Following last week's post, the very first question I was asked was "What about COBOL?"

In this week's post we will not only cover COBOL, but also show Pascal in a container and then look at several ways to handle one of my favourite languages, Perl, culminating in a complete guide for a fully routable BBS.

COBOL

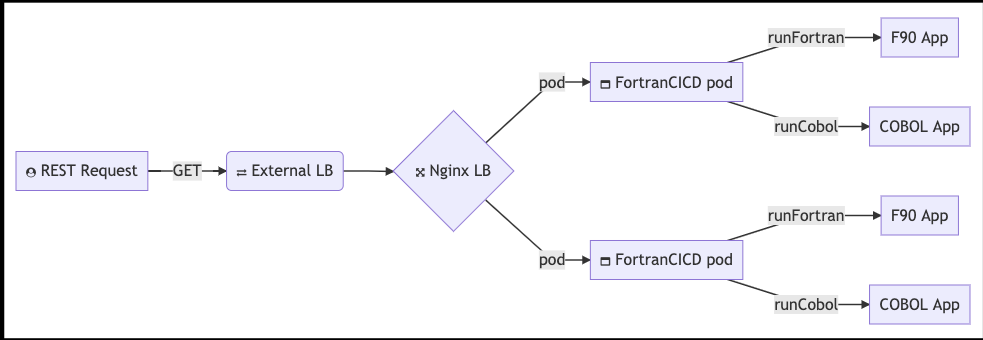

Handling COBOL is really no different from how we handled Fortran. We just need to add the program and an endpoint.

First, we’ll need a hello-world COBOL program:

$ cat myFirstCob.cob

IDENTIFICATION DIVISION.

PROGRAM-ID. SAMPLE-01.

ENVIRONMENT DIVISION.

DATA DIVISION.

PROCEDURE DIVISION.

MAIN.

DISPLAY "My First COBOL" UPON CONSOLE.

STOP RUN.

Then update the Dockerfile to include a COBOL install. Since we are based on CentOS, it was just a bit of searching and testing to add the right packages:

RUN yum update -y

RUN yum install -y gcc-gfortran gdb make curl gmp-devel libtool libdb-devel.x86_64 ncurses-devel

# COBOL

RUN curl -O https://ftp.gnu.org/gnu/gnucobol/gnu-cobol-1.1.tar.gz

RUN tar -xzf gnu-cobol-1.1.tar.gz

RUN cd gnu-cobol-1.1 && ./configure && make && make install

# COBOL Compile

COPY myFirstCob.cob /fortran/

WORKDIR /fortran/

RUN echo "/usr/local/lib" >> /etc/ld.so.conf.d/gnu-cobol-1.1.conf

RUN ldconfig

RUN cobc -V

RUN cobc -x -free myFirstCob.cob

RUN ./myFirstCob

Since I was doing this additively, here is the full Dockerfile:

$ cat Dockerfile

#HelloWorld_Fortran

# start by building the basic container

FROM centos:latest

MAINTAINER Isaac Johnson <isaac.johnson@gmail.com>

RUN yum update -y

RUN yum install -y gcc-gfortran gdb make curl gmp-devel libtool libdb-devel.x86_64 ncurses-devel

# COBOL

RUN curl -O https://ftp.gnu.org/gnu/gnucobol/gnu-cobol-1.1.tar.gz

RUN tar -xzf gnu-cobol-1.1.tar.gz

RUN cd gnu-cobol-1.1 && ./configure && make && make install

# COBOL Compile

COPY myFirstCob.cob /fortran/

WORKDIR /fortran/

RUN echo "/usr/local/lib" >> /etc/ld.so.conf.d/gnu-cobol-1.1.conf

RUN ldconfig

RUN cobc -V

RUN cobc -x -free myFirstCob.cob

RUN ./myFirstCob

# build the hello world code

COPY Makefile run_fortran.sh HelloWorld.f90 HelloAgainInput.txt /fortran/

WORKDIR /fortran/

RUN make HelloWorld

# NodeJs

RUN curl -sL https://rpm.nodesource.com/setup_10.x | bash -

RUN yum install -y nodejs

COPY package.json package-lock.json server.js users.json ./

RUN npm install

# configure the container to run the hello world executable by default

# CMD ["./HelloWorld"]

ENTRYPOINT ["./run_fortran.sh"]

Having a program there, I just need to expose it with Express JS, so we add an endpoint in server.js:

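```javascript
// exec comes from Node's child_process module, e.g.
// const { exec } = require('child_process');
app.get('/runcobol', function (req, res) {
  exec("./myFirstCob", (error, stdout, stderr) => {
    if (error) {
      console.log(`error: ${error.message}`);
      // return so we do not also send the stdout response below
      return res.end(`error: ${error.message}`);
    }
    if (stderr) {
      console.log(`stderr: ${stderr}`);
      return res.end(`error: ${stderr}`);
    }
    console.log(`stdout: ${stdout}`);
    res.end(`${stdout}`);
  });
})
```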

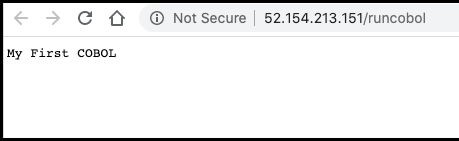

Once launched, it is easy to test:

Pascal

We need not show every example in full, but Free Pascal (FPC) lets us do something similar for Pascal.

I downloaded the tar from sourceforge: https://sourceforge.net/projects/freepascal/files/Linux/3.0.0/

Gzip and then transfer the tgz to the pod:

$ cd ~/Downloads && gzip fpc-3.0.0.x86_64-linux.tar

$ kubectl cp ./bin/fpc-3.0.0.x86_64-linux.tar.gz fortrancicd-67c498959f-bzss8:/fortran

Logging into the pod:

$ kubectl exec -it fortrancicd-67c498959f-bzss8 -- /bin/bash

[root@fortrancicd-67c498959f-bzss8 fortran]# tar -xzvf fpc-3.0.0.x86_64-linux.tar.gz

[root@fortrancicd-67c498959f-bzss8 fortran]# cd fpc-3.0.0.x86_64-linux

[root@fortrancicd-67c498959f-bzss8 fortran]# ./install.sh

Note: to make this non-interactive, I would need to rewrite install.sh.

[root@fortrancicd-67c498959f-bzss8 fortran]# cat Hello.p

program Hello;

begin

writeln ('Hello, world.');

readln;

end.

Then compile it and run

[root@fortrancicd-67c498959f-bzss8 fortran]# fpc Hello.p

Free Pascal Compiler version 3.0.0 [2015/11/20] for x86_64

Copyright (c) 1993-2015 by Florian Klaempfl and others

Target OS: Linux for x86-64

Compiling Hello.p

Linking Hello

/usr/bin/ld: warning: link.res contains output sections; did you forget -T?

5 lines compiled, 0.0 sec

[root@fortrancicd-67c498959f-bzss8 fortran]# ./Hello

Hello, world.

Perl

We’re going to really dig in here, so I made a brand new project. Perl arguably started out as a third-generation language (3GL), but it has a full TCP stack and a rather loyal fanbase that keeps it going.

Since there is an official Perl Docker image (perl), we can build a new project on top of it.

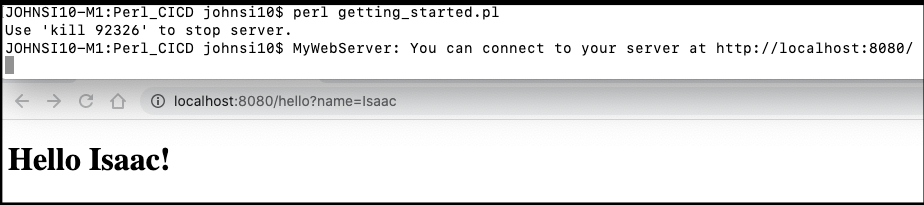

First, a basic HTTP app. There are a lot of ways to handle this; for now, we’ll use the HTTP::Server::Simple CPAN package.

$ cat getting_started.pl

```perl
#!/usr/bin/perl
{
    package MyWebServer;

    use HTTP::Server::Simple::CGI;
    use base qw(HTTP::Server::Simple::CGI);

    my %dispatch = (
        '/hello' => \&resp_hello,
        # ...
    );

    sub handle_request {
        my $self = shift;
        my $cgi  = shift;

        my $path    = $cgi->path_info();
        my $handler = $dispatch{$path};

        if (ref($handler) eq "CODE") {
            print "HTTP/1.0 200 OK\r\n";
            $handler->($cgi);
        } else {
            print "HTTP/1.0 404 Not found\r\n";
            print $cgi->header,
                  $cgi->start_html('Not found'),
                  $cgi->h1('Not found'),
                  $cgi->end_html;
        }
    }

    sub resp_hello {
        my $cgi = shift;   # CGI.pm object
        return if !ref $cgi;

        my $who = $cgi->param('name');

        print $cgi->header,
              $cgi->start_html("Hello"),
              $cgi->h1("Hello $who!"),
              $cgi->end_html;
    }
}

# start the server on port 8080
my $pid = MyWebServer->new(8080)->background();
print "Use 'kill $pid' to stop server.\n";
```

Testing locally:

$ sudo cpan install HTTP::Server::Simple

$ perl getting_started.pl

http://localhost:8080/hello?name=Isaac

Our Dockerfile really just needs to install the one CPAN module we added and then copy our script over. Most Perl modules have equivalent apt packages as well, so instead of CPAN we could have used:

sudo apt-get install -y libhttp-server-simple-perl

However, using CPAN eliminates the dependency on the apt package manager.

Dockerfile:

$ cat Dockerfile

FROM perl:5.20

# install required modules

RUN export PERL_MM_USE_DEFAULT=1 && cpan install HTTP::Server::Simple

COPY . /usr/src/myapp

WORKDIR /usr/src/myapp

CMD [ "perl", "./getting_started.pl" ]

And similar to our last Fortran example, we set up an environment (VSECluster, for k8s) and a new container registry (CR). We use the resource ID of the CR in azure-pipelines.yaml and the name of our environment in the stages:

$ cat azure-pipelines.yaml

```yaml
# Deploy to Azure Kubernetes Service
# Build and push image to Azure Container Registry; Deploy to Azure Kubernetes Service
# https://docs.microsoft.com/azure/devops/pipelines/languages/docker

trigger:
- master
- develop

resources:
- repo: self

variables:

  # Container registry service connection established during pipeline creation
  dockerRegistryServiceConnection: '478f27cc-e412-40cf-9d7d-6787376f5998'
  imageRepository: 'perlcicd'
  containerRegistry: 'idjf90cr.azurecr.io'
  dockerfilePath: '**/Dockerfile'
  tag: '$(Build.BuildId)'
  imagePullSecret: 'idjf90cr25108-auth'

  # Agent VM image name
  vmImageName: 'ubuntu-latest'

  # Name of the new namespace being created to deploy the PR changes.
  k8sNamespaceForPR: 'review-app-$(System.PullRequest.PullRequestId)'

stages:
- stage: Build
  displayName: Build stage
  jobs:
  - job: Build
    displayName: Build
    pool:
      vmImage: $(vmImageName)
    steps:
    - task: Docker@2
      displayName: Build and push an image to container registry
      inputs:
        command: buildAndPush
        repository: $(imageRepository)
        dockerfile: $(dockerfilePath)
        containerRegistry: $(dockerRegistryServiceConnection)
        tags: |
          $(tag)

    - upload: manifests
      artifact: manifests

- stage: Deploy
  displayName: Deploy stage
  dependsOn: Build

  jobs:
  - deployment: Deploy
    condition: and(succeeded(), not(startsWith(variables['Build.SourceBranch'], 'refs/pull/')))
    displayName: Deploy
    pool:
      vmImage: $(vmImageName)
    environment: 'VSECluster.default'
    strategy:
      runOnce:
        deploy:
          steps:
          - task: KubernetesManifest@0
            displayName: Create imagePullSecret
            inputs:
              action: createSecret
              secretName: $(imagePullSecret)
              dockerRegistryEndpoint: $(dockerRegistryServiceConnection)

          - task: KubernetesManifest@0
            displayName: Deploy to Kubernetes cluster
            inputs:
              action: deploy
              manifests: |
                $(Pipeline.Workspace)/manifests/deployment.yml
                $(Pipeline.Workspace)/manifests/service.yml
              imagePullSecrets: |
                $(imagePullSecret)
              containers: |
                $(containerRegistry)/$(imageRepository):$(tag)

  - deployment: DeployPullRequest
    displayName: Deploy Pull request
    condition: and(succeeded(), startsWith(variables['Build.SourceBranch'], 'refs/pull/'))
    pool:
      vmImage: $(vmImageName)
    environment: 'VSECluster.$(k8sNamespaceForPR)'
    strategy:
      runOnce:
        deploy:
          steps:
          - reviewApp: default

          - task: Kubernetes@1
            displayName: 'Create a new namespace for the pull request'
            inputs:
              command: apply
              useConfigurationFile: true
              inline: '{ "kind": "Namespace", "apiVersion": "v1", "metadata": { "name": "$(k8sNamespaceForPR)" }}'

          - task: KubernetesManifest@0
            displayName: Create imagePullSecret
            inputs:
              action: createSecret
              secretName: $(imagePullSecret)
              namespace: $(k8sNamespaceForPR)
              dockerRegistryEndpoint: $(dockerRegistryServiceConnection)

          - task: KubernetesManifest@0
            displayName: Deploy to the new namespace in the Kubernetes cluster
            inputs:
              action: deploy
              namespace: $(k8sNamespaceForPR)
              manifests: |
                $(Pipeline.Workspace)/manifests/deployment.yml
                $(Pipeline.Workspace)/manifests/service.yml
              imagePullSecrets: |
                $(imagePullSecret)
              containers: |
                $(containerRegistry)/$(imageRepository):$(tag)

          - task: Kubernetes@1
            name: get
            displayName: 'Get services in the new namespace'
            continueOnError: true
            inputs:
              command: get
              namespace: $(k8sNamespaceForPR)
              arguments: svc
              outputFormat: jsonpath='http://{.items[0].status.loadBalancer.ingress[0].ip}:{.items[0].spec.ports[0].port}'

          # Getting the IP of the deployed service and writing it to a variable for posing comment
          - script: |
              url="$(get.KubectlOutput)"
              message="Your review app has been deployed"
              if [ ! -z "$url" -a "$url" != "http://:" ]
              then
                message="${message} and is available at $url.<br><br>[Learn More](https://aka.ms/testwithreviewapps) about how to test and provide feedback for the app."
              fi
              echo "##vso[task.setvariable variable=GITHUB_COMMENT]$message"
```

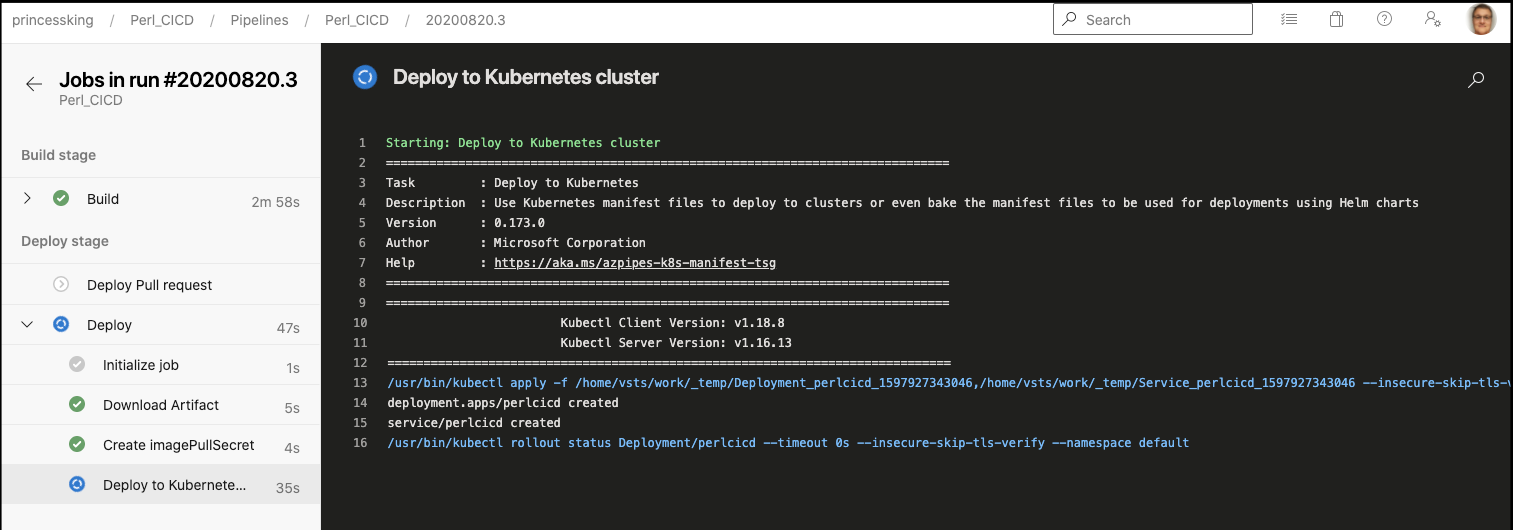

Deploying

Right away I see an issue: the pod keeps crashing.

JOHNSI10-M1:Downloads johnsi10$ kubectl get pods

NAME READY STATUS RESTARTS AGE

fortrancicd-67c498959f-bzss8 1/1 Running 0 9h

perlcicd-57dd7c85d4-9zbcj 0/1 CrashLoopBackOff 1 7s

JOHNSI10-M1:Downloads johnsi10$ kubectl logs perlcicd-57dd7c85d4-9zbcj

Use 'kill 9' to stop server.

We cannot log in because of the crashed state:

$ kubectl exec -it perlcicd-57dd7c85d4-9zbcj -- /bin/bash

error: unable to upgrade connection: container not found ("perlcicd")

A trick I learned some time ago is to make the container just sleep so there is time to debug interactively. Change the last line of the Dockerfile:

# CMD [ "perl", "./getting_started.pl" ]

ENTRYPOINT [ "sh", "-c", "sleep 500000" ]

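We push and CI/CD redeploys.

Now the pod stays up, and when we run the script by hand we can see the server start. However, the script itself exits right after backgrounding the server. In the Docker world, the container stops when its main process exits, which Kubernetes treats as a failure and restarts.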

JOHNSI10-M1:Downloads johnsi10$ kubectl get pods

NAME READY STATUS RESTARTS AGE

fortrancicd-67c498959f-bzss8 1/1 Running 0 10h

perlcicd-7fc6c7d5d5-vt2v6 1/1 Running 0 23s

JOHNSI10-M1:Downloads johnsi10$ kubectl exec -it perlcicd-7fc6c7d5d5-vt2v6 -- /bin/bash

root@perlcicd-7fc6c7d5d5-vt2v6:/usr/src/myapp# perl getting_started.pl

Use 'kill 14' to stop server.

root@perlcicd-7fc6c7d5d5-vt2v6:/usr/src/myapp# MyWebServer: You can connect to your server at http://localhost:8080/

root@perlcicd-7fc6c7d5d5-vt2v6:/usr/src/myapp#

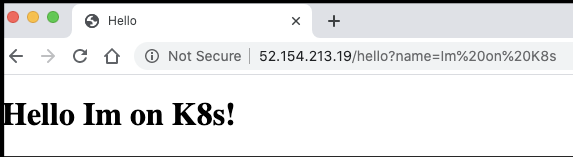

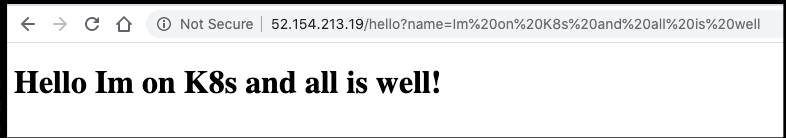

We can prove this is running now:

$ kubectl get svc perlcicd

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

perlcicd LoadBalancer 10.0.128.38 52.154.213.19 80:31695/TCP 22m

The problem is that the sample code backgrounds the server and returns, so the container's main process exits. The HTTP::Server::Simple documentation shows that we should use run() instead, which stays in the foreground.

Change the line to `my $pid = MyWebServer->new(8080)->run();` and then re-enable Perl in the Dockerfile with `CMD [ "perl", "./getting_started.pl" ]`.
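The difference between the two calls can be reproduced without Perl. The sketch below is only an illustration in plain shell, not part of the project: `sleep` stands in for the web server, and the two `sh -c` launchers stand in for a script that backgrounds its work versus one that blocks on it.

```shell
#!/bin/sh
# Illustration only: 'sleep 2' plays the role of the web server.

# Case 1: the launcher puts its work in the background and returns at once,
# the way getting_started.pl returned after calling ->background().
start=$(date +%s)
sh -c 'sleep 2 >/dev/null 2>&1 &'
bg_elapsed=$(( $(date +%s) - start ))
echo "backgrounding launcher returned after ${bg_elapsed}s"

# Case 2: the launcher stays in the foreground until the work ends,
# the way ->run() blocks for the life of the server.
start=$(date +%s)
sh -c 'sleep 2'
fg_elapsed=$(( $(date +%s) - start ))
echo "foreground launcher returned after ${fg_elapsed}s"
```

A container's CMD behaves like the launcher here: once it returns, the container is finished, whatever it left running in the background.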

Now we can check:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

fortrancicd-67c498959f-bzss8 1/1 Running 0 10h

perlcicd-865d876db-q98ck 1/1 Running 0 23m

$ kubectl get svc perlcicd

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

perlcicd LoadBalancer 10.0.128.38 52.154.213.19 80:31695/TCP 56m

That is great for Perl-based servers. But what about CGI?

Perl CGI in Docker

We’ll move to a different branch (feature/cgi).

We can follow this guide that is similar (using Docker compose).

First, let’s change Deployment and Service to use port 80:

$ cat manifests/deployment.yml | grep 80

- containerPort: 80

$ cat manifests/service.yml | grep 80

port: 80

targetPort: 80

We need to add a basic index and vhosts files:

$ cat index.html

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<meta http-equiv="X-UA-Compatible" content="ie=edge">

<title>Document</title>

</head>

<body>

<h1>Hello World In html file</h1>

</body>

</html>

$ cat vhost.conf

```nginx
server {
    listen 80;
    index index.php index.html;
    root /var/www;

    location ~ \.pl$ {
        gzip off;
        fastcgi_param SERVER_NAME \$http_host;
        include /etc/nginx/fastcgi_params;
        fastcgi_pass unix:/var/run/fcgiwrap.socket;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
    }

    error_log /var/log/nginx/web/error.log;
    access_log /var/log/nginx/web/access.log;
}
```

A Perl script to run:

$ cat hello_world.pl

#!/usr/bin/perl -wT

print "Content-type: text/plain\n\n";

print "Hello World In CGI Perl " . time;

$ chmod 755 hello_world.pl

Then a new Dockerfile to use the files:

$ cat Dockerfile

FROM nginx:1.10

RUN umask 0002

RUN apt-get clean && apt-get update && apt-get install -y nano spawn-fcgi fcgiwrap wget curl

RUN sed -i 's/www-data/nginx/g' /etc/init.d/fcgiwrap

RUN chown nginx:nginx /etc/init.d/fcgiwrap

ADD ./vhost.conf /etc/nginx/conf.d/default.conf

COPY . /var/www

RUN mkdir -p /var/log/nginx/web

WORKDIR /var/www

#ENTRYPOINT [ "sh", "-c", "sleep 500000" ]

CMD /etc/init.d/fcgiwrap start && nginx -g 'daemon off;'

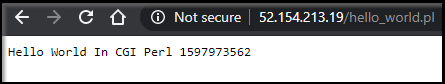

Let’s test:

$ kubectl get svc perlcicd

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

perlcicd LoadBalancer 10.0.128.38 52.154.213.19 80:31695/TCP 12h

Testing

YaBBS

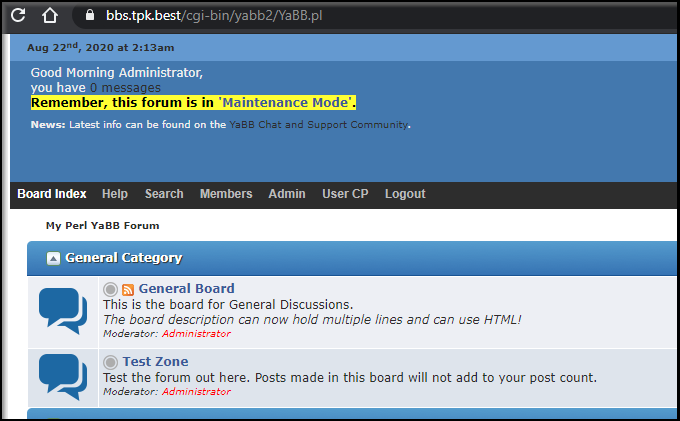

I downloaded YaBB (http://www.yabbforum.com/?page_id=32) into ./bin of my repo and then updated the Dockerfile. The tgz can be downloaded from http://www.yabbforum.com/downloads/release/YaBB_2.6.11.tar.gz

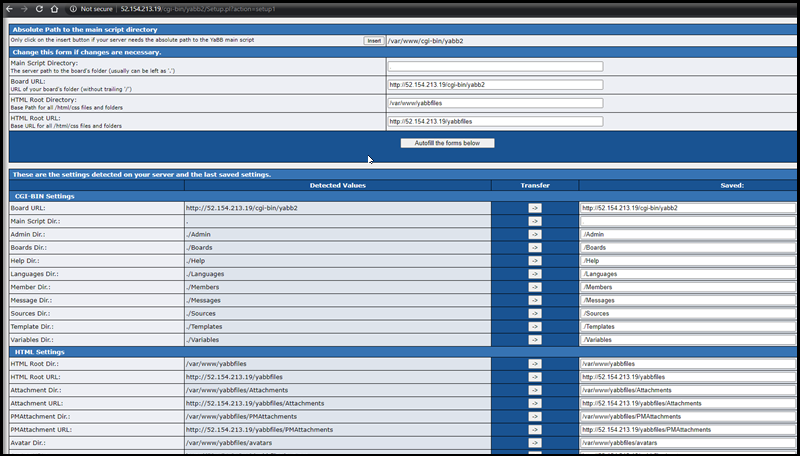

When we now go to the CGI setup page, we can set up the BBS:

http://52.154.213.19/cgi-bin/yabb2/Setup.pl

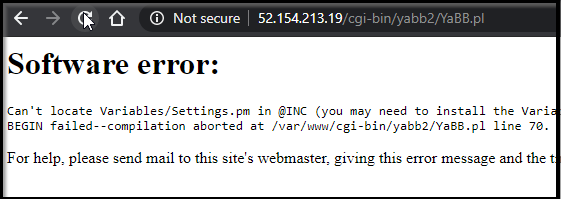

However, the lack of persistence is going to be an issue…

For instance, if I kill the pod, we lose the forum.

$ kubectl delete pod perlcicd-5d6f8d8dc8-bmvkc

pod "perlcicd-5d6f8d8dc8-bmvkc" deleted

This is where Persistent Volume Claims (PVCs) come into play.

If you are not using AKS, you will need to look up the storage class for your provider (e.g. "longhorn" or "local-storage" for k3s). You can use kubectl get sc to list the storage classes in your cluster and see which one is the default.

Here we just add a PVC to manifests/deployment.yml:

$ cat manifests/deployment.yml

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: azure-managed-disk
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: managed-premium
  resources:
    requests:
      storage: 10Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: perlcicd
spec:
  selector:
    matchLabels:
      app: perlcicd
  replicas: 1
  template:
    metadata:
      labels:
        app: perlcicd
    spec:
      containers:
      - name: perlcicd
        image: idjf90cr.azurecr.io/perlcicd
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: "/var/www"
          name: volume
      volumes:
      - name: volume
        persistentVolumeClaim:
          claimName: azure-managed-disk
```

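Additionally, we have a first-run issue: our files are in /var/www, so on first launch the PVC will mount over our install location and hide the files baked into the image.

To get around that, we keep a copy of the files in /var/wwwbase at build time and copy it into /var/www at startup without overwriting anything already there (cp -n), in effect a backup and restore. This is also useful if we ever have to move PVCs.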

$ cat Dockerfile

FROM nginx:1.10

RUN umask 0002

RUN apt-get clean && apt-get update && apt-get install -y nano spawn-fcgi fcgiwrap wget curl

RUN sed -i 's/www-data/nginx/g' /etc/init.d/fcgiwrap

RUN chown nginx:nginx /etc/init.d/fcgiwrap

ADD ./vhost.conf /etc/nginx/conf.d/default.conf

COPY . /var/www

RUN mkdir -p /tmp/bbinstall

COPY ./bin/YaBB_2.6.11.tar.gz /tmp/bbinstall

WORKDIR /tmp/bbinstall

RUN umask 0002 && tar -xzf YaBB_2.6.11.tar.gz

RUN chown -R nginx:nginx YaBB_2.6.11

RUN find . -type f -name \*.pl -exec chmod 755 {} \;

RUN mv YaBB_2.6.11/cgi-bin /var/www

RUN mv YaBB_2.6.11/public_html/yabbfiles /var/www

RUN mkdir -p /var/log/nginx/web

WORKDIR /var/www

RUN mkdir -p /var/wwwbase

RUN cp -vnpr /var/www/* /var/wwwbase

#ENTRYPOINT [ "sh", "-c", "sleep 500000" ]

CMD cp -vnpr /var/wwwbase/* /var/www && /etc/init.d/fcgiwrap start && nginx -g 'daemon off;'
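The seed-on-start behaviour of that CMD can be tried locally. The following is a minimal sketch, not the author's setup: two temporary directories stand in for /var/wwwbase and the PVC mounted at /var/www, and the file name and contents are made up for the demo.

```shell
#!/bin/sh
# Illustration only: temp directories replace the image copy and the PVC.
set -e
base=$(mktemp -d)     # plays the role of /var/wwwbase (baked into the image)
volume=$(mktemp -d)   # plays the role of the empty PVC mounted at /var/www

echo "fresh install" > "$base/index.html"

# First start: the volume is empty, so everything is copied in.
cp -npr "$base"/* "$volume"
first=$(cat "$volume/index.html")

# Later the application changes its data on the volume.
echo "forum data" > "$volume/index.html"

# Restart: -n (no-clobber) skips files that already exist, so the data survives.
# Some cp versions report skipped files with a non-zero status; tolerate that.
cp -npr "$base"/* "$volume" || true
second=$(cat "$volume/index.html")

echo "after first start: $first"
echo "after restart:     $second"
rm -rf "$base" "$volume"
```

The second line of output should still show the changed contents, which is the property the forum relies on when a pod is replaced. Note that the Dockerfile chains the copy with `&&`, so a cp that returns non-zero on skipped files would stop nginx from starting; the `|| true` above is one hedge against that.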

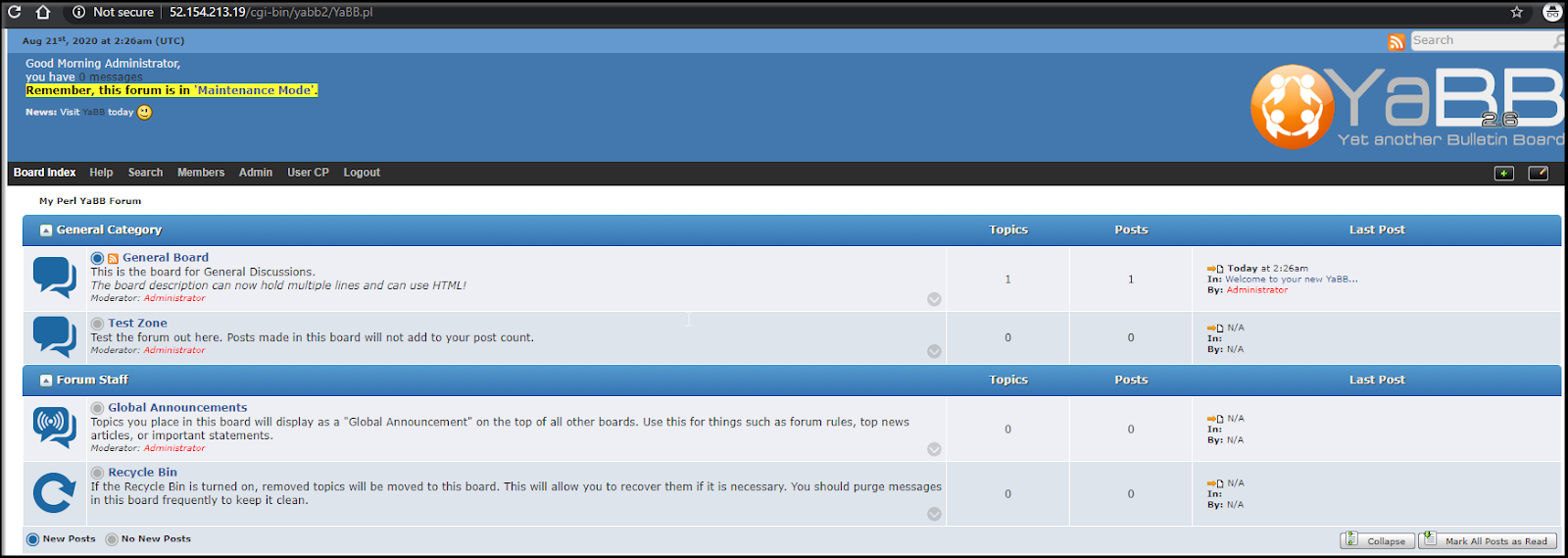

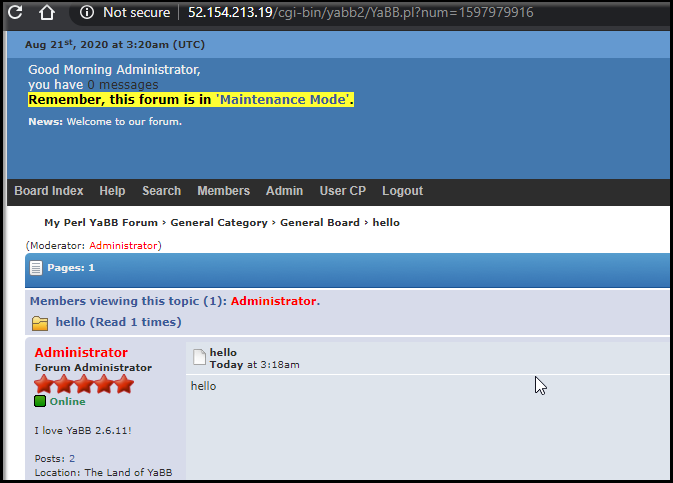

We can log in and create a post, then kill the pod, and the post survives:

Set up TLS ingress:

Following this guide: https://docs.microsoft.com/en-us/azure/aks/ingress-tls

Create a namespace and add the helm chart repo

$ kubectl create ns ingress-basic

$ helm repo add stable https://kubernetes-charts.storage.googleapis.com/

"stable" has been added to your repositories

JOHNSI10-M1:ghost-blog johnsi10$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "appdynamics-charts" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "banzaicloud-stable" chart repository

fetch from s3: fetch object from s3: AccessDenied: Access Denied

status code: 403, request id: B1B6B0DC3949C560, host id: Zfff4Q6fz/qoFTVCsH2xQDryD/QDUJvBgvskXpF+XcwgC4pu2ETAK08sdpk4acYmEC3u9P+/Bc8=

...Unable to get an update from the "fbs3" chart repository (s3://idjhelmtest/helm):

plugin "bin/helms3" exited with error

...Successfully got an update from the "bitnami" chart repository

fetch from s3: fetch object from s3: AccessDenied: Access Denied

status code: 403, request id: F63D4A110C406561, host id: D55rlAPdHyU9l9K7apA+t79Xq8wcZbu4IcND3CLC7Ey38A2ucJaG2xcQf/vwoypT4CxAj/fSvPw=

...Unable to get an update from the "vnc" chart repository (s3://idjhelmtest/helm):

plugin "bin/helms3" exited with error

...Successfully got an update from the "stable" chart repository

...Successfully got an update from the "mlifedev" chart repository

fetch from s3: fetch object from s3: AccessDenied: Access Denied

status code: 403, request id: D8C4BF6EB6991A18, host id: 6nApBOhXdKMC4d86rtyn+KbjM7F0YdEy+fdYdscHrivi/zbH8jd4EF1+Ngb7BiWBs6Gfc0QgvPA=

...Unable to get an update from the "fbs3b" chart repository (s3://idjhelmtest/helm/):

plugin "bin/helms3" exited with error

Update Complete. ⎈ Happy Helming!⎈

Then install it

$ helm install nginx stable/nginx-ingress \

> --namespace ingress-basic \

> --set controller.replicaCount=2 \

> --set controller.nodeSelector."beta\.kubernetes\.io/os"=linux \

> --set defaultBackend.nodeSelector."beta\.kubernetes\.io/os"=linux

WARNING: This chart is deprecated

NAME: nginx

LAST DEPLOYED: Fri Aug 21 07:31:34 2020

NAMESPACE: ingress-basic

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

*******************************************************************************************************

* DEPRECATED, please use https://github.com/kubernetes/ingress-nginx/tree/master/charts/ingress-nginx *

*******************************************************************************************************

The nginx-ingress controller has been installed.

It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status by running 'kubectl --namespace ingress-basic get services -o wide -w nginx-nginx-ingress-controller'

An example Ingress that makes use of the controller:

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

annotations:

kubernetes.io/ingress.class: nginx

name: example

namespace: foo

spec:

rules:

- host: www.example.com

http:

paths:

- backend:

serviceName: exampleService

servicePort: 80

path: /

# This section is only required if TLS is to be enabled for the Ingress

tls:

- hosts:

- www.example.com

secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

apiVersion: v1

kind: Secret

metadata:

name: example-tls

namespace: foo

data:

tls.crt: <base64 encoded cert>

tls.key: <base64 encoded key>

type: kubernetes.io/tls

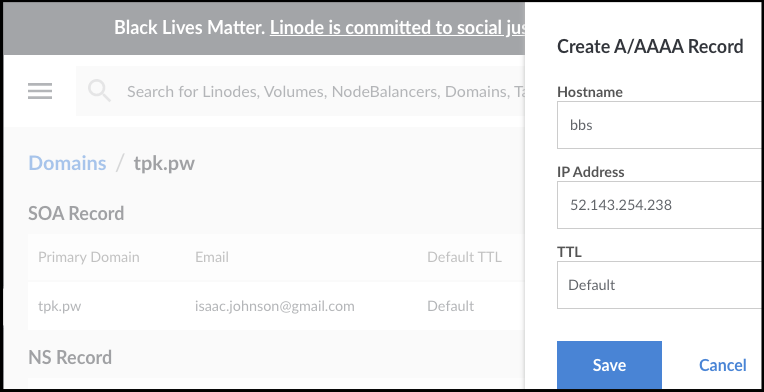

Let’s get the external IPv4 address and set an A/AAAA record for it:

$ kubectl get svc nginx-nginx-ingress-controller -n ingress-basic

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-nginx-ingress-controller LoadBalancer 10.0.239.164 52.143.254.238 80:32108/TCP,443:30089/TCP 3m57s

Note: I had to pivot. Try as I might, I could not route traffic from the ingress-basic namespace over to the default namespace to which I was deploying my app. I saw some pointers to using a service that points to another with an "external" attribute, but it didn't seem to work. Instead I relaunched the nginx chart and controller into the same namespace as our deployment (default):

$ helm install nginx stable/nginx-ingress --namespace default --set controller.replicaCount=2 --set controller.nodeSelector."beta\.kubernetes\.io/os"=linux --set defaultBackend.nodeSelector."beta\.kubernetes\.io/os"=linux

WARNING: This chart is deprecated

NAME: nginx

LAST DEPLOYED: Fri Aug 21 20:38:56 2020

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

*******************************************************************************************************

* DEPRECATED, please use https://github.com/kubernetes/ingress-nginx/tree/master/charts/ingress-nginx *

*******************************************************************************************************

The nginx-ingress controller has been installed.

It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status by running 'kubectl --namespace default get services -o wide -w nginx-nginx-ingress-controller'

An example Ingress that makes use of the controller:

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

annotations:

kubernetes.io/ingress.class: nginx

name: example

namespace: foo

spec:

rules:

- host: www.example.com

http:

paths:

- backend:

serviceName: exampleService

servicePort: 80

path: /

# This section is only required if TLS is to be enabled for the Ingress

tls:

- hosts:

- www.example.com

secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

apiVersion: v1

kind: Secret

metadata:

name: example-tls

namespace: foo

data:

tls.crt: <base64 encoded cert>

tls.key: <base64 encoded key>

type: kubernetes.io/tls

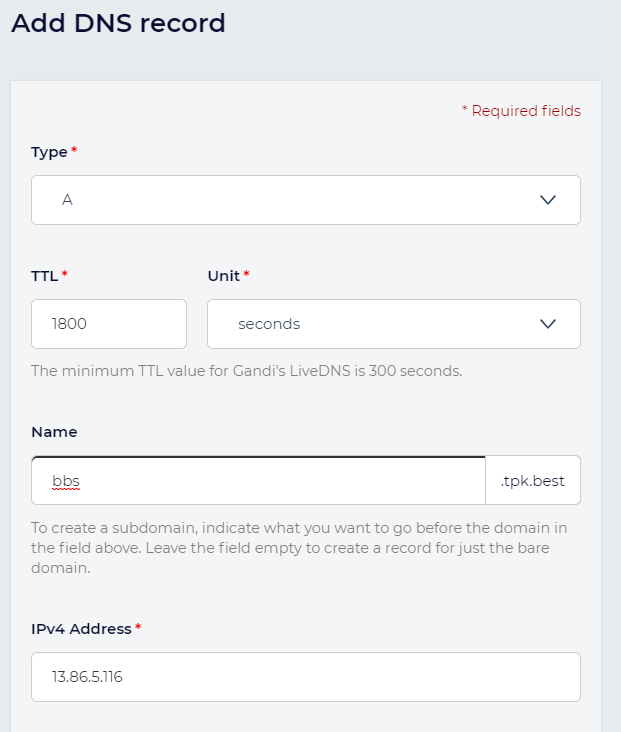

$ kubectl get svc nginx-nginx-ingress-controller

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-nginx-ingress-controller LoadBalancer 10.0.229.71 13.86.5.116 80:31597/TCP,443:31362/TCP 36s

I did need to log in to the pod and fix the paths. This will update the PVC as well.

I also realized tpk.pw had expired, so I used a different domain I own, tpk.best.

Label the default namespace to disable resource validation for cert-manager:

$ kubectl label namespace default cert-manager.io/disable-validation=true

namespace/default labeled

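Then install cert-manager (this assumes the jetstack chart repository, https://charts.jetstack.io, has already been added with helm repo add):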

$ helm install cert-manager --namespace default --version v0.16.1 --set installCRDs=true --set nodeSelector."beta\.kubernetes\.io/os"=linux jetstack/cert-manager

NAME: cert-manager

LAST DEPLOYED: Fri Aug 21 20:47:43 2020

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

cert-manager has been deployed successfully!

In order to begin issuing certificates, you will need to set up a ClusterIssuer

or Issuer resource (for example, by creating a 'letsencrypt-staging' issuer).

More information on the different types of issuers and how to configure them

can be found in our documentation:

https://cert-manager.io/docs/configuration/

For information on how to configure cert-manager to automatically provision

Certificates for Ingress resources, take a look at the `ingress-shim`

documentation:

https://cert-manager.io/docs/usage/ingress/

Next we need to install the ClusterIssuer for Let's Encrypt. If testing, you can use the staging endpoint. Here I use the real production one.

$ cat ca_ci.yaml

```yaml
apiVersion: cert-manager.io/v1alpha2
kind: ClusterIssuer
metadata:
  name: letsencrypt
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: isaac.johnson@gmail.com
    privateKeySecretRef:
      name: letsencrypt
    solvers:
    - http01:
        ingress:
          class: nginx
          podTemplate:
            spec:
              nodeSelector:
                "kubernetes.io/os": linux
```

$ kubectl apply -f ca_ci.yaml

clusterissuer.cert-manager.io/letsencrypt created

The important part is next. Here we are going to apply an ingress with an annotation that will ask our cluster issuer to automate the process of getting a valid TLS certificate from LetsEncrypt and applying it. You will need to ensure the DNS entry specified is pointed at your external IP before applying.

$ cat ingress.yaml

```yaml
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: perlcicd-ingress
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/rewrite-target: /$1
    nginx.ingress.kubernetes.io/use-regex: "true"
    cert-manager.io/cluster-issuer: letsencrypt
spec:
  tls:
  - hosts:
    - bbs.tpk.best
    secretName: tls-secret
  rules:
  - host: bbs.tpk.best
    http:
      paths:
      - backend:
          serviceName: perlcicd
          servicePort: 80
        path: /(.*)
```

$ kubectl apply -f ingress.yaml

ingress.networking.k8s.io/perlcicd-ingress configured

We can now check on the result. It will take a few minutes:

$ kubectl get certificate --all-namespaces

NAMESPACE NAME READY SECRET AGE

default tls-secret True tls-secret 11m

One final step: the BBS code still has the old IP-based URL saved in its variables files. We will need to log in and fix those:

$ kubectl exec -it perlcicd-6d7d87c64-7hpch -- /bin/bash

root@perlcicd-6d7d87c64-7hpch:/var/www# vim ./cgi-bin/yabb2/Paths.pm

# i changed from http://<ip> to https://bbs.tpk.best
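The same change could be scripted rather than made by hand in an editor. This is a hedged sketch only: it assumes the old base URL is the LoadBalancer address used during Setup.pl, it works on a throwaway file rather than the real Paths.pm, and the variable names inside that file are invented for the demo.

```shell
#!/bin/sh
# Illustration only: a temp file stands in for ./cgi-bin/yabb2/Paths.pm,
# and the two variable names below are hypothetical.
set -e
paths_file=$(mktemp)
cat > "$paths_file" <<'EOF'
$example_board_url = "http://52.154.213.19/cgi-bin/yabb2";
$example_files_url = "http://52.154.213.19/yabbfiles";
EOF

old_base="http://52.154.213.19"   # assumed: address the forum was set up under
new_base="https://bbs.tpk.best"   # hostname served by the TLS ingress

# -i.bak edits in place and leaves a backup copy next to the file.
# The dots in old_base act as regex wildcards, which is harmless here.
sed -i.bak "s#${old_base}#${new_base}#g" "$paths_file"

cat "$paths_file"
remaining=$(grep -c "$old_base" "$paths_file" || true)
echo "old references left: $remaining"
rm -f "$paths_file" "$paths_file.bak"
```

Because /var/www lives on the PVC, an edit like this only has to be made once; it would be worth checking the other YaBB settings files for the old address as well.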

Summary

We looked at COBOL as a follow-up to Fortran and then explored Pascal. We’ve now laid out a reusable pattern that should be useful for anyone having to handle a classic third-generation language.

Lastly, we took a look at a classic BBS system, YaBB, and set up a routable, persistent endpoint. Not only does this use Kubernetes to host a classic Linux-based forum, but by adding TLS to our Nginx ingress controller, we added a layer of security that wasn’t part of the former HTTP-based offering.