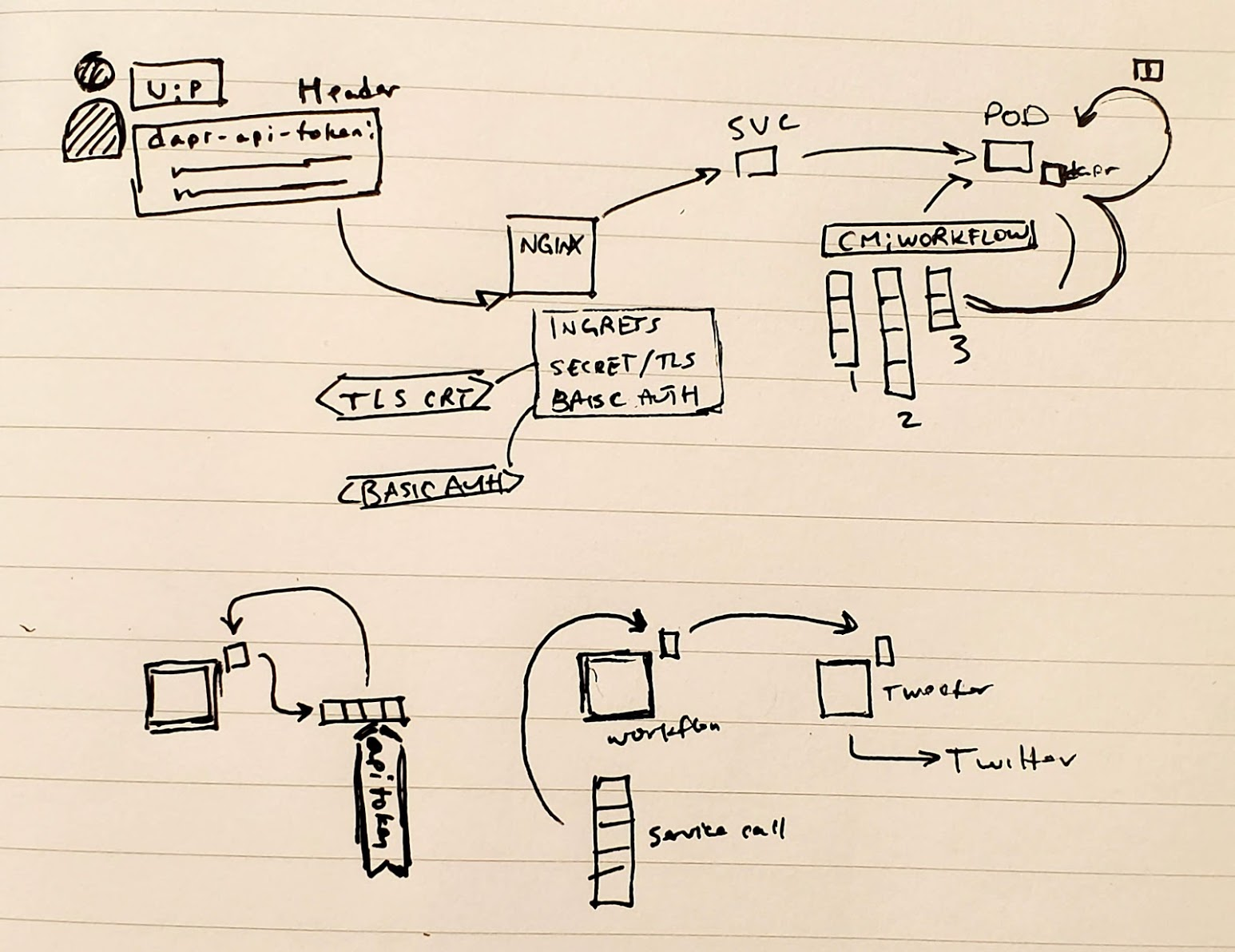

One of the challenges of exposing Dapr workflows, or really any Kubernetes-based flow, is securing it. We generally want to avoid exposing business logic orchestration that can be invoked without authentication. Luckily, there are solutions to this problem.

Today we'll explore using both Basic Auth and Dapr API tokens to restrict access to your REST-based services and see how each is used in practice. We'll go over AKS as well as what can be done on-prem.

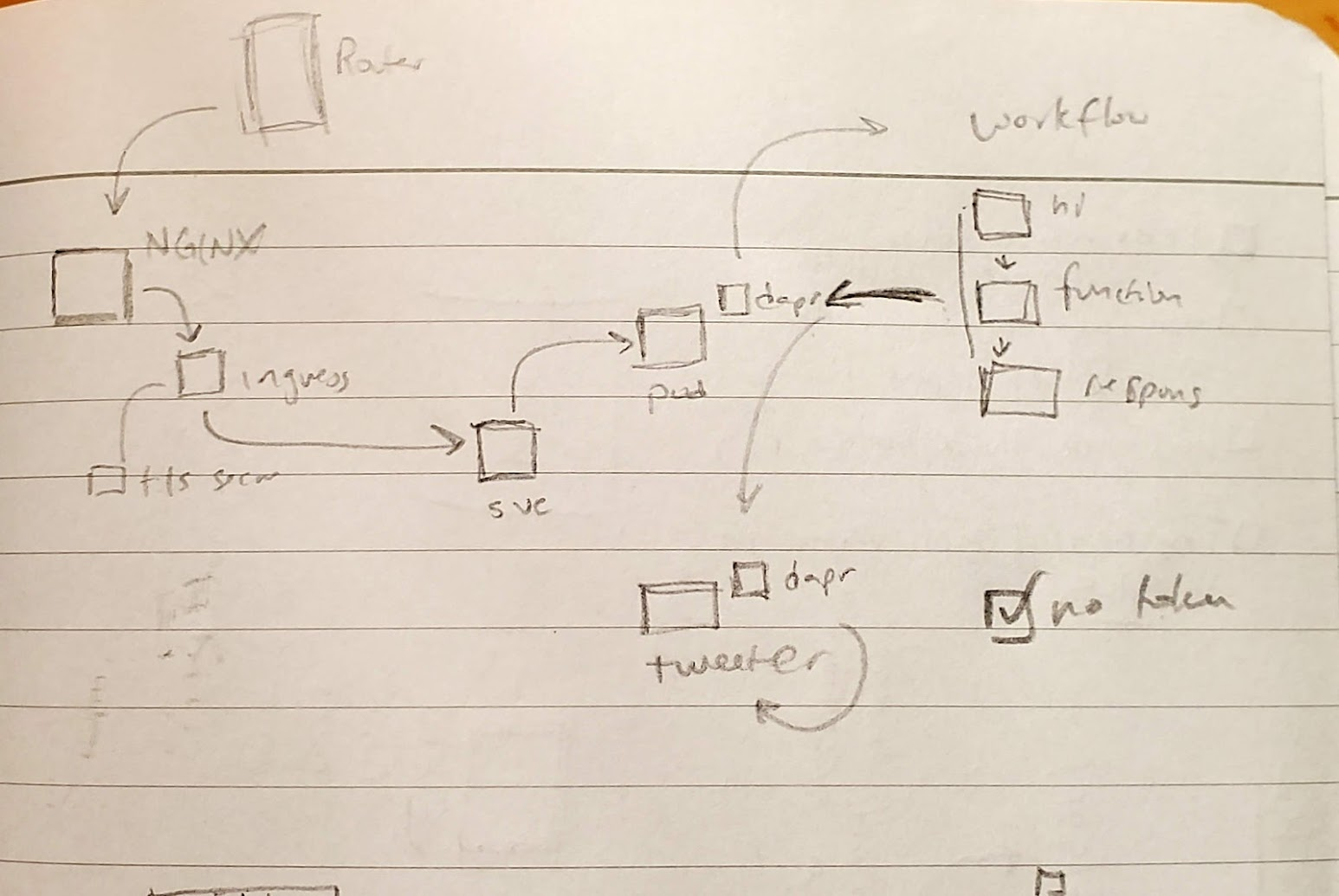

Our starting point

Let's look at what we built last time. We created a containerized Twitter API microservice ("Tweeter") that was invoked via Dapr by a Dapr Workflow (which implements Logic App flows). We then exposed that as a service tied to a Kubernetes Ingress and served by our Nginx Ingress controller. We used Cert Manager to get a free Let's Encrypt (LE) TLS certificate.

Essentially we exposed a Dapr Workflow to call a Dapr service to Tweet for us. However, anyone could hit that public endpoint and send out tweets. Probably not something we would want to keep open.

AKS Setup

Let's start by creating an AKS cluster running 1.19.9. First, as usual, create a resource group to hold the cluster.

$ az account set --subscription 70b42e6a-asdf-asdf-asdf-9f3995b1aca8

$ az group create --name idjtestingressrg --location centralus

{

"id": "/subscriptions/70b42e6a-asdf-asdf-asdf-9f3995b1aca8/resourceGroups/idjtestingressrg",

"location": "centralus",

"managedBy": null,

"name": "idjtestingressrg",

"properties": {

"provisioningState": "Succeeded"

},

"tags": null,

"type": "Microsoft.Resources/resourceGroups"

}

We will just re-use an existing SP (no need to recreate for a test AKS)

$ echo "re-use existing sp" && az ad sp create-for-rbac -n idjaks04sp --skip-assignment --output json > my_sp.json

re-use existing sp

Changing "idjaks04sp" to a valid URI of "http://idjaks04sp", which is the required format used for service principal names

Found an existing application instance of "4d3b5455-8ab1-4dc3-954b-39daed1cd954". We will patch it

The output includes credentials that you must protect. Be sure that you do not include these credentials in your code or check the credentials into your source control. For more information, see https://aka.ms/azadsp-cli

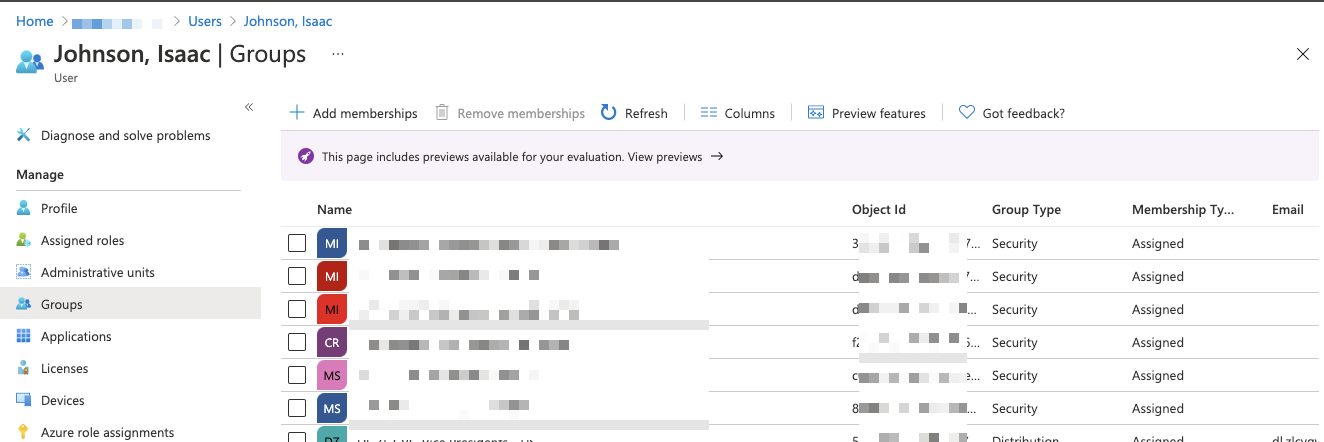

However, before we create the cluster, since this account is part of a proper AAD tenant, let's use some of the newer AAD support in AKS create. I'll look up my own user and find a group of which I'm a member in AAD

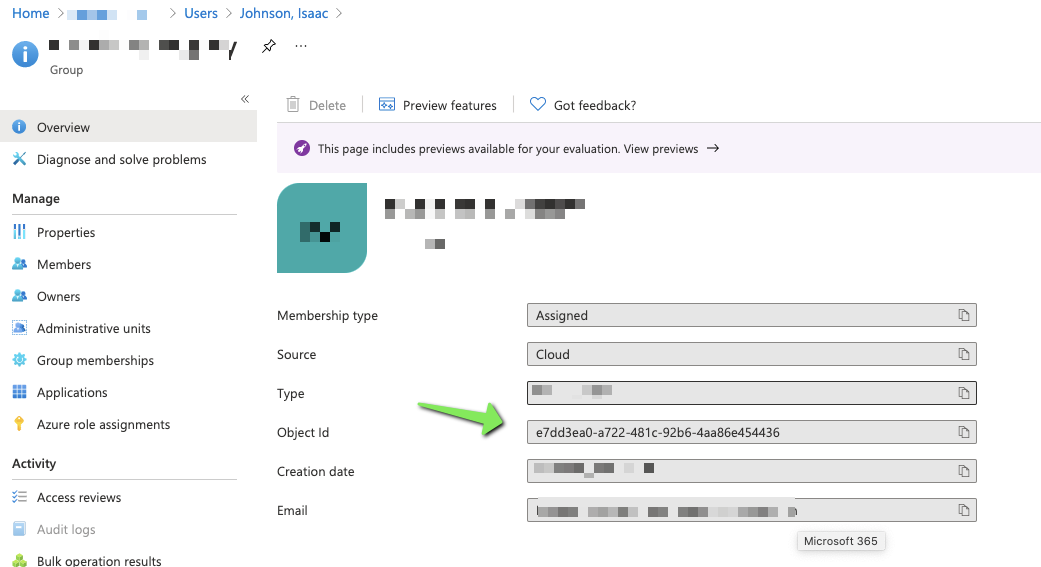

We need the Object ID of the DL that is our Management Group out of AAD

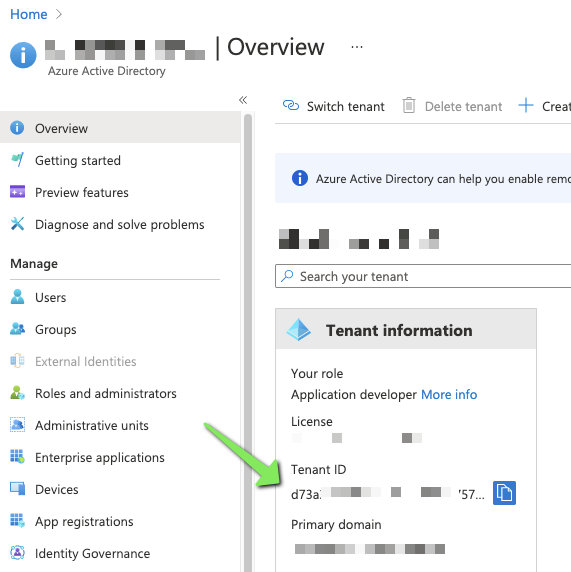

The other piece we need is the Tenant ID, which identifies the overall container for this organization's subscriptions

We can now set the Service Principal vars.

$ export SP_PASS=`cat my_sp.json | jq -r .password`

$ export SP_ID=`cat my_sp.json | jq -r .appId`
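Because `jq -r` prints the literal string `null` when a key is missing, it can be worth checking both variables before handing them to `az aks create`. A minimal sketch; the helper name `check_sp_var` is made up for this example and is not part of any CLI:

```shell
# Hypothetical helper: fail fast if a value parsed out of my_sp.json is unusable.
check_sp_var() {
  name=$1
  value=$2
  # jq -r prints "null" for a missing key, so treat that like an empty value
  if [ -z "$value" ] || [ "$value" = "null" ]; then
    echo "$name is not set; re-check my_sp.json" >&2
    return 1
  fi
}

# Usage, once SP_ID and SP_PASS have been exported:
#   check_sp_var SP_ID "$SP_ID" && check_sp_var SP_PASS "$SP_PASS"
```

If either check fails, the cluster create would otherwise be attempted with a bad credential, so stopping here saves a slow failure.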

Creating the cluster

$ az aks create --resource-group idjtestingressrg --name idjaks04b --location centralus --node-count 3 --enable-cluster-autoscaler --min-count 2 --max-count 4 --generate-ssh-keys --network-plugin azure --network-policy azure --service-principal $SP_ID --client-secret $SP_PASS --enable-aad --aad-admin-group-object-ids e7dd3ea0-a722-481c-92b6-4aa86e454436 --aad-tenant-id d73123-1234-1234-1234-1234aedaeda8

- Running ..

As one command:

$ az account set --subscription 70b42e6a-asdf-asdf-asdf-9f3995b1aca8

$ az group create --name idjtestingressrg --location centralus && echo "re-use existing sp" && az ad sp create-for-rbac -n idjaks04sp --skip-assignment --output json > my_sp.json

$ export SP_PASS=`cat my_sp.json | jq -r .password`

$ export SP_ID=`cat my_sp.json | jq -r .appId`

$ az aks create --resource-group idjtestingressrg --name idjaks04b --location centralus --node-count 3 --enable-cluster-autoscaler --min-count 2 --max-count 4 --generate-ssh-keys --network-plugin azure --network-policy azure --service-principal $SP_ID --client-secret $SP_PASS --enable-aad --aad-admin-group-object-ids e7dd3ea0-a722-481c-92b6-4aa86e454436 --aad-tenant-id d73123-1234-1234-1234-1234aedaeda8

Creating the Ingress

The simplest path is to install an Nginx ingress controller into its own namespace:

$ kubectl create namespace ingress-basic

namespace/ingress-basic created

$ helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

$ helm install nginx-ingress ingress-nginx/ingress-nginx --namespace ingress-basic --set controller.replicaCount=2 --set controller.nodeSelector."beta\.kubernetes\.io/os"=linux --set defaultBackend.nodeSelector."beta\.kubernetes\.io/os"=linux --set controller.admissionWebhooks.patch.nodeSelector."beta\.kubernetes\.io/os"=linux

NAME: nginx-ingress

LAST DEPLOYED: Wed May 26 18:53:19 2021

NAMESPACE: ingress-basic

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The ingress-nginx controller has been installed.

It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status by running 'kubectl --namespace ingress-basic get services -o wide -w nginx-ingress-ingress-nginx-controller'

An example Ingress that makes use of the controller:

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

annotations:

kubernetes.io/ingress.class: nginx

name: example

namespace: foo

spec:

rules:

- host: www.example.com

http:

paths:

- backend:

serviceName: exampleService

servicePort: 80

path: /

# This section is only required if TLS is to be enabled for the Ingress

tls:

- hosts:

- www.example.com

secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

apiVersion: v1

kind: Secret

metadata:

name: example-tls

namespace: foo

data:

tls.crt: <base64 encoded cert>

tls.key: <base64 encoded key>

type: kubernetes.io/tls

$ kubectl get svc --all-namespaces

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 12m

ingress-basic nginx-ingress-ingress-nginx-controller LoadBalancer 10.0.3.75 52.154.56.244 80:32200/TCP,443:32037/TCP 62s

ingress-basic nginx-ingress-ingress-nginx-controller-admission ClusterIP 10.0.37.196 <none> 443/TCP 62s

kube-system kube-dns ClusterIP 10.0.0.10 <none> 53/UDP,53/TCP 11m

kube-system metrics-server ClusterIP 10.0.20.248 <none> 443/TCP 11m

kube-system npm-metrics-cluster-service ClusterIP 10.0.30.57 <none> 9000/TCP 11m

However, because we want to engage directly with our services running in 'default', we will install the ingress controller in the default namespace instead. It's worth noting that I only discovered this during testing, after I had already created the foo2 A record, which is why I created a second A record, foo3.

# if you already installed into the ingress-basic namespace, remove it

$ helm delete nginx-ingress -n ingress-basic

$ helm install nginx-ingress ingress-nginx/ingress-nginx --set controller.replicaCount=1 --set controller.nodeSelector."beta\.kubernetes\.io/os"=linux --set defaultBackend.nodeSelector."beta\.kubernetes\.io/os"=linux --set controller.admissionWebhooks.patch.nodeSelector."beta\.kubernetes\.io/os"=linux

NAME: nginx-ingress

LAST DEPLOYED: Wed May 26 20:25:23 2021

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The ingress-nginx controller has been installed.

It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status by running 'kubectl --namespace default get services -o wide -w nginx-ingress-ingress-nginx-controller'

An example Ingress that makes use of the controller:

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

annotations:

kubernetes.io/ingress.class: nginx

name: example

namespace: foo

spec:

rules:

- host: www.example.com

http:

paths:

- backend:

serviceName: exampleService

servicePort: 80

path: /

# This section is only required if TLS is to be enabled for the Ingress

tls:

- hosts:

- www.example.com

secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

apiVersion: v1

kind: Secret

metadata:

name: example-tls

namespace: foo

data:

tls.crt: <base64 encoded cert>

tls.key: <base64 encoded key>

type: kubernetes.io/tls

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

..snip...

nginx-ingress-ingress-nginx-controller LoadBalancer 10.0.217.152 20.83.9.230 80:31772/TCP,443:31503/TCP 2m27s

nginx-ingress-ingress-nginx-controller-admission ClusterIP 10.0.115.233 <none> 443/TCP 2m27s

...snip...

To get TLS set up, we will need to add Cert Manager for Let's Encrypt (LE) certs

First install the CRDs for a 1.19.x cluster

$ kubectl apply -f https://github.com/jetstack/cert-manager/releases/download/v1.3.1/cert-manager.crds.yaml

customresourcedefinition.apiextensions.k8s.io/certificaterequests.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/certificates.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/challenges.acme.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/clusterissuers.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/issuers.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/orders.acme.cert-manager.io configured

Now add the Jetstack Helm repo and update

$ helm repo add jetstack https://charts.jetstack.io

"jetstack" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "kedacore" chart repository

...Successfully got an update from the "ingress-nginx" chart repository

...Successfully got an update from the "jetstack" chart repository

...Successfully got an update from the "datawire" chart repository

Update Complete. ⎈Happy Helming!⎈

And then install

$ helm install \
    cert-manager jetstack/cert-manager \
    --namespace cert-manager \
    --create-namespace \
    --version v1.3.1

NAME: cert-manager

LAST DEPLOYED: Wed May 26 19:01:05 2021

NAMESPACE: cert-manager

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

cert-manager has been deployed successfully!

In order to begin issuing certificates, you will need to set up a ClusterIssuer

or Issuer resource (for example, by creating a 'letsencrypt-staging' issuer).

More information on the different types of issuers and how to configure them

can be found in our documentation:

https://cert-manager.io/docs/configuration/

For information on how to configure cert-manager to automatically provision

Certificates for Ingress resources, take a look at the `ingress-shim`

documentation:

https://cert-manager.io/docs/usage/ingress/

We can test with a self signed cert

$ cat <<EOF > test-resources.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: cert-manager-test
---
apiVersion: cert-manager.io/v1
kind: Issuer
metadata:
  name: test-selfsigned
  namespace: cert-manager-test
spec:
  selfSigned: {}
---
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: selfsigned-cert
  namespace: cert-manager-test
spec:
  dnsNames:
    - example.com
  secretName: selfsigned-cert-tls
  issuerRef:
    name: test-selfsigned
EOF

$ kubectl apply -f test-resources.yaml

namespace/cert-manager-test created

issuer.cert-manager.io/test-selfsigned created

certificate.cert-manager.io/selfsigned-cert created

This shows that Cert Manager is running

$ kubectl get certificate -n cert-manager-test

NAME READY SECRET AGE

selfsigned-cert True selfsigned-cert-tls 96s

We next need to create our LetsEncrypt Prod cluster Issuer

apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod
spec:
  acme:
    # The ACME server URL
    server: https://acme-v02.api.letsencrypt.org/directory
    # Email address used for ACME registration
    email: isaac.johnson@gmail.com
    # Name of a secret used to store the ACME account private key
    privateKeySecretRef:
      name: letsencrypt-prod
    # Enable the HTTP-01 challenge provider
    solvers:
    - http01:
        ingress:
          class: nginx

and apply it

$ kubectl apply -f le-prod.yaml

clusterissuer.cert-manager.io/letsencrypt-prod created

At this point, I jumped over to Route53 and added DNS entries for foo2.freshbrewed.science and foo3.freshbrewed.science pointing to my external IP. Once those A records were there, I came back to create the Cert request

apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: foo2-freshbrewed.science
  namespace: default
spec:
  secretName: foo2.freshbrewed.science-cert
  issuerRef:
    name: letsencrypt-prod
    kind: ClusterIssuer
  commonName: foo2.freshbrewed.science
  dnsNames:
  - foo2.freshbrewed.science

$ kubectl apply -f foo2-fb-science-cert.yaml --validate=false

certificate.cert-manager.io/foo2-freshbrewed.science created

$ kubectl get certificate

NAME READY SECRET AGE

foo2-freshbrewed.science True foo2.freshbrewed.science-cert 28s

And our other cert is pretty much the same

apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: foo3-freshbrewed.science
  namespace: default
spec:
  secretName: foo3.freshbrewed.science-cert
  issuerRef:
    name: letsencrypt-prod
    kind: ClusterIssuer
  commonName: foo3.freshbrewed.science
  dnsNames:
  - foo3.freshbrewed.science

And apply it

$ kubectl apply -f foo3-fb-science-cert.yaml --validate=false

certificate.cert-manager.io/foo3-freshbrewed.science created

$ kubectl get cert

NAME READY SECRET AGE

foo2-freshbrewed.science True foo2.freshbrewed.science-cert 84m

foo3-freshbrewed.science False foo3.freshbrewed.science-cert 15s

$ kubectl get cert

NAME READY SECRET AGE

foo2-freshbrewed.science True foo2.freshbrewed.science-cert 85m

foo3-freshbrewed.science True foo3.freshbrewed.science-cert 47s
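Rather than re-running `kubectl get cert` by hand until READY flips to True, the polling can be scripted. A small sketch; `retry` and `cert_ready` are hypothetical helpers written for this example, and the certificate name is the one created above:

```shell
# Hypothetical helper: run a command up to N times, sleeping between attempts.
retry() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    # stop as soon as the command succeeds
    "$@" && return 0
    i=$((i + 1))
    # only sleep if another attempt is coming
    [ "$i" -le "$attempts" ] && sleep "$delay"
  done
  return 1
}

# Usage: poll up to 12 times, 10 seconds apart, until the cert reports True.
#   cert_ready() { kubectl get certificate "$1" | grep -q ' True '; }
#   retry 12 10 cert_ready foo3-freshbrewed.science
```

`kubectl wait --for=condition=Ready certificate/foo3-freshbrewed.science --timeout=120s` should achieve much the same without a helper, if you prefer a built-in.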

Dapr install

Since this is a brand new cluster, we also need to set up Dapr in it.

$ dapr init -k -n default

⌛ Making the jump to hyperspace...

ℹ️ Note: To install Dapr using Helm, see here: https://docs.dapr.io/getting-started/install-dapr-kubernetes/#install-with-helm-advanced

✅ Deploying the Dapr control plane to your cluster...

✅ Success! Dapr has been installed to namespace default. To verify, run `dapr status -k' in your terminal. To get started, go here: https://aka.ms/dapr-getting-started

They have a Helm chart as well, but I generally use -k as shown above

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

dapr-dashboard-76cc799b78-68bdz 1/1 Running 0 20s

dapr-operator-5d45c778c4-d4bvq 1/1 Running 0 20s

dapr-placement-server-0 1/1 Running 0 20s

dapr-sentry-68776c58b5-wsbgn 1/1 Running 0 20s

dapr-sidecar-injector-66c878c8b-vqjqq 1/1 Running 0 20s

We will set up a few services right away, including Redis

Add the bitnami chart repo and update

$ helm repo add bitnami https://charts.bitnami.com/bitnami

"bitnami" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "kedacore" chart repository

...Successfully got an update from the "ingress-nginx" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "jetstack" chart repository

...Successfully got an update from the "bitnami" chart repository

...Successfully got an update from the "datawire" chart repository

Update Complete. ⎈Happy Helming!⎈

Then install

$ helm install redis bitnami/redis

NAME: redis

LAST DEPLOYED: Wed May 26 19:14:31 2021

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

** Please be patient while the chart is being deployed **

Redis(TM) can be accessed on the following DNS names from within your cluster:

redis-master.default.svc.cluster.local for read/write operations (port 6379)

redis-replicas.default.svc.cluster.local for read-only operations (port 6379)

To get your password run:

export REDIS_PASSWORD=$(kubectl get secret --namespace default redis -o jsonpath="{.data.redis-password}" | base64 --decode)

To connect to your Redis(TM) server:

1. Run a Redis(TM) pod that you can use as a client:

kubectl run --namespace default redis-client --restart='Never' --env REDIS_PASSWORD=$REDIS_PASSWORD --image docker.io/bitnami/redis:6.2.3-debian-10-r18 --command -- sleep infinity

Use the following command to attach to the pod:

kubectl exec --tty -i redis-client \

--namespace default -- bash

2. Connect using the Redis(TM) CLI:

redis-cli -h redis-master -a $REDIS_PASSWORD

redis-cli -h redis-replicas -a $REDIS_PASSWORD

To connect to your database from outside the cluster execute the following commands:

kubectl port-forward --namespace default svc/redis-master 6379:6379 &

redis-cli -h 127.0.0.1 -p 6379 -a $REDIS_PASSWORD

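Now we can register our Redis instance with Dapr as a component. Note that this one is a state store (state.redis); the pub/sub quickstart we deploy below applies its own pubsub component.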

$ cat redis.yaml

apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: statestore
  namespace: default
spec:
  type: state.redis
  version: v1
  metadata:
  - name: redisHost
    value: redis-master.default.svc.cluster.local:6379
  - name: redisPassword
    secretKeyRef:
      name: redis
      key: redis-password

$ kubectl apply -f redis.yaml

component.dapr.io/statestore created

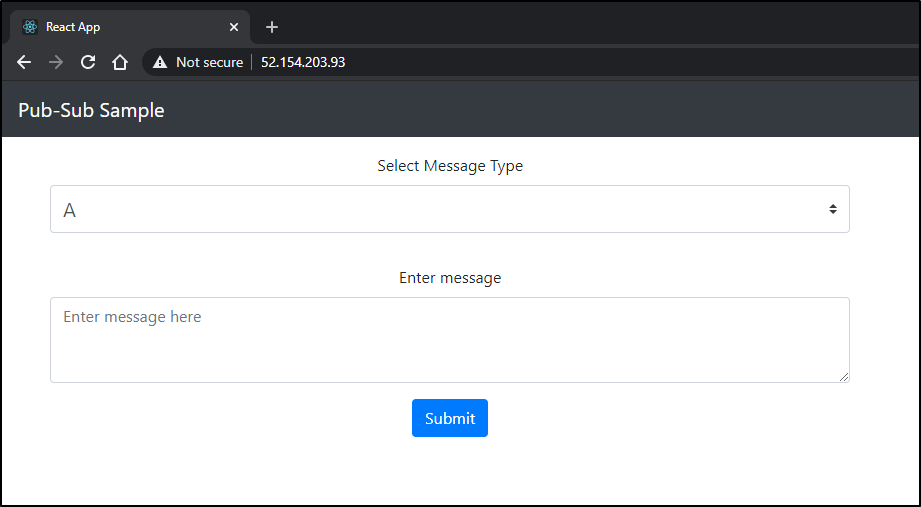

In fact, just so we have some services to use, let's fire up the pub-sub quickstart

$ git clone https://github.com/dapr/quickstarts.git

Cloning into 'quickstarts'...

remote: Enumerating objects: 2480, done.

remote: Counting objects: 100% (182/182), done.

remote: Compressing objects: 100% (109/109), done.

remote: Total 2480 (delta 98), reused 133 (delta 66), pack-reused 2298

Receiving objects: 100% (2480/2480), 10.09 MiB | 7.64 MiB/s, done.

Resolving deltas: 100% (1462/1462), done.

$ cd quickstarts/pub-sub/deploy

$ kubectl apply -f .

deployment.apps/node-subscriber created

deployment.apps/python-subscriber created

service/react-form created

deployment.apps/react-form created

component.dapr.io/pubsub configured

And AKS generously gave us a nice Public IP for the React Form (probably not something we would want to keep public like that)

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dapr-api ClusterIP 10.0.169.89 <none> 80/TCP 5m12s

dapr-dashboard ClusterIP 10.0.116.59 <none> 8080/TCP 5m12s

dapr-placement-server ClusterIP None <none> 50005/TCP,8201/TCP 5m12s

dapr-sentry ClusterIP 10.0.24.217 <none> 80/TCP 5m12s

dapr-sidecar-injector ClusterIP 10.0.55.9 <none> 443/TCP 5m12s

kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 35m

node-subscriber-dapr ClusterIP None <none> 80/TCP,50001/TCP,50002/TCP,9090/TCP 6s

python-subscriber-dapr ClusterIP None <none> 80/TCP,50001/TCP,50002/TCP,9090/TCP 6s

react-form LoadBalancer 10.0.247.142 52.154.203.93 80:31758/TCP 6s

react-form-dapr ClusterIP None <none> 80/TCP,50001/TCP,50002/TCP,9090/TCP 6s

redis-headless ClusterIP None <none> 6379/TCP 3m51s

redis-master ClusterIP 10.0.120.193 <none> 6379/TCP 3m51s

redis-replicas ClusterIP 10.0.223.203 <none> 6379/TCP 3m51s

I should point out that I first exposed the foo2 ingress:

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-with-auth
  annotations:
    kubernetes.io/ingress.class: nginx
    # type of authentication
    nginx.ingress.kubernetes.io/auth-type: basic
    # name of the secret that contains the user/password definitions
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    # message to display with an appropriate context why the authentication is required
    nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - foo'
spec:
  rules:
  - host: foo2.freshbrewed.science
    http:
      paths:
      - path: /
        backend:
          serviceName: workflows-dapr
          servicePort: 80
  tls:
  - hosts:
    - foo2.freshbrewed.science
    secretName: foo2.freshbrewed.science-cert

but then realized that I had used both the wrong nginx label and the wrong namespace, which is why the class didn't line up

$ kubectl get ingress

Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-with-auth <none> foo2.freshbrewed.science 52.154.56.244 80, 443 24m

Set up the Workflow System

We need to get a storage account

$ az storage account list -o table

AccessTier AllowBlobPublicAccess CreationTime EnableHttpsTrafficOnly Kind Location MinimumTlsVersion Name PrimaryLocation ProvisioningState ResourceGroup StatusOfPrimary SecondaryLocation StatusOfSecondary

------------ ----------------------- -------------------------------- ------------------------ ----------- ---------- ------------------- ------------------ ----------------- ------------------- --------------- ----------------- ------------------- -------------------

...snip...

Hot 2020-11-24T17:13:23.329093+00:00 True StorageV2 centralus idjdemoaksstorage centralus Succeeded idjdemoaks available eastus2 available

Hot 2020-11-24T18:06:05.110478+00:00 True StorageV2 centralus idjdemoaksstorage2 centralus Succeeded idjdemoaks available eastus2 available

Let's use an existing Demo storage account.

$ export STORAGE_ACCOUNT_NAME=idjdemoaksstorage

$ export STORAGE_ACCOUNT_RG=idjdemoaks

$ export STORAGE_ACCOUNT_KEY=`az storage account keys list -n $STORAGE_ACCOUNT_NAME -g $STORAGE_ACCOUNT_RG -o json | jq -r '.[] | .value' | head -n1 | tr -d '\n'`

Let's clone the Workflow example repo for our next steps

builder@DESKTOP-72D2D9T:~/Workspaces$ git clone https://github.com/dapr/workflows.git

Cloning into 'workflows'...

remote: Enumerating objects: 974, done.

remote: Counting objects: 100% (45/45), done.

remote: Compressing objects: 100% (28/28), done.

remote: Total 974 (delta 17), reused 22 (delta 12), pack-reused 929

Receiving objects: 100% (974/974), 124.11 MiB | 7.98 MiB/s, done.

Resolving deltas: 100% (438/438), done.

builder@DESKTOP-72D2D9T:~/Workspaces$ cd workflows/

builder@DESKTOP-72D2D9T:~/Workspaces/workflows$ kubectl create configmap workflows --from-file ./samples/workflow1.json

configmap/workflows created

We need the storage account secret for the workflow steps

$ kubectl create secret generic dapr-workflows --from-literal=accountName=$STORAGE_ACCOUNT_NAME --from-literal=accountKey=$STORAGE_ACCOUNT_KEY

secret/dapr-workflows created

Now deploy the workflow service

builder@DESKTOP-72D2D9T:~/Workspaces/workflows$ kubectl apply -f deploy/deploy.yaml

deployment.apps/dapr-workflows-host created

We can do a quick sanity check

$ kubectl port-forward deploy/dapr-workflows-host 3500:3500

Forwarding from 127.0.0.1:3500 -> 3500

Forwarding from [::1]:3500 -> 3500

Handling connection for 3500

Handling connection for 3500

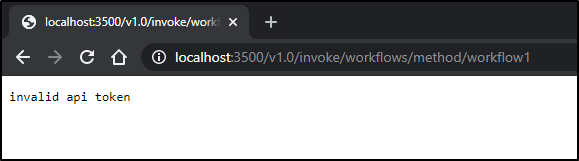

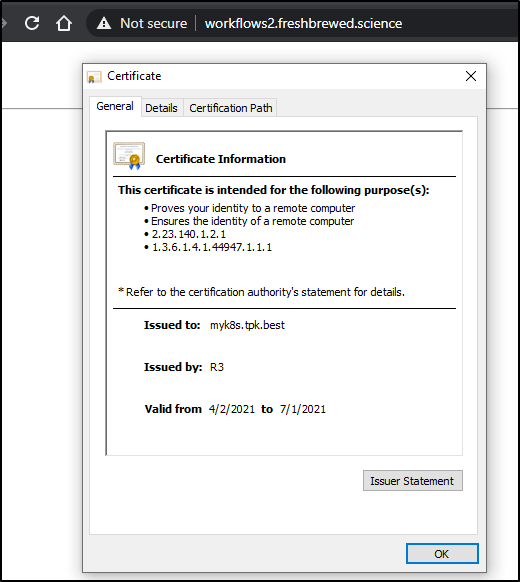

Quick Note: if you have enabled api-token support, then you'll get a window like this:

The api-token-secret annotation controls this behavior.

metadata:
  annotations:
    dapr.io/api-token-secret: dapr-api-token
    dapr.io/app-id: workflows
    dapr.io/app-port: "50003"
    dapr.io/app-protocol: grpc
    dapr.io/enabled: "true"

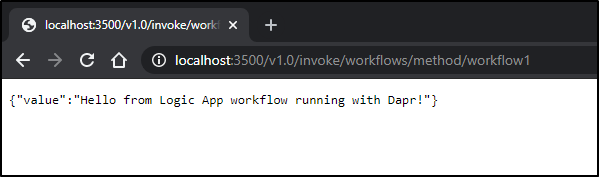

Otherwise, you should get a simple happy path with workflow1

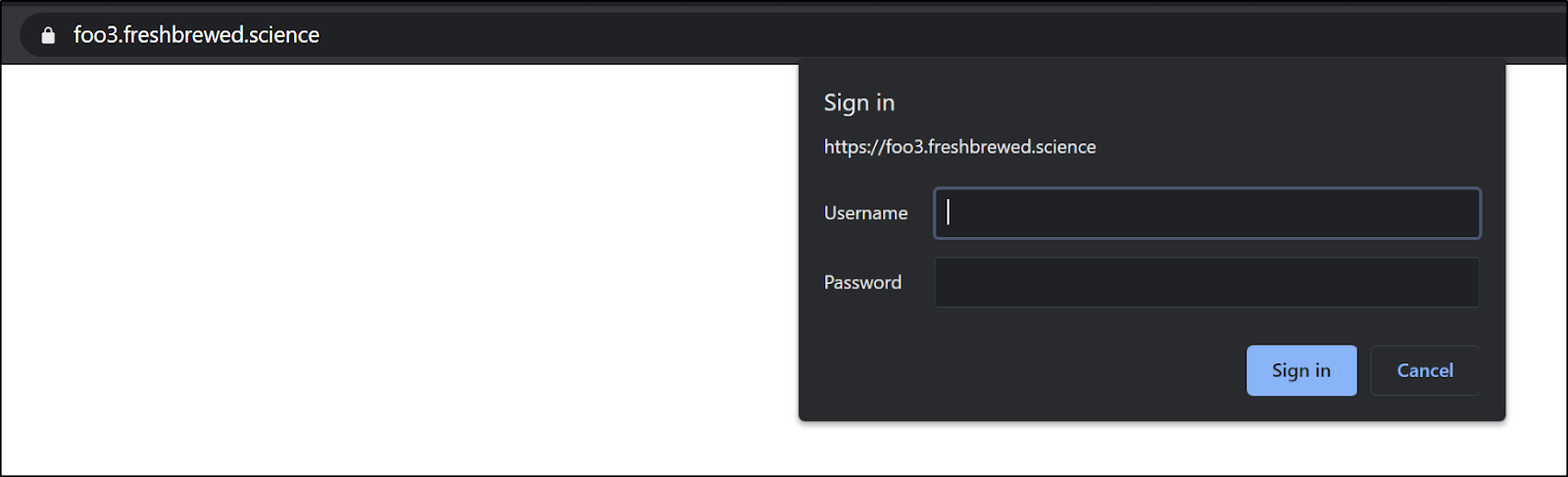

Setup basic auth in ingress

$ sudo apt install apache2-utils

$ htpasswd -c auth foo

$ kubectl create secret generic basic-auth --from-file=auth
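If `apache2-utils` is not available, the same kind of `auth` file can be produced with `openssl passwd`, which can emit the Apache MD5 (apr1) hash format that `htpasswd` typically uses by default. A minimal sketch, assuming `openssl` is installed; `htpasswd_line` is a hypothetical helper name:

```shell
# Hypothetical helper: print one "user:hash" line in htpasswd (apr1) format.
# Note: the password is passed as an argument, so it is briefly visible
# in the process list; fine for a demo, less so on a shared host.
htpasswd_line() {
  user=$1
  pass=$2
  printf '%s:%s\n' "$user" "$(openssl passwd -apr1 "$pass")"
}

# Usage: write the file that the basic-auth secret is created from.
#   htpasswd_line foo 'choose-a-password' > auth
#   kubectl create secret generic basic-auth --from-file=auth
```

The file still has to be named `auth`, since that becomes the key inside the secret that the ingress annotation refers to.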

Let's now use our Cert and DNS name to route TLS traffic into our cluster

$ cat ingress-with-auth-foo3.yaml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-with-auth
  annotations:
    kubernetes.io/ingress.class: nginx
    # type of authentication
    nginx.ingress.kubernetes.io/auth-type: basic
    # name of the secret that contains the user/password definitions
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    # message to display with an appropriate context why the authentication is required
    nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - foo'
spec:
  rules:
  - host: foo3.freshbrewed.science
    http:
      paths:
      - path: /
        backend:
          serviceName: workflows-dapr
          servicePort: 80
  tls:
  - hosts:
    - foo3.freshbrewed.science
    secretName: foo3.freshbrewed.science-cert

$ kubectl apply -f ingress-with-auth-foo3.yaml

Warning: networking.k8s.io/v1beta1 Ingress is deprecated in v1.19+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

ingress.networking.k8s.io/ingress-with-auth configured

It's these annotations that control enabling basic auth

nginx.ingress.kubernetes.io/auth-type: basic

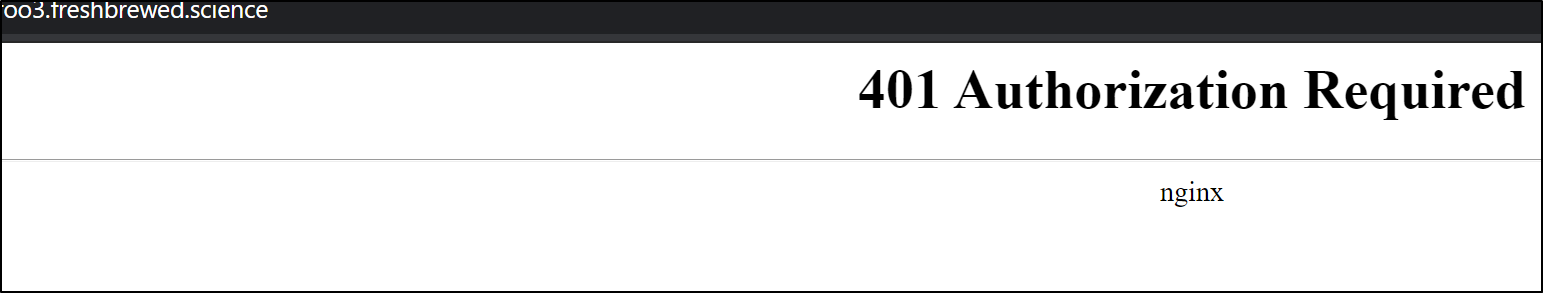

nginx.ingress.kubernetes.io/auth-secret: basic-authWith foo3 we can now see the password is prompted

And invalid entry will render

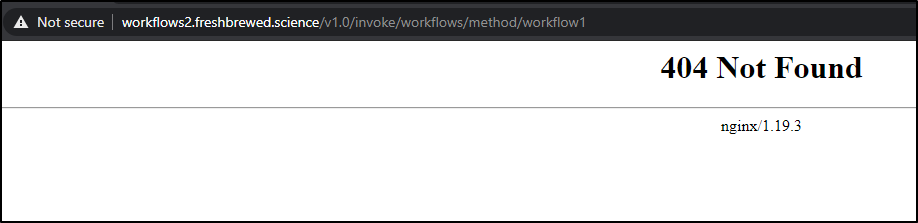

If we have the dapr-api-token disabled (not in the workflows annotation) we can expect a not found (since the workflows service only serves workflows on the v1.0 API URL)

Dapr API Token

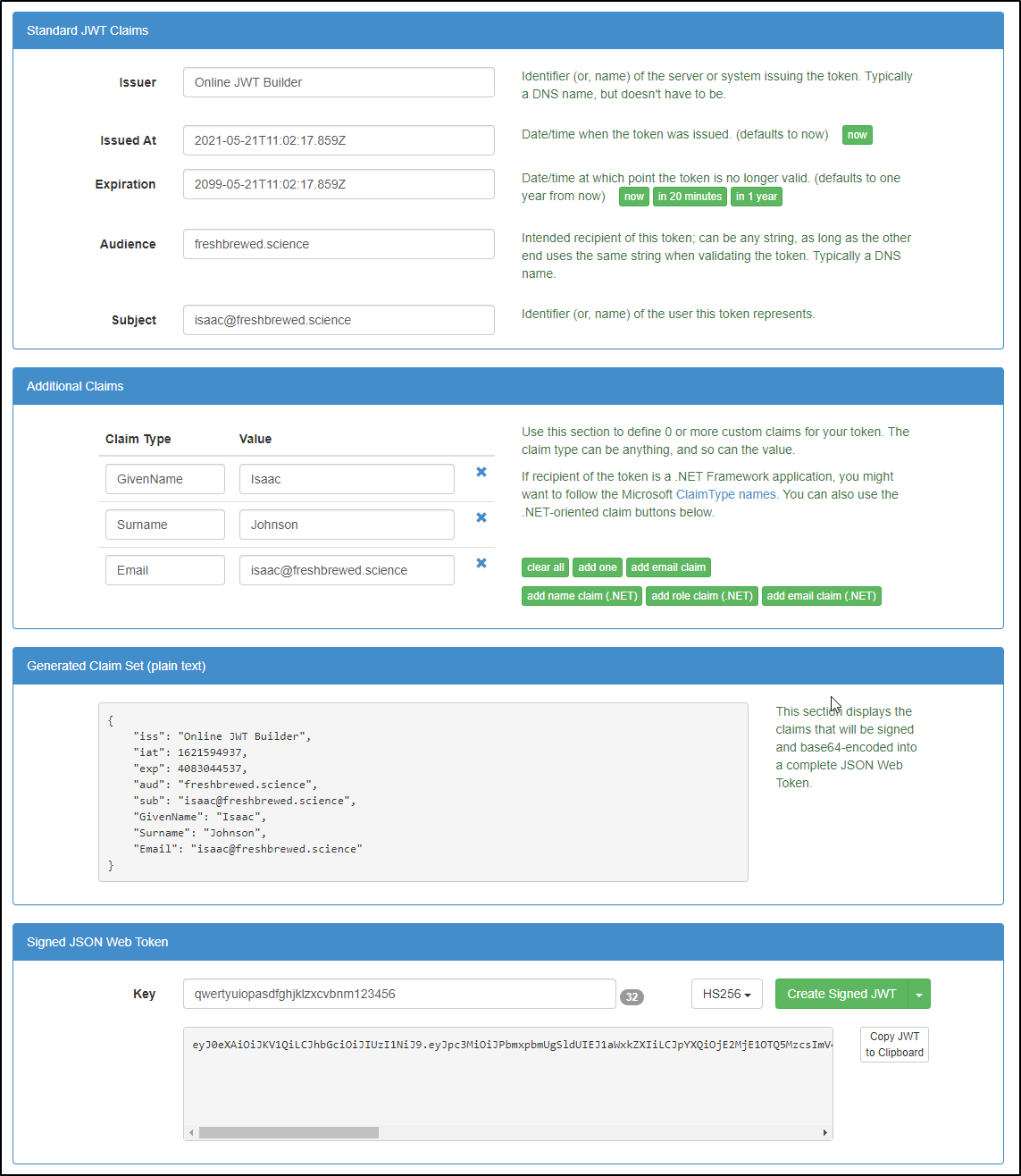

Our next step will be to add a token requirement following https://docs.dapr.io/operations/security/api-token/

The first step is to get a JWT token: http://jwtbuilder.jamiekurtz.com/
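A JWT is three base64url segments separated by dots, so the claims the builder put in the token (issuer, expiry, and so on) can be inspected locally before storing it. A sketch, assuming a `base64` that accepts `--decode` as used elsewhere in this post; `jwt_payload` is a hypothetical helper:

```shell
# Hypothetical helper: print the decoded claims (middle segment) of a JWT.
jwt_payload() {
  # take the middle segment and map the base64url alphabet back to base64
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the trailing padding, so restore it
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do
    seg="${seg}="
  done
  printf '%s' "$seg" | base64 --decode
}

# Usage:
#   jwt_payload "$MY_TOKEN"
```

This only decodes the payload; it does not verify the signature.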

Next, let's create the API token in our namespace (in our case, default)

$ kubectl create secret generic dapr-api-token --from-literal=token=eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJpc3MiOiJPbmxpbmUgSldUIEJ1aWxkZXIiLCJpYXQiOjE2MjE1OTQ5MzcsImV4cCI6NDA4MzA0NDUzNywiYXVkIjoiZnJlc2hicmV3ZWQuc2NpZW5jZSIsInN1YiI6ImlzYWFjQGZyZXNoYnJld2VkLnNjaWVuY2UiLCJHaXZlbk5hbWUiOiJJc2FhYyIsIlN1cm5hbWUiOiJKb2huc29uIiwiRW1haWwiOiJpc2FhY0BmcmVzaGJyZXdlZC5zY2llbmNlIn0.86p_txK7TxapSrQx53IzgHMi3SPIifzZjpn_6ynOHec

secret/dapr-api-token created

Verify it was created

$ kubectl get secrets dapr-api-token -o yaml

apiVersion: v1

data:

token: ZVhBaU9pSktWMVFpTENKaGJHY2lPaUpJVXpJMU5pSjkuZXlKcGMzTWlPaUpQYm14cGJtVWdTbGRVSUVKMWFXeGtaWElpTENKcFlYUWlPakUyTWpFMU9UUTVNemNzSW1WNGNDSTZOREE0TXpBME5EVXpOeXdpWVhWa0lqb2labkpsYzJoaWNtVjNaV1F1YzJOcFpXNWpaU0lzSW5OMVlpSTZJbWx6WVdGalFHWnlaWE5vWW5KbGQyVmtMbk5qYVdWdVkyVWlMQ0pIYVhabGJrNWhiV1VpT2lKSmMyRmhZeUlzSWxOMWNtNWhiV1VpT2lKS2IyaHVjMjl1SWl3aVJXMWhhV3dpT2lKcGMyRmhZMEJtY21WemFHSnlaWGRsWkM1elkybGxibU5sSW4wLjg2cF90eEs3VHhhcFNyUXg1M0l6Z0hNaTNTUElpZnpaanBuXzZ5bk9IZWM=

kind: Secret

metadata:

creationTimestamp: "2021-05-27T01:13:53Z"

managedFields:

- apiVersion: v1

fieldsType: FieldsV1

fieldsV1:

f:data:

.: {}

f:token: {}

f:type: {}

manager: kubectl-create

operation: Update

time: "2021-05-27T01:13:53Z"

name: dapr-api-token

namespace: default

resourceVersion: "21872"

selfLink: /api/v1/namespaces/default/secrets/dapr-api-token

uid: 3eb5d068-ba90-4623-87d2-fc2d8312c034

We can always read it back from the secret

$ echo ZVhBaU9pSktWMVFpTENKaGJHY2lPaUpJVXpJMU5pSjkuZXlKcGMzTWlPaUpQYm14cGJtVWdTbGRVSUVKMWFXeGtaWElpTENKcFlYUWlPakUyTWpFMU9UUTVNemNzSW1WNGNDSTZOREE0TXpBME5EVXpOeXdpWVhWa0lqb2labkpsYzJoaWNtVjNaV1F1YzJOcFpXNWpaU0lzSW5OMVlpSTZJbWx6WVdGalFHWnlaWE5vWW5KbGQyVmtMbk5qYVdWdVkyVWlMQ0pIYVhabGJrNWhiV1VpT2lKSmMyRmhZeUlzSWxOMWNtNWhiV1VpT2lKS2IyaHVjMjl1SWl3aVJXMWhhV3dpT2lKcGMyRmhZMEJtY21WemFHSnlaWGRsWkM1elkybGxibU5sSW4wLjg2cF90eEs3VHhhcFNyUXg1M0l6Z0hNaTNTUElpZnpaanBuXzZ5bk9IZWM= | base64 --decode

eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJpc3MiOiJPbmxpbmUgSldUIEJ1aWxkZXIiLCJpYXQiOjE2MjE1OTQ5MzcsImV4cCI6NDA4MzA0NDUzNywiYXVkIjoiZnJlc2hicmV3ZWQuc2NpZW5jZSIsInN1YiI6ImlzYWFjQGZyZXNoYnJld2VkLnNjaWVuY2UiLCJHaXZlbk5hbWUiOiJJc2FhYyIsIlN1cm5hbWUiOiJKb2huc29uIiwiRW1haWwiOiJpc2FhY0BmcmVzaGJyZXdlZC5zY2llbmNlIn0.86p_txK7TxapSrQx53IzgHMi3SPIifzZjpn_6ynOHec

We then merely need to add it to our workflow deployment to enable it.

$ kubectl get deployment dapr-workflows-host -o yaml > dwh.dep.yaml

$ kubectl get deployment dapr-workflows-host -o yaml > dwh.dep.yaml.old

$ vi dwh.dep.yaml

$ diff -c dwh.dep.yaml dwh.dep.yaml.old

*** dwh.dep.yaml 2021-05-27 01:28:25.367379100 -0500
--- dwh.dep.yaml.old 2021-05-27 01:27:55.637379100 -0500
***************
*** 160,166 ****
      metadata:
        annotations:
          dapr.io/app-id: workflows
-         dapr.io/api-token-secret: "dapr-api-token"
          dapr.io/app-port: "50003"
          dapr.io/app-protocol: grpc
          dapr.io/enabled: "true"
--- 160,165 ----

$ kubectl apply -f dwh.dep.yaml

deployment.apps/dapr-workflows-host configured

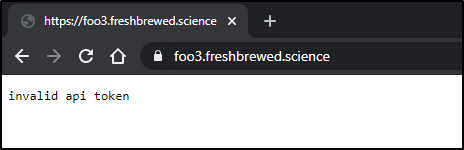

Now when we test our URL, once we pass basic auth, we get the error

Permutations

So let's look at some permutations. If you do not want basic auth, passing just the API token will suffice:

$ curl -X GET -H "Content-Type: application/xml" -H "Accept: application/json" -H "dapr-api-token: eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJpc3MiOiJPbmxpbmUgSldUIEJ1aWxkZXIiLCJpYXQiOjE2MjE1OTQ5MzcsImV4cCI6NDA4MzA0NDUzNywiYXVkIjoiZnJlc2hicmV3ZWQuc2NpZW5jZSIsInN1YiI6ImlzYWFjQGZyZXNoYnJld2VkLnNjaWVuY2UiLCJHaXZlbk5hbWUiOiJJc2FhYyIsIlN1cm5hbWUiOiJKb2huc29uIiwiRW1haWwiOiJpc2FhY0BmcmVzaGJyZXdlZC5zY2llbmNlIn0.86p_txK7TxapSrQx53IzgHMi3SPIifzZjpn_6ynOHec" https://foo3.freshbrewed.science/v1.0/invoke/workflows/method/workflow1

However, if you have basic auth enabled, that call will respond with:

<html>

<head><title>401 Authorization Required</title></head>

<body>

<center><h1>401 Authorization Required</h1></center>

<hr><center>nginx</center>

</body>

</html>

If you don't pass the token, or if the token you pass doesn't exactly match the stored one (the header below has a few extra leading characters), you'll get the same error as our browser test gave us

$ curl -u foo:bar -X GET -H "Content-Type: application/xml" -H "Accept: application/json" -H "dapr-api-token: eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJpc3MiOiJPbmxpbmUgSldUIEJ1aWxkZXIiLCJpYXQiOjE2MjE1OTQ5MzcsImV4cCI6NDA4MzA0NDUzNywiYXVkIjoiZnJlc2hicmV3ZWQuc2NpZW5jZSIsInN1YiI6ImlzYWFjQGZyZXNoYnJld2VkLnNjaWVuY2UiLCJHaXZlbk5hbWUiOiJJc2FhYyIsIlN1cm5hbWUiOiJKb2huc29uIiwiRW1haWwiOiJpc2FhY0BmcmVzaGJyZXdlZC5zY2llbmNlIn0.86p_txK7TxapSrQx53IzgHMi3SPIifzZjpn_6ynOHec" https://foo3.freshbrewed.science/v1.0/invoke/workflows/method/workflow3

invalid api token
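Comparing the header in that failing call with the stored secret, the value sent looks like the stored token with a few extra characters (`eyJ0`) on the front, which would explain the rejection. A quick way to spot that kind of mismatch, as a sketch (`describe_token_mismatch` is a hypothetical helper):

```shell
# Hypothetical helper: say how a token sent by a client differs from the stored one.
describe_token_mismatch() {
  sent=$1
  stored=$2
  if [ "$sent" = "$stored" ]; then
    echo "tokens match"
    return 0
  fi
  case $sent in
    # the stored token appears at the end of what was sent
    *"$stored") echo "sent token has $(( ${#sent} - ${#stored} )) extra leading characters" ;;
    *) echo "tokens differ" ;;
  esac
}

# Usage:
#   describe_token_mismatch "$TOKEN_FROM_HEADER" "$TOKEN_FROM_SECRET"
```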

That said, if we get both correct, then we should get a passing test:

$ curl -u foo:bar -X GET -H "Content-Type: application/xml" -H "Accept: application/json" -H "dapr-api-token: eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJpc3MiOiJPbmxpbmUgSldUIEJ1aWxkZXIiLCJpYXQiOjE2MjE1OTQ5MzcsImV4cCI6NDA4MzA0NDUzNywiYXVkIjoiZnJlc2hicmV3ZWQuc2NpZW5jZSIsInN1YiI6ImlzYWFjQGZyZXNoYnJld2VkLnNjaWVuY2UiLCJHaXZlbk5hbWUiOiJJc2FhYyIsIlN1cm5hbWUiOiJKb2huc29uIiwiRW1haWwiOiJpc2FhY0BmcmVzaGJyZXdlZC5zY2llbmNlIn0.86p_txK7TxapSrQx53IzgHMi3SPIifzZjpn_6ynOHec" https://foo3.freshbrewed.science/v1.0/invoke/workflows/method/workflow1

{"value":"Hello from Logic App workflow running with Dapr!"}
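The passing call carries two independent credentials: the `Authorization` header that nginx checks, and the `dapr-api-token` header that the Dapr sidecar checks. `curl -u user:pass` builds the first one by base64-encoding `user:pass`; a short sketch makes that visible (`basic_auth_header` is a hypothetical helper, not a curl feature):

```shell
# Hypothetical helper: print the header that `curl -u user:pass` would send.
basic_auth_header() {
  # HTTP Basic auth is "Basic " followed by base64("user:password")
  printf 'Authorization: Basic %s\n' "$(printf '%s:%s' "$1" "$2" | base64 | tr -d '\n')"
}

# Usage: these two should be equivalent
#   curl -u foo:bar ...
#   curl -H "$(basic_auth_header foo bar)" ...
```

Since base64 is an encoding and not encryption, this is only reasonable over the TLS endpoint we set up earlier.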

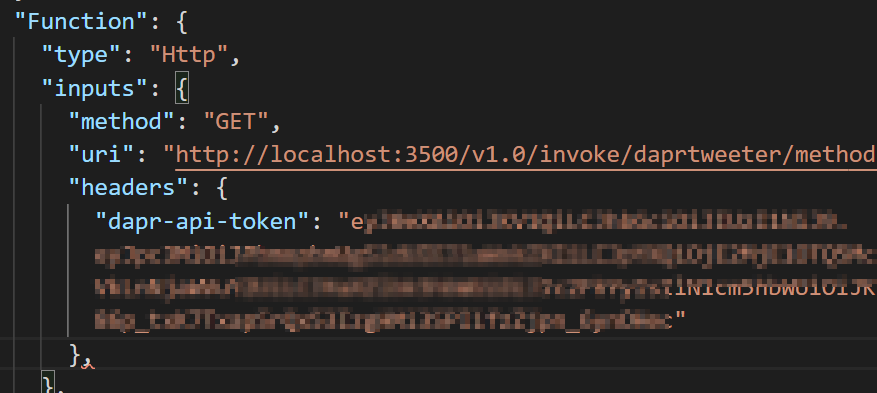

Workflows calling Workflows

We will set up workflow3 to call workflow1 through our Dapr app-id.

However, if we don't have the API token hardcoded in the headers of its GET action, we can expect the call back into the workflows service to fail (since the workflow endpoint now requires the token)

$ curl -u foo:bar -X GET -H "Content-Type: application/xml" -H "Accept: application/json" -H "dapr-api-token: eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJpc3MiOiJPbmxpbmUgSldUIEJ1aWxkZXIiLCJpYXQiOjE2MjE1OTQ5MzcsImV4cCI6NDA4MzA0NDUzNywiYXVkIjoiZnJlc2hicmV3ZWQuc2NpZW5jZSIsInN1YiI6ImlzYWFjQGZyZXNoYnJld2VkLnNjaWVuY2UiLCJHaXZlbk5hbWUiOiJJc2FhYyIsIlN1cm5hbWUiOiJKb2huc29uIiwiRW1haWwiOiJpc2FhY0BmcmVzaGJyZXdlZC5zY2llbmNlIn0.86p_txK7TxapSrQx53IzgHMi3SPIifzZjpn_6ynOHec" https://foo3.freshbrewed.science/v1.0/invoke/workflows/method/workflow3

{
  "error": {
    "code": "NoResponse",
    "message": "The server did not received a response from an upstream server. Request tracking id '08585795250225827997145858794CU00'."
  }
}

Using the correct Dapr ID we can now have a workflow call another workflow, with authentication:

$ cat workflow3.json

{
  "definition": {
    "$schema": "https://schema.management.azure.com/providers/Microsoft.Logic/schemas/2016-06-01/workflowdefinition.json#",
    "actions": {
      "Compose": {
        "type": "compose",
        "runAfter": {},
        "inputs": { "body": "Hello from Logic App workflow running with Dapr!" }
      },
      "triggerflow": {
        "inputs": {
          "headers": {
            "dapr-api-token": "eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJpc3MiOiJPbmxpbmUgSldUIEJ1aWxkZXIiLCJpYXQiOjE2MjE1OTQ5MzcsImV4cCI6NDA4MzA0NDUzNywiYXVkIjoiZnJlc2hicmV3ZWQuc2NpZW5jZSIsInN1YiI6ImlzYWFjQGZyZXNoYnJld2VkLnNjaWVuY2UiLCJHaXZlbk5hbWUiOiJJc2FhYyIsIlN1cm5hbWUiOiJKb2huc29uIiwiRW1haWwiOiJpc2FhY0BmcmVzaGJyZXdlZC5zY2llbmNlIn0.86p_txK7TxapSrQx53IzgHMi3SPIifzZjpn_6ynOHec"
          },
          "method": "GET",
          "uri": "http://localhost:3500/v1.0/invoke/workflows/method/workflow1"
        },
        "runAfter": {
          "Compose": [
            "Succeeded"
          ]
        },
        "type": "Http"
      },
      "Response": {
        "inputs": {
          "body": {
            "value": "@body('compose')"
          },
          "statusCode": 200
        },
        "runAfter": { "triggerflow": [ "Succeeded" ] },
        "type": "Response"
      }
    },
    "contentVersion": "1.0.0.0",
    "outputs": {},
    "parameters": {},
    "triggers": {
      "manual": {
        "inputs": {
          "schema": {}
        },
        "kind": "Http",
        "type": "Request"
      }
    }
  }
}

Above we see that workflow3 calls workflow1 with the token (because we use localhost:3500, and that localhost, the workflow service, requires a Dapr API token).
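As a rough sketch of the same call made from code rather than from a Logic App definition, the snippet below builds the sidecar request in Python. The header name, port 3500, and the invoke path come from workflow3.json above; the helper names and the DAPR_API_TOKEN environment variable are assumptions for illustration, used here only to avoid hardcoding the token.

```python
import os
import urllib.request

DAPR_HTTP_PORT = 3500  # sidecar port used in workflow3.json


def build_invoke_request(app_id, method, token):
    """Build (but do not send) a GET request to the local Dapr sidecar."""
    url = f"http://localhost:{DAPR_HTTP_PORT}/v1.0/invoke/{app_id}/method/{method}"
    # With API token auth enabled, the sidecar expects this header on every call.
    return urllib.request.Request(url, headers={"dapr-api-token": token}, method="GET")


def call_workflow(app_id, method):
    """Send the request; the token is read from the environment, not the source."""
    token = os.environ["DAPR_API_TOKEN"]  # assumed variable name
    with urllib.request.urlopen(build_invoke_request(app_id, method, token)) as resp:
        return resp.read().decode("utf-8")
```

Calling call_workflow("workflows", "workflow1") from inside the pod would mirror what the triggerflow action does.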

We can apply this to the ConfigMap and rotate the pod for the new workflow to take effect:

$ kubectl delete cm workflows && kubectl create configmap workflows --from-file ./samples/workflow1.json --from-file ./samples/workflow2.json --from-file ./samples/workflow3.json

configmap "workflows" deleted

configmap/workflows created

$ kubectl get pods | grep workf

dapr-workflows-host-85d9d74777-8s6xh 2/2 Running 0 34m

$ kubectl delete pods dapr-workflows-host-85d9d74777-8s6xh

pod "dapr-workflows-host-85d9d74777-8s6xh" deleted

Now when we test, we can get a proper result

$ curl -u foo:bar -X GET -H "Content-Type: application/xml" -H "Accept: application/json" -H "dapr-api-token: eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJpc3MiOiJPbmxpbmUgSldUIEJ1aWxkZXIiLCJpYXQiOjE2MjE1OTQ5MzcsImV4cCI6NDA4MzA0NDUzNywiYXVkIjoiZnJlc2hicmV3ZWQuc2NpZW5jZSIsInN1YiI6ImlzYWFjQGZyZXNoYnJld2VkLnNjaWVuY2UiLCJHaXZlbk5hbWUiOiJJc2FhYyIsIlN1cm5hbWUiOiJKb2huc29uIiwiRW1haWwiOiJpc2FhY0BmcmVzaGJyZXdlZC5zY2llbmNlIn0.86p_txK7TxapSrQx53IzgHMi3SPIifzZjpn_6ynOHec" https://foo3.freshbrewed.science/v1.0/invoke/workflows/method/workflow3

{"value":"Hello from Logic App workflow running with Dapr!"}

This means we have finally implemented our model, albeit without re-creating the Tweeter service. We merely had workflow3 call workflow1.

Using Dapr Tokens in externalized services

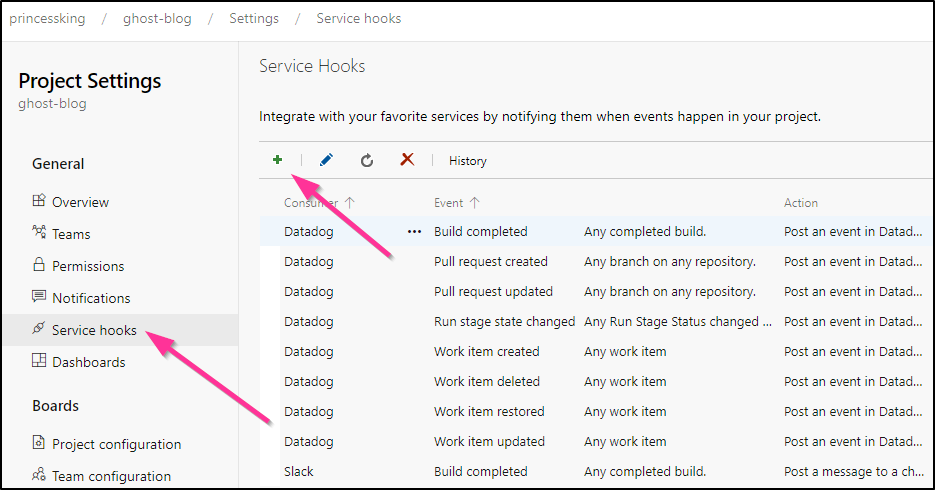

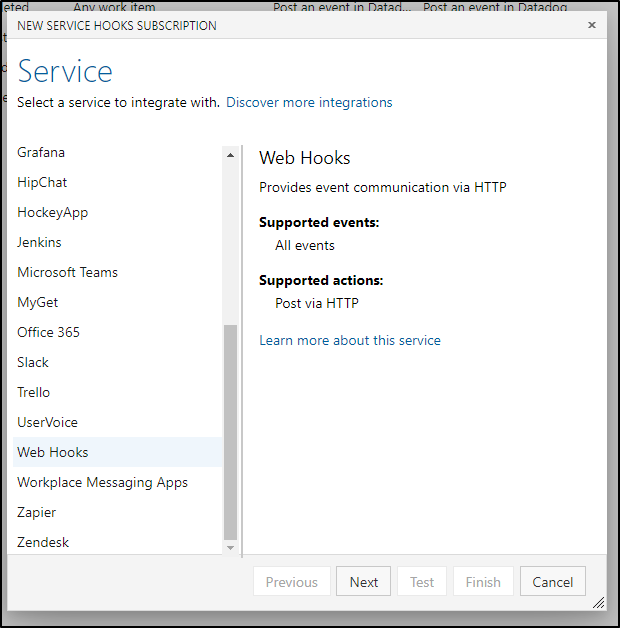

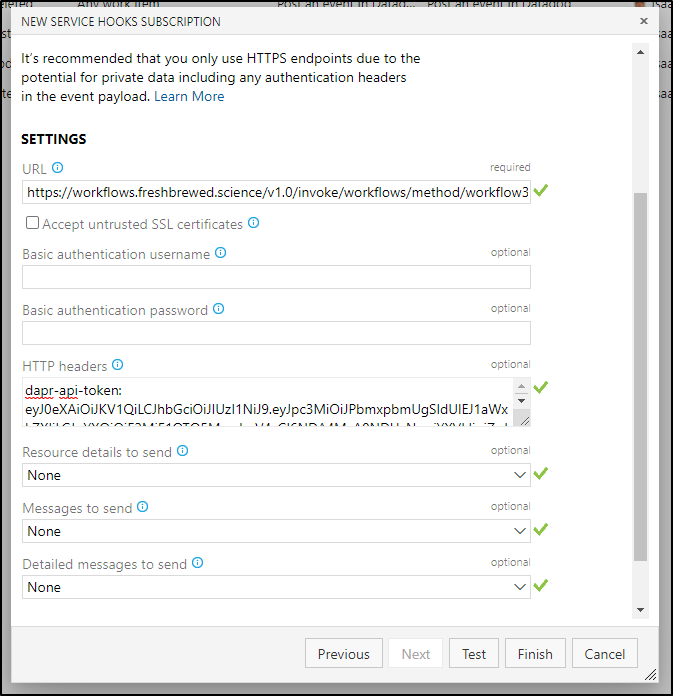

We'll go back to our AzDO and work on adding the service hook back to our project

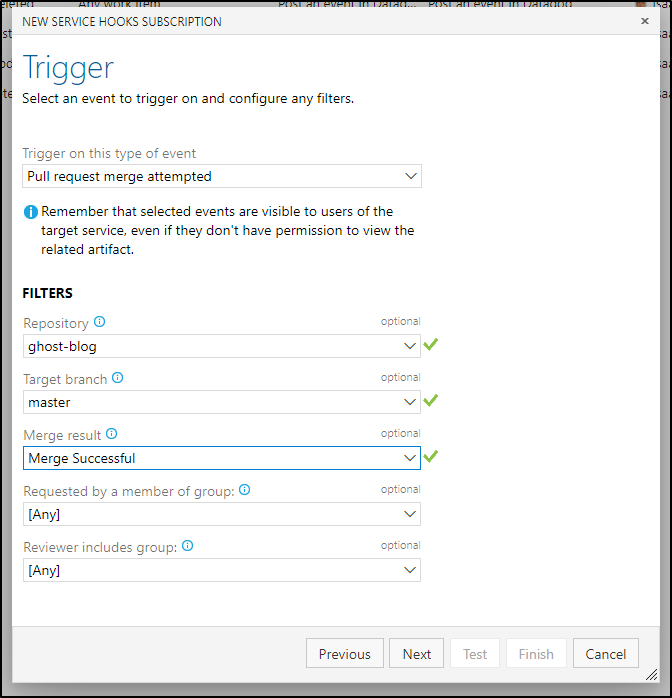

Using the standard webhook

We only want the webhook to fire when a successful merge happens on the blog.

This time we can pass the token in the HTTP header 'dapr-api-token' when hitting https://workflows.freshbrewed.science/v1.0/invoke/workflows/method/workflow3

You'll want this token to match what is in workflow3.json, as defined in the headers block.
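Since the webhook token and the token embedded in the workflow definition have to agree, a small check can catch a mismatch before deploying. This is only a sketch: the function names are made up for illustration, and it assumes the definition has the same "definition" / "actions" layout as workflow3.json.

```python
import json


def tokens_in_workflow(definition_text):
    """Return {action_name: token} for each Http action that sets dapr-api-token."""
    actions = json.loads(definition_text)["definition"]["actions"]
    found = {}
    for name, action in actions.items():
        inputs = action.get("inputs")
        # Some actions carry non-object inputs, so guard before reading headers.
        headers = inputs.get("headers", {}) if isinstance(inputs, dict) else {}
        if action.get("type") == "Http" and "dapr-api-token" in headers:
            found[name] = headers["dapr-api-token"]
    return found


def mismatched_actions(definition_text, expected_token):
    """List the actions whose embedded token differs from the expected one."""
    tokens = tokens_in_workflow(definition_text)
    return [name for name, token in tokens.items() if token != expected_token]
```

Running mismatched_actions(open("workflow3.json").read(), token) should return an empty list when the service hook and the workflow use the same token.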

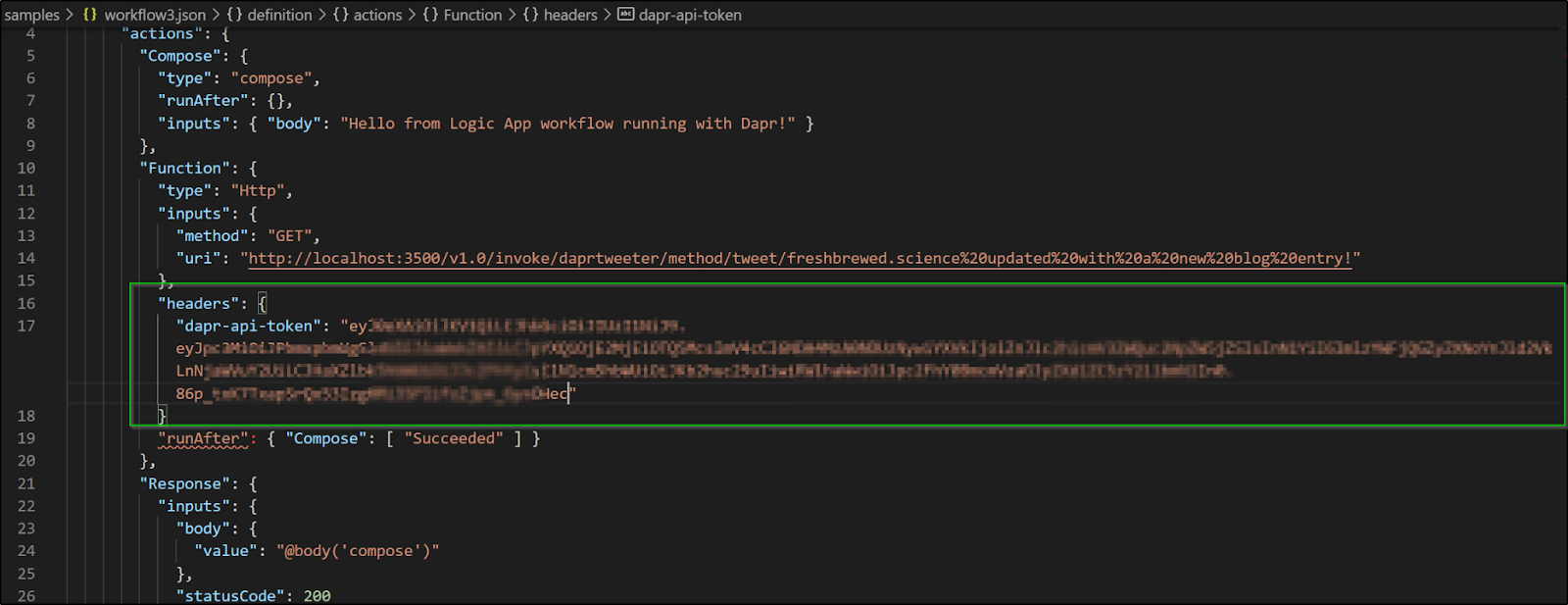

If we had the Dapr Tweeter app set up, the HTTP action would look like:

"HTTP": {

"inputs": {

"headers": {

"dapr-api-token": "eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJpc3MiOiJPbmxpbmUgSldUIEJ1aWxkZXIiLCJpYXQiOjE2MjE1OTQ5MzcsImV4cCI6NDA4MzA0NDUzNywiYXVkIjoiZnJlc2hicmV3ZWQuc2NpZW5jZSIsInN1YiI6ImlzYWFjQGZyZXNoYnJld2VkLnNjaWVuY2UiLCJHaXZlbk5hbWUiOiJJc2FhYyIsIlN1cm5hbWUiOiJKb2huc29uIiwiRW1haWwiOiJpc2FhY0BmcmVzaGJyZXdlZC5zY2llbmNlIn0.86p_txK7TxapSrQx53IzgHMi3SPIifzZjpn_6ynOHec"

},

"method": "GET",

"uri": "https://workflows.freshbrewed.science/v1.0/invoke/daprtweeter/method/tweet/freshbrewed.science%20updated%20with%20a%20new%20blog%20entry!"

},

"runAfter": {

"HTTP_Webhook": [

"Succeeded"

]

},

"type": "Http"

},

Cleanup

$ az aks list -o table

Name Location ResourceGroup KubernetesVersion ProvisioningState Fqdn

--------- ---------- ---------------- ------------------- ------------------- ------------------------------------------------------------------

idjaks04b centralus idjtestingressrg 1.19.9 Succeeded idjaks04b-idjtestingressrg-70b42e-bd9c7f78.hcp.centralus.azmk8s.io

$ az aks delete -n idjaks04b -g idjtestingressrg

Are you sure you want to perform this operation? (y/n): y

- Running ..

Trying to set up an On-Prem cluster

I spent many days fighting to get this working on my K3s cluster. I believe the problem lies either in the older Nginx version in my cluster, which did not respect the basic auth annotations, or in a typo in my Dapr App ID.

We know the model works as we see it working above. You can read below about things I tried or scroll to the end for Summary.

I tried different Nginx controllers in the hope that a newer one would respect the annotations or work better with the workflow model.

# tweaking NGinx

# To get our ingress to be served by the new Nginx and not the old, we need to set our ingressClass to a different name..

$ helm upgrade nginx-ingress ingress-nginx/ingress-nginx --namespace ingress-basic --set controller.replicaCount=2 --set controller.nodeSelector."beta\.kubernetes\.io/os"=linux --set defaultBackend.nodeSelector."beta\.kubernetes\.io/os"=linux --set controller.admissionWebhooks.patch.nodeSelector."beta\.kubernetes\.io/os"=linux --set controller.admissionWebhooks.enabled=false --set controller.service.type=NodePort --set controller.service.externalIPs[0]=192.168.1.12 --set controller.ingressClass=nginxnew

As you can see, I used "nginxnew" as the class name.

We can verify our Nginx deploy:

$ helm list -n ingress-basic

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

nginx-ingress ingress-basic 5 2021-05-24 21:39:44.2763018 -0500 CDT deployed ingress-nginx-3.31.0 0.46.0

And see the rendered values:

$ helm get values nginx-ingress -n ingress-basic

USER-SUPPLIED VALUES:

controller:

admissionWebhooks:

enabled: false

patch:

nodeSelector:

beta.kubernetes.io/os: linux

ingressClass: nginxnew

nodeSelector:

beta.kubernetes.io/os: linux

replicaCount: 2

service:

externalIPs:

- 192.168.1.12

type: NodePort

defaultBackend:

nodeSelector:

beta.kubernetes.io/os: linux

Compared to our former/existing Nginx

$ helm list | head -n1 && helm list | grep my-release

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

my-release default 2 2021-01-02 16:00:14.2231903 -0600 CST deployed nginx-ingress-0.7.1 1.9.1

$ helm get values my-release

USER-SUPPLIED VALUES:

controller:

defaultTLS:

secret: default/myk8s.tpk.best-cert

enableCustomResources: true

enableTLSPassthrough: true

logLevel: 3

nginxDebug: true

setAsDefaultIngress: true

useIngressClassOnly: false

watchNamespace: default

Now set it in the ingress definition:

$ cat ingress-with-auth-new.yaml

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

name: ingress-with-auth

annotations:

kubernetes.io/ingress.class: nginxnew

# type of authentication

nginx.ingress.kubernetes.io/auth-type: basic

# name of the secret that contains the user/password definitions

nginx.ingress.kubernetes.io/auth-secret: basic-auth

# message to display with an appropriate context why the authentication is required

nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - foo'

spec:

rules:

- host: workflows2.freshbrewed.science

http:

paths:

- path: /

backend:

serviceName: dapr-workflows-svc

servicePort: 80

tls:

- hosts:

- workflows2.freshbrewed.science

secretName: workflows2.freshbrewed.science-cert

This annotation should force the proper handler

kubernetes.io/ingress.class: nginxnew

Applying it:

$ kubectl apply -f ingress-with-auth-new.yaml

$ kubectl get ingress

Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

harbor-registry-harbor-ingress-notary nginx notary.freshbrewed.science 192.168.1.205 80, 443 18d

ingress-services <none> myk8s.tpk.pw,dapr-react.tpk.pw,myk8s.tpk.best + 1 more... 192.168.1.205 80, 443 52d

harbor-registry-harbor-ingress nginx harbor.freshbrewed.science 192.168.1.205 80, 443 18d

workflows-registry-workflows-ingress nginx workflows.freshbrewed.science 192.168.1.205 80, 443 3d20h

ingress-with-auth <none> workflows2.freshbrewed.science 192.168.1.12 80, 443 8h

We see that the new ingress got the IP of our new Nginx (192.168.1.12).

However, it's not crossing the namespace boundary to find our service (or our TLS secret).

Let's try moving our new Nginx ingress controller into the default namespace so it can see our service.

Omit "--namespace ingress-basic" from the invocation (and set setAsDefaultIngress to false) to install into our default namespace:

$ helm upgrade --install nginx-ingress ingress-nginx/ingress-nginx --set controller.replicaCount=1 --set controller.nodeSelector."beta\.kubernetes\.io/os"=linux --set defaultBackend.nodeSelector."beta\.kubernetes\.io/os"=linux --set controller.admissionWebhooks.patch.nodeSelector."beta\.kubernetes\.io/os"=linux --set controller.admissionWebhooks.enabled=false --set controller.service.type=NodePort --set controller.service.externalIPs[0]=192.168.1.12 --set controller.ingressClass=nginxnew --set controller.setAsDefaultIngress=false

Release "nginx-ingress" does not exist. Installing it now.

NAME: nginx-ingress

LAST DEPLOYED: Tue May 25 06:00:59 2021

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The ingress-nginx controller has been installed.

Get the application URL by running these commands:

export HTTP_NODE_PORT=$(kubectl --namespace default get services -o jsonpath="{.spec.ports[0].nodePort}" nginx-ingress-ingress-nginx-controller)

export HTTPS_NODE_PORT=$(kubectl --namespace default get services -o jsonpath="{.spec.ports[1].nodePort}" nginx-ingress-ingress-nginx-controller)

export NODE_IP=$(kubectl --namespace default get nodes -o jsonpath="{.items[0].status.addresses[1].address}")

echo "Visit http://$NODE_IP:$HTTP_NODE_PORT to access your application via HTTP."

echo "Visit https://$NODE_IP:$HTTPS_NODE_PORT to access your application via HTTPS."

An example Ingress that makes use of the controller:

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

annotations:

kubernetes.io/ingress.class: nginxnew

name: example

namespace: foo

spec:

rules:

- host: www.example.com

http:

paths:

- backend:

serviceName: exampleService

servicePort: 80

path: /

# This section is only required if TLS is to be enabled for the Ingress

tls:

- hosts:

- www.example.com

secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

apiVersion: v1

kind: Secret

metadata:

name: example-tls

namespace: foo

data:

tls.crt: <base64 encoded cert>

tls.key: <base64 encoded key>

type: kubernetes.io/tls

Now let's apply the ingress for workflows2. This time the controller should be able to see the basic-auth secret and the service:

$ kubectl apply -f ingress-with-auth-new.yaml

Warning: networking.k8s.io/v1beta1 Ingress is deprecated in v1.19+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

ingress.networking.k8s.io/ingress-with-auth created
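The deprecation warning points at networking.k8s.io/v1, where the backend fields are nested differently and pathType is required. As a hedged sketch of that migration (the helper name is hypothetical and the "Prefix" default is an assumption, not something the original manifest specifies), the conversion of the rules could look like this:

```python
import copy


def to_networking_v1(ingress, path_type="Prefix"):
    """Convert a v1beta1 Ingress dict to the networking.k8s.io/v1 shape."""
    out = copy.deepcopy(ingress)  # leave the caller's manifest untouched
    out["apiVersion"] = "networking.k8s.io/v1"
    for rule in out.get("spec", {}).get("rules", []):
        for path in rule.get("http", {}).get("paths", []):
            old = path["backend"]
            port = old["servicePort"]
            # v1 nests the service and separates numeric from named ports.
            port_ref = {"number": port} if isinstance(port, int) else {"name": port}
            path["backend"] = {"service": {"name": old["serviceName"], "port": port_ref}}
            # pathType is mandatory in v1; "Prefix" is assumed here.
            path.setdefault("pathType", path_type)
    return out
```

Because kubectl also accepts JSON manifests, the result of json.dumps(to_networking_v1(manifest)) could be piped to kubectl apply -f - once the manifest has been loaded as a dict.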

Getting the ingress:

$ kubectl get ingress

Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

harbor-registry-harbor-ingress-notary nginx notary.freshbrewed.science 192.168.1.205 80, 443 18d

ingress-services <none> myk8s.tpk.pw,dapr-react.tpk.pw,myk8s.tpk.best + 1 more... 192.168.1.205 80, 443 52d

harbor-registry-harbor-ingress nginx harbor.freshbrewed.science 192.168.1.205 80, 443 18d

workflows-registry-workflows-ingress nginx workflows.freshbrewed.science 192.168.1.205 80, 443 3d21h

ingress-with-auth <none> workflows2.freshbrewed.science 192.168.1.12 80, 443 61s

Still getting the old Nginx. Let's try again, this time setting ingressClassName in the spec instead of the annotation:

$ cat ingress-with-auth-new.yaml

#apiVersion: networking.k8s.io/v1beta1

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: ingress-with-auth

annotations:

#kubernetes.io/ingress.class: nginxnew

# type of authentication

nginx.ingress.kubernetes.io/auth-type: basic

# name of the secret that contains the user/password definitions

nginx.ingress.kubernetes.io/auth-secret: basic-auth

# message to display with an appropriate context why the authentication is required

nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - foo'

spec:

ingressClassName: nginxnew

rules:

- host: workflows2.freshbrewed.science

http:

paths:

- path: /

backend:

serviceName: dapr-workflows-svc

servicePort: 80

tls:

- hosts:

- workflows2.freshbrewed.science

secretName: workflows2.freshbrewed.science-cert

$ kubectl apply -f ingress-with-auth-new.yaml

$ kubectl get ingress

Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

harbor-registry-harbor-ingress-notary nginx notary.freshbrewed.science 192.168.1.205 80, 443 18d

ingress-services <none> myk8s.tpk.pw,dapr-react.tpk.pw,myk8s.tpk.best + 1 more... 192.168.1.205 80, 443 52d

harbor-registry-harbor-ingress nginx harbor.freshbrewed.science 192.168.1.205 80, 443 18d

workflows-registry-workflows-ingress nginx workflows.freshbrewed.science 192.168.1.205 80, 443 3d21h

ingress-with-auth nginxnew workflows2.freshbrewed.science 192.168.1.12 80, 443 3m58s

Even though I set the class name, the other Nginx is intercepting traffic!

$ kubectl logs my-release-nginx-ingress-988695c44-h4bs4 | grep workflows2 | tail -n10

10.42.1.161 [25/May/2021:11:06:51 +0000] TCP 200 156 7 0.001 "workflows2.freshbrewed.science"

10.42.1.161 [25/May/2021:11:08:10 +0000] TCP 200 2404 2128 79.115 "workflows2.freshbrewed.science"

10.42.1.161 [25/May/2021:11:08:47 +0000] TCP 200 156 7 0.002 "workflows2.freshbrewed.science"

10.42.1.161 [25/May/2021:11:10:56 +0000] TCP 200 3143 2917 128.749 "workflows2.freshbrewed.science"

10.42.1.161 [25/May/2021:11:18:15 +0000] TCP 200 2868 7 0.007 "workflows2.freshbrewed.science"

10.42.1.161 [25/May/2021:11:18:15 +0000] TCP 200 2868 7 0.008 "workflows2.freshbrewed.science"

10.42.1.161 [25/May/2021:11:18:17 +0000] TCP 200 156 7 0.002 "workflows2.freshbrewed.science"

10.42.1.161 [25/May/2021:11:18:27 +0000] TCP 200 2868 7 0.005 "workflows2.freshbrewed.science"

10.42.1.161 [25/May/2021:11:19:22 +0000] TCP 200 4667 1469 67.240 "workflows2.freshbrewed.science"

10.42.1.161 [25/May/2021:11:19:38 +0000] TCP 200 4667 1481 70.561 "workflows2.freshbrewed.science"

One more idea: there is an old, rarely used ingress ("ingress-services") that catches *. Since the invalid cert points to that old domain, perhaps it is 'catching' my ingresses.

Let's remove that

$ kubectl get ingress ingress-services -o yaml > ingress.services.yaml

Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

$ kubectl delete -f ingress.services.yaml

Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

ingress.extensions "ingress-services" deleted

And now we should have just the known URLs (and classes)

$ kubectl get ingress

Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

harbor-registry-harbor-ingress-notary nginx notary.freshbrewed.science 192.168.1.205 80, 443 18d

harbor-registry-harbor-ingress nginx harbor.freshbrewed.science 192.168.1.205 80, 443 18d

workflows-registry-workflows-ingress nginx workflows.freshbrewed.science 192.168.1.205 80, 443 3d21h

ingress-with-auth nginxnew workflows2.freshbrewed.science 192.168.1.12 80, 443 6m54s

Still no luck. Let's rotate the pods (foreshadowing: this will get worse, by the way):

builder@DESKTOP-JBA79RT:~/Workspaces/daprWorkflow$ kubectl get pods | grep nginx

svclb-my-release-nginx-ingress-cp4kw 0/2 Pending 0 5d9h

svclb-my-release-nginx-ingress-2vn8z 2/2 Running 0 5d9h

svclb-my-release-nginx-ingress-lx8hf 2/2 Running 0 5d9h

my-release-nginx-ingress-988695c44-h4bs4 1/1 Running 0 3d16h

nginx-ingress-ingress-nginx-controller-68cf8b69c-z88xd 1/1 Running 0 25m

builder@DESKTOP-JBA79RT:~/Workspaces/daprWorkflow$ kubectl delete pod nginx-ingress-ingress-nginx-controller-68cf8b69c-z88xd && kubectl delete pod my-release-nginx-ingress-988695c44-h4bs4 && kubectl delete pods -l app=svclb-my-release-nginx-ingress

pod "nginx-ingress-ingress-nginx-controller-68cf8b69c-z88xd" deleted

That not only didn't work, but moved everything to the same IP!

nginx-ingress-ingress-nginx-controller NodePort 10.43.251.142 192.168.1.12 80:32546/TCP,443:30338/TCP 30m

my-release-nginx-ingress LoadBalancer 10.43.214.113 192.168.1.12 80:32300/TCP,443:32020/TCP 142d

builder@DESKTOP-JBA79RT:~/Workspaces/daprWorkflow$ kubectl get ingress

Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-with-auth nginxnew workflows2.freshbrewed.science 192.168.1.12 80, 443 13m

harbor-registry-harbor-ingress nginx harbor.freshbrewed.science 192.168.1.12 80, 443 18d

harbor-registry-harbor-ingress-notary nginx notary.freshbrewed.science 192.168.1.12 80, 443 18d

workflows-registry-workflows-ingress nginx workflows.freshbrewed.science 192.168.1.12 80, 443 3d21h

At this point, I might just have to upgrade Nginx, my main ingress controller.

$ helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

..snip..

my-release default 2 2021-01-02 16:00:14.2231903 -0600 CST deployed nginx-ingress-0.7.1 1.9.1

nginx-ingress default 1 2021-05-25 06:00:59.0111771 -0500 CDT deployed ingress-nginx-3.31.0 0.46.0

I plan to continue to work on this.

Summary

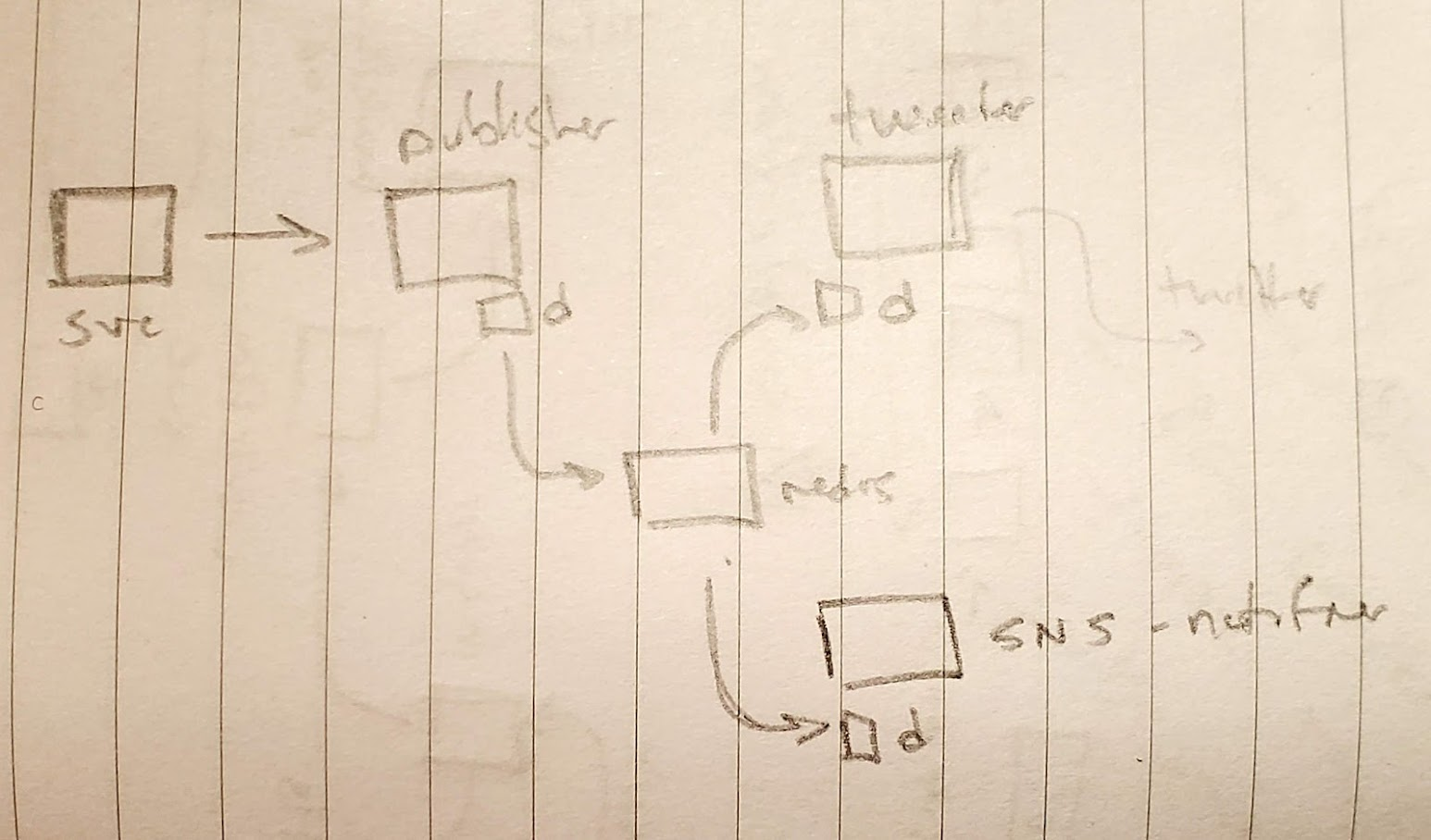

We found some issues implementing this locally, but that was mostly due to an older Nginx and only one ingress. We explored exposing workflow endpoints directly, behind basic auth, and lastly behind both basic auth and a Dapr API token. We looked at implementing workflows that call services and workflows that call workflows.

To address our problem, we could have used external services like Amazon SQS. In fact, any binding component that supports input bindings could trigger our workflow. Instead of a binding, we could also use a pub/sub model to trigger multiple notifiers.

The idea is to show how we could expose our services for real-world use. To that end, with a properly supported Nginx controller, it's straightforward.