Knative is a platform that brings serverless workloads to Kubernetes. With Knative, one can create the equivalent of Azure or GCP Functions and AWS Lambda in K8s. More concretely, it lets you create containerized microservices that scale to zero, meaning you can run far more services in a Kubernetes cluster than you could traditionally, because idle services stop consuming cluster resources.

So let’s look into Knative on Azure. We’ll step through creating a new AKS cluster, setting up Knative with an example app, and then talk about exposing it with SSL.

Setting up AKS and ACR.

First, let’s create a resource group and an ACR, in case we need it.

$ az account set --subscription 1fea80cf-bb25-4748-8e45-a0bc3775065f

$ az group create --name idjknaksrg --location centralus

{
  "id": "/subscriptions/1fea80cf-bb25-4748-8e45-a0bc3775065f/resourceGroups/idjknaksrg",
  "location": "centralus",
  "managedBy": null,
  "name": "idjknaksrg",
  "properties": {
    "provisioningState": "Succeeded"
  },
  "tags": null,
  "type": "Microsoft.Resources/resourceGroups"
}

$ az acr create --name idjknacr --resource-group idjknaksrg --sku Basic --admin-enabled true

{
  "adminUserEnabled": true,
  "creationDate": "2020-09-03T01:42:59.108419+00:00",
  "dataEndpointEnabled": false,
  "dataEndpointHostNames": [],
  "encryption": {
    "keyVaultProperties": null,
    "status": "disabled"
  },
  "id": "/subscriptions/1fea80cf-bb25-4748-8e45-a0bc3775065f/resourceGroups/idjknaksrg/providers/Microsoft.ContainerRegistry/registries/idjknacr",
  "identity": null,
  "location": "centralus",
  "loginServer": "idjknacr.azurecr.io",
  "name": "idjknacr",
  "networkRuleSet": null,
  "policies": {
    "quarantinePolicy": {
      "status": "disabled"
    },
    "retentionPolicy": {
      "days": 7,
      "lastUpdatedTime": "2020-09-03T01:42:59.939651+00:00",
      "status": "disabled"
    },
    "trustPolicy": {
      "status": "disabled",
      "type": "Notary"
    }
  },
  "privateEndpointConnections": [],
  "provisioningState": "Succeeded",
  "resourceGroup": "idjknaksrg",
  "sku": {
    "name": "Basic",
    "tier": "Basic"
  },
  "status": null,
  "storageAccount": null,
  "tags": {},
  "type": "Microsoft.ContainerRegistry/registries"
}

Next, we’ll need a service principal for AKS and then we can create our cluster.

$ az ad sp create-for-rbac --skip-assignment > my_sp2.json

$ cat my_sp2.json | jq -r .appId

653c316e-db05-4033-bb46-dbf1453b3b34

$ export SP_PASS=`cat my_sp2.json | jq -r .password`

$ export SP_ID=`cat my_sp2.json | jq -r .appId`

$ az aks create --resource-group idjknaksrg --name idjknaks --location centralus --node-count 3 --enable-cluster-autoscaler --min-count 2 --max-count 4 --generate-ssh-keys --network-plugin azure --network-policy azure --service-principal $SP_ID --client-secret $SP_PASS

- Running ..

Validation

$ rm ~/.kube/config && az aks get-credentials -n idjknaks -g idjknaksrg --admin

Merged "idjknaks-admin" as current context in /home/builder/.kube/config

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

aks-nodepool1-23052013-vmss000000 Ready agent 14h v1.16.13

aks-nodepool1-23052013-vmss000001 Ready agent 14h v1.16.13

aks-nodepool1-23052013-vmss000002 Ready agent 14h v1.16.13

Installing Knative

We’ll need to install the Custom Resource Definitions and core components first.

$ kubectl apply --filename https://github.com/knative/serving/releases/download/v0.17.0/serving-crds.yaml

customresourcedefinition.apiextensions.k8s.io/certificates.networking.internal.knative.dev created

customresourcedefinition.apiextensions.k8s.io/configurations.serving.knative.dev created

customresourcedefinition.apiextensions.k8s.io/ingresses.networking.internal.knative.dev created

customresourcedefinition.apiextensions.k8s.io/metrics.autoscaling.internal.knative.dev created

customresourcedefinition.apiextensions.k8s.io/podautoscalers.autoscaling.internal.knative.dev created

customresourcedefinition.apiextensions.k8s.io/revisions.serving.knative.dev created

customresourcedefinition.apiextensions.k8s.io/routes.serving.knative.dev created

customresourcedefinition.apiextensions.k8s.io/serverlessservices.networking.internal.knative.dev created

customresourcedefinition.apiextensions.k8s.io/services.serving.knative.dev created

customresourcedefinition.apiextensions.k8s.io/images.caching.internal.knative.dev created

$ kubectl apply --filename https://github.com/knative/serving/releases/download/v0.17.0/serving-core.yaml

customresourcedefinition.apiextensions.k8s.io/images.caching.internal.knative.dev unchanged

namespace/knative-serving created

serviceaccount/controller created

clusterrole.rbac.authorization.k8s.io/knative-serving-admin created

clusterrolebinding.rbac.authorization.k8s.io/knative-serving-controller-admin created

image.caching.internal.knative.dev/queue-proxy created

configmap/config-autoscaler created

configmap/config-defaults created

configmap/config-deployment created

configmap/config-domain created

configmap/config-features created

configmap/config-gc created

configmap/config-leader-election created

configmap/config-logging created

configmap/config-network created

configmap/config-observability created

configmap/config-tracing created

horizontalpodautoscaler.autoscaling/activator created

deployment.apps/activator created

service/activator-service created

deployment.apps/autoscaler created

service/autoscaler created

deployment.apps/controller created

service/controller created

deployment.apps/webhook created

service/webhook created

customresourcedefinition.apiextensions.k8s.io/certificates.networking.internal.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/configurations.serving.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/ingresses.networking.internal.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/metrics.autoscaling.internal.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/podautoscalers.autoscaling.internal.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/revisions.serving.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/routes.serving.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/serverlessservices.networking.internal.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/services.serving.knative.dev unchanged

clusterrole.rbac.authorization.k8s.io/knative-serving-addressable-resolver created

clusterrole.rbac.authorization.k8s.io/knative-serving-namespaced-admin created

clusterrole.rbac.authorization.k8s.io/knative-serving-namespaced-edit created

clusterrole.rbac.authorization.k8s.io/knative-serving-namespaced-view created

clusterrole.rbac.authorization.k8s.io/knative-serving-core created

clusterrole.rbac.authorization.k8s.io/knative-serving-podspecable-binding created

validatingwebhookconfiguration.admissionregistration.k8s.io/config.webhook.serving.knative.dev created

mutatingwebhookconfiguration.admissionregistration.k8s.io/webhook.serving.knative.dev created

validatingwebhookconfiguration.admissionregistration.k8s.io/validation.webhook.serving.knative.dev created

secret/webhook-certs created

Service Mesh

We have a few choices of networking layer (ingress or service mesh) that work with Knative out of the box. I first tried Istio but ran into trouble, so I switched to Kourier, a lightweight Envoy-based ingress.

# install kourier and get ext ip

$ kubectl apply --filename https://github.com/knative/net-kourier/releases/download/v0.17.0/kourier.yaml

namespace/kourier-system created

service/kourier created

deployment.apps/3scale-kourier-gateway created

deployment.apps/3scale-kourier-control created

clusterrole.rbac.authorization.k8s.io/3scale-kourier created

serviceaccount/3scale-kourier created

clusterrolebinding.rbac.authorization.k8s.io/3scale-kourier created

service/kourier-internal created

service/kourier-control created

configmap/kourier-bootstrap created

$ kubectl patch configmap/config-network \

> --namespace knative-serving \

> --type merge \

> --patch '{"data":{"ingress.class":"kourier.ingress.networking.knative.dev"}}'

configmap/config-network patched

$ kubectl --namespace kourier-system get service kourier

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kourier LoadBalancer 10.0.206.235 52.158.160.6 80:31584/TCP,443:31499/TCP 33s

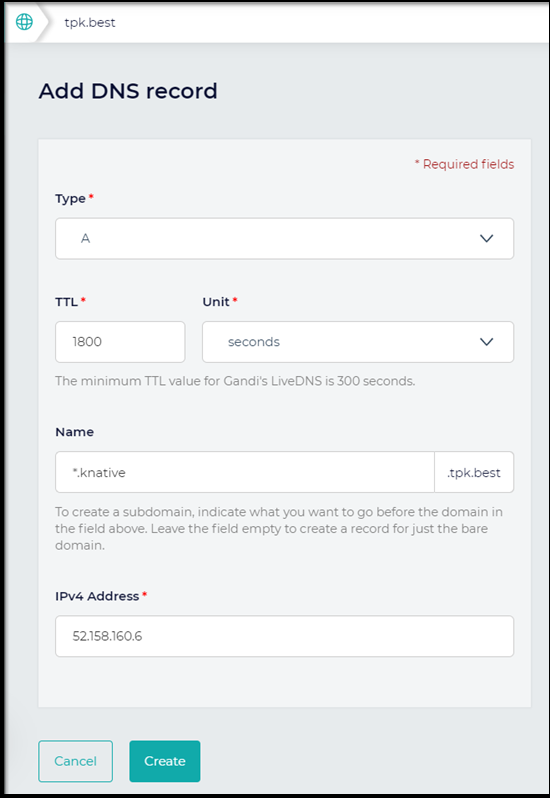

Create DNS:

With a wildcard DNS record (*.knative.tpk.best) pointing at the Kourier external IP, we can register that domain in the config-domain configmap in Knative’s namespace.

$ kubectl patch configmap/config-domain \

> --namespace knative-serving \

> --type merge \

> --patch '{"data":{"knative.tpk.best":""}}'

configmap/config-domain patched
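If you set this up for more than one domain, the patch JSON can be generated rather than hand-edited. The following is a minimal sketch, not part of Knative or kubectl: domain_patch is a hypothetical helper name, and it assumes the domain contains no characters that need JSON escaping.

```shell
#!/bin/sh
# Sketch: build the merge patch for Knative's config-domain ConfigMap.
# domain_patch is a hypothetical helper; $1 is the wildcard base domain.
domain_patch() {
  printf '{"data":{"%s":""}}' "$1"
}

# Usage (requires cluster access):
#   kubectl patch configmap/config-domain \
#     --namespace knative-serving \
#     --type merge \
#     --patch "$(domain_patch knative.tpk.best)"
```

The empty string value mirrors the patch used above, which applies the domain to all services.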

Verification

# see that knative pods are running

$ kubectl get pods --namespace knative-serving

NAME READY STATUS RESTARTS AGE

3scale-kourier-control-dfcbc46df-kf9rr 1/1 Running 0 16m

activator-68cbc9b5c7-xt5jr 1/1 Running 0 17m

autoscaler-5cf649dbb-m2p6d 1/1 Running 0 17m

controller-bc8d75cbc-2tls7 1/1 Running 0 17m

webhook-85758f4589-stxm6 1/1 Running 0 17m

Usage

Before we go further, we should get the knative CLI.

### download kn

$ wget https://storage.googleapis.com/knative-nightly/client/latest/kn-linux-amd64

--2020-09-02 22:20:58-- https://storage.googleapis.com/knative-nightly/client/latest/kn-linux-amd64

Resolving storage.googleapis.com (storage.googleapis.com)... 172.217.4.48, 172.217.5.16, 172.217.1.48, ...

Connecting to storage.googleapis.com (storage.googleapis.com)|172.217.4.48|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 49152920 (47M) [application/octet-stream]

Saving to: ‘kn-linux-amd64’

kn-linux-amd64 100%[==============================================>] 46.88M 18.3MB/s in 2.6s

2020-09-02 22:21:01 (18.3 MB/s) - ‘kn-linux-amd64’ saved [49152920/49152920]

$ chmod u+x kn-linux-amd64

$ mv kn-linux-amd64 kn

On WSL, I’ll move it into a directory on my PATH:

$ mv kn ~/.local/bin

Verification

$ ./kn --help

kn is the command line interface for managing Knative Serving and Eventing resources

Find more information about Knative at: https://knative.dev

Serving Commands:

service Manage Knative services

revision Manage service revisions

route List and describe service routes

Eventing Commands:

source Manage event sources

broker Manage message broker

trigger Manage event triggers

channel Manage event channels

Other Commands:

plugin Manage kn plugins

completion Output shell completion code

version Show the version of this client

Use "kn <command> --help" for more information about a given command.

Use "kn options" for a list of global command-line options (applies to all commands).

Installing a Knative App

Let’s now install a sample app. We could launch any containerized workload at this point.

# install sample app

$ ./kn service create helloworld-go --image gcr.io/knative-samples/helloworld-go --env TARGET="Go Sample v1"

Creating service 'helloworld-go' in namespace 'default':

0.115s The Configuration is still working to reflect the latest desired specification.

0.227s The Route is still working to reflect the latest desired specification.

0.278s Configuration "helloworld-go" is waiting for a Revision to become ready.

37.877s ...

38.083s Ingress has not yet been reconciled.

38.227s unsuccessfully observed a new generation

38.391s Ready to serve.

Service 'helloworld-go' created to latest revision 'helloworld-go-hrpsm-1' is available at URL:

http://helloworld-go.default.knative.tpk.best

Verification

$ kubectl get ksvc helloworld-go

NAME URL LATESTCREATED LATESTREADY READY REASON

helloworld-go http://helloworld-go.default.knative.tpk.best helloworld-go-hrpsm-1 helloworld-go-hrpsm-1 True

We can also use describe to get similar information:

$ ./kn service describe helloworld-go

Name: helloworld-go

Namespace: default

Age: 3m

URL: http://helloworld-go.default.knative.tpk.best

Revisions:

100% @latest (helloworld-go-hrpsm-1) [1] (3m)

Image: gcr.io/knative-samples/helloworld-go (pinned to 5ea96b)

Conditions:

OK TYPE AGE REASON

++ Ready 2m

++ ConfigurationsReady 2m

++ RoutesReady 2m

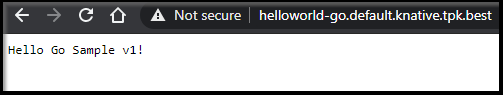

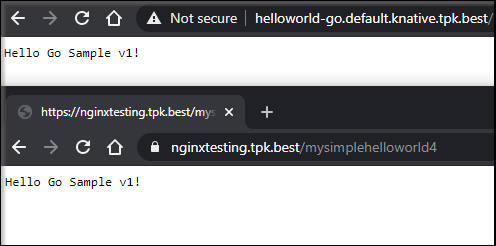

Viewing our Sample App:

Seeing how it scales:

Even though we just viewed the app, we can see it terminates the pod shortly thereafter when not active…

$ kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

default helloworld-go-hrpsm-1-deployment-5bbdcbf48-5x4vs 1/2 Terminating 0 98s

knative-serving 3scale-kourier-control-dfcbc46df-kf9rr 1/1 Running 0 28m

knative-serving activator-68cbc9b5c7-xt5jr 1/1 Running 0 29m

knative-serving autoscaler-5cf649dbb-m2p6d 1/1 Running 0 29m

knative-serving controller-bc8d75cbc-2tls7 1/1 Running 0 29m

knative-serving webhook-85758f4589-stxm6 1/1 Running 0 29m

kourier-system 3scale-kourier-gateway-6579576fff-hgkz7 1/1 Running 0 28m

kube-system azure-cni-networkmonitor-7d852 1/1 Running 0 33m

kube-system azure-cni-networkmonitor-gwjmp 1/1 Running 0 33m

kube-system azure-cni-networkmonitor-h8z5s 1/1 Running 0 33m

kube-system azure-ip-masq-agent-8wfjc 1/1 Running 0 33m

kube-system azure-ip-masq-agent-wfz7g 1/1 Running 0 33m

kube-system azure-ip-masq-agent-xk2dz 1/1 Running 0 33m

kube-system azure-npm-bbm4m 1/1 Running 0 32m

kube-system azure-npm-crklh 1/1 Running 0 32m

kube-system azure-npm-kg4vq 1/1 Running 0 32m

kube-system coredns-869cb84759-wr9bn 1/1 Running 0 34m

kube-system coredns-869cb84759-zs4v7 1/1 Running 0 33m

kube-system coredns-autoscaler-5b867494f-bbttp 1/1 Running 0 34m

kube-system dashboard-metrics-scraper-566c858889-zcp2m 1/1 Running 0 34m

kube-system kube-proxy-ndvsr 1/1 Running 0 33m

kube-system kube-proxy-scbzh 1/1 Running 0 33m

kube-system kube-proxy-vrxxr 1/1 Running 0 33m

kube-system kubernetes-dashboard-7f7d6bbd7f-tn4gl 1/1 Running 0 34m

kube-system metrics-server-6cd7558856-vcmzv 1/1 Running 0 34m

kube-system tunnelfront-6664c65584-z9f7h 2/2 Running 0 34m

$ kubectl describe pod helloworld-go-hrpsm-1-deployment-5bbdcbf48-5x4vs

Error from server (NotFound): pods "helloworld-go-hrpsm-1-deployment-5bbdcbf48-5x4vs" not found

$ kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

knative-serving 3scale-kourier-control-dfcbc46df-kf9rr 1/1 Running 0 28m

knative-serving activator-68cbc9b5c7-xt5jr 1/1 Running 0 29m

knative-serving autoscaler-5cf649dbb-m2p6d 1/1 Running 0 29m

knative-serving controller-bc8d75cbc-2tls7 1/1 Running 0 29m

knative-serving webhook-85758f4589-stxm6 1/1 Running 0 29m

kourier-system 3scale-kourier-gateway-6579576fff-hgkz7 1/1 Running 0 28m

kube-system azure-cni-networkmonitor-7d852 1/1 Running 0 33m

kube-system azure-cni-networkmonitor-gwjmp 1/1 Running 0 33m

kube-system azure-cni-networkmonitor-h8z5s 1/1 Running 0 33m

kube-system azure-ip-masq-agent-8wfjc 1/1 Running 0 33m

kube-system azure-ip-masq-agent-wfz7g 1/1 Running 0 33m

kube-system azure-ip-masq-agent-xk2dz 1/1 Running 0 33m

kube-system azure-npm-bbm4m 1/1 Running 0 33m

kube-system azure-npm-crklh 1/1 Running 0 33m

kube-system azure-npm-kg4vq 1/1 Running 0 33m

kube-system coredns-869cb84759-wr9bn 1/1 Running 0 35m

kube-system coredns-869cb84759-zs4v7 1/1 Running 0 33m

kube-system coredns-autoscaler-5b867494f-bbttp 1/1 Running 0 35m

kube-system dashboard-metrics-scraper-566c858889-zcp2m 1/1 Running 0 35m

kube-system kube-proxy-ndvsr 1/1 Running 0 33m

kube-system kube-proxy-scbzh 1/1 Running 0 33m

kube-system kube-proxy-vrxxr 1/1 Running 0 33m

kube-system kubernetes-dashboard-7f7d6bbd7f-tn4gl 1/1 Running 0 35m

kube-system metrics-server-6cd7558856-vcmzv 1/1 Running 0 35m

kube-system tunnelfront-6664c65584-z9f7h 2/2 Running 0 34m

Another way to view the same thing:

We can fire another view of the webpage and see it scale and then terminate…

$ kubectl get pods -l app=helloworld-go-hrpsm-1

NAME READY STATUS RESTARTS AGE

helloworld-go-hrpsm-1-deployment-5bbdcbf48-7smwl 2/2 Running 0 84s

$ kubectl get pods -l app=helloworld-go-hrpsm-1

NAME READY STATUS RESTARTS AGE

helloworld-go-hrpsm-1-deployment-5bbdcbf48-7smwl 2/2 Terminating 0 109s

$ kubectl get pods -l app=helloworld-go-hrpsm-1

NAME READY STATUS RESTARTS AGE

helloworld-go-hrpsm-1-deployment-5bbdcbf48-7smwl 1/2 Terminating 0 2m17s

$ kubectl get pods -l app=helloworld-go-hrpsm-1

NAME READY STATUS RESTARTS AGE

helloworld-go-hrpsm-1-deployment-5bbdcbf48-7smwl 0/2 Terminating 0 2m25s

$ kubectl get pods -l app=helloworld-go-hrpsm-1

No resources found in default namespace.
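Instead of re-running the command by hand, the same check can be polled in a loop. This is a minimal sketch under stated assumptions: wait_until_empty is a hypothetical helper, and it treats empty standard output as "no pods left", which is how kubectl behaves with -o name when nothing matches (the "No resources found" message goes to standard error).

```shell
#!/bin/sh
# Sketch: re-run a command every $1 seconds until it prints nothing on stdout.
# wait_until_empty is a hypothetical helper, not part of kubectl or kn.
# Caveat: a command that fails without output (e.g. no cluster access)
# also looks "empty", so check connectivity first.
wait_until_empty() {
  interval="$1"
  shift
  while [ -n "$("$@" 2>/dev/null)" ]; do
    sleep "$interval"
  done
}

# Usage (requires cluster access; label taken from the transcript above):
#   wait_until_empty 5 kubectl get pods -l app=helloworld-go-hrpsm-1 -o name
#   echo "scaled to zero"
```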

SSL / HTTPS

HTTP is fine for a demo, but everything needs SSL nowadays. Most browsers vomit on HTTP, so let’s set up SSL for our Knative services next.

We’ll start by following the guide we wrote in Kubernetes, SSL and Cert-Manager. First, ensure cert-manager isn’t already installed:

$ kubectl get pods --all-namespaces | grep cert

$

Next, ensure we are using Helm 3. This is just an issue I tend to have when dancing between Helm 2 (with Tiller) and Helm 3.

$ helm version

version.BuildInfo{Version:"v3.0.2", GitCommit:"19e47ee3283ae98139d98460de796c1be1e3975f", GitTreeState:"clean", GoVersion:"go1.13.5"}

Next we need to install the CRDs and the chart. Be sure to use the right version of the Helm chart. We are on Kubernetes 1.16.13, so I first tried the 0.16.0 version of the chart. UPDATE: 0.16.0 did not work; use 1.0.0.

$ helm repo add jetstack https://charts.jetstack.io

"jetstack" has been added to your repositories

$ kubectl apply --validate=false -f https://github.com/jetstack/cert-manager/releases/download/v1.0.0/cert-manager.crds.yaml

customresourcedefinition.apiextensions.k8s.io/certificaterequests.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/certificates.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/challenges.acme.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/clusterissuers.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/issuers.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/orders.acme.cert-manager.io configured

$ helm install cert-manager jetstack/cert-manager --namespace cert-manager --version v1.0.0 --set installCRDS=true

NAME: cert-manager

LAST DEPLOYED: Thu Sep 3 08:00:18 2020

NAMESPACE: cert-manager

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

cert-manager has been deployed successfully!

In order to begin issuing certificates, you will need to set up a ClusterIssuer

or Issuer resource (for example, by creating a 'letsencrypt-staging' issuer).

More information on the different types of issuers and how to configure them

can be found in our documentation:

https://cert-manager.io/docs/configuration/

For information on how to configure cert-manager to automatically provision

Certificates for Ingress resources, take a look at the `ingress-shim`

documentation:

https://cert-manager.io/docs/usage/ingress/

Verify it’s running:

$ kubectl get pods --namespace cert-manager

NAME READY STATUS RESTARTS AGE

cert-manager-69779b98cd-wcsqs 1/1 Running 0 2m53s

cert-manager-cainjector-7c4c4bbbb9-sswvw 1/1 Running 0 2m53s

cert-manager-webhook-6496b996cb-4rrzk 1/1 Running 0 2m53s

We will need to create cluster issuers for Staging and Prod Let’s Encrypt (ACME):

$ cat issuer-staging.yaml

apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-staging
spec:
  acme:
    # You must replace this email address with your own.
    # Let's Encrypt will use this to contact you about expiring
    # certificates, and issues related to your account.
    email: isaac.johnson@gmail.com
    server: https://acme-staging-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      # Secret resource used to store the account's private key.
      name: letsencrypt-account-key
    # Add a single challenge solver, HTTP01 using nginx
    solvers:
    - http01:
        ingress:
          class: nginx

$ cat issuer-prod.yaml

apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod
spec:
  acme:
    # The ACME server URL
    server: https://acme-v02.api.letsencrypt.org/directory
    # Email address used for ACME registration
    email: isaac.johnson@gmail.com
    # Name of a secret used to store the ACME account private key
    privateKeySecretRef:
      name: letsencrypt-prod
    # Enable the HTTP-01 challenge provider
    solvers:
    - http01:
        ingress:
          class: nginx

Before we start using them, let's set up NGINX to receive traffic.

$ helm repo add nginx-stable https://helm.nginx.com/stable

"nginx-stable" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "jetstack" chart repository

Update Complete. ⎈ Happy Helming!⎈

$ helm install my-release nginx-stable/nginx-ingress

NAME: my-release

LAST DEPLOYED: Thu Sep 3 07:42:46 2020

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The NGINX Ingress Controller has been installed.

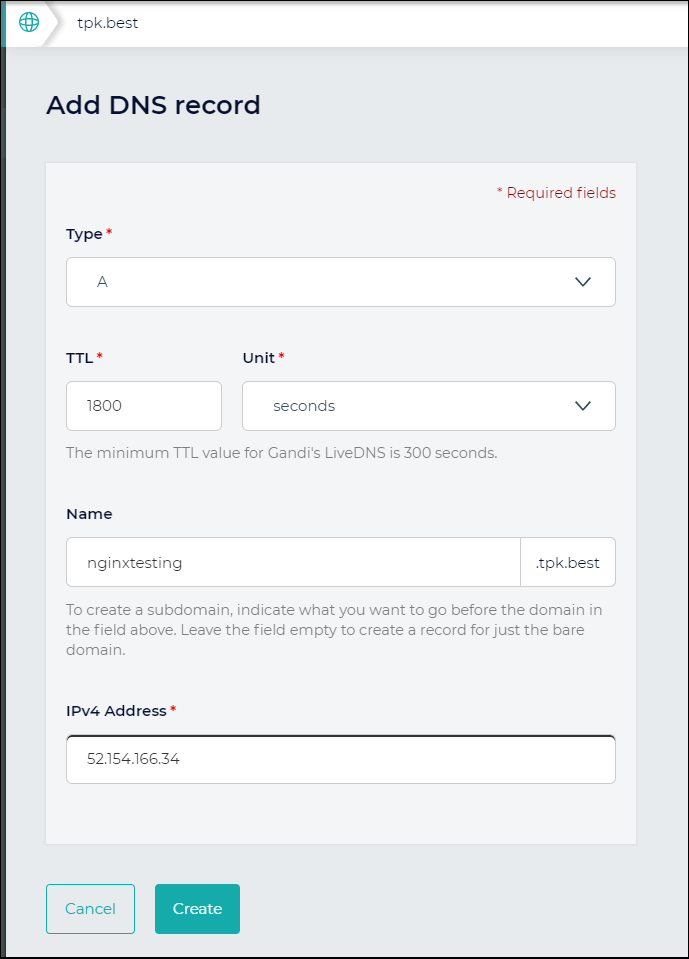

Next we can set up a testing entry in DNS. For that, we’ll need the NGINX external IP:

$ kubectl describe svc -l app.kubernetes.io/name=my-release-nginx-ingress | grep Ingress

LoadBalancer Ingress: 52.154.166.34

Now we have an entry for nginxtesting.tpk.best. However, we still need to set up the Let’s Encrypt SSL certs.

$ kubectl apply -f issuer-staging.yaml --validate=false

clusterissuer.cert-manager.io/letsencrypt-staging created

$ kubectl apply -f issuer-prod.yaml --validate=false

clusterissuer.cert-manager.io/letsencrypt-prod created

Create a cert request and apply it:

$ cat nginxtesting-tpk-best.yaml

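apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: nginxtesting-tpk-best
  namespace: default
spec:
  secretName: nginxtesting.tpk.best-cert
  issuerRef:
    name: letsencrypt-prod
    kind: ClusterIssuer
  commonName: nginxtesting.tpk.best
  dnsNames:
  - nginxtesting.tpk.best
  # Legacy field carried over from older cert-manager versions. It is not
  # part of the cert-manager.io/v1 schema and does not appear in the stored
  # spec shown below; the solver comes from the ClusterIssuer instead.
  acme:
    config:
    - http01:
        ingressClass: nginx
      domains:
      - nginxtesting.tpk.best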

$ kubectl apply -f nginxtesting-tpk-best.yaml --validate=false

certificate.cert-manager.io/nginxtesting-tpk-best created

We can watch for READY to change to True, which means cert-manager successfully obtained our certificate.

$ kubectl get certificate

NAME READY SECRET AGE

nginxtesting-tpk-best True nginxtesting.tpk.best-cert 16m

$ kubectl get certificate nginxtesting-tpk-best -o yaml

apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"cert-manager.io/v1","kind":"Certificate","metadata":{"annotations":{},"name":"nginxtesting-tpk-best","namespace":"default"},"spec":{"acme":{"config":[{"domains":["nginxtesting.tpk.best"],"http01":{"ingressClass":"nginx"}}]},"commonName":"nginxtesting.tpk.best","dnsNames":["nginxtesting.tpk.best"],"issuerRef":{"kind":"ClusterIssuer","name":"letsencrypt-prod"},"secretName":"nginxtesting.tpk.best-cert"}}
  creationTimestamp: "2020-09-03T12:53:04Z"
  generation: 1
  name: nginxtesting-tpk-best
  namespace: default
  resourceVersion: "296048"
  selfLink: /apis/cert-manager.io/v1/namespaces/default/certificates/nginxtesting-tpk-best
  uid: 6e6c0846-f468-42c8-99d4-4589ce0786a3
spec:
  commonName: nginxtesting.tpk.best
  dnsNames:
  - nginxtesting.tpk.best
  issuerRef:
    kind: ClusterIssuer
    name: letsencrypt-prod
  secretName: nginxtesting.tpk.best-cert
status:
  conditions:
  - lastTransitionTime: "2020-09-03T13:06:29Z"
    message: Certificate is up to date and has not expired
    reason: Ready
    status: "True"
    type: Ready
  notAfter: "2020-12-02T12:06:28Z"
  notBefore: "2020-09-03T12:06:28Z"
  renewalTime: "2020-11-02T12:06:28Z"
  revision: 2

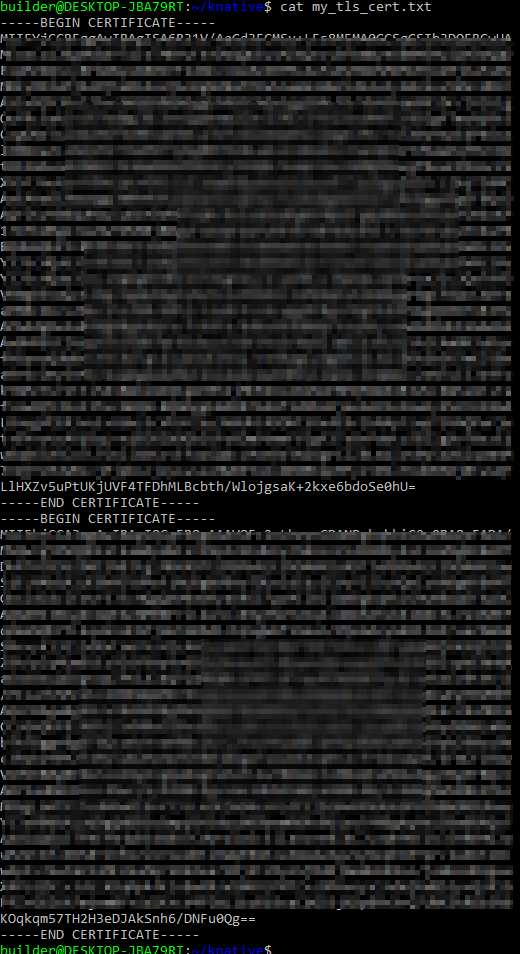

We now can download it locally:

$ kubectl get secret nginxtesting.tpk.best-cert -o yaml > my_certs.yaml

Now we have the cert base64-encoded in my_certs.yaml. Copy the tls.crt value to its own file (in the editor, delete everything except that value) and base64-decode it:

$ cp my_certs.yaml my_tls_cert_b64.txt

$ vi my_tls_cert_b64.txt

$ base64 -d my_tls_cert_b64.txt > my_tls_cert.txt

Same with the key (the tls.key value):

$ cp my_certs.yaml my_key_b64.txt

$ vi my_key_b64.txt

$ cat my_key_b64.txt | base64 --decode > my_key.txt
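The manual copy-and-edit step can also be scripted. Here is a minimal sketch, assuming the saved YAML keeps each base64 value on a single line next to its key (which is how kubectl normally prints it); secret_field is a hypothetical helper name, not a kubectl feature.

```shell
#!/bin/sh
# Sketch: pull one base64 value out of a Secret saved with "-o yaml".
# secret_field is a hypothetical helper.
#   $1 = path to the saved YAML, $2 = key under data: (tls.crt or tls.key)
secret_field() {
  # Match the line whose first field is "<key>:" and print its value.
  awk -v key="$2:" '$1 == key { print $2; exit }' "$1"
}

# Usage, producing the same files as the manual steps:
#   secret_field my_certs.yaml tls.crt > my_tls_cert_b64.txt
#   secret_field my_certs.yaml tls.key > my_key_b64.txt
#   base64 -d my_tls_cert_b64.txt > my_tls_cert.txt
#
# Alternatively, kubectl can print a single field straight from the cluster:
#   kubectl get secret nginxtesting.tpk.best-cert -o jsonpath='{.data.tls\.crt}'
```

Either route leaves the base64 text untouched, which is what the helm upgrade step expects.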

Now use those values in the helm upgrade (I used VS Code to remove newlines and comments from mine). You’ll use the base64 values from the secret directly, not the decoded files. Using “tr” to strip the newlines means we can just inline them with cat.

$ helm upgrade my-release nginx-stable/nginx-ingress --set controller.defaultTLS.cert=`cat my_tls_cert_b64.txt | tr -d '\n'` --set controller.defaultTLS.key=`cat my_key_b64.txt | tr -d '\n'`

Release "my-release" has been upgraded. Happy Helming!

NAME: my-release

LAST DEPLOYED: Thu Sep 3 08:29:59 2020

NAMESPACE: default

STATUS: deployed

REVISION: 3

TEST SUITE: None

NOTES:

The NGINX Ingress Controller has been installed.

We can now verify:

Exposing Knative with TLS

Using Kourier, we can expose the service with TLS if we have a wildcard certificate. Wildcard certificates require a DNS-01 challenge rather than HTTP-01, which for me would mean adding another webhook for Gandi (my provider).

That said, here is a guide if you have the wildcard certificate: https://lesv.dev/posts/using-kourier-with-knative/

If you use CIVO, another one of my favourite providers, they have an Okteto webhook to automate wildcard certificates: https://www.civo.com/learn/get-a-wildcard-certificate-with-cert-manager-and-civo-dns

Which would look like:

apiVersion: cert-manager.io/v1alpha2
kind: Certificate
metadata:
  name: wildcard-certificate
spec:
  dnsNames:
  - '*.knative.tpk.best'
  issuerRef:
    kind: Issuer
    name: civo
  secretName: wildcard-tpk-best-tls

However, because we do have NGINX with a proper certificate, we can still connect to a Knative service over TLS if we know its pod is running, even though a request through NGINX cannot trigger Knative to spin one up:

Create and apply an ingress rule.

Get the private service name:

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

hello-node ClusterIP 10.0.155.131 <none> 80/TCP 27m

helloworld-go ExternalName <none> kourier-internal.kourier-system.svc.cluster.local <none> 11h

helloworld-go-hrpsm-1 ClusterIP 10.0.181.96 <none> 80/TCP 11h

helloworld-go-hrpsm-1-private ClusterIP 10.0.216.90 <none> 80/TCP,9090/TCP,9091/TCP,8022/TCP 11h

kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 11h

my-release-nginx-ingress LoadBalancer 10.0.216.83 52.154.166.34 80:31983/TCP,443:31924/TCP 124m

Then make an Ingress YAML

$ cat hw-ingress2.yaml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: helloworld-ingress
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  tls:
  - hosts:
    - nginxtesting.tpk.best
    secretName: nginxtesting.tpk.best-cert
  rules:
  - host: "nginxtesting.tpk.best"
    http:
      paths:
      - path: /mysimplehelloworld4
        backend:
          serviceName: helloworld-go-hrpsm-1-private
          servicePort: 80

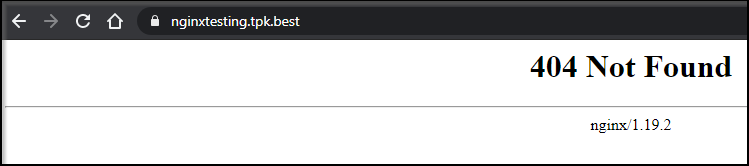

We can initially see nothing is there:

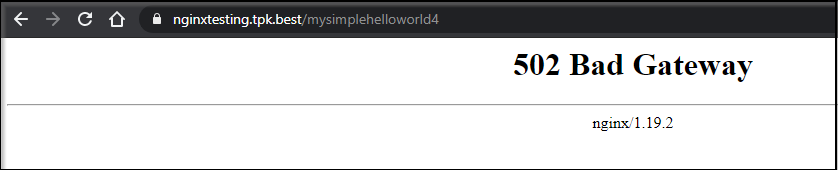

However if we use the Kourier ingress to spin a pod, then Nginx can find and resolve it:

You’ll note the lower image is TLS encrypted.

Summary

First, a few gotchas I encountered. An Ingress can only route to services in its own namespace. So if you launch NGINX and create your Ingress in, for instance, an “ingress-basic” namespace, you can’t point it at services in “default”. Be aware of the namespace delineations.

I also had trouble figuring out why my ClusterIssuers kept failing. My old guide used the pre-release of 0.16.0 for cert-manager, and I got all kinds of webhook issues until I removed everything and installed v1.0.0. Despite adding the “installCRDs” setting, Helm didn’t actually install the CRDs. One likely reason: Helm value names are case-sensitive, and the install command above spells it installCRDS rather than installCRDs. Either way, I applied the 1.0.0 CRDs manually (detailed above).

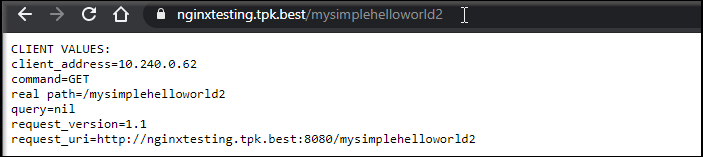

I also had to work through SSL and redirect syntax. A great little deployment to use when debugging is the echo server:

kubectl create deployment hello-node --image=k8s.gcr.io/echoserver:1.4

kubectl expose deployment hello-node --type=ClusterIP --port 80 --target-port 8080

This can be handy for testing nginx.

$ cat hw-ingress2.yaml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: helloworld-ingress
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  tls:
  - hosts:
    - nginxtesting.tpk.best
    secretName: nginxtesting.tpk.best-cert
  rules:
  - host: "nginxtesting.tpk.best"
    http:
      paths:
      - path: /mysimplehelloworld2
        backend:
          serviceName: hello-node
          servicePort: 80

I really like the idea of leveraging Knative to expose self-created services.

kn service create helloworld-go --image gcr.io/knative-samples/helloworld-go --env TARGET="Go Sample v1"

Any image could be used in the --image field. E.g.

kn service create helloworld-go --image k8s.gcr.io/echoserver:1.4 -p 8080

The ability to have containers available but not always running combines the power of containers with the savings of serverless. I think having Knative in one's toolset is a great way to expose infrequently used services where maximizing cluster resources is important.