Published: Sep 6, 2024 by Isaac Johnson

Today, we’ll look at gcsfuse, crontabs with gsutil, and attempt to set up Minio. I will build out some working PV/PVCs for that as well as an Ansible Playbook for GCSFuse.

Lastly, we will cover Azure Storage with both File Stores and Blob containers using Blobfuse on Linux and crontabs for backups.

GCS Fuse

We’ll install gcsfuse on our Dockerhost, the Linux box we used for the Storage Transfer Service last time, just so we can do a nice side-by-side comparison.

I’ll get apt set up first

builder@builder-T100:~$ export GCSFUSE_REPO=gcsfuse-`lsb_release -c -s`

builder@builder-T100:~$ echo "deb https://packages.cloud.google.com/apt $GCSFUSE_REPO main" | sudo tee /etc/apt/sources.list.d/gcsfuse.list

deb https://packages.cloud.google.com/apt gcsfuse-jammy main

builder@builder-T100:~$ curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0Warning: apt-key is deprecated. Manage keyring files in trusted.gpg.d instead (see apt-key(8)).

100 1021 100 1021 0 0 3323 0 --:--:-- --:--:-- --:--:-- 3336

OK

builder@builder-T100:~$ sudo apt-get update

Hit:1 http://us.archive.ubuntu.com/ubuntu jammy InRelease

Hit:2 https://packages.microsoft.com/repos/azure-cli focal InRelease

Hit:3 https://download.docker.com/linux/ubuntu focal InRelease

Get:4 http://us.archive.ubuntu.com/ubuntu jammy-updates InRelease [128 kB]

Get:6 https://packages.cloud.google.com/apt gcsfuse-jammy InRelease [1,227 B]

Get:7 http://security.ubuntu.com/ubuntu jammy-security InRelease [129 kB]

Ign:5 https://packages.cloud.google.com/apt kubernetes-xenial InRelease

Hit:9 http://us.archive.ubuntu.com/ubuntu jammy-backports InRelease

Err:8 https://packages.cloud.google.com/apt kubernetes-xenial Release

404 Not Found [IP: 142.250.190.46 443]

Get:10 https://packages.cloud.google.com/apt gcsfuse-jammy/main amd64 Packages [25.6 kB]

Get:11 http://us.archive.ubuntu.com/ubuntu jammy-updates/main i386 Packages [690 kB]

Get:12 https://packages.cloud.google.com/apt gcsfuse-jammy/main all Packages [750 B]

Get:13 http://us.archive.ubuntu.com/ubuntu jammy-updates/main amd64 Packages [1,988 kB]

Get:14 http://us.archive.ubuntu.com/ubuntu jammy-updates/universe amd64 Packages [1,121 kB]

Get:15 http://us.archive.ubuntu.com/ubuntu jammy-updates/universe i386 Packages [730 kB]

Reading package lists... Done

E: The repository 'https://apt.kubernetes.io kubernetes-xenial Release' no longer has a Release file.

N: Updating from such a repository can't be done securely, and is therefore disabled by default.

N: See apt-secure(8) manpage for repository creation and user configuration details.

W: https://packages.cloud.google.com/apt/dists/gcsfuse-jammy/InRelease: Key is stored in legacy trusted.gpg keyring (/etc/apt/trusted.gpg), see the DEPRECATION section in apt-key(8) for details.

N: Skipping acquire of configured file 'main/binary-i386/Packages' as repository 'https://packages.cloud.google.com/apt gcsfuse-jammy InRelease' doesn't support architecture 'i386'

builder@builder-T100:~$ sudo apt-get install fuse gcsfuse

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The following packages were automatically installed and are no longer required:

... snip ...

builder@builder-T100:~$ gcsfuse -v

gcsfuse version 2.4.0 (Go version go1.22.4)
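The apt-key warning in the output above can be avoided by storing the Google key in its own keyring and pointing the repo entry at it with signed-by. This is a minimal sketch, assuming the same keyring path the playbook later in this post uses; gcsfuse_sources_line is a hypothetical helper name, and the commands that need root and network are left commented out.

```shell
#!/usr/bin/env bash
# Sketch: keyring-based alternative to `apt-key add`.
# KEYRING is an assumed location; any path readable by apt would do.
KEYRING=/usr/share/keyrings/cloud.google.gpg

# Build the sources.list entry for a given Ubuntu codename (e.g. jammy).
gcsfuse_sources_line() {
  local codename="$1"
  echo "deb [signed-by=${KEYRING}] https://packages.cloud.google.com/apt gcsfuse-${codename} main"
}

# On a real host (needs root and network):
# curl -fsSL https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo gpg --dearmor -o "$KEYRING"
# gcsfuse_sources_line "$(lsb_release -c -s)" | sudo tee /etc/apt/sources.list.d/gcsfuse.list
# sudo apt-get update && sudo apt-get install -y fuse gcsfuse
```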

Even though I already authenticated for the Storage Transfer Service (STS), let’s do it again to show the full process

builder@builder-T100:~$ gcloud auth application-default login

Go to the following link in your browser, and complete the sign-in prompts:

https://accounts.google.com/o/oauth2/auth?response_type=code&client_id=764086051850-6qr4p6gpi6hn506pt8ejuq83di341hur.apps.googleusercontent.com&redirect_uri=https%3A%2F%2Fsdk.cloud.google.com%2Fapplicationdefaultauthcode.html&scope=openid+https%3A%2F%2Fwww.googleapis.com%2Fauth%2Fuserinfo.email+https%3A%2F%2Fwww.googleapis.com%2Fauth%2Fcloud-platform+https%3A%2F%2Fwww.googleapis.com%2Fauth%2Fsqlservice.login&state=RJsqdB6hb3T9zQ5YdzZhGA5aPadA3V&prompt=consent&token_usage=remote&access_type=offline&code_challenge=gpNipAiHQArbPk9G6keE4yfFLYPFP_aCyaOd6XDsJq4&code_challenge_method=S256

Once finished, enter the verification code provided in your browser: 4/********************************************

Credentials saved to file: [/home/builder/.config/gcloud/application_default_credentials.json]

These credentials will be used by any library that requests Application Default Credentials (ADC).

Quota project "myanthosproject2" was added to ADC which can be used by Google client libraries for billing and quota. Note that some services may still bill the project owning the resource.

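If you were doing this with Ansible or on a fleet of machines, of course you wouldn’t authenticate interactively as a named user. You can just as easily use a Service Account, as long as it has the role roles/storage.objectUser. Rather than logging in, point Application Default Credentials at the key file (gcsfuse also accepts the key directly through its key_file option, which we use in /etc/fstab later):

$ export GOOGLE_APPLICATION_CREDENTIALS=/path/to/serviceaccount.json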

Instead of re-using a sample bucket, let’s just create a fresh one

builder@builder-T100:~$ gcloud storage buckets create gs://fbsgcsfusetest --project=myanthosproject2

Creating gs://fbsgcsfusetest/...

Next, I need a mount point

builder@builder-T100:~$ sudo mkdir /mnt/fbsgcsfusetest

builder@builder-T100:~$ sudo chown builder:builder /mnt/fbsgcsfusetest

We mount as our own user because that is where gcloud saved the Application Default Credentials. If you try to mount as root (using sudo), you’ll get the error

... .Run: readFromProcess: sub-process: Error while mounting gcsfuse: mountWithArgs: failed to create storage handle using createStorageHandle: go storage client creation failed: while creating http endpoint: while fetching tokenSource: DefaultTokenSource: google: could not find default credentials. See https://cloud.google.com/docs/authentication/external/set-up-adc for more information

I can now mount the directory as builder (me)

builder@builder-T100:~$ gcsfuse fbsgcsfusetest /mnt/fbsgcsfusetest

{"timestamp":{"seconds":1725361703,"nanos":354874953},"severity":"INFO","message":"Start gcsfuse/2.4.0 (Go version go1.22.4) for app \"\" using mount point: /mnt/fbsgcsfusetest\n"}

{"timestamp":{"seconds":1725361703,"nanos":355051908},"severity":"INFO","message":"GCSFuse mount command flags: {\"AppName\":\"\",\"Foreground\":false,\"ConfigFile\":\"\",\"MountOptions\":{},\"DirMode\":493,\"FileMode\":420,\"Uid\":-1,\"Gid\":-1,\"ImplicitDirs\":false,\"OnlyDir\":\"\",\"RenameDirLimit\":0,\"IgnoreInterrupts\":true,\"CustomEndpoint\":null,\"BillingProject\":\"\",\"KeyFile\":\"\",\"TokenUrl\":\"\",\"ReuseTokenFromUrl\":true,\"EgressBandwidthLimitBytesPerSecond\":-1,\"OpRateLimitHz\":-1,\"SequentialReadSizeMb\":200,\"AnonymousAccess\":false,\"MaxRetrySleep\":30000000000,\"MaxRetryAttempts\":0,\"StatCacheCapacity\":20460,\"StatCacheTTL\":60000000000,\"TypeCacheTTL\":60000000000,\"KernelListCacheTtlSeconds\":0,\"HttpClientTimeout\":0,\"RetryMultiplier\":2,\"TempDir\":\"\",\"ClientProtocol\":\"http1\",\"MaxConnsPerHost\":0,\"MaxIdleConnsPerHost\":100,\"EnableNonexistentTypeCache\":false,\"StackdriverExportInterval\":0,\"PrometheusPort\":0,\"OtelCollectorAddress\":\"\",\"LogFile\":\"\",\"LogFormat\":\"json\",\"ExperimentalEnableJsonRead\":false,\"DebugFuse\":false,\"DebugGCS\":false,\"DebugInvariants\":false,\"DebugMutex\":false,\"ExperimentalMetadataPrefetchOnMount\":\"disabled\"}"}

{"timestamp":{"seconds":1725361703,"nanos":355158857},"severity":"INFO","message":"GCSFuse mount config flags: {\"CreateEmptyFile\":false,\"Severity\":\"INFO\",\"Format\":\"\",\"FilePath\":\"\",\"LogRotateConfig\":{\"MaxFileSizeMB\":512,\"BackupFileCount\":10,\"Compress\":true},\"MaxSizeMB\":-1,\"CacheFileForRangeRead\":false,\"EnableParallelDownloads\":false,\"ParallelDownloadsPerFile\":16,\"MaxParallelDownloads\":16,\"DownloadChunkSizeMB\":50,\"EnableCRC\":false,\"CacheDir\":\"\",\"TtlInSeconds\":-9223372036854775808,\"TypeCacheMaxSizeMB\":4,\"StatCacheMaxSizeMB\":-9223372036854775808,\"EnableEmptyManagedFolders\":false,\"GRPCConnPoolSize\":1,\"AnonymousAccess\":false,\"EnableHNS\":false,\"IgnoreInterrupts\":true,\"DisableParallelDirops\":false,\"KernelListCacheTtlSeconds\":0,\"MaxRetryAttempts\":0,\"PrometheusPort\":0}"}

{"timestamp":{"seconds":1725361703,"nanos":738800923},"severity":"INFO","message":"File system has been successfully mounted."}

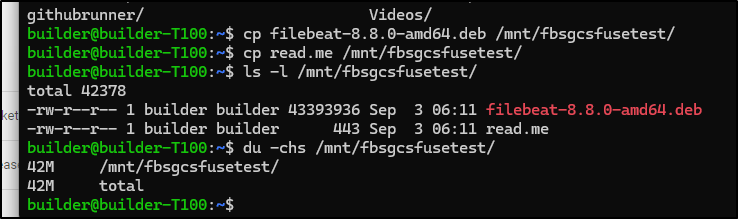

Here we can see it in action

This does bring up a minor gotcha I often have to remind my fellow SREs - this is not like an NFS mount or a Windows Share. GCSFuse replicates behind the scenes to a bucket - the files still live right there in the mount.

So while this is a very easy way to mount a bucket and transfer files, this is not a way to save space.
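One related trap: when the mount is not active, /mnt/fbsgcsfusetest is just an ordinary local directory, and anything copied there stays on the local disk and never reaches the bucket. A minimal guard, assuming the util-linux mountpoint command is available (require_mount is a hypothetical helper name), might look like this:

```shell
#!/usr/bin/env bash
# Return non-zero (and say why) when the directory is not an active mount point.
require_mount() {
  local dir="$1"
  if ! mountpoint -q "$dir"; then
    echo "refusing to write: $dir is not a mount point" >&2
    return 1
  fi
}

# Example: only copy when the bucket is really mounted.
# require_mount /mnt/fbsgcsfusetest && cp ./read.me /mnt/fbsgcsfusetest/
```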

GSUtil and cron

GSUtil is the sister program to gcloud in that we can use it to quickly and easily transfer files to and from buckets.

builder@DESKTOP-QADGF36:/var/log$ gsutil cp ~/fixRedis0.yaml gs://fbstestbucket123dd321b/fixRedis0.yaml

Copying file:///home/builder/fixRedis0.yaml [Content-Type=application/octet-stream]...

/ [1 files][ 829.0 B/ 829.0 B]

Operation completed over 1 objects/829.0 B.

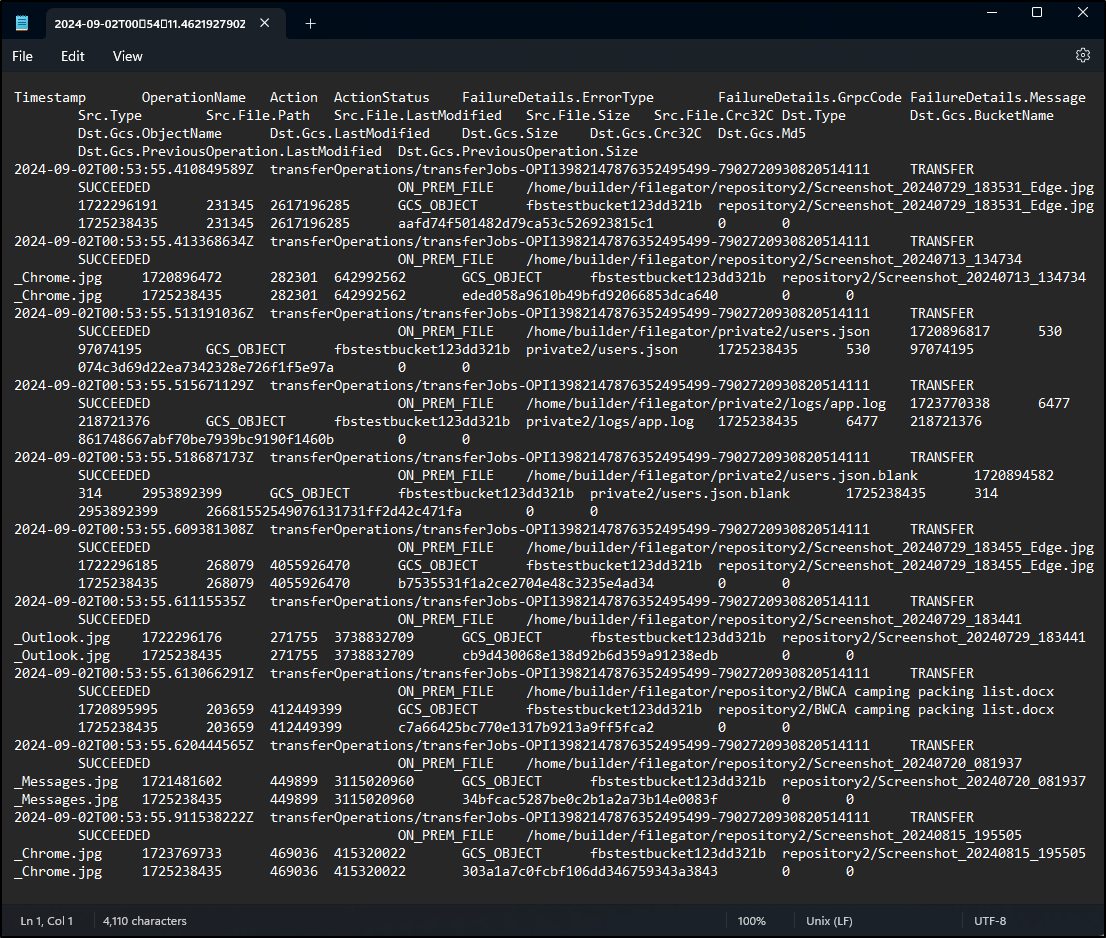

builder@DESKTOP-QADGF36:/var/log$ gsutil cp gs://fbstestbucket123dd321b/storage-transfer/logs/transferJobs/OPI13982147876352495499/transferOperations/transferJobs-OPI13982147876352495499-7902720930820514111/2024-09-02T00:54:11.462192790Z-d594662a-da7c-46ac-a444-01220ea31d37.log /mnt/c/Users/isaac/Documents/

Copying gs://fbstestbucket123dd321b/storage-transfer/logs/transferJobs/OPI13982147876352495499/transferOperations/transferJobs-OPI13982147876352495499-7902720930820514111/2024-09-02T00:54:11.462192790Z-d594662a-da7c-46ac-a444-01220ea31d37.log...

/ [1 files][ 4.0 KiB/ 4.0 KiB]

Operation completed over 1 objects/4.0 KiB.

Thinking logically here, we can do what we did with AWS: a crontab entry that copies logs on a schedule. Here is the root crontab from that setup, which copies to the S3 mount

builder@DESKTOP-QADGF36:/var/log$ sudo crontab -l

# Edit this file to introduce tasks to be run by cron.

#

# Each task to run has to be defined through a single line

# indicating with different fields when the task will be run

# and what command to run for the task

#

# To define the time you can provide concrete values for

# minute (m), hour (h), day of month (dom), month (mon),

# and day of week (dow) or use '*' in these fields (for 'any').

#

# Notice that tasks will be started based on the cron's system

# daemon's notion of time and timezones.

#

# Output of the crontab jobs (including errors) is sent through

# email to the user the crontab file belongs to (unless redirected).

#

# For example, you can run a backup of all your user accounts

# at 5 a.m every week with:

# 0 5 * * 1 tar -zcf /var/backups/home.tgz /home/

#

# For more information see the manual pages of crontab(5) and cron(8)

#

# m h dom mon dow command

0 0 * * * find /var/log -type f -name \*.log -exec cp -f {} /mnt/s3-fbs-logs/$(date +%Y-%m-%d)/ \;

This time we’ll do it as our own user (builder), using gsutil cp to a GCP bucket instead.

builder@DESKTOP-QADGF36:/var/log$ crontab -e

no crontab for builder - using an empty one

Select an editor. To change later, run 'select-editor'.

1. /bin/nano <---- easiest

2. /usr/bin/vim.basic

3. /usr/bin/vim.tiny

4. /bin/ed

Choose 1-4 [1]: 2

crontab: installing new crontab

builder@DESKTOP-QADGF36:/var/log$ crontab -l

... snip ...

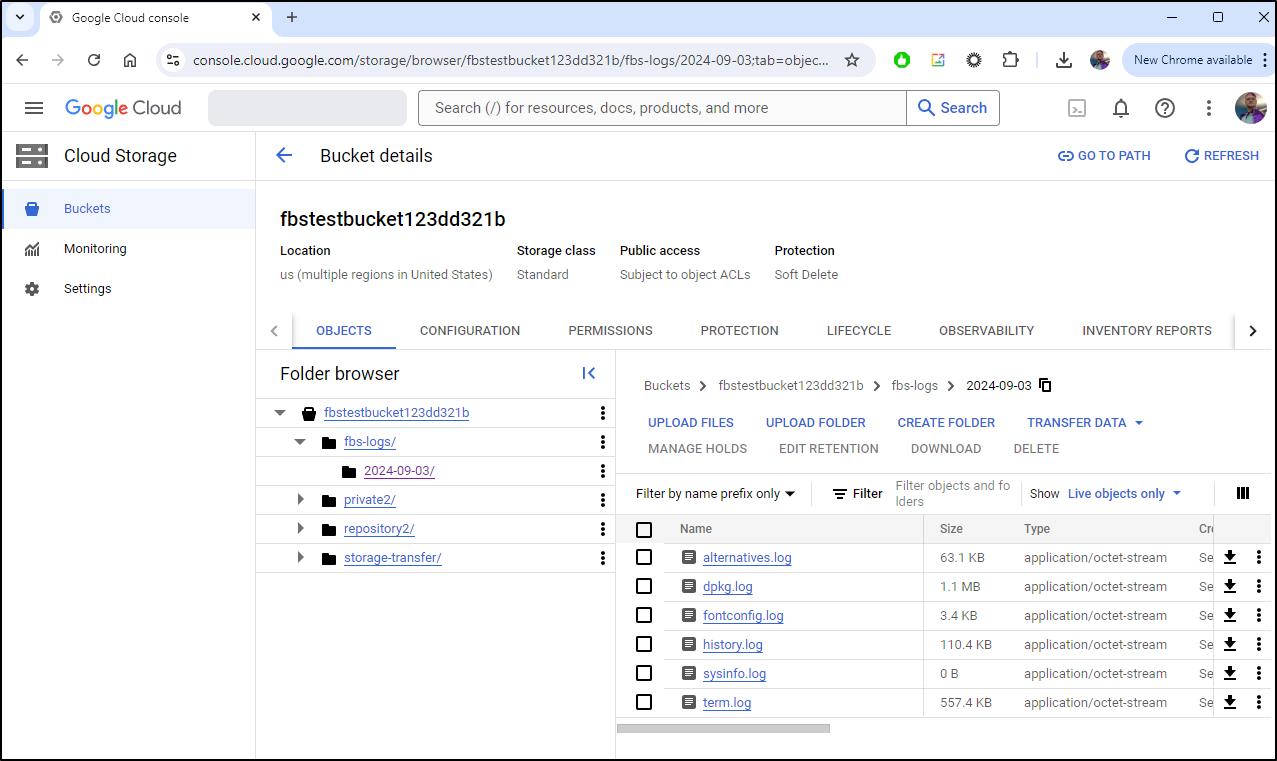

25 * * * * find /var/log -type f -name \*.log -exec gsutil cp {} gs://fbstestbucket123dd321b/fbs-logs/$(date +\%Y-\%m-\%d)/ \;

I can now see the logs appear in the bucket

Of course, if you wanted to move files out (copy and remove), then you can use ‘mv’ instead, e.g. find /var/log -type f -name \*.log -exec gsutil mv {} gs://fbstestbucket123dd321b/fbs-logs/$(date +%Y-%m-%d)/ \;

Cron is just a scheduler, so we can run the copy command interactively on demand just as easily

builder@DESKTOP-QADGF36:/var/log$ find /var/log -type f -name \*.log -exec gsutil cp {} gs://fbstestbucket123dd321b/fbs-logs/$(date +%Y-%m-%d)/ \;

find: ‘/var/log/ceph’: Permission denied

Copying file:///var/log/apt/term.log [Content-Type=application/octet-stream]...

/ [1 files][557.4 KiB/557.4 KiB]

Operation completed over 1 objects/557.4 KiB.

Copying file:///var/log/apt/history.log [Content-Type=application/octet-stream]...

/ [1 files][110.4 KiB/110.4 KiB]

Operation completed over 1 objects/110.4 KiB.

Copying file:///var/log/fontconfig.log [Content-Type=application/octet-stream]...

/ [1 files][ 3.4 KiB/ 3.4 KiB]

Operation completed over 1 objects/3.4 KiB.

find: ‘/var/log/private’: Permission denied

Copying file:///var/log/dpkg.log [Content-Type=application/octet-stream]...

/ [1 files][ 1.1 MiB/ 1.1 MiB]

Operation completed over 1 objects/1.1 MiB.

Copying file:///var/log/alternatives.log [Content-Type=application/octet-stream]...

/ [1 files][ 63.1 KiB/ 63.1 KiB]

Operation completed over 1 objects/63.1 KiB.

Copying file:///var/log/landscape/sysinfo.log [Content-Type=application/octet-stream]...

/ [1 files][ 0.0 B/ 0.0 B]

Operation completed over 1 objects.

CommandException: Error opening file "file:///var/log/ubuntu-advantage.log": [Errno 13] Permission denied: '/var/log/ubuntu-advantage.log'.
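The permission errors above, plus the fact that cron treats an unescaped % in the command field as a newline, are both good reasons to move the logic into a small script and have cron call that instead. Below is a minimal sketch, not the exact job I run: backup_logs and the DRY_RUN switch are hypothetical names, and it assumes GNU find (for -readable) and gsutil on the PATH.

```shell
#!/usr/bin/env bash
# backup_logs SRC_DIR DEST_PREFIX
# Copies every readable *.log under SRC_DIR to DEST_PREFIX/YYYY-MM-DD/.
# Set DRY_RUN=1 to print the gsutil commands instead of running them.
backup_logs() {
  local src_dir="$1" dest="$2"
  local day
  day="$(date +%Y-%m-%d)"
  local runner=()
  if [ "${DRY_RUN:-0}" = "1" ]; then runner=(echo); fi
  # -readable skips files our user cannot open; stderr hides unreadable dirs.
  find "$src_dir" -type f -name '*.log' -readable -print0 2>/dev/null |
    while IFS= read -r -d '' f; do
      "${runner[@]}" gsutil cp "$f" "${dest}/${day}/"
    done
}

# Example: DRY_RUN=1 backup_logs /var/log gs://fbstestbucket123dd321b/fbs-logs
```

Saved as an executable script (the path is up to you), the crontab entry then only needs the schedule and the script path, with no date format to escape.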

Minio (attempt)

Spoiler: ultimately I do not get Minio to work, but we do build out some interesting pieces and automations.

Minio is a suite for fronting various storage backends and exposing them as if they were a local AWS S3 endpoint. This enables you to use S3 object storage utilities that think they are talking to AWS when they are really using your Minio instance.

To use Minio, we first need to install the operator.

For this part, I’ll be using my test cluster

builder@DESKTOP-QADGF36:~$ helm repo add minio-operator https://operator.min.io

"minio-operator" has been added to your repositories

builder@DESKTOP-QADGF36:~$ helm install minio-operator minio-operator/operator

NAME: minio-operator

LAST DEPLOYED: Tue Sep 3 06:49:45 2024

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

For the deployment, I’ll want to find the latest tag in Dockerhub which as of this moment is minio/minio:RELEASE.2024-08-29T01-40-52Z

But before we do that, let’s create a Service Account (SA) with the Storage Object Admin role so we can configure Minio. This is the same kind of Service Account we would use to log in for gcsfuse

builder@DESKTOP-QADGF36:~$ gcloud iam service-accounts create myminio --project myanthosproject2

Created service account [myminio].

builder@DESKTOP-QADGF36:~$ gcloud projects add-iam-policy-binding myanthosproject2 --role roles/storage.objectAdmin --member serviceAccount:myminio@myanthosproject2.iam.gserviceaccount.com

Updated IAM policy for project [myanthosproject2].

bindings:

- members:

- serviceAccount:service-511842454269@gcp-sa-aiplatform.iam.gserviceaccount.com

role: roles/aiplatform.serviceAgent

- members:

... snip ...

etag: BwYhNbWrZKQ=

version: 1

builder@DESKTOP-QADGF36:~$ gcloud iam service-accounts keys create myminio.json --iam-account myminio@myanthosproject2.iam.gserviceaccount.com

created key [c0524871dc11a2beb9d876d021ecc8d9a745a193] of type [json] as [myminio.json] for [myminio@myanthosproject2.iam.gserviceaccount.com]

builder@DESKTOP-QADGF36:~$ ls -l myminio.json

-rw------- 1 builder builder 2356 Sep 3 06:53 myminio.json

I’ll want that base64 encoded value for our K8s secret

$ base64 -w 0 < myminio.json

ewogICJ0eXBlIjogInNlcnZpY2VfYWNjb3VudCIsCiAgInxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx....

Which I’ll use to create a secret

builder@DESKTOP-QADGF36:~$ cat myminio.secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: gcs-credentials
type: Opaque
data:
  gcs-credentials.json: ewogICJ0eXBlIjogInNlcnZpY2VfYWxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx....

builder@DESKTOP-QADGF36:~$ kubectl apply -f ./myminio.secret.yaml

secret/gcs-credentials created
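Pasting the base64 string by hand is easy to get wrong, so the manifest can be generated straight from the key file. This is a sketch under the same assumptions as above (GNU base64 with -w 0, a secret named gcs-credentials); make_gcs_secret is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# make_gcs_secret KEY_FILE [SECRET_NAME]
# Prints a Secret manifest with the key file base64-encoded on one line.
make_gcs_secret() {
  local key_file="$1" name="${2:-gcs-credentials}"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${name}
type: Opaque
data:
  gcs-credentials.json: $(base64 -w 0 < "$key_file")
EOF
}

# Example: make_gcs_secret myminio.json | kubectl apply -f -
```

kubectl can also do the encoding itself with `kubectl create secret generic gcs-credentials --from-file=gcs-credentials.json=myminio.json`, which avoids keeping the encoded key in a YAML file at all.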

I’ll also create a new bucket for this

builder@DESKTOP-QADGF36:~$ gcloud storage buckets create gs://fbsminiobucket

Creating gs://fbsminiobucket/...

While there does exist a “gcs-fuse-csi-driver” which will work with GKE to mount a bucket, it requires WIF (Workload Identity Federation) so sadly it can only work in GKE.

I’m going to need to get a gcsfuse mount setup on at least one host for the next steps.

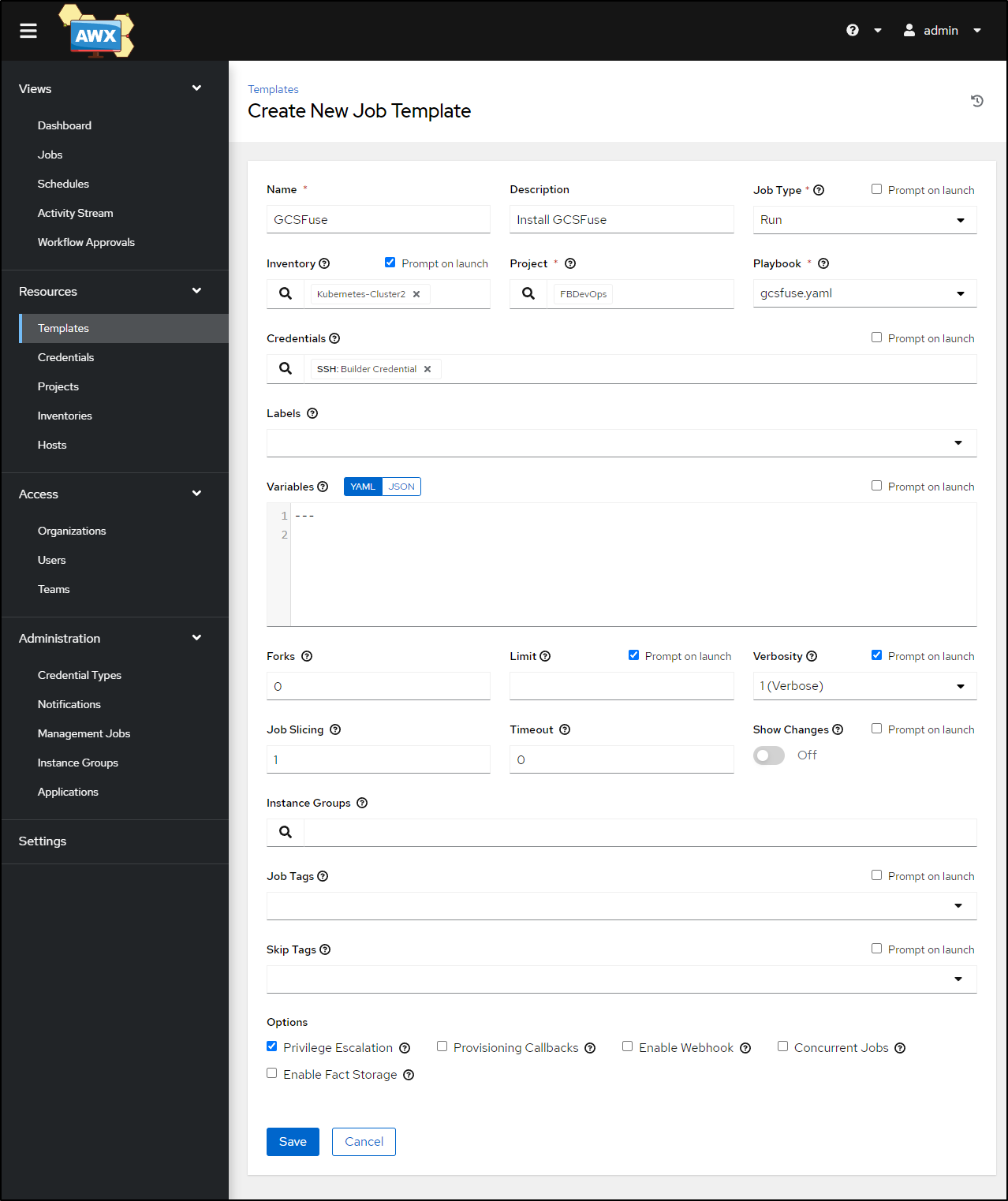

Since I hate toil, now is a good time to just write an ansible playbook and be done with it:

- name: Install GCSFuse
  hosts: all

  tasks:
    - name: Add Google Prereqs
      ansible.builtin.shell: |
        DEBIAN_FRONTEND=noninteractive apt update -y
        DEBIAN_FRONTEND=noninteractive apt install -y apt-transport-https ca-certificates gnupg curl
      become: true

    - name: Add Google Apt Repo and Key
      ansible.builtin.shell: |
        curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo gpg --dearmor -o /usr/share/keyrings/cloud.google.gpg
        echo "deb [signed-by=/usr/share/keyrings/cloud.google.gpg] https://packages.cloud.google.com/apt cloud-sdk main" | sudo tee -a /etc/apt/sources.list.d/google-cloud-sdk.list
      become: true

    - name: Install gcloud cli
      ansible.builtin.shell: |
        DEBIAN_FRONTEND=noninteractive apt update -y
        DEBIAN_FRONTEND=noninteractive apt install -y google-cloud-cli
      become: true

    - name: Add Google Pkgs Apt Repo and Key
      ansible.builtin.shell: |
        export GCSFUSE_REPO=gcsfuse-`lsb_release -c -s`
        echo "deb https://packages.cloud.google.com/apt $GCSFUSE_REPO main" | sudo tee /etc/apt/sources.list.d/gcsfuse.list
        curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -
      become: true

    - name: Install gcsfuse
      ansible.builtin.shell: |
        DEBIAN_FRONTEND=noninteractive apt update -y
        DEBIAN_FRONTEND=noninteractive apt install -y fuse gcsfuse
      become: true

I’ll refresh (sync) the project then add the template in AWX

then run it

I already had an SA.json locally on the primary node from a prior writeup, so I was able to just make a directory

builder@anna-MacBookAir:~$ sudo mkdir /mnt/fbsgcsfusetest

Add a line to the /etc/fstab

builder@anna-MacBookAir:~$ cat /etc/fstab | tail -n1

fbsgcsfusetest /mnt/fbsgcsfusetest gcsfuse rw,allow_other,file_mode=777,dir_mode=777,key_file=/home/builder/sa-storage-key.json
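Appending to /etc/fstab by hand is easy to repeat by accident, which leaves duplicate entries behind. A small helper that only appends when the exact line is missing keeps reruns safe. This is a sketch; ensure_fstab_entry is a hypothetical name, and it takes the file path as an argument so it can be tried on a scratch copy first.

```shell
#!/usr/bin/env bash
# ensure_fstab_entry FILE ENTRY
# Appends ENTRY to FILE unless an identical line is already present.
ensure_fstab_entry() {
  local fstab="$1" entry="$2"
  grep -qxF -- "$entry" "$fstab" 2>/dev/null || printf '%s\n' "$entry" >> "$fstab"
}

# Example (run as root against the real file once you are happy with it):
# ensure_fstab_entry /etc/fstab "fbsgcsfusetest /mnt/fbsgcsfusetest gcsfuse rw,allow_other,file_mode=777,dir_mode=777,key_file=/home/builder/sa-storage-key.json"
```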

Then mount

builder@anna-MacBookAir:~$ sudo mount -a

Calling gcsfuse with arguments: --file-mode 777 --dir-mode 777 --key-file /home/builder/sa-storage-key.json -o rw -o allow_other fbsgcsfusetest /mnt/fbsgcsfusetest

{"timestamp":{"seconds":1725467892,"nanos":564293007},"severity":"INFO","message":"Start gcsfuse/2.4.0 (Go version go1.22.4) for app \"\" using mount point: /mnt/fbsgcsfusetest\n"}

{"timestamp":{"seconds":1725467892,"nanos":564493985},"severity":"INFO","message":"GCSFuse mount command flags: {\"AppName\":\"\",\"Foreground\":false,\"ConfigFile\":\"\",\"MountOptions\":{\"allow_other\":\"\",\"rw\":\"\"},\"DirMode\":511,\"FileMode\":511,\"Uid\":-1,\"Gid\":-1,\"ImplicitDirs\":false,\"OnlyDir\":\"\",\"RenameDirLimit\":0,\"IgnoreInterrupts\":true,\"CustomEndpoint\":null,\"BillingProject\":\"\",\"KeyFile\":\"/home/builder/sa-storage-key.json\",\"TokenUrl\":\"\",\"ReuseTokenFromUrl\":true,\"EgressBandwidthLimitBytesPerSecond\":-1,\"OpRateLimitHz\":-1,\"SequentialReadSizeMb\":200,\"AnonymousAccess\":false,\"MaxRetrySleep\":30000000000,\"MaxRetryAttempts\":0,\"StatCacheCapacity\":20460,\"StatCacheTTL\":60000000000,\"TypeCacheTTL\":60000000000,\"KernelListCacheTtlSeconds\":0,\"HttpClientTimeout\":0,\"RetryMultiplier\":2,\"TempDir\":\"\",\"ClientProtocol\":\"http1\",\"MaxConnsPerHost\":0,\"MaxIdleConnsPerHost\":100,\"EnableNonexistentTypeCache\":false,\"StackdriverExportInterval\":0,\"PrometheusPort\":0,\"OtelCollectorAddress\":\"\",\"LogFile\":\"\",\"LogFormat\":\"json\",\"ExperimentalEnableJsonRead\":false,\"DebugFuse\":false,\"DebugGCS\":false,\"DebugInvariants\":false,\"DebugMutex\":false,\"ExperimentalMetadataPrefetchOnMount\":\"disabled\"}"}

{"timestamp":{"seconds":1725467892,"nanos":564599190},"severity":"INFO","message":"GCSFuse mount config flags: {\"CreateEmptyFile\":false,\"Severity\":\"INFO\",\"Format\":\"\",\"FilePath\":\"\",\"LogRotateConfig\":{\"MaxFileSizeMB\":512,\"BackupFileCount\":10,\"Compress\":true},\"MaxSizeMB\":-1,\"CacheFileForRangeRead\":false,\"EnableParallelDownloads\":false,\"ParallelDownloadsPerFile\":16,\"MaxParallelDownloads\":16,\"DownloadChunkSizeMB\":50,\"EnableCRC\":false,\"CacheDir\":\"\",\"TtlInSeconds\":-9223372036854775808,\"TypeCacheMaxSizeMB\":4,\"StatCacheMaxSizeMB\":-9223372036854775808,\"EnableEmptyManagedFolders\":false,\"GRPCConnPoolSize\":1,\"AnonymousAccess\":false,\"EnableHNS\":false,\"IgnoreInterrupts\":true,\"DisableParallelDirops\":false,\"KernelListCacheTtlSeconds\":0,\"MaxRetryAttempts\":0,\"PrometheusPort\":0}"}

{"timestamp":{"seconds":1725467893,"nanos":97276214},"severity":"INFO","message":"File system has been successfully mounted."}

I could then see files

builder@anna-MacBookAir:~$ ls -ltr /mnt/fbsgcsfusetest/

total 42378

-rwxrwxrwx 1 root root 43393936 Sep 3 06:11 filebeat-8.8.0-amd64.deb

-rwxrwxrwx 1 root root 443 Sep 3 06:11 read.me

I’ll make a subdir for minio

builder@anna-MacBookAir:~$ mkdir /mnt/fbsgcsfusetest/minio

builder@anna-MacBookAir:~$ ls -ltra /mnt/fbsgcsfusetest/

total 42378

-rwxrwxrwx 1 root root 43393936 Sep 3 06:11 filebeat-8.8.0-amd64.deb

-rwxrwxrwx 1 root root 443 Sep 3 06:11 read.me

drwxrwxrwx 1 root root 0 Sep 4 11:43 minio

Even though the hostname in the prompt above is mixed case, for the next bit of work we need to know what Kubernetes thinks its name is, which is all lowercase

$ kubectl get nodes -o yaml | grep name:

k3s.io/hostname: builder-macbookpro2

kubernetes.io/hostname: builder-macbookpro2

name: builder-macbookpro2

k3s.io/hostname: anna-macbookair

kubernetes.io/hostname: anna-macbookair

name: anna-macbookair

k3s.io/hostname: isaac-macbookpro

kubernetes.io/hostname: isaac-macbookpro

name: isaac-macbookpro
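As far as I can tell, the node name is simply the machine hostname lowercased, so the value for a nodeAffinity selector can be derived without querying the cluster. A tiny sketch (k8s_node_name is a hypothetical helper name); checking against the kubectl output above is still the safer route:

```shell
#!/usr/bin/env bash
# Lowercase a hostname so it matches the kubernetes.io/hostname label.
k8s_node_name() {
  printf '%s\n' "$1" | tr '[:upper:]' '[:lower:]'
}

k8s_node_name "anna-MacBookAir"   # prints anna-macbookair
```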

I’ll use that to create a PVC and its PV for Kubernetes and apply the manifest

builder@DESKTOP-QADGF36:~$ cat ./minio.pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: gcs-local-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 100Gi
  storageClassName: manual
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: gcs-local-pv
spec:
  capacity:
    storage: 100Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: manual
  local:
    path: /mnt/fbsgcsfusetest/minio
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - anna-macbookair

builder@DESKTOP-QADGF36:~$ kubectl apply -f ./minio.pvc.yaml

persistentvolumeclaim/gcs-local-pvc created

persistentvolume/gcs-local-pv created

I can see it’s bound

builder@DESKTOP-QADGF36:~$ kubectl get pvc gcs-local-pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

gcs-local-pvc Bound gcs-local-pv 100Gi RWX manual 46s

Fail fail fail

I fought to get Minio up. I tried different charts and operators, but nothing worked. I let this blog post run long trying to get Minio to come up, and I still hit errors and failed port-forwards

builder@DESKTOP-QADGF36:~$ kubectl port-forward myminio-pool-0-1 9443:9443

Forwarding from 127.0.0.1:9443 -> 9443

Forwarding from [::1]:9443 -> 9443

Handling connection for 9443

Handling connection for 9443

E0905 15:29:31.709042 12377 portforward.go:409] an error occurred forwarding 9443 -> 9443: error forwarding port 9443 to pod df0eb565d3bfb4d59ec9172b587a39d5cbc3c04d7afa984eff59d6ea093ad95a, uid : failed to execute portforward in network namespace "/var/run/netns/cni-ffa19ff9-1f3d-d15a-bb0c-82275fa1bf64": failed to connect to localhost:9443 inside namespace "df0eb565d3bfb4d59ec9172b587a39d5cbc3c04d7afa984eff59d6ea093ad95a", IPv4: dial tcp4 127.0.0.1:9443: connect: connection refused IPv6 dial tcp6: address localhost: no suitable address found

error: lost connection to pod

But I knew we should be able to do something if not Minio.

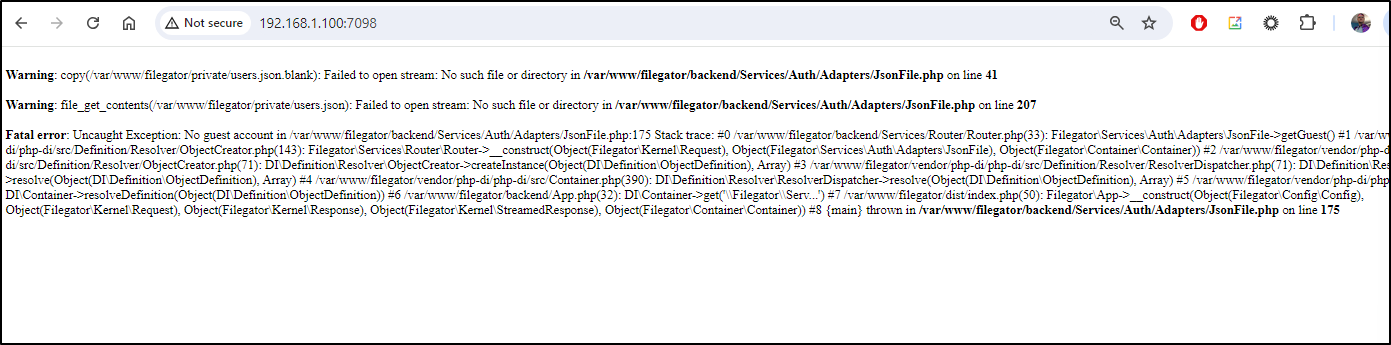

What about Filegator? We reviewed it in this post in July

I tried a direct mount, but that failed.

Similarly, Kubernetes manual mounts with node affinity selectors failed.

I worked on this for several evenings before deciding to punt to a future date. That said, there is still one more of the major clouds to explore…

Azure Storage

Even though we, for the most part, ruled out using Azure Storage, let’s go ahead and show how we might use it as well.

I’ll create a new resource group to hold the account

builder@DESKTOP-QADGF36:~$ az account set --subscription "Pay-As-You-Go"

builder@DESKTOP-QADGF36:~$ az group create --name "fbsbackupsrg" --location eastus

{

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/fbsbackupsrg",

"location": "eastus",

"managedBy": null,

"name": "fbsbackupsrg",

"properties": {

"provisioningState": "Succeeded"

},

"tags": null,

"type": "Microsoft.Resources/resourceGroups"

}

I’ll now make the cheapest storage I can, namely Standard_LRS in eastus.

builder@DESKTOP-QADGF36:~$ az storage account create --name fbsbackups -g fbsbackupsrg -l eastus --sku Standard_LRS

Command group 'az storage' is in preview and under development. Reference and support levels: https://aka.ms/CLI_refstatus

The behavior of this command has been altered by the following extension: storage-preview

{

"accessTier": "Hot",

"allowBlobPublicAccess": false,

"allowCrossTenantReplication": false,

"allowSharedKeyAccess": null,

"allowedCopyScope": null,

"azureFilesIdentityBasedAuthentication": null,

"blobRestoreStatus": null,

"creationTime": "2024-09-06T11:00:15.362893+00:00",

"customDomain": null,

"defaultToOAuthAuthentication": null,

"dnsEndpointType": null,

"enableHttpsTrafficOnly": true,

"enableNfsV3": null,

"encryption": {

"encryptionIdentity": null,

"keySource": "Microsoft.Storage",

"keyVaultProperties": null,

"requireInfrastructureEncryption": null,

"services": {

"blob": {

"enabled": true,

"keyType": "Account",

"lastEnabledTime": "2024-09-06T11:00:15.753519+00:00"

},

"file": {

"enabled": true,

"keyType": "Account",

"lastEnabledTime": "2024-09-06T11:00:15.753519+00:00"

},

"queue": null,

"table": null

}

},

"extendedLocation": null,

"failoverInProgress": null,

"geoReplicationStats": null,

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/fbsbackupsrg/providers/Microsoft.Storage/storageAccounts/fbsbackups",

"identity": null,

"immutableStorageWithVersioning": null,

"isHnsEnabled": null,

"isLocalUserEnabled": null,

"isSftpEnabled": null,

"keyCreationTime": {

"key1": "2024-09-06T11:00:15.503517+00:00",

"key2": "2024-09-06T11:00:15.503517+00:00"

},

"keyPolicy": null,

"kind": "StorageV2",

"largeFileSharesState": null,

"lastGeoFailoverTime": null,

"location": "eastus",

"minimumTlsVersion": "TLS1_0",

"name": "fbsbackups",

"networkRuleSet": {

"bypass": "AzureServices",

"defaultAction": "Allow",

"ipRules": [],

"resourceAccessRules": null,

"virtualNetworkRules": []

},

"primaryEndpoints": {

"blob": "https://fbsbackups.blob.core.windows.net/",

"dfs": "https://fbsbackups.dfs.core.windows.net/",

"file": "https://fbsbackups.file.core.windows.net/",

"internetEndpoints": null,

"microsoftEndpoints": null,

"queue": "https://fbsbackups.queue.core.windows.net/",

"table": "https://fbsbackups.table.core.windows.net/",

"web": "https://fbsbackups.z13.web.core.windows.net/"

},

"primaryLocation": "eastus",

"privateEndpointConnections": [],

"provisioningState": "Succeeded",

"publicNetworkAccess": null,

"resourceGroup": "fbsbackupsrg",

"routingPreference": null,

"sasPolicy": null,

"secondaryEndpoints": null,

"secondaryLocation": null,

"sku": {

"name": "Standard_LRS",

"tier": "Standard"

},

"statusOfPrimary": "available",

"statusOfSecondary": null,

"storageAccountSkuConversionStatus": null,

"tags": {},

"type": "Microsoft.Storage/storageAccounts"

}

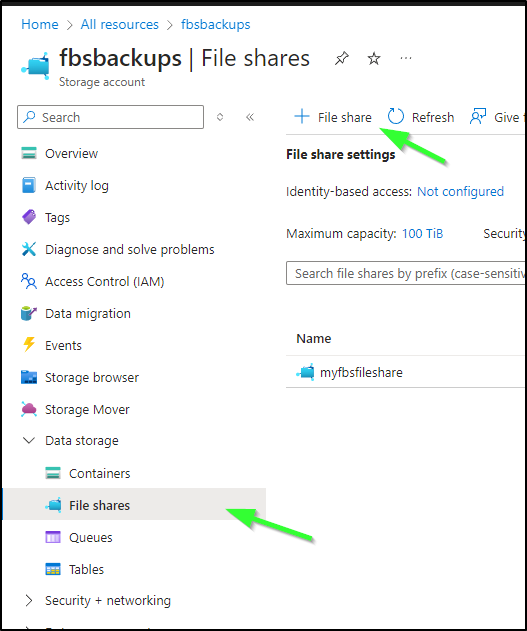

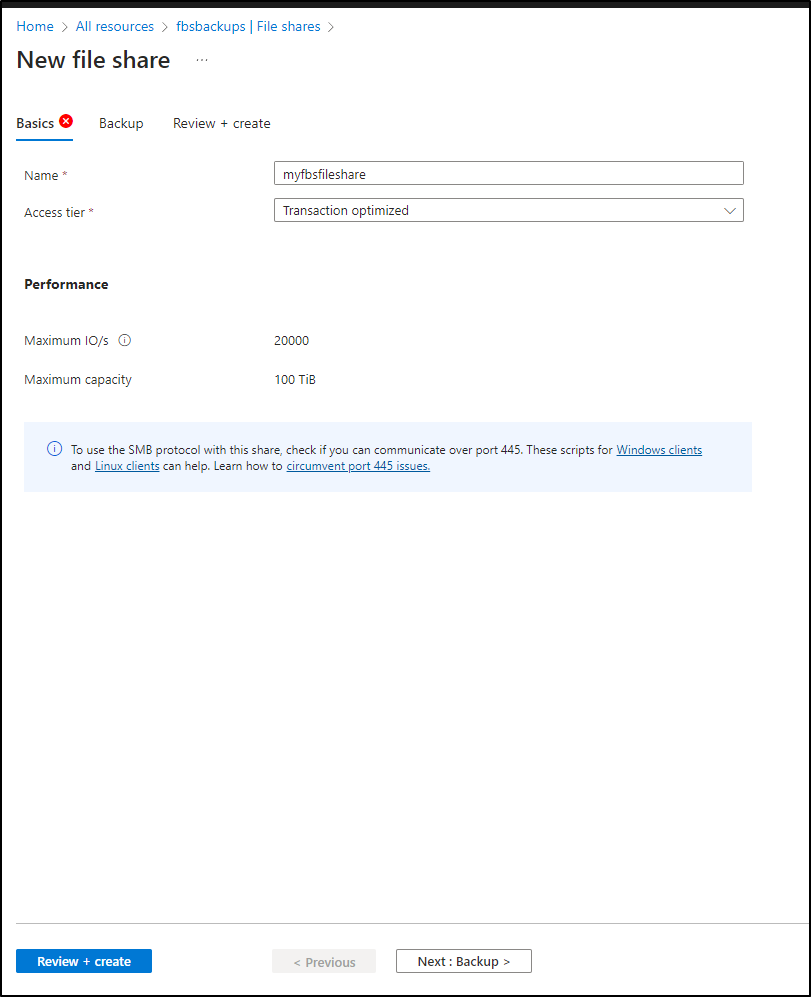

I’m going to use the Azure Portal to create a File Share next

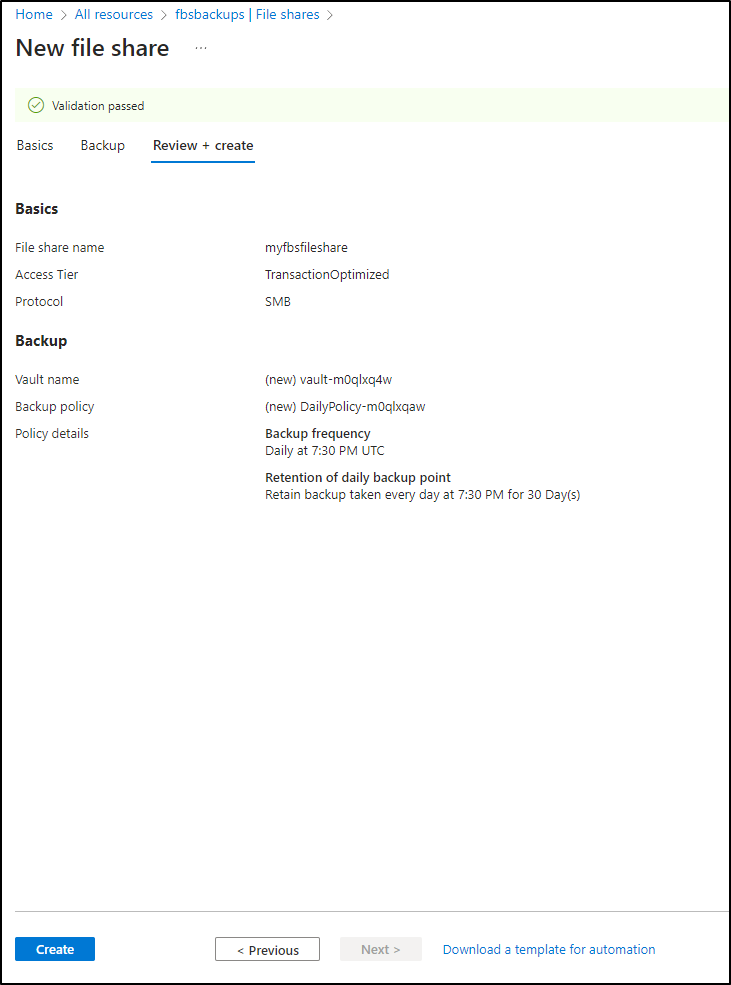

I’ll give it a name, and I can select Hot, Cool or Transaction Optimized for the access tier

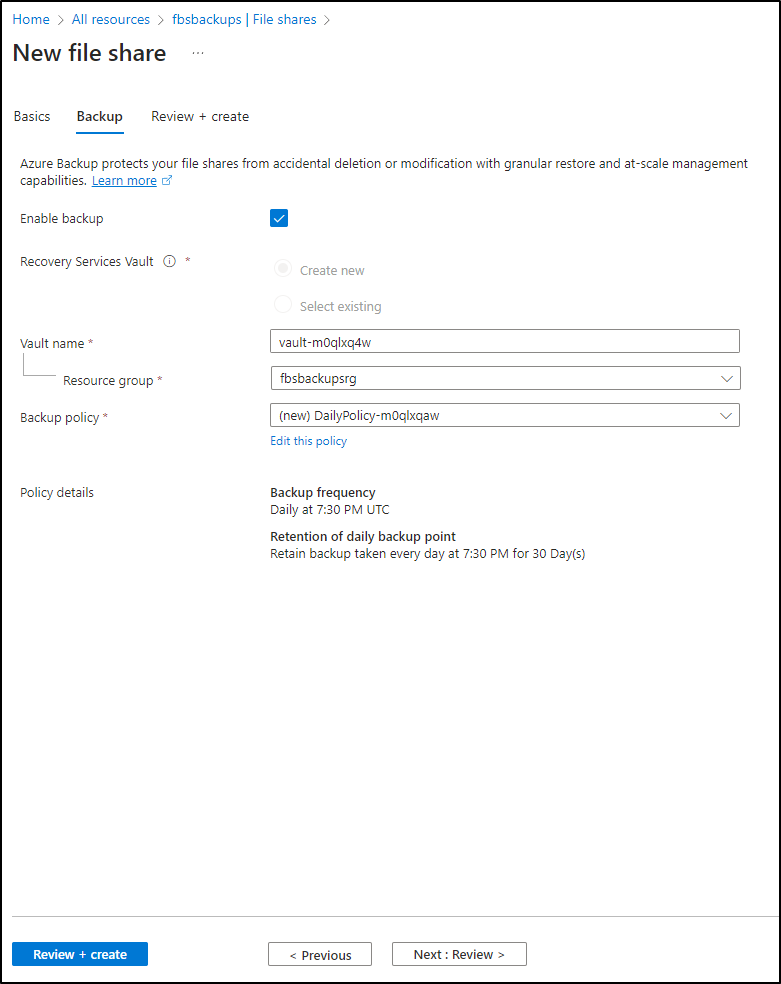

I’ll leave backups enabled, which is the default setting. Because we are using LRS (Locally Redundant Storage), which keeps every replica in a single location, backups make even more sense

Finally, review and create

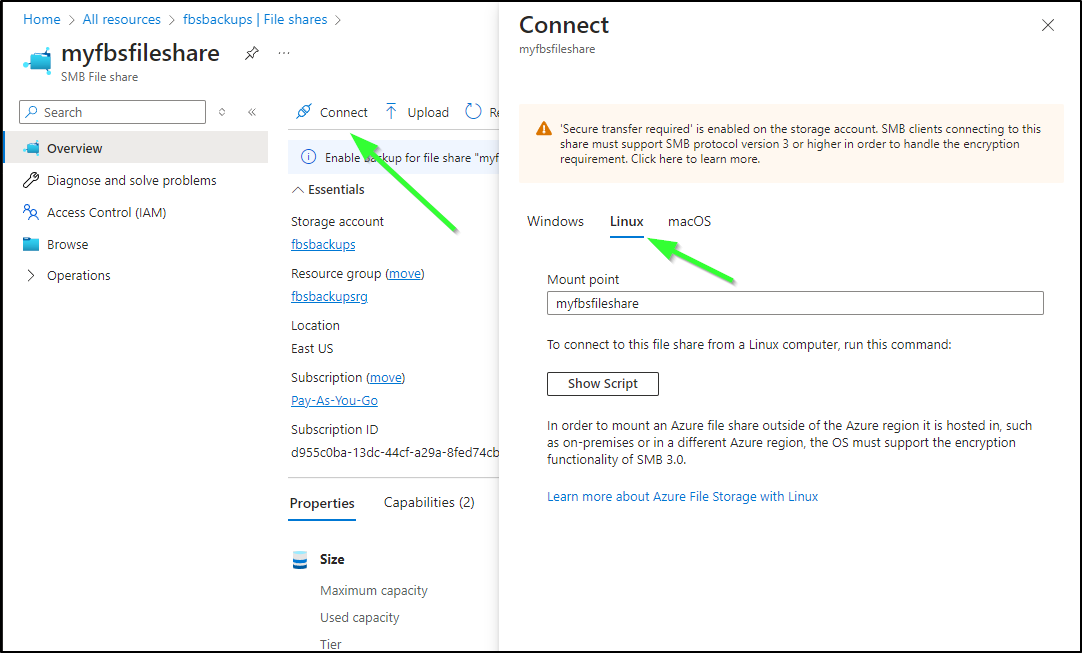

I can now select the share, click Connect, and go to Linux

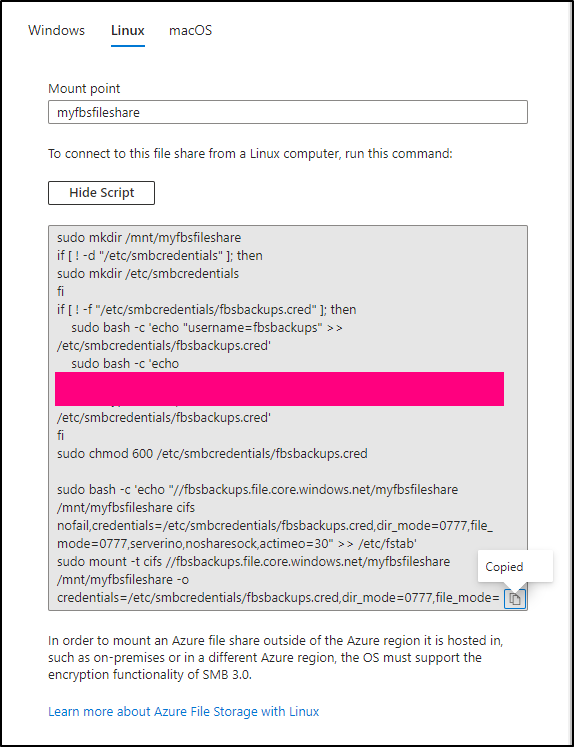

The generated script includes a valid storage access key, so it is something you’ll want to protect

I’ll copy that to my Dockerhost

builder@builder-T100:~$ vi create_azure_mount.sh

builder@builder-T100:~$ chmod 755 ./create_azure_mount.sh

builder@builder-T100:~$ cat ./create_azure_mount.sh | sed 's/password=.*"/password=************************/g'

sudo mkdir /mnt/myfbsfileshare

if [ ! -d "/etc/smbcredentials" ]; then
    sudo mkdir /etc/smbcredentials
fi

if [ ! -f "/etc/smbcredentials/fbsbackups.cred" ]; then
    sudo bash -c 'echo "username=fbsbackups" >> /etc/smbcredentials/fbsbackups.cred'
    sudo bash -c 'echo "password=************************ >> /etc/smbcredentials/fbsbackups.cred'
fi

sudo chmod 600 /etc/smbcredentials/fbsbackups.cred

sudo bash -c 'echo "//fbsbackups.file.core.windows.net/myfbsfileshare /mnt/myfbsfileshare cifs nofail,credentials=/etc/smbcredentials/fbsbackups.cred,dir_mode=0777,file_mode=0777,serverino,nosharesock,actimeo=30" >> /etc/fstab'

sudo mount -t cifs //fbsbackups.file.core.windows.net/myfbsfileshare /mnt/myfbsfileshare -o credentials=/etc/smbcredentials/fbsbackups.cred,dir_mode=0777,file_mode=0777,serverino,nosharesock,actimeo=30

At first I got the error

builder@builder-T100:~$ sudo mount -t cifs //fbsbackups.file.core.windows.net/myfbsfileshare /mnt/myfbsfileshare -o credentials=/etc/smbcredentials/fbsbackups.cred,dir_mode=0777,file_mode=0777,serverino,nosharesock,actimeo=30

mount: /mnt/myfbsfileshare: mount(2) system call failed: No route to host.

I just needed cifs-utils for that

$ sudo apt-get install cifs-utils

However, I am, once again, just damn stuck trying to get it to connect over SMB 3.0

[575336.599670] CIFS: Attempting to mount //fbsbackups.file.core.windows.net/myfbsfileshare

[575347.070100] CIFS: VFS: Error connecting to socket. Aborting operation.

[575347.070122] CIFS: VFS: cifs_mount failed w/return code = -115

[575360.055529] CIFS: Attempting to mount //fbsbackups.file.core.windows.net/myfbsfileshare

[575370.621089] CIFS: VFS: Error connecting to socket. Aborting operation.

[575370.621107] CIFS: VFS: cifs_mount failed w/return code = -115

This reminded me of the last time I tried to use Azure Storage for Longhorn. It is actually an ISP issue on my end, most likely outbound SMB traffic (TCP port 445) being blocked.
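A quick way to tell a network block apart from a cifs-utils or credentials problem is to test whether the SMB port is reachable at all. The sketch below assumes the share is served on TCP port 445 (the standard SMB port) and relies on bash's /dev/tcp feature plus the coreutils timeout command; can_reach is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# can_reach HOST PORT [SECONDS]
# Succeeds only if a TCP connection to HOST:PORT opens within the timeout.
can_reach() {
  local host="$1" port="$2" secs="${3:-5}"
  timeout "$secs" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Example:
# if can_reach fbsbackups.file.core.windows.net 445; then
#   echo "port 445 reachable"
# else
#   echo "port 445 blocked or host unreachable"
# fi
```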

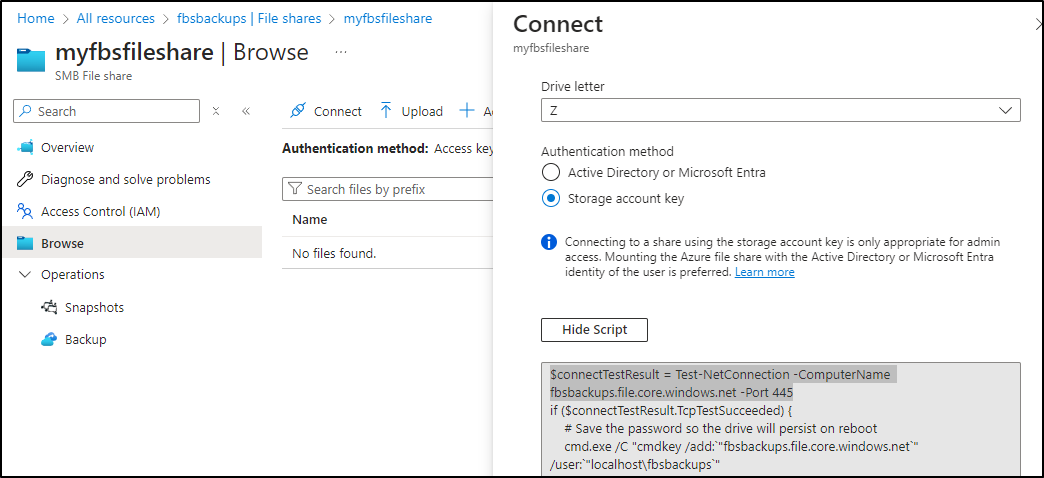

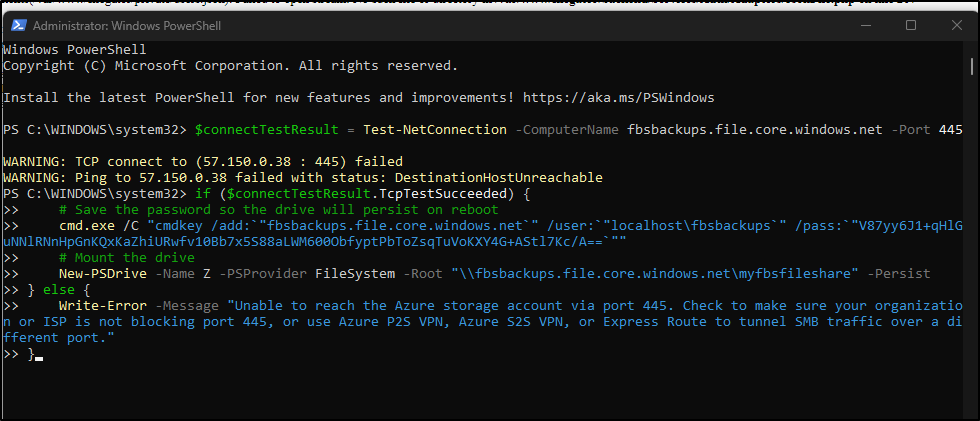

If I try the Windows mount in PowerShell

This is confirmed when the script complains:

Microsoft’s recommendation is to follow this guide to create a P2S (point-to-site) VPN. This, however, entails creating not only a built-out virtual network but also a VNet gateway, an appliance whose cost can really add up ($$).

Blob Fuse and Storage Containers

I’ll next pivot to Azure Storage containers with Blob Fuse.

I need the Microsoft Apt packages

$ sudo wget https://packages.microsoft.com/config/ubuntu/20.04/packages-microsoft-prod.deb

$ sudo dpkg -i packages-microsoft-prod.deb

$ sudo apt-get update

Then install blobfuse (if you have not already)

builder@builder-T100:~$ sudo apt-get install blobfuse

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

blobfuse is already the newest version (1.4.5).

The following packages were automatically installed and are no longer required:

apg gnome-control-center-faces gnome-online-accounts libcolord-gtk1 libfreerdp-server2-2 libgnome-bg-4-1 libgsound0 libgssdp-1.2-0 libgupnp-1.2-1 libgupnp-av-1.0-3 libgupnp-dlna-2.0-4 libntfs-3g89 librygel-core-2.6-2 librygel-db-2.6-2

librygel-renderer-2.6-2 librygel-server-2.6-2 libvncserver1 mobile-broadband-provider-info network-manager-gnome python3-macaroonbakery python3-protobuf python3-pymacaroons python3-rfc3339 python3-tz rygel

Use 'sudo apt autoremove' to remove them.

0 upgraded, 0 newly installed, 0 to remove and 171 not upgraded.

I’ll want a ramdisk for large files (not required, but helpful)

builder@builder-T100:~$ sudo mkdir /mnt/ramdisk

builder@builder-T100:~$ sudo mount -t tmpfs -o size=16g tmpfs /mnt/ramdisk

builder@builder-T100:~$ sudo mkdir /mnt/ramdisk/blobfusetmp

builder@builder-T100:~$ sudo chown builder /mnt/ramdisk/blobfusetmp

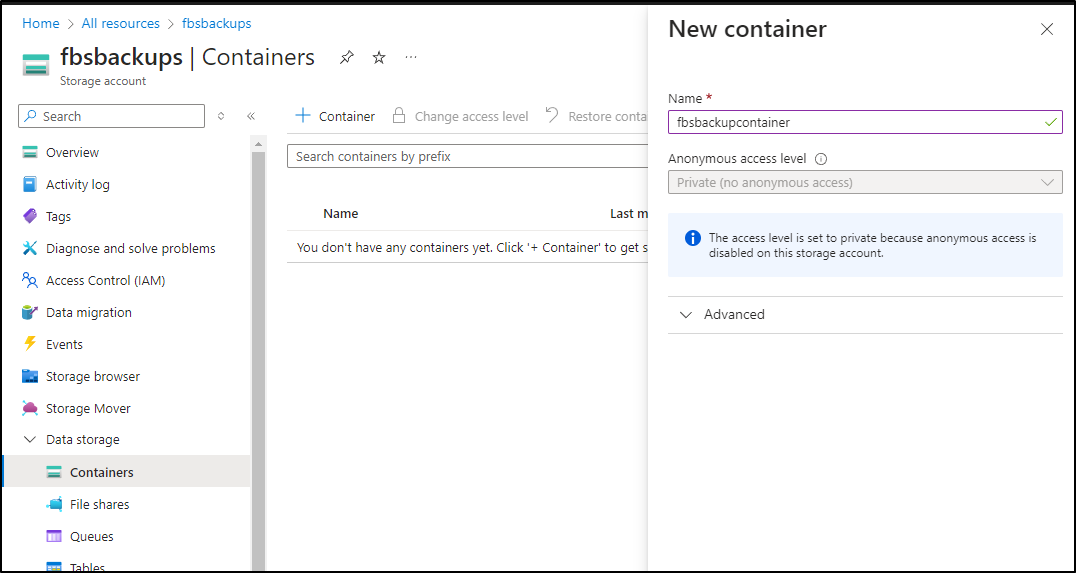

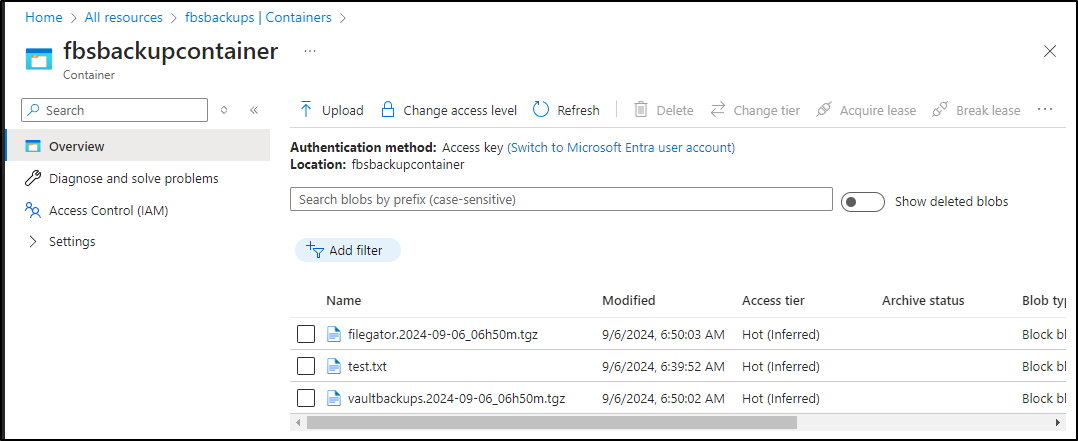

In the Azure Portal, I’ll make a container we can use (not a File Share)

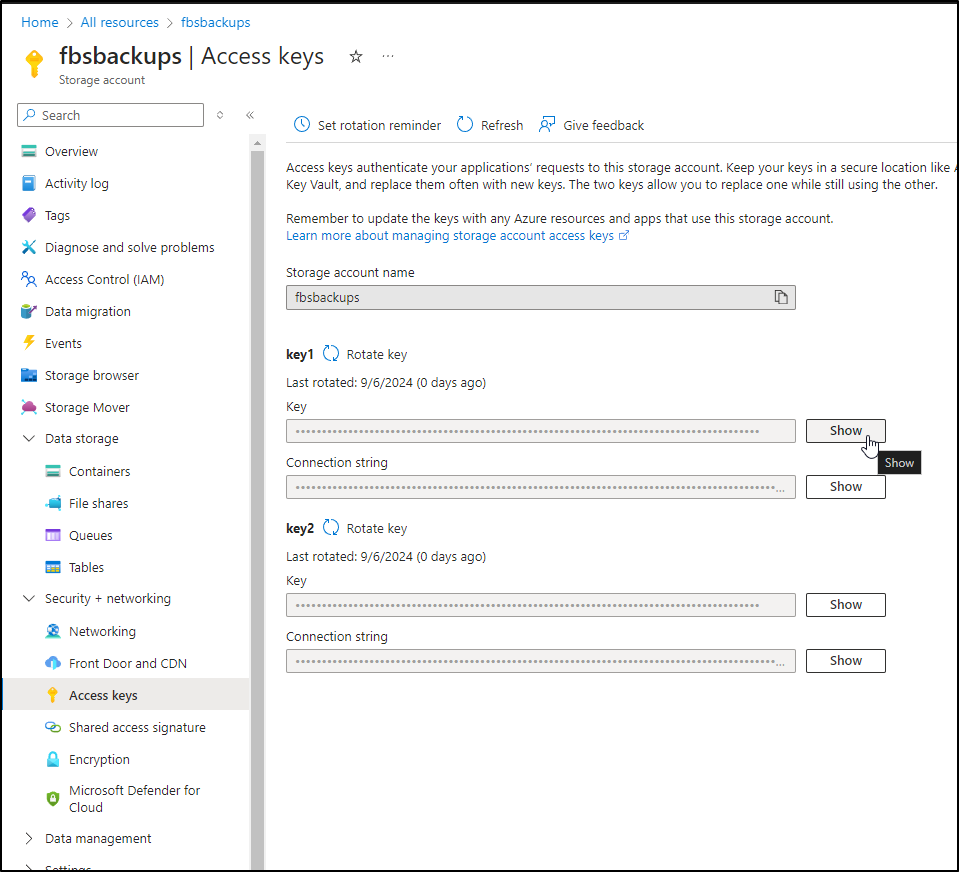

Using my storage account key, I’ll create a cfg file we can use

builder@builder-T100:~$ sudo vi /mnt/azure_fuse_connection.cfg

builder@builder-T100:~$ cat /mnt/azure_fuse_connection.cfg

accountName fbsbackups

accountKey Vxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx==

containerName fbsbackupcontainer

authType Key

Set the perms to 600

builder@builder-T100:~$ sudo chmod 600 /mnt/azure_fuse_connection.cfg
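Editing the file first and tightening permissions afterwards leaves a short window where the account key is readable by others. As a hedged alternative, the file could be written with a restrictive umask from the start. The function name `write_blobfuse_cfg` and the `AZURE_STORAGE_KEY` variable in the usage comment are my own assumptions, not part of blobfuse.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a key-auth blobfuse config that is mode 600
# from the moment it is created.
write_blobfuse_cfg() {
  local path="$1" account="$2" key="$3" container="$4"
  (
    umask 077   # new files are created rw-------; the subshell keeps this local
    cat > "$path" <<EOF
accountName $account
accountKey $key
containerName $container
authType Key
EOF
  )
  # umask only affects newly created files, so also fix a pre-existing one
  chmod 600 "$path"
}

# Example (run as root so /mnt is writable); reading the key from an
# environment variable named AZURE_STORAGE_KEY is an assumption:
# write_blobfuse_cfg /mnt/azure_fuse_connection.cfg fbsbackups "$AZURE_STORAGE_KEY" fbsbackupcontainer
```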

Now I can mount it

builder@builder-T100:~$ sudo blobfuse /mnt/myfbsfuseblob --tmp-path=/mnt/ramdisk/blobfusetmp --config-file=/mnt/azure_fuse_connection.cfg -o attr_timeout=240 -o entry_timeout=240 -o negative_timeout=120

builder@builder-T100:~$
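Since blobfuse returns silently, and neither the tmpfs ramdisk nor this mount survives a reboot on its own, it can help to wrap the mount in something safe to re-run. Below is a small sketch under my own assumptions: `mount_if_needed` is a hypothetical helper that relies on `mountpoint` from util-linux, creates the mount directory if it is missing, and only runs the supplied command when nothing is mounted there yet.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run the given mount command only when TARGET is not
# already a mount point, so repeated calls are harmless.
mount_if_needed() {
  local target="$1"; shift
  if mountpoint -q "$target"; then
    echo "$target is already mounted"
    return 0
  fi
  # Create the directory if needed, then run whatever command was passed in
  mkdir -p "$target" && "$@"
}

# Example with the same options used above (run as root):
# mount_if_needed /mnt/myfbsfuseblob \
#   blobfuse /mnt/myfbsfuseblob --tmp-path=/mnt/ramdisk/blobfusetmp \
#   --config-file=/mnt/azure_fuse_connection.cfg \
#   -o attr_timeout=240 -o entry_timeout=240 -o negative_timeout=120
```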

Testing

I’ll create a file

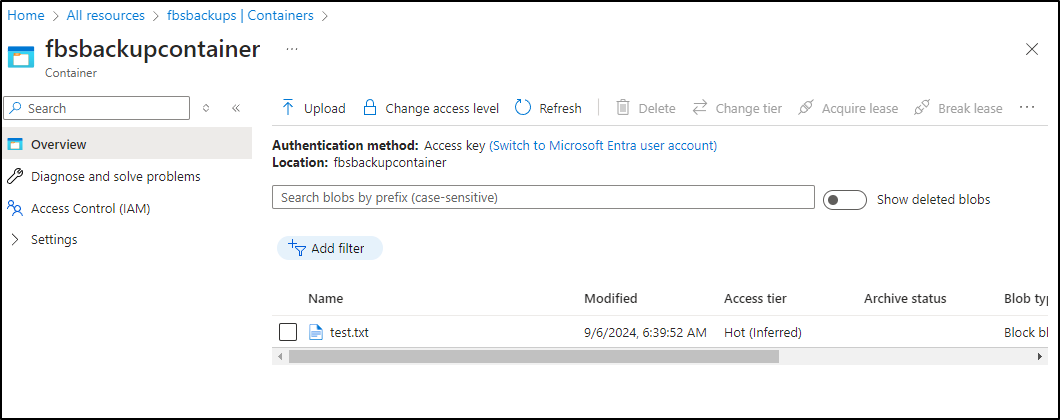

builder@builder-T100:~$ echo "This is a test" | sudo tee /mnt/myfbsfuseblob/test.txt

This is a test

And indeed I can see it is there

Usage

I could create a cron job, as I have with my NAS, to back up Bitwarden and Filegator there

builder@builder-T100:~$ crontab -e

builder@builder-T100:~$ crontab -l

@reboot /sbin/swapoff -a

45 3 * * * tar -zcvf /mnt/filestation/vaultbackups.$(date '+\%Y-\%m-\%d_\%Hh\%Mm').tgz /home/builder/vaultwarden/data

16 6 * * * tar -zcvf /mnt/filestation/filegator.$(date '+\%Y-\%m-\%d_\%Hh\%Mm').tgz /home/builder/filegator/repository2

45 * * * * tar -zcvf /mnt/myfbsfuseblob/vaultbackups.$(date '+\%Y-\%m-\%d_\%Hh\%Mm').tgz /home/builder/vaultwarden/data

45 * * * * tar -zcvf /mnt/myfbsfuseblob/filegator.$(date '+\%Y-\%m-\%d_\%Hh\%Mm').tgz /home/builder/filegator/repository2

I actually had to pivot to root due to file permissions

builder@builder-T100:~$ sudo crontab -e

no crontab for root - using an empty one

Select an editor. To change later, run 'select-editor'.

1. /bin/nano <---- easiest

2. /usr/bin/vim.basic

3. /usr/bin/vim.tiny

4. /bin/ed

Choose 1-4 [1]: 2

crontab: installing new crontab

builder@builder-T100:~$ sudo crontab -l

# Edit this file to introduce tasks to be run by cron.

#

# Each task to run has to be defined through a single line

# indicating with different fields when the task will be run

# and what command to run for the task

#

# To define the time you can provide concrete values for

# minute (m), hour (h), day of month (dom), month (mon),

# and day of week (dow) or use '*' in these fields (for 'any').

#

# Notice that tasks will be started based on the cron's system

# daemon's notion of time and timezones.

#

# Output of the crontab jobs (including errors) is sent through

# email to the user the crontab file belongs to (unless redirected).

#

# For example, you can run a backup of all your user accounts

# at 5 a.m every week with:

# 0 5 * * 1 tar -zcf /var/backups/home.tgz /home/

#

# For more information see the manual pages of crontab(5) and cron(8)

#

# m h dom mon dow command

50 * * * * tar -zcvf /mnt/myfbsfuseblob/vaultbackups.$(date '+\%Y-\%m-\%d_\%Hh\%Mm').tgz /home/builder/vaultwarden/data

50 * * * * tar -zcvf /mnt/myfbsfuseblob/filegator.$(date '+\%Y-\%m-\%d_\%Hh\%Mm').tgz /home/builder/filegator/repository2

In a few minutes I could see them work

Since these files are so small, I think I will keep Azure as a weekly backup for now

# m h dom mon dow command

30 1 * * 3 tar -zcvf /mnt/myfbsfuseblob/vaultbackups.$(date '+\%Y-\%m-\%d_\%Hh\%Mm').tgz /home/builder/vaultwarden/data

30 1 * * 3 tar -zcvf /mnt/myfbsfuseblob/filegator.$(date '+\%Y-\%m-\%d_\%Hh\%Mm').tgz /home/builder/filegator/repository2
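The `\%` escapes in those entries are needed because cron treats a bare `%` as a newline. One way to sidestep that, sketched here with hypothetical names (`backup_dir` and the script path `/usr/local/bin/backup_to_blob.sh` are assumptions of mine), is to move the tar logic into a small script and have cron call it only when the blob mount is actually present. Note that, unlike the entries above, this version uses `-C` so the archive holds paths relative to the source's parent directory.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: archive SRC into DEST_DIR as NAME.<timestamp>.tgz
# and print the path of the archive it created.
backup_dir() {
  local src="$1" dest_dir="$2" name="$3"
  local stamp archive
  stamp="$(date '+%Y-%m-%d_%Hh%Mm')"   # no backslash escaping needed outside crontab
  archive="${dest_dir}/${name}.${stamp}.tgz"
  # -C stores paths relative to the parent of SRC instead of the full path
  tar -zcf "$archive" -C "$(dirname "$src")" "$(basename "$src")" || return 1
  echo "$archive"
}

# When run as a script with three arguments, perform the backup
if [ "$#" -eq 3 ]; then
  backup_dir "$@"
fi

# Hypothetical root crontab entry; the mountpoint check keeps the archive from
# landing on the local disk if the blobfuse mount is missing:
# 30 1 * * 3 mountpoint -q /mnt/myfbsfuseblob && /usr/local/bin/backup_to_blob.sh /home/builder/vaultwarden/data /mnt/myfbsfuseblob vaultbackups
```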

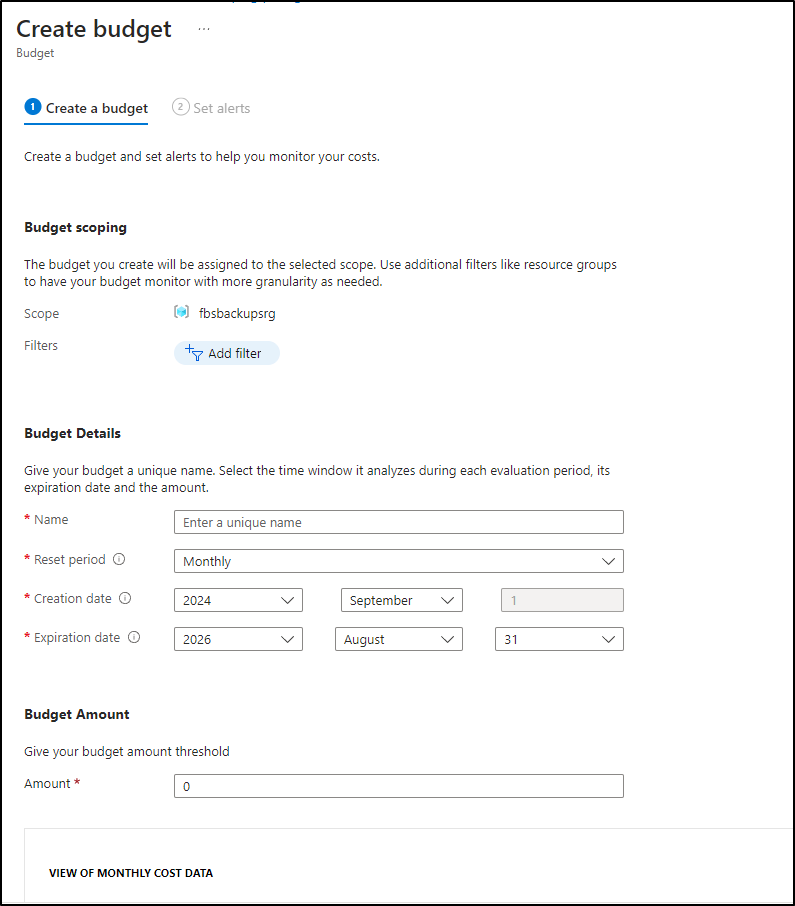

If I were worried about cost, I could create a Budget scoped to the RG with alerts tied to PagerDuty (I have one for the subscription already)

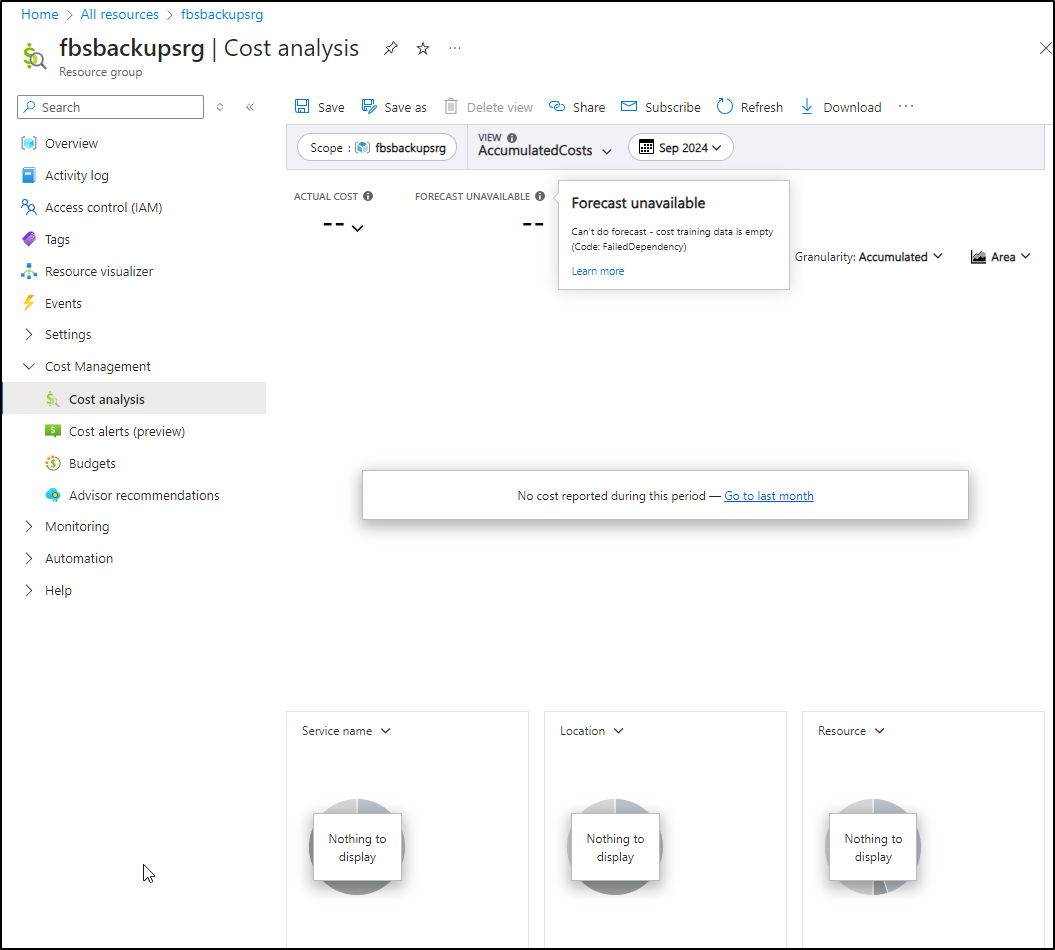

Or just view Costs on the RG periodically (no data yet)

Summary

We set up GCSFuse with mounts, then pivoted to using gsutil with find in a crontab to back up files to a Google bucket (as we did last time with AWS S3).

In my attempts to get Minio to work, which was a fail in the end, we did build out a proper Ansible playbook to set up GCSFuse on all my VMs, and we figured out the syntax for a manual Kubernetes PersistentVolume (PV) and PersistentVolumeClaim (PVC) that could use a GCS mount.

I actually delayed this blog post a day really fighting to make this work - from different releases of Minio, hosts, using pods, deployments, working on node affinity and nodeselectors. Nothing seemed to work.

I then decided we could at least get Azure File Storage to work, then recalled the lesson from a couple of years back: Xfinity (like many other ISPs) blocks the SMB port (TCP 445) that Azure Files depends on. That said, we did get Azure containers working with blobfuse and a crontab for backups.

I recommend checking out some older posts on Rook vs Longhorn and GlusterFS using GCSFuse for more ways to mount cloud buckets with Kubernetes.