Published: Aug 31, 2022 by Isaac Johnson

Rook and Longhorn are two CNCF-backed projects for providing storage to Kubernetes. Rook adds storage to a Kubernetes cluster via Ceph or NFS. Longhorn is similarly a storage class provider, but it focuses on distributed block storage replicated across the cluster.

We’ll try to set up both and see how they compare.

Rook : Attempt Numbero Uno

In this attempt, I’ll use a two-node cluster with SSD drives and one attached USB drive, formatted and mounted with NFS.

Before we set up Rook, let’s check our cluster configuration.

I can see that, presently, my cluster just has a 500 GB SSD available:

$ sudo fdisk -l | grep Disk | grep -v loop

Disk /dev/sda: 465.94 GiB, 500277790720 bytes, 977105060 sectors

Disk model: APPLE SSD SM512E

Disklabel type: gpt

Disk identifier: 35568E63-232C-4A36-97AB-F9022D0E462B

I then add a spare thumb drive:

$ sudo fdisk -l | grep Disk | grep -v loop

Disk /dev/sda: 465.94 GiB, 500277790720 bytes, 977105060 sectors

Disk model: APPLE SSD SM512E

Disklabel type: gpt

Disk identifier: 35568E63-232C-4A36-97AB-F9022D0E462B

Disk /dev/sdc: 29.3 GiB, 31457280000 bytes, 61440000 sectors

Disk model: USB DISK 2.0

Disklabel type: dos

Disk identifier: 0x6e52f796
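The `grep Disk | grep -v loop` pipeline above also picks up the `Disk model`, `Disklabel type` and `Disk identifier` lines. As a small sketch, assuming the `fdisk -l` output format shown here, anchoring on `^Disk /dev/` gives exactly one line per real disk:

```shell
#!/bin/sh
# Print "device size unit" for each disk in `fdisk -l` output, skipping
# loop devices. Matching "^Disk /dev/" avoids the "Disk model:",
# "Disklabel type:" and "Disk identifier:" lines.
list_disks() {
  awk '/^Disk \/dev\// && $2 !~ /^\/dev\/loop/ {
    dev = $2;  sub(/:$/, "", dev)    # "/dev/sdc:" -> "/dev/sdc"
    unit = $4; sub(/,$/, "", unit)   # "GiB,"      -> "GiB"
    print dev, $3, unit
  }'
}

# Usage on a node:
#   sudo fdisk -l | list_disks
```

On the output above this should print `/dev/sda 465.94 GiB` and `/dev/sdc 29.3 GiB`.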

It shows up as ‘/dev/sdc’, but it is not mounted:

$ df -h | grep /dev | grep -v loop

udev 3.8G 0 3.8G 0% /dev

/dev/sda2 458G 27G 408G 7% /

tmpfs 3.9G 0 3.9G 0% /dev/shm

/dev/sda1 511M 5.3M 506M 2% /boot/efi

Format and mount

Wipe it, then partition it and format it as ext4:

$ sudo wipefs -a /dev/sdc

[sudo] password for builder:

/dev/sdc: 2 bytes were erased at offset 0x000001fe (dos): 55 aa

/dev/sdc: calling ioctl to re-read partition table: Success

# Create a partition

builder@anna-MacBookAir:~$ sudo fdisk /dev/sdc

Welcome to fdisk (util-linux 2.34).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

The old ext4 signature will be removed by a write command.

Device does not contain a recognized partition table.

Created a new DOS disklabel with disk identifier 0x0c172cd2.

Command (m for help): n

Partition type

p primary (0 primary, 0 extended, 4 free)

e extended (container for logical partitions)

Select (default p): p

Partition number (1-4, default 1): 1

First sector (2048-61439999, default 2048):

Last sector, +/-sectors or +/-size{K,M,G,T,P} (2048-61439999, default 61439999):

Created a new partition 1 of type 'Linux' and of size 29.3 GiB.

Command (m for help): p

Disk /dev/sdc: 29.3 GiB, 31457280000 bytes, 61440000 sectors

Disk model: USB DISK 2.0

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0x0c172cd2

Device Boot Start End Sectors Size Id Type

/dev/sdc1 2048 61439999 61437952 29.3G 83 Linux

Command (m for help): w

The partition table has been altered.

Calling ioctl() to re-read partition table.

Syncing disks.

# this takes a while

~$ sudo mkfs.ext4 /dev/sdc1

mke2fs 1.45.5 (07-Jan-2020)

Creating filesystem with 7679744 4k blocks and 1921360 inodes

Filesystem UUID: f1c4e426-8e9a-4dde-88fa-412abd73b73b

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000

Allocating group tables: done

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

Now we can mount it so we could use it with Rook.
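The mount step itself isn't shown. Here is a minimal sketch, assuming the `/dev/sdc1` partition created above and a hypothetical mount point `/mnt/usbdisk`. It only prints the commands unless `DRY_RUN=0` is set:

```shell
#!/bin/sh
# DEVICE and MOUNT_POINT are assumptions; /mnt/usbdisk is a hypothetical path.
DEVICE="${DEVICE:-/dev/sdc1}"
MOUNT_POINT="${MOUNT_POINT:-/mnt/usbdisk}"

# Print commands by default; execute them only when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

run sudo mkdir -p "$MOUNT_POINT"
run sudo mount "$DEVICE" "$MOUNT_POINT"
run df -h "$MOUNT_POINT"
```

To survive a reboot, an `/etc/fstab` entry keyed on the UUID reported by `mkfs.ext4` would look like `UUID=f1c4e426-8e9a-4dde-88fa-412abd73b73b /mnt/usbdisk ext4 defaults 0 2`. Note that the Rook chart notes further down require OSD devices to have no filesystem and no partitions, so Ceph will not consume a disk prepared this way.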

Install Rook with Helm

Let’s first install the Rook operator with Helm:
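The `rook-release/...` chart references used in the following commands assume that the Rook Helm repository has already been added under the name `rook-release`. Here is a sketch of that prerequisite. It prints the commands by default; set `DRY_RUN=0` to run them:

```shell
#!/bin/sh
# Print commands by default; execute them only when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Register the Rook chart repository and refresh the local index so that
# "rook-release/rook-ceph" and "rook-release/rook-ceph-cluster" resolve.
add_rook_repo() {
  run helm repo add rook-release https://charts.rook.io/release
  run helm repo update
}

add_rook_repo
```

Afterwards, `helm search repo rook-release` should list both charts.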

$ helm install --create-namespace --namespace rook-ceph rook-ceph rook-release/rook-ceph

W0825 11:52:12.866453 4930 warnings.go:70] policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

W0825 11:52:13.548116 4930 warnings.go:70] policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

NAME: rook-ceph

LAST DEPLOYED: Thu Aug 25 11:52:12 2022

NAMESPACE: rook-ceph

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The Rook Operator has been installed. Check its status by running:

kubectl --namespace rook-ceph get pods -l "app=rook-ceph-operator"

Visit https://rook.io/docs/rook/latest for instructions on how to create and configure Rook clusters

Important Notes:

- You must customize the 'CephCluster' resource in the sample manifests for your cluster.

- Each CephCluster must be deployed to its own namespace, the samples use `rook-ceph` for the namespace.

- The sample manifests assume you also installed the rook-ceph operator in the `rook-ceph` namespace.

- The helm chart includes all the RBAC required to create a CephCluster CRD in the same namespace.

- Any disk devices you add to the cluster in the 'CephCluster' must be empty (no filesystem and no partitions).
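That last note is easy to trip over, because the USB drive above was partitioned and formatted as ext4. Here is a rough pre-flight check. It assumes the input comes from `lsblk -rn -o NAME,FSTYPE /dev/sdX`, which prints raw rows with no header: the first row is the disk itself, and any further rows are its partitions.

```shell
#!/bin/sh
# Decide whether a disk looks usable as a Ceph OSD device: no filesystem
# signature on the disk and no partitions (or other child devices).
# Reads `lsblk -rn -o NAME,FSTYPE /dev/sdX` output on stdin.
osd_candidate() {
  awk '
    NR == 1 && $2 != "" { reason = "has a filesystem (" $2 ")" }
    NR > 1              { reason = "has partitions" }
    END {
      if (NR == 0)      { print "not found"; exit 1 }
      if (reason != "") { print "not usable: " reason; exit 1 }
      print "usable"
    }'
}

# Usage:
#   lsblk -rn -o NAME,FSTYPE /dev/sdc | osd_candidate
```

For the drive prepared above, this would report `not usable: has partitions`. A disk that has only been through `wipefs -a` would be expected to report `usable`.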

$ helm list --all-namespaces

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

rook-ceph rook-ceph 1 2022-08-25 11:52:12.462213486 -0500 CDT deployed rook-ceph-v1.9.9 v1.9.9

traefik kube-system 1 2022-08-25 14:55:37.746738083 +0000 UTC deployed traefik-10.19.300 2.6.2

traefik-crd kube-system 1 2022-08-25 14:55:34.323550464 +0000 UTC deployed traefik-crd-10.19.300

Now install the cluster Helm chart:

$ helm install --create-namespace --namespace rook-ceph rook-ceph-cluster --set operatorNamespace=rook-ceph rook-release/rook-ceph-cluster

NAME: rook-ceph-cluster

LAST DEPLOYED: Thu Aug 25 11:55:39 2022

NAMESPACE: rook-ceph

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The Ceph Cluster has been installed. Check its status by running:

kubectl --namespace rook-ceph get cephcluster

Visit https://rook.io/docs/rook/latest/CRDs/ceph-cluster-crd/ for more information about the Ceph CRD.

Important Notes:

- You can only deploy a single cluster per namespace

- If you wish to delete this cluster and start fresh, you will also have to wipe the OSD disks using `sfdisk`
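The note above mentions wiping the OSD disks before starting fresh. Here is a hedged sketch of a per-node reset, loosely based on the cleanup steps in the Rook documentation. `DISK` is a placeholder, `sgdisk` comes from the `gdisk` package, and nothing runs unless `DRY_RUN=0` is set:

```shell
#!/bin/sh
# DESTRUCTIVE when DRY_RUN=0. DISK is a placeholder; point it at the OSD disk.
DISK="${DISK:-/dev/sdX}"

# Print commands by default; execute them only when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

run sudo wipefs -a "$DISK"          # drop filesystem/partition-table signatures
run sudo sgdisk --zap-all "$DISK"   # clear GPT and protective MBR structures
run sudo dd if=/dev/zero of="$DISK" bs=1M count=100 oflag=direct,dsync
run sudo rm -rf /var/lib/rook       # Rook's default dataDirHostPath, on every node
```

Leftover state in `/var/lib/rook` is one plausible reason a reinstalled cluster fails to come up, so clear it on every node, not just the one holding the disk.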

During initial startup, Rook starts and stops some containers (note the terminating rook-ceph-csi-detect-version pod):

$ kubectl get pods -n rook-ceph

NAME READY STATUS RESTARTS AGE

rook-ceph-operator-948ff69b5-v7gqm 1/1 Running 0 5m3s

csi-rbdplugin-lj2qx 0/2 ContainerCreating 0 20s

csi-rbdplugin-jkq8m 0/2 ContainerCreating 0 20s

csi-rbdplugin-provisioner-7bcc69755c-ws84s 0/5 ContainerCreating 0 20s

csi-rbdplugin-provisioner-7bcc69755c-6gpdw 0/5 ContainerCreating 0 20s

csi-cephfsplugin-nh984 0/2 ContainerCreating 0 20s

csi-cephfsplugin-4c6j8 0/2 ContainerCreating 0 20s

csi-cephfsplugin-provisioner-8556f8746-nz75b 0/5 ContainerCreating 0 20s

csi-cephfsplugin-provisioner-8556f8746-4sbk7 0/5 ContainerCreating 0 20s

rook-ceph-csi-detect-version-s85pg 1/1 Terminating 0 96s

$ kubectl get pods -n rook-ceph

NAME READY STATUS RESTARTS AGE

rook-ceph-operator-948ff69b5-v7gqm 1/1 Running 0 5m34s

csi-cephfsplugin-4c6j8 2/2 Running 0 51s

csi-rbdplugin-jkq8m 2/2 Running 0 51s

csi-cephfsplugin-nh984 2/2 Running 0 51s

csi-cephfsplugin-provisioner-8556f8746-4sbk7 5/5 Running 0 51s

csi-rbdplugin-provisioner-7bcc69755c-ws84s 5/5 Running 0 51s

csi-rbdplugin-lj2qx 2/2 Running 0 51s

csi-cephfsplugin-provisioner-8556f8746-nz75b 5/5 Running 0 51s

csi-rbdplugin-provisioner-7bcc69755c-6gpdw 5/5 Running 0 51s
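Instead of re-running `kubectl get pods` until everything settles, you can check the READY column mechanically. This small helper assumes the default `kubectl get pods` table layout: a header row, READY as `ready/total`, and STATUS in the third column. Completed or Terminating pods are reported too.

```shell
#!/bin/sh
# Print "name ready status" for every pod that is not fully ready or not
# Running, given `kubectl get pods` output (header row included) on stdin.
not_ready_pods() {
  awk 'NR > 1 && NF >= 3 {
    split($2, r, "/")                   # "0/2" -> r[1]="0", r[2]="2"
    if (r[1] != r[2] || $3 != "Running") print $1, $2, $3
  }'
}

# Usage: poll until nothing is reported
#   until [ -z "$(kubectl get pods -n rook-ceph | not_ready_pods)" ]; do sleep 10; done
```

`kubectl wait --for=condition=Ready pod --all -n rook-ceph --timeout=300s` is the built-in alternative. However, it only waits on pods that already exist when it is called.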

Now we can see the new StorageClasses:

$ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

local-path (default) rancher.io/local-path Delete WaitForFirstConsumer false 123m

ceph-bucket rook-ceph.ceph.rook.io/bucket Delete Immediate false 2m37s

ceph-filesystem rook-ceph.cephfs.csi.ceph.com Delete Immediate true 2m37s

ceph-block (default) rook-ceph.rbd.csi.ceph.com Delete Immediate true 2m37s
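Both `local-path` and `ceph-block` are now marked `(default)`. On Kubernetes releases of this vintage, a PVC that omits `storageClassName` may be rejected when more than one class is the default. The test PVC below names its class explicitly, so it is unaffected. The following sketch lists the defaults from `kubectl get sc` output and prints the standard annotation patch that demotes one of them (set `DRY_RUN=0` to apply it):

```shell
#!/bin/sh
# Print commands by default; execute them only when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Names of StorageClasses flagged "(default)"; the marker is printed as its
# own column right after the name in `kubectl get sc` output.
default_classes() {
  awk 'NR > 1 && $2 == "(default)" { print $1 }'
}

# Mark a StorageClass as non-default via the standard annotation.
demote_default() {
  run kubectl patch storageclass "$1" -p '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"false"}}}'
}

# Usage:
#   kubectl get sc | default_classes
#   demote_default local-path
```

On k3s, the bundled local-path manifest may be re-applied when the server restarts, which could restore the annotation. Verify this on your own install.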

Testing

Let’s use this with MongoDB

I’ll first create a 5Gi PVC using the Rook ceph-block storage class:

$ cat testingRook.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mongo-pvc
spec:
  storageClassName: ceph-block
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi

$ kubectl apply -f testingRook.yaml

persistentvolumeclaim/mongo-pvc created
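Applying the claim on its own doesn't run MongoDB. Here is a hypothetical Pod that would consume `mongo-pvc` by mounting it at MongoDB's default data directory, `/data/db`. The pod name `mongo-test` and the image tag `mongo:5.0` are assumptions, not part of the original setup:

```shell
#!/bin/sh
# Emit a Pod manifest that mounts the mongo-pvc claim at /data/db.
# Pod name and image tag are hypothetical choices for illustration.
mongo_pod_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: mongo-test
spec:
  containers:
    - name: mongo
      image: mongo:5.0
      ports:
        - containerPort: 27017
      volumeMounts:
        - name: data
          mountPath: /data/db
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: mongo-pvc
EOF
}

# Usage:
#   mongo_pod_manifest | kubectl apply -f -
```

The pod stays Pending until the claim binds.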

$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

mongo-pvc Pending ceph-block 32s

The claim stayed Pending. This failed because I only had 2 nodes (it requires 3).
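Here is a quick way to compare Ready nodes against the three the cluster needs. The required count defaults to 3 to mirror the statement above. My understanding is that this number comes from the chart defaults: three monitors that may not share a node, and a replicated pool of size 3 with a host failure domain. Verify it against your own `CephCluster` and `CephBlockPool` specs.

```shell
#!/bin/sh
# Count nodes whose STATUS column is exactly "Ready" in `kubectl get nodes`
# output (header included) read from stdin.
ready_nodes() {
  awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Compare that count with a required number of hosts (default 3, an
# assumption based on the chart defaults described above).
check_node_count() {
  required="${1:-3}"
  have=$(ready_nodes)
  if [ "$have" -lt "$required" ]; then
    echo "only $have Ready node(s); need $required"
    return 1
  fi
  echo "ok: $have Ready node(s)"
}

# Usage:
#   kubectl get nodes | check_node_count 3
```

On a two-node cluster like this one, the check reports `only 2 Ready node(s); need 3`. `kubectl describe pvc mongo-pvc` shows the provisioner events behind the Pending status.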

Attempting a pivot: redo with YAML

I’ll try to redo this using the YAML manifests instead, starting by removing the PVC and the cluster chart:

$ kubectl delete -f testingRook.yaml

persistentvolumeclaim "mongo-pvc" deleted

$ helm delete rook-ceph-cluster -n rook-ceph

release "rook-ceph-cluster" uninstalled

I’ll get the example manifests from the Rook repository:

builder@DESKTOP-QADGF36:~/Workspaces$ git clone --single-branch --branch master https://github.com/rook/rook.git

Cloning into 'rook'...

remote: Enumerating objects: 81890, done.

remote: Counting objects: 100% (278/278), done.

remote: Compressing objects: 100% (181/181), done.

remote: Total 81890 (delta 134), reused 207 (delta 95), pack-reused 81612

Receiving objects: 100% (81890/81890), 45.39 MiB | 27.43 MiB/s, done.

Resolving deltas: 100% (57204/57204), done.

Then I attempt to use the “Test” cluster (cluster-test.yaml):

builder@DESKTOP-QADGF36:~/Workspaces/rook/deploy/examples$ kubectl apply -f cluster-test.yaml

configmap/rook-config-override created

cephcluster.ceph.rook.io/my-cluster created

cephblockpool.ceph.rook.io/builtin-mgr created

And try to remove the earlier Helm-installed operator release:

builder@DESKTOP-QADGF36:~/Workspaces/rook/deploy/examples$ helm delete rook-ceph -n rook-ceph

W0825 12:13:40.446278 6088 warnings.go:70] policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

These resources were kept due to the resource policy:

[CustomResourceDefinition] cephblockpoolradosnamespaces.ceph.rook.io

[CustomResourceDefinition] cephblockpools.ceph.rook.io

[CustomResourceDefinition] cephbucketnotifications.ceph.rook.io

[CustomResourceDefinition] cephbuckettopics.ceph.rook.io

[CustomResourceDefinition] cephclients.ceph.rook.io

[CustomResourceDefinition] cephclusters.ceph.rook.io

[CustomResourceDefinition] cephfilesystemmirrors.ceph.rook.io

[CustomResourceDefinition] cephfilesystems.ceph.rook.io

[CustomResourceDefinition] cephfilesystemsubvolumegroups.ceph.rook.io

[CustomResourceDefinition] cephnfses.ceph.rook.io

[CustomResourceDefinition] cephobjectrealms.ceph.rook.io

[CustomResourceDefinition] cephobjectstores.ceph.rook.io

[CustomResourceDefinition] cephobjectstoreusers.ceph.rook.io

[CustomResourceDefinition] cephobjectzonegroups.ceph.rook.io

[CustomResourceDefinition] cephobjectzones.ceph.rook.io

[CustomResourceDefinition] cephrbdmirrors.ceph.rook.io

[CustomResourceDefinition] objectbucketclaims.objectbucket.io

[CustomResourceDefinition] objectbuckets.objectbucket.io

release "rook-ceph" uninstalled

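Now apply the CRDs and common resources with the YAML files. Because Helm kept the CRDs, kubectl warns that each one lacks the last-applied-configuration annotation and patches it in: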

builder@DESKTOP-QADGF36:~/Workspaces/rook/deploy/examples$ kubectl apply -f crds.yaml -f common.yaml

Warning: resource customresourcedefinitions/cephblockpoolradosnamespaces.ceph.rook.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/cephblockpoolradosnamespaces.ceph.rook.io configured

Warning: resource customresourcedefinitions/cephblockpools.ceph.rook.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/cephblockpools.ceph.rook.io configured

Warning: resource customresourcedefinitions/cephbucketnotifications.ceph.rook.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/cephbucketnotifications.ceph.rook.io configured

Warning: resource customresourcedefinitions/cephbuckettopics.ceph.rook.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/cephbuckettopics.ceph.rook.io configured

Warning: resource customresourcedefinitions/cephclients.ceph.rook.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/cephclients.ceph.rook.io configured

Warning: resource customresourcedefinitions/cephclusters.ceph.rook.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/cephclusters.ceph.rook.io configured

Warning: resource customresourcedefinitions/cephfilesystemmirrors.ceph.rook.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/cephfilesystemmirrors.ceph.rook.io configured

Warning: resource customresourcedefinitions/cephfilesystems.ceph.rook.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/cephfilesystems.ceph.rook.io configured

Warning: resource customresourcedefinitions/cephfilesystemsubvolumegroups.ceph.rook.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/cephfilesystemsubvolumegroups.ceph.rook.io configured

Warning: resource customresourcedefinitions/cephnfses.ceph.rook.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/cephnfses.ceph.rook.io configured

Warning: resource customresourcedefinitions/cephobjectrealms.ceph.rook.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/cephobjectrealms.ceph.rook.io configured

Warning: resource customresourcedefinitions/cephobjectstores.ceph.rook.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/cephobjectstores.ceph.rook.io configured

Warning: resource customresourcedefinitions/cephobjectstoreusers.ceph.rook.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/cephobjectstoreusers.ceph.rook.io configured

Warning: resource customresourcedefinitions/cephobjectzonegroups.ceph.rook.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/cephobjectzonegroups.ceph.rook.io configured

Warning: resource customresourcedefinitions/cephobjectzones.ceph.rook.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/cephobjectzones.ceph.rook.io configured

Warning: resource customresourcedefinitions/cephrbdmirrors.ceph.rook.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/cephrbdmirrors.ceph.rook.io configured

Warning: resource customresourcedefinitions/objectbucketclaims.objectbucket.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/objectbucketclaims.objectbucket.io configured

Warning: resource customresourcedefinitions/objectbuckets.objectbucket.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/objectbuckets.objectbucket.io configured

Warning: resource namespaces/rook-ceph is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

namespace/rook-ceph configured

clusterrole.rbac.authorization.k8s.io/cephfs-csi-nodeplugin created

clusterrole.rbac.authorization.k8s.io/cephfs-external-provisioner-runner created

clusterrole.rbac.authorization.k8s.io/psp:rook created

clusterrole.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

clusterrole.rbac.authorization.k8s.io/rbd-external-provisioner-runner created

clusterrole.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created

clusterrole.rbac.authorization.k8s.io/rook-ceph-global created

clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created

clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-system created

clusterrole.rbac.authorization.k8s.io/rook-ceph-object-bucket created

clusterrole.rbac.authorization.k8s.io/rook-ceph-osd created

clusterrole.rbac.authorization.k8s.io/rook-ceph-system created

clusterrolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role created

clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-global created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-object-bucket created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-osd created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-system created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-system-psp created

clusterrolebinding.rbac.authorization.k8s.io/rook-csi-cephfs-plugin-sa-psp created

clusterrolebinding.rbac.authorization.k8s.io/rook-csi-cephfs-provisioner-sa-psp created

clusterrolebinding.rbac.authorization.k8s.io/rook-csi-rbd-plugin-sa-psp created

clusterrolebinding.rbac.authorization.k8s.io/rook-csi-rbd-provisioner-sa-psp created

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy/00-rook-privileged created

role.rbac.authorization.k8s.io/cephfs-external-provisioner-cfg created

role.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

role.rbac.authorization.k8s.io/rbd-external-provisioner-cfg created

role.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created

role.rbac.authorization.k8s.io/rook-ceph-mgr created

role.rbac.authorization.k8s.io/rook-ceph-osd created

role.rbac.authorization.k8s.io/rook-ceph-purge-osd created

role.rbac.authorization.k8s.io/rook-ceph-rgw created

role.rbac.authorization.k8s.io/rook-ceph-system created

rolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role-cfg created

rolebinding.rbac.authorization.k8s.io/rbd-csi-nodeplugin-role-cfg created

rolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role-cfg created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cmd-reporter-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-default-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-system created

rolebinding.rbac.authorization.k8s.io/rook-ceph-osd created

rolebinding.rbac.authorization.k8s.io/rook-ceph-osd-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-purge-osd created

rolebinding.rbac.authorization.k8s.io/rook-ceph-purge-osd-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-rgw created

rolebinding.rbac.authorization.k8s.io/rook-ceph-rgw-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-system created

serviceaccount/rook-ceph-cmd-reporter created

serviceaccount/rook-ceph-mgr created

serviceaccount/rook-ceph-osd created

serviceaccount/rook-ceph-purge-osd created

serviceaccount/rook-ceph-rgw created

serviceaccount/rook-ceph-system created

serviceaccount/rook-csi-cephfs-plugin-sa created

serviceaccount/rook-csi-cephfs-provisioner-sa created

serviceaccount/rook-csi-rbd-plugin-sa created

serviceaccount/rook-csi-rbd-provisioner-sa created

Then the operator:

builder@DESKTOP-QADGF36:~/Workspaces/rook/deploy/examples$ kubectl apply -f operator.yaml

configmap/rook-ceph-operator-config created

deployment.apps/rook-ceph-operator created

We wait for the operator to come up

$ kubectl get pods -n rook-ceph

NAME READY STATUS RESTARTS AGE

rook-ceph-operator-b5c96c99b-9qzjq 0/1 ContainerCreating 0 28s

$ kubectl get pods -n rook-ceph

NAME READY STATUS RESTARTS AGE

rook-ceph-operator-b5c96c99b-9qzjq 1/1 Running 0 89s

Now let’s launch the test cluster again, starting by deleting the former one:

# delete the former

builder@DESKTOP-QADGF36:~/Workspaces/rook/deploy/examples$ kubectl delete -f cluster-test.yaml

configmap "rook-config-override" deleted

cephcluster.ceph.rook.io "my-cluster" deleted

cephblockpool.ceph.rook.io "builtin-mgr" deleted

However, the deletion appears stuck; the block pool controller keeps reconciling builtin-mgr:

$ kubectl describe cephblockpool.ceph.rook.io/builtin-mgr -n rook-ceph | tail -n5

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ReconcileSucceeded 7m58s (x14 over 10m) rook-ceph-block-pool-controller successfully configured CephBlockPool "rook-ceph/builtin-mgr"

Normal ReconcileSucceeded 7s (x32 over 5m7s) rook-ceph-block-pool-controller successfully configured CephBlockPool "rook-ceph/builtin-mgr"
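The events show the block pool controller still reconciling `builtin-mgr` after the delete. When Rook custom resources hang in deletion, the usual last resort is to clear their finalizers. This sketch uses the names from the output above (`my-cluster`, `builtin-mgr`, namespace `rook-ceph`). Clearing finalizers bypasses Rook's own cleanup, so only do this on a cluster whose data you are discarding. The commands are printed unless `DRY_RUN=0`:

```shell
#!/bin/sh
NS=rook-ceph

# Print commands by default; execute them only when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Show which Rook objects still carry finalizers.
run kubectl -n "$NS" get cephcluster,cephblockpool -o custom-columns=NAME:.metadata.name,FINALIZERS:.metadata.finalizers

# Remove the finalizers so the API server can finish the pending deletes.
run kubectl -n "$NS" patch cephblockpool builtin-mgr --type merge -p '{"metadata":{"finalizers":null}}'
run kubectl -n "$NS" patch cephcluster my-cluster --type merge -p '{"metadata":{"finalizers":null}}'
```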

Add (or confirm) LVM2, a prerequisite for Rook, on both nodes:

builder@anna-MacBookAir:~$ sudo apt-get install lvm2

[sudo] password for builder:

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages were automatically installed and are no longer required:

libfprint-2-tod1 libfwupdplugin1 libllvm10 libllvm11 shim

Use 'sudo apt autoremove' to remove them.

The following additional packages will be installed:

dmeventd libaio1 libdevmapper-event1.02.1 liblvm2cmd2.03 libreadline5 thin-provisioning-tools

The following NEW packages will be installed:

dmeventd libaio1 libdevmapper-event1.02.1 liblvm2cmd2.03 libreadline5 lvm2 thin-provisioning-tools

0 upgraded, 7 newly installed, 0 to remove and 1 not upgraded.

Need to get 2,255 kB of archives.

After this operation, 8,919 kB of additional disk space will be used.

Do you want to continue? [Y/n] y

...
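You can check whether lvm2 is already present before reaching for `apt-get`. This is a sketch for Debian/Ubuntu nodes, assuming `dpkg-query` is available. The install step is only printed unless `DRY_RUN=0`:

```shell
#!/bin/sh
# Print commands by default; execute them only when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# dpkg-query reports "install ok installed" for a fully installed package.
pkg_status_installed() {
  [ "$1" = "install ok installed" ]
}

# Install a package only if dpkg does not already report it as installed.
ensure_pkg() {
  pkg="$1"
  status=$(dpkg-query -W -f='${Status}' "$pkg" 2>/dev/null)
  if pkg_status_installed "$status"; then
    echo "$pkg already installed"
  else
    run sudo apt-get install -y "$pkg"
  fi
}

ensure_pkg lvm2
```

Run it on each node, since Rook needs LVM2 wherever OSDs may be scheduled.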

Download Rook, this time pinned to the release-1.9 branch:

$ git clone --single-branch --branch release-1.9 https://github.com/rook/rook.git

Cloning into 'rook'...

remote: Enumerating objects: 80478, done.

remote: Counting objects: 100% (86/86), done.

remote: Compressing objects: 100% (75/75), done.

remote: Total 80478 (delta 13), reused 58 (delta 9), pack-reused 80392

Receiving objects: 100% (80478/80478), 44.82 MiB | 5.54 MiB/s, done.

Resolving deltas: 100% (56224/56224), done.
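This clone pins `release-1.9`, which matches the `rook-ceph-v1.9.9` chart seen earlier. The first clone took `master`, whose example manifests may target a newer operator. Here is a tiny hypothetical helper that derives the branch name from an app version:

```shell
#!/bin/sh
# Map an app version such as "v1.9.9" to Rook's branch naming "release-1.9".
# The leading "v" is optional; only major.minor are kept.
release_branch() {
  printf '%s\n' "$1" | sed -E 's/^v?([0-9]+)\.([0-9]+).*/release-\1.\2/'
}

# Usage:
#   git clone --single-branch --branch "$(release_branch v1.9.9)" https://github.com/rook/rook.git
```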

We now need the common resources from common.yaml:

builder@DESKTOP-72D2D9T:~/Workspaces/rook/deploy/examples$ kubectl create -f common.yaml

namespace/rook-ceph created

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

role.rbac.authorization.k8s.io/cephfs-external-provisioner-cfg created

role.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

role.rbac.authorization.k8s.io/rbd-external-provisioner-cfg created

role.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created

role.rbac.authorization.k8s.io/rook-ceph-mgr created

role.rbac.authorization.k8s.io/rook-ceph-osd created

role.rbac.authorization.k8s.io/rook-ceph-purge-osd created

role.rbac.authorization.k8s.io/rook-ceph-rgw created

role.rbac.authorization.k8s.io/rook-ceph-system created

rolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role-cfg created

rolebinding.rbac.authorization.k8s.io/rbd-csi-nodeplugin-role-cfg created

rolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role-cfg created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cmd-reporter-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-default-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-system created

rolebinding.rbac.authorization.k8s.io/rook-ceph-osd created

rolebinding.rbac.authorization.k8s.io/rook-ceph-osd-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-purge-osd created

rolebinding.rbac.authorization.k8s.io/rook-ceph-purge-osd-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-rgw created

rolebinding.rbac.authorization.k8s.io/rook-ceph-rgw-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-system created

serviceaccount/rook-ceph-cmd-reporter created

serviceaccount/rook-ceph-mgr created

serviceaccount/rook-ceph-osd created

serviceaccount/rook-ceph-purge-osd created

serviceaccount/rook-ceph-rgw created

serviceaccount/rook-ceph-system created

serviceaccount/rook-csi-cephfs-plugin-sa created

serviceaccount/rook-csi-cephfs-provisioner-sa created

serviceaccount/rook-csi-rbd-plugin-sa created

serviceaccount/rook-csi-rbd-provisioner-sa created

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "cephfs-csi-nodeplugin" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "cephfs-external-provisioner-runner" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "psp:rook" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rbd-csi-nodeplugin" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rbd-external-provisioner-runner" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-cluster-mgmt" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-global" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-mgr-cluster" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-mgr-system" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-object-bucket" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-osd" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-system" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "cephfs-csi-nodeplugin" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "cephfs-csi-provisioner-role" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rbd-csi-nodeplugin" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rbd-csi-provisioner-role" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-ceph-global" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-ceph-mgr-cluster" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-ceph-object-bucket" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-ceph-osd" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-ceph-system" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-ceph-system-psp" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-csi-cephfs-plugin-sa-psp" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-csi-cephfs-provisioner-sa-psp" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-csi-rbd-plugin-sa-psp" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-csi-rbd-provisioner-sa-psp" already exists

Error from server (AlreadyExists): error when creating "common.yaml": podsecuritypolicies.policy "00-rook-privileged" already exists

I then tried the operator yet again

$ kubectl create -f operator.yaml

configmap/rook-ceph-operator-config created

deployment.apps/rook-ceph-operator created

But the Ceph cluster never moved beyond the operator pod; that is, the cluster never came up, even nine hours later:

$ kubectl get pod -n rook-ceph

NAME READY STATUS RESTARTS AGE

rook-ceph-operator-5949bdbb59-clhwc 1/1 Running 0 21s

$ kubectl get pod -n rook-ceph

NAME READY STATUS RESTARTS AGE

rook-ceph-operator-5949bdbb59-dq6cj 1/1 Running 0 9h

Rook : Attempt Deux : Unformatted drive and YAML

To summarize: I tried, unsuccessfully, to remove the failed Rook-Ceph install. The objects and their finalizers created so many dependent locks that I finally gave up and moved on to Longhorn.
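For anyone hitting the same wall: the Rook teardown documentation describes clearing finalizers on the Rook custom resources when a cluster is stuck deleting. Below is a minimal sketch of that idea, not the exact documented script. `strip_rook_finalizers` is a hypothetical helper name, it assumes the `rook-ceph` namespace by default, and it deliberately bypasses Rook's safety checks, so only use it on a cluster you are throwing away.

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove finalizers from every ceph.rook.io custom
# resource in a namespace so a stuck teardown can complete.
# WARNING: bypasses Rook's dependency checks; use only on a disposable cluster.
strip_rook_finalizers() {
  local ns="${1:-rook-ceph}"
  local crd obj
  # Pick out CRD names in the ceph.rook.io API group from "kubectl get crd -o name".
  for crd in $(kubectl get crd -o name \
      | sed -n 's|^customresourcedefinition\.apiextensions\.k8s\.io/\(.*\.ceph\.rook\.io\)$|\1|p'); do
    # Patch every object of that kind to an empty finalizer list.
    for obj in $(kubectl get -n "$ns" "$crd" -o name); do
      kubectl -n "$ns" patch "$obj" --type merge -p '{"metadata":{"finalizers":[]}}'
    done
  done
}
```

Once the finalizers are gone, `kubectl delete namespace rook-ceph` should be able to finish.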

Here I will use an unformatted thumb drive and, similar to this guide, the YAML manifests

Respin and try again

I respun the cluster fresh to try again

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

anna-macbookair Ready control-plane,master 7h57m v1.24.4+k3s1

builder-macbookpro2 Ready <none> 7h55m v1.24.4+k3s1

I double-checked that LVM (lvm2) was installed on both the master and worker nodes

builder@anna-MacBookAir:~$ sudo apt-get install lvm2

[sudo] password for builder:

Reading package lists... Done

Building dependency tree

Reading state information... Done

lvm2 is already the newest version (2.03.07-1ubuntu1).

The following packages were automatically installed and are no longer required:

libfprint-2-tod1 libfwupdplugin1 libllvm10 libllvm11 shim

Use 'sudo apt autoremove' to remove them.

0 upgraded, 0 newly installed, 0 to remove and 5 not upgraded.

builder@builder-MacBookPro2:~$ sudo apt-get install lvm2

[sudo] password for builder:

Reading package lists... Done

Building dependency tree

Reading state information... Done

lvm2 is already the newest version (2.03.07-1ubuntu1).

The following packages were automatically installed and are no longer required:

libfprint-2-tod1 libllvm10

Use 'sudo apt autoremove' to remove them.

0 upgraded, 0 newly installed, 0 to remove and 6 not upgraded.

I popped in a USB drive; however, it already has a formatted partition. Listing the devices before and after inserting it shows the new sdc disk and its sdc1 partition:

builder@anna-MacBookAir:~$ ls -ltra /dev | grep sd

brw-rw---- 1 root disk 8, 0 Aug 28 22:07 sda

brw-rw---- 1 root disk 8, 2 Aug 28 22:07 sda2

brw-rw---- 1 root disk 8, 1 Aug 28 22:07 sda1

brw-rw---- 1 root disk 8, 16 Aug 28 22:07 sdb

builder@anna-MacBookAir:~$ ls -ltra /dev | grep sd

brw-rw---- 1 root disk 8, 0 Aug 28 22:07 sda

brw-rw---- 1 root disk 8, 2 Aug 28 22:07 sda2

brw-rw---- 1 root disk 8, 1 Aug 28 22:07 sda1

brw-rw---- 1 root disk 8, 16 Aug 28 22:07 sdb

brw-rw---- 1 root disk 8, 32 Aug 29 06:14 sdc

brw-rw---- 1 root disk 8, 33 Aug 29 06:14 sdc1

I made sure it was unmounted and commented out the fstab line that would otherwise mount it on mount -a

builder@anna-MacBookAir:~$ cat /etc/fstab | tail -n1

# UUID=f1c4e426-8e9a-4dde-88fa-412abd73b73b /mnt/thumbdrive ext4 defaults,errors=remount-ro 0 1

builder@anna-MacBookAir:~$ sudo umount /mnt/thumbdrive

umount: /mnt/thumbdrive: not mounted.
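The fstab change can also be made non-interactively. A minimal sketch, assuming the fstab entry starts with `UUID=` as in this post; `comment_out_fstab_uuid` is a hypothetical helper, it writes a `.bak` copy first, and it must run as root when pointed at the real `/etc/fstab`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out the fstab entry for one filesystem UUID so
# that `mount -a` skips it. Keeps a backup at <fstab>.bak.
comment_out_fstab_uuid() {
  local uuid="$1"
  local fstab="${2:-/etc/fstab}"
  cp "$fstab" "$fstab.bak" || return 1
  # Prefix "# " to any uncommented line that starts with UUID=<uuid>;
  # lines that are already commented out are left unchanged.
  sed "s|^UUID=${uuid}[[:space:]]|# &|" "$fstab.bak" > "$fstab"
}
```

For example: `sudo bash -c "$(declare -f comment_out_fstab_uuid); comment_out_fstab_uuid f1c4e426-8e9a-4dde-88fa-412abd73b73b"`.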

Now I need to ditch the ext4 partition and create a new primary partition

builder@anna-MacBookAir:~$ sudo fdisk /dev/sdc

Welcome to fdisk (util-linux 2.34).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Command (m for help): m

Help:

DOS (MBR)

a toggle a bootable flag

b edit nested BSD disklabel

c toggle the dos compatibility flag

Generic

d delete a partition

F list free unpartitioned space

l list known partition types

n add a new partition

p print the partition table

t change a partition type

v verify the partition table

i print information about a partition

Misc

m print this menu

u change display/entry units

x extra functionality (experts only)

Script

I load disk layout from sfdisk script file

O dump disk layout to sfdisk script file

Save & Exit

w write table to disk and exit

q quit without saving changes

Create a new label

g create a new empty GPT partition table

G create a new empty SGI (IRIX) partition table

o create a new empty DOS partition table

s create a new empty Sun partition table

Command (m for help): d

Selected partition 1

Partition 1 has been deleted.

Command (m for help): p

Disk /dev/sdc: 29.3 GiB, 31457280000 bytes, 61440000 sectors

Disk model: USB DISK 2.0

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0x0c172cd2

Command (m for help): n

Partition type

p primary (0 primary, 0 extended, 4 free)

e extended (container for logical partitions)

Select (default p): p

Partition number (1-4, default 1):

First sector (2048-61439999, default 2048):

Last sector, +/-sectors or +/-size{K,M,G,T,P} (2048-61439999, default 61439999):

Created a new partition 1 of type 'Linux' and of size 29.3 GiB.

Command (m for help): t

Selected partition 1

Hex code (type L to list all codes): L

0 Empty 24 NEC DOS 81 Minix / old Lin bf Solaris

1 FAT12 27 Hidden NTFS Win 82 Linux swap / So c1 DRDOS/sec (FAT-

2 XENIX root 39 Plan 9 83 Linux c4 DRDOS/sec (FAT-

3 XENIX usr 3c PartitionMagic 84 OS/2 hidden or c6 DRDOS/sec (FAT-

4 FAT16 <32M 40 Venix 80286 85 Linux extended c7 Syrinx

5 Extended 41 PPC PReP Boot 86 NTFS volume set da Non-FS data

6 FAT16 42 SFS 87 NTFS volume set db CP/M / CTOS / .

7 HPFS/NTFS/exFAT 4d QNX4.x 88 Linux plaintext de Dell Utility

8 AIX 4e QNX4.x 2nd part 8e Linux LVM df BootIt

9 AIX bootable 4f QNX4.x 3rd part 93 Amoeba e1 DOS access

a OS/2 Boot Manag 50 OnTrack DM 94 Amoeba BBT e3 DOS R/O

b W95 FAT32 51 OnTrack DM6 Aux 9f BSD/OS e4 SpeedStor

c W95 FAT32 (LBA) 52 CP/M a0 IBM Thinkpad hi ea Rufus alignment

e W95 FAT16 (LBA) 53 OnTrack DM6 Aux a5 FreeBSD eb BeOS fs

f W95 Ext'd (LBA) 54 OnTrackDM6 a6 OpenBSD ee GPT

10 OPUS 55 EZ-Drive a7 NeXTSTEP ef EFI (FAT-12/16/

11 Hidden FAT12 56 Golden Bow a8 Darwin UFS f0 Linux/PA-RISC b

12 Compaq diagnost 5c Priam Edisk a9 NetBSD f1 SpeedStor

14 Hidden FAT16 <3 61 SpeedStor ab Darwin boot f4 SpeedStor

16 Hidden FAT16 63 GNU HURD or Sys af HFS / HFS+ f2 DOS secondary

17 Hidden HPFS/NTF 64 Novell Netware b7 BSDI fs fb VMware VMFS

18 AST SmartSleep 65 Novell Netware b8 BSDI swap fc VMware VMKCORE

1b Hidden W95 FAT3 70 DiskSecure Mult bb Boot Wizard hid fd Linux raid auto

1c Hidden W95 FAT3 75 PC/IX bc Acronis FAT32 L fe LANstep

1e Hidden W95 FAT1 80 Old Minix be Solaris boot ff BBT

Hex code (type L to list all codes): 60

Changed type of partition 'Linux' to 'unknown'.

Command (m for help): w

The partition table has been altered.

Calling ioctl() to re-read partition table.

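Note: 60 is not one of the type codes fdisk lists above, so fdisk marks the partition as 'unknown'. The goal is to leave it RAW, that is, without a filesystem. Keep in mind that the type code alone does not erase any old filesystem signature already on the disk.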
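Rook's prerequisites say a device or partition is only used when `lsblk -f` shows an empty FSTYPE. A small sketch for checking that; `devices_with_fs` is a hypothetical helper that reads `NAME FSTYPE` pairs in the raw format printed by `lsblk -rno NAME,FSTYPE`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the names of devices/partitions that still carry a
# filesystem signature. Expects "NAME FSTYPE" lines on stdin, as produced by
#   lsblk -rno NAME,FSTYPE /dev/sdc
# In raw (-r) output an empty FSTYPE leaves only the NAME field on the line.
devices_with_fs() {
  awk 'NF >= 2 { print $1 }'
}
```

Usage: `lsblk -rno NAME,FSTYPE /dev/sdc | devices_with_fs`. Anything it prints (for example `sdc1` still reporting `ext4`) would need its signature cleared, e.g. with `sudo wipefs -a`, before Rook will consider it.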

Last time we used Helm; this time we will use the YAML manifests from the Rook repository

builder@DESKTOP-QADGF36:~$ git clone --single-branch --branch master https://github.com/rook/rook.git

Cloning into 'rook'...

remote: Enumerating objects: 81967, done.

remote: Counting objects: 100% (355/355), done.

remote: Compressing objects: 100% (244/244), done.

remote: Total 81967 (delta 170), reused 247 (delta 109), pack-reused 81612

Receiving objects: 100% (81967/81967), 45.44 MiB | 37.20 MiB/s, done.

Resolving deltas: 100% (57240/57240), done.

builder@DESKTOP-QADGF36:~$ cd rook/deploy/examples/

builder@DESKTOP-QADGF36:~/rook/deploy/examples$

Create PSP

builder@DESKTOP-QADGF36:~/rook/deploy/examples$ kubectl create -f psp.yaml

clusterrole.rbac.authorization.k8s.io/psp:rook created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-system-psp created

clusterrolebinding.rbac.authorization.k8s.io/rook-csi-cephfs-plugin-sa-psp created

clusterrolebinding.rbac.authorization.k8s.io/rook-csi-cephfs-provisioner-sa-psp created

clusterrolebinding.rbac.authorization.k8s.io/rook-csi-rbd-plugin-sa-psp created

clusterrolebinding.rbac.authorization.k8s.io/rook-csi-rbd-provisioner-sa-psp created

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy/00-rook-privileged created

Error from server (NotFound): error when creating "psp.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "psp.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "psp.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "psp.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "psp.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "psp.yaml": namespaces "rook-ceph" not found

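The six namespace errors are expected at this point: those objects live in the rook-ceph namespace, which is only created by common.yaml (along with the same RoleBindings). Next, create the CRDs, common resources and the Operator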

builder@DESKTOP-QADGF36:~/rook/deploy/examples$ kubectl create -f crds.yaml -f common.yaml -f operator.yaml

customresourcedefinition.apiextensions.k8s.io/cephblockpoolradosnamespaces.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephblockpools.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephbucketnotifications.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephbuckettopics.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephclients.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephclusters.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephfilesystemmirrors.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephfilesystems.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephfilesystemsubvolumegroups.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephnfses.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectrealms.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectstores.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectstoreusers.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectzonegroups.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectzones.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephrbdmirrors.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/objectbucketclaims.objectbucket.io created

customresourcedefinition.apiextensions.k8s.io/objectbuckets.objectbucket.io created

namespace/rook-ceph created

clusterrole.rbac.authorization.k8s.io/cephfs-csi-nodeplugin created

clusterrole.rbac.authorization.k8s.io/cephfs-external-provisioner-runner created

clusterrole.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

clusterrole.rbac.authorization.k8s.io/rbd-external-provisioner-runner created

clusterrole.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created

clusterrole.rbac.authorization.k8s.io/rook-ceph-global created

clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created

clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-system created

clusterrole.rbac.authorization.k8s.io/rook-ceph-object-bucket created

clusterrole.rbac.authorization.k8s.io/rook-ceph-osd created

clusterrole.rbac.authorization.k8s.io/rook-ceph-system created

clusterrolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role created

clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-global created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-object-bucket created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-osd created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-system created

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

role.rbac.authorization.k8s.io/cephfs-external-provisioner-cfg created

role.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

role.rbac.authorization.k8s.io/rbd-external-provisioner-cfg created

role.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created

role.rbac.authorization.k8s.io/rook-ceph-mgr created

role.rbac.authorization.k8s.io/rook-ceph-osd created

role.rbac.authorization.k8s.io/rook-ceph-purge-osd created

role.rbac.authorization.k8s.io/rook-ceph-rgw created

role.rbac.authorization.k8s.io/rook-ceph-system created

rolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role-cfg created

rolebinding.rbac.authorization.k8s.io/rbd-csi-nodeplugin-role-cfg created

rolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role-cfg created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cmd-reporter-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-default-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-system created

rolebinding.rbac.authorization.k8s.io/rook-ceph-osd created

rolebinding.rbac.authorization.k8s.io/rook-ceph-osd-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-purge-osd created

rolebinding.rbac.authorization.k8s.io/rook-ceph-purge-osd-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-rgw created

rolebinding.rbac.authorization.k8s.io/rook-ceph-rgw-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-system created

serviceaccount/rook-ceph-cmd-reporter created

serviceaccount/rook-ceph-mgr created

serviceaccount/rook-ceph-osd created

serviceaccount/rook-ceph-purge-osd created

serviceaccount/rook-ceph-rgw created

serviceaccount/rook-ceph-system created

serviceaccount/rook-csi-cephfs-plugin-sa created

serviceaccount/rook-csi-cephfs-provisioner-sa created

serviceaccount/rook-csi-rbd-plugin-sa created

serviceaccount/rook-csi-rbd-provisioner-sa created

configmap/rook-ceph-operator-config created

deployment.apps/rook-ceph-operator created

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "psp:rook" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-ceph-system-psp" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-csi-cephfs-plugin-sa-psp" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-csi-cephfs-provisioner-sa-psp" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-csi-rbd-plugin-sa-psp" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-csi-rbd-provisioner-sa-psp" already exists

Error from server (AlreadyExists): error when creating "common.yaml": podsecuritypolicies.policy "00-rook-privileged" already exists

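The AlreadyExists errors are harmless: they are the PSP-related objects we already created from psp.yaml. We can check on the Operator to see when it comes up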

builder@DESKTOP-QADGF36:~/rook/deploy/examples$ kubectl get pods -n rook-ceph

NAME READY STATUS RESTARTS AGE

rook-ceph-operator-b5c96c99b-fpslw 0/1 ContainerCreating 0 41s

builder@DESKTOP-QADGF36:~/rook/deploy/examples$ kubectl describe pod rook-ceph-operator-b5c96c99b-fpslw -n rook-ceph | tail -n 5

Normal Scheduled 84s default-scheduler Successfully assigned rook-ceph/rook-ceph-operator-b5c96c99b-fpslw to builder-macbookpro2

Normal Pulling 83s kubelet Pulling image "rook/ceph:master"

Normal Pulled 0s kubelet Successfully pulled image "rook/ceph:master" in 1m22.985243362s

Normal Created 0s kubelet Created container rook-ceph-operator

Normal Started 0s kubelet Started container rook-ceph-operator

builder@DESKTOP-QADGF36:~/rook/deploy/examples$ kubectl get pods -n rook-ceph

NAME READY STATUS RESTARTS AGE

rook-ceph-operator-b5c96c99b-fpslw 1/1 Running 0 105s
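Rather than re-running `kubectl get pods` until the operator is ready, the same `kubectl rollout status` check this post uses for the toolbox works for the operator deployment too. A minimal sketch; `wait_for_rook_deploy` is a hypothetical wrapper, and the five-minute default timeout is an arbitrary choice (the first image pull above took about 1m23s).

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: block until a deployment in the rook-ceph namespace has
# rolled out, or fail after a timeout (default 5m, an arbitrary choice).
wait_for_rook_deploy() {
  local deploy="$1"
  local timeout="${2:-5m}"
  kubectl -n rook-ceph rollout status "deploy/${deploy}" --timeout="$timeout"
}
```

Usage: `wait_for_rook_deploy rook-ceph-operator && kubectl apply -f cluster-test.yaml`.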

Since we are on a basic 2-node cluster, we will use cluster-test.yaml to set up a test Ceph cluster

builder@DESKTOP-QADGF36:~/rook/deploy/examples$ kubectl apply -f cluster-test.yaml

configmap/rook-config-override created

cephcluster.ceph.rook.io/my-cluster created

cephblockpool.ceph.rook.io/builtin-mgr created

This time I see pods coming up

builder@DESKTOP-QADGF36:~/rook/deploy/examples$ kubectl get pods -n rook-ceph

NAME READY STATUS RESTARTS AGE

rook-ceph-operator-b5c96c99b-fpslw 1/1 Running 0 3m25s

rook-ceph-mon-a-canary-868d956cbf-zfvnd 1/1 Terminating 0 28s

csi-rbdplugin-kp8pk 0/2 ContainerCreating 0 25s

csi-rbdplugin-provisioner-6c99988f59-rfrs4 0/5 ContainerCreating 0 25s

csi-rbdplugin-vg7zm 0/2 ContainerCreating 0 25s

csi-rbdplugin-provisioner-6c99988f59-2b5mv 0/5 ContainerCreating 0 25s

csi-cephfsplugin-bfvnv 0/2 ContainerCreating 0 25s

csi-cephfsplugin-provisioner-846bf56886-zhvqf 0/5 ContainerCreating 0 25s

csi-cephfsplugin-provisioner-846bf56886-d52ww 0/5 ContainerCreating 0 25s

csi-cephfsplugin-wftx4 0/2 ContainerCreating 0 25s

rook-ceph-mon-a-5cddf7f9fc-2r42z 0/1 Running 0 26s

Ceph Toolbox

Let’s add the Toolbox and see that it was rolled out

builder@DESKTOP-QADGF36:~/rook/deploy/examples$ kubectl create -f toolbox.yaml

deployment.apps/rook-ceph-tools created

$ kubectl -n rook-ceph rollout status deploy/rook-ceph-tools

deployment "rook-ceph-tools" successfully rolled out

I can use it to check the status

builder@DESKTOP-QADGF36:~/rook/deploy/examples$ kubectl -n rook-ceph exec -it deploy/rook-ceph-tools -- bash

bash-4.4$ ceph status

cluster:

id: caceb937-3244-4bff-a80a-841da091d170

health: HEALTH_WARN

Reduced data availability: 1 pg inactive

services:

mon: 1 daemons, quorum a (age 13m)

mgr: a(active, since 12m)

osd: 1 osds: 0 up, 1 in (since 12m)

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs: 100.000% pgs unknown

1 unknown

bash-4.4$ ceph osd status

ID HOST USED AVAIL WR OPS WR DATA RD OPS RD DATA STATE

0 0 0 0 0 0 0 exists,new
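The key line in the status output is `osd: 1 osds: 0 up, 1 in`: the single OSD exists but never started, so there is no usable capacity (0 B avail) and every PG stays unknown. When scripting this check, a small parser helps; `osd_counts` is a hypothetical helper and assumes the `osd:` line format shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ceph status` text on stdin and print
# "<total> <up> <in>" from the "osd: N osds: M up, K in" line.
# The "up[^,]*" part tolerates a "(since ...)" suffix after "up".
osd_counts() {
  sed -n 's/^[[:space:]]*osd: \([0-9]*\) osds: \([0-9]*\) up[^,]*, \([0-9]*\) in.*/\1 \2 \3/p'
}
```

Usage: `kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph status | osd_counts`, which for the output above yields `1 0 1`. When the up count is 0, the logs of the rook-ceph-osd-prepare pods are usually the next place to look.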

We can check the Ceph Dashboard as well

Get Ceph Dashboard password

$ kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}" | base64 --decode && echo

adsfasdfasdf

We can then port-forward to the dashboard service

builder@DESKTOP-QADGF36:~/rook/deploy/examples$ kubectl port-forward -n rook-ceph svc/rook-ceph-mgr-dashboard 7000:7000

Forwarding from 127.0.0.1:7000 -> 7000

Forwarding from [::1]:7000 -> 7000

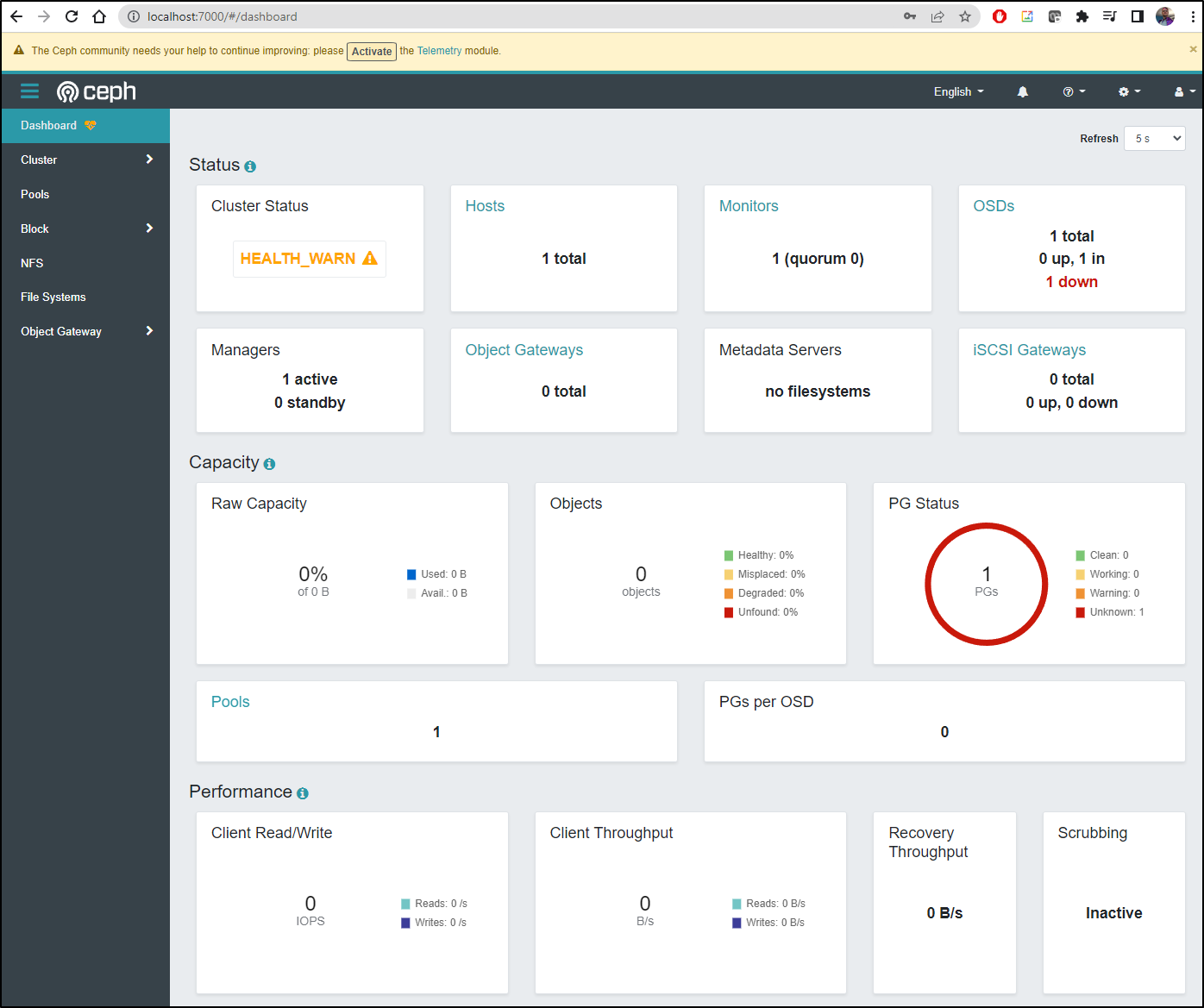

I see similar status information

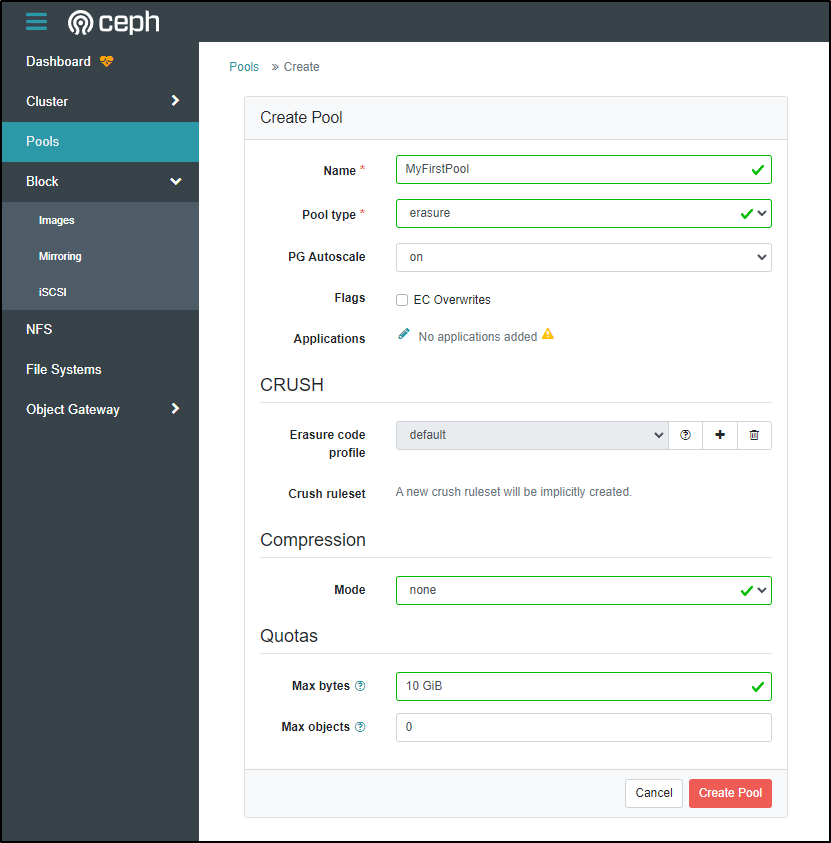

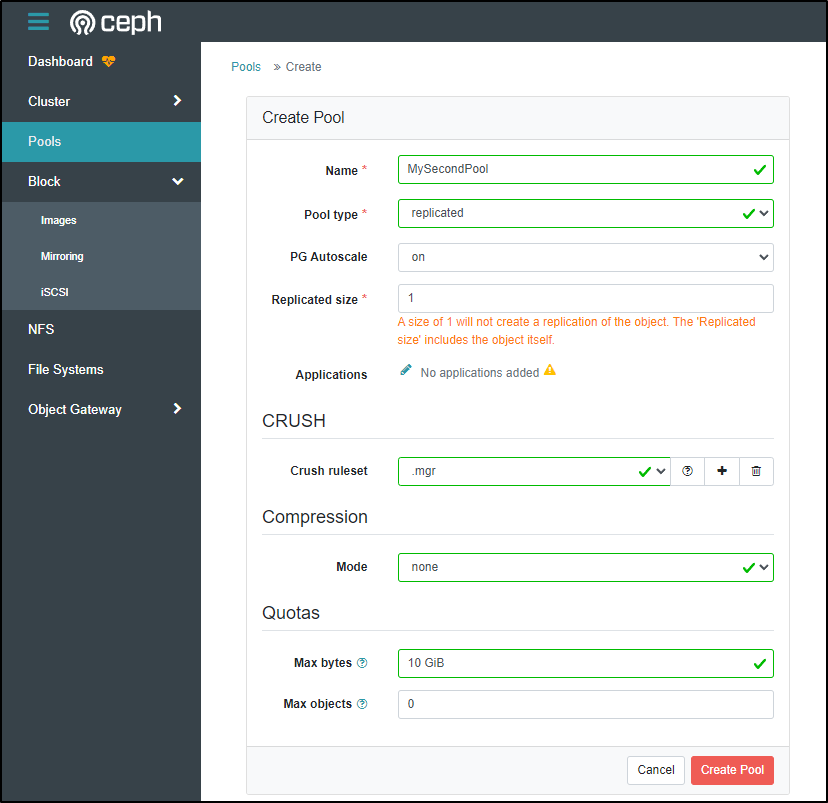

I’ll try to create a pool

And another

However, I do not see any StorageClasses made available
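cluster-test.yaml creates the CephCluster and the builtin-mgr pool but no StorageClass; the Rook examples directory ships StorageClass manifests separately. For a single-replica test cluster the RBD one is, as far as I recall, `csi/rbd/storageclass-test.yaml`, so verify that path in your checkout. A minimal sketch that applies it only if present; `create_test_storageclass` is a hypothetical helper, and a StorageClass will not help while the OSD is still down.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the single-replica RBD StorageClass from a Rook
# examples checkout, then list StorageClasses. The manifest path is assumed.
create_test_storageclass() {
  local examples_dir="${1:-.}"
  local manifest="${examples_dir}/csi/rbd/storageclass-test.yaml"
  if [ ! -f "$manifest" ]; then
    echo "manifest not found: $manifest" >&2
    return 1
  fi
  kubectl create -f "$manifest" && kubectl get storageclass
}
```

Usage, from `rook/deploy/examples`: `create_test_storageclass .`.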

The status has stayed at HEALTH_WARN

$ kubectl -n rook-ceph exec -it deploy/rook-ceph-tools -- ceph status

cluster:

id: caceb937-3244-4bff-a80a-841da091d170

health: HEALTH_WARN

Reduced data availability: 3 pgs inactive

services:

mon: 1 daemons, quorum a (age 24m)

mgr: a(active, since 22m)

osd: 1 osds: 0 up, 1 in (since 23m)

data:

pools: 3 pools, 3 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs: 100.000% pgs unknown

3 unknown

And the Operator logs complain that the only OSD is down and the PGs are not active+clean

$ kubectl -n rook-ceph logs -l app=rook-ceph-operator

2022-08-29 11:52:06.525404 I | clusterdisruption-controller: osd "rook-ceph-osd-0" is down but no node drain is detected

2022-08-29 11:52:07.219354 I | clusterdisruption-controller: osd is down in failure domain "anna-macbookair" and pgs are not active+clean. pg health: "cluster is not fully clean. PGs: [{StateName:unknown Count:3}]"

2022-08-29 11:52:25.261134 I | clusterdisruption-controller: osd "rook-ceph-osd-0" is down but no node drain is detected

2022-08-29 11:52:25.938538 I | clusterdisruption-controller: osd is down in failure domain "anna-macbookair" and pgs are not active+clean. pg health: "cluster is not fully clean. PGs: [{StateName:unknown Count:3}]"

2022-08-29 11:52:37.246992 I | clusterdisruption-controller: osd "rook-ceph-osd-0" is down but no node drain is detected

2022-08-29 11:52:38.056085 I | clusterdisruption-controller: osd is down in failure domain "anna-macbookair" and pgs are not active+clean. pg health: "cluster is not fully clean. PGs: [{StateName:unknown Count:3}]"

2022-08-29 11:52:55.973639 I | clusterdisruption-controller: osd "rook-ceph-osd-0" is down but no node drain is detected

2022-08-29 11:52:56.624652 I | clusterdisruption-controller: osd is down in failure domain "anna-macbookair" and pgs are not active+clean. pg health: "cluster is not fully clean. PGs: [{StateName:unknown Count:3}]"

2022-08-29 11:53:08.261183 I | clusterdisruption-controller: osd "rook-ceph-osd-0" is down but no node drain is detected

2022-08-29 11:53:08.929602 I | clusterdisruption-controller: osd is down in failure domain "anna-macbookair" and pgs are not active+clean. pg health: "cluster is not fully clean. PGs: [{StateName:unknown Count:3}]"
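These operator log lines are informational (`I`) and repeat every few seconds. When hunting for the actual failure it can help to keep only warnings and errors; `rook_log_problems` is a hypothetical filter that assumes the `<date> <time> <level> | <controller>: <message>` layout seen in these excerpts.

```shell
#!/usr/bin/env bash
# Hypothetical filter: keep only warning (W) and error (E) lines from Rook
# operator logs read on stdin. Field 3 is the single-letter log level in the
# "<date> <time> <level> | ..." layout.
rook_log_problems() {
  awk '$3 == "E" || $3 == "W"'
}
```

Usage: `kubectl -n rook-ceph logs -l app=rook-ceph-operator --tail=-1 | rook_log_problems` (with a label selector, `kubectl logs` otherwise shows only the last 10 lines per pod).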

Rook : Attempt 3 : No thumb drive, fresh k3s

Add LVM (lvm2), which Rook lists as a prerequisite for some OSD configurations, to both nodes

builder@anna-MacBookAir:~$ sudo apt-get install lvm2

[sudo] password for builder:

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages were automatically installed and are no longer required:

libfprint-2-tod1 libfwupdplugin1 libllvm10 libllvm11 shim

Use 'sudo apt autoremove' to remove them.

The following additional packages will be installed:

dmeventd libaio1 libdevmapper-event1.02.1 liblvm2cmd2.03 libreadline5 thin-provisioning-tools

The following NEW packages will be installed:

dmeventd libaio1 libdevmapper-event1.02.1 liblvm2cmd2.03 libreadline5 lvm2 thin-provisioning-tools

0 upgraded, 7 newly installed, 0 to remove and 1 not upgraded.

Need to get 2,255 kB of archives.

After this operation, 8,919 kB of additional disk space will be used.

Do you want to continue? [Y/n] y

...
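With more than a couple of nodes, installing the package by hand gets tedious. A minimal sketch, assuming you can ssh to each node by hostname and that sudo may prompt for a password (hence `-t`); `install_lvm2_on_nodes` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: install lvm2 on each host given as an argument over SSH.
# -t allocates a TTY so sudo can prompt for a password if it needs to.
install_lvm2_on_nodes() {
  local host
  for host in "$@"; do
    echo "== $host"
    ssh -t "$host" 'sudo apt-get install -y lvm2' || return 1
  done
}
```

Usage with this post's nodes: `install_lvm2_on_nodes anna-MacBookAir builder-MacBookPro2`.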

Download Rook (this time from the release-1.9 branch)

$ git clone --single-branch --branch release-1.9 https://github.com/rook/rook.git

Cloning into 'rook'...

remote: Enumerating objects: 80478, done.

remote: Counting objects: 100% (86/86), done.

remote: Compressing objects: 100% (75/75), done.

remote: Total 80478 (delta 13), reused 58 (delta 9), pack-reused 80392

Receiving objects: 100% (80478/80478), 44.82 MiB | 5.54 MiB/s, done.

Resolving deltas: 100% (56224/56224), done.

Create common

builder@DESKTOP-72D2D9T:~/Workspaces/rook/deploy/examples$ kubectl create -f common.yaml

namespace/rook-ceph created

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

role.rbac.authorization.k8s.io/cephfs-external-provisioner-cfg created

role.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

role.rbac.authorization.k8s.io/rbd-external-provisioner-cfg created

role.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created

role.rbac.authorization.k8s.io/rook-ceph-mgr created

role.rbac.authorization.k8s.io/rook-ceph-osd created

role.rbac.authorization.k8s.io/rook-ceph-purge-osd created

role.rbac.authorization.k8s.io/rook-ceph-rgw created

role.rbac.authorization.k8s.io/rook-ceph-system created

rolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role-cfg created

rolebinding.rbac.authorization.k8s.io/rbd-csi-nodeplugin-role-cfg created

rolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role-cfg created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cmd-reporter-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-default-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-system created

rolebinding.rbac.authorization.k8s.io/rook-ceph-osd created

rolebinding.rbac.authorization.k8s.io/rook-ceph-osd-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-purge-osd created

rolebinding.rbac.authorization.k8s.io/rook-ceph-purge-osd-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-rgw created

rolebinding.rbac.authorization.k8s.io/rook-ceph-rgw-psp created

rolebinding.rbac.authorization.k8s.io/rook-ceph-system created

serviceaccount/rook-ceph-cmd-reporter created

serviceaccount/rook-ceph-mgr created

serviceaccount/rook-ceph-osd created

serviceaccount/rook-ceph-purge-osd created

serviceaccount/rook-ceph-rgw created

serviceaccount/rook-ceph-system created

serviceaccount/rook-csi-cephfs-plugin-sa created

serviceaccount/rook-csi-cephfs-provisioner-sa created

serviceaccount/rook-csi-rbd-plugin-sa created

serviceaccount/rook-csi-rbd-provisioner-sa created

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "cephfs-csi-nodeplugin" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "cephfs-external-provisioner-runner" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "psp:rook" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rbd-csi-nodeplugin" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rbd-external-provisioner-runner" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-cluster-mgmt" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-global" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-mgr-cluster" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-mgr-system" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-object-bucket" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-osd" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-system" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "cephfs-csi-nodeplugin" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "cephfs-csi-provisioner-role" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rbd-csi-nodeplugin" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rbd-csi-provisioner-role" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-ceph-global" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-ceph-mgr-cluster" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-ceph-object-bucket" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-ceph-osd" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-ceph-system" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-ceph-system-psp" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-csi-cephfs-plugin-sa-psp" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-csi-cephfs-provisioner-sa-psp" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-csi-rbd-plugin-sa-psp" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-csi-rbd-provisioner-sa-psp" already exists

Error from server (AlreadyExists): error when creating "common.yaml": podsecuritypolicies.policy "00-rook-privileged" already exists
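Every AlreadyExists error here is for a cluster-scoped object (ClusterRole, ClusterRoleBinding, PodSecurityPolicy). Those are not removed when the rook-ceph namespace is deleted, so they appear to be leftovers from an earlier Rook install on this cluster. A minimal sketch to list them; `rook_leftovers` is a hypothetical helper, and its name filter (rook, ceph, csi) is an assumption that could also match unrelated objects, so review the list before deleting anything.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list cluster-scoped RBAC/PSP objects whose names look
# Rook/Ceph/CSI related. The name pattern is an assumption; review the output.
# On Kubernetes 1.25+ drop "podsecuritypolicy" (the API no longer exists).
rook_leftovers() {
  kubectl get clusterrole,clusterrolebinding,podsecuritypolicy -o name \
    | grep -E '/.*(rook|ceph|csi)'
}
```

On a cluster you are resetting anyway, the reviewed list could then be passed to `xargs kubectl delete`.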

Create the Operator

$ kubectl create -f operator.yaml

configmap/rook-ceph-operator-config created

deployment.apps/rook-ceph-operator created

I could see the Operator come up

$ kubectl get pod -n rook-ceph

NAME READY STATUS RESTARTS AGE

rook-ceph-operator-5949bdbb59-clhwc 1/1 Running 0 21s

# waiting overnight

$ kubectl get pod -n rook-ceph

NAME READY STATUS RESTARTS AGE

rook-ceph-operator-5949bdbb59-dq6cj 1/1 Running 0 9h

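Really, no matter what I did, rook-ceph stayed in a broken state. The operator logs show the old CephCluster stuck in deletion, waiting on its dependent pools, filesystem and object store: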

2022-08-27 02:08:03.115018 E | ceph-cluster-controller: failed to reconcile CephCluster "rook-ceph/rook-ceph". CephCluster "rook-ceph/rook-ceph" will not be deleted until all dependents are removed: CephBlockPool: [ceph-blockpool builtin-mgr], CephFilesystem: [ceph-filesystem], CephObjectStore: [ceph-objectstore]

2022-08-27 02:08:13.536370 I | ceph-cluster-controller: CephCluster "rook-ceph/rook-ceph" will not be deleted until all dependents are removed: CephBlockPool: [ceph-blockpool builtin-mgr], CephFilesystem: [ceph-filesystem], CephObjectStore: [ceph-objectstore]

2022-08-27 02:08:13.553691 E | ceph-cluster-controller: failed to reconcile CephCluster "rook-ceph/rook-ceph". CephCluster "rook-ceph/rook-ceph" will not be deleted until all dependents are removed: CephBlockPool: [ceph-blockpool builtin-mgr], CephFilesystem: [ceph-filesystem], CephObjectStore: [ceph-objectstore]

After that, I created a StorageClass

$ kubectl apply -f storageclass-bucket-retain.yaml

Then I tried to create a PVC

builder@DESKTOP-72D2D9T:~/Workspaces/jekyll-blog$ cat pvc-ceph.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mongo-ceph-pvc
spec:
  storageClassName: rook-ceph-retain-bucket
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi

builder@DESKTOP-72D2D9T:~/Workspaces/jekyll-blog$ kubectl apply -f pvc-ceph.yaml

persistentvolumeclaim/mongo-ceph-pvc created

builder@DESKTOP-72D2D9T:~/Workspaces/jekyll-blog$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

mongo-ceph-pvc Pending rook-ceph-retain-bucket 6s

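Clearly it didn't work: the PVC stayed Pending with no volume bound. In hindsight this was likely the wrong StorageClass as well, since storageclass-bucket-retain.yaml is Rook's example class for object bucket claims (S3 buckets), not block volumes, so a PVC against it would stay Pending regardless.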
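A quick way to check a Pending claim is to see which provisioner its StorageClass names; block PVCs need an RBD or CephFS CSI provisioner. A minimal sketch; `pvc_provisioner` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the provisioner behind a PVC's StorageClass.
# Usage: pvc_provisioner <pvc-name> [namespace]
pvc_provisioner() {
  local pvc="$1"
  local ns="${2:-default}"
  local sc
  sc="$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.spec.storageClassName}')" || return 1
  if [ -z "$sc" ]; then
    echo "PVC $pvc has no storageClassName" >&2
    return 1
  fi
  kubectl get storageclass "$sc" -o jsonpath='{.provisioner}'
}
```

Usage: `pvc_provisioner mongo-ceph-pvc`, alongside `kubectl describe pvc mongo-ceph-pvc` to see the provisioning events.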

Rook : Attempt 4 : Using AKS

At this point I just gave up trying to force Rook-Ceph onto my home cluster. I figured that perhaps AKS with some attached storage might work.

I created a basic 3-node AKS cluster and logged in.

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

aks-nodepool1-19679206-vmss000000 Ready agent 3m42s v1.23.8

aks-nodepool1-19679206-vmss000001 Ready agent 3m46s v1.23.8

aks-nodepool1-19679206-vmss000002 Ready agent 3m46s v1.23.8

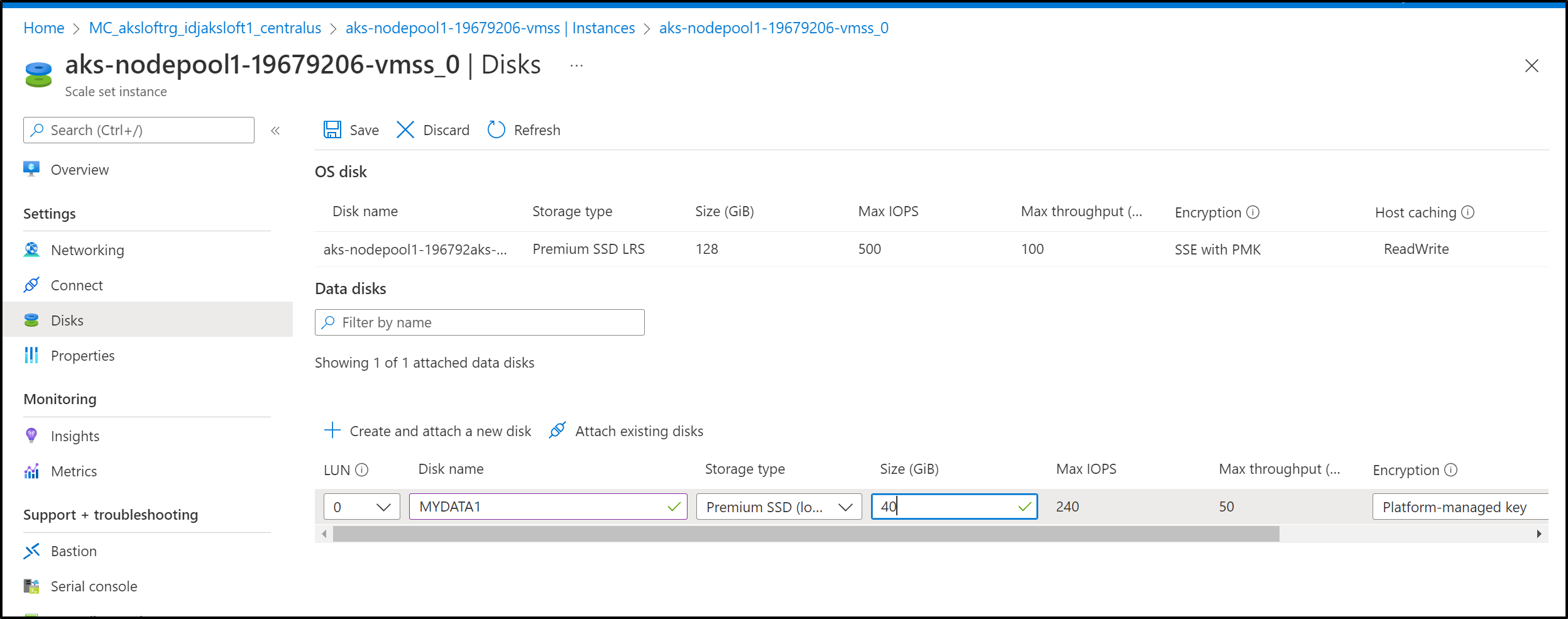

I then manually attached a 40 GB disk to each node.
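Attaching the disks can be scripted instead of clicked through the portal. Below is a hedged sketch that prints one `az vmss disk attach` command per node. The node resource group is a placeholder (AKS keeps node VMs in a separate `MC_...` group), the scale set name is inferred from the node names above, and instance IDs 0 to 2 are assumed to match `vmss000000` to `vmss000002`. The function name `vmss_attach_cmds` is hypothetical. New managed disks come up unpartitioned, which is what Rook wants.

```shell
#!/usr/bin/env bash
# Print (do not run) one `az vmss disk attach` command per scale set instance.
# Arguments: node resource group, scale set name, disk size in GB, node count.
vmss_attach_cmds() {
  local rg="$1" vmss="$2" size_gb="$3" count="$4"
  local i
  for ((i = 0; i < count; i++)); do
    # Instance IDs are assumed to be 0..count-1, matching the vmss00000N node names
    printf 'az vmss disk attach --resource-group %s --vmss-name %s --instance-id %d --size-gb %d\n' \
      "$rg" "$vmss" "$i" "$size_gb"
  done
}

# Example (resource group is a placeholder):
# vmss_attach_cmds MC_myrg_mycluster_centralus aks-nodepool1-19679206-vmss 40 3
```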

Now I can install the Rook Operator

$ helm install --create-namespace --namespace rook-ceph rook-ceph rook-release/rook-ceph

W0829 20:17:58.933428 2813 warnings.go:70] policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

W0829 20:18:11.490180 2813 warnings.go:70] policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

NAME: rook-ceph

LAST DEPLOYED: Mon Aug 29 20:17:57 2022

NAMESPACE: rook-ceph

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The Rook Operator has been installed. Check its status by running:

kubectl --namespace rook-ceph get pods -l "app=rook-ceph-operator"

Visit https://rook.io/docs/rook/latest for instructions on how to create and configure Rook clusters

Important Notes:

- You must customize the 'CephCluster' resource in the sample manifests for your cluster.

- Each CephCluster must be deployed to its own namespace, the samples use `rook-ceph` for the namespace.

- The sample manifests assume you also installed the rook-ceph operator in the `rook-ceph` namespace.

- The helm chart includes all the RBAC required to create a CephCluster CRD in the same namespace.

- Any disk devices you add to the cluster in the 'CephCluster' must be empty (no filesystem and no partitions).
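That last rule matters here. The USB drive from the first attempt had been partitioned and formatted as ext4, so Rook would most likely have skipped it. Below is a minimal pre-flight check, assuming bash and `lsblk`. `is_empty_disk` is a hypothetical helper that reads `lsblk --raw --noheadings --output NAME,FSTYPE` output on stdin.

```shell
#!/usr/bin/env bash
# Succeed only if the lsblk output on stdin is a single device line (no
# partitions) with an empty FSTYPE column, which is what Rook asks for.
# Expected input: lsblk --raw --noheadings --output NAME,FSTYPE /dev/sdX
is_empty_disk() {
  awk 'NF > 1 { has_fs = 1 } { lines++ } END { exit !(lines == 1 && !has_fs) }'
}

# Usage:
# lsblk --raw --noheadings --output NAME,FSTYPE /dev/sdc | is_empty_disk && echo "looks empty"
```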

We can check the status of the Operator

$ helm list -n rook-ceph

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

rook-ceph rook-ceph 1 2022-08-29 20:17:57.6679055 -0500 CDT deployed rook-ceph-v1.9.10 v1.9.10

Now install the Ceph cluster itself with the rook-ceph-cluster chart:

$ helm install --create-namespace --namespace rook-ceph rook-ceph-cluster --set operatorNamespace=rook-ceph rook-release/rook-ceph-cluster

NAME: rook-ceph-cluster

LAST DEPLOYED: Mon Aug 29 20:20:19 2022

NAMESPACE: rook-ceph

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The Ceph Cluster has been installed. Check its status by running:

kubectl --namespace rook-ceph get cephcluster

Visit https://rook.io/docs/rook/latest/CRDs/ceph-cluster-crd/ for more information about the Ceph CRD.

Important Notes:

- You can only deploy a single cluster per namespace

- If you wish to delete this cluster and start fresh, you will also have to wipe the OSD disks using `sfdisk`
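Wiping OSD disks is the step that likely bit the earlier attempts. Below is a hedged sketch based on the cleanup steps in Rook's teardown documentation, which use `sgdisk --zap-all` rather than `sfdisk`, plus the `wipefs -a` used earlier in this post. The function name is hypothetical, it only prints the commands, and `/var/lib/rook` assumes the default `dataDirHostPath`. These commands destroy everything on the disk, so double-check the device first.

```shell
#!/usr/bin/env bash
# Print (do not run) the commands to reset a disk for reuse as a Ceph OSD.
print_osd_wipe_cmds() {
  local disk="$1"
  # Refuse anything that is not a /dev path, as a basic guard
  case "$disk" in
    /dev/*) ;;
    *) echo "expected a /dev path, got: $disk" >&2; return 1 ;;
  esac
  printf '%s\n' \
    "sudo sgdisk --zap-all $disk" \
    "sudo dd if=/dev/zero of=$disk bs=1M count=100 oflag=direct,dsync" \
    "sudo wipefs -a $disk" \
    "sudo rm -rf /var/lib/rook"
}

# Usage: print_osd_wipe_cmds /dev/sdc   (review, then run on each node)
```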

Unlike before, the new StorageClasses showed up immediately:

$ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

azurefile file.csi.azure.com Delete Immediate true 22m

azurefile-csi file.csi.azure.com Delete Immediate true 22m

azurefile-csi-premium file.csi.azure.com Delete Immediate true 22m

azurefile-premium file.csi.azure.com Delete Immediate true 22m

ceph-block (default) rook-ceph.rbd.csi.ceph.com Delete Immediate true 98s

ceph-bucket rook-ceph.ceph.rook.io/bucket Delete Immediate false 98s

ceph-filesystem rook-ceph.cephfs.csi.ceph.com Delete Immediate true 98s

default (default) disk.csi.azure.com Delete WaitForFirstConsumer true 22m

managed disk.csi.azure.com Delete WaitForFirstConsumer true 22m

managed-csi disk.csi.azure.com Delete WaitForFirstConsumer true 22m

managed-csi-premium disk.csi.azure.com Delete WaitForFirstConsumer true 22m

managed-premium disk.csi.azure.com Delete WaitForFirstConsumer true 22m
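Note that both `ceph-block` and the AKS `default` class are marked `(default)`. On Kubernetes versions of this era (the nodes run v1.23.8), a PVC that omits `storageClassName` cannot be created while two classes are marked default. The test below names its class explicitly, so it is unaffected. Below is a small sketch, assuming bash, that lists the default classes from `kubectl get sc` output. `default_storage_classes` is a hypothetical helper, and the patch command in the comment is the documented way to clear the annotation.

```shell
#!/usr/bin/env bash
# Read `kubectl get sc` output on stdin and print every class marked (default).
default_storage_classes() {
  awk '$2 == "(default)" { print $1 }'
}

# Usage: kubectl get sc | default_storage_classes
# To demote the AKS class (name taken from the listing above):
# kubectl patch storageclass default -p '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"false"}}}'
```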

Testing

Let’s now add a PVC using the default ceph-block storage class:

$ cat pvc-ceph.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mongo-ceph-pvc
spec:
  storageClassName: ceph-block
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi

$ kubectl apply -f pvc-ceph.yaml

persistentvolumeclaim/mongo-ceph-pvc created

$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

mongo-ceph-pvc Pending ceph-block 60s

Looking for the Rook Ceph Dashboard

builder@DESKTOP-72D2D9T:~/Workspaces/jekyll-blog$ kubectl get svc -n rook-ceph

No resources found in rook-ceph namespace.

builder@DESKTOP-72D2D9T:~/Workspaces/jekyll-blog$ kubectl get ingress --all-namespaces

No resources found

builder@DESKTOP-72D2D9T:~/Workspaces/jekyll-blog$ kubectl get svc --all-namespaces

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 27m

gatekeeper-system gatekeeper-webhook-service ClusterIP 10.0.43.240 <none> 443/TCP 17m

kube-system azure-policy-webhook-service ClusterIP 10.0.194.119 <none> 443/TCP 17m

kube-system kube-dns ClusterIP 10.0.0.10 <none> 53/UDP,53/TCP 27m

kube-system metrics-server ClusterIP 10.0.234.196 <none> 443/TCP 27m

kube-system npm-metrics-cluster-service ClusterIP 10.0.149.126 <none> 9000/TCP 27m

I checked the Helm values and saw the dashboard was enabled.

However, no dashboard service had been created, and several pods were still Pending:

$ kubectl get pods -n rook-ceph

NAME READY STATUS RESTARTS AGE

csi-cephfsplugin-9wdwt 2/2 Running 0 33m

csi-cephfsplugin-f4s2t 2/2 Running 0 31m

csi-cephfsplugin-j4l6h 2/2 Running 0 33m

csi-cephfsplugin-provisioner-5965769756-42zzh 0/5 Pending 0 33m

csi-cephfsplugin-provisioner-5965769756-mhzcv 0/5 Pending 0 33m

csi-cephfsplugin-v5hx6 2/2 Running 0 33m

csi-rbdplugin-2qtbn 2/2 Running 0 33m

csi-rbdplugin-82nqx 2/2 Running 0 33m

csi-rbdplugin-k8rtj 2/2 Running 0 33m

csi-rbdplugin-provisioner-7cb769bbb7-d98bq 5/5 Running 0 33m

csi-rbdplugin-provisioner-7cb769bbb7-r4zgb 0/5 Pending 0 33m

csi-rbdplugin-qxrqf 2/2 Running 0 31m

rook-ceph-mon-a-canary-5cff67c4fb-4jgs6 0/1 Pending 0 93s

rook-ceph-mon-b-canary-56f8f669cd-zv2c9 0/1 Pending 0 93s

rook-ceph-mon-c-canary-77958fc564-mlw9r 0/1 Pending 0 93s

rook-ceph-operator-56c65d67c5-lm4dq 1/1 Running 0 35m
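All three mon canaries, along with several CSI provisioners, never left Pending. Without mons, Rook never gets as far as starting the mgr, which is likely why no dashboard service appeared and why the PVC never bound. `kubectl describe pod` shows the scheduler's reason in its Events. Below is a small sketch, assuming bash, that filters `kubectl get pods` output down to the pods worth describing. `not_running_pods` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Read `kubectl get pods` output on stdin and print the name and STATUS of
# every pod that is neither Running nor Completed.
not_running_pods() {
  awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1, $3 }'
}

# Usage:
# kubectl get pods -n rook-ceph | not_running_pods
# kubectl -n rook-ceph describe pod rook-ceph-mon-a-canary-5cff67c4fb-4jgs6   # check Events
```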

After waiting a while and seeing no progress, I deleted the AKS cluster.

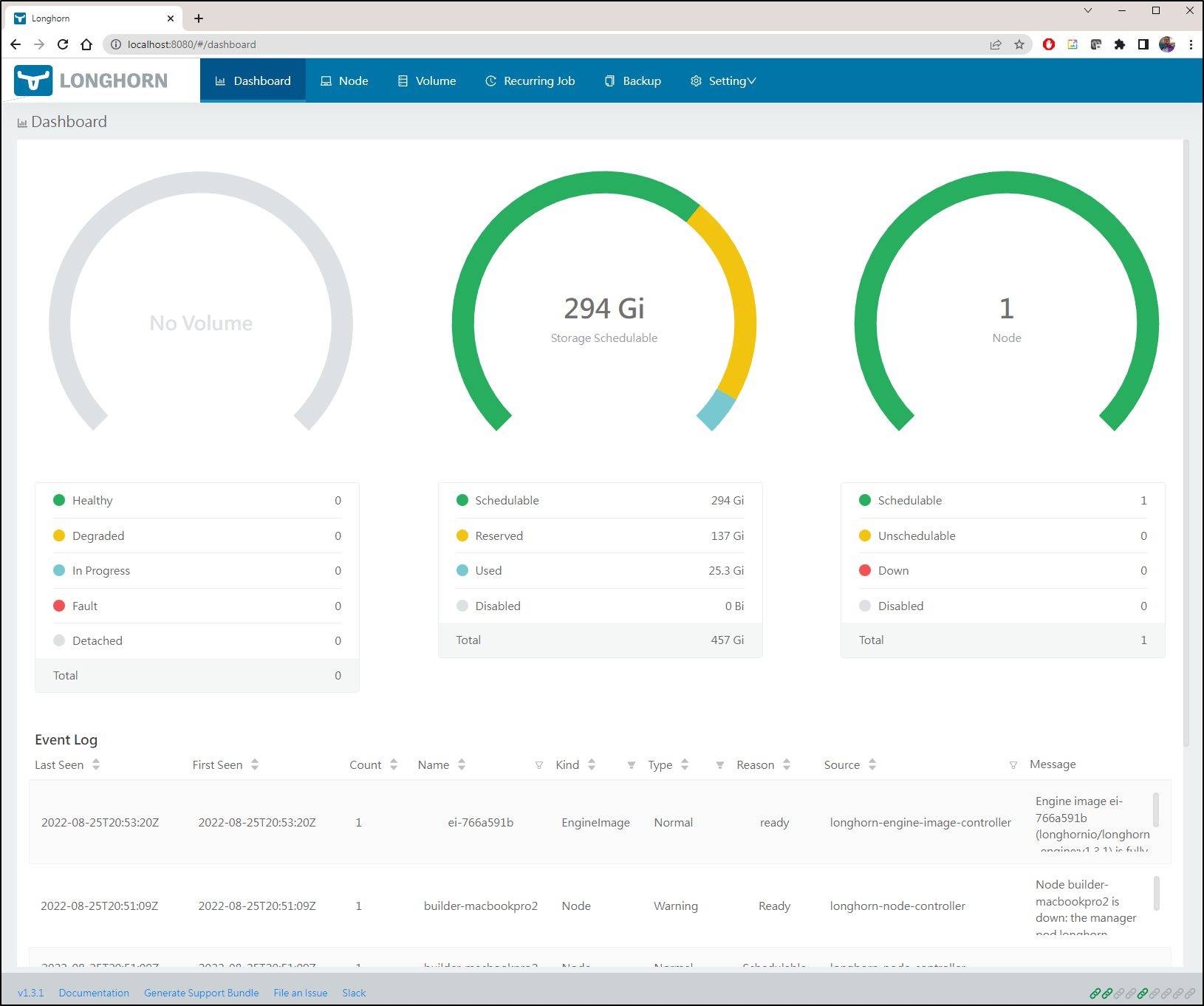

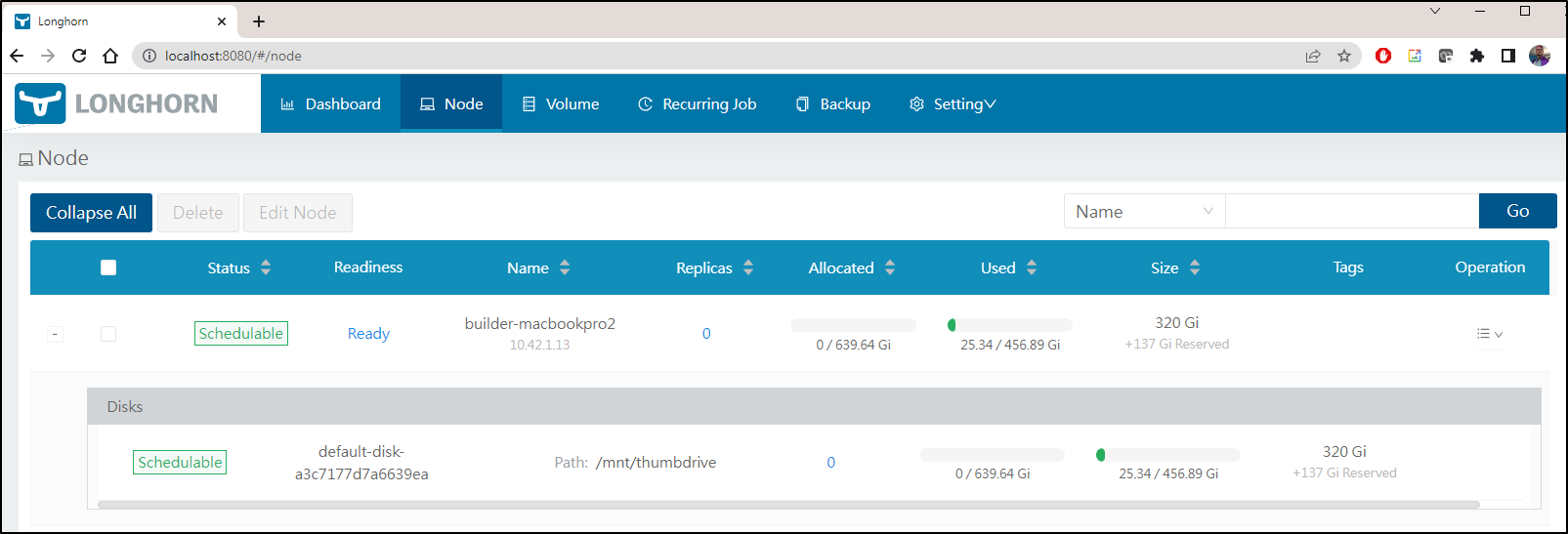

Rook : Attempt fünf : Using NFS instead of Ceph

I had one last idea for Rook - try the NFS implementation instead of Ceph.

I found yet another guide on using Rook NFS for PVCs. Perhaps Ceph’s requirements just don’t jibe with my laptops-and-Azure approach.

This time I respun the cluster with k3s v1.23:

$ curl -sfL https://get.k3s.io | INSTALL_K3S_VERSION="v1.23.10+k3s1" K3S_KUBECONFIG_MODE="644" INSTALL_K3S_EXEC="--tls-san 73.242.50.46" sh -

[INFO] Using v1.23.10+k3s1 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.23.10+k3s1/sha256sum-amd64.txt

[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.23.10+k3s1/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

[INFO] Skipping installation of SELinux RPM

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Creating /usr/local/bin/ctr symlink to k3s

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s.service

[INFO] systemd: Enabling k3s unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s.service → /etc/systemd/system/k3s.service.

[INFO] systemd: Starting k3s
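The worker join and the kubeconfig copy aren’t shown in the session. Below is a hedged sketch of both, assuming bash. A k3s agent joins by running the same installer with `K3S_URL` and `K3S_TOKEN` set (the token lives in `/var/lib/rancher/k3s/server/node-token` on the control-plane node). The server writes its kubeconfig to `/etc/rancher/k3s/k3s.yaml` pointing at `127.0.0.1`. Both function names are hypothetical, and the host and IP in the usage lines are placeholders.

```shell
#!/usr/bin/env bash
# Print the k3s agent install command for a worker node (same version as the server).
k3s_agent_join_cmd() {
  local server_ip="$1" token="$2"
  printf 'curl -sfL https://get.k3s.io | INSTALL_K3S_VERSION="v1.23.10+k3s1" K3S_URL=https://%s:6443 K3S_TOKEN=%s sh -\n' \
    "$server_ip" "$token"
}

# Rewrite the server URL in a k3s kubeconfig (read on stdin) so it works
# from another machine.
rewrite_kubeconfig_server() {
  local server_ip="$1"
  sed "s#https://127\.0\.0\.1:6443#https://${server_ip}:6443#"
}

# Usage (placeholders):
# k3s_agent_join_cmd 192.168.1.10 "$(ssh builder@anna-MacBookAir sudo cat /var/lib/rancher/k3s/server/node-token)"
# ssh builder@anna-MacBookAir cat /etc/rancher/k3s/k3s.yaml | rewrite_kubeconfig_server 192.168.1.10 > ~/.kube/k3s-config
```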

After adding the worker node, I could pull the kubeconfig and see the new cluster:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

anna-macbookair Ready control-plane,master 2m27s v1.23.10+k3s1

builder-macbookpro2 Ready <none> 66s v1.23.10+k3s1

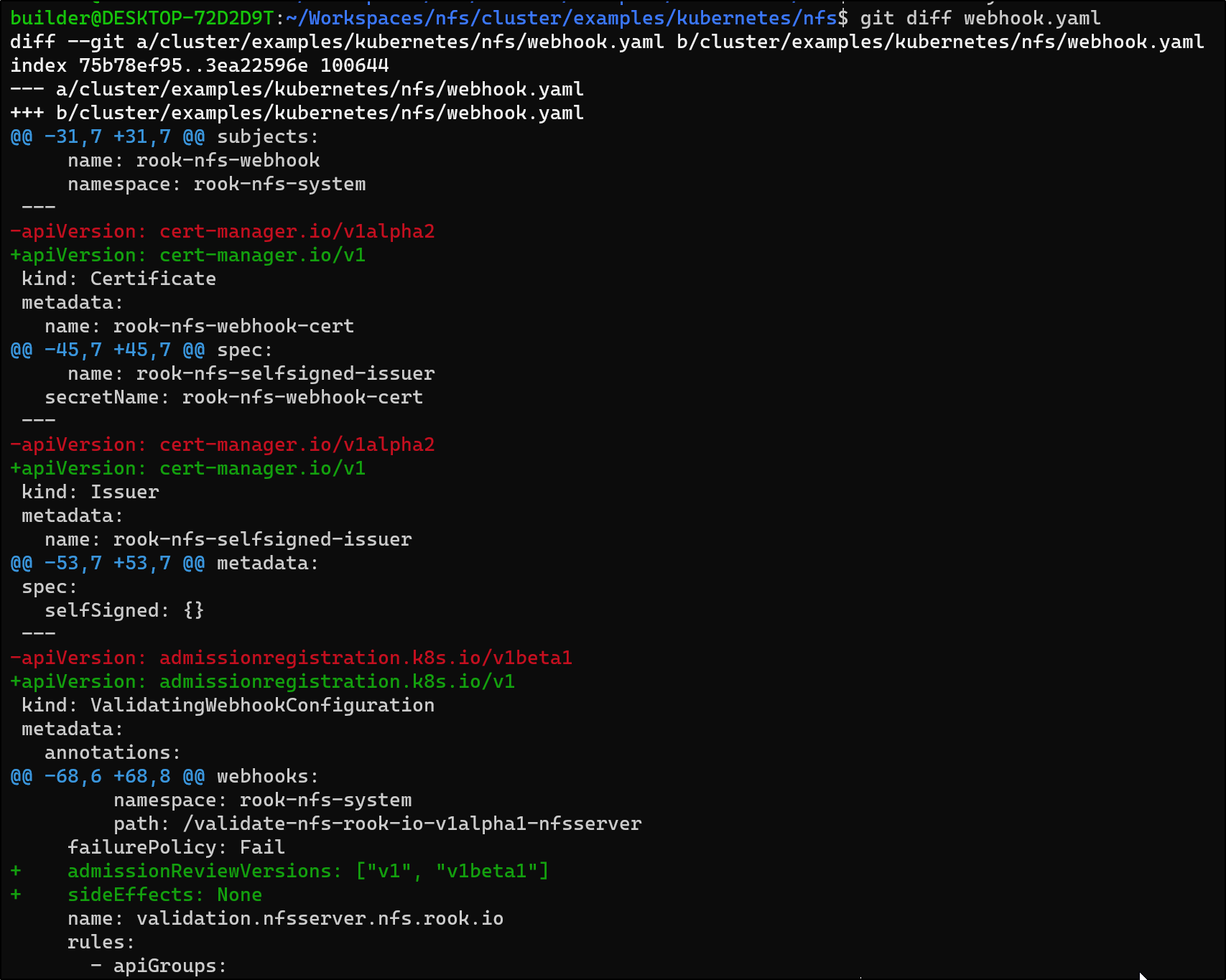

While Rook NFS is deprecated and unsupported, we can try its last known good release. We’ll largely be following this LKE guide, sprinkled with some notes from an unreleased version.

Get the Rook NFS Code

builder@DESKTOP-72D2D9T:~/Workspaces$ git clone --single-branch --branch v1.7.3 https://github.com/rook/nfs.git

Cloning into 'nfs'...

remote: Enumerating objects: 68742, done.

remote: Counting objects: 100% (17880/17880), done.

remote: Compressing objects: 100% (3047/3047), done.

remote: Total 68742 (delta 16619), reused 15044 (delta 14811), pack-reused 50862

Receiving objects: 100% (68742/68742), 34.16 MiB | 2.60 MiB/s, done.

Resolving deltas: 100% (48931/48931), done.

Note: switching to '99e2a518700c549e2d0855c00598ae560a4d002c'.

You are in 'detached HEAD' state. You can look around, make experimental

changes and commit them, and you can discard any commits you make in this

state without impacting any branches by switching back to a branch.

If you want to create a new branch to retain commits you create, you may

do so (now or later) by using -c with the switch command. Example:

git switch -c <new-branch-name>

Or undo this operation with:

git switch -

Turn off this advice by setting config variable advice.detachedHead to false

Now apply the CRDs and Operator

builder@DESKTOP-72D2D9T:~/Workspaces$ cd nfs/cluster/examples/kubernetes/nfs/

builder@DESKTOP-72D2D9T:~/Workspaces/nfs/cluster/examples/kubernetes/nfs$ kubectl create -f crds.yaml

customresourcedefinition.apiextensions.k8s.io/nfsservers.nfs.rook.io created

builder@DESKTOP-72D2D9T:~/Workspaces/nfs/cluster/examples/kubernetes/nfs$ kubectl create -f operator.yaml

namespace/rook-nfs-system created

serviceaccount/rook-nfs-operator created

clusterrolebinding.rbac.authorization.k8s.io/rook-nfs-operator created

clusterrole.rbac.authorization.k8s.io/rook-nfs-operator created

deployment.apps/rook-nfs-operator created

Check that the Operator is running

$ kubectl get pods -n rook-nfs-system

NAME READY STATUS RESTARTS AGE

rook-nfs-operator-556c5ddff7-dmfps 1/1 Running 0 81s

I need to add cert-manager

$ kubectl apply -f https://github.com/jetstack/cert-manager/releases/download/v1.8.0/cert-manager.yaml

namespace/cert-manager created

customresourcedefinition.apiextensions.k8s.io/certificaterequests.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/certificates.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/challenges.acme.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/clusterissuers.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/issuers.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/orders.acme.cert-manager.io created

serviceaccount/cert-manager-cainjector created

serviceaccount/cert-manager created

serviceaccount/cert-manager-webhook created

configmap/cert-manager-webhook created

clusterrole.rbac.authorization.k8s.io/cert-manager-cainjector created