Published: Sep 3, 2024 by Isaac Johnson

Recently I was asked about backups to the cloud using tools like s3fs, fuse, rsync and more.

Let’s first cover some real fundamentals on cloud storage and costs. The big three (AWS, Azure and GCP) all have some form of blob storage: AWS S3, Azure Storage Accounts and GCP Buckets.

With each of these we have the idea of “Hot”, “Cool” (many terms here) and “Cold” storage. Hot storage is the fastest and most expensive tier, and is generally the default. Cold or Archive storage is the cheapest to keep, but it has the highest cost to retrieve and at times carries a charge for removing files early. Archive can take longer to fetch as well.

Let’s break down the costs of storage (per month in USD unless otherwise specified) for the major cloud providers.

AWS Storage Classes and Costs

Let’s look at AWS S3 (source)

| | S3 Standard | S3 Intelligent-Tiering | S3 Express One Zone | S3 Standard-IA | S3 One Zone-IA | S3 Glacier Instant Retrieval | S3 Glacier Flexible Retrieval | S3 Glacier Deep Archive |
|---|---|---|---|---|---|---|---|---|
| I’d call it | Hot | Hot | Hot | Hot | Hot | Cool | Cold | Cold |
| Use cases | General purpose storage for frequently accessed data | Automatic cost savings for data with unknown or changing access patterns | High performance storage for your most frequently accessed data | Infrequently accessed data that needs millisecond access | Re-creatable infrequently accessed data | Long-lived data that is accessed a few times per year with instant retrievals | Backup and archive data that is rarely accessed and low cost | Archive data that is very rarely accessed and very low cost |
| First byte latency | milliseconds | milliseconds | single-digit milliseconds | milliseconds | milliseconds | milliseconds | minutes or hours | hours |
| Availability Zones | ≥3 | ≥3 | 1 | ≥3 | 1 | ≥3 | ≥3 | ≥3 |
| Minimum storage duration charge | N/A | N/A | 1 hour | 30 days | 30 days | 90 days | 90 days | 180 days |
| Retrieval charge | N/A | N/A | N/A | per GB retrieved | per GB retrieved | per GB retrieved | per GB retrieved | per GB retrieved |
| Lifecycle transitions | Yes | Yes | No | Yes | Yes | Yes | Yes | Yes |

In all cases except for Express One Zone we can have a storage lifecycle rule to move things between classes.

A common policy would be to use S3 Standard, move the object to Standard-IA once it has existed for 30 days, and then move it to S3 Glacier at 90 days. With the more nuanced classes, I might now use “S3 Intelligent-Tiering”, then move to “S3 Standard-IA” before going to “S3 Glacier Flexible Retrieval”. If it’s something that has regulatory requirements for years (or it’s personal files like photos), I might then send it to Deep Archive after 1 year.
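As a minimal sketch of that tiering policy, the function below maps an object's age to the class it would sit in. The thresholds (30 days, 90 days, 1 year) come from the paragraph above; the function name, the `long_term` flag and the returned labels are my own illustrative choices, not AWS API identifiers.

```python
def storage_class_for_age(age_days: int, long_term: bool = False) -> str:
    """Return the tier an object of the given age would sit in.

    Thresholds follow the policy described in the text: Standard for the
    first 30 days, Standard-IA until day 90, then Glacier Flexible Retrieval.
    When long_term is True (regulatory data, personal photos), objects
    older than one year move on to Deep Archive.
    """
    if age_days < 0:
        raise ValueError("age_days must be non-negative")
    if long_term and age_days >= 365:
        return "S3 Glacier Deep Archive"
    if age_days >= 90:
        return "S3 Glacier Flexible Retrieval"
    if age_days >= 30:
        return "S3 Standard-IA"
    return "S3 Standard"


# Walk a few sample ages through the policy.
for age in (0, 45, 120, 400):
    print(age, storage_class_for_age(age, long_term=True))
```

In practice the lifecycle rule on the bucket does this for you; the function is only a way to reason about where a given backup will be on a given day.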

Just to give an idea of cost basics, storing 100GB in US East (Ohio) today in Standard storage would be US$2.30, but only US$0.10 in Deep Archive. However, if I pull that 100GB back within the month, the Deep Archive cost climbs to $2.10.

If I’m just storing archives, the difference can really add up. Storing 50TB in Deep Archive would be US$51.36/month, but if we just left it in S3 Standard that would add up to US$1,177.60/month.
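The arithmetic behind those figures can be sketched as below. The per-GB rates are assumptions I back-calculated from the numbers quoted above (for example $2.30 / 100 GB), so check the current pricing page before relying on them; the function and constant names are hypothetical.

```python
def monthly_cost(stored_gb: float, storage_rate: float,
                 retrieved_gb: float = 0.0, retrieval_rate: float = 0.0) -> float:
    """Storage plus retrieval cost in USD for one month."""
    return stored_gb * storage_rate + retrieved_gb * retrieval_rate


# Assumed per-GB rates, derived from the figures in the text.
STANDARD_RATE = 0.023                # $2.30 for 100 GB
DEEP_ARCHIVE_RATE = 0.00099          # roughly $0.10 for 100 GB
DEEP_ARCHIVE_RETRIEVAL_RATE = 0.02   # ($2.10 - $0.10) over 100 GB

standard = monthly_cost(100, STANDARD_RATE)
archive_idle = monthly_cost(100, DEEP_ARCHIVE_RATE)
archive_restored = monthly_cost(100, DEEP_ARCHIVE_RATE,
                                retrieved_gb=100,
                                retrieval_rate=DEEP_ARCHIVE_RETRIEVAL_RATE)

print(f"Standard, 100 GB stored:           ${standard:.2f}")
print(f"Deep Archive, 100 GB stored:       ${archive_idle:.2f}")
print(f"Deep Archive, stored and restored: ${archive_restored:.2f}")
```

The point of the sketch is the shape of the bill: archive tiers are cheap only as long as you do not read the data back.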

GCP

Let’s now compare prices for what we just suggested in AWS. Storing 50TB in Standard storage in a GCP Bucket in us-central1 today would be US$999/month.

A quick estimate in us-central1 as of today in USD, per month

| Data | Standard | Nearline | Coldline | Archive |
|---|---|---|---|---|
| 50GB | 0.90 | 0.50 | 0.20 | 0.06 |
| 500GB | 9.90 | 5.00 | 2.00 | 0.60 |
| 50TB | 999 | 500 | 200 | 60 |

However, the retrieval costs in GCP are similar. If I stored 100GB in Coldline but also pulled 100GB, we would be looking at US$2.40. But if I stay in GCP then that would be $0.40 (meaning this could be handy for backups/restores in GCP).

Azure

Azure has the idea of Access Tiers (Hot, Cool, Cold and Archive) as well as Redundancy like LRS, ZRS, GRS, RA-GRS, GZRS and RA-GZRS. So for our Redundancy, it’s just about how much risk we would tolerate.

LRS is the cheapest, but least “safe”, in that your data is locally redundant and stored three times within a single physical location in the primary region. This means a full data center outage or loss could impact you. Zone-redundant storage (ZRS) copies it synchronously across three Availability Zones. Geo-zone-redundant storage (GZRS) copies it across three AZs in the primary region and then replicates it asynchronously to a secondary region.

For backups, I tend to like GRS, or Geo-redundant storage which copies it three times in a physical location but then syncs asynchronously to a location in a secondary region that is hundreds of miles from that primary location.

For a GPv2 Storage Account (which I generally use) in US East today, in USD per month:

| Data | Redundancy | Hot | Cool | Cold | Archive |
|---|---|---|---|---|---|
| 50GB | LRS | 1.04 | 0.76 | 0.18 | 0.05 |
| 50GB | GRS | 2.29 | 1.67 | 0.40 | 0.15 |
| 50GB | GZRS | 2.34 | 1.71 | 0.43 | 0.15 (RA-GRS) |
| 500GB | LRS | 10.40 | 7.60 | 1.80 | 0.50 |
| 500GB | GRS | 22.90 | 16.70 | 4.05 | 1.50 |
| 500GB | GZRS | 23.40 | 17.10 | 4.25 | 1.50 (RA-GRS) |
| 50TB | LRS | 1064.96 | 778.24 | 184.32 | 50.69 |
| 50TB | GRS | 2344.96 | 1710.08 | 414.72 | 153.09 |
| 50TB | GZRS | 2396.16 | 1751.04 | 435.20 | 153.09 (RA-GRS) |

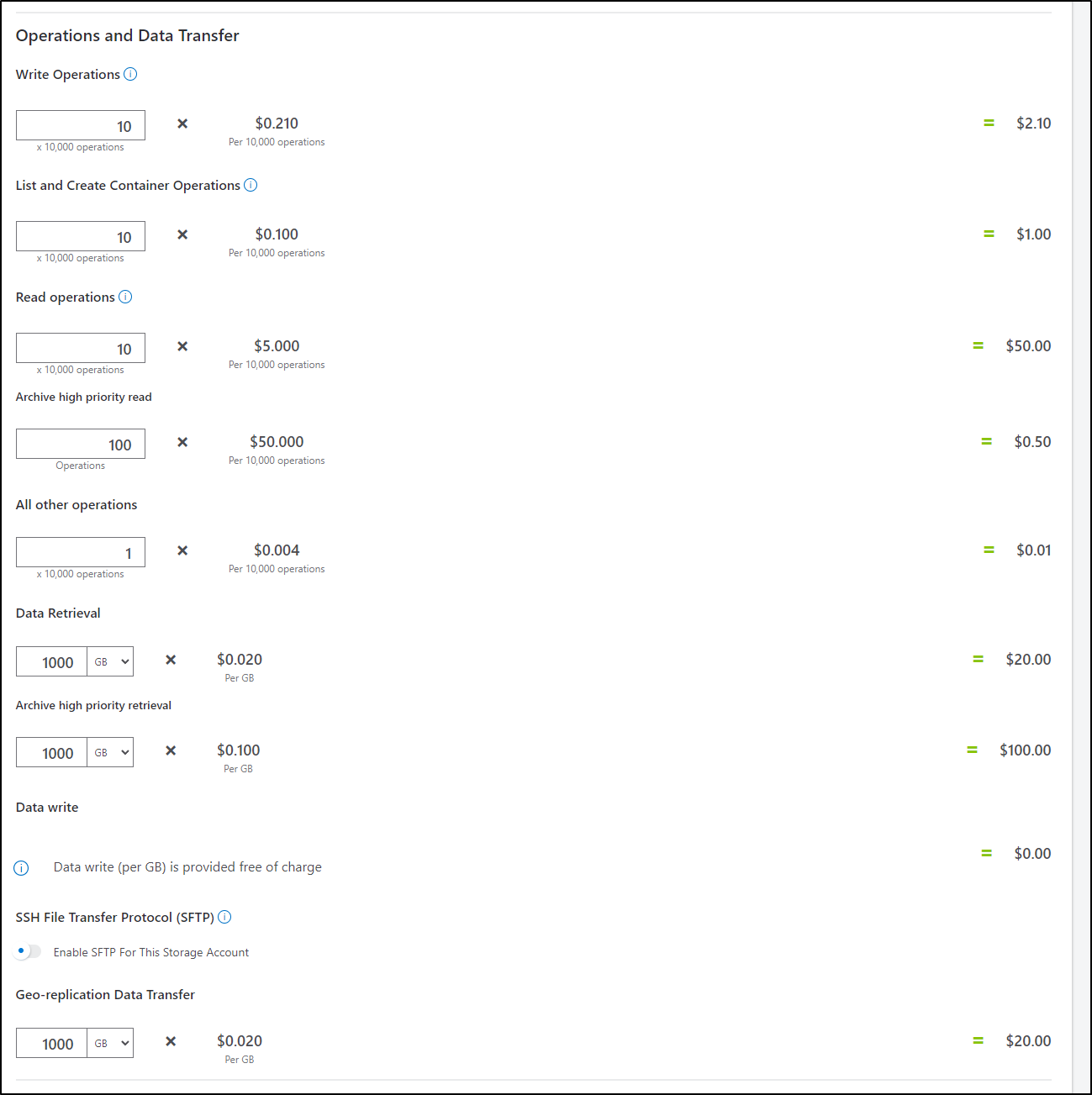

Azure has a lot of other small charges too that can add up.

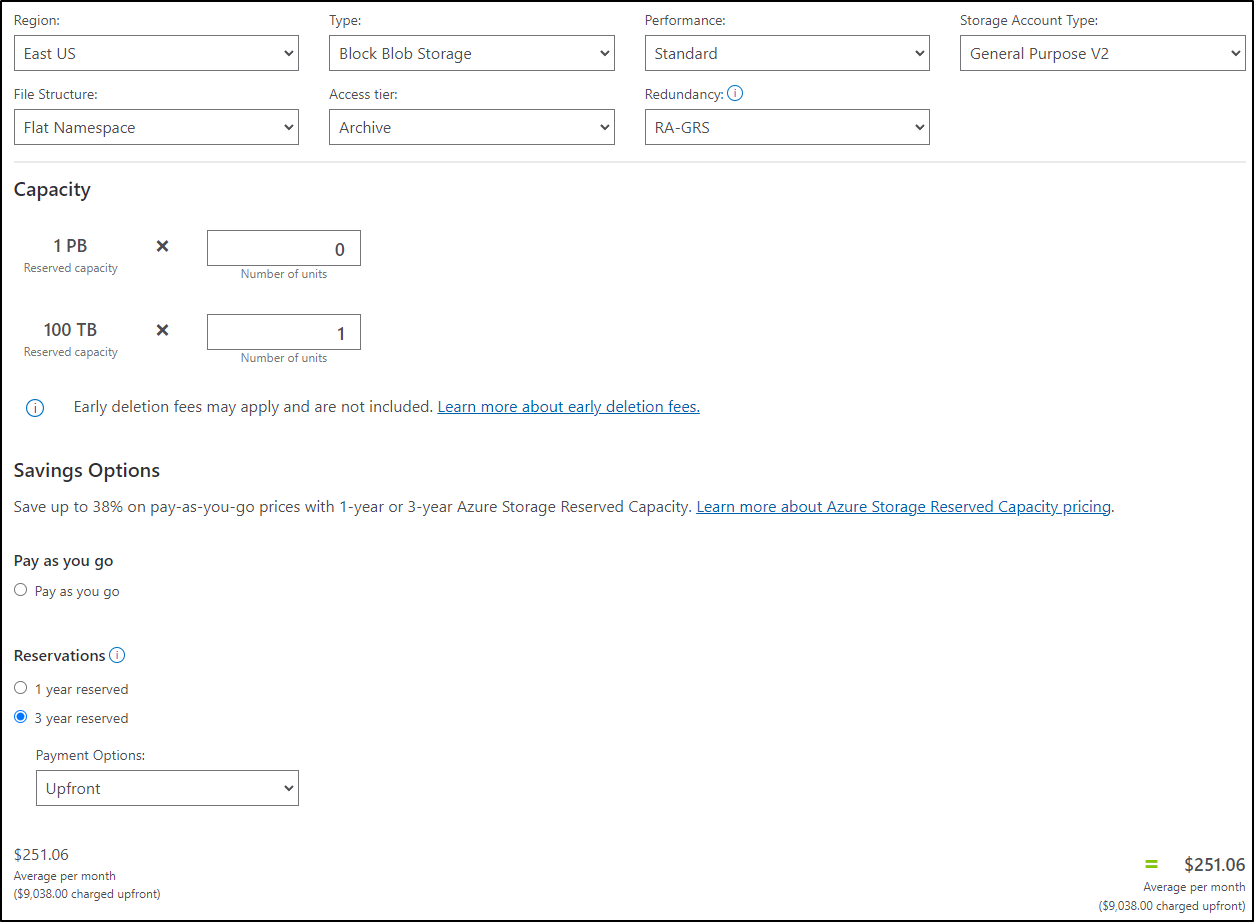

However, if we are looking at big storage, we can consider buying storage up front to just “stash” things.

Consider that I could get 100TB of storage for an average of US$251 a month if I wanted to pay for three years upfront ($9,038 today), compared to $306/mo buying it per month.

This makes more sense when we get to petabyte-sized units. For instance, paying for 1000TB (1PB) per month with RA-GRS would be US$3,061, compared to $2,445.50/mo by dropping $88,038 up front for a 3-year commitment or $2,664.92/mo by dropping $31,979 up front for a 1-year commitment.
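To make the reserved-capacity math explicit, here is a small sketch that turns an upfront payment into an effective monthly price and a percentage saving. All dollar figures are the ones quoted above; the helper names are hypothetical.

```python
def effective_monthly(upfront_usd: float, term_months: int) -> float:
    """Spread an upfront payment evenly over the commitment term."""
    return upfront_usd / term_months


def savings_pct(pay_as_you_go_monthly: float, reserved_monthly: float) -> float:
    """Percentage saved per month versus paying as you go."""
    return (1 - reserved_monthly / pay_as_you_go_monthly) * 100


# 1 PB, using the prices quoted in the text.
pay_as_you_go = 3061.00
three_year = effective_monthly(88038, 36)
one_year = effective_monthly(31979, 12)

print(f"3-year: ${three_year:,.2f}/mo ({savings_pct(pay_as_you_go, three_year):.1f}% saved)")
print(f"1-year: ${one_year:,.2f}/mo ({savings_pct(pay_as_you_go, one_year):.1f}% saved)")
```

The same two functions reproduce the 100TB example: $9,038 over 36 months is about $251 a month against $306 pay-as-you-go.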

Big Data, a comparison

Assuming I have to stash a full petabyte (1000TB)

| Cloud | Type | Per month | Per month 1-y upfront | Per month 3-y upfront |
|---|---|---|---|---|
| Azure | Archive LRS | 1014 | 882 | 810 |
| AWS | Glacier Deep Archive | 1027 | — | — |
| GCP | Archive Storage | 1258 | — | — |
| Azure | GRS | 3061 | 2665 | 2446 |

Azure only looks good if you have a tolerance for risk as LRS “is the lowest-cost redundancy option and offers the least durability compared to other options. LRS protects your data against server rack and drive failures. However, if a disaster such as fire or flooding occurs within the data center, all replicas of a storage account using LRS might be lost or unrecoverable” (source: Azure docs)

Okay I’m now more confused.

While professors and software architects love to say “It Depends”, this can generally just aggravate a manager looking for some kind of straightforward answer.

The key decision paths here are how much data you need to stash and for how long. A lot of times we need to store “all database backups for 4 years” or some kind of DR that says we need “90 days of hot backups of our main database in case of a breach or disaster”.

Let’s just take those two questions…

Database Backups for 4 years

I typically see production databases between 500GB and 50TB, so let’s consider these two common sizes with some different RPOs and RTOs.

My first RPO is just once a month (so for four years that is 1 in hot, 2 in cool, and 45 backups in archive). I’m just considering Full Backups to keep the math simple.

AWS

| Database Size | Copies | Storage Class | Cost a Month | Total per Month |
|---|---|---|---|---|
| 500GB | 1 | Standard | 11.50 | 11.50 |
| 500GB | 2 | Glacier Flexible Retrieval | 3.62 | 7.24 |
| 500GB | 45 | Glacier Deep Archive | 0.50 | 22.57 |
| Total | | | | $41.31 |

GCP

| Database Size | Copies | Storage Class | Cost a Month | Total per Month |
|---|---|---|---|---|
| 500GB | 1 | Nearline | 5.00 | 5.00 |
| 500GB | 2 | Coldline | 2.00 | 4.00 |
| 500GB | 45 | Archive | 0.60 | 27.00 |
| Total | | | | $36.00 |

Azure

| Database Size | Copies | Storage Class | Cost a Month | Total per Month |
|---|---|---|---|---|
| 500GB | 1 | Cool (ZRS) | 9.50 | 9.50 |
| 500GB | 2 | Cold (ZRS) | 2.00 | 4.00 |
| 500GB | 45 | Archive (GRS) | 1.50 | 67.28 |
| Total | | | | $80.78 |

Note: if we had tolerance for losing data (which we don’t on a production database backup), Archive LRS would be 22.28/mo, for a total of $35.78 for Azure.
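The retention math in these tables can be sketched as follows: four years of monthly full backups gives 48 copies, of which the newest sits in a hot tier, the next two in a cool tier, and the rest in archive. The example uses the GCP per-copy prices from the table above; the class and function names are my own illustration.

```python
from dataclasses import dataclass


@dataclass
class Tier:
    name: str
    copies: int
    monthly_cost_per_copy: float  # USD for one 500 GB full backup


def split_monthly_backups(years: int, hot: int = 1, cool: int = 2) -> tuple:
    """Return (hot, cool, archive) copy counts for monthly backups kept `years` years."""
    archive = years * 12 - hot - cool
    if archive < 0:
        raise ValueError("retention period is shorter than the hot and cool windows")
    return hot, cool, archive


def monthly_bill(tiers: list) -> float:
    """Total monthly storage cost across all tiers."""
    return sum(t.copies * t.monthly_cost_per_copy for t in tiers)


hot, cool, archive = split_monthly_backups(years=4)
gcp = [
    Tier("Nearline", hot, 5.00),
    Tier("Coldline", cool, 2.00),
    Tier("Archive", archive, 0.60),
]
print(f"{hot + cool + archive} copies, ${monthly_bill(gcp):.2f} per month")
```

Swapping in another provider's per-copy prices gives that provider's total, keeping in mind that the published per-copy figures are rounded.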

90 days of hot backups of our main database in case of a breach or disaster

Here we will assume daily backups for 90 days and we cannot afford slow access.

AWS

| Database Size | Copies | Storage Class | Cost a Month | Total |
|---|---|---|---|---|
| 500GB | 93 | Standard-IA | 6.25 | 581.25 |
| 1TB | 93 | Standard-IA | 12.50 | 1,162.50 |

GCP

| Database Size | Copies | Storage Class | Cost a Month | Total |
|---|---|---|---|---|
| 500GB | 93 | Nearline | 5.00 | 465.00 |
| 1TB | 93 | Nearline | 10.00 | 930.00 |

Azure

| Database Size | Copies | Storage Class | Cost a Month | Total |
|---|---|---|---|---|
| 500GB | 93 | Cool (ZRS) | 7.60 | 706.80 |
| 1TB | 93 | Cool (ZRS) | 7.60 | 1,556.48 |
| 1TB | 93 | Cool (ZRS) | 7.60 | 1,276 (1y) |
| 1TB | 93 | Cool (ZRS) | 7.60 | 1,027 (3y) |

I don’t need to explore more - from a storage perspective, it seems that short of special discounts your c-suite might negotiate with Microsoft, Azure is eliminated from a costing perspective.
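For a quick side-by-side, the 500GB rows above can be ranked with a few lines of Python. The per-copy prices and the 93-copy count are taken straight from the tables; the dictionary keys and function name are just labels I picked.

```python
def total_monthly(copies: int, per_copy_usd: float) -> float:
    """Monthly bill for keeping `copies` backups at a given per-copy price."""
    return copies * per_copy_usd


# Per-copy monthly prices for one 500 GB backup, from the tables above.
PER_COPY_500GB = {
    "AWS Standard-IA": 6.25,
    "GCP Nearline": 5.00,
    "Azure Cool (ZRS)": 7.60,
}
COPIES = 93

# Sort cheapest first.
ranked = sorted(
    (total_monthly(COPIES, price), name) for name, price in PER_COPY_500GB.items()
)
for total, name in ranked:
    print(f"{name}: ${total:,.2f}/month")
```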

Let’s do some backups

I think we have laid out some arguments above for why we want to pursue either AWS, which as the largest cloud has some of the best prices around storage in S3, or GCP, which, because they are Google and have a massive backend for the likes of YouTube, Google and their other properties, offers a good deal on storage.

S3Fuse for AWS

The most common path I tend to follow is to use a mount on a Linux box to just “expose” the cloud via the filesystem. Then the rest is all about my tooling for keeping one folder synced to another.

We start by installing s3fs with apt, though many other package managers can install it as well.

$ sudo apt install s3fs

Reading package lists...

Building dependency tree

Reading state information... Done

s3fs is already the newest version (1.86-1).

The following packages were automatically installed and are no longer required:

aspnetcore-runtime-3.1 aspnetcore-targeting-pack-3.1 aspnetcore-targeting-pack-6.0 dotnet-apphost-pack-3.1 dotnet-apphost-pack-6.0 dotnet-targeting-pack-3.1

dotnet-targeting-pack-6.0 libappstream-glib8 libdbus-glib-1-2 libfwupdplugin1 liblttng-ust-ctl4 liblttng-ust0 libxmlb1 python3-crcmod

Use 'sudo apt autoremove' to remove them.

0 upgraded, 0 newly installed, 0 to remove and 0 not upgraded.

2 not fully installed or removed.

After this operation, 0 B of additional disk space will be used.

Do you want to continue? [Y/n] Y

My next step is to put an Access Key and Secret into a local secrets file. The format should be “KEYID:KEYSECRET”.

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ vi ${HOME}/.passwd-s3fs

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ chmod 600 ~/.passwd-s3fs

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ cat ~/.passwd-s3fs | sed 's/[^:]/*/g'

********************:****************************************

I can now mount it and list the contents

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ sudo s3fs fbs-logs /mnt/s3-fbs-logs -o passwd_file=/home/builder/.passwd-s3fs

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ sudo ls -ltra /mnt/s3-fbs-logs

total 5

drwx------ 1 root root 0 Dec 31 1969 .

drwxr-xr-x 8 root root 4096 Aug 30 08:02 ..

To test, I’ll just copy a local index file in using the AWS CLI then check the folder to see if it’s there.

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ aws s3 cp ./index.html s3://fbs-logs/

upload: ./index.html to s3://fbs-logs/index.html

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ sudo ls -ltra /mnt/s3-fbs-logs

total 13

drwx------ 1 root root 0 Dec 31 1969 .

drwxr-xr-x 8 root root 4096 Aug 30 08:02 ..

-rw-r----- 1 root root 7756 Aug 30 08:05 index.html

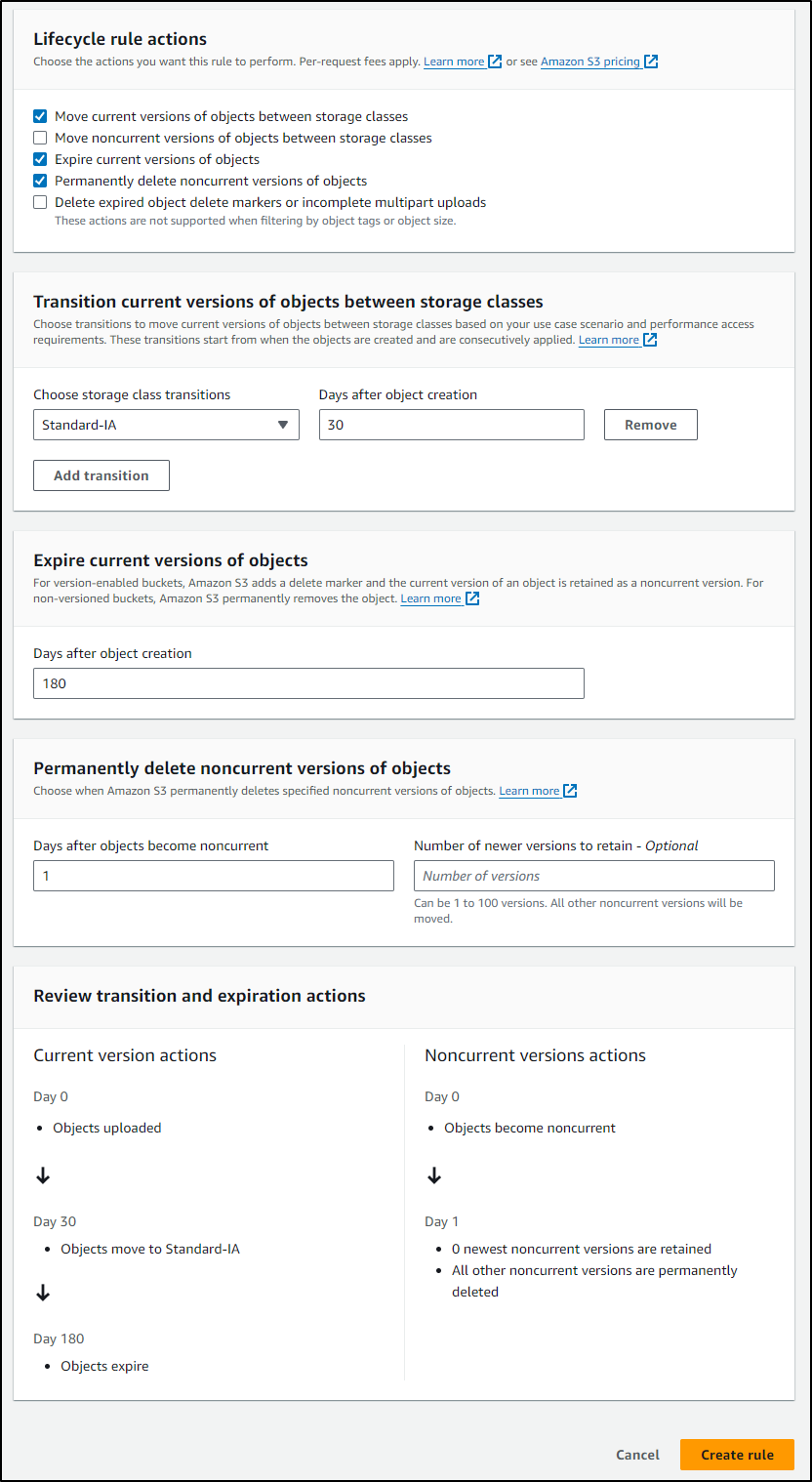

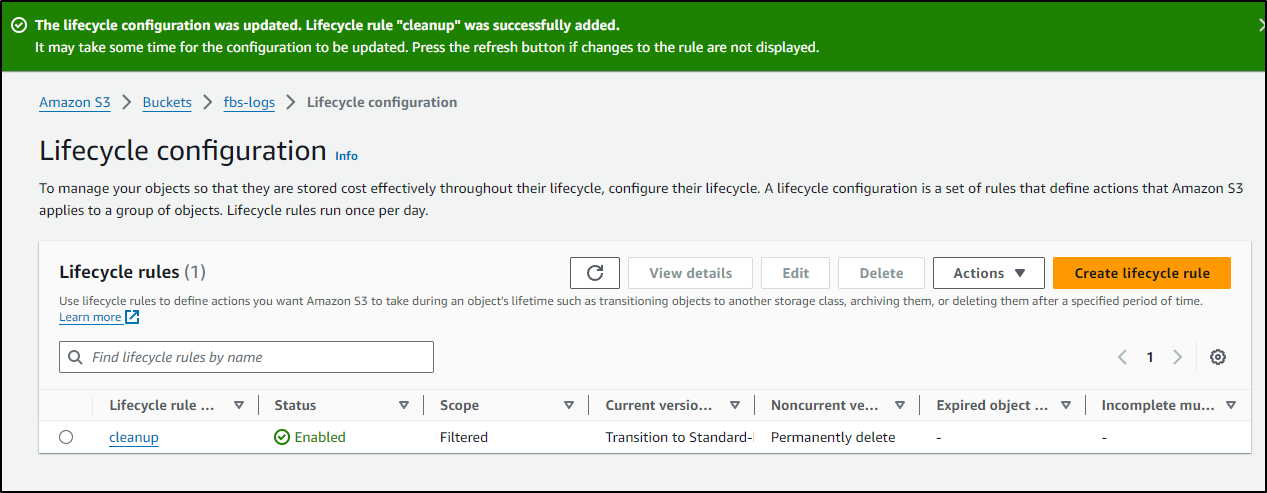

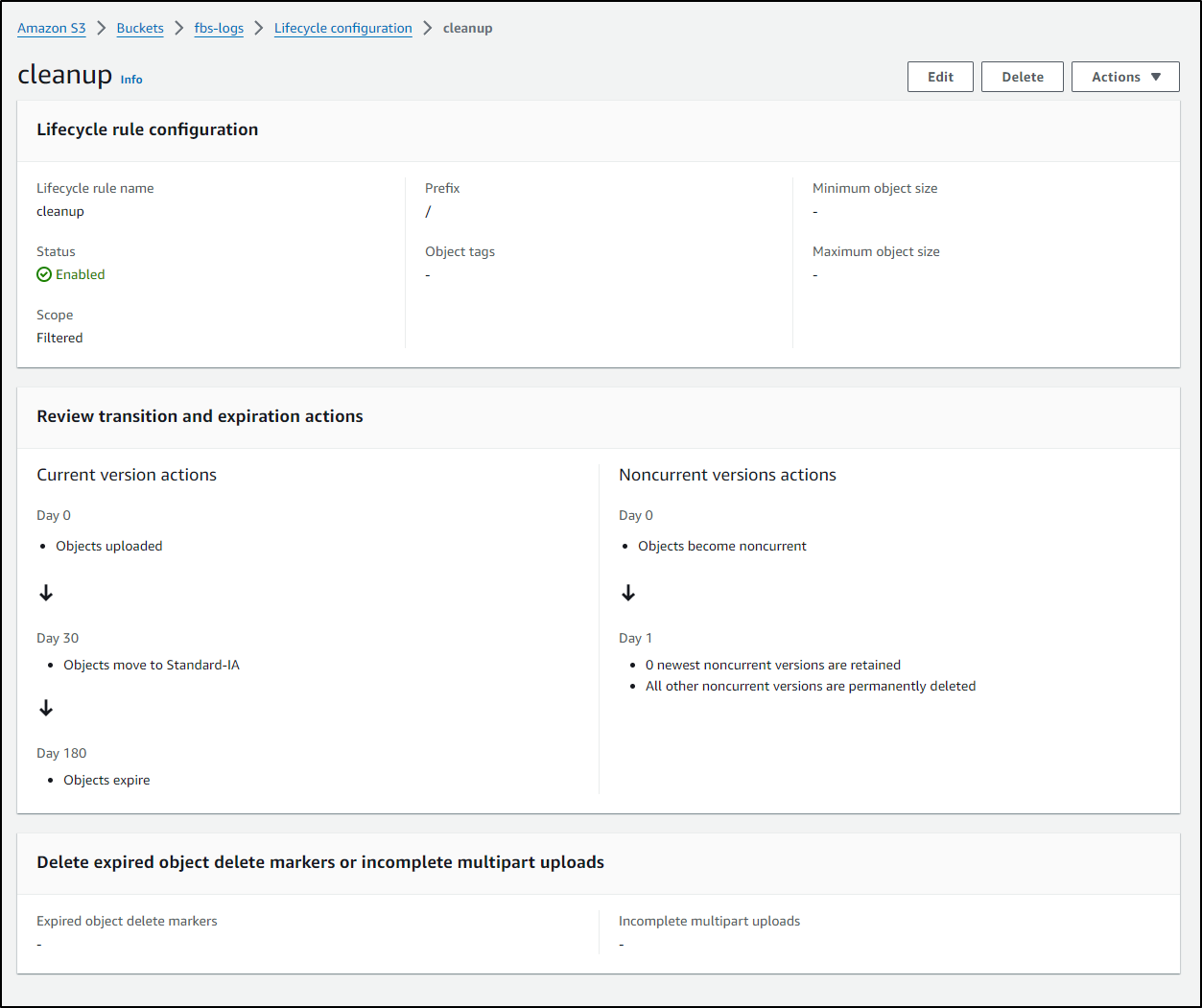

If I were using this for a 180-day backup scenario, I would set a lifecycle rule that moves objects into IA right after the minimum 30 days (it won’t let you use less). I would also set a rule to expire objects and remove the expired ones after 180 days.

Once saved, I can see the rule on the logs bucket

If I click it, I can review it and/or edit/remove it.
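I set the rule up in the console, but a scripted equivalent might look like the sketch below. It builds the rule as a plain dictionary and hands it to boto3's `put_bucket_lifecycle_configuration`. The rule ID is a hypothetical name, and I am assuming boto3 is installed and credentials are configured before `apply_lifecycle` is called.

```python
import json


def build_lifecycle_configuration(ia_after_days: int = 30,
                                  expire_after_days: int = 180) -> dict:
    """Build a rule that moves objects to Standard-IA, then expires them."""
    if ia_after_days < 30:
        raise ValueError("S3 requires at least 30 days before a move to Standard-IA")
    if expire_after_days <= ia_after_days:
        raise ValueError("expiration must come after the transition")
    return {
        "Rules": [
            {
                "ID": "backups-ia-then-expire",  # hypothetical rule name
                "Filter": {"Prefix": ""},        # empty prefix: whole bucket
                "Status": "Enabled",
                "Transitions": [
                    {"Days": ia_after_days, "StorageClass": "STANDARD_IA"}
                ],
                "Expiration": {"Days": expire_after_days},
            }
        ]
    }


def apply_lifecycle(bucket: str, configuration: dict) -> None:
    """Push the configuration to the bucket (needs boto3 and AWS credentials)."""
    import boto3  # third-party; imported lazily so the builder works without it

    boto3.client("s3").put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=configuration
    )


# Print the rule for review before applying it.
print(json.dumps(build_lifecycle_configuration(), indent=2))
```

Calling `apply_lifecycle("fbs-logs", build_lifecycle_configuration())` would replace the bucket's existing lifecycle configuration, so review the printed rule first.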

One way I might sync files, using ‘/var/log’ as my example, would be to use rsync

builder@DESKTOP-QADGF36:/var/log$ sudo rsync -av --include='*.log' --exclude="*" /var/log/ /mnt/s3-fbs-logs/$(date +%Y-%m-%d)/

sending incremental file list

ubuntu-advantage.log

sent 215 bytes received 35 bytes 100.00 bytes/sec

total size is 1,220,324 speedup is 4,881.30

Here I’ll copy all my log files to a folder with the date. I could run it more than once a day and it would just clobber the destination files if different, but kindly skip those that are unchanged

builder@DESKTOP-QADGF36:/var/log$ sudo rsync -av --include='*.log' --exclude="*" /var/log/ /mnt/s3-fbs-logs/$(date +%Y-%m-%d)/

sending incremental file list

sent 172 bytes received 12 bytes 368.00 bytes/sec

total size is 1,220,324 speedup is 6,632.20

However, I found it copied just the one file, ignoring the other “.log” files

builder@DESKTOP-QADGF36:/var/log$ ls *.log

alternatives.log dpkg.log fontconfig.log ubuntu-advantage.log

builder@DESKTOP-QADGF36:/var/log$ sudo ls -ltra /mnt/s3-fbs-logs/2024-08-30/

total 2

drwx------ 1 root root 0 Dec 31 1969 ..

-rw------- 1 root root 0 Jul 21 2021 ubuntu-advantage.log

drwxrwxr-x 1 root syslog 0 Aug 30 07:57 .

I can use a simple find and cp command as well. This wouldn’t skip repeats, but I know it would work. Because there is a delay in the file “showing up” in the S3 mount, we see some warnings the first time, but not the second.

builder@DESKTOP-QADGF36:/var/log$ sudo find /var/log -type f -name \*.log -exec cp -f {} /mnt/s3-fbs-logs/$(date +%Y-%m-%d)/ \;

cp: cannot fstat '/mnt/s3-fbs-logs/2024-08-30/fontconfig.log': No such file or directory

cp: cannot fstat '/mnt/s3-fbs-logs/2024-08-30/dpkg.log': No such file or directory

cp: cannot fstat '/mnt/s3-fbs-logs/2024-08-30/alternatives.log': No such file or directory

builder@DESKTOP-QADGF36:/var/log$ sudo find /var/log -type f -name \*.log -exec cp -f {} /mnt/s3-fbs-logs/$(date +%Y-%m-%d)/ \;

builder@DESKTOP-QADGF36:/var/log$

Now I see backups

builder@DESKTOP-QADGF36:/var/log$ sudo ls -ltra /mnt/s3-fbs-logs/2024-08-30/

total 1863

drwx------ 1 root root 0 Dec 31 1969 ..

-rw------- 1 root root 0 Jul 21 2021 ubuntu-advantage.log

drwxrwxr-x 1 root syslog 0 Aug 30 07:57 .

-rw-r--r-- 1 root root 0 Aug 30 08:26 sysinfo.log

-rw-r----- 1 root root 570778 Aug 30 08:26 term.log

-rw-r--r-- 1 root root 112999 Aug 30 08:26 history.log

-rw-r--r-- 1 root root 3494 Aug 30 08:26 fontconfig.log

-rw-r--r-- 1 root root 1152190 Aug 30 08:26 dpkg.log

-rw-r--r-- 1 root root 64640 Aug 30 08:26 alternatives.log
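If skipping unchanged files matters, the find-and-cp approach could be replaced with a short script. This is a minimal sketch using only the standard library: it copies every `*.log` file under a source directory into a dated folder on the mount and skips files whose size and modification time already match. The function name is hypothetical, and I am assuming the mount preserves modification times; if yours does not, files would simply be copied again.

```python
import shutil
from datetime import date
from pathlib import Path
from typing import List, Optional


def backup_logs(source: Path, mount: Path, today: Optional[date] = None) -> List[Path]:
    """Copy *.log files under `source` into mount/YYYY-MM-DD/, skipping unchanged ones."""
    dest_dir = mount / (today or date.today()).isoformat()
    dest_dir.mkdir(parents=True, exist_ok=True)  # create the dated folder first

    copied = []
    for src in sorted(source.rglob("*.log")):
        if not src.is_file():
            continue
        dest = dest_dir / src.name  # flattened, like the find/cp command
        if dest.exists():
            src_stat, dest_stat = src.stat(), dest.stat()
            unchanged = (dest_stat.st_size == src_stat.st_size
                         and dest_stat.st_mtime >= src_stat.st_mtime)
            if unchanged:
                continue
        shutil.copy2(src, dest)  # copy2 also copies the modification time
        copied.append(dest)
    return copied
```

A call such as `backup_logs(Path("/var/log"), Path("/mnt/s3-fbs-logs"))` would mirror the command above. Like the find command, it flattens subdirectories, so two files with the same name in different folders would overwrite each other.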

And because I pack the date, I can run this daily

builder@DESKTOP-QADGF36:/var/log$ sudo crontab -e

no crontab for root - using an empty one

crontab: installing new crontab

builder@DESKTOP-QADGF36:/var/log$ sudo crontab -l

# Edit this file to introduce tasks to be run by cron.

#

# Each task to run has to be defined through a single line

# indicating with different fields when the task will be run

# and what command to run for the task

#

# To define the time you can provide concrete values for

# minute (m), hour (h), day of month (dom), month (mon),

# and day of week (dow) or use '*' in these fields (for 'any').

#

# Notice that tasks will be started based on the cron's system

# daemon's notion of time and timezones.

#

# Output of the crontab jobs (including errors) is sent through

# email to the user the crontab file belongs to (unless redirected).

#

# For example, you can run a backup of all your user accounts

# at 5 a.m every week with:

# 0 5 * * 1 tar -zcf /var/backups/home.tgz /home/

#

# For more information see the manual pages of crontab(5) and cron(8)

#

# m h dom mon dow command

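# % is special in crontab and must be escaped as \%; the dated folder must exist before cp runs

0 0 * * * mkdir -p /mnt/s3-fbs-logs/$(date +\%Y-\%m-\%d) && find /var/log -type f -name \*.log -exec cp -f {} /mnt/s3-fbs-logs/$(date +\%Y-\%m-\%d)/ \;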

I estimate this might total 186MB for three months of data, which doesn’t even register as a value in AWS S3 storage (shows 0.00).

If you are doing this on a real server, you’ll want to move that bucket mount into /etc/fstab so you can withstand a reboot.

To do this, find your user id and group id

builder@DESKTOP-QADGF36:~$ id -u builder

1000

builder@DESKTOP-QADGF36:~$ id -g builder

1000

Have a mount point created

builder@DESKTOP-QADGF36:/var/log$ sudo mkdir /mnt/bucket2

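Then add an entry for your bucket in fstab. Note that `default_acl=public-read` in my entry applies a public-read ACL to objects written through the mount; for private backups, drop it or use `default_acl=private`.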

builder@DESKTOP-QADGF36:/var/log$ sudo vi /etc/fstab

builder@DESKTOP-QADGF36:/var/log$ cat /etc/fstab

LABEL=cloudimg-rootfs / ext4 defaults 0 1

fbs-logs /mnt/bucket2 fuse.s3fs _netdev,allow_other,passwd_file=/home/builder/.passwd-s3fs,default_acl=public-read,uid=1000,gid=1000 0 0

Then mount and verify you can see files

builder@DESKTOP-QADGF36:/var/log$ sudo mount -a

builder@DESKTOP-QADGF36:/var/log$ ls /mnt/bucket2/

2024-08-30 index.html

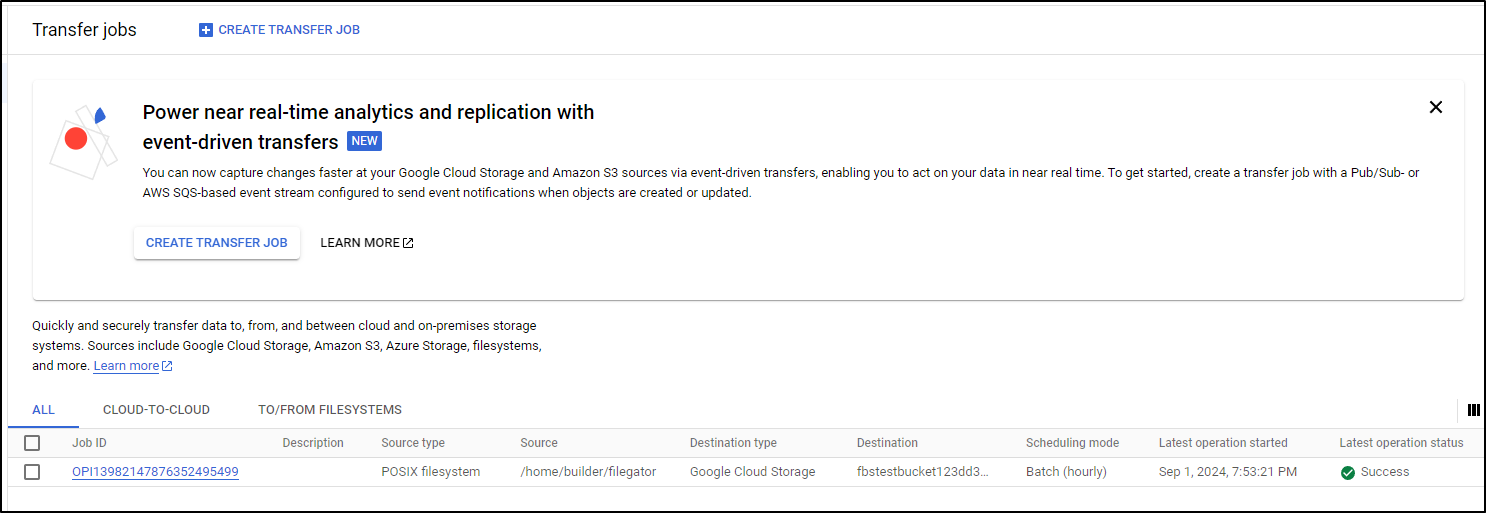

STS / DTS - GCP Storage Transfer jobs

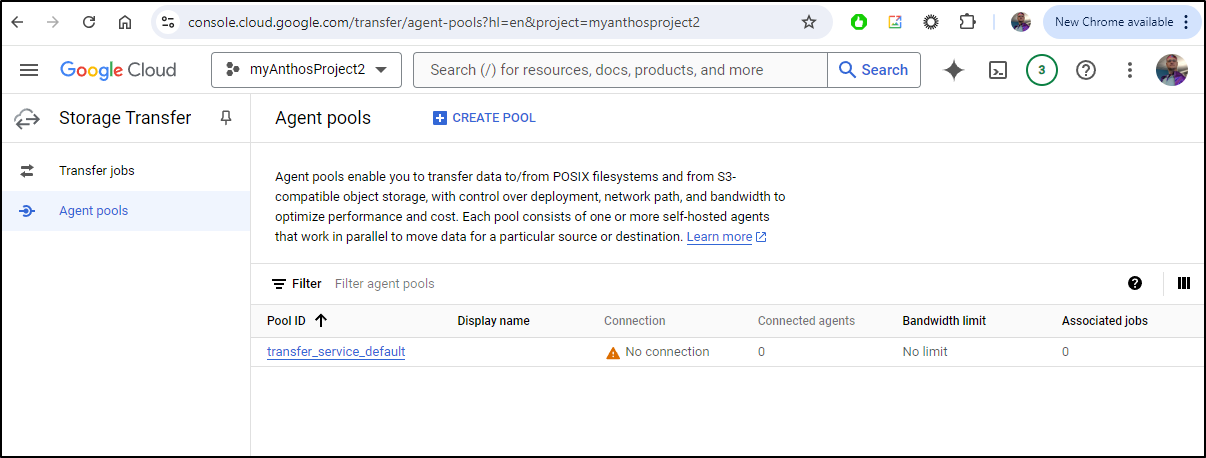

I’ve used the terms Data Transfer Service and Storage Transfer Service back and forth for GCP Storage Transfer Jobs, but regardless of the acronym du jour, the idea of these jobs is to create an agent that syncs things on your behalf up to GCP.

I’ve used these to transfer nearly a petabyte before out of an alternate cloud over the course of time. They are idempotent, meaning they can ‘catch up’ if killed or time out.

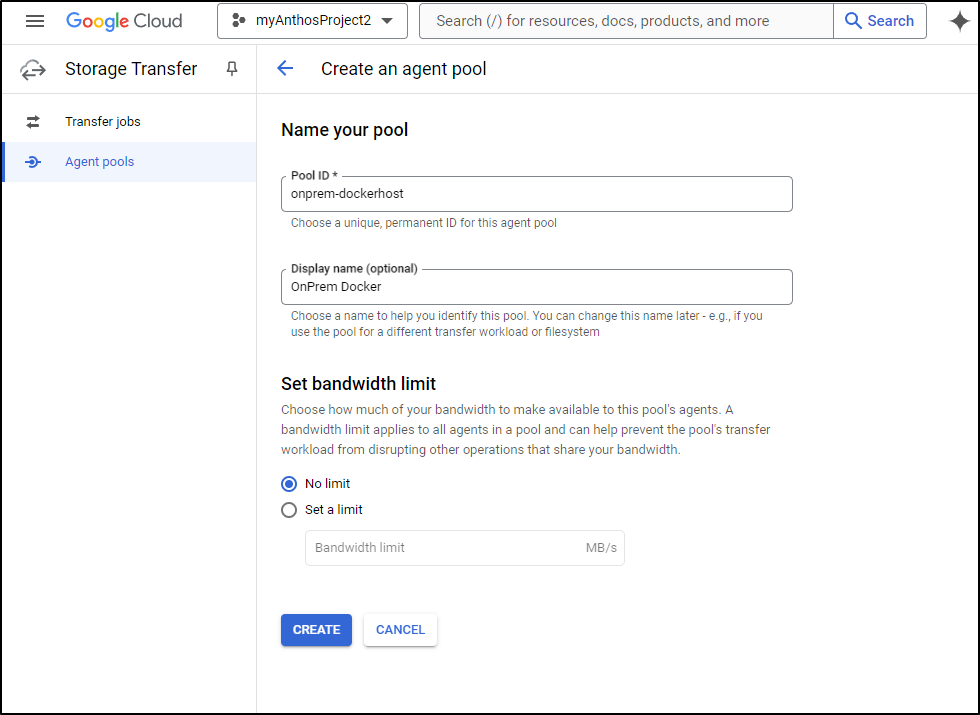

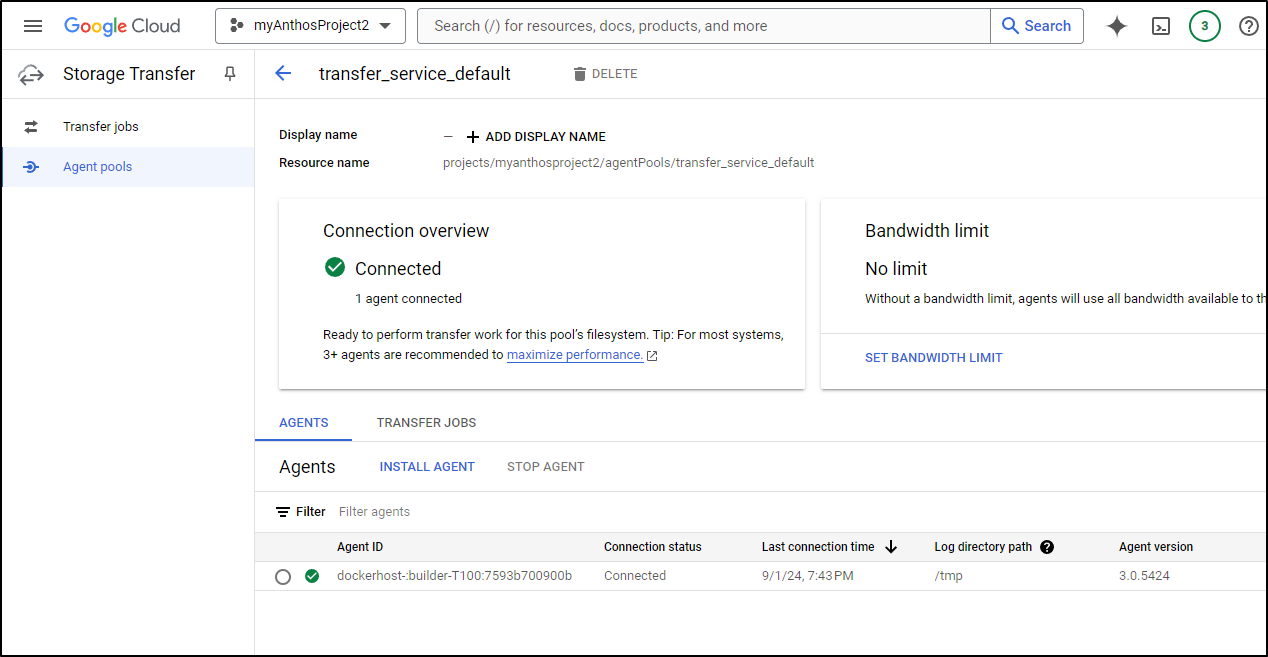

My first step is to create an on-prem transfer pool by clicking “Create Pool” in the Storage Transfer agent pools area.

We can optionally choose to set a limit on bandwidth. This is useful when you don’t want to affect daytime traffic on a production system.
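When picking a bandwidth cap, it helps to estimate how long the transfer will then take. Below is a rough back-of-the-envelope sketch; the function name and the `efficiency` factor (protocol overhead, retries) are my own assumptions, and real throughput will vary.

```python
def transfer_days(data_tb: float, bandwidth_mbps: float, efficiency: float = 1.0) -> float:
    """Days needed to move `data_tb` decimal terabytes at `bandwidth_mbps` megabits/s."""
    if bandwidth_mbps <= 0:
        raise ValueError("bandwidth_mbps must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = data_tb * 1e12 * 8                       # terabytes -> bits
    seconds = bits / (bandwidth_mbps * 1e6 * efficiency)
    return seconds / 86_400                          # seconds per day


# Compare a few caps for a 50 TB backlog.
for cap in (100, 500, 1000):
    print(f"50 TB at {cap} Mbps: {transfer_days(50, cap):.1f} days")
```

Even at a full gigabit, a petabyte takes roughly three months of continuous transfer, which is why resumable jobs matter at that scale.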

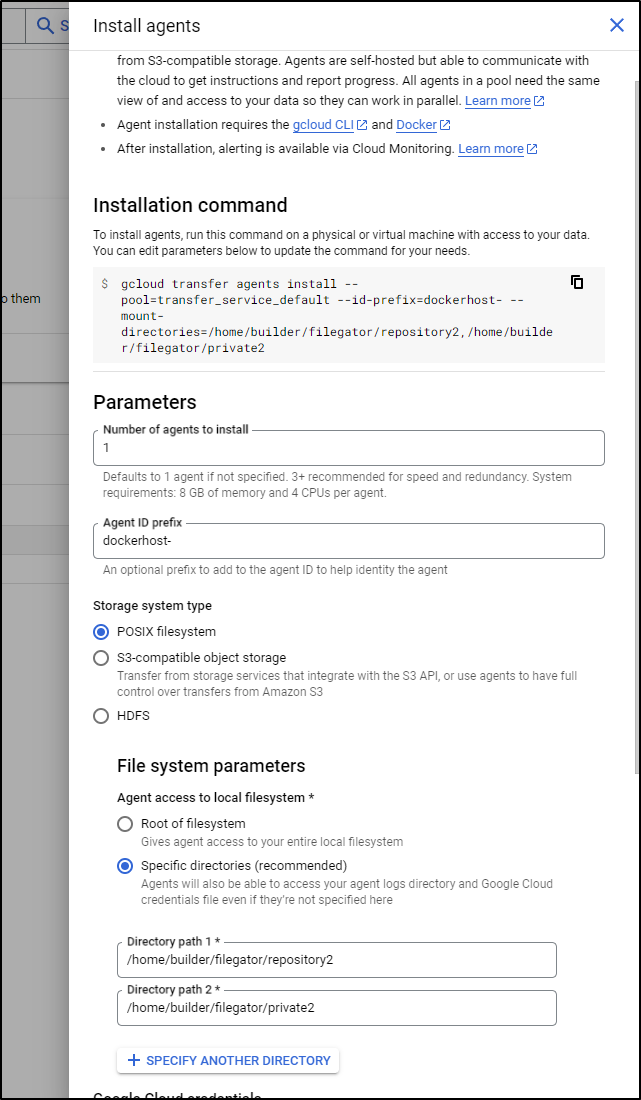

Let’s consider our dockerhost. For a simple example, I’ll look to just back up the Repository folder from Filegator

builder@builder-T100:~/filegator/repository2$ ls -ltra

total 2144

drwxrwxr-x 13 builder builder 4096 Jul 13 13:11 ..

-rw-r--r-- 1 www-data www-data 203659 Jul 13 13:39 'BWCA camping packing list.docx'

-rw-r--r-- 1 www-data www-data 282301 Jul 13 13:47 Screenshot_20240713_134734_Chrome.jpg

-rw-r--r-- 1 www-data www-data 449899 Jul 20 08:20 Screenshot_20240720_081937_Messages.jpg

-rw-r--r-- 1 www-data www-data 271755 Jul 29 18:36 Screenshot_20240729_183441_Outlook.jpg

-rw-r--r-- 1 www-data www-data 268079 Jul 29 18:36 Screenshot_20240729_183455_Edge.jpg

-rw-r--r-- 1 www-data www-data 231345 Jul 29 18:36 Screenshot_20240729_183531_Edge.jpg

-rw-r--r-- 1 www-data www-data 469036 Aug 15 19:55 Screenshot_20240815_195505_Chrome.jpg

drwxrwxrwx 2 builder builder 4096 Aug 15 19:55 .

builder@builder-T100:~/filegator/repository2$ pwd

/home/builder/filegator/repository2

I’ll also include the ‘private’ dir that houses user credentials

If I just try to run the command, we’ll hit the problem that we haven’t added ‘gcloud’ to this host yet

builder@builder-T100:~$ gcloud transfer agents install --pool=transfer_service_default --id-prefix=dockerhost- --mount-directories=/home/builder/filegator/repository2,/home/builder/filegator/private2

Command 'gcloud' not found, but can be installed with:

sudo snap install google-cloud-cli # version 490.0.0, or

sudo snap install google-cloud-sdk # version 490.0.0

See 'snap info <snapname>' for additional versions.

To do that I’ll run:

curl -O https://dl.google.com/dl/cloudsdk/channels/rapid/downloads/google-cloud-cli-linux-x86_64.tar.gz

tar -xf google-cloud-cli-linux-x86_64.tar.gz

./google-cloud-sdk/install.sh

As such

builder@builder-T100:~$ curl -O https://dl.google.com/dl/cloudsdk/channels/rapid/downloads/google-cloud-cli-linux-x86_64.tar.gz

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 124M 100 124M 0 0 66.1M 0 0:00:01 0:00:01 --:--:-- 66.1M

builder@builder-T100:~$ tar -xf google-cloud-cli-linux-x86_64.tar.gz

builder@builder-T100:~$ ./google-cloud-sdk/install.sh

Welcome to the Google Cloud CLI!

To help improve the quality of this product, we collect anonymized usage data

and anonymized stacktraces when crashes are encountered; additional information

is available at <https://cloud.google.com/sdk/usage-statistics>. This data is

handled in accordance with our privacy policy

<https://cloud.google.com/terms/cloud-privacy-notice>. You may choose to opt in this

collection now (by choosing 'Y' at the below prompt), or at any time in the

future by running the following command:

gcloud config set disable_usage_reporting false

Do you want to help improve the Google Cloud CLI (y/N)? y

Your current Google Cloud CLI version is: 490.0.0

The latest available version is: 490.0.0

┌─────────────────────────────────────────────────────────────────────────────────────────────────────────────────┐

│ Components │

├───────────────┬──────────────────────────────────────────────────────┬──────────────────────────────┬───────────┤

│ Status │ Name │ ID │ Size │

├───────────────┼──────────────────────────────────────────────────────┼──────────────────────────────┼───────────┤

│ Not Installed │ App Engine Go Extensions │ app-engine-go │ 4.7 MiB │

│ Not Installed │ Appctl │ appctl │ 21.0 MiB │

│ Not Installed │ Artifact Registry Go Module Package Helper │ package-go-module │ < 1 MiB │

│ Not Installed │ Cloud Bigtable Command Line Tool │ cbt │ 18.4 MiB │

│ Not Installed │ Cloud Bigtable Emulator │ bigtable │ 7.3 MiB │

│ Not Installed │ Cloud Datastore Emulator │ cloud-datastore-emulator │ 36.2 MiB │

│ Not Installed │ Cloud Firestore Emulator │ cloud-firestore-emulator │ 46.3 MiB │

│ Not Installed │ Cloud Pub/Sub Emulator │ pubsub-emulator │ 63.7 MiB │

│ Not Installed │ Cloud Run Proxy │ cloud-run-proxy │ 13.3 MiB │

│ Not Installed │ Cloud SQL Proxy v2 │ cloud-sql-proxy │ 13.8 MiB │

│ Not Installed │ Cloud Spanner Emulator │ cloud-spanner-emulator │ 36.9 MiB │

│ Not Installed │ Cloud Spanner Migration Tool │ harbourbridge │ 20.9 MiB │

│ Not Installed │ Google Container Registry's Docker credential helper │ docker-credential-gcr │ 1.8 MiB │

│ Not Installed │ Kustomize │ kustomize │ 4.3 MiB │

│ Not Installed │ Log Streaming │ log-streaming │ 13.9 MiB │

│ Not Installed │ Minikube │ minikube │ 34.4 MiB │

│ Not Installed │ Nomos CLI │ nomos │ 30.3 MiB │

│ Not Installed │ On-Demand Scanning API extraction helper │ local-extract │ 24.6 MiB │

│ Not Installed │ Skaffold │ skaffold │ 24.2 MiB │

│ Not Installed │ Spanner migration tool │ spanner-migration-tool │ 24.5 MiB │

│ Not Installed │ Terraform Tools │ terraform-tools │ 66.1 MiB │

│ Not Installed │ anthos-auth │ anthos-auth │ 22.0 MiB │

│ Not Installed │ config-connector │ config-connector │ 91.1 MiB │

│ Not Installed │ enterprise-certificate-proxy │ enterprise-certificate-proxy │ 8.6 MiB │

│ Not Installed │ gcloud Alpha Commands │ alpha │ < 1 MiB │

│ Not Installed │ gcloud Beta Commands │ beta │ < 1 MiB │

│ Not Installed │ gcloud app Java Extensions │ app-engine-java │ 127.8 MiB │

│ Not Installed │ gcloud app Python Extensions │ app-engine-python │ 5.0 MiB │

│ Not Installed │ gcloud app Python Extensions (Extra Libraries) │ app-engine-python-extras │ < 1 MiB │

│ Not Installed │ gke-gcloud-auth-plugin │ gke-gcloud-auth-plugin │ 4.0 MiB │

│ Not Installed │ istioctl │ istioctl │ 24.0 MiB │

│ Not Installed │ kpt │ kpt │ 14.6 MiB │

│ Not Installed │ kubectl │ kubectl │ < 1 MiB │

│ Not Installed │ kubectl-oidc │ kubectl-oidc │ 22.0 MiB │

│ Not Installed │ pkg │ pkg │ │

│ Installed │ BigQuery Command Line Tool │ bq │ 1.7 MiB │

│ Installed │ Bundled Python 3.11 │ bundled-python3-unix │ 74.2 MiB │

│ Installed │ Cloud Storage Command Line Tool │ gsutil │ 11.3 MiB │

│ Installed │ Google Cloud CLI Core Libraries │ core │ 19.5 MiB │

│ Installed │ Google Cloud CRC32C Hash Tool │ gcloud-crc32c │ 1.3 MiB │

└───────────────┴──────────────────────────────────────────────────────┴──────────────────────────────┴───────────┘

To install or remove components at your current SDK version [490.0.0], run:

$ gcloud components install COMPONENT_ID

$ gcloud components remove COMPONENT_ID

To update your SDK installation to the latest version [490.0.0], run:

$ gcloud components update

Modify profile to update your $PATH and enable shell command completion?

Do you want to continue (Y/n)? Y

The Google Cloud SDK installer will now prompt you to update an rc file to bring the Google Cloud CLIs into your environment.

Enter a path to an rc file to update, or leave blank to use [/home/builder/.bashrc]:

Backing up [/home/builder/.bashrc] to [/home/builder/.bashrc.backup].

[/home/builder/.bashrc] has been updated.

==> Start a new shell for the changes to take effect.

For more information on how to get started, please visit:

https://cloud.google.com/sdk/docs/quickstarts

I want to add a few of those optional components as well

builder@builder-T100:~$ source ~/.bashrc

builder@builder-T100:~$ gcloud components install kustomize log-streaming terraform-tools docker-credential-gcr

Your current Google Cloud CLI version is: 490.0.0

Installing components from version: 490.0.0

┌───────────────────────────────────────────────────────────────────────────────────────────────┐

│ These components will be installed. │

├──────────────────────────────────────────────────────────────────────────┬─────────┬──────────┤

│ Name │ Version │ Size │

├──────────────────────────────────────────────────────────────────────────┼─────────┼──────────┤

│ Google Container Registry's Docker credential helper (Platform Specific) │ 1.5.0 │ 1.8 MiB │

│ Kustomize (Platform Specific) │ 4.4.0 │ 4.3 MiB │

│ Log Streaming (Platform Specific) │ 0.3.0 │ 13.9 MiB │

│ Terraform Tools (Platform Specific) │ 0.11.1 │ 66.1 MiB │

└──────────────────────────────────────────────────────────────────────────┴─────────┴──────────┘

For the latest full release notes, please visit:

https://cloud.google.com/sdk/release_notes

Once started, canceling this operation may leave your SDK installation in an inconsistent state.

Do you want to continue (Y/n)? Y

Performing in place update...

╔════════════════════════════════════════════════════════════╗

╠═ Downloading: Google Container Registry's Docker crede... ═╣

╠════════════════════════════════════════════════════════════╣

╠═ Downloading: Google Container Registry's Docker crede... ═╣

╠════════════════════════════════════════════════════════════╣

╠═ Downloading: Kustomize ═╣

╠════════════════════════════════════════════════════════════╣

╠═ Downloading: Kustomize (Platform Specific) ═╣

╠════════════════════════════════════════════════════════════╣

╠═ Downloading: Log Streaming ═╣

╠════════════════════════════════════════════════════════════╣

╠═ Downloading: Log Streaming (Platform Specific) ═╣

╠════════════════════════════════════════════════════════════╣

╠═ Downloading: Terraform Tools ═╣

╠════════════════════════════════════════════════════════════╣

╠═ Downloading: Terraform Tools (Platform Specific) ═╣

╠════════════════════════════════════════════════════════════╣

╠═ Installing: Google Container Registry's Docker creden... ═╣

╠════════════════════════════════════════════════════════════╣

╠═ Installing: Google Container Registry's Docker creden... ═╣

╠════════════════════════════════════════════════════════════╣

╠═ Installing: Kustomize ═╣

╠════════════════════════════════════════════════════════════╣

╠═ Installing: Kustomize (Platform Specific) ═╣

╠════════════════════════════════════════════════════════════╣

╠═ Installing: Log Streaming ═╣

╠════════════════════════════════════════════════════════════╣

╠═ Installing: Log Streaming (Platform Specific) ═╣

╠════════════════════════════════════════════════════════════╣

╠═ Installing: Terraform Tools ═╣

╠════════════════════════════════════════════════════════════╣

╠═ Installing: Terraform Tools (Platform Specific) ═╣

╚════════════════════════════════════════════════════════════╝

Performing post processing steps...⠶

I can now login and set my default project

builder@builder-T100:~$ gcloud auth login

Go to the following link in your browser, and complete the sign-in prompts:

https://accounts.google.com/o/oauth2/auth?response_type=code&client_id=32555940559.apps.googleusercontent.com&redirect_uri=https%3A%2F%2Fsdk.cloud.google.com%2Fauthcode.html&scope=openid+https%3A%2F%2Fwww.googleapis.com%2Fauth%2Fuserinfo.email+https%3A%2F%2Fwww.googleapis.com%2Fauth%2Fcloud-platform+https%3A%2F%2Fwww.googleapis.com%2Fauth%2Fappengine.admin+https%3A%2F%2Fwww.googleapis.com%2Fauth%2Fsqlservice.login+https%3A%2F%2Fwww.googleapis.com%2Fauth%2Fcompute+https%3A%2F%2Fwww.googleapis.com%2Fauth%2Faccounts.reauth&state=ZPw9l9vLlykrME8qAwD0NERyD3ZcIh&prompt=consent&token_usage=remote&access_type=offline&code_challenge=kKp9ZIM4qsEd8ktxQGgKVuE8_0rPvfkZj2kg_hIdlyU&code_challenge_method=S256

Once finished, enter the verification code provided in your browser: ******************************************

You are now logged in as [isaac.johnson@gmail.com].

Your current project is [None]. You can change this setting by running:

$ gcloud config set project PROJECT_ID

builder@builder-T100:~$ gcloud config set project myanthosproject2

Updated property [core/project].

I also needed to do the application-default login as well

builder@builder-T100:~$ gcloud auth application-default login

Go to the following link in your browser, and complete the sign-in prompts:

...snip...

Now I can install it

builder@builder-T100:~$ gcloud transfer agents install --pool=transfer_service_default --id-prefix=dockerhost- --mount-directories=/home/builder/filegator/repository2,/home/builder/filegator/private2

[1/3] Credentials found ✓

[2/3] Docker found ✓

Unable to find image 'gcr.io/cloud-ingest/tsop-agent:latest' locally

latest: Pulling from cloud-ingest/tsop-agent

c6a83fedfae6: Already exists

f565a99c3795: Pull complete

e79ecc92a63a: Pull complete

21e26252b179: Pull complete

bfa0723221fa: Pull complete

5a83ea39f40d: Pull complete

1b84d5badb15: Pull complete

dbe9d0bfee51: Pull complete

e8980c948446: Pull complete

c37c5c6393da: Pull complete

ae8b8f020400: Pull complete

bc7a3b5b6f07: Pull complete

d4ed31182a19: Pull complete

ff5b09c120b4: Pull complete

78e169bf3005: Pull complete

514d01812583: Pull complete

bad972a5c535: Pull complete

b74c782c7e5a: Pull complete

Digest: sha256:b0efca60fa4dfa659e36bb88c5097ccca1d91958f97b5ec98bd6d8b69918a228

Status: Downloaded newer image for gcr.io/cloud-ingest/tsop-agent:latest

7593b700900b80dde5f5f2dc0821f7684041c60adacd601fa2b8d531ac448a5b

[3/3] Agent installation complete! ✓

To confirm your agents are connected, go to the following link in your browser,

and check that agent status is 'Connected' (it can take a moment for the status

to update and may require a page refresh):

https://console.cloud.google.com/transfer/on-premises/agent-pools/pool/transfer_service_default/agents?project=myanthosproject2

If your agent does not appear in the pool, check its local logs by running

"docker container logs [container ID]". The container ID is the string of random

characters printed by step [2/3]. The container ID can also be found by running

"docker container list".

I can now see my agent in the pool

All we have done at this point is enable the API, create a Transfer Agent pool and add an agent to the pool.

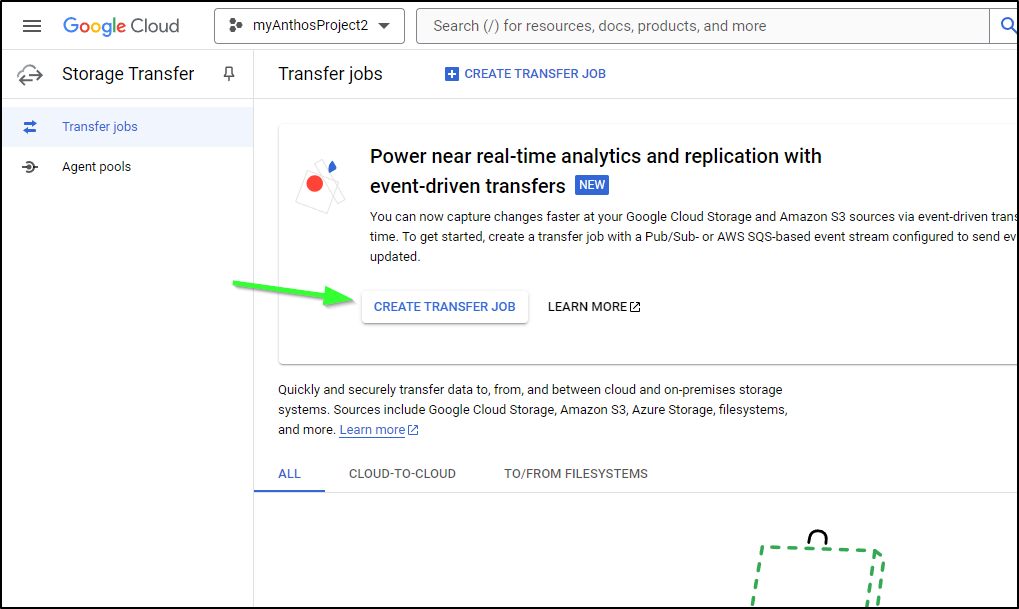

We now have to set up the Transfer Job, which is what really defines the data pipeline

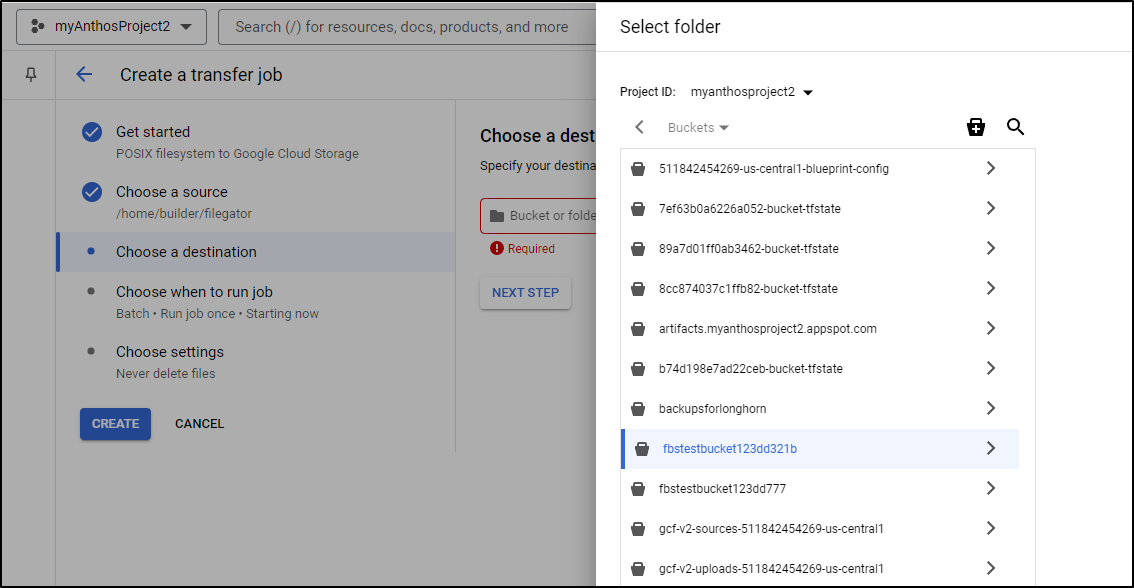

We click the “Create Transfer Job” under the Transfer Jobs page.

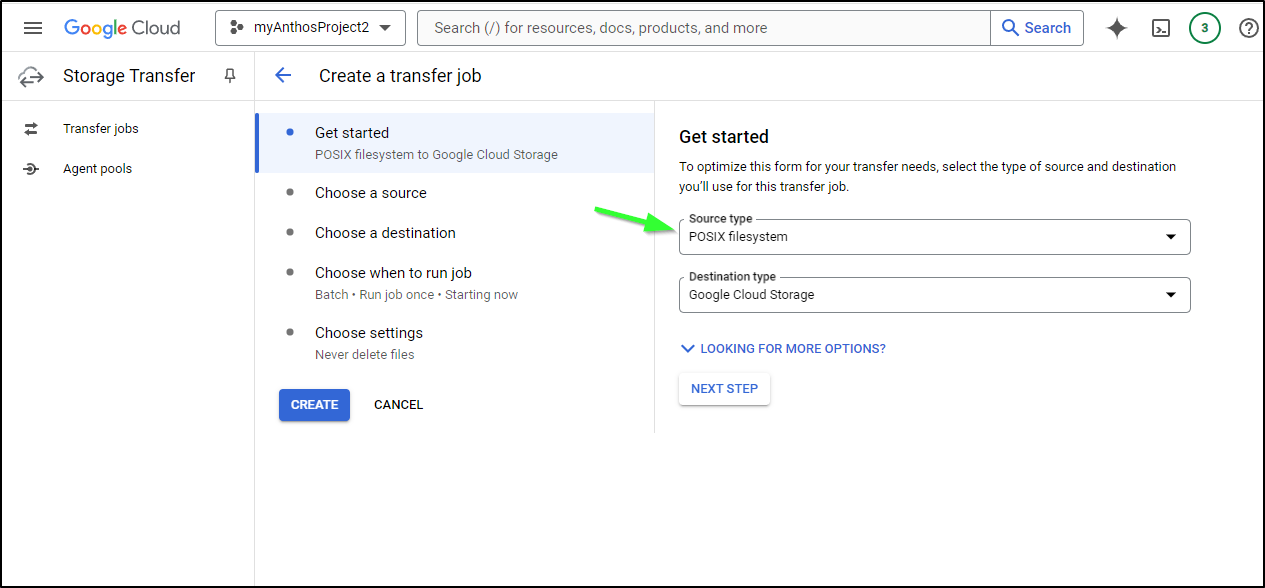

The first change we make is to change from “Google Cloud Storage” as the source type to “POSIX filesystem”

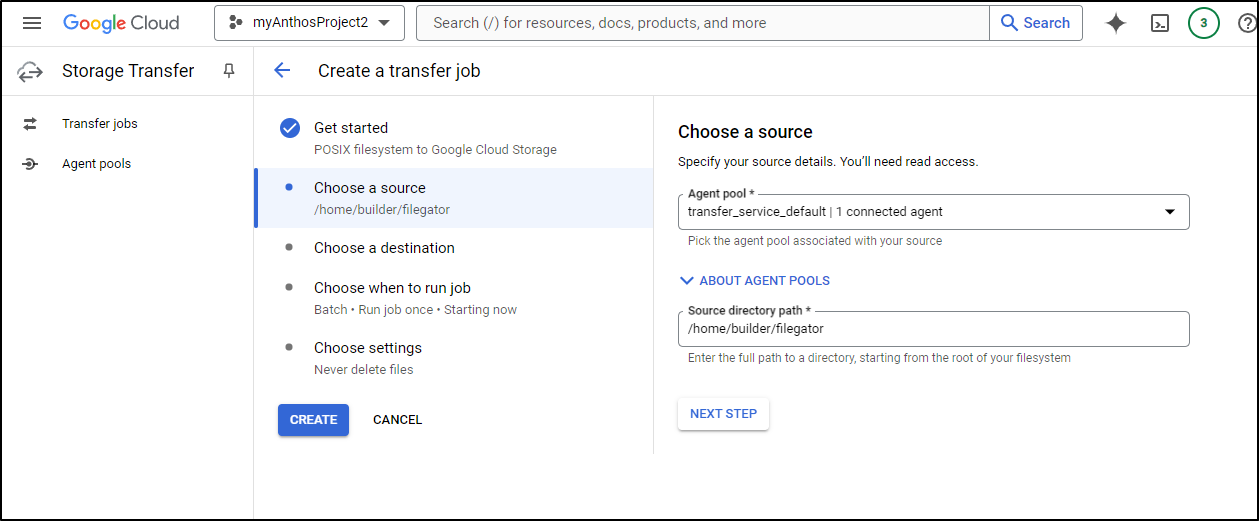

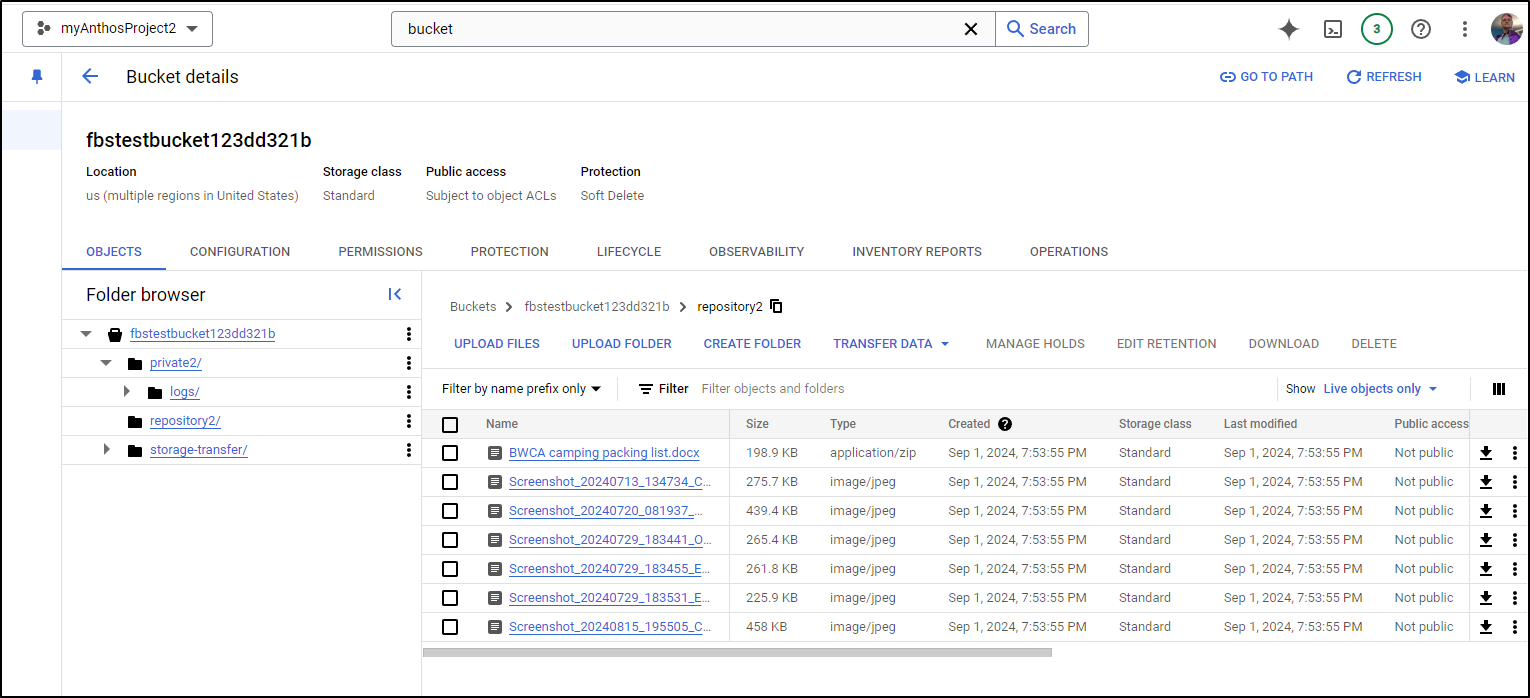

I’ll try and pull the root of both the private2 and repository2 subdirectories we mounted

I have a bunch of rather random buckets so I’ll just pick one that ends in 321b to use as our destination as this is just a test

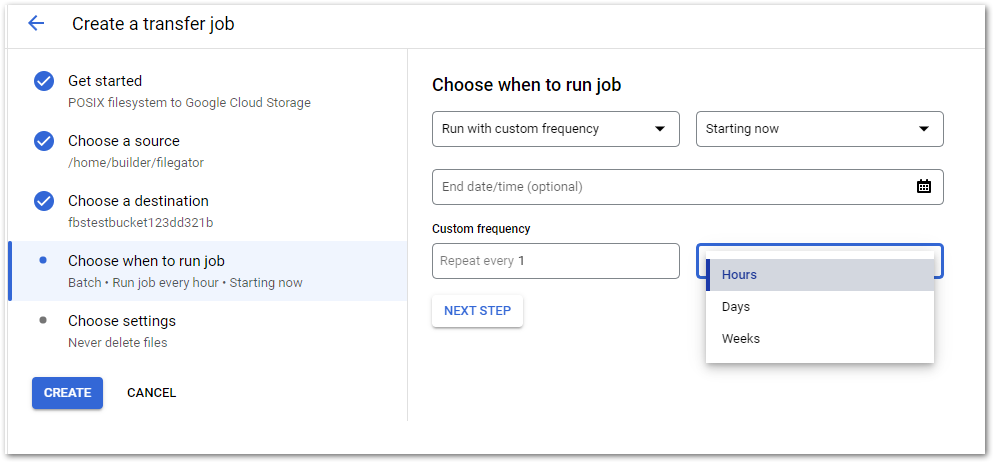

We now have some choices on how often to run. For things like database backups, that might be daily. We may want to run

Here, I’ll set it to the most frequent option, which is hourly

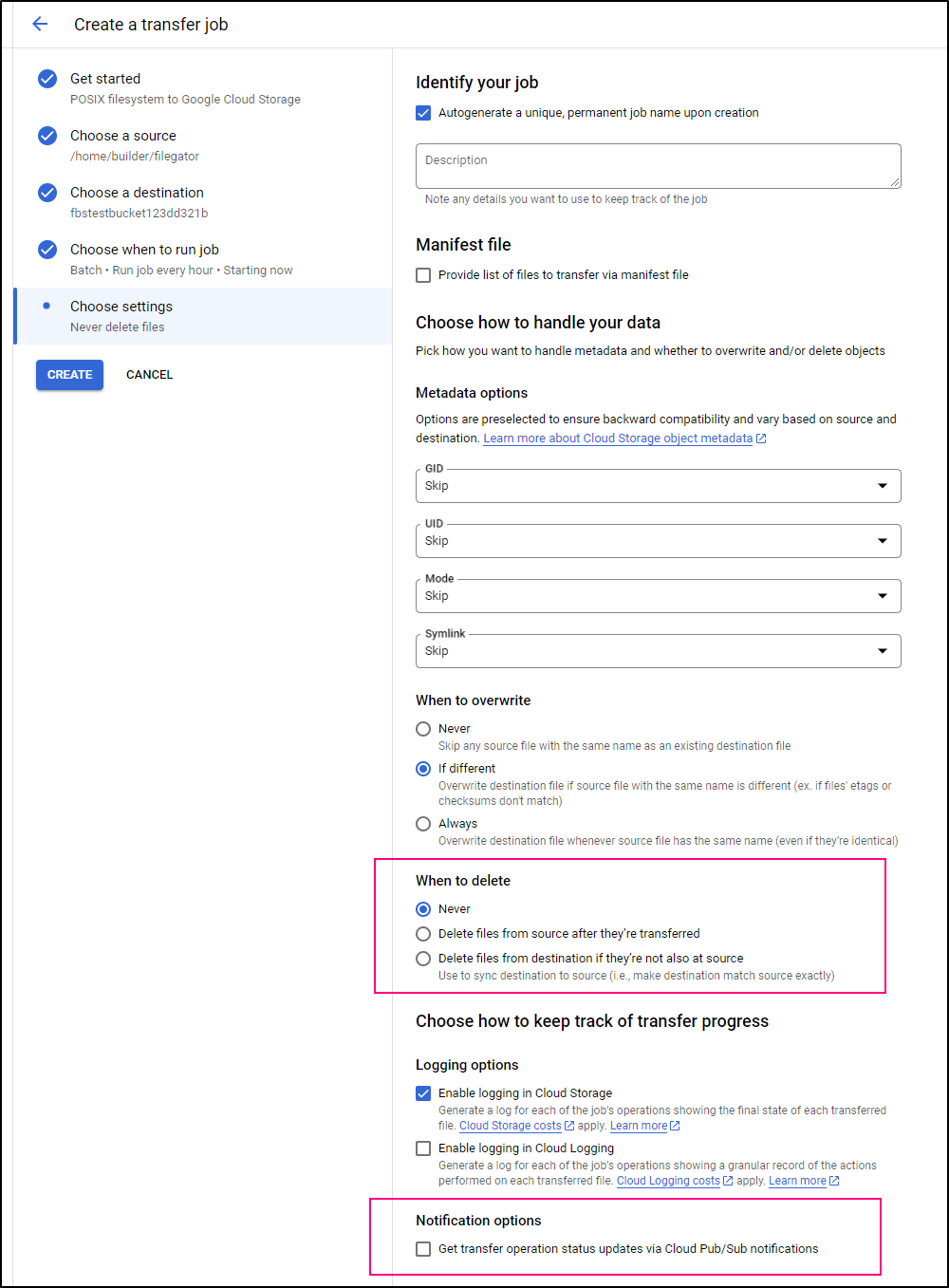

The next page has a couple of options I want to call out

The “When to delete” option is handy for cloud migrations when you are trying to get everything from an S3 bucket, or from some on-prem archive, up to “the cloud”. You can choose to delete when transferred.

The other is “Notifications”. There are no “email” notifications, but one can create a Pub/Sub topic and then a simple Cloud Run function to trigger emails/alerts. It’s a bit janky, but it’s what I did in one professional situation where we had lots to transfer and I wanted alerts.
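The Pub/Sub-to-alert idea can be sketched as a small parsing function that a Cloud Run service would call with the JSON body of a push delivery. The envelope shape (`message.data` base64-encoded, `message.attributes`) is the standard Pub/Sub push format. The attribute names `eventType` and `transferJobName` and the event values are my assumptions about what Storage Transfer Service publishes, so verify them against a real message before relying on this.

```python
import base64
import json

# Assumed event names for operations that should raise an alert.
FAILURE_EVENTS = {"TRANSFER_OPERATION_FAILED", "TRANSFER_OPERATION_ABORTED"}


def summarize_push_message(envelope: dict) -> dict:
    """Extract job name, event type and payload from a Pub/Sub push envelope."""
    message = envelope["message"]
    attributes = message.get("attributes", {})
    raw = message.get("data", "")
    # The data field is base64-encoded; it may be empty if no payload was requested.
    payload = json.loads(base64.b64decode(raw)) if raw else {}
    event_type = attributes.get("eventType", "UNKNOWN")
    return {
        "job": attributes.get("transferJobName", "unknown"),
        "event": event_type,
        "should_alert": event_type in FAILURE_EVENTS,
        "payload": payload,
    }
```

The sending side (email, chat webhook, pager) is left out on purpose; the function only decides whether a message is worth alerting on.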

In my case, there were some bizarro s3 files with newlines in the name that needed some cajoling to get copied over.

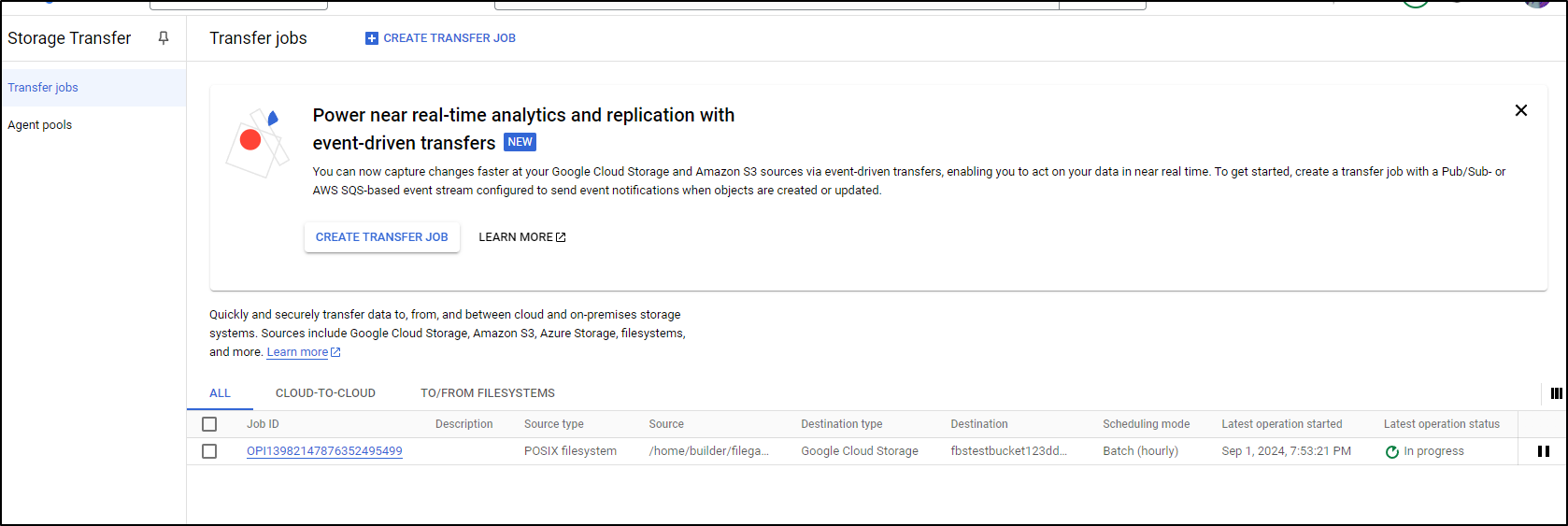

Once saved, I saw it go from “Queued” to “In Progress” in short order

wrapping with “Success”

I can browse to my bucket and see, indeed, both subfolders were copied

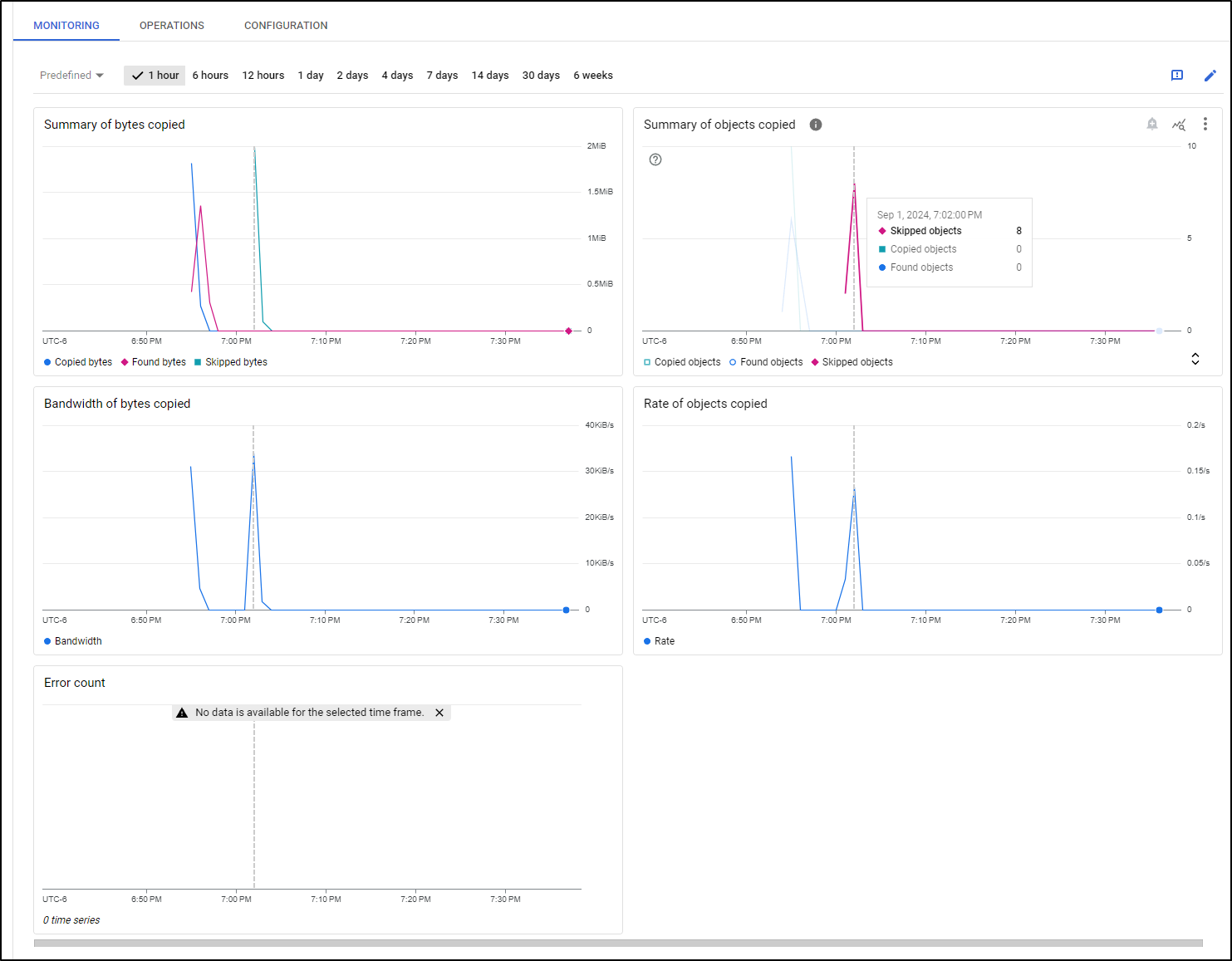

If I give it a few minutes, we can then see the monitoring graphs update with the details of what it transferred.

Here we can see at the next hour mark it skipped all the objects as they were copied already

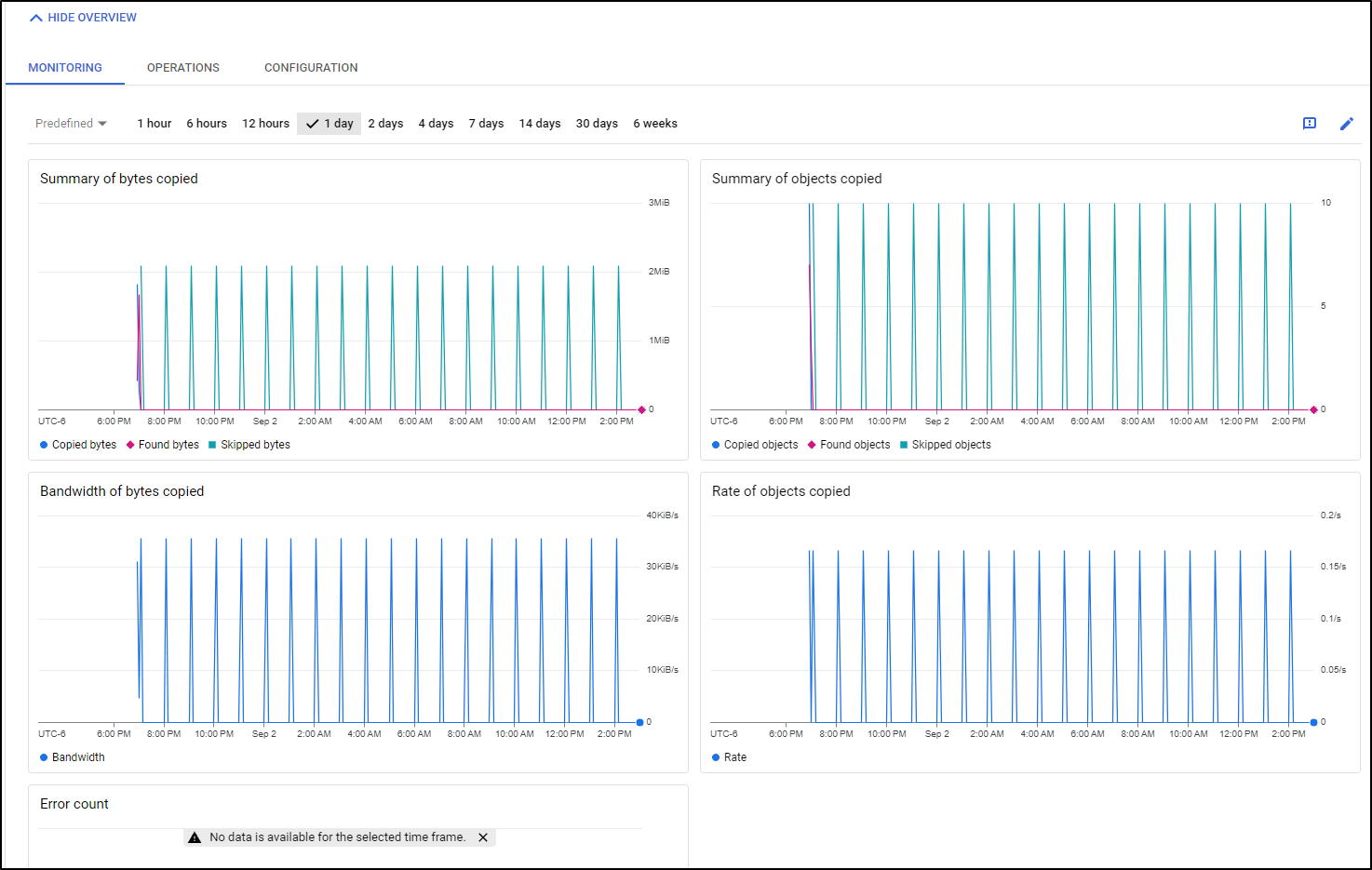

and a day later

Summary

Today we started by looking into Cloud Storage costs and what different storage classes mean for costs. We broke down the major three cloud vendors (Google, Microsoft Azure and Amazon AWS) to explore what makes sense for backups.

Then, to put into practice, we looked at AWS and Google covering at least three ways to sync from a local filesystem to Cloud storage. With s3fs we mounted a drive to an S3 bucket and looked at copying files with rsync as well as with crontab using find and cp.

We then looked at GCP and set up a Storage Transfer Job (Data Transfer Job) to sync paths on a schedule using a containerized transfer agent.

I have plans to show you some more in our next post, but this is a good start and hopefully you can find some good patterns to get you going.