Published: Sep 20, 2022 by Isaac Johnson

GlusterFS is a free, scalable, open-source network filesystem we can use to keep paths synchronized across systems. Today we’ll set up GlusterFS on an on-prem 3-node cluster and then use it in a variety of ways.

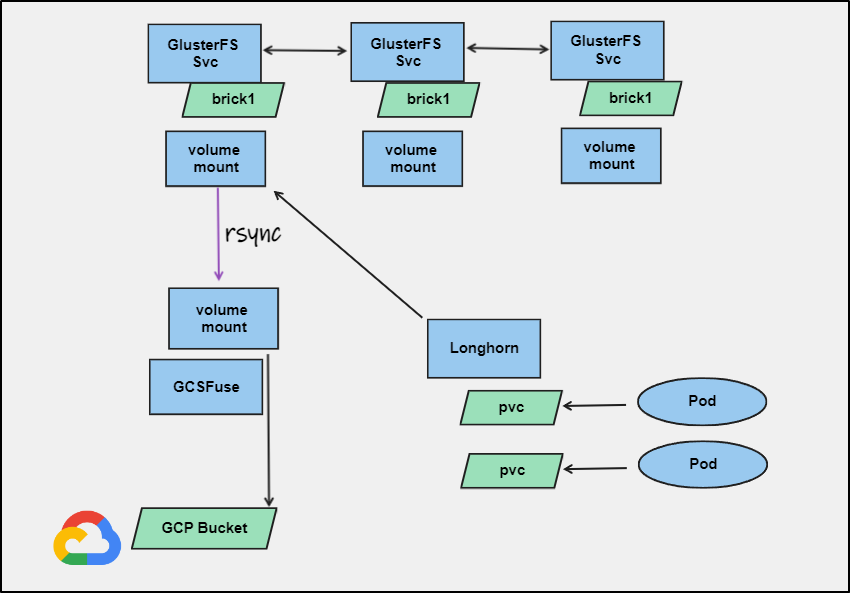

In addition, we’ll piggyback on last week’s blog to tie this into GCP Cloud Buckets for backups. We’ll see how GlusterFS and GCSFuse can tie nicely together to provide a cohesive durable storage solution.

GCSFuse for GCP Buckets

To mount the directory for GCP Cloud Storage, we can fix the local permissions we ignored in our last post.

First, I want to determine my user ID and group ID

builder@anna-MacBookAir:~$ id -u builder

1000

builder@anna-MacBookAir:~$ cat /etc/group | grep ^builder

builder:x:1000:

Next, I’ll create a dir for mounting

$ sudo mkdir /mnt/gcpbucket2

Lastly, assuming we still have our saved sa-storage-key.json (see the last blog entry for how to get one), we can mount it

builder@anna-MacBookAir:~$ sudo mount -t gcsfuse -o gid=1000,uid=1000,allow_other,implicit_dirs,rw,noauto,file_mode=777,dir_mode=777,key_file=/home/builder/sa-storage-key.json myk3spvcstore /mnt/gcpbucket2

Calling gcsfuse with arguments: --key-file /home/builder/sa-storage-key.json -o rw --gid 1000 --uid 1000 -o allow_other --implicit-dirs --file-mode 777 --dir-mode 777 myk3spvcstore /mnt/gcpbucket2

2022/09/17 13:34:32.380108 Start gcsfuse/0.41.6 (Go version go1.18.4) for app "" using mount point: /mnt/gcpbucket2

2022/09/17 13:34:32.393651 Opening GCS connection...

2022/09/17 13:34:33.415344 Mounting file system "myk3spvcstore"...

2022/09/17 13:34:33.430344 File system has been successfully mounted.

And I can test

builder@anna-MacBookAir:~$ ls -ltra /mnt/gcpbucket2

total 1

-rwxrwxrwx 1 builder builder 0 Sep 9 07:20 testing

-rwxrwxrwx 1 builder builder 51 Sep 9 12:02 longhorn-disk.cfg

drwxrwxrwx 1 builder builder 0 Sep 17 13:34 replicas
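Rather than hard-coding 1000, the uid and gid can be looked up when the options are built. This is a minimal sketch, not what I ran above: `gcsfuse_opts` is a hypothetical helper name, and it only prints the same option string used in the mount command so it can be reviewed before running it with sudo.

```shell
# gcsfuse_opts (hypothetical helper): print the mount options used above,
# with uid/gid looked up for the given user. The ( ) body keeps variables local.
gcsfuse_opts() (
    user="$1"      # local account that should own the mounted files
    key_file="$2"  # path to the service-account JSON key
    uid="$(id -u "$user")" || exit 1
    gid="$(id -g "$user")" || exit 1   # primary group of that user
    printf 'gid=%s,uid=%s,allow_other,implicit_dirs,rw,noauto,file_mode=777,dir_mode=777,key_file=%s\n' \
        "$gid" "$uid" "$key_file"
)

# Print (rather than run) the mount command for the current user.
echo "sudo mount -t gcsfuse -o $(gcsfuse_opts "$(id -un)" "$HOME/sa-storage-key.json") myk3spvcstore /mnt/gcpbucket2"
```

Using `id -g` avoids grepping /etc/group, which only works when the group happens to share the user's name.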

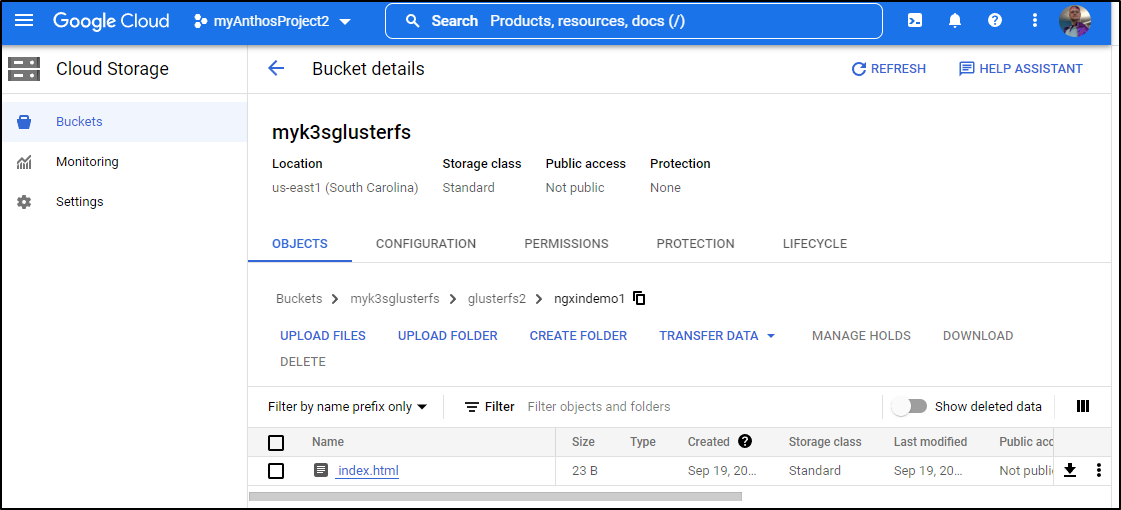

We’ve mounted the PVC storage from before. Now I should create a bucket for glusterfs

$ gcloud alpha storage buckets create gs://mkk3sglusterfs

Creating gs://mkk3sglusterfs/...

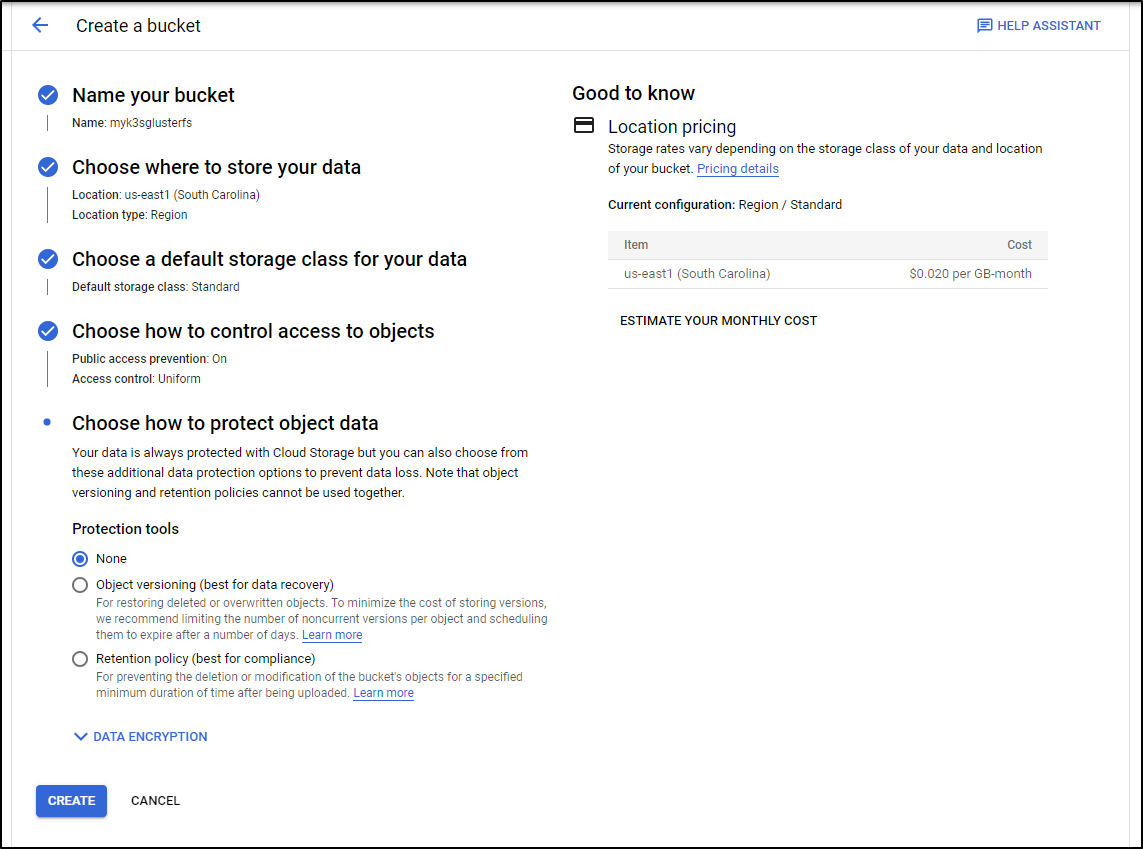

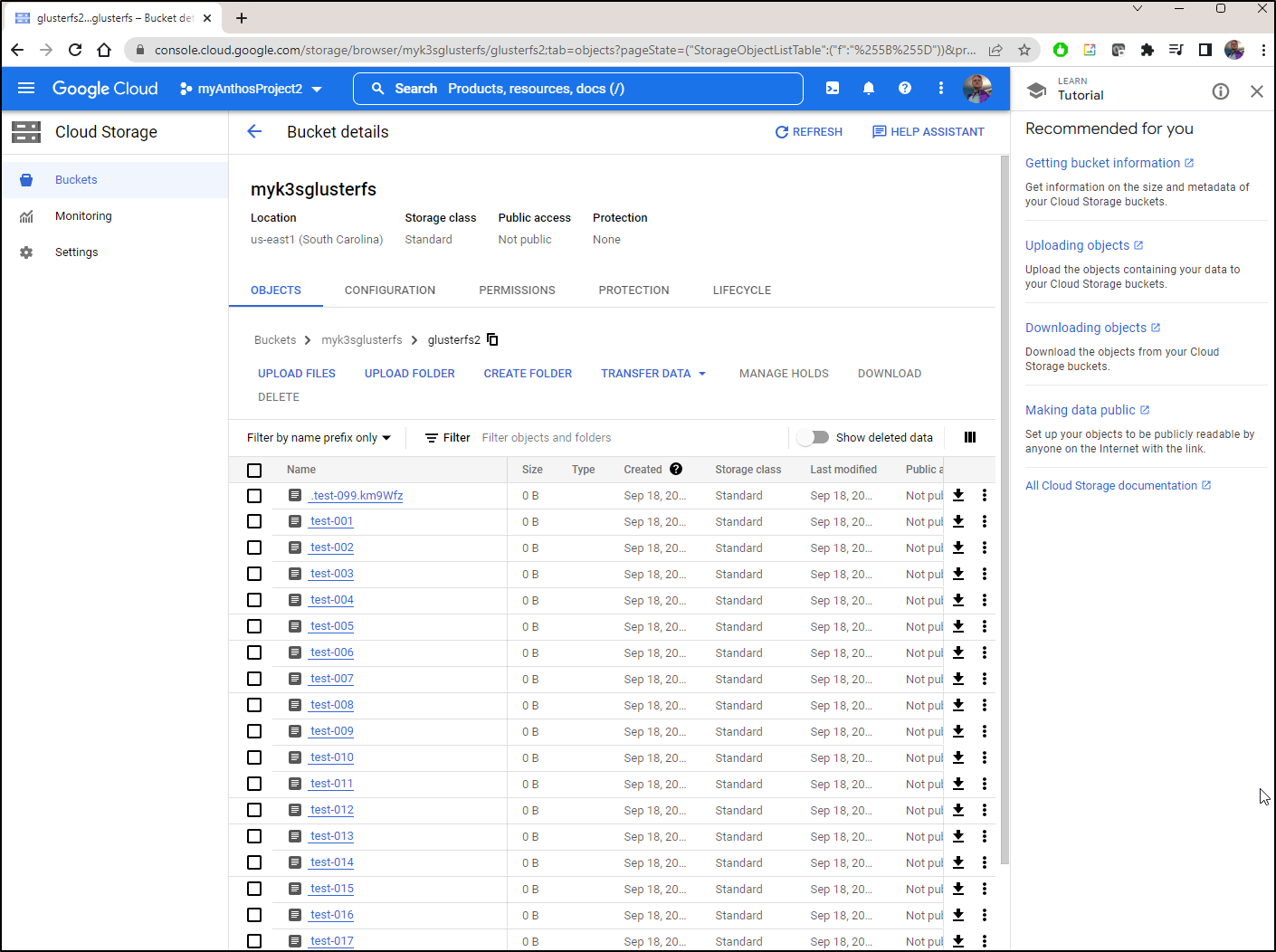

I can also create with the Cloud Console

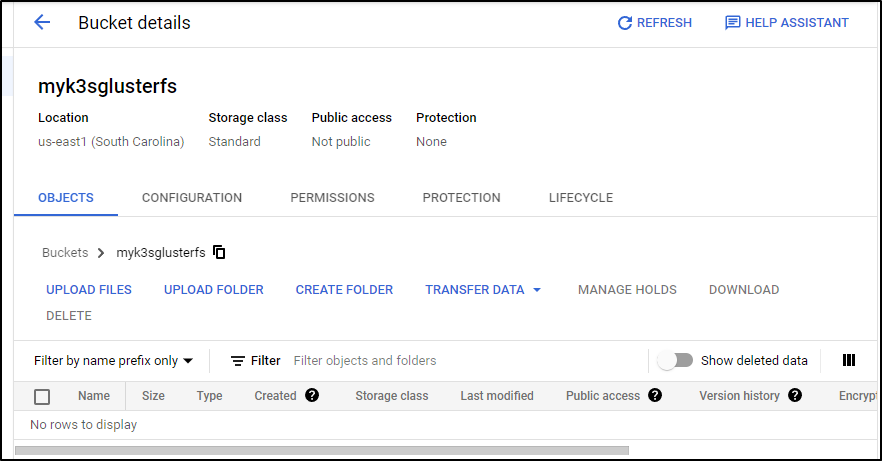

Which shows as

I’ll want to mount this locally to use with glusterfs

builder@anna-MacBookAir:~$ sudo mount -t gcsfuse -o gid=1000,uid=1000,allow_other,implicit_dirs,rw,noauto,file_mode=777,dir_mode=777,key_file=/home/builder/sa-storage-key.json myk3sglusterfs /mnt/glusterfs

Calling gcsfuse with arguments: --key-file /home/builder/sa-storage-key.json -o rw --gid 1000 --uid 1000 -o allow_other --implicit-dirs --file-mode 777 --dir-mode 777 myk3sglusterfs /mnt/glusterfs

2022/09/17 13:56:22.374727 Start gcsfuse/0.41.6 (Go version go1.18.4) for app "" using mount point: /mnt/glusterfs

2022/09/17 13:56:22.390939 Opening GCS connection...

2022/09/17 13:56:22.898022 Mounting file system "myk3sglusterfs"...

2022/09/17 13:56:22.902388 File system has been successfully mounted.

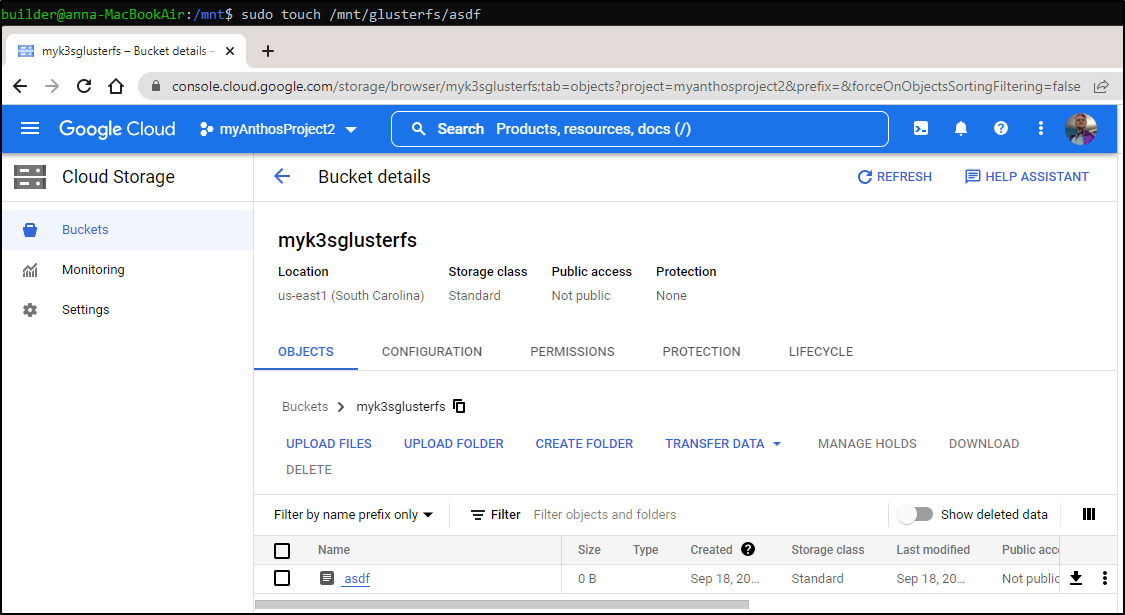

Verification

We can touch a file to see it get created in our GCP Storage Bucket
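That check can be scripted. The sketch below assumes the bucket is mounted at /mnt/glusterfs as above; `verify_write` is a hypothetical helper name and the marker file name is made up for illustration.

```shell
# verify_write (hypothetical helper): create a marker file under a directory
# and confirm it is visible afterwards. The ( ) body keeps variables local.
verify_write() (
    mount_dir="$1"
    marker="$mount_dir/write-test-$$"   # $$ is the shell PID, to avoid name clashes
    touch "$marker" 2>/dev/null || exit 1
    [ -e "$marker" ] && echo "created $marker"
)

# Example against the gcsfuse mount from this post (uncomment to run):
# verify_write /mnt/glusterfs
```

The same object should then appear in the Cloud Console, or in the output of `gsutil ls gs://myk3sglusterfs/`.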

GlusterFS install

Now we can install GlusterFS

builder@anna-MacBookAir:~$ sudo apt install glusterfs-server

Preparing to unpack .../12-glusterfs-client_7.2-2build1_amd64.deb ...

Unpacking glusterfs-client (7.2-2build1) ...

Selecting previously unselected package glusterfs-server.

Preparing to unpack .../13-glusterfs-server_7.2-2build1_amd64.deb ...

Unpacking glusterfs-server (7.2-2build1) ...

Selecting previously unselected package ibverbs-providers:amd64.

Preparing to unpack .../14-ibverbs-providers_28.0-1ubuntu1_amd64.deb ...

Unpacking ibverbs-providers:amd64 (28.0-1ubuntu1) ...

Setting up libibverbs1:amd64 (28.0-1ubuntu1) ...

Setting up ibverbs-providers:amd64 (28.0-1ubuntu1) ...

Setting up attr (1:2.4.48-5) ...

Setting up libglusterfs0:amd64 (7.2-2build1) ...

Setting up xfsprogs (5.3.0-1ubuntu2) ...

update-initramfs: deferring update (trigger activated)

Setting up liburcu6:amd64 (0.11.1-2) ...

Setting up python3-prettytable (0.7.2-5) ...

Setting up libgfxdr0:amd64 (7.2-2build1) ...

Setting up librdmacm1:amd64 (28.0-1ubuntu1) ...

Setting up libgfrpc0:amd64 (7.2-2build1) ...

Setting up libgfchangelog0:amd64 (7.2-2build1) ...

Setting up libgfapi0:amd64 (7.2-2build1) ...

Setting up glusterfs-common (7.2-2build1) ...

Adding group `gluster' (GID 134) ...

Done.

/usr/lib/x86_64-linux-gnu/glusterfs/python/syncdaemon/syncdutils.py:705: SyntaxWarning: "is" with a literal. Did you mean "=="?

if dirpath is "/":

Setting up glusterfs-client (7.2-2build1) ...

Setting up glusterfs-server (7.2-2build1) ...

Processing triggers for man-db (2.9.1-1) ...

Processing triggers for libc-bin (2.31-0ubuntu9.9) ...

Processing triggers for initramfs-tools (0.136ubuntu6.7) ...

update-initramfs: Generating /boot/initrd.img-5.15.0-46-generic

Then we can start the GlusterFS service

builder@anna-MacBookAir:~$ sudo service glusterd start

builder@anna-MacBookAir:~$ sudo service glusterd status

● glusterd.service - GlusterFS, a clustered file-system server

Loaded: loaded (/lib/systemd/system/glusterd.service; disabled; vendor preset: enabled)

Active: active (running) since Sat 2022-09-17 14:01:57 CDT; 30s ago

Docs: man:glusterd(8)

Process: 835492 ExecStart=/usr/sbin/glusterd -p /var/run/glusterd.pid --log-level $LOG_LEVEL $GLUSTERD_OPTIONS (cod>

Main PID: 835494 (glusterd)

Tasks: 9 (limit: 9331)

Memory: 3.9M

CGroup: /system.slice/glusterd.service

└─835494 /usr/sbin/glusterd -p /var/run/glusterd.pid --log-level INFO

Sep 17 14:01:55 anna-MacBookAir systemd[1]: Starting GlusterFS, a clustered file-system server...

Sep 17 14:01:57 anna-MacBookAir systemd[1]: Started GlusterFS, a clustered file-system server.

We will want to enable the nodes to all talk to each other, so we’ll add rules to iptables

builder@anna-MacBookAir:~$ sudo iptables -I INPUT -p all -s 192.168.1.159 -j ACCEPT

builder@anna-MacBookAir:~$ sudo iptables -I INPUT -p all -s 192.168.1.205 -j ACCEPT
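Since every node needs a rule for each of its peers, a small loop keeps the list in one place. This is a minimal sketch, assuming the three node IPs used in this post. `allow_peers` is a hypothetical helper that only prints the commands. It uses `iptables -C` to check for an existing rule first, so re-running it does not stack duplicates.

```shell
# allow_peers (hypothetical helper): print one idempotent iptables command
# per peer IP passed as an argument.
allow_peers() {
    for ip in "$@"; do
        rule="INPUT -p all -s $ip -j ACCEPT"
        # -C checks whether the rule already exists; -I inserts it if not.
        printf 'iptables -C %s 2>/dev/null || iptables -I %s\n' "$rule" "$rule"
    done
}

# Review the output, then pipe it to "sudo sh" to apply.
allow_peers 192.168.1.81 192.168.1.159 192.168.1.205
```

A rule for a node's own address is harmless, so the same list can be used on all three hosts.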

Using Ansible

I realized these steps would be prime candidates to automate with Ansible.

Thus, I created a GlusterFS playbook

- name: Install and Configure GlusterFS
  hosts: all
  tasks:
    - name: Set IPTables entry (if Firewall enabled)
      ansible.builtin.shell: |
        iptables -I INPUT -p all -s 192.168.1.205 -j ACCEPT
        iptables -I INPUT -p all -s 192.168.1.159 -j ACCEPT
        iptables -I INPUT -p all -s 192.168.1.81 -j ACCEPT
      become: true
      ignore_errors: True
    - name: Install glusterfs server
      ansible.builtin.shell: |
        apt update
        apt install -y glusterfs-server
      become: true
    - name: GlusterFS server - Start and check status
      ansible.builtin.shell: |
        service glusterd start
        sleep 5
        service glusterd status
      become: true
    - name: Create First Brick
      ansible.builtin.shell: |
        mkdir -p /mnt/glusterfs/brick1/gv0
      become: true

- name: Volume Create and Start on Primary
  hosts: AnnaMacbook
  tasks:
    - name: GlusterFS Peer Probe
      ansible.builtin.shell: |
        gluster peer probe 192.168.1.81
        gluster peer probe 192.168.1.159
        gluster peer probe 192.168.1.205
      become: true
      ignore_errors: True
    - name: GlusterFS Peer Status
      ansible.builtin.shell: |
        gluster peer status
      become: true
    - name: Create GV0 Volume
      ansible.builtin.shell: |
        gluster volume create gv0 replica 3 192.168.1.81:/mnt/glusterfs/brick1/gv0 192.168.1.159:/mnt/glusterfs/brick1/gv0 192.168.1.205:/mnt/glusterfs/brick1/gv0
      become: true
    - name: Start GV0 Volume
      ansible.builtin.shell: |
        gluster volume start gv0
        gluster volume info
      become: true
    - name: Check Status of Volume
      ansible.builtin.shell: |
        gluster volume info
      become: true

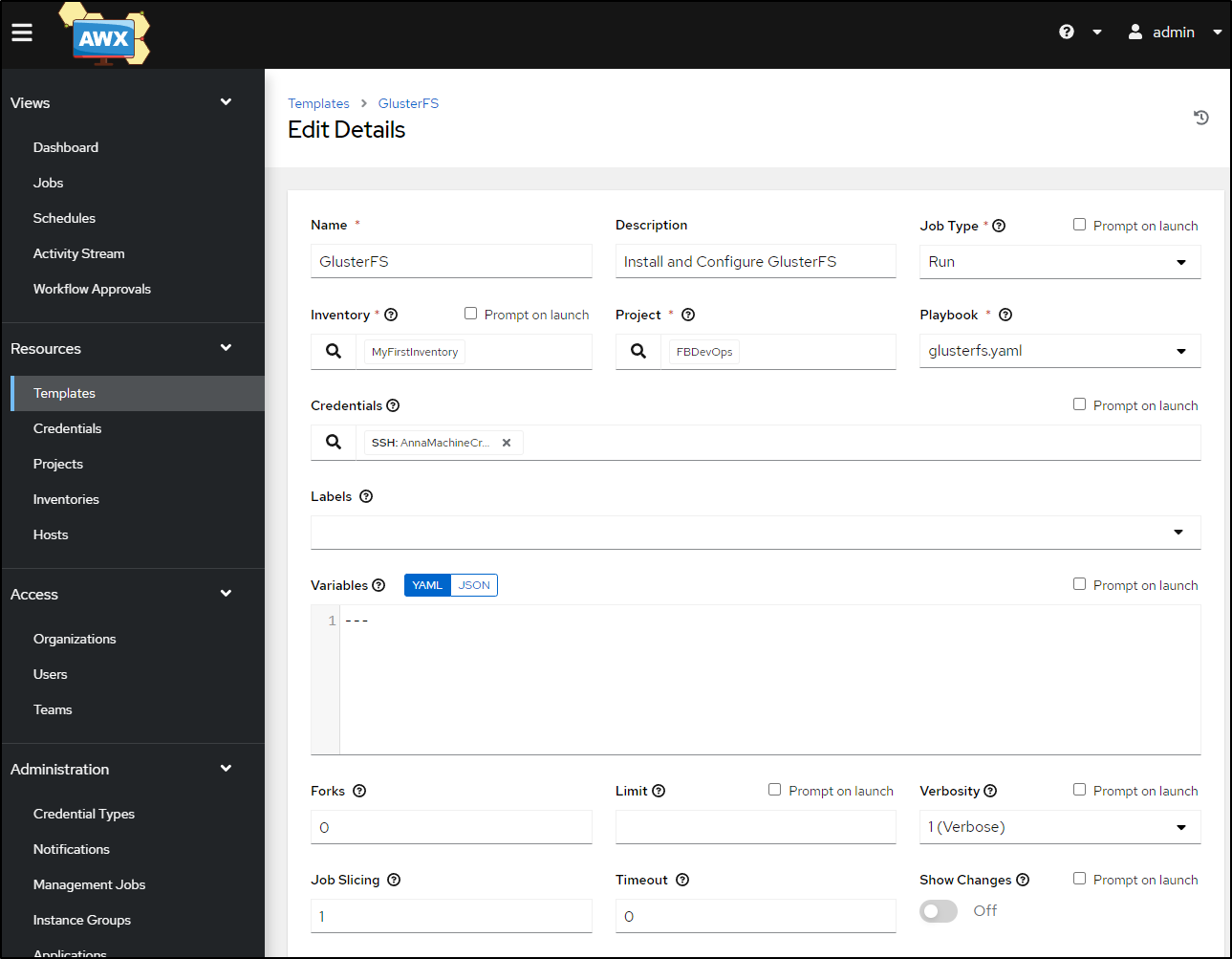

I then added it as a new template in AWX

Debugging

In the end, using IP addresses just would not work. The peers stayed in the “Accepted peer request” state instead of reaching “Peer in Cluster”.

builder@anna-MacBookAir:/mnt$ sudo gluster peer status

Number of Peers: 2

Hostname: 192.168.1.159

Uuid: bf5698c7-3bd4-4b5e-9951-1c7152ae34cf

State: Accepted peer request (Connected)

Hostname: 192.168.1.205

Uuid: ec7e5bd5-5ca9-496d-9ae9-66ef19df5c4c

State: Accepted peer request (Connected)
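A healthy peer reports `Peer in Cluster (Connected)` on its State line, so the output can be checked in a script before attempting a volume create. A minimal sketch, where `peers_in_cluster` is a hypothetical helper that reads `gluster peer status` output on stdin:

```shell
# peers_in_cluster (hypothetical helper): succeed only if at least one peer
# is listed and every State line reads "Peer in Cluster (Connected)".
peers_in_cluster() {
    awk '
        BEGIN { total = 0; ok = 0 }
        /^State:/ {
            total++
            if ($0 ~ /Peer in Cluster \(Connected\)/) ok++
        }
        END { exit !(total > 0 && ok == total) }
    '
}

# Example (uncomment on a gluster node):
# sudo gluster peer status | peers_in_cluster && echo "all peers joined"
```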

If I created and updated /etc/hosts files, then joined manually, I was good to go

isaac@isaac-MacBookPro:~$ sudo gluster peer status

Number of Peers: 2

Hostname: builder-MacBookPro2

Uuid: bf5698c7-3bd4-4b5e-9951-1c7152ae34cf

State: Peer in Cluster (Connected)

Other names:

builder-MacBookPro2

Hostname: anna-MacBookAir

Uuid: c21f126c-0bf9-408e-afc7-a9e1d91d6431

State: Peer in Cluster (Connected)
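The /etc/hosts change mentioned above could be scripted so it is safe to re-run on every node. This is a sketch only: `add_host_entry` is a hypothetical helper, and the IP-to-hostname mapping in the demo is my inference from the peer UUIDs in the outputs above, so treat it as an assumption and confirm it against your own nodes.

```shell
# add_host_entry (hypothetical helper): append "IP NAME" to a hosts file
# unless NAME already appears as a hostname on a non-comment line.
add_host_entry() (
    hosts_file="$1"; ip="$2"; name="$3"
    if awk -v n="$name" '
            $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }
        ' "$hosts_file" 2>/dev/null; then
        exit 0   # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
)

# Demo against a scratch file; point it at /etc/hosts (as root) for real use.
demo="$(mktemp)"
add_host_entry "$demo" 192.168.1.81  anna-MacBookAir      # assumed mapping
add_host_entry "$demo" 192.168.1.159 builder-MacBookPro2  # assumed mapping
add_host_entry "$demo" 192.168.1.205 isaac-MacBookPro     # assumed mapping
cat "$demo"
rm -f "$demo"
```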

I tried a few variations, but this is the create command that worked:

builder@anna-MacBookAir:/mnt$ sudo gluster volume create gv0 replica 3 anna-MacBookAir:/mnt/glusterfs/brick1/gv0 isaac-MacBookPro:/mnt/glusterfs/brick1/gv0 builder-MacBookPro2:/mnt/glusterfs/brick1/gv0 force

volume create: gv0: success: please start the volume to access data

I could then start the volume

builder@anna-MacBookAir:/mnt$ sudo gluster volume start gv0

volume start: gv0: success

And get info, which showed the 3 replicas

builder@anna-MacBookAir:/mnt$ sudo gluster volume info

Volume Name: gv0

Type: Replicate

Volume ID: f7f5ff8d-d5e8-4e1b-ab2d-9af2a2ee8b91

Status: Started

Snapshot Count: 0

Number of Bricks: 1 x 3 = 3

Transport-type: tcp

Bricks:

Brick1: anna-MacBookAir:/mnt/glusterfs/brick1/gv0

Brick2: isaac-MacBookPro:/mnt/glusterfs/brick1/gv0

Brick3: builder-MacBookPro2:/mnt/glusterfs/brick1/gv0

Options Reconfigured:

transport.address-family: inet

storage.fips-mode-rchecksum: on

nfs.disable: on

performance.client-io-threads: off

Using

We can now mount it locally

builder@anna-MacBookAir:/mnt$ sudo mkdir /mnt/glusterfs2

builder@anna-MacBookAir:/mnt$ sudo mount -t glusterfs anna-MacBookAir:/gv0 /mnt/glusterfs2

Testing

Let’s touch 100 files in the mount

builder@anna-MacBookAir:/mnt$ for i in `seq -w 1 100`; do sudo touch /mnt/glusterfs2/test-$i; done

builder@anna-MacBookAir:/mnt$ sudo ls -ltra /mnt/glusterfs2 | tail -n10

-rw-r--r-- 1 root root 0 Sep 18 17:00 test-092

-rw-r--r-- 1 root root 0 Sep 18 17:00 test-093

-rw-r--r-- 1 root root 0 Sep 18 17:00 test-094

-rw-r--r-- 1 root root 0 Sep 18 17:00 test-095

-rw-r--r-- 1 root root 0 Sep 18 17:00 test-096

-rw-r--r-- 1 root root 0 Sep 18 17:00 test-097

-rw-r--r-- 1 root root 0 Sep 18 17:00 test-098

-rw-r--r-- 1 root root 0 Sep 18 17:00 test-099

drwxr-xr-x 3 root root 4096 Sep 18 17:00 .

-rw-r--r-- 1 root root 0 Sep 18 17:00 test-100

I can see on each host that the 100 files were replicated

builder@anna-MacBookAir:/mnt$ sudo ls -ltra /mnt/glusterfs/brick1/gv0/ | tail -n5

-rw-r--r-- 2 root root 0 Sep 18 17:00 test-098

-rw-r--r-- 2 root root 0 Sep 18 17:00 test-099

drw------- 86 root root 4096 Sep 18 17:00 .glusterfs

drwxr-xr-x 3 root root 4096 Sep 18 17:00 .

-rw-r--r-- 2 root root 0 Sep 18 17:00 test-100

builder@anna-MacBookAir:/mnt$ sudo ls -ltra /mnt/glusterfs/brick1/gv0/ | wc -l

104

isaac@isaac-MacBookPro:~$ ls -ltra /mnt/glusterfs/brick1/gv0/ | tail -n10

-rw-r--r-- 2 root root 0 Sep 18 17:00 test-093

-rw-r--r-- 2 root root 0 Sep 18 17:00 test-094

-rw-r--r-- 2 root root 0 Sep 18 17:00 test-095

-rw-r--r-- 2 root root 0 Sep 18 17:00 test-096

-rw-r--r-- 2 root root 0 Sep 18 17:00 test-097

-rw-r--r-- 2 root root 0 Sep 18 17:00 test-098

-rw-r--r-- 2 root root 0 Sep 18 17:00 test-099

drw------- 86 root root 4096 Sep 18 17:00 .glusterfs

drwxr-xr-x 3 root root 4096 Sep 18 17:00 .

-rw-r--r-- 2 root root 0 Sep 18 17:00 test-100

isaac@isaac-MacBookPro:~$ ls -ltra /mnt/glusterfs/brick1/gv0/ | wc -l

104

builder@builder-MacBookPro2:~$ ls -ltra /mnt/glusterfs/brick1/gv0/ | wc -l

104
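The count is 104 rather than 100 because `ls -ltra` also prints a "total" line plus the `.`, `..` and `.glusterfs` entries: 100 + 4 = 104. A small sketch that reproduces the arithmetic in a scratch directory (not a real brick), with a hypothetical `count_test_files` helper that counts only the test files:

```shell
# Recreate the brick layout in a scratch directory.
scratch="$(mktemp -d)"
mkdir "$scratch/.glusterfs"
for i in $(seq -w 1 100); do
    touch "$scratch/test-$i"
done

# Same pipeline as above: counts the "total" line, ".", "..", ".glusterfs" and the files.
ls -ltra "$scratch" | wc -l

# count_test_files (hypothetical helper): count only the replicated test files.
count_test_files() {
    find "$1" -maxdepth 1 -type f -name 'test-*' | wc -l
}
count_test_files "$scratch"
```

Running `count_test_files /mnt/glusterfs/brick1/gv0` on each host gives a number that can be compared directly with the 100 files created.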

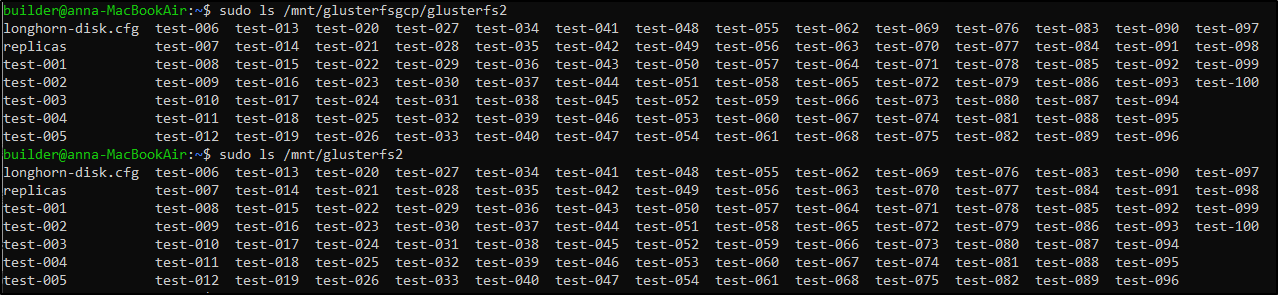

I’m now going to make a GCP mount

builder@anna-MacBookAir:/mnt$ sudo mkdir /mnt/glusterfsgcp

builder@anna-MacBookAir:/mnt$ sudo mount -t gcsfuse -o gid=1000,uid=1000,rw,noauto,file_mode=777,dir_mode=777,key_file=/home/builder/sa-storage-key.json myk3sglusterfs /mnt/glusterfsgcp

Calling gcsfuse with arguments: --dir-mode 777 --key-file /home/builder/sa-storage-key.json -o rw --gid 1000 --uid 1000 --file-mode 777 myk3sglusterfs /mnt/glusterfsgcp

2022/09/18 17:03:44.405349 Start gcsfuse/0.41.6 (Go version go1.18.4) for app "" using mount point: /mnt/glusterfsgcp

2022/09/18 17:03:44.419975 Opening GCS connection...

2022/09/18 17:03:44.866581 Mounting file system "myk3sglusterfs"...

2022/09/18 17:03:44.882374 File system has been successfully mounted.

I can then rsync the glusterfs distributed local storage out to GCP

builder@anna-MacBookAir:/mnt$ sudo rsync -r /mnt/glusterfs2 /mnt/glusterfsgcp

And after a bit, I can see it all replicated

Now I’ll schedule that to run every hour by adding the following entry with crontab -e as root

0 * * * * rsync -r /mnt/glusterfs2 /mnt/glusterfsgcp

builder@anna-MacBookAir:/mnt$ sudo crontab -e

no crontab for root - using an empty one

Select an editor. To change later, run 'select-editor'.

1. /bin/nano <---- easiest

2. /usr/bin/vim.tiny

3. /bin/ed

Choose 1-3 [1]: 2

crontab: installing new crontab

builder@anna-MacBookAir:/mnt$ sudo crontab -l

# Edit this file to introduce tasks to be run by cron.

#

# Each task to run has to be defined through a single line

# indicating with different fields when the task will be run

# and what command to run for the task

#

# To define the time you can provide concrete values for

# minute (m), hour (h), day of month (dom), month (mon),

# and day of week (dow) or use '*' in these fields (for 'any').

#

# Notice that tasks will be started based on the cron's system

# daemon's notion of time and timezones.

#

# Output of the crontab jobs (including errors) is sent through

# email to the user the crontab file belongs to (unless redirected).

#

# For example, you can run a backup of all your user accounts

# at 5 a.m every week with:

# 0 5 * * 1 tar -zcf /var/backups/home.tgz /home/

0 * * * * rsync -r /mnt/glusterfs2 /mnt/glusterfsgcp

#

# For more information see the manual pages of crontab(5) and cron(8)

#

# m h dom mon dow command
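One caveat with the entry as written: the bucket was mounted with noauto, so if the mount is missing (for example after a reboot) rsync would copy into the plain local directory instead of GCP. A hedged sketch of a guard, where `sync_if_mounted` is a hypothetical wrapper that runs the same rsync only when both paths are mount points:

```shell
# sync_if_mounted (hypothetical wrapper): refuse to run unless both the
# source and the destination are mount points, then call rsync as above.
sync_if_mounted() {
    src="$1"
    dst="$2"
    for dir in "$src" "$dst"; do
        if ! mountpoint -q "$dir"; then
            echo "skipping sync: $dir is not mounted" >&2
            return 1
        fi
    done
    rsync -r "$src" "$dst"
}

# Example for the paths in this post (uncomment to run as root):
# sync_if_mounted /mnt/glusterfs2 /mnt/glusterfsgcp
```

Saved as a script, the crontab line would call that script instead of rsync directly.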

A quick note: the reason you cannot use the GCS FUSE mount directly for GlusterFS is that you cannot have the same path used by two mounts. Additionally, the data brick path needs extended attributes, which my ext4 partitions support just fine but a FUSE mount does not.

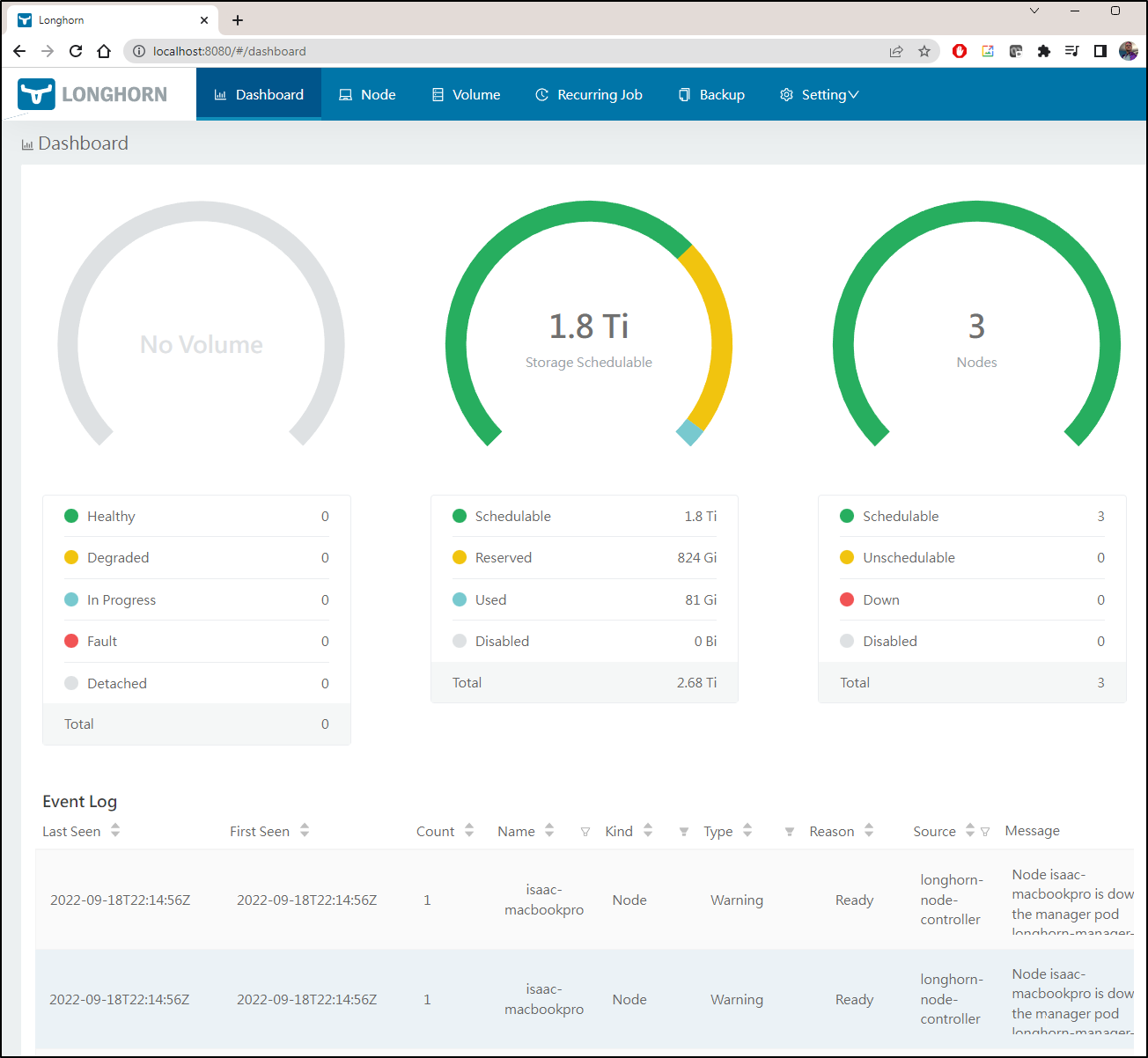

Adding Longhorn

Now that I have some replicated storage that is backed up every hour to GCP, I’ll use it as the basis for a longhorn local stack.

Note, the GlusterFS will be faster than the GCP mount, so I’ll focus on the gluster mount

$ helm install longhorn longhorn/longhorn --namespace longhorn-system --create-namespace --set defaultSettings.defaultDataPath="/mnt/glusterfs2"

WARNING: Kubernetes configuration file is group-readable. This is insecure. Location: /home/builder/.kube/config

WARNING: Kubernetes configuration file is world-readable. This is insecure. Location: /home/builder/.kube/config

W0918 17:13:32.013147 10463 warnings.go:70] policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

W0918 17:13:32.268602 10463 warnings.go:70] policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

NAME: longhorn

LAST DEPLOYED: Sun Sep 18 17:13:31 2022

NAMESPACE: longhorn-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Longhorn is now installed on the cluster!

Please wait a few minutes for other Longhorn components such as CSI deployments, Engine Images, and Instance Managers to be initialized.

Visit our documentation at https://longhorn.io/docs/

Like before, we’ll want a service to route traffic to the longhorn UI

builder@DESKTOP-QADGF36:~$ cat Workspaces/jekyll-blog/longhornsvc.yaml

apiVersion: v1
kind: Service
metadata:
  name: longhorn-ingress-lb
  namespace: longhorn-system
spec:
  selector:
    app: longhorn-ui
  type: LoadBalancer
  ports:
    - name: http
      protocol: TCP
      port: 80
      targetPort: http

builder@DESKTOP-QADGF36:~$ kubectl apply -f Workspaces/jekyll-blog/longhornsvc.yaml

service/longhorn-ingress-lb created

Then I’ll port-forward to longhorn

$ kubectl port-forward svc/longhorn-ingress-lb -n longhorn-system 8080:80

Forwarding from 127.0.0.1:8080 -> 8000

Forwarding from [::1]:8080 -> 8000

Handling connection for 8080

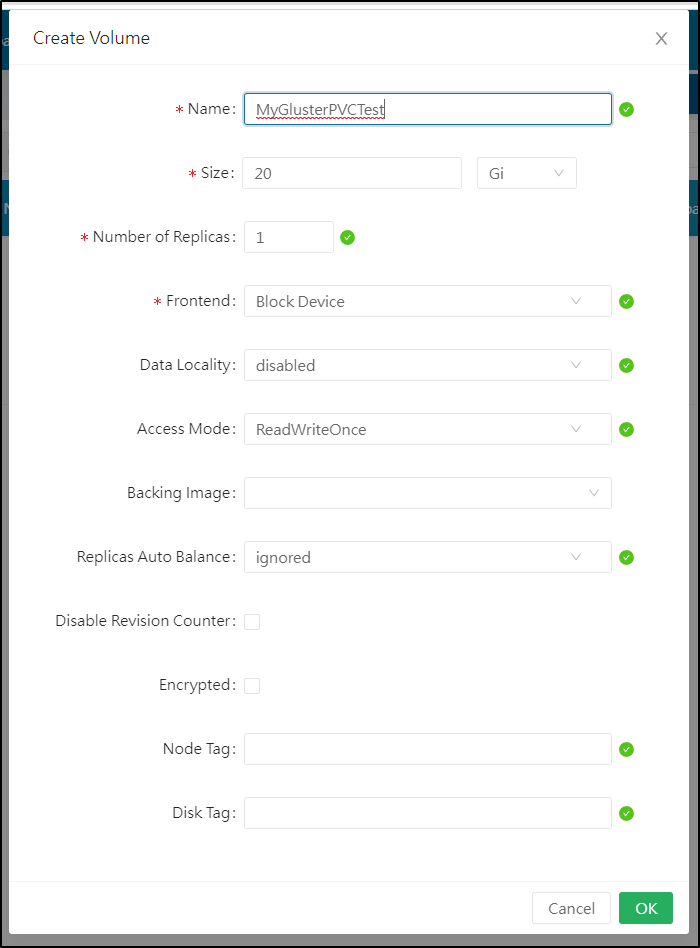

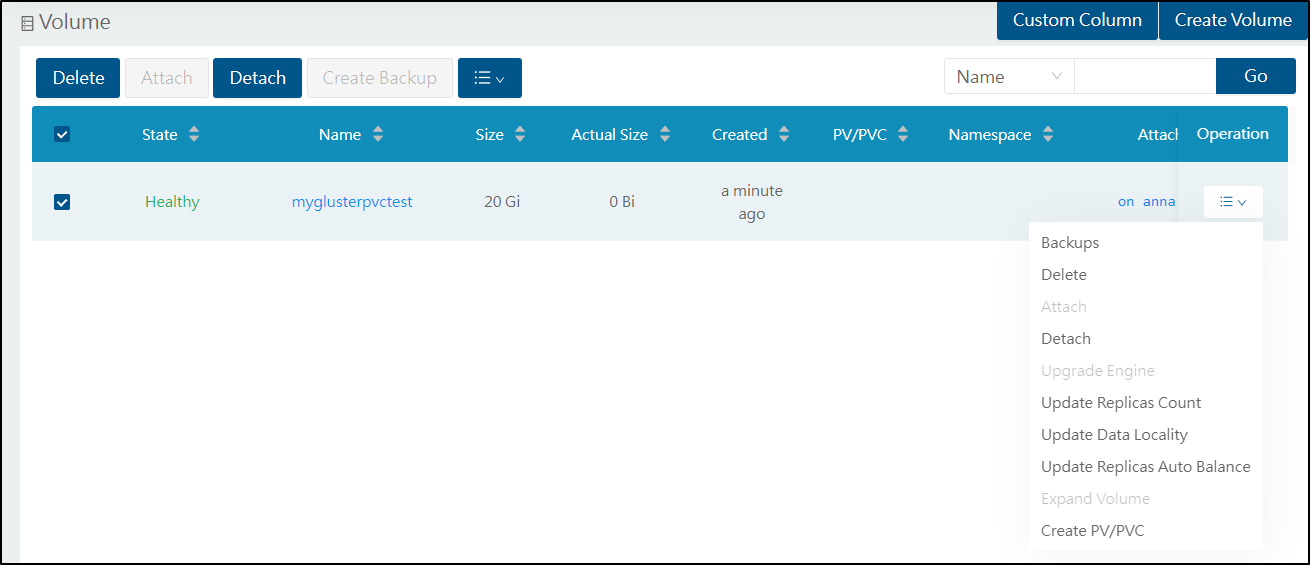

I’ll create a simple volume

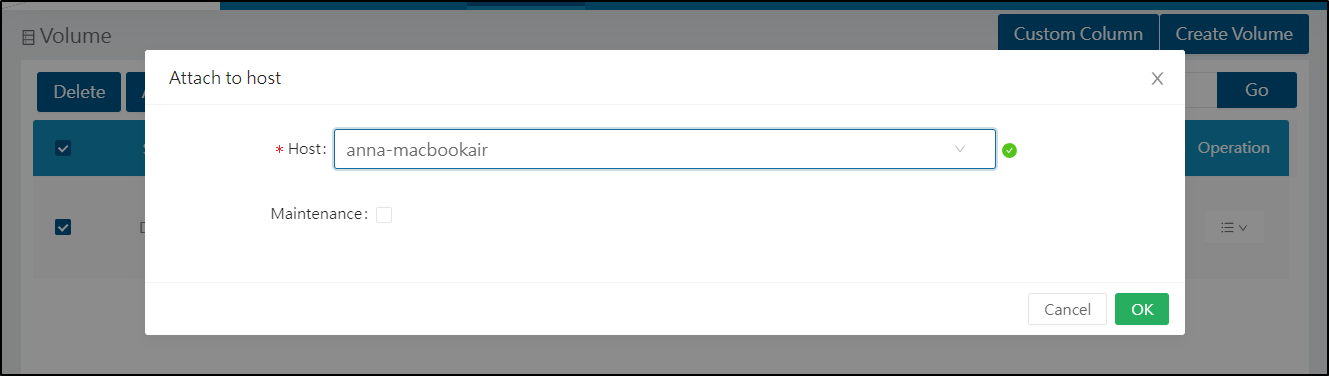

And I’ll attach it to a host

Which I can see marked healthy

GlusterFS Replication

I can see that the Volume has been replicated across the hosts

I’ll hop to a different worker node than the master to which I attached the volume and check the GlusterFS data brick directory

builder@builder-MacBookPro2:~$ ls -ltra /mnt/glusterfs/brick1/gv0/ | tail -n5

-rw-r--r-- 2 root root 0 Sep 18 17:00 test-100

drwxr-xr-x 2 root root 4096 Sep 18 17:14 replicas

drwxr-xr-x 4 root root 4096 Sep 18 17:14 .

drw------- 87 root root 4096 Sep 18 17:14 .glusterfs

-rw-r--r-- 2 root root 51 Sep 18 17:14 longhorn-disk.cfg

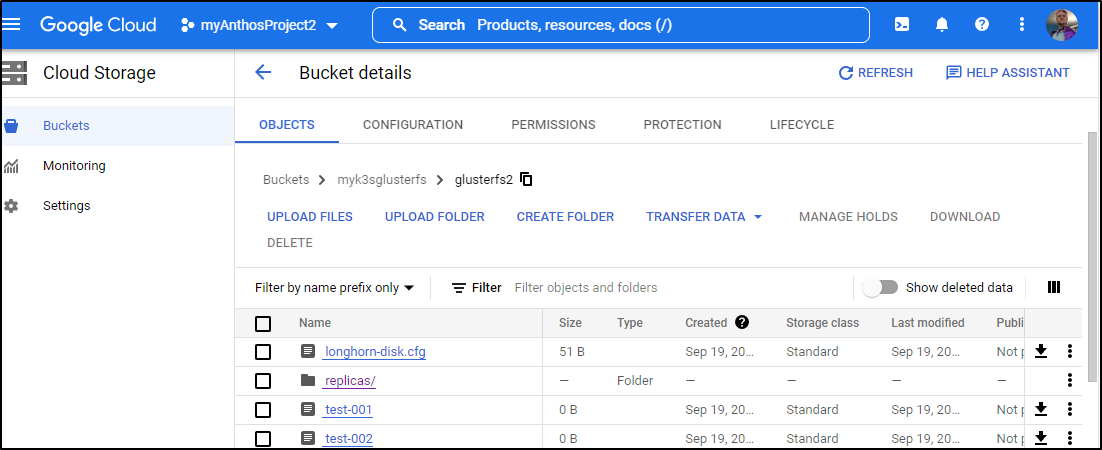

GCP Bucket Backup

Because of the crontab entry we created above, the data will sync to GCP every hour.

I can verify by looking at the bucket mount

as well as GCP

Using with Pods

Let’s now use Longhorn for a PVC that will back a simple Hello World pod

$ cat glusterdemo.yml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: gluster-claim
spec:
  storageClassName: longhorn
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: hello-openshift-pod
  labels:
    name: hello-openshift-pod
spec:
  containers:
    - name: hello-openshift-pod
      image: openshift/hello-openshift
      ports:
        - name: web
          containerPort: 80
      volumeMounts:
        - name: gluster-vol1
          mountPath: /usr/share/nginx/html
          readOnly: false
  volumes:
    - name: gluster-vol1
      persistentVolumeClaim:
        claimName: gluster-claim

$ kubectl apply -f glusterdemo.yml

persistentvolumeclaim/gluster-claim created

pod/hello-openshift-pod created

We can see it’s immediately bound

$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

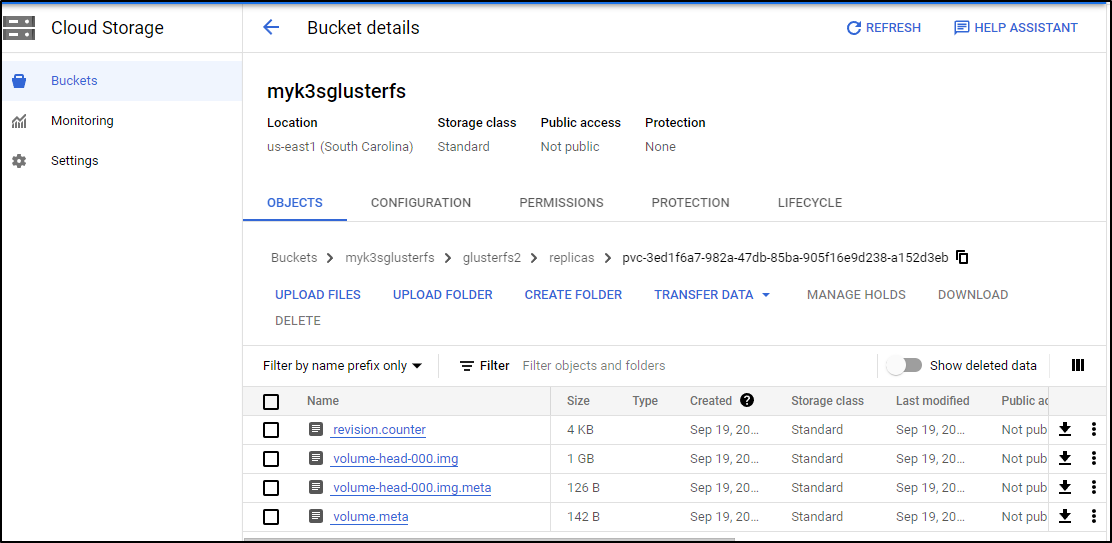

gluster-claim Bound pvc-3ed1f6a7-982a-47db-85ba-905f16e9d238 1Gi RWX longhorn 44s
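In a script, the Bound check above can be polled instead of read by eye. A minimal sketch, assuming kubectl is already configured for the cluster; `wait_for_bound` is a hypothetical helper and the 2-second interval and 30 attempts are arbitrary choices.

```shell
# wait_for_bound (hypothetical helper): poll a PVC until its phase is
# "Bound" or the attempts run out.
wait_for_bound() {
    pvc="$1"
    attempts="${2:-30}"
    while [ "$attempts" -gt 0 ]; do
        phase="$(kubectl get pvc "$pvc" -o jsonpath='{.status.phase}' 2>/dev/null)"
        if [ "$phase" = "Bound" ]; then
            echo "$pvc is Bound"
            return 0
        fi
        attempts=$((attempts - 1))
        sleep 2
    done
    echo "$pvc did not bind in time" >&2
    return 1
}

# Example (uncomment to run against the cluster):
# wait_for_bound gluster-claim
```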

I can also see it has been created in the GlusterFS space

builder@anna-MacBookAir:~$ sudo ls /mnt/glusterfs2/replicas/

pvc-3ed1f6a7-982a-47db-85ba-905f16e9d238-a152d3eb

As well as on other hosts

builder@builder-MacBookPro2:~$ ls -ltra /mnt/glusterfs2/replicas/

total 16

drwxr-xr-x 3 root root 4096 Sep 18 17:14 ..

drwx------ 2 root root 4096 Sep 18 17:18 myglusterpvctest-45c7b2b3

drwxr-xr-x 4 root root 4096 Sep 19 06:03 .

drwx------ 2 root root 4096 Sep 19 06:03 pvc-3ed1f6a7-982a-47db-85ba-905f16e9d238-ba406489

And after the next rsync, I can see it in GCP as well

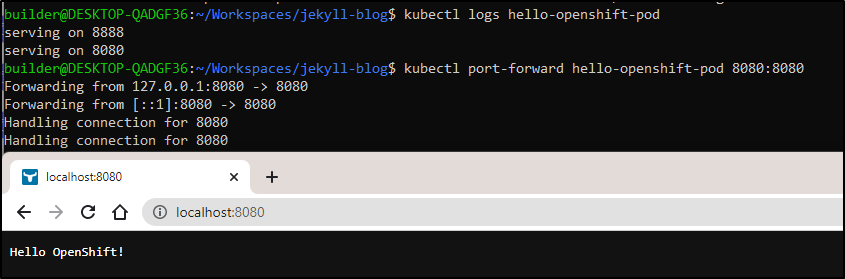

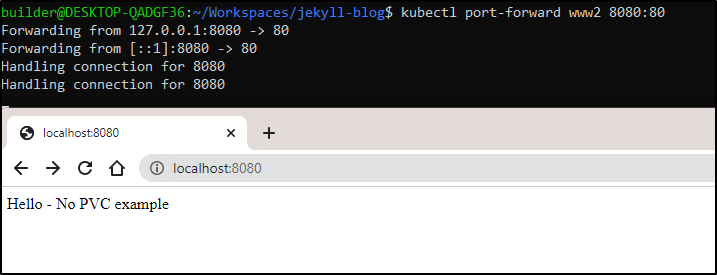

I can port-forward to the pod and see it is serving files

$ kubectl logs hello-openshift-pod

serving on 8888

serving on 8080

$ kubectl port-forward hello-openshift-pod 8080:8080

Forwarding from 127.0.0.1:8080 -> 8080

Forwarding from [::1]:8080 -> 8080

Handling connection for 8080

Handling connection for 8080

Sadly, despite docs to the contrary, I could not exec into the pod. As the errors below show, the image has no sh, bash or zsh to run

$ kubectl exec -it hello-openshift-pod /bin/sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

error: Internal error occurred: error executing command in container: failed to exec in container: failed to start exec "10b5f3db478c3068d4767b268ce8580b0b92e8819ffbbcef004b5e4b3c193945": OCI runtime exec failed: exec failed: unable to start container process: exec: "/bin/sh": stat /bin/sh: no such file or directory: unknown

$ kubectl exec -it hello-openshift-pod sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

error: Internal error occurred: error executing command in container: failed to exec in container: failed to start exec "35d20bc3ef6b85e9cbab9921a171fb5dca6e79a77c3be53beb75154a2950dfa7": OCI runtime exec failed: exec failed: unable to start container process: exec: "sh": executable file not found in $PATH: unknown

$ kubectl exec -it hello-openshift-pod bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

error: Internal error occurred: error executing command in container: failed to exec in container: failed to start exec "e584776d0c8de17d2d7bdae8de6a2c6db26c623a80680504956ce1e1affc1c39": OCI runtime exec failed: exec failed: unable to start container process: exec: "bash": executable file not found in $PATH: unknown

$ kubectl exec -it hello-openshift-pod zsh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

error: Internal error occurred: error executing command in container: failed to exec in container: failed to start exec "5c0d184278f6e3e4541b42b61269e8ceab931318318e3ecde241ae3586bd1098": OCI runtime exec failed: exec failed: unable to start container process: exec: "zsh": executable file not found in $PATH: unknown

I’ll use an Nginx example instead

$ cat glusterdemo2.yml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: http-claim
spec:
  storageClassName: longhorn
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: www
  labels:
    name: www
spec:
  containers:
    - name: www
      image: nginx:alpine
      ports:
        - containerPort: 80
          name: www
      volumeMounts:
        - name: www-persistent-storage
          mountPath: /usr/share/nginx/html
  volumes:
    - name: www-persistent-storage
      persistentVolumeClaim:
        claimName: http-claim

$ kubectl apply -f glusterdemo2.yml

persistentvolumeclaim/http-claim created

pod/www created

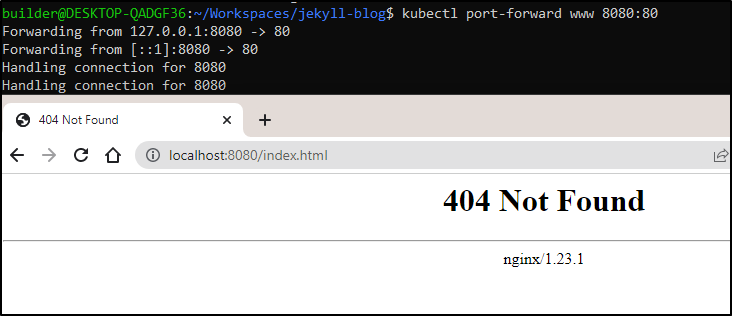

And a quick port-forward test

Now I’ll create a real file in the PVC

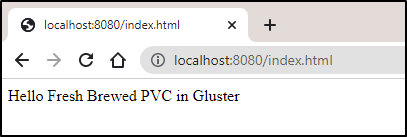

$ kubectl exec www -- sh -c 'echo "Hello Fresh Brewed PVC in Gluster" > /usr/share/nginx/html/index.html'

Then test

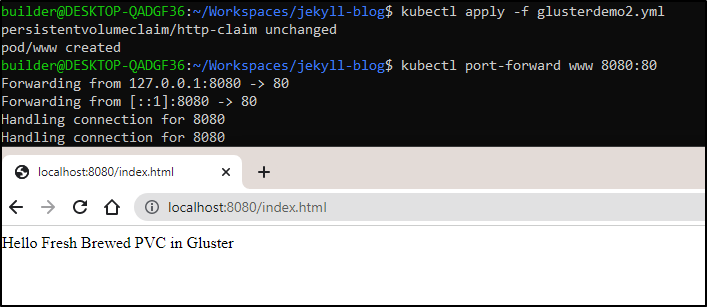

I can now delete the pod

$ kubectl delete pod www && kubectl get pods

pod "www" deleted

NAME READY STATUS RESTARTS AGE

hello-openshift-pod 1/1 Running 0 23m

I can re-run the YAML to create the pod (but it will skip the already satisfied PVC)

$ cat glusterdemo2.yml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: http-claim
spec:
  storageClassName: longhorn
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: www
  labels:
    name: www
spec:
  containers:
    - name: www
      image: nginx:alpine
      ports:
        - containerPort: 80
          name: www
      volumeMounts:
        - name: www-persistent-storage
          mountPath: /usr/share/nginx/html
  volumes:
    - name: www-persistent-storage
      persistentVolumeClaim:
        claimName: http-claim

$ kubectl apply -f glusterdemo2.yml

persistentvolumeclaim/http-claim unchanged

pod/www created

And a port-forward test shows that, indeed, the data is still there

Direct Mounts

We can skip Longhorn altogether and use local files. Since GlusterFS replicates across systems, I could just use a hostPath mount, knowing the data will be on any host in my pool (since I added Gluster to all 3).

On one of the hosts, I’ll create a folder and file to use (here I used the primary node as root)

root@anna-MacBookAir:~# mkdir /mnt/glusterfs2/nginxdemo1

root@anna-MacBookAir:~# echo "Testing Local Path" > /mnt/glusterfs2/nginxdemo1/index.html

root@anna-MacBookAir:~# chmod 755 /mnt/glusterfs2/nginxdemo1

root@anna-MacBookAir:~# chmod 644 /mnt/glusterfs2/nginxdemo1/index.html

Then I can create a definition that will use its mounted path…

$ cat glusterdemo3.yml

apiVersion: v1
kind: Pod
metadata:
  name: www2
  labels:
    name: www2
spec:
  containers:
    - name: www2
      image: nginx:alpine
      ports:
        - containerPort: 80
          name: www
      volumeMounts:
        - name: glusterfs-storage
          mountPath: /usr/share/nginx/html
  volumes:
    - name: glusterfs-storage
      hostPath:
        path: /mnt/glusterfs2/nginxdemo1

$ kubectl apply -f glusterdemo3.yml

pod/www2 created

While this may work, it points the hostPath at the GlusterFS client mount rather than at the brick directory itself (which is kept in sync on every node).

A more direct mapping:

$ cat glusterdemo3.yml

apiVersion: v1
kind: Pod
metadata:
  name: www2
  labels:
    name: www2
spec:
  containers:
    - name: www2
      image: nginx:alpine
      ports:
        - containerPort: 80
          name: www
      volumeMounts:
        - name: glusterfs-storage
          mountPath: /usr/share/nginx/html
  volumes:
    - name: glusterfs-storage
      hostPath:
        path: /mnt/glusterfs/brick1/gv0/nginxdemo1

$ kubectl apply -f glusterdemo3.yml

pod/www2 created

Which we can see works

And as you would expect, the hourly rsync to GCP takes care of the backups

Summary

The first thing we tackled was setting up GlusterFS. To do this, we created an Ansible playbook but then found some issues when using only IPv4 addresses for the peers.

Once I modified the /etc/hosts files and re-ran with hostnames, I was able to complete the creation of the GlusterFS Peers and subsequent Volumes.

I then created a Longhorn installation that exposed the mountpoint as a backend for hosting volumes as well as satisfying persistent volume claims (PVC). We then tested this with two different web front-end pods. We tested the durability of the PVC by destroying a pod after manually updating a file and then recreating against the same PVC to see the file persisted.

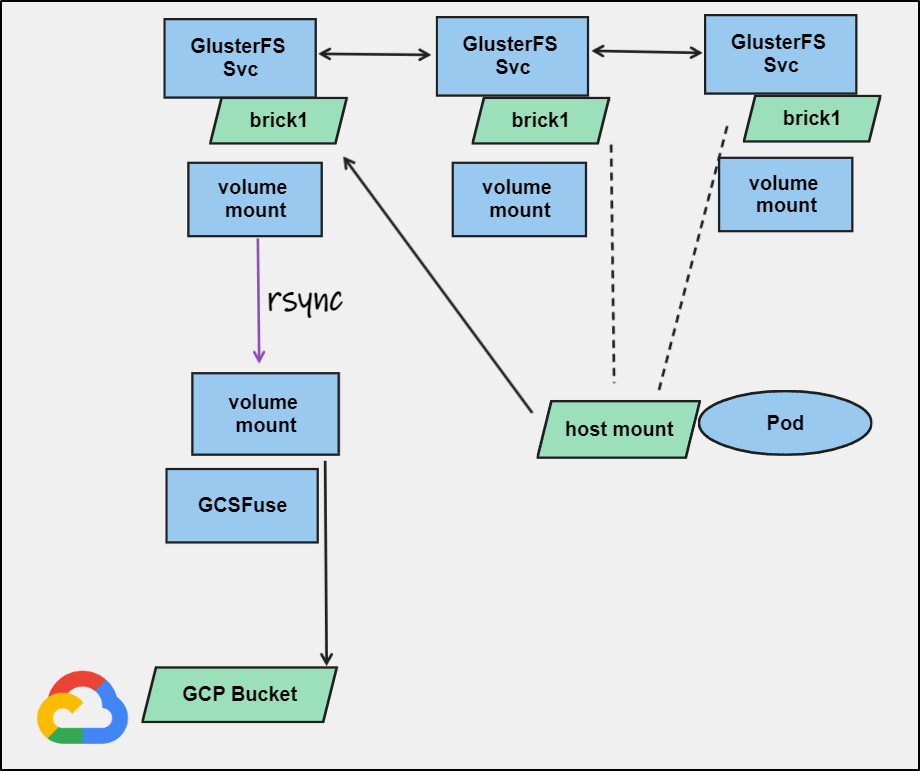

Essentially, we built out the following:

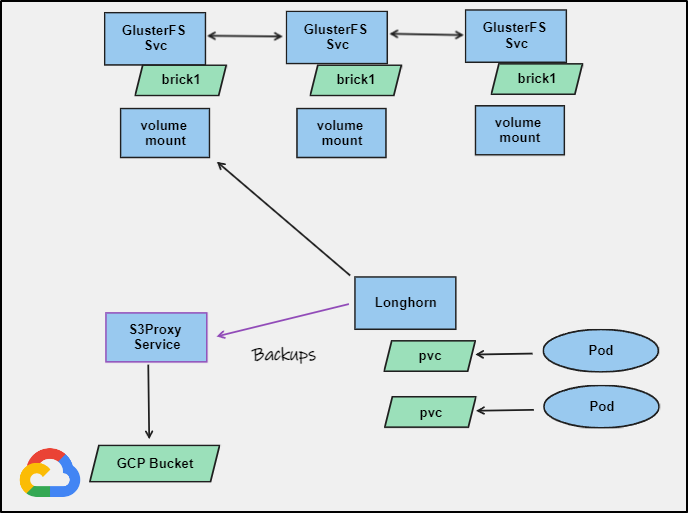

This certainly works to give us a per-hour backup of our files. However, we could alternatively use the backup feature in Longhorn to push backups of our PVCs into GCP by way of the S3Proxy (which we covered in our last blog).

Our last test used the GlusterFS data brick as a Pod’s hostPath mount to skip Longhorn altogether. In this example I wouldn’t need a PVC. It essentially just exposes a path on the nodes that we happen to know is kept consistent by GlusterFS.

Overall, GlusterFS is a pretty handy tool to keep paths in sync across hosts. Clearly, we could do something similar by mounting an external NFS path or cloud storage as we’ve covered before. But in all of those scenarios, we then have an external dependence on other systems.

With GlusterFS we have a relatively air-gapped solution where the nodes just keep each other in sync. This could also be done hub-and-spoke style with a master path replicating to the workers using rsync, and I do not want to discount that approach. But then you have to create a mapping and decide on timings.

I could easily see using GlusterFS, for instance, to sync common build tools across hosts that mount the builder agents (such as GitHub Runners or AzDO Agents). I could also see it serve a basic internal website or wiki that covers simple system metrics (where each host writes stats to a hostname.html file).