Published: Sep 14, 2022 by Isaac Johnson

Today we’ll dig into some more ways we can leverage cloud storage to back our on-prem Kubernetes Persistent Volumes (and Persistent Volume Claims). I’ll cover Google Cloud (GCP) using a few different methods, including gcsfuse, Longhorn.io and S3Proxy.

GCSFuse

Let’s first try to mount a bucket using FUSE (gcsfuse).

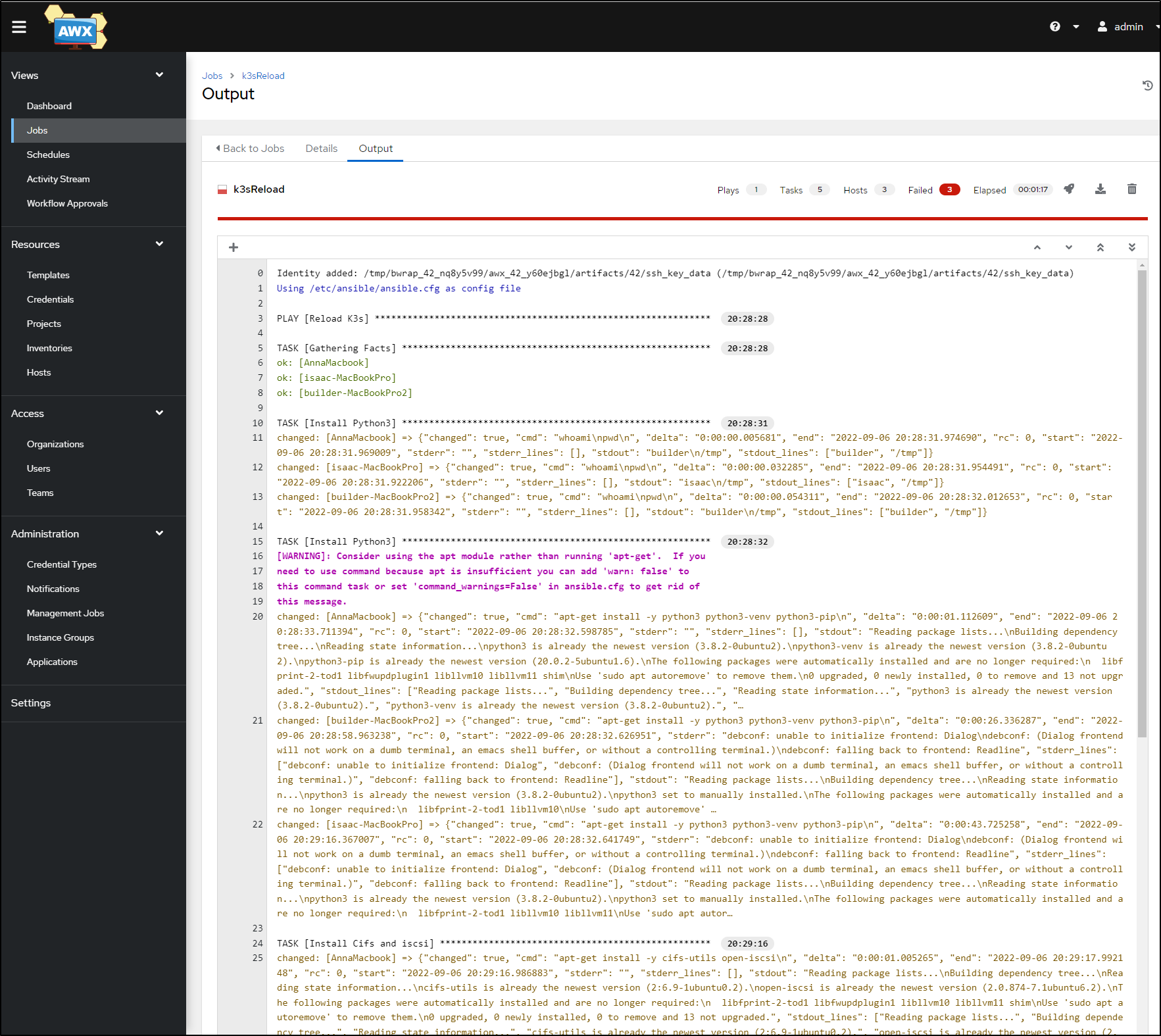

I’ve already started the work to build out a k3s reload and prep playbook

---
- name: Reload K3s
  hosts: all
  tasks:
    - name: Check user and working directory
      ansible.builtin.shell: |
        whoami
        pwd
      args:
        chdir: /tmp
    - name: Install Python3
      ansible.builtin.shell: |
        apt-get install -y python3 python3-venv python3-pip
      become: true
      args:
        chdir: /tmp
    - name: Install Cifs and iscsi
      ansible.builtin.shell: |
        apt-get install -y cifs-utils open-iscsi
      become: true
      args:
        chdir: /tmp
    - name: Add AWS CLI
      ansible.builtin.shell: |
        apt-get install -y awscli s3fs
      become: true
      args:
        chdir: /tmp

I’ll add a new task for gcsfuse and run the playbook:

    - name: Install GCP Fuse
      ansible.builtin.shell: |
        export GCSFUSE_REPO=gcsfuse-`lsb_release -c -s`
        rm -f /etc/apt/sources.list.d/gcsfuse.list || true
        echo "deb http://packages.cloud.google.com/apt $GCSFUSE_REPO main" | sudo tee /etc/apt/sources.list.d/gcsfuse.list
        curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -
        apt-get update
        apt-get install -y gcsfuse
      become: true
      args:
        chdir: /tmp
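The GCSFUSE_REPO line above builds the repository name from the distribution codename that `lsb_release -c -s` prints (these hosts run Ubuntu 20.04, "focal"). Here is a minimal sketch of the same derivation in Python. It assumes that `/etc/os-release` carries a `VERSION_CODENAME` field matching what `lsb_release` reports, which should hold on stock Ubuntu. The helper name is hypothetical, and the sample text is a trimmed, illustrative os-release file.

```python
def gcsfuse_apt_source(os_release_text: str) -> str:
    """Build the gcsfuse apt source line from the contents of /etc/os-release."""
    fields = {}
    for line in os_release_text.splitlines():
        key, sep, value = line.partition("=")
        if sep:  # skip blank or malformed lines
            fields[key.strip()] = value.strip().strip('"')
    # Assumption: VERSION_CODENAME matches what `lsb_release -c -s` prints
    codename = fields["VERSION_CODENAME"]
    return f"deb http://packages.cloud.google.com/apt gcsfuse-{codename} main"


# Trimmed, illustrative /etc/os-release content for Ubuntu 20.04
sample = 'NAME="Ubuntu"\nVERSION="20.04.4 LTS (Focal Fossa)"\nVERSION_CODENAME=focal\n'
print(gcsfuse_apt_source(sample))
# deb http://packages.cloud.google.com/apt gcsfuse-focal main
```

On a host, passing it the real file's contents should produce the same line the playbook writes to /etc/apt/sources.list.d/gcsfuse.list.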

Create bucket and creds

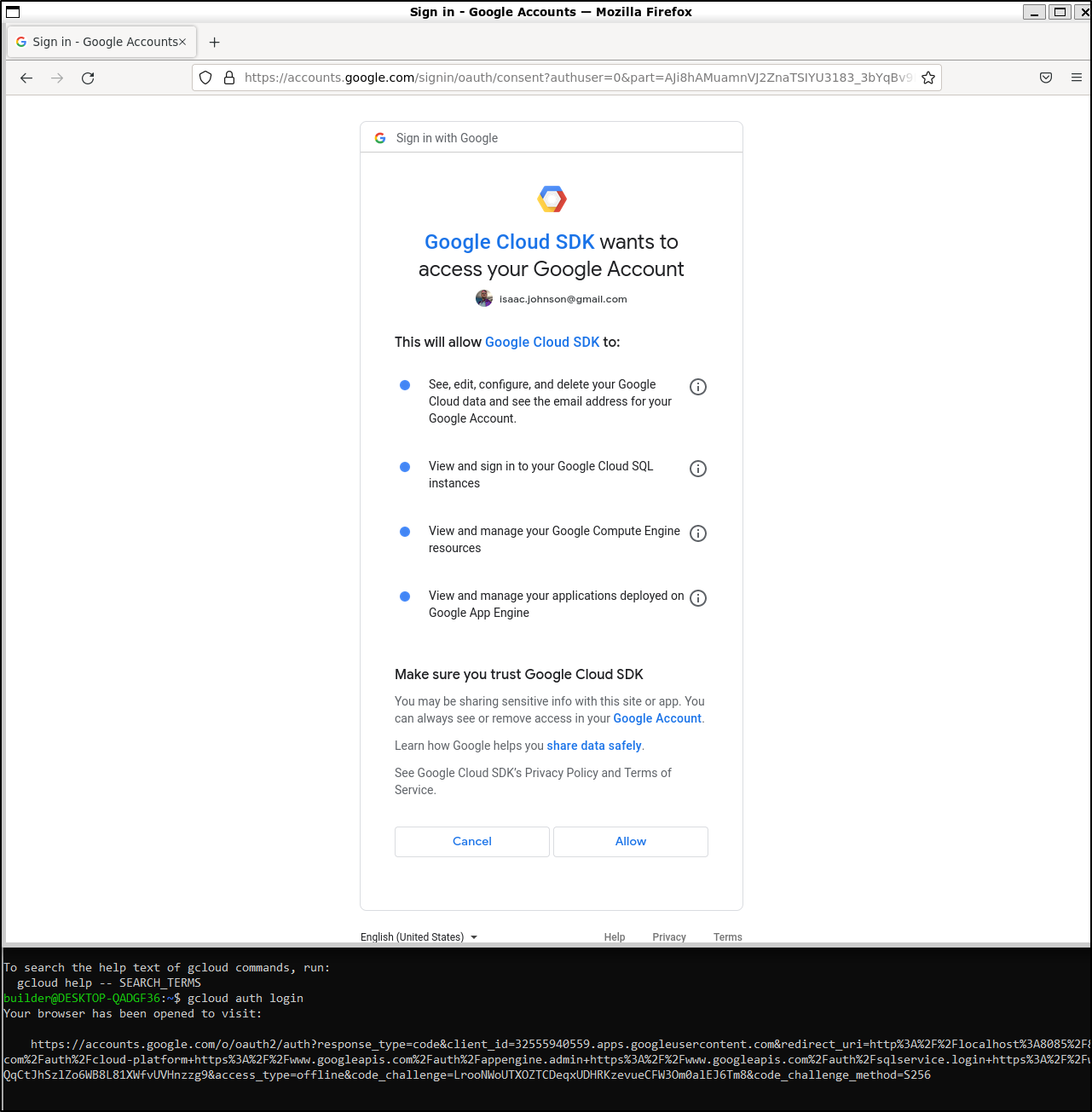

First, I’ll need to log in to gcloud with the CLI (gcloud auth login).

I still get a kick out of the X11-based Firefox that launches, since Windows 11 supports X11 apps.

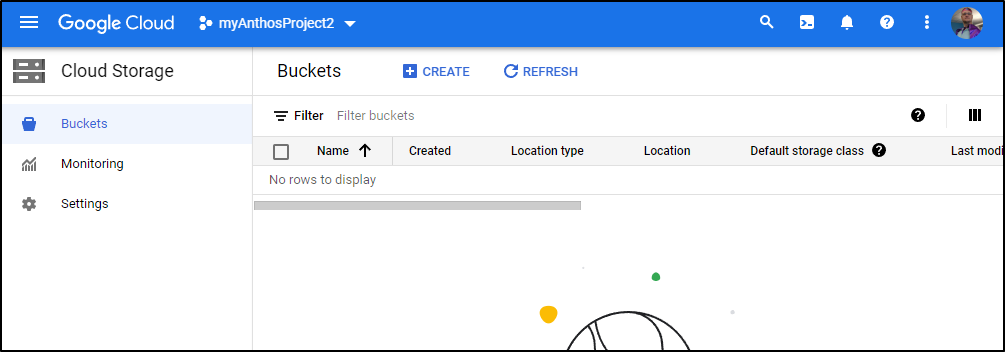

I can now see that I have no buckets

builder@DESKTOP-QADGF36:~$ export PROJECTID=myanthosproject2

builder@DESKTOP-QADGF36:~$ gcloud alpha storage ls --project=$PROJECTID

ERROR: (gcloud.alpha.storage.ls) One or more URLs matched no objects.

which matches what I see in the GCP Cloud Console

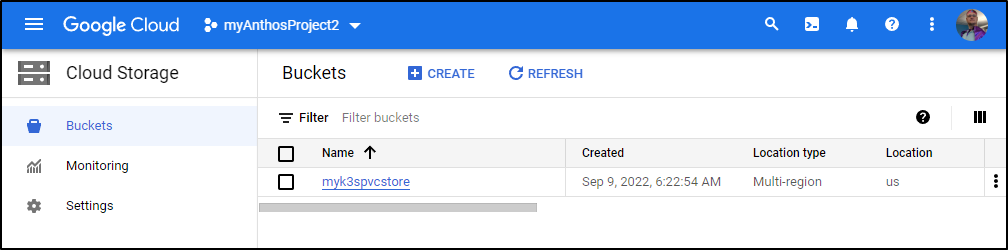

I can then create a bucket

$ gcloud alpha storage buckets create gs://myk3spvcstore --project=$PROJECTID

Creating gs://myk3spvcstore/...

$ gcloud alpha storage ls --project=$PROJECTID

gs://myk3spvcstore/

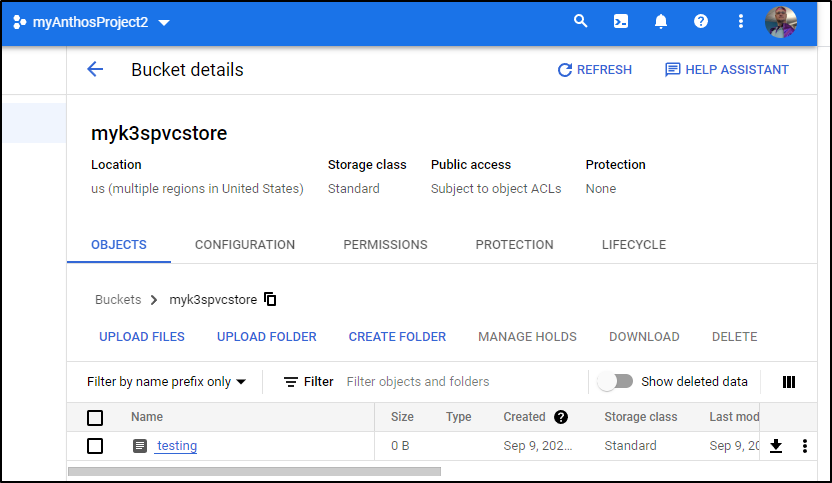

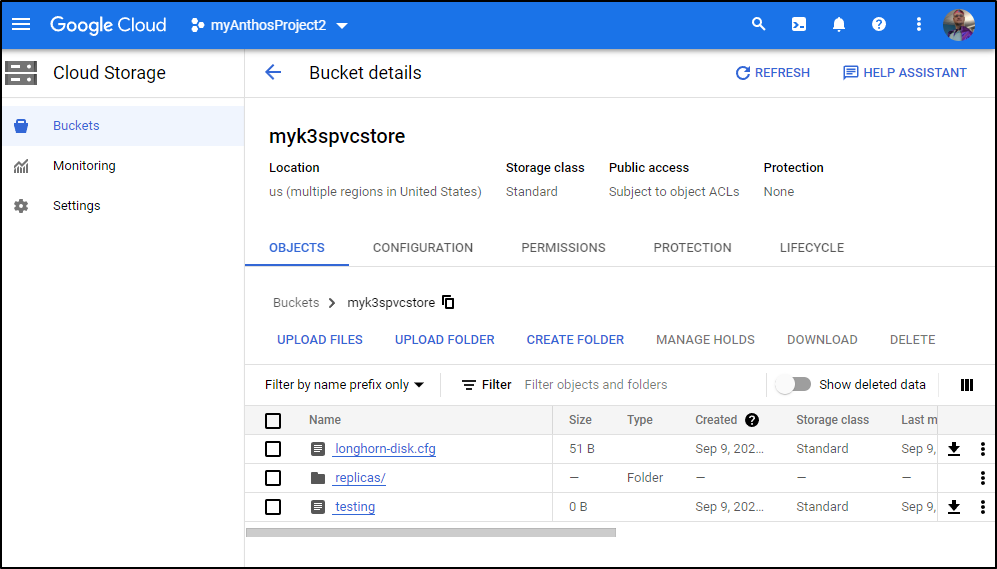

and see it listed in the Cloud Console (so I know I got the project correct)

Create SA and Keys

Now that we have a bucket, we’ll need a service account that can use it:

$ gcloud iam service-accounts create gcpbucketsa --description "SA for Bucket Access" --display-name="GCP Bucket SA" --project=$PROJECTID

Created service account [gcpbucketsa].

Then grant it the Storage Admin role so it can interact with our buckets:

$ gcloud projects add-iam-policy-binding $PROJECTID --member="serviceAccount:gcpbucketsa@$PROJECTID.iam.gserviceaccount.com" --role="roles/storage.admin"

Updated IAM policy for project [myanthosproject2].

bindings:
- members:
  - serviceAccount:service-511842454269@gcp-sa-servicemesh.iam.gserviceaccount.com
  role: roles/anthosservicemesh.serviceAgent
- members:
  - serviceAccount:service-511842454269@gcp-sa-artifactregistry.iam.gserviceaccount.com
  role: roles/artifactregistry.serviceAgent
- members:
  - serviceAccount:service-511842454269@compute-system.iam.gserviceaccount.com
  role: roles/compute.serviceAgent
- members:
  - serviceAccount:service-511842454269@container-engine-robot.iam.gserviceaccount.com
  role: roles/container.serviceAgent
- members:
  - serviceAccount:service-511842454269@containerregistry.iam.gserviceaccount.com
  role: roles/containerregistry.ServiceAgent
- members:
  - serviceAccount:511842454269-compute@developer.gserviceaccount.com
  - serviceAccount:511842454269@cloudservices.gserviceaccount.com
  role: roles/editor
- members:
  - serviceAccount:511842454269-compute@developer.gserviceaccount.com
  role: roles/gkehub.connect
- members:
  - serviceAccount:511842454269-compute@developer.gserviceaccount.com
  - serviceAccount:service-511842454269@gcp-sa-gkehub.iam.gserviceaccount.com
  role: roles/gkehub.serviceAgent
- members:
  - serviceAccount:511842454269-compute@developer.gserviceaccount.com
  role: roles/logging.logWriter
- members:
  - serviceAccount:service-511842454269@gcp-sa-meshcontrolplane.iam.gserviceaccount.com
  role: roles/meshcontrolplane.serviceAgent
- members:
  - serviceAccount:service-511842454269@gcp-sa-meshdataplane.iam.gserviceaccount.com
  role: roles/meshdataplane.serviceAgent
- members:
  - serviceAccount:511842454269-compute@developer.gserviceaccount.com
  role: roles/monitoring.metricWriter
- members:
  - serviceAccount:service-511842454269@gcp-sa-multiclusteringress.iam.gserviceaccount.com
  role: roles/multiclusteringress.serviceAgent
- members:
  - serviceAccount:service-511842454269@gcp-sa-mcmetering.iam.gserviceaccount.com
  role: roles/multiclustermetering.serviceAgent
- members:
  - serviceAccount:service-511842454269@gcp-sa-mcsd.iam.gserviceaccount.com
  role: roles/multiclusterservicediscovery.serviceAgent
- members:
  - user:isaac.johnson@gmail.com
  role: roles/owner
- members:
  - serviceAccount:daprpubsub@myanthosproject2.iam.gserviceaccount.com
  role: roles/pubsub.admin
- members:
  - serviceAccount:service-511842454269@gcp-sa-pubsub.iam.gserviceaccount.com
  role: roles/pubsub.serviceAgent
- members:
  - serviceAccount:511842454269-compute@developer.gserviceaccount.com
  role: roles/resourcemanager.projectIamAdmin
- members:
  - serviceAccount:azure-pipelines-publisher@myanthosproject2.iam.gserviceaccount.com
  - serviceAccount:gcpbucketsa@myanthosproject2.iam.gserviceaccount.com
  role: roles/storage.admin
etag: BwXoPNtXGtE=
version: 1

Then we’ll need a key it can use for FUSE (and later S3Proxy):

$ gcloud iam service-accounts keys create ./sa-private-key.json --iam-account=gcpbucketsa@$PROJECTID.iam.gserviceaccount.com

created key [4c1asdfsadasdfsadfasdasasdf2c] of type [json] as [./sa-private-key.json] for [gcpbucketsa@myanthosproject2.iam.gserviceaccount.com]

We can see the details we will need in the file

$ cat sa-private-key.json | jq

{
  "type": "service_account",
  "project_id": "myanthosproject2",
  "private_key_id": "4c1asdfsadasdfsadfasdasasdf2c",
  "private_key": "-----BEGIN PRIVATE KEY-----\nMIIEstuff stuff stuff stuff stuff stuff stuff stuff Q==\n-----END PRIVATE KEY-----\n",
  "client_email": "gcpbucketsa@myanthosproject2.iam.gserviceaccount.com",
  "client_id": "111234123412341234123412349",
  "auth_uri": "https://accounts.google.com/o/oauth2/auth",
  "token_uri": "https://oauth2.googleapis.com/token",
  "auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",
  "client_x509_cert_url": "https://www.googleapis.com/robot/v1/metadata/x509/gcpbucketsa%40myanthosproject2.iam.gserviceaccount.com"
}

Now we have a bit of an issue: I have a secret, but my Ansible playbooks are public. Clearly, I won’t save it in the playbook.

A quick solution is to base64-encode the file on my local workstation:

$ cat sa-private-key.json | base64 -w 0

ewogICJ0eXBl........

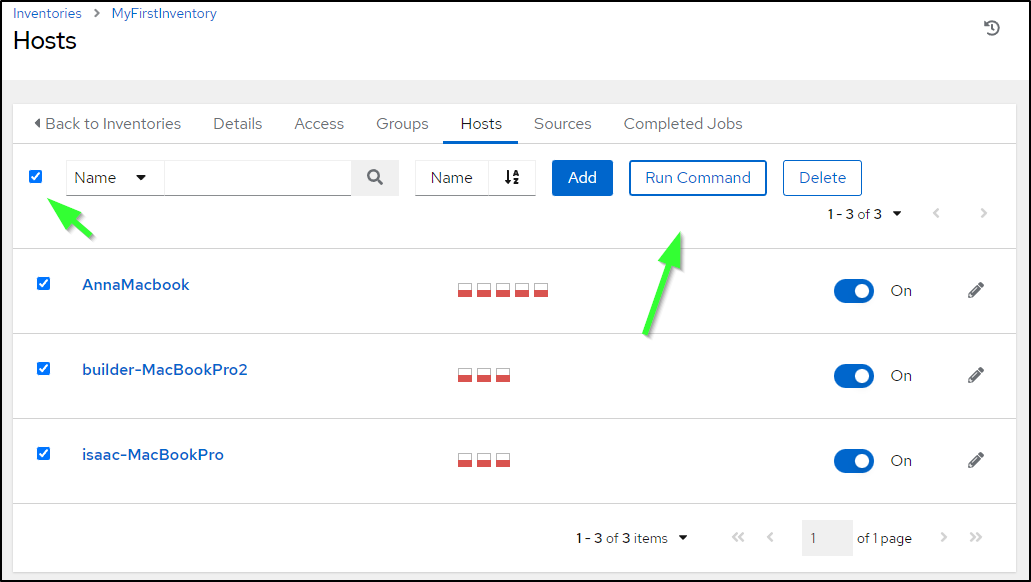

Under Hosts, we’ll check the box to select all hosts, then click “Run Command”.

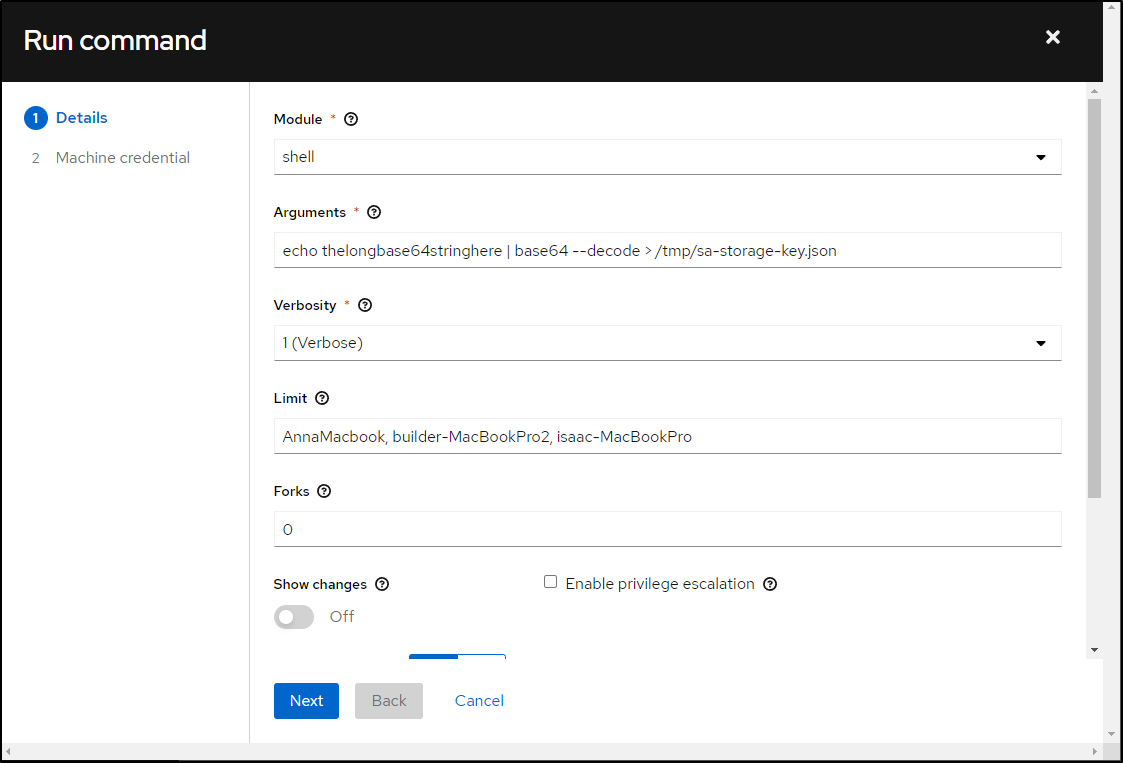

I’ll then paste in the base64 string from above (where you see “thelongbase64stringhere”)

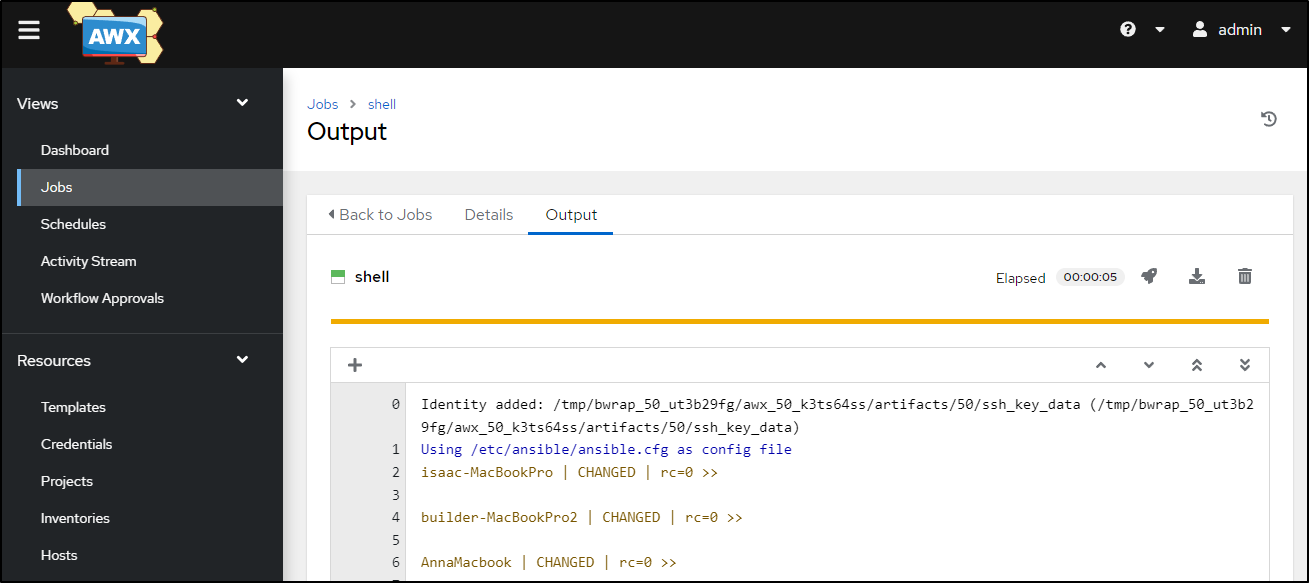

Then launch it to see the file decoded onto the 3 hosts in the /tmp folder. (Base64 is an encoding, not encryption, so treat the string as carefully as the key itself.)
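The command that decodes the pasted string on each host appears only in the screenshots. What follows is therefore a sketch of the same round trip in Python, not the exact command I ran, and the function names are hypothetical. It encodes the key file as a single unwrapped line, as `base64 -w 0` does. It then decodes that line and confirms the result still parses as a service-account key before anything is written to disk.

```python
import base64
import json


def encode_for_paste(raw: bytes) -> str:
    """Single-line base64, like `base64 -w 0` (no line wrapping)."""
    return base64.b64encode(raw).decode("ascii")


def decode_pasted(b64_text: str) -> dict:
    """Decode the pasted string and check it is still a service-account key."""
    raw = base64.b64decode(b64_text.strip(), validate=True)
    key = json.loads(raw)
    if key.get("type") != "service_account":
        raise ValueError("decoded data is not a service-account key")
    return key


# Round trip with a stand-in key file (a real one also carries private_key, client_email, ...)
sample = json.dumps({"type": "service_account", "project_id": "myanthosproject2"}).encode()
pasted = encode_for_paste(sample)
print(decode_pasted(pasted)["project_id"])  # myanthosproject2
```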

I can check one host as a sanity check:

$ ssh builder@192.168.1.81

Welcome to Ubuntu 20.04.4 LTS (GNU/Linux 5.15.0-46-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

12 updates can be applied immediately.

To see these additional updates run: apt list --upgradable

New release '22.04.1 LTS' available.

Run 'do-release-upgrade' to upgrade to it.

Your Hardware Enablement Stack (HWE) is supported until April 2025.

Last login: Fri Sep 9 07:03:50 2022 from 192.168.1.57

builder@anna-MacBookAir:~$ cat /tmp/sa-storage-key.json | jq | head -n3

{
  "type": "service_account",
  "project_id": "myanthosproject2",

I could automate the next steps, but I prefer to do fstab updates by hand:

builder@anna-MacBookAir:~$ cp /tmp/sa-storage-key.json /home/builder/

builder@anna-MacBookAir:~$ chmod 600 ~/sa-storage-key.json

builder@anna-MacBookAir:~$ sudo mkdir /mnt/gcpbucket1 && sudo chmod 777 /mnt/gcpbucket1

builder@anna-MacBookAir:~$ sudo vi /etc/fstab

builder@anna-MacBookAir:~$ cat /etc/fstab | tail -n1

myk3spvcstore /mnt/gcpbucket1 gcsfuse rw,noauto,user,key_file=/home/builder/sa-storage-key.json

builder@anna-MacBookAir:~$ sudo mount -a

mount error(115): Operation now in progress

Refer to the mount.cifs(8) manual page (e.g. man mount.cifs) and kernel log messages (dmesg)

dmesg showed:

[725220.764420] kauditd_printk_skb: 1 callbacks suppressed

[725220.764424] audit: type=1400 audit(1662723333.575:68): apparmor="DENIED" operation="open" profile="snap.snap-store.ubuntu-software" name="/etc/PackageKit/Vendor.conf" pid=1107928 comm="snap-store" requested_mask="r" denied_mask="r" fsuid=1000 ouid=0

[725237.139454] audit: type=1400 audit(1662723349.946:69): apparmor="DENIED" operation="open" profile="snap.snap-store.ubuntu-software" name="/var/lib/snapd/hostfs/usr/share/gdm/greeter/applications/gnome-initial-setup.desktop" pid=1107928 comm="pool-org.gnome." requested_mask="r" denied_mask="r" fsuid=1000 ouid=0

[725237.191463] audit: type=1400 audit(1662723349.998:70): apparmor="DENIED" operation="open" profile="snap.snap-store.ubuntu-software" name="/var/lib/snapd/hostfs/usr/share/gdm/greeter/applications/gnome-initial-setup.desktop" pid=1107928 comm="pool-org.gnome." requested_mask="r" denied_mask="r" fsuid=1000 ouid=0

[725238.124161] audit: type=1326 audit(1662723350.934:71): auid=1000 uid=1000 gid=1000 ses=305 subj=? pid=1107928 comm="pool-org.gnome." exe="/snap/snap-store/558/usr/bin/snap-store" sig=0 arch=c000003e syscall=93 compat=0 ip=0x7f17c2b1d39b code=0x50000

[727245.148213] CIFS: Attempting to mount \\mac81azstoragecsi.file.core.windows.net\mycisstorage

[727255.471005] CIFS: VFS: Error connecting to socket. Aborting operation.

[727255.471022] CIFS: VFS: cifs_mount failed w/return code = -115

Ah, this is caused by my wunderbar ISP blocking port 445 (SMB) and my forgetting that I had left my Azure storage mount in fstab. I’ll comment that out and try again:

builder@anna-MacBookAir:~$ sudo vi /etc/fstab

builder@anna-MacBookAir:~$ sudo mount -a

builder@anna-MacBookAir:~$

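Sadly, this did not mount the bucket, nor did any errors spew out.

However, if I ran the equivalent mount command directly, it mounted without error. The culprit is “noauto”: mount -a skips any fstab entry marked “noauto”, so such entries only mount when you name them explicitly: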

builder@anna-MacBookAir:~$ sudo mount -t gcsfuse -o rw,noauto,user,key_file=/home/builder/sa-storage-key.json myk3spvcstore /mnt/gcpbucket1

Calling gcsfuse with arguments: -o nosuid -o nodev --key-file /home/builder/sa-storage-key.json -o rw -o noexec myk3spvcstore /mnt/gcpbucket1

2022/09/09 07:20:21.638506 Start gcsfuse/0.41.6 (Go version go1.18.4) for app "" using mount point: /mnt/gcpbucket1

2022/09/09 07:20:21.667333 Opening GCS connection...

2022/09/09 07:20:22.080744 Mounting file system "myk3spvcstore"...

2022/09/09 07:20:22.092792 File system has been successfully mounted.

I could then touch a file

builder@anna-MacBookAir:~$ sudo touch /mnt/gcpbucket1/testing

and see it immediately reflected in the GCP Cloud Console
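To see in advance which fstab entries `mount -a` will silently pass over, here is a small sketch that flags any entry whose options include `noauto`. The helper name is hypothetical, and the parsing is simplified: it splits on whitespace and ignores escaped spaces in paths.

```python
def skipped_by_mount_all(fstab_text: str) -> list[str]:
    """Return mount points that `mount -a` skips because of the noauto option."""
    skipped = []
    for raw in fstab_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments, e.g. a commented-out CIFS mount
        fields = line.split()
        if len(fields) < 4:
            continue  # no options field to inspect
        mount_point, options = fields[1], fields[3].split(",")
        if "noauto" in options:
            skipped.append(mount_point)
    return skipped


fstab = """\
# (commented-out Azure Files CIFS mount)
myk3spvcstore /mnt/gcpbucket1 gcsfuse rw,noauto,user,key_file=/home/builder/sa-storage-key.json
"""
print(skipped_by_mount_all(fstab))  # ['/mnt/gcpbucket1']
```

Dropping `noauto` from the options, as in the entry on the next host below, takes the bucket out of that list.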

When I went to test on the next two hosts, it was fine. On the first of them, the fstab entry leaves out “noauto”, and I mounted by mount point (an explicit mount like this works either way):

builder@DESKTOP-QADGF36:~/Workspaces/ansible-playbooks$ ssh builder@192.168.1.159

Welcome to Ubuntu 20.04.4 LTS (GNU/Linux 5.15.0-46-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

13 updates can be applied immediately.

To see these additional updates run: apt list --upgradable

New release '22.04.1 LTS' available.

Run 'do-release-upgrade' to upgrade to it.

Your Hardware Enablement Stack (HWE) is supported until April 2025.

Last login: Fri Sep 9 07:03:44 2022 from 192.168.1.57

builder@builder-MacBookPro2:~$ cp /tmp/sa-storage-key.json /home/builder/ && chmod 600 ~/sa-storage-key.json

builder@builder-MacBookPro2:~$ sudo mkdir /mnt/gcpbucket1

builder@builder-MacBookPro2:~$ sudo chmod 777 /mnt/gcpbucket1

builder@builder-MacBookPro2:~$ sudo vi /etc/fstab

builder@builder-MacBookPro2:~$ cat /etc/fstab | tail -n1

myk3spvcstore /mnt/gcpbucket1 gcsfuse rw,user,key_file=/home/builder/sa-storage-key.json

builder@builder-MacBookPro2:~$ sudo mount /mnt/gcpbucket1/

Calling gcsfuse with arguments: --key-file /home/builder/sa-storage-key.json -o rw -o noexec -o nosuid -o nodev myk3spvcstore /mnt/gcpbucket1

2022/09/09 07:26:25.162134 Start gcsfuse/0.41.6 (Go version go1.18.4) for app "" using mount point: /mnt/gcpbucket1

2022/09/09 07:26:25.180687 Opening GCS connection...

2022/09/09 07:26:25.650479 Mounting file system "myk3spvcstore"...

2022/09/09 07:26:25.662212 File system has been successfully mounted.

builder@builder-MacBookPro2:~$ sudo ls -l /mnt/gcpbucket1

total 0

-rw-r--r-- 1 root root 0 Sep 9 07:20 testing

and on the other:

isaac@isaac-MacBookPro:~$ sudo mount /mnt/gcpbucket1

Calling gcsfuse with arguments: -o noexec -o nosuid -o nodev --key-file /home/isaac/sa-storage-key.json -o rw myk3spvcstore /mnt/gcpbucket1

2022/09/09 07:31:18.296250 Start gcsfuse/0.41.6 (Go version go1.18.4) for app "" using mount point: /mnt/gcpbucket1

2022/09/09 07:31:18.312584 Opening GCS connection...

2022/09/09 07:31:18.747362 Mounting file system "myk3spvcstore"...

2022/09/09 07:31:18.755200 File system has been successfully mounted.

isaac@isaac-MacBookPro:~$ sudo ls -l /mnt/gcpbucket1

total 0

-rw-r--r-- 1 root root 0 Sep 9 07:20 testing

isaac@isaac-MacBookPro:~$

Longhorn

Now, as you might expect (and as we covered in our last two blog entries), we can use Longhorn, with its default data path pointed at the gcsfuse mount, to expose this as a storage class:

$ helm install longhorn longhorn/longhorn --namespace longhorn-system --create-namespace --set defaultSettings.defaultDataPath="/mnt/gcpbucket1"

WARNING: Kubernetes configuration file is group-readable. This is insecure. Location: /home/builder/.kube/config

WARNING: Kubernetes configuration file is world-readable. This is insecure. Location: /home/builder/.kube/config

W0909 07:37:12.676674 30182 warnings.go:70] policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

W0909 07:37:13.114282 30182 warnings.go:70] policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

NAME: longhorn

LAST DEPLOYED: Fri Sep 9 07:37:11 2022

NAMESPACE: longhorn-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Longhorn is now installed on the cluster!

Please wait a few minutes for other Longhorn components such as CSI deployments, Engine Images, and Instance Managers to be initialized.

Visit our documentation at https://longhorn.io/docs/

Create a service (if you have not already) to forward traffic to the Longhorn UI:

$ cat longhornsvc.yaml

apiVersion: v1
kind: Service
metadata:
  name: longhorn-ingress-lb
  namespace: longhorn-system
spec:
  selector:
    app: longhorn-ui
  type: LoadBalancer
  ports:
    - name: http
      protocol: TCP
      port: 80
      targetPort: http

$ kubectl apply -f ./longhornsvc.yaml

service/longhorn-ingress-lb created

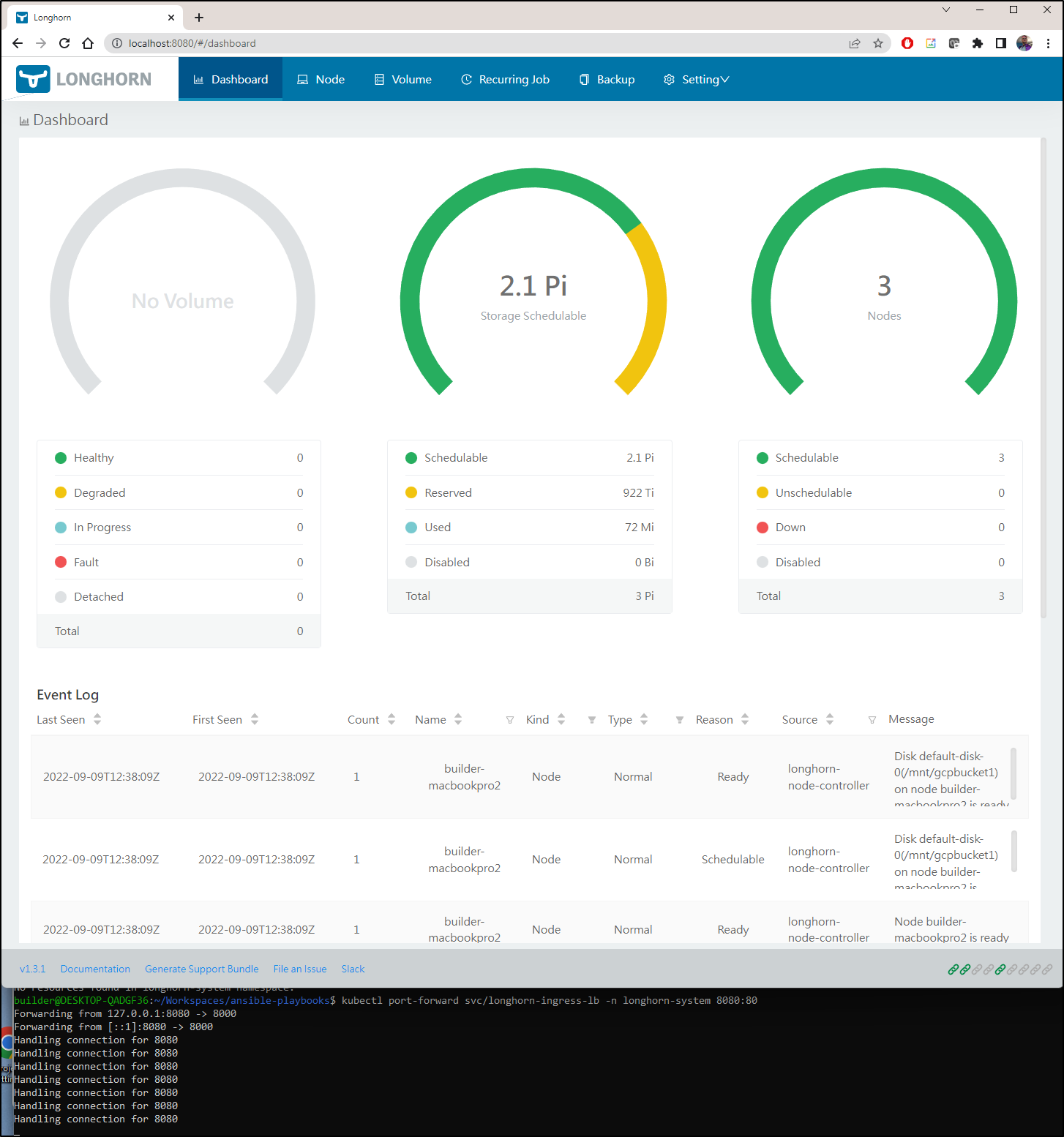

We can then port-forward to the service we created and see 2.1Pi of storage we can use:

$ kubectl port-forward svc/longhorn-ingress-lb -n longhorn-system 8080:80

We can also see longhorn as a storage class we can use with containers

$ kubectl get sc

NAME                   PROVISIONER                        RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
local-path (default)   rancher.io/local-path              Delete          WaitForFirstConsumer   false                  10d
rook-nfs-share1        nfs.rook.io/rook-nfs-provisioner   Delete          Immediate              false                  9d
s3-buckets             aws-s3.io/bucket                   Delete          Immediate              false                  8d
s3-existing-buckets    aws-s3.io/bucket                   Delete          Immediate              false                  8d
s3-existing-buckets2   aws-s3.io/bucket                   Delete          Immediate              false                  8d
s3-existing-buckets3   aws-s3.io/bucket                   Delete          Immediate              false                  3d
s3-existing-buckets4   aws-s3.io/bucket                   Delete          Immediate              false                  3d
longhorn (default)     driver.longhorn.io                 Delete          Immediate              true                   3m35s
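Besides the UI, workloads can consume the new class through a PersistentVolumeClaim that sets `storageClassName: longhorn`. Naming the class explicitly also sidesteps the fact that both local-path and longhorn are marked (default) in the listing above. Below is a minimal sketch that builds such a claim as JSON, which `kubectl apply -f -` accepts. The claim name, namespace and size are placeholders, not values from my cluster.

```python
import json


def longhorn_pvc(name: str, size: str, namespace: str = "default") -> dict:
    """Build a PersistentVolumeClaim that requests storage from the longhorn class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": "longhorn",  # explicit, so the default class does not matter
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # Hypothetical usage: python3 pvc.py | kubectl apply -f -
    print(json.dumps(longhorn_pvc("gcs-backed-pvc", "5Gi"), indent=2))
```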

Testing

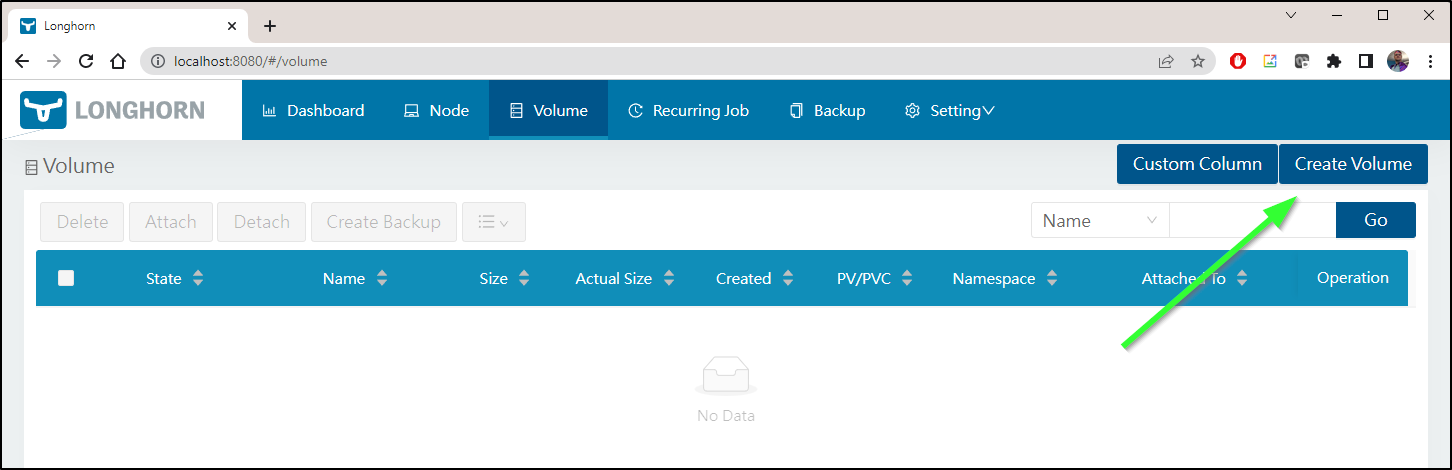

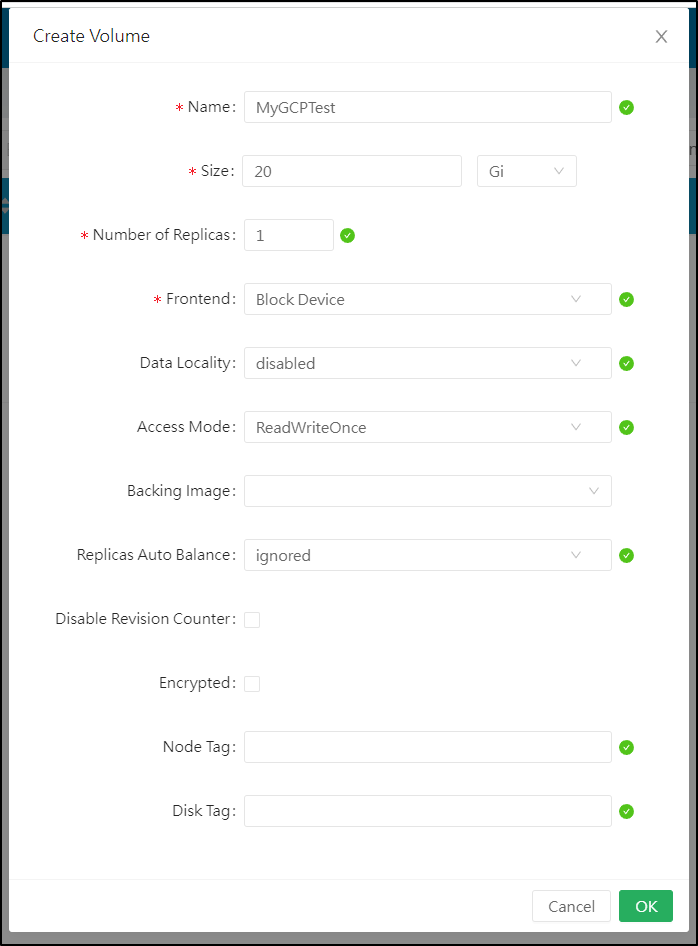

Under Volume, we can click “Create Volume”

and give it a name and size. As its backend is a GCS bucket, which already stores data redundantly, we do not need extra replicas.

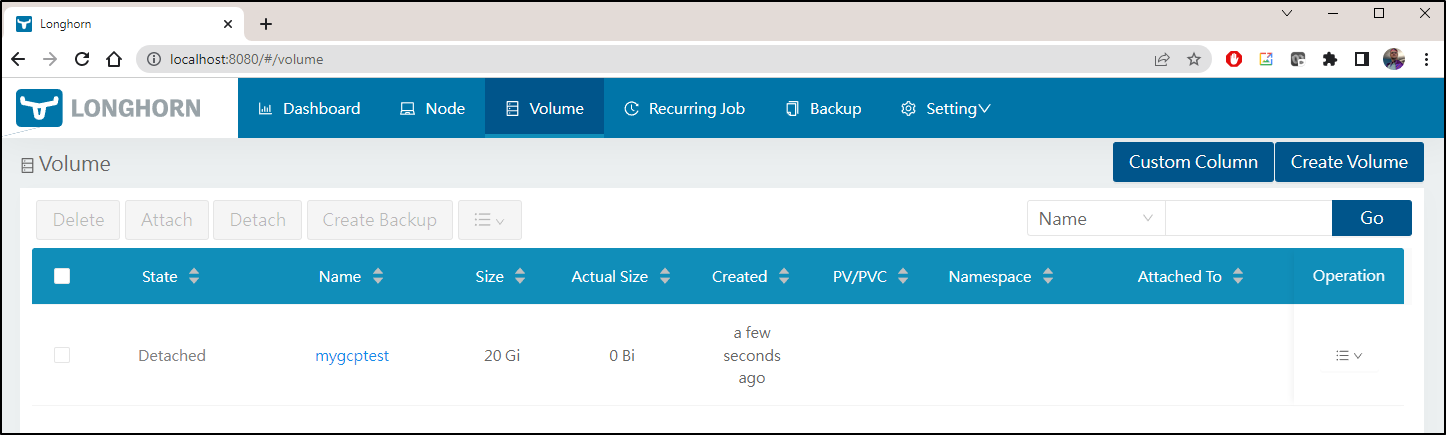

I can now see a detached volume listed in Longhorn,

as well as reflected in the GCP Cloud Console.

S3Proxy

We can now use that same SA JSON file to fire up S3Proxy, creating an internal S3 endpoint that Longhorn can use for backups, or that can serve as an S3-compliant storage medium.

$ cat s3proxy.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: s3proxy
---
apiVersion: v1
kind: Secret
metadata:
  name: sa-storage-key
  namespace: s3proxy
data:
  sa-storage-key.json: ewo****base 64 of the Google SA JSON*****n0K
---
apiVersion: v1
kind: Service
metadata:
  name: s3proxy
  namespace: s3proxy
spec:
  selector:
    app: s3proxy
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: s3proxy
  namespace: s3proxy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: s3proxy
  template:
    metadata:
      labels:
        app: s3proxy
    spec:
      volumes:
        - name: sacred
          secret:
            secretName: sa-storage-key
      containers:
        - name: s3proxy
          image: andrewgaul/s3proxy:latest
          imagePullPolicy: Always
          volumeMounts:
            - mountPath: "/mnt/sacred"
              name: sacred
              readOnly: true
          ports:
            - containerPort: 80
          env:
            - name: JCLOUDS_PROVIDER
              value: google-cloud-storage
            - name: JCLOUDS_IDENTITY
              value: gcpbucketsa@myanthosproject2.iam.gserviceaccount.com
            - name: JCLOUDS_CREDENTIAL
              value: /mnt/sacred/sa-storage-key.json
            - name: S3PROXY_IDENTITY
              value: mac81azstoragepremcsi
            - name: S3PROXY_CREDENTIAL
              value: S3l1wzS52sY4qbilPv8SrRKTTZEw1AbVljNnspxeaHvw8JpZdlW8A6og8ck5KF+6Wp2GYoF1i0Yn+AStjJJH4w==

Note: the S3Proxy identity and credential are rather arbitrary. They are just the access key and secret that clients will use when connecting _to_ the S3Proxy service. Also, as we’ll see in a moment, we have to change some values to make this work.
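Since these two values are yours to choose, one way to generate a fresh pair is with Python's `secrets` module. This is a sketch, not something S3Proxy requires, and the helper name and the `s3proxy-` prefix are arbitrary choices. The credential is 64 random bytes, base64-encoded, which happens to give the same 88-character shape as the credential above.

```python
import base64
import secrets


def make_s3proxy_credentials(prefix: str = "s3proxy") -> tuple[str, str]:
    """Return an arbitrary (identity, credential) pair for S3PROXY_IDENTITY / S3PROXY_CREDENTIAL."""
    identity = f"{prefix}-{secrets.token_hex(4)}"  # prefix plus 8 random hex characters
    # 64 random bytes base64-encode to 88 characters ending in "=="
    credential = base64.b64encode(secrets.token_bytes(64)).decode("ascii")
    return identity, credential


identity, credential = make_s3proxy_credentials()
print(f"S3PROXY_IDENTITY={identity}")
print(f"S3PROXY_CREDENTIAL={credential}")
```

Whatever pair you pick, the same two values later become the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY that clients present to S3Proxy.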

Then apply the above YAML

$ kubectl apply -f s3proxy.yaml

namespace/s3proxy unchanged

secret/sa-storage-key unchanged

service/s3proxy unchanged

deployment.apps/s3proxy configured

We can see that the credential file indeed mounted as we would hope:

$ kubectl exec -it s3proxy-6d7df87b6-mrx94 -n s3proxy -- /bin/bash

root@s3proxy-6d7df87b6-mrx94:/opt/s3proxy# ls /mnt/sacred/

..2022_09_10_10_56_09.236322181/ ..data/ sa-storage-key.json

root@s3proxy-6d7df87b6-mrx94:/opt/s3proxy# ls /mnt/sacred/sa-storage-key.json

/mnt/sacred/sa-storage-key.json

root@s3proxy-6d7df87b6-mrx94:/opt/s3proxy# cat /mnt/sacred/sa-storage-key.json

{
  "type": "service_account",
  "project_id": "myanthosproject2",
  ...

This manifest creates a proxy pod and service that we can use to direct S3-compatible traffic to GCP buckets:

$ kubectl get pods -n s3proxy

NAME                      READY   STATUS    RESTARTS   AGE
s3proxy-6d7df87b6-mrx94   1/1     Running   0          2d

$ kubectl get svc -n s3proxy

NAME      TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
s3proxy   ClusterIP   10.43.48.252   <none>        80/TCP    6d10h

To use this for Longhorn backups, we need to create a secret. To do this, we first base64-encode the values from the S3Proxy definition above:

$ echo S3l1wzS52sY4qbilPv8SrRKTTZEw1AbVljNnspxeaHvw8JpZdlW8A6og8ck5KF+6Wp2GYoF1i0Yn+AStjJJH4w== | tr -d '\n' | base64 -w 0 && echo

UzNsMXd6UzUyc1k0cWJpbFB2OFNyUktUVFpFdzFBYlZsak5uc3B4ZWFIdnc4SnBaZGxXOEE2b2c4Y2s1S0YrNldwMkdZb0YxaTBZbitBU3RqSkpINHc9PQ==

builder@DESKTOP-QADGF36:~/Workspaces/ansible-playbooks$ echo mac81azstoragepremcsi | tr -d '\n' | base64 -w 0 && echo

bWFjODFhenN0b3JhZ2VwcmVtY3Np

builder@DESKTOP-QADGF36:~/Workspaces/ansible-playbooks$ echo http://s3proxy.s3proxy.svc.cluster.local | tr -d '\n' | base64 -w 0 && echo

aHR0cDovL3MzcHJveHkuczNwcm94eS5zdmMuY2x1c3Rlci5sb2NhbA==
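The same encoding can be sketched in Python. Calling `base64.b64encode` on the raw string matches `echo VALUE | tr -d '\n' | base64 -w 0`, because no trailing newline gets encoded. The helper name is hypothetical, and the secret key below is a placeholder rather than the real value.

```python
import base64


def k8s_secret_data(values: dict[str, str]) -> dict[str, str]:
    """Base64-encode each value for the `data:` field of a Kubernetes Secret."""
    # No trailing newline is encoded, matching `echo X | tr -d '\n' | base64 -w 0`
    return {k: base64.b64encode(v.encode("utf-8")).decode("ascii") for k, v in values.items()}


data = k8s_secret_data({
    "AWS_ACCESS_KEY_ID": "mac81azstoragepremcsi",          # the S3PROXY_IDENTITY value
    "AWS_SECRET_ACCESS_KEY": "<S3PROXY_CREDENTIAL value>",  # placeholder
    "AWS_ENDPOINTS": "http://s3proxy.s3proxy.svc.cluster.local",
})
for key, encoded in data.items():
    print(f"  {key}: {encoded}")
```

Alternatively, a Secret's `stringData` field accepts plain values, and Kubernetes does the encoding for you.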

Now we can create the secret for Longhorn to use. Note that the base64-encoded values for AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY match the output above. And yes, even if your secret key already looks like a base64 value, you encode it again, because every value under a Kubernetes Secret’s data field must be base64-encoded:

$ cat longhornS3ProxySecret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: s3proxy-secret
  namespace: longhorn-system
type: Opaque
data:
  AWS_ACCESS_KEY_ID: bWFjODFhenN0b3JhZ2VwcmVtY3Np
  AWS_SECRET_ACCESS_KEY: UzNsMXd6UzUyc1k0cWJpbFB2OFNyUktUVFpFdzFBYlZsak5uc3B4ZWFIdnc4SnBaZGxXOEE2b2c4Y2s1S0YrNldwMkdZb0YxaTBZbitBU3RqSkpINHc9PQ==
  AWS_ENDPOINTS: aHR0cDovL3MzcHJveHkuczNwcm94eS5zdmMuY2x1c3Rlci5sb2NhbA== # http://s3proxy.s3proxy.svc.cluster.local

$ kubectl apply -f longhornS3ProxySecret.yaml

secret/s3proxy-secret created

However, testing from a shell pod in the cluster, we get errors:

root@my-shell:/# aws s3 --endpoint-url http://s3proxy.s3proxy.svc.cluster.local ls

An error occurred () when calling the ListBuckets operation:

root@my-shell:/# aws s3 --endpoint-url http://10.43.48.252 ls s3://myk3spvcstore/

An error occurred () when calling the ListObjectsV2 operation:

root@my-shell:/# aws s3 --endpoint-url http://10.43.48.252 ls

An error occurred () when calling the ListBuckets operation:

root@my-shell:/# aws s3 --endpoint-url=http://s3proxy.s3proxy.svc.cluster.local ls s3://myk3spvcstore/

An error occurred () when calling the ListObjectsV2 operation:

The error returned by S3Proxy matches what others have reported:

</head>\n<body><h2>HTTP ERROR 400 chars /mnt/sacred/sa-storage-key.json doesn't contain % line [-----BEGIN ]</h2>\n<table>\n<tr><th>URI:</th><td>/myk3spvcstore</td></tr>\n<tr><th>STATUS:</t

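The message gives it away: S3Proxy treated the JCLOUDS_CREDENTIAL value as the key itself rather than as a path to a file, and the string “/mnt/sacred/sa-storage-key.json” contains no BEGIN line. After a lot of debugging and trial and error, I figured out the working syntax. It just wants the PEM private key from the JSON file (the private_key field) without any other decorations, but as multi-line text.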
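Rather than copying the key by hand, it can be pulled out of the service-account file. Below is a minimal sketch with hypothetical helper names. `json.loads` turns the escaped newlines in `private_key` back into real line breaks, which yields the multi-line BEGIN/END block. The second helper renders that block as a YAML literal block (`value: |`). By default it indents the block to sit under `env:` in a Deployment laid out like the one that follows (entries at 12 spaces).

```python
import json


def jclouds_settings(sa_json_text: str) -> dict[str, str]:
    """Pull JCLOUDS_IDENTITY and JCLOUDS_CREDENTIAL out of a GCP service-account key file."""
    key = json.loads(sa_json_text)  # also turns the escaped newlines in private_key into real ones
    pem = key["private_key"]
    if not pem.startswith("-----BEGIN PRIVATE KEY-----"):
        raise ValueError("private_key is not a PEM block")
    return {"JCLOUDS_IDENTITY": key["client_email"], "JCLOUDS_CREDENTIAL": pem}


def env_literal_block(name: str, text: str, indent: int = 12) -> str:
    """Render one env entry whose value is a YAML literal block (value: |)."""
    pad = " " * indent
    body = "\n".join(f"{pad}    {line}" for line in text.rstrip("\n").splitlines())
    return f"{pad}- name: {name}\n{pad}  value: |\n{body}"


# Usage on the workstation (file name from the gcloud command earlier):
#   with open("sa-private-key.json") as f:
#       settings = jclouds_settings(f.read())
#   print(env_literal_block("JCLOUDS_CREDENTIAL", settings["JCLOUDS_CREDENTIAL"]))
```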

Here (with some masking) is my working s3proxy.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: s3proxy
---
apiVersion: v1
kind: Service
metadata:
  name: s3proxy
  namespace: s3proxy
spec:
  selector:
    app: s3proxy
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: s3proxy
  namespace: s3proxy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: s3proxy
  template:
    metadata:
      labels:
        app: s3proxy
    spec:
      volumes:
        - name: sacred
          secret:
            secretName: sa-storage-key
      containers:
        - name: s3proxy
          image: andrewgaul/s3proxy:latest
          imagePullPolicy: Always
          volumeMounts:
            - mountPath: "/mnt/sacred"
              name: sacred
              readOnly: true
          ports:
            - containerPort: 80
          env:
            - name: JCLOUDS_PROVIDER
              value: "google-cloud-storage"
            - name: JCLOUDS_ENDPOINT
              value: "https://storage.googleapis.com"
            - name: JCLOUDS_IDENTITY
              value: "gcpbucketsa@myanthosproject2.iam.gserviceaccount.com"
            - name: JCLOUDS_CREDENTIAL
              value: |
                -----BEGIN PRIVATE KEY-----
                MIIEvAIBADANBgkq************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                ****************************************************************
                *********************Q==
                -----END PRIVATE KEY-----
            - name: S3PROXY_IDENTITY
              value: "mac81azstoragepremcsi"
            - name: S3PROXY_CREDENTIAL
              value: "S3l1wzS52sY4qbilPv8SrRKTTZEw1AbVljNnspxeaHvw8JpZdlW8A6og8ck5KF+6Wp2GYoF1i0Yn+AStjJJH4w=="

We can now test again from the Ubuntu shell pod:

root@my-shell:/# aws s3 --endpoint-url=http://s3proxy.s3proxy.svc.cluster.local ls

1969-12-31 15:00:00 myk3spvcstore

I noticed “mb” (make bucket) did not work: it reports BucketAlreadyOwnedByYou, yet the new bucket never shows up in the listing.

root@my-shell:/# aws s3 --endpoint-url=http://s3proxy.s3proxy.svc.cluster.local mb s3://mybackups/

make_bucket failed: s3://mybackups/ An error occurred (BucketAlreadyOwnedByYou) when calling the CreateBucket operation: Your previous request to create the named bucket succeeded and you already own it. {BucketName=mybackups}

root@my-shell:/# aws s3 --endpoint-url=http://s3proxy.s3proxy.svc.cluster.local ls

1969-12-31 15:00:00 myk3spvcstore

root@my-shell:/# aws s3 --endpoint-url=http://s3proxy.s3proxy.svc.cluster.local mb s3://mybackups2/

make_bucket failed: s3://mybackups2/ An error occurred (BucketAlreadyOwnedByYou) when calling the CreateBucket operation: Your previous request to create the named bucket succeeded and you already own it. {BucketName=mybackups2}

root@my-shell:/# aws s3 --endpoint-url=http://s3proxy.s3proxy.svc.cluster.local ls

1969-12-31 15:00:00 myk3spvcstore

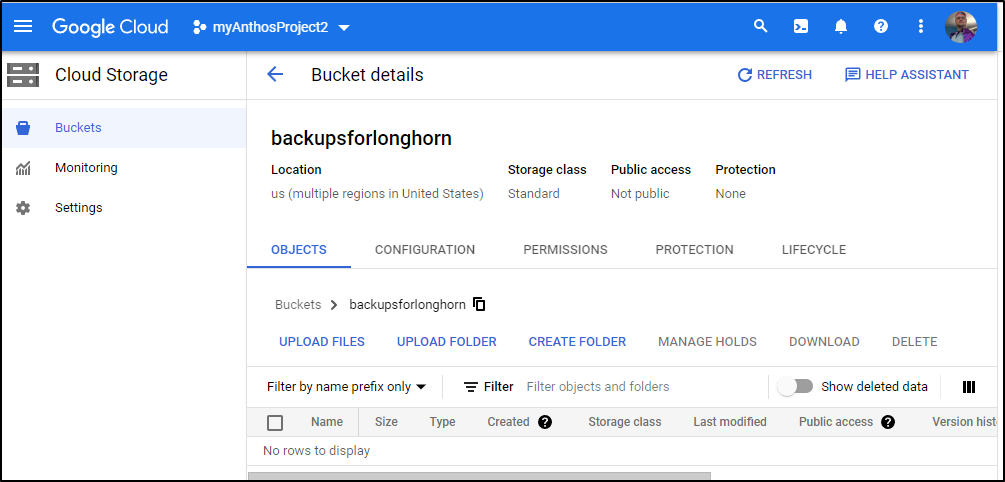

So I created one manually in the GCP Cloud Console

and could then see it with the CLI:

root@my-shell:/# aws s3 --endpoint-url=http://s3proxy.s3proxy.svc.cluster.local ls

1969-12-31 15:00:00 backupsforlonghorn

1969-12-31 15:00:00 myk3spvcstore

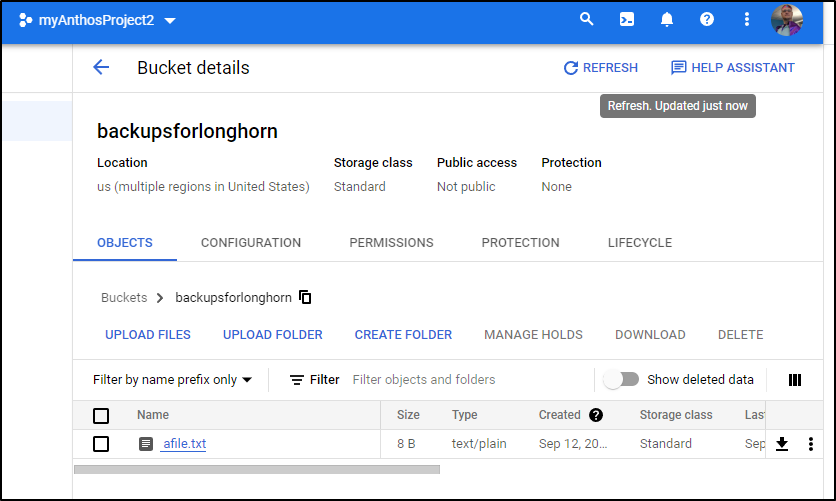

I did a quick test of creating and uploading a small file

root@my-shell:/# echo "testing" > afile.txt

root@my-shell:/# aws s3 --endpoint-url=http://s3proxy.s3proxy.svc.cluster.local cp ./afile.txt s3://backupsforlonghorn/

upload: ./afile.txt to s3://backupsforlonghorn/afile.txt

And I could see it reflected in the GCP Cloud Console bucket storage browser.

Backups in Longhorn

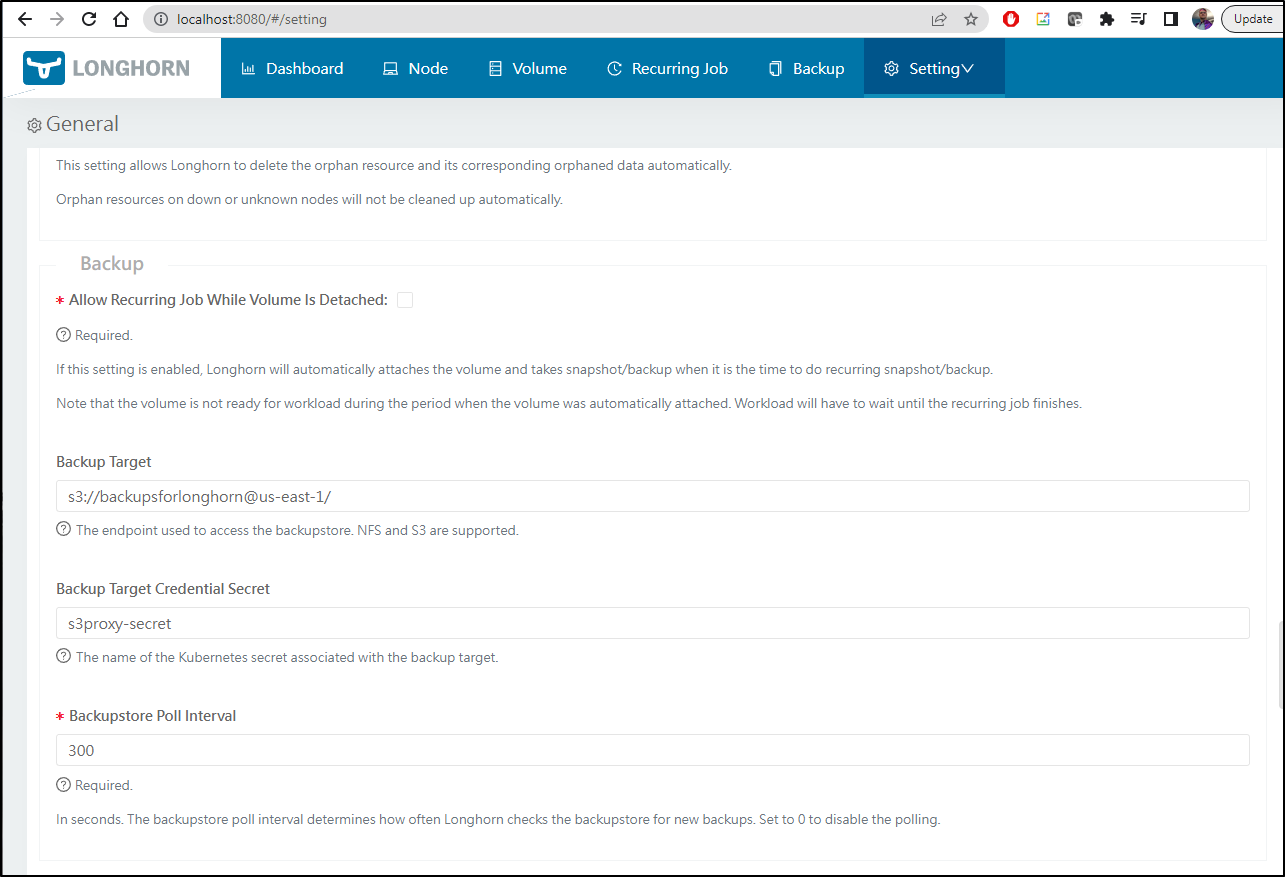

We can port-forward to the Longhorn UI service:

$ kubectl port-forward svc/longhorn-ingress-lb -n longhorn-system 8080:80

Forwarding from 127.0.0.1:8080 -> 8000

Forwarding from [::1]:8080 -> 8000

Handling connection for 8080

Handling connection for 8080

Then go to Settings and find the Backup section. Here we point the backup target at the S3 bucket and set the backup target credential secret to the s3proxy-secret we created above.
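For reference, Longhorn expects an S3 backup target in the form `s3://<bucket>@<region>/<optional path>`. Below is a small sketch that formats it, using a hypothetical helper name. The region is an assumption: with S3Proxy in front of GCS it is likely just a placeholder Longhorn needs in order to parse the URL, but check what your setup accepts. The bucket-name check is a simplified approximation of the naming rules, not the full specification.

```python
import re

# Simplified approximation of bucket naming rules (an assumption, not the full spec)
_BUCKET_NAME = re.compile(r"^[a-z0-9][a-z0-9._-]{1,61}[a-z0-9]$")


def longhorn_s3_backup_target(bucket: str, region: str, path: str = "") -> str:
    """Format a Longhorn S3 backup target: s3://<bucket>@<region>/<path>."""
    if not _BUCKET_NAME.match(bucket):
        raise ValueError(f"unexpected bucket name: {bucket!r}")
    return f"s3://{bucket}@{region}/{path}"


# "us-east-1" is a placeholder region for illustration
print(longhorn_s3_backup_target("backupsforlonghorn", "us-east-1"))
# s3://backupsforlonghorn@us-east-1/
```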

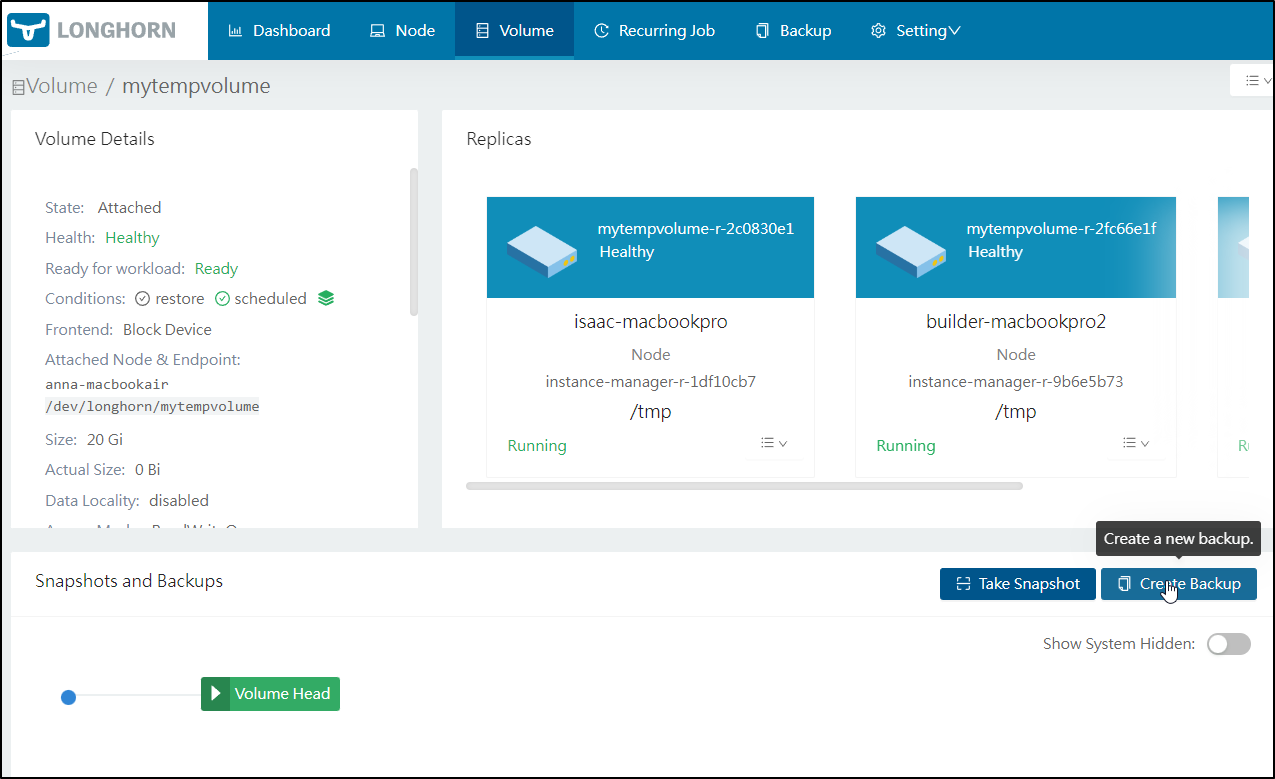

We can now create a backup of an existing volume through S3Proxy:

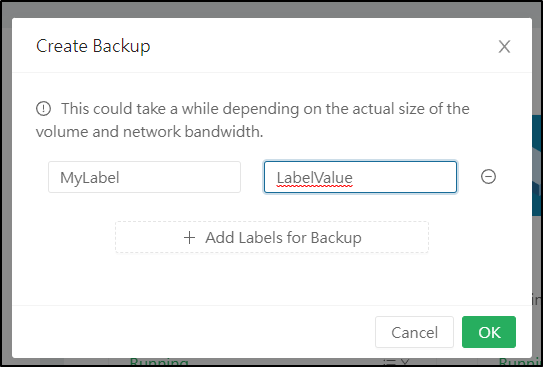

Add any Labels

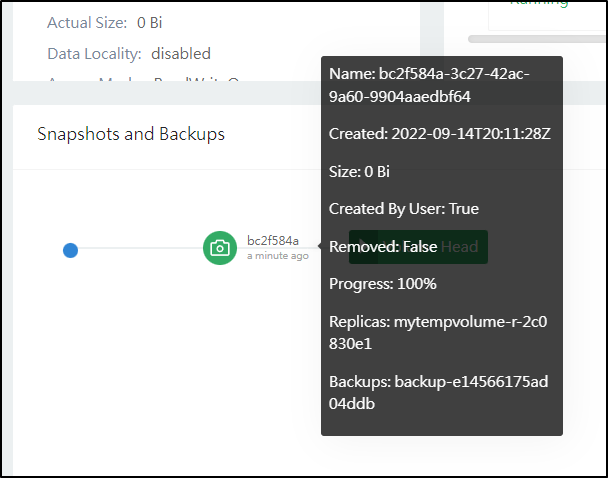

We can now see a backup

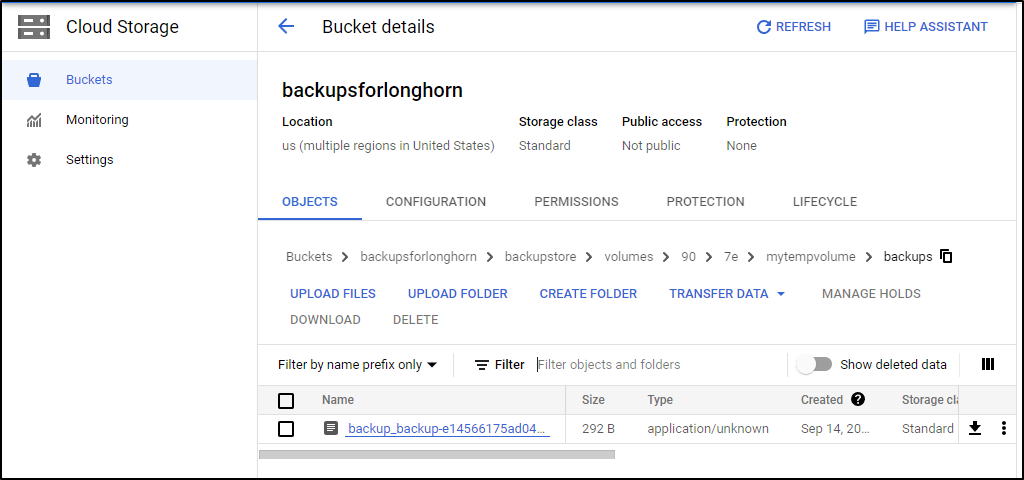

And I can now see my backup in the GCS bucket, routed from Longhorn through S3Proxy.

Summary

So we have done a few things here. We used GCSFuse to mount a Google Cloud Storage bucket on a series of Kubernetes hosts, then used Longhorn.io to expose that mount as a storage class to our Kubernetes cluster. Secondly, we used S3Proxy to expose another bucket as an S3 endpoint for Longhorn backups, and successfully backed up a volume to Google Cloud Storage buckets.

The only real struggle was figuring out the syntax of the S3Proxy settings. They simply did not match the documentation, and I found others reporting similar errors.