AppsCode Stash is a slick Kubernetes-native suite for backing up a variety of Kubernetes objects, including deployments and more. Let's dig in on AKS to see how we can use Stash for a real application.

Creating a cluster to test:

By now this should be familiar.

First, log in and create a resource group to contain our work:

$ az login

Note, we have launched a browser for you to login. For old experience with device code, use "az login --use-device-code"

You have logged in. Now let us find all the subscriptions to which you have access...

[

{

"cloudName": "AzureCloud",

"id": "8afad3d5-6093-4d1e-81e5-4f148a8c4621",

"isDefault": true,

"name": "My-Azure-Place",

"state": "Enabled",

"tenantId": "5cb73318-f7c7-4633-a7da-0e4b18115d29",

"user": {

"name": "Isaac.Johnson@cyberdyn.com",

"type": "user"

}

}

]

$ az group create --location eastus --name idjakstest01rg

{

"id": "/subscriptions/8afad3d5-6093-4d1e-81e5-4f148a8c4621/resourceGroups/idjakstest01rg",

"location": "eastus",

"managedBy": null,

"name": "idjakstest01rg",

"properties": {

"provisioningState": "Succeeded"

},

"tags": {

"Exception": "no",

"Owner": "missing",

"StopResources": "yes"

},

"type": null

}

Next, create the cluster:

$ az aks create --resource-group idjakstest01rg --name idj-aks-stash --kubernetes-version 1.12.6 --node-count 1 --enable-vmss --enable-cluster-autoscaler --min-count 1 --max-count 3 --generate-ssh-keys

The behavior of this command has been altered by the following extension: aks-preview

{

"aadProfile": null,

"addonProfiles": null,

"agentPoolProfiles": [

{

"count": 1,

"enableAutoScaling": true,

"maxCount": 3,

"maxPods": 110,

"minCount": 1,

"name": "nodepool1",

"osDiskSizeGb": 100,

"osType": "Linux",

"type": "VirtualMachineScaleSets",

"vmSize": "Standard_DS2_v2",

"vnetSubnetId": null

}

],

"apiServerAuthorizedIpRanges": null,

"dnsPrefix": "idj-aks-st-idjakstest01rg-fefd67",

"enableRbac": true,

"fqdn": "idj-aks-st-idjakstest01rg-fefd67-b5af97af.hcp.eastus.azmk8s.io",

"id": "/subscriptions/8afad3d5-6093-4d1e-81e5-4f148a8c4621/resourcegroups/idjakstest01rg/providers/Microsoft.ContainerService/managedClusters/idj-aks-stash",

"kubernetesVersion": "1.12.6",

"linuxProfile": {

"adminUsername": "azureuser",

"ssh": {

"publicKeys": [

{

"keyData": "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC8kZzEtk7J7Mvv4hJIE1jcQ0q6h41g5hUwPtOUPjNWPIKm4djmy4+C4+Gtsxxh5jUFooAbwl+DubFZogbU1Q5aLOGKSsD/K4XimTyOhr90DO47naCnaSS0Rg0XyZlvQsHKwcXGuGOleCMhB2gQ70QAK4X/N1dvGfqCDdKBbTORKQyz0WHWo7YGA6YAgtvzn1C5W0l7cT0AXgOfFEAGF31nqqTuRVBbBmosq1qhXJlVt+PO32MqmxZv44ZuCP1jWjyTz1rbQ1OLHCxP/+eDIlpOlkYop4XgwiHHMRn/rxHFTKOAxtFOccFw9KEnDM0j0M5FRBj5qU1BCa/6jhnu7LIz"

}

]

}

},

"location": "eastus",

"name": "idj-aks-stash",

"networkProfile": {

"dnsServiceIp": "10.0.0.10",

"dockerBridgeCidr": "172.17.0.1/16",

"networkPlugin": "kubenet",

"networkPolicy": null,

"podCidr": "10.244.0.0/16",

"serviceCidr": "10.0.0.0/16"

},

"nodeResourceGroup": "MC_idjakstest01rg_idj-aks-stash_eastus",

"provisioningState": "Succeeded",

"resourceGroup": "idjakstest01rg",

"servicePrincipalProfile": {

"clientId": "05ed2bfd-6340-4bae-85b4-f8e9a0ff5c76",

"secret": null

},

"tags": null,

"type": "Microsoft.ContainerService/ManagedClusters"

}

Then fetch the cluster credentials and set up Tiller (for Helm):

$ az aks get-credentials --name idj-aks-stash --resource-group idjakstest01rg

Merged "idj-aks-stash" as current context in /Users/isaac.johnson/.kube/config

$ cat rbac-config.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: tiller
    namespace: kube-system

$ kubectl create -f rbac-config.yaml

serviceaccount/tiller created

clusterrolebinding.rbac.authorization.k8s.io/tiller created

$ helm init --service-account tiller --upgrade --history-max 200

$HELM_HOME has been configured at /Users/isaac.johnson/.helm.

Tiller (the Helm server-side component) has been upgraded to the current version.

Happy Helming!

Next, let's add Appscode helm repos and install Stash:

$ helm repo add appscode https://charts.appscode.com/stable/

"appscode" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Skip local chart repository

...Successfully got an update from the "ibm-repo" chart repository

...Successfully got an update from the "akomljen-charts" chart repository

...Successfully got an update from the "appscode" chart repository

...Successfully got an update from the "incubatorold" chart repository

...Successfully got an update from the "incubator" chart repository

...Successfully got an update from the "stable" chart repository

Update Complete. ⎈ Happy Helming!⎈

$ helm install appscode/stash --name stash-operator --namespace kube-system

NAME: stash-operator

LAST DEPLOYED: Tue Apr 30 21:22:47 2019

NAMESPACE: kube-system

STATUS: DEPLOYED

RESOURCES:

==> v1/ClusterRole

NAME AGE

stash-operator 2s

==> v1/ClusterRoleBinding

NAME AGE

stash-operator 2s

stash-operator-apiserver-auth-delegator 2s

==> v1/Deployment

NAME READY UP-TO-DATE AVAILABLE AGE

stash-operator 0/1 1 0 2s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

stash-operator-5f7bb8f4d5-rkm9j 0/2 ContainerCreating 0 2s

==> v1/RoleBinding

NAME AGE

stash-operator-apiserver-extension-server-authentication-reader 2s

==> v1/Secret

NAME TYPE DATA AGE

stash-operator-apiserver-cert Opaque 2 2s

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

stash-operator ClusterIP 10.0.76.9 <none> 443/TCP,56789/TCP 2s

==> v1/ServiceAccount

NAME SECRETS AGE

stash-operator 1 2s

==> v1beta1/APIService

NAME AGE

v1alpha1.admission.stash.appscode.com 2s

v1alpha1.repositories.stash.appscode.com 2s

NOTES:

To verify that Stash has started, run:

kubectl --namespace=kube-system get deployments -l "release=stash-operator, app=stash"

Lastly, verify it started:

$ kubectl --namespace=kube-system get deployments -l "release=stash-operator, app=stash"

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

stash-operator 1 1 1 1 102s
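Rather than re-running that check by hand until the deployment reports available, one option is to let kubectl block until the rollout finishes. This is a minimal sketch: the wrapper name wait_for_stash_operator and the 120s default timeout are illustrative choices of mine, while kubectl rollout status is a standard kubectl subcommand.

```shell
# Hypothetical helper: block until the stash-operator deployment has rolled out.
# Usage: wait_for_stash_operator [namespace] [timeout]
wait_for_stash_operator() {
  local ns="${1:-kube-system}"
  local timeout="${2:-120s}"
  kubectl rollout status deployment/stash-operator --namespace "$ns" --timeout "$timeout"
}

# Example (needs access to the cluster):
#   wait_for_stash_operator kube-system 180s
```

The function returns kubectl's exit status, so it can gate later steps in a script.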

Using Stash

Let's install our favourite demo app, SonarQube:

$ helm install stable/sonarqube

NAME: dusty-butterfly

LAST DEPLOYED: Tue Apr 30 22:07:52 2019

NAMESPACE: default

STATUS: DEPLOYED

RESOURCES:

==> v1/ConfigMap

NAME DATA AGE

dusty-butterfly-sonarqube-config 0 1s

dusty-butterfly-sonarqube-copy-plugins 1 1s

dusty-butterfly-sonarqube-install-plugins 1 1s

dusty-butterfly-sonarqube-tests 1 1s

==> v1/PersistentVolumeClaim

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

dusty-butterfly-postgresql Pending default 1s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

dusty-butterfly-postgresql-865f459b5-jhn82 0/1 Pending 0 0s

dusty-butterfly-sonarqube-756c789969-fh2bg 0/1 ContainerCreating 0 0s

==> v1/Secret

NAME TYPE DATA AGE

dusty-butterfly-postgresql Opaque 1 1s

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dusty-butterfly-postgresql ClusterIP 10.0.113.110 <none> 5432/TCP 0s

dusty-butterfly-sonarqube LoadBalancer 10.0.48.93 <pending> 9000:31335/TCP 0s

==> v1beta1/Deployment

NAME READY UP-TO-DATE AVAILABLE AGE

dusty-butterfly-postgresql 0/1 1 0 0s

dusty-butterfly-sonarqube 0/1 1 0 0s

NOTES:

1. Get the application URL by running these commands:

NOTE: It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status of by running 'kubectl get svc -w dusty-butterfly-sonarqube'

export SERVICE_IP=$(kubectl get svc --namespace default dusty-butterfly-sonarqube -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

echo http://$SERVICE_IP:9000

Pro-tip: say you cleaned up a bunch of k8s resource groups and service principals and now everything is exploding. It could be that AKS is trying to use an old service principal (not that it happened to me, of course). You can create a new service principal in Azure, then apply it to all the nodes in the cluster pretty easily:

$ az aks update-credentials --resource-group idjakstest01rg --name idj-aks-stash --reset-service-principal --client-secret UDJuiejdJDUejDJJDuieUDJDJiJDJDJ+didjdjDi= --service-principal f1815adc-eeee-dddd-aaaa-ffffffffff

The SonarQube instance gets a public IP, so we log in and immediately change the admin password.

Then let’s follow some instructions and scan something:

fortran-cli isaac.johnson$ sonar-scanner -Dsonar.projectKey=SAMPLE -Dsonar.sources=F90 -Dsonar.host.url=http://168.61.36.201:9000 -Dsonar.login=4cdf844fe382f8d9003c28c92b36c4546b33d079

INFO: Scanner configuration file: /Users/isaac.johnson/Downloads/sonar-scanner-3.3.0.1492-macosx/conf/sonar-scanner.properties

INFO: Project root configuration file: NONE

INFO: SonarQube Scanner 3.3.0.1492

INFO: Java 1.8.0_121 Oracle Corporation (64-bit)

INFO: Mac OS X 10.14.4 x86_64

INFO: User cache: /Users/isaac.johnson/.sonar/cache

INFO: SonarQube server 7.7.0

INFO: Default locale: "en_US", source code encoding: "UTF-8" (analysis is platform dependent)

INFO: Load global settings

INFO: Load global settings (done) | time=176ms

INFO: Server id: 2090D6C0-AWpxX2_MBdGJAq0bH5y-

INFO: User cache: /Users/isaac.johnson/.sonar/cache

INFO: Load/download plugins

INFO: Load plugins index

INFO: Load plugins index (done) | time=85ms

INFO: Load/download plugins (done) | time=91ms

INFO: Process project properties

INFO: Project key: SAMPLE

INFO: Base dir: /Users/isaac.johnson/Workspaces/fortran-cli

INFO: Working dir: /Users/isaac.johnson/Workspaces/fortran-cli/.scannerwork

INFO: Load project settings for component key: 'SAMPLE'

INFO: Load project settings for component key: 'SAMPLE' (done) | time=98ms

INFO: Load project repositories

INFO: Load project repositories (done) | time=98ms

INFO: Load quality profiles

INFO: Load quality profiles (done) | time=101ms

INFO: Load active rules

INFO: Load active rules (done) | time=913ms

WARN: SCM provider autodetection failed. Please use "sonar.scm.provider" to define SCM of your project, or disable the SCM Sensor in the project settings.

INFO: Indexing files...

INFO: Project configuration:

INFO: 4 files indexed

INFO: Quality profile for f90: Sonar way

INFO: ------------- Run sensors on module SAMPLE

INFO: Load metrics repository

INFO: Load metrics repository (done) | time=71ms

INFO: Sensor fr.cnes.sonar.plugins.icode.check.ICodeSensor [icode]

WARN: Results file result.res has not been found and wont be processed.

INFO: Sensor fr.cnes.sonar.plugins.icode.check.ICodeSensor [icode] (done) | time=1ms

INFO: ------------- Run sensors on project

INFO: Sensor Zero Coverage Sensor

INFO: Sensor Zero Coverage Sensor (done) | time=1ms

INFO: No SCM system was detected. You can use the 'sonar.scm.provider' property to explicitly specify it.

INFO: Calculating CPD for 0 files

INFO: CPD calculation finished

INFO: Analysis report generated in 73ms, dir size=12 KB

INFO: Analysis report compressed in 15ms, zip size=3 KB

INFO: Analysis report uploaded in 106ms

INFO: ANALYSIS SUCCESSFUL, you can browse http://168.61.36.201:9000/dashboard?id=SAMPLE

INFO: Note that you will be able to access the updated dashboard once the server has processed the submitted analysis report

INFO: More about the report processing at http://168.61.36.201:9000/api/ce/task?id=AWpxavS8-5ofCQKUskvk

INFO: Analysis total time: 1.834 s

INFO: ------------------------------------------------------------------------

INFO: EXECUTION SUCCESS

INFO: ------------------------------------------------------------------------

INFO: Total time: 2.822s

INFO: Final Memory: 10M/198M

INFO: ------------------------------------------------------------------------

We can now see the project has successfully been indexed and is visible:

Applying Stash

Now let’s use Stash to start backing up this application (we’ll be expanding on their offline backup guide).

We need some details first:

[1] the pod namespace and name (verify the label)

$ kubectl get pod -n default -l app=dusty-butterfly-postgresql

NAME READY STATUS RESTARTS AGE

dusty-butterfly-postgresql-865f459b5-jhn82 1/1 Running 0 4d9h

[2] the container’s database directory (let’s double-check it)

$ kubectl exec -it dusty-butterfly-postgresql-865f459b5-jhn82 -- /bin/sh

# su postgres

$ psql

$ psql sonarDB

psql (9.6.2)

Type "help" for help.

sonarDB=# SHOW data_directory;

data_directory

---------------------------------

/var/lib/postgresql/data/pgdata

(1 row)

$ kubectl exec -n default dusty-butterfly-postgresql-865f459b5-jhn82 -- ls -R /var/lib/postgresql/data/pgdata | head -n10

/var/lib/postgresql/data/pgdata:

base

global

pg_clog

pg_commit_ts

pg_dynshmem

pg_hba.conf

pg_ident.conf

pg_logical

pg_multixact

The next step is to create a secret that Stash can use to encrypt the backup data. Note that everything we create from here on needs to live in the same namespace as the workload (default in my case):

$ echo -n 'MyBackupPassword' > RESTIC_PASSWORD

$ kubectl create secret generic -n default local-secret --from-file=./RESTIC_PASSWORD

secret/local-secret created

And verify it's there:

$ kubectl get secret -n default local-secret -o yaml

apiVersion: v1
data:
  RESTIC_PASSWORD: TXlCYWNrdXBQYXNzd29yZA==
kind: Secret
metadata:
  creationTimestamp: "2019-05-05T12:52:25Z"
  name: local-secret
  namespace: default
  resourceVersion: "496452"
  selfLink: /api/v1/namespaces/default/secrets/local-secret
  uid: a1b1839b-6f34-11e9-a14b-7af187f5df5f
type: Opaque
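Keep in mind that the value stored in the secret is only base64-encoded, not encrypted, so anyone who can read the secret can recover the password. A quick sketch, using the encoded value from the output above:

```shell
# Decode the RESTIC_PASSWORD value shown in the secret output.
# base64 is an encoding, not encryption, so this recovers the original text.
encoded='TXlCYWNrdXBQYXNzd29yZA=='
decoded=$(printf '%s' "$encoded" | base64 --decode)
echo "$decoded"
```

This is a good reason to restrict who can read secrets in the namespace and to pick a stronger password than the demo one.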

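One more thing we are going to need, if you haven’t set one up already, is an NFS server to receive the backups. This could be exposed in many ways, but for ease, let’s just spin up a simple containerized instance. (It exports an emptyDir volume, so the backups last only as long as the pod does; that is fine for a demo but not for real use.)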

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nfs-server
spec:
  replicas: 1
  selector:
    matchLabels:
      role: nfs-server
  template:
    metadata:
      labels:
        role: nfs-server
    spec:
      containers:
      - name: nfs-server
        image: gcr.io/google_containers/volume-nfs:0.8
        ports:
        - name: nfs
          containerPort: 2049
        - name: mountd
          containerPort: 20048
        - name: rpcbind
          containerPort: 111
        securityContext:
          privileged: true
        volumeMounts:
        - mountPath: /exports
          name: exports
      volumes:
      - name: exports
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  name: nfs-server
spec:
  ports:
  - name: nfs
    port: 2049
  - name: mountd
    port: 20048
  - name: rpcbind
    port: 111
  selector:
    role: nfs-server

While we should be able to refer to this as nfs-server.default.svc.cluster.local, I ran into issues, as have others (mount.nfs: Failed to resolve server nfs-server.default.svc.cluster.local: Name or service not known), so I just pulled up the pod IP:

$ kubectl describe pod nfs-server-676897cb56-d9xv7 | grep IP

IP: 10.244.0.65
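A pod IP changes whenever the pod is rescheduled, so a backup definition pinned to it can quietly break later. One possible alternative, sketched below, is to use the ClusterIP of the nfs-server Service we created, which stays fixed for the life of the Service. The likely reason the DNS name fails is that the NFS mount is performed on the node, which does not use the cluster's DNS resolver; treat that as my reading rather than something verified here. The helper name nfs_service_ip is made up for this example and only wraps a standard kubectl jsonpath query.

```shell
# Hypothetical helper: print the ClusterIP of a Service.
# Usage: nfs_service_ip [service-name] [namespace]
nfs_service_ip() {
  local svc="${1:-nfs-server}"
  local ns="${2:-default}"
  kubectl get svc "$svc" --namespace "$ns" -o jsonpath='{.spec.clusterIP}'
}

# Example (needs access to the cluster):
#   NFS_IP=$(nfs_service_ip nfs-server default)
#   echo "NFS server address: $NFS_IP"
```

Whichever address you choose, it is the value that goes into the server field of the NFS backend.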

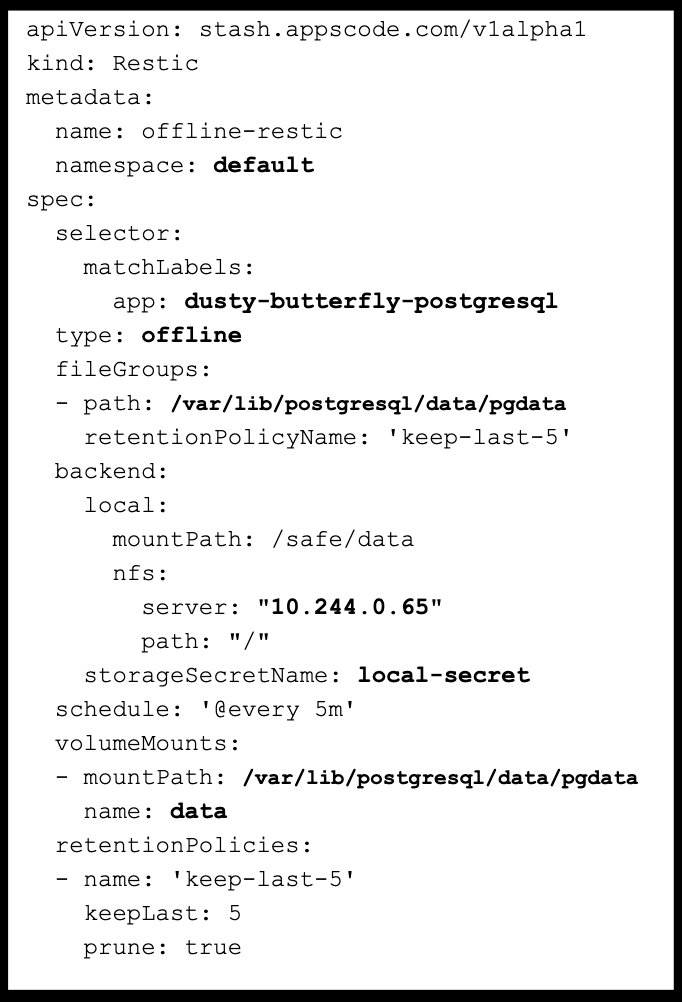

So now we know all the specifics we need for the Restic definition. The Restic spec is a YAML object that tells Stash what to back up, in this case in offline mode:

Note the areas we changed (file in markdown below image):

apiVersion: stash.appscode.com/v1alpha1
kind: Restic
metadata:
  name: offline-restic
  namespace: default
spec:
  selector:
    matchLabels:
      app: dusty-butterfly-postgresql
  type: offline
  fileGroups:
  - path: /var/lib/postgresql/data/pgdata
    retentionPolicyName: 'keep-last-5'
  backend:
    local:
      mountPath: /safe/data
      nfs:
        server: "10.244.0.65"
        path: "/"
    storageSecretName: local-secret
  schedule: '@every 5m'
  volumeMounts:
  - mountPath: /var/lib/postgresql/data/pgdata
    name: data
  retentionPolicies:
  - name: 'keep-last-5'
    keepLast: 5
    prune: true

Now apply it:

$ kubectl apply -f ./restic_offline_sonar.yaml

restic.stash.appscode.com/offline-restic created

The backups should start soon. Let’s check that the stash “init-container” has been injected:

$ kubectl get deployment -n default dusty-butterfly-postgresql -o yaml

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

annotations:

deployment.kubernetes.io/revision: "4"

old-replica: "1"

restic.appscode.com/last-applied-configuration: |

{"kind":"Restic","apiVersion":"stash.appscode.com/v1alpha1","metadata":{"name":"offline-restic","namespace":"default","selfLink":"/apis/stash.appscode.com/v1alpha1/namespaces/default/restics/offline-restic","uid":"a478b03e-6f35-11e9-a14b-7af187f5df5f","resourceVersion":"513231","generation":4,"creationTimestamp":"2019-05-05T12:59:39Z","annotations":{"kubectl.kubernetes.io/last-applied-configuration":"{\"apiVersion\":\"stash.appscode.com/v1alpha1\",\"kind\":\"Restic\",\"metadata\":{\"annotations\":{},\"name\":\"offline-restic\",\"namespace\":\"default\"},\"spec\":{\"backend\":{\"local\":{\"mountPath\":\"/safe/data\",\"nfs\":{\"path\":\"/\",\"server\":\"nfs-server.default.svc.cluster.local\"}},\"storageSecretName\":\"local-secret\"},\"fileGroups\":[{\"path\":\"/var/lib/postgresql/data/pgdata\",\"retentionPolicyName\":\"keep-last-5\"}],\"retentionPolicies\":[{\"keepLast\":5,\"name\":\"keep-last-5\",\"prune\":true}],\"schedule\":\"@every 5m\",\"selector\":{\"matchLabels\":{\"app\":\"dusty-butterfly-postgresql\"}},\"type\":\"offline\",\"volumeMounts\":[{\"mountPath\":\"/var/lib/postgresql/data/pgdata\",\"name\":\"data\"}]}}\n"}},"spec":{"selector":{"matchLabels":{"app":"dusty-butterfly-postgresql"}},"fileGroups":[{"path":"/var/lib/postgresql/data/pgdata","retentionPolicyName":"keep-last-5"}],"backend":{"storageSecretName":"local-secret","local":{"nfs":{"server":"nfs-server.default.svc.cluster.local","path":"/"},"mountPath":"/safe/data"}},"schedule":"@every 5m","volumeMounts":[{"name":"data","mountPath":"/var/lib/postgresql/data/pgdata"}],"resources":{},"retentionPolicies":[{"name":"keep-last-5","keepLast":5,"prune":true}],"type":"offline"}}

restic.appscode.com/tag: 0.8.3

creationTimestamp: "2019-05-01T03:07:53Z"

generation: 64

labels:

app: dusty-butterfly-postgresql

chart: postgresql-0.8.3

heritage: Tiller

release: dusty-butterfly

name: dusty-butterfly-postgresql

namespace: default

resourceVersion: "513248"

selfLink: /apis/extensions/v1beta1/namespaces/default/deployments/dusty-butterfly-postgresql

uid: 4f6afc14-6bbe-11e9-a14b-7af187f5df5f

spec:

progressDeadlineSeconds: 2147483647

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

app: dusty-butterfly-postgresql

strategy:

rollingUpdate:

maxSurge: 1

maxUnavailable: 1

type: RollingUpdate

template:

metadata:

annotations:

restic.appscode.com/resource-hash: "9340540511619766860"

creationTimestamp: null

labels:

app: dusty-butterfly-postgresql

spec:

containers:

- env:

- name: POSTGRES_USER

value: sonarUser

- name: PGUSER

value: sonarUser

- name: POSTGRES_DB

value: sonarDB

- name: POSTGRES_INITDB_ARGS

- name: PGDATA

value: /var/lib/postgresql/data/pgdata

- name: POSTGRES_PASSWORD

valueFrom:

secretKeyRef:

key: postgres-password

name: dusty-butterfly-postgresql

- name: POD_IP

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: status.podIP

image: postgres:9.6.2

imagePullPolicy: IfNotPresent

livenessProbe:

exec:

command:

- sh

- -c

- exec pg_isready --host $POD_IP

failureThreshold: 6

initialDelaySeconds: 60

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 5

name: dusty-butterfly-postgresql

ports:

- containerPort: 5432

name: postgresql

protocol: TCP

readinessProbe:

exec:

command:

- sh

- -c

- exec pg_isready --host $POD_IP

failureThreshold: 3

initialDelaySeconds: 5

periodSeconds: 5

successThreshold: 1

timeoutSeconds: 3

resources:

requests:

cpu: 100m

memory: 256Mi

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

volumeMounts:

- mountPath: /var/lib/postgresql/data/pgdata

name: data

subPath: postgresql-db

dnsPolicy: ClusterFirst

initContainers:

- args:

- backup

- --restic-name=offline-restic

- --workload-kind=Deployment

- --workload-name=dusty-butterfly-postgresql

- --docker-registry=appscode

- --image-tag=0.8.3

- --pushgateway-url=http://stash-operator.kube-system.svc:56789

- --enable-status-subresource=true

- --use-kubeapiserver-fqdn-for-aks=true

- --enable-analytics=true

- --logtostderr=true

- --alsologtostderr=false

- --v=3

- --stderrthreshold=0

- --enable-rbac=true

env:

- name: NODE_NAME

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: spec.nodeName

- name: POD_NAME

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: metadata.name

- name: APPSCODE_ANALYTICS_CLIENT_ID

value: de2453edf3a39c712f20b4697845f2bb

image: appscode/stash:0.8.3

imagePullPolicy: IfNotPresent

name: stash

resources: {}

securityContext:

procMount: Default

runAsUser: 0

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

volumeMounts:

- mountPath: /tmp

name: stash-scratchdir

- mountPath: /etc/stash

name: stash-podinfo

- mountPath: /var/lib/postgresql/data/pgdata

name: data

readOnly: true

- mountPath: /safe/data

name: stash-local

restartPolicy: Always

schedulerName: default-scheduler

securityContext: {}

terminationGracePeriodSeconds: 30

volumes:

- name: data

persistentVolumeClaim:

claimName: dusty-butterfly-postgresql

- emptyDir: {}

name: stash-scratchdir

- downwardAPI:

defaultMode: 420

items:

- fieldRef:

apiVersion: v1

fieldPath: metadata.labels

path: labels

name: stash-podinfo

- name: stash-local

nfs:

path: /

server: nfs-server.default.svc.cluster.local

status:

conditions:

- lastTransitionTime: "2019-05-01T03:07:53Z"

lastUpdateTime: "2019-05-01T03:07:53Z"

message: Deployment has minimum availability.

reason: MinimumReplicasAvailable

status: "True"

type: Available

observedGeneration: 64

replicas: 1

unavailableReplicas: 1

updatedReplicas: 1

Next, let’s see if we’ve been creating backups:

$ kubectl get repository

NAME BACKUP-COUNT LAST-SUCCESSFUL-BACKUP AGE

deployment.dusty-butterfly-postgresql 16 4m 1h

Restoring

Backups aren't that useful if we can't use them. Let's explore how we can get our data back.

First, we need to create a persistent volume claim to dump a backup into:

Create restore-pvc.yaml:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: stash-recovered
  namespace: default
  labels:
    app: sonardb-restore
spec:
  storageClassName: default
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 50Mi

$ kubectl apply -f restore-pvc.yaml

persistentvolumeclaim/stash-recovered created

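Quick tip: you can use “managed-premium” as the storage class instead of default if you want faster SSD drives in AKS (for a cost, of course).

Then we can verify it. Note that the claim reports 1Gi even though we asked for 50Mi, since Azure managed disks are sized in whole GiB: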

$ kubectl get pvc -n default -l app=sonardb-restore

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

stash-recovered Bound pvc-e95c72e3-6f4f-11e9-a14b-7af187f5df5f 1Gi RWO default 17s

Next, we need to create a Recovery custom resource (an instance of the Stash Recovery CRD). This is where the restore action actually takes place:

$ cat ./recovery-it.yaml

apiVersion: stash.appscode.com/v1alpha1
kind: Recovery
metadata:
  name: local-recovery
  namespace: default
spec:
  repository:
    name: deployment.dusty-butterfly-postgresql
    namespace: default
  paths:
  - /var/lib/postgresql/data/pgdata
  recoveredVolumes:
  - mountPath: /var/lib/postgresql/data/pgdata
    persistentVolumeClaim:
      claimName: stash-recovered

$ kubectl apply -f ./recovery-it.yaml

recovery.stash.appscode.com/local-recovery created

Check on the recovery:

$ kubectl get recovery -n default local-recovery

NAME REPOSITORY-NAMESPACE REPOSITORY-NAME SNAPSHOT PHASE AGE

local-recovery default deployment.dusty-butterfly-postgresql Running 16s

$ kubectl get recovery -n default local-recovery

NAME REPOSITORY-NAMESPACE REPOSITORY-NAME SNAPSHOT PHASE AGE

local-recovery default deployment.dusty-butterfly-postgresql Succeeded 2m
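Instead of re-running the get command until the phase flips, the polling can be scripted. The sketch below is not from the Stash docs: the function name wait_for_recovery is hypothetical, and it assumes the phase shown in the PHASE column is exposed at .status.phase and that a failed recovery reports the phase Failed.

```shell
# Hypothetical helper: poll a Stash Recovery until it reports a final phase.
# Assumes the phase lives at .status.phase and that failures report "Failed".
# Usage: wait_for_recovery <name> [namespace] [max-attempts] [sleep-seconds]
wait_for_recovery() {
  local name="$1"
  local ns="${2:-default}"
  local attempts="${3:-30}"
  local delay="${4:-10}"
  local phase=""
  local i=0
  while [ "$i" -lt "$attempts" ]; do
    phase=$(kubectl get recovery "$name" --namespace "$ns" -o jsonpath='{.status.phase}')
    case "$phase" in
      Succeeded) echo "recovery $name succeeded"; return 0 ;;
      Failed)    echo "recovery $name failed" >&2; return 1 ;;
    esac
    i=$((i + 1))
    sleep "$delay"
  done
  echo "timed out waiting for recovery $name (last phase: ${phase:-unknown})" >&2
  return 2
}

# Example (needs access to the cluster):
#   wait_for_recovery local-recovery default 30 10
```

The distinct return codes (0 success, 1 failure, 2 timeout) let a calling script decide whether to continue with verification.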

Now let’s verify the files:

$ cat ./verify-restore.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: sonar-restore
  name: sonar-restore-test
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sonar-restore-test
  template:
    metadata:
      labels:
        app: sonar-restore-test
      name: busybox
    spec:
      containers:
      - args:
        - sleep
        - "3600"
        image: busybox
        imagePullPolicy: IfNotPresent
        name: busybox
        volumeMounts:
        - mountPath: /var/lib/postgresql/data/pgdata
          name: source-data
      restartPolicy: Always
      volumes:
      - name: source-data
        persistentVolumeClaim:
          claimName: stash-recovered

$ kubectl apply -f ./verify-restore.yaml

deployment.apps/sonar-restore-test created

Now just get the pod and check that the files are mounted:

$ kubectl get pods -n default -l app=sonar-restore-test

NAME READY STATUS RESTARTS AGE

sonar-restore-test-744498d8b8-rsfz6 1/1 Running 0 3m26s

$ kubectl exec -n default sonar-restore-test-744498d8b8-rsfz6 -- ls -R /var/lib/postgresql/data/pgdata | head -n10

/var/lib/postgresql/data/pgdata:

lost+found

postgresql-db

/var/lib/postgresql/data/pgdata/lost+found:

/var/lib/postgresql/data/pgdata/postgresql-db:

PG_VERSION

base

global

At this point, we could delete the PostgreSQL deployment and the Restic backup process (kubectl delete deployment dusty-butterfly-postgresql and kubectl delete restic offline-restic), then restore with a full redeployment. However, a more common approach would be to load a new database with the data and point a SonarQube instance at it to verify it's what you were expecting.

In the case of PostgreSQL, we could just restore manually by copying the files locally and then to the pod (or a new Azure Database for PostgreSQL database):

Locally:

$ mkdir /tmp/backups

$ kubectl cp sonar-restore-test-744498d8b8-rsfz6:/var/lib/postgresql/data/pgdata /tmp/backups

$ du -csh /tmp/backups/

48M /tmp/backups/

48M total

$ ls /tmp/backups/

lost+found postgresql-db
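Before moving the copied files anywhere else, a quick sanity check can confirm the directory still looks like a PostgreSQL data directory. This is only a rough sketch: the function name looks_like_pgdata is hypothetical, and it tests only for the entries visible in the earlier listing (PG_VERSION, base, global), not for database consistency.

```shell
# Hypothetical check: does a directory look like a PostgreSQL data directory?
# It only tests for PG_VERSION, base and global; it does not validate the data.
looks_like_pgdata() {
  local dir="$1"
  [ -f "$dir/PG_VERSION" ] && [ -d "$dir/base" ] && [ -d "$dir/global" ]
}

# Example, using the path copied above:
#   if looks_like_pgdata /tmp/backups/postgresql-db; then
#     echo "PostgreSQL $(cat /tmp/backups/postgresql-db/PG_VERSION) data directory found"
#   fi
```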

Next steps:

Stash can do more than offline backups. You can back up an entire deployment at once as well (see guide: https://appscode.com/products/stash/0.8.3/guides/backup/).

Summary:

In this guide we dug into AppsCode Stash and used it to create regular scheduled backups of a live containerized database. We then created a restore process and verified the contents. Lastly, we showed how we could take those files and move them around (such as copying them locally).