Published: Oct 9, 2025 by Isaac Johnson

There are some that may think I have it out for tools like Jenkins and ArgoCD. Yes, I do have it out for Jenkins (while I don’t use Harness, I sure enjoyed their latest ad). But I have no such beef with ArgoCD.

In fact, three years ago I presented to OpenSource North on the topic “Three ways to GitOps” that focused on Flux and ArgoCD. I also last wrote about it back in 2022.

The issue I always had with ArgoCD was that it just worked on pre-baked manifests. Sometimes you could jury-rig Helm, or use Kustomize, but it was really just designed to reconcile YAML manifests, and most teams I saw using it had pipelines that had to push updates to GIT just to get the CD to kick off.

ArgoCD 3.1

An InfoQ article in August thus caught my attention when it said not only has ArgoCD had some pretty major UI updates, it would now natively support OCI registries. This makes it much more interesting to me as by using OCI, we can bundle both our containers and their respective charts.

The article is well written with lots of big words. Very professional. I decided now is just a good time to bang on it and see what it does.

I think I’m just a simpleton that needs to try and put blocks in spaces

Installation

We should just need to create a namespace and apply the ArgoCD manifest

$ kubectl create namespace argocd

namespace/argocd created

$ kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

customresourcedefinition.apiextensions.k8s.io/applications.argoproj.io created

customresourcedefinition.apiextensions.k8s.io/applicationsets.argoproj.io created

customresourcedefinition.apiextensions.k8s.io/appprojects.argoproj.io created

serviceaccount/argocd-application-controller created

serviceaccount/argocd-applicationset-controller created

serviceaccount/argocd-dex-server created

serviceaccount/argocd-notifications-controller created

serviceaccount/argocd-redis created

serviceaccount/argocd-repo-server created

serviceaccount/argocd-server created

role.rbac.authorization.k8s.io/argocd-application-controller created

role.rbac.authorization.k8s.io/argocd-applicationset-controller created

role.rbac.authorization.k8s.io/argocd-dex-server created

role.rbac.authorization.k8s.io/argocd-notifications-controller created

role.rbac.authorization.k8s.io/argocd-redis created

role.rbac.authorization.k8s.io/argocd-server created

clusterrole.rbac.authorization.k8s.io/argocd-application-controller created

clusterrole.rbac.authorization.k8s.io/argocd-applicationset-controller created

clusterrole.rbac.authorization.k8s.io/argocd-server created

rolebinding.rbac.authorization.k8s.io/argocd-application-controller created

rolebinding.rbac.authorization.k8s.io/argocd-applicationset-controller created

rolebinding.rbac.authorization.k8s.io/argocd-dex-server created

rolebinding.rbac.authorization.k8s.io/argocd-notifications-controller created

rolebinding.rbac.authorization.k8s.io/argocd-redis created

rolebinding.rbac.authorization.k8s.io/argocd-server created

clusterrolebinding.rbac.authorization.k8s.io/argocd-application-controller created

clusterrolebinding.rbac.authorization.k8s.io/argocd-applicationset-controller created

clusterrolebinding.rbac.authorization.k8s.io/argocd-server created

configmap/argocd-cm created

configmap/argocd-cmd-params-cm created

configmap/argocd-gpg-keys-cm created

configmap/argocd-notifications-cm created

configmap/argocd-rbac-cm created

configmap/argocd-ssh-known-hosts-cm created

configmap/argocd-tls-certs-cm created

secret/argocd-notifications-secret created

secret/argocd-secret created

service/argocd-applicationset-controller created

service/argocd-dex-server created

service/argocd-metrics created

service/argocd-notifications-controller-metrics created

service/argocd-redis created

service/argocd-repo-server created

service/argocd-server created

service/argocd-server-metrics created

deployment.apps/argocd-applicationset-controller created

deployment.apps/argocd-dex-server created

deployment.apps/argocd-notifications-controller created

deployment.apps/argocd-redis created

deployment.apps/argocd-repo-server created

deployment.apps/argocd-server created

statefulset.apps/argocd-application-controller created

networkpolicy.networking.k8s.io/argocd-application-controller-network-policy created

networkpolicy.networking.k8s.io/argocd-applicationset-controller-network-policy created

networkpolicy.networking.k8s.io/argocd-dex-server-network-policy created

networkpolicy.networking.k8s.io/argocd-notifications-controller-network-policy created

networkpolicy.networking.k8s.io/argocd-redis-network-policy created

networkpolicy.networking.k8s.io/argocd-repo-server-network-policy created

networkpolicy.networking.k8s.io/argocd-server-network-policy created

By default, it creates a bunch of ClusterIP services and suggests you do the work for an LB or Ingress

$ kubectl get svc -n argocd

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

argocd-applicationset-controller ClusterIP 10.43.63.214 <none> 7000/TCP,8080/TCP 99s

argocd-dex-server ClusterIP 10.43.179.31 <none> 5556/TCP,5557/TCP,5558/TCP 99s

argocd-metrics ClusterIP 10.43.118.109 <none> 8082/TCP 99s

argocd-notifications-controller-metrics ClusterIP 10.43.46.252 <none> 9001/TCP 99s

argocd-redis ClusterIP 10.43.206.179 <none> 6379/TCP 99s

argocd-repo-server ClusterIP 10.43.195.240 <none> 8081/TCP,8084/TCP 99s

argocd-server ClusterIP 10.43.237.36 <none> 80/TCP,443/TCP 99s

argocd-server-metrics ClusterIP 10.43.31.206 <none> 8083/TCP 99s

I’m a fan, at least for testing, of using a nice NodePort service so I can hit any node to just view the app (instead of invoking a port-forward).

Let’s set that up now:

apiVersion: v1
kind: Service
metadata:
  name: argocd-server-nodeport
  namespace: argocd
  labels:
    app.kubernetes.io/component: server
    app.kubernetes.io/name: argocd-server-nodeport
    app.kubernetes.io/part-of: argocd
spec:
  type: NodePort
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  selector:
    app.kubernetes.io/name: argocd-server
  ports:
  - name: http
    port: 80
    targetPort: 8080
    protocol: TCP
    nodePort: 31080
  - name: https
    port: 443
    targetPort: 8080
    protocol: TCP
    nodePort: 31443

I’ll apply it

$ kubectl apply -f ./argo-nodeport.yaml -n argocd

service/argocd-server-nodeport created

And now I can see it listed

$ kubectl get svc -n argocd

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

argocd-applicationset-controller ClusterIP 10.43.63.214 <none> 7000/TCP,8080/TCP 6m44s

argocd-dex-server ClusterIP 10.43.179.31 <none> 5556/TCP,5557/TCP,5558/TCP 6m44s

argocd-metrics ClusterIP 10.43.118.109 <none> 8082/TCP 6m44s

argocd-notifications-controller-metrics ClusterIP 10.43.46.252 <none> 9001/TCP 6m44s

argocd-redis ClusterIP 10.43.206.179 <none> 6379/TCP 6m44s

argocd-repo-server ClusterIP 10.43.195.240 <none> 8081/TCP,8084/TCP 6m44s

argocd-server ClusterIP 10.43.237.36 <none> 80/TCP,443/TCP 6m44s

argocd-server-metrics ClusterIP 10.43.31.206 <none> 8083/TCP 6m44s

argocd-server-nodeport NodePort 10.43.152.6 <none> 80:31080/TCP,443:31443/TCP 16s

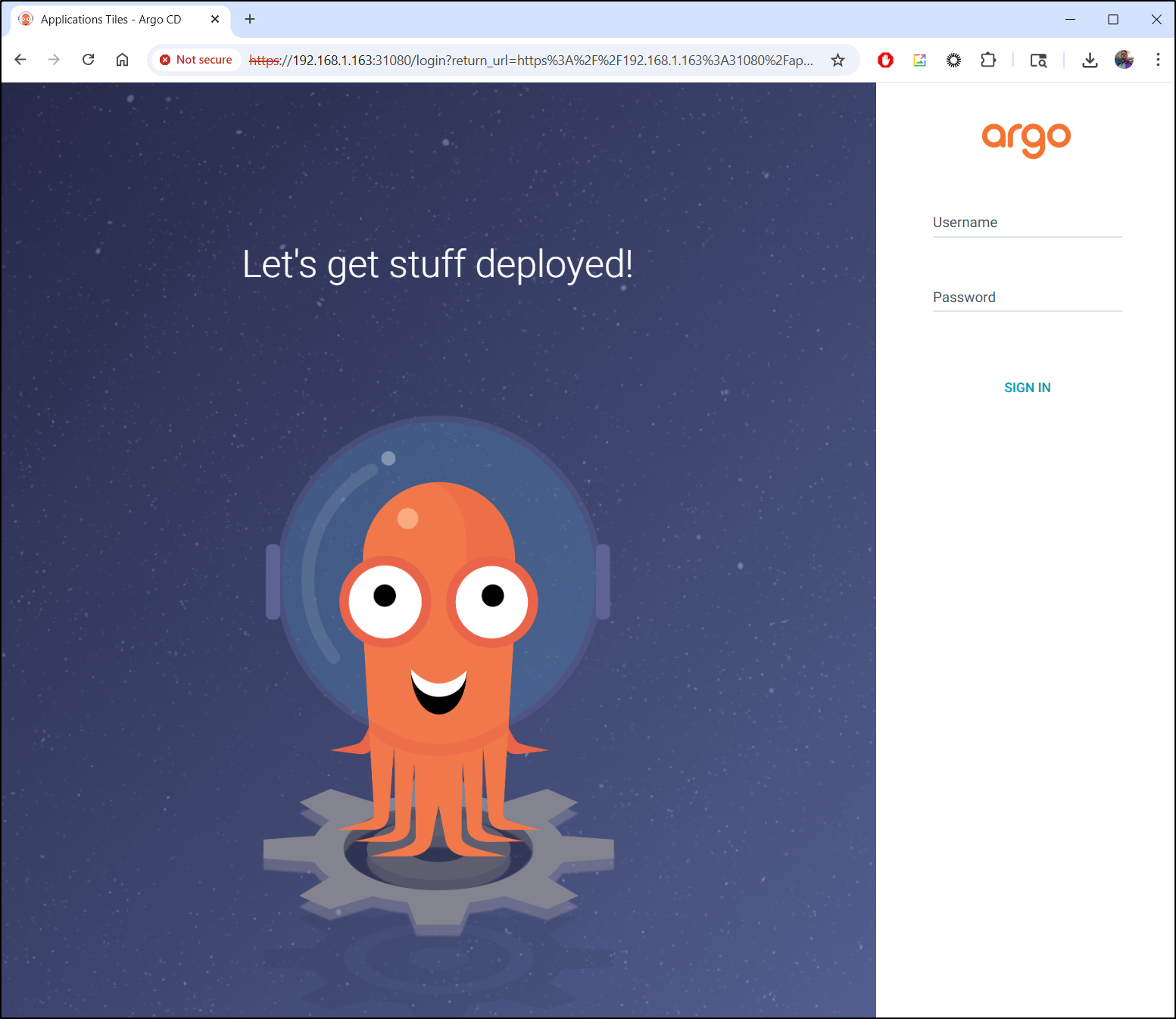

I can now hit the 80 (http) URL - I was surprised that even on 80 it serves a self-signed SSL connection (https)

We can get the initial admin user password from the Kubernetes secret:

$ kubectl get secrets -n argocd argocd-initial-admin-secret -o json | jq -r .data.password | base64 --decode

ASDFasdfASDFasdfASDF

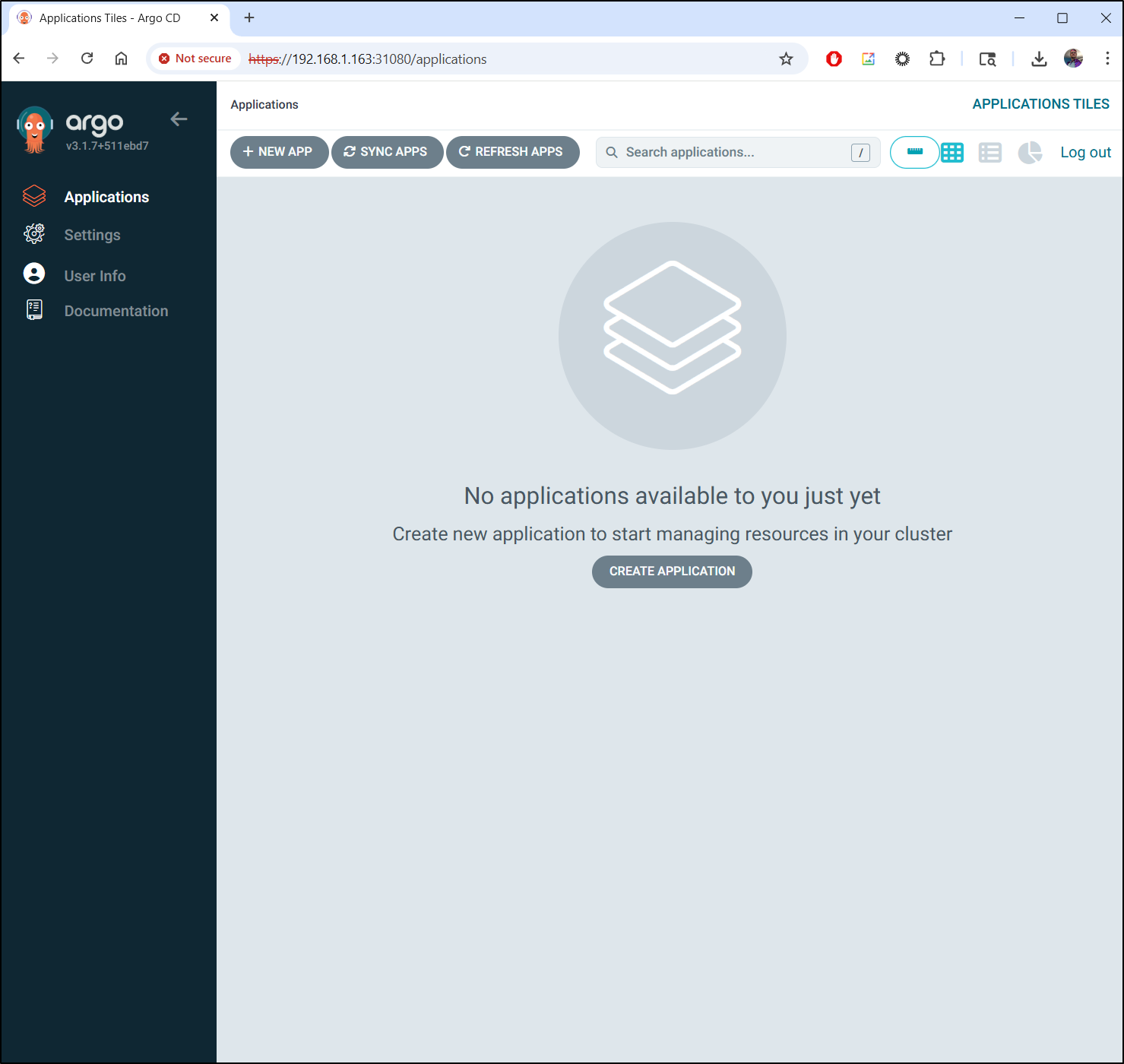

and login

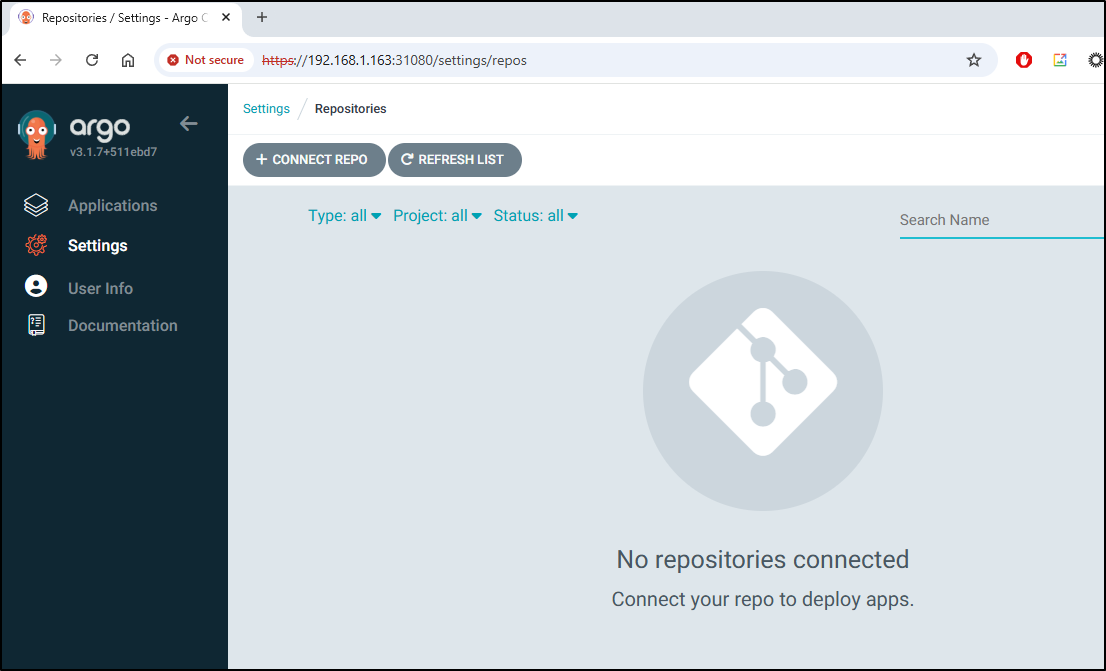

OCI Repos

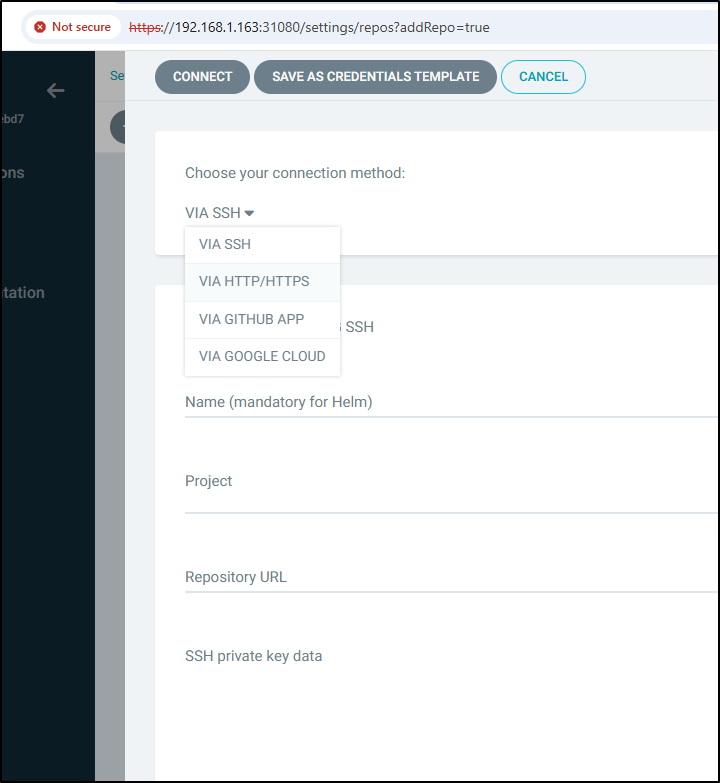

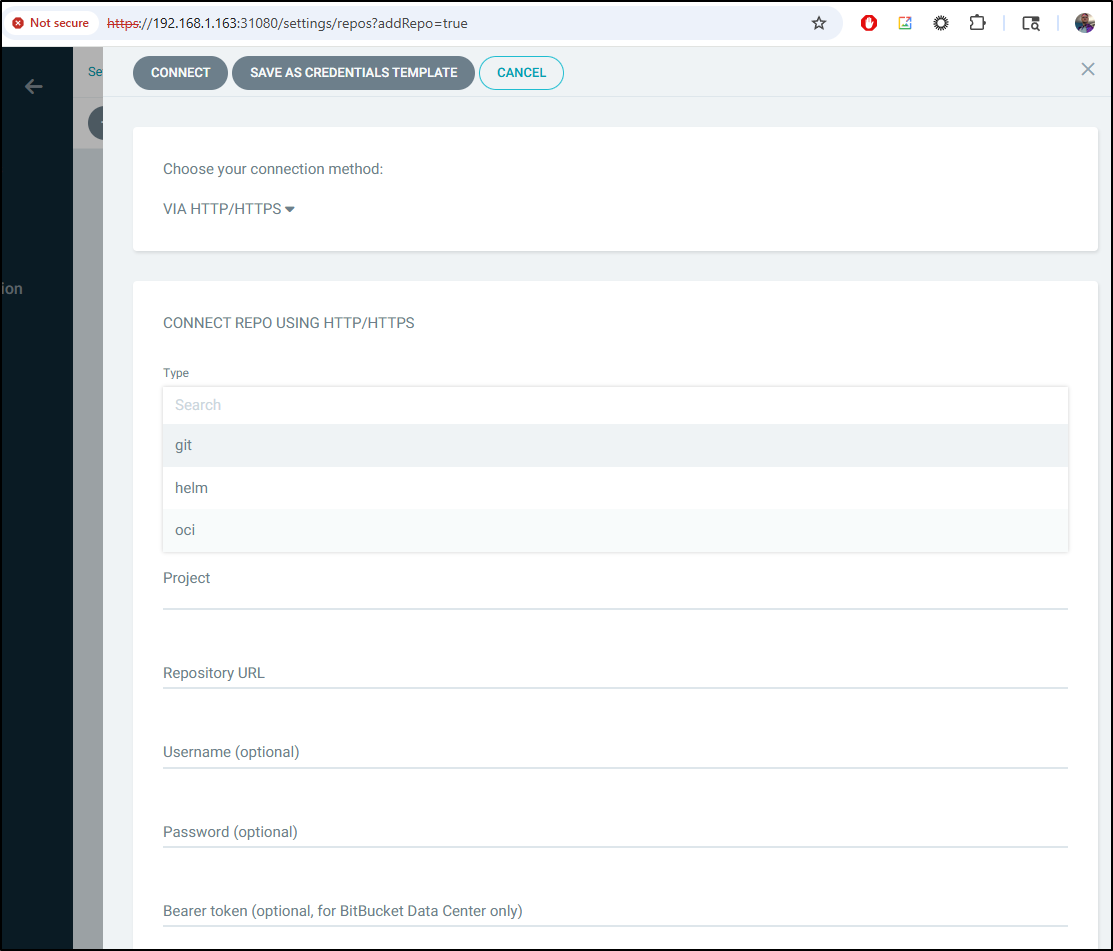

Let’s try adding an OCI repo. In Settings / Repositories, let’s “+ Connect Repo”. Don’t let the logo for GIT throw you…

I’ll change from SSH to HTTP/HTTPS

Now we can see type “OCI” in the list

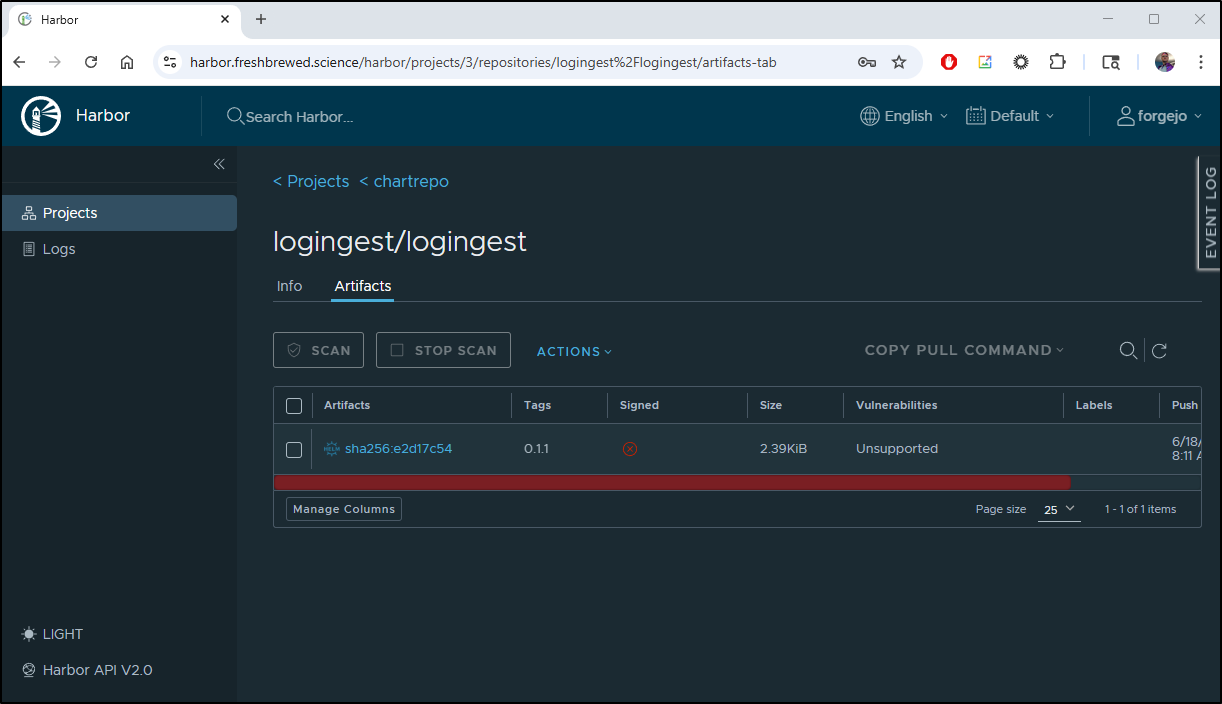

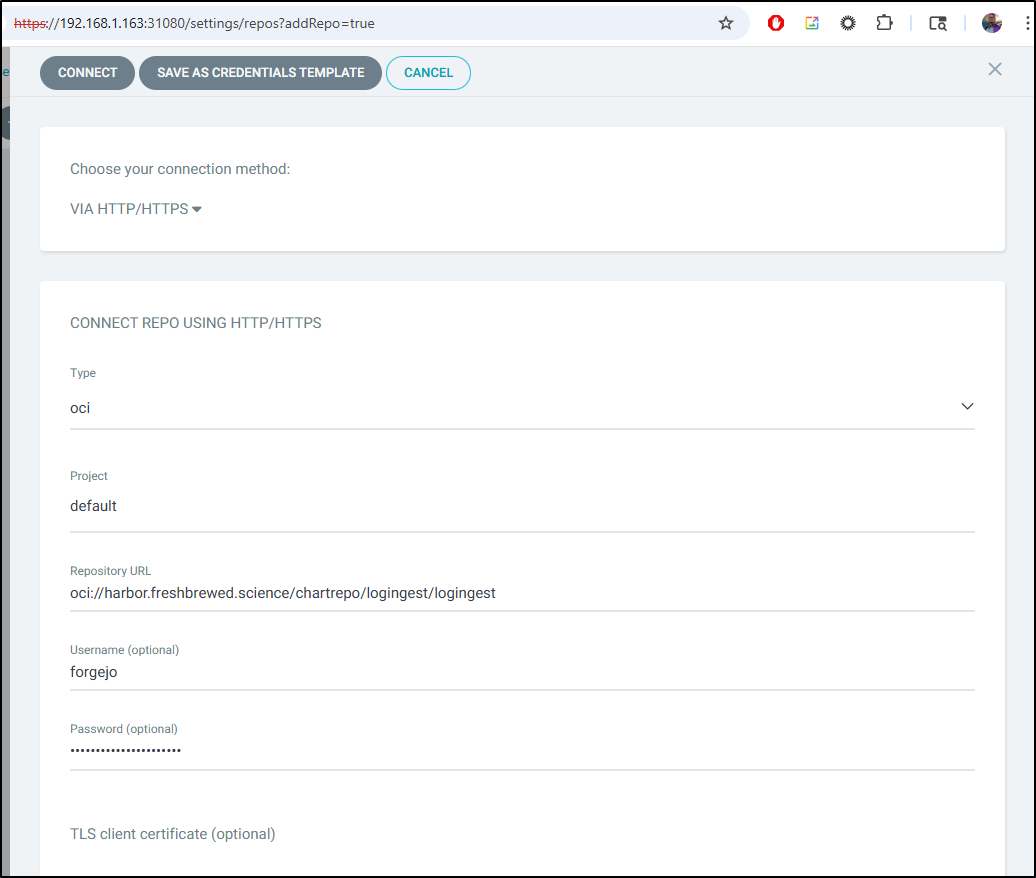

Let’s try and get my logingest chart to pull in

I will give it the OCI url oci://harbor.freshbrewed.science/chartrepo/logingest/logingest and pass a user and password

This would match what we might use with helm, e.g.

$ helm pull oci://harbor.freshbrewed.science/chartrepo/logingest/logingest --version 0.1.1

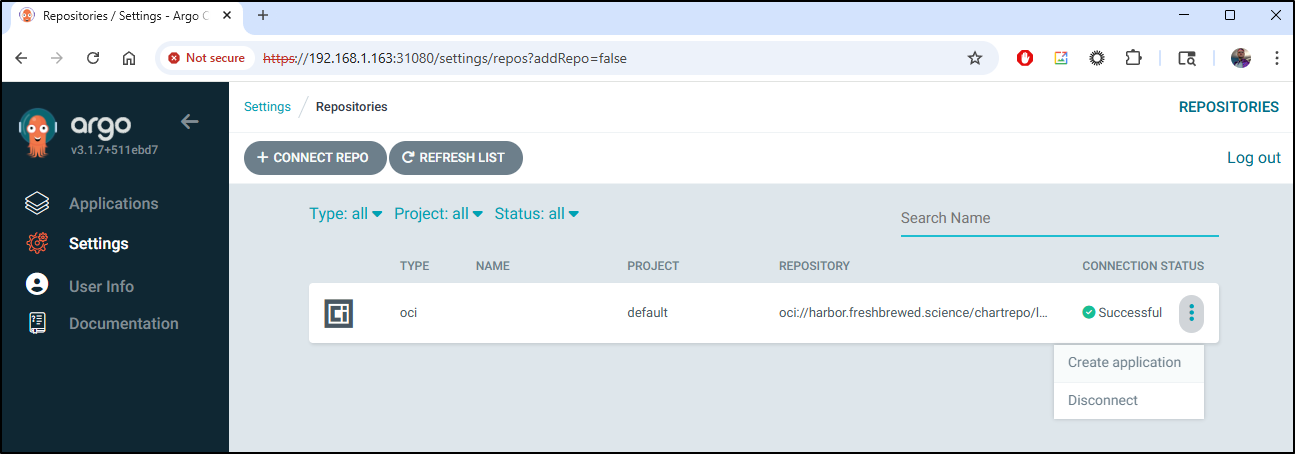

I can see it’s successful, from there I can use the menu to create an application

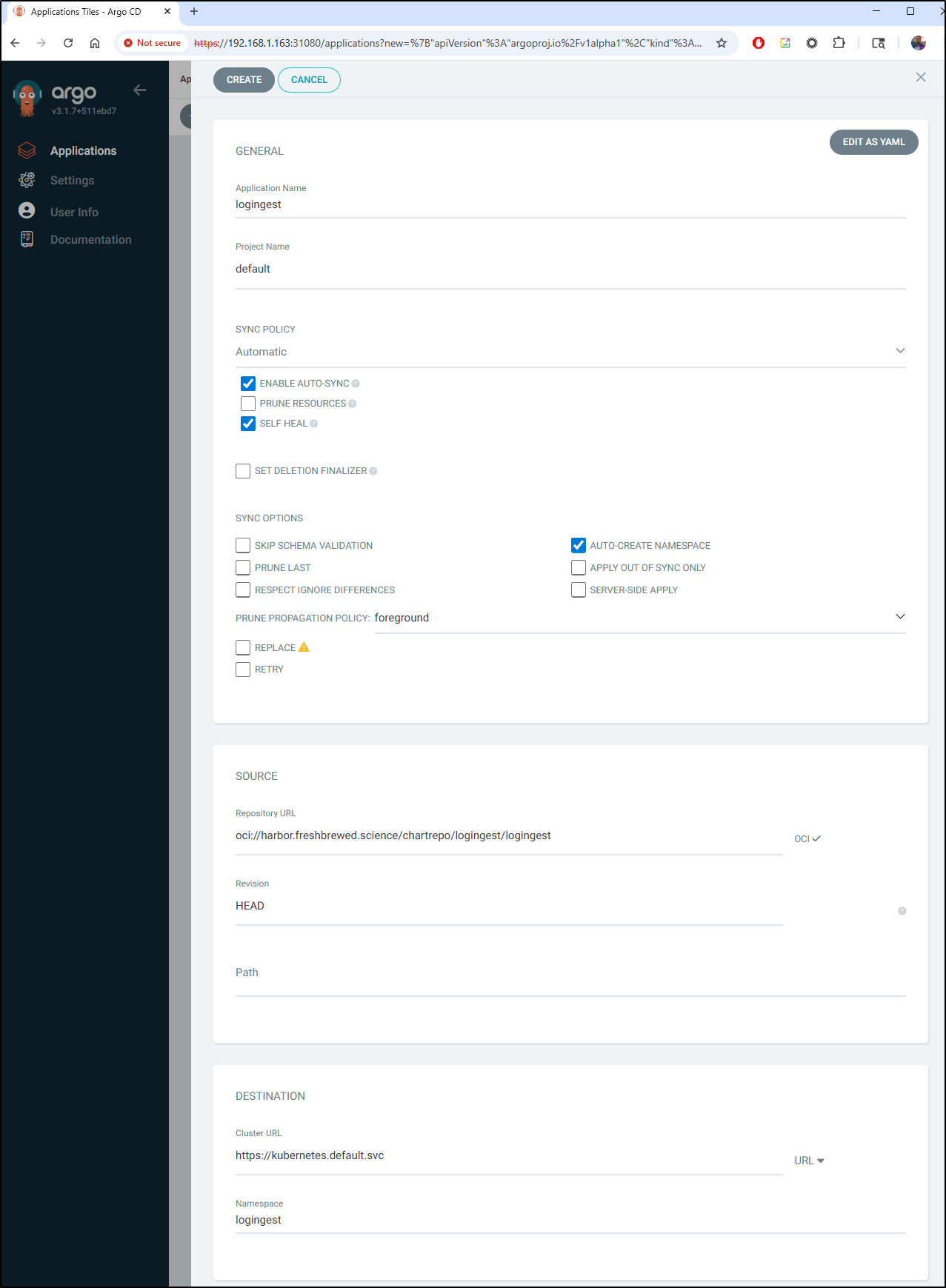

I filled in the details

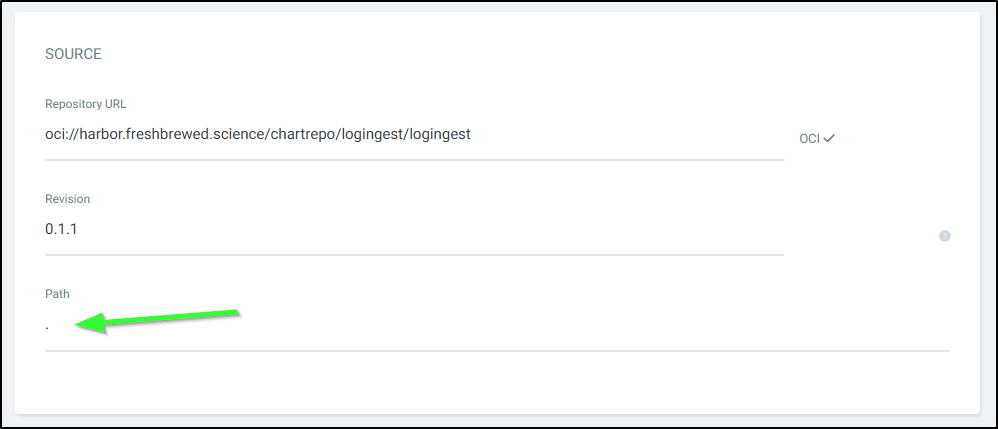

but then saw some errors about the path. It took me a few to figure out what they wanted. The “path” is just so that when it pulls the chart from the URL, if the chart was in, say a subfolder, you could reference that. In most cases, if your OCI is the chart, you can use “.”

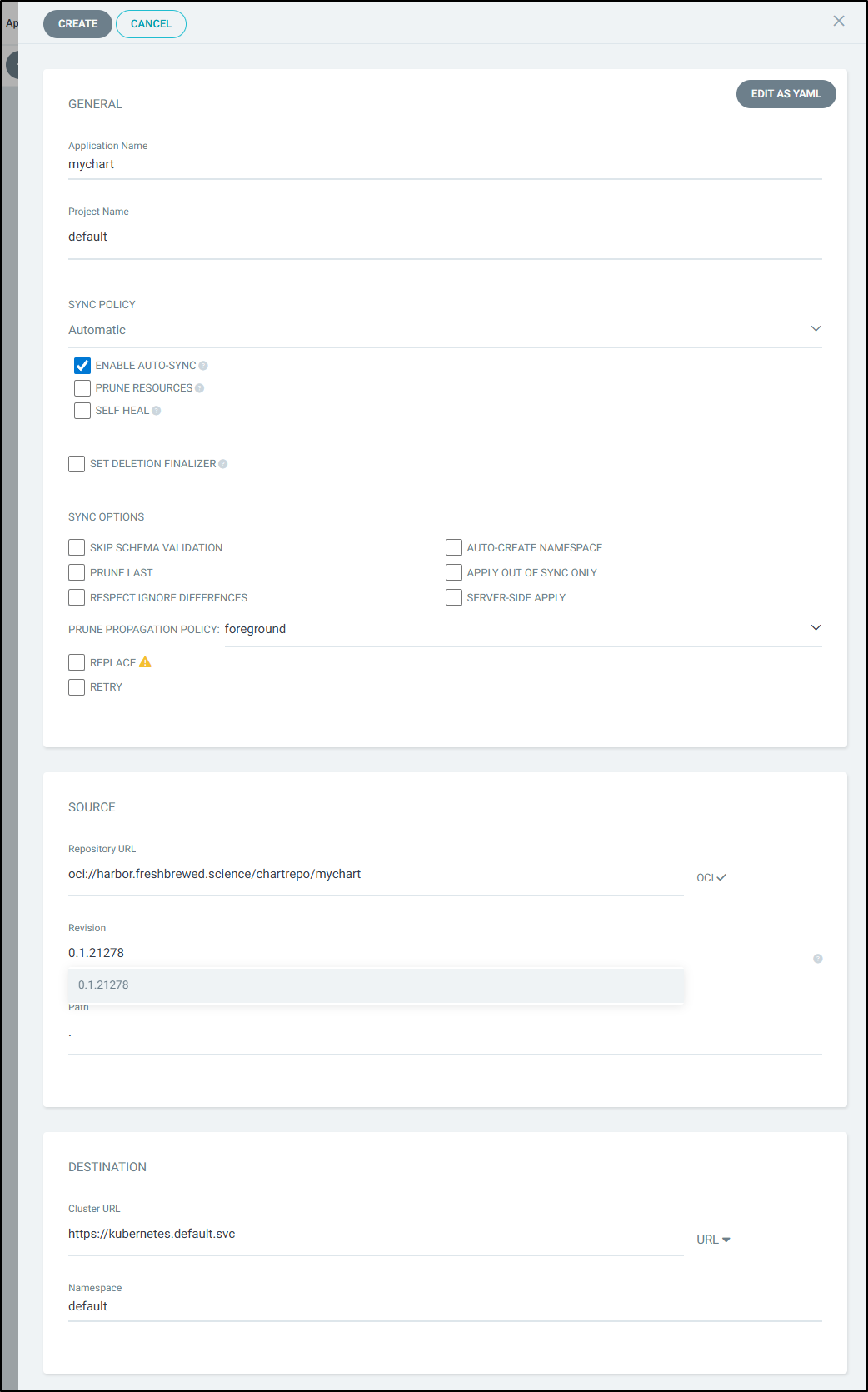

Unfortunately, that particular chart had a bug (a misspelling), so I pivoted to one I know works, my pyk8service

I pivoted to a basic Nginx chart I have there

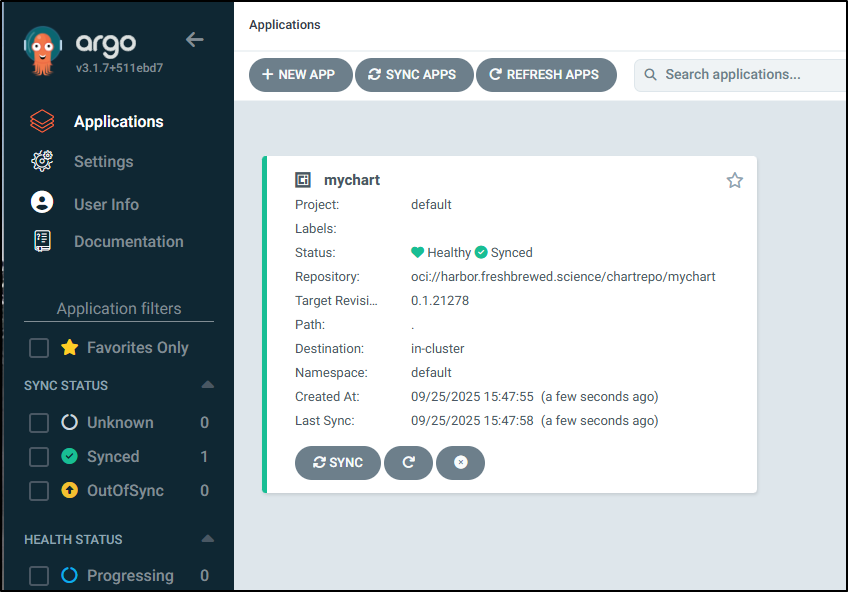

which worked dandy

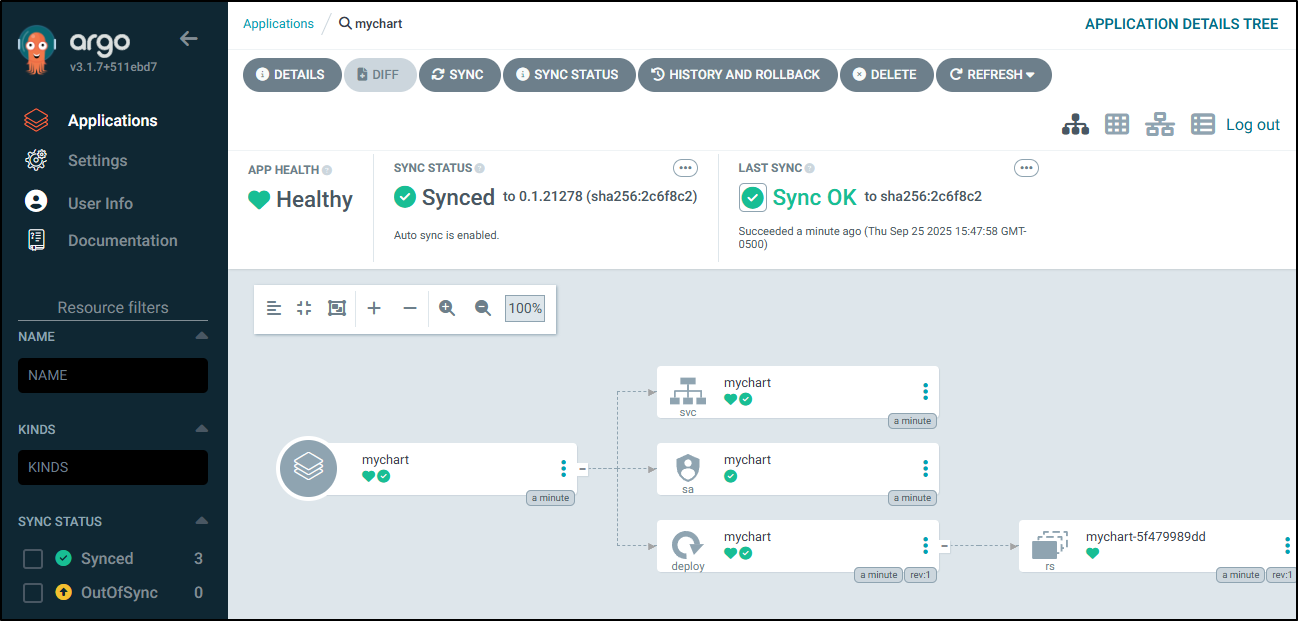

I can see it synced without issue

That said, like the others I tried, it does not like “HEAD” - so I needed to set my version each time.

I can view the objects it deployed

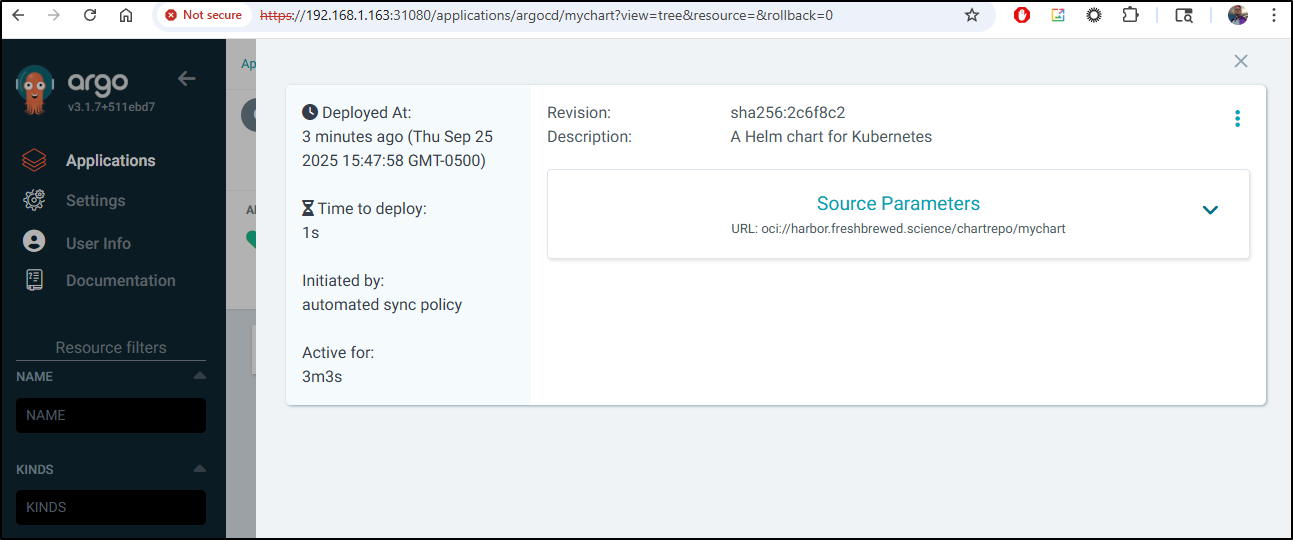

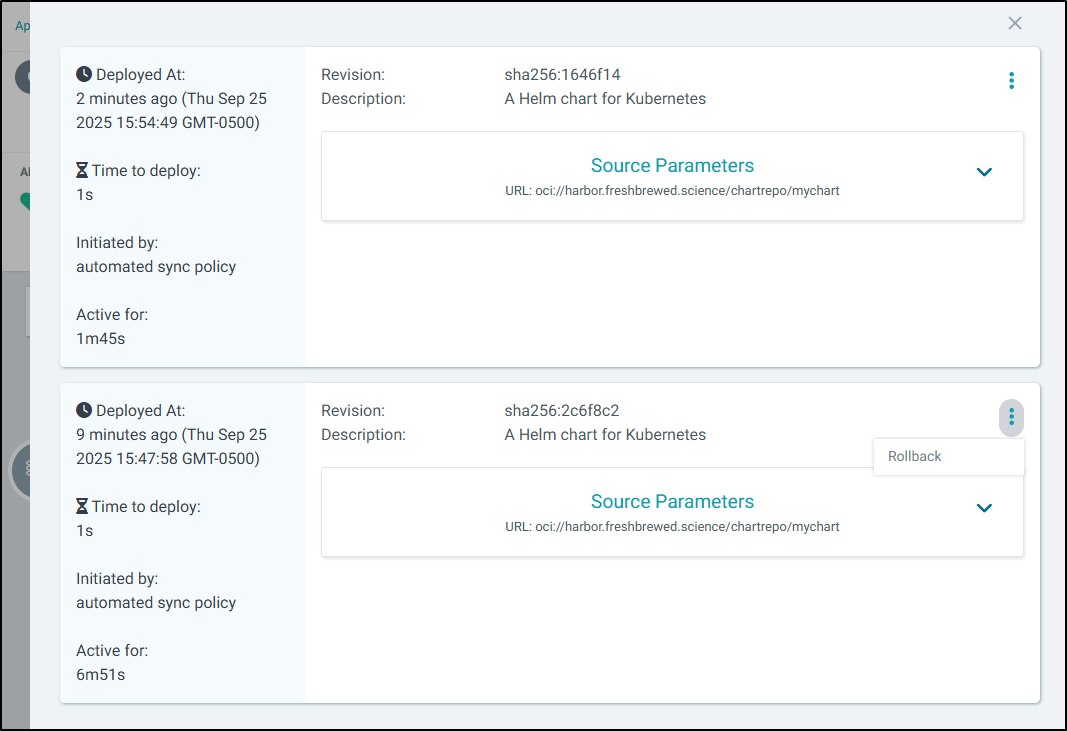

And I can always look at history to see when it deployed and of what revision

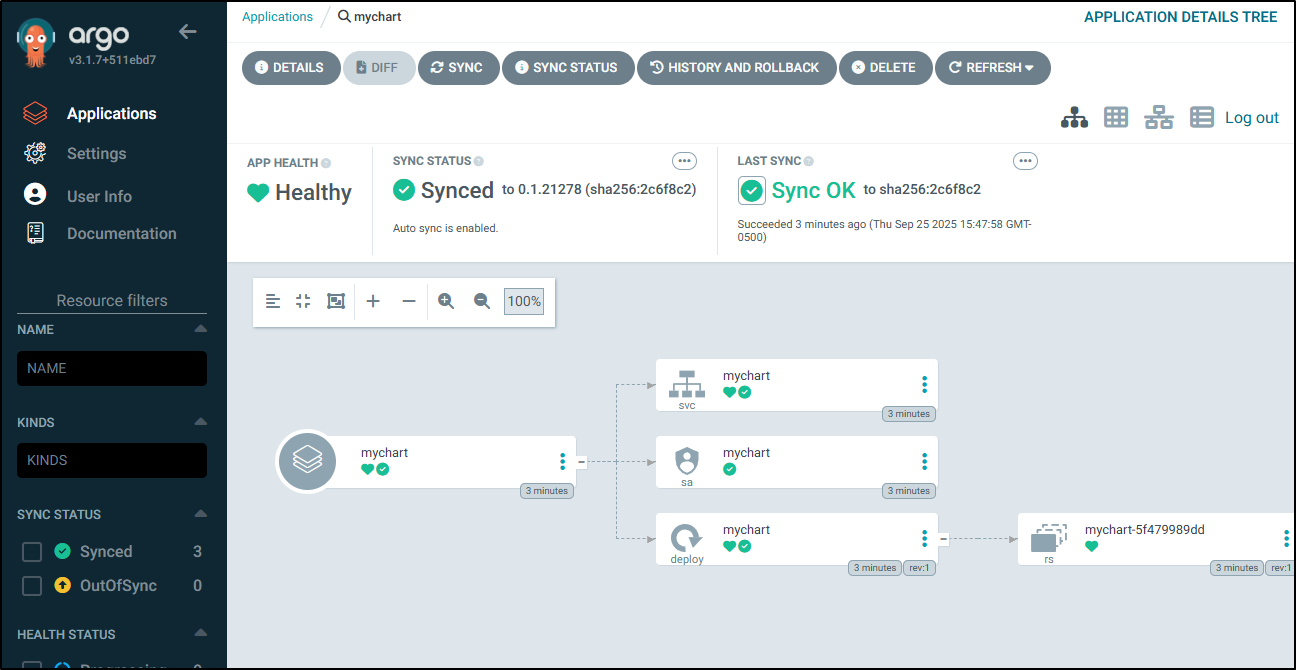

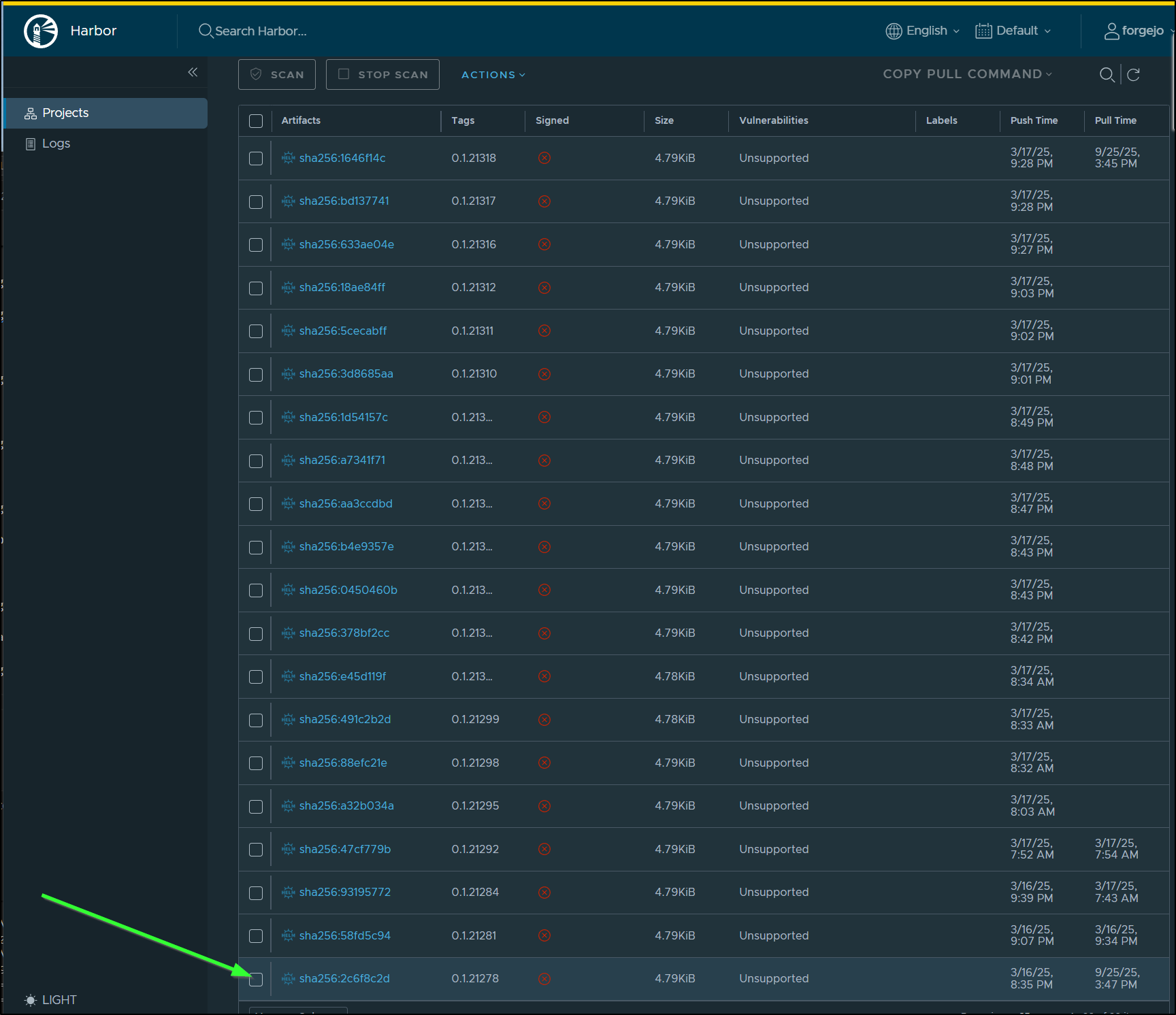

You can see above that it synced “to 0.1.21278 (sha256:2c6f8c2)”

Which matches the SHA in Harbor, but it’s not the latest

Switching it to the latest is pretty easy:

Had something gone wrong, I could always rollback in the UI

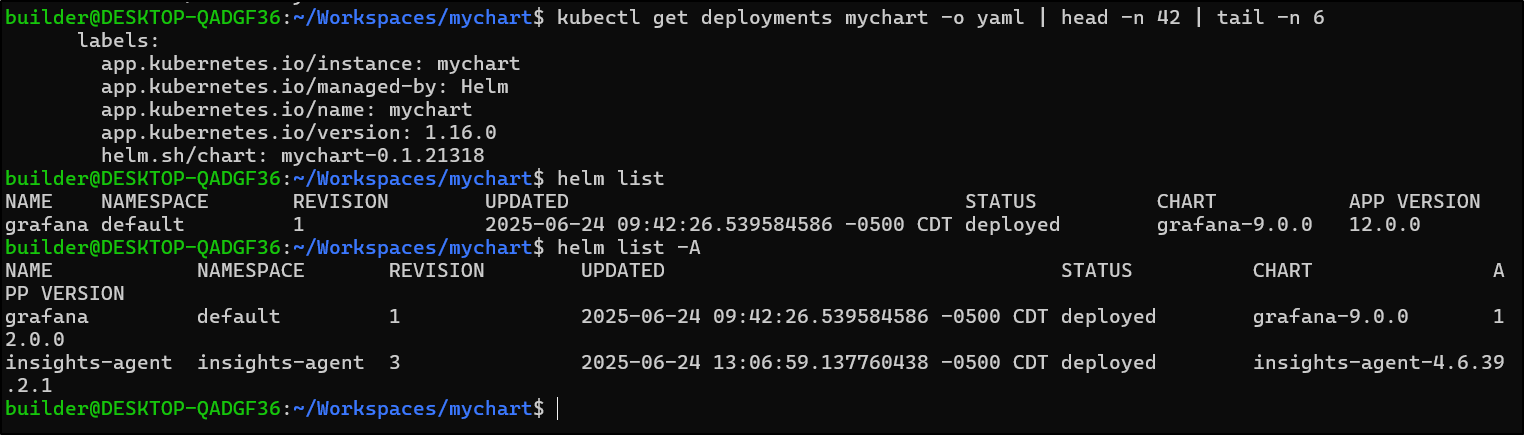

The version is also saved in the helm.sh/chart label on the deployment, which is nice in case we are just looking at our cluster directly or via an APM

$ kubectl get deployments mychart -o yaml | head -n 42 | tail -n 6

labels:

app.kubernetes.io/instance: mychart

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/name: mychart

app.kubernetes.io/version: 1.16.0

helm.sh/chart: mychart-0.1.21318

However, unlike a traditional Helm chart, we will not see it with Helm directly

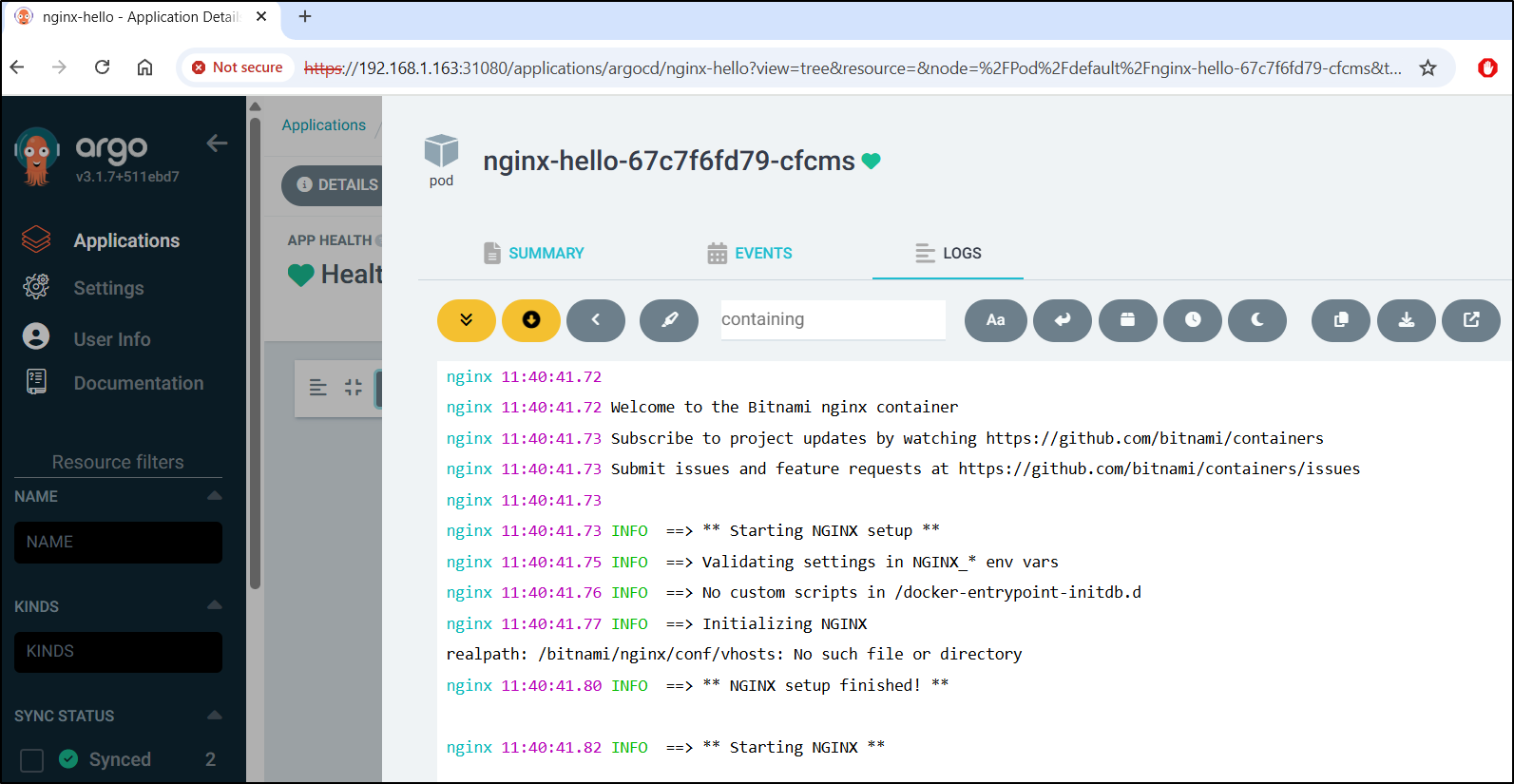

Logs

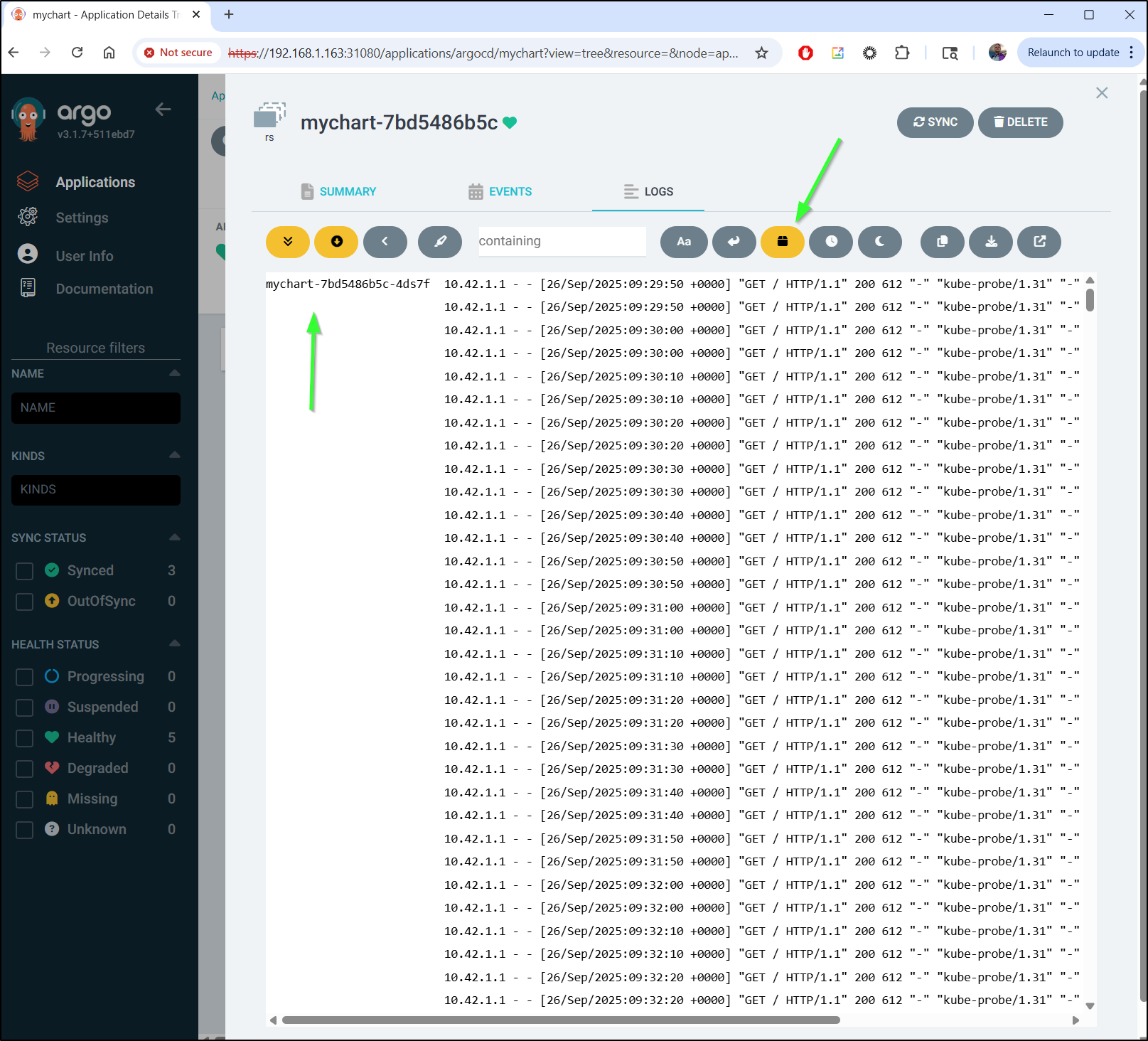

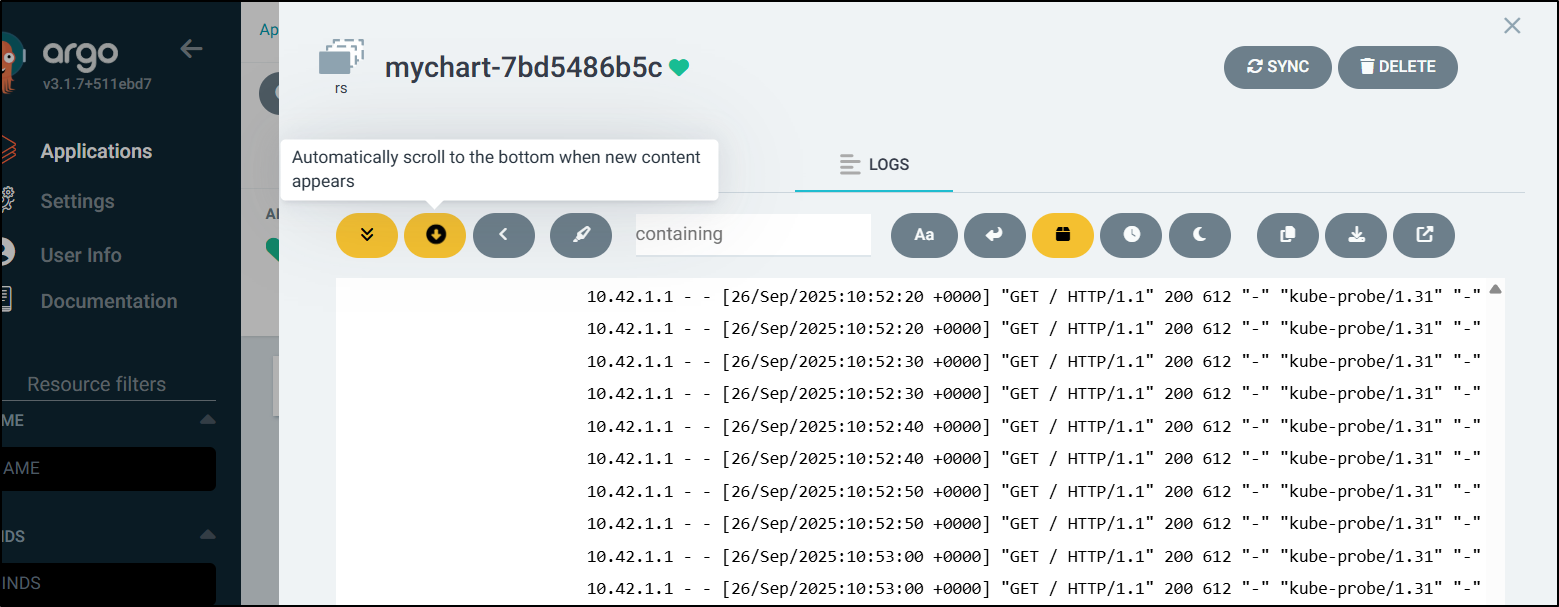

Something I only now discovered - ArgoCD can include the Pod names in the logs. This is quite useful when trying to correlate with other systems or see when a given pod crashed.

We can also use this page as a live tail, showing the latest logs as they appear.

This can be helpful in troubleshooting issues where you want to see which containers are picking up traffic, or catching debug output in a more controlled environment like staging or production.

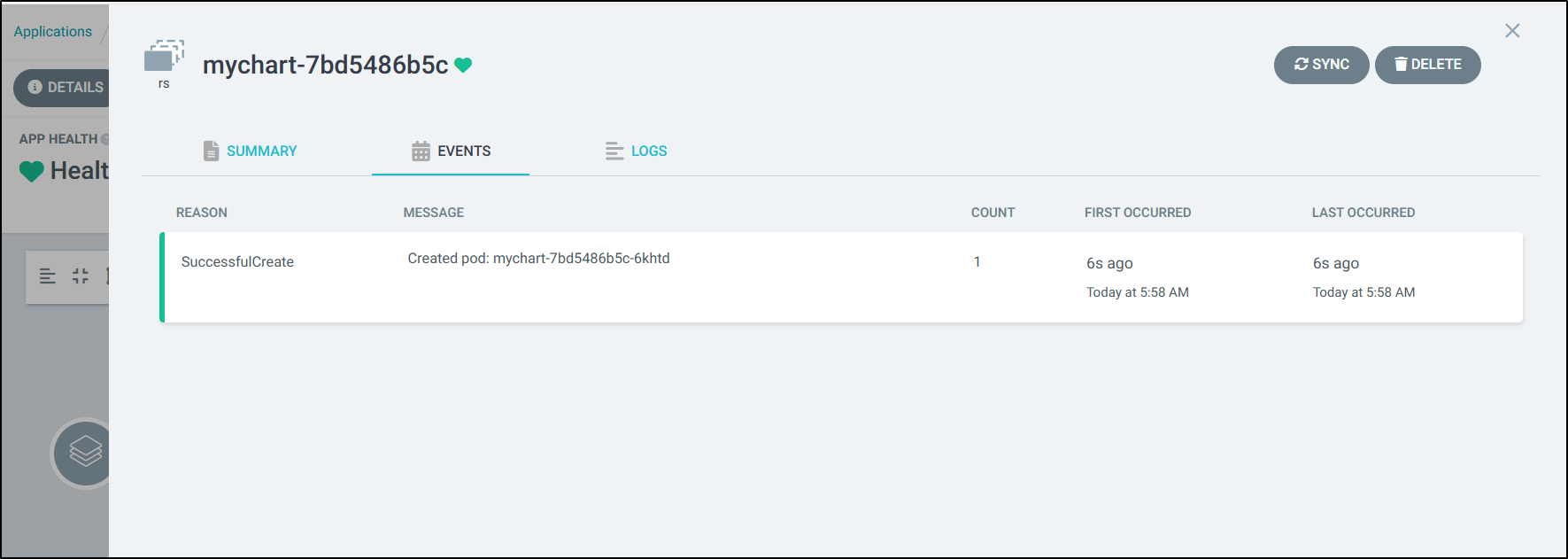

Events

We can use events to track things like a ReplicaSet restoring a terminated pod

Scaling

We can use the UI to easily scale out and in if we see a demand on service:

Projects and Kubernetes

We showed using the UI. Let’s move on to the objects we can create and modify within Kubernetes.

For instance, we can view our ArgoCD projects from the apiVersion argoproj.io/v1alpha1 and kind AppProject

$ kubectl get AppProject -A

NAMESPACE NAME AGE

argocd default 16h

The output of the YAML shows the namespaces and servers this project can use:

$ kubectl get AppProject default -n argocd -o yaml

apiVersion: argoproj.io/v1alpha1

kind: AppProject

metadata:

creationTimestamp: "2025-09-25T18:31:00Z"

generation: 1

name: default

namespace: argocd

resourceVersion: "5275222"

uid: 419f7071-d57d-40f8-a3ca-4063d62bec4a

spec:

clusterResourceWhitelist:

- group: '*'

kind: '*'

destinations:

- namespace: '*'

server: '*'

sourceRepos:

- '*'

status: {}

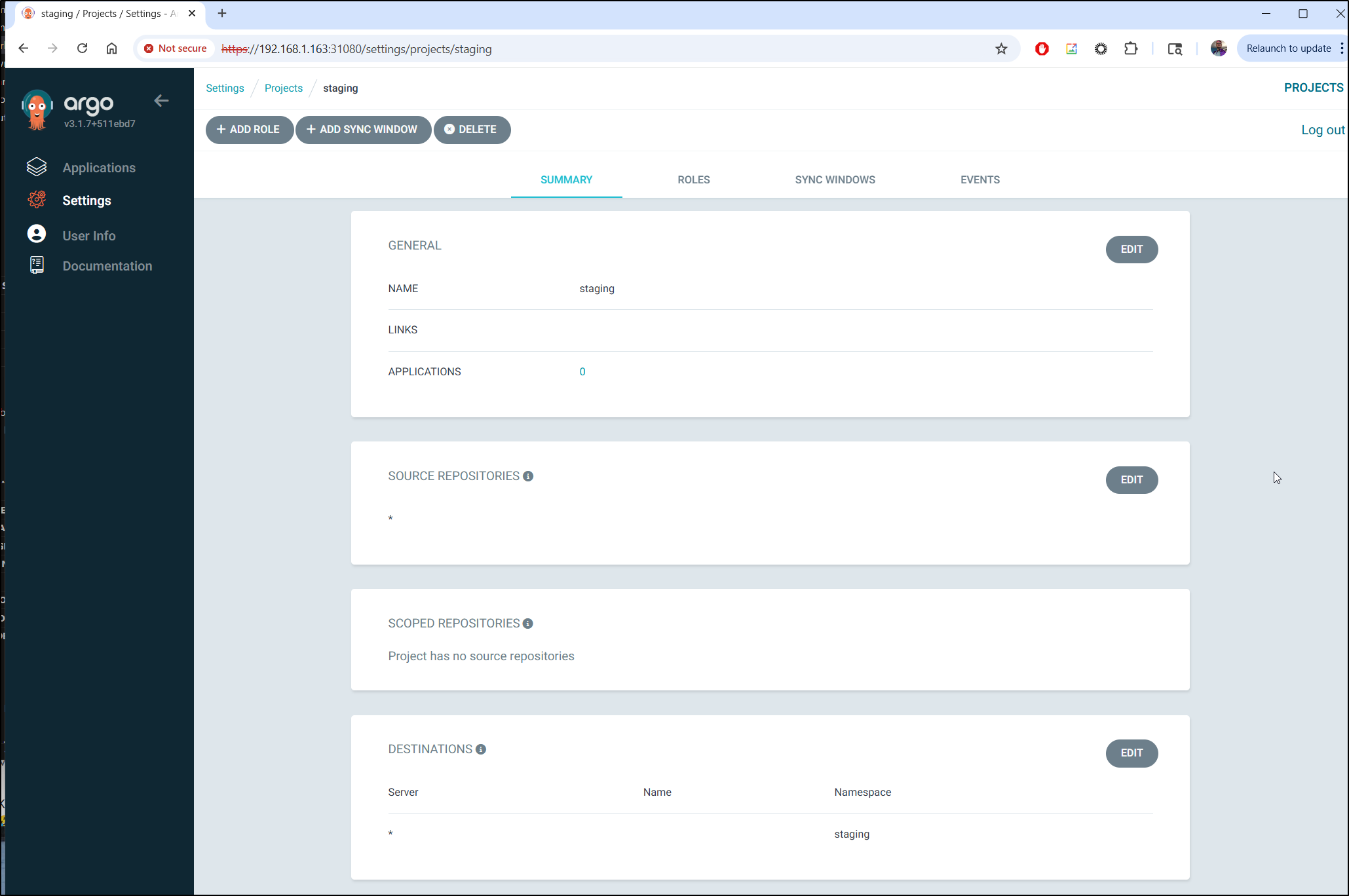

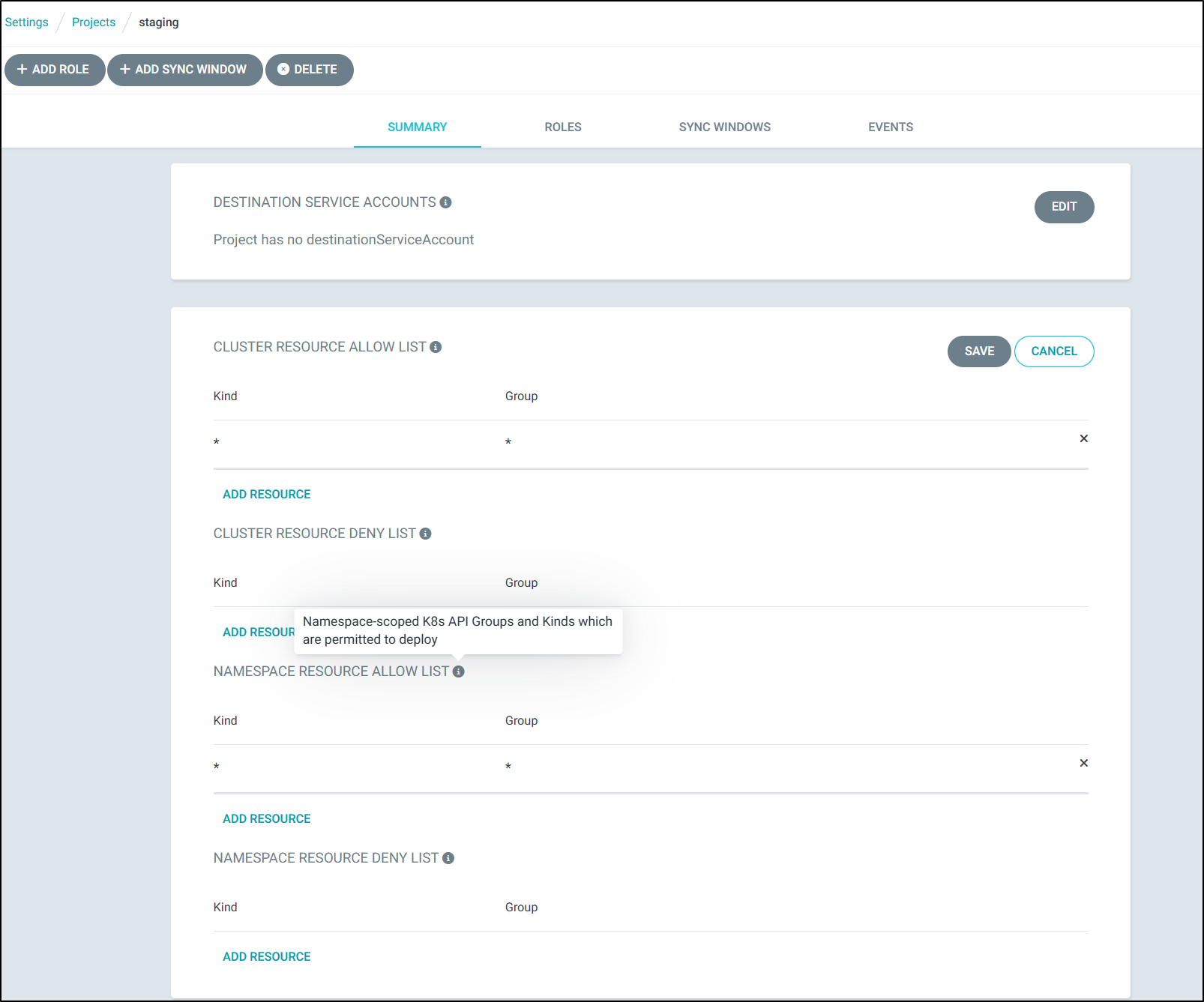

Let’s say we want a “Staging” project that would let us create and manage applications, but they must always be in a “staging” namespace. I do not care about clusters, as they come and go. I just want to limit our use of namespaces.

$ cat staging.prj.yaml

apiVersion: argoproj.io/v1alpha1
kind: AppProject
metadata:
  name: staging
  namespace: argocd
spec:
  clusterResourceWhitelist:
  - group: '*'
    kind: '*'
  destinations:
  - namespace: 'staging'
    server: '*'
  sourceRepos:
  - '*'

Once I apply it:

$ kubectl apply -f ./staging.prj.yaml

appproject.argoproj.io/staging created

I can see it light up with the destination namespace of “staging”

We can get pretty narrowly focused with projects even limiting the API Groups and Kinds

Only once can I recall seeing a place where I would use this - it was a product that would let developers launch devutil pods for debugging and only pods.
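To make the "only pods" case concrete, here is a minimal sketch of what such a project could look like. The project name devutil and the staging namespace are purely illustrative, and I am assuming the AppProject field namespaceResourceWhitelist behaves as documented for your ArgoCD version, so check it before relying on it. The function only prints the manifest so it can be reviewed first.

```shell
# Print a hypothetical AppProject that only permits Pods in one namespace.
# Assumptions: the default name "devutil" and namespace "staging" are
# illustrative and not taken from the setup above.
render_pod_only_project() {
  project_name="${1:-devutil}"
  project_ns="${2:-staging}"
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: AppProject
metadata:
  name: ${project_name}
  namespace: argocd
spec:
  destinations:
  - namespace: '${project_ns}'
    server: '*'
  sourceRepos:
  - '*'
  # Only the core-group Pod kind may be deployed by apps in this project.
  namespaceResourceWhitelist:
  - group: ''
    kind: Pod
EOF
}

# Review the output, then apply it (requires cluster access):
#   render_pod_only_project devutil staging | kubectl apply -f -
```

Printing rather than applying keeps the sketch safe to run anywhere; the commented line shows how it would be piped into kubectl.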

Personally, I’m more a fan of locking down namespaces and if it’s really important, use something like an Istio NetworkPolicy which comes with Istio (or in GKE, they call it Anthos Service Mesh). You can read more about NetworkPolicy usage here

Applications and Kubernetes

If you are thinking, “Can’t I also use YAML manifests for Applications”, then the answer is “Of course!”.

We can look at the application we did by hand:

$ kubectl get application -n argocd

NAME SYNC STATUS HEALTH STATUS

mychart Synced Healthy

The full manifest includes a lot of events, for example:

$ kubectl get application -n argocd -o yaml

apiVersion: v1

items:

- apiVersion: argoproj.io/v1alpha1

kind: Application

metadata:

creationTimestamp: "2025-09-25T20:47:55Z"

generation: 343

name: mychart

namespace: argocd

resourceVersion: "5316803"

uid: 71d0b1e5-d814-4015-8fd0-5ed404cb91ab

spec:

destination:

namespace: default

server: https://kubernetes.default.svc

project: default

source:

helm:

parameters:

- name: autoscaling.minReplicas

value: "2"

- name: replicaCount

value: "2"

path: .

repoURL: oci://harbor.freshbrewed.science/chartrepo/mychart

targetRevision: 0.1.21318

syncPolicy:

automated:

enabled: true

status:

controllerNamespace: argocd

health:

lastTransitionTime: "2025-09-26T11:03:39Z"

status: Healthy

history:

- deployStartedAt: "2025-09-25T20:47:57Z"

deployedAt: "2025-09-25T20:47:58Z"

id: 0

initiatedBy:

automated: true

revision: sha256:2c6f8c2d4eedda549717b9dc6986b1a28e4c86e087c689baba9b5103a4f1f390

source:

path: .

repoURL: oci://harbor.freshbrewed.science/chartrepo/mychart

targetRevision: 0.1.21278

- deployStartedAt: "2025-09-25T20:54:48Z"

deployedAt: "2025-09-25T20:54:49Z"

id: 1

initiatedBy:

automated: true

revision: sha256:1646f14c159bd8b7a1089d3a1c2687bc130aa50c0390a256c6ec0edfde1a8770

source:

path: .

repoURL: oci://harbor.freshbrewed.science/chartrepo/mychart

targetRevision: 0.1.21318

- deployStartedAt: "2025-09-26T11:00:01Z"

deployedAt: "2025-09-26T11:00:02Z"

id: 2

initiatedBy:

username: admin

revision: sha256:1646f14c159bd8b7a1089d3a1c2687bc130aa50c0390a256c6ec0edfde1a8770

source:

helm:

parameters:

- name: autoscaling.minReplicas

value: "10"

path: .

repoURL: oci://harbor.freshbrewed.science/chartrepo/mychart

targetRevision: 0.1.21318

- deployStartedAt: "2025-09-26T11:00:55Z"

deployedAt: "2025-09-26T11:00:56Z"

id: 3

initiatedBy:

username: admin

revision: sha256:1646f14c159bd8b7a1089d3a1c2687bc130aa50c0390a256c6ec0edfde1a8770

source:

helm:

parameters:

- name: autoscaling.minReplicas

value: "10"

path: .

repoURL: oci://harbor.freshbrewed.science/chartrepo/mychart

targetRevision: 0.1.21318

- deployStartedAt: "2025-09-26T11:01:43Z"

deployedAt: "2025-09-26T11:01:44Z"

id: 4

initiatedBy:

automated: true

revision: sha256:1646f14c159bd8b7a1089d3a1c2687bc130aa50c0390a256c6ec0edfde1a8770

source:

helm:

parameters:

- name: autoscaling.minReplicas

value: "10"

- name: replicaCount

value: "10"

path: .

repoURL: oci://harbor.freshbrewed.science/chartrepo/mychart

targetRevision: 0.1.21318

- deployStartedAt: "2025-09-26T11:03:08Z"

deployedAt: "2025-09-26T11:03:09Z"

id: 5

initiatedBy:

automated: true

revision: sha256:1646f14c159bd8b7a1089d3a1c2687bc130aa50c0390a256c6ec0edfde1a8770

source:

helm:

parameters:

- name: autoscaling.minReplicas

value: "10"

- name: replicaCount

value: "30"

path: .

repoURL: oci://harbor.freshbrewed.science/chartrepo/mychart

targetRevision: 0.1.21318

- deployStartedAt: "2025-09-26T11:03:47Z"

deployedAt: "2025-09-26T11:03:48Z"

id: 6

initiatedBy:

automated: true

revision: sha256:1646f14c159bd8b7a1089d3a1c2687bc130aa50c0390a256c6ec0edfde1a8770

source:

helm:

parameters:

- name: autoscaling.minReplicas

value: "2"

- name: replicaCount

value: "2"

path: .

repoURL: oci://harbor.freshbrewed.science/chartrepo/mychart

targetRevision: 0.1.21318

operationState:

finishedAt: "2025-09-26T11:03:48Z"

message: successfully synced (all tasks run)

operation:

initiatedBy:

automated: true

retry:

limit: 5

sync:

revision: sha256:1646f14c159bd8b7a1089d3a1c2687bc130aa50c0390a256c6ec0edfde1a8770

phase: Succeeded

startedAt: "2025-09-26T11:03:47Z"

syncResult:

resources:

- group: ""

hookPhase: Running

kind: ServiceAccount

message: serviceaccount/mychart unchanged

name: mychart

namespace: default

status: Synced

syncPhase: Sync

version: v1

- group: ""

hookPhase: Running

kind: Service

message: service/mychart unchanged

name: mychart

namespace: default

status: Synced

syncPhase: Sync

version: v1

- group: apps

hookPhase: Running

images:

- nginx:1.16.0

kind: Deployment

message: deployment.apps/mychart configured

name: mychart

namespace: default

status: Synced

syncPhase: Sync

version: v1

revision: sha256:1646f14c159bd8b7a1089d3a1c2687bc130aa50c0390a256c6ec0edfde1a8770

source:

helm:

parameters:

- name: autoscaling.minReplicas

value: "2"

- name: replicaCount

value: "2"

path: .

repoURL: oci://harbor.freshbrewed.science/chartrepo/mychart

targetRevision: 0.1.21318

reconciledAt: "2025-09-26T11:15:36Z"

resourceHealthSource: appTree

resources:

- kind: Service

name: mychart

namespace: default

status: Synced

version: v1

- kind: ServiceAccount

name: mychart

namespace: default

status: Synced

version: v1

- group: apps

kind: Deployment

name: mychart

namespace: default

status: Synced

version: v1

sourceHydrator: {}

sourceType: Helm

summary:

images:

- nginx:1.16.0

sync:

comparedTo:

destination:

namespace: default

server: https://kubernetes.default.svc

source:

helm:

parameters:

- name: autoscaling.minReplicas

value: "2"

- name: replicaCount

value: "2"

path: .

repoURL: oci://harbor.freshbrewed.science/chartrepo/mychart

targetRevision: 0.1.21318

revision: sha256:1646f14c159bd8b7a1089d3a1c2687bc130aa50c0390a256c6ec0edfde1a8770

status: Synced

kind: List

metadata:

resourceVersion: ""

This means we can use things like kubectl patch to update values like replicas or images.

Let’s try changing our OCI version which results in a Helm update to go back to the last release:

As you saw, we were able to go back and forth with:

$ kubectl -n argocd patch application mychart --type=merge -p '{"spec":{"source":{"targetRevision":"0.1.21318"}}}'

$ kubectl -n argocd patch application mychart --type=merge -p '{"spec":{"source":{"targetRevision":"0.1.21278"}}}'
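If you flip versions often, the two patch commands above can be wrapped in a small helper. This is just a sketch; the function names are my own and nothing here is an ArgoCD feature beyond the same kubectl patch call.

```shell
# Build the JSON merge patch that pins an Application to a chart version.
target_revision_patch() {
  printf '{"spec":{"source":{"targetRevision":"%s"}}}\n' "$1"
}

# Hypothetical wrapper: patch the named Application in the argocd namespace.
# Requires cluster access; it is only defined here, not invoked.
pin_chart_version() {
  app_name="$1"
  chart_version="$2"
  kubectl -n argocd patch application "$app_name" --type=merge \
    -p "$(target_revision_patch "$chart_version")"
}

# Usage:
#   pin_chart_version mychart 0.1.21278
#   pin_chart_version mychart 0.1.21318
```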

We can also just create new Applications with YAML.

For instance, to fire off another mychart using the same OCI endpoint:

$ cat newapp.yaml

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: mychart2
  namespace: argocd
spec:
  destination:
    namespace: default
    server: https://kubernetes.default.svc
  project: default
  source:
    repoURL: oci://harbor.freshbrewed.science/chartrepo/mychart
    path: .
    targetRevision: 0.1.21318
    helm:
      parameters:
      - name: replicaCount
        value: "7"
  syncPolicy:
    automated:
      enabled: true

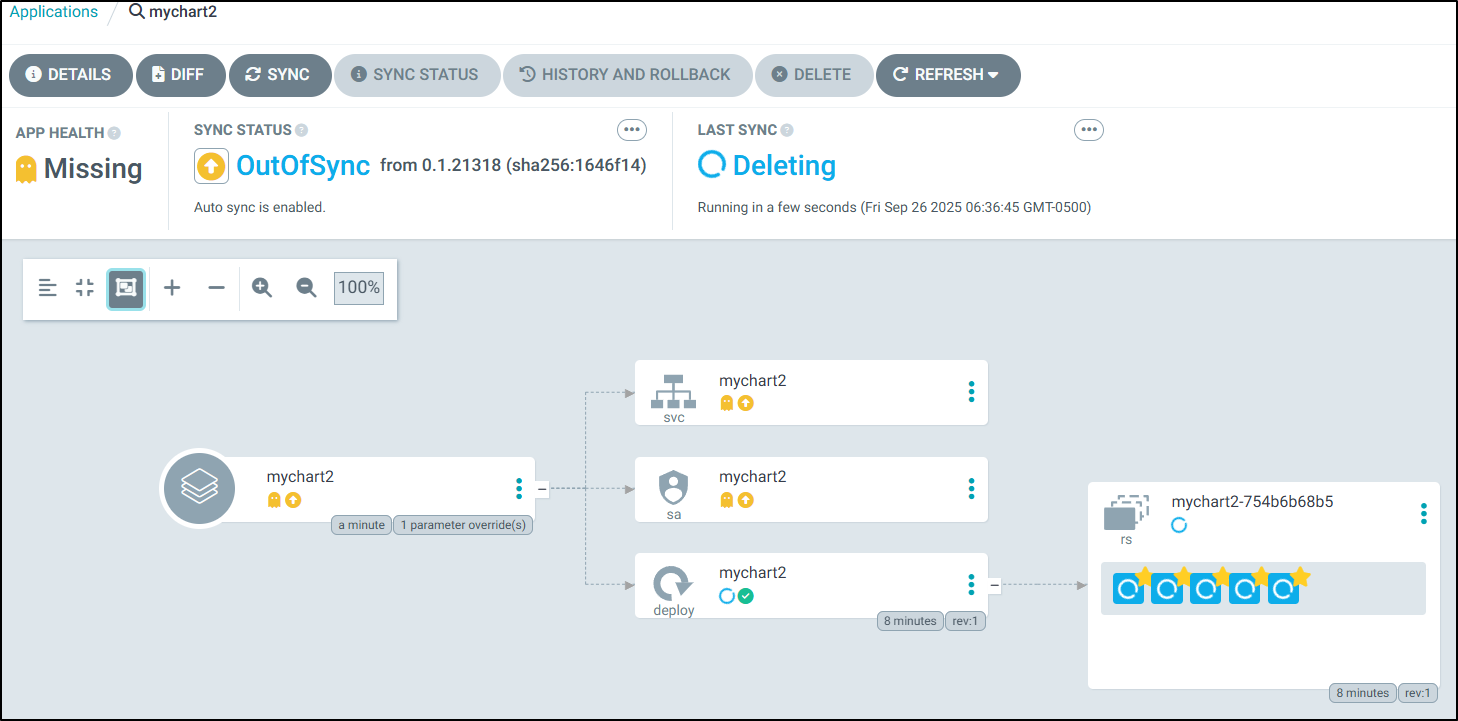

We can see it in action:

Now, a caveat - we can create this way, but destroy does not work this way.

For instance, if I remove this app:

$ kubectl delete -f ./newapp.yaml

application.argoproj.io "mychart2" deleted

$ kubectl get po | grep mychart

mychart-7bd5486b5c-b9bmw 1/1 Running 0 9m54s

mychart-7bd5486b5c-hnfmf 1/1 Running 0 9m55s

mychart2-754b6b68b5-bgnf4 1/1 Running 0 4m15s

mychart2-754b6b68b5-bxw4n 1/1 Running 0 4m15s

mychart2-754b6b68b5-jbs25 1/1 Running 0 4m15s

mychart2-754b6b68b5-pkkws 1/1 Running 0 4m15s

mychart2-754b6b68b5-qm4zg 1/1 Running 0 4m15s

mychart2-754b6b68b5-rhk4q 1/1 Running 0 4m15s

mychart2-754b6b68b5-tfdpv 1/1 Running 0 4m15s

We can see it leaves the workloads around (just removes the definition)

$ kubectl get po | grep mychart

mychart-7bd5486b5c-b9bmw 1/1 Running 0 11m

mychart-7bd5486b5c-hnfmf 1/1 Running 0 11m

mychart2-754b6b68b5-bgnf4 1/1 Running 0 6m5s

mychart2-754b6b68b5-bxw4n 1/1 Running 0 6m5s

mychart2-754b6b68b5-jbs25 1/1 Running 0 6m5s

mychart2-754b6b68b5-pkkws 1/1 Running 0 6m5s

mychart2-754b6b68b5-qm4zg 1/1 Running 0 6m5s

mychart2-754b6b68b5-rhk4q 1/1 Running 0 6m5s

mychart2-754b6b68b5-tfdpv 1/1 Running 0 6m5s

$ kubectl get applications -n argocd

NAME SYNC STATUS HEALTH STATUS

mychart Synced Healthy

$ kubectl get po | grep mychart

mychart-7bd5486b5c-b9bmw 1/1 Running 0 12m

mychart-7bd5486b5c-hnfmf 1/1 Running 0 12m

mychart2-754b6b68b5-bgnf4 1/1 Running 0 7m

mychart2-754b6b68b5-bxw4n 1/1 Running 0 7m

mychart2-754b6b68b5-jbs25 1/1 Running 0 7m

mychart2-754b6b68b5-pkkws 1/1 Running 0 7m

mychart2-754b6b68b5-qm4zg 1/1 Running 0 7m

mychart2-754b6b68b5-rhk4q 1/1 Running 0 7m

mychart2-754b6b68b5-tfdpv 1/1 Running 0 7m
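As far as I understand the ArgoCD docs, this is expected: a kubectl delete only cascades to the deployed resources when the Application carries the resources-finalizer.argocd.argoproj.io finalizer. Here is a hedged sketch of adding it before deleting. The helper names are my own, and note that a merge patch replaces any finalizers already on the object.

```shell
# JSON merge patch that sets ArgoCD's cascading-delete finalizer.
# Note: a merge patch replaces the whole finalizers list.
cascade_finalizer_patch() {
  printf '%s\n' '{"metadata":{"finalizers":["resources-finalizer.argocd.argoproj.io"]}}'
}

# Hypothetical helper: add the finalizer, then delete the Application.
# Requires cluster access; it is only defined here, not invoked.
delete_app_with_cascade() {
  app_name="$1"
  kubectl -n argocd patch application "$app_name" --type=merge \
    -p "$(cascade_finalizer_patch)" &&
    kubectl -n argocd delete application "$app_name"
}

# Usage:
#   delete_app_with_cascade mychart2
```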

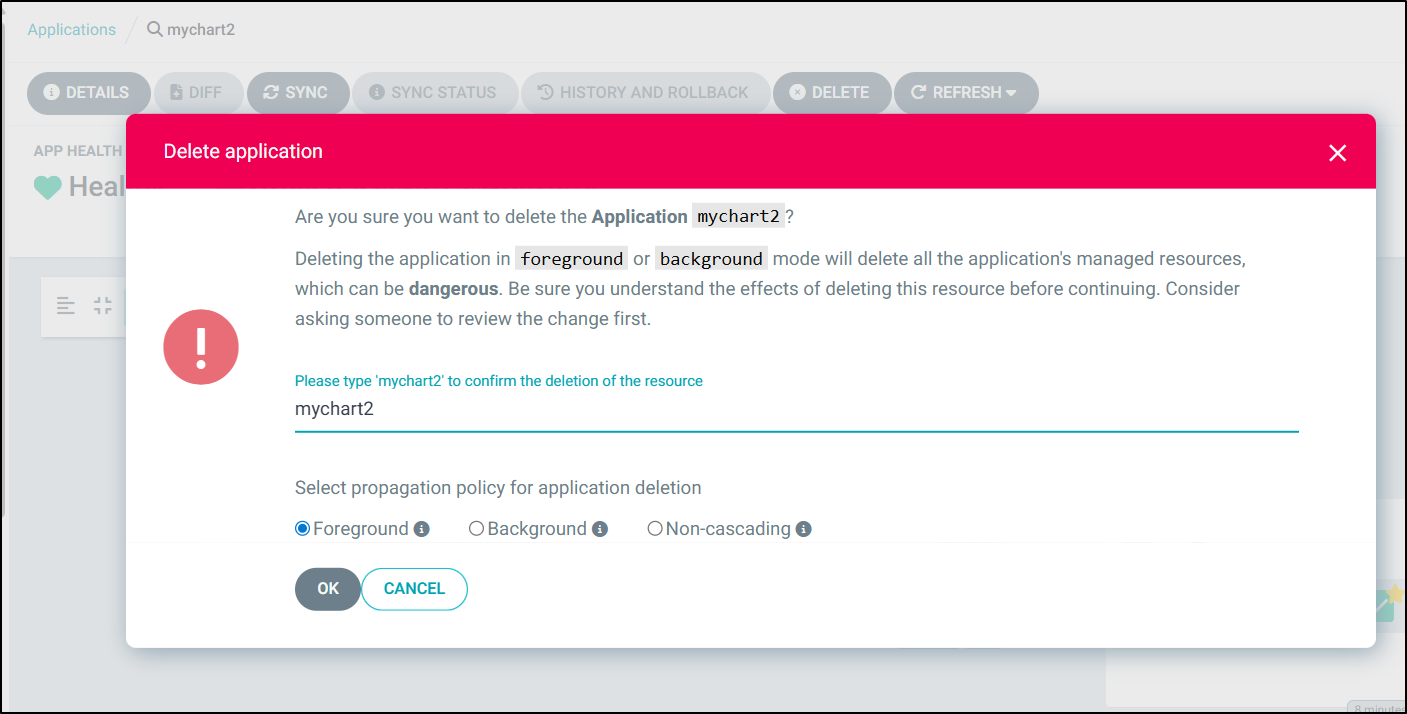

Now, if I use “delete” in the UI, which requires a confirmation:

It then starts to remove resources rapidly (barely had time to grab this screenshot)

and we can see they are gone now in K8s:

$ kubectl get po | grep mychart

mychart-7bd5486b5c-b9bmw 1/1 Running 0 13m

mychart-7bd5486b5c-hnfmf 1/1 Running 0 13m

mychart2-754b6b68b5-bgnf4 1/1 Running 0 7m42s

mychart2-754b6b68b5-bxw4n 1/1 Running 0 7m42s

mychart2-754b6b68b5-jbs25 1/1 Running 0 7m42s

mychart2-754b6b68b5-pkkws 1/1 Running 0 7m42s

mychart2-754b6b68b5-qm4zg 1/1 Running 0 7m42s

mychart2-754b6b68b5-rhk4q 1/1 Running 0 7m42s

mychart2-754b6b68b5-tfdpv 1/1 Running 0 7m42s

$ kubectl get po | grep mychart

mychart-7bd5486b5c-b9bmw 1/1 Running 0 14m

mychart-7bd5486b5c-hnfmf 1/1 Running 0 14m

Non-OCI charts

We showed OCI charts - how about the more common Helm repositories?

For instance, we could fire up an Nginx helm deploy

$ cat nginx.yaml

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: nginx-hello
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://charts.bitnami.com/bitnami
    chart: nginx
    targetRevision: "13.2.9"
    helm:
      values: |
        replicaCount: 1
        service:
          type: ClusterIP
  destination:
    server: https://kubernetes.default.svc
    namespace: default
  syncPolicy:
    automated:
      prune: true
      selfHeal: true

$ kubectl apply -f ./nginx.yaml

application.argoproj.io/nginx-hello created

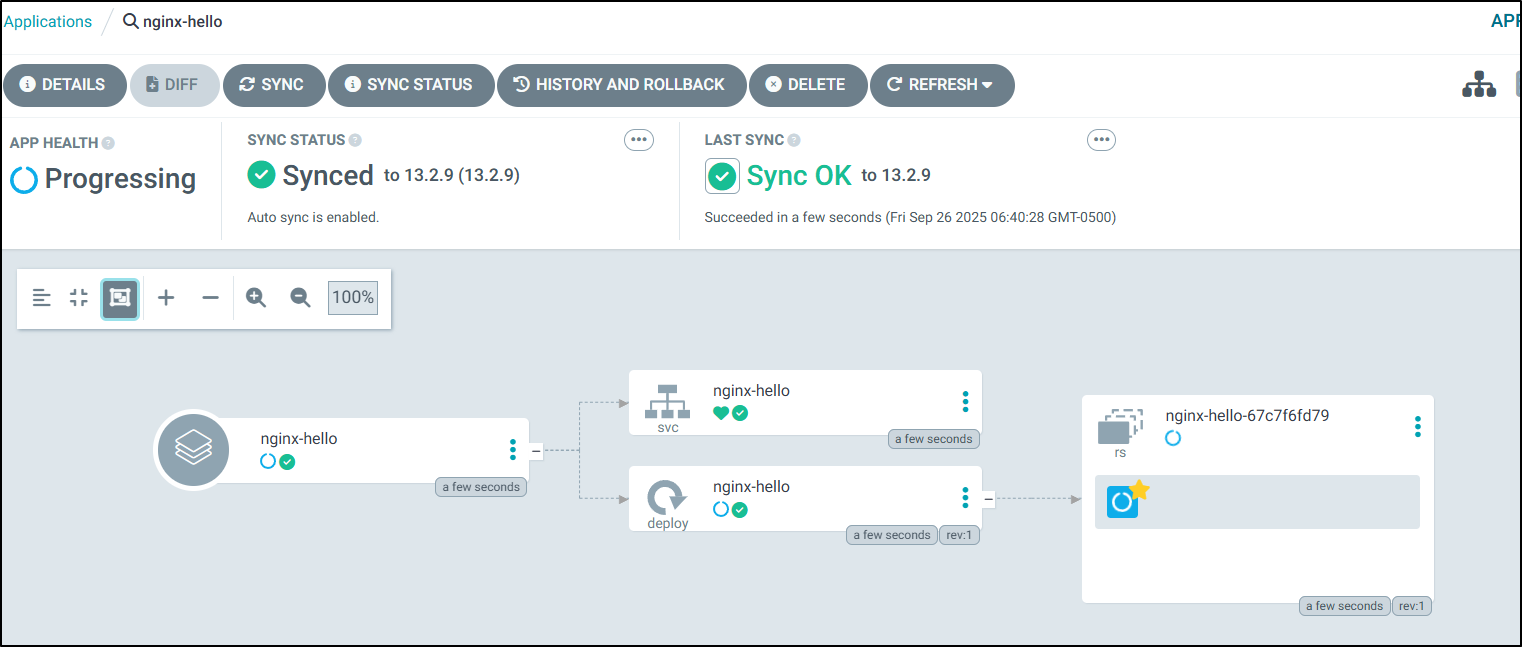

I see it create and start to sync

The reason that pod is taking a minute to come up is I do not have that container cached already (as I did with mychart)

We can verify that by looking in Kubernetes to see it is creating the container:

$ kubectl get po

NAME READY STATUS RESTARTS AGE

grafana-85b4d9589c-chl4k 1/1 Running 0 11d

headless-vnc-5f7f6b8569-9slww 1/1 Running 2 (11d ago) 41d

mychart-7bd5486b5c-b9bmw 1/1 Running 0 17m

mychart-7bd5486b5c-hnfmf 1/1 Running 0 17m

nginx-hello-67c7f6fd79-cfcms 0/1 ContainerCreating 0 13s

But soon the pod is up and we can see logs

GIT

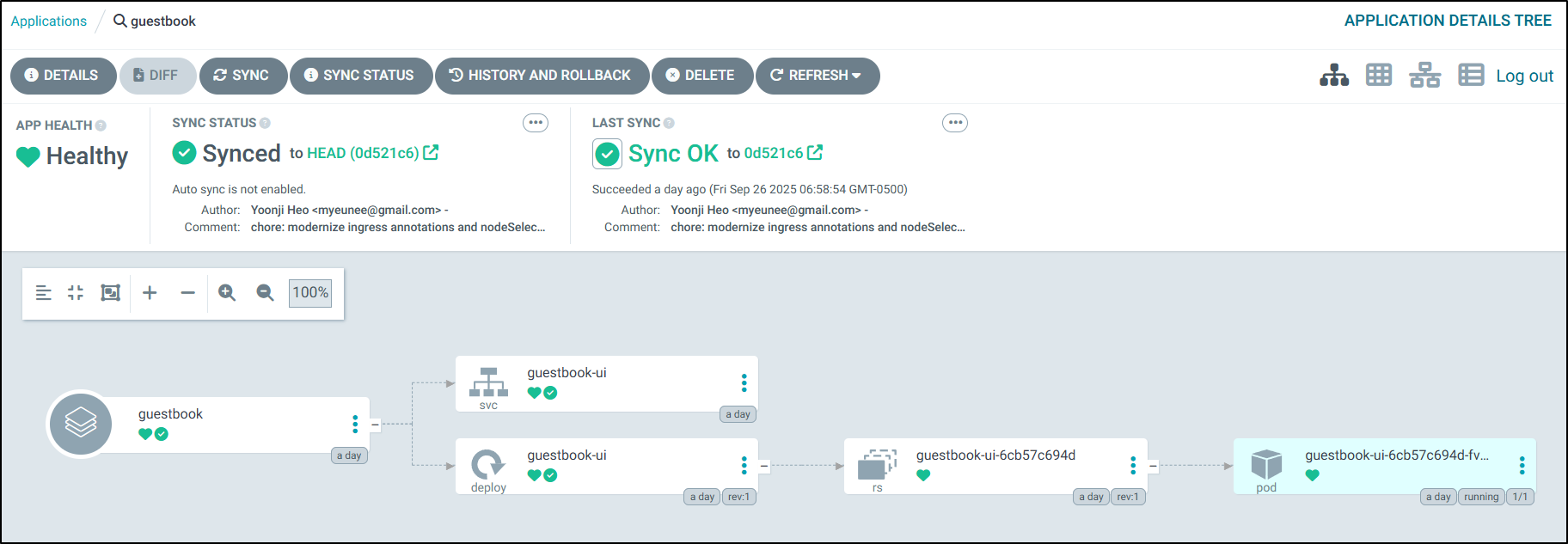

Of course, we can still do GIT based repos as we always have. Just for completeness, I can show an example using their guestbook app:

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: guestbook
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/argoproj/argocd-example-apps.git
    targetRevision: HEAD
    path: guestbook
  destination:
    server: https://kubernetes.default.svc
    namespace: guestbook

Which we can just apply to deploy

As you saw, this does highlight an order-of-operations issue. We need to ensure the namespace exists before using it.
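One option for the missing namespace is ArgoCD's CreateNamespace=true sync option, which asks it to create the destination namespace during sync. Below is a minimal sketch of the same guestbook Application with that option added. The render function is my own wrapper, and I have not shown it running against this cluster.

```shell
# Print the guestbook Application with the CreateNamespace sync option.
# The destination namespace defaults to "guestbook" as in the manifest above.
render_guestbook_app() {
  dest_ns="${1:-guestbook}"
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: guestbook
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/argoproj/argocd-example-apps.git
    targetRevision: HEAD
    path: guestbook
  destination:
    server: https://kubernetes.default.svc
    namespace: ${dest_ns}
  syncPolicy:
    syncOptions:
    # Ask ArgoCD to create the destination namespace if it is missing.
    - CreateNamespace=true
EOF
}

# Review the output, then apply it (requires cluster access):
#   render_guestbook_app guestbook | kubectl apply -f -
```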

I have seen teams use GitOps tools like ArgoCD to stand up all namespaces and shared SAs prior to launching apps. One way is just to have a standard GIT repo with YAML manifests that we would use to “prep” all our K8s clusters at launch.

I do know of a team that once used Anthos Config Management (which is Google’s take on Flux) to do that, but I would worry about having competing GitOps systems on the same cluster.

I’ve also seen teams mix GitOps, like ArgoCD, with deployment pipelines - be they GitHub or Azure DevOps. This does work provided there is little overlap.

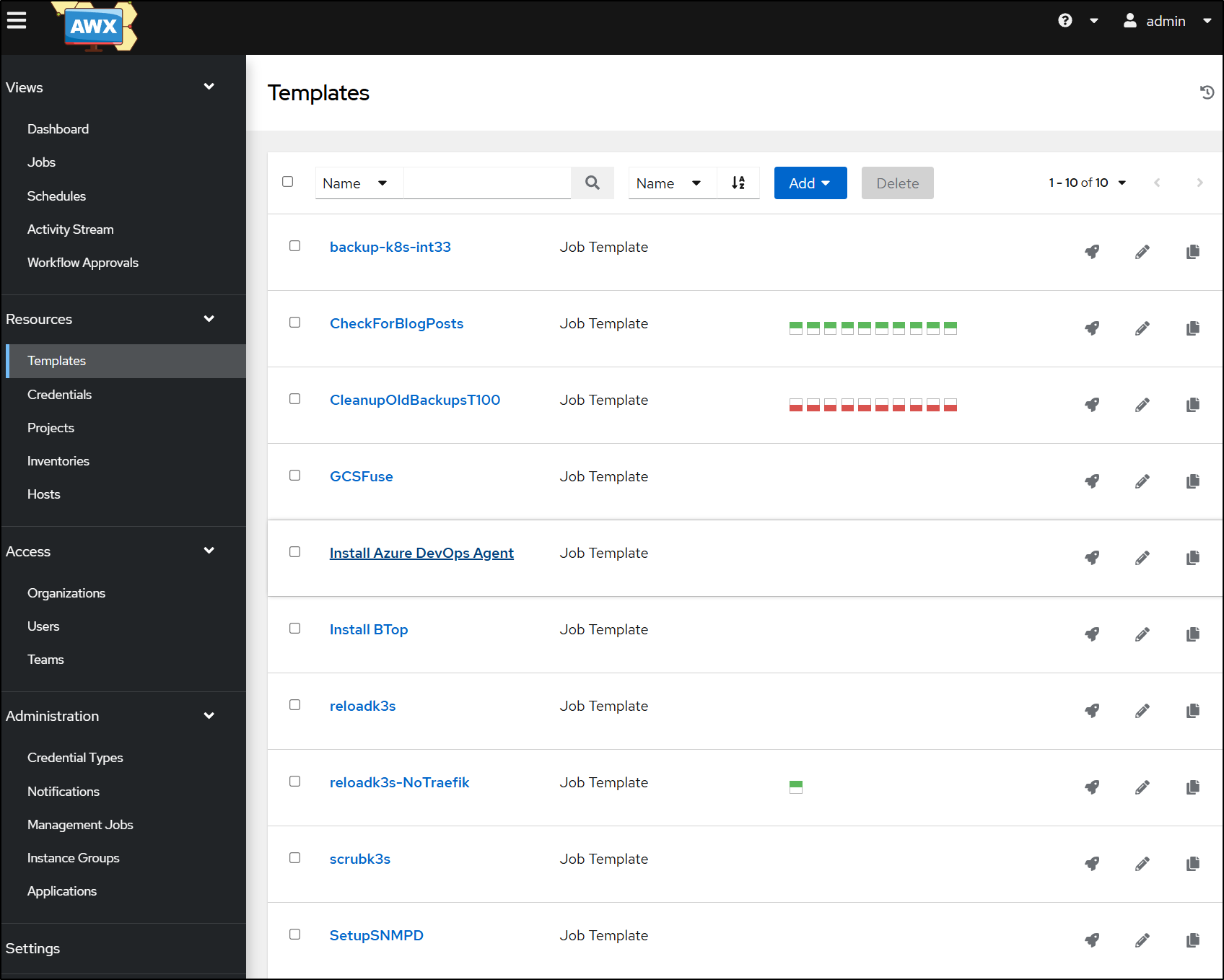

Personally, I use ArgoCD (and other GitOps) for the base layer of my cluster - things that should always be there. And when I don’t use GitOps for that, I use Ansible.

For instance, here is the YAML Ansible Playbook for Datadog: https://github.com/idjohnson/ansible-playbooks/blob/main/ddhelm.yaml

---
- name: Setup Datadog
  hosts: all

  tasks:
  - name: Install Helm
    ansible.builtin.shell: |
      curl https://baltocdn.com/helm/signing.asc | gpg --dearmor | tee /usr/share/keyrings/helm.gpg > /dev/null
      apt-get install apt-transport-https --yes
      echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/helm.gpg] https://baltocdn.com/helm/stable/debian/ all main" | tee /etc/apt/sources.list.d/helm-stable-debian.list
      apt-get update
      apt-get install helm

  - name: Create DD Secret
    ansible.builtin.shell: |
      kubectl delete secret my-dd-apikey || true
      kubectl create secret generic my-dd-apikey --from-literal=api-key=$(az keyvault secret show --vault-name idjhomelabakv --name ddapikey -o json | jq -r .value | tr -d '\n')

  - name: Add DD Repo
    ansible.builtin.shell: |
      helm repo add datadog https://helm.datadoghq.com
      helm repo update
    become: true
    args:
      chdir: /tmp

  - name: Create Initial DD Values Templates
    ansible.builtin.shell: |
      # Fetch APPKEY
      export APPKEY=`az keyvault secret show --vault-name idjhomelabakv --name ddappkey -o json | jq -r .value | tr -d '\n'`
      # Create Helm Values
      cat >/tmp/ddvalues2.yaml <<EOF
      agents:
        rbac:
          create: true
          serviceAccountName: default
      clusterAgent:
        metricsProvider:
          createReaderRbac: true
          enabled: true
          service:
            port: 8443
            type: ClusterIP
          useDatadogMetrics: true
        rbac:
          create: true
          serviceAccountName: default
        replicas: 2
      clusterChecksRunner:
        enabled: true
        rbac:
          create: true
          serviceAccountName: default
        replicas: 2
      datadog:
        apiKeyExistingSecret: my-dd-apikey
        apm:
          enabled: true
          port: 8126
          portEnabled: true
        appKey: ${APPKEY}
        clusterName:
        logs:
          containerCollectAll: true
          enabled: true
        networkMonitoring:
          enabled: true
        orchestratorExplorer:
          enabled: true
        processAgent:
          enabled: true
          processCollection: true
        tags: []
      targetSystem: linux
      EOF
      export KUBECONFIG=/etc/rancher/k3s/k3s.yaml
      helm install my-dd-release -f /tmp/ddvalues2.yaml datadog/datadog
    args:
      chdir: /tmp

I could put Datadog as a Helm install with Argo and update the APIKEY value in parameters.

I could also have a yaml manifest with the value set in a private GIT repository and use secrets to access it.

Either way, secrets become the thing to pay attention to with GitOps because, as a general rule of thumb, you never want to check secrets into code/GIT.

That said, as you saw with our YAML manifests we launched with kubectl directly, we can set some helm values when we invoke them (we did this with versions and replicaCounts). Passing in an APIKEY there for New Relic or Datadog would do the trick (then ArgoCD would be in charge of keeping it up to date).

Cluster to Cluster ingress

I had an idea - what if I want to expose this ArgoCD that runs on a disconnected cluster (test cluster) externally? How might I accomplish that?

Let’s start with an A Record

$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.72.233.202 -n argocdtest

{

"ARecords": [

{

"ipv4Address": "75.72.233.202"

}

],

"TTL": 3600,

"etag": "bc658ce2-d068-4d45-a14e-e5996e4b214a",

"fqdn": "argocdtest.tpk.pw.",

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/argocdtest",

"name": "argocdtest",

"provisioningState": "Succeeded",

"resourceGroup": "idjdnsrg",

"targetResource": {},

"trafficManagementProfile": {},

"type": "Microsoft.Network/dnszones/A"

}

Next, I’m going to create an endpoint that points off to the NodePort of the 2nd cluster so we can route via my external Nginx LB on the primary cluster

apiVersion: v1
kind: Endpoints
metadata:
  name: argocdtest-external-ip
subsets:
- addresses:
  - ip: 192.168.1.163
  ports:
  - name: argocdtestint
    port: 31080
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: argocdtest-external-ip
spec:
  clusterIP: None
  clusterIPs:
  - None
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  - IPv6
  ipFamilyPolicy: RequireDualStack
  ports:
  - name: argocdtest
    port: 80
    protocol: TCP
    targetPort: 31080
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/websocket-services: argocdtest-external-ip
  generation: 1
  name: argocdtestingress
spec:
  rules:
  - host: argocdtest.tpk.pw
    http:
      paths:
      - backend:
          service:
            name: argocdtest-external-ip
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - argocdtest.tpk.pw
    secretName: argocdtest-tls

Then apply (quick note: don’t forget to swap kubectx back to primary cluster)

$ kubectl apply -f ./argotesting.yml

endpoints/argocdtest-external-ip created

service/argocdtest-external-ip created

Warning: annotation "kubernetes.io/ingress.class" is deprecated, please use 'spec.ingressClassName' instead

ingress.networking.k8s.io/argocdtestingress created

When I see it satisfied

$ kubectl get cert argocdtest-tls

NAME READY SECRET AGE

argocdtest-tls True argocdtest-tls 109s

I tried to connect but then my browser bogged down and I got “too many redirects”

Let me set a URL and disable TLS on 80 by editing the CM

$ kubectl edit cm argocd-cmd-params-cm -n argocd

configmap/argocd-cmd-params-cm edited

apiVersion: v1
kind: ConfigMap
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"ConfigMap","metadata":{"annotations":{},"labels":{"app.kubernetes.io/name":"argocd-cmd-params-cm","app.kubernetes.io/part-of":"argocd"},"name":"argocd-cmd-params-cm","namespace":"argocd"}}
  creationTimestamp: "2025-09-25T18:29:24Z"
  labels:
    app.kubernetes.io/name: argocd-cmd-params-cm
    app.kubernetes.io/part-of: argocd
  name: argocd-cmd-params-cm
  namespace: argocd
  resourceVersion: "5274808"
  uid: fb5d7c76-8a47-4788-b7f7-577477943542
data:
  url: "https://argocdtest.tpk.pw"
  server.insecure: "true"

Then restart ArgoCD to have it take effect

$ kubectl rollout restart deployment argocd-server -n argocd

deployment.apps/argocd-server restarted
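The same change can be made without an interactive edit, which is handy for scripting. This is a sketch with helper names of my own that only sets server.insecure. I believe the url setting is normally read from the argocd-cm ConfigMap rather than argocd-cmd-params-cm, so that key is worth double-checking against the ArgoCD docs.

```shell
# JSON merge patch that turns off TLS termination in argocd-server.
server_insecure_patch() {
  printf '%s\n' '{"data":{"server.insecure":"true"}}'
}

# Hypothetical helper: patch the ConfigMap, then restart argocd-server.
# Requires cluster access; it is only defined here, not invoked.
apply_server_insecure() {
  kubectl -n argocd patch configmap argocd-cmd-params-cm --type=merge \
    -p "$(server_insecure_patch)" &&
    kubectl -n argocd rollout restart deployment argocd-server
}

# Usage:
#   apply_server_insecure
```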

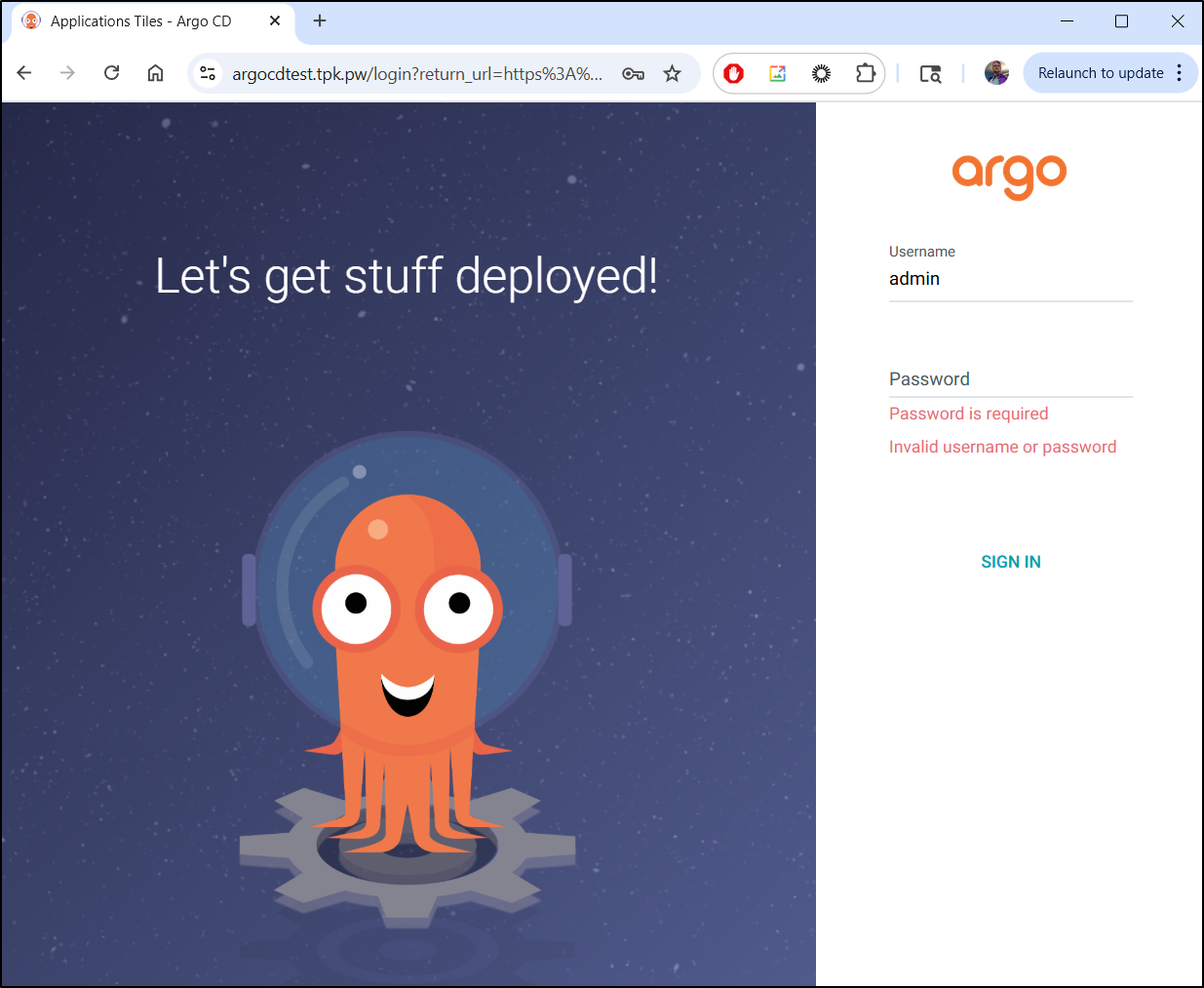

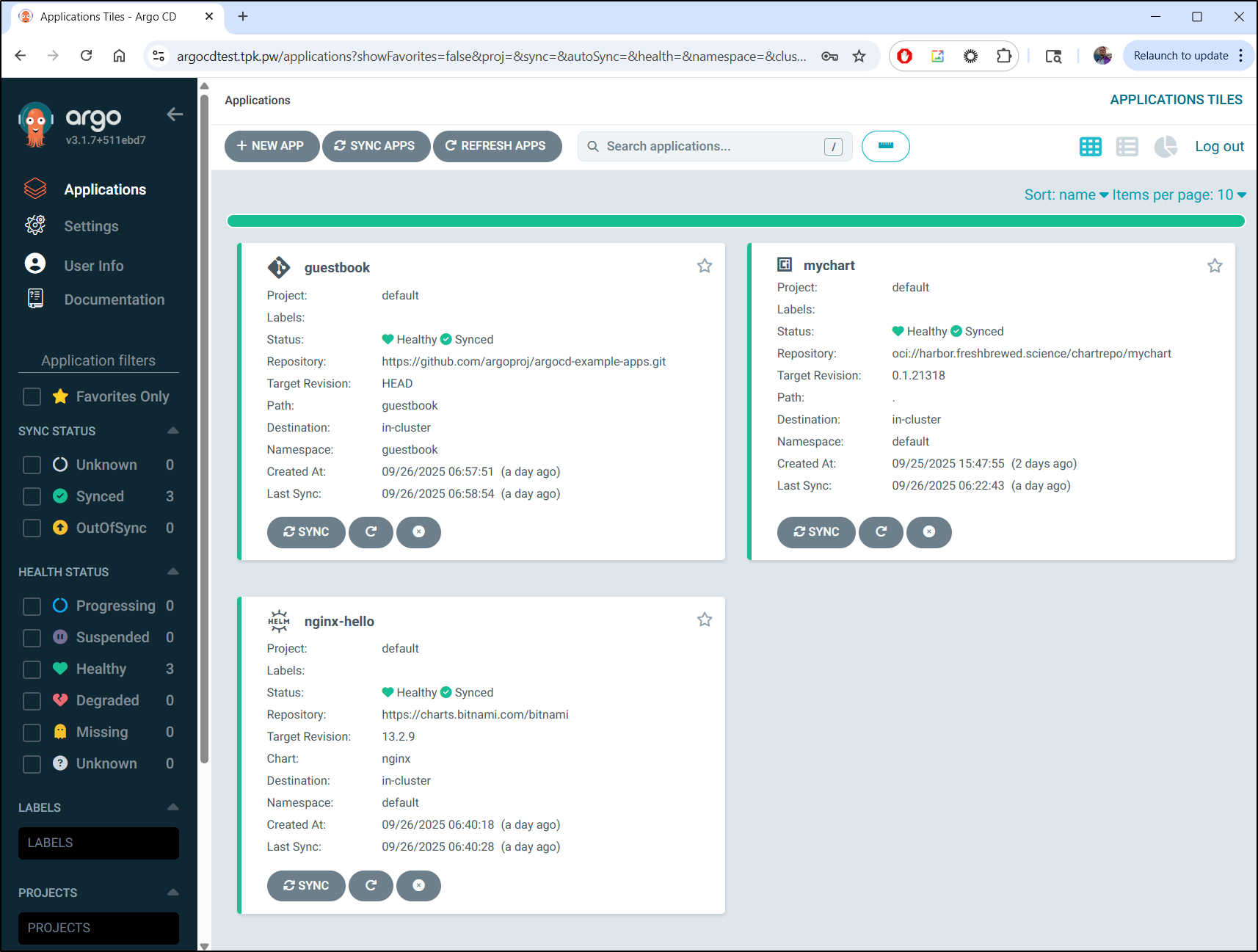

This time it worked

I can now sign-in and see the apps

CLI

I tried the Windows CLI (https://github.com/argoproj/argo-cd/releases/latest/download/argocd-windows-amd64.exe) but it failed to launch.

Next, I tried Homebrew in WSL

$ brew install argocd

==> Auto-updating Homebrew...

Adjust how often this is run with `$HOMEBREW_AUTO_UPDATE_SECS` or disable with

`$HOMEBREW_NO_AUTO_UPDATE=1`. Hide these hints with `$HOMEBREW_NO_ENV_HINTS=1` (see `man brew`).

==> Auto-updated Homebrew!

Updated 3 taps (loft-sh/tap, homebrew/core and homebrew/cask).

==> New Formulae

atomic_queue: C++14 lock-free queues

doge: Command-line DNS client

fastmcp: Fast, Pythonic way to build MCP servers and clients

jqp: TUI playground to experiment and play with jq

libcpucycles: Microlibrary for counting CPU cycles

libpq@17: Postgres C API library

lue-reader: Terminal eBook reader with text-to-speech and multi-format support

mcp-atlassian: MCP server for Atlassian tools (Confluence, Jira)

mcp-server-chart: MCP with 25+ @antvis charts for visualization, generation, and analysis

msedit: Simple text editor with clickable interface

nanobot: Build MCP Agents

ni: Selects the right Node package manager based on lockfiles

postgresql@18: Object-relational database system

privatebin-cli: CLI for creating and managing PrivateBin pastes

slack-mcp-server: Powerful MCP Slack Server with multiple transports and smart history fetch logic

swag: Automatically generate RESTful API documentation with Swagger 2.0 for Go

termsvg: Record, share and export your terminal as a animated SVG image

tfstate-lookup: Lookup resource attributes in tfstate

wuppiefuzz: Coverage-guided REST API fuzzer developed on top of LibAFL

zuban: Python language server and type checker, written in Rust

You have 103 outdated formulae installed.

argocd 2.3.3 is already installed but outdated (so it will be upgraded).

==> Fetching downloads for: argocd

==> Downloading https://ghcr.io/v2/homebrew/core/argocd/manifests/3.1.7

############################################################################################################### 100.0%

==> Fetching argocd

==> Downloading https://ghcr.io/v2/homebrew/core/argocd/blobs/sha256:ba38780a01b3f704fed43e48a4a6df1a99c81663132e200d0

############################################################################################################### 100.0%

==> Upgrading argocd

2.3.3 -> 3.1.7

==> Pouring argocd--3.1.7.x86_64_linux.bottle.tar.gz

🍺 /home/linuxbrew/.linuxbrew/Cellar/argocd/3.1.7: 10 files, 221.6MB

==> Running `brew cleanup argocd`...

Disable this behaviour by setting `HOMEBREW_NO_INSTALL_CLEANUP=1`.

Hide these hints with `HOMEBREW_NO_ENV_HINTS=1` (see `man brew`).

Removing: /home/linuxbrew/.linuxbrew/Cellar/argocd/2.3.3... (8 files, 112.9MB)

==> No outdated dependents to upgrade!

==> Caveats

Bash completion has been installed to:

/home/linuxbrew/.linuxbrew/etc/bash_completion.d

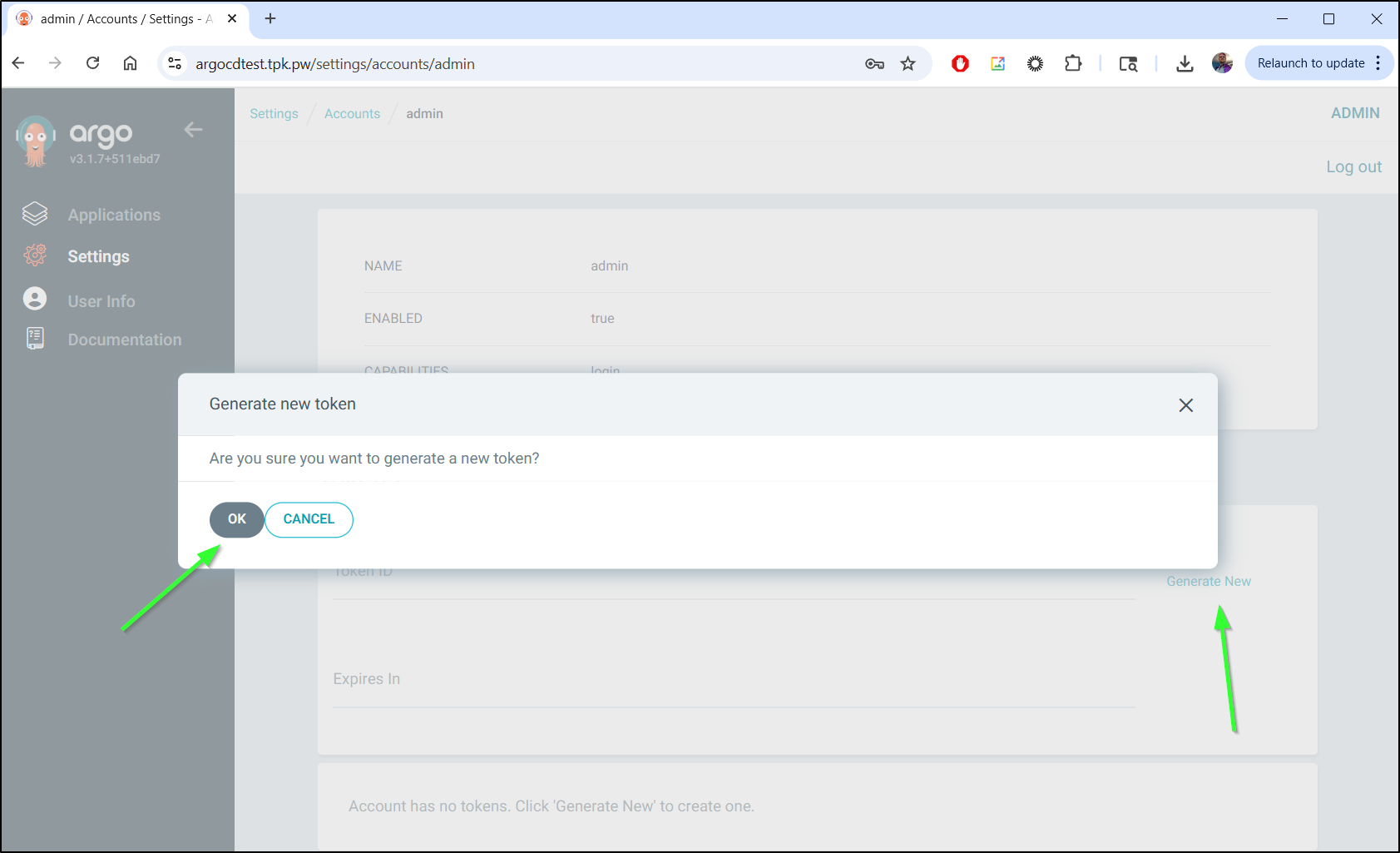

To use the CLI, we need a token.

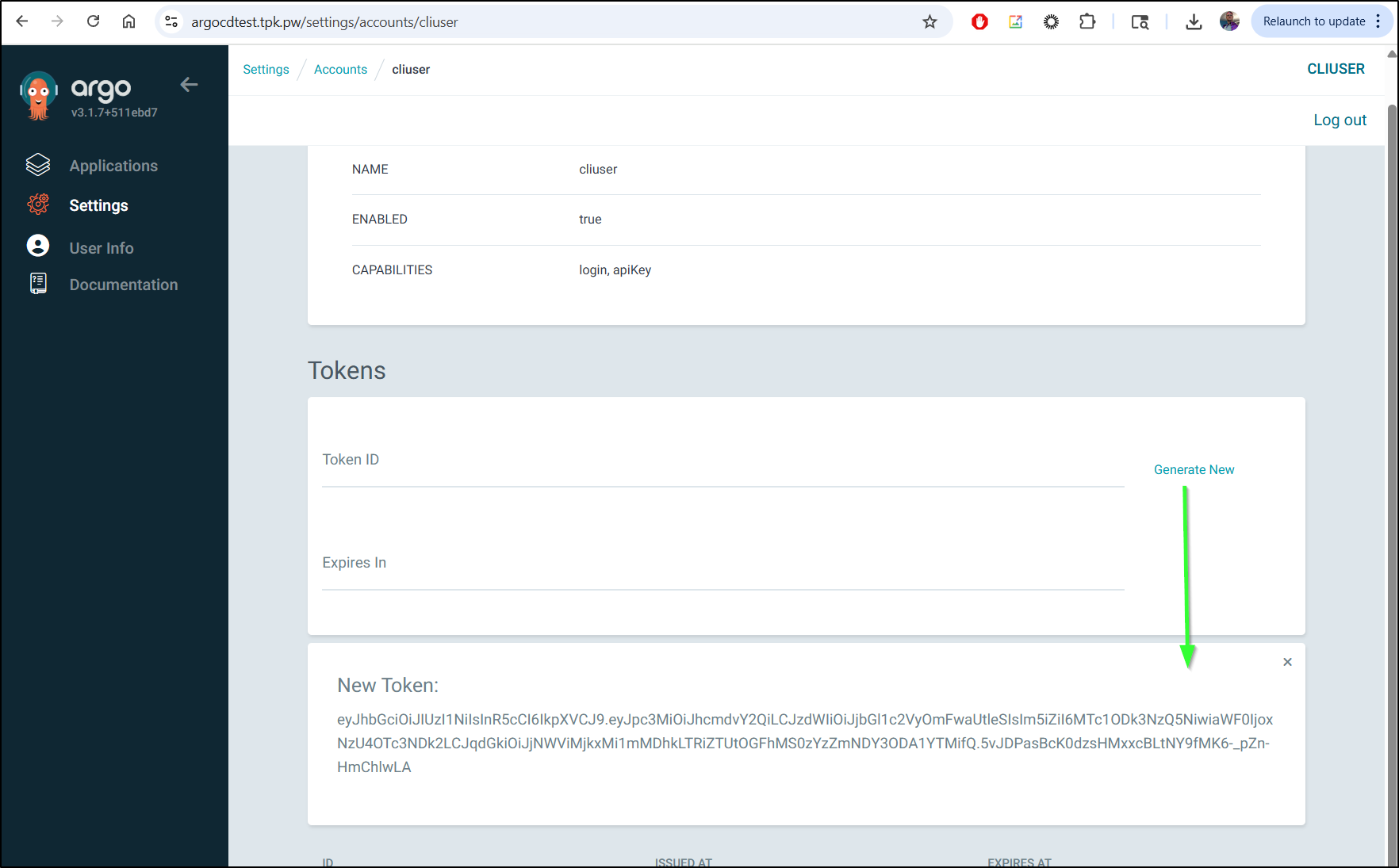

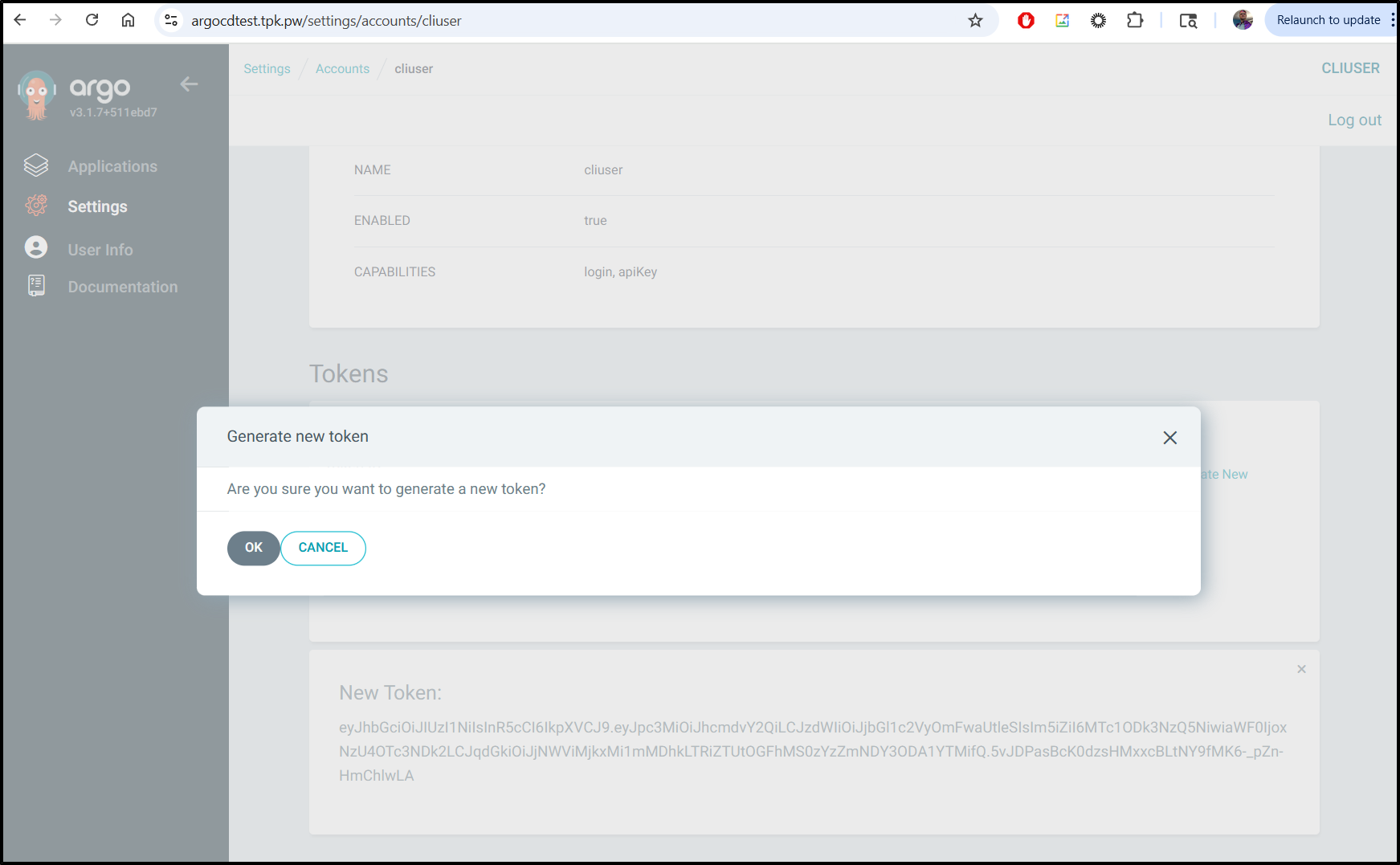

I can fetch that from the Accounts page by clicking “Generate New”

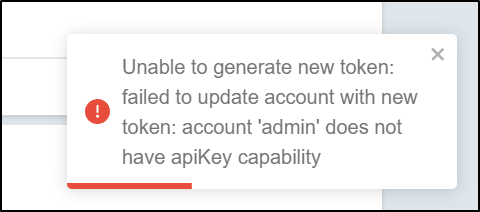

However, it would seem “admin” cannot get an auth token, as it only has the “login” capability and not “apiKey”.

I think the following flow is odd, but to create a new user, we have to update the CM as we did for the URL.

Add an “accounts.ACCOUNTNAME” key, e.g. for a new “cliuser” user:

apiVersion: v1

data:

  server.insecure: "true"

  url: https://argocdtest.tpk.pw

  accounts.cliuser: apiKey, login

kind: ConfigMap

metadata:

  annotations:

    kubectl.kubernetes.io/last-applied-configuration: |

      {"apiVersion":"v1","kind":"ConfigMap","metadata":{"annotations":{},"labels":{"app.kubernetes.io/name":"argocd-cmd-params-cm","app.kubernetes.io/part-of":"argocd"},"name":"argocd-cmd-params-cm","namespace":"argocd"}}

  creationTimestamp: "2025-09-25T18:29:24Z"

  labels:

    app.kubernetes.io/name: argocd-cmd-params-cm

    app.kubernetes.io/part-of: argocd

  name: argocd-cmd-params-cm

  namespace: argocd

  resourceVersion: "5378546"

  uid: fb5d7c76-8a47-4788-b7f7-577477943542

Then bounce ArgoCD to have it take effect

$ kubectl rollout restart deployment argocd-server -n argocd

deployment.apps/argocd-server restarted

That failed to work. Moreover, no flavor of login seemed to like my URL.

I was able to use the NodePort, however:

$ argocd login --insecure 192.168.1.163:31080

WARNING: server is not configured with TLS. Proceed (y/n)? y

Username: admin

Password:

'admin:login' logged in successfully

Context '192.168.1.163:31080' updated

But the update password command failed

$ argocd account update-password --account cliuser --current-password asdfsdfsadf --new-password 'asdfasdfsdf'

{"level":"fatal","msg":"rpc error: code = NotFound desc = account 'cliuser' does not exist","time":"2025-09-27T07:46:21-05:00"}

which is confirmed with account list

$ argocd account list

NAME ENABLED CAPABILITIES

admin true login

I think I used the wrong CM. Let’s use the primary “argocd-cm” this time

$ kubectl edit cm argocd-cm -n argocd

configmap/argocd-cm edited

and add the block

apiVersion: v1

data:

  accounts.cliuser: login, apiKey

... the rest ...

A quick bounce

$ kubectl rollout restart deployment argocd-server -n argocd

deployment.apps/argocd-server restarted

NOW I see the user

$ argocd account list

NAME ENABLED CAPABILITIES

admin true login

cliuser true login, apiKey
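
The account and its token can also be created without the editor or the UI. This is a rough sketch rather than the one true way: the helper names are hypothetical, the namespace is the one from this post, and "generate_cli_token" assumes you are already logged in with the CLI as a user allowed to manage accounts (such as admin). The optional second argument is passed to "--expires-in" so the token does not live forever.

```shell
# Hypothetical helper: register a local account with both capabilities in argocd-cm.
add_local_account() {
  local name="$1"
  kubectl patch configmap argocd-cm -n "${ARGOCD_NS:-argocd}" --type merge \
    -p "{\"data\":{\"accounts.${name}\":\"login, apiKey\"}}"
}

# Hypothetical helper: mint an API token for the account.
# $2 is an optional lifetime such as 24h; without it the token has no expiry.
generate_cli_token() {
  local name="$1" ttl="${2:-}"
  if [ -n "$ttl" ]; then
    argocd account generate-token --account "$name" --expires-in "$ttl"
  else
    argocd account generate-token --account "$name"
  fi
}

# Usage:
#   add_local_account cliuser
#   generate_cli_token cliuser 24h
```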

This time generating a token worked

But the public URL failed here too

$ argocd login argocdtest.tpk.pw --auth-token eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJhcmdvY2QiLCJzdWIiOiJjbGl1c2VyOmFwaUtleSIsIm5iZiI6MTc1ODk3NzQ5NiwiaWF0IjoxNzU4OTc3NDk2LCJqdGkiOiJjNWViMjkxMi1mMDhkLTRiZTUtOGFhMS0zYzZmNDY3ODA1YTMifQ.5vJDPasBcK0dzsHMxxcBLtNY9fMK6-_pZn-HmChlwLA

{"level":"fatal","msg":"context deadline exceeded","time":"2025-09-27T07:54:12-05:00"}

Also, providing the auth token didn’t seem to take for the login command

$ argocd login --insecure 192.168.1.163:31080 --auth-token eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJhcmdvY2QiLCJzdWIiOiJjbGl1c2VyOmFwaUtleSIsIm5iZiI6MTc1ODk3NzQ5NiwiaWF0IjoxNzU4OTc3NDk2LCJqdGkiOiJjNWViMjkxMi1mMDhkLTRiZTUtOGFhMS0zYzZmNDY3ODA1YTMifQ.5vJDPasBcK0dzsHMxxcBLtNY9fMK6-_pZn-HmChlwLA

WARNING: server is not configured with TLS. Proceed (y/n)? y

Username: cliuser

Password:

But it does seem to work when just invoking commands, so perhaps the idea is to pass it per command (and not use it for login)

$ argocd account list --insecure 192.168.1.163:31080 --auth-token eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJhcmdvY2QiLCJzdWIiOiJjbGl1c2VyOmFwaUtleSIsIm5iZiI6MTc1ODk3NzQ5NiwiaWF0IjoxNzU4OTc3NDk2LCJqdGkiOiJjNWViMjkxMi1mMDhkLTRiZTUtOGFhMS0zYzZmNDY3ODA1YTMifQ.5vJDPasBcK0dzsHMxxcBLtNY9fMK6-_pZn-HmChlwLA

NAME ENABLED CAPABILITIES

cliuser true login, apiKey
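
Pasting the token into every command gets old (and leaves it in shell history). The CLI reads ARGOCD_SERVER, ARGOCD_AUTH_TOKEN and ARGOCD_OPTS from the environment, so a small wrapper can pull the token from a file instead. Treat this as a sketch: the function name and the token file path are hypothetical, and the "--plaintext" default is my assumption based on the "server is not configured with TLS" warning above.

```shell
# Hypothetical wrapper: run argocd as cliuser with the token read from a file.
# ARGOCD_TOKEN_FILE is a made-up variable name; the default path is illustrative.
argocd_as_cliuser() {
  local token_file="${ARGOCD_TOKEN_FILE:-$HOME/.argocd-cliuser.token}"
  # Per-command environment: nothing is exported into the calling shell.
  ARGOCD_SERVER="${ARGOCD_SERVER:-192.168.1.163:31080}" \
  ARGOCD_OPTS="${ARGOCD_OPTS:---plaintext}" \
  ARGOCD_AUTH_TOKEN="$(cat "$token_file")" \
    argocd "$@"
}

# Usage:
#   argocd_as_cliuser account list
#   argocd_as_cliuser app list
```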

By default, the user has no rights, so it cannot view apps

$ argocd app list --insecure 192.168.1.163:31080 --auth-token eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJhcmdvY2QiLCJzdWIiOiJjbGl1c2VyOmFwaUtleSIsIm5iZiI6MTc1ODk3NzQ5NiwiaWF0IjoxNzU4OTc3NDk2LCJqdGkiOiJjNWViMjkxMi1mMDhkLTRiZTUtOGFhMS0zYzZmNDY3ODA1YTMifQ.5vJDPasBcK0dzsHMxxcBLtNY9fMK6-_pZn-HmChlwLA

NAME CLUSTER NAMESPACE PROJECT STATUS HEALTH SYNCPOLICY CONDITIONS REPO PATH TARGET

I can edit the RBAC CM

$ kubectl edit cm argocd-rbac-cm -n argocd

configmap/argocd-rbac-cm edited

And add a data block (or update it if it exists) to set the rights for the cliuser

apiVersion: v1

kind: ConfigMap

metadata:

  annotations:

    kubectl.kubernetes.io/last-applied-configuration: |

      {"apiVersion":"v1","kind":"ConfigMap","metadata":{"annotations":{},"labels":{"app.kubernetes.io/name":"argocd-rbac-cm","app.kubernetes.io/part-of":"argocd"},"name":"argocd-rbac-cm","namespace":"argocd"}}

  creationTimestamp: "2025-09-25T18:29:24Z"

  labels:

    app.kubernetes.io/name: argocd-rbac-cm

    app.kubernetes.io/part-of: argocd

  name: argocd-rbac-cm

  namespace: argocd

  resourceVersion: "5274811"

  uid: f61eb6df-9d12-4227-bbdb-0595e5ae8e03

data:

  policy.csv: |

    g, cliuser, role:admin

Then bounce to take effect

$ kubectl rollout restart deployment argocd-server -n argocd

deployment.apps/argocd-server restarted
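
Granting "role:admin" is the quick route. If the CLI user only needs to look at things, ArgoCD also ships a built-in "role:readonly". Below is a minimal sketch of setting either one without the editor. The helper name is hypothetical, and note that it replaces the whole "policy.csv" value, so it only suits a fresh install like this one where no other policy lines exist.

```shell
# Hypothetical helper: bind an account to a role in argocd-rbac-cm.
# WARNING: this overwrites policy.csv rather than appending to it.
grant_argocd_role() {
  local account="$1" role="${2:-role:readonly}"
  # \\n below becomes a literal \n, which JSON decodes to a trailing newline.
  kubectl patch configmap argocd-rbac-cm -n "${ARGOCD_NS:-argocd}" --type merge \
    -p "{\"data\":{\"policy.csv\":\"g, ${account}, ${role}\\n\"}}"
}

# Usage:
#   grant_argocd_role cliuser              # read-only
#   grant_argocd_role cliuser role:admin   # same grant as in this post
```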

Now our user can see and manage apps

$ argocd app list --insecure 192.168.1.163:31080 --auth-token eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJhcmdvY2QiLCJzdWIiOiJjbGl1c2VyOmFwaUtleSIsIm5iZiI6MTc1ODk3NzQ5NiwiaWF0IjoxNzU4OTc3NDk2LCJqdGkiOiJjNWViMjkxMi1mMDhkLTRiZTUtOGFhMS0zYzZmNDY3ODA1YTMifQ.5vJDPasBcK0dzsHMxxcBLtNY9fMK6-_pZn-HmChlwLA

NAME CLUSTER NAMESPACE PROJECT STATUS HEALTH SYNCPOLICY CONDITIONS REPO PATH TARGET

argocd/guestbook https://kubernetes.default.svc guestbook default Synced Healthy Manual <none> https://github.com/argoproj/argocd-example-apps.git guestbook HEAD

argocd/mychart https://kubernetes.default.svc default default Synced Healthy Auto <none> oci://harbor.freshbrewed.science/chartrepo/mychart . 0.1.21318

argocd/nginx-hello https://kubernetes.default.svc default default Synced Healthy Auto-Prune <none> https://charts.bitnami.com/bitnami 13.2.9

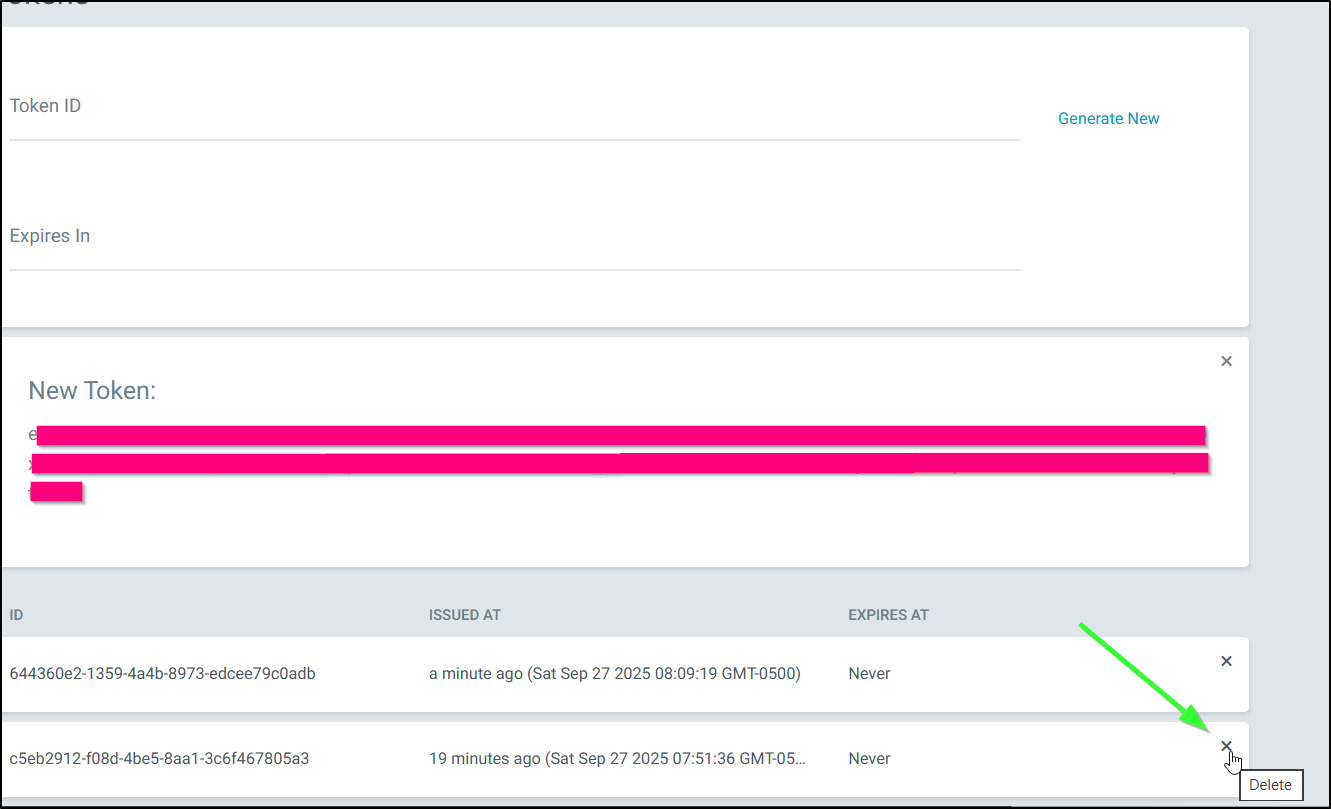

I thought that, at this point, I could rotate my token and be good

But I can still use the old token

$ argocd app list --insecure 192.168.1.163:31080 --auth-token eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJhcmdvY2QiLCJzdWIiOiJjbGl1c2VyOmFwaUtleSIsIm5iZiI6MTc1ODk3NzQ5NiwiaWF0IjoxNzU4OTc3NDk2LCJqdGkiOiJjNWViMjkxMi1mMDhkLTRiZTUtOGFhMS0zYzZmNDY3ODA1YTMifQ.5vJDPasBcK0dzsHMxxcBLtNY9fMK6-_pZn-HmChlwLA

NAME CLUSTER NAMESPACE PROJECT STATUS HEALTH SYNCPOLICY CONDITIONS REPO PATH TARGET

argocd/guestbook https://kubernetes.default.svc guestbook default Synced Healthy Manual <none> https://github.com/argoproj/argocd-example-apps.git guestbook HEAD

argocd/mychart https://kubernetes.default.svc default default Synced Healthy Auto <none> oci://harbor.freshbrewed.science/chartrepo/mychart . 0.1.21318

argocd/nginx-hello https://kubernetes.default.svc default default Synced Healthy Auto-Prune <none> https://charts.bitnami.com/bitnami 13.2.9

We need to delete the old token (near the bottom) to revoke it

Now I can verify the old token is no longer functional

$ argocd app list --insecure 192.168.1.163:31080 --auth-token eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJhcmdvY2QiLCJzdWIiOiJjbGl1c2VyOmFwaUtleSIsIm5iZiI6MTc1ODk3NzQ5NiwiaWF0IjoxNzU4OTc3NDk2LCJqdGkiOiJjNWViMjkxMi1mMDhkLTRiZTUtOGFhMS0zYzZmNDY3ODA1YTMifQ.5vJDPasBcK0dzsHMxxcBLtNY9fMK6-_pZn-HmChlwLA

{"level":"fatal","msg":"rpc error: code = Unauthenticated desc = invalid session: account cliuser does not have token with id c5eb2912-f08d-4be5-8aa1-3c6f467805a3","time":"2025-09-27T08:11:27-05:00"}
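
The token id in that error is not random: it is the "jti" claim inside the token itself. A quick way to see that is to decode the middle segment of the JWT. This is just a sketch with a made-up function name, using the (now revoked) token from above. It also shows there is no "exp" claim, which fits with the old token staying valid until it was deleted.

```shell
# Print the JSON payload (second dot-separated segment) of a JWT.
jwt_payload() {
  local seg
  # JWTs use URL-safe base64 without padding: swap the alphabet, then re-pad.
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do seg="${seg}="; done
  printf '%s' "$seg" | base64 -d
}

# The revoked token from this post.
TOKEN='eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJhcmdvY2QiLCJzdWIiOiJjbGl1c2VyOmFwaUtleSIsIm5iZiI6MTc1ODk3NzQ5NiwiaWF0IjoxNzU4OTc3NDk2LCJqdGkiOiJjNWViMjkxMi1mMDhkLTRiZTUtOGFhMS0zYzZmNDY3ODA1YTMifQ.5vJDPasBcK0dzsHMxxcBLtNY9fMK6-_pZn-HmChlwLA'

jwt_payload "$TOKEN"; echo
# {"iss":"argocd","sub":"cliuser:apiKey","nbf":1758977496,"iat":1758977496,"jti":"c5eb2912-f08d-4be5-8aa1-3c6f467805a3"}
```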

Summary

We covered a lot of ground today with ArgoCD. We explored the OCI repositories.

I didn’t show the YAML for it, but this is what the secret for an OCI repo with a password looks like:

$ kubectl get secret -n argocd repo-1010768722 -o yaml

apiVersion: v1

data:

  password: VGhpcyBJcyBOb3QgVGhlIFBhc3N3b3JkLCBidXQgdGhhbmtzIGZvciBjaGVja2luZyE=

  project: ZGVmYXVsdA==

  type: b2Np

  url: b2NpOi8vaGFyYm9yLmZyZXNoYnJld2VkLnNjaWVuY2UvY2hhcnRyZXBvL215Y2hhcnQ=

  username: Zm9yZ2Vqbw==

kind: Secret

metadata:

  annotations:

    managed-by: argocd.argoproj.io

  creationTimestamp: "2025-09-25T20:47:00Z"

  labels:

    argocd.argoproj.io/secret-type: repository

  name: repo-1010768722

  namespace: argocd

  resourceVersion: "5280757"

  uid: 06cc2c8c-1aec-4ccd-bf82-6084ae57f5d7

type: Opaque
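
Since Secret data is just base64, the interesting fields are easy to read back. Here is a small sketch (the helper name is mine) that decodes the non-sensitive values shown above. Against a live cluster the same thing would be a "kubectl get secret ... -o jsonpath" piped to "base64 -d", as noted in the comment.

```shell
# Decode one base64-encoded Secret value and end with a newline.
b64d() { printf '%s' "$1" | base64 -d; echo; }

b64d 'b2Np'                                                                      # type     -> oci
b64d 'b2NpOi8vaGFyYm9yLmZyZXNoYnJld2VkLnNjaWVuY2UvY2hhcnRyZXBvL215Y2hhcnQ='      # url      -> oci://harbor.freshbrewed.science/chartrepo/mychart
b64d 'ZGVmYXVsdA=='                                                              # project  -> default
b64d 'Zm9yZ2Vqbw=='                                                              # username -> forgejo

# Live equivalent for a single field:
#   kubectl get secret repo-1010768722 -n argocd -o jsonpath='{.data.url}' | base64 -d
```

The "type" of "oci" together with the "argocd.argoproj.io/secret-type: repository" label appears to be what marks this Secret as an OCI repository for ArgoCD.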

We looked at the CLI and user management.

I spoke a bit about patterns with GitOps, namely that you still need to bootstrap your cluster with some things, like ArgoCD itself and namespaces. I also compared it with what I do with AWX (Ansible).

ArgoCD does serve a purpose. I do like the OCI support. But I’m not sure I would really use it as my primary driver as, at least to me, it adds a lot of unnecessary abstractions.

In other words, I might apply a SumoLogic or New Relic helm chart to monitor my cluster, and it has some settings. For me, I would much prefer to just do a helm upgrade to get it to the latest version, knowing it would keep the values I set initially. I don’t want to browse a set of parameters in an application block.

That said, ArgoCD is great at showing me the big picture of an application. Seeing how things map out is nice.