Published: Jan 25, 2022 by Isaac Johnson

ArgoCD is a nearly five-year-old, CNCF-supported open-source suite for applying GitOps on Kubernetes. Its goal is to be a straightforward, deployable system that monitors sources (Git and Helm) for changes and automatically applies them (CD) to clusters. Put more simply, “Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes”.

Today we’ll get started by setting up ArgoCD on an on-prem cluster, securing ingress, then setting up GitOps with a sample app, a local app and forking a project to show how to trigger errors and correct them.

Setup

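There are simplified steps on their site which just use YAML (i.e. create an argocd namespace, then kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml). Note that kubectl needs the raw manifest URL; the github.com/.../blob/... page is HTML and will not apply.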

However, I like Arthur Koziel’s blog that detailed how to do it with Helm instead. We’ll use this as a basis (with some tweaks).

Create a chart yaml and values yaml

$ mkdir -p charts/argo-cd

$ cat charts/argo-cd/Chart.yaml

apiVersion: v2
name: argo-cd
version: 1.0.0
dependencies:
  - name: argo-cd
    version: 2.11.0
    repository: https://argoproj.github.io/argo-helm

$ cat charts/argo-cd/values.yaml

argo-cd:
  installCRDs: false
  global:
    image:
      tag: v1.8.1
  dex:
    enabled: false
  server:
    extraArgs:
      - --insecure
    config:
      repositories: |
        - type: helm
          name: stable
          url: https://charts.helm.sh/stable
        - type: helm
          name: argo-cd
          url: https://argoproj.github.io/argo-helm

We’ll need to update some deps next, so add the argo repo (if not already)

$ helm repo add argo-cd https://argoproj.github.io/argo-helm

"argo-cd" already exists with the same configuration, skipping

$ helm dep update charts/argo-cd/

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "cribl" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "argo-cd" chart repository

...Successfully got an update from the "bitnami" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 1 charts

Downloading argo-cd from repo https://argoproj.github.io/argo-helm

Deleting outdated charts

$ git init

Initialized empty Git repository in /home/builder/Workspaces/argoCD2/.git/

$ git checkout -b main

Switched to a new branch 'main'

Now we can deploy:

$ helm install argo-cd charts/argo-cd/

W0121 17:05:39.242910 7594 warnings.go:70] apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition

W0121 17:05:39.776781 7594 warnings.go:70] apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition

W0121 17:05:40.573821 7594 warnings.go:70] apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition

W0121 17:05:42.947895 7594 warnings.go:70] apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition

W0121 17:05:43.156558 7594 warnings.go:70] apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition

NAME: argo-cd

LAST DEPLOYED: Fri Jan 21 17:05:46 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None
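The release above lands in the default namespace, which is what the rest of this post assumes. If you would rather keep Argo in its own namespace, a minimal sketch might look like the following. The `ARGOCD_NAMESPACE` variable, the `argocd` name and the function name are my own assumptions, and every later kubectl command in this post would then need a matching `-n` flag.

```shell
#!/bin/sh
# Sketch: install the umbrella chart into its own namespace.
# ARGOCD_NAMESPACE is a hypothetical variable; "argocd" is an assumed name.
ARGOCD_NAMESPACE="${ARGOCD_NAMESPACE:-argocd}"

install_argocd_release() {
  # Refresh the chart dependency, then install or upgrade in one step.
  helm dep update charts/argo-cd/ &&
  helm upgrade --install argo-cd charts/argo-cd/ \
    --namespace "$ARGOCD_NAMESPACE" --create-namespace
}

# Run it explicitly when ready:
# install_argocd_release
```

Using `helm upgrade --install` means the same command works for both the first install and later chart bumps.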

Since I don’t like those warnings, I did some looking and found the latest versions of the charts take care of that for newer K8s versions.

I updated the chart dependency and image tag accordingly:

$ cat charts/argo-cd/Chart.yaml

apiVersion: v2
name: argo-cd
version: 1.0.1
dependencies:
  - name: argo-cd
    version: 2.14.1
    repository: https://argoproj.github.io/argo-helm

$ cat charts/argo-cd/values.yaml

argo-cd:
  installCRDs: false
  global:
    image:
      tag: v1.8.4
  dex:
    enabled: false
  server:
    extraArgs:
      - --insecure
    config:
      repositories: |
        - type: helm
          name: stable
          url: https://charts.helm.sh/stable
        - type: helm
          name: argo-cd
          url: https://argoproj.github.io/argo-helm

then update and upgrade:

$ helm dep update charts/argo-cd/

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "cribl" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "argo-cd" chart repository

...Successfully got an update from the "bitnami" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 1 charts

Downloading argo-cd from repo https://argoproj.github.io/argo-helm

Deleting outdated charts

$ helm upgrade --install argo-cd charts/argo-cd/

Release "argo-cd" has been upgraded. Happy Helming!

NAME: argo-cd

LAST DEPLOYED: Sat Jan 22 10:05:19 2022

NAMESPACE: default

STATUS: deployed

REVISION: 2

TEST SUITE: None

We should now be able to get the admin password.

For Argo CD 1.8 and earlier, the initial admin password is the name of the server pod:

$ kubectl get pods -l app.kubernetes.io/name=argocd-server -o json | jq -r '.items[] | .metadata.name'

argo-cd-argocd-server-564ddc97c4-9bd7c

# another way

$ kubectl get pods -l app.kubernetes.io/name=argocd-server -o name | cut -d'/' -f 2

argo-cd-argocd-server-564ddc97c4-9bd7c

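The admin password is also kept in a Kubernetes secret, argocd-secret (from Argo CD 1.9 on, the initial password lives in a separate argocd-initial-admin-secret):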

$ kubectl get secrets argocd-secret -o json | jq -r '.data."admin.password"' | base64 --decode && echo

$2a$10$ifBzX9dla.eu2SNwHd/U8OGCE56H.3iSk/3wvjNdzcdOnC/nNT.7O

The problem is that it’s a bcrypt hash, so the original password cannot be read back out of it.

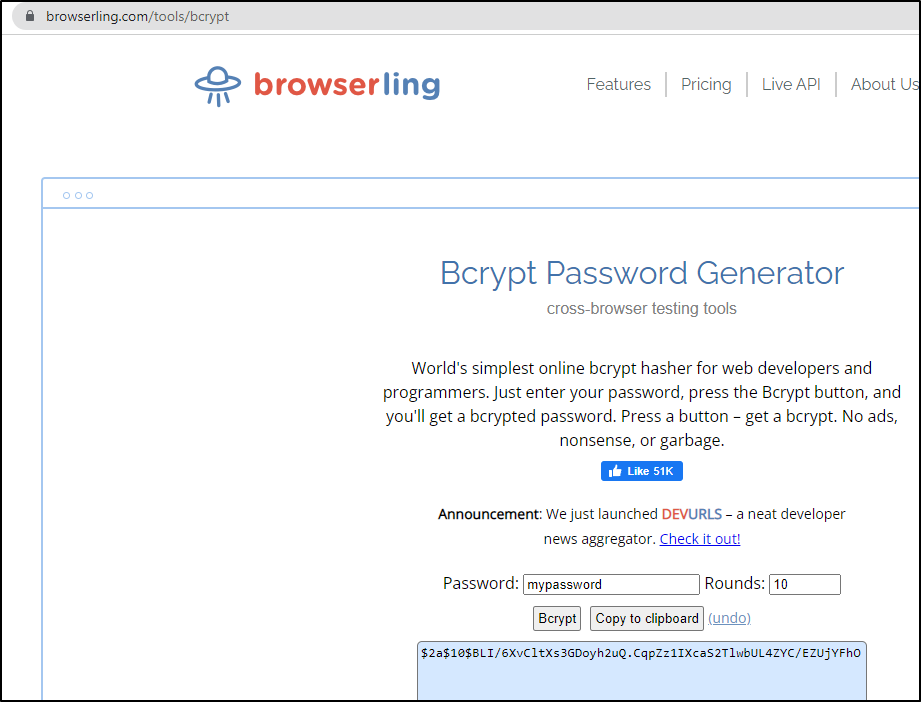

The most straightforward way to set the password to something you can recall is to use a site like https://www.browserling.com/tools/bcrypt to generate the bcrypt hash of a new password:

Then patch that to k8s right away:

$ kubectl patch secret argocd-secret -p '{"stringData": { "admin.passwordMtime": "'$(date +%FT%T%Z)'", "admin.password": "$2a$10$BLI/6XvCltXs3GDoyh2uQ.CqpZz1IXcaS2TlwbUL4ZYC/EZUjYFhO"}}'

secret/argocd-secret patched
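The hash used in the patch above can also be produced locally rather than on a website. This is a rough sketch, assuming `htpasswd` from apache2-utils is installed; the function names are hypothetical, and the password is passed on the command line, so treat it as a lab convenience.

```shell
#!/bin/sh
# Sketch: create the bcrypt hash locally with htpasswd (apache2-utils)
# instead of pasting a password into a website.

argocd_bcrypt() {
  # -n print to stdout, -b take the password from argv, -B bcrypt, -C 10 cost.
  # htpasswd emits ":<hash>" for an empty user name with a "$2y$" prefix;
  # strip the colon and newlines, then rewrite the prefix to the "$2a$" form
  # seen in the secret above.
  htpasswd -nbBC 10 "" "$1" | tr -d ':\n' | sed 's/^\$2y/$2a/'
}

patch_admin_password() {
  # $1 is the plain-text password; the hash and mtime go into argocd-secret.
  hash="$(argocd_bcrypt "$1")" || return 1
  kubectl patch secret argocd-secret -p \
    "{\"stringData\": {\"admin.password\": \"$hash\", \"admin.passwordMtime\": \"$(date +%FT%T%Z)\"}}"
}

# Usage (hypothetical password):
# patch_admin_password 'mypassword'
```

The patch payload mirrors the one-liner above; only the source of the hash changes.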

then rotate the pod to have it pick up the fresh secret:

$ kubectl delete pod `kubectl get pods -l app.kubernetes.io/name=argocd-server -o name | cut -d'/' -f 2 | tr -d '\n'`

pod "argo-cd-argocd-server-564ddc97c4-mvvq8" deleted

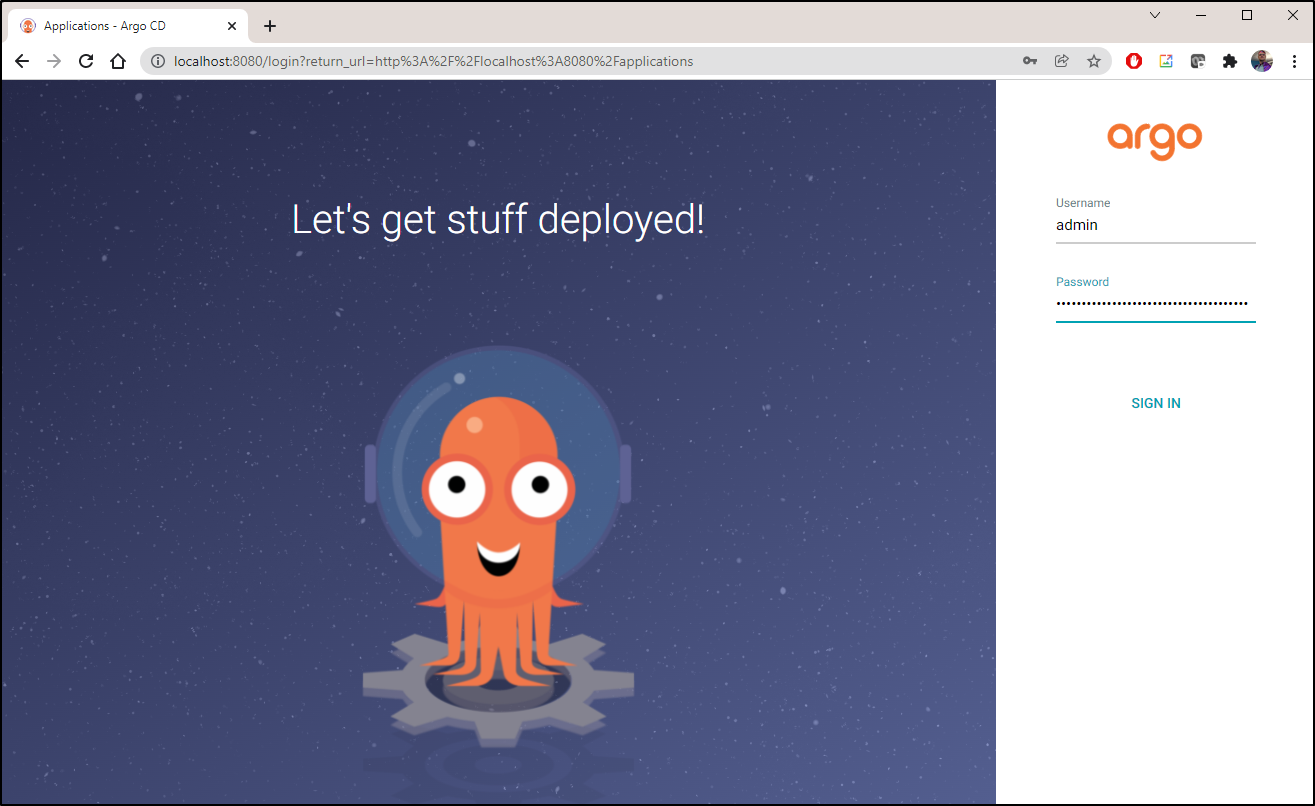

port-forward to the ArgoCD server to login:

$ kubectl port-forward svc/argo-cd-argocd-server 8080:443

Forwarding from 127.0.0.1:8080 -> 8080

Forwarding from [::1]:8080 -> 8080

Handling connection for 8080

Handling connection for 8080

I log in with the admin/mypassword credentials I set up (having patched the secret and rotated the pod).

Setting up proper Ingress

Before I go further, I’m going to expose the service with a proper DNS as anything worth doing is worth doing right.

DNS updates

First, I’ll fetch my HostedZone ID:

$ aws route53 list-hosted-zones-by-name | jq -r '.HostedZones[] | select(.Name == "freshbrewed.science.") | .Id' | cut -d'/' -f 3

Z39E8QFU0F9PZP

then create a JSON with my Ingress IP:

$ cat r53-argocd.json

{

"Comment": "CREATE argocd fb.s A record ",

"Changes": [

{

"Action": "CREATE",

"ResourceRecordSet": {

"Name": "argocd.freshbrewed.science",

"Type": "A",

"TTL": 300,

"ResourceRecords": [

{

"Value": "73.242.50.46"

}

]

}

}

]

}

Lastly, I can set the Route53 A Record:

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-argocd.json

# or as a one-liner

$ aws route53 change-resource-record-sets --hosted-zone-id `aws route53 list-hosted-zones-by-name | jq -r '.HostedZones[] | select(.Name == "freshbrewed.science.") | .Id' | cut -d'/' -f 3 | tr -d '\n'` --change-batch file://r53-argocd.json

{

"ChangeInfo": {

"Id": "/change/C0109767AN5XND1AZU6M",

"Status": "PENDING",

"SubmittedAt": "2022-01-22T20:22:06.496Z",

"Comment": "CREATE argocd fb.s A record "

}

}
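If you add records like this often, the change batch can be rendered from variables instead of a hand-edited file. The sketch below is only an illustration: `make_r53_batch` is a hypothetical helper, and I have swapped the CREATE action for UPSERT so the command can be re-run if the IP changes.

```shell
#!/bin/sh
# Sketch: render the Route53 change batch from variables rather than a
# hand-edited file. UPSERT (create or update) is a substitution for the
# CREATE action used above, so the command can be re-run safely.

make_r53_batch() {
  # $1 = record name, $2 = IPv4 address of the ingress
  cat <<EOF
{
  "Comment": "UPSERT $1 A record",
  "Changes": [
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "$1",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [
          { "Value": "$2" }
        ]
      }
    }
  ]
}
EOF
}

# Usage, with the values from this post:
# make_r53_batch argocd.freshbrewed.science 73.242.50.46 > r53-argocd.json
# aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP \
#   --change-batch file://r53-argocd.json
```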

Basic Auth and Ingress

I’ll want to expose with basic auth:

# if you need it

$ sudo apt install apache2-utils

$ htpasswd -c auth builder

New password:

Re-type new password:

Adding password for user builder

$ kubectl create secret generic argocd-basic-auth --from-file=auth

secret/argocd-basic-auth created

Now we can create the Ingress with Basic Auth:

$ cat argo-ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/auth-realm: Authentication Required - ArgoCD
    nginx.ingress.kubernetes.io/auth-secret: argocd-basic-auth
    nginx.ingress.kubernetes.io/auth-type: basic
    kubernetes.io/tls-acme: "true"
    kubernetes.io/ingress.class: nginx
    cert-manager.io/cluster-issuer: "letsencrypt-prod"
  name: argocd-with-auth
  namespace: default
spec:
  tls:
  - hosts:
    - argocd.freshbrewed.science
    secretName: argocd-tls
  rules:
  - host: argocd.freshbrewed.science
    http:
      paths:
      - backend:
          service:
            name: argo-cd-argocd-server
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific

checking on Cert acquisition:

$ kubectl get cert

NAME READY SECRET AGE

...

argocd-tls False argocd-tls 3s

$ kubectl get cert | grep argo

argocd-tls True argocd-tls 32s
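Rather than re-running `kubectl get cert` until READY flips to True, the apply and the wait can be scripted. A minimal sketch, assuming cert-manager sets the usual Ready condition on the Certificate; the function name and the 120s default timeout are my own choices.

```shell
#!/bin/sh
# Sketch: apply the Ingress, then block until cert-manager reports the
# Certificate as Ready (or the timeout expires).

apply_ingress_and_wait() {
  # $1 = ingress manifest, $2 = Certificate name, $3 = optional timeout
  kubectl apply -f "$1" &&
  kubectl wait --for=condition=Ready "certificate/$2" --timeout="${3:-120s}"
}

# Usage with the files from this post:
# apply_ingress_and_wait argo-ingress.yaml argocd-tls
```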

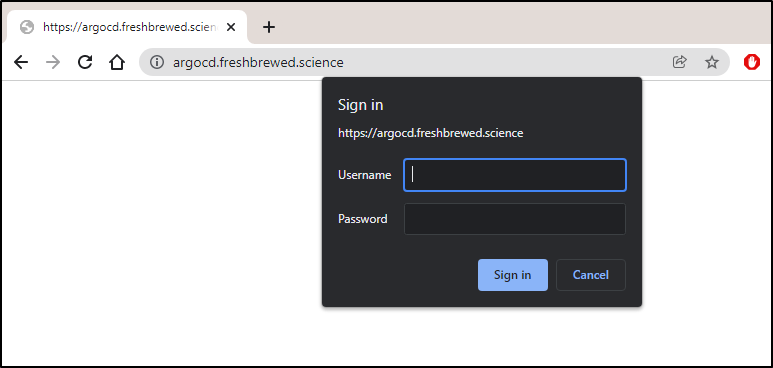

Now in logging in I am prompted:

and while I did have to type in the basic pass a few times on redirects, I was able to auth then login:

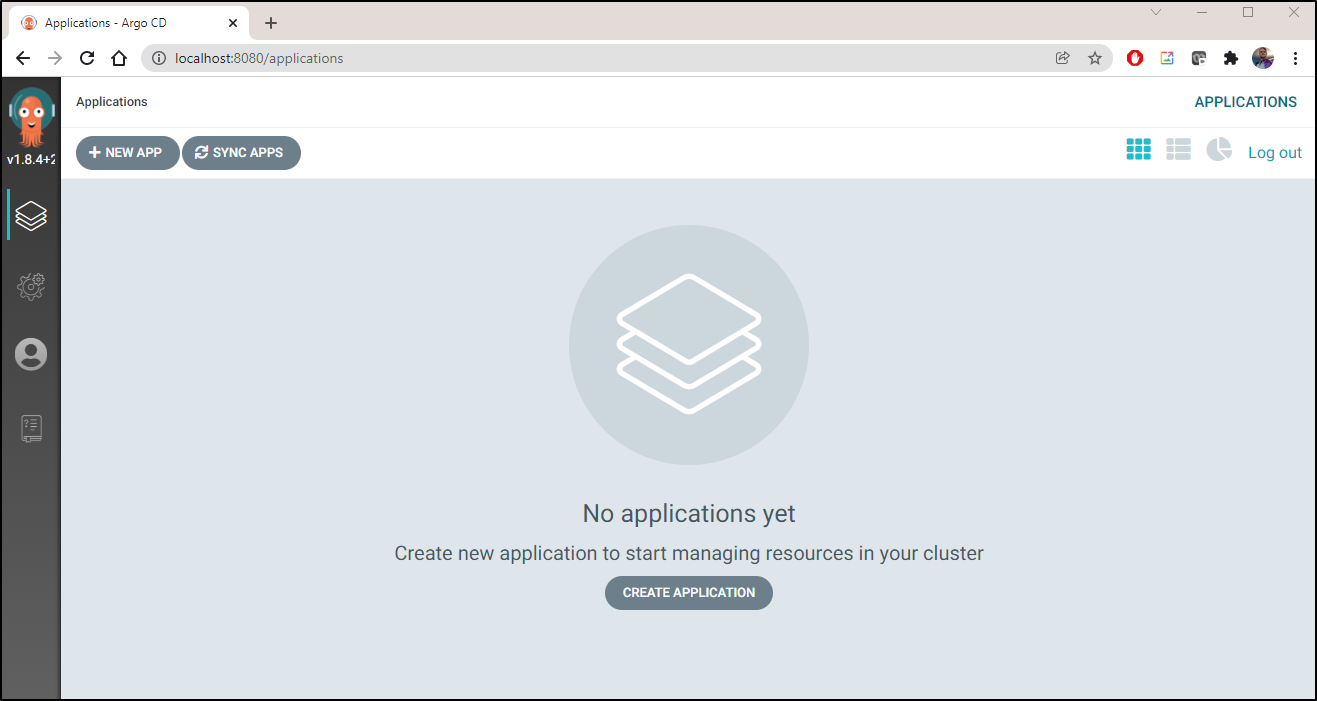

Example Sample App

Let’s fire up the Argo sample app next.

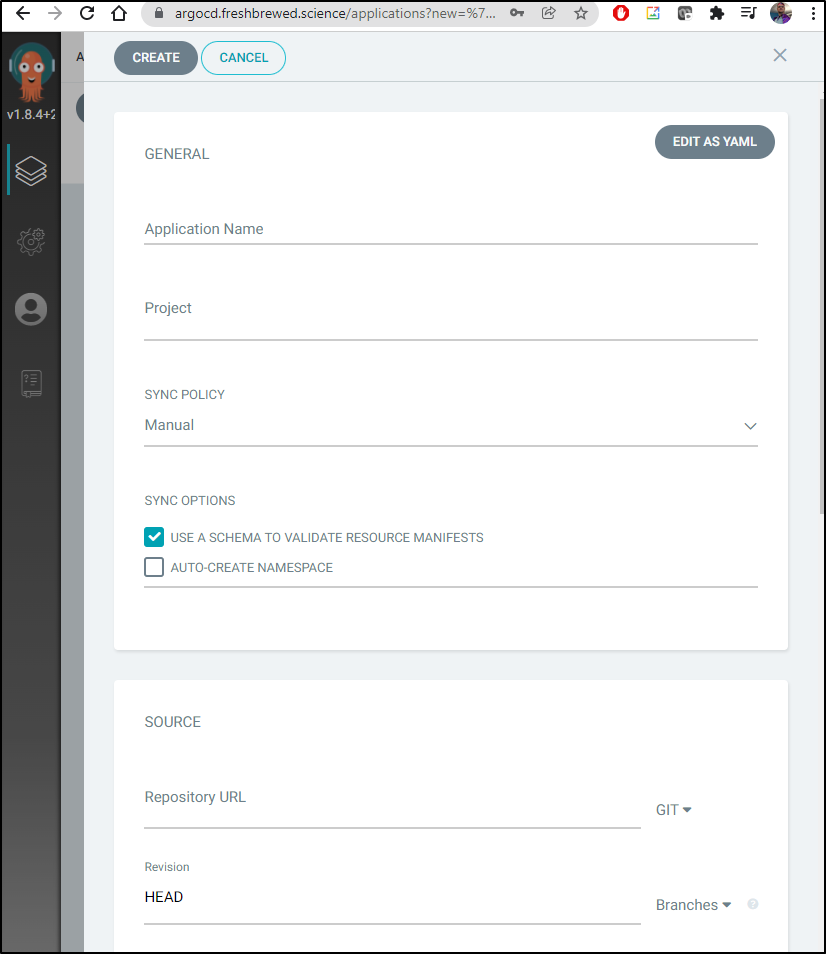

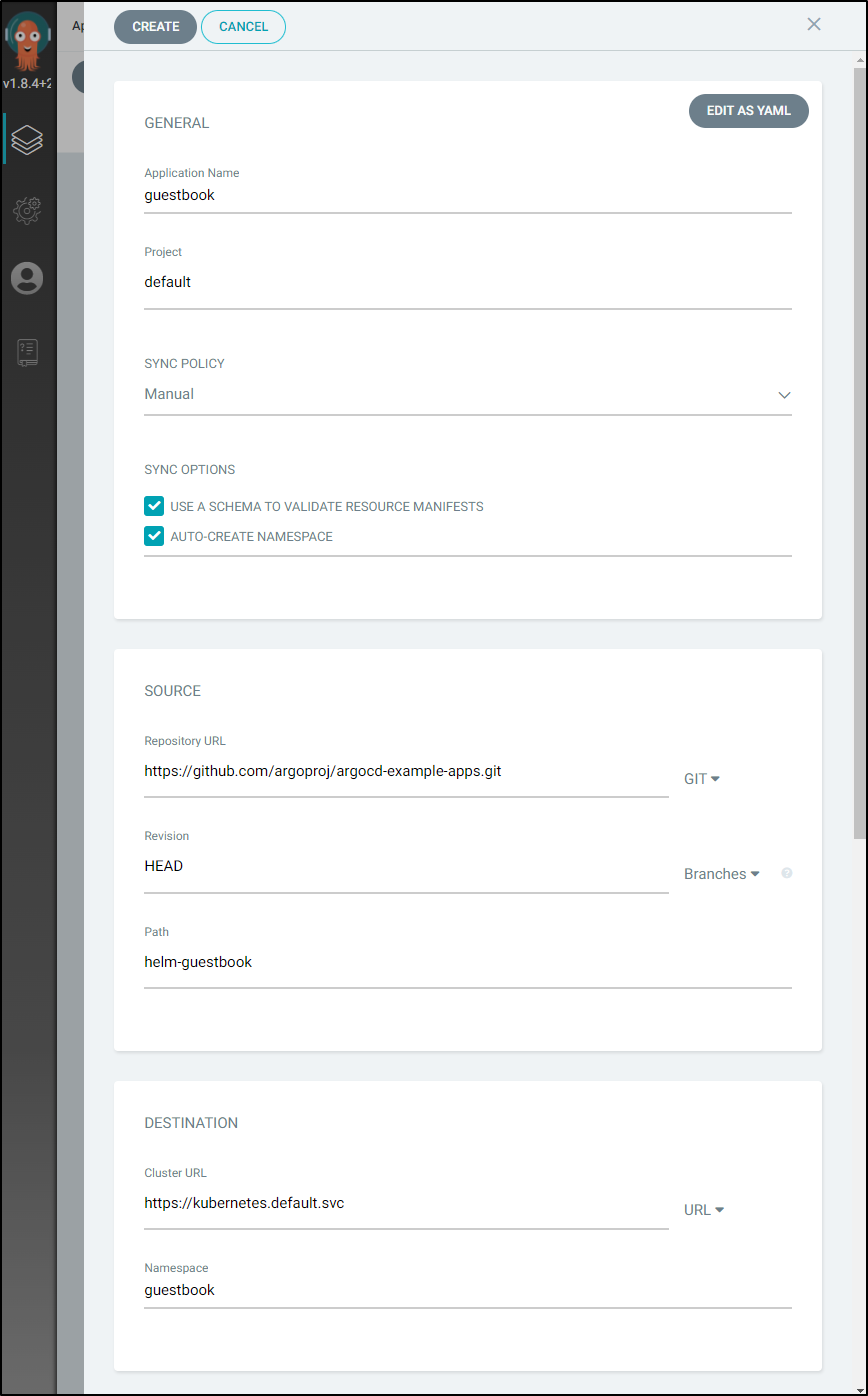

Click the Create Application button:

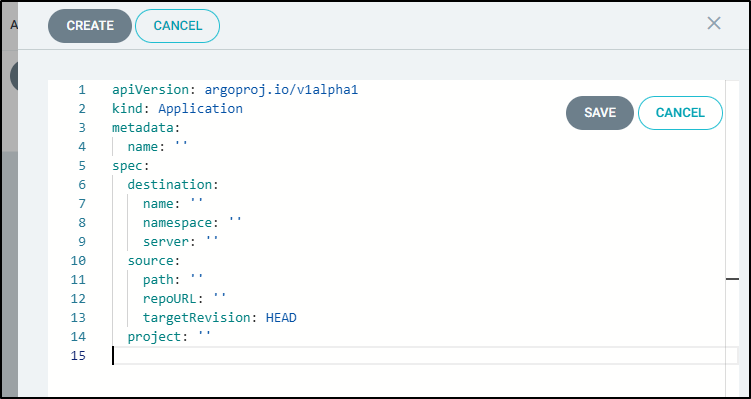

Click Edit as YAML in the upper right to switch to the YAML view:

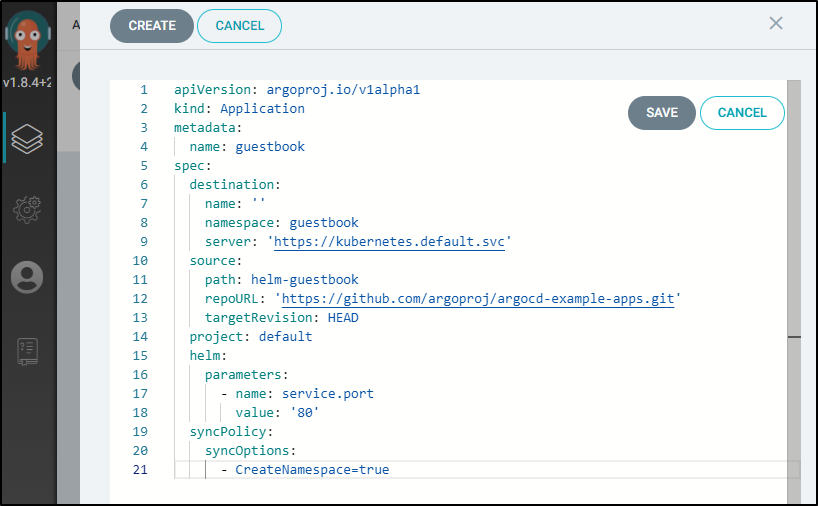

Use the following YAML:

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: guestbook
spec:
  destination:
    name: ''
    namespace: guestbook
    server: 'https://kubernetes.default.svc'
  source:
    path: helm-guestbook
    repoURL: 'https://github.com/argoproj/argocd-example-apps.git'
    targetRevision: HEAD
    helm:
      parameters:
        - name: service.port
          value: '80'
  project: default
  syncPolicy:
    syncOptions:
      - CreateNamespace=true

Then click “save”

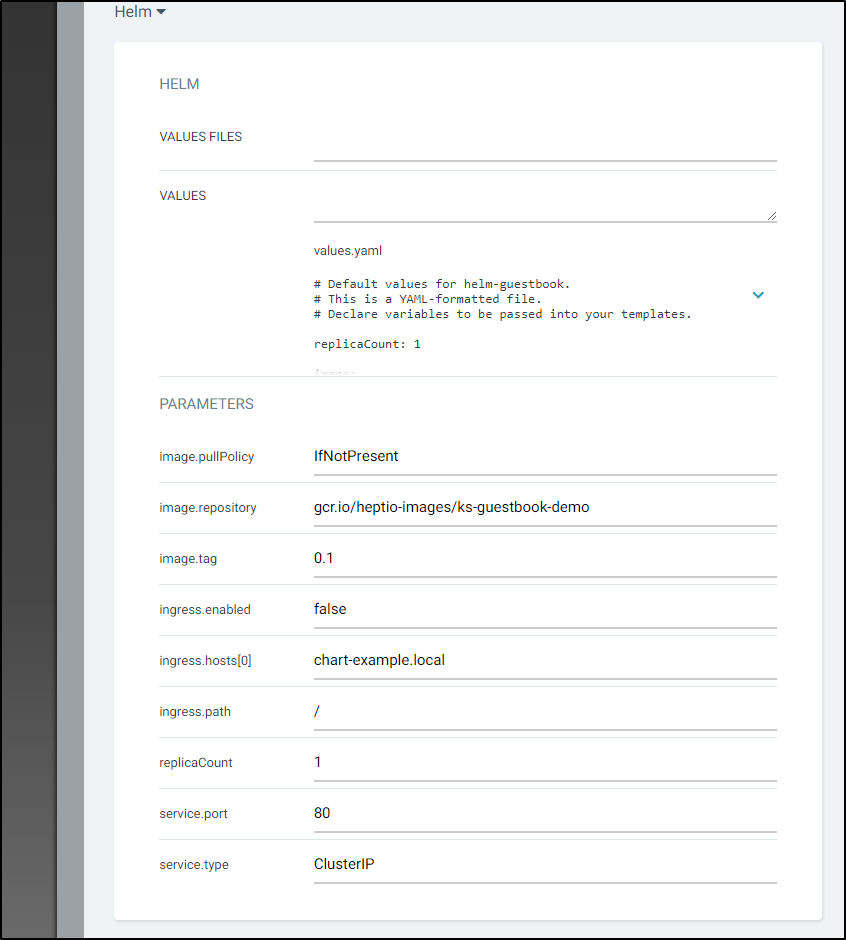

You can page through the Graphical UI settings:

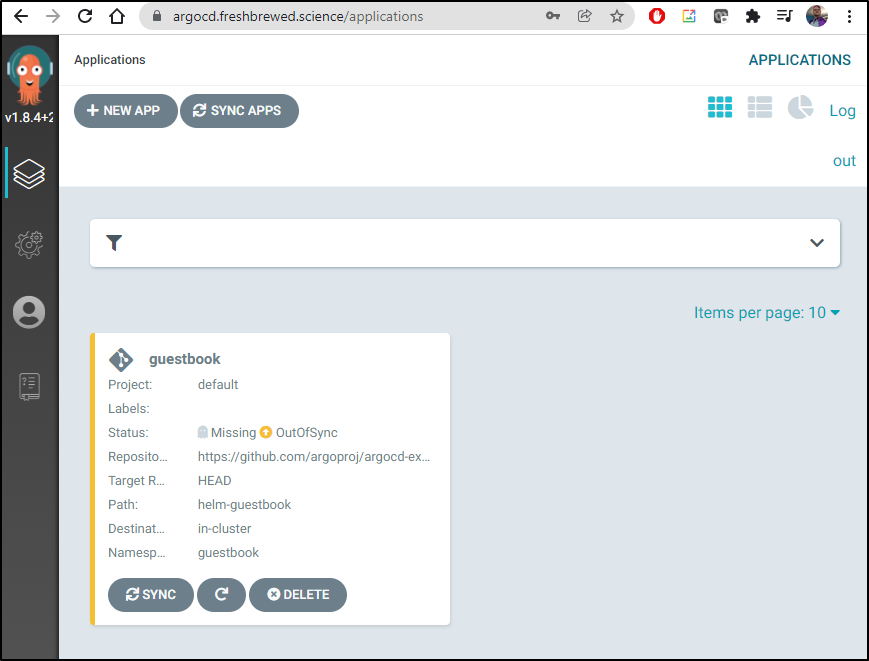

Then click Create to create it:

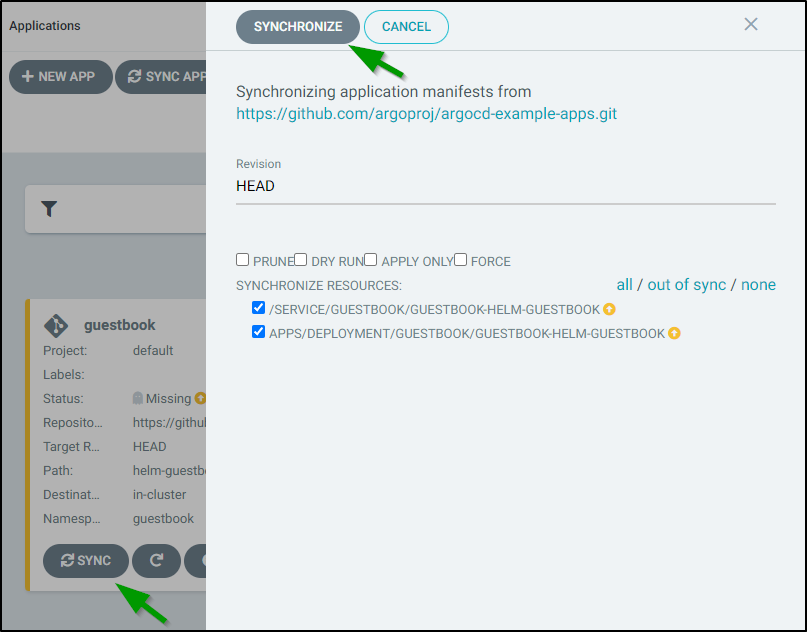

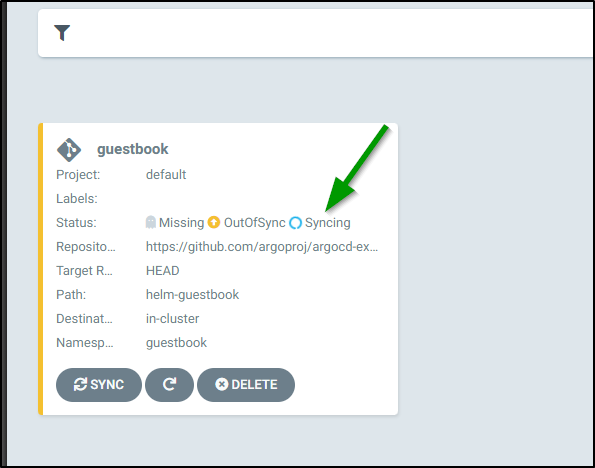

Next, we click on “Sync” to bring up the sync window then “Synchronize” to sync it.

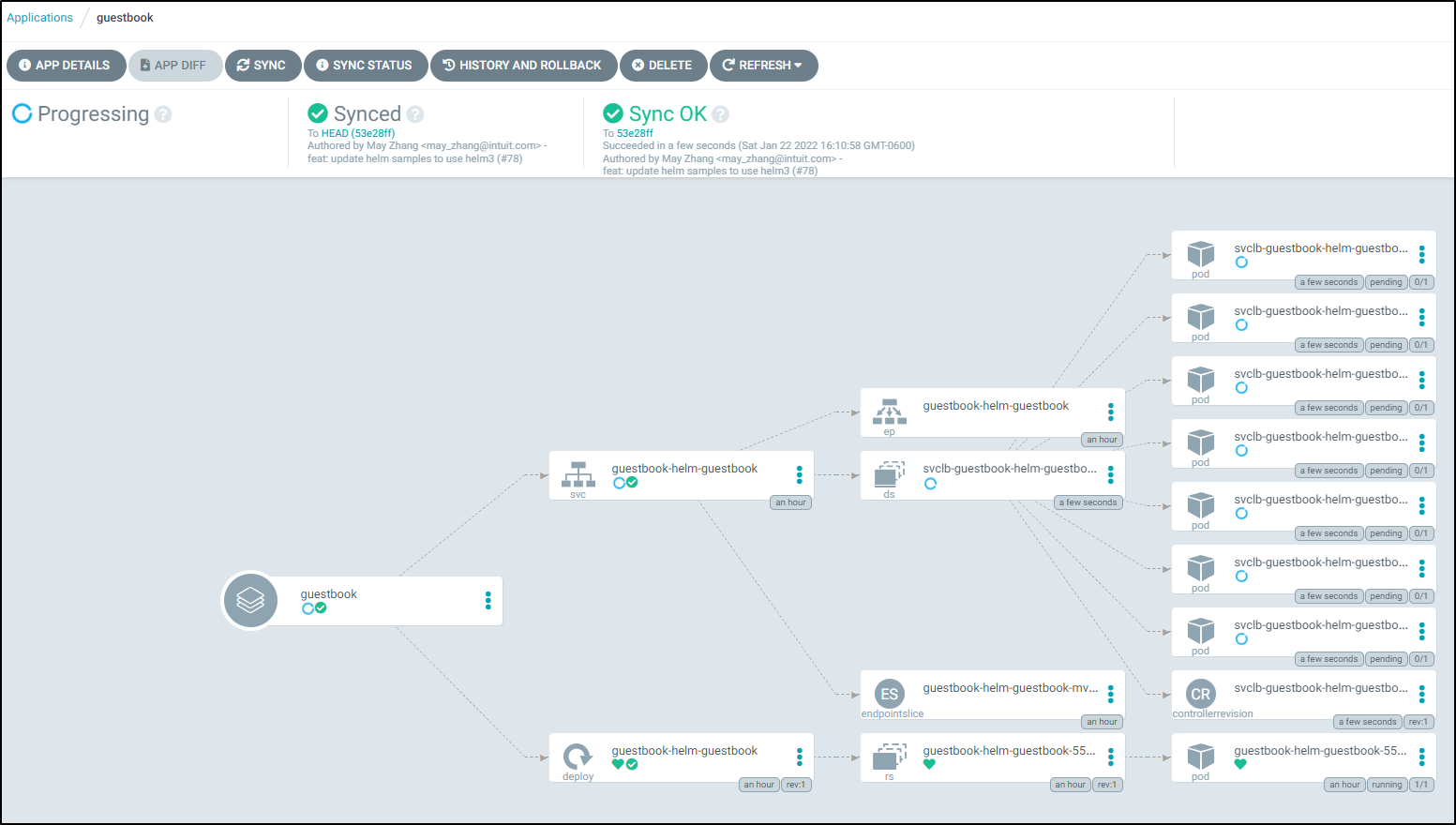

and we get a visual indicator that it is indeed syncing

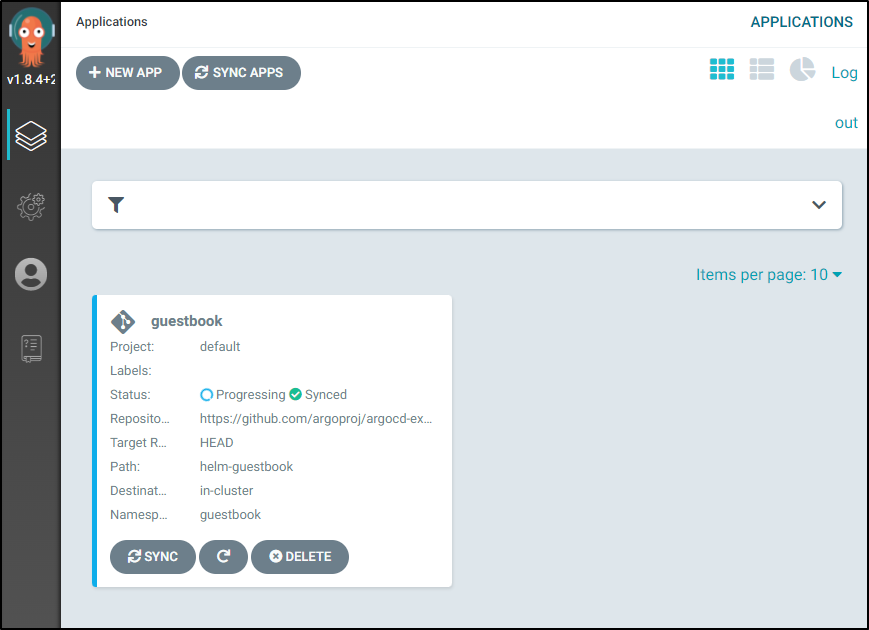

and when done syncing

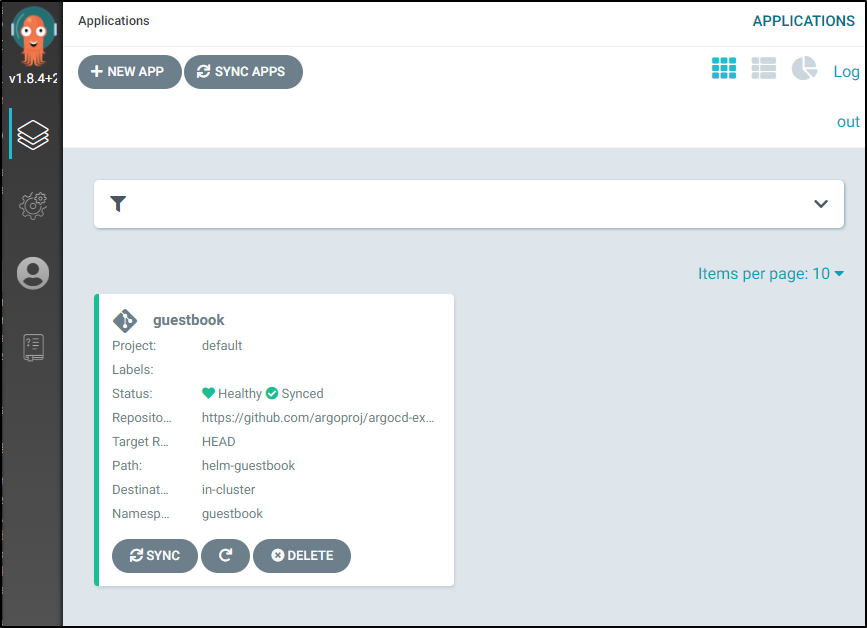

lastly when deployed:

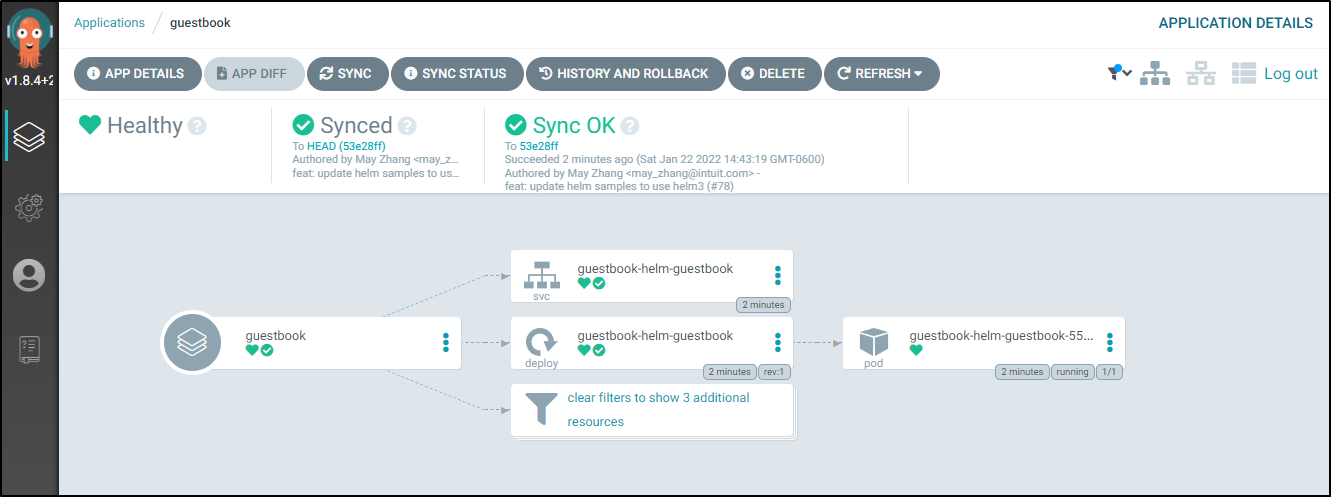

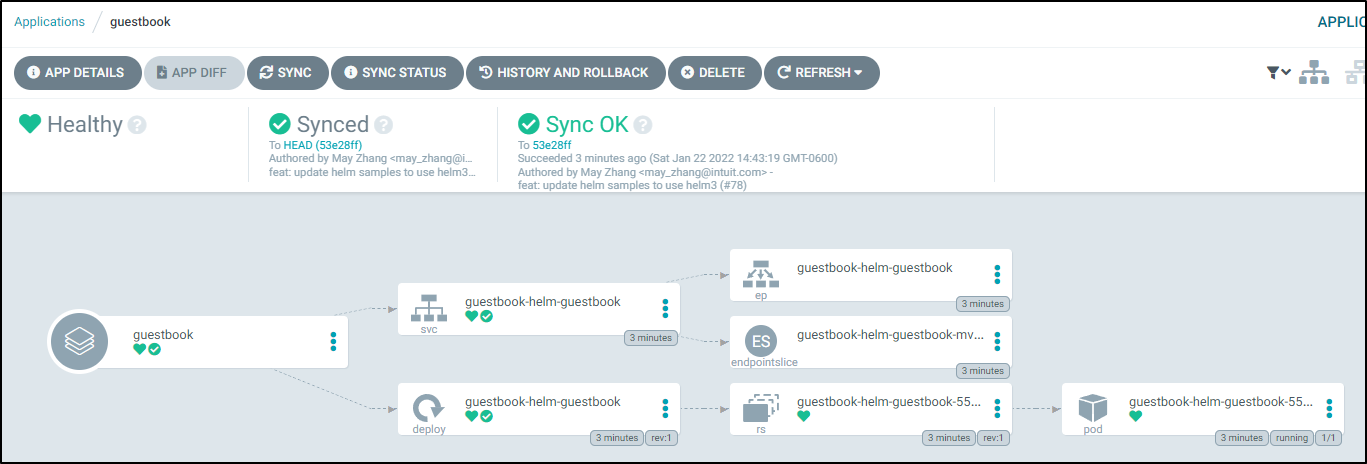

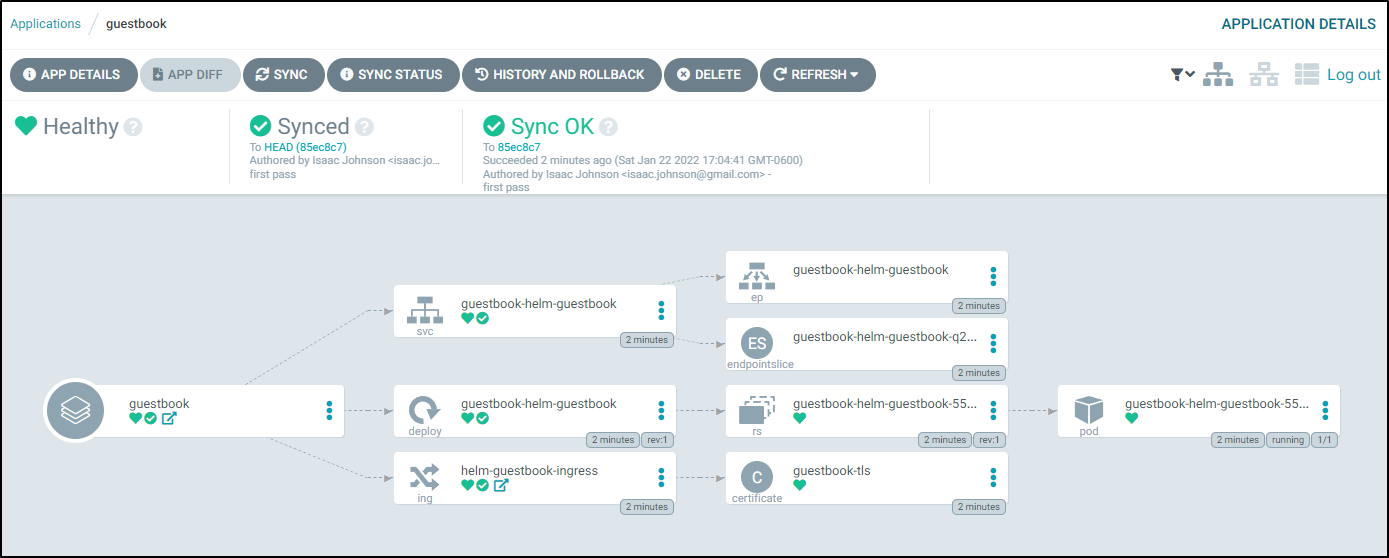

If we click on the Guestbook Application we can see the Application tree

and with filters clear:

This lines up with what we see in the cluster:

$ kubectl get svc -n guestbook

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

guestbook-helm-guestbook ClusterIP 10.43.3.36 <none> 80/TCP 4m27s

$ kubectl get ingress -n guestbook

No resources found in guestbook namespace.

$ kubectl get pods -n guestbook

NAME READY STATUS RESTARTS AGE

guestbook-helm-guestbook-55d9465ff4-br4t2 1/1 Running 0 4m40s

$ kubectl get rs -n guestbook

NAME DESIRED CURRENT READY AGE

guestbook-helm-guestbook-55d9465ff4 1 1 1 4m56s

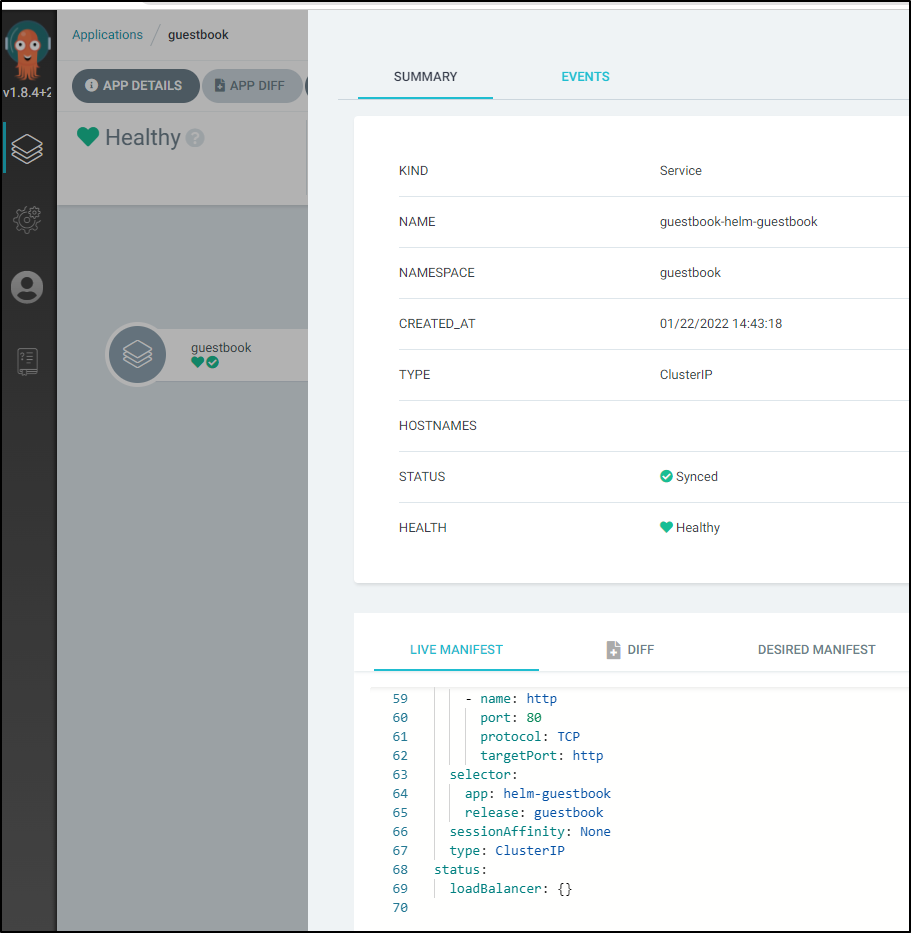

If I look at the service in ArgoCD and scroll down on the manifest I can see it runs on port 80

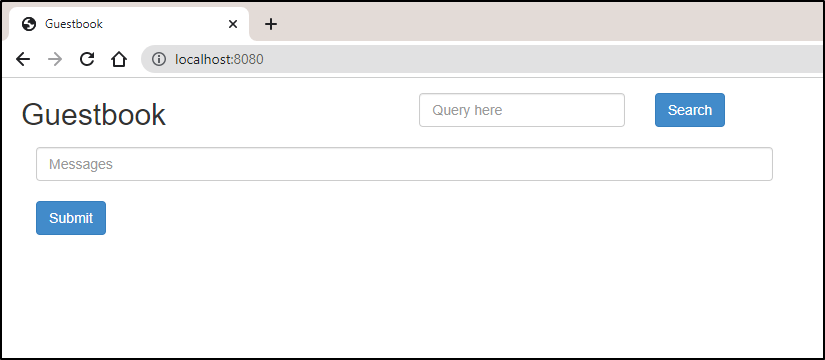

Which means I can port forward and try it out:

$ kubectl port-forward -n guestbook svc/guestbook-helm-guestbook 8080:80

Forwarding from 127.0.0.1:8080 -> 80

Forwarding from [::1]:8080 -> 80

Handling connection for 8080

$ kubectl logs guestbook-helm-guestbook-55d9465ff4-br4t2 -n guestbook | tail -n30

10.42.6.1 - - [22/Jan/2022:20:50:59 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

10.42.6.1 - - [22/Jan/2022:20:50:59 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

10.42.6.1 - - [22/Jan/2022:20:51:09 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

10.42.6.1 - - [22/Jan/2022:20:51:09 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

10.42.6.1 - - [22/Jan/2022:20:51:19 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

10.42.6.1 - - [22/Jan/2022:20:51:19 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

10.42.6.1 - - [22/Jan/2022:20:51:29 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

10.42.6.1 - - [22/Jan/2022:20:51:29 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

10.42.6.1 - - [22/Jan/2022:20:51:39 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

10.42.6.1 - - [22/Jan/2022:20:51:39 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

10.42.6.1 - - [22/Jan/2022:20:51:49 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

10.42.6.1 - - [22/Jan/2022:20:51:49 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

127.0.0.1 - - [22/Jan/2022:20:51:51 +0000] "GET /guestbook.php?cmd=set&key=messages&value=Hi%20there HTTP/1.1" 200 1387 "http://localhost:8080/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.99 Safari/537.36"

127.0.0.1 - - [22/Jan/2022:20:51:51 +0000] "GET /guestbook.php?cmd=get&key=messages HTTP/1.1" 200 1386 "http://localhost:8080/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.99 Safari/537.36"

127.0.0.1 - - [22/Jan/2022:20:51:55 +0000] "GET /guestbook.php?cmd=search&query=undefined HTTP/1.1" 200 229 "http://localhost:8080/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.99 Safari/537.36"

127.0.0.1 - - [22/Jan/2022:20:51:59 +0000] "GET /guestbook.php?cmd=search&query=Hi%20there HTTP/1.1" 200 228 "http://localhost:8080/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.99 Safari/537.36"

10.42.6.1 - - [22/Jan/2022:20:51:59 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

10.42.6.1 - - [22/Jan/2022:20:51:59 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

127.0.0.1 - - [22/Jan/2022:20:52:01 +0000] "GET /guestbook.php?cmd=set&key=messages&value= HTTP/1.1" 200 1386 "http://localhost:8080/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.99 Safari/537.36"

127.0.0.1 - - [22/Jan/2022:20:52:01 +0000] "GET /guestbook.php?cmd=get&key=messages HTTP/1.1" 200 1386 "http://localhost:8080/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.99 Safari/537.36"

127.0.0.1 - - [22/Jan/2022:20:52:02 +0000] "GET /guestbook.php?cmd=set&key=messages&value=asdf HTTP/1.1" 200 1386 "http://localhost:8080/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.99 Safari/537.36"

127.0.0.1 - - [22/Jan/2022:20:52:02 +0000] "GET /guestbook.php?cmd=get&key=messages HTTP/1.1" 200 1386 "http://localhost:8080/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.99 Safari/537.36"

10.42.6.1 - - [22/Jan/2022:20:52:09 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

10.42.6.1 - - [22/Jan/2022:20:52:09 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

10.42.6.1 - - [22/Jan/2022:20:52:19 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

10.42.6.1 - - [22/Jan/2022:20:52:19 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

10.42.6.1 - - [22/Jan/2022:20:52:29 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

10.42.6.1 - - [22/Jan/2022:20:52:29 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

10.42.6.1 - - [22/Jan/2022:20:52:39 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

10.42.6.1 - - [22/Jan/2022:20:52:39 +0000] "GET / HTTP/1.1" 200 1871 "-" "kube-probe/1.21"

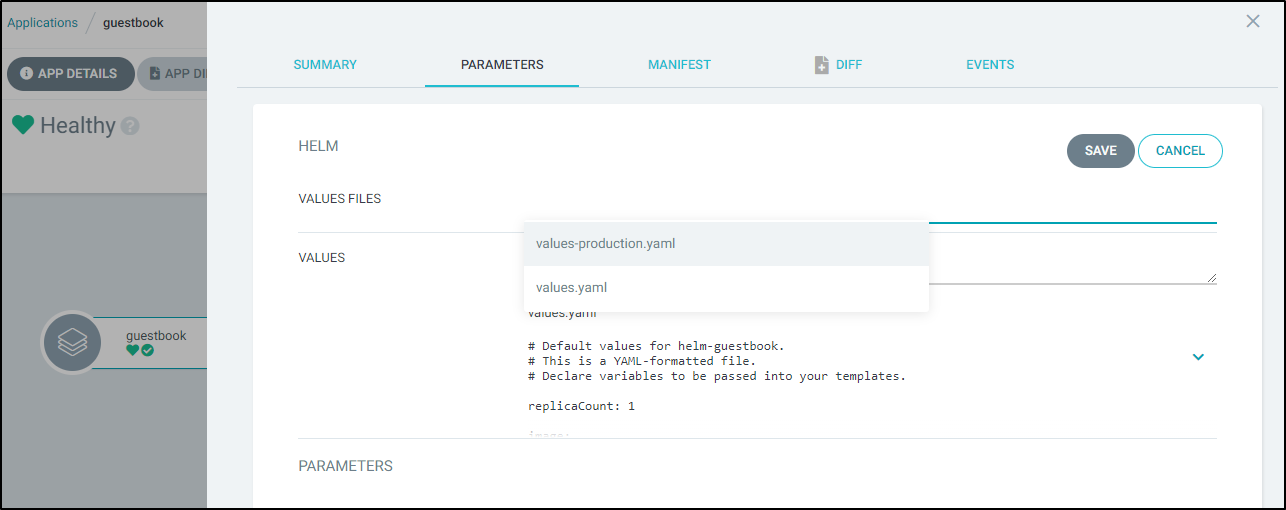

This is great; it deployed with default values as we can see in the repo we specified. But what if we want to now ‘promote’ it to production Values?

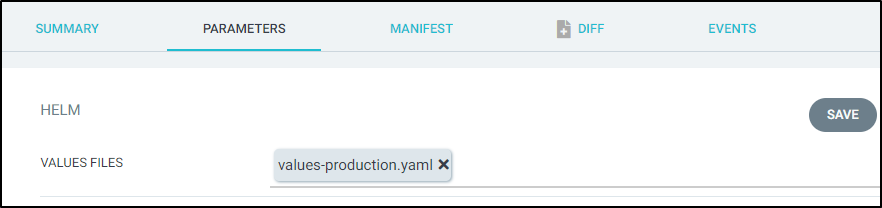

We can go to parameters in ArgoCD and select the production values YAML:

Then click save

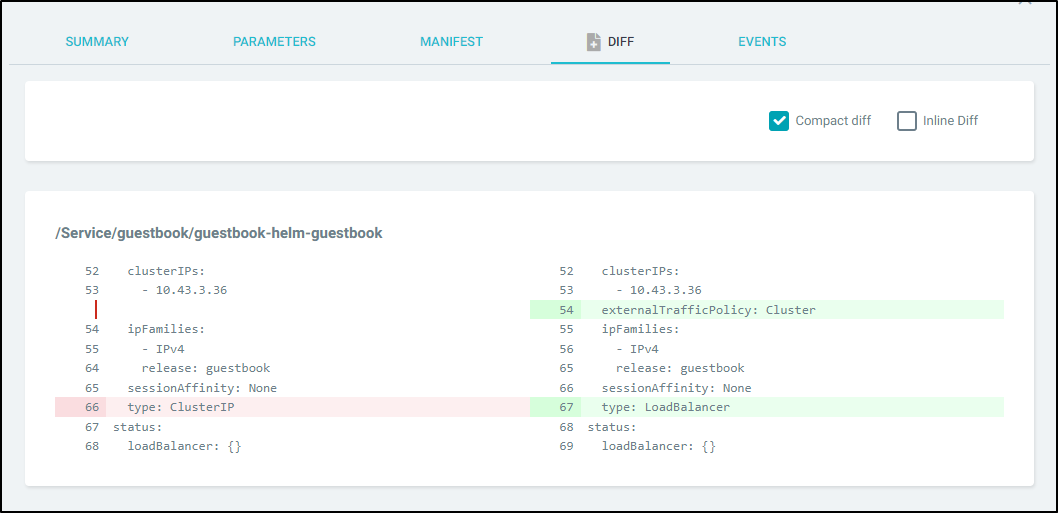

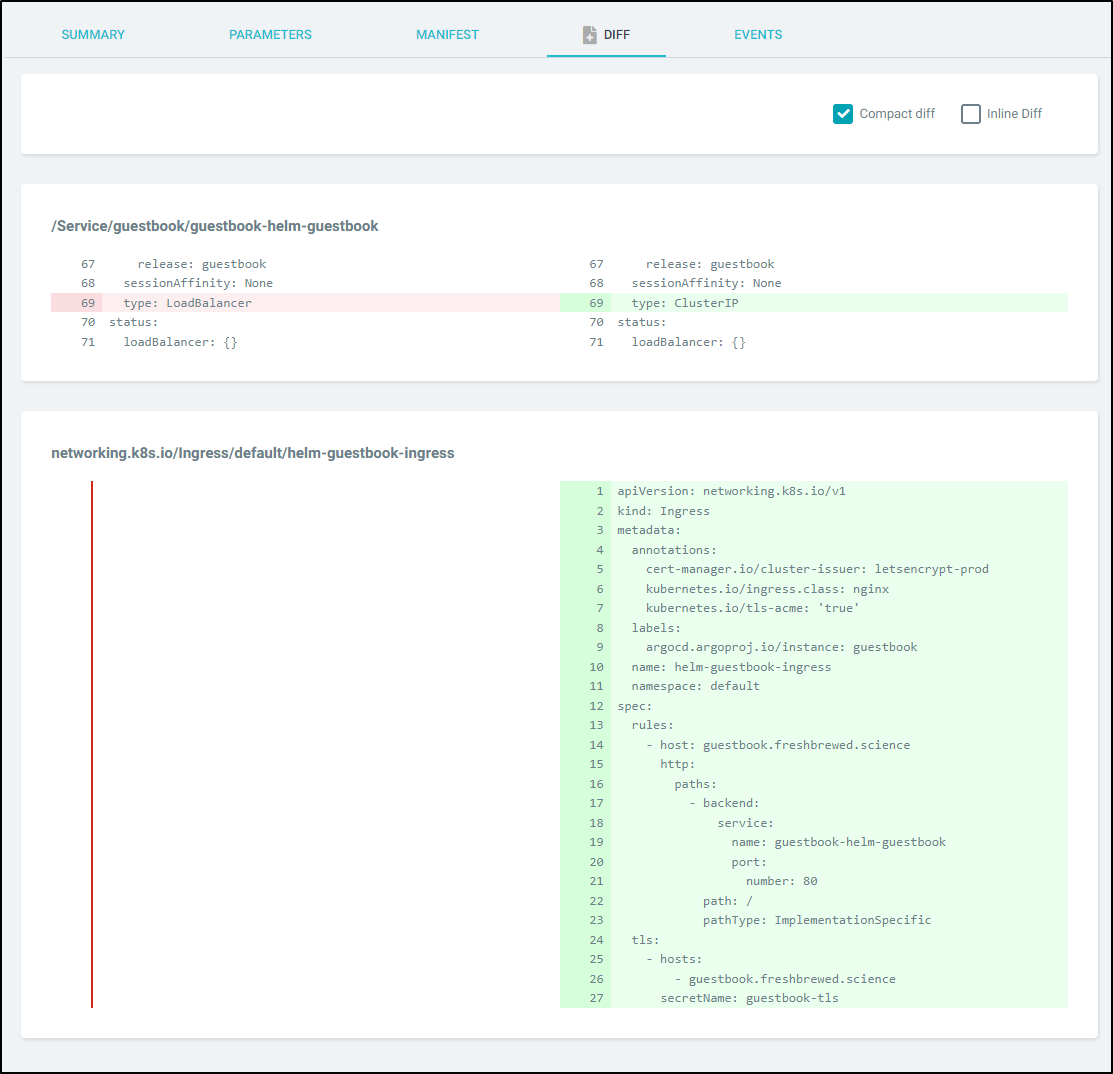

After we click save, the diff will show us (inline, side-by-side or compact) what will change:

And the Manifest shows the new YAML

project: default
source:
  repoURL: 'https://github.com/argoproj/argocd-example-apps.git'
  path: helm-guestbook
  targetRevision: HEAD
  helm:
    valueFiles:
      - values-production.yaml
destination:
  server: 'https://kubernetes.default.svc'
  namespace: guestbook
syncPolicy:
  syncOptions:
    - CreateNamespace=true

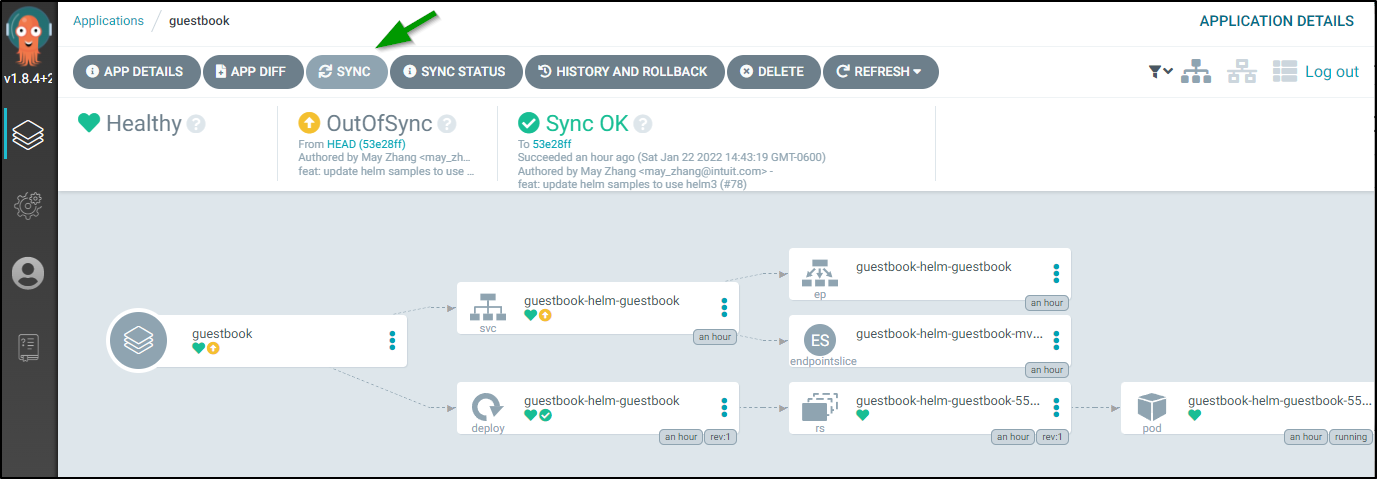

As before, we need to sync. We can Sync from the Application pane:

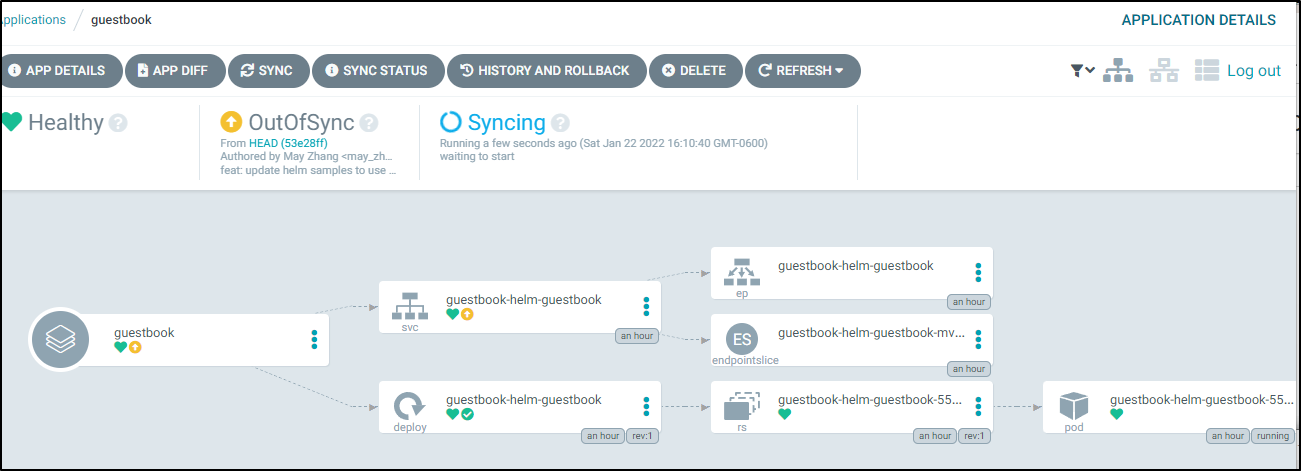

This will show us syncing

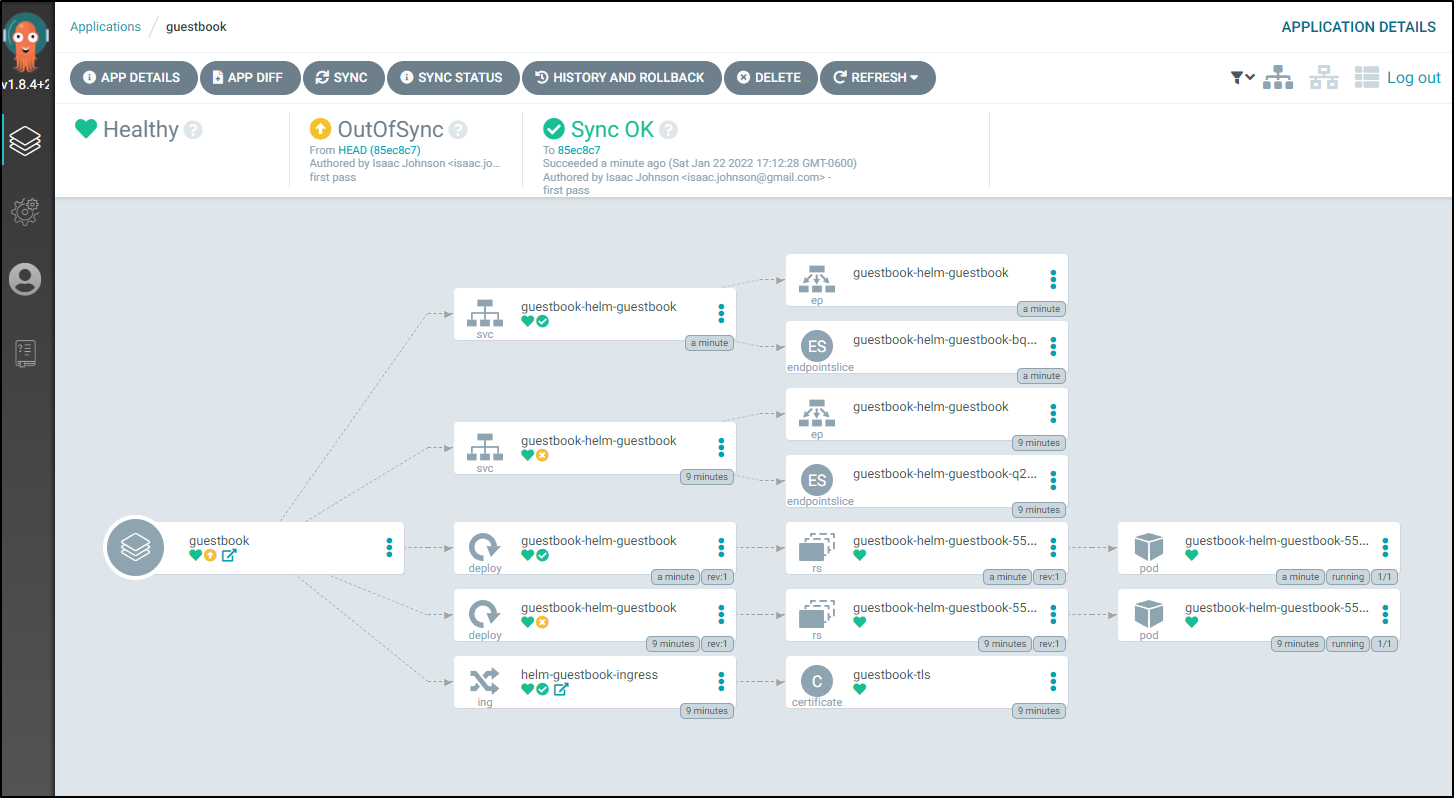

then automatically expand the deployment diagram when ready:

which reflects in the namespace in kubernetes:

$ kubectl get pods -n guestbook

NAME READY STATUS RESTARTS AGE

guestbook-helm-guestbook-55d9465ff4-br4t2 1/1 Running 0 88m

svclb-guestbook-helm-guestbook-bgqj8 0/1 Pending 0 66s

svclb-guestbook-helm-guestbook-rsbpv 0/1 Pending 0 66s

svclb-guestbook-helm-guestbook-mzgb6 0/1 Pending 0 66s

svclb-guestbook-helm-guestbook-z7vcx 0/1 Pending 0 66s

svclb-guestbook-helm-guestbook-zqs99 0/1 Pending 0 66s

svclb-guestbook-helm-guestbook-4w2gm 0/1 Pending 0 66s

svclb-guestbook-helm-guestbook-thkjk 0/1 Pending 0 66s

Oh, but we have an issue. The production values want a cluster LoadBalancer, and my k3s is not going to give up a port on every node, so those svclb pods will likely stay Pending until they time out.

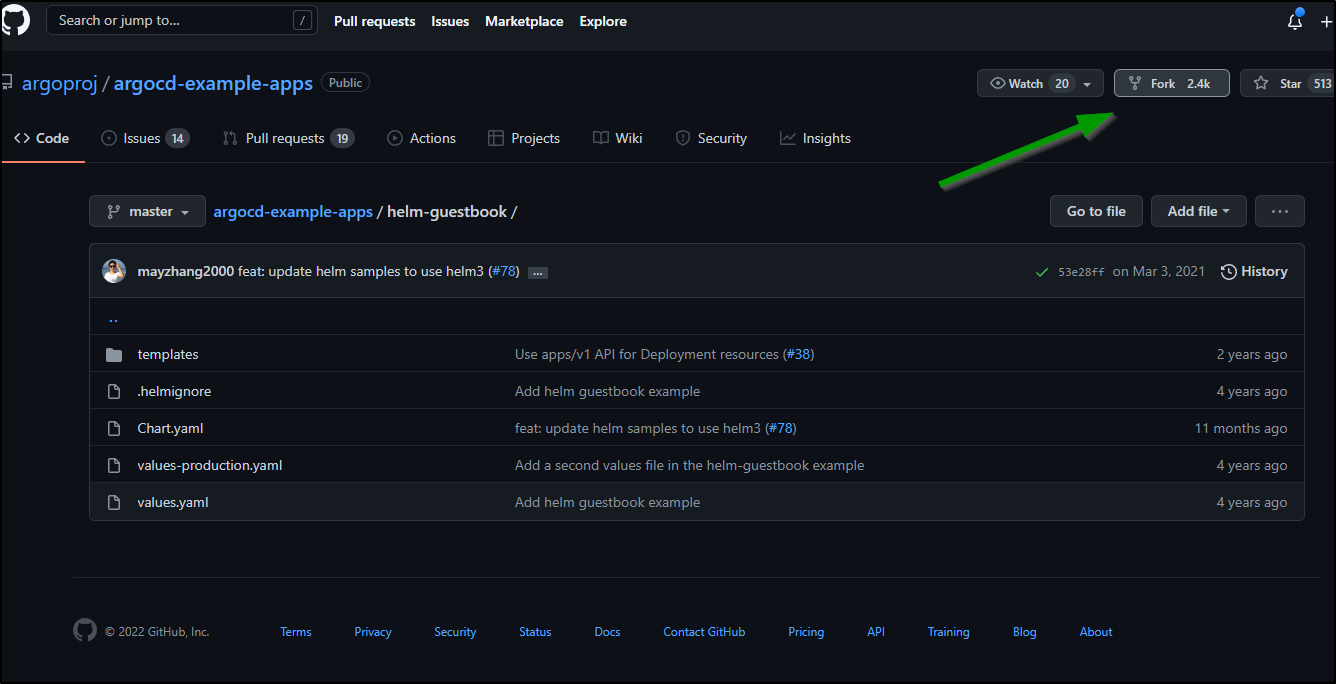

We could override values by hand, manipulate the cluster, or change the chart ourselves. Let’s do the last:

I’ll fork it off to my own namespace in github:

If I change the Manifest YAML to use my repo, we can see the results in the diff

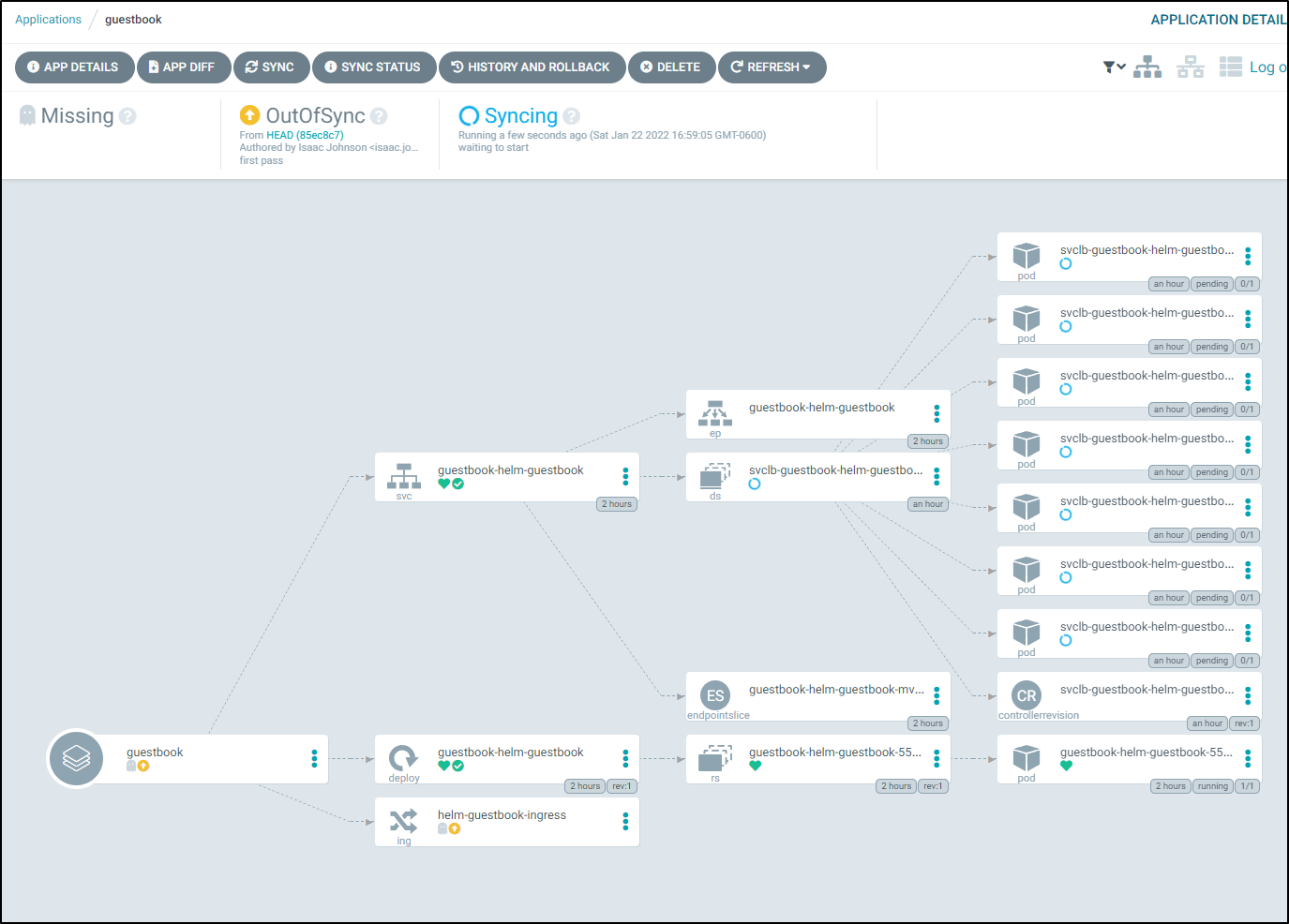

and when applied, it should update properly:

However, it just would not remove the svclb pods, and removing them manually did not help either.

I ended up using “delete” to remove the whole app, then re-added it with this YAML:

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: guestbook
spec:
  destination:
    name: ''
    namespace: guestbook
    server: 'https://kubernetes.default.svc'
  source:
    path: helm-guestbook
    repoURL: 'https://github.com/idjohnson/argocd-example-apps.git'
    targetRevision: HEAD
    helm:
      valueFiles:
        - values-production.yaml
      parameters:
        - name: service.port
          value: '80'
  project: default
  syncPolicy:
    syncOptions:
      - CreateNamespace=true

Now we can see production values that enable a proper TLS ingress:

# Default values for helm-guestbook.
# This is a YAML-formatted file.
# Declare variables to be passed into your templates.

replicaCount: 1

image:
  repository: gcr.io/heptio-images/ks-guestbook-demo
  tag: 0.1
  pullPolicy: IfNotPresent

service:
  type: ClusterIP
  port: 80

ingress:
  enabled: true
  annotations: {}
    # kubernetes.io/ingress.class: nginx
    # kubernetes.io/tls-acme: "true"
  path: /
  hosts:
    - guestbook.freshbrewed.science
  tls:
    secretName: guestbook-tls

resources: {}
  # We usually recommend not to specify default resources and to leave this as a conscious
  # choice for the user. This also increases chances charts run on environments with little
  # resources, such as Minikube. If you do want to specify resources, uncomment the following
  # lines, adjust them as necessary, and remove the curly braces after 'resources:'.
  # limits:
  #  cpu: 100m
  #  memory: 128Mi
  # requests:
  #  cpu: 100m
  #  memory: 128Mi

nodeSelector: {}

tolerations: []

affinity: {}

I should point out a future optimization will be to update the ingress.yaml to handle annotations properly.

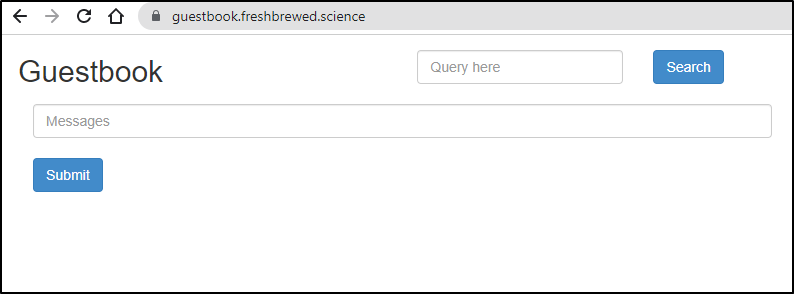

And the Application diagram as deployed with Production values:

And if we update our App to use the “default” namespace then the ingress actually routes:

validation:

and yes, I did add a route53 entry for this url

Private Repo

Let’s create a Private local git repo on a nearby Linux box (though a pi server would work just as well).

If you haven’t already, copy your public SSH key over that you plan to use:

$ cat ~/.ssh/id_rsa.pub

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCztCsq2pg/AFf8t6d6LobwssgaXLVNnzn4G0e7M13J9t2o41deZjLQaRTLYyqGhmwp114GpJnac08F4Ln87CbIu2jbQCC2y89bE4k2a9226VJDbZhidPyvEiEyquUpKvvJ9QwUYeQlz3rYLQ3f8gDvO4iFKNla2s8E1gpYv6VxN7e7OX+FJmJ4dY2ydPxQ6RoxOLxWx6IDk9ysDK8MoSIUoD9nvD/PqlWBZLXBqqlO6OadGku3q2naNvafDM5F2p4ixCsJV5fQxPWxqZy0CbyXzs1easL4lQHjK2NwqN8AK6pC1ywItDc1fUpJZEEPOJQLShI+dqqqjUptwJUPq87h

# on destination

builder@builder-HP-EliteBook-745-G5:~$ cat ~/.ssh/authorized_keys

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCztCsq2pg/AFf8t6d6LobwssgaXLVNnzn4G0e7M13J9t2o41deZjLQaRTLYyqGhmwp114GpJnac08F4Ln87CbIu2jbQCC2y89bE4k2a9226VJDbZhidPyvEiEyquUpKvvJ9QwUYeQlz3rYLQ3f8gDvO4iFKNla2s8E1gpYv6VxN7e7OX+FJmJ4dY2ydPxQ6RoxOLxWx6IDk9ysDK8MoSIUoD9nvD/PqlWBZLXBqqlO6OadGku3q2naNvafDM5F2p4ixCsJV5fQxPWxqZy0CbyXzs1easL4lQHjK2NwqN8AK6pC1ywItDc1fUpJZEEPOJQLShI+dqqqjUptwJUPq87h

Create a repo on destination Linux box:

$ ssh builder@192.168.1.32

Welcome to Ubuntu 20.04.3 LTS (GNU/Linux 5.11.0-38-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

54 updates can be applied immediately.

To see these additional updates run: apt list --upgradable

Your Hardware Enablement Stack (HWE) is supported until April 2025.

*** System restart required ***

Last login: Tue Jan 18 06:32:43 2022 from 192.168.1.160

builder@builder-HP-EliteBook-745-G5:~$ mkdir gitRepo

builder@builder-HP-EliteBook-745-G5:~$ cd gitRepo/

builder@builder-HP-EliteBook-745-G5:~/gitRepo$ pwd

/home/builder/gitRepo

builder@builder-HP-EliteBook-745-G5:~/gitRepo$ git init --bare

Initialized empty Git repository in /home/builder/gitRepo/

builder@builder-HP-EliteBook-745-G5:~/gitRepo$ exit

logout

Connection to 192.168.1.32 closed.

Test locally, create a main branch:

builder@DESKTOP-QADGF36:~/Workspaces$ git clone ssh://builder@192.168.1.32:/home/builder/gitRepo/

Cloning into 'gitRepo'...

warning: You appear to have cloned an empty repository.

builder@DESKTOP-QADGF36:~/Workspaces$ cd gitRepo/

builder@DESKTOP-QADGF36:~/Workspaces/gitRepo$ echo "# First" > README.md

builder@DESKTOP-QADGF36:~/Workspaces/gitRepo$ git checkout -b main

Switched to a new branch 'main'

builder@DESKTOP-QADGF36:~/Workspaces/gitRepo$ git add README.md

builder@DESKTOP-QADGF36:~/Workspaces/gitRepo$ git commit -m "init"

[main (root-commit) 6569031] init

1 file changed, 1 insertion(+)

create mode 100644 README.md

builder@DESKTOP-QADGF36:~/Workspaces/gitRepo$ git push

fatal: The current branch main has no upstream branch.

To push the current branch and set the remote as upstream, use

git push --set-upstream origin main

builder@DESKTOP-QADGF36:~/Workspaces/gitRepo$ darf

git push --set-upstream origin main [enter/↑/↓/ctrl+c]

Enumerating objects: 3, done.

Counting objects: 100% (3/3), done.

Writing objects: 100% (3/3), 219 bytes | 219.00 KiB/s, done.

Total 3 (delta 0), reused 0 (delta 0)

To ssh://192.168.1.32:/home/builder/gitRepo/

* [new branch] main -> main

Branch 'main' set up to track remote branch 'main' from 'origin'.

(Note: I alias darf to thef*** since I’m somewhat vulgarity-averse.)

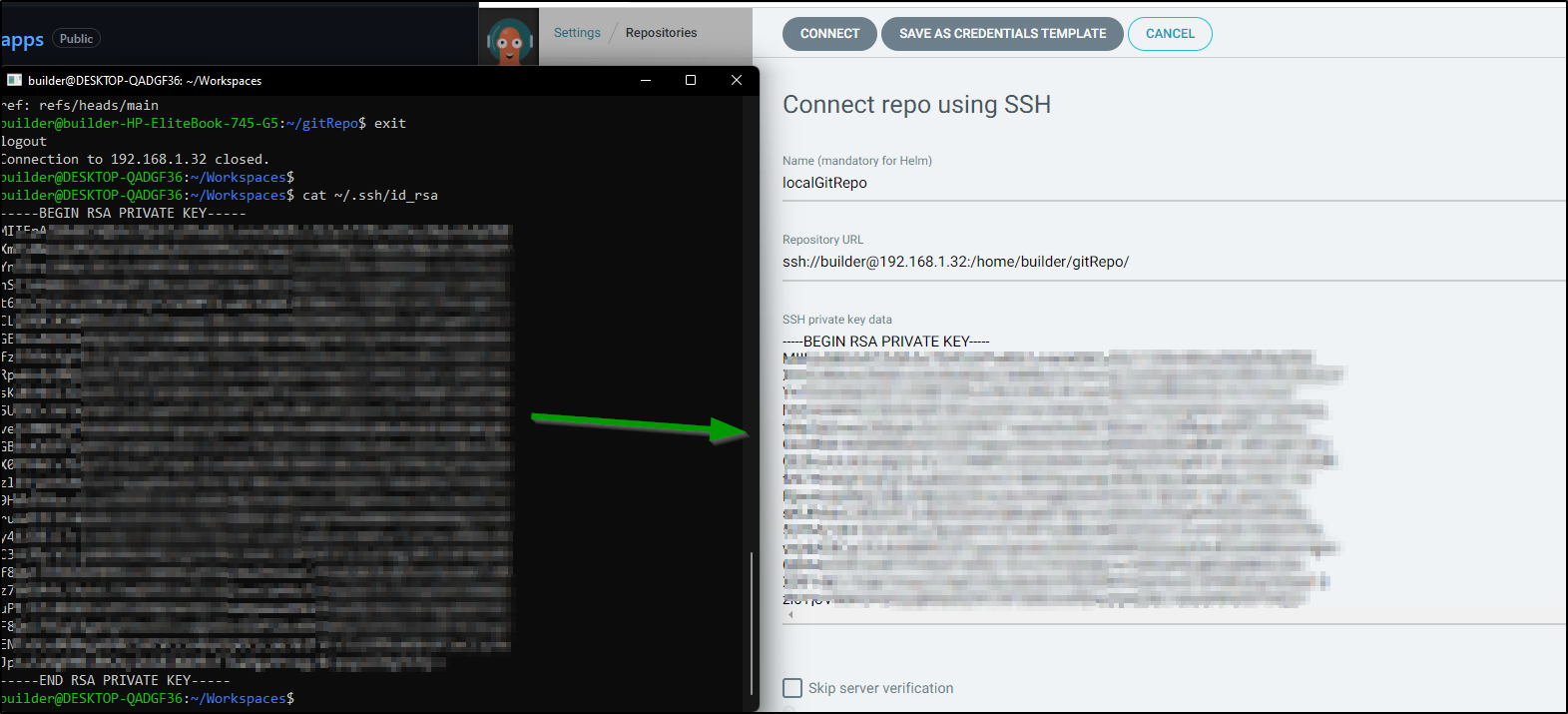

Next, in ArgoCD, go to Repositories (gear icon) and “+ connect repo using ssh”

Quick note: since I use proper inclusive naming, we have to fix the ‘bare’ default branch of ‘master’.

$ ssh builder@192.168.1.32

builder@builder-HP-EliteBook-745-G5:~$ cd gitRepo/

builder@builder-HP-EliteBook-745-G5:~/gitRepo$ cat HEAD

ref: refs/heads/master

builder@builder-HP-EliteBook-745-G5:~/gitRepo$ vi HEAD

builder@builder-HP-EliteBook-745-G5:~/gitRepo$ cat HEAD

ref: refs/heads/main

builder@builder-HP-EliteBook-745-G5:~/gitRepo$ exit

logout

Connection to 192.168.1.32 closed.

otherwise you’ll see errors when cloning (and adding in ArgoCD):

$ git clone ssh://builder@192.168.1.32:/home/builder/gitRepo/ testing123

Cloning into 'testing123'...

remote: Enumerating objects: 3, done.

remote: Counting objects: 100% (3/3), done.

remote: Total 3 (delta 0), reused 0 (delta 0)

Receiving objects: 100% (3/3), done.

warning: remote HEAD refers to nonexistent ref, unable to checkout.
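Editing HEAD in vi works, but the same fix can be made with Git itself. A small sketch follows; the function name is hypothetical.

```shell
#!/bin/sh
# Sketch: point a bare repository's HEAD at a different default branch
# without hand-editing the HEAD file.

set_bare_default_branch() {
  # $1 = path to the bare repository, $2 = branch name
  git --git-dir="$1" symbolic-ref HEAD "refs/heads/$2"
}

# Usage on the Git host from this post:
# set_bare_default_branch /home/builder/gitRepo main
```

On hosts with Git 2.28 or newer, `git init --bare --initial-branch=main` should avoid the problem up front. The Ubuntu 20.04 box here likely ships an older Git, so the after-the-fact fix is the safer assumption.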

Next copy over your private key (~/.ssh/id_rsa) file:

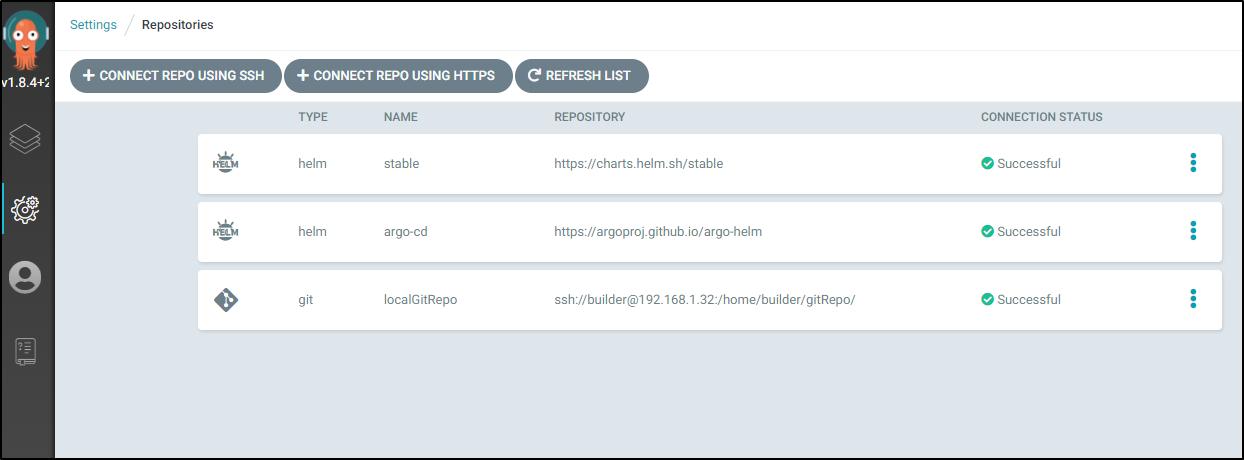

Press Connect and you should add the local GIT repo:

Sample chart

Let’s add a simple sample chart. By default it will be a straightforward Nginx web app.

$ git clone ssh://builder@192.168.1.32:/home/builder/gitRepo/

$ cd gitRepo

$ mkdir helm

$ cd helm

$ helm create sampleChart

Creating sampleChart

$ cat sampleChart/Chart.yaml | head -n3

apiVersion: v2

name: sampleChart

description: A Helm chart for Kubernetes

$ cd ..

$ git add helm/

builder@DESKTOP-QADGF36:~/Workspaces/gitRepo$ git commit -m "add a sample helm chart"

[main d7fd5c3] add a sample helm chart

11 files changed, 405 insertions(+)

create mode 100644 helm/sampleChart/.helmignore

create mode 100644 helm/sampleChart/Chart.yaml

create mode 100644 helm/sampleChart/templates/NOTES.txt

create mode 100644 helm/sampleChart/templates/_helpers.tpl

create mode 100644 helm/sampleChart/templates/deployment.yaml

create mode 100644 helm/sampleChart/templates/hpa.yaml

create mode 100644 helm/sampleChart/templates/ingress.yaml

create mode 100644 helm/sampleChart/templates/service.yaml

create mode 100644 helm/sampleChart/templates/serviceaccount.yaml

create mode 100644 helm/sampleChart/templates/tests/test-connection.yaml

create mode 100644 helm/sampleChart/values.yaml

$ git push

Enumerating objects: 18, done.

Counting objects: 100% (18/18), done.

Delta compression using up to 16 threads

Compressing objects: 100% (15/15), done.

Writing objects: 100% (17/17), 5.63 KiB | 5.63 MiB/s, done.

Total 17 (delta 0), reused 0 (delta 0)

To ssh://192.168.1.32:/home/builder/gitRepo/

6569031..d7fd5c3 main -> main

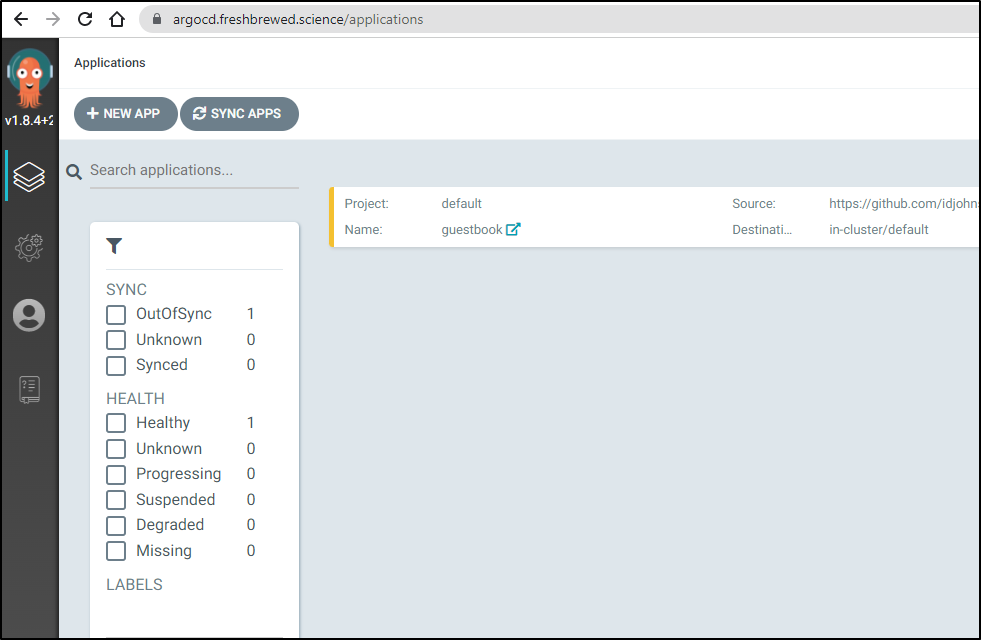

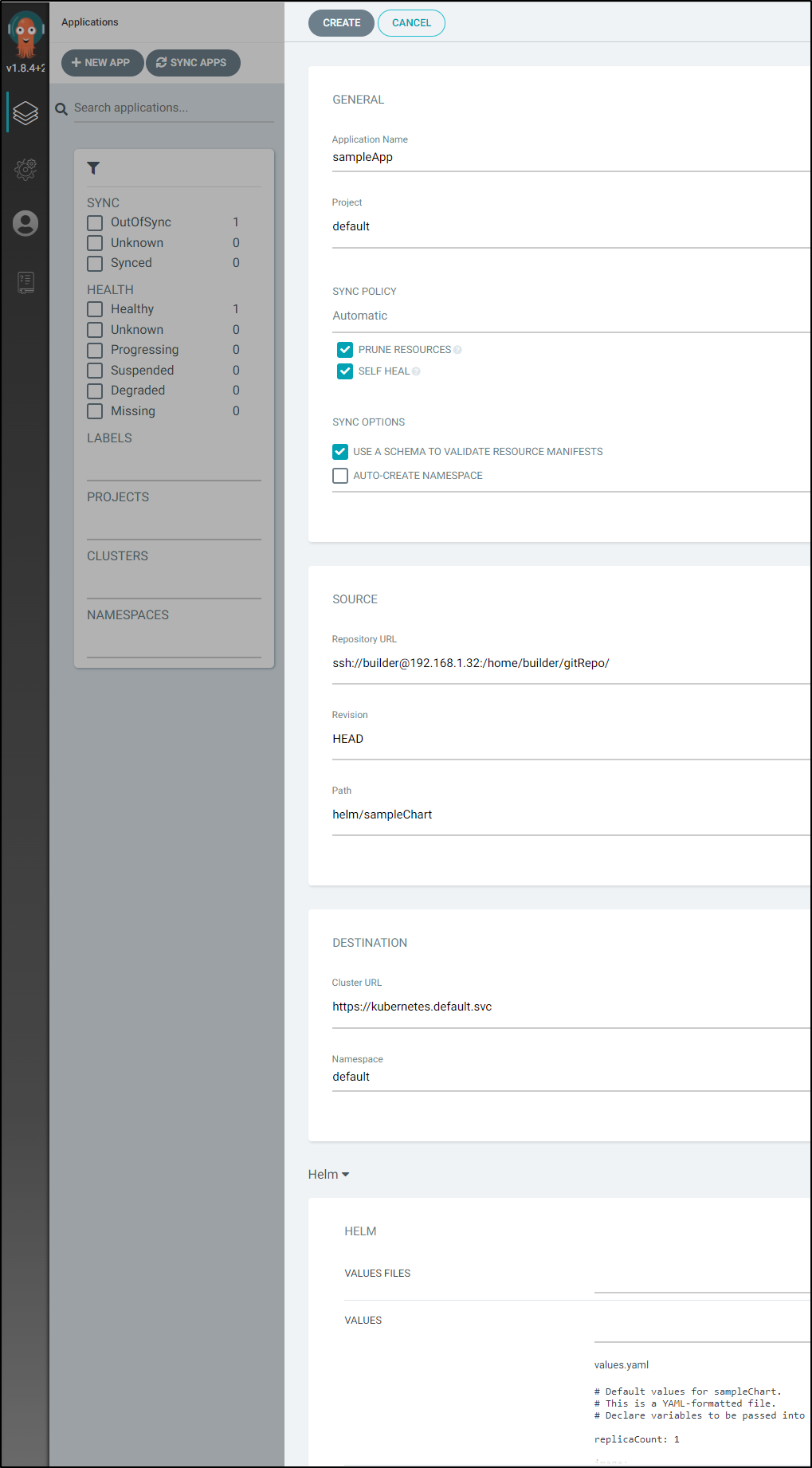

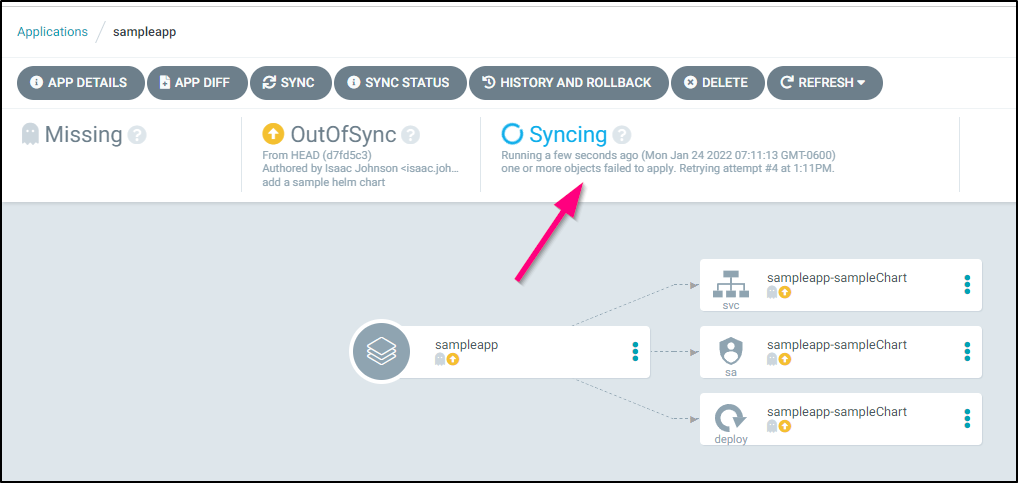

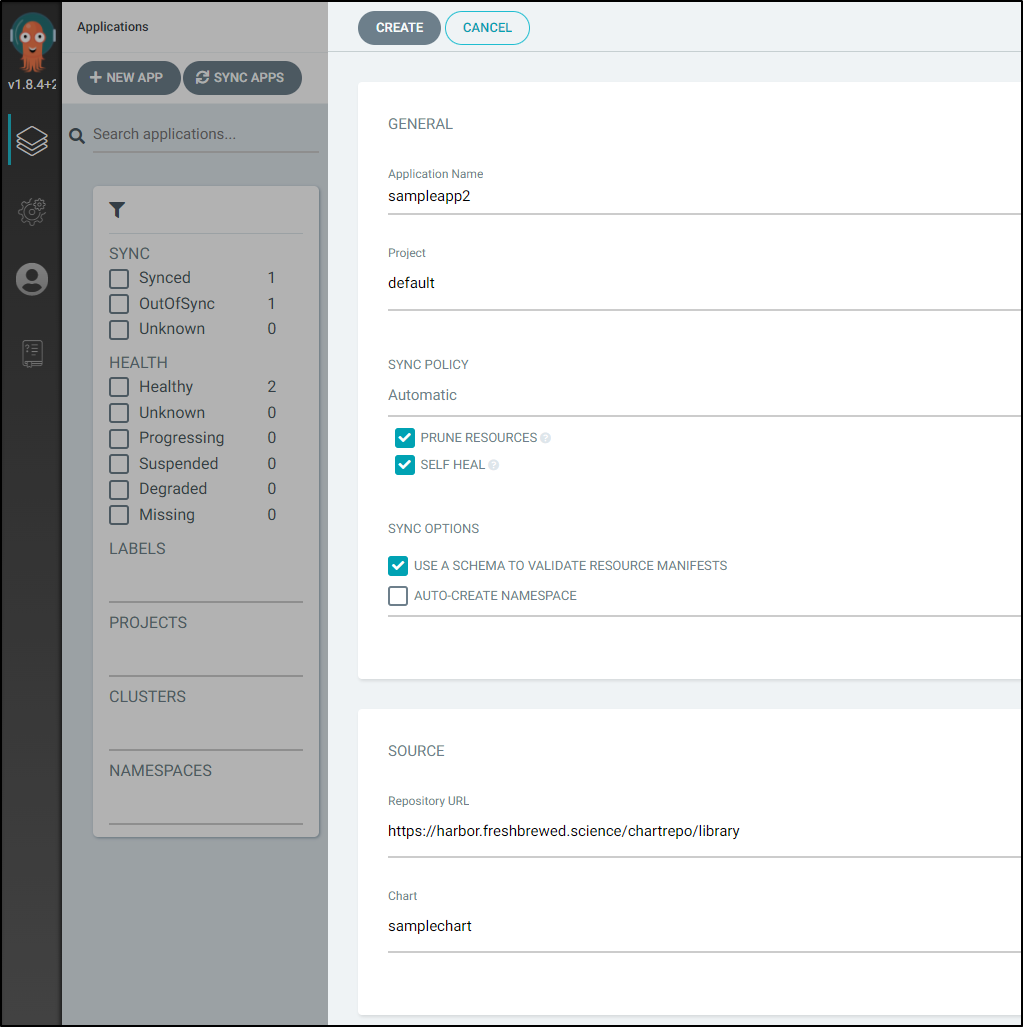

In Argo, Let’s “+ New App” in the Applications section

Next, we will set a name, project, pick our Repo and path.

Note: I’ve set the Sync Policy this time to “Automatic” with Prune and Self-Heal.
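For reference, the same Automatic / Prune / Self-Heal choice can be expressed declaratively. This is a sketch rather than the exact manifest the UI produced: the app name `samplechart`, the `default` destination namespace and the `main` target revision are my assumptions, and the helper function is hypothetical.

```shell
#!/bin/sh
# Sketch: emit an Application manifest with automated sync enabled.
# The quoted heredoc keeps the YAML literal (no shell expansion).

write_samplechart_app() {
  cat <<'EOF'
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: samplechart          # assumed name; must be lowercase
spec:
  project: default
  source:
    repoURL: 'ssh://builder@192.168.1.32:/home/builder/gitRepo/'
    path: helm/sampleChart
    targetRevision: main     # assumed branch
  destination:
    server: 'https://kubernetes.default.svc'
    namespace: default       # assumed destination namespace
  syncPolicy:
    automated:
      prune: true            # delete resources removed from Git
      selfHeal: true         # revert manual drift in the cluster
EOF
}

# Usage: write the file, then apply it in the namespace Argo CD runs in.
# write_samplechart_app > samplechart-app.yaml
# kubectl apply -f samplechart-app.yaml
```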

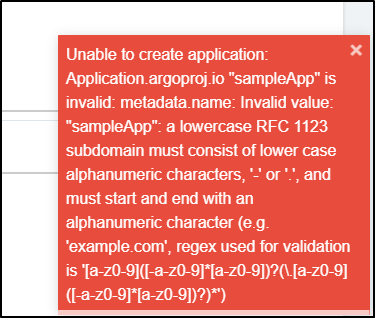

Then I press create and Argo accurately flags that app names need to be lowercase

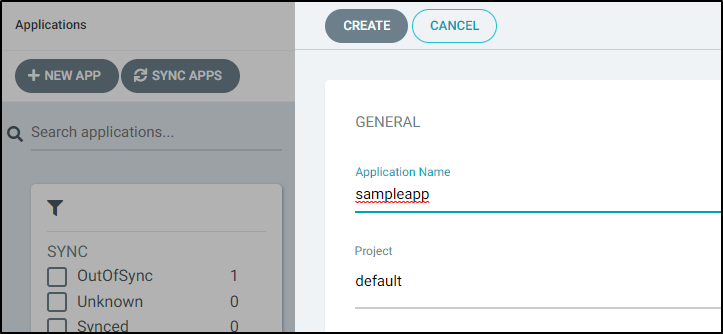

I correct and try again,

this time it works

You’ll notice that it’s already syncing. Because we set automatic, I did not need to synchronize after adding as we did earlier.

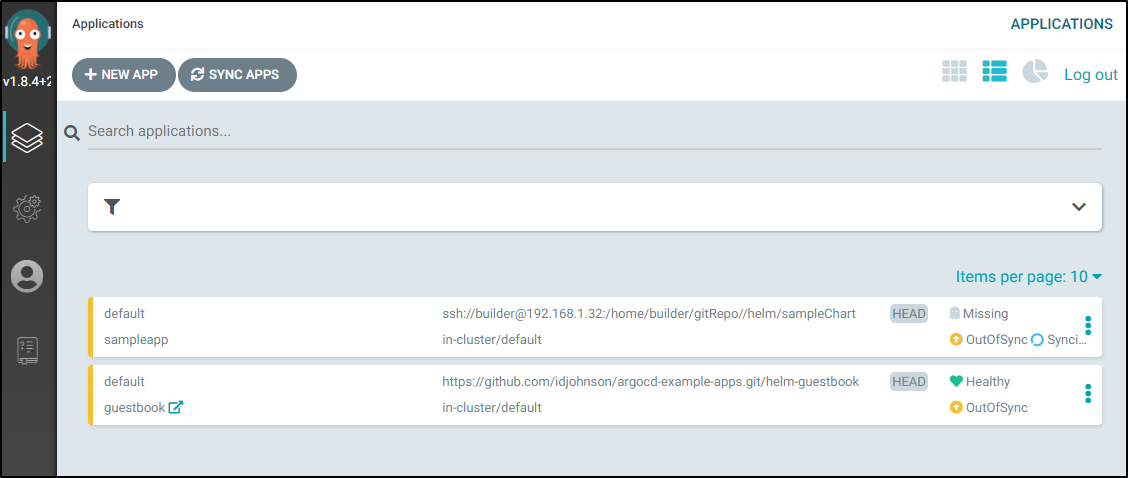

Watching the syncing, we see some issues…

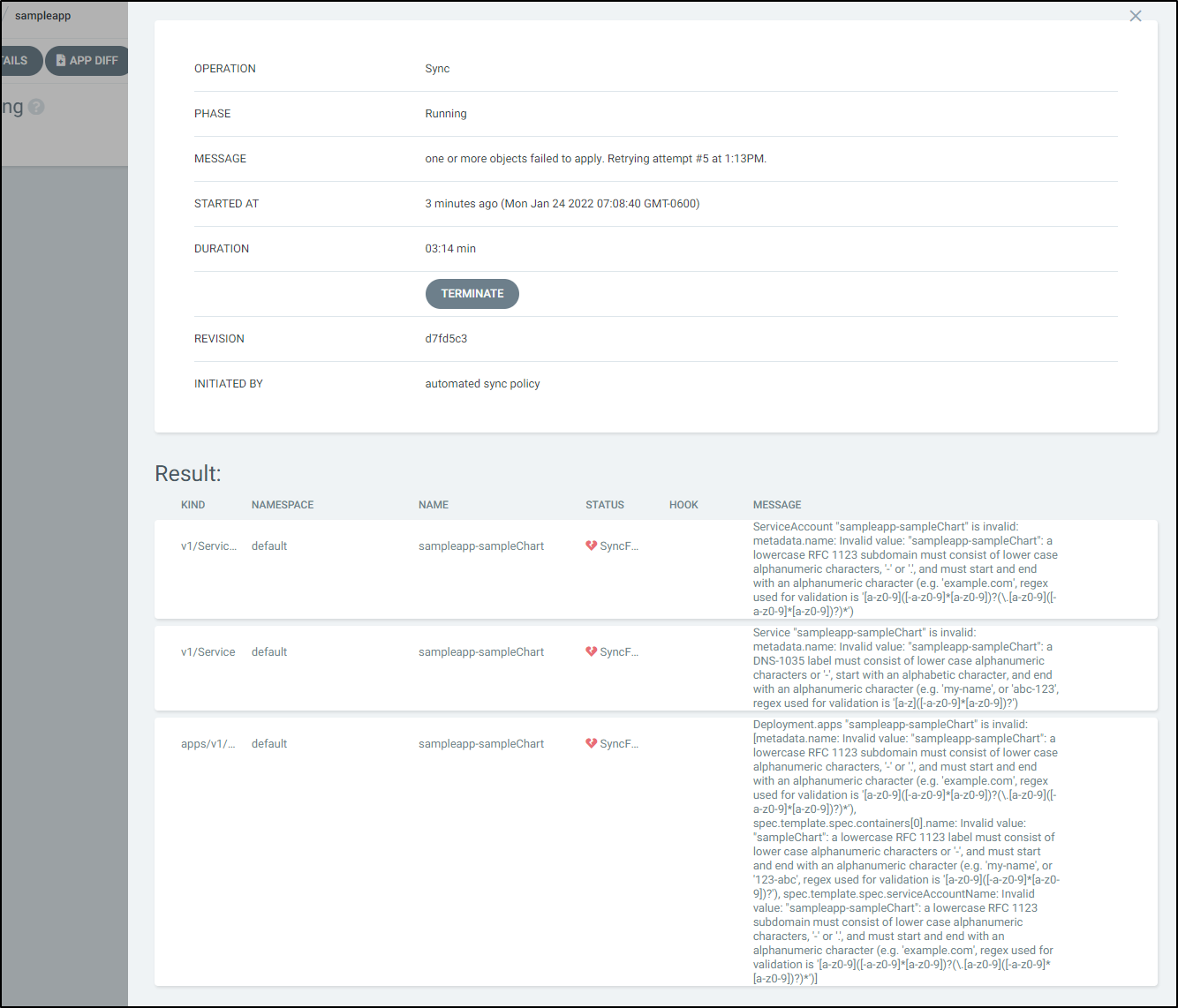

If we click on the large blue text “Syncing” we get the issues report:

Clearly my chart is broken due to my habit of camel-casing names. Let’s fix that.

builder@DESKTOP-QADGF36:~/Workspaces/gitRepo/helm/sampleChart$ find . -type f -exec sed -i 's/sampleChart/samplechart/g' {} \; -print

./.helmignore

./templates/NOTES.txt

./templates/hpa.yaml

./templates/serviceaccount.yaml

./templates/_helpers.tpl

./templates/tests/test-connection.yaml

./templates/service.yaml

./templates/ingress.yaml

./templates/deployment.yaml

./values.yaml

./Chart.yaml

builder@DESKTOP-QADGF36:~/Workspaces/gitRepo/helm/sampleChart$ git status

On branch main

Your branch is up to date with 'origin/main'.

Changes not staged for commit:

(use "git add <file>..." to update what will be committed)

(use "git restore <file>..." to discard changes in working directory)

modified: Chart.yaml

modified: templates/NOTES.txt

modified: templates/_helpers.tpl

modified: templates/deployment.yaml

modified: templates/hpa.yaml

modified: templates/ingress.yaml

modified: templates/service.yaml

modified: templates/serviceaccount.yaml

modified: templates/tests/test-connection.yaml

modified: values.yaml

no changes added to commit (use "git add" and/or "git commit -a")

builder@DESKTOP-QADGF36:~/Workspaces/gitRepo/helm/sampleChart$ git add -A

builder@DESKTOP-QADGF36:~/Workspaces/gitRepo/helm/sampleChart$ git commit -m "switch casing"

[main 1d80e71] switch casing

10 files changed, 34 insertions(+), 34 deletions(-)

builder@DESKTOP-QADGF36:~/Workspaces/gitRepo/helm/sampleChart$ git push

Enumerating objects: 31, done.

Counting objects: 100% (31/31), done.

Delta compression using up to 16 threads

Compressing objects: 100% (14/14), done.

Writing objects: 100% (16/16), 1.34 KiB | 1.34 MiB/s, done.

Total 16 (delta 10), reused 0 (delta 0)

To ssh://192.168.1.32:/home/builder/gitRepo/

d7fd5c3..1d80e71 main -> main
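Both the app-name error and the chart failure come down to Kubernetes wanting lowercase names. A quick pre-commit check could catch that earlier. The sketch below assumes the stricter DNS-1123 label form (lowercase alphanumerics and dashes, at most 63 characters); both helper names are hypothetical.

```shell
#!/bin/sh
# Sketch: check a name against the DNS-1123 label rules before using it
# as a chart or application name.

is_dns1123_label() {
  # At most 63 chars; lowercase alphanumerics or '-';
  # must start and end with an alphanumeric.
  [ "${#1}" -le 63 ] &&
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]([-a-z0-9]*[a-z0-9])?$'
}

to_k8s_name() {
  # Lowercases only; it does not repair other invalid characters.
  printf '%s\n' "$1" | tr '[:upper:]' '[:lower:]'
}

# Usage:
# is_dns1123_label sampleChart || echo "rename to $(to_k8s_name sampleChart)"
```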

Within moments we see the chart updates and is fixed:

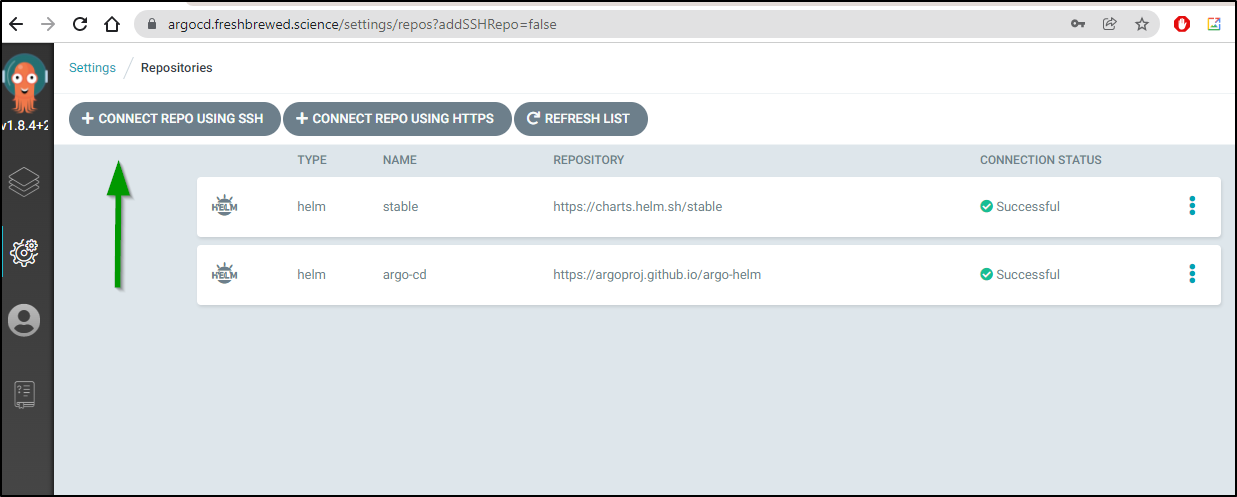

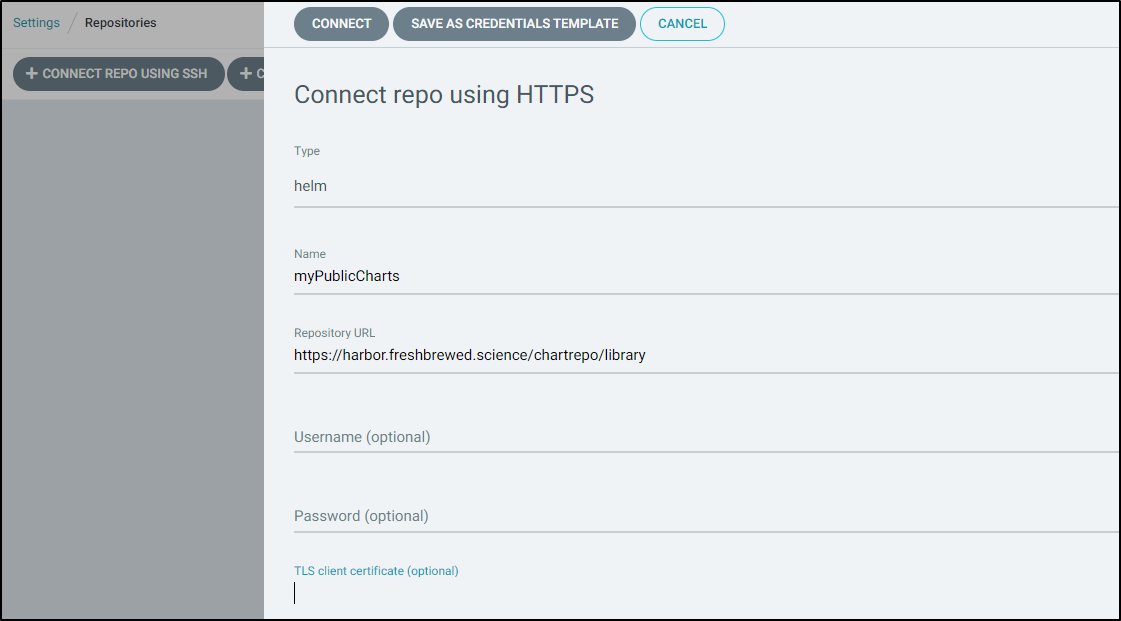

We demonstrated private repos with local GIT via SSH. However, you can add any accessible HTTPS repo as well:

We can also consume both private and public Helm charts directly:

https://harbor.freshbrewed.science/harbor/projects/1/helm-charts/samplechart/versions/0.1.1

and using Helm repos is very similar to GIT repos

CLI

For some activities, like adding another cluster, we will need the ArgoCD command line interface.

Installing:

$ sudo curl -sSL -o /usr/local/bin/argocd https://github.com/argoproj/argo-cd/releases/latest/download/argocd-linux-amd64

[sudo] password for builder:

$ sudo chmod 755 /usr/local/bin/argocd

$ argocd version

argocd: v2.2.3+987f665

BuildDate: 2022-01-18T17:53:49Z

GitCommit: 987f6659b88e656a8f6f8feef87f4dd467d53c44

GitTreeState: clean

GoVersion: go1.16.11

Compiler: gc

Platform: linux/amd64

FATA[0000] Argo CD server address unspecified

The easiest way to log in to a k8s instance is to port-forward to the server and use another window to log in:

$ kubectl port-forward argo-cd-argocd-server-564ddc97c4-j69fk 8080:8080

Forwarding from 127.0.0.1:8080 -> 8080

Forwarding from [::1]:8080 -> 8080

Handling connection for 8080

# another bash window

$ argocd login localhost:8080

WARNING: server is not configured with TLS. Proceed (y/n)? y

Username: admin

Password:

'admin' logged in successfully

Context 'localhost:8080' updated

$ argocd cluster add idjaks02-admin

WARNING: This will create a service account `argocd-manager` on the cluster referenced by context `idjaks02-admin` with full cluster level admin privileges. Do you want to continue [y/N]? y

INFO[0001] ServiceAccount "argocd-manager" created in namespace "kube-system"

INFO[0001] ClusterRole "argocd-manager-role" created

INFO[0001] ClusterRoleBinding "argocd-manager-role-binding" created

Cluster 'https://idjaks02-idjaks02rg-d4c024-2712c2e1.hcp.centralus.azmk8s.io:443' added

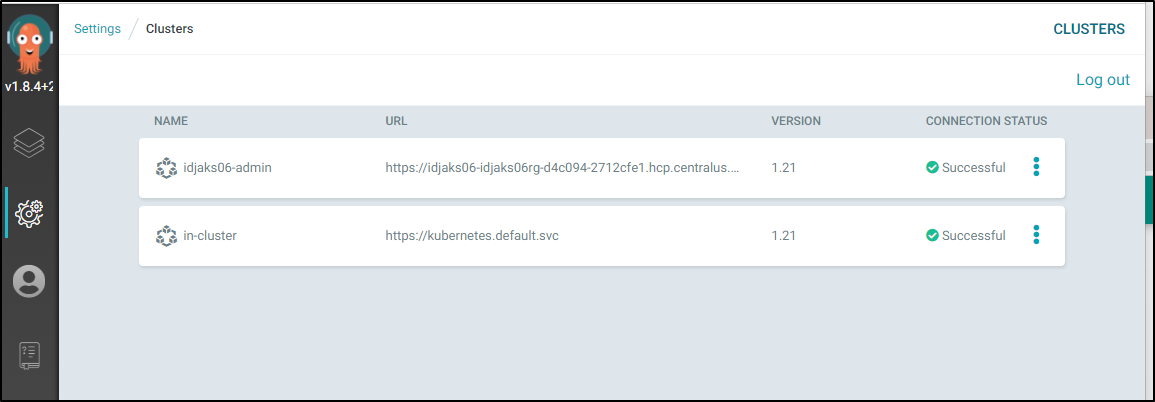

And now we can see it in the Clusters page:

We can also keep it simple and use the Server pod itself to orchestrate this flow rather easily:

# create a kubeconfig dir

$ kubectl exec `kubectl get pod -l app.kubernetes.io/name=argocd-server -o json | jq -r '.items[] | .metadata.name' | tr -d '\n'` -- mkdir -p /home/argocd/.kube

# copy my current kubeconfig over to the pod

$ kubectl cp ~/.kube/config `kubectl get pod -l app.kubernetes.io/name=argocd-server -o json | jq -r '.items[] | .metadata.name' | tr -d '\n'`:/home/argocd/.kube

# login to argocd locally then add the cluster.

$ kubectl exec `kubectl get pod -l app.kubernetes.io/name=argocd-server -o json | jq -r '.items[] | .metadata.name' | tr -d '\n'` -- bash -c "yes | argocd login --username admin --password myadminpassword localhost:8080 && argocd cluster add idjaks06-admin"

WARNING: server is not configured with TLS. Proceed (y/n)? 'admin' logged in successfully

Context 'localhost:8080' updated

time="2022-01-24T23:18:07Z" level=info msg="ServiceAccount \"argocd-manager\" already exists in namespace \"kube-system\""

time="2022-01-24T23:18:07Z" level=info msg="ClusterRole \"argocd-manager-role\" updated"

time="2022-01-24T23:18:07Z" level=info msg="ClusterRoleBinding \"argocd-manager-role-binding\" updated"

Cluster 'https://idjaks06-idjaks06rg-d4c094-2712cfe1.hcp.centralus.azmk8s.io:443' added
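The pod lookup is repeated in every command above, so it may read better wrapped in small functions. A sketch under the same assumptions as the commands above (Argo in the current namespace, `bash` and `argocd` present in the server pod); the function names are mine, and the password is interpolated into the remote command, so it must not contain single quotes.

```shell
#!/bin/sh
# Sketch: look up the argocd-server pod once and reuse it.

argocd_server_pod() {
  # First pod matching the label, with the "pod/" prefix stripped.
  kubectl get pods -l app.kubernetes.io/name=argocd-server -o name |
    head -n 1 | cut -d/ -f2
}

add_cluster_via_pod() {
  # $1 = kubeconfig context to register, $2 = Argo CD admin password
  pod="$(argocd_server_pod)"
  [ -n "$pod" ] || { echo "no argocd-server pod found" >&2; return 1; }
  kubectl exec "$pod" -- mkdir -p /home/argocd/.kube &&
  kubectl cp "$HOME/.kube/config" "$pod:/home/argocd/.kube/config" &&
  kubectl exec "$pod" -- bash -c \
    "yes | argocd login --username admin --password '$2' localhost:8080 && argocd cluster add '$1'"
}

# Usage (hypothetical password):
# add_cluster_via_pod idjaks06-admin myadminpassword
```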

Summary

ArgoCD does a great job of managing automated deployments to Kubernetes. It’s easy to tie in any Git or Helm repo to automatically deploy charts (or deploy manually, if that is one’s desire). It gets even wilder when you tie it to something like Crossplane.io. You can see Viktor Farcic’s YouTube (DOT) demo of that here.

ArgoCD came out in 2017 and is maintained by a dedicated team with support from the Cloud Native Computing Foundation. Presently there is no commercial or Enterprise offering, nor dedicated support; however, for the last two years it has been publicly backed by Intuit and IBM (Red Hat).

I’m thrilled with ArgoCD so far. In the past I’ve limited GitOps to using Azure Arc, but that requires a persistent Azure subscription. I will likely check out its direct competition, Flux CD (also a CNCF incubating project, initially developed by Weaveworks). Both suites will allow one to implement GitOps quite well. The wider Argo project has more tools we will explore in future posts, such as Argo Workflows and Argo Rollouts.