Published: May 8, 2025 by Isaac Johnson

Today we are going to revisit a couple of open-source suites, namely Vikunja and Glance. I use both, though Vikunja nearly daily.

We’ll also look at Smokeping, a simple open-source network latency monitor that is easy to fire up in Docker or Kubernetes. I’ll cover both, as well as how to add one’s own sites to it. Lastly, I’ll wrap up by showing how Smokeping fits in with my existing monitoring strategies.

Vikunja

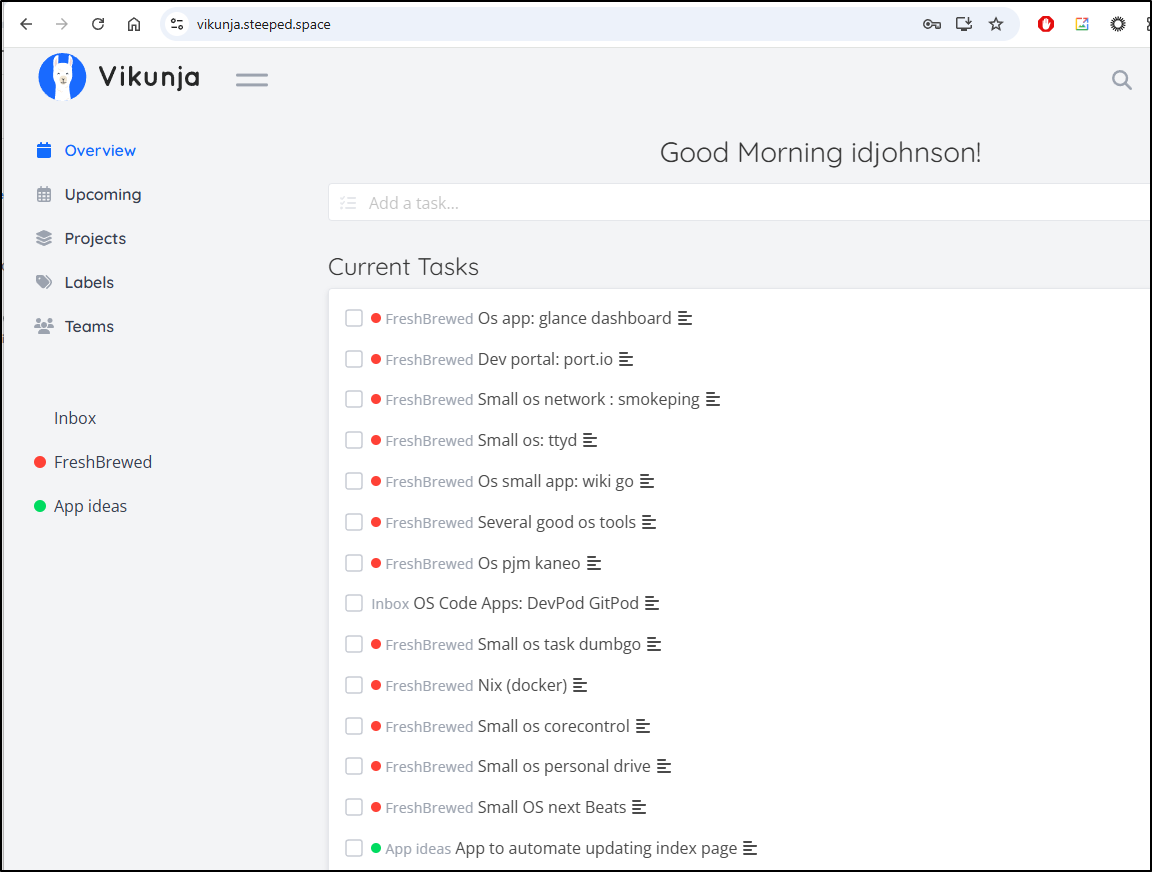

I wanted to post a follow-up on Vikunja Task Management from my February write-up.

I’ve been using Vikunja now, almost exclusively, for tracking app ideas and blog posts for the last 3 months.

I have a board for tracking status, though I don’t use it much.

Most of the time I fire it up on my phone, and the mobile interface works great.

I won’t rewrite the same article, but I do recommend it if you are looking for a task management tool, especially a non-US-based one.

Glance

I came across this XDA post about Glance while eating breakfast this morning.

It looks like a really solid landing page that is easy to self-host. As it looked familiar, I searched back and found that I did indeed cover it in June of 2024, and I still have it running.

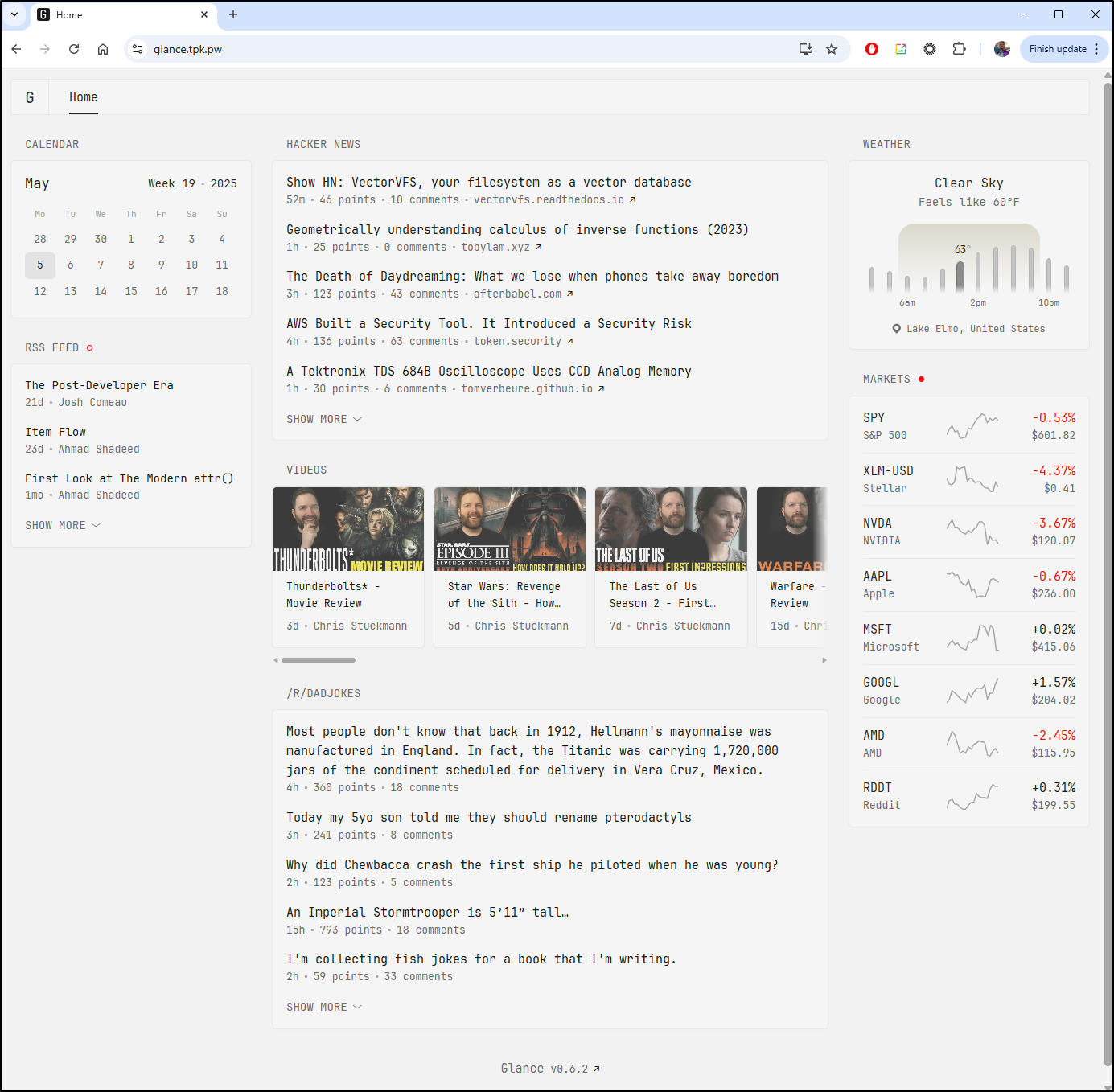

However, there have been plenty of updates since, so let’s upgrade my 0.6.2 version to the latest 0.7.13 and see what is new.

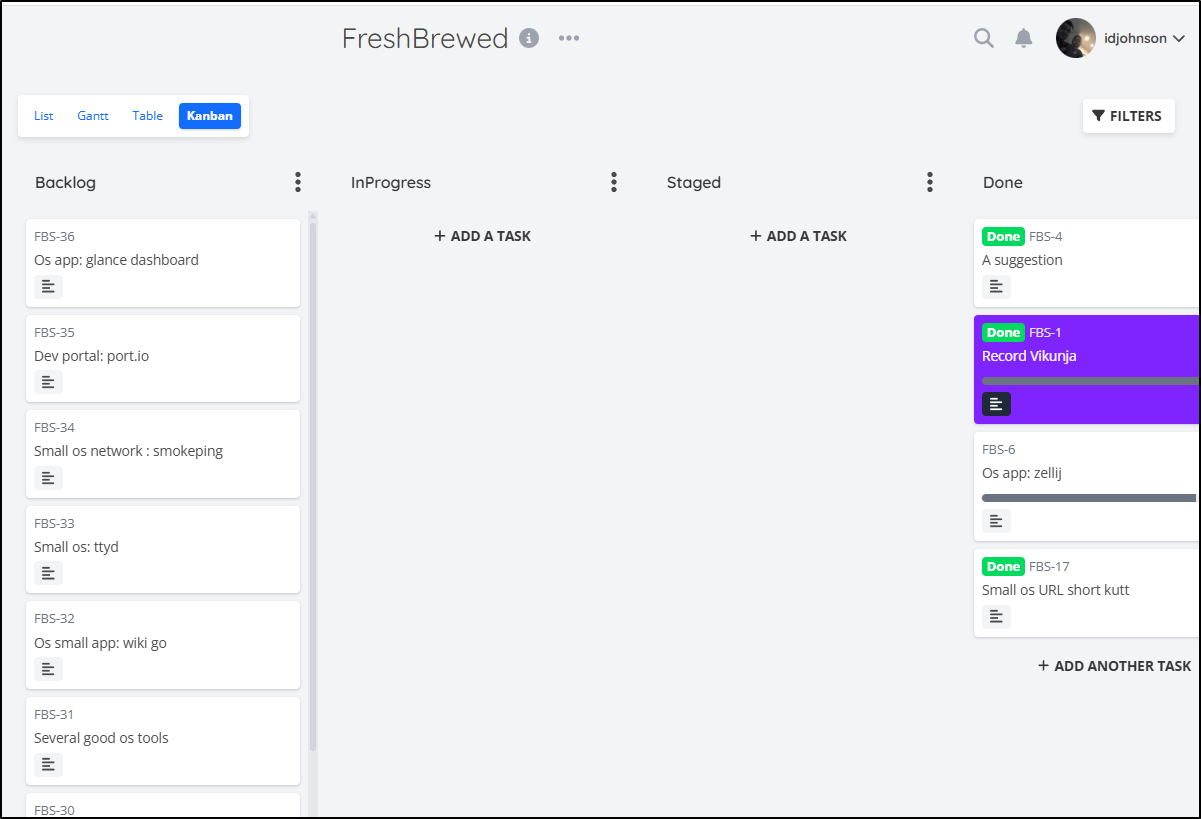

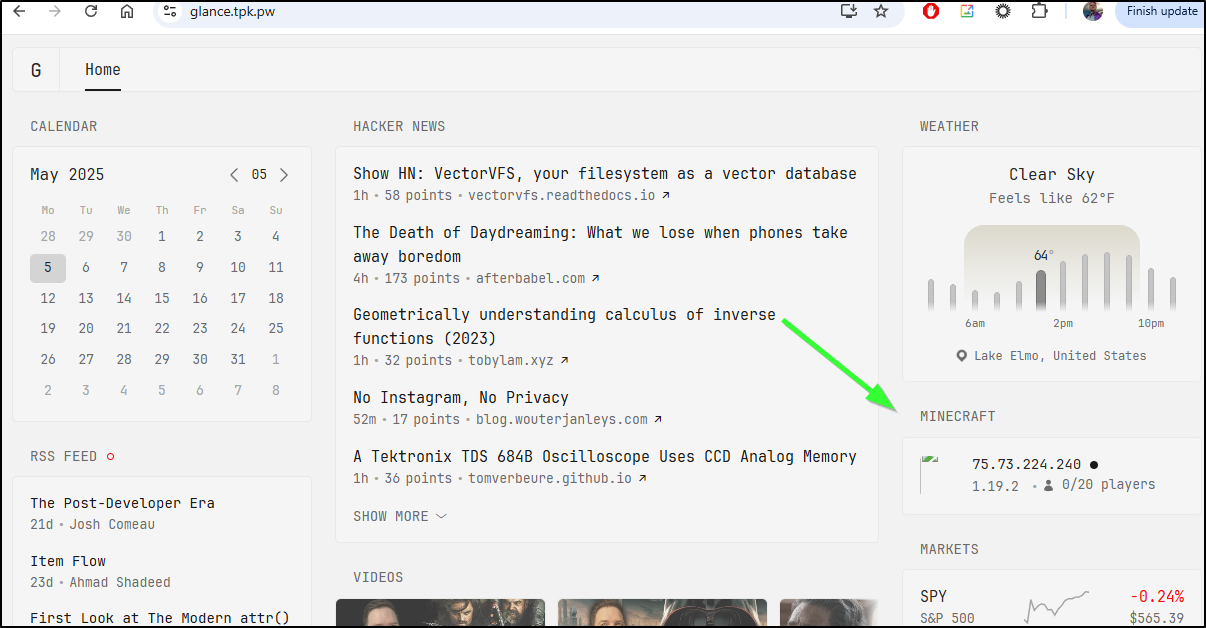

At this moment, Glance 0.6.2 looks like:

The README still lacks Kubernetes instructions, so we can just update the existing deployment.

It is still there, nearly a full year later:

```
$ kubectl get deployments -A | grep -i glance
default   glance-deployment   1/1   1   1   347d
```

I never specified a tag in the deployment:

```
$ kubectl get deployment glance-deployment -o yaml | grep -C 2 image:
    spec:
      containers:
      - image: glanceapp/glance
        imagePullPolicy: Always
        name: glance
```

So I should be able to just rotate the pod to “upgrade” (an untagged image resolves to latest, and imagePullPolicy: Always forces a fresh pull when the pod is recreated):

```
$ kubectl get po -l app=glance
NAME                                 READY   STATUS    RESTARTS   AGE
glance-deployment-5547d948ff-w5xg6   1/1     Running   0          177d

$ kubectl delete po -l app=glance
pod "glance-deployment-5547d948ff-w5xg6" deleted

$ kubectl get po -l app=glance
NAME                                 READY   STATUS   RESTARTS   AGE
glance-deployment-5547d948ff-rjwj5   0/1     Error    0          5s

$ kubectl get po -l app=glance
NAME                                 READY   STATUS             RESTARTS     AGE
glance-deployment-5547d948ff-rjwj5   0/1     CrashLoopBackOff   1 (3s ago)   7s
```

Uh-oh, it looks like we have an issue:

```
$ kubectl logs glance-deployment-5547d948ff-rjwj5
parsing config: reading main YAML file: open /app/config/glance.yml: no such file or directory
```

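I’m guessing that is because the original volume mount was just /app/glance.yml, while the newer release reads its config from /app/config/glance.yml:

```yaml
volumeMounts:
- name: config-volume
  mountPath: /app/glance.yml
  subPath: glance.yml
```

I did a quick inline edit to point the mount at the new path: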

```
$ kubectl edit deployment glance-deployment
deployment.apps/glance-deployment edited

$ kubectl get deployment glance-deployment -o yaml | grep -C 2 glance.yml
    deployment.kubernetes.io/revision: "4"
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"name":"glance-deployment","namespace":"default"},"spec":{"replicas":1,"selector":{"matchLabels":{"app":"glance"}},"template":{"metadata":{"labels":{"app":"glance"}},"spec":{"containers":[{"image":"glanceapp/glance","name":"glance","ports":[{"containerPort":8080}],"volumeMounts":[{"mountPath":"/app/glance.yml","name":"config-volume","subPath":"glance.yml"}]}],"volumes":[{"configMap":{"name":"glanceconfig"},"name":"config-volume"}]}}}}
  creationTimestamp: "2024-05-23T00:31:41Z"
  generation: 4
--
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /app/config/glance.yml
          name: config-volume
          subPath: glance.yml
      dnsPolicy: ClusterFirst
      restartPolicy: Always
```

And it came right up:

```
$ kubectl get po -l app=glance
NAME                                 READY   STATUS    RESTARTS   AGE
glance-deployment-5fdc699b86-nm42f   1/1     Running   0          6s
```
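If you would rather not hand-edit the deployment, the same fix could be scripted as a JSON Patch for kubectl patch --type=json. Below is a minimal Python sketch. It assumes the config mount is the first volumeMount of the first container, as in the deployment above. The helper name glance_mount_patch is hypothetical. The leading "test" operation is meant to make the patch fail rather than rewrite some other mount, assuming the API server honors JSON Patch test operations.

```python
import json

# Old and new config locations (the new one comes from the error message above).
OLD_MOUNT_PATH = "/app/glance.yml"
NEW_MOUNT_PATH = "/app/config/glance.yml"


def glance_mount_patch(container_index=0, mount_index=0):
    """Build an RFC 6902 JSON Patch that moves the Glance config mount.

    Assumes the config mount is volumeMounts[mount_index] of
    containers[container_index], as in the deployment shown above.
    """
    path = (
        f"/spec/template/spec/containers/{container_index}"
        f"/volumeMounts/{mount_index}/mountPath"
    )
    ops = [
        # "test" aborts the whole patch if this mount isn't at the old path
        {"op": "test", "path": path, "value": OLD_MOUNT_PATH},
        {"op": "replace", "path": path, "value": NEW_MOUNT_PATH},
    ]
    return json.dumps(ops)


if __name__ == "__main__":
    print(glance_mount_patch())
```

Saved as, say, glance_patch.py, it could be applied with kubectl patch deployment glance-deployment --type=json -p "$(python3 glance_patch.py)".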

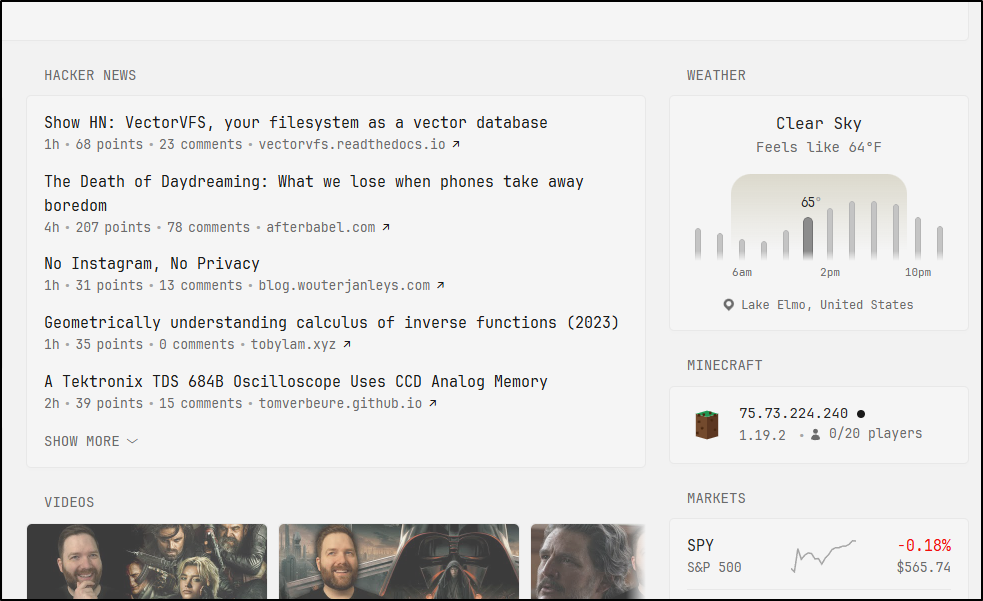

Visually, I don’t see much difference. That said, there are a lot of updates in the release notes, including the availability of community widgets.

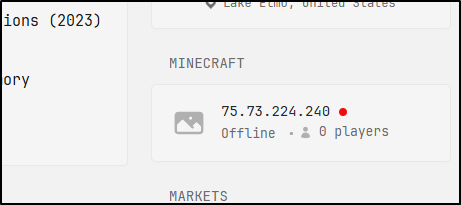

I had to try it, so I added a Minecraft server monitor for my FTB server.

I noticed the icon URL in the returned JSON payload was empty.

The offline state works fine (I tested it by changing the port).

So I created a better SVG with Copilot that sort of looks like a Minecraft dirt block:

```xml
<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 20 20" style="width:32px; height:32px;">
  <!-- Right side face (darker) -->
  <polygon points="17,3 17,17 10,19 10,5" fill="#5C3721"/>
  <!-- Left side face (medium) -->
  <polygon points="3,3 3,17 10,19 10,5" fill="#734A2D"/>
  <!-- Top face (lightest) -->
  <polygon points="3,3 10,5 17,3 10,1" fill="#229954"/>
  <!-- Texture dots (darker spots) -->
  <circle cx="5" cy="4" r="0.8" fill="#4A2810"/>
  <circle cx="12" cy="2.5" r="0.8" fill="#4A2810"/>
  <circle cx="8" cy="3.5" r="0.8" fill="#4A2810"/>
  <circle cx="15" cy="4" r="0.8" fill="#4A2810"/>
  <!-- Side texture dots -->
  <circle cx="5" cy="8" r="0.8" fill="#4A2810"/>
  <circle cx="7" cy="12" r="0.8" fill="#4A2810"/>
  <circle cx="4" cy="15" r="0.8" fill="#4A2810"/>
  <circle cx="13" cy="7" r="0.8" fill="#4A2810"/>
  <circle cx="15" cy="13" r="0.8" fill="#4A2810"/>
</svg>
```

You are welcome to use the SVG. Here it is in the online branch of the widget’s template, e.g.:

```yaml
- type: custom-api
  title: Minecraft
  url: https://api.mcstatus.io/v2/status/java/75.73.224.240:12345
  cache: 30s
  template: |
    <div style="display:flex; align-items:center; gap:12px;">
      <div style="width:40px; height:40px; flex-shrink:0; border-radius:4px; display:flex; justify-content:center; align-items:center; overflow:hidden;">
        {{ if .JSON.Bool "online" }}
        <svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 20 20" style="width:32px; height:32px;">
          <!-- Right side face (darker) -->
          <polygon points="17,3 17,17 10,19 10,5" fill="#5C3721"/>
          <!-- Left side face (medium) -->
          <polygon points="3,3 3,17 10,19 10,5" fill="#734A2D"/>
          <!-- Top face (lightest) -->
          <polygon points="3,3 10,5 17,3 10,1" fill="#229954"/>
          <!-- Texture dots (darker spots) -->
          <circle cx="5" cy="4" r="0.8" fill="#4A2810"/>
          <circle cx="12" cy="2.5" r="0.8" fill="#4A2810"/>
          <circle cx="8" cy="3.5" r="0.8" fill="#4A2810"/>
          <circle cx="15" cy="4" r="0.8" fill="#4A2810"/>
          <!-- Side texture dots -->
          <circle cx="5" cy="8" r="0.8" fill="#4A2810"/>
          <circle cx="7" cy="12" r="0.8" fill="#4A2810"/>
          <circle cx="4" cy="15" r="0.8" fill="#4A2810"/>
          <circle cx="13" cy="7" r="0.8" fill="#4A2810"/>
          <circle cx="15" cy="13" r="0.8" fill="#4A2810"/>
        </svg>
        {{ else }}
        <svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 20 20" fill="currentColor" style="width:32px; height:32px; opacity:0.5;">
          <path fill-rule="evenodd" d="M1 5.25A2.25 2.25 0 0 1 3.25 3h13.5A2.25 2.25 0 0 1 19 5.25v9.5A2.25 2.25 0 0 1 16.75 17H3.25A2.25 2.25 0 0 1 1 14.75v-9.5Zm1.5 5.81v3.69c0 .414.336.75.75.75h13.5a.75.75 0 0 0 .75-.75v-2.690l-2.22-2.219a.75.75 0 0 0-1.06 0l-1.91 1.909.47.47a.75.75 0 1 1-1.06 1.06L6.53 8.091a.75.75 0 0 0-1.06 0l-2.97 2.97ZM12 7a1 1 0 1 1-2 0 1 1 0 0 1 2 0Z" clip-rule="evenodd" />
        </svg>
        {{ end }}
      </div>
      ... snip ...
```
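To see what the widget is keying off, here is a rough Python sketch. It fetches the same mcstatus.io endpoint and mirrors the template’s online/offline branch. The online field comes straight from the template. The players.online and players.max fields are my assumption about the rest of the v2 payload, so the code falls back to "?" when they are missing.

```python
import json
import urllib.request

# Same endpoint pattern as the widget's url: field.
API = "https://api.mcstatus.io/v2/status/java/{address}"


def fetch_status(address, timeout=10.0):
    """Fetch the raw JSON the Glance widget sees (makes a network call)."""
    with urllib.request.urlopen(API.format(address=address), timeout=timeout) as resp:
        return json.load(resp)


def summarize(payload):
    """Mirror the template's {{ if .JSON.Bool "online" }} branch."""
    if not payload.get("online", False):
        return "offline"
    # players.online / players.max are assumed field names; fall back to "?"
    players = payload.get("players") or {}
    return f"online ({players.get('online', '?')}/{players.get('max', '?')} players)"
```

For example, print(summarize(fetch_status("75.73.224.240:12345"))) prints either offline or a one-line player count.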

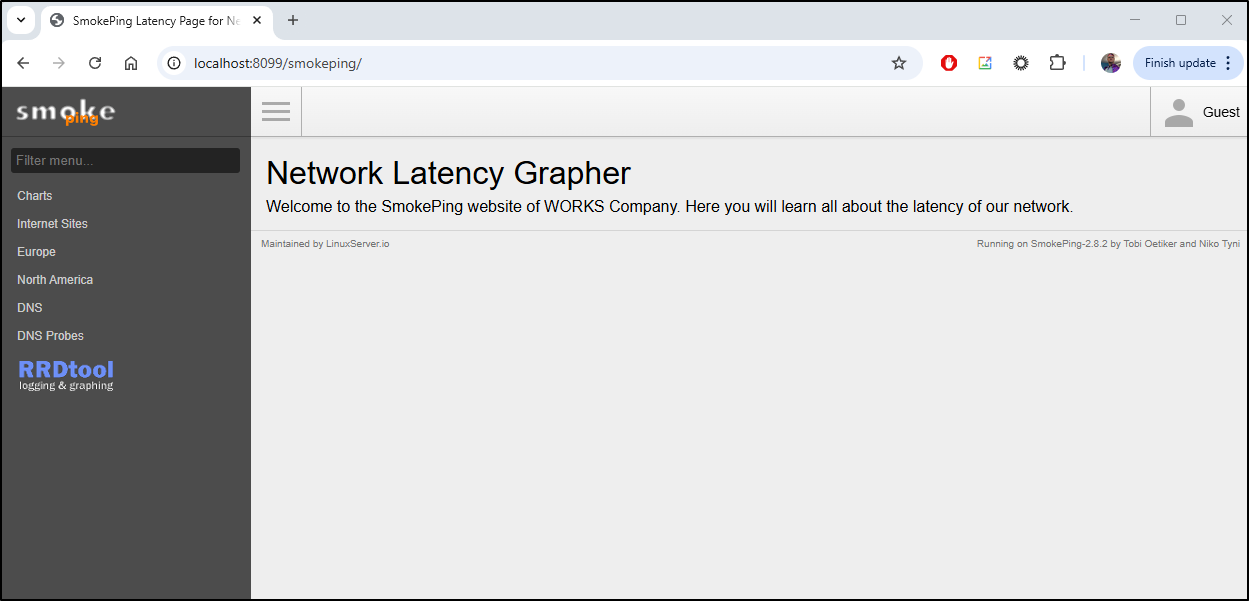

Smokeping

Recently I saw an article on Marius Hosting about Smokeping.

Smokeping from the LinuxServer.io group

Let’s start with a basic docker compose run:

```
$ mkdir /tmp/smokeconfig
$ mkdir /tmp/smokedata
$ !v
vi docker-compose.yaml
$ cat ./docker-compose.yaml
---
services:
  smokeping:
    image: lscr.io/linuxserver/smokeping:latest
    container_name: smokeping
    environment:
      - PUID=1000
      - PGID=1000
      - TZ=Etc/UTC
      - SHARED_SECRET=password #optional
    volumes:
      - /tmp/smokeconfig:/config
      - /tmp/smokedata:/data
    ports:
      - 8099:80
    restart: unless-stopped
```

Now we can compose up:

```
$ docker compose up
[+] Running 9/9
 ✔ smokeping Pulled                 12.9s
   ✔ 88f52c619a3d Pull complete      1.6s
   ✔ e1cde46db0e1 Pull complete      1.6s
   ✔ c32d061e9a23 Pull complete      1.7s
   ✔ 1bf67c2a6cde Pull complete      1.7s
   ✔ 2cf302d3aa0f Pull complete      2.5s
   ✔ 28623f5332b1 Pull complete      2.6s
   ✔ 73125ea7fbc2 Pull complete     10.8s
   ✔ c1347cfb876d Pull complete     10.8s
[+] Running 2/2
 ✔ Network jekyll-blog_default  Created    0.1s
 ✔ Container smokeping          Created    0.3s
Attaching to smokeping
smokeping | [migrations] started
smokeping | [migrations] no migrations found
smokeping | ───────────────────────────────────────
smokeping |
smokeping |       ██╗     ███████╗██╗ ██████╗
smokeping |       ██║     ██╔════╝██║██╔═══██╗
smokeping |       ██║     ███████╗██║██║   ██║
smokeping |       ██║     ╚════██║██║██║   ██║
smokeping |       ███████╗███████║██║╚██████╔╝
smokeping |       ╚══════╝╚══════╝╚═╝ ╚═════╝
smokeping |
smokeping |    Brought to you by linuxserver.io
smokeping | ───────────────────────────────────────
smokeping |
smokeping | To support LSIO projects visit:
smokeping | https://www.linuxserver.io/donate/
smokeping |
smokeping | ───────────────────────────────────────
smokeping | GID/UID
smokeping | ───────────────────────────────────────
smokeping |
smokeping | User UID: 1000
smokeping | User GID: 1000
smokeping | ───────────────────────────────────────
smokeping | Linuxserver.io version: 2.8.2-r3-ls130
smokeping | Build-date: 2025-04-22T20:40:37+00:00
smokeping | ───────────────────────────────────────
smokeping |
smokeping | 'Alerts' -> '/config/Alerts'
smokeping | 'Database' -> '/config/Database'
smokeping | 'General' -> '/config/General'
smokeping | 'Presentation' -> '/config/Presentation'
smokeping | 'Probes' -> '/config/Probes'
smokeping | 'Slaves' -> '/config/Slaves'
smokeping | 'Targets' -> '/config/Targets'
smokeping | 'pathnames' -> '/config/pathnames'
smokeping | 'ssmtp.conf' -> '/config/ssmtp.conf'
smokeping | [custom-init] No custom files found, skipping...
smokeping | AH00558: httpd: Could not reliably determine the server's fully qualified domain name, using 172.23.0.2. Set the 'ServerName' directive globally to suppress this message
smokeping | Connection to localhost (::1) 80 port [tcp/http] succeeded!
smokeping | [ls.io-init] done.
smokeping | WARNING: Hostname 'ipv6.google.com' does currently not resolve to an IPv4 address
smokeping | ### parsing dig output...OK
smokeping | Smokeping version 2.008002 successfully launched.
smokeping | Entering multiprocess mode.
smokeping | ### assuming you are using an tcpping copy reporting in milliseconds
smokeping | Child process 274 started for probe FPing6.
smokeping | No targets defined for probe TCPPing, skipping.
smokeping | FPing6: probing 1 targets with step 300 s and offset 255 s.
smokeping | Child process 275 started for probe DNS.
smokeping | DNS: probing 9 targets with step 300 s and offset 295 s.
smokeping | Child process 276 started for probe FPing.
smokeping | All probe processes started successfully.
smokeping | FPing: probing 25 targets with step 300 s and offset 298 s.
```

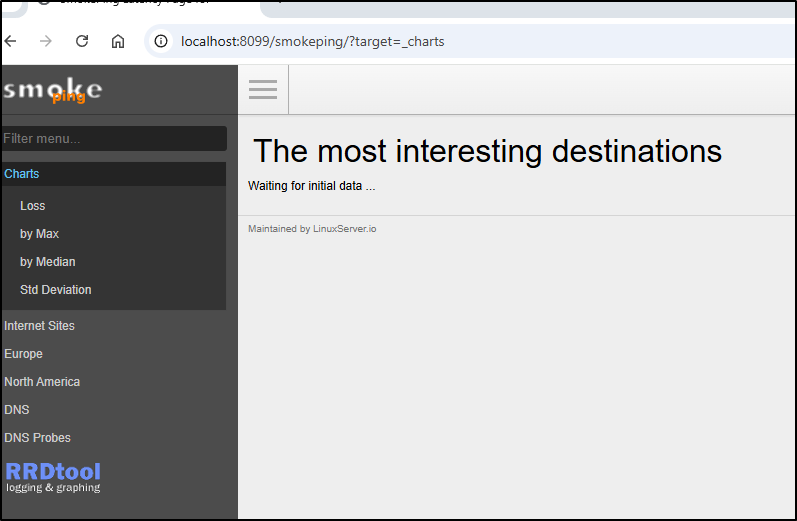

I can now see Smokeping on port 8099 (nothing magic there; I just wanted an available local port).

However, I did not see any data load, and all the Internet sites remained flat.

Docker host

Let’s try it on my dedicated Docker host:

```
builder@builder-T100:~$ mkdir smokeping
builder@builder-T100:~$ mkdir smokeping/data
builder@builder-T100:~$ mkdir smokeping/config
# just to make sure it isn't a container user access issue
builder@builder-T100:~$ chmod 777 smokeping/data
builder@builder-T100:~$ chmod 777 smokeping/config
# run
builder@builder-T100:~$ docker run -d \
  --name=smokeping \
  -e PUID=1000 \
  -e PGID=1000 \
  -e TZ=Etc/UTC \
  -p 8099:80 \
  -v /home/builder/smokeping/config:/config \
  -v /home/builder/smokeping/data:/data \
  --restart unless-stopped \
  lscr.io/linuxserver/smokeping:latest
Unable to find image 'lscr.io/linuxserver/smokeping:latest' locally
latest: Pulling from linuxserver/smokeping
88f52c619a3d: Pull complete
e1cde46db0e1: Pull complete
c32d061e9a23: Pull complete
1bf67c2a6cde: Pull complete
2cf302d3aa0f: Pull complete
28623f5332b1: Pull complete
73125ea7fbc2: Pull complete
c1347cfb876d: Pull complete
Digest: sha256:55d87021ff7a66575029d324d6cb5116d37ee7815637a29282b894a8336201a6
Status: Downloaded newer image for lscr.io/linuxserver/smokeping:latest
f3989c434dbd80bf8c67c68c54d4fb9a61ce0b5c72f44ed1f996ccb3d0eeaa86
```

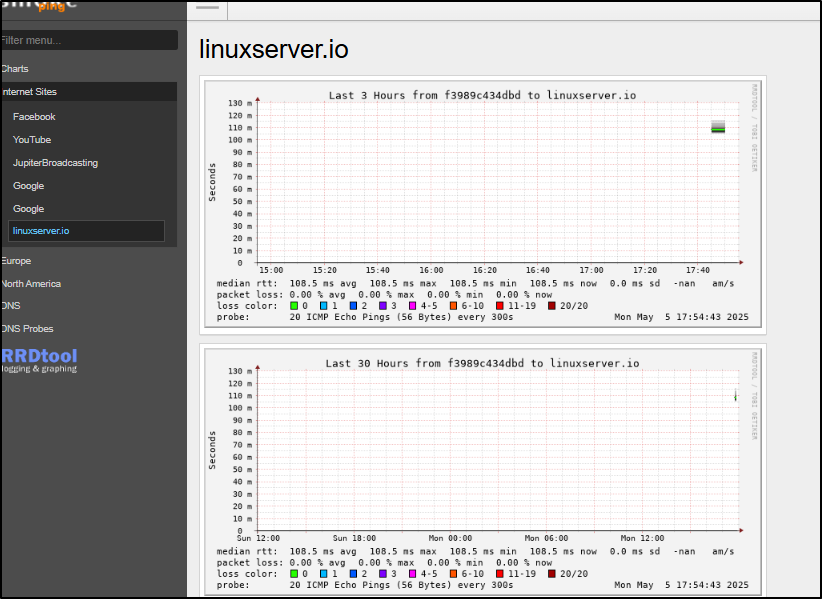

I can see graphs now, but no data.

The logs look fine:

```
builder@builder-T100:~$ docker logs smokeping
[migrations] started
[migrations] no migrations found
───────────────────────────────────────
      ██╗     ███████╗██╗ ██████╗
      ██║     ██╔════╝██║██╔═══██╗
      ██║     ███████╗██║██║   ██║
      ██║     ╚════██║██║██║   ██║
      ███████╗███████║██║╚██████╔╝
      ╚══════╝╚══════╝╚═╝ ╚═════╝
   Brought to you by linuxserver.io
───────────────────────────────────────
To support LSIO projects visit:
https://www.linuxserver.io/donate/
───────────────────────────────────────
GID/UID
───────────────────────────────────────
User UID: 1000
User GID: 1000
───────────────────────────────────────
Linuxserver.io version: 2.8.2-r3-ls130
Build-date: 2025-04-22T20:40:37+00:00
───────────────────────────────────────
'Alerts' -> '/config/Alerts'
'Database' -> '/config/Database'
'General' -> '/config/General'
'Presentation' -> '/config/Presentation'
'Probes' -> '/config/Probes'
'Slaves' -> '/config/Slaves'
'Targets' -> '/config/Targets'
'pathnames' -> '/config/pathnames'
'ssmtp.conf' -> '/config/ssmtp.conf'
[custom-init] No custom files found, skipping...
AH00558: httpd: Could not reliably determine the server's fully qualified domain name, using 172.17.0.15. Set the 'ServerName' directive globally to suppress this message
Connection to localhost (127.0.0.1) 80 port [tcp/http] succeeded!
[ls.io-init] done.
WARNING: Hostname 'ipv6.google.com' does currently not resolve to an IPv4 address
### parsing dig output...OK
### assuming you are using an fping copy reporting in milliseconds
Smokeping version 2.008002 successfully launched.
Entering multiprocess mode.
No targets defined for probe TCPPing, skipping.
### assuming you are using an tcpping copy reporting in milliseconds
Child process 272 started for probe DNS.
DNS: probing 9 targets with step 300 s and offset 185 s.
Child process 273 started for probe FPing6.
FPing6: probing 1 targets with step 300 s and offset 150 s.
Child process 274 started for probe FPing.
All probe processes started successfully.
FPing: probing 25 targets with step 300 s and offset 58 s.
```

And I can see data in the mounted volumes:

```
builder@builder-T100:~$ ls -ltra smokeping/data/
total 28
drwxrwxr-x 4 builder builder 4096 May  5 12:41 ..
drwxr-xr-x 2 builder builder 4096 May  5 12:44 DNS
drwxr-xr-x 2 builder builder 4096 May  5 12:44 USA
drwxr-xr-x 5 builder builder 4096 May  5 12:44 Europe
drwxr-xr-x 2 builder builder 4096 May  5 12:44 DNSProbes
drwxrwxrwx 7 builder builder 4096 May  5 12:44 .
drwxr-xr-x 2 builder builder 4096 May  5 12:44 InternetSites
builder@builder-T100:~$ ls -ltra smokeping/config/
total 72
drwxrwxr-x 4 builder builder  4096 May  5 12:41 ..
-rw-r--r-- 1 builder builder   177 May  5 12:44 Alerts
-rw-r--r-- 1 builder builder   237 May  5 12:44 Database
-rw-r--r-- 1 builder builder   486 May  5 12:44 General
-rw-r--r-- 1 builder builder   875 May  5 12:44 Presentation
-rw-r--r-- 1 builder builder   251 May  5 12:44 Probes
-rw-r--r-- 1 builder builder   147 May  5 12:44 Slaves
-rw-r--r-- 1 builder builder  3390 May  5 12:44 Targets
-rw-r--r-- 1 builder builder   188 May  5 12:44 pathnames
-rw-r--r-- 1 builder builder   613 May  5 12:44 ssmtp.conf
drwxr-xr-x 2 builder builder  4096 May  5 12:44 site-confs
-rw-r--r-- 1 builder builder 17460 May  5 12:44 httpd.conf
-rwxr-x--- 1 builder builder    57 May  5 12:44 smokeping_secrets
drwxrwxrwx 3 builder builder  4096 May  5 12:44 .
```

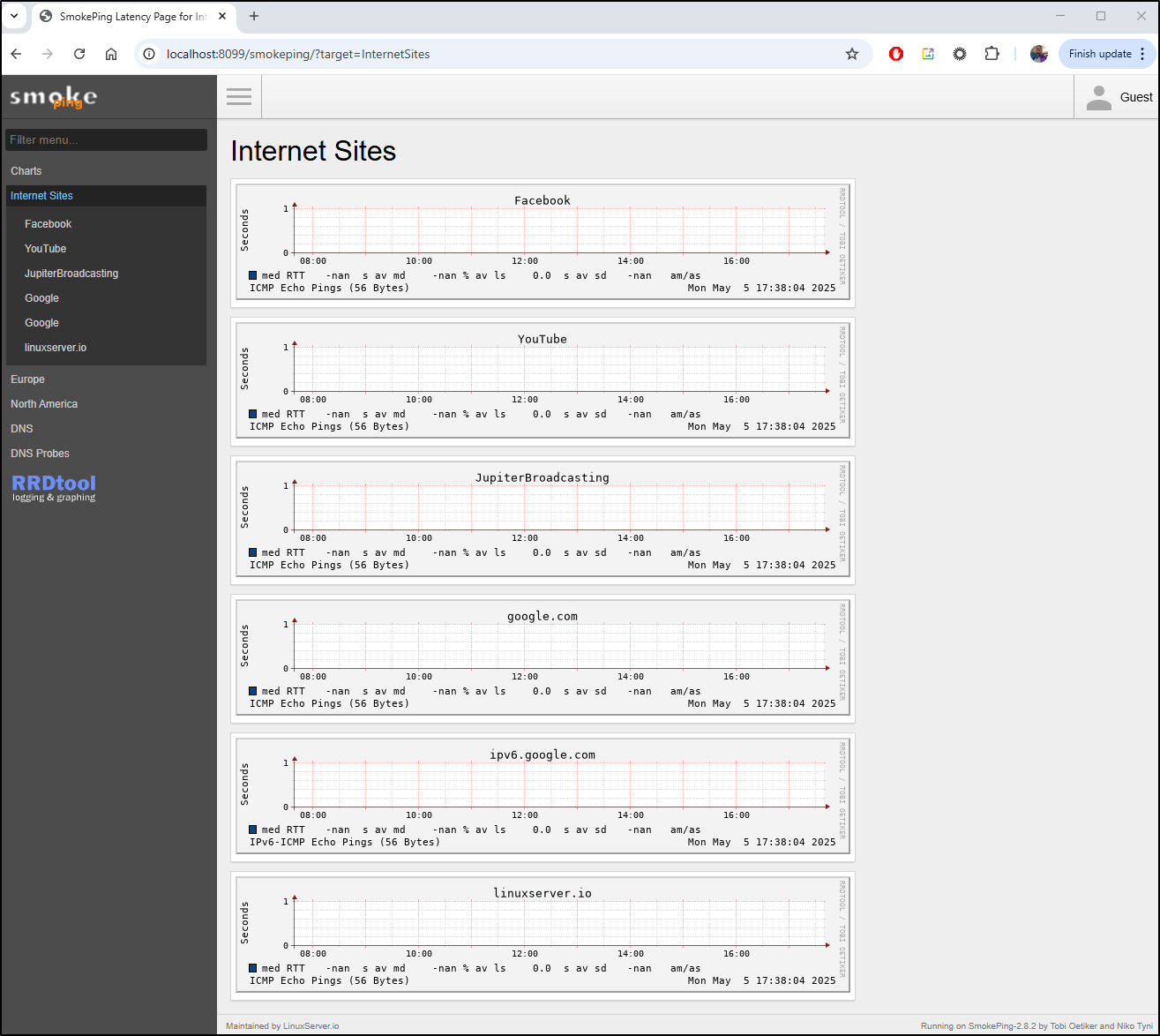

That was when I realized that I just lacked patience.

Coming back about 15 minutes later, I could see the graphs starting to fill in.

Kubernetes

I’ll try one more way: Kubernetes.

Converting this to a manifest:

```
$ cat ./smokeping.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: smokeping-config
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: local-path
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: smokeping-data
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: local-path
  resources:
    requests:
      storage: 5Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: smokeping
  labels:
    app: smokeping
spec:
  replicas: 1
  selector:
    matchLabels:
      app: smokeping
  template:
    metadata:
      labels:
        app: smokeping
    spec:
      containers:
        - name: smokeping
          image: lscr.io/linuxserver/smokeping:latest
          env:
            - name: PUID
              value: "1000"
            - name: PGID
              value: "1000"
            - name: TZ
              value: "America/Chicago"
          ports:
            - containerPort: 80
              name: http
          volumeMounts:
            - name: config
              mountPath: /config
            - name: data
              mountPath: /data
          resources:
            requests:
              memory: "256Mi"
              cpu: "100m"
            limits:
              memory: "512Mi"
              cpu: "500m"
      volumes:
        - name: config
          persistentVolumeClaim:
            claimName: smokeping-config
        - name: data
          persistentVolumeClaim:
            claimName: smokeping-data
---
apiVersion: v1
kind: Service
metadata:
  name: smokeping
spec:
  selector:
    app: smokeping
  ports:
    - port: 80
      targetPort: 80
      name: http
  type: ClusterIP
```

Then apply it:

```
$ kubectl apply -f ./smokeping.yaml
persistentvolumeclaim/smokeping-config created
persistentvolumeclaim/smokeping-data created
deployment.apps/smokeping created
service/smokeping created
```

I can see the pod is up and running:

```
$ kubectl logs smokeping-594bc499b8-nchx4
[migrations] started
[migrations] no migrations found
───────────────────────────────────────
      ██╗     ███████╗██╗ ██████╗
      ██║     ██╔════╝██║██╔═══██╗
      ██║     ███████╗██║██║   ██║
      ██║     ╚════██║██║██║   ██║
      ███████╗███████║██║╚██████╔╝
      ╚══════╝╚══════╝╚═╝ ╚═════╝
   Brought to you by linuxserver.io
───────────────────────────────────────
To support LSIO projects visit:
https://www.linuxserver.io/donate/
───────────────────────────────────────
GID/UID
───────────────────────────────────────
User UID: 1000
User GID: 1000
───────────────────────────────────────
Linuxserver.io version: 2.8.2-r3-ls130
Build-date: 2025-04-22T20:40:37+00:00
───────────────────────────────────────
'Alerts' -> '/config/Alerts'
'Database' -> '/config/Database'
'General' -> '/config/General'
'Presentation' -> '/config/Presentation'
'Probes' -> '/config/Probes'
'Slaves' -> '/config/Slaves'
'Targets' -> '/config/Targets'
'pathnames' -> '/config/pathnames'
'ssmtp.conf' -> '/config/ssmtp.conf'
[custom-init] No custom files found, skipping...
AH00558: httpd: Could not reliably determine the server's fully qualified domain name, using 10.42.1.119. Set the 'ServerName' directive globally to suppress this message
Connection to localhost (::1) 80 port [tcp/http] succeeded!
[ls.io-init] done.
WARNING: Hostname 'ipv6.google.com' does currently not resolve to an IPv4 address
### parsing dig output...OK
### assuming you are using an tcpping copy reporting in milliseconds
Smokeping version 2.008002 successfully launched.
Entering multiprocess mode.
Child process 275 started for probe FPing6.
No targets defined for probe TCPPing, skipping.
FPing6: probing 1 targets with step 300 s and offset 77 s.
Child process 276 started for probe FPing.
FPing: probing 25 targets with step 300 s and offset 129 s.
Child process 277 started for probe DNS.
All probe processes started successfully.
DNS: probing 9 targets with step 300 s and offset 252 s.
```

I’ll want to check this remotely, so let’s create an A record and then add an ingress:

```
$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n smokeping
{
  "ARecords": [
    {
      "ipv4Address": "75.73.224.240"
    }
  ],
  "TTL": 3600,
  "etag": "ddd74614-d3a2-4e7c-9716-0869aa23d27c",
  "fqdn": "smokeping.tpk.pw.",
  "id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/smokeping",
  "name": "smokeping",
  "provisioningState": "Succeeded",
  "resourceGroup": "idjdnsrg",
  "targetResource": {},
  "trafficManagementProfile": {},
  "type": "Microsoft.Network/dnszones/A"
}
```
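Before testing the ingress, it can be worth confirming that the new record actually resolves from where you are. Here is a minimal Python sketch that uses only the standard library resolver. The retry count and delay are arbitrary choices of mine. The helpers resolves_to and wait_for_record are hypothetical and not part of any tool used above.

```python
import socket
import time


def resolves_to(host, expected_ip):
    """True if any IPv4 address returned for `host` equals `expected_ip`."""
    try:
        infos = socket.getaddrinfo(host, None, family=socket.AF_INET)
    except socket.gaierror:
        return False  # NXDOMAIN or resolver failure: treat as "not yet"
    # Each entry is (family, type, proto, canonname, (address, port)).
    return any(info[4][0] == expected_ip for info in infos)


def wait_for_record(host, expected_ip, attempts=10, delay=30.0, check=resolves_to):
    """Poll until the A record shows up or we run out of attempts."""
    for attempt in range(attempts):
        if check(host, expected_ip):
            return True
        if attempt < attempts - 1:
            time.sleep(delay)
    return False
```

For this post, that would be wait_for_record("smokeping.tpk.pw", "75.73.224.240").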

I can then add this Ingress block to the Kubernetes YAML manifest:

```yaml
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.org/websocket-services: smokeping
  name: smokepingingress
spec:
  rules:
  - host: smokeping.tpk.pw
    http:
      paths:
      - backend:
          service:
            name: smokeping
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - smokeping.tpk.pw
    secretName: smokeping-tls
```

and apply:

```
$ kubectl apply -f ./smokeping.yaml
persistentvolumeclaim/smokeping-config unchanged
persistentvolumeclaim/smokeping-data unchanged
deployment.apps/smokeping unchanged
service/smokeping unchanged
ingress.networking.k8s.io/smokepingingress created
```

Once the cert showed as ready,

```
$ kubectl get cert smokeping-tls
NAME            READY   SECRET          AGE
smokeping-tls   True    smokeping-tls   96s
```

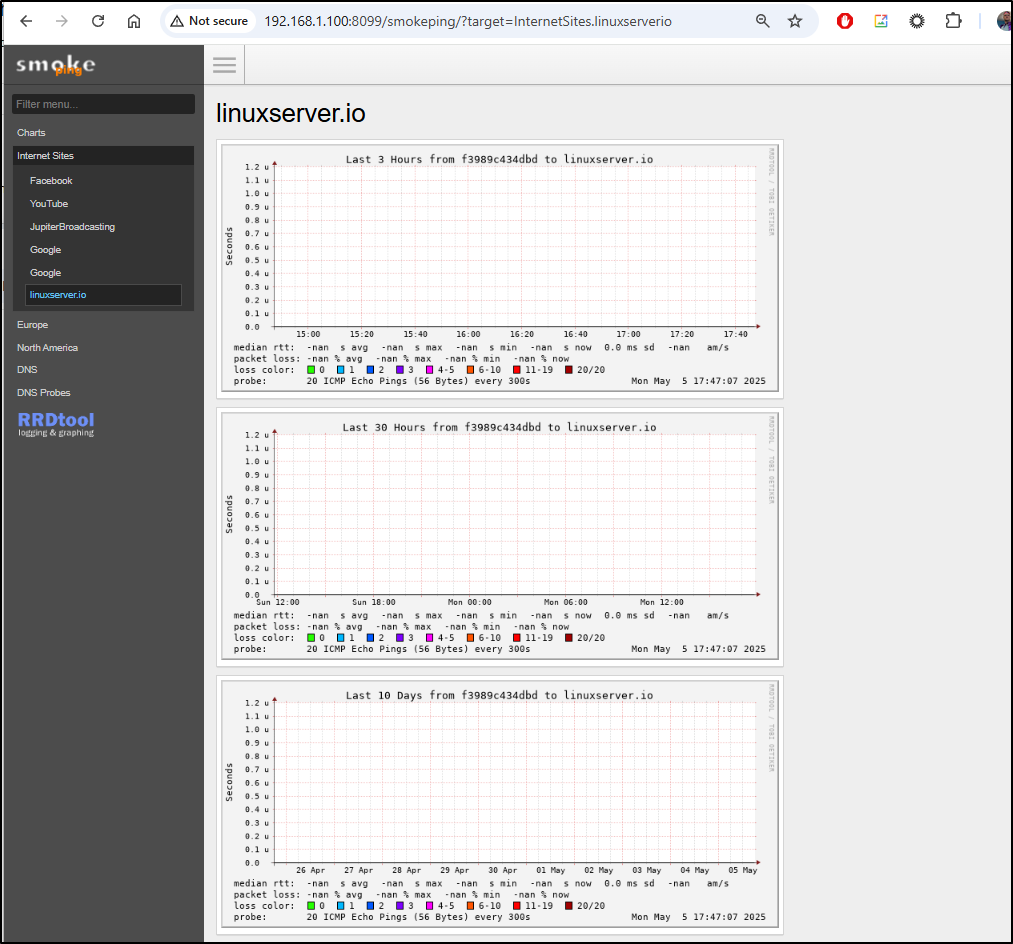

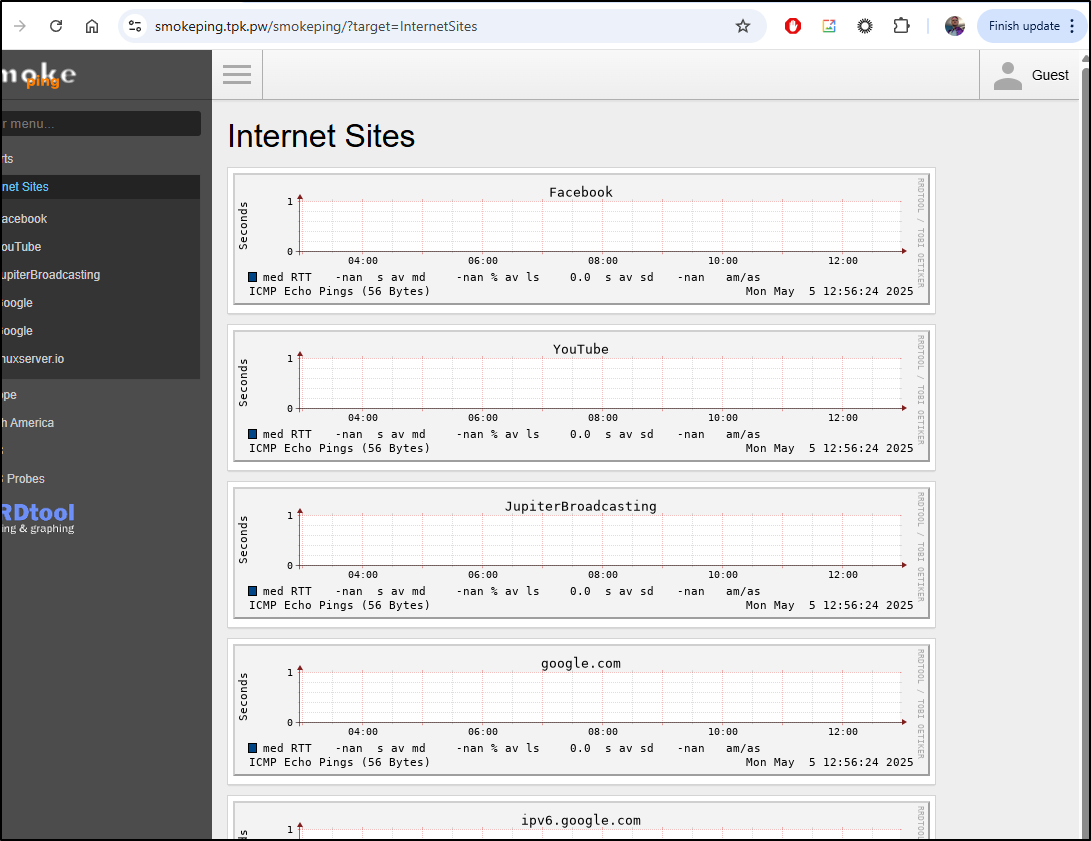

I could then test the URL, smokeping.tpk.pw.

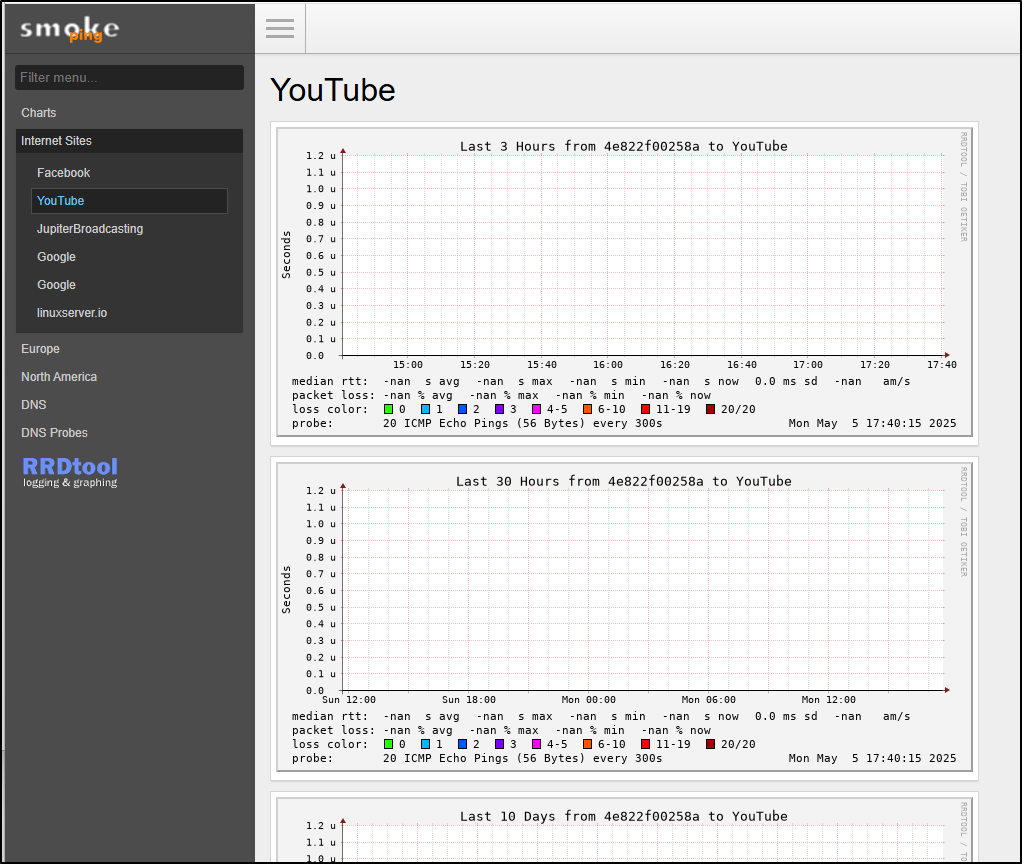

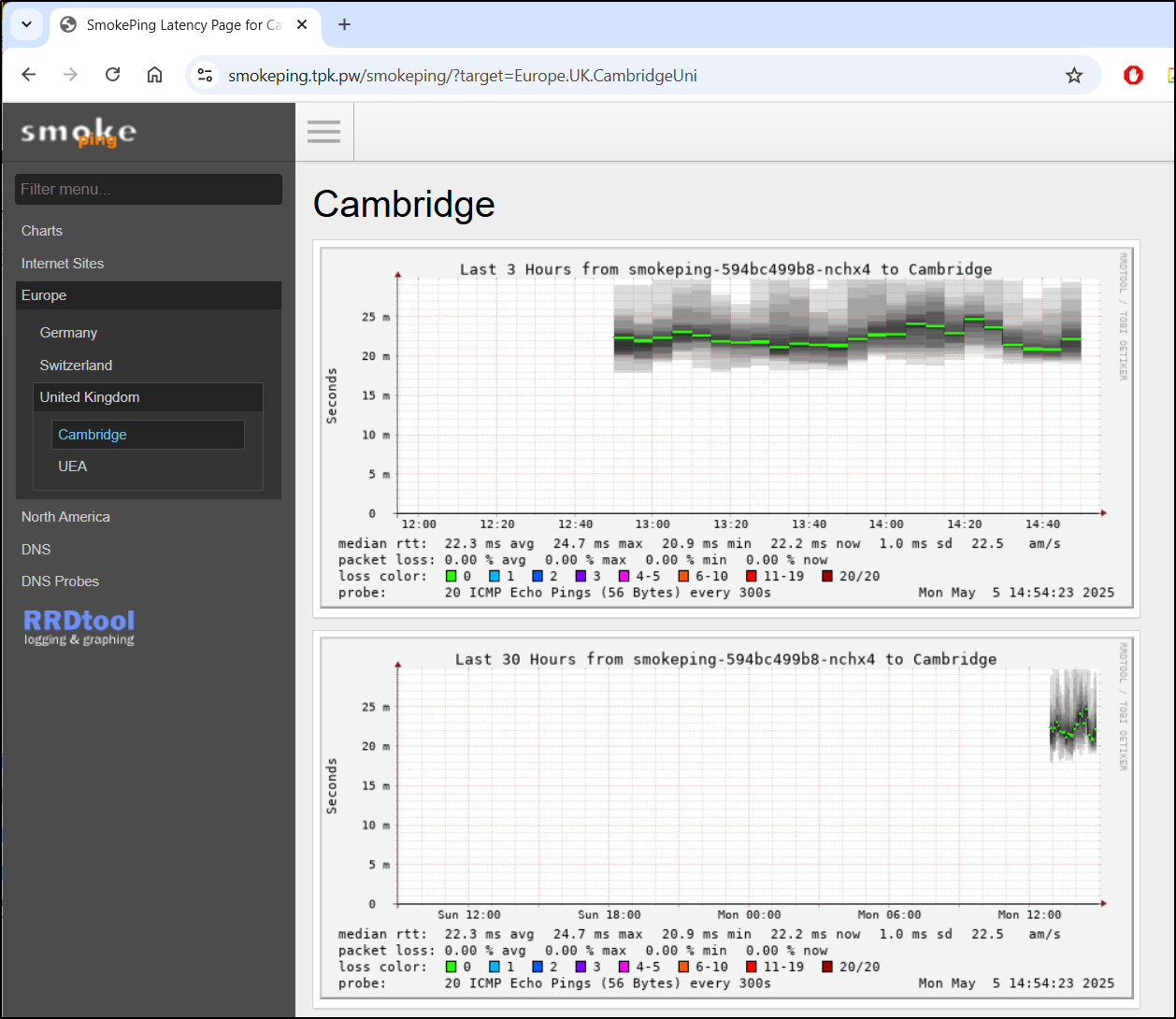

It didn’t take long to see some good data in the graphs, such as pings to the UK.

Adding sites

We could rebuild the image to add sites to the Targets config file, or just get a shell in the container and edit the file in place, since it lives on the PVC:

```
builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ kubectl get po | grep smoke
smokeping-594bc499b8-nchx4   1/1   Running   0   126m
builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ kubectl exec -it smokeping-594bc499b8-nchx4 -- /bin/bash
root@smokeping-594bc499b8-nchx4:/# pwd
/
root@smokeping-594bc499b8-nchx4:/# ls
app  build_version  config  defaults  docker-mods  home  lib  media  opt  proc  run  srv  tmp  var
bin  command  data  dev  etc  init  lsiopy  mnt  package  root  sbin  sys  usr
root@smokeping-594bc499b8-nchx4:/# cd /config
root@smokeping-594bc499b8-nchx4:/config# cat Targets
*** Targets ***

probe = FPing

menu = Top
title = Network Latency Grapher
remark = Welcome to the SmokePing website of WORKS Company. \
         Here you will learn all about the latency of our network.

+ InternetSites

menu = Internet Sites
title = Internet Sites

++ Facebook

menu = Facebook
title = Facebook
host = facebook.com
```

Here I’ll add a target of my own:

```
root@smokeping-594bc499b8-nchx4:/config# ls -ltra
total 72
drwxr-xr-x 1 root root  4096 May  5 12:51 ..
-rw-r--r-- 1 abc users   177 May  5 12:51 Alerts
-rw-r--r-- 1 abc users   237 May  5 12:51 Database
-rw-r--r-- 1 abc users   486 May  5 12:51 General
-rw-r--r-- 1 abc users   875 May  5 12:51 Presentation
-rw-r--r-- 1 abc users   251 May  5 12:51 Probes
-rw-r--r-- 1 abc users   147 May  5 12:51 Slaves
-rw-r--r-- 1 abc users  3390 May  5 12:51 Targets
-rw-r--r-- 1 abc users   188 May  5 12:51 pathnames
-rw-r--r-- 1 abc users   613 May  5 12:51 ssmtp.conf
drwxr-xr-x 2 abc users  4096 May  5 12:51 site-confs
-rw-r--r-- 1 abc users 17460 May  5 12:51 httpd.conf
-rwxr-x--- 1 abc users    57 May  5 12:51 smokeping_secrets
drwxrwxrwx 3 abc users  4096 May  5 12:51 .
root@smokeping-594bc499b8-nchx4:/config# vi Targets
```

Then add my lines

+ InternetSites

menu = Internet Sites

title = Internet Sites

++ Facebook

menu = Facebook

title = Facebook

host = facebook.com

++ Youtube

menu = YouTube

title = YouTube

host = youtube.com

++ JupiterBroadcasting

menu = JupiterBroadcasting

title = JupiterBroadcasting

host = jupiterbroadcasting.com

++ GoogleSearch

menu = Google

title = google.com

host = google.com

++ GoogleSearchIpv6

menu = Google

probe = FPing6

title = ipv6.google.com

host = ipv6.google.com

++ linuxserverio

menu = linuxserver.io

title = linuxserver.io

host = linuxserver.io

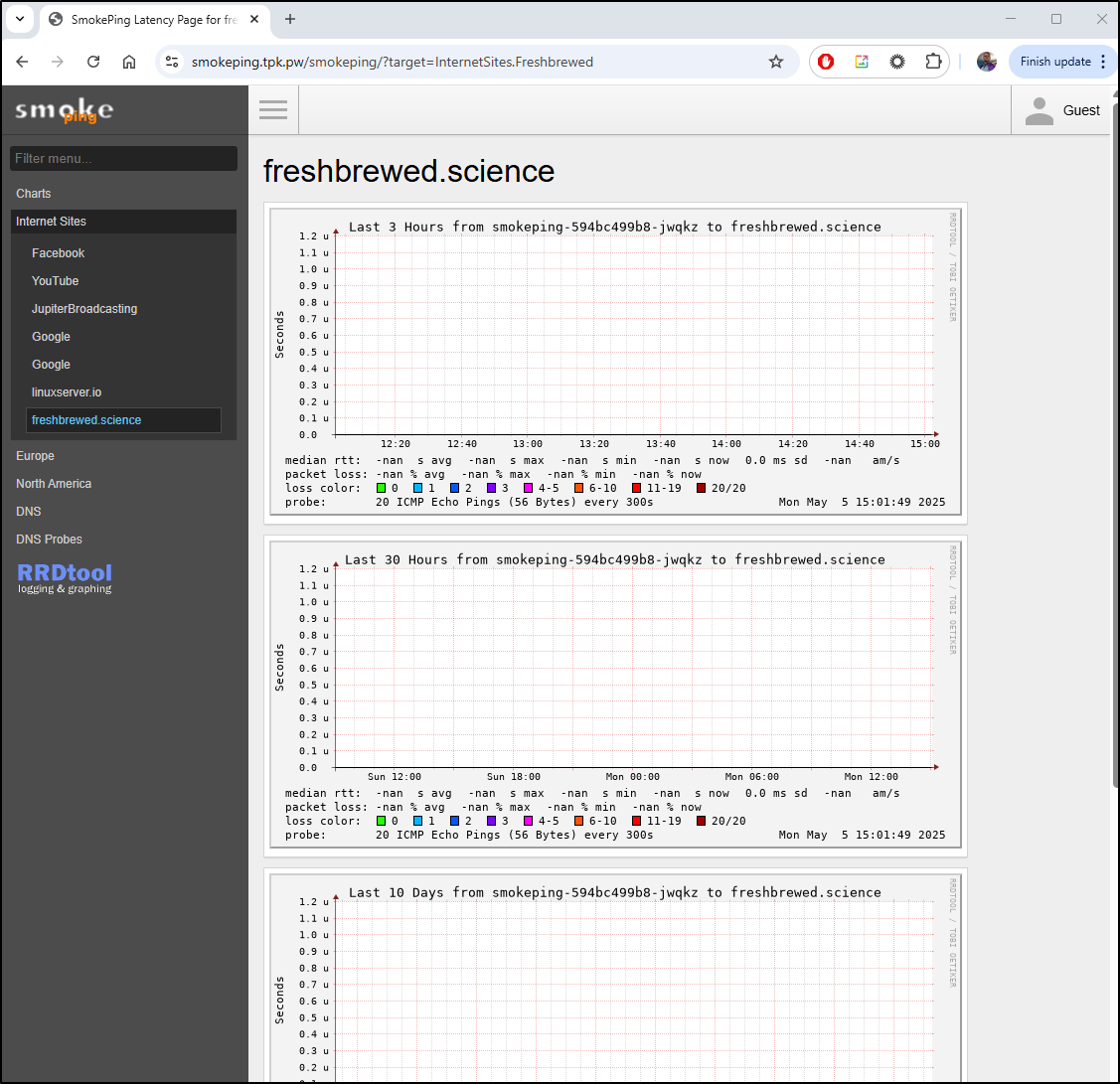

++ Freshbrewed

menu = freshbrewed.science

title = freshbrewed.science

host = freshbrewed.science

+ Europe
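Hand-editing in vi works fine. If you find yourself doing it often, the same change could be scripted. Here is a minimal Python sketch that assumes the Targets layout shown above: a top-level + Section followed by ++ entries with menu, title and host. The helper add_target is hypothetical. The allowed-name check is a conservative assumption on my part, not Smokeping’s documented rule.

```python
import re

# Conservative assumption: entry names use only letters, digits, "_" and "-".
NAME_RE = re.compile(r"[A-Za-z0-9_-]+")


def add_target(targets_text, section, name, host):
    """Append a '++ name' entry at the end of '+ section' in a Smokeping Targets file."""
    if not NAME_RE.fullmatch(name):
        raise ValueError(f"unsupported target name: {name!r}")
    lines = targets_text.splitlines()
    header = f"+ {section}"
    starts = [i for i, line in enumerate(lines) if line.strip() == header]
    if not starts:
        raise ValueError(f"section {section!r} not found")
    start = starts[0]
    # The section ends at the next top-level "+ " header ("++" doesn't match), or at EOF.
    end = next(
        (i for i in range(start + 1, len(lines)) if lines[i].startswith("+ ")),
        len(lines),
    )
    # Back up over trailing blank lines so the entry follows the last one directly.
    while end > start + 1 and not lines[end - 1].strip():
        end -= 1
    entry = ["", f"++ {name}", f"menu = {host}", f"title = {host}", f"host = {host}"]
    lines[end:end] = entry
    return "\n".join(lines) + "\n"
```

One way to use it is to pull the file out with kubectl cp, run add_target(text, "InternetSites", "Freshbrewed", "freshbrewed.science"), and copy the result back before restarting Smokeping.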

Since the Smokeping daemon reads its configuration when it starts (the web front end is just a CGI), I’ll rotate the pod to ensure the change takes effect:

```
$ kubectl delete po smokeping-594bc499b8-nchx4
pod "smokeping-594bc499b8-nchx4" deleted
$ kubectl get po | grep smoke
smokeping-594bc499b8-jwqkz   1/1   Running   0   18s
```

I can now see my own site listed under Internet Sites.

Where I could really see this being handy is adding a new section for all of one’s exposed sites, so you can see whether there is any latency overnight.
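One way to build such a section without typing every host is to pull hostnames from the cluster’s ingresses (kubectl get ingress -A -o json) and render a Smokeping block from them. Here is a minimal Python sketch. The section name and the dots-to-underscores entry naming are my own choices, and wildcard hosts are skipped. If every ingress host resolves to the same public IP (as smokeping.tpk.pw does here), FPing would essentially be measuring that one address.

```python
import json
import re
from typing import List


def hosts_from_ingress_json(doc: str) -> List[str]:
    """Collect unique spec.rules[].host values from `kubectl get ingress -A -o json`."""
    data = json.loads(doc)
    hosts = set()
    for item in data.get("items", []):
        for rule in (item.get("spec") or {}).get("rules") or []:
            host = rule.get("host")
            if host and not host.startswith("*"):  # wildcards can't be pinged
                hosts.add(host)
    return sorted(hosts)


def render_section(section: str, hosts: List[str]) -> str:
    """Render a top-level Smokeping section with one ++ entry per host."""
    out = [f"+ {section}", "", f"menu = {section}", f"title = {section}"]
    for host in hosts:
        # Entry names avoid dots, so "glance.tpk.pw" becomes "glance_tpk_pw".
        name = re.sub(r"[^A-Za-z0-9_-]", "_", host)
        out += ["", f"++ {name}", f"menu = {host}", f"title = {host}", f"host = {host}"]
    return "\n".join(out) + "\n"
```

The rendered text could then be appended to the end of /config/Targets, e.g. render_section("MySites", hosts_from_ingress_json(open("ing.json").read())).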

Other ways to monitor

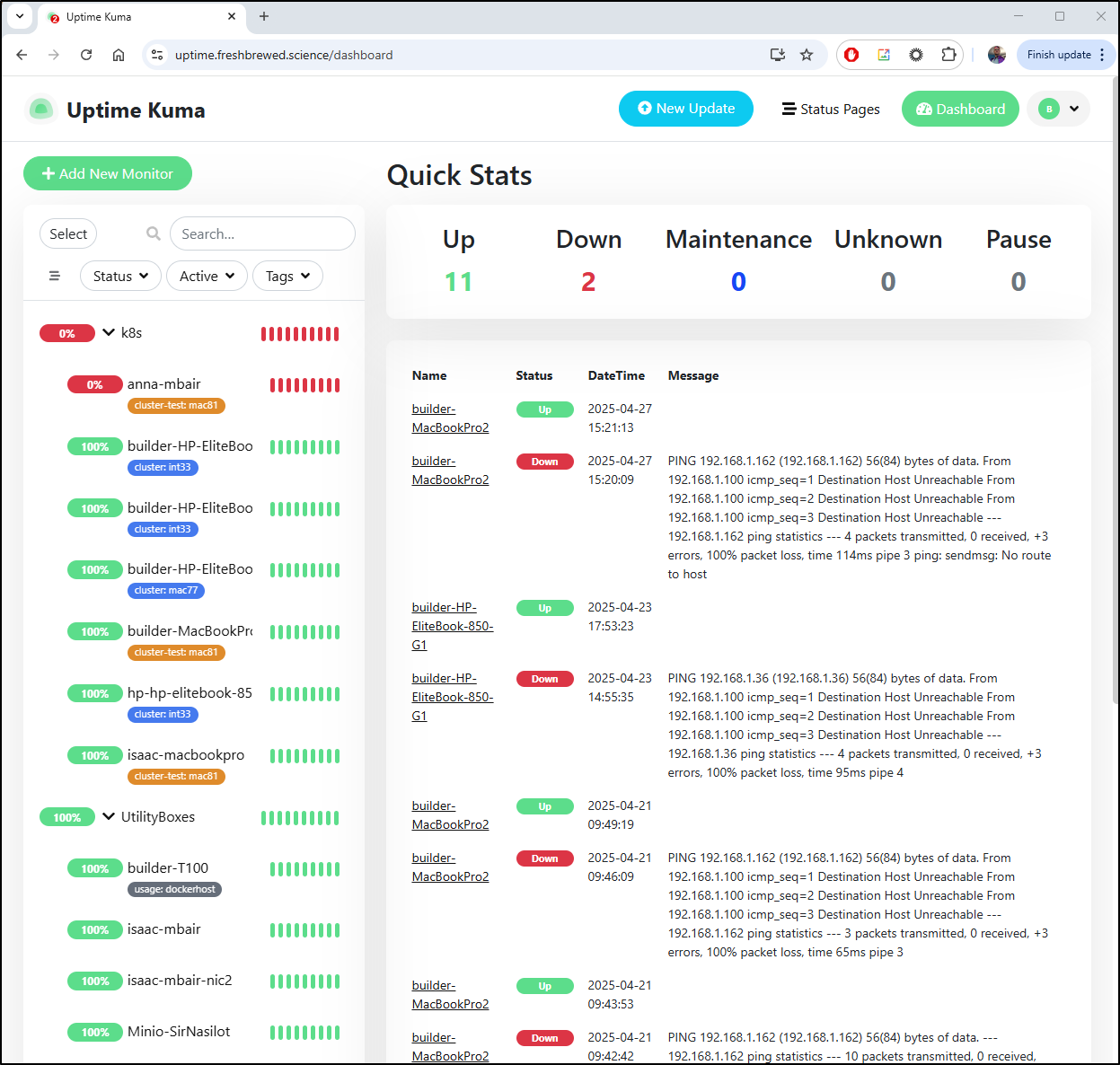

I would still use Uptime Kuma to monitor and send alerts. One of my issues with Uptime Kuma, though, is that it really only monitors from inside my network.

It would be nice to have a simple external app, perhaps in Cloud Run, just chugging along and showing when I’m down (or if I had a network blip at night).
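As a rough idea of what such an external check might look like, here is a minimal Python sketch. It borrows Smokeping’s approach of sending several probes per round and reporting loss plus min/median/max latency. It uses TCP connects to port 443 rather than ICMP, since containers often can’t send raw ICMP without extra privileges. The helpers probe and tcp_connect_ms are hypothetical, and scheduling and alerting are left out.

```python
import socket
import statistics
import time
from typing import Optional


def tcp_connect_ms(host: str, port: int, timeout: float = 5.0) -> Optional[float]:
    """Time one TCP handshake in milliseconds; None means the attempt failed."""
    start = time.perf_counter()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            pass
    except OSError:  # refused, unreachable, DNS failure, or timeout
        return None
    return (time.perf_counter() - start) * 1000.0


def probe(host: str, port: int = 443, pings: int = 5) -> dict:
    """Smokeping-style round: several samples, then loss and latency spread."""
    samples = [tcp_connect_ms(host, port) for _ in range(pings)]
    ok = [s for s in samples if s is not None]
    result = {"loss": (pings - len(ok)) / pings}
    if ok:
        result.update(min=min(ok), median=statistics.median(ok), max=max(ok))
    return result
```

Calling probe("freshbrewed.science") once per interval and storing the results would give a crude outside-in latency history.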

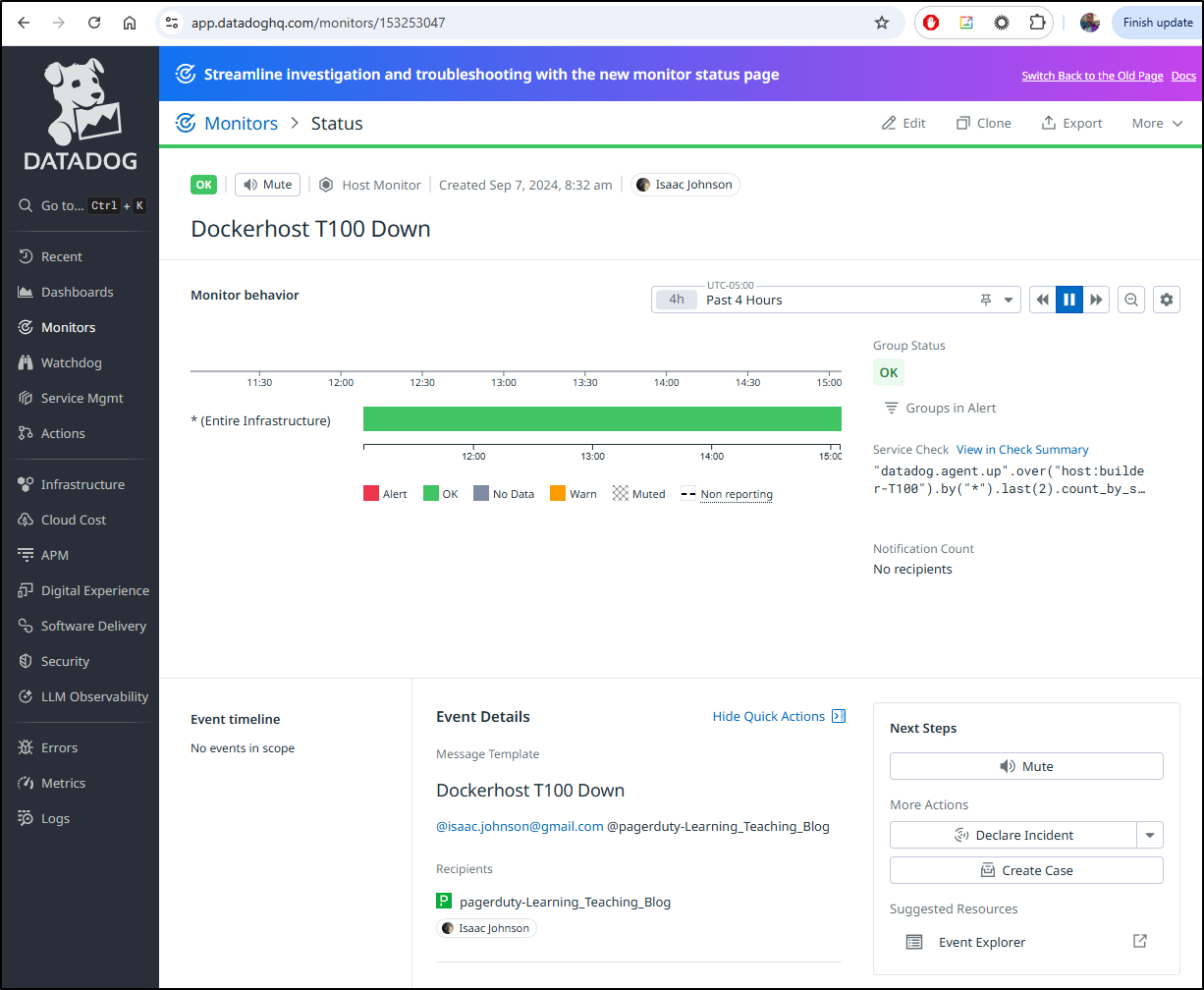

For an “external check”, today I rely on Datadog to alert when a host monitor goes down

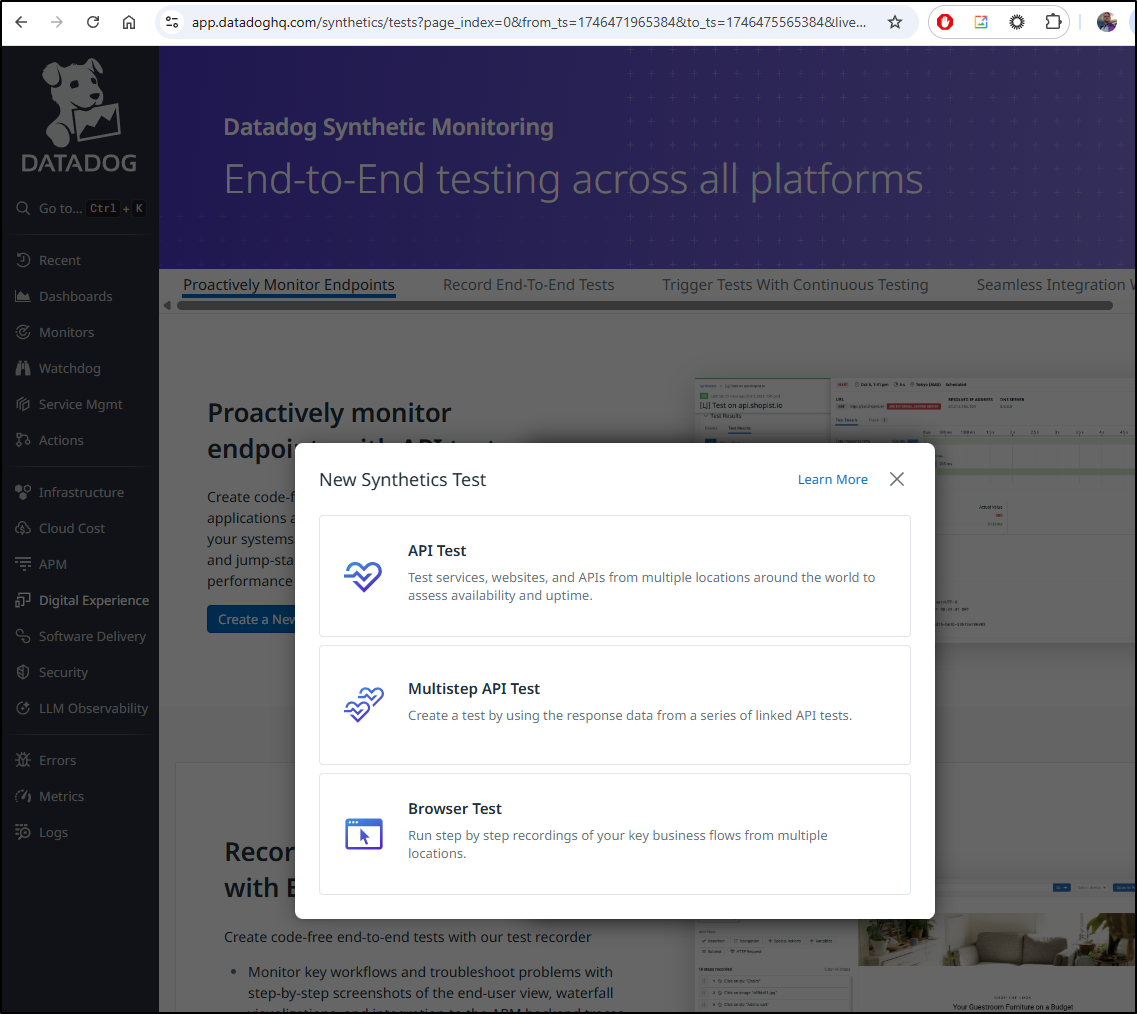

And if I wanted something more complicated or not requiring an Agent, I could use Synthetic Monitoring to get the job done

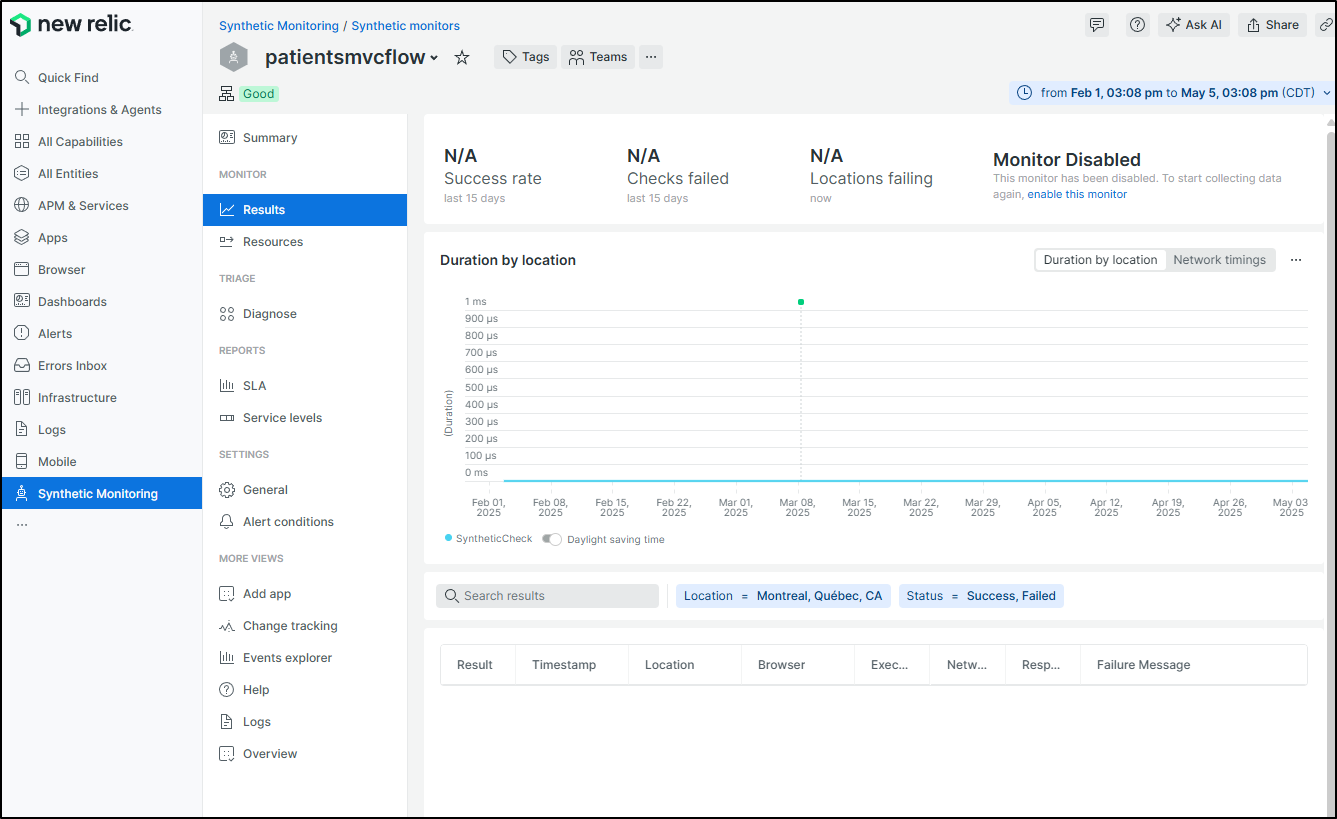

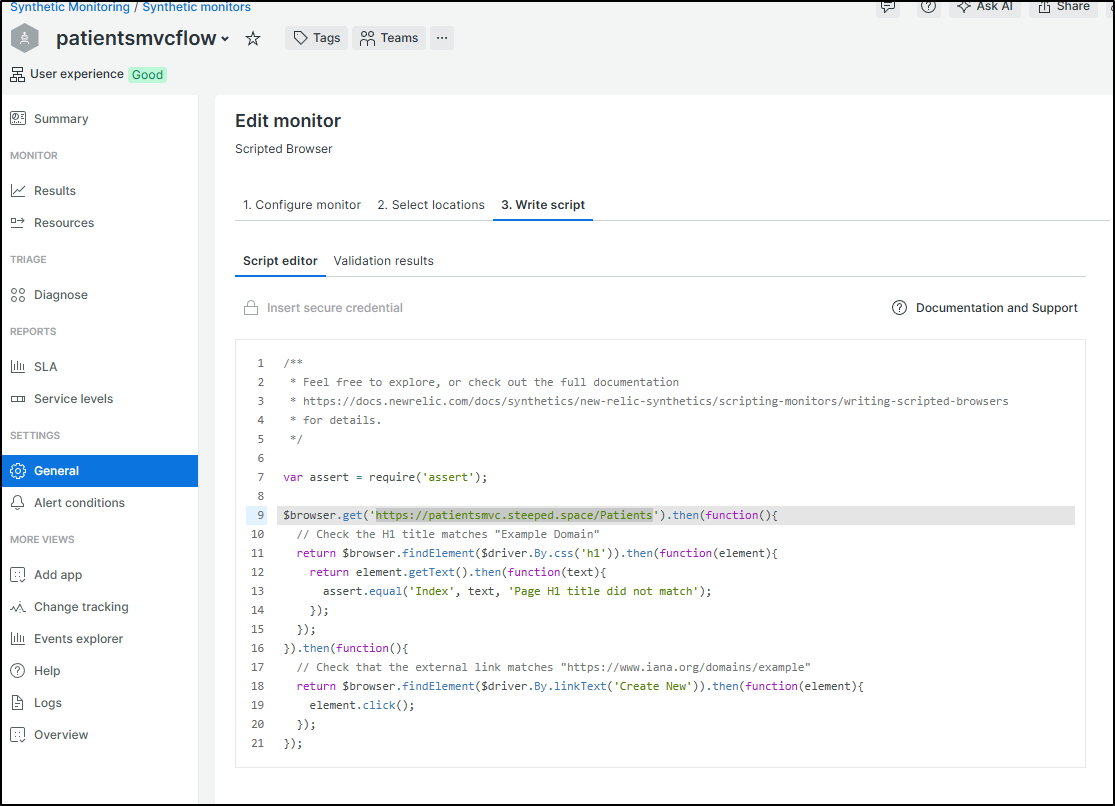

I use Synthetic Monitoring in New Relic as well which is pretty easy to configure

With New Relic, you simply write some Selenium to get the job done (e.g. testing if https://patientsmvc.steeped.icu/Patients responds with a payload including an H1 tag):
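As a rough stand-in for that assertion outside New Relic, here is a minimal Python sketch that uses only the standard library. It swaps Selenium for a plain HTTP fetch plus html.parser. Unlike a browser check, it will not see an H1 that is rendered by JavaScript. The names H1Finder, has_h1 and check are hypothetical.

```python
import urllib.request
from html.parser import HTMLParser


class H1Finder(HTMLParser):
    """Flags whether an <h1> start tag appears anywhere in the document."""

    def __init__(self):
        super().__init__()
        self.found = False

    def handle_starttag(self, tag, attrs):
        if tag == "h1":  # HTMLParser lower-cases tag names
            self.found = True


def has_h1(html: str) -> bool:
    finder = H1Finder()
    finder.feed(html)
    finder.close()
    return finder.found


def check(url: str, timeout: float = 15.0) -> bool:
    """Fetch `url` and report whether the payload contains an H1 tag.
    urlopen raises HTTPError for 4xx/5xx responses."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        body = resp.read().decode(charset, errors="replace")
    return has_h1(body)
```

For the example above, that would be check("https://patientsmvc.steeped.icu/Patients").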

Summary

Today we revisited two open-source tools, Vikunja and Glance. I covered how I use Vikunja as my primary task manager and find it quite useful for tracking blog ideas, though I rarely use its other features. We upgraded Glance and checked out the Minecraft server widget (as a way to see how to use community widgets).

I then moved on to Smokeping, a simple but very handy open-source containerized tool for monitoring endpoint latency over time. I showed how to run it in Docker and deploy it to Kubernetes, and then how to add new sites (by manually updating the config on the PVC). Lastly, I showed a few other ways I monitor systems, including Uptime Kuma, Datadog and New Relic.

You’re welcome to see the hosted versions of Glance and Smokeping: