Published: Jun 4, 2024 by Isaac Johnson

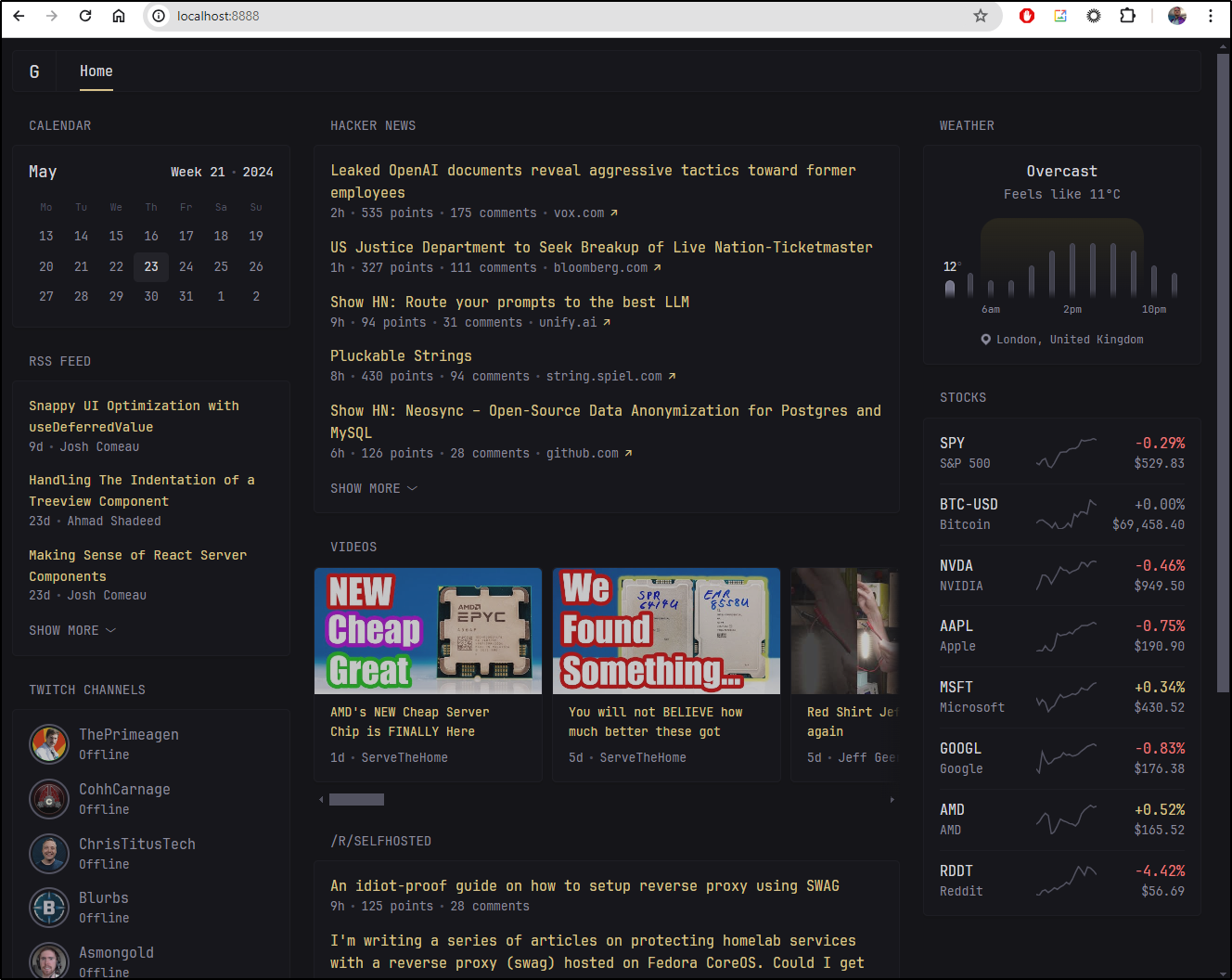

I had two bookmarked apps I wanted to check out, both of which were originally mentioned on MariusHosting. The first is Glance, a YAML-driven landing page. Glance is easy to install and configure, probably the easiest landing page I’ve tried thus far.

The other app I wanted to check out is TimeTagger, which can be hosted in Docker or Kubernetes and simply keeps a log of time spent on things.

Let’s start with Glance.

Glance

I came across Glance from a MariusHosting post.

The GitHub page points out we can just launch with Docker using

    docker run -d -p 8080:8080 \
      -v ./glance.yml:/app/glance.yml \
      -v /etc/timezone:/etc/timezone:ro \
      -v /etc/localtime:/etc/localtime:ro \
      glanceapp/glance

A little bit buried in the docs is an example glance.yml file: https://github.com/glanceapp/glance/blob/main/docs/configuration.md#preconfigured-page

    pages:
      - name: Home
        columns:
          - size: small
            widgets:
              - type: calendar
              - type: rss
                limit: 10
                collapse-after: 3
                cache: 3h
                feeds:
                  - url: https://ciechanow.ski/atom.xml
                  - url: https://www.joshwcomeau.com/rss.xml
                    title: Josh Comeau
                  - url: https://samwho.dev/rss.xml
                  - url: https://awesomekling.github.io/feed.xml
                  - url: https://ishadeed.com/feed.xml
                    title: Ahmad Shadeed
              - type: twitch-channels
                channels:
                  - theprimeagen
                  - cohhcarnage
                  - christitustech
                  - blurbs
                  - asmongold
                  - jembawls
          - size: full
            widgets:
              - type: hacker-news
              - type: videos
                channels:
                  - UCR-DXc1voovS8nhAvccRZhg # Jeff Geerling
                  - UCv6J_jJa8GJqFwQNgNrMuww # ServeTheHome
                  - UCOk-gHyjcWZNj3Br4oxwh0A # Techno Tim
              - type: reddit
                subreddit: selfhosted
          - size: small
            widgets:
              - type: weather
                location: London, United Kingdom
              - type: stocks
                stocks:
                  - symbol: SPY
                    name: S&P 500
                  - symbol: BTC-USD
                    name: Bitcoin
                  - symbol: NVDA
                    name: NVIDIA
                  - symbol: AAPL
                    name: Apple
                  - symbol: MSFT
                    name: Microsoft
                  - symbol: GOOGL
                    name: Google
                  - symbol: AMD
                    name: AMD
                  - symbol: RDDT
                    name: Reddit

Since I like to run with Kubernetes, I’ll convert this over to a manifest with a ConfigMap, a Deployment and a Service

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: glanceconfig
    data:
      glance.yml: |
        pages:
          - name: Home
            columns:
              - size: small
                widgets:
                  - type: calendar
                  - type: rss
                    limit: 10
                    collapse-after: 3
                    cache: 3h
                    feeds:
                      - url: https://ciechanow.ski/atom.xml
                      - url: https://www.joshwcomeau.com/rss.xml
                        title: Josh Comeau
                      - url: https://samwho.dev/rss.xml
                      - url: https://awesomekling.github.io/feed.xml
                      - url: https://ishadeed.com/feed.xml
                        title: Ahmad Shadeed
                  - type: twitch-channels
                    channels:
                      - theprimeagen
                      - cohhcarnage
                      - christitustech
                      - blurbs
                      - asmongold
                      - jembawls
              - size: full
                widgets:
                  - type: hacker-news
                  - type: videos
                    channels:
                      - UCR-DXc1voovS8nhAvccRZhg # Jeff Geerling
                      - UCv6J_jJa8GJqFwQNgNrMuww # ServeTheHome
                      - UCOk-gHyjcWZNj3Br4oxwh0A # Techno Tim
                  - type: reddit
                    subreddit: selfhosted
              - size: small
                widgets:
                  - type: weather
                    location: London, United Kingdom
                  - type: stocks
                    stocks:
                      - symbol: SPY
                        name: S&P 500
                      - symbol: BTC-USD
                        name: Bitcoin
                      - symbol: NVDA
                        name: NVIDIA
                      - symbol: AAPL
                        name: Apple
                      - symbol: MSFT
                        name: Microsoft
                      - symbol: GOOGL
                        name: Google
                      - symbol: AMD
                        name: AMD
                      - symbol: RDDT
                        name: Reddit
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: glance-deployment
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: glance
      template:
        metadata:
          labels:
            app: glance
        spec:
          containers:
            - name: glance
              image: glanceapp/glance
              ports:
                - containerPort: 8080
              volumeMounts:
                - name: config-volume
                  mountPath: /app/glance.yml
                  subPath: glance.yml
          volumes:
            - name: config-volume
              configMap:
                name: glanceconfig
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: glance-service
    spec:
      type: ClusterIP
      selector:
        app: glance
      ports:
        - protocol: TCP
          port: 80
          targetPort: 8080

Which I applied

    $ kubectl apply -f ./manifest.yml
    Warning: resource configmaps/glanceconfig is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
    configmap/glanceconfig configured
    deployment.apps/glance-deployment created
    service/glance-service created

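Seems it doesn’t like that CM, but the warning is harmless: a glanceconfig ConfigMap already existed that had not been created with kubectl apply, so kubectl patched in the missing annotation. The pod came up fine

    $ kubectl get pods -l app=glance
    NAME                                 READY   STATUS    RESTARTS   AGE
    glance-deployment-5547d948ff-69h6q   1/1     Running   0          46s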

I can now port-forward to test

    $ kubectl port-forward svc/glance-service 8888:80
    Forwarding from 127.0.0.1:8888 -> 8080
    Forwarding from [::1]:8888 -> 8080
    Handling connection for 8888
    Handling connection for 8888

One thing I realized is that when I change the ConfigMap, the running pod doesn’t pick it up. The file is mounted into the pod with a subPath, and Kubernetes does not propagate ConfigMap updates to subPath mounts. That means that if you update your glance.yml, you also need to bounce the pod for the change to take effect

    builder@LuiGi:~/Workspaces/glance$ kubectl apply -f ./manifest.yml
    configmap/glanceconfig configured
    deployment.apps/glance-deployment unchanged
    service/glance-service unchanged
    builder@LuiGi:~/Workspaces/glance$ kubectl port-forward svc/glance-service 8888:80
    Forwarding from 127.0.0.1:8888 -> 8080
    Forwarding from [::1]:8888 -> 8080
    Handling connection for 8888
    Handling connection for 8888
    ^Cbuilder@LuiGi:~/Workspaces/glance$ kubectl get pods -l app=glance
    NAME                                 READY   STATUS    RESTARTS   AGE
    glance-deployment-5547d948ff-69h6q   1/1     Running   0          11m
    builder@LuiGi:~/Workspaces/glance$ kubectl delete pod glance-deployment-5547d948ff-69h6q
    pod "glance-deployment-5547d948ff-69h6q" deleted

or a bit easier

    builder@LuiGi:~/Workspaces/glance$ kubectl apply -f ./manifest.yml
    configmap/glanceconfig configured
    deployment.apps/glance-deployment unchanged
    service/glance-service unchanged
    builder@LuiGi:~/Workspaces/glance$ kubectl delete pod -l app=glance
    pod "glance-deployment-5547d948ff-s8cqk" deleted
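Deleting the pod works. As an alternative sketch, `kubectl rollout restart` asks the Deployment to replace its pods, and `kubectl rollout status` waits for the replacement to become ready. The wrapper function, the `KUBECTL` override variable and the 120 second timeout are illustrative assumptions of mine; only the Deployment name comes from the manifest.

```shell
#!/usr/bin/env bash
# Sketch: restart the Glance Deployment so a fresh pod reads the updated ConfigMap.
# KUBECTL is a hypothetical override hook; set KUBECTL=echo to preview the commands.

restart_glance() {
  # Default Deployment name is taken from the manifest; pass another name as $1.
  local deployment="${1:-glance-deployment}"
  local kubectl_bin="${KUBECTL:-kubectl}"

  # Trigger a rolling restart, then block until the new pod reports ready.
  # The 120s timeout is an arbitrary assumption.
  "$kubectl_bin" rollout restart "deployment/${deployment}" &&
    "$kubectl_bin" rollout status "deployment/${deployment}" --timeout=120s
}
```

After sourcing the file, running `restart_glance` following a `kubectl apply` would replace the pod without having to look up its name or label.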

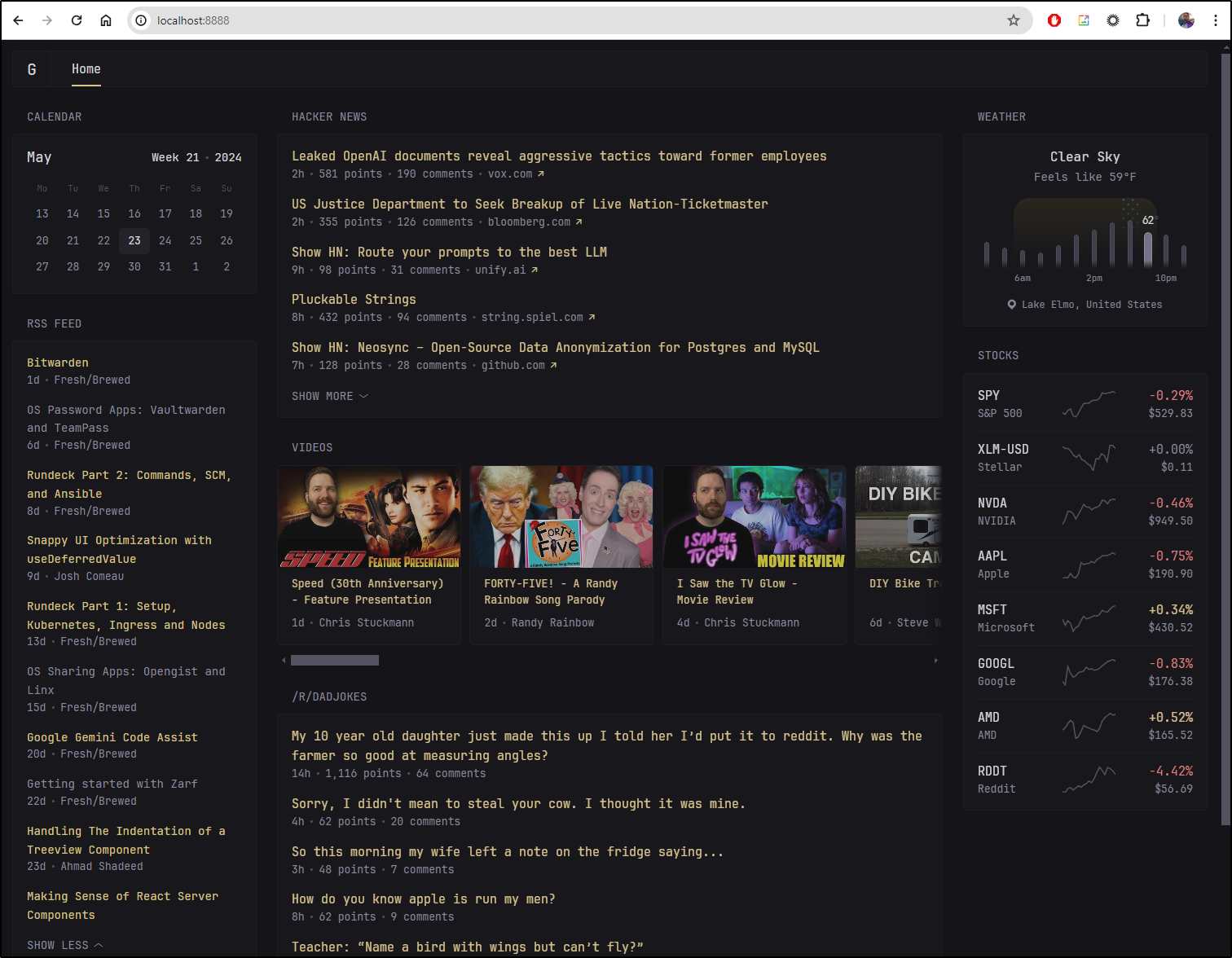

Once I got it configured to my tastes, I figured I might as well expose it. Perhaps I can use it as a landing page. At the very least, I can keep an eye on my RSS feeds.

First, I need to create an A record

    $ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n glance
    {
      "ARecords": [
        {
          "ipv4Address": "75.73.224.240"
        }
      ],
      "TTL": 3600,
      "etag": "2c7054da-e087-4fa9-95f1-3b8da2b08d20",
      "fqdn": "glance.tpk.pw.",
      "id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/glance",
      "name": "glance",
      "provisioningState": "Succeeded",
      "resourceGroup": "idjdnsrg",
      "targetResource": {},
      "trafficManagementProfile": {},
      "type": "Microsoft.Network/dnszones/A"
    }

Then I can create an ingress to that same service

    builder@LuiGi:~/Workspaces/glance$ cat glance.tpk.yaml
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      annotations:
        cert-manager.io/cluster-issuer: azuredns-tpkpw
        ingress.kubernetes.io/ssl-redirect: "true"
        kubernetes.io/ingress.class: nginx
        kubernetes.io/tls-acme: "true"
        nginx.ingress.kubernetes.io/ssl-redirect: "true"
      name: glance-ingress
    spec:
      rules:
      - host: glance.tpk.pw
        http:
          paths:
          - backend:
              service:
                name: glance-service
                port:
                  number: 80
            path: /
            pathType: Prefix
      tls:
      - hosts:
        - glance.tpk.pw
        secretName: glance-tls
    builder@LuiGi:~/Workspaces/glance$ kubectl apply -f ./glance.tpk.yaml
    ingress.networking.k8s.io/glance-ingress created

Once I see the cert is satisfied

    builder@LuiGi:~/Workspaces/glance$ kubectl get cert glance-tls
    NAME         READY   SECRET       AGE
    glance-tls   False   glance-tls   38s
    builder@LuiGi:~/Workspaces/glance$ kubectl get cert glance-tls
    NAME         READY   SECRET       AGE
    glance-tls   False   glance-tls   63s
    glance-tls   False   glance-tls   78s
    builder@LuiGi:~/Workspaces/glance$ kubectl get cert glance-tls
    NAME         READY   SECRET       AGE
    glance-tls   True    glance-tls   84s
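Rather than re-running `kubectl get cert` by hand, one option is to let `kubectl wait` block until the Certificate reports its Ready condition. This is only a sketch: the function name, the `KUBECTL` override variable and the 300 second default timeout are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Sketch: wait for a cert-manager Certificate to become Ready instead of polling.
# KUBECTL is a hypothetical override hook; set KUBECTL=echo to preview the command.

wait_for_cert() {
  local cert="$1"              # Certificate name, e.g. glance-tls
  local timeout="${2:-300s}"   # arbitrary default, adjust to taste
  local kubectl_bin="${KUBECTL:-kubectl}"

  # Returns non-zero if the Ready condition is not met within the timeout.
  "$kubectl_bin" wait --for=condition=Ready "certificate/${cert}" --timeout="${timeout}"
}
```

Calling `wait_for_cert glance-tls` would return once the READY column flips to True, or fail after the timeout.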

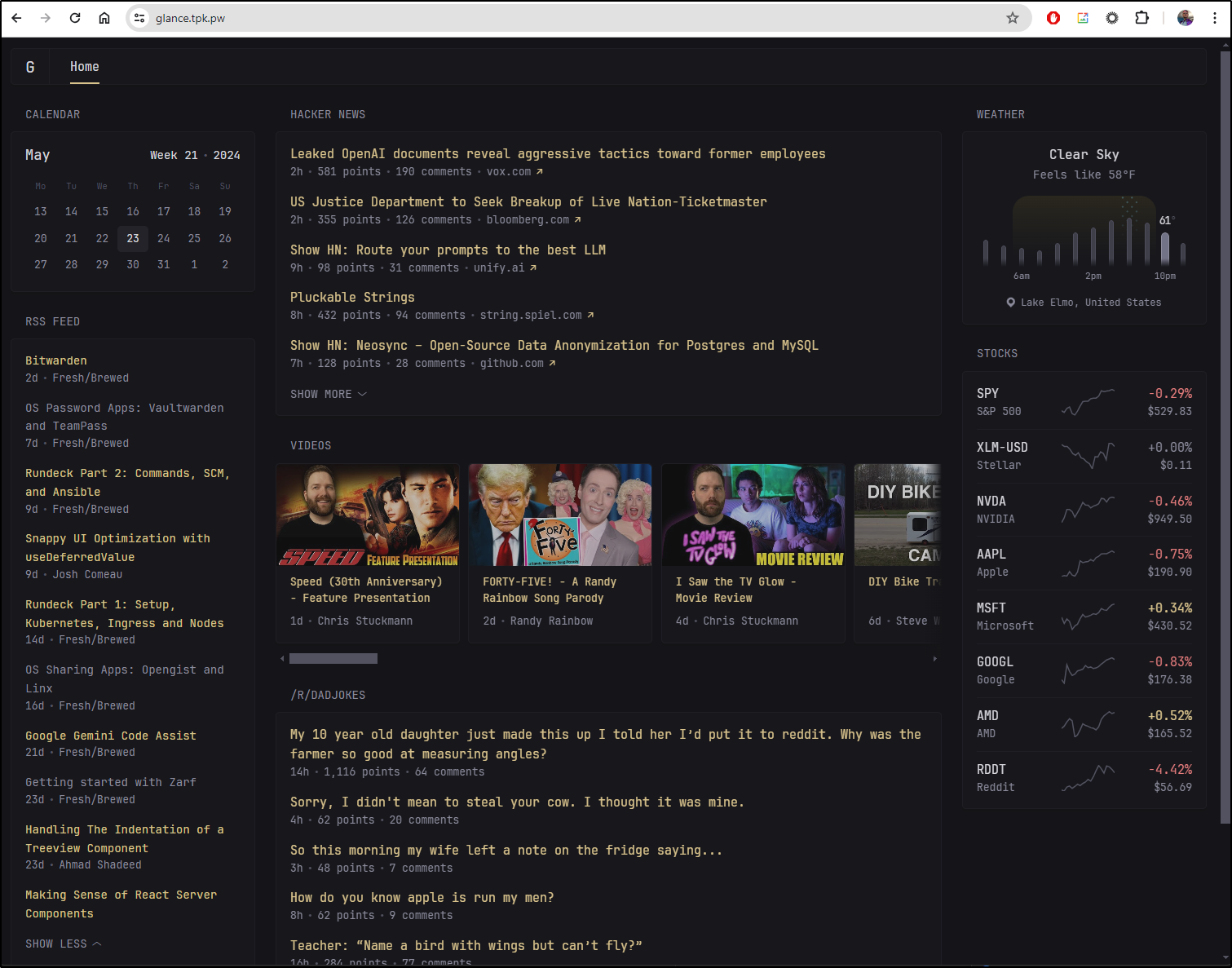

I can test https://glance.tpk.pw/

It’s really quite easy to update. Here we can see me changing the theme and making it live:

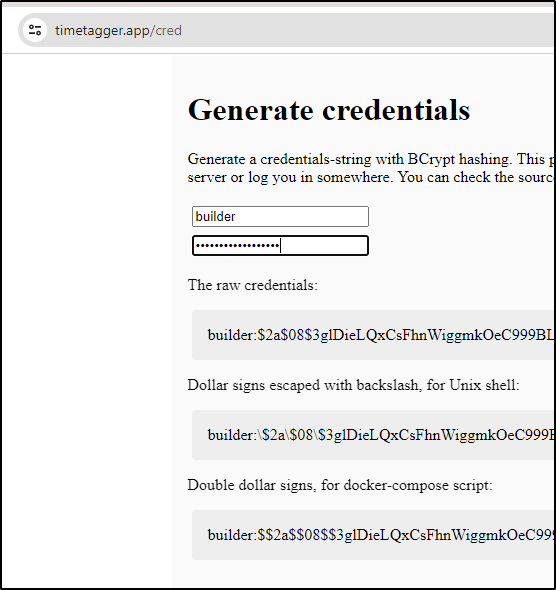

Time Tagger

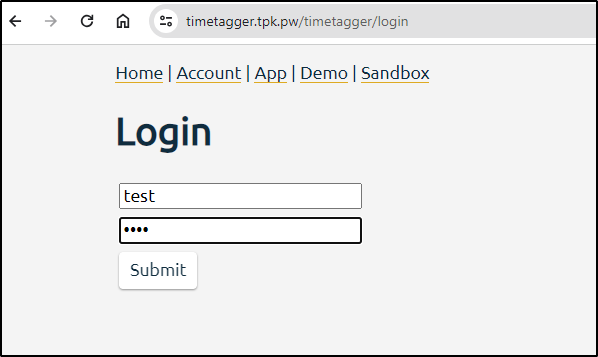

Before I install, I’ll need to create a BCrypt hash of my password. Luckily the author made it easy by creating a utility page we can use

The sample Docker Compose file shows the quick way to launch in Docker

    version: "3"
    services:
      timetagger:
        image: ghcr.io/almarklein/timetagger
        ports:
          - "80:80"
        volumes:
          - ./_timetagger:/root/_timetagger
        environment:
          - TIMETAGGER_BIND=0.0.0.0:80
          - TIMETAGGER_DATADIR=/root/_timetagger
          - TIMETAGGER_LOG_LEVEL=info
          - TIMETAGGER_CREDENTIALS=test:$$2a$$08$$zMsjPEGdXHzsu0N/felcbuWrffsH4.4ocDWY5oijsZ0cbwSiLNA8. # test:test
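The doubled `$$` in `TIMETAGGER_CREDENTIALS` is there because Compose treats a single `$` as the start of a variable reference, so every literal `$` in the BCrypt hash has to be written as `$$`. A small sketch of that escaping step, assuming you already have a `user:hash` string (the helper name is made up for illustration):

```shell
#!/usr/bin/env bash
# Sketch: double every "$" in a user:hash credential string so it can be
# pasted into a Compose file. escape_compose_dollars is a hypothetical name.

escape_compose_dollars() {
  # sed matches each literal "$" and replaces it with "$$".
  # Single quotes keep the shell from expanding anything in the expression.
  printf '%s\n' "$1" | sed 's/\$/$$/g'
}
```

Pass the credential in single quotes, for example `escape_compose_dollars 'test:$2a$08$...'`, so the shell does not try to expand the `$` characters before the function sees them.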

I’ll turn this into a Kubernetes YAML manifest and launch it

    $ cat timetagger.manifest.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: timetagger-pvc
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: timetagger-deployment
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: timetagger
      template:
        metadata:
          labels:
            app: timetagger
        spec:
          containers:
            - name: timetagger
              image: ghcr.io/almarklein/timetagger
              ports:
                - containerPort: 80
              env:
                - name: TIMETAGGER_BIND
                  value: "0.0.0.0:80"
                - name: TIMETAGGER_DATADIR
                  value: "/root/_timetagger"
                - name: TIMETAGGER_LOG_LEVEL
                  value: "info"
                - name: TIMETAGGER_CREDENTIALS
                  value: "test:$$2a$$08$$zMsjPEGdXHzsu0N/felcbuWrffsH4.4ocDWY5oijsZ0cbwSiLNA8."
              volumeMounts:
                - name: timetagger-volume
                  mountPath: /root/_timetagger
          volumes:
            - name: timetagger-volume
              persistentVolumeClaim:
                claimName: timetagger-pvc
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: timetagger-service
    spec:
      selector:
        app: timetagger
      ports:
        - protocol: TCP
          port: 80
          targetPort: 80
    $ kubectl apply -f ./timetagger.manifest.yaml
    persistentvolumeclaim/timetagger-pvc created
    deployment.apps/timetagger-deployment created
    service/timetagger-service created

I can see it’s running

    $ kubectl get pods -l app=timetagger
    NAME                                     READY   STATUS    RESTARTS   AGE
    timetagger-deployment-75c5df6d65-bhht8   1/1     Running   0          65s

I’ll skip ahead to exposing it, starting with the A record

    $ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n timetagger
    {
      "ARecords": [
        {
          "ipv4Address": "75.73.224.240"
        }
      ],
      "TTL": 3600,
      "etag": "a72c0b63-971a-4a1b-a808-98fb5e374592",
      "fqdn": "timetagger.tpk.pw.",
      "id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/timetagger",
      "name": "timetagger",
      "provisioningState": "Succeeded",
      "resourceGroup": "idjdnsrg",
      "targetResource": {},
      "trafficManagementProfile": {},
      "type": "Microsoft.Network/dnszones/A"
    }

Then, as before, fire off the Ingress

    $ cat timetagger.tpk.yaml
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      annotations:
        cert-manager.io/cluster-issuer: azuredns-tpkpw
        ingress.kubernetes.io/ssl-redirect: "true"
        kubernetes.io/ingress.class: nginx
        kubernetes.io/tls-acme: "true"
        nginx.ingress.kubernetes.io/ssl-redirect: "true"
      name: timetagger-ingress
    spec:
      rules:
      - host: timetagger.tpk.pw
        http:
          paths:
          - backend:
              service:
                name: timetagger-service
                port:
                  number: 80
            path: /
            pathType: Prefix
      tls:
      - hosts:
        - timetagger.tpk.pw
        secretName: timetagger-tls
    $ kubectl apply -f ./timetagger.tpk.yaml
    ingress.networking.k8s.io/timetagger-ingress created

Once the cert was ready, I could test

    builder@LuiGi:~/Workspaces/glance$ kubectl get cert timetagger-tls
    NAME             READY   SECRET           AGE
    timetagger-tls   False   timetagger-tls   53s
    builder@LuiGi:~/Workspaces/glance$ kubectl get cert timetagger-tls
    NAME             READY   SECRET           AGE
    timetagger-tls   True    timetagger-tls   2m7s

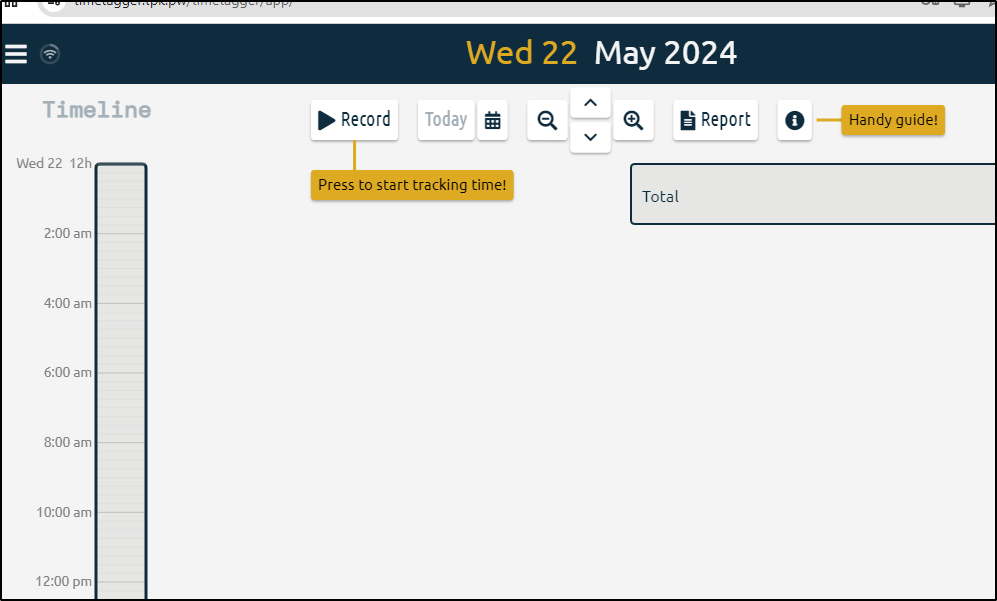

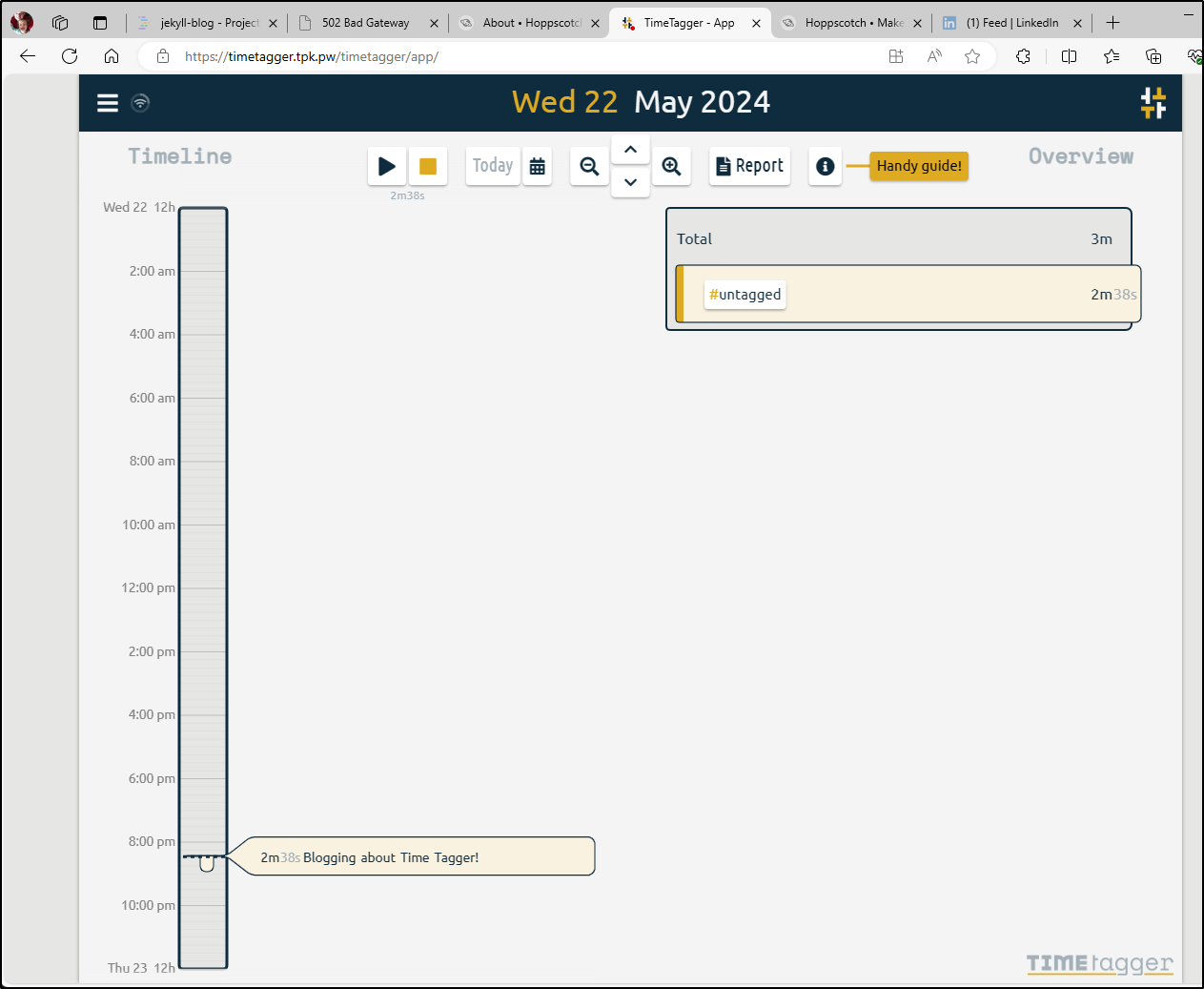

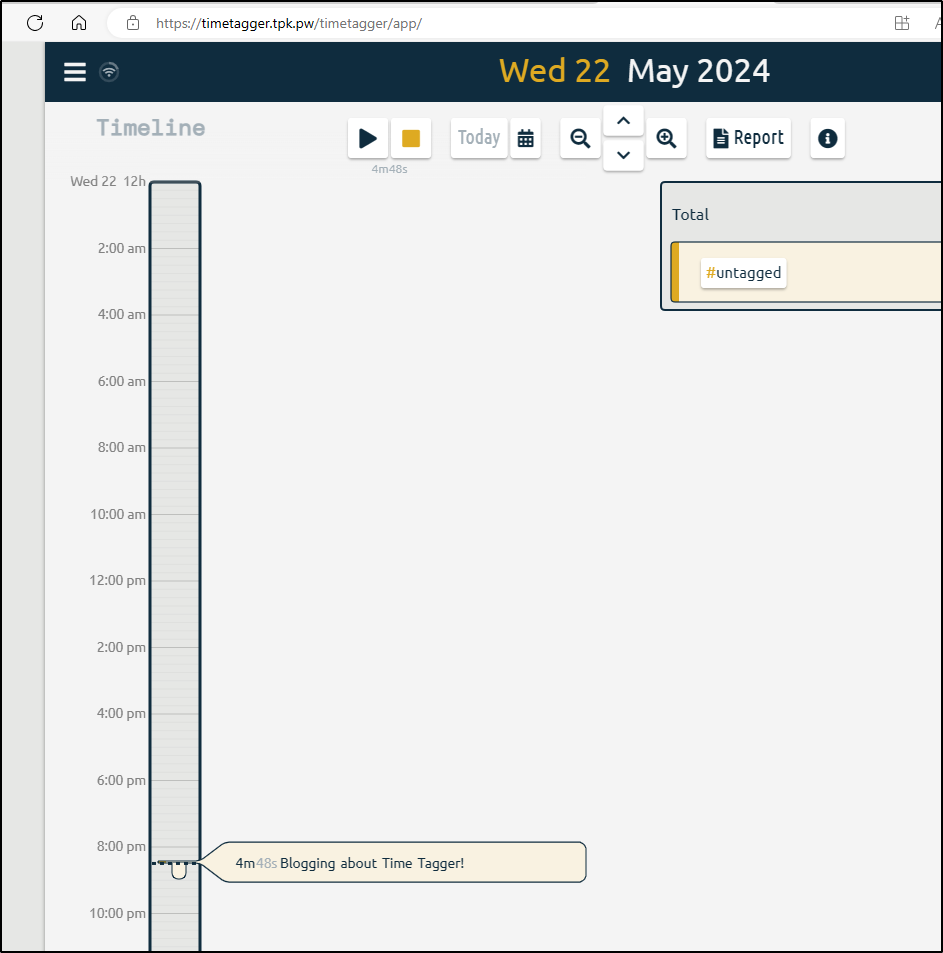

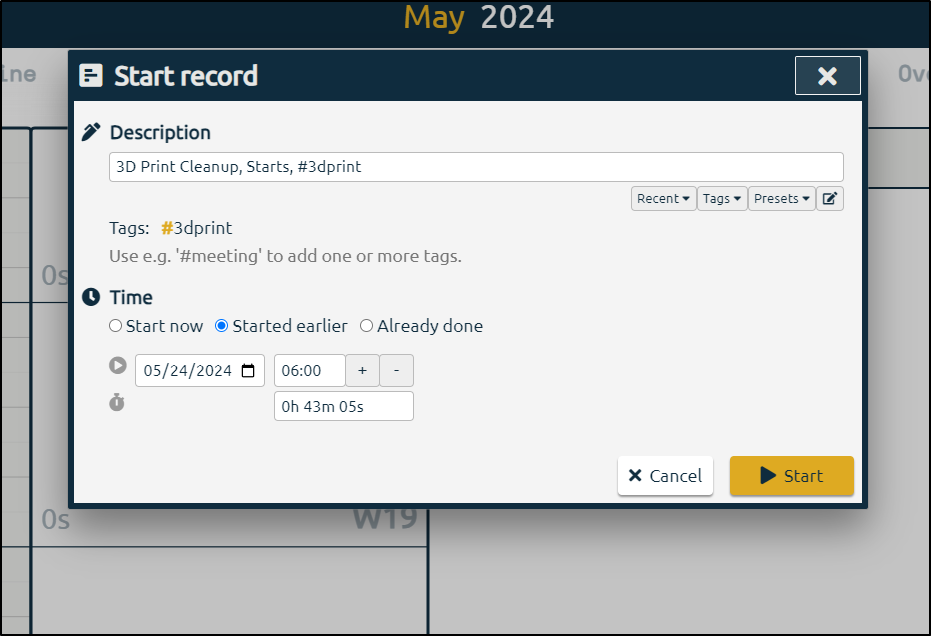

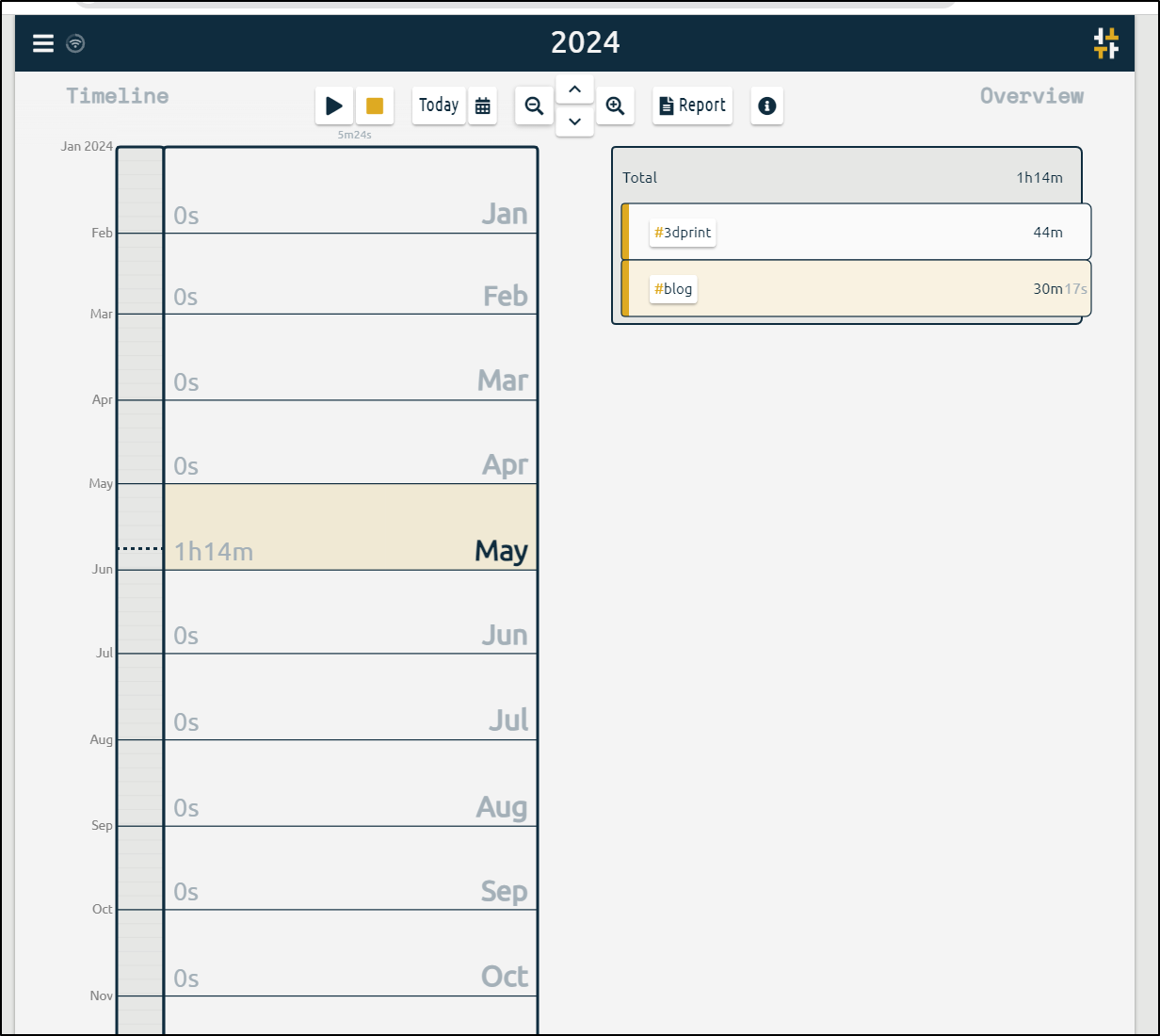

I can then press record to start tracking time

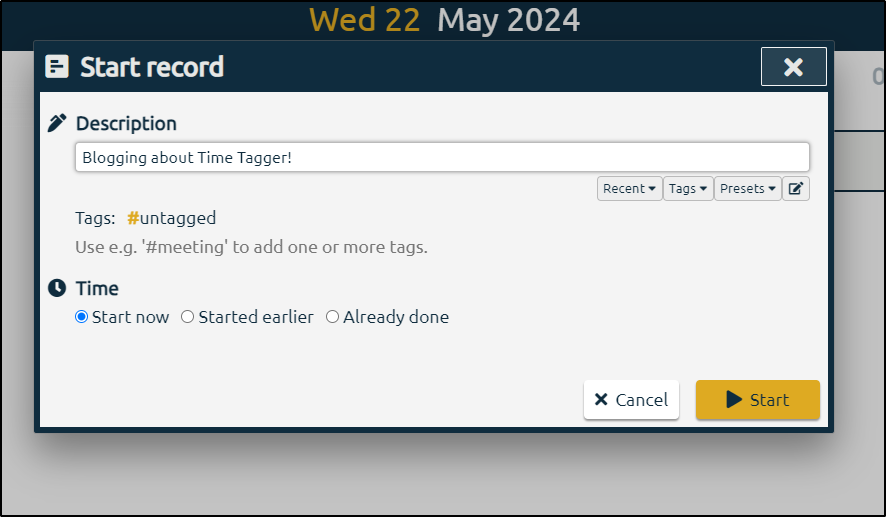

I’ll give it a note

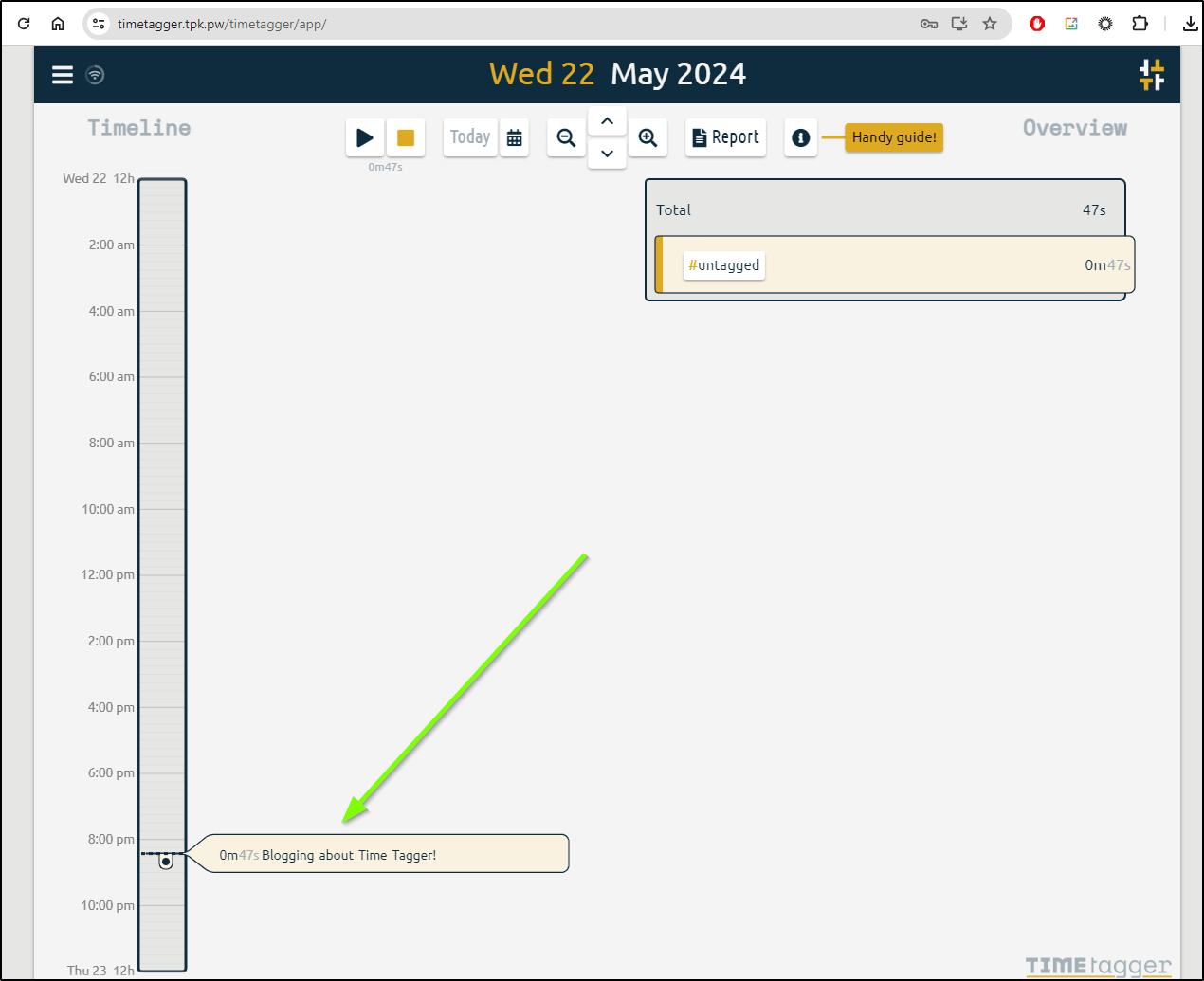

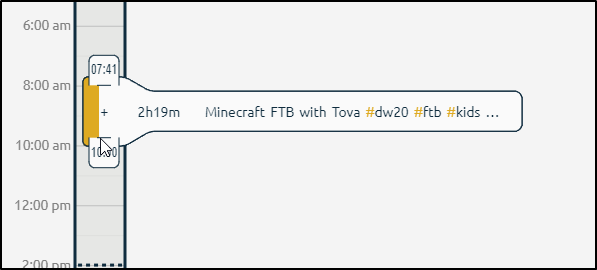

I can see it tracking my time

What is pretty nice is that the running timer is not tied to JavaScript state or to a particular browser.

I was able to log in from a different browser and see that it had kept the time tag running

How fault tolerant is it? Does it use the PVC?

Let’s rotate the pod and check

    builder@LuiGi:~/Workspaces/glance$ kubectl get pods -l app=timetagger
    NAME                                     READY   STATUS    RESTARTS   AGE
    timetagger-deployment-75c5df6d65-bhht8   1/1     Running   0          11m
    builder@LuiGi:~/Workspaces/glance$ kubectl delete pods -l app=timetagger
    pod "timetagger-deployment-75c5df6d65-bhht8" deleted
    builder@LuiGi:~/Workspaces/glance$ kubectl get pods -l app=timetagger
    NAME                                     READY   STATUS    RESTARTS   AGE
    timetagger-deployment-75c5df6d65-6k2qf   1/1     Running   0          8s

It is still recording!

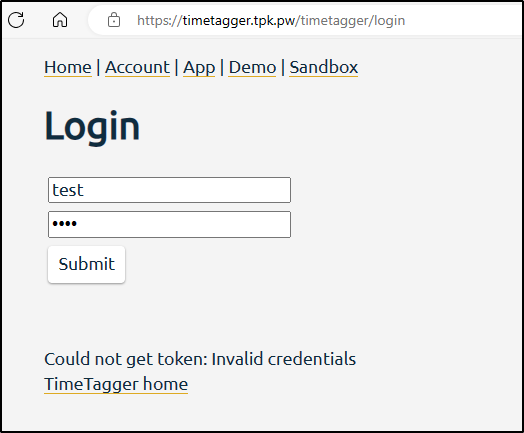

I’ll try another radical change and switch users. I changed the credentials from the test user to a builder user with a different password

    $ kubectl apply -f ./timetagger.manifest.yaml
    persistentvolumeclaim/timetagger-pvc unchanged
    deployment.apps/timetagger-deployment configured
    service/timetagger-service unchanged

The test user no longer works

Forgetting to stop

I started it and planned to stop that Blogging activity that night. I forgot.

Coming back two days later, that entry seems to have disappeared

I’ll try adding some time today. I spent my first 45 minutes cleaning some 3D prints, restarting a project and doing general 3D printing right-brain work

I noticed that if I start a new activity, the old one is stopped. Perhaps that makes sense, since tracking simultaneous work might be a challenge. That said, it’s good to know the behavior.

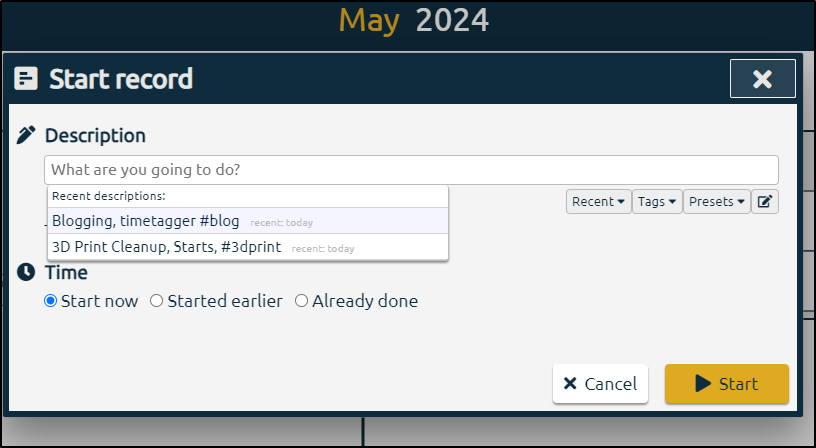

I can always restart a task by looking at recent entries. It doesn’t create a duplicate, just adds more time (continues the clock)

One can also grab a start or stop time in the page and click-hold-drag to move it to a new start/stop time

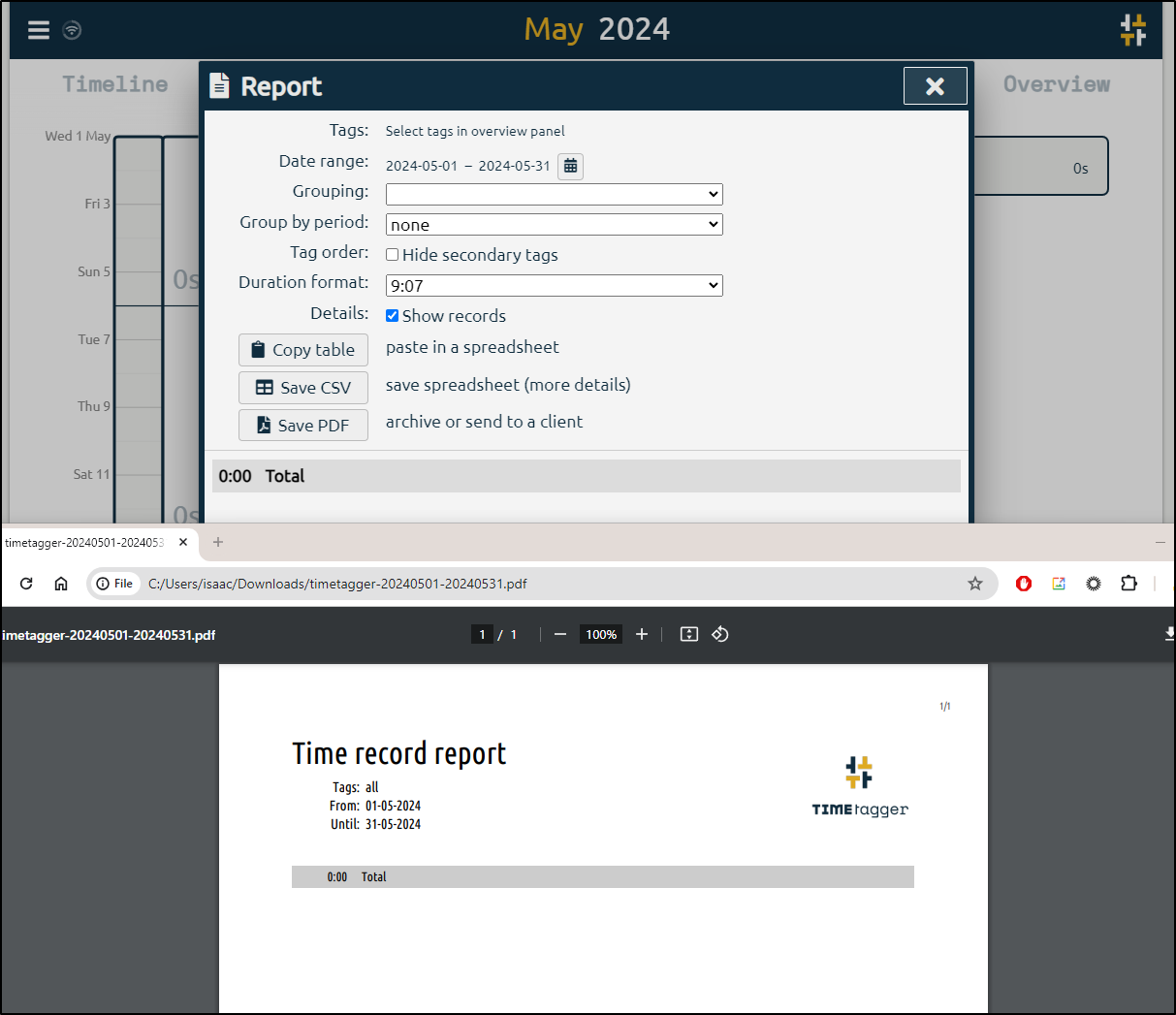

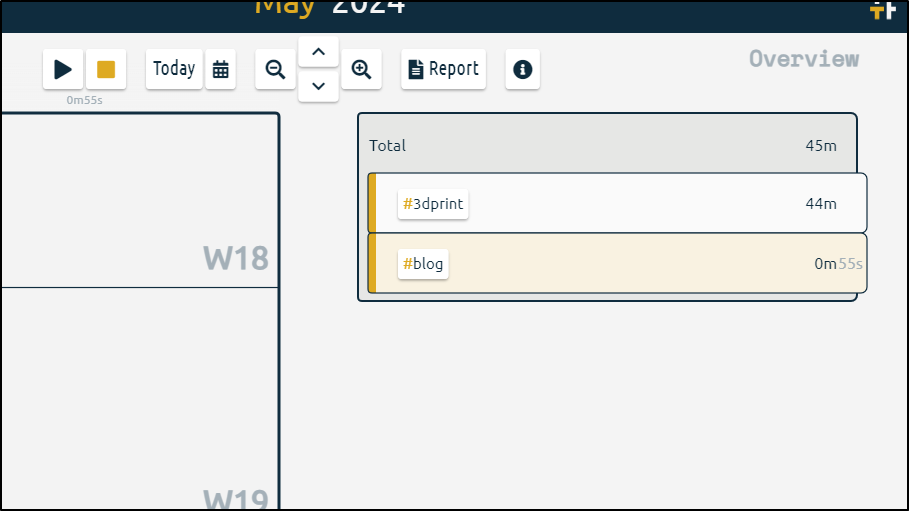

Reporting

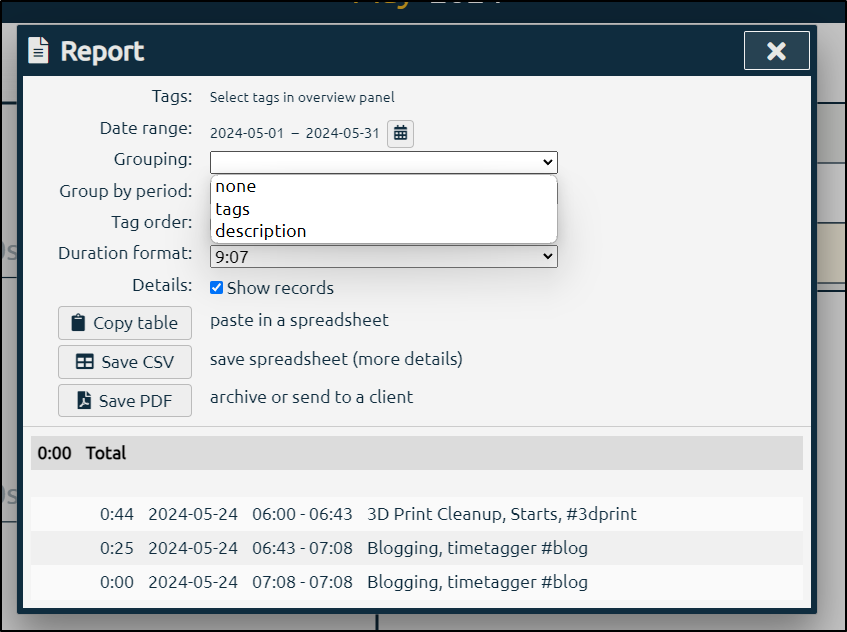

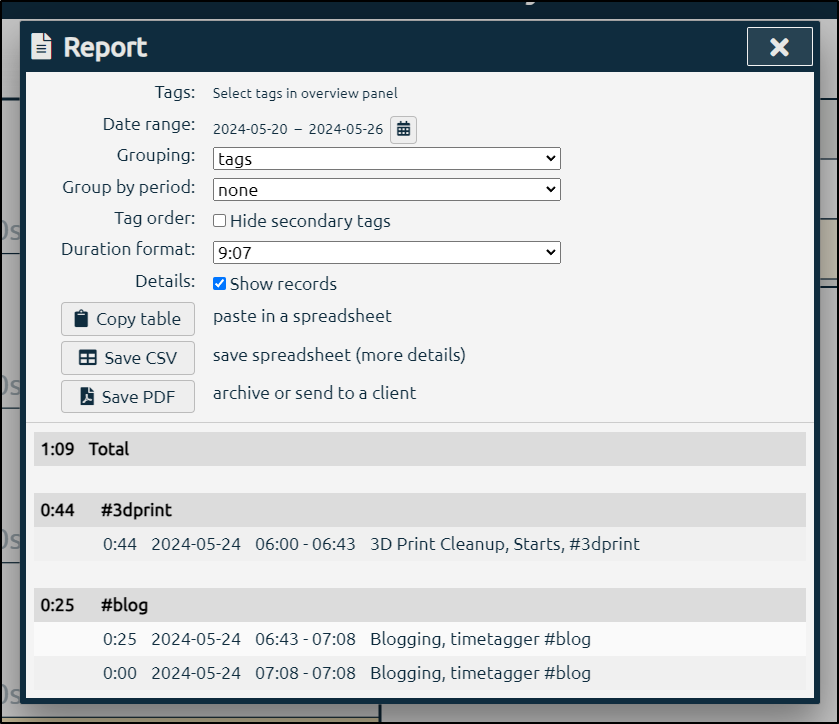

Clicking “Report” will show recent entries and allow us to group by tags or description

For instance, a report of this week grouped by tag

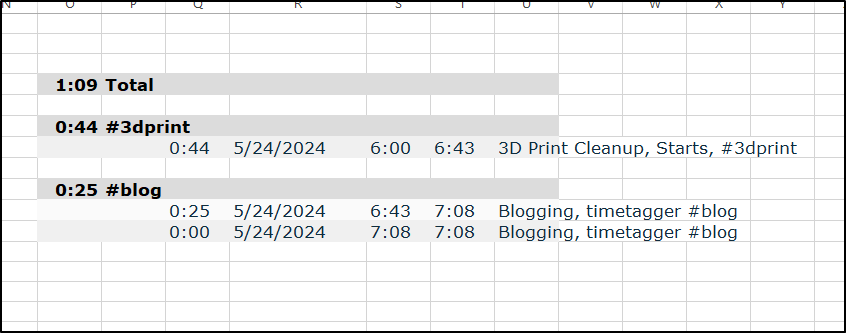

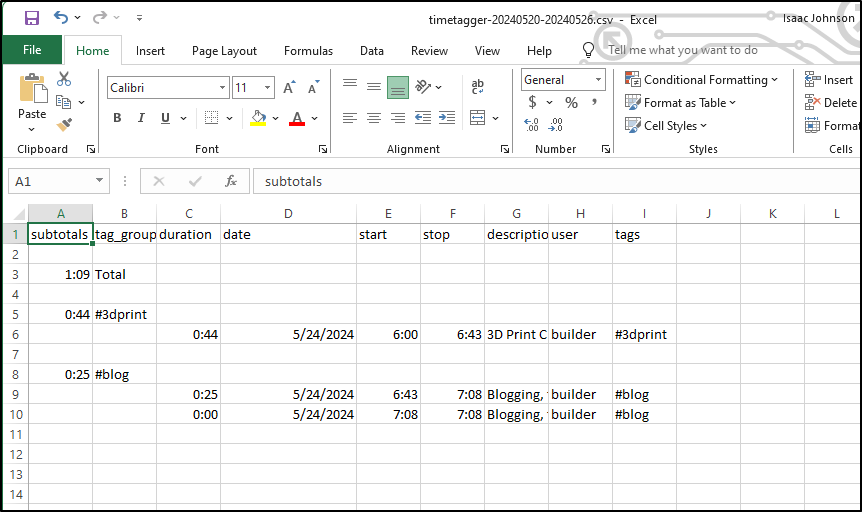

If I copy the table, this is what ends up in the clipboard:

    1:09 Total
    0:44 #3dprint
    0:44 2024-05-24 06:00 06:43 3D Print Cleanup, Starts, #3dprint
    0:25 #blog
    0:25 2024-05-24 06:43 07:08 Blogging, timetagger #blog
    0:00 2024-05-24 07:08 07:08 Blogging, timetagger #blog

which pastes just fine into Excel
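Since the copied report is plain text, it can also be processed outside a spreadsheet. As a rough sketch, the function below totals the individual entry rows with awk. It assumes entry rows look like `H:MM YYYY-MM-DD start stop description`, as in the clipboard text above; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: total the per-entry durations in a copied TimeTagger report.
# sum_report_entries is a hypothetical helper name; it reads the report on stdin.

sum_report_entries() {
  # Only rows whose second field is a date are counted, which skips the
  # Total row and the per-tag subtotal rows so nothing is counted twice.
  awk '
    $2 ~ /^[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]$/ {
      split($1, t, ":")
      minutes += t[1] * 60 + t[2]
    }
    END { printf "%d:%02d\n", int(minutes / 60), minutes % 60 }
  '
}
```

With the clipboard saved to a file such as `report.txt` (a made-up name), `sum_report_entries < report.txt` should agree with the report’s own Total row.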

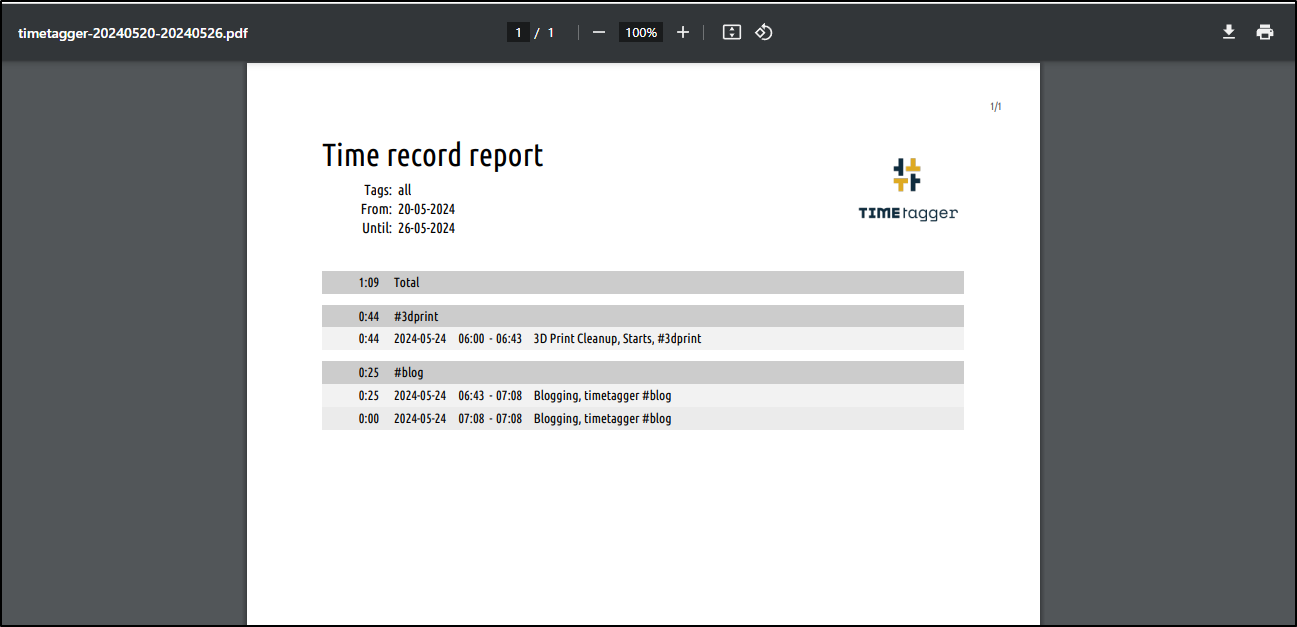

The PDF has a nice breakdown by time

And, of course, the CSV imports into Excel just fine as well

We can use the zoom buttons to zoom in and out with ease

A little bit of usage:

Backups

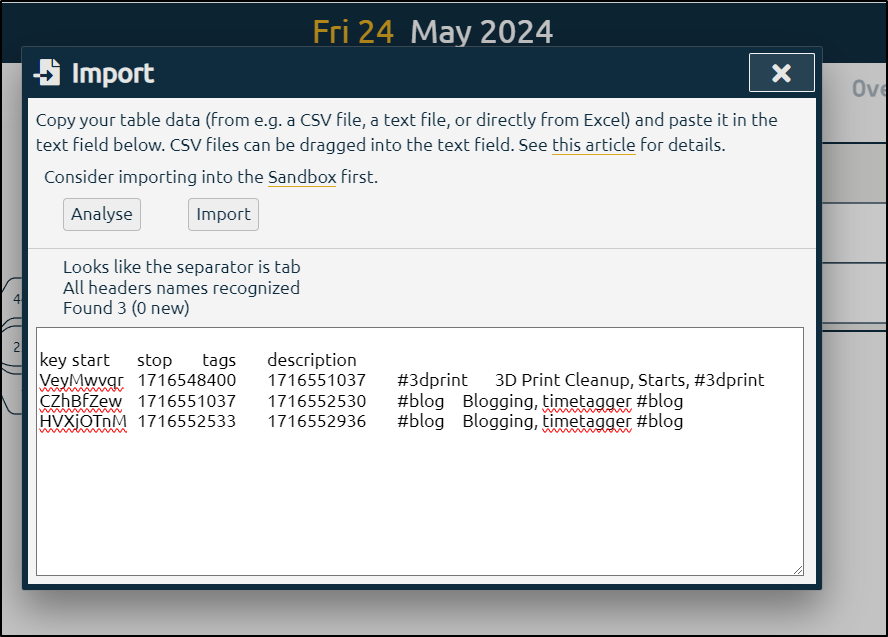

We can copy our data out, but only to the clipboard

I thought the import was nice in that it automatically eliminates existing duplicate entries

Summary

I hope you found at least one of these apps to be of use. I enjoyed Glance as a landing page that is very easy to launch and configure. It was easy to turn it into a Kubernetes app using a ConfigMap for the config YAML.

TimeTagger is a very fast little app. I’m surprised how feature rich it is. I plan to try using it for a few side projects. I only wish I could have multiple users (or identities).