Published: May 6, 2025 by Isaac Johnson

When I recently looked at DevPod, I noticed it was from Loft.sh, which I had covered a few years ago. It seems that over time they’ve organized their products into more focused channels (moving vClusters to vcluster.com).

As their offerings are open-source, I wanted to dig back in and see what is new with vClusters, how well they work, and whether they have made any big improvements.

Today we’ll set up vClusters by both the CLI and the platform UI. We’ll look at connectivity via Windows and Linux and some of the features in the vCluster platform.

Company

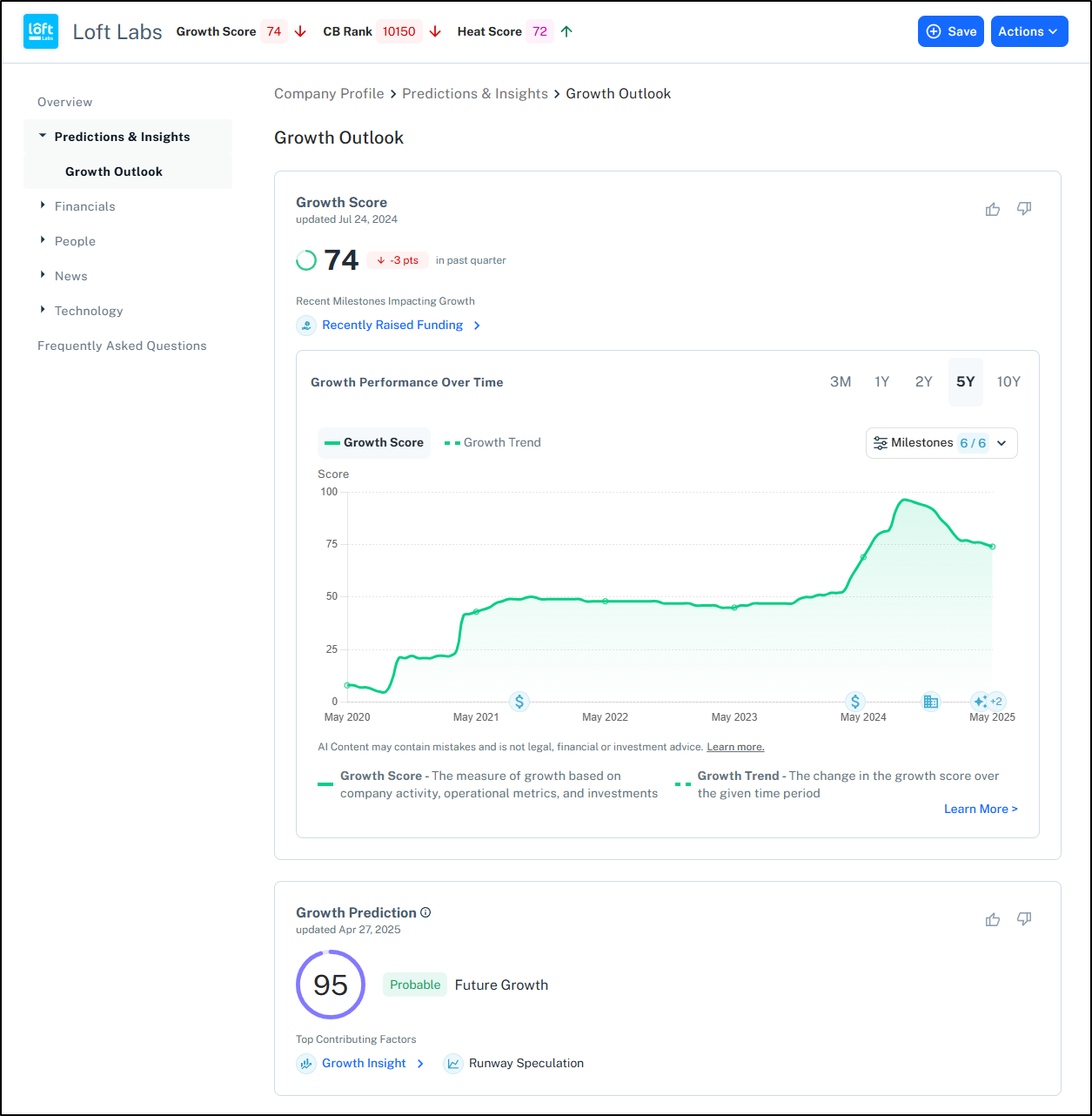

Three years ago, when I last took a look at vClusters by Loft.sh, I noted that they were in seed funding and at around 11-50 employees. It seems that has not changed.

However, according to Crunchbase, they are very likely positioned to get acquired at this stage.

That said, the founders, Lukas Gentele and Fabian Kramm, are still there and in the same roles (CEO and CTO, respectively).

vCluster

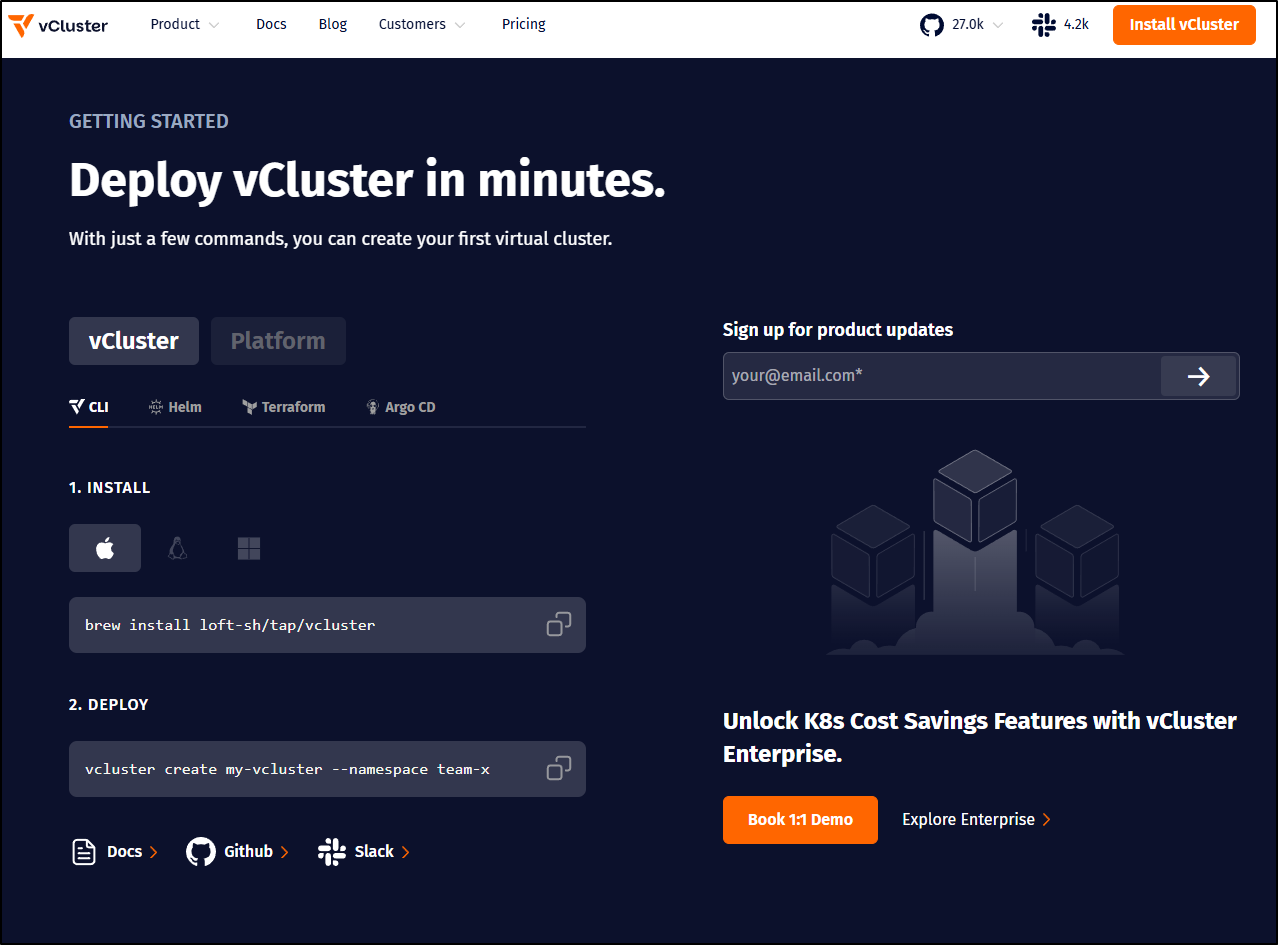

We can head over to vcluster.com to get started. The install steps have far more options now, including Homebrew, Helm, Terraform/OpenTofu and ArgoCD.

Homebrew

I’ll start with homebrew since I always prefer to brew install things when that option is available.

I first need to brew install loft-sh/tap/vcluster

$ brew install loft-sh/tap/vcluster

==> Auto-updating Homebrew...

Adjust how often this is run with HOMEBREW_AUTO_UPDATE_SECS or disable with

HOMEBREW_NO_AUTO_UPDATE. Hide these hints with HOMEBREW_NO_ENV_HINTS (see `man brew`).

Warning: loft-sh/tap/vcluster 0.23.2 is already installed and up-to-date.

To reinstall 0.23.2, run:

brew reinstall vcluster

$ vcluster --version

vcluster version 0.23.2

I can now create a virtual cluster for “team-sre”

$ vcluster create sre-vcluster --namespace team-sre

07:20:56 warn There is a newer version of vcluster: v0.24.1. Run `vcluster upgrade` to upgrade to the newest version.

07:20:57 info Creating namespace team-sre

07:20:58 info Create vcluster sre-vcluster...

07:20:58 info execute command: helm upgrade sre-vcluster /tmp/vcluster-0.23.2.tgz-389014886 --create-namespace --kubeconfig /tmp/3761679172 --namespace team-sre --install --repository-config='' --values /tmp/1342473163

07:21:06 done Successfully created virtual cluster sre-vcluster in namespace team-sre

07:21:33 info Waiting for vcluster to come up...

07:21:33 info vcluster is waiting, because vcluster pod sre-vcluster-0 has status: Init:0/3

07:21:43 info vcluster is waiting, because vcluster pod sre-vcluster-0 has status: Init:0/3

07:21:54 info vcluster is waiting, because vcluster pod sre-vcluster-0 has status: Init:0/3

07:22:05 info vcluster is waiting, because vcluster pod sre-vcluster-0 has status: Init:0/3

07:22:16 info vcluster is waiting, because vcluster pod sre-vcluster-0 has status: Init:1/3

07:22:27 info vcluster is waiting, because vcluster pod sre-vcluster-0 has status: Init:1/3

07:22:37 info vcluster is waiting, because vcluster pod sre-vcluster-0 has status: Init:1/3

07:22:48 info vcluster is waiting, because vcluster pod sre-vcluster-0 has status: Init:2/3

07:22:59 info vcluster is waiting, because vcluster pod sre-vcluster-0 has status: Init:2/3

07:23:53 done vCluster is up and running

07:23:53 info Starting background proxy container...

07:24:07 done Switched active kube context to vcluster_sre-vcluster_team-sre_mac77

- Use `vcluster disconnect` to return to your previous kube context

- Use `kubectl get namespaces` to access the vcluster

When I use kubectx, I can now see a new context has been added and set as the current context

$ kubectx

docker-desktop

ext33

ext77

ext81

int33

mac77

mac81

vcluster_sre-vcluster_team-sre_mac77
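The context name added above appears to follow a `vcluster_<name>_<namespace>_<parent context>` pattern. That pattern is inferred only from the output in this post, not from the vCluster documentation, so treat the following as a minimal sketch. The helper name `parse_vcluster_context` is made up for illustration.

```python
from typing import NamedTuple, Optional


class VClusterContext(NamedTuple):
    name: str
    namespace: str
    parent_context: str


def parse_vcluster_context(context: str) -> Optional[VClusterContext]:
    """Split 'vcluster_<name>_<namespace>_<parent>' into its three parts.

    Assumption: the vCluster name and namespace contain no underscores
    (Kubernetes names cannot), so only the parent context may contain them.
    """
    prefix = "vcluster_"
    if not context.startswith(prefix):
        return None
    parts = context[len(prefix):].split("_", 2)
    if len(parts) != 3:
        return None
    return VClusterContext(*parts)


print(parse_vcluster_context("vcluster_sre-vcluster_team-sre_mac77"))
print(parse_vcluster_context("mac77"))
```

This can be handy for scripting a switch back to the parent context when `vcluster disconnect` is not an option.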

However, I found this didn’t actually work, as the new context pointed at 127.0.0.1 and nothing was listening there.

(Note: I later realized you have to “connect” and then confirm that the vCluster shows as connected. In my case, the connection just was not working from WSL.)

$ kubectl get nodes

E0502 07:25:24.014484 69926 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://127.0.0.1:11491/api?timeout=32s\": dial tcp 127.0.0.1:11491: connect: connection refused"

E0502 07:25:24.016236 69926 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://127.0.0.1:11491/api?timeout=32s\": dial tcp 127.0.0.1:11491: connect: connection refused"

E0502 07:25:24.017679 69926 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://127.0.0.1:11491/api?timeout=32s\": dial tcp 127.0.0.1:11491: connect: connection refused"

E0502 07:25:24.019073 69926 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://127.0.0.1:11491/api?timeout=32s\": dial tcp 127.0.0.1:11491: connect: connection refused"

E0502 07:25:24.020414 69926 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://127.0.0.1:11491/api?timeout=32s\": dial tcp 127.0.0.1:11491: connect: connection refused"

The connection to the server 127.0.0.1:11491 was refused - did you specify the right host or port?

$ kubectl get namespaces

E0502 07:26:02.358694 69965 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://127.0.0.1:11491/api?timeout=32s\": dial tcp 127.0.0.1:11491: connect: connection refused"

E0502 07:26:02.360356 69965 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://127.0.0.1:11491/api?timeout=32s\": dial tcp 127.0.0.1:11491: connect: connection refused"

E0502 07:26:02.361863 69965 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://127.0.0.1:11491/api?timeout=32s\": dial tcp 127.0.0.1:11491: connect: connection refused"

E0502 07:26:02.363553 69965 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://127.0.0.1:11491/api?timeout=32s\": dial tcp 127.0.0.1:11491: connect: connection refused"

E0502 07:26:02.365006 69965 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://127.0.0.1:11491/api?timeout=32s\": dial tcp 127.0.0.1:11491: connect: connection refused"

The connection to the server 127.0.0.1:11491 was refused - did you specify the right host or port?

From the kubeconfig:

server: https://127.0.0.1:11491

name: vcluster_sre-vcluster_team-sre_mac77
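A "connection refused" on 127.0.0.1 usually means nothing is listening on that port, which is separate from any TLS or credential problem. A quick way to check is a plain TCP probe. This is a minimal sketch; `port_is_open` is a hypothetical helper, and 11491 is simply the port from the kubeconfig above.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # Port taken from the kubeconfig entry above; adjust to your own.
    print(port_is_open("127.0.0.1", 11491))
```

If this prints `False` right after `vcluster connect` reports success, the local proxy is likely not reachable from the shell you are in, which would fit the WSL behavior described here.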

If I head back to the mac77 cluster, I can see the “sre-vcluster” was created with services

$ kubectx mac77

Switched to context "mac77".

$ kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 28d

kube-system kube-dns ClusterIP 10.43.0.10 <none> 53/UDP,53/TCP,9153/TCP 28d

kube-system metrics-server ClusterIP 10.43.51.82 <none> 443/TCP 28d

team-sre kube-dns-x-kube-system-x-sre-vcluster ClusterIP 10.43.166.221 <none> 53/UDP,53/TCP,9153/TCP 3m50s

team-sre sre-vcluster ClusterIP 10.43.130.46 <none> 443/TCP,10250/TCP 6m34s

team-sre sre-vcluster-headless ClusterIP None <none> 443/TCP 6m34s

team-sre sre-vcluster-node-isaac-macbookpro ClusterIP 10.43.211.16 <none> 10250/TCP 3m48s

I tried using that server and port just to see:

server: https://192.168.1.78:10250

name: vcluster_sre-vcluster_team-sre_mac77

But that failed to work

$ kubectl --insecure-skip-tls-verify get nodes

E0502 07:30:19.938304 70214 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: the server could not find the requested resource"

E0502 07:30:19.939745 70214 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: the server could not find the requested resource"

E0502 07:30:19.941189 70214 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: the server could not find the requested resource"

E0502 07:30:19.942530 70214 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: the server could not find the requested resource"

E0502 07:30:19.943855 70214 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: the server could not find the requested resource"

Error from server (NotFound): the server could not find the requested resource

I can switch back to my mac77 cluster and see it was created

$ kubectx mac77

Switched to context "mac77".

$ vcluster list

NAME | NAMESPACE | STATUS | VERSION | CONNECTED | AGE

---------------+-----------+---------+---------+-----------+---------

sre-vcluster | team-sre | Running | 0.23.2 | | 10m57s

But trying to connect seems to fail each time

$ vcluster connect --local-port 11491 sre-vcluster

07:32:35 warn There is a newer version of vcluster: v0.24.1. Run `vcluster upgrade` to upgrade to the newest version.

07:32:37 done vCluster is up and running

07:32:37 info Stopping background proxy...

07:32:37 info Starting background proxy container...

07:32:37 done Switched active kube context to vcluster_sre-vcluster_team-sre_mac77

- Use `vcluster disconnect` to return to your previous kube context

- Use `kubectl get namespaces` to access the vcluster

$ kubectl get nodes

E0502 07:32:44.041902 70383 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://127.0.0.1:11491/api?timeout=32s\": dial tcp 127.0.0.1:11491: connect: connection refused"

E0502 07:32:44.043404 70383 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://127.0.0.1:11491/api?timeout=32s\": dial tcp 127.0.0.1:11491: connect: connection refused"

E0502 07:32:44.044838 70383 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://127.0.0.1:11491/api?timeout=32s\": dial tcp 127.0.0.1:11491: connect: connection refused"

E0502 07:32:44.046221 70383 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://127.0.0.1:11491/api?timeout=32s\": dial tcp 127.0.0.1:11491: connect: connection refused"

E0502 07:32:44.047561 70383 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://127.0.0.1:11491/api?timeout=32s\": dial tcp 127.0.0.1:11491: connect: connection refused"

The connection to the server 127.0.0.1:11491 was refused - did you specify the right host or port?

Note: as I said before, moving to my primary Windows host worked with the same steps, so I think this has something to do with my WSL setup

Helm

Let’s try the helm approach

I’ll reset back to the test cluster

$ kubectx mac77

Switched to context "mac77".

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

builder-macbookpro2 Ready <none> 28d v1.31.4+k3s1

isaac-macbookair Ready control-plane,master 28d v1.31.4+k3s1

isaac-macbookpro Ready <none> 28d v1.31.4+k3s1

Now let’s use Helm to install another team

$ helm upgrade --install my-vcluster vcluster \

--repo https://charts.loft.sh \

--namespace team-ops \

--repository-config='' \

--create-namespace

Release "my-vcluster" does not exist. Installing it now.

NAME: my-vcluster

LAST DEPLOYED: Fri May 2 13:36:45 2025

NAMESPACE: team-ops

STATUS: deployed

REVISION: 1

TEST SUITE: None

I can now see it listed

$ vcluster list

NAME | NAMESPACE | STATUS | VERSION | CONNECTED | AGE

---------------+-----------+----------+---------+-----------+----------

my-vcluster | team-ops | Init:0/3 | 0.24.1 | | 18s

sre-vcluster | team-sre | Running | 0.23.2 | | 6h16m2s
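If you want to act on this listing in a script (for example, to wait until a vCluster leaves its `Init` state), the pipe-delimited table is easy to parse. The following is a minimal sketch that works on the text as pasted in this post; `parse_vcluster_list` is a hypothetical helper, and the real column spacing may differ. The CLI may also offer a machine-readable output option, so check `vcluster list --help` before relying on text parsing.

```python
def parse_vcluster_list(text: str) -> list[dict[str, str]]:
    """Turn the pipe-delimited table printed by `vcluster list` into dicts."""
    rows: list[dict[str, str]] = []
    header = None
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, the dashed separator row, and log lines (no pipes).
        if not line or "|" not in line:
            continue
        cells = [cell.strip() for cell in line.split("|")]
        if header is None:
            header = [cell.lower() for cell in cells]
            continue
        rows.append(dict(zip(header, cells)))
    return rows


sample = """\
NAME | NAMESPACE | STATUS | VERSION | CONNECTED | AGE
---------------+-----------+----------+---------+-----------+----------
my-vcluster | team-ops | Init:0/3 | 0.24.1 | | 18s
sre-vcluster | team-sre | Running | 0.23.2 | | 6h16m2s
"""

for row in parse_vcluster_list(sample):
    print(row["name"], row["status"], row["connected"] or "not connected")
```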

Windows

I’ll try to download a release from GitHub Releases.

(Note: I did try to follow their steps for using PowerShell at first, but the IWR (Invoke-WebRequest) just was not finding the right release, so I decided to KISS and just download from GitHub directly.)

I can now try using it to list vClusters

PS C:\Users\isaac> kubectl get nodes

NAME STATUS ROLES AGE VERSION

builder-macbookpro2 Ready <none> 28d v1.31.4+k3s1

isaac-macbookair Ready control-plane,master 28d v1.31.4+k3s1

isaac-macbookpro Ready <none> 28d v1.31.4+k3s1

PS C:\Users\isaac> C:\Users\isaac\Downloads\vcluster-windows-amd64.exe list

NAME | NAMESPACE | STATUS | VERSION | CONNECTED | AGE

---------------+-----------+---------+---------+-----------+----------

my-vcluster | team-ops | Running | 0.24.1 | | 13m16s

sre-vcluster | team-sre | Running | 0.23.2 | | 6h29m0s

From Windows, connecting to the vCluster worked

PS C:\Users\isaac> C:\Users\isaac\Downloads\vcluster-windows-amd64.exe connect sre-vcluster

13:51:27 warn There is a newer version of vcluster: v0.24.1. Run `vcluster upgrade` to upgrade to the newest version.

13:51:28 done vCluster is up and running

13:51:29 info Stopping background proxy...

13:51:29 info Starting background proxy container...

13:51:30 done Switched active kube context to vcluster_sre-vcluster_team-sre_mac77

- Use `vcluster disconnect` to return to your previous kube context

- Use `kubectl get namespaces` to access the vcluster

PS C:\Users\isaac> kubectl get namespaces

NAME STATUS AGE

default Active 6h28m

kube-node-lease Active 6h28m

kube-public Active 6h28m

kube-system Active 6h28m

I confirmed it does show as 127.0.0.1 in the config

server: https://127.0.0.1:11189

name: vcluster_sre-vcluster_team-sre_mac77

This time, however, we can clearly see we are connected

PS C:\Users\isaac\.kube> C:\Users\isaac\Downloads\vcluster-windows-amd64.exe list

NAME | NAMESPACE | STATUS | VERSION | CONNECTED | AGE

---------------+-----------+---------+---------+-----------+-----------

my-vcluster | team-ops | Running | 0.24.1 | | 16m11s

sre-vcluster | team-sre | Running | 0.23.2 | True | 6h31m55s

13:53:02 info Run `vcluster disconnect` to switch back to the parent context

We can see that if we make a namespace, it shows up in the vCluster

PS C:\Users\isaac\.kube> kubectl create ns fakens

namespace/fakens created

PS C:\Users\isaac\.kube> kubectl get nodes

NAME STATUS ROLES AGE VERSION

isaac-macbookpro Ready <none> 6h32m v1.31.4

PS C:\Users\isaac\.kube> kubectl get ns

NAME STATUS AGE

default Active 6h32m

fakens Active 2m20s

kube-node-lease Active 6h32m

kube-public Active 6h32m

kube-system Active 6h32m

But it only exists in the vCluster. If we head back to the hosting cluster, we see only the namespaces that host our vClusters (not fakens), and we see all of the nodes

PS C:\Users\isaac\.kube> kubectl get ns

NAME STATUS AGE

default Active 28d

kube-node-lease Active 28d

kube-public Active 28d

kube-system Active 28d

team-ops Active 18m

team-sre Active 6h34m

PS C:\Users\isaac\.kube> kubectl get nodes

NAME STATUS ROLES AGE VERSION

builder-macbookpro2 Ready <none> 28d v1.31.4+k3s1

isaac-macbookair Ready control-plane,master 28d v1.31.4+k3s1

isaac-macbookpro Ready <none> 28d v1.31.4+k3s1

Platform

Let’s add the vCluster Platform via Helm

$ helm upgrade vcluster-platform vcluster-platform --install \

--repo https://charts.loft.sh/ \

--namespace vcluster-platform \

--create-namespace

I can then see the services created

$ kubectl get svc -n vcluster-platform

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

loft ClusterIP 10.43.142.75 <none> 80/TCP,443/TCP 46s

loft-apiservice ClusterIP 10.43.199.69 <none> 443/TCP 46s

loft-apiservice-agent ClusterIP 10.43.154.222 <none> 443/TCP 46s

loft-ingress-wakeup-agent ClusterIP 10.43.204.162 <none> 9090/TCP 46s

loft-webhook-agent ClusterIP 10.43.26.102 <none> 443/TCP 46s

I can fire off a kubectl port-forward to the main vCluster service

PS C:\Users\isaac\Workspaces> kubectl port-forward svc/loft 8088:80 -n vcluster-platform

Forwarding from 127.0.0.1:8088 -> 8080

Forwarding from [::1]:8088 -> 8080

Handling connection for 8088

Handling connection for 8088

Handling connection for 8088

Handling connection for 8088

Handling connection for 8088

Handling connection for 8088

We can fetch our username and password from the Helm release values

$ helm get values --all vcluster-platform -n vcluster-platform

COMPUTED VALUES:

additionalCA: ""

admin:

create: true

password: my-password

username: admin

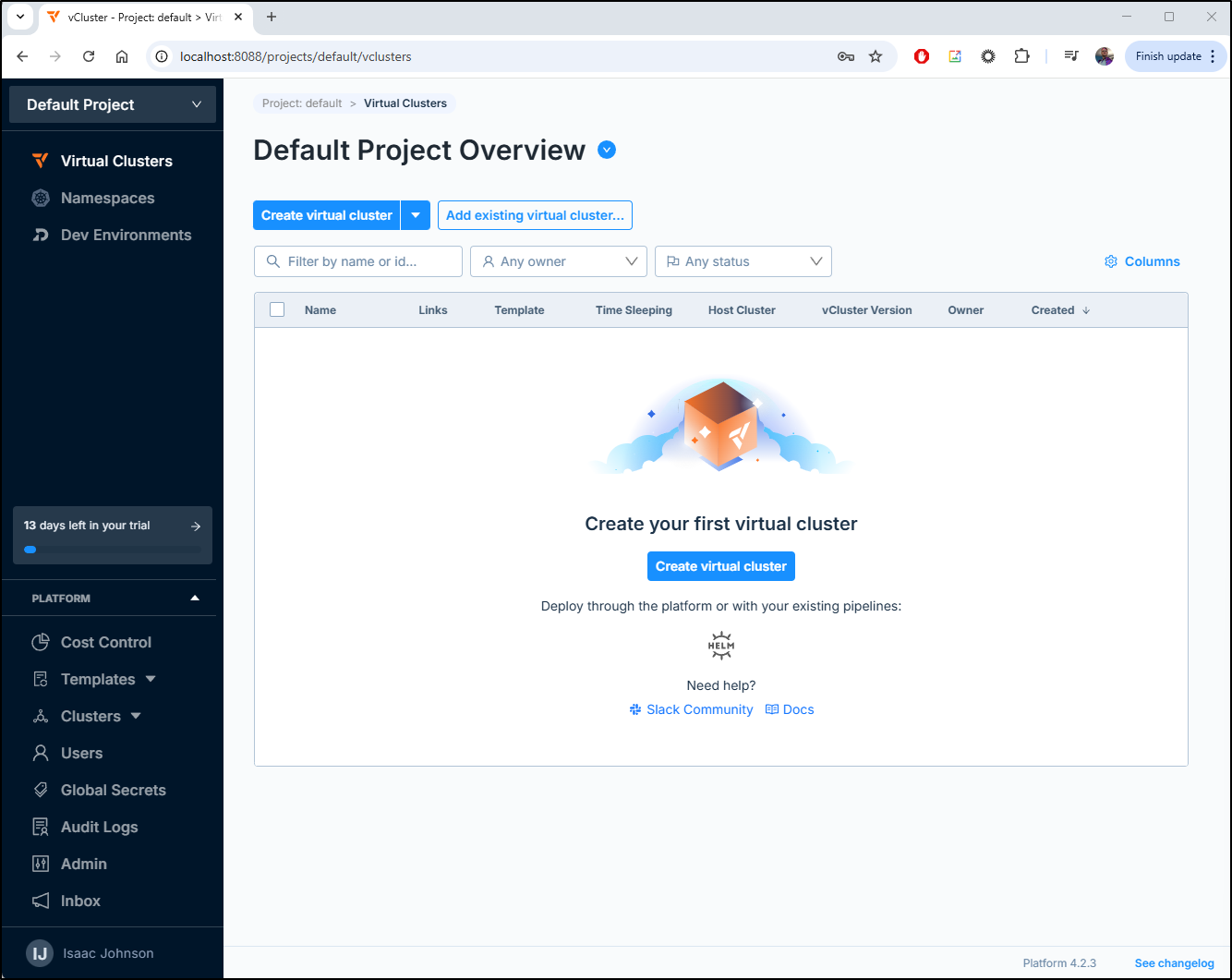

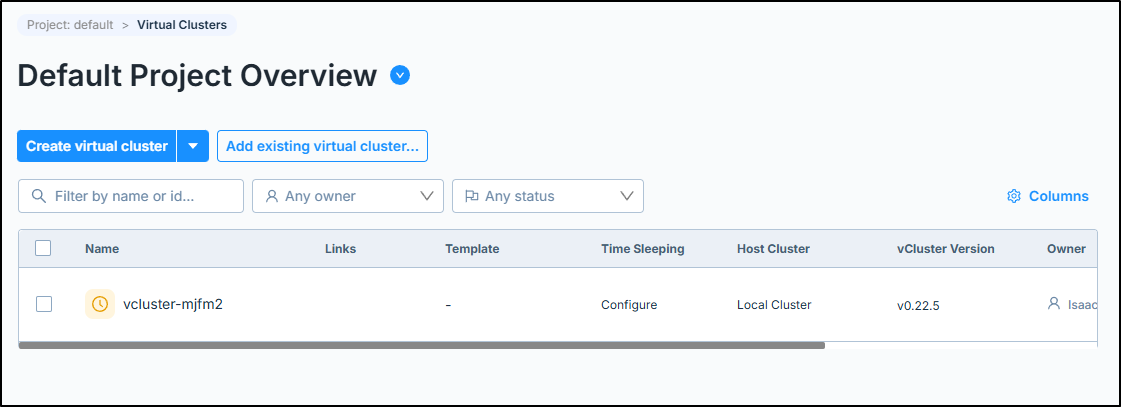

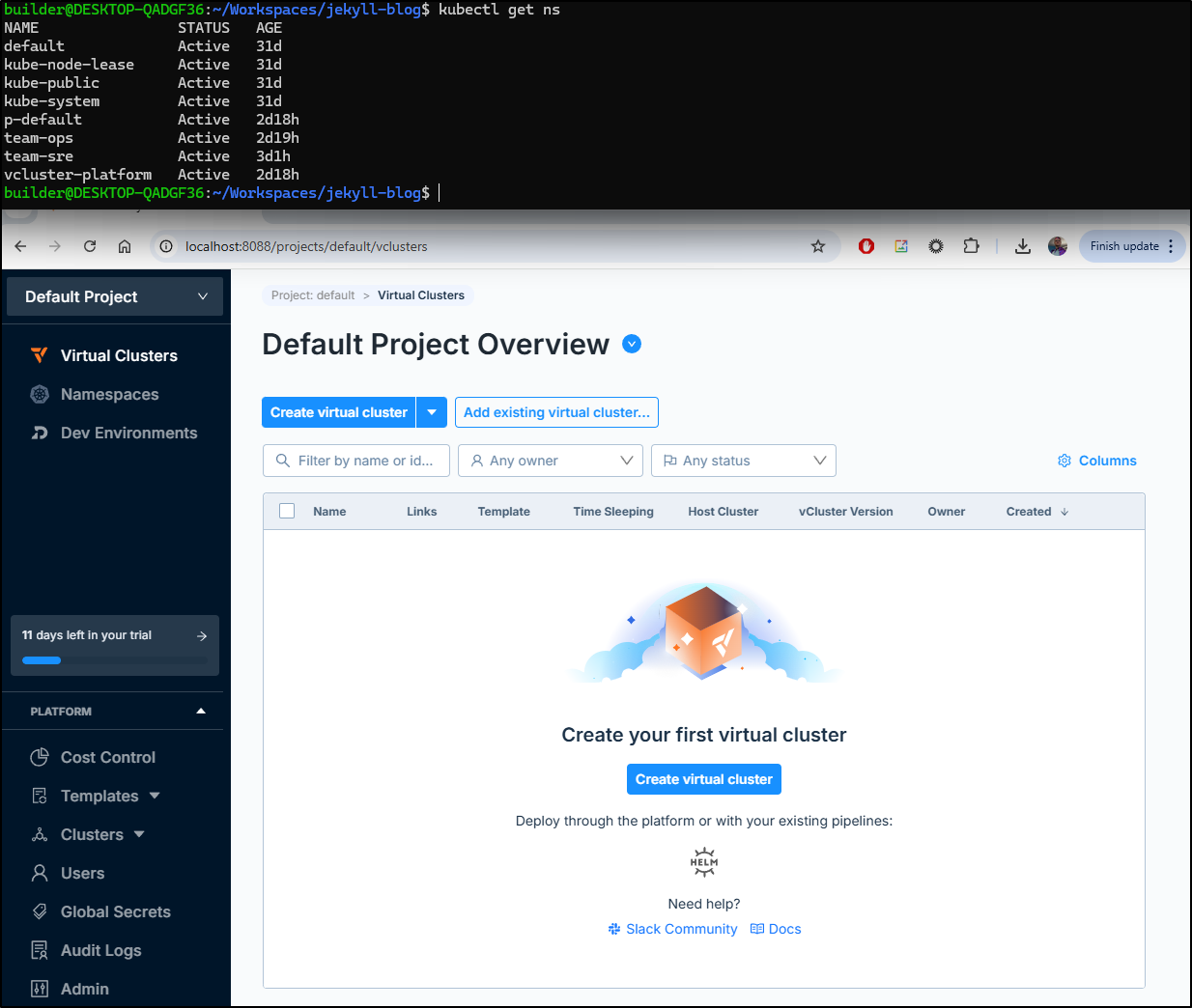

I can now see my clusters list, which is empty for this platform

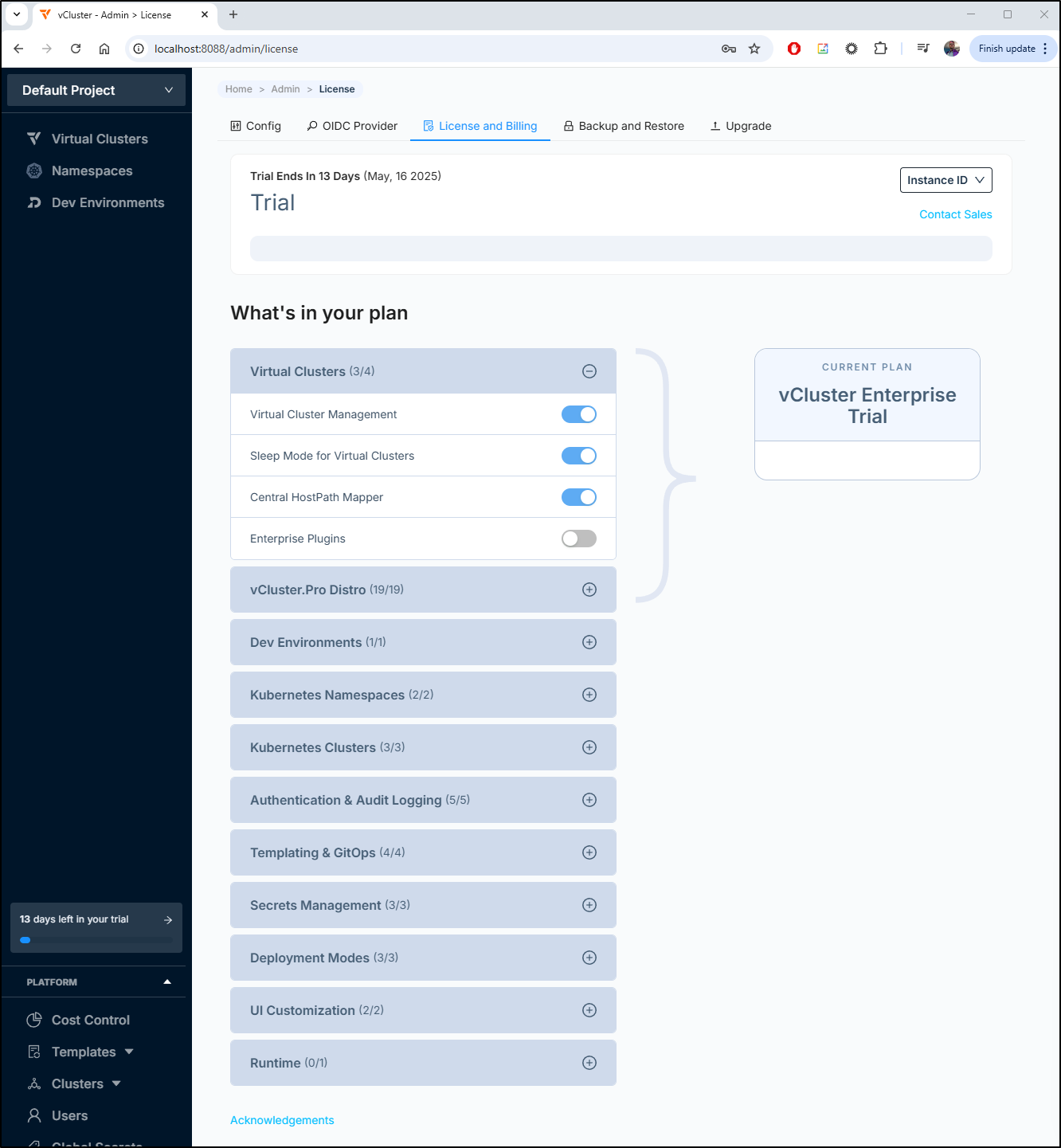

I was a bit worried about the note “13 days left in your trial”, only to see it opts in to “vCluster Enterprise Trial” by default

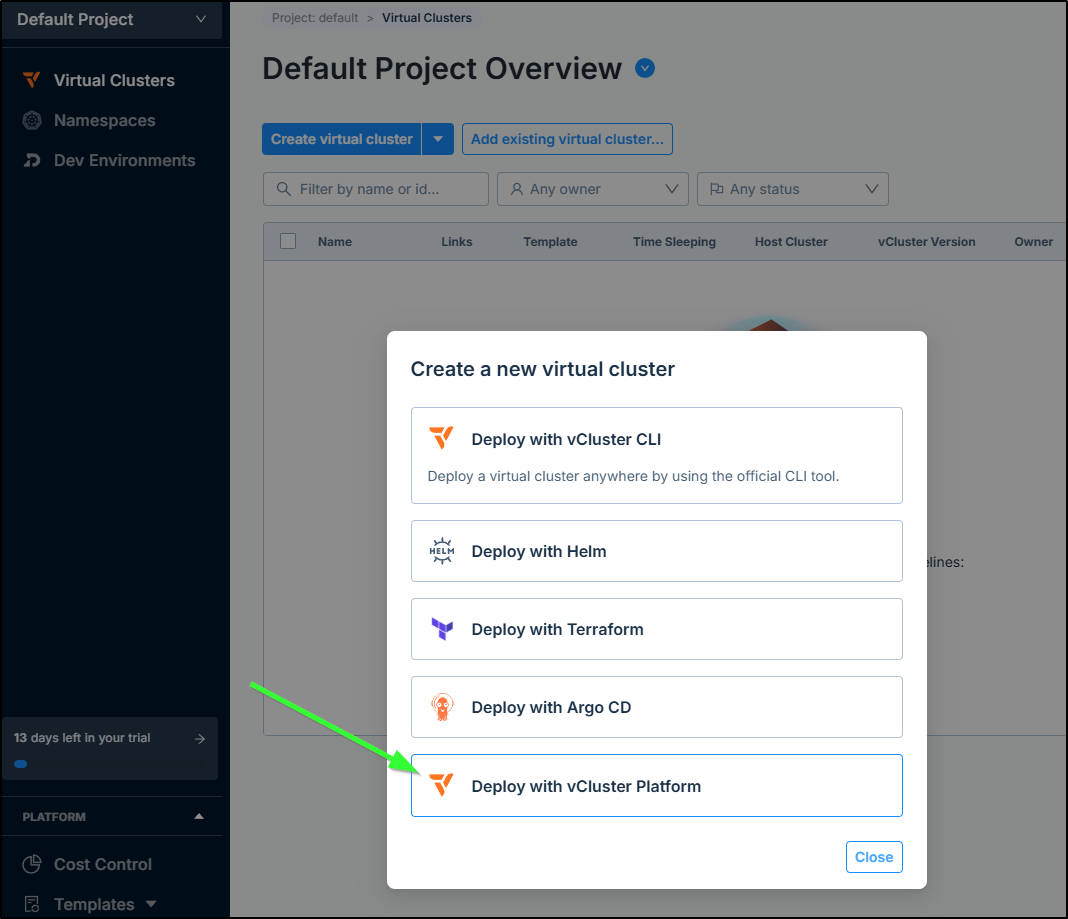

I’ll create a new cluster with Platform

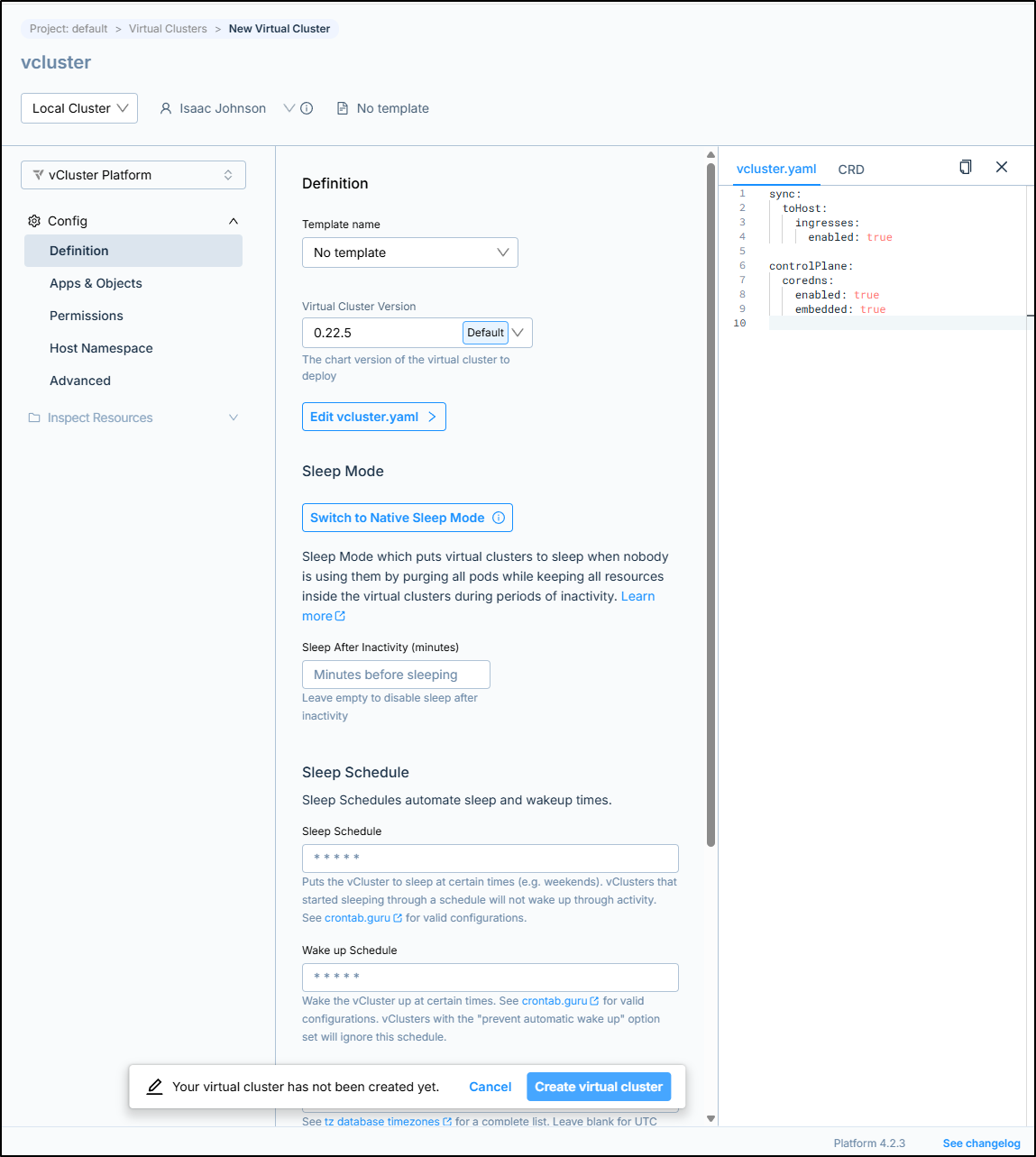

I’ll now use default settings for my vCluster

This starts the create process.

Soon it was created.

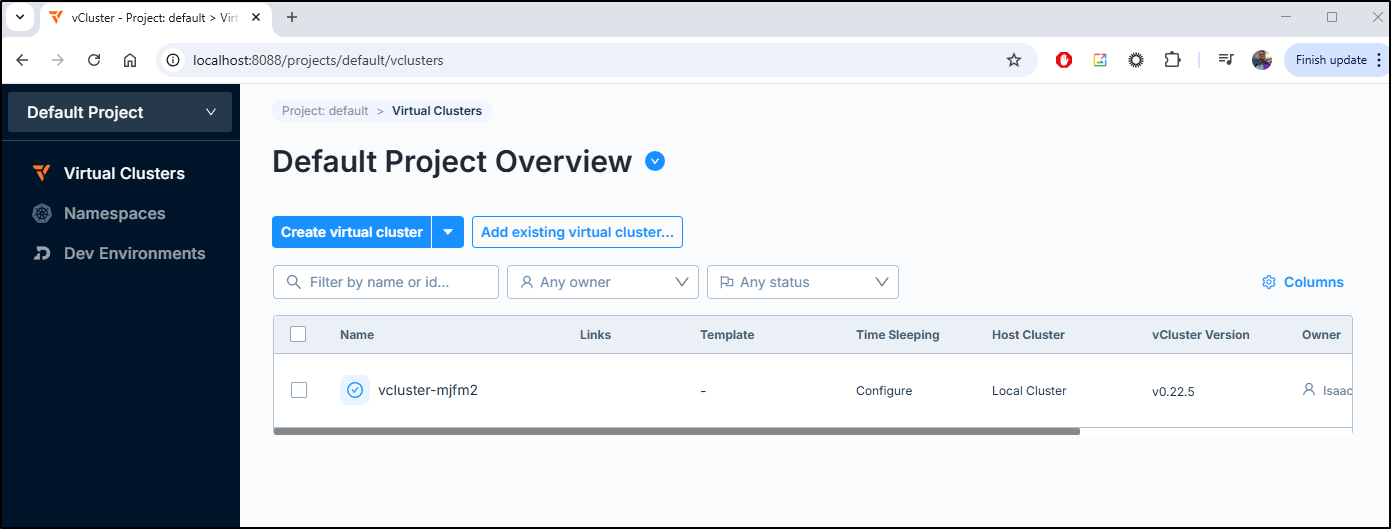

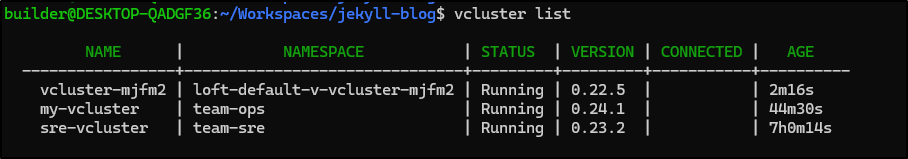

I want to point out that we can see this with the vCluster CLI as well!

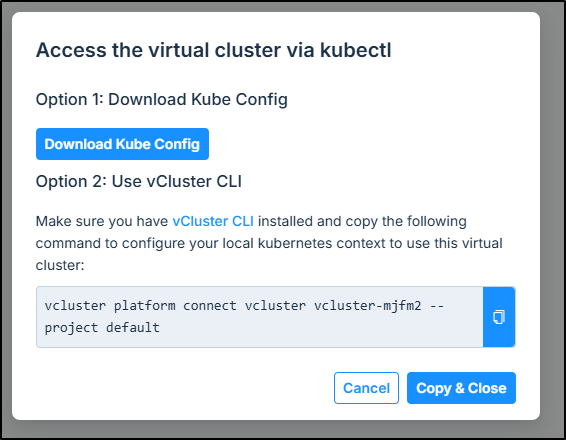

However, in the UI, we can not only see the vcluster command but also download a kubeconfig.

Trying the downloaded kubeconfig did not work

PS C:\Users\isaac> kubectl get nodes --kubeconfig C:\Users\isaac\Downloads\kubeconfig.yaml

Unable to connect to the server: http: server gave HTTP response to HTTPS client

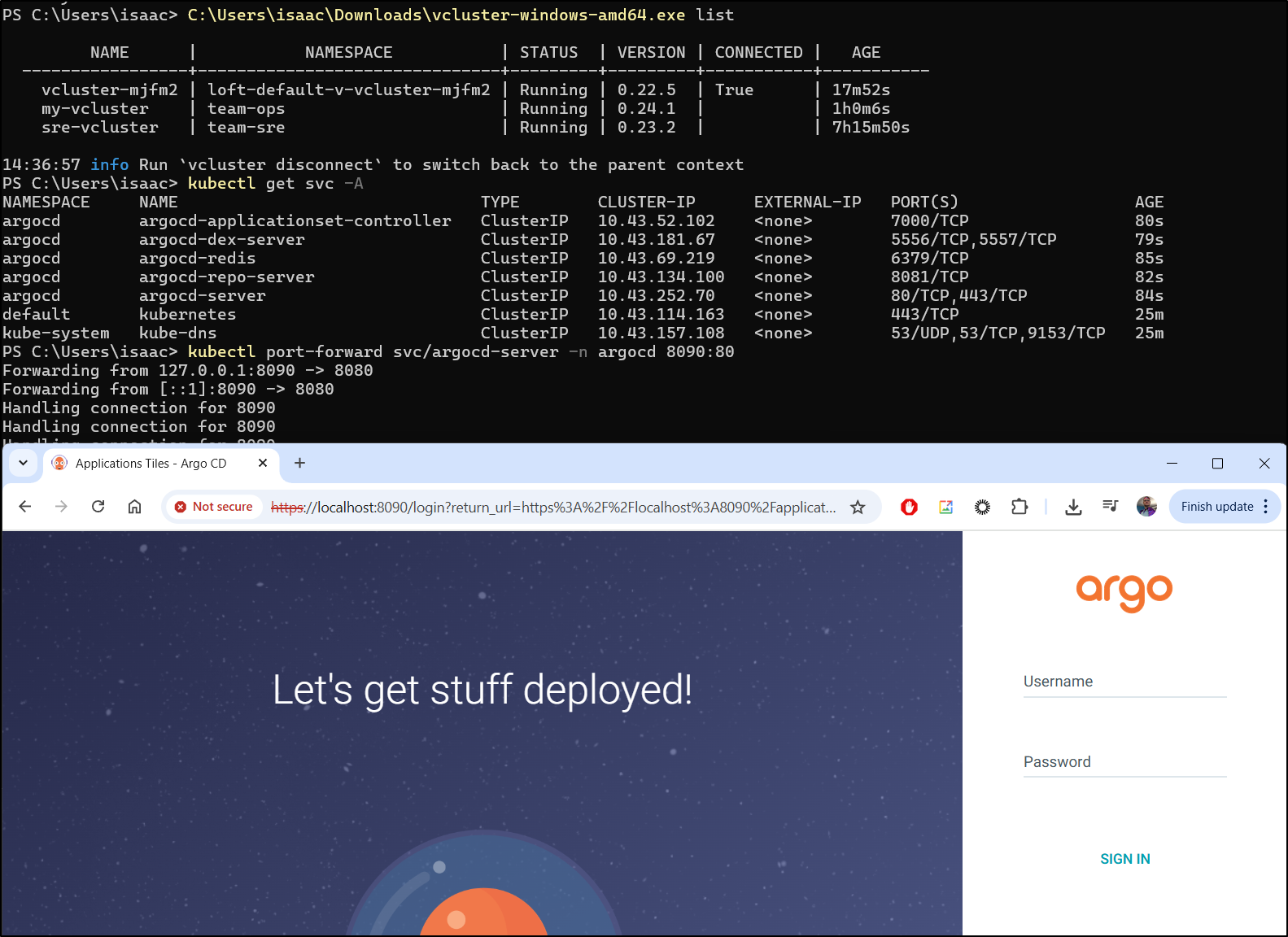

However, using the binary to connect did

PS C:\Users\isaac> C:\Users\isaac\Downloads\vcluster-windows-amd64.exe list

NAME | NAMESPACE | STATUS | VERSION | CONNECTED | AGE

-----------------+-------------------------------+---------+---------+-----------+-----------

vcluster-mjfm2 | loft-default-v-vcluster-mjfm2 | Running | 0.22.5 | | 16m43s

my-vcluster | team-ops | Running | 0.24.1 | | 58m57s

sre-vcluster | team-sre | Running | 0.23.2 | | 7h14m41s

PS C:\Users\isaac> C:\Users\isaac\Downloads\vcluster-windows-amd64.exe connect vcluster-mjfm2

14:35:56 warn There is a newer version of vcluster: v0.24.1. Run `vcluster upgrade` to upgrade to the newest version.

14:35:58 done vCluster is up and running

14:35:58 info Starting background proxy container...

14:35:59 done Switched active kube context to vcluster_vcluster-mjfm2_loft-default-v-vcluster-mjfm2_mac77

- Use `vcluster disconnect` to return to your previous kube context

- Use `kubectl get namespaces` to access the vcluster

I can see I’m connected

PS C:\Users\isaac> C:\Users\isaac\Downloads\vcluster-windows-amd64.exe list

NAME | NAMESPACE | STATUS | VERSION | CONNECTED | AGE

-----------------+-------------------------------+---------+---------+-----------+-----------

vcluster-mjfm2 | loft-default-v-vcluster-mjfm2 | Running | 0.22.5 | True | 17m52s

my-vcluster | team-ops | Running | 0.24.1 | | 1h0m6s

sre-vcluster | team-sre | Running | 0.23.2 | | 7h15m50s

14:36:57 info Run `vcluster disconnect` to switch back to the parent context

and view things like namespaces

PS C:\Users\isaac> kubectl get nodes

No resources found

PS C:\Users\isaac> kubectl get ns

NAME STATUS AGE

default Active 16m

kube-node-lease Active 16m

kube-public Active 16m

kube-system Active 16m

DevPod Integration?

I saw a “Dev Environments” section that got me excited that we could fire off DevPods in here, but it seems more like just a static page to suggest we use DevPod

I really thought I would see, at the least, a “DevPod” app install that we could fire off from Apps, but no such luck

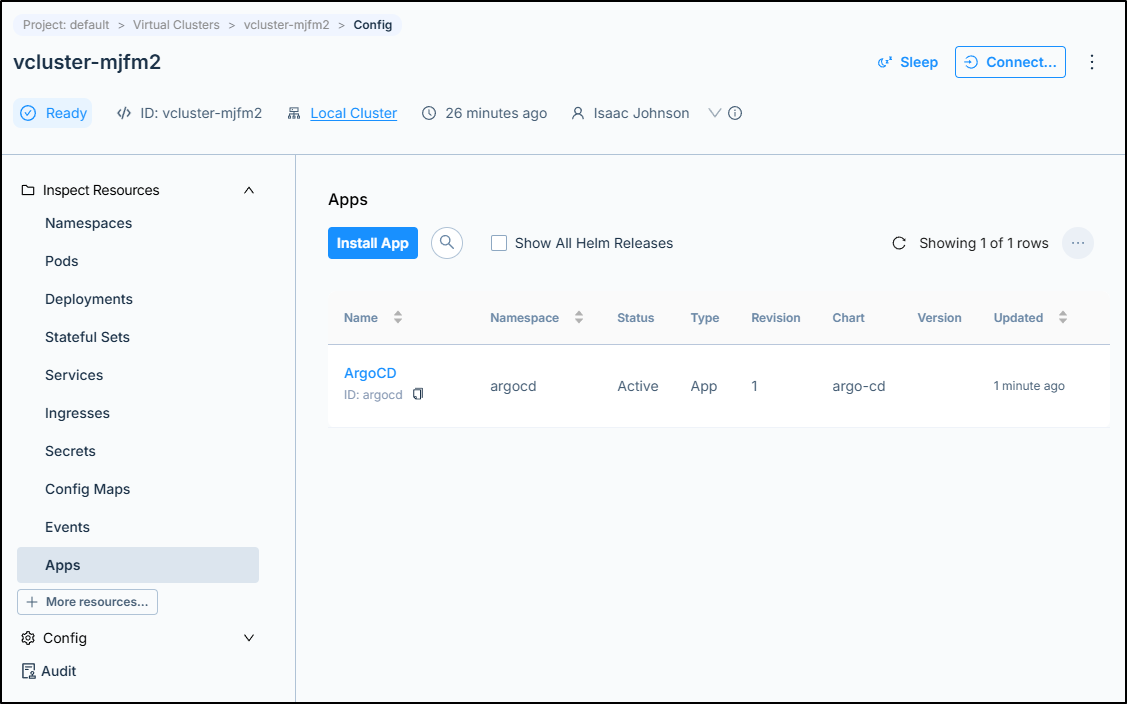

Apps

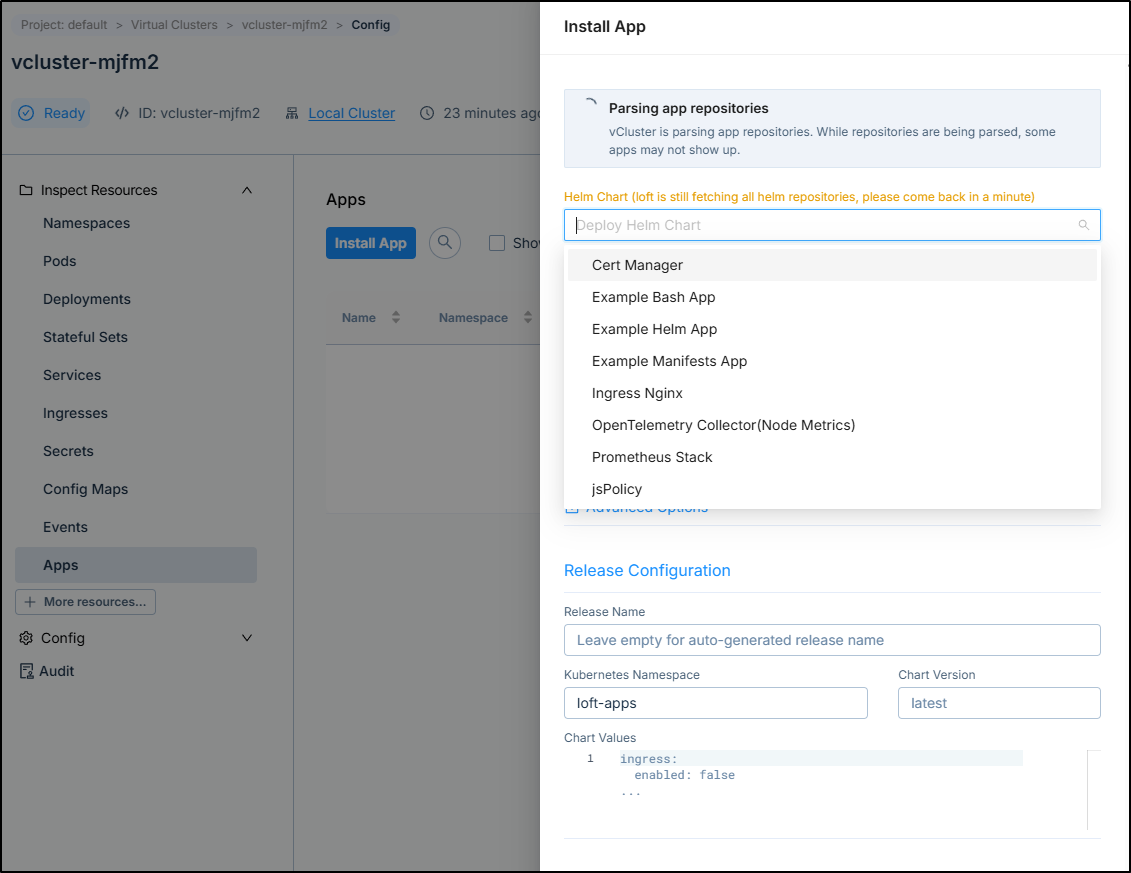

We can fire apps into our vCluster using the platform UI if desired.

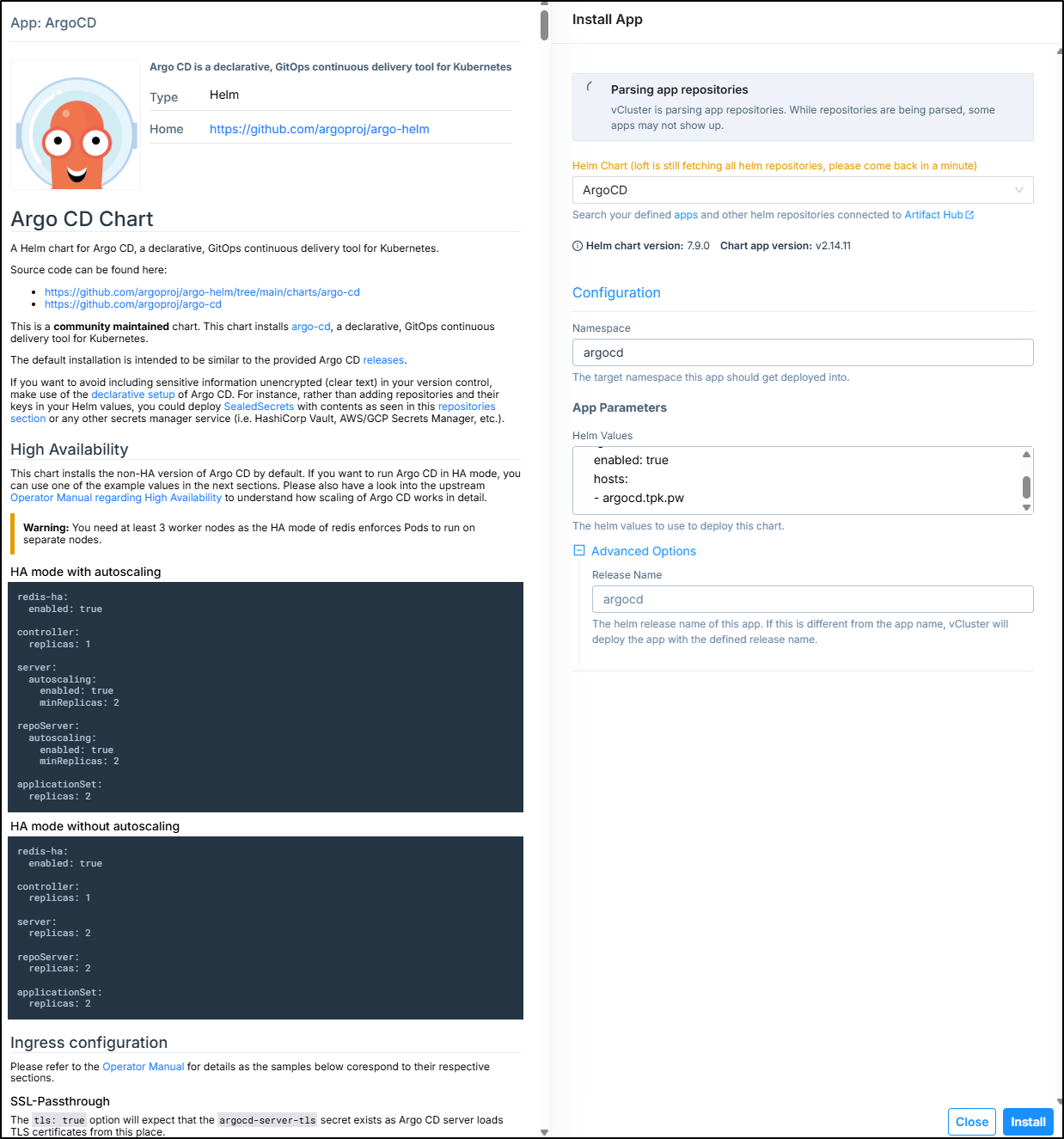

For instance, if we wished to do GitOps via ArgoCD, we could use the Argo CD chart.

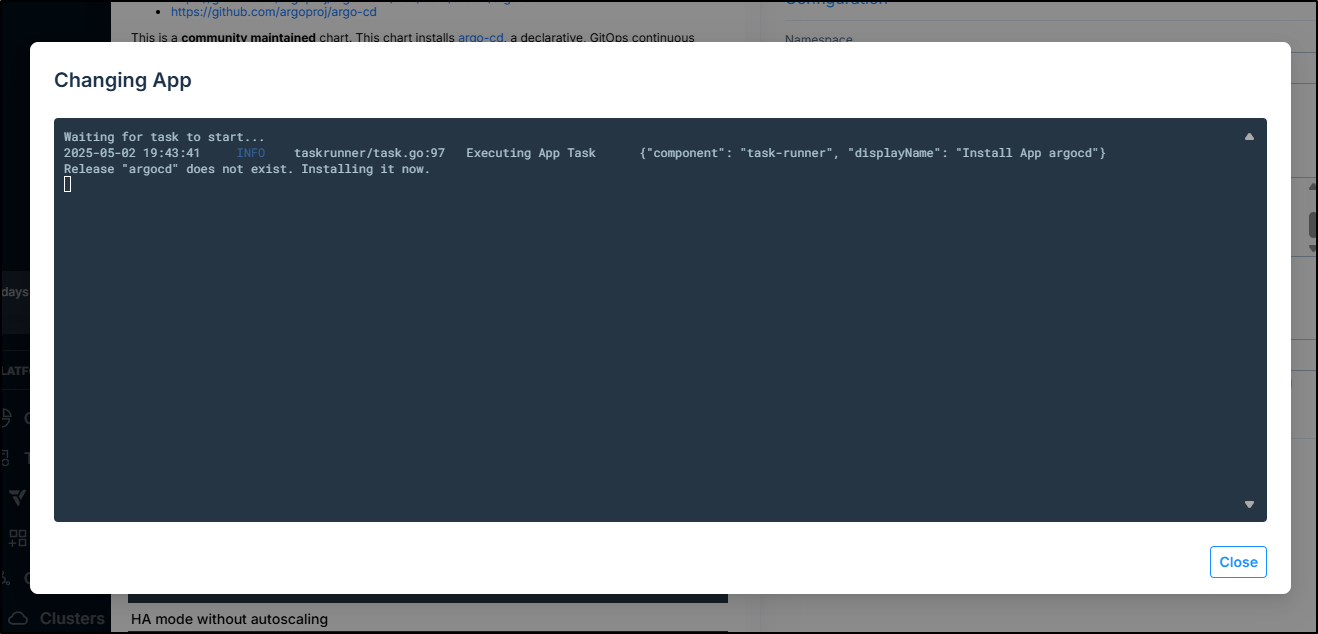

Clicking install pops up a window where we can see it install

Once done, I can see it is listed in the “Apps” section of my vCluster

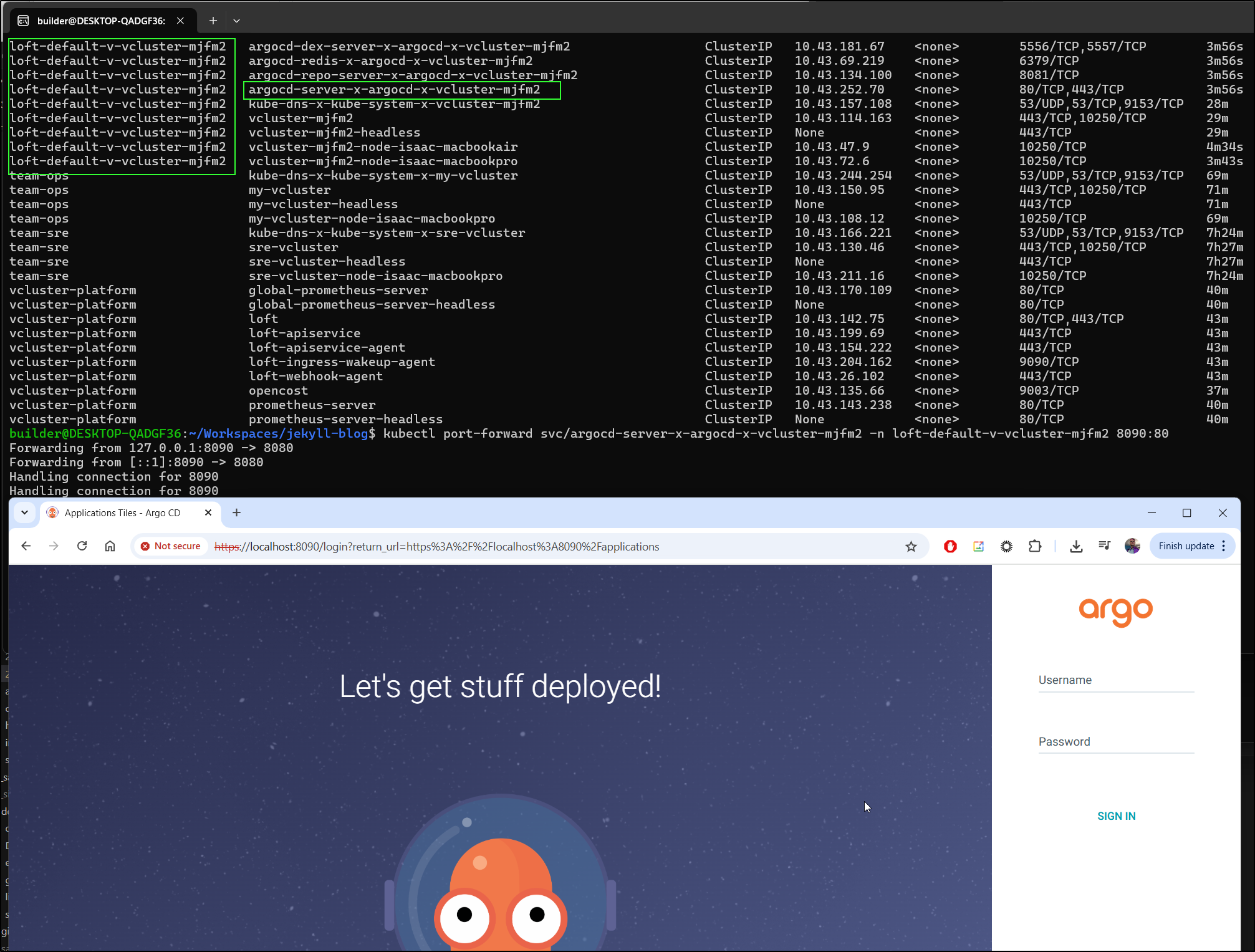

and as you can see, since I’m connected to my vCluster, I can port-forward to the service to access ArgoCD

I can also reach my services from the hosting cluster as the services are exposed in the vCluster namespace.

For instance, I can port-forward to that same ArgoCD by way of the primary mac77 kube context:

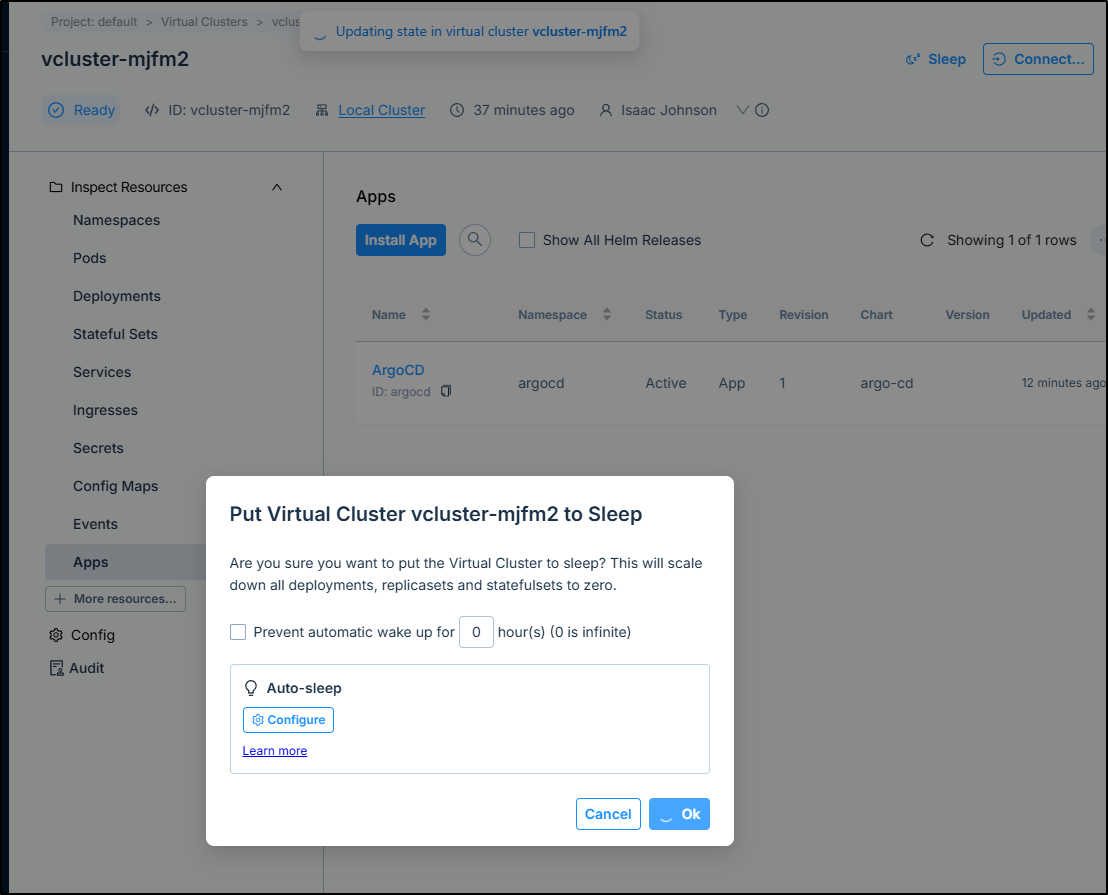

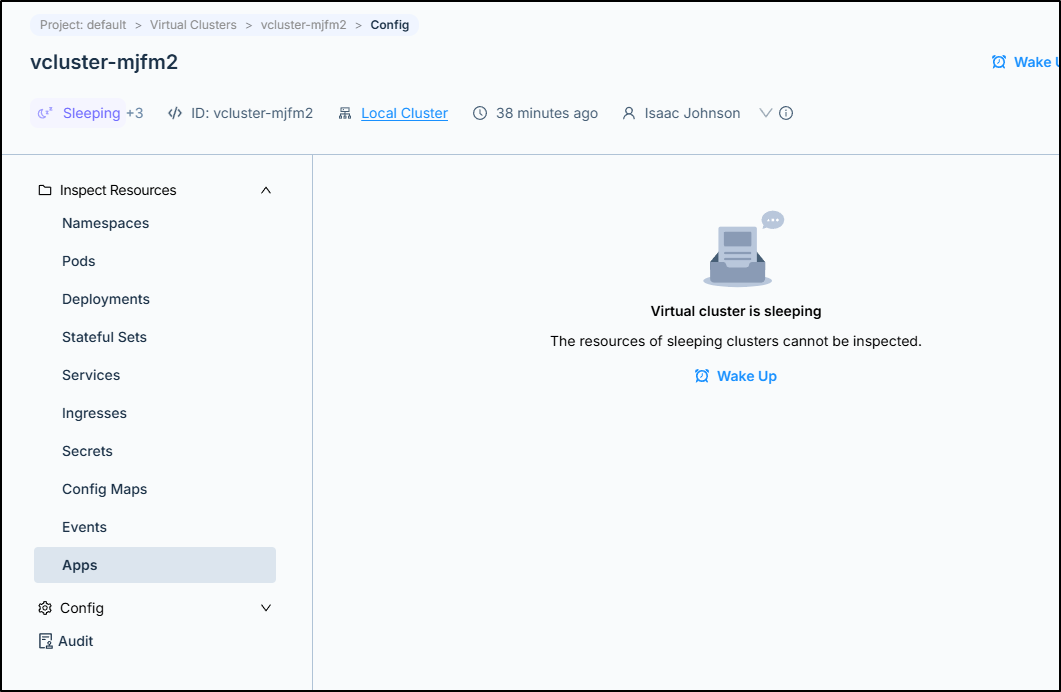

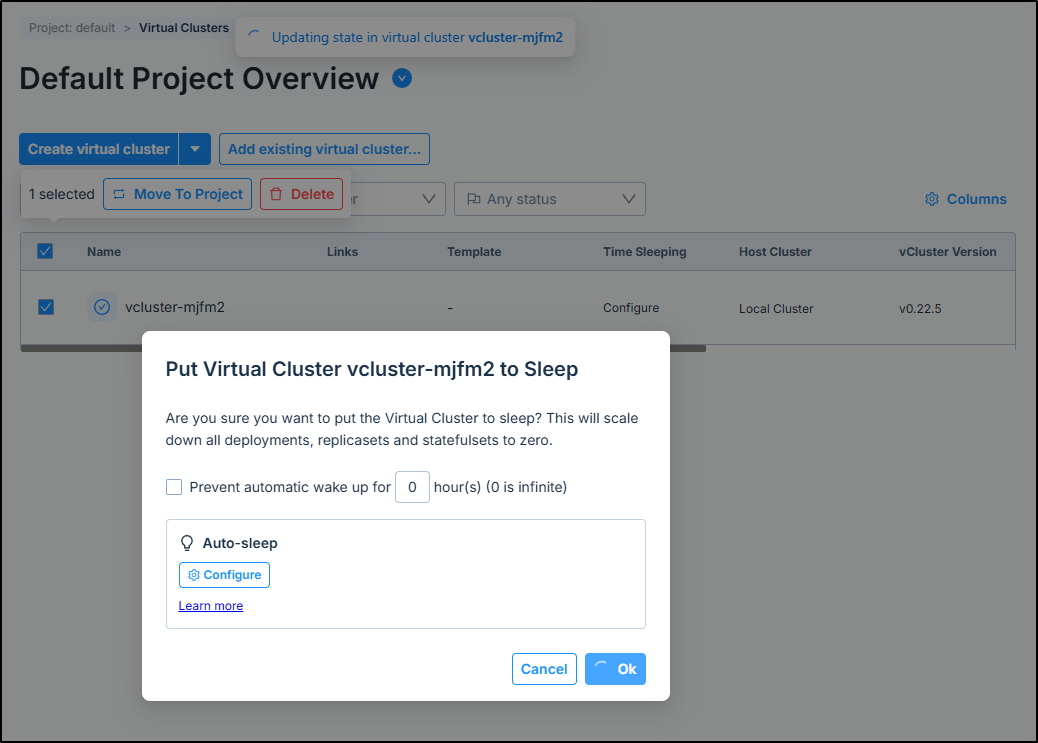

Sleep

One of the features that is new to me is “sleep”, which lets us put the whole cluster to bed and scale it to zero when not in use:

We can now see the vCluster is taking a nap:

And more importantly, we can see the pods went from:

$ kubectl get po -n loft-default-v-vcluster-mjfm2

NAME READY STATUS RESTARTS AGE

argocd-application-controller-0-x-argocd-x-vcluster-mjfm2 1/1 Running 0 11m

argocd-applicationset-controller-b9f789649-5hsfj-x-a-b03ec82d91 1/1 Running 0 11m

argocd-dex-server-58b585f5b9-t9x5g-x-argocd-x-vcluster-mjfm2 1/1 Running 0 11m

argocd-notifications-controller-f66d57bcf-fldwd-x-ar-2214f8b04e 1/1 Running 0 11m

argocd-redis-9b66c678-cbhnl-x-argocd-x-vcluster-mjfm2 1/1 Running 0 11m

argocd-repo-server-79fbf7c5c8-8pnmn-x-argocd-x-vcluster-mjfm2 1/1 Running 0 11m

argocd-server-79b96f9f4f-ldv6l-x-argocd-x-vcluster-mjfm2 1/1 Running 0 11m

vcluster-mjfm2-0 1/1 Running 0 36m

to

$ kubectl get po -n loft-default-v-vcluster-mjfm2

No resources found in loft-default-v-vcluster-mjfm2 namespace.

This is a great way to turn vClusters on and off for test projects and temporary environments.
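A side note on the pod names in the listing above: the workloads synced to the host namespace appear to be named `<pod>-x-<namespace>-x-<vcluster>`, while longer names are shortened and end in what looks like a hash. The sketch below maps a host-side name back to its name and namespace inside the vCluster. It assumes that pattern (inferred from the output in this post) and deliberately returns `None` for shortened names, since those cannot be reversed. `split_synced_name` is a hypothetical helper.

```python
from typing import Optional


def split_synced_name(host_name: str, vcluster: str) -> Optional[tuple[str, str]]:
    """Return (virtual_name, virtual_namespace) for a synced host object name.

    Assumes the '<name>-x-<namespace>-x-<vcluster>' pattern; returns None
    for anything that does not fit it, such as shortened names.
    """
    suffix = f"-x-{vcluster}"
    if not host_name.endswith(suffix):
        return None
    stem = host_name[: -len(suffix)]
    name, sep, namespace = stem.rpartition("-x-")
    if not sep or not name:
        return None
    return name, namespace


for pod in [
    "argocd-redis-9b66c678-cbhnl-x-argocd-x-vcluster-mjfm2",
    "argocd-applicationset-controller-b9f789649-5hsfj-x-a-b03ec82d91",
    "vcluster-mjfm2-0",
]:
    print(pod, "->", split_synced_name(pod, "vcluster-mjfm2"))
```

The same pattern shows up in the services of the first vCluster, such as `kube-dns-x-kube-system-x-sre-vcluster`.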

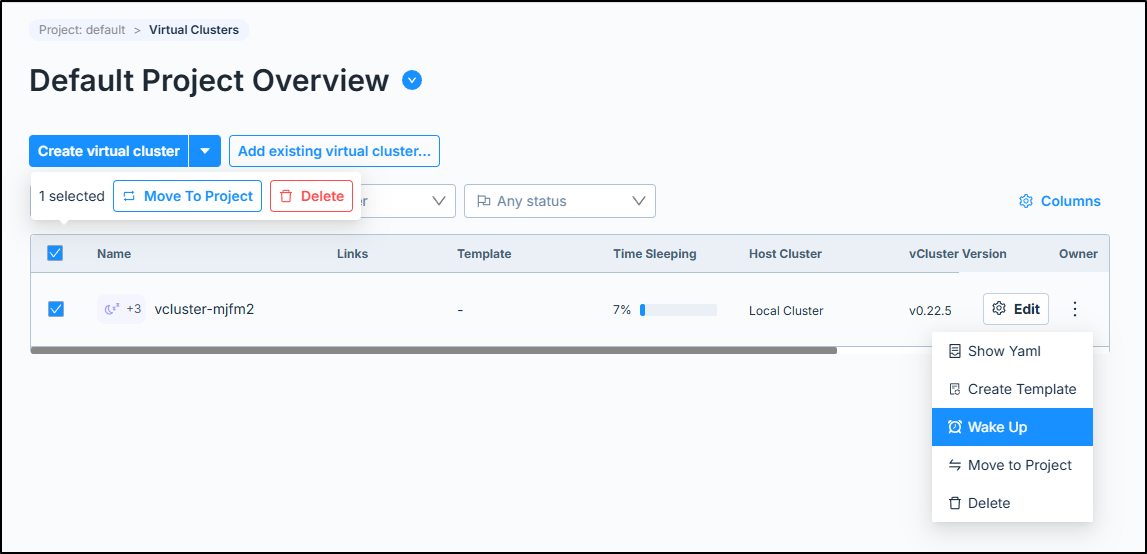

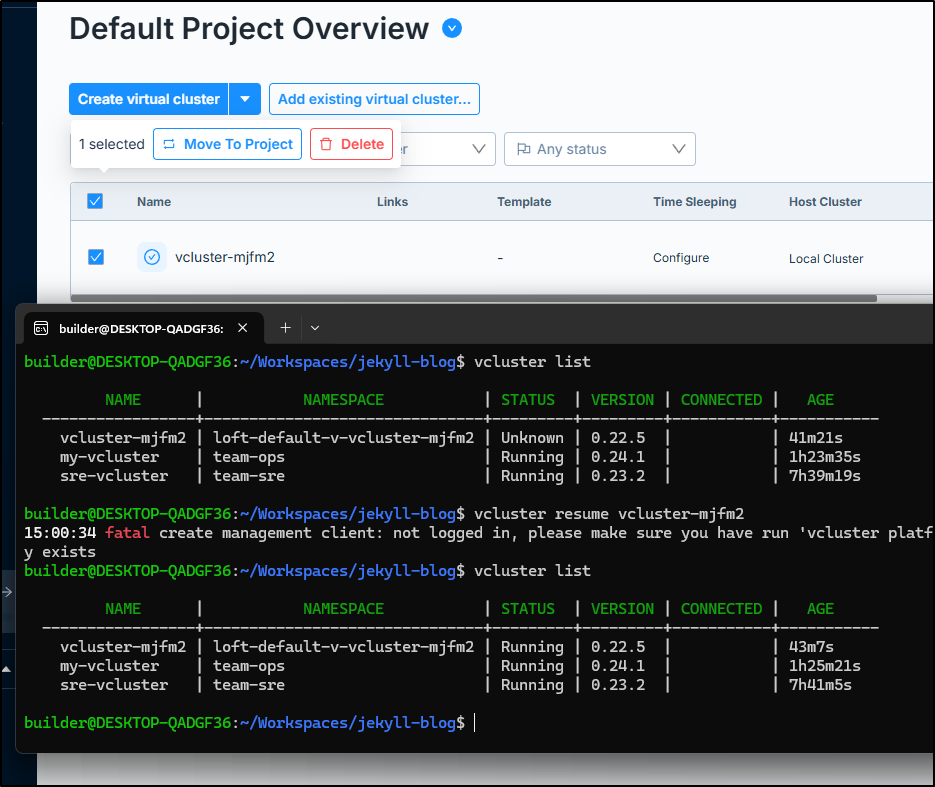

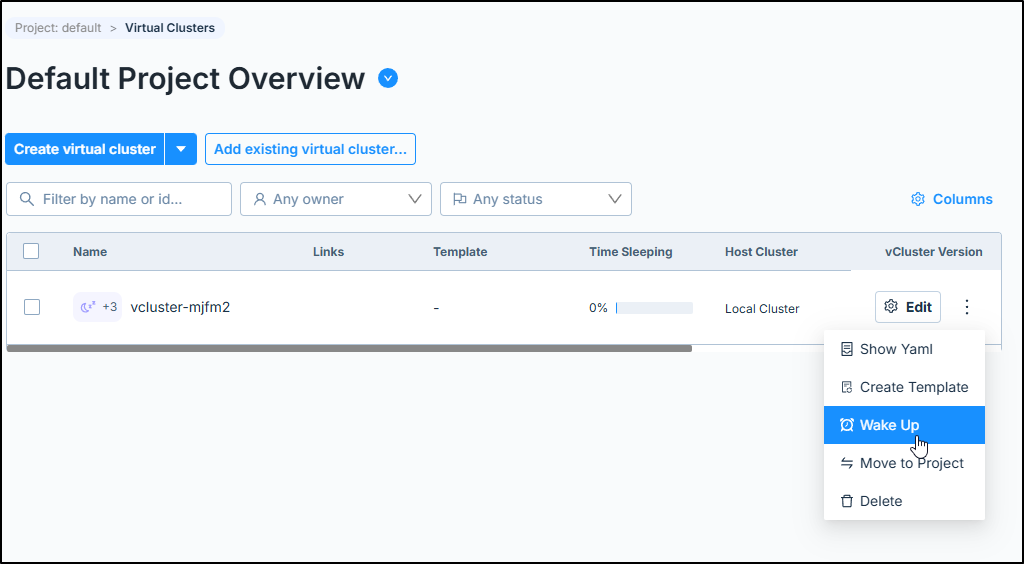

We can use the Platform manager to “Wake up”

In the vcluster CLI, the state will show “Unknown”.

Since I’m not authenticated as a Platform admin in the CLI, I cannot just “resume” a “sleeping” cluster this way without logging in first

$ vcluster list

NAME | NAMESPACE | STATUS | VERSION | CONNECTED | AGE

-----------------+-------------------------------+---------+---------+-----------+-----------

vcluster-mjfm2 | loft-default-v-vcluster-mjfm2 | Unknown | 0.22.5 | | 41m21s

my-vcluster | team-ops | Running | 0.24.1 | | 1h23m35s

sre-vcluster | team-sre | Running | 0.23.2 | | 7h39m19s

$ vcluster resume vcluster-mjfm2

15:00:34 fatal create management client: not logged in, please make sure you have run 'vcluster platform start' to create one or 'vcluster login [vcluster-pro-url]' if one already exists

I noticed that the “Age” counts from creation; it does not reset on resume, nor does it discount any “sleep” time

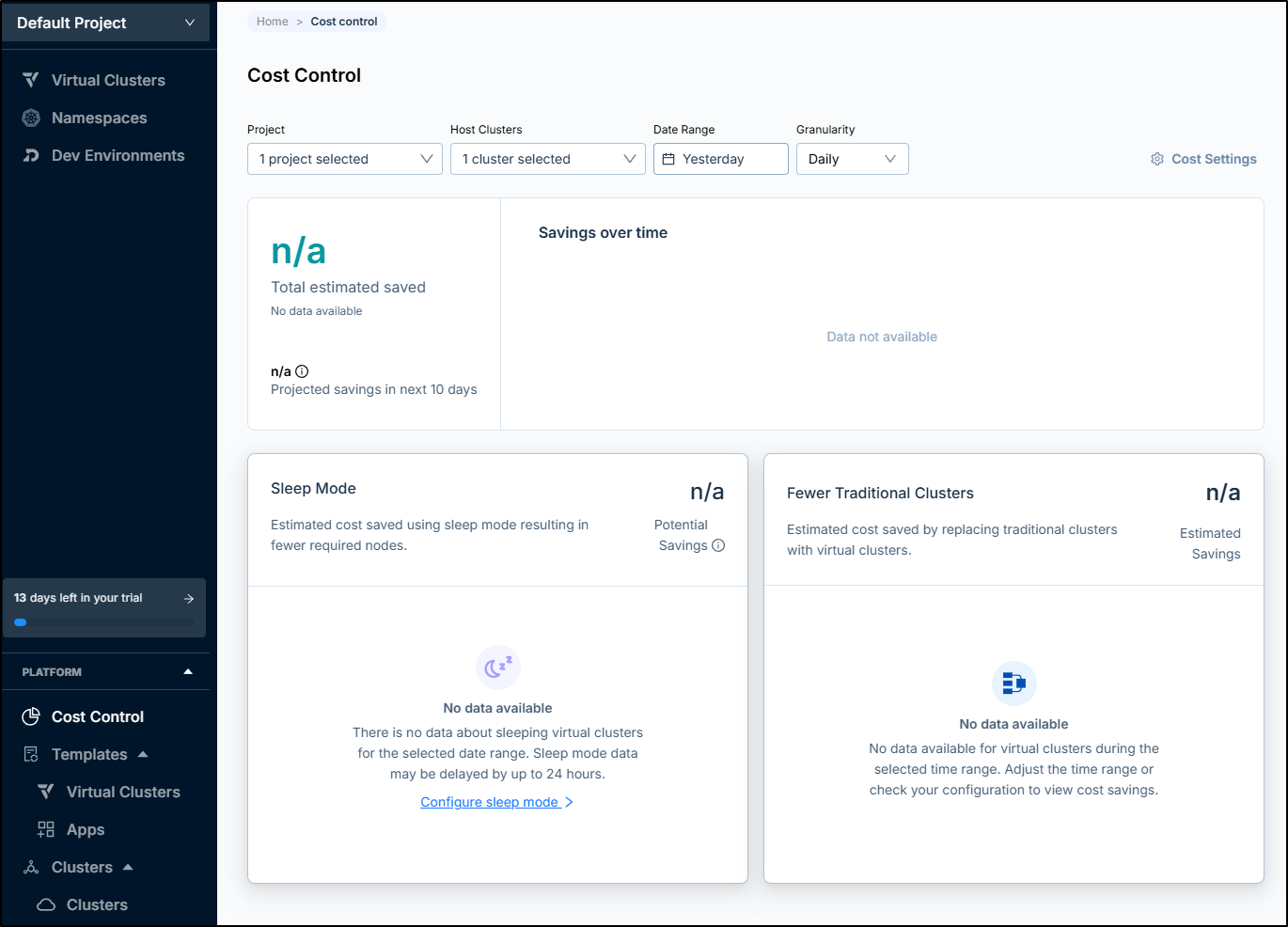

Cost control

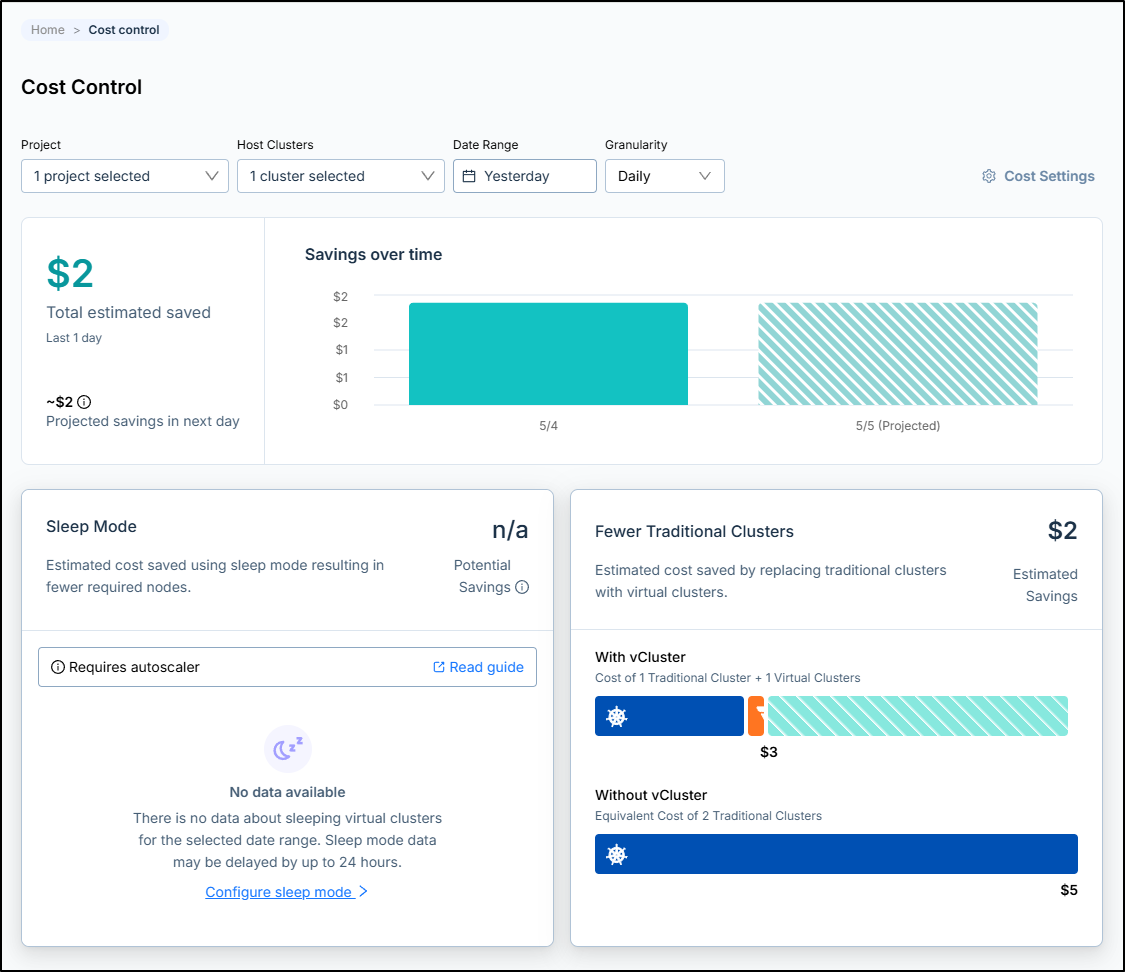

We can view costs via “Cost Control”

I gave it a couple of days to collect data, and it suggested I had saved $2 by using a vCluster

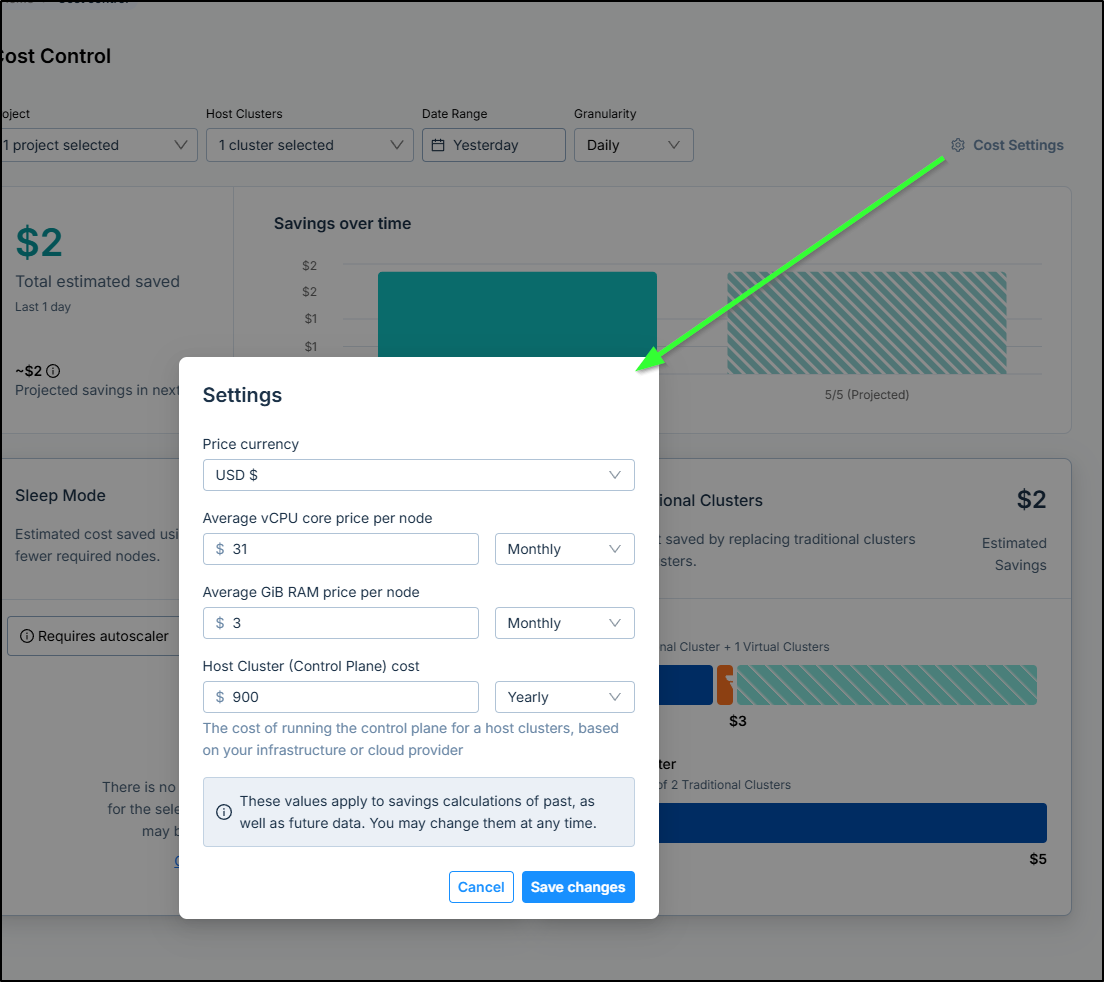

To really use Cost Savings accurately, however, you need to go to the gear icon by “Cost Settings” and set your usual spend, because Kubernetes costs can vary wildly by implementation

Global Secrets

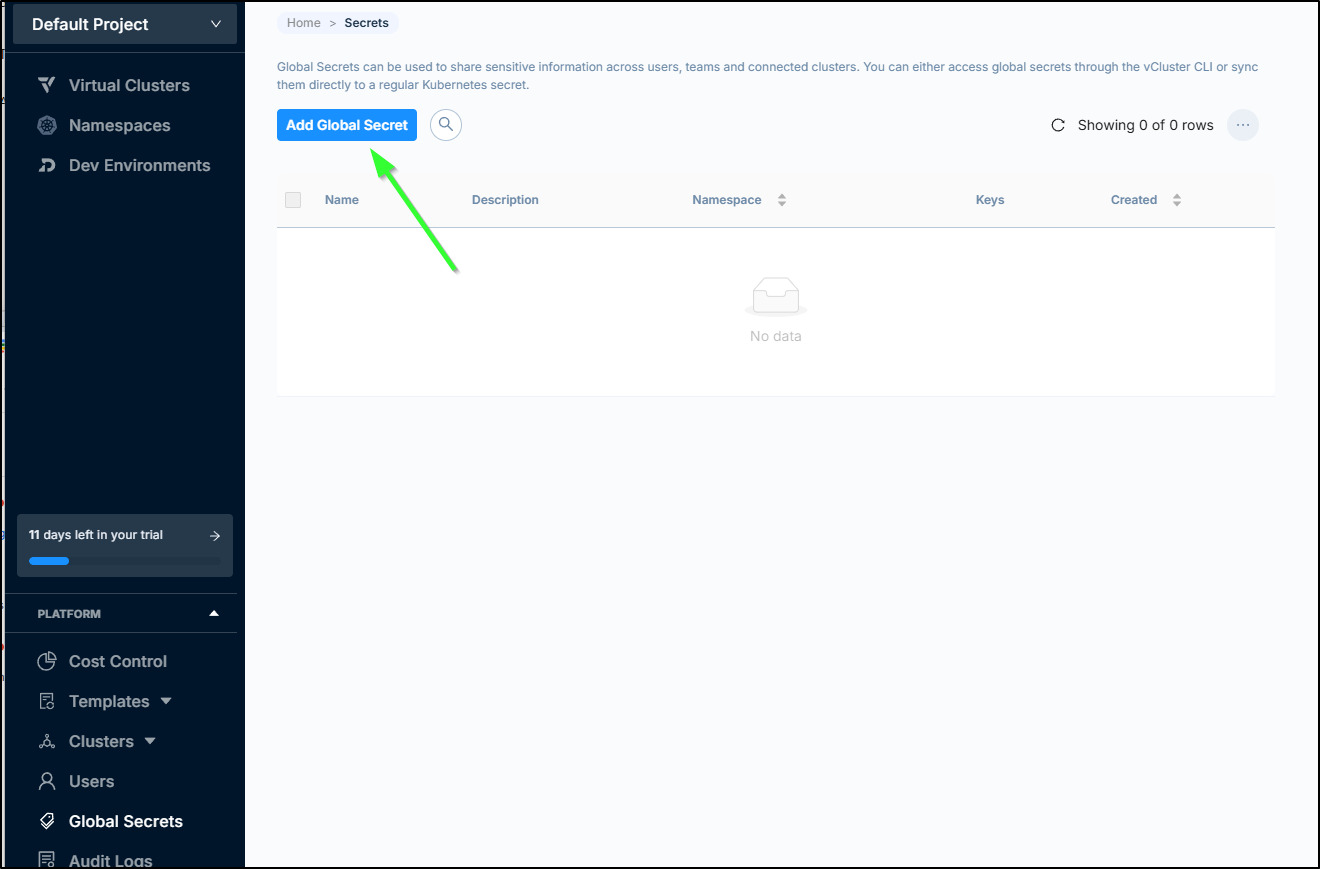

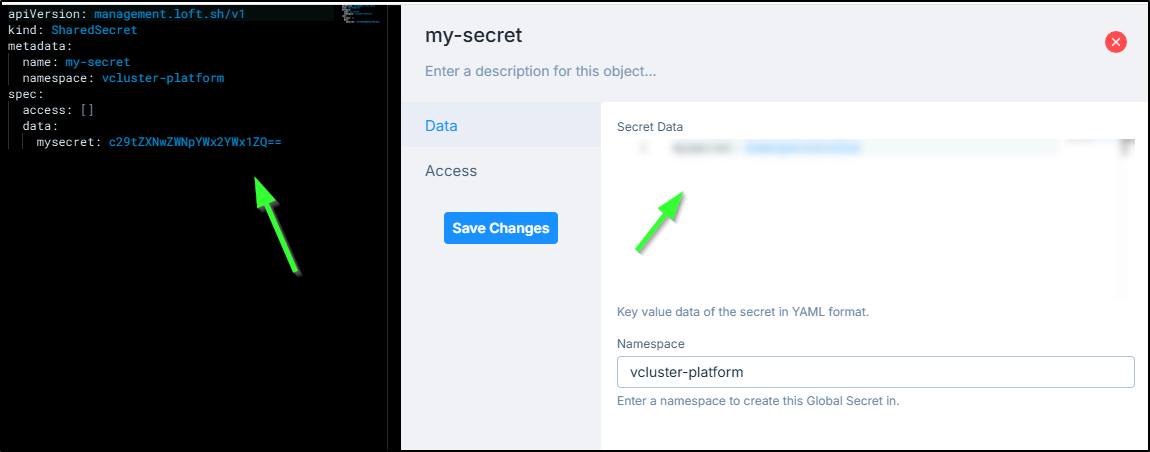

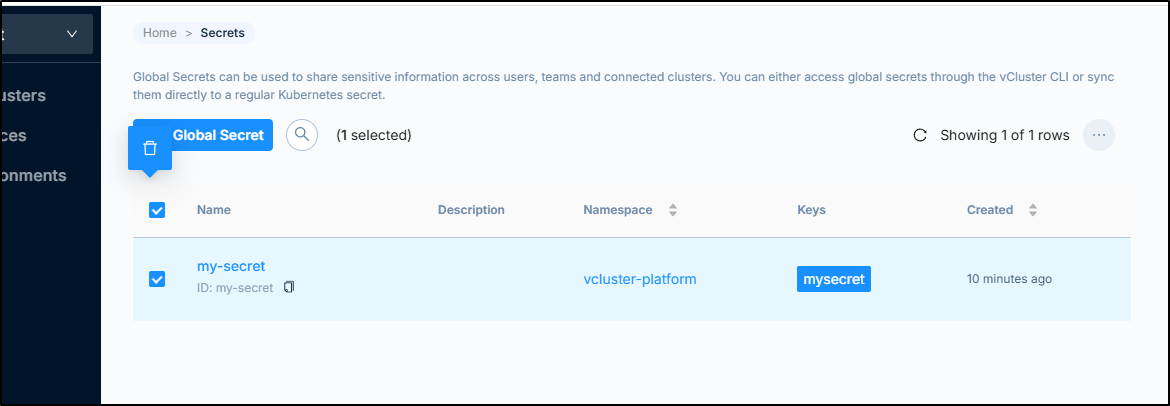

To help with secrets management, vCluster offers a “Global Secrets” option that lets us sync a managed secret through the CLI or a K8s secret.

We can go to “Global Secrets” to “Add Global Secret”

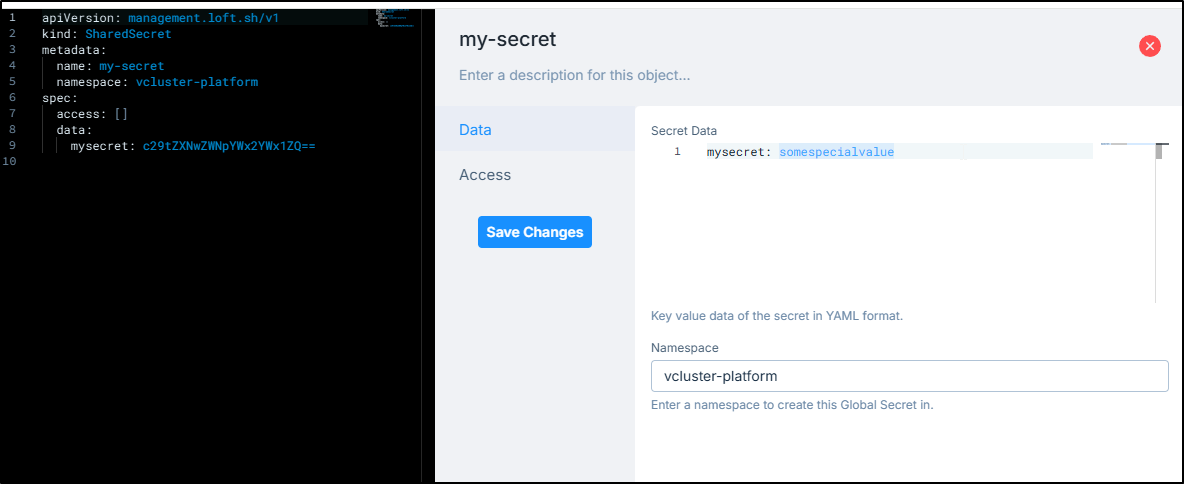

As I type the value, I can see on the left it’s BASE64 encoding it

I was somewhat amused that when you leave edit mode in the UI, it applies a Gaussian blur to the value (that isn’t my doing), but leaves the base64 just as it is. I guess that masks it from people who don’t know how to base64 decode?

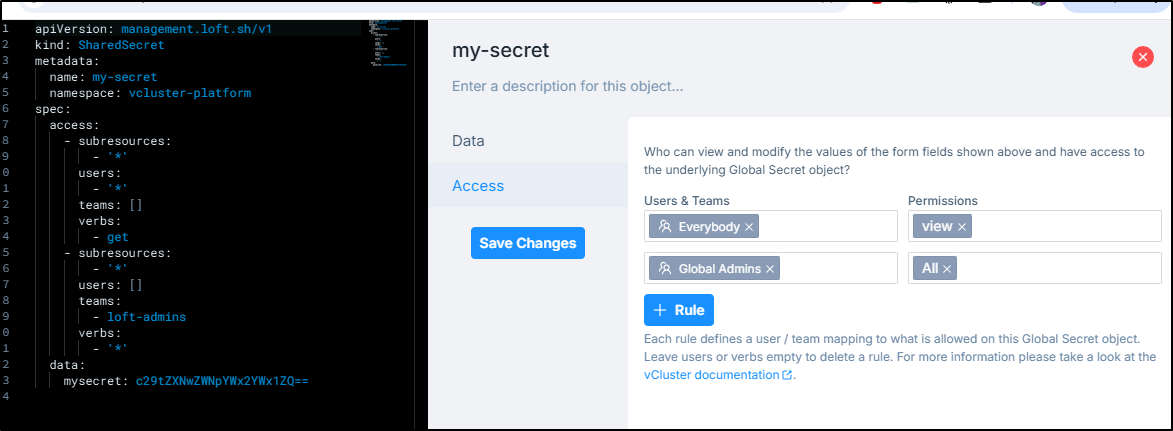

I can also set some permissions here which are reflected in the spec.access area:

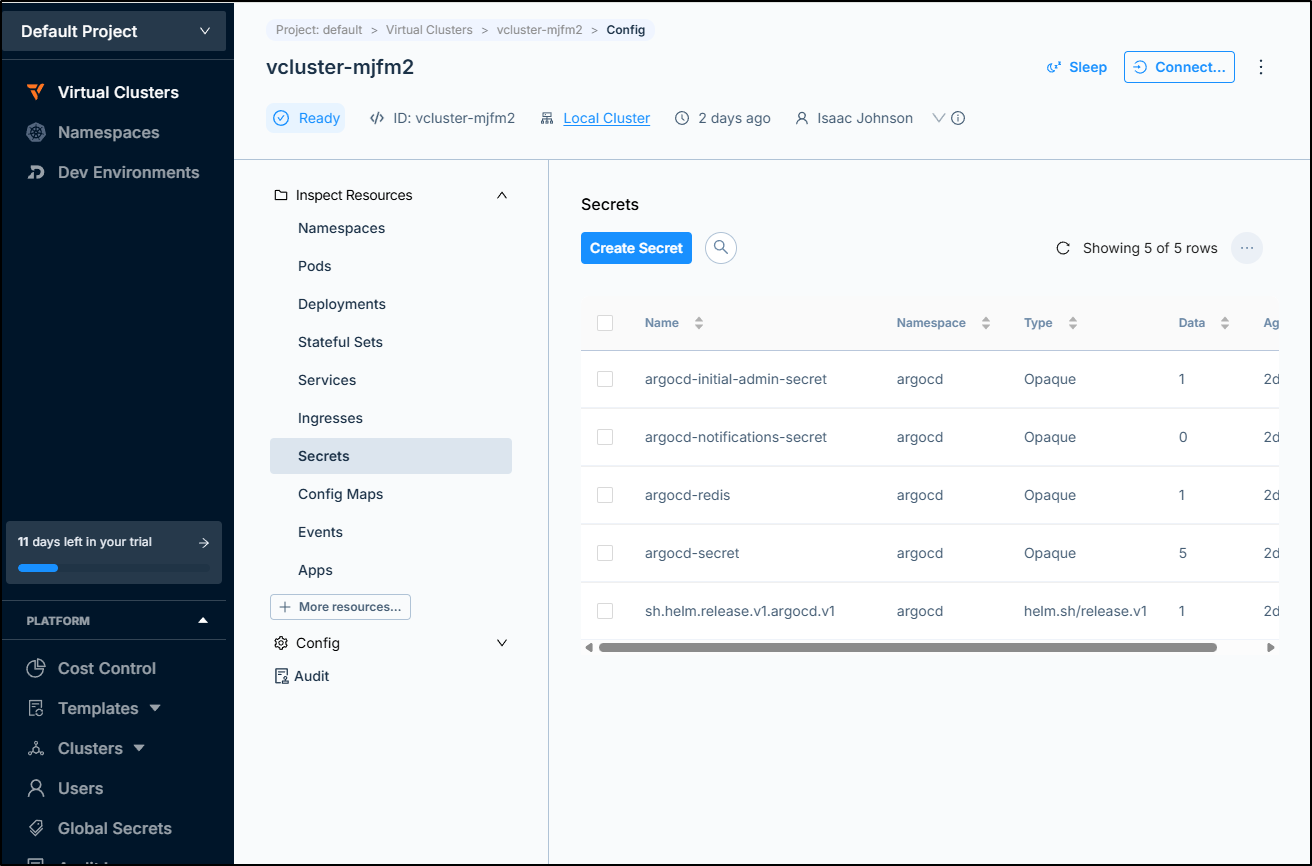

I saved it but when I went to check my vCluster, I did not see it propagated there

PS C:\Users\isaac\.kube> C:\Users\isaac\Downloads\vcluster-windows-amd64.exe disconnect

08:08:29 info Successfully disconnected and switched back to the original context: mac77

PS C:\Users\isaac\.kube> C:\Users\isaac\Downloads\vcluster-windows-amd64.exe connect vcluster-mjfm2

08:08:36 warn There is a newer version of vcluster: v0.24.1. Run `vcluster upgrade` to upgrade to the newest version.

08:08:38 done vCluster is up and running

08:08:38 info Starting background proxy container...

08:08:39 done Switched active kube context to vcluster_vcluster-mjfm2_loft-default-v-vcluster-mjfm2_mac77

- Use `vcluster disconnect` to return to your previous kube context

- Use `kubectl get namespaces` to access the vcluster

PS C:\Users\isaac\.kube> kubectl get ns

NAME STATUS AGE

argocd Active 2d17h

default Active 2d17h

kube-node-lease Active 2d17h

kube-public Active 2d17h

kube-system Active 2d17h

PS C:\Users\isaac\.kube> kubectl get secret -A

NAMESPACE NAME TYPE DATA AGE

argocd argocd-initial-admin-secret Opaque 1 2d17h

argocd argocd-notifications-secret Opaque 0 2d17h

argocd argocd-redis Opaque 1 2d17h

argocd argocd-secret Opaque 5 2d17h

argocd sh.helm.release.v1.argocd.v1 helm.sh/release.v1 1 2d17h

Nor via the UI in vCluster

I also went to the hosting cluster to check if it’s in the ‘vcluster-platform’ namespace

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ kubectl get secrets -n vcluster-platform

NAME TYPE DATA AGE

loft-agent-connection Opaque 2 2d18h

loft-api-service-cert kubernetes.io/tls 3 2d18h

loft-apiservice-agent-cert kubernetes.io/tls 3 2d18h

loft-cert kubernetes.io/tls 3 2d18h

loft-ingress-wakeup-agent-server-cert kubernetes.io/tls 3 2d18h

loft-manager-config Opaque 1 2d18h

loft-network-control-key Opaque 3 2d18h

loft-router-domain Opaque 1 2d18h

loft-server-cert kubernetes.io/tls 3 2d18h

loft-user-secret-admin Opaque 1 2d18h

loft-webhook-agent-cert kubernetes.io/tls 3 2d18h

sh.helm.release.v1.global-prometheus.v1 helm.sh/release.v1 1 2d18h

sh.helm.release.v1.global-prometheus.v2 helm.sh/release.v1 1 2d18h

sh.helm.release.v1.opencost.v1 helm.sh/release.v1 1 2d18h

sh.helm.release.v1.prometheus.v1 helm.sh/release.v1 1 2d18h

sh.helm.release.v1.vcluster-platform.v1 helm.sh/release.v1 1 2d18h

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ kubectl get secrets -n vcluster-platform -o yaml | grep -i my-secret

However, that was when I realized they make their own Custom Resource (SharedSecret):

$ kubectl get SharedSecret -A

NAMESPACE NAME CREATED AT

vcluster-platform my-secret 2025-05-05T13:06:01Z

$ kubectl get SharedSecret my-secret -n vcluster-platform -o json | jq -r .spec.data.mysecret | base64 --decode && echo

somespecialvalue
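The same decode step can be done without `jq`. The sketch below assumes the resource keeps its base64 values under `spec.data`, which is the path used in the `jq` filter above; the sample JSON is a trimmed, hypothetical stand-in for the real `kubectl ... -o json` output. It also underlines the point about the UI blur: base64 is an encoding, not a protection.

```python
import base64
import json


def decode_secret_data(resource_json: str) -> dict[str, str]:
    """Decode every base64 value under spec.data of a JSON resource dump."""
    resource = json.loads(resource_json)
    data = resource.get("spec", {}).get("data") or {}
    return {
        key: base64.b64decode(value).decode("utf-8")
        for key, value in data.items()
    }


# Trimmed, hypothetical stand-in for:
#   kubectl get SharedSecret my-secret -n vcluster-platform -o json
sample = json.dumps({
    "kind": "SharedSecret",
    "metadata": {"name": "my-secret", "namespace": "vcluster-platform"},
    "spec": {"data": {"mysecret": "c29tZXNwZWNpYWx2YWx1ZQ=="}},
})

print(decode_secret_data(sample))
```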

Though that type does not exist in the vCluster

PS C:\Users\isaac\.kube> kubectl get sharedsecret -A

error: the server doesn't have a resource type "sharedsecret"

Nor is there a sync option (just delete) from the “Shared Secret” menu

I decided I would try one more thing - the old “turn it off and on again”

I disconnected from the vCluster in Windows

PS C:\Users\isaac\.kube> C:\Users\isaac\Downloads\vcluster-windows-amd64.exe disconnect

08:16:58 info Successfully disconnected and switched back to the original context: mac77

Then I put it to sleep

and woke it back up

I reconnected, but still no go

PS C:\Users\isaac\.kube> C:\Users\isaac\Downloads\vcluster-windows-amd64.exe connect vcluster-mjfm2

08:22:19 warn There is a newer version of vcluster: v0.24.1. Run `vcluster upgrade` to upgrade to the newest version.

08:22:21 done vCluster is up and running

08:22:21 info Stopping background proxy...

08:22:22 info Starting background proxy container...

08:22:23 done Switched active kube context to vcluster_vcluster-mjfm2_loft-default-v-vcluster-mjfm2_mac77

- Use `vcluster disconnect` to return to your previous kube context

- Use `kubectl get namespaces` to access the vcluster

PS C:\Users\isaac\.kube> kubectl get sharedsecret -A

error: the server doesn't have a resource type "sharedsecret"

PS C:\Users\isaac\.kube> kubectl get secret -A

NAMESPACE NAME TYPE DATA AGE

argocd argocd-initial-admin-secret Opaque 1 2d17h

argocd argocd-notifications-secret Opaque 0 2d17h

argocd argocd-redis Opaque 1 2d17h

argocd argocd-secret Opaque 5 2d17h

argocd sh.helm.release.v1.argocd.v1 helm.sh/release.v1 1 2d17h

I have to believe, then, that “Shared Secrets” must just be a local Platform feature for basic secret sharing within the platform itself, not something that propagates to the vClusters it creates.

If it could sync to namespaces in vClusters, I could see myself using it. Otherwise, I’m not really sure what the point is. I would far prefer to just use a secret store like AKV (Azure Key Vault) or GSM (Google Secret Manager), which version their secrets.

Other features/odd UI elements

So there is an Inbox, but there is nothing you can do there and no indication of how it could be used.

Perhaps it’s just a placeholder for Loft.sh company announcements?

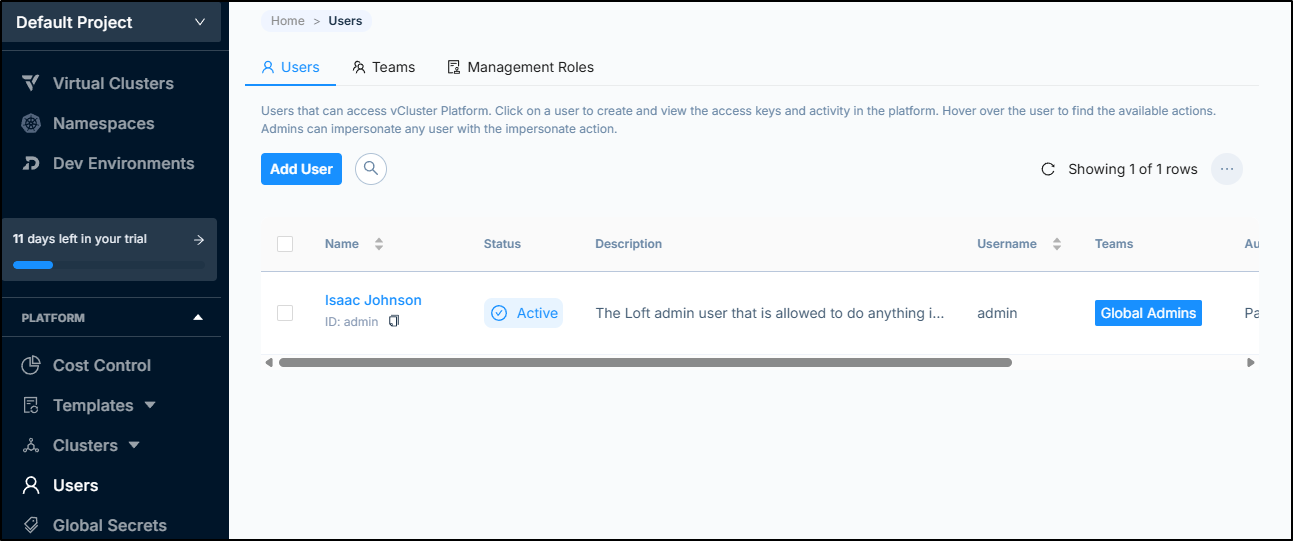

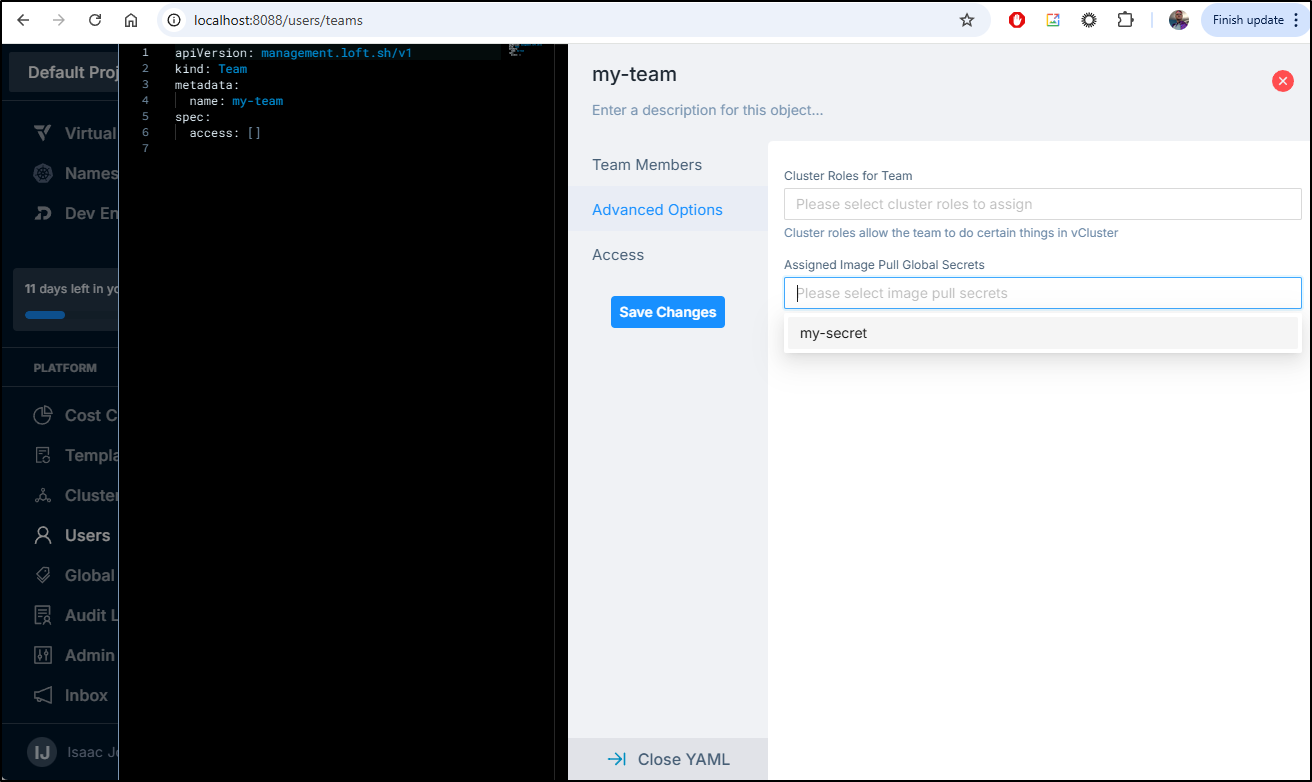

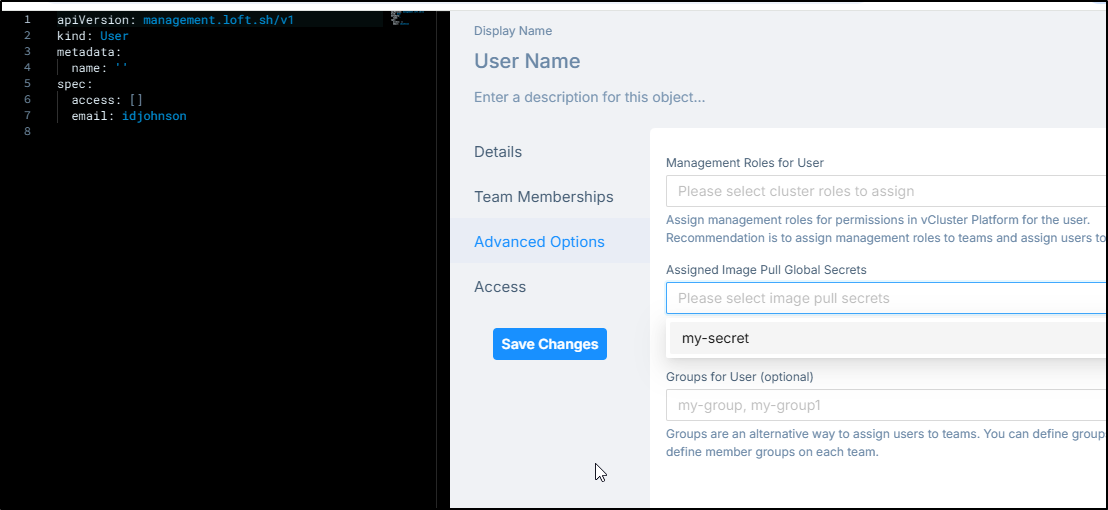

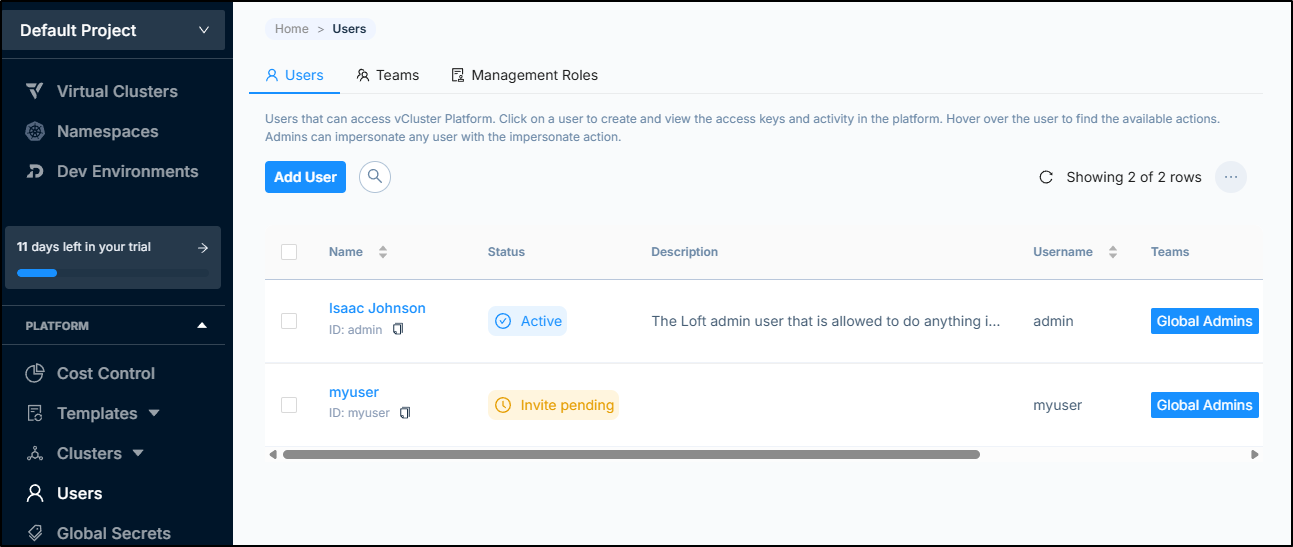

There is user and team management.

For instance, you can create a team and let it pull from a shared image pull secret (perhaps that is the usage for “Global Secrets”?)

There is also a “Namespaces” placeholder with little to no information shown

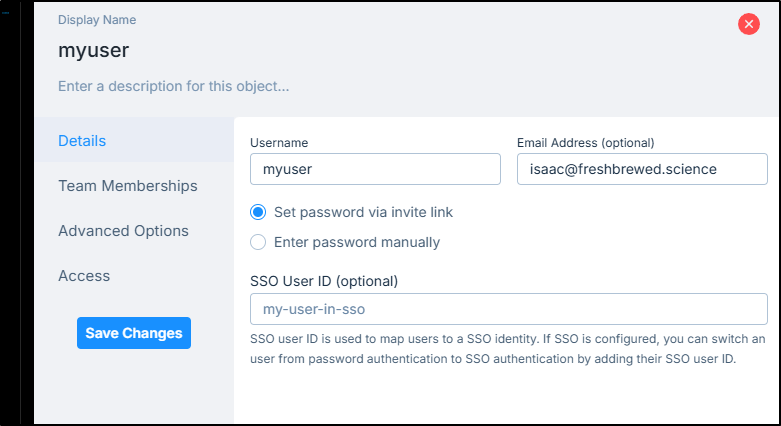

User Creation

I was curious what “set password via invite link” might entail, so I created a user (I had to change the email to a Gmail address), but then it went to “Invite Pending”.

I don’t recall setting up an SMTP server so I’m curious if something is going to get sent somehow.

I checked, and there are no mail server settings in the Helm chart values

$ helm get values --all -n vcluster-platform vcluster-platform

COMPUTED VALUES:

additionalCA: ""

admin:

create: true

password: my-password

username: admin

affinity:

nodeAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- preference:

matchExpressions:

- key: eks.amazonaws.com/capacityType

operator: NotIn

values:

- SPOT

- key: kubernetes.azure.com/scalesetpriority

operator: NotIn

values:

- spot

- key: cloud.google.com/gke-provisioning

operator: NotIn

values:

- spot

weight: 1

agentOnly: false

agentValues: {}

apiservice:

create: true

audit:

enableSideCar: false

image: library/alpine:3.13.1

persistence:

enabled: false

size: 10Gi

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

runAsNonRoot: true

runAsUser: 1000

certIssuer:

create: false

email: ""

httpResolver:

enabled: true

ingressClass: nginx

name: lets-encrypt-http-issuer

resolvers: []

secretName: loft-letsencrypt-credentials

server: https://acme-v02.api.letsencrypt.org/directory

config:

audit:

enabled: true

env: {}

envValueFrom: {}

hostAliases: []

ingress:

enabled: false

host: loft.mydomain.tld

ingressClass: nginx

name: loft-ingress

path: /

tls:

enabled: true

secret: loft-tls

insecureSkipVerify: false

livenessProbe:

enabled: true

logging:

encoding: console

level: info

persistence:

enabled: false

size: 30Gi

podDisruptionBudget:

create: true

minAvailable: 1

podSecurityContext: {}

product: vcluster-pro

readinessProbe:

enabled: true

replicaCount: 1

resources:

limits:

cpu: "2"

memory: 4Gi

requests:

cpu: 200m

memory: 256Mi

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

enabled: true

privileged: false

runAsNonRoot: true

service:

type: ClusterIP

serviceAccount:

annotations: {}

clusterRole: cluster-admin

create: true

imagePullSecrets: []

name: loft

serviceMonitor:

enabled: false

interval: 60s

jobLabel: loft

labels: {}

path: /metrics

scrapeTimeout: 30s

targetPort: 8080

tls:

crtKey: tls.crt

enabled: false

keyKey: tls.key

secret: loft-tls

token: ""

url: ""

volumeMounts: []

volumes: []

webhook:

create: true

I can see it was created in the logs

$ kubectl logs loft-6d88556b5d-9pmvt -n vcluster-platform | grep -i myuser

2025-05-05 13:31:07 INFO user-controller user/controller.go:90 Updated user access because it was out of sync {"component": "loft", "object": {"name":"myuser"}, "reconcileID": "8aca97df-0632-4994-92d1-21b9713ed985"}

2025-05-05 13:31:07 INFO clienthelper/clienthelper.go:347 created object {"component": "loft", "kind": "", "name": "loft-user-password-myuser-nr2sz"}

Nothing came through email (I gave it a few hours).

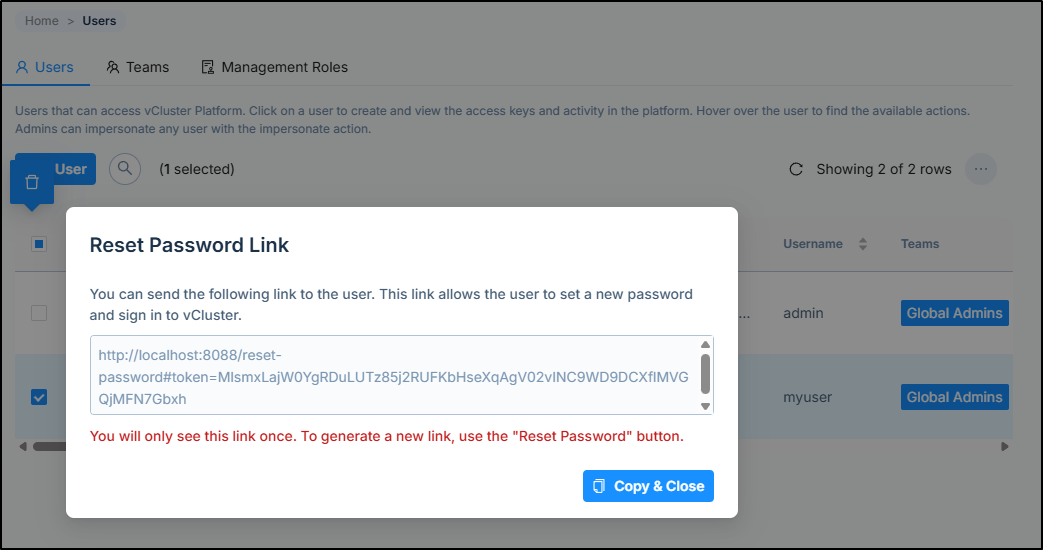

I can, however, reset the user’s password and vCluster Platform will provide a link I could send them

Cleanup

If we want to be done with vClusters, we can just delete our vCluster namespaces and the Loft Platform one.

PS C:\Users\isaac\Workspaces> kubectl get ns

NAME STATUS AGE

default Active 31d

kube-node-lease Active 31d

kube-public Active 31d

kube-system Active 31d

loft-default-v-vcluster-mjfm2 Active 2d18h

p-default Active 2d18h

team-ops Active 2d19h

team-sre Active 3d1h

vcluster-platform Active 2d18h

I’ll do it a bit more delicately and first disconnect any existing sessions

PS C:\Users\isaac\.kube> C:\Users\isaac\Downloads\vcluster-windows-amd64.exe disconnect

08:38:13 info Successfully disconnected and switched back to the original context: mac77

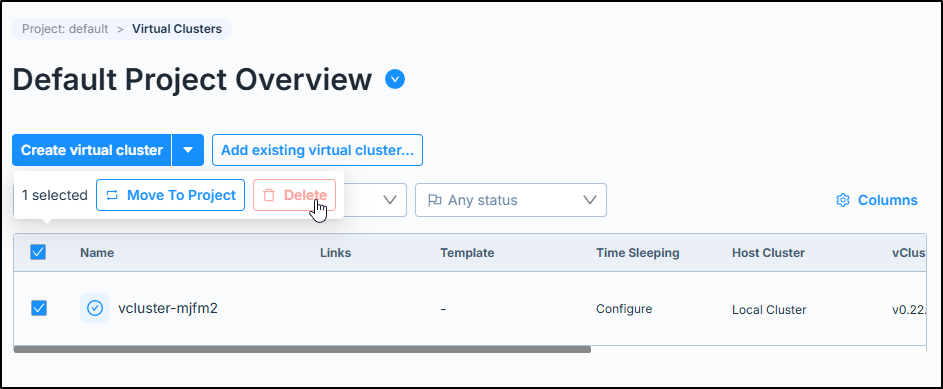

Then delete from the platform UI

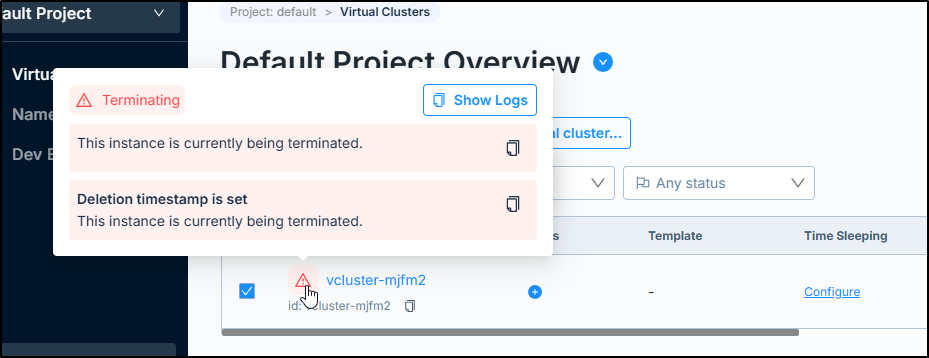

I can see it’s now being terminated

Soon, in the hosting cluster, I saw the namespace show “Terminating”

$ kubectl get ns

NAME STATUS AGE

default Active 31d

kube-node-lease Active 31d

kube-public Active 31d

kube-system Active 31d

loft-default-v-vcluster-mjfm2 Terminating 2d18h

p-default Active 2d18h

team-ops Active 2d19h

team-sre Active 3d1h

vcluster-platform Active 2d18h

In a couple of minutes it was gone:

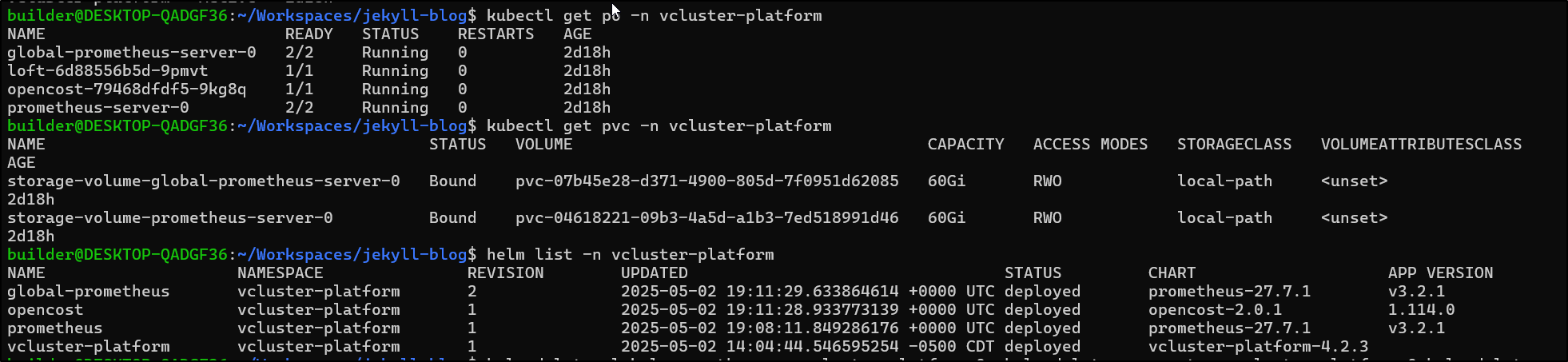

I could now delete the platform by removing each of its Helm releases:

$ helm delete global-prometheus -n vcluster-platform

$ helm delete opencost -n vcluster-platform

$ helm delete prometheus -n vcluster-platform

$ helm delete vcluster-platform -n vcluster-platform
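Those four release names match the `sh.helm.release.v1.<release>.v<revision>` secrets in the `vcluster-platform` namespace listing earlier in this post, which is how Helm 3 stores release history by default. As a rough sketch, the release names can be derived from that listing rather than typed by hand. `helm_releases` is a hypothetical helper, and the secret names below are copied from the earlier output.

```python
import re

# Helm 3 release secrets are named sh.helm.release.v1.<release>.v<revision>.
RELEASE_SECRET = re.compile(
    r"^sh\.helm\.release\.v1\.(?P<release>.+)\.v(?P<revision>\d+)$"
)


def helm_releases(secret_names: list[str]) -> list[str]:
    """Return unique Helm release names in first-seen order."""
    releases: list[str] = []
    for name in secret_names:
        match = RELEASE_SECRET.match(name)
        if match and match.group("release") not in releases:
            releases.append(match.group("release"))
    return releases


secrets = [
    "loft-cert",
    "sh.helm.release.v1.global-prometheus.v1",
    "sh.helm.release.v1.global-prometheus.v2",
    "sh.helm.release.v1.opencost.v1",
    "sh.helm.release.v1.prometheus.v1",
    "sh.helm.release.v1.vcluster-platform.v1",
]

for release in helm_releases(secrets):
    print(f"helm delete {release} -n vcluster-platform")
```

In practice `helm list -n vcluster-platform` gives the same answer directly; the sketch just shows where those names come from.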

However, since it’s just consuming some local-path disk, I’ll leave it be for now so I can see what happens in 11 days when the “trial” license expires.

While the PVCs are provisioned for 60Gi, they are barely using any space at the moment. Just to be certain, I hopped on the node and checked:

builder@isaac-MacBookPro:~$ sudo du -chs /var/lib/rancher/k3s/storage/pvc-04618221-09b3-4a5d-a1b3-7ed518991d46_vcluster-platform_storage-volume-prometheus-server-0

23M /var/lib/rancher/k3s/storage/pvc-04618221-09b3-4a5d-a1b3-7ed518991d46_vcluster-platform_storage-volume-prometheus-server-0

23M total

Summary

Today we used a mix of Helm and Homebrew (and just downloading the Windows binary) to set up vClusters. We found there is a nice “Platform” app that can help us manage and configure them; however, the CLI works just as well.

We saw some features that are either not finished or hidden behind a sales paywall (like namespaces), and what looks like future plans with a DevPod placeholder in the Platform UI.

When I revisit that post from 2022, I can see they moved from “Spaces” to “DevPod” and the UI is much nicer than before. I can also say the setup process was very clean.

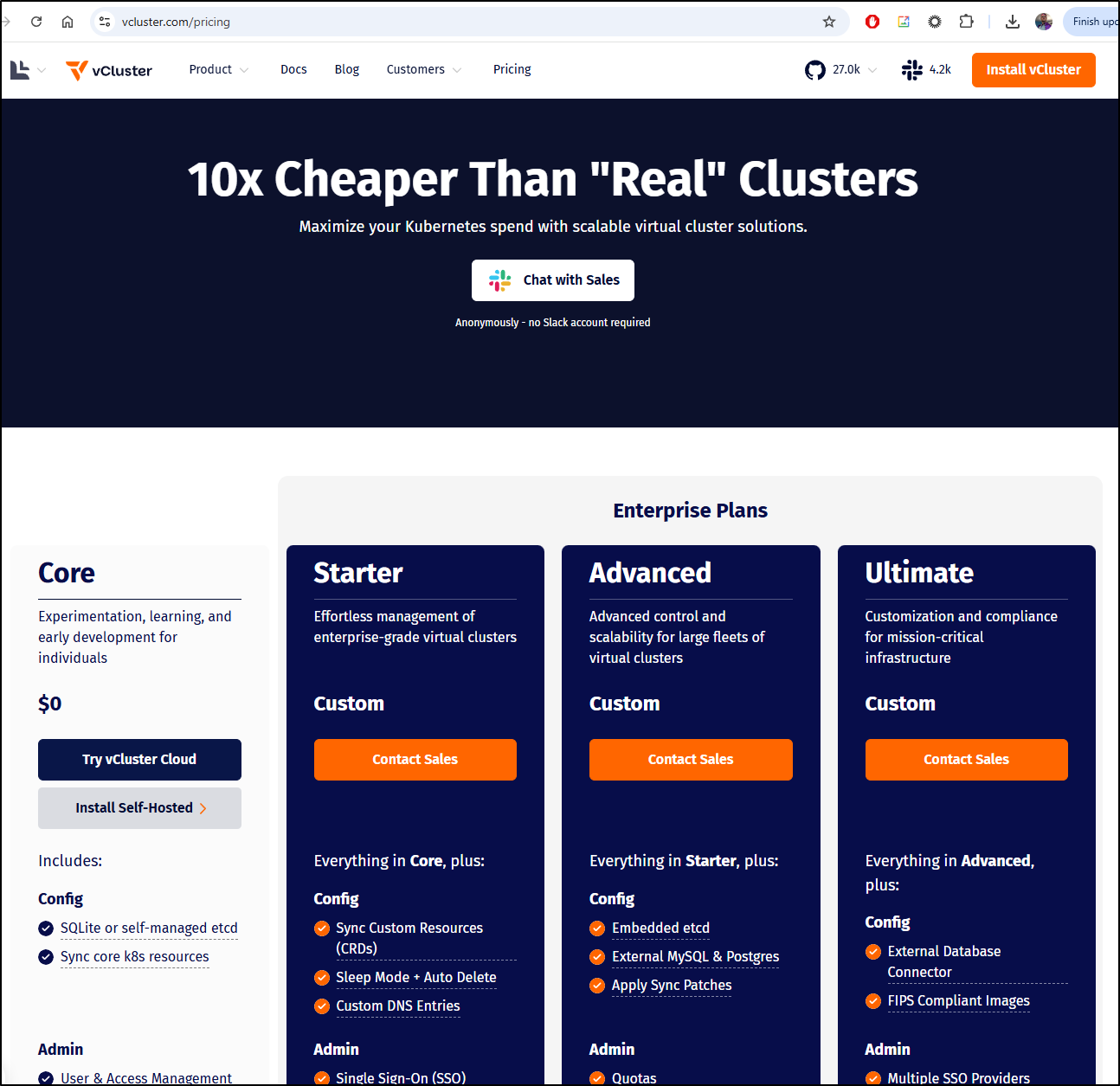

I’ll be interested to see what happens when the default “Enterprise Trial” expires. I also tend to shy away from any solution whose prices are all “talk to us”.

I would have expected that, at this point, the product would have at least some straightforward pricing at the Starter level.