Published: Mar 25, 2025 by Isaac Johnson

Recently I was asked to explain how one might control many Azure DevOps pipelines at once. The goal, of course, is to make a more robust deployment gate than emails and right-click approvals.

The fact is, email driven systems are inherently fraught with issues - they really should be reserved for out-of-band flows and release processes necessarily disconnected from process gates.

To expand on that a bit, think of systems where I need to only deploy a pipeline when an external customer has cleared a hurdle, or a regulatory statute has defined a time-bound gate but it’s hard to define (only on weekends during hours of less than 10% usage and with proven 2-week notice).

But more often than not, our releases should flow automatically on a schedule based on codified gating conditions (Wednesdays at 5p CT, fortnightly on Thursdays).

But let’s explore all this next with the Meet app (Fika). I’ll cover conversion from Classic UI to YAML, usage of Azure Environments as well as Gate Configuration. We’ll look at how we can automate tagging (two ways) to GIT as well as tagging our Container Registry repository artifacts with a tag after validation.

Let’s dig in!

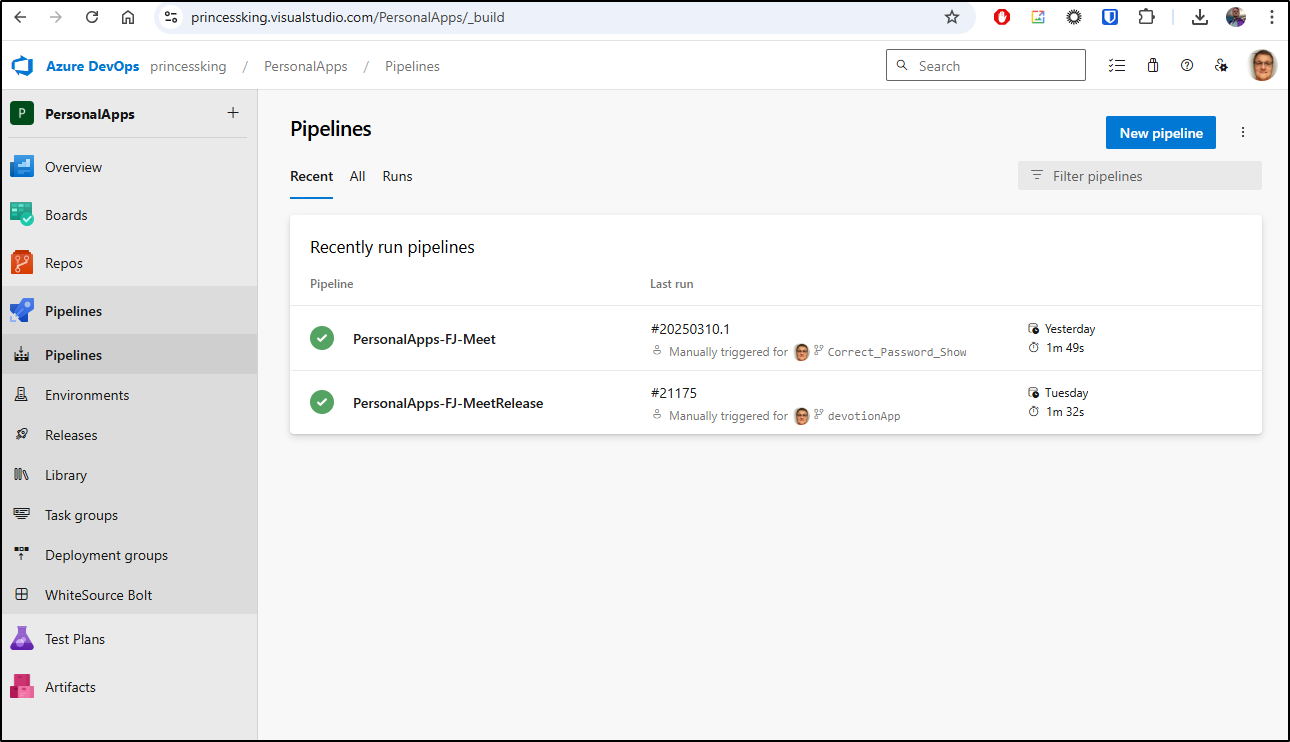

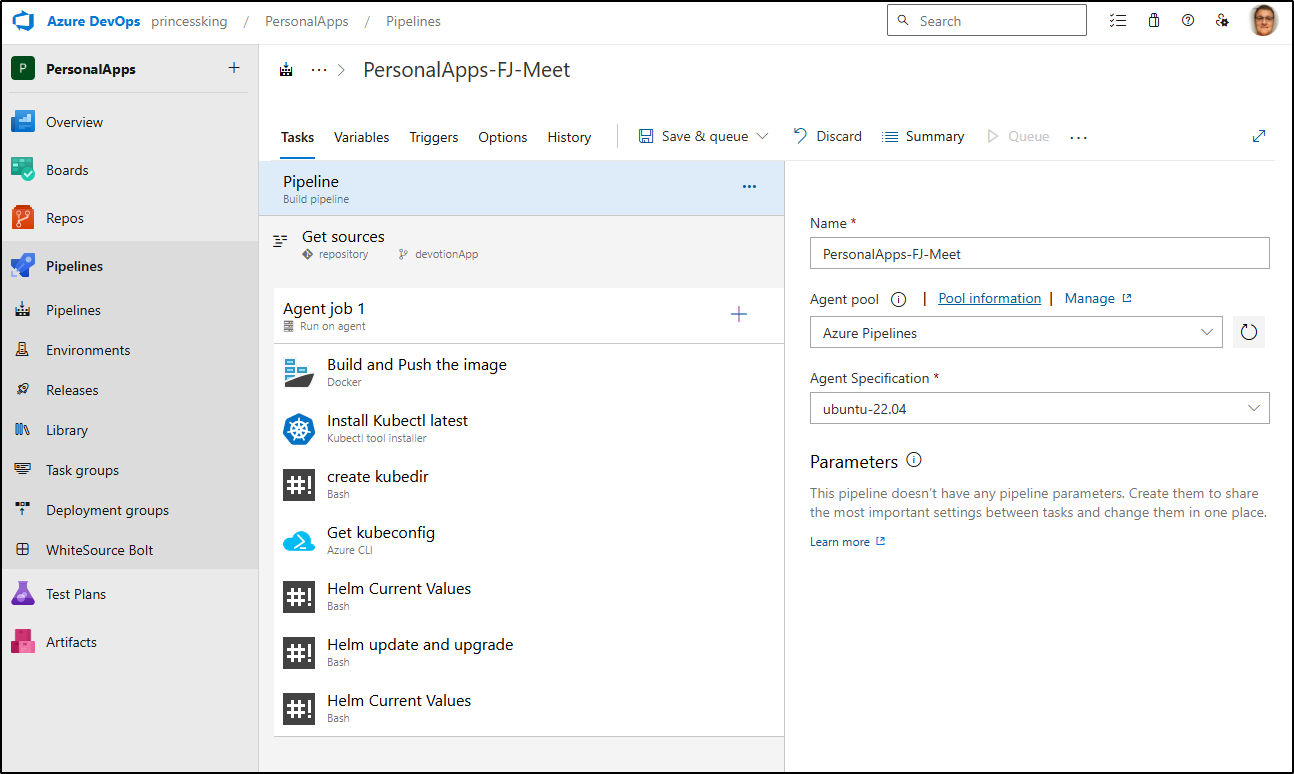

The pipelines today

Here we have a build pipeline and a release pipeline, albeit both as YAML azure pipelines

How can I connect them in such a way as to not require two separate pipelines, two histories, and two ways that could diverge?

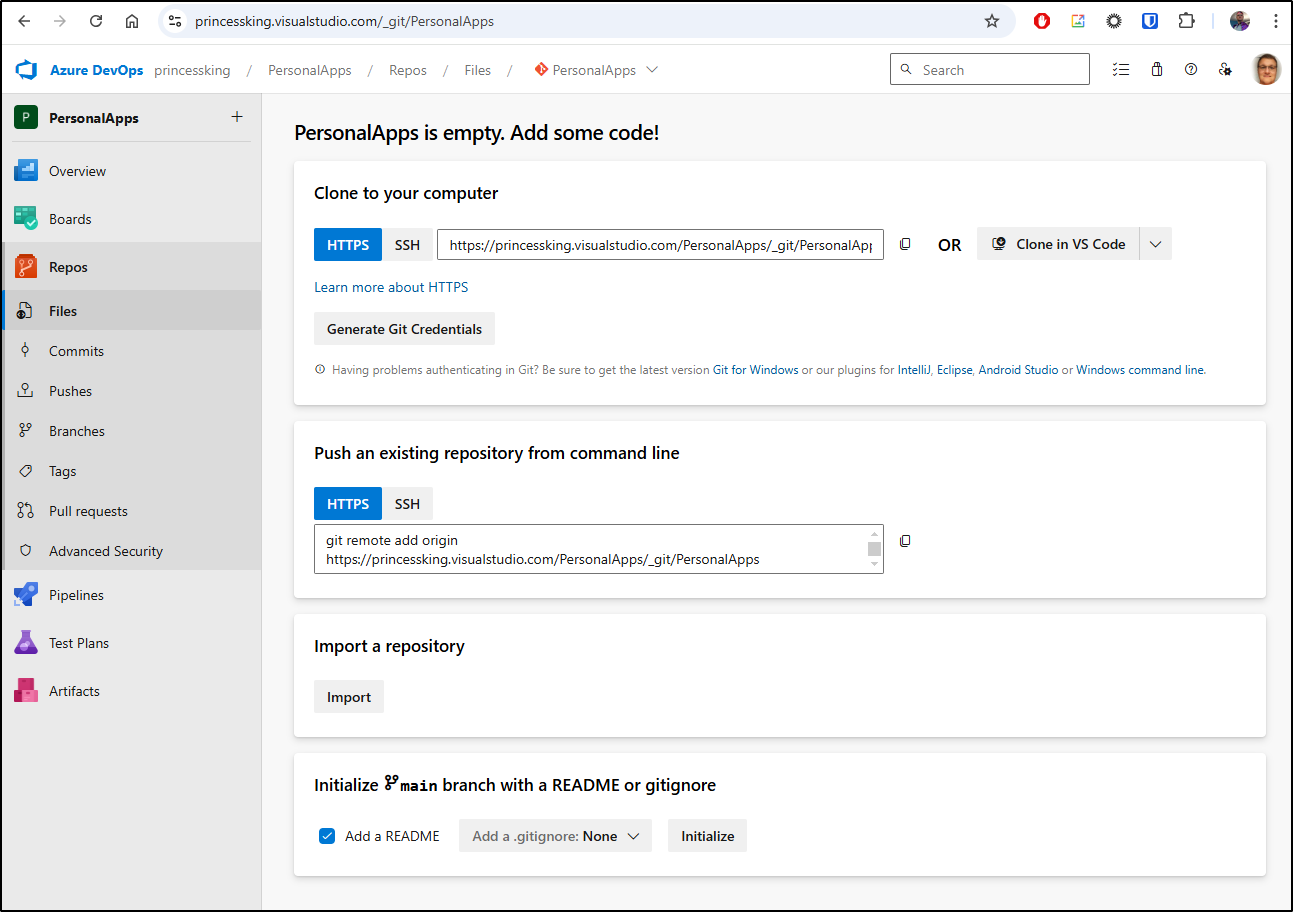

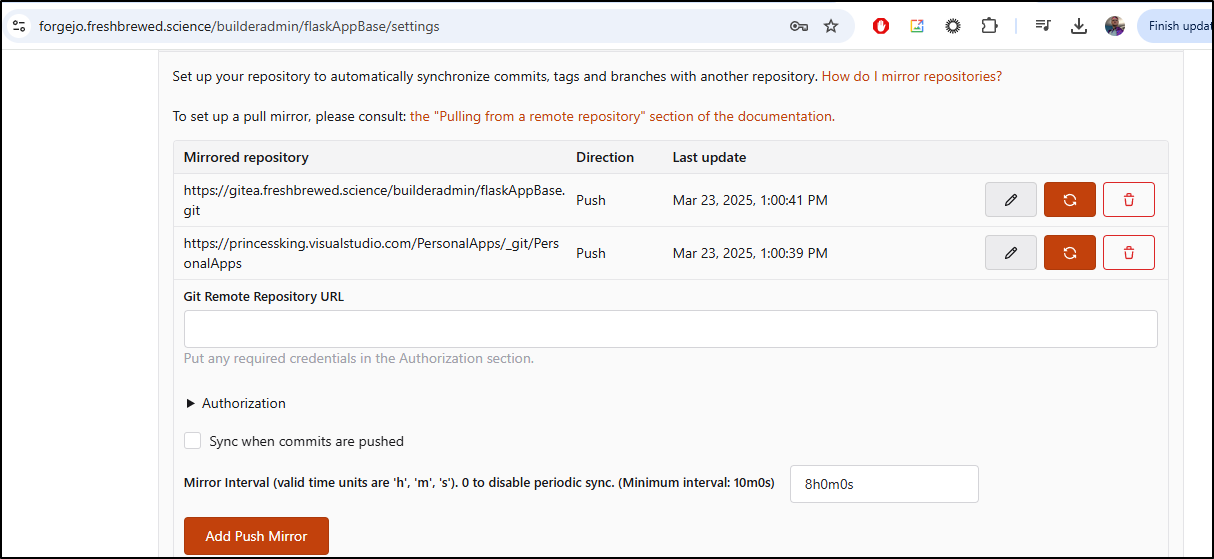

I think my first step is to switch to Azure Repos or Github so I can switch off of the Classic pipeline (a consequence of me using Forgejo)

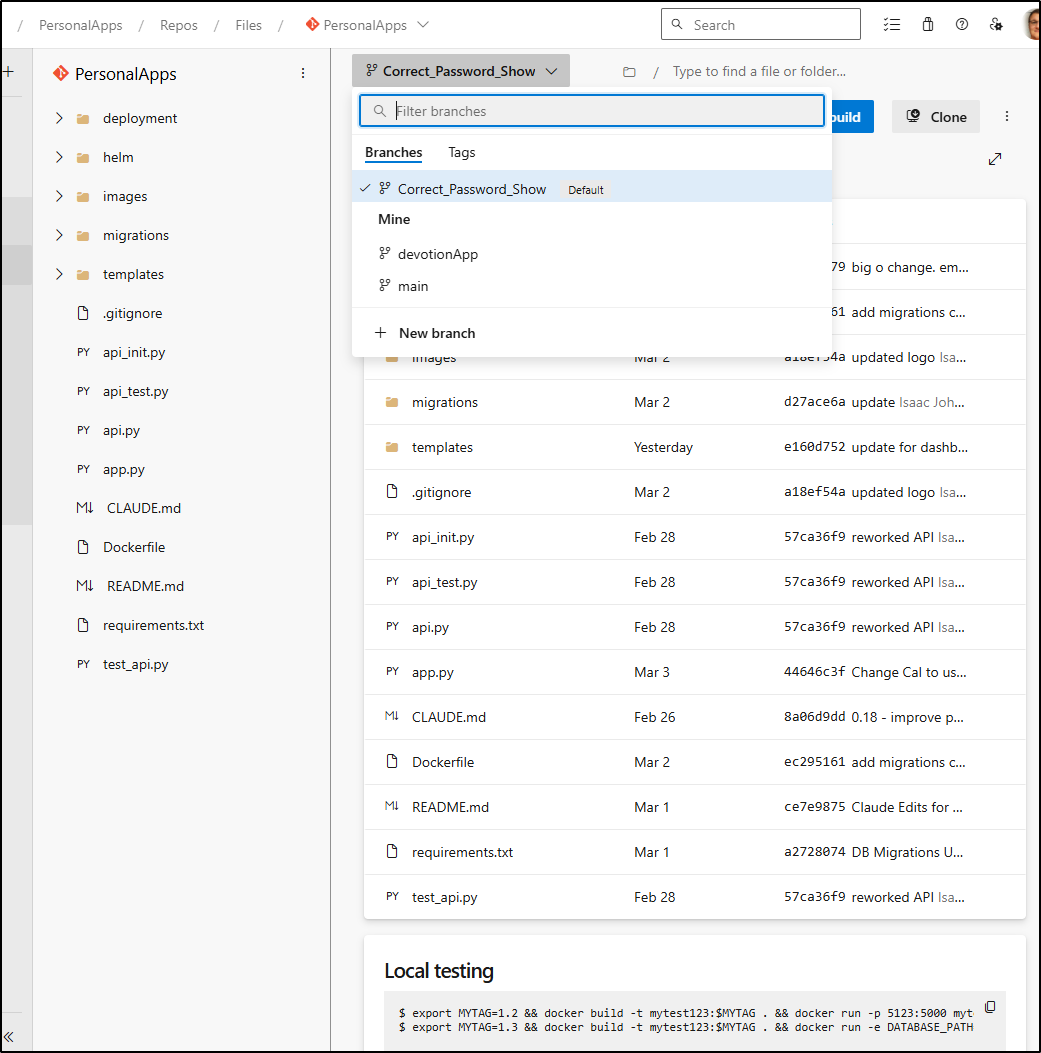

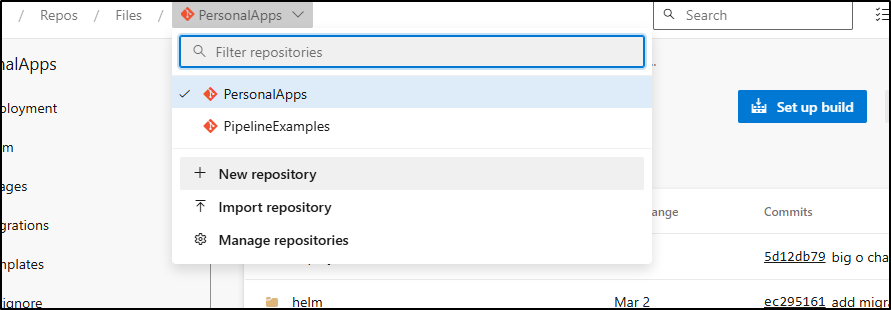

I’ll create an Azure Repo

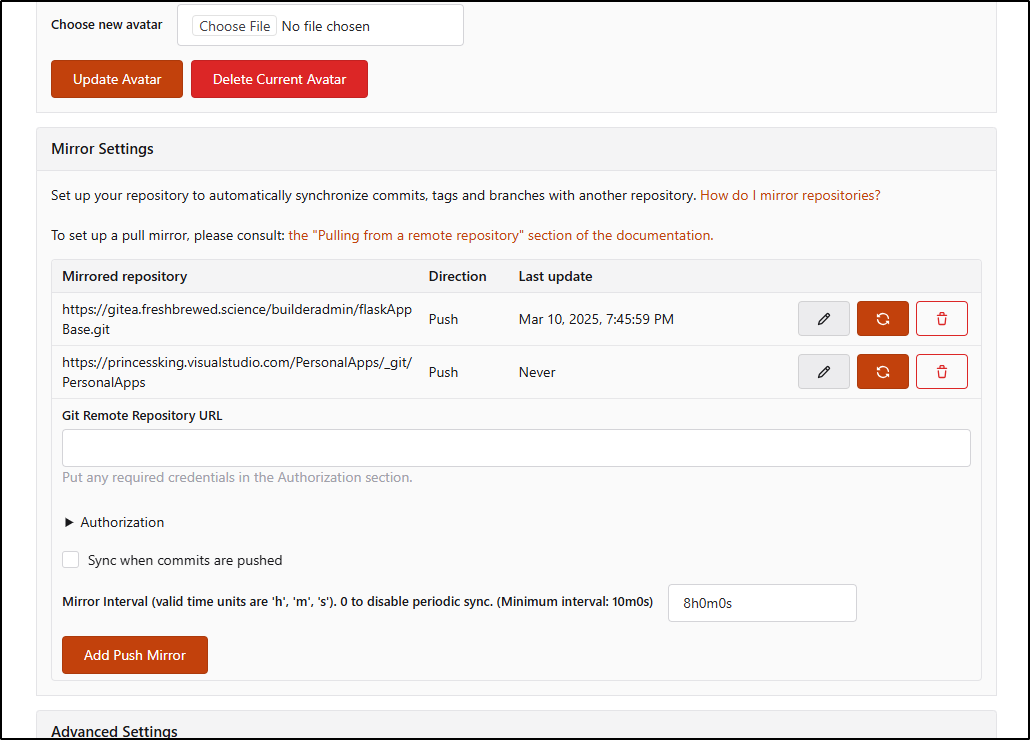

I can add a Push Mirror to Forgejo

Once pushed I can see the repo contents including all the branches

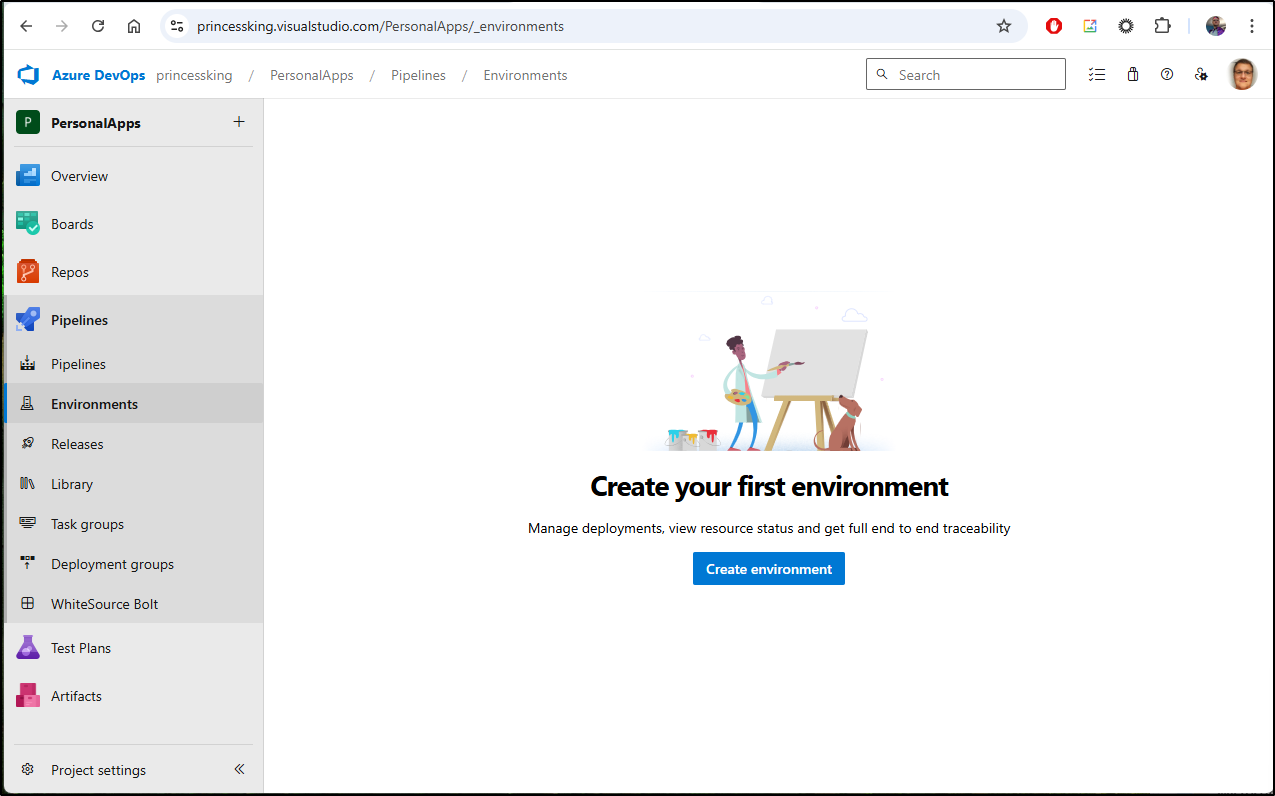

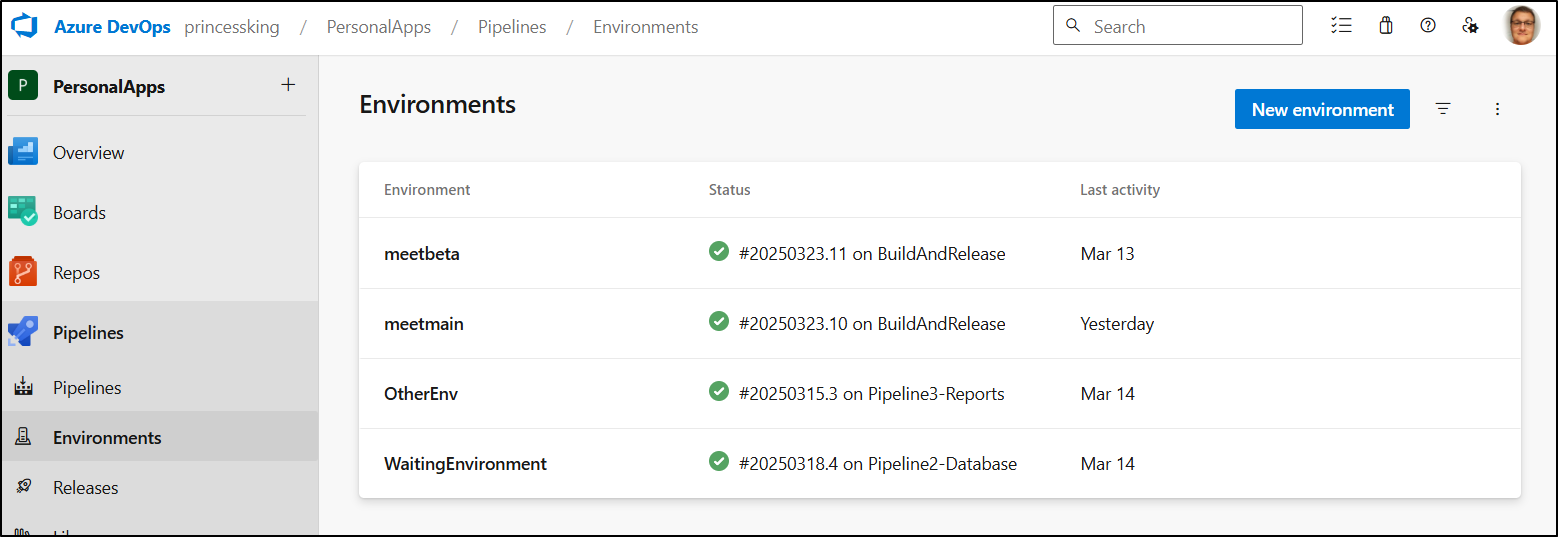

Environments

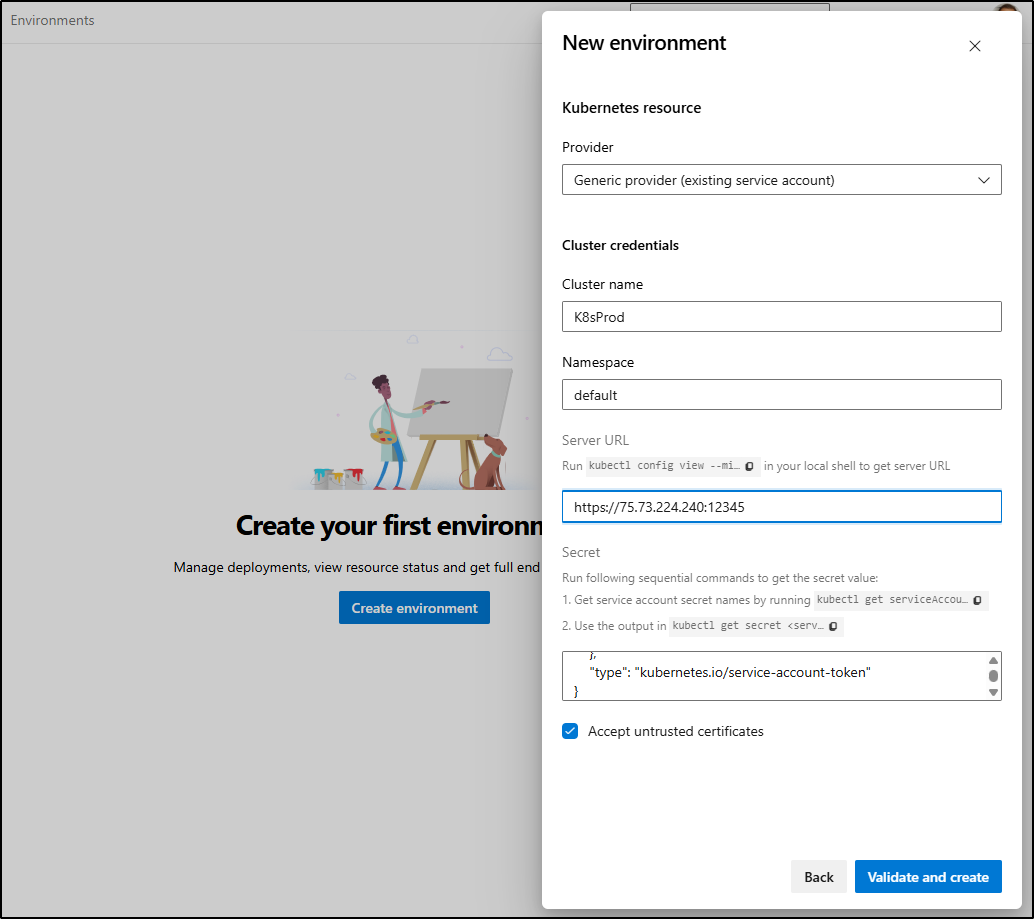

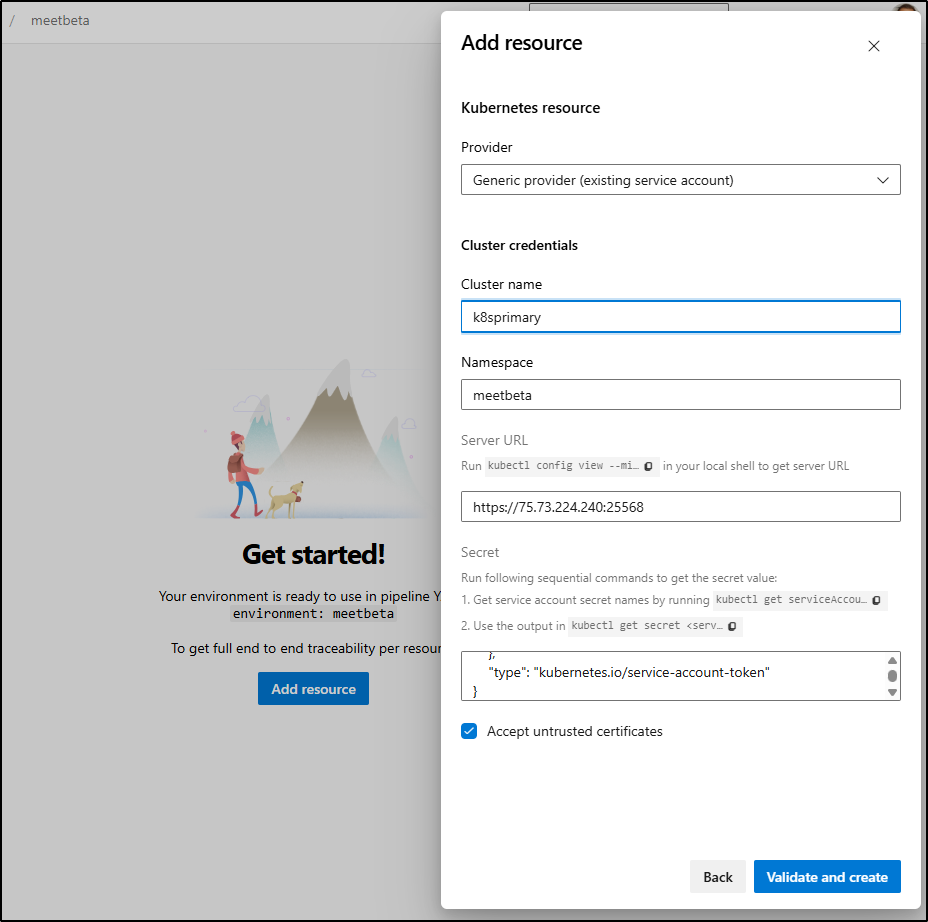

I’ll create an environment in Pipelines

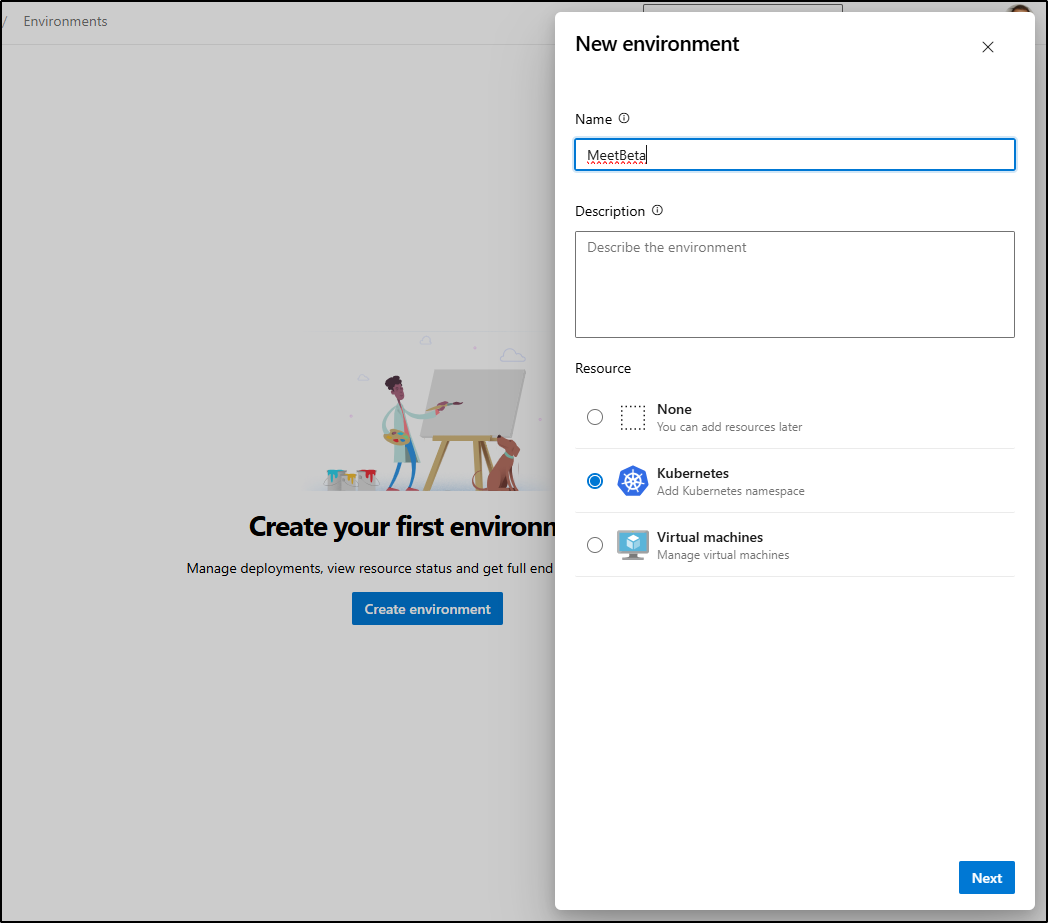

I’ll make an Environment for Meet Beta

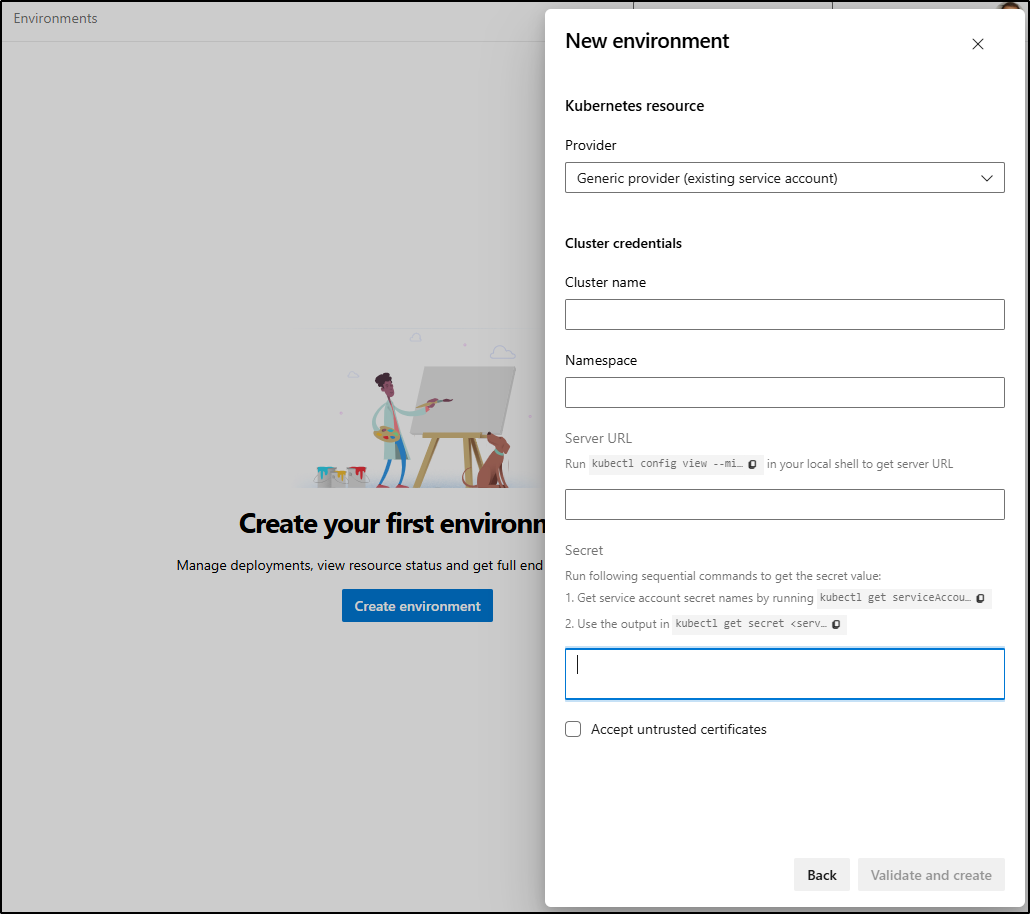

I’ll want to use a Generic k8s entry

Then I’ll create an SA and secret JSON

builder@LuiGi:~/Workspaces/jekyll-blog$ kubectl create serviceaccount meetdeploy

serviceaccount/meetdeploy created

builder@LuiGi:~/Workspaces/jekyll-blog$ vi sa.secret.yaml

builder@LuiGi:~/Workspaces/jekyll-blog$ cat ./sa.secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: meetdeploy
  annotations:
    kubernetes.io/service-account.name: meetdeploy
type: kubernetes.io/service-account-token

builder@LuiGi:~/Workspaces/jekyll-blog$ kubectl apply -f ./sa.secret.yaml

secret/meetdeploy created

I can then bind it to a clusterrole

builder@LuiGi:~/Workspaces/jekyll-blog$ cat ca.svc.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  name: meetdeploy-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: meetdeploy
  namespace: default

builder@LuiGi:~/Workspaces/jekyll-blog$ kubectl apply -f ./ca.svc.yaml

clusterrolebinding.rbac.authorization.k8s.io/meetdeploy-admin created

Then I can use the secret in JSON format in the environment

I found it was better to scope to a namespace, so I redid this but scoped to a new “meetbeta” namespace
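As a rough sketch of what a namespace-scoped version could look like: the script below prints a ServiceAccount and a RoleBinding limited to one namespace. The "meetbeta" namespace and "meetdeploy" account come from the steps above. The RoleBinding name and the use of the built-in "admin" ClusterRole are assumptions for illustration, not a copy of what was applied.

```shell
#!/bin/sh
# Sketch: print a namespace-scoped ServiceAccount + RoleBinding manifest.
# "${SA}-admin" and the built-in "admin" ClusterRole are assumptions,
# not values confirmed by the original setup.
NS="${NS:-meetbeta}"
SA="${SA:-meetdeploy}"

render_rbac() {
  cat <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ${SA}
  namespace: ${NS}
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ${SA}-admin
  namespace: ${NS}
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: admin
subjects:
- kind: ServiceAccount
  name: ${SA}
  namespace: ${NS}
EOF
}

# Print the manifest; pipe it to kubectl to apply it
render_rbac
```

To apply it, one would create the namespace first (`kubectl create namespace meetbeta`) and then run `render_rbac | kubectl apply -f -`. A RoleBinding that references a ClusterRole grants those permissions only inside its own namespace, which is the point of scoping it this way.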

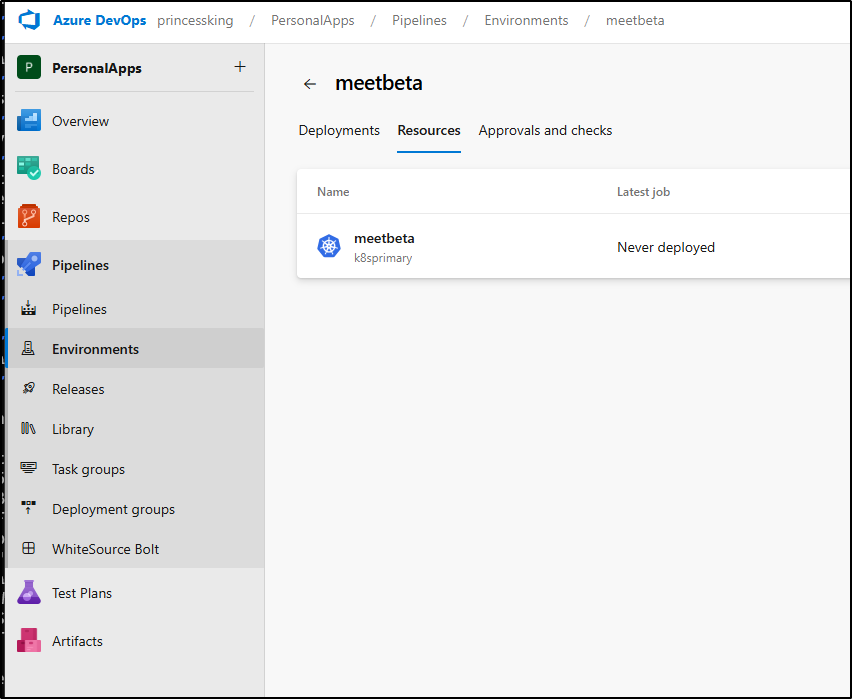

I can see it created without issue

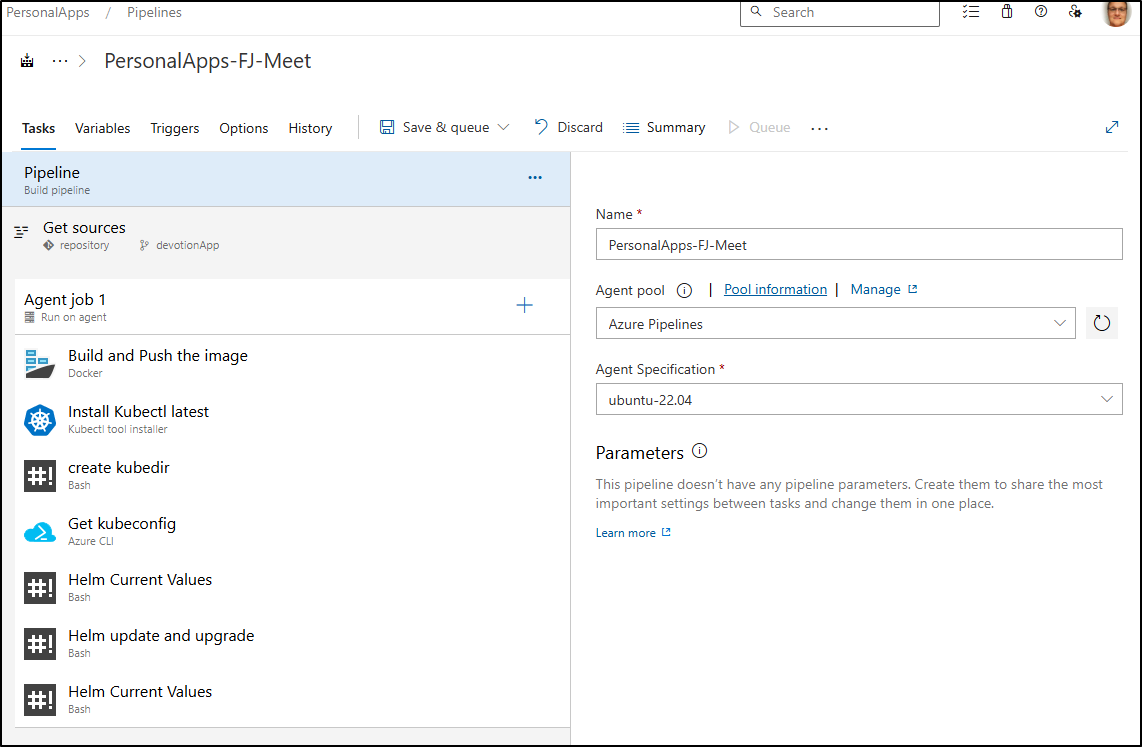

Moving from Classic to YAML Pipelines

My next step is to convert the “Classic” UI pipeline to YAML. We had to use Classic because the code wasn’t hosted in a Git provider that Azure DevOps accepts for YAML pipelines (namely Bitbucket, GitHub, and Azure Repos).

The truth is I really have two paths I can go down:

- I create a new “build” repo that will consume from Forgejo (or Repos sync) and be the real home of my build files

- I create the “azure-pipelines.yaml” in the Forgejo, it syncs, then I can tell AzDO about it.

If I do the latter, Azure DevOps will really want to edit and save in Azure Repos because it has no idea that is a sync-only repo.

If I do the former, I have a disconnect between the source files and build files.

I think I’ll try the former first as it keeps the source of truth in Forgejo and I will be able to rethink Github Actions if I go that route in the future.

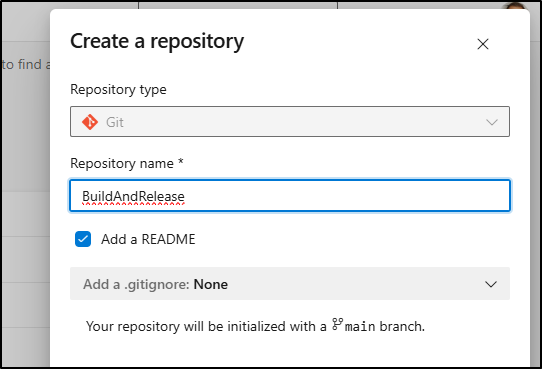

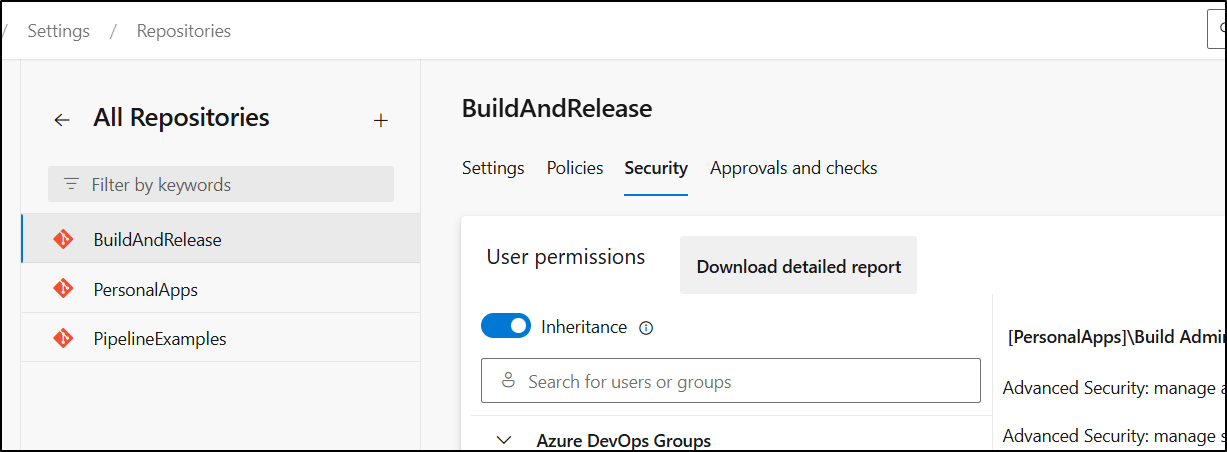

I’ll create a new repo

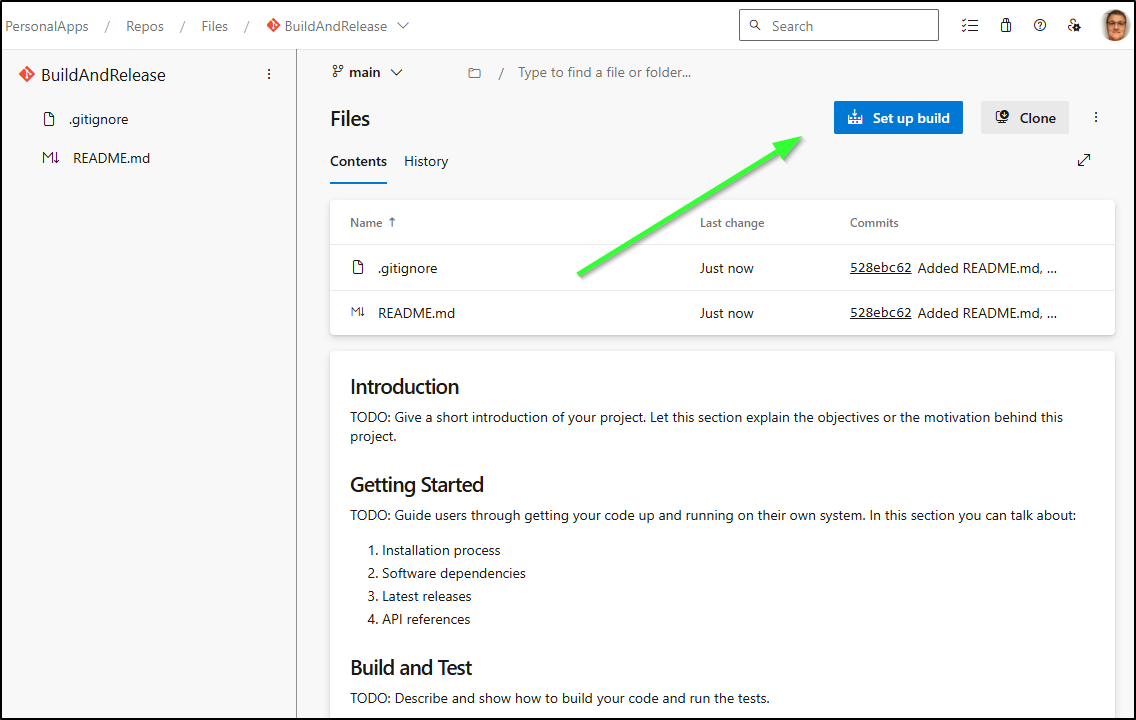

I’ll call it “BuildAndRelease” because I could have a few different project build and release pipelines here beyond just Fika/Meet

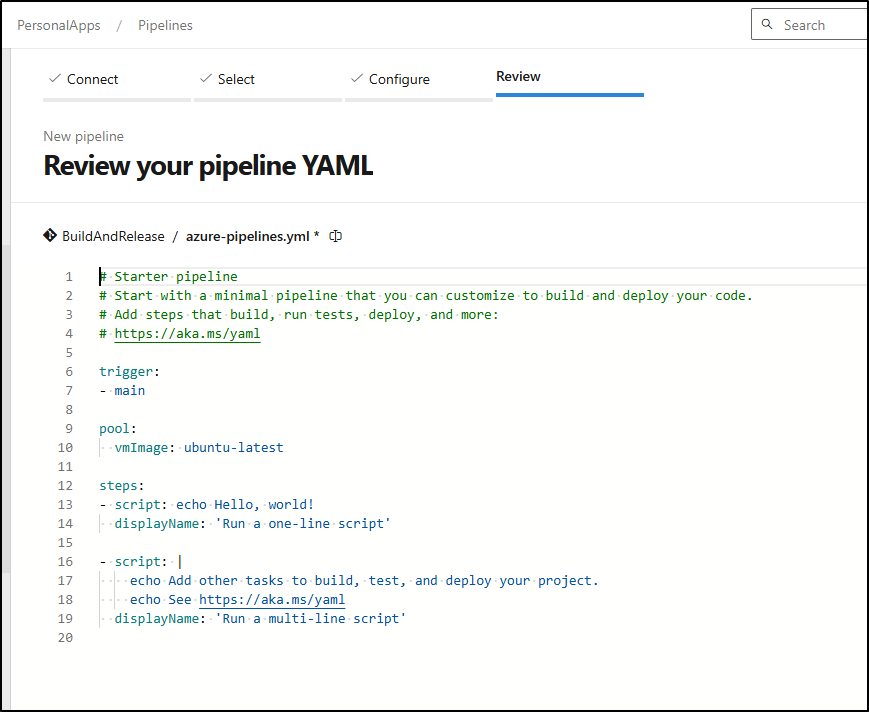

I can now “Set up build”

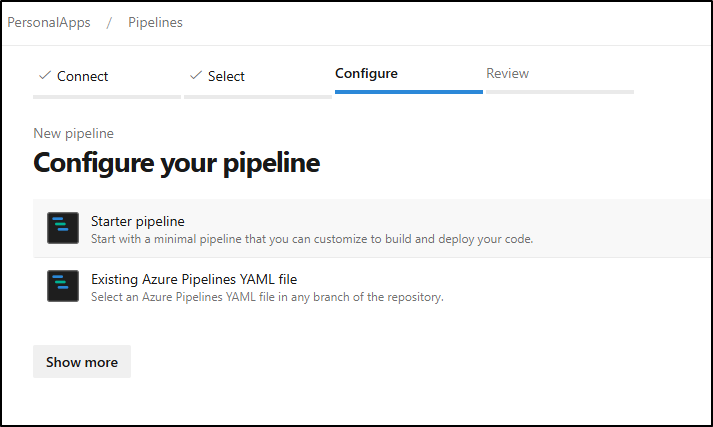

Choose “Starter pipeline”

We get a basic Hello World pipeline to start

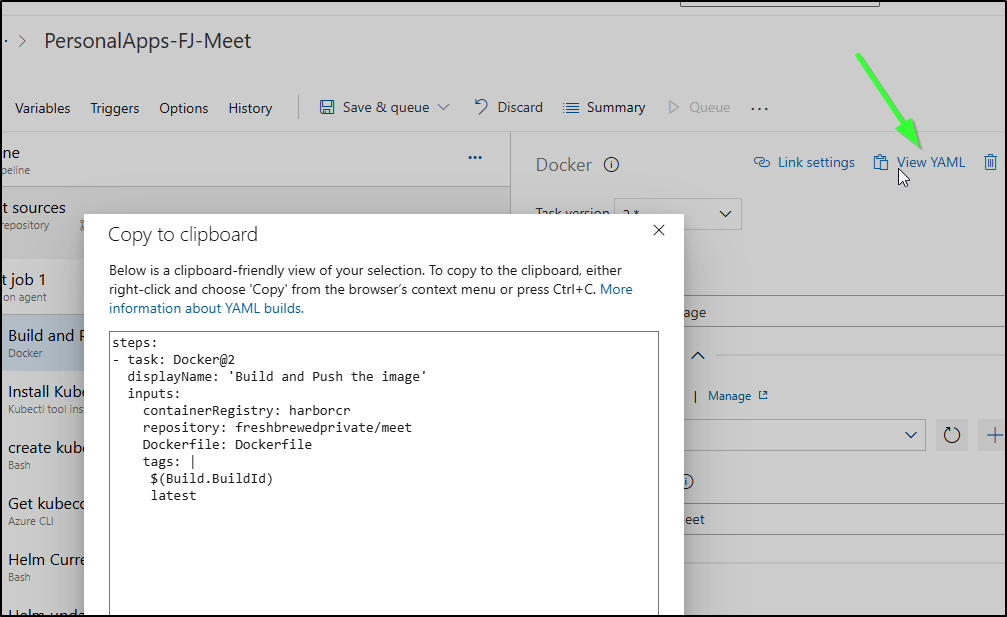

Next, I’ll look at the Classic pipeline where I can “view YAML” to see the YAML steps

After trimming some whitespace and redundant “steps:”, I now have a YAML pipeline that matches the former Classic

trigger:

- main

pool:

vmImage: ubuntu-latest

steps:

- task: Docker@2

displayName: 'Build and Push the image'

inputs:

containerRegistry: harborcr

repository: freshbrewedprivate/meet

Dockerfile: Dockerfile

tags: |

$(Build.BuildId)

latest

- task: KubectlInstaller@0

displayName: 'Install Kubectl latest'

- bash: |

#!/bin/bash

set -x

mkdir ~/.kube

which kubectl || true

which helm || true

displayName: 'create kubedir'

- task: AzureCLI@2

displayName: 'Get kubeconfig'

inputs:

azureSubscription: myAZConn

scriptType: bash

scriptLocation: inlineScript

inlineScript: 'az keyvault secret show --vault-name idjakv --name ext33-int | jq -r .value > ~/.kube/config'

- bash: |

#!/bin/bash

set -x

kubectl get nodes

helm list -A

echo "===1===="

helm get values meetbeta -n default | tee $(Build.StagingDirectory)/meetbeta.values.yaml

displayName: 'Helm Current Values'

- bash: |

#!/bin/bash

set -x

kubectl get nodes

helm list -A

echo "== update ===="

helm get values meetbeta -n default | tee $(Build.StagingDirectory)/meetbeta.values.yaml

sed -i 's/tag: .*/tag: $(Build.BuildID)/g' $(Build.StagingDirectory)/meetbeta.values.yaml

cat $(Build.StagingDirectory)/meetbeta.values.yaml

echo "== upgrade===="

helm upgrade -f $(Build.StagingDirectory)/meetbeta.values.yaml meetbeta ./helm/devotion/

displayName: 'Helm update and upgrade'

- bash: |

#!/bin/bash

set -x

kubectl get nodes

helm list -A

echo "===1===="

helm get values meetbeta -n default | tee $(Build.StagingDirectory)/meetbeta.values.yaml

displayName: 'Helm Current Values'
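To make the "Helm update and upgrade" step a bit more concrete, here is a minimal sketch of the same `sed` substitution run against a throwaway file. The values content is made-up sample data, and the build ID is hardcoded instead of coming from `$(Build.BuildId)`.

```shell
#!/bin/sh
# Sketch: rewrite the image tag in a throwaway values file.
# The file content and the build ID below are sample data for illustration.
workdir=$(mktemp -d)
cat > "$workdir/meetbeta.values.yaml" <<'EOF'
image:
  repository: harbor.freshbrewed.science/freshbrewedprivate/meet
  tag: "21300"
EOF

BUILD_ID=21344
# Same substitution as the pipeline step, written to a new file instead of -i
sed "s/tag: .*/tag: ${BUILD_ID}/g" "$workdir/meetbeta.values.yaml" \
  > "$workdir/meetbeta.values.new.yaml"

cat "$workdir/meetbeta.values.new.yaml"
```

One caveat with this pattern: it rewrites every line containing `tag: `, so a chart with more than one tag-like key would have all of them changed. The later multi-stage version avoids the text edit by passing `image.tag` through `overrideValues`.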

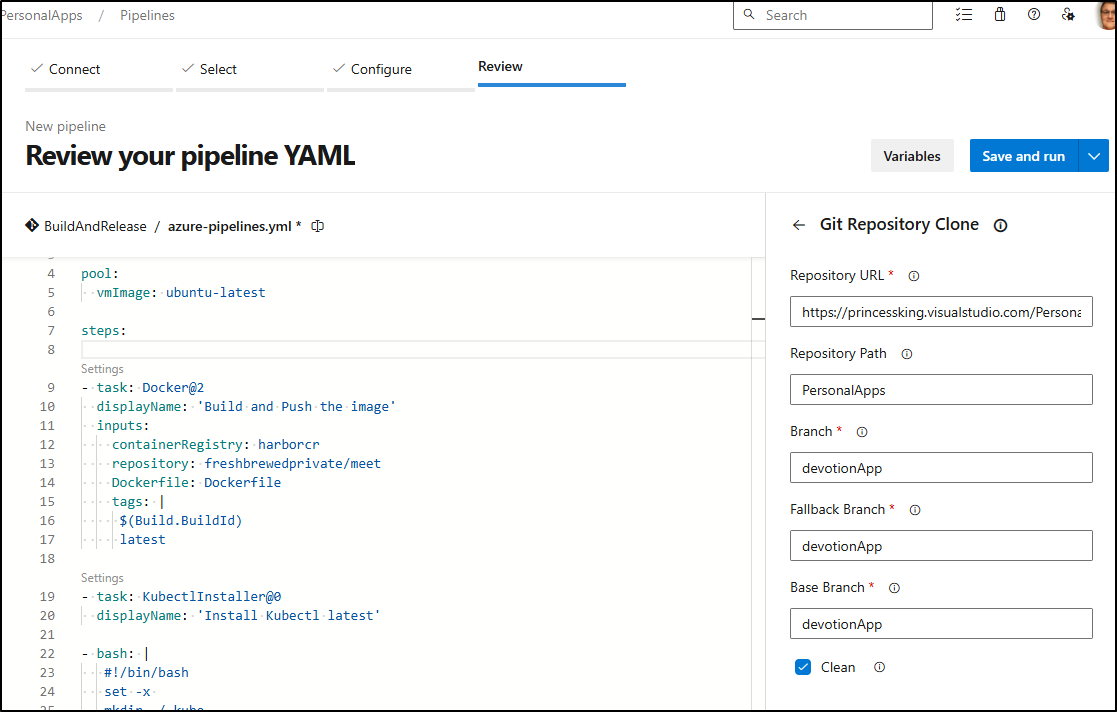

I’ll want to add a step to clone the repo

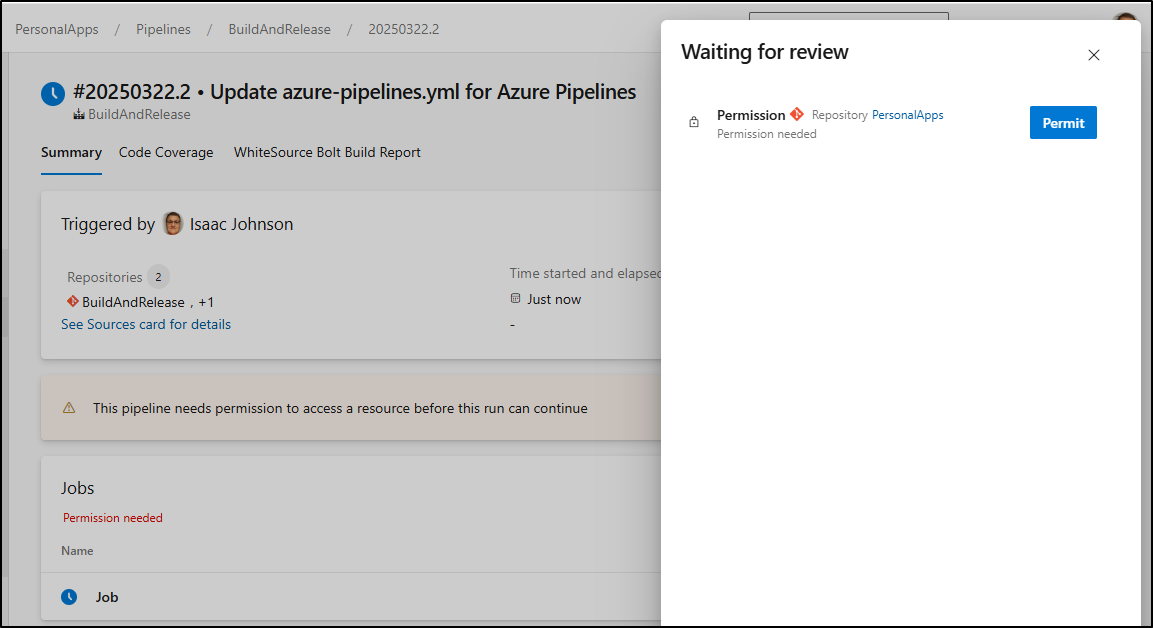

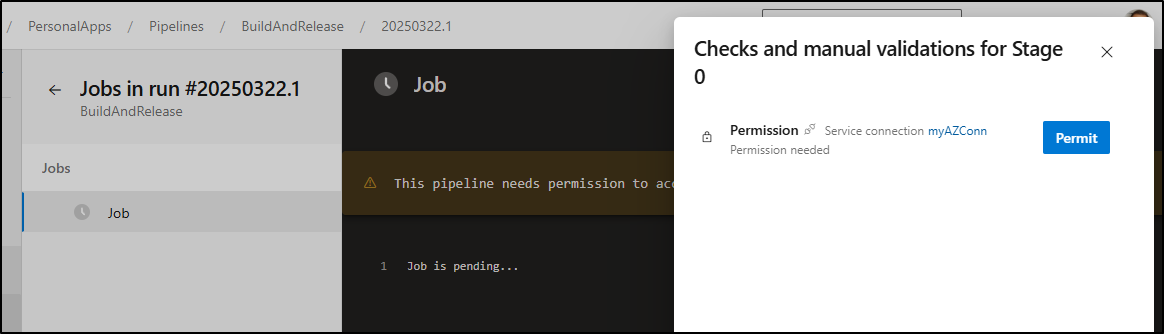

Note: I did a run with that task and later found that it is for Windows build agents only, so I changed to using “resources.repositories” as you see below. It does require permission to be granted:

Back to the YAML, I’ll also need to add “PersonalApps” to the path for Docker build and the helm upgrade

trigger:

- main

pool:

vmImage: ubuntu-latest

resources:

repositories:

- repository: PersonalApps # identifier for use in pipeline

type: git # type of repository (GitHub, Git, Bitbucket)

name: PersonalApps/PersonalApps # project/repository

ref: devotionApp # ref name to checkout (optional)

steps:

- checkout: self # checkout the current repository

- checkout: PersonalApps # checkout the repository defined in resources

path: PersonalApps # path where to checkout the repository

- task: Bash@3

inputs:

targetType: 'inline'

script: |

set -x

export

pwd

ls -ltra

ls -ltra $AGENT_BUILDDIRECTORY

ls -ltra $(Agent.BuildDirectory)/PersonalApps

ls -ltra $AGENT_BUILDDIRECTORY/PersonalApps

- task: Docker@2

displayName: 'Build and Push the image'

inputs:

containerRegistry: 'harborcr'

repository: 'freshbrewedprivate/meet'

command: 'buildAndPush'

Dockerfile: '$(Agent.BuildDirectory)/PersonalApps/Dockerfile'

buildContext: '$(Agent.BuildDirectory)/PersonalApps'

tags: |

$(Build.BuildId)

latest

- task: KubectlInstaller@0

displayName: 'Install Kubectl latest'

- bash: |

#!/bin/bash

set -x

mkdir ~/.kube

which kubectl || true

which helm || true

displayName: 'create kubedir'

- task: AzureCLI@2

displayName: 'Get kubeconfig'

inputs:

azureSubscription: myAZConn

scriptType: bash

scriptLocation: inlineScript

inlineScript: 'az keyvault secret show --vault-name idjakv --name ext33-int | jq -r .value > ~/.kube/config'

- bash: |

#!/bin/bash

set -x

kubectl get nodes

helm list -A

echo "===1===="

helm get values meetbeta -n default | tee $(Build.StagingDirectory)/meetbeta.values.yaml

displayName: 'Helm Current Values'

- bash: |

#!/bin/bash

set -x

kubectl get nodes

helm list -A

echo "== update ===="

helm get values meetbeta -n default | tee $(Build.StagingDirectory)/meetbeta.values.yaml

sed -i 's/tag: .*/tag: $(Build.BuildID)/g' $(Build.StagingDirectory)/meetbeta.values.yaml

cat $(Build.StagingDirectory)/meetbeta.values.yaml

echo "== upgrade===="

helm upgrade -f $(Build.StagingDirectory)/meetbeta.values.yaml meetbeta $(Agent.BuildDirectory)/PersonalApps/helm/devotion/

displayName: 'Helm update and upgrade'

- bash: |

#!/bin/bash

set -x

kubectl get nodes

helm list -A

echo "===1===="

helm get values meetbeta -n default | tee $(Build.StagingDirectory)/meetbeta.values.yaml

displayName: 'Helm Current Values'

I needed to permit usage of the SA

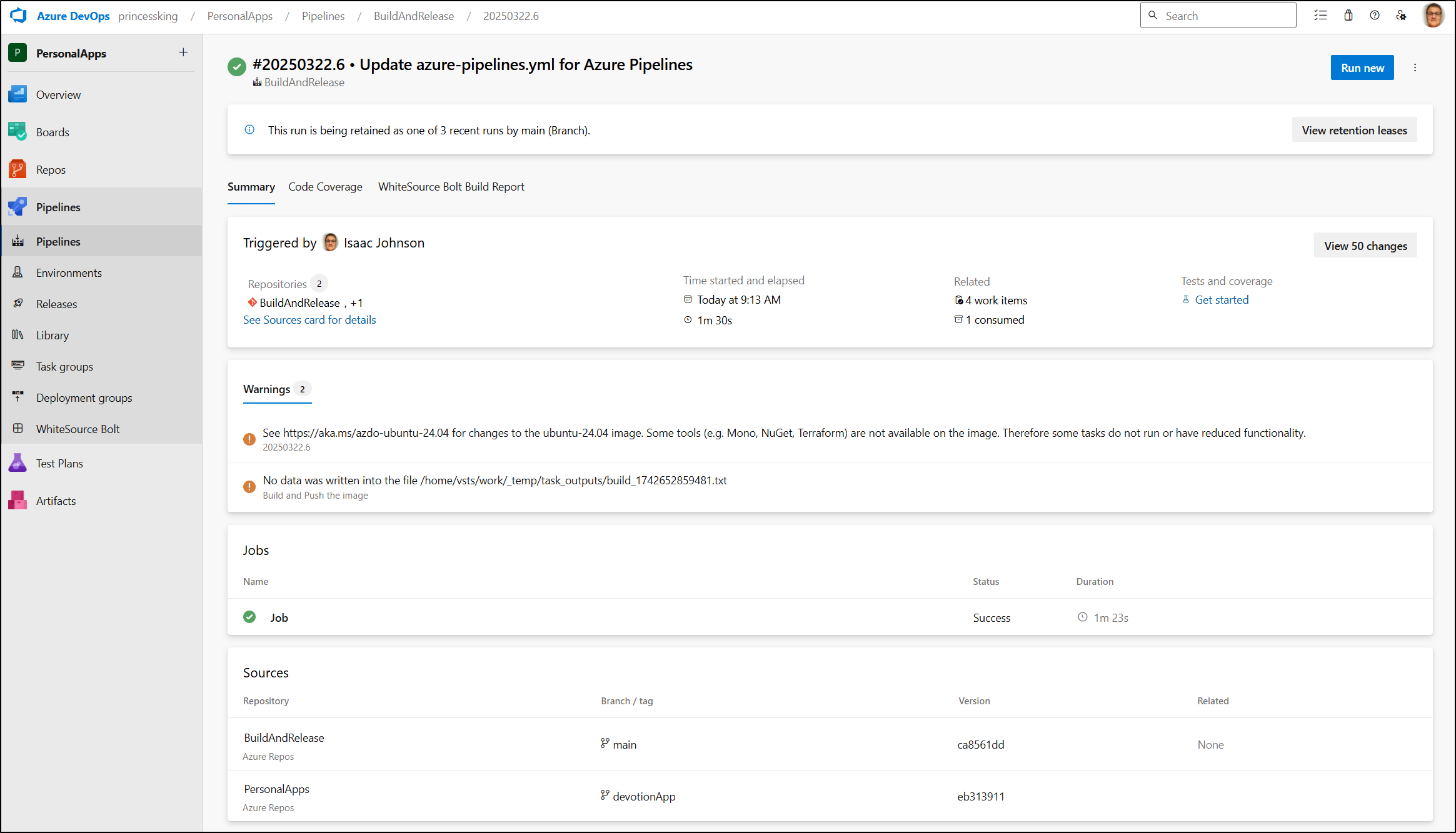

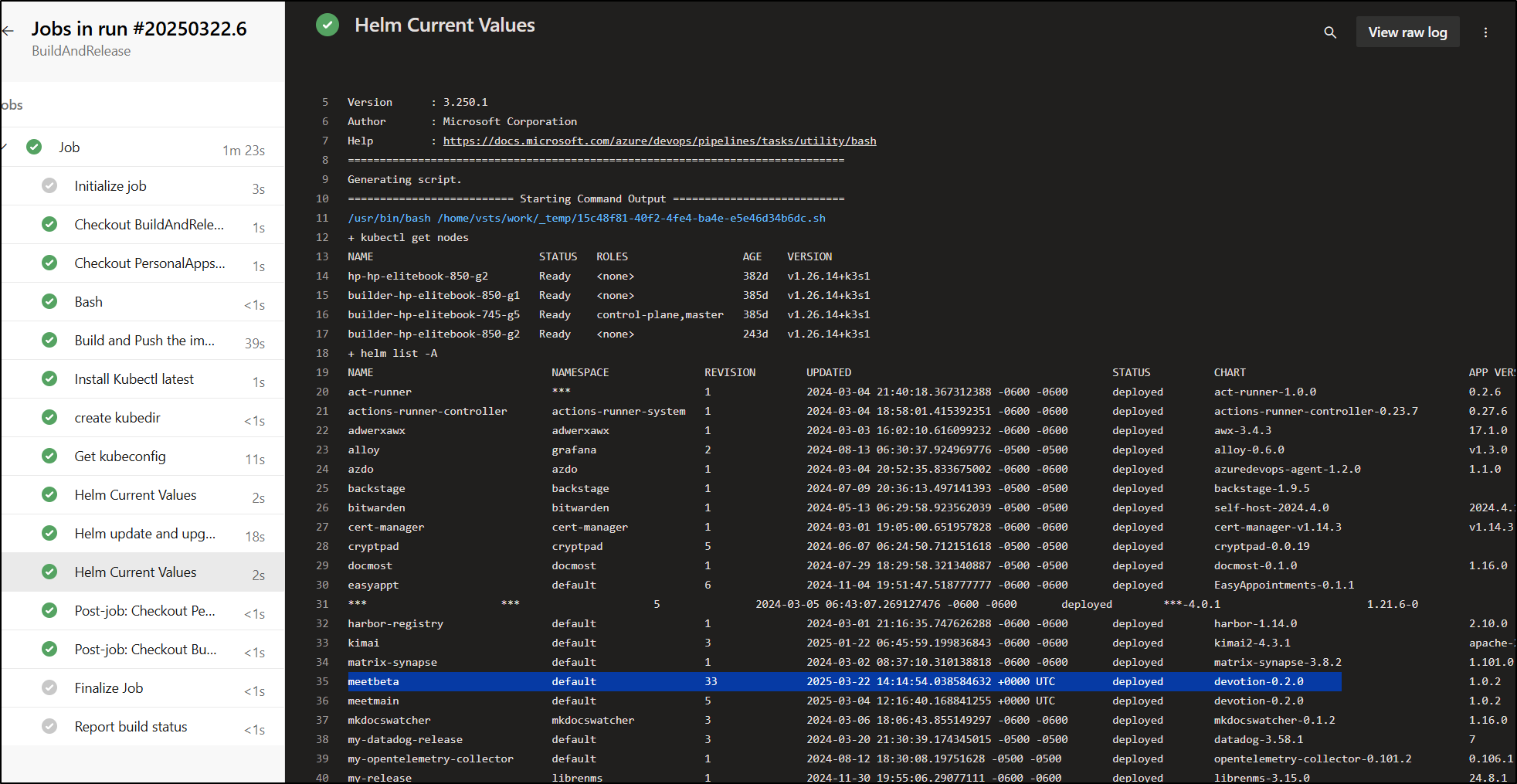

We can see it fetched both this “BuildAndRelease” repo as well as the “PersonalApps” one.

And it did succeed in building a new release and pushing to the Beta app

Now this worked, however we are only triggering on “main” of this repo, namely “BuildAndRelease”, so changes to the application source in “PersonalApps” do not start a build.

As we can see in the documentation, we just need to add a “trigger” block to the repository resource.

Adding a trigger

Our first activity is to add a trigger:

pool:
  vmImage: ubuntu-latest

resources:
  repositories:
  - repository: PersonalApps # identifier for use in pipeline
    type: git # type of repository (GitHub, Git, Bitbucket)
    name: PersonalApps/PersonalApps # project/repository
    ref: devotionApp # ref name to checkout (optional)
    trigger:
      branches:
        include:
        - devotionApp

Note: The trigger only works with Azure Repos (not external nor Github).

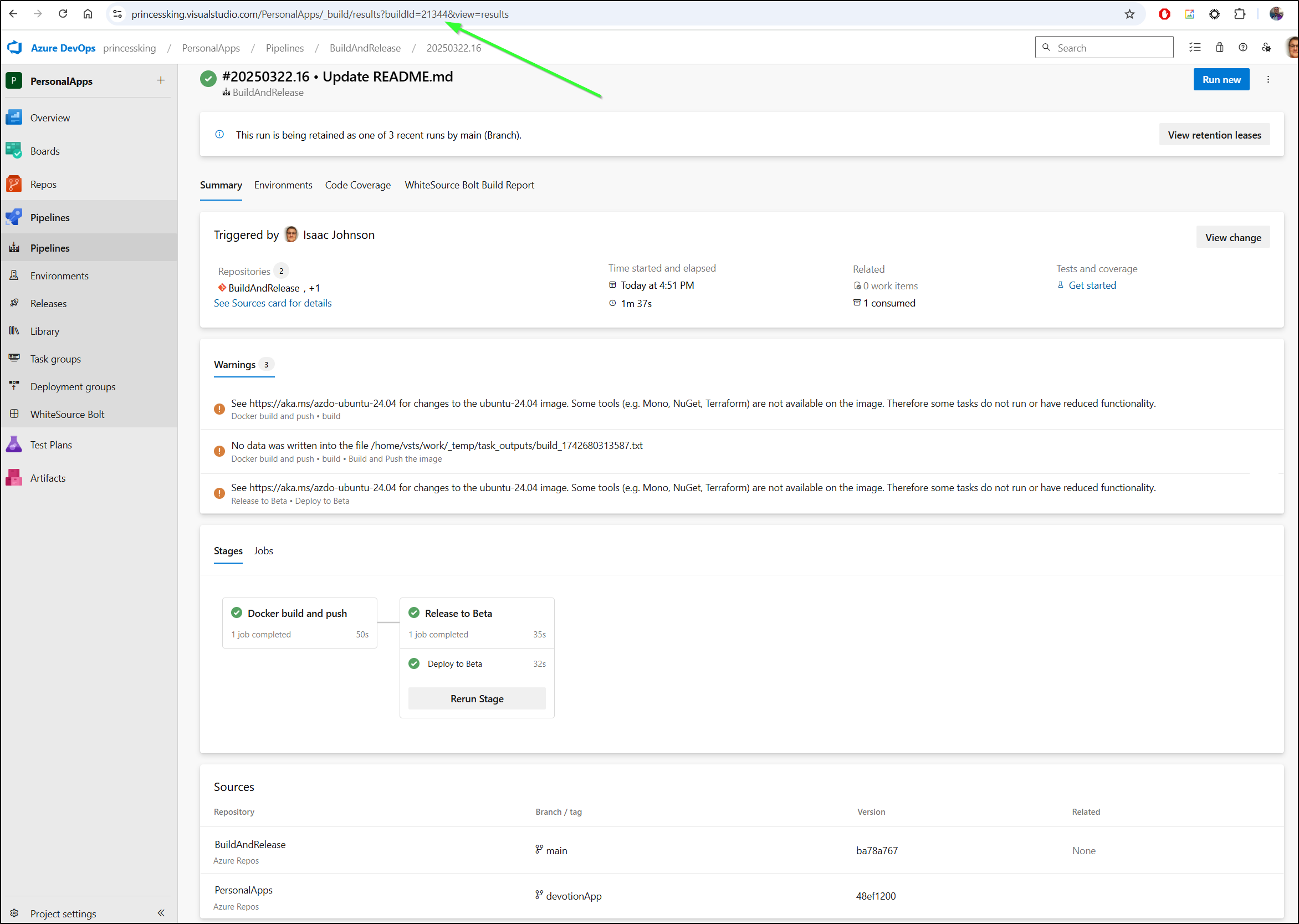

Here we can see it working with a small README change in Forgejo then triggering our build and release pipeline:

Using the Gate

Recall that earlier we created the “meetbeta” Environment to enable deployments:

To use it, we can use a multi-stage pipeline that can fetch our Repo (for the chart) and update just the image tag.

pool:

vmImage: ubuntu-latest

resources:

repositories:

- repository: PersonalApps # identifier for use in pipeline

type: git # type of repository (GitHub, Git, Bitbucket)

name: PersonalApps/PersonalApps # project/repository

ref: devotionApp # ref name to checkout (optional)

trigger:

branches:

include:

- devotionApp

stages:

- stage: build

displayName: "Docker build and push"

jobs:

- job: build

steps:

- checkout: self # checkout the current repository

- checkout: PersonalApps # checkout the repository defined in resources

path: PersonalApps # path where to checkout the repository

- task: Docker@2

displayName: 'Build and Push the image'

inputs:

containerRegistry: 'harborcr'

repository: 'freshbrewedprivate/meet'

command: 'buildAndPush'

Dockerfile: '$(Agent.BuildDirectory)/PersonalApps/Dockerfile'

buildContext: '$(Agent.BuildDirectory)/PersonalApps'

tags: |

$(Build.BuildId)

latest

- stage: releasebeta

displayName: "Release to Beta"

dependsOn: build

jobs:

- deployment: deploybeta

displayName: "Deploy to Beta"

environment:

name: 'meetbeta.meetbeta'

strategy:

runOnce:

deploy:

steps:

- checkout: PersonalApps # checkout the repository defined in resources

path: PersonalApps # path where to checkout the repository

- task: HelmDeploy@1

inputs:

connectionType: 'Kubernetes Service Connection'

kubernetesServiceConnection: 'meetbeta-meetbeta-1741916980775'

namespace: 'default'

command: 'upgrade'

chartType: 'FilePath'

chartPath: '$(Agent.BuildDirectory)/PersonalApps/helm/devotion'

releaseName: 'meetbeta'

overrideValues: 'image.tag=$(Build.BuildID)'

arguments: '--reuse-values'

- task: HelmDeploy@1

inputs:

connectionType: 'Kubernetes Service Connection'

kubernetesServiceConnection: 'meetbeta-meetbeta-1741916980775'

namespace: 'default'

command: 'get'

arguments: 'values meetbeta'

- task: HelmDeploy@1

inputs:

connectionType: 'Kubernetes Service Connection'

kubernetesServiceConnection: 'meetbeta-meetbeta-1741916980775'

namespace: 'default'

command: 'ls'

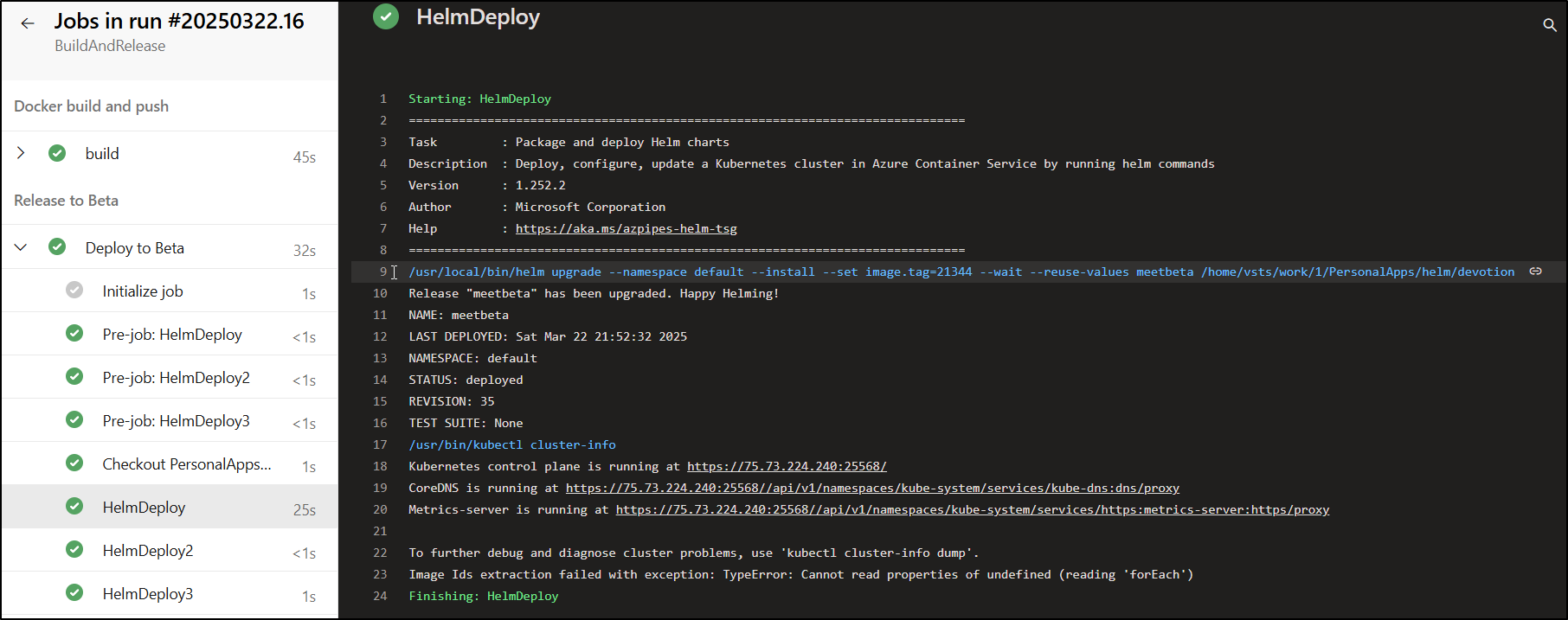

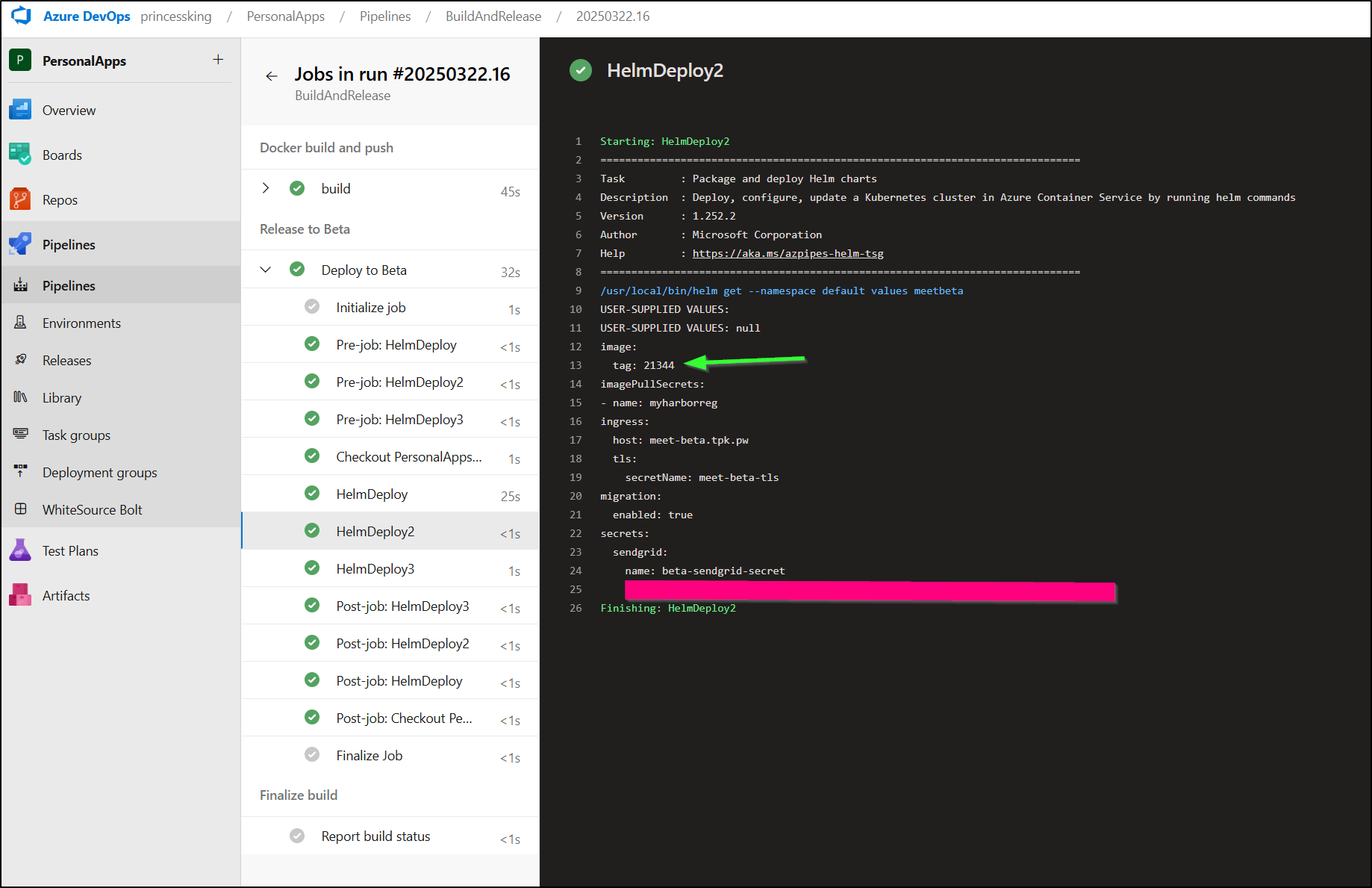

I can see it deployed. Noting the build for the README.md update is “21344”

I can see the updated value now reflected after the upgrade command:

and also in the fetched post-upgrade values:

Release to Production

Now that we have the Release to Beta (Staging) completed, let’s focus on Production next

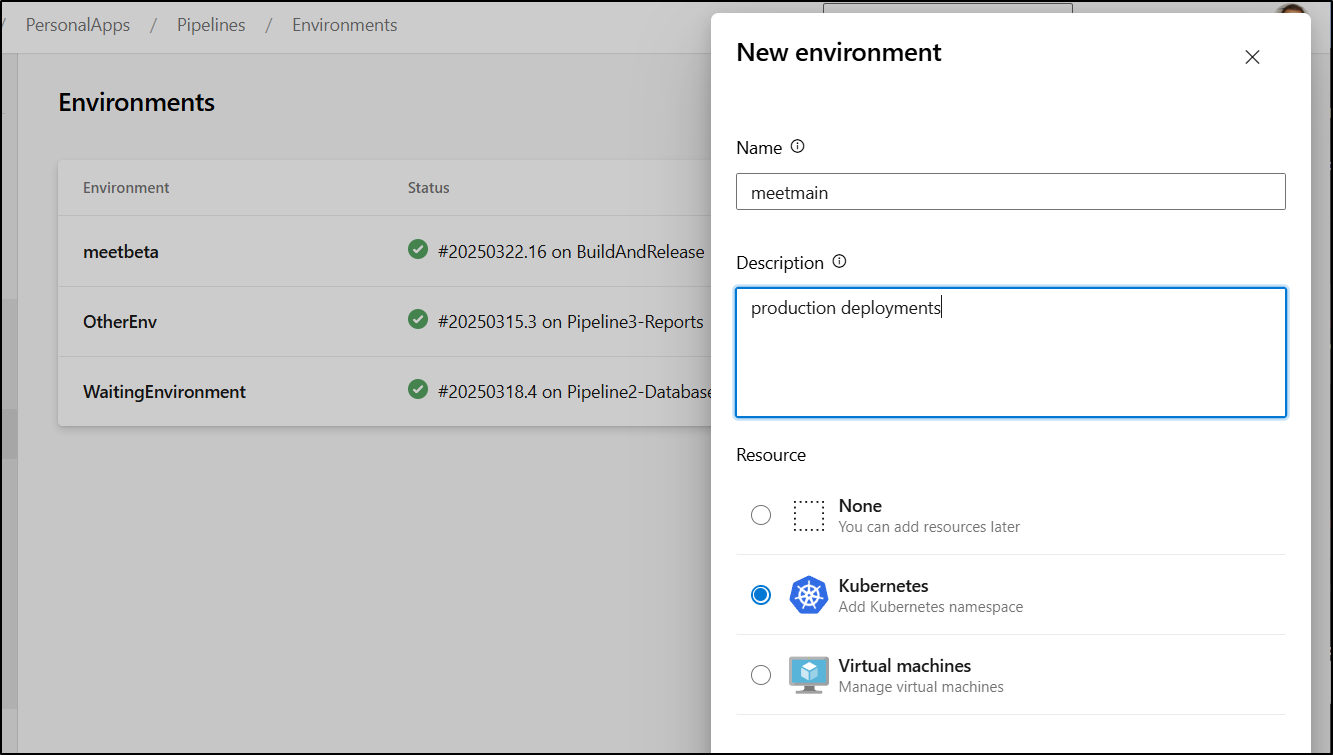

I’ll create a “Production” environment

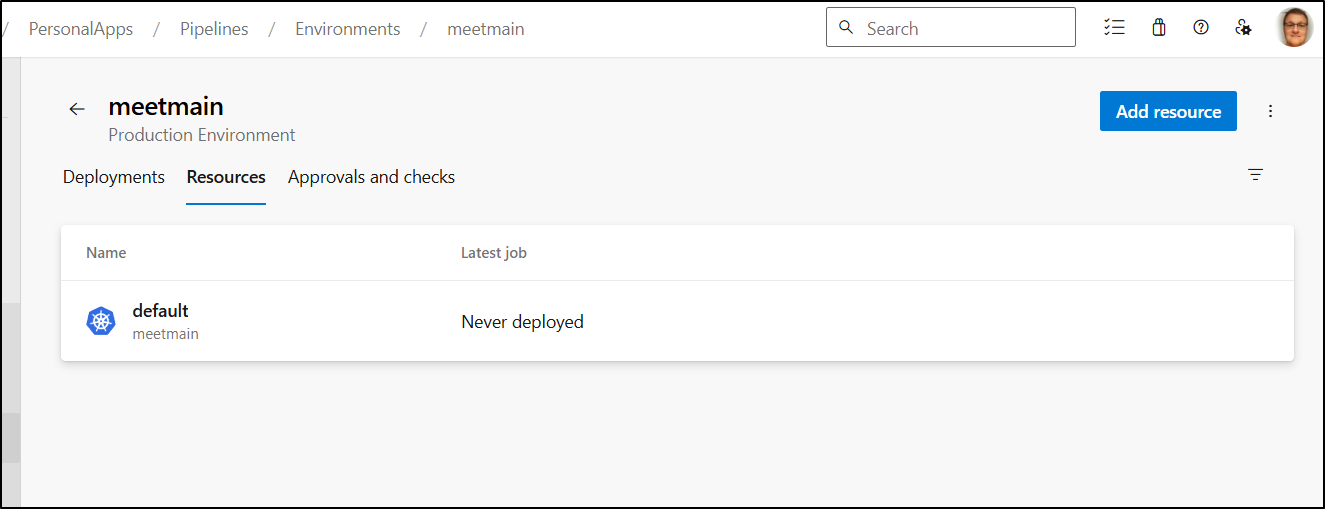

I can now create the “meetmain” entry.

In most situations, this would be a completely different cluster or namespace. For me, in this case, they are collocated. However, I wanted to write this to cover the more normal case of separate clusters for Stage and Production.

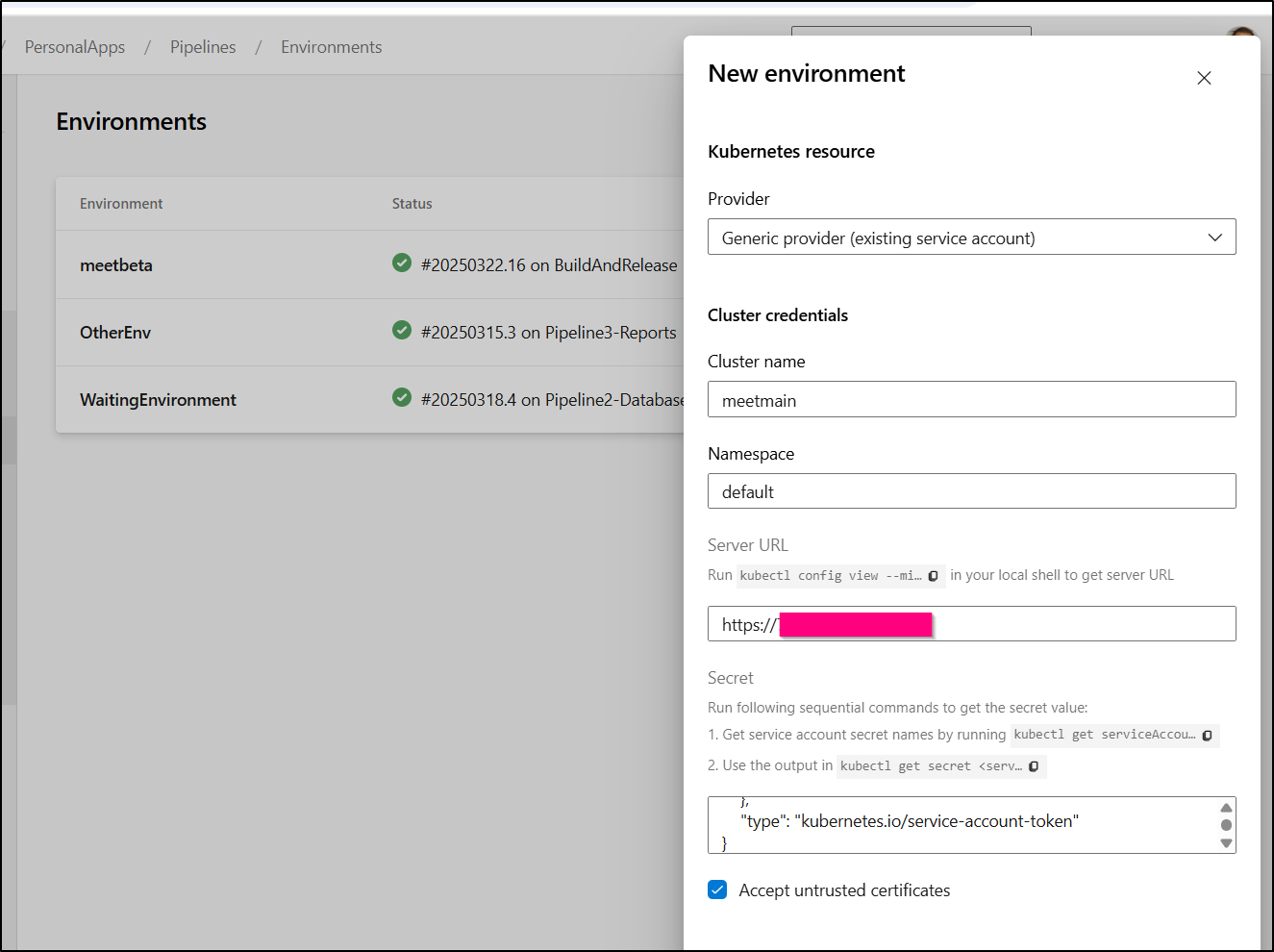

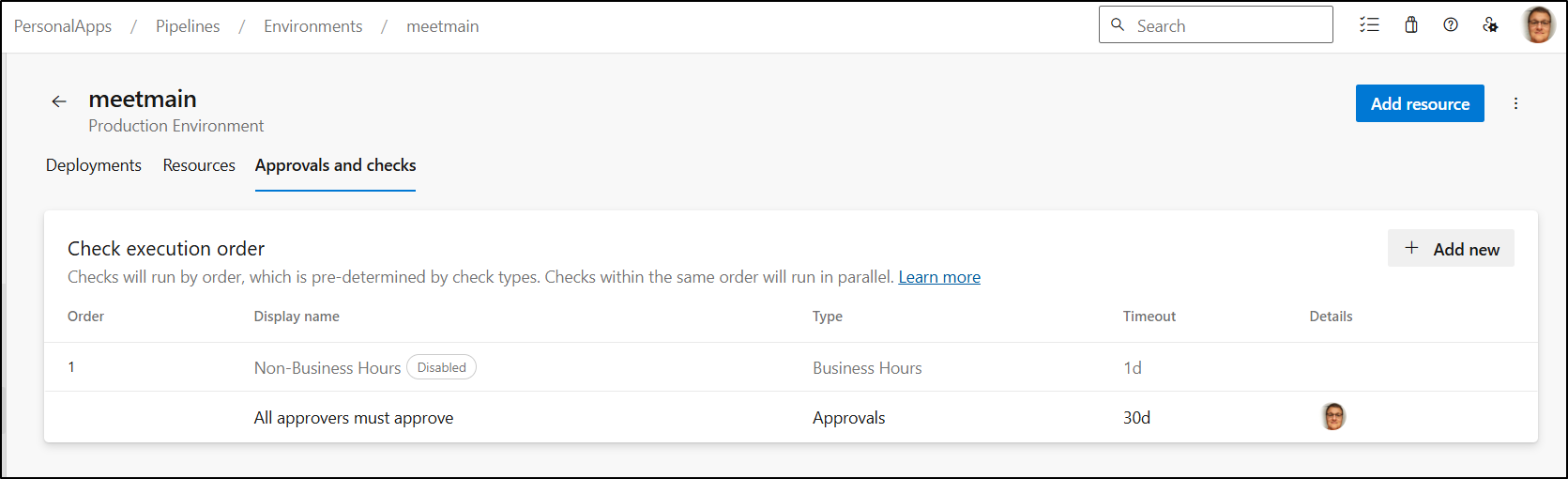

Besides now having a “meetmain” environment with a “meetmain” Kubernetes cluster entry

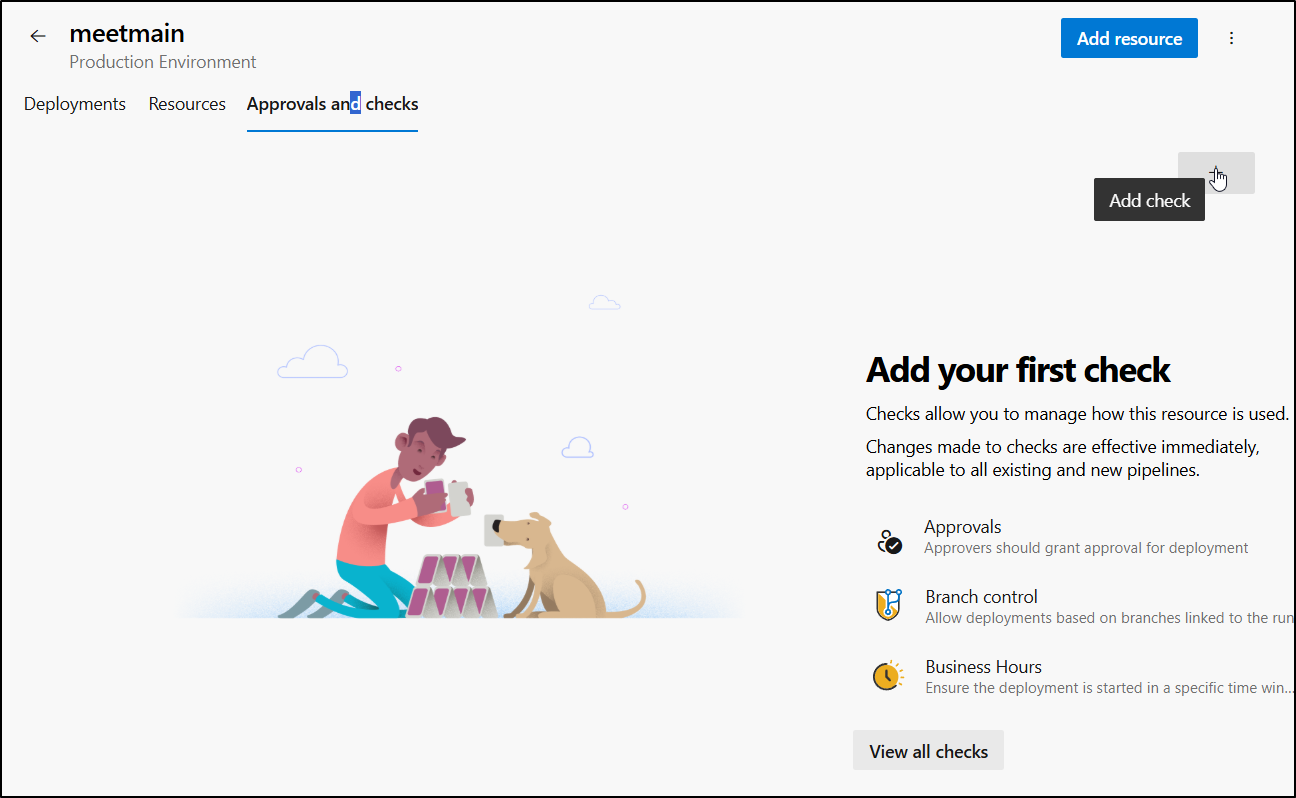

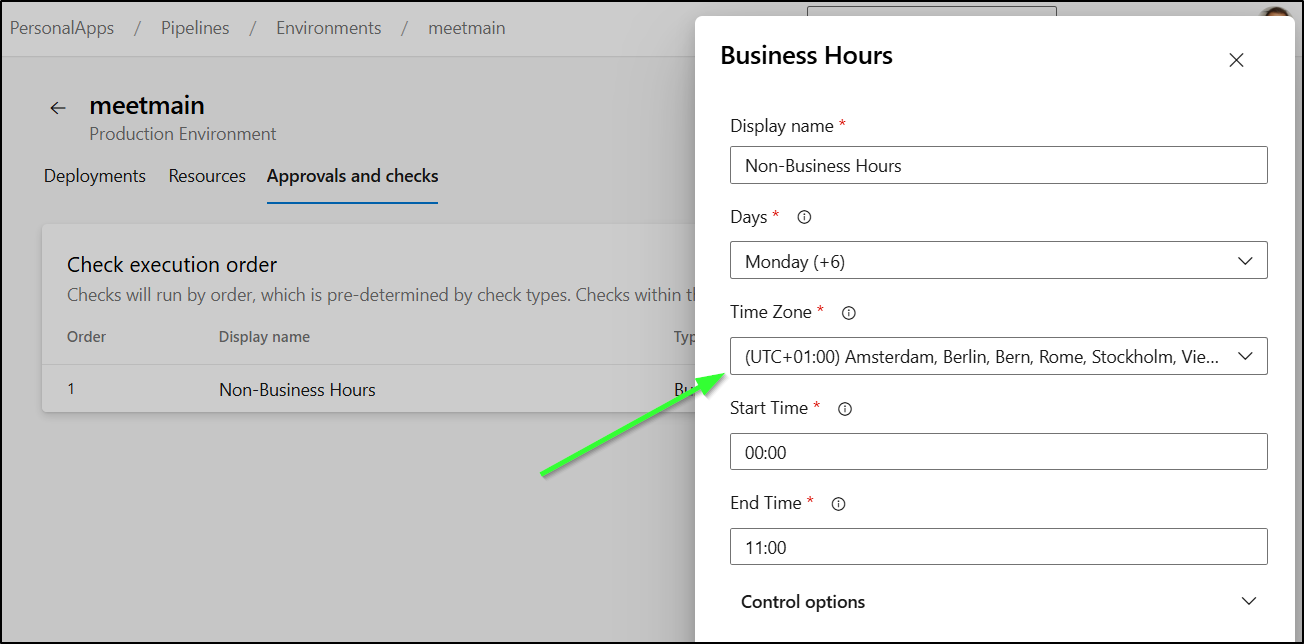

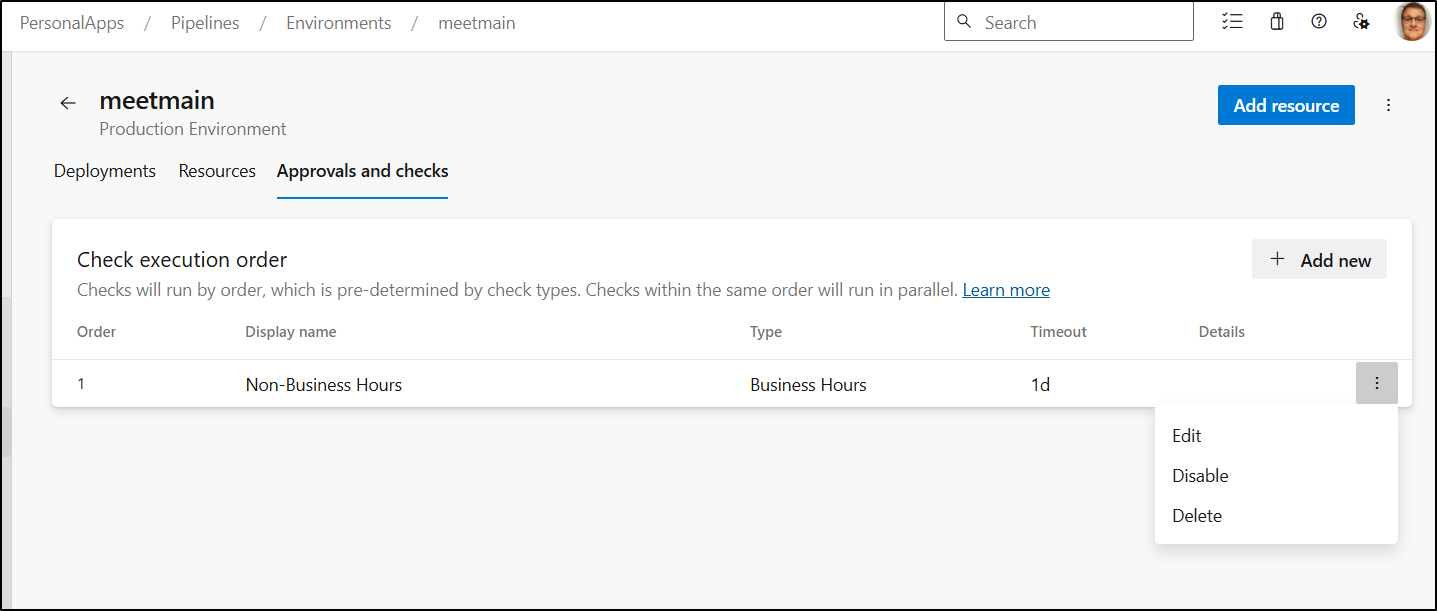

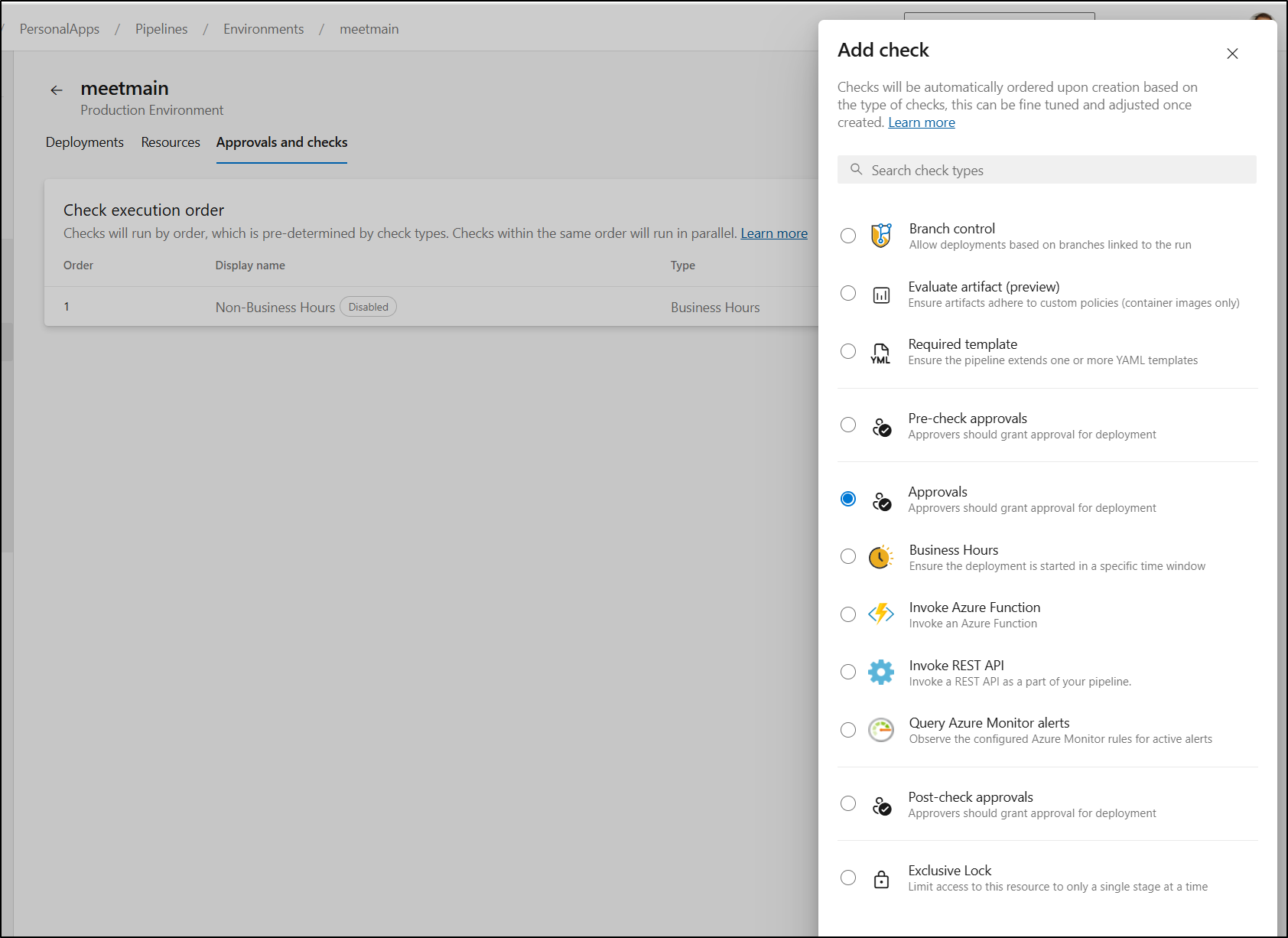

I’m going to want to go to “Approvals and Checks” to control releases to Production

The first check I’ll add is to limit when this can release.

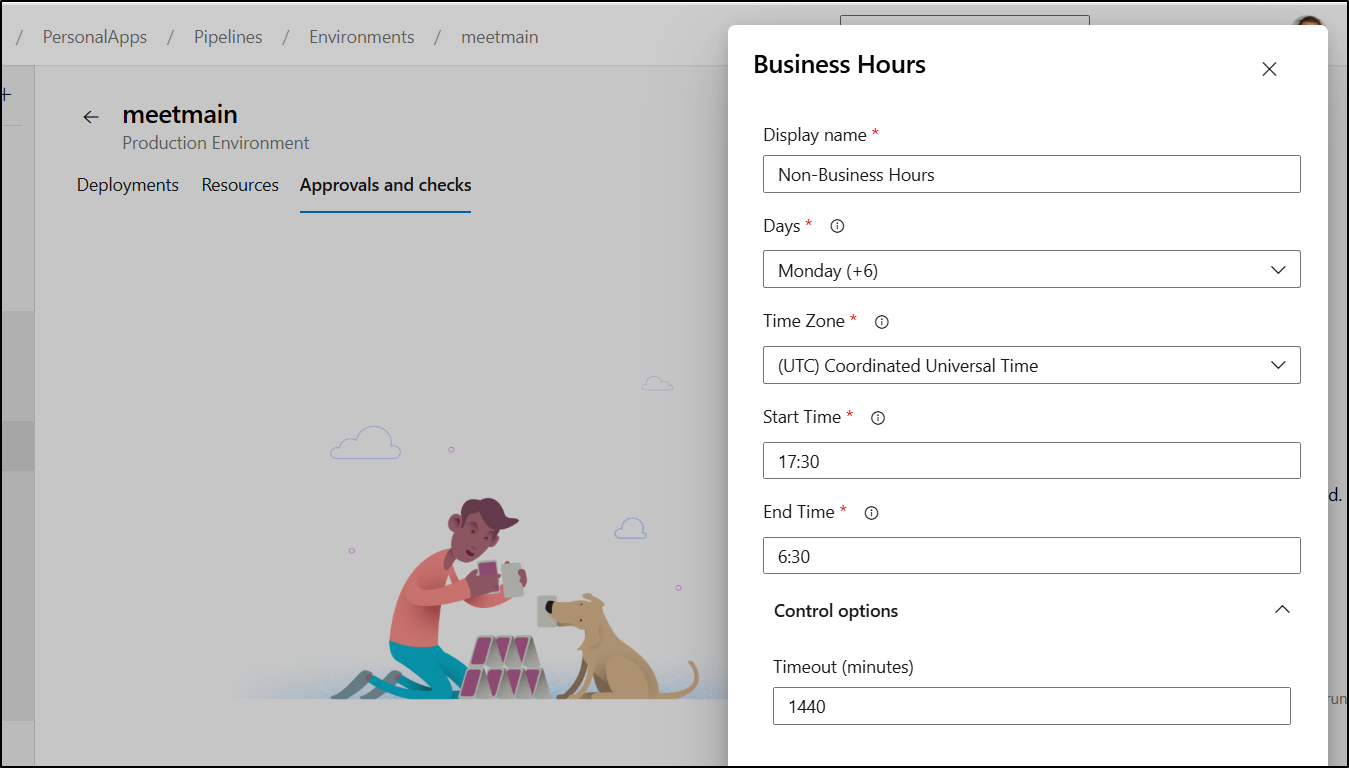

I’ve set it to M-Sun (7 days a week) between 5:30PM and 6:30AM every day as allowable times to deploy.

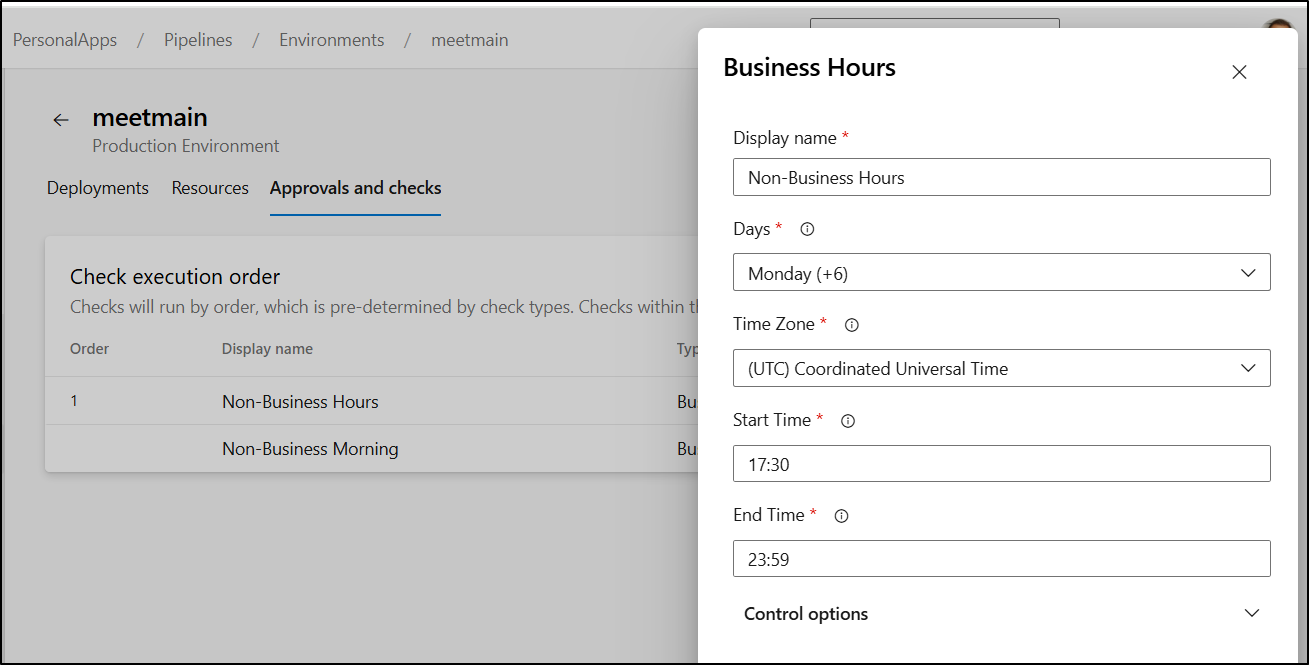

This actually caused a bit of a dilemma as it seems to have troubles crossing a day boundary.

I first made two rules (for night and mornings):

But then realized I could solve my issue by going back to UTC or near UTC to cover the 6pm to 5am opening I wanted:

I’ll start by testing this now at 6:30pm CDT and seeing if it works.
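The day-boundary problem is easier to see with a small worked example. This is only a sketch of the wrap-around logic, not how Azure DevOps implements its Business Hours check. The function names are made up for illustration, and times are HH:MM in 24-hour form.

```shell
#!/bin/sh
# Sketch: does a clock time fall inside a window that may wrap past midnight?

to_min() {
  h=${1%%:*}; m=${1##*:}
  h=${h#0}; m=${m#0}          # drop one leading zero so 08 is not read as octal
  echo $(( ${h:-0} * 60 + ${m:-0} ))
}

in_window() {  # usage: in_window START END NOW
  s=$(to_min "$1"); e=$(to_min "$2"); n=$(to_min "$3")
  if [ "$s" -le "$e" ]; then
    [ "$n" -ge "$s" ] && [ "$n" -lt "$e" ]     # same-day window
  else
    [ "$n" -ge "$s" ] || [ "$n" -lt "$e" ]     # window wraps past midnight
  fi
}

in_window 17:30 06:30 18:30 && echo "18:30 allowed"
in_window 17:30 06:30 08:30 || echo "08:30 blocked"
```

A window whose start is later than its end has to be treated as "after start OR before end". A check that only understands "after start AND before end" needs either two rules or a time zone in which the window no longer crosses midnight.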

For starters, I’ll just check the helm list (also, leaving in the meetbeta connection as I found AzDO creates the named entry after first usage).

- stage: releasemain

displayName: "Release to Main"

dependsOn: releasebeta

jobs:

- deployment: deploymain

displayName: "Deploy to Main"

environment:

name: 'meetmain.default'

strategy:

runOnce:

deploy:

steps:

- checkout: PersonalApps # checkout the repository defined in resources

path: PersonalApps # path where to checkout the repository

- task: HelmDeploy@1

inputs:

connectionType: 'Kubernetes Service Connection'

kubernetesServiceConnection: 'meetbeta-meetbeta-1741916980775'

namespace: 'default'

command: 'get'

arguments: 'values meetmain'

- task: HelmDeploy@1

inputs:

connectionType: 'Kubernetes Service Connection'

kubernetesServiceConnection: 'meetbeta-meetbeta-1741916980775'

namespace: 'default'

command: 'ls'

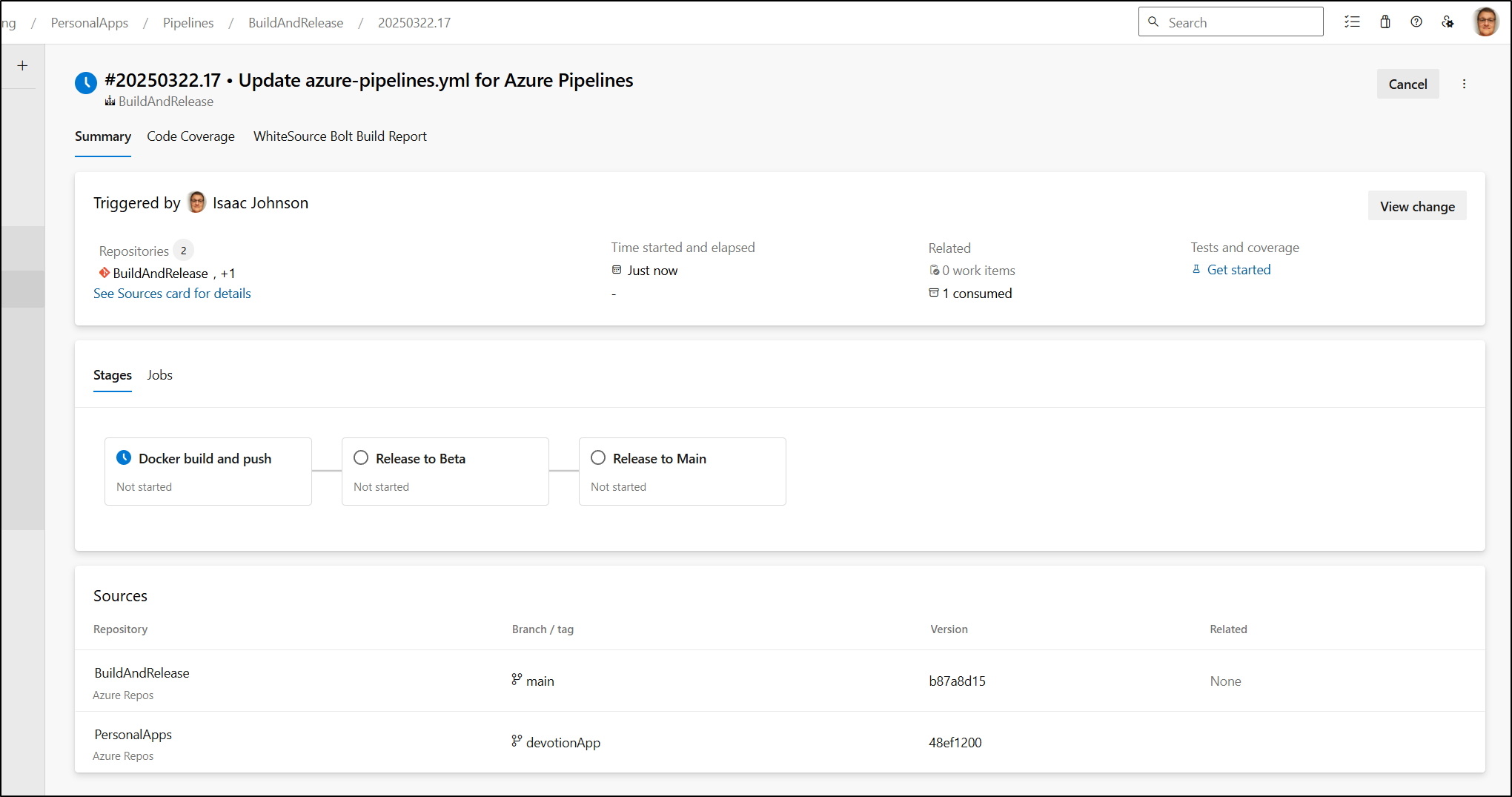

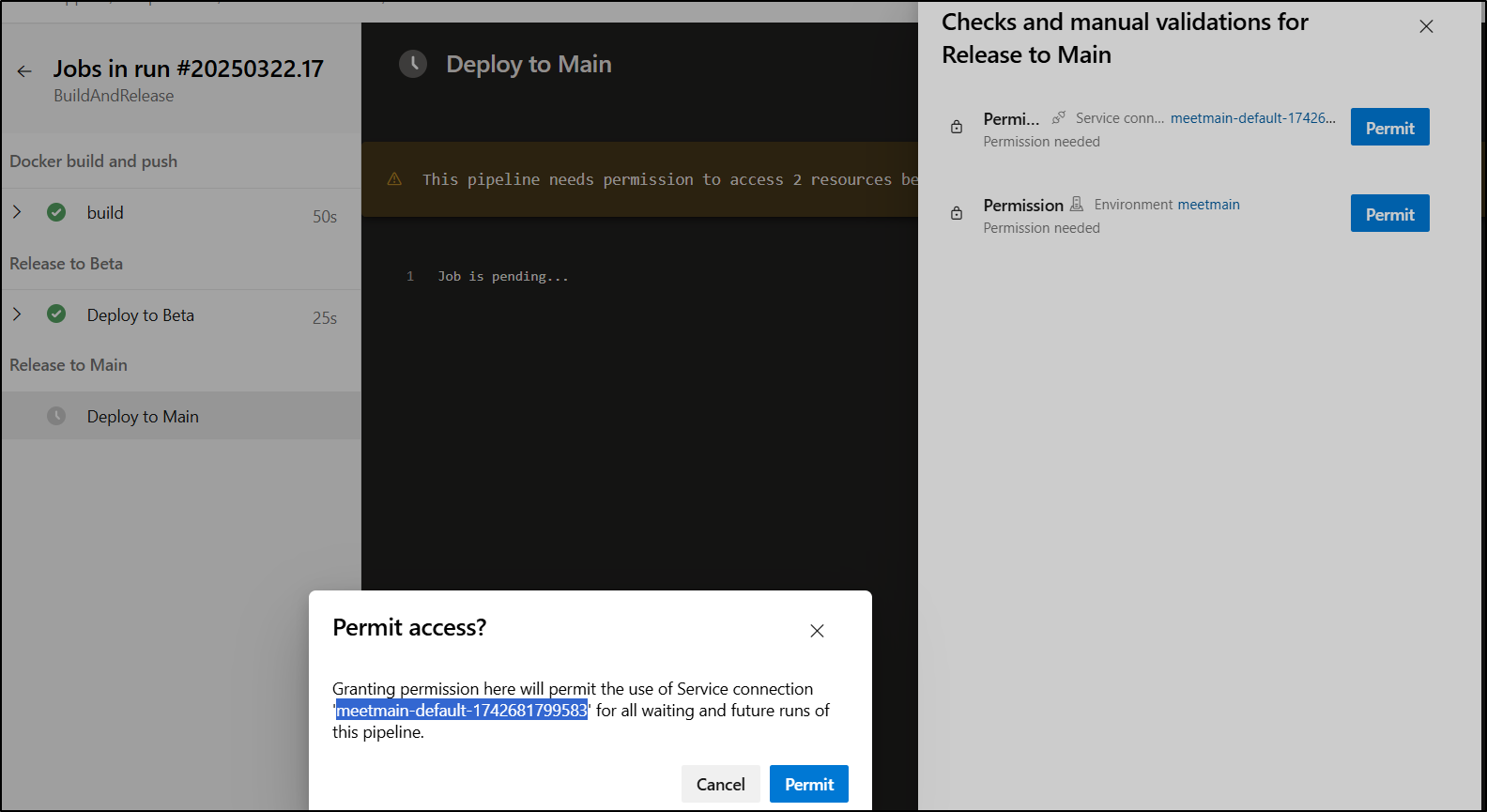

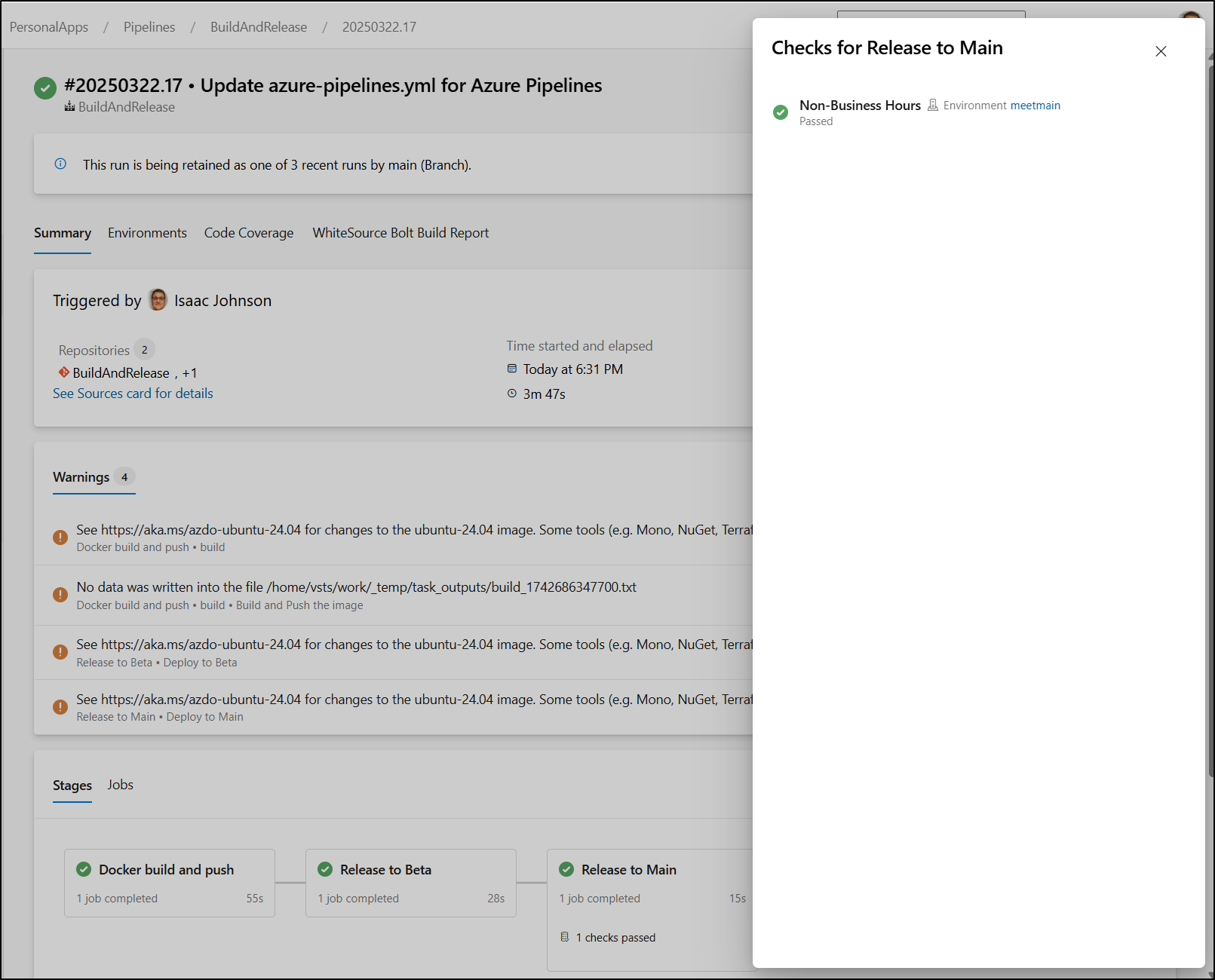

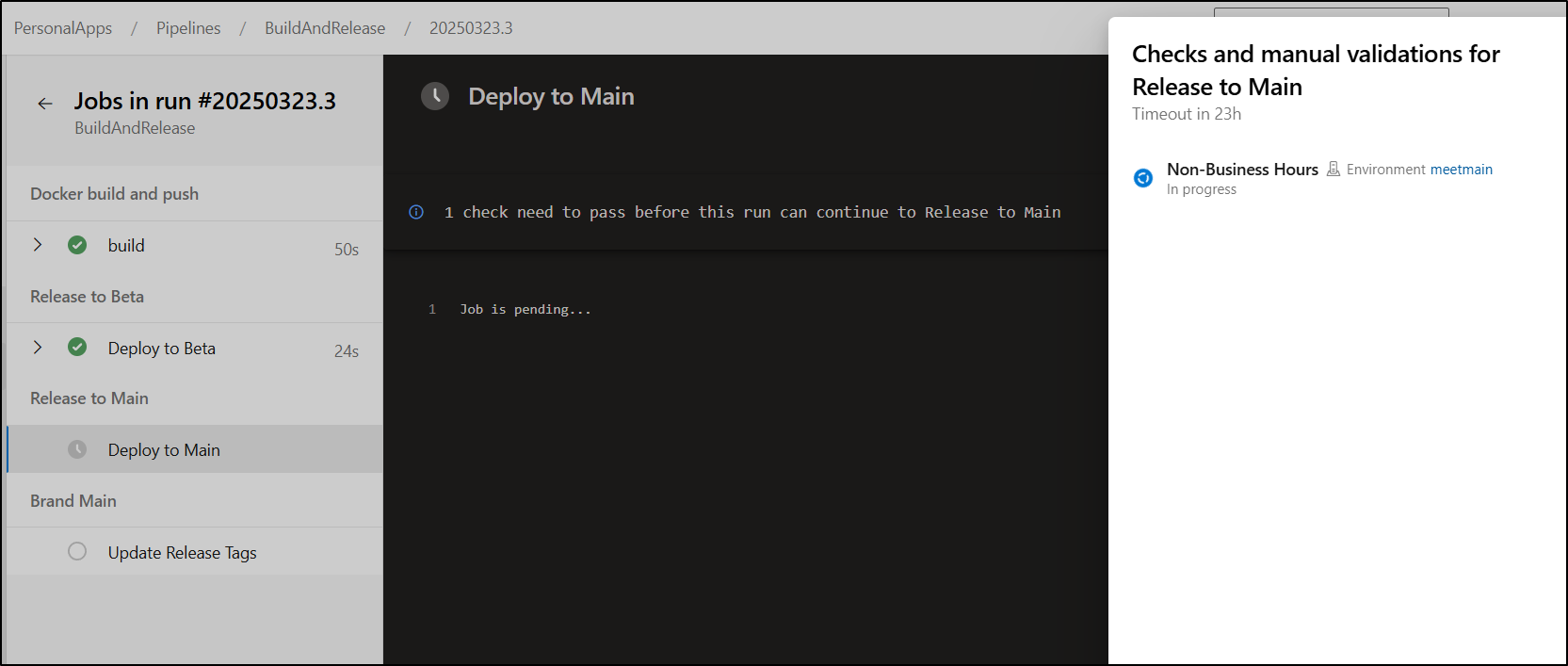

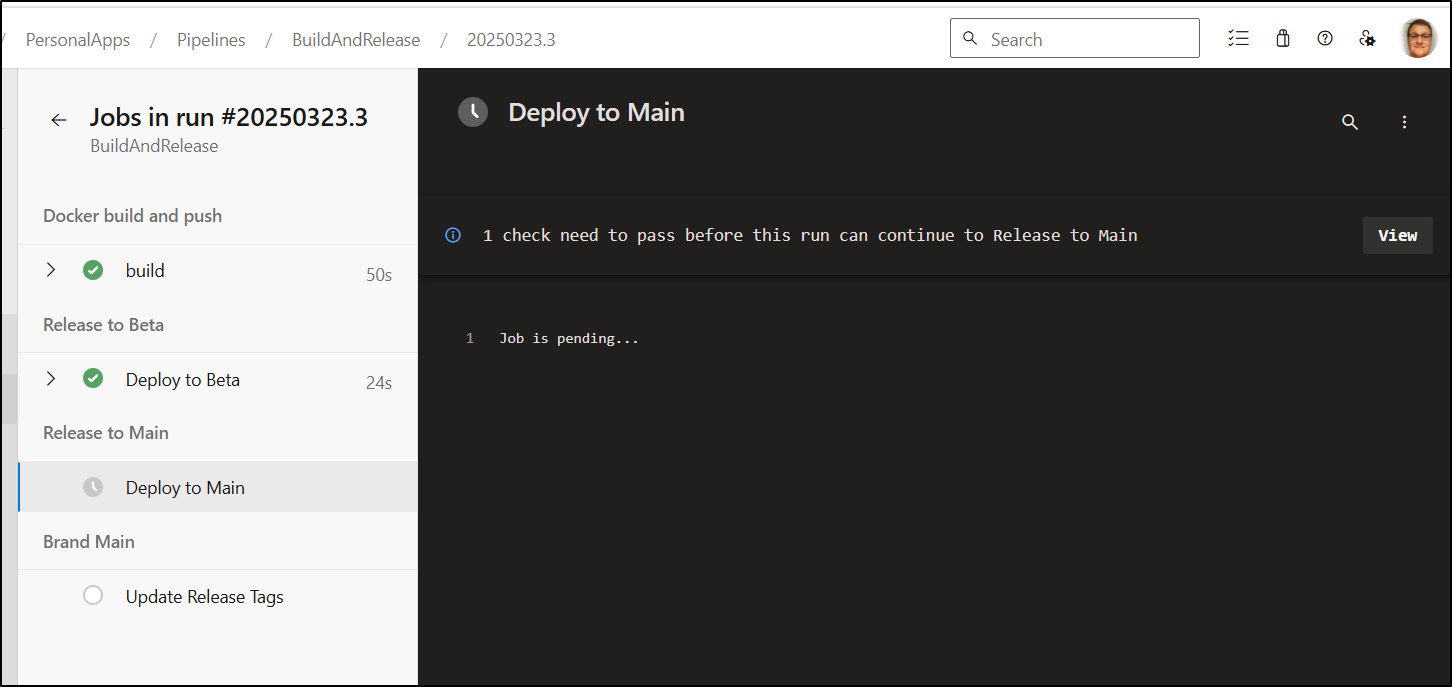

I can now see three stages:

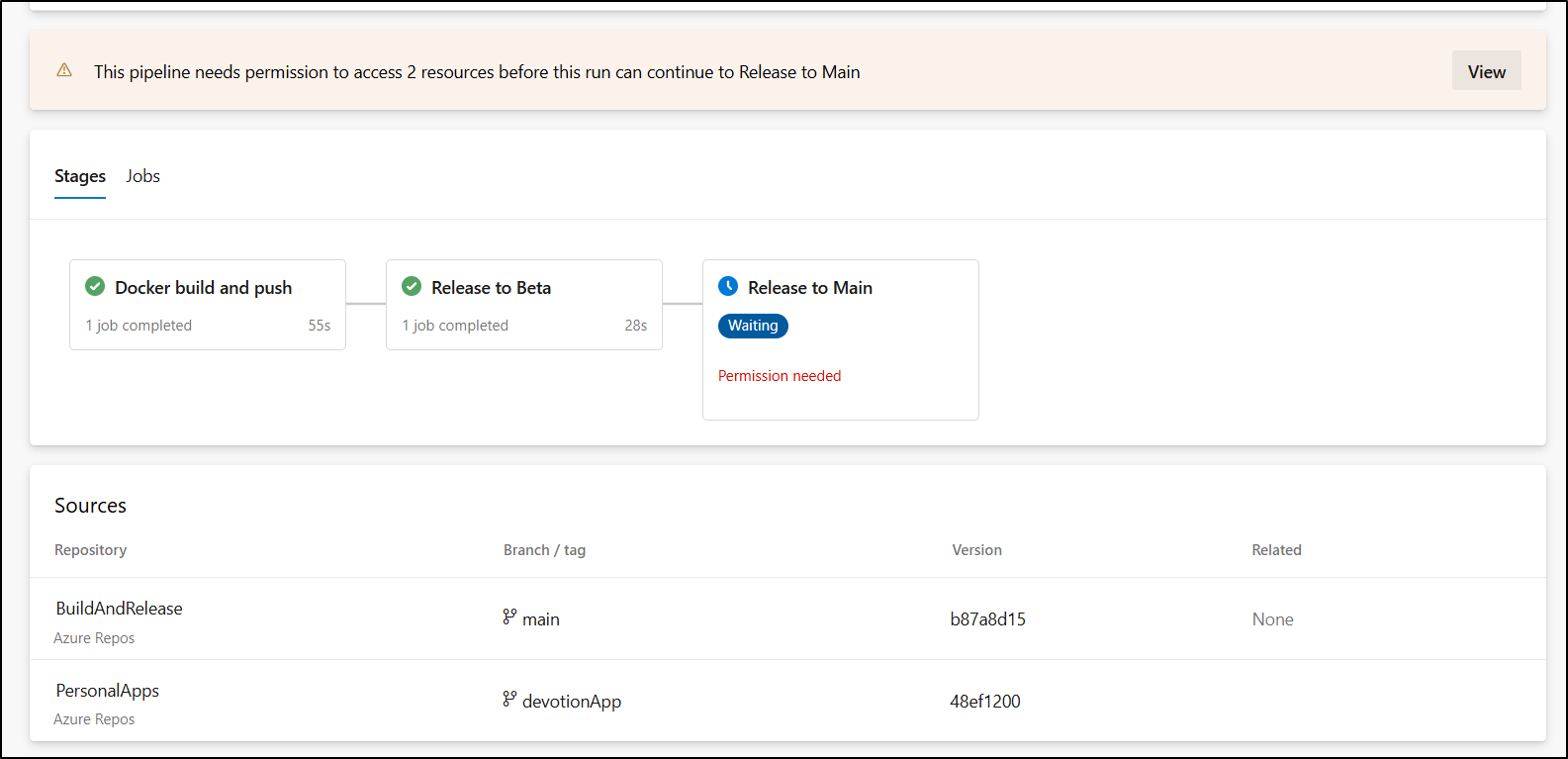

As before we need to approve the stage

I can see it creates the service connection (meetmain-default-1742681799583) when I click Permit

We see the Non-Business Hours validation passed

Tagging

If I plan to use this with my release pipeline, I would want to apply a tag

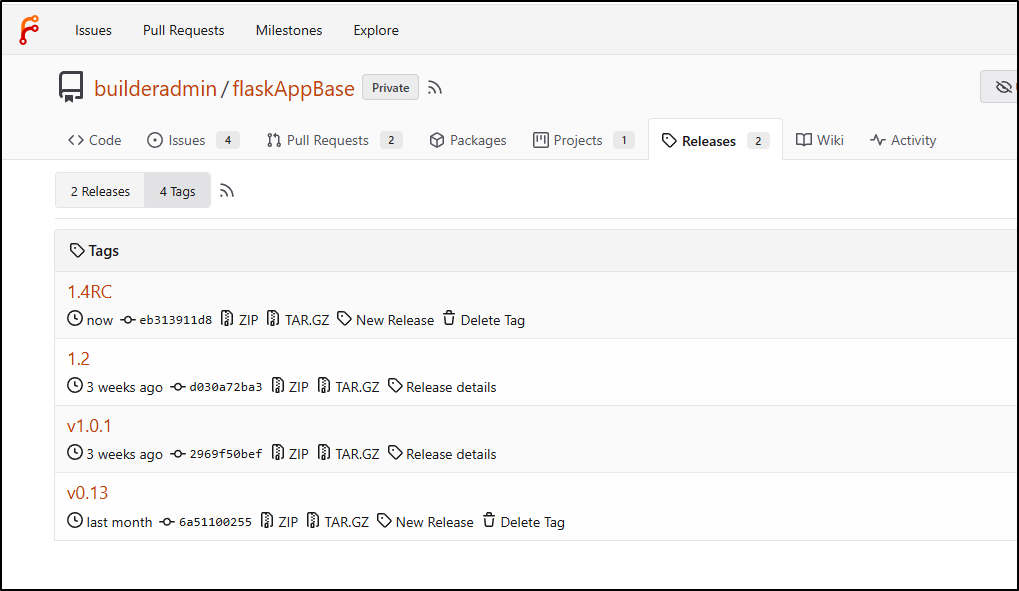

I could create a tag by hand with a REST call to Forgejo.

Quick Note on Basic Auth. This is just “username:password” base64 encoded. For instance, “username:password” would turn into dXNlcm5hbWU6cGFzc3dvcmQ=
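A quick way to produce that value on the command line, using the same placeholder credentials as the note above:

```shell
#!/bin/sh
# Encode "username:password" for a Basic auth header.
# printf (not echo) avoids encoding a trailing newline.
AUTH=$(printf '%s' 'username:password' | base64)
echo "authorization: Basic ${AUTH}"
```

Using `echo` without `-n` would encode the trailing newline as well and produce a different string (ending in `cmQK` instead of `cmQ=`), which the server would reject.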

curl -X 'POST' \
  'https://forgejo.freshbrewed.science/api/v1/repos/builderadmin/flaskAppBase/tags' \
  -H 'accept: application/json' \
  -H 'authorization: Basic asdfasdfasdf' \
  -H 'Content-Type: application/json' \
  -d '{
  "message": "My draft 1.5",
  "tag_name": "1.4RC",
  "target": "eb313911d8671dafd9411c0220dea63486d04cd2"
}'

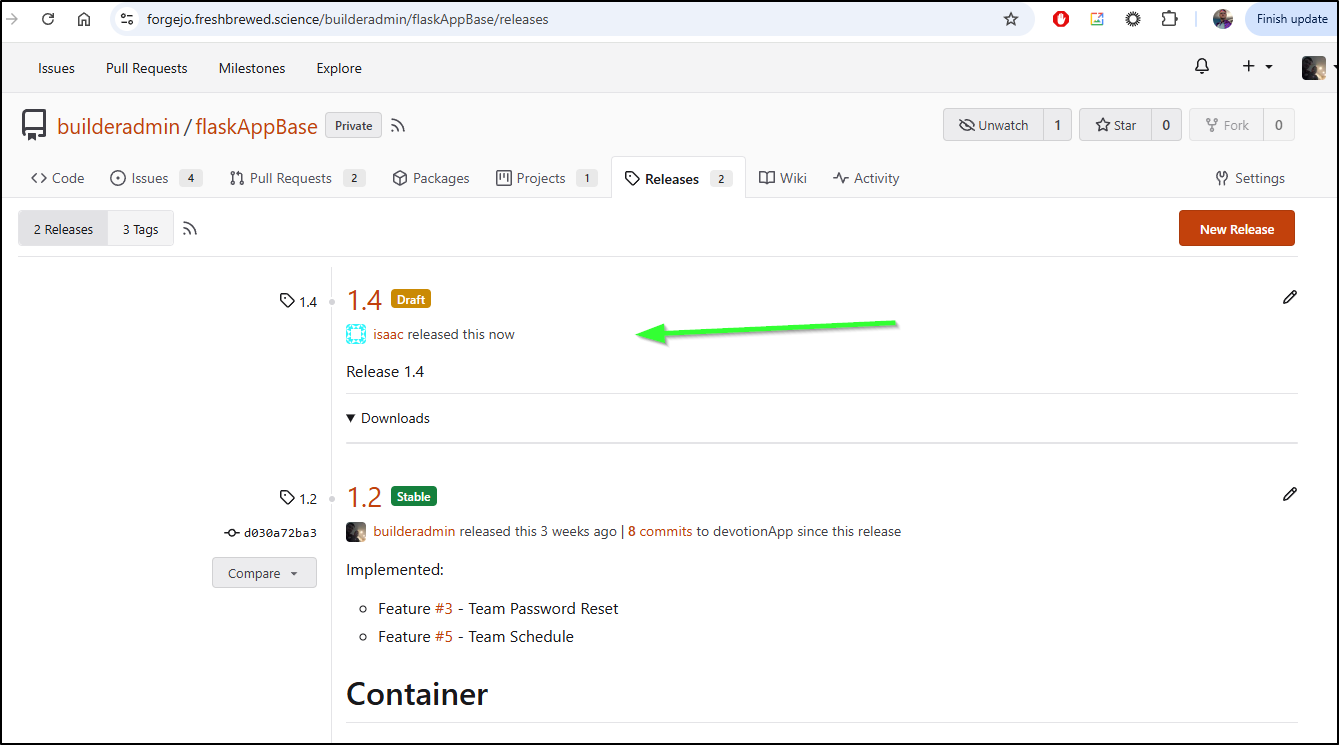

Or I could even create a new Release

curl -X 'POST' \
  'https://forgejo.freshbrewed.science/api/v1/repos/builderadmin/flaskAppBase/releases' \
  -H 'accept: application/json' \
  -H 'authorization: Basic asdfasdfasdfasdfasdf' \
  -H 'Content-Type: application/json' \
  -d '{
  "body": "Release 1.4",
  "draft": true,
  "name": "1.4",
  "prerelease": false,
  "tag_name": "1.4",
  "target_commitish": "48ef1200c6bb94e400b28fa33f5a264d31fcd0ce"
}'

On tagging

We really have a few ways to tag a remote repo.

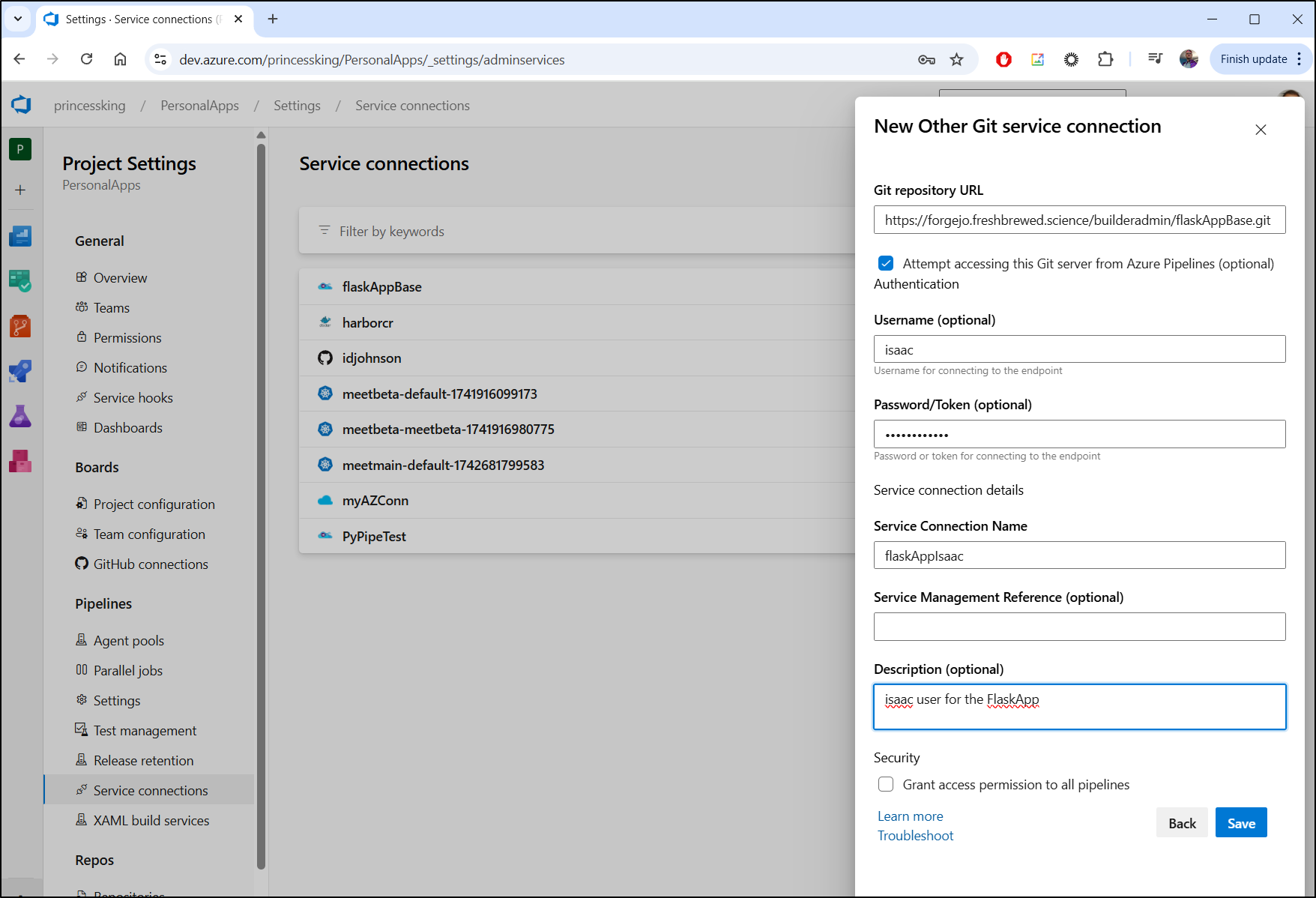

We could use a Service Connection with a user that has write access

Then clone the repo and CD in to tag and push

jobs:

- job: UpdateTags

displayName: "Update Release Tags"

steps:

- checkout: self # checkout the current repository

- checkout: PersonalApps # checkout the repository defined in resources

path: PersonalApps # path where to checkout the repository

- task: Bash@3

displayName: "Get Next Release Tag"

inputs:

targetType: 'inline'

script: 'curl --silent -X ''GET'' ''https://harbor.freshbrewed.science/api/v2.0/projects/freshbrewedprivate/repositories/meet/artifacts?page=1&page_size=100&with_tag=true&with_label=true&with_scan_overview=false&with_signature=false&with_immutable_status=false&with_accessory=false'' -H ''accept: application/json'' -H ''X-Accept-Vulnerabilities: application/vnd.security.vulnerability.report; version=1.1, application/vnd.scanner.adapter.vuln.report.harbor+json; version=1.0'' -H "authorization: Basic $(MYHARBORCRED)" | jq -r ''.[].tags[].name'' | sort -r |grep ''^1\.'' | head -n1 | awk -F. ''{$NF = $NF + 1;} 1'' OFS=. | tr -d ''\n'' | tee $(Build.ArtifactStagingDirectory)/RELVAL'

- task: Bash@3

displayName: "Show New Tag"

inputs:

targetType: 'inline'

script: 'cat $(Build.ArtifactStagingDirectory)/RELVAL'

- task: Bash@3

displayName: "Add tag to Forgejo"

inputs:

targetType: 'inline'

script: |

cd $(Agent.BuildDirectory)/PersonalApps/

git tag -a `cat $(Build.ArtifactStagingDirectory)/RELVAL` -m "Create Tag From Build"

git push origin `cat $(Build.ArtifactStagingDirectory)/RELVAL`

or use a REST call, although I would need to pass the SHA or branch

- task: Bash@3

displayName: "Update Tag on Forgejo"

inputs:

targetType: 'inline'

script: |

# Create JSON file using the release value

echo "{\"tag_name\": \"`cat $(Build.ArtifactStagingDirectory)/RELVAL`RC\", \"message\": \"my draft tag\", \"target\": \"devotionApp\"}" > $(Build.ArtifactStagingDirectory)/fjtag.json

# Use the JSON file in curl command

curl -X 'POST' \

'https://forgejo.freshbrewed.science/api/v1/repos/builderadmin/flaskAppBase/tags' \

-H 'accept: application/json' \

-H 'authorization: Basic $(FORGEJOAUTH)' \

-H 'Content-Type: application/json' \

--data @$(Build.ArtifactStagingDirectory)/fjtag.json
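The nested backslash escapes in that `echo` are easy to get wrong. As a sketch of a less fragile way to build the same payload, `printf` with a single-quoted format string keeps the JSON readable. The `RELVAL` value here is sample data; in the pipeline it would be read from the RELVAL file.

```shell
#!/bin/sh
# Sketch: build the Forgejo tag payload with printf instead of nested escapes.
# RELVAL is sample data for illustration.
RELVAL="1.5"
payload=$(printf '{"tag_name": "%sRC", "message": "my draft tag", "target": "devotionApp"}' "$RELVAL")
echo "$payload"
```

This is only safe when the substituted value contains no quotes or backslashes, which holds for simple version strings. For arbitrary text, something like `jq -n --arg` would be the safer route.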

Before I actually deploy to main, let’s attempt to create a “1.4” release using these new steps.

I’ve put them all into a “Brand” stage that follows release

pool:

vmImage: ubuntu-latest

resources:

repositories:

- repository: PersonalApps # identifier for use in pipeline

type: git # type of repository (GitHub, Git, Bitbucket)

name: PersonalApps/PersonalApps # project/repository

ref: devotionApp # ref name to checkout (optional)

trigger:

branches:

include:

- devotionApp

stages:

- stage: build

displayName: "Docker build and push"

jobs:

- job: build

steps:

- checkout: self # checkout the current repository

- checkout: PersonalApps # checkout the repository defined in resources

path: PersonalApps # path where to checkout the repository

- task: Docker@2

displayName: 'Build and Push the image'

inputs:

containerRegistry: 'harborcr'

repository: 'freshbrewedprivate/meet'

command: 'buildAndPush'

Dockerfile: '$(Agent.BuildDirectory)/PersonalApps/Dockerfile'

buildContext: '$(Agent.BuildDirectory)/PersonalApps'

tags: |

$(Build.BuildId)

latest

- stage: releasebeta

displayName: "Release to Beta"

dependsOn: build

jobs:

- deployment: deploybeta

displayName: "Deploy to Beta"

environment:

name: 'meetbeta.meetbeta'

strategy:

runOnce:

deploy:

steps:

- checkout: PersonalApps # checkout the repository defined in resources

path: PersonalApps # path where to checkout the repository

- task: HelmDeploy@1

inputs:

connectionType: 'Kubernetes Service Connection'

kubernetesServiceConnection: 'meetbeta-meetbeta-1741916980775'

namespace: 'default'

command: 'upgrade'

chartType: 'FilePath'

chartPath: '$(Agent.BuildDirectory)/PersonalApps/helm/devotion'

releaseName: 'meetbeta'

overrideValues: 'image.tag=$(Build.BuildID)'

arguments: '--reuse-values'

- task: HelmDeploy@1

inputs:

connectionType: 'Kubernetes Service Connection'

kubernetesServiceConnection: 'meetbeta-meetbeta-1741916980775'

namespace: 'default'

command: 'get'

arguments: 'values meetbeta'

- task: HelmDeploy@1

inputs:

connectionType: 'Kubernetes Service Connection'

kubernetesServiceConnection: 'meetbeta-meetbeta-1741916980775'

namespace: 'default'

command: 'ls'

- stage: releasemain

displayName: "Release to Main"

dependsOn: releasebeta

jobs:

- deployment: deploymain

displayName: "Deploy to Main"

environment:

name: 'meetmain.default'

strategy:

runOnce:

deploy:

steps:

- checkout: PersonalApps # checkout the repository defined in resources

path: PersonalApps # path where to checkout the repository

- task: HelmDeploy@1

inputs:

connectionType: 'Kubernetes Service Connection'

kubernetesServiceConnection: 'meetmain-default-1742681799583'

namespace: 'default'

command: 'get'

arguments: 'values meetmain'

- task: HelmDeploy@1

inputs:

connectionType: 'Kubernetes Service Connection'

kubernetesServiceConnection: 'meetmain-default-1742681799583'

namespace: 'default'

command: 'ls'

- stage: brandmain

displayName: "Brand Main"

dependsOn: releasemain

jobs:

- job: UpdateTags

displayName: "Update Release Tags"

steps:

- checkout: self # checkout the current repository

- checkout: PersonalApps # checkout the repository defined in resources

path: PersonalApps # path where to checkout the repository

- task: Bash@3

displayName: "Get Next Release Tag"

inputs:

targetType: 'inline'

script: |

curl --silent -X 'GET' \

'https://harbor.freshbrewed.science/api/v2.0/projects/freshbrewedprivate/repositories/meet/artifacts?page=1&page_size=100&with_tag=true&with_label=true&with_scan_overview=false&with_signature=false&with_immutable_status=false&with_accessory=false' \

-H 'accept: application/json' \

-H 'X-Accept-Vulnerabilities: application/vnd.security.vulnerability.report; version=1.1, application/vnd.scanner.adapter.vuln.report.harbor+json; version=1.0' \

-H "authorization: Basic $(MYHARBORCRED)" | jq -r '.[].tags[].name' | sort -r |grep '^1\.' | head -n1 | awk -F. '{$NF = $NF + 1;} 1' OFS=. | tr -d '\n' | tee $(Build.ArtifactStagingDirectory)/RELVAL

- task: Bash@3

displayName: "Show New Tag"

inputs:

targetType: 'inline'

script: 'cat $(Build.ArtifactStagingDirectory)/RELVAL'

- task: Bash@3

displayName: "Add tag to Forgejo"

inputs:

targetType: 'inline'

script: |

cd $(Agent.BuildDirectory)/PersonalApps/

git tag -a `cat $(Build.ArtifactStagingDirectory)/RELVAL` -m "Create Tag From Build"

git push origin `cat $(Build.ArtifactStagingDirectory)/RELVAL`

- task: Bash@3

displayName: "Update Tag on Harbor"

inputs:

targetType: 'inline'

script: |

# Create JSON file using the release value

echo "{\"name\": \"`cat $(Build.ArtifactStagingDirectory)/RELVAL`\", \"immutable\": true}" > $(Build.ArtifactStagingDirectory)/tag.json

# Use the JSON file in curl command

curl -X 'POST' \

'https://harbor.freshbrewed.science/api/v2.0/projects/freshbrewedprivate/repositories/meet/artifacts/$(Build.BuildId)/tags' \

-H 'accept: application/json' \

-H 'authorization: Basic $(MYHARBORCRED)' \

-H 'Content-Type: application/json' \

--data @$(Build.ArtifactStagingDirectory)/tag.json

- task: Bash@3

displayName: "Update Tag on Forgejo"

inputs:

targetType: 'inline'

script: |

# Create JSON file using the release value

echo "{\"tag_name\": \"`cat $(Build.ArtifactStagingDirectory)/RELVAL`RC\", \"message\": \"my draft tag\", \"target\": \"devotionApp\"}" > $(Build.ArtifactStagingDirectory)/fjtag.json

# Use the JSON file in curl command

curl -X 'POST' \

'https://forgejo.freshbrewed.science/api/v1/repos/builderadmin/flaskAppBase/tags' \

-H 'accept: application/json' \

-H 'authorization: Basic $(FORGEJOAUTH)' \

-H 'Content-Type: application/json' \

--data @$(Build.ArtifactStagingDirectory)/fjtag.json

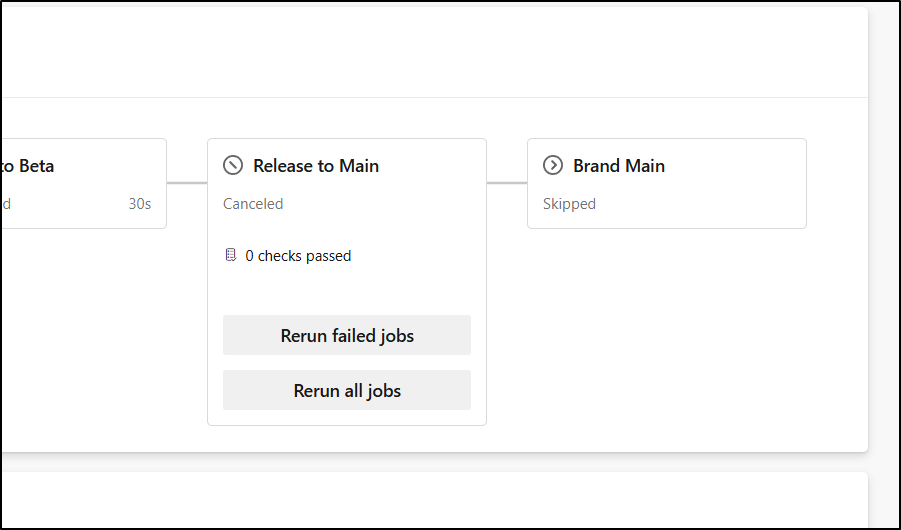

I tested, but it was already 8:30am so I got caught in my gate

I can disable the check

and see it is now disabled

Though, it does not unblock the current run

Though I can cancel then “re-run failed jobs” to retrigger

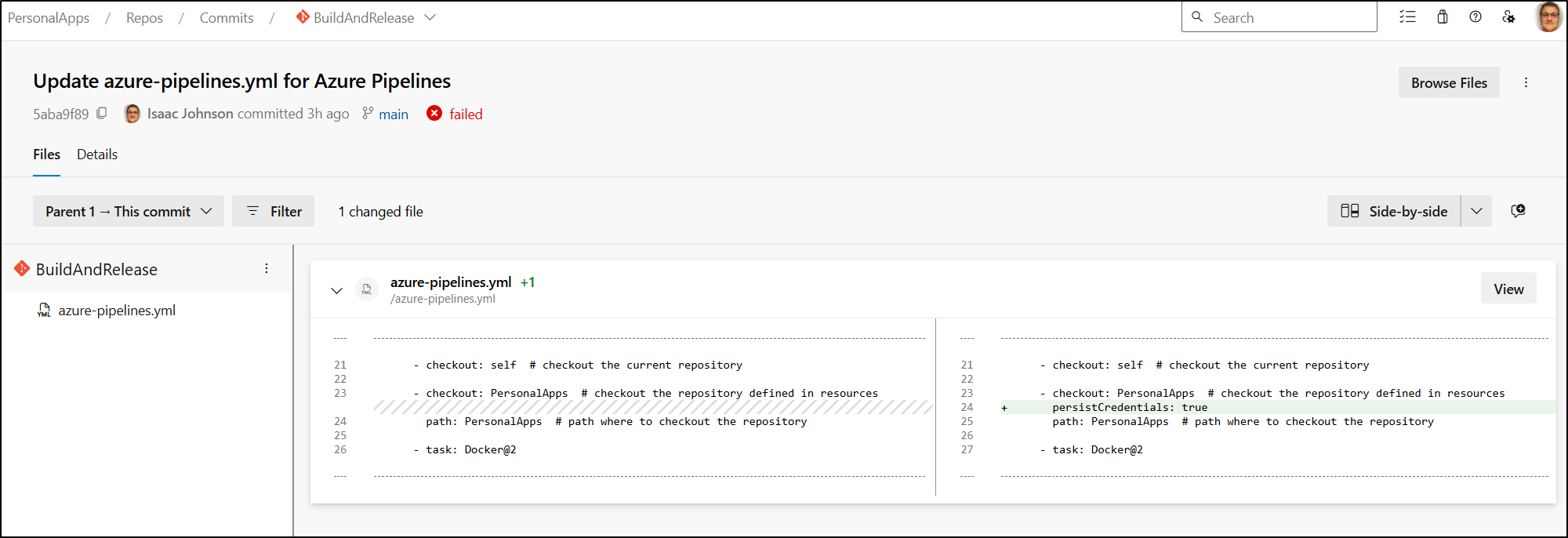

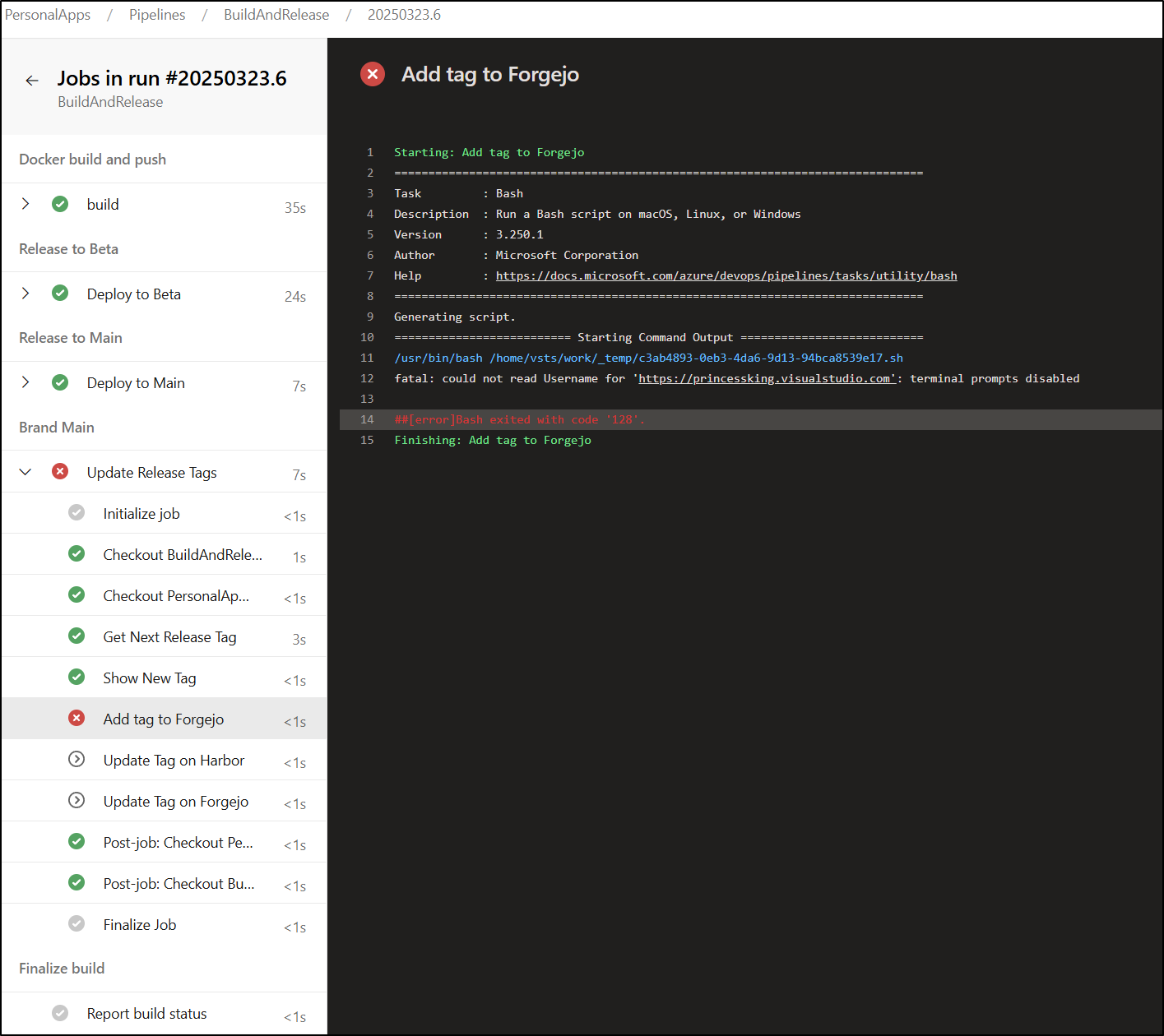

Unfortunately, even when I persist the credentials and set the GIT username and email

It fails to see the correct repo and attempts to push to AzDO instead of Forgejo, because the checked-out copy is the Azure Repos mirror and its origin remote points there

Unfortunately, I cannot use the other Git in a Checkout step.

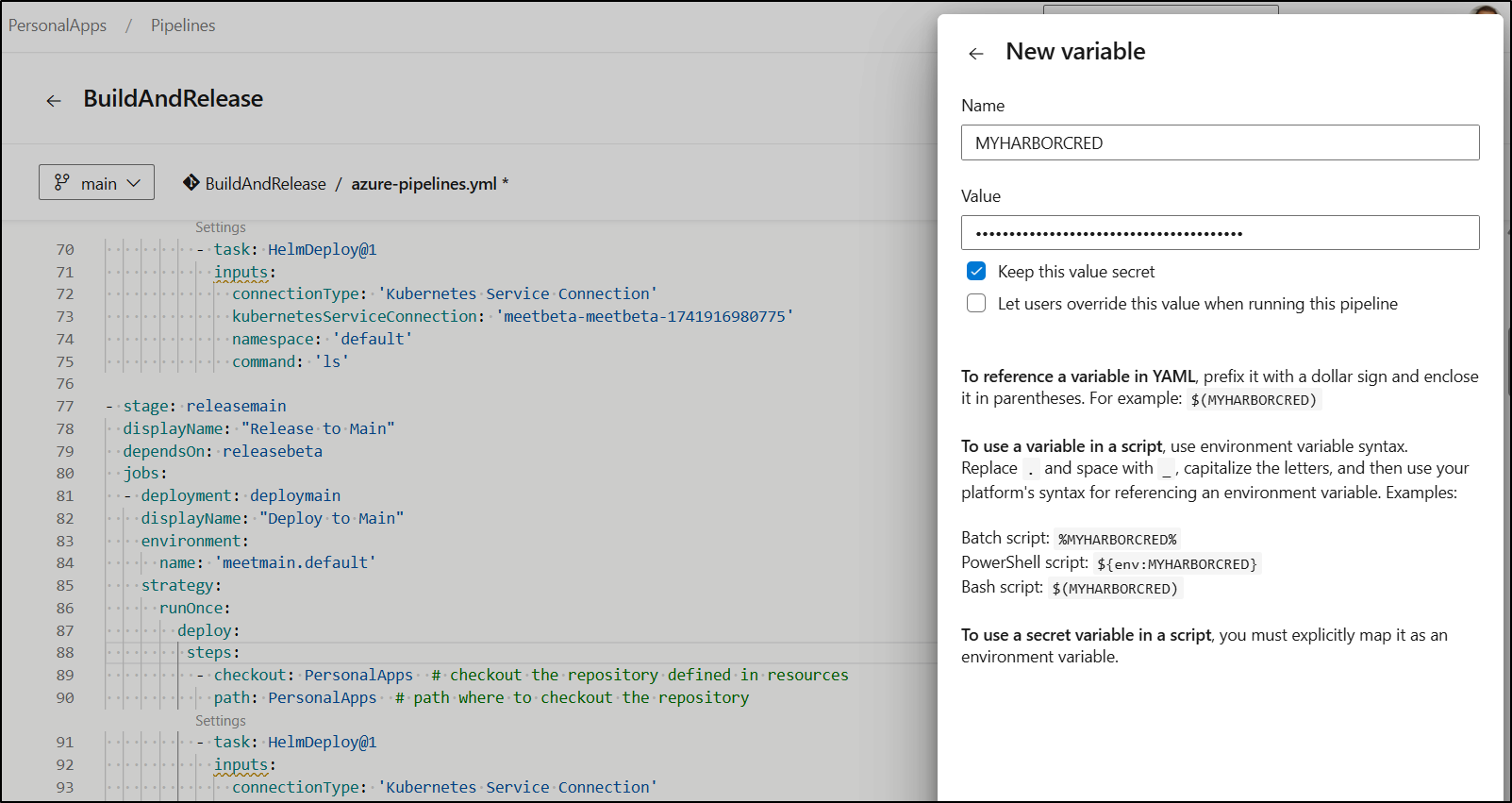

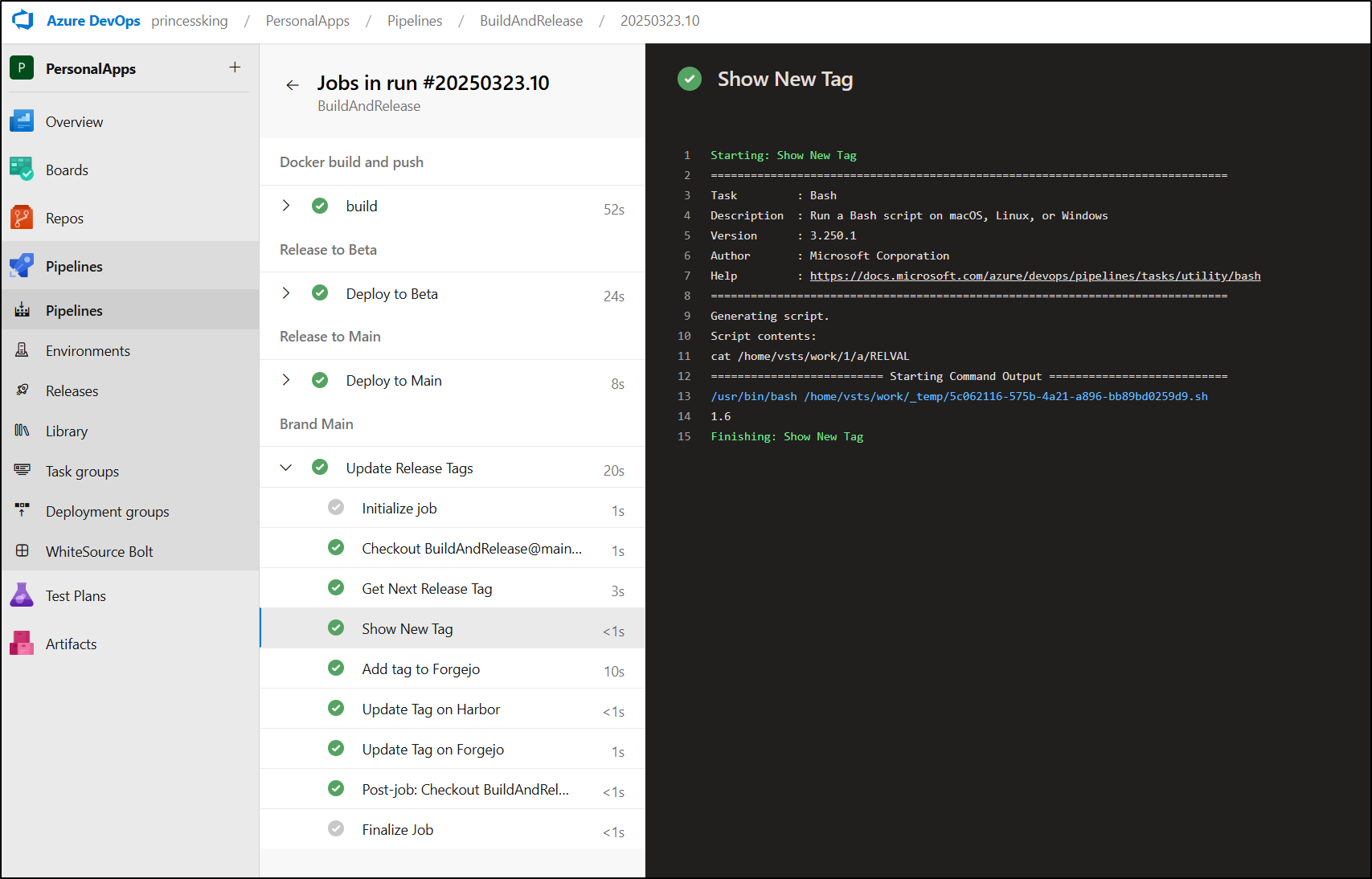

That said, I did solve the issue by using GIT credentials as a secret variable and doing a local clone

pool:

vmImage: ubuntu-latest

resources:

repositories:

- repository: PersonalApps # identifier for use in pipeline

type: git # type of repository (GitHub, Git, Bitbucket)

name: PersonalApps/PersonalApps # project/repository

ref: devotionApp # ref name to checkout (optional)

trigger:

branches:

include:

- devotionApp

stages:

- stage: build

displayName: "Docker build and push"

jobs:

- job: build

steps:

- checkout: self # checkout the current repository

- checkout: PersonalApps # checkout the repository defined in resources

persistCredentials: true

path: PersonalApps # path where to checkout the repository

- task: Docker@2

displayName: 'Build and Push the image'

inputs:

containerRegistry: 'harborcr'

repository: 'freshbrewedprivate/meet'

command: 'buildAndPush'

Dockerfile: '$(Agent.BuildDirectory)/PersonalApps/Dockerfile'

buildContext: '$(Agent.BuildDirectory)/PersonalApps'

tags: |

$(Build.BuildId)

latest

- stage: releasebeta

displayName: "Release to Beta"

dependsOn: build

jobs:

- deployment: deploybeta

displayName: "Deploy to Beta"

environment:

name: 'meetbeta.meetbeta'

strategy:

runOnce:

deploy:

steps:

- checkout: PersonalApps # checkout the repository defined in resources

path: PersonalApps # path where to checkout the repository

- task: HelmDeploy@1

inputs:

connectionType: 'Kubernetes Service Connection'

kubernetesServiceConnection: 'meetbeta-meetbeta-1741916980775'

namespace: 'default'

command: 'upgrade'

chartType: 'FilePath'

chartPath: '$(Agent.BuildDirectory)/PersonalApps/helm/devotion'

releaseName: 'meetbeta'

overrideValues: 'image.tag=$(Build.BuildID)'

arguments: '--reuse-values'

- task: HelmDeploy@1

inputs:

connectionType: 'Kubernetes Service Connection'

kubernetesServiceConnection: 'meetbeta-meetbeta-1741916980775'

namespace: 'default'

command: 'get'

arguments: 'values meetbeta'

- task: HelmDeploy@1

inputs:

connectionType: 'Kubernetes Service Connection'

kubernetesServiceConnection: 'meetbeta-meetbeta-1741916980775'

namespace: 'default'

command: 'ls'

- stage: releasemain

displayName: "Release to Main"

dependsOn: releasebeta

jobs:

- deployment: deploymain

displayName: "Deploy to Main"

environment:

name: 'meetmain.default'

strategy:

runOnce:

deploy:

steps:

- checkout: PersonalApps # checkout the repository defined in resources

path: PersonalApps # path where to checkout the repository

- task: HelmDeploy@1

inputs:

connectionType: 'Kubernetes Service Connection'

kubernetesServiceConnection: 'meetmain-default-1742681799583'

namespace: 'default'

command: 'get'

arguments: 'values meetmain'

- task: HelmDeploy@1

inputs:

connectionType: 'Kubernetes Service Connection'

kubernetesServiceConnection: 'meetmain-default-1742681799583'

namespace: 'default'

command: 'ls'

- stage: brandmain

displayName: "Brand Main"

dependsOn: releasemain

jobs:

- job: UpdateTags

displayName: "Update Release Tags"

steps:

- checkout: self # checkout the current repository

- task: Bash@3

displayName: "Get Next Release Tag"

inputs:

targetType: 'inline'

script: |

curl --silent -X 'GET' \

'https://harbor.freshbrewed.science/api/v2.0/projects/freshbrewedprivate/repositories/meet/artifacts?page=1&page_size=100&with_tag=true&with_label=true&with_scan_overview=false&with_signature=false&with_immutable_status=false&with_accessory=false' \

-H 'accept: application/json' \

-H 'X-Accept-Vulnerabilities: application/vnd.security.vulnerability.report; version=1.1, application/vnd.scanner.adapter.vuln.report.harbor+json; version=1.0' \

-H "authorization: Basic $(MYHARBORCRED)" | jq -r '.[].tags[].name' | sort -r |grep '^1\.' | head -n1 | awk -F. '{$NF = $NF + 1;} 1' OFS=. | tr -d '\n' | tee $(Build.ArtifactStagingDirectory)/RELVAL

- task: Bash@3

displayName: "Show New Tag"

inputs:

targetType: 'inline'

script: 'cat $(Build.ArtifactStagingDirectory)/RELVAL'

- task: Bash@3

displayName: "Add tag to Forgejo"

inputs:

targetType: 'inline'

script: |

cd $(Agent.BuildDirectory)

pwd

git clone 'https://$(FJGIT)@forgejo.freshbrewed.science/builderadmin/flaskAppBase.git' flaskAppFJ

cd flaskAppFJ

git checkout devotionApp

git remote show origin

git config --global user.email "isaac@freshbrewed.science"

git config --global user.name "Isaac Johnson"

git tag -a `cat $(Build.ArtifactStagingDirectory)/RELVAL` -m "Create Tag From Build"

git push origin `cat $(Build.ArtifactStagingDirectory)/RELVAL`

- task: Bash@3

displayName: "Update Tag on Harbor"

inputs:

targetType: 'inline'

script: |

# Create JSON file using the release value

echo "{\"name\": \"`cat $(Build.ArtifactStagingDirectory)/RELVAL`\", \"immutable\": true}" > $(Build.ArtifactStagingDirectory)/tag.json

# Use the JSON file in curl command

curl -X 'POST' \

'https://harbor.freshbrewed.science/api/v2.0/projects/freshbrewedprivate/repositories/meet/artifacts/$(Build.BuildId)/tags' \

-H 'accept: application/json' \

-H 'authorization: Basic $(MYHARBORCRED)' \

-H 'Content-Type: application/json' \

--data @$(Build.ArtifactStagingDirectory)/tag.json

- task: Bash@3

displayName: "Update Tag on Forgejo"

inputs:

targetType: 'inline'

script: |

# Create JSON file using the release value

echo "{\"tag_name\": \"`cat $(Build.ArtifactStagingDirectory)/RELVAL`RC\", \"message\": \"my draft tag\", \"target\": \"devotionApp\"}" > $(Build.ArtifactStagingDirectory)/fjtag.json

# Use the JSON file in curl command

curl -X 'POST' \

'https://forgejo.freshbrewed.science/api/v1/repos/builderadmin/flaskAppBase/tags' \

-H 'accept: application/json' \

-H 'authorization: Basic $(FORGEJOAUTH)' \

-H 'Content-Type: application/json' \

--data @$(Build.ArtifactStagingDirectory)/fjtag.json
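The "Get Next Release Tag" step is the least obvious part of this stage, so here is a small sketch of the version bump on its own, fed with sample tag names rather than a live Harbor response. It swaps in `sort -V` (version sort, a GNU coreutils extension) for the pipeline's `sort -r | head -n1`. That swap is my assumption about what would behave better over time, not what the pipeline above runs.

```shell
#!/bin/sh
# Sketch: derive the next 1.x tag from a list of tag names on stdin.
# Uses sort -V as an assumption; the pipeline itself uses "sort -r | head -n1".
next_tag() {
  grep '^1\.' | sort -V | tail -n1 | awk -F. '{$NF = $NF + 1} 1' OFS=.
}

# Sample tag list, similar in shape to the names pulled from the Harbor API
printf '%s\n' latest 21344 1.4 1.5 | next_tag
```

The `awk` part splits on dots, adds one to the last field and rejoins with dots, so "1.5" becomes "1.6". The reason to consider a version sort is that a plain reverse text sort would likely rank "1.9" above "1.10", and the step would then keep proposing "1.10" once it already exists.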

Here we can see it handling tag “1.6”

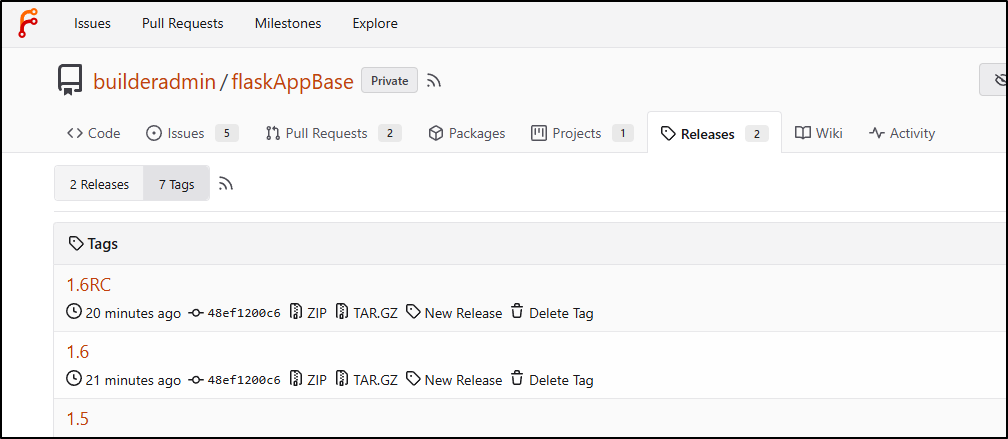

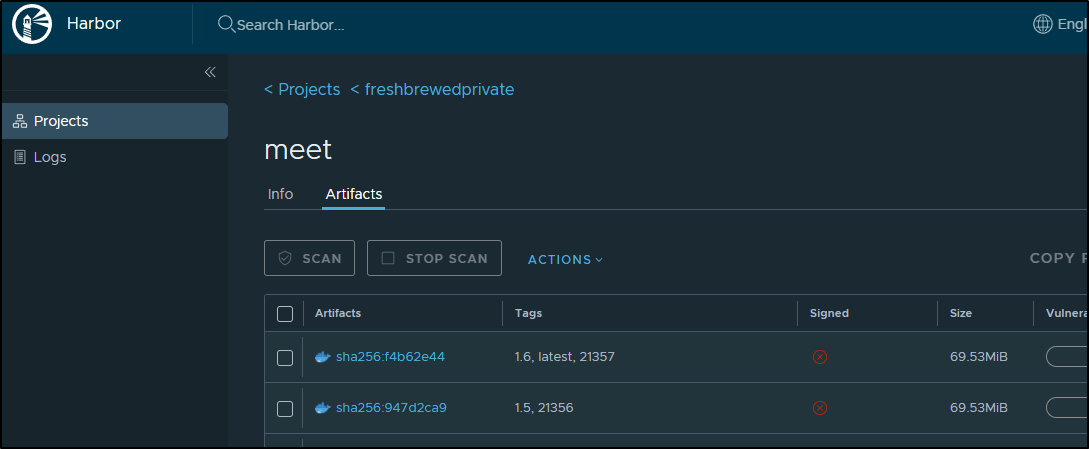

We see the “1.6” tag applied in source (both ways, the “RC” came from the REST API)

And Harbor is updated as well with a container image tag

Final setup

Now, I don’t want to release every time. I would rather gate on an approval for when there are real features.

Also, it would seem to me that doing the official tag before release is more proper. If I were to release, say “1.7” and it had problems, then I would create a “1.7.1” to fix.

That means I want some say in whether enough content is there to create a release.

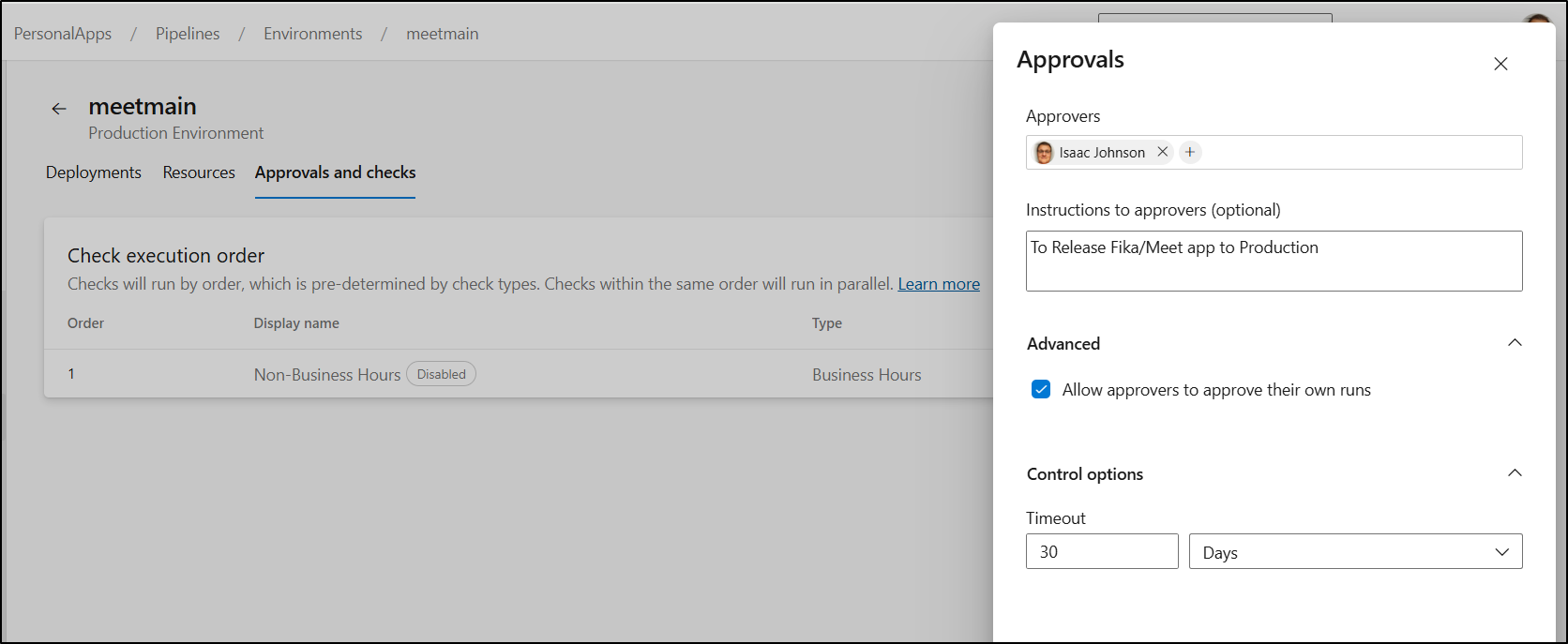

An “Approvals” check is a good option for this kind of gate. We can add that to our “meetmain” Environment

I’ll set myself as approver (allowing self-approval) and give it a 30d timeout

I debated re-enabling the “Non-Business Hours” check. The consequence is that if I really wanted to push out a release midday, I would be blocking myself. I decided that for now, for this app, it doesn’t make sense to enable it

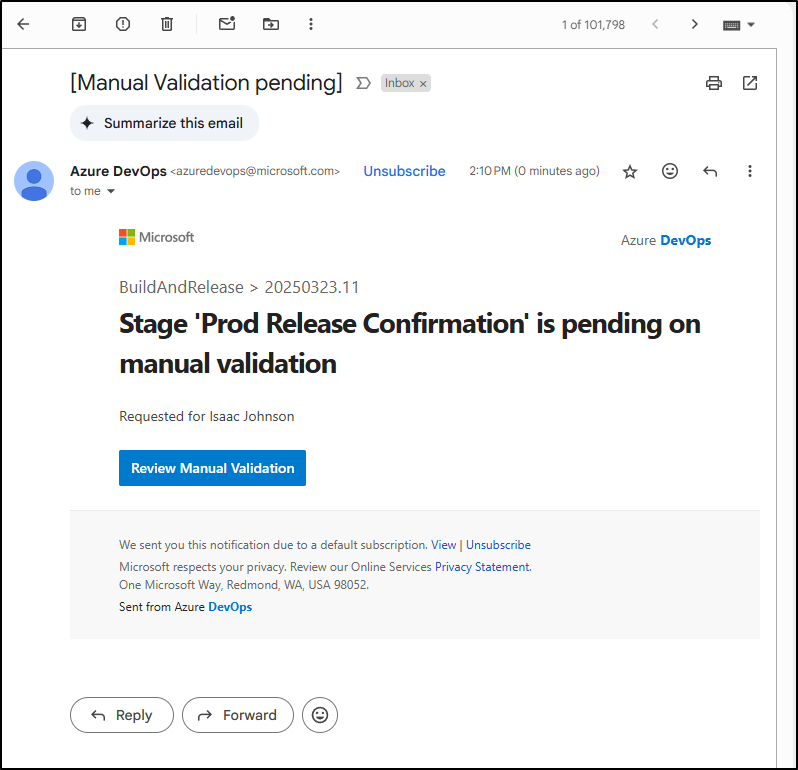

To pause before the branding stage, I could either model the gate as a deployment job so it can use the Environment and its Approvals check, or just add a stage that runs the ManualValidation task:

- stage: gaterelease
  dependsOn: releasebeta
  displayName: "Prod Release Confirmation"
  jobs:
  - job: waitForValidation
    displayName: Wait for external validation
    pool: server
    timeoutInMinutes: 10080 # job times out in 7 days
    steps:
    - task: ManualValidation@0
      inputs:
        notifyUsers: 'isaac.johnson@gmail.com'
        instructions: 'Release new Fika/Meet?'
        onTimeout: 'reject'

I chose the latter for now. The full pipeline now looks like this:

pool:
  vmImage: ubuntu-latest
resources:
  repositories:
  - repository: PersonalApps # identifier for use in pipeline
    type: git # type of repository (GitHub, Git, Bitbucket)
    name: PersonalApps/PersonalApps # project/repository
    ref: devotionApp # ref name to checkout (optional)
trigger:
  branches:
    include:
    - devotionApp
stages:
- stage: build
  displayName: "Docker build and push"
  jobs:
  - job: build
    steps:
    - checkout: self # checkout the current repository
    - checkout: PersonalApps # checkout the repository defined in resources
      persistCredentials: true
      path: PersonalApps # path where to checkout the repository
    - task: Docker@2
      displayName: 'Build and Push the image'
      inputs:
        containerRegistry: 'harborcr'
        repository: 'freshbrewedprivate/meet'
        command: 'buildAndPush'
        Dockerfile: '$(Agent.BuildDirectory)/PersonalApps/Dockerfile'
        buildContext: '$(Agent.BuildDirectory)/PersonalApps'
        tags: |
          $(Build.BuildId)
          latest
- stage: releasebeta
  displayName: "Release to Beta"
  dependsOn: build
  jobs:
  - deployment: deploybeta
    displayName: "Deploy to Beta"
    environment:
      name: 'meetbeta.meetbeta'
    strategy:
      runOnce:
        deploy:
          steps:
          - checkout: PersonalApps # checkout the repository defined in resources
            path: PersonalApps # path where to checkout the repository
          - task: HelmDeploy@1
            inputs:
              connectionType: 'Kubernetes Service Connection'
              kubernetesServiceConnection: 'meetbeta-meetbeta-1741916980775'
              namespace: 'default'
              command: 'upgrade'
              chartType: 'FilePath'
              chartPath: '$(Agent.BuildDirectory)/PersonalApps/helm/devotion'
              releaseName: 'meetbeta'
              overrideValues: 'image.tag=$(Build.BuildID)'
              arguments: '--reuse-values'
          - task: HelmDeploy@1
            inputs:
              connectionType: 'Kubernetes Service Connection'
              kubernetesServiceConnection: 'meetbeta-meetbeta-1741916980775'
              namespace: 'default'
              command: 'get'
              arguments: 'values meetbeta'
          - task: HelmDeploy@1
            inputs:
              connectionType: 'Kubernetes Service Connection'
              kubernetesServiceConnection: 'meetbeta-meetbeta-1741916980775'
              namespace: 'default'
              command: 'ls'
- stage: gaterelease
  dependsOn: releasebeta
  displayName: "Prod Release Confirmation"
  jobs:
  - job: waitForValidation
    displayName: Wait for external validation
    pool: server
    timeoutInMinutes: 10080 # job times out in 7 days
    steps:
    - task: ManualValidation@0
      inputs:
        notifyUsers: 'isaac.johnson@gmail.com'
        instructions: 'Release new Fika/Meet?'
        onTimeout: 'reject'
- stage: brandmain
  displayName: "Brand Main"
  dependsOn: gaterelease
  jobs:
  - job: UpdateTags
    displayName: "Update Release Tags"
    steps:
    - checkout: self # checkout the current repository
    - task: Bash@3
      displayName: "Get Next Release Tag"
      inputs:
        targetType: 'inline'
        script: |
          curl --silent -X 'GET' \
            'https://harbor.freshbrewed.science/api/v2.0/projects/freshbrewedprivate/repositories/meet/artifacts?page=1&page_size=100&with_tag=true&with_label=true&with_scan_overview=false&with_signature=false&with_immutable_status=false&with_accessory=false' \
            -H 'accept: application/json' \
            -H 'X-Accept-Vulnerabilities: application/vnd.security.vulnerability.report; version=1.1, application/vnd.scanner.adapter.vuln.report.harbor+json; version=1.0' \
            -H "authorization: Basic $(MYHARBORCRED)" | jq -r '.[].tags[].name' | sort -r |grep '^1\.' | head -n1 | awk -F. '{$NF = $NF + 1;} 1' OFS=. | tr -d '\n' | tee $(Build.ArtifactStagingDirectory)/RELVAL
    - task: Bash@3
      displayName: "Show New Tag"
      inputs:
        targetType: 'inline'
        script: 'cat $(Build.ArtifactStagingDirectory)/RELVAL'
    - task: Bash@3
      displayName: "Add tag to Forgejo"
      inputs:
        targetType: 'inline'
        script: |
          cd $(Agent.BuildDirectory)
          pwd
          git clone 'https://$(FJGIT)@forgejo.freshbrewed.science/builderadmin/flaskAppBase.git' flaskAppFJ
          cd flaskAppFJ
          git checkout devotionApp
          git remote show origin
          git config --global user.email "isaac@freshbrewed.science"
          git config --global user.name "Isaac Johnson"
          git tag -a `cat $(Build.ArtifactStagingDirectory)/RELVAL` -m "Create Tag From Build"
          git push origin `cat $(Build.ArtifactStagingDirectory)/RELVAL`
    - task: Bash@3
      displayName: "Update Tag on Harbor"
      inputs:
        targetType: 'inline'
        script: |
          # Create JSON file using the release value
          echo "{\"name\": \"`cat $(Build.ArtifactStagingDirectory)/RELVAL`\", \"immutable\": true}" > $(Build.ArtifactStagingDirectory)/tag.json
          # Use the JSON file in curl command
          curl -X 'POST' \
            'https://harbor.freshbrewed.science/api/v2.0/projects/freshbrewedprivate/repositories/meet/artifacts/$(Build.BuildId)/tags' \
            -H 'accept: application/json' \
            -H 'authorization: Basic $(MYHARBORCRED)' \
            -H 'Content-Type: application/json' \
            --data @$(Build.ArtifactStagingDirectory)/tag.json
    - task: Bash@3
      displayName: "Update Tag on Forgejo"
      inputs:
        targetType: 'inline'
        script: |
          # Create JSON file using the release value
          echo "{\"tag_name\": \"`cat $(Build.ArtifactStagingDirectory)/RELVAL`RC\", \"message\": \"my draft tag\", \"target\": \"devotionApp\"}" > $(Build.ArtifactStagingDirectory)/fjtag.json
          # Use the JSON file in curl command
          curl -X 'POST' \
            'https://forgejo.freshbrewed.science/api/v1/repos/builderadmin/flaskAppBase/tags' \
            -H 'accept: application/json' \
            -H 'authorization: Basic $(FORGEJOAUTH)' \
            -H 'Content-Type: application/json' \
            --data @$(Build.ArtifactStagingDirectory)/fjtag.json
- stage: releasemain
  displayName: "Release to Main"
  dependsOn: brandmain
  jobs:
  - deployment: deploymain
    displayName: "Deploy to Main"
    environment:
      name: 'meetmain.default'
    strategy:
      runOnce:
        deploy:
          steps:
          - checkout: PersonalApps # checkout the repository defined in resources
            path: PersonalApps # path where to checkout the repository
          - task: HelmDeploy@1
            inputs:
              connectionType: 'Kubernetes Service Connection'
              kubernetesServiceConnection: 'meetmain-default-1742681799583'
              namespace: 'default'
              command: 'upgrade'
              chartType: 'FilePath'
              chartPath: '$(Agent.BuildDirectory)/PersonalApps/helm/devotion'
              releaseName: 'meetmain'
              overrideValues: 'image.tag=$(Build.BuildID)'
              arguments: '--reuse-values'
          - task: HelmDeploy@1
            inputs:
              connectionType: 'Kubernetes Service Connection'
              kubernetesServiceConnection: 'meetmain-default-1742681799583'
              namespace: 'default'
              command: 'get'
              arguments: 'values meetmain'
          - task: HelmDeploy@1
            inputs:
              connectionType: 'Kubernetes Service Connection'
              kubernetesServiceConnection: 'meetmain-default-1742681799583'
              namespace: 'default'
              command: 'ls'

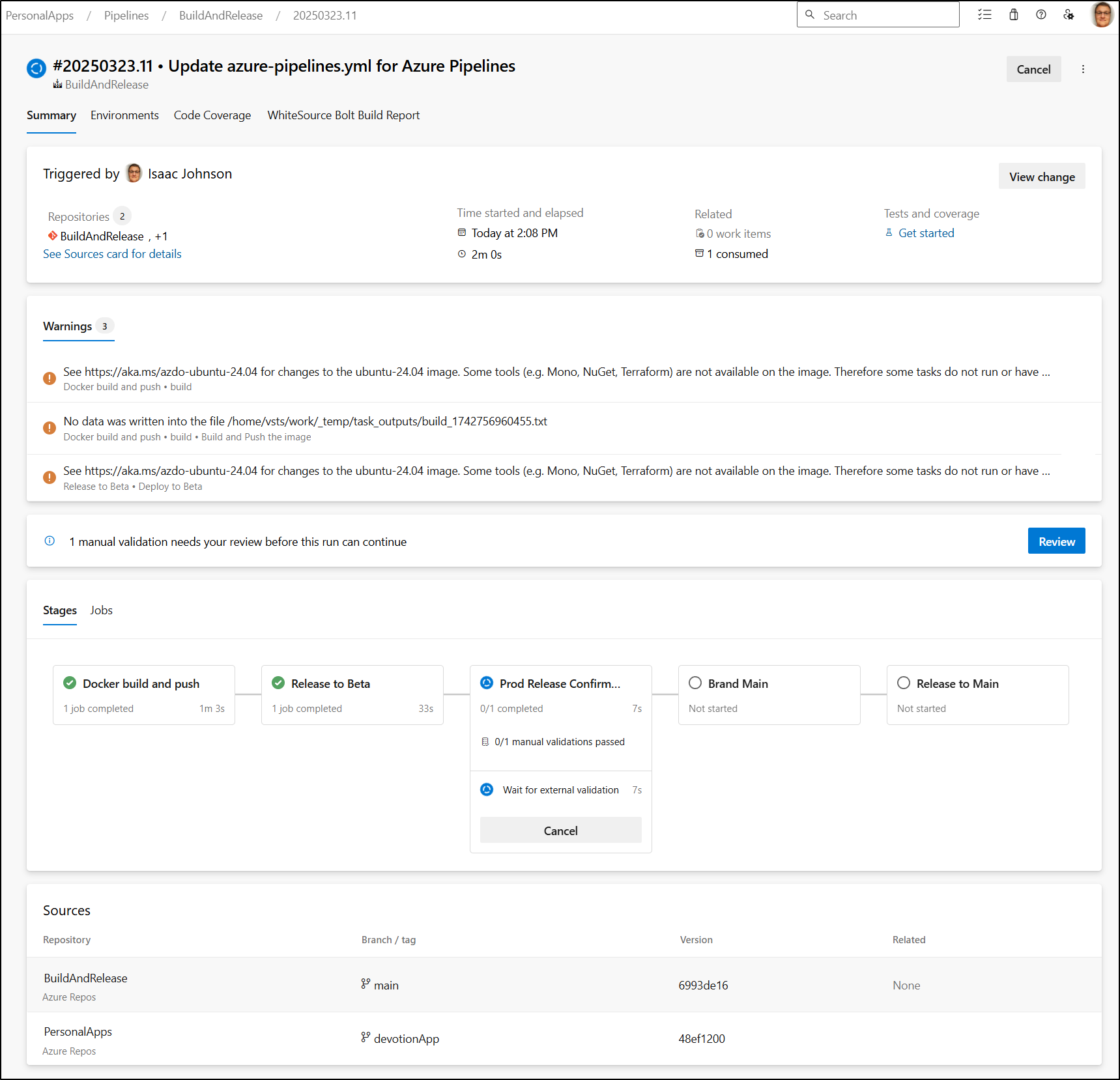

We can see it deploys to beta but stops short of branding and releasing to main.

And I have a gate now waiting on confirmation

Summary

In following up on our first Azure DevOps Gating post, I wanted to implement what I described on a real release pipeline.

This meant moving first from the Classic UI pipeline to YAML. As my Git repo was not in GitHub, Bitbucket or Azure Repos, I needed to get clever about how I went about this. I could have just moved from Forgejo to GitHub or Azure Repos, but to keep Forgejo as the origin, I merely set up an Azure Repo as a push-mirror copy of my Forgejo repository.

Once that was done, I had another big choice to make: Do I create the YAML pipeline in Forgejo and let it sync to Azure Repos (and then get used in Pipelines)? Or do I abstract the “Build and Release” pipeline to its “own thing”? I chose the latter as I felt it kept the Azure Repo a pure sync copy.

While not entirely necessary, I decided to leverage Azure Pipelines “Environments” to create Kubernetes service connections that could be used with Helm. I didn’t mention this earlier, but part of the reason was that I just didn’t see a lot of good examples out there of Kubernetes Azure Pipelines Environments that used Helm (they all seemed to show demos using YAML manifests instead).

Lastly, we covered a multi-stage release with “beta” (Staging) and “main” (Production). I covered multiple ways to push a tag to GIT (Forgejo) and to my container registry (Harbor). I showed example code for pushing a GIT Release, but for now, I want to do those manually as I’m still building out some of the steps and docs around them. I might cover that in a future post.

I hope you found something useful above. It was pretty fun to write this up over the last week.