Published: Mar 18, 2025 by Isaac Johnson

Recently it was put upon me to come up with a variety of approaches to handle Azure DevOps gating on a myriad of related Azure Pipelines. There are a lot of ways to handle this situation.

Today I’ll walk through using Environment gates with scripted approvals via the Azure DevOps REST API for automated releases. I’ll also cover the “REST” gate used in Environments and some of its limitations (with a full working example).

Lastly, I’ll walk through using a ticket, such as a Release ticket in Azure DevOps Work Items (though JIRA could be used just as well). Here we’ll find tickets of a given type and parse their details. I’ll also touch on how to search a container registry (CR) like Harbor for tags that match a release pattern, and how to tag released artifacts (which we would do upon completion).

I do not build out the entire Work Item Automation (WIA) approach. If that is desired, I can do a follow-up to tie together the last couple of bits and bobs, but I think this is more than enough to walk through the idea and provide the jq examples one would need.

Let’s dig in!

Azure DevOps setup

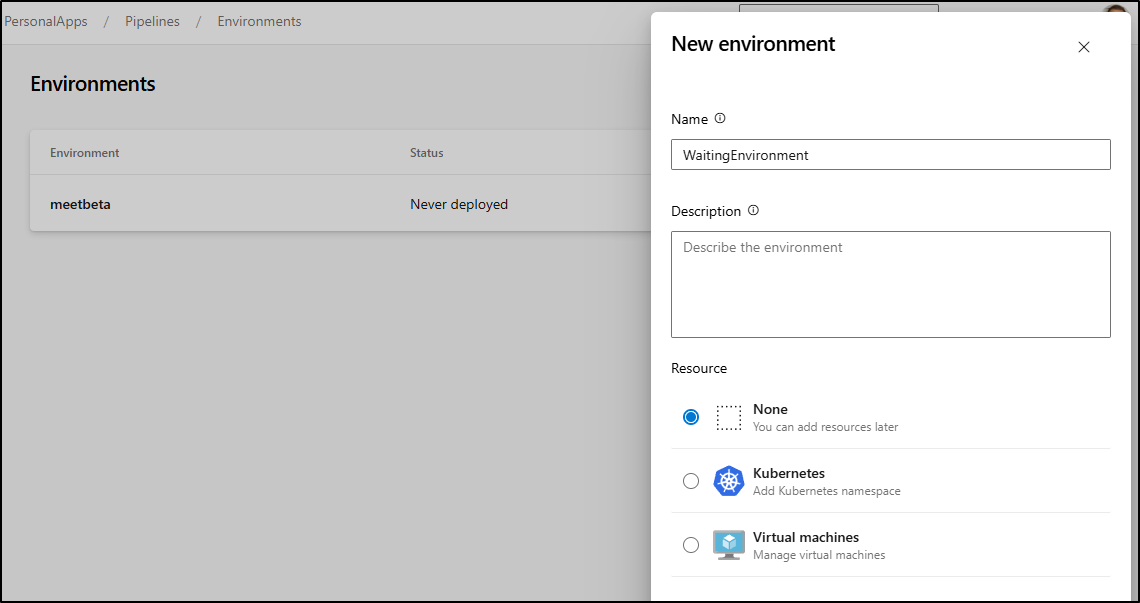

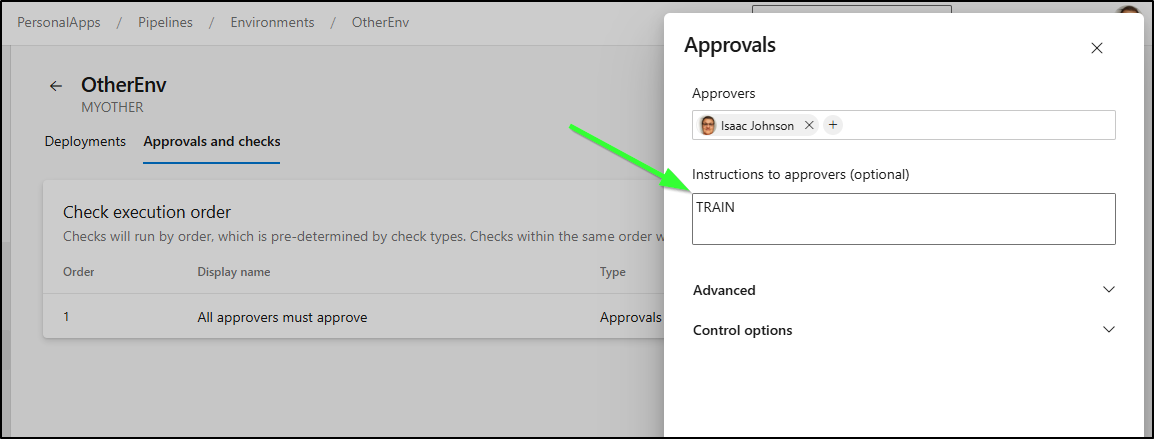

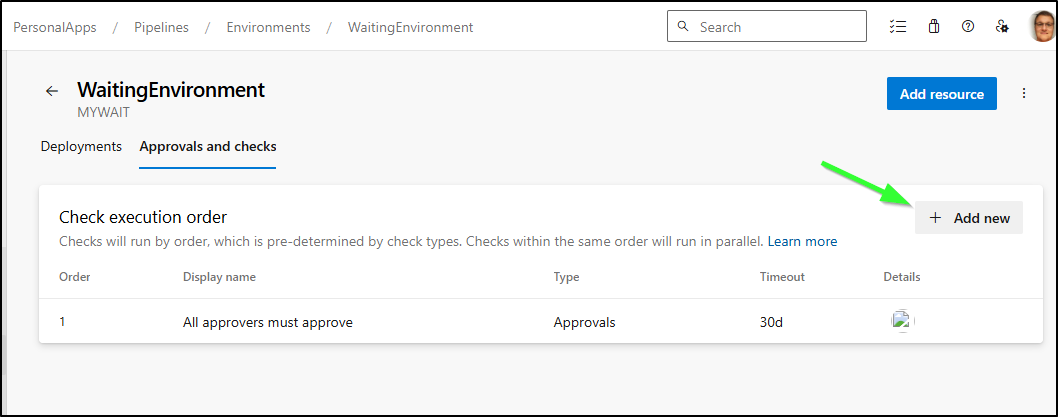

First, we need an environment we can use to gate pipelines.

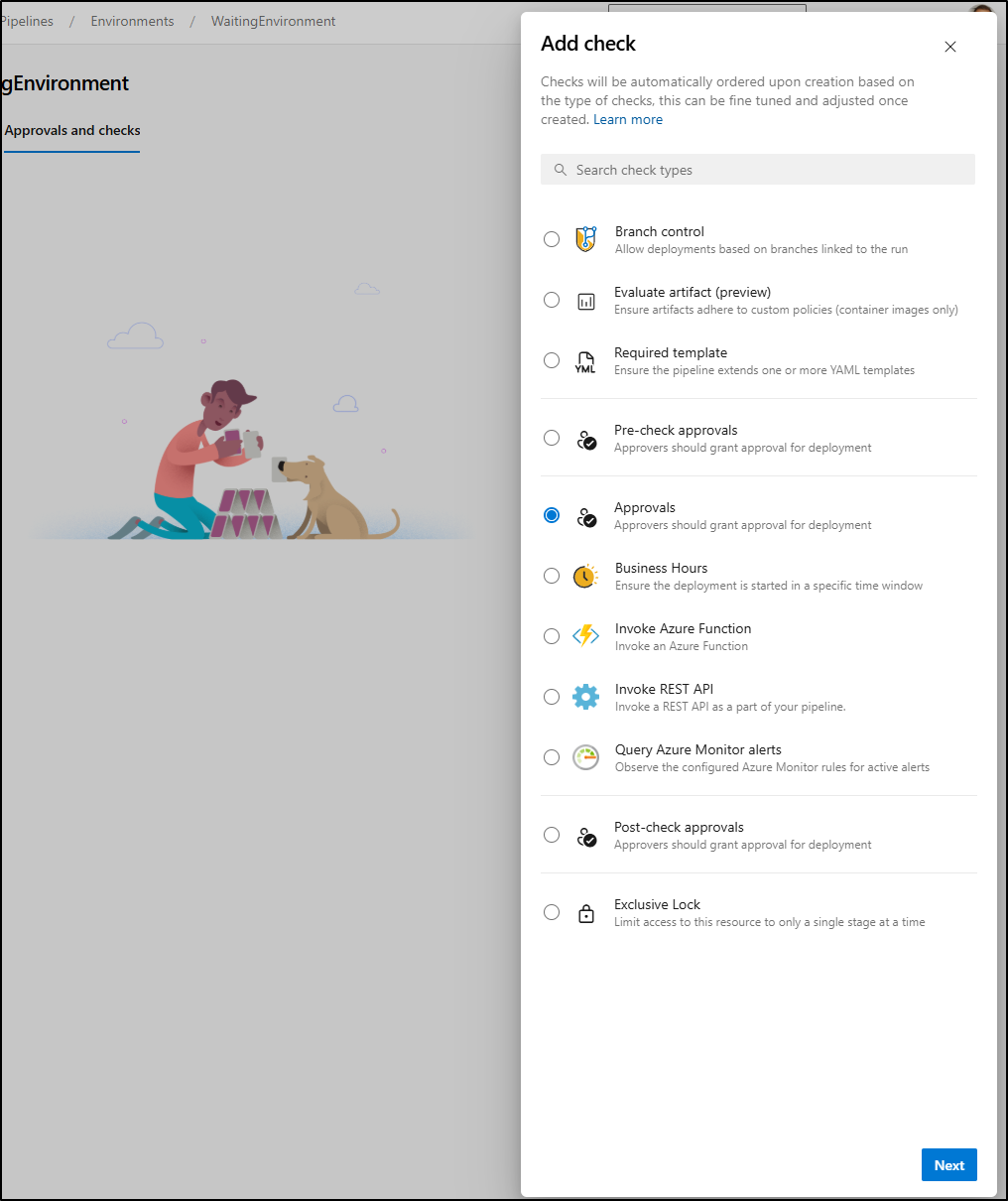

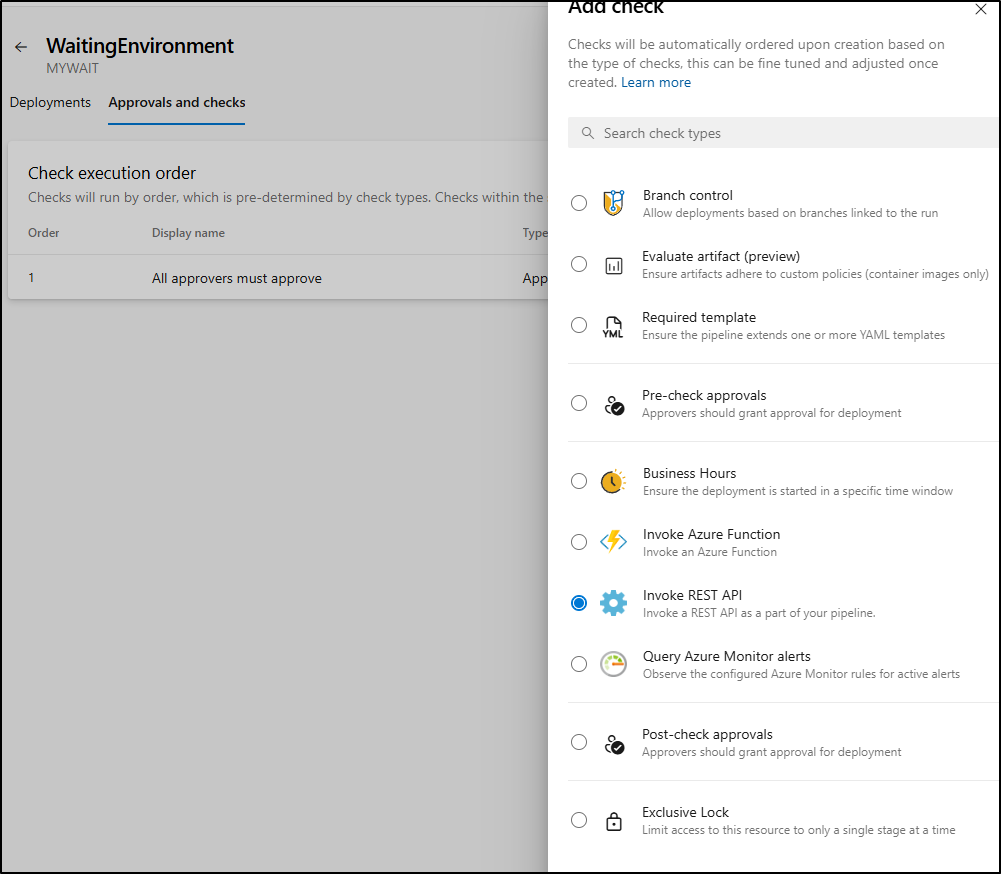

Next, I want to gate here, so I’ll start with something simple: Approval checks.

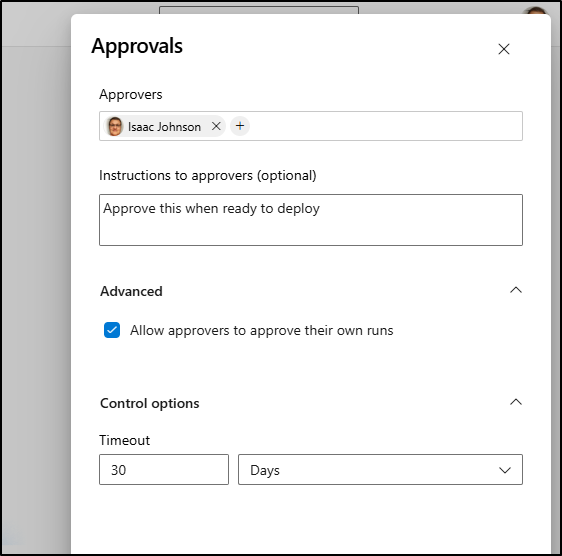

We can name groups and users, as well as set a timeout, such as expiring this run after 30 days.

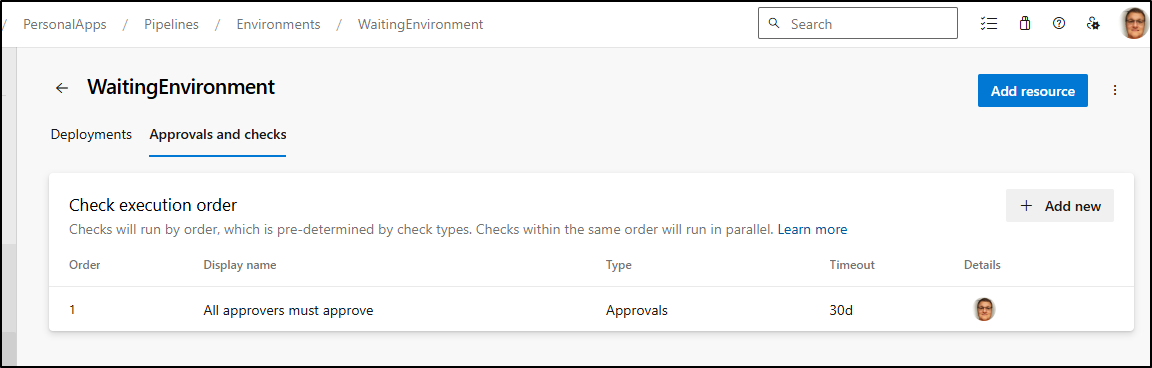

We can now see we have a gate with an approver and a timeout:

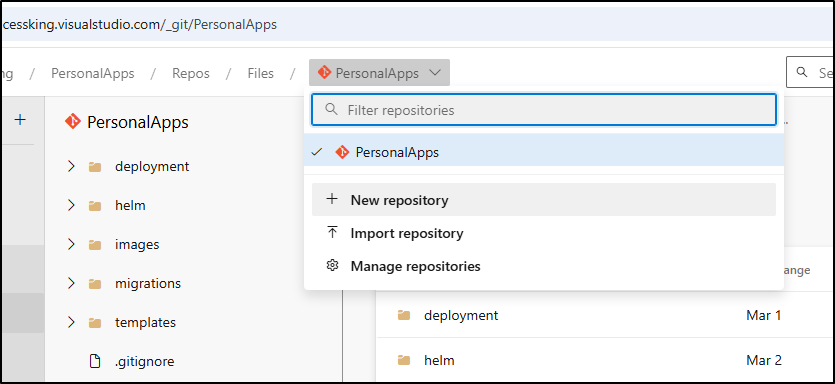

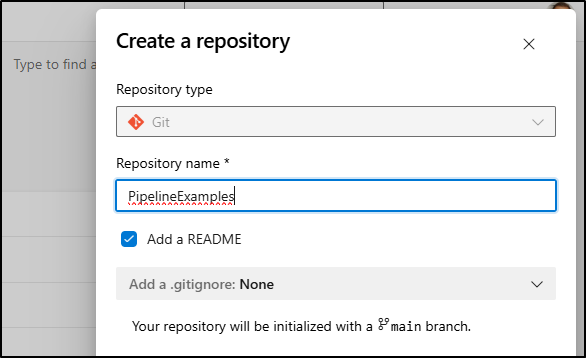

Now let’s build out some pipelines. I’m going to create a new repo to hold these.

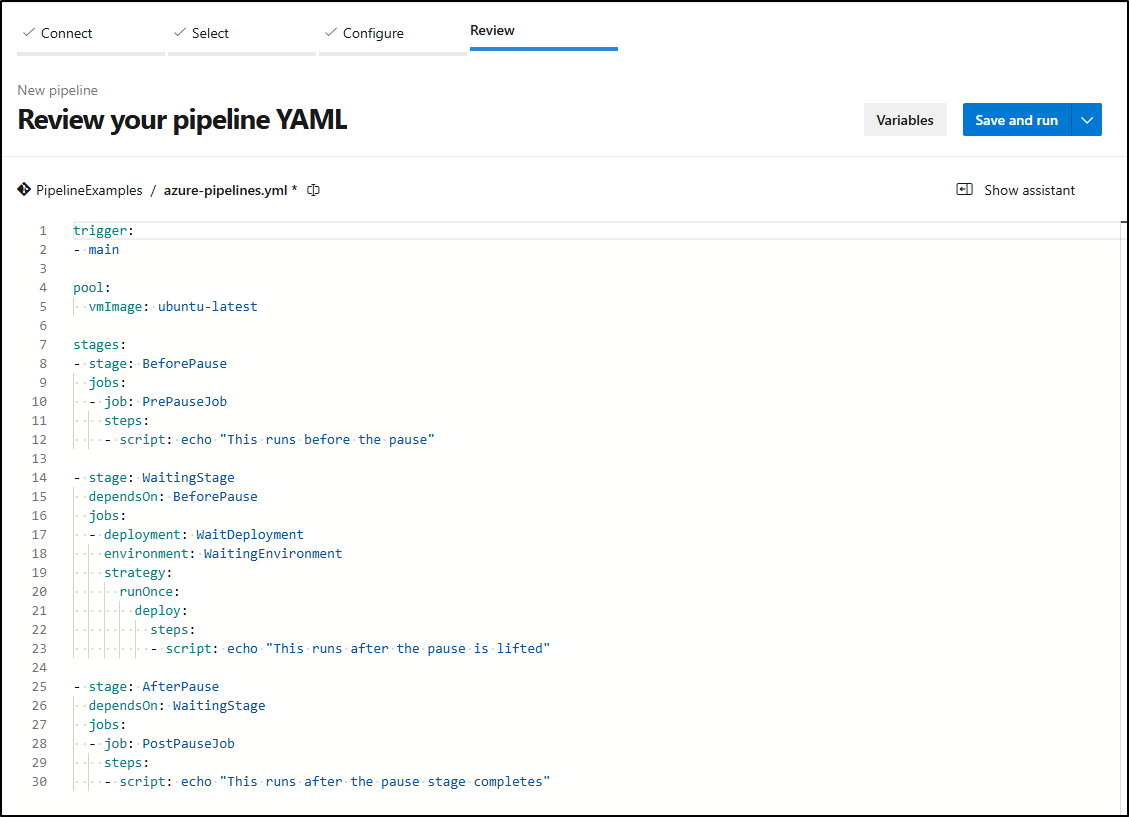

I can then use “Setup build” to create a pipeline.

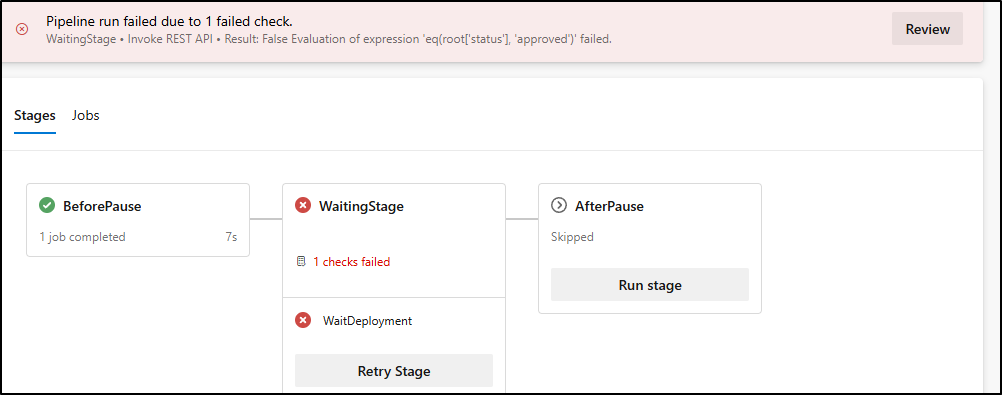

The key parts are in the YAML here:

```yaml
stages:
- stage: BeforePause
  jobs:
  - job: PrePauseJob
    steps:
    - script: echo "This runs before the pause"

- stage: WaitingStage
  dependsOn: BeforePause
  jobs:
  - deployment: WaitDeployment
    environment: WaitingEnvironment
    strategy:
      runOnce:
        deploy:
          steps:
          - script: echo "This runs after the pause is lifted"

- stage: AfterPause
  dependsOn: WaitingStage
  jobs:
  - job: PostPauseJob
    steps:
    - script: echo "This runs after the pause stage completes"
```

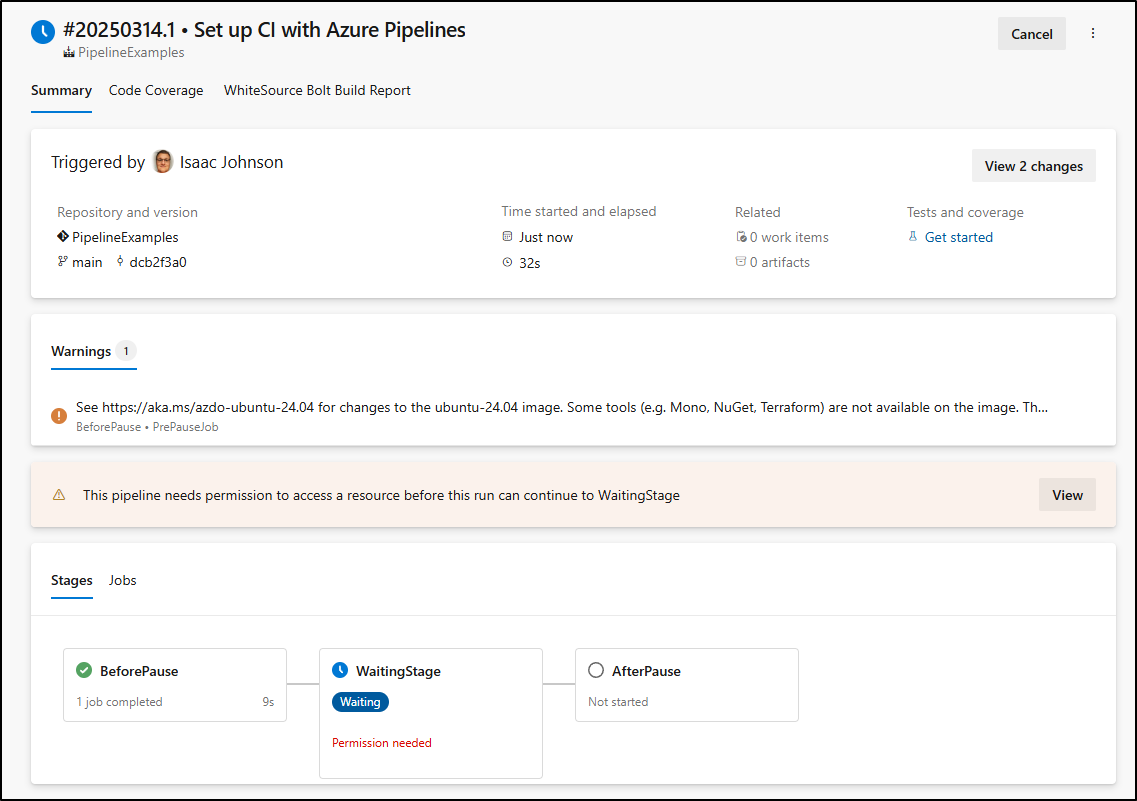

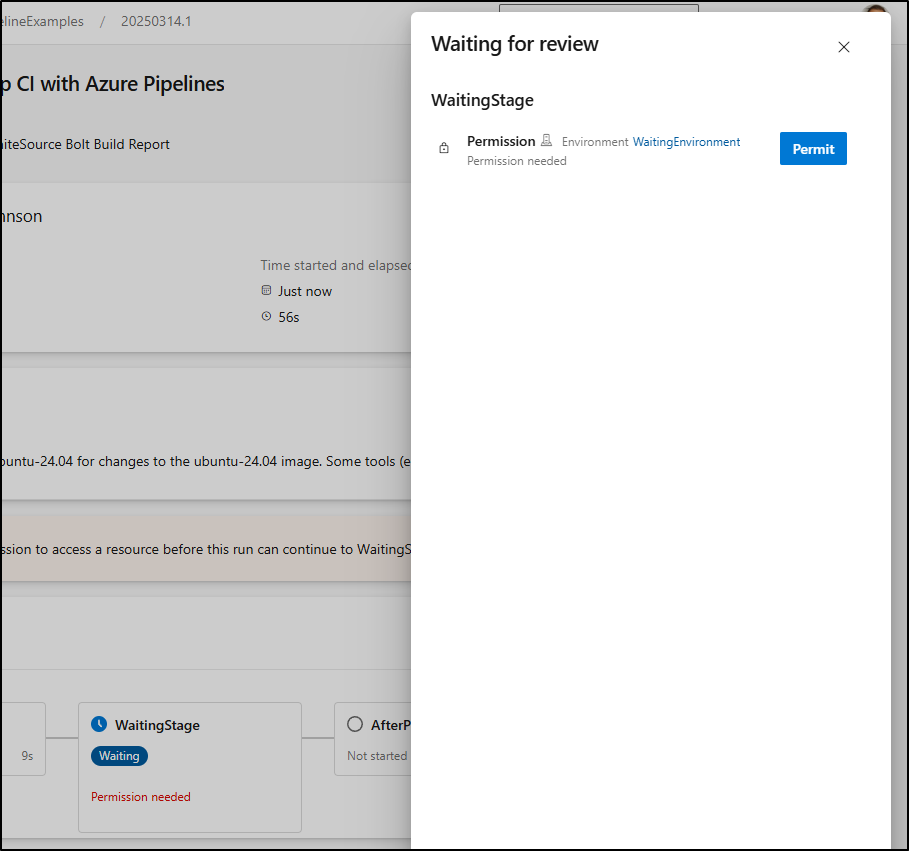

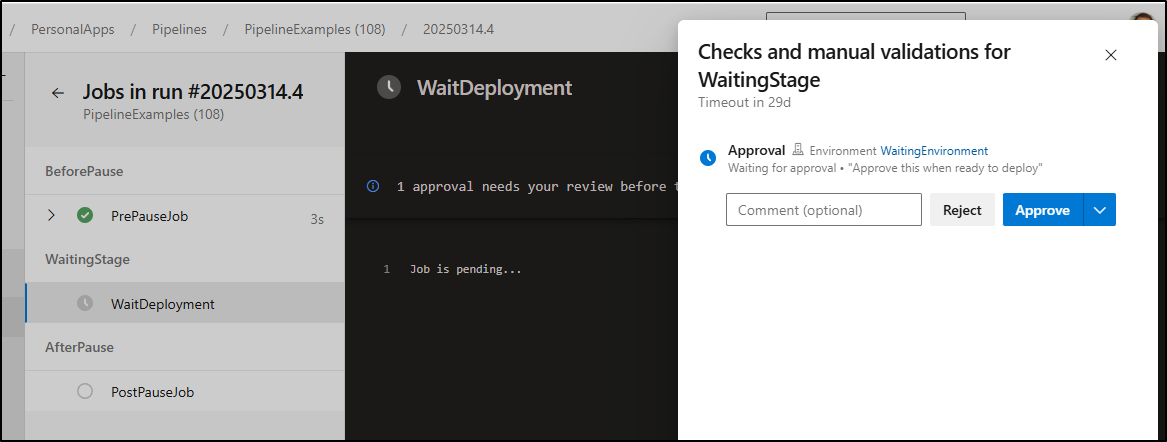

When saved, we can see it first needs permission to access a resource:

Even after I permit access

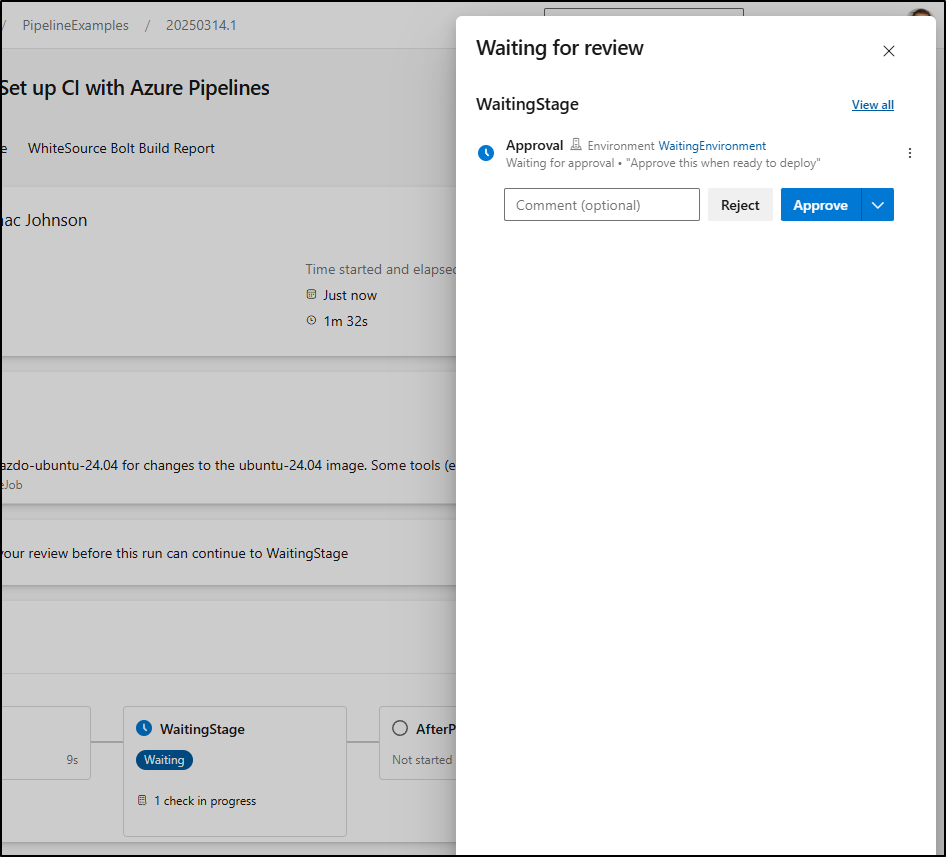

I still need to approve the gate:

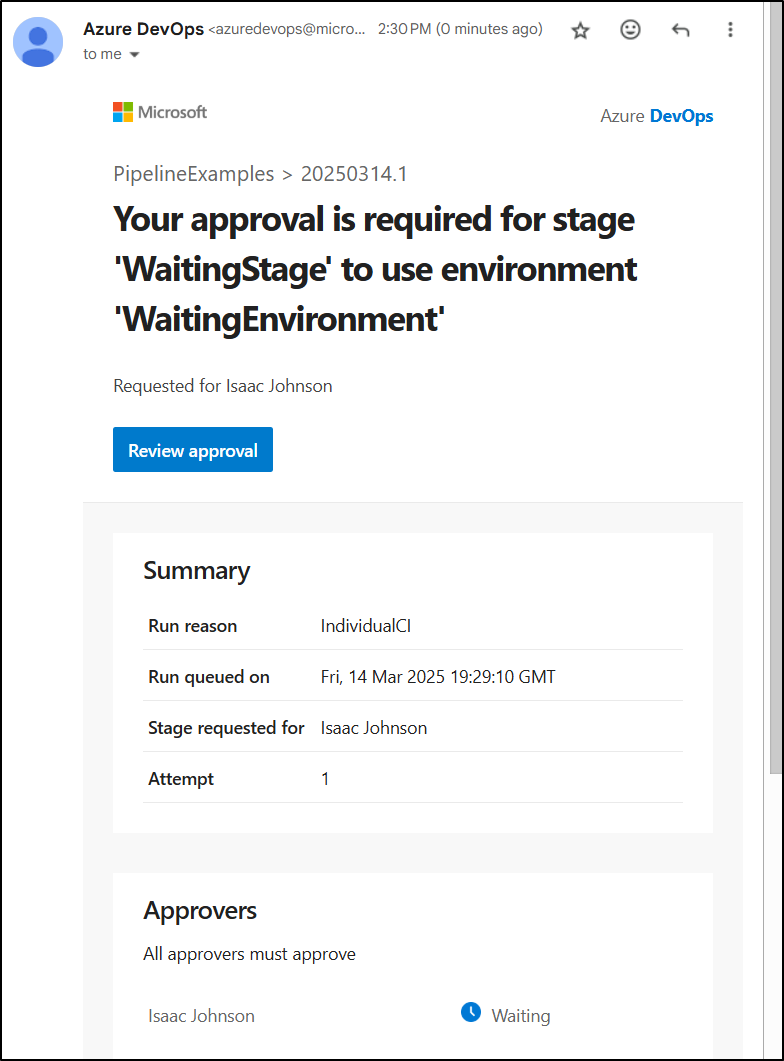

(and I also get emails for gate approvals)

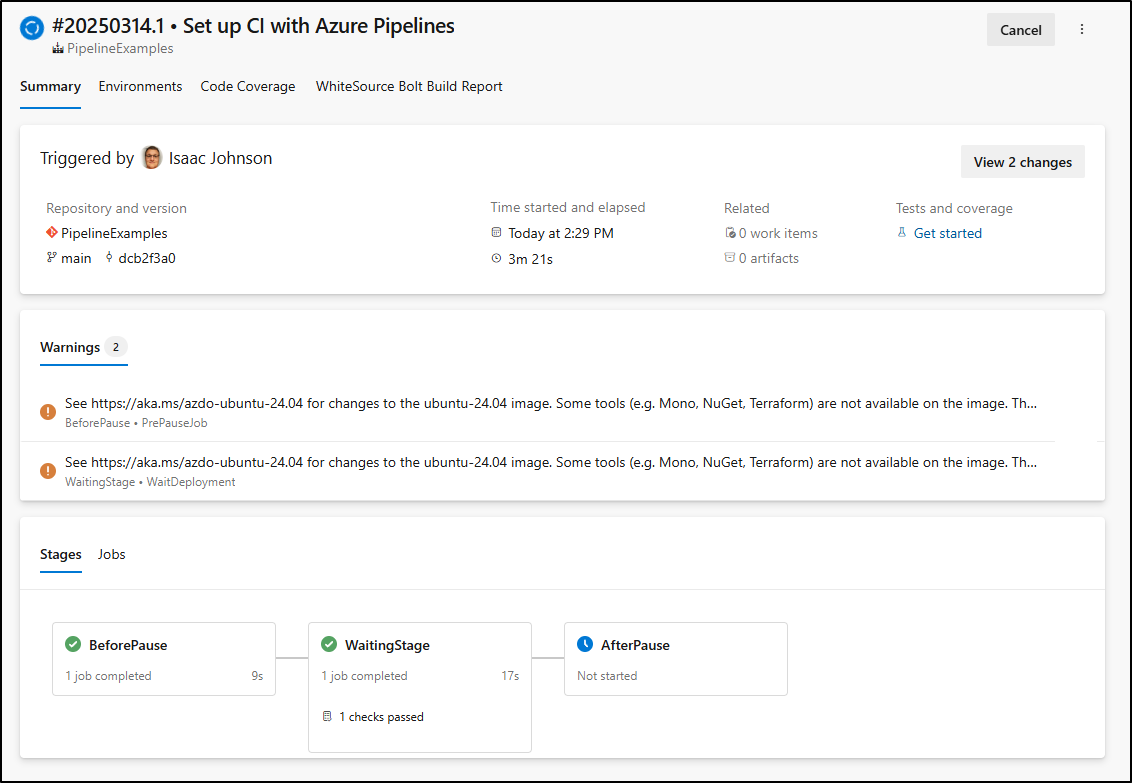

Once approved, it moves on:

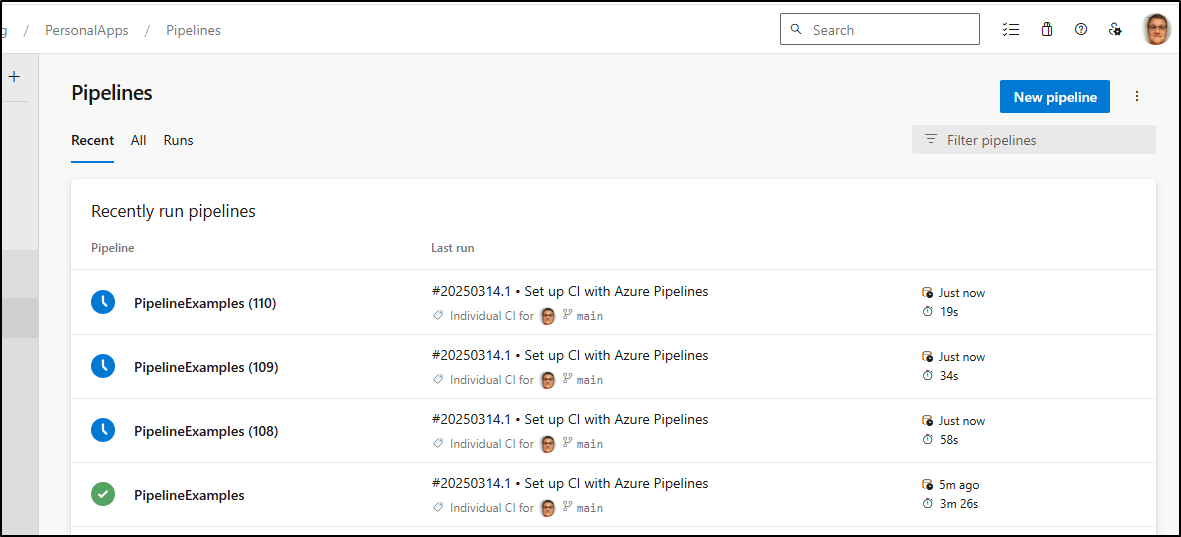

I added three more pipelines, identical to the first.

The thing is, if we had to try and release all of these at the same time, it might be a challenge.

Imagine having many pipelines that would need to be “released” through the same gate at the same time.

Approval scripting

Despite a few AIs insisting there is a “runs/checks” API endpoint for Azure DevOps, I can assure you, based on the documentation, that there is not.

That said, we can create a Bash script that finds all pending approvals and approves them on our behalf:

```bash
#!/bin/bash

# Configuration
ORG="princessking"
PROJECT="PersonalApps"
PAT="asdfsadfasfsadfasdf"   # placeholder; use a PAT allowed to approve pipeline checks

# Base64 encode the PAT (empty username, PAT as the password)
AUTH_HEADER="Authorization: Basic $(echo -n ":$PAT" | base64 -w 0)"

# Find all pending approvals in the project
echo "Get approvals"
APPROVALS_QUERY_URL="https://dev.azure.com/$ORG/$PROJECT/_apis/pipelines/approvals?state=pending&api-version=7.1"
APPROVALS_QUERY_RESP=$(curl -s -H "$AUTH_HEADER" "$APPROVALS_QUERY_URL")
APPROVAL_IDS=$(echo "$APPROVALS_QUERY_RESP" | jq -r '.value[] | .id')

for APPROVAL_ID in $APPROVAL_IDS; do
  # Get this approval instance (\$ keeps the literal $expand query parameter)
  echo "Getting details for Approval $APPROVAL_ID"
  APPROVAL_URL="https://dev.azure.com/$ORG/$PROJECT/_apis/pipelines/approvals/$APPROVAL_ID?\$expand=steps&api-version=7.1"
  APPROVAL_RESP=$(curl -s -H "$AUTH_HEADER" "$APPROVAL_URL")
  #echo "$APPROVAL_RESP"

  echo "Approving"
  # Point approval.json at this approval ID, then PATCH it
  sed -i "s/approvalId\": \".*/approvalId\": \"$APPROVAL_ID\",/g" approval.json
  curl -s -H "$AUTH_HEADER" -H "Content-Type: application/json" -X PATCH \
    "https://dev.azure.com/$ORG/$PROJECT/_apis/pipelines/approvals?api-version=7.1" \
    -d @approval.json
done

exit
```
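The script PATCHes approval.json, whose contents aren't shown here. As a minimal sketch, assuming the Approvals - Update request body documented for this endpoint (a JSON array of objects with approvalId, status and comment), the body could be built inline instead of rewriting a file with sed. build_approval_body and approve_one are hypothetical helper names, and approve_one relies on the ORG, PROJECT and AUTH_HEADER variables from the script:

```bash
#!/bin/bash
# Sketch, assuming the Approvals - Update body is a JSON array of
# {approvalId, status, comment}. Helper names are hypothetical.
build_approval_body() {
  local approval_id="$1"
  local status="${2:-approved}"             # "approved" or "rejected"
  local comment="${3:-Approved by script}"  # inserted verbatim: no double quotes/backslashes
  printf '[{"approvalId":"%s","status":"%s","comment":"%s"}]\n' \
    "$approval_id" "$status" "$comment"
}

# Possible use inside the loop: pipe the body straight to curl (-d @- reads stdin)
approve_one() {
  build_approval_body "$1" | curl -s -H "$AUTH_HEADER" \
    -H "Content-Type: application/json" -X PATCH \
    "https://dev.azure.com/$ORG/$PROJECT/_apis/pipelines/approvals?api-version=7.1" \
    -d @-
}
```

Because the body is an array, several approvals could also be batched into a single PATCH. If the comment might contain quotes, building the JSON with jq -n --arg is the safer route.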

In action

Let’s fire that off for all the queued gates:

Approval of some Gates

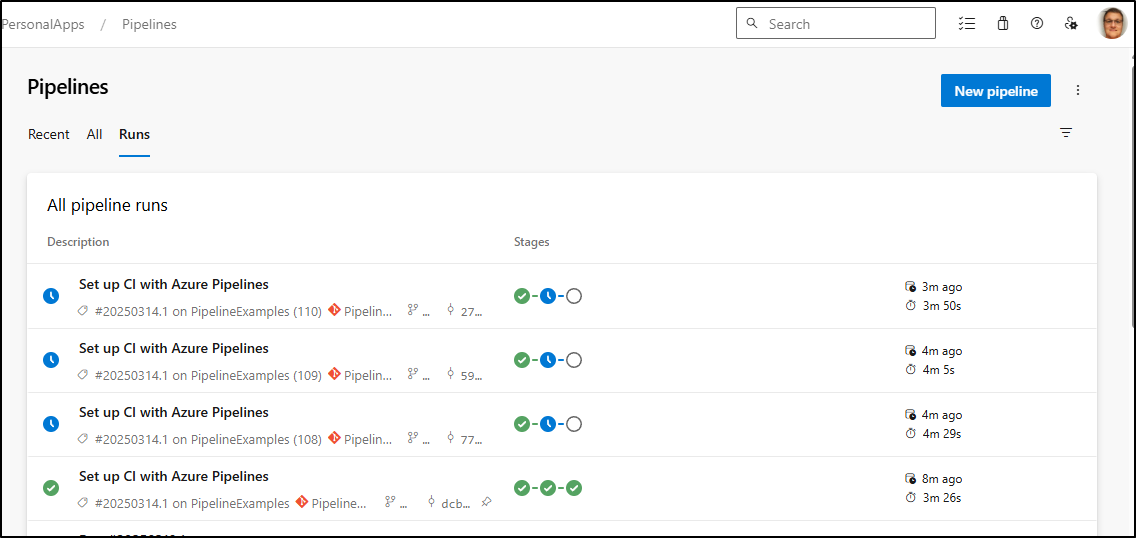

We cannot query the gate name via the Azure DevOps REST API, but we can check the instructions.

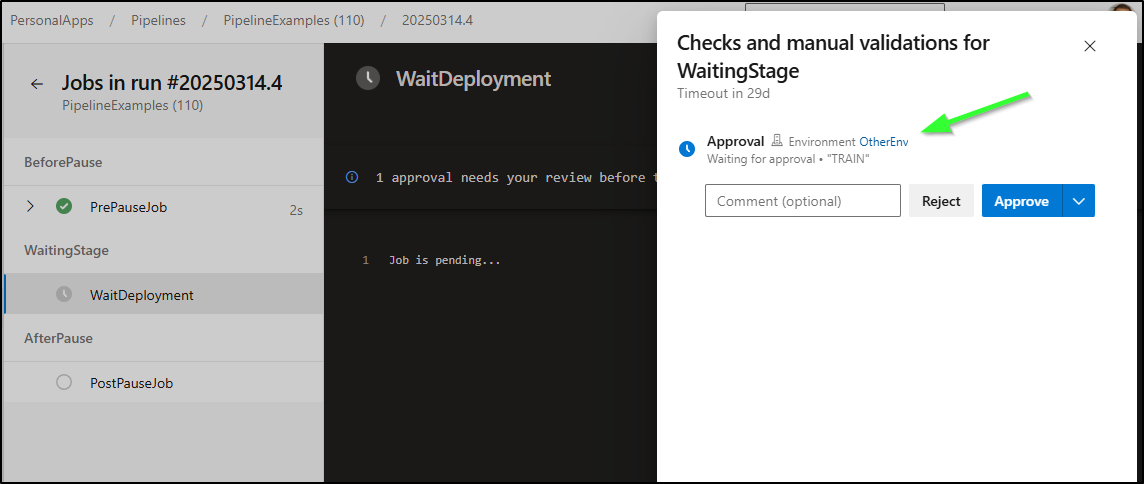

Say I made one environment dedicated to training. I could set its “instructions” to “TRAIN” as a way to denote this environment:

For this activity, I now have a “WaitingEnvironment” and an “OtherEnv”, the latter denoted as “TRAIN”.

I now have all four pipelines queued up. Most are set to the “WaitingEnvironment”.

However, Pipeline (110) is slated for “OtherEnv”:

```yaml
- stage: WaitingStage
  dependsOn: BeforePause
  jobs:
  - deployment: WaitDeployment
    environment: OtherEnv
    strategy:
      runOnce:
        deploy:
          steps:
          - script: echo "This runs after the pause is lifted"
```

My updated approval script checks the instructions for the “TRAIN” marker and only approves those gates:

```bash
#!/bin/bash
set +x

# Configuration
ORG="princessking"
PROJECT="PersonalApps"
ENVIRONMENT_NAME="WaitingStage"    # not used below
PAT="asdfasdfsadfasdfasdfasdasdf"  # placeholder PAT

# Base64 encode the PAT
AUTH_HEADER="Authorization: Basic $(echo -n ":$PAT" | base64 -w 0)"

# Find all pending approvals
echo "Get approvals"
APPROVALS_QUERY_URL="https://dev.azure.com/$ORG/$PROJECT/_apis/pipelines/approvals?state=pending&api-version=7.1"
APPROVALS_QUERY_RESP=$(curl -s -H "$AUTH_HEADER" "$APPROVALS_QUERY_URL")
APPROVAL_IDS=$(echo "$APPROVALS_QUERY_RESP" | jq -r '.value[] | .id')

for APPROVAL_ID in $APPROVAL_IDS; do
  # Get this approval instance
  echo "Getting details for Approval $APPROVAL_ID"
  APPROVAL_URL="https://dev.azure.com/$ORG/$PROJECT/_apis/pipelines/approvals/$APPROVAL_ID?\$expand=steps&api-version=7.1"
  APPROVAL_RESP=$(curl -s -H "$AUTH_HEADER" "$APPROVAL_URL")
  #echo "$APPROVAL_RESP"

  # Accept either a single approval object or a {"value": [...]} wrapper
  INSTRUCTIONS=$(echo "$APPROVAL_RESP" | jq -r '(.value // [.])[] | .instructions')

  # Unquoted glob: a quoted "*TRAIN*" would only match that literal string
  if [[ "$INSTRUCTIONS" == *TRAIN* ]]; then
    echo "Approving"
    sed -i "s/approvalId\": \".*/approvalId\": \"$APPROVAL_ID\",/g" approval.json
    curl -s -H "$AUTH_HEADER" -H "Content-Type: application/json" -X PATCH \
      "https://dev.azure.com/$ORG/$PROJECT/_apis/pipelines/approvals?api-version=7.1" \
      -d @approval.json
  fi
done

exit
```

Here we can see it in action:

And as you can imagine, the converse works as well.

Review of Environment Gates with user approvals and scripts

If we want to gate many pipelines that target a particular environment, we can have a multitude of pipelines queued at that Environment’s approval gate.

By using “instructions”, we can key off a field we can read from the REST API and selectively approve just those gates in one pass.
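The marker check hinges on a Bash detail: inside [[ ]], a quoted right-hand side is compared as a literal string, while unquoted * and ? act as globs. A small sketch of a reusable check (has_marker is a hypothetical name):

```bash
#!/bin/bash
# Sketch: does an approval's instructions text contain a marker like TRAIN?
# has_marker is a hypothetical helper. The quoted "$marker" is matched
# literally; the unquoted * on either side are globs ("anything").
has_marker() {
  local instructions="$1" marker="$2"
  [[ "$instructions" == *"$marker"* ]]
}

# Quoting the whole pattern turns it into a literal comparison:
#   [[ "Deploy TRAIN" == "*TRAIN*" ]]   -> false (looks for the text *TRAIN*)
#   [[ "Deploy TRAIN" == *TRAIN* ]]     -> true
```

In the loop, that becomes if has_marker "$INSTRUCTIONS" TRAIN; then ... fi. If the instructions might be typed in mixed case, shopt -s nocasematch makes the [[ == ]] match case-insensitive.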

REST API

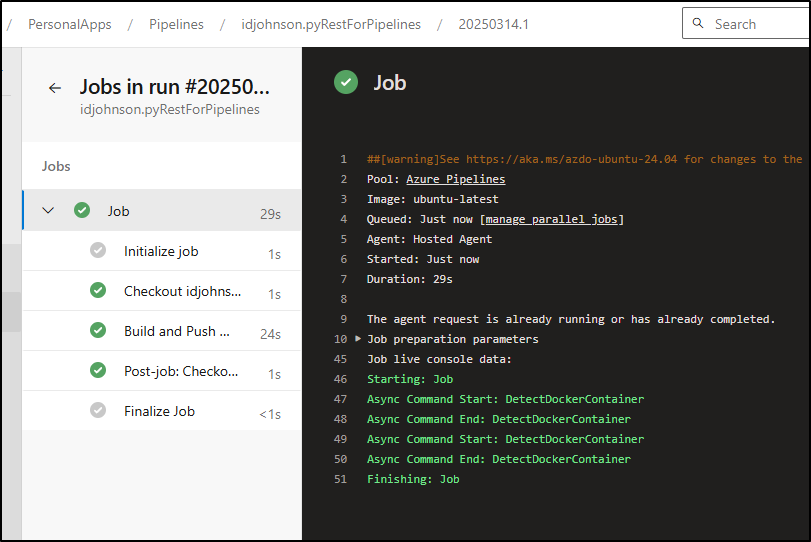

Before we start, let’s create a quick containerized Python app that can serve the responses we need.

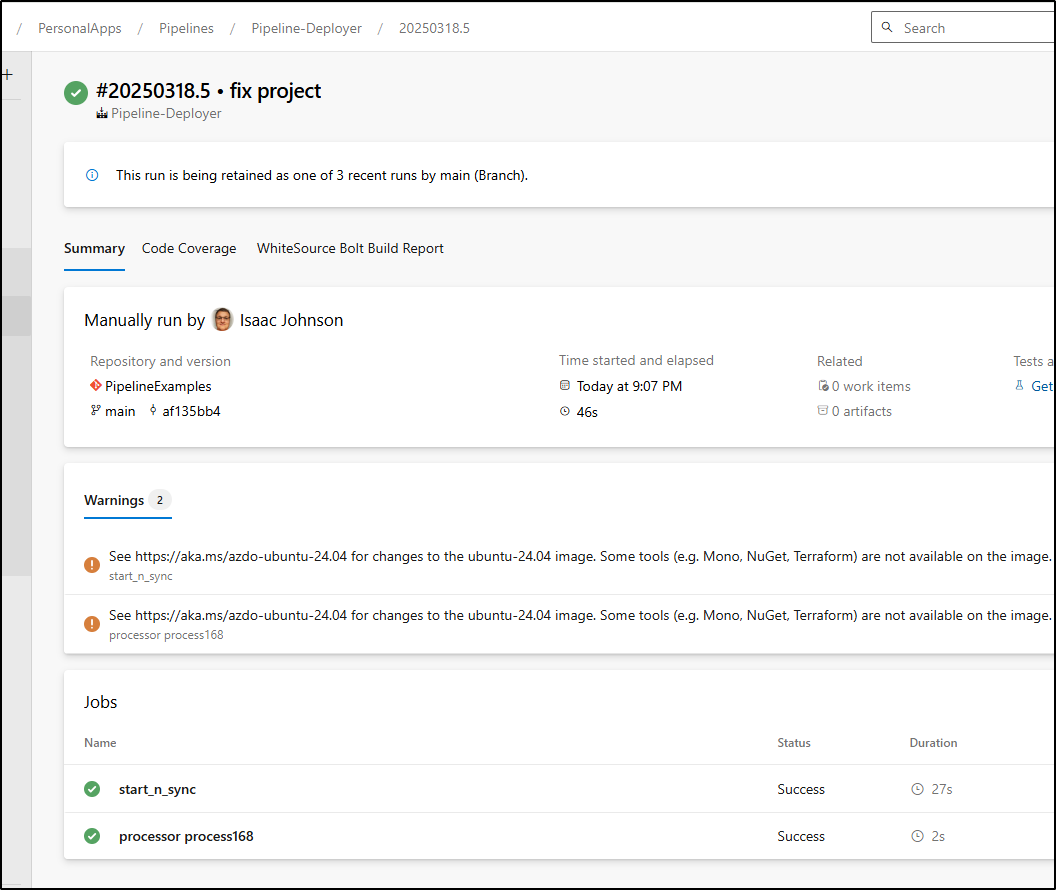

Here you can see my first push of a basic Python Flask app that can do this work.

Azure DevOps is a bit irritated with me for using a public GitHub repo in a private project, but I should still be able to create a YAML pipeline that builds and pushes to my Harbor CR:

```yaml
trigger:
- main

pool:
  vmImage: ubuntu-latest

steps:
- task: Docker@2
  displayName: 'Build and Push the image'
  inputs:
    containerRegistry: harborcr
    repository: freshbrewedprivate/pyRestForPipelines
    Dockerfile: Dockerfile
    tags: |
      $(Build.BuildId)
      latest
```

I can see the build worked

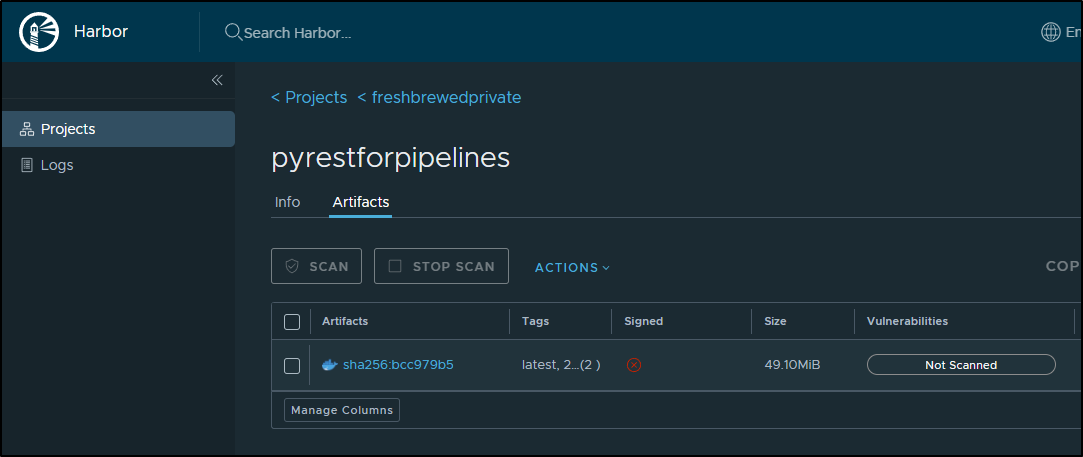

and an image is now in my CR.

My next step is to apply a Kubernetes Deployment and Service:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pyrestforpipelines
  labels:
    app: pyrestforpipelines
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pyrestforpipelines
  template:
    metadata:
      labels:
        app: pyrestforpipelines
    spec:
      containers:
      - name: pyrestforpipelines
        image: harbor.freshbrewed.science/freshbrewedprivate/pyrestforpipelines:latest
        ports:
        - containerPort: 5000
        readinessProbe:
          httpGet:
            path: /approved
            port: 5000
          initialDelaySeconds: 5
          periodSeconds: 10
      imagePullSecrets:
      - name: myharborreg
---
apiVersion: v1
kind: Service
metadata:
  name: pyrestforpipelines-service
spec:
  selector:
    app: pyrestforpipelines
  ports:
  - protocol: TCP
    port: 80
    targetPort: 5000
  type: ClusterIP
```

Applying it:

```
$ kubectl apply -f ./deployment.yaml
deployment.apps/pyrestforpipelines created
service/pyrestforpipelines-service created
```

And I can see it running:

```
$ kubectl get po | grep pyrest
pyrestforpipelines-7c9cc7bc47-24n5x   1/1     Running   0          43s
```

Let’s test it:

```
$ kubectl port-forward svc/pyrestforpipelines-service 8080:80
Forwarding from 127.0.0.1:8080 -> 5000
Forwarding from [::1]:8080 -> 5000
```

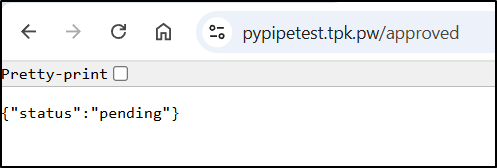

We can get the status:

```
$ curl -X GET http://localhost:8080/approved
{"status":"pending"}
```

I can flip the status:

```
$ curl -X POST -H 'Content-Type: application/json' "http://localhost:8080/approved" --data '{"status":"approved"}'
{"status":"approved"}
$ curl -X GET http://localhost:8080/approved
{"status":"approved"}
$ curl -X POST -H 'Content-Type: application/json' "http://localhost:8080/approved" --data '{"status":"pending"}'
{"status":"pending"}
$ curl -X GET http://localhost:8080/approved
{"status":"pending"}
```
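Before wiring this endpoint into a check, it can help to mimic locally what the check will do: poll a URL a fixed number of times, a fixed interval apart, until the response says approved. A rough sketch, assuming the compact {"status":"approved"} response shown above; wait_for_approval, its defaults and the grep-based match are my own choices, not how Azure DevOps actually evaluates the check:

```bash
#!/bin/bash
# Rough local imitation of a polling REST check (hypothetical helper).
# Args: url, max attempts (default 10), seconds between attempts (default 60)
wait_for_approval() {
  local url="$1"
  local max_attempts="${2:-10}"
  local sleep_secs="${3:-60}"
  local attempt body
  for (( attempt = 1; attempt <= max_attempts; attempt++ )); do
    body=$(curl -s "$url")
    # Match {"status":"approved"}, tolerating spaces around the colon
    if grep -q '"status" *: *"approved"' <<< "$body"; then
      echo "approved after $attempt attempt(s)"
      return 0
    fi
    # Only sleep if another attempt is coming
    if (( attempt < max_attempts )); then sleep "$sleep_secs"; fi
  done
  echo "still pending after $max_attempts attempt(s)" >&2
  return 1
}
```

For example, wait_for_approval http://localhost:8080/approved 10 60 polls for up to roughly ten minutes while the port-forward is running.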

I want an ingress so AzDO can reach it, so first I’ll add a DNS A record:

```
$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n pypipetest
{
  "ARecords": [
    {
      "ipv4Address": "75.73.224.240"
    }
  ],
  "TTL": 3600,
  "etag": "5d7e7f2b-cd07-4c28-ae53-ef1ac7b534da",
  "fqdn": "pypipetest.tpk.pw.",
  "id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/pypipetest",
  "name": "pypipetest",
  "provisioningState": "Succeeded",
  "resourceGroup": "idjdnsrg",
  "targetResource": {},
  "trafficManagementProfile": {},
  "type": "Microsoft.Network/dnszones/A"
}
```

I can then add an Ingress block to my deployment.yaml:

```
$ cat deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pyrestforpipelines
  labels:
    app: pyrestforpipelines
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pyrestforpipelines
  template:
    metadata:
      labels:
        app: pyrestforpipelines
    spec:
      containers:
      - name: pyrestforpipelines
        image: harbor.freshbrewed.science/freshbrewedprivate/pyrestforpipelines:21219
        ports:
        - containerPort: 5000
        readinessProbe:
          httpGet:
            path: /approved
            port: 5000
          initialDelaySeconds: 5
          periodSeconds: 10
      imagePullSecrets:
      - name: myharborreg
---
apiVersion: v1
kind: Service
metadata:
  name: pyrestforpipelines-service
spec:
  selector:
    app: pyrestforpipelines
  ports:
  - protocol: TCP
    port: 80
    targetPort: 5000
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/tls-acme: "true"
  name: pypipetest-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: pypipetest.tpk.pw
    http:
      paths:
      - backend:
          service:
            name: pyrestforpipelines-service
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - pypipetest.tpk.pw
    secretName: pypipetest-tls
```

Once the certificate is satisfied:

```
$ kubectl get cert pypipetest-tls
NAME             READY   SECRET           AGE
pypipetest-tls   True    pypipetest-tls   87s
```

the endpoint works:

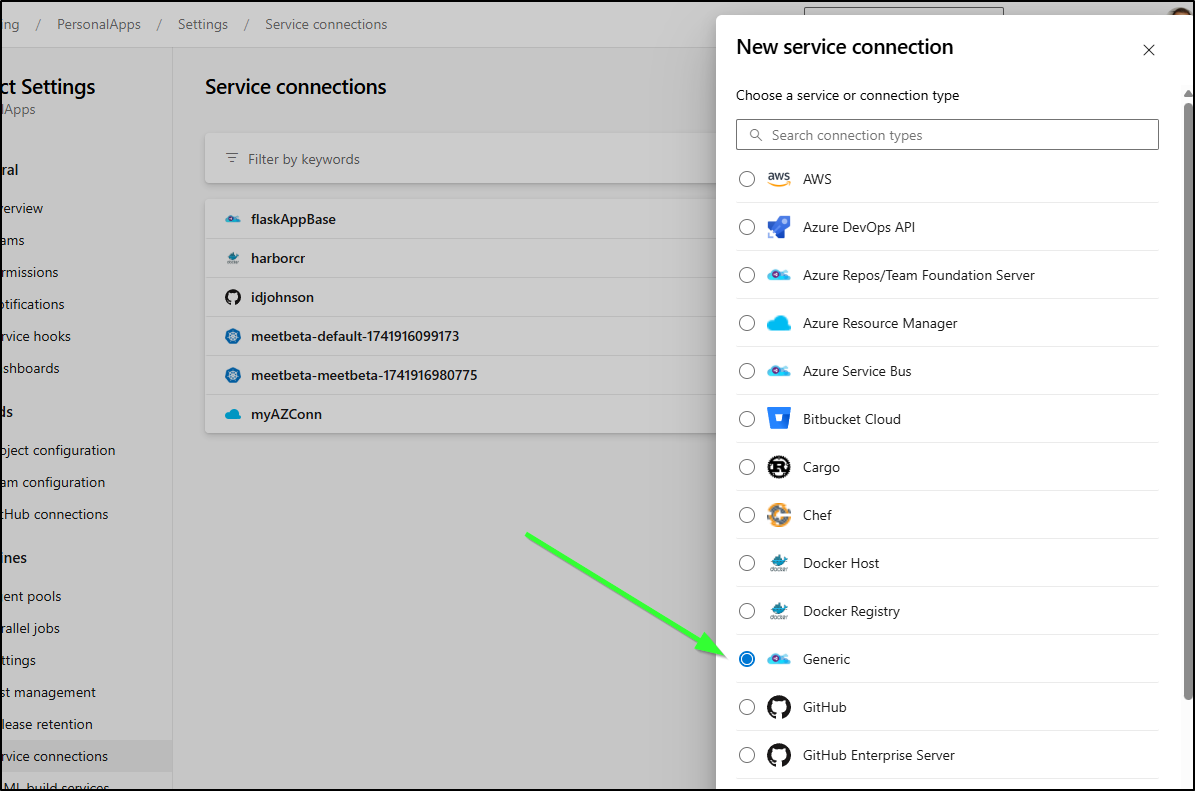

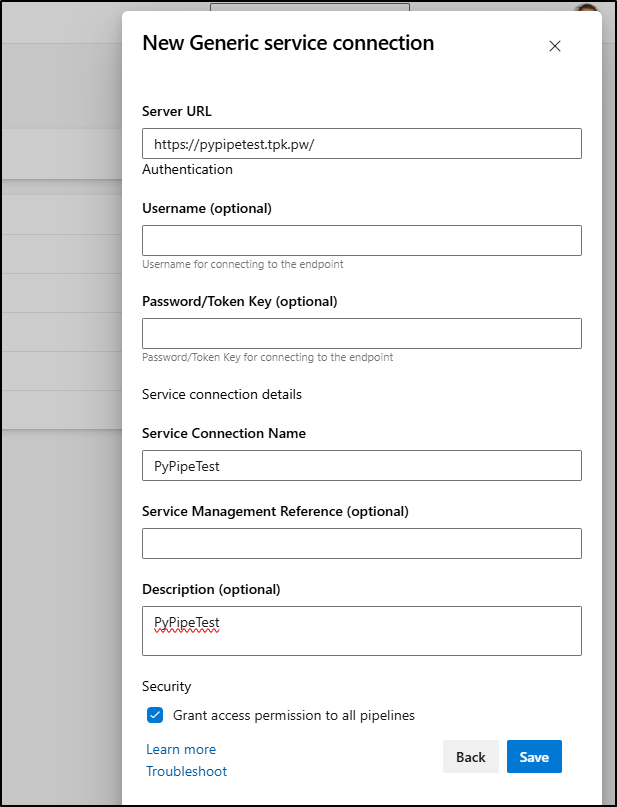

First, before we get a gate going, we need to add a “Generic” service connection:

Since I’m not using basic auth, I can just make a very simple one for this base URL.

Now, let’s go to the environment (gate) used by three of the pipelines and add a new check:

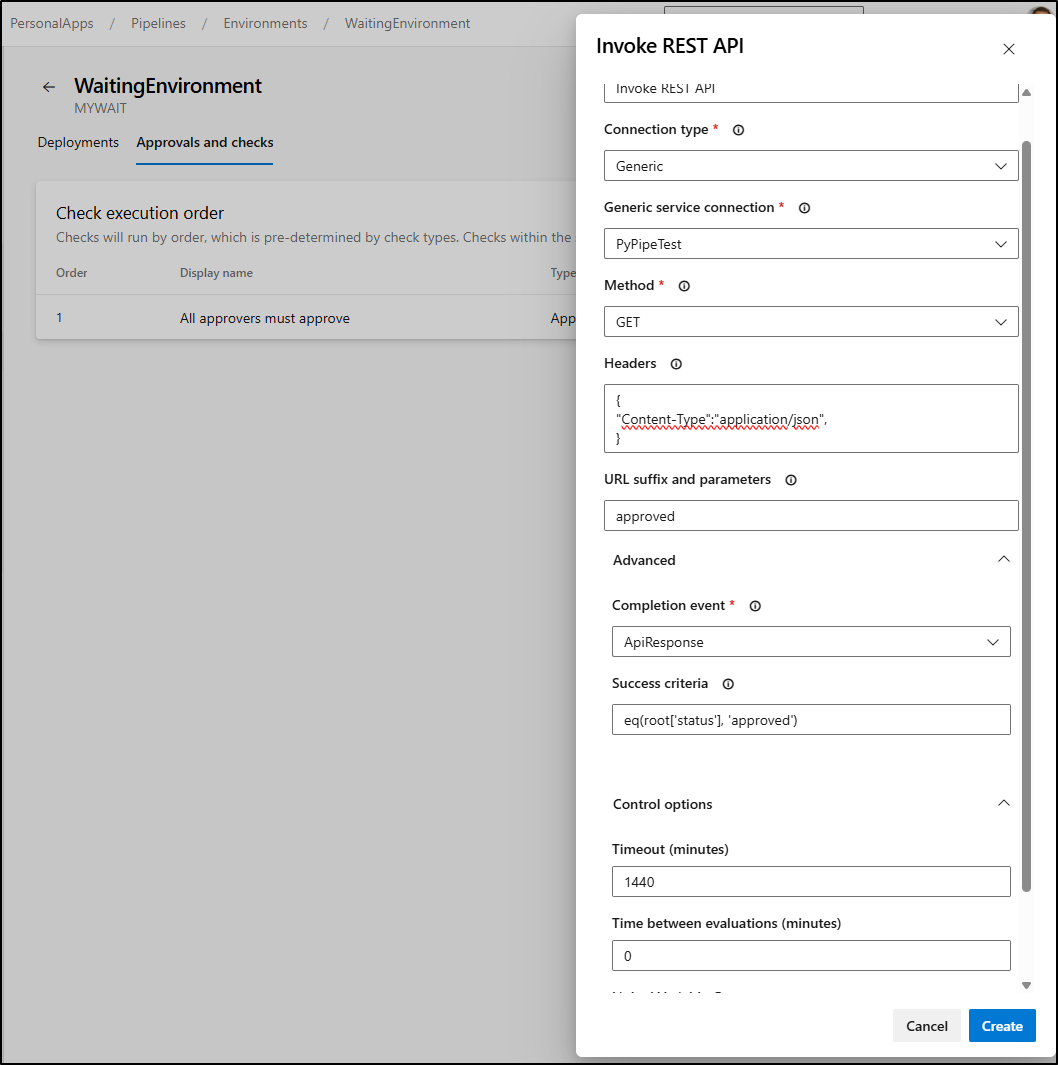

I’ll now add an “Invoke REST API” check:

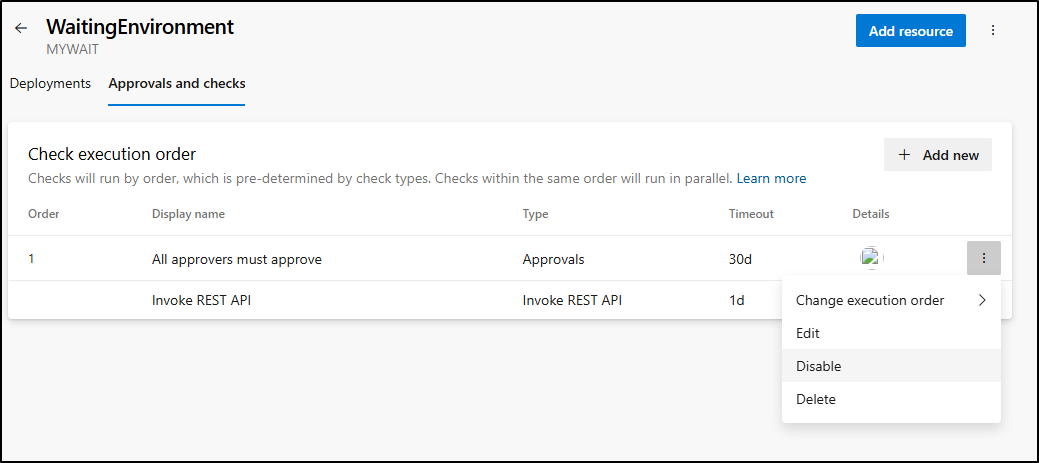

Based on my app’s response model, I’ll use the ApiResponse completion event with the success criteria eq(root['status'], 'approved'):

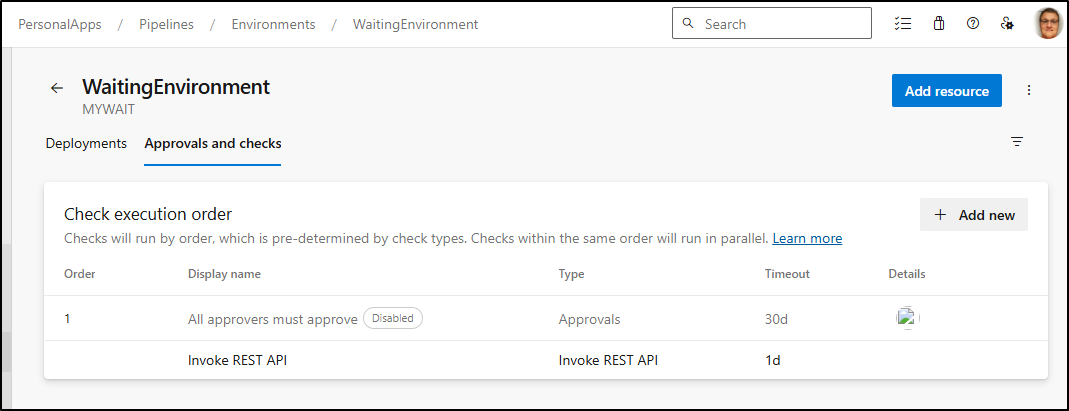

Then I can disable the email/user approval check.

So now we should have a check based entirely on REST.

Let’s give it a try:

It seems to try once and then give up.

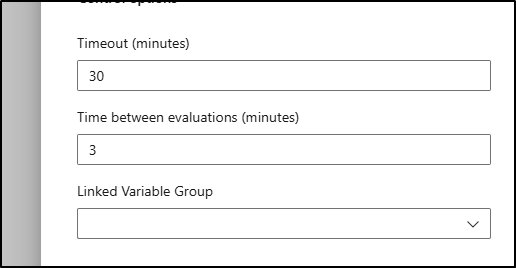

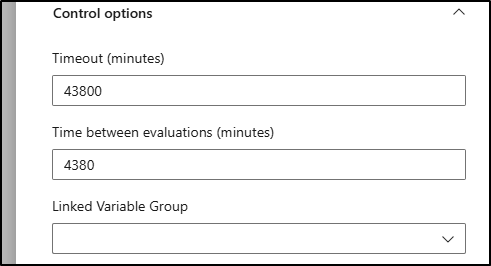

The check allows at most 10 retries, so if I want a maximum timeout of 30 minutes, the shortest time between evaluations is 3 minutes (30 / 10).

For context, if we have an environment where runs could sit queued for a month (43,800 minutes), the shortest retry interval we could set is 4,380 minutes (43,800 / 10), or 73 hours.
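The arithmetic above is just a ceiling division of the timeout by the retry cap. A tiny sketch (min_retry_interval is an illustrative name; the cap of 10 is the limit described above):

```bash
#!/bin/bash
# Shortest interval between evaluations, in minutes: ceil(timeout / max_retries).
# min_retry_interval is an illustrative helper name.
min_retry_interval() {
  local timeout_minutes="$1" max_retries="${2:-10}"
  echo $(( (timeout_minutes + max_retries - 1) / max_retries ))
}

min_retry_interval 30                          # 3 (minutes)
min_retry_interval 43800                       # 4380 (minutes)
echo $(( $(min_retry_interval 43800) / 60 ))   # 73 (hours)
```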

Here you can see a gate I set to a 10-minute maximum with retries every minute, and how I “approved” it with the REST API:

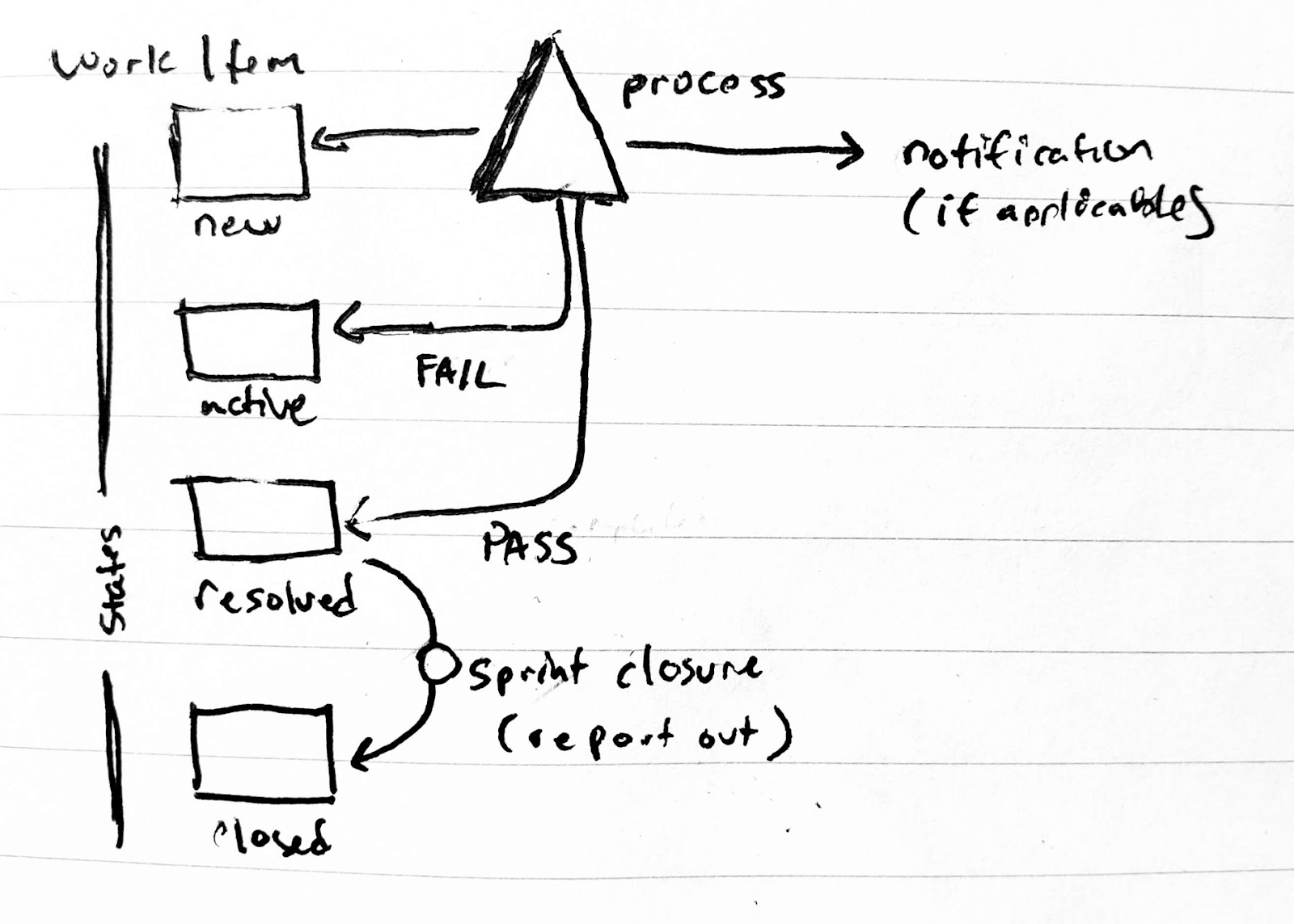

Work Item driven

One of my favourite topics is Work Item Automation. I’ll just have to admit that I tend to push this anywhere I work that has Azure DevOps.

I covered this topic in July of 2021 in “Work Item Automations in Azure DevOps”.

I also covered this in my OSN 2021 speaker series here.

The idea is we use a Work Item (ticket) to drive automations that can resolve the ticket.

Let’s show how that could work for releases.

Ticket Type

True, we could key off the summary or description fields. If I were forced to use a ticketing system like JIRA owned by another org, with no power to change process templates, I might have to do just that.

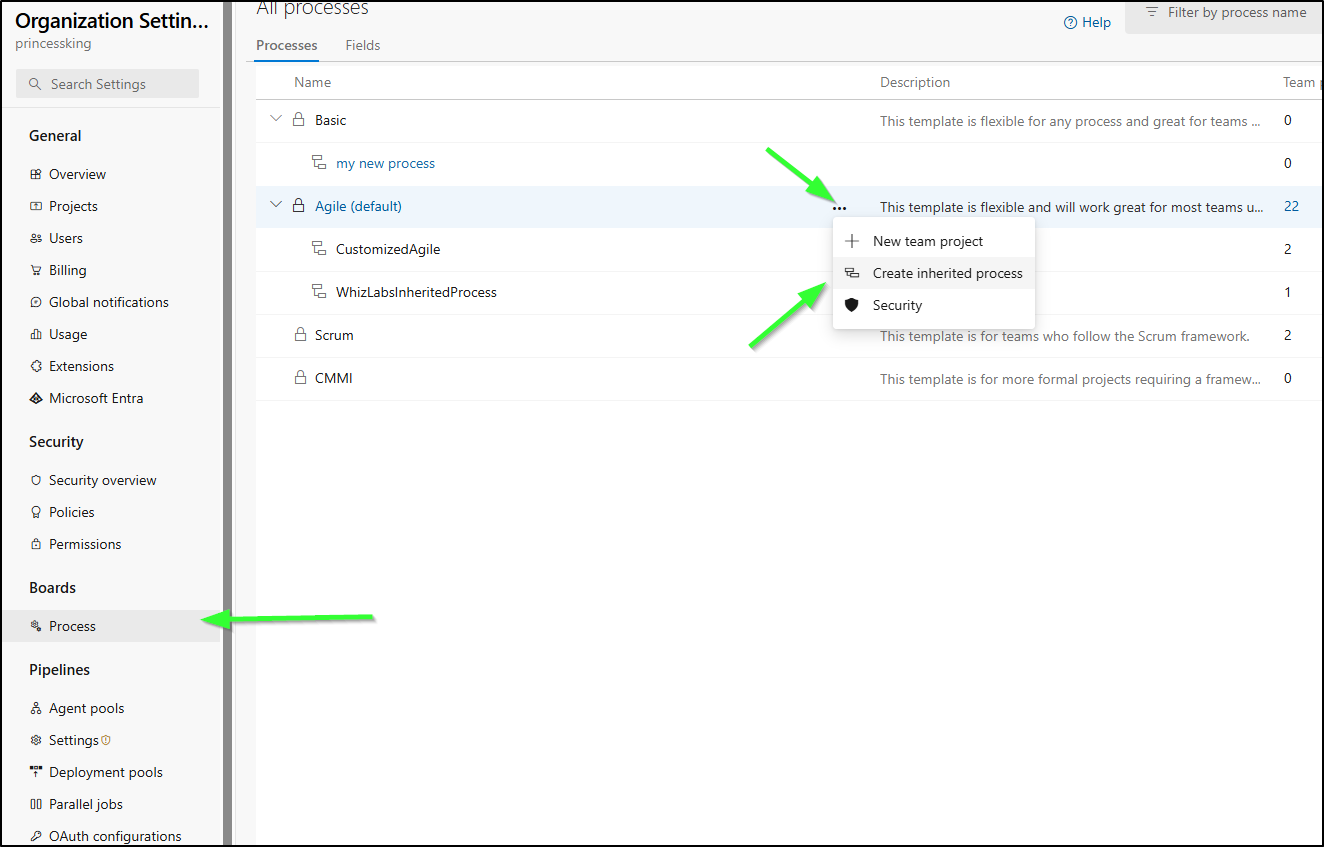

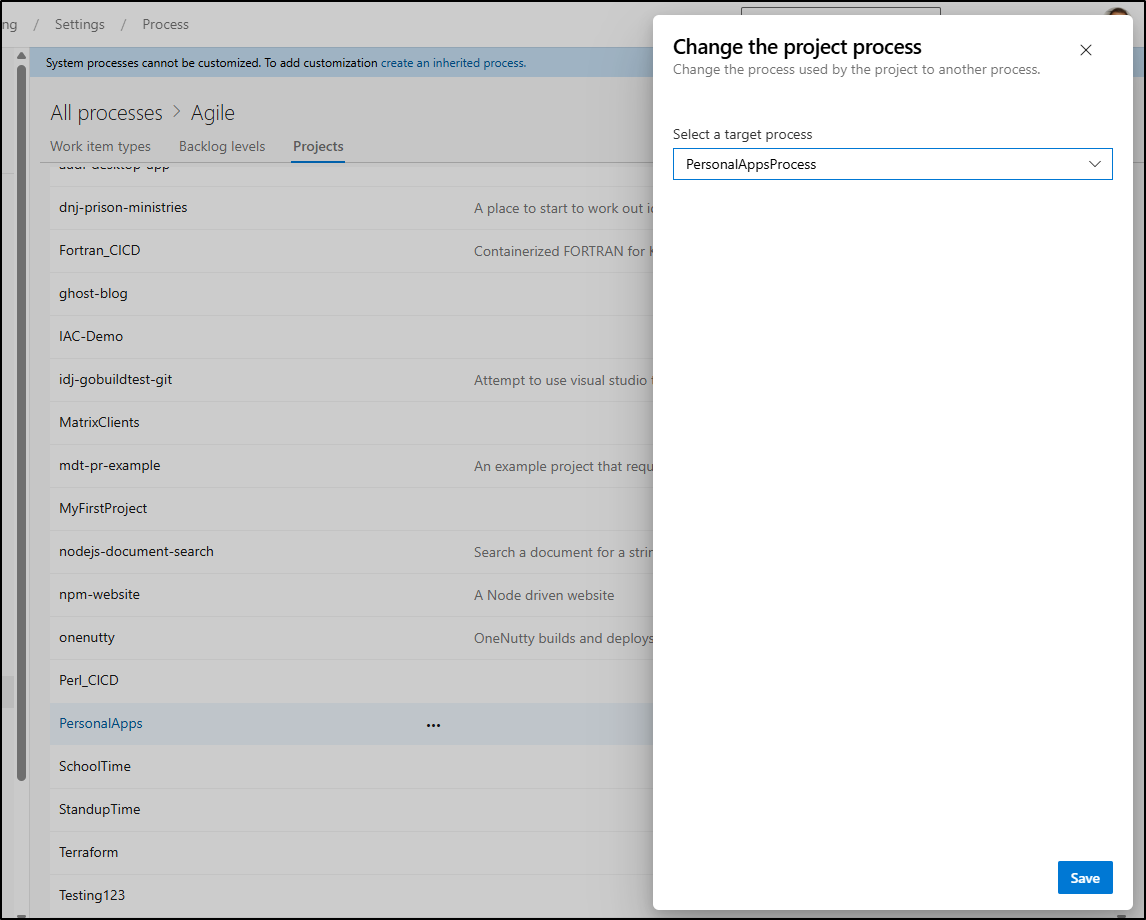

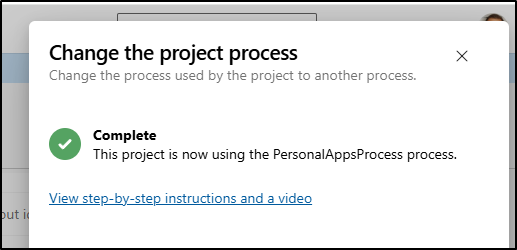

But here, we can go ahead and make a “Release” work item type. I’ll fork from the Agile process.

I’ll give it a name and description.

I could add a release type field with a picklist:

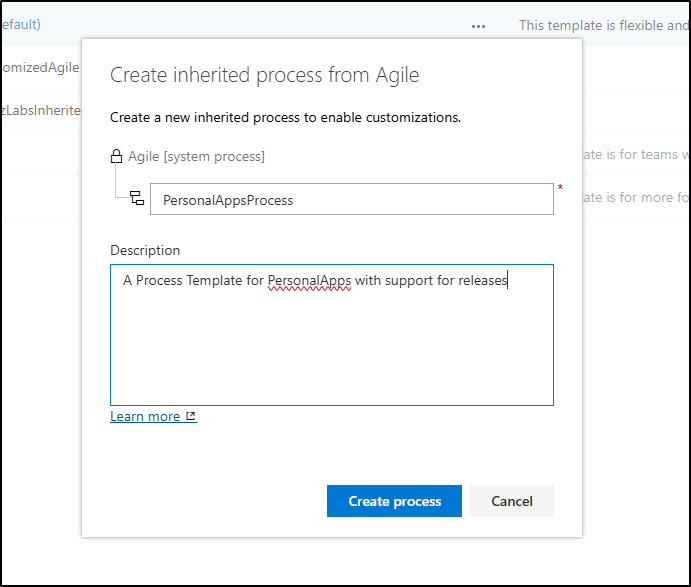

This is a bit of a double-edged sword. A picklist prevents mistakes from non-technical people: they cannot type “Staging” instead of “Stage” or “TRN” instead of “Train”. But if we want to add or remove environments, we are on the hook to update our picklist values.

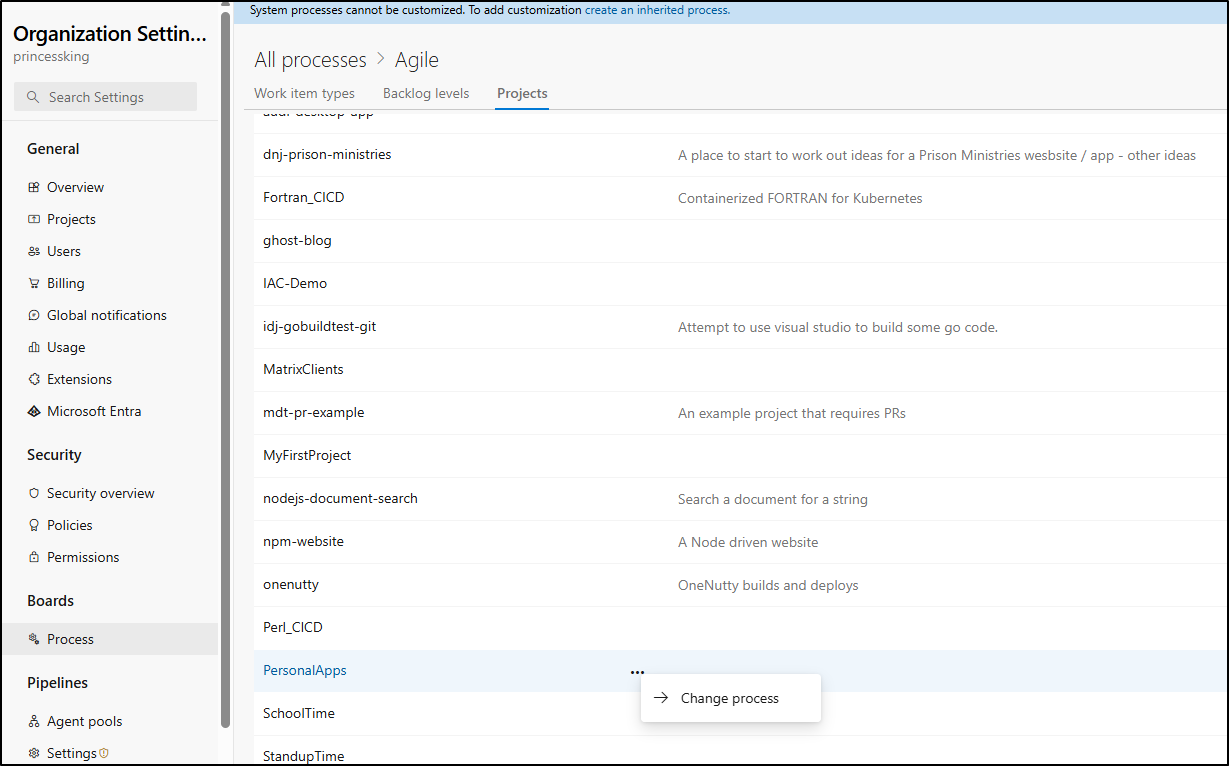

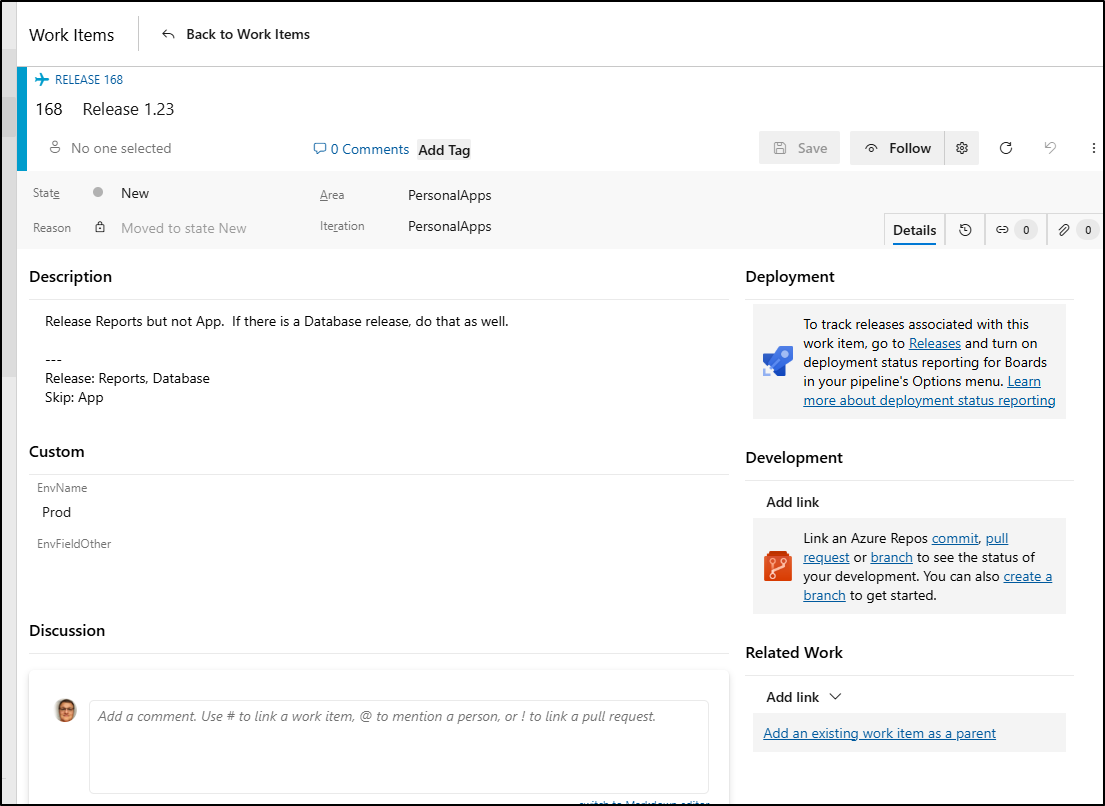

Just to illustrate the “how”, I’ll also add a string field, “EnvFieldOther”. (Note: in a few moments I’ll realize I named the work item type “Releases” rather than “Release”, and fix it.)

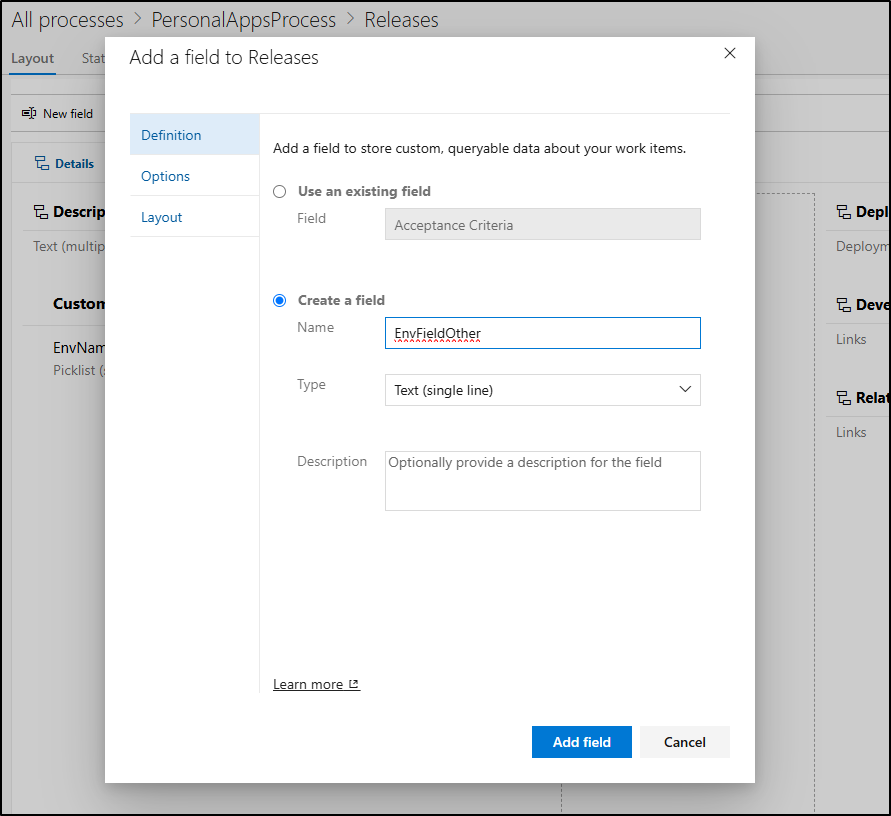

Changing a project’s process is a bit of an odd flow. You find the existing process, go to Projects, and from there you can “Change Process”.

There I can change which process to use.

As we had no conflicts, it’s complete. It can get trickier if the old and new flows have mutually exclusive states or dropped fields.

I realized I goofed and called it “Releases” instead of “Release”. You cannot rename work item types, but I can delete and recreate it in the process template.

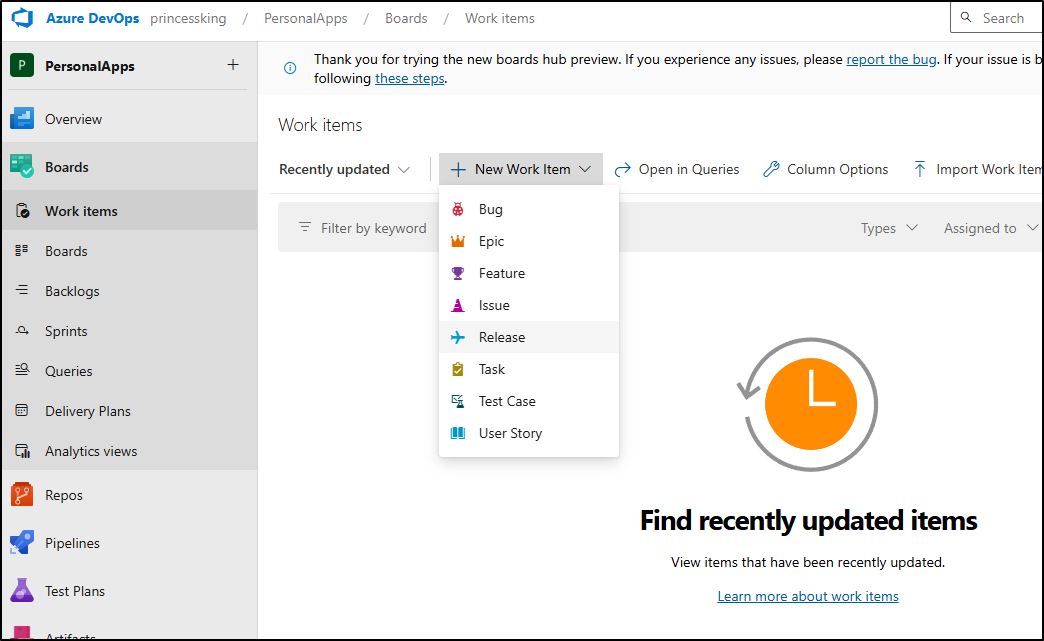

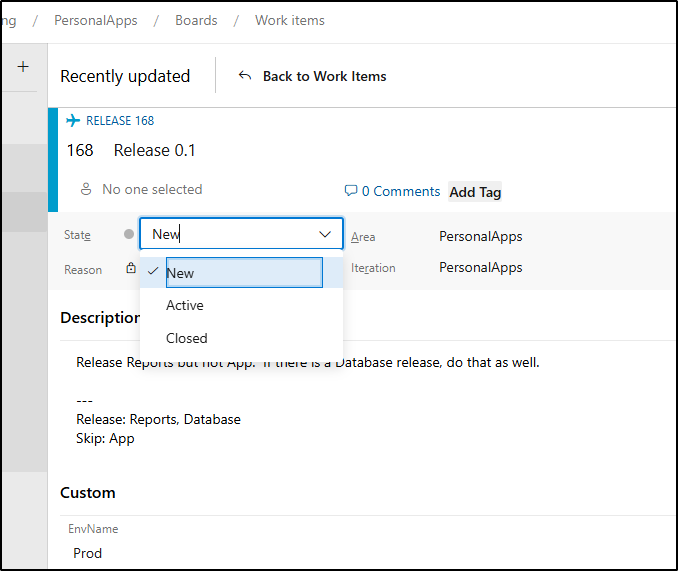

I can now create a “Release”

So let’s experiment and say we want to release “1.23” of the Reports and Database, but skip the “App” as it has a major feature that won’t make it out yet.

Its destination is Prod.

Setting up our sample pipelines

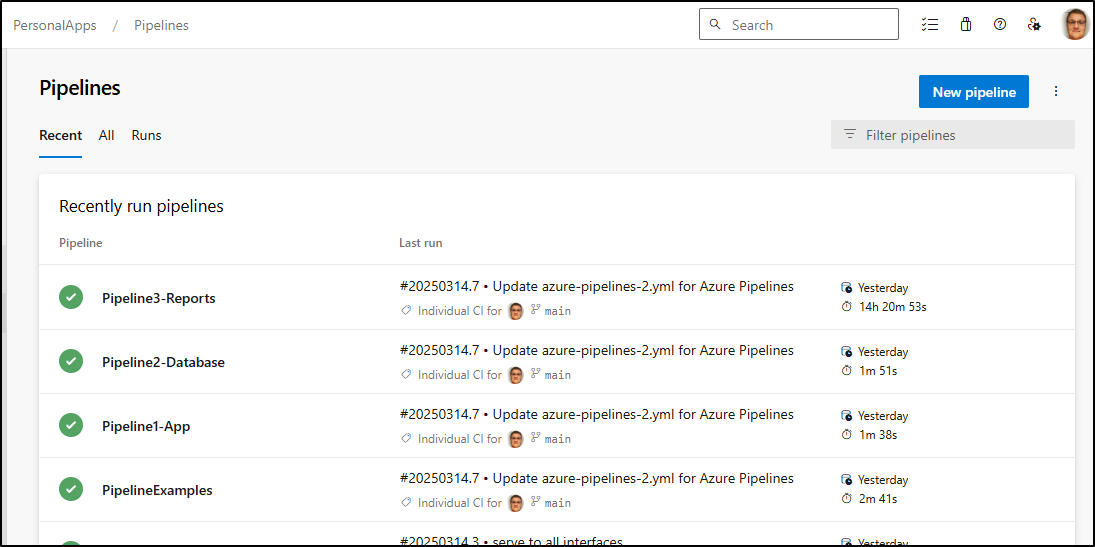

Here I’ll rename our existing pipelines to stand in for the apps.

Now, the destinations really don’t need a Pipeline-per-env. That would be a bit excessive.

However, let’s assume we have contained environments - that is, not publicly accessible endpoints.

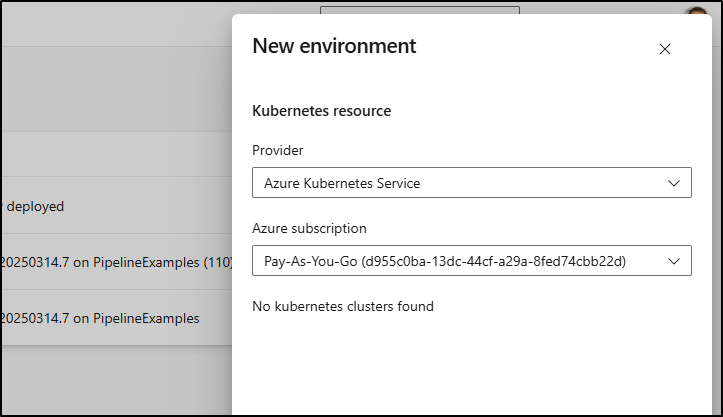

If we are thinking Kubernetes, we could use AKS, as it ties in natively without needing to expose the control plane.

However, in my case, I have two on-prem clusters and I would prefer to just deploy to them.

You can shoehorn Azure DevOps agents into Kubernetes or Docker, but the truth is, it’s a very hand-crafted process and not one of the Microsoft-supported paths.

That said, there is nothing wrong with having an agent on a VM or Linux host to do the work. It’s also a lot easier to update and control access points.

That will be the way we go here.

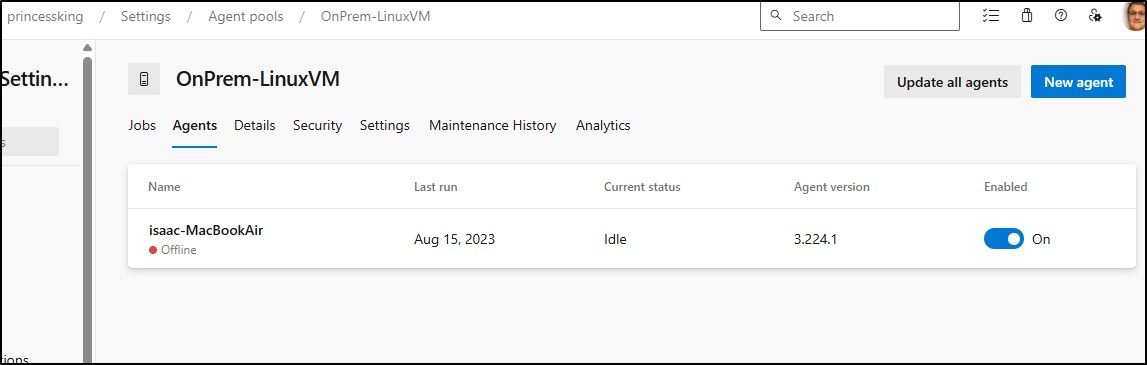

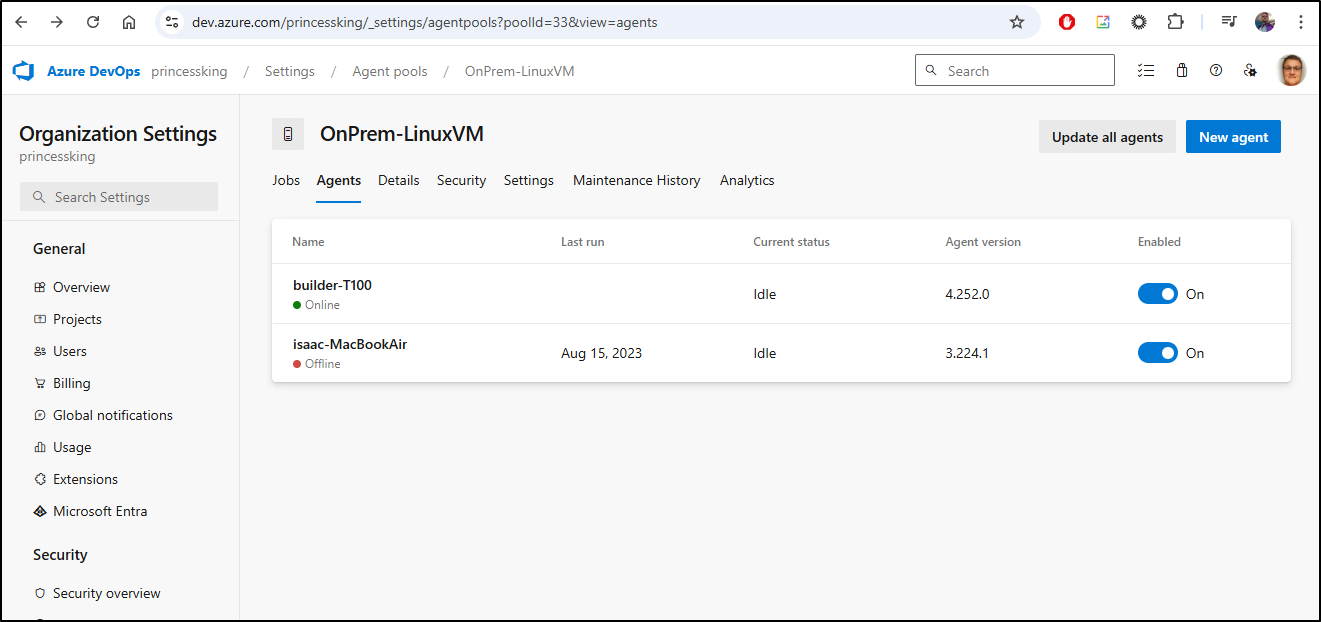

I already had a pool slated for this and some Perl containers.

However, they have been offline for a while.

I’m sure you can guess what I’ll do next - why do something manually when you have Ansible to use?

AzDo Agent Playbook

The way I generally work is to build a shell script that can do the job.

I’ll make a first pass at a Bash script that installs the agent:

```bash
#!/bin/bash

# Check if required arguments are provided
if [ $# -ne 3 ]; then
  echo "Usage: $0 <azure_devops_org> <poolname> <pat_token>"
  echo "Example: $0 myorganization mypoolname pat_token_value"
  exit 1
fi

AZURE_ORG="$1"
POOL_NAME="$2"
PAT_TOKEN="$3"
AGENT_NAME=$(hostname)
MYUSERNAME=$(whoami)

# Create and navigate to agent directory
mkdir -p ./myagent
cd ./myagent || exit 1

wget https://vstsagentpackage.azureedge.net/agent/4.252.0/vsts-agent-linux-x64-4.252.0.tar.gz

# Extract the agent package
tar zxvf ./vsts-agent-linux-x64-4.252.0.tar.gz

# Configure the agent
./config.sh --unattended \
  --url "https://dev.azure.com/${AZURE_ORG}" \
  --auth pat \
  --token "${PAT_TOKEN}" \
  --pool "${POOL_NAME}" \
  --agent "${AGENT_NAME}" \
  --acceptTeeEula \
  --work ./_work

# Install and start the agent as a service running as this user
sudo ./svc.sh install "$MYUSERNAME"
sudo ./svc.sh start

# We are already inside ./myagent, so remove the tarball from here
rm ./vsts-agent-linux-x64-4.252.0.tar.gz
```

Then a playbook to copy it over and run it:

```yaml
- name: Install Azure DevOps
  hosts: all
  tasks:
  - name: Transfer the script
    copy: src=azdoadd.sh dest=/tmp mode=0755
  # Note: azdoadd.sh expects <org> <pool> <pat> arguments
  - name: Run Installer
    ansible.builtin.shell: |
      /tmp/azdoadd.sh
    args:
      chdir: /home/builder
```

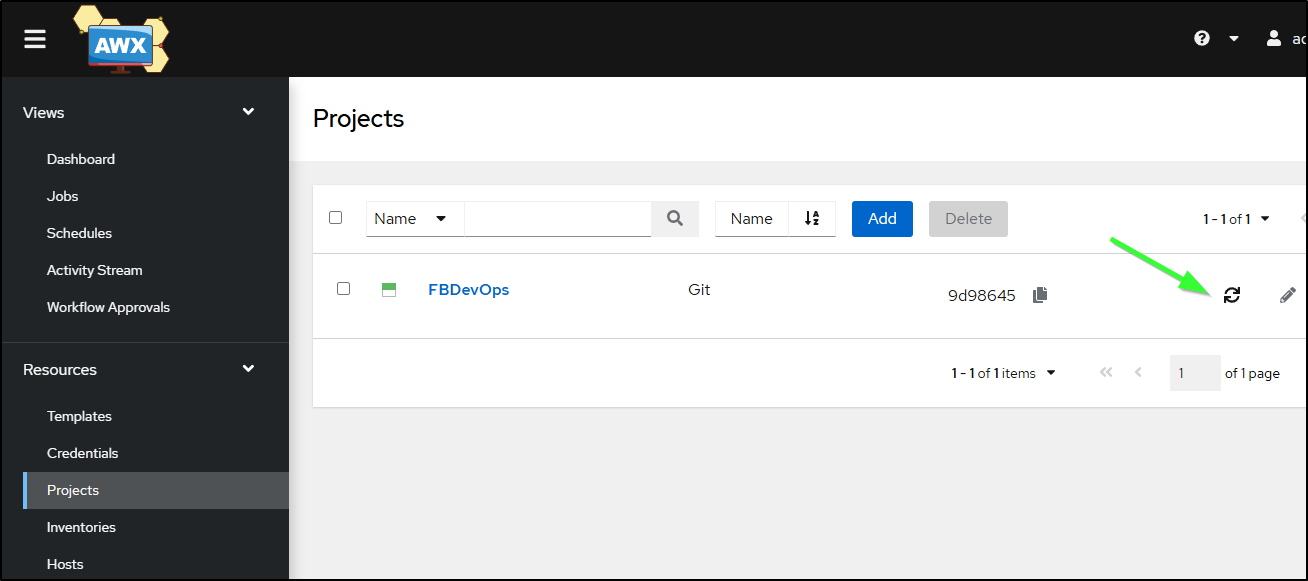

I pushed that to main.

Then I’ll sync the latest:

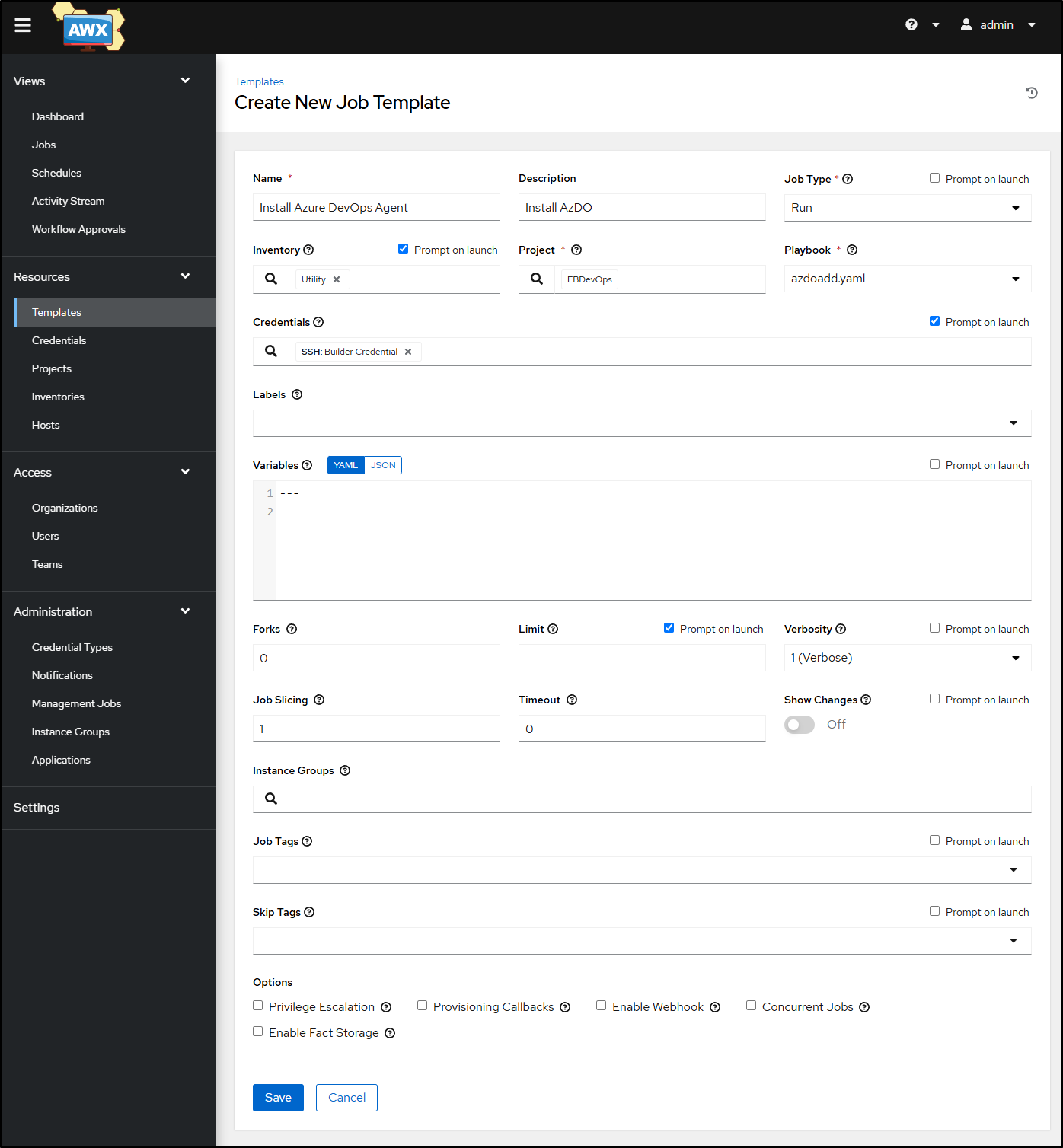

I’ll add the playbook, but because I want most of the operations to be performed as “builder”, not root, I won’t check the “Privilege Escalation” box.

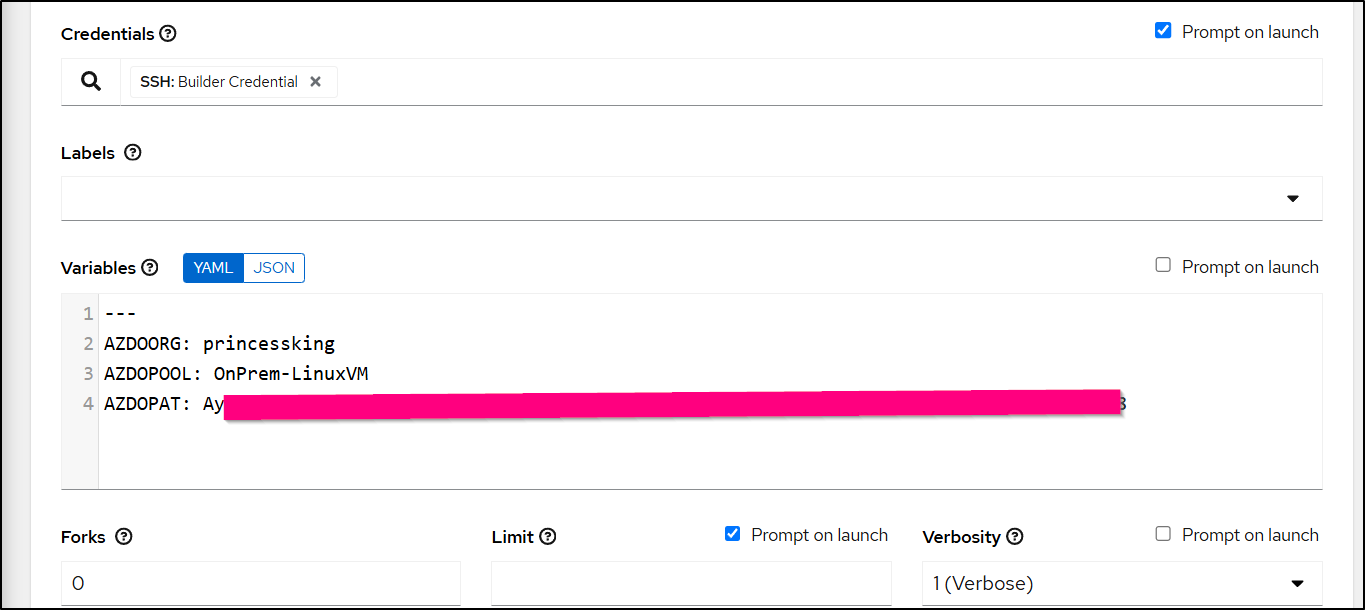

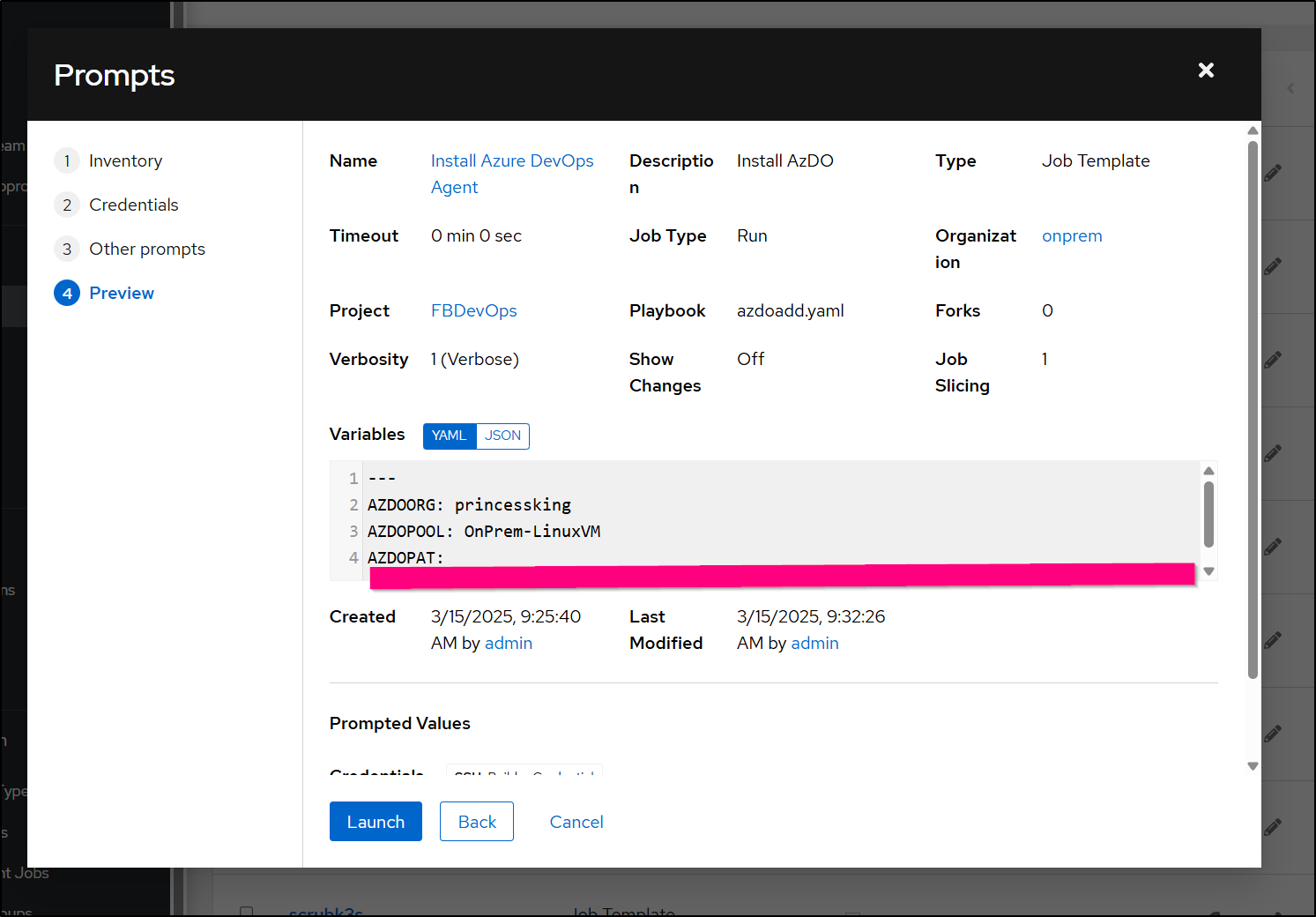

I’ll want to set the variables that are expected in the Variables block:

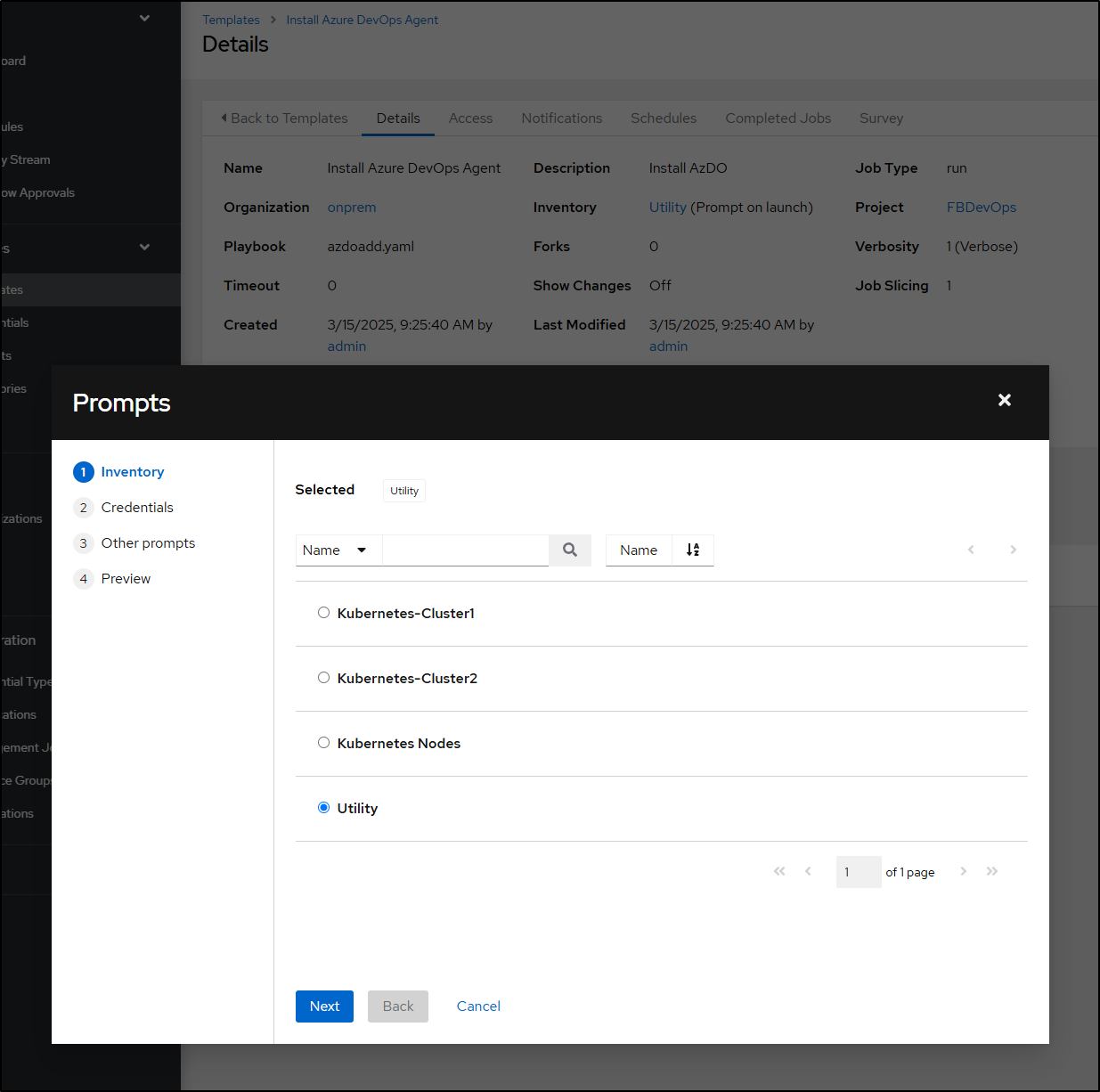

Now when I launch from Templates, I can confirm I want to use my “Utility” inventory

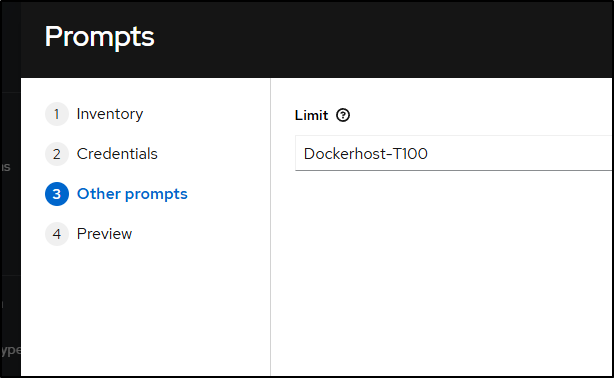

But I’ll limit it to the Dockerhost-T100.

I’m now ready to launch

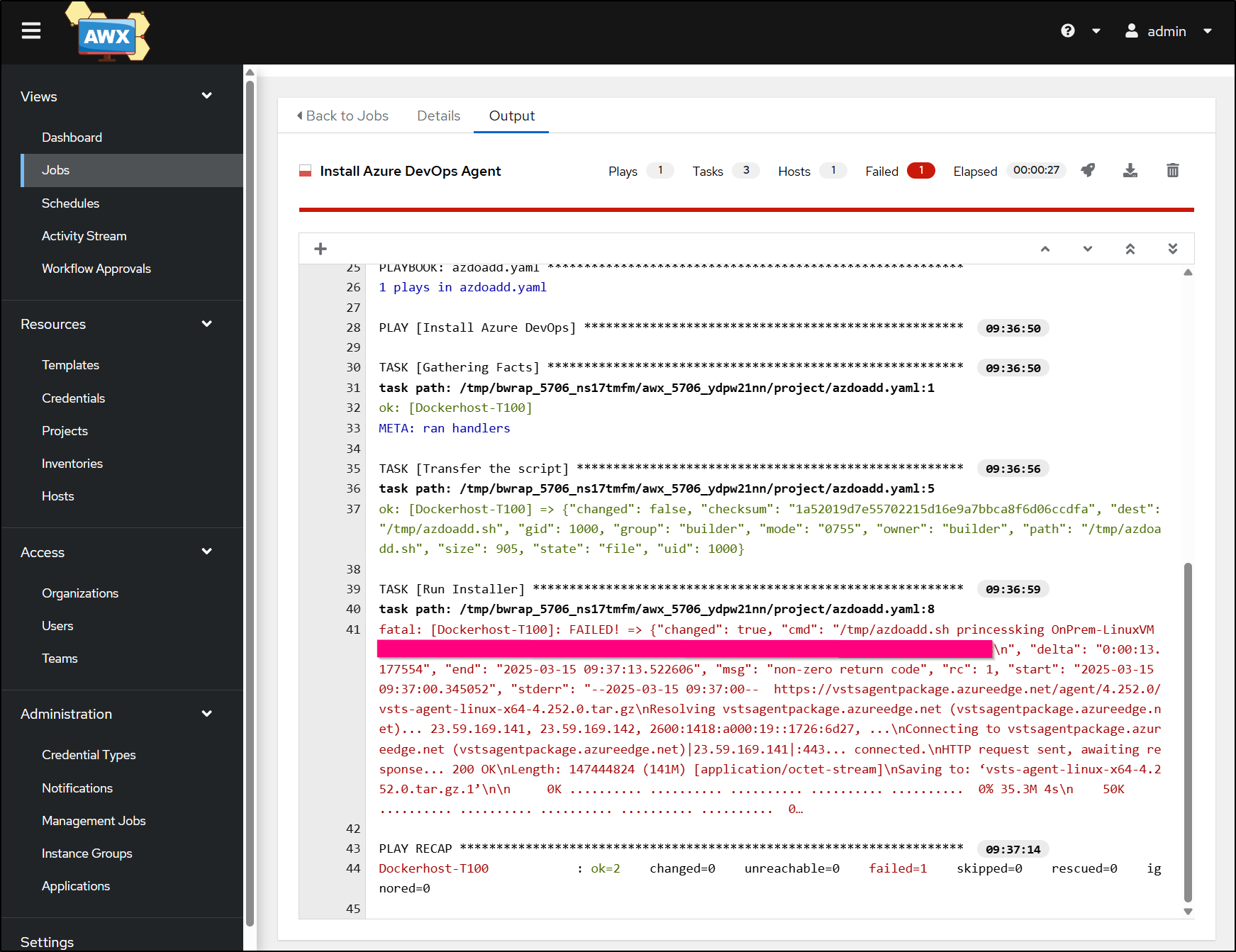

It seems to have hit an issue, but there’s nothing in stderr.

It seems okay, though; I see it expanded into the right directory:

```
builder@builder-T100:~/myagent$ ls -ltra
total 288092
-rwxrwxr-x  1 builder builder      2014 Feb 12 00:31 run.sh
-rw-rw-r--  1 builder builder      2753 Feb 12 00:31 run-docker.sh
-rw-rw-r--  1 builder builder      3170 Feb 12 00:31 reauth.sh
-rw-rw-r--  1 builder builder      9465 Feb 12 00:31 license.html
-rwxrwxr-x  1 builder builder       726 Feb 12 00:31 env.sh
-rwxrwxr-x  1 builder builder      3173 Feb 12 00:31 config.sh
drwxrwxr-x  7 builder builder      4096 Feb 12 00:32 externals
drwxrwxr-x 26 builder builder     20480 Feb 12 00:41 bin
drwxrwxr-x  5 builder builder      4096 Feb 12 00:43 .
-rw-rw-r--  1 builder builder 147444824 Feb 12 02:55 vsts-agent-linux-x64-4.252.0.tar.gz.1
-rw-rw-r--  1 builder builder 147444824 Feb 12 02:55 vsts-agent-linux-x64-4.252.0.tar.gz
drwxr-xr-x 81 builder builder      4096 Mar 15 09:34 ..
-rw-rw-r--  1 builder builder        17 Mar 15 09:34 .env
-rw-------  1 builder builder      1709 Mar 15 09:34 .credentials_rsaparams
-rw-rw-r--  1 builder builder       272 Mar 15 09:34 .credentials
-rw-rw-r--  1 builder builder       208 Mar 15 09:34 .agent
-rwxr-xr-x  1 builder builder      4621 Mar 15 09:34 svc.sh
-rwxr-xr-x  1 builder builder       697 Mar 15 09:34 runsvc.sh
-rw-r--r--  1 builder builder        66 Mar 15 09:34 .service
-rw-rw-r--  1 builder builder        99 Mar 15 09:37 .path
drwxrwxr-x  2 builder builder      4096 Mar 15 09:37 _diag
```

The status shows it is indeed running

```
builder@builder-T100:~/myagent$ sudo ./svc.sh status

/etc/systemd/system/vsts.agent.princessking.OnPrem\x2dLinuxVM.builder\x2dT100.service

● vsts.agent.princessking.OnPrem\x2dLinuxVM.builder\x2dT100.service - Azure Pipelines Agent (princessking.OnPrem-LinuxVM.builder-T100)
     Loaded: loaded (/etc/systemd/system/vsts.agent.princessking.OnPrem\x2dLinuxVM.builder\x2dT100.service; enabled; vendor preset: enabled)
     Active: active (running) since Sat 2025-03-15 09:34:17 CDT; 6min ago
   Main PID: 1630339 (runsvc.sh)
      Tasks: 31 (limit: 9092)
     Memory: 160.7M
        CPU: 9.060s
     CGroup: /system.slice/vsts.agent.princessking.OnPrem\x2dLinuxVM.builder\x2dT100.service
             ├─1630339 /bin/bash /home/builder/myagent/runsvc.sh
             ├─1630493 ./externals/node20_1/bin/node ./bin/AgentService.js
             └─1630749 /home/builder/myagent/bin/Agent.Listener run --startuptype service

Mar 15 09:34:17 builder-T100 systemd[1]: Started Azure Pipelines Agent (princessking.OnPrem-LinuxVM.builder-T100).
Mar 15 09:34:17 builder-T100 runsvc.sh[1630339]: .path=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/l…/snap/bin
Mar 15 09:34:17 builder-T100 runsvc.sh[1630363]: v20.17.0
Mar 15 09:34:18 builder-T100 runsvc.sh[1630493]: Starting Agent listener with startup type: service
Mar 15 09:34:18 builder-T100 runsvc.sh[1630493]: Started listener process
Mar 15 09:34:18 builder-T100 runsvc.sh[1630493]: Started running service
Mar 15 09:34:21 builder-T100 runsvc.sh[1630493]: Scanning for tool capabilities.
Mar 15 09:34:21 builder-T100 runsvc.sh[1630493]: Connecting to the server.
Mar 15 09:34:23 builder-T100 runsvc.sh[1630493]: 2025-03-15 14:34:23Z: Listening for Jobs
Hint: Some lines were ellipsized, use -l to show in full.
```

Refreshing my pool shows the agent is there, so it must just be a wayward return code.
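Re-running the playbook is likely, and the duplicate vsts-agent-linux-x64-4.252.0.tar.gz.1 in the directory listing above is what wget produces when the file already exists. A sketch of two guards that could make the install script safe to re-run, assuming a configured agent directory contains the .agent file that config.sh writes (as in that listing); AGENT_VERSION, agent_tarball_url and agent_already_configured are hypothetical names:

```bash
#!/bin/bash
# Sketch: guards to make the agent install safe to re-run.
# AGENT_VERSION and both helper names are hypothetical; the URL pattern
# is the one used in the install script above.
AGENT_VERSION="${AGENT_VERSION:-4.252.0}"

agent_tarball_url() {
  local version="$1"
  echo "https://vstsagentpackage.azureedge.net/agent/${version}/vsts-agent-linux-x64-${version}.tar.gz"
}

# Assumption: a directory already registered by config.sh contains a .agent file
agent_already_configured() {
  [ -f "$1/.agent" ]
}

# Possible flow inside the install script (not executed here):
#   agent_already_configured . && { echo "Agent already configured"; exit 0; }
#   wget -q -O agent.tar.gz "$(agent_tarball_url "$AGENT_VERSION")"  # -O overwrites, no .1 copies
#   tar zxf agent.tar.gz && rm -f agent.tar.gz
```

Skipping configuration on a second run also avoids config.sh complaining that the agent is already configured.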

Kubectx and access points

There are a few ways we could approach this. One is a dedicated AzDO agent per environment, each authorized to reach its own Kubernetes cluster.

But again, I’m all about minimizing agents and systems to maintain. Let’s use kubectx to dance between clusters:

```
builder@builder-T100:~/myagent$ sudo snap install kubectx --classic
kubectx 0.9.5 from Marcos Alano (mhalano) installed
```

I usually keep a script to prep my kubeconfig each time:

```bash
az keyvault secret show --vault-name idjakv --name k3sremoteconfigb64tgz --subscription Pay-As-You-Go | jq -r .value > /tmp/k3s.tgz.b64
cat /tmp/k3s.tgz.b64 | base64 --decode > /tmp/k3s.tgz
cd /tmp
tar -xzvf /tmp/k3s.tgz
chown builder:builder home/builder/.kube/config
mv home/builder/.kube/config ~/.kube/config
```

I can now use kubectx to see my clusters:

```
builder@builder-T100:/tmp$ kubectx
docker-desktop
ext33
ext77
ext81
int33
mac77
mac81
```

A quick test shows it works:

```
builder@builder-T100:/tmp$ kubectl get nodes
NAME                          STATUS   ROLES                  AGE    VERSION
builder-hp-elitebook-745-g5   Ready    control-plane,master   378d   v1.26.14+k3s1
hp-hp-elitebook-850-g2        Ready    <none>                 375d   v1.26.14+k3s1
builder-hp-elitebook-850-g1   Ready    <none>                 378d   v1.26.14+k3s1
builder-hp-elitebook-850-g2   Ready    <none>                 236d   v1.26.14+k3s1
```
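With one agent serving several clusters, a deploy step has to pick the right kube context for the release’s destination. A minimal sketch; the environment-to-context mapping is entirely hypothetical (the context names come from the kubectx listing above, but which cluster is Prod is my assumption):

```bash
#!/bin/bash
# Sketch: map a release destination (e.g. from the Release work item) to a
# kube context. The mapping below is hypothetical.
context_for_env() {
  case "$1" in
    Prod)  echo "ext33" ;;
    Train) echo "ext77" ;;
    Stage) echo "int33" ;;
    *) echo "unknown environment: $1" >&2; return 1 ;;
  esac
}

# Switch the current context; same effect as `kubectx <name>`
use_env() {
  local ctx
  ctx=$(context_for_env "$1") || return 1
  kubectl config use-context "$ctx"
}
```

Failing loudly on an unknown environment keeps a typo in a ticket from silently deploying to whatever context happened to be current.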

Helm samples

I want a stand-in Helm chart for this, which I can scaffold with helm create:

```
builder@LuiGi:~/Workspaces/PipelineExamples$ helm create mychart
Creating mychart
builder@LuiGi:~/Workspaces/PipelineExamples$ tree ./mychart/
./mychart/
├── Chart.yaml
├── charts
├── templates
│   ├── NOTES.txt
│   ├── _helpers.tpl
│   ├── deployment.yaml
│   ├── hpa.yaml
│   ├── ingress.yaml
│   ├── service.yaml
│   ├── serviceaccount.yaml
│   └── tests
│       └── test-connection.yaml
└── values.yaml

4 directories, 10 files
```

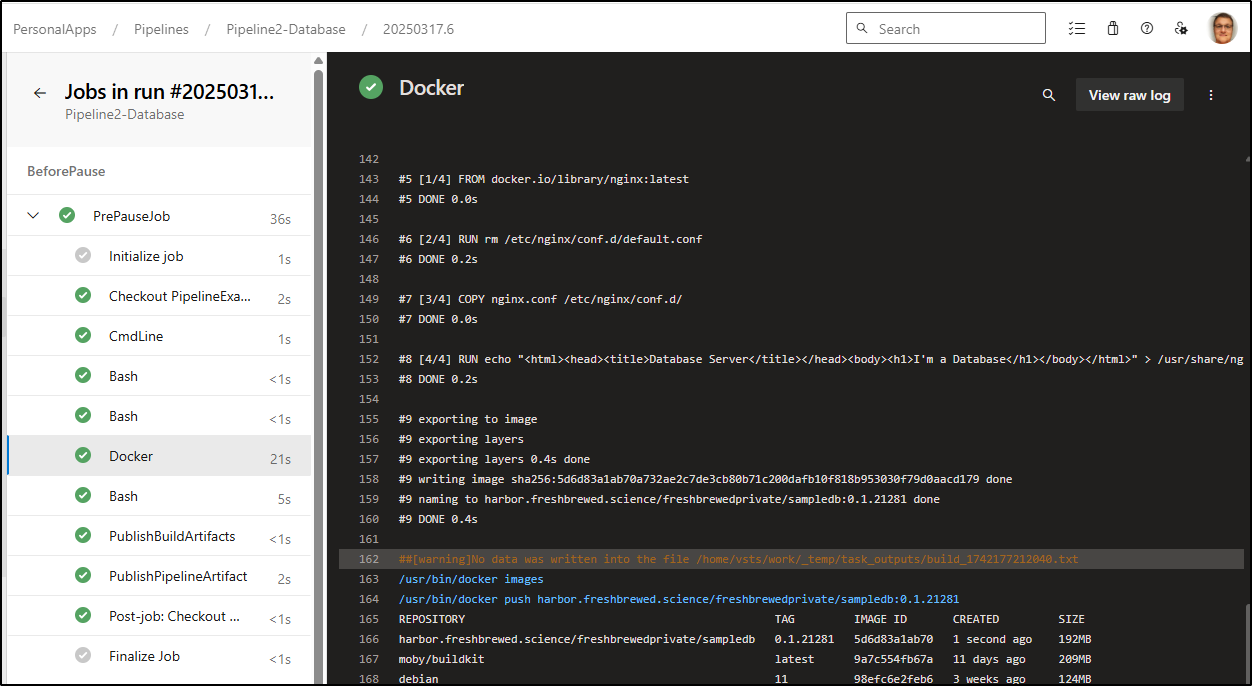

I updated the Database pipeline to build an Nginx container with a database message, then push both the container and the Helm chart to my CR:

```yaml
stages:
- stage: BeforePause
  jobs:
  - job: PrePauseJob
    steps:
    - script: echo "This runs before the pause"
    - task: Bash@3
      inputs:
        targetType: 'inline'
        script: |
          echo "Test Database File" > SampleFile.txt
          tar -czvf $(Build.ArtifactStagingDirectory)/Samplefile-$(Build.BuildId).tgz ./SampleFile.txt
    - task: Bash@3
      inputs:
        targetType: 'inline'
        script: |
          sed -i 's/XXXX/Database/g' Dockerfile
    - task: Docker@2
      inputs:
        containerRegistry: 'harborcr'
        repository: 'freshbrewedprivate/sampledb'
        command: 'buildAndPush'
        Dockerfile: 'Dockerfile'
        tags: '0.1.$(build.buildid)'
    - task: Bash@3
      inputs:
        targetType: 'inline'
        script: |
          set -x
          sed -i 's/version: .*/version: 0.1.$(build.buildid)/g' mychart/Chart.yaml
          helm package mychart
          #helm plugin install https://github.com/chartmuseum/helm-push
          helm registry login -u $(harboruser) -p $(harborpass) harbor.freshbrewed.science
          helm push mychart-0.1.$(build.buildid).tgz oci://harbor.freshbrewed.science/chartrepo
    - task: PublishBuildArtifacts@1
      inputs:
        PathtoPublish: '$(Build.ArtifactStagingDirectory)'
        ArtifactName: 'drop'
        publishLocation: 'Container'
        StoreAsTar: true
    - task: PublishPipelineArtifact@1
      inputs:
        targetPath: '$(Build.ArtifactStagingDirectory)'
        artifact: 'drop2'
        publishLocation: 'pipeline'
```

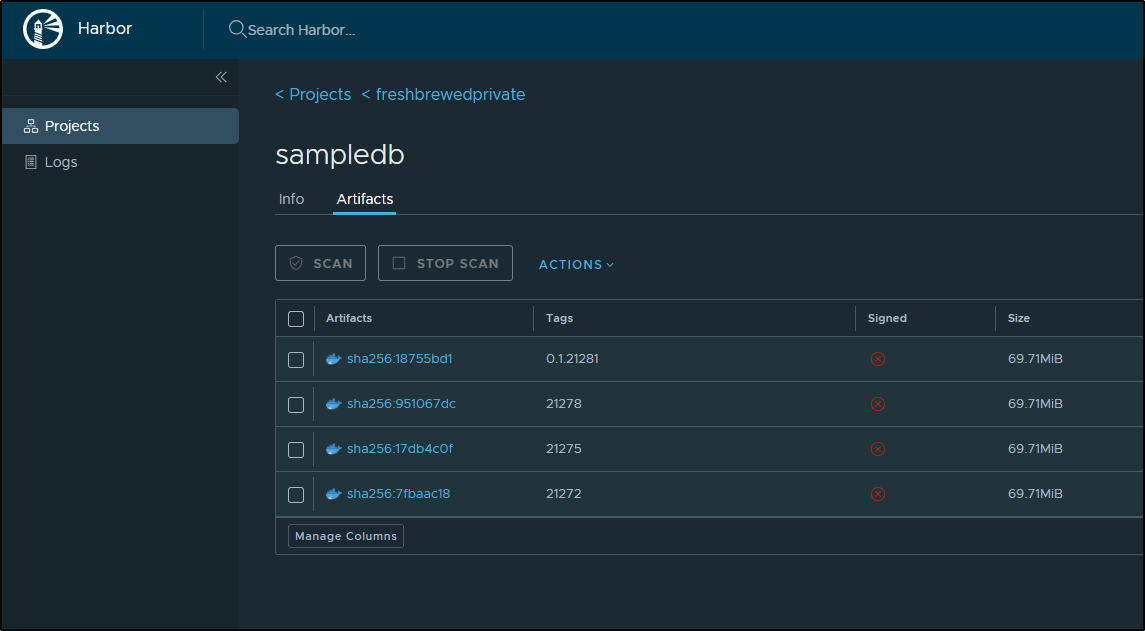

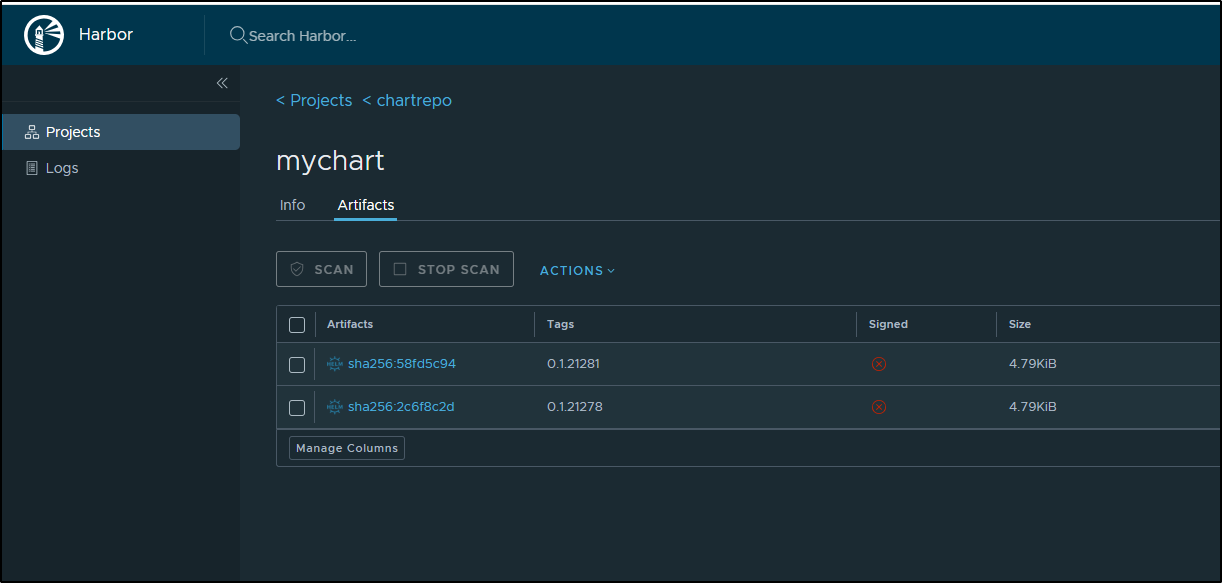

As you can see, it built and pushed the Docker container and the chart.

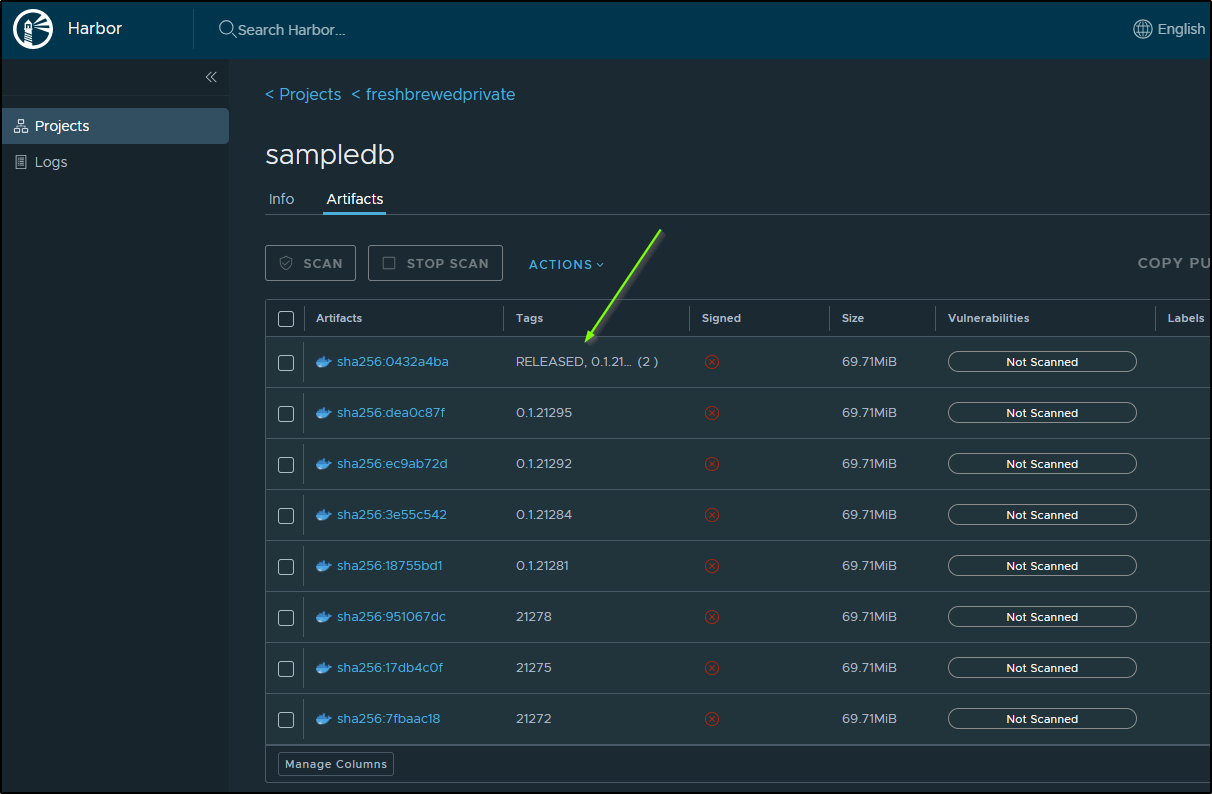

We can see we have a container that matches our build tag, and a chart pushed with the matching tag as well.

I could now use this in a deployment:

```
$ helm pull oci://harbor.freshbrewed.science/chartrepo/mychart --version 0.1.21281
Pulled: harbor.freshbrewed.science/chartrepo/mychart:0.1.21281
Digest: sha256:58fd5c941ae6c8459966c61957574b847689bed8b84589cb4ba45184f512fece
```

I could also use it directly:

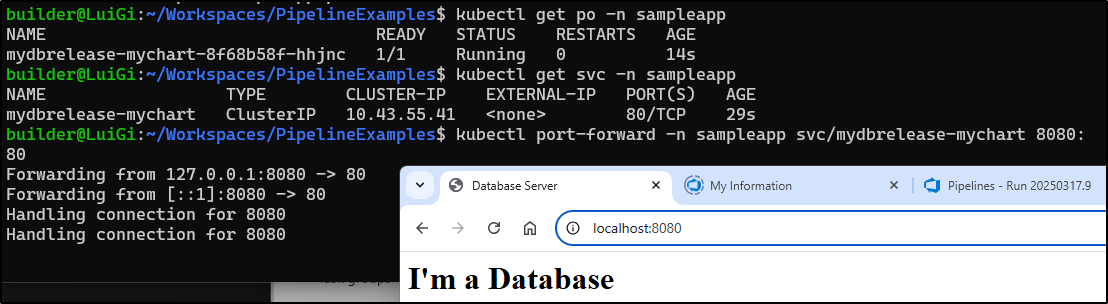

```
$ helm install mydbrelease oci://harbor.freshbrewed.science/chartrepo/mychart --version 0.1.21281
```

For instance, I created a namespace, pulled in the Harbor CR secret, and then installed the OCI chart with the image and tag set (as well as that Harbor registry secret):

```
builder@LuiGi:~/Workspaces/PipelineExamples$ kubectl get secrets myharborreg -o yaml > myharborreg.yaml
builder@LuiGi:~/Workspaces/PipelineExamples$ vi myharborreg.yaml
builder@LuiGi:~/Workspaces/PipelineExamples$ !v
vi myharborreg.yaml
builder@LuiGi:~/Workspaces/PipelineExamples$ helm install mydbrelease --namespace sampleapp --set image.repository=harbor.freshbrewed.science/freshbrewedprivate/sampledb --set image.tag=0.1.21281 --set "imagePullSecrets[0].name=myharborreg" oci://harbor.freshbrewed.science/chartrepo/mychart --version 0.1.21281
Pulled: harbor.freshbrewed.science/chartrepo/mychart:0.1.21281
Digest: sha256:58fd5c941ae6c8459966c61957574b847689bed8b84589cb4ba45184f512fece
NAME: mydbrelease
LAST DEPLOYED: Sun Mar 16 21:34:17 2025
NAMESPACE: sampleapp
STATUS: deployed
REVISION: 1
NOTES:
1. Get the application URL by running these commands:
  export POD_NAME=$(kubectl get pods --namespace sampleapp -l "app.kubernetes.io/name=mychart,app.kubernetes.io/instance=mydbrelease" -o jsonpath="{.items[0].metadata.name}")
  export CONTAINER_PORT=$(kubectl get pod --namespace sampleapp $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")
  echo "Visit http://127.0.0.1:8080 to use your application"
  kubectl --namespace sampleapp port-forward $POD_NAME 8080:$CONTAINER_PORT
```

There seems to be an issue with my Nginx config:

```
$ kubectl logs mydbrelease-mychart-774d5f76c7-hqmrg -n sampleapp
/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration
/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/
/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh
10-listen-on-ipv6-by-default.sh: info: /etc/nginx/conf.d/default.conf is not a file or does not exist
/docker-entrypoint.sh: Sourcing /docker-entrypoint.d/15-local-resolvers.envsh
/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh
/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh
/docker-entrypoint.sh: Configuration complete; ready for start up
2025/03/17 02:34:41 [emerg] 1#1: "worker_processes" directive is not allowed here in /etc/nginx/conf.d/nginx.conf:1
nginx: [emerg] "worker_processes" directive is not allowed here in /etc/nginx/conf.d/nginx.conf:1
```

As I debug the issue, the delete-and-retry pattern is pretty handy. This is only possible because I have been pushing both the charts and the container builds to my container registry with the same build ID:

```
builder@LuiGi:~/Workspaces/PipelineExamples$ !2106
helm delete mydbrelease -n sampleapp
release "mydbrelease" uninstalled
builder@LuiGi:~/Workspaces/PipelineExamples$ helm install mydbrelease --namespace sampleapp --set image.repository=harbor.freshbrewed.science/freshbrewedprivate/sampledb --set image.tag=0.1.21284 --set "imagePullSecrets[0].name=myharborreg" oci://harbor.freshbrewed.science/chartrepo/mychart --version 0.1.21284
Pulled: harbor.freshbrewed.science/chartrepo/mychart:0.1.21284
Digest: sha256:93195772075a129bd1c928e80a9cbe661015a36532ec6425c486e74dcdcf5bb1
NAME: mydbrelease
LAST DEPLOYED: Mon Mar 17 07:43:46 2025
NAMESPACE: sampleapp
STATUS: deployed
REVISION: 1
NOTES:
1. Get the application URL by running these commands:
  export POD_NAME=$(kubectl get pods --namespace sampleapp -l "app.kubernetes.io/name=mychart,app.kubernetes.io/instance=mydbrelease" -o jsonpath="{.items[0].metadata.name}")
  export CONTAINER_PORT=$(kubectl get pod --namespace sampleapp $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")
  echo "Visit http://127.0.0.1:8080 to use your application"
  kubectl --namespace sampleapp port-forward $POD_NAME 8080:$CONTAINER_PORT
```

With some tweaking I got the Database splash page to launch

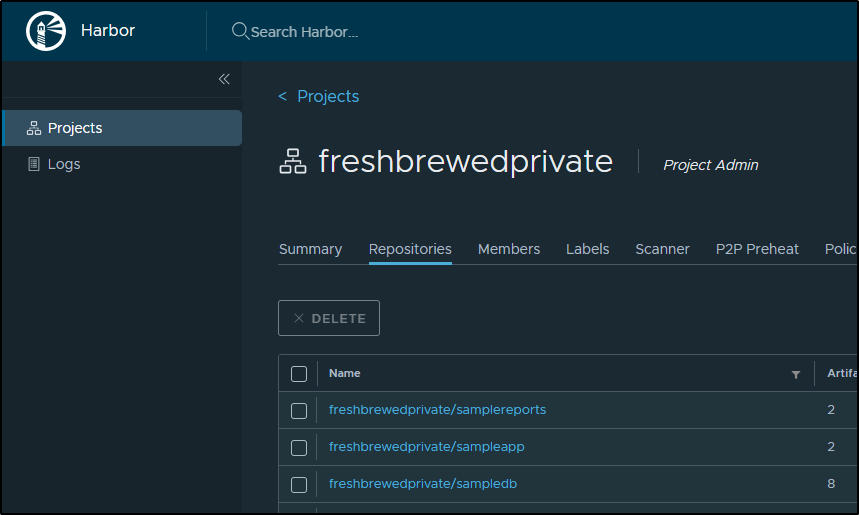
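Delete-and-reinstall works well while debugging, but helm upgrade --install does the same job in one step: it installs the release if it doesn’t exist and upgrades it in place if it does. A sketch wrapping the install command used above (deploy_build and its argument order are hypothetical; the chart location and pull secret are the ones from this post):

```bash
#!/bin/bash
# Sketch: deploy (or redeploy) a given build with one command.
# deploy_build is a hypothetical helper; args: release namespace image_repo version
deploy_build() {
  local release="$1" namespace="$2" image_repo="$3" version="$4"
  # Chart version and image tag share the build ID, so one value drives both
  helm upgrade --install "$release" \
    oci://harbor.freshbrewed.science/chartrepo/mychart \
    --version "$version" \
    --namespace "$namespace" \
    --set image.repository="$image_repo" \
    --set image.tag="$version" \
    --set "imagePullSecrets[0].name=myharborreg"
}

# e.g. deploy_build mydbrelease sampleapp harbor.freshbrewed.science/freshbrewedprivate/sampledb 0.1.21284
```

Upgrading in place also keeps the Helm revision history, so a bad build can be rolled back with helm rollback rather than reinstalled.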

Soon I had all the pipelines updated, so we can look at a multi-microservice deployment structure:

The idea is now to create a system that would track the latest passing builds of these and look to deploy to an environment based on criteria such as a Work Item.

That is, we do not want to require the program administrator or deploy-night engineer to manually approve or advance individual pipelines.

Ideally, we would find the latest validated pipeline on a lineage (in our case 0.1.x).

The reason to pay attention to major/minor versions, and not just “latest”, is that we may have bug fixes for earlier releases in the pipeline, as well as a future release in the works that could get picked up by mistake.

We could fetch from the CR, assuming only validated images are pushed there:

```
builder@LuiGi:~/Workspaces/PipelineExamples$ curl --silent -X 'GET' \
  'https://harbor.freshbrewed.science/api/v2.0/projects/freshbrewedprivate/repositories/samplereports/artifacts?page=1&page_size=10&with_tag=true&with_label=true&with_scan_overview=false&with_signature=false&with_immutable_status=false&with_accessory=false' \
  -H 'accept: application/json' \
  -H 'X-Accept-Vulnerabilities: application/vnd.security.vulnerability.report; version=1.1, application/vnd.scanner.adapter.vuln.report.harbor+json; version=1.0' \
  -H "authorization: Basic $MYHARBORCRED" \
  | jq -r '.[].tags[]?.name' | sort -rV | grep '^0\.1\.' | head -n1
0.1.21300

builder@LuiGi:~/Workspaces/PipelineExamples$ curl --silent -X 'GET' \
  'https://harbor.freshbrewed.science/api/v2.0/projects/freshbrewedprivate/repositories/sampleapp/artifacts?page=1&page_size=10&with_tag=true&with_label=true&with_scan_overview=false&with_signature=false&with_immutable_status=false&with_accessory=false' \
  -H 'accept: application/json' \
  -H 'X-Accept-Vulnerabilities: application/vnd.security.vulnerability.report; version=1.1, application/vnd.scanner.adapter.vuln.report.harbor+json; version=1.0' \
  -H "authorization: Basic $MYHARBORCRED" \
  | jq -r '.[].tags[]?.name' | sort -rV | grep '^0\.1\.' | head -n1
0.1.21298

builder@LuiGi:~/Workspaces/PipelineExamples$ curl --silent -X 'GET' \
  'https://harbor.freshbrewed.science/api/v2.0/projects/freshbrewedprivate/repositories/sampledb/artifacts?page=1&page_size=10&with_tag=true&with_label=true&with_scan_overview=false&with_signature=false&with_immutable_status=false&with_accessory=false' \
  -H 'accept: application/json' \
  -H 'X-Accept-Vulnerabilities: application/vnd.security.vulnerability.report; version=1.1, application/vnd.scanner.adapter.vuln.report.harbor+json; version=1.0' \
  -H "authorization: Basic $MYHARBORCRED" \
  | jq -r '.[].tags[]?.name' | sort -rV | grep '^0\.1\.' | head -n1
0.1.21299
```
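One caveat when picking the newest tag: a plain text sort ranks 0.1.9999 above 0.1.21299 once build IDs cross a digit boundary, while GNU sort -V compares the numeric parts. Also, page_size=10 only returns the first page of artifacts, so a busy repository may need a larger page size or paging. A sketch of a lineage filter (latest_in_lineage is a hypothetical name):

```bash
#!/bin/bash
# Sketch: newest tag in a lineage (e.g. "0.1.") from a list of tags on stdin.
# latest_in_lineage is a hypothetical helper name.
latest_in_lineage() {
  local prefix="$1"
  # index(...) == 1 means "starts with", so the dots need no regex escaping;
  # sort -V (GNU) orders 0.1.9999 before 0.1.21299
  awk -v p="$prefix" 'index($0, p) == 1' | sort -V | tail -n 1
}

# e.g. ... | jq -r '.[].tags[]?.name' | latest_in_lineage "0.1."
```

Non-lineage tags such as RELEASED or bare build IDs like 21278 are simply filtered out by the prefix check.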

After deploying, I can of course push a “deployed” tag

```bash
curl -X 'POST' \
  'https://harbor.freshbrewed.science/api/v2.0/projects/freshbrewedprivate/repositories/sampledb/artifacts/0.1.21299/tags' \
  -H 'accept: application/json' \
  -H "authorization: Basic $MYHARBORCRED" \
  -H 'Content-Type: application/json' \
  -H 'X-Harbor-CSRF-Token: lY70OhGkPpyqCEULEQmN93l+ksRvHspHqB8/n3pST0apfsLSM2y+lqu8PrEm5aISLoMKwOX6bQzn4uDGGXk4+Q==' \
  -d '{
  "name": "RELEASED",
  "push_time": "2025-03-18T00:55:39.023Z",
  "pull_time": "2025-03-18T00:55:39.023Z",
  "immutable": true
}'
```
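To reuse this step in a pipeline, the same call can be wrapped in a small bash function. This is a sketch under a few assumptions: MYHARBORCRED holds the base64-encoded `user:password` as above, the tag name is a plain string without quotes, only the tag name is sent in the body, and the X-Harbor-CSRF-Token header is left out (that token came from a Swagger UI session; I'm assuming a basic-auth API call does not need it, so add it back if your Harbor rejects the request). The double URL-encoding of a slash in a repository name follows Harbor's API documentation for nested repositories. `harbor_tag_url` and `harbor_add_tag` are illustrative names:

```bash
#!/usr/bin/env bash
# Sketch: add a tag (e.g. RELEASED) to an existing Harbor artifact.
# Assumes MYHARBORCRED is base64("user:password") and TAG contains no quotes.

# Build the tags endpoint URL for one artifact reference (tag or digest).
harbor_tag_url() {
  local base="$1" project="$2" repo="$3" reference="$4"
  # Harbor wants a slash inside a repository name encoded twice: a/b -> a%252Fb
  local encoded_repo="${repo//\//%252F}"
  printf '%s/api/v2.0/projects/%s/repositories/%s/artifacts/%s/tags\n' \
    "$base" "$project" "$encoded_repo" "$reference"
}

# POST the new tag; --fail makes curl return non-zero on an HTTP error.
harbor_add_tag() {
  local base="$1" project="$2" repo="$3" reference="$4" tag="$5"
  curl --silent --show-error --fail -X POST \
    "$(harbor_tag_url "$base" "$project" "$repo" "$reference")" \
    -H 'accept: application/json' \
    -H 'Content-Type: application/json' \
    -H "authorization: Basic ${MYHARBORCRED}" \
    -d "{\"name\": \"${tag}\"}"
}
```

For example: `harbor_add_tag https://harbor.freshbrewed.science freshbrewedprivate sampledb 0.1.21299 RELEASED`.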

As we can see, it is now tagged.

I can also summarize the artifacts and their tags with jq (here t.o holds the saved JSON output of an artifacts query):

```
$ jq -r '.[] | "Image ID: \(.id)\nPush Time: \(.push_time)\nTags: \([.tags[].name] | join(", "))\n---"' t.o
Image ID: 391
Push Time: 2025-03-17T13:33:04.457Z
Tags: RELEASED, 0.1.21299
---
Image ID: 386
Push Time: 2025-03-17T13:02:55.765Z
Tags: 0.1.21295
---
Image ID: 383
Push Time: 2025-03-17T12:52:06.580Z
Tags: 0.1.21292
---
Image ID: 381
Push Time: 2025-03-17T02:39:30.464Z
Tags: 0.1.21284
---
Image ID: 379
Push Time: 2025-03-17T02:07:01.580Z
Tags: 0.1.21281
---
Image ID: 377
Push Time: 2025-03-17T01:35:50.531Z
Tags: 21278
---
Image ID: 376
Push Time: 2025-03-17T01:27:19.580Z
Tags: 21275
---
Image ID: 375
Push Time: 2025-03-17T00:48:41.249Z
Tags: 21272
---
```

The next step is to fetch work items that are ready to deploy. Right now I have just three states: New, Active and Closed. Obviously, we could add more states, such as Tested and Approved. However, for the sake of the demo, let us assume “Active” means approved and ready to go:

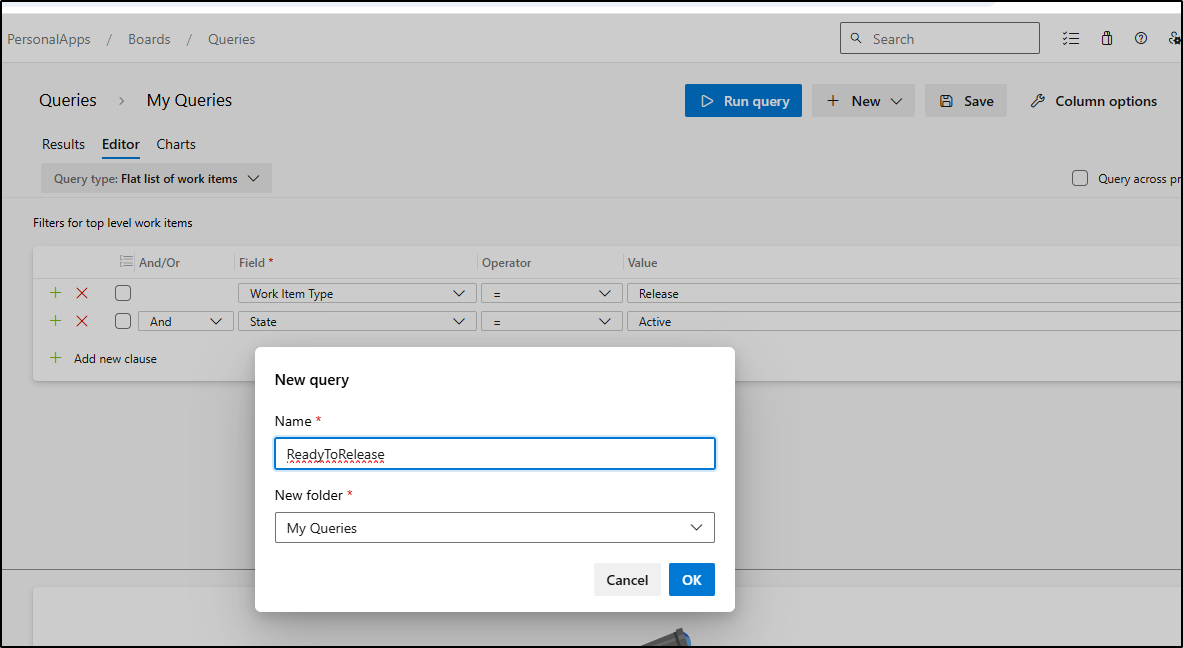

I’ll create and save a Work Item Query to find Release tickets that are now active.

Once saved, I can see the query's URL, which ends in its ID:

https://princessking.visualstudio.com/PersonalApps/_queries/query-edit/724048fd-2869-4995-8bb4-3bea1212c5ca/
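The GUID at the end of that URL is the query ID that `az boards query --id` expects, and it is the value that ends up in `job_supportUserWIQueryID` in the pipeline. If you would rather store the URL than copy the GUID by hand, bash parameter expansion can pull it out. A small sketch; `wiq_id_from_url` is an illustrative name:

```bash
#!/usr/bin/env bash
# Extract the query GUID from a saved Work Item Query URL (trailing slash optional).
wiq_id_from_url() {
  local url="${1%/}"        # drop one trailing slash, if present
  local id="${url##*/}"     # keep everything after the last remaining slash
  # Sanity-check that it looks like a GUID before using it
  if [[ "$id" =~ ^[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}$ ]]; then
    printf '%s\n' "$id"
  else
    return 1
  fi
}

# Example:
# az boards query --organization https://dev.azure.com/princessking/ \
#   --id "$(wiq_id_from_url "$WIQ_URL")" -o json
```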

I then created a deployer pipeline that runs every 15 minutes and fetches the query results:

```yaml
schedules:
- cron: "*/15 * * * *"
  displayName: 15m WI Check
  branches:
    include:
    - wia-adduser
  always: true

pool:
  vmImage: ubuntu-latest

variables:
- name: Org_Name
  value: 'princessking'
- name: affector
  value: isaac.johnson@gmail.com
- name: ThisPipelineID
  value: 112

stages:
- stage: parse
  jobs:
  - job: parse_work_item
    variables:
      job_supportUserWIQueryID: 724048fd-2869-4995-8bb4-3bea1212c5ca
    displayName: start_n_sync
    continueOnError: false
    steps:
    - task: AzureCLI@2
      displayName: 'Azure CLI - wiq AddSPToAzure'
      inputs:
        azureSubscription: 'myAZConn'
        scriptType: 'bash'
        scriptLocation: 'inlineScript'
        inlineScript: 'az boards query --organization https://dev.azure.com/$(Org_Name)/ --id $(job_supportUserWIQueryID) -o json | jq ''.[] | .id'' | tr ''\n'' '','' > ids.txt'
      env:
        AZURE_DEVOPS_EXT_PAT: $(AzureDevOpsAutomationPAT)
    - task: AzureCLI@2
      displayName: 'Azure CLI - Pipeline Semaphore'
      inputs:
        azureSubscription: 'myAZConn'
        scriptType: bash
        scriptLocation: inlineScript
        inlineScript: 'az pipelines build list --project PersonalApps --definition-ids $(ThisPipelineID) --org https://dev.azure.com/$(Org_Name)/ -o table > $(Build.StagingDirectory)/pipelinestate.txt'
      env:
        AZURE_DEVOPS_EXT_PAT: $(AzureDevOpsAutomationPAT)
    - bash: |
        #!/bin/bash
        set +x
        # take comma sep list and set a var (remove trailing comma if there)
        echo "##vso[task.setvariable variable=WISTOPROCESS]"`cat ids.txt | sed 's/,$//'` > t.o
        set -x
        cat t.o
      displayName: 'Set WISTOPROCESS'
    - bash: |
        set -x
        export
        set +x
        export IFS=","
        read -a strarr <<< "$(WISTOPROCESS)"
        # Build the matrix object: one 'processN':{'wi':'N'} leg per work item ID
        export tval="{"
        for val in "${strarr[@]}";
        do
          export tval="${tval}'process$val':{'wi':'$val'}, "
        done
        set -x
        echo "... task.setvariable variable=mywis;isOutput=true]$tval" | sed 's/..$/}/'
        set +x
        if [[ "$(WISTOPROCESS)" == "" ]]; then
          echo "##vso[task.setvariable variable=mywis;isOutput=true]{}" > ./t.o
        else
          echo "##vso[task.setvariable variable=mywis;isOutput=true]$tval" | sed 's/..$/}/' > ./t.o
        fi
        # regardless of above, if we detect another queued "notStarted" or "inProgress" job, bail out; don't double process
        # this way if an existing job is taking a while, we just bail out on subsequent builds (gracefully)
        export tVarNS="`cat $(Build.StagingDirectory)/pipelinestate.txt | grep -v $(Build.BuildID) | grep notStarted | head -n1 | tr -d '\n'`"
        export tVarIP="`cat $(Build.StagingDirectory)/pipelinestate.txt | grep -v $(Build.BuildID) | grep inProgress | head -n1 | tr -d '\n'`"
        if [[ "$tVarNS" == "" ]]; then
          echo "No one else is NotStarted"
        else
          echo "##vso[task.setvariable variable=mywis;isOutput=true]{}" > ./t.o
        fi
        if [[ "$tVarIP" == "" ]]; then
          echo "No one else is InProgress"
        else
          echo "##vso[task.setvariable variable=mywis;isOutput=true]{}" > ./t.o
        fi
        set -x
        cat ./t.o
      name: mtrx
      displayName: 'create mywis var'
    - bash: |
        set -x
        export
      displayName: 'debug'
```
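The semaphore check in the 'create mywis var' step uses `grep -v $(Build.BuildID)`, which drops any line containing that number anywhere, not just in the ID column. A slightly stricter sketch compares only the first column with awk. It assumes the build ID is the first whitespace-separated field of each row in the `-o table` output; `other_runs_active` is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# Succeed (status 0) if any run other than CURRENT_ID is notStarted or inProgress.
# Reads `az pipelines build list ... -o table` output on stdin and assumes the
# build ID is the first whitespace-separated field of each row.
other_runs_active() {
  local current_id="$1"
  awk -v id="$current_id" '
    $1 != id && /notStarted|inProgress/ { found = 1 }
    END { exit !found }
  '
}

# Example use in the step (Azure expands the $(...) macros before bash runs):
# if other_runs_active "$(Build.BuildId)" < "$(Build.StagingDirectory)/pipelinestate.txt"; then
#   echo "##vso[task.setvariable variable=mywis;isOutput=true]{}" > ./t.o
# fi
```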

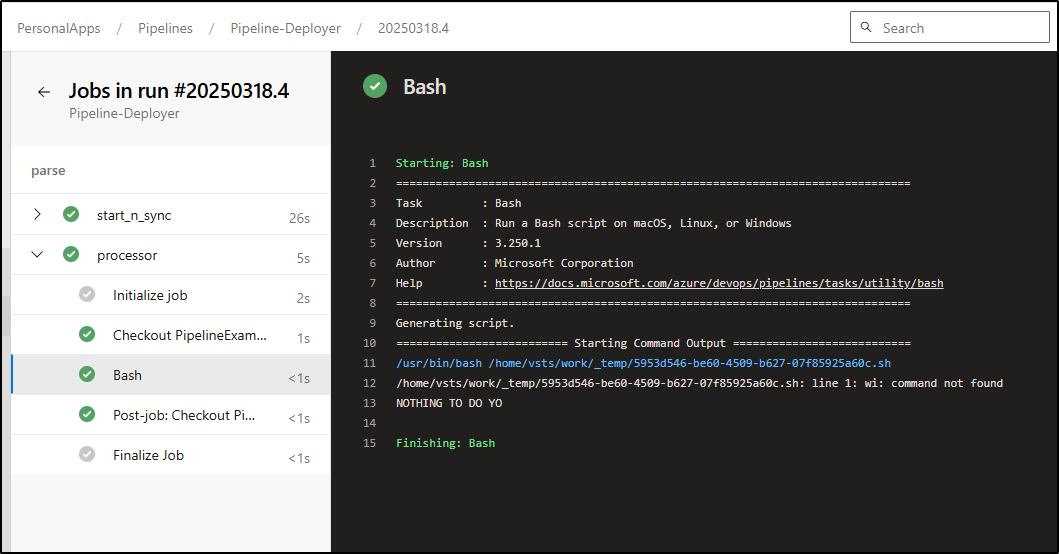

```yaml
  - job: processor
    dependsOn: parse_work_item
    strategy:
      matrix: $[ dependencies.parse_work_item.outputs['mtrx.mywis']]
    steps:
    - bash: |
        if [ "$(wi)" == "" ]; then
          echo "NOTHING TO DO YO"
          echo "##vso[task.setvariable variable=skipall;]yes"
          exit
        else
          echo "PROCESS THIS WI $(wi)"
          echo "##vso[task.setvariable variable=skipall;]no"
        fi
        echo "my item: $(wi)"
```
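The `tval` loop in the 'create mywis var' step builds the matrix string and then trims the trailing `, ` with sed. An alternative sketch joins the entries with a separator instead, so there is nothing to trim and an empty list falls out naturally as `{}`. It keeps the same single-quoted `{'processN':{'wi':'N'}}` shape that the processor job's `strategy.matrix` expression consumes; `build_matrix` is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# Turn a comma-separated list of work item IDs (e.g. "168,170") into the
# matrix object consumed by the processor job: one leg per work item.
build_matrix() {
  local ids="$1" entries="" sep="" id
  local -a arr=()
  # IFS is set only for this read, so it does not leak into later commands
  IFS=',' read -r -a arr <<< "$ids"
  for id in "${arr[@]}"; do
    id="${id//[[:space:]]/}"   # tolerate spaces around the commas
    if [[ -n "$id" ]]; then
      entries+="${sep}'process${id}':{'wi':'${id}'}"
      sep=", "
    fi
  done
  printf '{%s}\n' "$entries"
}

# Example use inside the step (Azure expands $(WISTOPROCESS) before bash runs):
# echo "##vso[task.setvariable variable=mywis;isOutput=true]$(build_matrix "$(WISTOPROCESS)")"
```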

When the Release ticket is “New”, we see no results to process.

But if I move it to Active, it shows up in the results.

My next step would be to parse out the “To Release” items:

```
$ az boards work-item show --id 168 --organization https://dev.azure.com/princessking/ > x.o
$ cat x.o | jq -r '.fields."System.Description"' | sed 's/.*Release: //' | sed 's/ <.div.*//'
Reports, Database
```

I could then determine which release to use from the work item's title:

```
$ cat x.o | jq -r '.fields."System.Title"'
Release 0.1
```
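To tie the ticket back to the Harbor lookups, the title needs to become a tag prefix and the description list a set of repositories. A minimal sketch, assuming titles always take the form “Release <major>.<minor>” and using a hypothetical mapping from component names to the sample repositories queried earlier; the function names and the `App` entry are illustrative only:

```bash
#!/usr/bin/env bash
# "Release 0.1" -> "0.1." (a tag prefix for the release lineage).
# Assumes the title is exactly "Release <major>.<minor>".
release_prefix_from_title() {
  local version="${1#Release }"
  [[ "$version" =~ ^[0-9]+\.[0-9]+$ ]] || return 1
  printf '%s.\n' "$version"
}

# "Reports, Database" -> one trimmed component name per line
components_from_list() {
  local item
  local -a items=()
  IFS=',' read -r -a items <<< "$1"
  for item in "${items[@]}"; do
    item="${item#"${item%%[![:space:]]*}"}"   # trim leading whitespace
    item="${item%"${item##*[![:space:]]}"}"   # trim trailing whitespace
    if [[ -n "$item" ]]; then
      printf '%s\n' "$item"
    fi
  done
}

# Hypothetical mapping from ticket component names to Harbor repositories
repo_for_component() {
  case "$1" in
    Reports)  echo samplereports ;;
    Database) echo sampledb ;;
    App)      echo sampleapp ;;
    *)        return 1 ;;
  esac
}
```

With the values above, `release_prefix_from_title 'Release 0.1'` prints `0.1.`, and `components_from_list 'Reports, Database'` prints `Reports` and `Database` on separate lines.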

At this point, I'll hold off on detailing the rest, as it depends on my full model.

That is, I could decide that I want the release number as a field in the Azure Work Item and pull it from there. Or I could pack it into the YAML block and parse it out from there. I could also see value in POSIX-style pattern matching like “0.1.*”, or an exact version like “0.1.234” to be explicit.
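On the matching idea: bash's own pattern matching already covers both forms. With an unquoted right-hand side, `[[ $tag == $pattern ]]` treats `0.1.*` as a glob and `0.1.234` as an exact string. A small sketch (the helper names are hypothetical), whose output could then be fed to a version-aware sort such as `sort -V | tail -n 1` to pick the newest match:

```bash
#!/usr/bin/env bash
# Succeed if TAG satisfies PATTERN, where PATTERN is either an exact version
# ("0.1.234") or a shell glob ("0.1.*"). Note that "*" matches any text,
# so "0.1.*" also accepts tags like "0.1.rc1".
tag_matches() {
  local pattern="$1" tag="$2"
  # $pattern is deliberately unquoted so that glob characters stay active
  [[ "$tag" == $pattern ]]
}

# Print only the tags (read one per line on stdin) that match PATTERN
filter_tags() {
  local pattern="$1" tag
  while IFS= read -r tag; do
    if tag_matches "$pattern" "$tag"; then
      printf '%s\n' "$tag"
    fi
  done
}
```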

The other part to contend with is failures. What if I pull the releases for Reports and Database and one fails? Do I stop in my tracks? Should I mark just that one as failed? Certainly, this would be a condition worth kicking off alerts and emails.

Hopefully, though, you get the general idea of a Work Item driven flow.

Additionally, while I did this using Azure Work Items, you can use JIRA just as well, and I have an article on that.

Summary

Today I covered a few options for controlling flows of Azure Pipelines through Azure DevOps.

We looked at using Environment gates with email approvals, including automating the approval by way of the Az CLI. This wasn’t ideal, as the approval is still tied to the user requested for review, and it’s a bit tricky to parse out the gate name.

We looked at using a REST API for gating. We did this by building a quick REST app just to turn gates on and off. However, there is a limitation here: Azure DevOps has had a fixed maximum of 10 retries (since 2023), after which the check either passes or fails. That means if you want it to stay paused for 10 days, it can check only once a day.

While I didn’t cover it, there is, of course, a dirty trick: just use a bunch of back-to-back gates. That is, if I wanted it to check once a day for up to 30 days, I could chain 3 gates using the same REST API (once a day, for 10 days, 3 times in a row). It would work, albeit it seems kind of excessive.
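The arithmetic behind that trick: each chained gate can cover roughly (retries × interval) of waiting, so the number of gates is the total wait divided by that, rounded up. A tiny sketch taking the 10-retry ceiling above as a given; `gates_needed` is an illustrative name and whole days are assumed:

```bash
#!/usr/bin/env bash
# How many back-to-back REST gates are needed to keep polling for TOTAL_DAYS,
# checking once every INTERVAL_DAYS, if each gate allows at most MAX_RETRIES checks?
gates_needed() {
  local total_days="$1" interval_days="$2" max_retries="${3:-10}"
  local per_gate=$(( max_retries * interval_days ))   # days one gate can cover
  # Integer ceiling division: (a + b - 1) / b
  echo $(( (total_days + per_gate - 1) / per_gate ))
}
```

`gates_needed 30 1` prints 3, matching the three chained gates described above.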

Lastly, we looked at Work Item Automations. This is a topic I covered in 2021 in “Work Item Automations in Azure DevOps” and in an OSN 2021 speaker series talk. I put more notes on the matrix-style build setup above. If people want a full end-to-end build-out, I can do that. However, I did show how we can:

- Search and pull containers matching a pattern from the Harbor CR REST API, finding the latest of a release lineage

- Tag containers on release

- Parse a work item query for matching tickets

- Pull the requisite fields from a Work Item to trigger releases.