Published: Dec 12, 2024 by Isaac Johnson

About eight months ago Redis (the company) decided to change their license model drastically, dropping the BSD license and moving to dual RSALv2/SSPL licensing starting with 7.4. This caused a lot of strong feelings about the SSPL and whether developers could still trust Redis (the company).

In our first writeup about the forks in April 2024, I spoke about the reaction of the community at the time and how some big forks kicked off almost immediately, including Valkey and Redict. Microsoft didn’t fork Redis; rather, they released Garnet, a very lightweight, Redis-compatible, .NET-based potential drop-in replacement.

Time has passed since March 2024, and the most interesting development since a bunch of companies decided to ditch OSS is that some actually changed their minds. At the end of August, Elastic added AGPL as a license option for Elasticsearch and Kibana, making them open source again. We could pick apart some things about the change, but overall, it’s a good thing. Also in that time, IBM stepped up to buy HashiCorp (a deal which still may close any day now), and I personally have heard rumors that license changes might come with that.

Redict

So where did our forks go? I really don’t see many commits to Redict. It seems to have forked, changed to LGPL and just stayed there, save for updates of the Alpine base image. I mean, it’s a point-in-time archive, I guess. And I respect the maintainer’s decision to keep it off GitHub, but that does limit one’s audience.

Garnet

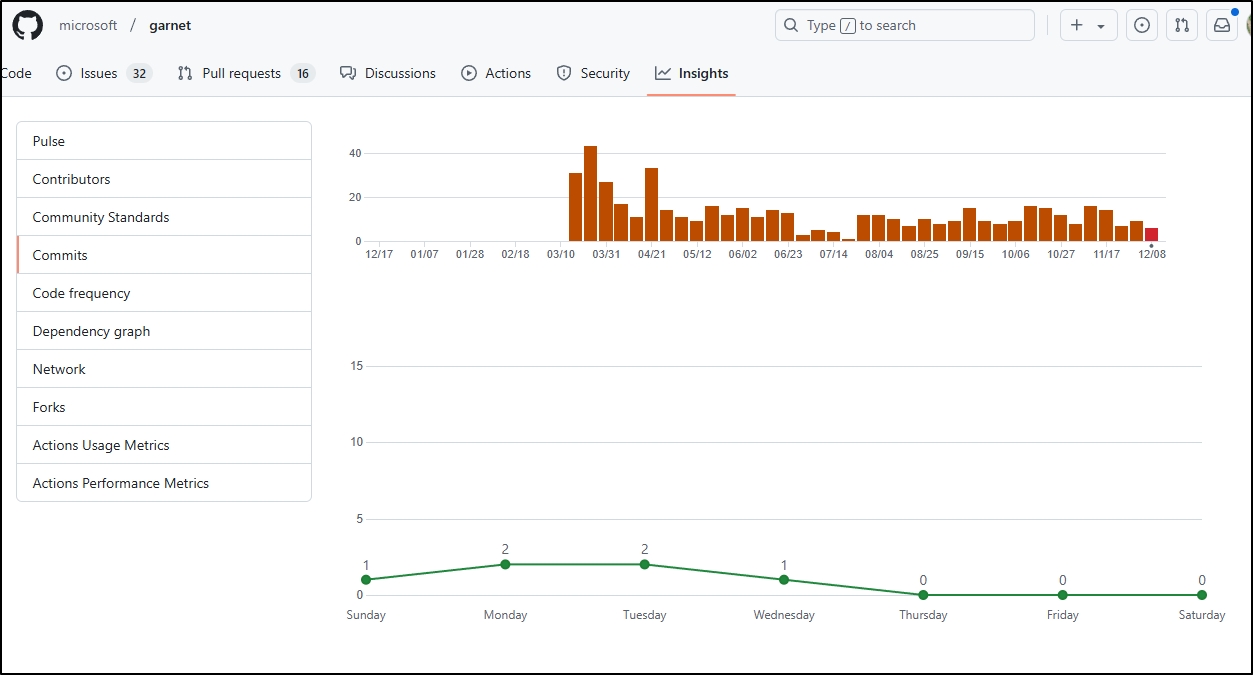

Microsoft still supports and highlights Garnet from their Research Project page. From Github Insights we can see it has kept up with regular updates.

It has had 46 releases in that time; the latest as of this writing, v1.0.46, is just two weeks old.

Valkey

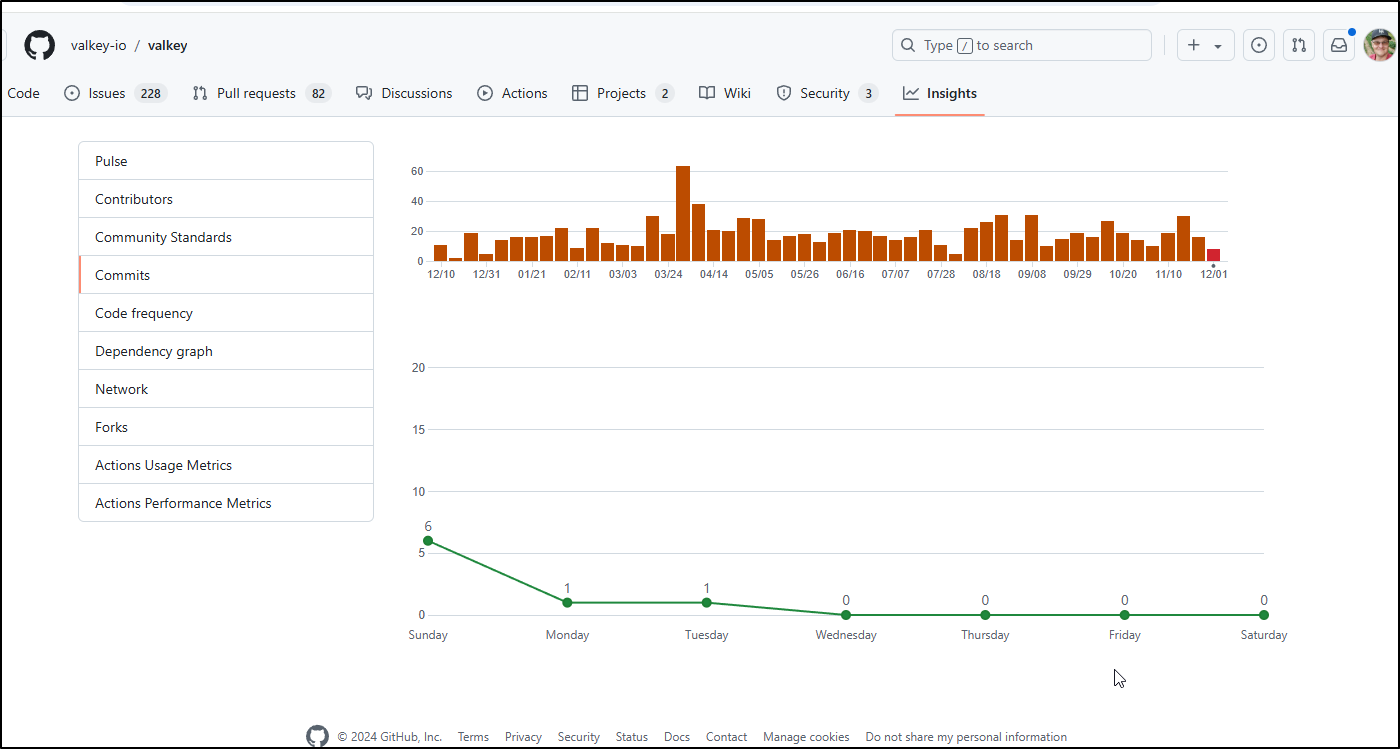

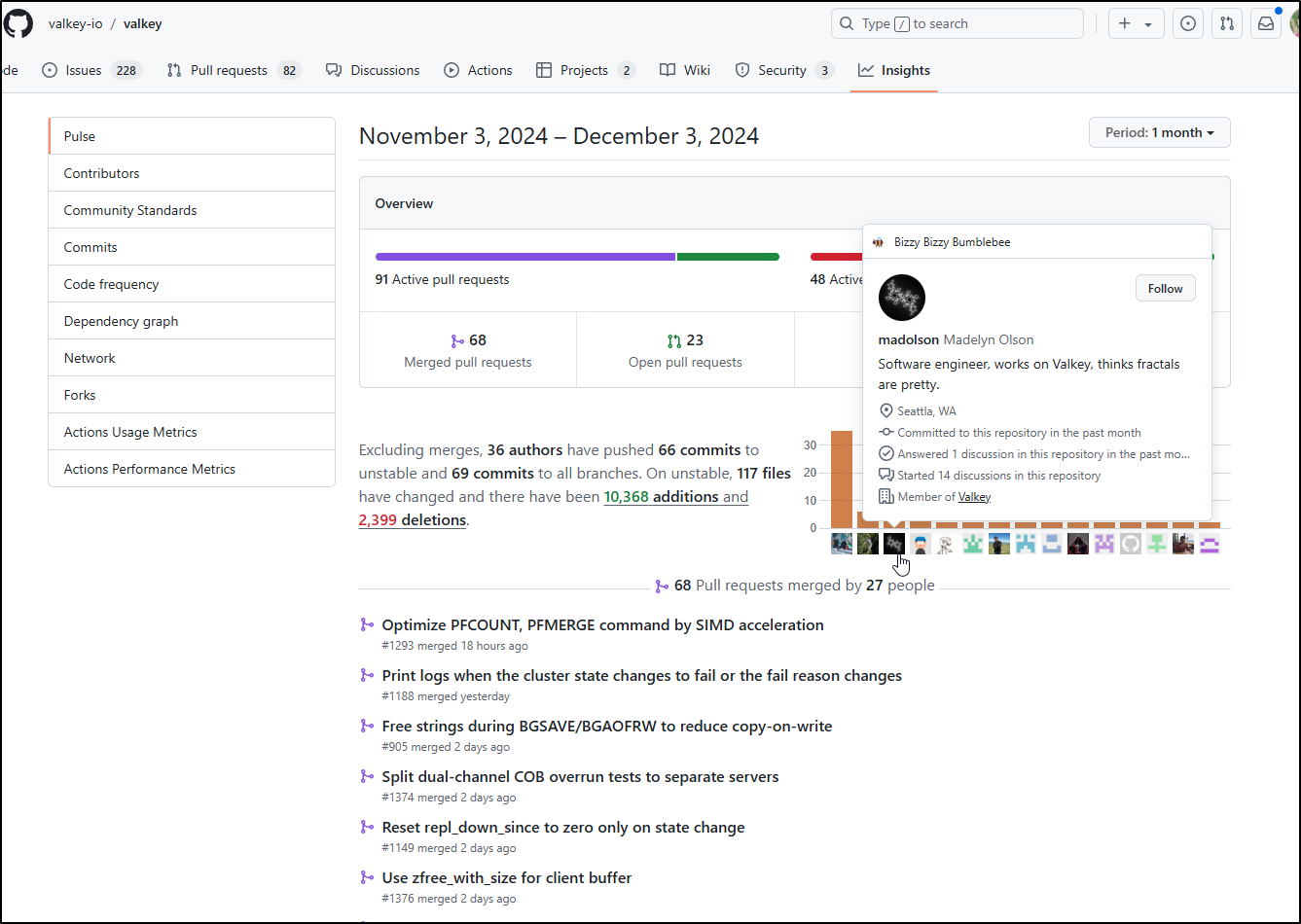

Valkey is alive and well. We can see a constant stream of commits, with a large portion of them from the folks who kicked this off, including Madelyn Olson.

Installation

Last time I had to hack the Redis chart to make it work but today they actually have a published working native helm chart.

We can use the Bitnami package for Valkey to install with helm.

$ helm install my-valkey oci://registry-1.docker.io/bitnamicharts/valkey

Pulled: registry-1.docker.io/bitnamicharts/valkey:2.1.1

Digest: sha256:f01034c1f66402e9308100d28a764317d666b0d908466480477c272f2767fe8d

NAME: my-valkey

LAST DEPLOYED: Tue Dec 3 07:34:05 2024

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: valkey

CHART VERSION: 2.1.1

APP VERSION: 8.0.1

** Please be patient while the chart is being deployed **

Valkey can be accessed on the following DNS names from within your cluster:

my-valkey-primary.default.svc.cluster.local for read/write operations (port 6379)

my-valkey-replicas.default.svc.cluster.local for read-only operations (port 6379)

To get your password run:

export VALKEY_PASSWORD=$(kubectl get secret --namespace default my-valkey -o jsonpath="{.data.valkey-password}" | base64 -d)

To connect to your Valkey server:

1. Run a Valkey pod that you can use as a client:

kubectl run --namespace default valkey-client --restart='Never' --env VALKEY_PASSWORD=$VALKEY_PASSWORD --image docker.io/bitnami/valkey:8.0.1-debian-12-r3 --command -- sleep infinity

Use the following command to attach to the pod:

kubectl exec --tty -i valkey-client \

--namespace default -- bash

2. Connect using the Valkey CLI:

REDISCLI_AUTH="$VALKEY_PASSWORD" valkey-cli -h my-valkey-primary

REDISCLI_AUTH="$VALKEY_PASSWORD" valkey-cli -h my-valkey-replicas

To connect to your database from outside the cluster execute the following commands:

kubectl port-forward --namespace default svc/my-valkey-primary 6379:6379 &

REDISCLI_AUTH="$VALKEY_PASSWORD" valkey-cli -h 127.0.0.1 -p 6379

WARNING: There are "resources" sections in the chart not set. Using "resourcesPreset" is not recommended for production. For production installations, please set the following values according to your workload needs:

- replica.resources

- primary.resources

+info https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/

Let’s say you want to create a password and use an existing secret.

The docs are a bit sparse on examples

We can make a file with the password

$ cat valkey-pass

mygreatpassword

Then create a generic secret

$ kubectl create secret generic valkey-password-secret --from-file=valkey-pass

secret/valkey-password-secret created

Then use it with the helm install

$ helm install my-valkey-pass oci://registry-1.docker.io/bitnamicharts/valkey --set auth.enabled=true --set auth.existingSecret=valkey-password-secret --set auth.existingSecretKey=valkey-pass

Pulled: registry-1.docker.io/bitnamicharts/valkey:2.1.1

Digest: sha256:f01034c1f66402e9308100d28a764317d666b0d908466480477c272f2767fe8d

NAME: my-valkey-pass

LAST DEPLOYED: Tue Dec 3 07:46:15 2024

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: valkey

CHART VERSION: 2.1.1

APP VERSION: 8.0.1

** Please be patient while the chart is being deployed **

Valkey can be accessed on the following DNS names from within your cluster:

my-valkey-pass-primary.default.svc.cluster.local for read/write operations (port 6379)

my-valkey-pass-replicas.default.svc.cluster.local for read-only operations (port 6379)

To get your password run:

export VALKEY_PASSWORD=$(kubectl get secret --namespace default valkey-password-secret -o jsonpath="{.data.valkey-password}" | base64 -d)

To connect to your Valkey server:

1. Run a Valkey pod that you can use as a client:

kubectl run --namespace default valkey-client --restart='Never' --env VALKEY_PASSWORD=$VALKEY_PASSWORD --image docker.io/bitnami/valkey:8.0.1-debian-12-r3 --command -- sleep infinity

Use the following command to attach to the pod:

kubectl exec --tty -i valkey-client \

--namespace default -- bash

2. Connect using the Valkey CLI:

REDISCLI_AUTH="$VALKEY_PASSWORD" valkey-cli -h my-valkey-pass-primary

REDISCLI_AUTH="$VALKEY_PASSWORD" valkey-cli -h my-valkey-pass-replicas

To connect to your database from outside the cluster execute the following commands:

kubectl port-forward --namespace default svc/my-valkey-pass-primary 6379:6379 &

REDISCLI_AUTH="$VALKEY_PASSWORD" valkey-cli -h 127.0.0.1 -p 6379

WARNING: There are "resources" sections in the chart not set. Using "resourcesPreset" is not recommended for production. For production installations, please set the following values according to your workload needs:

- replica.resources

- primary.resources

+info https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/

I waited a long time for the pods to be created and assumed a cluster issue.

But later I checked the CreateContainerConfigError and noted it was looking for a password key of ‘valkey-password’

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 3m23s default-scheduler Successfully assigned default/my-valkey-pass-primary-0 to isaac-macbookpro

Warning Failed 37s (x12 over 2m55s) kubelet Error: couldn't find key valkey-password in Secret default/valkey-password-secret

Normal Pulled 22s (x13 over 2m55s) kubelet Container image "docker.io/bitnami/valkey:8.0.1-debian-12-r3" already present on machine

Which does not make sense since I told it, I’m certain, that the key would be ‘valkey-pass’:

$ helm get values my-valkey-pass

USER-SUPPLIED VALUES:

auth:

enabled: true

existingSecret: valkey-password-secret

existingSecretKey: valkey-pass
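Since the event above says the pod looked for `valkey-password`, a quick pre-flight check of which keys a Secret actually holds can save the wait. This is a minimal sketch, assuming the JSON comes from something like `kubectl get secret valkey-password-secret -o json`; the `missing_keys` helper and the sample document are hypothetical and only for illustration.

```python
import json


def missing_keys(secret_json: str, required: list[str]) -> list[str]:
    """Return the required keys that are absent from a Secret's data map."""
    data = json.loads(secret_json).get("data") or {}
    return [key for key in required if key not in data]


# Illustrative Secret shaped like the one created from the valkey-pass file.
sample = json.dumps({
    "kind": "Secret",
    "metadata": {"name": "valkey-password-secret"},
    "data": {"valkey-pass": "bXlncmVhdHBhc3N3b3Jk"},
})

# The pod wanted 'valkey-password', which this Secret does not contain.
print(missing_keys(sample, ["valkey-password"]))
```

An empty list would mean the key the pod expects is present.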

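A likely culprit is the value name: as far as I can tell, the Bitnami chart’s README lists the key setting as `auth.existingSecretPasswordKey`, so `auth.existingSecretKey` was probably ignored. Rather than reinstall, I’ll recreate the secret to use the default key name, ‘valkey-password’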

$ mv valkey-pass valkey-password

$ kubectl delete secret valkey-password-secret

secret "valkey-password-secret" deleted

$ kubectl create secret generic valkey-password-secret --from-file=valkey-password

secret/valkey-password-secret created

Then rotate the pods

$ kubectl delete pod my-valkey-pass-primary-0 & kubectl delete pod my-valkey-pass-replicas-0 &

[1] 88080

[2] 88081

Testing

I’ll fire up a client pod that does have an ENV var set to the password (though not required). I’ll use this for both Valkey instances

$ kubectl run --namespace default valkey-client --restart='Never' --env VALKEY_PASSWORD=mygreatpassword --image docker.io/bitnami/valkey:8.0.1-debian-12-r3 --command -- sleep infinity

pod/valkey-client created

First, on the instance where we did not supply a password, we can see that auth is enabled by default, as an anonymous SET fails

$ kubectl exec --tty -i valkey-client -- /bin/bash

I have no name!@valkey-client:/$ valkey-cli -h my-valkey-primary

my-valkey-primary:6379> set asdf 1234

(error) NOAUTH Authentication required.

Let’s quickly fetch the generated password

$ kubectl get secret --namespace default my-valkey -o jsonpath="{.data.valkey-password}" | base64 -d

B8ZHpp9OP6

And try again

I have no name!@valkey-client:/$ REDISCLI_AUTH="B8ZHpp9OP6" valkey-cli -h my-valkey-primary

my-valkey-primary:6379> set asdf 1234

OK

my-valkey-primary:6379> get asdf

"1234"

That clearly worked. How about the one for which we set the auth?

Testing with Auth from file

For some reason, it just does not like that ‘existing secret’ approach:

$ kubectl exec --tty -i valkey-client -- /bin/bash

I have no name!@valkey-client:/$ REDISCLI_AUTH="$VALKEY_PASSWORD" valkey-cli -h my-valkey-pass-primary

AUTH failed: WRONGPASS invalid username-password pair or user is disabled.

my-valkey-pass-primary:6379> echo $VALKEY_PASSWORD

(error) NOAUTH Authentication required.

my-valkey-pass-primary:6379> quit

I have no name!@valkey-client:/$ echo $VALKEY_PASSWORD

mygreatpassword

I have no name!@valkey-client:/$ REDISCLI_AUTH="mygreatpassword" valkey-cli -h my-valkey-pass-primary

AUTH failed: WRONGPASS invalid username-password pair or user is disabled.

Yet this is clearly what the value is

$ kubectl get secret --namespace default valkey-password-secret -o jsonpath="{.data.valkey-password}" | base64 -d

mygreatpassword
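One possible explanation, not verified in this run, is a trailing newline. `kubectl create secret --from-file` stores the file's bytes verbatim, and a file written with an editor or `echo` usually ends in `\n`, which `base64 -d` prints invisibly on a terminal. The sketch below assumes the file held `mygreatpassword` plus a newline and shows why the stored value would then differ from what was typed into `REDISCLI_AUTH`.

```python
import base64

# Assumption: the valkey-pass file ended with a newline, as most editors and `echo` produce.
file_bytes = b"mygreatpassword\n"

# Kubernetes stores Secret data base64-encoded; --from-file keeps the bytes as-is.
stored = base64.b64encode(file_bytes)

# Decoded and printed to a terminal, this looks identical to the intended password.
decoded = base64.b64decode(stored)

# The raw bytes, however, differ from what a client would send.
typed = b"mygreatpassword"
print(repr(decoded), repr(typed), decoded == typed)

# Stripping the trailing line ending removes the difference.
cleaned = decoded.rstrip(b"\r\n")
print(cleaned == typed)
```

Piping the decoded secret through `od -c` would confirm or rule this out; creating the file with `printf '%s'` avoids the newline in the first place.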

No Pass

Some apps - especially older ones - assume a Redis without a password. We can override the default behaviour to disable auth

$ helm install my-valkey-nopass oci://registry-1.docker.io/bitnamicharts/valkey --set auth.enabled=false --set usePassword=false

Pulled: registry-1.docker.io/bitnamicharts/valkey:2.1.1

Digest: sha256:f01034c1f66402e9308100d28a764317d666b0d908466480477c272f2767fe8d

NAME: my-valkey-nopass

LAST DEPLOYED: Tue Dec 3 07:55:06 2024

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: valkey

CHART VERSION: 2.1.1

APP VERSION: 8.0.1

** Please be patient while the chart is being deployed **

Valkey can be accessed on the following DNS names from within your cluster:

my-valkey-nopass-primary.default.svc.cluster.local for read/write operations (port 6379)

my-valkey-nopass-replicas.default.svc.cluster.local for read-only operations (port 6379)

To connect to your Valkey server:

1. Run a Valkey pod that you can use as a client:

kubectl run --namespace default valkey-client --restart='Never' --image docker.io/bitnami/valkey:8.0.1-debian-12-r3 --command -- sleep infinity

Use the following command to attach to the pod:

kubectl exec --tty -i valkey-client \

--namespace default -- bash

2. Connect using the Valkey CLI:

valkey-cli -h my-valkey-nopass-primary

valkey-cli -h my-valkey-nopass-replicas

To connect to your database from outside the cluster execute the following commands:

kubectl port-forward --namespace default svc/my-valkey-nopass-primary 6379:6379 &

valkey-cli -h 127.0.0.1 -p 6379

WARNING: There are "resources" sections in the chart not set. Using "resourcesPreset" is not recommended for production. For production installations, please set the following values according to your workload needs:

- replica.resources

- primary.resources

+info https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/

I can then test using a CLI without a password

$ kubectl exec --tty -i valkey-client -- /bin/bash

I have no name!@valkey-client:/$ valkey-cli -h my-valkey-nopass-primary

my-valkey-nopass-primary:6379> get asdf

(nil)

my-valkey-nopass-primary:6379> set asdf 1234

OK

my-valkey-nopass-primary:6379> get adsf

(nil)

my-valkey-nopass-primary:6379> get asdf

"1234"

my-valkey-nopass-primary:6379> exit

I have no name!@valkey-client:/$ exit

Using Valkey

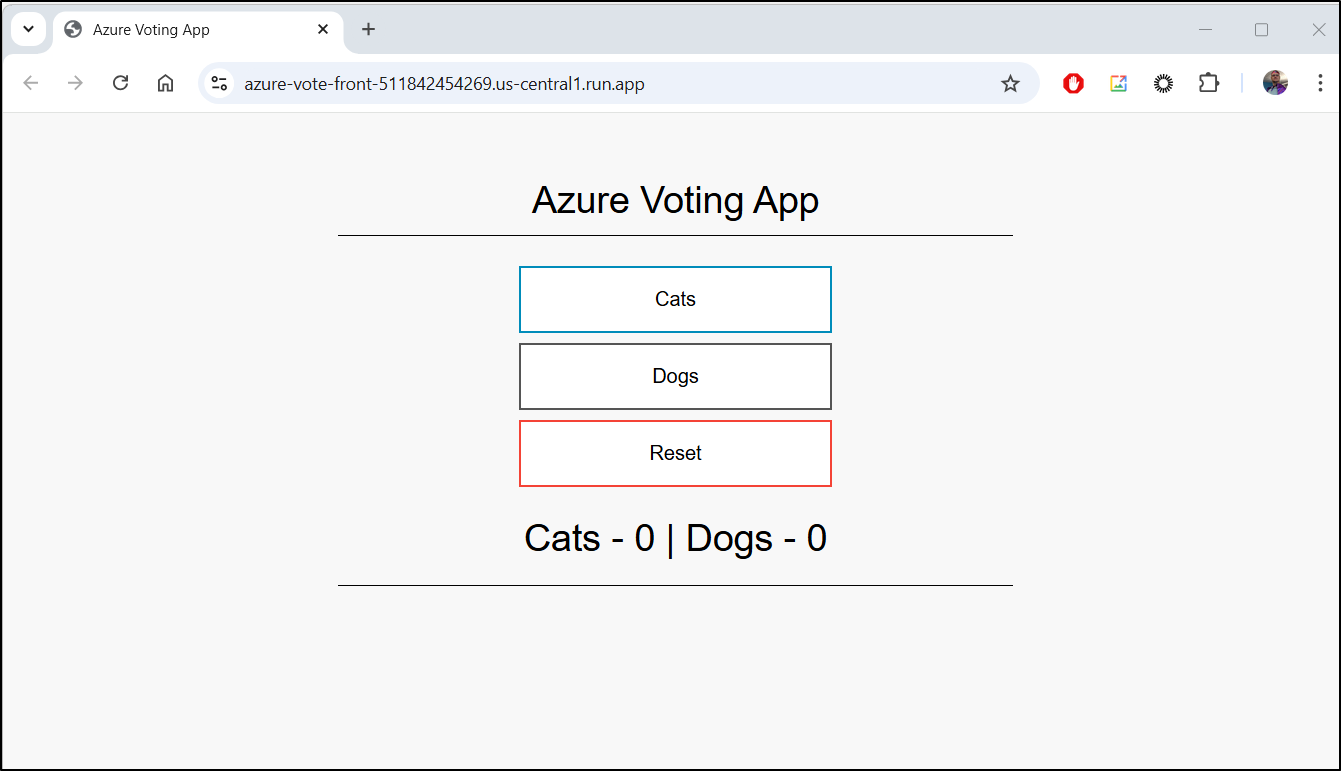

One simple app I like to use to test Redis deployments is the Azure Vote App, which is just a simple voting webapp that uses a key-value store to save votes.

The usual deployment looks like

apiVersion: apps/v1
kind: Deployment
metadata:
  name: azure-vote-back
spec:
  replicas: 1
  selector:
    matchLabels:
      app: azure-vote-back
  template:
    metadata:
      labels:
        app: azure-vote-back
    spec:
      nodeSelector:
        "kubernetes.io/os": linux
      containers:
      - name: azure-vote-back
        image: mcr.microsoft.com/oss/bitnami/redis:6.0.8
        env:
        - name: ALLOW_EMPTY_PASSWORD
          value: "yes"
        ports:
        - containerPort: 6379
          name: redis
---
apiVersion: v1
kind: Service
metadata:
  name: azure-vote-back
spec:
  ports:
  - port: 6379
  selector:
    app: azure-vote-back
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: azure-vote-front
spec:
  replicas: 1
  selector:
    matchLabels:
      app: azure-vote-front
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  minReadySeconds: 5
  template:
    metadata:
      labels:
        app: azure-vote-front
    spec:
      nodeSelector:
        "kubernetes.io/os": linux
      containers:
      - name: azure-vote-front
        image: mcr.microsoft.com/azuredocs/azure-vote-front:v1
        ports:
        - containerPort: 80
        resources:
          requests:
            cpu: 250m
          limits:
            cpu: 500m
        env:
        - name: REDIS
          value: "azure-vote-back"
---
apiVersion: v1
kind: Service
metadata:
  name: azure-vote-front
spec:
  type: LoadBalancer
  ports:
  - port: 80
  selector:
    app: azure-vote-front

So to test my password-less Valkey, I’ll launch just the front end, but notice the “REDIS” env var is set to the service fronting my NOPASS instance of Valkey

$ cat azavalkey.yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: azure-vote-front
spec:
  replicas: 1
  selector:
    matchLabels:
      app: azure-vote-front
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  minReadySeconds: 5
  template:
    metadata:
      labels:
        app: azure-vote-front
    spec:
      nodeSelector:
        "kubernetes.io/os": linux
      containers:
      - name: azure-vote-front
        image: mcr.microsoft.com/azuredocs/azure-vote-front:v1
        ports:
        - containerPort: 80
        resources:
          requests:
            cpu: 250m
          limits:
            cpu: 500m
        env:
        - name: REDIS
          value: "my-valkey-nopass-headless"
---
apiVersion: v1
kind: Service
metadata:
  name: azure-vote-front
spec:
  type: ClusterIP
  ports:
  - port: 80
  selector:
    app: azure-vote-front

I’ll create

$ kubectl apply -f ./azavalkey.yaml

deployment.apps/azure-vote-front created

service/azure-vote-front created

I saw a pull error

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 3m16s default-scheduler Successfully assigned default/azure-vote-front-59bffc5859-wh2tr to isaac-macbookpro

Normal Pulling 44s (x3 over 2m20s) kubelet Pulling image "mcr.microsoft.com/azuredocs/azure-vote-front:v1"

Warning Failed 34s (x3 over 116s) kubelet Failed to pull image "mcr.microsoft.com/azuredocs/azure-vote-front:v1": rpc error: code = NotFound desc = failed to pull and unpack image "mcr.microsoft.com/azuredocs/azure-vote-front:v1": failed to resolve reference "mcr.microsoft.com/azuredocs/azure-vote-front:v1": mcr.microsoft.com/azuredocs/azure-vote-front:v1: not found

Warning Failed 34s (x3 over 116s) kubelet Error: ErrImagePull

Normal BackOff 2s (x4 over 106s) kubelet Back-off pulling image "mcr.microsoft.com/azuredocs/azure-vote-front:v1"

Warning Failed 2s (x4 over 106s) kubelet Error: ImagePullBackOff

It seems the old image is gone from the Microsoft registry (MCR)

$ docker pull mcr.microsoft.com/azuredocs/azure-vote-front:v1

Error response from daemon: manifest for mcr.microsoft.com/azuredocs/azure-vote-front:v1 not found: manifest unknown: manifest tagged by "v1" is not found

$ docker pull mcr.microsoft.com/azuredocs/azure-vote-front:v2

Error response from daemon: manifest for mcr.microsoft.com/azuredocs/azure-vote-front:v2 not found: manifest unknown: manifest tagged by "v2" is not found

I decided to snag the top entry on Docker Hub, as it’s likely just a copy, from https://hub.docker.com/r/neilpeterson/azure-vote-front/tags

- name: azure-vote-front

image: neilpeterson/azure-vote-front:v1 # mcr.microsoft.com/azuredocs/azure-vote-front:v1

Then applied

$ kubectl apply -f ./azavalkey.yaml

deployment.apps/azure-vote-front configured

service/azure-vote-front unchanged

Judging by the logs, my first issue is that I must be hitting a read-only (RO) replica

mapped 1237056 bytes (1208 KB) for 16 cores

*** Operational MODE: preforking ***

2024-12-04 12:38:25,304 INFO success: nginx entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2024-12-04 12:38:25,304 INFO success: uwsgi entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

Traceback (most recent call last):

File "./main.py", line 31, in <module>

r.set(button1,0)

File "/usr/local/lib/python3.6/site-packages/redis/client.py", line 1171, in set

return self.execute_command('SET', *pieces)

File "/usr/local/lib/python3.6/site-packages/redis/client.py", line 668, in execute_command

return self.parse_response(connection, command_name, **options)

File "/usr/local/lib/python3.6/site-packages/redis/client.py", line 680, in parse_response

response = connection.read_response()

File "/usr/local/lib/python3.6/site-packages/redis/connection.py", line 629, in read_response

raise response

redis.exceptions.ReadOnlyError: You can't write against a read only replica.

unable to load app 0 (mountpoint='') (callable not found or import error)

*** no app loaded. going in full dynamic mode ***

*** uWSGI is running in multiple interpreter mode ***

I changed the REDIS env var from headless to ‘my-valkey-nopass-primary’ and tried again
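The ReadOnlyError fits how a headless Service behaves: its DNS name resolves to the pods behind it, which here appears to include the replicas as well as the primary, so a client can land on a replica. Below is a rough sketch of how a client could tell the two apart from the text returned by `INFO replication`. The sample text is illustrative rather than captured from this cluster, and `parse_info` and `is_writable` are hypothetical helpers.

```python
def parse_info(text: str) -> dict[str, str]:
    """Parse INFO-style output (key:value lines, '#' section headers) into a dict."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or ":" not in line:
            continue
        key, value = line.split(":", 1)
        fields[key] = value
    return fields


def is_writable(info_text: str) -> bool:
    """A node reporting role:master accepts writes; replicas report role:slave."""
    return parse_info(info_text).get("role") == "master"


# Illustrative INFO replication snippets for a primary and a replica.
primary_info = "# Replication\r\nrole:master\r\nconnected_slaves:1\r\n"
replica_info = "# Replication\r\nrole:slave\r\nmaster_link_status:up\r\n"

print(is_writable(primary_info), is_writable(replica_info))
```

Pointing writers at the `-primary` Service, as done here, sidesteps the need for any such check.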

$ kubectl apply -f ./azavalkey.yaml

deployment.apps/azure-vote-front configured

service/azure-vote-front unchanged

I can now test

$ kubectl port-forward svc/azure-vote-front 8088:80

Forwarding from 127.0.0.1:8088 -> 80

Forwarding from [::1]:8088 -> 80

Handling connection for 8088

Handling connection for 8088

Moreover, we can use the Valkey CLI to see the values set in the Valkey cluster

$ kubectl exec --tty -i valkey-client -- /bin/bash

I have no name!@valkey-client:/$ valkey-cli -h my-valkey-nopass-primary

my-valkey-nopass-primary:6379> keys *

1) "Dogs"

2) "Cats"

3) "asdf"

my-valkey-nopass-primary:6379> get Docs

(nil)

my-valkey-nopass-primary:6379> get Dogs

"5"

my-valkey-nopass-primary:6379> get Cats

"5"

As well as the replicas

my-valkey-nopass-primary:6379> quit

I have no name!@valkey-client:/$ valkey-cli -h my-valkey-nopass-headless

my-valkey-nopass-headless:6379> get Dogs

"5"

my-valkey-nopass-headless:6379> get Cats

"5"

Valkey in the Cloud

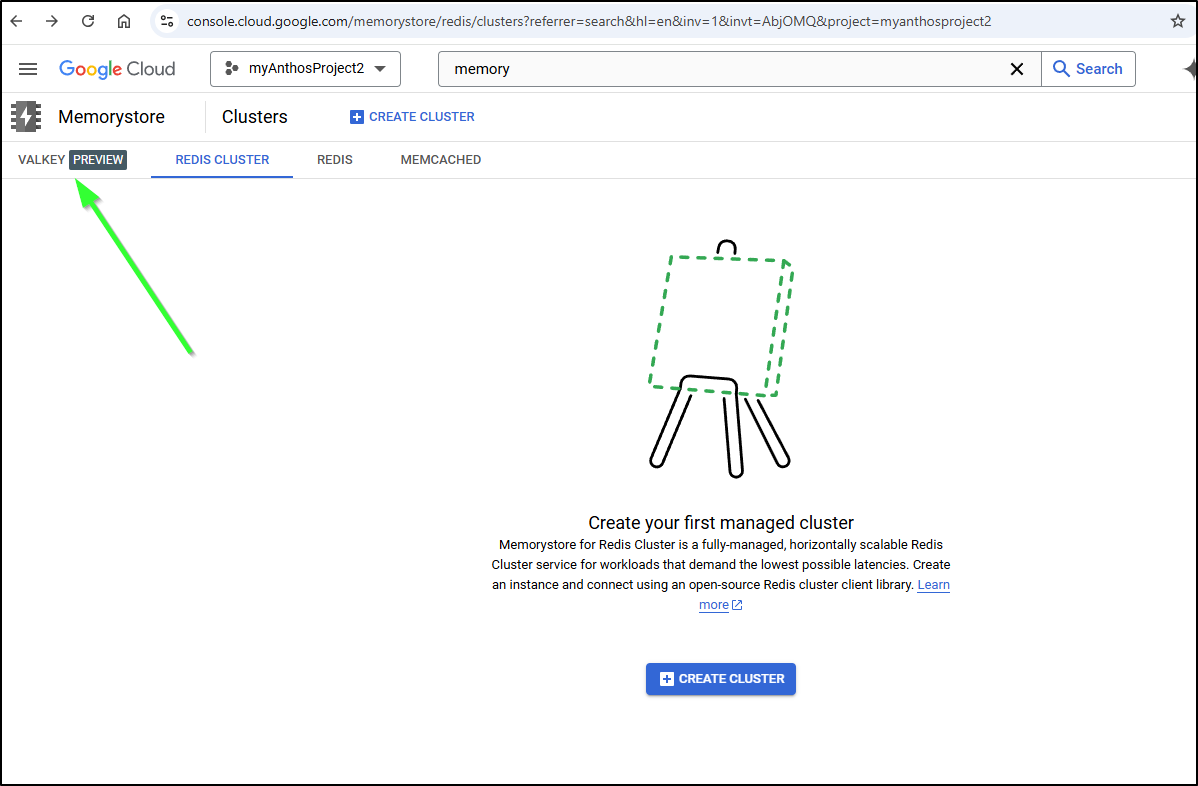

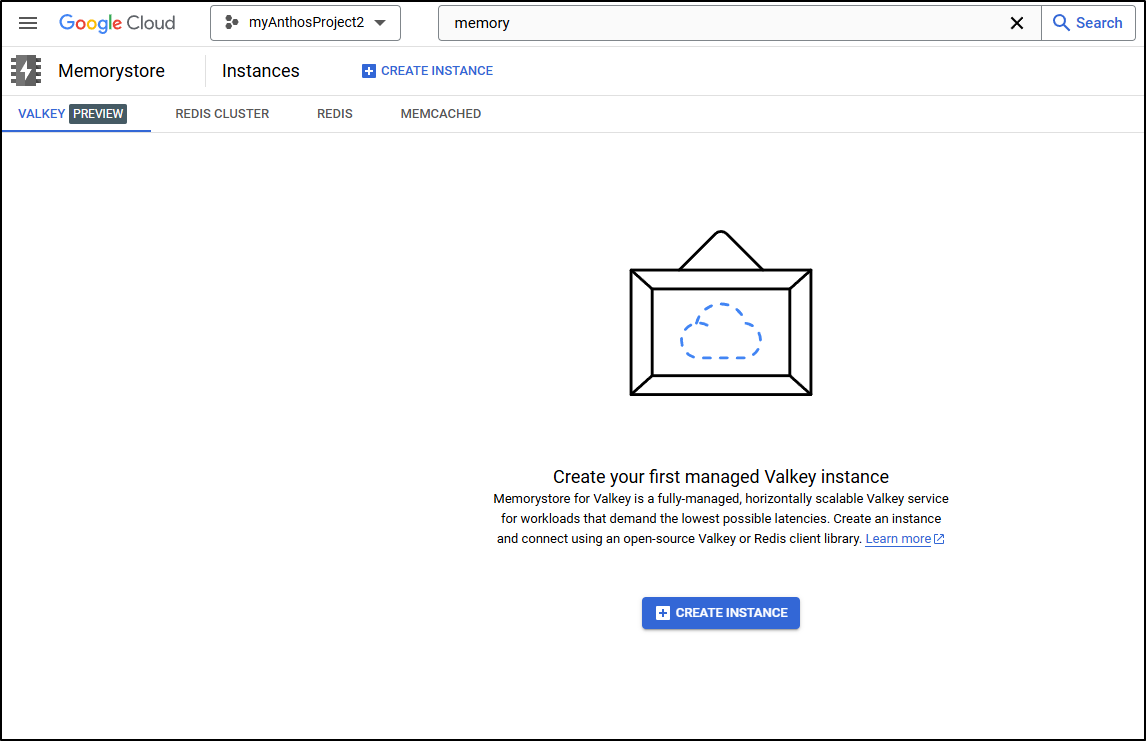

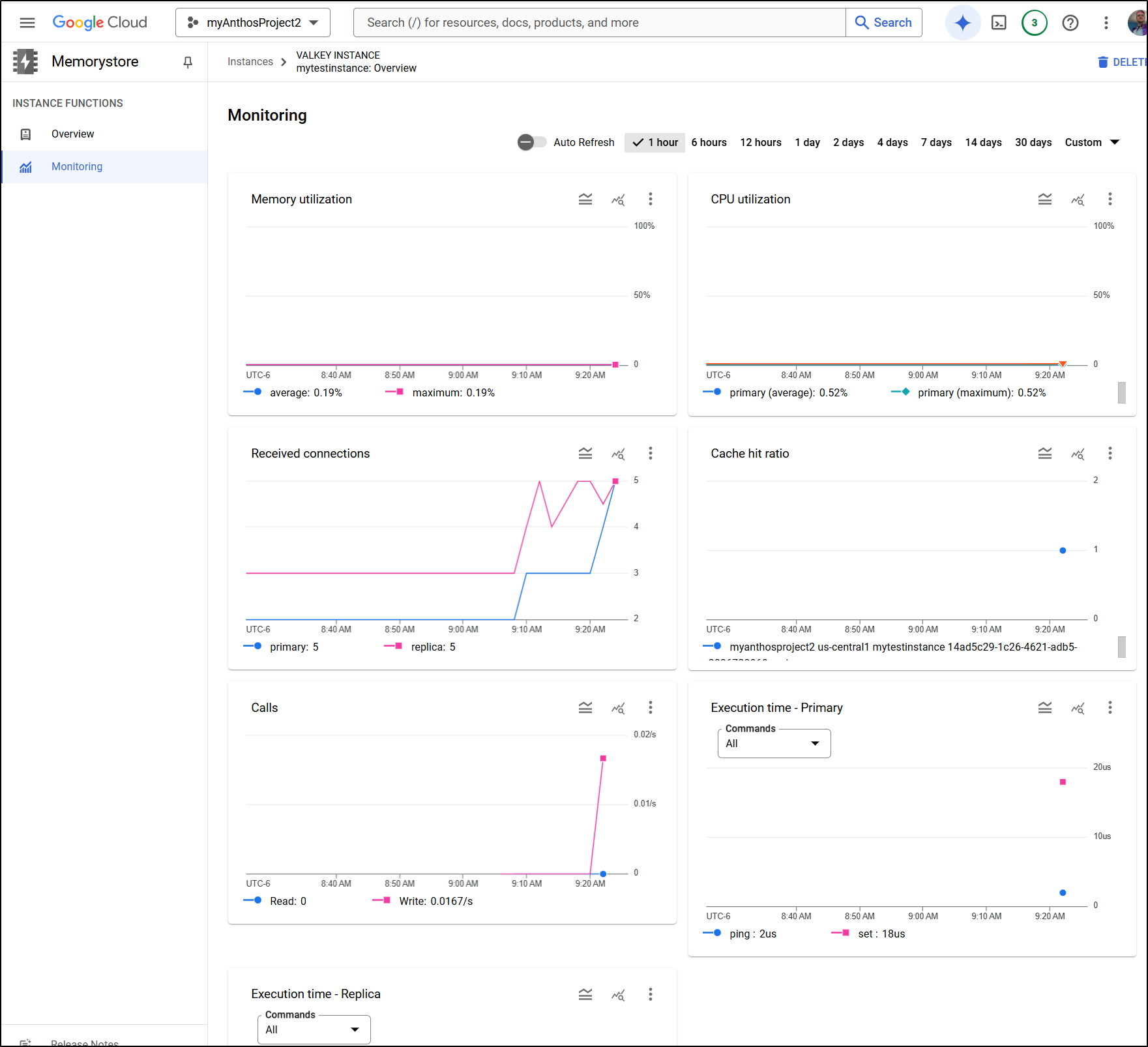

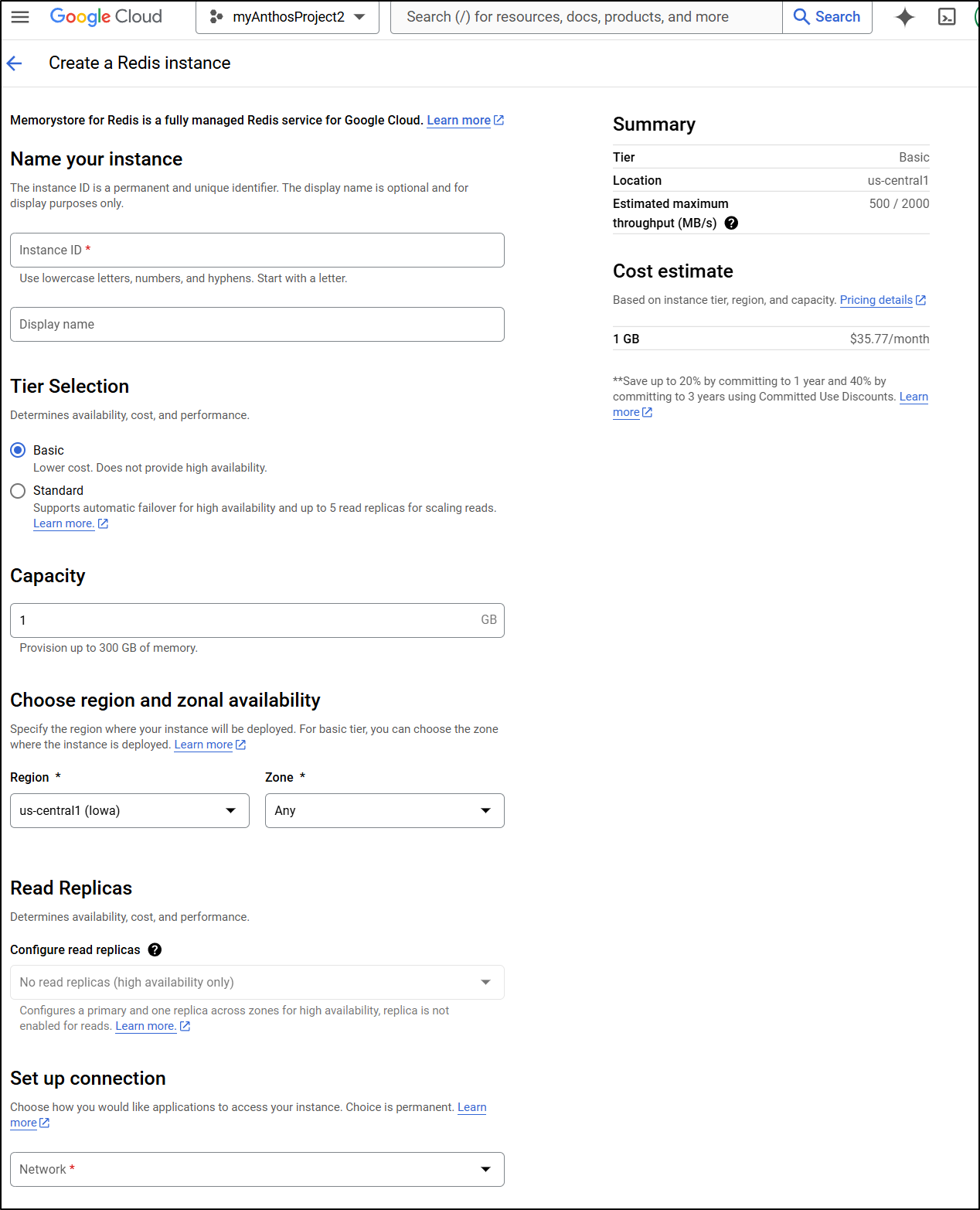

Something I noticed just within the last month was “Valkey” showing up in my GCP Memorystore UI.

For work, I need to be in CloudSQL and Memorystore on a regular basis and suddenly this Preview option just appeared

To use it, I need to enable the API

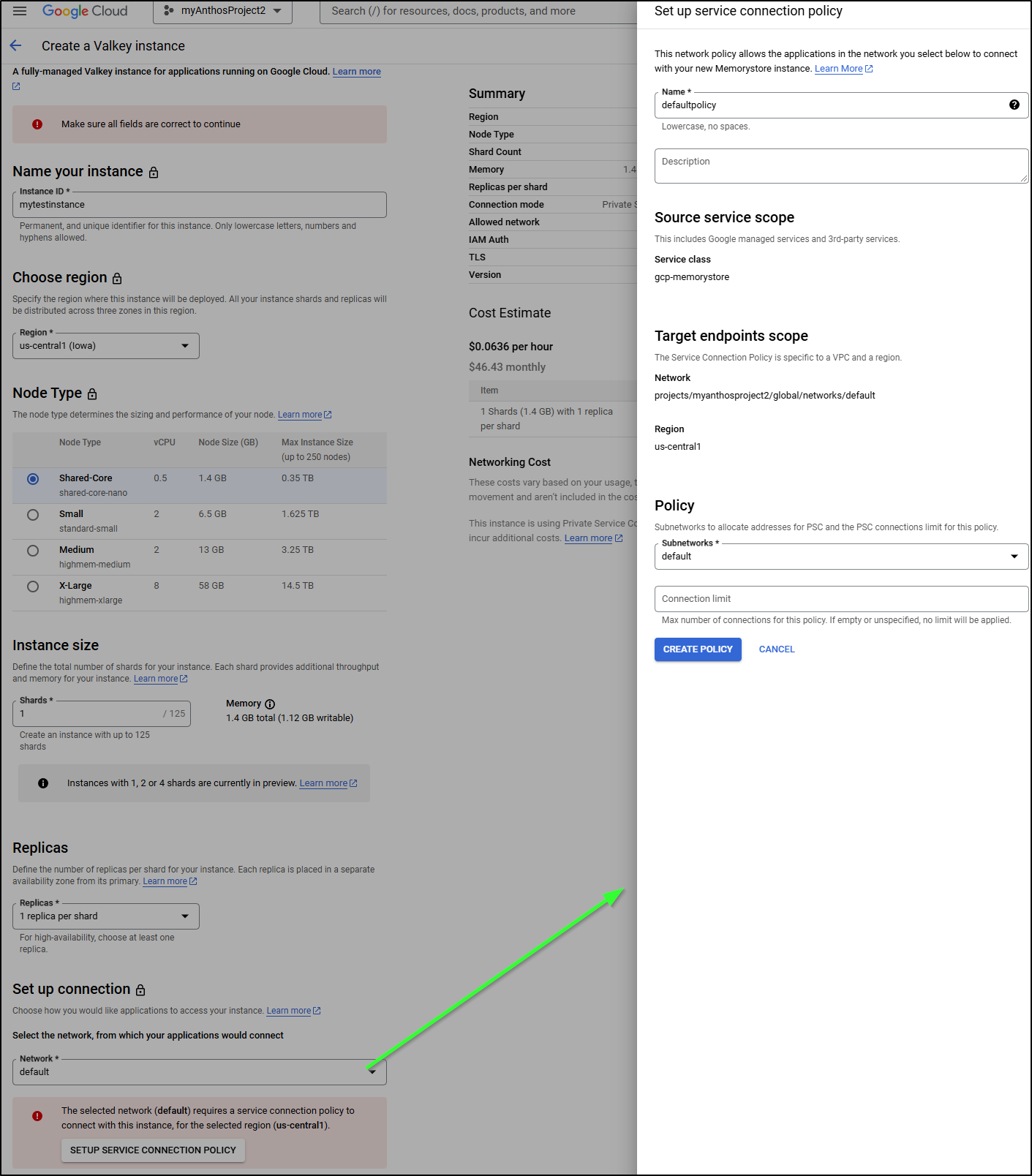

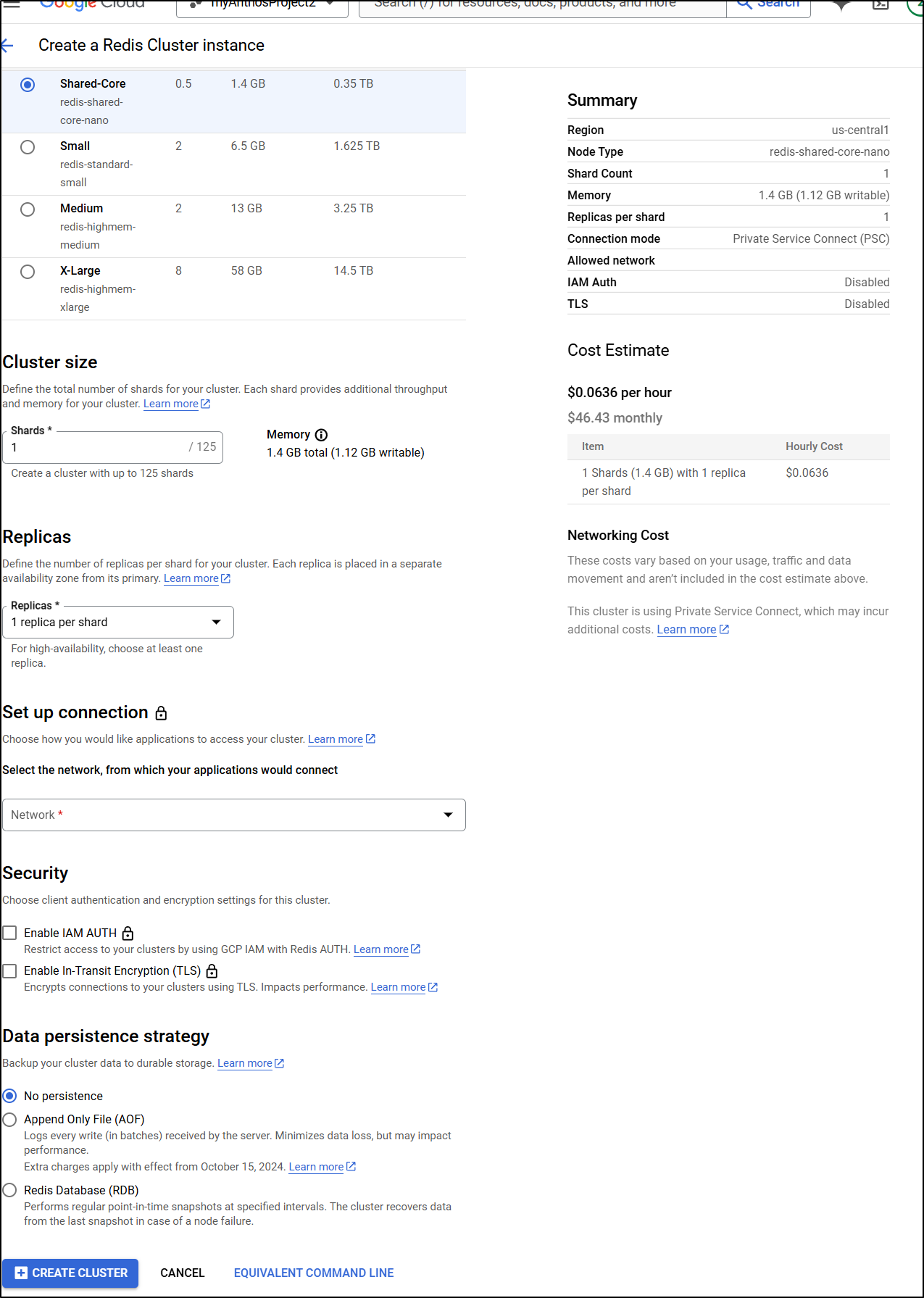

I can now create a Valkey instance in the UI

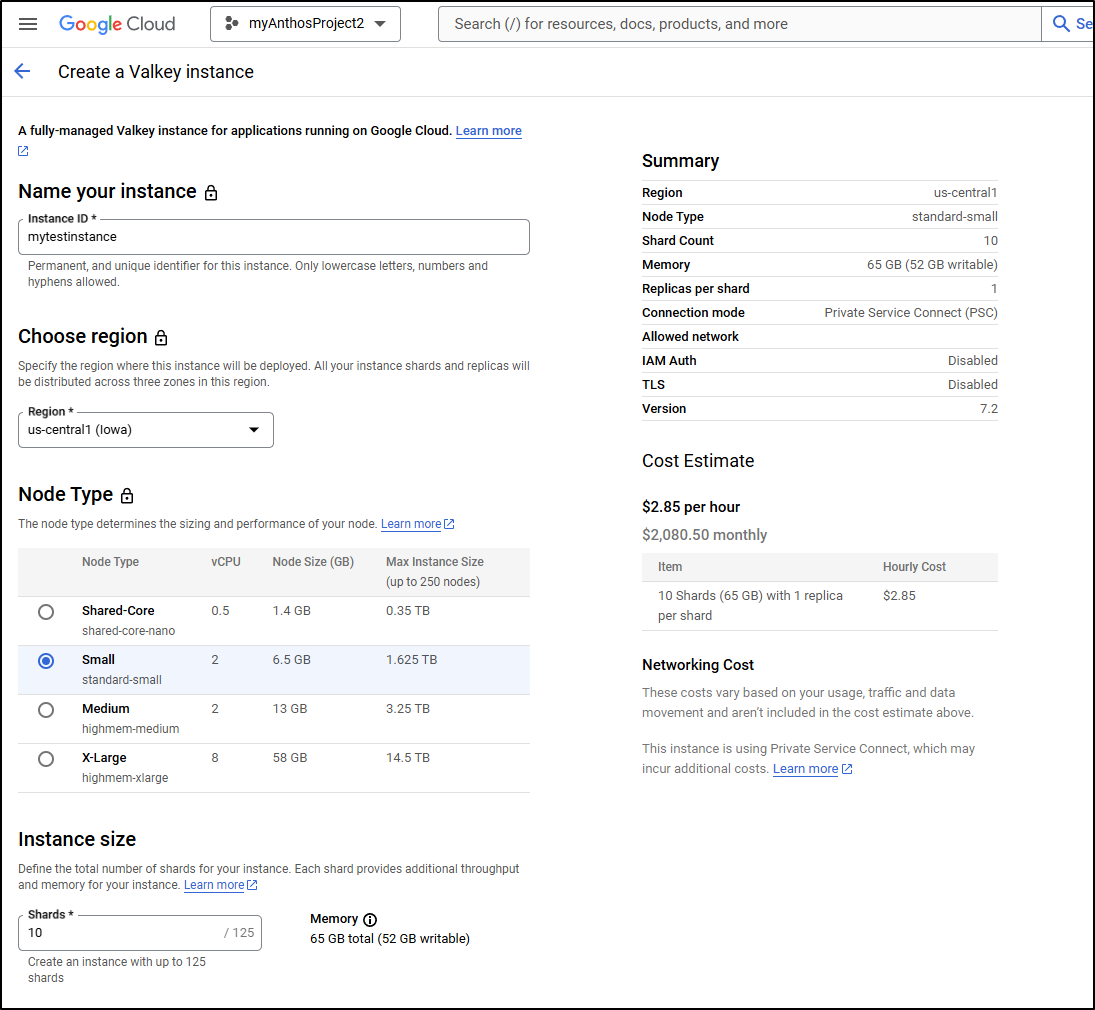

The pricing is essentially for the infrastructure. Here we can see a 2 vCPU instance that can stretch up to 1.625 TB would run me US$2.85/hour (or about US$2,080/month) with 10 shards

The smallest I can go is 1 shard on the shared-core instance, which would be US$0.0636 an hour or US$46.43 a month
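The monthly figures follow from the hourly rates. This is a quick sketch, assuming a 730-hour month (365 × 24 ÷ 12); that constant is my assumption about how the console rounds, not something the pricing page states here.

```python
HOURS_PER_MONTH = 730  # assumption: 365 days * 24 hours / 12 months


def monthly_cost(hourly_usd: float) -> float:
    """Convert an hourly rate in USD to an approximate monthly cost."""
    return round(hourly_usd * HOURS_PER_MONTH, 2)


print(monthly_cost(2.85))    # the 10-shard quote
print(monthly_cost(0.0636))  # the single shared-core shard
```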

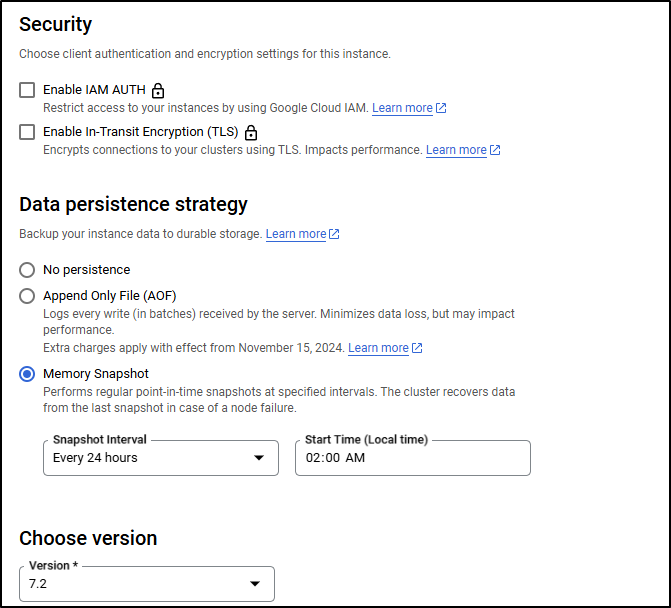

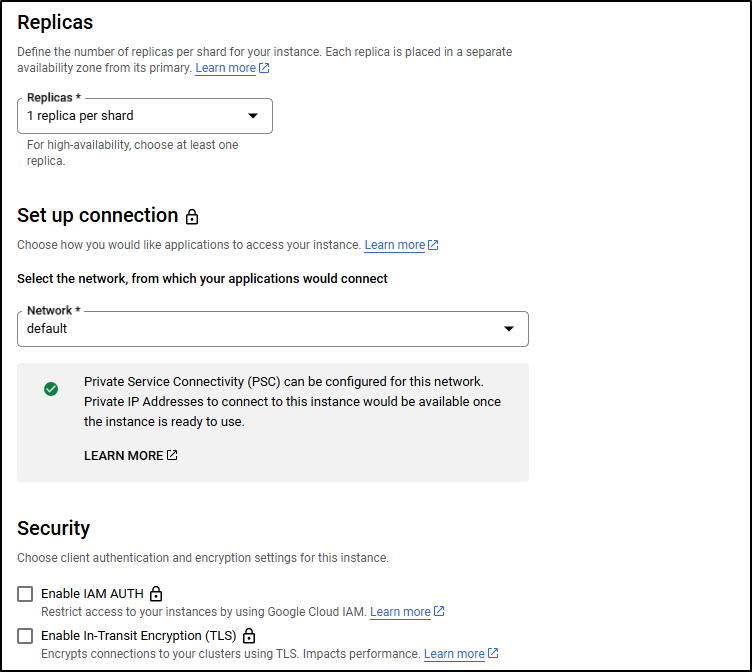

For security we can use IAM Auth or enable TLS. For DR we can choose no persistence, AOF (append-only file) persistence, or RDB snapshots of memory on a regular schedule. Lastly, at the time of writing, we can use versions 7.2 or 8.0

Unlike some other options, I can only really make this internally facing. I am required to set up a connection policy to an existing GCP Network

Which confirms PSC is enabled

Note: the equivalent command line for all this is:

$ gcloud alpha memorystore instances create mytestinstance --project=myanthosproject2 --location=us-central1 --psc-auto-connections="network=projects/myanthosproject2/global/networks/default,projectId=myanthosproject2" --shard-count=1 --node-type=shared-core-nano --replica-count=1 --engine-version=VALKEY_7_2 --persistence-config-mode=rdb --rdb-config-snapshot-period=twenty-four-hours --rdb-config-snapshot-start-time=2024-12-05T08:00:00.000Z

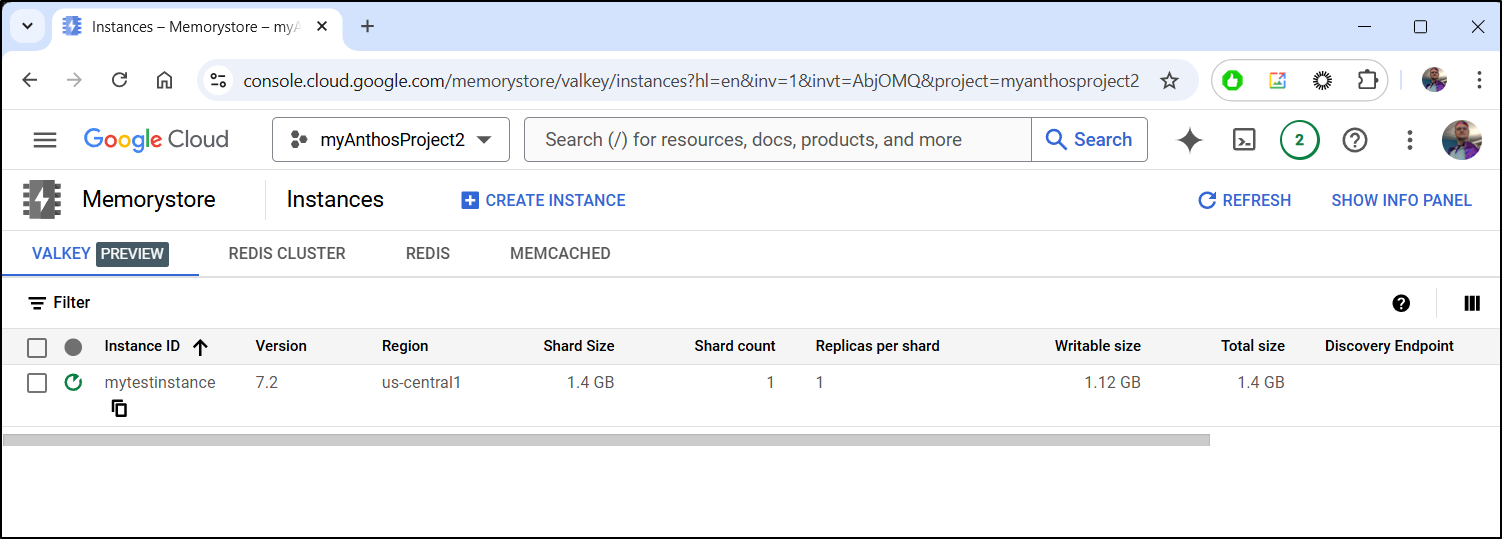

Once I click create I can see it spinning up

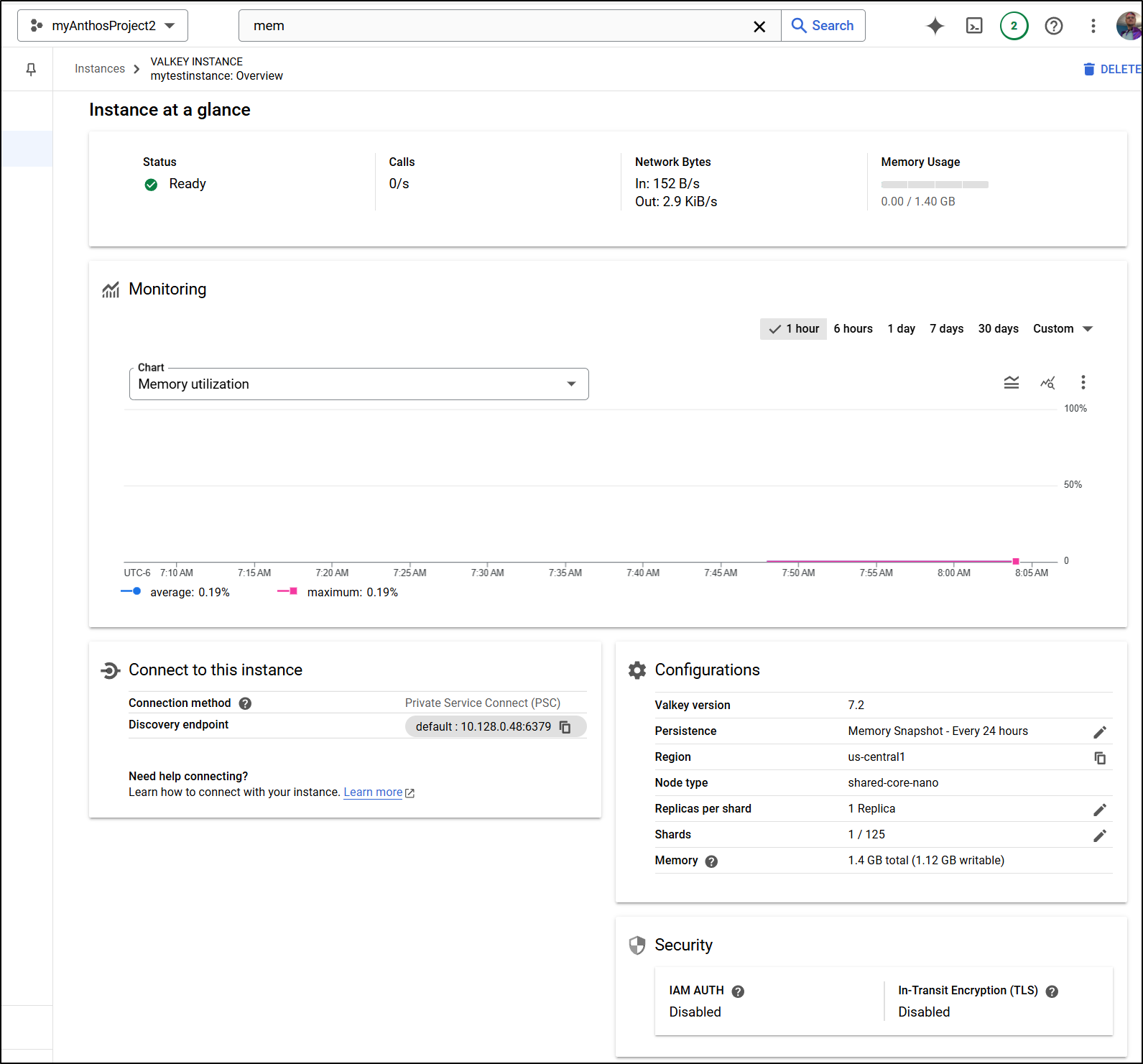

Once launched, I can see details including the private IP address and port (default 6379)

Testing

To use a cloud function, I’ll need this vote container in GAR/GCR. I could use Dockerhub as well

$ docker tag harbor.freshbrewed.science/freshbrewedprivate/azure-vote-front:v1 gcr.io/myanthosproject2/azure-vote-front:v1

$ docker push gcr.io/myanthosproject2/azure-vote-front:v1

The push refers to repository [gcr.io/myanthosproject2/azure-vote-front]

051efe652c4f: Pushed

dcd9a3bb3b8e: Pushed

475e51c5e84e: Pushed

0889a339caa2: Pushed

bba5e66e1bc9: Pushed

31b3b1f0ab7b: Pushed

66ddad2c15b4: Pushed

357d65e53b78: Pushed

40dc546a568a: Pushed

b61bb40af46f: Pushed

461de7fb06ff: Pushed

394c0a98982c: Pushed

433a67f63093: Pushed

5babbba9a986: Pushed

87e2b99e95df: Pushed

3c0d8f1e556d: Pushed

df08e2c3d6fe: Pushed

9a9ce7dcd474: Pushed

2548e7db2a94: Pushed

325b9d6f2920: Pushed

815acdffadff: Pushed

97108d083e01: Pushed

5616a6292c16: Pushed

f3ed6cb59ab0: Pushed

654f45ecb7e3: Pushed

2c40c66f7667: Pushed

v1: digest: sha256:cb790955fbe7d15450d5f9a254b9b748d0fdcc31364b17efbef0e7b1e1ff6407 size: 5754

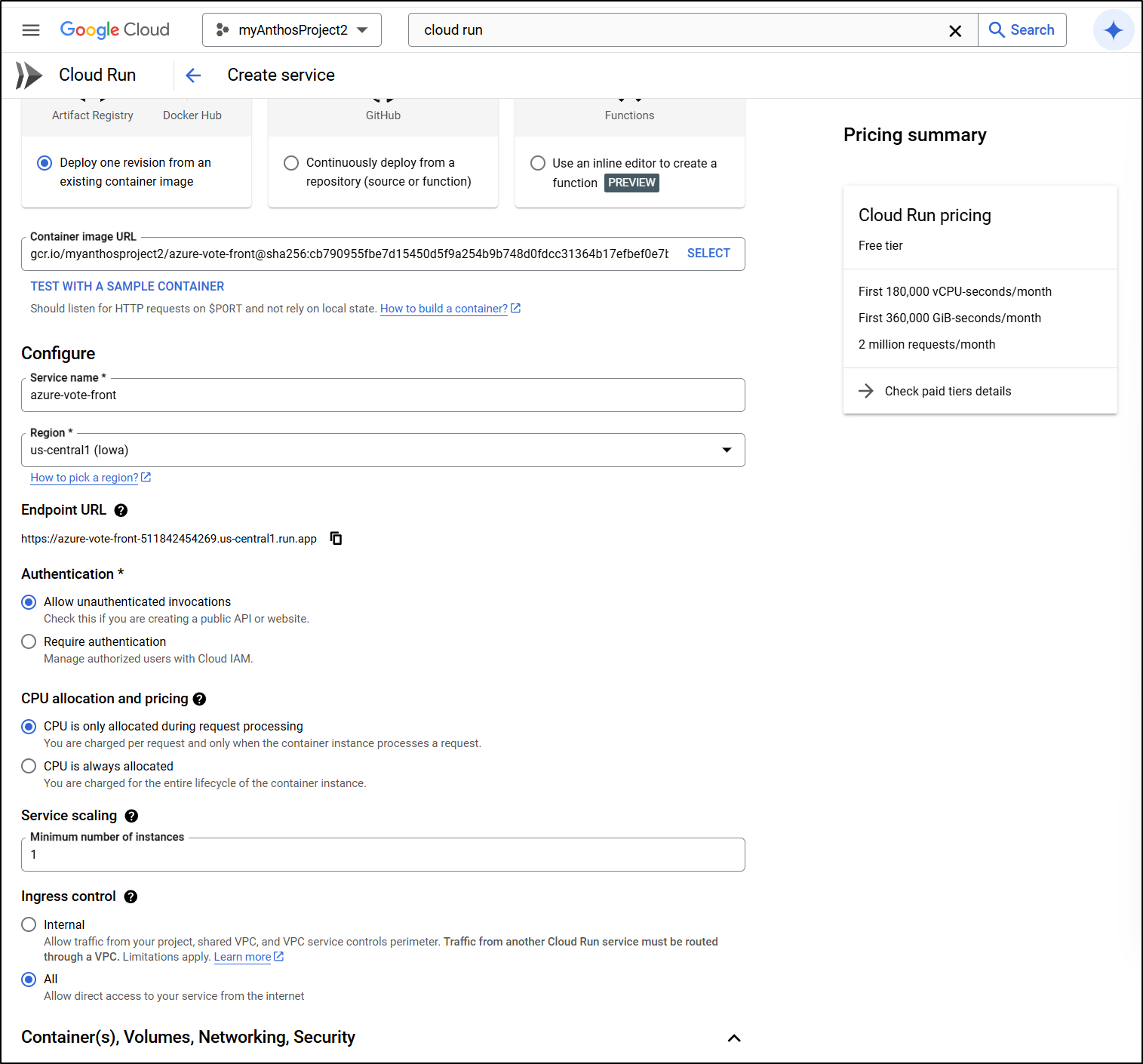

I can now create a Cloud Run function that is un-authed and public that will have an endpoint I can use

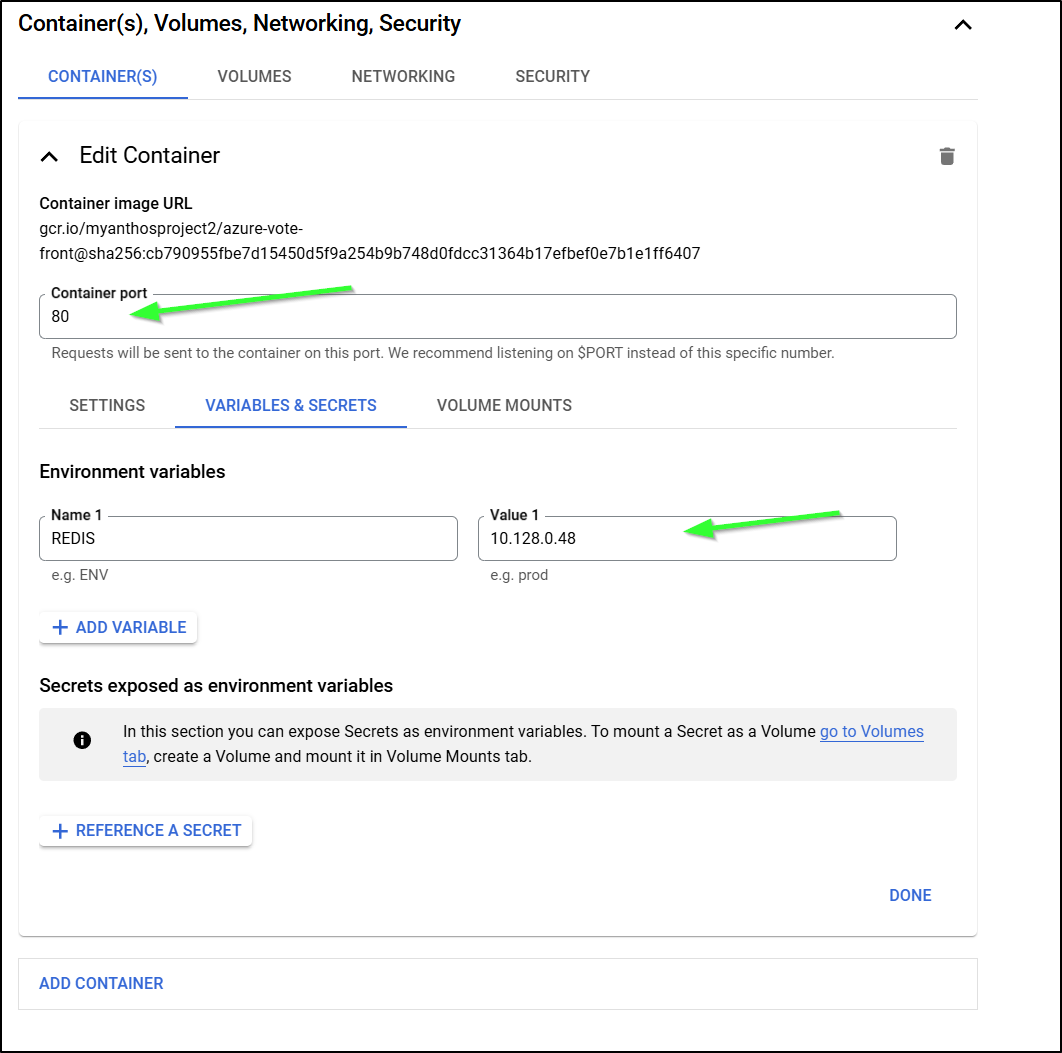

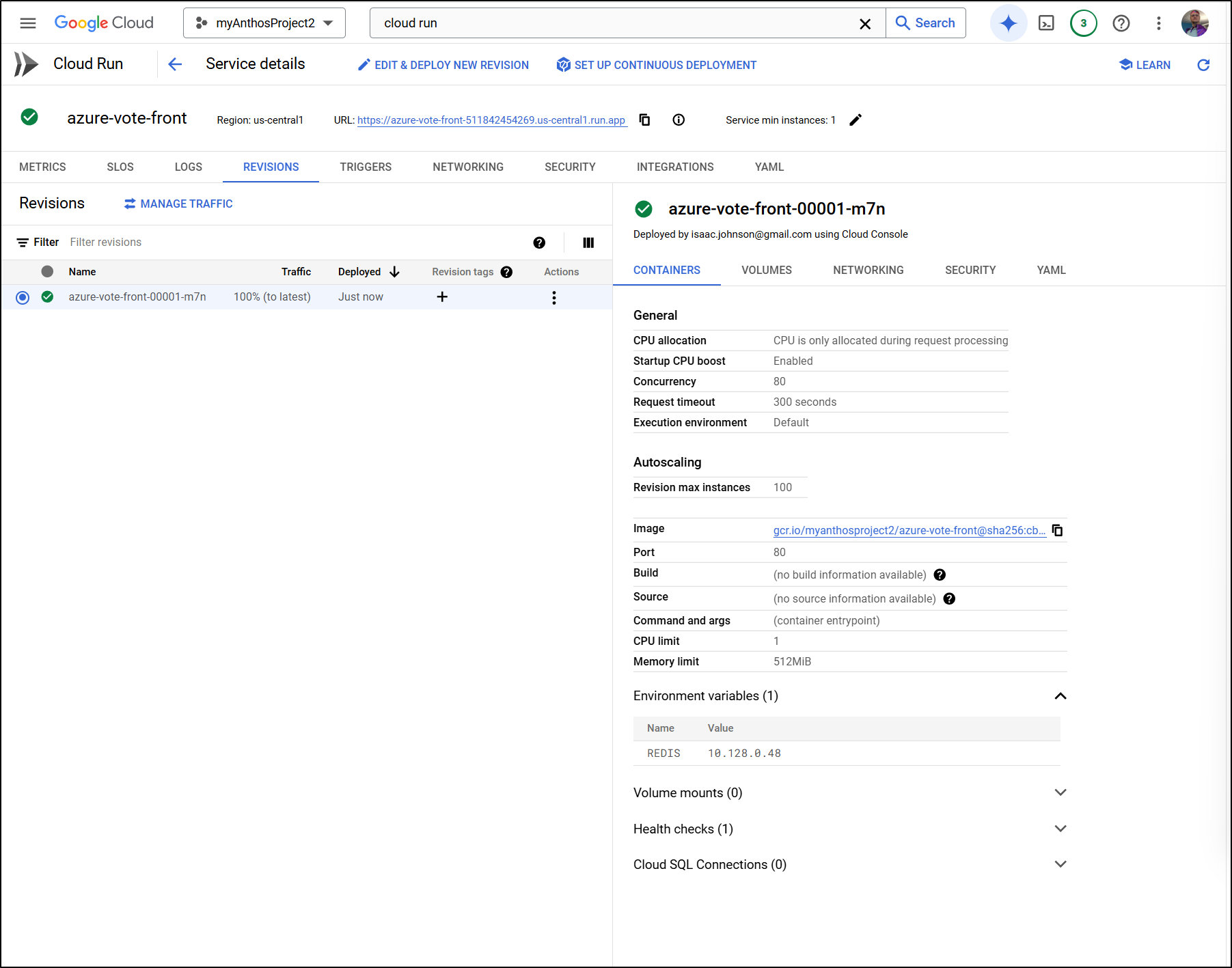

I’ll set the Valkey instance in the Environment variables and set the container port

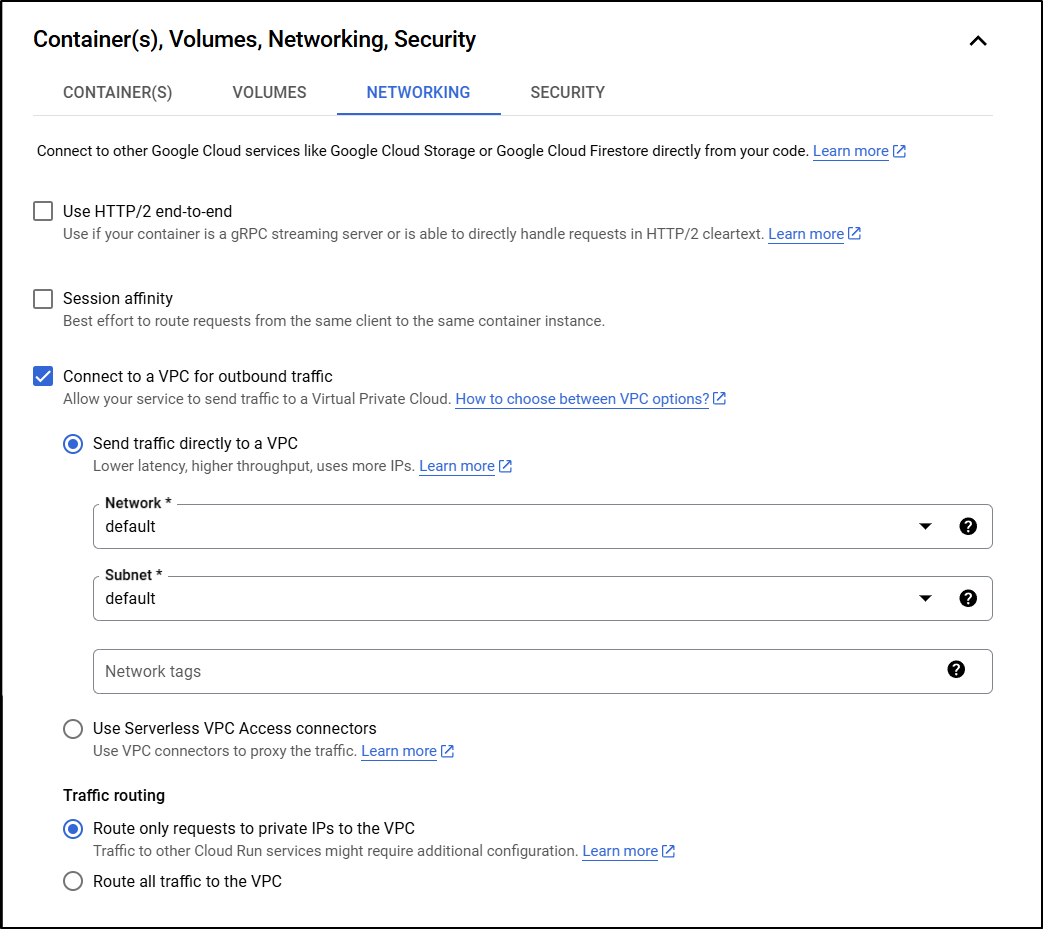

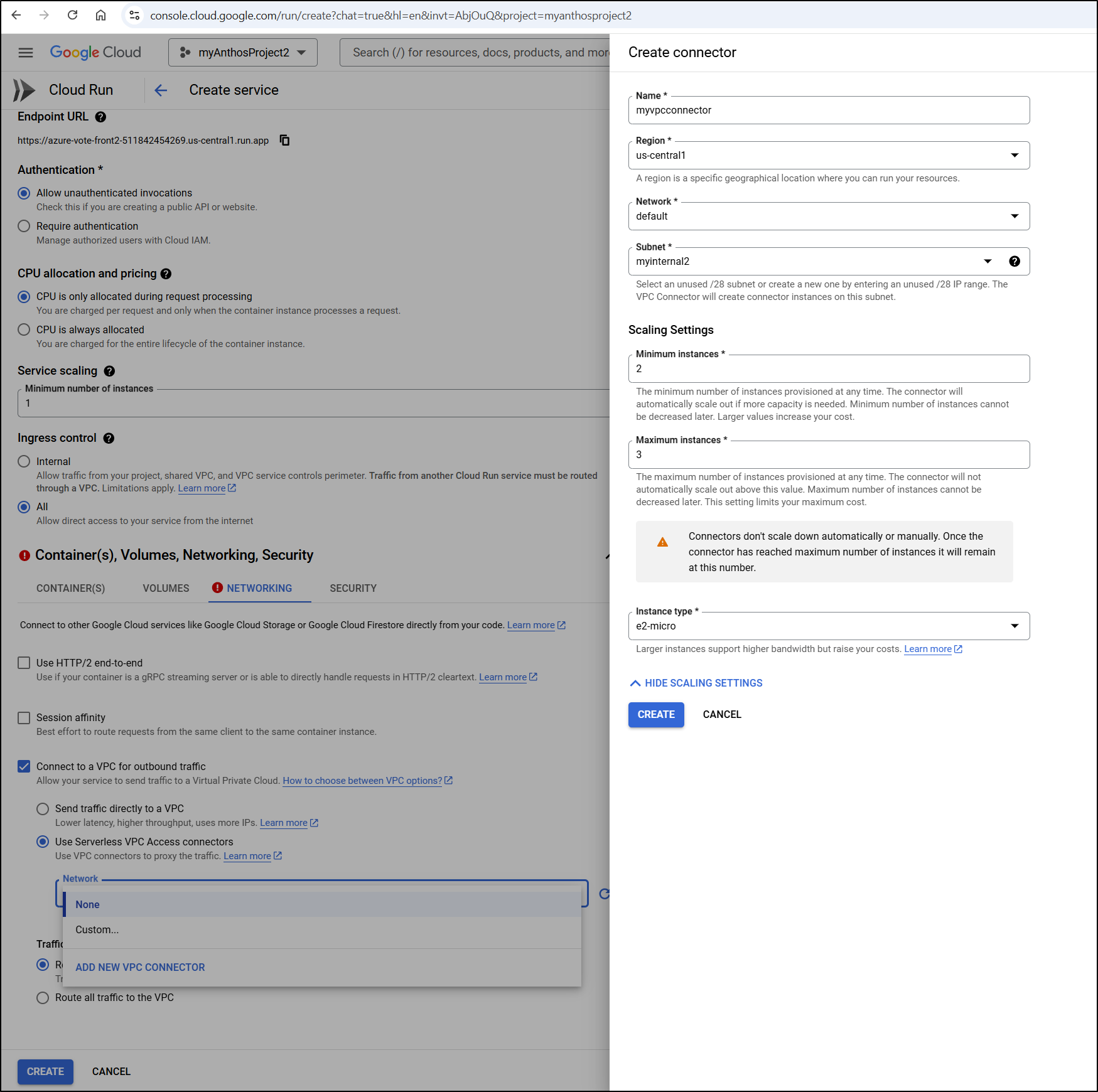

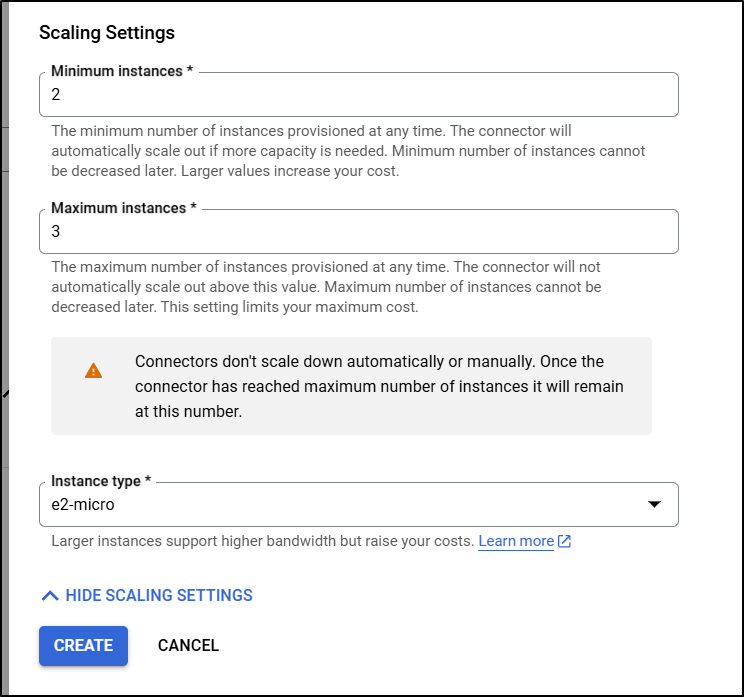

And since I need to reach the private IP, I have to either use VPC access connectors or just direct through the VPC.

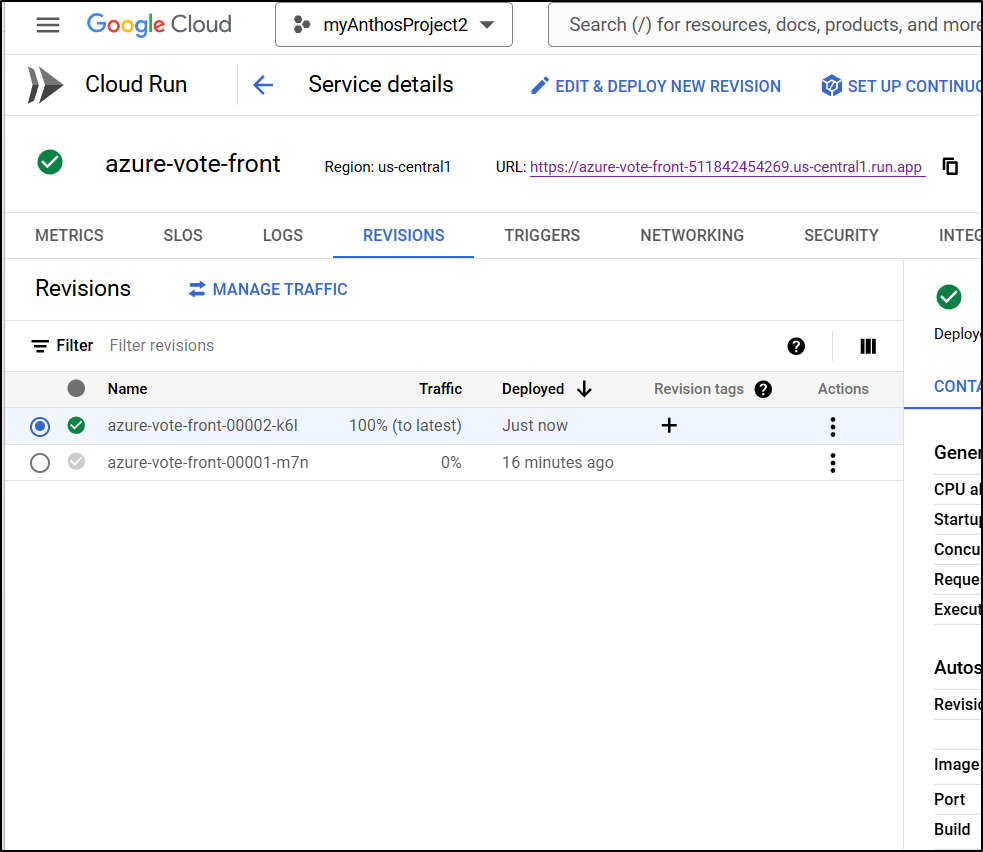

I click create and I can see it creating

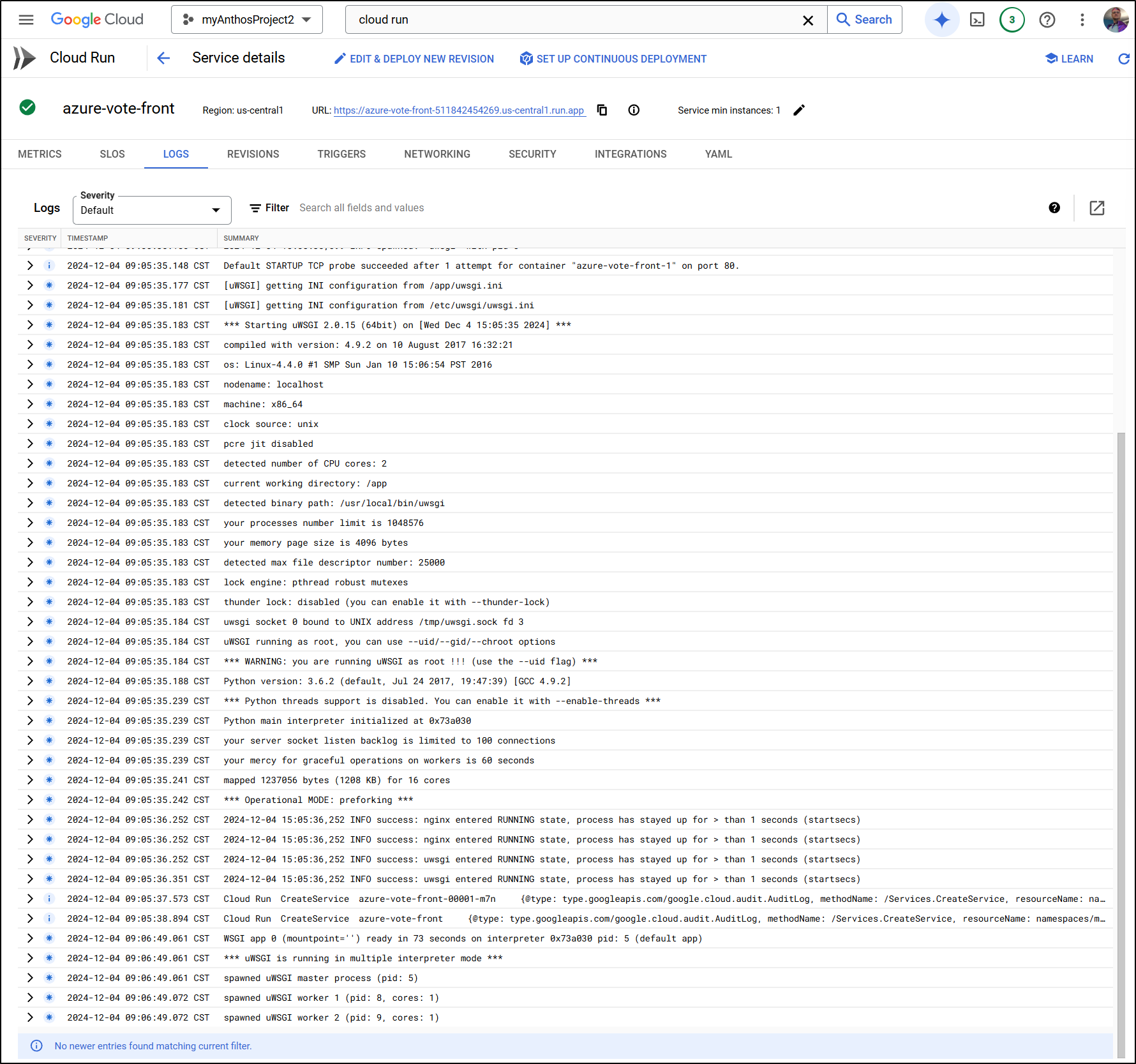

The logs suggest we are good to go

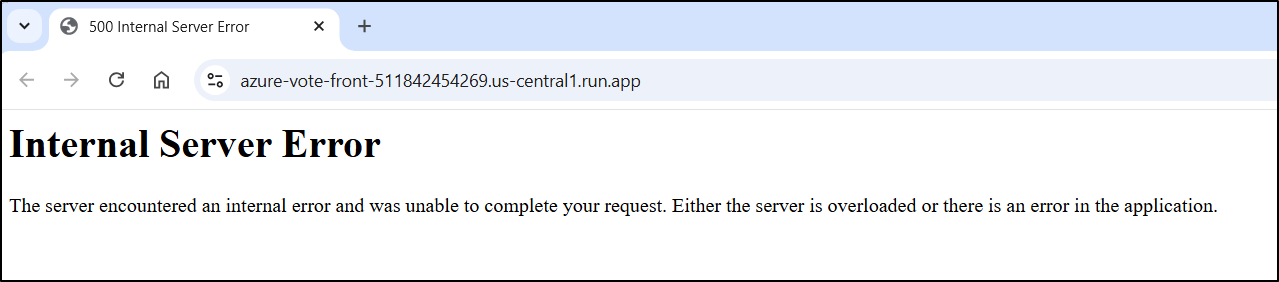

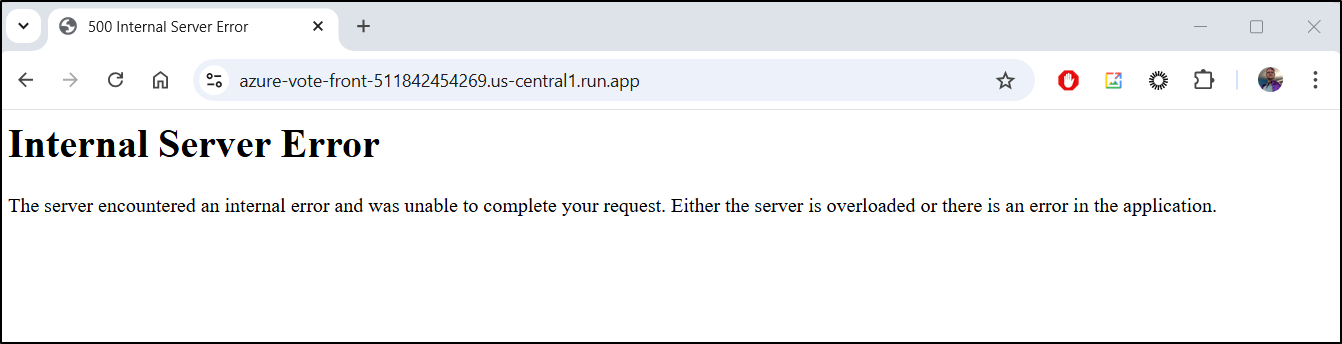

I keep getting 500 errors

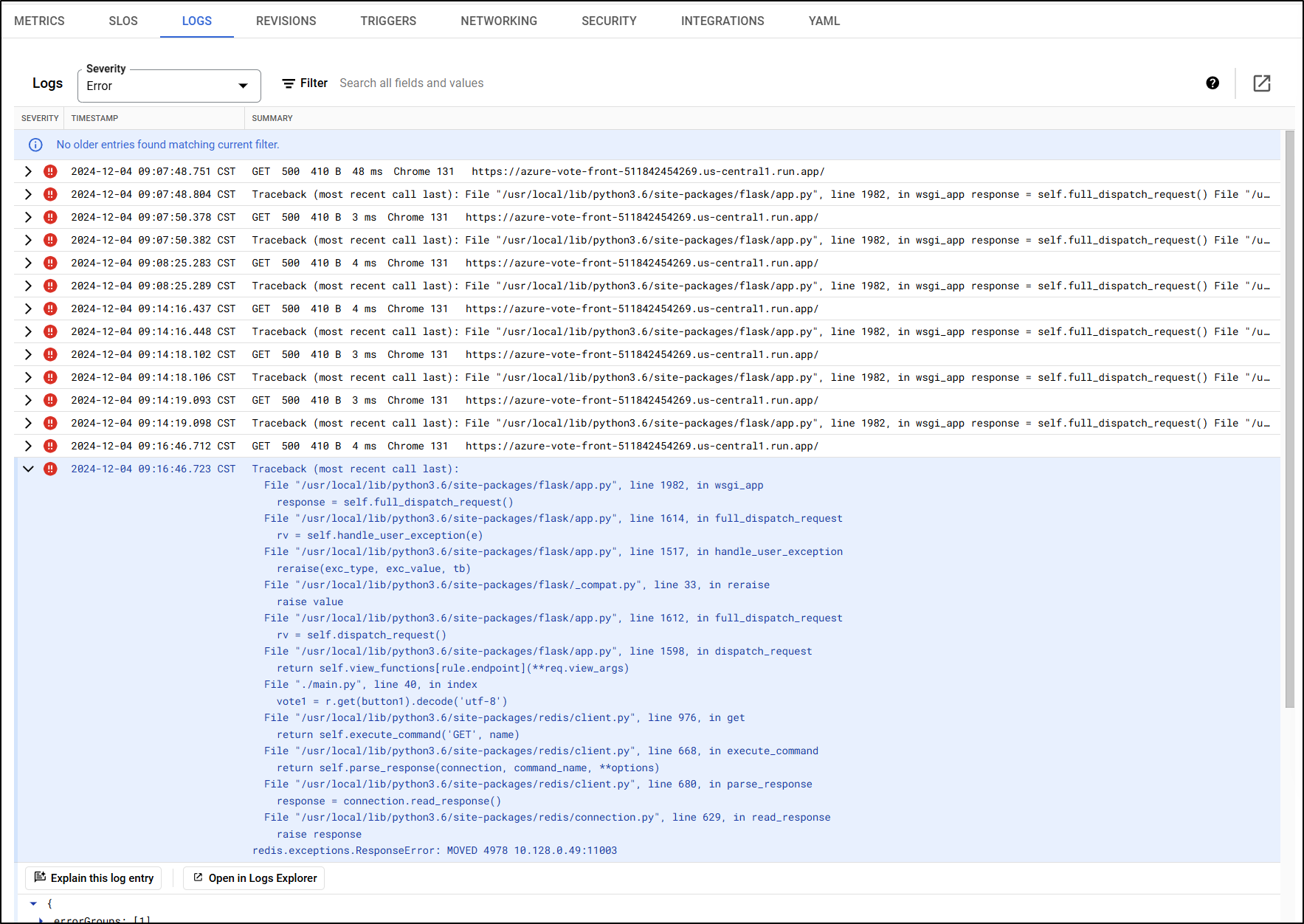

And the logs keep showing a “redis.exceptions.ResponseError: Moved 4978 0.128.0.49:11003”
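For context, `MOVED` is the redirect a cluster-mode server returns when a key's hash slot lives on a different node, and a non-cluster client surfaces it as a ResponseError instead of following it. The sketch below shows how a slot number is derived (CRC16 of the key, modulo 16384) and how the reply can be split apart. `key_slot` and `parse_moved` are illustrative helpers, not part of redis-py, and the message parsed is the one from the log above.

```python
import binascii


def key_slot(key: str) -> int:
    """Cluster hash slot: CRC16 (XMODEM) of the key, modulo 16384."""
    data = key.encode()
    # If the key contains a non-empty {hash tag}, only the tag is hashed.
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        if end > start + 1:
            data = data[start + 1:end]
    return binascii.crc_hqx(data, 0) % 16384


def parse_moved(message: str) -> tuple[int, str, int]:
    """Split 'MOVED <slot> <host>:<port>' into slot, host and port."""
    _, slot, address = message.split()
    host, port = address.rsplit(":", 1)
    return int(slot), host, int(port)


print(key_slot("foo"))
print(parse_moved("Moved 4978 0.128.0.49:11003"))
```

A cluster-aware client, such as the `RedisCluster` class in recent redis-py releases, follows these redirects on its own; whether the vote app's older client could be swapped for one is untested here.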

I’m going to try updating this to send all traffic to the VPC to see if that helps

That worked!

I can now see my GCP Serverless function running the voting app

However, a Vote does make it crash

This too shows a similar error

Meanwhile I can see it is calling Valkey

I could move to using connectors next, but then I would have to hunt them down to remove them, and since, unlike Azure, I cannot just go to a Resource Group and delete it, I’ll stop here.

Honestly, I have the same gripe with AWS. To network in a way that makes sense, I have to pay for VMs to run as gateways and for a simple blogger like myself, throwing away $30-40/mo just to have my network work the way I want isn’t something I’m willing to do.

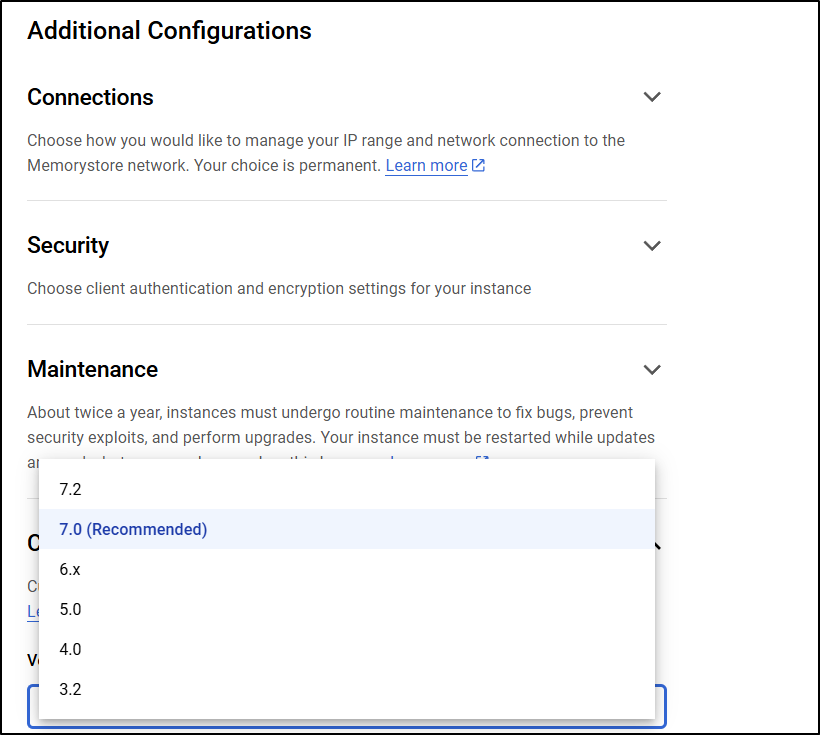

Compared to Redis in GCP

For comparison, the cheapest Redis instance in my GCP is $35.77/month - this is for 1 GB (versus the 1.4 GB of the Valkey option) and has no replicas at all

However, I can pick from a few more versions:

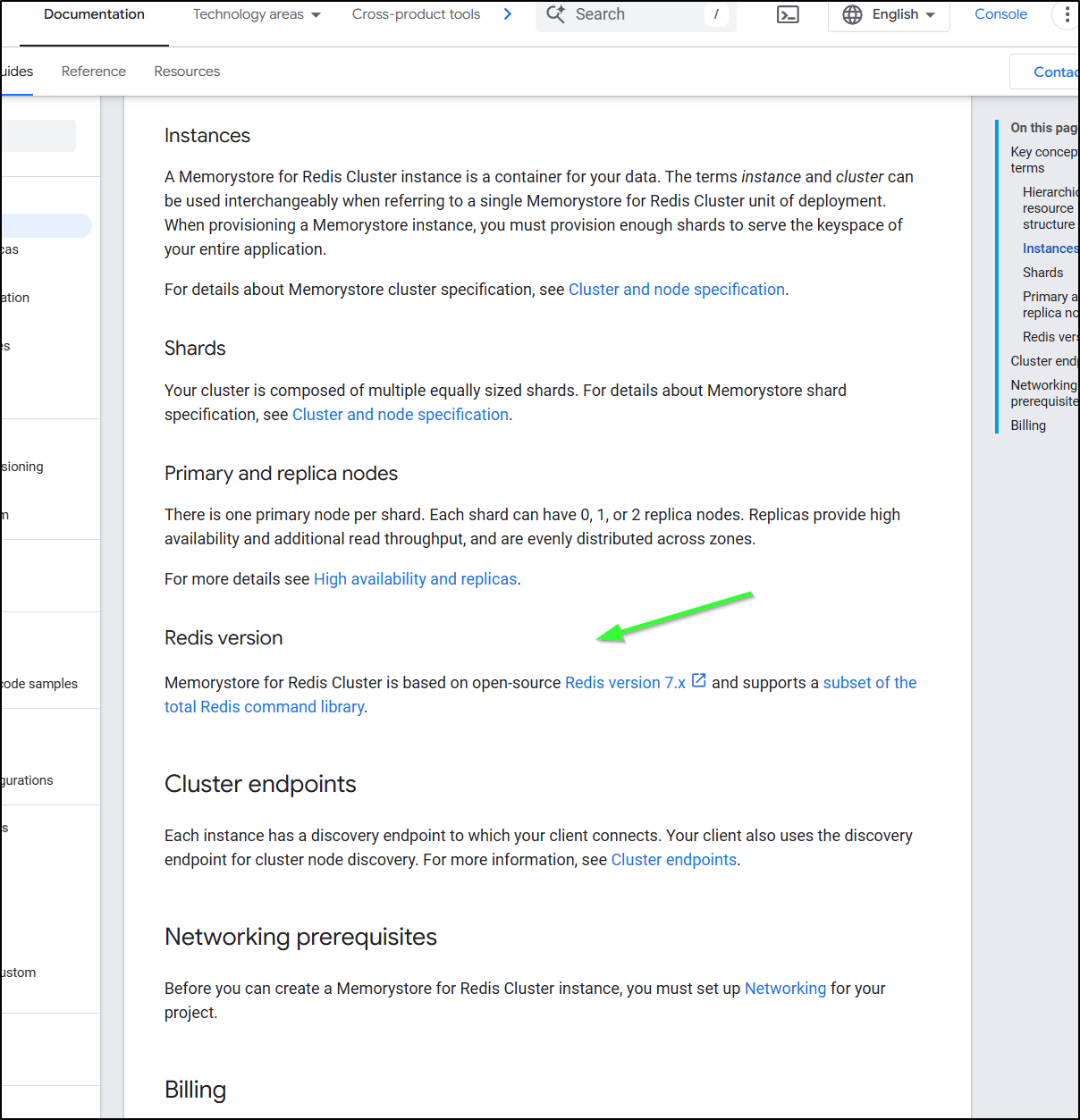

I should point out, there is a Redis Cluster option that lines up to the Valkey cluster but you’ll notice nowhere on the page does it show the version

The reason, I believe, is that if you look at the docs, we can see it’s pinned to the last OSS version, 7.x

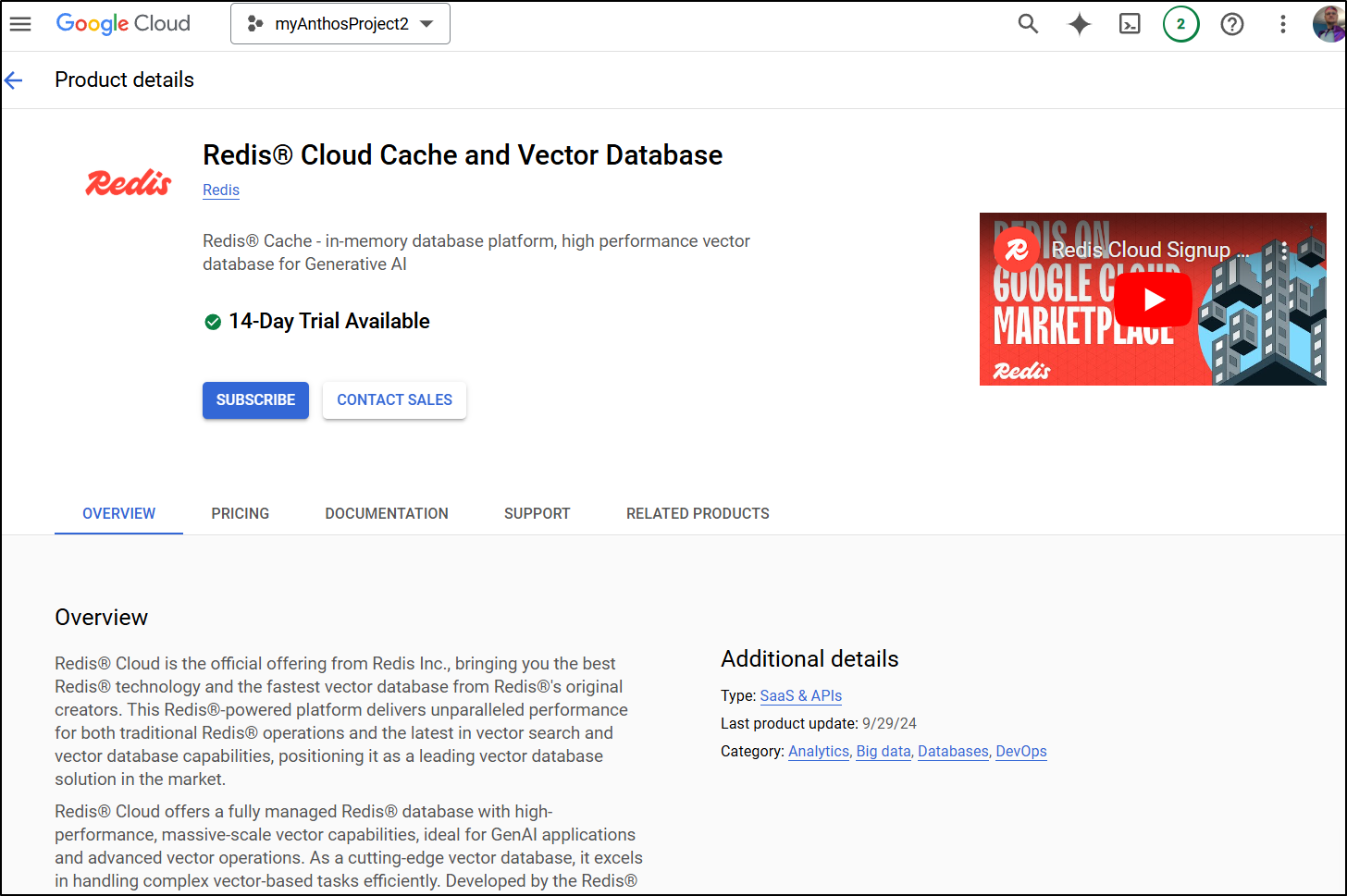

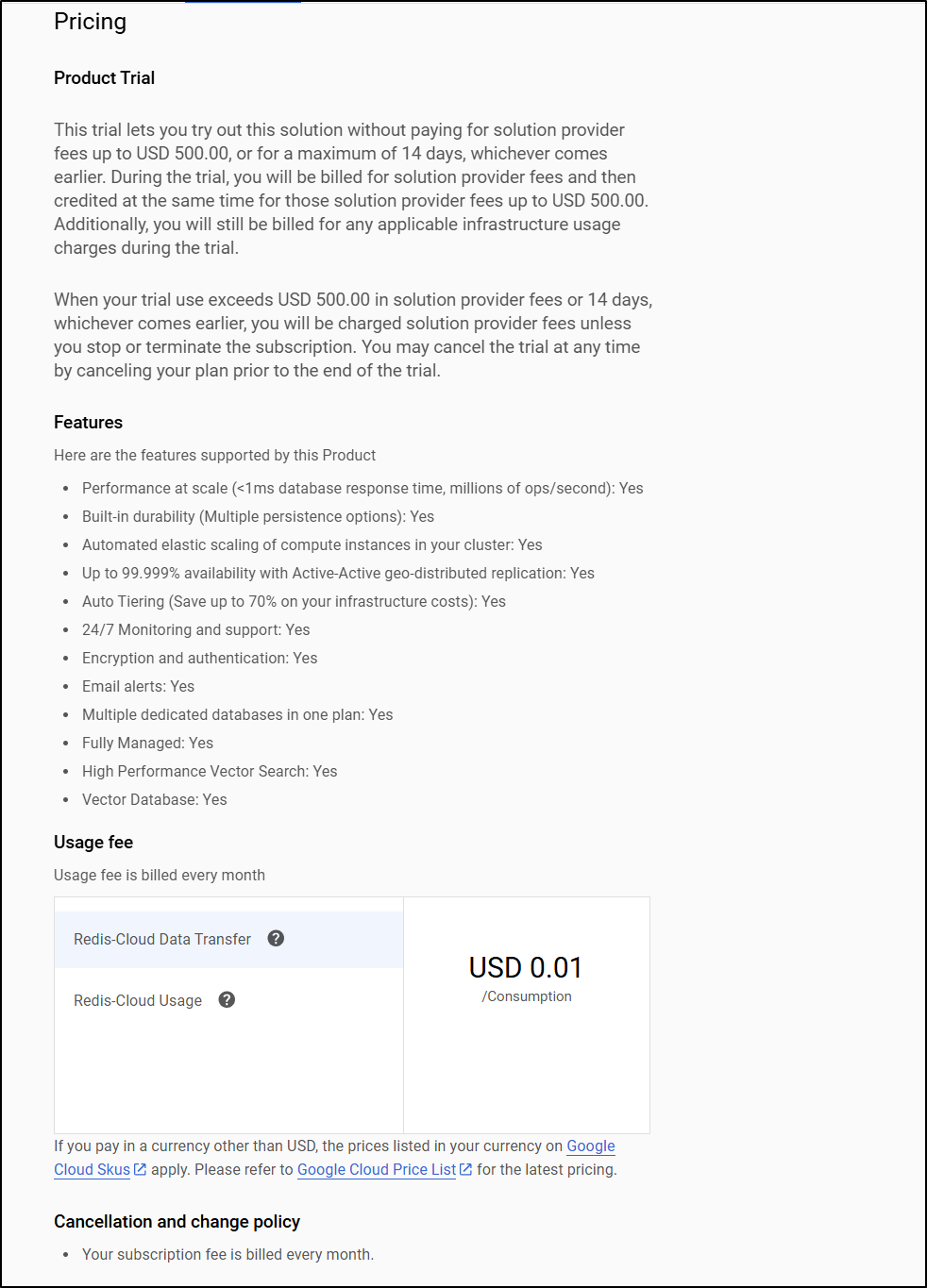

You can also always get “Redis Cloud” for mystery pricing

”$ per consumption” whatever that means

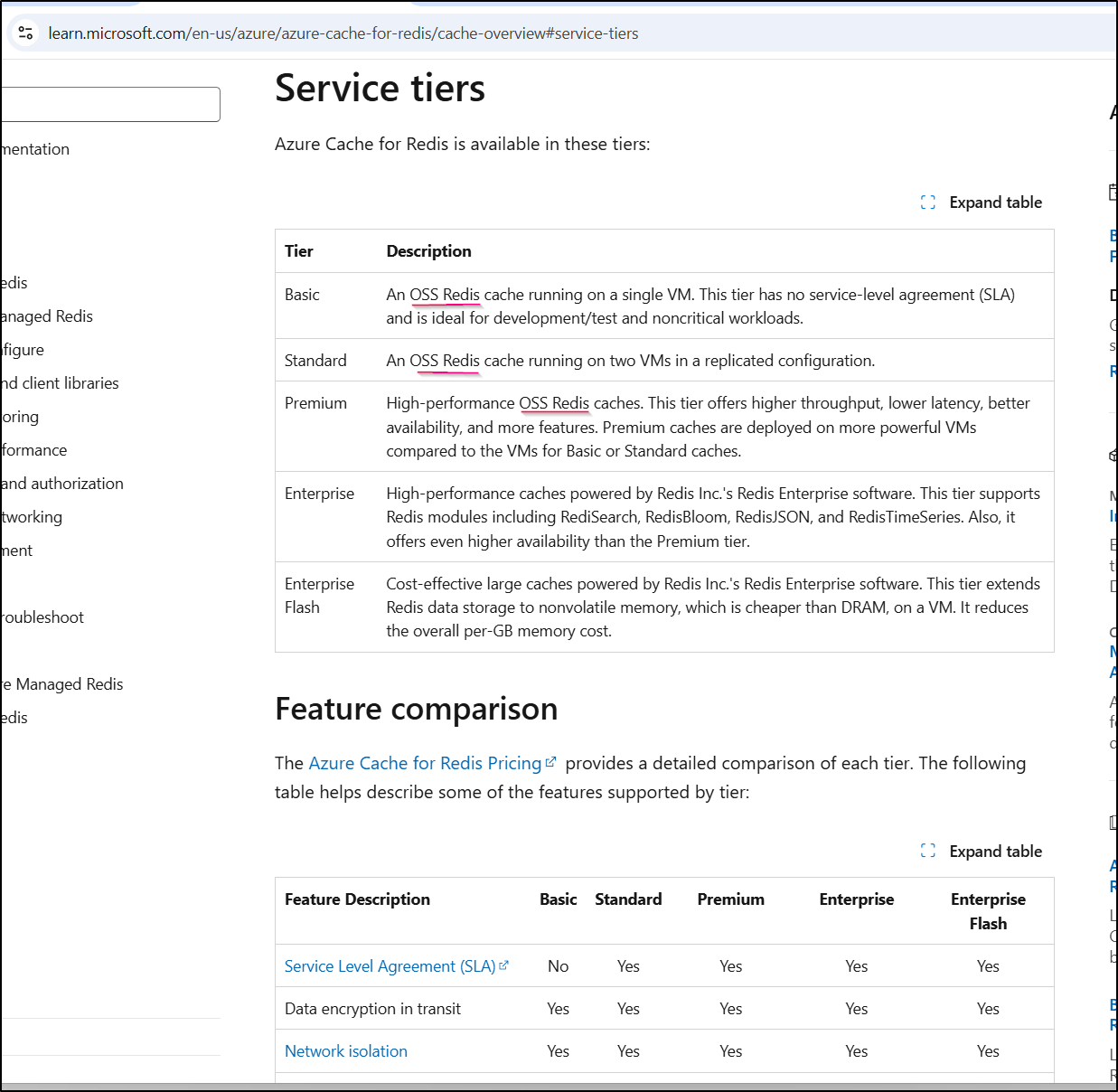

Redis/Valkey in Azure

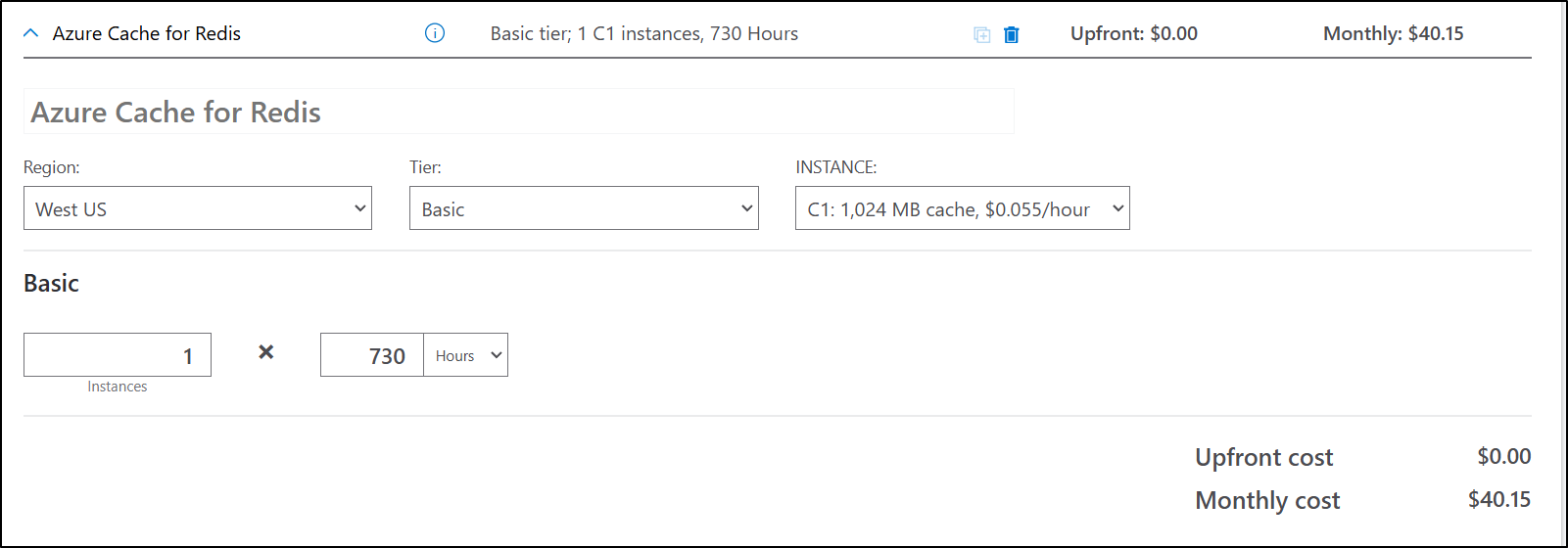

For years now, Azure has had “Azure Cache for Redis”.

In the docs I now see it’s clear that for Basic through Premium, it’s “OSS Redis”, meaning 7.x

Pricing-wise this lines up. It’s about US$40/month for a non-HA basic instance

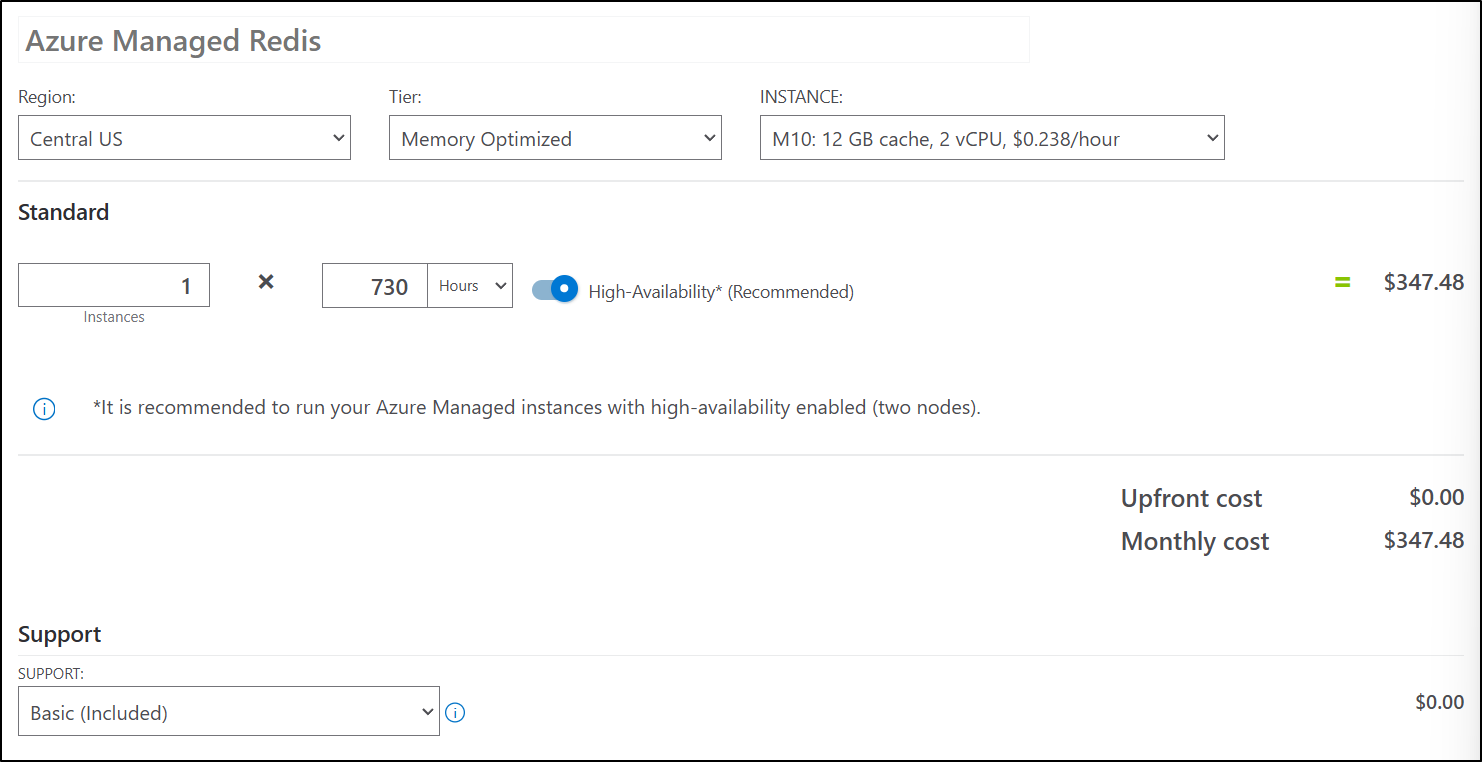

However, the cheapest SaaS Cluster option is 12 GB and US$347 a month

Redis/Valkey in AWS

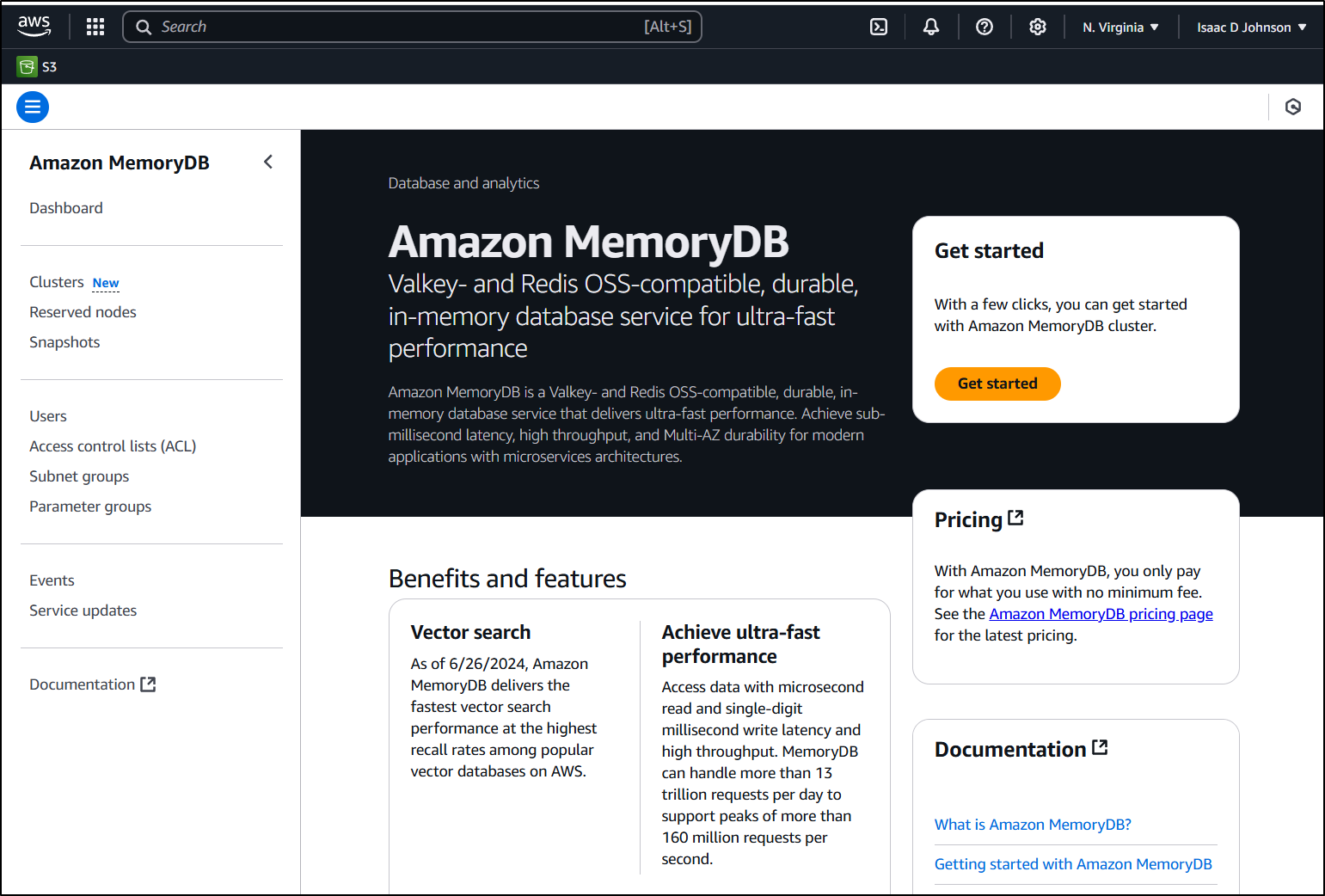

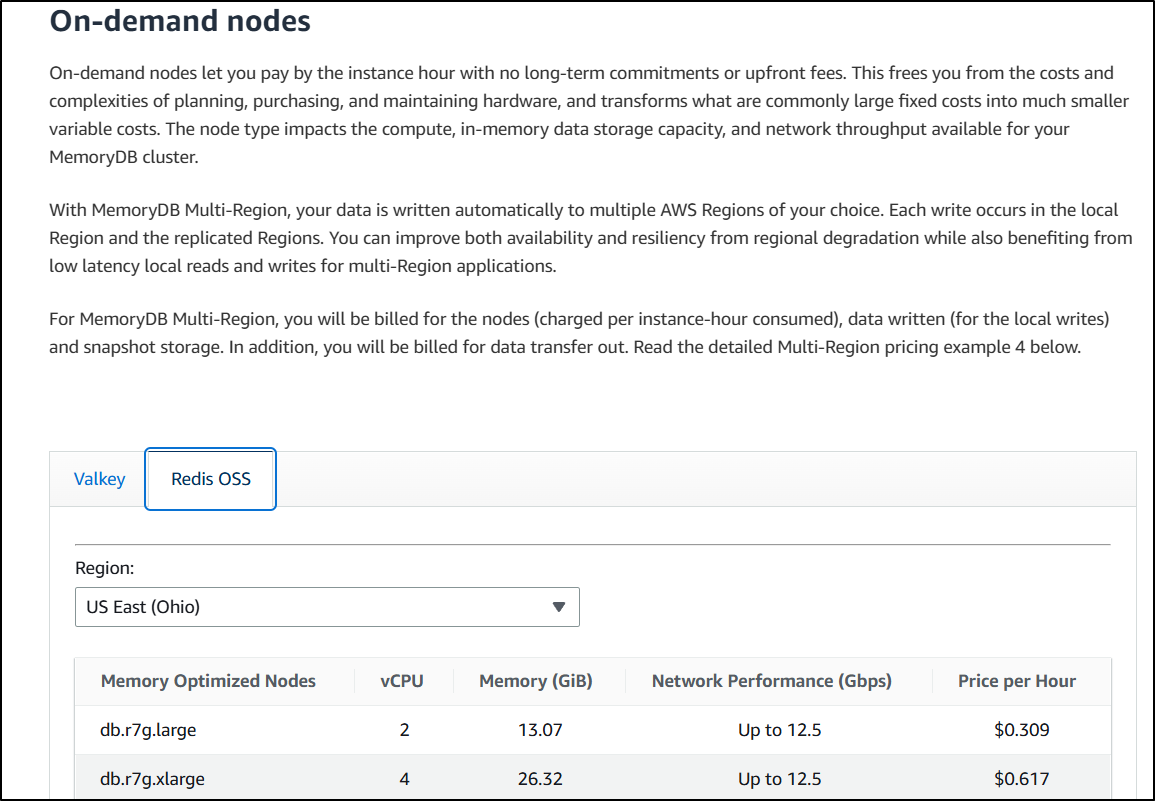

Amazon MemoryDB is only Valkey and Redis-OSS now. It’s telling that “Valkey” gets first billing in the offering text

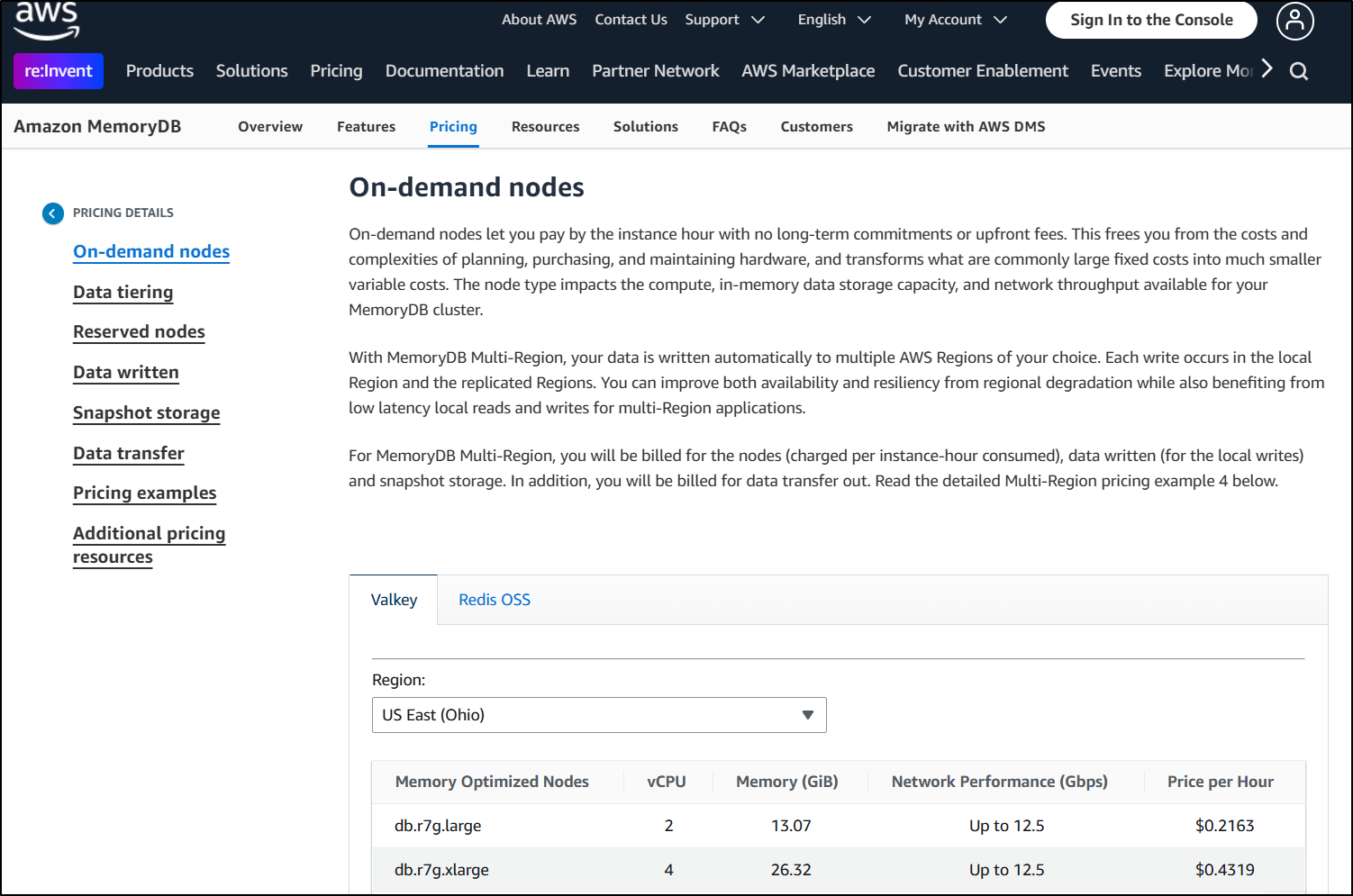

Here it’s easy to see the pricing difference: Valkey is US$0.2163 an hour (about $160/mo), compared to Redis OSS at US$0.309 an hour (about $230/mo)
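Put as a percentage, using the two hourly MemoryDB rates quoted above (the helper name is just for illustration):

```python
def percent_cheaper(cheaper: float, pricier: float) -> float:
    """How much lower the cheaper rate is, as a percentage of the pricier one."""
    return round((pricier - cheaper) / pricier * 100, 1)


# Valkey versus Redis OSS hourly rates from the MemoryDB pricing shown here.
print(percent_cheaper(0.2163, 0.309))
```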

For a non-cluster option - just a Redis or Valkey instance, we need to look at ElastiCache.

Here I need not even look at the Pricing Calculator as they are pushing Valkey with a discounted US$6/mo. That’s cheaper than a Venti PSL at Starbucks.

Testing Valkey on AWS

Let’s give this a test as well.

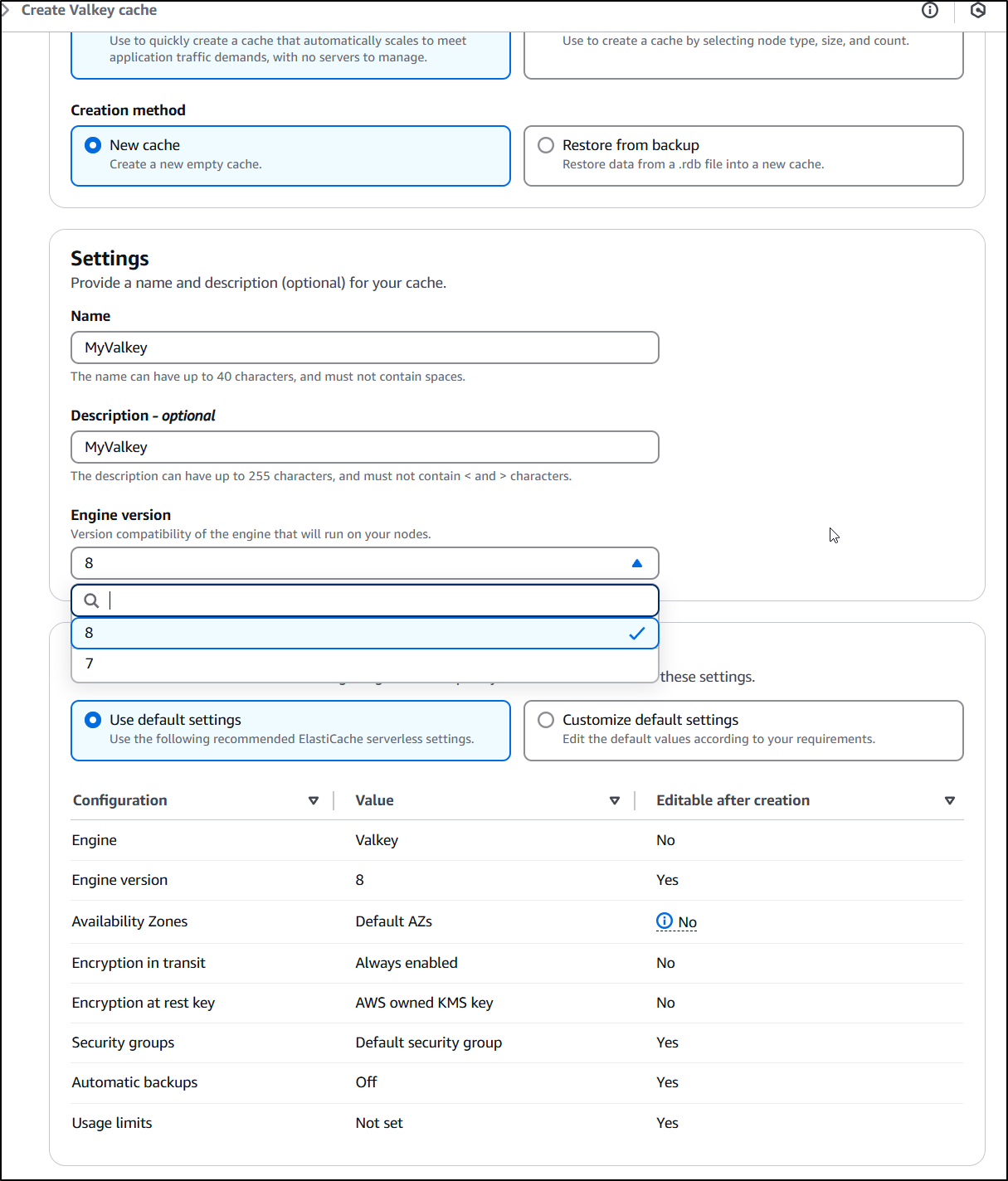

I’ll use the default ‘serverless’ model that “scales to meet application traffic” and we can pick between release 7 and 8

Ah, here I’m blocked - because I need a VPC and because the way AWS has jacked me around for pricing on gateways, I won’t make a VPC anymore

Aiven

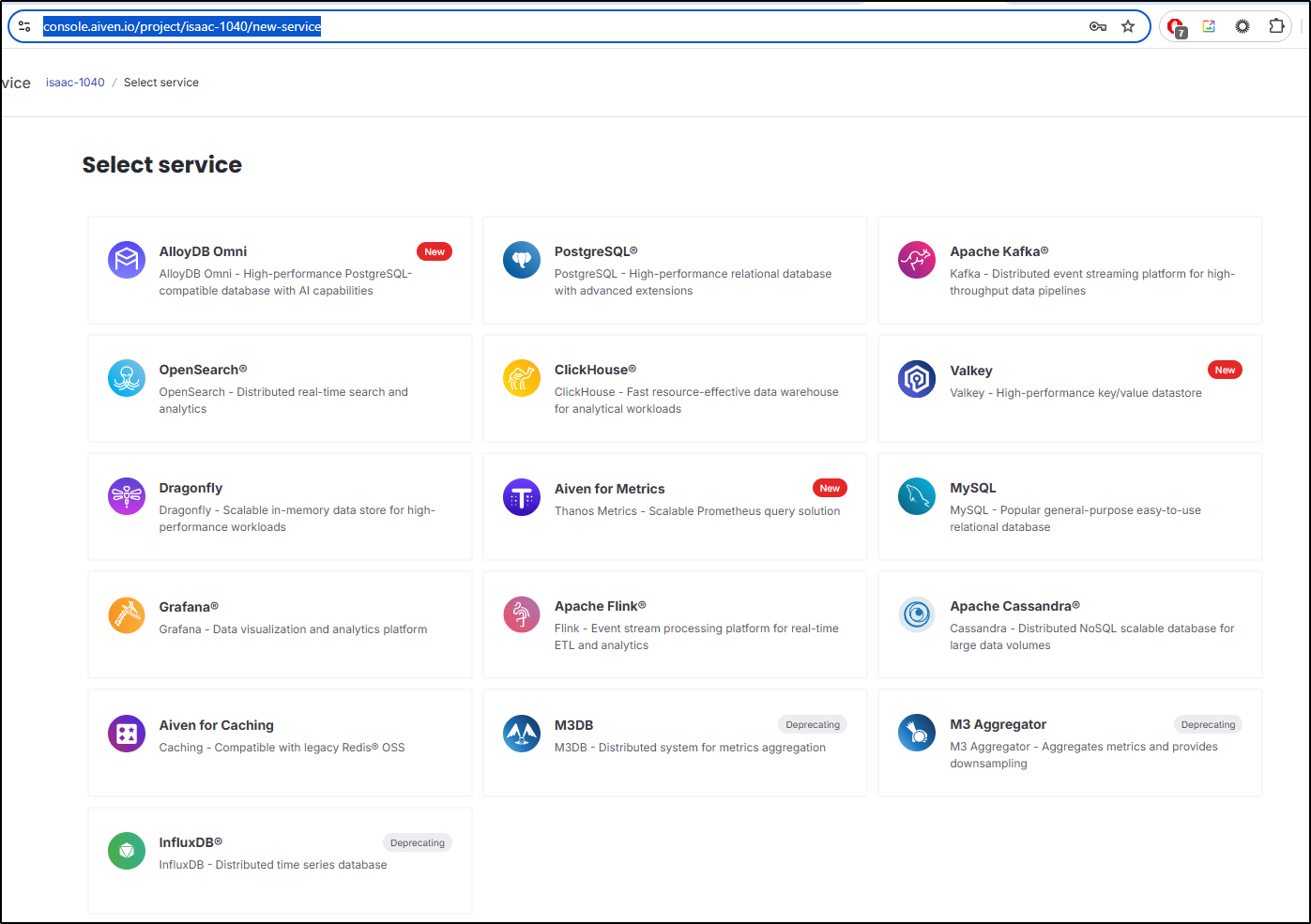

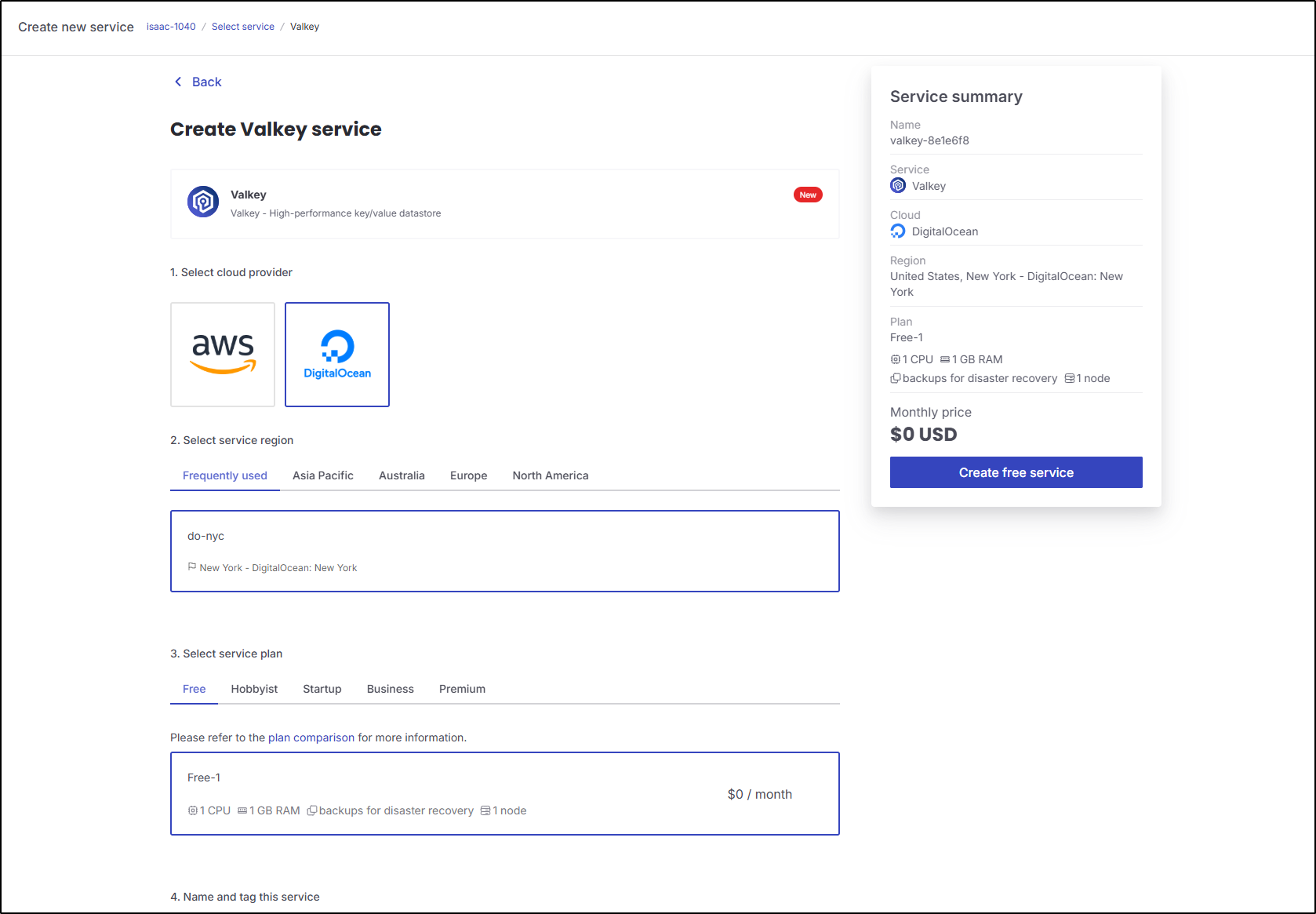

I don’t mention them often enough, but one of my favourite Cloud Providers for open-source solutions is Aiven.io.

I went to check there and see they now have a Valkey option

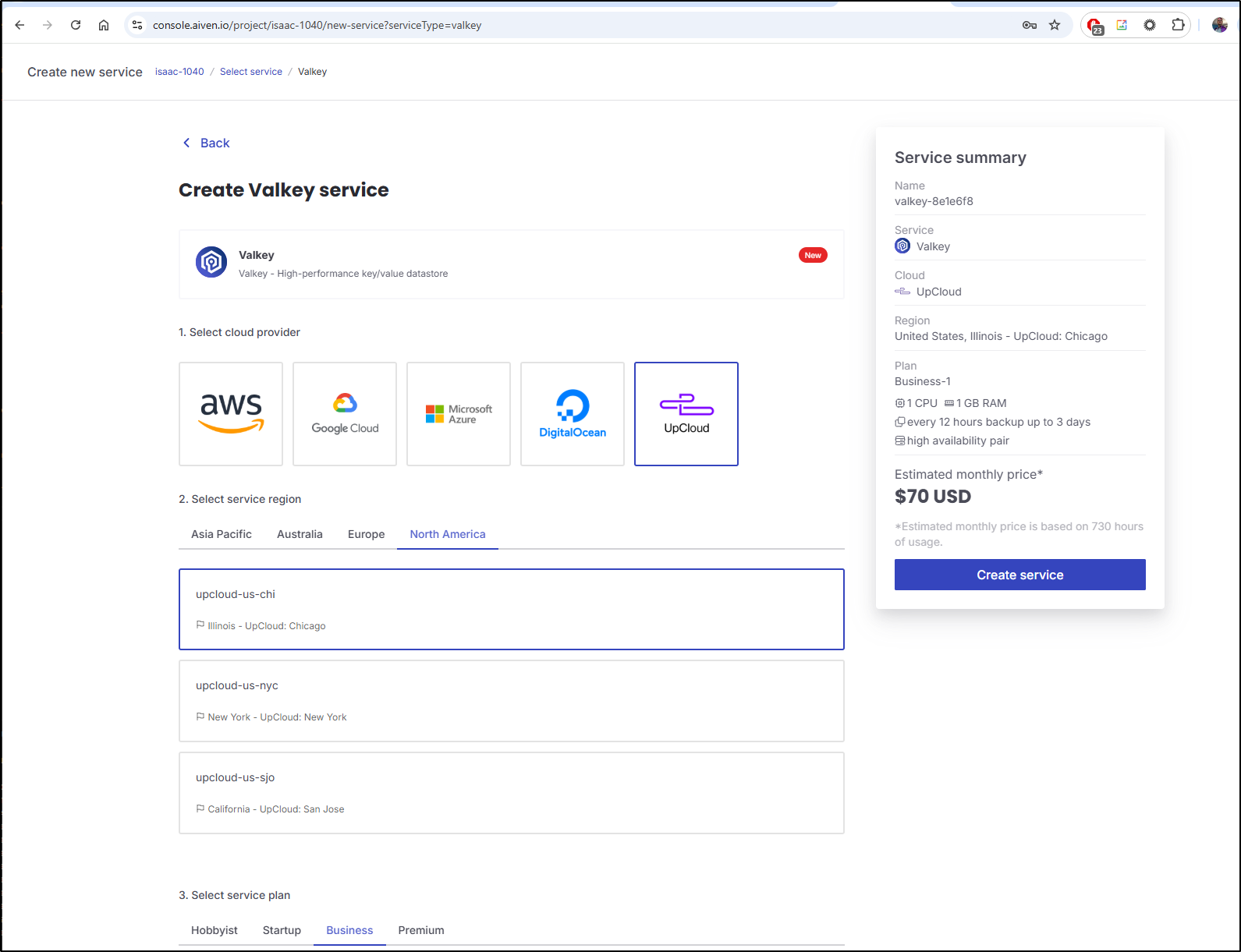

The cheapest Business plan level is on DigitalOcean (DO) and UpCloud for $70

However, in the free tier they do have an option on AWS and DO

Unfortunately, I cannot disable the password in Advanced Options, so I cannot just use this as a drop-in for the Azure Vote App

I waited for it to come up

And if I use TLS and pass AUTH with --user and --pass then it works

I have no name!@valkey-client:/$ valkey-cli -h valkey-8e1e6f8-isaac-1040.f.aivencloud.com -p 11997 --tls --user default --pass AVNS_Zg5doQnvBmkNXw45qpH

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

valkey-8e1e6f8-isaac-1040.f.aivencloud.com:11997> set asdf 1234

OK

valkey-8e1e6f8-isaac-1040.f.aivencloud.com:11997> get asdf

"1234"

I realized I didn’t really need to pass a user of “default”.

I have no name!@valkey-client:/$ valkey-cli -h valkey-8e1e6f8-isaac-1040.f.aivencloud.com -p 11997 --tls --pass AVNS_Zg5doQnvBmkNXw45qpH

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

valkey-8e1e6f8-isaac-1040.f.aivencloud.com:11997> get asdf

"1234"

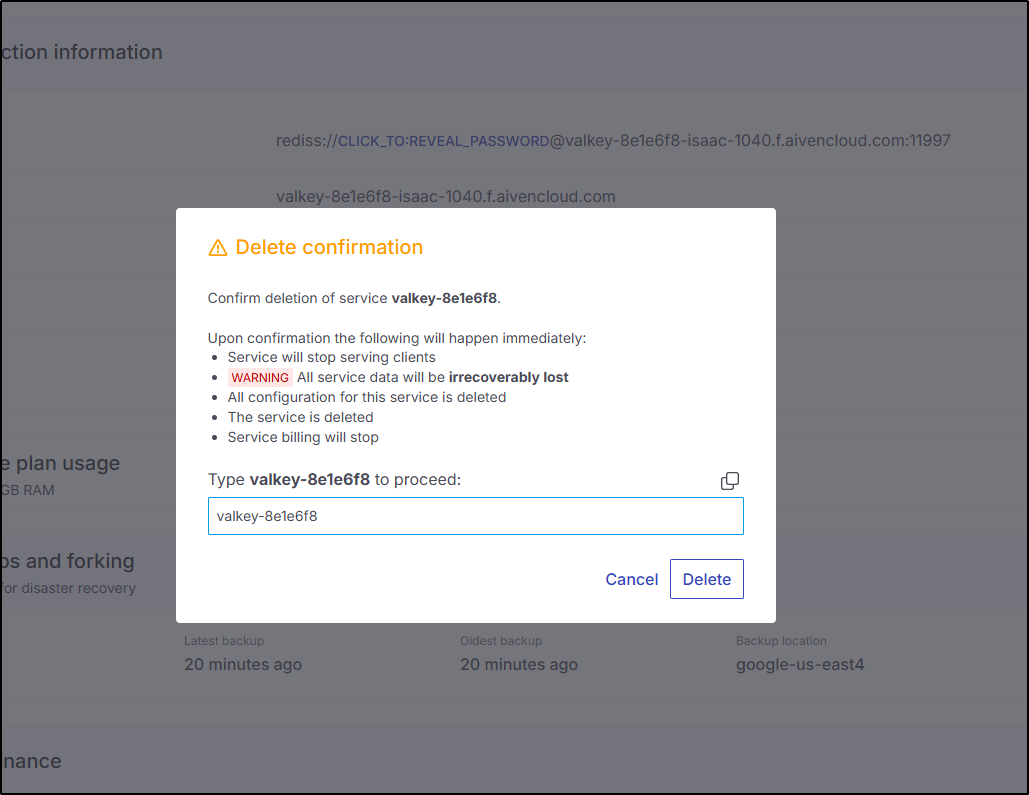
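To keep the password off the command line, the same AUTH, SET and GET exchange can be driven from code. This is a minimal standard-library sketch, assuming the server speaks plain RESP over TLS, as the `--tls` flag suggests. `encode_command` and `run_commands` are hypothetical helpers, the host and the `VALKEY_PASSWORD` variable in the usage note are placeholders, and replies come back raw rather than parsed.

```python
import socket
import ssl


def encode_command(*args: str) -> bytes:
    """Encode one command as a RESP array of bulk strings."""
    out = [f"*{len(args)}\r\n".encode()]
    for arg in args:
        raw = arg.encode()
        out.append(b"$" + str(len(raw)).encode() + b"\r\n" + raw + b"\r\n")
    return b"".join(out)


def run_commands(host: str, port: int, password: str,
                 commands: list[tuple[str, ...]]) -> list[bytes]:
    """Open a TLS connection, AUTH, then send each command and collect raw replies."""
    context = ssl.create_default_context()
    replies = []
    with socket.create_connection((host, port), timeout=5) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            for command in [("AUTH", password), *commands]:
                tls.sendall(encode_command(*command))
                replies.append(tls.recv(4096))  # adequate for short replies only
    return replies


# Show the bytes that a SET would put on the wire.
print(encode_command("SET", "asdf", "1234"))
```

A call would look something like `run_commands("<host>", 11997, os.environ["VALKEY_PASSWORD"], [("GET", "asdf")])`. For the CLI itself, the `REDISCLI_AUTH` environment variable used earlier in this post keeps the password out of the arguments as well.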

I’ll now delete it just to be a good user

I thought Aiven had Redis and, consulting my old blog entry, indeed I did set it up with Redis on Aiven in 2022.

However, their product page just shows Valkey now.

Summary

Today we revisited the Redis forks after nearly nine months and found that Garnet and Valkey are very much alive and well. We set up Valkey in Kubernetes and tried it with and without passwords. The passwordless version was specifically so we could test an older containerized voting app, the Azure Vote App, with it.

Other than some gaps in the docs around using an existing secret for the password, Valkey installation is now quite smooth. Moreover, in reviewing our options in the clouds, it was clear most of the clouds, at least for Redis, have stopped at the last OSS release in the 7.x line. The versions 8.x and beyond were, for the most part, all Valkey. This does not bode well for Redis (the company).

As a privately held company, it’s hard to say how they are faring. I can only go by what is free for me to see on PitchBook, which suggests no new investments and flat employee growth. Glassdoor reviews are hard to parse as so many recent 5 star ones are glowing recommendations from “anonymous employees” that sound suspiciously similar.

I have no bent against Redis or HashiCorp or other companies. Many good people work there. I just don’t like the practice of taking what others contributed and then saying “it’s all mine, pay me” - to me, it feels a bit like stealing. But therein lies the beauty of OSS. The license protects the versions up until the fork. So that means things like Valkey can shoot off and companies can just choose to stop taking in new updates if they don’t want to deal with the BSL or SSPL.