Published: Oct 17, 2024 by Isaac Johnson

We spoke of Immich last in 2023. I used it often up until my cluster had a severe outage. But even in my article in 2023, I noted things were actively updating.

Let’s look at this self-hosted photo and video hosting platform. It’s open source, easy to set up, and now appears to have native Android and iOS apps.

Immich in Kubernetes

Spoiler: I end up having some trouble trying to use my own PostgreSQL with the required vectors add-on below. If following along, perhaps use the bundled PostgreSQL in the chart.

Unlike before, we now have an official Helm chart with documentation here

I’ll do a helm repo add and helm repo update

$ helm repo add immich https://immich-app.github.io/immich-charts

"immich" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Unable to get an update from the "freshbrewed" chart repository (https://harbor.freshbrewed.science/chartrepo/library):

failed to fetch https://harbor.freshbrewed.science/chartrepo/library/index.yaml : 404 Not Found

...Unable to get an update from the "myharbor" chart repository (https://harbor.freshbrewed.science/chartrepo/library):

failed to fetch https://harbor.freshbrewed.science/chartrepo/library/index.yaml : 404 Not Found

...Successfully got an update from the "confluentinc" chart repository

...Successfully got an update from the "nfs" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "zabbix-community" chart repository

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "bitwarden" chart repository

...Successfully got an update from the "kuma" chart repository

...Successfully got an update from the "novum-rgi-helm" chart repository

...Successfully got an update from the "opencost" chart repository

...Successfully got an update from the "opencost-charts" chart repository

...Successfully got an update from the "backstage" chart repository

...Successfully got an update from the "longhorn" chart repository

...Successfully got an update from the "castai-helm" chart repository

...Successfully got an update from the "minio-operator" chart repository

...Successfully got an update from the "kiwigrid" chart repository

...Successfully got an update from the "openproject" chart repository

...Successfully got an update from the "spacelift" chart repository

...Successfully got an update from the "open-telemetry" chart repository

...Successfully got an update from the "elastic" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "signoz" chart repository

...Successfully got an update from the "uptime-kuma" chart repository

...Successfully got an update from the "crossplane-stable" chart repository

...Successfully got an update from the "ananace-charts" chart repository

...Successfully got an update from the "grafana" chart repository

...Successfully got an update from the "minio" chart repository

...Successfully got an update from the "immich" chart repository

...Successfully got an update from the "makeplane" chart repository

...Successfully got an update from the "portainer" chart repository

...Successfully got an update from the "kube-state-metrics" chart repository

...Successfully got an update from the "ngrok" chart repository

...Successfully got an update from the "adwerx" chart repository

...Successfully got an update from the "openfunction" chart repository

...Successfully got an update from the "dapr" chart repository

...Successfully got an update from the "btungut" chart repository

...Successfully got an update from the "gitea-charts" chart repository

...Successfully got an update from the "zipkin" chart repository

...Successfully got an update from the "rhcharts" chart repository

...Successfully got an update from the "ingress-nginx" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "jfelten" chart repository

...Successfully got an update from the "lifen-charts" chart repository

...Successfully got an update from the "sonarqube" chart repository

...Successfully got an update from the "hashicorp" chart repository

...Unable to get an update from the "epsagon" chart repository (https://helm.epsagon.com):

Get "https://helm.epsagon.com/index.yaml": dial tcp: lookup helm.epsagon.com on 10.255.255.254:53: server misbehaving

...Successfully got an update from the "skm" chart repository

...Successfully got an update from the "akomljen-charts" chart repository

...Successfully got an update from the "sumologic" chart repository

...Successfully got an update from the "kubecost" chart repository

...Successfully got an update from the "openzipkin" chart repository

...Successfully got an update from the "rook-release" chart repository

...Successfully got an update from the "jetstack" chart repository

...Successfully got an update from the "argo-cd" chart repository

...Successfully got an update from the "rancher-latest" chart repository

...Successfully got an update from the "incubator" chart repository

...Successfully got an update from the "prometheus-community" chart repository

...Successfully got an update from the "gitlab" chart repository

...Successfully got an update from the "newrelic" chart repository

...Successfully got an update from the "bitnami" chart repository

Update Complete. ⎈Happy Helming!⎈
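With this many repositories configured, the few failures are easy to miss in the scroll. A small sketch that filters the update output down to the repositories that failed; the function name `failed_repos` is made up for illustration, and it only relies on the "Unable to get an update" wording visible in the output above:

```shell
# Hypothetical filter: list repos that failed in `helm repo update` output.
# Reads the command output on stdin and prints one repo name per line.
failed_repos() {
  sed -n 's/.*Unable to get an update from the "\([^"]*\)" chart repository.*/\1/p'
}

# Example: helm repo update 2>&1 | failed_repos
```

In the run above this would report the two Harbor aliases and `epsagon`, none of which the Immich install depends on.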

I will then want to create a values.yaml from the base. However, I see some differences between the linked common values and the latest version, and this doesn’t really match the top-level values file either.

I’m going to err on the side of the topmost file
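One way to get a starting point for that file is to dump the chart's own defaults rather than copying from a web page. A minimal sketch, assuming the `immich` repo alias added above; the helper name `dump_chart_values` is hypothetical and simply wraps `helm show values`:

```shell
# Hypothetical helper: write a chart's default values to a file for editing.
# Args: chart reference (repo/chart), output file
dump_chart_values() {
  # `helm show values` prints the chart's bundled values.yaml to stdout
  helm show values "$1" > "$2"
}

# Example: dump_chart_values immich/immich immich.values.yaml
```

The resulting file reflects whichever chart version the local repo index resolves to, so it may differ from what is shown on GitHub.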

Before we dig in there, we will need a Redis or Valkey instance, a PVC, and a PostgreSQL host as well.

PVC

This is probably the easiest to sort out. A quick manifest should do it

$ cat immich.pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: immich-vol
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi
  storageClassName: local-path
  volumeMode: Filesystem

$ kubectl apply -f ./immich.pvc.yaml

persistentvolumeclaim/immich-vol created

I’ll note that in the values file (we’ll also review the whole file before installing later):

immich:
  metrics:
    # Enabling this will create the service monitors needed to monitor immich with the prometheus operator
    enabled: false
  persistence:
    # Main data store for all photos shared between different components.
    library:
      # Automatically creating the library volume is not supported by this chart
      # You have to specify an existing PVC to use
      existingClaim: immich-vol

Valkey

I do have a valkey test instance from some time ago, but it was an early instance.

Let’s install a current instance with the official Bitnami chart, though I need to disable auth

$ helm install my-release oci://registry-1.docker.io/bitnamicharts/valkey --set auth.enabled=false

Pulled: registry-1.docker.io/bitnamicharts/valkey:1.0.2

Digest: sha256:7f042e99e7af8209e237ba75451334de641bdd906880a285a485b24ba78f9a3c

NAME: my-release

LAST DEPLOYED: Sun Oct 6 07:29:17 2024

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: valkey

CHART VERSION: 1.0.2

APP VERSION: 8.0.1

** Please be patient while the chart is being deployed **

Valkey can be accessed on the following DNS names from within your cluster:

my-release-valkey-master.default.svc.cluster.local for read/write operations (port 6379)

my-release-valkey-replicas.default.svc.cluster.local for read-only operations (port 6379)

To connect to your Valkey server:

1. Run a Valkey pod that you can use as a client:

kubectl run --namespace default valkey-client --restart='Never' --image docker.io/bitnami/valkey:8.0.1-debian-12-r0 --command -- sleep infinity

Use the following command to attach to the pod:

kubectl exec --tty -i valkey-client \

--namespace default -- bash

2. Connect using the Valkey CLI:

valkey-cli -h my-release-valkey-master

valkey-cli -h my-release-valkey-replicas

To connect to your database from outside the cluster execute the following commands:

kubectl port-forward --namespace default svc/my-release-valkey-master 6379:6379 &

valkey-cli -h 127.0.0.1 -p 6379

WARNING: There are "resources" sections in the chart not set. Using "resourcesPreset" is not recommended for production. For production installations, please set the following values according to your workload needs:

- replica.resources

- master.resources

+info https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/

I can use the service

$ kubectl get svc | grep my-release

my-release-valkey-headless   ClusterIP   None           <none>   6379/TCP   3m28s
my-release-valkey-replicas   ClusterIP   10.43.212.95   <none>   6379/TCP   3m28s
my-release-valkey-master     ClusterIP   10.43.82.30    <none>   6379/TCP   3m28s

Which I’ll use in my values

env:
  REDIS_HOSTNAME: 'my-release-valkey-master.default.svc.cluster.local'
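The long hostname matters when the consumer runs in a different namespace than the Service (Valkey is in `default` here): the fully qualified form is `<service>.<namespace>.svc.<cluster-domain>`. A small sketch that builds it; the helper name `svc_fqdn` is hypothetical, and `cluster.local` is assumed as the cluster domain, which is the usual default but can be changed per cluster:

```shell
# Hypothetical helper: build the in-cluster DNS name for a Service.
# Args: service name, namespace, optional cluster domain
# (assumption: defaults to cluster.local)
svc_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "$2" "${3:-cluster.local}"
}

svc_fqdn my-release-valkey-master default
# prints my-release-valkey-master.default.svc.cluster.local
```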

I could spin up PostgreSQL in Docker, but in this case I will use an instance already running on a host

builder@isaac-MacBookAir:~$ sudo su - postgres

postgres@isaac-MacBookAir:~$ psql

psql (14.13 (Ubuntu 14.13-0ubuntu0.22.04.1))

Type "help" for help.

postgres=# \list

                                   List of databases
    Name    |  Owner   | Encoding |   Collate   |    Ctype    |     Access privileges
------------+----------+----------+-------------+-------------+---------------------------
 patientsdb | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 | =Tc/postgres             +
            |          |          |             |             | postgres=CTc/postgres    +
            |          |          |             |             | patientsuser=CTc/postgres
 postgres   | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 |
 template0  | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 | =c/postgres              +
            |          |          |             |             | postgres=CTc/postgres
 template1  | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 | =c/postgres              +
            |          |          |             |             | postgres=CTc/postgres
(4 rows)

postgres=#

I’ll then create the user and database

postgres=# create user immichuser with password 'immichp@ssw0rd';

CREATE ROLE

postgres=# create database immichdb;

CREATE DATABASE

postgres=# grant all privileges on database immichdb to immichuser;

GRANT

This rendered a final values file:

$ cat immich.values.yaml

## This chart relies on the common library chart from bjw-s
## You can find it at https://github.com/bjw-s/helm-charts/tree/main/charts/library/common
## Refer there for more detail about the supported values

# These entries are shared between all the Immich components

env:
  REDIS_HOSTNAME: 'my-release-valkey-master.default.svc.cluster.local'
  DB_HOSTNAME: "192.168.1.78"
  DB_USERNAME: "immichuser"
  DB_DATABASE_NAME: "immichdb"
  # -- You should provide your own secret outside of this helm-chart and use `postgresql.global.postgresql.auth.existingSecret` to provide credentials to the postgresql instance
  DB_PASSWORD: "immichp@ssw0rd"
  IMMICH_MACHINE_LEARNING_URL: '{{ printf "http://%s-machine-learning:3003" .Release.Name }}'

image:
  tag: v1.117.0

immich:
  metrics:
    # Enabling this will create the service monitors needed to monitor immich with the prometheus operator
    enabled: false
  persistence:
    # Main data store for all photos shared between different components.
    library:
      # Automatically creating the library volume is not supported by this chart
      # You have to specify an existing PVC to use
      existingClaim: immich-vol
  # configuration is immich-config.json converted to yaml
  # ref: https://immich.app/docs/install/config-file/
  #
  configuration: {}
    # trash:
    #   enabled: false
    #   days: 30
    # storageTemplate:
    #   enabled: true
    #   template: "{{y}}/{{y}}-{{MM}}-{{dd}}/{{filename}}"

# Dependencies

postgresql:
  enabled: false
  image:
    repository: tensorchord/pgvecto-rs
    tag: pg14-v0.2.0
  global:
    postgresql:
      auth:
        username: immich
        database: immich
        password: immich
  primary:
    containerSecurityContext:
      readOnlyRootFilesystem: false
    initdb:
      scripts:
        create-extensions.sql: |
          CREATE EXTENSION cube;
          CREATE EXTENSION earthdistance;
          CREATE EXTENSION vectors;

redis:
  enabled: false
  architecture: standalone
  auth:
    enabled: false

# Immich components

server:
  enabled: true
  image:
    repository: ghcr.io/immich-app/immich-server
    pullPolicy: IfNotPresent
  ingress:
    main:
      enabled: true
      annotations:
        # proxy-body-size is set to 0 to remove the body limit on file uploads
        nginx.ingress.kubernetes.io/proxy-body-size: "0"
        ingress.kubernetes.io/proxy-body-size: "0"
        nginx.org/client-max-body-size: "0"
        cert-manager.io/cluster-issuer: letsencrypt-prod
        ingress.kubernetes.io/ssl-redirect: "true"
        kubernetes.io/ingress.class: nginx
        nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
        nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
        nginx.ingress.kubernetes.io/ssl-redirect: "true"
        nginx.org/proxy-connect-timeout: "3600"
        nginx.org/proxy-read-timeout: "3600"
      hosts:
        - host: photos.freshbrewed.science
          paths:
            - path: "/"
      tls:
        - hosts:
            - photos.freshbrewed.science
          secretName: immich-tls

machine-learning:
  enabled: true
  image:
    repository: ghcr.io/immich-app/immich-machine-learning
    pullPolicy: IfNotPresent
  env:
    TRANSFORMERS_CACHE: /cache
  persistence:
    cache:
      enabled: true
      size: 10Gi
      # Optional: Set this to pvc to avoid downloading the ML models every start.
      type: emptyDir
      accessMode: ReadWriteMany
      storageClass: local-path

Let’s see if this all comes together

$ helm install --create-namespace --namespace immich immich immich/immich -f immich.values.yaml

W1006 07:55:38.025218 2703 warnings.go:70] annotation "kubernetes.io/ingress.class" is deprecated, please use 'spec.ingressClassName' instead

NAME: immich

LAST DEPLOYED: Sun Oct 6 07:55:36 2024

NAMESPACE: immich

STATUS: deployed

REVISION: 1

TEST SUITE: None

After 12 minutes, I didn’t see it come up

$ kubectl get pods -n immich

NAME READY STATUS RESTARTS AGE

immich-server-564db8f6c5-w5rdk 0/1 Pending 0 16s

immich-machine-learning-d946964f8-d8mk7 0/1 ContainerCreating 0 16s

$ kubectl get pods -n immich

NAME READY STATUS RESTARTS AGE

immich-server-564db8f6c5-w5rdk 0/1 Pending 0 12m

immich-machine-learning-d946964f8-d8mk7 1/1 Running 0 12m

Seems it’s waiting for the PVC

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 13m default-scheduler 0/3 nodes are available: persistentvolumeclaim "immich-vol" not found. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling..

Warning FailedScheduling 2m36s (x2 over 7m36s) default-scheduler 0/3 nodes are available: persistentvolumeclaim "immich-vol" not found. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling..

Did I not create it?

$ kubectl get pvc -n immich

No resources found in immich namespace.

I must have neglected to add the namespace. Let’s fix that

$ kubectl apply -f immich.pvc.yaml -n immich

persistentvolumeclaim/immich-vol created

$ kubectl get pvc -n immich

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

immich-vol Pending local-path 7s
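PersistentVolumeClaims are namespaced, so a pod can only mount a claim that lives in its own namespace. A small pre-flight sketch that checks for the claim before installing; the function name `pvc_in_namespace` is hypothetical, and it assumes `kubectl` is already pointed at the intended cluster:

```shell
# Hypothetical pre-flight check: confirm a PVC exists in the release namespace.
# Args: namespace, PVC name
pvc_in_namespace() {
  if kubectl get pvc "$2" -n "$1" >/dev/null 2>&1; then
    echo "PVC $2 found in namespace $1"
  else
    echo "PVC $2 missing in namespace $1" >&2
    return 1
  fi
}

# Example: pvc_in_namespace immich immich-vol && helm install ...
```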

I’ll rotate the pod to force it to try again

$ kubectl delete pod immich-server-564db8f6c5-w5rdk -n immich

pod "immich-server-564db8f6c5-w5rdk" deleted

$ kubectl get pods -n immich

NAME READY STATUS RESTARTS AGE

immich-machine-learning-d946964f8-d8mk7 1/1 Running 0 15m

immich-server-564db8f6c5-z2sl2 0/1 Pending 0 14s

$ kubectl describe pod immich-server-564db8f6c5-z2sl2 -n immich | tail -n 7

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events: <none>

$ kubectl describe pod immich-server-564db8f6c5-z2sl2 -n immich | tail -n 7

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 1s default-scheduler Successfully assigned immich/immich-server-564db8f6c5-z2sl2 to isaac-macbookpro

Perhaps it’s GitHub rate limits, but the image pull is certainly taking some time

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 5m10s default-scheduler Successfully assigned immich/immich-server-564db8f6c5-z2sl2 to isaac-macbookpro

Normal Pulling 5m3s kubelet Pulling image "ghcr.io/immich-app/immich-server:v1.117.0"

Once it pulled, I saw that it could not resolve the KubeDNS entry for the Valkey instance

Error: getaddrinfo ENOTFOUND my-release-valkey-master.default.svc.cluster.local

at GetAddrInfoReqWrap.onlookup [as oncomplete] (node:dns:109:26) {

errno: -3008,

code: 'ENOTFOUND',

syscall: 'getaddrinfo',

hostname: 'my-release-valkey-master.default.svc.cluster.local'

}

I realized a big mistake (which explains the PVC). I left my kubectx on the wrong cluster. Doh!

I removed the install and switched back to the primary cluster

$ helm delete -n immich immich

release "immich" uninstalled

$ kubectx int33

Switched to context "int33".
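A cheap guard against repeating this mistake is to compare the active context with the one you expect before running anything that changes the cluster. A minimal sketch; the function name `require_context` is hypothetical, and `int33` is simply the context name used in this post:

```shell
# Hypothetical guard: stop unless kubectl points at the expected context.
# Arg: expected context name
require_context() {
  current="$(kubectl config current-context)"
  if [ "$current" != "$1" ]; then
    echo "kubectl context is '$current', expected '$1'" >&2
    return 1
  fi
}

# Example: require_context int33 && helm install ...
```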

I then created a namespace and the PVC and verified the Valkey service was in the default namespace

$ kubectl create ns immich

namespace/immich created

$ kubectl apply -f ./immich.pvc.yaml -n immich

persistentvolumeclaim/immich-vol created

$ kubectl get svc -A | grep my | grep master

valkeytest   my-redis-master            ClusterIP   10.43.147.68   <none>   6379/TCP   184d
default      my-release-valkey-master   ClusterIP   10.43.82.30    <none>   6379/TCP   51m

Let’s try that again

$ helm install --create-namespace --namespace immich immich immich/immich -f immich.values.yaml

NAME: immich

LAST DEPLOYED: Sun Oct 6 08:22:43 2024

NAMESPACE: immich

STATUS: deployed

REVISION: 1

TEST SUITE: None

It seems Immich requires a PostgreSQL extension to be installed

$ kubectl get pods -n immich

NAME READY STATUS RESTARTS AGE

immich-machine-learning-84cd94d44-8kmw6 1/1 Running 0 9m45s

immich-server-7fbc5764bc-xgtqp 0/1 Running 4 (47s ago) 2m11s

$ kubectl logs immich-server-7fbc5764bc-xgtqp -n immich

Detected CPU Cores: 4

Starting api worker

Starting microservices worker

[Nest] 7 - 10/06/2024, 1:32:39 PM LOG [Microservices:EventRepository] Initialized websocket server

Error: The pgvecto.rs extension is not available in this Postgres instance.

If using a container image, ensure the image has the extension installed.

at /usr/src/app/dist/services/database.service.js:72:23

at process.processTicksAndRejections (node:internal/process/task_queues:95:5)

at async /usr/src/app/dist/repositories/database.repository.js:199:23

microservices worker error: Error: The pgvecto.rs extension is not available in this Postgres instance.

If using a container image, ensure the image has the extension installed.

microservices worker exited with code 1

The extension comes from the pgvecto.rs project. I used the v0.3.0 release: https://github.com/tensorchord/pgvecto.rs/releases/tag/v0.3.0

Add the vectors-pg14 package

$ sudo apt install vectors-pg14

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

vectors-pg14 is already the newest version (0.3.0).

The following packages were automatically installed and are no longer required:

app-install-data-partner bsdmainutils g++-9 gcc-10-base gir1.2-clutter-1.0 gir1.2-clutter-gst-3.0 gir1.2-cogl-1.0 gir1.2-coglpango-1.0 gir1.2-gnomebluetooth-1.0 gir1.2-gtkclutter-1.0

gnome-getting-started-docs gnome-screenshot ippusbxd libamtk-5-0 libamtk-5-common libaom0 libasn1-8-heimdal libboost-date-time1.71.0 libboost-filesystem1.71.0 libboost-iostreams1.71.0

libboost-locale1.71.0 libboost-thread1.71.0 libbrlapi0.7 libcamel-1.2-62 libcbor0.6 libcdio18 libcmis-0.5-5v5 libcodec2-0.9 libdns-export1109 libdvdnav4 libdvdread7

libedataserver-1.2-24 libedataserverui-1.2-2 libextutils-pkgconfig-perl libfprint-2-tod1 libfuse2 libfwupdplugin1 libgdk-pixbuf-xlib-2.0-0 libgdk-pixbuf2.0-0 libgssapi3-heimdal

libgupnp-1.2-0 libhandy-0.0-0 libhcrypto4-heimdal libheimbase1-heimdal libheimntlm0-heimdal libhogweed5 libhx509-5-heimdal libicu66 libidn11 libigdgmm11 libisl22 libjson-c4

libjuh-java libjurt-java libkrb5-26-heimdal libldap-2.4-2 liblibreoffice-java libllvm10 libllvm12 libmozjs-68-0 libmpdec2 libneon27-gnutls libnettle7 libntfs-3g883 liborcus-0.15-0

libperl5.30 libpgm-5.2-0 libphonenumber7 libpoppler97 libprotobuf17 libpython3.8 libpython3.8-minimal libpython3.8-stdlib libqpdf26 libraw19 libreoffice-style-tango libridl-java

libroken18-heimdal libsane libsnmp35 libssl1.1 libstdc++-9-dev libtepl-4-0 libtracker-control-2.0-0 libtracker-miner-2.0-0 libtracker-sparql-2.0-0 libunoloader-java libvpx6 libwebp6

libwind0-heimdal libwmf0.2-7 libx264-155 libx265-179 libxmlb1 linux-hwe-5.15-headers-5.15.0-100 ltrace lz4 ncal perl-modules-5.30 pkg-config popularity-contest python3-entrypoints

python3-requests-unixsocket python3-simplejson python3.8 python3.8-minimal syslinux syslinux-common syslinux-legacy ure-java vino xul-ext-ubufox

Use 'sudo apt autoremove' to remove them.

0 upgraded, 0 newly installed, 0 to remove and 84 not upgraded.

Ensure the extension is enabled

builder@isaac-MacBookAir:~/vectors$ !27

sudo su - postgres

postgres@isaac-MacBookAir:~$ psql

psql (14.13 (Ubuntu 14.13-0ubuntu0.22.04.1))

Type "help" for help.

postgres=# ALTER SYSTEM SET shared_preload_libraries = "vectors.so";

ALTER SYSTEM

postgres=# ALTER SYSTEM SET search_path TO "$user", public, vectors;

ALTER SYSTEM

postgres=# \q

postgres=# CREATE EXTENSION IF NOT EXISTS vectors;

CREATE EXTENSION

postgres=# exit

Restart PostgreSQL

$ sudo systemctl restart postgresql.service

However, no matter what I did, it still failed to activate the vectors extension

$ kubectl logs immich-server-7fbc5764bc-nvjm2 -n immich

Detected CPU Cores: 4

Starting api worker

Starting microservices worker

[Nest] 7 - 10/06/2024, 4:00:19 PM LOG [Microservices:EventRepository] Initialized websocket server

[Nest] 7 - 10/06/2024, 4:00:19 PM FATAL [Microservices:DatabaseService] Failed to activate pgvecto.rs extension.

Please ensure the Postgres instance has pgvecto.rs installed.

If the Postgres instance already has pgvecto.rs installed, Immich may not have the necessary permissions to activate it.

In this case, please run 'CREATE EXTENSION IF NOT EXISTS vectors' manually as a superuser.

See https://immich.app/docs/guides/database-queries for how to query the database.

Alternatively, if your Postgres instance has pgvector, you may use this instead by setting the environment variable 'DB_VECTOR_EXTENSION=pgvector'.

Note that switching between the two extensions after a successful startup is not supported.

The exception is if your version of Immich prior to upgrading was 1.90.2 or earlier.

In this case, you may set either extension now, but you will not be able to switch to the other extension following a successful startup.

QueryFailedError: permission denied to create extension "vectors"

at PostgresQueryRunner.query (/usr/src/app/node_modules/typeorm/driver/postgres/PostgresQueryRunner.js:219:19)

at process.processTicksAndRejections (node:internal/process/task_queues:95:5)

at async DataSource.query (/usr/src/app/node_modules/typeorm/data-source/DataSource.js:350:20)

at async DatabaseRepository.createExtension (/usr/src/app/dist/repositories/database.repository.js:70:9)

at async DatabaseService.createExtension (/usr/src/app/dist/services/database.service.js:119:13)

at async /usr/src/app/dist/services/database.service.js:81:17

at async /usr/src/app/dist/repositories/database.repository.js:199:23 {

query: 'CREATE EXTENSION IF NOT EXISTS vectors',

parameters: undefined,

driverError: error: permission denied to create extension "vectors"

at /usr/src/app/node_modules/pg/lib/client.js:535:17

at process.processTicksAndRejections (node:internal/process/task_queues:95:5)

at async PostgresQueryRunner.query (/usr/src/app/node_modules/typeorm/driver/postgres/PostgresQueryRunner.js:184:25)

at async DataSource.query (/usr/src/app/node_modules/typeorm/data-source/DataSource.js:350:20)

at async DatabaseRepository.createExtension (/usr/src/app/dist/repositories/database.repository.js:70:9)

at async DatabaseService.createExtension (/usr/src/app/dist/services/database.service.js:119:13)

at async /usr/src/app/dist/services/database.service.js:81:17

at async /usr/src/app/dist/repositories/database.repository.js:199:23 {

length: 164,

severity: 'ERROR',

code: '42501',

detail: undefined,

hint: 'Must be superuser to create this extension.',

position: undefined,

internalPosition: undefined,

internalQuery: undefined,

where: undefined,

schema: undefined,

table: undefined,

column: undefined,

dataType: undefined,

constraint: undefined,

file: 'extension.c',

line: '899',

routine: 'execute_extension_script'

},

length: 164,

severity: 'ERROR',

code: '42501',

detail: undefined,

hint: 'Must be superuser to create this extension.',

position: undefined,

internalPosition: undefined,

internalQuery: undefined,

where: undefined,

schema: undefined,

table: undefined,

column: undefined,

dataType: undefined,

constraint: undefined,

file: 'extension.c',

line: '899',

routine: 'execute_extension_script'

}

microservices worker error: QueryFailedError: permission denied to create extension "vectors"

microservices worker exited with code 1
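A note on this error: PostgreSQL extensions are created per database, and the `CREATE EXTENSION` above was run at the `postgres=#` prompt, meaning in the default `postgres` database rather than in `immichdb`. The log itself suggests running the statement manually as a superuser. The sketch below does that against the Immich database. It was not tried in this walkthrough and may not be the only change needed; the function name `create_vectors_extension` is hypothetical, and it assumes `sudo` access to the `postgres` OS user on the database host:

```shell
# Hypothetical helper, untested against this setup: create the pgvecto.rs
# extension inside a specific database as the postgres superuser.
# Arg: database name (immichdb is the database created earlier in this post)
create_vectors_extension() {
  sudo -u postgres psql -d "$1" -c 'CREATE EXTENSION IF NOT EXISTS vectors;'
}

# Example (run on the PostgreSQL host): create_vectors_extension immichdb
```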

I decided to pivot to the bundled PostgreSQL, which isn’t ideal but would match the release

First, remove the existing run

$ helm delete immich -n immich

release "immich" uninstalled

I’ll then change the settings back to using the bundled psql

$ cat immich.values.yaml

## This chart relies on the common library chart from bjw-s
## You can find it at https://github.com/bjw-s/helm-charts/tree/main/charts/library/common
## Refer there for more detail about the supported values

# These entries are shared between all the Immich components

env:
  REDIS_HOSTNAME: 'my-release-valkey-master.default.svc.cluster.local'
  DB_HOSTNAME: "{{ .Release.Name }}-postgresql"
  DB_USERNAME: "{{ .Values.postgresql.global.postgresql.auth.username }}"
  DB_DATABASE_NAME: "{{ .Values.postgresql.global.postgresql.auth.database }}"
  DB_PASSWORD: "{{ .Values.postgresql.global.postgresql.auth.password }}"
  IMMICH_MACHINE_LEARNING_URL: '{{ printf "http://%s-machine-learning:3003" .Release.Name }}'

image:
  tag: v1.117.0

immich:
  metrics:
    # Enabling this will create the service monitors needed to monitor immich with the prometheus operator
    enabled: false
  persistence:
    # Main data store for all photos shared between different components.
    library:
      # Automatically creating the library volume is not supported by this chart
      # You have to specify an existing PVC to use
      existingClaim: immich-vol
  # configuration is immich-config.json converted to yaml
  # ref: https://immich.app/docs/install/config-file/
  #
  configuration: {}
    # trash:
    #   enabled: false
    #   days: 30
    # storageTemplate:
    #   enabled: true
    #   template: "{{y}}/{{y}}-{{MM}}-{{dd}}/{{filename}}"

# Dependencies

postgresql:
  enabled: true
  image:
    repository: tensorchord/pgvecto-rs
    tag: pg14-v0.2.0
  global:
    postgresql:
      auth:
        username: immich
        database: immich
        password: immich
  primary:
    containerSecurityContext:
      readOnlyRootFilesystem: false
    initdb:
      scripts:
        create-extensions.sql: |
          CREATE EXTENSION cube;
          CREATE EXTENSION earthdistance;
          CREATE EXTENSION vectors;

redis:
  enabled: false
  architecture: standalone
  auth:
    enabled: false

# Immich components

server:
  enabled: true
  image:
    repository: ghcr.io/immich-app/immich-server
    pullPolicy: IfNotPresent
  ingress:
    main:
      enabled: true
      annotations:
        # proxy-body-size is set to 0 to remove the body limit on file uploads
        nginx.ingress.kubernetes.io/proxy-body-size: "0"
        ingress.kubernetes.io/proxy-body-size: "0"
        nginx.org/client-max-body-size: "0"
        cert-manager.io/cluster-issuer: letsencrypt-prod
        ingress.kubernetes.io/ssl-redirect: "true"
        kubernetes.io/ingress.class: nginx
        nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
        nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
        nginx.ingress.kubernetes.io/ssl-redirect: "true"
        nginx.org/proxy-connect-timeout: "3600"
        nginx.org/proxy-read-timeout: "3600"
      hosts:
        - host: photos.freshbrewed.science
          paths:
            - path: "/"
      tls:
        - hosts:
            - photos.freshbrewed.science
          secretName: immich-tls

machine-learning:
  enabled: true
  image:
    repository: ghcr.io/immich-app/immich-machine-learning
    pullPolicy: IfNotPresent
  env:
    TRANSFORMERS_CACHE: /cache
  persistence:
    cache:
      enabled: true
      size: 10Gi
      # Optional: Set this to pvc to avoid downloading the ML models every start.
      type: emptyDir
      accessMode: ReadWriteMany
      storageClass: local-path

Then install

$ helm install --create-namespace --namespace immich immich immich/immich -f immich.values.yaml

NAME: immich

LAST DEPLOYED: Sun Oct 6 11:05:30 2024

NAMESPACE: immich

STATUS: deployed

REVISION: 1

TEST SUITE: None

I can see the vector-enabled PostgreSQL DB pod now and the server startup is looking better

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ kubectl get pods -n immich

NAME READY STATUS RESTARTS AGE

immich-machine-learning-85d8459897-mrkcj 1/1 Running 0 110s

immich-postgresql-0 1/1 Running 0 110s

immich-server-5c6d9479c8-jmr2x 0/1 Running 1 (74s ago) 110s

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ kubectl logs immich-server-5c6d9479c8-jmr2x -n immich

Detected CPU Cores: 4

Starting api worker

Starting microservices worker

[Nest] 7 - 10/06/2024, 4:06:14 PM LOG [Microservices:EventRepository] Initialized websocket server

[Nest] 17 - 10/06/2024, 4:06:15 PM LOG [Api:EventRepository] Initialized websocket server

[Nest] 7 - 10/06/2024, 4:06:23 PM LOG [Microservices:MapRepository] Initializing metadata repository

Hmm. I’ve been more than generous with time, but it’s still not live

$ kubectl get pods -n immich

NAME READY STATUS RESTARTS AGE

immich-machine-learning-85d8459897-mrkcj 1/1 Running 0 89m

immich-postgresql-0 1/1 Running 0 89m

immich-server-5c6d9479c8-jmr2x 0/1 Running 18 (4m6s ago) 89m

It just seems to refuse connections on the health check

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning Unhealthy 4m45s (x511 over 89m) kubelet Startup probe failed: Get "http://10.42.3.81:3001/api/server/ping": dial tcp 10.42.3.81:3001: connect: connection refused

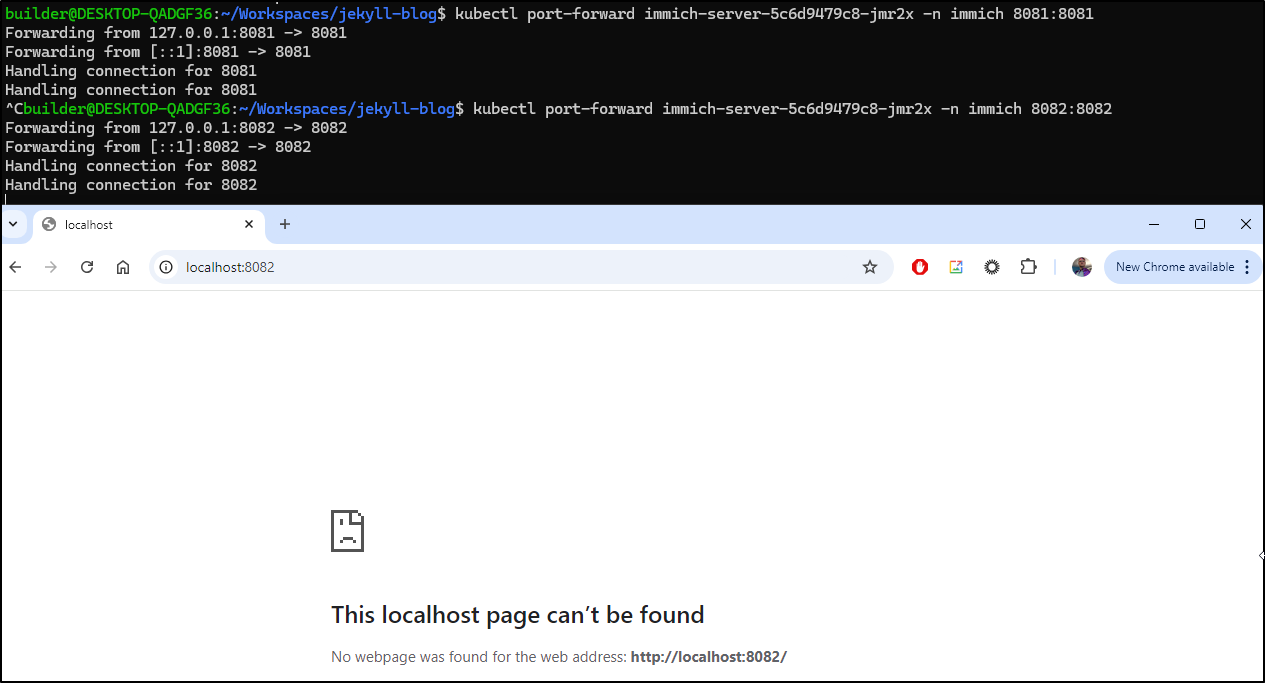

I did some digging on the pod. After adding lsof, I see it’s listening on 8081 and 8082, but not on 3001, the port the startup probe checks

root@immich-server-5c6d9479c8-jmr2x:/usr/src/app# lsof -i -P -n | grep LISTEN

immich 7 root 24u IPv6 774671140 0t0 TCP *:8082 (LISTEN)

immich-ap 17 root 19u IPv6 774669017 0t0 TCP *:8081 (LISTEN)

root@immich-server-5c6d9479c8-jmr2x:/usr/src/app# wget http://localhost:3001/api/server/ping

--2024-10-06 17:40:20-- http://localhost:3001/api/server/ping

Resolving localhost (localhost)... ::1, 127.0.0.1

Connecting to localhost (localhost)|::1|:3001... failed: Connection refused.

Connecting to localhost (localhost)|127.0.0.1|:3001... failed: Connection refused.

root@immich-server-5c6d9479c8-jmr2x:/usr/src/app# wget http://localhost:8081/api/server/ping

--2024-10-06 17:40:26-- http://localhost:8081/api/server/ping

Resolving localhost (localhost)... ::1, 127.0.0.1

Connecting to localhost (localhost)|::1|:8081... connected.

HTTP request sent, awaiting response... 404 Not Found

2024-10-06 17:40:26 ERROR 404: Not Found.

root@immich-server-5c6d9479c8-jmr2x:/usr/src/app# wget http://localhost:8082/api/server/ping

--2024-10-06 17:40:32-- http://localhost:8082/api/server/ping

Resolving localhost (localhost)... ::1, 127.0.0.1

Connecting to localhost (localhost)|::1|:8082... connected.

HTTP request sent, awaiting response... 404 Not Found

2024-10-06 17:40:32 ERROR 404: Not Found.

root@immich-server-5c6d9479c8-jmr2x:/usr/src/app# command terminated with exit code 137

However, none of those seemed to serve the app

Rotating the pod did not help; the new pod’s logs stop shortly after the workers start

$ kubectl logs immich-server-5c6d9479c8-hcmtx -n immich

Detected CPU Cores: 4

Starting api worker

Starting microservices worker

[Nest] 7 - 10/06/2024, 5:44:58 PM LOG [Microservices:EventRepository] Initialized websocket server

[Nest] 7 - 10/06/2024, 5:44:58 PM LOG [Microservices:MapRepository] Initializing metadata repository

[Nest] 17 - 10/06/2024, 5:44:58 PM LOG [Api:EventRepository] Initialized websocket server

Docker

Let’s pivot to Docker

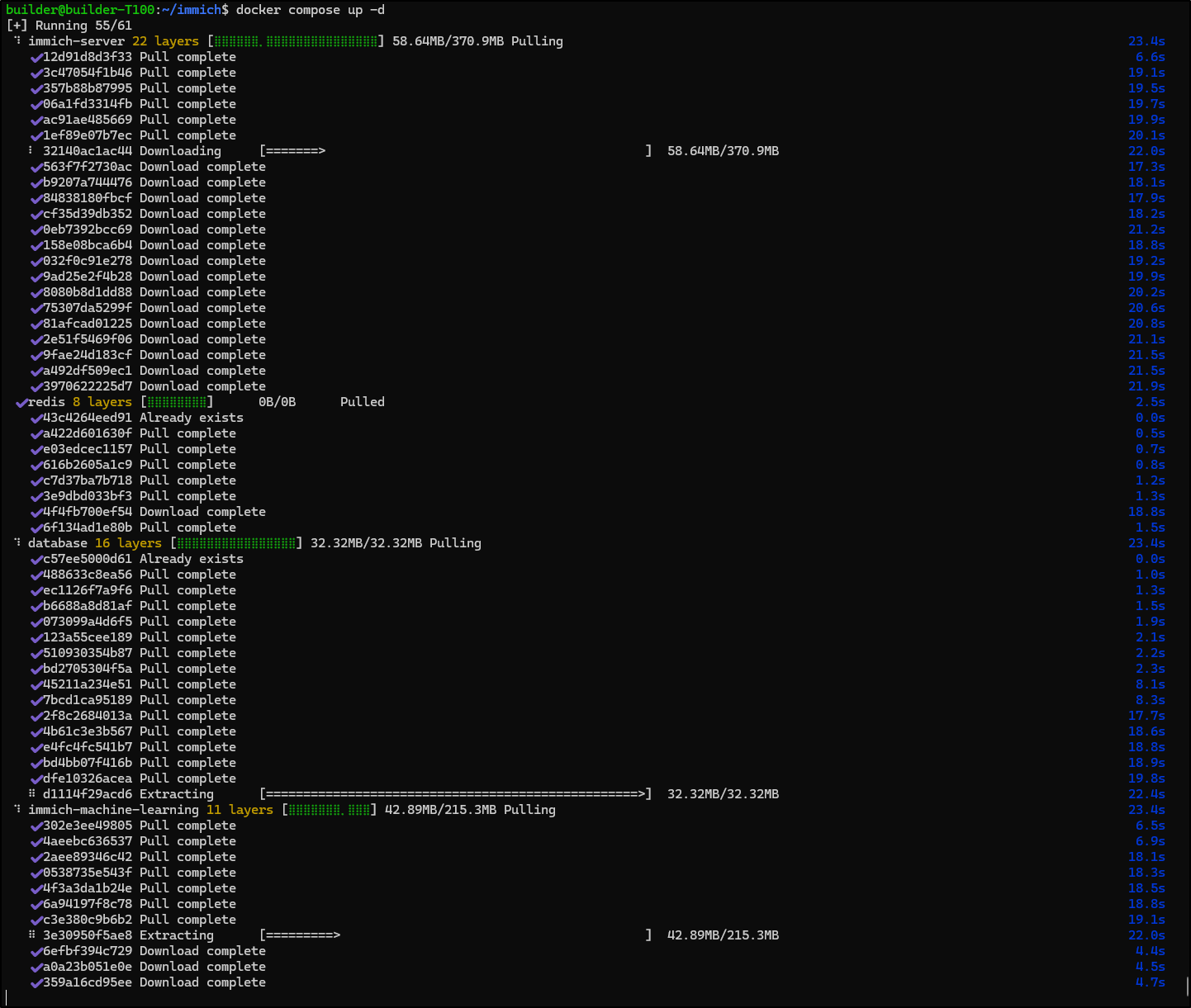

I’ll pull down the .env and docker-compose.yml files

$ wget -O docker-compose.yml https://github.com/immich-app/immich/releases/latest/download/docker-compose.yml

$ wget -O .env https://github.com/immich-app/immich/releases/latest/download/example.env

I can modify those, but really I just need to make folders for uploads and PostgreSQL data

$ mkdir ./postgres

$ mkdir ./library

Now I can launch it…. maybe

builder@builder-T100:~/immich$ docker compose up -d

validating /home/builder/immich/docker-compose.yml: services.database.healthcheck Additional property start_interval is not allowed

That property is probably only understood by a newer Docker Compose than the one on this host. I removed the “start_interval” line and tried again
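Upgrading Docker is the cleaner fix, since the healthcheck start_interval property is, to my knowledge, only supported by fairly recent Compose releases paired with Docker Engine 25.0 or later (treat that version detail as an assumption and check with docker compose version). A minimal sketch of the workaround, using a hypothetical helper named strip_start_interval that keeps a backup of the original file:

```shell
# Hypothetical helper: delete any healthcheck `start_interval:` line from a
# compose file, leaving the untouched original next to it as <file>.bak.
strip_start_interval() {
  compose_file="${1:-docker-compose.yml}"
  cp "$compose_file" "$compose_file.bak"
  sed '/^[[:space:]]*start_interval:/d' "$compose_file.bak" > "$compose_file"
}

# Example:
#   strip_start_interval docker-compose.yml && docker compose up -d
```

Dropping the line only changes how often the health check runs while the database is starting, so the stack should still come up; it just falls back to the regular interval.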

Eventually it came up

[+] Building 0.0s (0/0)

[+] Running 6/6

✔ Network immich_default Created 0.4s

✔ Volume "immich_model-cache" Created 0.0s

✔ Container immich_postgres Started 2.6s

✔ Container immich_machine_learning Started 2.5s

✔ Container immich_redis Started 2.6s

✔ Container immich_server Started 3.9s

builder@builder-T100:~/immich$

And while this does run, I can tell it’s pushing my Docker host to the edge and will likely crash it, so I stopped it

builder@builder-T100:~/immich$ docker compose down

[+] Running 5/5

✔ Container immich_machine_learning Removed 1.7s

✔ Container immich_server Removed 1.7s

✔ Container immich_redis Removed 0.9s

✔ Container immich_postgres Removed 1.9s

✔ Network immich_default Removed

I figured my spare host that runs PostgreSQL might serve well as a Docker host for this. I followed the steps here to install the latest Docker binaries.

Then I ran a quick test to confirm it was working

builder@isaac-MacBookAir:~$ sudo docker run hello-world

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

c1ec31eb5944: Pull complete

Digest: sha256:91fb4b041da273d5a3273b6d587d62d518300a6ad268b28628f74997b93171b2

Status: Downloaded newer image for hello-world:latest

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

1. The Docker client contacted the Docker daemon.

2. The Docker daemon pulled the "hello-world" image from the Docker Hub.

(amd64)

3. The Docker daemon created a new container from that image which runs the

executable that produces the output you are currently reading.

4. The Docker daemon streamed that output to the Docker client, which sent it

to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

https://hub.docker.com/

For more examples and ideas, visit:

https://docs.docker.com/get-started/

Then just make the folders and fire up docker compose again

builder@isaac-MacBookAir:~$ cd immich/

builder@isaac-MacBookAir:~/immich$ mkdir postgres

builder@isaac-MacBookAir:~/immich$ mkdir library

builder@isaac-MacBookAir:~/immich$ wget -O docker-compose.yml https://github.com/immich-app/immich/releases/latest/download/docker-compose.yml

--2024-10-06 13:04:04-- https://github.com/immich-app/immich/releases/latest/download/docker-compose.yml

Resolving github.com (github.com)... 140.82.112.4

Connecting to github.com (github.com)|140.82.112.4|:443... connected.

HTTP request sent, awaiting response... 302 Found

Location: https://github.com/immich-app/immich/releases/download/v1.117.0/docker-compose.yml [following]

--2024-10-06 13:04:04-- https://github.com/immich-app/immich/releases/download/v1.117.0/docker-compose.yml

Reusing existing connection to github.com:443.

HTTP request sent, awaiting response... 302 Found

Location: https://objects.githubusercontent.com/github-production-release-asset-2e65be/455229168/1a38fc33-3a65-4c48-888f-36c5a00cf6fd?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=releaseassetproduction%2F20241006%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20241006T180404Z&X-Amz-Expires=300&X-Amz-Signature=431c82aaa93e03a63ad434b56da6903133444552a975aa2bf86e230ecb157790&X-Amz-SignedHeaders=host&response-content-disposition=attachment%3B%20filename%3Ddocker-compose.yml&response-content-type=application%2Foctet-stream [following]

--2024-10-06 13:04:04-- https://objects.githubusercontent.com/github-production-release-asset-2e65be/455229168/1a38fc33-3a65-4c48-888f-36c5a00cf6fd?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=releaseassetproduction%2F20241006%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20241006T180404Z&X-Amz-Expires=300&X-Amz-Signature=431c82aaa93e03a63ad434b56da6903133444552a975aa2bf86e230ecb157790&X-Amz-SignedHeaders=host&response-content-disposition=attachment%3B%20filename%3Ddocker-compose.yml&response-content-type=application%2Foctet-stream

Resolving objects.githubusercontent.com (objects.githubusercontent.com)... 185.199.109.133, 185.199.110.133, 185.199.111.133, ...

Connecting to objects.githubusercontent.com (objects.githubusercontent.com)|185.199.109.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 3202 (3.1K) [application/octet-stream]

Saving to: ‘docker-compose.yml’

docker-compose.yml 100%[==========================================================================================================================================================>] 3.13K --.-KB/s in 0s

2024-10-06 13:04:04 (8.37 MB/s) - ‘docker-compose.yml’ saved [3202/3202]

builder@isaac-MacBookAir:~/immich$ wget -O .env https://github.com/immich-app/immich/releases/latest/download/example.env

--2024-10-06 13:04:09-- https://github.com/immich-app/immich/releases/latest/download/example.env

Resolving github.com (github.com)... 140.82.112.4

Connecting to github.com (github.com)|140.82.112.4|:443... connected.

HTTP request sent, awaiting response... 302 Found

Location: https://github.com/immich-app/immich/releases/download/v1.117.0/example.env [following]

--2024-10-06 13:04:10-- https://github.com/immich-app/immich/releases/download/v1.117.0/example.env

Reusing existing connection to github.com:443.

HTTP request sent, awaiting response... 302 Found

Location: https://objects.githubusercontent.com/github-production-release-asset-2e65be/455229168/c33408f1-fdb8-477c-a394-29538aee0ec6?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=releaseassetproduction%2F20241006%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20241006T180410Z&X-Amz-Expires=300&X-Amz-Signature=9ead5c07b449622cb35d0c36e98498ca5efa552e709bbd95de080a27f122132b&X-Amz-SignedHeaders=host&response-content-disposition=attachment%3B%20filename%3Dexample.env&response-content-type=application%2Foctet-stream [following]

--2024-10-06 13:04:10-- https://objects.githubusercontent.com/github-production-release-asset-2e65be/455229168/c33408f1-fdb8-477c-a394-29538aee0ec6?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=releaseassetproduction%2F20241006%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20241006T180410Z&X-Amz-Expires=300&X-Amz-Signature=9ead5c07b449622cb35d0c36e98498ca5efa552e709bbd95de080a27f122132b&X-Amz-SignedHeaders=host&response-content-disposition=attachment%3B%20filename%3Dexample.env&response-content-type=application%2Foctet-stream

Resolving objects.githubusercontent.com (objects.githubusercontent.com)... 185.199.111.133, 185.199.108.133, 185.199.109.133, ...

Connecting to objects.githubusercontent.com (objects.githubusercontent.com)|185.199.111.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 933 [application/octet-stream]

Saving to: ‘.env’

.env 100%[==========================================================================================================================================================>] 933 --.-KB/s in 0s

2024-10-06 13:04:10 (11.1 MB/s) - ‘.env’ saved [933/933]

builder@isaac-MacBookAir:~/immich$ docker compose up -d

permission denied while trying to connect to the Docker daemon socket at unix:///var/run/docker.sock: Get "http://%2Fvar%2Frun%2Fdocker.sock/v1.47/containers/json?all=1&filters=%7B%22label%22%3A%7B%22com.docker.compose.config-hash%22%3Atrue%2C%22com.docker.compose.project%3Dimmich%22%3Atrue%7D%7D": dial unix /var/run/docker.sock: connect: permission denied

builder@isaac-MacBookAir:~/immich$ sudo docker compose up -d

[+] Running 33/61

⠴ immich-server [⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀] Pulling 9.5s

⠙ 12d91d8d3f33 Waiting 8.1s

⠙ 3c47054f1b46 Waiting 8.1s

⠙ 357b88b87995 Waiting 8.1s

⠙ 06a1fd3314fb Waiting
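The permission denied error above is what Docker returns when the current user cannot read /var/run/docker.sock. Running with sudo works, as shown; the usual alternative is to add the user to the docker group. A small sketch for checking that first (in_docker_group is a hypothetical helper name, not a Docker command):

```shell
# Hypothetical helper: report whether a user is already in the docker group.
# Defaults to the current user when no name is given.
in_docker_group() {
  user="${1:-$(id -un)}"
  if id -nG "$user" 2>/dev/null | tr ' ' '\n' | grep -qx docker; then
    echo yes
  else
    echo no
  fi
}

# If it prints "no", the standard post-install step is:
#   sudo usermod -aG docker "$USER"
# followed by logging out and back in (or running `newgrp docker`).
```

Keep in mind that membership in the docker group is effectively root access on that host, so sticking with sudo is a reasonable choice on a shared machine.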

Which we can see is now running

builder@isaac-MacBookAir:~/immich$ sudo docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3eff417bebb9 ghcr.io/immich-app/immich-server:release "tini -- /bin/bash s…" About a minute ago Up About a minute (healthy) 0.0.0.0:2283->3001/tcp, [::]:2283->3001/tcp immich_server

aa7439ea8c3d redis:6.2-alpine "docker-entrypoint.s…" About a minute ago Up About a minute (healthy) 6379/tcp immich_redis

db105b3c7857 tensorchord/pgvecto-rs:pg14-v0.2.0 "docker-entrypoint.s…" About a minute ago Up About a minute (healthy) 5432/tcp immich_postgres

5302ff1fdb37 ghcr.io/immich-app/immich-machine-learning:release "tini -- ./start.sh" About a minute ago Up About a minute (healthy) immich_machine_learning

This seemed to work

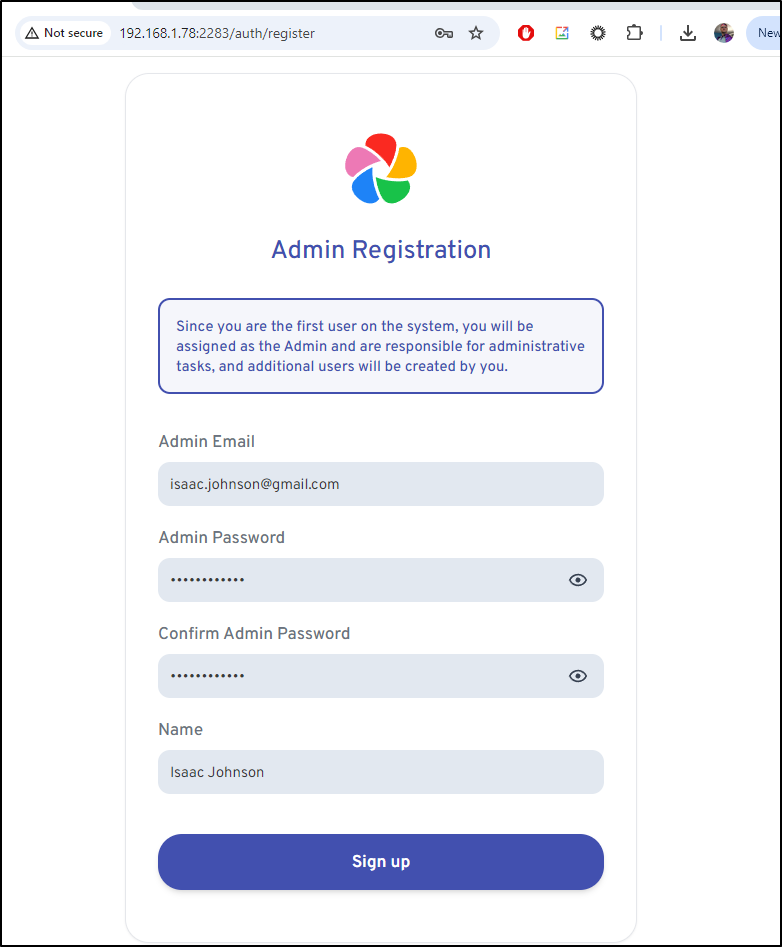

I created an admin user

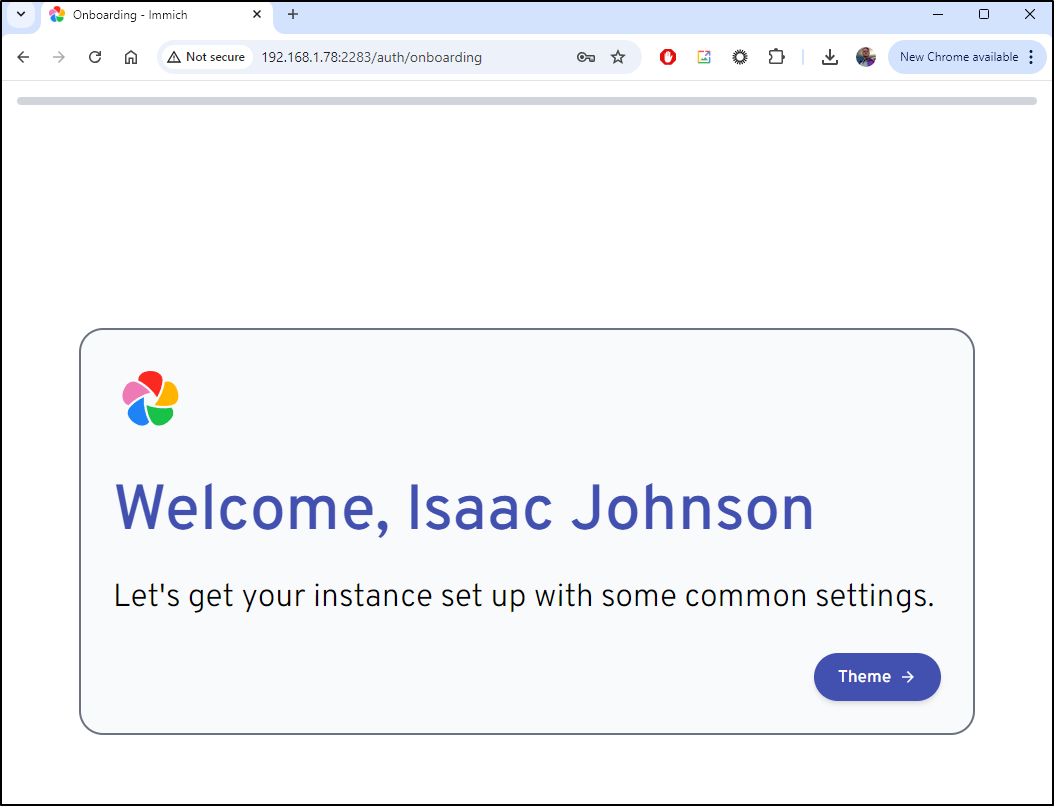

Upon login, I get a setup wizard

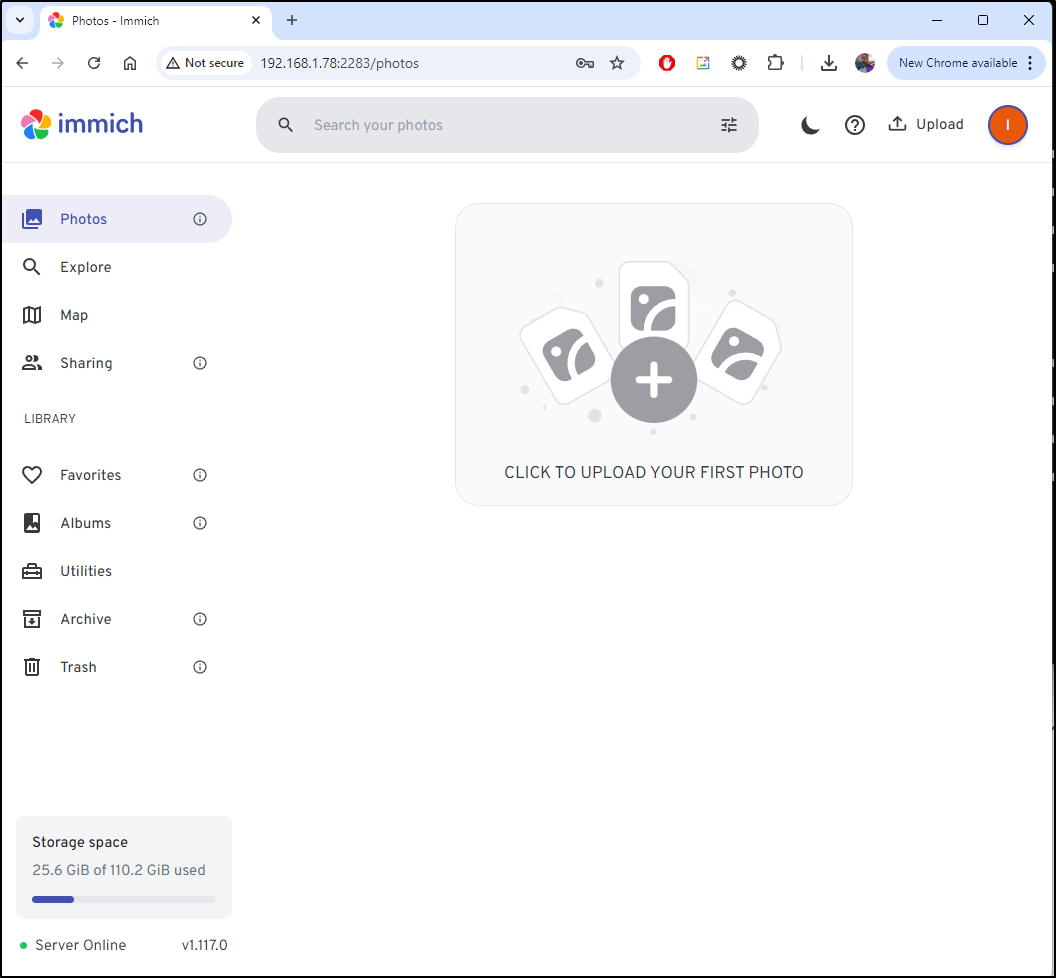

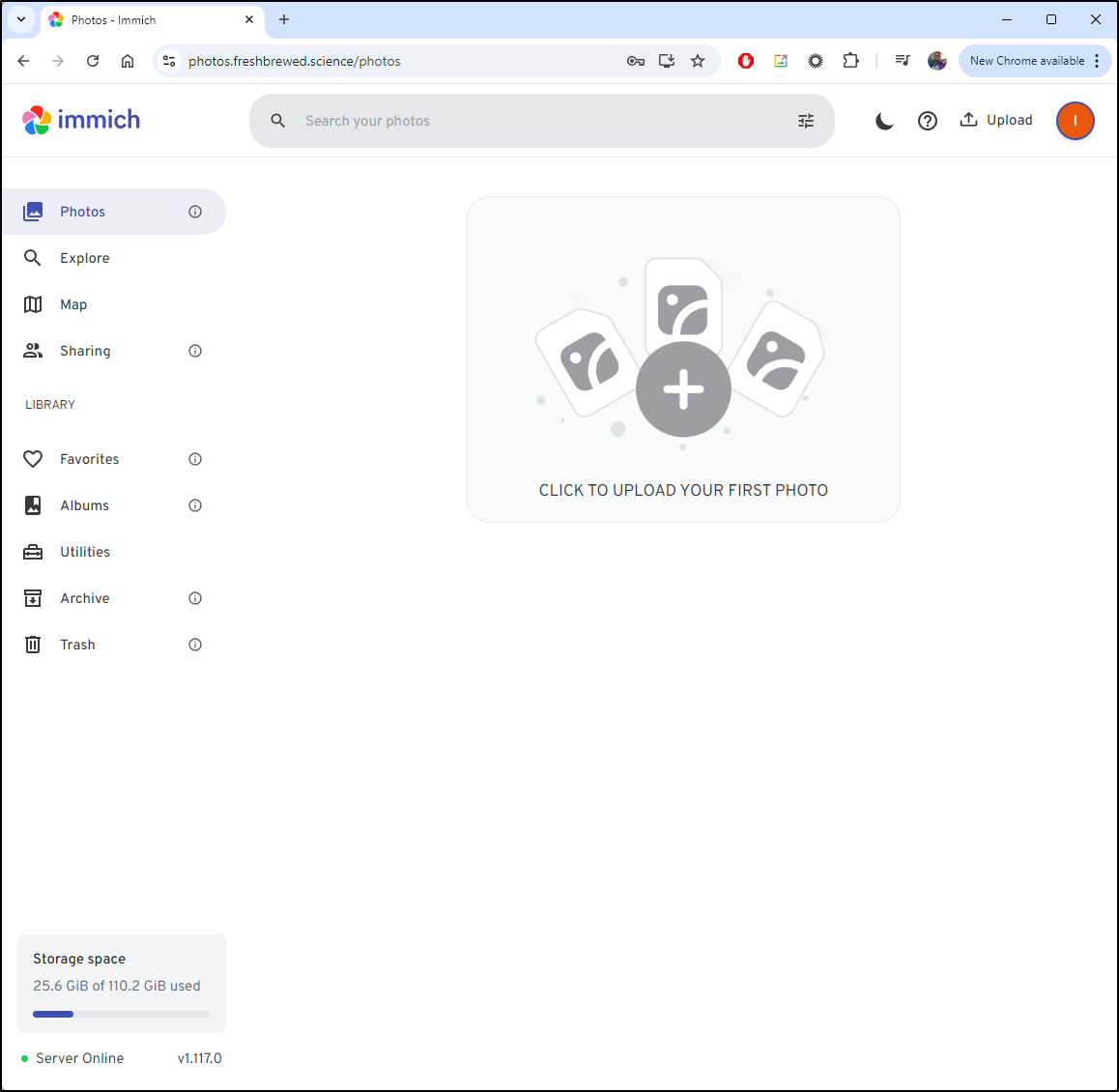

Once completed, I see the main page, albeit much newer and more spiffy than my last install

Let’s now route traffic through Kubernetes on to that host

I’ll create a Service, an Endpoints object that points at the Docker host’s IP, and an Ingress

$ cat immich.docker.ingress.yml

apiVersion: v1
kind: Service
metadata:
  name: photos-external-ip
spec:
  clusterIP: None
  internalTrafficPolicy: Cluster
  ports:
  - name: photosp
    port: 2283
    protocol: TCP
    targetPort: 2283
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: v1
kind: Endpoints
metadata:
  name: photos-external-ip
subsets:
- addresses:
  - ip: 192.168.1.78
  ports:
  - name: photosp
    port: 2283
    protocol: TCP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/websocket-services: photos-external-ip
  labels:
    app.kubernetes.io/instance: photosingress
  name: photosingress
spec:
  rules:
  - host: photos.freshbrewed.science
    http:
      paths:
      - backend:
          service:
            name: photos-external-ip
            port:
              number: 2283
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - photos.freshbrewed.science
    secretName: photos-tls

Then apply it

$ kubectl apply -f ./immich.docker.ingress.yml

service/photos-external-ip created

endpoints/photos-external-ip created

ingress.networking.k8s.io/photosingress created
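The Service and Endpoints pair is the reusable part of that manifest: a Service without a selector, plus an Endpoints object of the same name that lists the outside host. As a sketch of how that pair could be generated for other hosts, here is a hypothetical render_external_service helper; the function name and the port name "http" are made up for illustration, and the Ingress is deliberately left out.

```shell
# Hypothetical helper: print a selector-less Service and a matching Endpoints
# object that together route cluster traffic to a host outside the cluster.
# Usage: render_external_service NAME IP PORT
render_external_service() {
  name="$1"; ip="$2"; port="$3"
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: ${name}
spec:
  clusterIP: None
  ports:
  - name: http
    port: ${port}
    protocol: TCP
    targetPort: ${port}
---
apiVersion: v1
kind: Endpoints
metadata:
  name: ${name}
subsets:
- addresses:
  - ip: ${ip}
  ports:
  - name: http
    port: ${port}
    protocol: TCP
EOF
}

# Example:
#   render_external_service photos-external-ip 192.168.1.78 2283 | kubectl apply -f -
```

The two objects must share the same name, and the port name must match between them, for Kubernetes to associate the endpoints with the Service.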

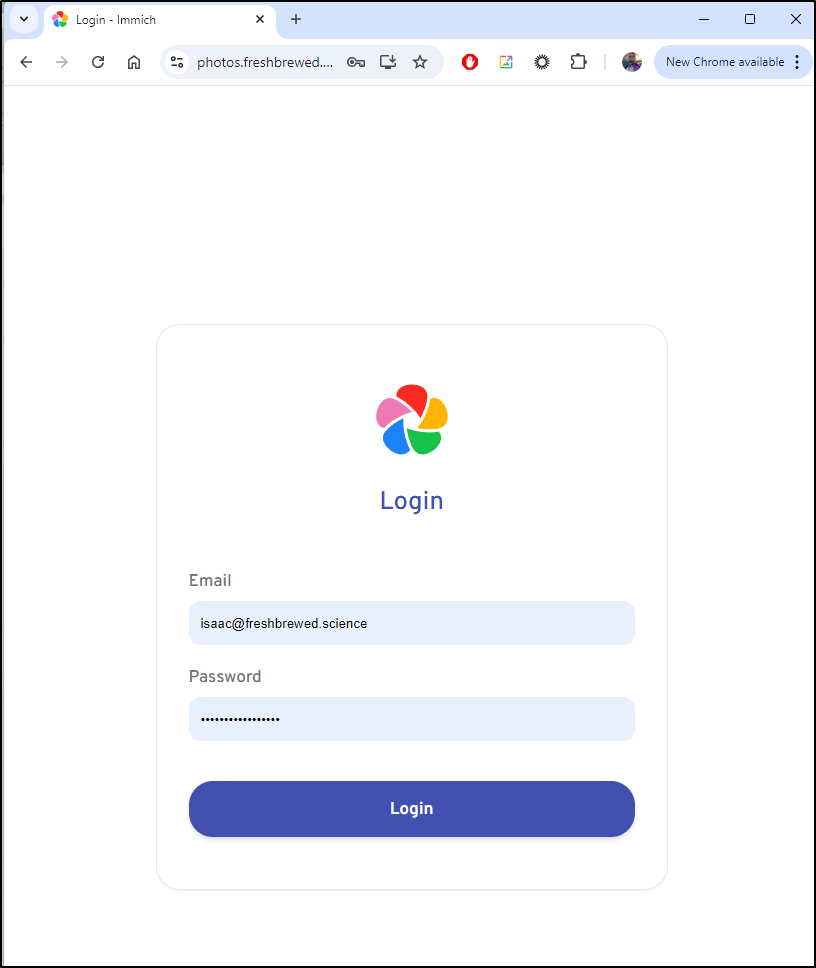

That kicked in immediately

And I could log right in

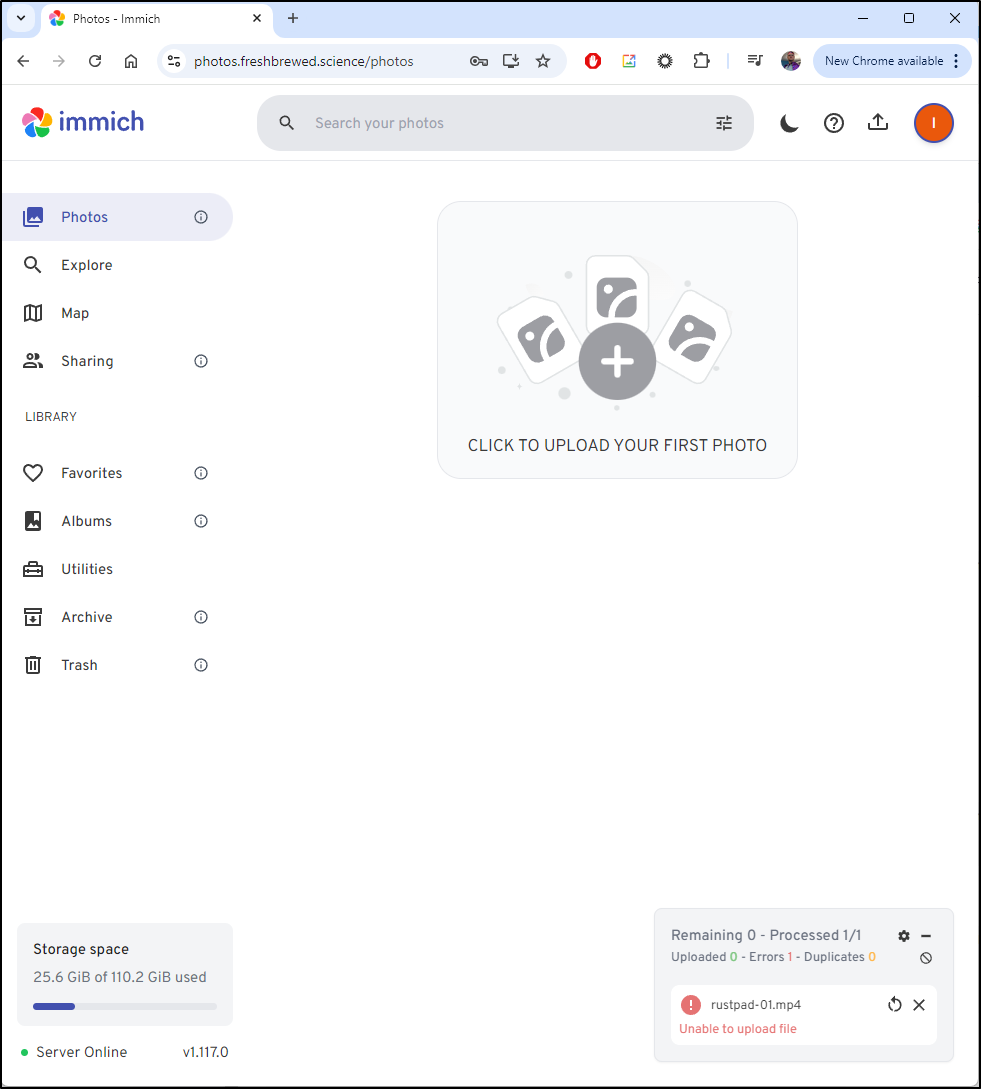

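My first attempt to upload failed

I soon fixed the larger file uploads by adding proxy timeout and body size annotations to the ingress. Which ones take effect depends on the controller: the nginx.ingress.kubernetes.io/ annotations are read by the community ingress-nginx controller, while the nginx.org/ ones are read by the NGINX Inc. controller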

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    ingress.kubernetes.io/proxy-body-size: "0"
    nginx.org/client-max-body-size: "0"
    ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/proxy-connect-timeout: "3600"
    nginx.org/proxy-read-timeout: "3600"
    nginx.org/websocket-services: photos-external-ip
  labels:
    app.kubernetes.io/instance: photosingress
  name: photosingress
spec:
  rules:
  - host: photos.freshbrewed.science
    http:
      paths:
      - backend:
          service:
            name: photos-external-ip
            port:
              number: 2283
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - photos.freshbrewed.science
    secretName: photos-tls

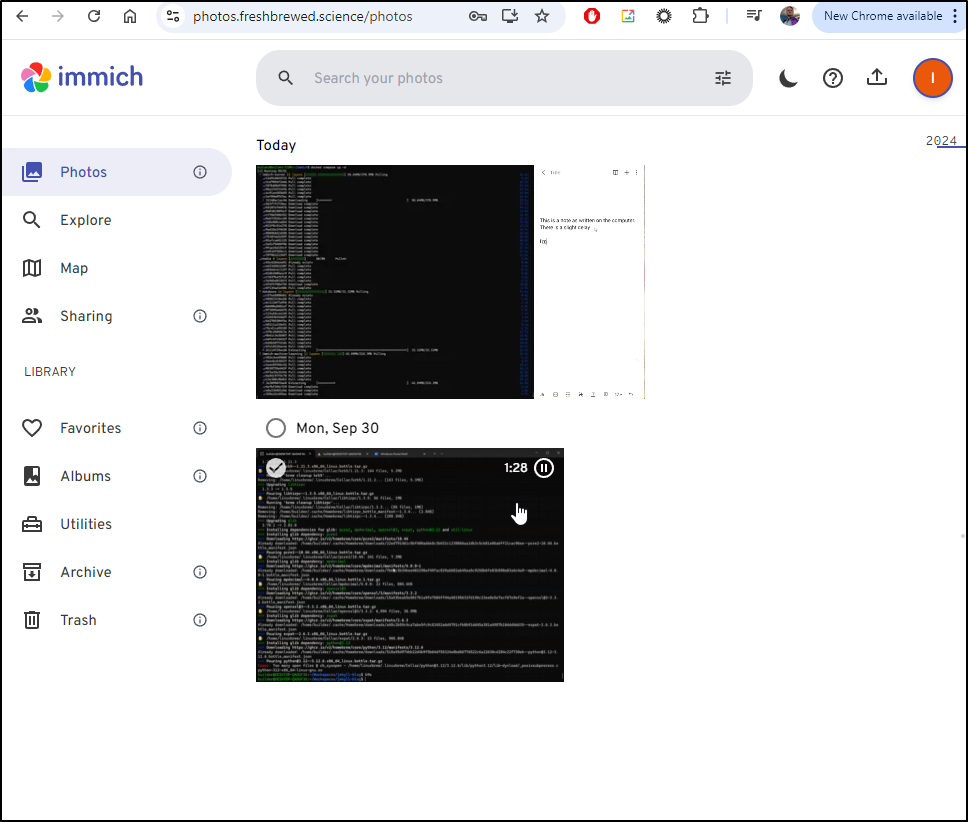

Immich covers a lot of the same ground as Google Photos.

For instance, we can use it to upload a video to share with others.

Mobile App

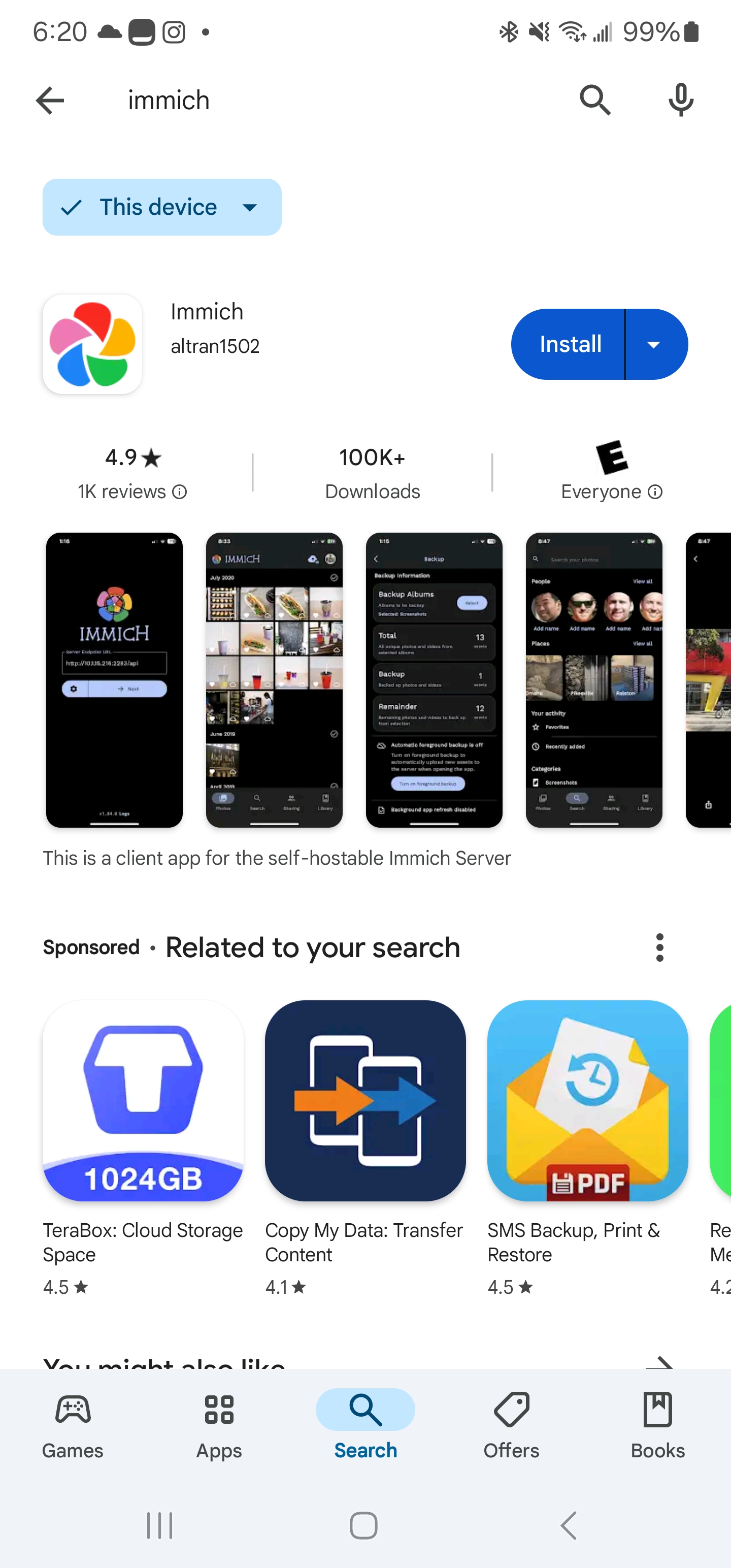

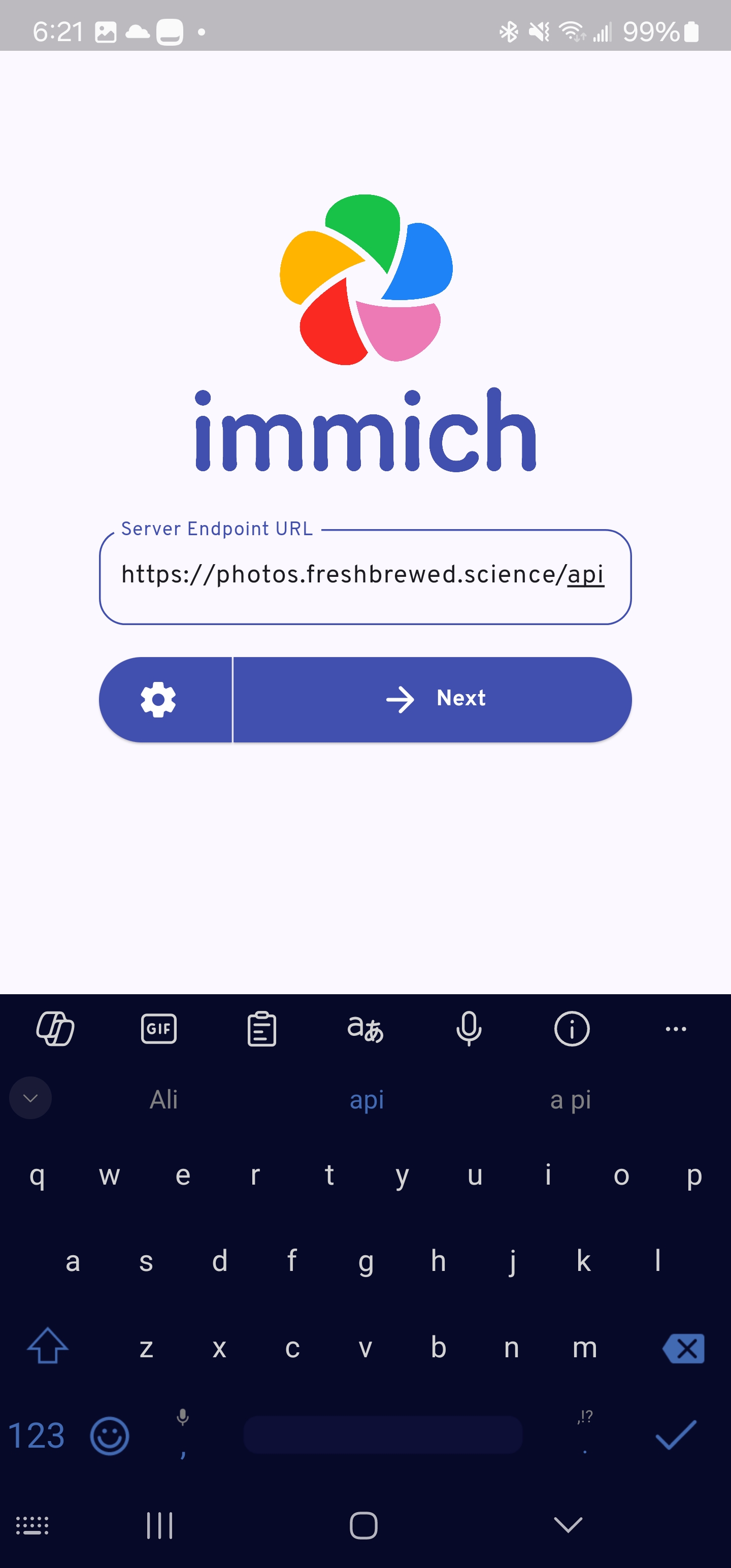

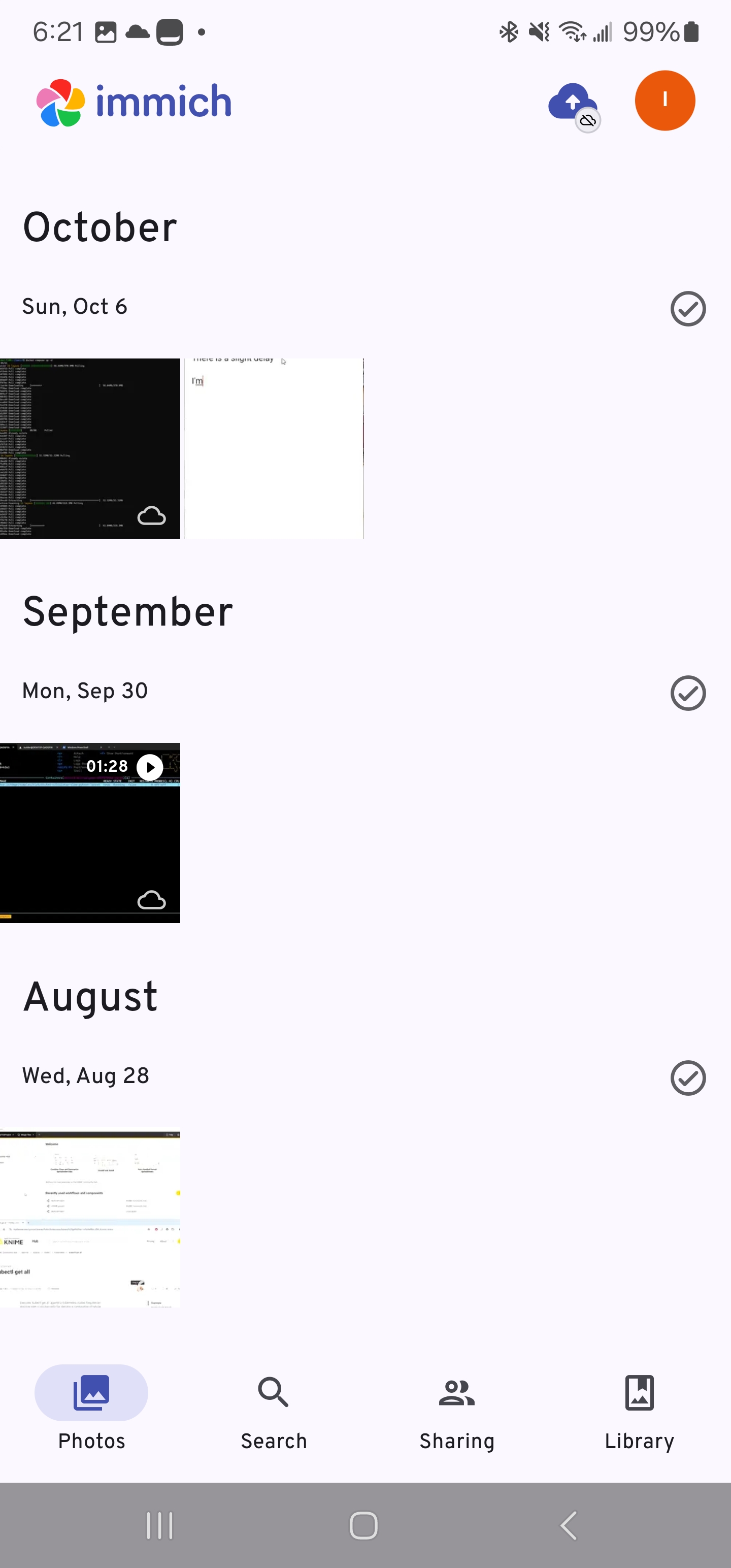

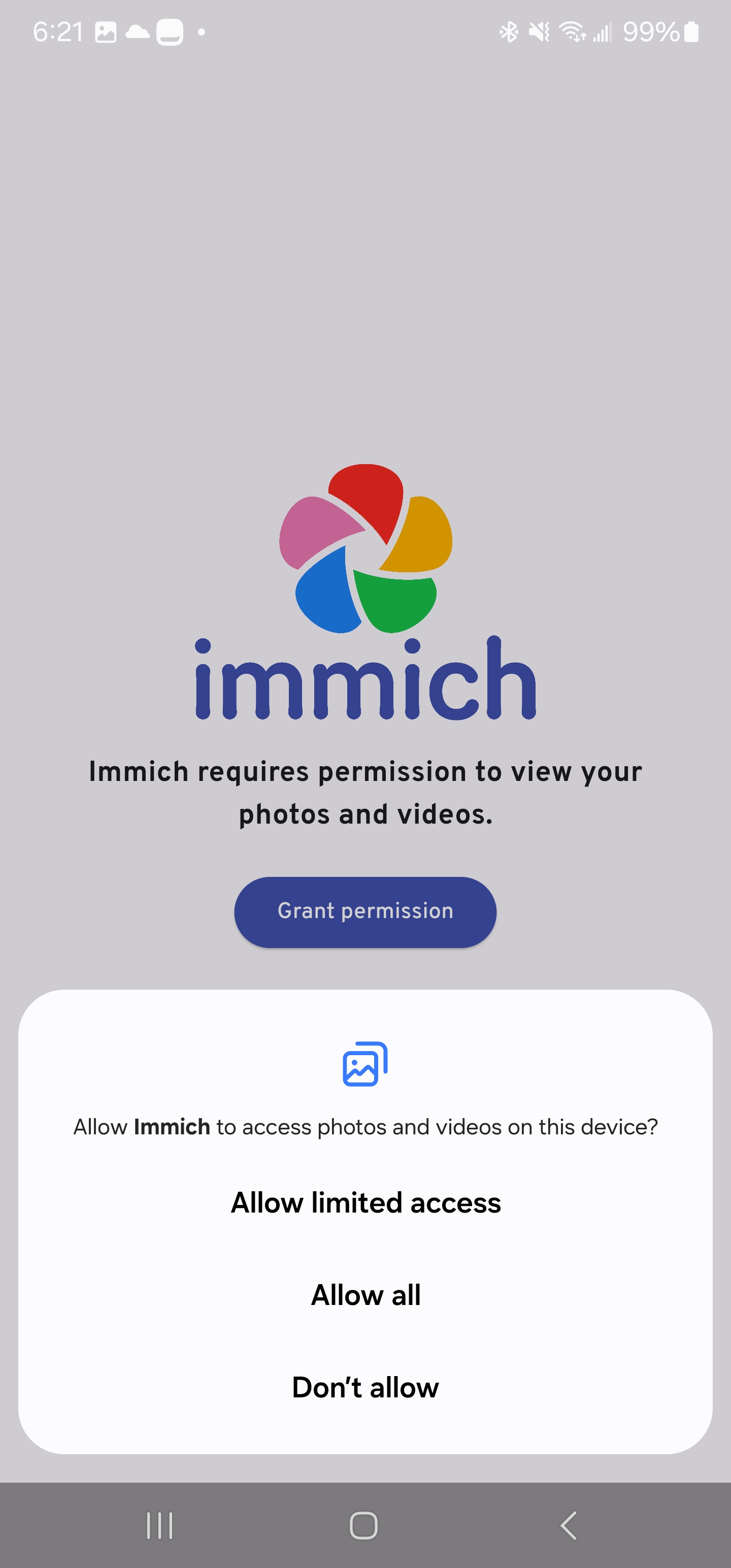

Something new this time is a mobile app for Android and iOS.

I can download and install the app from the Google Play store

When launched, I need to enter the Immich URL with “/api” at the end

For me, it would be https://photos.freshbrewed.science/api
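Before typing the URL into the app, it can help to confirm the API answers through the ingress. The sketch below builds the URL for the same /api/server/ping path that the Helm chart’s startup probe was calling earlier; ping_url is a hypothetical helper name, and the exact response body is an assumption (I would expect a small JSON “pong” reply from this Immich release).

```shell
# Hypothetical helper: turn an Immich base URL (with or without a trailing
# slash or /api suffix) into the URL of the server ping endpoint.
ping_url() {
  base="${1%/}"          # drop one trailing slash, if any
  base="${base%/api}"    # tolerate a base that already ends in /api
  printf '%s/api/server/ping\n' "$base"
}

# Example:
#   curl -fsS "$(ping_url https://photos.freshbrewed.science)"
```

If curl fails here, the mobile app will not be able to log in either, so it is a quick way to separate ingress problems from app problems.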

I can then log in

And see the photos and videos thus far

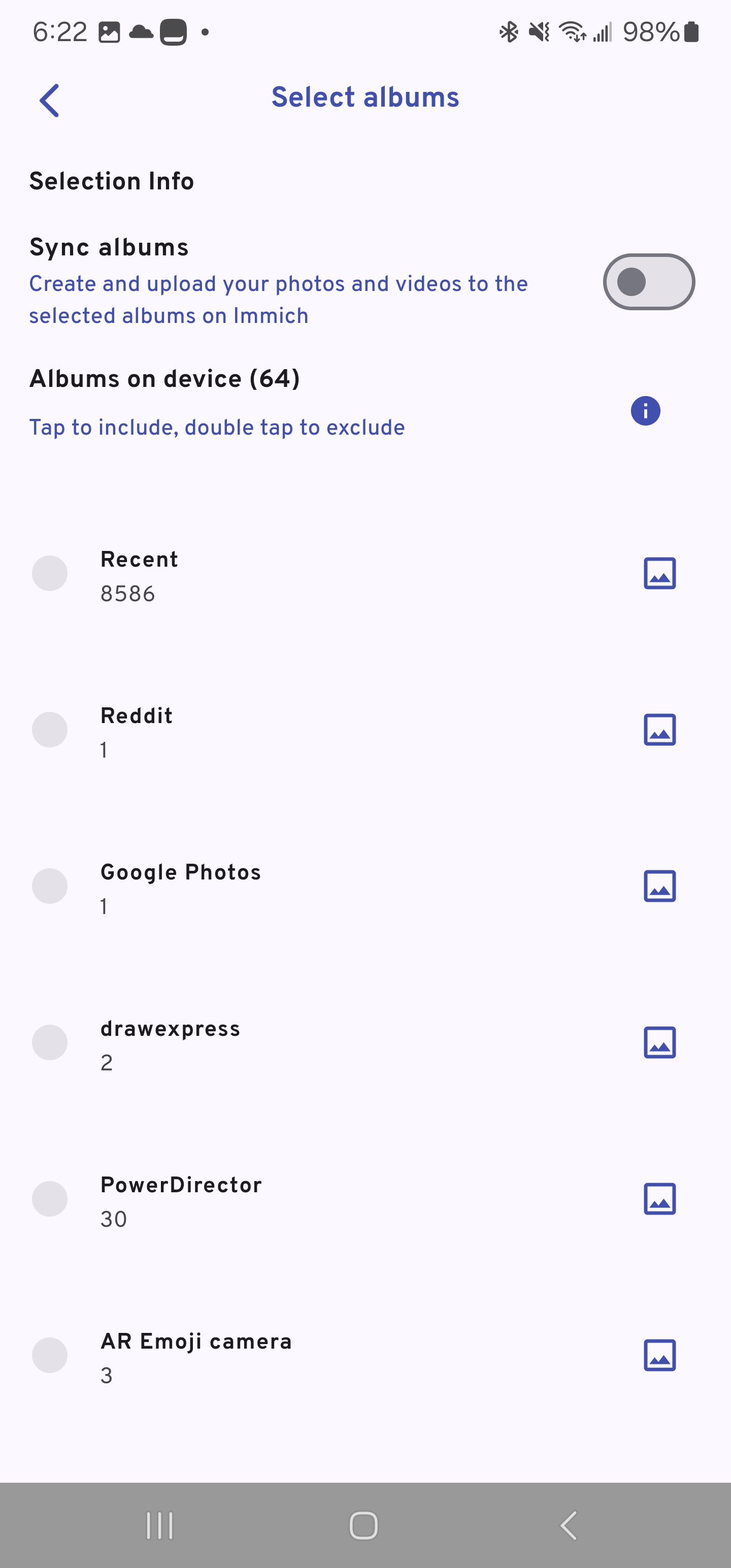

You need to grant permissions if you want to sync or upload

However, the app only lets me sync an album as a whole - not individual images or videos

That said, it is easy to just use the browser to log in and upload individual items (honestly, that is how all these photos made their way back to the blog)

Summary

Immich is a really great suite that now offers several self-hosting options and mobile apps. It could just be my cluster having trouble with the chart, or my PostgreSQL - you may have better luck following their Kubernetes steps. Today we tried to self-host in Kubernetes, then pivoted to Docker Compose - first on my shared Docker host, then on a more dedicated physical host. I then exposed it with TLS through Kubernetes using an external endpoint.

We wrapped up by giving the new Android app a spin and comparing it to the features of just using the web app.