Published: Nov 9, 2023 by Isaac Johnson

Immich is a fully open-source project for “Self-Hosted” “Photos and Videos” that supports web and mobile devices. It was created (and is maintained) by Alex Tran. In fact, I think it’s so good, I bought him a coffee.

You can host it many ways, from just a Linux install through to Docker, Docker Compose and Kubernetes. Since I’m fundamentally a K8s guy, I went that route.

Let’s dig in! (And if you want to see an example of the end result, here is a link to the art ideas for this post, self-hosted in Immich.)

Installing

Let’s clone the repo

builder@LuiGi17:~/Workspaces$ git clone https://github.com/immich-app/immich-charts.git

Cloning into 'immich-charts'...

remote: Enumerating objects: 546, done.

remote: Counting objects: 100% (546/546), done.

remote: Compressing objects: 100% (189/189), done.

remote: Total 546 (delta 294), reused 518 (delta 272), pack-reused 0

Receiving objects: 100% (546/546), 112.84 KiB | 1.08 MiB/s, done.

Resolving deltas: 100% (294/294), done.

I’ll then create a namespace

builder@LuiGi17:~/Workspaces/immich-charts$ kubectl create ns immich

namespace/immich created

Oddly, this chart doesn’t automatically create a PVC, so we’ll need to do that.

builder@LuiGi17:~/Workspaces/immich-charts$ cat immich.pvc.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

  name: immich-vol

spec:

  accessModes:

    - ReadWriteOnce

  resources:

    requests:

      storage: 5Gi

  storageClassName: local-path

  volumeMode: Filesystem

builder@LuiGi17:~/Workspaces/immich-charts$ kubectl apply -f immich.pvc.yaml

persistentvolumeclaim/immich-vol created

Now we are able to install via helm

builder@LuiGi17:~/Workspaces/immich-charts$ helm install immichtest -n immich ./charts/immich/ --set immich.persistence.library.existingClaim=immich-vol

NAME: immichtest

LAST DEPLOYED: Sun Oct 29 19:49:41 2023

NAMESPACE: immich

STATUS: deployed

REVISION: 1

TEST SUITE: None

My one goof there was to create the PVC in the default namespace:

builder@LuiGi17:~/Workspaces/jekyll-blog$ kubectl get pvc -n immich

No resources found in immich namespace.

builder@LuiGi17:~/Workspaces/jekyll-blog$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

immich-vol Pending local-path 11m

which was blocking the pods from coming up

$ kubectl get pods -n immich

NAME READY STATUS RESTARTS AGE

immichtest-server-6dfc4cff-2q4df 0/1 Pending 0 9m24s

immichtest-machine-learning-6578fb7f8b-8cmkg 0/1 Pending 0 9m24s

immichtest-microservices-5fd4c5445c-qpg76 0/1 Pending 0 9m24s

immichtest-proxy-c97965d58-nsbdn 1/1 Running 0 9m24s

immichtest-web-58d6d6478-dwwfs 1/1 Running 0 9m24s

I went back and created it in the namespace

builder@LuiGi17:~/Workspaces/immich-charts$ kubectl apply -f immich.pvc.yaml -n immich

persistentvolumeclaim/immich-vol created

builder@LuiGi17:~/Workspaces/immich-charts$ kubectl get pvc -n immich

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

immich-vol Pending local-path 8s

builder@LuiGi17:~/Workspaces/immich-charts$ kubectl get pvc -n immich

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

immich-vol Bound pvc-bb1639a8-a84a-4f61-9b3e-1160549c5fa2 5Gi RWO local-path 11s
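One way to sidestep the wrong-namespace slip is to put the namespace in the manifest itself, so a plain `kubectl apply -f` cannot land the claim in `default`. The sketch below is only an illustration: `write_pvc` is a hypothetical helper name, and the manifest fields are the same ones used above with `metadata.namespace` added.

```shell
#!/bin/sh
# write_pvc is a hypothetical helper: it writes the PVC manifest with an
# explicit namespace so that `kubectl apply -f` needs no -n flag.
write_pvc() {
  ns="$1"
  out="$2"
  cat > "$out" <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: immich-vol
  namespace: ${ns}
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
  storageClassName: local-path
  volumeMode: Filesystem
EOF
}

# Usage (not run here):
#   write_pvc immich immich.pvc.yaml
#   kubectl apply -f immich.pvc.yaml
```

With the namespace baked in, a mismatched `-n` flag makes kubectl report an error instead of quietly creating the claim in the wrong place.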

Now I can see the pods coming up

$ kubectl get pods -n immich

NAME READY STATUS RESTARTS AGE

immichtest-proxy-c97965d58-nsbdn 1/1 Running 0 10m

immichtest-web-58d6d6478-dwwfs 1/1 Running 0 10m

immichtest-server-6dfc4cff-2q4df 0/1 ContainerCreating 0 10m

immichtest-microservices-5fd4c5445c-qpg76 0/1 ContainerCreating 0 10m

immichtest-machine-learning-6578fb7f8b-8cmkg 0/1 ContainerCreating 0 10m

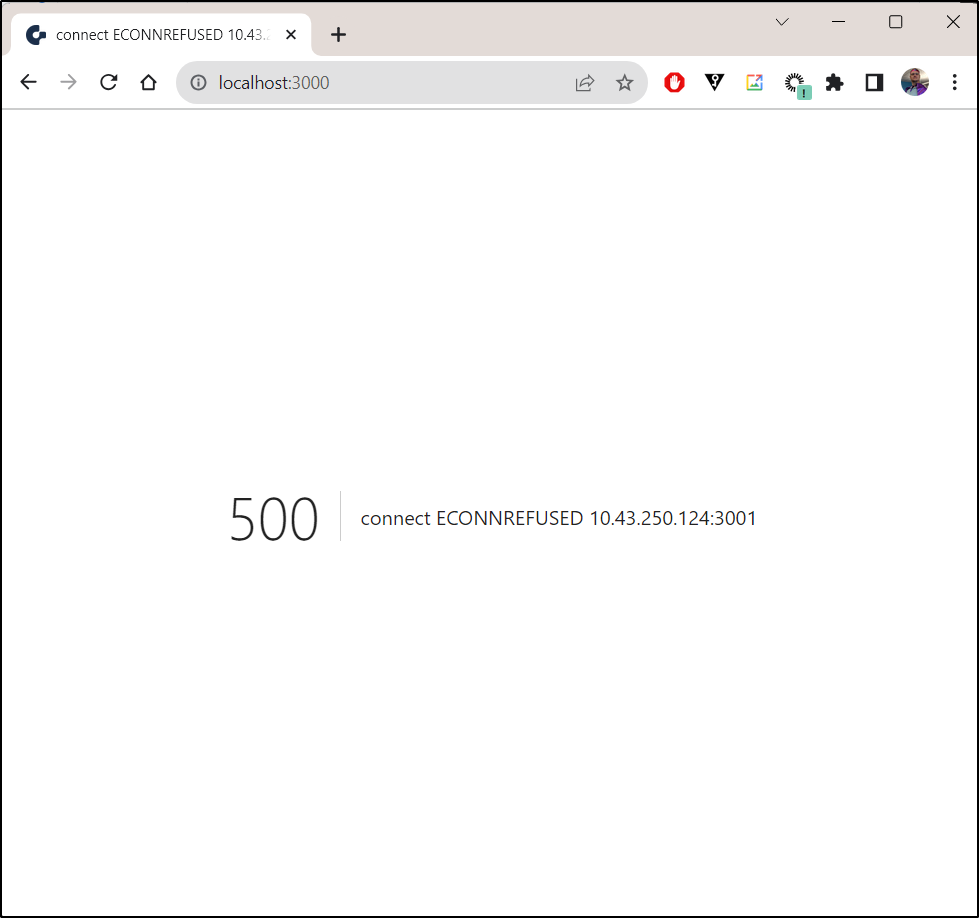

I tried port-forwarding but it didn’t work

$ kubectl port-forward svc/immichtest-web -n immich 3000:3000

Forwarding from 127.0.0.1:3000 -> 3000

Forwarding from [::1]:3000 -> 3000

Handling connection for 3000

Handling connection for 3000

Handling connection for 3000

Handling connection for 3000

The errors from the microservices pod suggest it needs a Redis instance

Error: getaddrinfo ENOTFOUND immichtest-redis-master

at GetAddrInfoReqWrap.onlookup [as oncomplete] (node:dns:108:26) {

errno: -3008,

code: 'ENOTFOUND',

syscall: 'getaddrinfo',

hostname: 'immichtest-redis-master'

}

I’ll enable the redis section in the chart with a set

$ helm upgrade immichtest -n immich ./charts/immich/ --set immich.persistence.library.existingClaim=immich-vol --set redis.enabled=true

Release "immichtest" has been upgraded. Happy Helming!

NAME: immichtest

LAST DEPLOYED: Sun Oct 29 20:09:16 2023

NAMESPACE: immich

STATUS: deployed

REVISION: 2

TEST SUITE: None

The server logs then showed we also need a PostgreSQL instance

builder@LuiGi17:~/Workspaces/immich-charts$ kubectl logs immichtest-server-6dfc4cff-2q4df -n immich

[Nest] 6 - 10/30/2023, 1:11:07 AM LOG [NestFactory] Starting Nest application...

[Nest] 6 - 10/30/2023, 1:11:07 AM LOG [InstanceLoader] TypeOrmModule dependencies initialized +74ms

[Nest] 6 - 10/30/2023, 1:11:07 AM LOG [InstanceLoader] BullModule dependencies initialized +0ms

[Nest] 6 - 10/30/2023, 1:11:07 AM LOG [InstanceLoader] ConfigHostModule dependencies initialized +2ms

[Nest] 6 - 10/30/2023, 1:11:07 AM LOG [InstanceLoader] DiscoveryModule dependencies initialized +0ms

[Nest] 6 - 10/30/2023, 1:11:07 AM LOG [InstanceLoader] ConfigModule dependencies initialized +7ms

[Nest] 6 - 10/30/2023, 1:11:07 AM LOG [InstanceLoader] ScheduleModule dependencies initialized +0ms

[Nest] 6 - 10/30/2023, 1:11:07 AM LOG [InstanceLoader] BullModule dependencies initialized +0ms

[Nest] 6 - 10/30/2023, 1:11:07 AM LOG [InstanceLoader] BullModule dependencies initialized +1ms

[Nest] 6 - 10/30/2023, 1:11:07 AM ERROR [TypeOrmModule] Unable to connect to the database. Retrying (1)...

Error: getaddrinfo ENOTFOUND immichtest-postgresql

at GetAddrInfoReqWrap.onlookup [as oncomplete] (node:dns:108:26)

[Nest] 6 - 10/30/2023, 1:11:10 AM ERROR [TypeOrmModule] Unable to connect to the database. Retrying (2)...

Error: getaddrinfo ENOTFOUND immichtest-postgresql

at GetAddrInfoReqWrap.onlookup [as oncomplete] (node:dns:108:26)

[Nest] 6 - 10/30/2023, 1:11:13 AM ERROR [TypeOrmModule] Unable to connect to the database. Retrying (3)...

Error: getaddrinfo ENOTFOUND immichtest-postgresql

at GetAddrInfoReqWrap.onlookup [as oncomplete] (node:dns:108:26)

Since I’m using the upgrade command, I need to provide a password for PostgreSQL, even though it isn’t deployed yet

$ helm upgrade immichtest -n immich ./charts/immich/ --set immich.persistence.library.existingClaim=immich-vol --set redis.enabled=true --set postgresql.enabled=true --set global.postgresql.auth.postgresPassword=DBPassword123

Release "immichtest" has been upgraded. Happy Helming!

NAME: immichtest

LAST DEPLOYED: Sun Oct 29 20:13:49 2023

NAMESPACE: immich

STATUS: deployed

REVISION: 3

TEST SUITE: None

We can rotate the pods to get them to start

builder@LuiGi17:~/Workspaces/immich-charts$ kubectl delete pod immichtest-microservices-5fd4c5445c-qpg76 -n immich

pod "immichtest-microservices-5fd4c5445c-qpg76" deleted

builder@LuiGi17:~/Workspaces/immich-charts$ kubectl delete pod immichtest-server-6dfc4cff-2q4df -n immich

pod "immichtest-server-6dfc4cff-2q4df" deleted

builder@LuiGi17:~/Workspaces/immich-charts$ kubectl get pods -n immich

NAME READY STATUS RESTARTS AGE

immichtest-proxy-c97965d58-nsbdn 1/1 Running 0 27m

immichtest-web-58d6d6478-dwwfs 1/1 Running 0 27m

immichtest-machine-learning-6578fb7f8b-8cmkg 1/1 Running 1 (13m ago) 27m

immichtest-redis-master-0 1/1 Running 0 3m13s

immichtest-postgresql-0 1/1 Running 0 3m20s

immichtest-microservices-5fd4c5445c-wpsml 1/1 Running 0 68s

immichtest-server-6dfc4cff-jb6bg 1/1 Running 0 34s
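Deleting pods by name works, but the pod suffixes change on every rollout. A rough alternative is `kubectl rollout restart`, which targets the Deployment instead. The Deployment names below are inferred from the pod names above and are an assumption, as is the helper name `restart_immich`.

```shell
#!/bin/sh
# restart_immich is a hypothetical helper. It restarts the workloads that
# were waiting on Redis and PostgreSQL, then waits for each rollout.
# Assumption: Deployments are named <release>-microservices and <release>-server.
restart_immich() {
  release="$1"
  ns="$2"
  for component in microservices server; do
    kubectl rollout restart "deployment/${release}-${component}" -n "$ns"
    kubectl rollout status "deployment/${release}-${component}" -n "$ns" --timeout=120s
  done
}

# Usage (not run here):
#   restart_immich immichtest immich
```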

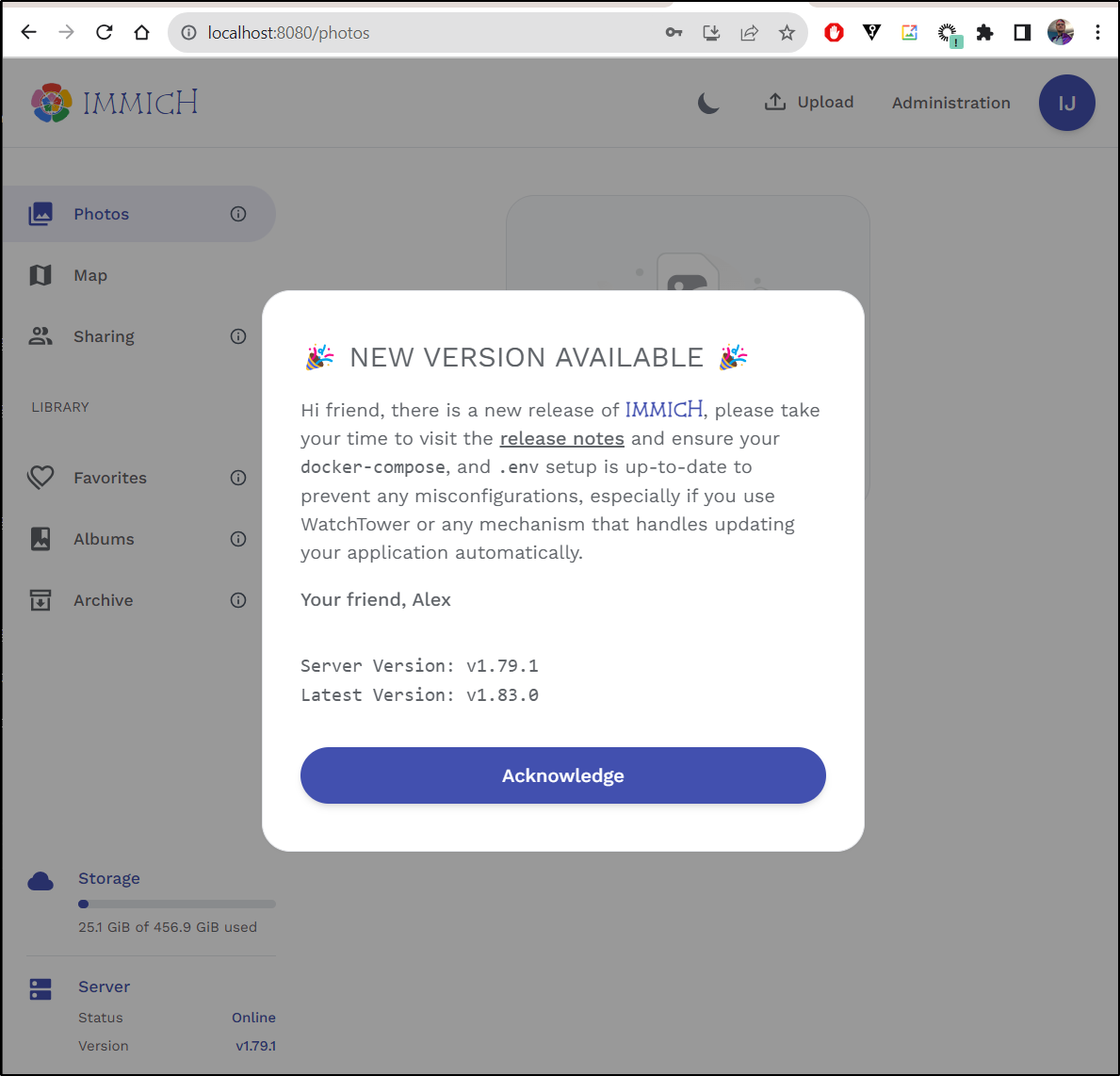

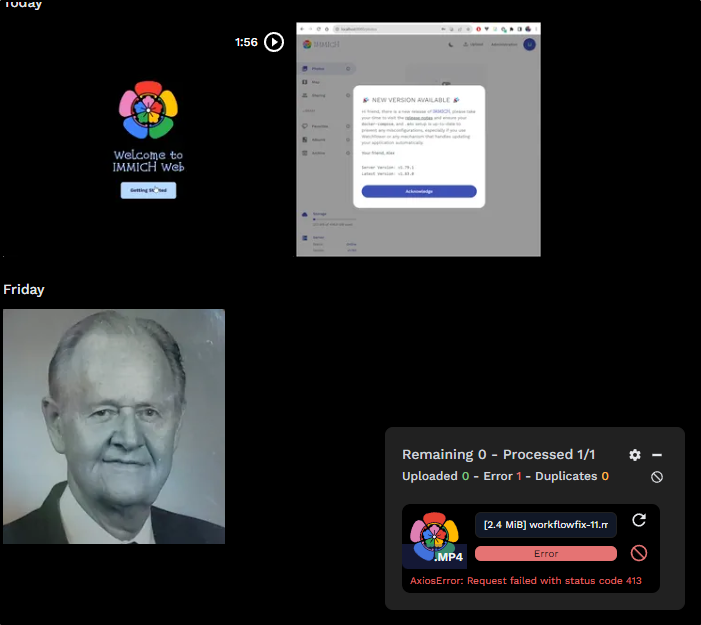

Now port-forwarding gives me a “Getting Started” page

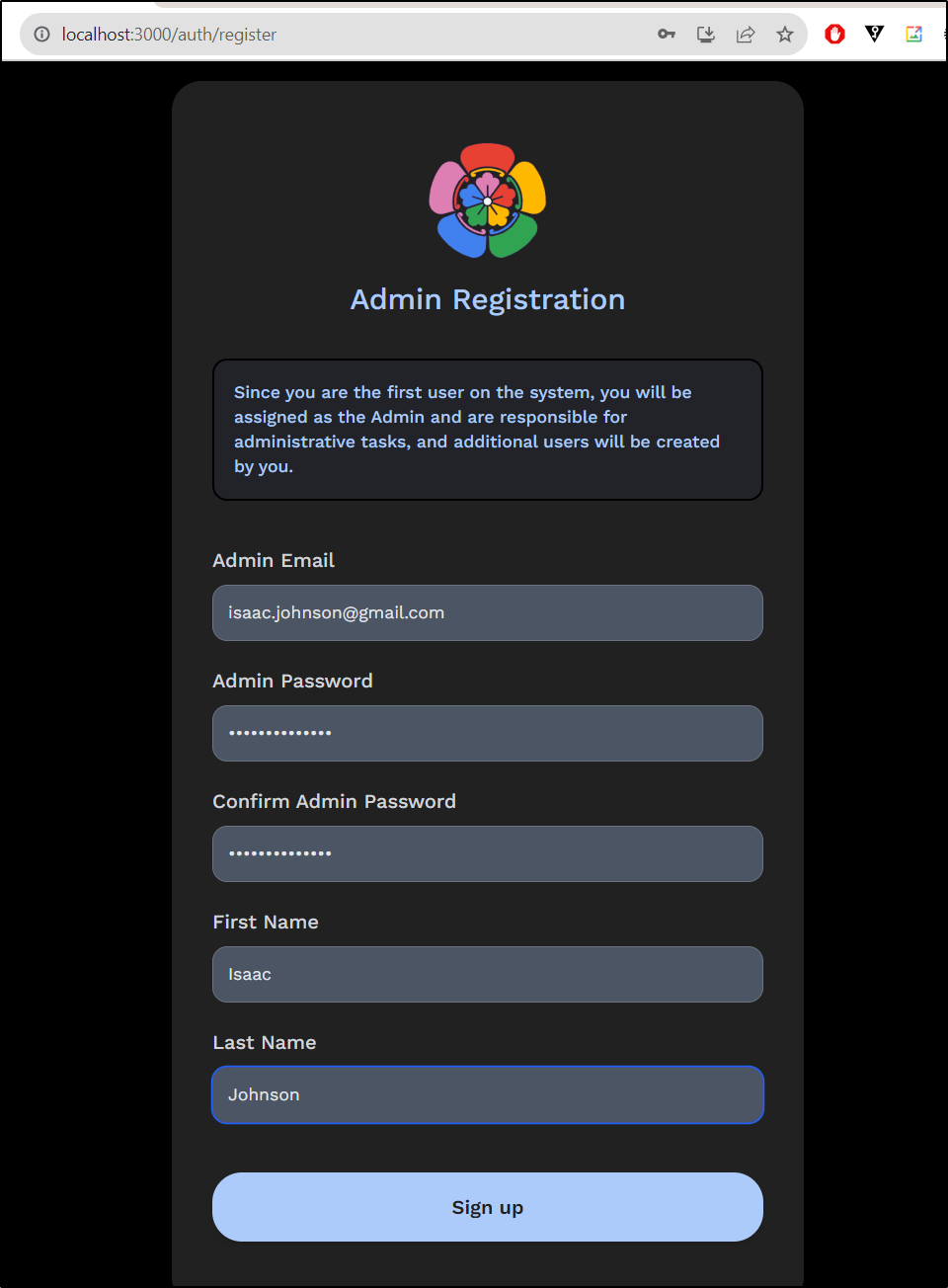

I’ll fill in the signup page

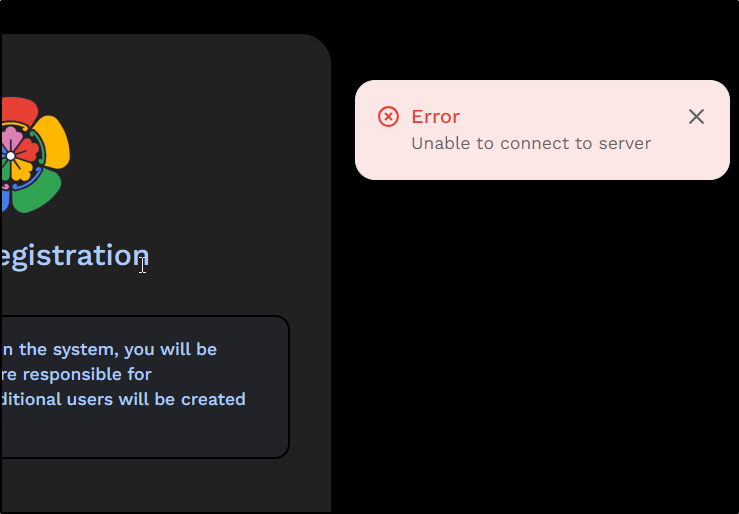

But it dies here

I realized I needed to use the Proxy service instead

$ kubectl port-forward svc/immichtest-proxy -n immich 8080:8080

Forwarding from 127.0.0.1:8080 -> 8080

Forwarding from [::1]:8080 -> 8080

Handling connection for 8080

Handling connection for 8080

Which let me log in

We can now test it out

Production cluster

This seems like an app I’ll want to explore more. I’ll go ahead and launch it in my production cluster

I’ll create an R53 entry

builder@LuiGi17:~/Workspaces/jekyll-blog$ cat r53-photos.json

{

"Comment": "CREATE photos fb.s A record ",

"Changes": [

{

"Action": "CREATE",

"ResourceRecordSet": {

"Name": "photos.freshbrewed.science",

"Type": "A",

"TTL": 300,

"ResourceRecords": [

{

"Value": "75.73.224.240"

}

]

}

}

]

}

builder@LuiGi17:~/Workspaces/jekyll-blog$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-photos.json

{

"ChangeInfo": {

"Id": "/change/C08093172IQ4HZR0BE8TC",

"Status": "PENDING",

"SubmittedAt": "2023-10-30T02:35:14.686Z",

"Comment": "CREATE photos fb.s A record "

}

}
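If you create records like this often, the change batch can be generated rather than hand-edited. This is a minimal sketch under a few assumptions: `make_change_batch` and `apply_change_batch` are hypothetical helper names, and the second one uses the AWS CLI waiter `route53 wait resource-record-sets-changed` to block until the `PENDING` change has propagated.

```shell
#!/bin/sh
# make_change_batch (hypothetical helper) prints a Route 53 change batch
# for a single A record. The action defaults to CREATE, as in the post;
# UPSERT is the other Route 53 action that would make reruns idempotent.
make_change_batch() {
  name="$1"
  ip="$2"
  action="${3:-CREATE}"
  cat <<EOF
{
  "Comment": "${action} ${name} A record",
  "Changes": [
    {
      "Action": "${action}",
      "ResourceRecordSet": {
        "Name": "${name}",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [ { "Value": "${ip}" } ]
      }
    }
  ]
}
EOF
}

# apply_change_batch (hypothetical helper) submits the batch file and waits
# until Route 53 reports the change as in sync.
apply_change_batch() {
  zone_id="$1"
  batch_file="$2"
  change_id=$(aws route53 change-resource-record-sets \
    --hosted-zone-id "$zone_id" \
    --change-batch "file://${batch_file}" \
    --query 'ChangeInfo.Id' --output text)
  aws route53 wait resource-record-sets-changed --id "$change_id"
}

# Usage (not run here):
#   make_change_batch photos.freshbrewed.science 75.73.224.240 > r53-photos.json
#   apply_change_batch Z39E8QFU0F9PZP r53-photos.json
```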

I’ll then want the charts downloaded, but also saved for later in Harbor.

builder@DESKTOP-QADGF36:~/Workspaces/immich-charts/charts$ helm package immich

Error: found in Chart.yaml, but missing in charts/ directory: common, postgresql, redis

builder@DESKTOP-QADGF36:~/Workspaces/immich-charts/charts$ helm dependency build immich/

Getting updates for unmanaged Helm repositories...

...Successfully got an update from the "https://bjw-s.github.io/helm-charts" chart repository

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "btungut" chart repository

...Successfully got an update from the "jfelten" chart repository

...Successfully got an update from the "ngrok" chart repository

...Successfully got an update from the "confluentinc" chart repository

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "kube-state-metrics" chart repository

...Successfully got an update from the "zabbix-community" chart repository

...Successfully got an update from the "rhcharts" chart repository

...Successfully got an update from the "kuma" chart repository

...Successfully got an update from the "adwerx" chart repository

...Unable to get an update from the "freshbrewed" chart repository (https://harbor.freshbrewed.science/chartrepo/library):

failed to fetch https://harbor.freshbrewed.science/chartrepo/library/index.yaml : 404 Not Found

...Unable to get an update from the "myharbor" chart repository (https://harbor.freshbrewed.science/chartrepo/library):

failed to fetch https://harbor.freshbrewed.science/chartrepo/library/index.yaml : 404 Not Found

...Successfully got an update from the "portainer" chart repository

...Successfully got an update from the "novum-rgi-helm" chart repository

...Successfully got an update from the "nfs" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "longhorn" chart repository

...Successfully got an update from the "dapr" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "opencost" chart repository

...Successfully got an update from the "lifen-charts" chart repository

...Successfully got an update from the "hashicorp" chart repository

...Successfully got an update from the "sonarqube" chart repository

...Successfully got an update from the "akomljen-charts" chart repository

...Successfully got an update from the "kiwigrid" chart repository

...Successfully got an update from the "castai-helm" chart repository

...Successfully got an update from the "sumologic" chart repository

...Unable to get an update from the "epsagon" chart repository (https://helm.epsagon.com):

Get "https://helm.epsagon.com/index.yaml": dial tcp: lookup helm.epsagon.com on 172.22.64.1:53: no such host

...Successfully got an update from the "kubecost" chart repository

...Successfully got an update from the "gitea-charts" chart repository

...Successfully got an update from the "openzipkin" chart repository

...Successfully got an update from the "rook-release" chart repository

...Successfully got an update from the "elastic" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "open-telemetry" chart repository

...Successfully got an update from the "signoz" chart repository

...Successfully got an update from the "argo-cd" chart repository

...Successfully got an update from the "incubator" chart repository

...Successfully got an update from the "uptime-kuma" chart repository

...Successfully got an update from the "rancher-latest" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "crossplane-stable" chart repository

...Successfully got an update from the "newrelic" chart repository

...Successfully got an update from the "grafana" chart repository

...Successfully got an update from the "gitlab" chart repository

...Successfully got an update from the "prometheus-community" chart repository

...Successfully got an update from the "bitnami" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 3 charts

Downloading common from repo https://bjw-s.github.io/helm-charts

Downloading postgresql from repo https://charts.bitnami.com/bitnami

Downloading redis from repo https://charts.bitnami.com/bitnami

Deleting outdated charts

builder@DESKTOP-QADGF36:~/Workspaces/immich-charts/charts$ helm package immich

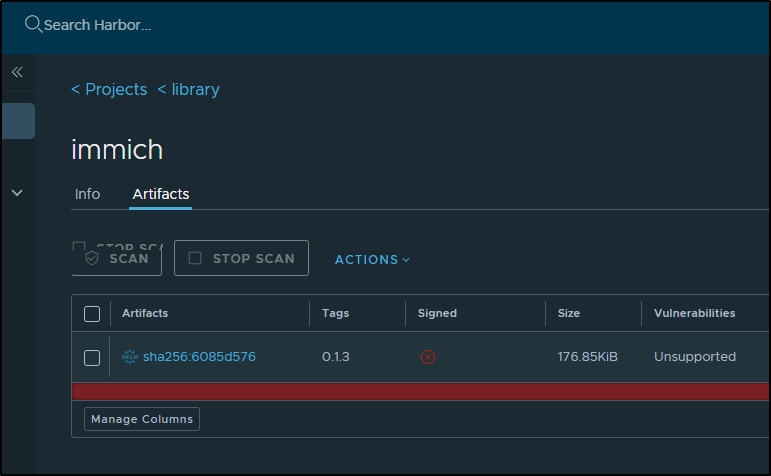

Successfully packaged chart and saved it to: /home/builder/Workspaces/immich-charts/charts/immich-0.1.3.tgz

builder@DESKTOP-QADGF36:~/Workspaces/immich-charts/charts$ helm push immich-0.1.3.tgz oci://harbor.freshbrewed.science/library/

Pushed: harbor.freshbrewed.science/library/immich:0.1.3

Digest: sha256:6085d5763fefac3ea18706a3fd1be3f5ffeea40f3a2672271b91a536eefde429

I can now see the chart as an OCI artifact in Harbor. Not as ideal as a proper Chart Repo, but we’ll come back to that later.
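For what it’s worth, a chart pushed as an OCI artifact can be installed straight from the registry, without a local checkout. The sketch below assumes a Helm version with OCI support (3.8 or later) and that you are already logged in to the registry. `install_from_harbor` is a hypothetical helper name. It is not what I ran below, where I installed from the local chart directory.

```shell
#!/bin/sh
# install_from_harbor (hypothetical helper) installs or upgrades a release
# from the OCI reference that `helm push` reported above.
# Extra arguments (for example --set flags) are passed through to helm.
install_from_harbor() {
  release="$1"
  ns="$2"
  version="$3"
  shift 3
  helm upgrade --install "$release" \
    "oci://harbor.freshbrewed.science/library/immich" \
    --version "$version" -n "$ns" "$@"
}

# Usage (not run here):
#   install_from_harbor immichprod immich 0.1.3 --set redis.enabled=true
```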

As before, I’ll create my namespace

$ kubectl create ns immich

namespace/immich created

I’ll create the PVC, this time using NFS as my storage class

builder@DESKTOP-QADGF36:~/Workspaces/immich-charts/charts$ cat immich-pvc.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

  annotations:

    volume.beta.kubernetes.io/storage-provisioner: fuseim.pri/ifs

    volume.kubernetes.io/storage-provisioner: fuseim.pri/ifs

  labels:

    app.kubernetes.io/instance: immichprod

  name: immichpvc

  namespace: immich

spec:

  accessModes:

    - ReadWriteOnce

  resources:

    requests:

      storage: 10Gi

  storageClassName: managed-nfs-storage

  volumeMode: Filesystem

builder@DESKTOP-QADGF36:~/Workspaces/immich-charts/charts$ kubectl apply -f immich-pvc.yaml

persistentvolumeclaim/immichpvc created

Now there is a section in the values for letting Helm set the ingress

proxy:

  enabled: true

  image:

    repository: ghcr.io/immich-app/immich-proxy

    pullPolicy: IfNotPresent

  persistence:

    library:

      enabled: false

  ingress:

    main:

      enabled: false

      annotations:

        # proxy-body-size is set to 0 to remove the body limit on file uploads

        nginx.ingress.kubernetes.io/proxy-body-size: "0"

      hosts:

        - host: immich.local

          paths:

            - path: "/"

      tls: []

But I was seeing a lot of threads on Stack Overflow about struggles folks had with it.

I’ll KISS and create the ingress myself after the service is up and running.

$ helm install immichprod -n immich ./immich/ --set immich.persistence.library.existingClaim=immichpvc --set redis.enabled=true --set postgresql.enabled=true --set global.postgresql.auth.postgresPassword=DBPassword123

NAME: immichprod

LAST DEPLOYED: Mon Oct 30 06:51:00 2023

NAMESPACE: immich

STATUS: deployed

REVISION: 1

TEST SUITE: None

I saw the pods up and running

$ kubectl get pods -n immich

NAME READY STATUS RESTARTS AGE

immichprod-proxy-7cbc7bf69f-4nfpw 1/1 Running 0 3m29s

immichprod-web-59759c645f-9klw4 1/1 Running 0 3m29s

immichprod-postgresql-0 1/1 Running 0 3m28s

immichprod-redis-master-0 1/1 Running 0 3m28s

immichprod-microservices-6b6bd985cc-9jn5z 1/1 Running 0 3m28s

immichprod-server-649fcc4855-k7d78 1/1 Running 0 3m29s

immichprod-machine-learning-598bf9bbfd-g8c2q 1/1 Running 0 3m28s

Lastly, I can marry that Proxy service with the Ingress

$ cat immich.ingress.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

  annotations:

    cert-manager.io/cluster-issuer: letsencrypt-prod

    ingress.kubernetes.io/ssl-redirect: "true"

    kubernetes.io/ingress.class: nginx

    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"

    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"

    nginx.ingress.kubernetes.io/ssl-redirect: "true"

    nginx.org/proxy-connect-timeout: "3600"

    nginx.org/proxy-read-timeout: "3600"

  labels:

    app.kubernetes.io/instance: immichprod

  name: immich

  namespace: immich

spec:

  rules:

  - host: photos.freshbrewed.science

    http:

      paths:

      - backend:

          service:

            name: immichprod-proxy

            port:

              number: 8080

        path: /

        pathType: ImplementationSpecific

  tls:

  - hosts:

    - photos.freshbrewed.science

    secretName: immich-tls

$ kubectl apply -f immich.ingress.yaml

ingress.networking.k8s.io/immich created

In a bit over a minute, cert-manager got us a TLS cert

builder@DESKTOP-QADGF36:~/Workspaces/immich-charts/charts$ kubectl get cert -n immich

NAME READY SECRET AGE

immich-tls False immich-tls 90s

builder@DESKTOP-QADGF36:~/Workspaces/immich-charts/charts$ kubectl get cert -n immich

NAME READY SECRET AGE

immich-tls True immich-tls 99s
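Rather than re-running `kubectl get cert` until it flips to `True`, `kubectl wait` can block on the Certificate’s `Ready` condition. A small sketch, assuming cert-manager’s `Certificate` resource as shown above; `wait_for_cert` is a hypothetical helper name and the 300s default timeout is an arbitrary choice.

```shell
#!/bin/sh
# wait_for_cert (hypothetical helper) blocks until the cert-manager
# Certificate reports Ready, or the timeout expires.
wait_for_cert() {
  cert="$1"
  ns="$2"
  timeout="${3:-300s}"
  kubectl wait --for=condition=Ready "certificate/${cert}" -n "$ns" --timeout="$timeout"
}

# Usage (not run here):
#   wait_for_cert immich-tls immich
```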

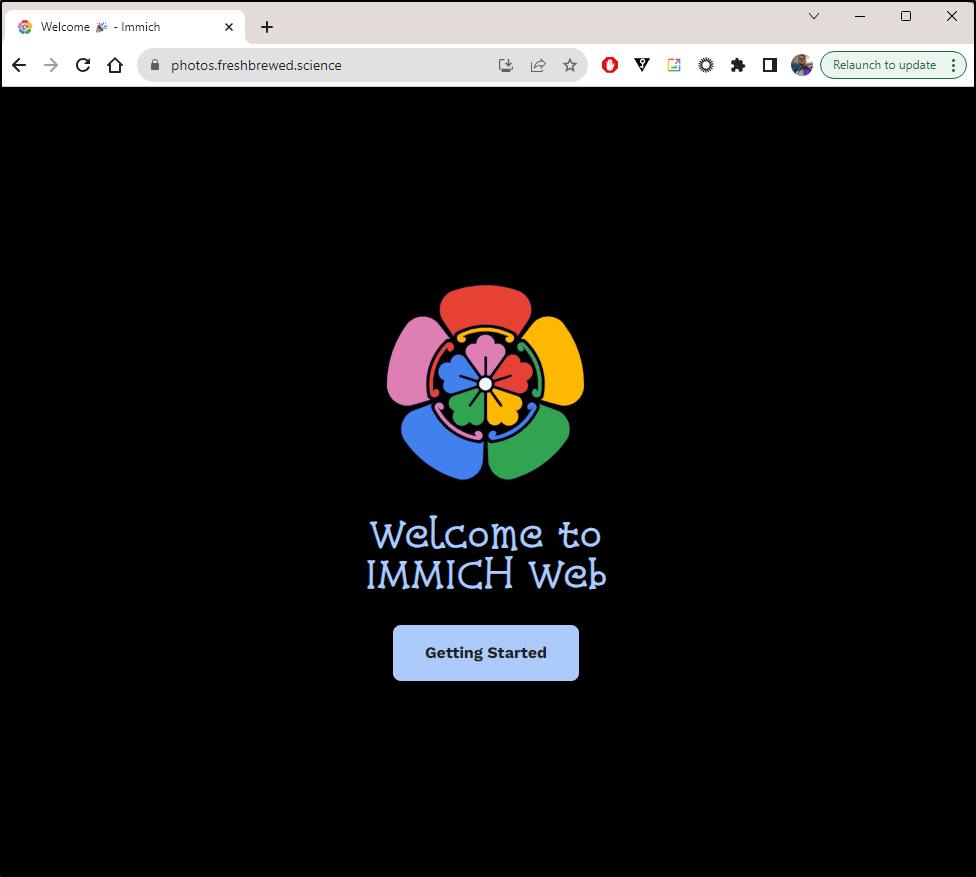

I was then able to sign-in with proper certs

We can now set it up and even share an album

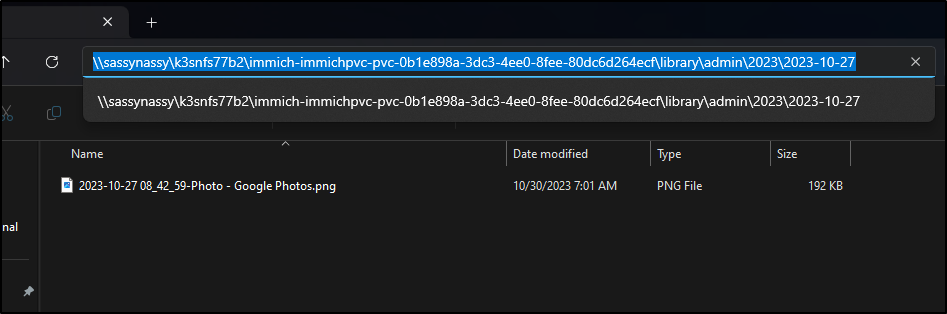

One nice feature about using the NFS provisioner is that my images are safe on the NAS

I can look up my PVC

$ kubectl get pvc immichpvc -n immich -o yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

annotations:

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"v1","kind":"PersistentVolumeClaim","metadata":{"annotations":{"volume.beta.kubernetes.io/storage-provisioner":"fuseim.pri/ifs","volume.kubernetes.io/storage-provisioner":"fuseim.pri/ifs"},"labels":{"app.kubernetes.io/instance":"immichprod"},"name":"immichpvc","namespace":"immich"},"spec":{"accessModes":["ReadWriteOnce"],"resources":{"requests":{"storage":"10Gi"}},"storageClassName":"managed-nfs-storage","volumeMode":"Filesystem"}}

pv.kubernetes.io/bind-completed: "yes"

pv.kubernetes.io/bound-by-controller: "yes"

volume.beta.kubernetes.io/storage-provisioner: fuseim.pri/ifs

volume.kubernetes.io/storage-provisioner: fuseim.pri/ifs

creationTimestamp: "2023-10-30T11:36:54Z"

finalizers:

- kubernetes.io/pvc-protection

labels:

app.kubernetes.io/instance: immichprod

name: immichpvc

namespace: immich

resourceVersion: "255565294"

uid: 0b1e898a-3dc3-4ee0-8fee-80dc6d264ecf

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 10Gi

storageClassName: managed-nfs-storage

volumeMode: Filesystem

volumeName: pvc-0b1e898a-3dc3-4ee0-8fee-80dc6d264ecf

status:

accessModes:

- ReadWriteOnce

capacity:

storage: 10Gi

phase: Bound

The volume name shown above is pvc-0b1e898a-3dc3-4ee0-8fee-80dc6d264ecf

From that, I can see the full path in the Volume description:

$ kubectl describe persistentvolume pvc-0b1e898a-3dc3-4ee0-8fee-80dc6d264ecf -n immich

Name: pvc-0b1e898a-3dc3-4ee0-8fee-80dc6d264ecf

Labels: <none>

Annotations: pv.kubernetes.io/provisioned-by: fuseim.pri/ifs

Finalizers: [kubernetes.io/pv-protection]

StorageClass: managed-nfs-storage

Status: Bound

Claim: immich/immichpvc

Reclaim Policy: Delete

Access Modes: RWO

VolumeMode: Filesystem

Capacity: 10Gi

Node Affinity: <none>

Message:

Source:

Type: NFS (an NFS mount that lasts the lifetime of a pod)

Server: 192.168.1.129

Path: /volume1/k3snfs77b2/immich-immichpvc-pvc-0b1e898a-3dc3-4ee0-8fee-80dc6d264ecf

ReadOnly: false

Events: <none>
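The same lookup can be scripted with JSONPath instead of reading through the full YAML and describe output. A minimal sketch, assuming the PV is NFS-backed as it is here (so `.spec.nfs` is populated); `nfs_path_for_pvc` is a hypothetical helper name.

```shell
#!/bin/sh
# nfs_path_for_pvc (hypothetical helper) resolves a PVC to its bound PV,
# then prints the NFS server and export path as server:path.
# PersistentVolumes are cluster-scoped, so no namespace is needed for the PV.
nfs_path_for_pvc() {
  pvc="$1"
  ns="$2"
  pv=$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.spec.volumeName}')
  kubectl get pv "$pv" -o jsonpath='{.spec.nfs.server}:{.spec.nfs.path}{"\n"}'
}

# Usage (not run here):
#   nfs_path_for_pvc immichpvc immich
```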

If I browse to 192.168.1.129 (sassynassy), I can see the files:

In testing, I found most videos failed.

However, we can see part of the reason is that Typesense was not enabled.

$ kubectl get pods -n immich

NAME READY STATUS RESTARTS AGE

immichprod-proxy-7cbc7bf69f-4nfpw 1/1 Running 0 33m

immichprod-web-59759c645f-9klw4 1/1 Running 0 33m

immichprod-postgresql-0 1/1 Running 0 33m

immichprod-redis-master-0 1/1 Running 0 33m

immichprod-microservices-6b6bd985cc-9jn5z 1/1 Running 0 33m

immichprod-server-649fcc4855-k7d78 1/1 Running 0 33m

immichprod-machine-learning-598bf9bbfd-g8c2q 1/1 Running 0 33m

We can fix that now by upgrading the release with Typesense enabled

$ helm upgrade immichprod -n immich ./immich/ --set immich.persistence.library.existingClaim=immichpvc --set redis.enabled=true --set postgresql.enabled=true --set typesense.enabled=true --set global.postgresql.auth.postgresPassword=DBPassword123

Which didn’t work because it needs some storage (which oddly is not enabled by default)

$ kubectl logs immichprod-typesense-75cd6d8dbb-rqt8p -n immich

I20231030 12:27:08.123667 1 typesense_server_utils.cpp:357] Starting Typesense 0.24.0

I20231030 12:27:08.123689 1 typesense_server_utils.cpp:360] Typesense is using jemalloc.

E20231030 12:27:08.123869 1 typesense_server_utils.cpp:378] Typesense failed to start. Data directory /tsdata does not exist.

Running now with persistence enabled, a storage class set and a more reasonable size (3Gi instead of 1Gi):

$ helm upgrade immichprod -n immich ./immich/ --set immich.persistence.library.existingClaim=immichpvc --set redis.enabled=true --set postgresql.enabled=true --set typesense.enabled=true --set typesense.persistence.tsdata.enabled=true --set typesense.persistence.tsdata.size=3Gi --set typesense.persistence.tsdata.storageClass=managed-nfs-storage --set global.postgresql.auth.postgresPassword=DBPassword123

Release "immichprod" has been upgraded. Happy Helming!

NAME: immichprod

LAST DEPLOYED: Mon Oct 30 07:29:19 2023

NAMESPACE: immich

STATUS: deployed

REVISION: 3

TEST SUITE: None
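That `--set` chain is getting long enough to be error-prone. One option is to move the non-secret settings into a values file and pass it with `-f`. The sketch below mirrors the flags from the upgrade command above. The helper name `write_values`, the file name `immich-values.yaml` and the `PG_PASSWORD` environment variable are assumptions for illustration.

```shell
#!/bin/sh
# write_values (hypothetical helper) writes the non-secret chart settings
# from the --set flags above into a values file.
write_values() {
  out="$1"
  cat > "$out" <<'EOF'
immich:
  persistence:
    library:
      existingClaim: immichpvc
redis:
  enabled: true
postgresql:
  enabled: true
typesense:
  enabled: true
  persistence:
    tsdata:
      enabled: true
      size: 3Gi
      storageClass: managed-nfs-storage
EOF
}

# Usage (not run here). PG_PASSWORD is a hypothetical environment variable
# that keeps the password out of the values file:
#   write_values immich-values.yaml
#   helm upgrade immichprod -n immich ./immich/ -f immich-values.yaml \
#     --set global.postgresql.auth.postgresPassword="$PG_PASSWORD"
```

Keeping the file in version control also gives a record of what each revision changed.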

The pods now come up

$ kubectl get pods -n immich

NAME READY STATUS RESTARTS AGE

immichprod-postgresql-0 1/1 Running 0 39m

immichprod-redis-master-0 1/1 Running 0 39m

immichprod-proxy-764698ffb4-qwsbn 1/1 Running 0 4m22s

immichprod-machine-learning-75d84dd96f-stqlf 1/1 Running 0 4m23s

immichprod-microservices-5b969c856d-8zct7 1/1 Running 0 3m53s

immichprod-web-dd4b87954-g2n56 1/1 Running 0 3m52s

immichprod-typesense-55bf4bd8d6-kt596 1/1 Running 0 60s

immichprod-server-c6698b848-pf4fv 1/1 Running 3 (51s ago) 3m53s

But I still see errors, this time a permission denied error

[Nest] 7 - 10/30/2023, 12:29:37 PM LOG [ImmichServer] Immich Server is listening on http://[::1]:3001 [v1.79.1] [PRODUCTION]

[Nest] 7 - 10/30/2023, 12:31:37 PM ERROR [ExceptionsHandler] EACCES: permission denied, access 'upload/library/admin/2023/2023-10-30/immich-08.mp4'

That made sense, since my NFS share won’t let me re-upload the exact same file. I then fought some other errors that left nothing in the logs.

However, Chrome has gotten pretty bad on me lately. I switched to Firefox and uploaded an MP4 (granted, through a port-forward) without issue

I did get the same error via NGINX. This makes me think I might need that nginx.ingress.kubernetes.io/proxy-body-size: "0" annotation I saw in values.yaml

I added the three annotation variants that NGINX ingress controllers look for to set a proxy body size of zero (which means unlimited) and recreated the ingress:

$ kubectl delete -f immich.ingress.yaml

ingress.networking.k8s.io "immich" deleted

$ cat immich.ingress.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

  annotations:

    ingress.kubernetes.io/proxy-body-size: "0"

    nginx.ingress.kubernetes.io/proxy-body-size: "0"

    nginx.org/client-max-body-size: "0"

    cert-manager.io/cluster-issuer: letsencrypt-prod

    ingress.kubernetes.io/ssl-redirect: "true"

    kubernetes.io/ingress.class: nginx

    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"

    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"

    nginx.ingress.kubernetes.io/ssl-redirect: "true"

    nginx.org/proxy-connect-timeout: "3600"

    nginx.org/proxy-read-timeout: "3600"

  labels:

    app.kubernetes.io/instance: immichprod

  name: immich

  namespace: immich

spec:

  rules:

  - host: photos.freshbrewed.science

    http:

      paths:

      - backend:

          service:

            name: immichprod-proxy

            port:

              number: 8080

        path: /

        pathType: ImplementationSpecific

  tls:

  - hosts:

    - photos.freshbrewed.science

    secretName: immich-tls

$ kubectl apply -f immich.ingress.yaml

ingress.networking.k8s.io/immich created

That fixed it!
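Deleting and recreating the ingress is not the only route. An annotation can also be patched in place with `kubectl annotate --overwrite`. This is a sketch only: it sets just the `nginx.ingress.kubernetes.io/` variant, which assumes the community ingress-nginx controller is the one honoring it, and `set_unlimited_body_size` is a hypothetical helper name.

```shell
#!/bin/sh
# set_unlimited_body_size (hypothetical helper) patches the upload limit
# annotation on an existing Ingress, then reads it back to confirm.
# Assumption: the ingress-nginx controller is the one serving this Ingress.
set_unlimited_body_size() {
  ingress="$1"
  ns="$2"
  kubectl annotate ingress "$ingress" -n "$ns" --overwrite \
    nginx.ingress.kubernetes.io/proxy-body-size="0"
  # Dots inside the annotation key must be escaped in JSONPath.
  kubectl get ingress "$ingress" -n "$ns" \
    -o jsonpath='{.metadata.annotations.nginx\.ingress\.kubernetes\.io/proxy-body-size}{"\n"}'
}

# Usage (not run here):
#   set_unlimited_body_size immich immich
```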

Let’s do one last test:

To make this full circle, let’s take that video above and upload it to immich and share it with a link.

Summary

We set up Immich first in a test Kubernetes cluster, then in my production cluster. We set up TLS Ingress with NGINX and worked through a proxy body size limit and some missing services (like Typesense). I showed the value of using the NFS provisioner and lastly we did a couple of full demos with shared albums.

Until I can figure out how to embed videos, I will continue to use S3 for my backend store. However, that is getting more and more expensive, so I may start to consider moving some content, like longer-form videos, to Immich and YouTube. I have some issues with how YouTube monetizes and deals with creators so it’s not my first choice, but it is effectively free video sharing (for now).

If you like Immich, consider buying Alex a coffee (not me). It’s important to help support great open-source tools like this.