Published: Jan 6, 2024 by Isaac Johnson

When we left off with Part 4, we had mostly automated the CI/CD flow for building and storing the container and charts. However, we punted on finishing the functionality of the app.

Today, let’s tackle changing how we store the ingress/disabled YAML. I also want to build out the templates repo and set up examples for Traefik, Nginx and Istio. Lastly, if I have time, I want to figure out a non-CR based Helm repository endpoint for storing and sharing charts.

Update to store ingress files

As of last time, we launched the app and a basic Nginx “disabled” page:

builder@DESKTOP-QADGF36:~/Workspaces/pyK8sService$ helm list -n disabledtest

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

mytest disabledtest 5 2023-12-25 23:20:43.932018982 -0600 CST deployed pyk8sservice-0.1.0 1.16.0

builder@DESKTOP-QADGF36:~/Workspaces/pyK8sService$ kubectl get pods -n disabledtest

NAME READY STATUS RESTARTS AGE

mytest-pyk8sservice-7d8798768f-9vgv8 1/1 Running 0 12h

mytest-pyk8sservice-app-74cc77666b-w8fpn 1/1 Running 0 11h

builder@DESKTOP-QADGF36:~/Workspaces/pyK8sService$ kubectl get svc -n disabledtest

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

mytest-pyk8sservice ClusterIP 10.43.179.105 <none> 80/TCP 12h

mytest-pyk8sservice-app ClusterIP 10.43.206.218 <none> 5000/TCP 12h

From the app deployment chart we can see that we mount the pvc to /config.

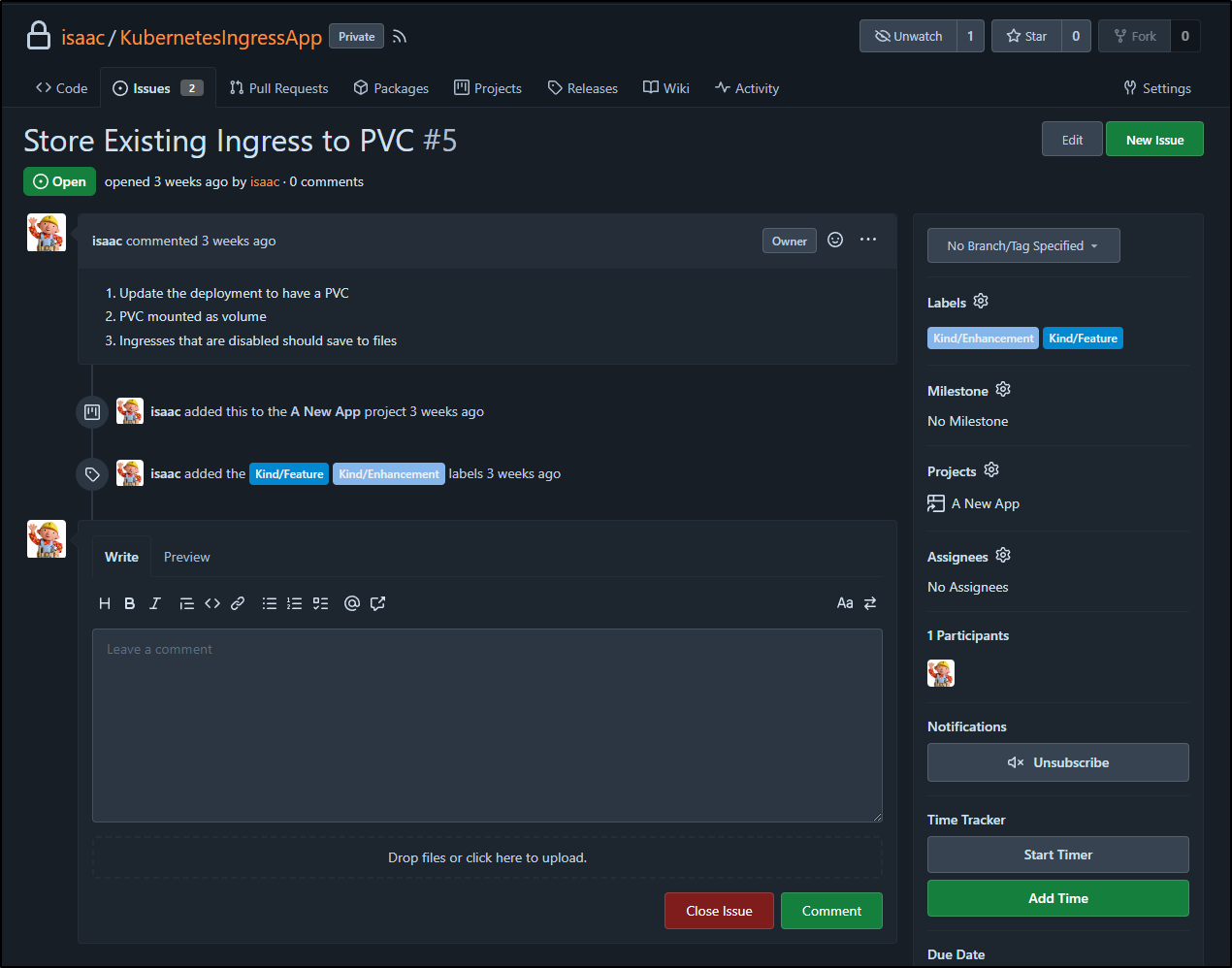

The first change I want to make is to store the existing Ingress into that mount. We originally wrote this up as Feature #5.

I’ll now create a branch for it (feature-5-store-pvc) and make some initial changes.

I’ll test by building locally:

builder@DESKTOP-QADGF36:~/Workspaces/pyK8sService$ docker build -t harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:test0501 .

[+] Building 13.9s (10/10) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 193B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/library/python:3.8-slim 2.0s

=> [1/5] FROM docker.io/library/python:3.8-slim@sha256:d1cba0f8754d097bd333b8f3d4c655f37c2ede9042d1 1.5s

=> => resolve docker.io/library/python:3.8-slim@sha256:d1cba0f8754d097bd333b8f3d4c655f37c2ede9042d1 0.0s

=> => sha256:8ce3f2b601ccac03ff1858022363c325355bafba224123a4563dade58bc8e70f 3.51MB / 3.51MB 0.2s

=> => sha256:7f4f85c41831fbd0f3274211b4400f992d8e7a77938d86a5d24bc7af2e8df503 13.75MB / 13.75MB 0.5s

=> => sha256:61e3cec0af2bf2f5a7e75b87fb4daeeef94d7054b09c461a9da8604f805a1806 244B / 244B 0.2s

=> => sha256:d1cba0f8754d097bd333b8f3d4c655f37c2ede9042d1e7db69561d9eae2eebfa 1.86kB / 1.86kB 0.0s

=> => sha256:514ad38605babc4749a1dfb47a800a9ad30e97795ea3c52390a8cdd8a0d5952c 1.37kB / 1.37kB 0.0s

=> => sha256:067655fb1c0910227ec88b6bc6bc18d6697f82e80ab04a2ce00f36879df9b36e 6.97kB / 6.97kB 0.0s

=> => sha256:8244879b209cbcd120ac0c4a2bd01d70ee60cc770924a99c1948b6698ea4d2af 3.13MB / 3.13MB 0.4s

=> => extracting sha256:8ce3f2b601ccac03ff1858022363c325355bafba224123a4563dade58bc8e70f 0.3s

=> => extracting sha256:7f4f85c41831fbd0f3274211b4400f992d8e7a77938d86a5d24bc7af2e8df503 0.5s

=> => extracting sha256:61e3cec0af2bf2f5a7e75b87fb4daeeef94d7054b09c461a9da8604f805a1806 0.0s

=> => extracting sha256:8244879b209cbcd120ac0c4a2bd01d70ee60cc770924a99c1948b6698ea4d2af 0.2s

=> [internal] load build context 0.2s

=> => transferring context: 153.31kB 0.1s

=> [2/5] WORKDIR /app 0.1s

=> [3/5] COPY requirements.txt . 0.0s

=> [4/5] RUN pip install --no-cache-dir -r requirements.txt 9.0s

=> [5/5] COPY . . 0.1s

=> exporting to image 0.4s

=> => exporting layers 0.4s

=> => writing image sha256:ad61fb8404c926e0748514b81aaf985f96cf8513d39fde4799e81f8224e45117 0.0s

=> => naming to harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:test0501 0.0s

Then push

builder@DESKTOP-QADGF36:~/Workspaces/pyK8sService$ docker push harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:test0501

The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc]

577ac6d2efff: Pushed

63699480bf04: Pushed

095f4ccf99ba: Pushed

f96fd631fd42: Pushed

148dc688e60f: Layer already exists

009aa92f6140: Layer already exists

ad7efa606e4e: Layer already exists

384858ccd7ef: Layer already exists

7292cf786aa8: Layer already exists

test0501: digest: sha256:1c77454910f2b4ebc3ab201b9c19b2e17d6983b184ec444e3bb2f31540f3a4b0 size: 2203

I then edited the deployment manually, setting image: and adding an imagePullSecrets: section, to test:

    spec:
      containers:
      - image: harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:test0501
        imagePullPolicy: Always
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /
            port: http
            scheme: HTTP
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        name: pyk8sservice
        ports:
        - containerPort: 5000
          name: http
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /
            port: http
            scheme: HTTP
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        resources: {}
        securityContext: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /config
          name: config
      dnsPolicy: ClusterFirst
      imagePullSecrets:
      - name: myharborreg

Actually, I came around and opted to use this chart to create an ingress I could test with. I ended up messing up my local deploy, so I deleted it and re-installed:

$ cat myvalues.yaml

appservice:

port: 5000

persistence:

storageClass: local-path

rbac:

create: true

appimage:

repository: harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc

pullPolicy: Always

tag: "test0501"

appimagePullSecrets:

- name: myharborreg

ingress:

enabled: true

className: "traefik"

annotations:

kubernetes.io/ingress.class: traefik

# kubernetes.io/tls-acme: "true"

hosts:

- host: pytestapp.local

paths:

- path: /

pathType: ImplementationSpecific

tls:

- secretName: chart-example-tls

hosts:

- chart-example.local

$ helm install mytest -n disabledtest -f myvalues.yaml ./pyK8sService/

NAME: mytest

LAST DEPLOYED: Wed Dec 27 12:02:35 2023

NAMESPACE: disabledtest

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

https://pytestapp.local/

I can now see the pods running

$ kubectl get pods -n disabledtest

NAME READY STATUS RESTARTS AGE

mytest-pyk8sservice-app-5f67b78b94-rdtmg 1/1 Running 0 49s

mytest-pyk8sservice-7d8798768f-9pwcb 1/1 Running 0 49s

with an Ingress I can use for testing

$ kubectl get ingress -n disabledtest

NAME CLASS HOSTS ADDRESS PORTS AGE

mytest-pyk8sservice traefik pytestapp.local 192.168.1.13,192.168.1.159,192.168.1.206 80, 443 5m40s

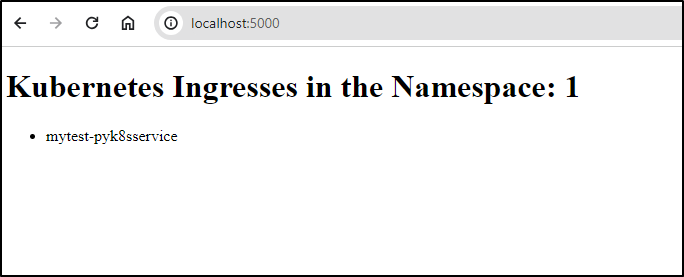

We can now test

$ kubectl port-forward svc/mytest-pyk8sservice-app -n disabledtest 5000:5000

Forwarding from 127.0.0.1:5000 -> 5000

Forwarding from [::1]:5000 -> 5000

Handling connection for 5000

Handling connection for 5000

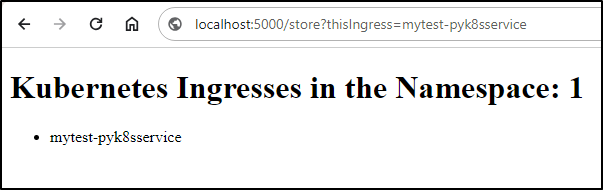

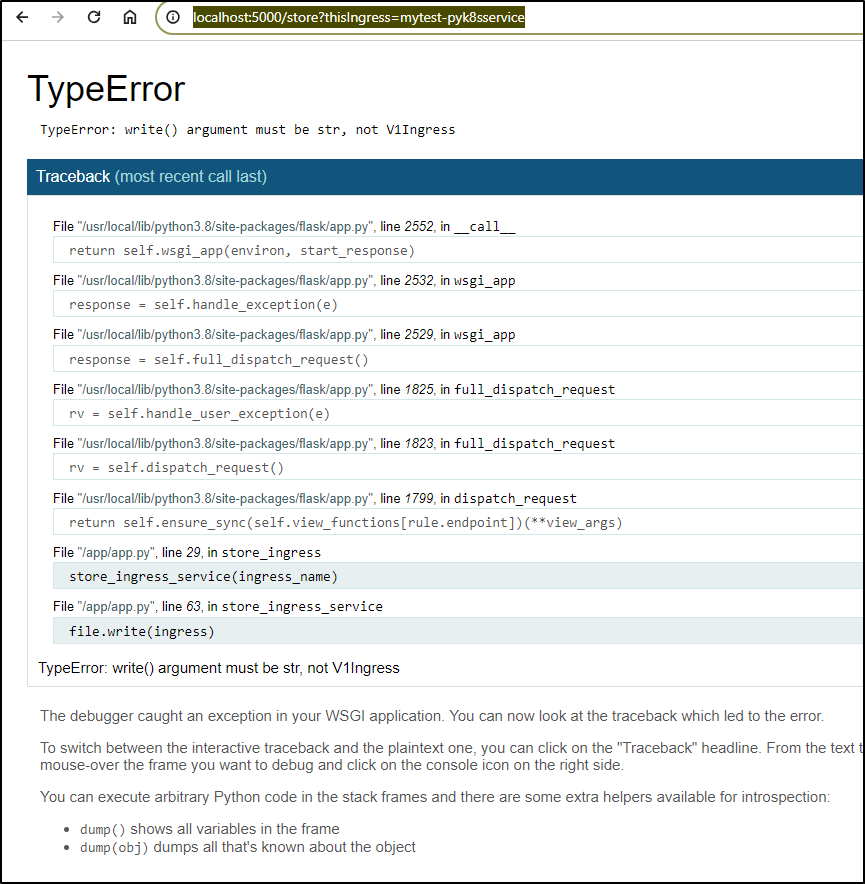

I then typed in a URL I hoped would work: http://localhost:5000/store?thisIngress=mytest-pyk8sservice

And boom, this is what one gets when they forget to cast to string:

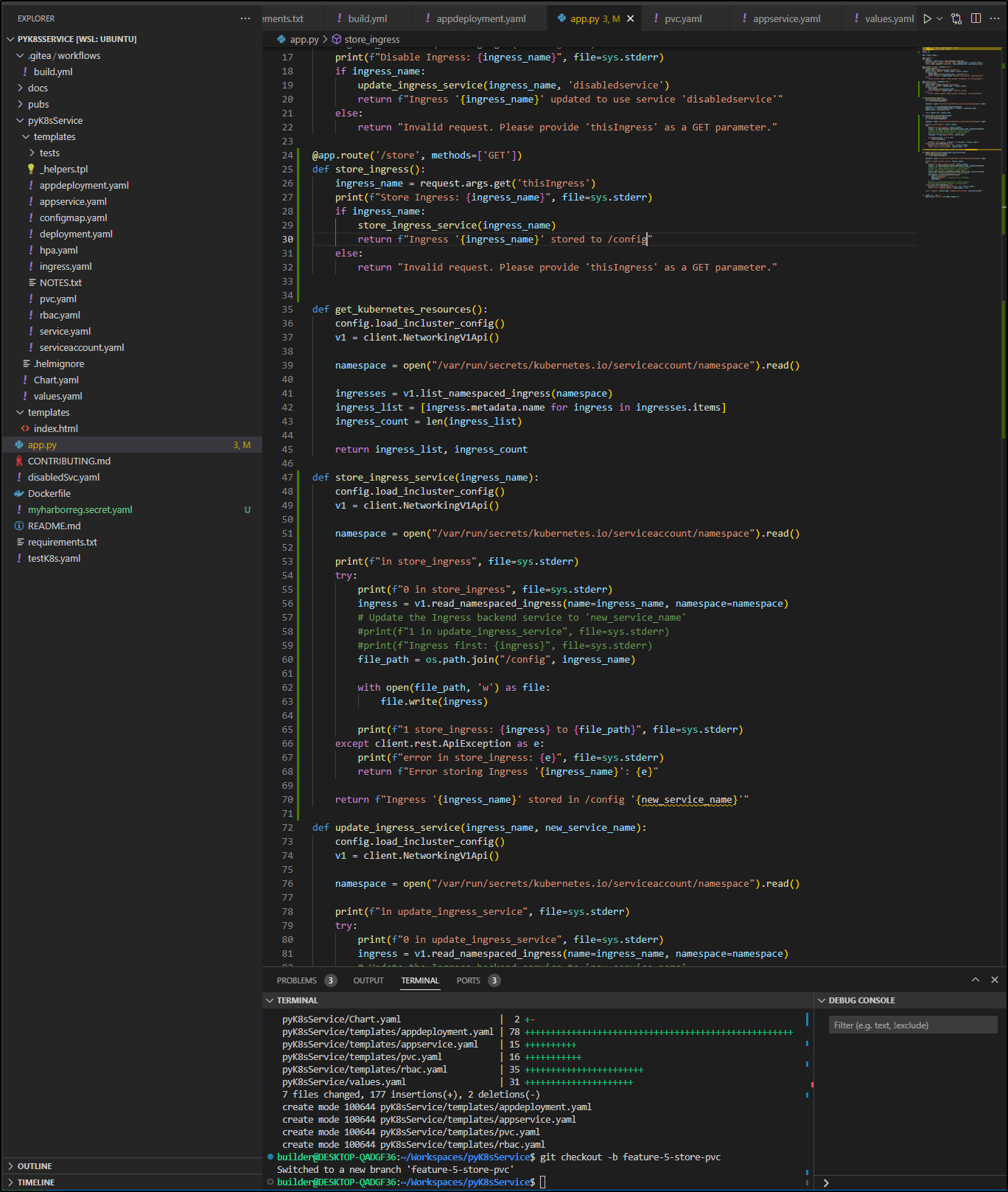

It took a bit of tweaking, but I landed on the following app.py code:

    from flask import Flask, render_template, request
    from kubernetes import client, config
    import sys
    import os
    import yaml

    app = Flask(__name__)

    @app.route('/')
    def index():
        ingresses, ingress_count = get_kubernetes_resources()
        print(f"Number of Ingress resources found: {ingress_count}", file=sys.stderr)
        return render_template('index.html', resources=ingresses, count=ingress_count)

    @app.route('/disable', methods=['GET'])
    def disable_ingress():
        ingress_name = request.args.get('thisIngress')
        print(f"Disable Ingress: {ingress_name}", file=sys.stderr)
        if ingress_name:
            update_ingress_service(ingress_name, 'disabledservice')
            return f"Ingress '{ingress_name}' updated to use service 'disabledservice'"
        else:
            return "Invalid request. Please provide 'thisIngress' as a GET parameter."

    @app.route('/store', methods=['GET'])
    def store_ingress():
        ingress_name = request.args.get('thisIngress')
        print(f"Store Ingress: {ingress_name}", file=sys.stderr)
        if ingress_name:
            store_ingress_service(ingress_name)
            return f"Ingress '{ingress_name}' stored to /config"
        else:
            return "Invalid request. Please provide 'thisIngress' as a GET parameter."

    def get_kubernetes_resources():
        config.load_incluster_config()
        v1 = client.NetworkingV1Api()
        namespace = open("/var/run/secrets/kubernetes.io/serviceaccount/namespace").read()
        ingresses = v1.list_namespaced_ingress(namespace)
        ingress_list = [ingress.metadata.name for ingress in ingresses.items]
        ingress_count = len(ingress_list)
        return ingress_list, ingress_count

    def store_ingress_service(ingress_name):
        config.load_incluster_config()
        v1 = client.NetworkingV1Api()
        namespace = open("/var/run/secrets/kubernetes.io/serviceaccount/namespace").read()
        print(f"in store_ingress", file=sys.stderr)
        try:
            print(f"0 in store_ingress", file=sys.stderr)
            ingress = v1.read_namespaced_ingress(name=ingress_name, namespace=namespace)
            # Update the Ingress backend service to 'new_service_name'
            #print(f"1 in update_ingress_service", file=sys.stderr)
            #print(f"Ingress first: {ingress}", file=sys.stderr)
            #file_path = os.path.join("/config", ingress_name, ".yaml")
            file_path = f"/config/{ingress_name}.yaml"
            ingress_dict = ingress.to_dict()
            ingress_yaml = yaml.dump(ingress_dict)
            with open(file_path, 'w') as file:
                file.write(ingress_yaml)
            print(f"1 store_ingress: {ingress} to {file_path}", file=sys.stderr)
        except client.rest.ApiException as e:
            print(f"error in store_ingress: {e}", file=sys.stderr)
            return f"Error storing Ingress '{ingress_name}': {e}"
        return f"Ingress '{ingress_name}' stored in /config '{ingress_name}'"

    def update_ingress_service(ingress_name, new_service_name):
        config.load_incluster_config()
        v1 = client.NetworkingV1Api()
        namespace = open("/var/run/secrets/kubernetes.io/serviceaccount/namespace").read()
        print(f"in update_ingress_service", file=sys.stderr)
        try:
            print(f"0 in update_ingress_service", file=sys.stderr)
            ingress = v1.read_namespaced_ingress(name=ingress_name, namespace=namespace)
            # Update the Ingress backend service to 'new_service_name'
            #print(f"1 in update_ingress_service", file=sys.stderr)
            #print(f"Ingress first: {ingress}", file=sys.stderr)
            ingress.spec.rules[0].http.paths[0].backend.service.name = new_service_name
            #print(f"2 in update_ingress_service", file=sys.stderr)
            api_response = v1.patch_namespaced_ingress(
                name='rundeckingress',
                namespace='default', # replace with your namespace
                body=ingress
            )
            #print(f"3 in update_ingress_service", file=sys.stderr)
            #print(f"Ingress second: {ingress}", file=sys.stderr)
        except client.rest.ApiException as e:
            print(f"error in update_ingress_service: {e}", file=sys.stderr)
            return f"Error updating Ingress '{ingress_name}': {e}"
        return f"Ingress '{ingress_name}' updated to use service '{new_service_name}'"

    if __name__ == '__main__':
        app.run(host='0.0.0.0', port=5000, debug=True)
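Each routine above re-reads the service account namespace file with a bare open().read(), which leaves the file handle to the garbage collector and keeps any trailing whitespace. A small helper is one way to tidy that up. This is only a sketch: current_namespace and NAMESPACE_FILE are names I made up for illustration, and caching the value assumes the pod's namespace never changes while it runs.

```python
from functools import lru_cache

# Path used throughout app.py; mounted into every pod by Kubernetes.
NAMESPACE_FILE = "/var/run/secrets/kubernetes.io/serviceaccount/namespace"


@lru_cache(maxsize=None)
def current_namespace(path=NAMESPACE_FILE):
    """Read the pod's namespace once, closing the file and trimming whitespace."""
    with open(path, encoding="utf-8") as handle:
        return handle.read().strip()
```

With something like this in place, each routine could call current_namespace() instead of repeating the open() line.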

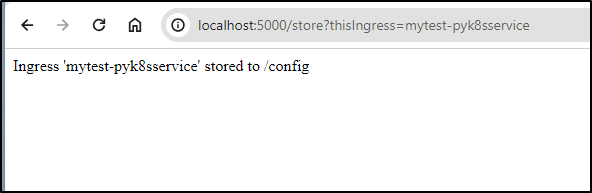

Which, when tested:

$ kubectl port-forward svc/mytest-pyk8sservice-app -n disabledtest 5000:5000

Forwarding from 127.0.0.1:5000 -> 5000

Forwarding from [::1]:5000 -> 5000

Handling connection for 5000

Handling connection for 5000

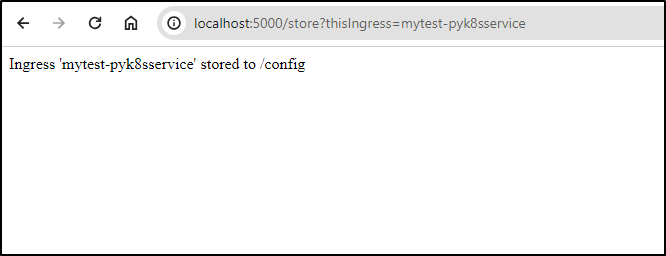

The app said it saved the file.

We can actually verify that by cat’ing out the file:

$ kubectl exec mytest-pyk8sservice-app-6cdd6d756f-bfnfr -n disabledtest cat '/config/mytest-pyk8sservice.yaml'

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

api_version: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

kubernetes.io/ingress.class: traefik

meta.helm.sh/release-name: mytest

meta.helm.sh/release-namespace: disabledtest

creation_timestamp: 2023-12-27 18:02:36+00:00

deletion_grace_period_seconds: null

deletion_timestamp: null

finalizers: null

generate_name: null

generation: 1

labels:

app.kubernetes.io/instance: mytest

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/name: pyk8sservice

app.kubernetes.io/version: 1.16.0

helm.sh/chart: pyk8sservice-0.2.0

managed_fields:

- api_version: networking.k8s.io/v1

fields_type: FieldsV1

fields_v1:

f:metadata:

f:annotations:

.: {}

f:kubernetes.io/ingress.class: {}

f:meta.helm.sh/release-name: {}

f:meta.helm.sh/release-namespace: {}

f:labels:

.: {}

f:app.kubernetes.io/instance: {}

f:app.kubernetes.io/managed-by: {}

f:app.kubernetes.io/name: {}

f:app.kubernetes.io/version: {}

f:helm.sh/chart: {}

f:spec:

f:ingressClassName: {}

f:rules: {}

f:tls: {}

manager: helm

operation: Update

subresource: null

time: 2023-12-27 18:02:36+00:00

- api_version: networking.k8s.io/v1

fields_type: FieldsV1

fields_v1:

f:status:

f:loadBalancer:

f:ingress: {}

manager: traefik

operation: Update

subresource: status

time: 2023-12-27 18:02:36+00:00

name: mytest-pyk8sservice

namespace: disabledtest

owner_references: null

resource_version: '8438650'

self_link: null

uid: 56eed306-e43c-409b-9d17-b07f6a270c0d

spec:

default_backend: null

ingress_class_name: traefik

rules:

- host: pytestapp.local

http:

paths:

- backend:

resource: null

service:

name: mytest-pyk8sservice

port:

name: null

number: 80

path: /

path_type: ImplementationSpecific

tls:

- hosts:

- chart-example.local

secret_name: chart-example-tls

status:

load_balancer:

ingress:

- hostname: null

ip: 192.168.1.13

ports: null

- hostname: null

ip: 192.168.1.159

ports: null

- hostname: null

ip: 192.168.1.206

ports: null

And I can prove it’s in the PVC by deleting the pod and listing the directory from the new one:

$ kubectl exec mytest-pyk8sservice-app-6cdd6d756f-nwf6q -n disabledtest -- bash -c 'ls -l /config/'

total 8

-rw-r--r-- 1 root root 2342 Dec 27 18:31 mytest-pyk8sservice

-rw-r--r-- 1 root root 2342 Dec 27 18:36 mytest-pyk8sservice.yaml

Note: the file without a .yaml extension was from an earlier test.
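Since the file path is built straight from the thisIngress query parameter, it might be worth checking the name before touching /config. Here is a minimal sketch, assuming we only want to accept names that follow the Kubernetes DNS subdomain rules (lowercase alphanumerics, '-' and '.', at most 253 characters). The helper name safe_config_path is hypothetical and not part of the app.

```python
import re
from pathlib import PurePosixPath

# DNS subdomain pattern: labels of lowercase alphanumerics and '-',
# each starting and ending with an alphanumeric, separated by dots.
_DNS_SUBDOMAIN = re.compile(
    r"[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*"
)


def safe_config_path(ingress_name, config_dir="/config"):
    """Return the <config_dir>/<name>.yaml path, or raise ValueError."""
    if not ingress_name or len(ingress_name) > 253:
        raise ValueError("ingress name is empty or too long")
    if not _DNS_SUBDOMAIN.fullmatch(ingress_name):
        raise ValueError(f"not a valid Kubernetes name: {ingress_name!r}")
    return str(PurePosixPath(config_dir) / f"{ingress_name}.yaml")
```

A name such as ../etc/passwd fails the pattern and raises, so it never reaches open().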

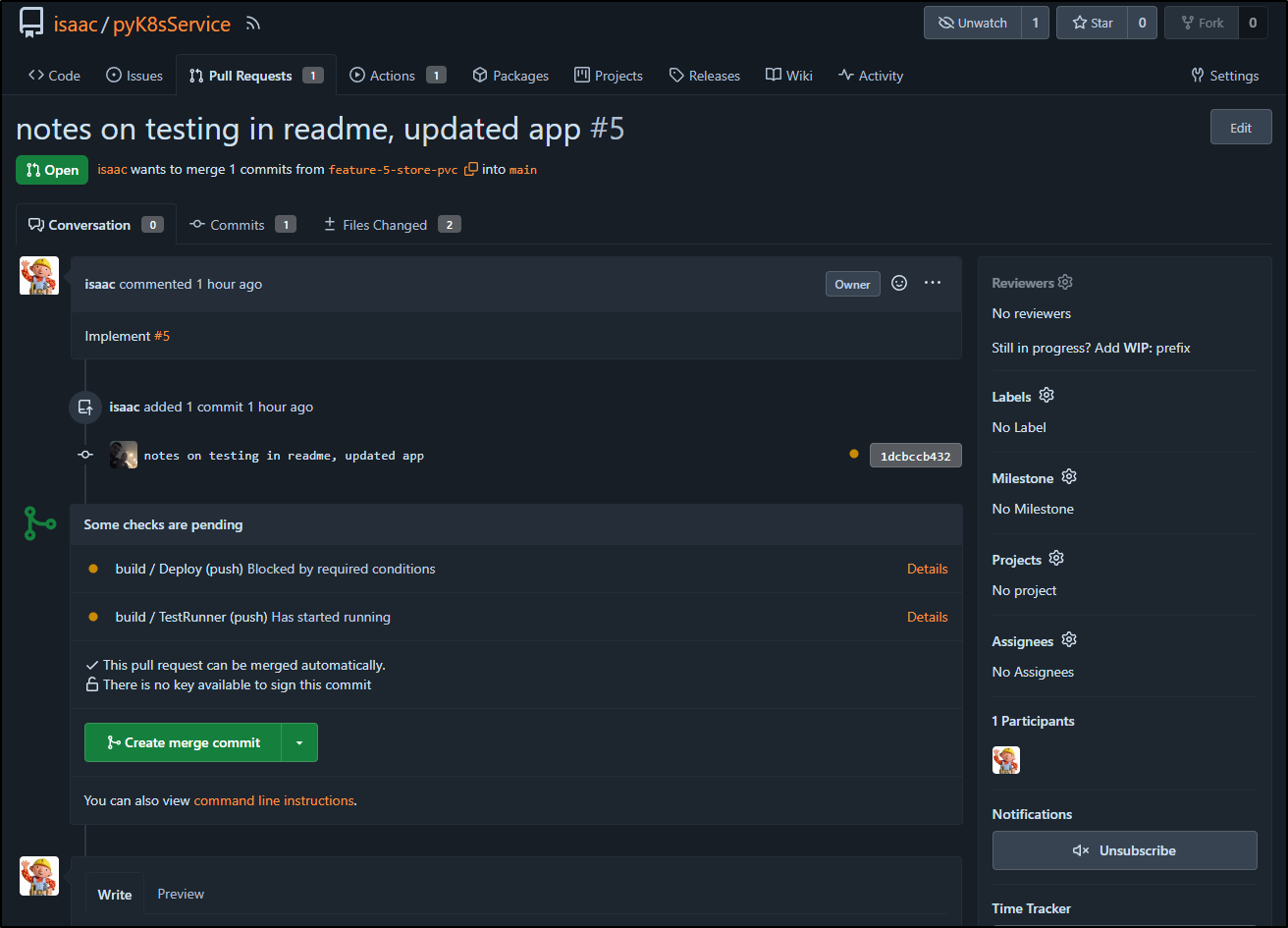

I’m happy enough with this incremental progress to push the changes back to Git.

I then created PR #5.

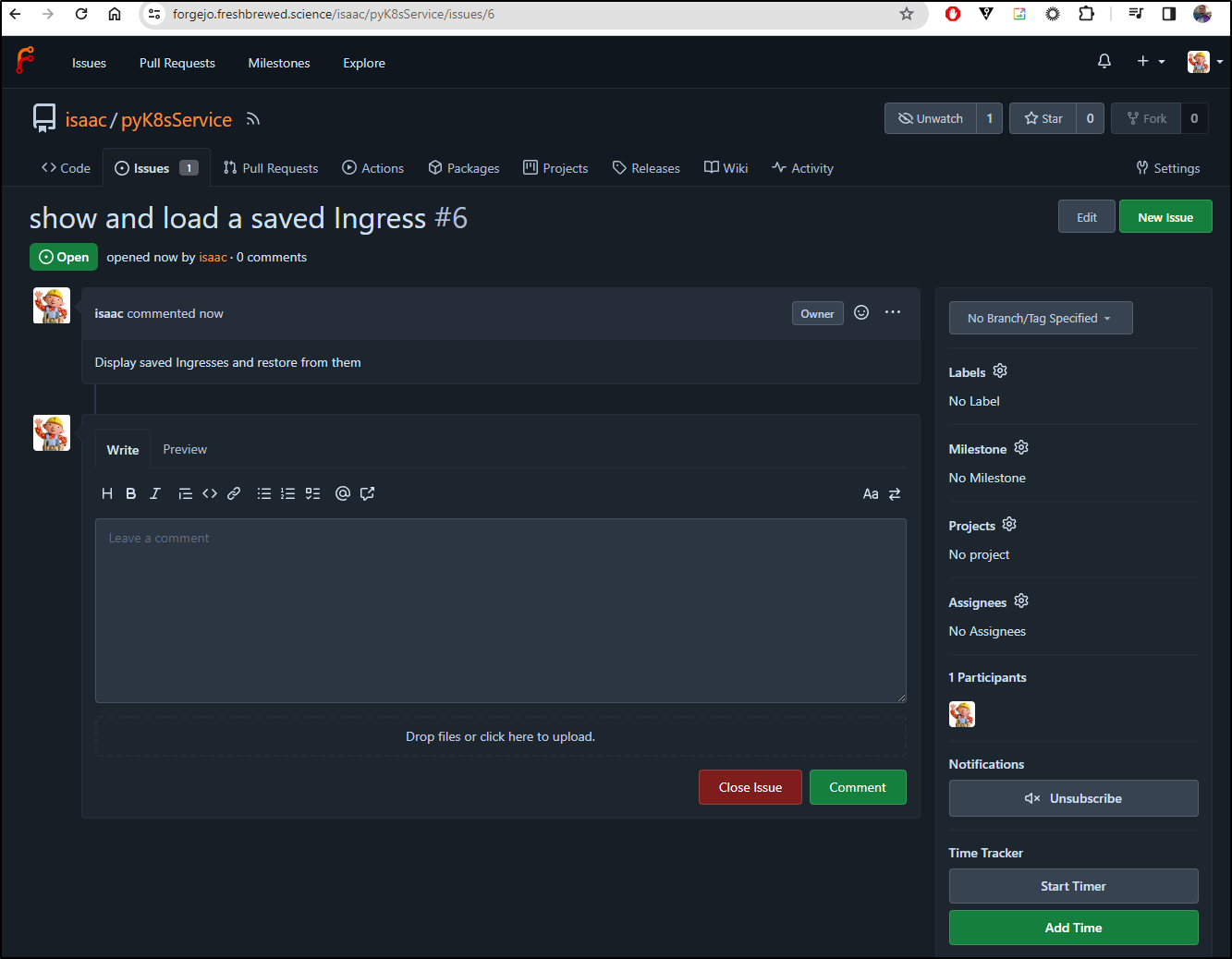

Now that we have Ingress objects set aside, how hard would it be to restore from them?

Let’s write a Ticket for that:

I created a new branch and then wrote routines to list saved files and restore from them:

    @app.route('/saved', methods=['GET'])
    def showsaved_ingress():
        filenames = os.listdir('/config')
        ingress_count = len(filenames)
        print(f"1 show saved ingresses in /config: {ingress_count} total", file=sys.stderr)
        return render_template('restore.html', resources=filenames, count=ingress_count)

    @app.route('/restore', methods=['GET'])
    def restore_ingress():
        ingress_name = request.args.get('thisIngress')
        print(f"Restore Ingress: {ingress_name}", file=sys.stderr)
        if ingress_name:
            restore_ingress_service(ingress_name)
            return f"Ingress '{ingress_name}' restored from /config"
        else:
            return "Invalid request. Please provide 'thisIngress' as a GET parameter."

    #==================================================================

    def restore_ingress_service(ingress_name):
        config.load_incluster_config()
        v1 = client.NetworkingV1Api()
        namespace = open("/var/run/secrets/kubernetes.io/serviceaccount/namespace").read()
        print(f"in store_ingress", file=sys.stderr)
        v1 = client.NetworkingV1Api()
        print(f"0 in restore ingress", file=sys.stderr)
        # Remove IFF exists
        try:
            v1.delete_namespaced_ingress(name=ingress_name, namespace=namespace)
        except client.exceptions.ApiException as e:
            if e.status != 404: # Ignore error if the ingress does not exist
                raise
        # Now try and restore it
        print(f"1 in restore ingress", file=sys.stderr)
        try:
            file_path = f"/config/{ingress_name}.yaml"
            with open(file_path, 'r') as file:
                ingress_dict = yaml.safe_load(file)
            # Convert Dict back to an Ingress Object
            ingress = client.V1beta1Ingress(**ingress_dict)
            # ingress_name = ingress.metadata.name
            v1.create_namespaced_ingress(namespace=namespace, body=ingress)
        except client.rest.ApiException as e:
            print(f"error in restore: {e}", file=sys.stderr)
            return f"Error restoring Ingress '{ingress_name}': {e}"
        return f"Ingress '{ingress_name}' restored from /config '{ingress_name}'"
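One thing to watch in the /saved route above: os.listdir returns full file names, extension included, plus any stray files in /config, while the restore routine appends .yaml itself. Unless restore.html strips the extension (the template is not shown here), a listing helper along these lines could hand back names that restore can use directly. This is a sketch only, and saved_ingress_names is a hypothetical name.

```python
from pathlib import Path


def saved_ingress_names(config_dir="/config"):
    """Return the sorted Ingress names that have a saved <name>.yaml file."""
    return sorted(
        entry.stem                      # "foo.yaml" -> "foo"
        for entry in Path(config_dir).glob("*.yaml")
        if entry.is_file()
    )
```

The route could then pass saved_ingress_names() to render_template in place of the raw directory listing.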

I did the usual local build and push:

builder@DESKTOP-QADGF36:~/Workspaces/pyK8sService$ docker push harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:test0601 .

"docker push" requires exactly 1 argument.

See 'docker push --help'.

Usage: docker push [OPTIONS] NAME[:TAG]

Push an image or a repository to a registry

builder@DESKTOP-QADGF36:~/Workspaces/pyK8sService$ docker push harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:test0601

The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc]

8fe78eea7bfd: Pushed

63699480bf04: Layer already exists

095f4ccf99ba: Layer already exists

f96fd631fd42: Layer already exists

148dc688e60f: Layer already exists

009aa92f6140: Layer already exists

ad7efa606e4e: Layer already exists

384858ccd7ef: Layer already exists

7292cf786aa8: Layer already exists

test0601: digest: sha256:ea38d03be384df9f481b35a64c113f3b8a3e375c5bff3ec8e4e7c7c853782093 size: 2203

Then a helm upgrade to use that image:

$ helm upgrade mytest -n disabledtest -f myvalues.yaml ./pyK8sService/

Release "mytest" has been upgraded. Happy Helming!

NAME: mytest

LAST DEPLOYED: Wed Dec 27 14:41:18 2023

NAMESPACE: disabledtest

STATUS: deployed

REVISION: 8

NOTES:

1. Get the application URL by running these commands:

https://pytestapp.local/

Then a port-forward to test

builder@DESKTOP-QADGF36:~/Workspaces/pyK8sService$ kubectl port-forward svc/mytest-pyk8sservice-app -n disabledtest 5000:5000

Forwarding from 127.0.0.1:5000 -> 5000

Forwarding from [::1]:5000 -> 5000

Handling connection for 5000

Handling connection for 5000

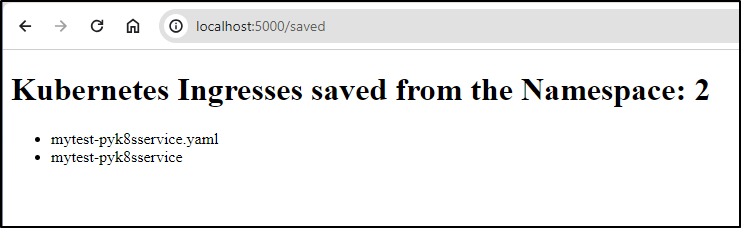

It would seem listing works just dandy

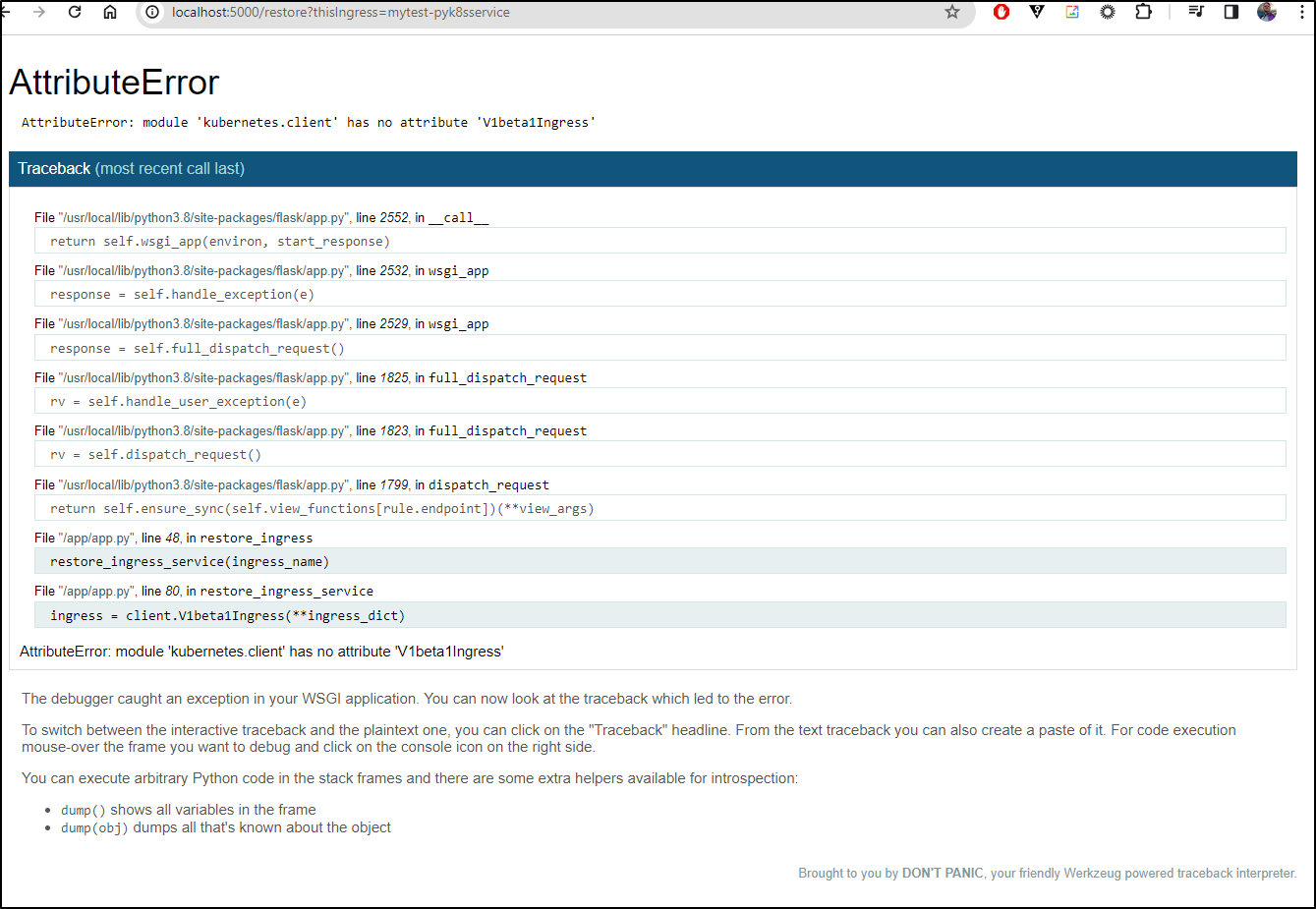

Though my restore fell down.

I realized that I needed to switch the class to just “V1Ingress”:

        try:
            file_path = f"/config/{ingress_name}.yaml"
            with open(file_path, 'r') as file:
                ingress_dict = yaml.safe_load(file)
            # Convert Dict back to an Ingress Object
            ingress = client.V1Ingress(**ingress_dict)
            # ingress_name = ingress.metadata.name
            v1.create_namespaced_ingress(namespace=namespace, body=ingress)

Now, after a build and push, the test appears to work.

However, I don’t actually see the Ingress created:

builder@DESKTOP-QADGF36:~/Workspaces/pyK8sService$ kubectl get ingress -n disabledtest

No resources found in disabledtest namespace.

Logs show us something is amiss

$ kubectl logs mytest-pyk8sservice-app-b587b498b-nlwhc -n disabledtest

* Serving Flask app 'app'

* Debug mode: on

WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.

* Running on all addresses (0.0.0.0)

* Running on http://127.0.0.1:5000

* Running on http://10.42.0.64:5000

Press CTRL+C to quit

* Restarting with stat

* Debugger is active!

* Debugger PIN: 127-226-652

Number of Ingress resources found: 1

10.42.0.1 - - [27/Dec/2023 20:50:59] "GET / HTTP/1.1" 200 -

Number of Ingress resources found: 1

Number of Ingress resources found: 1

10.42.0.1 - - [27/Dec/2023 20:51:07] "GET / HTTP/1.1" 200 -

10.42.0.1 - - [27/Dec/2023 20:51:07] "GET / HTTP/1.1" 200 -

Restore Ingress: mytest-pyk8sservice

in store_ingress

0 in restore ingress

1 in restore ingress

error in restore: (422)

Reason: Unprocessable Entity

HTTP response headers: HTTPHeaderDict({'Audit-Id': '7d07b45d-7c9c-40eb-934a-c715fb37533a', 'Cache-Control': 'no-cache, private', 'Content-Type': 'application/json', 'Warning': '299 - "unknown field \\"metadata.creation_timestamp\\"", 299 - "unknown field \\"metadata.deletion_grace_period_seconds\\"", 299 - "unknown field \\"metadata.deletion_timestamp\\"", 299 - "unknown field \\"metadata.generate_name\\"", 299 - "unknown field \\"metadata.managed_fields\\"", 299 - "unknown field \\"metadata.owner_references\\"", 299 - "unknown field \\"metadata.resource_version\\"", 299 - "unknown field \\"metadata.self_link\\"", 299 - "unknown field \\"spec.default_backend\\"", 299 - "unknown field \\"spec.ingress_class_name\\"", 299 - "unknown field \\"spec.rules[0].http.paths[0].path_type\\"", 299 - "unknown field \\"spec.tls[0].secret_name\\"", 299 - "unknown field \\"status.load_balancer\\""', 'X-Kubernetes-Pf-Flowschema-Uid': '9d2994dc-4137-4742-91f2-ef281ef01ff2', 'X-Kubernetes-Pf-Prioritylevel-Uid': '70d13cea-624c-4356-af78-6b13c6d82bba', 'Date': 'Wed, 27 Dec 2023 20:51:15 GMT', 'Content-Length': '471'})

HTTP response body: {"kind":"Status","apiVersion":"v1","metadata":{},"status":"Failure","message":"Ingress.extensions \"mytest-pyk8sservice\" is invalid: spec.rules[0].http.paths[0].pathType: Required value: pathType must be specified","reason":"Invalid","details":{"name":"mytest-pyk8sservice","group":"extensions","kind":"Ingress","causes":[{"reason":"FieldValueRequired","message":"Required value: pathType must be specified","field":"spec.rules[0].http.paths[0].pathType"}]},"code":422}

127.0.0.1 - - [27/Dec/2023 20:51:15] "GET /restore?thisIngress=mytest-pyk8sservice HTTP/1.1" 200 -

Number of Ingress resources found: 0

Number of Ingress resources found: 0

10.42.0.1 - - [27/Dec/2023 20:51:17] "GET / HTTP/1.1" 200 -

10.42.0.1 - - [27/Dec/2023 20:51:17] "GET / HTTP/1.1" 200 -

Number of Ingress resources found: 0

10.42.0.1 - - [27/Dec/2023 20:51:27] "GET / HTTP/1.1" 200 -

Number of Ingress resources found: 0

10.42.0.1 - - [27/Dec/2023 20:51:27] "GET / HTTP/1.1" 200 -

Number of Ingress resources found: 0

10.42.0.1 - - [27/Dec/2023 20:51:37] "GET / HTTP/1.1" 200 -

I added a lot of debug output and found it was just vomiting on the parsed YAML. I decided to pivot to the utils create_from_yaml method instead.

From

    def restore_ingress_service(ingress_name):
        config.load_incluster_config()
        v1 = client.NetworkingV1Api()
        namespace = open("/var/run/secrets/kubernetes.io/serviceaccount/namespace").read()
        print(f"in restore ingress", file=sys.stderr)
        v1 = client.NetworkingV1Api()
        print(f"0 in restore ingress", file=sys.stderr)
        # Remove IFF exists
        try:
            v1.delete_namespaced_ingress(name=ingress_name, namespace=namespace)
        except client.exceptions.ApiException as e:
            if e.status != 404: # Ignore error if the ingress does not exist
                raise
        # Now try and restore it
        print(f"1 in restore ingress", file=sys.stderr)
        try:
            file_path = f"/config/{ingress_name}.yaml"
            print(f"1a Restore Ingress: {file_path}", file=sys.stderr)
            with open(file_path, 'r') as file:
                ingress_dict = yaml.safe_load(file)
            print(f"1b Restore Ingress: {ingress_dict}", file=sys.stderr)
            # Convert Dict back to an Ingress Object
            ingress = client.V1Ingress(**ingress_dict)
            print(f"1c Restore Ingress: {ingress}", file=sys.stderr)
            # ingress_name = ingress.metadata.name
            v1.create_namespaced_ingress(namespace=namespace, body=ingress)
            print(f"1d Restored Ingress: {ingress_name}", file=sys.stderr)
        except client.rest.ApiException as e:
            print(f"error in restore: {e}", file=sys.stderr)
            return f"Error restoring Ingress '{ingress_name}': {e}"
        print(f"2 Restored Ingress: {ingress_name}", file=sys.stderr)
        return f"Ingress '{ingress_name}' restored from /config '{ingress_name}'"

to:

    from kubernetes import client, config, utils

    #... snip ...

    def restore_ingress_service(ingress_name):
        config.load_incluster_config()
        apiClient = client.ApiClient()
        namespace = open("/var/run/secrets/kubernetes.io/serviceaccount/namespace").read()
        print(f"in restore ingress", file=sys.stderr)
        v1 = client.NetworkingV1Api()
        print(f"0 in restore ingress", file=sys.stderr)
        # Remove IFF exists
        try:
            v1.delete_namespaced_ingress(name=ingress_name, namespace=namespace)
        except client.exceptions.ApiException as e:
            if e.status != 404: # Ignore error if the ingress does not exist
                raise
        # Now try and restore it
        print(f"1 in restore ingress", file=sys.stderr)
        try:
            file_path = f"/config/{ingress_name}.yaml"
            print(f"1a Restore Ingress: {file_path}", file=sys.stderr)
            # Apply the manifest
            utils.create_from_yaml(apiClient, file_path)
            print(f"1d Restored Ingress: {ingress_name}", file=sys.stderr)
        except client.rest.ApiException as e:
            print(f"error in restore: {e}", file=sys.stderr)
            return f"Error restoring Ingress '{ingress_name}': {e}"
        print(f"2 Restored Ingress: {ingress_name}", file=sys.stderr)
        return f"Ingress '{ingress_name}' restored from /config '{ingress_name}'"

Actually, this ran into another issue: the V1 API object’s to_dict() was storing the YAML with “api_version”, not “apiVersion”.

$ kubectl exec `kubectl get pods -l app.kubernetes.io/instance=mytest-app -n disabledtest -o=jsonpath='{.items[0].metadata.name}'` -n disabledtest cat '/config/mytest-pyk8sservice.yaml'

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

api_version: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

kubernetes.io/ingress.class: traefik

meta.helm.sh/release-name: mytest

meta.helm.sh/release-namespace: disabledtest

creation_timestamp: 2023-12-28 21:39:08+00:00

... snip ...

I fought and fought this. I finally succumbed to just using a post-save file replace in the store routine:

    def store_ingress_service(ingress_name):
        config.load_incluster_config()
        v1 = client.NetworkingV1Api()
        namespace = open("/var/run/secrets/kubernetes.io/serviceaccount/namespace").read()
        print(f"in store_ingress", file=sys.stderr)
        try:
            print(f"0 in store_ingress", file=sys.stderr)
            ingress = v1.read_namespaced_ingress(name=ingress_name, namespace=namespace)
            # Update the Ingress backend service to 'new_service_name'
            #print(f"1 in update_ingress_service", file=sys.stderr)
            #print(f"Ingress first: {ingress}", file=sys.stderr)
            #file_path = os.path.join("/config", ingress_name, ".yaml")
            file_path = f"/config/{ingress_name}.yaml"
            ingress_dict = ingress.to_dict()
            ingress_yaml = yaml.dump(ingress_dict)
            with open(file_path, 'w') as file:
                file.write(ingress_yaml)
            # seems to really want to use "api_version". Force it to use "apiVersion"!
            with open(file_path, 'r') as file:
                content = file.read()
            content = content.replace('api_version', 'apiVersion', 1)
            with open(file_path, 'w') as file:
                file.write(content)
            print(f"1 store_ingress: {ingress} to {file_path}", file=sys.stderr)
        except client.rest.ApiException as e:
            print(f"error in store_ingress: {e}", file=sys.stderr)
            return f"Error storing Ingress '{ingress_name}': {e}"
        return f"Ingress '{ingress_name}' stored in /config '{ingress_name}'"

Now the store routine (http://localhost:5000/store?thisIngress=mytest-pyk8sservice) saves it properly:

$ kubectl exec `kubectl get pods -l app.kubernetes.io/instance=mytest-app -n disabledtest -o=jsonpath='{.items[0].metadata.name}'` -n disabledtest cat '/config/mytest-pyk8sservice.yaml' | head -n10

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

kubernetes.io/ingress.class: traefik

meta.helm.sh/release-name: mytest

meta.helm.sh/release-namespace: disabledtest

creation_timestamp: 2023-12-28 21:39:08+00:00

deletion_grace_period_seconds: null

deletion_timestamp: null
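The output above shows apiVersion fixed, but keys such as creation_timestamp are still in snake_case, and the earlier 422 complained about path_type and secret_name as well. One possible alternative to the single string replace is to convert every key before dumping the YAML. The sketch below is an assumption-laden illustration, not what the app does: camelize_keys and PASSTHROUGH_PARENTS are hypothetical names, and it deliberately leaves the keys under annotations, labels and fields_v1 alone since those hold user or server data rather than API field names. The client's ApiClient.sanitize_for_serialization() may be another route worth checking.

```python
def snake_to_camel(word):
    """Convert snake_case to camelCase without capitalizing the first word."""
    head, *tail = word.split("_")
    return head + "".join(part.capitalize() for part in tail)


# Children of these keys are data, not API field names, so keep them verbatim.
PASSTHROUGH_PARENTS = {"annotations", "labels", "fields_v1"}


def camelize_keys(obj, passthrough=False):
    """Recursively rename dict keys from snake_case to camelCase."""
    if isinstance(obj, dict):
        converted = {}
        for key, value in obj.items():
            new_key = key if passthrough else snake_to_camel(key)
            keep_children = passthrough or key in PASSTHROUGH_PARENTS
            converted[new_key] = camelize_keys(value, keep_children)
        return converted
    if isinstance(obj, list):
        return [camelize_keys(item, passthrough) for item in obj]
    return obj
```

In the store routine this would sit between ingress.to_dict() and yaml.dump(), for example yaml.dump(camelize_keys(ingress_dict)).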

I had to fix the update ingress service routine, as it had some hardcoded values:

    def update_ingress_service(ingress_name, new_service_name):
        config.load_incluster_config()
        v1 = client.NetworkingV1Api()
        namespace = open("/var/run/secrets/kubernetes.io/serviceaccount/namespace").read()
        print(f"in update_ingress_service", file=sys.stderr)
        try:
            print(f"0 in update_ingress_service", file=sys.stderr)
            ingress = v1.read_namespaced_ingress(name=ingress_name, namespace=namespace)
            # Update the Ingress backend service to 'new_service_name'
            #print(f"1 in update_ingress_service", file=sys.stderr)
            #print(f"Ingress first: {ingress}", file=sys.stderr)
            ingress.spec.rules[0].http.paths[0].backend.service.name = new_service_name
            #print(f"2 in update_ingress_service", file=sys.stderr)
            api_response = v1.patch_namespaced_ingress(
                name=ingress_name,
                namespace=namespace, # replace with your namespace
                body=ingress
            )
            #print(f"3 in update_ingress_service", file=sys.stderr)
            #print(f"Ingress second: {ingress}", file=sys.stderr)
        except client.rest.ApiException as e:
            print(f"error in update_ingress_service: {e}", file=sys.stderr)
            return f"Error updating Ingress '{ingress_name}': {e}"
        return f"Ingress '{ingress_name}' updated to use service '{new_service_name}'"

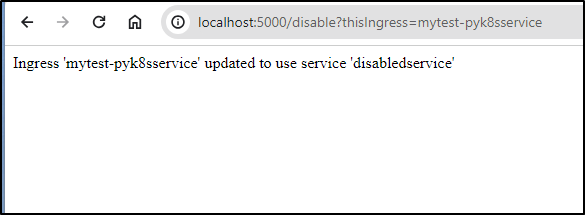

But now disabling works, and I can see it disabled its own ingress:

$ kubectl get ingress mytest-pyk8sservice -n disabledtest -o yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

kubernetes.io/ingress.class: traefik

meta.helm.sh/release-name: mytest

meta.helm.sh/release-namespace: disabledtest

creationTimestamp: "2023-12-28T21:39:08Z"

generation: 2

labels:

app.kubernetes.io/instance: mytest

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/name: pyk8sservice

app.kubernetes.io/version: 1.16.0

helm.sh/chart: pyk8sservice-0.2.0

name: mytest-pyk8sservice

namespace: disabledtest

resourceVersion: "9126079"

uid: cba226d5-2cc9-4161-972f-79367486876e

spec:

ingressClassName: traefik

rules:

- host: pytestapp.local

http:

paths:

- backend:

service:

name: disabledservice

port:

number: 80

path: /

pathType: ImplementationSpecific

tls:

- hosts:

- chart-example.local

secretName: chart-example-tls

status:

loadBalancer:

ingress:

- ip: 192.168.1.13

- ip: 192.168.1.159

- ip: 192.168.1.206
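The update routine only rewrites rules[0].http.paths[0], which is fine for this single-rule test Ingress. For an Ingress with several hosts or paths, a loop over every rule and path might be what we want. Here is a minimal sketch, assuming the object has the same attribute layout as the V1Ingress the client returns (spec.rules, rule.http.paths, path.backend.service.name). The function name point_all_backends is hypothetical, and it does not touch spec.default_backend.

```python
def point_all_backends(ingress, new_service_name):
    """Point every path's service backend at new_service_name.

    Returns the number of backends that were changed.
    """
    changed = 0
    for rule in (ingress.spec.rules or []):
        http = getattr(rule, "http", None)
        if http is None:            # a rule may carry only a host
            continue
        for path in (http.paths or []):
            service = getattr(path.backend, "service", None)
            if service is not None:  # skip resource-type backends
                service.name = new_service_name
                changed += 1
    return changed
```

Inside update_ingress_service this could replace the single assignment before the patch_namespaced_ingress call.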

To be honest, I spent hours fighting to get it to case the field names right:

    from flask import Flask, render_template, request
    from kubernetes import client, config, utils
    import sys
    import os
    import yaml

    app = Flask(__name__)

    @app.route('/')
    def index():
        ingresses, ingress_count = get_kubernetes_resources()
        print(f"Number of Ingress resources found: {ingress_count}", file=sys.stderr)
        return render_template('index.html', resources=ingresses, count=ingress_count)

    @app.route('/disable', methods=['GET'])
    def disable_ingress():
        ingress_name = request.args.get('thisIngress')
        print(f"Disable Ingress: {ingress_name}", file=sys.stderr)
        if ingress_name:
            update_ingress_service(ingress_name, 'disabledservice')
            return f"Ingress '{ingress_name}' updated to use service 'disabledservice'"
        else:
            return "Invalid request. Please provide 'thisIngress' as a GET parameter."

    @app.route('/store', methods=['GET'])
    def store_ingress():
        ingress_name = request.args.get('thisIngress')
        print(f"Store Ingress: {ingress_name}", file=sys.stderr)
        if ingress_name:
            store_ingress_service(ingress_name)
            return f"Ingress '{ingress_name}' stored to /config"
        else:
            return "Invalid request. Please provide 'thisIngress' as a GET parameter."

    @app.route('/saved', methods=['GET'])
    def showsaved_ingress():
        filenames = os.listdir('/config')
        ingress_count = len(filenames)
        print(f"1 show saved ingresses in /config: {ingress_count} total", file=sys.stderr)
        return render_template('restore.html', resources=filenames, count=ingress_count)

    @app.route('/restore', methods=['GET'])
    def restore_ingress():
        ingress_name = request.args.get('thisIngress')
        print(f"Restore Ingress: {ingress_name}", file=sys.stderr)
        if ingress_name:
            restore_ingress_service(ingress_name)
            return f"Ingress '{ingress_name}' restored from /config"
        else:
            return "Invalid request. Please provide 'thisIngress' as a GET parameter."

    #==================================================================

    def snake_to_camel(word):
        """Convert a snake case string to camel case without capitalizing the first word."""
        components = word.split('_')
        return components[0] + ''.join(x.capitalize() for x in components[1:])

    def restore_ingress_service(ingress_name):
        config.load_incluster_config()
        namespace = open("/var/run/secrets/kubernetes.io/serviceaccount/namespace").read()
        print(f"in restore ingress", file=sys.stderr)
        v1 = client.NetworkingV1Api()
        print(f"0 in restore ingress", file=sys.stderr)
        # Remove IFF exists
        try:
            v1.delete_namespaced_ingress(name=ingress_name, namespace=namespace)
        except client.exceptions.ApiException as e:
            if e.status != 404: # Ignore error if the ingress does not exist
                raise
        # Now try and restore it
        print(f"1 in restore ingress", file=sys.stderr)
        try:
            file_path = f"/config/{ingress_name}.yaml"
            print(f"1a Restore Ingress: {file_path}", file=sys.stderr)
            # Apply the manifest
            utils.create_from_yaml(client.ApiClient(), yaml_file=file_path, verbose=True, namespace=namespace)
            print(f"1d Restored Ingress: {ingress_name}", file=sys.stderr)
        except client.rest.ApiException as e:
            print(f"error in restore: {e}", file=sys.stderr)
            return f"Error restoring Ingress '{ingress_name}': {e}"
        print(f"2 Restored Ingress: {ingress_name}", file=sys.stderr)
        return f"Ingress '{ingress_name}' restored from /config '{ingress_name}'"

    def get_kubernetes_resources():
        config.load_incluster_config()
        v1 = client.NetworkingV1Api()
        namespace = open("/var/run/secrets/kubernetes.io/serviceaccount/namespace").read()
        ingresses = v1.list_namespaced_ingress(namespace)
        ingress_list = [ingress.metadata.name for ingress in ingresses.items]
        ingress_count = len(ingress_list)
        return ingress_list, ingress_count

def store_ingress_service(ingress_name):

config.load_incluster_config()

v1 = client.NetworkingV1Api()

namespace = open("/var/run/secrets/kubernetes.io/serviceaccount/namespace").read()

print(f"in store_ingress", file=sys.stderr)

try:

print(f"0 in store_ingress", file=sys.stderr)

ingress = v1.read_namespaced_ingress(name=ingress_name, namespace=namespace)

# Update the Ingress backend service to 'new_service_name'

#print(f"1 in update_ingress_service", file=sys.stderr)

#print(f"Ingress first: {ingress}", file=sys.stderr)

#file_path = os.path.join("/config", ingress_name, ".yaml")

file_path = f"/config/{ingress_name}.yaml"

ingress_dict = ingress.to_dict()

ingress_yaml = yaml.dump(ingress_dict)

with open(file_path, 'w') as file:

file.write(ingress_yaml)

# seems to really want to use "api_version". Force it to use "apiVersion"!

with open(file_path, 'r') as file:

lines = file.readlines()

for i in range(len(lines)):

words = lines[i].split(' ')

words = [snake_to_camel(word) if '_' in word else word for word in words]

lines[i] = ' '.join(words)

content = ''.join(lines)

with open(file_path, 'w') as file:

file.write(content)

print(f"1 store_ingress: {ingress} to {file_path}", file=sys.stderr)

except client.rest.ApiException as e:

print(f"error in store_ingress: {e}", file=sys.stderr)

return f"Error storing Ingress '{ingress_name}': {e}"

return f"Ingress '{ingress_name}' stored in /config '{ingress_name}'"

def update_ingress_service(ingress_name, new_service_name):

config.load_incluster_config()

v1 = client.NetworkingV1Api()

namespace = open("/var/run/secrets/kubernetes.io/serviceaccount/namespace").read()

print(f"in update_ingress_service", file=sys.stderr)

try:

print(f"0 in update_ingress_service", file=sys.stderr)

ingress = v1.read_namespaced_ingress(name=ingress_name, namespace=namespace)

# Update the Ingress backend service to 'new_service_name'

#print(f"1 in update_ingress_service", file=sys.stderr)

#print(f"Ingress first: {ingress}", file=sys.stderr)

ingress.spec.rules[0].http.paths[0].backend.service.name = new_service_name

#print(f"2 in update_ingress_service", file=sys.stderr)

api_response = v1.patch_namespaced_ingress(

name=ingress_name,

namespace=namespace, # replace with your namespace

body=ingress

)

#print(f"3 in update_ingress_service", file=sys.stderr)

#print(f"Ingress second: {ingress}", file=sys.stderr)

except client.rest.ApiException as e:

print(f"error in update_ingress_service: {e}", file=sys.stderr)

return f"Error updating Ingress '{ingress_name}': {e}"

return f"Ingress '{ingress_name}' updated to use service '{new_service_name}'"

if __name__ == '__main__':

app.run(host='0.0.0.0', port=5000, debug=True)

Only to get stopped by

kubernetes.utils.create_from_yaml.FailToCreateError: Error from server (Internal Server Error): {"kind":"Status","apiVersion":"v1","metadata":{},"status":"Failure","message":"resourceVersion should not be set on objects to be created","code":500}

I ended up fighting snake_case versus camelCase for a few more hours before I pivoted to just calling kubectl directly.
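For anyone who would rather stay inside the Python client, here is a minimal sketch of one way this might be approached. It assumes the snake_case keys come from the model's `to_dict()` output and that the create error is caused by server-populated metadata (such as `resourceVersion`) still being present in the saved manifest. The helpers `camelize` and `strip_server_fields` and the `SERVER_FIELDS` list are illustrative names, not part of the app. It works on the dictionary rather than on lines of YAML text. The naive conversion does not hold for every Kubernetes field (it would produce `clusterIp` rather than `clusterIP`), so the client's own `ApiClient.sanitize_for_serialization` may be the more reliable route to camelCase keys.

```python
# Hypothetical helpers: convert snake_case keys on the dict itself and drop
# metadata that the API server populates, before writing or re-creating.

# Assumed list of server-managed metadata fields; adjust as needed.
SERVER_FIELDS = ("resourceVersion", "uid", "creationTimestamp",
                 "generation", "managedFields", "selfLink")

# Keys under these maps are user data and are left untouched.
USER_KEYED = ("annotations", "labels")


def snake_to_camel(word):
    """Convert 'api_version' to 'apiVersion'."""
    head, *rest = word.split("_")
    return head + "".join(part.capitalize() for part in rest)


def camelize(obj):
    """Recursively camelCase dict keys, skipping unset (None) values."""
    if isinstance(obj, dict):
        out = {}
        for key, value in obj.items():
            if value is None:
                continue
            new_key = snake_to_camel(key)
            out[new_key] = value if new_key in USER_KEYED else camelize(value)
        return out
    if isinstance(obj, list):
        return [camelize(item) for item in obj]
    return obj


def strip_server_fields(manifest):
    """Return a copy without server-managed metadata or status."""
    cleaned = dict(manifest)
    cleaned["metadata"] = {
        key: value
        for key, value in cleaned.get("metadata", {}).items()
        if key not in SERVER_FIELDS
    }
    cleaned.pop("status", None)
    return cleaned
```

In the store function this would sit between `ingress.to_dict()` and `yaml.dump(...)`, for example `yaml.dump(strip_server_fields(camelize(ingress_dict)))`. Whether the result then applies cleanly was not tested against the cluster in this post.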

I updated the Dockerfile to pull in kubectl

FROM python:3.8-slim

WORKDIR /app

COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Install necessary packages for adding apt repositories
RUN apt-get update && \
    apt-get install -y apt-transport-https ca-certificates curl gnupg lsb-release

# Add Kubernetes apt repository
# (note: this legacy Google-hosted repository has since been deprecated by the Kubernetes project in favor of pkgs.k8s.io)
RUN echo "deb https://apt.kubernetes.io/ kubernetes-xenial main" | tee -a /etc/apt/sources.list.d/kubernetes.list

# Add Kubernetes apt repository GPG key
RUN curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -

# Update package list
RUN apt-get update

# Install kubectl
RUN apt-get install -y kubectl

COPY . .

CMD ["python", "app.py"]

Then the app needed to be changed. This is after quite a lot of cleaning:

from flask import Flask, render_template, request

from kubernetes import client, config, utils

import sys

import os

import subprocess

app = Flask(__name__)

@app.route('/')

def index():

ingresses, ingress_count = get_kubernetes_resources()

print(f"Number of Ingress resources found: {ingress_count}", file=sys.stderr)

return render_template('index.html', resources=ingresses, count=ingress_count)

@app.route('/disable', methods=['GET'])

def disable_ingress():

ingress_name = request.args.get('thisIngress')

print(f"Disable Ingress: {ingress_name}", file=sys.stderr)

if ingress_name:

update_ingress_service(ingress_name, 'disabledservice')

return f"Ingress '{ingress_name}' updated to use service 'disabledservice'"

else:

return "Invalid request. Please provide 'thisIngress' as a GET parameter."

@app.route('/store', methods=['GET'])

def store_ingress():

ingress_name = request.args.get('thisIngress')

print(f"Store Ingress: {ingress_name}", file=sys.stderr)

if ingress_name:

newstore_ingress_service(ingress_name)

return f"Ingress '{ingress_name}' stored to /config"

else:

return "Invalid request. Please provide 'thisIngress' as a GET parameter."

@app.route('/saved', methods=['GET'])

def showsaved_ingress():

filenames = os.listdir('/config')

ingress_count = len(filenames)

print(f"1 show saved ingresses in /config: {ingress_count} total", file=sys.stderr)

return render_template('restore.html', resources=filenames, count=ingress_count)

@app.route('/restore', methods=['GET'])

def restore_ingress():

ingress_name = request.args.get('thisIngress')

print(f"Restore Ingress: {ingress_name}", file=sys.stderr)

if ingress_name:

newrestore_ingress_service(ingress_name)

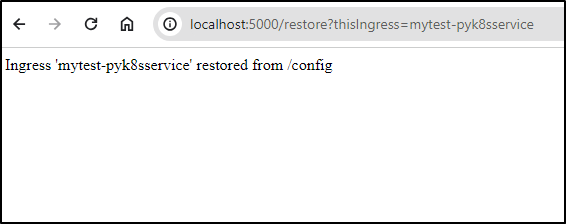

return f"Ingress '{ingress_name}' restored from /config"

else:

return "Invalid request. Please provide 'thisIngress' as a GET parameter."

#==================================================================

def newrestore_ingress_service(ingress_name):

config.load_incluster_config()

namespace = open("/var/run/secrets/kubernetes.io/serviceaccount/namespace").read()

v1 = client.NetworkingV1Api()

print(f"0 in restore ingress", file=sys.stderr)

# Remove IFF exists

try:

v1.delete_namespaced_ingress(name=ingress_name, namespace=namespace)

except client.exceptions.ApiException as e:

if e.status != 404: # Ignore error if the ingress does not exist

raise

file_path = f"/config/{ingress_name}.yaml"

cmd = f"kubectl apply --validate='false' -f {file_path}"

# Execute the command

subprocess.run(cmd, shell=True, check=True)

return f"Ingress '{ingress_name}' restored from /config '{ingress_name}'"

def get_kubernetes_resources():

config.load_incluster_config()

v1 = client.NetworkingV1Api()

namespace = open("/var/run/secrets/kubernetes.io/serviceaccount/namespace").read()

ingresses = v1.list_namespaced_ingress(namespace)

ingress_list = [ingress.metadata.name for ingress in ingresses.items]

ingress_count = len(ingress_list)

return ingress_list, ingress_count

def newstore_ingress_service(ingress_name):

config.load_incluster_config()

v1 = client.NetworkingV1Api()

namespace = open("/var/run/secrets/kubernetes.io/serviceaccount/namespace").read()

file_path = f"/config/{ingress_name}.yaml"

cmd = f"kubectl get ingress {ingress_name} -n {namespace} -o yaml > {file_path}"

# Execute the command

subprocess.run(cmd, shell=True, check=True)

return f"Ingress '{ingress_name}' stored in /config '{ingress_name}'"

def update_ingress_service(ingress_name, new_service_name):

config.load_incluster_config()

v1 = client.NetworkingV1Api()

namespace = open("/var/run/secrets/kubernetes.io/serviceaccount/namespace").read()

print(f"in update_ingress_service", file=sys.stderr)

try:

print(f"0 in update_ingress_service", file=sys.stderr)

ingress = v1.read_namespaced_ingress(name=ingress_name, namespace=namespace)

# Update the Ingress backend service to 'new_service_name'

#print(f"1 in update_ingress_service", file=sys.stderr)

#print(f"Ingress first: {ingress}", file=sys.stderr)

ingress.spec.rules[0].http.paths[0].backend.service.name = new_service_name

#print(f"2 in update_ingress_service", file=sys.stderr)

api_response = v1.patch_namespaced_ingress(

name=ingress_name,

namespace=namespace, # replace with your namespace

body=ingress

)

#print(f"3 in update_ingress_service", file=sys.stderr)

#print(f"Ingress second: {ingress}", file=sys.stderr)

except client.rest.ApiException as e:

print(f"error in update_ingress_service: {e}", file=sys.stderr)

return f"Error updating Ingress '{ingress_name}': {e}"

return f"Ingress '{ingress_name}' updated to use service '{new_service_name}'"

if __name__ == '__main__':

app.run(host='0.0.0.0', port=5000, debug=True)
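Because the ingress name arrives as a GET parameter and ends up in both a file path under /config and a kubectl command line, it may be worth validating it before use. The following is a minimal sketch, assuming names follow the DNS subdomain rule that Kubernetes documents for Ingress names. `is_valid_ingress_name`, `safe_ingress_path`, and `kubectl_get_args` are hypothetical helpers and are not part of the app.

```python
import re

CONFIG_DIR = "/config"  # mount point used by the app

# Lowercase alphanumerics, '-' and '.', starting and ending with an
# alphanumeric (the DNS subdomain shape; assumed to apply to Ingress names).
_DNS_SUBDOMAIN = re.compile(
    r"[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*"
)


def is_valid_ingress_name(name):
    """True only for non-empty names of at most 253 chars that match the pattern."""
    return (
        bool(name)
        and len(name) <= 253
        and _DNS_SUBDOMAIN.fullmatch(name) is not None
    )


def safe_ingress_path(name, config_dir=CONFIG_DIR):
    """Build the YAML path for a name, rejecting anything path-like."""
    if not is_valid_ingress_name(name):
        raise ValueError(f"invalid ingress name: {name!r}")
    return f"{config_dir}/{name}.yaml"


def kubectl_get_args(name, namespace):
    """Argument list for subprocess.run (no shell involved)."""
    safe_ingress_path(name)  # raises ValueError on a bad name
    return ["kubectl", "get", "ingress", name, "-n", namespace, "-o", "yaml"]
```

A route handler could call `safe_ingress_path(ingress_name)` first and return the "Invalid request" message when it raises `ValueError`.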

Lastly, I wanted at least the start of an Actions column on the index page so I wouldn’t need to keep typing in the URLs manually:

$ cat ./templates/index.html

<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>Kubernetes Ingresses</title>
    <style>
        .styled-table {
            border-collapse: collapse;
            margin: 25px 0;
            font-size: 0.9em;
            font-family: sans-serif;
            min-width: 400px;
            box-shadow: 0 0 20px rgba(0, 0, 0, 0.15);
        }
        .styled-table thead tr {
            background-color: #491ac9;
            color: #ffffff;
            text-align: left;
        }
        .styled-table th,
        .styled-table td {
            padding: 12px 15px;
        }
        .styled-table tbody tr {
            border-bottom: 1px solid #dddddd;
        }
        .styled-table tbody tr:nth-of-type(even) {
            background-color: #dadada;
        }
        .styled-table tbody tr:last-of-type {
            border-bottom: 2px solid #491ac9;
        }
        .styled-table tbody tr.active-row {
            font-weight: bold;
            color: #491ac9;
        }
        caption {
            font-weight: bold;
            font-size: 24px;
            text-align: left;
            color: #333;
        }
    </style>
</head>
<body>
    <table class="styled-table">
        <caption>Kubernetes Ingresses in the Namespace: 8</caption>
        <thead>
            <tr>
                <th>Ingress</th>
                <th>Actions</th>
            </tr>
        </thead>
        <tbody>
        </tbody>
    </table>
</body>
</html>
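The table body in the listing is empty, so how the Actions column gets populated is not shown here. As a rough illustration only, the per-ingress links could be built along these lines. The sketch assumes the three routes defined in the app (`/disable`, `/store`, `/restore`) and their `thisIngress` query parameter. `action_links` and `table_rows` are hypothetical helper names, not taken from the project.

```python
from urllib.parse import urlencode

# Routes the app exposes that take a 'thisIngress' GET parameter.
ACTIONS = ("disable", "store", "restore")


def action_links(ingress_name, actions=ACTIONS):
    """Map each action to a URL with the ingress name safely URL-encoded."""
    query = urlencode({"thisIngress": ingress_name})
    return {action: f"/{action}?{query}" for action in actions}


def table_rows(ingress_names):
    """Pair every ingress name with its action links, one tuple per table row."""
    return [(name, action_links(name)) for name in ingress_names]
```

A list like the one returned by `table_rows` could be passed to `render_template` and looped over in the template to emit one row per ingress.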

Here we can see it in action with a full end-to-end demo:

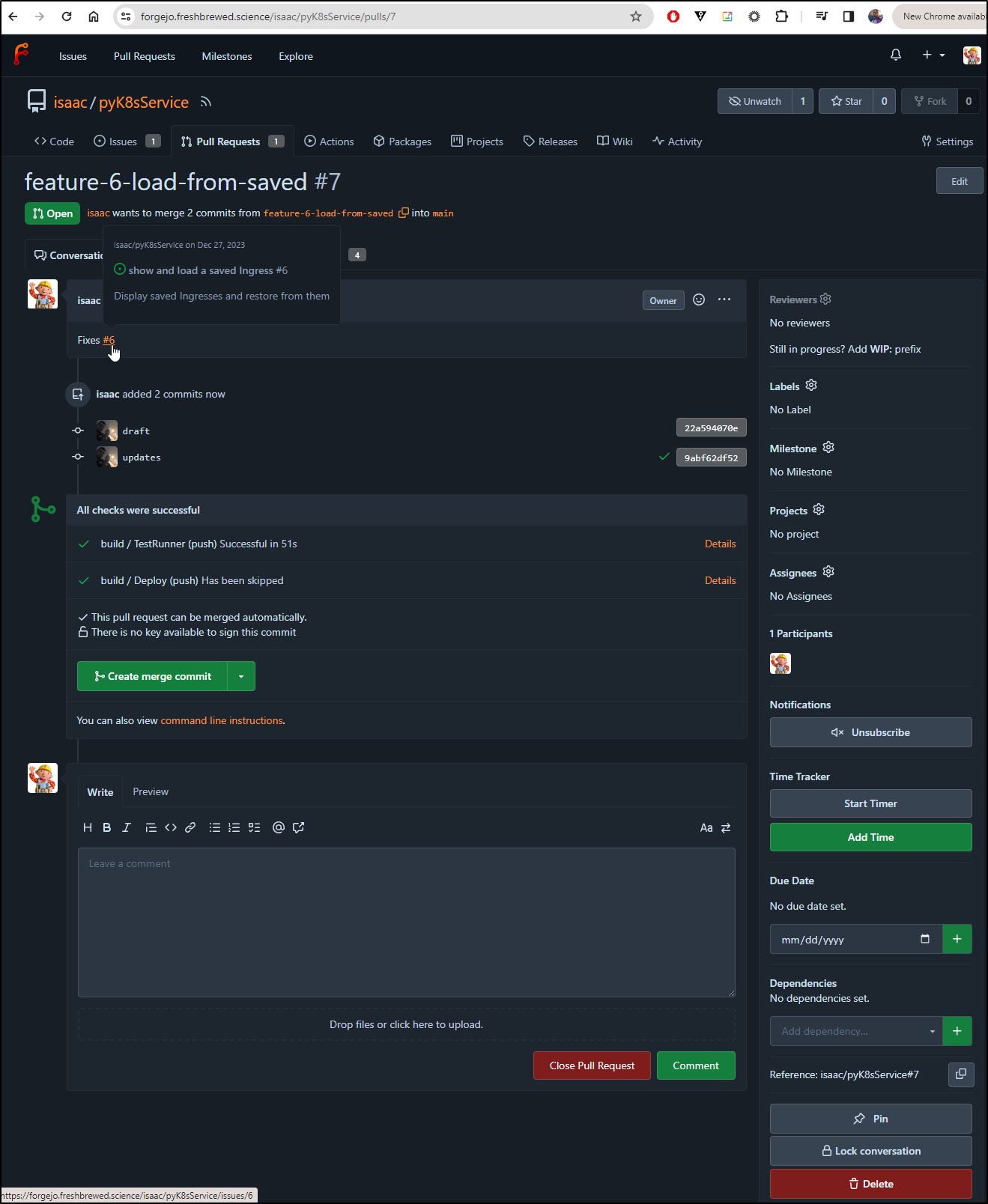

I created PR 7 and we can see it’s associated with the issue (#6).

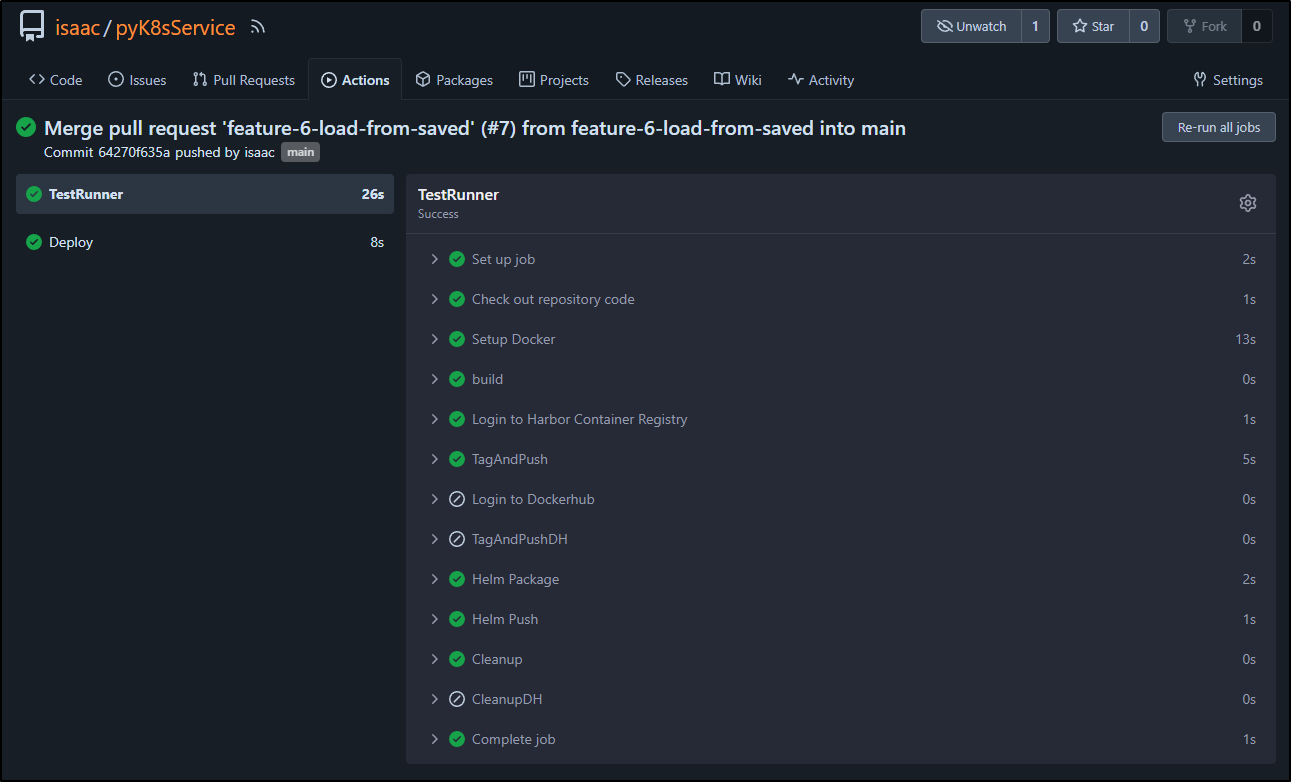

On merge, it built and pushed to Harbor.

I want this pushed to Docker Hub so others can enjoy it, so I created a release.0.0.3 tag, which kicked off a build. You can access that image here in Docker Hub.

Summary

Today we changed the app to store our Ingress definitions in a mounted Persistent Volume Claim (PVC) for permanent storage. In doing so, I demonstrated how to use imagePullSecrets for local builds of the container. This was sorted by PR 5. We then moved on to Feature #6 for restoring from backups. This led down a rabbit hole of fighting with the Kubernetes Python library, which insisted on reformatting the YAML from camelCase to snake_case. After enough trying, I moved to just using a local kubectl installed in the Dockerfile. This wrapped up with PR 7, which implemented Issue #6 on restore. The code at this point is tagged release.0.0.3.

At this point we have a functional app, in the basic sense. Our next goals will be to tackle password login, some styling changes, and using the real Nginx disabled service name. We’ll cover all that next week. Stay tuned!