Published: Dec 30, 2023 by Isaac Johnson

So far, in Part 1 we covered ideation and initial project creation. Part 2 was about the first app where we tried NodeJS and Python and stuck with Python. Last week, we put out Part 3 where we tackled CICD, Pipelines in Forgejo and a first working Minimum Viable Product (MVP).

This week, we will take the next steps to create releases and documentation. We’ll build our Container and push to Harbor CR and to Dockerhub on release. Pivoting from YAML manifests, we’ll build out Helm charts.

Branching

You’ve seen me pushing to main for the most part. There is a philosophy out there of only using main and not doing PRs. That is, keep the primary live and if there are problems, they’ll be rolled back by others.

I see a lot of issues there. PRs allow checks before merging. By doing proper PR branches with code checks, we can limit bad changes and raise the overall quality of the main branch. Additionally, when more than one developer is involved, PRs allow us to do code reviews and, lastly, to close Issues automatically once the work is merged.
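To make the auto-close idea concrete, here is a minimal sketch of how a forge might spot closing keywords in a commit message such as "Resolves #1". The keyword list and the regex are my assumptions for illustration; Forgejo's real matching is configurable and may differ.

```python
import re

# Assumed keyword set for illustration; the forge's actual list is configurable.
CLOSING_KEYWORDS = (
    "close", "closes", "closed",
    "fix", "fixes", "fixed",
    "resolve", "resolves", "resolved",
)

# A closing keyword, whitespace, then "#<number>".
_PATTERN = re.compile(
    r"\b(?:%s)\s+#(\d+)" % "|".join(CLOSING_KEYWORDS),
    re.IGNORECASE,
)


def issues_closed_by(message: str) -> list[int]:
    """Return the issue numbers a commit message asks to close, in order."""
    return [int(number) for number in _PATTERN.findall(message)]


print(issues_closed_by("Resolves #1: create docs and pubs"))
```

A plain mention like "see #2" does not match, which is the behavior you want: only an explicit closing keyword should close an Issue on merge.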

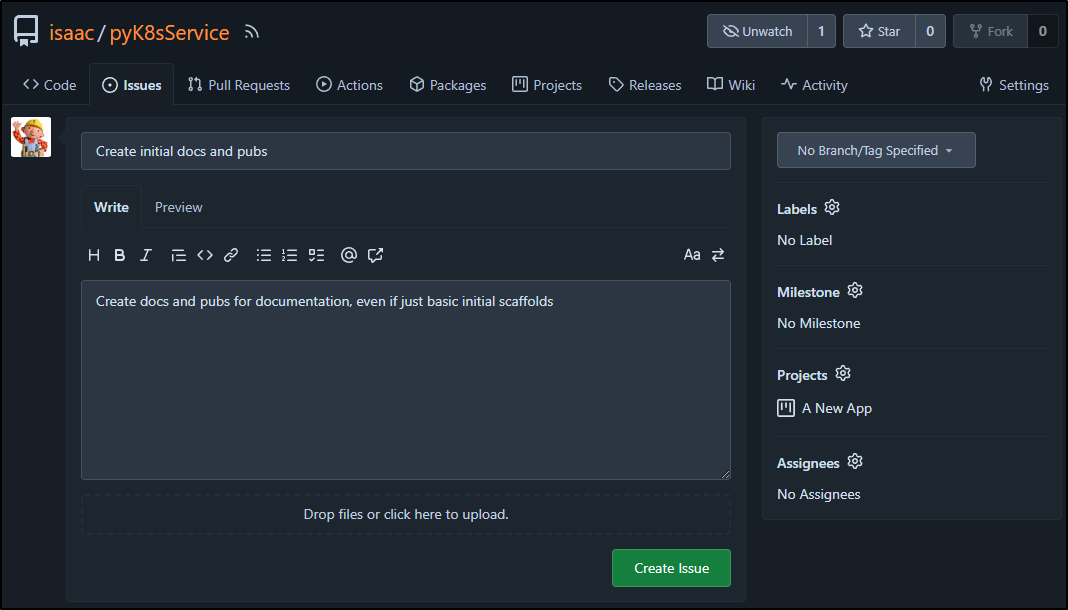

To show what I mean, let’s create an issue:

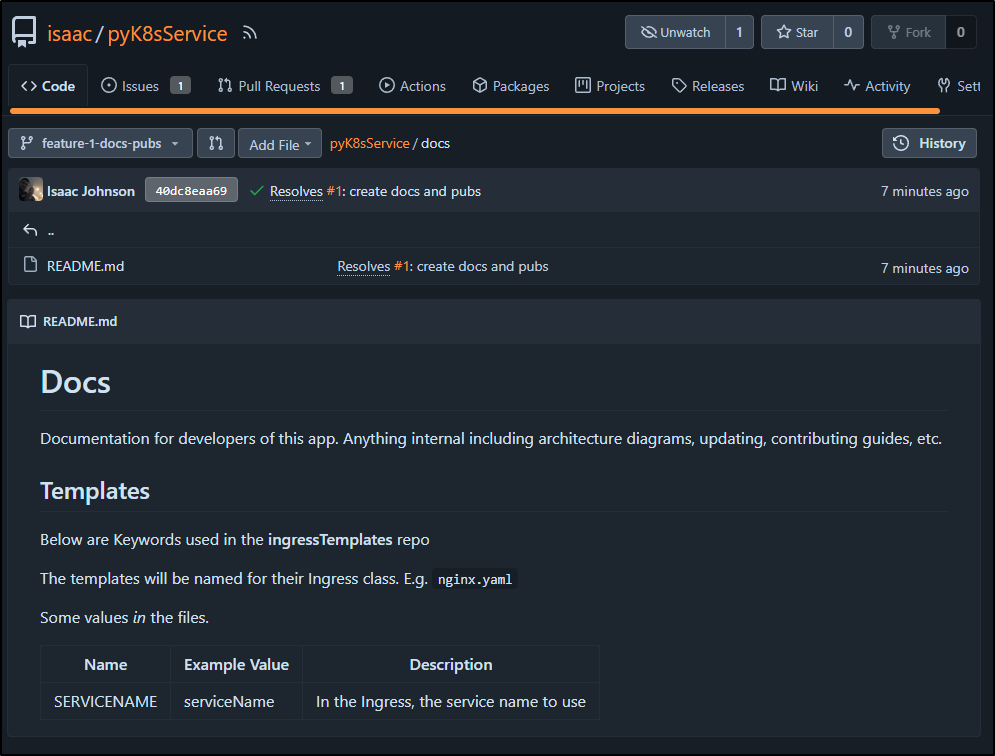

I’ll create some initial docs

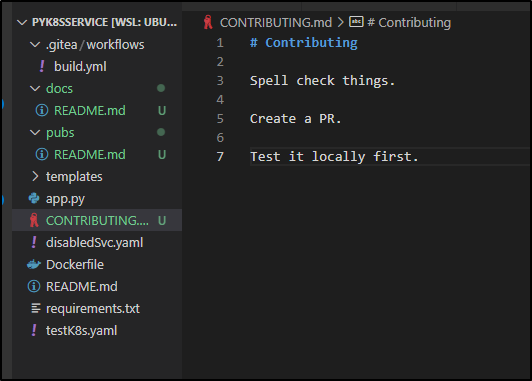

Next, I’ll create a commit and push the branch

builder@DESKTOP-QADGF36:~/Workspaces/pyK8sService$ git add -A

builder@DESKTOP-QADGF36:~/Workspaces/pyK8sService$ git commit -m "Resolves #1: create docs and pubs"

[feature-1-docs-pubs 40dc8ea] Resolves #1: create docs and pubs

3 files changed, 33 insertions(+)

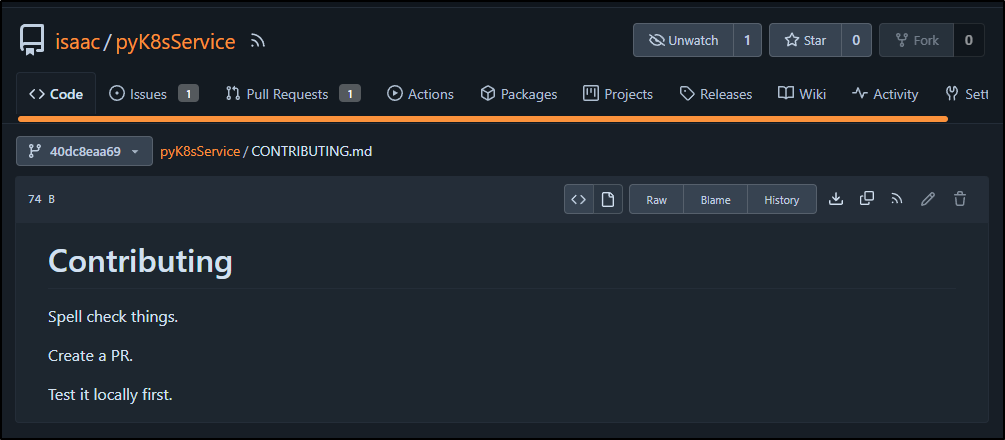

create mode 100644 CONTRIBUTING.md

create mode 100644 docs/README.md

create mode 100644 pubs/README.md

builder@DESKTOP-QADGF36:~/Workspaces/pyK8sService$ git push

fatal: The current branch feature-1-docs-pubs has no upstream branch.

To push the current branch and set the remote as upstream, use

git push --set-upstream origin feature-1-docs-pubs

builder@DESKTOP-QADGF36:~/Workspaces/pyK8sService$ git push --set-upstream origin feature-1-docs-pubs

Enumerating objects: 8, done.

Counting objects: 100% (8/8), done.

Delta compression using up to 16 threads

Compressing objects: 100% (5/5), done.

Writing objects: 100% (7/7), 983 bytes | 983.00 KiB/s, done.

Total 7 (delta 1), reused 0 (delta 0)

remote:

remote: Create a new pull request for 'feature-1-docs-pubs':

remote: https://forgejo.freshbrewed.science/isaac/pyK8sService/compare/main...feature-1-docs-pubs

remote:

remote: . Processing 1 references

remote: Processed 1 references in total

To https://forgejo.freshbrewed.science/isaac/pyK8sService.git

* [new branch] feature-1-docs-pubs -> feature-1-docs-pubs

Branch 'feature-1-docs-pubs' set up to track remote branch 'feature-1-docs-pubs' from 'origin'.

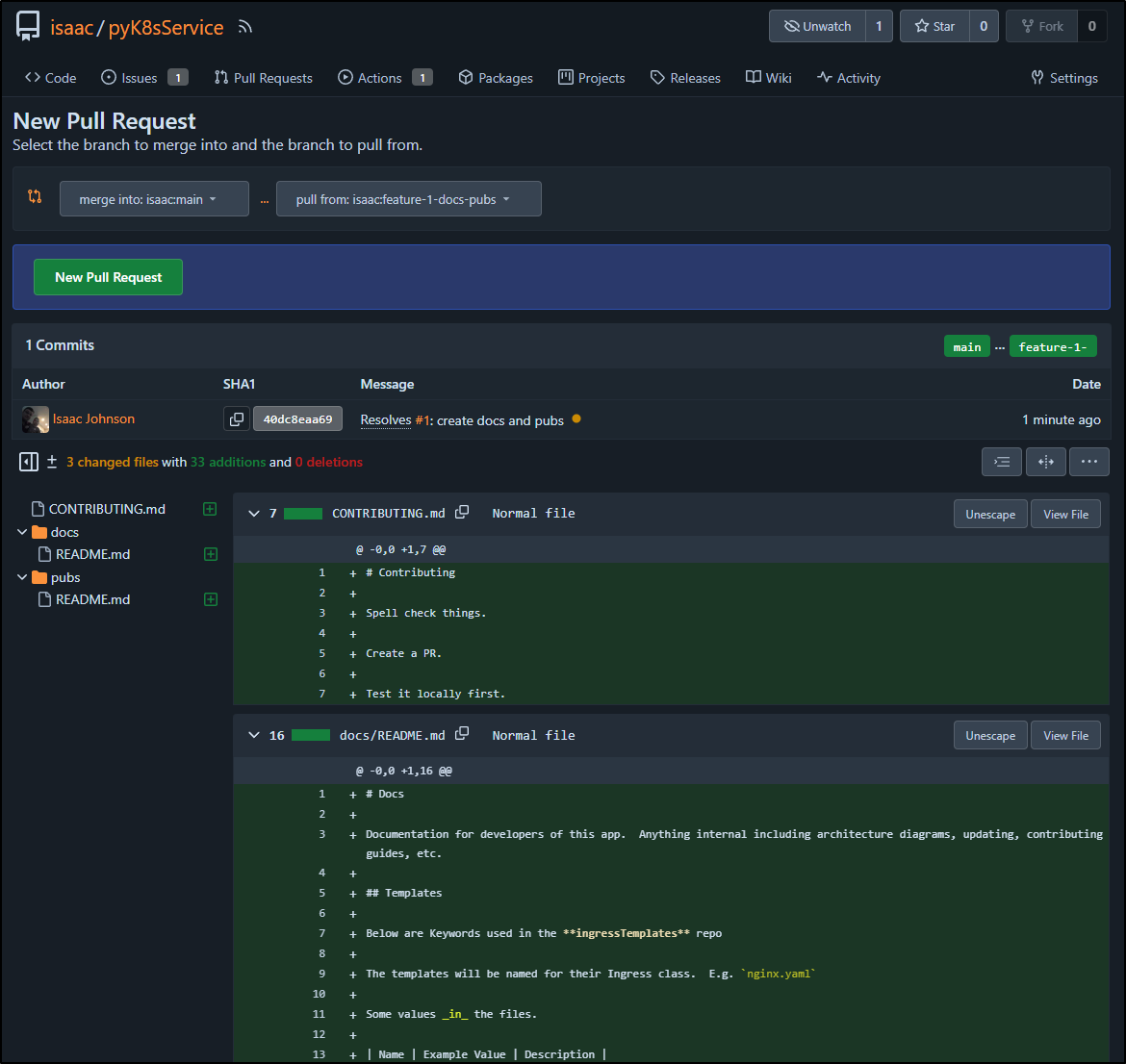

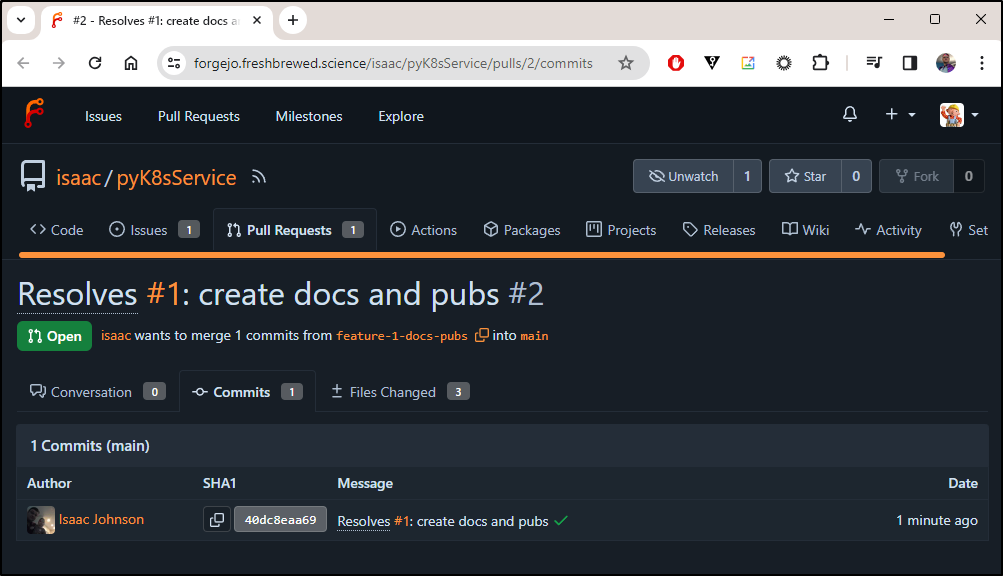

The link lets me create a PR

Let’s talk about what happens next.

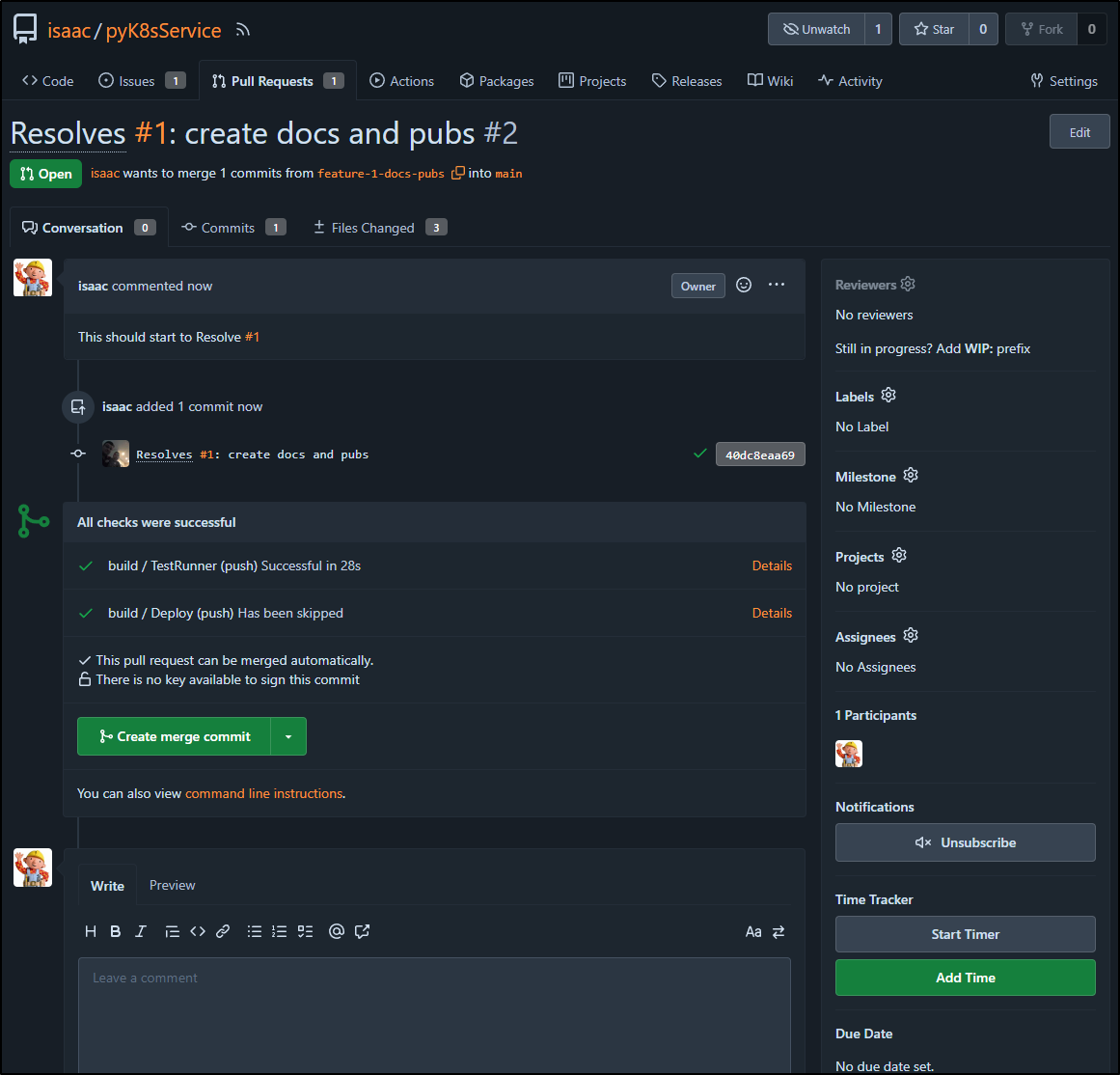

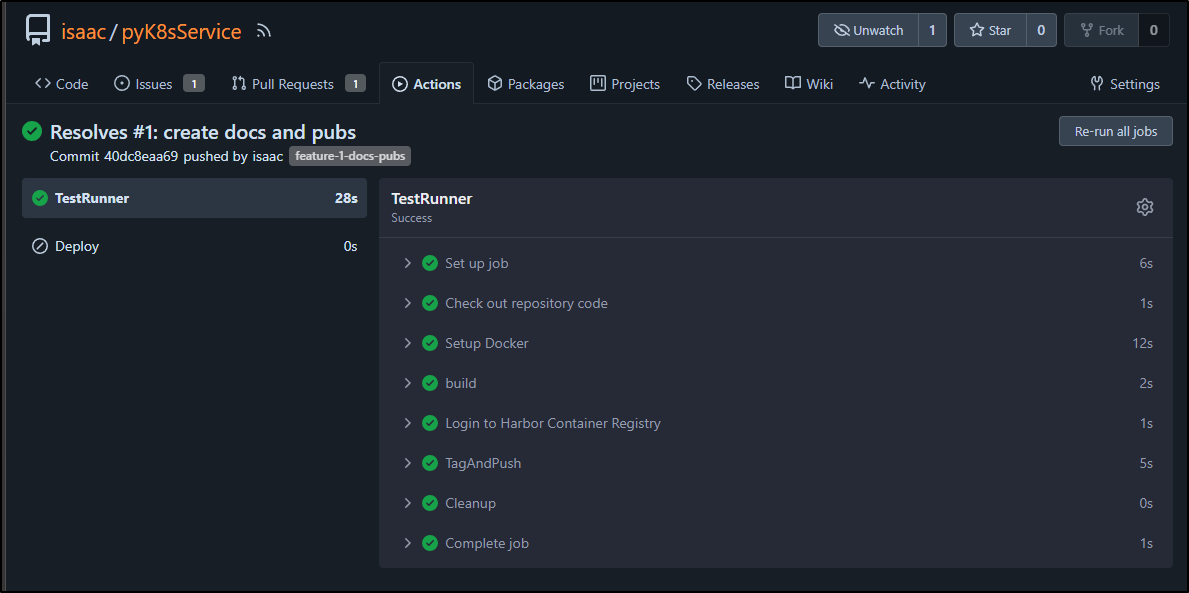

We can see our PR checks happened:

On the issue in the repo, we can see an associated commit

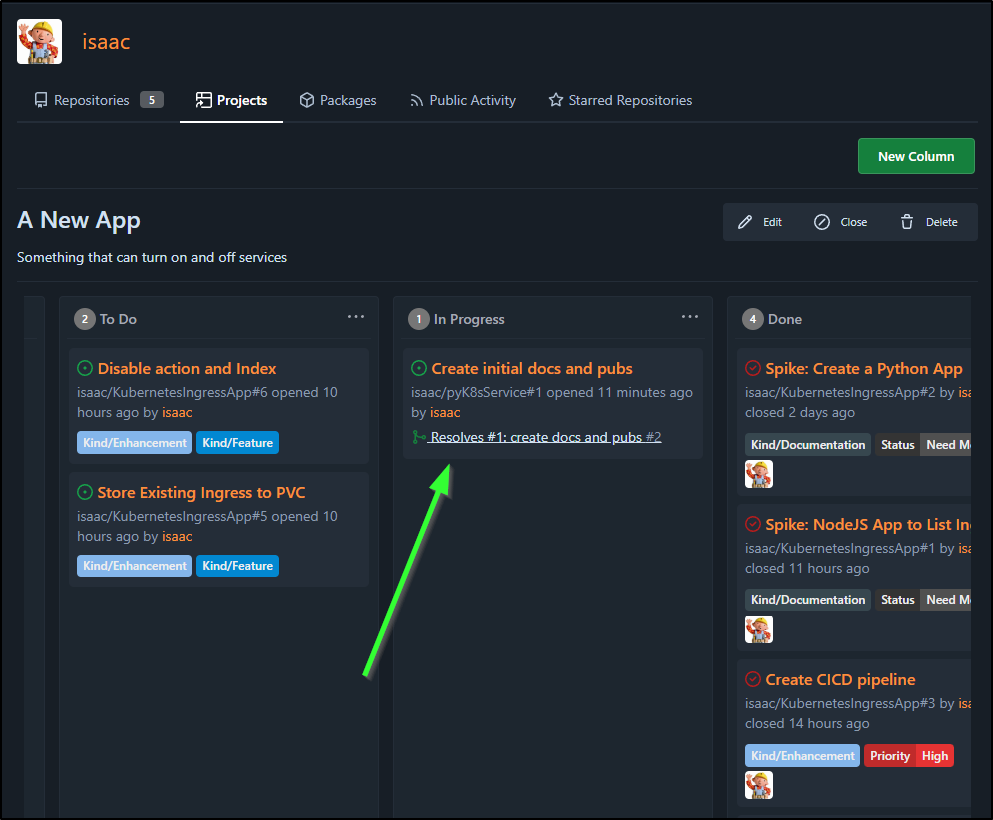

Which I can also see associated when I look at the overall project plan

Recall as well, that because this is not on main, we skip deploys. So only the build and push happened

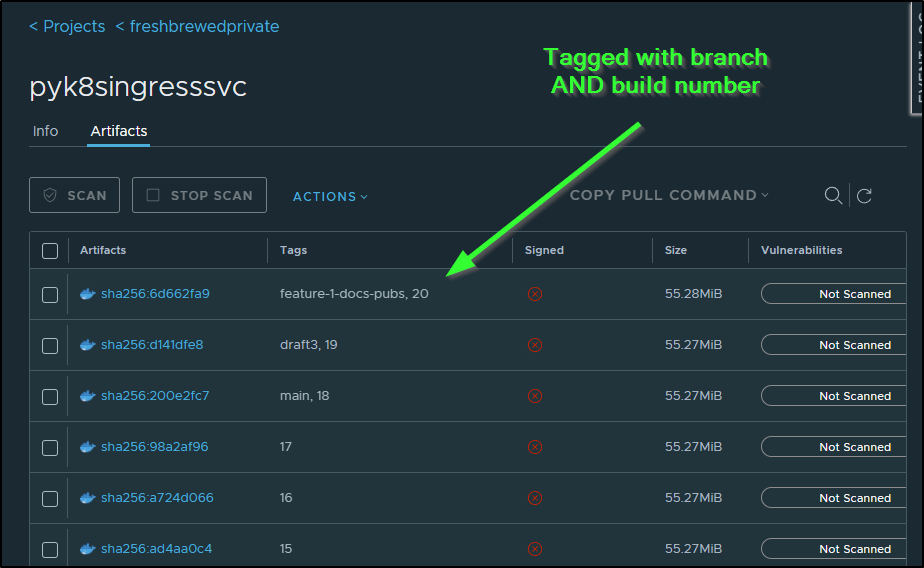

I can now see the image tagged by branch name in Harbor as well. Long term, I’ll want some kind of cleanup on these artifacts, but at 55MB, I can afford to be a bit wasteful.

Since I’m acting as my own reviewer, I can check the rendered Markdown in the branch

or PR

Let’s watch how this might play out from the perspective of a reviewer, with a merge and deploy. (Note: I did have to roll a quick hotfix and then build at the end.)

Dockerhub

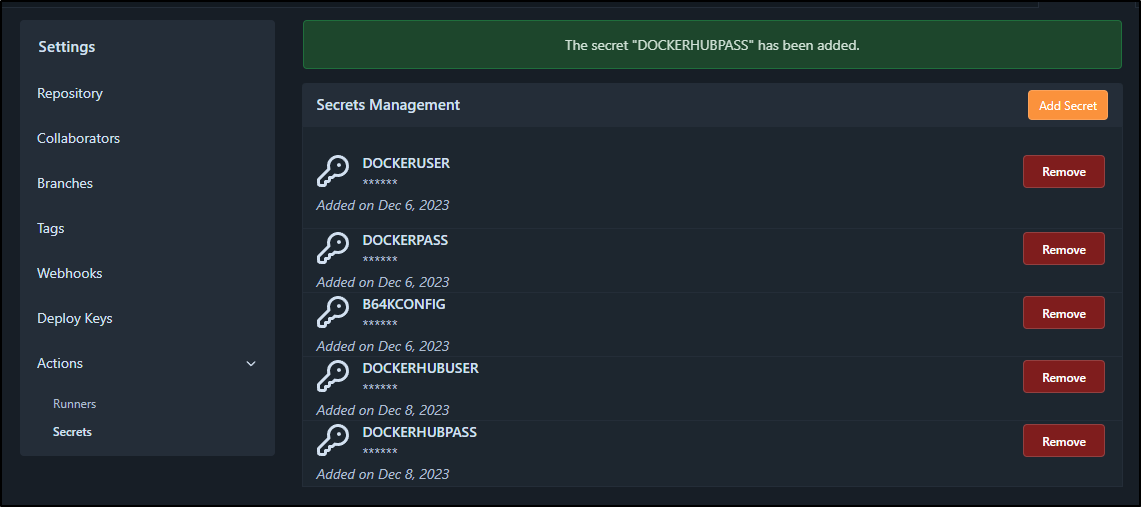

I’ll add my Dockerhub creds to our repo secrets

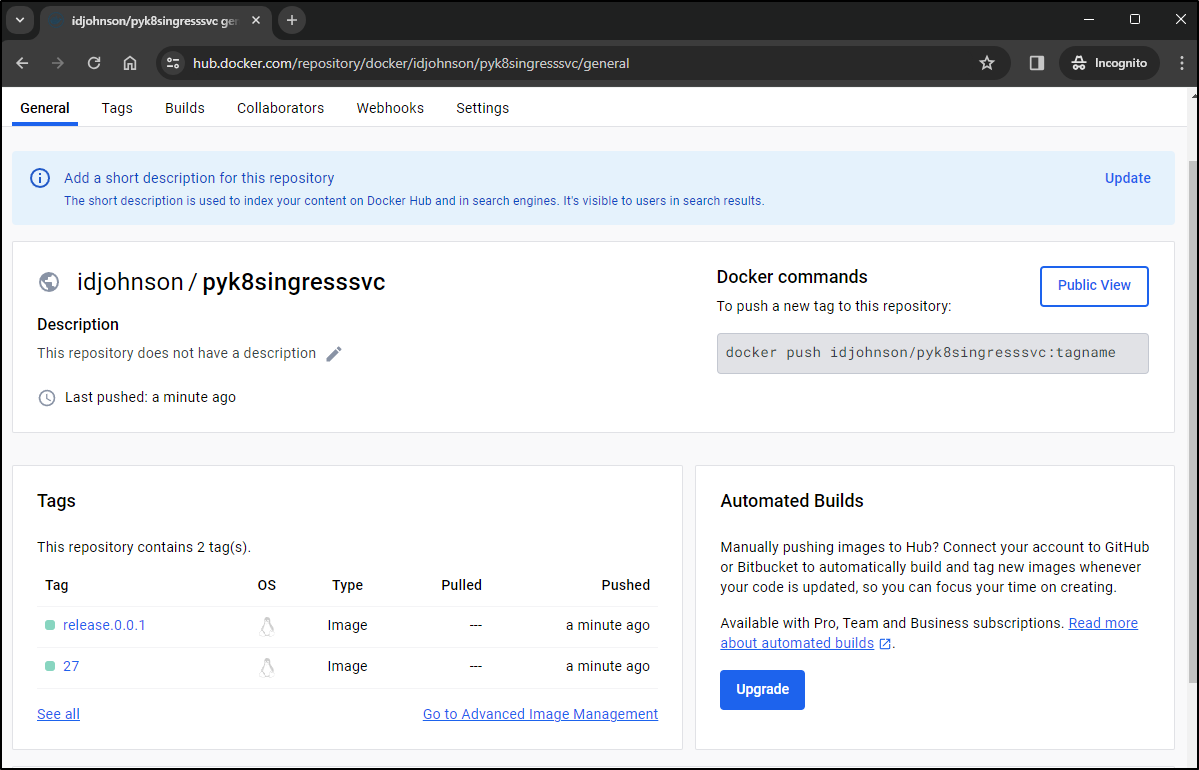

Then in the build steps, I can check for the ref type to be “tag” and push on tagging to DH

    - name: build
      run: |
        docker build -t pyk8singresssvc:$GITHUB_RUN_NUMBER .
    - name: Login to Harbor Container Registry
      uses: docker/login-action@v2
      with:
        registry: harbor.freshbrewed.science
        username: ${{ secrets.DOCKERUSER }}
        password: ${{ secrets.DOCKERPASS }}
    - name: TagAndPush
      run: |
        # if running as non-root, add sudo
        echo hi
        docker tag pyk8singresssvc:$GITHUB_RUN_NUMBER harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:$GITHUB_RUN_NUMBER
        docker tag pyk8singresssvc:$GITHUB_RUN_NUMBER harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:$GITHUB_REF_NAME
        docker push harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:$GITHUB_RUN_NUMBER
        docker push harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:$GITHUB_REF_NAME
    - name: Login to Dockerhub
      if: ${{ github.ref_type == 'tag' }}
      run: |
        echo ${{ secrets.DOCKERHUBPASS }} | docker login --username ${{ secrets.DOCKERHUBUSER }} --password-stdin
    - name: TagAndPushDH
      if: ${{ github.ref_type == 'tag' }}
      run: |
        # if running as non-root, add sudo
        docker tag pyk8singresssvc:$GITHUB_RUN_NUMBER idjohnson/pyk8singresssvc:$GITHUB_RUN_NUMBER
        docker tag pyk8singresssvc:$GITHUB_RUN_NUMBER idjohnson/pyk8singresssvc:$GITHUB_REF_NAME
        docker push idjohnson/pyk8singresssvc:$GITHUB_RUN_NUMBER
        docker push idjohnson/pyk8singresssvc:$GITHUB_REF_NAME
    - name: Cleanup
      run: |
        docker image rm pyk8singresssvc:$GITHUB_RUN_NUMBER
        docker image rm harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:$GITHUB_RUN_NUMBER
        docker image rm harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:$GITHUB_REF_NAME
    - name: CleanupDH
      if: ${{ github.ref_type == 'tag' }}
      run: |
        docker image rm idjohnson/pyk8singresssvc:$GITHUB_RUN_NUMBER
        docker image rm idjohnson/pyk8singresssvc:$GITHUB_REF_NAME

I did try earlier to just use the docker login action as we did with Harbor, but at v2 it doesn’t presently seem to work with either Dockerhub or Forgejo Actions (I tried to reuse harborcr), so I reverted to the inline docker login.
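The tag gating in those steps boils down to a small decision: Harbor always gets two tags, and Dockerhub gets the same two only when the ref is a tag. Here is a minimal sketch of that logic in Python. The function name `image_refs` is hypothetical; the registry paths are the ones used in the workflow.

```python
HARBOR_REPO = "harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc"
DOCKERHUB_REPO = "idjohnson/pyk8singresssvc"


def image_refs(run_number: str, ref_name: str, ref_type: str) -> list[str]:
    """List every image reference a workflow run would push.

    run_number, ref_name and ref_type stand in for GITHUB_RUN_NUMBER,
    GITHUB_REF_NAME and github.ref_type from the workflow.
    """
    # Harbor receives the run number and the branch/tag name on every run.
    refs = [f"{HARBOR_REPO}:{run_number}", f"{HARBOR_REPO}:{ref_name}"]
    # Dockerhub is only pushed to on a release, i.e. when the ref is a tag.
    if ref_type == "tag":
        refs += [f"{DOCKERHUB_REPO}:{run_number}", f"{DOCKERHUB_REPO}:{ref_name}"]
    return refs


for ref in image_refs("42", "release.0.0.1", "tag"):
    print(ref)
```

A branch build such as `image_refs("41", "feature-1-docs-pubs", "branch")` returns only the two Harbor references, which matches the skipped Dockerhub steps on a PR branch.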

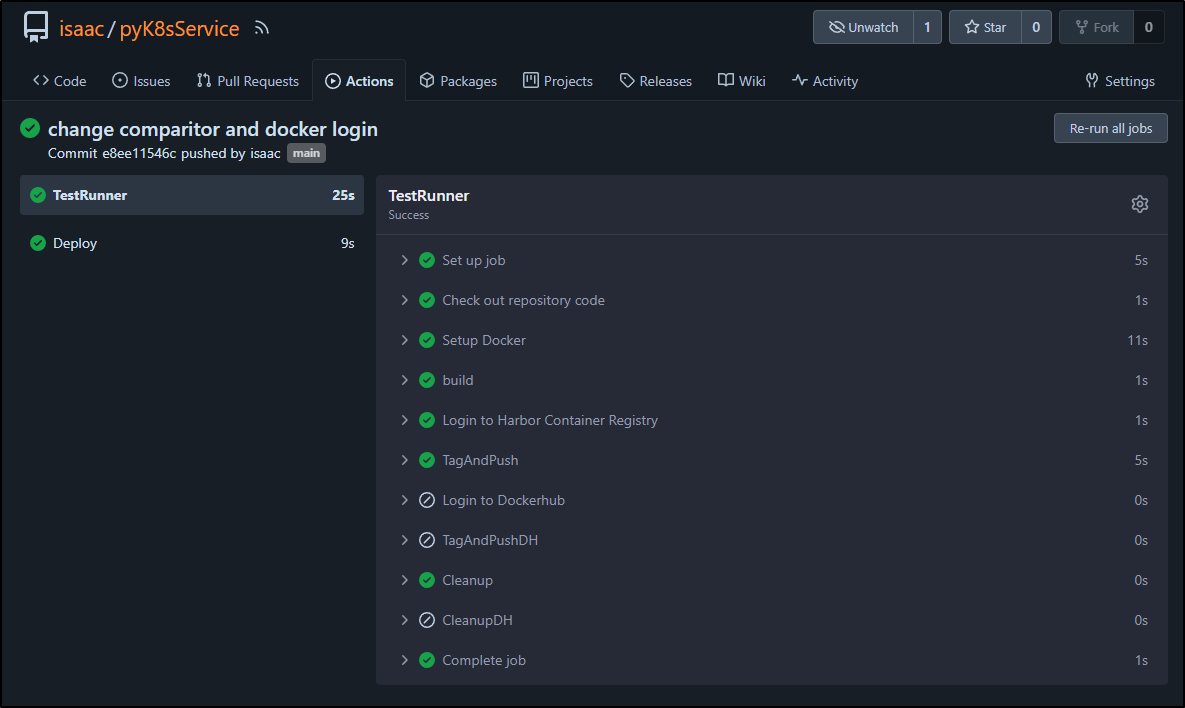

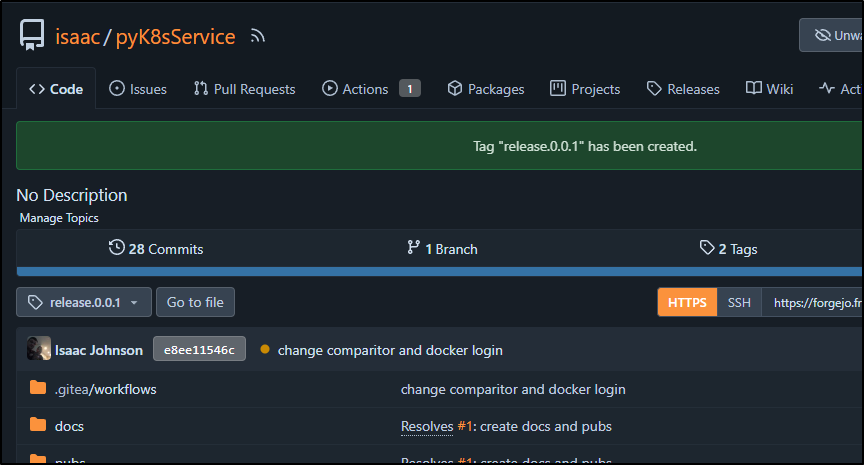

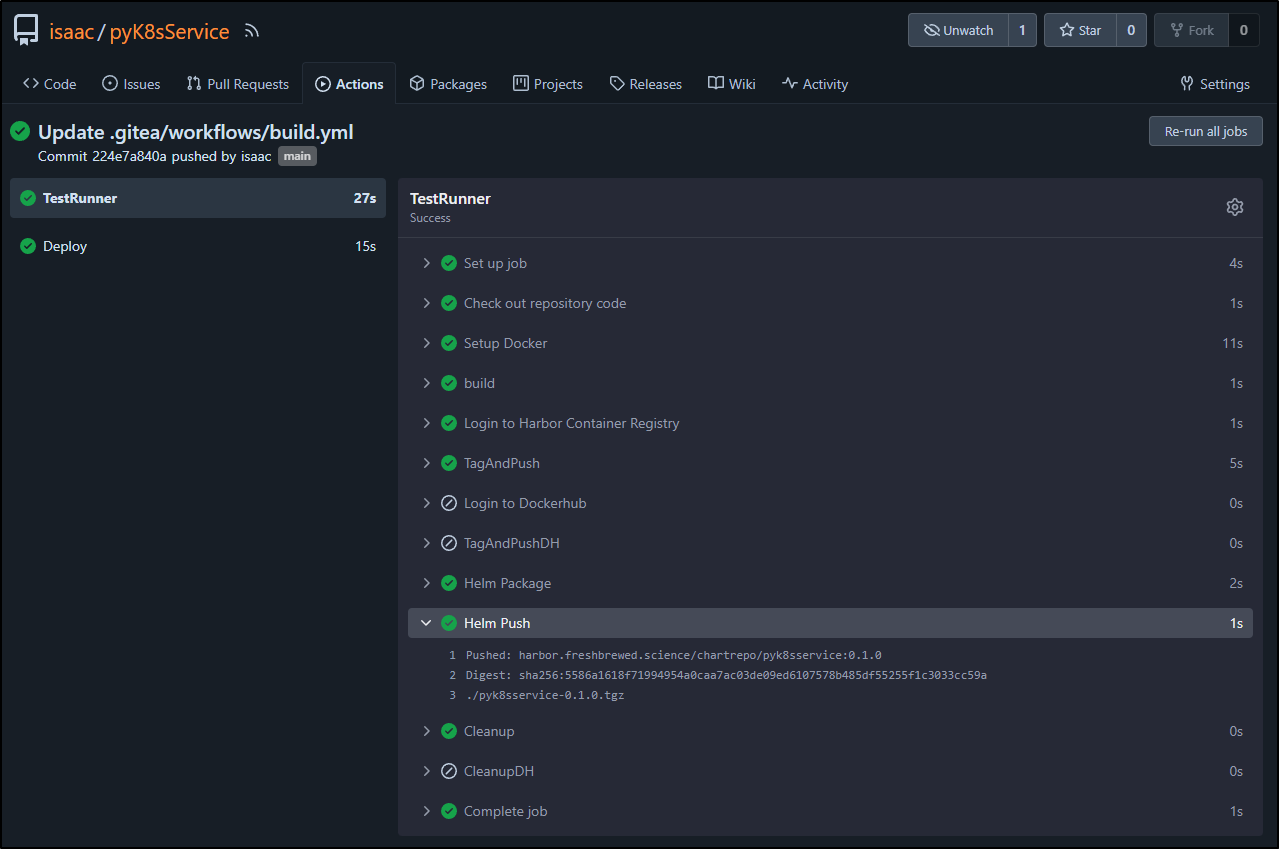

We can see the conditionals were respected at the step level:

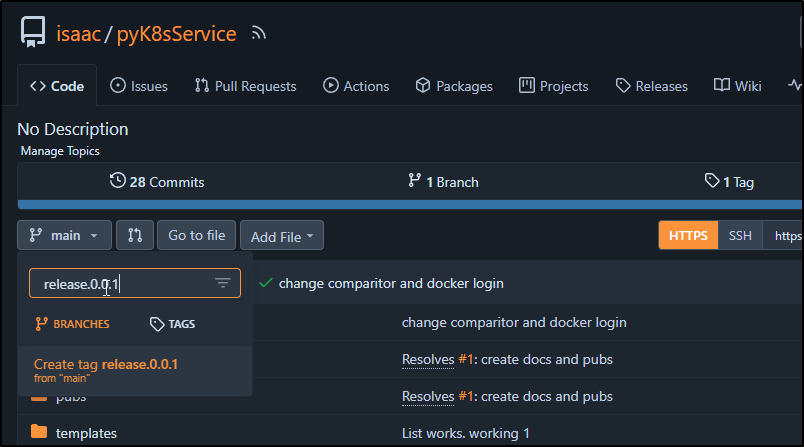

I’ll now try testing the flow by creating Release 0.0.1

Which makes the tag

I can see those steps now queue and execute

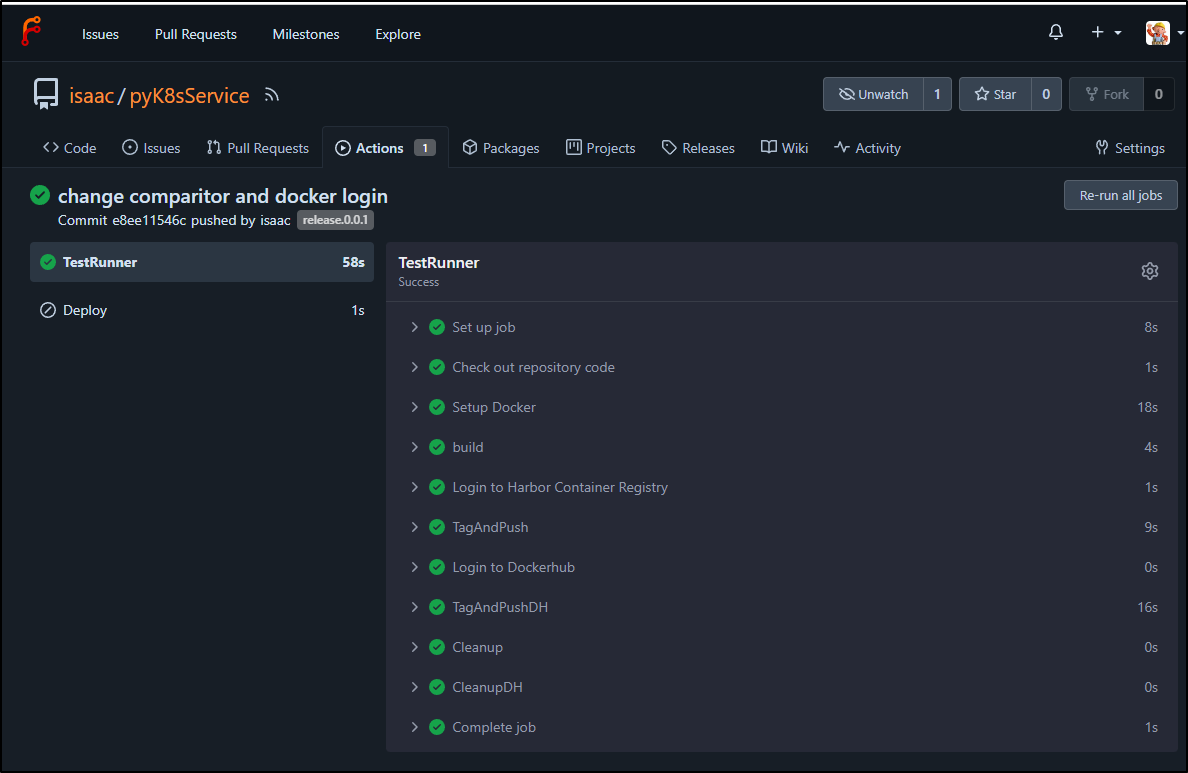

and more importantly, I can see the container was built and pushed to Dockerhub here

The other part of the equation is the Helm chart.

I’ll use helm create to create a chart then add the configmap.

$ helm create pyK8sService

Creating pyK8sService

Once modified, I created a Namespace and tested

$ kubectl create ns disabledtest

namespace/disabledtest created

$ helm install mytest -n disabledtest ./pyK8sService/

NAME: mytest

LAST DEPLOYED: Mon Dec 25 21:31:53 2023

NAMESPACE: disabledtest

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace disabledtest -l "app.kubernetes.io/name=pyk8sservice,app.kubernetes.io/instance=mytest" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace disabledtest $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace disabledtest port-forward $POD_NAME 8080:$CONTAINER_PORT

It looked like it installed the service and pods

builder@LuiGi17:~/Workspaces/pyK8sService$ kubectl get svc -n disabledtest

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

mytest-pyk8sservice ClusterIP 10.43.219.225 <none> 80/TCP 88s

builder@LuiGi17:~/Workspaces/pyK8sService$ kubectl get pods -n disabledtest

NAME READY STATUS RESTARTS AGE

mytest-pyk8sservice-7d8798768f-gdp4b 1/1 Running 0 96s

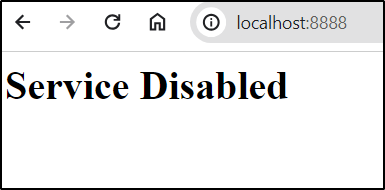

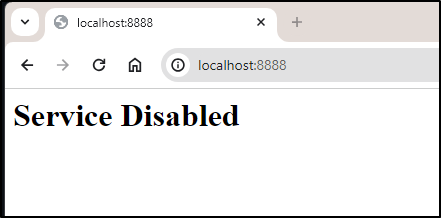

If I port-forward

$ kubectl port-forward svc/mytest-pyk8sservice -n disabledtest 8888:80

Forwarding from 127.0.0.1:8888 -> 80

Forwarding from [::1]:8888 -> 80

It’s working.

Note: I won’t try to paste the full contents of the Helm charts. As I go over changes and updates below, feel free to browse the version we arrive at here in the repo.

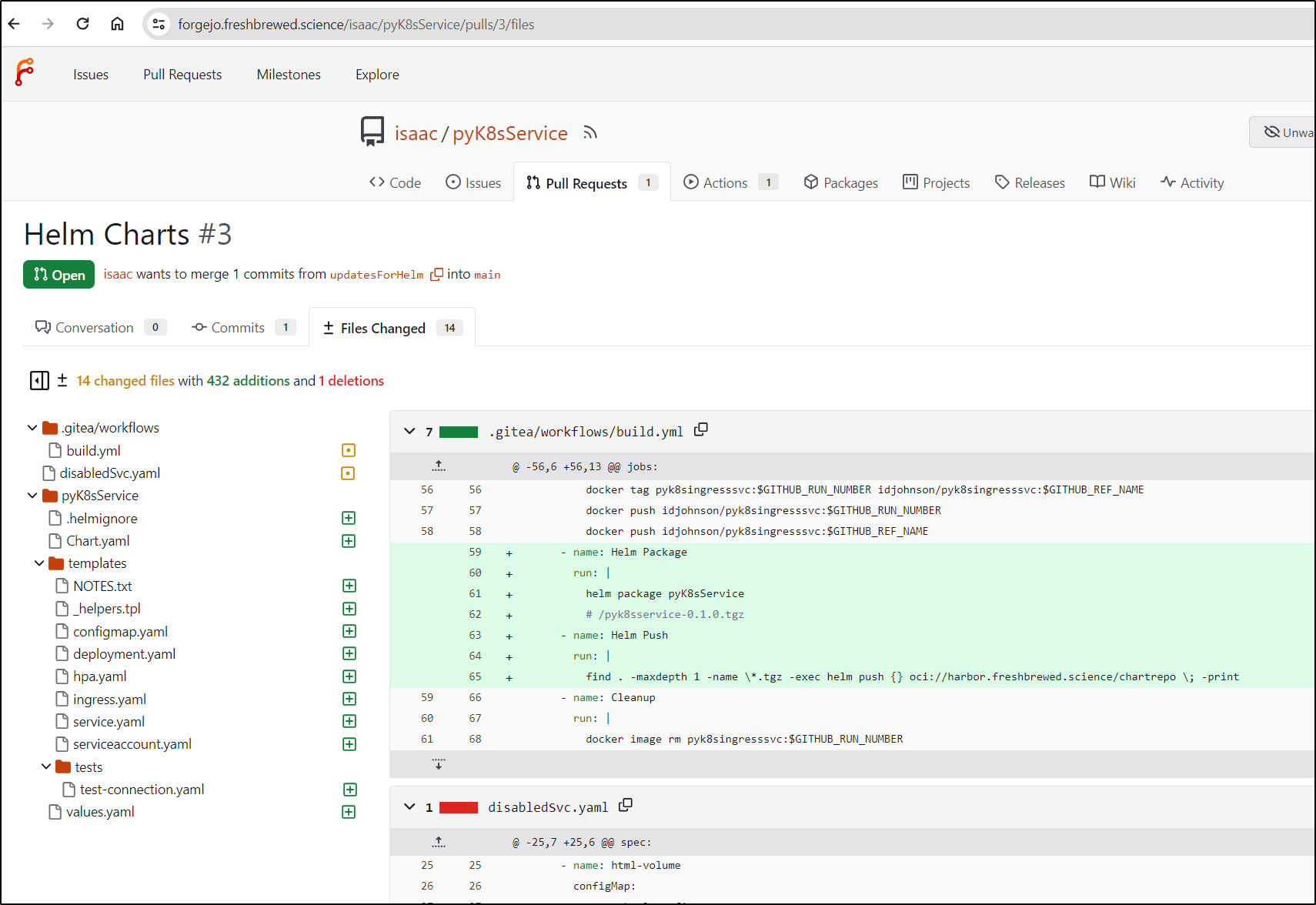

I created a new PR for the change

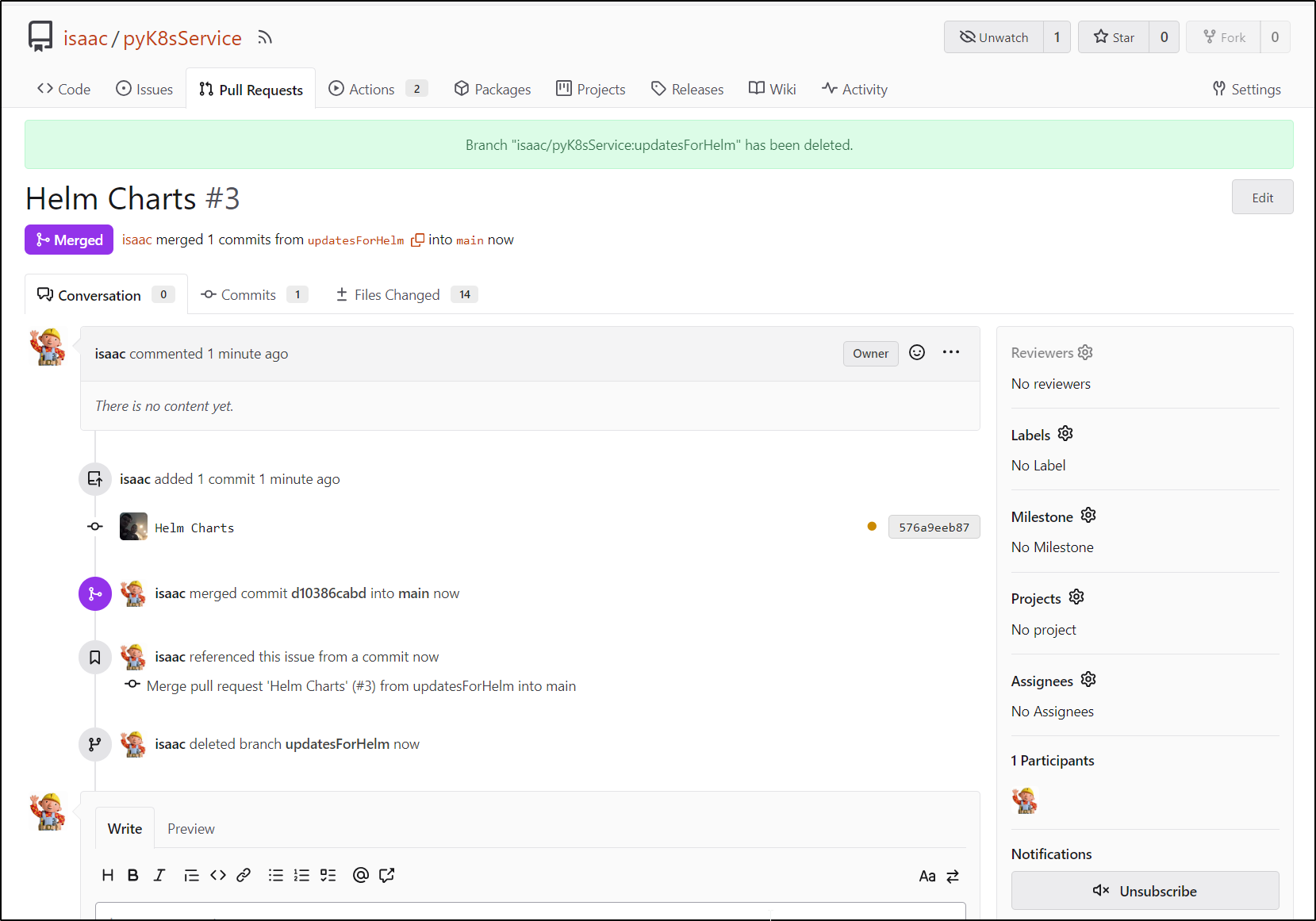

I reviewed, merged then deleted the branch in PR3

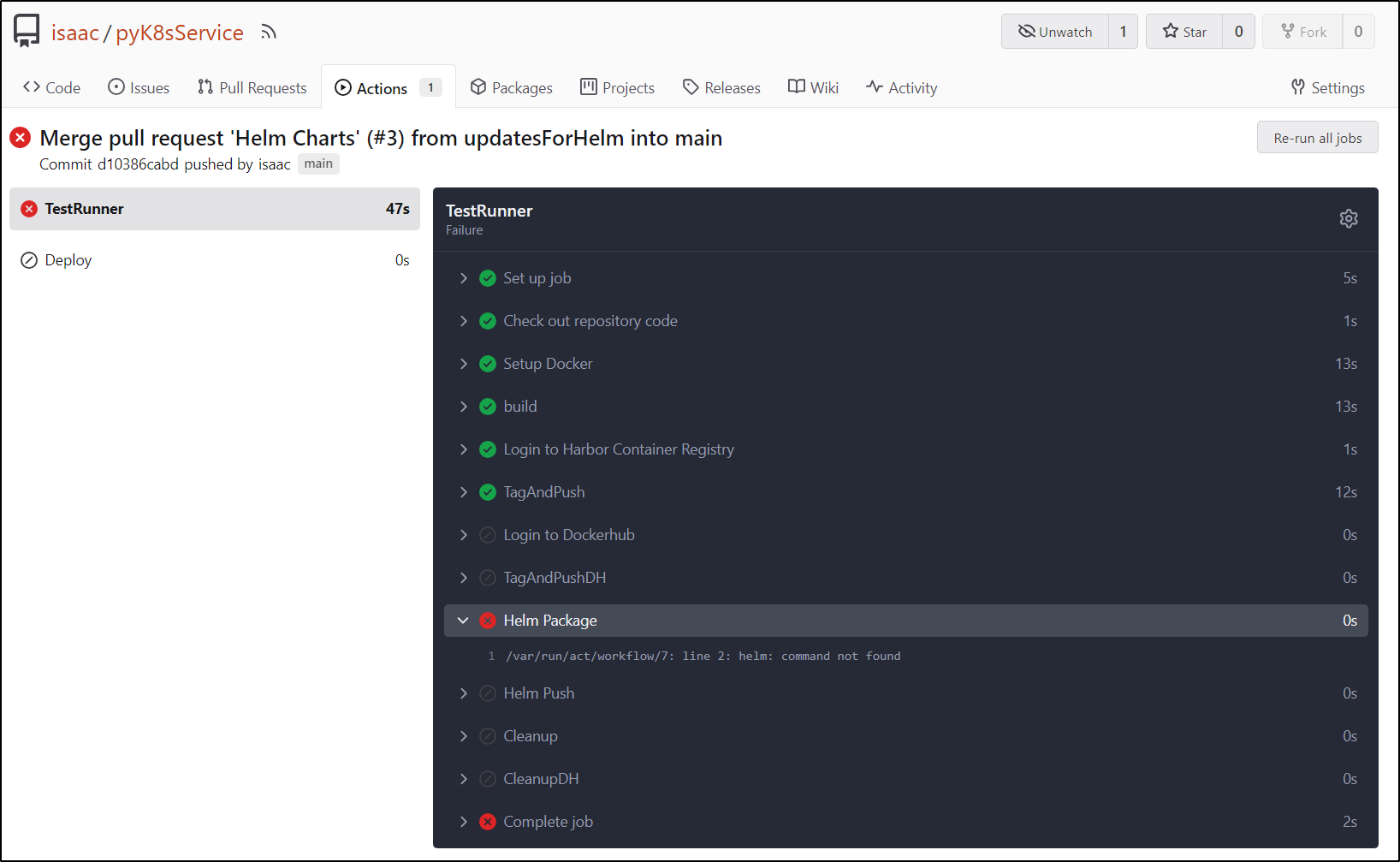

For some reason my runners were stopped. I likely need a check for that. However, I just restarted them and all was well.

uptime-kuma

builder@builder-T100:~$ docker ps -a | grep buildert100

84c578380db9 gitea/act_runner:nightly "/sbin/tini -- /opt/…" 2 weeks ago Exited (143) 9 days ago

buildert100gf2

c1f96fc0c41d gitea/act_runner:nightly "/sbin/tini -- /opt/…" 3 weeks ago Exited (143) 9 days ago

buildert100cb

5abdc928e11d gitea/act_runner:nightly "/sbin/tini -- /opt/…" 4 weeks ago Exited (143) 9 days ago

buildert100gf

builder@builder-T100:~$ docker start 84c578380db9

84c578380db9

builder@builder-T100:~$ docker start c1f96fc0c41d

c1f96fc0c41d

builder@builder-T100:~$ docker start 5abdc928e11d

5abdc928e11d
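The "check" I mentioned could be as simple as a script on the runner host that finds exited runner containers and starts them again. This is a rough sketch, not something I have wired up: it assumes the `docker` CLI is on the PATH and that the runner containers share the `buildert100` name prefix seen above.

```python
import subprocess

# An actual tab between fields keeps the parsing unambiguous.
PS_FORMAT = "{{.ID}}\t{{.Names}}\t{{.Status}}"


def exited_containers(ps_output: str, name_prefix: str) -> list[str]:
    """Return IDs of exited containers whose name starts with name_prefix.

    Expects one `ID<TAB>Names<TAB>Status` line per container.
    """
    ids = []
    for line in ps_output.splitlines():
        parts = line.split("\t")
        if len(parts) != 3:
            continue  # skip blank or unexpected lines
        container_id, name, status = parts
        if name.startswith(name_prefix) and status.startswith("Exited"):
            ids.append(container_id)
    return ids


def restart_stopped_runners(name_prefix: str = "buildert100") -> list[str]:
    """Start every exited runner container and return the IDs restarted."""
    listing = subprocess.run(
        ["docker", "ps", "-a", "--format", PS_FORMAT],
        check=True, capture_output=True, text=True,
    ).stdout
    stopped = exited_containers(listing, name_prefix)
    for container_id in stopped:
        subprocess.run(["docker", "start", container_id], check=True)
    return stopped


if __name__ == "__main__":
    print("restarted:", restart_stopped_runners())
```

Run from cron or a systemd timer, something like this would have caught the nine days of downtime. Setting a restart policy on the containers is another option worth weighing.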

Seems I’m missing helm

I’ll just add a step to install helm on the fly:

    - name: Helm Package
      run: |
        # install helm
        curl https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash
        helm package pyK8sService
        # /pyk8sservice-0.1.0.tgz
    - name: Helm Push
      run: |
        find . -maxdepth 1 -name \*.tgz -exec helm push {} oci://harbor.freshbrewed.science/chartrepo \; -print
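The `find` works because `helm package` names the archive `<name>-<version>.tgz` from Chart.yaml, and pushing to an OCI base produces `<base>/<name>:<version>`. A small sketch of that naming follows. The parser is deliberately naive and the sample Chart.yaml is an assumption for illustration, not a copy of the one in the repo.

```python
def read_chart_metadata(chart_yaml: str) -> dict[str, str]:
    """Pull the top-level `name` and `version` out of Chart.yaml text.

    Naive on purpose: it only handles plain `key: value` lines, so a real
    YAML parser is the safer choice for anything unusual.
    """
    wanted = {"name", "version"}
    found = {}
    for line in chart_yaml.splitlines():
        key, sep, value = line.partition(":")
        # Exact key match skips indented keys, comments and `appVersion`.
        if sep and key in wanted:
            found[key] = value.strip().strip("\"'")
    return found


def chart_artifacts(chart_yaml: str, oci_base: str) -> tuple[str, str]:
    """Return (package file name, OCI reference) for a chart."""
    meta = read_chart_metadata(chart_yaml)
    package = f"{meta['name']}-{meta['version']}.tgz"
    reference = f"{oci_base.rstrip('/')}/{meta['name']}:{meta['version']}"
    return package, reference


# Hypothetical Chart.yaml contents, shaped like what `helm create` generates.
SAMPLE_CHART = """\
apiVersion: v2
name: pyk8sservice
description: A Helm chart for Kubernetes
type: application
version: 0.1.0
appVersion: "1.16.0"
"""

print(chart_artifacts(SAMPLE_CHART, "oci://harbor.freshbrewed.science/chartrepo"))
```

Knowing the exact file name ahead of time would let the push step name the archive directly instead of globbing for any `.tgz` in the workspace.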

It appears to have worked

We can now easily install using the remote chart

$ kubectl delete ns disabledtest

namespace "disabledtest" deleted

$ helm install mytest --namespace disabledtest --create-namespace oci://harbor.freshbrewed.science/chartrepo/pyk8sservice --version 0.1.0

Pulled: harbor.freshbrewed.science/chartrepo/pyk8sservice:0.1.0

Digest: sha256:5586a1618f71994954a0caa7ac03de09ed6107578b485df55255f1c3033cc59a

NAME: mytest

LAST DEPLOYED: Tue Dec 26 19:23:43 2023

NAMESPACE: disabledtest

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace disabledtest -l "app.kubernetes.io/name=pyk8sservice,app.kubernetes.io/instance=mytest" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace disabledtest $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace disabledtest port-forward $POD_NAME 8080:$CONTAINER_PORT

I can do a test

$ kubectl port-forward svc/mytest-pyk8sservice -n disabledtest 8888:80

Forwarding from 127.0.0.1:8888 -> 80

Forwarding from [::1]:8888 -> 80

Handling connection for 8888

Handling connection for 8888

My next step is to add the app container.

We can see in PR4 that means adding an app deployment with a PVC

    $ cat pyK8sService/templates/appdeployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: {{ include "pyK8sService.fullname" . }}-app
      labels:
        {{- include "pyK8sService.labels" . | nindent 4 }}-app
    spec:
      {{- if not .Values.autoscaling.enabled }}
      replicas: {{ .Values.replicaCount }}
      {{- end }}
      selector:
        matchLabels:
          {{- include "pyK8sService.selectorLabels" . | nindent 6 }}-app
      template:
        metadata:
          {{- with .Values.podAnnotations }}
          annotations:
            {{- toYaml . | nindent 8 }}
          {{- end }}
          labels:
            {{- include "pyK8sService.selectorLabels" . | nindent 8 }}-app
        spec:
          {{- with .Values.appimagePullSecrets }}
          imagePullSecrets:
            {{- toYaml . | nindent 8 }}
          {{- end }}
          serviceAccountName: {{ include "pyK8sService.serviceAccountName" . }}
          securityContext:
            {{- toYaml .Values.podSecurityContext | nindent 8 }}
          containers:
            - name: {{ .Chart.Name }}
              securityContext:
                {{- toYaml .Values.securityContext | nindent 12 }}
              image: "{{ .Values.appimage.repository }}:{{ .Values.appimage.tag | default .Chart.AppVersion }}"
              imagePullPolicy: {{ .Values.appimage.pullPolicy }}
              ports:
                - name: http
                  containerPort: {{ .Values.appservice.port }}
                  protocol: TCP
              livenessProbe:
                httpGet:
                  path: /
                  port: http
              readinessProbe:
                httpGet:
                  path: /
                  port: http
              volumeMounts:
                - mountPath: /config
                  name: config
                {{- if .Values.persistence.additionalMounts }}
                {{- .Values.persistence.additionalMounts | toYaml | nindent 12 }}
                {{- end }}
              resources:
                {{- toYaml .Values.resources | nindent 12 }}
          volumes:
            - name: config
            {{- if .Values.persistence.enabled }}
              persistentVolumeClaim:
                claimName: {{ .Values.persistence.existingClaim | default (include "pyK8sService.fullname" .) }}
            {{- else }}
              emptyDir: { }
            {{- end }}
            {{- if .Values.persistence.additionalVolumes }}
            {{- .Values.persistence.additionalVolumes | toYaml | nindent 8}}
            {{- end }}
          {{- with .Values.nodeSelector }}
          nodeSelector:
            {{- toYaml . | nindent 8 }}
          {{- end }}
          {{- with .Values.affinity }}
          affinity:
            {{- toYaml . | nindent 8 }}
          {{- end }}
          {{- with .Values.tolerations }}
          tolerations:
            {{- toYaml . | nindent 8 }}
          {{- end }}

The App service

    apiVersion: v1
    kind: Service
    metadata:
      name: {{ include "pyK8sService.fullname" . }}-app
      labels:
        {{- include "pyK8sService.labels" . | nindent 4 }}-app
    spec:
      type: {{ .Values.appservice.type }}
      ports:
        - port: {{ .Values.appservice.port }}
          targetPort: http
          protocol: TCP
          name: http
      selector:
        {{- include "pyK8sService.selectorLabels" . | nindent 4 }}-app

and lastly, the PVC yaml

    {{- if and .Values.persistence.enabled (not .Values.persistence.existingClaim) }}
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: {{ include "pyK8sService.fullname" . }}
      labels:
        {{- include "pyK8sService.labels" . | nindent 4 }}
    spec:
      storageClassName: {{ .Values.persistence.storageClass }}
      accessModes:
        - {{ .Values.persistence.accessMode | quote }}
      resources:
        requests:
          storage: {{ .Values.persistence.size | quote }}
    {{- end }}

Lastly, I added to our values.yaml

    ############################
    appimage:
      repository: idjohnson/pyk8singresssvc
      pullPolicy: Always
      # Overrides the image tag whose default is the chart appVersion.
      tag: "release.0.0.1"
    appimagePullSecrets: []
    appservice:
      type: ClusterIP
      port: 3000
    persistence:
      storageClass: ""
      existingClaim: ""
      enabled: true
      accessMode: ReadWriteOnce
      size: 500Mi
      # if you need any additional volumes, you can define them here
      additionalVolumes: []
      # if you need any additional volume mounts, you can define them here
      additionalMounts: []
    #############################

Note: tip of the hat to this blog on the PVC template

I slated it to use the released version on Dockerhub so, by default, anyone could use the chart.

Testing locally

$ helm install mytest -n disabledtest ./pyK8sService/

NAME: mytest

LAST DEPLOYED: Tue Dec 26 19:50:00 2023

NAMESPACE: disabledtest

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace disabledtest -l "app.kubernetes.io/name=pyk8sservice,app.kubernetes.io/instance=mytest" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace disabledtest $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace disabledtest port-forward $POD_NAME 8080:$CONTAINER_PORT

I can see both services up

$ kubectl get svc -n disabledtest

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

mytest-pyk8sservice ClusterIP 10.43.179.105 <none> 80/TCP 7s

mytest-pyk8sservice-app ClusterIP 10.43.206.218 <none> 3000/TCP 7s

Then the pods launch

$ kubectl get pods -n disabledtest

NAME READY STATUS RESTARTS AGE

mytest-pyk8sservice-7d8798768f-9vgv8 1/1 Running 0 20s

mytest-pyk8sservice-app-76c8f69c68-pw65q 0/1 Running 0 20s

$ kubectl get pods -n disabledtest

NAME READY STATUS RESTARTS AGE

mytest-pyk8sservice-7d8798768f-9vgv8 1/1 Running 0 6m1s

mytest-pyk8sservice-app-76c8f69c68-pw65q 0/1 CrashLoopBackOff 6 (97s ago) 6m1s

Oops… I see my app is on 5000, not 3000

app.run(host='0.0.0.0', port=5000, debug=True)

The PVC is blocking a quick upgrade to test

$ helm upgrade mytest --set appservice.port=5000 -n disabledtest ./pyK8sService/

Error: UPGRADE FAILED: cannot patch "mytest-pyk8sservice" with kind PersistentVolumeClaim: PersistentVolumeClaim "mytest-pyk8sservice" is invalid: spec: Forbidden: spec is immutable after creation except resources.requests for bound claims

core.PersistentVolumeClaimSpec{

... // 2 identical fields

Resources: {Requests: {s"storage": {i: {...}, s: "500Mi", Format: "BinarySI"}}},

VolumeName: "pvc-bf50af03-ffb8-4d45-a0f3-f87321bdef65",

- StorageClassName: &"local-path",

+ StorageClassName: nil,

VolumeMode: &"Filesystem",

DataSource: nil,

DataSourceRef: nil,

}

But I can work around it, knowing that it was assigned the default “local-path”

$ helm upgrade mytest --set appservice.port=5000 --set persistence.storageClass="local-path" -n disabledtest ./pyK8sService/

Release "mytest" has been upgraded. Happy Helming!

NAME: mytest

LAST DEPLOYED: Tue Dec 26 20:00:05 2023

NAMESPACE: disabledtest

STATUS: deployed

REVISION: 3

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace disabledtest -l "app.kubernetes.io/name=pyk8sservice,app.kubernetes.io/instance=mytest" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace disabledtest $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace disabledtest port-forward $POD_NAME 8080:$CONTAINER_PORT
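The upgrade failed because the chart rendered an empty `storageClassName`, the cluster filled in its default ("local-path"), and the next render tried to set it back to nothing. Rather than remembering the default, the value can be read off the existing claim. Here is a minimal sketch: it takes the JSON from `kubectl get pvc <name> -n <namespace> -o json` and builds the matching `--set` argument. The function name is hypothetical.

```python
import json


def storage_class_set_args(pvc_json: str) -> list[str]:
    """Build the helm `--set` arguments that pin the claim's storage class.

    pvc_json is the output of `kubectl get pvc <name> -o json`.
    Returns an empty list when the claim has no storage class recorded.
    """
    pvc = json.loads(pvc_json)
    storage_class = pvc.get("spec", {}).get("storageClassName")
    if not storage_class:
        return []
    return ["--set", f"persistence.storageClass={storage_class}"]


# Trimmed, illustrative claim using the values from the error above.
example = json.dumps({
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "mytest-pyk8sservice", "namespace": "disabledtest"},
    "spec": {
        "storageClassName": "local-path",
        "volumeName": "pvc-bf50af03-ffb8-4d45-a0f3-f87321bdef65",
    },
})
print(" ".join(storage_class_set_args(example)))
```

The longer-term fix probably belongs in the PVC template itself (only emitting `storageClassName` when a value is set), but that is a chart change I have not made here.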

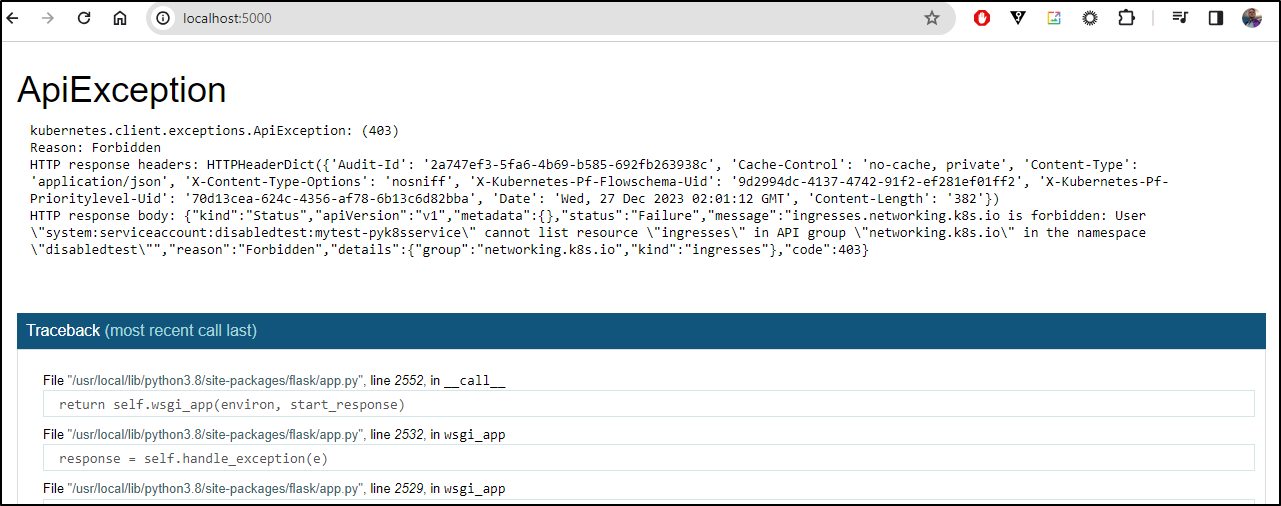

I certainly got further this time:

$ kubectl port-forward mytest-pyk8sservice-app-74cc77666b-7w98l -n disabledtest 5000:5000

Forwarding from 127.0.0.1:5000 -> 5000

Forwarding from [::1]:5000 -> 5000

Handling connection for 5000

Handling connection for 5000

Seems we have some RBAC needs from the error

kubernetes.client.exceptions.ApiException: (403)

Reason: Forbidden

HTTP response headers: HTTPHeaderDict({'Audit-Id': '2a747ef3-5fa6-4b69-b585-692fb263938c', 'Cache-Control': 'no-cache, private', 'Content-Type': 'application/json', 'X-Content-Type-Options': 'nosniff', 'X-Kubernetes-Pf-Flowschema-Uid': '9d2994dc-4137-4742-91f2-ef281ef01ff2', 'X-Kubernetes-Pf-Prioritylevel-Uid': '70d13cea-624c-4356-af78-6b13c6d82bba', 'Date': 'Wed, 27 Dec 2023 02:01:12 GMT', 'Content-Length': '382'})

HTTP response body: {"kind":"Status","apiVersion":"v1","metadata":{},"status":"Failure","message":"ingresses.networking.k8s.io is forbidden: User \"system:serviceaccount:disabledtest:mytest-pyk8sservice\" cannot list resource \"ingresses\" in API group \"networking.k8s.io\" in the namespace \"disabledtest\"","reason":"Forbidden","details":{"group":"networking.k8s.io","kind":"ingresses"},"code":403}
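The 403 spells out exactly what is missing: the service account cannot `list` `ingresses` in the `networking.k8s.io` API group within the namespace. One way to grant that is a namespaced Role plus a RoleBinding. The sketch below generates both as JSON that `kubectl apply -f -` can read. The `-ingress` object name is my own hypothetical choice, and only the `list` verb is taken from the error; the app will likely need more verbs (for example `get` and `patch`) once it starts changing Ingresses, which is an assumption on my part.

```python
import json


def ingress_rbac(namespace: str, service_account: str, verbs=("list",)) -> dict:
    """Build a Role and RoleBinding letting a service account use Ingresses."""
    name = f"{service_account}-ingress"  # hypothetical naming convention
    role = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [{
            "apiGroups": ["networking.k8s.io"],
            "resources": ["ingresses"],
            "verbs": list(verbs),
        }],
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": name, "namespace": namespace},
        "subjects": [{
            "kind": "ServiceAccount",
            "name": service_account,
            "namespace": namespace,
        }],
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "Role",
            "name": name,
        },
    }
    # A v1 List lets both objects be applied in a single kubectl call.
    return {"apiVersion": "v1", "kind": "List", "items": [role, binding]}


if __name__ == "__main__":
    print(json.dumps(ingress_rbac("disabledtest", "mytest-pyk8sservice"), indent=2))
```

In a chart, the same two objects would normally live as templates next to the ServiceAccount so every install gets them.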

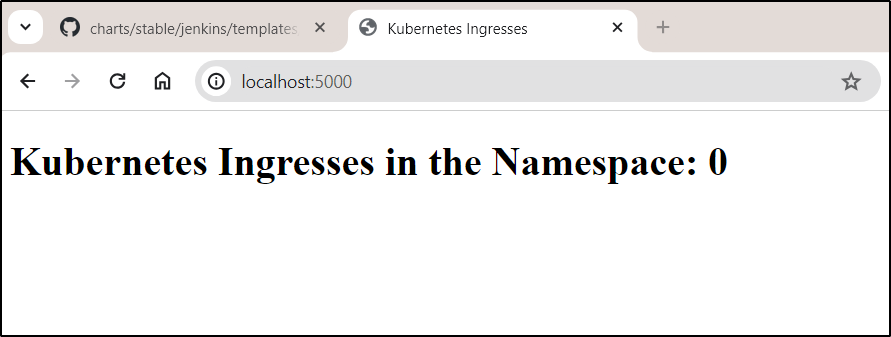

Once corrected:

I could port-forward

$ kubectl port-forward mytest-pyk8sservice-app-74cc77666b-w8fpn -n disabledtest 5000:5000

Forwarding from 127.0.0.1:5000 -> 5000

Forwarding from [::1]:5000 -> 5000

Handling connection for 5000

Handling connection for 5000

and see it work

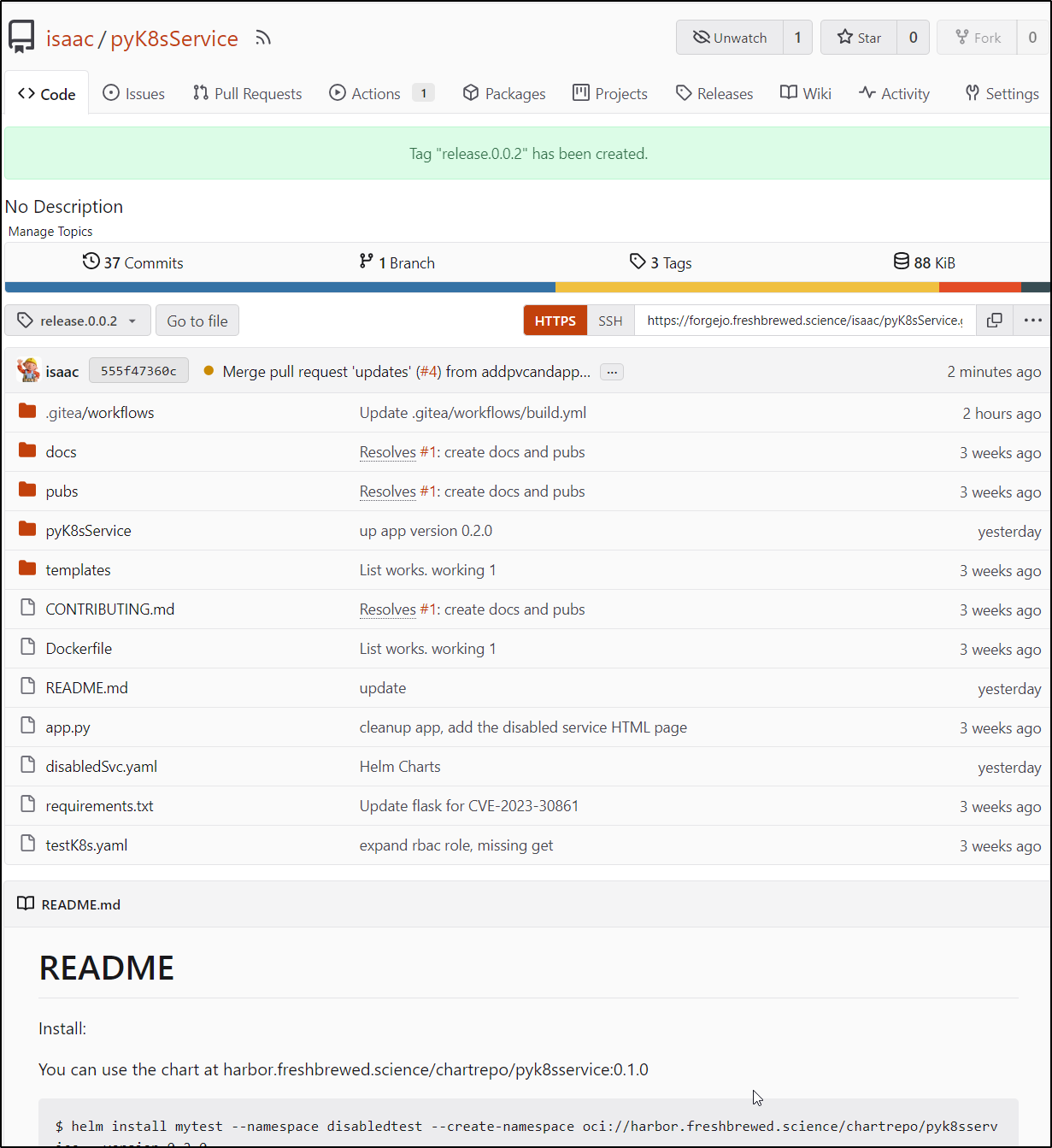

So then I wrapped up by creating a new tag, release.0.0.2

Summary

Today we started by looking at branching and PRs for a more properly controlled development flow with Pull Requests and reviews. We covered building and pushing our app container to Dockerhub on a release tag. A larger chunk of time was spent building and testing Helm charts that could deploy two services and backing ReplicaSets to serve the disabled page (Nginx) and our switcher (App). In testing, we also took care of some (initial) RBAC challenges.

For next time, we will look at updating the app. I want to change how we store the YAML ingress/disabled. I also want to build out the templates repo and set up examples for Traefik, Nginx and Istio. Lastly, if I have time, I want to figure out a non-CR based helm repository endpoint for storing and sharing charts.