Published: Dec 23, 2023 by Isaac Johnson

Today we have a lot on our plate. We’ll want to set up CICD pipelines in Forgejo. The goal is to build the containers on git push, but also to figure out a way to tag on release and probably push those to shared places like Dockerhub.

I’ll also want to fix the non-functional ‘disable’ action and, since this is moving along well, get DR sorted by replicating to at least one or two other Git repository systems.

CICD

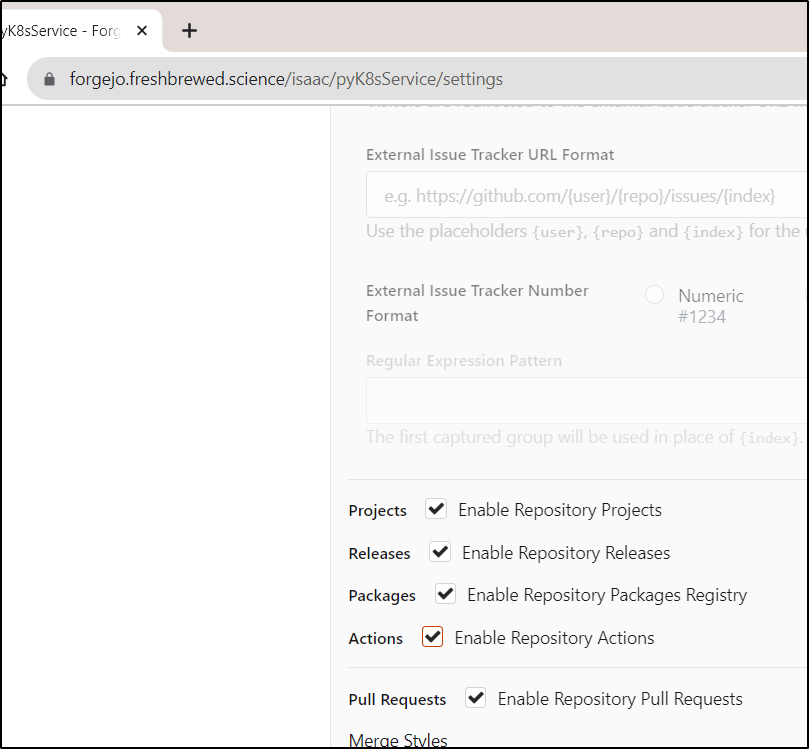

I need to enable Actions first

Next, I’ll create a build file in .gitea/workflows/build.yaml

    name: build
    run-name: $ building test
    on: [push]

    jobs:
      TestRunner:
        steps:
          - name: Check out repository code
            uses: actions/checkout@v3
          - name: Setup Docker
            run: |
              # if running as non-root, add sudo
              echo "Hello"
              apt-get update && \
                apt-get install -y \
                ca-certificates \
                curl \
                gnupg \
                sudo \
                lsb-release
              curl -fsSL https://download.docker.com/linux/ubuntu/gpg | gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg
              echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu focal stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null
              apt-get update
              apt install -y docker-ce-cli
          - name: build
            run: |
              docker build -t testbuild .
          - name: Test Docker
            run: |
              # if running as non-root, add sudo
              echo hi
              docker ps

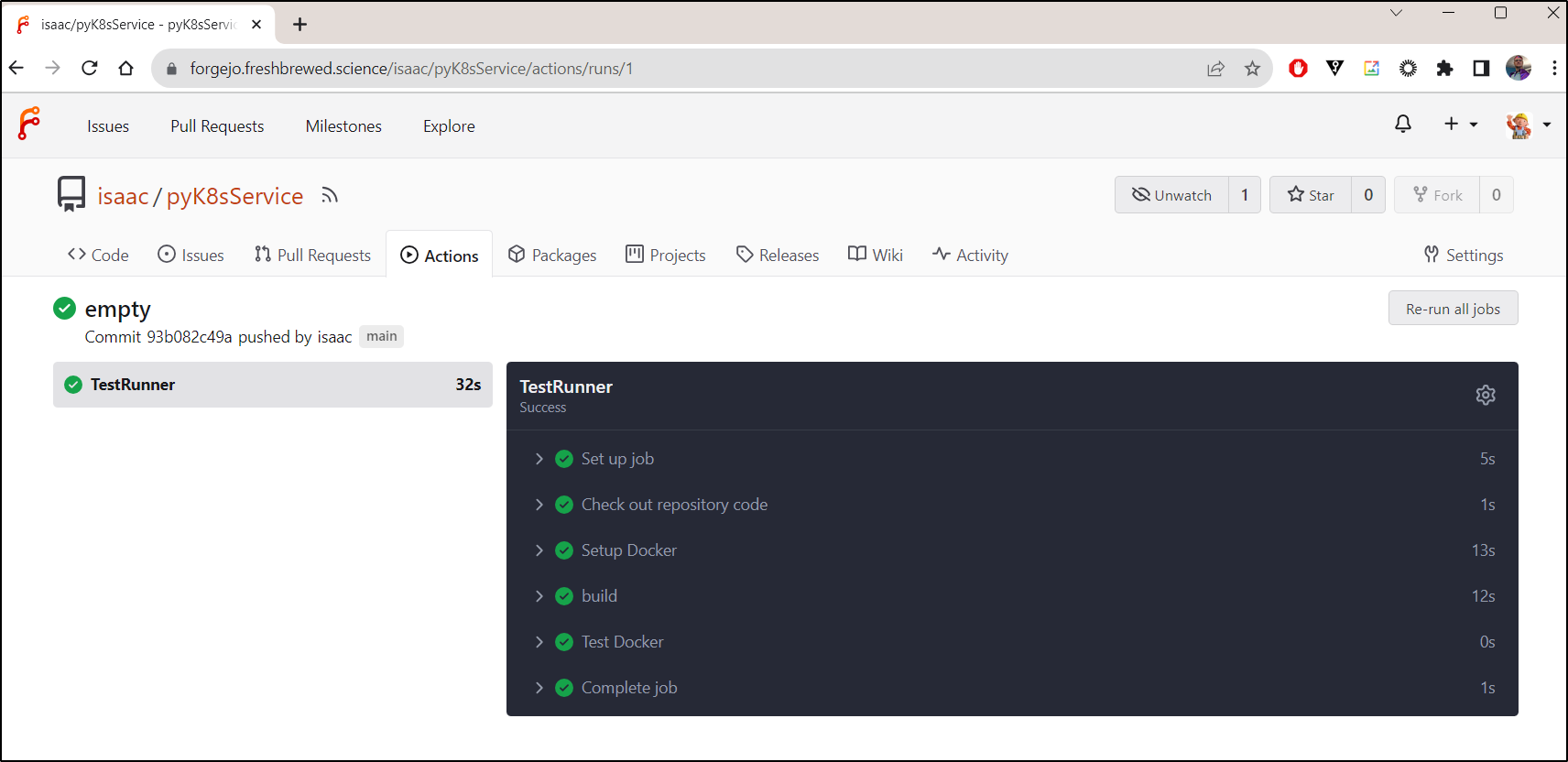

I’ll push to build

and I can see it built the container

Taking a pause for DR

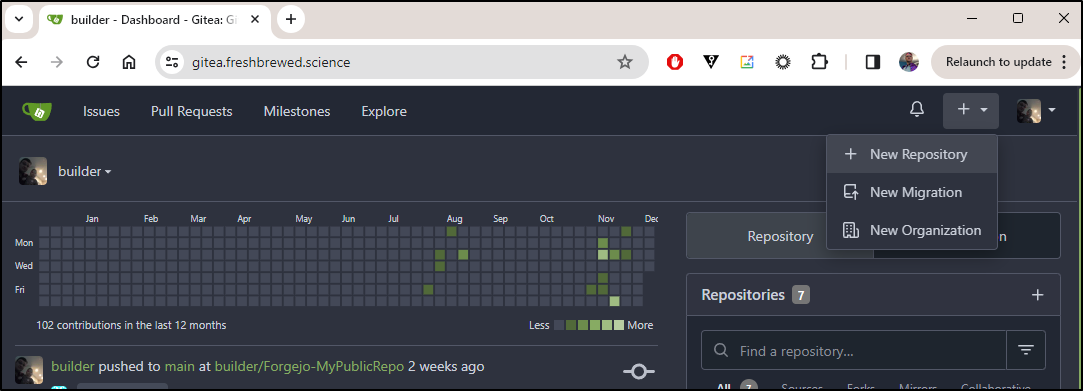

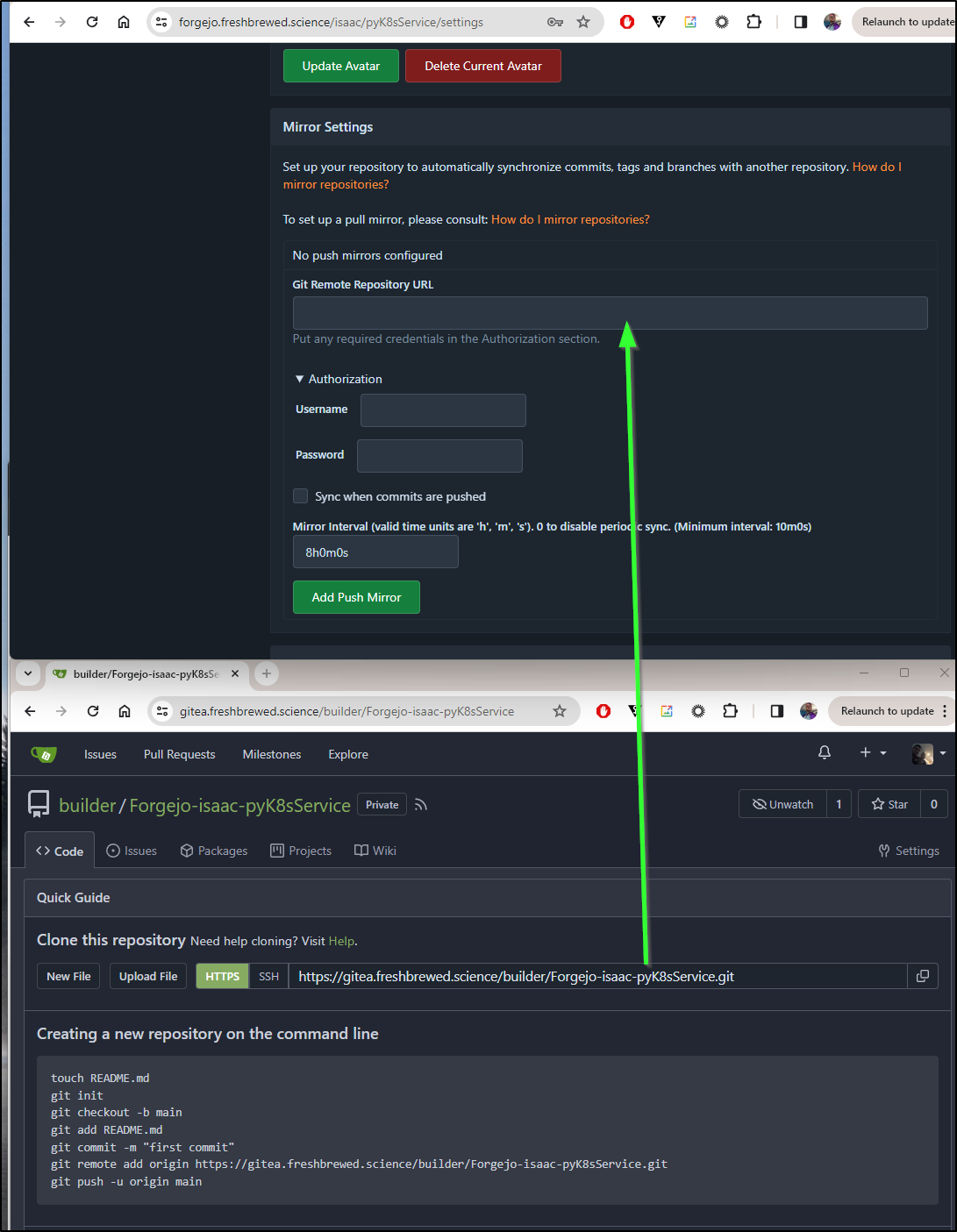

There is one more bit of housekeeping I want to do before I go any further: create a clone in Gitea.

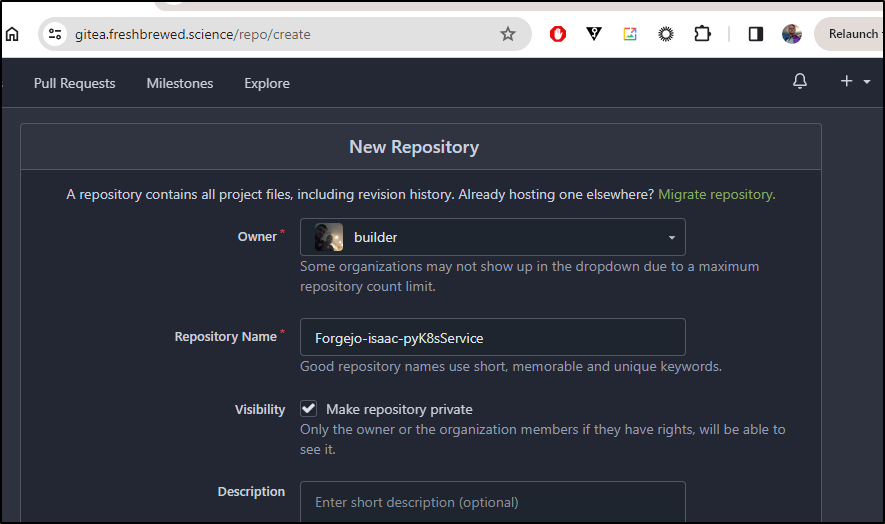

I’ll create a new repo

Since this is just for receiving the replicated bits, I’ll leave it set to private

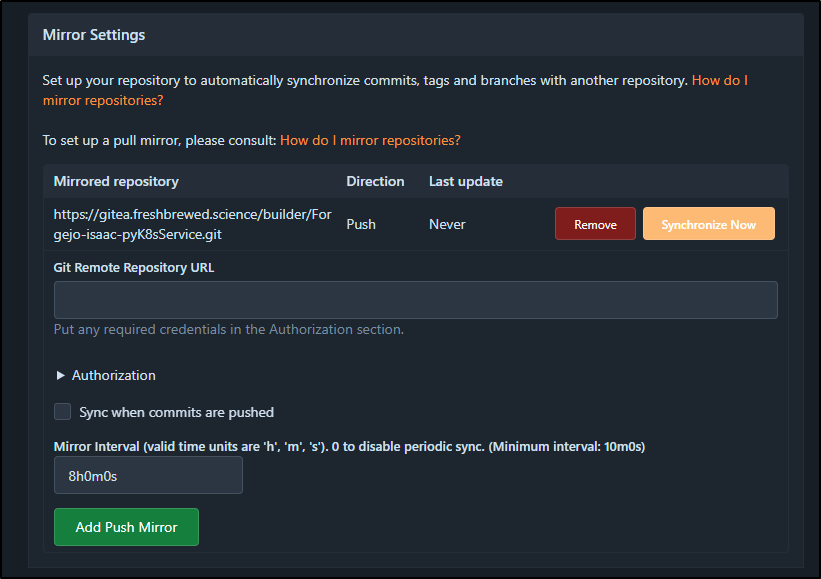

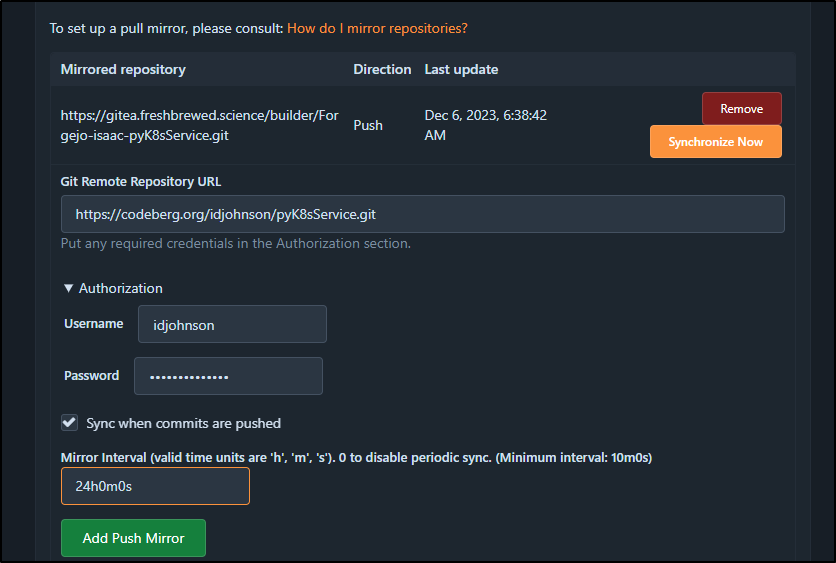

Next, I’ll use that clone URL in the Mirror setting of pyK8sService

Once added, I’ll click Synchronize now

One thing I will not do that I did in my prior demos is to enable Actions in Gitea. I really just want containers built and pushed by the primary. This replica is just for DR

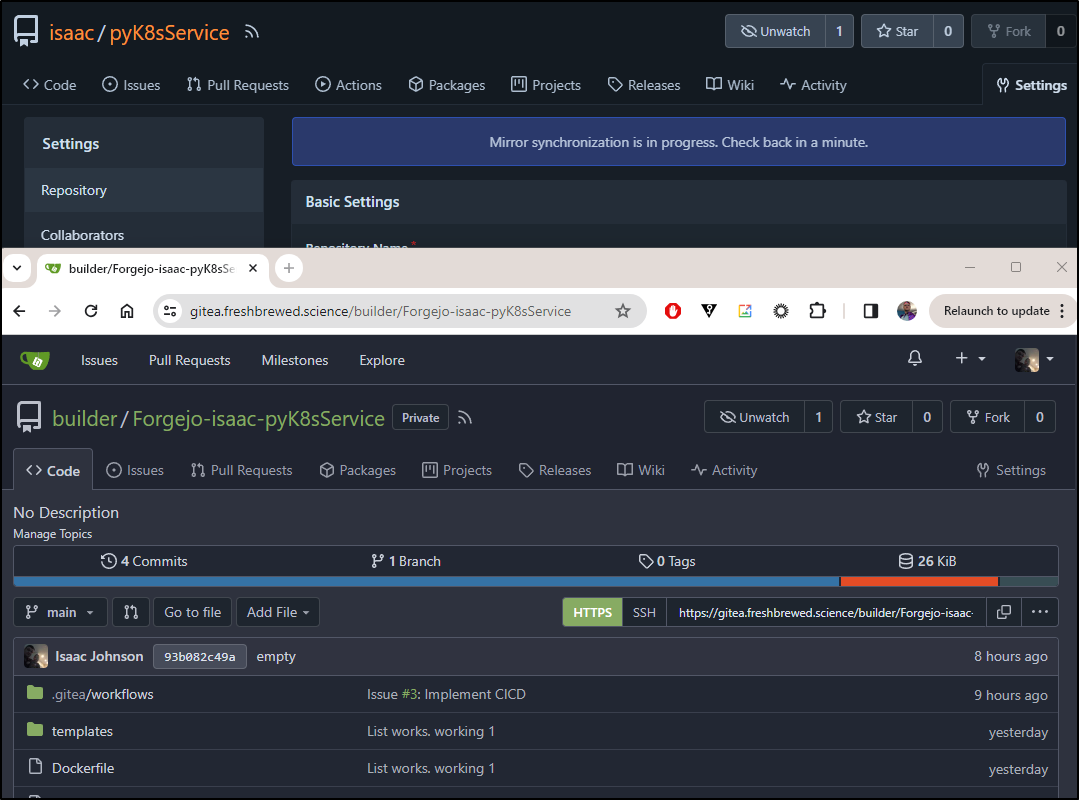

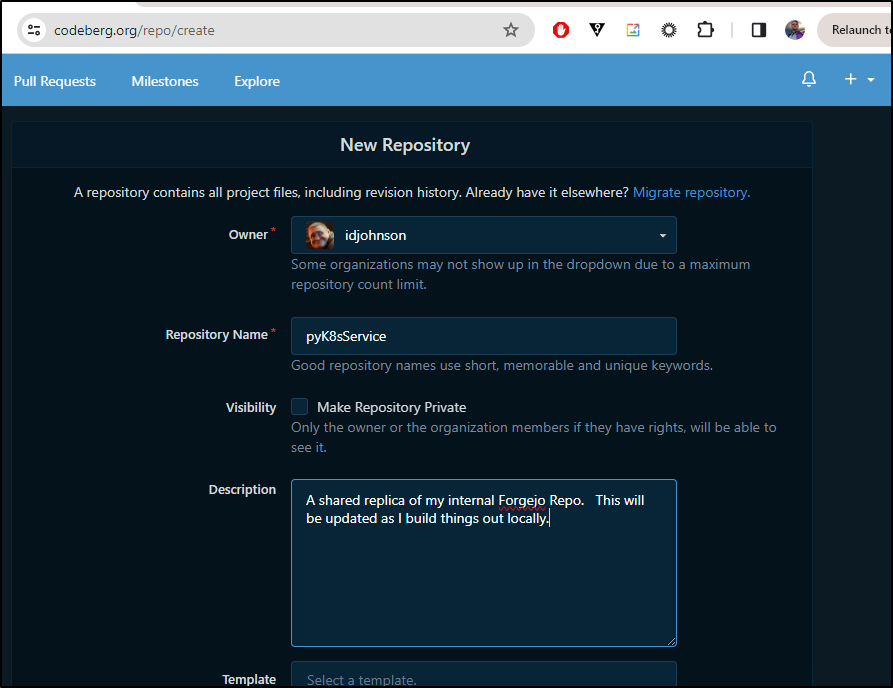

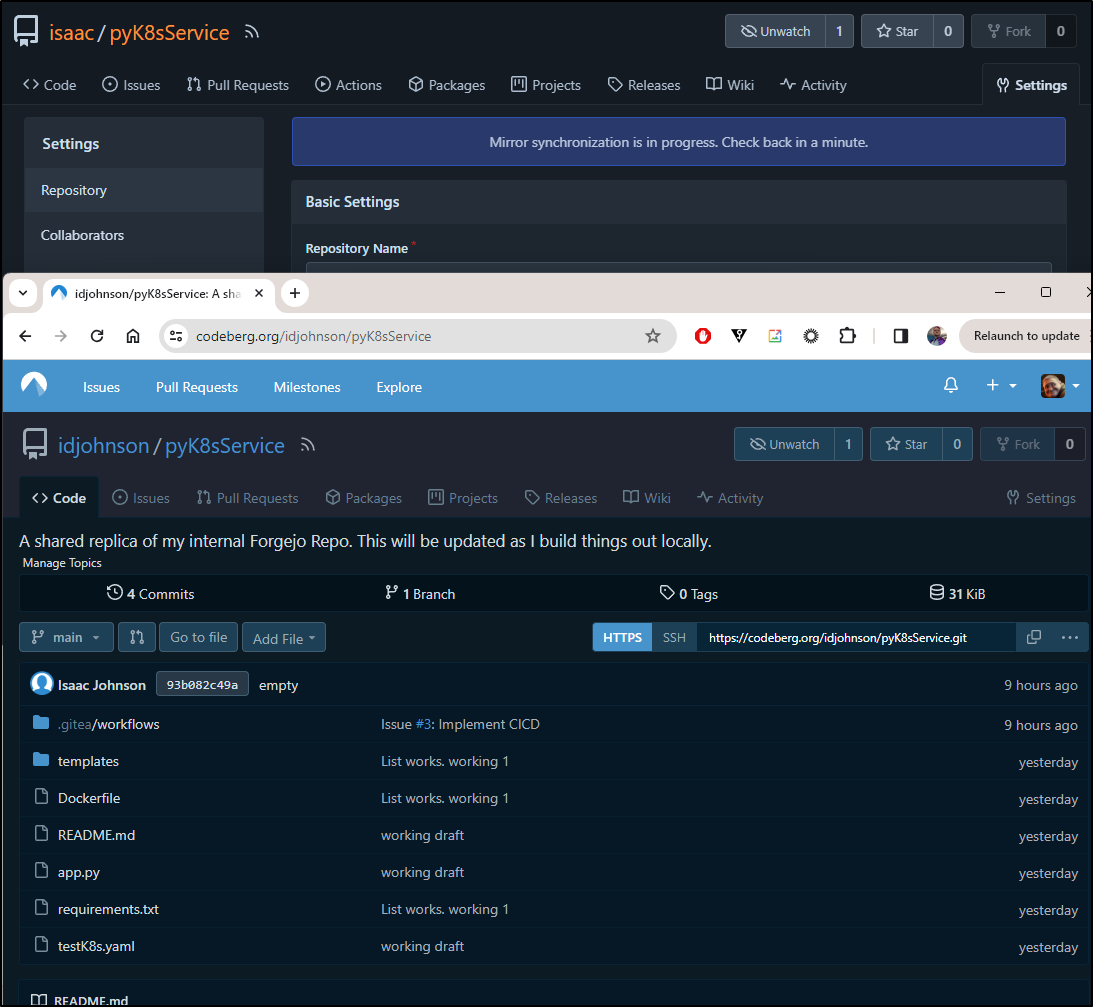

Since Gitea and Forgejo are both in the same cluster, I’m also going to do DR properly and sync to Codeberg as well.

I’ll set this one to daily

Lastly, I’ll kick a sync off manually as well to verify creds and get the first bits over

Back to CICD

Now that I can rest easier knowing that my Git repo is replicated, I’ll push on.

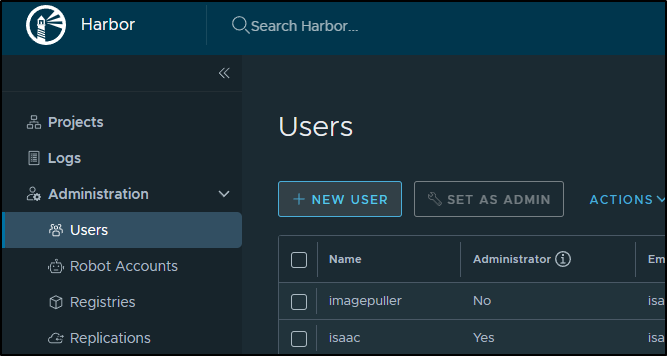

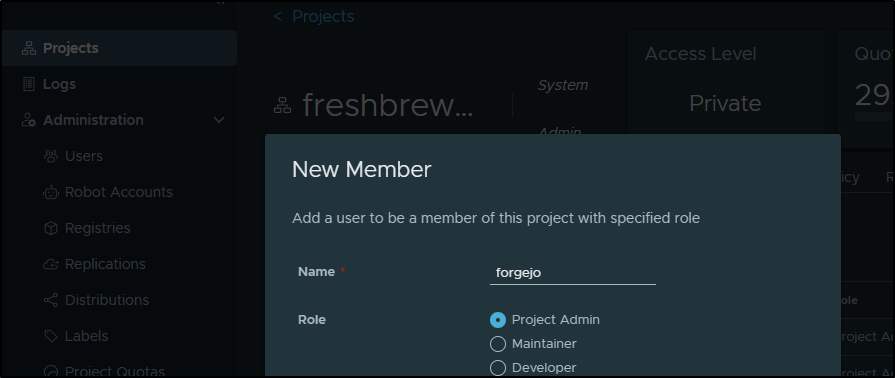

I would like to better control output to Harbor. To do so, I’ll create a user just for Forgejo in Harbor CR.

Under users, I’ll click “+ New User”

Because it is not a project admin, I’ll need to give it developer or admin perms to the private registry

And the library if I end up using the Chart repo

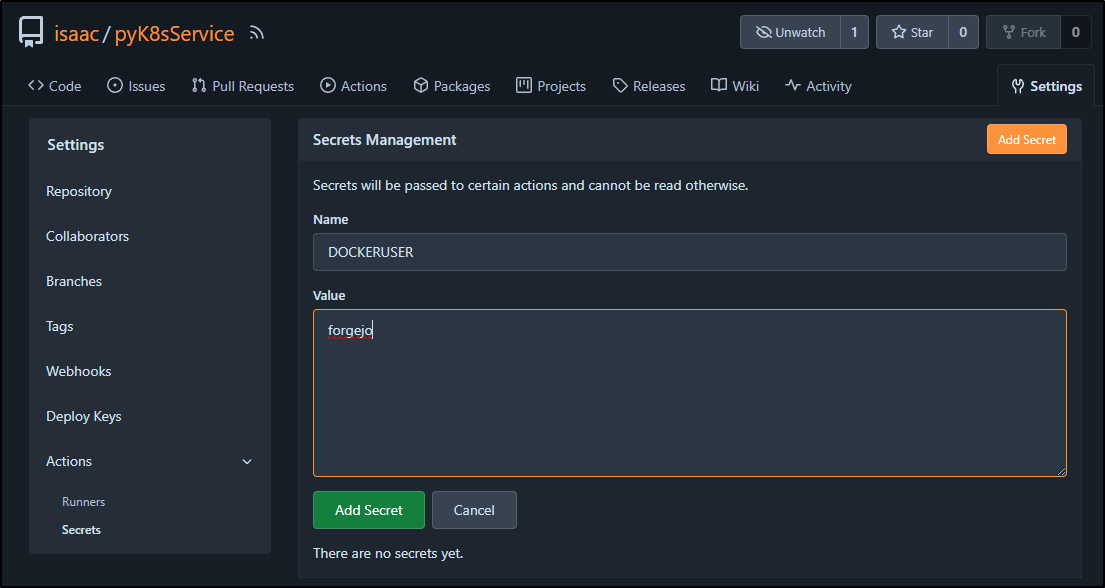

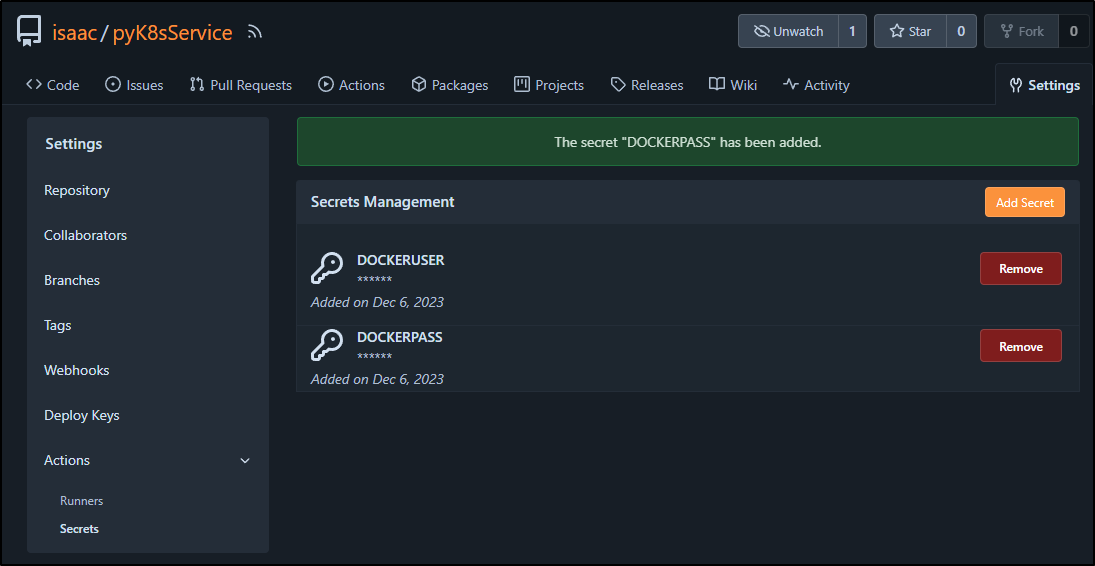

Back in Forgejo, I’ll want to create DOCKERUSER and DOCKERPASS secrets

I can now use these in my pipelines

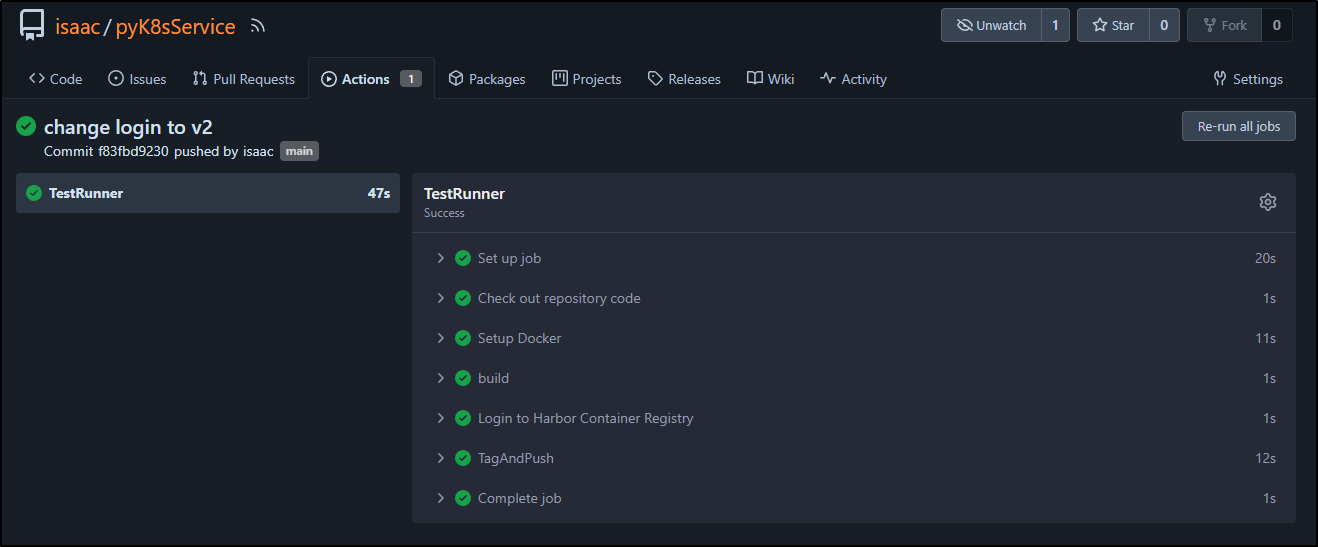

The latest docker-login action caused me troubles initially, but when I moved back to docker/login-action@v2 I was fine:

This should build our image and then push to the CR using the credential we created

    name: build
    run-name: $ building test
    on: [push]

    jobs:
      TestRunner:
        steps:
          - name: Check out repository code
            uses: actions/checkout@v3
          - name: Setup Docker
            run: |
              # if running as non-root, add sudo
              echo "Hello"
              export
              apt-get update && \
                apt-get install -y \
                ca-certificates \
                curl \
                gnupg \
                sudo \
                lsb-release
              curl -fsSL https://download.docker.com/linux/ubuntu/gpg | gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg
              echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu focal stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null
              apt-get update
              apt install -y docker-ce-cli
          - name: build
            run: |
              docker build -t pyk8singresssvc:$GITHUB_RUN_NUMBER .
          - name: Login to Harbor Container Registry
            uses: docker/login-action@v2
            with:
              registry: harbor.freshbrewed.science
              username: $
              password: $
          - name: TagAndPush
            run: |
              # if running as non-root, add sudo
              echo hi
              docker tag pyk8singresssvc:$GITHUB_RUN_NUMBER harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:$GITHUB_RUN_NUMBER
              docker push harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:$GITHUB_RUN_NUMBER

Which built

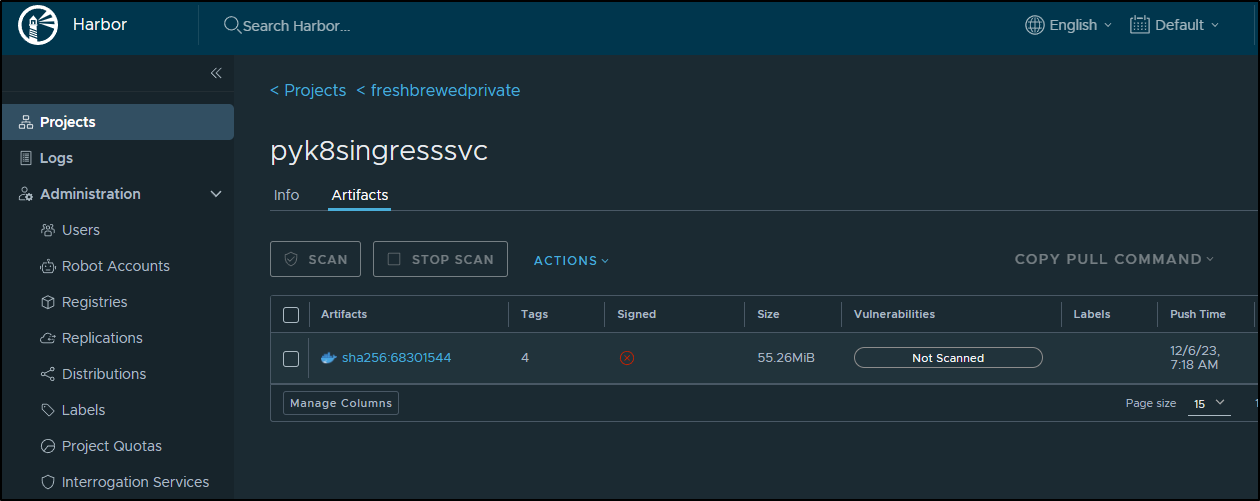

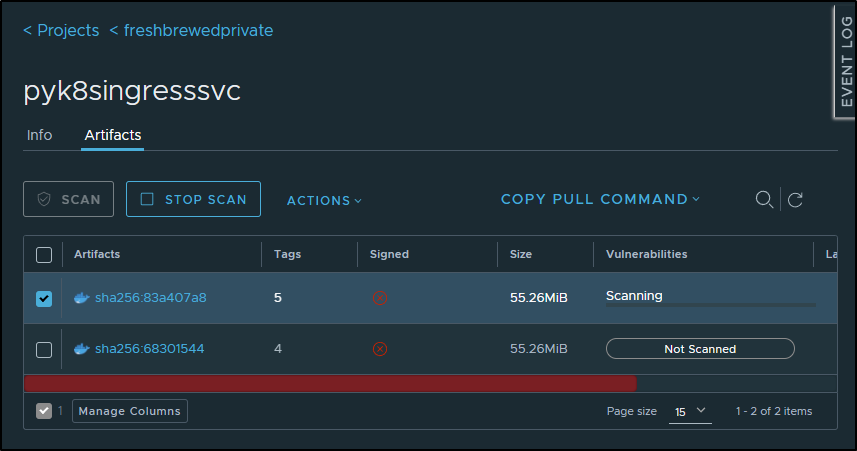

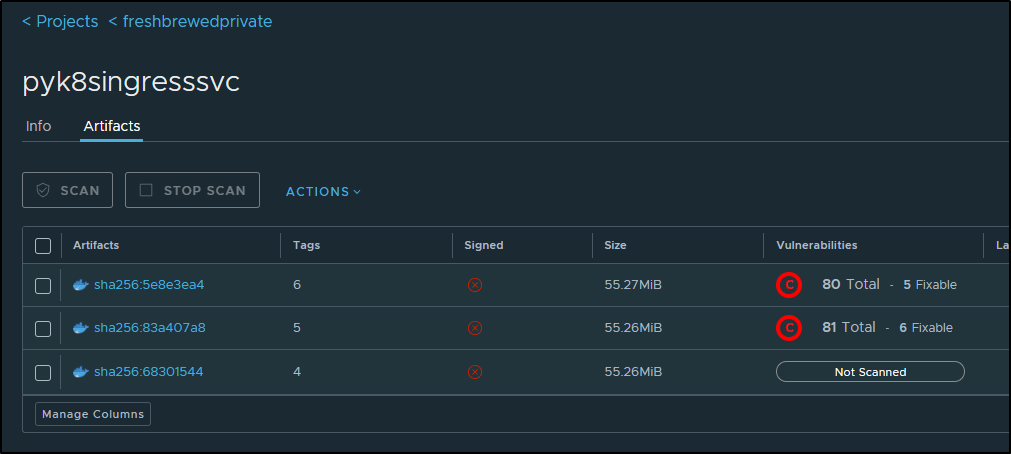

And I can now see

One issue we had better solve now is the space consumed on the Docker host. Because our runner uses a Docker shim to the Docker host, I’ll end up creating a whole lot of images locally, consuming disk unnecessarily.

Since both tags point at the same image SHA, to save disk I’ll need to remove both when the build completes.

Testing interactively:

    builder@builder-T100:~$ docker image rm pyk8singresssvc:4
    Untagged: pyk8singresssvc:4
    builder@builder-T100:~$ docker image rm harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:4
    Untagged: harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:4
    Untagged: harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc@sha256:68301544b517ac213f50fbcabdb30163f2d089da7f4a475106efc34414144667
    Deleted: sha256:74b856960bb496f983a7995aa815b041e4975f2f12fa99b69faaca1337405e21
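As a rough illustration of why both tags have to go, here is a small Python sketch that builds the two references for a given run number and the matching `docker image rm` command lines. The helper names `image_refs` and `cleanup_commands` are hypothetical and not part of the pipeline; the registry, project, and image names are taken from the workflow.

```python
# Hypothetical helpers: build both tags for a run and the commands to remove them.
REGISTRY = "harbor.freshbrewed.science"
PROJECT = "freshbrewedprivate"
IMAGE = "pyk8singresssvc"


def image_refs(run_number: int) -> list[str]:
    """Return the local tag and the Harbor tag that share one image ID."""
    local = f"{IMAGE}:{run_number}"
    remote = f"{REGISTRY}/{PROJECT}/{IMAGE}:{run_number}"
    return [local, remote]


def cleanup_commands(run_number: int) -> list[list[str]]:
    """Return one `docker image rm` argv per tag.

    Removing a tag only untags it; the image itself is deleted once the
    last tag referencing it is removed, so both tags must be covered.
    """
    return [["docker", "image", "rm", ref] for ref in image_refs(run_number)]


for argv in cleanup_commands(4):
    print(" ".join(argv))
```

This only prints the commands; it matches what the interactive test showed, where the first removal untagged and the second one deleted the image.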

I’ll just add a cleanup task

    $ cat .gitea/workflows/build.yml
    name: build
    run-name: $ building test
    on: [push]

    jobs:
      TestRunner:
        steps:
          - name: Check out repository code
            uses: actions/checkout@v3
          - name: Setup Docker
            run: |
              # if running as non-root, add sudo
              echo "Hello"
              export
              apt-get update && \
                apt-get install -y \
                ca-certificates \
                curl \
                gnupg \
                sudo \
                lsb-release
              curl -fsSL https://download.docker.com/linux/ubuntu/gpg | gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg
              echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu focal stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null
              apt-get update
              apt install -y docker-ce-cli
          - name: build
            run: |
              docker build -t pyk8singresssvc:$GITHUB_RUN_NUMBER .
          - name: Login to Harbor Container Registry
            uses: docker/login-action@v2
            with:
              registry: harbor.freshbrewed.science
              username: $
              password: $
          - name: TagAndPush
            run: |
              # if running as non-root, add sudo
              echo hi
              docker tag pyk8singresssvc:$GITHUB_RUN_NUMBER harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:$GITHUB_RUN_NUMBER
              docker push harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:$GITHUB_RUN_NUMBER
          - name: Cleanup
            run: |
              docker image rm pyk8singresssvc:$GITHUB_RUN_NUMBER
              docker image rm harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:$GITHUB_RUN_NUMBER

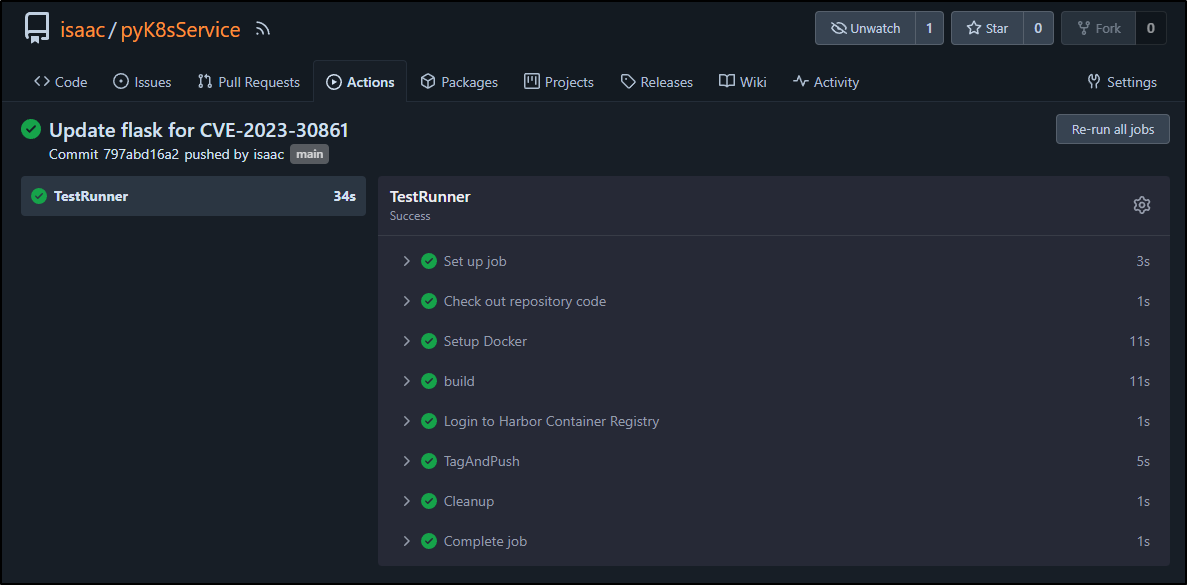

We can see that in action:

At this point, in a basic way, we have CI covered

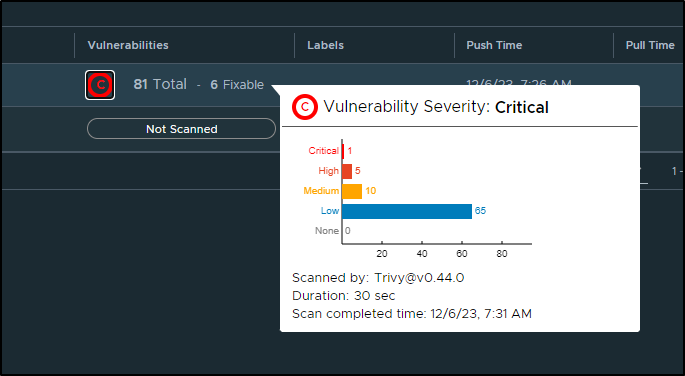

I’ll do a quick scan in Harbor to check for any big CVEs I’ll want to handle

Which found at least 6 for me to fix

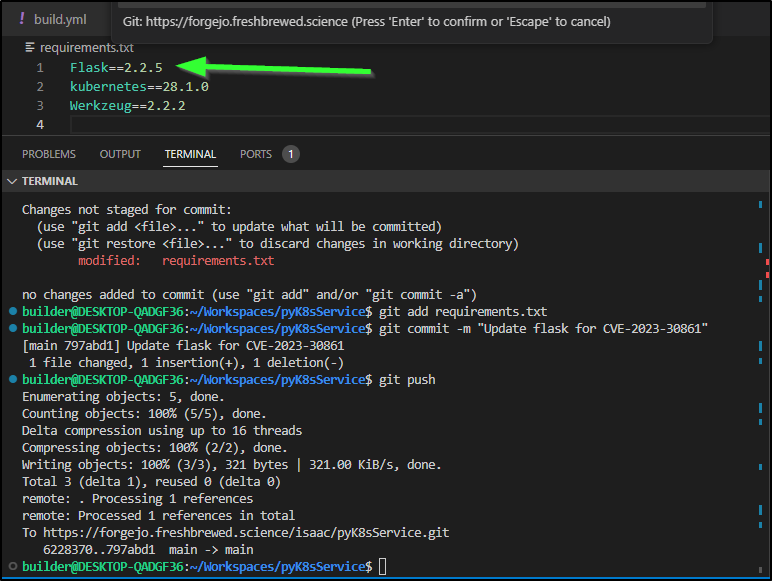

The ones that are easy to correct seem like low hanging fruit. For instance, a CVE around the Flask version

Just means I’ll update the flask version and rebuild

which built

and I can now rescan and see one less issue on the new tag for build 6

I’ll now add my base64’ed kubeconfig to my repo secrets. Since this is operating in my network, I can actually use the internal config with private IP addresses

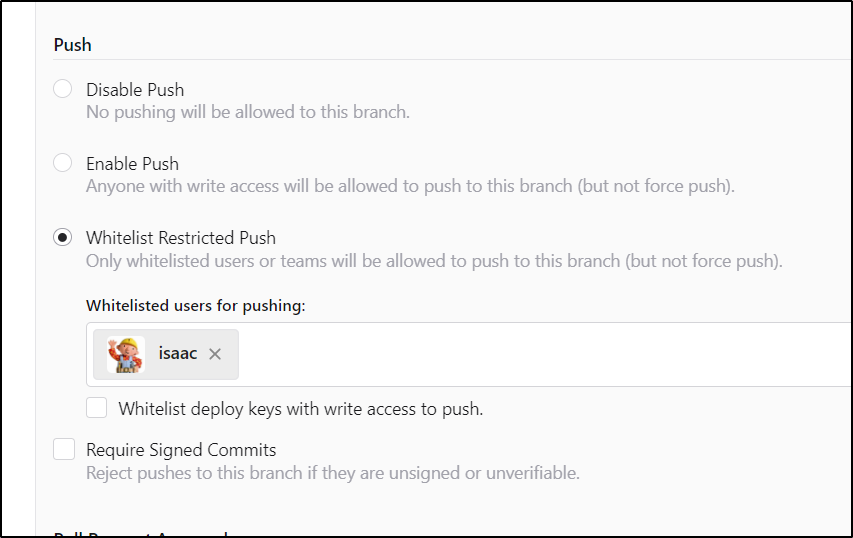

I should mention that now that I’m putting something like a kubeconfig in secrets, this main branch is going to get some protections

Now, I can add a Deploy step that uses the Kubeconfig to deploy

    name: build
    run-name: $ building test
    on: [push]

    jobs:
      TestRunner:
        steps:
          - name: Check out repository code
            uses: actions/checkout@v3
          - name: Setup Docker
            run: |
              # if running as non-root, add sudo
              echo "Hello"
              export
              apt-get update && \
                apt-get install -y \
                ca-certificates \
                curl \
                gnupg \
                sudo \
                lsb-release
              curl -fsSL https://download.docker.com/linux/ubuntu/gpg | gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg
              echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu focal stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null
              apt-get update
              apt install -y docker-ce-cli
          - name: build
            run: |
              docker build -t pyk8singresssvc:$GITHUB_RUN_NUMBER .
          - name: Login to Harbor Container Registry
            uses: docker/login-action@v2
            with:
              registry: harbor.freshbrewed.science
              username: $
              password: $
          - name: TagAndPush
            run: |
              # if running as non-root, add sudo
              echo hi
              docker tag pyk8singresssvc:$GITHUB_RUN_NUMBER harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:$GITHUB_RUN_NUMBER
              docker push harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:$GITHUB_RUN_NUMBER
          - name: Cleanup
            run: |
              docker image rm pyk8singresssvc:$GITHUB_RUN_NUMBER
              docker image rm harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:$GITHUB_RUN_NUMBER
      Deploy:
        needs: TestRunner
        steps:
          - name: Check out repository code
            uses: actions/checkout@v3
          - name: GetKubectl
            run: |
              curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"
              chmod 755 ./kubectl
          - name: createKConfig
            run: |
              echo $ | base64 --decode > ./kconfig
          - name: update build
            run: |
              cat testK8s.yaml | sed "s/image: .*/image: harbor.freshbrewed.science\/freshbrewedprivate\/pyk8singresssvc:$GITHUB_RUN_NUMBER/g" > ./runK8s.yaml
              cat ./runK8s.yaml
              ./kubectl apply -f ./runK8s.yaml --kubeconfig=./kconfig

which built and applied

I can also check the cluster to see the latest image was applied

    builder@LuiGi17:~/Workspaces/k8sChecker2/my-app$ kubectl get pods -l app=list-services
    NAME                                        READY   STATUS    RESTARTS   AGE
    list-services-deployment-5f7559db96-c7vxn   1/1     Running   0          3m3s
    builder@LuiGi17:~/Workspaces/k8sChecker2/my-app$ kubectl get pods -l app=list-services -o yaml | grep image:
        - image: harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:11
          image: harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc:11
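To spell out what the sed line in the update build step does, here is an approximate Python equivalent. It is only a sketch: `set_image_tag` is a hypothetical name, and the sample manifest (including the container name) is made up for illustration rather than copied from testK8s.yaml.

```python
import re

REPO = "harbor.freshbrewed.science/freshbrewedprivate/pyk8singresssvc"


def set_image_tag(manifest: str, run_number: str) -> str:
    """Rewrite every 'image: ...' line to the Harbor image for this run.

    Mirrors sed "s/image: .*/image: <repo>:<tag>/g": '.' does not match a
    newline, so each match stops at the end of its line.
    """
    return re.sub(r"image: .*", f"image: {REPO}:{run_number}", manifest)


# Made-up manifest fragment standing in for testK8s.yaml
sample = """\
    spec:
      containers:
      - name: list-services
        image: placeholder:latest
"""
print(set_image_tag(sample, "11"))
```

Like the sed version, this replaces every `image:` line it finds, so it fits a manifest with a single container image and would need tightening for manifests with several.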

Disabled Service

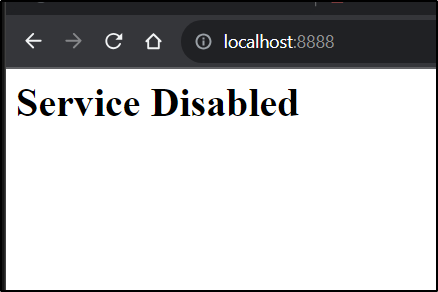

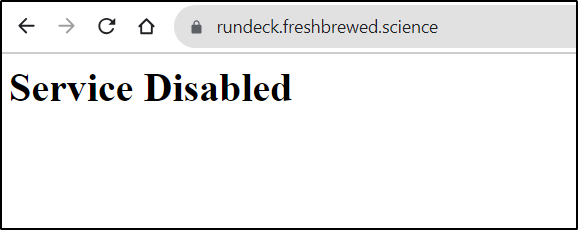

I want some basic splash page for a disabled service. It should at the very least respond with ‘Service Disabled’

Something like this:

    $ cat disabledSvc.yaml
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: disabled-nginx-deployment
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: disablednginx
      template:
        metadata:
          labels:
            app: disablednginx
        spec:
          containers:
          - name: disablednginx
            image: nginx:1.14.2
            ports:
            - containerPort: 80
            volumeMounts:
            - name: html-volume
              mountPath: /usr/share/nginx/html
          volumes:
          - name: html-volume
            configMap:
              name: html-configmap
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: html-configmap
    data:
      index.html: |
        <html>
        <body>
        <h1>Service Disabled</h1>
        </body>
        </html>
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: disabledservice
    spec:
      selector:
        app: disablednginx
      ports:
      - protocol: TCP
        port: 80
        targetPort: 80

I’ll add it to the build step:

          - name: update build
            run: |
              cat testK8s.yaml | sed "s/image: .*/image: harbor.freshbrewed.science\/freshbrewedprivate\/pyk8singresssvc:$GITHUB_RUN_NUMBER/g" > ./runK8s.yaml
              cat ./runK8s.yaml
              ./kubectl apply -f ./disabledSvc.yaml --kubeconfig=./kconfig
              ./kubectl apply -f ./runK8s.yaml --kubeconfig=./kconfig

I can then test with a port-forward

$ kubectl port-forward disabled-nginx-deployment-b49d6cc4c-v65f8 8888:80
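With the port-forward running, the same check could be scripted. The following is a minimal sketch, assuming the forward is listening on localhost:8888 as in the command above; `is_disabled_page` and `check_disabled` are hypothetical helper names.

```python
from urllib.request import urlopen


def is_disabled_page(html: str) -> bool:
    """True when the body carries the splash text served from html-configmap."""
    return "Service Disabled" in html


def check_disabled(url: str = "http://localhost:8888/") -> bool:
    """Fetch the forwarded port and look for the splash text.

    Only works while the kubectl port-forward is running.
    """
    with urlopen(url, timeout=5) as resp:
        return is_disabled_page(resp.read().decode("utf-8"))
```

Calling `check_disabled()` while the port-forward is up should return True if nginx is serving the ConfigMap's index.html.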

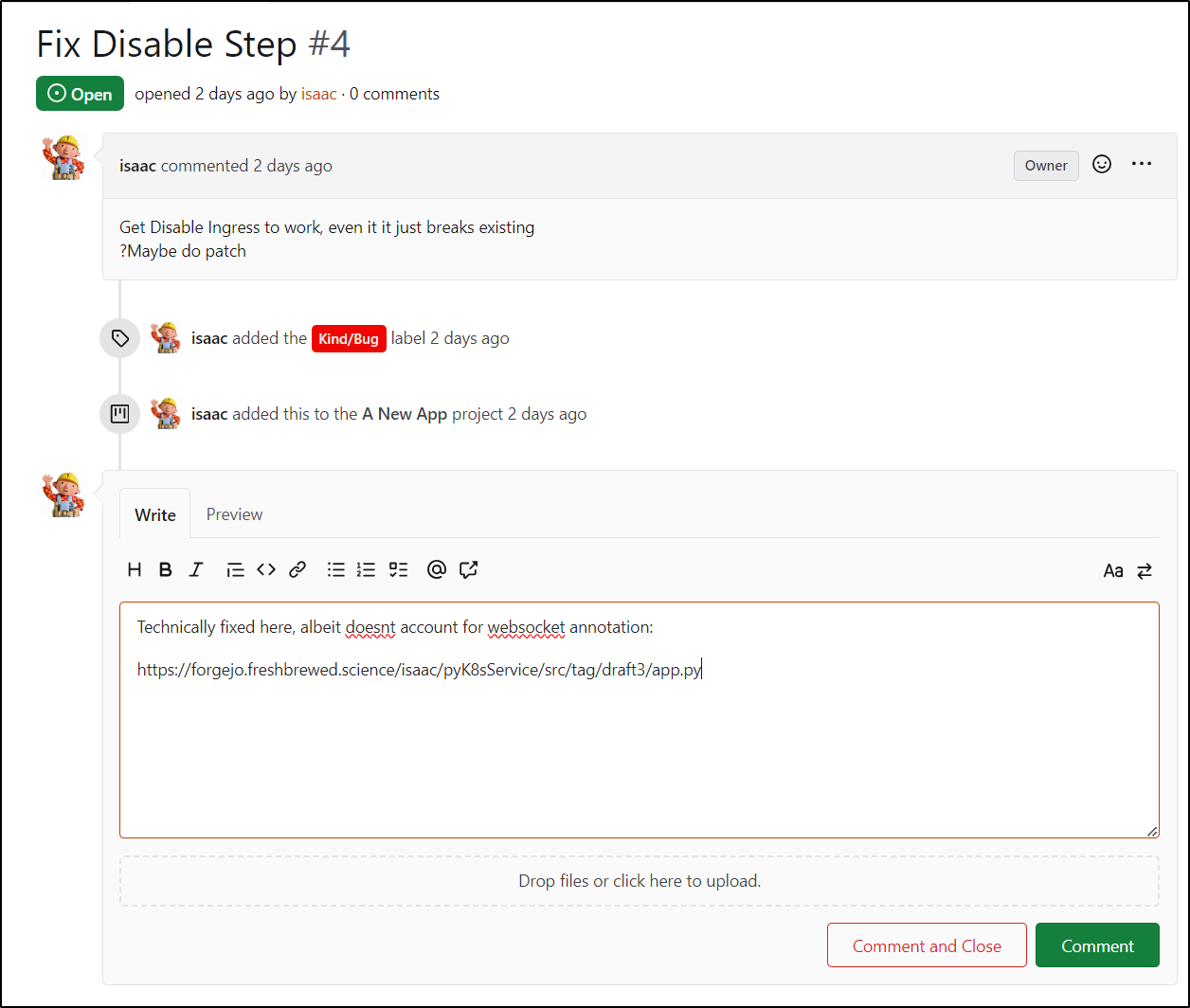

I did manage to get the disable service working by patching the service name:

    import sys

    from kubernetes import client, config


    def update_ingress_service(ingress_name, new_service_name):
        """Point the first rule/path of an Ingress at a different backend service."""
        config.load_incluster_config()
        v1 = client.NetworkingV1Api()
        # The namespace this pod runs in, as mounted by the service account
        with open("/var/run/secrets/kubernetes.io/serviceaccount/namespace") as f:
            namespace = f.read().strip()
        print("in update_ingress_service", file=sys.stderr)
        try:
            ingress = v1.read_namespaced_ingress(name=ingress_name, namespace=namespace)
            # Update the Ingress backend service to 'new_service_name'
            ingress.spec.rules[0].http.paths[0].backend.service.name = new_service_name
            # Patch the same ingress we just read, rather than a hardcoded name/namespace
            v1.patch_namespaced_ingress(
                name=ingress_name,
                namespace=namespace,
                body=ingress,
            )
        except client.rest.ApiException as e:
            print(f"error in update_ingress_service: {e}", file=sys.stderr)
            return f"Error updating Ingress '{ingress_name}': {e}"
        return f"Ingress '{ingress_name}' updated to use service '{new_service_name}'"

Which we can see here:

$ kubectl get ingress rundeckingress -o yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

cert-manager.io/cluster-issuer: letsencrypt-prod

field.cattle.io/publicEndpoints: "null"

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"networking.k8s.io/v1","kind":"Ingress","metadata":{"annotations":{"cert-manager.io/cluster-issuer":"letsencrypt-prod","kubernetes.io/ingress.class":"nginx","kubernetes.io/tls-acme":"true","nginx.ingress.kubernetes.io/proxy-read-timeout":"3600","nginx.ingress.kubernetes.io/proxy-send-timeout":"3600","nginx.org/websocket-services":"rundeck-external-ip"},"labels":{"app.kubernetes.io/instance":"rundeckingress"},"name":"rundeckingress","namespace":"default"},"spec":{"rules":[{"host":"rundeck.freshbrewed.science","http":{"paths":[{"backend":{"service":{"name":"rundeck-external-ip","port":{"number":80}}},"path":"/","pathType":"ImplementationSpecific"}]}}],"tls":[{"hosts":["rundeck.freshbrewed.science"],"secretName":"rundeck-tls"}]}}

kubernetes.io/ingress.class: nginx

kubernetes.io/tls-acme: "true"

nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"

nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"

nginx.org/websocket-services: rundeck-external-ip

creationTimestamp: "2023-10-25T00:40:40Z"

generation: 2

labels:

app.kubernetes.io/instance: rundeckingress

name: rundeckingress

namespace: default

resourceVersion: "281502944"

uid: dc7dd8ec-5d7d-496b-b7b5-ab496e140a11

spec:

rules:

- host: rundeck.freshbrewed.science

http:

paths:

- backend:

service:

name: disabledservice

port:

number: 80

path: /

pathType: ImplementationSpecific

tls:

- hosts:

- rundeck.freshbrewed.science

secretName: rundeck-tls

status:

loadBalancer: {}

It almost worked. If I remove the websocket annotation (done by hand; I just added ‘xxx’ so I could stub it out), then it works:

$ kubectl get ingress rundeckingress -o yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

cert-manager.io/cluster-issuer: letsencrypt-prod

field.cattle.io/publicEndpoints: '[{"addresses":["192.168.1.215","192.168.1.36","192.168.1.57","192.168.1.78"],"port":443,"protocol":"HTTPS","serviceName":"default:disabledservice","ingressName":"default:rundeckingress","hostname":"rundeck.freshbrewed.science","path":"/","allNodes":false}]'

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"networking.k8s.io/v1","kind":"Ingress","metadata":{"annotations":{"cert-manager.io/cluster-issuer":"letsencrypt-prod","kubernetes.io/ingress.class":"nginx","kubernetes.io/tls-acme":"true","nginx.ingress.kubernetes.io/proxy-read-timeout":"3600","nginx.ingress.kubernetes.io/proxy-send-timeout":"3600","nginx.org/websocket-services":"rundeck-external-ip"},"labels":{"app.kubernetes.io/instance":"rundeckingress"},"name":"rundeckingress","namespace":"default"},"spec":{"rules":[{"host":"rundeck.freshbrewed.science","http":{"paths":[{"backend":{"service":{"name":"rundeck-external-ip","port":{"number":80}}},"path":"/","pathType":"ImplementationSpecific"}]}}],"tls":[{"hosts":["rundeck.freshbrewed.science"],"secretName":"rundeck-tls"}]}}

kubernetes.io/ingress.class: nginx

kubernetes.io/tls-acme: "true"

nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"

nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"

nginx.org/websocket-xxx-services: rundeck-external-ip

creationTimestamp: "2023-10-25T00:40:40Z"

generation: 5

labels:

app.kubernetes.io/instance: rundeckingress

name: rundeckingress

namespace: default

resourceVersion: "282160853"

uid: dc7dd8ec-5d7d-496b-b7b5-ab496e140a11

spec:

rules:

- host: rundeck.freshbrewed.science

http:

paths:

- backend:

service:

name: disabledservice

port:

number: 80

path: /

pathType: ImplementationSpecific

tls:

- hosts:

- rundeck.freshbrewed.science

secretName: rundeck-tls

status:

loadBalancer:

ingress:

- ip: 192.168.1.215

- ip: 192.168.1.36

- ip: 192.168.1.57

- ip: 192.168.1.78

and if I set it back, again, by hand, it works again

builder@LuiGi17:~/Workspaces/pyK8sService$ kubectl edit ingress rundeckingress

ingress.networking.k8s.io/rundeckingress edited

builder@LuiGi17:~/Workspaces/pyK8sService$ kubectl get ingress rundeckingress -o yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

cert-manager.io/cluster-issuer: letsencrypt-prod

field.cattle.io/publicEndpoints: '[{"addresses":["192.168.1.215","192.168.1.36","192.168.1.57","192.168.1.78"],"port":443,"protocol":"HTTPS","serviceName":"default:rundeck-external-ip","ingressName":"default:rundeckingress","hostname":"rundeck.freshbrewed.science","path":"/","allNodes":false}]'

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"networking.k8s.io/v1","kind":"Ingress","metadata":{"annotations":{"cert-manager.io/cluster-issuer":"letsencrypt-prod","kubernetes.io/ingress.class":"nginx","kubernetes.io/tls-acme":"true","nginx.ingress.kubernetes.io/proxy-read-timeout":"3600","nginx.ingress.kubernetes.io/proxy-send-timeout":"3600","nginx.org/websocket-services":"rundeck-external-ip"},"labels":{"app.kubernetes.io/instance":"rundeckingress"},"name":"rundeckingress","namespace":"default"},"spec":{"rules":[{"host":"rundeck.freshbrewed.science","http":{"paths":[{"backend":{"service":{"name":"rundeck-external-ip","port":{"number":80}}},"path":"/","pathType":"ImplementationSpecific"}]}}],"tls":[{"hosts":["rundeck.freshbrewed.science"],"secretName":"rundeck-tls"}]}}

kubernetes.io/ingress.class: nginx

kubernetes.io/tls-acme: "true"

nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"

nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"

nginx.org/websocket-services: rundeck-external-ip

creationTimestamp: "2023-10-25T00:40:40Z"

generation: 6

labels:

app.kubernetes.io/instance: rundeckingress

name: rundeckingress

namespace: default

resourceVersion: "282162195"

uid: dc7dd8ec-5d7d-496b-b7b5-ab496e140a11

spec:

rules:

- host: rundeck.freshbrewed.science

http:

paths:

- backend:

service:

name: rundeck-external-ip

port:

number: 80

path: /

pathType: ImplementationSpecific

tls:

- hosts:

- rundeck.freshbrewed.science

secretName: rundeck-tls

status:

loadBalancer:

ingress:

- ip: 192.168.1.215

- ip: 192.168.1.36

- ip: 192.168.1.57

- ip: 192.168.1.78

So herein lies a problem. Ingresses vary.

For instance, how can I handle something like Harbor, which routes several paths to two different services? Here is just the spec block:

    spec:
      ingressClassName: nginx
      rules:
      - host: harbor.freshbrewed.science
        http:
          paths:
          - backend:
              service:
                name: harbor-registry2-core
                port:
                  number: 80
            path: /api/
            pathType: Prefix
          - backend:
              service:
                name: harbor-registry2-core
                port:
                  number: 80
            path: /service/
            pathType: Prefix
          - backend:
              service:
                name: harbor-registry2-core
                port:
                  number: 80
            path: /v2/
            pathType: Prefix
          - backend:
              service:
                name: harbor-registry2-core
                port:
                  number: 80
            path: /chartrepo/
            pathType: Prefix
          - backend:
              service:
                name: harbor-registry2-core
                port:
                  number: 80
            path: /c/
            pathType: Prefix
          - backend:
              service:
                name: harbor-registry2-portal
                port:
                  number: 80
            path: /
            pathType: Prefix
      tls:
      - hosts:
        - harbor.freshbrewed.science
        secretName: harbor.freshbrewed.science-cert
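To make the problem concrete: the patch in update_ingress_service only touches rules[0].http.paths[0], so on an ingress like this one only /api/ would move. A rough sketch of looping over every path instead, using plain dicts rather than the Kubernetes client objects, might look like the following. The function name is hypothetical, the sample is trimmed to two of the six paths, and this is not what the app does today.

```python
import copy


def point_all_paths_at(spec: dict, service_name: str, port: int = 80) -> dict:
    """Return a copy of an Ingress spec with every rule/path backend
    pointed at one service, not just rules[0].http.paths[0]."""
    new_spec = copy.deepcopy(spec)
    for rule in new_spec.get("rules", []):
        for path in rule.get("http", {}).get("paths", []):
            path["backend"] = {
                "service": {"name": service_name, "port": {"number": port}}
            }
    return new_spec


# Trimmed version of the Harbor spec: one core path and the portal path
harbor_like = {
    "rules": [{
        "host": "harbor.freshbrewed.science",
        "http": {"paths": [
            {"path": "/api/", "pathType": "Prefix",
             "backend": {"service": {"name": "harbor-registry2-core",
                                     "port": {"number": 80}}}},
            {"path": "/", "pathType": "Prefix",
             "backend": {"service": {"name": "harbor-registry2-portal",
                                     "port": {"number": 80}}}},
        ]},
    }]
}

disabled = point_all_paths_at(harbor_like, "disabledservice")
names = [p["backend"]["service"]["name"]
         for p in disabled["rules"][0]["http"]["paths"]]
print(names)
```

Even this leaves open how to restore the original mix of backends afterwards, since the disabled spec no longer records which path went to which service.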

Pivoting

It has me thinking that perhaps I’m going about this wrong and what is really needed is simpler. Thinking through the flow if I had to do it by hand, I would very likely:

- save the current ingress YAML somewhere so I could restore it verbatim

- capture the host (URL) being served

- delete the current ingress, then create a new one from some boilerplate that sends that $hostname to my disabled service

My “undo”, again if doing it by hand, would be:

- fetch the ingress I saved and have it handy

- delete the current ‘disabled’ ingress

- relaunch the former with kubectl apply -f

So perhaps I should pivot now… To automate this, I could:

- save the current ingress YAML to a bucket/storage account; a PVC should work

- have a canned boilerplate ingress template ready

- a CM would be good, as we can feed this with some common options

- this way those using VirtualService with Istio/ASM or those using Traefik can just use the boilerplate

- if there are other Ingress providers, they’ll see the common pattern and add them in (the power of Open Source!)

This means adding a CM, boilerplates, docs and a PVC.
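As a first pass at what that flow might look like in code, here is a small Python sketch using plain dicts and a directory standing in for the PVC mount. All of the function names are hypothetical, the boilerplate is deliberately minimal (no TLS block, annotations, or ingress class), and JSON is used for storage only to keep the example to the standard library.

```python
import json
import tempfile
from pathlib import Path


def disabled_ingress(name, namespace, host, service="disabledservice", port=80):
    """Build a minimal networking.k8s.io/v1 Ingress sending `host` to the disabled service."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"rules": [{"host": host, "http": {"paths": [{
            "path": "/",
            "pathType": "Prefix",
            "backend": {"service": {"name": service, "port": {"number": port}}},
        }]}}]},
    }


def save_original(ingress: dict, store_dir: str) -> Path:
    """Write the current ingress to the store so it can be restored verbatim."""
    meta = ingress["metadata"]
    out = Path(store_dir) / f"{meta['namespace']}-{meta['name']}.json"
    out.write_text(json.dumps(ingress, indent=2))
    return out


def load_original(path: Path) -> dict:
    """Read a previously saved ingress back for the 'undo' step."""
    return json.loads(path.read_text())


# Demo: a temporary directory stands in for the mounted PVC
original = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "rundeckingress", "namespace": "default"},
    "spec": {"rules": [{"host": "rundeck.freshbrewed.science"}]},
}
with tempfile.TemporaryDirectory() as store:
    saved = save_original(original, store)
    host = original["spec"]["rules"][0]["host"]
    replacement = disabled_ingress("rundeckingress", "default", host)
    restored = load_original(saved)

print(replacement["spec"]["rules"][0]["http"]["paths"][0]["backend"]["service"]["name"])
print(restored == original)
```

A real template would likely need the TLS section and ingress class carried over as well, which is where feeding options in through the CM would come in.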

Story Time

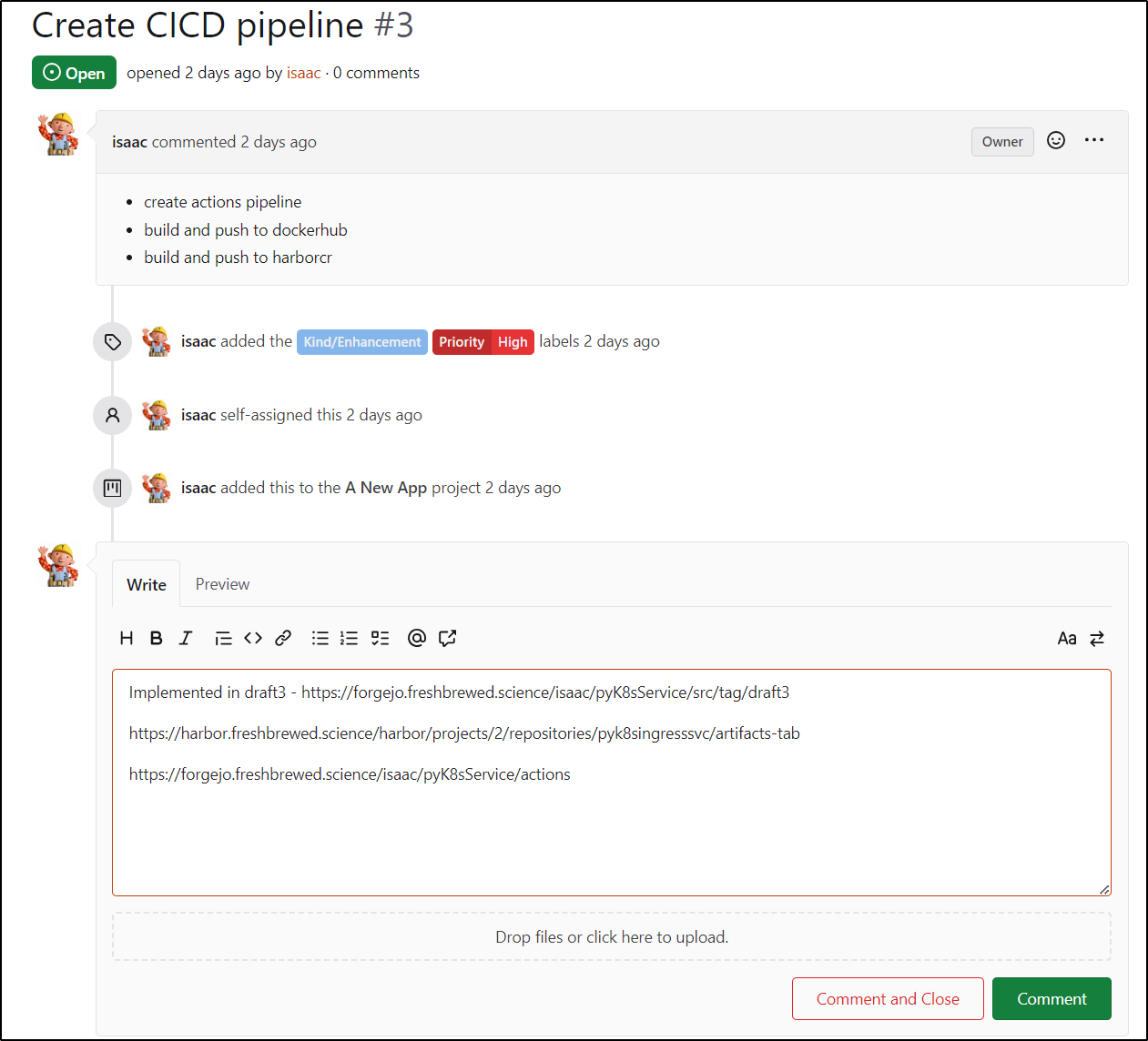

First, the CICD pipeline was created and we successfully pushed to Harbor CR, so I can close ticket 3

I’m going to close Issue 4 as well, though we have some new work to do

I created a couple issues to cover our next steps:

- Save disabled ingress to a PVC

- Create PVC and mount as volume

- Show index that lets us flip

- Use templates to deploy (in CM)

Summary

We covered a lot: creating the CICD pipelines, fixing the app to properly change an ingress’s service name, and building out a basic “Disabled Service” deployment. We set up DR to Gitea and Codeberg so we can rest assured our repo’s contents are secured.

As for next steps: once I realized that patching the service name wouldn’t scale to other ingress classes, it made sense to switch to a template that can be kept in a ConfigMap. Next time, we’ll create a volume to store the disabled ingresses and, lastly, set up a nice web UI picker to turn ingresses on and off in a more straightforward manner.

We did punt on getting proper release tagging (save for adding branch). I’ll want to tackle dockerhub and releases in the next sprint.

Addendum

I really liked the AI art this time. You’re welcome to download and use however you like: