Published: Jul 20, 2023 by Isaac Johnson

I wanted to circle back on Configure8 now that they have announced cost management features. I had a bit of an inside scoop there but was under NDA. I’m guessing some of you might have wondered why I encouraged folks to set up the OpenCost tagging in AWS in the last post.

Today we’ll look at AWS Cost Management (including a follow-up to last time when I attempted to lower my bill). We’ll dig into some specifics and wrap by checking out a few integrations we haven’t tried to date: Azure DevOps and SonarSource. To make that extra fun, we’ll set that up on some public AzDO projects, Fortran_CICD and Perl_CICD.

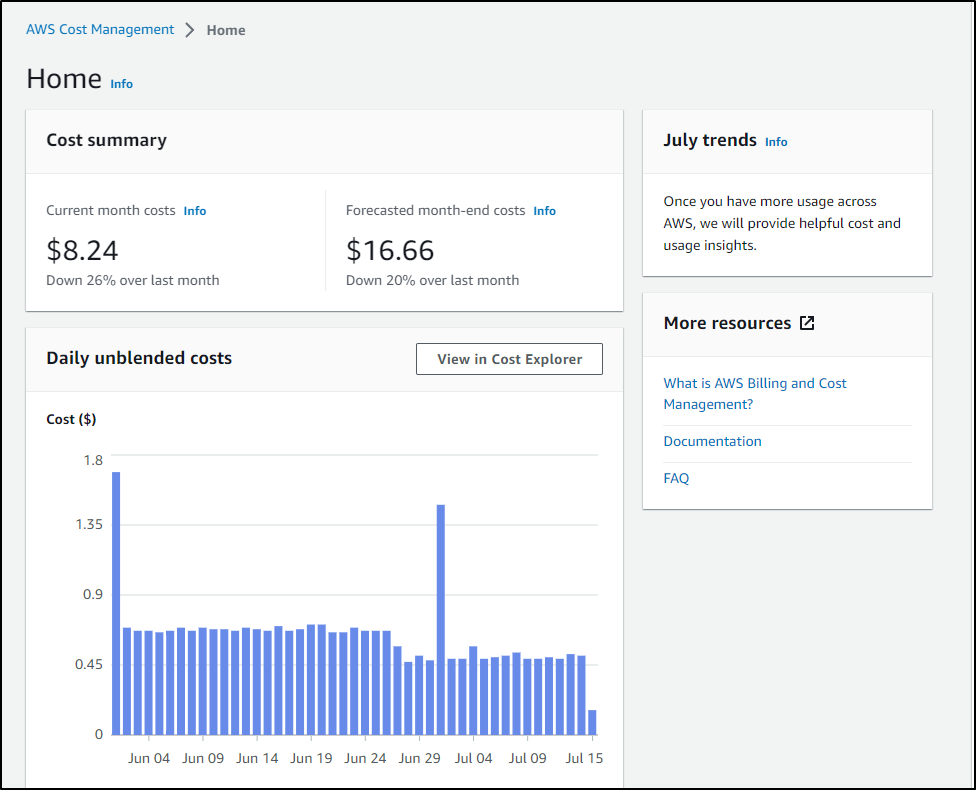

AWS Savings

I mentioned using Configure8 to find some unused EC2s in the last post. It’s been a while, so I figured I would share the good news that it did, in fact, bring my bills down.

Pricing Details in Environments

As you recall, in the last post we covered setting up costing, though I really did not say why. I was a bit under embargo until release, but now Configure8 has Cost details!

So, had you done similar, you should be able to follow along. Don’t worry if you haven’t; just go halfway down the last post to “Gathering Costs”.

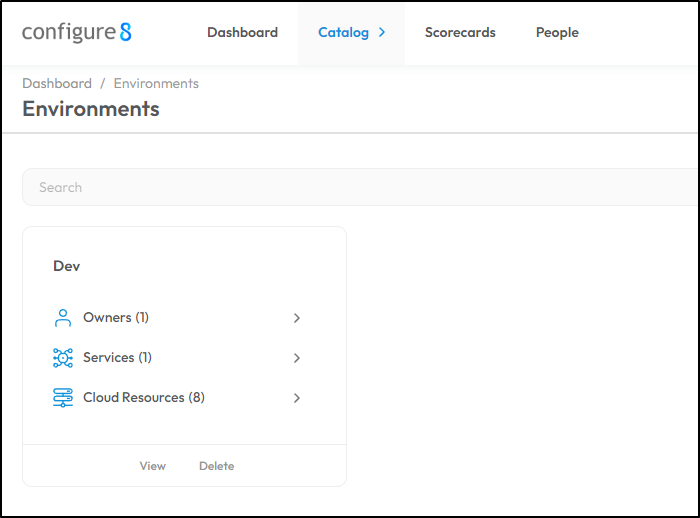

We can now look at an environment

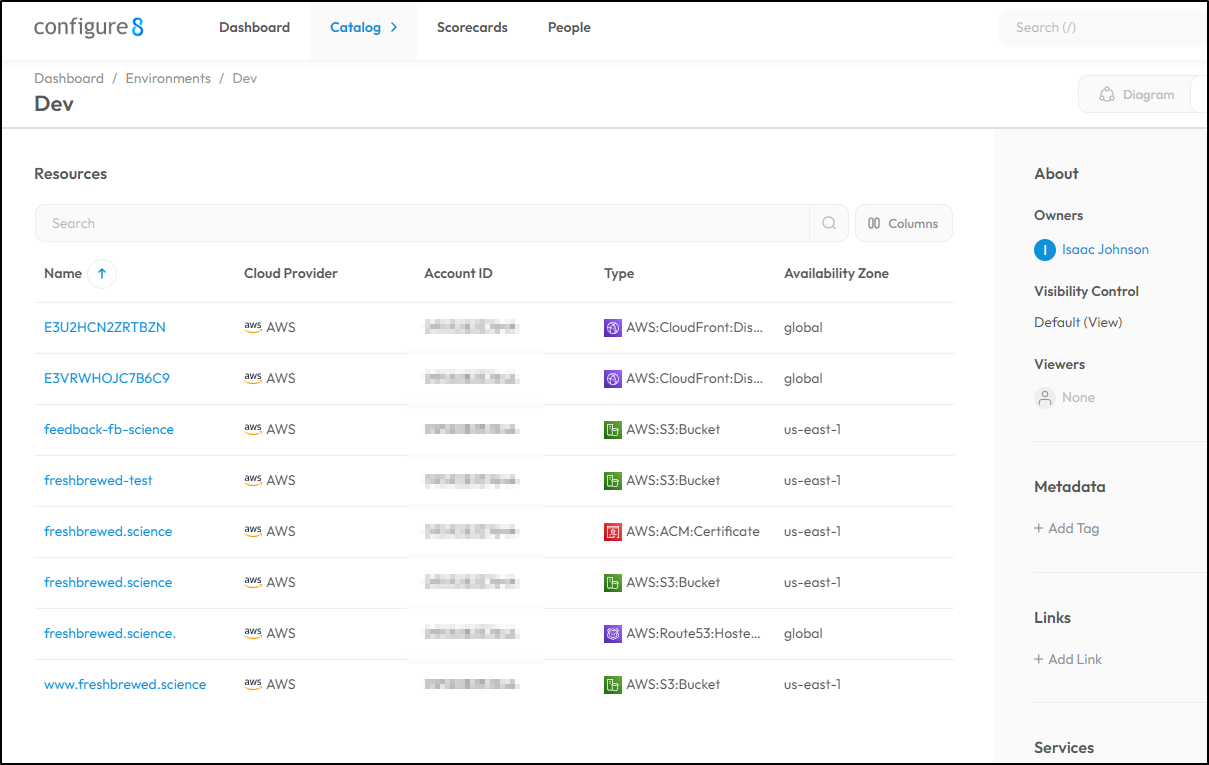

And pick either “View” or “Cloud Resources”. I’ll now see the Cloud Resources that make up my Dev environment

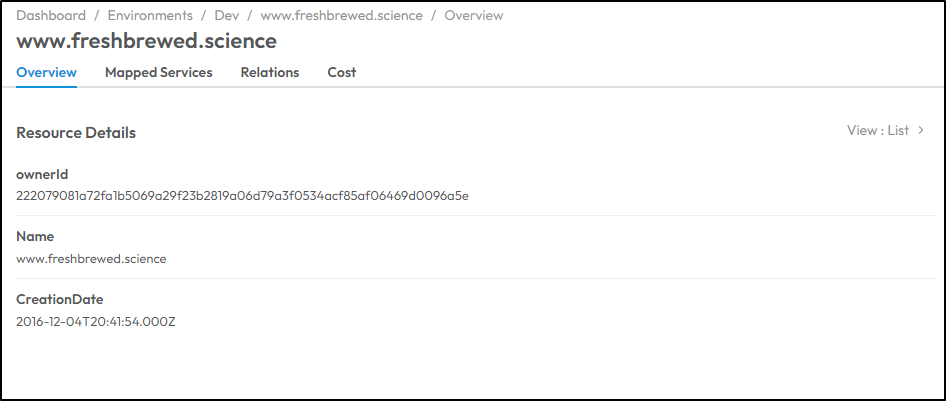

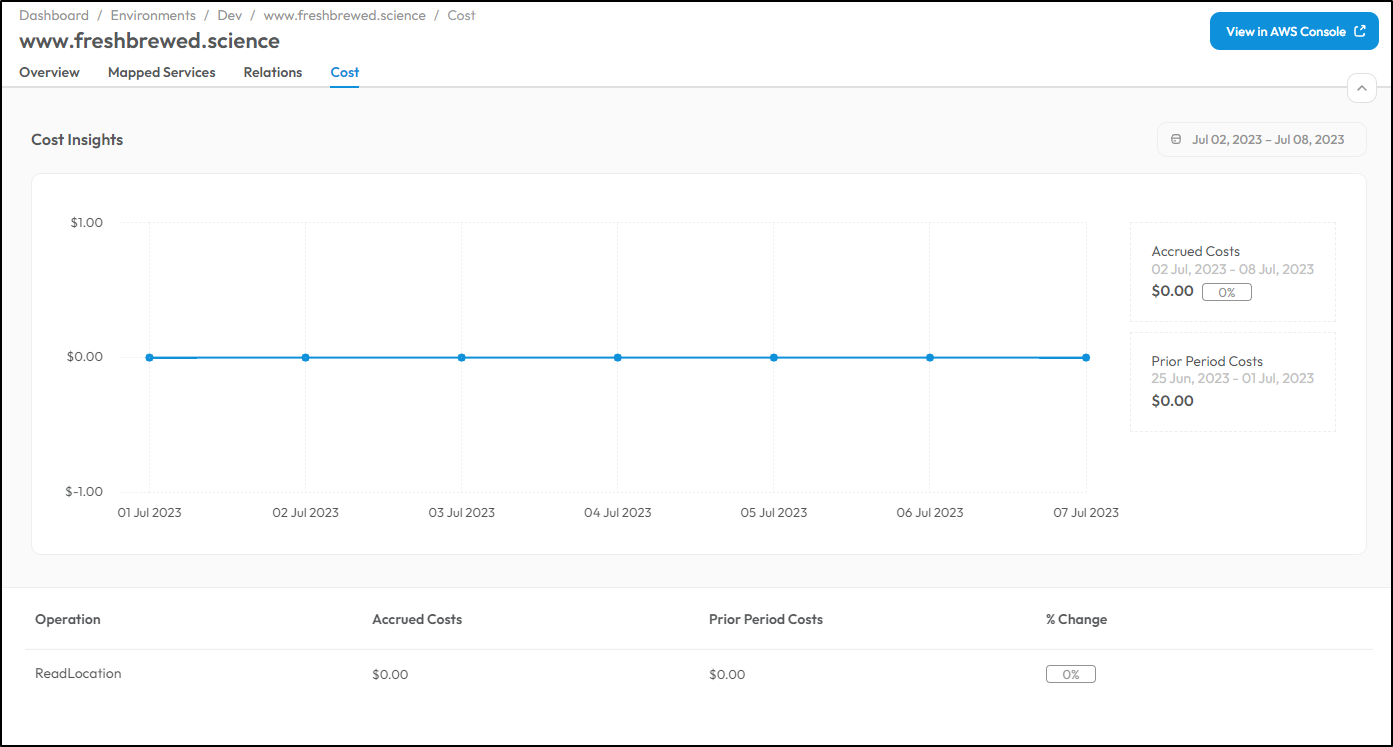

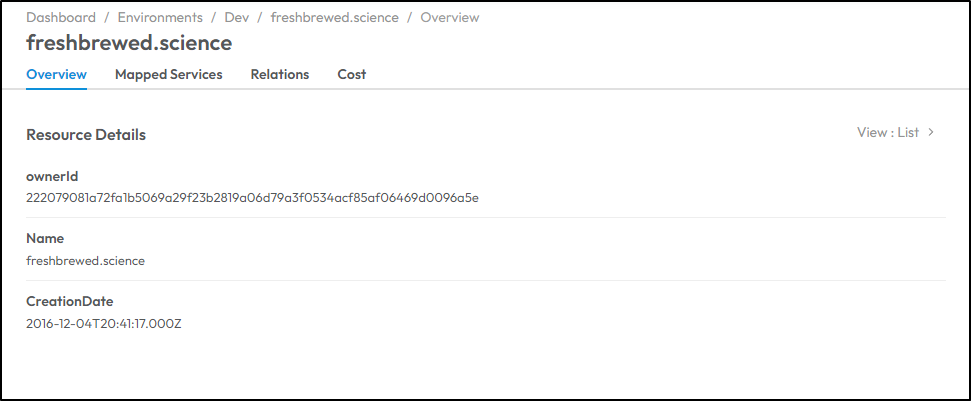

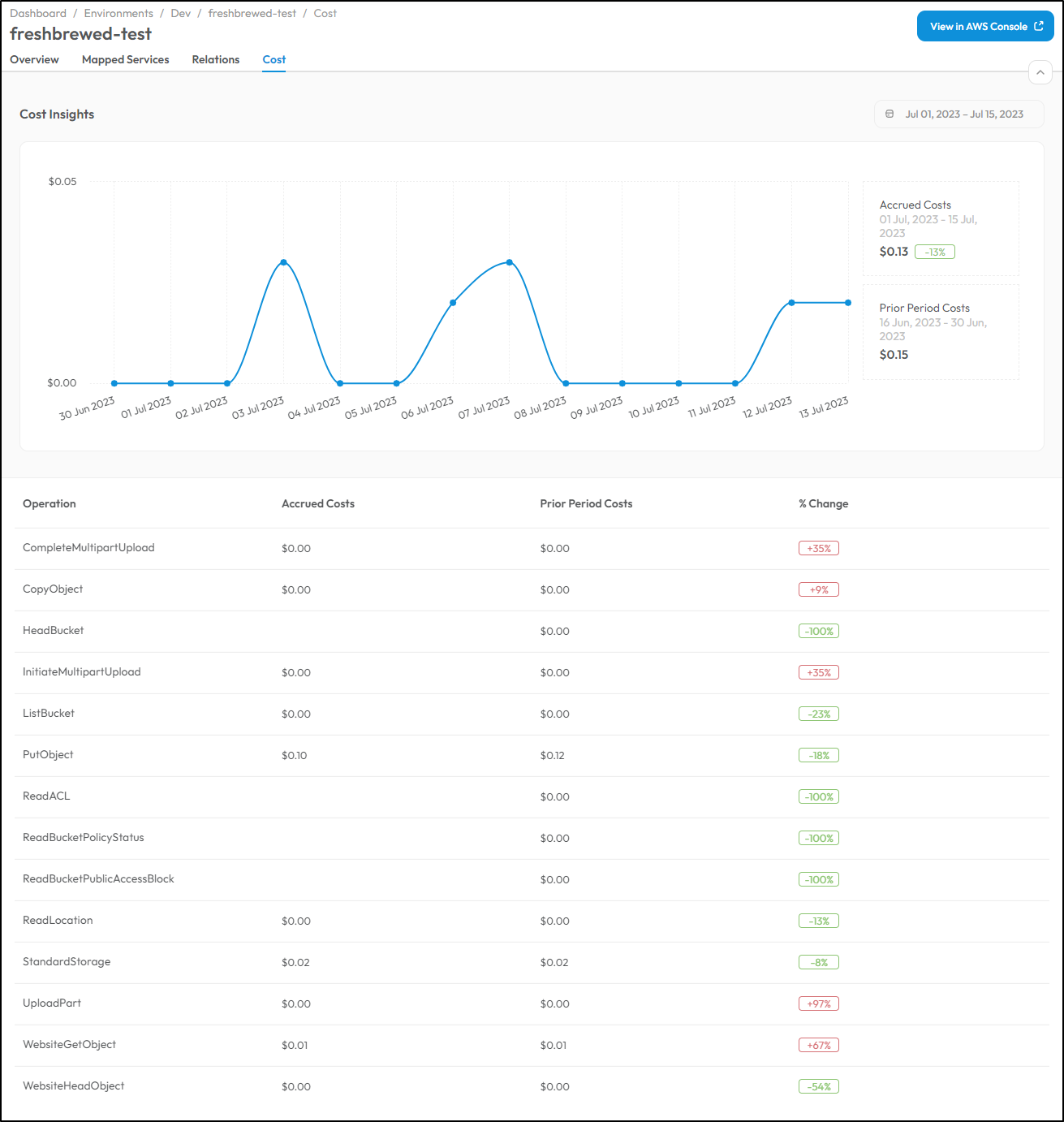

I’ll pick one I don’t use very much, the old “www.freshbrewed.science” bucket

As you can see, there is now a “Cost” tab on the right. Clicking that shows me costing details

Not too interesting, other than to note it has been zero. Perhaps I should remove this then? We’ve identified something monitored, but clearly unused.

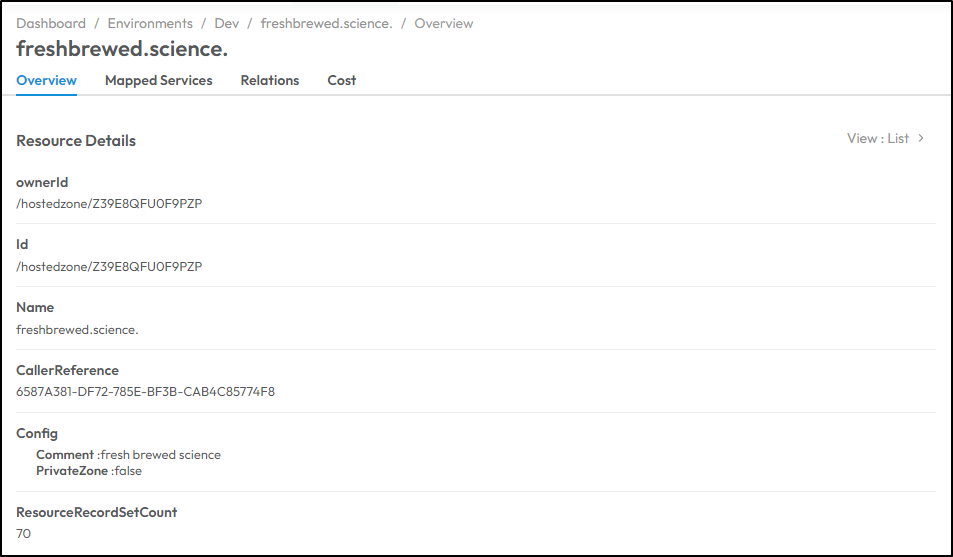

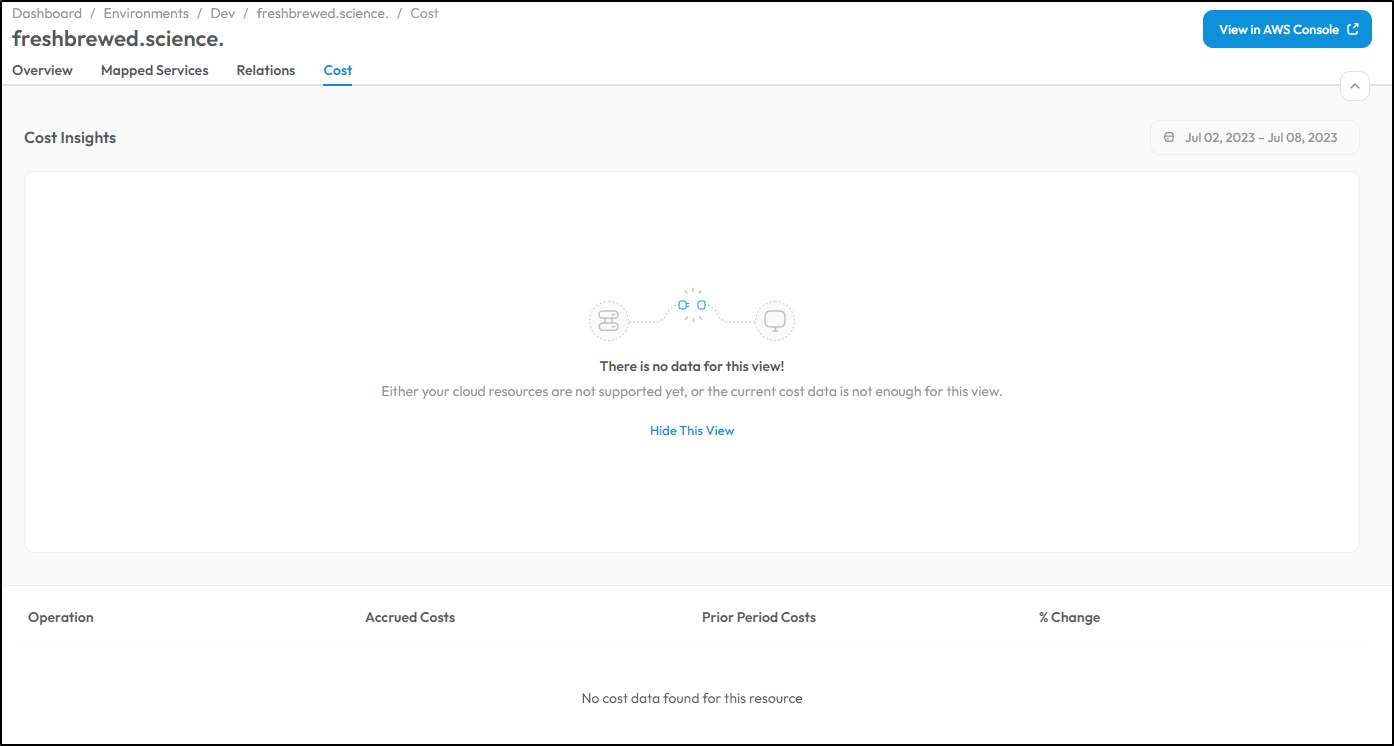

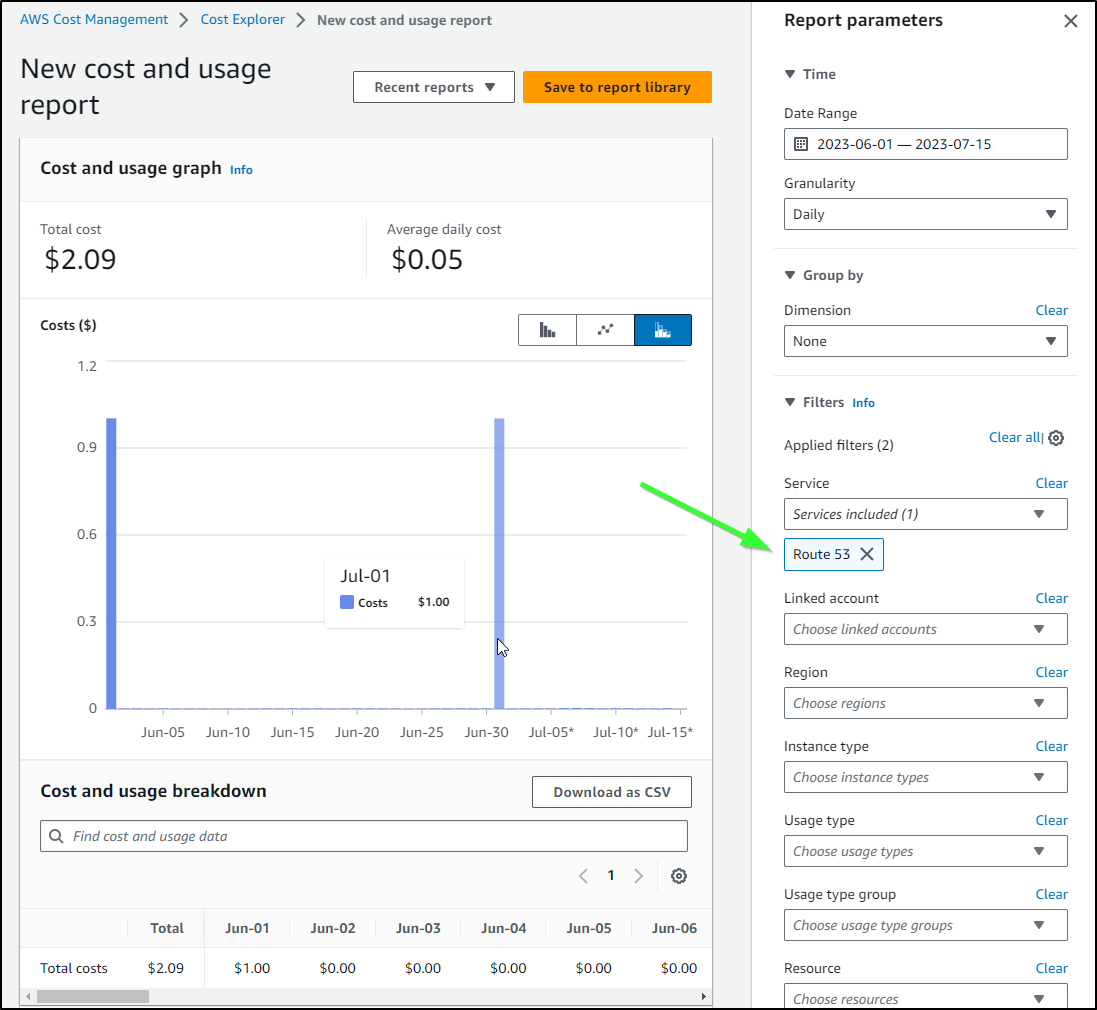

Some things, like my Route53 Hosted Zone, do not expose costing details.

NOTE: I got word this is being fixed this week so likely you will see details by the time you read this

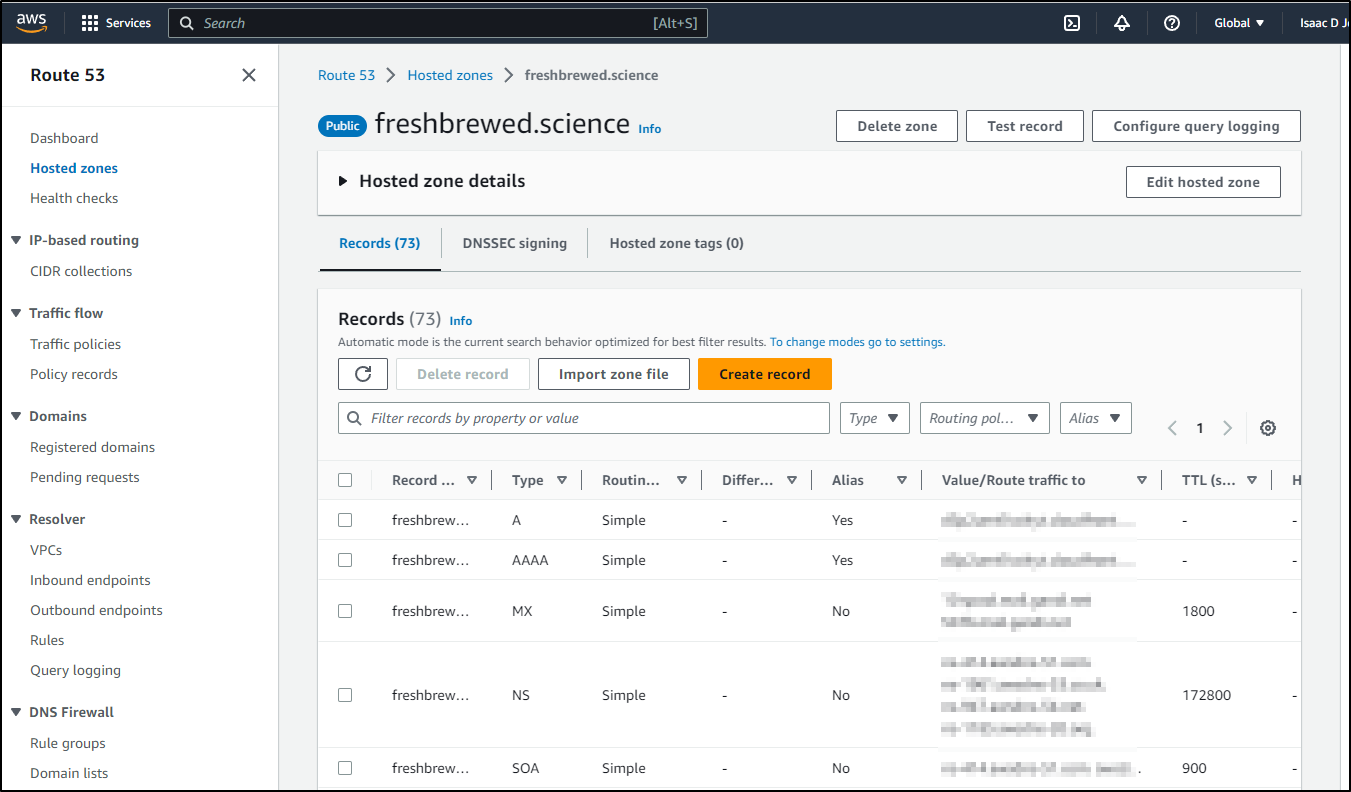

Clicking the “View in AWS Console” brings me to the HZ

And from there I can jump to the AWS Cost Management console to see it has cost me a buck a month

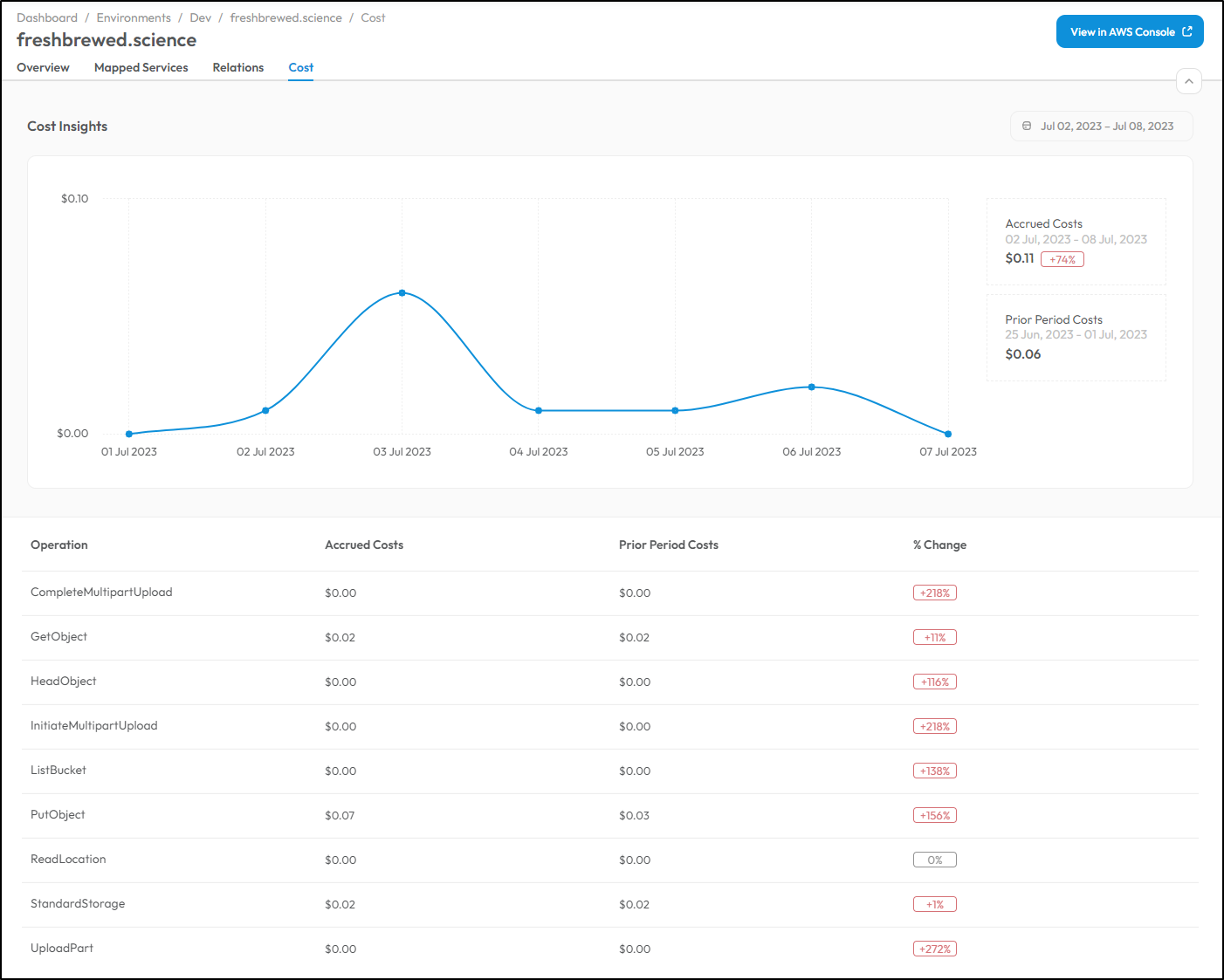

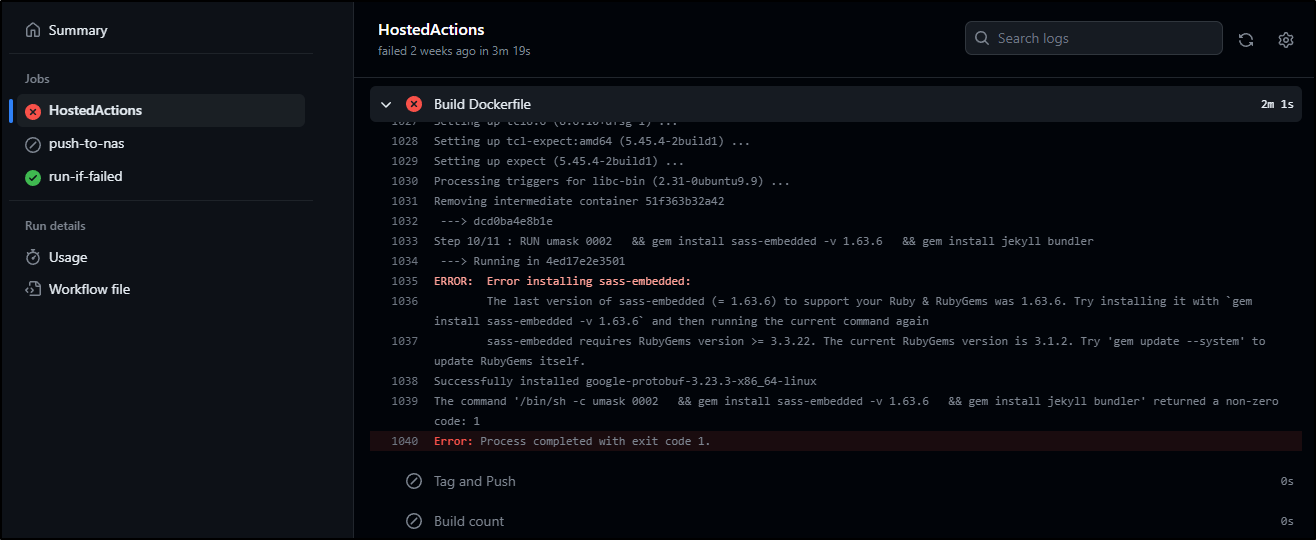

Let’s go to a resource with a bit more usage. We can look at the primary FB bucket

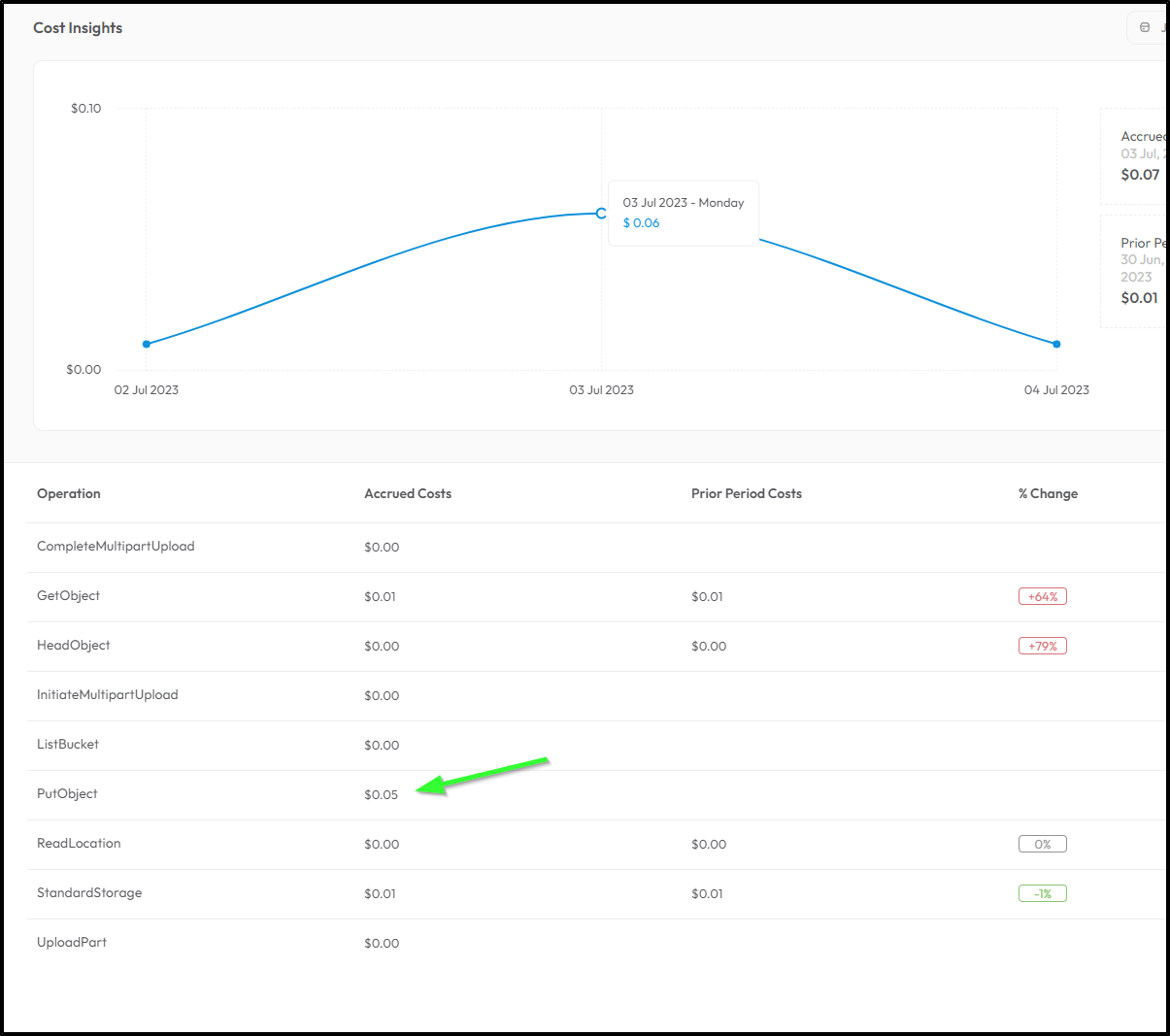

Here we can see a bit more in costs. Clearly, I had a spike around July 4th

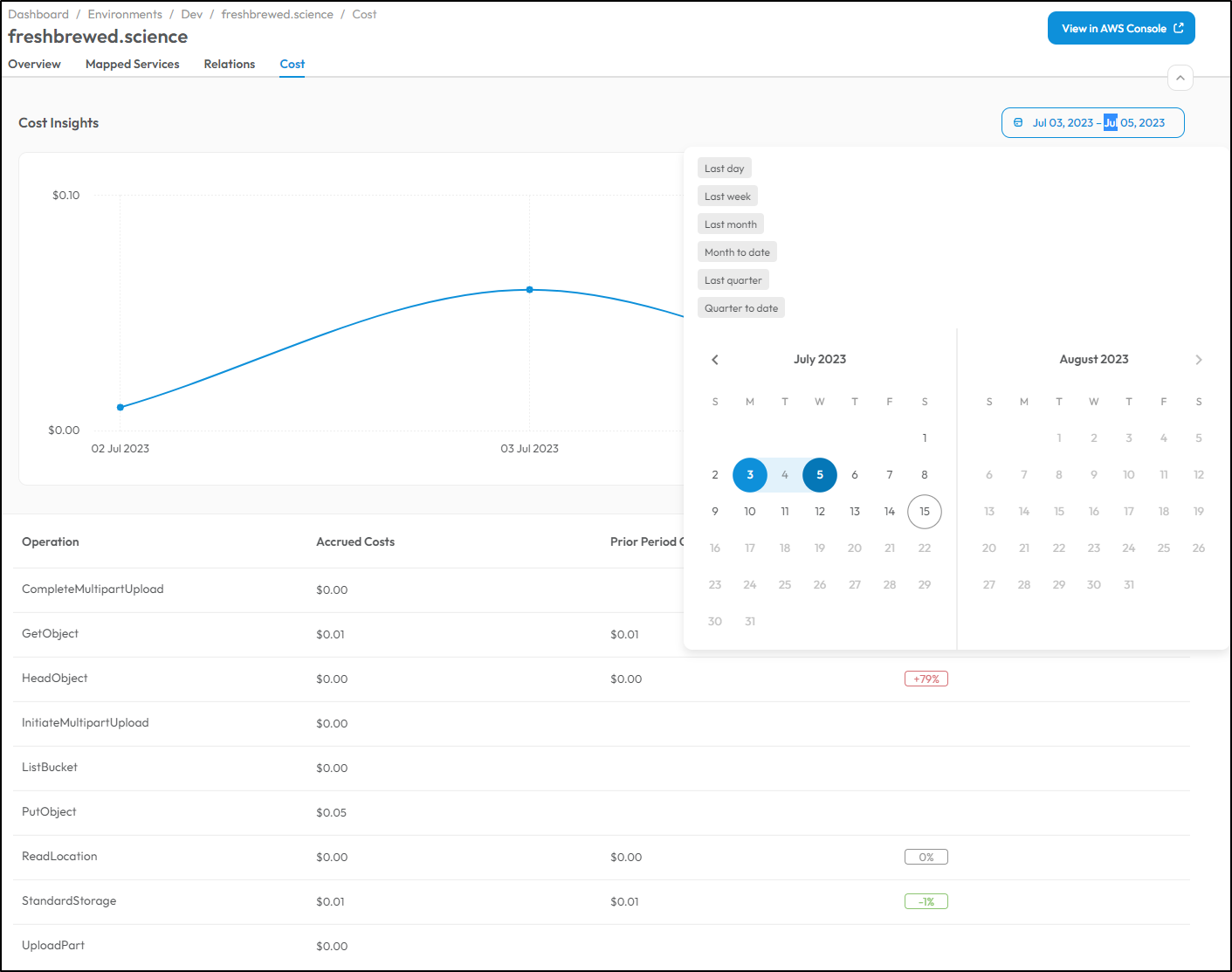

Let’s use the date picker to zoom in on that range

It looks to be the Put Object

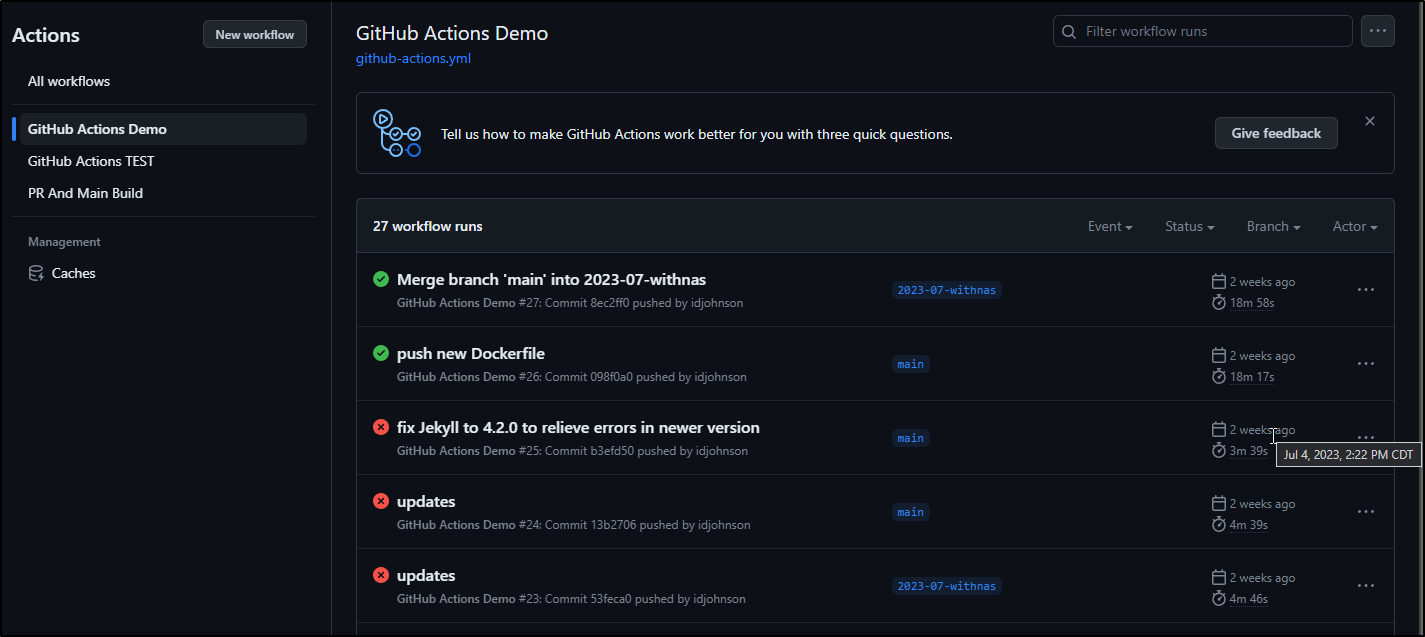

Going to my Service page, I see two items of interest - the Github deploy that day (PR204 to post Synology NAS: DSM, Central Management, and a Container Registry). This had a sizable 1:29 video in the deliverable.

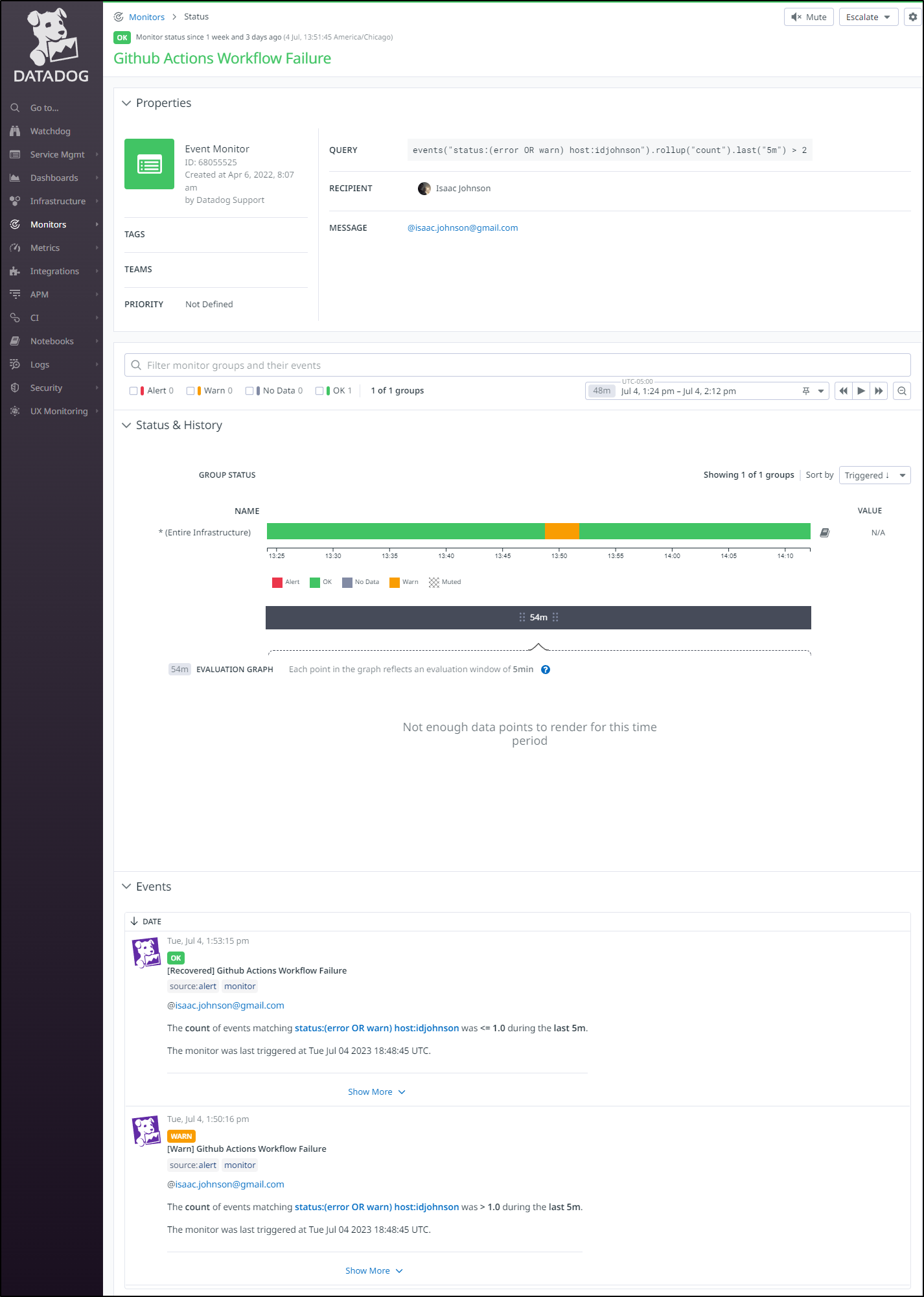

Datadog, on that same day, alerted me to a pipeline failure

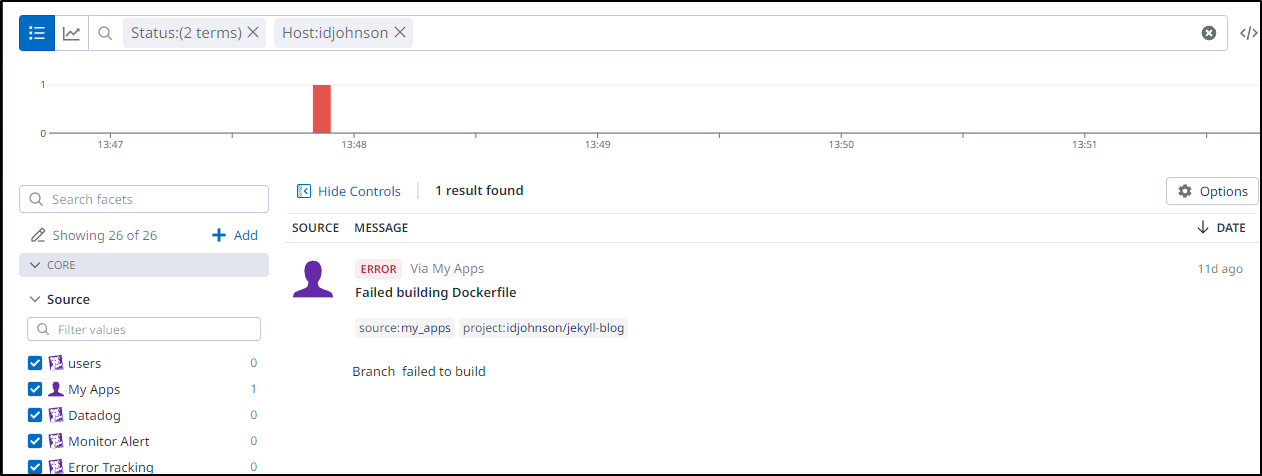

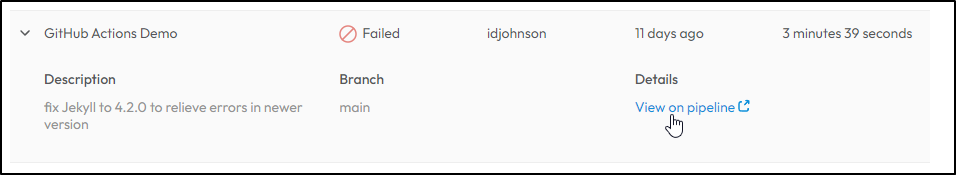

This failed a Dockerfile build

Since I know I do not build Docker to post to the blog, it reminded me that I was also updating my backend agent pool image to use with the NAS Registry and that had failures

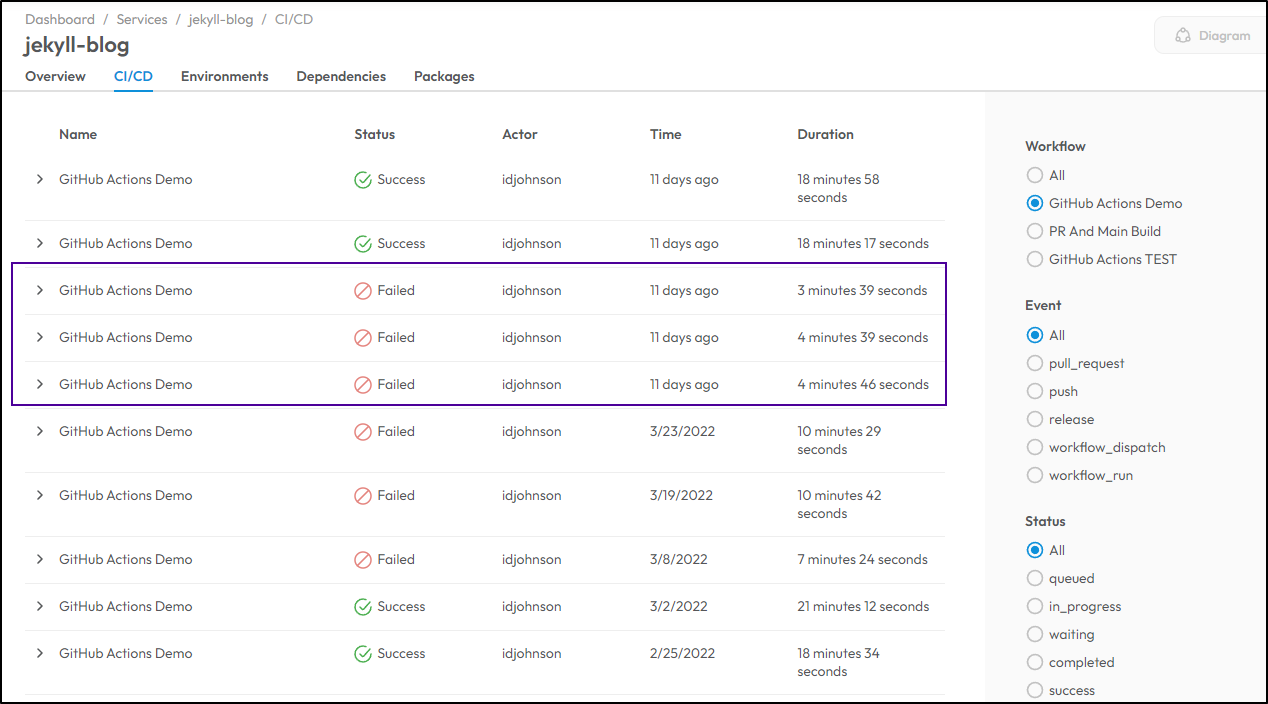

I didn’t even need to go to Github for that as I can easily see it in my CI/CD page in Configure8

I can “View on pipeline” to see more

Not that interesting, per se, but it lets me easily see why (Ruby module issue)

More Cost details

We can see my costs are doubled since every post goes to a staging site for review. I can view a Quarter of data to see the spikes in my “test” bucket

I dug a bit more. The real costs here are usage requests (Requests-Tier1).

What is odd is Requests-Tier1 really should be PUT, POST and LIST. The GET (read) is Tier2.

Yet clearly I have huge usage bills on Tier1 POST/PUT/LIST
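To make that tier split concrete, here is a minimal Python sketch that buckets S3 operation names by their leading verb. The `request_tier` name and the prefix matching are my own simplification for illustration; AWS's real billing mapping covers more operations (multipart uploads, for example) than this handles, so anything it does not recognize is left unclassified.

```python
# Simplified view of the S3 request tiers discussed above.
# Assumption: classifying by the leading verb of the operation name is
# only a heuristic, not AWS's actual billing logic.
TIER1_PREFIXES = ("PUT", "COPY", "POST", "LIST")   # write and list style requests
TIER2_PREFIXES = ("GET", "HEAD", "SELECT")          # read style requests


def request_tier(operation: str) -> str:
    """Return the request tier for an operation name such as 'ListBucketVersions'."""
    op = operation.strip().upper()
    if op.startswith(TIER1_PREFIXES):
        return "Requests-Tier1"
    if op.startswith(TIER2_PREFIXES):
        return "Requests-Tier2"
    # Deletes, multipart calls, lifecycle transitions and so on are not handled here.
    return "unclassified"


if __name__ == "__main__":
    for name in ("PutObject", "ListBucketVersions", "GetObject"):
        print(name, "->", request_tier(name))
```

Under this heuristic, ListBucketVersions falls into Tier1 along with the PUT and POST calls, which matches where the charges show up.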

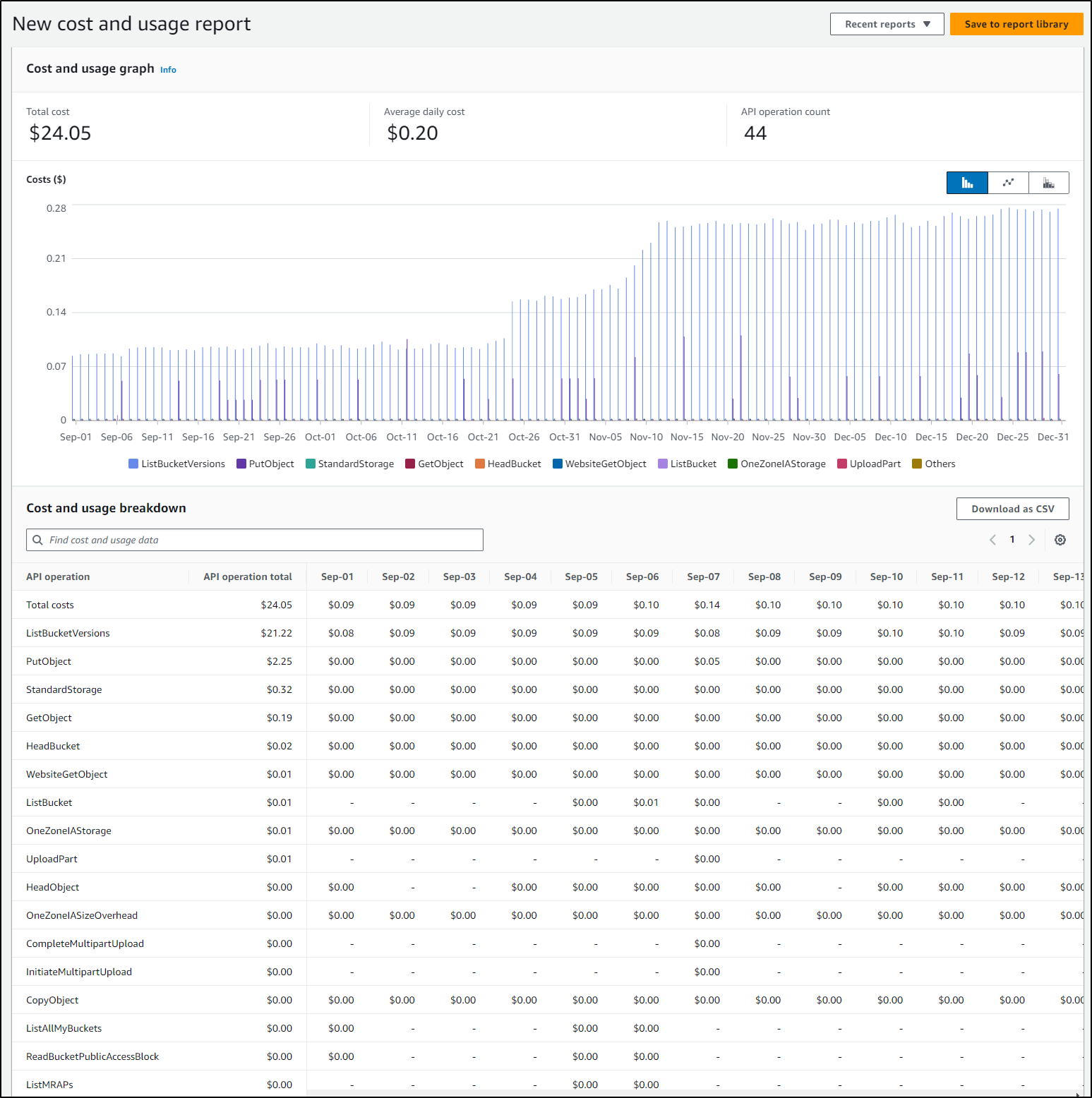

Around Oct 26th, ListBucketVersions usage spiked and never went back down.

The only blog post around that time was on k3s rebuilds.

Honestly, I’m a bit stumped on this one. I suspect there is a FUSE mount somewhere in my network using S3 for storage, and I have lost track of which host has it.
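If I wanted the same per-operation breakdown outside of the console, a rough sketch with the Cost Explorer API might look like the following. It assumes `boto3` is installed and credentials with Cost Explorer access are configured; the function names are hypothetical and the date range is up to you. It only reads the first page of results and ignores `NextPageToken`.

```python
from datetime import date

# Service name as it appears in the Cost Explorer SERVICE dimension.
S3_SERVICE_NAME = "Amazon Simple Storage Service"


def build_s3_operation_query(start: date, end: date) -> dict:
    """Build get_cost_and_usage arguments: daily S3 cost grouped by operation."""
    return {
        "TimePeriod": {"Start": start.isoformat(), "End": end.isoformat()},
        "Granularity": "DAILY",
        "Metrics": ["UnblendedCost", "UsageQuantity"],
        "Filter": {"Dimensions": {"Key": "SERVICE", "Values": [S3_SERVICE_NAME]}},
        "GroupBy": [{"Type": "DIMENSION", "Key": "OPERATION"}],
    }


def fetch_s3_costs_by_operation(start: date, end: date) -> list:
    """Call Cost Explorer and return the first page of daily results."""
    import boto3  # imported here so the query builder works without boto3

    client = boto3.client("ce")
    response = client.get_cost_and_usage(**build_s3_operation_query(start, end))
    return response["ResultsByTime"]
```

This would only confirm which operation is driving the bill, not who is making the calls. For that I would still need something like access logs on the bucket.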

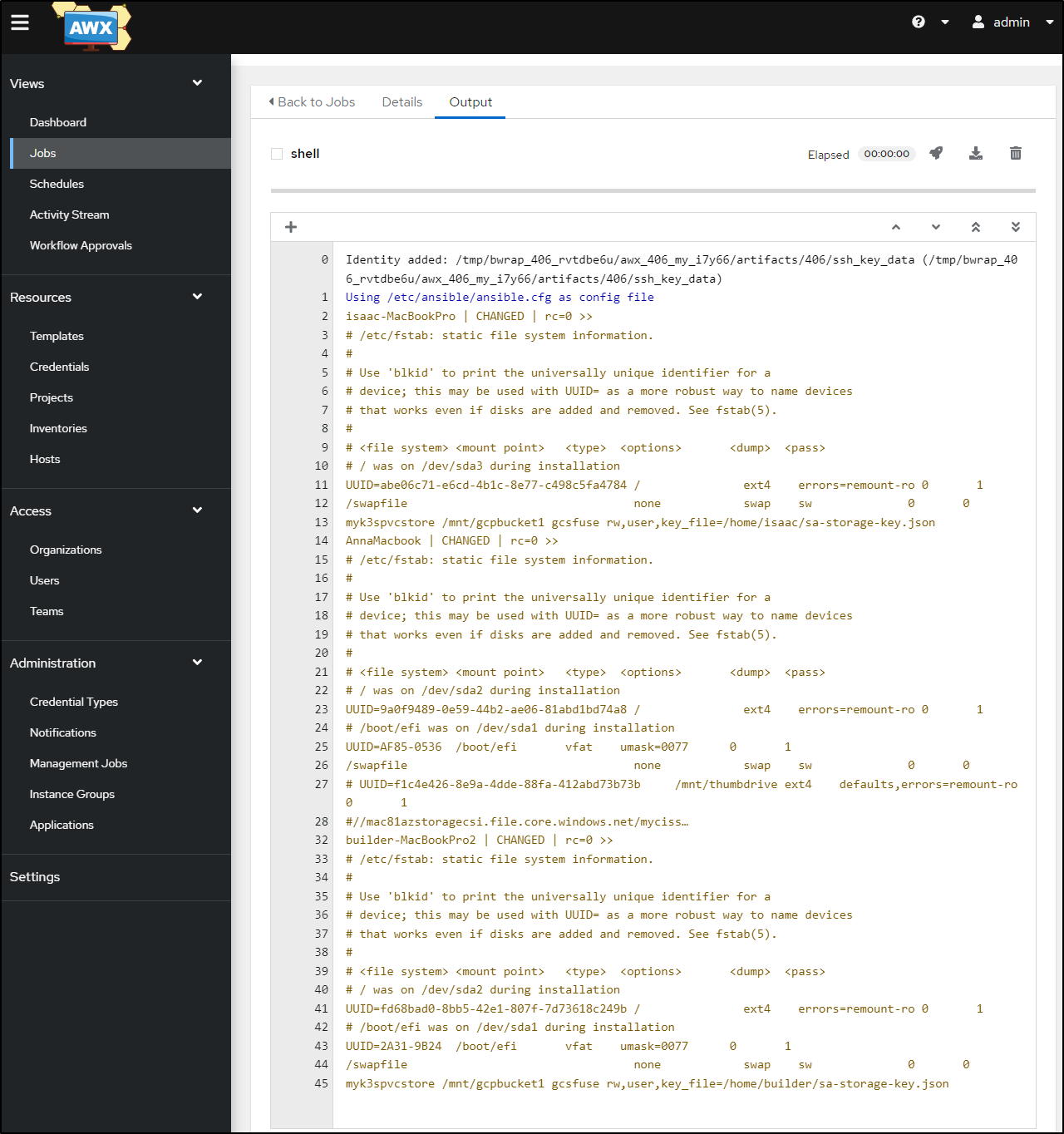

I found at least one host using GCP for FUSE data

myk3spvcstore /mnt/gcpbucket1 gcsfuse rw,noauto,user,key_file=/home/builder/sa-storage-key.json

myk3spvcstore /mnt/gcptest2 gcsfuse rw,noauto,user,key_file=/home/builder/sa-storage-key.json

builder@anna-MacBookAir:~

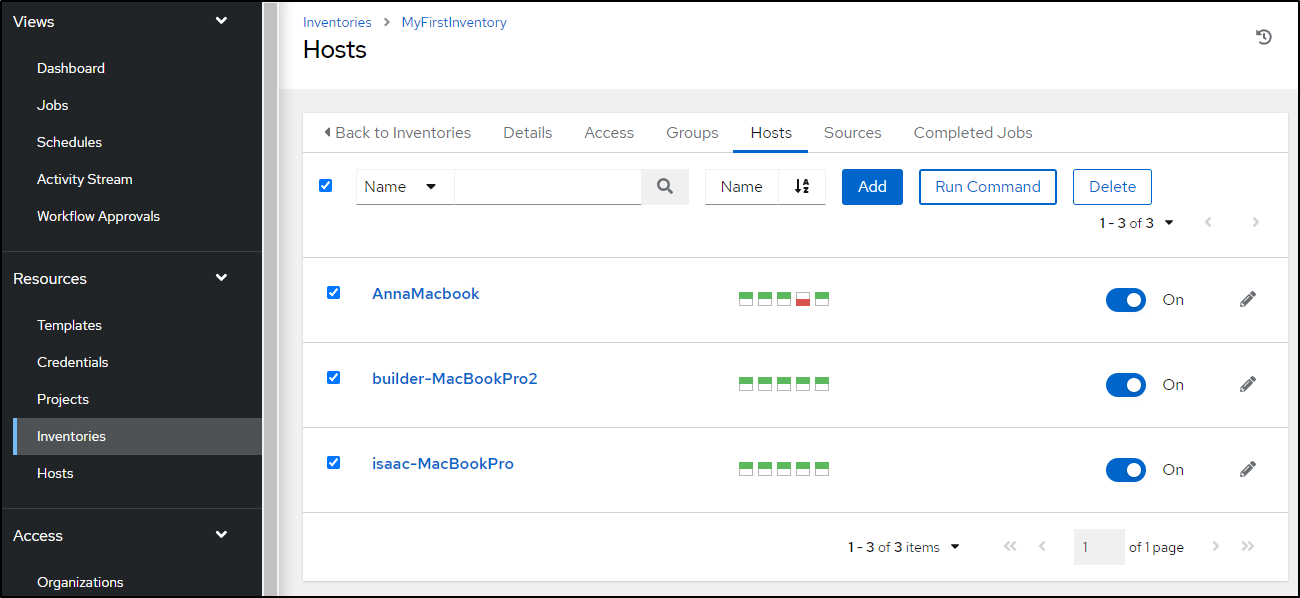

I can at least use AWX to check one cluster. Picking all the hosts in the inventory

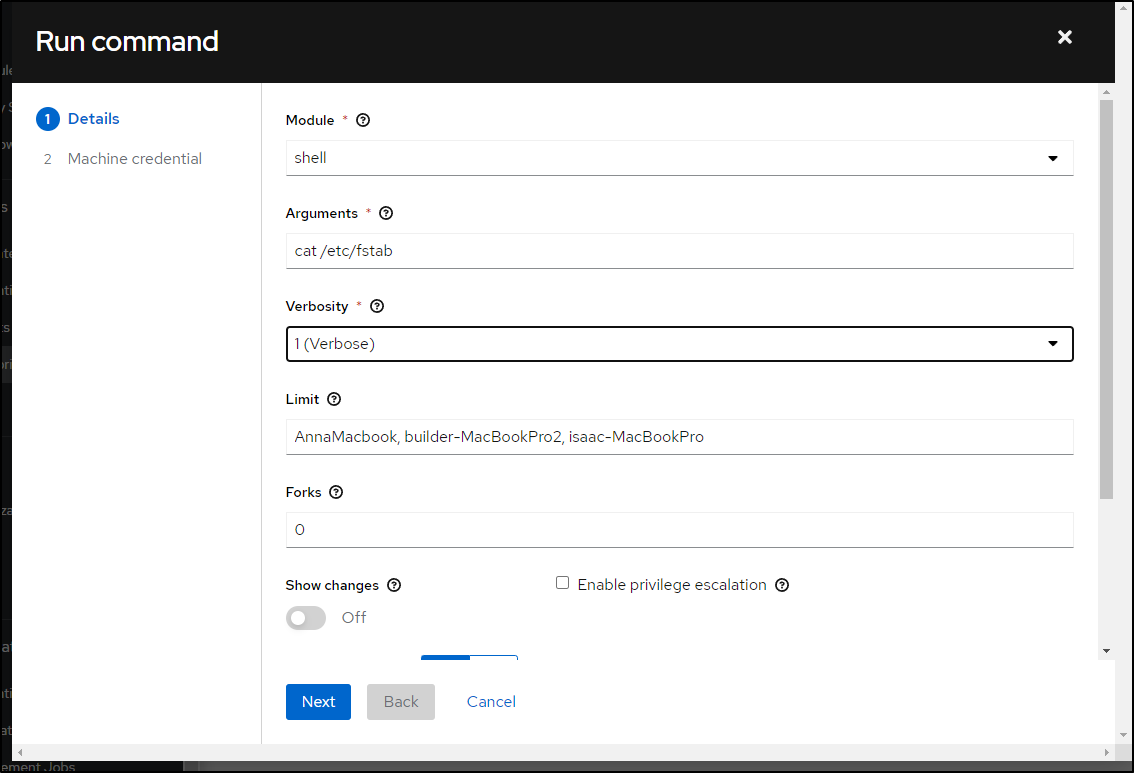

I can cat /etc/fstab to see what is there

And I see they are all pretty much GCP based
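Eyeballing fstab output across a whole inventory gets tedious, so here is a small Python sketch that picks the FUSE-style entries out of fstab text. The `find_fuse_mounts` name and the matching rules are assumptions of mine: it flags any filesystem type containing "fuse" or "s3fs", plus the older `s3fs#bucket` device form.

```python
def find_fuse_mounts(fstab_text: str) -> list:
    """Return (device, mount_point, fs_type) for entries that look FUSE-backed."""
    mounts = []
    for raw in fstab_text.splitlines():
        line = raw.strip()
        # Skip blanks and comments.
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        # A valid fstab entry has at least device, mount point and type.
        if len(fields) < 3:
            continue
        device, mount_point, fs_type = fields[:3]
        kind = fs_type.lower()
        if "fuse" in kind or "s3fs" in kind or device.lower().startswith("s3fs#"):
            mounts.append((device, mount_point, fs_type))
    return mounts


if __name__ == "__main__":
    sample = (
        "myk3spvcstore /mnt/gcpbucket1 gcsfuse "
        "rw,noauto,user,key_file=/home/builder/sa-storage-key.json\n"
    )
    for entry in find_fuse_mounts(sample):
        print(entry)
```

Anything reporting `gcsfuse` points at GCP, so an `s3fs` hit is the one I would actually be hunting for.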

The only other thought I had was my Cribl LogStream. But it was powered down and, checking it recently, it really only has local destinations

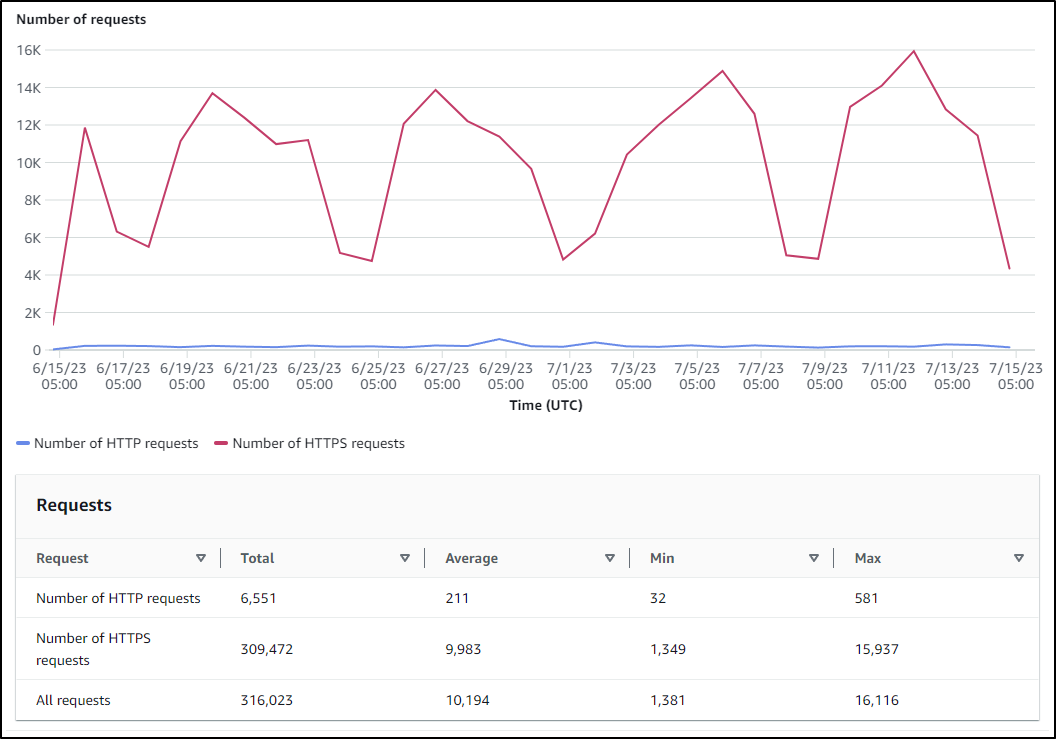

My other theory (and one I like since it strokes my ego) is that the blog is popular enough that I just get that much traffic. It is probably not the case, but it is fun to think we are at 1/3 million requests a month with spikes that tie to regular weeks (drops off on weekends)

Azure DevOps and Sonar

Enough pining over costs. Let’s look at some new Integrations.

We can add a Service that has Azure Repos, DevOps and SonarCloud scans.

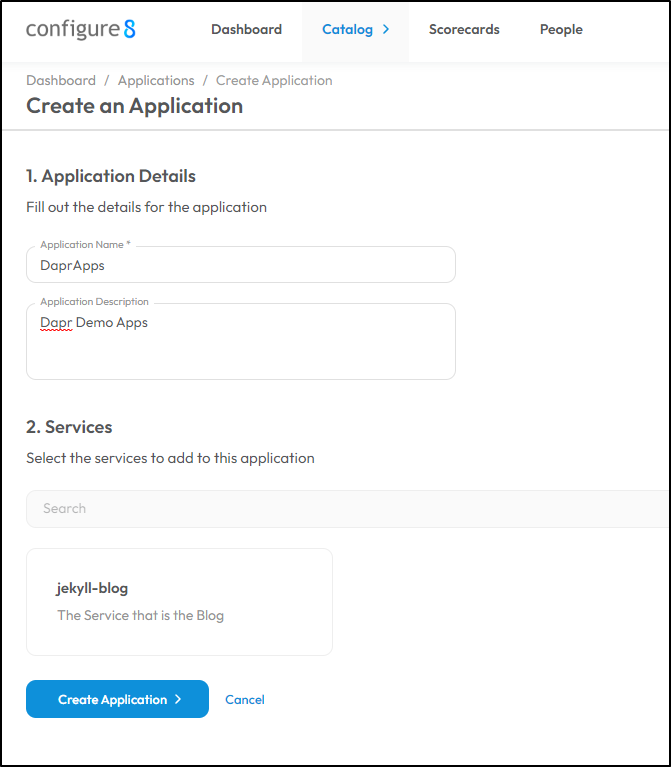

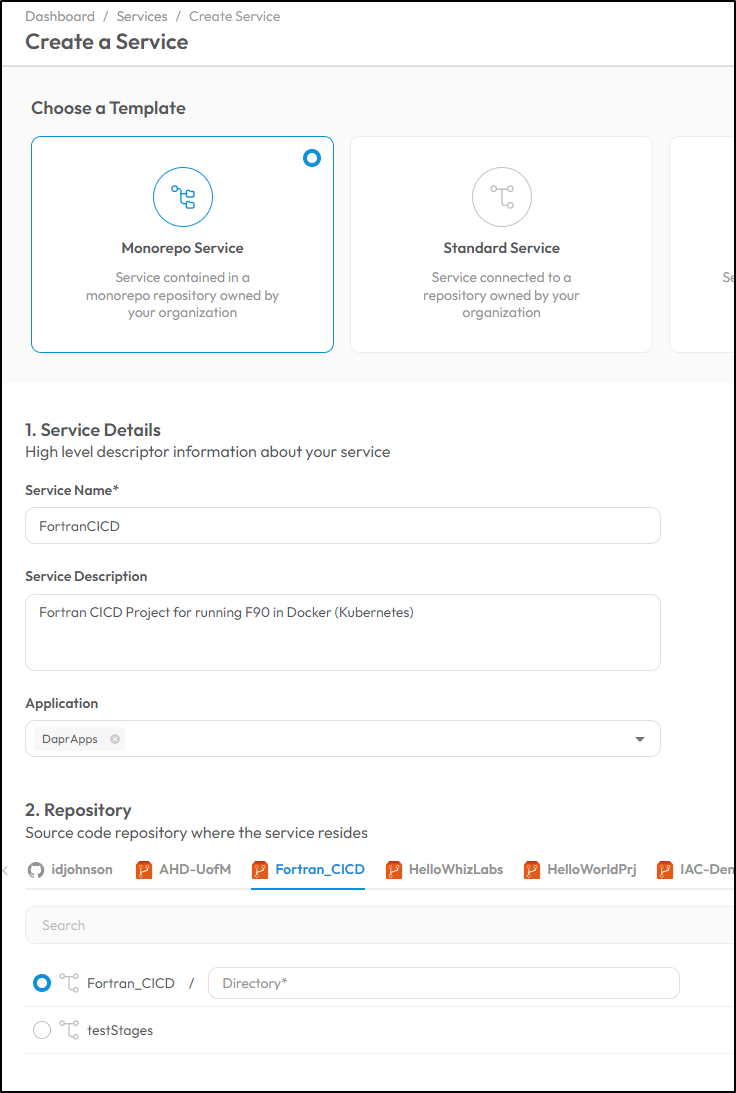

I want to put these into a larger concept of a Dapr Apps Application. So I’ll create that first

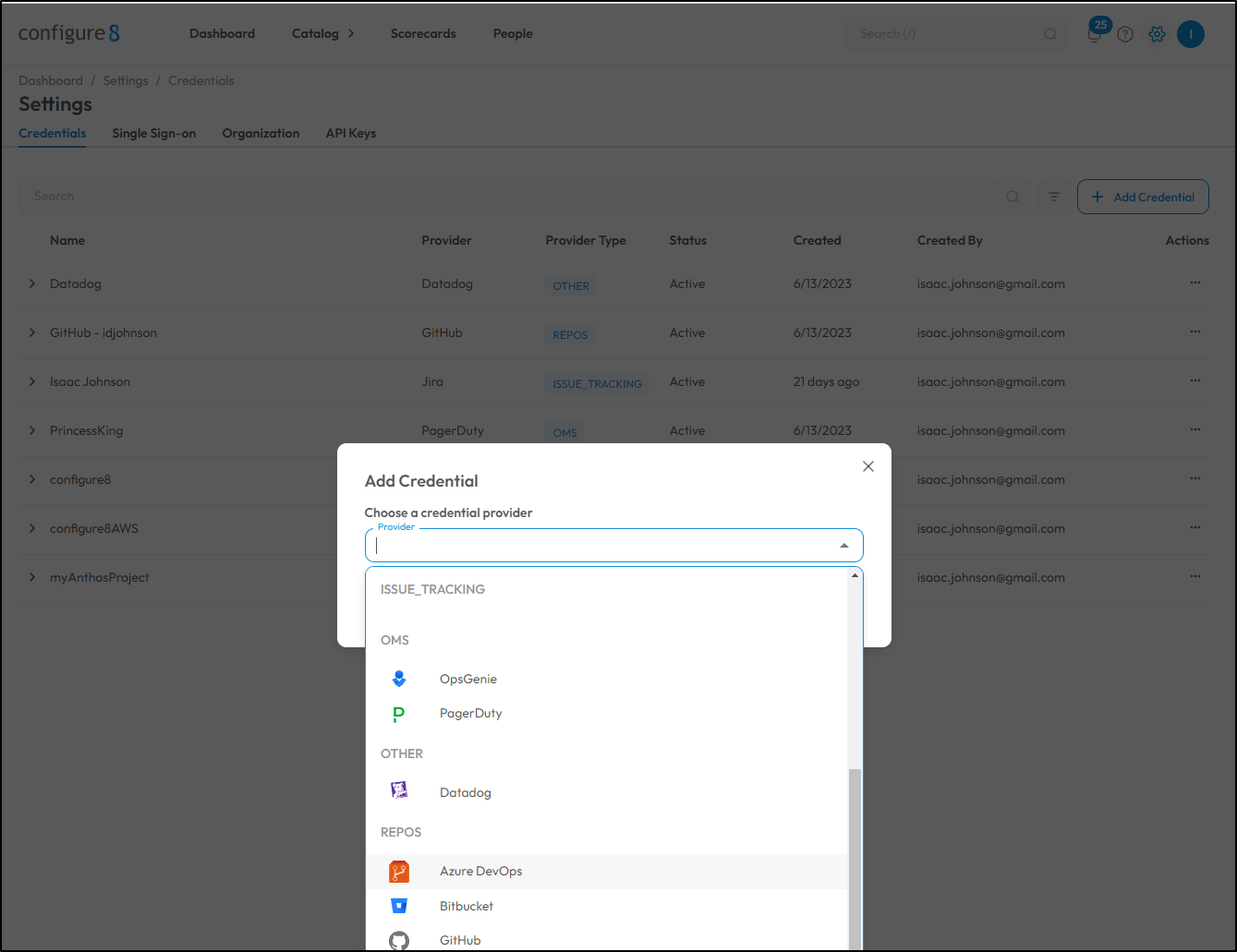

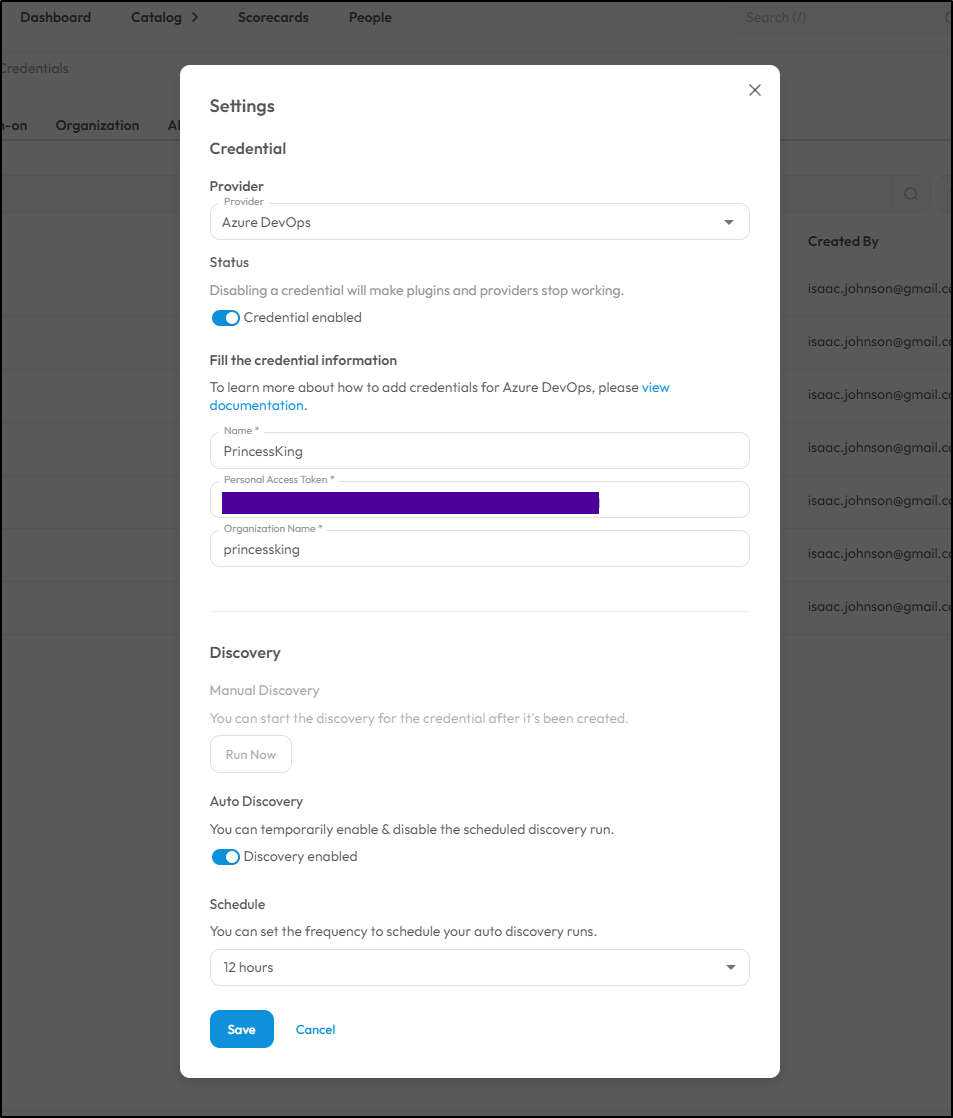

Next, before we add the service, I’ll add Azure Repos by credential

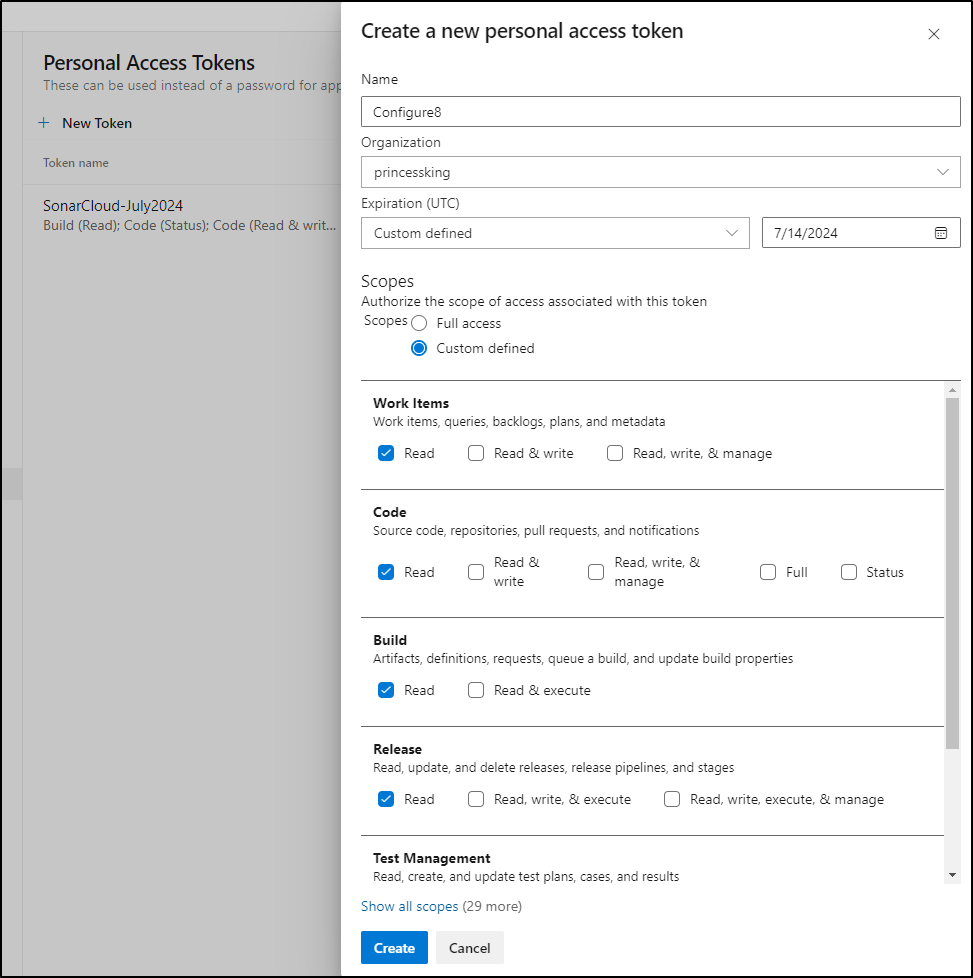

I’ll want to use a new PAT with Read scopes on Work Items, Code, Build and Release

which I’ll use in the provider
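Before handing a new PAT to a provider, it can be worth confirming the token works at all. The sketch below shows how a PAT is usually passed to the Azure DevOps REST API: Basic auth with an empty username and the PAT as the password. The helper names and the `my-org` organization are placeholders of mine, and the API version is just one I picked for illustration.

```python
import base64
from urllib.parse import quote


def azdo_auth_header(pat: str) -> dict:
    """Build a Basic auth header from a PAT (empty username, PAT as password)."""
    token = base64.b64encode(f":{pat}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


def builds_url(organization: str, project: str, api_version: str = "7.0") -> str:
    """URL that lists builds for a project; needs the Build (Read) scope."""
    org = quote(organization, safe="")
    proj = quote(project, safe="")
    return f"https://dev.azure.com/{org}/{proj}/_apis/build/builds?api-version={api_version}"


if __name__ == "__main__":
    # "my-org" is a hypothetical organization name.
    print(builds_url("my-org", "Fortran_CICD"))
```

Sending a GET to that URL with the header attached (via `urllib.request` or `requests`) should return JSON if the scopes are right, and an auth error or sign-in redirect otherwise.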

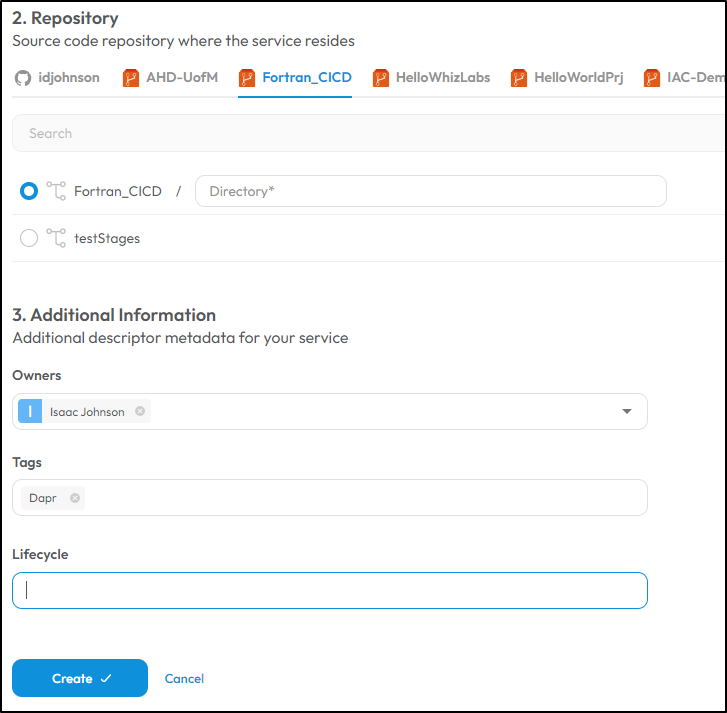

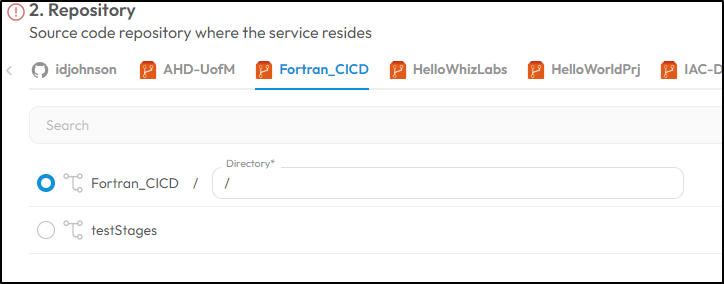

I’ll now add a service and pick the Application and Azure Repo

I’ll update the Tags and Owners then click Create

I wanted a Subdirectory, but this project has none. I’ll try with a root “/”

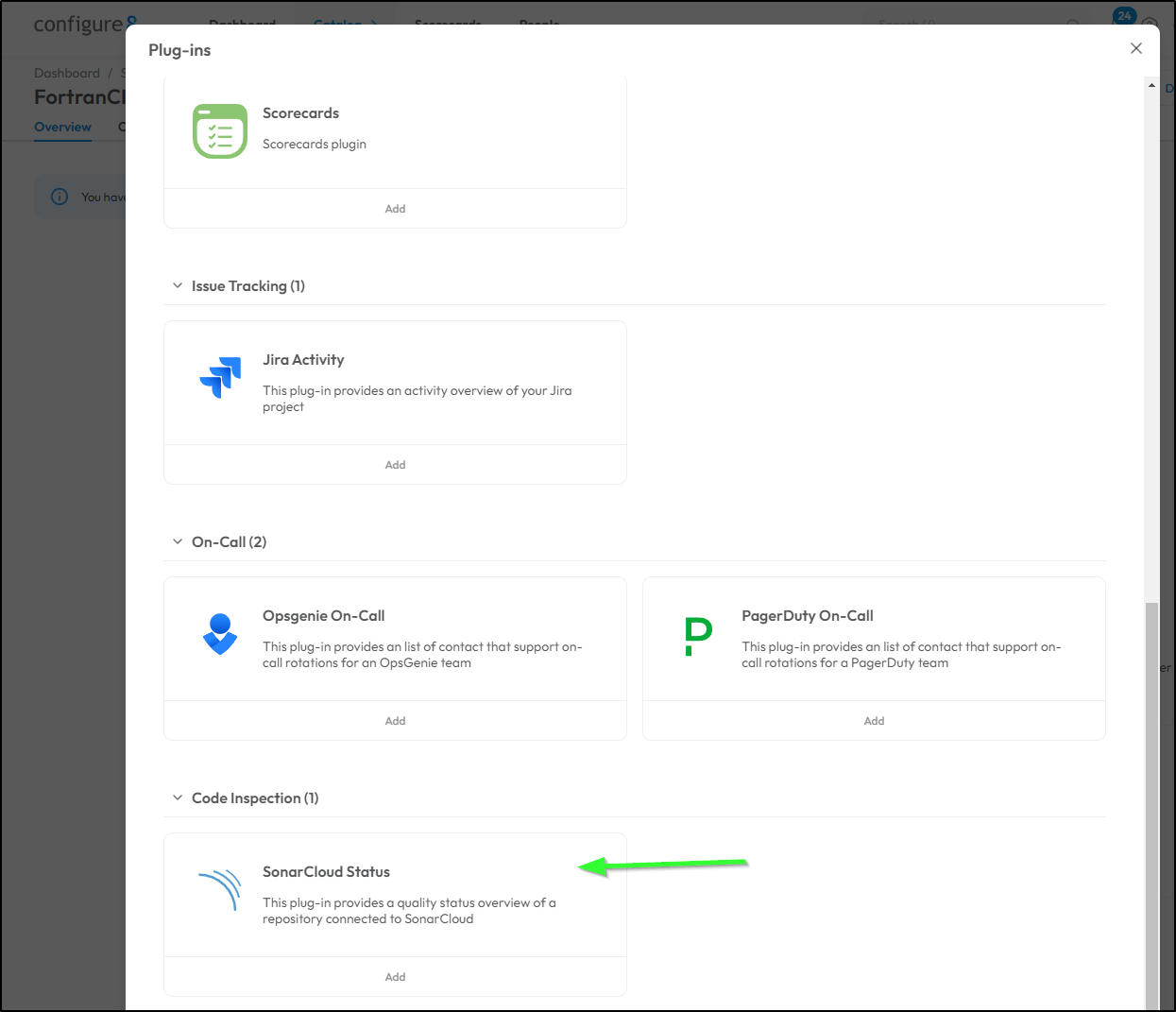

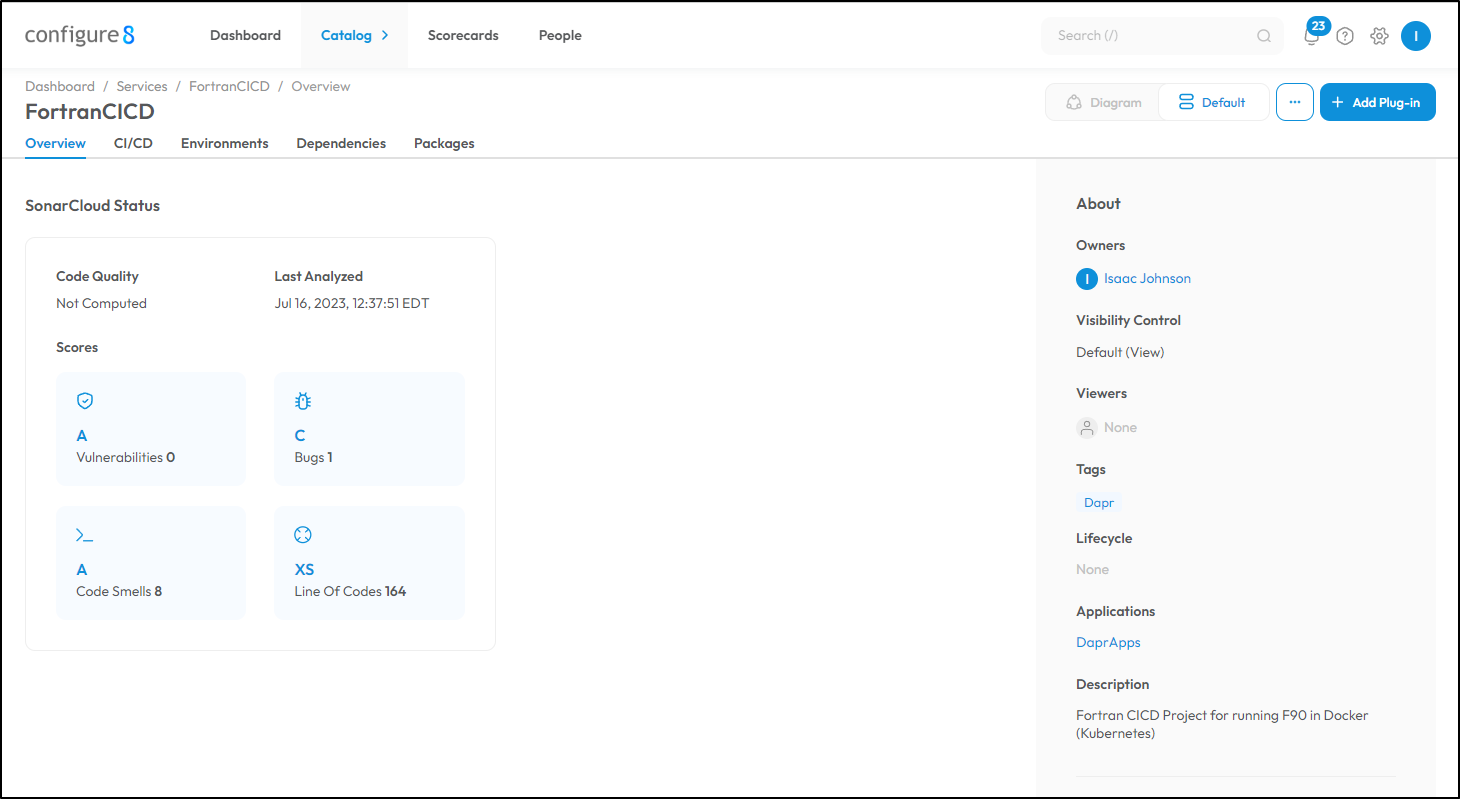

I’ll now add SonarCloud Status

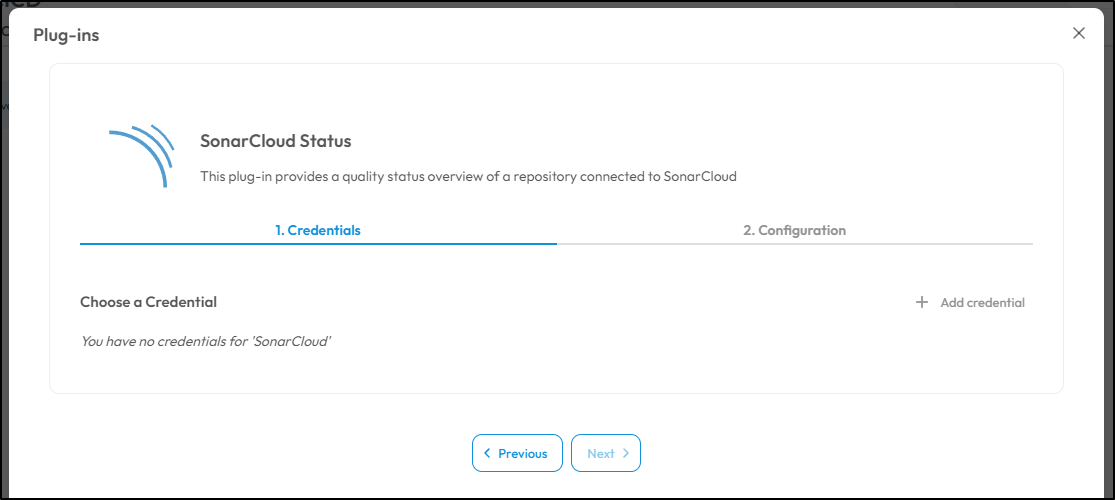

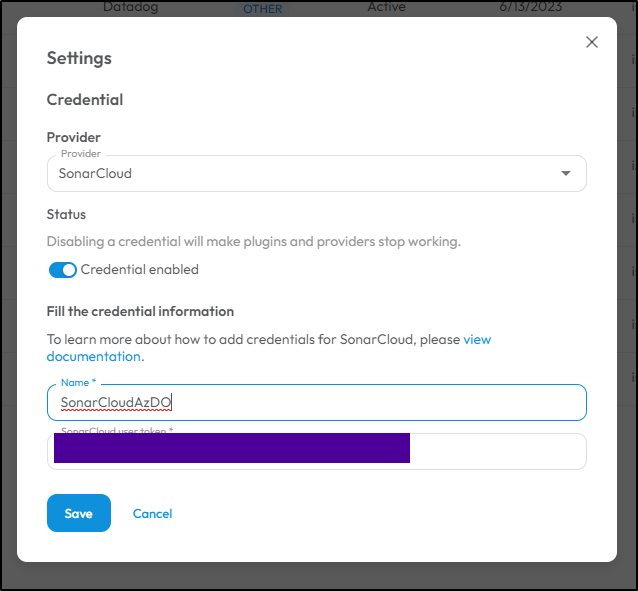

To use SonarCloud, I’ll need to add a credential

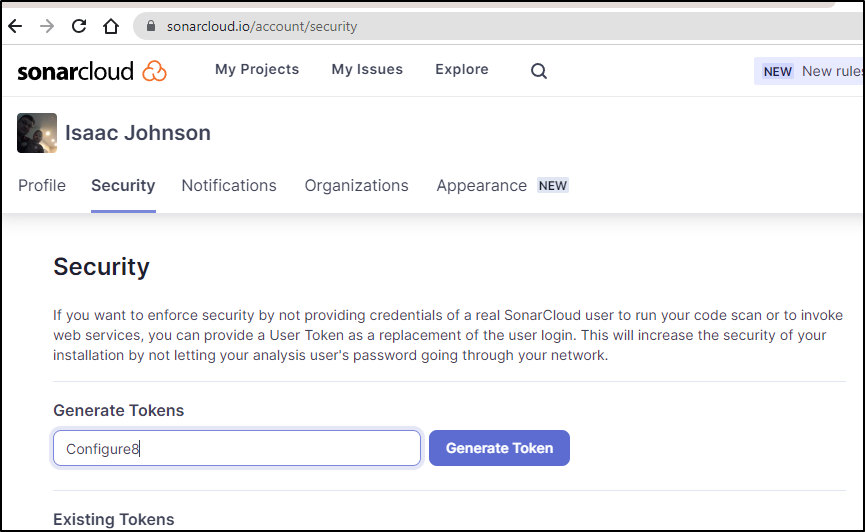

I can generate that from the Security section in SonarCloud

Then add in Configure8
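The same kind of sanity check works for the SonarCloud token. As a hedged sketch: SonarCloud's Web API accepts the token as the Basic auth username with an empty password, and the quality gate for a project is available from the `qualitygates/project_status` endpoint. The function names and the project key below are placeholders of mine, not values from my account.

```python
import base64
from urllib.parse import urlencode

SONARCLOUD_API = "https://sonarcloud.io/api"


def sonar_auth_header(token: str) -> dict:
    """Basic auth header with the token as username and an empty password."""
    raw = f"{token}:".encode("utf-8")
    return {"Authorization": "Basic " + base64.b64encode(raw).decode("ascii")}


def quality_gate_url(project_key: str) -> str:
    """URL for the quality gate status of a single project."""
    query = urlencode({"projectKey": project_key})
    return f"{SONARCLOUD_API}/qualitygates/project_status?{query}"


def gate_status(payload: dict) -> str:
    """Pull the overall status (for example 'OK' or 'ERROR') from the response JSON."""
    return payload["projectStatus"]["status"]


if __name__ == "__main__":
    # "my-org_Fortran_CICD" is a hypothetical project key.
    print(quality_gate_url("my-org_Fortran_CICD"))
```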

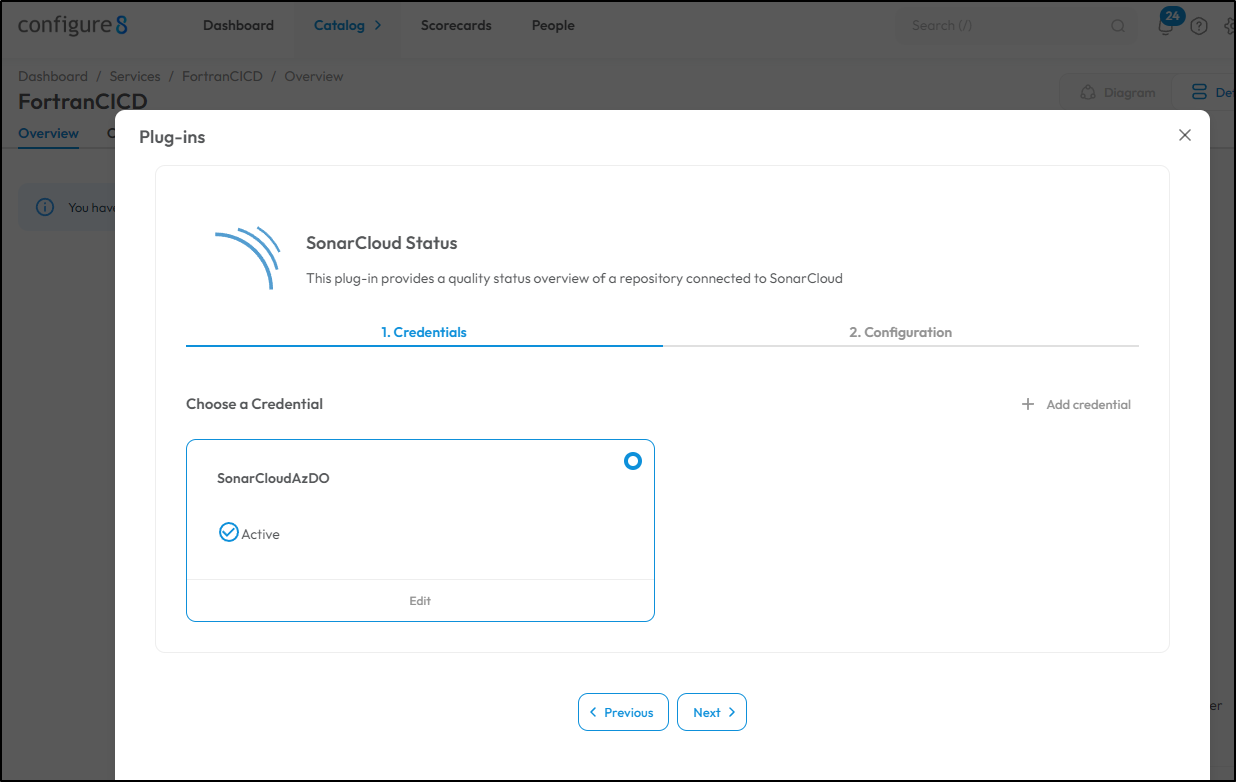

Unfortunately, that popped me out of the Add Integration flow, so I went back to the Fortran_CICD project and selected to add SonarCloud. This time I can see the credential I added

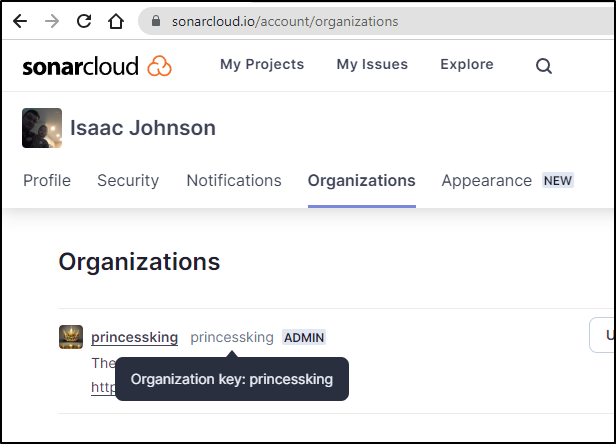

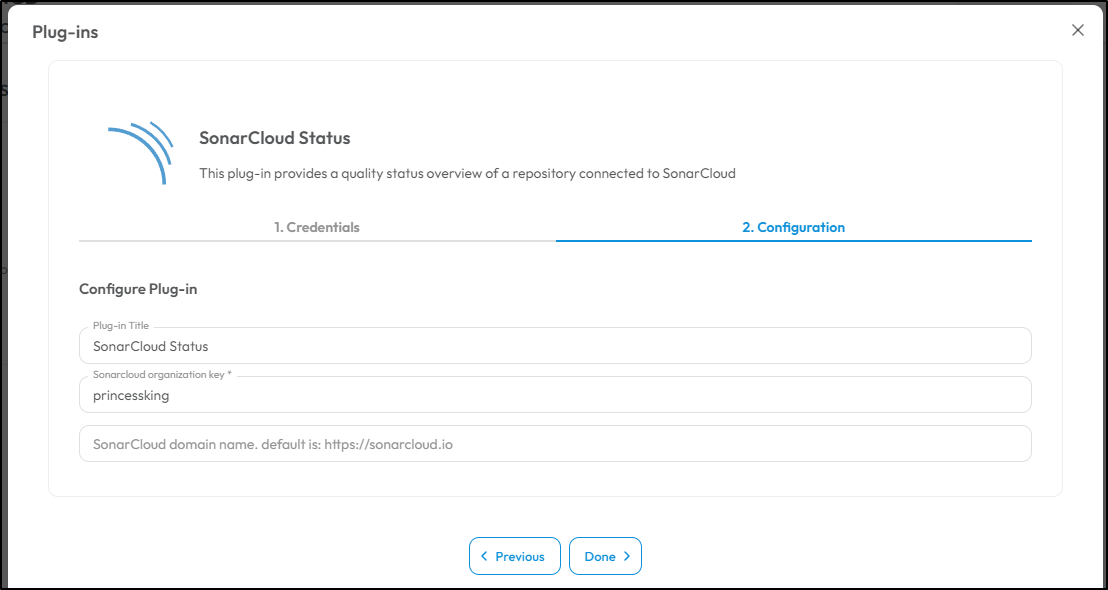

I can see the Organization Key in SonarCloud

And use it in Configure8

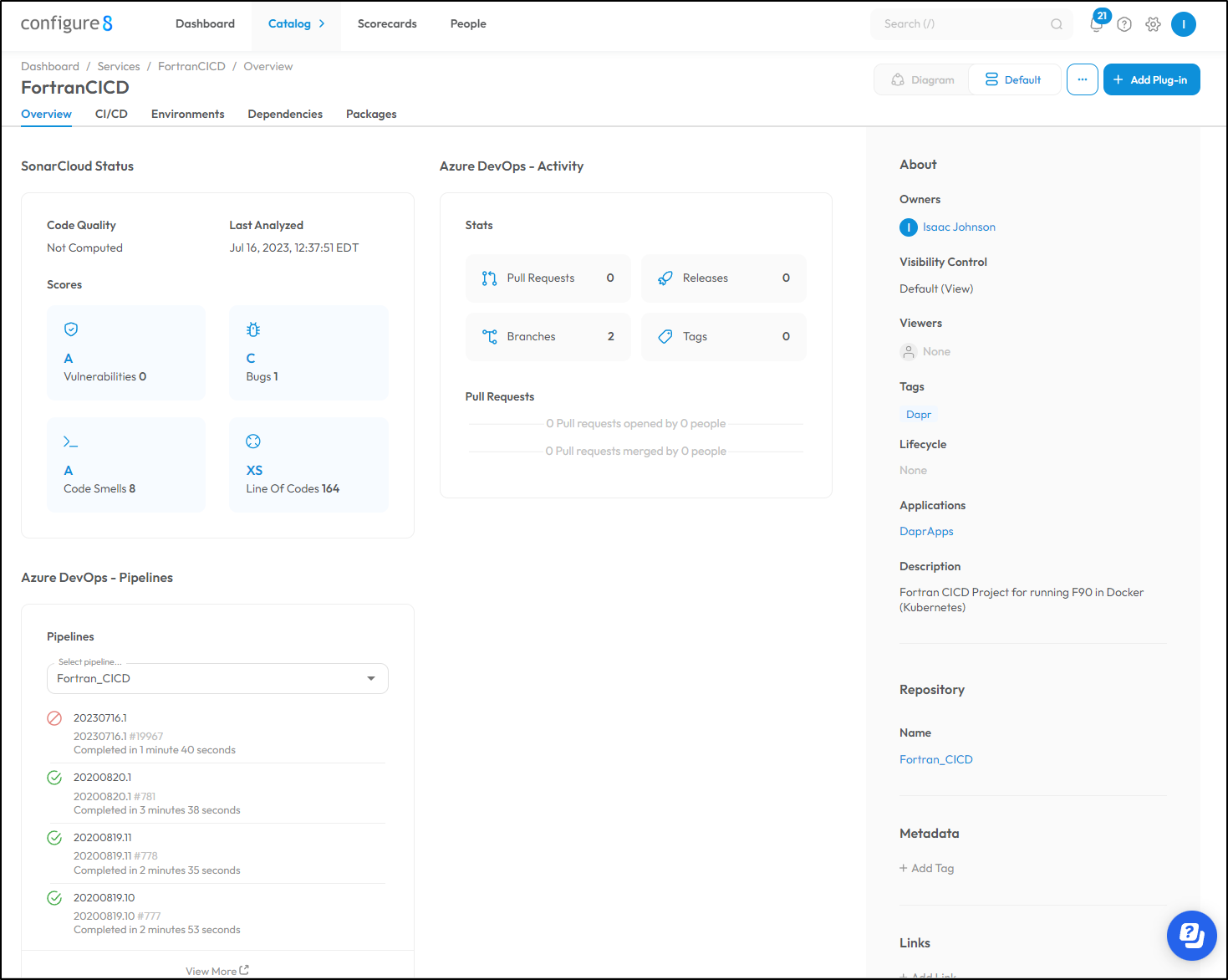

I can now see the latest results in my Configure8 Dashboard

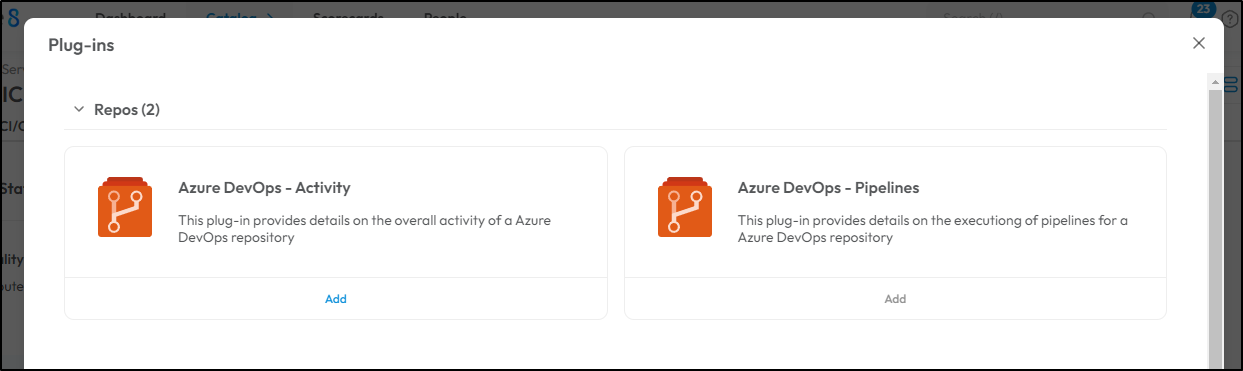

Next I’ll add Activity and Pipelines from Azure DevOps

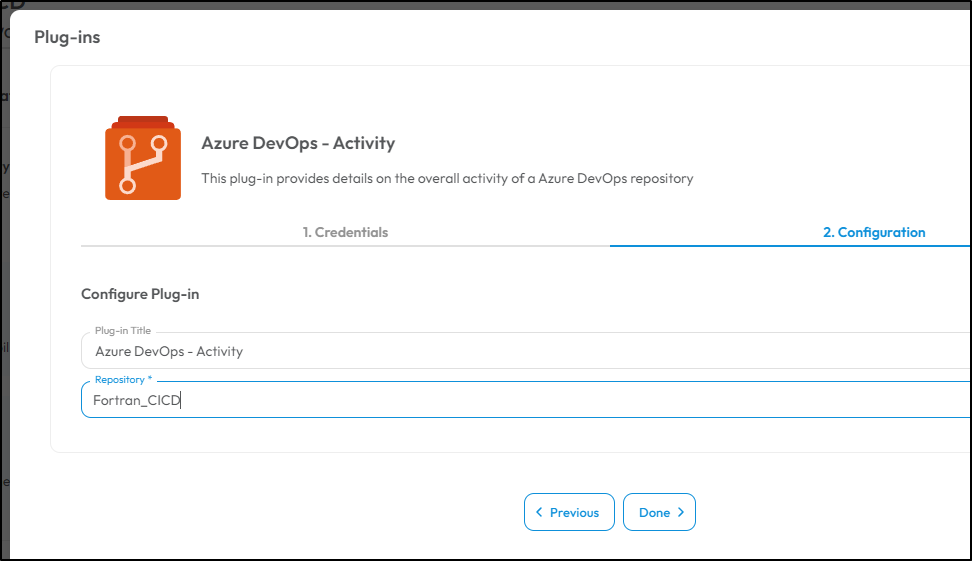

I’ll add activity

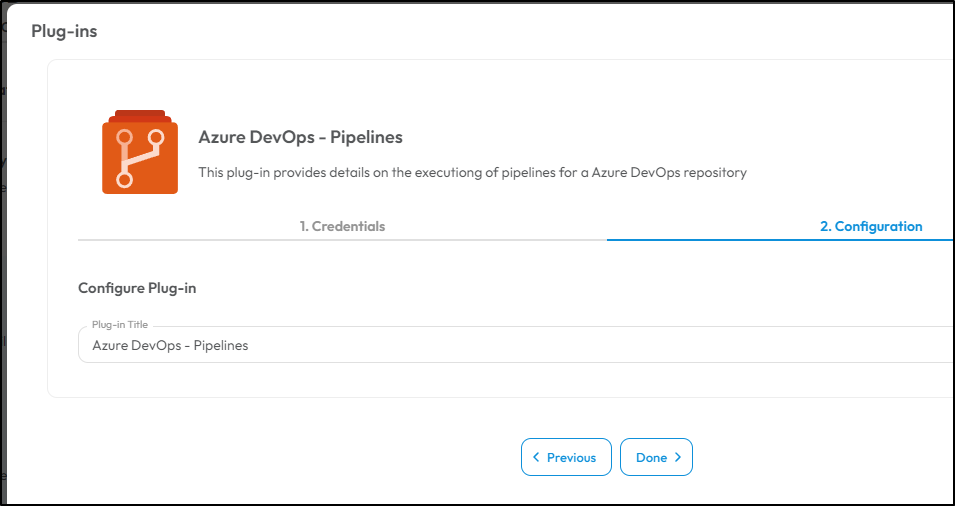

and Pipelines

We now have a Dashboard for an Azure DevOps based service that includes SonarCloud status, Azure DevOps Repos activity as well as Pipelines activity.

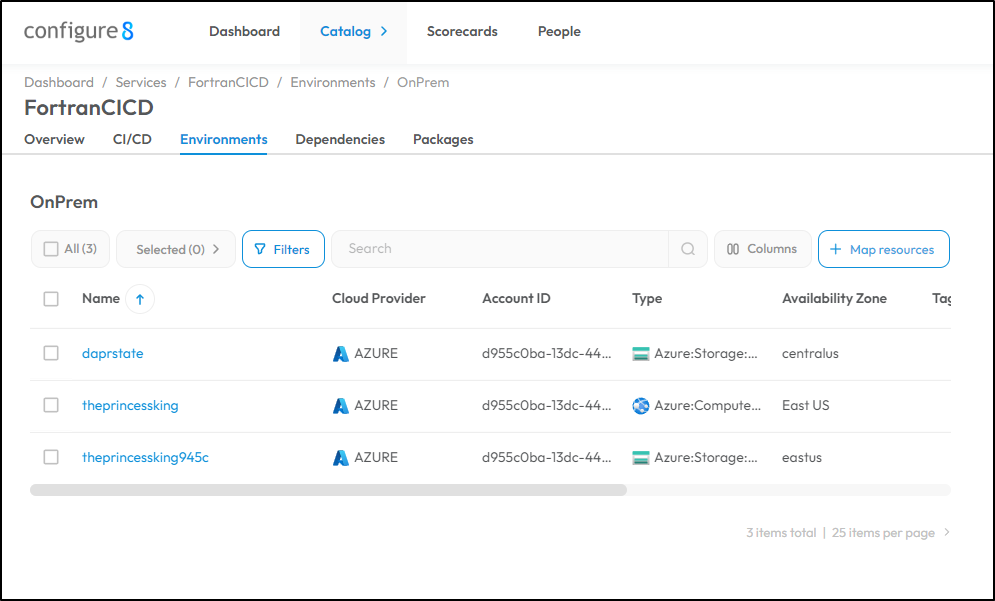

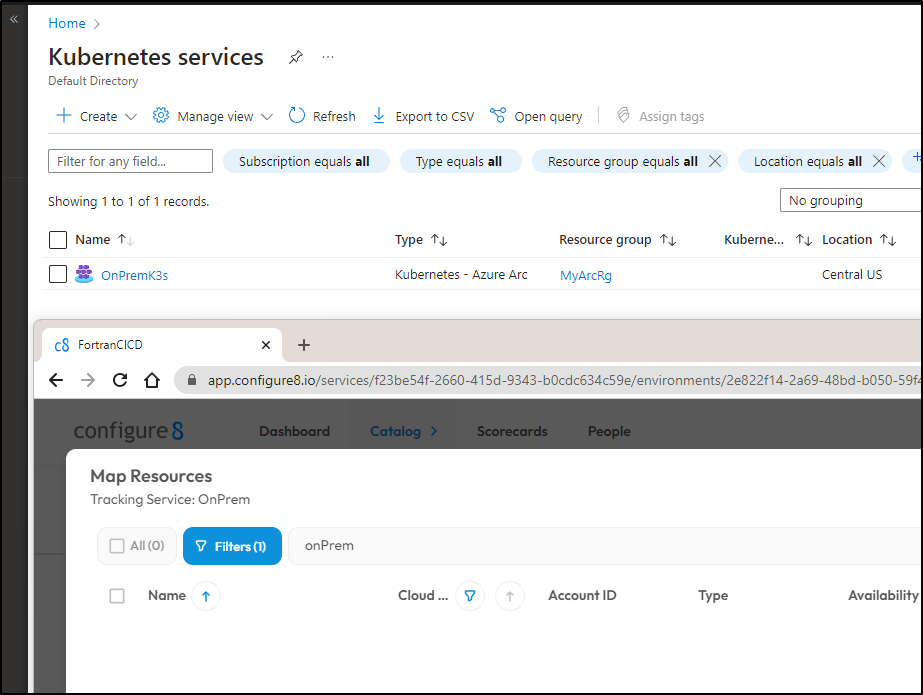

I’ll link to a new Environment to point to some Azure Resources

I had the thought that perhaps I could map an environment via Azure Arc for Kubernetes which allows me to onboard an on-prem K8s into Azure. But unfortunately I didn’t see that in the list

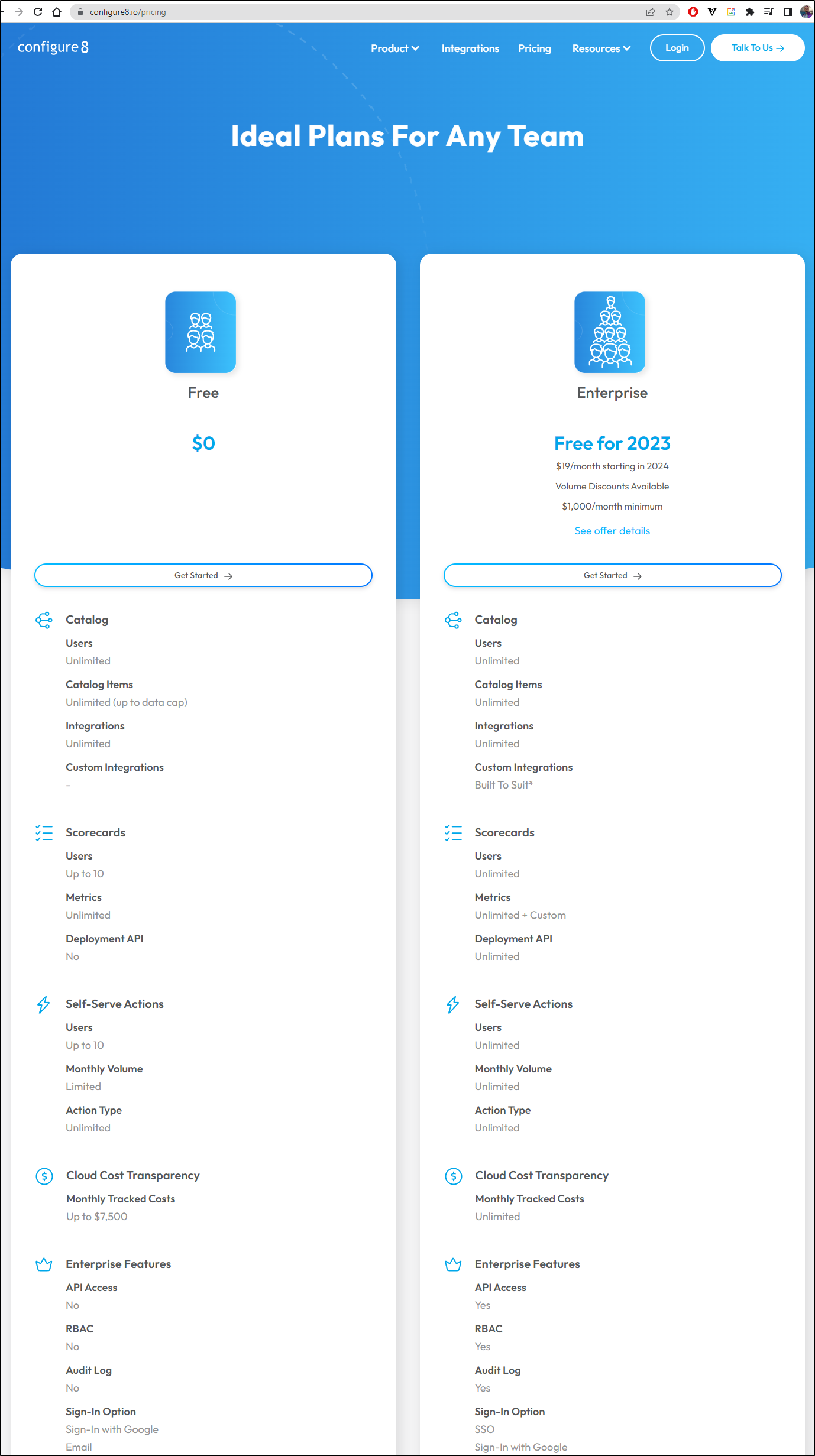

Pricing for Configure8

I don’t want to be pushy, or sound like a shill, but they are basically giving this away through 2023 so take it!

Summary

Today we looked at Configure8’s new Cost Monitoring abilities, specifically in AWS. I pivoted to try to find where some AWS S3 costs came from, without success. We then wanted to look at the SonarSource and Azure DevOps integrations, so we onboarded two public AzDO projects, Fortran_CICD and Perl_CICD. Lastly, I gently nudged everyone to sign up while Enterprise is free (for 2023).