Published: Jun 28, 2022 by Isaac Johnson

Kaniko is an open source, Kubernetes-based build tool that came out of Google Container Tools. It’s under the Apache 2.0 license and is up to release v1.8.1 as of this writing (the oldest being 0.1.0, which shows this project started prior to May 2018).

While it’s not the first build-a-container-in-a-container tool, it is the first I’ve seen that does not rely on the Docker-in-Docker method, which often leverages a behind-the-scenes Docker daemon. Instead, the executor extracts the file system from the base image, then executes the commands in the Dockerfile, snapshotting the file system in userspace after each one.

Today we’ll walk through a hello-world tutorial while also setting up ArgoCD and a properly supported NFS storage class (using the fuseim.pri/ifs provisioner name) in our new cluster. I’ll also take a moment to set up Sonarqube, which can be used with our DockerWithTests repo.

Setup

First, we need to create a volume claim. We’ll be using the example files in the GCT repo here, but I’ll be using NFS instead of local-storage. If you wish to use local-storage, you can also create the volume via YAML.

$ cat volume-claim.yaml

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: dockerfile-claim

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 8Gi

storageClassName: nfs

$ kubectl apply -f volume-claim.yaml

persistentvolumeclaim/dockerfile-claim created

$ kubectl get pvc dockerfile-claim

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

dockerfile-claim Bound pvc-c77ccf96-5aea-4e3f-bde3-0ecdde265ac9 8Gi RWO nfs 2m19s

I wanted to see where files written to the volume actually end up, so I created a second PVC and a test pod that mounts it

$ cat volume-claim.yaml

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: dockerfile2-claim

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 8Gi

storageClassName: nfs

volumeMode: Filesystem

$ kubectl apply -f volume-claim.yaml

persistentvolumeclaim/dockerfile2-claim created

$ cat pod-test.yaml

apiVersion: v1

kind: Pod

metadata:

name: kaniko-tgest

spec:

containers:

- name: ubuntu

image: ubuntu:latest

command:

- "sleep"

- "604800"

volumeMounts:

- name: dockerfile-storage

mountPath: /workspace

restartPolicy: Never

volumes:

- name: dockerfile-storage

persistentVolumeClaim:

claimName: dockerfile2-claim

$ kubectl apply -f pod-test.yaml

pod/kaniko-tgest created

$ kubectl exec -it kaniko-tgest -- /bin/bash

root@kaniko-tgest:/# ls /workspace

root@kaniko-tgest:/# cd /workspace

root@kaniko-tgest:/workspace# touch testing-kaniko

Interestingly, my NFS provisioner was really using local-path under the hood. As a result, the file showed up at a path on the master node

builder@anna-MacBookAir:~$ sudo ls /var/lib/rancher/k3s/storage/pvc-ce1165bd-4c45-4ee3-a641-2438e50c1139_default_data-nfs-server-provisioner-1655037797-0/pvc-31a6f985-a4c7-41f6-8cd1-8a86f0c9f15a

testing-kaniko

Fixing NFS

First, I want to ensure NFS works on the cluster nodes. To do so, I’ll run a test locally with an existing share

builder@anna-MacBookAir:~$ sudo mount -t nfs 192.168.1.129:/volume1/k3snfs /tmp/nfscheck/

builder@anna-MacBookAir:~$ ls /tmp/nfscheck/

default-beospvc6-pvc-099fe2f3-2d63-4df5-ba65-4c7f3eba099e default-redis-data-bitnami-harbor-redis-master-0-pvc-73a7e833-90fb-41ab-b42c-7a1e7fd5aad3

default-beospvc-pvc-f36c2986-ab0b-4978-adb6-710d4698e170 default-redis-data-redis-master-0-pvc-bdf57f20-661c-4982-aebd-a1bb30b44830

default-data-redis-ha-1605552203-server-0-pvc-35be9319-4b0b-429e-82f6-6fbf3afab721 default-redis-data-redis-slave-0-pvc-651cdaa3-d321-45a3-adf3-62224c341fba

default-data-redis-ha-1605552203-server-1-pvc-17c79f00-ac73-454f-a664-e02de9158bd5 default-redis-data-redis-slave-1-pvc-3c569803-3275-443d-9b65-be028ce4481f

default-data-redis-ha-1605552203-server-2-pvc-728cf90d-b725-44b9-8a2d-73ddae84abfa k3s-backup-master-20211206.tgz

default-fedorawsiso-pvc-cad0ce95-9af3-4cb4-959d-d8b944de47ce '#recycle'

default-mongo-release-mongodb-pvc-ecb4cc4f-153e-4eff-a5e7-5972b48e6f37 test

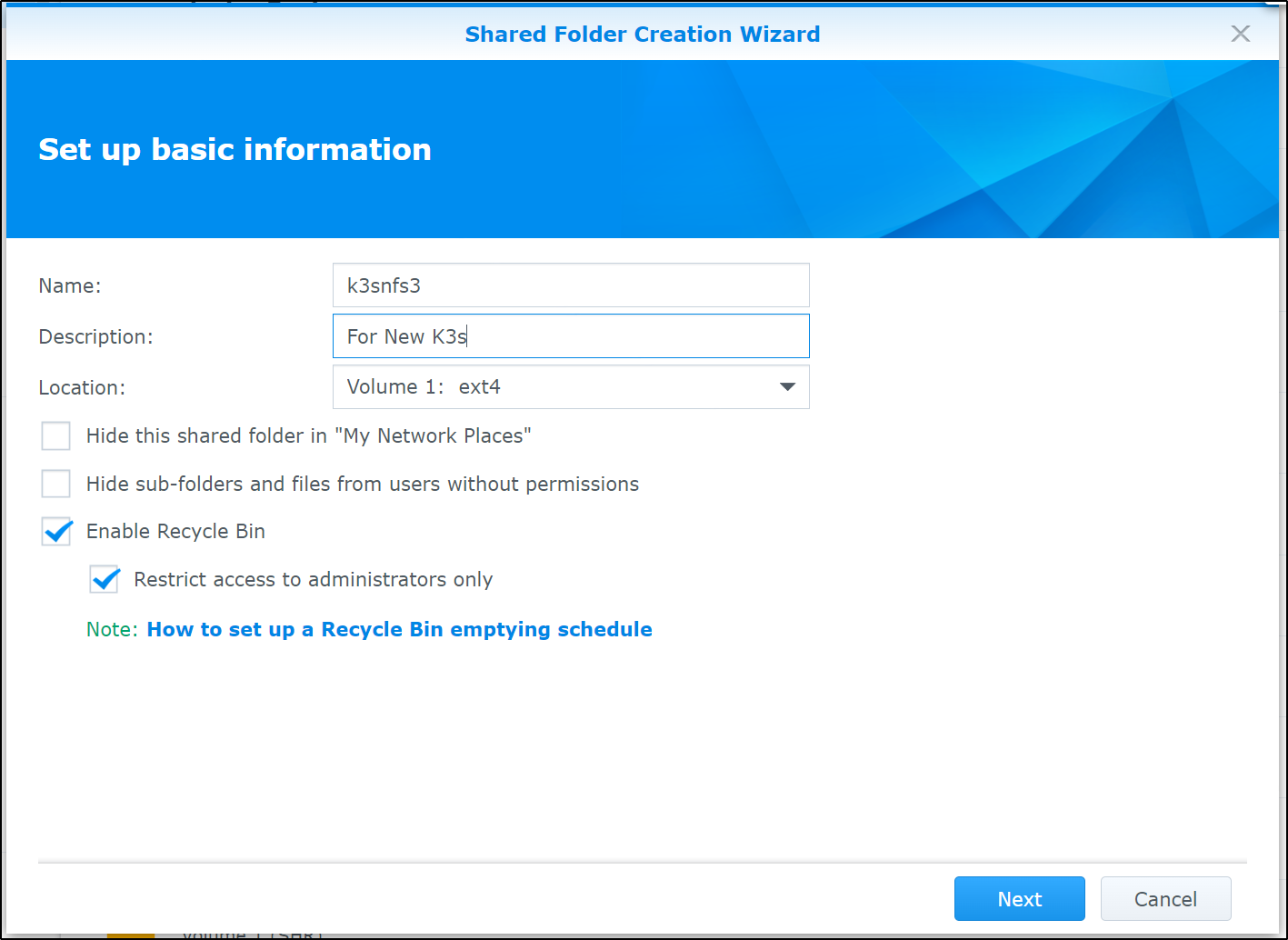

Create a Share

Next, I’ll create a new share on my Synology NAS.

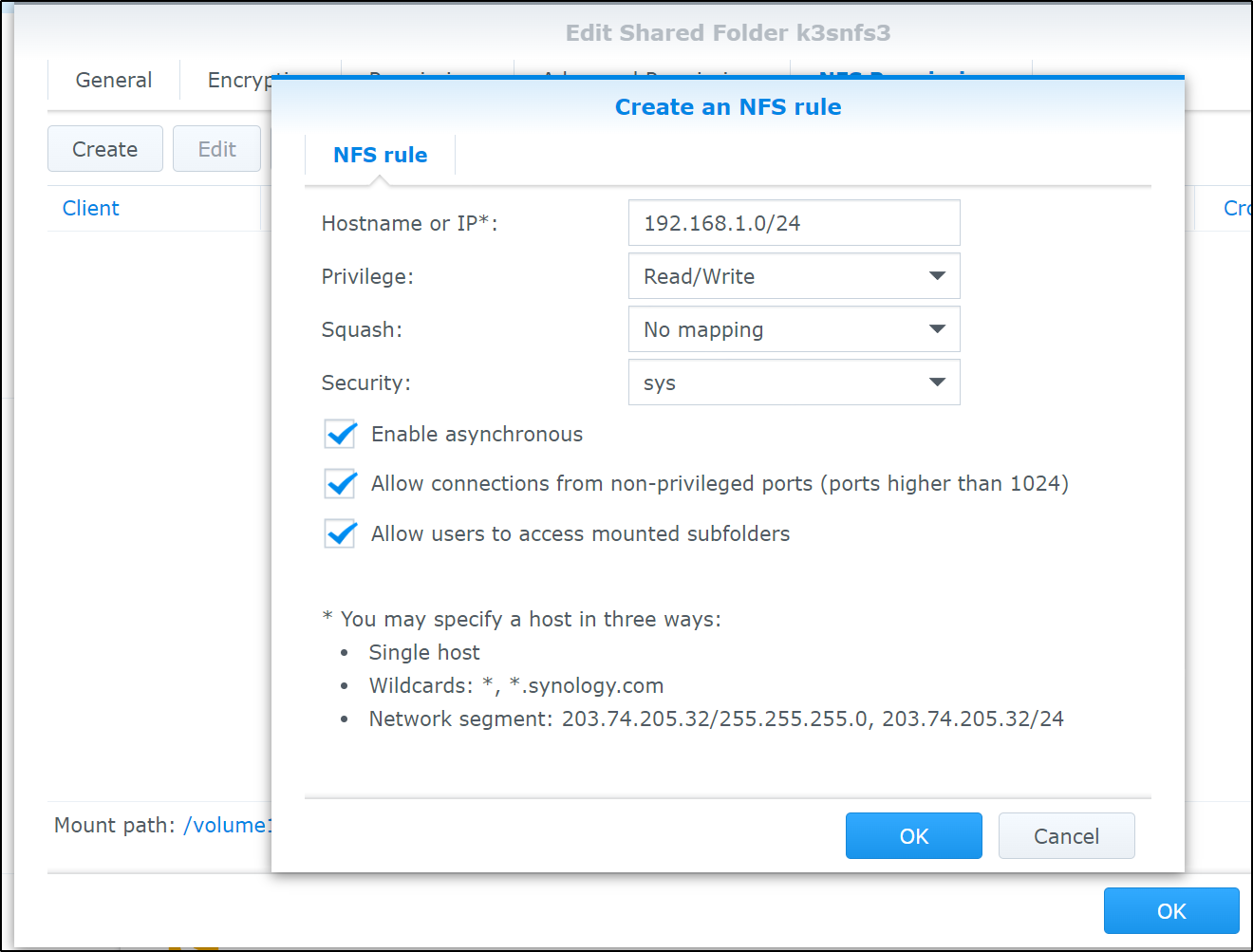

We can enable NFS sharing next

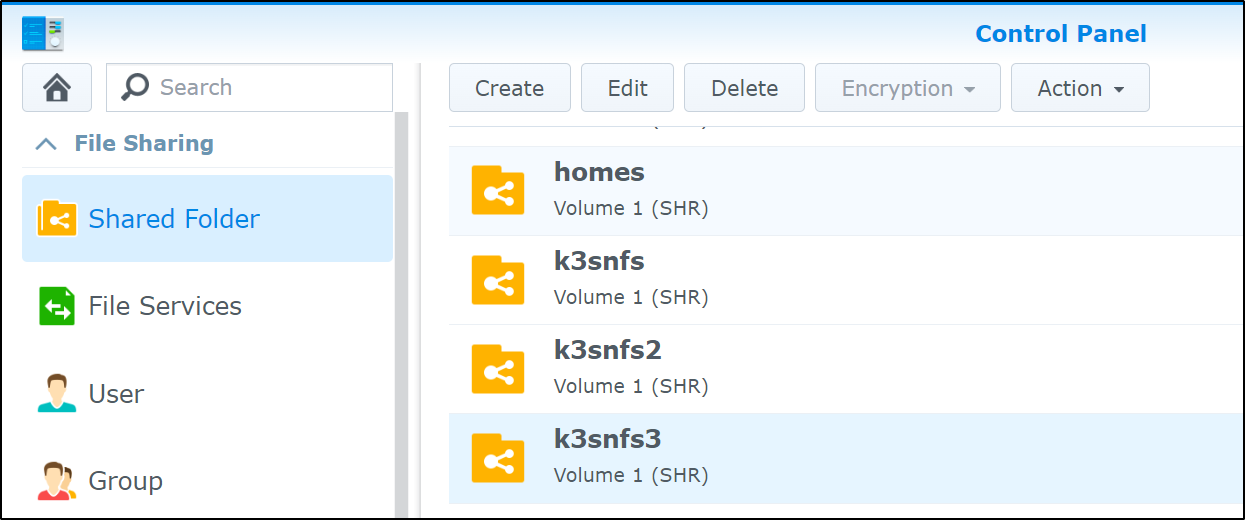

When done, we see the share in the list of shares on the NAS

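Now I’ll add the pre-reqs for the NFS client provisioner: a service account, the RBAC roles and a StorageClass that uses the fuseim.pri/ifs provisioner name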

$ cat k3s-prenfs.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: default

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: nfs-client-provisioner-runner

rules:

- apiGroups: [""]

resources: ["persistentvolumes"]

verbs: ["get", "list", "watch", "create", "delete"]

- apiGroups: [""]

resources: ["persistentvolumeclaims"]

verbs: ["get", "list", "watch", "update"]

- apiGroups: ["storage.k8s.io"]

resources: ["storageclasses"]

verbs: ["get", "list", "watch"]

- apiGroups: [""]

resources: ["events"]

verbs: ["create", "update", "patch"]

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: run-nfs-client-provisioner

subjects:

- kind: ServiceAccount

name: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: default

roleRef:

kind: ClusterRole

name: nfs-client-provisioner-runner

apiGroup: rbac.authorization.k8s.io

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: leader-locking-nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: default

rules:

- apiGroups: [""]

resources: ["endpoints"]

verbs: ["get", "list", "watch", "create", "update", "patch"]

---

kind: RoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: leader-locking-nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: default

subjects:

- kind: ServiceAccount

name: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: default

roleRef:

kind: Role

name: leader-locking-nfs-client-provisioner

apiGroup: rbac.authorization.k8s.io

---

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: managed-nfs-storage

provisioner: fuseim.pri/ifs # or choose another name, must match deployment's env PROVISIONER_NAME

parameters:

archiveOnDelete: "false"

reclaimPolicy: "Delete"

allowVolumeExpansion: true

$ kubectl apply -f k3s-prenfs.yaml

serviceaccount/nfs-client-provisioner created

clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created

role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

storageclass.storage.k8s.io/managed-nfs-storage created

Then we can launch it

$ cat k3s-nfs.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: nfs-client-provisioner

labels:

app: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: default

spec:

replicas: 1

strategy:

type: Recreate

selector:

matchLabels:

app: nfs-client-provisioner

template:

metadata:

labels:

app: nfs-client-provisioner

spec:

serviceAccountName: nfs-client-provisioner

containers:

- name: nfs-client-provisioner

image: gcr.io/k8s-staging-sig-storage/nfs-subdir-external-provisioner:v4.0.0

volumeMounts:

- name: nfs-client-root

mountPath: /persistentvolumes

env:

- name: PROVISIONER_NAME

value: fuseim.pri/ifs

- name: NFS_SERVER

value: 192.168.1.129

- name: NFS_PATH

value: /volume1/k3snfs3

volumes:

- name: nfs-client-root

nfs:

server: 192.168.1.129

path: /volume1/k3snfs3

$ kubectl apply -f k3s-nfs.yaml

deployment.apps/nfs-client-provisioner created

Note: I used to use quay.io/external_storage/nfs-client-provisioner:latest, but because selfLink was deprecated and is no longer populated by default as of Kubernetes 1.20, we now need to use the image gcr.io/k8s-staging-sig-storage/nfs-subdir-external-provisioner:v4.0.0

When done, we can see the Storage Classes and note that managed-nfs-storage is now listed

$ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs (default) cluster.local/nfs-server-provisioner-1655037797 Delete Immediate true 14d

local-path (default) rancher.io/local-path Delete WaitForFirstConsumer false 21d

managed-nfs-storage fuseim.pri/ifs Delete Immediate true 119s

The defaults are a bit out of whack (two classes are marked default and neither is the new one), so some simple patches will sort that out

$ kubectl patch storageclass local-path -p '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"false"}}}' && kubectl patch storageclass nfs -p '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"false"}}}' && kubectl patch storageclass managed-nfs-storage -p '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

storageclass.storage.k8s.io/local-path patched

storageclass.storage.k8s.io/nfs patched

storageclass.storage.k8s.io/managed-nfs-storage patched

$ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

local-path rancher.io/local-path Delete WaitForFirstConsumer false 21d

nfs cluster.local/nfs-server-provisioner-1655037797 Delete Immediate true 14d

managed-nfs-storage (default) fuseim.pri/ifs Delete Immediate true 9m6s
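If you end up doing this on more than one cluster, the three patches can be wrapped in a small helper. This is only a sketch: default_class_patch and set_only_default are hypothetical names of my own, and set_only_default assumes kubectl is already pointed at the right cluster.

```shell
# Build the JSON patch that sets or clears the default-class annotation.
# $1: "true" or "false"
default_class_patch() {
  printf '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"%s"}}}\n' "$1"
}

# Hypothetical helper: make the first class the only default.
# $1: class that should become default, remaining args: classes to demote
set_only_default() {
  wanted="$1"
  shift
  for sc in "$@"; do
    kubectl patch storageclass "$sc" -p "$(default_class_patch false)"
  done
  kubectl patch storageclass "$wanted" -p "$(default_class_patch true)"
}

# Print one payload so the snippet is safe to run without a cluster.
default_class_patch true
```

Calling set_only_default managed-nfs-storage local-path nfs would issue the same three patches as above, clearing the annotation on the old classes before setting it on the new one.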

Before moving on to using the proper NFS shares, I’m going to delete the last tests

$ kubectl delete -f pod-test.yaml

pod "kaniko-tgest" deleted

$ kubectl delete -f volume-claim.yaml

persistentvolumeclaim "dockerfile2-claim" deleted

Now we can create a Volume Claim to use the new provisioner

$ cat volume-claim.yaml

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: dockerfile3-claim

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 8Gi

storageClassName: managed-nfs-storage

volumeMode: Filesystem

$ kubectl apply -f volume-claim.yaml

persistentvolumeclaim/dockerfile3-claim created

Let’s fire up a pod to use it

$ cat pod-test.yaml

apiVersion: v1

kind: Pod

metadata:

name: kaniko-tgest

spec:

containers:

- name: ubuntu

image: ubuntu:latest

command:

- "sleep"

- "604800"

volumeMounts:

- name: dockerfile-storage

mountPath: /workspace

restartPolicy: Never

volumes:

- name: dockerfile-storage

persistentVolumeClaim:

claimName: dockerfile3-claim

$ kubectl apply -f pod-test.yaml

pod/kaniko-tgest created

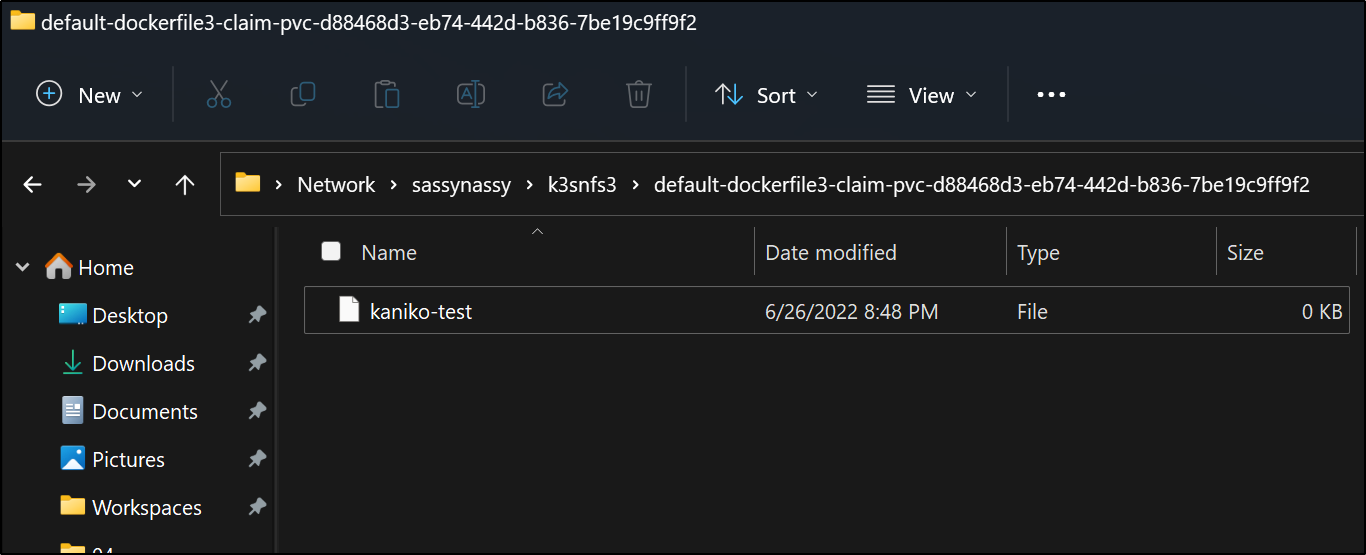

And then test it

$ kubectl exec -it kaniko-tgest -- /bin/bash

root@kaniko-tgest:/# cd /workspace/

root@kaniko-tgest:/workspace# touch kaniko-test

root@kaniko-tgest:/workspace# ls -l

total 0

-rw-r--r-- 1 root root 0 Jun 27 01:48 kaniko-test

root@kaniko-tgest:/workspace# exit

exit

We can see it created a file

Kaniko

Let’s first create the PVC for Kaniko. In their writeup, they suggested making a volume, then a claim. However, with NFS as our provisioner, a volume is created automatically when we request a PVC

$ cat volume-claim.yaml

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: dockerfile-claim

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 8Gi

storageClassName: managed-nfs-storage

volumeMode: Filesystem

Now create the claim

$ kubectl apply -f volume-claim.yaml

persistentvolumeclaim/dockerfile-claim created

$ kubectl get pvc | grep docker

dockerfile3-claim Bound pvc-d88468d3-eb74-442d-b836-7be19c9ff9f2 8Gi RWO managed-nfs-storage 14m

dockerfile-claim Bound pvc-848781c6-8a0f-44ee-89f5-beebc4bf55b5 8Gi RWO managed-nfs-storage 14s

I’ll follow that by creating a dockerfile in the PVC. For fun, I’ll do this from Windows, accessing the NFS share directly
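The dockerfile itself isn’t shown here, but judging by the Kaniko logs further down (an ubuntu base image and a single ENTRYPOINT), it would look something like the sketch below. WORKSPACE_DIR is a hypothetical variable standing in for wherever the PVC’s NFS directory is mounted; it falls back to a scratch directory so the snippet is safe to run anywhere.

```shell
# Assumption: WORKSPACE_DIR points at the mounted NFS directory backing the PVC.
# It defaults to a temporary directory when unset.
WORKSPACE_DIR="${WORKSPACE_DIR:-$(mktemp -d)}"

# The Kaniko pod is pointed at /workspace/dockerfile (lowercase), so match that name.
cat > "$WORKSPACE_DIR/dockerfile" <<'EOF'
FROM ubuntu
ENTRYPOINT ["/bin/bash", "-c", "echo fresh brewed fun"]
EOF

# Show what was written.
cat "$WORKSPACE_DIR/dockerfile"
```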

I’ll use the Harbor registry cred I already have set up for my private Harbor CR. If you want to use Docker Hub, you can create the secret using kubectl create secret docker-registry regcred --docker-server=<your-registry-server> --docker-username=<your-name> --docker-password=<your-pword> --docker-email=<your-email>

First update the Kaniko pod

$ git diff pod.yaml

diff --git a/examples/pod.yaml b/examples/pod.yaml

index 27f40a4b..92952d50 100644

--- a/examples/pod.yaml

+++ b/examples/pod.yaml

@@ -8,7 +8,7 @@ spec:

image: gcr.io/kaniko-project/executor:latest

args: ["--dockerfile=/workspace/dockerfile",

"--context=dir://workspace",

- "--destination=<user-name>/<repo>"] # replace with your dockerhub account

+ "--destination=harbor.freshbrewed.science/freshbrewedprivate"] # replace with your dockerhub account

volumeMounts:

- name: kaniko-secret

mountPath: /kaniko/.docker

@@ -18,7 +18,7 @@ spec:

volumes:

- name: kaniko-secret

secret:

- secretName: regcred

+ secretName: myharborreg

items:

- key: .dockerconfigjson

path: config.json

Then apply the pod

$ cat pod.yaml

apiVersion: v1

kind: Pod

metadata:

name: kaniko

spec:

containers:

- name: kaniko

image: gcr.io/kaniko-project/executor:latest

args: ["--dockerfile=/workspace/dockerfile",

"--context=dir://workspace",

"--destination=harbor.freshbrewed.science/freshbrewedprivate/kanikotest"] # replace with your dockerhub account

volumeMounts:

- name: kaniko-secret

mountPath: /kaniko/.docker

- name: dockerfile-storage

mountPath: /workspace

restartPolicy: Never

volumes:

- name: kaniko-secret

secret:

secretName: myharborreg

items:

- key: .dockerconfigjson

path: config.json

- name: dockerfile-storage

persistentVolumeClaim:

claimName: dockerfile-claim

$ kubectl apply -f pod.yaml

pod/kaniko created

When I checked the kaniko logs, I realized the harborreg cred I was using only had “Image Pull” privs

$ kubectl logs kaniko

error checking push permissions -- make sure you entered the correct tag name, and that you are authenticated correctly, and try again: checking push permission for "harbor.freshbrewed.science/freshbrewedprivate/kanikotest": POST https://harbor.freshbrewed.science/v2/freshbrewedprivate/kanikotest/blobs/uploads/: UNAUTHORIZED: unauthorized to access repository: freshbrewedprivate/kanikotest, action: push: unauthorized to access repository: freshbrewedprivate/kanikotest, action: push

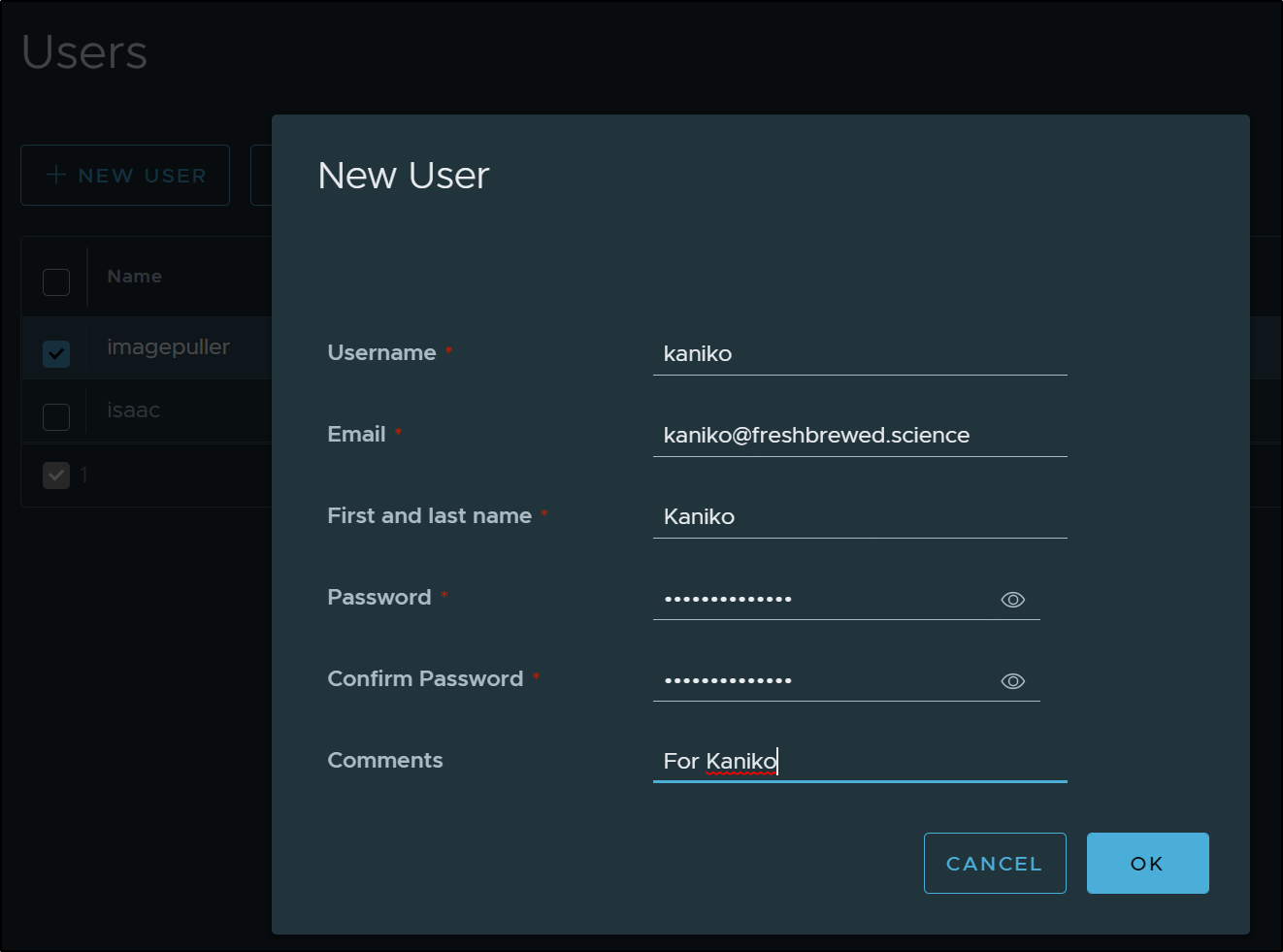

I’ll go ahead and create a new user “kaniko”

Create a Kaniko registry cred

$ kubectl create secret docker-registry kanikoharborcred --docker-server=harbor.freshbrewed.science --docker-username=kaniko --docker-password=************ --docker-email=kaniko@freshbrewed.science

secret/kanikoharborcred created
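For reference, a docker-registry secret is essentially a Docker config.json stored under the .dockerconfigjson key, which is why the pod spec maps that key to config.json under /kaniko/.docker. Here is a rough sketch of what ends up inside. The function name and demo values are made up, and it does no JSON escaping, so treat it as an illustration rather than a way to generate real secrets.

```shell
# Print a minimal Docker config.json for one registry.
# $1: registry server, $2: username, $3: password
docker_config_json() {
  server="$1"
  user="$2"
  pass="$3"
  # The "auth" field is base64 of "username:password".
  auth="$(printf '%s:%s' "$user" "$pass" | base64 | tr -d '\n')"
  printf '{"auths":{"%s":{"username":"%s","password":"%s","auth":"%s"}}}\n' \
    "$server" "$user" "$pass" "$auth"
}

# Demo values only; these are not real credentials.
docker_config_json harbor.example.com demo-user demo-pass
```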

Then we can use it

$ cat pod.yaml

apiVersion: v1

kind: Pod

metadata:

name: kaniko

spec:

containers:

- name: kaniko

image: gcr.io/kaniko-project/executor:latest

args: ["--dockerfile=/workspace/dockerfile",

"--context=dir://workspace",

"--destination=harbor.freshbrewed.science/freshbrewedprivate/kanikotest"] # replace with your dockerhub account

volumeMounts:

- name: kaniko-secret

mountPath: /kaniko/.docker

- name: dockerfile-storage

mountPath: /workspace

restartPolicy: Never

volumes:

- name: kaniko-secret

secret:

secretName: kanikoharborcred

items:

- key: .dockerconfigjson

path: config.json

- name: dockerfile-storage

persistentVolumeClaim:

claimName: dockerfile-claim

$ kubectl delete -f pod.yaml

pod "kaniko" deleted

$ kubectl apply -f pod.yaml

pod/kaniko created
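Between the two attempts only the secret name changed, and the destination and claim name are the other bits you would swap for your own setup. A small template function makes that explicit. This is a sketch; render_kaniko_pod is a hypothetical helper of mine, not something that ships with Kaniko.

```shell
# Print a Kaniko pod manifest.
# $1: image destination, $2: registry secret name, $3: PVC holding the dockerfile
render_kaniko_pod() {
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: kaniko
spec:
  containers:
  - name: kaniko
    image: gcr.io/kaniko-project/executor:latest
    args: ["--dockerfile=/workspace/dockerfile",
           "--context=dir://workspace",
           "--destination=$1"]
    volumeMounts:
    - name: kaniko-secret
      mountPath: /kaniko/.docker
    - name: dockerfile-storage
      mountPath: /workspace
  restartPolicy: Never
  volumes:
  - name: kaniko-secret
    secret:
      secretName: $2
      items:
      - key: .dockerconfigjson
        path: config.json
  - name: dockerfile-storage
    persistentVolumeClaim:
      claimName: $3
EOF
}

# Render the same pod used above.
render_kaniko_pod harbor.freshbrewed.science/freshbrewedprivate/kanikotest kanikoharborcred dockerfile-claim
```

Piping the output into kubectl apply -f - would create the pod without keeping a pod.yaml around.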

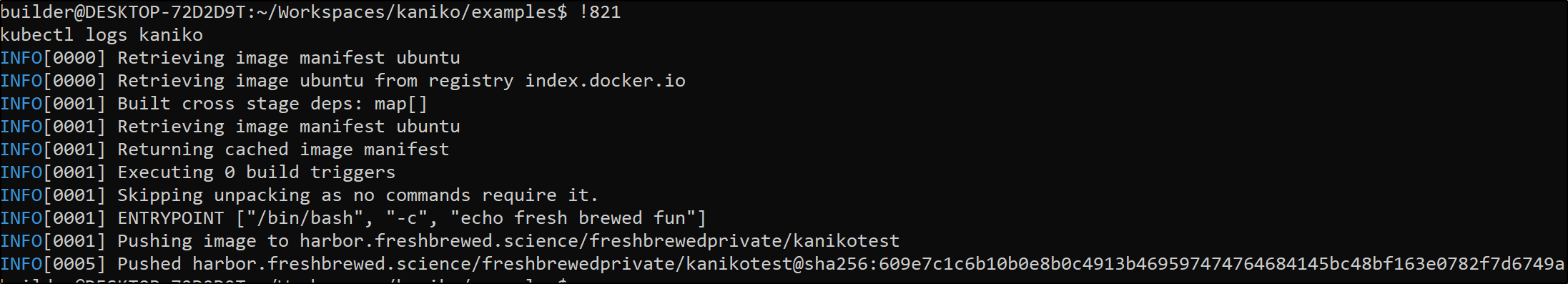

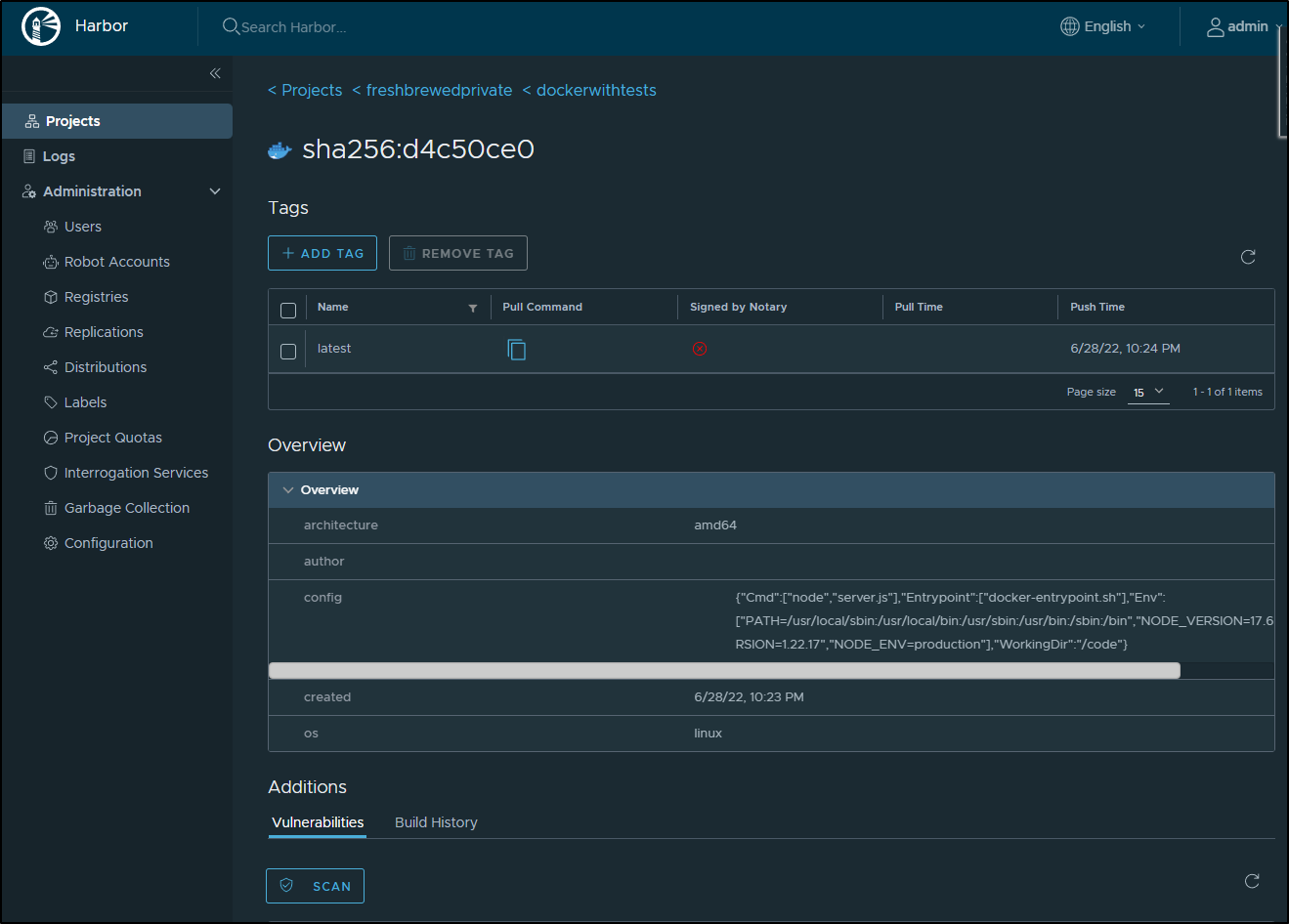

We can see the repository in Harbor was created

The Kaniko logs show the build and push, and you can pull the image to test

$ kubectl logs kaniko

INFO[0000] Retrieving image manifest ubuntu

INFO[0000] Retrieving image ubuntu from registry index.docker.io

INFO[0001] Built cross stage deps: map[]

INFO[0001] Retrieving image manifest ubuntu

INFO[0001] Returning cached image manifest

INFO[0001] Executing 0 build triggers

INFO[0001] Skipping unpacking as no commands require it.

INFO[0001] ENTRYPOINT ["/bin/bash", "-c", "echo fresh brewed fun"]

INFO[0001] Pushing image to harbor.freshbrewed.science/freshbrewedprivate/kanikotest

INFO[0005] Pushed harbor.freshbrewed.science/freshbrewedprivate/kanikotest@sha256:609e7c1c6b10b0e8b0c4913b469597474764684145bc48bf163e0782f7d6749a

$ docker pull harbor.freshbrewed.science/freshbrewedprivate/kanikotest

Using default tag: latest

latest: Pulling from freshbrewedprivate/kanikotest

405f018f9d1d: Pull complete

Digest: sha256:609e7c1c6b10b0e8b0c4913b469597474764684145bc48bf163e0782f7d6749a

Status: Downloaded newer image for harbor.freshbrewed.science/freshbrewedprivate/kanikotest:latest

harbor.freshbrewed.science/freshbrewedprivate/kanikotest:latest

$ docker run -it harbor.freshbrewed.science/freshbrewedprivate/kanikotest:latest

fresh brewed fun

Using Kaniko

First, I need Argo CD to show how this could work.

Before I go further, I’ll add self-hosted Argo CD back into the mix. We could use https://www.koncrete.dev/ to do this; if you want to use the free Koncrete.dev hosted option, you can skip this whole Argo CD install section. In my case, I just want to add a working, current (as of 5 days ago) ArgoCD installation.

Setting up Argo CD

I’ll follow a similar path as I did with the Argo CD : Part 1 write up (albeit with newer versions)

builder@DESKTOP-QADGF36:~/Workspaces/argotest$ cat charts/argo-cd/Chart.yaml

apiVersion: v2

name: argo-cd

version: 1.0.1

dependencies:

- name: argo-cd

version: 4.9.7

repository: https://argoproj.github.io/argo-helm

$ cat charts/argo-cd/values.yaml

argo-cd:

installCRDs: false

global:

image:

tag: v2.4.2

dex:

enabled: false

server:

extraArgs:

- --insecure

config:

repositories: |

- type: helm

name: stable

url: https://charts.helm.sh/stable

- type: helm

name: argo-cd

url: https://argoproj.github.io/argo-helm

$ helm dep update charts/argo-cd/

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "epsagon" chart repository

...Successfully got an update from the "kuma" chart repository

...Successfully got an update from the "dapr" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "sonarqube" chart repository

...Successfully got an update from the "uptime-kuma" chart repository

...Successfully got an update from the "kubecost" chart repository

...Successfully got an update from the "sumologic" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "myharbor" chart repository

...Successfully got an update from the "argo-cd" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "incubator" chart repository

...Successfully got an update from the "crossplane-stable" chart repository

...Successfully got an update from the "rancher-latest" chart repository

...Successfully got an update from the "newrelic" chart repository

...Successfully got an update from the "gitlab" chart repository

...Successfully got an update from the "bitnami" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 1 charts

Downloading argo-cd from repo https://argoproj.github.io/argo-helm

Deleting outdated charts

Then install

$ helm upgrade --install argo-cd charts/argo-cd/

Release "argo-cd" does not exist. Installing it now.

NAME: argo-cd

LAST DEPLOYED: Mon Jun 27 05:58:58 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

We check the pod and get the default login credential

$ kubectl get pods -l app.kubernetes.io/name=argocd-server -o name -o json | jq -r '.items[] | .metadata.name'

argo-cd-argocd-server-7fff4b76b7-w5pmw

$ kubectl get secrets argocd-secret -o json | jq -r '.data."admin.password"' | base64 --decode && echo

$2a$10$Qo4TCjNtQzzoNM7pD.hd0OiUo4Ug.jlzen/YkKuDk2I9nbHUKVi52

This is useless as it’s bcrypted. So, as before, I’ll create a bcrypt hash of a fresh password with this page, then use it to patch the secret and rotate the pod

$ kubectl patch secret argocd-secret -p '{"stringData": { "admin.passwordMtime": "'$(date +%FT%T%Z)'", "admin.password": "$2a$10$ifBzX9dla.eu2SNwHd/U8OGCE56H.3iSk/3wvjNdzcdOnC/nNT.7O"}}'

secret/argocd-secret patched

$ kubectl delete pod `kubectl get pods -l app.kubernetes.io/name=argocd-server -o name | cut -d'/' -f 2 | tr -d '\n'`

pod "argo-cd-argocd-server-7fff4b76b7-w5pmw" deleted
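One gotcha with the patch above: a bcrypt hash is full of dollar signs, so it has to stay inside single quotes or the shell will try to expand parts of it. Below is a sketch of building the payload with a helper. The name argocd_password_patch is hypothetical, and the hash is a placeholder rather than a real one.

```shell
# Build the stringData patch for the argocd-secret.
# $1: bcrypt hash of the new admin password, $2: modification timestamp
argocd_password_patch() {
  printf '{"stringData":{"admin.passwordMtime":"%s","admin.password":"%s"}}\n' "$2" "$1"
}

# Placeholder hash: single quotes keep $2a, $10 and friends from being expanded.
new_hash='$2a$10$replace.me.with.a.real.bcrypt.hash'

# Print the payload; the timestamp format matches the one used above.
argocd_password_patch "$new_hash" "$(date +%FT%T%Z)"
```

The printed payload is what would be handed to kubectl patch secret argocd-secret -p, followed by deleting the server pod as before.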

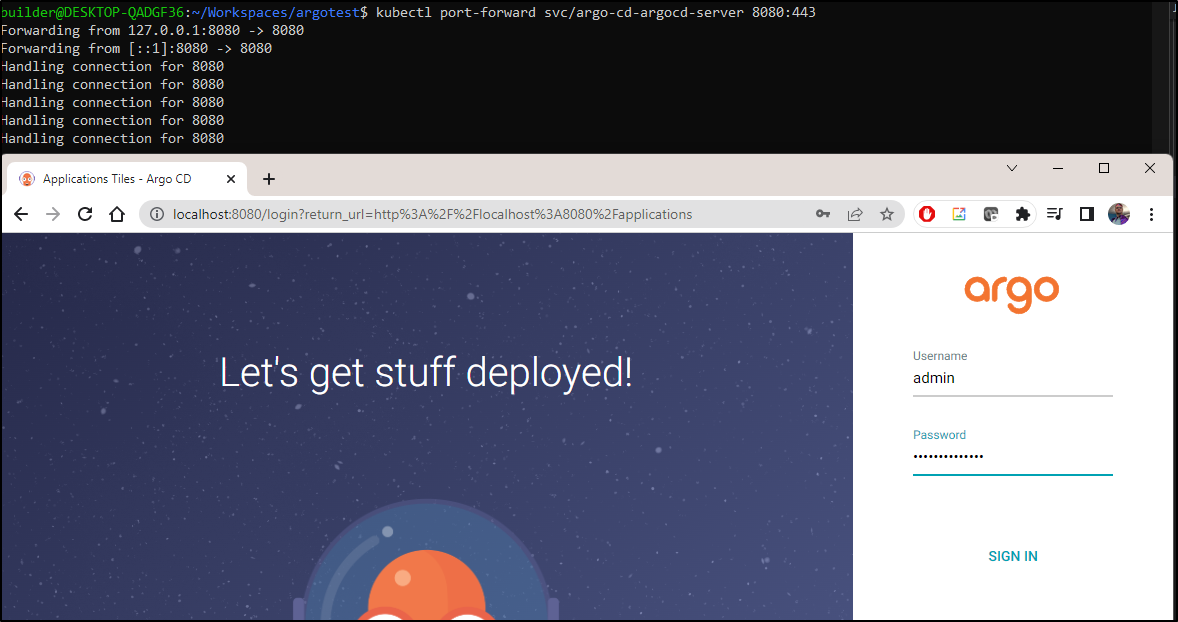

Now we can port-forward and log in with “admin” and our password

$ kubectl port-forward svc/argo-cd-argocd-server 8080:443

Forwarding from 127.0.0.1:8080 -> 8080

Forwarding from [::1]:8080 -> 8080

Clearly I’ll want a proper ingress. My new ingress controller requires an ingressClassName, so we’ll update the Ingress.yaml from before

$ cat argo-ingress2.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

cert-manager.io/cluster-issuer: letsencrypt-prod

kubernetes.io/tls-acme: "true"

ingress.kubernetes.io/proxy-body-size: "0"

nginx.ingress.kubernetes.io/proxy-body-size: "0"

nginx.ingress.kubernetes.io/proxy-buffer-size: 32k

nginx.ingress.kubernetes.io/proxy-buffers-number: 8 32k

nginx.ingress.kubernetes.io/proxy-read-timeout: "43200"

nginx.ingress.kubernetes.io/proxy-send-timeout: "43200"

name: argocd-ingress

namespace: default

spec:

ingressClassName: nginx

rules:

- host: argocd.freshbrewed.science

http:

paths:

- backend:

service:

name: argo-cd-argocd-server

port:

number: 80

path: /

pathType: ImplementationSpecific

tls:

- hosts:

- argocd.freshbrewed.science

secretName: argocd-tls

$ kubectl apply -f argo-ingress2.yaml

ingress.networking.k8s.io/argocd-ingress created

We’ll watch for the cert to get applied

$ kubectl get cert

NAME READY SECRET AGE

azurevote-tls True azurevote-tls 15d

harbor-fb-science True harbor.freshbrewed.science-cert 14d

notary-fb-science True notary.freshbrewed.science-cert 14d

harbor.freshbrewed.science-cert True harbor.freshbrewed.science-cert 14d

notary.freshbrewed.science-cert True notary.freshbrewed.science-cert 14d

argocd-tls False argocd-tls 68s

$ kubectl get cert

NAME READY SECRET AGE

azurevote-tls True azurevote-tls 15d

harbor-fb-science True harbor.freshbrewed.science-cert 14d

notary-fb-science True notary.freshbrewed.science-cert 14d

harbor.freshbrewed.science-cert True harbor.freshbrewed.science-cert 14d

notary.freshbrewed.science-cert True notary.freshbrewed.science-cert 14d

argocd-tls True argocd-tls 116s
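Rather than re-running the command by hand, a small polling loop can do the watching. This is a sketch: wait_until and cert_ready are hypothetical helpers, and cert_ready assumes the Certificate resource reports a Ready condition, which is what the READY column above reflects.

```shell
# Retry a command until it succeeds or the attempts run out.
# $1: max attempts, $2: seconds to sleep between attempts, rest: command to run
wait_until() {
  attempts="$1"
  delay="$2"
  shift 2
  i=0
  while [ "$i" -lt "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Succeeds when the named Certificate has Ready=True (needs kubectl and cert-manager).
# $1: certificate name
cert_ready() {
  status="$(kubectl get cert "$1" -o jsonpath='{.status.conditions[?(@.type=="Ready")].status}')"
  [ "$status" = "True" ]
}

# Example (commented out so the snippet runs without a cluster):
# wait_until 30 10 cert_ready argocd-tls && echo "argocd-tls is ready"
```

With those numbers the loop would give the certificate about five minutes before giving up.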

Then the ingress

$ kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

azurevote-ingress nginx azurevote.freshbrewed.science 192.168.1.159 80, 443 15d

harbor-registry-ingress nginx harbor.freshbrewed.science 192.168.1.159 80, 443 14d

harbor-registry-ingress-notary nginx notary.freshbrewed.science 192.168.1.159 80, 443 14d

argocd-ingress nginx argocd.freshbrewed.science 192.168.1.159 80, 443 2m21s

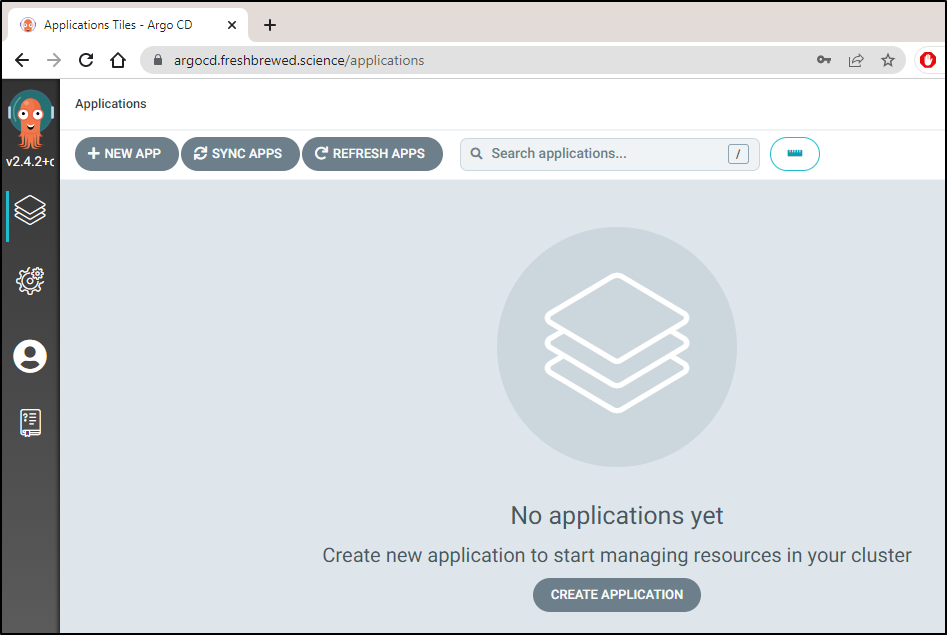

And now we have ArgoCD running again

Sonarqube

One more item of housekeeping: I’ll need to add Sonarqube back

$ helm repo add sonarqube https://SonarSource.github.io/helm-chart-sonarqube

"sonarqube" already exists with the same configuration, skipping

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "epsagon" chart repository

...Successfully got an update from the "kuma" chart repository

...Successfully got an update from the "dapr" chart repository

...Successfully got an update from the "sonarqube" chart repository

...Successfully got an update from the "uptime-kuma" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "myharbor" chart repository

...Successfully got an update from the "sumologic" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "kubecost" chart repository

...Successfully got an update from the "argo-cd" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "crossplane-stable" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "rancher-latest" chart repository

...Successfully got an update from the "incubator" chart repository

...Successfully got an update from the "gitlab" chart repository

...Successfully got an update from the "newrelic" chart repository

...Successfully got an update from the "bitnami" chart repository

Update Complete. ⎈Happy Helming!⎈

$ helm upgrade --install sonarqube sonarqube/sonarqube

Release "sonarqube" does not exist. Installing it now.

NAME: sonarqube

LAST DEPLOYED: Mon Jun 27 06:56:32 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace default -l "app=sonarqube,release=sonarqube" -o jsonpath="{.items[0].metadata.name}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl port-forward $POD_NAME 8080:9000 -n default

and then add an Ingress for it

$ cat sonarqubeIngress.yml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

kubernetes.io/tls-acme: "true"

cert-manager.io/cluster-issuer: letsencrypt-prod

ingress.kubernetes.io/proxy-body-size: "0"

nginx.ingress.kubernetes.io/proxy-body-size: "0"

name: sonarqube-ingress

namespace: default

spec:

ingressClassName: nginx

tls:

- hosts:

- sonarqube.freshbrewed.science

secretName: sonarqube-tls

rules:

- host: sonarqube.freshbrewed.science

http:

paths:

- backend:

service:

name: sonarqube-sonarqube

port:

number: 9000

path: /

pathType: ImplementationSpecific

We’ll check the cert is created

$ kubectl get cert

NAME READY SECRET AGE

azurevote-tls True azurevote-tls 15d

harbor-fb-science True harbor.freshbrewed.science-cert 14d

notary-fb-science True notary.freshbrewed.science-cert 14d

harbor.freshbrewed.science-cert True harbor.freshbrewed.science-cert 14d

notary.freshbrewed.science-cert True notary.freshbrewed.science-cert 14d

argocd-tls True argocd-tls 50m

sonarqube-tls True sonarqube-tls 108s

and the ingress as well

$ kubectl get ingress sonarqube-ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

sonarqube-ingress nginx sonarqube.freshbrewed.science 192.168.1.159 80, 443 2m16s

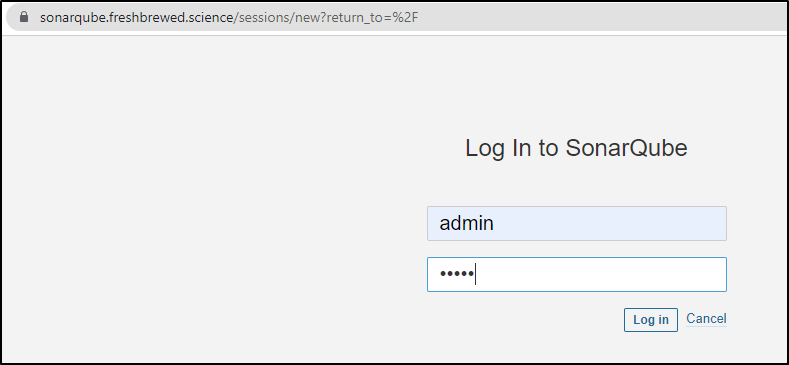

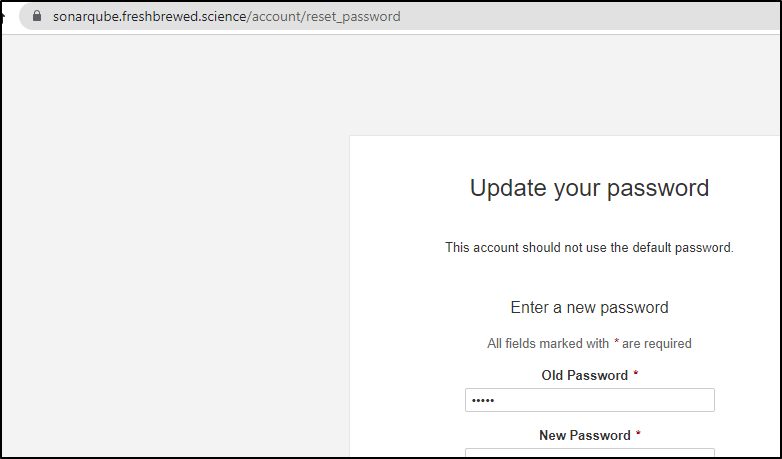

We need to log in and change the password, since by default the login and password are both “admin”. On first login it prompts for a password change

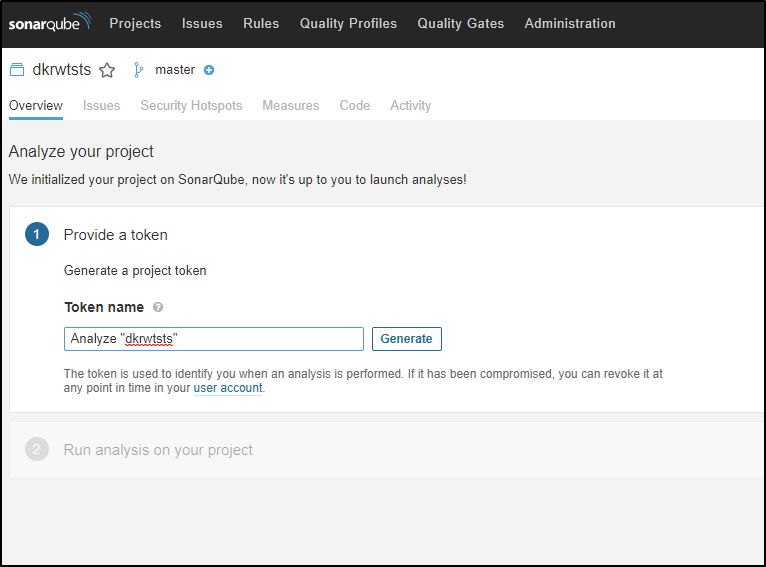

Because I plan to update the DockerWithTests repo to publish results during my Kaniko builds, I’ll get a new token.

The old invocation (with an expired token) looked like this in the Dockerfile

+RUN apt update && apt install -y jq

+RUN curl --silent -u 93846bed357b0cad92e6550dbb40203601451103: https://sonarqube.freshbrewed.science/api/project_analyses/search?project=dkrwtsts | jq -r '.analyses[].events[] | select(.category=="QUALITY_GATE") | .name' > results

+RUN grep -q "Passed" output; exit $?

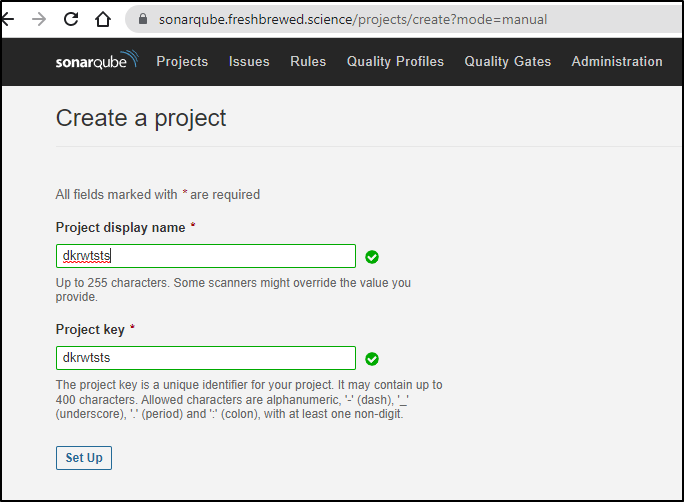

I’ll create the project in Sonarqube

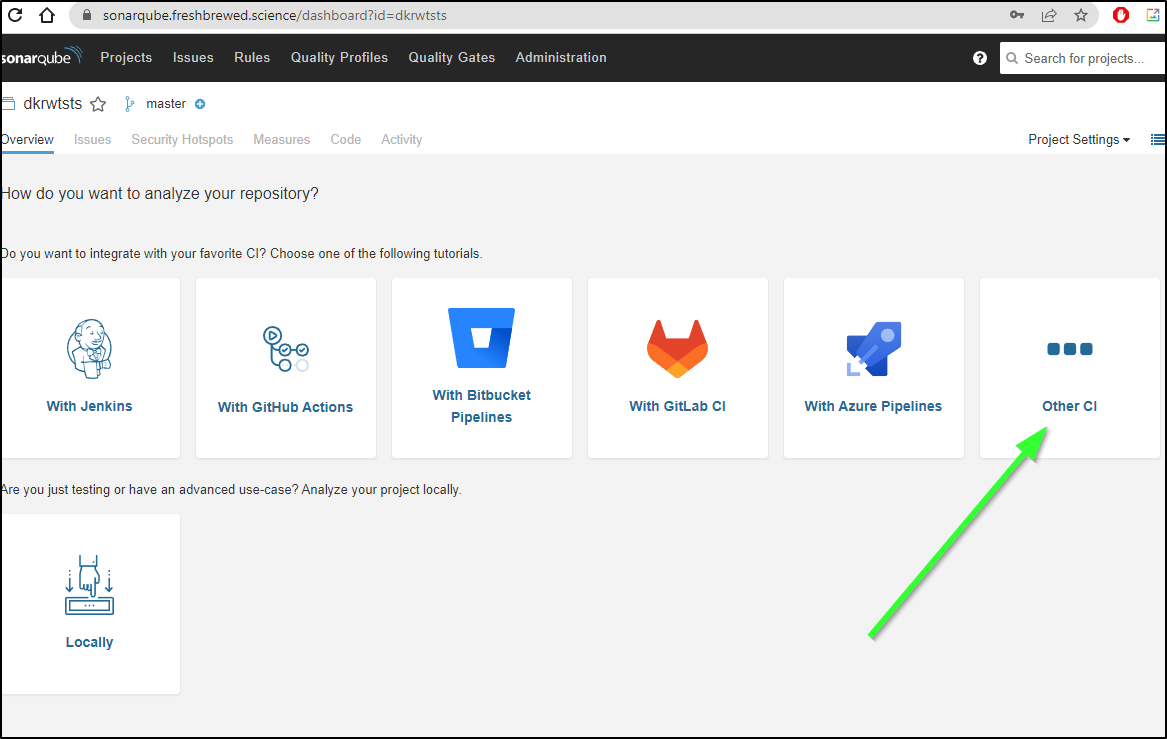

To create a token, I’ll choose “Other CI”

Then “Generate” to create the token

This creates the upload token “sqp_72d6f8cbe95fd000004d2d7465625f587cd39558”

I’ll add this to my Dockerfile:

$ cat Dockerfile

FROM node:17.6.0 as base

WORKDIR /code

COPY package.json package.json

COPY package-lock.json package-lock.json

FROM base as test

RUN curl -s -L https://binaries.sonarsource.com/Distribution/sonar-scanner-cli/sonar-scanner-cli-4.7.0.2747-linux.zip -o sonarscanner.zip \

&& unzip -qq sonarscanner.zip \

&& rm -rf sonarscanner.zip \

&& mv sonar-scanner-4.7.0.2747-linux sonar-scanner

COPY sonar-scanner.properties sonar-scanner/conf/sonar-scanner.properties

ENV SONAR_RUNNER_HOME=sonar-scanner

ENV PATH $PATH:sonar-scanner/bin

# RUN sed -i 's/use_embedded_jre=true/use_embedded_jre=false/g' sonar-scanner/bin/sonar-scanner

RUN npm ci

COPY . .

COPY .git .git

RUN npm run test2

RUN sonar-scanner -Dsonar.settings=sonar-scanner/conf/sonar-scanner.properties

RUN apt update && apt install -y jq

RUN curl --silent -u sqp_72d6f8cbe95fd000004d2d7465625f587cd39558: https://sonarqube.freshbrewed.science/api/project_analyses/search?project=dkrwtsts | jq -r '.analyses[].events[] | select(.category=="QUALITY_GATE") | .name' > results

RUN grep -q "Passed" output; exit $?

FROM base as prod

ENV NODE_ENV=production

RUN npm ci --production

COPY . .

CMD [ "node", "server.js" ]
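The last two steps of the test stage boil down to "save the quality gate event names, then fail the build unless the gate passed". As a sketch of that check on its own, here is a helper that reads the same file the curl step writes. gate_passed is a hypothetical name, the sample data is invented, and looking only at the first line assumes the API lists the newest analysis first.

```shell
# Succeed only if the first quality gate event in the file is "Passed".
# $1: file with one event name per line (the jq output)
gate_passed() {
  [ "$(head -n 1 "$1")" = "Passed" ]
}

# Invented sample data standing in for the jq output.
results="$(mktemp)"
printf 'Passed\nFailed\n' > "$results"

if gate_passed "$results"; then
  echo "quality gate passed"
else
  echo "quality gate failed"
fi
```

In a Dockerfile RUN step, a non-zero exit from a check like this is what stops the build.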

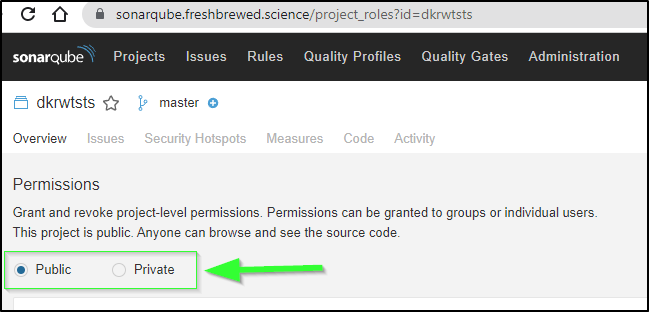

Since this is learning code, I’m leaving the project Public. In a real-world scenario, you would likely want to keep your Sonar project private (as it fundamentally exposes code in analysis)

I’ll push the current changes to the sonarscans branch

$ git status

On branch sonarscans

Your branch is ahead of 'origin/sonarscans' by 1 commit.

(use "git push" to publish your local commits)

Changes to be committed:

(use "git restore --staged <file>..." to unstage)

modified: Dockerfile

new file: calculator.js

new file: index.js

modified: package-lock.json

modified: package.json

new file: tests/calc.js

$ git commit -m "Working Copy"

[sonarscans 7b6a4b1] Working Copy

6 files changed, 3020 insertions(+), 94 deletions(-)

create mode 100644 calculator.js

create mode 100644 index.js

create mode 100644 tests/calc.js

$ git push

Enumerating objects: 22, done.

Counting objects: 100% (22/22), done.

Delta compression using up to 16 threads

Compressing objects: 100% (15/15), done.

Writing objects: 100% (15/15), 65.62 KiB | 10.94 MiB/s, done.

Total 15 (delta 5), reused 0 (delta 0)

remote: Resolving deltas: 100% (5/5), completed with 3 local objects.

To https://github.com/idjohnson/dockerWithTests2.git

d79ce4e..7b6a4b1 sonarscans -> sonarscans

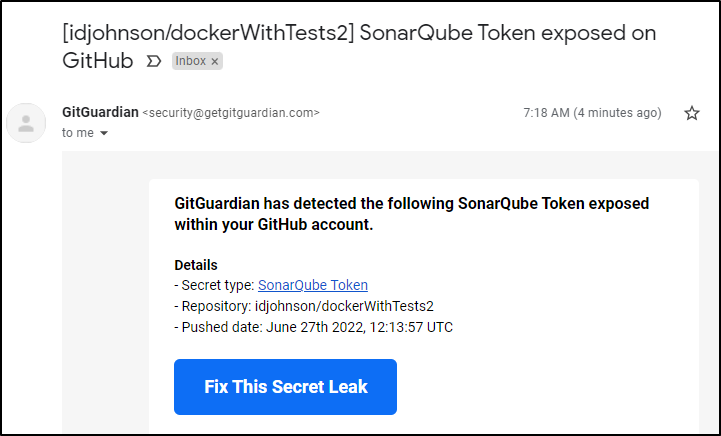

Which is exposed in github here

I might add that leaving your Sonar token in the repo makes GitHub mad. You’ll likely get an alert from GitGuardian

To use Kaniko, starting with a Hello World example, we can put the Dockerfile into a ConfigMap and mount it as a volume (as opposed to a PVC)

That gives us this k8s/deployment.yaml

kind: Namespace

apiVersion: v1

metadata:

name: test

labels:

name: test

---

apiVersion: v1

kind: ConfigMap

metadata:

name: docker-test-cm

data:

Dockerfile: |

FROM ubuntu:latest

CMD [ "sleep", "64000" ]

---

apiVersion: v1

kind: Pod

metadata:

name: kaniko

spec:

containers:

- name: kaniko

image: gcr.io/kaniko-project/executor:latest

args: ["--dockerfile=/workspace/dockerfile",

"--context=dir://workspace",

"--destination=harbor.freshbrewed.science/freshbrewedprivate/dockerwithtests"]

volumeMounts:

- name: kaniko-secret

mountPath: /kaniko/.docker

- name: dockerfile-storage

mountPath: /workspace

restartPolicy: Never

volumes:

- name: kaniko-secret

secret:

secretName: kanikoharborcred

items:

- key: .dockerconfigjson

path: config.json

- name: dockerfile-storage

configMap:

name: docker-test-cm

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: my-nginx

namespace: test

spec:

selector:

matchLabels:

run: my-nginx

replicas: 3

template:

metadata:

labels:

run: my-nginx

spec:

imagePullSecrets:

- name: regcred

containers:

- name: my-nginx

image: nginx

imagePullPolicy: Always

ports:

- containerPort: 8000

---

apiVersion: v1

kind: Service

metadata:

name: nginx-run-svc

namespace: test

spec:

type: LoadBalancer

ports:

- port: 80

targetPort: 8000

protocol: TCP

name: http

selector:

run: my-nginx

and the k8s/kustomization.yaml

resources:

- deployment.yaml

images:

- name: nginx

newName: harbor.freshbrewed.science/freshbrewedprivate/dockerwithtests

newTag: latest

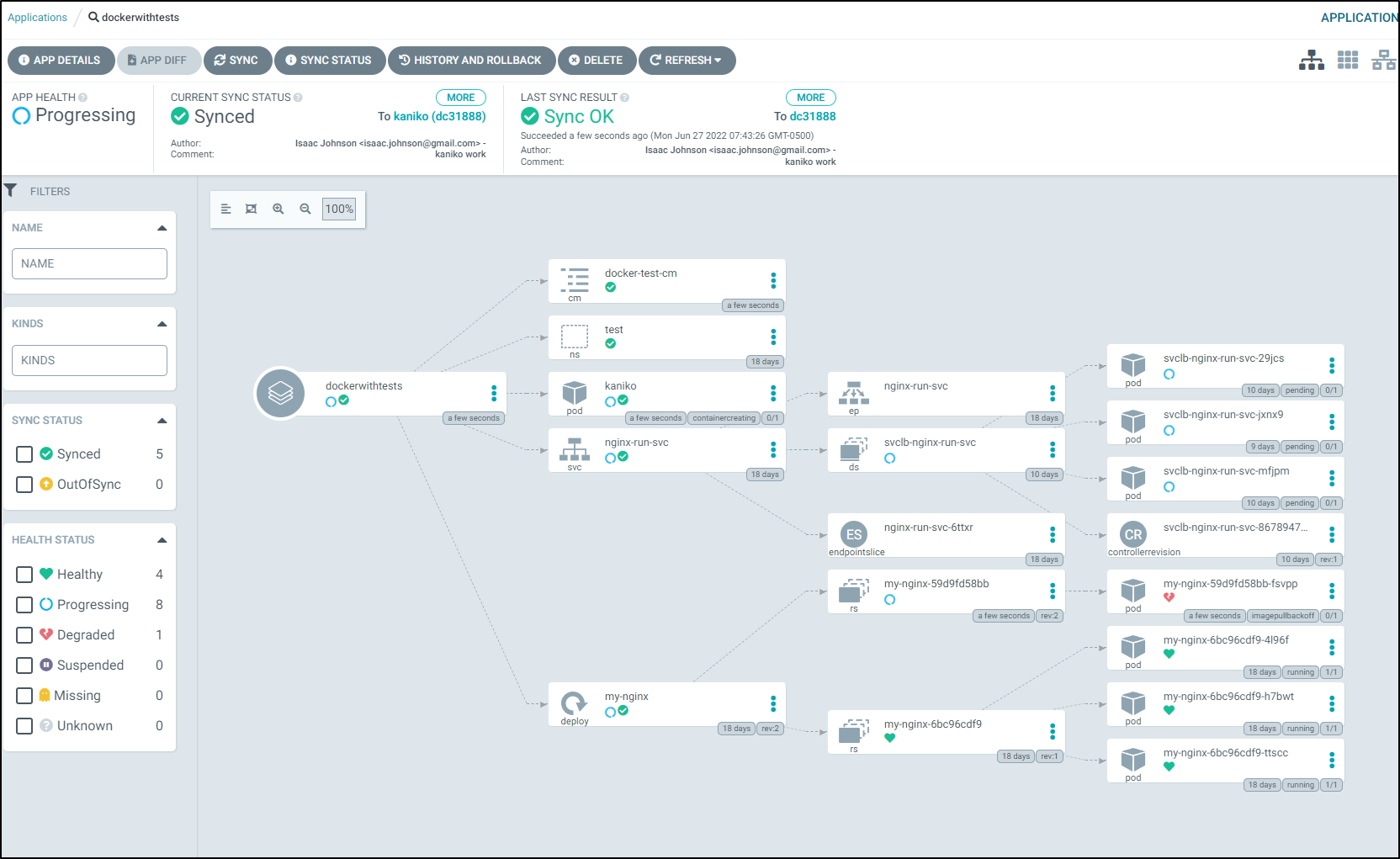

Which we can see here

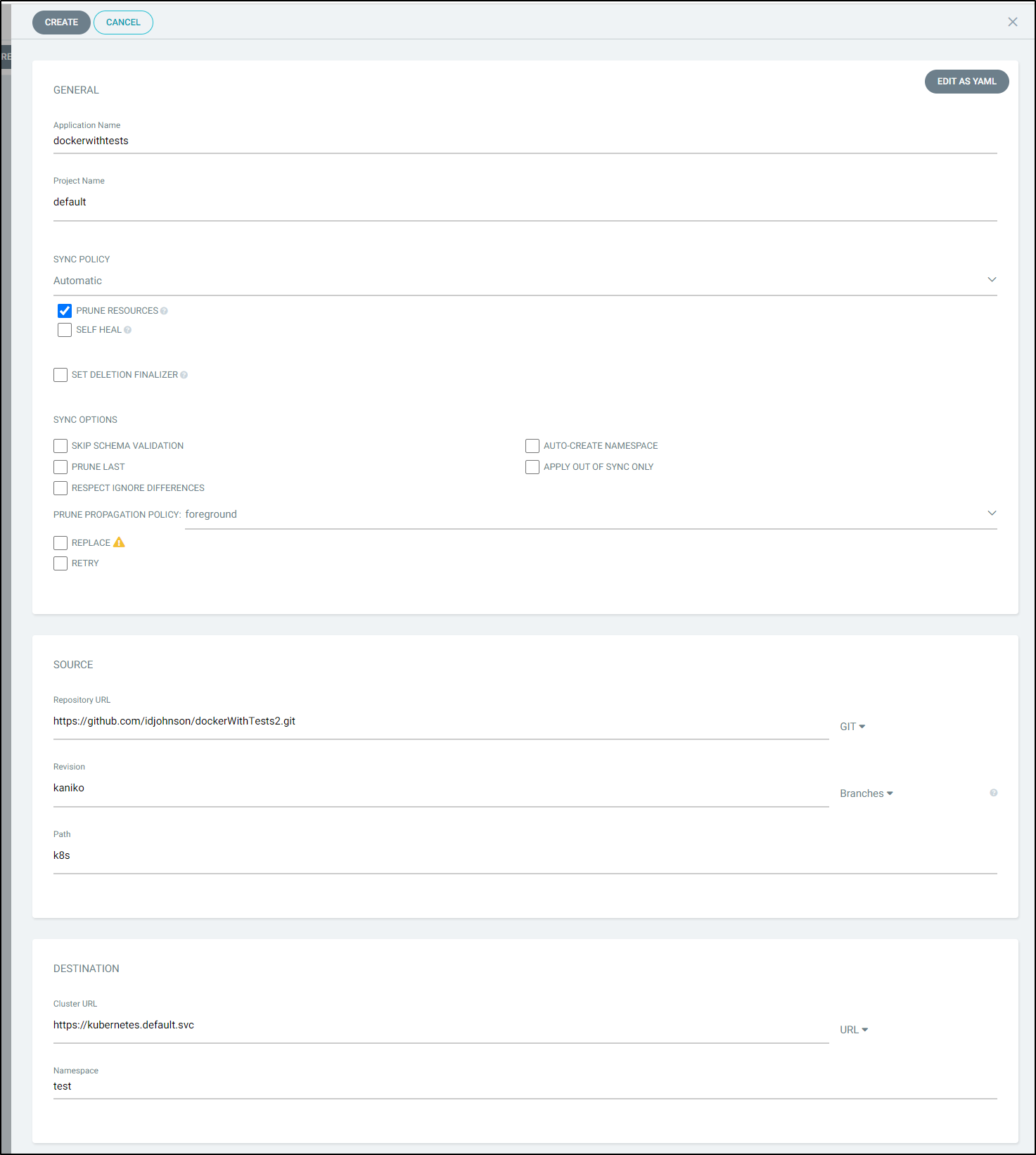

I’ll add the app to ArgoCD

And immediately see things start to get created

I’ll have to sort out the registry creds first, since kanikoharborcred only exists in the default namespace

$ kubectl get secret kanikoharborcred -o yaml > kanikoharborcred.yaml && sed -i 's/namespace: default/namespace: test/g' kanikoharborcred.yaml && kubectl apply -f kanikoharborcred.yaml

secret/kanikoharborcred created

which will unblock the Kaniko pod

$ kubectl describe pod kaniko -n test | tail -n 10

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 4m34s default-scheduler Successfully assigned test/kaniko to builder-macbookpro2

Warning FailedMount 2m32s kubelet Unable to attach or mount volumes: unmounted volumes=[kaniko-secret], unattached volumes=[dockerfile-storage kube-api-access-v7dx5 kaniko-secret]: timed out waiting for the condition

Warning FailedMount 2m27s (x9 over 4m35s) kubelet MountVolume.SetUp failed for volume "kaniko-secret" : secret "kanikoharborcred" not found

Normal Pulling 24s kubelet Pulling image "gcr.io/kaniko-project/executor:latest"

Normal Pulled 14s kubelet Successfully pulled image "gcr.io/kaniko-project/executor:latest" in 10.478949347s

Normal Created 13s kubelet Created container kaniko

Normal Started 13s kubelet Started container kaniko

I can then see that it built and pushed the image

$ kubectl logs kaniko -n test

INFO[0000] Retrieving image manifest ubuntu:latest

INFO[0000] Retrieving image ubuntu:latest from registry index.docker.io

INFO[0001] Built cross stage deps: map[]

INFO[0001] Retrieving image manifest ubuntu:latest

INFO[0001] Returning cached image manifest

INFO[0001] Executing 0 build triggers

INFO[0001] Skipping unpacking as no commands require it.

INFO[0001] CMD [ "sleep", "64000" ]

INFO[0001] Pushing image to harbor.freshbrewed.science/freshbrewedprivate/dockerwithtests

I’ll then quickly add the regcred for Harbor in the test namespace to fix the image pull errors

$ kubectl describe pod my-nginx-59d9fd58bb-fsvpp -n test | tail -n 9

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 8m59s default-scheduler Successfully assigned test/my-nginx-59d9fd58bb-fsvpp to builder-macbookpro2

Normal Pulling 7m26s (x4 over 8m59s) kubelet Pulling image "harbor.freshbrewed.science/freshbrewedprivate/dockerwithtests:latest"

Warning Failed 7m26s (x4 over 8m59s) kubelet Failed to pull image "harbor.freshbrewed.science/freshbrewedprivate/dockerwithtests:latest": rpc error: code = Unknown desc = failed to pull and unpack image "harbor.freshbrewed.science/freshbrewedprivate/dockerwithtests:latest": failed to resolve reference "harbor.freshbrewed.science/freshbrewedprivate/dockerwithtests:latest": pulling from host harbor.freshbrewed.science failed with status code [manifests latest]: 401 Unauthorized

Warning Failed 7m26s (x4 over 8m59s) kubelet Error: ErrImagePull

Warning Failed 7m15s (x6 over 8m58s) kubelet Error: ImagePullBackOff

Normal BackOff 3m46s (x21 over 8m58s) kubelet Back-off pulling image "harbor.freshbrewed.science/freshbrewedprivate/dockerwithtests:latest"

A quick fix

$ kubectl get secrets myharborreg -o yaml > myharborreg.yaml && sed -i 's/name: myharborreg/name: regcred/' myharborreg.yaml && sed -i 's/namespace: default/namespace: test/' myharborreg.yaml && kubectl apply -f myharborreg.yaml

secret/regcred created
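Both secret copies above follow the same pattern: dump the Secret, rewrite its namespace (and optionally its name), and apply it again. As a rough sketch, that can be wrapped in a small filter so no intermediate file is left on disk. The function name `retarget_secret` is my own invention, and the filter assumes the two-space metadata indentation that `kubectl get -o yaml` normally prints. It also drops the server-populated `resourceVersion`, `uid`, and `creationTimestamp` lines, which is a tidiness choice rather than something the original commands did.

```shell
# Filter: reads a Secret manifest (as printed by `kubectl get secret -o yaml`)
# on stdin and writes a copy aimed at another namespace, optionally renamed.
# Assumption: metadata fields are indented by exactly two spaces, and no key
# under `data:` is itself called name, namespace, uid, and so on.
retarget_secret() {
  awk -v ns="$1" -v name="${2:-}" '
    /^  (resourceVersion|uid|creationTimestamp):/ { next }  # drop server-set fields
    /^  namespace:/          { print "  namespace: " ns; next }
    /^  name:/ && name != "" { print "  name: " name; next }
    { print }
  '
}
```

Under those assumptions, the regcred copy could be written as `kubectl get secret myharborreg -o yaml | retarget_secret test regcred | kubectl apply -f -`, and the Kaniko one as `kubectl get secret kanikoharborcred -o yaml | retarget_secret test | kubectl apply -f -`.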

And we can see the default Ubuntu container now running in the test namespace:

$ kubectl get pods -n test

NAME READY STATUS RESTARTS AGE

my-nginx-6bc96cdf9-4l96f 1/1 Running 0 18d

my-nginx-6bc96cdf9-ttscc 1/1 Running 0 18d

my-nginx-6bc96cdf9-h7bwt 1/1 Running 0 18d

svclb-nginx-run-svc-jxnx9 0/1 Pending 0 9d

svclb-nginx-run-svc-29jcs 0/1 Pending 0 9d

svclb-nginx-run-svc-mfjpm 0/1 Pending 0 9d

kaniko 0/1 Completed 0 3m29s

my-nginx-59d9fd58bb-fsvpp 0/1 ErrImagePull 0 11m

$ kubectl delete pod my-nginx-59d9fd58bb-fsvpp -n test

pod "my-nginx-59d9fd58bb-fsvpp" deleted

$ kubectl get pods -n test

NAME READY STATUS RESTARTS AGE

my-nginx-6bc96cdf9-4l96f 1/1 Running 0 18d

my-nginx-6bc96cdf9-h7bwt 1/1 Running 0 18d

svclb-nginx-run-svc-jxnx9 0/1 Pending 0 9d

svclb-nginx-run-svc-29jcs 0/1 Pending 0 9d

svclb-nginx-run-svc-mfjpm 0/1 Pending 0 9d

kaniko 0/1 Completed 0 3m45s

my-nginx-59d9fd58bb-tjkb2 1/1 Running 0 7s

my-nginx-59d9fd58bb-gnhbm 0/1 ContainerCreating 0 1s

my-nginx-6bc96cdf9-ttscc 0/1 Terminating 0 18d
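Picking the stuck pod out of the listing by eye works for one pod, but it is easy to script. The sketch below is a hypothetical helper (the name `pull_failed_pods` is mine) that reads the table from `kubectl get pods` on stdin and prints the names of pods whose STATUS column shows an image pull failure. It assumes the default column order of NAME, READY, STATUS.

```shell
# Print the names of pods whose STATUS is ErrImagePull or ImagePullBackOff.
# Input: the plain table printed by `kubectl get pods` (header on line 1).
pull_failed_pods() {
  awk 'NR > 1 && ($3 == "ErrImagePull" || $3 == "ImagePullBackOff") { print $1 }'
}
```

A possible use, once the registry secret is in place, is `kubectl get pods -n test | pull_failed_pods | xargs -r kubectl delete pod -n test` (the `-r` flag is a GNU xargs option that skips the delete when nothing matched).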

We can verify with the logs and an interactive shell

$ kubectl logs my-nginx-59d9fd58bb-tjkb2 -n test

$ kubectl exec -it my-nginx-59d9fd58bb-tjkb2 -n test -- /bin/bash

root@my-nginx-59d9fd58bb-tjkb2:/# cat /etc/lsb-release

DISTRIB_ID=Ubuntu

DISTRIB_RELEASE=22.04

DISTRIB_CODENAME=jammy

DISTRIB_DESCRIPTION="Ubuntu 22.04 LTS"

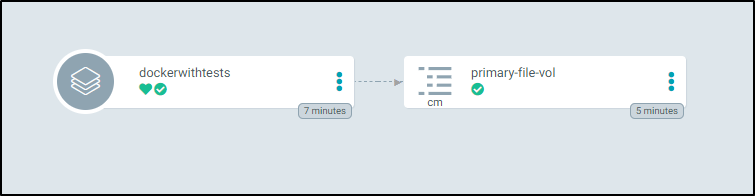

This can get complicated with Kaniko, since it builds from a mounted volume that must be pre-populated.

I could use a ConfigMap generator in the kustomization. In fact, I did so in order to create the cm.yaml. However, it seemed to stall the ArgoCD deploy at this step.

configMapGenerator:
- name: primary-file-vol
  files:
  - Dockerfile
  - package.json
  - server.js
generatorOptions:
  disableNameSuffixHash: true

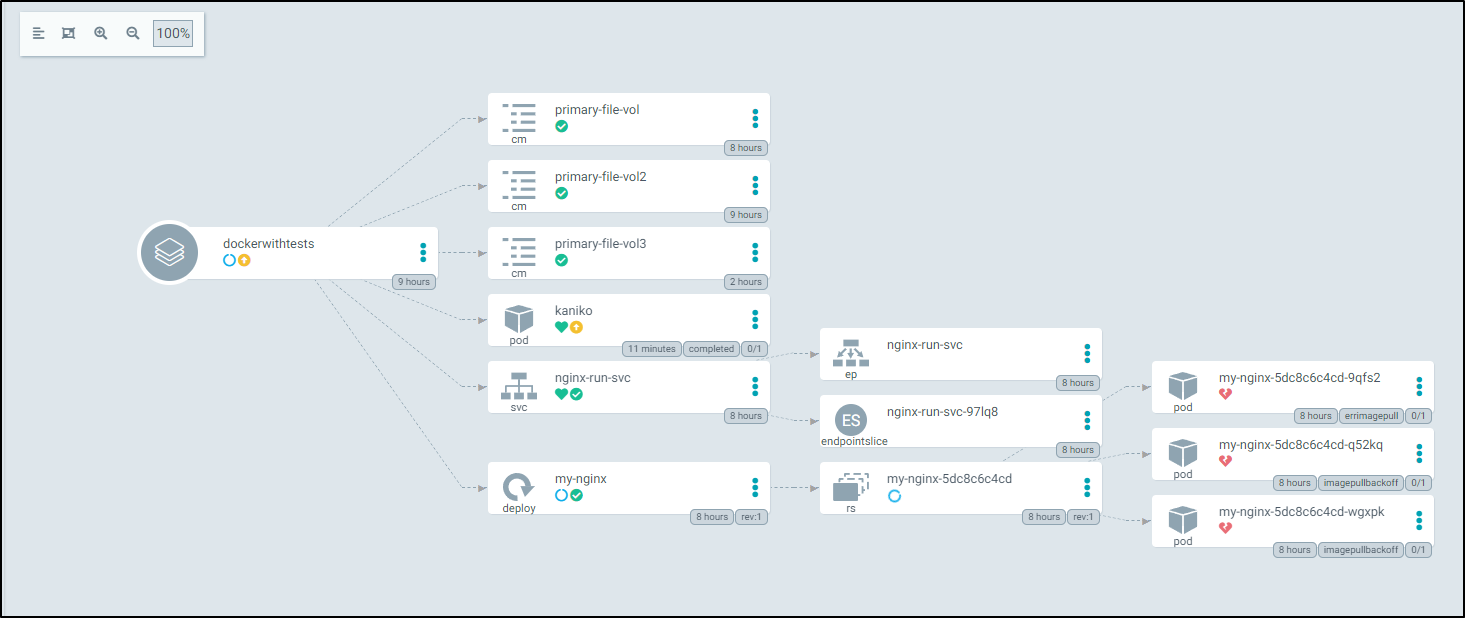
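To make it clearer what such a generator entry has to produce, here is a rough shell stand-in that renders a ConfigMap manifest from a list of local text files, one key per file. This is only an illustration of the resulting shape, not how Kustomize is implemented, and `configmap_from_files` is a made-up name. In practice `kubectl create configmap NAME --from-file=FILE --dry-run=client -o yaml` does the same job. The sketch assumes plain text files that fit within the ConfigMap size limit.

```shell
# Render a ConfigMap manifest on stdout: first argument is the ConfigMap name,
# remaining arguments are text files that each become one key under `data:`.
configmap_from_files() {
  cm_name=$1
  shift
  printf 'apiVersion: v1\nkind: ConfigMap\nmetadata:\n  name: %s\ndata:\n' "$cm_name"
  for path in "$@"; do
    # The key is the file name; the content goes into a YAML literal block.
    printf '  %s: |\n' "$(basename "$path")"
    awk '{ if (length($0)) print "    " $0; else print "" }' "$path"
  done
}
```

When a ConfigMap like this is mounted at /workspace, each key shows up as a file of the same name, which is what Kaniko reads as its build context.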

I realized soon after that the kustomization has to list all of the files that make up a deployment. I didn’t see many working examples that combine several Kustomize features in one file.

Here, for instance, I create two ConfigMaps populated with local files as well as rewrite the image reference for nginx.

$ cat kustomization.yaml

commonLabels:
  app: hello
resources:
- deployment.yaml
images:
- name: nginx
  newName: harbor.freshbrewed.science/freshbrewedprivate/dockerwithtests
  newTag: latest
configMapGenerator:
- name: primary-file-vol2
  files:
  - Dockerfile
  - package.json
  - server.js
- name: primary-file-vol3
  files:
  - package-lock.json
generatorOptions:
  disableNameSuffixHash: true

The other note is that when using Kustomizations, if you want to load more than one file, you need to specify them all in the resources. For instance:

$ cat kustomization.yaml

commonLabels:
  app: hello
resources:
- deployment.yaml
- service.yaml
- secrets.yaml
...etc

I struggled to get the DnD part of Kaniko to actually pull in the files. However, I found a slick cheat: I could use GitHub to pull them in (since this was a public repo). This saved me the hassle of having to set up working local copy commands.

apiVersion: v1
data:
  Dockerfile: |
    FROM node:17.6.0 as base
    WORKDIR /code
    RUN git clone https://github.com/idjohnson/dockerWithTests2.git && cd dockerWithTests2 && git checkout kaniko && cp package.json .. && cp package-lock.json .. && cp server.js .. && cd ..
    ENV NODE_ENV=production
    RUN npm ci --production
    CMD [ "node", "server.js" ]
kind: ConfigMap
metadata:
  name: primary-file-vol2
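A possible alternative to cloning inside the Dockerfile is Kaniko's git build context, which its README documents in the form `git://<repo>#refs/heads/<branch>`. The sketch below was not part of this run and is untested against this repo. It assumes a Dockerfile at the root of the branch that uses ordinary COPY lines, and `kaniko_git_pod` is a hypothetical helper that just prints a Pod manifest reusing the registry secret from earlier.

```shell
# Print a Kaniko Pod manifest that builds straight from a public git repo.
# Arguments: repo (host/path, no scheme), branch, destination image.
# Assumption: the Dockerfile lives at the root of that branch.
kaniko_git_pod() {
  repo=$1 branch=$2 dest=$3
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: kaniko
spec:
  restartPolicy: Never
  containers:
  - name: kaniko
    image: gcr.io/kaniko-project/executor:latest
    args:
    - "--dockerfile=Dockerfile"
    - "--context=git://${repo}#refs/heads/${branch}"
    - "--destination=${dest}"
    volumeMounts:
    - name: kaniko-secret
      mountPath: /kaniko/.docker
  volumes:
  - name: kaniko-secret
    secret:
      secretName: kanikoharborcred
      items:
      - key: .dockerconfigjson
        path: config.json
EOF
}
```

For example, `kaniko_git_pod github.com/idjohnson/dockerWithTests2.git kaniko harbor.freshbrewed.science/freshbrewedprivate/dockerwithtests | kubectl apply -n test -f -` would submit such a pod. With this approach no ConfigMap volume is needed, since the whole repo becomes the build context.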

With the git clone Dockerfile in the ConfigMap, Kaniko pulled the base image, cloned the repo (the same one I was building), then built the image and pushed it up to Harbor.

$ kubectl logs kaniko -n test

INFO[0000] Resolved base name node:17.6.0 to base

INFO[0000] Retrieving image manifest node:17.6.0

INFO[0000] Retrieving image node:17.6.0 from registry index.docker.io

INFO[0001] Built cross stage deps: map[]

INFO[0001] Retrieving image manifest node:17.6.0

INFO[0001] Returning cached image manifest

INFO[0001] Executing 0 build triggers

INFO[0001] Unpacking rootfs as cmd RUN git clone https://github.com/idjohnson/dockerWithTests2.git && cd dockerWithTests2 && git checkout kaniko && cp package.json .. && cp package-lock.json .. && cp server.js .. && cd .. requires it.

INFO[0038] WORKDIR /code

INFO[0038] cmd: workdir

INFO[0038] Changed working directory to /code

INFO[0038] Creating directory /code

INFO[0038] Taking snapshot of files...

INFO[0038] RUN git clone https://github.com/idjohnson/dockerWithTests2.git && cd dockerWithTests2 && git checkout kaniko && cp package.json .. && cp package-lock.json .. && cp server.js .. && cd ..

INFO[0038] Taking snapshot of full filesystem...

INFO[0056] cmd: /bin/sh

INFO[0056] args: [-c git clone https://github.com/idjohnson/dockerWithTests2.git && cd dockerWithTests2 && git checkout kaniko && cp package.json .. && cp package-lock.json .. && cp server.js .. && cd ..]

INFO[0056] Running: [/bin/sh -c git clone https://github.com/idjohnson/dockerWithTests2.git && cd dockerWithTests2 && git checkout kaniko && cp package.json .. && cp package-lock.json .. && cp server.js .. && cd ..]

Cloning into 'dockerWithTests2'...

Switched to a new branch 'kaniko'

Branch 'kaniko' set up to track remote branch 'kaniko' from 'origin'.

INFO[0057] Taking snapshot of full filesystem...

INFO[0059] ENV NODE_ENV=production

INFO[0059] RUN npm ci --production

INFO[0059] cmd: /bin/sh

INFO[0059] args: [-c npm ci --production]

INFO[0059] Running: [/bin/sh -c npm ci --production]

npm WARN old lockfile

npm WARN old lockfile The package-lock.json file was created with an old version of npm,

npm WARN old lockfile so supplemental metadata must be fetched from the registry.

npm WARN old lockfile

npm WARN old lockfile This is a one-time fix-up, please be patient...

npm WARN old lockfile

added 231 packages, and audited 232 packages in 23s

27 packages are looking for funding

run `npm fund` for details

3 high severity vulnerabilities

To address all issues, run:

npm audit fix

Run `npm audit` for details.

npm notice

npm notice New minor version of npm available! 8.5.1 -> 8.13.1

npm notice Changelog: <https://github.com/npm/cli/releases/tag/v8.13.1>

npm notice Run `npm install -g npm@8.13.1` to update!

npm notice

INFO[0082] Taking snapshot of full filesystem...

INFO[0091] CMD [ "node", "server.js" ]

INFO[0091] Pushing image to harbor.freshbrewed.science/freshbrewedprivate/dockerwithtests

INFO[0171] Pushed harbor.freshbrewed.science/freshbrewedprivate/dockerwithtests@sha256:d4c50ce0d44fb1e3101c4ffb6cf067dc356a8b34b6ec9ac7ae00361ad8bb2658
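The last log line carries the digest of the pushed image, which is handy if the deployment should be pinned to an exact build rather than `latest`. As a small sketch, a filter like the following (the name `pushed_image_ref` is hypothetical) pulls that reference out of the Kaniko log. It assumes the `INFO[nnnn] Pushed <image>@sha256:<hex>` line format shown above, which may differ in other Kaniko versions.

```shell
# Read Kaniko log output on stdin and print the image@sha256 reference
# from the final "Pushed" line, if there is one.
pushed_image_ref() {
  sed -n 's/^INFO\[[0-9]*] Pushed \([^ ]*@sha256:[0-9a-f][0-9a-f]*\)[[:space:]]*$/\1/p'
}
```

Usage would look like `kubectl logs kaniko -n test | pushed_image_ref`. The digest part could then go into the `digest` field of the `images` entry in the kustomization in place of `newTag`. Kaniko's README also lists a `--digest-file` flag that writes the digest to a file, which may be cleaner where a shared volume is available.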

And in ArgoCD

Summary

We tackled some local cluster housekeeping and properly set up a FUSE-based NFS storage class to satisfy our PVCs. We also set up ArgoCD for GitOps from our DockerWithTests public repo and SonarQube to gather test results.

We used an NFS volume claim and a series of methods to build the container. We paired Kaniko with ArgoCD to create a really solid turn-key GitOps solution.

Our next steps will be to tighten up the Dockerfile, add test coverage, and handle any secrets.

Overall, I found Kaniko to be quite fast. However, it failed on ordinary file copy commands, which required me to fall back to the git clone method.