Published: Jun 21, 2022 by Isaac Johnson

In our last post we covered setting up K3s and basic Ingress. We used NGINX as our ingress provider and MetalLB as our load balancer. Next, we set up a Storage Class for NFS backed by our on-prem NAS. Lastly, we set up Harbor and verified that TLS ingress works.

Today, we’ll cover migrating additional nodes and setting up our observability suite (Datadog), GitHub runners, Dapr, Crossplane.io and Loft.

Adding a worker node

To add a worker node, we first fetch the join token from the master:

builder@anna-MacBookAir:~$ sudo cat /var/lib/rancher/k3s/server/node-token

[sudo] password for builder:

K10a9e665bb0e1fe827b71f76d09ade426153bd0ba99d5d9df39a76ac4f90b584ef::server:3246003cde679880017e7a14a63fa05d

K3s moves fast, so we also need to confirm the exact Kubernetes version the master is running:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

anna-macbookair Ready control-plane,master 7d18h v1.23.6+k3s1
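If you want to script this step, a minimal sketch can pull the control-plane version out of the listing so it can be reused as INSTALL_K3S_VERSION. It assumes the default `kubectl get nodes` layout shown above (NAME STATUS ROLES AGE VERSION), and the function name `control_plane_version` is hypothetical.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print the
# VERSION column of the first node whose ROLES column mentions control-plane.
# Assumes the default (non-wide) layout: NAME STATUS ROLES AGE VERSION.
control_plane_version() {
  awk 'NR > 1 && $3 ~ /control-plane/ { print $5; exit }'
}
```

Usage: `INSTALL_K3S_VERSION=$(kubectl get nodes | control_plane_version)`. Parsing the table is fragile. The jsonpath form `kubectl get nodes -l node-role.kubernetes.io/control-plane -o jsonpath='{.items[0].status.nodeInfo.kubeletVersion}'` reads the same value directly.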

Looking at the old cluster, we can see I have already peeled one node off to move over: one of the older MacBook Pros (builder-macbookpro2, now NotReady).

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

anna-macbookair NotReady <none> 421d v1.23.3+k3s1

builder-macbookpro2 NotReady <none> 161d v1.23.3+k3s1

hp-hp-elitebook-850-g2 Ready <none> 248d v1.23.3+k3s1

isaac-macbookair Ready control-plane,master 533d v1.23.3+k3s1

builder-hp-elitebook-850-g1 Ready <none> 228d v1.23.3+k3s1

builder-hp-elitebook-850-g2 Ready <none> 223d v1.23.3+k3s1

isaac-macbookpro Ready <none> 533d v1.23.3+k3s1

I can quickly fetch its IP:

$ kubectl describe node builder-macbookpro2 | grep IP:

InternalIP: 192.168.1.159

Now I’ll SSH in to move it. The process list shows I already stopped the former k3s agent (the only matches are the grep itself and the desktop ssh-agent):

pi@raspberrypi:~ $ ssh builder@192.168.1.159

The authenticity of host '192.168.1.159 (192.168.1.159)' can't be established.

ECDSA key fingerprint is 91:8b:bd:b8:ae:00:e8:ae:29:d1:ff:9d:f0:47:49:c9.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.1.159' (ECDSA) to the list of known hosts.

builder@192.168.1.159's password:

Welcome to Ubuntu 20.04.4 LTS (GNU/Linux 5.13.0-40-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

8 updates can be applied immediately.

To see these additional updates run: apt list --upgradable

Your Hardware Enablement Stack (HWE) is supported until April 2025.

*** System restart required ***

Last login: Sun Jun 5 16:59:32 2022 from 192.168.1.160

builder@builder-MacBookPro2:~$ ps -ef | grep k3s

builder 689439 689418 0 09:02 pts/0 00:00:00 grep --color=auto k3s

builder@builder-MacBookPro2:~$ ps -ef | grep agent

builder 1180 1099 0 May12 ? 00:00:15 /usr/bin/ssh-agent /usr/bin/im-launch env GNOME_SHELL_SESSION_MODE=ubuntu /usr/bin/gnome-session --systemd --session=ubuntu

builder 689442 689418 0 09:02 pts/0 00:00:00 grep --color=auto agent

To be safe, I’ll double-check that nfs-common is installed (our NFS storage class needs it):

builder@builder-MacBookPro2:~$ sudo apt install nfs-common

[sudo] password for builder:

Reading package lists... Done

Building dependency tree

Reading state information... Done

nfs-common is already the newest version (1:1.3.4-2.5ubuntu3.4).

The following packages were automatically installed and are no longer required:

libfprint-2-tod1 libllvm10

Use 'sudo apt autoremove' to remove them.

0 upgraded, 0 newly installed, 0 to remove and 7 not upgraded.

Now I just need to download and launch the agent

$ curl -sfL https://get.k3s.io | INSTALL_K3S_VERSION=v1.23.6+k3s1 K3S_URL=https://192.168.1.81:6443 K3S_TOKEN=K10a9e665bb0e1fe827b71f76d09ade426153bd0ba99d5d9df39a76ac4f90b584ef::server:3246003cde679880017e7a14a63fa05d sh -

The key parts are as follows. INSTALL_K3S_VERSION must match the master node’s k3s version. K3S_URL points at the master’s internal IP on port 6443 (we would use the external address if joining a node over the internet). K3S_TOKEN is the token we fetched above.
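A small guard can catch a mangled copy/paste before it reaches the installer. This is a sketch that assumes the token uses the K3s "secure" format seen above: K10, a 64-character hex CA hash, then `::user:password`. The function names `is_k3s_secure_token` and `k3s_agent_env` are hypothetical.

```shell
# Hypothetical helper: succeed only if $1 looks like a K3s secure join token,
# i.e. K10 + 64 hex characters + "::" + a user name + ":" + a password.
is_k3s_secure_token() {
  printf '%s\n' "$1" | grep -Eq '^K10[0-9a-f]{64}::[^:]+:.+$'
}

# Hypothetical helper: print the three installer variables for an agent join.
# Usage: k3s_agent_env VERSION SERVER_IP TOKEN
k3s_agent_env() {
  if ! is_k3s_secure_token "$3"; then
    echo "k3s_agent_env: token does not look like a K10 secure token" >&2
    return 1
  fi
  printf 'INSTALL_K3S_VERSION=%s K3S_URL=https://%s:6443 K3S_TOKEN=%s\n' "$1" "$2" "$3"
}
```

Usage: `curl -sfL https://get.k3s.io | env $(k3s_agent_env v1.23.6+k3s1 192.168.1.81 "$TOKEN") sh -`. The unquoted `$(...)` relies on word splitting, which is safe here only because none of the three values contain spaces.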

builder@builder-MacBookPro2:~$ curl -sfL https://get.k3s.io | INSTALL_K3S_VERSION=v1.23.6+k3s1 K3S_URL=https://192.168.1.81:6443 K3S_TOKEN=K10a9e665bb0e1fe827b71f76d09ade426153bd0ba99d5d9df39a76ac4f90b584ef::server:3246003cde679880017e7a14a63fa05d sh -

[sudo] password for builder:

[INFO] Using v1.23.6+k3s1 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.23.6+k3s1/sha256sum-amd64.txt

[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.23.6+k3s1/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

[INFO] Skipping installation of SELinux RPM

[INFO] Skipping /usr/local/bin/kubectl symlink to k3s, already exists

[INFO] Skipping /usr/local/bin/crictl symlink to k3s, already exists

[INFO] Skipping /usr/local/bin/ctr symlink to k3s, already exists

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-agent-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s-agent.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s-agent.service

[INFO] systemd: Enabling k3s-agent unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s-agent.service → /etc/systemd/system/k3s-agent.service.

[INFO] systemd: Starting k3s-agent

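The installer already enabled and started the k3s-agent unit (see the last lines of its output), but running systemctl enable --now is harmless and confirms it: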

builder@builder-MacBookPro2:~$ systemctl enable --now k3s-agent

==== AUTHENTICATING FOR org.freedesktop.systemd1.manage-unit-files ===

Authentication is required to manage system service or unit files.

Authenticating as: builder,,, (builder)

Password:

==== AUTHENTICATION COMPLETE ===

==== AUTHENTICATING FOR org.freedesktop.systemd1.reload-daemon ===

Authentication is required to reload the systemd state.

Authenticating as: builder,,, (builder)

Password:

==== AUTHENTICATION COMPLETE ===

==== AUTHENTICATING FOR org.freedesktop.systemd1.manage-units ===

Authentication is required to start 'k3s-agent.service'.

Authenticating as: builder,,, (builder)

Password:

==== AUTHENTICATION COMPLETE ===

And in a few moments, I can see the node has joined

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

anna-macbookair Ready control-plane,master 7d18h v1.23.6+k3s1

builder-macbookpro2 Ready <none> 75s v1.23.6+k3s1
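Rather than re-running kubectl get nodes by hand, you can script the wait. `kubectl wait --for=condition=Ready node/builder-macbookpro2 --timeout=120s` does this natively. The sketch below is a hypothetical text-parsing alternative that assumes the default column layout shown above.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and exit 0 only
# if node $1 is listed with STATUS exactly "Ready".
node_is_ready() {
  awk -v node="$1" '
    NR > 1 && $1 == node { found = 1; ready = ($2 == "Ready") }
    END { exit !(found && ready) }'
}
```

Usage: `until kubectl get nodes | node_is_ready builder-macbookpro2; do sleep 5; done`. A cordoned node (STATUS `Ready,SchedulingDisabled`) deliberately does not count as ready here.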

Datadog

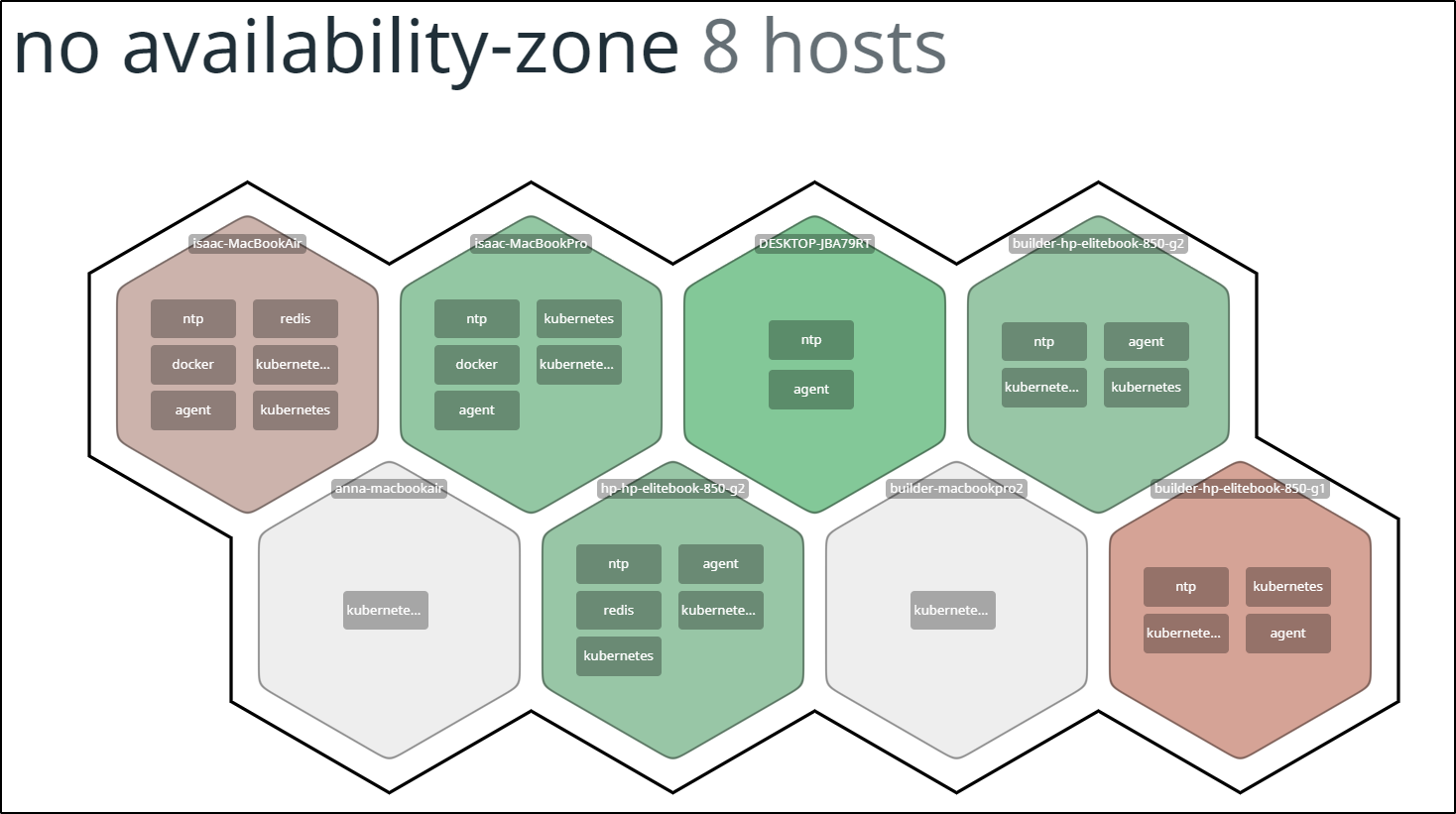

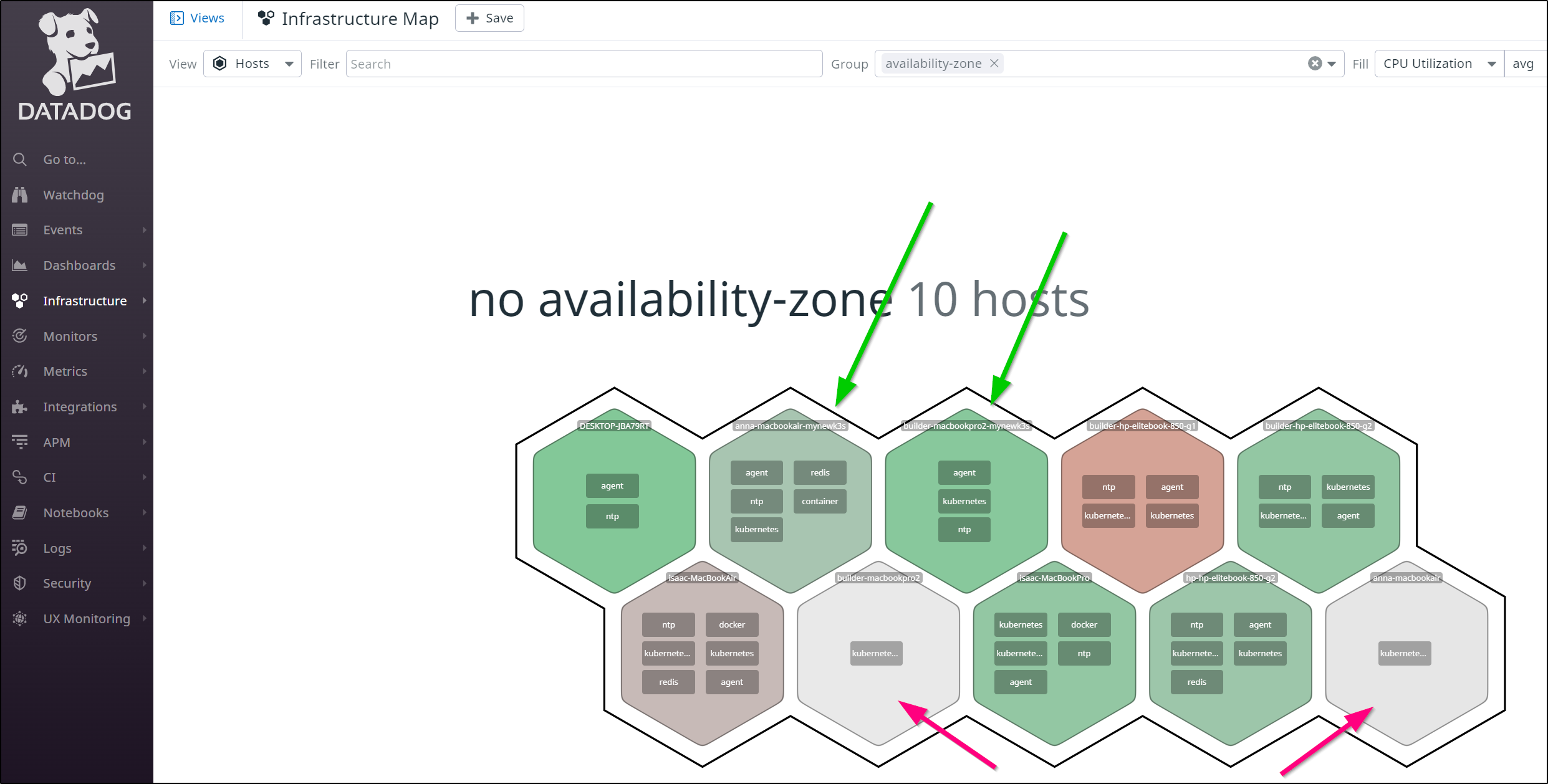

I can see Datadog has noticed the old nodes have been dropped from the former cluster:

I’ll use the Datadog Helm chart.

$ helm repo add datadog https://helm.datadoghq.com

"datadog" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "metallb" chart repository

...Successfully got an update from the "cribl" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "hashicorp" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "argo-cd" chart repository

...Successfully got an update from the "jenkins" chart repository

...Successfully got an update from the "gitlab" chart repository

...Successfully got an update from the "bitnami" chart repository

...Successfully got an update from the "stable" chart repository

Update Complete. ⎈Happy Helming!⎈

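I needed to export my existing API key secret from the old cluster and apply it to the new one. I edited the exported YAML in vi, then switched my kubeconfig to the new cluster before applying it: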

builder@DESKTOP-72D2D9T:~/Workspaces/jekyll-blog$ kubectl get secret dd-secret -o yaml > dd-secret.yaml

builder@DESKTOP-72D2D9T:~/Workspaces/jekyll-blog$ vi dd-secret.yaml

builder@DESKTOP-72D2D9T:~/Workspaces/jekyll-blog$ cp ~/.kube/my-NEWmac-k3s-external ~/.kube/config

builder@DESKTOP-72D2D9T:~/Workspaces/jekyll-blog$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

anna-macbookair Ready control-plane,master 9d v1.23.6+k3s1

builder-macbookpro2 Ready <none> 33h v1.23.6+k3s1

builder@DESKTOP-72D2D9T:~/Workspaces/jekyll-blog$ kubectl apply -f ./dd-secret.yaml

secret/dd-secret created
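The export, edit and re-apply can also be done in one pipeline by pointing kubectl at each cluster with its global `--kubeconfig` flag. This sketch makes two assumptions:

* The old and new kubeconfig paths are passed in as arguments.
* Deleting the server-populated metadata fields (creationTimestamp, resourceVersion, uid, selfLink) is all the editing needed.

The function names and the KUBECTL override are hypothetical.

```shell
# Hypothetical helper: drop metadata fields the API server fills in, so an
# object exported from one cluster can be applied to another. YAML on stdin.
strip_server_fields() {
  sed -E '/^  (creationTimestamp|resourceVersion|uid|selfLink):/d'
}

# Hypothetical helper: copy Secret $3 (namespace $4, default "default") from
# the cluster in kubeconfig $1 to the cluster in kubeconfig $2.
# Set KUBECTL to use a different binary; it defaults to kubectl.
copy_secret() {
  ns=${4:-default}
  ${KUBECTL:-kubectl} --kubeconfig "$1" -n "$ns" get secret "$3" -o yaml \
    | strip_server_fields \
    | ${KUBECTL:-kubectl} --kubeconfig "$2" -n "$ns" apply -f -
}
```

Usage: `copy_secret OLD_CONFIG ~/.kube/my-NEWmac-k3s-external dd-secret`, where OLD_CONFIG is a saved copy of the old cluster’s kubeconfig. For the runner secrets later on, the call would be `copy_secret OLD_CONFIG NEW_CONFIG controller-manager actions-runner-system`, once that namespace exists.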

I then put together a Helm values file that turns on pretty much every bell and whistle:

$ cat datadog.release.values.yml

```yaml
targetSystem: "linux"
datadog:
  # apiKey: <DATADOG_API_KEY>
  # appKey: <DATADOG_APP_KEY>
  # If not using secrets, then use apiKey and appKey instead
  apiKeyExistingSecret: dd-secret
  clusterName: mynewk3s
  tags: []
  orchestratorExplorer:
    enabled: true
  appKey: 51bbf169c11305711e4944b9e74cd918838efbb2
  apm:
    enabled: true
    port: 8126
    portEnabled: true
  logs:
    containerCollectAll: true
    enabled: true
  networkMonitoring:
    enabled: true
  processAgent:
    enabled: true
    processCollection: true
clusterAgent:
  replicas: 2
  rbac:
    create: true
    serviceAccountName: default
  metricsProvider:
    enabled: true
    createReaderRbac: true
    useDatadogMetrics: true
    service:
      type: ClusterIP
      port: 8443
agents:
  rbac:
    create: true
    serviceAccountName: default
clusterChecksRunner:
  enabled: true
  rbac:
    create: true
    serviceAccountName: default
  replicas: 2
```

Then install

$ helm install my-dd-release -f datadog.release.values.yml datadog/datadog

W0614 18:22:36.197929 25203 warnings.go:70] spec.template.metadata.annotations[container.seccomp.security.alpha.kubernetes.io/system-probe]: deprecated since v1.19, non-functional in v1.25+; use the "seccompProfile" field instead

NAME: my-dd-release

LAST DEPLOYED: Tue Jun 14 18:22:19 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Datadog agents are spinning up on each node in your cluster. After a few

minutes, you should see your agents starting in your event stream:

https://app.datadoghq.com/event/explorer

You disabled creation of Secret containing API key, therefore it is expected

that you create Secret named 'dd-secret' which includes a key called 'api-key' containing the API key.

The Datadog Agent is listening on port 8126 for APM service.

#################################################################

#### WARNING: Deprecation notice ####

#################################################################

The option `datadog.apm.enabled` is deprecated, please use `datadog.apm.portEnabled` to enable TCP communication to the trace-agent.

The option `datadog.apm.socketEnabled` is enabled by default and can be used to rely on unix socket or name-pipe communication.

###################################################################################

#### WARNING: Cluster-Agent should be deployed in high availability mode ####

###################################################################################

The Cluster-Agent should be in high availability mode because the following features

are enabled:

* Admission Controller

* External Metrics Provider

To run in high availability mode, our recommandation is to update the chart

configuration with:

* set `clusterAgent.replicas` value to `2` replicas .

* set `clusterAgent.createPodDisruptionBudget` to `true`.
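The chart is told to use an existing secret, so it is worth confirming that dd-secret really carries the `api-key` data key the notes ask for. The minimal sketch below inspects `kubectl get secret ... -o yaml` output. The function name is hypothetical, and it assumes kubectl’s usual two-space indentation under `data:`.

```shell
# Hypothetical helper: exit 0 if the Secret YAML on stdin has a top-level
# data entry named $1 (default: api-key).
secret_has_key() {
  awk -v key="${1:-api-key}" '
    /^data:/ { in_data = 1; next }
    /^[^ ]/  { in_data = 0 }
    in_data && $1 == key ":" { found = 1 }
    END { exit !found }'
}
```

Usage: `kubectl get secret dd-secret -o yaml | secret_has_key api-key || echo "dd-secret is missing api-key"`.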

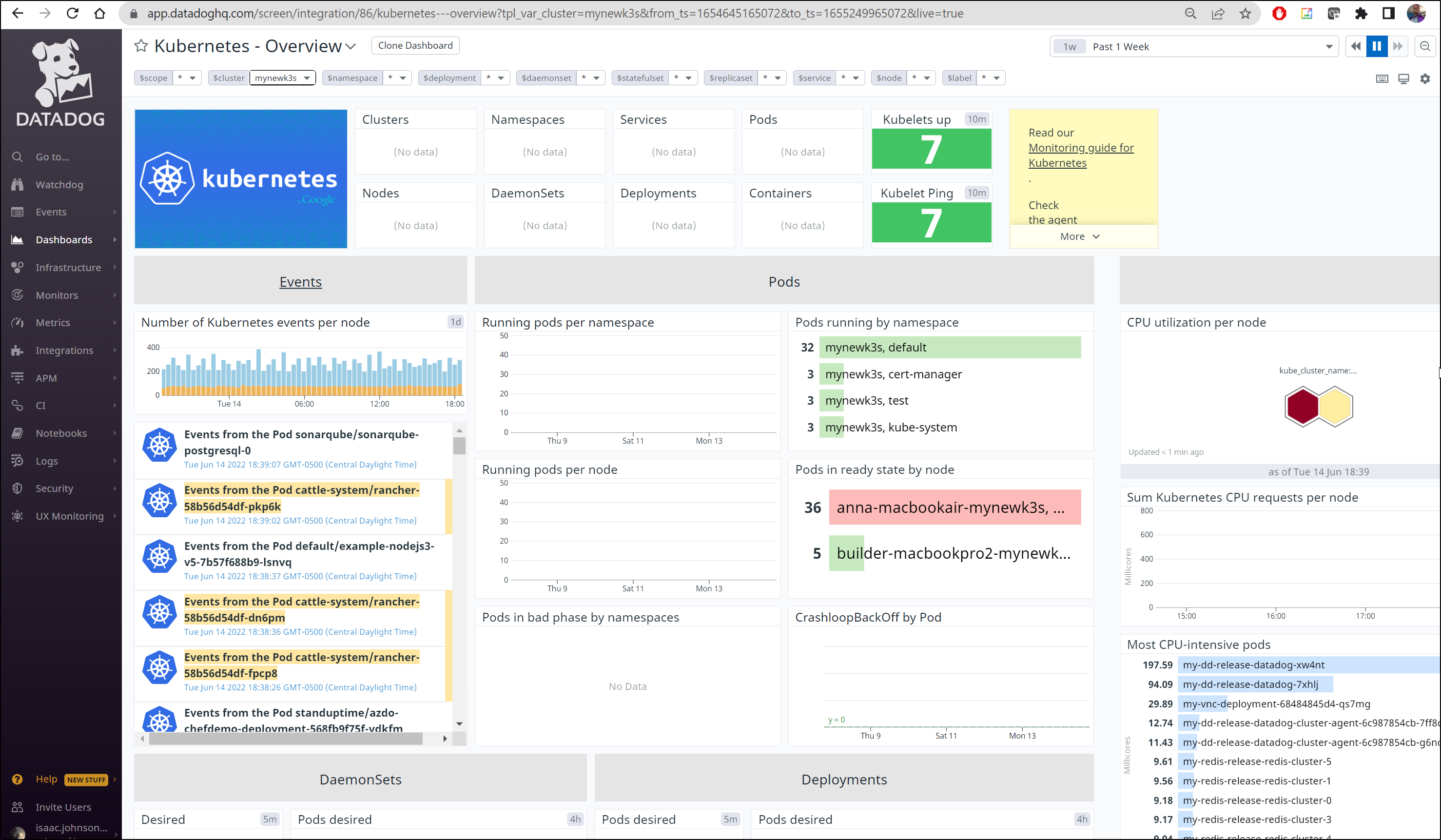

Soon our hosts started showing up in my Infrastructure panel, and the cluster is properly monitored in the Kubernetes dashboard.

GH Actions Runners

First, we will need to add the Helm repo if we haven’t already

$ helm repo add actions-runner-controller https://actions-runner-controller.github.io/actions-runner-controller

"actions-runner-controller" already exists with the same configuration, skipping

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "metallb" chart repository

...Successfully got an update from the "cribl" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "hashicorp" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "argo-cd" chart repository

...Successfully got an update from the "jenkins" chart repository

...Successfully got an update from the "gitlab" chart repository

...Successfully got an update from the "bitnami" chart repository

...Successfully got an update from the "stable" chart repository

Update Complete. ⎈Happy Helming!⎈

I could create a new GitHub token (see our last guide), but instead I’ll copy out the token I was already using.

On the old cluster:

$ kubectl get secrets -n actions-runner-system | head -n3

NAME TYPE DATA AGE

default-token-q68c8 kubernetes.io/service-account-token 3 198d

controller-manager Opaque 1 198d

$ kubectl get secret -n actions-runner-system controller-manager -o yaml > cm.ars.secret.yaml

I also need to fetch the Datadog and AWS secrets my runners use:

builder@DESKTOP-72D2D9T:~/Workspaces/jekyll-blog/ghRunnerImage$ kubectl get secret awsjekyll -o yaml

```yaml
apiVersion: v1
data:
  PASSWORD: asdfasdfasdfasdfasdfasdfasdf==
  USER_NAME: asdfasdfasdfasdfasdf=
kind: Secret
metadata:
  annotations:
  creationTimestamp: "2021-12-03T20:09:56Z"
  name: awsjekyll
  namespace: default
  resourceVersion: "126580363"
  uid: 3c7c19f2-ee5d-484e-9bd2-668be16ab5fc
type: Opaque
```

builder@DESKTOP-72D2D9T:~/Workspaces/jekyll-blog/ghRunnerImage$ kubectl get secret ddjekyll -o yaml

```yaml
apiVersion: v1
data:
  DDAPIKEY: asdfasdfasdasdfasdfsadfasdf=
kind: Secret
metadata:
  creationTimestamp: "2021-12-09T00:02:17Z"
  name: ddjekyll
  namespace: default
  resourceVersion: "131766209"
  uid: c13dfead-3cde-41db-aa1d-79d9dd669fad
type: Opaque
```

$ kubectl get secret ddjekyll -o yaml > dd.key.yaml

$ kubectl get secret awsjekyll -o yaml > aws.key.yaml

Now on the new cluster:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

anna-macbookair Ready control-plane,master 11d v1.23.6+k3s1

builder-macbookpro2 Ready <none> 3d10h v1.23.6+k3s1

$ kubectl create ns actions-runner-system

namespace/actions-runner-system created

$ kubectl apply -f cm.ars.secret.yaml -n actions-runner-system

secret/controller-manager created

$ kubectl apply -f dd.key.yaml

secret/ddjekyll created

$ kubectl apply -f aws.key.yaml

secret/awsjekyll created

Now we can install the Actions Runner Controller

$ helm upgrade --install --namespace actions-runner-system --wait actions-runner-controller actions-runner-controller/actions-runner-controller --set authSecret.name=controller-manager

Release "actions-runner-controller" does not exist. Installing it now.

NAME: actions-runner-controller

LAST DEPLOYED: Thu Jun 16 19:56:04 2022

NAMESPACE: actions-runner-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace actions-runner-system -l "app.kubernetes.io/name=actions-runner-controller,app.kubernetes.io/instance=actions-runner-controller" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace actions-runner-system $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace actions-runner-system port-forward $POD_NAME 8080:$CONTAINER_PORT

I want to build a fresh runner image. Yes, I could pull the TGZ from backup and push the last known build, but I think it is better to build fresh.

$ cat Dockerfile

```dockerfile
FROM summerwind/actions-runner:latest

RUN sudo apt update -y \
  && umask 0002 \
  && sudo apt install -y ca-certificates curl apt-transport-https lsb-release gnupg

# Install MS Key
RUN curl -sL https://packages.microsoft.com/keys/microsoft.asc | gpg --dearmor | sudo tee /etc/apt/trusted.gpg.d/microsoft.gpg > /dev/null

# Add MS Apt repo
RUN umask 0002 && echo "deb [arch=amd64] https://packages.microsoft.com/repos/azure-cli/ focal main" | sudo tee /etc/apt/sources.list.d/azure-cli.list

# Install Azure CLI
RUN sudo apt update -y \
  && umask 0002 \
  && sudo apt install -y azure-cli awscli ruby-full

RUN sudo chown runner /usr/local/bin
RUN sudo chmod 777 /var/lib/gems/2.7.0
RUN sudo chown runner /var/lib/gems/2.7.0

# Install Expect and SSHPass
RUN sudo apt update -y \
  && umask 0002 \
  && sudo apt install -y sshpass expect

# save time per build
RUN umask 0002 \
  && gem install jekyll bundler

RUN sudo rm -rf /var/lib/apt/lists/*

#harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.13
```

I’ll build that locally then push

$ docker build -t harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.13 .

[+] Building 610.2s (16/16) FINISHED

=> [internal] load build definition from Dockerfile 0.1s

=> => transferring dockerfile: 1.06kB 0.0s

=> [internal] load .dockerignore 0.1s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/summerwind/actions-runner:latest 16.0s

=> [auth] summerwind/actions-runner:pull token for registry-1.docker.io 0.0s

=> [ 1/11] FROM docker.io/summerwind/actions-runner:latest@sha256:f83396c4ac90086d8c3d25f41212d9ef73736b6ba112c0851df61ce3a9651617 77.3s

=> => resolve docker.io/summerwind/actions-runner:latest@sha256:f83396c4ac90086d8c3d25f41212d9ef73736b6ba112c0851df61ce3a9651617 0.0s

=> => sha256:f83396c4ac90086d8c3d25f41212d9ef73736b6ba112c0851df61ce3a9651617 743B / 743B 0.0s

=> => sha256:d7bfe07ed8476565a440c2113cc64d7c0409dba8ef761fb3ec019d7e6b5952df 28.57MB / 28.57MB 22.8s

=> => sha256:4f4fb700ef54461cfa02571ae0db9a0dc1e0cdb5577484a6d75e68dc38e8acc1 32B / 32B 0.6s

=> => sha256:d48872a271e977faf7b80d5fc0721b1856a38323f19900e15670b1539f02a3ee 2.20kB / 2.20kB 0.0s

=> => sha256:872f30b04217e3400237a455184f93041eac7a4c1c566d4631b016e3d1118619 8.64kB / 8.64kB 0.0s

=> => sha256:950cd68d33668db6e6eb7d82d8e7f6cf1e011e897577145cd5283ff1ce227d38 156.62MB / 156.62MB 55.3s

=> => sha256:e2df5ad5697424aba35b0f7334c7ada541cba3bcd4d7b1eca6177b42bf495d5d 31.79kB / 31.79kB 1.1s

=> => sha256:bb706cc364161c7a1edd0997e22b31c1a538592860b53bc3257f1d2e0d9e71ac 14.22MB / 14.22MB 8.3s

=> => sha256:2241dd8bb07288c5f38b04830e38441b2a10daed369bcc07371f67a86fd669a9 146.04MB / 146.04MB 51.4s

=> => sha256:b3ff8120f0cf25d31810272b5026af616a7b790a9894bd7195abd3cb169126b0 141B / 141B 24.0s

=> => extracting sha256:d7bfe07ed8476565a440c2113cc64d7c0409dba8ef761fb3ec019d7e6b5952df 4.3s

=> => sha256:e6e70f4531187af92531a2964464900def63a11db7bd3069c7f6c1c2553de043 3.71kB / 3.71kB 24.4s

=> => sha256:25d02c262a4e3cb4364616187cf847d0f8bdddfc55e52dae812a1186cb80e716 213B / 213B 24.8s

=> => extracting sha256:4f4fb700ef54461cfa02571ae0db9a0dc1e0cdb5577484a6d75e68dc38e8acc1 0.1s

=> => extracting sha256:950cd68d33668db6e6eb7d82d8e7f6cf1e011e897577145cd5283ff1ce227d38 10.0s

=> => extracting sha256:e2df5ad5697424aba35b0f7334c7ada541cba3bcd4d7b1eca6177b42bf495d5d 0.0s

=> => extracting sha256:bb706cc364161c7a1edd0997e22b31c1a538592860b53bc3257f1d2e0d9e71ac 0.7s

=> => extracting sha256:2241dd8bb07288c5f38b04830e38441b2a10daed369bcc07371f67a86fd669a9 8.3s

=> => extracting sha256:b3ff8120f0cf25d31810272b5026af616a7b790a9894bd7195abd3cb169126b0 0.0s

=> => extracting sha256:e6e70f4531187af92531a2964464900def63a11db7bd3069c7f6c1c2553de043 0.0s

=> => extracting sha256:25d02c262a4e3cb4364616187cf847d0f8bdddfc55e52dae812a1186cb80e716 0.0s

=> [ 2/11] RUN sudo apt update -y && umask 0002 && sudo apt install -y ca-certificates curl apt-transport-https lsb-release gnupg 28.8s

=> [ 3/11] RUN curl -sL https://packages.microsoft.com/keys/microsoft.asc | gpg --dearmor | sudo tee /etc/apt/trusted.gpg.d/microsoft.gpg > /dev/null 1.8s

=> [ 4/11] RUN umask 0002 && echo "deb [arch=amd64] https://packages.microsoft.com/repos/azure-cli/ focal main" | sudo tee /etc/apt/sources.list.d/azure-cli.list 1.1s

=> [ 5/11] RUN sudo apt update -y && umask 0002 && sudo apt install -y azure-cli awscli ruby-full 139.6s

=> [ 6/11] RUN sudo chown runner /usr/local/bin 1.1s

=> [ 7/11] RUN sudo chmod 777 /var/lib/gems/2.7.0 1.2s

=> [ 8/11] RUN sudo chown runner /var/lib/gems/2.7.0 1.1s

=> [ 9/11] RUN sudo apt update -y && umask 0002 && sudo apt install -y sshpass expect 12.7s

=> [10/11] RUN umask 0002 && gem install jekyll bundler 308.6s

=> [11/11] RUN sudo rm -rf /var/lib/apt/lists/* 1.0s

=> exporting to image 19.4s

=> => exporting layers 19.3s

=> => writing image sha256:df143cc715b4ebc3b63edf8653ece5bad7aa14120666e2f0f1c6c1cd827afd32 0.0s

=> => naming to harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.13 0.0s

Since I rebuilt Harbor, and not necessarily with the same credentials, I need to log in again (Docker caches our credentials).

$ docker login harbor.freshbrewed.science

Authenticating with existing credentials...

Stored credentials invalid or expired

Username (isaac): isaac

Password:

Login Succeeded

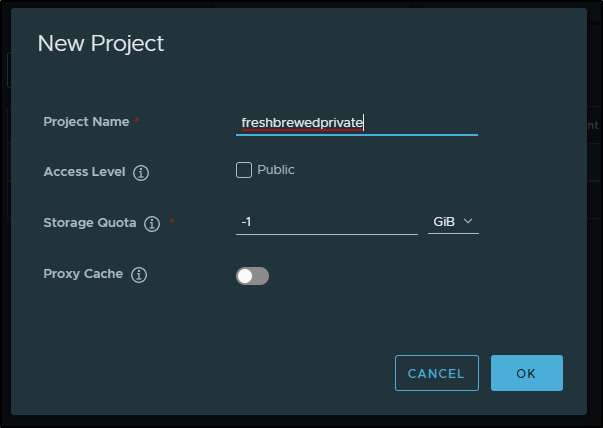

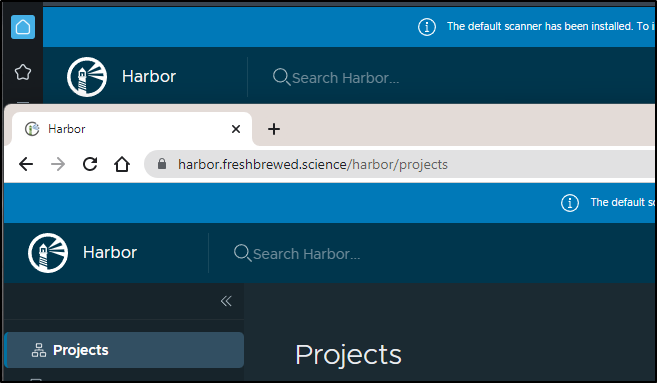

I need to recreate the private project (freshbrewedprivate) in Harbor before I can push. Otherwise the push fails with “unauthorized: project freshbrewedprivate not found: project freshbrewedprivate not found”.
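You can also script the project creation against Harbor’s REST API. This sketch makes three assumptions:

* Harbor’s v2.0 API accepts `POST /api/v2.0/projects` with a `project_name` and a `metadata.public` flag.
* Admin credentials are in hypothetical `HARBOR_USER` and `HARBOR_PASSWORD` environment variables.
* No JSON escaping is needed, which holds for Harbor’s lowercase project names.

```shell
# Hypothetical helper: JSON body for creating a Harbor project.
# $1 = project name, $2 = "true" for public, anything else -> private.
harbor_project_payload() {
  public=false
  if [ "$2" = "true" ]; then public=true; fi
  printf '{"project_name":"%s","metadata":{"public":"%s"}}\n' "$1" "$public"
}

# Hypothetical helper: create private project $2 on the Harbor host $1.
# Assumes HARBOR_USER / HARBOR_PASSWORD hold an admin login.
harbor_create_project() {
  harbor_project_payload "$2" false \
    | curl -sf -u "$HARBOR_USER:$HARBOR_PASSWORD" \
        -H 'Content-Type: application/json' \
        -X POST --data @- "https://$1/api/v2.0/projects"
}
```

With HARBOR_USER and HARBOR_PASSWORD exported, `harbor_create_project harbor.freshbrewed.science freshbrewedprivate` would create the private project.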

The first push then failed with an HTTP 413 (Request Entity Too Large) error:

$ docker push harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.13

The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/myghrunner]

b8e25a20d2eb: Pushed

7ec94da33254: Pushing [==================================================>] 211.9MB/211.9MB

2ba26b1ba48d: Pushing [==================================================>] 7.665MB

c5e499e69756: Pushing 3.072kB

991f5d1d1df6: Pushing 3.072kB

d56e855828af: Waiting

3674ed7036c5: Waiting

8d4930403abc: Waiting

94b4423c9024: Waiting

d0ed446f74e8: Waiting

42b9506c2ecc: Waiting

e44b7f70f7b4: Waiting

567c7c8f0210: Waiting

cc92a348ad7b: Waiting

20dbbcc55f09: Waiting

58d066333799: Waiting

4d5ea7b64e1e: Waiting

5f70bf18a086: Waiting

af7ed92504ae: Waiting

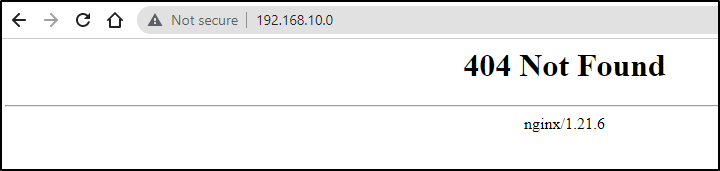

error parsing HTTP 413 response body: invalid character '<' looking for beginning of value: "<html>\r\n<head><title>413 Request Entity Too Large</title></head>\r\n<body>\r\n<center><h1>413 Request Entity Too Large</h1></center>\r\n<hr><center>nginx/1.21.6</center>\r\n</body>\r\n</html>\r\n"

We need to raise the request size limits on the Harbor ingress. This is the current ingress:

$ kubectl get ingress harbor-registry-ingress -o yaml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-production
    ingress.kubernetes.io/proxy-body-size: "0"
    ingress.kubernetes.io/ssl-redirect: "true"
    meta.helm.sh/release-name: harbor-registry
    meta.helm.sh/release-namespace: default
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
  creationTimestamp: "2022-06-13T00:35:26Z"
  generation: 2
```

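It needs proxy-read-timeout and proxy-send-timeout added, plus the nginx.org annotations. The two annotation prefixes belong to different controllers:

* nginx.org/* is read by the NGINX Inc. ingress controller. This cluster appears to run that controller, since its service is nginx-ingress-release-nginx-ingress.
* nginx.ingress.kubernetes.io/* belongs to the community ingress-nginx controller.

That would explain why the existing proxy-body-size: "0" had no effect. The updated ingress: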

$ kubectl get ingress harbor-registry-ingress -o yaml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-production
    ingress.kubernetes.io/proxy-body-size: "0"
    ingress.kubernetes.io/ssl-redirect: "true"
    meta.helm.sh/release-name: harbor-registry
    meta.helm.sh/release-namespace: default
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "600"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/client-max-body-size: "0"
    nginx.org/proxy-connect-timeout: "600"
    nginx.org/proxy-read-timeout: "600"
  creationTimestamp: "2022-06-13T00:35:26Z"
  generation: 2
```

I saved the updated manifest as harbor-registry-ingress.yml and applied it:

$ kubectl apply -f harbor-registry-ingress.yml

Warning: resource ingresses/harbor-registry-ingress is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

ingress.networking.k8s.io/harbor-registry-ingress configured

This time the push succeeded:

$ docker push harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.13

The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/myghrunner]

b8e25a20d2eb: Layer already exists

7ec94da33254: Pushed

2ba26b1ba48d: Pushed

c5e499e69756: Layer already exists

991f5d1d1df6: Layer already exists

d56e855828af: Pushed

3674ed7036c5: Pushed

8d4930403abc: Pushed

94b4423c9024: Pushed

d0ed446f74e8: Pushed

42b9506c2ecc: Pushed

e44b7f70f7b4: Pushed

567c7c8f0210: Pushed

cc92a348ad7b: Pushed

20dbbcc55f09: Pushed

58d066333799: Pushed

4d5ea7b64e1e: Pushed

5f70bf18a086: Pushed

af7ed92504ae: Pushed

1.1.13: digest: sha256:f387ab12a8ee1bb2cab5b129443e0c2684403d2f7a6dfcfeac87ad1e94df9517 size: 4295
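Instead of exporting, editing and re-applying the manifest (which is what triggered the last-applied-configuration warning), you can set the same annotations in place with `kubectl annotate --overwrite`. The sketch below does that; the function name and KUBECTL override are hypothetical. Because this Ingress is owned by the harbor-registry Helm release, the more durable home for these annotations is likely the Harbor chart’s `expose.ingress.annotations` value, so that a future upgrade keeps them.

```shell
# Hypothetical helper: add the upload-size and timeout annotations used above
# to Ingress $1 (namespace $2, default "default"). Set KUBECTL to override.
annotate_large_uploads() {
  ${KUBECTL:-kubectl} -n "${2:-default}" annotate ingress "$1" --overwrite \
    nginx.ingress.kubernetes.io/proxy-body-size=0 \
    nginx.ingress.kubernetes.io/proxy-read-timeout=600 \
    nginx.ingress.kubernetes.io/proxy-send-timeout=600 \
    nginx.org/client-max-body-size=0 \
    nginx.org/proxy-connect-timeout=600 \
    nginx.org/proxy-read-timeout=600
}
```

Usage: `annotate_large_uploads harbor-registry-ingress`.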

A note for Static Route issues

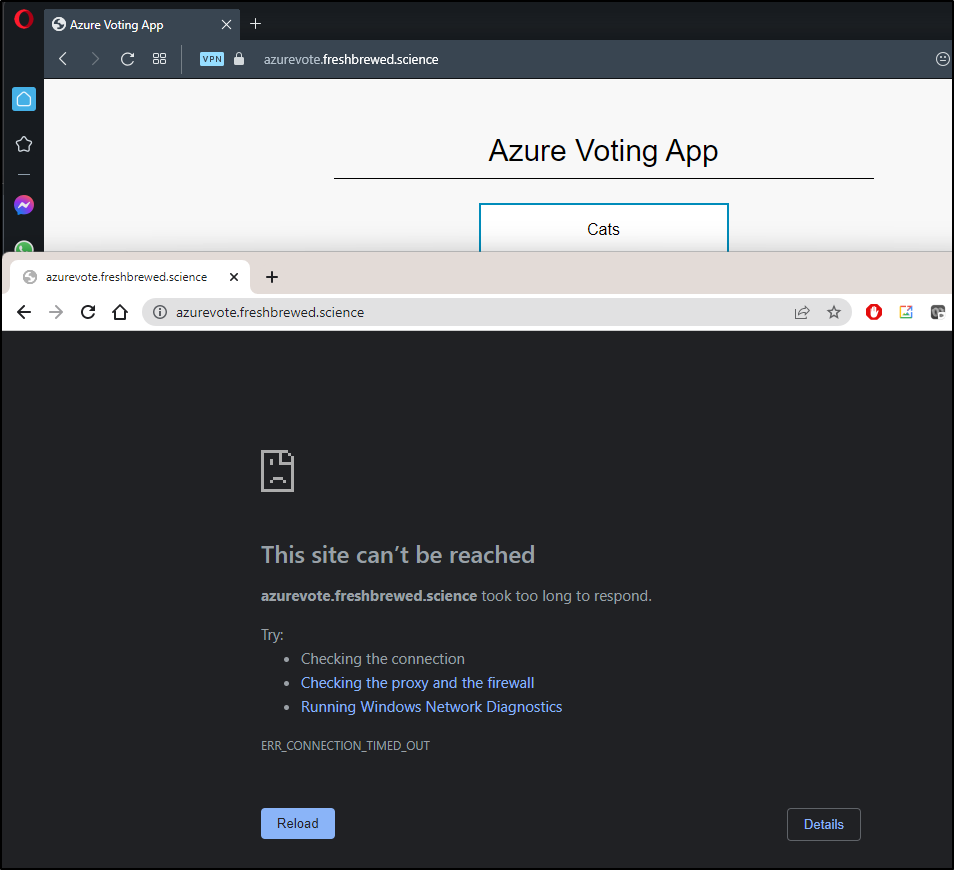

I hit the strangest behavior. I had set a static route in the router that uses the master host as the gateway for 192.168.10.0, and everything worked just dandy from outside. Likewise, if I enabled my VPN to route traffic through Europe, I had no issues.

But inside my network, 192.168.0.10 was reachable, yet I got timeouts for harbor.freshbrewed.science (and any other DNS name routed to port 443 on my router).

This really confused me. Why would my router treat internal traffic to its own external IP differently?

I decided to try hand-patching my hosts files:

WSL

$ cat /etc/hosts | grep harbor

192.168.0.10 harbor.freshbrewed.science

Windows

C:\Windows\System32\drivers\etc>type hosts

# Copyright (c) 1993-2009 Microsoft Corp.

#

# This is a sample HOSTS file used by Microsoft TCP/IP for Windows.

#

# This file contains the mappings of IP addresses to host names. Each

# entry should be kept on an individual line. The IP address should

# be placed in the first column followed by the corresponding host name.

# The IP address and the host name should be separated by at least one

# space.

#

# Additionally, comments (such as these) may be inserted on individual

# lines or following the machine name denoted by a '#' symbol.

#

# For example:

#

# 102.54.94.97 rhino.acme.com # source server

# 38.25.63.10 x.acme.com # x client host

# localhost name resolution is handled within DNS itself.

# 127.0.0.1 localhost

# ::1 localhost

# Added by Docker Desktop

192.168.10.0 harbor.freshbrewed.science

192.168.1.160 host.docker.internal

192.168.1.160 gateway.docker.internal

# To allow the same kube context to work on the host and the container:

127.0.0.1 kubernetes.docker.internal

# End of section

I might have to address this tech debt later
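If the hand-patched entries stick around, a small idempotent helper beats editing by hand. This hypothetical sketch works on any hosts-format file. It replaces the line whose first hostname matches, or appends a new line if none does. On WSL, /etc/hosts is regenerated at startup unless `generateHosts = false` is set under `[network]` in /etc/wsl.conf.

```shell
# Hypothetical helper: make hosts-format file $1 map hostname $3 to IP $2.
# Replaces the first non-comment line whose first hostname is $3 (dropping
# any later duplicates) or appends a new line. Rewrites the file in place
# via cat so its owner and permissions are kept.
set_hosts_entry() {
  tmp=$(mktemp) || return 1
  awk -v ip="$2" -v host="$3" '
    $1 !~ /^#/ && $2 == host { if (!done) print ip " " host; done = 1; next }
    { print }
    END { if (!done) print ip " " host }' "$1" > "$tmp" && cat "$tmp" > "$1"
  rm -f "$tmp"
}
```

From a root shell, `set_hosts_entry /etc/hosts 192.168.0.10 harbor.freshbrewed.science` would pin Harbor to the internal address.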

Switching back to Klipper

Even though I could fix the hosts files on Windows and Mac, my runners would never be able to reach those DNS names, which breaks things in k3s.

It seems my ASUS routers simply will not honor static routes outside their subnet. Changing the router’s subnet settings would reset everything, so I saw little choice (at least in my case) but to go back to Klipper, the K3s built-in service load balancer (servicelb).

The plan: uninstall MetalLB, then on the master host re-run the K3s installer from the stable channel without disabling servicelb (Klipper), while still disabling Traefik.

First, uninstall MetalLB:

builder@DESKTOP-QADGF36:~$ helm delete metallb

W0617 09:02:23.079922 608 warnings.go:70] policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

W0617 09:02:23.080375 608 warnings.go:70] policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

release "metallb" uninstalled

builder@anna-MacBookAir:~$ curl -sfL https://get.k3s.io | INSTALL_K3S_EXEC="server --disable traefik" sh

[sudo] password for builder:

[INFO] Finding release for channel stable

[INFO] Using v1.23.6+k3s1 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.23.6+k3s1/sha256sum-amd64.txt

[INFO] Skipping binary downloaded, installed k3s matches hash

[INFO] Skipping installation of SELinux RPM

[INFO] Skipping /usr/local/bin/kubectl symlink to k3s, already exists

[INFO] Skipping /usr/local/bin/crictl symlink to k3s, already exists

[INFO] Skipping /usr/local/bin/ctr symlink to k3s, already exists

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s.service

[INFO] systemd: Enabling k3s unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s.service → /etc/systemd/system/k3s.service.

[INFO] systemd: Starting k3s

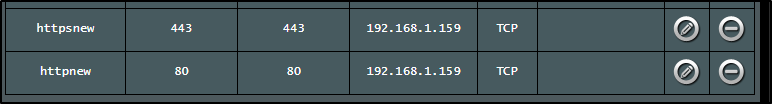

I checked, and the LoadBalancer services immediately picked up the nodes’ internal IPs as their external addresses:

$ kubectl get svc | tail -n2

nginx-ingress-release-nginx-ingress LoadBalancer 10.43.209.58 192.168.1.159 80:30272/TCP,443:30558/TCP 8d

azure-vote-front LoadBalancer 10.43.251.119 192.168.1.81 80:31761/TCP 6d12h
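To grab a LoadBalancer service’s address for the router’s port-forwarding rule, kubectl’s jsonpath output can read it directly, for example `kubectl get svc NAME -o jsonpath='{.status.loadBalancer.ingress[0].ip}'`. The hypothetical helper below does the same from the plain `kubectl get svc` table. It assumes the single-namespace layout shown above, where EXTERNAL-IP is the fourth column.

```shell
# Hypothetical helper: print the EXTERNAL-IP of service $1 from
# `kubectl get svc` output on stdin (NAME TYPE CLUSTER-IP EXTERNAL-IP ...).
# With --all-namespaces the columns shift by one, so this would not apply.
svc_external_ip() {
  awk -v svc="$1" '$1 == svc { print $4; exit }'
}
```

Usage: `kubectl get svc | svc_external_ip nginx-ingress-release-nginx-ingress`.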

I pointed my router’s Virtual Server (port forwarding) entry at the new ingress IP (192.168.1.159), and everything was good again.

Look, I get it. Klipper is old, and its audience is clearly different from MetalLB’s (the Klipper docs show using nano to edit files; that says a lot).

But I can’t seem to fix my home router, and replacing it just to use the “better” LB isn’t viable right now.

The fact is I like TLS, I like DNS, and I like externally facing services. That means the bulk of what I stand up will go through NGINX and Ingress, which requires DNS resolution.

One issue I often faced before was that my NGINX ingress controller only served traffic for the default namespace. Let’s see if that has been cleared up.

Testing Ingress

Last time I launched a quick NGINX deployment into a “test” namespace:

$ kubectl get deployments -n test

NAME READY UP-TO-DATE AVAILABLE AGE

my-nginx 3/3 3 3 8d

Let’s try routing a “test” ingress. First, I’ll create a Route 53 A record for test.freshbrewed.science pointing at my external IP:

$ cat r53-test.json

```json
{
  "Comment": "CREATE test fb.s A record ",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "test.freshbrewed.science",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "73.242.50.46"
          }
        ]
      }
    }
  ]
}
```

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-test.json
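One caveat with the change batch above is that Route 53 rejects a CREATE if the record already exists, so re-running it fails. Using UPSERT (create or update) makes the step repeatable. The hypothetical generator below produces the same A record, with the TTL defaulting to the 300 used above.

```shell
# Hypothetical helper: print a Route 53 change batch that UPSERTs an A record.
# $1 = record name, $2 = IPv4 address, $3 = TTL in seconds (default 300).
r53_a_record_batch() {
  cat <<EOF
{
  "Comment": "UPSERT $1 A record",
  "Changes": [
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "$1",
        "Type": "A",
        "TTL": ${3:-300},
        "ResourceRecords": [ { "Value": "$2" } ]
      }
    }
  ]
}
EOF
}
```

Usage: first run `r53_a_record_batch test.freshbrewed.science 73.242.50.46 > r53-test.json`. Then run the same `aws route53 change-resource-record-sets ... --change-batch file://r53-test.json` command.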

Since the earlier NGINX service was of type LoadBalancer, let’s create a quick ClusterIP-based one:

$ cat t.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: test-cip-svc
spec:
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    run: my-nginx
  sessionAffinity: None
  type: ClusterIP
```

$ kubectl apply -f t.yaml -n test

service/test-cip-svc created

Lastly, I’ll create an Ingress in the test namespace that points to that service:

$ cat t2.yaml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    ingress.kubernetes.io/proxy-body-size: "0"
    ingress.kubernetes.io/ssl-redirect: "true"
  name: test-ingress-nginx
  namespace: test
spec:
  ingressClassName: nginx
  rules:
  - host: test.freshbrewed.science
    http:
      paths:
      - backend:
          service:
            name: test-cip-svc
            port:
              number: 80
        path: /
        pathType: Prefix
  tls:
  - hosts:
    - test.freshbrewed.science
    secretName: test1.freshbrewed.science-cert
```

$ kubectl apply -f t2.yaml -n test

ingress.networking.k8s.io/test-ingress-nginx configured

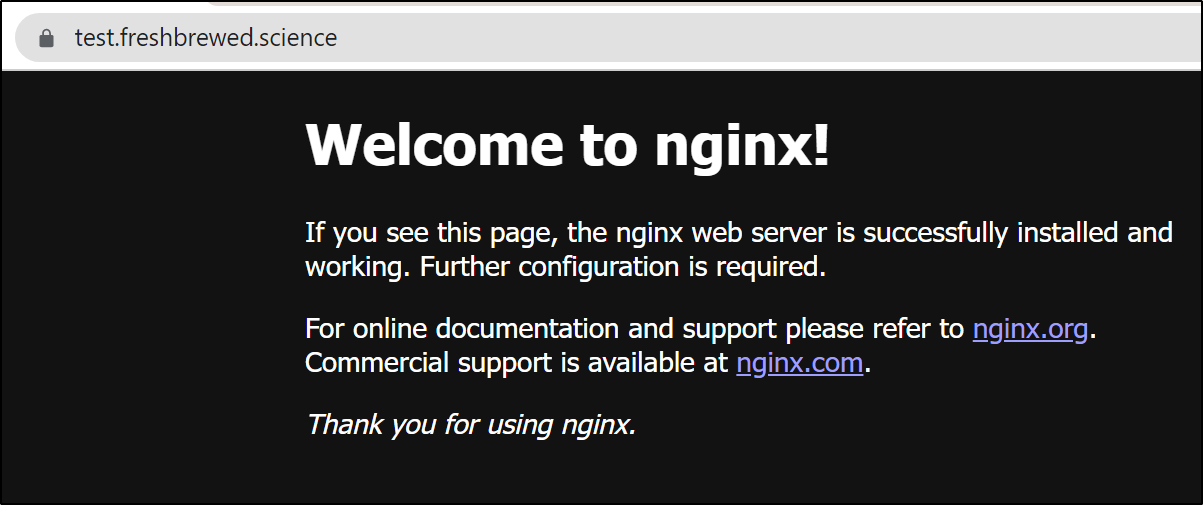

After the certificate is issued, we can check and see that traffic is routed.

GH Runner (continued)

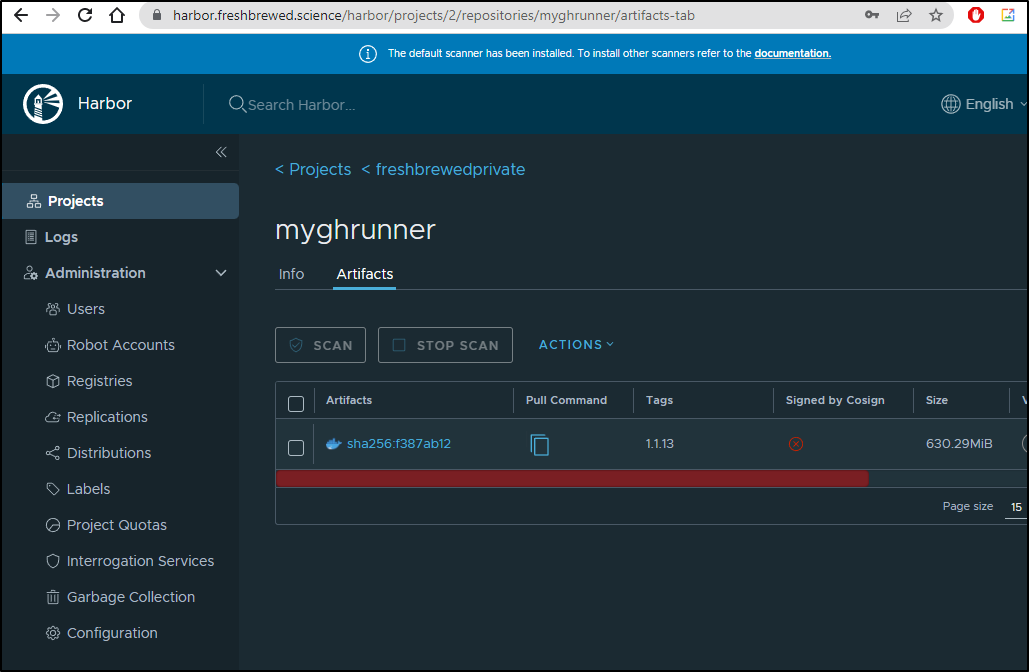

We can see that the image is now in our Harbor project.

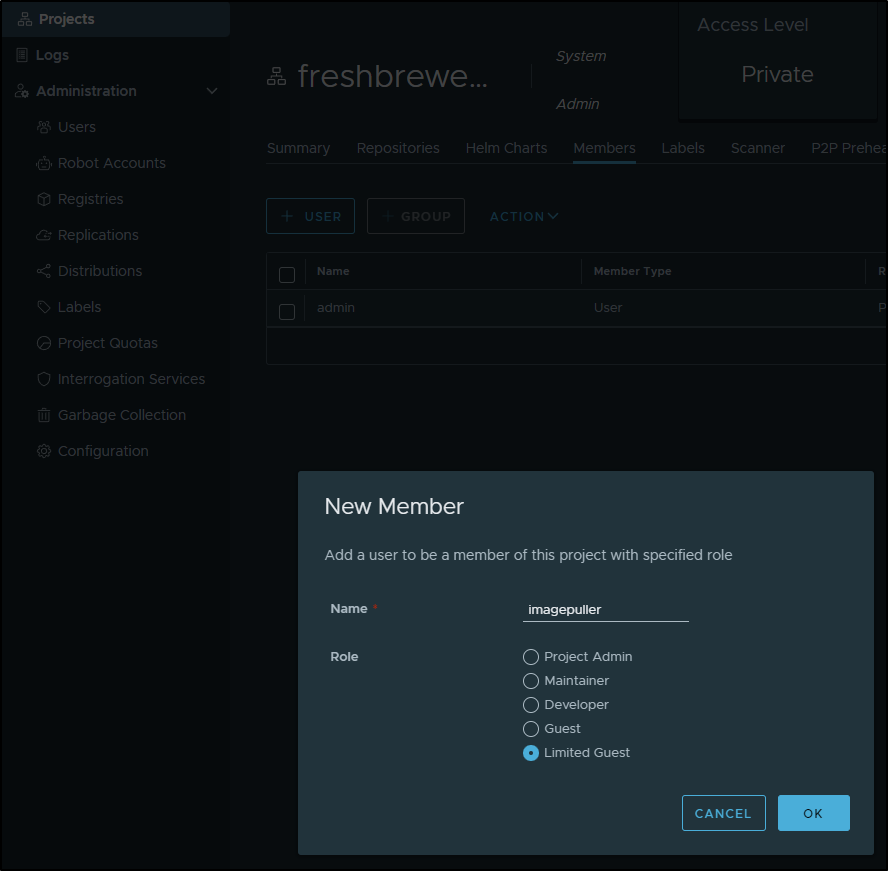

I’ll need to create a Docker pull secret. This time I’ll be more deliberate about which user it uses.

First, I’ll create an ImagePuller user

We can check Harbor’s documentation on roles to be sure, but the role we want is “Limited Guest”, which can pull images but not push them. I’ll go to the private project and add that user as a member with that role.

Then create the secret (don’t forget to escape or single-quote any special characters in the password):

$ kubectl create secret docker-registry myharborreg --docker-server=harbor.freshbrewed.science --docker-username=imagepuller --docker-password=adsfasdfasdfsadf --docker-email=isaac.johnson@gmail.com

secret/myharborreg created
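Here is a concrete illustration of the escaping advice. In bash, wrapping the password in single quotes keeps $, ! and backticks literal. The auth field stored in the secret's .dockerconfigjson is the base64 of user:password, so decoding it quickly confirms that nothing was mangled. This is a minimal sketch. The password below is a made-up example, and base64 -d assumes GNU coreutils.

```bash
#!/usr/bin/env bash
# Sketch: keep a password with special characters intact for
# `kubectl create secret docker-registry`.
set -euo pipefail

REG_USER='imagepuller'
# Made-up password: single quotes stop bash from expanding $, ! or backticks.
REG_PASS='pa$$w0rd!`x`'

# Pass it double-quoted so the value is handed over unchanged, e.g.:
#   kubectl create secret docker-registry myharborreg \
#     --docker-server=harbor.freshbrewed.science \
#     --docker-username="$REG_USER" --docker-password="$REG_PASS" \
#     --docker-email=isaac.johnson@gmail.com

# The secret's .dockerconfigjson carries auth = base64("user:password").
AUTH=$(printf '%s:%s' "$REG_USER" "$REG_PASS" | base64 | tr -d '\n')
echo "auth field: $AUTH"
echo "decodes to: $(printf '%s' "$AUTH" | base64 -d)"
```

To inspect the secret that was actually created in the cluster, run kubectl get secret myharborreg -o jsonpath='{.data.\.dockerconfigjson}' | base64 -d. It prints the stored JSON, including that auth field.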

Since we launched the actions runner controller with our GH token, GitHub authentication should already be sorted, and the image pull credential (myharborreg) is now in place. We have also set the required AWS and Datadog secrets, so we should have all the pieces to launch our private runner pool.

$ cat newRunnerDeployment.yml

apiVersion: actions.summerwind.dev/v1alpha1
kind: RunnerDeployment
metadata:
  name: my-jekyllrunner-deployment
  namespace: default
spec:
  replicas: 1
  selector: null
  template:
    metadata: {}
    spec:
      dockerEnabled: true
      dockerdContainerResources: {}
      env:
      - name: AWS_DEFAULT_REGION
        value: us-east-1
      - name: AWS_ACCESS_KEY_ID
        valueFrom:
          secretKeyRef:
            key: USER_NAME
            name: awsjekyll
      - name: AWS_SECRET_ACCESS_KEY
        valueFrom:
          secretKeyRef:
            key: PASSWORD
            name: awsjekyll
      - name: DATADOG_API_KEY
        valueFrom:
          secretKeyRef:
            key: DDAPIKEY
            name: ddjekyll
      image: harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.13
      imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: myharborreg
      #- name: alicloud
      labels:
      - my-jekyllrunner-deployment
      repository: idjohnson/jekyll-blog
      resources: {}

And apply

$ kubectl apply -f newRunnerDeployment.yml

runnerdeployment.actions.summerwind.dev/my-jekyllrunner-deployment created

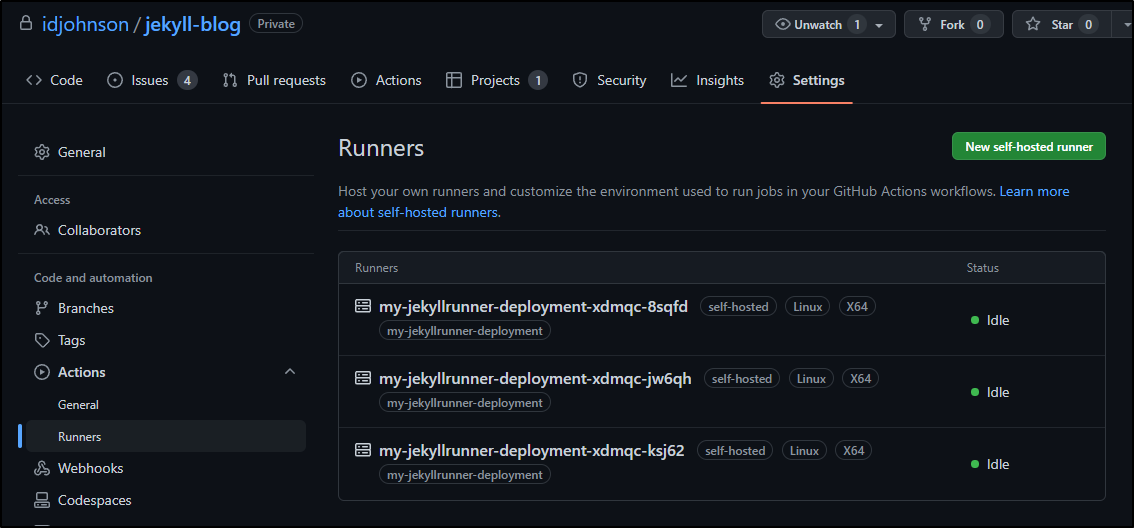

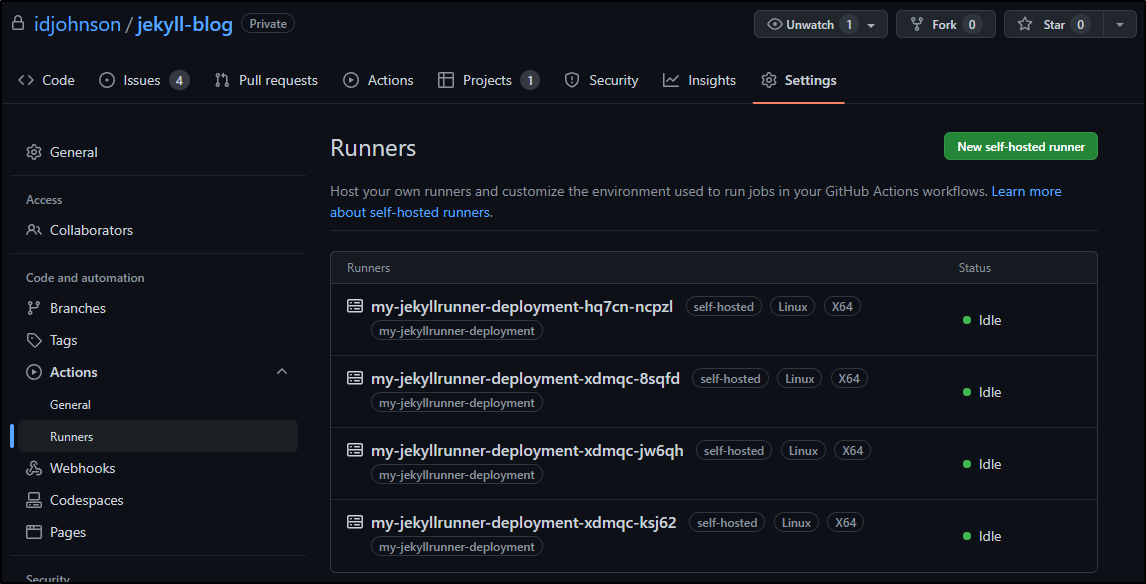

Because the old cluster isn’t dead yet, I can still see its 3 active runners listed.

However, our new runner is coming up

$ kubectl get pods -l runner-deployment-name=my-jekyllrunner-deployment

NAME READY STATUS RESTARTS AGE

my-jekyllrunner-deployment-hq7cn-ncpzl 0/2 ContainerCreating 0 3m10s

$ kubectl get pods -l runner-deployment-name=my-jekyllrunner-deployment

NAME READY STATUS RESTARTS AGE

my-jekyllrunner-deployment-hq7cn-ncpzl 2/2 Running 0 3m42s
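You can script this check instead of re-running kubectl get pods until READY reads 2/2. The built-in option is kubectl wait --for=condition=Ready pod -l runner-deployment-name=my-jekyllrunner-deployment --timeout=300s. The sketch below takes a different approach and parses the READY column. The all_ready and wait_for_runner helpers are hypothetical, as are the 10-second interval and the 30-try default.

```bash
#!/usr/bin/env bash
# Sketch: poll until every pod of a RunnerDeployment is fully ready.

# all_ready reads `kubectl get pods --no-headers` lines on stdin and succeeds
# only if at least one pod is listed and every READY column is N/N (e.g. 2/2).
all_ready() {
  awk '{ n++; split($2, r, "/"); if (r[1] != r[2]) bad = 1 }
       END { if (n == 0 || bad) exit 1; exit 0 }'
}

# wait_for_runner polls every 10 seconds, up to the given number of tries.
wait_for_runner() {
  local deploy="$1" tries="${2:-30}" i
  for ((i = 0; i < tries; i++)); do
    if kubectl get pods -l "runner-deployment-name=${deploy}" --no-headers 2>/dev/null | all_ready; then
      echo "${deploy}: all runner pods ready"
      return 0
    fi
    sleep 10
  done
  echo "${deploy}: timed out waiting for runner pods" >&2
  return 1
}

# Usage: wait_for_runner my-jekyllrunner-deployment
```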

And in a few moments, I see it on the Runners page

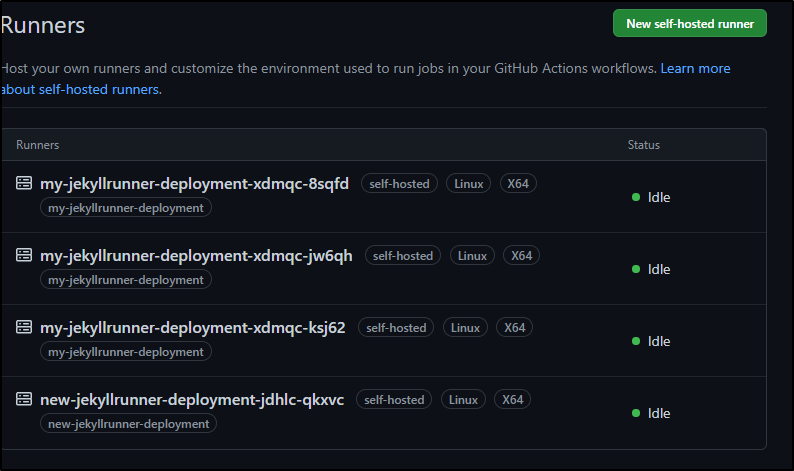

Since I’m doing this all fresh, I decided a new runner name might be nice.

$ diff newRunnerDeployment.yml newRunnerDeployment.yml.bak

4c4
< name: new-jekyllrunner-deployment
---
> name: my-jekyllrunner-deployment
38c38
< - new-jekyllrunner-deployment
---
> - my-jekyllrunner-deployment

$ kubectl delete -f newRunnerDeployment.yml.bak

runnerdeployment.actions.summerwind.dev "my-jekyllrunner-deployment" deleted

$ kubectl apply -f newRunnerDeployment.yml

runnerdeployment.actions.summerwind.dev/new-jekyllrunner-deployment created

This goes much faster since the runner image is already cached on the hosts.

$ kubectl get pods -l runner-deployment-name=new-jekyllrunner-deployment

NAME READY STATUS RESTARTS AGE

new-jekyllrunner-deployment-jdhlc-qkxvc 2/2 Running 0 41s
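You can script the rename above and keep the old manifest for kubectl delete -f. This is a minimal sketch assuming GNU sed (on macOS, sed -i needs an explicit '' suffix argument). The rename_runner helper is hypothetical and replaces every occurrence of the old name, which here means metadata.name and the runner label. Names containing / or regex metacharacters would need escaping first.

```bash
#!/usr/bin/env bash
# Sketch: rename a RunnerDeployment in place, keeping the original as .bak.
rename_runner() {
  local file="$1" old="$2" new="$3"
  cp "$file" "${file}.bak"            # old name stays available for kubectl delete -f
  sed -i "s/${old}/${new}/g" "$file"  # GNU sed in-place edit of every occurrence
  diff "$file" "${file}.bak" || true  # diff exits 1 when the files differ
}

# Usage:
#   rename_runner newRunnerDeployment.yml my-jekyllrunner-deployment new-jekyllrunner-deployment
#   kubectl delete -f newRunnerDeployment.yml.bak
#   kubectl apply -f newRunnerDeployment.yml
```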

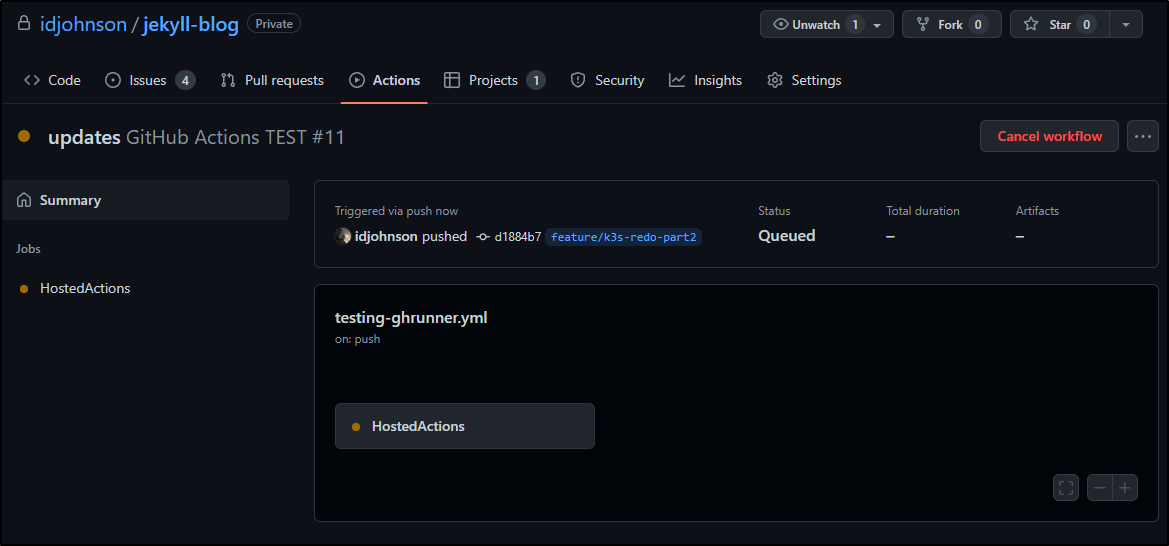

Validation

I will update my GH Actions workflows later. For a quick test, I can modify the GH Runner Image package workflow, which triggers an invocation on push because its path filter matches the workflow file itself:

$ cat .github/workflows/testing-ghrunner.yml

name: GitHub Actions TEST
on:
  push:
    paths:
      - "**/testing-ghrunner.yml"
jobs:
  HostedActions:
    runs-on: self-hosted
    steps:
      - name: Check out repository code
        uses: actions/checkout@v2
      - name: Pull From Tag
        run: |
          set -x
          export BUILDIMGTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^.*\///g'`"
          export FINALBUILDTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^#//g'`"
          docker images
          echo $CR_PAT | docker login harbor.freshbrewed.science -u $CR_USER --password-stdin
          docker pull $FINALBUILDTAG
        env: # Or as an environment variable
          CR_PAT: $
          CR_USER: $
      - name: SFTP Locally
        run: |
          set -x
          export BUILDIMGTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^.*\///g'`"
          export FILEOUT="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^.*\///g' | sed 's/:/-/g'`".tgz
          export FINALBUILDTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^#//g'`"
          docker save $FINALBUILDTAG | gzip > $FILEOUT
          mkdir ~/.ssh || true
          ssh-keyscan sassynassy >> ~/.ssh/known_hosts
          sshpass -e sftp -oBatchMode=no -b - builder@sassynassy << !
          cd dockerBackup
          put $FILEOUT
          bye
          !
        env: # Or as an environment variable
          SSHPASS: $

# change to force a test
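Both steps derive their tags from the last line of ghRunnerImage/Dockerfile. The sed expressions imply that this line is a comment holding the full image reference. This sketch assumes it reads #harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.13, the image used in the RunnerDeployment. It replays the same pipelines on a scratch copy to show what each variable ends up as.

```bash
#!/usr/bin/env bash
# Sketch: replay the workflow's tag extraction on a scratch Dockerfile.
# Assumption: the Dockerfile's last line is "#<full image reference>".
set -euo pipefail

workdir=$(mktemp -d)
cat > "$workdir/Dockerfile" <<'EOF'
# ... build steps omitted ...
#harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.13
EOF

last=$(tail -n1 "$workdir/Dockerfile")
# Strip everything up to and including the last "/": image name and tag only.
BUILDIMGTAG=$(printf '%s\n' "$last" | sed 's/^.*\///g')
# Drop the leading "#": the full reference that docker pull/save need.
FINALBUILDTAG=$(printf '%s\n' "$last" | sed 's/^#//g')
# Turn "name:tag" into a filesystem-friendly archive name.
FILEOUT="$(printf '%s\n' "$BUILDIMGTAG" | sed 's/:/-/g').tgz"

echo "BUILDIMGTAG=$BUILDIMGTAG"
echo "FINALBUILDTAG=$FINALBUILDTAG"
echo "FILEOUT=$FILEOUT"
```

Under that assumption, both the pull step and the backup step read the same final comment line. Bumping the tag there appears to be all it takes to change what gets pulled and archived.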

Moving more nodes

At this point, we can start to move over more of the old cluster’s nodes

I’ll take you through just one:

$ ssh hp@192.168.1.57

The authenticity of host '192.168.1.57 (192.168.1.57)' can't be established.

ECDSA key fingerprint is SHA256:4dCxYuYp4SSYM0HyP52xHxDZri3a8Kh8DAv/ka8cI+U.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '192.168.1.57' (ECDSA) to the list of known hosts.

hp@192.168.1.57's password:

Welcome to Ubuntu 20.04.3 LTS (GNU/Linux 5.4.0-109-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

122 updates can be applied immediately.

To see these additional updates run: apt list --upgradable

*** System restart required ***

Last login: Sat Oct 30 16:34:15 2021 from 192.168.1.160

As with all nodes, I’ll make sure nfs-common is installed, since any node that mounts a volume from the NFS storage class needs the NFS client:

hp@hp-HP-EliteBook-850-G2:~$ sudo apt install nfs-common

[sudo] password for hp:

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages were automatically installed and are no longer required:

linux-generic linux-headers-generic

Use 'sudo apt autoremove' to remove them.

The following additional packages will be installed:

keyutils libevent-2.1-7 libnfsidmap2 libtirpc-common libtirpc3 rpcbind

Suggested packages:

open-iscsi watchdog

The following NEW packages will be installed:

keyutils libevent-2.1-7 libnfsidmap2 libtirpc-common libtirpc3 nfs-common rpcbind

0 upgraded, 7 newly installed, 0 to remove and 123 not upgraded.

Need to get 542 kB of archives.

After this operation, 1,922 kB of additional disk space will be used.

Do you want to continue? [Y/n] Y

Get:1 http://us.archive.ubuntu.com/ubuntu focal/main amd64 libtirpc-common all 1.2.5-1 [7,632 B]

Get:2 http://us.archive.ubuntu.com/ubuntu focal/main amd64 libtirpc3 amd64 1.2.5-1 [77.2 kB]

Get:3 http://us.archive.ubuntu.com/ubuntu focal/main amd64 rpcbind amd64 1.2.5-8 [42.8 kB]

Get:4 http://us.archive.ubuntu.com/ubuntu focal-updates/main amd64 keyutils amd64 1.6-6ubuntu1.1 [44.8 kB]

Get:5 http://us.archive.ubuntu.com/ubuntu focal/main amd64 libevent-2.1-7 amd64 2.1.11-stable-1 [138 kB]

Get:6 http://us.archive.ubuntu.com/ubuntu focal/main amd64 libnfsidmap2 amd64 0.25-5.1ubuntu1 [27.9 kB]

Get:7 http://us.archive.ubuntu.com/ubuntu focal-updates/main amd64 nfs-common amd64 1:1.3.4-2.5ubuntu3.4 [204 kB]

Fetched 542 kB in 0s (1,104 kB/s)

Selecting previously unselected package libtirpc-common.

(Reading database ... 212280 files and directories currently installed.)

Preparing to unpack .../0-libtirpc-common_1.2.5-1_all.deb ...

Unpacking libtirpc-common (1.2.5-1) ...

Selecting previously unselected package libtirpc3:amd64.

Preparing to unpack .../1-libtirpc3_1.2.5-1_amd64.deb ...

Unpacking libtirpc3:amd64 (1.2.5-1) ...

Selecting previously unselected package rpcbind.

Preparing to unpack .../2-rpcbind_1.2.5-8_amd64.deb ...

Unpacking rpcbind (1.2.5-8) ...

Selecting previously unselected package keyutils.

Preparing to unpack .../3-keyutils_1.6-6ubuntu1.1_amd64.deb ...

Unpacking keyutils (1.6-6ubuntu1.1) ...

Selecting previously unselected package libevent-2.1-7:amd64.

Preparing to unpack .../4-libevent-2.1-7_2.1.11-stable-1_amd64.deb ...

Unpacking libevent-2.1-7:amd64 (2.1.11-stable-1) ...

Selecting previously unselected package libnfsidmap2:amd64.

Preparing to unpack .../5-libnfsidmap2_0.25-5.1ubuntu1_amd64.deb ...

Unpacking libnfsidmap2:amd64 (0.25-5.1ubuntu1) ...

Selecting previously unselected package nfs-common.

Preparing to unpack .../6-nfs-common_1%3a1.3.4-2.5ubuntu3.4_amd64.deb ...

Unpacking nfs-common (1:1.3.4-2.5ubuntu3.4) ...

Setting up libtirpc-common (1.2.5-1) ...

Setting up libevent-2.1-7:amd64 (2.1.11-stable-1) ...

Setting up keyutils (1.6-6ubuntu1.1) ...

Setting up libnfsidmap2:amd64 (0.25-5.1ubuntu1) ...

Setting up libtirpc3:amd64 (1.2.5-1) ...

Setting up rpcbind (1.2.5-8) ...

Created symlink /etc/systemd/system/multi-user.target.wants/rpcbind.service → /lib/systemd/system/rpcbind.service.

Created symlink /etc/systemd/system/sockets.target.wants/rpcbind.socket → /lib/systemd/system/rpcbind.socket.

Setting up nfs-common (1:1.3.4-2.5ubuntu3.4) ...

Creating config file /etc/idmapd.conf with new version

Adding system user `statd' (UID 128) ...

Adding new user `statd' (UID 128) with group `nogroup' ...

Not creating home directory `/var/lib/nfs'.

Created symlink /etc/systemd/system/multi-user.target.wants/nfs-client.target → /lib/systemd/system/nfs-client.target.

Created symlink /etc/systemd/system/remote-fs.target.wants/nfs-client.target → /lib/systemd/system/nfs-client.target.

nfs-utils.service is a disabled or a static unit, not starting it.

Processing triggers for systemd (245.4-4ubuntu3.15) ...

Processing triggers for man-db (2.9.1-1) ...

Processing triggers for libc-bin (2.31-0ubuntu9.7) ...

Next, I’ll stop the currently running agent with the k3s kill-all script:

hp@hp-HP-EliteBook-850-G2:~$ sudo /usr/local/bin/k3s-killall.sh

[sudo] password for hp:

+ [ -s /etc/systemd/system/k3s-agent.service ]

+ basename /etc/systemd/system/k3s-agent.service

+ systemctl stop k3s-agent.service

+ [ -x /etc/init.d/k3s* ]

+ killtree 3167 3707 4090 4581 4617 5185 5632 6922 9079 322683 478408 987833 988908 989742 1130770 1165788 1166882 1784138 2684089 2791000 2857269 2857446 3248392 3248674 3248807

+ kill -9 3167 3187 3224 3261 3297 3338 3380 3422 3463 3707 3728 4869 5008 5161 4090 4129 4166 4240 4581 4619 4741 4617 4651 4824 4901 5185 5228 5295 5388 5632 5656 5741 6922 6944 13240 13272 15809 17696 420896 420897 421097 421098 1070047 1070048 9079 9111 9256 322683 322706 322785 478408 478434 478467 478480 478835 478842 478500 478624 478632 987833 987860 988465 988496 988497 988498 988499 988908 988934 990020 989742 989765 990628 990814 990815 990816 990817 990818 990819 1019202 1020157 1130770 1130791 1131187 1131217 1785197 1785198 1785199 1785200 1165788 1165810 1173343 1166882 1166904 1174828 1174877 1784138 1784169 1784370 1784568 1784580 2684089 2684117 2685244 2685298 2791000 2791025 2791073 2791088 2791477 3104583 2791113 2791325 2791335 2857269 2857317 2857601 2857446 2857479 2857896 3248392 3248431 3249532 3249700 3249724 3248674 1782394 1782441 3248731 3249543 3248807 3248829 3249692 3249723 3249760

+ do_unmount_and_remove /run/k3s

+ set +x

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/ffc7fc1471c2ccffc3b1ed34970f2a6bfbca1a5770870b70e7c48be1fa5b95e0/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/f866c6b61f4948aa068b5c4bb4df4ff6ec263448a7f3ce9e513354df3942b51b/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/f7f159c481a00411a60f71f038076583b2c1f377f2cedd80dfe6d1119235f5c6/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/f63939b78b580892d216ef0d8a3c8b2fc86d7ae2d114d0e1eda8f9660df66cbe/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/f4bc5980fb8a79a6f0919be3664b16db87dff5bdad53b8dd274306be74decfef/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/eca9c73903bf4267cc365f378473ec0c352d2d84215bd7e637611420d22abe19/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/eae7bafe3fbd53a51f497575c3e45766bd97eb8a4b5596bea0aeb446025bffb0/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/e3ccb7dfc3bcc368b37fc78a3000480821ce67407ed2aee44b3f1cd03f7e9225/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/d9e964a8b480d8c451e03c6f05ba5f9ce733620d5c0a6bb57e14c9bda9e23ca2/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/d99ea989f2ac0c2e89163c60165852a00c14b53106d39f2eb729978fd0827475/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/d60045a3e5f62684e255a72caab419dd440c7c20e29ca0d95d30887faefc777e/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/d5fd01941bfa012061d3a4588373f649f01222922e60b6a247589e9c06ba25ff/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/d336f8b1ac353a11d79022871bf89ef0ac134ef83740f52b529c07b2a7c07315/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/cebcbf387d7c8e28cedf89ae7d799376bacfb0e23fb9c1a43c24e8b622665dfc/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/cca95452575508070f2d37cf7f84494901a28495f20b6be539e5ab6093956e40/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/c7426a2d6af3f02540c187076dcd37bbe3608996cce0f49afcc47741201b796b/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/c5abe4aceaa1710ec767f21a30ffcd6fbcf913b6433dca8620846a7b0cb98b2c/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/c3cadebd58ea9e827d9c110022787db7e7c10eaf33f911a61e254728b27e0345/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/c3285d78a5ee51b746b9c9a5725e463aa4403445ee793e033b6b44ccf3eb5b5b/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/c2e2b2f96a234a412619eb7f4cf9e40424bb028821c16b378e5c176a5a2a3b3f/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/c1ef277980db46d45ac00418c8f274fd8f6f0db6c7cb259152b134cc0ad7bc98/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/bf22cc4b6579944f7d8d9d0a5f0fd4951077d177a57d5ef8be37939ec6d277e6/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/b9abdd929d5e31e1283cc4cca19273a275c6eb78e625083fb5e0644e4f7cf930/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/b500779e25ffd6512619ea996d02bbe6ca1d695601ddf69470e8d030b677462d/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/b4e1ff5523e78aec14f1f80560c68c9fa58e42fd73e71e5cf5fb086265fe508c/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/b440d30c287fa376b1dfa5d58cbe53bd073c498697647b97cac5370f8865309f/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/b0c1e6261ad64048a2f07b2f58bc22f461597d6d2c14e0e73dcb6be24e51ed6b/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/b02358707de92844f8077db4483f1d439185c6d85d6403aa86f0c242262cf2e4/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/b00571af8af97fbe4848b2d195835ff418e38b1eec87d9785f7f0dc84f3f2b14/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/aedb2019c318d388ef6559fd9e0d2b2a550441dbbd04c5ae7f0189f6703c0f99/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/adaf5ce8ade0e1c4ce15ad07f59afd4383dd95d04a6f907d0a11e81c601388d8/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/ac0a4499d313d63cb5cc2f993a259728ac1f1a13a6875ca9c0c5e0364981648b/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/aa0f265571cabe037448122198c216253bf4fdf9a4e750f52b74e5b0b60f7385/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/a76350a00672fdad5e93d8bfe47a75bec9172778d0489ce76388dc90ef88564f/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/a510d2b60a57d94d264c42bf7762e4dba52c768f01248ba9c22d70b75a72917c/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/a23fb7e1102ab174f293ce7b619c324055d551642de1eb844b802d1ae20ae873/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/9b78baf58105417bb7307546a94b7bf4d17a54fae5d1ea9f1d9a8b245d69b351/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/976b6a4ace4cb3b824811d69cf3fd2d95dffc60bbb397d7ca6bec920c71d42ca/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/95e10c44d62d286fa943d143514af04e2b06df3d9f558ee9585ae96b4468c96e/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/8d98182ceaaaee70a9e7bb4ca660e63daf1e2061eb0eabbda3f491a744d4338e/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/8c6fc4e980b3e707e91ce1ae1b44c37ac26dcb730f37cca638da264b41f189ac/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/8886647afe4a874b87d288ca7876f370bb245c93bbaf7f74e7bafe5f12624a35/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/7d23351fe619f896418e6248de644d95be61a6683fe551f4918a37d8265ccf8a/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/7ce447032ec07f8db6a16590b6b0a99eaf50e96fbd8c67a8665c91c0ace65283/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/7acef0986cb4c1ae7d01f79f29b24c5172159e88c43e40f78546afe2835e9e70/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/7704b184efbe9e2156d43d08bc09ad7f91d1fdc158f011d77285bfe3deba00cc/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/756e98f55a5753bfc9856c22be93d43b3ff6a206a6acfab6dfbefe4c108b25bd/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/6b54cd6332662c46d296b1535e86ff4dfd76aea35b1de2a5cfc8a7e94d983063/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/6881057e010383535457204de85f5aa9f44ccee83a14627a8ef8c5f032fee335/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/5dabe008aba819fe9244773feb91bc662c60592ca5148f7c54486aced09114e5/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/589612ffd5c5213f3c855db443d2f0bac6eed8691df5d2fb6c72f255057b60b5/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/5254b9017e329b423350558069e51c923113151cdf0623ba4c49be598c6732b7/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/4af34eb7145e4da93c163f53019f16e0facf4daee681f39c0d73d41350cdb711/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/4abb4b6062abcf5528e0cdef841c09aca42ab7a97a6350de6b5b3af4806a797b/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/494d943a37719d3ce4a98880507e26bd1a66f7af4341edcbb65488cd1f85e338/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/4305da821d8954d8596038b7a63018900c94319aeefe60e7b181a87606db5547/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/3ce5971c203b439911c6d1e25b043316a8f8ebb8b8f38c28f6eb7507852369f3/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/3a1dc3b1525ced786de1edede9c2e8f6a8e2a56d3458127caf117e60375060aa/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/383dde2890ec1293e98a7679c05a06c72e153c1c2e8da480414651a15513bc77/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/30d846bab538be4f010ac795fc004b36c9492f0bedcfd8c7c5ee8e6e708175cf/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/29221b5d55cb0a50731363b5a95d1ed1d91bed77ec4cc9d54bc30ba4721d7994/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/24b48955b382eb4d23937550ef13f43b65cb0ccc5b773d8af5ae7b620353eba9/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/20d3076c7b1ba47484b5e0e7b0a9b797fd14a203c0adbe2b3a8d866cff090f55/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/19130d54595851f48efef28c4612c2e3ee217d82ac7bfa5a0f90ea62ffbe2a3a/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/169ba6ef058bc973951d8cfb6e3a871f1c6e58dbc49e8e1a37511e199c610555/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/1050414a75be606d94bf37773764f2b124860797638fb63922cefd4485a19c86/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/081532e61ac8fd96af0f28323db3d2113f90853f82b985ae3b5f94094f8c30ac/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/07dc8eee8b9ac9d8599ba6a8b4b61f641c59cc2925389b4c8b81ad26242ca2ce/rootfs

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/ffc7fc1471c2ccffc3b1ed34970f2a6bfbca1a5770870b70e7c48be1fa5b95e0/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/eca9c73903bf4267cc365f378473ec0c352d2d84215bd7e637611420d22abe19/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/e3ccb7dfc3bcc368b37fc78a3000480821ce67407ed2aee44b3f1cd03f7e9225/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/d60045a3e5f62684e255a72caab419dd440c7c20e29ca0d95d30887faefc777e/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/d5fd01941bfa012061d3a4588373f649f01222922e60b6a247589e9c06ba25ff/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/d336f8b1ac353a11d79022871bf89ef0ac134ef83740f52b529c07b2a7c07315/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/cca95452575508070f2d37cf7f84494901a28495f20b6be539e5ab6093956e40/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/c5abe4aceaa1710ec767f21a30ffcd6fbcf913b6433dca8620846a7b0cb98b2c/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/b4e1ff5523e78aec14f1f80560c68c9fa58e42fd73e71e5cf5fb086265fe508c/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/b0c1e6261ad64048a2f07b2f58bc22f461597d6d2c14e0e73dcb6be24e51ed6b/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/b02358707de92844f8077db4483f1d439185c6d85d6403aa86f0c242262cf2e4/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/adaf5ce8ade0e1c4ce15ad07f59afd4383dd95d04a6f907d0a11e81c601388d8/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/ac0a4499d313d63cb5cc2f993a259728ac1f1a13a6875ca9c0c5e0364981648b/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/aa0f265571cabe037448122198c216253bf4fdf9a4e750f52b74e5b0b60f7385/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/976b6a4ace4cb3b824811d69cf3fd2d95dffc60bbb397d7ca6bec920c71d42ca/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/95e10c44d62d286fa943d143514af04e2b06df3d9f558ee9585ae96b4468c96e/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/8886647afe4a874b87d288ca7876f370bb245c93bbaf7f74e7bafe5f12624a35/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/7d23351fe619f896418e6248de644d95be61a6683fe551f4918a37d8265ccf8a/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/7704b184efbe9e2156d43d08bc09ad7f91d1fdc158f011d77285bfe3deba00cc/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/756e98f55a5753bfc9856c22be93d43b3ff6a206a6acfab6dfbefe4c108b25bd/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/5dabe008aba819fe9244773feb91bc662c60592ca5148f7c54486aced09114e5/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/589612ffd5c5213f3c855db443d2f0bac6eed8691df5d2fb6c72f255057b60b5/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/3a1dc3b1525ced786de1edede9c2e8f6a8e2a56d3458127caf117e60375060aa/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/1050414a75be606d94bf37773764f2b124860797638fb63922cefd4485a19c86/shm

sh -c 'umount "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/07dc8eee8b9ac9d8599ba6a8b4b61f641c59cc2925389b4c8b81ad26242ca2ce/shm

+ do_unmount_and_remove /var/lib/rancher/k3s

+ set +x

+ do_unmount_and_remove /var/lib/kubelet/pods

+ set +x

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/ec0fb262-26bd-4c1d-a57e-9f3867069a8e/volumes/kubernetes.io~secret/credentials

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/ec0fb262-26bd-4c1d-a57e-9f3867069a8e/volumes/kubernetes.io~projected/kube-api-access-58t2g

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/ce45061e-beb9-4106-b0b7-aa70869f7078/volumes/kubernetes.io~projected/kube-api-access-gcmvk

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/bc2d82ee-b879-401b-be52-72cf6d0a7a30/volumes/kubernetes.io~projected/kube-api-access-kdsg6

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/b9ab38cf-141e-426c-83e7-8960e021d646/volume-subpaths/installinfo/cluster-agent/0

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/b9ab38cf-141e-426c-83e7-8960e021d646/volumes/kubernetes.io~projected/kube-api-access-9fdr7

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/9e74372d-3b88-4618-a31a-6b271ac37a02/volumes/kubernetes.io~projected/kube-api-access-tdrkf

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/8da68bd5-59e4-4780-a1ba-033779a11e99/volume-subpaths/portal-config/portal/0

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/8c2e1489-0375-4933-ba78-3e58d7e23e8b/volume-subpaths/sysprobe-config/system-probe/4

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/8c2e1489-0375-4933-ba78-3e58d7e23e8b/volume-subpaths/sysprobe-config/process-agent/9

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/8c2e1489-0375-4933-ba78-3e58d7e23e8b/volume-subpaths/sysprobe-config/init-config/4

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/8c2e1489-0375-4933-ba78-3e58d7e23e8b/volume-subpaths/sysprobe-config/agent/7

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/8c2e1489-0375-4933-ba78-3e58d7e23e8b/volume-subpaths/installinfo/agent/0

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/8c2e1489-0375-4933-ba78-3e58d7e23e8b/volumes/kubernetes.io~projected/kube-api-access-tw4wh

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/88505b02-a299-490c-84c0-b047e17807f1/volumes/kubernetes.io~empty-dir/shm-volume

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/873431b3-67bb-4c4e-8b0a-f16a91f049d7/volumes/kubernetes.io~projected/kube-api-access-9dxl9

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/85f737eb-1576-4930-94d9-4bbd73baf23b/volumes/kubernetes.io~projected/kube-api-access-hswr8

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/69f6095b-8418-41c5-baf3-98e855e63212/volume-subpaths/sonarqube/sonarqube/2

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/69f6095b-8418-41c5-baf3-98e855e63212/volume-subpaths/sonarqube/sonarqube/1

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/69f6095b-8418-41c5-baf3-98e855e63212/volume-subpaths/sonarqube/sonarqube/0

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/69f6095b-8418-41c5-baf3-98e855e63212/volume-subpaths/sonarqube/inject-prometheus-exporter/0

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/69f6095b-8418-41c5-baf3-98e855e63212/volume-subpaths/prometheus-config/sonarqube/4

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/69f6095b-8418-41c5-baf3-98e855e63212/volume-subpaths/prometheus-ce-config/sonarqube/5

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/69f6095b-8418-41c5-baf3-98e855e63212/volumes/kubernetes.io~projected/kube-api-access-tsvmm

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/61078392-2f69-48a6-b5b0-d44da6fd658d/volumes/kubernetes.io~projected/kube-api-access-wz74v

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/54310b14-3e23-47c9-82e0-742aba6f0260/volumes/kubernetes.io~projected/kube-api-access-mhbhg

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/522214b2-064d-46f0-abeb-bb825375b8d4/volumes/kubernetes.io~projected/kube-api-access-grz9z

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/4d06a0c3-a05f-416b-b693-52a0fe08a398/volumes/kubernetes.io~secret/kube-aad-proxy-tls

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/4d06a0c3-a05f-416b-b693-52a0fe08a398/volumes/kubernetes.io~projected/kube-api-access-5fzm8

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/39c05ea0-db93-4f6f-b3e3-dcddfe5408e2/volumes/kubernetes.io~projected/kube-api-access-m4h45

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/31fdcc58-a021-4c51-a396-909f4d60d557/volumes/kubernetes.io~projected/kube-api-access-4g9jz

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/17cbc947-895d-48f3-a02c-262f6158c29b/volumes/kubernetes.io~projected/kube-api-access-p9zxr

sh -c 'umount "$0" && rm -rf "$0"' /var/lib/kubelet/pods/0ead65f7-772b-4530-9738-83fa81eb5fdb/volumes/kubernetes.io~projected/kube-api-access-xzq4l

+ do_unmount_and_remove /var/lib/kubelet/plugins

+ set +x

+ do_unmount_and_remove /run/netns/cni-

+ set +x

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-fdb63637-6f86-f725-a40e-347e361dde99

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-f7b395a3-e372-9374-b621-a14ba17c5adf

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-f700fa00-8242-8bff-9d6a-d95ce93a2292

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-d999ed41-ee4c-1310-0690-20db473607eb

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-d661f5fc-136c-922b-c20a-0f3490ed9063

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-d55b74d4-f13d-0f39-d7e3-d2a85685aa74

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-cb70d5d2-c19b-c2bc-a567-bdbca17169df

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-c98c1c6d-65f6-3b13-70ff-1a57833702ee

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-baa81cb9-b5b0-8a1c-bc28-4de7cf401f44

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-968c86d1-eb2c-b660-b799-77c685d4a80b

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-76947fb8-a7be-fb5a-f8fa-097b20d92f8b

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-70eb69af-a35d-57ef-d9f5-051e254ccb9a

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-697c36d5-c163-627c-1802-01922539dd93

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-674ee367-f910-9866-5778-892e4f80fe21

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-63303dd6-6c20-a5f8-b5e1-3d7c61a1d5b3

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-575f4d64-9e60-b1ce-2b4c-6c18a6957059

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-4e916f3d-5fd2-c7b4-fc57-a460325d8f36

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-482df260-7228-8e1f-fa92-e548dbc24051

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-46c026ba-7cb5-72e7-e9ed-c9df7ee1bab5

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-31ca3780-18d8-ae00-9c6f-78f987e37d17

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-2ce596a4-6cf1-f18b-1eee-e2bf917fb8da

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-26bacf14-9bd1-4361-e9cf-fd7d70bfb7bb

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-0e44aec8-3e44-90ab-9a99-4b80c46d81f5

sh -c 'umount "$0" && rm -rf "$0"' /run/netns/cni-06d2f652-b237-e99e-1834-4ea3602a0d86

+ ip netns show

+ + grep cni-

xargs -r -t -n 1 ip netns delete

+ grep master cni0

+ ip link show

+ read ignore iface ignore

+ iface=veth219730cd

+ [ -z veth219730cd ]

+ ip link delete veth219730cd

+ read ignore iface ignore

+ iface=veth895290ab

+ [ -z veth895290ab ]

+ ip link delete veth895290ab

+ read ignore iface ignore

+ iface=vethe9081f8e

+ [ -z vethe9081f8e ]

+ ip link delete vethe9081f8e

+ read ignore iface ignore

+ iface=vethc71264d0

+ [ -z vethc71264d0 ]

+ ip link delete vethc71264d0

Cannot find device "vethc71264d0"

+ read ignore iface ignore

+ ip link delete cni0

+ ip link delete flannel.1

+ ip link delete flannel-v6.1

Cannot find device "flannel-v6.1"

+ rm -rf /var/lib/cni/

+ iptables-save

+ grep -v CNI-

+ iptables-restore

+ grep -v KUBE-

hp@hp-HP-EliteBook-850-G2:~$ sudo systemctl stop k3s

Failed to stop k3s.service: Unit k3s.service not loaded.

hp@hp-HP-EliteBook-850-G2:~$ sudo service k3s-agent stop
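The first stop attempt failed because this machine ran K3s as an agent, not a server. The K3s installer writes k3s.service on servers and k3s-agent.service on agents, as the agent install log further down shows. Below is a small sketch that picks the right unit name by checking which unit file exists. The helper name k3s_unit_name is hypothetical, and it assumes the default unit directory /etc/systemd/system.

```shell
# Hypothetical helper: print the K3s systemd unit installed on this host.
# Servers get k3s.service and agents get k3s-agent.service from the installer.
# Runs in a subshell so unit_dir does not leak into the caller.
k3s_unit_name() (
  unit_dir="${1:-/etc/systemd/system}"
  if [ -f "$unit_dir/k3s.service" ]; then
    echo k3s
  elif [ -f "$unit_dir/k3s-agent.service" ]; then
    echo k3s-agent
  else
    echo "no k3s unit found in $unit_dir" >&2
    exit 1
  fi
)

# Usage on a node being retired:
#   sudo systemctl stop "$(k3s_unit_name)"
```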

Now we can add the node to the new cluster. It's always good to double-check the cluster version first, since we will likely upgrade over time.

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

builder-macbookpro2 Ready <none> 4d22h v1.23.6+k3s1

anna-macbookair Ready control-plane,master 12d v1.23.6+k3s1
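Eyeballing the version works for two nodes, but it gets harder as more laptops join. Here is a minimal sketch that reads kubectl get nodes output on stdin and reports the distinct versions. It assumes the default five-column layout shown above, with VERSION in the last column. The function names are illustrative only.

```shell
# Print each distinct VERSION (5th column) from `kubectl get nodes` output,
# skipping the header row and any blank lines.
node_versions() {
  awk 'NR > 1 && NF >= 5 { print $5 }' | sort -u
}

# Succeed only when every node reports the same version.
nodes_on_one_version() {
  [ "$(node_versions | wc -l | tr -d ' ')" = 1 ]
}

# Usage:
#   kubectl get nodes | node_versions
#   kubectl get nodes | nodes_on_one_version && echo "all nodes match"
```

The version this prints is the one to pin with INSTALL_K3S_VERSION when joining the next node.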

Now install the agent (pinned to the same version) and enable it via systemctl:

$ curl -sfL https://get.k3s.io | INSTALL_K3S_VERSION=v1.23.6+k3s1 K3S_URL=https://192.168.1.81:6443 K3S_TOKEN=K10a9e665bb0e1fe827b71f76d09ade426153bd0ba99d5d9df39a76ac4f90b584ef::server:3246003cde679880017e7a14a63fa05d sh -

[sudo] password for hp:

[INFO] Using v1.23.6+k3s1 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.23.6+k3s1/sha256sum-amd64.txt

[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.23.6+k3s1/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

[INFO] Skipping installation of SELinux RPM

[INFO] Skipping /usr/local/bin/kubectl symlink to k3s, already exists

[INFO] Skipping /usr/local/bin/crictl symlink to k3s, already exists

[INFO] Skipping /usr/local/bin/ctr symlink to k3s, already exists

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-agent-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s-agent.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s-agent.service

[INFO] systemd: Enabling k3s-agent unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s-agent.service → /etc/systemd/system/k3s-agent.service.

[INFO] systemd: Starting k3s-agent

hp@hp-HP-EliteBook-850-G2:~$ sudo systemctl enable --now k3s-agent

And back on the laptop, I can see the new worker node has been added

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

builder-macbookpro2 Ready <none> 4d22h v1.23.6+k3s1

anna-macbookair Ready control-plane,master 12d v1.23.6+k3s1

hp-hp-elitebook-850-g2 Ready <none> 73s v1.23.6+k3s1
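Instead of re-running kubectl get nodes until the new worker shows Ready, we can let kubectl block until it does. This is a small sketch built on the standard kubectl wait command. The wrapper name and the 120s default timeout are arbitrary assumptions.

```shell
# Hypothetical wrapper: wait until the named node reports the Ready condition,
# failing if it does not do so within the timeout (default 120s).
wait_node_ready() {
  kubectl wait --for=condition=Ready "node/$1" --timeout="${2:-120s}"
}

# Usage:
#   wait_node_ready hp-hp-elitebook-850-g2
#   wait_node_ready hp-hp-elitebook-850-g2 300s
```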

Dapr

Dapr.io moves fast, so it's a good idea to update your local CLI before installing the operator into Kubernetes.

Here I can check my current version

builder@DESKTOP-QADGF36:~$ dapr -v

CLI version: 1.5.1

Runtime version: n/a

Then update it to the latest:

builder@DESKTOP-QADGF36:~$ wget -q https://raw.githubusercontent.com/dapr/cli/master/install/install.sh -O - | /bin/bash

Getting the latest Dapr CLI...

Your system is linux_amd64

Dapr CLI is detected:

CLI version: 1.5.1

Runtime version: n/a

Reinstalling Dapr CLI - /usr/local/bin/dapr...

Installing v1.7.1 Dapr CLI...

Downloading https://github.com/dapr/cli/releases/download/v1.7.1/dapr_linux_amd64.tar.gz ...

[sudo] password for builder:

dapr installed into /usr/local/bin successfully.

CLI version: 1.7.1

Runtime version: n/a

To get started with Dapr, please visit https://docs.dapr.io/getting-started/

builder@DESKTOP-QADGF36:~$ dapr -v

CLI version: 1.7.1

Runtime version: n/a
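To make the "is my CLI current enough?" check repeatable, here is a minimal sketch. It assumes dapr -v keeps printing a "CLI version: X.Y.Z" line as shown above, and that sort -V is available (GNU coreutils). The function names and the 1.7.0 minimum are illustrative and are not part of the Dapr CLI.

```shell
# Extract the CLI version from `dapr -v` output on stdin.
dapr_cli_version() {
  sed -n 's/^CLI version: *//p'
}

# version_ge A B: succeed when version A >= version B.
# sort -V orders version strings numerically per component (1.10.0 > 1.7.0).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Usage (1.7.0 is an example minimum):
#   v=$(dapr -v | dapr_cli_version)
#   version_ge "$v" 1.7.0 || echo "Dapr CLI $v is older than 1.7.0, update it first"
```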

Installing it into the cluster is as easy as dapr init -k

We can then confirm it is installed with dapr status -k

$ dapr init -k

⌛ Making the jump to hyperspace...

ℹ️ Note: To install Dapr using Helm, see here: https://docs.dapr.io/getting-started/install-dapr-kubernetes/#install-with-helm-advanced

✅ Deploying the Dapr control plane to your cluster...

✅ Success! Dapr has been installed to namespace dapr-system. To verify, run `dapr status -k' in your terminal. To get started, go here: https://aka.ms/dapr-getting-started

$ dapr status -k

NAME NAMESPACE HEALTHY STATUS REPLICAS VERSION AGE CREATED

dapr-placement-server dapr-system True Running 1 1.7.4 49s 2022-06-21 11:58.38

dapr-dashboard dapr-system True Running 1 0.10.0 50s 2022-06-21 11:58.37

dapr-sidecar-injector dapr-system True Running 1 1.7.4 50s 2022-06-21 11:58.37

dapr-operator dapr-system True Running 1 1.7.4 51s 2022-06-21 11:58.36

dapr-sentry dapr-system True Running 1 1.7.4 51s 2022-06-21 11:58.36
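With five control-plane components, a quick scripted check can be handier than reading the table. This minimal sketch reads dapr status -k output on stdin and lists any component whose HEALTHY column (third) is not True. It assumes the column layout shown above, and the function name is illustrative.

```shell
# Print the NAME of every Dapr component whose HEALTHY column is not "True".
# Exit status is non-zero when at least one component is unhealthy.
dapr_unhealthy() {
  awk 'NR > 1 && NF >= 3 && $3 != "True" { print $1; bad = 1 }
       END { exit bad + 0 }'
}

# Usage:
#   dapr status -k | dapr_unhealthy && echo "all Dapr components healthy"
```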

Loft

The Loft CLI installs with a plain bash one-liner:

curl -s -L "https://github.com/loft-sh/loft/releases/latest" | sed -nE 's!.*"([^"]*loft-linux-amd64)".*!https://github.com\1!p' | xargs -n 1 curl -L -o loft && chmod +x loft;

sudo mv loft /usr/local/bin;
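The one-liner above scrapes the release page HTML, so it can break if GitHub changes its markup. GitHub also redirects /releases/latest/download/ASSET to that asset in the newest release. Below is a sketch that builds such a URL instead. It assumes Loft keeps naming its binaries loft-OS-ARCH, which matches the sed pattern above. The helper name is hypothetical.

```shell
# Hypothetical helper: URL of the newest Loft CLI binary for an OS/arch pair.
# Relies on GitHub's /releases/latest/download/<asset> redirect; the asset
# naming (loft-<os>-<arch>) is assumed from the scraping pattern above.
loft_download_url() {
  echo "https://github.com/loft-sh/loft/releases/latest/download/loft-${1:-linux}-${2:-amd64}"
}

# Usage:
#   curl -fL -o loft "$(loft_download_url linux amd64)" && chmod +x loft
#   sudo mv loft /usr/local/bin
```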

We can check the version:

builder@DESKTOP-QADGF36:~$ loft -v

loft version 2.2.0

We'll now install with the start command, using our own domain (if you run loft start with no domain, you'll need to log in with --insecure later):

$ loft start --host=loft.freshbrewed.science

[info] Welcome to Loft!

[info] This installer will help you configure and deploy Loft.

? Enter your email address to create the login for your admin user isaac.johnson@gmail.com

[info] Executing command: helm upgrade loft loft --install --reuse-values --create-namespace --repository-config='' --kube-context default --namespace loft --repo https://charts.loft.sh/ --set admin.email=isaac.johnson@gmail.com --set admin.password=4dbfa47f-cb7f-43ba-8526-45f5587f8b73 --set ingress.enabled=true --set ingress.host=loft.freshbrewed.science --reuse-values

[done] √ Loft has been deployed to your cluster!

[done] √ Loft pod successfully started

? Unable to reach Loft at https://loft.freshbrewed.science. Do you want to start port-forwarding instead?

No, please re-run the DNS check

########################## LOGIN ############################

Username: admin

Password: 4dbfa47f-cb7f-43ba-8526-45f5587f8b73 # Change via UI or via: loft reset password

Login via UI: https://loft.freshbrewed.science

Login via CLI: loft login --insecure https://loft.freshbrewed.science

!!! You must accept the untrusted certificate in your browser !!!

Follow this guide to add a valid certificate: https://loft.sh/docs/administration/ssl

#################################################################

Loft was successfully installed and can now be reached at: https://loft.freshbrewed.science

Thanks for using Loft!

I noticed the Ingress wasn't picked up again. Following this doc, I then set Nginx as my default IngressClass:

$ kubectl get ingressclass

NAME CONTROLLER PARAMETERS AGE

nginx nginx.org/ingress-controller <none> 12d

$ kubectl get ingressclass nginx -o yaml > ingress.class.yaml

$ kubectl get ingressclass nginx -o yaml > ingress.class.yaml.bak

$ vi ingress.class.yaml

$ diff ingress.class.yaml ingress.class.yaml.bak

5d4

< ingressclass.kubernetes.io/is-default-class: "true"

$ kubectl apply -f ingress.class.yaml

Warning: resource ingressclasses/nginx is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

ingressclass.networking.k8s.io/nginx configured
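The export, edit and apply round trip can be done with one kubectl annotate call. That also avoids the last-applied-configuration warning. The Kubernetes docs state that the default IngressClass is only filled in when an Ingress is created. This may explain why Loft's already-existing Ingress still wasn't picked up. The sketch below covers both steps. The function names are illustrative, and the Ingress name passed to set_ingress_class is whatever kubectl get ingress -n loft reports, which is not shown in this post.

```shell
# Mark an IngressClass as the cluster default (same annotation as the diff above).
set_default_ingressclass() {
  kubectl annotate ingressclass "${1:-nginx}" \
    ingressclass.kubernetes.io/is-default-class=true --overwrite
}

# Set spec.ingressClassName on an existing Ingress, which the default does not
# retroactively update. Args: namespace, ingress name, class name.
set_ingress_class() {
  kubectl patch ingress "$2" -n "$1" --type merge \
    -p "{\"spec\":{\"ingressClassName\":\"$3\"}}"
}

# Usage:
#   set_default_ingressclass nginx
#   kubectl get ingress -n loft              # find the Ingress name
#   set_ingress_class loft <ingress-name> nginx
```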

However, as others have noted, I was still seeing issues with it getting picked up.

My other (minor) issue was that my A record had been created pointing at a different IPv4 address, so I had to update it manually in Route 53. Once it was corrected (and the TTL had expired), I could resolve loft.freshbrewed.science and proceed (re-running the DNS check).
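Before re-running Loft's DNS check, it helps to confirm that the record now resolves to the address you expect. Here is a minimal sketch using dig +short. The helper name is hypothetical, and 203.0.113.10 is a documentation placeholder, not the real ingress IP.

```shell
# Hypothetical helper: succeed when HOST's A record includes EXPECTED_IP.
# grep -x matches whole lines and -F treats the IP as a fixed string.
dns_points_to() {
  dig +short "$1" A | grep -qxF "$2"
}

# Usage (placeholder IP):
#   dns_points_to loft.freshbrewed.science 203.0.113.10 \
#     || echo "A record not updated yet, or the old TTL has not expired"
```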

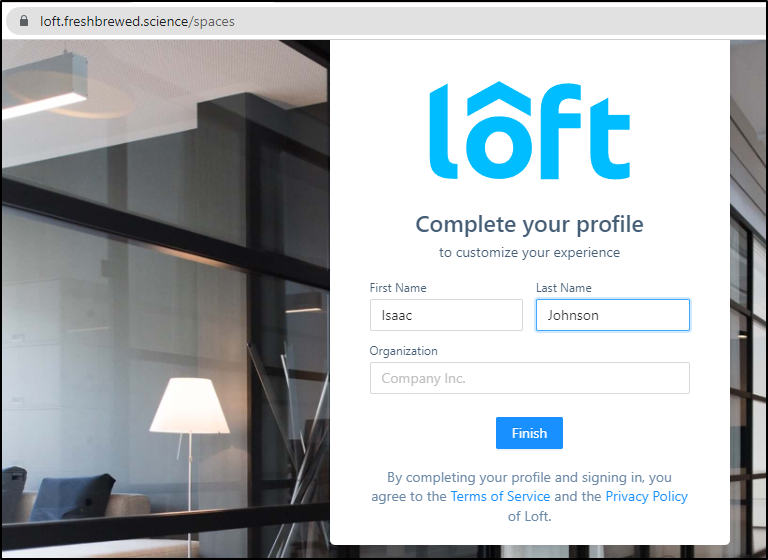

Loft Setup

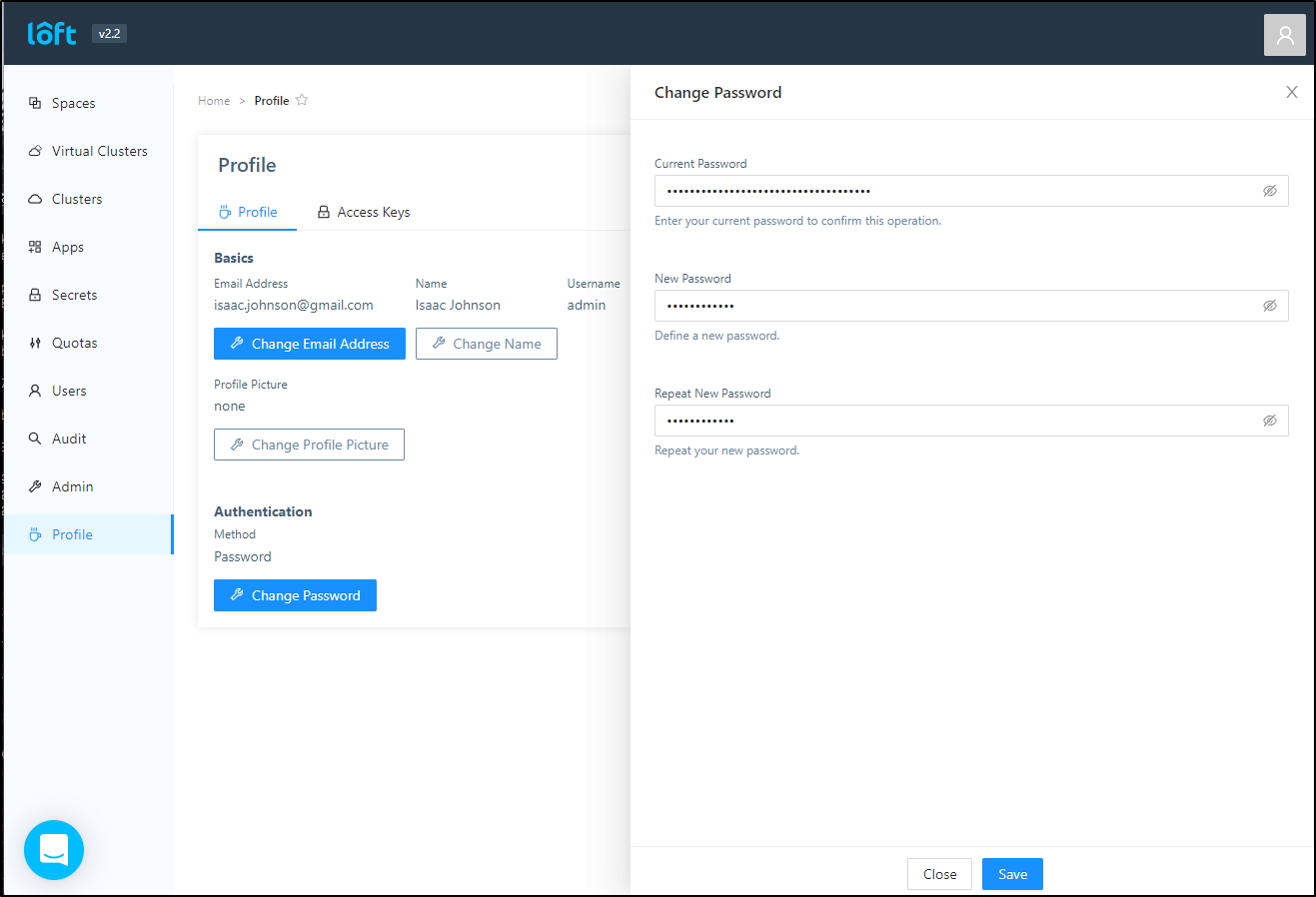

Since I'm doing this again, I'll use that default password (the GUID in my invocation) to log in, then change the password under Profile.

Crossplane.io

We can set up the Crossplane namespace now by installing the latest Crossplane Helm chart:

builder@DESKTOP-QADGF36:~$ helm repo add crossplane-stable https://charts.crossplane.io/stable

"crossplane-stable" has been added to your repositories

builder@DESKTOP-QADGF36:~$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "kuma" chart repository

...Successfully got an update from the "crossplane-stable" chart repository

...Successfully got an update from the "myharbor" chart repository

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "uptime-kuma" chart repository

...Successfully got an update from the "dapr" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "epsagon" chart repository

...Successfully got an update from the "sonarqube" chart repository

...Successfully got an update from the "kubecost" chart repository

...Successfully got an update from the "sumologic" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "incubator" chart repository