Published: Dec 1, 2021 by Isaac Johnson

GitHub Actions allow us to run workflows to automate our work. The Actions model is very similar to Azure DevOps Pipelines in that we have Workflows (Pipelines) with Runners (Agents) that execute Jobs (Builds). GitHub Actions are triggered by events (most often pushes, such as merges, or Pull Requests).

We can use a GitHub-provided runner, which has some limitations at the free tier. However, we can also leverage our own private runners on a variety of hosts. The steps to set up a self-hosted runner are easy to follow and can be found in the GitHub settings under Actions / Runners.

The more interesting option in self-hosting, at least to me, is leveraging our existing Kubernetes clusters as a workhorse for our GitHub Actions.

How easy is it to use existing containerized agents? How can we create our own runner containers? And lastly, how can we easily scale our runners horizontally?

We’ll cover the setup on a new and an existing cluster, launching a single runner as well as autoscaled (HPA-style) runners, and lastly how to build and leverage private agent containers.

Setup

In this first example, we will start with a blank slate and set up a fresh Azure Kubernetes Service (AKS) instance from tip to toe.

Create a Resource Group

$ az group create --location centralus --name testaksrg02

{

"id": "/subscriptions/d4c094eb-e397-46d6-bcb2-fd877504a619/resourceGroups/testaksrg02",

"location": "centralus",

"managedBy": null,

"name": "testaksrg02",

"properties": {

"provisioningState": "Succeeded"

},

"tags": null,

"type": "Microsoft.Resources/resourceGroups"

}

If you have a Service Principal (SP) to use, you can use its ID and secret as the identity of this AKS cluster:

$ export SP_ID=`cat ~/sp_id | tr -d '\n'`

$ export SP_PASS=`cat ~/sp_pass | tr -d '\n'`

Now create the AKS cluster using the SP above:

$ az aks create -g testaksrg02 -n testaks02 -l centralus --node-count 2 --generate-ssh-keys --network-plugin azure --network-policy azure --service-principal $SP_ID --client-secret $SP_PASS

The behavior of this command has been altered by the following extension: aks-preview

{

"aadProfile": null,

"addonProfiles": null,

"agentPoolProfiles": [

{

"availabilityZones": null,

"count": 2,

"creationData": null,

"enableAutoScaling": null,

"enableEncryptionAtHost": false,

"enableFips": false,

"enableNodePublicIp": false,

"enableUltraSsd": false,

"gpuInstanceProfile": null,

"kubeletConfig": null,

"kubeletDiskType": "OS",

"linuxOsConfig": null,

"maxCount": null,

"maxPods": 30,

"minCount": null,

"mode": "System",

"name": "nodepool1",

"nodeImageVersion": "AKSUbuntu-1804gen2containerd-2021.10.30",

"nodeLabels": null,

"nodePublicIpPrefixId": null,

"nodeTaints": null,

"orchestratorVersion": "1.20.9",

"osDiskSizeGb": 128,

"osDiskType": "Managed",

"osSku": "Ubuntu",

"osType": "Linux",

"podSubnetId": null,

"powerState": {

"code": "Running"

},

"provisioningState": "Succeeded",

"proximityPlacementGroupId": null,

"scaleDownMode": null,

"scaleSetEvictionPolicy": null,

"scaleSetPriority": null,

"spotMaxPrice": null,

"tags": null,

"type": "VirtualMachineScaleSets",

"upgradeSettings": null,

"vmSize": "Standard_DS2_v2",

"vnetSubnetId": null,

"workloadRuntime": "OCIContainer"

}

],

"apiServerAccessProfile": null,

"autoScalerProfile": null,

"autoUpgradeProfile": null,

"azurePortalFqdn": "testaks02-testaksrg02-d4c094-704d8fbc.portal.hcp.centralus.azmk8s.io",

...

snip

...

"type": "Microsoft.ContainerService/ManagedClusters",

"windowsProfile": {

"adminPassword": null,

"adminUsername": "azureuser",

"enableCsiProxy": true,

"licenseType": null

}

}

Verification

Login

$ (rm -f ~/.kube/config || true) && az aks get-credentials -g testaksrg02 -n testaks02 --admin

The behavior of this command has been altered by the following extension: aks-preview

Merged "testaks02-admin" as current context in /home/builder/.kube/config

Verify access by listing the active nodes:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

aks-nodepool1-65902513-vmss000000 Ready agent 2m53s v1.20.9

aks-nodepool1-65902513-vmss000001 Ready agent 3m4s v1.20.9
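If you want to script this check (say, in a provisioning script that waits for the cluster), a minimal Python sketch can parse the plain-text table. It assumes kubectl's default column layout with NAME and STATUS headers; kubectl get nodes -o json would be sturdier for anything serious.

```python
def not_ready_nodes(kubectl_output):
    """Return the names of nodes whose STATUS is not exactly 'Ready'.

    Assumes the default `kubectl get nodes` table: whitespace-separated
    columns, with the first non-empty line being the header.
    """
    lines = [line for line in kubectl_output.splitlines() if line.strip()]
    header = lines[0].split()
    name_col, status_col = header.index("NAME"), header.index("STATUS")
    not_ready = []
    for row in lines[1:]:
        cols = row.split()
        # Statuses such as "NotReady" or "Ready,SchedulingDisabled" are flagged.
        if cols[status_col] != "Ready":
            not_ready.append(cols[name_col])
    return not_ready


sample = """
NAME                                STATUS   ROLES   AGE    VERSION
aks-nodepool1-65902513-vmss000000   Ready    agent   2m53s  v1.20.9
aks-nodepool1-65902513-vmss000001   Ready    agent   3m4s   v1.20.9
"""
print(not_ready_nodes(sample))  # []
```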

Installing a Github Runner

First, add and update the Helm repo:

$ helm repo add actions-runner-controller https://actions-runner-controller.github.io/actions-runner-controller

"actions-runner-controller" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "azuredisk-csi-driver" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "azure-samples" chart repository

Update Complete. ⎈Happy Helming!⎈

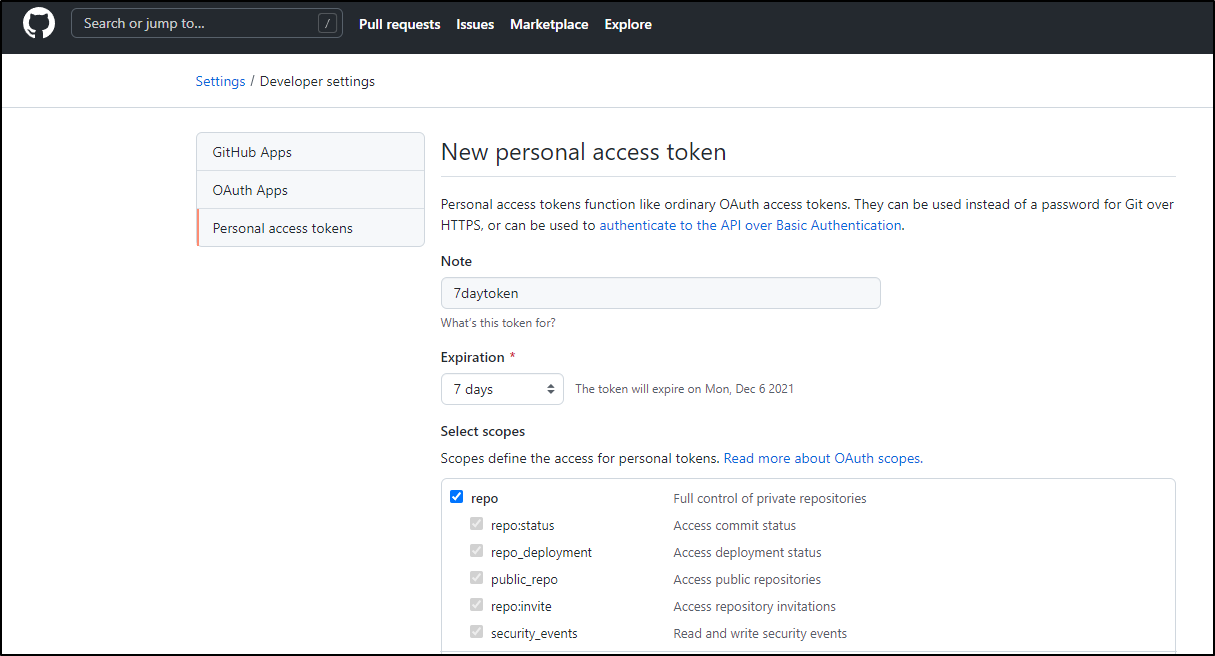

Next, we need a GitHub Personal Access Token (PAT) for the runner to use.

From the docs:

Required Scopes for Repository Runners

- repo (Full control)

Required Scopes for Organization Runners

- repo (Full control)

- admin:org (Full control)

- admin:public_key (read:public_key)

- admin:repo_hook (read:repo_hook)

- admin:org_hook (Full control)

- notifications (Full control)

- workflow (Full control)

Required Scopes for Enterprise Runners

- admin:enterprise (manage_runners:enterprise)

We will just need the first set (repo), since this is a repository runner. As this is a demo, I’ll set a short expiration window (I’ll expire this token when done).

We’ll save that token ghp_8w6F7MHEmgV6A5SKDHe8djdjejdGeNC aside for now.
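Before wiring the token into the cluster, it can be worth double-checking its scopes. For classic PATs, GitHub reports the granted scopes in the X-OAuth-Scopes response header of authenticated API calls (for example, a curl -sI against https://api.github.com/user). The sketch below compares that header value against the lists above; the REQUIRED_SCOPES table is my own transcription, and reading each parenthesized scope as "this narrower scope also suffices" is an assumption.

```python
# Required scopes per runner type, transcribed from the docs list above.
# Each tuple lists alternatives: granting any one of them satisfies that
# requirement (an assumption about how the parenthesized scopes apply).
REQUIRED_SCOPES = {
    "repository": [("repo",)],
    "organization": [
        ("repo",),
        ("admin:org",),
        ("admin:public_key", "read:public_key"),
        ("admin:repo_hook", "read:repo_hook"),
        ("admin:org_hook",),
        ("notifications",),
        ("workflow",),
    ],
    "enterprise": [("admin:enterprise", "manage_runners:enterprise")],
}


def missing_scopes(runner_type, x_oauth_scopes):
    """Return the requirements not covered by an X-OAuth-Scopes header value."""
    granted = {s.strip() for s in x_oauth_scopes.split(",") if s.strip()}
    return [alts for alts in REQUIRED_SCOPES[runner_type]
            if not granted.intersection(alts)]


print(missing_scopes("repository", "repo"))            # []
print(missing_scopes("repository", "workflow, gist"))  # [('repo',)]
```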

We then need to create a secret in our cluster to hold it:

$ kubectl create ns actions-runner-system

namespace/actions-runner-system created

$ kubectl create secret generic controller-manager -n actions-runner-system --from-literal=github_token=ghp_8w6F7MHEmgV6A5SKDHe8djdjejdGeNC

secret/controller-manager created
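For reference, kubectl create secret generic --from-literal simply base64-encodes each value into the Secret's data map. If you would rather keep the secret as a manifest, here is a sketch that builds the equivalent object; the token value is a placeholder, and kubectl apply -f accepts the JSON output directly.

```python
import base64
import json


def generic_secret(name, namespace, literals):
    """Build the Secret that `kubectl create secret generic --from-literal` would."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        # Secret data values must be base64-encoded strings.
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in literals.items()
        },
    }


# "ghp_PLACEHOLDER" is a stand-in, not a real token.
secret = generic_secret("controller-manager", "actions-runner-system",
                        {"github_token": "ghp_PLACEHOLDER"})
print(json.dumps(secret, indent=2))
```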

While controller-manager is the default name for the secret, I’ll call it out explicitly in my Helm invocation, just to show where you would change it if you used a different secret name:

$ helm upgrade --install --namespace actions-runner-system --wait actions-runner-controller actions-runner-controller/actions-runner-controller --set authSecret.name=controller-manager

Release "actions-runner-controller" does not exist. Installing it now.

Error: unable to build kubernetes objects from release manifest: [unable to recognize "": no matches for kind "Certificate" in version "cert-manager.io/v1", unable to recognize "": no matches for kind "Issuer" in version "cert-manager.io/v1"]

Ah… the chart requires cert-manager to be installed (it creates cert-manager Certificate and Issuer objects).
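The error names the missing kinds and their API group, and cert-manager.io/v1 is cert-manager's API group, so those kinds only exist once cert-manager's CRDs are installed. If you install charts from a script, a small sketch like this can pull the missing kinds out of Helm's error text; the regular expression is written against the message shown above and may need adjusting for other Kubernetes or Helm versions.

```python
import re

# Matches fragments like: no matches for kind "Certificate" in version "cert-manager.io/v1"
MISSING_KIND = re.compile(r'no matches for kind "([^"]+)" in version "([^"]+)"')


def missing_kinds(helm_error):
    """Return (kind, apiVersion) pairs that the cluster did not recognize."""
    return MISSING_KIND.findall(helm_error)


err = ('Error: unable to build kubernetes objects from release manifest: '
       '[unable to recognize "": no matches for kind "Certificate" in version '
       '"cert-manager.io/v1", unable to recognize "": no matches for kind '
       '"Issuer" in version "cert-manager.io/v1"]')
print(missing_kinds(err))
# [('Certificate', 'cert-manager.io/v1'), ('Issuer', 'cert-manager.io/v1')]
```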

$ helm repo add jetstack https://charts.jetstack.io

"jetstack" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "azuredisk-csi-driver" chart repository

...Successfully got an update from the "jetstack" chart repository

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

Update Complete. ⎈Happy Helming!⎈

$ helm install cert-manager jetstack/cert-manager --namespace cert-manager --create-namespace --version v1.6.1 --set installCRDs=true

NAME: cert-manager

LAST DEPLOYED: Mon Nov 29 13:15:15 2021

NAMESPACE: cert-manager

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

cert-manager v1.6.1 has been deployed successfully!

In order to begin issuing certificates, you will need to set up a ClusterIssuer

or Issuer resource (for example, by creating a 'letsencrypt-staging' issuer).

More information on the different types of issuers and how to configure them

can be found in our documentation:

https://cert-manager.io/docs/configuration/

For information on how to configure cert-manager to automatically provision

Certificates for Ingress resources, take a look at the `ingress-shim`

documentation:

https://cert-manager.io/docs/usage/ingress/

For our Let’s Encrypt issuer, we need an NGINX Ingress controller as well:

$ helm upgrade --install ingress-nginx ingress-nginx \
  --repo https://kubernetes.github.io/ingress-nginx \
  --namespace ingress-nginx --create-namespace

Release "ingress-nginx" does not exist. Installing it now.

NAME: ingress-nginx

LAST DEPLOYED: Mon Nov 29 13:18:20 2021

NAMESPACE: ingress-nginx

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The ingress-nginx controller has been installed.

It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status by running 'kubectl --namespace ingress-nginx get services -o wide -w ingress-nginx-controller'

An example Ingress that makes use of the controller:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example
  namespace: foo
spec:
  ingressClassName: nginx
  rules:
    - host: www.example.com
      http:
        paths:
          - backend:
              service:
                name: exampleService
                port:
                  number: 80
            path: /
  # This section is only required if TLS is to be enabled for the Ingress
  tls:
    - hosts:
      - www.example.com
      secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

apiVersion: v1
kind: Secret
metadata:
  name: example-tls
  namespace: foo
data:
  tls.crt: <base64 encoded cert>
  tls.key: <base64 encoded key>
type: kubernetes.io/tls

Now add the Let’s Encrypt (LE) issuer (as it turns out, this is not actually needed):

builder@US-5CG92715D0:~/Workspaces/DevOps$ cat prod-issuer.yaml
# prod-issuer.yml
apiVersion: cert-manager.io/v1
kind: Issuer
metadata:
  # different name
  name: letsencrypt-prod
  namespace: default
spec:
  acme:
    # now pointing to Let's Encrypt production API
    server: https://acme-v02.api.letsencrypt.org/directory
    email: isaac.johnson@gmail.com
    privateKeySecretRef:
      # storing key material for the ACME account in dedicated secret
      name: account-key-prod
    solvers:
    - http01:
        ingress:
          class: nginx

builder@US-5CG92715D0:~/Workspaces/DevOps$ kubectl apply -f prod-issuer.yaml

issuer.cert-manager.io/letsencrypt-prod created

Now let’s try again:

$ helm upgrade --install --namespace actions-runner-system --wait actions-runner-controller actions-runner-controller/actions-runner-controller --set authSecret.name=controller-manager

Release "actions-runner-controller" does not exist. Installing it now.

NAME: actions-runner-controller

LAST DEPLOYED: Mon Nov 29 13:20:47 2021

NAMESPACE: actions-runner-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace actions-runner-system -l "app.kubernetes.io/name=actions-runner-controller,app.kubernetes.io/instance=actions-runner-controller" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace actions-runner-system $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace actions-runner-system port-forward $POD_NAME 8080:$CONTAINER_PORT

Runner Object

Now we can define a Runner object in YAML and launch it:

$ cat runner.yaml
# runner.yaml
apiVersion: actions.summerwind.dev/v1alpha1
kind: Runner
metadata:
  name: idjohnson-gh-runner
spec:
  repository: idjohnson/idjohnson.github.io
  env: []

$ kubectl apply -f runner.yaml

runner.actions.summerwind.dev/idjohnson-gh-runner created
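Because kubectl apply accepts JSON as well as YAML, the same Runner object can be generated from code, which is handy if you want one runner per repository. This is a hypothetical helper, not part of actions-runner-controller, and it only sets the fields used in runner.yaml above.

```python
import json


def runner_manifest(name, repository, env=None):
    """Build a minimal actions-runner-controller Runner, mirroring runner.yaml."""
    return {
        "apiVersion": "actions.summerwind.dev/v1alpha1",
        "kind": "Runner",
        "metadata": {"name": name},
        "spec": {"repository": repository, "env": env or []},
    }


manifest = runner_manifest("idjohnson-gh-runner", "idjohnson/idjohnson.github.io")
print(json.dumps(manifest, indent=2))
```

Piping the printed JSON into kubectl apply -f - should create the same object as runner.yaml.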

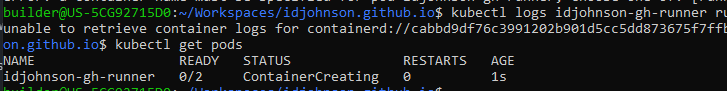

We can see the status:

$ kubectl describe runner

Name: idjohnson-gh-runner

Namespace: default

Labels: <none>

Annotations: <none>

API Version: actions.summerwind.dev/v1alpha1

Kind: Runner

Metadata:

Creation Timestamp: 2021-11-29T19:24:07Z

Finalizers:

runner.actions.summerwind.dev

Generation: 1

Managed Fields:

API Version: actions.summerwind.dev/v1alpha1

Fields Type: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

.:

f:kubectl.kubernetes.io/last-applied-configuration:

f:spec:

.:

f:env:

f:repository:

Manager: kubectl-client-side-apply

Operation: Update

Time: 2021-11-29T19:24:07Z

API Version: actions.summerwind.dev/v1alpha1

Fields Type: FieldsV1

fieldsV1:

f:metadata:

f:finalizers:

f:status:

.:

f:lastRegistrationCheckTime:

f:registration:

.:

f:expiresAt:

f:repository:

f:token:

Manager: manager

Operation: Update

Time: 2021-11-29T19:24:09Z

Resource Version: 3924

UID: e797fee5-49bb-46d2-a25e-09840983a67a

Spec:

Dockerd Container Resources:

Image:

Repository: idjohnson/idjohnson.github.io

Resources:

Status:

Last Registration Check Time: 2021-11-29T19:24:09Z

Registration:

Expires At: 2021-11-29T20:24:09Z

Repository: idjohnson/idjohnson.github.io

Token: ABTDTVPXKMHC576SGH335QLBUU3OS

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal RegistrationTokenUpdated 63s runner-controller Successfully update registration token

Normal PodCreated 63s runner-controller Created pod 'idjohnson-gh-runner'

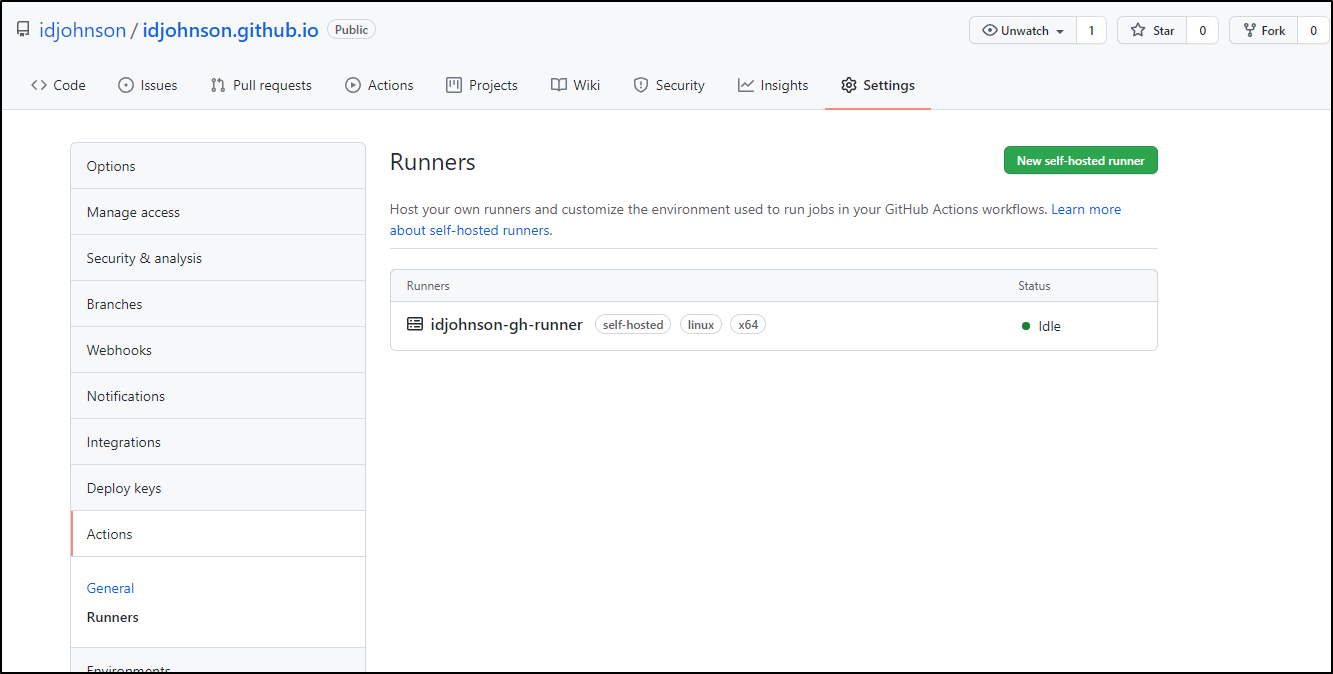

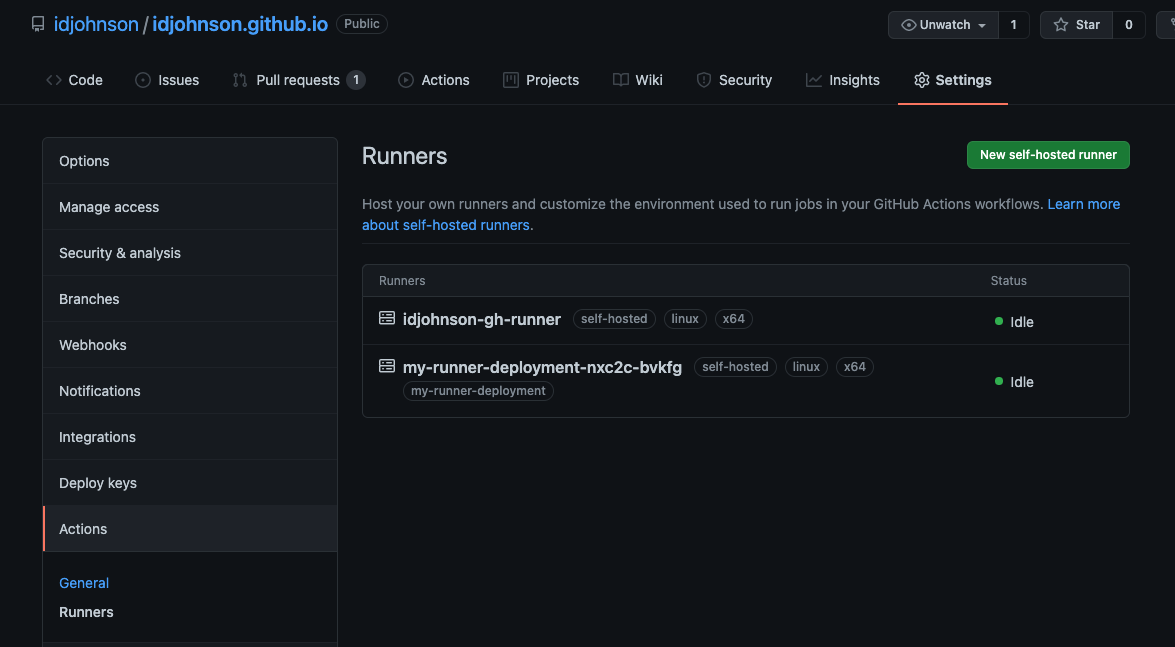

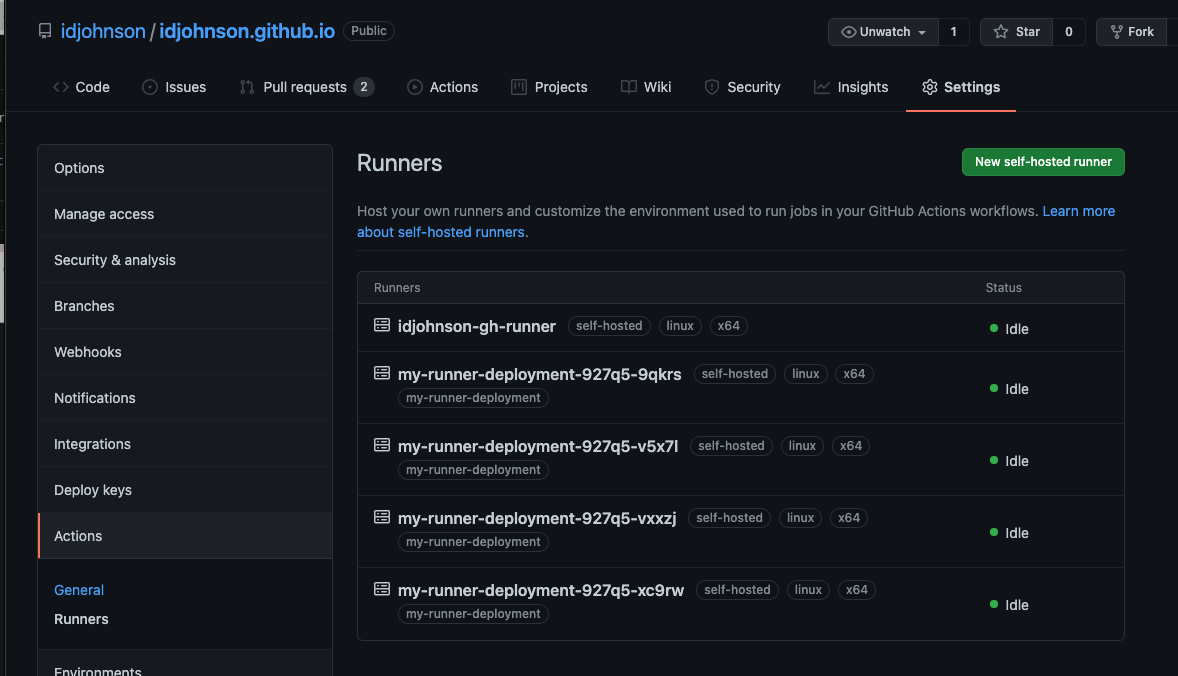

Lastly, if we go to GitHub, we can see the runner under Actions:

Github Workflow

Now let’s make a simple GitHub workflow to show it works:

$ mkdir .github

$ mkdir .github/workflows

$ vi .github/workflows/github-actions-demo.yml

$ cat .github/workflows/github-actions-demo.yml

name: GitHub Actions Demo
on: [push]
jobs:
  Explore-GitHub-Actions:
    runs-on: self-hosted
    steps:
      - run: echo "🎉 The job was automatically triggered by a ${{ github.event_name }} event."
      - run: echo "🐧 This job is now running on a ${{ runner.os }} server hosted by GitHub!"
      - run: echo "🔎 The name of your branch is ${{ github.ref }} and your repository is ${{ github.repository }}."
      - name: Check out repository code
        uses: actions/checkout@v2
      - run: echo "💡 The ${{ github.repository }} repository has been cloned to the runner."
      - run: echo "🖥️ The workflow is now ready to test your code on the runner."
      - name: List files in the repository
        run: |
          ls ${{ github.workspace }}
      - run: echo "🍏 This job's status is ${{ job.status }}."

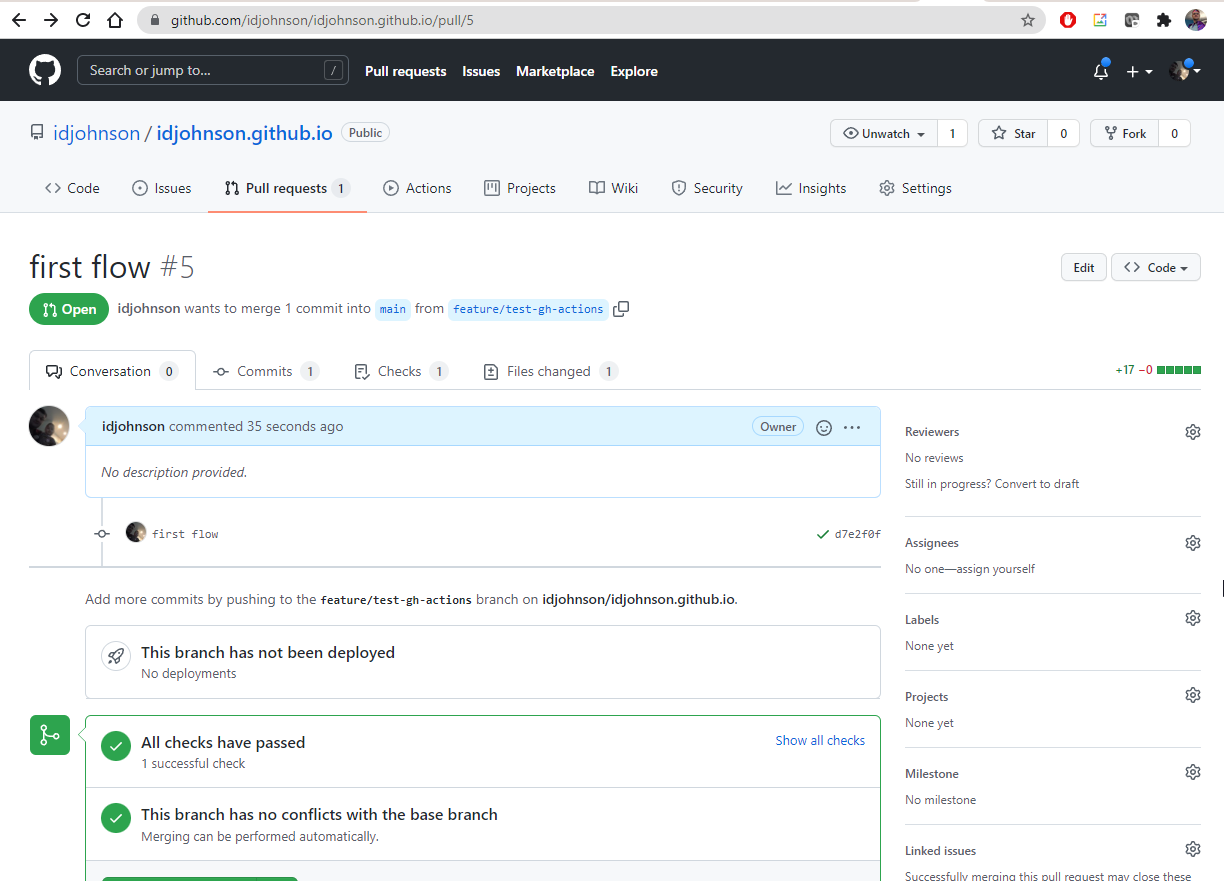

Then we can add it:

$ git checkout -b feature/test-gh-actions

Switched to a new branch 'feature/test-gh-actions'

$ git add .github/

$ git commit -m "first flow"

[feature/test-gh-actions d7e2f0f] first flow

1 file changed, 17 insertions(+)

create mode 100644 .github/workflows/github-actions-demo.yml

$ git push

fatal: The current branch feature/test-gh-actions has no upstream branch.

To push the current branch and set the remote as upstream, use

git push --set-upstream origin feature/test-gh-actions

$ darf

git push --set-upstream origin feature/test-gh-actions [enter/↑/↓/ctrl+c]

Enumerating objects: 6, done.

Counting objects: 100% (6/6), done.

Delta compression using up to 8 threads

Compressing objects: 100% (4/4), done.

Writing objects: 100% (5/5), 779 bytes | 779.00 KiB/s, done.

Total 5 (delta 1), reused 0 (delta 0)

remote: Resolving deltas: 100% (1/1), completed with 1 local object.

remote:

remote: Create a pull request for 'feature/test-gh-actions' on GitHub by visiting:

remote: https://github.com/idjohnson/idjohnson.github.io/pull/new/feature/test-gh-actions

remote:

To https://github.com/idjohnson/idjohnson.github.io.git

* [new branch] feature/test-gh-actions -> feature/test-gh-actions

Branch 'feature/test-gh-actions' set up to track remote branch 'feature/test-gh-actions' from 'origin'.

I then created a Pull Request with the link above, though since the workflow runs on push, the branch push alone is enough to trigger the action!

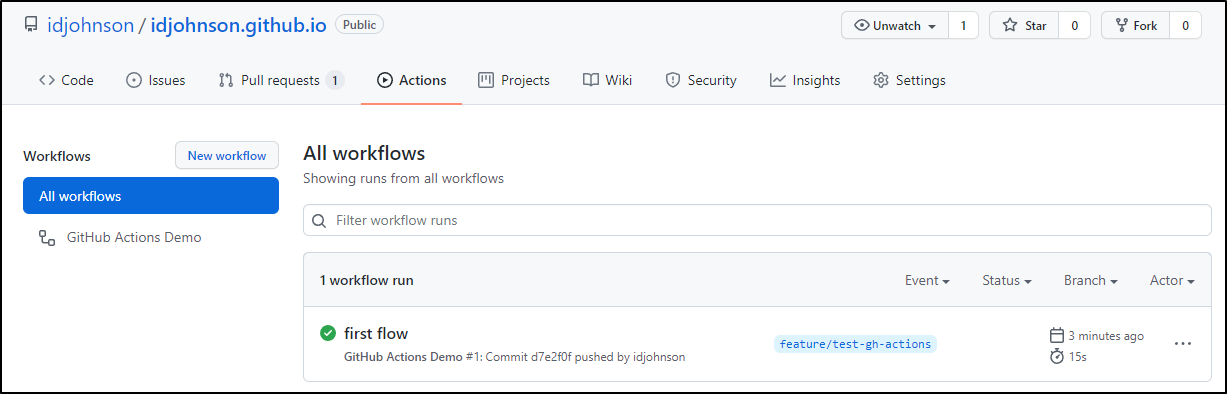

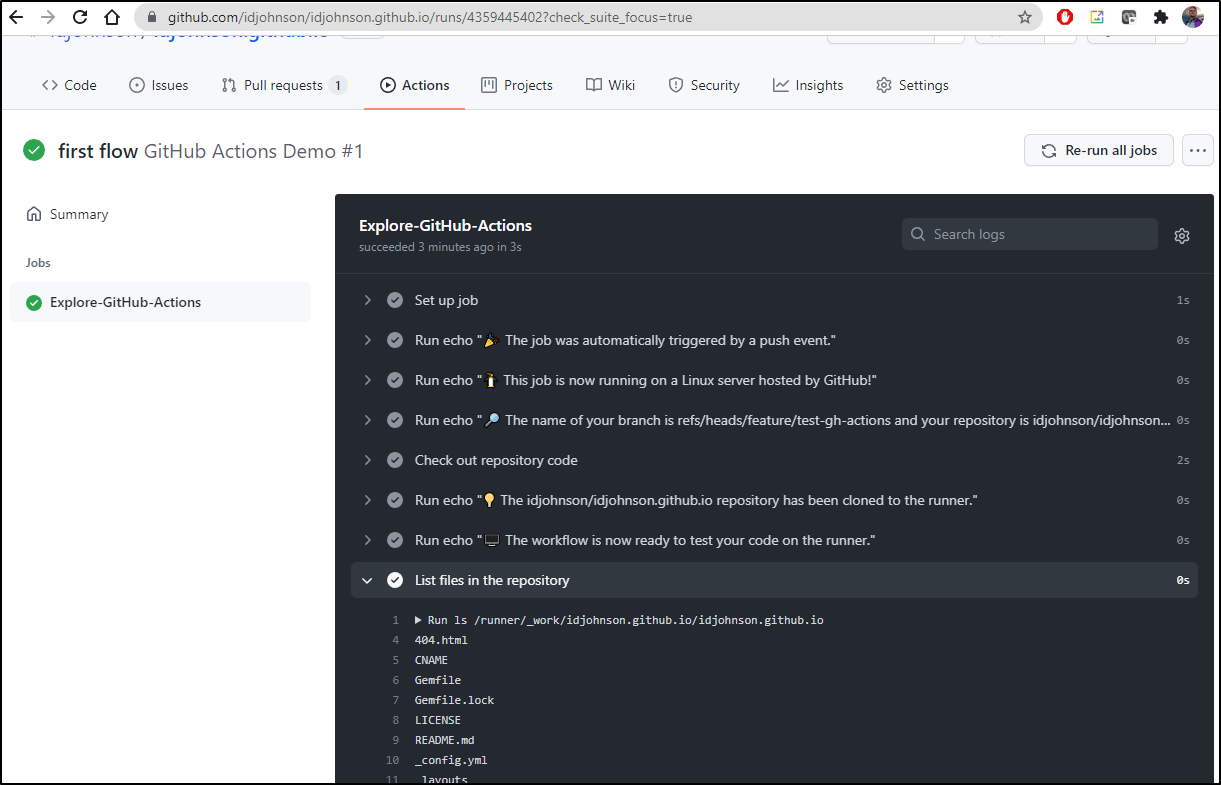

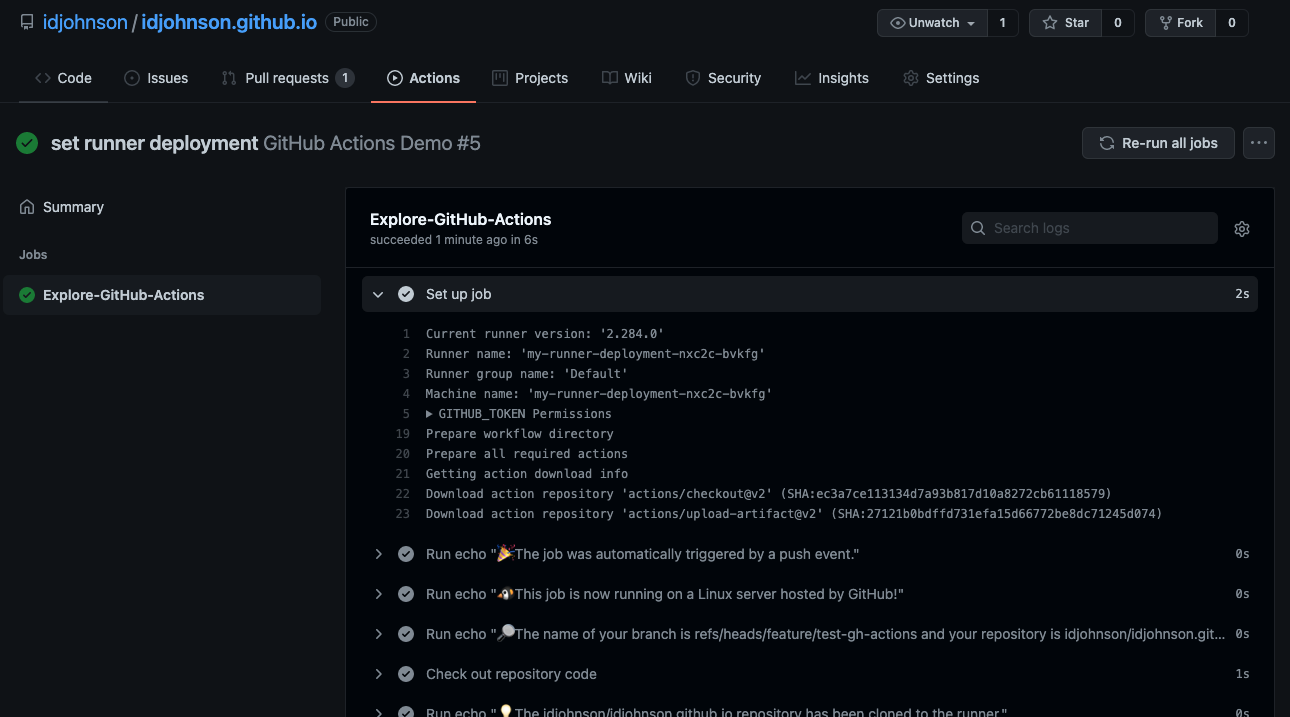

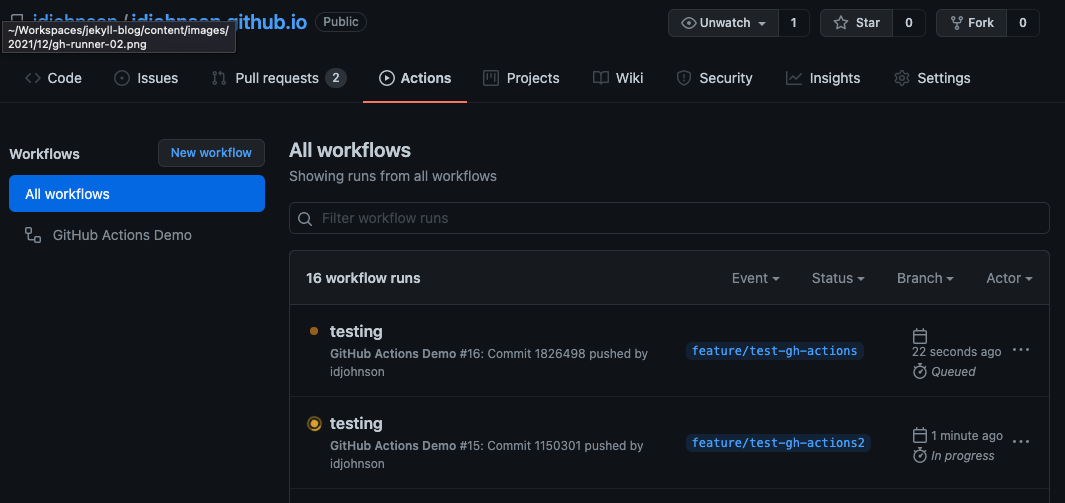

We can now see results in the Actions section of our repo:

And we can explore the results of the run:

If we look at the first step, we see it was scheduled on our own private runner, not on a default GitHub-provided agent.

In this default configuration, each invocation of our action creates a fresh GitHub runner.

We can hop onto the runner container in the pod, but note that nothing is kept locally once the build completes:

$ kubectl exec -it idjohnson-gh-runner runner -- /bin/bash

Defaulted container "runner" out of: runner, docker

runner@idjohnson-gh-runner:/$ cd /runner/_work

runner@idjohnson-gh-runner:/runner/_work$ ls

runner@idjohnson-gh-runner:/runner/_work$

Let’s add a step that touches a file outside of _work and runs uname -a, to see whether we are running IN the runner container or in the docker container.

$ git diff
diff --git a/.github/workflows/github-actions-demo.yml b/.github/workflows/github-actions-demo.yml
index 953a721..aef4e73 100644
--- a/.github/workflows/github-actions-demo.yml
+++ b/.github/workflows/github-actions-demo.yml
@@ -14,4 +14,8 @@ jobs:
       - name: List files in the repository
         run: |
           ls ${{ github.workspace }}
+      - name: List files in the repository
+        run: |
+          uname -a
+          touch /tmp/asdf
       - run: echo "🍏 This job's status is ${{ job.status }}."

$ git add .github/workflows/

$ git commit -m "testing a new step"

[feature/test-gh-actions 4b311b0] testing a new step

1 file changed, 4 insertions(+)

$ git push

Enumerating objects: 9, done.

Counting objects: 100% (9/9), done.

Delta compression using up to 8 threads

Compressing objects: 100% (4/4), done.

Writing objects: 100% (5/5), 448 bytes | 448.00 KiB/s, done.

Total 5 (delta 2), reused 0 (delta 0)

remote: Resolving deltas: 100% (2/2), completed with 2 local objects.

To https://github.com/idjohnson/idjohnson.github.io.git

d7e2f0f..4b311b0 feature/test-gh-actions -> feature/test-gh-actions

We can see the step ran in the GitHub Action.

At first it seemed it must be using a DinD image or some other isolated system, as the /tmp/asdf file was not saved and nothing persisted in _work (as we’ll see, we are indeed using the runner container).

$ kubectl exec -it idjohnson-gh-runner runner -- /bin/bash

Defaulted container "runner" out of: runner, docker

runner@idjohnson-gh-runner:/$ ls /tmp

clr-debug-pipe-86-1009061-in clr-debug-pipe-86-1009061-out dotnet-diagnostic-86-1009061-socket tmpc_dfmpyt

runner@idjohnson-gh-runner:/$ uname -a

Linux idjohnson-gh-runner 5.4.0-1062-azure #65~18.04.1-Ubuntu SMP Tue Oct 12 11:26:28 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux

# just make sure i can touch a file

runner@idjohnson-gh-runner:/$ touch /tmp/asdfasdf

runner@idjohnson-gh-runner:/$ ls /tmp

asdfasdf clr-debug-pipe-86-1009061-in clr-debug-pipe-86-1009061-out dotnet-diagnostic-86-1009061-socket tmpc_dfmpyt

runner@idjohnson-gh-runner:/$ ls _work

ls: cannot access '_work': No such file or directory

runner@idjohnson-gh-runner:/$ ls /runner/_work/

runner@idjohnson-gh-runner:/$

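The uname output (including its kernel timestamp) lines up, so we did use this pod.

A lot of this behavior likely stems from the runner being ephemeral: it is torn down and re-created after each job, so every run starts from a clean container. Our runner.yaml left env empty, so this appears to be the controller’s default:

RUNNER_EPHEMERAL: true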

On certificates

It seems the Helm chart only needs cert-manager to generate a self-signed certificate for its webhook service, not a publicly signed one:

$ kubectl get certificates actions-runner-controller-serving-cert -n actions-runner-system -o yaml
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  annotations:
    meta.helm.sh/release-name: actions-runner-controller
    meta.helm.sh/release-namespace: actions-runner-system
  creationTimestamp: "2021-11-29T19:20:42Z"
  generation: 1
  labels:
    app.kubernetes.io/managed-by: Helm
  name: actions-runner-controller-serving-cert
  namespace: actions-runner-system
  resourceVersion: "3346"
  uid: 340a19e5-e07b-4c19-adb4-5f8dd841edbd
spec:
  dnsNames:
  - actions-runner-controller-webhook.actions-runner-system.svc
  - actions-runner-controller-webhook.actions-runner-system.svc.cluster.local
  issuerRef:
    kind: Issuer
    name: actions-runner-controller-selfsigned-issuer
  secretName: actions-runner-controller-serving-cert
status:
  conditions:
  - lastTransitionTime: "2021-11-29T19:20:43Z"
    message: Certificate is up to date and has not expired
    observedGeneration: 1
    reason: Ready
    status: "True"
    type: Ready
  notAfter: "2022-02-27T19:20:43Z"
  notBefore: "2021-11-29T19:20:43Z"
  renewalTime: "2022-01-28T19:20:43Z"
  revision: 1
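The status fields are consistent with cert-manager’s default renewal behavior: when renewBefore is not set, a certificate is renewed two thirds of the way through its lifetime. As a quick worked check (a sketch, assuming that default applies here), the 90-day certificate above should renew 30 days before notAfter:

```python
from datetime import datetime

FMT = "%Y-%m-%dT%H:%M:%SZ"  # the UTC timestamp format used in the status block


def default_renewal_time(not_before, not_after):
    """Renew one third of the lifetime before expiry (2/3 of the way through)."""
    start = datetime.strptime(not_before, FMT)
    end = datetime.strptime(not_after, FMT)
    renew_before = (end - start) / 3
    return (end - renew_before).strftime(FMT)


print(default_renewal_time("2021-11-29T19:20:43Z", "2022-02-27T19:20:43Z"))
# 2022-01-28T19:20:43Z, matching renewalTime above
```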

By default, the controller runs the image summerwind/actions-runner-controller and launches runners with the image summerwind/actions-runner:latest.

However, we can override these in the Helm chart if we wish, via image.repository (controller) and image.actionsRunnerRepositoryAndTag (runner), plus imagePullSecrets if required.

The Docker-in-Docker sidecar comes from the image.dindSidecarRepositoryAndTag setting and is docker:dind by default.

We can verify this:

$ kubectl describe pod idjohnson-gh-runner | grep Image:

Image: summerwind/actions-runner:latest

Image: docker:dind

If we remove the cluster:

$ az aks delete -n testaks02 -g testaksrg02

Are you sure you want to perform this operation? (y/n): y

We can see the runner was removed from GitHub:

Note: I later re-added the runner from a different cluster, with a different token but the same name. This restored the runner and my actions just fine.

Adding tools to the runner

Say we want to expand what the runner container can do. Or perhaps, for safety and security, we wish to control the runner image by building it ourselves and storing it in our own container registry.

Below, I extend the private GitHub runner image with a Dockerfile that adds the Azure CLI:

$ cat Dockerfile

FROM summerwind/actions-runner:latest

RUN sudo apt update -y \
  && umask 0002 \
  && sudo apt install -y ca-certificates curl apt-transport-https lsb-release gnupg

# Install MS Key
RUN curl -sL https://packages.microsoft.com/keys/microsoft.asc | gpg --dearmor | sudo tee /etc/apt/trusted.gpg.d/microsoft.gpg > /dev/null

# Add MS Apt repo
RUN umask 0002 && echo "deb [arch=amd64] https://packages.microsoft.com/repos/azure-cli/ focal main" | sudo tee /etc/apt/sources.list.d/azure-cli.list

# Install Azure CLI
RUN sudo apt update -y \
  && umask 0002 \
  && sudo apt install -y azure-cli

# Clean up apt lists (in a separate layer this does not shrink earlier layers)
RUN sudo rm -rf /var/lib/apt/lists/*

I can then build it with a tag of ‘myrunner’:

$ docker build -t myrunner .

Sending build context to Docker daemon 2.56kB

Step 1/6 : FROM summerwind/actions-runner:latest

---> 811e8827de51

Step 2/6 : RUN sudo apt update -y && umask 0002 && sudo apt install -y ca-certificates curl apt-transport-https lsb-release gnupg

---> Using cache

---> 685bb558ce7a

Step 3/6 : RUN curl -sL https://packages.microsoft.com/keys/microsoft.asc | gpg --dearmor | sudo tee /etc/apt/trusted.gpg.d/microsoft.gpg > /dev/null

---> Using cache

---> a5955c58c74d

Step 4/6 : RUN umask 0002 && echo "deb [arch=amd64] https://packages.microsoft.com/repos/azure-cli/ focal main" | sudo tee /etc/apt/sources.list.d/azure-cli.list

---> Running in f1c23b246b36

deb [arch=amd64] https://packages.microsoft.com/repos/azure-cli/ focal main

Removing intermediate container f1c23b246b36

---> 78be389253d4

Step 5/6 : RUN sudo apt update -y && umask 0002 && sudo apt install -y azure-cli

---> Running in 715d67b45fd7

WARNING: apt does not have a stable CLI interface. Use with caution in scripts.

Hit:1 http://security.ubuntu.com/ubuntu focal-security InRelease

Get:2 https://packages.microsoft.com/repos/azure-cli focal InRelease [10.4 kB]

Hit:3 http://ppa.launchpad.net/git-core/ppa/ubuntu focal InRelease

Hit:4 http://archive.ubuntu.com/ubuntu focal InRelease

Hit:5 http://archive.ubuntu.com/ubuntu focal-updates InRelease

Get:6 https://packages.microsoft.com/repos/azure-cli focal/main amd64 Packages [7589 B]

Hit:7 http://archive.ubuntu.com/ubuntu focal-backports InRelease

Fetched 18.0 kB in 1s (17.9 kB/s)

Reading package lists...

Building dependency tree...

Reading state information...

10 packages can be upgraded. Run 'apt list --upgradable' to see them.

WARNING: apt does not have a stable CLI interface. Use with caution in scripts.

Reading package lists...

Building dependency tree...

Reading state information...

The following NEW packages will be installed:

azure-cli

0 upgraded, 1 newly installed, 0 to remove and 10 not upgraded.

Need to get 67.5 MB of archives.

After this operation, 1056 MB of additional disk space will be used.

Get:1 https://packages.microsoft.com/repos/azure-cli focal/main amd64 azure-cli all 2.30.0-1~focal [67.5 MB]

debconf: delaying package configuration, since apt-utils is not installed

Fetched 67.5 MB in 7s (9865 kB/s)

Selecting previously unselected package azure-cli.

(Reading database ... 19200 files and directories currently installed.)

Preparing to unpack .../azure-cli_2.30.0-1~focal_all.deb ...

Unpacking azure-cli (2.30.0-1~focal) ...

Setting up azure-cli (2.30.0-1~focal) ...

Removing intermediate container 715d67b45fd7

---> ef271c40444e

Step 6/6 : RUN sudo rm -rf /var/lib/apt/lists/*

---> Running in 0a29bc1f45bc

Removing intermediate container 0a29bc1f45bc

---> 2dd337569832

Successfully built 2dd337569832

Successfully tagged myrunner:latest

We can verify that the CLI was properly installed with a quick local test (using the image ID from the “Successfully built” line above, which matches the built image):

$ docker run --rm 2dd337569832 az version

{

"azure-cli": "2.30.0",

"azure-cli-core": "2.30.0",

"azure-cli-telemetry": "1.0.6",

"extensions": {}

}
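The same check can be scripted, for example as a smoke test in a CI job that builds the image. Here is a minimal sketch that parses the JSON printed by az version; the MINIMUM threshold is an arbitrary example, not a documented requirement.

```python
import json

az_output = """
{
  "azure-cli": "2.30.0",
  "azure-cli-core": "2.30.0",
  "azure-cli-telemetry": "1.0.6",
  "extensions": {}
}
"""


def cli_version(az_version_json):
    """Return the azure-cli version from `az version` output as a tuple of ints."""
    data = json.loads(az_version_json)
    return tuple(int(part) for part in data["azure-cli"].split("."))


MINIMUM = (2, 30, 0)  # example threshold only
print(cli_version(az_output), cli_version(az_output) >= MINIMUM)  # (2, 30, 0) True
```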

I can now tag it and push it to my Harbor registry:

$ docker tag myrunner:latest harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.0.0

$ docker push harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.0.0

The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/myghrunner]

5132f0c8f95c: Pushed

802c4fb218a3: Pushed

19c53885ab06: Pushed

540c2e008748: Pushed

d1a880742d49: Pushed

27673375a57a: Pushed

f8dcc7bda31e: Pushed

c8e3e9fe1918: Pushed

42fc571eebac: Pushed

3848ade27b67: Pushed

b3ca2680764a: Pushed

2e902537dac1: Pushed

9f54eef41275: Pushed

1.0.0: digest: sha256:a34e14e861368225fdff9a62e14bef90c46682e578f59847c7d4823a1727136a size: 3045
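It helps to be precise about how image references break down, because the registry host is what determines which credentials the kubelet needs. Below is a simplified sketch of Docker’s reference rules that ignores some edge cases: the first path component counts as a registry only if it contains a dot or a colon or is localhost; otherwise the image comes from Docker Hub, and single-name images such as docker:dind live under library/ (matching the docker.io/library/docker Image ID shown later for the DinD sidecar).

```python
def parse_image_ref(ref):
    """Split an image reference into (registry, repository, tag, digest)."""
    digest = None
    if "@" in ref:
        ref, digest = ref.split("@", 1)
    name, tag = ref, None
    # A tag is a ':' in the last path component (an earlier ':' is a registry port).
    if ":" in ref.rsplit("/", 1)[-1]:
        name, tag = ref.rsplit(":", 1)
    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = "docker.io", name
    # Official Docker Hub images live under the implicit "library/" namespace.
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository
    if tag is None and digest is None:
        tag = "latest"
    return registry, repository, tag, digest


for ref in ["harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.0.0",
            "summerwind/actions-runner:latest",
            "docker:dind"]:
    print(parse_image_ref(ref))
```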

Verification

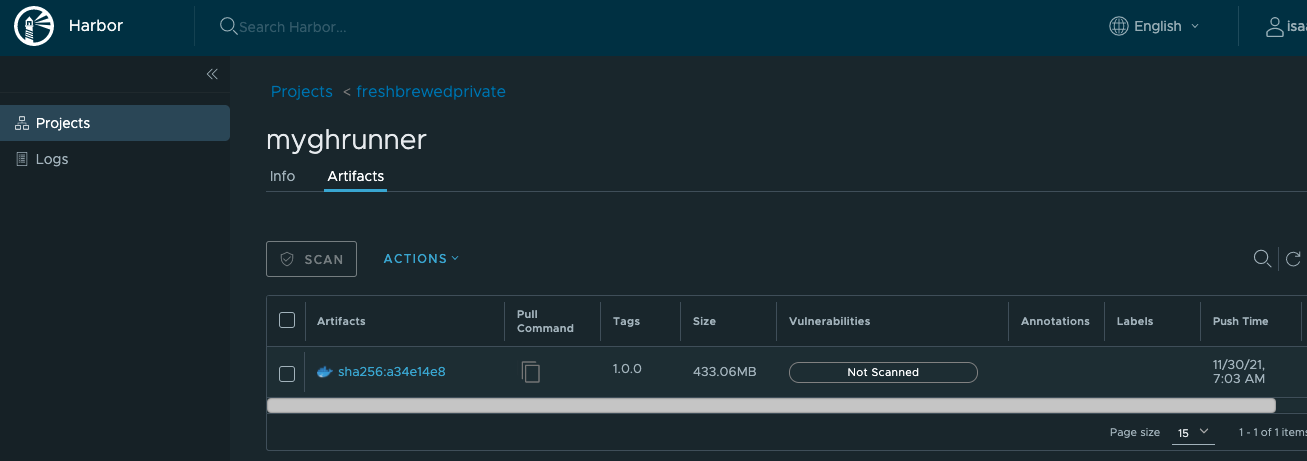

We can see it listed in our Private repo

We can now use this in our Helm deployment (note that imagePullSecrets refers to an existing secret by name):

$ helm upgrade --install --namespace actions-runner-system --wait actions-runner-controller actions-runner-controller/actions-runner-controller --set authSecret.name=controller-manager --set image.actionsRunnerRepositoryAndTag=harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.0.0 --set imagePullSecrets[0].name=myharborreg

Release "actions-runner-controller" has been upgraded. Happy Helming!

NAME: actions-runner-controller

LAST DEPLOYED: Tue Nov 30 07:18:10 2021

NAMESPACE: actions-runner-system

STATUS: deployed

REVISION: 2

TEST SUITE: None

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace actions-runner-system -l "app.kubernetes.io/name=actions-runner-controller,app.kubernetes.io/instance=actions-runner-controller" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace actions-runner-system $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace actions-runner-system port-forward $POD_NAME 8080:$CONTAINER_PORT

We can see the actions-runner-controller pod was updated:

$ kubectl get pods --all-namespaces | grep runner

default idjohnson-gh-runner 2/2 Running 0 10h

actions-runner-system actions-runner-controller-7bc9cf6475-zpzzk 2/2 Running 0 2m46s

And we can see it running:

$ kubectl get pods | grep '[Ni][Ad][Mj]'

NAME READY STATUS RESTARTS AGE

idjohnson-gh-runner 2/2 Running 0 9m17s

Using the Custom Private Agent

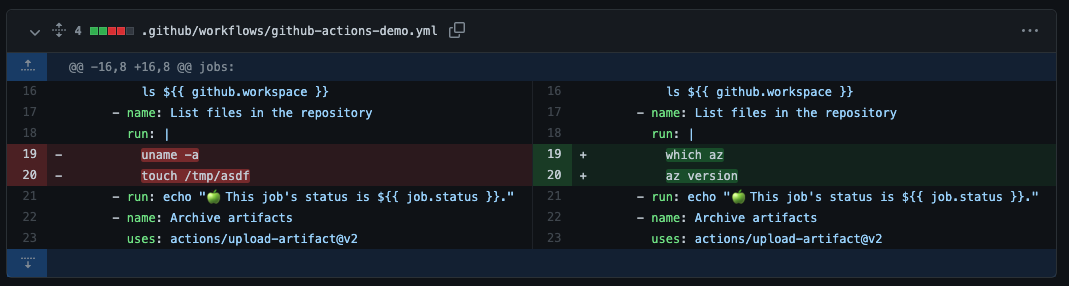

I updated the GitHub Actions workflow, and this is where I should have seen it run…

However, it turns out the controller uses imagePullSecrets for its own pod, but it does NOT pass them on to the Runner pods. The Runner definition I used doesn’t even include a place to put secrets.

Pod listing:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

idjohnson-gh-runner 1/2 ErrImagePull 0 3s

And from describing the pod

$ kubectl describe pod idjohnson-gh-runner

Name: idjohnson-gh-runner

...snip...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 10s default-scheduler Successfully assigned default/idjohnson-gh-runner to anna-macbookair

Normal Pulling 10s kubelet Pulling image "harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.0.0"

Warning Failed 10s kubelet Failed to pull image "harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.0.0": rpc error: code = Unknown desc = failed to pull and unpack image "harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.0.0": failed to resolve reference "harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.0.0": pulling from host harbor.freshbrewed.science failed with status code [manifests 1.0.0]: 401 Unauthorized

Warning Failed 10s kubelet Error: ErrImagePull

Normal Pulled 10s kubelet Container image "docker:dind" already present on machine

Normal Created 10s kubelet Created container docker

Normal Started 10s kubelet Started container docker

Normal BackOff 8s (x2 over 9s) kubelet Back-off pulling image "harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.0.0"

Warning Failed 8s (x2 over 9s) kubelet Error: ImagePullBackOff
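A 401 Unauthorized here means the kubelet had no usable credentials for harbor.freshbrewed.science when creating the runner pod. For reference, the secret named in imagePullSecrets (myharborreg, whose creation isn’t shown here) is a Secret of type kubernetes.io/dockerconfigjson, the kind kubectl create secret docker-registry builds from --docker-server, --docker-username and --docker-password. Here is a sketch of the equivalent object; the robot-account credentials are hypothetical, and the Secret has to live in the same namespace as the pods that reference it.

```python
import base64
import json


def b64(text):
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


def docker_registry_secret(name, namespace, server, username, password):
    """Build a kubernetes.io/dockerconfigjson Secret for a single registry."""
    config = {
        "auths": {
            server: {
                "username": username,
                "password": password,
                # "auth" is base64("username:password"), as in ~/.docker/config.json
                "auth": b64(f"{username}:{password}"),
            }
        }
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/dockerconfigjson",
        "data": {".dockerconfigjson": b64(json.dumps(config))},
    }


# Hypothetical credentials; "default" is where the runner pods run in this post.
secret = docker_registry_secret("myharborreg", "default",
                                "harbor.freshbrewed.science",
                                "robot-user", "robot-password")
print(json.dumps(secret, indent=2))
```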

I pulled the chart locally, and we can see it simply passes the images to use as command-line arguments to the controller:

$ cat templates/deployment.yaml | head -n50 | tail -n10

- "--enable-leader-election"

{{- end }}

{{- if .Values.leaderElectionId }}

- "--leader-election-id={{ .Values.leaderElectionId }}"

{{- end }}

- "--sync-period={{ .Values.syncPeriod }}"

- "--docker-image={{ .Values.image.dindSidecarRepositoryAndTag }}"

- "--runner-image={{ .Values.image.actionsRunnerRepositoryAndTag }}"

{{- if .Values.dockerRegistryMirror }}

- "--docker-registry-mirror={{ .Values.dockerRegistryMirror }}"
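Put differently, the chart turns a handful of values into controller flags. A small sketch mirroring just the template lines shown above (leader-election and other flags omitted; the values, including the syncPeriod, are examples) shows that the runner image travels as a plain string, and nothing in these lines carries a pull secret along with it:

```python
def controller_args(values):
    """Mirror the args rendered by the excerpt of templates/deployment.yaml above."""
    image = values["image"]
    args = [
        f"--sync-period={values['syncPeriod']}",
        f"--docker-image={image['dindSidecarRepositoryAndTag']}",
        f"--runner-image={image['actionsRunnerRepositoryAndTag']}",
    ]
    if values.get("dockerRegistryMirror"):
        args.append(f"--docker-registry-mirror={values['dockerRegistryMirror']}")
    # values["imagePullSecrets"] is not consulted anywhere in these lines.
    return args


values = {
    "syncPeriod": "10m",  # example value
    "image": {
        "dindSidecarRepositoryAndTag": "docker:dind",
        "actionsRunnerRepositoryAndTag": "harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.0.0",
    },
    "imagePullSecrets": [{"name": "myharborreg"}],
}
for arg in controller_args(values):
    print(arg)
```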

I could instead use a RunnerDeployment object (outside of Helm) to specify the image: https://github.com/actions-runner-controller/actions-runner-controller/issues/637

However, as you can see in the code (https://github.com/actions-runner-controller/actions-runner-controller/blob/master/controllers/runnerset_controller.go), there is no field for imagePullSecrets, just the image, which may pose issues. In the end, I moved my image to a public repository endpoint and tried again:

$ kubectl get pods | grep '[Ni][Ad][Mj]'

NAME READY STATUS RESTARTS AGE

idjohnson-gh-runner 2/2 Running 0 19m

RunnerDeployment

In one of those “I would rather know” situations, I went ahead and created a RunnerDeployment object with an imagePullSecret anyway.

I did not want to be in a situation where a private runner had to live in a public image repository:

$ cat testingPrivate.yaml
apiVersion: actions.summerwind.dev/v1alpha1
kind: RunnerDeployment
metadata:
  name: my-runner-deployment
spec:
  replicas: 1
  template:
    spec:
      repository: idjohnson/idjohnson.github.io
      image: harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.0.0
      imagePullSecrets:
      - name: myharborreg
      dockerEnabled: true
      labels:
        - my-runner-deployment

Applied:

$ kubectl apply -f testingPrivate.yaml --validate=false

runnerdeployment.actions.summerwind.dev/my-runner-deployment created

We can see it did launch a runner:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

idjohnson-gh-runner 2/2 Running 0 62m

my-runner-deployment-nxc2c-bvkfg 2/2 Running 0 34m

I can see it was added to my private runner pool, albeit under the pod name.

We can also see the deployment label “my-runner-deployment” was applied, so if we update our GitHub workflow to use that label, we can restrict runs to just that agent:

$ cat .github/workflows/github-actions-demo.yml

name: GitHub Actions Demo

on: [push]

jobs:

Explore-GitHub-Actions:

runs-on: my-runner-deployment

steps:

- run: echo "🎉 The job was automatically triggered by a $ event."

- run: echo "🐧 This job is now running on a $ server hosted by GitHub!"

- run: echo "🔎 The name of your branch is $ and your repository is $."

- name: Check out repository code

uses: actions/checkout@v2

- run: echo "💡 The $ repository has been cloned to the runner."

- run: echo "🖥️ The workflow is now ready to test your code on the runner."

- name: List files in the repository

run: |

ls $

- name: List files in the repository

run: |

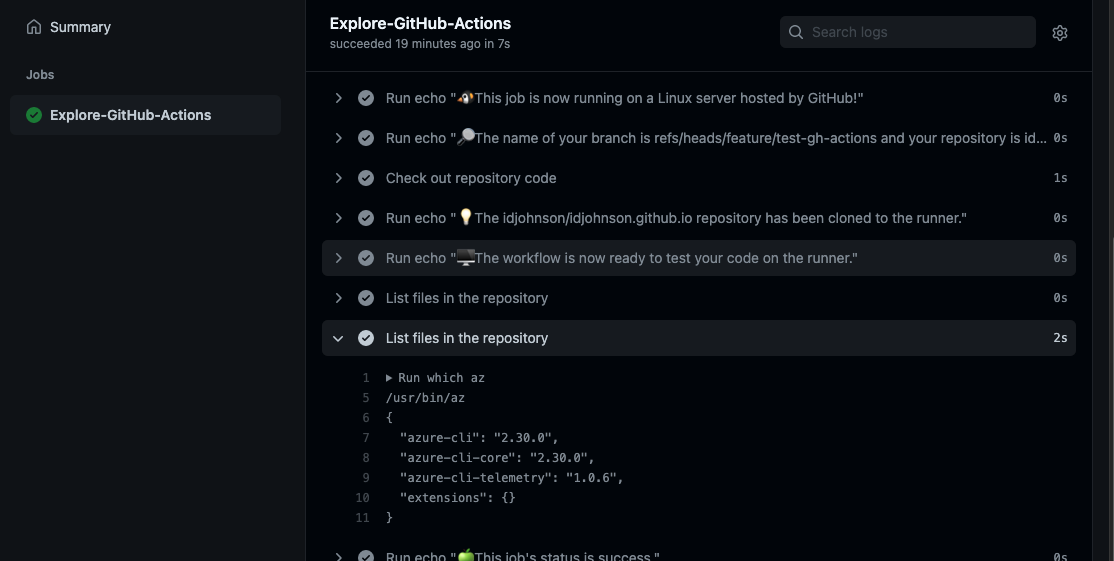

which az

az version

- run: echo "🍏 This job's status is $."

- name: Archive artifacts

uses: actions/upload-artifact@v2

with:

name: dist-with-markdown

path: |

**/*.md

And pushing the workflow showed it ran on the agent:

Also, as a sanity check, I verified that it pulled from my private registry:

```
$ kubectl describe pod my-runner-deployment-nxc2c-bvkfg | grep Image
    Image:          harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.0.0
    Image ID:       harbor.freshbrewed.science/freshbrewedprivate/myghrunner@sha256:a34e14e861368225fdff9a62e14bef90c46682e578f59847c7d4823a1727136a
    Image:          docker:dind
    Image ID:       docker.io/library/docker@sha256:79d0c6e997920e71e96571ef434defcca1364d693d5b937232decf1ee7524a9b
```

HPAs: Horizontal Pod Autoscalers

I hoped a straightforward HorizontalRunnerAutoscaler (the actions-runner-controller's runner-aware counterpart to a Kubernetes HPA) would scale up for me:

```
$ cat testingPrivate2.yaml
apiVersion: actions.summerwind.dev/v1alpha1
kind: RunnerDeployment
metadata:
  name: my-runner-deployment
spec:
  template:
    spec:
      repository: idjohnson/idjohnson.github.io
      image: harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.0.0
      imagePullSecrets:
      - name: myharborreg
      dockerEnabled: true
      labels:
        - my-runner-deployment
---
apiVersion: actions.summerwind.dev/v1alpha1
kind: HorizontalRunnerAutoscaler
metadata:
  name: my-runner-deployment-autoscaler
spec:
  # Runners in the targeted RunnerDeployment won't be scaled down for 5 minutes instead of the default 10 minutes now
  scaleDownDelaySecondsAfterScaleOut: 300
  scaleTargetRef:
    name: my-runner-deployment
  minReplicas: 1
  maxReplicas: 5
  metrics:
  - type: PercentageRunnersBusy
    scaleUpThreshold: '0.75'
    scaleDownThreshold: '0.25'
    scaleUpFactor: '2'
    scaleDownFactor: '0.5'
```

After about 8 minutes, the HPA kicked in:

```
$ kubectl get HorizontalRunnerAutoscaler my-runner-deployment-autoscaler -o yaml
apiVersion: actions.summerwind.dev/v1alpha1
kind: HorizontalRunnerAutoscaler
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"actions.summerwind.dev/v1alpha1","kind":"HorizontalRunnerAutoscaler","metadata":{"annotations":{},"name":"my-runner-deployment-autoscaler","namespace":"default"},"spec":{"maxReplicas":5,"metrics":[{"scaleDownFactor":"0.5","scaleDownThreshold":"0.25","scaleUpFactor":"2","scaleUpThreshold":"0.75","type":"PercentageRunnersBusy"}],"minReplicas":1,"scaleDownDelaySecondsAfterScaleOut":300,"scaleTargetRef":{"name":"my-runner-deployment"}}}
  creationTimestamp: "2021-11-30T16:42:37Z"
  generation: 1
  name: my-runner-deployment-autoscaler
  namespace: default
  resourceVersion: "123931037"
  uid: 9548550c-a6f0-42c3-a2c3-a2168cf5df6d
spec:
  maxReplicas: 5
  metrics:
  - scaleDownFactor: "0.5"
    scaleDownThreshold: "0.25"
    scaleUpFactor: "2"
    scaleUpThreshold: "0.75"
    type: PercentageRunnersBusy
  minReplicas: 1
  scaleDownDelaySecondsAfterScaleOut: 300
  scaleTargetRef:
    name: my-runner-deployment
status:
  cacheEntries:
  - expirationTime: "2021-11-30T17:11:25Z"
    key: desiredReplicas
    value: 2
  desiredReplicas: 2
  lastSuccessfulScaleOutTime: "2021-11-30T17:01:35Z"
```
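The `desiredReplicas: 2` in that status follows from the `PercentageRunnersBusy` settings. Our reading of the actions-runner-controller README is that the controller computes roughly the following, where N is the current number of runners and B is the number that are busy. Treat the exact comparison operators and rounding as assumptions, not the controller's confirmed code:

$$
f = \frac{B}{N},
\qquad
N' =
\begin{cases}
\lceil N \cdot 2 \rceil & \text{if } f \ge 0.75 \\
\lfloor N \cdot 0.5 \rfloor & \text{if } f < 0.25 \\
N & \text{otherwise}
\end{cases},
\qquad
N_{\text{desired}} = \min\bigl(\max(N',\ 1),\ 5\bigr)
$$

With one runner busy, f = 1/1 = 1 ≥ 0.75, giving ⌈1 × 2⌉ = 2, which matches the status above. If both of those runners were then busy, the same rule gives ⌈2 × 2⌉ = 4. That is consistent with the four runners seen later. A further step would give 8, which is capped at `maxReplicas: 5`.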

And I saw both agents:

```
$ kubectl get pods | grep my-runner
my-runner-deployment-927q5-9qkrs   2/2     Running   0          9m5s
my-runner-deployment-927q5-v5x7l   2/2     Running   0          52s
```

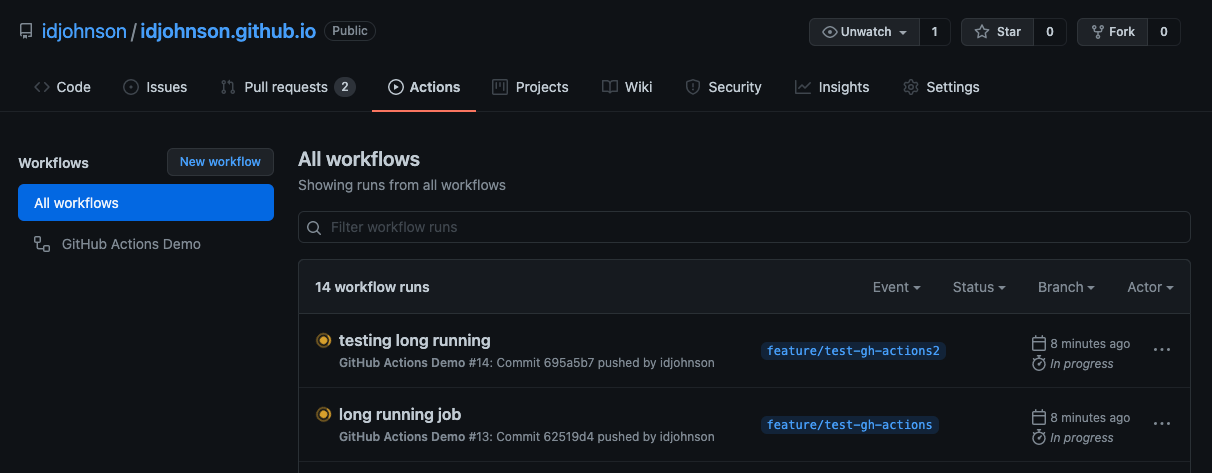

I could also see them listed against the queued jobs in Github Actions:

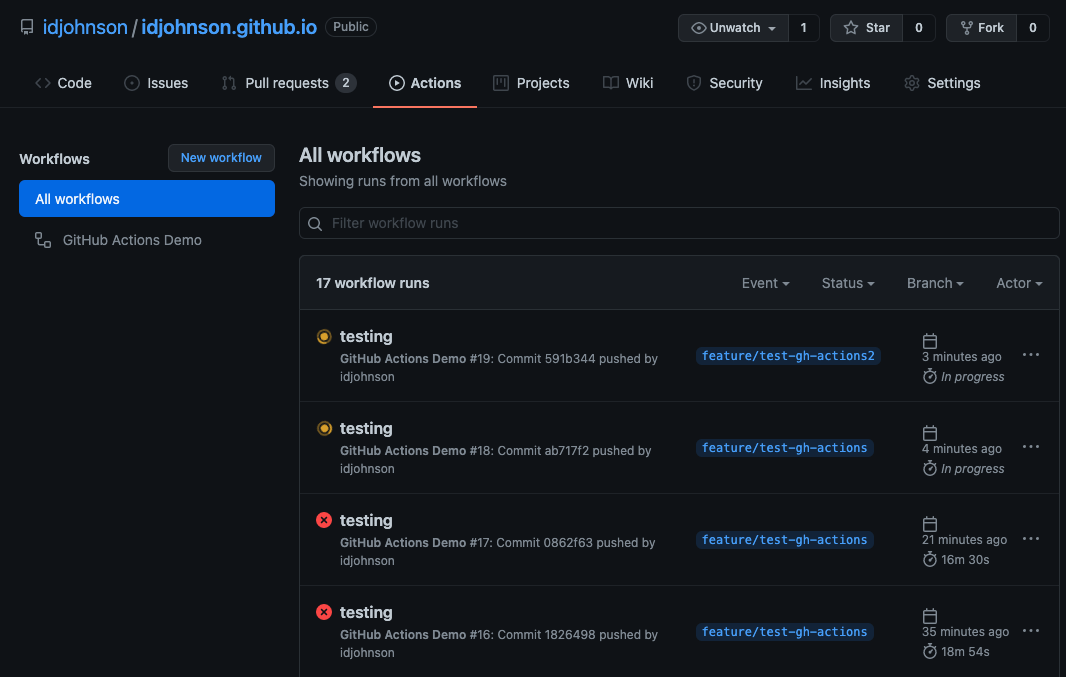

Given a long enough time, I saw up to 4 runners spin up:

```
$ kubectl get pods | grep runner
idjohnson-gh-runner                2/2     Running   0          3h18m
my-runner-deployment-927q5-9qkrs   2/2     Running   0          42m
my-runner-deployment-927q5-v5x7l   2/2     Running   0          34m
my-runner-deployment-927q5-xc9rw   2/2     Running   0          16m
my-runner-deployment-927q5-vxxzj   2/2     Running   0          15m
```

This also gave me the opportunity to verify that the runner container was indeed acting as the Github Actions runner:

```
$ kubectl exec -it my-runner-deployment-927q5-v5x7l -- /bin/bash
Defaulted container "runner" out of: runner, docker
runner@my-runner-deployment-927q5-v5x7l:/$ ls /runner/_work/
_PipelineMapping  _actions  _temp  idjohnson.github.io
runner@my-runner-deployment-927q5-v5x7l:/$ ls /runner/_work/idjohnson.github.io/
idjohnson.github.io
runner@my-runner-deployment-927q5-v5x7l:/$ ls /runner/_work/idjohnson.github.io/idjohnson.github.io/
404.html  CNAME  Gemfile  Gemfile.lock  LICENSE  README.md  _config.yml  _layouts  _posts  about.markdown  assets  docs  index.markdown
$ ps -ef | grep sleep
runner     241   240  0 17:01 ?        00:00:00 sleep 6000
runner     586   556  0 17:38 pts/0    00:00:00 grep --color=auto sleep
```

It also verified one more behavior: our Actions workflows can run for over an hour (a limit that catches us with the Microsoft-hosted agents in Azure DevOps):

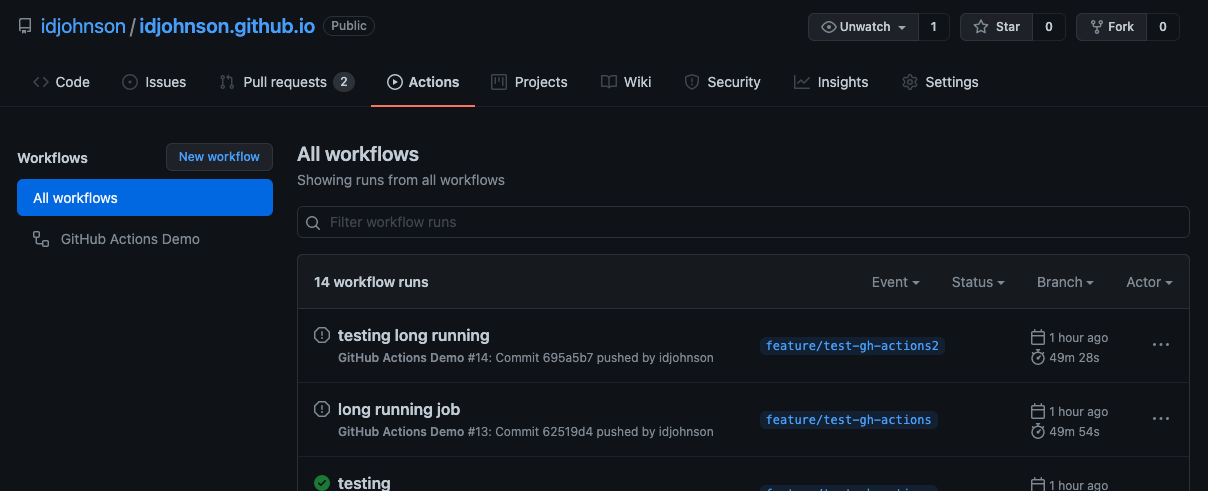

Cancelling runs

If we cancel the runs, those agents self-terminate, as expected:

```
$ kubectl get pods | grep runner
idjohnson-gh-runner                2/2     Running       0          3h25m
my-runner-deployment-927q5-xc9rw   2/2     Running       0          23m
my-runner-deployment-927q5-vxxzj   2/2     Running       0          22m
my-runner-deployment-927q5-v5x7l   1/2     Terminating   0          41m
my-runner-deployment-927q5-9qkrs   0/2     Terminating   0          49m
```

But because the autoscaler has a cooldown before it scales down, the RunnerDeployment still wants the same number of runners, so fresh workers are spun up to replace them:

```
$ kubectl get pods | grep my-runner
my-runner-deployment-927q5-xc9rw   2/2     Running   0          23m
my-runner-deployment-927q5-vxxzj   2/2     Running   0          22m
my-runner-deployment-927q5-9qkrs   2/2     Running   0          48s
my-runner-deployment-927q5-v5x7l   2/2     Running   0          44s
```

Fun with HPA Scalers

Let’s try one more simple HPA case: triggering on queued jobs (via webhook).

```
$ cat testingPrivate3.yaml
apiVersion: actions.summerwind.dev/v1alpha1
kind: RunnerDeployment
metadata:
  name: my-runner-deployment
spec:
  template:
    spec:
      repository: idjohnson/idjohnson.github.io
      image: harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.0.0
      imagePullSecrets:
      - name: myharborreg
      dockerEnabled: true
      labels:
        - my-runner-deployment
---
apiVersion: actions.summerwind.dev/v1alpha1
kind: HorizontalRunnerAutoscaler
metadata:
  name: my-runner-deployment-autoscaler
spec:
  scaleTargetRef:
    name: my-runner-deployment
  scaleUpTriggers:
  - githubEvent: {}
    duration: "1m"
```

```
$ kubectl apply -f testingPrivate3.yaml --validate=false
runnerdeployment.actions.summerwind.dev/my-runner-deployment created
horizontalrunnerautoscaler.actions.summerwind.dev/my-runner-deployment-autoscaler created
$ kubectl get pods | grep my-runner
my-runner-deployment-q248s-s9st5   2/2     Running   0          82s
```

Our initial change builds immediately, and the next one queues.

However, it never scaled up another runner.

Initially, it complained about a missing minReplicas value:

```
Events:
  Type    Reason                    Age                   From                                   Message
  ----    ------                    ----                  ----                                   -------
  Normal  RunnerAutoscalingFailure  37s (x18 over 7m10s)  horizontalrunnerautoscaler-controller  horizontalrunnerautoscaler default/my-runner-deployment-autoscaler is missing minReplicas
```

I corrected that:

```
$ cat testingPrivate3.yaml
apiVersion: actions.summerwind.dev/v1alpha1
kind: RunnerDeployment
metadata:
  name: my-runner-deployment
spec:
  template:
    spec:
      repository: idjohnson/idjohnson.github.io
      image: harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.0.0
      imagePullSecrets:
      - name: myharborreg
      dockerEnabled: true
      labels:
        - my-runner-deployment
---
apiVersion: actions.summerwind.dev/v1alpha1
kind: HorizontalRunnerAutoscaler
metadata:
  name: my-runner-deployment-autoscaler
spec:
  scaleTargetRef:
    name: my-runner-deployment
  minReplicas: 1
  maxReplicas: 15
  scaleUpTriggers:
  - githubEvent: {}
    duration: "1m"
```

But it still didn't scale up:

```
$ kubectl get pods | grep runner
idjohnson-gh-runner                2/2     Running   0          4h1m
my-runner-deployment-44zhh-5vf5f   2/2     Running   0          15m
$ kubectl get horizontalrunnerautoscaler my-runner-deployment-autoscaler
NAME                              MIN   MAX   DESIRED   SCHEDULE
my-runner-deployment-autoscaler   1     15    1
```
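Webhook-driven `scaleUpTriggers` only fire when Github delivers webhook events (such as `workflow_job`) to the controller's separate webhook server. The steps above don't show that server being enabled, or a repository webhook being created. That is one plausible, unconfirmed reason why no second runner appeared. Below is a sketch of a Helm values override. It assumes the actions-runner-controller chart exposes `githubWebhookServer` keys as shown, so check them against your chart version:

```yaml
# Hypothetical Helm values override for the actions-runner-controller chart.
# Key names are assumed from the chart's values.yaml; verify before use.
githubWebhookServer:
  enabled: true
  service:
    # Must be reachable from Github; an Ingress in front of a
    # ClusterIP service is a common alternative
    type: LoadBalancer
```

With that applied via `helm upgrade`, you would still need to add a webhook under the repository's Settings / Webhooks. It should point at the server's external address and subscribe to workflow job events.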

While the “scaleUpTriggers” section on its own failed me, the metrics-based section did work.

Switching over:

```
$ kubectl delete -f testingPrivate3.yaml
runnerdeployment.actions.summerwind.dev "my-runner-deployment" deleted
horizontalrunnerautoscaler.actions.summerwind.dev "my-runner-deployment-autoscaler" deleted
$ kubectl apply -f testingPrivate3.yaml --validate=false
runnerdeployment.actions.summerwind.dev/my-runner-deployment created
horizontalrunnerautoscaler.actions.summerwind.dev/my-runner-deployment-autoscaler created
$ kubectl get HorizontalRunnerAutoscaler
NAME                              MIN   MAX   DESIRED   SCHEDULE
my-runner-deployment-autoscaler   1     15    2
```

I immediately saw it scale up:

```
$ kubectl get pods | grep runner
idjohnson-gh-runner                2/2     Running   0          4h8m
my-runner-deployment-msjdw-psn4j   2/2     Running   0          2m46s
my-runner-deployment-wkvr8-6lzjd   2/2     Running   0          16s
my-runner-deployment-wkvr8-c9cc5   2/2     Running   0          15s
```

The HPA can get rather fancy, and there are quite a few ways to use it.

For instance, say you wanted to pre-warm the agent pool for afternoon work:

```
apiVersion: actions.summerwind.dev/v1alpha1
kind: RunnerDeployment
metadata:
  name: my-runner-deployment
spec:
  template:
    spec:
      repository: idjohnson/idjohnson.github.io
      image: harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.0.0
      imagePullSecrets:
      - name: myharborreg
      dockerEnabled: true
      labels:
        - my-runner-deployment
---
apiVersion: actions.summerwind.dev/v1alpha1
kind: HorizontalRunnerAutoscaler
metadata:
  name: my-runner-deployment-autoscaler
spec:
  scaleTargetRef:
    name: my-runner-deployment
  minReplicas: 1
  maxReplicas: 15
  metrics:
  - type: TotalNumberOfQueuedAndInProgressWorkflowRuns
    repositoryNames:
    - idjohnson/idjohnson.github.io
  # Pre-warm for afternoon spike
  scheduledOverrides:
  - startTime: "2021-11-30T15:15:00-06:00"
    endTime: "2021-11-30T17:30:00-06:00"
    recurrenceRule:
      frequency: Daily
      untilTime: "2022-05-01T00:00:00-06:00"
    minReplicas: 10
```

Above, I set the regular minimum to 1 and the maximum to 15. However, once 3:15pm CST hits, the minimum is raised to 10 until 5:30pm CST (daily, until May 1, 2022), so the next event would scale up to at least 10.
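This sketch assumes the `TotalNumberOfQueuedAndInProgressWorkflowRuns` metric does what its name says. That is, it targets the count of queued plus in-progress workflow runs (Q + P) in the listed repository. On that assumption, the desired replica count works out to roughly:

$$
\text{desired} = \min\bigl(\max(Q + P,\ m),\ 15\bigr),
\qquad
m =
\begin{cases}
10 & \text{if } 15{:}15 \le t_{\text{CST}} < 17{:}30 \\
1 & \text{otherwise}
\end{cases}
$$

Outside the window, an idle repository sits at the floor of 1. Inside it, any count below 10 is lifted to 10, which is what the afternoon output below shows. This is a simplified model, not the controller's actual code.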

When first launched, it shows just 1 agent desired:

```
$ kubectl get horizontalrunnerautoscaler
NAME                              MIN   MAX   DESIRED   SCHEDULE
my-runner-deployment-autoscaler   1     15    1         min=10 time=2021-11-30 21:15:00 +0000 UTC
```
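The SCHEDULE column reports times in UTC, but the override was written with a fixed `-06:00` (CST) offset. Converting the window's endpoints:

$$
\begin{aligned}
15{:}15\ (\text{UTC}{-}06{:}00) &= 15{:}15 + 6{:}00 = 21{:}15\ \text{UTC} \\
17{:}30\ (\text{UTC}{-}06{:}00) &= 17{:}30 + 6{:}00 = 23{:}30\ \text{UTC}
\end{aligned}
$$

The first matches the `time=2021-11-30 21:15:00 +0000 UTC` above. The second matches the `min=1 time=2021-11-30 23:30:00 +0000 UTC` reported once the window opens. The offset is fixed rather than a named time zone. So, assuming recurrences are computed in that fixed offset, the window would likely start at 4:15pm local time once daylight saving time begins in March 2022.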

But when I pushed a commit in the afternoon window, the cluster scaled up:

```
$ date
Tue Nov 30 15:19:21 CST 2021
$ kubectl get horizontalrunnerautoscaler
NAME                              MIN   MAX   DESIRED   SCHEDULE
my-runner-deployment-autoscaler   1     15    10        min=1 time=2021-11-30 23:30:00 +0000 UTC
$ kubectl get pods | grep my-runner
my-runner-deployment-njn2k-njs5z   2/2     Running             0          72s
my-runner-deployment-njn2k-ptkhc   0/2     ContainerCreating   0          68s
my-runner-deployment-njn2k-jjtsn   2/2     Running             0          70s
my-runner-deployment-njn2k-9wmnl   2/2     Running             0          71s
my-runner-deployment-njn2k-fqfdm   2/2     Running             0          67s
my-runner-deployment-njn2k-zwz9t   2/2     Running             0          69s
my-runner-deployment-njn2k-tdn9j   2/2     Running             0          67s
my-runner-deployment-njn2k-tr8cf   2/2     Running             0          66s
my-runner-deployment-njn2k-r8pzc   0/2     ContainerCreating   0          54s
my-runner-deployment-njn2k-5s7d8   2/2     Running             0          9s
```

For more, dig into the project README, which has all the examples.

Footprint

We already showed that the image itself is fairly large in our container registry.

However, I had concerns about the memory and CPU footprint of the runners themselves.

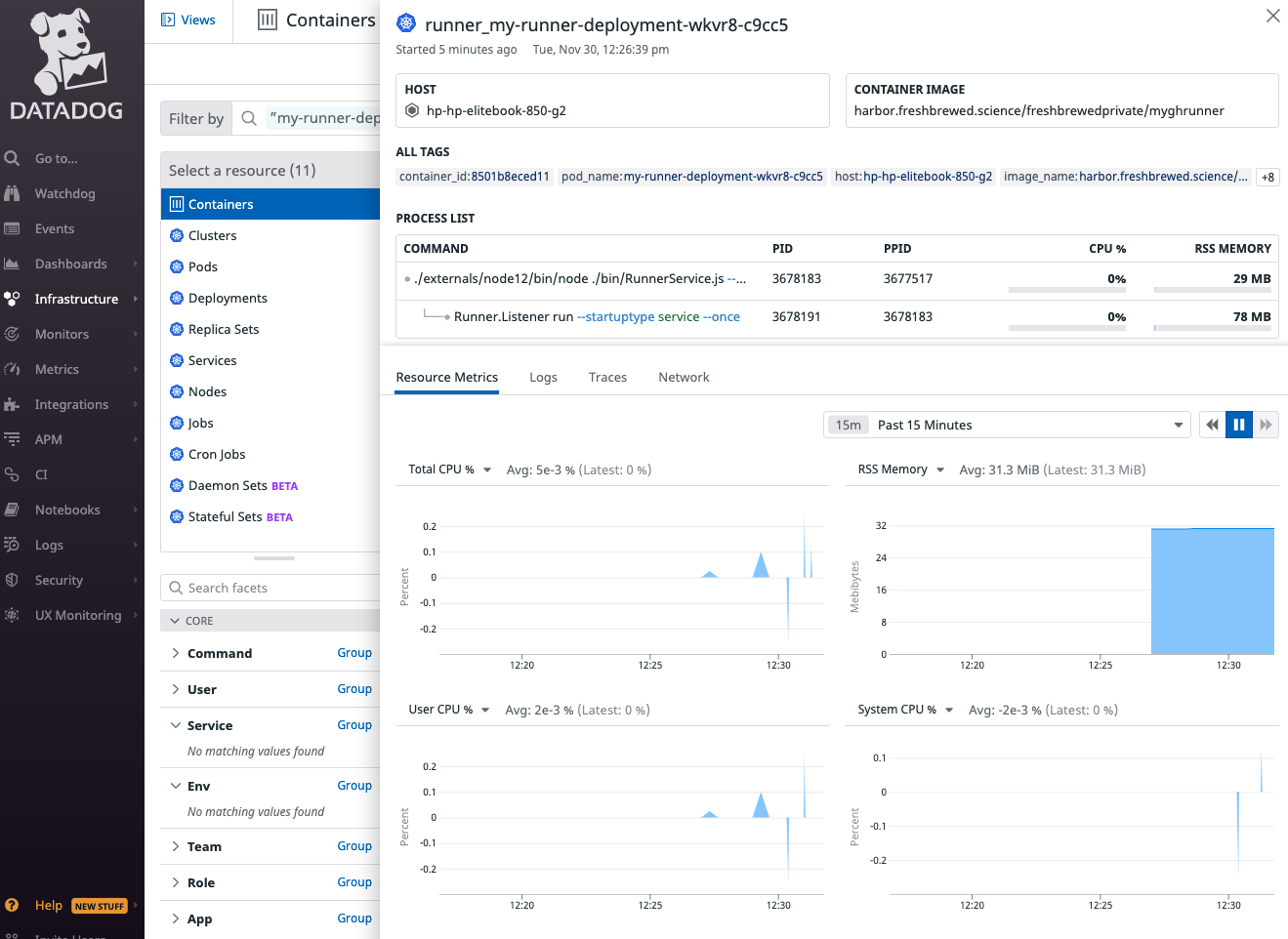

I was able to verify that the container was not consuming much CPU or memory:

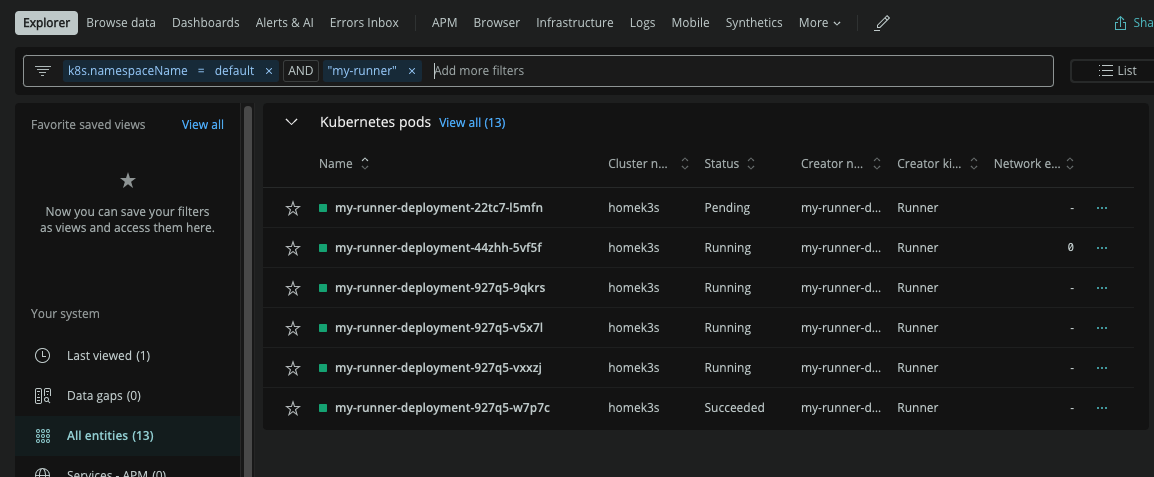

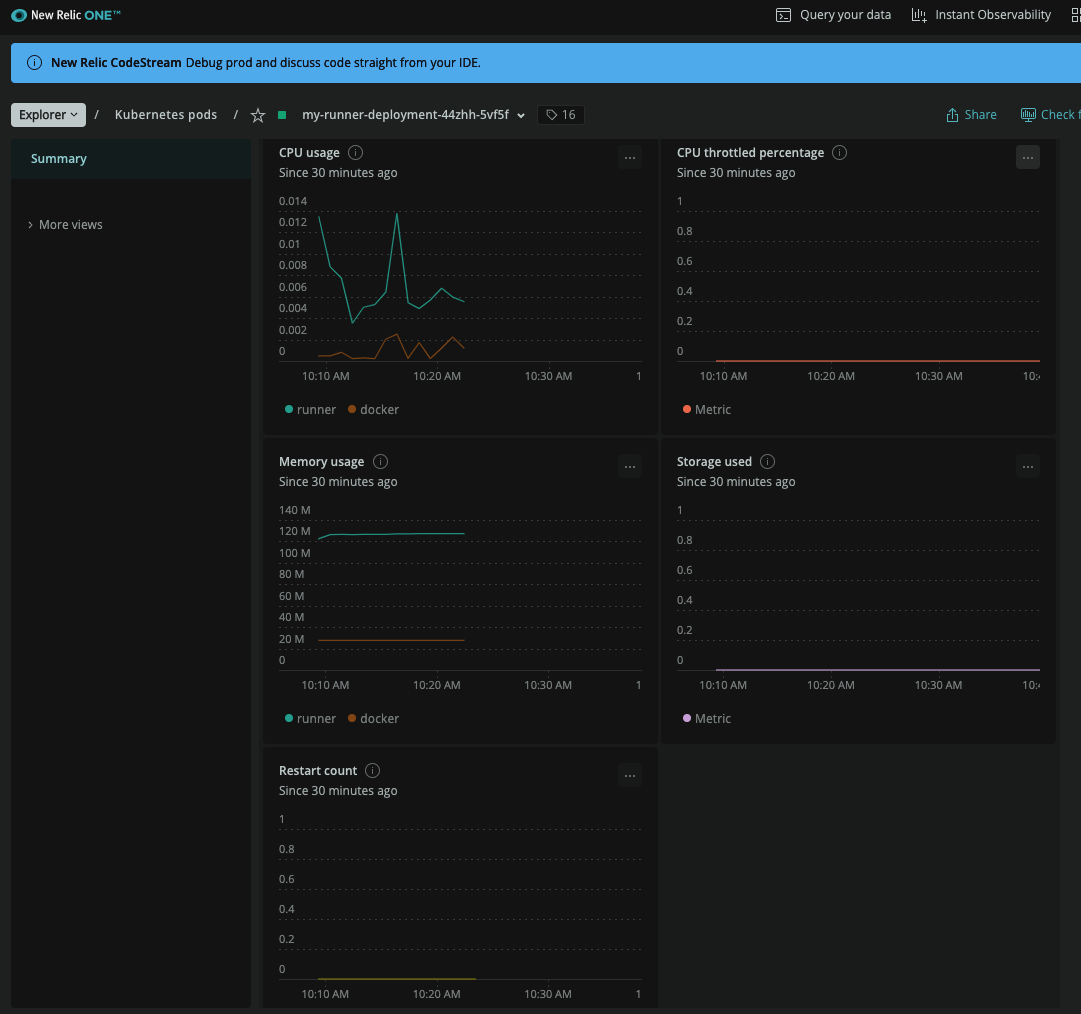

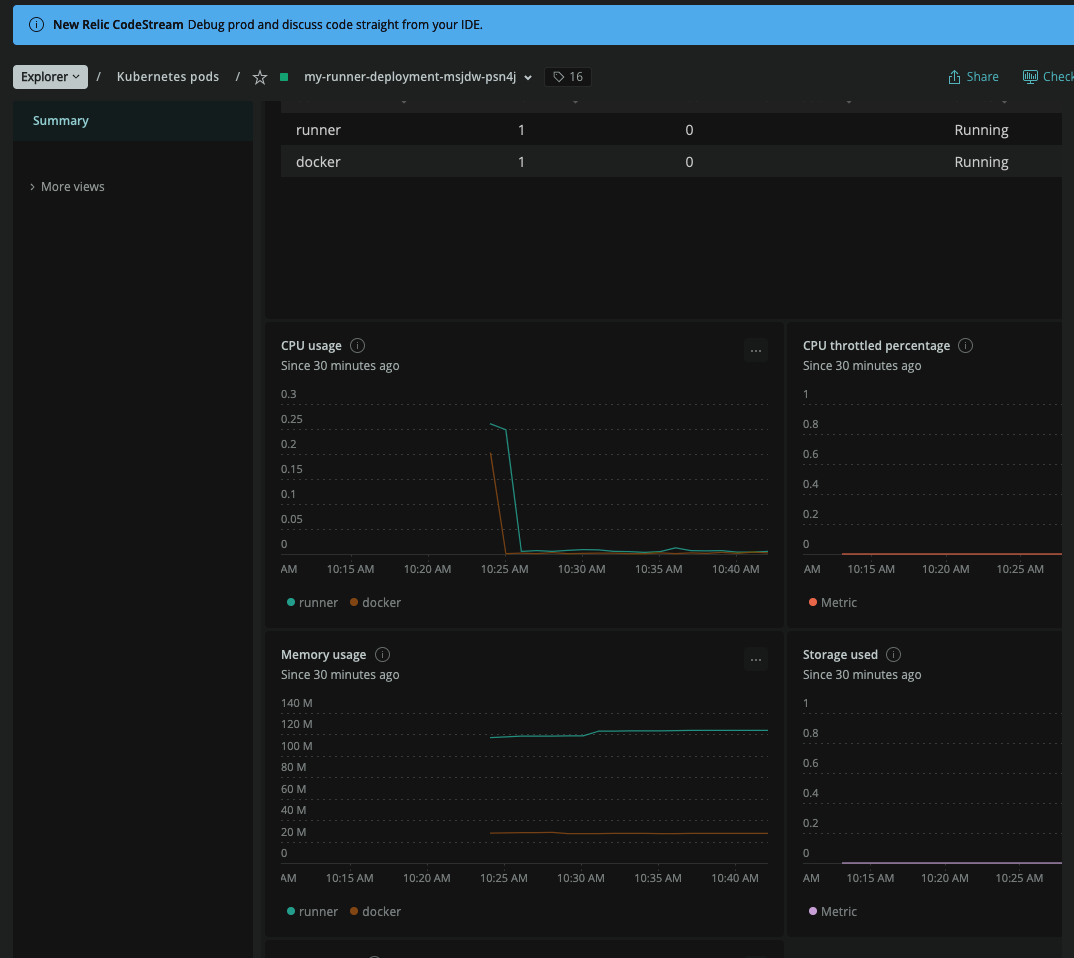

However, Datadog failed to capture all the pods, so I used New Relic and found the worker agents.

Specifically, a worker used about 120 MB of memory.

This includes some CPU spikes at the start, but because the command is just a sleep, CPU usage trails off rather quickly:

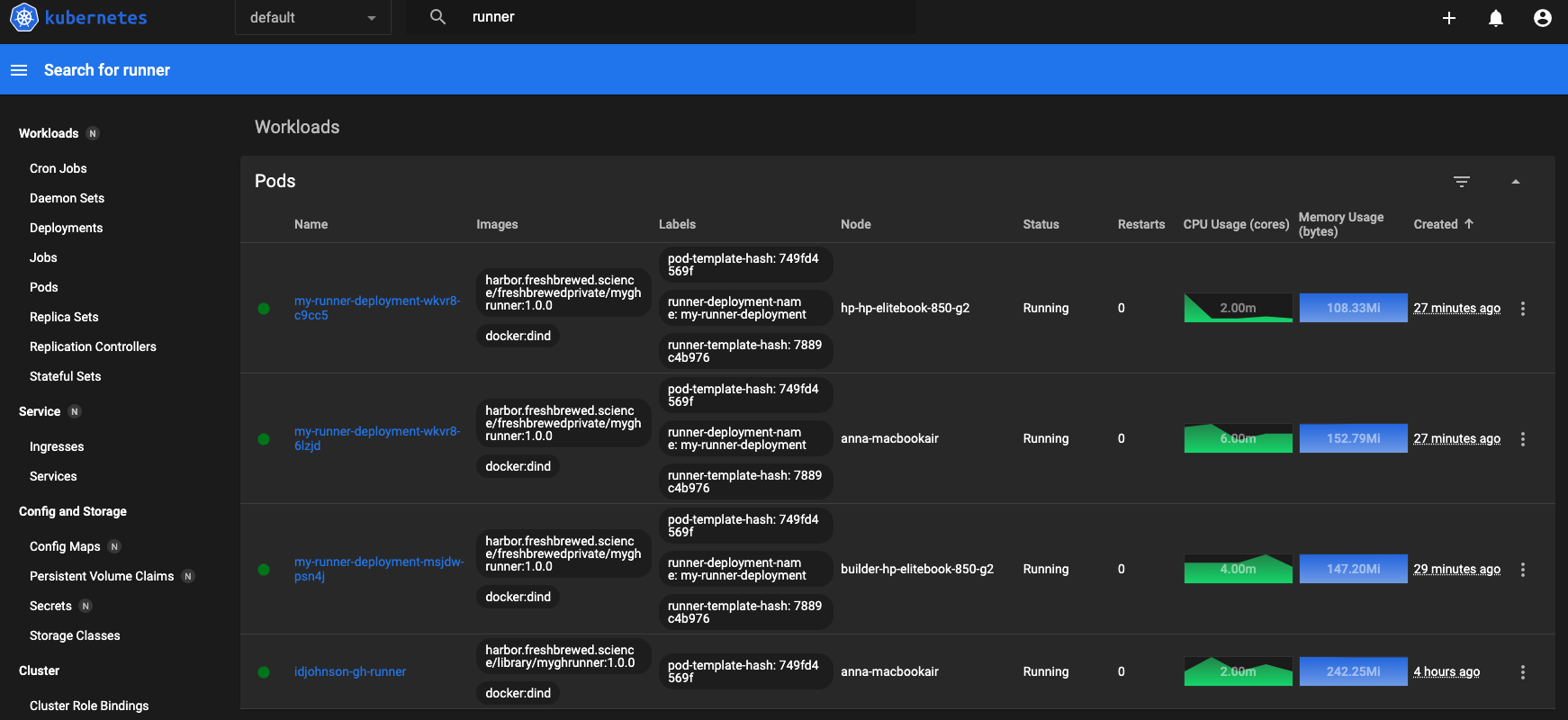

I launched the Kubernetes dashboard and saw that Kubernetes had sensibly spread the pods across different nodes.
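The roughly 120 MB measured for a worker gives a starting point for sizing. At the `maxReplicas: 15` used in the scheduled example, the runner containers alone would need about 15 × 120 MB ≈ 1.8 GB. That is before counting the `docker` sidecar or any real build work. The sketch below sets requests and limits on the runner container through the RunnerDeployment's `resources` field. The values are hypothetical, and the docker sidecar is not covered by this sketch:

```yaml
# Sketch only: resource values are hypothetical, sized from the ~120 MB
# observed for a mostly idle worker plus headroom for real jobs.
apiVersion: actions.summerwind.dev/v1alpha1
kind: RunnerDeployment
metadata:
  name: my-runner-deployment
spec:
  template:
    spec:
      repository: idjohnson/idjohnson.github.io
      image: harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.0.0
      imagePullSecrets:
      - name: myharborreg
      dockerEnabled: true
      labels:
        - my-runner-deployment
      # Applies to the "runner" container, not the docker sidecar
      resources:
        requests:
          cpu: "100m"
          memory: "256Mi"
        limits:
          cpu: "1"
          memory: "1Gi"
```

Requests drive where the scheduler places each pod, so a burst of runners does not over-pack a single node. Limits cap what a runaway build can consume.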

Summary

We covered setting up a fresh AKS cluster as a Github Actions runner host. We launched a single-instance Runner and a horizontally scaled RunnerDeployment. We looked at how to build, host and use private runner containers. Finally, we examined their size and impact on the cluster.

Github Actions are a good start to pipelining, and there is some value in using a comprehensive system. That is, if you are already hosting your Git repositories in Github, why hook them up to some other external suite like Azure DevOps or Jenkins? I might argue the same holds true for Azure Repos in Azure DevOps, and for Gitlab with Gitlab runners.

The trend here is that source control and pipelining (and, to a lesser extent, ticketing) are becoming facets of comprehensive suites. This makes a lot of sense. Atlassian JIRA on its own is a fine (actually exceedingly well made) ticketing and work item management suite, but when you pair it with Stash and Crucible (I’ve rather lost track of all their product names du jour), it’s a compelling suite. Azure Repos held in isolation is a rather underwhelming, but functional, source code suite. However, paired with Work Items in Azure Boards and a rich set of Pull Request rules, Azure DevOps becomes a very compelling suite.

| Parent | Brand | Code | Ticketing | Pipelines | Agents |
|---|---|---|---|---|---|
| Microsoft | Github | Repositories | Issues / Boards | Actions | Runners |
| Microsoft | Azure DevOps | Repos | Work Items / Boards | Pipelines | Agents |
| Gitlab | Gitlab | Repository | Issues | Pipelines | Runners |
| Atlassian | Atlassian | Bitbucket (was Stash) | JIRA | Bamboo | Agents |

So what do we make of Github’s offering? How does it fit with the fact that Microsoft owns two very mature and well-loved suites: Azure DevOps (of the TFS lineage) and Github (of the Github Enterprise lineage)?

I would argue that trends show Microsoft is putting a considerable amount of effort into Github, especially around Actions and Runners. In Azure DevOps, they have mostly been focusing on Work Items and Test Plan management of late.

This would lead me to believe a merging of products is coming (likely under the Github brand).

I plan to explore Github Actions and how they tie into secrets and issues next.

More Info

You can read more from the Github page for the Action Runner: https://github.com/actions-runner-controller/actions-runner-controller