Published: Feb 15, 2022 by Isaac Johnson

I’m guilty of saying “Junkins” in meetings. For years, I’ve had a not-so-hidden disdain for Jenkins. It stemmed from being on a team that touted itself as “Jenkineers” back at a prior employer, as well as from the unctuous consternations of Jenkins diehards whenever they were given a forum.

That said, it is arguably the oldest and still the most popular CI/CD tool, making top CI/CD lists year after year. Whether I like the toolset or not, it has a dedicated and vocal following.

Moreover, it is often the first tool new SRE/DevOps engineers learn. In some form, it has been in active use at every employer I’ve had over the last decade, so, if for no other reason than to level set, it deserves a look.

A Personal History

I asked myself: what was life like before Hudson? At the start, it was Makefiles and Bash scripts. Later, this gave way to homegrown solutions like Perl-based scripting. In the early 2000s we moved to CruiseControl and CruiseControl.NET. Around the time Hudson was starting to make its rounds, we were looking at commercial tooling like Build Forge and Visual Build. But by 2010 Hudson was the ‘hotness’ and teams were adopting it all over.

In January 2011, following legal threats from Oracle, Andrew Bayer published the blog entry that would forever fork Jenkins from Hudson and make a clean break. Around that time, CloudBees was formed and took over the care and feeding that Sun had provided before.

Getting Started

The fastest way to get started with Jenkins is to download the Jenkins WAR file and run it:

builder@DESKTOP-QADGF36:~$ java -jar /mnt/c/Users/isaac/Downloads/jenkins.war

Running from: /mnt/c/Users/isaac/Downloads/jenkins.war

webroot: $user.home/.jenkins

2022-02-15 13:06:20.837+0000 [id=1] INFO org.eclipse.jetty.util.log.Log#initialized: Logging initialized @461ms to org.eclipse.jetty.util.log.JavaUtilLog

2022-02-15 13:06:20.891+0000 [id=1] INFO winstone.Logger#logInternal: Beginning extraction from war file

2022-02-15 13:06:25.418+0000 [id=1] WARNING o.e.j.s.handler.ContextHandler#setContextPath: Empty contextPath

2022-02-15 13:06:25.456+0000 [id=1] INFO org.eclipse.jetty.server.Server#doStart: jetty-9.4.43.v20210629; built: 2021-06-30T11:07:22.254Z; git: 526006ecfa3af7f1a27ef3a288e2bef7ea9dd7e8; jvm 1.8.0_312-8u312-b07-0ubuntu1~20.04-b07

2022-02-15 13:06:25.616+0000 [id=1] INFO o.e.j.w.StandardDescriptorProcessor#visitServlet: NO JSP Support for /, did not find org.eclipse.jetty.jsp.JettyJspServlet

2022-02-15 13:06:25.645+0000 [id=1] INFO o.e.j.s.s.DefaultSessionIdManager#doStart: DefaultSessionIdManager workerName=node0

2022-02-15 13:06:25.645+0000 [id=1] INFO o.e.j.s.s.DefaultSessionIdManager#doStart: No SessionScavenger set, using defaults

2022-02-15 13:06:25.646+0000 [id=1] INFO o.e.j.server.session.HouseKeeper#startScavenging: node0 Scavenging every 600000ms

...

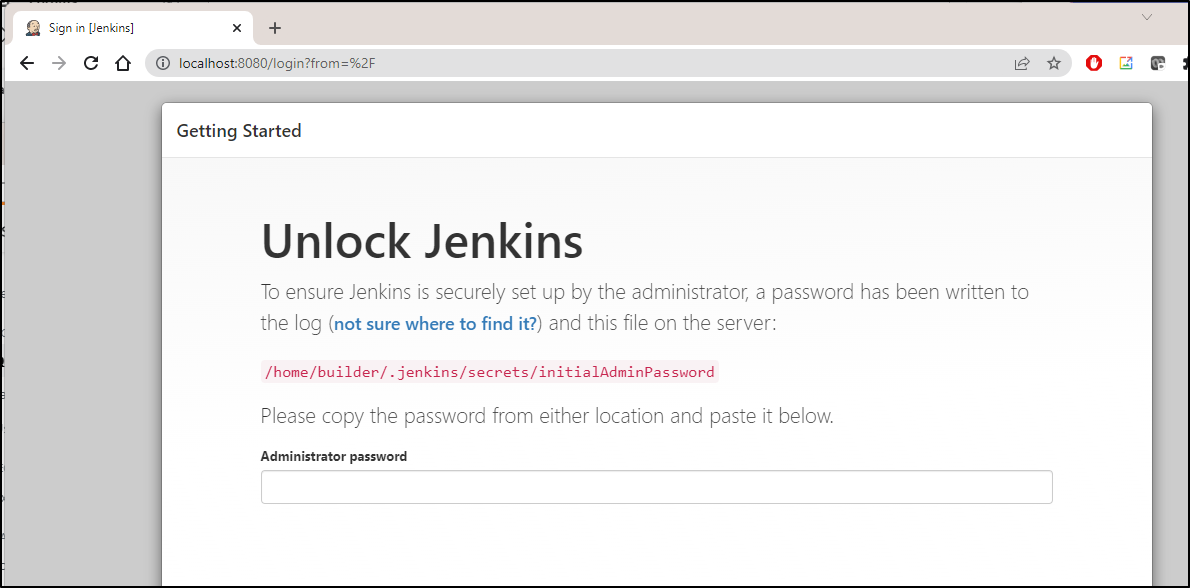

We can fetch the initial password:

$ cat /home/builder/.jenkins/secrets/initialAdminPassword

9a50c4d3462641c5a97b69f20516d9de
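If you script this first launch, keep in mind that the password file only appears once Jenkins has finished initializing. Here is a minimal bash sketch that waits for it; the function name `wait_for_file` and the 60-second default are my own assumptions, not anything Jenkins provides:

```bash
#!/usr/bin/env bash
# wait_for_file PATH [TIMEOUT_SECONDS]
# Hypothetical helper: polls once per second until PATH exists and is
# non-empty, then prints its contents. Returns 1 if the timeout expires first.
wait_for_file() {
  local path="$1"
  local timeout="${2:-60}"
  local waited=0
  while [ ! -s "$path" ]; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out waiting for $path" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  cat "$path"
}

# Example, using the default Jenkins home when running the WAR directly:
# wait_for_file "$HOME/.jenkins/secrets/initialAdminPassword" 120
```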

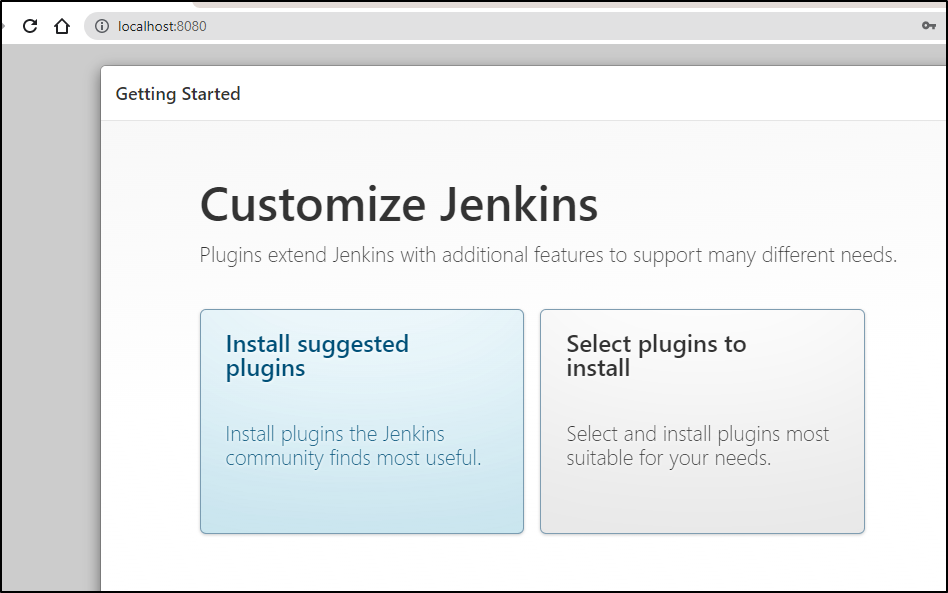

Then log in and install the default set of plugins:

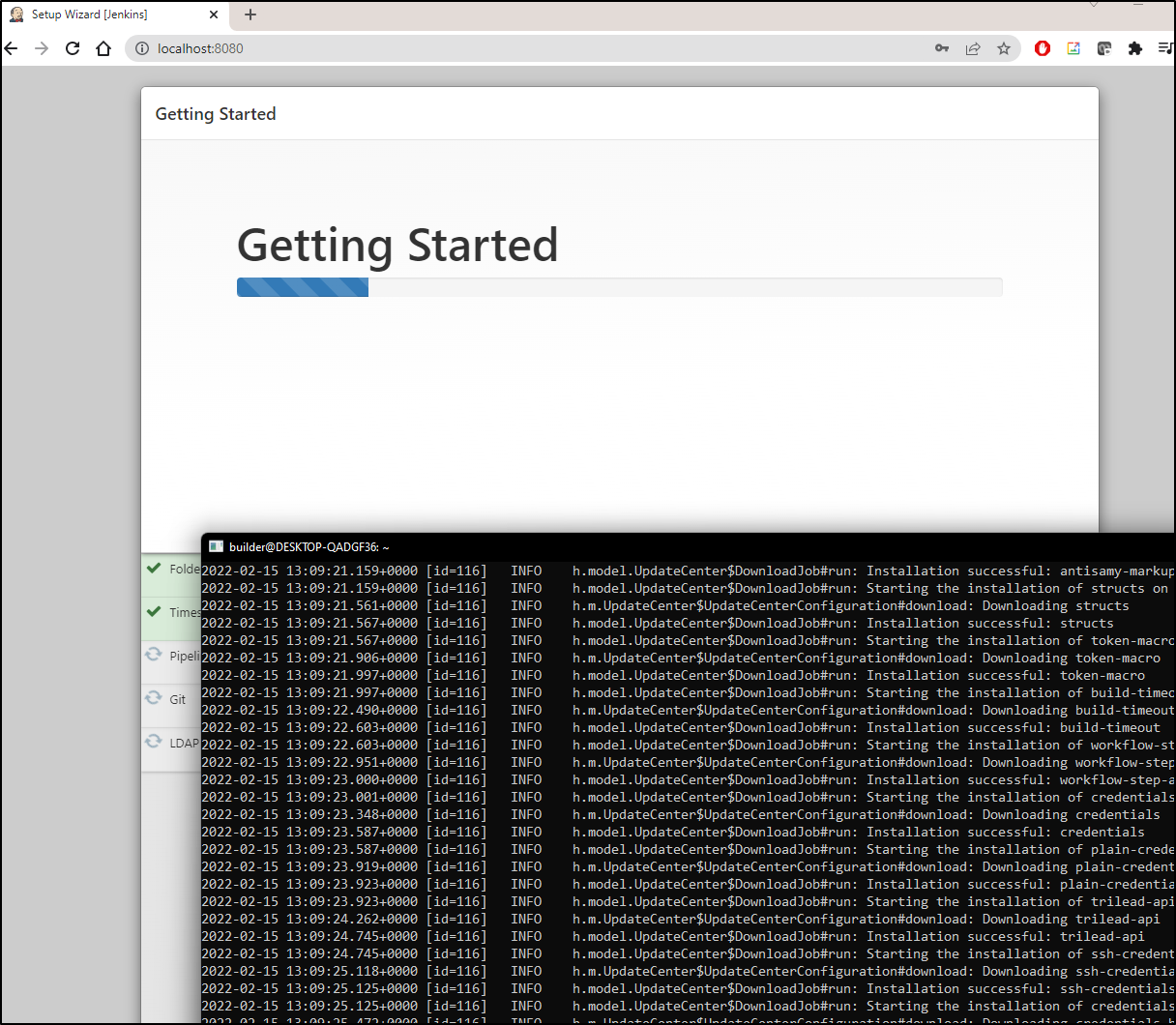

While it installs them under the “Getting Started” banner, we can see a flurry of downloads logged in the shell running the server.

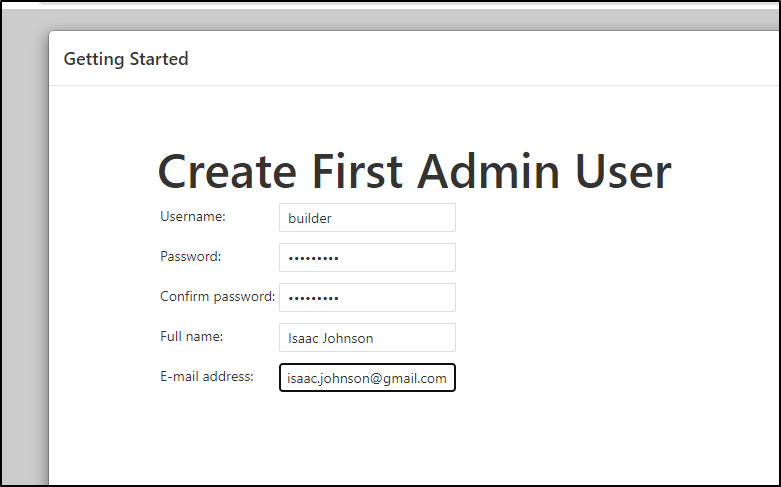

When done, create your admin user:

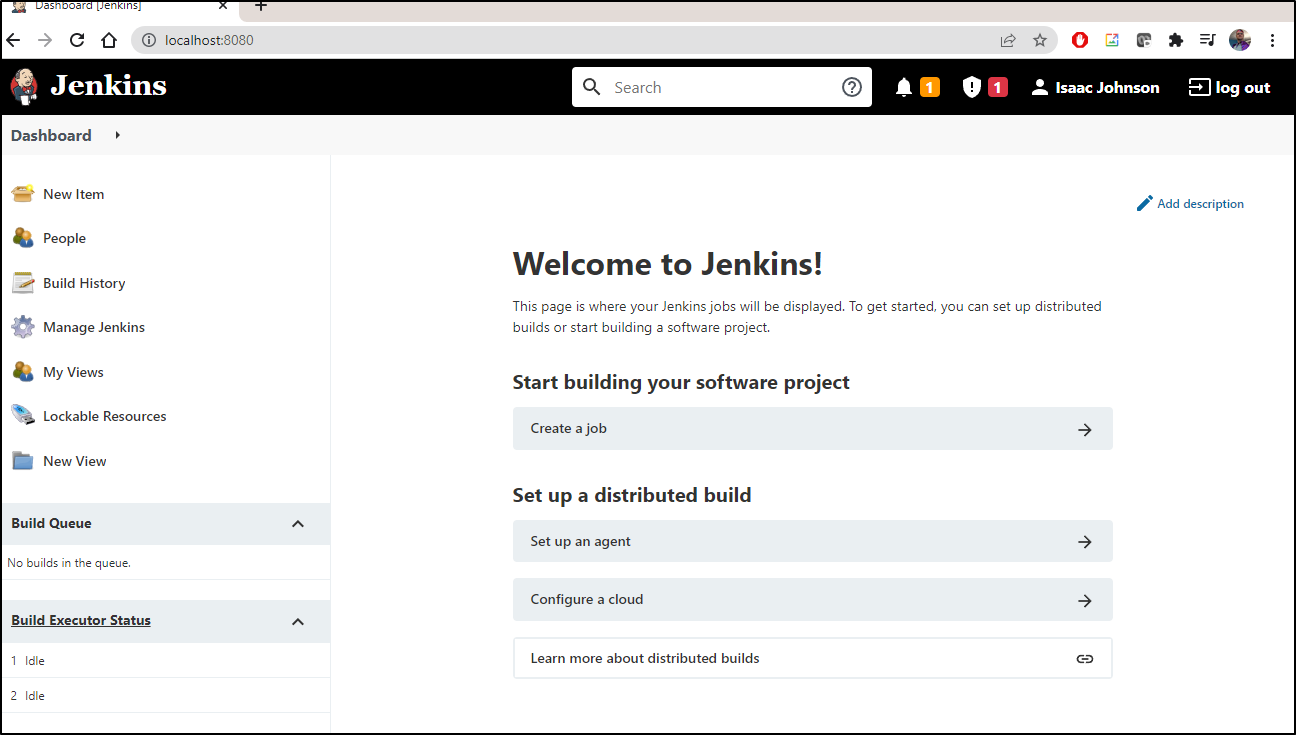

Leave the default Jenkins URL (localhost:8080) and “Save and Finish”, then click “Start Using Jenkins”.

This will give you a perfectly usable test Jenkins. If your goal is to learn some plugins or test Groovy pipelines, this would work just dandy.

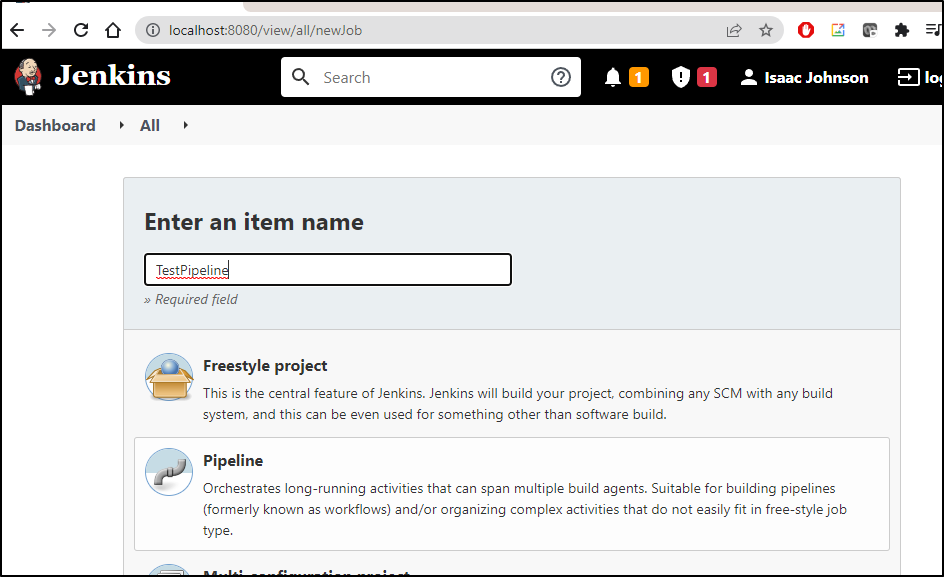

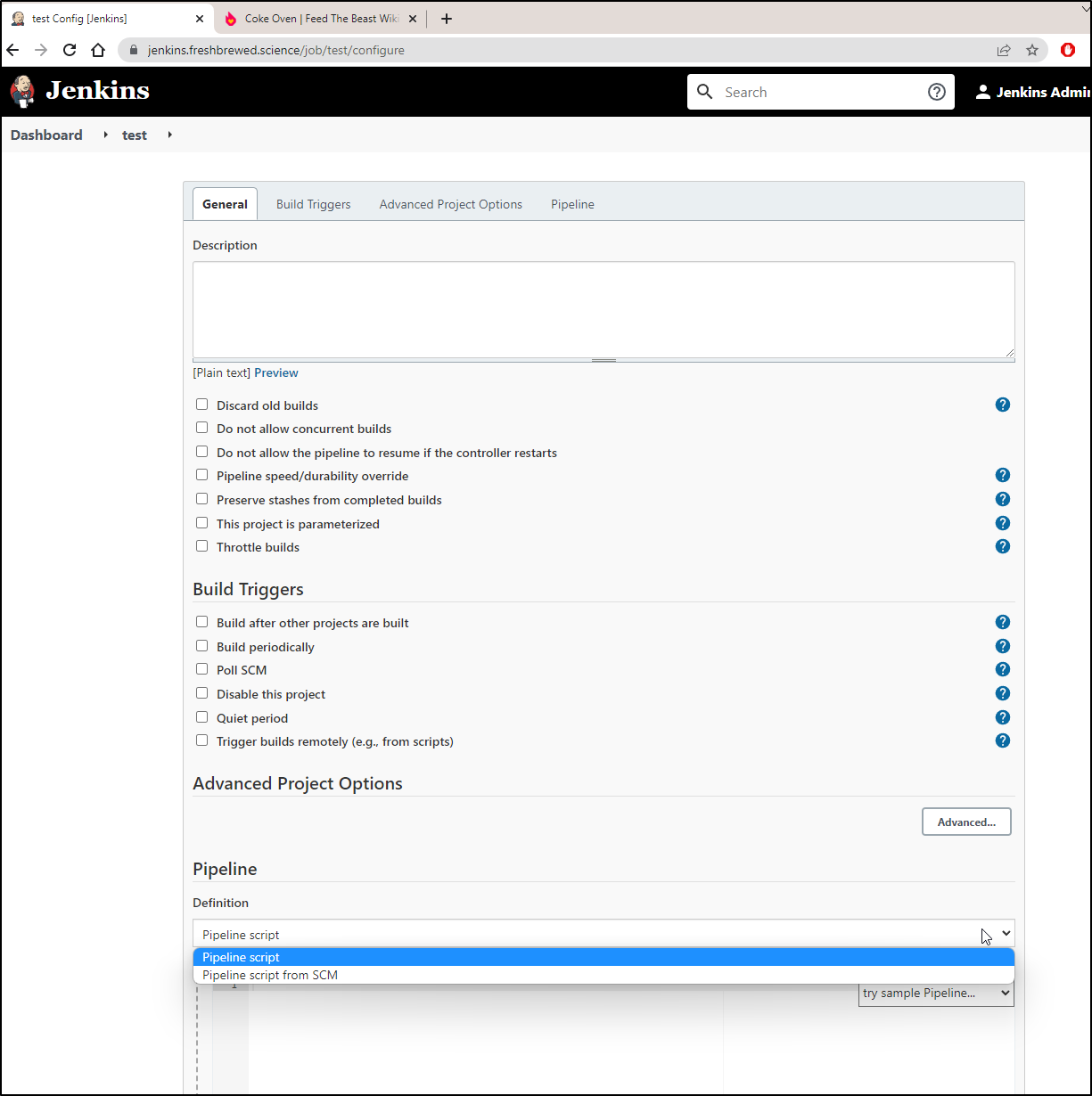

For instance, to create a quick hello world Groovy pipeline, click “New Item” and choose Pipeline as the project type:

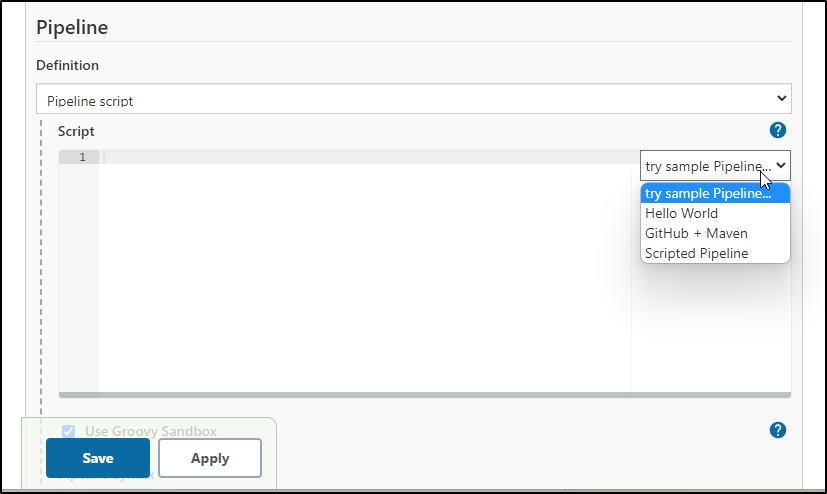

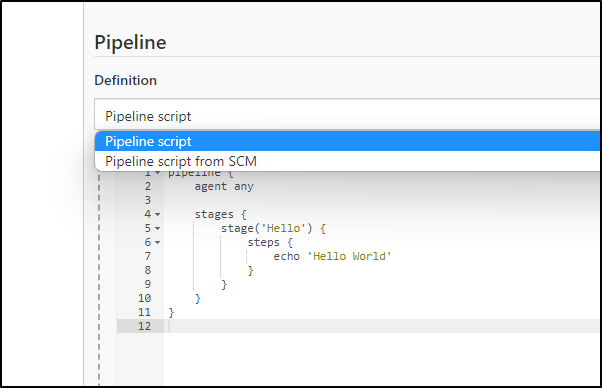

Then we can pick a sample pipeline from the pick list:

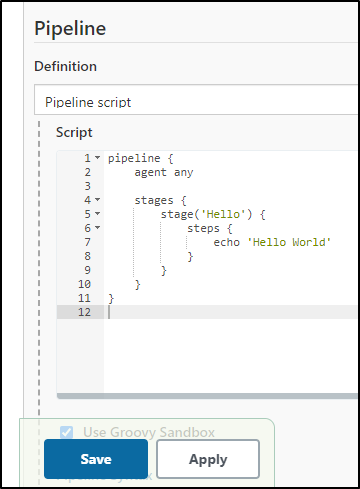

```groovy
pipeline {
    agent any

    stages {
        stage('Hello') {
            steps {
                echo 'Hello World'
            }
        }
    }
}
```

We can then save and apply it:

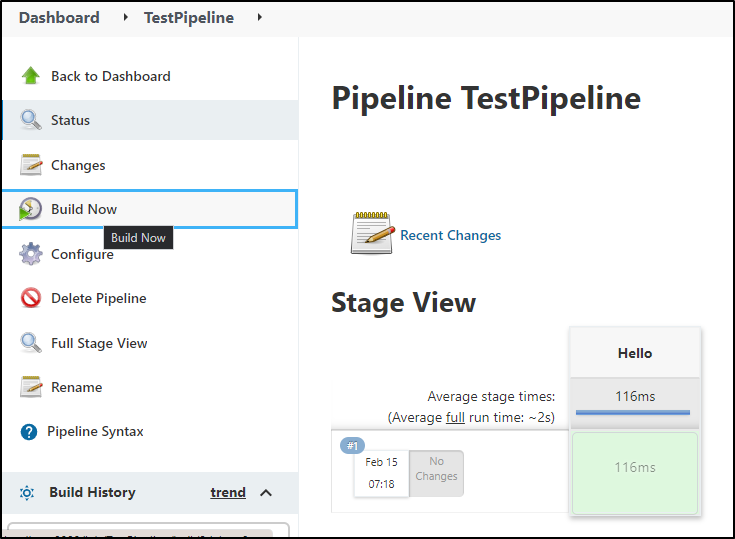

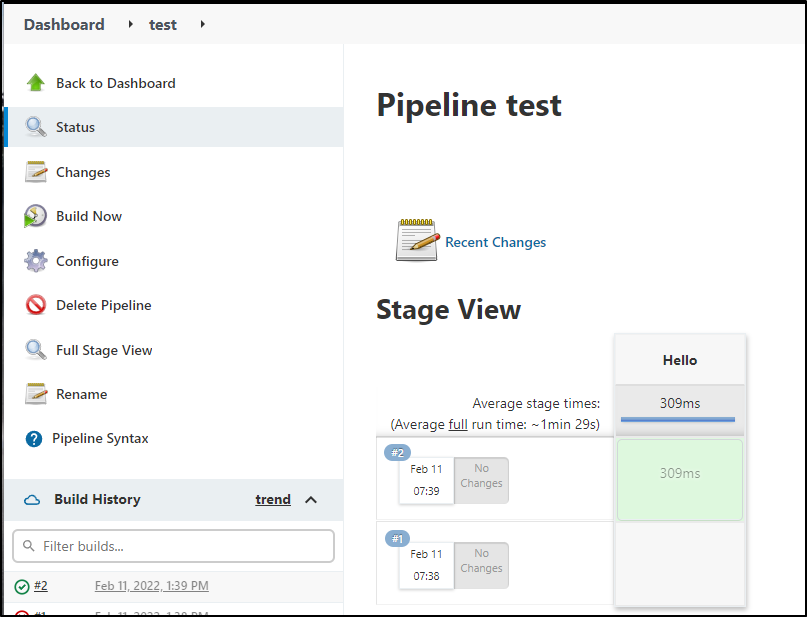

Then click “Build Now” to invoke it:

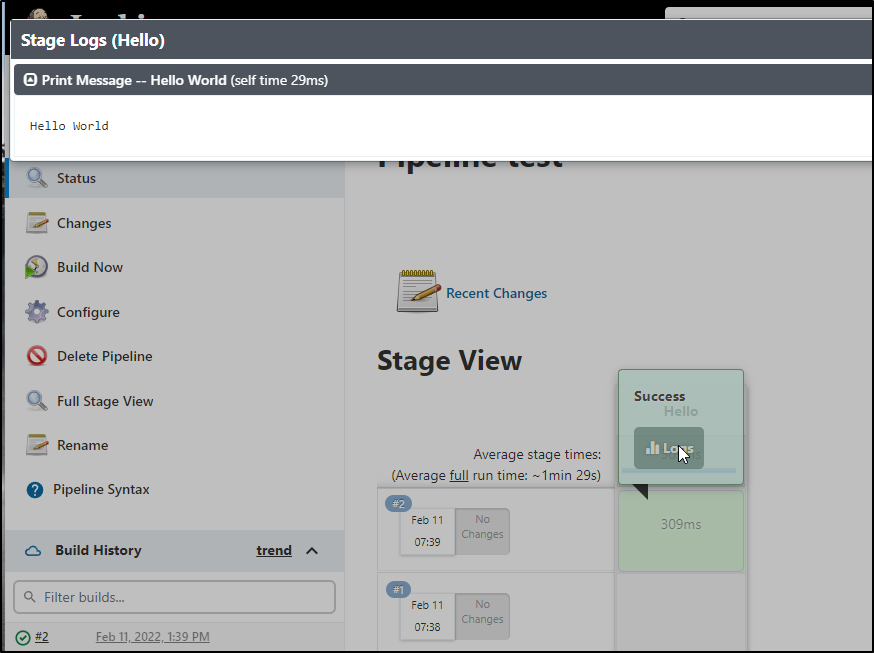

Lastly, you can click the logs icon inline to view the logs on the same results page:

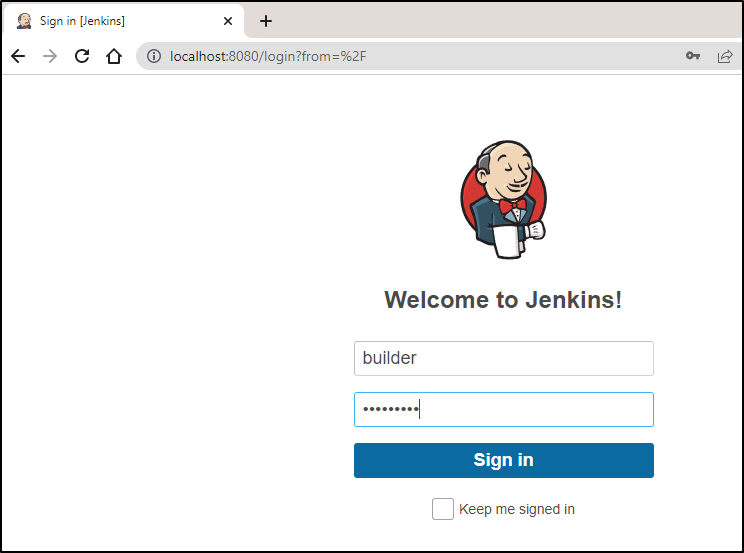

If we have to kill the Java process, we can always relaunch it and log back in (this time with the user we created):

$ java -jar /mnt/c/Users/isaac/Downloads/jenkins.war

Running from: /mnt/c/Users/isaac/Downloads/jenkins.war

webroot: $user.home/.jenkins

2022-02-15 13:13:35.095+0000 [id=1] INFO org.eclipse.jetty.util.log.Log#initialized: Logging initialized @303ms to org.eclipse.jetty.util.log.JavaUtilLog

2022-02-15 13:13:35.142+0000 [id=1] INFO winstone.Logger#logInternal: Beginning extraction from war file

2022-02-15 13:13:35.164+0000 [id=1] WARNING o.e.j.s.handler.ContextHandler#setContextPath: Empty contextPath

2022-02-15 13:13:35.203+0000 [id=1] INFO org.eclipse.jetty.server.Server#doStart: jetty-9.4.43.v20210629; built: 2021-06-30T11:07:22.254Z; git: 526006ecfa3af7f1a27ef3a288e2bef7ea9dd7e8; jvm 1.8.0_312-8u312-b07-0ubuntu1~20.04-b07

2022-02-15 13:13:35.358+0000 [id=1] INFO o.e.j.w.StandardDescriptorProcessor#visitServlet: NO JSP Support for /, did not find org.eclipse.jetty.jsp.JettyJspServlet

2022-02-15 13:13:35.384+0000 [id=1] INFO o.e.j.s.s.DefaultSessionIdManager#doStart: DefaultSessionIdManager workerName=node0

2022-02-15 13:13:35.384+0000 [id=1] INFO o.e.j.s.s.DefaultSessionIdManager#doStart: No SessionScavenger set, using defaults

2022-02-15 13:13:35.385+0000 [id=1] INFO o.e.j.server.session.HouseKeeper#startScavenging: node0 Scavenging every 660000ms

2022-02-15 13:13:35.645+0000 [id=1] INFO hudson.WebAppMain#contextInitialized: Jenkins home directory: /home/builder/.jenkins found at: $user.home/.jenkins

2022-02-15 13:13:35.704+0000 [id=1] INFO o.e.j.s.handler.ContextHandler#doStart: Started w.@3fa247d1{Jenkins v2.319.3,/,file:///home/builder/.jenkins/war/,AVAILABLE}{/home/builder/.jenkins/war}

2022-02-15 13:13:35.716+0000 [id=1] INFO o.e.j.server.AbstractConnector#doStart: Started ServerConnector@32e6e9c3{HTTP/1.1, (http/1.1)}{0.0.0.0:8080}

2022-02-15 13:13:35.716+0000 [id=1] INFO org.eclipse.jetty.server.Server#doStart: Started @924ms

2022-02-15 13:13:35.717+0000 [id=33] INFO winstone.Logger#logInternal: Winstone Servlet Engine running: controlPort=disabled

2022-02-15 13:13:36.509+0000 [id=40] INFO jenkins.InitReactorRunner$1#onAttained: Started initialization

2022-02-15 13:13:36.639+0000 [id=62] INFO jenkins.InitReactorRunner$1#onAttained: Listed all plugins

2022-02-15 13:13:39.601+0000 [id=45] INFO jenkins.InitReactorRunner$1#onAttained: Prepared all plugins

2022-02-15 13:13:39.609+0000 [id=44] INFO jenkins.InitReactorRunner$1#onAttained: Started all plugins

2022-02-15 13:13:39.615+0000 [id=57] INFO jenkins.InitReactorRunner$1#onAttained: Augmented all extensions

2022-02-15 13:13:40.300+0000 [id=45] INFO jenkins.InitReactorRunner$1#onAttained: System config loaded

2022-02-15 13:13:40.300+0000 [id=58] INFO jenkins.InitReactorRunner$1#onAttained: System config adapted

2022-02-15 13:13:40.301+0000 [id=55] INFO jenkins.InitReactorRunner$1#onAttained: Loaded all jobs

2022-02-15 13:13:40.301+0000 [id=62] INFO jenkins.InitReactorRunner$1#onAttained: Configuration for all jobs updated

2022-02-15 13:13:40.314+0000 [id=93] INFO hudson.model.AsyncPeriodicWork#lambda$doRun$1: Started Download metadata

2022-02-15 13:13:40.324+0000 [id=93] INFO hudson.model.AsyncPeriodicWork#lambda$doRun$1: Finished Download metadata. 9 ms

2022-02-15 13:13:40.363+0000 [id=53] INFO jenkins.InitReactorRunner$1#onAttained: Completed initialization

2022-02-15 13:13:40.378+0000 [id=30] INFO hudson.WebAppMain$3#run: Jenkins is fully up and running

Running Jenkins as a WAR-based Java app has been possible since around 2005. It was born at Sun Microsystems, after all.

A more interesting path might be to use Kubernetes to host a Jenkins master.

Containerized Jenkins

To run Jenkins in Kubernetes, we first add the official Jenkins Helm chart repository:

$ helm repo add jenkins https://charts.jenkins.io

"jenkins" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "cribl" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "argo-cd" chart repository

...Successfully got an update from the "jenkins" chart repository

...Successfully got an update from the "bitnami" chart repository

Update Complete. ⎈Happy Helming!⎈

Then we can install with Helm

$ helm install jenkinsrelease jenkins/jenkins --set controller.adminPassword=MyAdminPassword1234

Once installed, we can fetch the initial password from the container (if we didn’t set it directly already):

$ kubectl exec --namespace default -it svc/jenkinsrelease -c jenkins -- /bin/cat /run/secrets/chart-admin-password
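Typing the admin password into --set also leaves it in your shell history. As an alternative (my suggestion, not something the chart requires), you could generate one into a variable first. A small sketch using only coreutils; `random_password` is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# random_password [LENGTH]
# Hypothetical helper: prints LENGTH (default 24, keep it under ~100)
# random alphanumeric characters.
random_password() {
  local len="${1:-24}"
  # Read a fixed 1 KiB from /dev/urandom (a finite read, so no SIGPIPE),
  # keep only alphanumerics, then cut to the requested length.
  head -c 1024 /dev/urandom | LC_ALL=C tr -dc 'A-Za-z0-9' | cut -c "1-${len}"
}

# Example:
# JENKINS_PW=$(random_password)
# helm install jenkinsrelease jenkins/jenkins --set controller.adminPassword="$JENKINS_PW"
```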

Before we set up ingress, we can access it directly with a port-forward:

$ kubectl --namespace default port-forward svc/jenkinsrelease 8080:8080

Forwarding from 127.0.0.1:8080 -> 8080

Forwarding from [::1]:8080 -> 8080

Handling connection for 8080

Handling connection for 8080

Handling connection for 8080

Setup Ingress

This, by now, should be a pattern you see throughout my various blogs.

We first create an A record in our DNS provider:

```
$ cat r53-jenkins.json
{
  "Comment": "CREATE jenkins fb.s A record ",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "jenkins.freshbrewed.science",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "73.242.50.46"
          }
        ]
      }
    }
  ]
}
$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-jenkins.json
{
  "ChangeInfo": {
    "Id": "/change/C0568972QALICKZPK4G0",
    "Status": "PENDING",
    "SubmittedAt": "2022-02-07T03:00:37.512Z",
    "Comment": "CREATE jenkins fb.s A record "
  }
}
$ nslookup jenkins.freshbrewed.science
Server: 172.31.48.1
Address: 172.31.48.1#53

Non-authoritative answer:
Name: jenkins.freshbrewed.science
Address: 73.242.50.46
```
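If you find yourself repeating this for each service, the change batch is easy to template. The sketch below is a hypothetical helper that prints an equivalent batch; it uses UPSERT rather than CREATE (both are valid Route 53 actions) so that re-running it does not fail when the record already exists. The hosted zone ID and IP in the comments are placeholders:

```bash
#!/usr/bin/env bash
# r53_a_record_batch NAME IP [TTL]
# Hypothetical helper: prints a Route 53 change batch that UPSERTs one A record.
r53_a_record_batch() {
  local name="$1" ip="$2" ttl="${3:-300}"
  cat <<EOF
{
  "Comment": "UPSERT ${name} A record",
  "Changes": [
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "${name}",
        "Type": "A",
        "TTL": ${ttl},
        "ResourceRecords": [ { "Value": "${ip}" } ]
      }
    }
  ]
}
EOF
}

# Example (placeholder zone ID and documentation IP):
# r53_a_record_batch jenkins.example.com 203.0.113.10 > r53-jenkins.json
# aws route53 change-resource-record-sets --hosted-zone-id <ZONE_ID> \
#   --change-batch file://r53-jenkins.json
```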

Next, we create a password file for basic auth. The htpasswd tool comes from the apache2-utils package:

$ sudo apt install apache2-utils

[sudo] password for builder:

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following additional packages will be installed:

libapr1 libaprutil1

The following NEW packages will be installed:

apache2-utils libapr1 libaprutil1

0 upgraded, 3 newly installed, 0 to remove and 45 not upgraded.

Need to get 260 kB of archives.

After this operation, 970 kB of additional disk space will be used.

Do you want to continue? [Y/n] Y

Get:1 http://archive.ubuntu.com/ubuntu focal/main amd64 libapr1 amd64 1.6.5-1ubuntu1 [91.4 kB]

Get:2 http://archive.ubuntu.com/ubuntu focal/main amd64 libaprutil1 amd64 1.6.1-4ubuntu2 [84.7 kB]

Get:3 http://archive.ubuntu.com/ubuntu focal-updates/main amd64 apache2-utils amd64 2.4.41-4ubuntu3.9 [84.3 kB]

Fetched 260 kB in 2s (167 kB/s)

Selecting previously unselected package libapr1:amd64.

(Reading database ... 131977 files and directories currently installed.)

Preparing to unpack .../libapr1_1.6.5-1ubuntu1_amd64.deb ...

Unpacking libapr1:amd64 (1.6.5-1ubuntu1) ...

Selecting previously unselected package libaprutil1:amd64.

Preparing to unpack .../libaprutil1_1.6.1-4ubuntu2_amd64.deb ...

Unpacking libaprutil1:amd64 (1.6.1-4ubuntu2) ...

Selecting previously unselected package apache2-utils.

Preparing to unpack .../apache2-utils_2.4.41-4ubuntu3.9_amd64.deb ...

Unpacking apache2-utils (2.4.41-4ubuntu3.9) ...

Setting up libapr1:amd64 (1.6.5-1ubuntu1) ...

Setting up libaprutil1:amd64 (1.6.1-4ubuntu2) ...

Setting up apache2-utils (2.4.41-4ubuntu3.9) ...

Processing triggers for man-db (2.9.1-1) ...

Processing triggers for libc-bin (2.31-0ubuntu9.2) ...

/sbin/ldconfig.real: /usr/lib/wsl/lib/libcuda.so.1 is not a symbolic link

$ htpasswd -c auth2 builder

New password:

Re-type new password:

Adding password for user builder

$ kubectl create secret generic jenkins-basic-auth --from-file=auth2

secret/jenkins-basic-auth created
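One gotcha worth checking here: ingress-nginx basic auth expects the htpasswd content under a key named `auth` in the secret, but `--from-file=auth2` stores it under the key `auth2`. With plain kubectl, `--from-file=auth=auth2` sets the key explicitly. If you prefer to review the manifest before applying it, here is a sketch (the helper name is hypothetical):

```bash
#!/usr/bin/env bash
# basic_auth_secret NAME HTPASSWD_FILE
# Hypothetical helper: prints a Secret manifest whose data key is "auth",
# the key ingress-nginx reads for basic auth, whatever the local file is named.
basic_auth_secret() {
  local name="$1" file="$2" encoded
  # Strip newlines so the base64 value stays on one YAML line.
  encoded=$(base64 < "$file" | tr -d '\n')
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${name}
type: Opaque
data:
  auth: ${encoded}
EOF
}

# Example:
# basic_auth_secret jenkins-basic-auth auth2 | kubectl apply -f -
```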

Then reference that in the ingress

```
$ cat jenkinsIngress.yml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/auth-realm: Authentication Required - Jenkins
    nginx.ingress.kubernetes.io/auth-secret: jenkins-basic-auth
    nginx.ingress.kubernetes.io/auth-type: basic
    kubernetes.io/tls-acme: "true"
    kubernetes.io/ingress.class: nginx
    cert-manager.io/cluster-issuer: "letsencrypt-prod"
  name: jenkins-with-auth
  namespace: default
spec:
  tls:
  - hosts:
    - jenkins.freshbrewed.science
    secretName: jenkins-tls
  rules:
  - host: jenkins.freshbrewed.science
    http:
      paths:
      - backend:
          service:
            name: jenkinsrelease
            port:
              number: 8080
        path: /
        pathType: ImplementationSpecific
```

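I did run into some nuances with auth and with not specifying the ingress class. One likely culprit on the auth side: ingress-nginx expects the htpasswd data under a key named auth in the secret, while the secret above was created from a file named auth2. In the end, this was the Ingress definition that worked with TLS and auth (note that it points at my existing argocd-basic-auth secret):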

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-production
    ingress.kubernetes.io/proxy-body-size: 2048m
    ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/auth-realm: Authentication Required - jenkins
    nginx.ingress.kubernetes.io/auth-secret: argocd-basic-auth
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/proxy-body-size: 2048m
    nginx.ingress.kubernetes.io/proxy-read-timeout: "900"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "900"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/client-max-body-size: 2048m
  labels:
    app: jenkins
    release: jenkinsrelease
  name: jenkins-with-auth
spec:
  ingressClassName: nginx
  rules:
  - host: jenkins.freshbrewed.science
    http:
      paths:
      - backend:
          service:
            name: jenkinsrelease
            port:
              number: 8080
        path: /
        pathType: Prefix
  tls:
  - hosts:
    - jenkins.freshbrewed.science
    secretName: jenkins-tls
```

Apply it:

$ kubectl apply -f jenkinsIngress.yml

ingress.networking.k8s.io/jenkins-with-auth created

Adding another Kubernetes endpoint

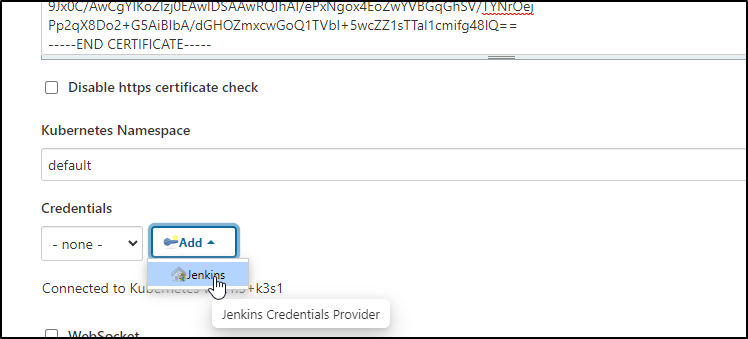

If we wanted to add Kubernetes endpoints other than the built-in one, we could follow the docs on the plugin.

Find the cert authority:

$ cat ~/.kube/config | grep certificate-authority-data | head -n1 | sed 's/^.*-data: //' | base64 -d

-----BEGIN CERTIFICATE-----

MIIBdzCCAR2gAwIBAgIBADAKBggqhkjOPQQDAjAjMSEwHwYDVQQDDBhrM3Mtc2Vy

dmVyLWNhasdfasdasdfasdfasdfasdfasdfasdfasdfasZwYVBGqGhSV/TYNrOej

dmVyLWNhasdfasdasdfasdfasdfasdfasdfasdfasdfasZwYVBGqGhSV/TYNrOej

dmVyLWNhasdfasdasdfasdfasdfasdfasdfasdfasdfasZwYVBGqGhSV/TYNrOej

dmVyLWNhasdfasdasdfasdfasdfasdfasdfasdfasdfasZwYVBGqGhSV/TYNrOej

asdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdf==

-----END CERTIFICATE-----

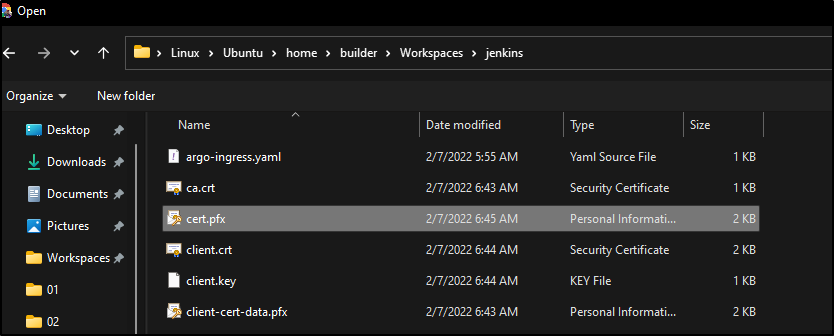

Build out our cert and key files:

builder@DESKTOP-QADGF36:~/Workspaces/jenkins$ cat ~/.kube/config | grep certificate-authority-data | head -n1 | sed 's/^.*-data: //' | base64 -d > ca.crt

builder@DESKTOP-QADGF36:~/Workspaces/jenkins$ cat ~/.kube/config | grep client-key-data | head -n1 | sed 's/^.*-data: //' | base64 -d > client.key

builder@DESKTOP-QADGF36:~/Workspaces/jenkins$ cat ~/.kube/config | grep client-certificate-data | head -n1 | sed 's/^.*-data: //' | base64 -d > client.crt

builder@DESKTOP-QADGF36:~/Workspaces/jenkins$ openssl pkcs12 -export -out cert.pfx -inkey client.key -in client.crt -certfile ca.crt

Enter Export Password:

Verifying - Enter Export Password:

(use a passphrase you will remember)
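The three extraction commands above differ only in the field name, so they can be wrapped in one function. A sketch, assuming the same kind of single-cluster kubeconfig (like the originals, it decodes only the first matching entry); `kubeconfig_field` is a hypothetical name:

```bash
#!/usr/bin/env bash
# kubeconfig_field FIELD [KUBECONFIG_PATH]
# Hypothetical helper: decodes the first base64 "*-data" field, such as
# certificate-authority-data, client-certificate-data or client-key-data.
# With several clusters/users you get whichever appears first in the file.
kubeconfig_field() {
  local field="$1" cfg="${2:-$HOME/.kube/config}"
  grep -m1 "^[[:space:]]*${field}:" "$cfg" \
    | sed 's/^.*-data:[[:space:]]*//' \
    | base64 -d
}

# Example, equivalent to the commands above:
# kubeconfig_field certificate-authority-data > ca.crt
# kubeconfig_field client-certificate-data   > client.crt
# kubeconfig_field client-key-data           > client.key
```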

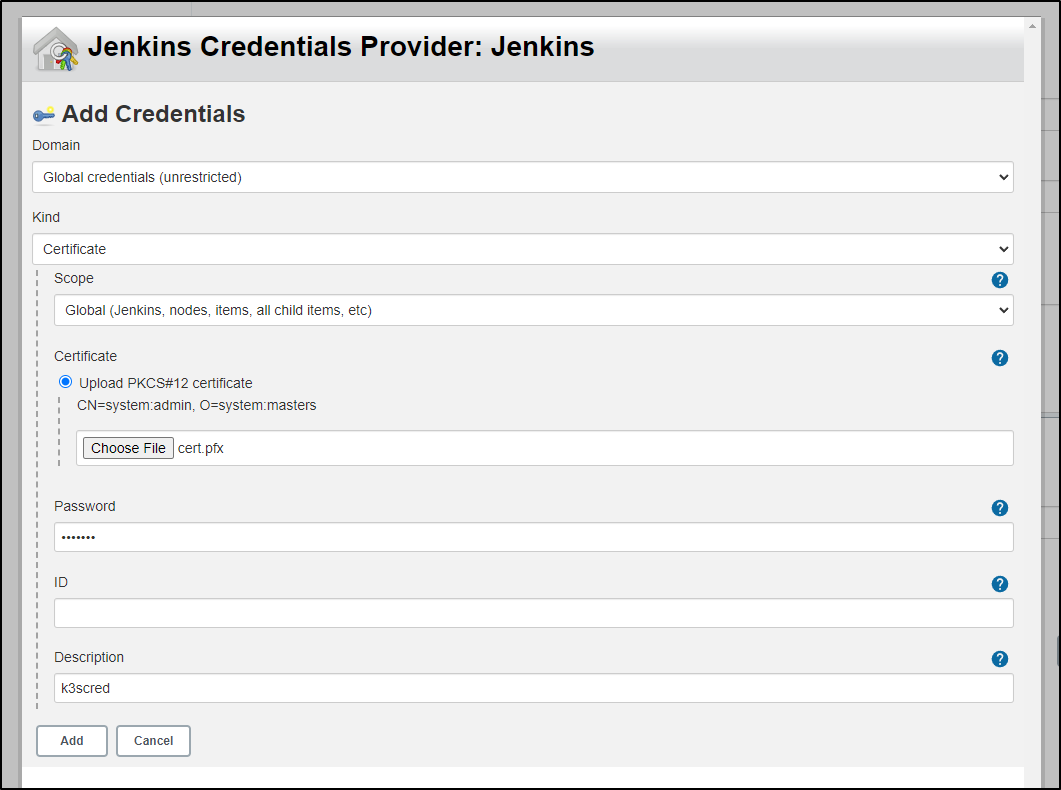

Pick the PFX we just created.

It will probably warn that the password is wrong. Click “Change” and enter the password you used when you encrypted the PFX. Now you can click “Add” to save.

This should work; however, I did not need more k8s clusters, so I stopped here.

Adding Email

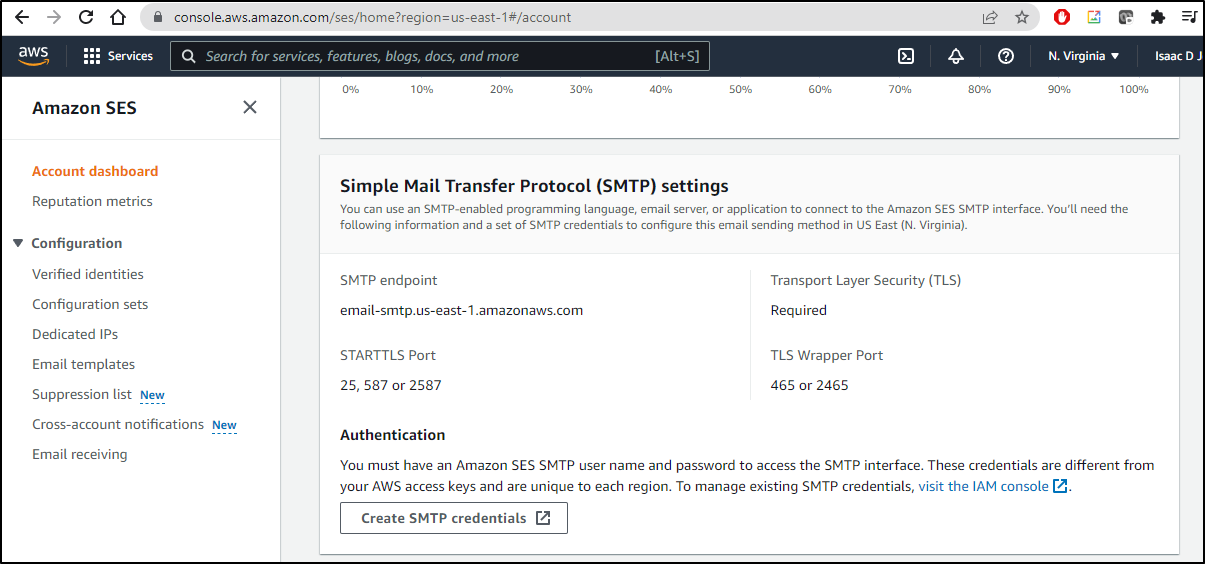

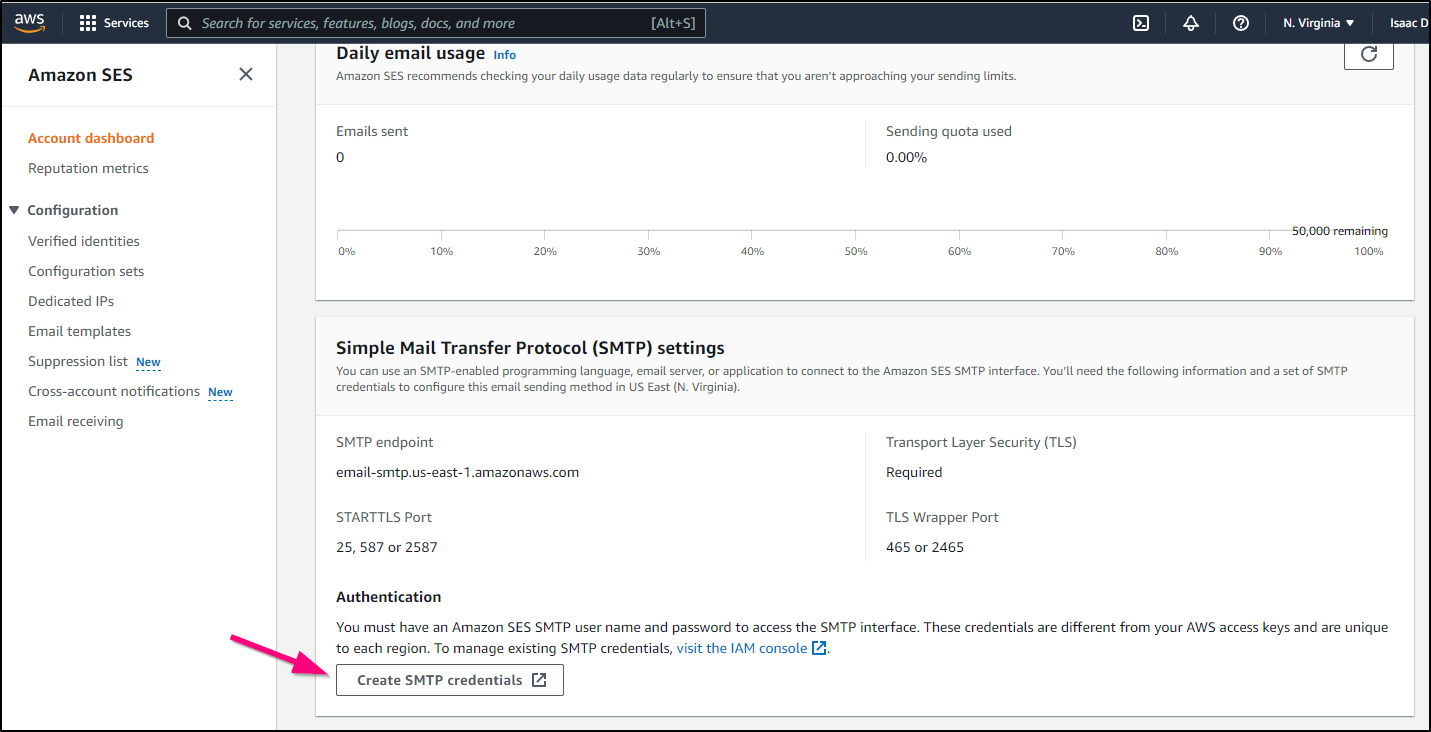

We can use Amazon SES as a proper SMTP email provider for our Jenkins instance.

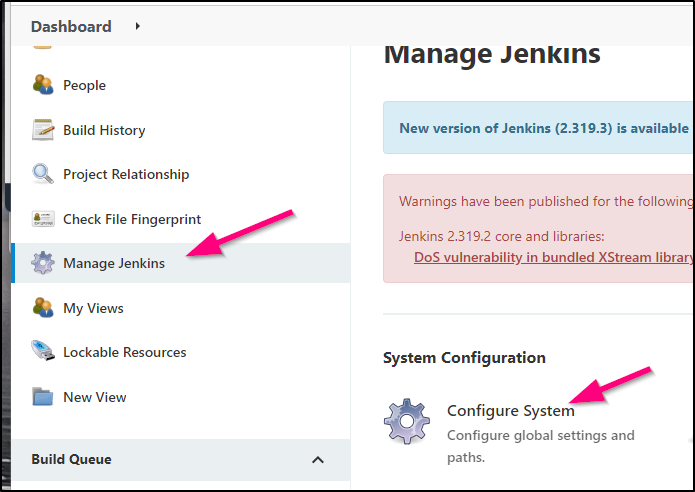

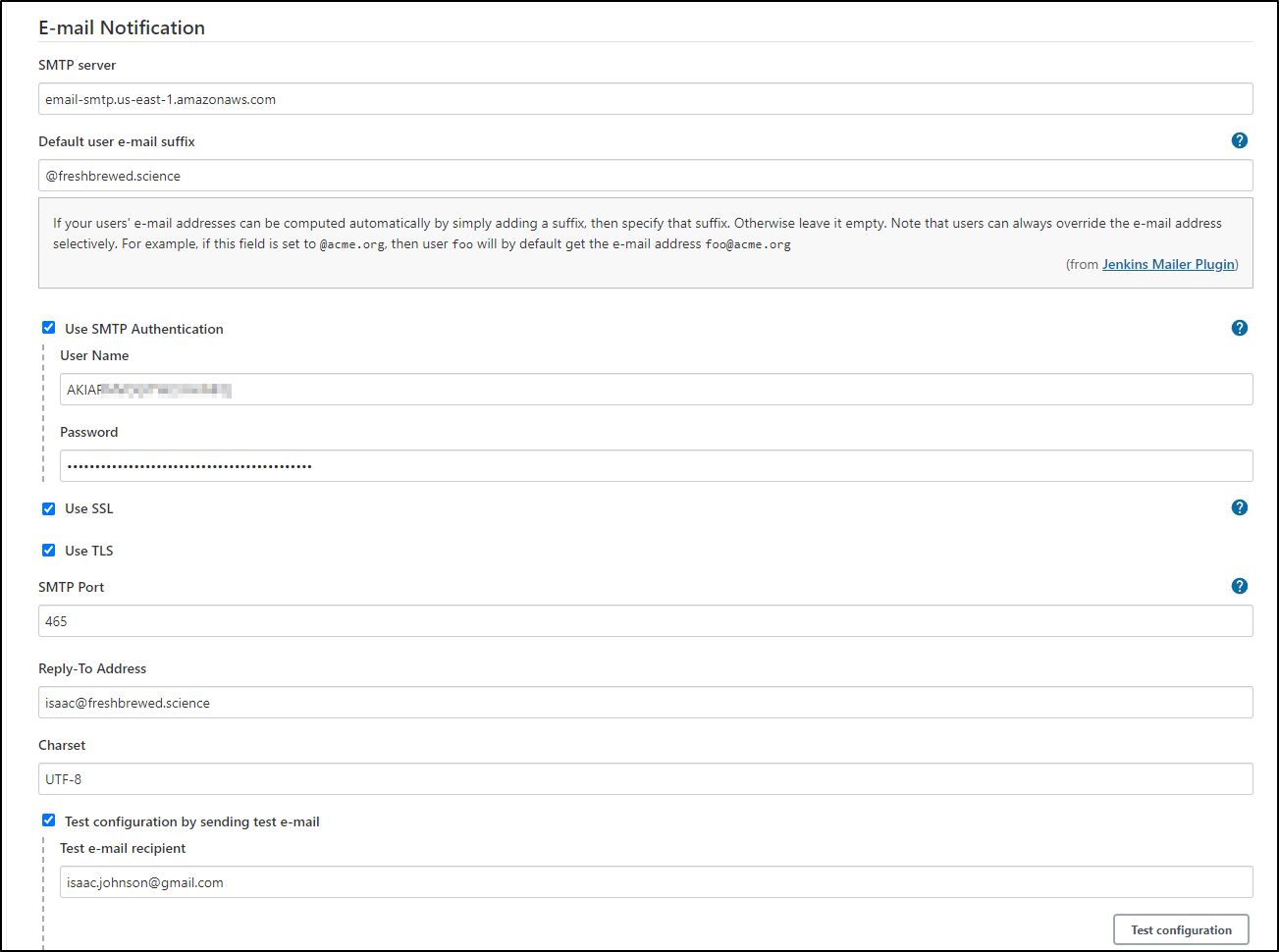

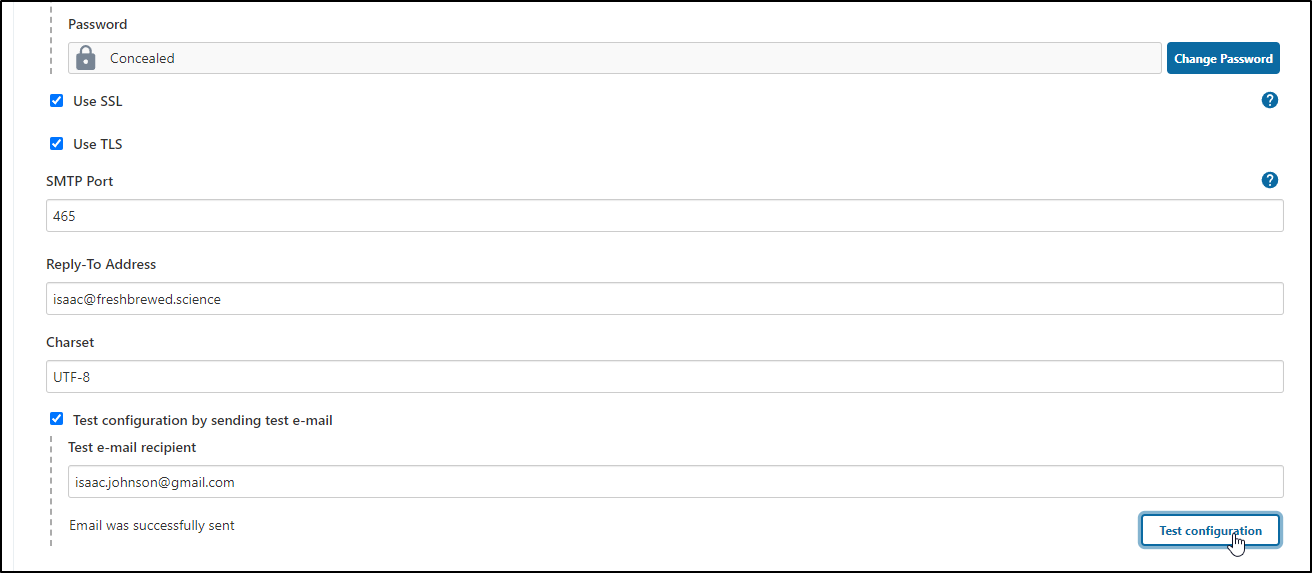

Then, under Manage Jenkins / Configure System, we set up email. First, we create the SMTP credentials:

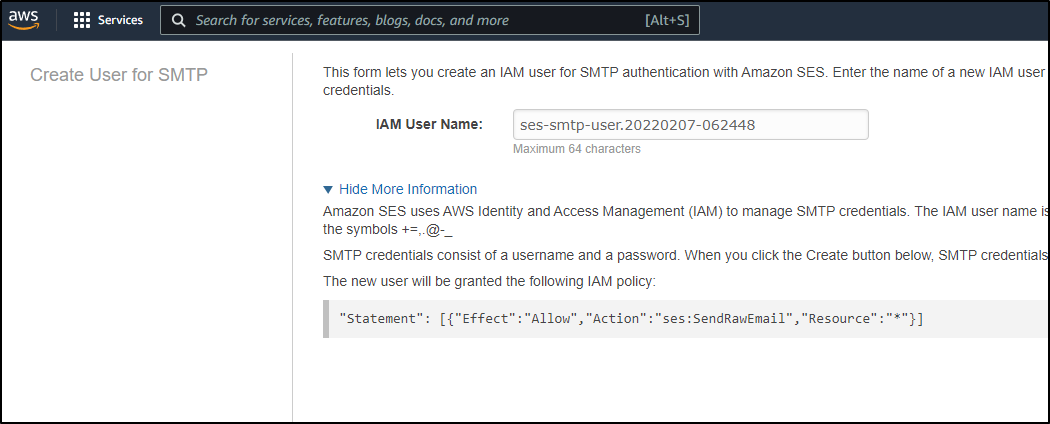

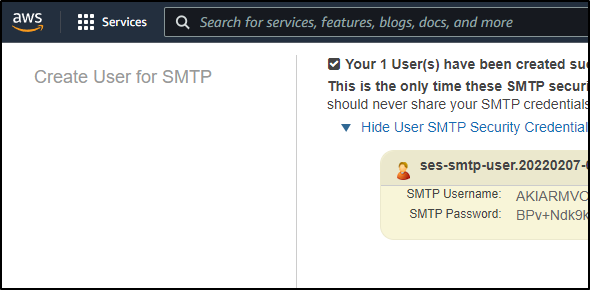

Create the user, which creates narrowly scoped IAM credentials.

Back in the Email Notification section, we can set the SMTP authentication, then click “Test Configuration”.

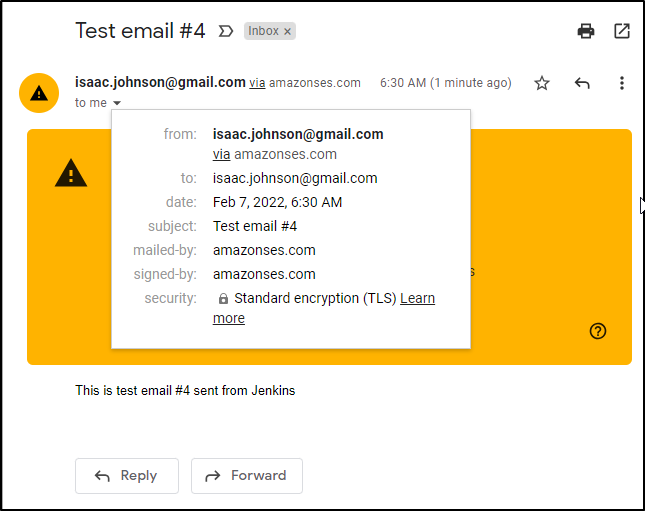

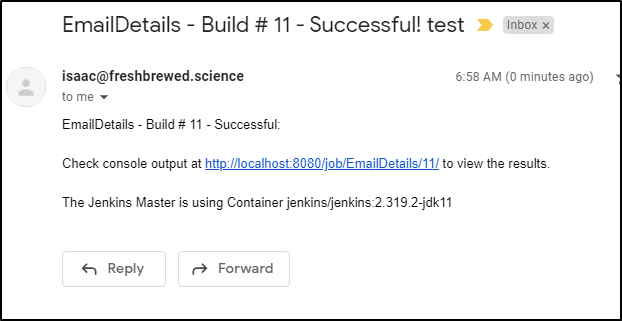

This should send an email.

Once I set the “From” address to match what SES uses (freshbrewed.science), the mail was shown as valid.

Note: it is worth saving these IAM creds as we will need them later when we use the Editable Email Notification Plugin

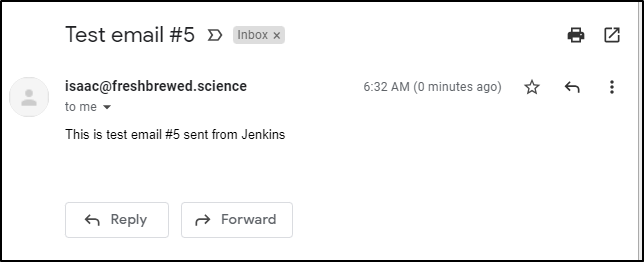

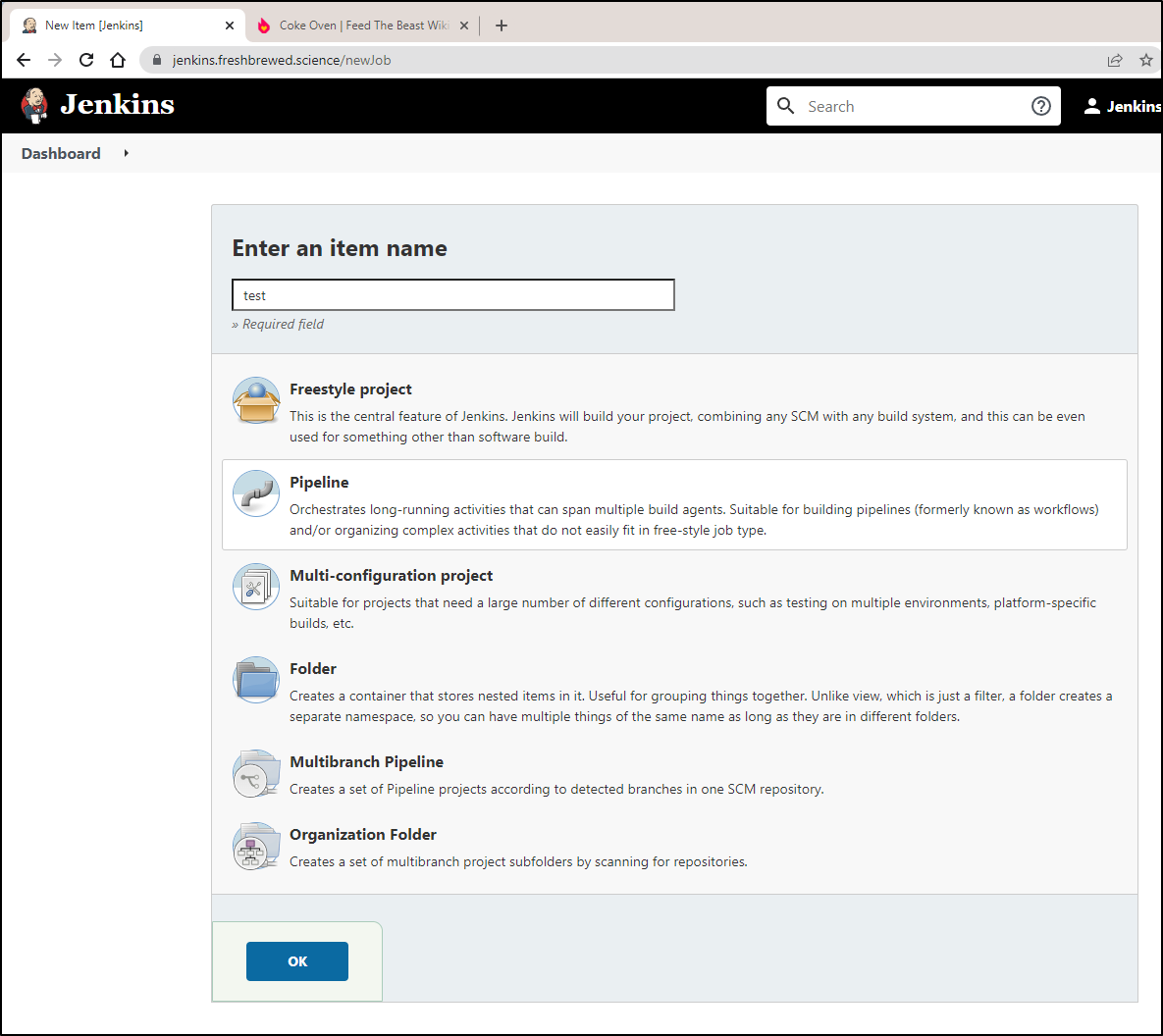

Create a basic Groovy build

Create a new Pipeline style build

Next, we’ll want to define a pipeline script

This can be a simple hello world:

```groovy
pipeline {
    agent any

    stages {
        stage('Hello') {
            steps {
                echo 'Hello World'
            }
        }
    }
}
```

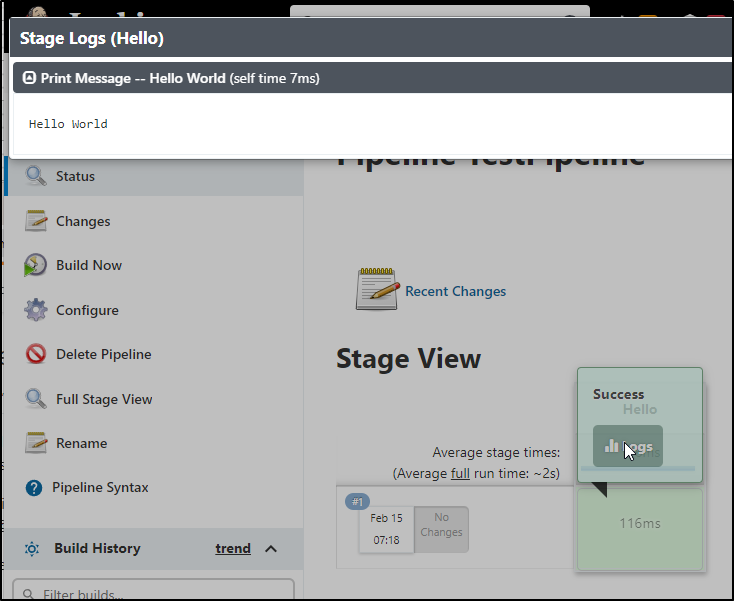

Save and run, then view the logs from the results page.

Now let’s add that same file to SCM (Git).

We will create a branch in our local Git repository, which is served over SSH:

$ cd testing123/

$ git remote show origin

* remote origin

Fetch URL: ssh://builder@192.168.1.32:/home/builder/gitRepo/

Push URL: ssh://builder@192.168.1.32:/home/builder/gitRepo/

...

$ git pull

remote: Enumerating objects: 47, done.

remote: Counting objects: 100% (47/47), done.

remote: Compressing objects: 100% (40/40), done.

remote: Total 46 (delta 17), reused 0 (delta 0)

Unpacking objects: 100% (46/46), 7.70 KiB | 1.28 MiB/s, done.

From ssh://192.168.1.32:/home/builder/gitRepo

6569031..c717101 main -> origin/main

Updating 6569031..c717101

Fast-forward

helm/sampleChart/.helmignore | 23 +++++++++++++++++++++++

helm/sampleChart/Chart.yaml | 24 ++++++++++++++++++++++++

helm/sampleChart/templates/NOTES.txt | 22 ++++++++++++++++++++++

helm/sampleChart/templates/_helpers.tpl | 62 ++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

helm/sampleChart/templates/deployment.yaml | 61 +++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

helm/sampleChart/templates/hpa.yaml | 28 ++++++++++++++++++++++++++++

helm/sampleChart/templates/ingress.yaml | 61 +++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

helm/sampleChart/templates/service.yaml | 15 +++++++++++++++

helm/sampleChart/templates/serviceaccount.yaml | 12 ++++++++++++

helm/sampleChart/templates/tests/test-connection.yaml | 15 +++++++++++++++

helm/sampleChart/values.yaml | 82 ++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

11 files changed, 405 insertions(+)

create mode 100644 helm/sampleChart/.helmignore

create mode 100644 helm/sampleChart/Chart.yaml

create mode 100644 helm/sampleChart/templates/NOTES.txt

create mode 100644 helm/sampleChart/templates/_helpers.tpl

create mode 100644 helm/sampleChart/templates/deployment.yaml

create mode 100644 helm/sampleChart/templates/hpa.yaml

create mode 100644 helm/sampleChart/templates/ingress.yaml

create mode 100644 helm/sampleChart/templates/service.yaml

create mode 100644 helm/sampleChart/templates/serviceaccount.yaml

create mode 100644 helm/sampleChart/templates/tests/test-connection.yaml

create mode 100644 helm/sampleChart/values.yaml

# create new Jenkins branch

$ git checkout -b jenkinsBuild

Switched to a new branch 'jenkinsBuild'

We need to add a file named “Jenkinsfile”:

```
$ vi Jenkinsfile
$ cat Jenkinsfile
pipeline {
    agent any

    stages {
        stage('Hello') {
            steps {
                echo 'Hello World'
            }
        }
    }
}
$ git add Jenkinsfile
$ git commit -m "Hello World"
[jenkinsBuild fbb3b3b] Hello World
 1 file changed, 11 insertions(+)
 create mode 100644 Jenkinsfile
```

Now push

$ git push

fatal: The current branch jenkinsBuild has no upstream branch.

To push the current branch and set the remote as upstream, use

git push --set-upstream origin jenkinsBuild

$ darf

git push --set-upstream origin jenkinsBuild [enter/↑/↓/ctrl+c]

Enumerating objects: 4, done.

Counting objects: 100% (4/4), done.

Delta compression using up to 16 threads

Compressing objects: 100% (3/3), done.

Writing objects: 100% (3/3), 392 bytes | 392.00 KiB/s, done.

Total 3 (delta 0), reused 0 (delta 0)

To ssh://192.168.1.32:/home/builder/gitRepo/

* [new branch] jenkinsBuild -> jenkinsBuild

Branch 'jenkinsBuild' set up to track remote branch 'jenkinsBuild' from 'origin'.

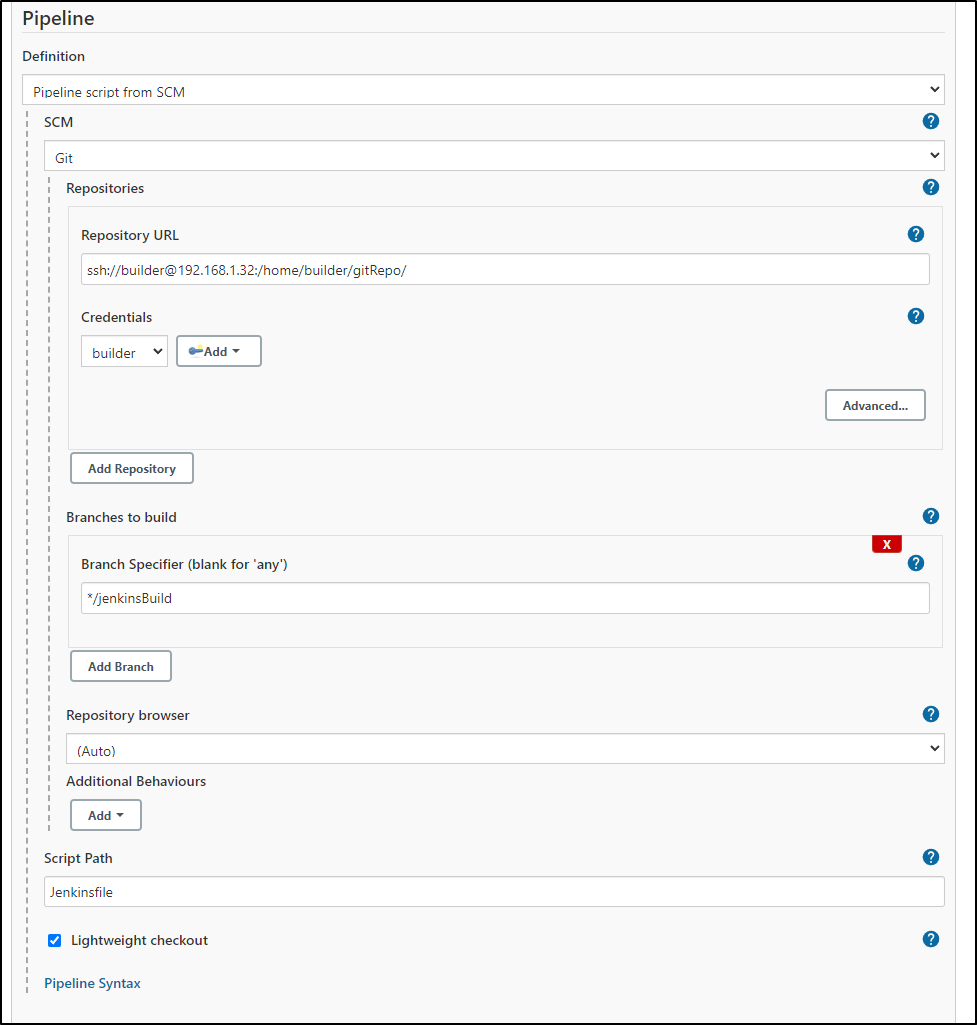

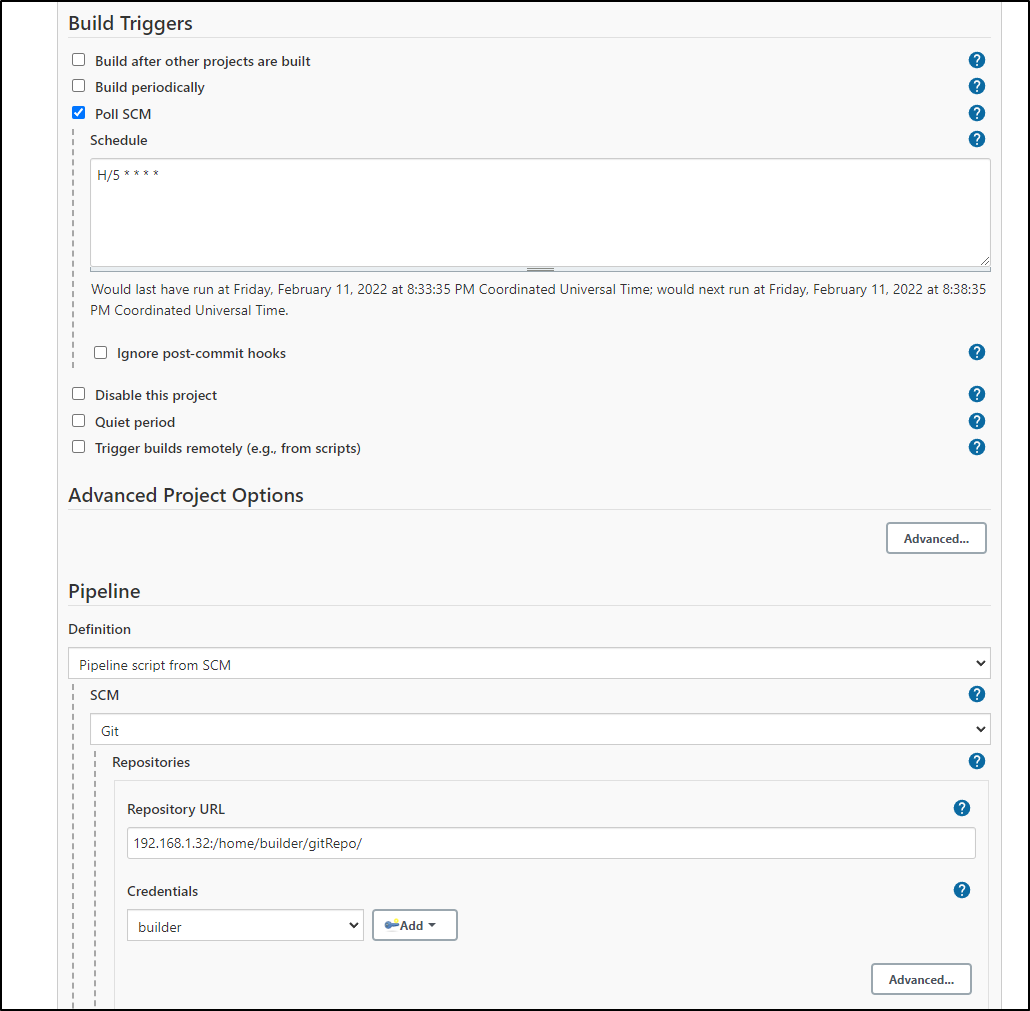

In our Jenkins configuration, change to “Pipeline script from SCM”

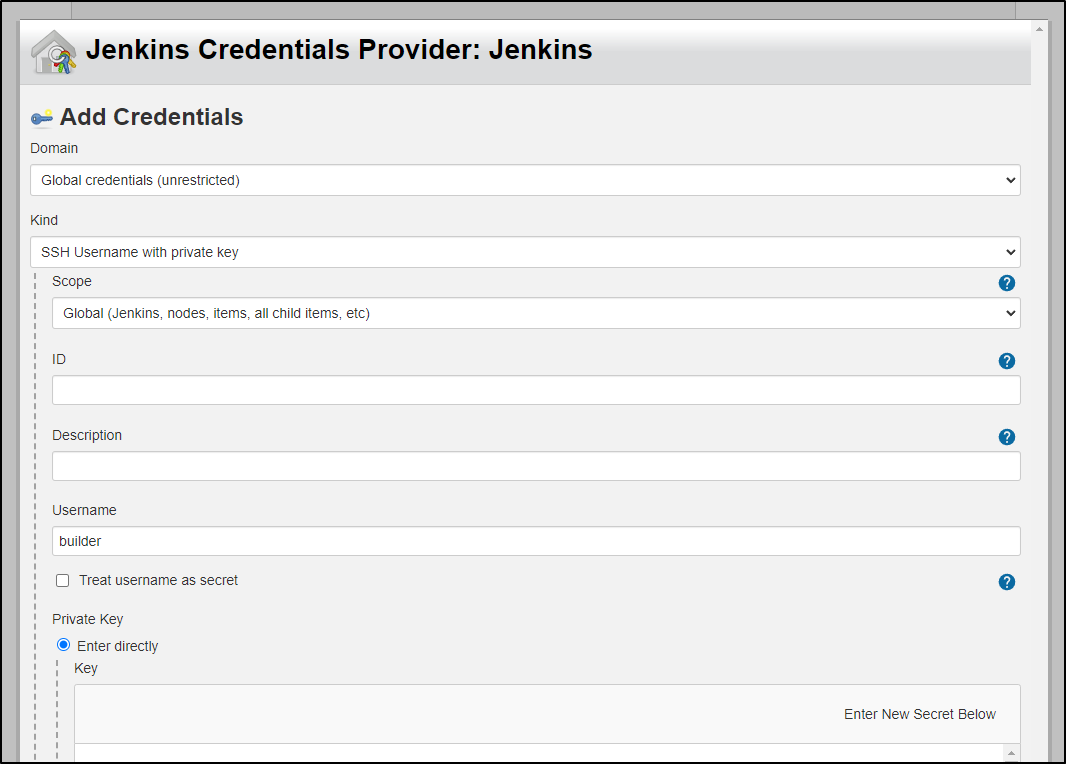

We can add our Git SSH credentials inline here.

This makes our new Pipeline configuration page look like this:

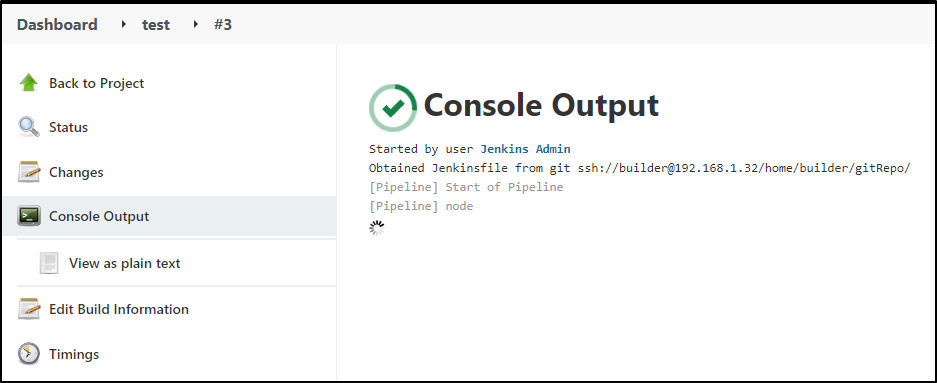

Now we can build manually and see that it kicks off a build using the local repo:

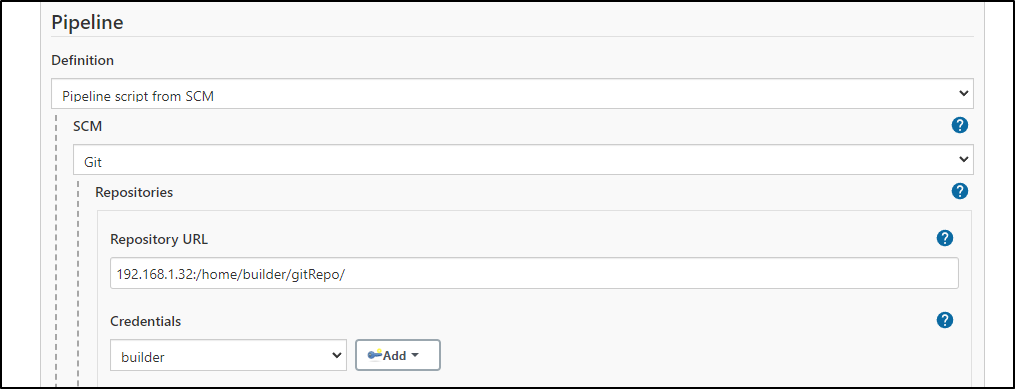

Note: if you get a host key verification error, you can tweak the repository URL syntax or use new keys. In my case, changing the Git URL format solved it.

To enable CI, we can set Jenkins to poll our Git repo every 5 minutes.

It should only build if there are changes on the branch.
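Conceptually, “only build if there are changes” boils down to comparing the branch head with the last commit that was built. The Git plugin does this bookkeeping for you; the sketch below only illustrates the idea, with a hypothetical state file and the current commit SHA passed in (something like `git ls-remote origin refs/heads/jenkinsBuild` could supply it):

```bash
#!/usr/bin/env bash
# needs_build CURRENT_SHA STATE_FILE
# Hypothetical illustration of SCM polling: returns 0 (build) and records
# CURRENT_SHA when it differs from the SHA stored in STATE_FILE; returns 1
# (skip) when the branch head has not moved.
needs_build() {
  local current="$1" state="$2" last=""
  if [ -f "$state" ]; then
    last=$(cat "$state")
  fi
  if [ "$current" = "$last" ]; then
    return 1
  fi
  printf '%s\n' "$current" > "$state"
  return 0
}
```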

Integration of Helm

Let’s say we want to do some Helm work.

What does not work

One challenge with the default “cloud” pod is that it has no helm, curl, or wget:

[Pipeline] sh

+ which curl

[Pipeline] }

...

+ helm list

/home/jenkins/agent/workspace/test2@tmp/durable-39475986/script.sh: 1: helm: not found

...

[Pipeline] sh

+ wget https://get.helm.sh/helm-v3.8.0-linux-amd64.tar.gz

/home/jenkins/agent/workspace/test2@tmp/durable-facefb61/script.sh: 1: wget: not found

apt needs root privileges:

[Pipeline] sh

+ apt update

WARNING: apt does not have a stable CLI interface. Use with caution in scripts.

Reading package lists...

E: List directory /var/lib/apt/lists/partial is missing. - Acquire (13: Permission denied)

and there is no sudo in the image:

[Pipeline] sh

+ sudo apt install -y wget

/home/jenkins/agent/workspace/test2@tmp/durable-a27d3dbb/script.sh: 1: sudo: not found

[Pipeline] }

Using plugins to try to download the files instead crashed with an out-of-memory error:

[Pipeline] End of Pipeline

java.lang.OutOfMemoryError: Java heap space

at java.base/java.util.Arrays.copyOf(Unknown Source)

at java.base/java.io.ByteArrayOutputStream.grow(Unknown Source)

at java.base/java.io.ByteArrayOutputStream.ensureCapacity(Unknown Source)

at java.base/java.io.ByteArrayOutputStream.write(Unknown Source)
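Before reaching for workarounds, it can help to have a step fail fast with a clear list of what the agent image lacks, rather than discovering it one “not found” at a time. A small sketch using the shell builtin `command -v`; the function name is hypothetical:

```bash
#!/usr/bin/env bash
# missing_tools TOOL...
# Hypothetical helper: prints each TOOL that is not on PATH and returns 1
# if any are missing, 0 if all are present.
missing_tools() {
  local tool missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
      missing=1
    fi
  done
  return "$missing"
}

# Example inside an sh step:
# missing_tools helm curl wget || { echo "agent image lacks required tools" >&2; exit 1; }
```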

Workarounds

One quick and easy solution is to just add the binary to Git.

I would not recommend it for the long term, but helm is small and this gets us to our next steps.

$ ls -ltrah bin/

total 14M

-rw-r--r-- 1 builder builder 13M Jan 24 10:30 helm-v3.8.0-linux-amd64.tar.gz

drwxr-xr-x 2 builder builder 4.0K Feb 14 06:41 .

drwxr-xr-x 5 builder builder 4.0K Feb 14 07:09 ..
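If you do vendor an archive like this, it is worth verifying it against the SHA-256 checksum Helm publishes for each release. A sketch assuming GNU `sha256sum` is available; the expected digest in the example is a placeholder you would copy from the Helm release page, and the helper name is hypothetical:

```bash
#!/usr/bin/env bash
# verify_sha256 FILE EXPECTED_HEX
# Hypothetical helper: returns 0 when FILE's SHA-256 digest equals
# EXPECTED_HEX, otherwise prints both digests to stderr and returns 1.
verify_sha256() {
  local file="$1" expected="$2" actual
  actual=$(sha256sum "$file" | cut -d' ' -f1)
  if [ "$actual" = "$expected" ]; then
    return 0
  fi
  echo "checksum mismatch for $file: got $actual, expected $expected" >&2
  return 1
}

# Example:
# verify_sha256 bin/helm-v3.8.0-linux-amd64.tar.gz "<sha256 from the release page>"
```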

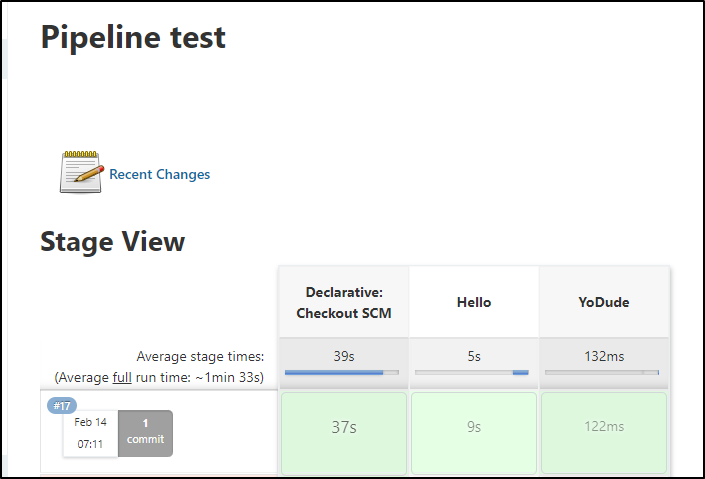

We can also define multiple stages in our Jenkinsfile:

```
$ cat Jenkinsfile
pipeline {
    agent any

    stages {
        stage('Hello') {
            steps {
                sh "which curl || true"
                sh "cd ./bin && tar -xzvf *.gz"
                sh "$WORKSPACE/bin/linux-amd64/helm list"
                echo 'Hello World'
            }
        }
        stage('YoDude') {
            steps {
                echo 'Hello Dude'
            }
        }
    }
}
```

And we can see that helm works without any extra auth configuration:

[Pipeline] sh

+ /home/jenkins/agent/workspace/test/bin/linux-amd64/helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

argo-cd default 1 2022-01-22 11:50:52.2846044 -0600 -0600 deployed argo-cd-1.0.1

azure-vote-1608995981 default 1 2020-12-26 09:19:42.3568184 -0600 -0600 deployed azure-vote-0.1.1

dapr default 3 2021-12-03 14:11:44.294724493 -0600 -0600 deployed dapr-1.5.0 1.5.0

datadogrelease default 10 2021-12-09 07:54:54.780959189 -0600 -0600 deployed datadog-2.27.5 7

docker-registry default 1 2021-01-01 16:53:12.375859 -0600 -0600 deployed docker-registry-1.9.6 2.7.1

harbor-registry default 1 2021-05-06 07:57:55.4126017 -0500 -0500 deployed harbor-1.6.1 2.2.1

jenkinsrelease default 1 2022-02-06 20:35:42.036675 -0600 -0600 deployed jenkins-3.11.3 2.319.2

kubewatch default 9 2021-06-10 08:35:11.802945 -0500 -0500 deployed kubewatch-3.2.6 0.1.0

mongo-x86-release default 3 2021-01-02 23:39:56.0582053 -0600 -0600 deployed mongodb-10.3.3 4.4.3

my-release default 3 2021-05-29 21:30:58.8689736 -0500 -0500 deployed ingress-nginx-3.31.0 0.46.0

redis default 1 2021-04-02 08:40:08.0504813 -0500 -0500 deployed redis-12.10.0 6.0.12

rundeckrelease default 1 2021-12-19 16:23:42.714181058 -0600 -0600 deployed rundeck-0.3.6 3.2.7

Installing a Sample Chart with Helm

The next step is to install something. Initially I used --generate-name; however, this has two issues. The first is that it uses the folder name (sampleChart) for the release name, and the uppercase letter breaks the RFC naming rules. The second is that it would be hard to find which release was created in order to remove it later. A more elegant approach is to use the Jenkins BUILD_TAG, which fits the RFC rules for releases (as long as the job name is lowercase) and is unique to this run.

Note: we are using a basic Nginx sample chart from a prior guide, but you can use helm create sampleChart to achieve the same (see the Helm docs).
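BUILD_TAG is `jenkins-${JOB_NAME}-${BUILD_NUMBER}`, so it is only a valid release name when the job name is already lowercase and free of spaces. A defensive sketch that normalizes it; the 53-character cap is Helm 3’s limit on release names, and the helper name is hypothetical:

```bash
#!/usr/bin/env bash
# release_name_from_tag TAG
# Hypothetical helper: lowercases TAG, replaces anything other than a-z, 0-9
# and '-' with '-', collapses repeated dashes, keeps the LAST 53 characters
# (the tail holds the unique build number), and trims leading/trailing dashes.
release_name_from_tag() {
  printf '%s\n' "$1" \
    | tr '[:upper:]' '[:lower:]' \
    | sed -e 's/[^a-z0-9-]/-/g' \
          -e 's/--*/-/g' \
          -e 's/.*\(.\{53\}\)$/\1/' \
          -e 's/^-*//' \
          -e 's/-*$//'
}

# Example inside an sh step:
# REL=$(release_name_from_tag "$BUILD_TAG")
# helm install -n "$REL" "$REL" ./sampleChart --create-namespace
```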

```
$ cat Jenkinsfile
pipeline {
    agent any

    stages {
        stage('Hello') {
            steps {
                echo 'Hello World'
                sh "which curl || true"
                sh "cd ./bin && tar -xzvf *.gz"
                sh "$WORKSPACE/bin/linux-amd64/helm list"
                sh "cd $WORKSPACE/helm && $WORKSPACE/bin/linux-amd64/helm install $BUILD_TAG ./sampleChart"
                sh "$WORKSPACE/bin/linux-amd64/helm list"
            }
        }
        stage('YoDude') {
            steps {
                echo 'Hello Dude'
            }
        }
    }
}
```

We can see from the logs it installed just fine:

+ cd /home/jenkins/agent/workspace/test/helm

+ /home/jenkins/agent/workspace/test/bin/linux-amd64/helm install jenkins-test-19 ./sampleChart

NAME: jenkins-test-19

LAST DEPLOYED: Mon Feb 14 13:26:46 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=samplechart,app.kubernetes.io/instance=jenkins-test-19" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace default $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace default port-forward $POD_NAME 8080:$CONTAINER_PORT

[Pipeline] sh

+ /home/jenkins/agent/workspace/test/bin/linux-amd64/helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

argo-cd default 1 2022-01-22 11:50:52.2846044 -0600 -0600 deployed argo-cd-1.0.1

azure-vote-1608995981 default 1 2020-12-26 09:19:42.3568184 -0600 -0600 deployed azure-vote-0.1.1

dapr default 3 2021-12-03 14:11:44.294724493 -0600 -0600 deployed dapr-1.5.0 1.5.0

datadogrelease default 10 2021-12-09 07:54:54.780959189 -0600 -0600 deployed datadog-2.27.5 7

docker-registry default 1 2021-01-01 16:53:12.375859 -0600 -0600 deployed docker-registry-1.9.6 2.7.1

harbor-registry default 1 2021-05-06 07:57:55.4126017 -0500 -0500 deployed harbor-1.6.1 2.2.1

jenkins-test-19 default 1 2022-02-14 13:26:46.151337869 +0000 UTC deployed samplechart-0.1.1 1.16.0

jenkinsrelease default 1 2022-02-06 20:35:42.036675 -0600 -0600 deployed jenkins-3.11.3 2.319.2

kubewatch default 9 2021-06-10 08:35:11.802945 -0500 -0500 deployed kubewatch-3.2.6 0.1.0

mongo-x86-release default 3 2021-01-02 23:39:56.0582053 -0600 -0600 deployed mongodb-10.3.3 4.4.3

my-release default 3 2021-05-29 21:30:58.8689736 -0500 -0500 deployed ingress-nginx-3.31.0 0.46.0

redis default 1 2021-04-02 08:40:08.0504813 -0500 -0500 deployed redis-12.10.0 6.0.12

rundeckrelease default 1 2021-12-19 16:23:42.714181058 -0600 -0600 deployed rundeck-0.3.6 3.2.7

After the build, I see it running in the cluster just fine

$ helm list | grep jenkins

jenkins-test-19 default 1 2022-02-14 13:26:46.151337869 +0000 UTC deployed samplechart-0.1.1 1.16.0

jenkinsrelease default 1 2022-02-06 20:35:42.036675 -0600 CST deployed jenkins-3.11.3 2.319.2
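Releases like jenkins-test-19 will pile up if nothing removes them. A sketch for spotting them in `helm list` output; the default prefix is an assumption based on the BUILD_TAG naming used here, and the helper name is hypothetical (Helm 3 can also filter directly with `helm list --short --filter '^jenkins-test-'`):

```bash
#!/usr/bin/env bash
# jenkins_releases [PREFIX]
# Hypothetical helper: reads `helm list` output on stdin, skips the header
# row, and prints release names that start with PREFIX (default "jenkins-test-").
jenkins_releases() {
  local prefix="${1:-jenkins-test-}"
  awk -v p="$prefix" 'NR > 1 && index($1, p) == 1 { print $1 }'
}

# Example (review the list before deleting anything!):
# helm list | jenkins_releases
# helm list | jenkins_releases | xargs -r -n1 helm delete
```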

We can also install and remove the release within the same Jenkinsfile:

```
$ cat Jenkinsfile
pipeline {
    agent any

    stages {
        stage('Hello') {
            steps {
                echo 'Hello World'
                sh "which kubectl || true"
                sh "cd ./bin && tar -xzvf *.gz"
                sh "$WORKSPACE/bin/linux-amd64/helm list"
                sh "cd $WORKSPACE/helm && $WORKSPACE/bin/linux-amd64/helm install $BUILD_TAG ./sampleChart"
                sh "$WORKSPACE/bin/linux-amd64/helm list"
                sh "$WORKSPACE/bin/linux-amd64/helm delete $BUILD_TAG"
            }
        }
        stage('YoDude') {
            steps {
                echo 'Hello Dude'
                sh "ls -ltra ./bin"
            }
        }
    }
}
```

Output:

[Pipeline] sh

+ cd /home/jenkins/agent/workspace/test/helm

+ /home/jenkins/agent/workspace/test/bin/linux-amd64/helm install jenkins-test-20 ./sampleChart

NAME: jenkins-test-20

LAST DEPLOYED: Mon Feb 14 13:32:44 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=samplechart,app.kubernetes.io/instance=jenkins-test-20" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace default $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace default port-forward $POD_NAME 8080:$CONTAINER_PORT

[Pipeline] sh

+ /home/jenkins/agent/workspace/test/bin/linux-amd64/helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

argo-cd default 1 2022-01-22 11:50:52.2846044 -0600 -0600 deployed argo-cd-1.0.1

azure-vote-1608995981 default 1 2020-12-26 09:19:42.3568184 -0600 -0600 deployed azure-vote-0.1.1

dapr default 3 2021-12-03 14:11:44.294724493 -0600 -0600 deployed dapr-1.5.0 1.5.0

datadogrelease default 10 2021-12-09 07:54:54.780959189 -0600 -0600 deployed datadog-2.27.5 7

docker-registry default 1 2021-01-01 16:53:12.375859 -0600 -0600 deployed docker-registry-1.9.6 2.7.1

harbor-registry default 1 2021-05-06 07:57:55.4126017 -0500 -0500 deployed harbor-1.6.1 2.2.1

jenkins-test-19 default 1 2022-02-14 13:26:46.151337869 +0000 UTC deployed samplechart-0.1.1 1.16.0

jenkins-test-20 default 1 2022-02-14 13:32:44.393016282 +0000 UTC deployed samplechart-0.1.1 1.16.0

jenkinsrelease default 1 2022-02-06 20:35:42.036675 -0600 -0600 deployed jenkins-3.11.3 2.319.2

kubewatch default 9 2021-06-10 08:35:11.802945 -0500 -0500 deployed kubewatch-3.2.6 0.1.0

mongo-x86-release default 3 2021-01-02 23:39:56.0582053 -0600 -0600 deployed mongodb-10.3.3 4.4.3

my-release default 3 2021-05-29 21:30:58.8689736 -0500 -0500 deployed ingress-nginx-3.31.0 0.46.0

redis default 1 2021-04-02 08:40:08.0504813 -0500 -0500 deployed redis-12.10.0 6.0.12

rundeckrelease default 1 2021-12-19 16:23:42.714181058 -0600 -0600 deployed rundeck-0.3.6 3.2.7

[Pipeline] sh

+ /home/jenkins/agent/workspace/test/bin/linux-amd64/helm delete jenkins-test-20

release "jenkins-test-20" uninstalled

[Pipeline] }

I improved it a bit to install into its own namespace. First, though, I found more leftover Anthos garbage in the cluster that needed cleansing:

$ kubectl delete ValidatingWebhookConfiguration gatekeeper-validating-webhook-configuration

validatingwebhookconfiguration.admissionregistration.k8s.io "gatekeeper-validating-webhook-configuration" deleted

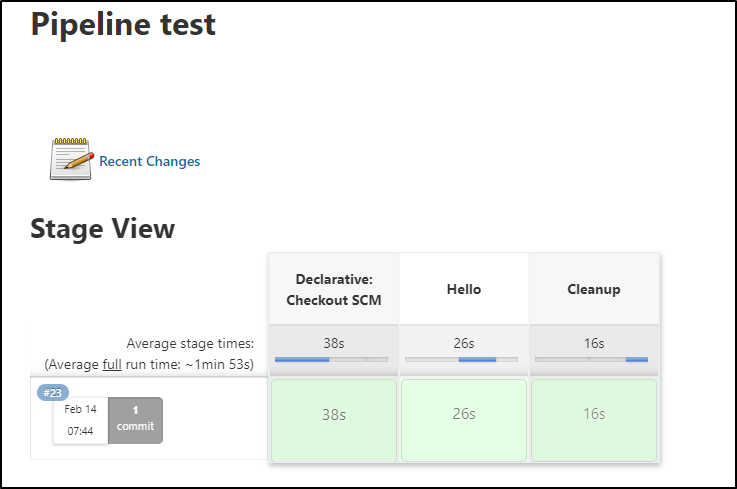

Since stages execute in the same workspace, I could use the extracted helm binary to do the cleanup in a subsequent stage:

```
$ cat Jenkinsfile
pipeline {
    agent any

    stages {
        stage('Hello') {
            steps {
                echo 'Hello World'
                sh "which kubectl || true"
                sh "cd ./bin && tar -xzvf *.gz"
                sh "$WORKSPACE/bin/linux-amd64/helm list"
                sh "cd $WORKSPACE/helm && $WORKSPACE/bin/linux-amd64/helm install -n $BUILD_TAG $BUILD_TAG ./sampleChart --create-namespace"
            }
        }
        stage('Cleanup') {
            steps {
                echo 'Hello Dude'
                sh "$WORKSPACE/bin/linux-amd64/helm list -n $BUILD_TAG"
                sh "$WORKSPACE/bin/linux-amd64/helm delete $BUILD_TAG -n $BUILD_TAG"
            }
        }
    }
}
```

Here we see the results of the multi-stage run

Output:

```
[Pipeline] sh
+ cd /home/jenkins/agent/workspace/test/helm
+ /home/jenkins/agent/workspace/test/bin/linux-amd64/helm install -n jenkins-test-23 jenkins-test-23 ./sampleChart --create-namespace
I0214 13:46:13.819086 219 request.go:665] Waited for 1.084184802s due to client-side throttling, not priority and fairness, request: GET:https://10.43.0.1:443/apis/external.metrics.k8s.io/v1beta1?timeout=32s
NAME: jenkins-test-23
LAST DEPLOYED: Mon Feb 14 13:46:24 2022
NAMESPACE: jenkins-test-23
STATUS: deployed
REVISION: 1
NOTES:
1. Get the application URL by running these commands:
export POD_NAME=$(kubectl get pods --namespace jenkins-test-23 -l "app.kubernetes.io/name=samplechart,app.kubernetes.io/instance=jenkins-test-23" -o jsonpath="{.items[0].metadata.name}")
export CONTAINER_PORT=$(kubectl get pod --namespace jenkins-test-23 $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")
echo "Visit http://127.0.0.1:8080 to use your application"
kubectl --namespace jenkins-test-23 port-forward $POD_NAME 8080:$CONTAINER_PORT
[Pipeline] }
[Pipeline] // stage
[Pipeline] stage
[Pipeline] { (Cleanup)
[Pipeline] echo
Hello Dude
[Pipeline] sh
+ /home/jenkins/agent/workspace/test/bin/linux-amd64/helm list -n jenkins-test-23
NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION
jenkins-test-23 jenkins-test-23 1 2022-02-14 13:46:24.208637521 +0000 UTC deployed samplechart-0.1.1 1.16.0
[Pipeline] sh
+ /home/jenkins/agent/workspace/test/bin/linux-amd64/helm delete jenkins-test-23 -n jenkins-test-23
release "jenkins-test-23" uninstalled
```
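Splitting install and cleanup across stages has one gap: if anything in the `Hello` stage fails, the pipeline stops and `Cleanup` never runs, leaving the release and its namespace behind. In a declarative pipeline the idiomatic fix is a `post { always { ... } }` block. If you would rather keep install and teardown in a single `sh` step, a shell `trap` gives a similar guarantee. Below is a minimal sketch, assuming the workspace layout used above; `deploy_and_cleanup` is a hypothetical helper, and `HELM` can be overridden (for instance with a stub when testing).

```shell
#!/bin/sh
# Path to the helm binary extracted into the workspace; override HELM to use another one.
HELM="${HELM:-${WORKSPACE:-.}/bin/linux-amd64/helm}"

# Hypothetical helper: install a release into its own namespace, run a check,
# and always uninstall it afterwards, even if the install or the check fails.
deploy_and_cleanup() {
  release="$1"
  chart="$2"
  (
    # The EXIT trap fires when this subshell exits, on success or failure.
    trap '"$HELM" delete "$release" -n "$release"' EXIT
    "$HELM" install -n "$release" "$release" "$chart" --create-namespace &&
      "$HELM" list -n "$release"
    # Exit explicitly so the caller sees the install/check status, not the cleanup's.
    rc=$?
    exit "$rc"
  )
}
```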

We could keep expanding on Jenkinsfile builds. But what if we wanted to trigger Argo (which we covered last week)?

Jenkins to Argo

We may wish to use Jenkins to orchestrate containerized workflows in Argo Workflows.

We can add an Argo Workflow template to the sample Helm chart in our repo:

```
builder@DESKTOP-QADGF36:~/Workspaces/testing123/helm/sampleChart$ cat templates/argoworkflow.yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  name: {{ include "samplechart.fullname" . }}
  labels:
    workflows.argoproj.io/container-runtime-executor: pns
spec:
  entrypoint: dind-sidecar-example
  volumes:
  - name: my-secret-vol
    secret:
      secretName: argo-harbor-secret
  templates:
  - name: dind-sidecar-example
    inputs:
      artifacts:
      - name: buildit
        path: /tmp/buildit.sh
        raw:
          data: |
            cat Dockerfile
            docker build -t hello .
            echo $HARBORPASS | docker login --username $HARBORUSER --password-stdin https://harbor.freshbrewed.science/freshbrewedprivate
            echo 'going to tag.'
            docker tag hello:latest harbor.freshbrewed.science/freshbrewedprivate/hello-argo:latest
            echo 'going to push.'
            docker push harbor.freshbrewed.science/freshbrewedprivate/hello-argo:latest
      - name: dockerfile
        path: /tmp/Dockerfile
        raw:
          data: |
            FROM alpine
            CMD ["echo","Hello Argo. Let us CICD entirely in Argo"]
    container:
      image: docker:19.03.13
      command: [sh, -c]
      args: ["until docker ps; do sleep 3; done; echo 'hello' && cd /tmp && chmod u+x buildit.sh && ./buildit.sh"]
      env:
      - name: DOCKER_HOST
        value: 127.0.0.1
      - name: HARBORPASS
        valueFrom:
          secretKeyRef:
            name: argo-harbor-secret
            key: harborpassword
      - name: HARBORUSER
        valueFrom:
          secretKeyRef:
            name: argo-harbor-secret
            key: harborusername
      volumeMounts:
      - name: my-secret-vol
        mountPath: "/secret/mountpath"
    sidecars:
    - name: dind
      image: docker:19.03.13-dind
      env:
      - name: DOCKER_TLS_CERTDIR
        value: ""
      securityContext:
        privileged: true
      mirrorVolumeMounts: true
```

Granted, due to my setup, I needed to install into the default namespace (where the Harbor secret lives and where my Argo installation watches for Workflow objects).

From the Jenkins log I can see the release was installed:

```
[Pipeline] sh
+ cd /home/jenkins/agent/workspace/test/helm
+ /home/jenkins/agent/workspace/test/bin/linux-amd64/helm install jenkins-test-25 ./sampleChart
NAME: jenkins-test-25
LAST DEPLOYED: Mon Feb 14 14:09:06 2022
NAMESPACE: default
STATUS: deployed
REVISION: 1
NOTES:
1. Get the application URL by running these commands:
export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=samplechart,app.kubernetes.io/instance=jenkins-test-25" -o jsonpath="{.items[0].metadata.name}")
export CONTAINER_PORT=$(kubectl get pod --namespace default $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")
echo "Visit http://127.0.0.1:8080 to use your application"
kubectl --namespace default port-forward $POD_NAME 8080:$CONTAINER_PORT
[Pipeline] }
```

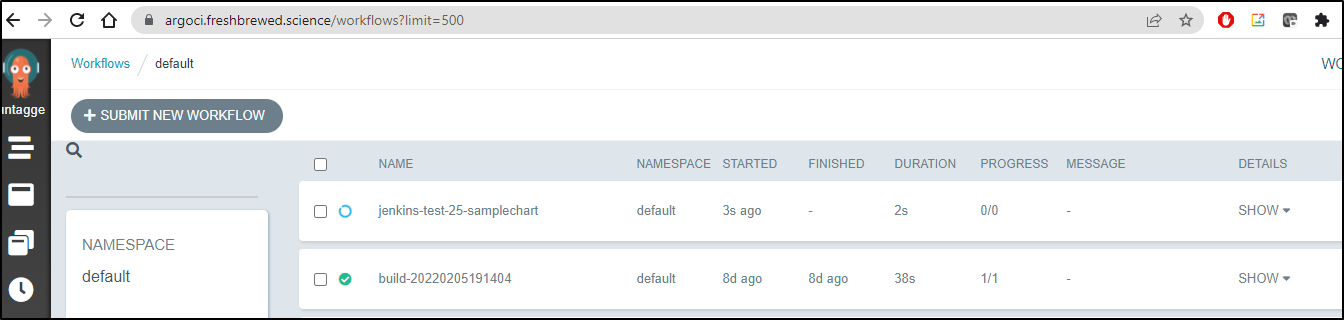

This then created the Workflow instance

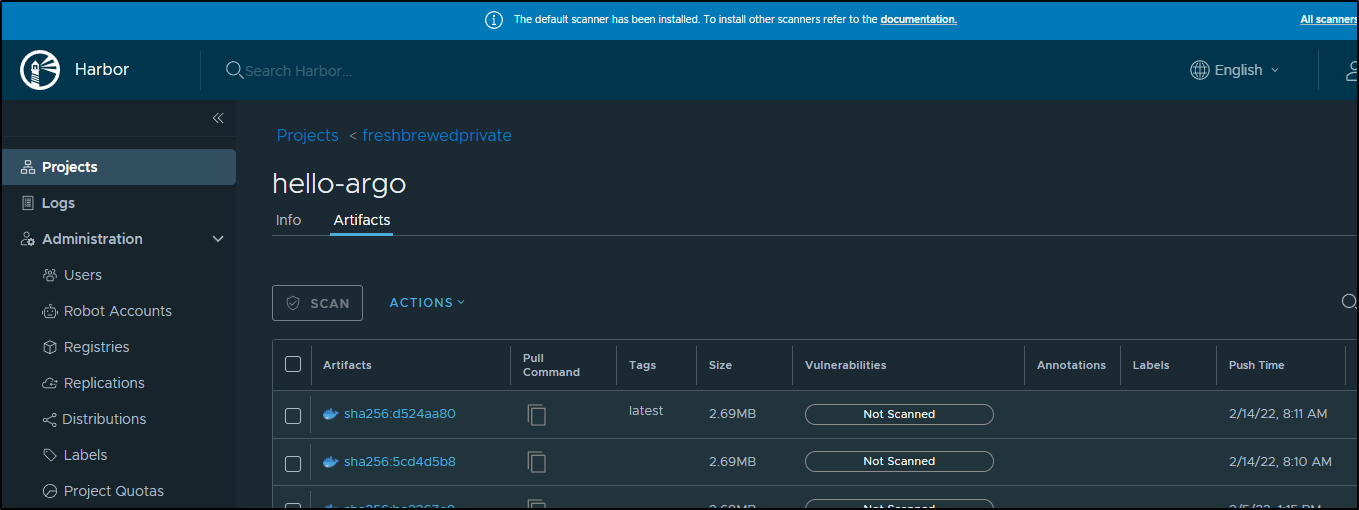

Which then generated a container image

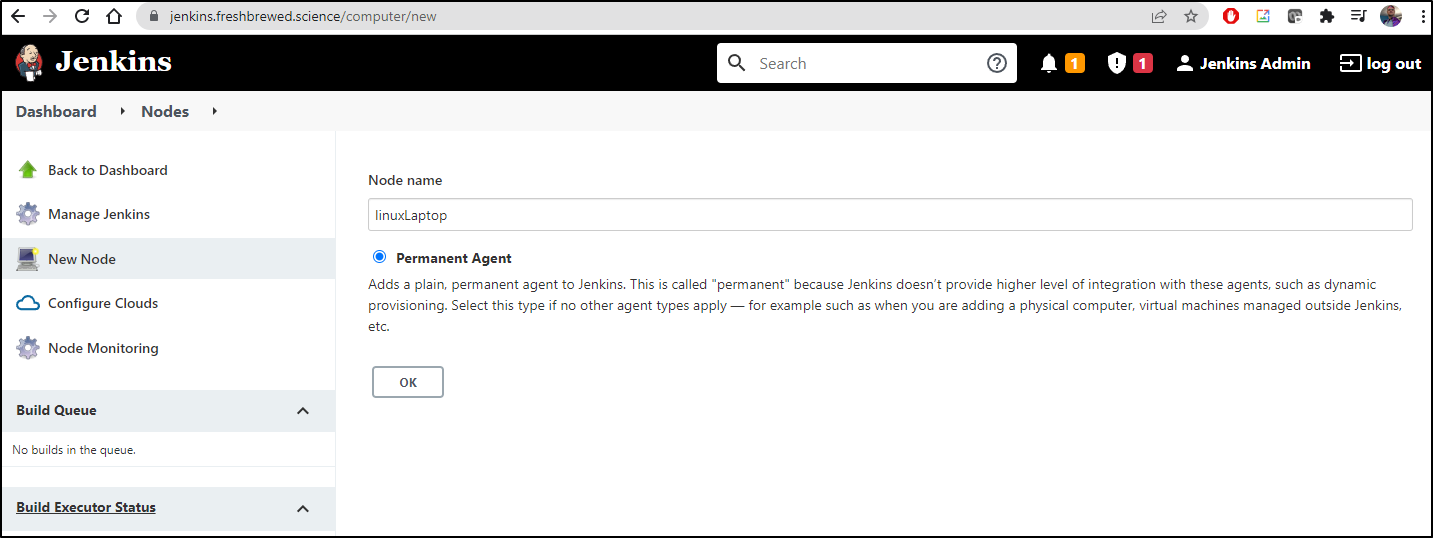
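One thing this flow does not do is wait: the Jenkins build goes green as soon as `helm install` returns, whether or not the Workflow it created later succeeds. A simple way to tie the two together is to poll the Workflow's `.status.phase`, which Argo moves through values such as `Pending`, `Running`, `Succeeded`, `Failed` and `Error`. This is a rough sketch assuming the Workflow lives in `default`, as in my setup; `wait_for_workflow`, its retry defaults and the `KUBECTL` override are illustrative, not part of Argo or Jenkins. The Workflow's real name comes from the chart's `samplechart.fullname` helper, so confirm it with `kubectl get workflows` first. (If the Argo CLI is on the agent, `argo wait` covers similar ground.)

```shell
#!/bin/sh
# kubectl binary to use; override KUBECTL to point at another binary or a stub.
KUBECTL="${KUBECTL:-kubectl}"

# Hypothetical helper: poll an Argo Workflow until it reaches a terminal phase.
# Args: workflow name, namespace (default), attempts (120), seconds between (5).
# Returns 0 on Succeeded, 1 on Failed/Error, 2 on timeout.
wait_for_workflow() {
  wf="$1"
  ns="${2:-default}"
  tries="${3:-120}"
  delay="${4:-5}"
  while [ "$tries" -gt 0 ]; do
    # Jenkins runs sh steps with -e, so guard the lookup: the Workflow may not
    # exist yet, and a failed kubectl call should mean "retry", not "abort".
    phase=$("$KUBECTL" get workflow "$wf" -n "$ns" -o jsonpath='{.status.phase}' 2>/dev/null) || phase=""
    case "$phase" in
      Succeeded) echo "workflow $wf succeeded"; return 0 ;;
      Failed|Error) echo "workflow $wf ended in phase $phase" >&2; return 1 ;;
    esac
    # Still Pending/Running (or not found yet): wait and try again.
    tries=$((tries - 1))
    sleep "$delay"
  done
  echo "timed out waiting for workflow $wf" >&2
  return 2
}
```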

Adding a Jenkins Persistent Node

We can also add non-Kubernetes-based agents just as easily.

Add a New Node

Give it a name and set the label. The label is what subsequent jobs will use to target this node.

On the VM, install Java if it is not already there:

```
$ sudo apt install openjdk-11-jre-headless
[sudo] password for builder:
Reading package lists... Done
Building dependency tree
Reading state information... Done
The following packages were automatically installed and are no longer required:
libfprint-2-tod1 libllvm10
Use 'sudo apt autoremove' to remove them.
The following additional packages will be installed:
ca-certificates-java java-common
Suggested packages:
default-jre fonts-dejavu-extra fonts-ipafont-gothic fonts-ipafont-mincho fonts-wqy-microhei | fonts-wqy-zenhei
The following NEW packages will be installed:
ca-certificates-java java-common openjdk-11-jre-headless
0 upgraded, 3 newly installed, 0 to remove and 76 not upgraded.
Need to get 37.3 MB of archives.
After this operation, 171 MB of additional disk space will be used.
Do you want to continue? [Y/n]
...
```
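Before launching the agent, it is worth checking which Java the VM actually picks up, since an older JRE earlier on the `PATH` would win over the package just installed. Here is a small sketch of a hypothetical `java_major` helper that pulls the major version out of the first line of `java -version`, handling both the legacy `1.8.0_292` numbering and the newer `11.0.13` style. The threshold of 11 in the example simply mirrors the package installed above; it is my assumption, not a quoted Jenkins requirement.

```shell
#!/bin/sh
# Hypothetical helper: print the Java major version from a `java -version` line,
# e.g. 'openjdk version "11.0.13" 2021-10-19' -> 11, 'java version "1.8.0_292"' -> 8.
# Prints an empty line if no quoted version string is found.
java_major() {
  # Pull out the quoted version string.
  ver=$(printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p')
  # Pre-Java 9 releases use the legacy 1.x numbering scheme.
  case "$ver" in
    1.*) ver=${ver#1.} ;;
  esac
  # Keep only the leading digits.
  printf '%s\n' "${ver%%[!0-9]*}"
}

# Example (not run here):
#   major=$(java_major "$(java -version 2>&1 | head -n 1)")
#   [ "${major:-0}" -ge 11 ] || sudo apt install openjdk-11-jre-headless
```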

Next, I tried to fetch the JNLP agent jar through the ingress:

```
builder@builder-HP-EliteBook-745-G5:~$ wget https://builder:MyPassword1234@jenkins.freshbrewed.science/jnlpJars/agent.jar
--2022-02-14 10:17:34-- https://builder:*password*@jenkins.freshbrewed.science/jnlpJars/agent.jar
Resolving jenkins.freshbrewed.science (jenkins.freshbrewed.science)... 73.242.50.46
Connecting to jenkins.freshbrewed.science (jenkins.freshbrewed.science)|73.242.50.46|:443... connected.
HTTP request sent, awaiting response... 401 Unauthorized
Authentication selected: Basic realm="Authentication Required - jenkins"
Reusing existing connection to jenkins.freshbrewed.science:443.
HTTP request sent, awaiting response... 200 OK
Length: 1522173 (1.5M) [application/java-archive]
Saving to: ‘agent.jar’
agent.jar 100%[===========================================================================================>] 1.45M --.-KB/s in 0.1s
2022-02-14 10:17:35 (10.0 MB/s) - ‘agent.jar’ saved [1522173/1522173]
```

However, I struggled to get through the basic auth and TLS I had set up on my ingress. The easiest workaround (and likely not something I would do in production) was to port-forward ports 8080 and 50000 so I could address the jenkinsrelease-0 pod directly:

```
$ kubectl port-forward jenkinsrelease-0 8080:8080 & kubectl port-forward jenkinsrelease-0 50000:50000 &
```
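Since both port-forwards are backgrounded with `&`, the next command can race ahead of them. A small guard is to poll the forwarded Jenkins login page until it answers before downloading anything. This is a minimal sketch using `wget`, which this post already relies on; the helper name, retry count and `WGET` override are my own assumptions. I only probe 8080 here, since 50000 is the inbound agent port rather than an HTTP endpoint.

```shell
#!/bin/sh
# wget binary to use; override WGET to point at another binary or a stub.
WGET="${WGET:-wget}"

# Hypothetical helper: wait until an HTTP URL answers, e.g. behind a freshly
# backgrounded `kubectl port-forward`. Args: URL, attempts (30), seconds between (1).
# Returns 0 once it responds, 1 on timeout.
wait_for_http() {
  url="$1"
  tries="${2:-30}"
  delay="${3:-1}"
  while [ "$tries" -gt 0 ]; do
    if "$WGET" -q -O /dev/null "$url"; then
      return 0
    fi
    tries=$((tries - 1))
    sleep "$delay"
  done
  echo "no response from $url" >&2
  return 1
}

# Example (not run here):
#   wait_for_http http://localhost:8080/login && wget http://localhost:8080/jnlpJars/agent.jar
```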

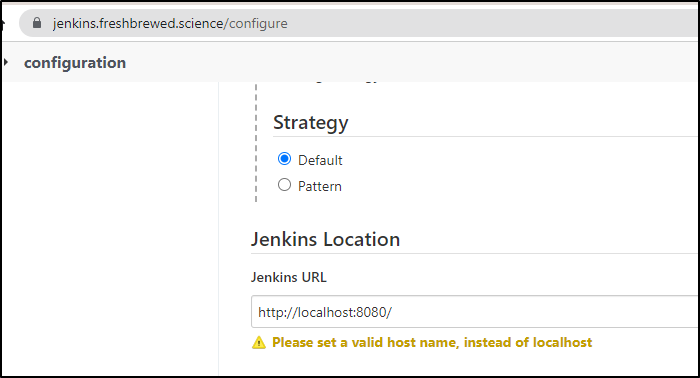

For the moment, you’ll need to change the Jenkins URL (which is embedded in the JNLP file) to the port-forwarded address, here http://localhost:8080/. You can change it back once the JNLP file has been downloaded and the agent is connected.

I was then able to download the agent jar and use it to start the agent:

```
$ wget http://localhost:8080/jnlpJars/agent.jar --no-check-certificate
--2022-02-15 06:09:45-- http://localhost:8080/jnlpJars/agent.jar
Resolving localhost (localhost)... 127.0.0.1
Connecting to localhost (localhost)|127.0.0.1|:8080... connected.
HTTP request sent, awaiting response... Handling connection for 8080
200 OK
Length: 1522173 (1.5M) [application/java-archive]
Saving to: ‘agent.jar’
agent.jar 100%[===========================================================================================>] 1.45M 8.47MB/s in 0.2s
2022-02-15 06:09:45 (8.47 MB/s) - ‘agent.jar’ saved [1522173/1522173]

$ echo ce123412341234123412341234123412341234123421241231231239c > secret-file && java -jar agent.jar -jnlpUrl http://localhost:8080/computer/linuxLaptop/jenkins-agent.jnlp -secret @secret-file -workDir "/tmp"
Feb 15, 2022 6:13:36 AM org.jenkinsci.remoting.engine.WorkDirManager initializeWorkDir
INFO: Using /tmp/remoting as a remoting work directory
Feb 15, 2022 6:13:36 AM org.jenkinsci.remoting.engine.WorkDirManager setupLogging
INFO: Both error and output logs will be printed to /tmp/remoting
Handling connection for 8080
Feb 15, 2022 6:13:36 AM hudson.remoting.jnlp.Main createEngine
INFO: Setting up agent: linuxLaptop
Feb 15, 2022 6:13:36 AM hudson.remoting.jnlp.Main$CuiListener <init>
INFO: Jenkins agent is running in headless mode.
Feb 15, 2022 6:13:36 AM hudson.remoting.Engine startEngine
INFO: Using Remoting version: 4.11.2
Feb 15, 2022 6:13:36 AM org.jenkinsci.remoting.engine.WorkDirManager initializeWorkDir
INFO: Using /tmp/remoting as a remoting work directory
Feb 15, 2022 6:13:36 AM hudson.remoting.jnlp.Main$CuiListener status
INFO: Locating server among [http://localhost:8080/]
Handling connection for 8080
Feb 15, 2022 6:13:36 AM org.jenkinsci.remoting.engine.JnlpAgentEndpointResolver resolve
INFO: Remoting server accepts the following protocols: [JNLP4-connect, Ping]
Handling connection for 50000
Feb 15, 2022 6:13:36 AM hudson.remoting.jnlp.Main$CuiListener status
INFO: Agent discovery successful
Agent address: localhost
Agent port: 50000
Identity: f0:6c:ab:2c:75:e2:73:98:b9:c2:12:1b:7e:3f:de:68
Feb 15, 2022 6:13:36 AM hudson.remoting.jnlp.Main$CuiListener status
INFO: Handshaking
Feb 15, 2022 6:13:36 AM hudson.remoting.jnlp.Main$CuiListener status
INFO: Connecting to localhost:50000
Handling connection for 50000
Feb 15, 2022 6:13:36 AM hudson.remoting.jnlp.Main$CuiListener status
INFO: Trying protocol: JNLP4-connect
Feb 15, 2022 6:13:36 AM org.jenkinsci.remoting.protocol.impl.BIONetworkLayer$Reader run
INFO: Waiting for ProtocolStack to start.
Feb 15, 2022 6:13:37 AM hudson.remoting.jnlp.Main$CuiListener status
INFO: Remote identity confirmed: f0:6c:ab:2c:75:e2:73:98:b9:c2:12:1b:7e:3f:de:68
Feb 15, 2022 6:13:37 AM hudson.remoting.jnlp.Main$CuiListener status
INFO: Connected
```
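Echoing the agent secret inline, as above, leaves it in shell history, and with a typical `022` umask `secret-file` ends up world-readable. A slightly tidier sketch reads the secret from a shell variable and writes the file with owner-only permissions, then launches `agent.jar` with the same `-secret @secret-file` form. `JENKINS_AGENT_SECRET` and `write_agent_secret` are hypothetical names used for illustration.

```shell
#!/bin/sh
# Hypothetical helper: write the agent secret held in $JENKINS_AGENT_SECRET
# (a hypothetical variable name) to a file only the current user can read,
# so the secret never has to be typed into shell history.
write_agent_secret() {
  target="$1"
  if [ -z "${JENKINS_AGENT_SECRET:-}" ]; then
    echo "JENKINS_AGENT_SECRET is not set" >&2
    return 1
  fi
  (
    # umask 077 makes the newly created file mode 600 (owner read/write only);
    # remove any old copy first so its permissions are not reused.
    umask 077
    rm -f "$target"
    printf '%s\n' "$JENKINS_AGENT_SECRET" > "$target"
  )
}

# Example (not run here):
#   read -r JENKINS_AGENT_SECRET    # paste the secret, then press Enter
#   write_agent_secret secret-file && java -jar agent.jar \
#     -jnlpUrl http://localhost:8080/computer/linuxLaptop/jenkins-agent.jnlp \
#     -secret @secret-file -workDir "/tmp"
```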

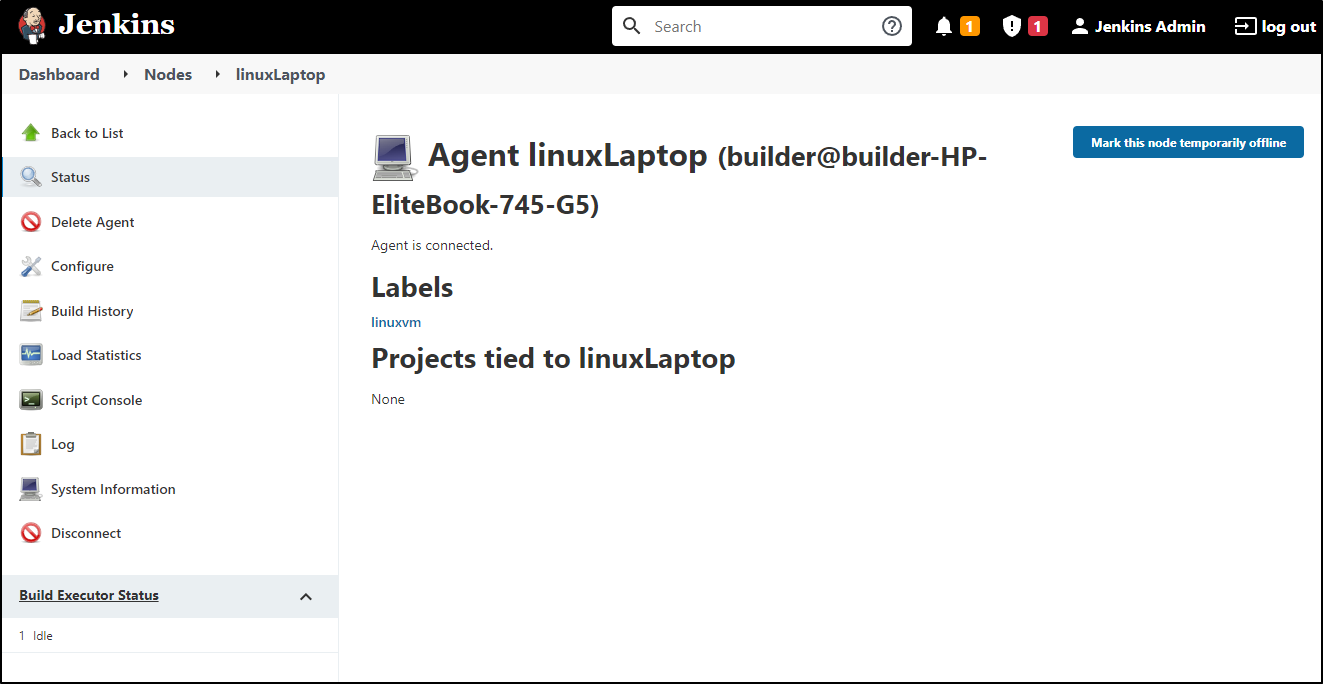

Here we can see the agent is now live

Validation

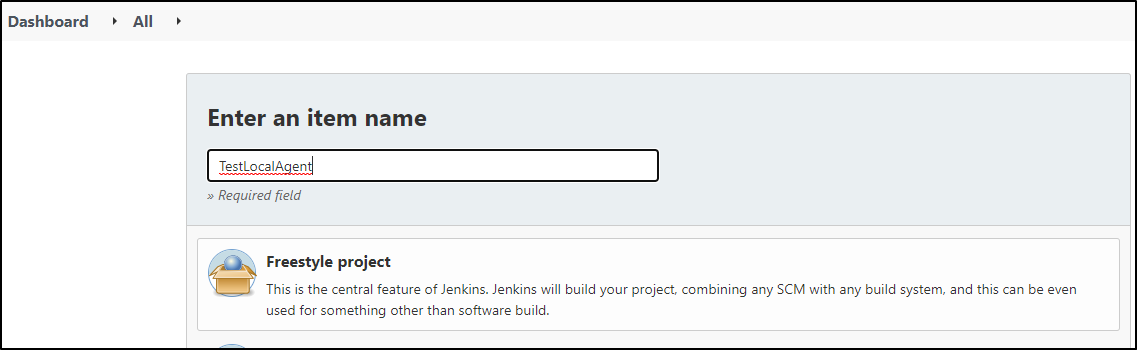

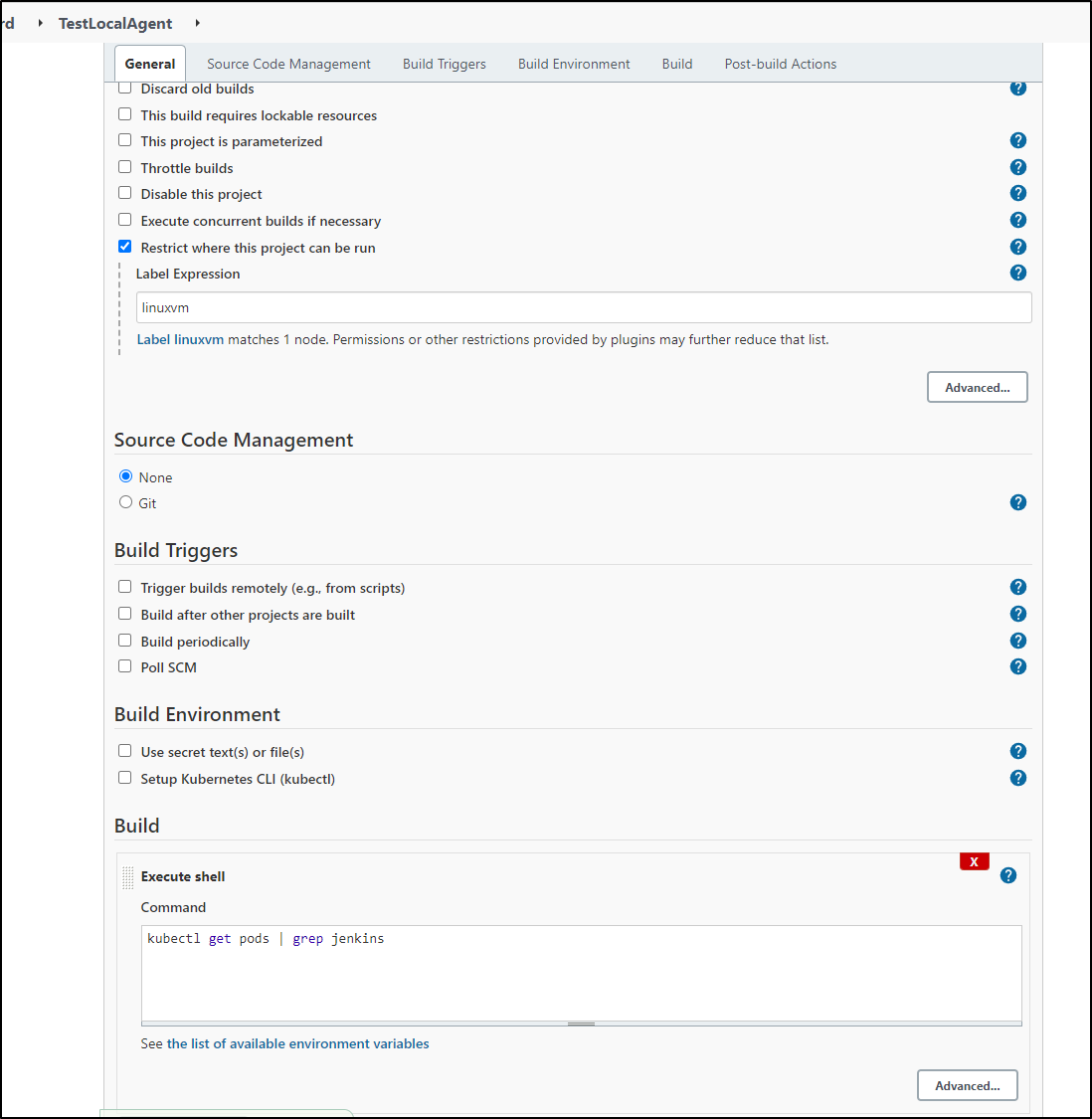

For a simple test, we can use a freestyle project

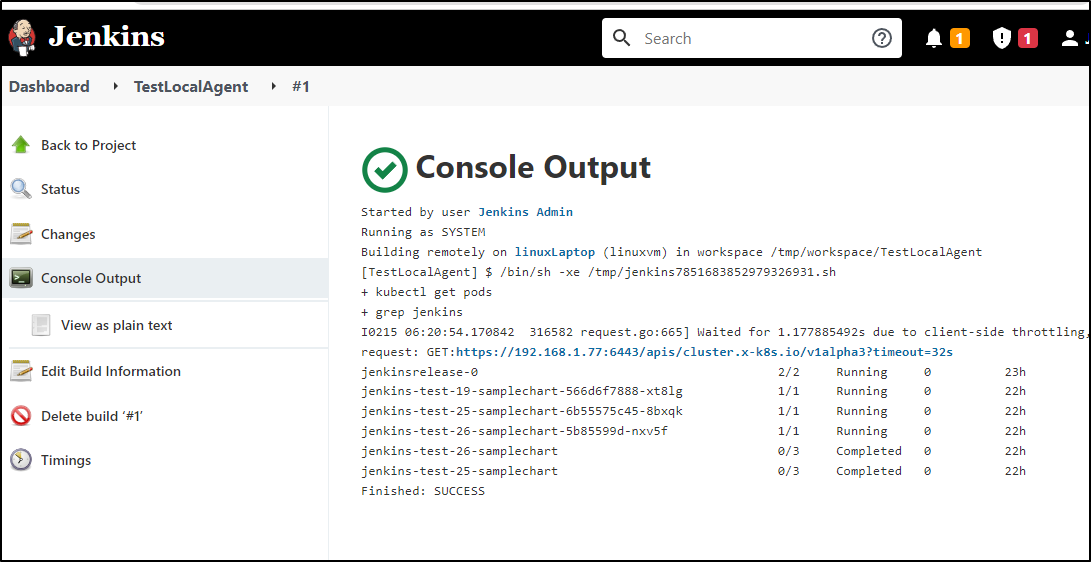

We can use any binary on the host. Here we’ll list the pods using the same kubectl that is running the port-forwards, and we can see it used the builder user’s auth to get them.

Triggering Other Jobs

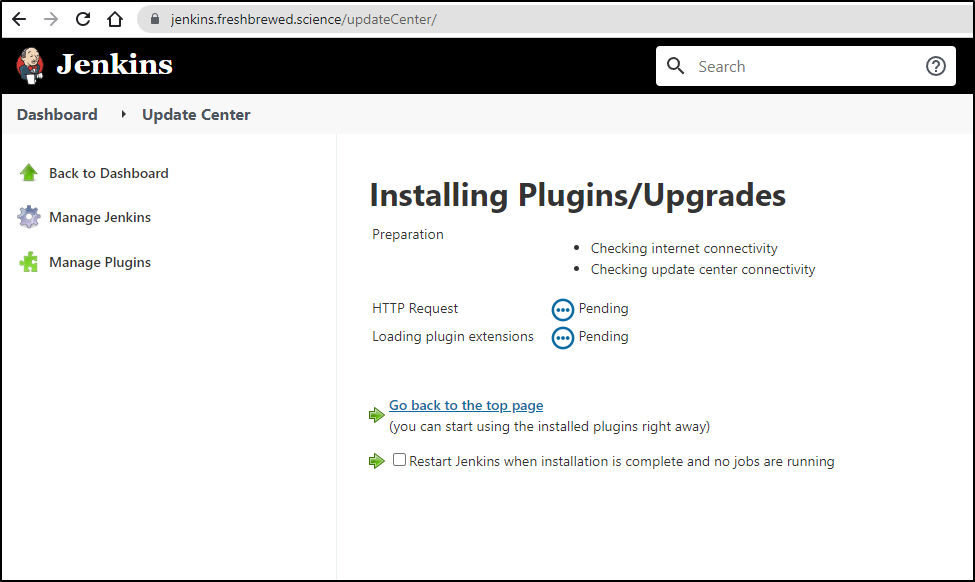

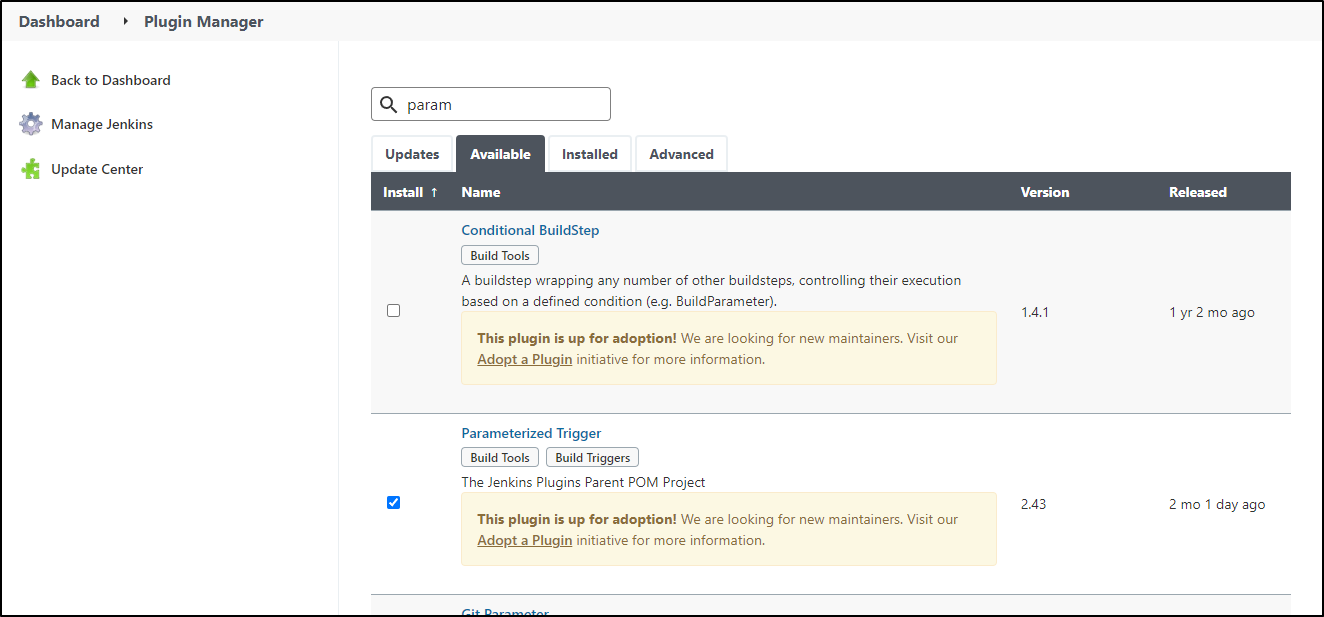

Let’s add the Parameterized Trigger plugin.

We want to pass some details along via the email notification plugin. There are more elegant approaches (e.g., using SMTP directly or calling SES with curl).

But let’s KISS and just use a plugin and the built-in email step.

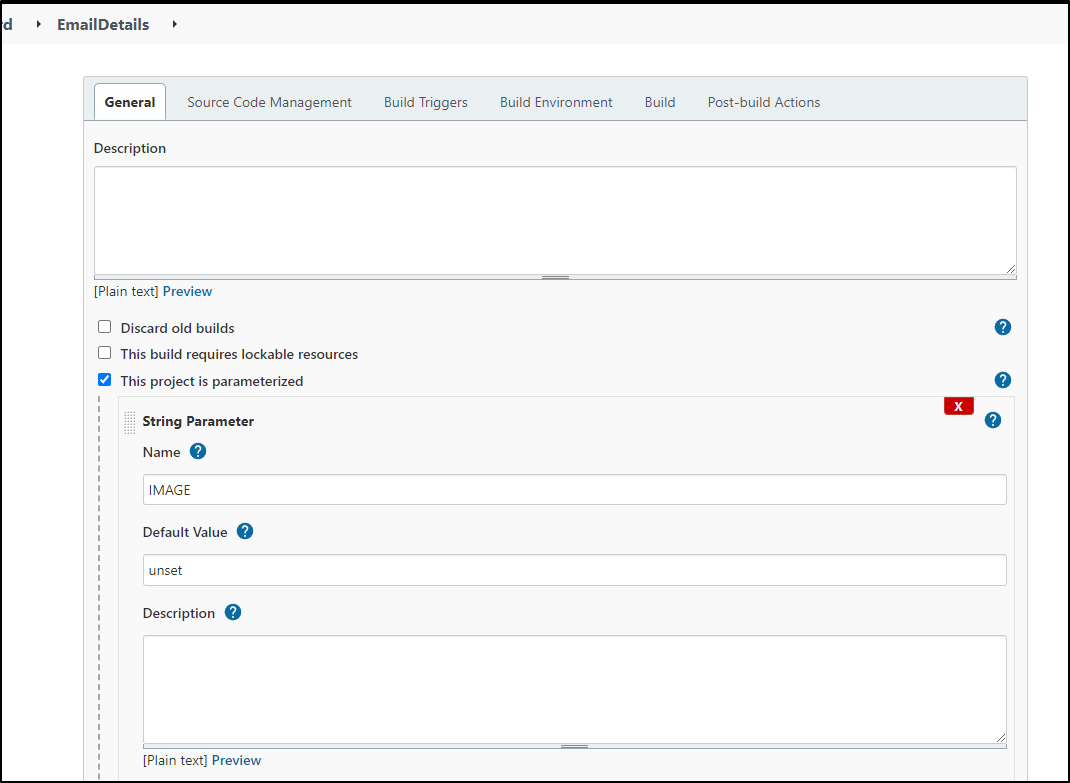

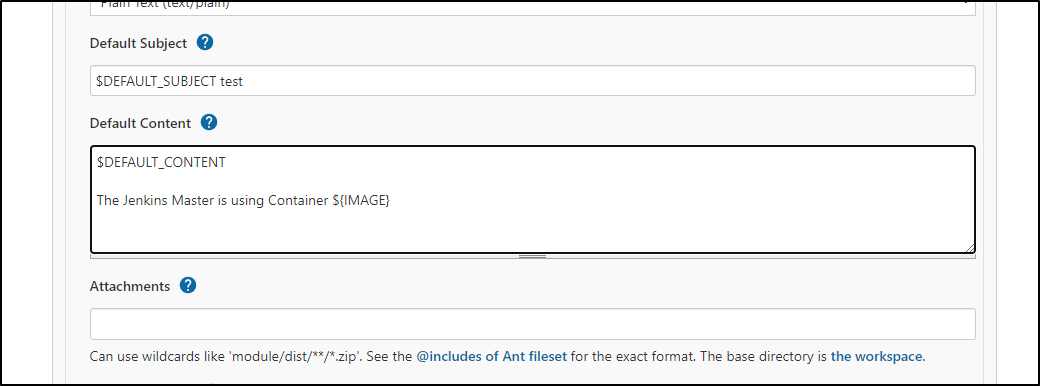

I’ll create a quick freestyle email job with a parameter

You will want to configure the email settings on the Jenkins configuration page (if you saved the SES password details earlier, you can simply reuse the same email settings).

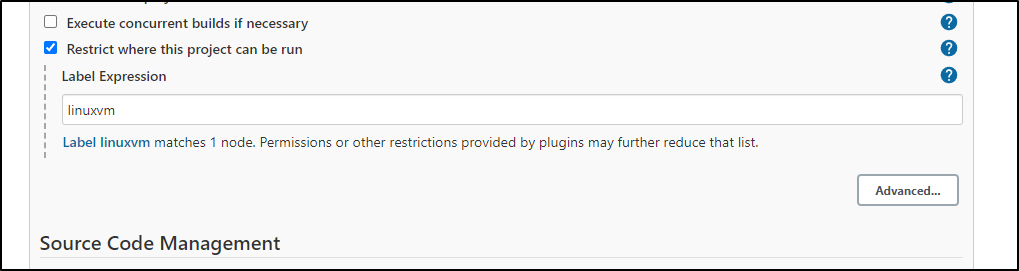

We will restrict it to run on the same Linux host.

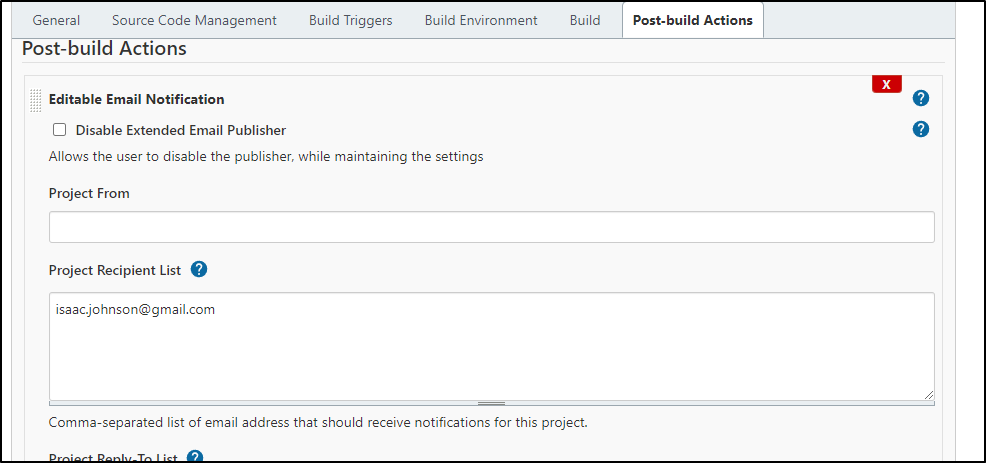

We’ll set a default sender.

We want to put the parameter into the content.

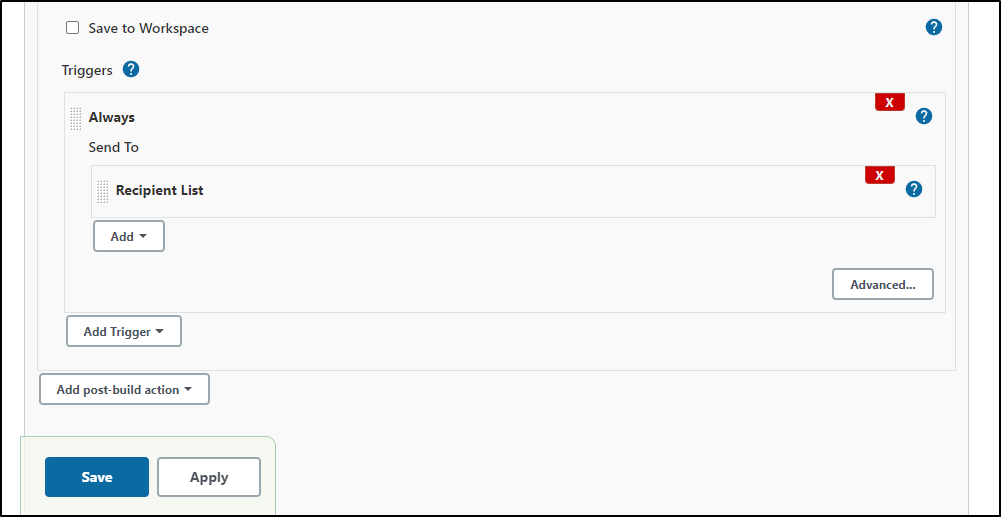

Make sure to change the trigger to Always (by default, it only fires on failure).

After making sure the Editable Email Notification settings were correct in Manage Jenkins, I gave it a test.

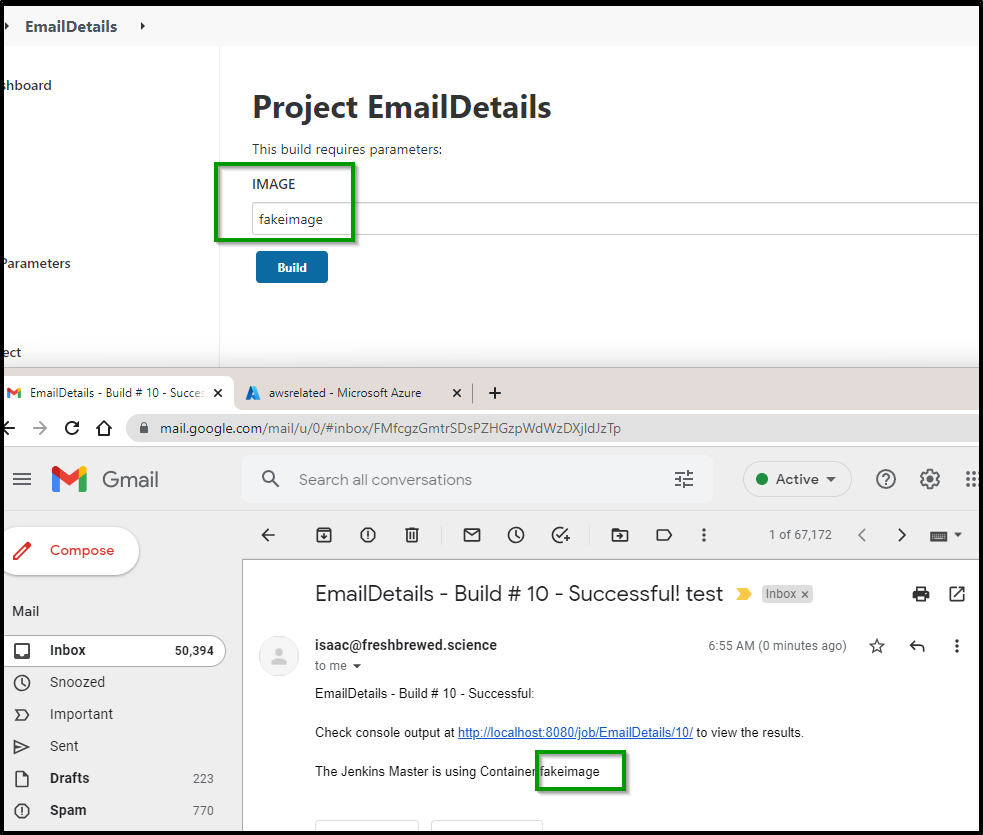

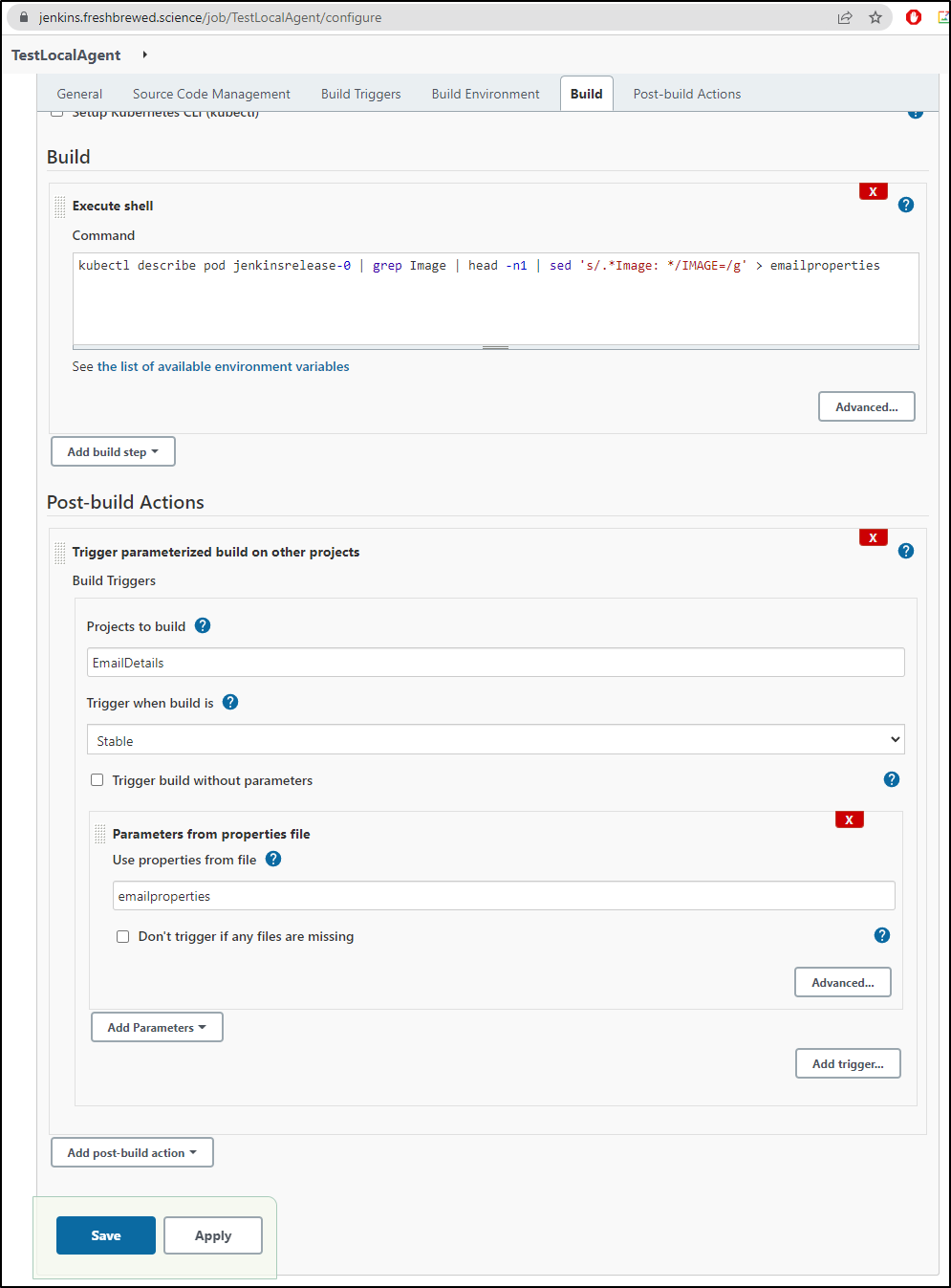

Back in the TestLocalAgent job we started with, we can add a post-build step to trigger that email job.
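Besides fixed values, the Parameterized Trigger plugin can pass parameters read from a properties file written by the upstream job (its "Parameters from properties file" option), which is handy when the value is multi-line `kubectl` output. Assuming that file is parsed with standard Java properties rules, backslashes and newlines in the value need escaping. Here is a minimal sketch; `write_trigger_props` and the `POD_LIST` parameter are hypothetical names.

```shell
#!/bin/sh
# Hypothetical helper: write KEY=VALUE to a Java-style properties file.
# Backslashes are doubled and each newline becomes the two characters \n,
# so a multi-line value (such as kubectl output) stays on one property line.
write_trigger_props() {
  file="$1"
  key="$2"
  value="$3"
  escaped=$(printf '%s\n' "$value" \
    | sed -e 's/\\/\\\\/g' \
    | awk 'NR > 1 { printf "%s", "\\n" } { printf "%s", $0 }')
  printf '%s=%s\n' "$key" "$escaped" > "$file"
}

# Example (not run here), in the upstream job's "Execute shell" step:
#   write_trigger_props pods.properties POD_LIST "$(kubectl get pods --no-headers)"
# then point the trigger's properties-file option at pods.properties.
```

The downstream email job would then declare a matching `POD_LIST` string parameter and reference it in the email content.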

And we now see the actual Jenkins server image shown

Summary

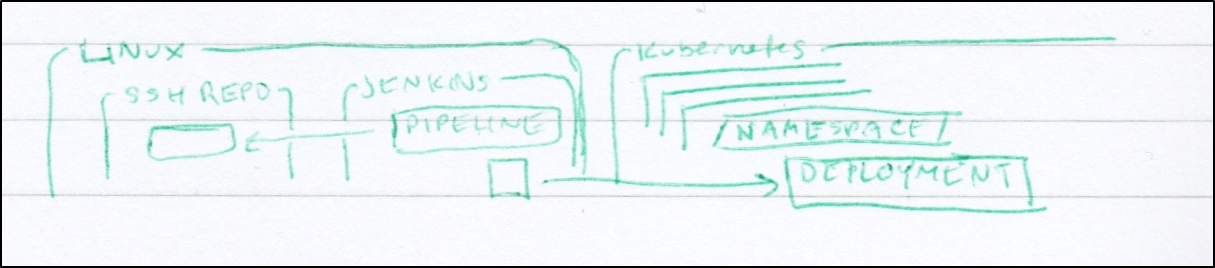

We set up Jenkins on a Linux VM (WSL on Windows 11). Then we used the stable Helm chart to launch both the Jenkins master and agents into our cluster. We tried both Freestyle and Pipeline jobs and experimented with parameterized builds and email notifications.

Jenkins is a perfectly fine basic CI/CD tool. Many changes over the years have made for a multitude of methods one can use to create pipelines.

There are challenges to a tool with such wide adoption and long life. The advantage is also the disadvantage: there are many, many ways to implement pipelines. Finding good examples of Kubernetes pod design, for instance, proved challenging. I could find plenty of Docker and script syntax, but not much by way of pod labels and how best to sideload the JNLP.

I had many more examples to show; however, the Jenkins master would hang and stop creating containerized agents for no apparent reason, and I would need to bounce the master to get it back online. The settings survived each restart, which was good, but it was clearly temperamental.

As I mentioned last week, Jenkins can provide a SaaS-less option for private CI/CD, and to that end, it solves a need. Back in July, I covered how one could run Jenkinsfile builds in AzDO, which would allow teams to migrate to a SaaS offering later more seamlessly.

The question one must ask is: does Jenkins provide any real value over SaaS offerings like Azure DevOps, GitHub or GitLab, all of which have solid, free, scalable and supported CI/CD solutions? And for on-prem needs, there are commercial offerings like CircleCI, Atlassian CI/CD and now HashiCorp Waypoint, which offer a bit more support.

That said, I highly doubt Jenkins will ever go away entirely. CVS still exists. Companies still need COBOL developers from time to time. Hudson had some dark hours in 2011 after Oracle acquired Sun, but the Jenkins fork saved it. How many nearly 18-year-old CI/CD tools still have their own conferences?