Published: Feb 8, 2022 by Isaac Johnson

Argo Workflows is the workflow system from the Argo Project that can be used to run containerized parallel workloads in Kubernetes.

In this post, we will explore setting up Argo Workflows with YAML and as an App within Argo CD. We’ll explore some basic workflows, then look at more complicated examples, including using, building and pushing Docker containers.

Lastly, we’ll look at a full CI/CD workflow leveraging Git pre-commit hooks, Argo CD, and Argo Workflows that builds and promotes to a private Container Registry.

History

Argo, as a workflow system, has been around since 2017. It was developed by Applatix, which was acquired by Intuit in 2018. A couple of years later, in 2020, Argo was adopted into the CNCF.

We often think of its sibling, ArgoCD, when we talk about “Argo”, but Argo Workflows is a distinct project and actually predates the ArgoCD project (which wasn’t announced until 2018).

Model

The idea behind workflows is to run parallel jobs in Kubernetes via CRDs. The Workflow model is a multi-step object that defines a series of tasks and lives entirely within the Kubernetes system.

Both Argo projects (Workflows and CD) include GUIs that can show status, logs and flow, as well as allow invocation via a graphical wizard. There is also an Argo CLI; however, most operations can be done entirely with YAML and kubectl.

Setup (Quickstart)

From the quickstart we can see the manifest to install:

kubectl create ns argo

kubectl apply -n argo -f https://raw.githubusercontent.com/argoproj/argo-workflows/master/manifests/quick-start-postgres.yaml

We can port-forward and test it:

$ kubectl port-forward -n argo argo-server-79bf45b5b7-jgh45 2746:2746

Forwarding from 127.0.0.1:2746 -> 2746

Forwarding from [::1]:2746 -> 2746

Handling connection for 2746

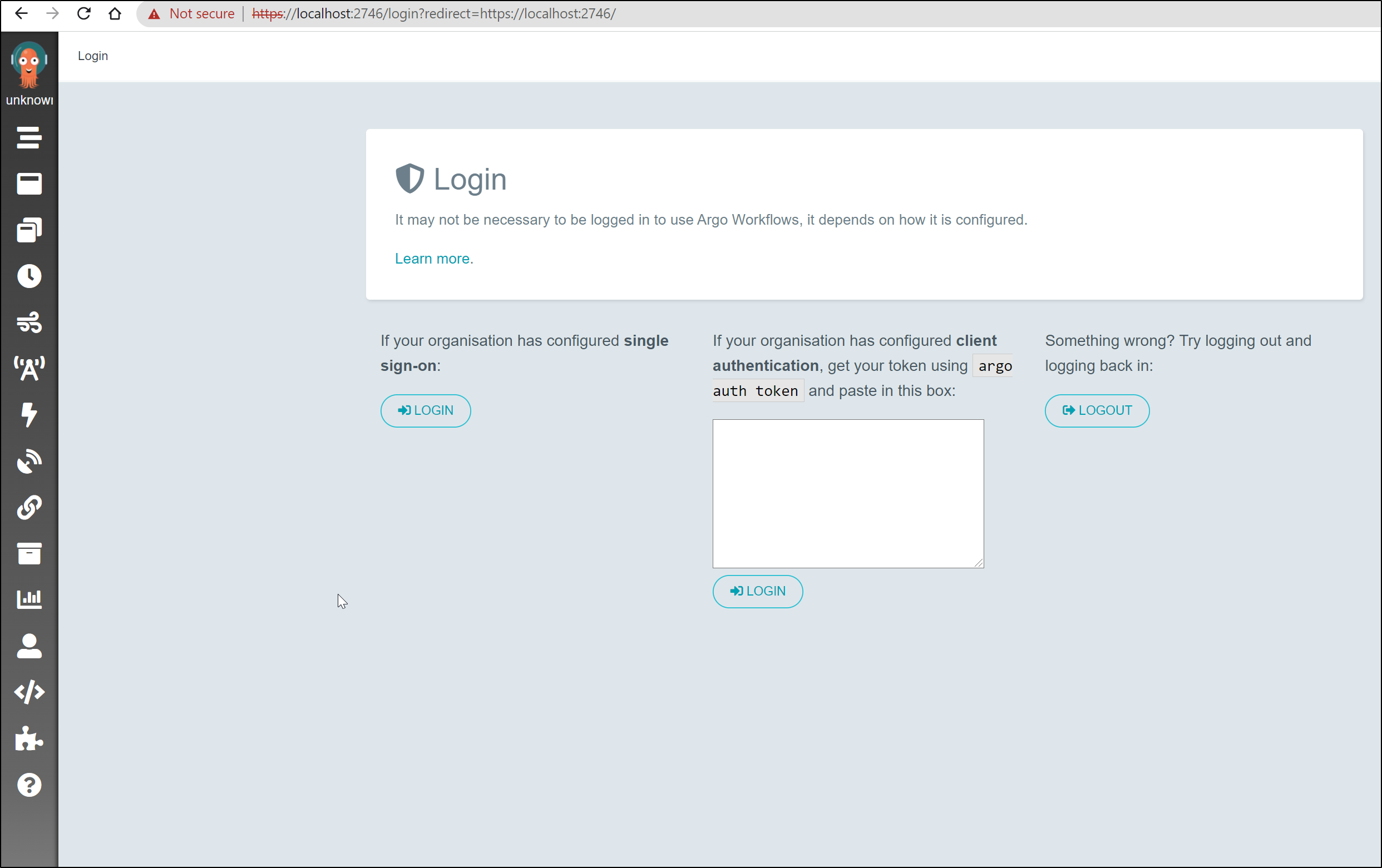

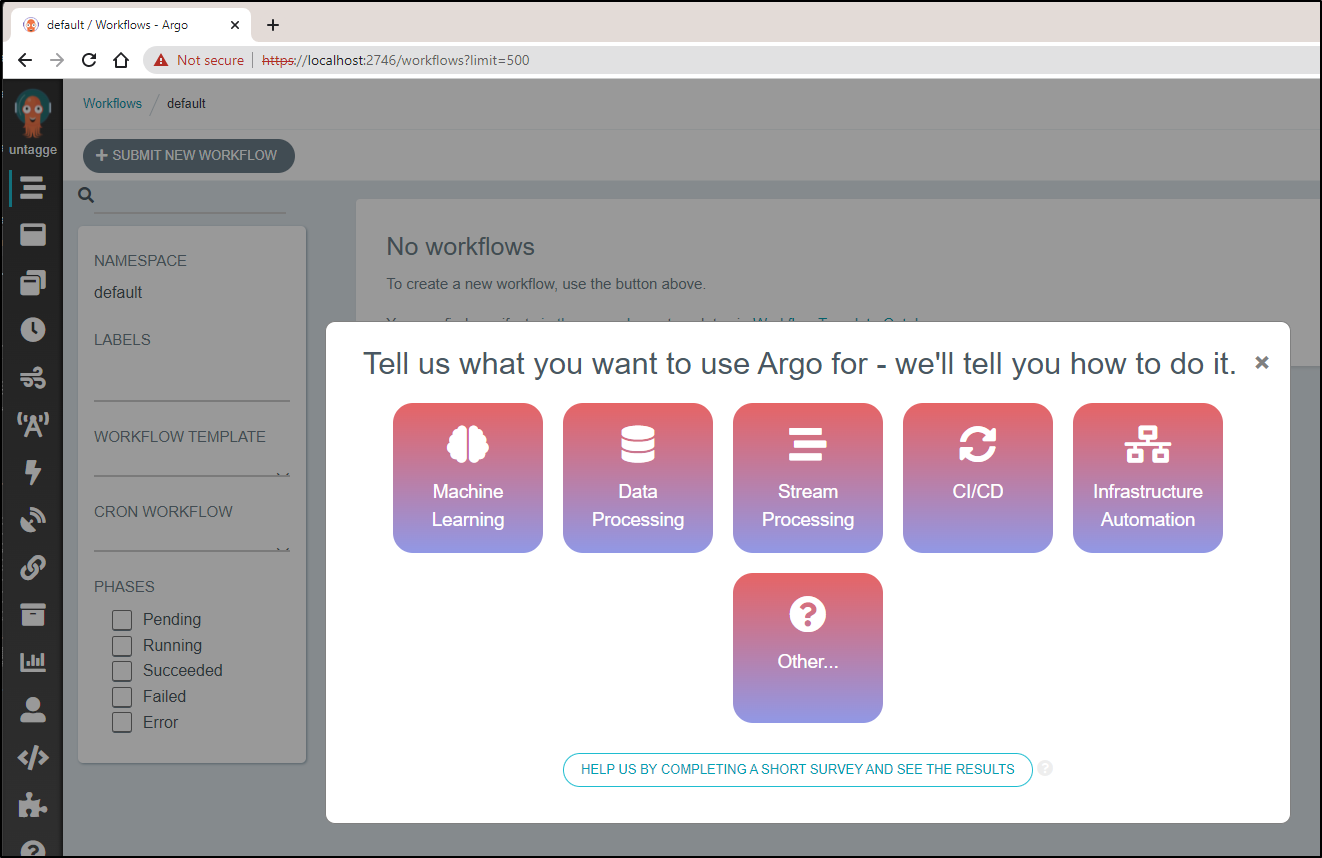

Now log in locally: https://localhost:2746/login?redirect=https://localhost:2746/

Testing

To try it out, we can deploy a basic hello world CI app via YAML and the Argo CLI.

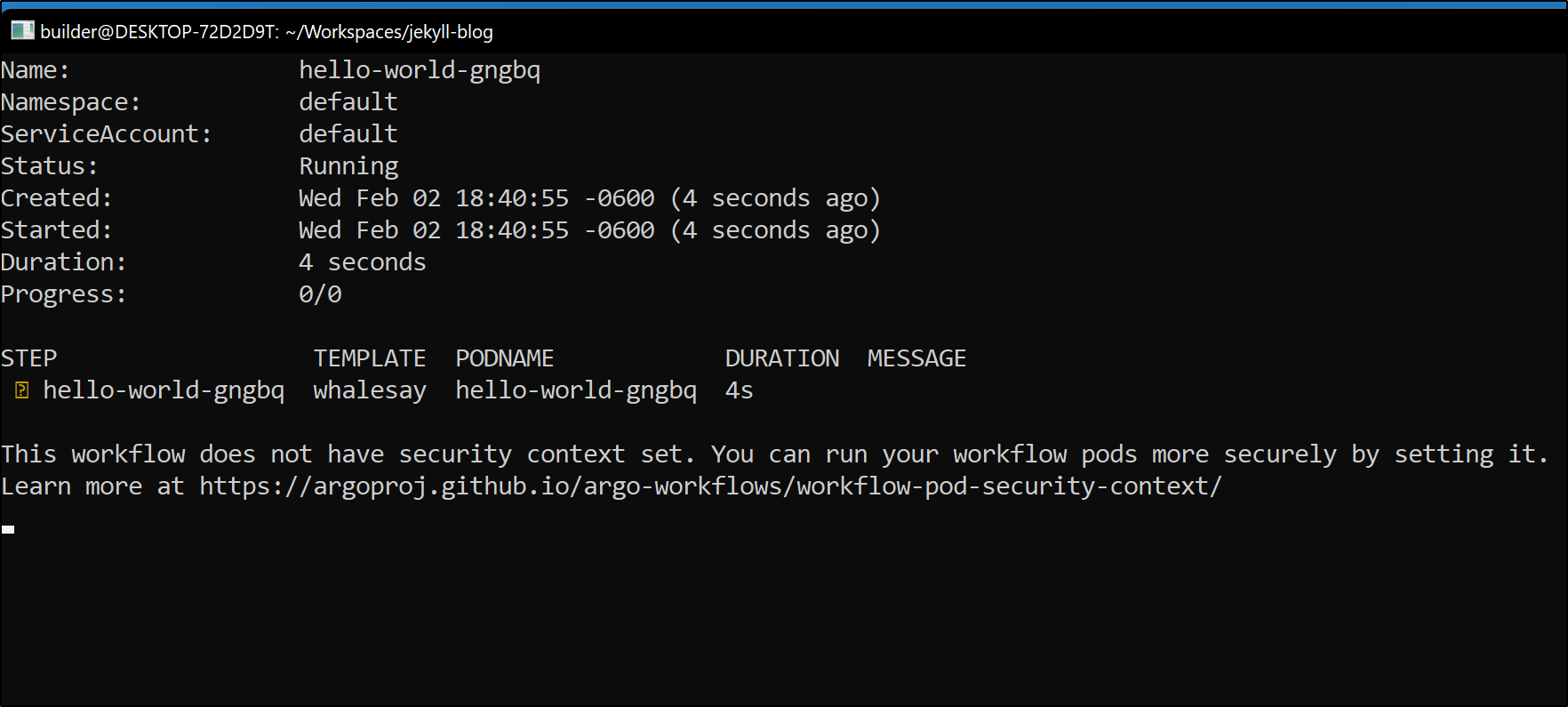

$ argo submit --watch https://raw.githubusercontent.com/argoproj/argo-workflows/master/examples/hello-world.yaml

While it invokes, we’ll see status update in our console window:

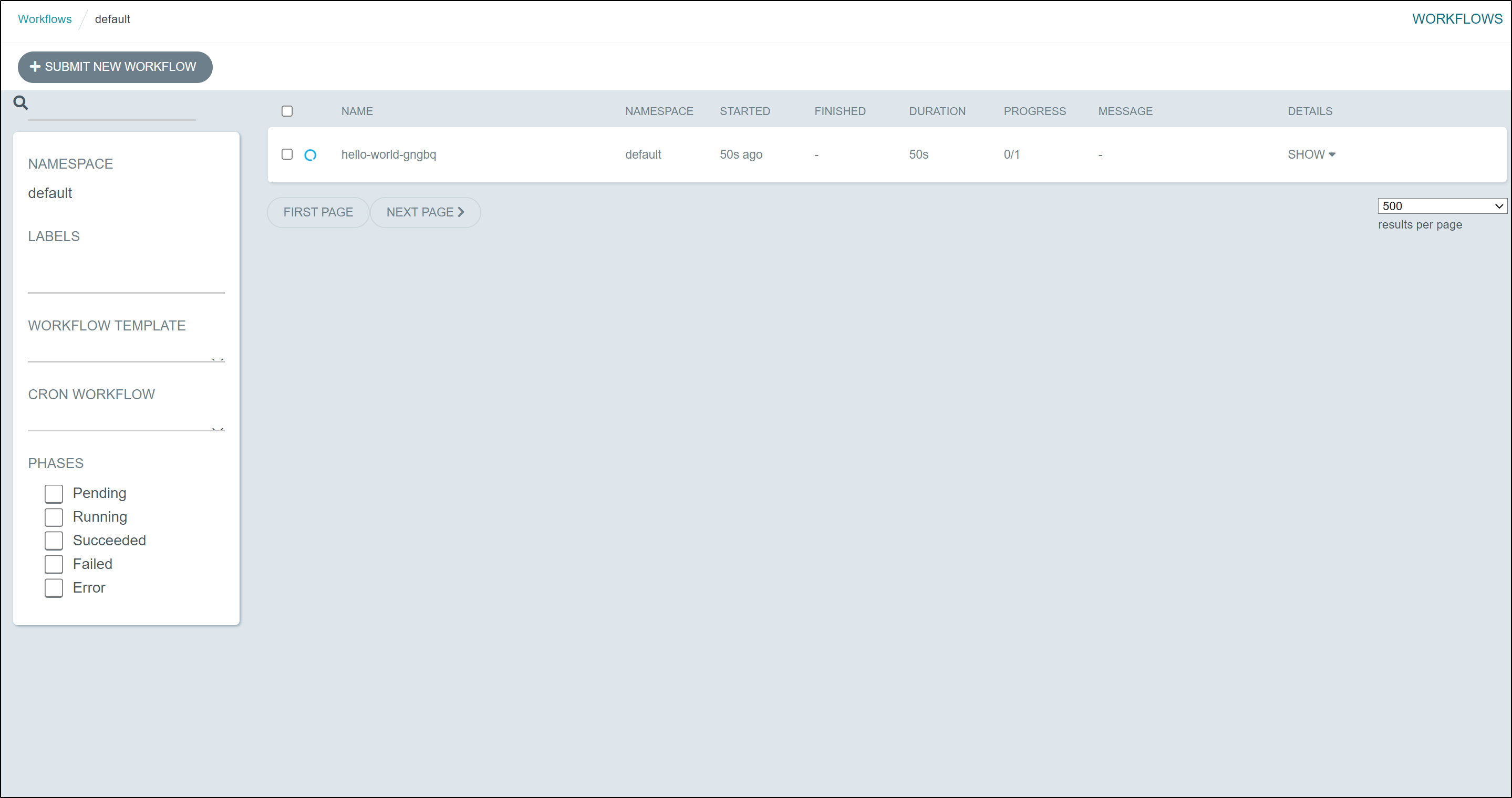

We can also see it in the UI:

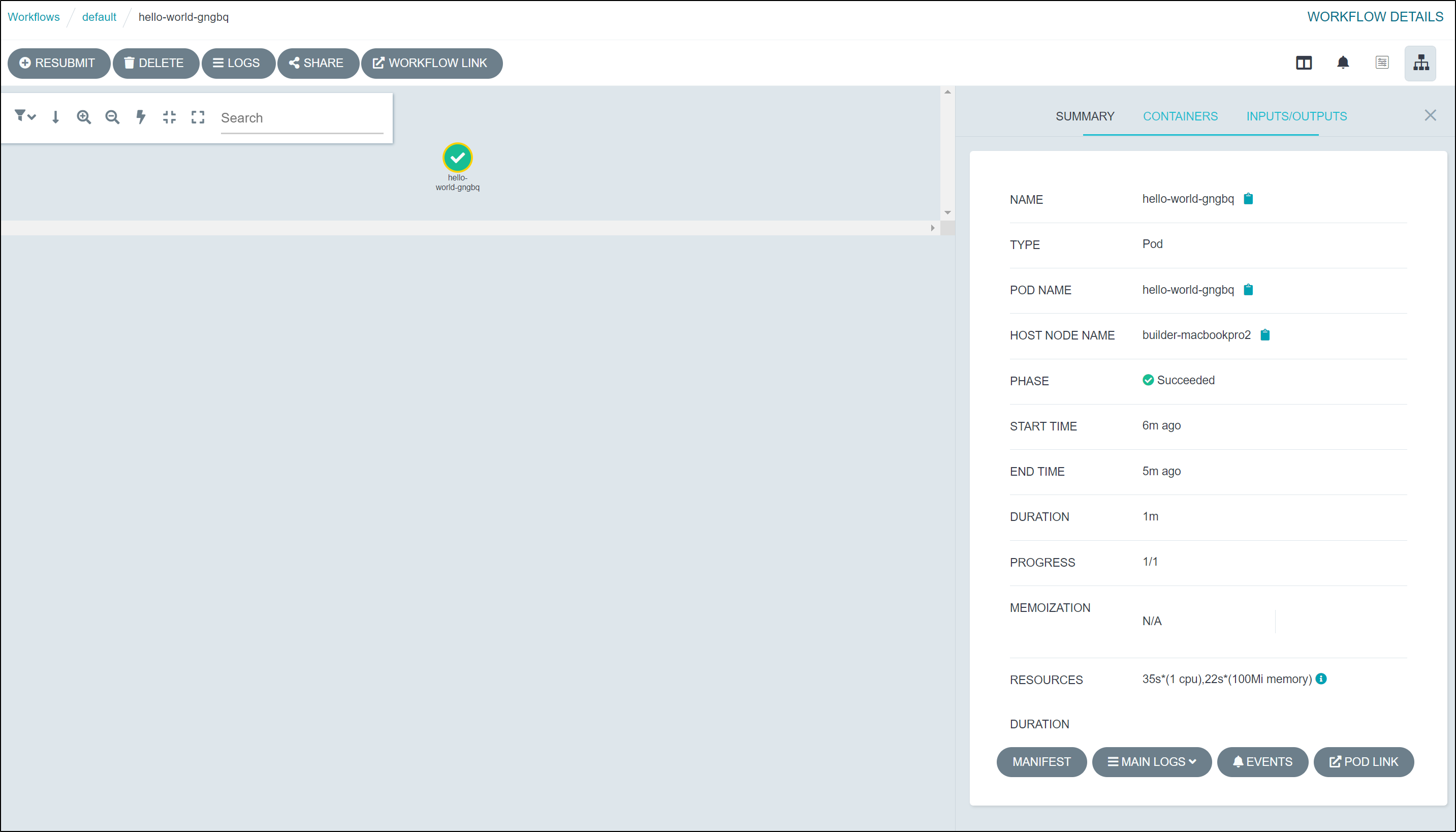

When we pick the workflow in the UI, we can see the results.

This includes the Init, Wait and Main logs:

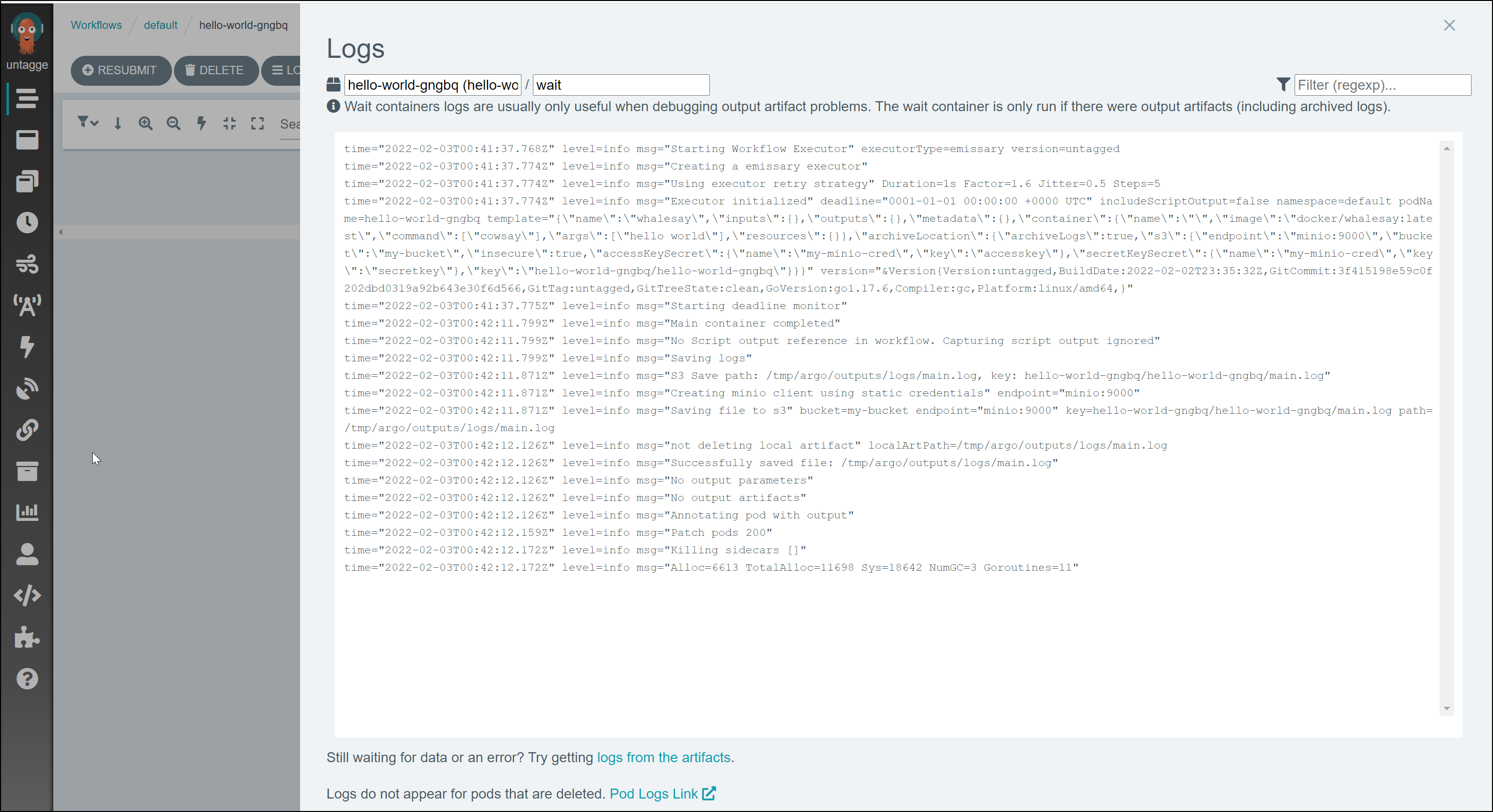

For example, the wait log

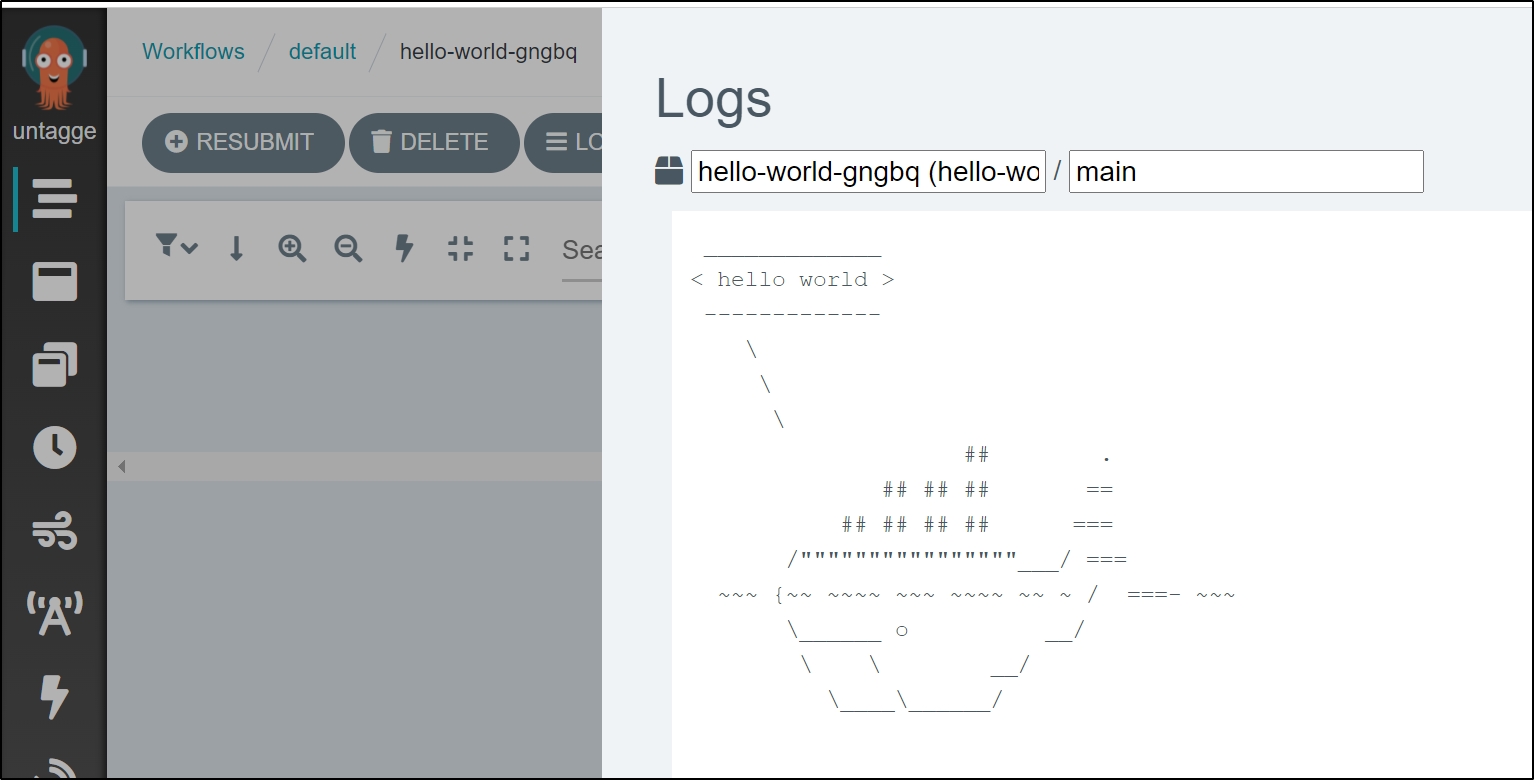

and main log

We can also use the CLI for the logs, such as the latest main log

$ argo logs @latest

hello-world-gngbq: time="2022-02-03T00:42:11.335Z" level=info msg="capturing logs" argo=true

hello-world-gngbq: _____________

hello-world-gngbq: < hello world >

hello-world-gngbq: -------------

hello-world-gngbq: \

hello-world-gngbq: \

hello-world-gngbq: \

hello-world-gngbq: ## .

hello-world-gngbq: ## ## ## ==

hello-world-gngbq: ## ## ## ## ===

hello-world-gngbq: /""""""""""""""""___/ ===

hello-world-gngbq: ~~~ {~~ ~~~~ ~~~ ~~~~ ~~ ~ / ===- ~~~

hello-world-gngbq: \______ o __/

hello-world-gngbq: \ \ __/

hello-world-gngbq: \____\______/
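Each line that `argo logs` prints is prefixed with the pod name, as in the output above. If only the container output is wanted, that prefix can be stripped with sed. A minimal sketch, using two sample lines copied from the output above rather than a live cluster (the `strip_pod_prefix` function name is hypothetical, not part of the Argo CLI):

```shell
# Strip the leading "<pod-name>: " prefix that argo logs adds to each line.
# strip_pod_prefix is a hypothetical helper name, not part of the Argo CLI.
strip_pod_prefix() {
  sed 's/^[^:]*: //'
}

# Sample lines copied from the log output above.
sample='hello-world-gngbq: < hello world >
hello-world-gngbq: -------------'

stripped=$(printf '%s\n' "$sample" | strip_pod_prefix)
printf '%s\n' "$stripped"
```

Against a real cluster this would be used as `argo logs @latest | strip_pod_prefix`.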

There is nothing wrong with this port-forward approach. In fact, you could use ArgoCI running in the namespace, accessible via port-forward, just fine.

However, I often want to access things remotely. There are ways to use the Argo CD we already set up as well, albeit with a little pre-work.

Setup (using Argo CD)

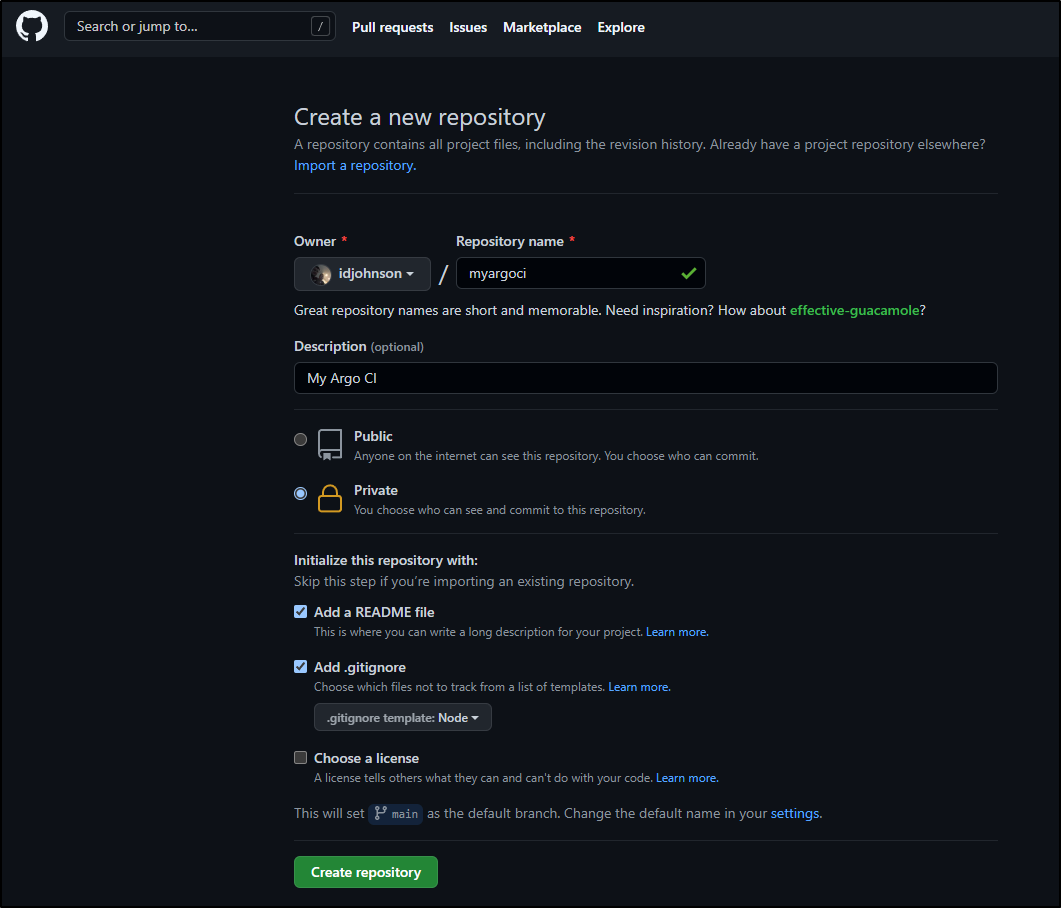

First, let’s create a repo to start this work.

We now want to put together a manifests folder:

builder@DESKTOP-QADGF36:~/Workspaces$ git clone https://github.com/idjohnson/myargoci.git

Cloning into 'myargoci'...

remote: Enumerating objects: 4, done.

remote: Counting objects: 100% (4/4), done.

remote: Compressing objects: 100% (3/3), done.

remote: Total 4 (delta 0), reused 0 (delta 0), pack-reused 0

Unpacking objects: 100% (4/4), 1.39 KiB | 1.39 MiB/s, done.

builder@DESKTOP-QADGF36:~/Workspaces$ cd myargoci/

builder@DESKTOP-QADGF36:~/Workspaces/myargoci$ mkdir manifests

builder@DESKTOP-QADGF36:~/Workspaces/myargoci$ cd manifests/

builder@DESKTOP-QADGF36:~/Workspaces/myargoci/manifests$ wget https://raw.githubusercontent.com/argoproj/argo-workflows/master/manifests/quick-start-postgres.yaml

--2022-02-01 06:23:37-- https://raw.githubusercontent.com/argoproj/argo-workflows/master/manifests/quick-start-postgres.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.111.133, 185.199.108.133, 185.199.109.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.111.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 20407 (20K) [text/plain]

Saving to: ‘quick-start-postgres.yaml’

quick-start-postgres.yaml 100%[==================================================================================================================================================================>] 19.93K --.-KB/s in 0.002s

2022-02-01 06:23:37 (7.79 MB/s) - ‘quick-start-postgres.yaml’ saved [20407/20407]

I want an admin account to do activities as well:

builder@DESKTOP-QADGF36:~/Workspaces/myargoci/manifests$ vi adminaccount.yaml

builder@DESKTOP-QADGF36:~/Workspaces/myargoci/manifests$ cat adminaccount.yaml

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: argoci-admin
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: argoci-admin
  namespace: kube-system

Now I’ll go ahead and add them and push them to GitHub:

builder@DESKTOP-QADGF36:~/Workspaces/myargoci$ git branch

* main

builder@DESKTOP-QADGF36:~/Workspaces/myargoci$ git add manifests/

builder@DESKTOP-QADGF36:~/Workspaces/myargoci$ git commit -m "argo workflow postgres manifest and admin user"

[main a4d1072] argo workflow postgres manifest and admin user

2 files changed, 1073 insertions(+)

create mode 100644 manifests/adminaccount.yaml

create mode 100644 manifests/quick-start-postgres.yaml

builder@DESKTOP-QADGF36:~/Workspaces/myargoci$ git push

Enumerating objects: 6, done.

Counting objects: 100% (6/6), done.

Delta compression using up to 16 threads

Compressing objects: 100% (5/5), done.

Writing objects: 100% (5/5), 3.73 KiB | 3.73 MiB/s, done.

Total 5 (delta 0), reused 0 (delta 0)

To https://github.com/idjohnson/myargoci.git

1fa5abf..a4d1072 main -> main

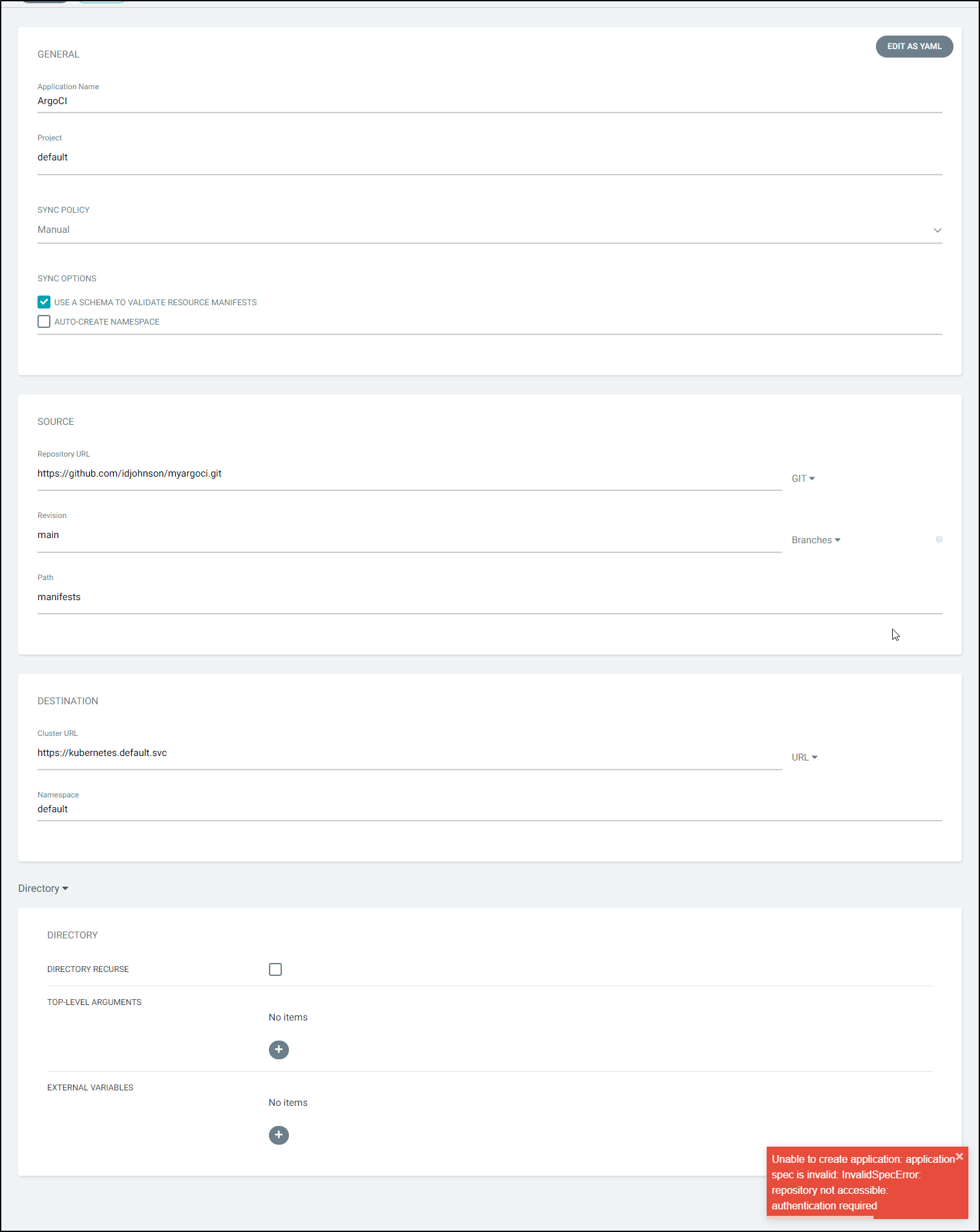

If we try to add it to ArgoCD right now, we get an error, as this repo requires auth:

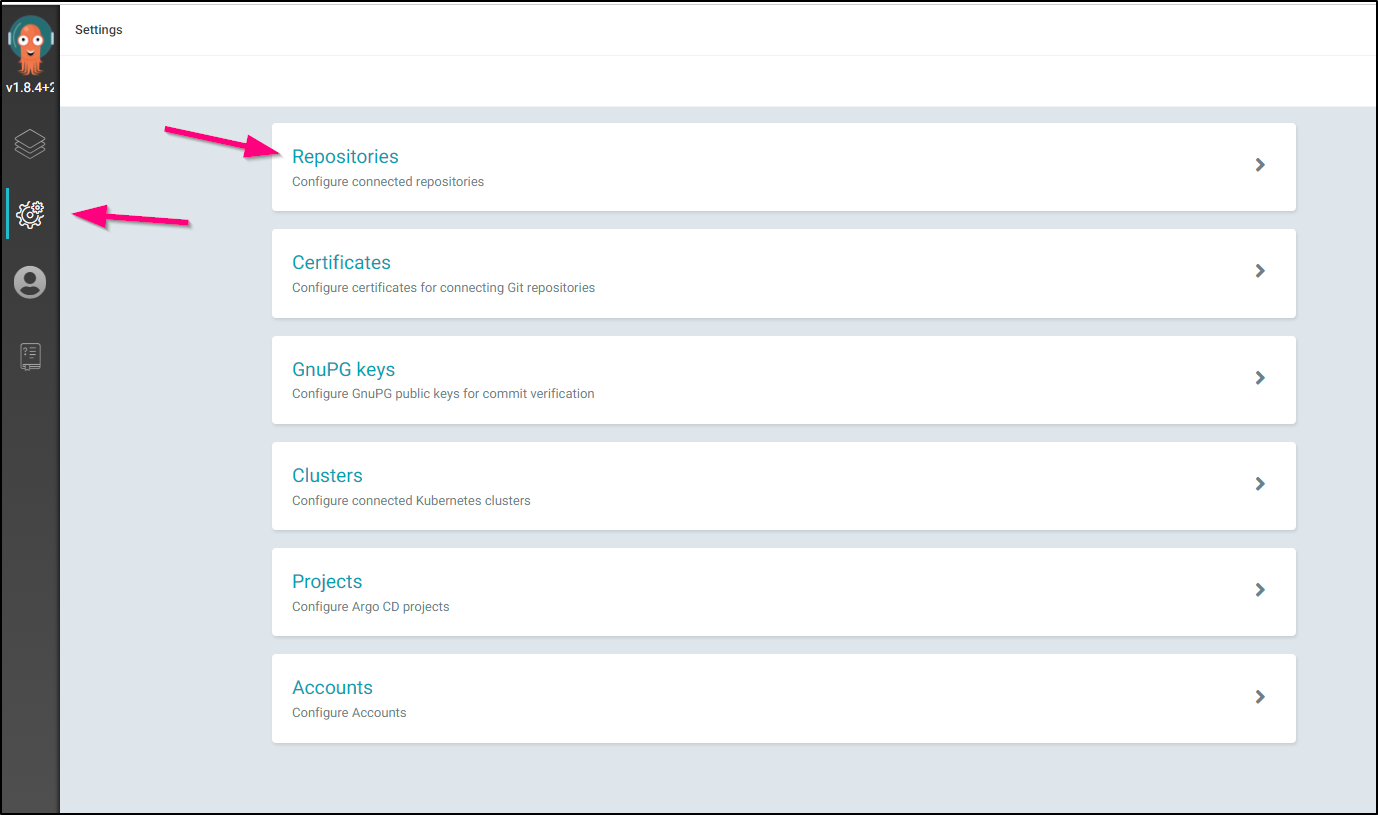

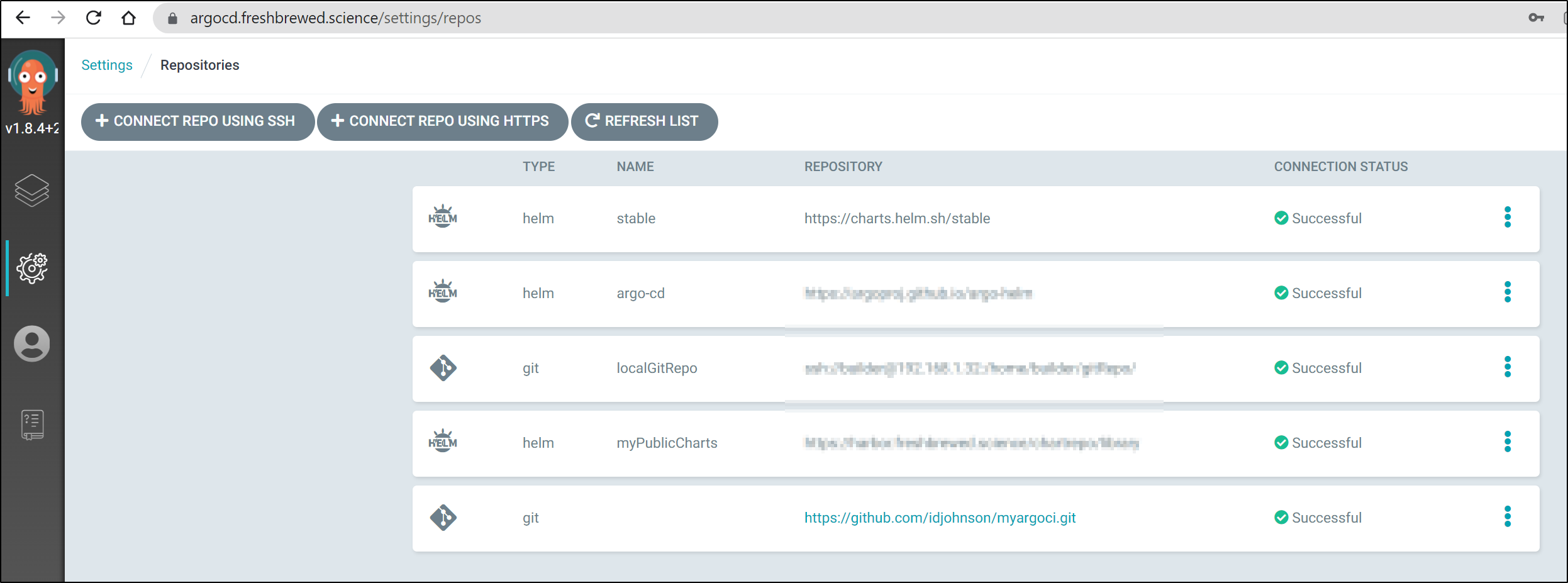

We will want to go to Settings and Repositories:

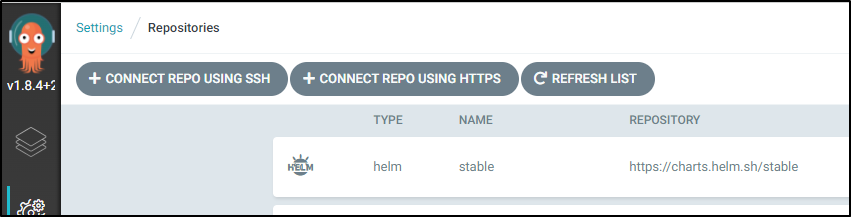

Click “+ Connect Repo using HTTPS”:

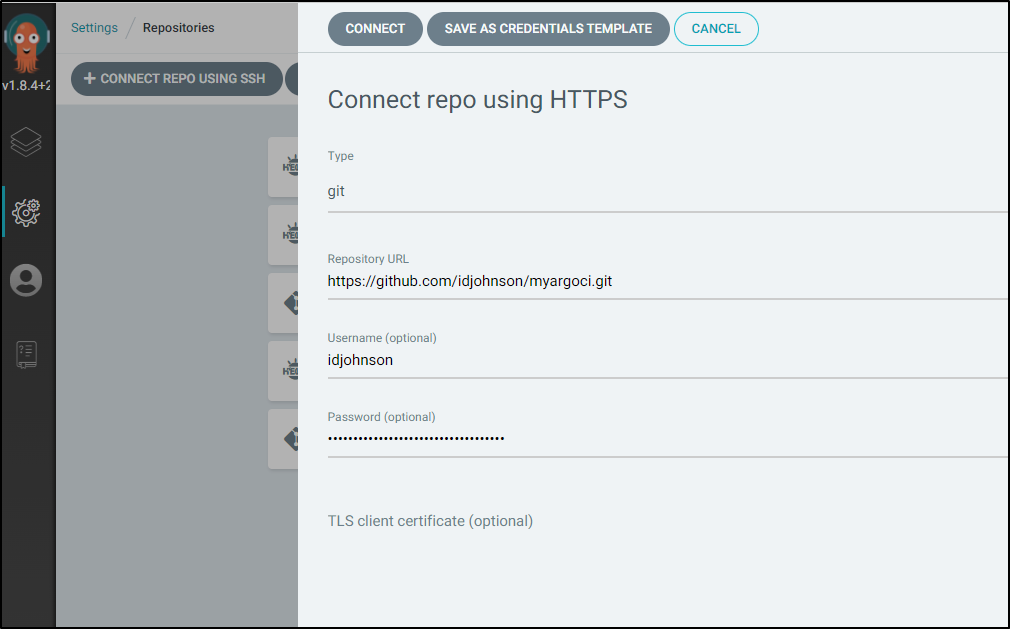

Enter your connection details. Whether you use GitHub, GitLab, Azure Repos or any other Git hosting service, it just needs the URL, user and password. If you have self-signed SSL certs, you can skip TLS verification (skip server verification) at the bottom.

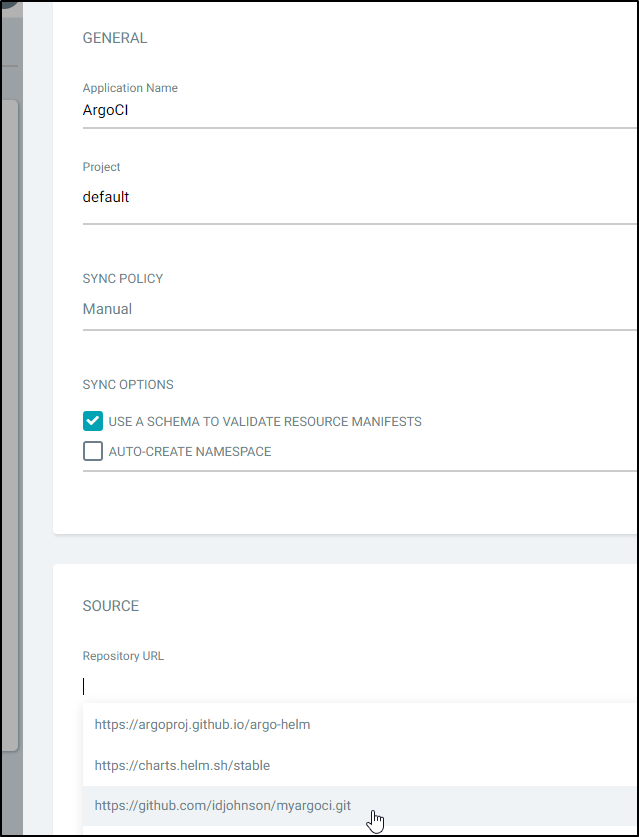

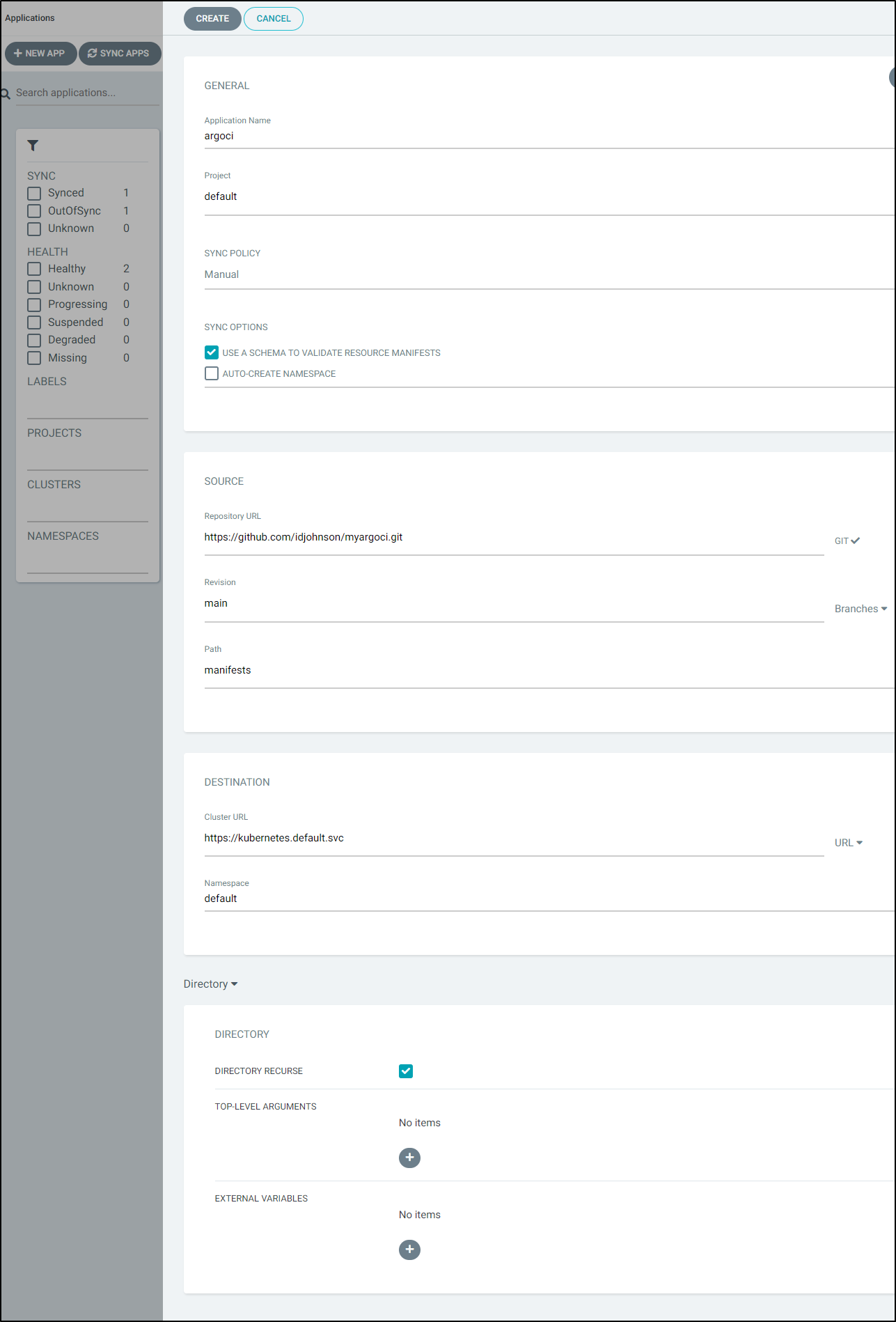

Now when we go to add the App in ArgoCD, we can pick the authenticated URL from the list.

Go ahead and create it

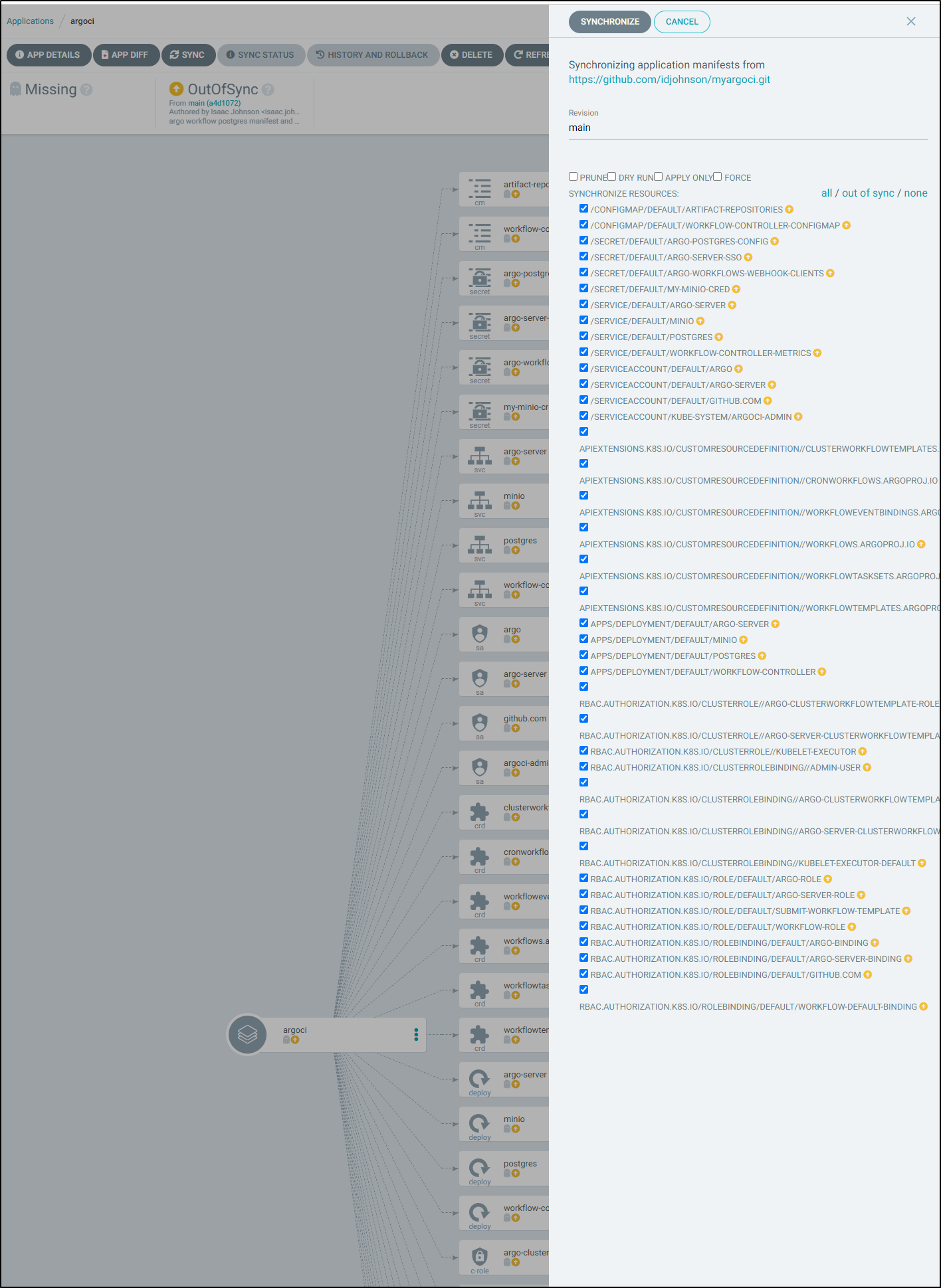

We will need to sync (as we set the sync policy to manual):

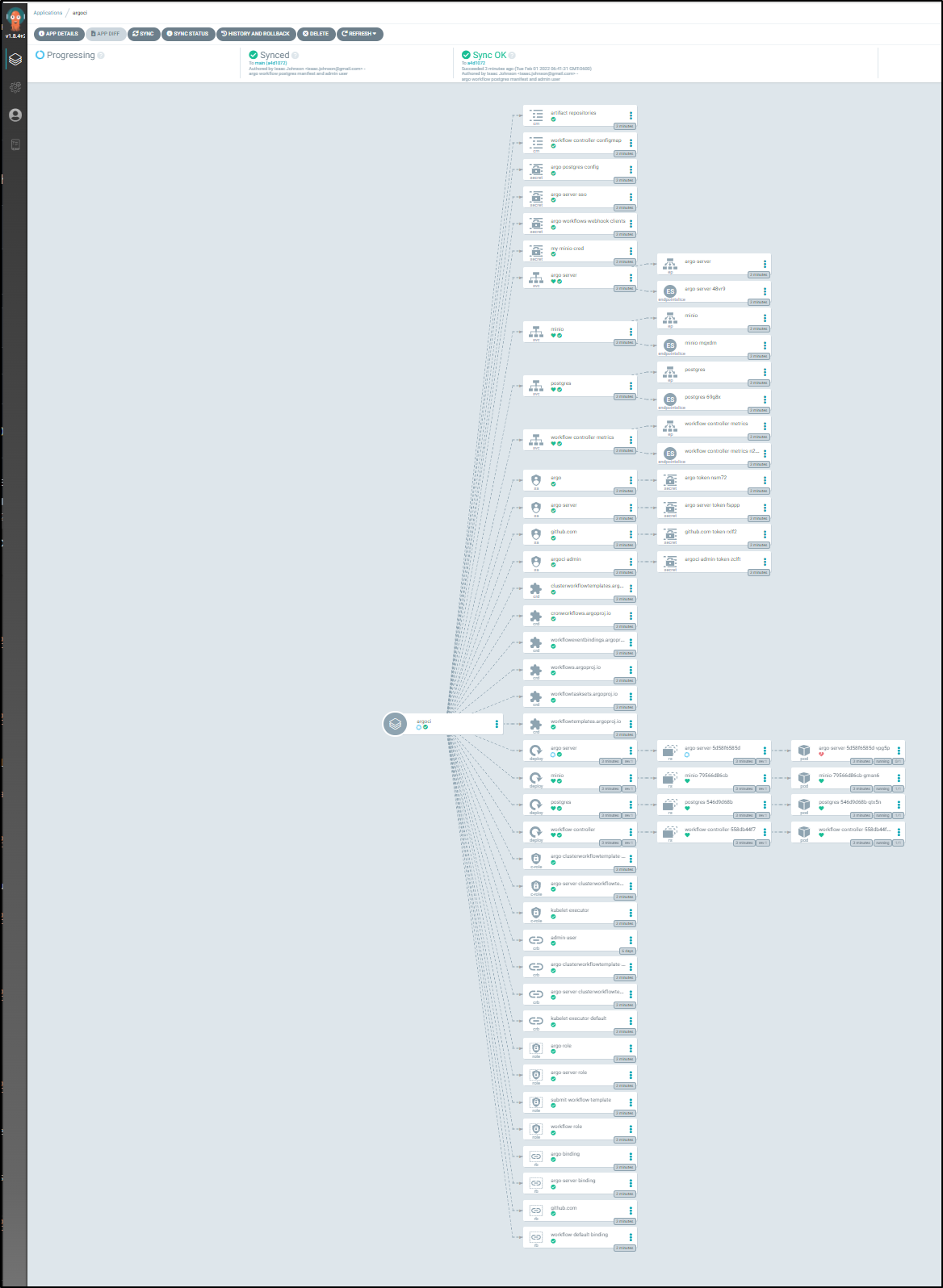

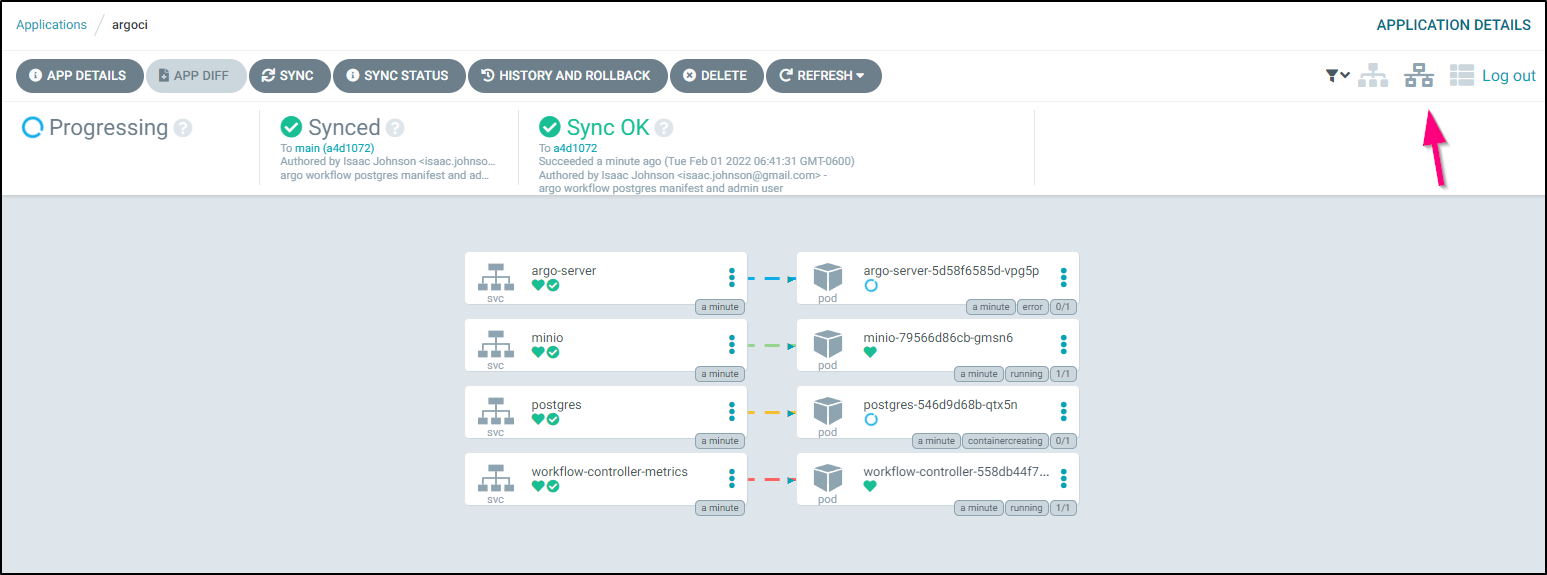

Once deployed, we can see quite a lot of things were created.

We can use the Network view to see the deployment a bit more easily.

Validation

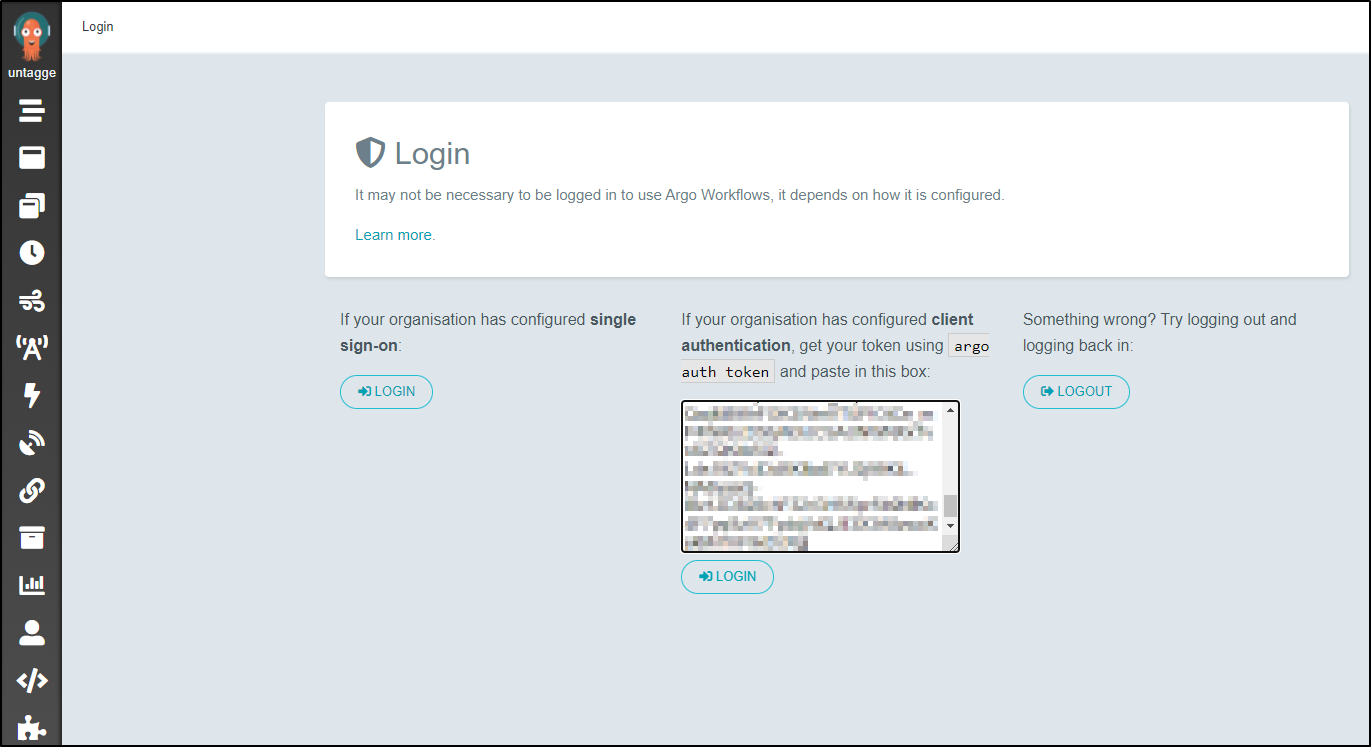

Next, we need the access token. We can review the steps, but essentially we need the base64-decoded token from the admin user we created.

$ kubectl get sa argoci-admin -n kube-system

NAME SECRETS AGE

argoci-admin 1 8m30s

$ SECRET=`kubectl get sa argoci-admin -n kube-system -o=jsonpath='{.secrets[0].name}'`

$ ARGO_TOKEN="Bearer `kubectl get secret -n kube-system $SECRET -o=jsonpath='{.data.token}' | base64 --decode`"

$ echo $ARGO_TOKEN

Bearer YXBpVmVyc2lvbjogYXJnb3Byb2ouaW8vdjFhbHBoYTEKa2luZDogV29ya2Zsb3cKbWV0YWRhdGE6CiAgZ2VuZXJhdGVOYW1lOiBidWlsZC1kaW5kLQogIGxhYmVsczoKICAgIHdvcmtmbG93cy5hcmdvcHJvai5pby9jb250YWluZXItcnVudGltZS1leGVjdXRvcjogcG5zCnNwZWM6CiAgZW50cnlwb2ludDogZGluZC1zaWRlY2FyLWV4YW1wbGUKICB2b2x1bWVzOgogIC0gbmFtZTogbXktc2VjcmV0LXZvbAogICAgc2VjcmV0OgogICAgICBzZWNyZXROYW1lOiBhcmdvLWhhcmJvci1zZWNyZXQKICB0ZW1wbGF0ZXM6CiAgLSBuYW1lOiBkaW5kLXNpZGVjYXItZXhhbXBsZQogICAgaW5wdXRzOgogICAgICBhcnRpZmFjdHM6CiAgICAgIC0gbmFtZTogYnVpbGRpdAogICAgICAgIHBhdGg6IC90bXAvYnVpbGRpdC5zaAogICAgICAgIHJhdzoKICAgICAgICAgIGRhdGE6IHwKICAgICAgICAgICAgY2F0Isdfasdfasdfasdfasfdasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfg6IC90bXAvRG9ja2VyZmlsZQogICAgICAgIHJhdzoKICAgICAgICAgIGRhdGE6IHwKICAgICAgICAgICAgRlJPTSBhbHBpbmUKICAgICAgICAgICAgQ01EIFsiZWNobyIsIkhlbGxvIEFyZ28iXQogICAgY29udGFpbmVyOgogICAgICBpbWFnZTogZG9ja2VyOjE5LjAzLjEzCiAgICAgIGNvbW1hbmQ6IFtzaCwgLWNdCiAgICAgIGFyZ3M6IFsidW50aWwgZG9ja2VyIHBzOyBkbyBzbGVlcCAzOyBkb2
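The token step is plain base64 decoding plus a `Bearer ` prefix. A minimal sketch with a made-up value standing in for the secret's `.data.token` field (the string `example-token` and the variable names `ENCODED` and `DEMO_TOKEN` are illustrative assumptions, not values from the cluster):

```shell
# Kubernetes stores Secret values base64-encoded; simulate that field here.
ENCODED=$(printf '%s' 'example-token' | base64)

# Decode and prepend "Bearer ", mirroring the ARGO_TOKEN command above.
DEMO_TOKEN="Bearer $(printf '%s' "$ENCODED" | base64 --decode)"
echo "$DEMO_TOKEN"
```

Keeping the value in double quotes matters, since the prefix and the token are separated by a space.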

Let’s first test with port-forwarding

$ kubectl port-forward svc/argo-server 2746:2746

Forwarding from 127.0.0.1:2746 -> 2746

Forwarding from [::1]:2746 -> 2746

Handling connection for 2746

Handling connection for 2746

You might be logged in by default. If so, and you want to test the token, you can log out from the user screen.

Then you can paste in that token (again, it is the service account token with the word “Bearer” at the start).

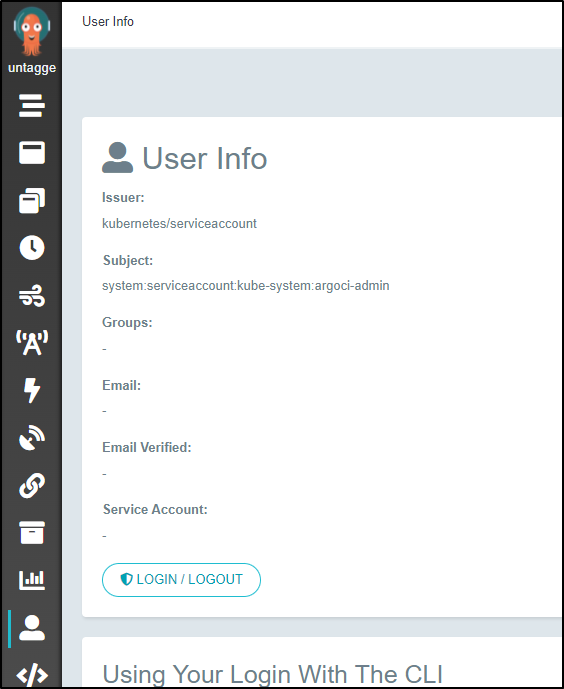

We can see that user listed now when logged in.

Ingress

Set up a Route53 A record:

$ cat r53-argoci.json

{
  "Comment": "CREATE argocd fb.s A record ",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "argoci.freshbrewed.science",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "73.242.50.46"
          }
        ]
      }
    }
  ]
}

$ aws route53 change-resource-record-sets --hosted-zone-id `aws route53 list-hosted-zones-by-name | jq -r '.HostedZones[] | select(.Name == "freshbrewed.science.") | .Id' | cut -d'/' -f 3 | tr -d '\n'` --change-batch file://r53-argoci.json

{
  "ChangeInfo": {
    "Id": "/change/C0580280HE9C9OO07A87",
    "Status": "PENDING",
    "SubmittedAt": "2022-02-01T21:20:31.768Z",
    "Comment": "CREATE argocd fb.s A record "
  }
}
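The backtick expression in that command looks up the hosted zone and trims its `Id` down to the bare zone ID, since Route53 returns it in the form `/hostedzone/<ID>`. A sketch of just the trimming step, with a placeholder ID (`Z0123456789EXAMPLE` is made up, as are the variable names):

```shell
# Route53 reports hosted zone Ids as "/hostedzone/<ID>"; this value is a placeholder.
RAW_ID='/hostedzone/Z0123456789EXAMPLE'

# Splitting on "/" gives an empty first field, then "hostedzone", then the ID (field 3).
ZONE_ID=$(printf '%s\n' "$RAW_ID" | cut -d'/' -f 3 | tr -d '\n')
echo "$ZONE_ID"
```

Capturing the ID in a variable first also makes the long `change-resource-record-sets` command easier to read and re-run.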

With the Route53 record set, we can create our Ingress with TLS:

$ cat argoci-ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
    nginx.ingress.kubernetes.io/ssl-passthrough: "true"
    #nginx.ingress.kubernetes.io/force-ssl-redirect: "true"
    kubernetes.io/tls-acme: "true"
    kubernetes.io/ingress.class: nginx
    cert-manager.io/cluster-issuer: "letsencrypt-prod"
  name: argoci-with-auth
  namespace: default
spec:
  tls:
  - hosts:
    - argoci.freshbrewed.science
    secretName: argoci-tls
  rules:
  - host: argoci.freshbrewed.science
    http:
      paths:
      - backend:
          service:
            name: argo-server
            port:
              number: 2746
        path: /
        pathType: Prefix

$ kubectl apply -f argoci-ingress.yaml

ingress.networking.k8s.io/argoci-with-auth created

Note: in experimenting, I found that, at least with my NGINX ingress controller, I needed to run kubectl delete -f on the Ingress file before I could apply it again. A repeated apply did not seem to change the values.

Now we can see it.

Validation

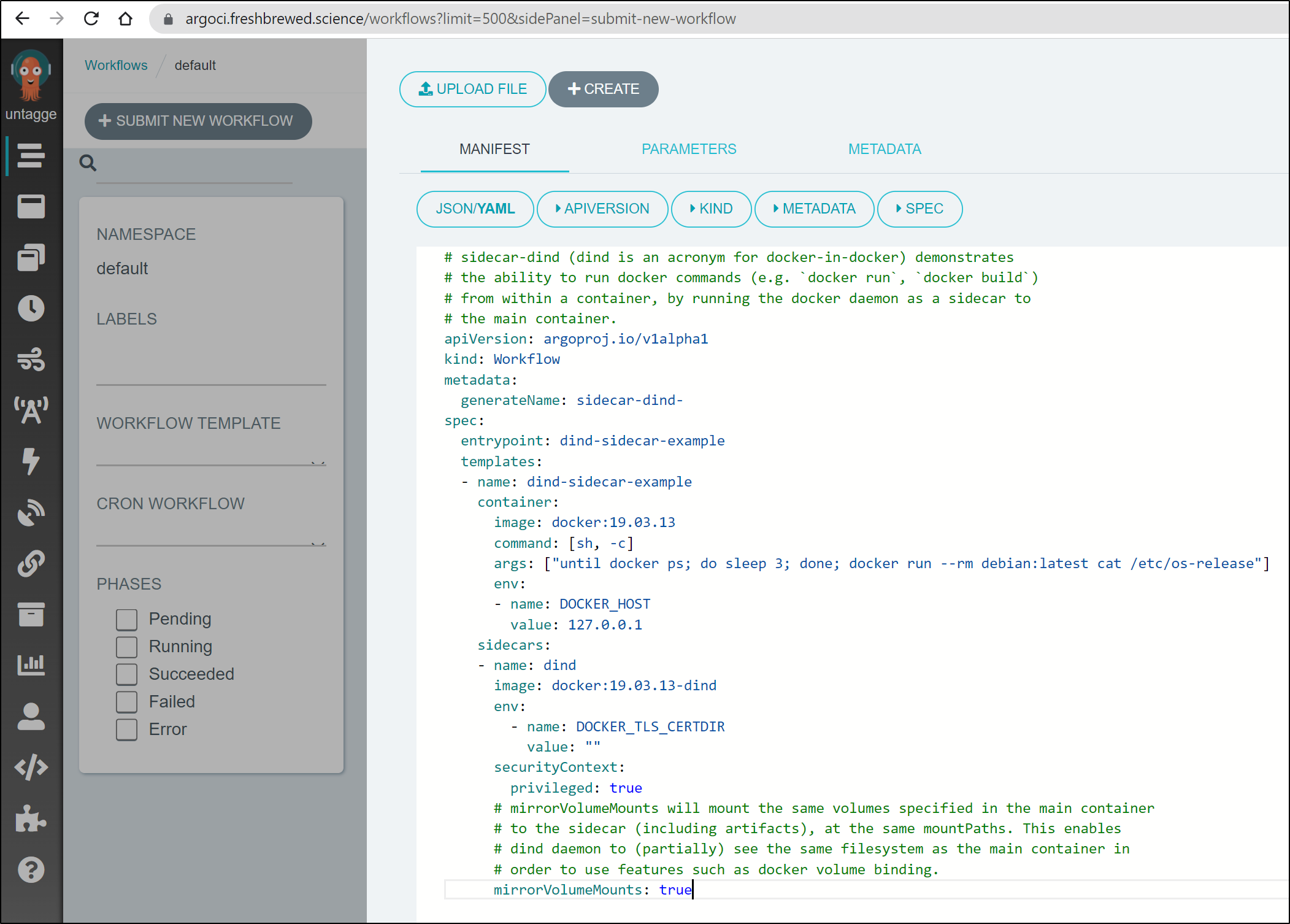

This time, let’s try running a Docker-in-Docker (DinD) Job.

The first time, I attempted using the UI.

But when it failed, I soon realized that the workflow now needs a label:

labels:
  workflows.argoproj.io/container-runtime-executor: pns

That made it look like this:

$ cat ./sidecar-dind.yaml

# sidecar-dind (dind is an acronym for docker-in-docker) demonstrates
# the ability to run docker commands (e.g. `docker run`, `docker build`)
# from within a container, by running the docker daemon as a sidecar to
# the main container.
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: sidecar-dind-
  labels:
    workflows.argoproj.io/container-runtime-executor: pns
spec:
  entrypoint: dind-sidecar-example
  templates:
  - name: dind-sidecar-example
    container:
      image: docker:19.03.13
      command: [sh, -c]
      args: ["until docker ps; do sleep 3; done; docker run --rm debian:latest cat /etc/os-release"]
      env:
      - name: DOCKER_HOST
        value: 127.0.0.1
    sidecars:
    - name: dind
      image: docker:19.03.13-dind
      env:
      - name: DOCKER_TLS_CERTDIR
        value: ""
      securityContext:
        privileged: true
      # mirrorVolumeMounts will mount the same volumes specified in the main container
      # to the sidecar (including artifacts), at the same mountPaths. This enables
      # dind daemon to (partially) see the same filesystem as the main container in
      # order to use features such as docker volume binding.
      mirrorVolumeMounts: true

If we have not already, install the Argo CLI (see the releases page for the latest release and steps):

$ curl -sLO https://github.com/argoproj/argo-workflows/releases/download/v3.2.8/argo-linux-amd64.gz

$ gunzip argo-linux-amd64.gz

$ chmod +x argo-linux-amd64

$ mv ./argo-linux-amd64 /usr/local/bin/argo

mv: cannot move './argo-linux-amd64' to '/usr/local/bin/argo': Permission denied

$ sudo mv ./argo-linux-amd64 /usr/local/bin/argo

[sudo] password for builder:
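To make later upgrades easier, the download URL can be built from a version variable. A small sketch that only assembles and prints the URL, following the release asset naming used above (`ARGO_VERSION` and `ARGO_URL` are names chosen here for illustration):

```shell
# Version tag taken from the release used above; change it to upgrade.
ARGO_VERSION="v3.2.8"

# Same URL pattern as the curl command above, with the version substituted.
ARGO_URL="https://github.com/argoproj/argo-workflows/releases/download/${ARGO_VERSION}/argo-linux-amd64.gz"
echo "$ARGO_URL"
```

The result can then be passed to `curl -sLO "$ARGO_URL"` and unpacked with the same gunzip, chmod and mv steps.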

We can submit this on the command line:

$ argo submit --watch ./sidecar-dind.yaml

This workflow does not have security context set. You can run your workflow pods more securely by setting it.

Learn more at https://argoproj.github.io/argo-workflows/workflow-pod-security-context/

Name: sidecar-dind-q6v5j

Namespace: default

ServiceAccount: default

Status: Succeeded

Conditions:

PodRunning False

Completed True

Created: Wed Feb 02 20:15:56 -0600 (1 minute ago)

Started: Wed Feb 02 20:15:56 -0600 (1 minute ago)

Finished: Wed Feb 02 20:17:09 -0600 (now)

Duration: 1 minute 13 seconds

Progress: 1/1

ResourcesDuration: 2m2s*(1 cpu),1m40s*(100Mi memory)

STEP TEMPLATE PODNAME DURATION MESSAGE

✔ sidecar-dind-q6v5j dind-sidecar-example sidecar-dind-q6v5j 1m

This workflow does not have security context set. You can run your workflow pods more securely by setting it.

Learn more at https://argoproj.github.io/argo-workflows/workflow-pod-security-context/

We can also fetch the logs from the command line:

$ argo logs @latest

sidecar-dind-q6v5j: Cannot connect to the Docker daemon at tcp://127.0.0.1:2375. Is the docker daemon running?

sidecar-dind-q6v5j: Cannot connect to the Docker daemon at tcp://127.0.0.1:2375. Is the docker daemon running?

sidecar-dind-q6v5j: Cannot connect to the Docker daemon at tcp://127.0.0.1:2375. Is the docker daemon running?

sidecar-dind-q6v5j: Cannot connect to the Docker daemon at tcp://127.0.0.1:2375. Is the docker daemon running?

sidecar-dind-q6v5j: CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

sidecar-dind-q6v5j: Unable to find image 'debian:latest' locally

sidecar-dind-q6v5j: latest: Pulling from library/debian

sidecar-dind-q6v5j: 0c6b8ff8c37e: Pulling fs layer

sidecar-dind-q6v5j: 0c6b8ff8c37e: Verifying Checksum

sidecar-dind-q6v5j: 0c6b8ff8c37e: Download complete

sidecar-dind-q6v5j: 0c6b8ff8c37e: Pull complete

sidecar-dind-q6v5j: Digest: sha256:fb45fd4e25abe55a656ca69a7bef70e62099b8bb42a279a5e0ea4ae1ab410e0d

sidecar-dind-q6v5j: Status: Downloaded newer image for debian:latest

sidecar-dind-q6v5j: PRETTY_NAME="Debian GNU/Linux 11 (bullseye)"

sidecar-dind-q6v5j: NAME="Debian GNU/Linux"

sidecar-dind-q6v5j: VERSION_ID="11"

sidecar-dind-q6v5j: VERSION="11 (bullseye)"

sidecar-dind-q6v5j: VERSION_CODENAME=bullseye

sidecar-dind-q6v5j: ID=debian

sidecar-dind-q6v5j: HOME_URL="https://www.debian.org/"

sidecar-dind-q6v5j: SUPPORT_URL="https://www.debian.org/support"

sidecar-dind-q6v5j: BUG_REPORT_URL="https://bugs.debian.org/"

Next Steps

We showed we can pull containers, but can we build a Dockerfile in DinD?

Indeed, with a little update to our yaml:

apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: sidecar-dind-
  labels:
    workflows.argoproj.io/container-runtime-executor: pns
spec:
  entrypoint: dind-sidecar-example
  templates:
  - name: dind-sidecar-example
    container:
      image: docker:19.03.13
      command: [sh, -c]
      args: ["until docker ps; do sleep 3; done; echo 'hello' && cd /tmp && echo 'FROM alpine' > Dockerfile && echo 'CMD [\"echo\",\"Hello Argo\"]' >> Dockerfile && cat Dockerfile && docker build -t hello ."]
      env:
      - name: DOCKER_HOST
        value: 127.0.0.1
    sidecars:
    - name: dind
      image: docker:19.03.13-dind
      env:
      - name: DOCKER_TLS_CERTDIR
        value: ""
      securityContext:
        privileged: true
      # mirrorVolumeMounts will mount the same volumes specified in the main container
      # to the sidecar (including artifacts), at the same mountPaths. This enables
      # dind daemon to (partially) see the same filesystem as the main container in
      # order to use features such as docker volume binding.
      mirrorVolumeMounts: true

It builds without issue:

$ argo submit --watch ./sidecar-dnd.yaml

$ argo logs @latest

sidecar-dind-n5ptj: Cannot connect to the Docker daemon at tcp://127.0.0.1:2375. Is the docker daemon running?

sidecar-dind-n5ptj: CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

sidecar-dind-n5ptj: hello

sidecar-dind-n5ptj: FROM alpine

sidecar-dind-n5ptj: CMD ["echo","Hello Argo"]

sidecar-dind-n5ptj: Sending build context to Docker daemon 2.048kB

sidecar-dind-n5ptj: Step 1/2 : FROM alpine

sidecar-dind-n5ptj: latest: Pulling from library/alpine

sidecar-dind-n5ptj: 59bf1c3509f3: Pulling fs layer

sidecar-dind-n5ptj: 59bf1c3509f3: Verifying Checksum

sidecar-dind-n5ptj: 59bf1c3509f3: Download complete

sidecar-dind-n5ptj: 59bf1c3509f3: Pull complete

sidecar-dind-n5ptj: Digest: sha256:21a3deaa0d32a8057914f36584b5288d2e5ecc984380bc0118285c70fa8c9300

sidecar-dind-n5ptj: Status: Downloaded newer image for alpine:latest

sidecar-dind-n5ptj: ---> c059bfaa849c

sidecar-dind-n5ptj: Step 2/2 : CMD ["echo","Hello Argo"]

sidecar-dind-n5ptj: ---> Running in f9273e55574f

sidecar-dind-n5ptj: Removing intermediate container f9273e55574f

sidecar-dind-n5ptj: ---> 29a0b45e3051

sidecar-dind-n5ptj: Successfully built 29a0b45e3051

sidecar-dind-n5ptj: Successfully tagged hello:latest

sidecar-dind-n5ptj: Hello Argo

when run:

$ argo submit --watch ./sidecar-dnd.yaml

Name: sidecar-dind-dj4fd

Namespace: default

ServiceAccount: default

Status: Succeeded

Conditions:

PodRunning False

Completed True

Created: Fri Feb 04 17:08:14 -0600 (2 seconds ago)

Started: Fri Feb 04 17:08:14 -0600 (2 seconds ago)

Finished: Fri Feb 04 17:08:34 -0600 (17 seconds from now)

Duration: 20 seconds

Progress: 1/1

ResourcesDuration: 21s*(1 cpu),17s*(100Mi memory)

STEP TEMPLATE PODNAME DURATION MESSAGE

✔ sidecar-dind-dj4fd dind-sidecar-example sidecar-dind-dj4fd 10s

This workflow does not have security context set. You can run your workflow pods more securely by setting it.

Learn more at https://argoproj.github.io/argo-workflows/workflow-pod-security-context/

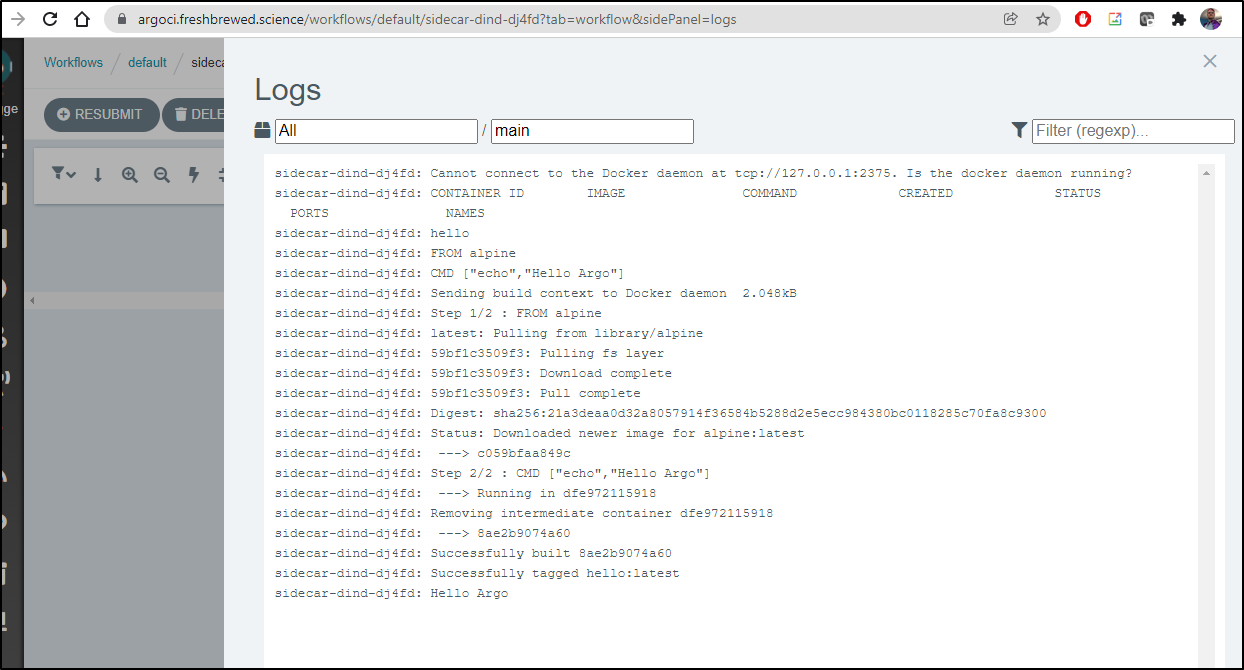

The logs

$ argo logs @latest

sidecar-dind-dj4fd: Cannot connect to the Docker daemon at tcp://127.0.0.1:2375. Is the docker daemon running?

sidecar-dind-dj4fd: CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

sidecar-dind-dj4fd: hello

sidecar-dind-dj4fd: FROM alpine

sidecar-dind-dj4fd: CMD ["echo","Hello Argo"]

sidecar-dind-dj4fd: Sending build context to Docker daemon 2.048kB

sidecar-dind-dj4fd: Step 1/2 : FROM alpine

sidecar-dind-dj4fd: latest: Pulling from library/alpine

sidecar-dind-dj4fd: 59bf1c3509f3: Pulling fs layer

sidecar-dind-dj4fd: 59bf1c3509f3: Download complete

sidecar-dind-dj4fd: 59bf1c3509f3: Pull complete

sidecar-dind-dj4fd: Digest: sha256:21a3deaa0d32a8057914f36584b5288d2e5ecc984380bc0118285c70fa8c9300

sidecar-dind-dj4fd: Status: Downloaded newer image for alpine:latest

sidecar-dind-dj4fd: ---> c059bfaa849c

sidecar-dind-dj4fd: Step 2/2 : CMD ["echo","Hello Argo"]

sidecar-dind-dj4fd: ---> Running in dfe972115918

sidecar-dind-dj4fd: Removing intermediate container dfe972115918

sidecar-dind-dj4fd: ---> 8ae2b9074a60

sidecar-dind-dj4fd: Successfully built 8ae2b9074a60

sidecar-dind-dj4fd: Successfully tagged hello:latest

sidecar-dind-dj4fd: Hello Argo

And of course, we can see the same logs in the UI.

Can we take this a step further and build and push Docker images?

Here I’ll reset the demo password for use in the flow and try it:

apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: sidecar-dind-
  labels:
    workflows.argoproj.io/container-runtime-executor: pns
spec:
  entrypoint: dind-sidecar-example
  templates:
  - name: dind-sidecar-example
    container:
      image: docker:19.03.13
      command: [sh, -c]
      args: ["until docker ps; do sleep 3; done; echo 'hello' && cd /tmp && echo 'FROM alpine' > Dockerfile && echo 'CMD [\"echo\",\"Hello Argo\"]' >> Dockerfile && cat Dockerfile && docker build -t hello . && echo Testing1234 | docker login --username chrdemo --password-stdin https://harbor.freshbrewed.science/freshbrewedprivate && echo 'going to tag' && docker tag hello:latest harbor.freshbrewed.science/freshbrewedprivate/hello-argo:latest && echo 'going to push' && docker push harbor.freshbrewed.science/freshbrewedprivate/hello-argo:latest"]
      env:
      - name: DOCKER_HOST
        value: 127.0.0.1
    sidecars:
    - name: dind
      image: docker:19.03.13-dind
      env:
      - name: DOCKER_TLS_CERTDIR
        value: ""
      securityContext:
        privileged: true
      # mirrorVolumeMounts will mount the same volumes specified in the main container
      # to the sidecar (including artifacts), at the same mountPaths. This enables
      # dind daemon to (partially) see the same filesystem as the main container in
      # order to use features such as docker volume binding.
      mirrorVolumeMounts: true

The steps, if we split on the &&, are:

until docker ps; do sleep 3; done; echo 'hello'

cd /tmp

echo 'FROM alpine' > Dockerfile

echo 'CMD ["echo","Hello Argo"]' >> Dockerfile

cat Dockerfile

docker build -t hello .

echo Testing1234 | docker login --username chrdemo --password-stdin https://harbor.freshbrewed.science/freshbrewedprivate

echo 'going to tag'

docker tag hello:latest harbor.freshbrewed.science/freshbrewedprivate/hello-argo:latest

echo 'going to push'

docker push harbor.freshbrewed.science/freshbrewedprivate/hello-argo:latest
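One detail when running these steps by hand: inside the workflow, args is a double-quoted YAML string, so the inner quotes are written as \" there. Once the YAML is parsed, the shell receives plain double quotes. A sketch of just the Dockerfile-writing steps as the shell sees them, run in a scratch directory instead of /tmp (the `WORKDIR` and `DOCKERFILE_CONTENT` variables are assumptions for illustration):

```shell
# Work in a throwaway directory so nothing in /tmp is overwritten.
WORKDIR=$(mktemp -d)
cd "$WORKDIR" || exit 1

# The same two echo steps, without the YAML-level backslashes.
echo 'FROM alpine' > Dockerfile
echo 'CMD ["echo","Hello Argo"]' >> Dockerfile

cat Dockerfile
DOCKERFILE_CONTENT=$(cat Dockerfile)

# Clean up the scratch directory.
cd / && rm -rf "$WORKDIR"
```

The docker build, login, tag and push steps are left out here because they need a running Docker daemon and registry credentials.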

When run, we can see this works:

Name: sidecar-dind-lptgf

Namespace: default

ServiceAccount: default

Status: Succeeded

Conditions:

PodRunning False

Completed True

Created: Fri Feb 04 17:52:57 -0600 (51 seconds ago)

Started: Fri Feb 04 17:52:57 -0600 (51 seconds ago)

Finished: Fri Feb 04 17:54:06 -0600 (17 seconds from now)

Duration: 1 minute 9 seconds

Progress: 1/1

ResourcesDuration: 1m17s*(1 cpu),1m6s*(100Mi memory)

STEP TEMPLATE PODNAME DURATION MESSAGE

✔ sidecar-dind-lptgf dind-sidecar-example sidecar-dind-lptgf 1m

This workflow does not have security context set. You can run your workflow pods more securely by setting it.

Learn more at https://argoproj.github.io/argo-workflows/workflow-pod-security-context/

Logs:

$ argo logs @latest

sidecar-dind-lptgf: Cannot connect to the Docker daemon at tcp://127.0.0.1:2375. Is the docker daemon running?

sidecar-dind-lptgf: CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

sidecar-dind-lptgf: hello

sidecar-dind-lptgf: FROM alpine

sidecar-dind-lptgf: CMD ["echo","Hello Argo"]

sidecar-dind-lptgf: Sending build context to Docker daemon 2.048kB

sidecar-dind-lptgf: Step 1/2 : FROM alpine

sidecar-dind-lptgf: latest: Pulling from library/alpine

sidecar-dind-lptgf: 59bf1c3509f3: Pulling fs layer

sidecar-dind-lptgf: 59bf1c3509f3: Download complete

sidecar-dind-lptgf: 59bf1c3509f3: Pull complete

sidecar-dind-lptgf: Digest: sha256:21a3deaa0d32a8057914f36584b5288d2e5ecc984380bc0118285c70fa8c9300

sidecar-dind-lptgf: Status: Downloaded newer image for alpine:latest

sidecar-dind-lptgf: ---> c059bfaa849c

sidecar-dind-lptgf: Step 2/2 : CMD ["echo","Hello Argo"]

sidecar-dind-lptgf: ---> Running in 45d793756aed

sidecar-dind-lptgf: Removing intermediate container 45d793756aed

sidecar-dind-lptgf: ---> 397a6d70ecae

sidecar-dind-lptgf: Successfully built 397a6d70ecae

sidecar-dind-lptgf: Successfully tagged hello:latest

sidecar-dind-lptgf: WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

sidecar-dind-lptgf: Configure a credential helper to remove this warning. See

sidecar-dind-lptgf: https://docs.docker.com/engine/reference/commandline/login/#credentials-store

sidecar-dind-lptgf:

sidecar-dind-lptgf: Login Succeeded

sidecar-dind-lptgf: going to tag

sidecar-dind-lptgf: going to push

sidecar-dind-lptgf: The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/hello-argo]

sidecar-dind-lptgf: 8d3ac3489996: Preparing

sidecar-dind-lptgf: 8d3ac3489996: Pushed

sidecar-dind-lptgf: latest: digest: sha256:ebdc1b52363811844e946ad2775ff93e8c9d88c5b0c70407855054c6e368b5bd size: 528

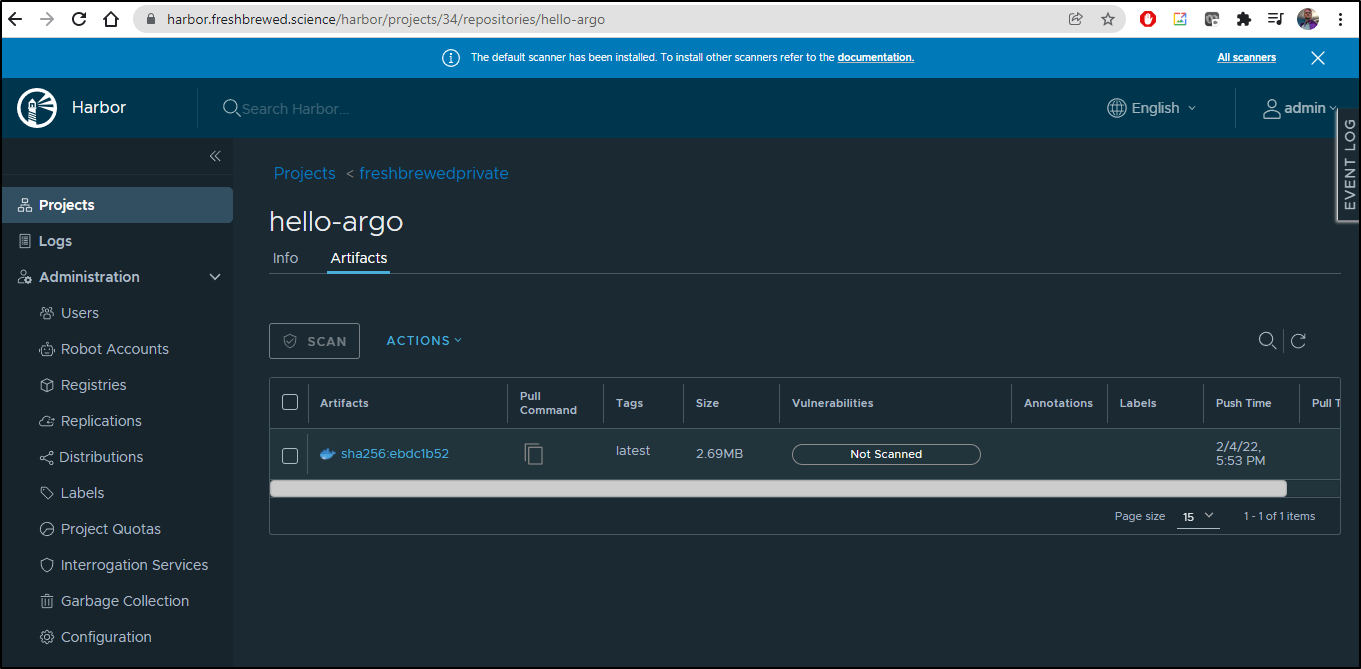

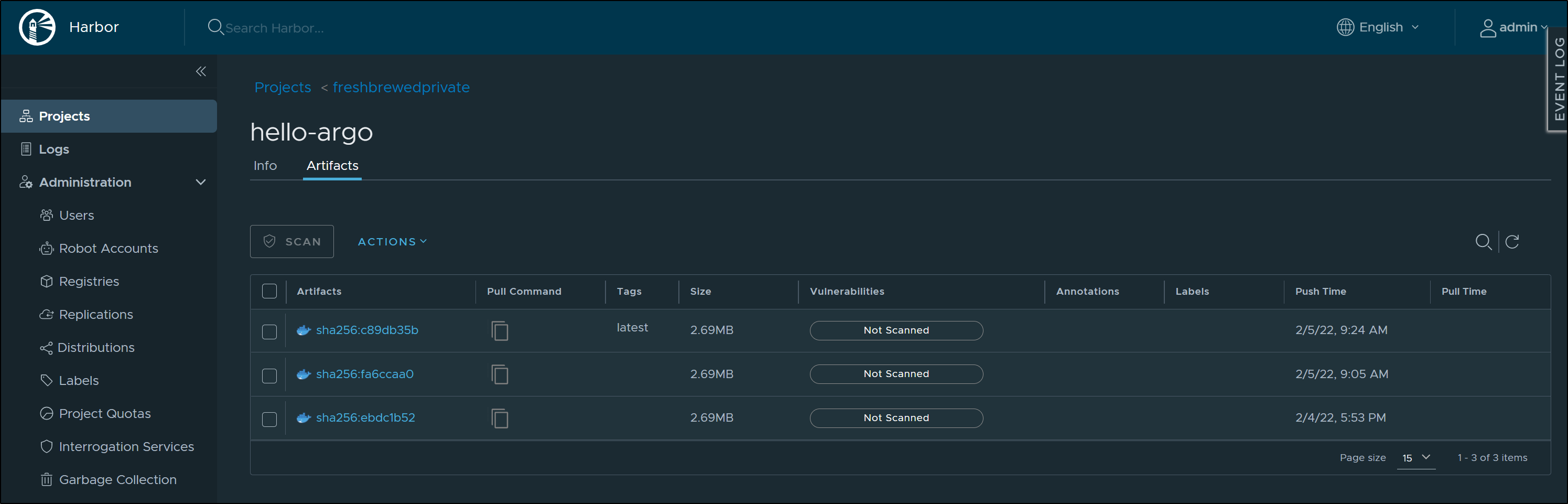

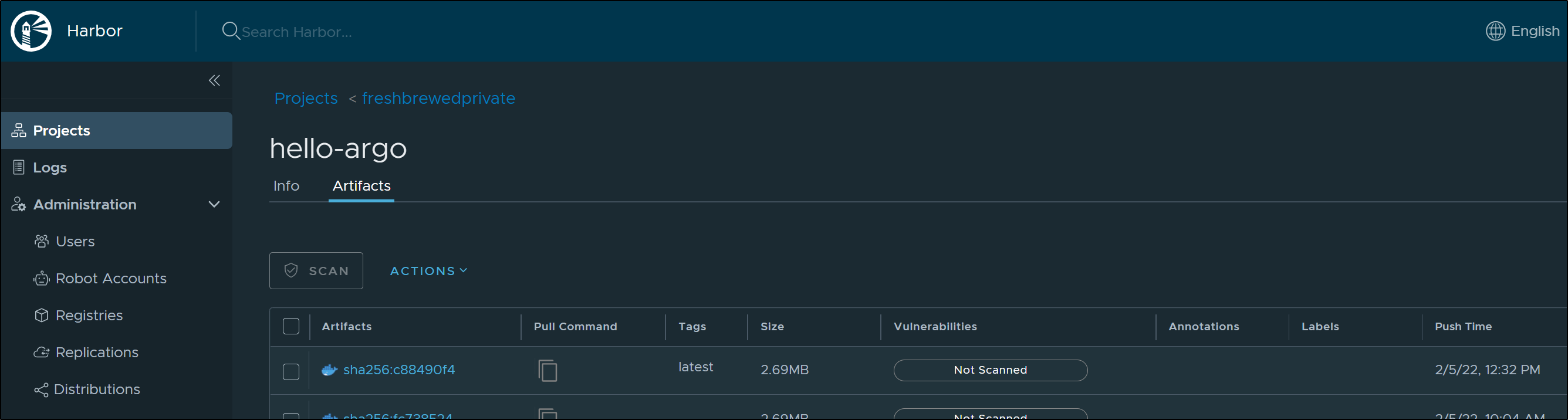

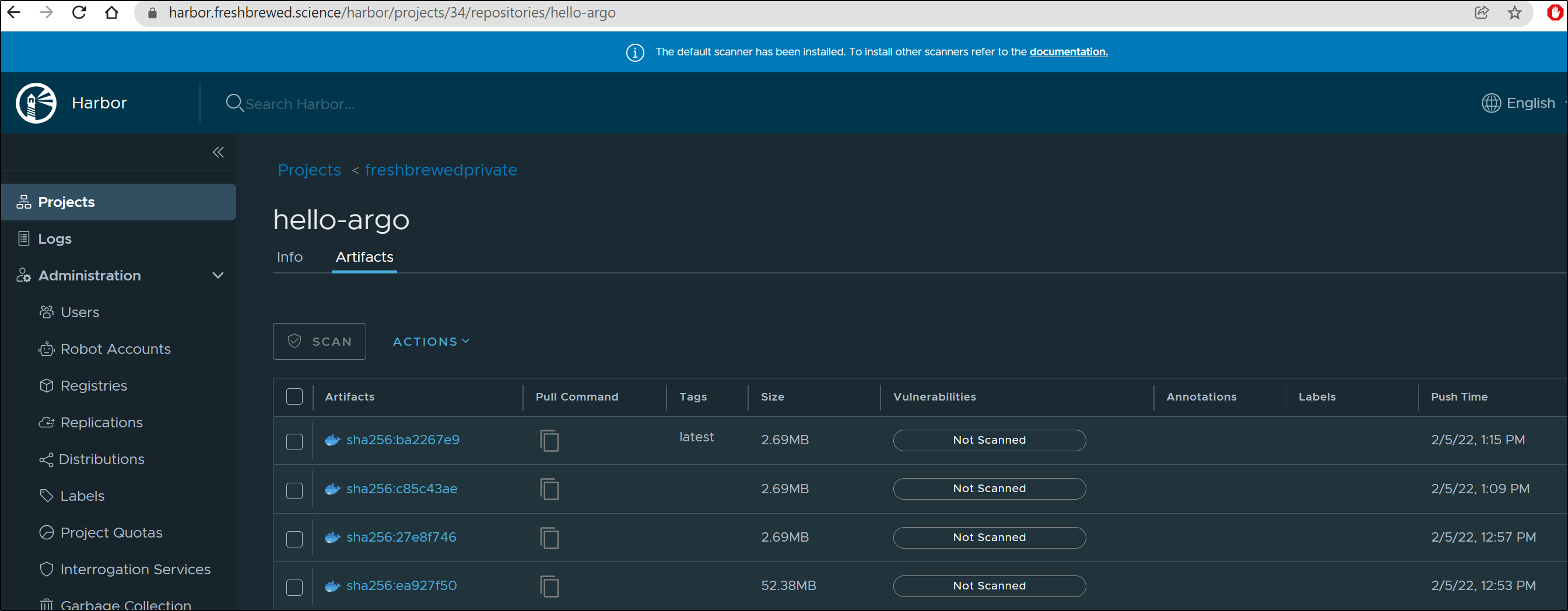

And lastly, confirmation from our private repo:

We can make it a bit more readable by moving the build script and Dockerfile into raw data blocks that will be mounted into the file system:

apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: build-dind-
  labels:
    workflows.argoproj.io/container-runtime-executor: pns
spec:
  entrypoint: dind-sidecar-example
  templates:
  - name: dind-sidecar-example
    inputs:
      artifacts:
      - name: buildit
        path: /tmp/buildit.sh
        raw:
          data: |
            cat Dockerfile
            docker build -t hello .
            echo Testing1234 | docker login --username chrdemo --password-stdin https://harbor.freshbrewed.science/freshbrewedprivate
            echo 'going to tag'
            docker tag hello:latest harbor.freshbrewed.science/freshbrewedprivate/hello-argo:latest
            echo 'going to push'
            docker push harbor.freshbrewed.science/freshbrewedprivate/hello-argo:latest
      - name: dockerfile
        path: /tmp/Dockerfile
        raw:
          data: |
            FROM alpine
            CMD ["echo","Hello Argo"]
    container:
      image: docker:19.03.13
      command: [sh, -c]
      args: ["until docker ps; do sleep 3; done; echo 'hello' && cd /tmp && chmod u+x buildit.sh && ./buildit.sh"]
      env:
      - name: DOCKER_HOST
        value: 127.0.0.1
    sidecars:
    - name: dind
      image: docker:19.03.13-dind
      env:
      - name: DOCKER_TLS_CERTDIR
        value: ""
      securityContext:
        privileged: true
      # mirrorVolumeMounts will mount the same volumes specified in the main container
      # to the sidecar (including artifacts), at the same mountPaths. This enables
      # dind daemon to (partially) see the same filesystem as the main container in
      # order to use features such as docker volume binding.
      mirrorVolumeMounts: true

Secrets to Workflows

Since we will want to check in the files and it is generally poor form to check in plain text passwords, let’s move the Harbor password to a k8s secret:

$ kubectl create secret generic argo-harbor-secret --from-literal=harborpassword=Testing1234 --from-literal=harborusername=chrdemo

secret/argo-harbor-secret created

We can now mount the secret as a volume or variable:

$ cat ./argoDocker.yml

apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: build-dind-
  labels:
    workflows.argoproj.io/container-runtime-executor: pns
spec:
  entrypoint: dind-sidecar-example
  volumes:
  - name: my-secret-vol
    secret:
      secretName: argo-harbor-secret
  templates:
  - name: dind-sidecar-example
    inputs:
      artifacts:
      - name: buildit
        path: /tmp/buildit.sh
        raw:
          data: |
            cat Dockerfile
            docker build -t hello .
            echo $HARBORPASS | docker login --username $HARBORUSER --password-stdin https://harbor.freshbrewed.science/freshbrewedprivate
            echo 'going to tag'
            docker tag hello:latest harbor.freshbrewed.science/freshbrewedprivate/hello-argo:latest
            echo 'going to push'
            docker push harbor.freshbrewed.science/freshbrewedprivate/hello-argo:latest
      - name: dockerfile
        path: /tmp/Dockerfile
        raw:
          data: |
            FROM alpine
            CMD ["echo","Hello Argo"]
    container:
      image: docker:19.03.13
      command: [sh, -c]
      args: ["until docker ps; do sleep 3; done; echo 'hello' && cd /tmp && chmod u+x buildit.sh && ./buildit.sh"]
      env:
      - name: DOCKER_HOST
        value: 127.0.0.1
      - name: HARBORPASS
        valueFrom:
          secretKeyRef:
            name: argo-harbor-secret
            key: harborpassword
      - name: HARBORUSER
        valueFrom:
          secretKeyRef:
            name: argo-harbor-secret
            key: harborusername
      volumeMounts:
      - name: my-secret-vol
        mountPath: "/secret/mountpath"
    sidecars:
    - name: dind
      image: docker:19.03.13-dind
      env:
      - name: DOCKER_TLS_CERTDIR
        value: ""
      securityContext:
        privileged: true
      mirrorVolumeMounts: true

$ argo submit --watch ./argoDocker.yml

Name: build-dind-hxhsr

Namespace: default

ServiceAccount: default

Status: Succeeded

Conditions:

PodRunning False

Completed True

Created: Sat Feb 05 09:24:29 -0600 (34 seconds ago)

Started: Sat Feb 05 09:24:29 -0600 (34 seconds ago)

Finished: Sat Feb 05 09:25:04 -0600 (now)

Duration: 35 seconds

Progress: 1/1

ResourcesDuration: 44s*(1 cpu),37s*(100Mi memory)

STEP TEMPLATE PODNAME DURATION MESSAGE

✔ build-dind-hxhsr dind-sidecar-example build-dind-hxhsr 27s

This workflow does not have security context set. You can run your workflow pods more securely by setting it.

Learn more at https://argoproj.github.io/argo-workflows/workflow-pod-security-context/

And we can review the logs:

$ argo logs @latest

build-dind-hxhsr: Cannot connect to the Docker daemon at tcp://127.0.0.1:2375. Is the docker daemon running?

build-dind-hxhsr: CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

build-dind-hxhsr: hello

build-dind-hxhsr: FROM alpine

build-dind-hxhsr: CMD ["echo","Hello Argo"]

build-dind-hxhsr: Sending build context to Docker daemon 3.072kB

build-dind-hxhsr: Step 1/2 : FROM alpine

build-dind-hxhsr: latest: Pulling from library/alpine

build-dind-hxhsr: 59bf1c3509f3: Pulling fs layer

build-dind-hxhsr: 59bf1c3509f3: Download complete

build-dind-hxhsr: 59bf1c3509f3: Pull complete

build-dind-hxhsr: Digest: sha256:21a3deaa0d32a8057914f36584b5288d2e5ecc984380bc0118285c70fa8c9300

build-dind-hxhsr: Status: Downloaded newer image for alpine:latest

build-dind-hxhsr: ---> c059bfaa849c

build-dind-hxhsr: Step 2/2 : CMD ["echo","Hello Argo"]

build-dind-hxhsr: ---> Running in 8cf5187418d4

build-dind-hxhsr: Removing intermediate container 8cf5187418d4

build-dind-hxhsr: ---> 247e7dfdfe32

build-dind-hxhsr: Successfully built 247e7dfdfe32

build-dind-hxhsr: Successfully tagged hello:latest

build-dind-hxhsr: WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

build-dind-hxhsr: Configure a credential helper to remove this warning. See

build-dind-hxhsr: https://docs.docker.com/engine/reference/commandline/login/#credentials-store

build-dind-hxhsr:

build-dind-hxhsr: Login Succeeded

build-dind-hxhsr: going to tag

build-dind-hxhsr: going to push

build-dind-hxhsr: The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/hello-argo]

build-dind-hxhsr: 8d3ac3489996: Preparing

build-dind-hxhsr: 8d3ac3489996: Layer already exists

build-dind-hxhsr: latest: digest: sha256:c89db35b58f32b45289100a981add587b3c92ffefca8b10743e134acf842c3d6 size: 528

Lastly, confirm we see a fresh image in our Container Registry:

Rotating the password was simple. I just changed it in Harbor, then set it again in the namespace. The next flow picked it up without issue:

$ kubectl delete secret argo-harbor-secret && kubectl create secret generic argo-harbor-secret --from-literal=harborpassword=somenewpassword --from-literal=harborusername=chrdemo

secret "argo-harbor-secret" deleted

secret/argo-harbor-secret created
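The workflow in this section also mounts the secret as a volume at /secret/mountpath, although buildit.sh only reads the environment variables. With a secret volume, Kubernetes writes each key as a file named after the key, so the script could read the credentials from files instead. A sketch of that pattern, simulating the mount with a scratch directory (the `SECRET_DIR` variable, the `example-password` value and the file-based approach are assumptions; the original workflow does not do this):

```shell
# Simulate the secret volume: one file per key, as Kubernetes lays it out.
SECRET_DIR=$(mktemp -d)
printf '%s' 'chrdemo' > "$SECRET_DIR/harborusername"
printf '%s' 'example-password' > "$SECRET_DIR/harborpassword"

# Read the values from files rather than from environment variables.
HARBORUSER=$(cat "$SECRET_DIR/harborusername")
HARBORPASS=$(cat "$SECRET_DIR/harborpassword")

# Print the password length only, never the password itself.
echo "user=$HARBORUSER password-length=${#HARBORPASS}"

rm -rf "$SECRET_DIR"
```

Inside the workflow, `SECRET_DIR` would be /secret/mountpath, and the password file could be fed to `docker login --password-stdin` directly.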

Continuous Integration

We now have a Workflow that builds and pushes Docker images. However, at present, we have to submit the flow ourselves to make it happen.

To enable CI, let’s update our myargoci repo with the same steps as YAML:

$ git checkout -b develop

Switched to a new branch 'develop'

$ git add cicd/

$ git commit -m "create CICD files in new develop branch"

[develop 68a9dcd] create CICD files in new develop branch:

1 file changed, 62 insertions(+)

create mode 100644 cicd/argoDocker.yml

$ git push --set-upstream origin develop

Username for 'https://github.com': idjohnson

Password for 'https://idjohnson@github.com':

Enumerating objects: 5, done.

Counting objects: 100% (5/5), done.

Delta compression using up to 4 threads

Compressing objects: 100% (3/3), done.

Writing objects: 100% (4/4), 1.10 KiB | 1.10 MiB/s, done.

Total 4 (delta 0), reused 0 (delta 0)

remote:

remote: Create a pull request for 'develop' on GitHub by visiting:

remote: https://github.com/idjohnson/myargoci/pull/new/develop

remote:

To https://github.com/idjohnson/myargoci.git

* [new branch] develop -> develop

Branch 'develop' set up to track remote branch 'develop' from 'origin'.

First, we add the repo, with authentication, to our Repositories in Argo CD:

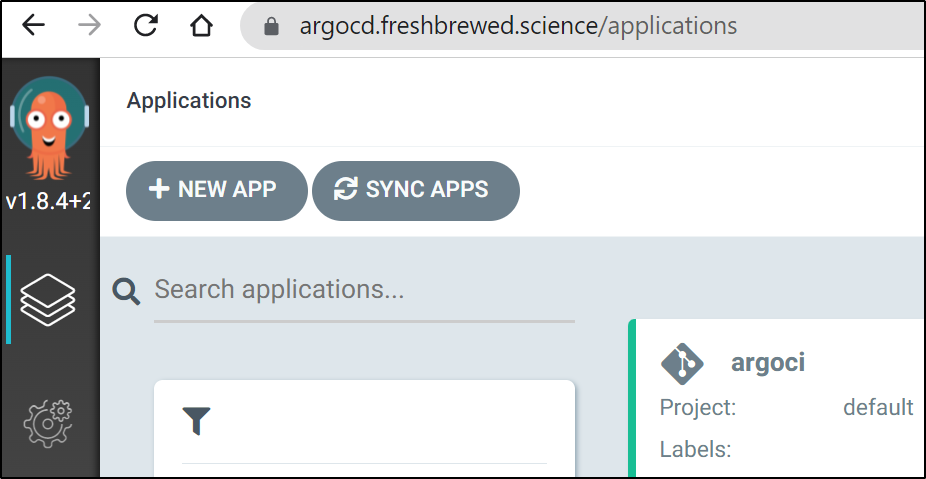

Then we can go to the Applications menu to create a new App

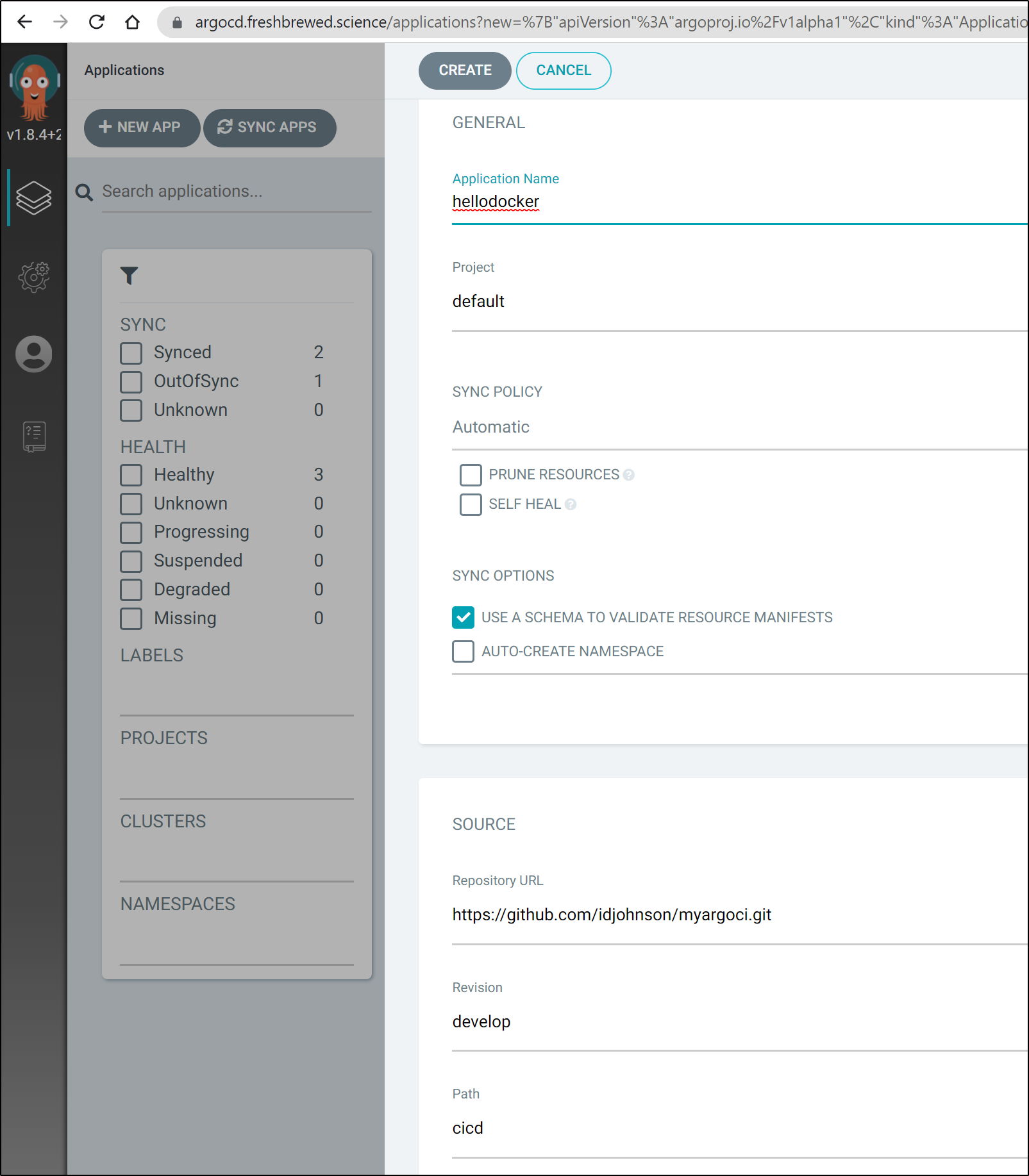

Now we can use our GIT repo and the folder/branch we created

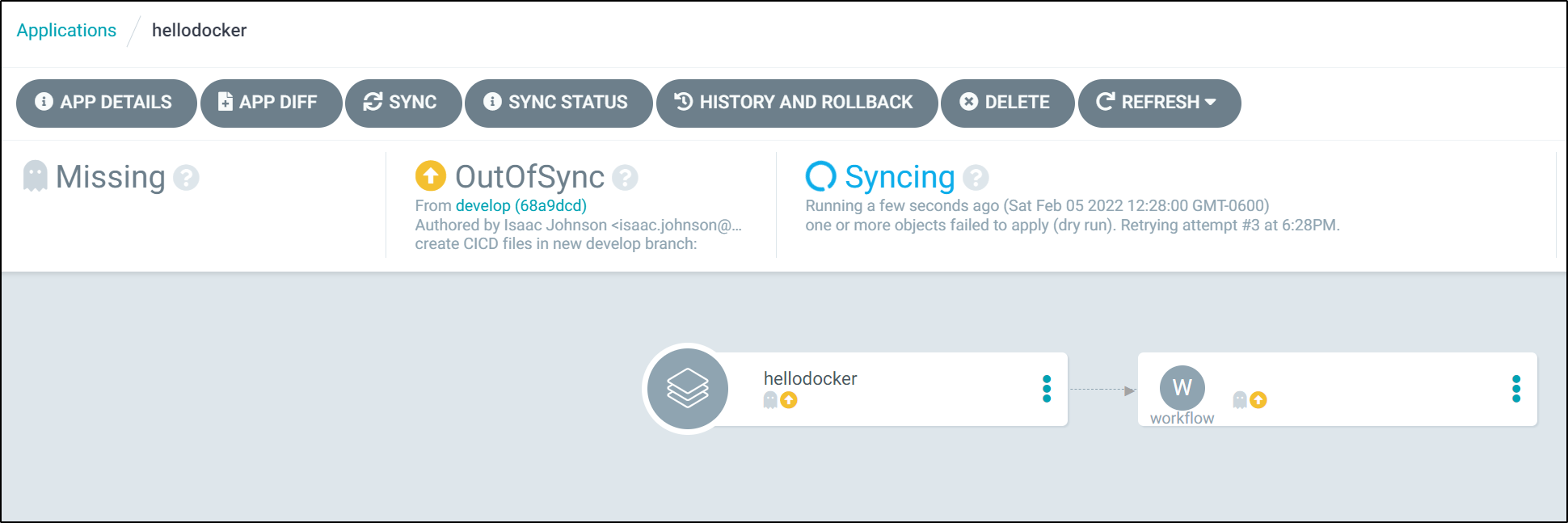

When created, we can see it attempts to invoke an Argo Workflow:

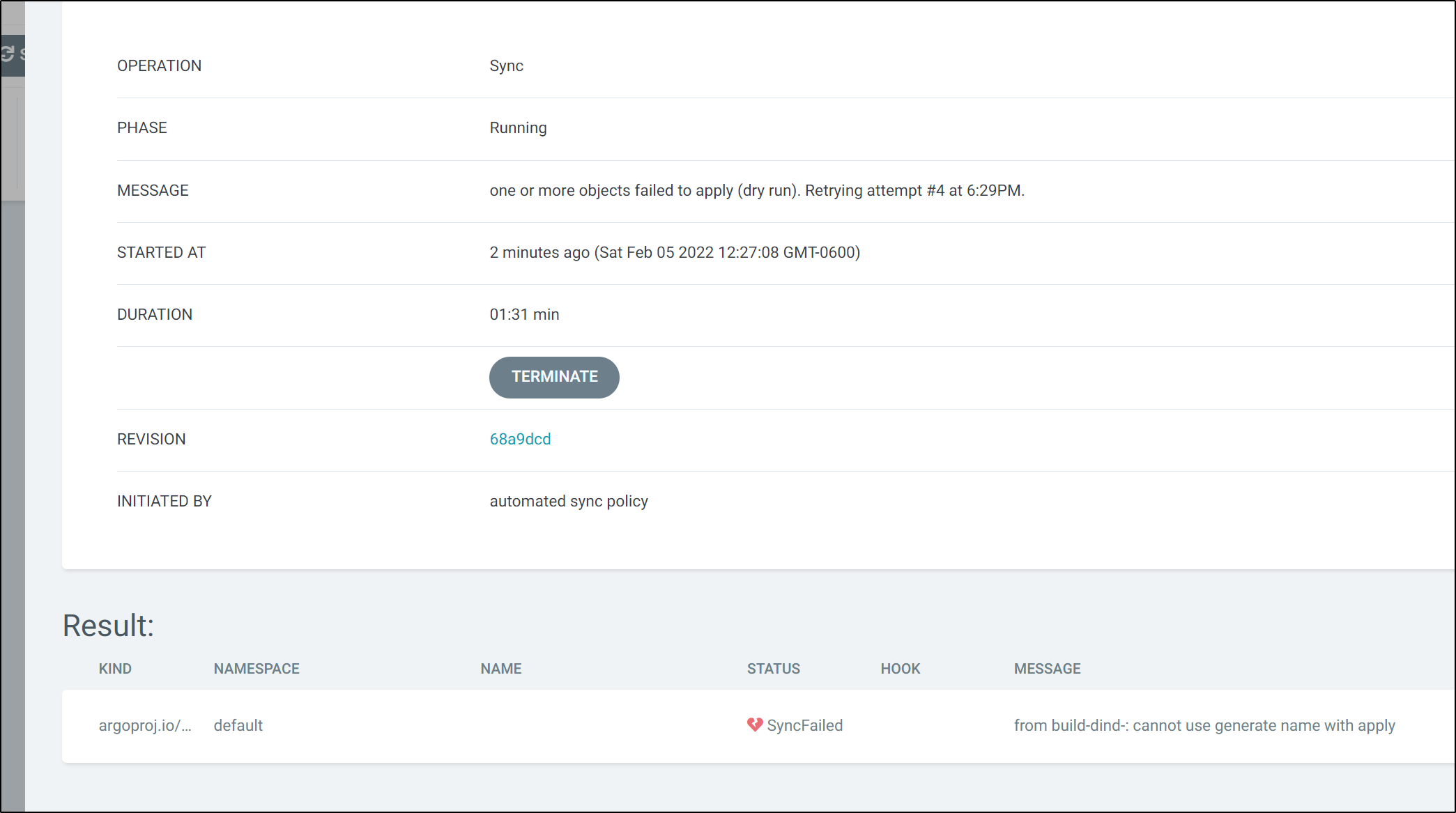

It does not accept generateName when invoked this way, which I can reproduce locally as well:

$ kubectl apply -f argoDocker.yml

error: from build-dind-: cannot use generate name with apply

If I change to a fixed name:

$ git diff -c

diff --git a/cicd/argoDocker.yml b/cicd/argoDocker.yml

index 9ec0781..cc8bff8 100644

--- a/cicd/argoDocker.yml

+++ b/cicd/argoDocker.yml

@@ -1,7 +1,7 @@

apiVersion: argoproj.io/v1alpha1

kind: Workflow

metadata:

- generateName: build-dind-

+ name: build-hellodocker

labels:

workflows.argoproj.io/container-runtime-executor: pns

Then it works:

$ kubectl apply -f argoDocker.yml

workflow.argoproj.io/build-hellodocker created
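The difference between the two kubectl verbs can be captured in a small guard for local use. This is a minimal sketch, not part of the original setup: the helper names `uses_generate_name` and `kubectl_verb_for` are made up for illustration, and it relies on `kubectl create` accepting `generateName` where `kubectl apply` requires a fixed `metadata.name`.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the manifest relies on generateName.
uses_generate_name() {
  grep -Eq '^[[:space:]]*generateName:' "$1"
}

# Print the kubectl verb that fits the manifest: "create" accepts
# generateName, while "apply" needs a fixed metadata.name.
kubectl_verb_for() {
  if uses_generate_name "$1"; then
    echo create
  else
    echo apply
  fi
}
```

Locally this could be used as `kubectl "$(kubectl_verb_for argoDocker.yml)" -f argoDocker.yml`. It does not change what Argo CD does on sync, so the fixed name is still what makes the Application healthy here.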

To test, we commit and push the change:

$ git add argoDocker.yml

$ git commit -m "fix name"

[develop bb6e339] fix name

1 file changed, 2 insertions(+), 2 deletions(-)

$ git push

Username for 'https://github.com': idjohnson

Password for 'https://idjohnson@github.com':

Enumerating objects: 7, done.

Counting objects: 100% (7/7), done.

Delta compression using up to 4 threads

Compressing objects: 100% (3/3), done.

Writing objects: 100% (4/4), 370 bytes | 370.00 KiB/s, done.

Total 4 (delta 2), reused 0 (delta 0)

remote: Resolving deltas: 100% (2/2), completed with 2 local objects.

To https://github.com/idjohnson/myargoci.git

68a9dcd..bb6e339 develop -> develop

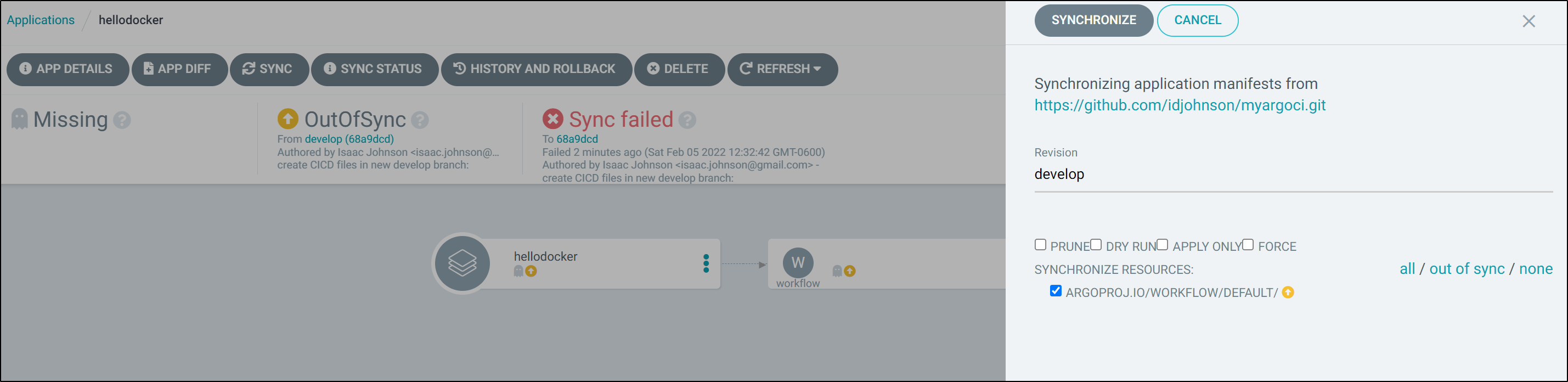

We can then force a sync (as the “Application” is presently in a failed state)

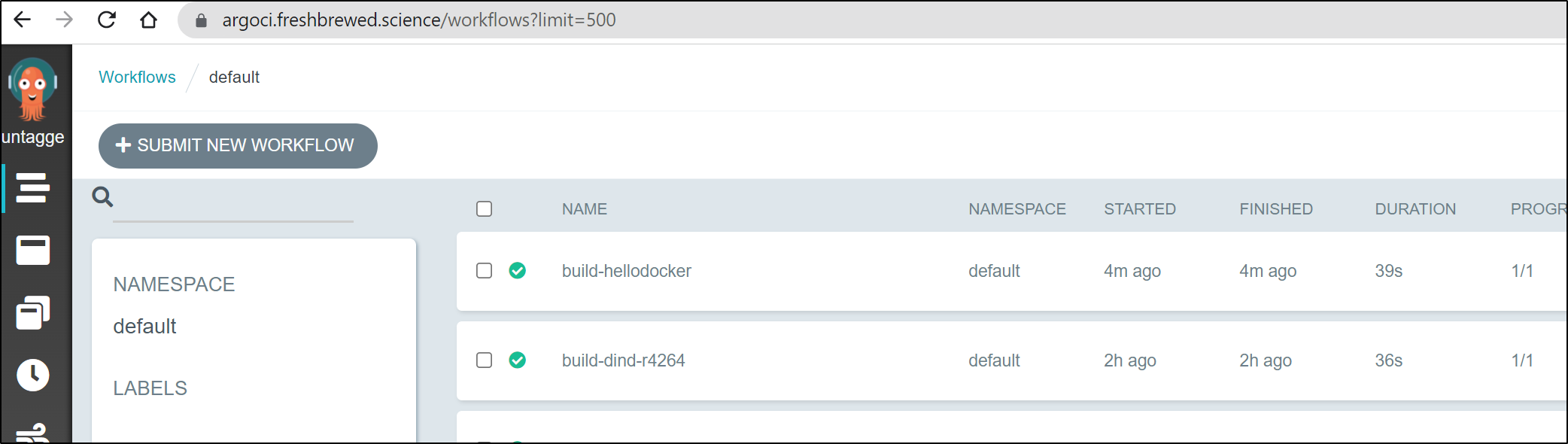

And now we see it is successful

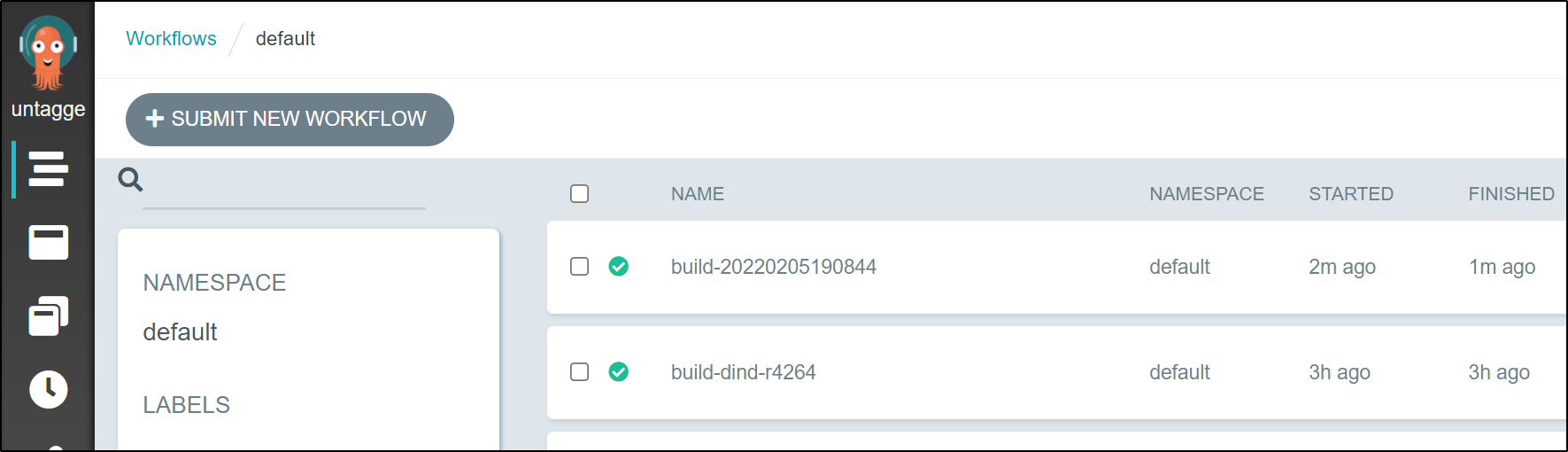

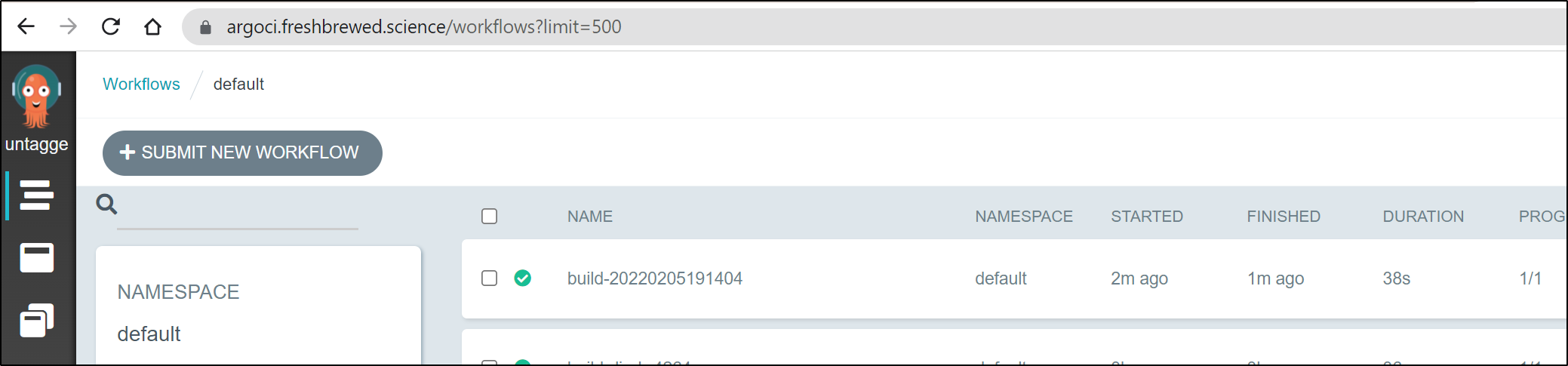

We can also see that it properly triggered a new flow in Argo Workflows:

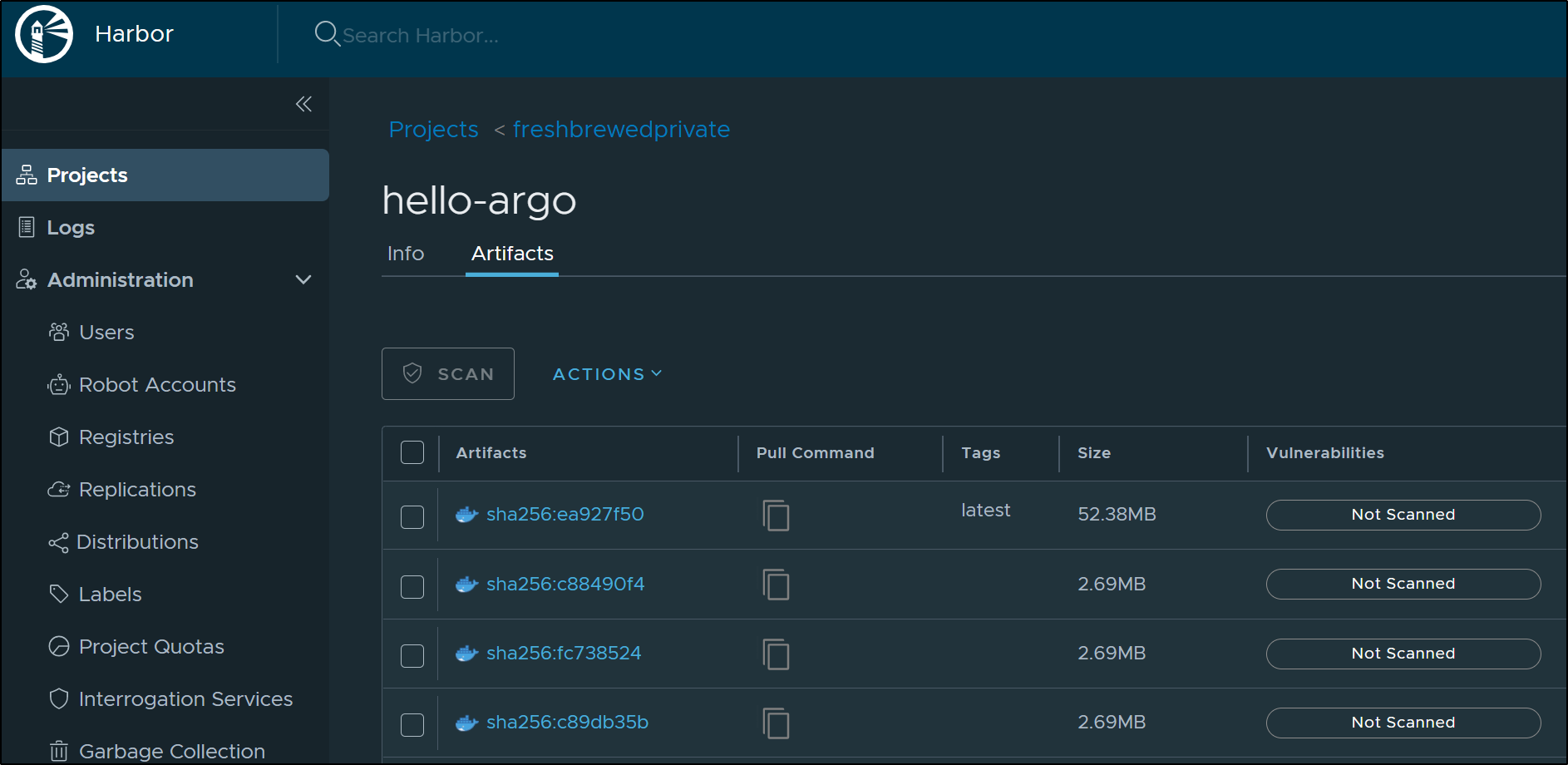

Lastly, we see the fresh image in our Container Registry.

Testing

Let’s change the base image so that we can see a larger docker image reflected in Harbor:

builder@DESKTOP-72D2D9T:~/Workspaces/myargoci/cicd$ vi argoDocker.yml

builder@DESKTOP-72D2D9T:~/Workspaces/myargoci/cicd$ git diff

diff --git a/cicd/argoDocker.yml b/cicd/argoDocker.yml

index cc8bff8..3081346 100644

--- a/cicd/argoDocker.yml

+++ b/cicd/argoDocker.yml

@@ -29,7 +29,7 @@ spec:

path: /tmp/Dockerfile

raw:

data: |

- FROM alpine

+ FROM debian

CMD ["echo","Hello Argo"]

container:

image: docker:19.03.13

builder@DESKTOP-72D2D9T:~/Workspaces/myargoci/cicd$ git add argoDocker.yml

builder@DESKTOP-72D2D9T:~/Workspaces/myargoci/cicd$ git commit -m "A new Base should show a larger image"

[develop 0b48193] A new Base should show a larger image

1 file changed, 1 insertion(+), 1 deletion(-)

builder@DESKTOP-72D2D9T:~/Workspaces/myargoci/cicd$ git push

Enumerating objects: 7, done.

Counting objects: 100% (7/7), done.

Delta compression using up to 4 threads

Compressing objects: 100% (3/3), done.

Writing objects: 100% (4/4), 367 bytes | 367.00 KiB/s, done.

Total 4 (delta 2), reused 0 (delta 0)

remote: Resolving deltas: 100% (2/2), completed with 2 local objects.

To https://github.com/idjohnson/myargoci.git

bb6e339..0b48193 develop -> develop

Sadly, this did not auto-trigger, nor did it redeploy, presumably because the Workflow kept the same name and so there was no new object to run. I needed to change the name of the Workflow directly to get a fresh run.

However, it did work, and we can see the larger image

Automating name updates

We can automate updating the name with a pre-commit hook

builder@DESKTOP-72D2D9T:~/Workspaces/myargoci/cicd$ cat ../.git/hooks/pre-commit

#!/bin/sh

# Contents of .git/hooks/pre-commit

# Replace workflow name with one that has timestamp

git diff --cached --name-status | grep -Ei '^(A|M).*\.yml$' | while read -r status file; do

  sed "s/name: build-.*/name: build-$(date -u "+%Y%m%d%H%M%S")/" "$file" > "$file.tmp"

  mv "$file.tmp" "$file"

  git add "$file"

done

builder@DESKTOP-72D2D9T:~/Workspaces/myargoci/cicd$ chmod 755 ../.git/hooks/pre-commit

builder@DESKTOP-72D2D9T:~/Workspaces/myargoci/cicd$ git add ../.git/hooks/pre-commit

builder@DESKTOP-72D2D9T:~/Workspaces/myargoci/cicd$ git commit -m "Add Pre-Commit hook"

[develop aafcf3f] Add Pre-Commit hook

1 file changed, 2 insertions(+), 2 deletions(-)
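To try the rename without making a commit, the hook's substitution can be pulled into a standalone function. This is only a sketch: `stamp_workflow_name` is a hypothetical name, and the optional second argument exists purely so the result is predictable when experimenting. It reuses the same sed expression as the hook, so any line containing `name: build-` is rewritten, while a `generateName:` line is left alone because of the capital N.

```shell
#!/bin/sh
# Hypothetical helper mirroring the hook's sed expression for a single file.
# $1 = manifest path, $2 = optional stamp (defaults to the current UTC time).
stamp_workflow_name() {
  file=$1
  stamp=${2:-$(date -u "+%Y%m%d%H%M%S")}
  # Write to a temporary file next to the manifest, then move it into place
  # only if sed succeeded.
  sed "s/name: build-.*/name: build-$stamp/" "$file" > "$file.tmp" &&
    mv "$file.tmp" "$file"
}
```

Running `stamp_workflow_name cicd/argoDocker.yml` on a scratch copy shows what the next commit would rename the Workflow to.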

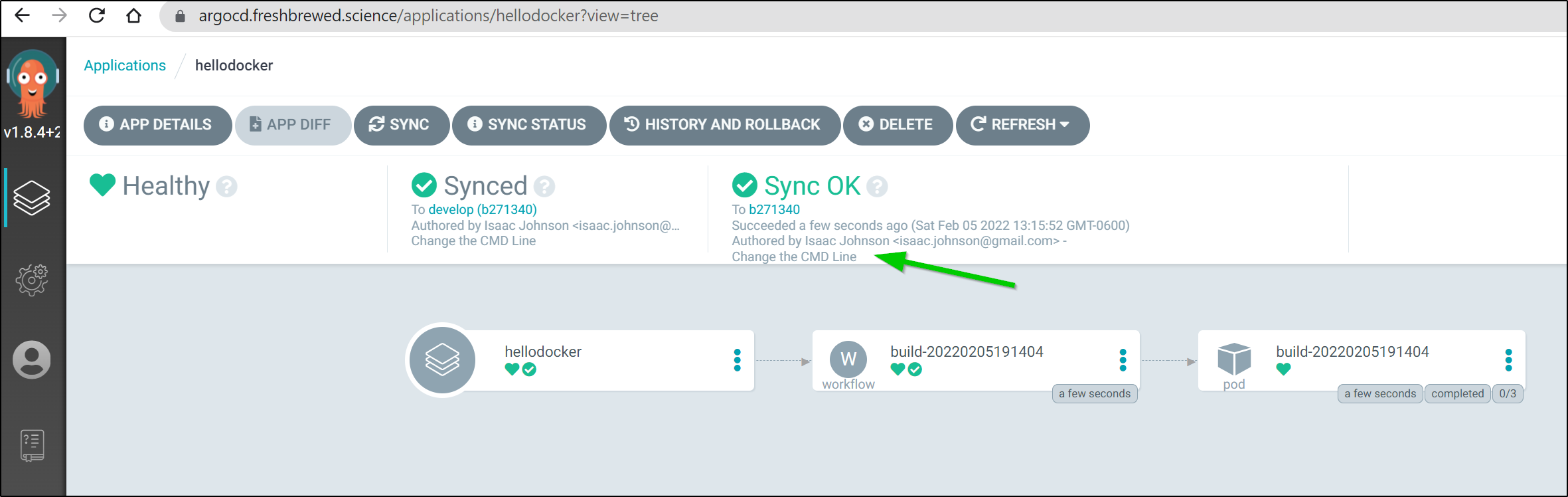

This now updates ArgoCD automatically and we can see the resulting flow created:

End-to-End one more time.

So let’s see all of it in action one more time

builder@DESKTOP-72D2D9T:~/Workspaces/myargoci$ vi cicd/argoDocker.yml

builder@DESKTOP-72D2D9T:~/Workspaces/myargoci$ git diff

diff --git a/cicd/argoDocker.yml b/cicd/argoDocker.yml

index 393289e..c09e992 100644

--- a/cicd/argoDocker.yml

+++ b/cicd/argoDocker.yml

@@ -30,7 +30,7 @@ spec:

raw:

data: |

FROM alpine

- CMD ["echo","Hello Argo.."]

+ CMD ["echo","Hello Argo. Let us CICD entirely in Argo"]

container:

image: docker:19.03.13

command: [sh, -c]

builder@DESKTOP-72D2D9T:~/Workspaces/myargoci$ git add cicd/argoDocker.yml

builder@DESKTOP-72D2D9T:~/Workspaces/myargoci$ git commit -m "Change the CMD Line"

[develop b271340] Change the CMD Line

1 file changed, 2 insertions(+), 2 deletions(-)

builder@DESKTOP-72D2D9T:~/Workspaces/myargoci$ git push

Enumerating objects: 7, done.

Counting objects: 100% (7/7), done.

Delta compression using up to 4 threads

Compressing objects: 100% (3/3), done.

Writing objects: 100% (4/4), 390 bytes | 390.00 KiB/s, done.

Total 4 (delta 2), reused 0 (delta 0)

remote: Resolving deltas: 100% (2/2), completed with 2 local objects.

To https://github.com/idjohnson/myargoci.git

aafcf3f..b271340 develop -> develop

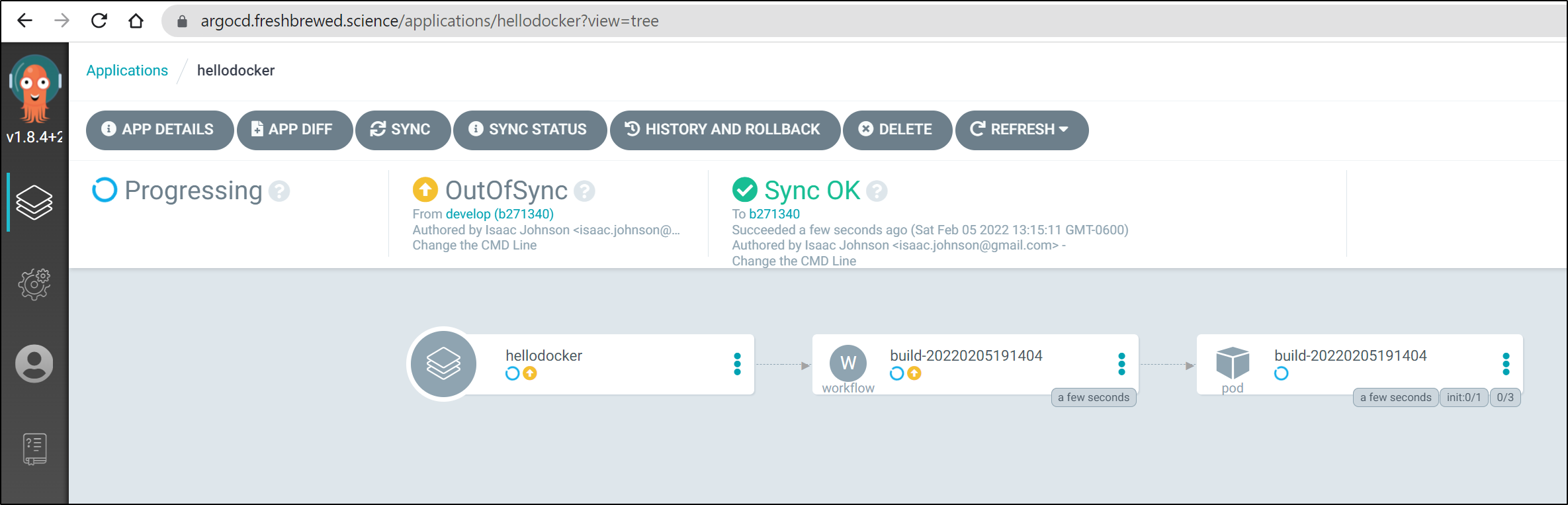

We see ArgoCD update

Updated:

This then spawned a fresh build (because I used Prune in ArgoCD, the previous flow was removed).

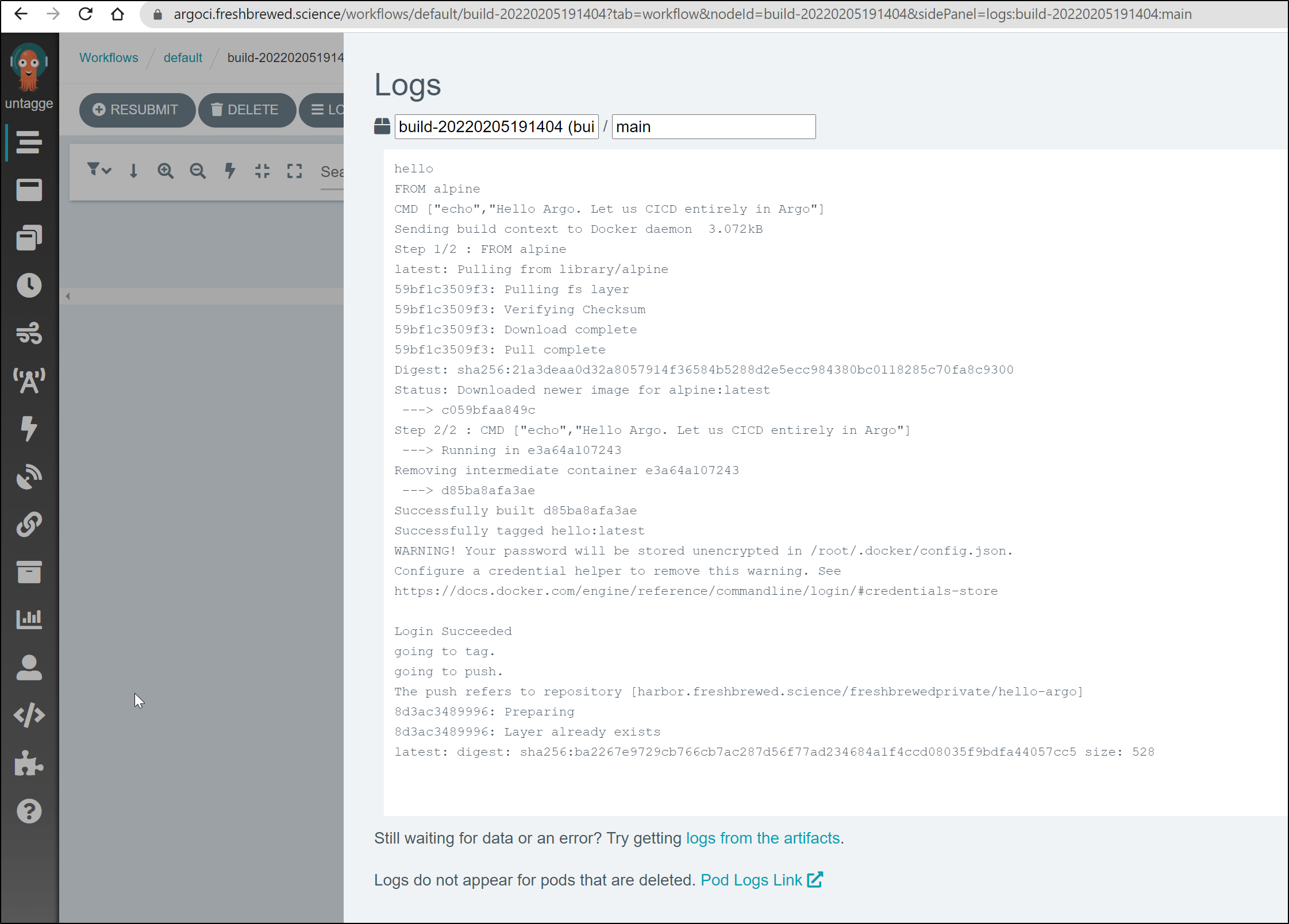

We can see logs of the job in Argo Workflows.

We can see the same on the command line:

builder@DESKTOP-72D2D9T:~/Workspaces/myargoci/cicd$ argo logs @latest

build-20220205191404: Cannot connect to the Docker daemon at tcp://127.0.0.1:2375. Is the docker daemon running?

build-20220205191404: Cannot connect to the Docker daemon at tcp://127.0.0.1:2375. Is the docker daemon running?

build-20220205191404: CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

build-20220205191404: hello

build-20220205191404: FROM alpine

build-20220205191404: CMD ["echo","Hello Argo. Let us CICD entirely in Argo"]

build-20220205191404: Sending build context to Docker daemon 3.072kB

build-20220205191404: Step 1/2 : FROM alpine

build-20220205191404: latest: Pulling from library/alpine

build-20220205191404: 59bf1c3509f3: Pulling fs layer

build-20220205191404: 59bf1c3509f3: Verifying Checksum

build-20220205191404: 59bf1c3509f3: Download complete

build-20220205191404: 59bf1c3509f3: Pull complete

build-20220205191404: Digest: sha256:21a3deaa0d32a8057914f36584b5288d2e5ecc984380bc0118285c70fa8c9300

build-20220205191404: Status: Downloaded newer image for alpine:latest

build-20220205191404: ---> c059bfaa849c

build-20220205191404: Step 2/2 : CMD ["echo","Hello Argo. Let us CICD entirely in Argo"]

build-20220205191404: ---> Running in e3a64a107243

build-20220205191404: Removing intermediate container e3a64a107243

build-20220205191404: ---> d85ba8afa3ae

build-20220205191404: Successfully built d85ba8afa3ae

build-20220205191404: Successfully tagged hello:latest

build-20220205191404: WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

build-20220205191404: Configure a credential helper to remove this warning. See

build-20220205191404: https://docs.docker.com/engine/reference/commandline/login/#credentials-store

build-20220205191404:

build-20220205191404: Login Succeeded

build-20220205191404: going to tag.

build-20220205191404: going to push.

build-20220205191404: The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/hello-argo]

build-20220205191404: 8d3ac3489996: Preparing

build-20220205191404: 8d3ac3489996: Layer already exists

build-20220205191404: latest: digest: sha256:ba2267e9729cb766cb7ac287d56f77ad234684a1f4ccd08035f9bdfa44057cc5 size: 528

builder@DESKTOP-72D2D9T:~/Workspaces/myargoci/cicd$

Lastly, we see the Image updated in Harbor, our container registry
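To compare what was pushed against what Harbor shows, the digest can be pulled out of the same log stream. This is a small sketch: `pushed_digest` is a hypothetical helper name, and it assumes the push line keeps the `digest: sha256:...` format seen in the logs above.

```shell
#!/bin/sh
# Hypothetical helper: read workflow log lines on stdin and print the digest
# reported by "docker push" (the last one, if several appear).
# The "Digest:" line from the base image pull is skipped because the
# pattern is case-sensitive.
pushed_digest() {
  sed -n 's/.*digest: \(sha256:[0-9a-f]*\).*/\1/p' | tail -n 1
}
```

With the run above, `argo logs @latest | pushed_digest` would print `sha256:ba2267e9729cb766cb7ac287d56f77ad234684a1f4ccd08035f9bdfa44057cc5`, which can then be checked against the artifact listed in Harbor.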

Summary

In this post we installed Argo Workflows using the provided YAML. We also installed it by forking the manifests into our own private GitHub repo and deploying them with Argo CD. We learned how to log in and engage with Argo using the CLI and UI. We exposed it with basic HTTP Auth and TLS (as we had with Argo CD). We also showed how to use an admin token to get elevated permissions in Argo Workflows.

We tried several hello world basic flows before diving into a Docker-in-Docker (DinD) example that went from showing a docker sidecar was running to building and pushing freshly created container images to a private Container Registry.

This means that, with the exception of the GIT repo, we have a full CICD solution self-contained within a cluster (even Harbor runs in that cluster).

There are webhooks coming in Argo Workflows (but they are in beta and still slated for a future release). The usage of Argo CD to trigger CI has a logical next step: A fully contained CICD Deployment model that does not need any other outside tool:

- git commit code

- the code is compiled and built within a container

- the container is pushed to a CR (with an immutable tag)

- the charts (that reference the tag) are also pushed via Argo CD (and can be satisfied when the container appears).
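The "immutable tag" step in the list above could be sketched as follows. The workflows in this post push `latest`, so this is an assumption about a possible next step rather than something that was run: `immutable_image_ref` is a hypothetical helper, and the `<timestamp>-<short commit>` tag format is only one of many reasonable conventions.

```shell
#!/bin/sh
# Hypothetical helper: build an image reference with a unique, non-"latest" tag.
# $1 = registry/repository, $2 = commit id, $3 = optional UTC stamp.
immutable_image_ref() {
  repo=$1
  commit=$2
  stamp=${3:-$(date -u "+%Y%m%d%H%M%S")}
  # Keep only the first 7 characters of the commit id for a short tag.
  short=$(printf '%s' "$commit" | cut -c1-7)
  printf '%s:%s-%s\n' "$repo" "$stamp" "$short"
}
```

For example, `immutable_image_ref harbor.freshbrewed.science/freshbrewedprivate/hello-argo "$(git rev-parse HEAD)"` yields a reference that both the build step and the chart could share. A unique tag is only a convention; actual immutability would still have to be enforced on the registry side.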

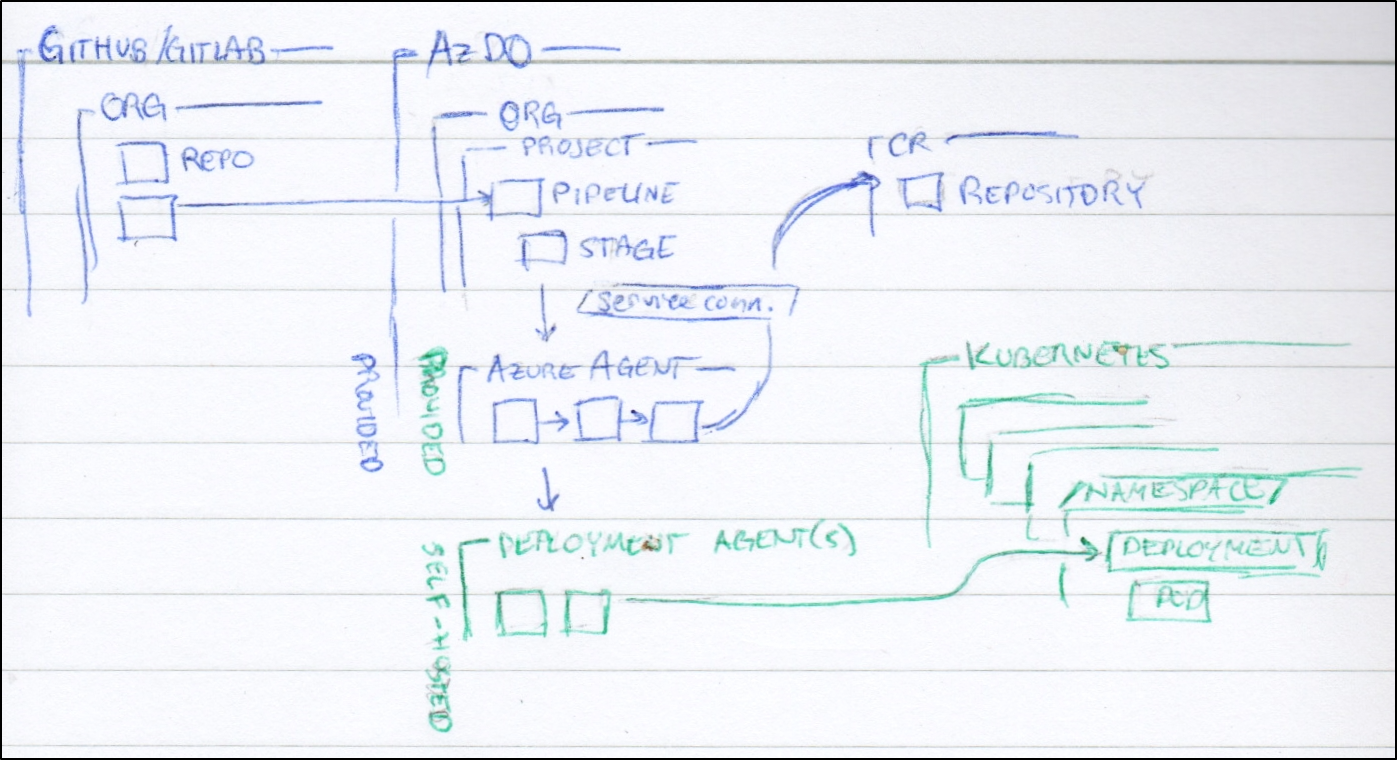

Consider our traditional build and deploy model that uses many SaaS tools (in blue) to deploy to our infrastructure (green):

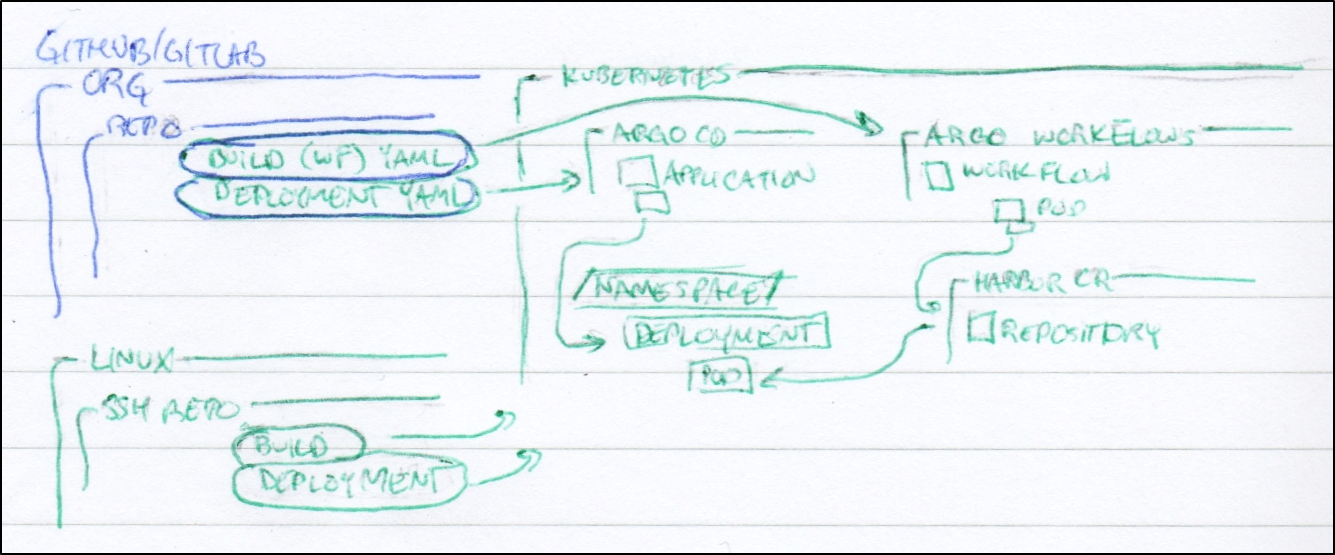

Using ArgoCD with Argo Workflows could enable us to have a proper CICD that does not need an external “build” tool: we need neither Jenkins, GitHub Actions, nor AzDO if we can build and deploy all within the cluster using Argo.

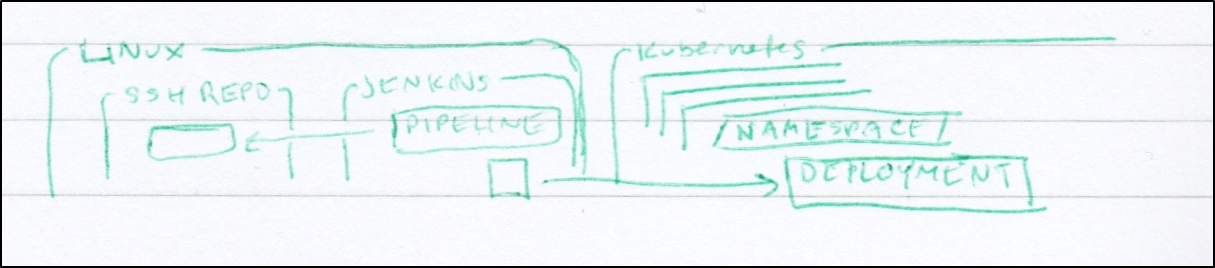

And, despite my general disdain for Jenkins, for a true air-gap model, one can use private Linux SSH repos with a self-hosted Jenkins for true CICD independence.

Going forward, I am hoping to see the Argo Workflow model improve a bit more before I would consider moving off of GitHub Actions. For instance, I would want to create ConfigMaps or Secrets on the fly, because the need to keep everything inside a single Workflow CRD can make things a bit cumbersome. Additionally, I would want to be able to source in files or trigger external systems.

That said, I do plan to come back and do more work. This model allows us to build a full on-prem, non-SaaS, Open Source CICD system that can be isolated and self-contained. If we used a GIT host internally (as I did with the ArgoCD writeup), we would need no external access yet still have turnkey DevOps workflow automations. This could be highly desirable in high-security environments that require air-gapped or heavily firewalled compute environments.