Published: May 21, 2020 by Isaac Johnson

Azure Arc for Kubernetes has finally gone out to public preview. Like Arc for Servers, Arc for Kubernetes lets us add our external Kubernetes clusters, be they on-prem or in other clouds, into Azure to be managed with Policies, RBAC and GitOps deployment.

What I hope to discover is:

- What kind of clusters will it support? Old versions/new versions of k8s? ARM?

- Can I use Azure Pipelines Kubernetes Tasks with these?

Creating a CIVO K8s cluster

First, let’s get the CIVO client. We need to install Ruby if we don’t have it already:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ sudo apt update && sudo apt install ruby-full

[sudo] password for builder:

Hit:1 http://archive.ubuntu.com/ubuntu bionic InRelease

Get:2 http://security.ubuntu.com/ubuntu bionic-security InRelease [88.7 kB]

Get:3 https://packages.microsoft.com/repos/azure-cli bionic InRelease [3965 B]

Get:4 https://packages.microsoft.com/ubuntu/18.04/prod bionic InRelease [4003 B]

Get:5 http://archive.ubuntu.com/ubuntu bionic-updates InRelease [88.7 kB]

Get:6 https://packages.microsoft.com/repos/azure-cli bionic/main amd64 Packages [9314 B]

Get:7 https://packages.microsoft.com/ubuntu/18.04/prod bionic/main amd64 Packages [112 kB]

Get:8 http://archive.ubuntu.com/ubuntu bionic-backports InRelease [74.6 kB]

Get:9 http://security.ubuntu.com/ubuntu bionic-security/main amd64 Packages [717 kB]

Get:10 http://archive.ubuntu.com/ubuntu bionic-updates/main amd64 Packages [947 kB]

Get:11 http://security.ubuntu.com/ubuntu bionic-security/main Translation-en [227 kB]

Get:12 http://security.ubuntu.com/ubuntu bionic-security/restricted amd64 Packages [41.9 kB]

Get:13 http://archive.ubuntu.com/ubuntu bionic-updates/main Translation-en [322 kB]

Get:14 http://security.ubuntu.com/ubuntu bionic-security/restricted Translation-en [10.5 kB]

Get:15 http://archive.ubuntu.com/ubuntu bionic-updates/restricted amd64 Packages [54.9 kB]

Get:16 http://security.ubuntu.com/ubuntu bionic-security/universe amd64 Packages [665 kB]

Get:17 http://archive.ubuntu.com/ubuntu bionic-updates/restricted Translation-en [13.7 kB]

Get:18 http://archive.ubuntu.com/ubuntu bionic-updates/universe amd64 Packages [1075 kB]

Get:19 http://security.ubuntu.com/ubuntu bionic-security/universe Translation-en [221 kB]

Get:20 http://security.ubuntu.com/ubuntu bionic-security/multiverse amd64 Packages [7596 B]

Get:21 http://archive.ubuntu.com/ubuntu bionic-updates/universe Translation-en [334 kB]

Get:22 http://security.ubuntu.com/ubuntu bionic-security/multiverse Translation-en [2824 B]

Get:23 http://archive.ubuntu.com/ubuntu bionic-updates/multiverse amd64 Packages [15.7 kB]

Get:24 http://archive.ubuntu.com/ubuntu bionic-updates/multiverse Translation-en [6384 B]

Get:25 http://archive.ubuntu.com/ubuntu bionic-backports/main amd64 Packages [7516 B]

Get:26 http://archive.ubuntu.com/ubuntu bionic-backports/main Translation-en [4764 B]

Get:27 http://archive.ubuntu.com/ubuntu bionic-backports/universe amd64 Packages [7484 B]

Get:28 http://archive.ubuntu.com/ubuntu bionic-backports/universe Translation-en [4436 B]

Fetched 5068 kB in 3s (1856 kB/s)

Reading package lists... Done

Building dependency tree

Reading state information... Done

69 packages can be upgraded. Run 'apt list --upgradable' to see them.

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following additional packages will be installed:

fonts-lato javascript-common libgmp-dev libgmpxx4ldbl libjs-jquery libruby2.5 rake ri ruby ruby-dev ruby-did-you-mean ruby-minitest ruby-net-telnet ruby-power-assert ruby-test-unit

ruby2.5 ruby2.5-dev ruby2.5-doc rubygems-integration

Suggested packages:

apache2 | lighttpd | httpd gmp-doc libgmp10-doc libmpfr-dev bundler

The following NEW packages will be installed:

fonts-lato javascript-common libgmp-dev libgmpxx4ldbl libjs-jquery libruby2.5 rake ri ruby ruby-dev ruby-did-you-mean ruby-full ruby-minitest ruby-net-telnet ruby-power-assert

ruby-test-unit ruby2.5 ruby2.5-dev ruby2.5-doc rubygems-integration

0 upgraded, 20 newly installed, 0 to remove and 69 not upgraded.

Need to get 8208 kB/8366 kB of archives.

After this operation, 48.1 MB of additional disk space will be used.

Do you want to continue? [Y/n] Y

Get:1 http://archive.ubuntu.com/ubuntu bionic/main amd64 fonts-lato all 2.0-2 [2698 kB]

Get:2 http://archive.ubuntu.com/ubuntu bionic/main amd64 libgmpxx4ldbl amd64 2:6.1.2+dfsg-2 [8964 B]

Get:3 http://archive.ubuntu.com/ubuntu bionic/main amd64 libgmp-dev amd64 2:6.1.2+dfsg-2 [316 kB]

Get:4 http://archive.ubuntu.com/ubuntu bionic/main amd64 rubygems-integration all 1.11 [4994 B]

Get:5 http://archive.ubuntu.com/ubuntu bionic-updates/main amd64 ruby2.5 amd64 2.5.1-1ubuntu1.6 [48.6 kB]

Get:6 http://archive.ubuntu.com/ubuntu bionic/main amd64 ruby amd64 1:2.5.1 [5712 B]

Get:7 http://archive.ubuntu.com/ubuntu bionic-updates/main amd64 rake all 12.3.1-1ubuntu0.1 [44.9 kB]

Get:8 http://archive.ubuntu.com/ubuntu bionic/main amd64 ruby-did-you-mean all 1.2.0-2 [9700 B]

Get:9 http://archive.ubuntu.com/ubuntu bionic/main amd64 ruby-minitest all 5.10.3-1 [38.6 kB]

Get:10 http://archive.ubuntu.com/ubuntu bionic/main amd64 ruby-net-telnet all 0.1.1-2 [12.6 kB]

Get:11 http://archive.ubuntu.com/ubuntu bionic/main amd64 ruby-power-assert all 0.3.0-1 [7952 B]

Get:12 http://archive.ubuntu.com/ubuntu bionic/main amd64 ruby-test-unit all 3.2.5-1 [61.1 kB]

Get:13 http://archive.ubuntu.com/ubuntu bionic-updates/main amd64 libruby2.5 amd64 2.5.1-1ubuntu1.6 [3069 kB]

Get:14 http://archive.ubuntu.com/ubuntu bionic-updates/main amd64 ruby2.5-doc all 2.5.1-1ubuntu1.6 [1806 kB]

Get:15 http://archive.ubuntu.com/ubuntu bionic/universe amd64 ri all 1:2.5.1 [4496 B]

Get:16 http://archive.ubuntu.com/ubuntu bionic-updates/main amd64 ruby2.5-dev amd64 2.5.1-1ubuntu1.6 [63.7 kB]

Get:17 http://archive.ubuntu.com/ubuntu bionic/main amd64 ruby-dev amd64 1:2.5.1 [4604 B]

Get:18 http://archive.ubuntu.com/ubuntu bionic/universe amd64 ruby-full all 1:2.5.1 [2716 B]

Fetched 8208 kB in 3s (2550 kB/s)

Selecting previously unselected package fonts-lato.

(Reading database ... 78441 files and directories currently installed.)

Preparing to unpack .../00-fonts-lato_2.0-2_all.deb ...

Unpacking fonts-lato (2.0-2) ...

Selecting previously unselected package javascript-common.

Preparing to unpack .../01-javascript-common_11_all.deb ...

Unpacking javascript-common (11) ...

Selecting previously unselected package libgmpxx4ldbl:amd64.

Preparing to unpack .../02-libgmpxx4ldbl_2%3a6.1.2+dfsg-2_amd64.deb ...

Unpacking libgmpxx4ldbl:amd64 (2:6.1.2+dfsg-2) ...

Selecting previously unselected package libgmp-dev:amd64.

Preparing to unpack .../03-libgmp-dev_2%3a6.1.2+dfsg-2_amd64.deb ...

Unpacking libgmp-dev:amd64 (2:6.1.2+dfsg-2) ...

Selecting previously unselected package libjs-jquery.

Preparing to unpack .../04-libjs-jquery_3.2.1-1_all.deb ...

Unpacking libjs-jquery (3.2.1-1) ...

Selecting previously unselected package rubygems-integration.

Preparing to unpack .../05-rubygems-integration_1.11_all.deb ...

Unpacking rubygems-integration (1.11) ...

Selecting previously unselected package ruby2.5.

Preparing to unpack .../06-ruby2.5_2.5.1-1ubuntu1.6_amd64.deb ...

Unpacking ruby2.5 (2.5.1-1ubuntu1.6) ...

Selecting previously unselected package ruby.

Preparing to unpack .../07-ruby_1%3a2.5.1_amd64.deb ...

Unpacking ruby (1:2.5.1) ...

Selecting previously unselected package rake.

Preparing to unpack .../08-rake_12.3.1-1ubuntu0.1_all.deb ...

Unpacking rake (12.3.1-1ubuntu0.1) ...

Selecting previously unselected package ruby-did-you-mean.

Preparing to unpack .../09-ruby-did-you-mean_1.2.0-2_all.deb ...

Unpacking ruby-did-you-mean (1.2.0-2) ...

Selecting previously unselected package ruby-minitest.

Preparing to unpack .../10-ruby-minitest_5.10.3-1_all.deb ...

Unpacking ruby-minitest (5.10.3-1) ...

Selecting previously unselected package ruby-net-telnet.

Preparing to unpack .../11-ruby-net-telnet_0.1.1-2_all.deb ...

Unpacking ruby-net-telnet (0.1.1-2) ...

Selecting previously unselected package ruby-power-assert.

Preparing to unpack .../12-ruby-power-assert_0.3.0-1_all.deb ...

Unpacking ruby-power-assert (0.3.0-1) ...

Selecting previously unselected package ruby-test-unit.

Preparing to unpack .../13-ruby-test-unit_3.2.5-1_all.deb ...

Unpacking ruby-test-unit (3.2.5-1) ...

Selecting previously unselected package libruby2.5:amd64.

Preparing to unpack .../14-libruby2.5_2.5.1-1ubuntu1.6_amd64.deb ...

Unpacking libruby2.5:amd64 (2.5.1-1ubuntu1.6) ...

Selecting previously unselected package ruby2.5-doc.

Preparing to unpack .../15-ruby2.5-doc_2.5.1-1ubuntu1.6_all.deb ...

Unpacking ruby2.5-doc (2.5.1-1ubuntu1.6) ...

Selecting previously unselected package ri.

Preparing to unpack .../16-ri_1%3a2.5.1_all.deb ...

Unpacking ri (1:2.5.1) ...

Selecting previously unselected package ruby2.5-dev:amd64.

Preparing to unpack .../17-ruby2.5-dev_2.5.1-1ubuntu1.6_amd64.deb ...

Unpacking ruby2.5-dev:amd64 (2.5.1-1ubuntu1.6) ...

Selecting previously unselected package ruby-dev:amd64.

Preparing to unpack .../18-ruby-dev_1%3a2.5.1_amd64.deb ...

Unpacking ruby-dev:amd64 (1:2.5.1) ...

Selecting previously unselected package ruby-full.

Preparing to unpack .../19-ruby-full_1%3a2.5.1_all.deb ...

Unpacking ruby-full (1:2.5.1) ...

Setting up libjs-jquery (3.2.1-1) ...

Setting up fonts-lato (2.0-2) ...

Setting up ruby-did-you-mean (1.2.0-2) ...

Setting up ruby-net-telnet (0.1.1-2) ...

Setting up rubygems-integration (1.11) ...

Setting up ruby2.5-doc (2.5.1-1ubuntu1.6) ...

Setting up javascript-common (11) ...

Setting up libgmpxx4ldbl:amd64 (2:6.1.2+dfsg-2) ...

Setting up ruby-minitest (5.10.3-1) ...

Setting up ruby-power-assert (0.3.0-1) ...

Setting up libgmp-dev:amd64 (2:6.1.2+dfsg-2) ...

Setting up ruby2.5 (2.5.1-1ubuntu1.6) ...

Setting up ri (1:2.5.1) ...

Setting up ruby (1:2.5.1) ...

Setting up ruby-test-unit (3.2.5-1) ...

Setting up rake (12.3.1-1ubuntu0.1) ...

Setting up libruby2.5:amd64 (2.5.1-1ubuntu1.6) ...

Setting up ruby2.5-dev:amd64 (2.5.1-1ubuntu1.6) ...

Setting up ruby-dev:amd64 (1:2.5.1) ...

Setting up ruby-full (1:2.5.1) ...

Processing triggers for man-db (2.8.3-2ubuntu0.1) ...

Processing triggers for libc-bin (2.27-3ubuntu1) ...

Next let’s install the CIVO client:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ sudo gem install civo_cli

Fetching: unicode-display_width-1.7.0.gem (100%)

Successfully installed unicode-display_width-1.7.0

Fetching: terminal-table-1.8.0.gem (100%)

Successfully installed terminal-table-1.8.0

Fetching: thor-1.0.1.gem (100%)

Successfully installed thor-1.0.1

Fetching: colorize-0.8.1.gem (100%)

Successfully installed colorize-0.8.1

Fetching: bundler-2.1.4.gem (100%)

Successfully installed bundler-2.1.4

Fetching: mime-types-data-3.2020.0512.gem (100%)

Successfully installed mime-types-data-3.2020.0512

Fetching: mime-types-3.3.1.gem (100%)

Successfully installed mime-types-3.3.1

Fetching: multi_json-1.14.1.gem (100%)

Successfully installed multi_json-1.14.1

Fetching: safe_yaml-1.0.5.gem (100%)

Successfully installed safe_yaml-1.0.5

Fetching: crack-0.4.3.gem (100%)

Successfully installed crack-0.4.3

Fetching: multipart-post-2.1.1.gem (100%)

Successfully installed multipart-post-2.1.1

Fetching: faraday-1.0.1.gem (100%)

Successfully installed faraday-1.0.1

Fetching: concurrent-ruby-1.1.6.gem (100%)

Successfully installed concurrent-ruby-1.1.6

Fetching: i18n-1.8.2.gem (100%)

HEADS UP! i18n 1.1 changed fallbacks to exclude default locale.

But that may break your application.

If you are upgrading your Rails application from an older version of Rails:

Please check your Rails app for 'config.i18n.fallbacks = true'.

If you're using I18n (>= 1.1.0) and Rails (< 5.2.2), this should be

'config.i18n.fallbacks = [I18n.default_locale]'.

If not, fallbacks will be broken in your app by I18n 1.1.x.

If you are starting a NEW Rails application, you can ignore this notice.

For more info see:

https://github.com/svenfuchs/i18n/releases/tag/v1.1.0

Successfully installed i18n-1.8.2

Fetching: thread_safe-0.3.6.gem (100%)

Successfully installed thread_safe-0.3.6

Fetching: tzinfo-1.2.7.gem (100%)

Successfully installed tzinfo-1.2.7

Fetching: zeitwerk-2.3.0.gem (100%)

Successfully installed zeitwerk-2.3.0

Fetching: activesupport-6.0.3.1.gem (100%)

Successfully installed activesupport-6.0.3.1

Fetching: flexirest-1.9.15.gem (100%)

Successfully installed flexirest-1.9.15

Fetching: parslet-1.8.2.gem (100%)

Successfully installed parslet-1.8.2

Fetching: toml-0.2.0.gem (100%)

Successfully installed toml-0.2.0

Fetching: highline-2.0.3.gem (100%)

Successfully installed highline-2.0.3

Fetching: commander-4.5.2.gem (100%)

Successfully installed commander-4.5.2

Fetching: civo-1.3.3.gem (100%)

Successfully installed civo-1.3.3

Fetching: civo_cli-0.5.9.gem (100%)

Successfully installed civo_cli-0.5.9

Parsing documentation for unicode-display_width-1.7.0

Installing ri documentation for unicode-display_width-1.7.0

Parsing documentation for terminal-table-1.8.0

Installing ri documentation for terminal-table-1.8.0

Parsing documentation for thor-1.0.1

Installing ri documentation for thor-1.0.1

Parsing documentation for colorize-0.8.1

Installing ri documentation for colorize-0.8.1

Parsing documentation for bundler-2.1.4

Installing ri documentation for bundler-2.1.4

Parsing documentation for mime-types-data-3.2020.0512

Installing ri documentation for mime-types-data-3.2020.0512

Parsing documentation for mime-types-3.3.1

Installing ri documentation for mime-types-3.3.1

Parsing documentation for multi_json-1.14.1

Installing ri documentation for multi_json-1.14.1

Parsing documentation for safe_yaml-1.0.5

Installing ri documentation for safe_yaml-1.0.5

Parsing documentation for crack-0.4.3

Installing ri documentation for crack-0.4.3

Parsing documentation for multipart-post-2.1.1

Installing ri documentation for multipart-post-2.1.1

Parsing documentation for faraday-1.0.1

Installing ri documentation for faraday-1.0.1

Parsing documentation for concurrent-ruby-1.1.6

Installing ri documentation for concurrent-ruby-1.1.6

Parsing documentation for i18n-1.8.2

Installing ri documentation for i18n-1.8.2

Parsing documentation for thread_safe-0.3.6

Installing ri documentation for thread_safe-0.3.6

Parsing documentation for tzinfo-1.2.7

Installing ri documentation for tzinfo-1.2.7

Parsing documentation for zeitwerk-2.3.0

Installing ri documentation for zeitwerk-2.3.0

Parsing documentation for activesupport-6.0.3.1

Installing ri documentation for activesupport-6.0.3.1

Parsing documentation for flexirest-1.9.15

Installing ri documentation for flexirest-1.9.15

Parsing documentation for parslet-1.8.2

Installing ri documentation for parslet-1.8.2

Parsing documentation for toml-0.2.0

Installing ri documentation for toml-0.2.0

Parsing documentation for highline-2.0.3

Installing ri documentation for highline-2.0.3

Parsing documentation for commander-4.5.2

Installing ri documentation for commander-4.5.2

Parsing documentation for civo-1.3.3

Installing ri documentation for civo-1.3.3

Parsing documentation for civo_cli-0.5.9

Installing ri documentation for civo_cli-0.5.9

Done installing documentation for unicode-display_width, terminal-table, thor, colorize, bundler, mime-types-data, mime-types, multi_json, safe_yaml, crack, multipart-post, faraday, concurrent-ruby, i18n, thread_safe, tzinfo, zeitwerk, activesupport, flexirest, parslet, toml, highline, commander, civo, civo_cli after 21 seconds

25 gems installed

Verify that it’s installed:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ civo help

Commands:

/var/lib/gems/2.5.0/gems/thor-1.0.1/lib/thor/shell/basic.rb:399: warning: Insecure world writable dir /mnt/c in PATH, mode 040777

civo apikey # manage API keys stored in the client

civo applications # list and add marketplace applications to Kubernetes clusters. Alias: apps, addons, marketplace, k8s-apps, k3s-apps

civo blueprint # manage blueprints

civo domain # manage DNS domains

civo domainrecord # manage domain name DNS records for a domain

civo firewall # manage firewalls

civo help [COMMAND] # Describe available commands or one specific command

civo instance # manage instances

civo kubernetes # manage Kubernetes. Aliases: k8s, k3s

civo loadbalancer # manage load balancers

civo network # manage networks

civo quota # view the quota for the active account

civo region # manage regions

civo size # manage sizes

civo snapshot # manage snapshots

civo sshkey # manage uploaded SSH keys

civo template # manage templates

civo update # update to the latest Civo CLI

civo version # show the version of Civo CLI used

civo volume # manage volumes

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ civo version

You are running the current v0.5.9 of Civo CLI

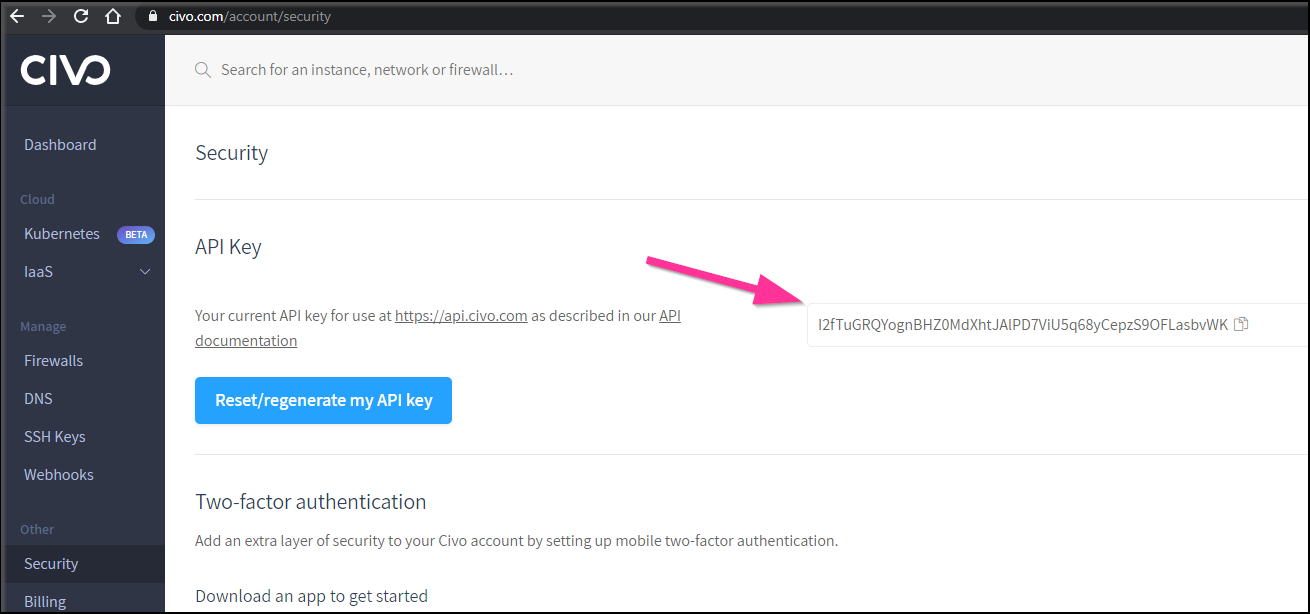

Next we need to log in to CIVO and get/set our API key. It’s now shown under Other/Security:

I have since reset it, but as you see, right now it’s listed as: I2fTuGRQYognBHZ0MdXhtJAlPD7ViU5q68yCepzS9OFLasbvWK

We now need to save that in the client:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ civo apikey add K8s I2fTuGRQYognBHZ0MdXhtJAlPD7ViU5q68yCepzS9OFLasbvWK

Saved the API Key I2fTuGRQYognBHZ0MdXhtJAlPD7ViU5q68yCepzS9OFLasbvWK as K8s

The current API Key is now K8s
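Passing the key as a literal argument leaves it in shell history and in any pasted terminal output. A minimal sketch, assuming the key has been placed in an environment variable named `CIVO_API_KEY` (a name chosen here for illustration, not something the Civo CLI reads on its own), wraps the same `civo apikey add` call shown above:

```shell
# Sketch: store a Civo API key without typing it on the command line.
# CIVO_API_KEY and save_civo_key are illustrative names, not part of the Civo CLI.
save_civo_key() {
  key_name="$1"
  if [ -z "${CIVO_API_KEY:-}" ]; then
    echo "CIVO_API_KEY is not set" >&2
    return 1
  fi
  # Same command as in the session above, with the key taken from the environment.
  civo apikey add "$key_name" "$CIVO_API_KEY"
}

# Usage (requires the civo client to be installed):
#   read -r -s CIVO_API_KEY && export CIVO_API_KEY   # type or paste the key, not echoed
#   save_civo_key K8s
```

Note that the client still echoes the saved key in its confirmation message, so the output should be trimmed before sharing.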

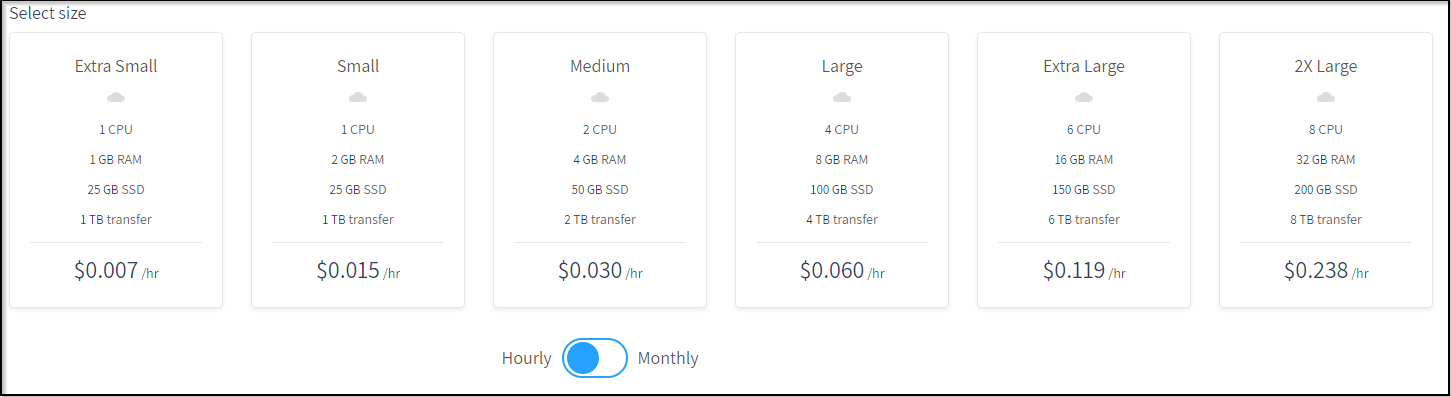

We’ll need to refresh our memory on CIVO sizes before moving on:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ civo sizes

+------------+----------------------------------------------------+-----+----------+-----------+

| Name | Description | CPU | RAM (MB) | Disk (GB) |

+------------+----------------------------------------------------+-----+----------+-----------+

| g2.xsmall | Extra Small - 1GB RAM, 1 CPU Core, 25GB SSD Disk | 1 | 1024 | 25 |

| g2.small | Small - 2GB RAM, 1 CPU Core, 25GB SSD Disk | 1 | 2048 | 25 |

| g2.medium | Medium - 4GB RAM, 2 CPU Cores, 50GB SSD Disk | 2 | 4096 | 50 |

| g2.large | Large - 8GB RAM, 4 CPU Cores, 100GB SSD Disk | 4 | 8192 | 100 |

| g2.xlarge | Extra Large - 16GB RAM, 6 CPU Core, 150GB SSD Disk | 6 | 16386 | 150 |

| g2.2xlarge | 2X Large - 32GB RAM, 8 CPU Core, 200GB SSD Disk | 8 | 32768 | 200 |

+------------+----------------------------------------------------+-----+----------+-----------+

And the costs haven’t changed since the last time we blogged:

Lastly we’ll pick a version. Since our last post, they’ve added 1.17.2 in preview:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ civo kubernetes versions

+-------------+-------------+---------+

| Version | Type | Default |

+-------------+-------------+---------+

| 1.17.2+k3s1 | development | |

| 1.0.0 | stable | <===== |

| 0.10.2 | deprecated | |

| 0.10.0 | deprecated | |

| 0.9.1 | deprecated | |

| 0.8.1 | legacy | |

+-------------+-------------+---------+

And like before, we can review applications we can install at the same time:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ civo applications list

+----------------------+-------------+--------------+-----------------+--------------+

| Name | Version | Category | Plans | Dependencies |

+----------------------+-------------+--------------+-----------------+--------------+

| cert-manager | v0.11.0 | architecture | Not applicable | Helm |

| Helm | 2.14.3 | management | Not applicable | |

| Jenkins | 2.190.1 | ci_cd | 5GB, 10GB, 20GB | Longhorn |

| KubeDB | v0.12.0 | database | Not applicable | Longhorn |

| Kubeless | 1.0.5 | architecture | Not applicable | |

| kubernetes-dashboard | v2.0.0-rc7 | management | Not applicable | |

| Linkerd | 2.5.0 | architecture | Not applicable | |

| Longhorn | 0.7.0 | storage | Not applicable | |

| Maesh | Latest | architecture | Not applicable | Helm |

| MariaDB | 10.4.7 | database | 5GB, 10GB, 20GB | Longhorn |

| metrics-server | (default) | architecture | Not applicable | |

| MinIO | 2019-08-29 | storage | 5GB, 10GB, 20GB | Longhorn |

| MongoDB | 4.2.0 | database | 5GB, 10GB, 20GB | Longhorn |

| OpenFaaS | 0.18.0 | architecture | Not applicable | Helm |

| Portainer | beta | management | Not applicable | |

| PostgreSQL | 11.5 | database | 5GB, 10GB, 20GB | Longhorn |

| prometheus-operator | 0.35.0 | monitoring | Not applicable | |

| Rancher | v2.3.0 | management | Not applicable | |

| Redis | 3.2 | database | Not applicable | |

| Selenium | 3.141.59-r1 | ci_cd | Not applicable | |

| Traefik | (default) | architecture | Not applicable | |

+----------------------+-------------+--------------+-----------------+--------------+

I notice that since February they dropped Klum, added Portainer, and upgraded prometheus-operator to 0.35.0 (from 0.34.0).

The last step is to create our cluster:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ civo kubernetes create MyNextCluster --size g2.medium --applications=Kubernetes-dashboard --nodes=2 --wait=true --save

Building new Kubernetes cluster MyNextCluster: Done

Created Kubernetes cluster MyNextCluster in 02 min 01 sec

KUBECONFIG=~/.kube/config:/tmp/import_kubeconfig20200520-3031-r5ix5y kubectl config view --flatten

/var/lib/gems/2.5.0/gems/civo_cli-0.5.9/lib/kubernetes.rb:350: warning: Insecure world writable dir /mnt/c in PATH, mode 040777

Saved config to ~/.kube/config

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

kube-node-8727 Ready <none> 22s v1.16.3-k3s.2

kube-master-e748 Ready master 33s v1.16.3-k3s.2
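When scripting this, it can help to block until every node reports Ready before moving on to the Azure side. The following is a rough sketch rather than anything the Civo CLI provides; the function names are made up, and it simply parses the second column of `kubectl get nodes --no-headers`:

```shell
# Sketch: count nodes whose STATUS column is exactly "Ready".
# count_ready_nodes and wait_for_ready_nodes are hypothetical helper names.
count_ready_nodes() {
  kubectl get nodes --no-headers | awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Poll until at least $1 nodes are Ready.
# $2 = max attempts (default 30), $3 = seconds between attempts (default 5).
wait_for_ready_nodes() {
  expected="$1"
  attempts="${2:-30}"
  delay="${3:-5}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    if [ "$(count_ready_nodes)" -ge "$expected" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "Timed out waiting for $expected Ready nodes" >&2
  return 1
}

# Usage, for the two-node cluster created above:
#   wait_for_ready_nodes 2
```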

Azure Setup

First, log in to Azure:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az login

To sign in, use a web browser to open the page https://microsoft.com/devicelogin and enter the code FF64MDUEP to authenticate.

…

Create a Resource Group we’ll use to contain our clusters:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az group create --name myArcClusters --location centralus

{

"id": "/subscriptions/70b42e6a-asdf-asdf-asdf-9f3995b1aca8/resourceGroups/myArcClusters",

"location": "centralus",

"managedBy": null,

"name": "myArcClusters",

"properties": {

"provisioningState": "Succeeded"

},

"tags": null,

"type": "Microsoft.Resources/resourceGroups"

}

Next, let’s create a Service Principal for Arc:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az ad sp create-for-RBAC --skip-assignment --name "https://azure-arc-for-k8s-onboarding"

{

"appId": "9f7e213f-3b03-411e-b874-ab272e46de41",

"displayName": "azure-arc-for-k8s-onboarding",

"name": "https://azure-arc-for-k8s-onboarding",

"password": "4545458-aaee-3344-4321-12345678ad",

"tenant": "d73a39db-6eda-495d-8000-7579f56d68b7"

}

Give it access to our subscription:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az role assignment create --role 34e09817-6cbe-4d01-b1a2-e0eac5743d41 --assignee 9f7e213f-3b03-411e-b874-ab272e46de41 --scope /subscriptions/70b42e6a-asdf-asdf-asdf-9f3995b1aca8

{

"canDelegate": null,

"id": "/subscriptions/70b42e6a-asdf-asdf-asdf-9f3995b1aca8/providers/Microsoft.Authorization/roleAssignments/c2cc591e-adca-4f91-af83-bdcea56a56f2",

"name": "c2cc591e-adca-4f91-af83-bdcea56a56f2",

"principalId": "e3349b46-3444-4f4a-b9f8-c36a8cf0bf38",

"principalType": "ServicePrincipal",

"roleDefinitionId": "/subscriptions/70b42e6a-asdf-asdf-asdf-9f3995b1aca8/providers/Microsoft.Authorization/roleDefinitions/34e09817-6cbe-4d01-b1a2-e0eac5743d41",

"scope": "/subscriptions/70b42e6a-asdf-asdf-asdf-9f3995b1aca8",

"type": "Microsoft.Authorization/roleAssignments"

}
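The role assignment can be wrapped so the GUIDs are passed as variables rather than pasted by hand. In this sketch the function and variable names are hypothetical. The role ID is the one used in the command above; I believe it corresponds to the built-in "Kubernetes Cluster - Azure Arc Onboarding" role, but that mapping is an assumption worth confirming against the Azure built-in roles list.

```shell
# Role ID copied from the command above (assumed to be the Arc onboarding role).
ARC_ONBOARDING_ROLE_ID="34e09817-6cbe-4d01-b1a2-e0eac5743d41"

# Sketch: grant the onboarding role to a service principal at subscription scope.
# $1 = appId of the service principal, $2 = subscription ID.
assign_onboarding_role() {
  app_id="$1"
  subscription_id="$2"
  az role assignment create \
    --role "$ARC_ONBOARDING_ROLE_ID" \
    --assignee "$app_id" \
    --scope "/subscriptions/${subscription_id}"
}

# Usage (requires an authenticated Azure CLI session):
#   assign_onboarding_role "<appId from the create-for-rbac output>" \
#     "$(az account show --query id -o tsv)"
```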

Next we need to log in as the service principal:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az login --service-principal -u 9f7e213f-3b03-411e-b874-ab272e46de41 -p 4545458-aaee-3344-4321-12345678ad --tenant d73a39db-6eda-495d-8000-7579f56d68b7

[

{

"cloudName": "AzureCloud",

"homeTenantId": "d73a39db-6eda-495d-8000-7579f56d68b7",

"id": "70b42e6a-asdf-asdf-asdf-9f3995b1aca8",

"isDefault": true,

"managedByTenants": [],

"name": "Visual Studio Enterprise Subscription",

"state": "Enabled",

"tenantId": "d73a39db-6eda-495d-8000-7579f56d68b7",

"user": {

"name": "9f7e213f-3b03-411e-b874-ab272e46de41",

"type": "servicePrincipal"

}

}

]

If your Azure CLI is out of date, or the connectedk8s extension is not yet installed, you may get an error:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az connectedk8s connect -n myCIVOCluster -g myArcClusters

az: 'connectedk8s' is not in the 'az' command group. See 'az --help'. If the command is from an extension, please make sure the corresponding extension is installed. To learn more about extensions, please visit https://docs.microsoft.com/en-us/cli/azure/azure-cli-extensions-overview

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az version

This command is in preview. It may be changed/removed in a future release.

{

"azure-cli": "2.3.1",

"azure-cli-command-modules-nspkg": "2.0.3",

"azure-cli-core": "2.3.1",

"azure-cli-nspkg": "3.0.4",

"azure-cli-telemetry": "1.0.4",

"extensions": {

"azure-devops": "0.18.0"

}

}

Updating is easy:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ sudo apt-get update && sudo apt-get install azure-cli

[sudo] password for builder:

Get:1 http://security.ubuntu.com/ubuntu bionic-security InRelease [88.7 kB]

Hit:2 http://archive.ubuntu.com/ubuntu bionic InRelease

Hit:3 https://packages.microsoft.com/repos/azure-cli bionic InRelease

Hit:4 https://packages.microsoft.com/ubuntu/18.04/prod bionic InRelease

Get:5 http://archive.ubuntu.com/ubuntu bionic-updates InRelease [88.7 kB]

Get:6 http://archive.ubuntu.com/ubuntu bionic-backports InRelease [74.6 kB]

Get:7 http://archive.ubuntu.com/ubuntu bionic-updates/universe Translation-en [334 kB]

Fetched 586 kB in 1s (396 kB/s)

Reading package lists... Done

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages will be upgraded:

azure-cli

1 upgraded, 0 newly installed, 0 to remove and 68 not upgraded.

Need to get 47.7 MB of archives.

After this operation, 23.5 MB of additional disk space will be used.

Get:1 https://packages.microsoft.com/repos/azure-cli bionic/main amd64 azure-cli all 2.6.0-1~bionic [47.7 MB]

Fetched 47.7 MB in 1s (32.0 MB/s)

(Reading database ... 94395 files and directories currently installed.)

Preparing to unpack .../azure-cli_2.6.0-1~bionic_all.deb ...

Unpacking azure-cli (2.6.0-1~bionic) over (2.3.1-1~bionic) ...

Setting up azure-cli (2.6.0-1~bionic) ...

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az version

{

"azure-cli": "2.6.0",

"azure-cli-command-modules-nspkg": "2.0.3",

"azure-cli-core": "2.6.0",

"azure-cli-nspkg": "3.0.4",

"azure-cli-telemetry": "1.0.4",

"extensions": {

"azure-devops": "0.18.0"

}

}

We need to register two providers. Note: I had permission issues, so I had to elevate the role of the SP in my subscription to get it done:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az provider register --namespace Microsoft.Kubernetes

Registering is still on-going. You can monitor using 'az provider show -n Microsoft.Kubernetes'

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az provider register --namespace Microsoft.KubernetesConfiguration

Registering is still on-going. You can monitor using 'az provider show -n Microsoft.KubernetesConfiguration'

Verification

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az provider show -n Microsoft.Kubernetes -o table

Namespace RegistrationPolicy RegistrationState

-------------------- -------------------- -------------------

Microsoft.Kubernetes RegistrationRequired Registered

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az provider show -n Microsoft.KubernetesConfiguration -o table

Namespace RegistrationPolicy RegistrationState

--------------------------------- -------------------- -------------------

Microsoft.KubernetesConfiguration RegistrationRequired Registered
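Because registration is asynchronous ("Registering is still on-going"), a script would need to poll before continuing. Here is a minimal sketch, with a made-up function name, that repeats the same `az provider show` call and reads only the `registrationState` field:

```shell
# Sketch: poll a resource provider until it reports "Registered".
# wait_for_provider is a hypothetical helper name.
# $1 = provider namespace, $2 = max attempts (default 30), $3 = delay in seconds (default 10).
wait_for_provider() {
  namespace="$1"
  attempts="${2:-30}"
  delay="${3:-10}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    state="$(az provider show -n "$namespace" --query registrationState -o tsv)"
    if [ "$state" = "Registered" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "Provider $namespace not registered after $attempts attempts" >&2
  return 1
}

# Usage (requires an authenticated Azure CLI session):
#   wait_for_provider Microsoft.Kubernetes
#   wait_for_provider Microsoft.KubernetesConfiguration
```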

Install and update to the latest version of the extensions:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az extension add --name connectedk8s

The installed extension 'connectedk8s' is in preview.

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az extension add --name k8sconfiguration

The installed extension 'k8sconfiguration' is in preview.

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az extension update --name connectedk8s

No updates available for 'connectedk8s'. Use --debug for more information.

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az extension update --name k8sconfiguration

No updates available for 'k8sconfiguration'. Use --debug for more information.
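The add-then-update sequence above can be folded into one idempotent helper. This is a sketch under a couple of assumptions: the function name is invented, and since I have not checked what exit code `az extension update` returns when there is nothing to update, the update step is treated as non-fatal.

```shell
# Sketch: install an Azure CLI extension if missing, otherwise try to update it.
# ensure_extension is a hypothetical helper name.
ensure_extension() {
  name="$1"
  if az extension show --name "$name" >/dev/null 2>&1; then
    # Assumption: "No updates available" may exit non-zero, so do not fail on it.
    az extension update --name "$name" || true
  else
    az extension add --name "$name"
  fi
}

# Usage:
#   ensure_extension connectedk8s
#   ensure_extension k8sconfiguration
```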

Onboarding to Arc

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az connectedk8s connect -n myCIVOCluster -g myArcClusters

Command group 'connectedk8s' is in preview. It may be changed/removed in a future release.

Ensure that you have the latest helm version installed before proceeding.

This operation might take a while...

Connected cluster resource creation is supported only in the following locations: eastus, westeurope. Use the --location flag to specify one of these locations.

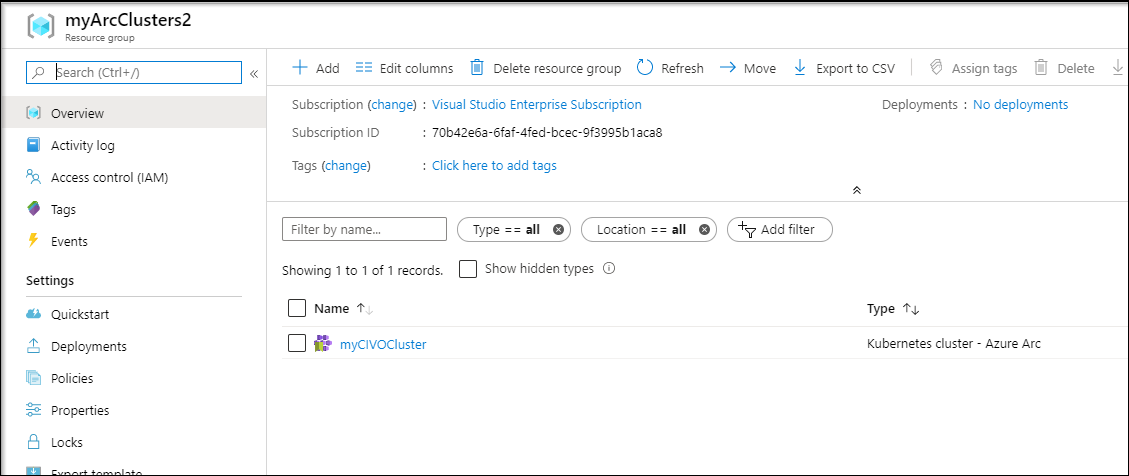

That would have been good to know. Let’s create a new resource group in eastus and try again.

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az group create --name myArcClusters2 --location eastus

{

"id": "/subscriptions/70b42e6a-asdf-asdf-asdf-9f3995b1aca8/resourceGroups/myArcClusters2",

"location": "eastus",

"managedBy": null,

"name": "myArcClusters2",

"properties": {

"provisioningState": "Succeeded"

},

"tags": null,

"type": "Microsoft.Resources/resourceGroups"

}
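Instead of creating a second resource group, the error message itself suggests passing --location. A rough sketch of a wrapper that checks the region first is below. The function names are hypothetical, and the region list is just the two values from the preview-era error message above, so it may well be out of date.

```shell
# Regions named in the error message above (preview-era; assumed to change over time).
ARC_PREVIEW_LOCATIONS="eastus westeurope"

# is_arc_location LOCATION
# Hypothetical helper: succeeds if LOCATION is in the list above.
is_arc_location() {
  for _ial_loc in $ARC_PREVIEW_LOCATIONS; do
    [ "$_ial_loc" = "$1" ] && return 0
  done
  return 1
}

# connect_cluster NAME RESOURCE_GROUP LOCATION
# Hypothetical wrapper around 'az connectedk8s connect'; --location is the
# flag the error message points to.
connect_cluster() {
  if ! is_arc_location "$3"; then
    echo "location '$3' is not one of: $ARC_PREVIEW_LOCATIONS" >&2
    return 1
  fi
  az connectedk8s connect -n "$1" -g "$2" --location "$3"
}

# Example (not run here):
#   connect_cluster myCIVOCluster myArcClusters eastus
```

Failing fast on the region saves waiting through the "This operation might take a while..." step only to hit the location error.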

Let’s add it!

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az connectedk8s connect -n myCIVOCluster -g myArcClusters2

Command group 'connectedk8s' is in preview. It may be changed/removed in a future release.

Ensure that you have the latest helm version installed before proceeding.

This operation might take a while...

{

"aadProfile": {

"clientAppId": "",

"serverAppId": "",

"tenantId": ""

},

"agentPublicKeyCertificate": "MIICCgKCAgEAsux97vWSl97JnRSsED6UyXq7TtIOBSR/eSy8fGAfGHL1wUCYQQeYI+8bgqSN0n7fvIELalrQ8efvCLdNE3C8ocBC/0RpfjRrIpdg7LPXrCCAA1hvR2PymNMWSyAJcPjyCPu5jbKkRSj4XSSLk1sVgVeJpWXjl4ZKZluwVEGD1EWA3Xwd43fwI8gYNKu55qpyO/knUJngoMV3jwnE0UJuEMzbVyoeKWNQJJe4g0vflA5j4cvU7QYBW6XRGGKiFYgWq1053kzvVuucZIl0QPt9hZU8NbbMnU/b8NPLIue4S/w7cZobpYf46CV2ZzySZ0OIe+kcZ7T8v0yw+i+77C76OcDwDaojov+DBEMLJ7AJtoQ/dwf9JebVO92ZuWFtHbdT3M3222xF2R1KWGVXxmOnqEtB3D+zsNpcSp8abKOH8du9l07Jts1ss2j24Lqf/QFVDrBHUTxidvLENFSCh4qQ2npfJq/v/ndLUv4qPXyRmbNLiFn5/+4shhSQBPBj+GopzMdkZn6Zoh4uPGX77svBS5jmBjyDYj0OIJobt/cfPwQzn9iV3/t2ED7IHauT5WGrGPeqFyF/43YAkanPOQUWDaWy2YLHyrR12Hh2KHLfVq/9k9VvwlcJ0Md96QOhqicBqGDZRR9YDoQjVkOP1Gb11AStvvMmeumWfeCqkVsBy9ECAwEAAQ==",

"agentVersion": null,

"id": "/subscriptions/70b42e6a-asdf-asdf-asdf-9f3995b1aca8/resourceGroups/myArcClusters2/providers/Microsoft.Kubernetes/connectedClusters/myCIVOCluster",

"identity": {

"principalId": "23947e5a-0b1b-45fc-a265-7cad37625959",

"tenantId": "d73a39db-6eda-495d-8000-7579f56d68b7",

"type": "SystemAssigned"

},

"kubernetesVersion": null,

"location": "eastus",

"name": "myCIVOCluster",

"provisioningState": "Succeeded",

"resourceGroup": "myArcClusters2",

"tags": null,

"totalNodeCount": null,

"type": "Microsoft.Kubernetes/connectedClusters"

}

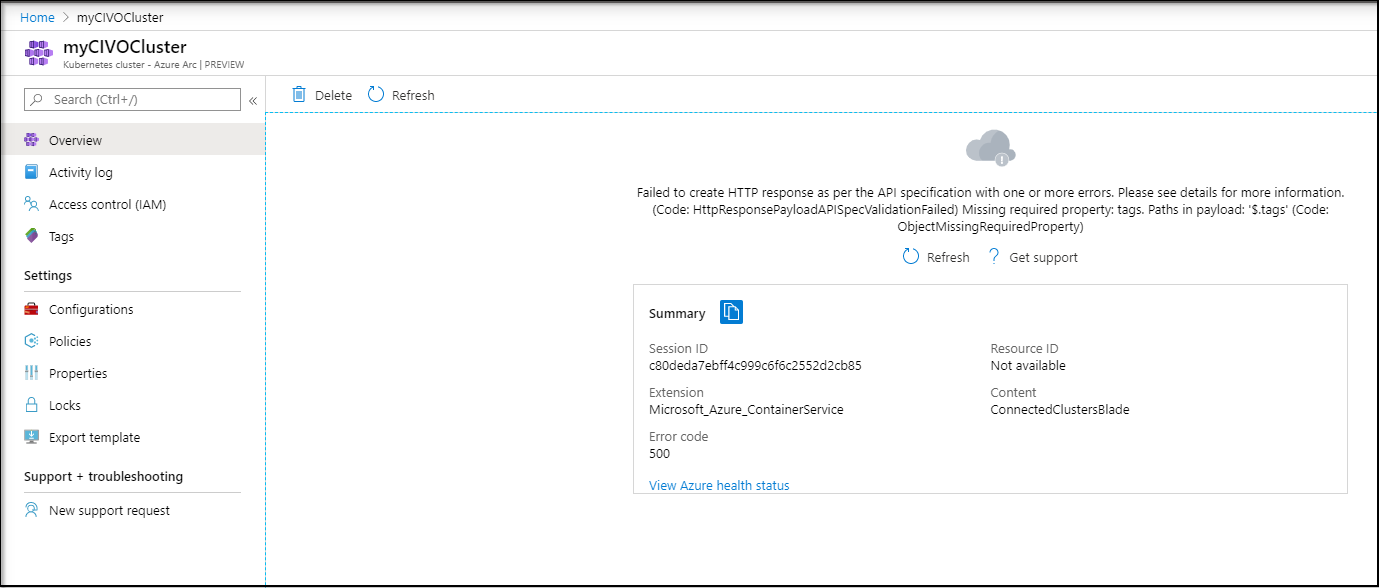

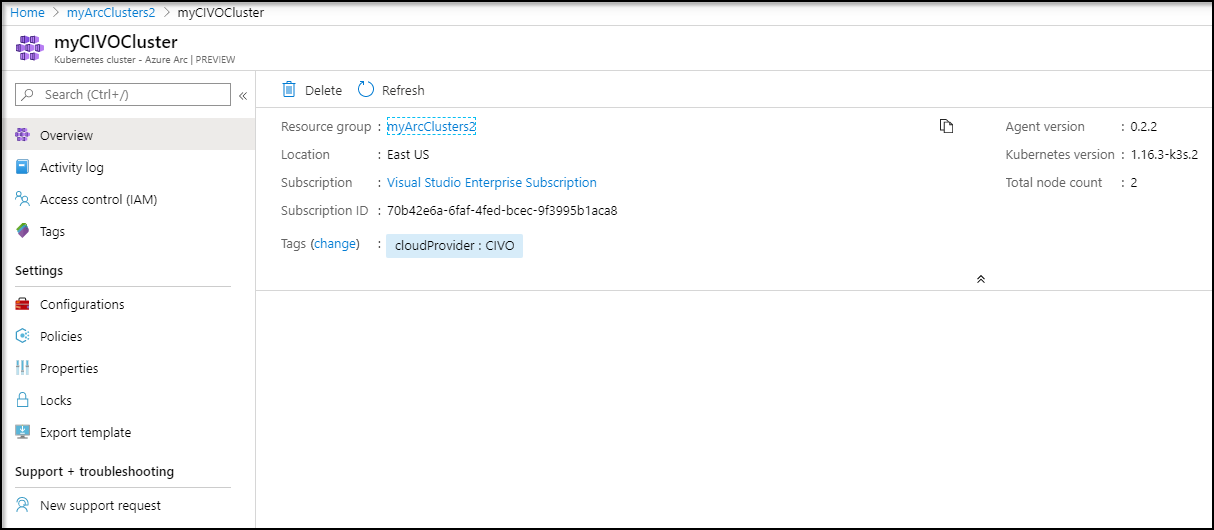

The details are missing until we add in at least one tag in Tags.

We can see the pods are running fine:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ kubectl get pods -n azure-arc

NAME READY STATUS RESTARTS AGE

flux-logs-agent-6989466ff6-f7znc 2/2 Running 0 20m

metrics-agent-bb65d876-gh7bd 2/2 Running 0 20m

clusteridentityoperator-5649f66cf8-spngt 3/3 Running 0 20m

config-agent-dcf745b57-lsnj7 3/3 Running 0 20m

controller-manager-98947d4f6-lg2hw 3/3 Running 0 20m

resource-sync-agent-75f6885587-d76mf 3/3 Running 0 20m

cluster-metadata-operator-5959f77f6d-7hqtf 2/2 Running 0 20m

The deployments look good as well:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ kubectl get deployments -n azure-arc

NAME READY UP-TO-DATE AVAILABLE AGE

flux-logs-agent 1/1 1 1 30m

metrics-agent 1/1 1 1 30m

clusteridentityoperator 1/1 1 1 30m

config-agent 1/1 1 1 30m

controller-manager 1/1 1 1 30m

resource-sync-agent 1/1 1 1 30m

cluster-metadata-operator 1/1 1 1 30m

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

azure-arc default 1 2020-05-20 08:45:13.2639004 -0500 CDT deployed azure-arc-k8sagents-0.2.2 1.0

Let’s stop here, and tear down this cluster.

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ civo kubernetes list

+--------------------------------------+---------------+---------+-----------+---------+--------+

| ID | Name | # Nodes | Size | Version | Status |

+--------------------------------------+---------------+---------+-----------+---------+--------+

| 88f155ad-08e2-4935-8f2a-788deebaa451 | MyNextCluster | 2 | g2.medium | 1.0.0 | ACTIVE |

+--------------------------------------+---------------+---------+-----------+---------+--------+

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ civo kubernetes remove 88f155ad-08e2-4935-8f2a-788deebaa451

Removing Kubernetes cluster MyNextCluster

*Update*: the problem was not having tags. For some reason, Arc for K8s needs at least one tag to function properly. Once I set a tag (I used cloudProvider = CIVO), it loaded just fine.

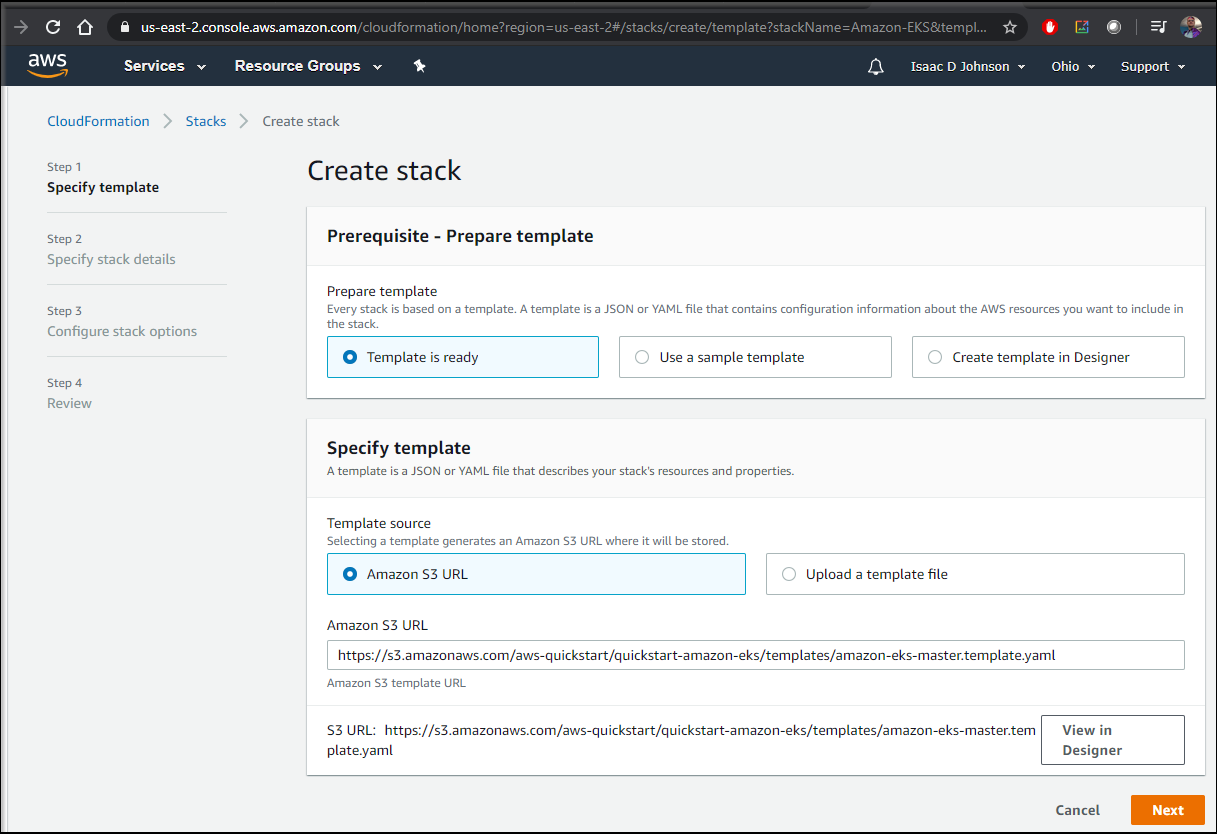

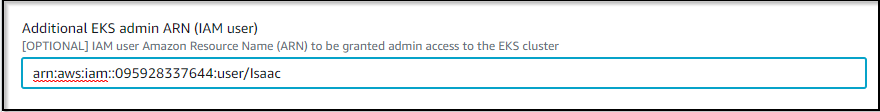

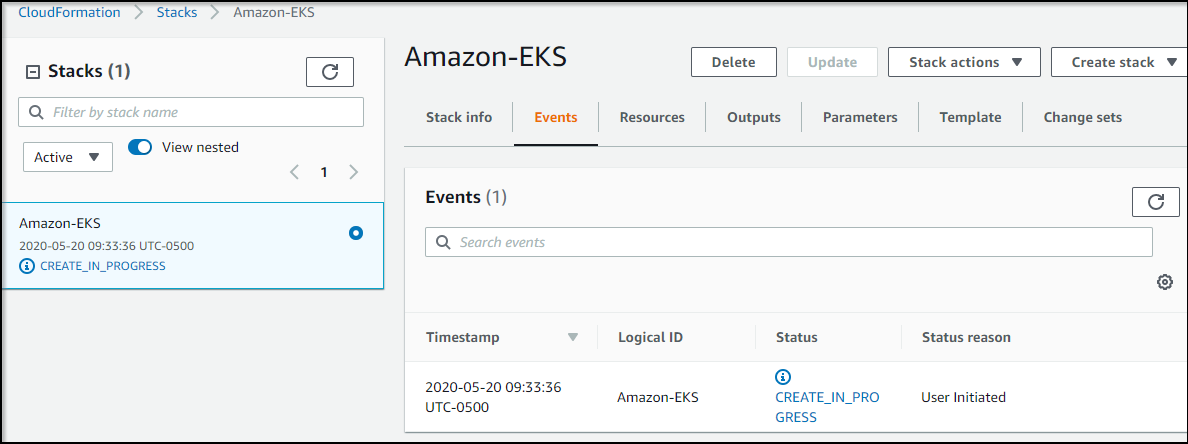
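The tag was set in the portal, but the same thing can probably be scripted. The sketch below rebuilds the resource ID in the shape of the "id" field from the connect output and hands it to az resource tag. Both function names are hypothetical, and I have not verified that a tag set this way fixes the portal view the same way a portal-set tag does.

```shell
# arc_cluster_id SUBSCRIPTION_ID RESOURCE_GROUP CLUSTER_NAME
# Hypothetical helper: prints a connected-cluster resource ID in the same
# shape as the "id" field returned by 'az connectedk8s connect'.
arc_cluster_id() {
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.Kubernetes/connectedClusters/%s\n' \
    "$1" "$2" "$3"
}

# tag_arc_cluster RESOURCE_GROUP CLUSTER_NAME KEY=VALUE
# Hypothetical wrapper: looks up the current subscription, then tags the cluster.
# Note: 'az resource tag' replaces the resource's existing tags by default.
tag_arc_cluster() {
  _tac_sub="$(az account show --query id -o tsv)"
  az resource tag --ids "$(arc_cluster_id "$_tac_sub" "$1" "$2")" --tags "$3"
}

# Example (not run here):
#   tag_arc_cluster myArcClusters2 myCIVOCluster cloudProvider=CIVO
```

Since the connect output shows "tags": null, replacing the tag set is harmless here; on a cluster that already has tags it would overwrite them.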

AWS EKS

Let’s keep it simple and use a Quickstart guide to launch EKS into a new VPC: https://aws.amazon.com/quickstart/architecture/amazon-eks/

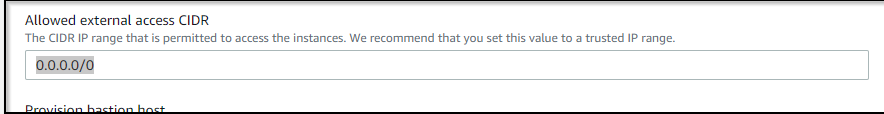

I set Allow external access to 0.0.0.0/0:

And set the EKS public access endpoint to Enabled:

We can now see the EKS cluster via the CLI:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ aws eks list-clusters --region us-east-2

{

"clusters": [

"EKS-YVA1LL5Y"

]

}

We can now download the kubeconfig:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ aws --region us-east-2 eks update-kubeconfig --name EKS-YVA1LL5Y

Added new context arn:aws:eks:us-east-2:01234512345:cluster/EKS-YVA1LL5Y to /home/builder/.kube/config

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 172.20.0.1 <none> 443/TCP 73m

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

ip-10-0-0-74.us-east-2.compute.internal Ready <none> 58m v1.15.10-eks-bac369

ip-10-0-61-98.us-east-2.compute.internal Ready <none> 58m v1.15.10-eks-bac369

ip-10-0-82-16.us-east-2.compute.internal Ready <none> 58m v1.15.10-eks-bac369

Onboarding to Azure Arc

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az connectedk8s connect -n myEKSCluster -g myArcClusters2

Command group 'connectedk8s' is in preview. It may be changed/removed in a future release.

Ensure that you have the latest helm version installed before proceeding.

This operation might take a while...

{

"aadProfile": {

"clientAppId": "",

"serverAppId": "",

"tenantId": ""

},

"agentPublicKeyCertificate": "MIICCgKCAgEAlIaZLZ0eHd1zwSlXflD8L5tQcH8MAF/t3tHOewN4+6EsVW6wtwHL8rup+1i/1jbnArKHFrMCPpok+JCkxXvTgWsD5VSnZxDg/dkkACHw1I2RTq+9Q7E4SwT9QxreuxTejyLI/0w+kCnNwWlAvS6I4J/M709Bvz6KhxzFCWsX5fgRCoIO5zE0wjlxGG0s4c4f1hmHz3Mb4utPaX4gk6+Bo5MZs5EVNbhuHKdasDvJWe1itrtKVKQh7LfWICb1M34Kmo+TPhADKcoV4Ltaxb3VHnHLZpStGfcFI5SqTppn5dULOcGGob2wG8Mlw2INy17QUsyZ728qIKrBaNaJXgeQmZ93xUh7H0IBjV7VXuCOScsDBP7Cx5KZnXT7uWPRLjWCzYlOodCNeCMnBAabEGPECbUq3juvkmrbYiktjn6ZnWm32bDEYqiobqyFz3XMZVkK1zTnrVY0Bguc+n3RHXQeKKHZLyg84Cm1p83uadKFB7RZdCmBw5a8rS4PJEF+bpgPgS5FEcuNa5qp35BaWKTPifiDx9vo8uIADtEwSqpsrxqDnTf2gm8ORoww3vNfTO9WdiAzpBMDToJQamauVzGLB/HFO7pfrvjzRTdeKjamv8lzI/IyStr6Wf2QHAZVF95XfmmZ0iCHmkVHRwbQE4IABUeyZPzB6VKJD3yC9K8q4EsCAwEAAQ==",

"agentVersion": null,

"id": "/subscriptions/70b42e6a-asdf-asdf-asdf-9f3995b1aca8/resourceGroups/myArcClusters2/providers/Microsoft.Kubernetes/connectedClusters/myEKSCluster",

"identity": {

"principalId": "e5c729a1-ef9c-4839-89cd-a850536a400a",

"tenantId": "d73a39db-6eda-495d-8000-7579f56d68b7",

"type": "SystemAssigned"

},

"kubernetesVersion": null,

"location": "eastus",

"name": "myEKSCluster",

"provisioningState": "Succeeded",

"resourceGroup": "myArcClusters2",

"tags": null,

"totalNodeCount": null,

"type": "Microsoft.Kubernetes/connectedClusters"

}

Linode LKE

Since my last blog entry, the Linode CLI can now create LKE clusters.

First, let’s get a list of types:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ linode-cli linodes types

┌──────────────────┬──────────────────────────────────┬───────────┬─────────┬────────┬───────┬─────────────┬──────────┬────────┬─────────┬──────┐

│ id │ label │ class │ disk │ memory │ vcpus │ network_out │ transfer │ hourly │ monthly │ gpus │

├──────────────────┼──────────────────────────────────┼───────────┼─────────┼────────┼───────┼─────────────┼──────────┼────────┼─────────┼──────┤

│ g6-nanode-1 │ Nanode 1GB │ nanode │ 25600 │ 1024 │ 1 │ 1000 │ 1000 │ 0.0075 │ 5.0 │ 0 │

│ g6-standard-1 │ Linode 2GB │ standard │ 51200 │ 2048 │ 1 │ 2000 │ 2000 │ 0.015 │ 10.0 │ 0 │

│ g6-standard-2 │ Linode 4GB │ standard │ 81920 │ 4096 │ 2 │ 4000 │ 4000 │ 0.03 │ 20.0 │ 0 │

│ g6-standard-4 │ Linode 8GB │ standard │ 163840 │ 8192 │ 4 │ 5000 │ 5000 │ 0.06 │ 40.0 │ 0 │

│ g6-standard-6 │ Linode 16GB │ standard │ 327680 │ 16384 │ 6 │ 6000 │ 8000 │ 0.12 │ 80.0 │ 0 │

│ g6-standard-8 │ Linode 32GB │ standard │ 655360 │ 32768 │ 8 │ 7000 │ 16000 │ 0.24 │ 160.0 │ 0 │

│ g6-standard-16 │ Linode 64GB │ standard │ 1310720 │ 65536 │ 16 │ 9000 │ 20000 │ 0.48 │ 320.0 │ 0 │

│ g6-standard-20 │ Linode 96GB │ standard │ 1966080 │ 98304 │ 20 │ 10000 │ 20000 │ 0.72 │ 480.0 │ 0 │

│ g6-standard-24 │ Linode 128GB │ standard │ 2621440 │ 131072 │ 24 │ 11000 │ 20000 │ 0.96 │ 640.0 │ 0 │

│ g6-standard-32 │ Linode 192GB │ standard │ 3932160 │ 196608 │ 32 │ 12000 │ 20000 │ 1.44 │ 960.0 │ 0 │

│ g6-highmem-1 │ Linode 24GB │ highmem │ 20480 │ 24576 │ 1 │ 5000 │ 5000 │ 0.09 │ 60.0 │ 0 │

│ g6-highmem-2 │ Linode 48GB │ highmem │ 40960 │ 49152 │ 2 │ 6000 │ 6000 │ 0.18 │ 120.0 │ 0 │

│ g6-highmem-4 │ Linode 90GB │ highmem │ 92160 │ 92160 │ 4 │ 7000 │ 7000 │ 0.36 │ 240.0 │ 0 │

│ g6-highmem-8 │ Linode 150GB │ highmem │ 204800 │ 153600 │ 8 │ 8000 │ 8000 │ 0.72 │ 480.0 │ 0 │

│ g6-highmem-16 │ Linode 300GB │ highmem │ 348160 │ 307200 │ 16 │ 9000 │ 9000 │ 1.44 │ 960.0 │ 0 │

│ g6-dedicated-2 │ Dedicated 4GB │ dedicated │ 81920 │ 4096 │ 2 │ 4000 │ 4000 │ 0.045 │ 30.0 │ 0 │

│ g6-dedicated-4 │ Dedicated 8GB │ dedicated │ 163840 │ 8192 │ 4 │ 5000 │ 5000 │ 0.09 │ 60.0 │ 0 │

│ g6-dedicated-8 │ Dedicated 16GB │ dedicated │ 327680 │ 16384 │ 8 │ 6000 │ 6000 │ 0.18 │ 120.0 │ 0 │

│ g6-dedicated-16 │ Dedicated 32GB │ dedicated │ 655360 │ 32768 │ 16 │ 7000 │ 7000 │ 0.36 │ 240.0 │ 0 │

│ g6-dedicated-32 │ Dedicated 64GB │ dedicated │ 1310720 │ 65536 │ 32 │ 8000 │ 8000 │ 0.72 │ 480.0 │ 0 │

│ g6-dedicated-48 │ Dedicated 96GB │ dedicated │ 1966080 │ 98304 │ 48 │ 9000 │ 9000 │ 1.08 │ 720.0 │ 0 │

│ g1-gpu-rtx6000-1 │ Dedicated 32GB + RTX6000 GPU x1 │ gpu │ 655360 │ 32768 │ 8 │ 10000 │ 16000 │ 1.5 │ 1000.0 │ 1 │

│ g1-gpu-rtx6000-2 │ Dedicated 64GB + RTX6000 GPU x2 │ gpu │ 1310720 │ 65536 │ 16 │ 10000 │ 20000 │ 3.0 │ 2000.0 │ 2 │

│ g1-gpu-rtx6000-3 │ Dedicated 96GB + RTX6000 GPU x3 │ gpu │ 1966080 │ 98304 │ 20 │ 10000 │ 20000 │ 4.5 │ 3000.0 │ 3 │

│ g1-gpu-rtx6000-4 │ Dedicated 128GB + RTX6000 GPU x4 │ gpu │ 2621440 │ 131072 │ 24 │ 10000 │ 20000 │ 6.0 │ 4000.0 │ 4 │

└──────────────────┴──────────────────────────────────┴───────────┴─────────┴────────┴───────┴─────────────┴──────────┴────────┴─────────┴──────┘

Next, let’s create a cluster:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ linode-cli lke cluster-create --label mycluster --k8s_version 1.15 --node_pools.type g6-standard-2 --node_pools.count 2

┌──────┬───────────┬────────────┐

│ id │ label │ region │

├──────┼───────────┼────────────┤

│ 5193 │ mycluster │ us-central │

└──────┴───────────┴────────────┘

The Kubeconfig view is still screwy so we can use our last hack to get it:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ linode-cli lke kubeconfig-view 5193 > t.o && cat t.o | tail -n2 | head -n1 | sed 's/.\{8\}$//' | sed 's/^.\{8\}//' | base64 --decode > ~/.kube/config

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

lke5193-6544-5ec54610dcac NotReady <none> 2s v1.15.6

lke5193-6544-5ec54610e234 NotReady <none> 9s v1.15.6
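The sed step in the hack above depends on the table padding being exactly eight characters on each side. A slightly more forgiving sketch is to keep only base64 characters from the data row and decode that. extract_kubeconfig is a made-up name, and it assumes, as the original pipeline does, that the second-to-last line of the table holds the encoded kubeconfig.

```shell
# extract_kubeconfig
# Hypothetical helper: reads the table printed by
# 'linode-cli lke kubeconfig-view <id>' on stdin and writes the decoded
# kubeconfig to stdout.
extract_kubeconfig() {
  tail -n 2 |                      # data row + bottom border
    head -n 1 |                    # data row only
    tr -cd 'A-Za-z0-9+/=' |        # drop box-drawing characters and padding
    base64 --decode
}

# Example (not run here): write to a separate file instead of overwriting
# ~/.kube/config, then point kubectl at it.
#   linode-cli lke kubeconfig-view 5193 | extract_kubeconfig > ~/.kube/lke-config
#   KUBECONFIG=~/.kube/lke-config kubectl get nodes
```

Writing to its own file is a design choice, not what the post does: redirecting into ~/.kube/config replaces whatever was there, including the EKS context added earlier.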

Onboarding to Azure Arc

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ az connectedk8s connect -n myLKECluster -g myArcClusters2

Command group 'connectedk8s' is in preview. It may be changed/removed in a future release.

Ensure that you have the latest helm version installed before proceeding.

This operation might take a while...

{

"aadProfile": {

"clientAppId": "",

"serverAppId": "",

"tenantId": ""

},

"agentPublicKeyCertificate": "MIICCgKCAgEAqjk+8jskhpDa+O6cDqL37tad130qQCRBcCPimQ5wExwKPE0+BLrprfDGdXfAEbH9sQXTl6yWiXLiJkrV8PP/D+Qhkze4WINTY0pSu5yRT9x0MO/bu1E7ygcXjxuVvd7fJV0JF5fisWMaB/maY3FtmpoX1zOd0Sbz/yKg3pTrZE8R7QLDuNmKEkbHj5/ZmeZAmGYBUguKpqtnNM7++OE5BKjtHcI5H5A8fEV5xUJEA1+F7TTtrFTrFZdMPUR5QqQ+WQzsN3hrDakW51+SU5IkkppmzORHCehAn9TG/36mD7C8k05h/JKPzyU0UWlLb1yi7wOZqh9FksmEdqix6XcAMQCIp3CzdJc5M8KXa9rUNPmHf+KvPaqQtuZYPUiErMWHMiSqK/arZEj7N4NAToPjIjT1oPHB7g01yQFCmHcgs5gYt7H3b0ZSxWQJ78aZaYVc3HskegmYwL2qsg/P6uOIoS6+SqlPcb4U/ER+J+vKPLm71sOydDG2uMU02JiBJqrOqoxFRFtbrksOcL0zsFe3axjTrEJTz18NIhOJ98Af0en/q5r/h6zG7SR+xp/VkIk81UzYlCKPTMF01lbm1nKtrIdmYchp/ggC21bWqClhmOMPDw0eoiINvYWGoOfsugm0ceEbpxkKEv/avir+Z+krVnOrb/enVP5qli6leZRgSTsCAwEAAQ==",

"agentVersion": null,

"id": "/subscriptions/70b42e6a-asdf-asdf-asdf-9f3995b1aca8/resourceGroups/myArcClusters2/providers/Microsoft.Kubernetes/connectedClusters/myLKECluster",

"identity": {

"principalId": "0f31b18c-743c-4697-9aa2-b1d3585a401c",

"tenantId": "d73a39db-6eda-495d-8000-7579f56d68b7",

"type": "SystemAssigned"

},

"kubernetesVersion": null,

"location": "eastus",

"name": "myLKECluster",

"provisioningState": "Succeeded",

"resourceGroup": "myArcClusters2",

"tags": null,

"totalNodeCount": null,

"type": "Microsoft.Kubernetes/connectedClusters"

}

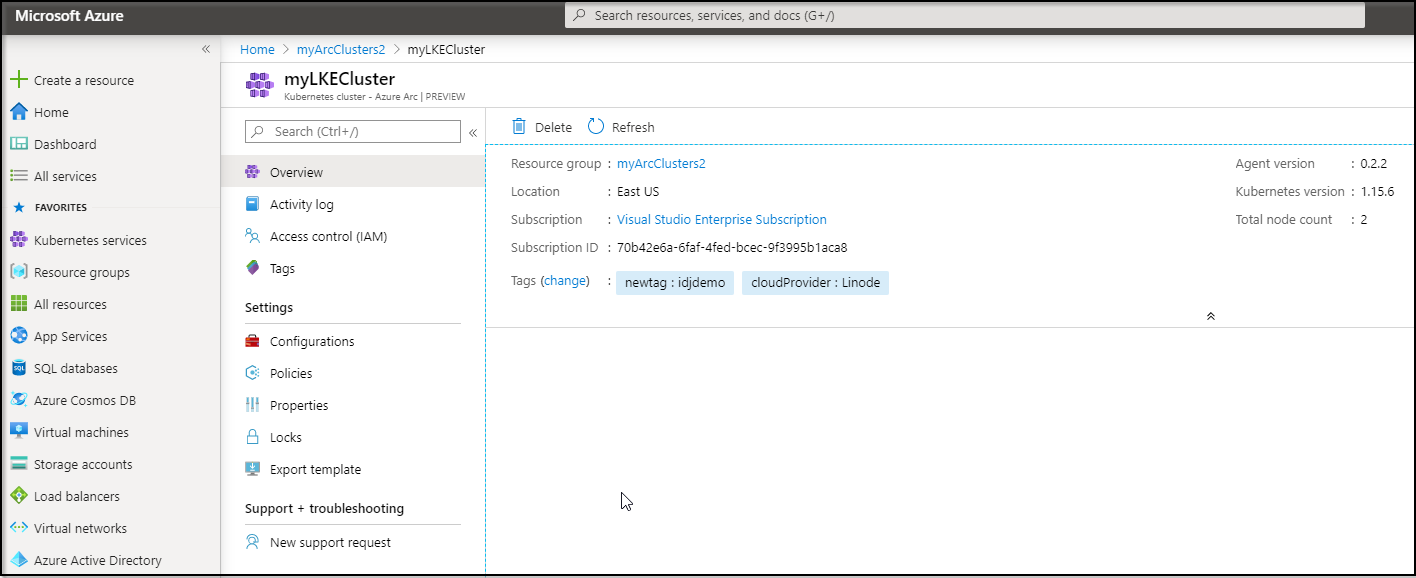

Once I set a tag in the Azure Portal:

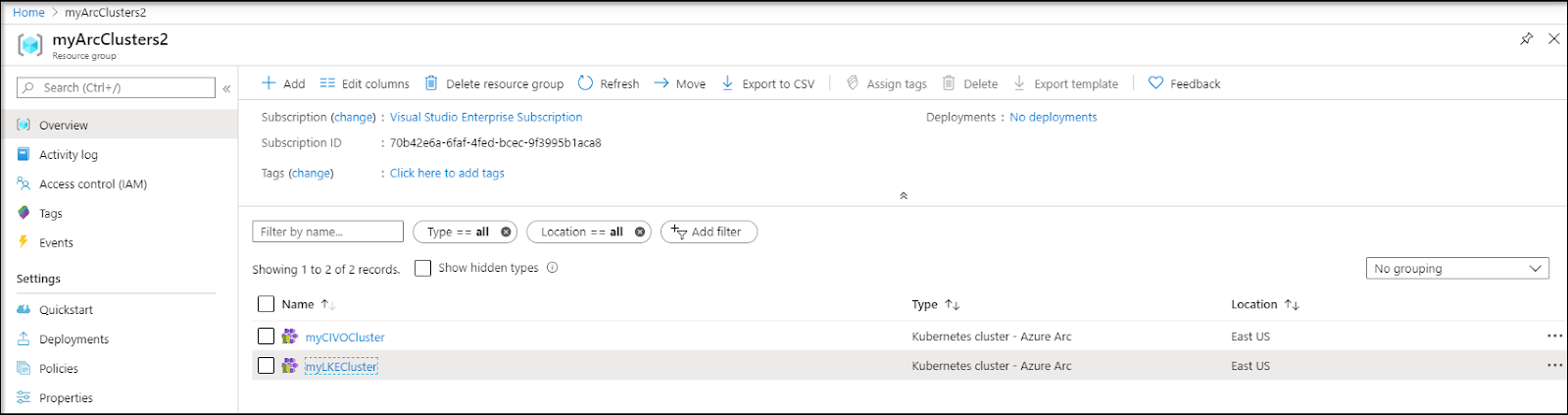

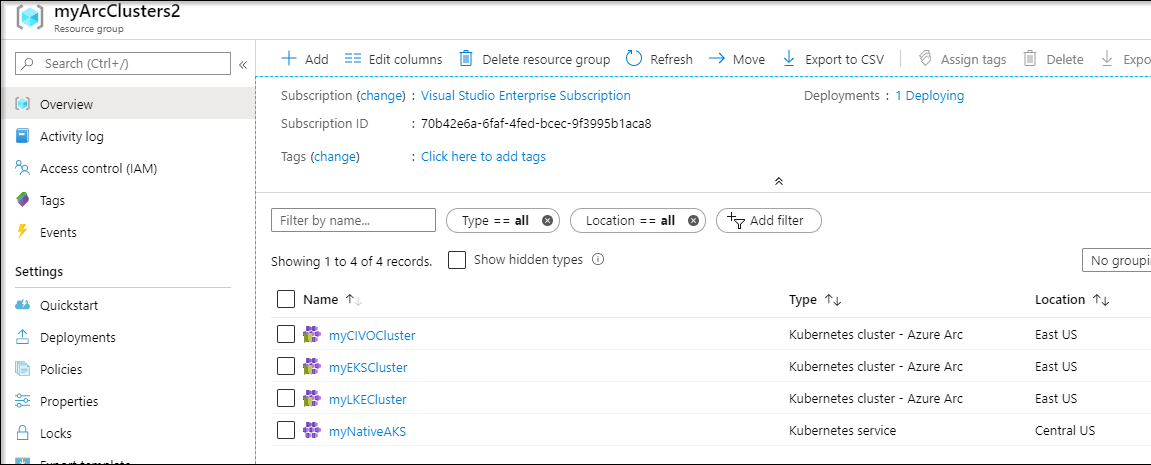

We can now see Kubernetes clusters hosted in two other cloud providers in my Azure resource group:

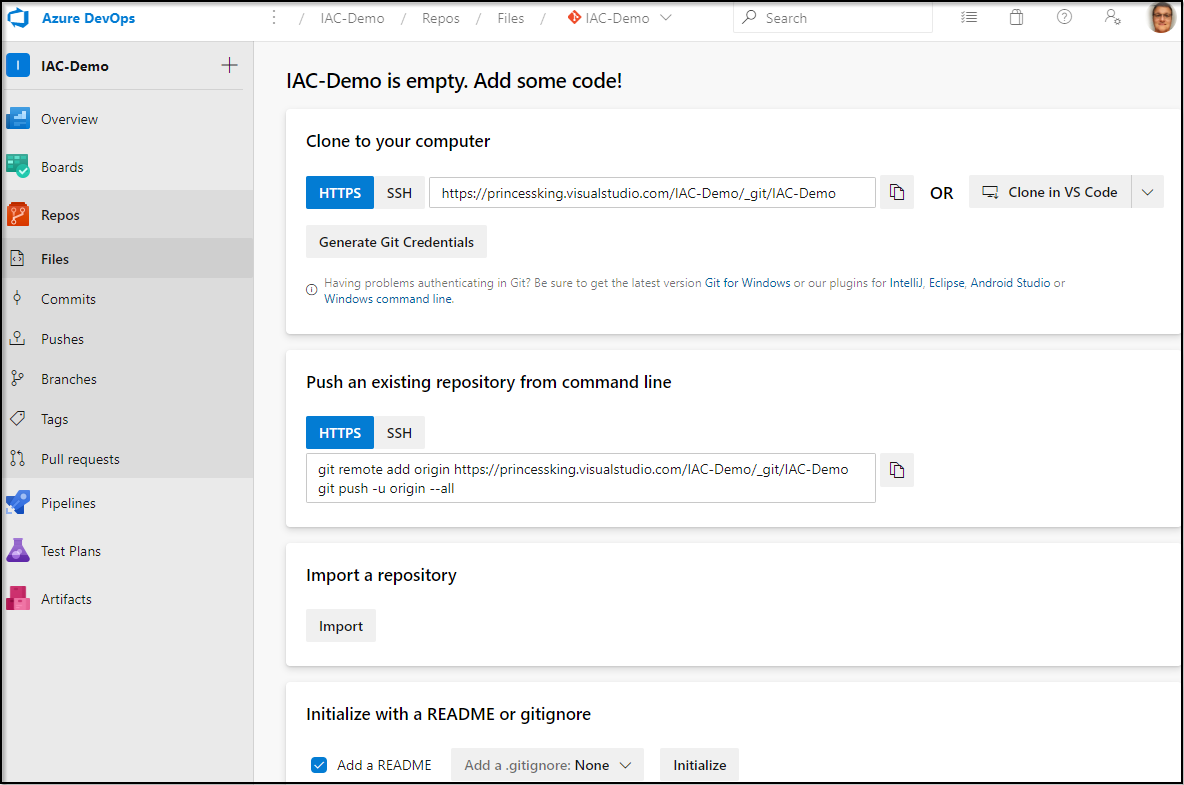

GitOps

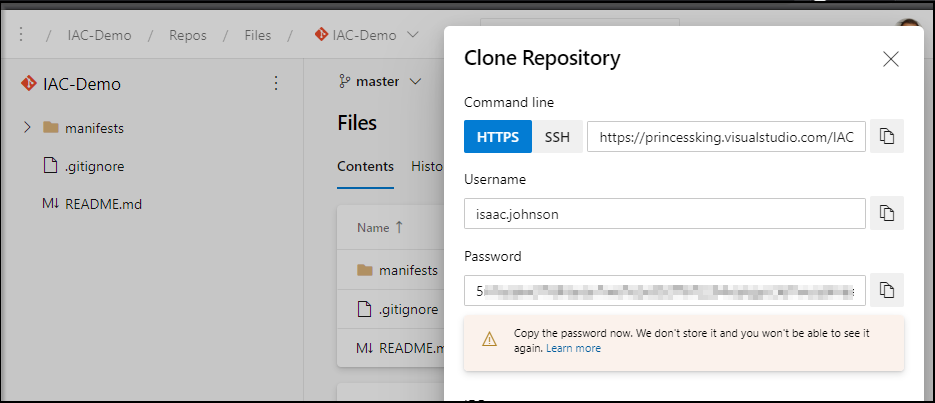

First, we need a repo to store our YAML/Helm charts. We can create one in Azure Repos: https://princessking.visualstudio.com/_git/IAC-Demo

I’ll then initialize it with a README.md and create new Git credentials:

We’ll clone the repo, then add a directory for manifests. I could create a simple app, but I’ll just borrow from Azure’s Hello World Voting app and put that YAML in my manifests folder.

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo$ mkdir manifests

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo$ cd manifests/

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo/manifests$ curl -O https://raw.githubusercontent.com/Azure-Samples/azure-voting-app-redis/master/azure-vote-all-in-one-redis.yaml

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 100 1405 100 1405 0 0 9493 0 --:--:-- --:--:-- --:--:-- 9493

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo/manifests$ cat azure-vote-all-in-one-redis.yaml | tail -n5

type: LoadBalancer

ports:

- port: 80

selector:

app: azure-vote-front

We can now launch a configuration that deploys the YAMLs in manifests to the default namespace. Note that I have NOT yet pushed the manifests folder, so there is nothing to deploy:

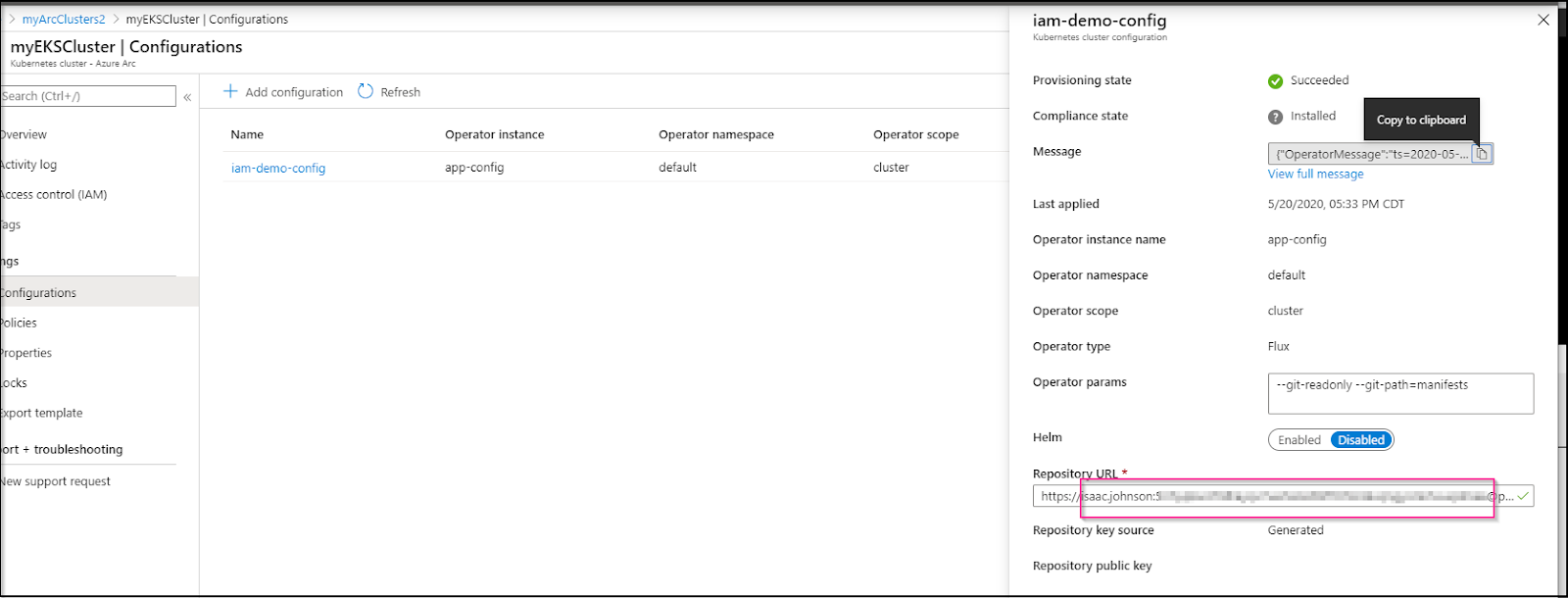

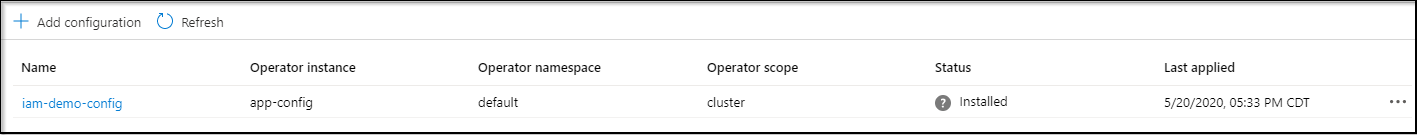

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo/manifests$ az k8sconfiguration create --name iam-demo-config --cluster-name myEKSCluster --resource-group myArcClusters2 --operator-instance-name app-config --operator-namespace default --repository-url https://princessking.visualstudio.com/IAC-Demo/_git/IAC-Demo --cluster-type connectedClusters --scope cluster --operator-params="--git-readonly --git-path=manifests"

Command group 'k8sconfiguration' is in preview. It may be changed/removed in a future release.

{

"complianceStatus": {

"complianceState": "Pending",

"lastConfigApplied": "0001-01-01T00:00:00",

"message": "{\"OperatorMessage\":null,\"ClusterState\":null}",

"messageLevel": "3"

},

"enableHelmOperator": "False",

"helmOperatorProperties": null,

"id": "/subscriptions/70b42e6a-asdf-asdf-asdf-9f3995b1aca8/resourceGroups/myArcClusters2/providers/Microsoft.Kubernetes/connectedClusters/myEKSCluster/providers/Microsoft.KubernetesConfiguration/sourceControlConfigurations/iam-demo-config",

"name": "iam-demo-config",

"operatorInstanceName": "app-config",

"operatorNamespace": "default",

"operatorParams": "--git-readonly --git-path=manifests",

"operatorScope": "cluster",

"operatorType": "Flux",

"provisioningState": "Succeeded",

"repositoryPublicKey": "",

"repositoryUrl": "https://princessking.visualstudio.com/IAC-Demo/_git/IAC-Demo",

"resourceGroup": "myArcClusters2",

"type": "Microsoft.KubernetesConfiguration/sourceControlConfigurations"

}
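The create call returns while complianceState is still "Pending". To watch for it leaving that state from a script, something like the sketch below could work. wait_while_pending is a hypothetical helper built on az k8sconfiguration show, and it deliberately does not assume which state name follows Pending. It just prints whatever comes back.

```shell
# wait_while_pending CONFIG_NAME CLUSTER_NAME RESOURCE_GROUP [ATTEMPTS] [DELAY_SECONDS]
# Hypothetical helper: polls the configuration until complianceState is
# anything other than "Pending", then prints that state.
wait_while_pending() {
  _wwp_tries="${4:-30}"   # assumed default: 30 polls
  _wwp_delay="${5:-10}"   # assumed default: 10 seconds between polls
  _wwp_i=1
  while [ "$_wwp_i" -le "$_wwp_tries" ]; do
    _wwp_state="$(az k8sconfiguration show --name "$1" --cluster-name "$2" \
      --resource-group "$3" --cluster-type connectedClusters \
      --query complianceStatus.complianceState -o tsv)"
    if [ "$_wwp_state" != "Pending" ]; then
      echo "$_wwp_state"
      return 0
    fi
    sleep "$_wwp_delay"
    _wwp_i=$((_wwp_i + 1))
  done
  echo "Pending"
  return 1
}

# Example (not run here):
#   wait_while_pending iam-demo-config myEKSCluster myArcClusters2
```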


And we see a deployment launched in EKS:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ kubectl get deployments --all-namespaces

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE

azure-arc cluster-metadata-operator 1/1 1 1 50m

azure-arc clusteridentityoperator 1/1 1 1 50m

azure-arc config-agent 1/1 1 1 50m

azure-arc controller-manager 1/1 1 1 50m

azure-arc flux-logs-agent 1/1 1 1 50m

azure-arc metrics-agent 1/1 1 1 50m

azure-arc resource-sync-agent 1/1 1 1 50m

default app-config 0/1 1 0 4s

default memcached 0/1 1 0 4s

kube-system coredns 2/2 2 2 125m

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ kubectl describe deployment app-config

Name: app-config

Namespace: default

CreationTimestamp: Wed, 20 May 2020 12:12:10 -0500

Labels: <none>

Annotations: deployment.kubernetes.io/revision: 1

Selector: instanceName=app-config,name=flux

Replicas: 1 desired | 1 updated | 1 total | 1 available | 0 unavailable

StrategyType: Recreate

MinReadySeconds: 0

Pod Template:

Labels: instanceName=app-config

name=flux

Annotations: prometheus.io/port: 3031

Service Account: app-config

Containers:

flux:

Image: docker.io/fluxcd/flux:1.18.0

Port: 3030/TCP

Host Port: 0/TCP

Args:

--k8s-secret-name=app-config-git-deploy

--memcached-service=

--ssh-keygen-dir=/var/fluxd/keygen

--git-url=https://princessking.visualstudio.com/IAC-Demo/_git/IAC-Demo

--git-branch=master

--git-path=manifests

--git-label=flux

--git-user=Flux

--git-readonly

--connect=ws://flux-logs-agent.azure-arc/default/iam-demo-config

--listen-metrics=:3031

Requests:

cpu: 50m

memory: 64Mi

Liveness: http-get http://:3030/api/flux/v6/identity.pub delay=5s timeout=5s period=10s #success=1 #failure=3

Readiness: http-get http://:3030/api/flux/v6/identity.pub delay=5s timeout=5s period=10s #success=1 #failure=3

Environment: <none>

Mounts:

/etc/fluxd/ssh from git-key (ro)

/var/fluxd/keygen from git-keygen (rw)

Volumes:

git-key:

Type: Secret (a volume populated by a Secret)

SecretName: app-config-git-deploy

Optional: false

git-keygen:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium: Memory

SizeLimit: <unset>

Conditions:

Type Status Reason

---- ------ ------

Available True MinimumReplicasAvailable

Progressing True NewReplicaSetAvailable

OldReplicaSets: <none>

NewReplicaSet: app-config-768cc4fc89 (1/1 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 18s deployment-controller Scaled up replica set app-config-768cc4fc89 to 1

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ kubectl get pods

NAME READY STATUS RESTARTS AGE

app-config-768cc4fc89-f5n66 1/1 Running 0 45s

memcached-86869f57fd-r2kgj 1/1 Running 0 45s

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ kubectl describe app-config-768cc4fc89-f5n66

error: the server doesn't have a resource type "app-config-768cc4fc89-f5n66"

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ kubectl describe pod app-config-768cc4fc89-f5n66

Name: app-config-768cc4fc89-f5n66

Namespace: default

Priority: 0

Node: ip-10-0-82-16.us-east-2.compute.internal/10.0.82.16

Start Time: Wed, 20 May 2020 12:12:10 -0500

Labels: instanceName=app-config

name=flux

pod-template-hash=768cc4fc89

Annotations: kubernetes.io/psp: eks.privileged

prometheus.io/port: 3031

Status: Running

IP: 10.0.84.119

Controlled By: ReplicaSet/app-config-768cc4fc89

Containers:

flux:

Container ID: docker://d638ec677da94ca4d4c32fcd5ab9e35b390d33fa433b350976152f744f105244

Image: docker.io/fluxcd/flux:1.18.0

Image ID: docker-pullable://fluxcd/flux@sha256:8fcf24dccd7774b87a33d87e42fa0d9233b5c11481c8414fe93a8bdc870b4f5b

Port: 3030/TCP

Host Port: 0/TCP

Args:

--k8s-secret-name=app-config-git-deploy

--memcached-service=

--ssh-keygen-dir=/var/fluxd/keygen

--git-url=https://princessking.visualstudio.com/IAC-Demo/_git/IAC-Demo

--git-branch=master

--git-path=manifests

--git-label=flux

--git-user=Flux

--git-readonly

--connect=ws://flux-logs-agent.azure-arc/default/iam-demo-config

--listen-metrics=:3031

State: Running

Started: Wed, 20 May 2020 12:12:18 -0500

Ready: True

Restart Count: 0

Requests:

cpu: 50m

memory: 64Mi

Liveness: http-get http://:3030/api/flux/v6/identity.pub delay=5s timeout=5s period=10s #success=1 #failure=3

Readiness: http-get http://:3030/api/flux/v6/identity.pub delay=5s timeout=5s period=10s #success=1 #failure=3

Environment: <none>

Mounts:

/etc/fluxd/ssh from git-key (ro)

/var/fluxd/keygen from git-keygen (rw)

/var/run/secrets/kubernetes.io/serviceaccount from app-config-token-rpzr5 (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

git-key:

Type: Secret (a volume populated by a Secret)

SecretName: app-config-git-deploy

Optional: false

git-keygen:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium: Memory

SizeLimit: <unset>

app-config-token-rpzr5:

Type: Secret (a volume populated by a Secret)

SecretName: app-config-token-rpzr5

Optional: false

QoS Class: Burstable

Node-Selectors: beta.kubernetes.io/os=linux

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 63s default-scheduler Successfully assigned default/app-config-768cc4fc89-f5n66 to ip-10-0-82-16.us-east-2.compute.internal

Normal Pulling 62s kubelet, ip-10-0-82-16.us-east-2.compute.internal Pulling image "docker.io/fluxcd/flux:1.18.0"

Normal Pulled 55s kubelet, ip-10-0-82-16.us-east-2.compute.internal Successfully pulled image "docker.io/fluxcd/flux:1.18.0"

Normal Created 55s kubelet, ip-10-0-82-16.us-east-2.compute.internal Created container flux

Normal Started 55s kubelet, ip-10-0-82-16.us-east-2.compute.internal Started container flux

Let’s push it:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo$ git add manifests/

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo$ git status

On branch master

Your branch is up to date with 'origin/master'.

Changes to be committed:

(use "git reset HEAD <file>..." to unstage)

new file: manifests/azure-vote-all-in-one-redis.yaml

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo$ git commit -m "push changes for a redis app"

[master f9f49cc] push changes for a redis app

1 file changed, 78 insertions(+)

create mode 100644 manifests/azure-vote-all-in-one-redis.yaml

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo$ git push

Counting objects: 4, done.

Delta compression using up to 8 threads.

Compressing objects: 100% (4/4), done.

Writing objects: 100% (4/4), 815 bytes | 815.00 KiB/s, done.

Total 4 (delta 0), reused 0 (delta 0)

remote: Analyzing objects... (4/4) (41 ms)

remote: Storing packfile... done (143 ms)

remote: Storing index... done (51 ms)

remote: We noticed you're using an older version of Git. For the best experience, upgrade to a newer version.

To https://princessking.visualstudio.com/IAC-Demo/_git/IAC-Demo

d11c1e8..f9f49cc master -> master

However, I did not see it change. That’s when I realized I needed to pack the credentials into the URL:

Once saved, I saw it start to update:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ kubectl get pods

NAME READY STATUS RESTARTS AGE

app-config-8c4b5fd6f-jj995 1/1 Running 0 13s

azure-vote-back-5775d78ff5-s2mh9 0/1 ContainerCreating 0 4s

azure-vote-front-559d85d4f7-jhb84 0/1 ContainerCreating 0 4s

memcached-86869f57fd-r2kgj 1/1 Running 0 20m
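Packing credentials into the repository URL means building a https://user:token@host/path string. A minimal sketch of that is below. Both helpers are hypothetical, and the percent-encoding only matters when the user name or token contains characters such as @ or :, and it assumes ASCII input.

```shell
# urlencode STRING
# Hypothetical helper: percent-encodes everything except unreserved
# characters (letters, digits, '.', '_', '~', '-'). ASCII input assumed.
urlencode() {
  _ue_in="$1"
  _ue_out=""
  while [ -n "$_ue_in" ]; do
    _ue_c="${_ue_in%"${_ue_in#?}"}"   # first character
    _ue_in="${_ue_in#?}"              # remainder
    case "$_ue_c" in
      [A-Za-z0-9._~-]) _ue_out="$_ue_out$_ue_c" ;;
      *) _ue_out="$_ue_out$(printf '%%%02X' "'$_ue_c")" ;;
    esac
  done
  printf '%s\n' "$_ue_out"
}

# repo_url_with_creds USER TOKEN HOST PATH
# Hypothetical helper: prints https://USER:TOKEN@HOST/PATH with USER and
# TOKEN percent-encoded.
repo_url_with_creds() {
  printf 'https://%s:%s@%s/%s\n' "$(urlencode "$1")" "$(urlencode "$2")" "$3" "$4"
}

# Example (not run here; GIT_TOKEN is a placeholder variable):
#   repo_url_with_creds isaac.johnson "$GIT_TOKEN" \
#     princessking.visualstudio.com IAC-Demo/_git/IAC-Demo
```

One thing to keep in mind with this approach: the full URL, token included, is stored on the configuration and echoed back in the repositoryUrl field of the create output.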

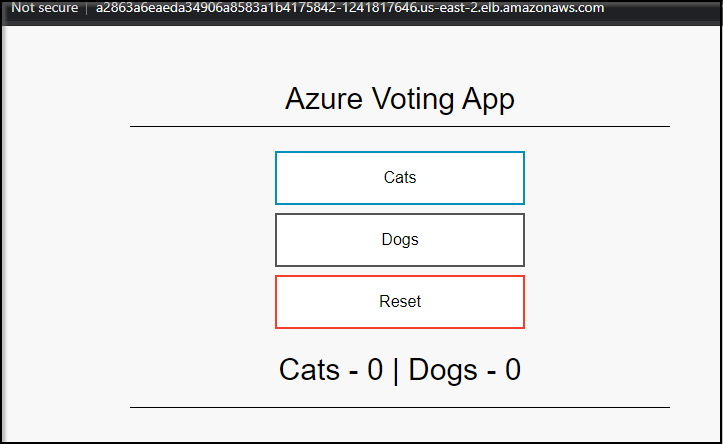

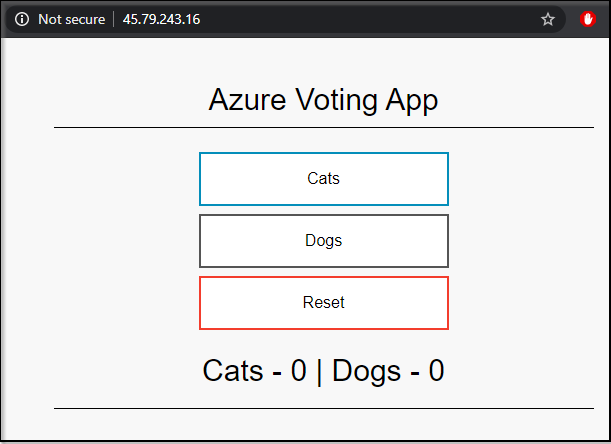

And we can see it launched:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

azure-vote-back ClusterIP 172.20.46.228 <none> 6379/TCP 6m55s

azure-vote-front LoadBalancer 172.20.208.101 a2863a6eaeda34906a8583a1b4175842-1241817646.us-east-2.elb.amazonaws.com 80:30262/TCP 6m55s

kubernetes ClusterIP 172.20.0.1 <none> 443/TCP 153m

memcached ClusterIP 172.20.171.75 <none> 11211/TCP 27m

Let’s then launch the same app on LKE:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo$ az k8sconfiguration create --name iam-demo-config --cluster-name myLKECluster --resource-group myArcClusters2 --operator-instance-name app-config --operator-namespace default --repository-url https://isaac.johnson:***********************************@princessking.visualstudio.com/IAC-Demo/_git/IAC-Demo --cluster-type connectedClusters --scope cluster --operator-params="--git-readonly --git-path=manifests"

Command group 'k8sconfiguration' is in preview. It may be changed/removed in a future release.

{

"complianceStatus": {

"complianceState": "Pending",

"lastConfigApplied": "0001-01-01T00:00:00",

"message": "{\"OperatorMessage\":null,\"ClusterState\":null}",

"messageLevel": "3"

},

"enableHelmOperator": "False",

"helmOperatorProperties": null,

"id": "/subscriptions/70b42e6a-asdf-asdf-asdf-9f3995b1aca8/resourceGroups/myArcClusters2/providers/Microsoft.Kubernetes/connectedClusters/myLKECluster/providers/Microsoft.KubernetesConfiguration/sourceControlConfigurations/iam-demo-config",

"name": "iam-demo-config",

"operatorInstanceName": "app-config",

"operatorNamespace": "default",

"operatorParams": "--git-readonly --git-path=manifests",

"operatorScope": "cluster",

"operatorType": "Flux",

"provisioningState": "Succeeded",

"repositoryPublicKey": "",

"repositoryUrl": "https://isaac.johnson:abcdef12345abcdef12345abcdef12345abcdef12345@princessking.visualstudio.com/IAC-Demo/_git/IAC-Demo",

"resourceGroup": "myArcClusters2",

"type": "Microsoft.KubernetesConfiguration/sourceControlConfigurations"

}

Verification:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo$ !1675

linode-cli lke kubeconfig-view 5193 > t.o && cat t.o | tail -n2 | head -n1 | sed 's/.\{8\}$//' | sed 's/^.\{8\}//' | base64 --decode > ~/.kube/config

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

azure-vote-back ClusterIP 10.128.43.37 <none> 6379/TCP 2m

azure-vote-front LoadBalancer 10.128.85.35 45.79.243.16 80:32408/TCP 2m

kubernetes ClusterIP 10.128.0.1 <none> 443/TCP 160m

memcached ClusterIP 10.128.82.70 <none> 11211/TCP 2m24s

Updating

Let’s now change the values:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo/manifests$ git diff

diff --git a/manifests/azure-vote-all-in-one-redis.yaml b/manifests/azure-vote-all-in-one-redis.yaml

index 80a8edb..d64cfce 100644

--- a/manifests/azure-vote-all-in-one-redis.yaml

+++ b/manifests/azure-vote-all-in-one-redis.yaml

@@ -20,6 +20,9 @@ spec:

ports:

- containerPort: 6379

name: redis

+ env:

+ - name: VOTE1VALUE

+ value: "Elephants"

---

apiVersion: v1

kind: Service

@@ -65,6 +68,8 @@ spec:

env:

- name: REDIS

value: "azure-vote-back"

+ - name: VOTE1VALUE

+ value: "Elephants"

---

apiVersion: v1

kind: Service

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo/manifests$ git add azure-vote-all-in-one-redis.yaml

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo/manifests$ git commit -m "Change the Cats option to Elephants"

[master 46cdb15] Change the Cats option to Elephants

1 file changed, 5 insertions(+)

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo/manifests$ git push

Counting objects: 4, done.

Delta compression using up to 8 threads.

Compressing objects: 100% (4/4), done.

Writing objects: 100% (4/4), 430 bytes | 430.00 KiB/s, done.

Total 4 (delta 2), reused 0 (delta 0)

remote: Analyzing objects... (4/4) (169 ms)

remote: Storing packfile... done (175 ms)

remote: Storing index... done (204 ms)

remote: We noticed you're using an older version of Git. For the best experience, upgrade to a newer version.

To https://princessking.visualstudio.com/IAC-Demo/_git/IAC-Demo

f9f49cc..46cdb15 master -> master

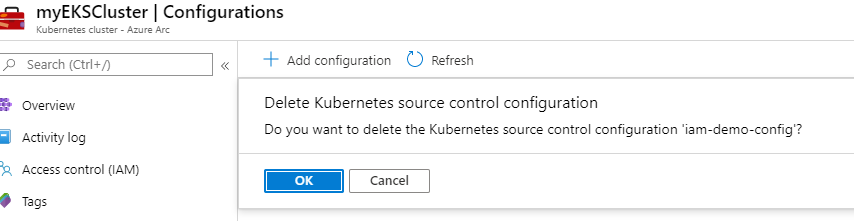

Deleting

I watched for a while, but deleting the configuration did not remove the deployments it had created:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo$ kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

app-config 1/1 1 1 52m

azure-vote-back 1/1 1 1 32m

azure-vote-front 1/1 1 1 32m

memcached 1/1 1 1 52m

What happens when we relaunch?

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo$ az k8sconfiguration create --name iam-demo-config --cluster-name myEKSCluster --resource-group myArcClusters2 --operator-instance-name app-config --operator-namespace default --repository-url https://isaac.johnson:abcdef12345abcdef12345abcdef12345abcdef12345@princessking.visualstudio.com/IAC-Demo/_git/IAC-Demo --cluster-type connectedClusters --scope cluster --operator-params="--git-path=manifests"

Command group 'k8sconfiguration' is in preview. It may be changed/removed in a future release.

{

"complianceStatus": {

"complianceState": "Pending",

"lastConfigApplied": "0001-01-01T00:00:00",

"message": "{\"OperatorMessage\":null,\"ClusterState\":null}",

"messageLevel": "3"

},

"enableHelmOperator": "False",

"helmOperatorProperties": null,

"id": "/subscriptions/70b42e6a-asdf-asdf-asdf-9f3995b1aca8/resourceGroups/myArcClusters2/providers/Microsoft.Kubernetes/connectedClusters/myEKSCluster/providers/Microsoft.KubernetesConfiguration/sourceControlConfigurations/iam-demo-config",

"name": "iam-demo-config",

"operatorInstanceName": "app-config",

"operatorNamespace": "default",

"operatorParams": "--git-readonly --git-path=manifests",

"operatorScope": "cluster",

"operatorType": "Flux",

"provisioningState": "Succeeded",

"repositoryPublicKey": "",

"repositoryUrl": "https://isaac.johnson:abcdef12345abcdef12345abcdef12345abcdef12345@princessking.visualstudio.com/IAC-Demo/_git/IAC-Demo",

"resourceGroup": "myArcClusters2",

"type": "Microsoft.KubernetesConfiguration/sourceControlConfigurations"

}

Within moments, I did see the former deployment get removed:

Every 2.0s: kubectl get deployments DESKTOP-JBA79RT: Wed May 20 13:08:36 2020

NAME READY UP-TO-DATE AVAILABLE AGE

azure-vote-back 1/1 1 1 36m

azure-vote-front 1/1 1 1 36m

I noticed an error in the portal:

{"OperatorMessage":"unable to add the configuration with configId {iam-demo-config} due to error: {error while adding the CRD configuration: error {object is being deleted: gitconfigs.clusterconfig.azure.com \"iam-demo-config\" already exists}}","ClusterState":"Failed","LastGitCommitInformation":"","ErrorsInTheLastSynced":""}

Every 2.0s: kubectl get deployments DESKTOP-JBA79RT: Wed May 20 13:09:36 2020

NAME READY UP-TO-DATE AVAILABLE AGE

app-config 1/1 1 1 31s

azure-vote-back 1/1 1 1 37m

azure-vote-front 1/1 1 1 37m

memcached 1/1 1 1 31s

It seemed that while it did clean up the old deployments, it did not relaunch the app and got hung up on the --git-readonly flag.

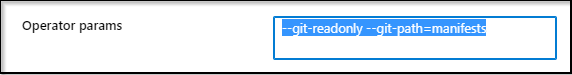

No matter what, it keeps that flag in there. I recreated the configuration in new namespaces and made sure I only set --operator-params="--git-path=manifests", but Azure still shows "--git-readonly --git-path=manifests".

I tried new namespaces, deleting deployments, and updating the Git token and applying it. Nothing seemed to relaunch. This is in preview, so I would expect these issues to get sorted out.
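The portal error ("object is being deleted ... already exists") reads like the recreate raced the cleanup of the old in-cluster object. One thing that might help, and this is a guess rather than something I confirmed, is to wait for the old gitconfigs object to disappear before creating the configuration again. The helper name below is hypothetical. The resource name gitconfigs.clusterconfig.azure.com is taken from the error message.

```shell
# wait_for_gitconfig_gone CONFIG_NAME [ATTEMPTS] [DELAY_SECONDS]
# Hypothetical helper: polls the cluster until no gitconfigs object named
# CONFIG_NAME is listed in any namespace.
wait_for_gitconfig_gone() {
  _wgg_tries="${2:-30}"   # assumed default: 30 polls
  _wgg_delay="${3:-10}"   # assumed default: 10 seconds between polls
  _wgg_i=1
  while [ "$_wgg_i" -le "$_wgg_tries" ]; do
    # '-o name' prints one "<kind>.<group>/<name>" per line.
    if ! kubectl get gitconfigs.clusterconfig.azure.com --all-namespaces -o name 2>/dev/null |
      grep -q "/$1\$"; then
      return 0
    fi
    sleep "$_wgg_delay"
    _wgg_i=$((_wgg_i + 1))
  done
  return 1
}

# Example (not run here):
#   az k8sconfiguration delete --name iam-demo-config --cluster-name myEKSCluster \
#     --resource-group myArcClusters2 --cluster-type connectedClusters
#   wait_for_gitconfig_gone iam-demo-config && echo "safe to recreate"
```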

Slow… eventually it came back:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo$ kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

azure-arc cluster-metadata-operator-57fc656db4-zdf5p 2/2 Running 0 157m

azure-arc clusteridentityoperator-7598664f64-5cvk2 3/3 Running 0 157m

azure-arc config-agent-798648fbd5-g74gb 3/3 Running 0 157m

azure-arc controller-manager-64985bcb6b-7kwl9 3/3 Running 0 157m

azure-arc flux-logs-agent-6f8fdb7d58-4hbmc 2/2 Running 0 157m

azure-arc metrics-agent-5f55ccddfd-pmcc8 2/2 Running 0 157m

azure-arc resource-sync-agent-95c997779-rnpf8 3/3 Running 0 157m

default app-config-55c7974448-rwdkb 1/1 Running 0 15m

default azure-vote-back-567c66c8d8-5xqp8 1/1 Running 0 30s

default azure-vote-front-fd7d8d4d7-wvhnx 1/1 Running 0 30s

default memcached-86869f57fd-t7g5k 1/1 Running 0 49m

Indeed, I can verify that I passed in the SHOWHOST var:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo$ kubectl describe pod azure-vote-front-fd7d8d4d7-wvhnx

Name: azure-vote-front-fd7d8d4d7-wvhnx

Namespace: default

Priority: 0

Node: ip-10-0-61-98.us-east-2.compute.internal/10.0.61.98

Start Time: Wed, 20 May 2020 13:58:23 -0500

Labels: app=azure-vote-front

pod-template-hash=fd7d8d4d7

Annotations: kubernetes.io/psp: eks.privileged

Status: Running

IP: 10.0.52.147

Controlled By: ReplicaSet/azure-vote-front-fd7d8d4d7

Containers:

azure-vote-front:

Container ID: docker://41ddabfcab73e3e4d9c1e8e0d78aac5427cdf91df3d8fc56167b3e55e3fe71a1

Image: microsoft/azure-vote-front:v1

Image ID: docker-pullable://microsoft/azure-vote-front@sha256:9ace3ce43db1505091c11d15edce7b520cfb598d38402be254a3024146920859

Port: 80/TCP

Host Port: 0/TCP

State: Running

Started: Wed, 20 May 2020 13:58:24 -0500

Ready: True

Restart Count: 0

Limits:

cpu: 500m

Requests:

cpu: 250m

Environment:

REDIS: azure-vote-back

SHOWHOST: true

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-7c4vq (ro)
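When only the environment variables matter, the full describe output is more than is needed. As a minimal sketch (describe_env is a hypothetical helper, not part of kubectl), the Environment block can be filtered out of piped or saved `kubectl describe pod` output. It assumes the section is introduced by an "Environment:" line and ends at the "Mounts:" line, as in the output above:

```shell
# describe_env: read `kubectl describe pod` output on stdin and print only
# the entries listed under "Environment:" (hypothetical helper).
describe_env() {
  awk '
    /^[ \t]*Environment:/ { in_env = 1; next }   # entries start after this header
    /^[ \t]*Mounts:/      { in_env = 0 }         # the next section ends the block
    in_env && NF          { $1 = $1; print }     # collapse whitespace, then print
  '
}

# Usage (needs access to the cluster):
#   kubectl describe pod azure-vote-front-fd7d8d4d7-wvhnx | describe_env
```

For a pod with several containers this prints the variables of all of them one after another, so it is best suited to quick checks like this one.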

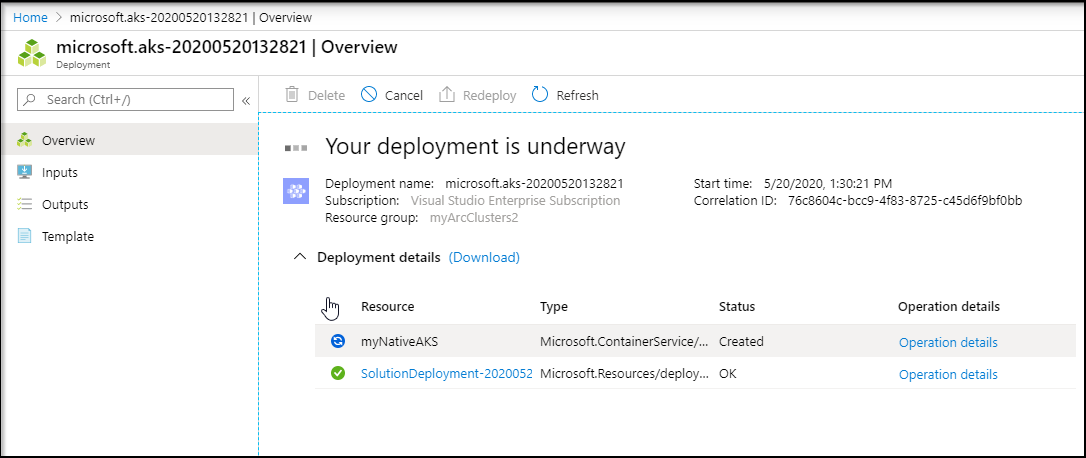

Let’s make an AKS cluster in the same RG:

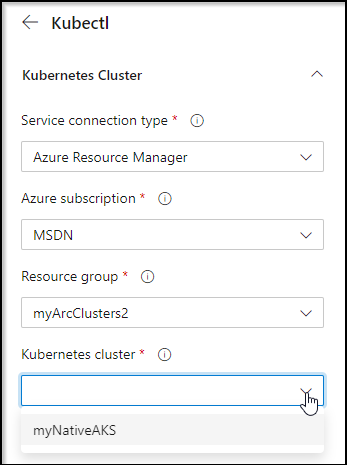

I then created a new YAML pipeline and verified that, indeed, I cannot see Arc clusters listed via ARM connections:

One last check. I added another helloworld yaml (different app).

version: v2

spec:
  replicas: 1
  selector:
    matchLabels:
      app: helloworld
      version: v2
  template:
    metadata:
      labels:
        app: helloworld
        version: v2
    spec:
      containers:
      - name: helloworld
        image: docker.io/istio/examples-helloworld-v2
        resources:
          requests:
            cpu: "100m"
        imagePullPolicy: IfNotPresent #Always
        ports:
        - containerPort: 5000

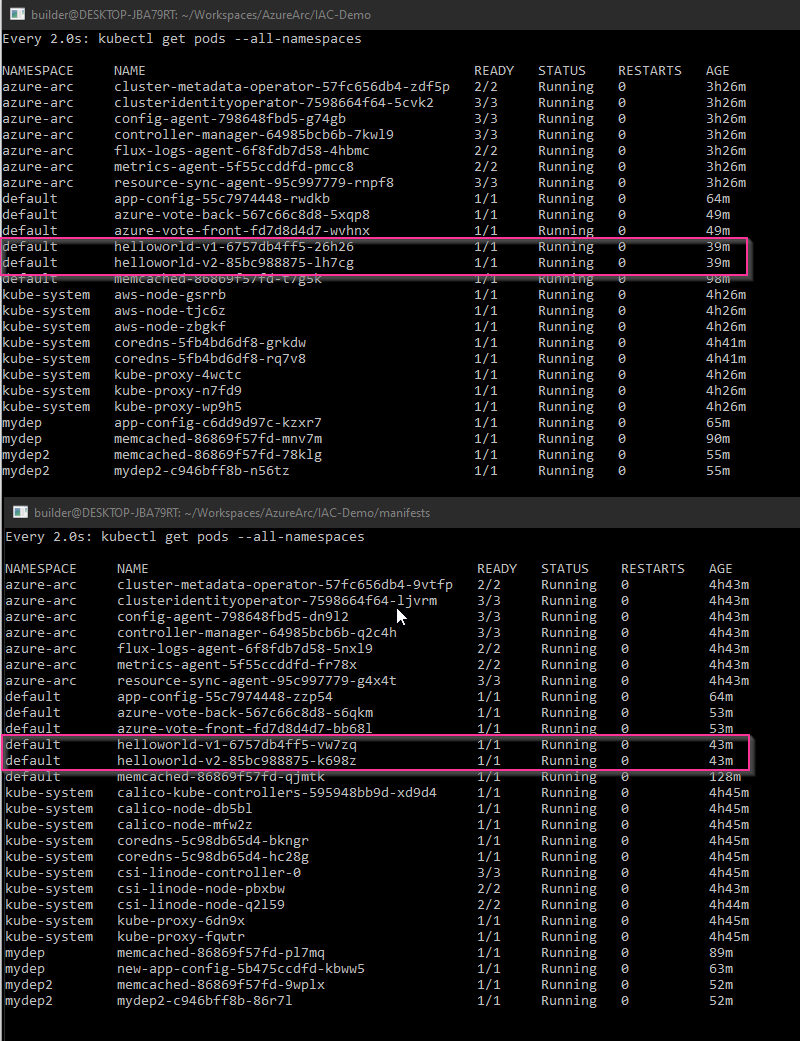

I saw that it was indeed deployed, albeit to the first configuration we had applied:

EKS:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo/manifests$ kubectl get pods | grep hello

helloworld-v1-6757db4ff5-26h26 1/1 Running 0 23m

helloworld-v2-85bc988875-lh7cg 1/1 Running 0 23m

LKE:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo/manifests$ kubectl get pods --all-namespaces | grep hello

default helloworld-v1-6757db4ff5-vw7zq 1/1 Running 0 27m

default helloworld-v2-85bc988875-k698z 1/1 Running 0 27m
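Running the same check against each cluster by hand gets repetitive. A rough sketch of looping over kubeconfig contexts follows; pods_matching is a hypothetical helper and the context names in the usage line are placeholders, since the real context names are not shown in this post:

```shell
# pods_matching PATTERN CONTEXT...
# For each kubeconfig context, list pods in all namespaces whose line
# matches PATTERN (hypothetical helper).
pods_matching() {
  pattern=$1
  shift
  for ctx in "$@"; do
    echo "== $ctx =="
    # grep exits non-zero when nothing matches; report that instead of failing
    kubectl --context "$ctx" get pods --all-namespaces |
      grep -- "$pattern" || echo "(no matching pods)"
  done
}

# Usage (context names are placeholders):
#   pods_matching hello my-eks-context my-lke-context
```

Using --context keeps the current context untouched, so the check does not change which cluster later kubectl commands talk to.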

I then removed the helloworld manifest from the manifests folder by renaming it to helloworld.md at the repo root:

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo$ git commit -m "remove helloworld"

[master 2c12de4] remove helloworld

1 file changed, 1 insertion(+), 1 deletion(-)

rename manifests/helloworld.yaml => helloworld.md (98%)

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo$ git push

Counting objects: 4, done.

Delta compression using up to 8 threads.

Compressing objects: 100% (4/4), done.

Writing objects: 100% (4/4), 704 bytes | 704.00 KiB/s, done.

Total 4 (delta 1), reused 0 (delta 0)

remote: Analyzing objects... (4/4) (189 ms)

remote: Storing packfile... done (252 ms)

remote: Storing index... done (92 ms)

remote: We noticed you're using an older version of Git. For the best experience, upgrade to a newer version.

To https://princessking.visualstudio.com/IAC-Demo/_git/IAC-Demo

5b88894..2c12de4 master -> master

However, it was not removed from the EKS or LKE clusters.

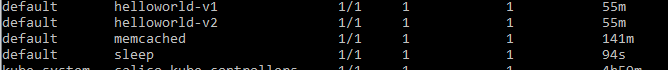

I did one more test. I added a sleep deployment to ensure content was still getting pushed. Within about 4 minutes on both clusters, I could see the sleep deployment but, importantly, the helloworld deployments still existed.

So it’s clearly additive (i.e. kubectl create) and not deleting anything.
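Since nothing is pruned, leftovers have to be found by hand. The --git-readonly and --git-path parameters are Flux daemon flags, and Flux also has a --sync-garbage-collection flag that is off by default, which may explain this behaviour; whether this preview accepts it in --operator-params was not tested here. In the meantime, a minimal sketch for spotting orphans is to compare a list of names running in the cluster with a list of names still defined in the repo. The helper orphaned_names, the file names, and the sample data are all illustrative assumptions:

```shell
# orphaned_names CLUSTER_FILE REPO_FILE
# Print names listed in CLUSTER_FILE that do not appear in REPO_FILE.
# Both files hold one resource name per line (hypothetical inputs).
orphaned_names() {
  # -F fixed strings, -x whole-line match, -v invert, -f read patterns from file
  grep -vxFf "$2" "$1" | sort
}

# Illustrative data only, loosely based on the names seen in this post.
workdir=$(mktemp -d)
printf '%s\n' azure-vote-back azure-vote-front helloworld-v1 helloworld-v2 sleep > "$workdir/cluster.txt"
printf '%s\n' azure-vote-back azure-vote-front sleep > "$workdir/repo.txt"

# Lists the two helloworld deployments as no longer backed by the repo.
orphaned_names "$workdir/cluster.txt" "$workdir/repo.txt"
rm -rf "$workdir"
```

The cluster-side list could come from something like `kubectl get deployments -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}{end}'`. How the repo-side list is built depends on how the manifests are laid out.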

Cleanup

LKE

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo/manifests$ linode-cli lke clusters-list

┌──────┬───────────┬────────────┐

│ id │ label │ region │

├──────┼───────────┼────────────┤

│ 5193 │ mycluster │ us-central │

└──────┴───────────┴────────────┘

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo/manifests$ linode-cli lke cluster-delete 5193

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo/manifests$ linode-cli lke clusters-list

┌────┬───────┬────────┐

│ id │ label │ region │

└────┴───────┴────────┘

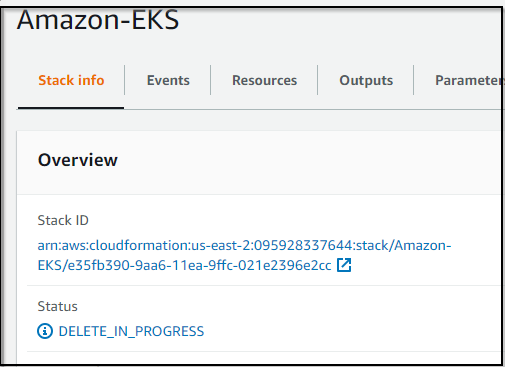

EKS

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo/manifests$ aws cloudformation list-stacks --region us-east-2 | grep Amazon-EKS

"StackId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS-EKSStack-SDZCUE9193SC-BastionStack-75ZZA7D9Y8VZ/5df70170-9aad-11ea-aa5c-02582149edd0",

"StackName": "Amazon-EKS-EKSStack-SDZCUE9193SC-BastionStack-75ZZA7D9Y8VZ",

"ParentId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS-EKSStack-SDZCUE9193SC/7ab4d9f0-9aa7-11ea-81b8-064e3beebd9c",

"RootId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS/e35fb390-9aa6-11ea-9ffc-021e2396e2cc",

"StackId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS-EKSStack-SDZCUE9193SC-NodeGroupStack-14OM4SDFRQ1HZ/5dd48550-9aad-11ea-856e-063c30684966",

"StackName": "Amazon-EKS-EKSStack-SDZCUE9193SC-NodeGroupStack-14OM4SDFRQ1HZ",

"ParentId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS-EKSStack-SDZCUE9193SC/7ab4d9f0-9aa7-11ea-81b8-064e3beebd9c",

"RootId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS/e35fb390-9aa6-11ea-9ffc-021e2396e2cc",

"StackId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS-EKSStack-SDZCUE9193SC-EKSControlPlane-RLECTP00VA5Z/4c73eb70-9aa8-11ea-bc6c-026789fff09e",

"StackName": "Amazon-EKS-EKSStack-SDZCUE9193SC-EKSControlPlane-RLECTP00VA5Z",

"ParentId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS-EKSStack-SDZCUE9193SC/7ab4d9f0-9aa7-11ea-81b8-064e3beebd9c",

"RootId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS/e35fb390-9aa6-11ea-9ffc-021e2396e2cc",

"StackId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS-EKSStack-SDZCUE9193SC-FunctionStack-J0MM85F3VKX3/ec211b30-9aa7-11ea-9fe1-0696b95a38c4",

"StackName": "Amazon-EKS-EKSStack-SDZCUE9193SC-FunctionStack-J0MM85F3VKX3",

"ParentId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS-EKSStack-SDZCUE9193SC/7ab4d9f0-9aa7-11ea-81b8-064e3beebd9c",

"RootId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS/e35fb390-9aa6-11ea-9ffc-021e2396e2cc",

"StackId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS-EKSStack-SDZCUE9193SC-IamStack-144D49GJXUSOB/8bb803d0-9aa7-11ea-a843-0a9fc5c4303c",

"StackName": "Amazon-EKS-EKSStack-SDZCUE9193SC-IamStack-144D49GJXUSOB",

"ParentId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS-EKSStack-SDZCUE9193SC/7ab4d9f0-9aa7-11ea-81b8-064e3beebd9c",

"RootId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS/e35fb390-9aa6-11ea-9ffc-021e2396e2cc",

"StackId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS-EKSStack-SDZCUE9193SC/7ab4d9f0-9aa7-11ea-81b8-064e3beebd9c",

"StackName": "Amazon-EKS-EKSStack-SDZCUE9193SC",

"ParentId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS/e35fb390-9aa6-11ea-9ffc-021e2396e2cc",

"RootId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS/e35fb390-9aa6-11ea-9ffc-021e2396e2cc",

"StackId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS-VPCStack-1LUZPCD2BF1J/e78197e0-9aa6-11ea-9392-062398501aa6",

"StackName": "Amazon-EKS-VPCStack-1LUZPCD2BF1J",

"ParentId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS/e35fb390-9aa6-11ea-9ffc-021e2396e2cc",

"RootId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS/e35fb390-9aa6-11ea-9ffc-021e2396e2cc",

"StackId": "arn:aws:cloudformation:us-east-2:01234512345:stack/Amazon-EKS/e35fb390-9aa6-11ea-9ffc-021e2396e2cc",

"StackName": "Amazon-EKS",

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo/manifests$ aws cloudformation delete-stack --stack-name "Amazon-EKS" --region us-east-2

builder@DESKTOP-JBA79RT:~/Workspaces/AzureArc/IAC-Demo/manifests$ aws cloudformation list-stacks --region us-east-2 | grep StackStatus

"StackStatus": "DELETE_IN_PROGRESS",

"StackStatus": "DELETE_IN_PROGRESS",

"StackStatus": "UPDATE_COMPLETE",

"StackStatus": "CREATE_COMPLETE",

"StackStatus": "CREATE_COMPLETE",

"StackStatus": "DELETE_IN_PROGRESS",

"StackStatus": "CREATE_COMPLETE",

"StackStatus": "DELETE_IN_PROGRESS",

"StackStatus": "DELETE_COMPLETE",

"StackStatus": "DELETE_COMPLETE",

"StackStatus": "DELETE_COMPLETE",

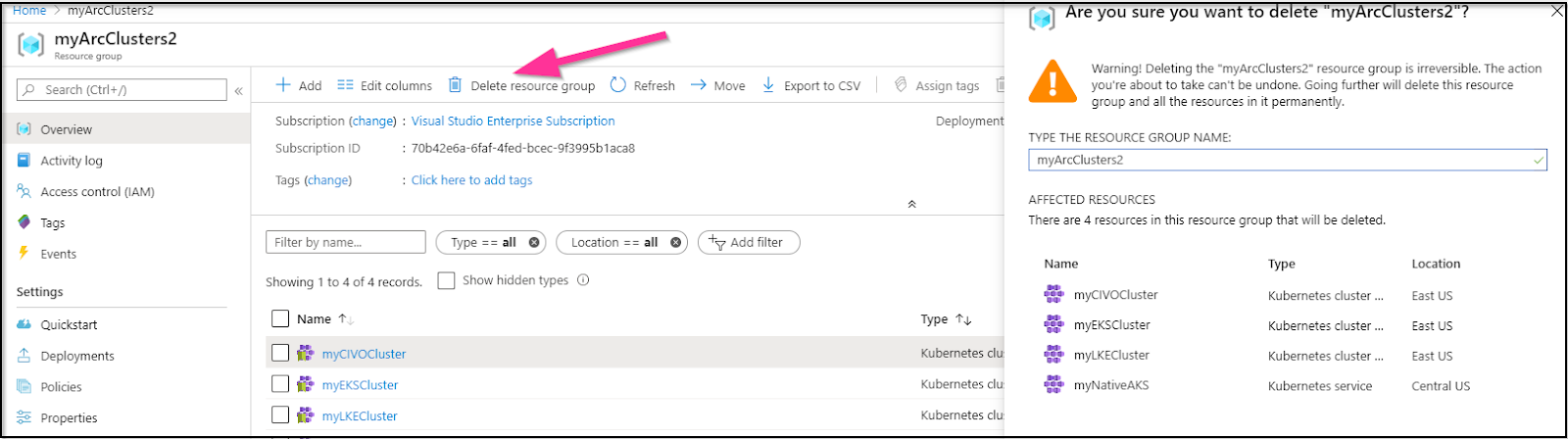
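The grep for StackStatus above is hard to read at a glance, partly because list-stacks also returns stacks that were deleted earlier. A small sketch that tallies the statuses instead (status_counts is a hypothetical helper that reads the list-stacks JSON on stdin):

```shell
# status_counts: read `aws cloudformation list-stacks` JSON on stdin and
# print one "STATUS: count" line per distinct StackStatus value.
status_counts() {
  grep -o '"StackStatus": "[A-Z_]*"' |   # keep only the status key/value pairs
    cut -d '"' -f 4 |                    # the 4th quote-delimited field is the value
    sort | uniq -c |                     # count each distinct status
    awk '{ print $2 ": " $1 }'           # reorder to "STATUS: count"
}

# Usage (needs AWS credentials):
#   aws cloudformation list-stacks --region us-east-2 | status_counts
```

To simply block until the root stack is gone, `aws cloudformation wait stack-delete-complete --stack-name Amazon-EKS --region us-east-2` is an alternative to polling by hand.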

Cleaning up the Azure RG:

Summary

Azure Arc for Kubernetes is a really solid offering from Microsoft for managing all your Kubernetes clusters’ configurations in one place. It is in public preview, so I did run into some issues, namely the handling of bad YAML and the lack of any way to remove deployments (add and update, but not delete).

We did not test Helm support, but one can enable Helm chart deployments as well.