We've dug into a few Kubernetes launch guides, but in reality, once a cluster is launched, it isn't of much use without a DevOps pipeline to deliver content. Let's focus on taking a DigitalOcean Kubernetes cluster and connecting it with an Azure DevOps (AzDO, formerly VSTS) instance to automate delivery of containerized content.

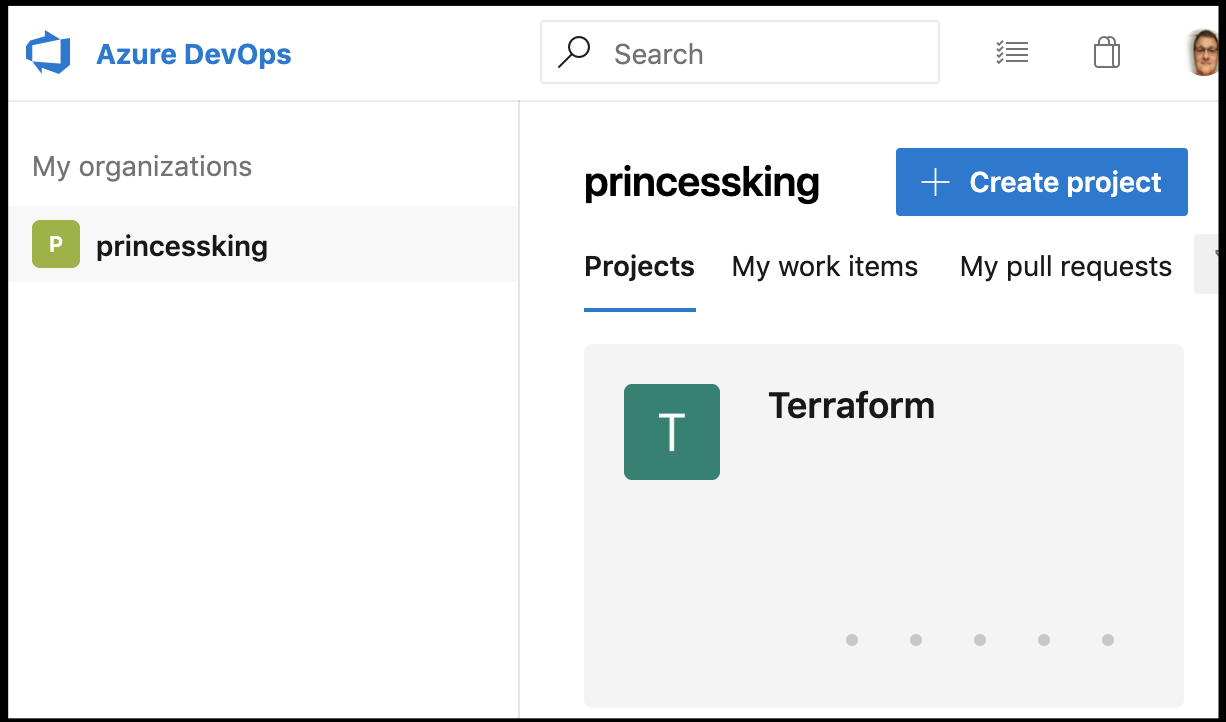

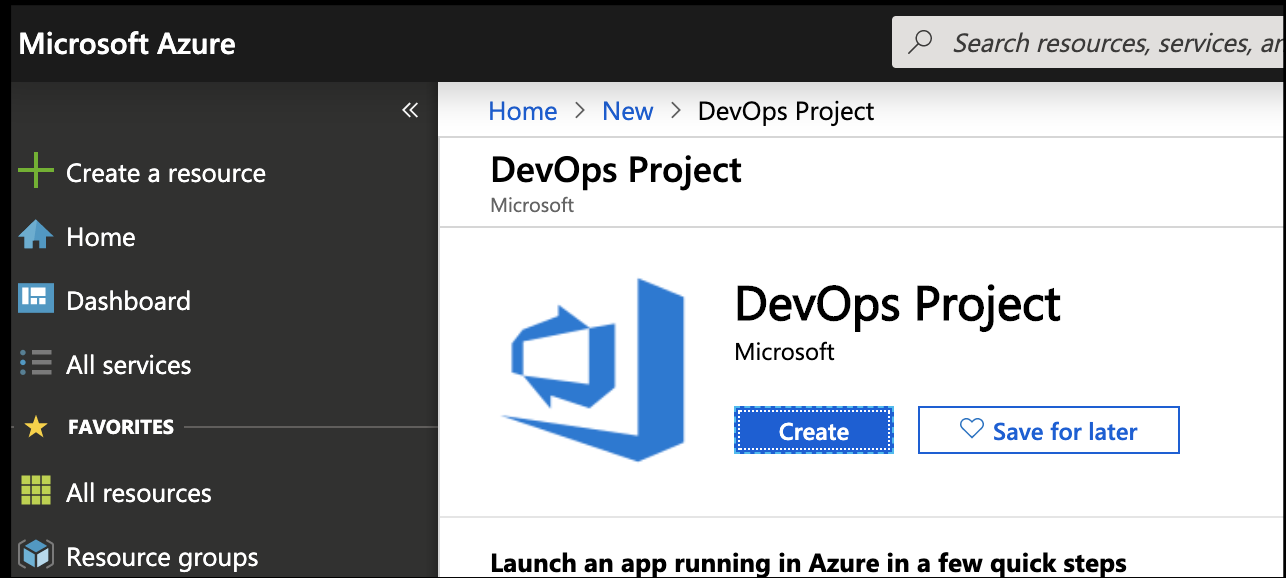

Set up AzDO

Create a project in AzDO:

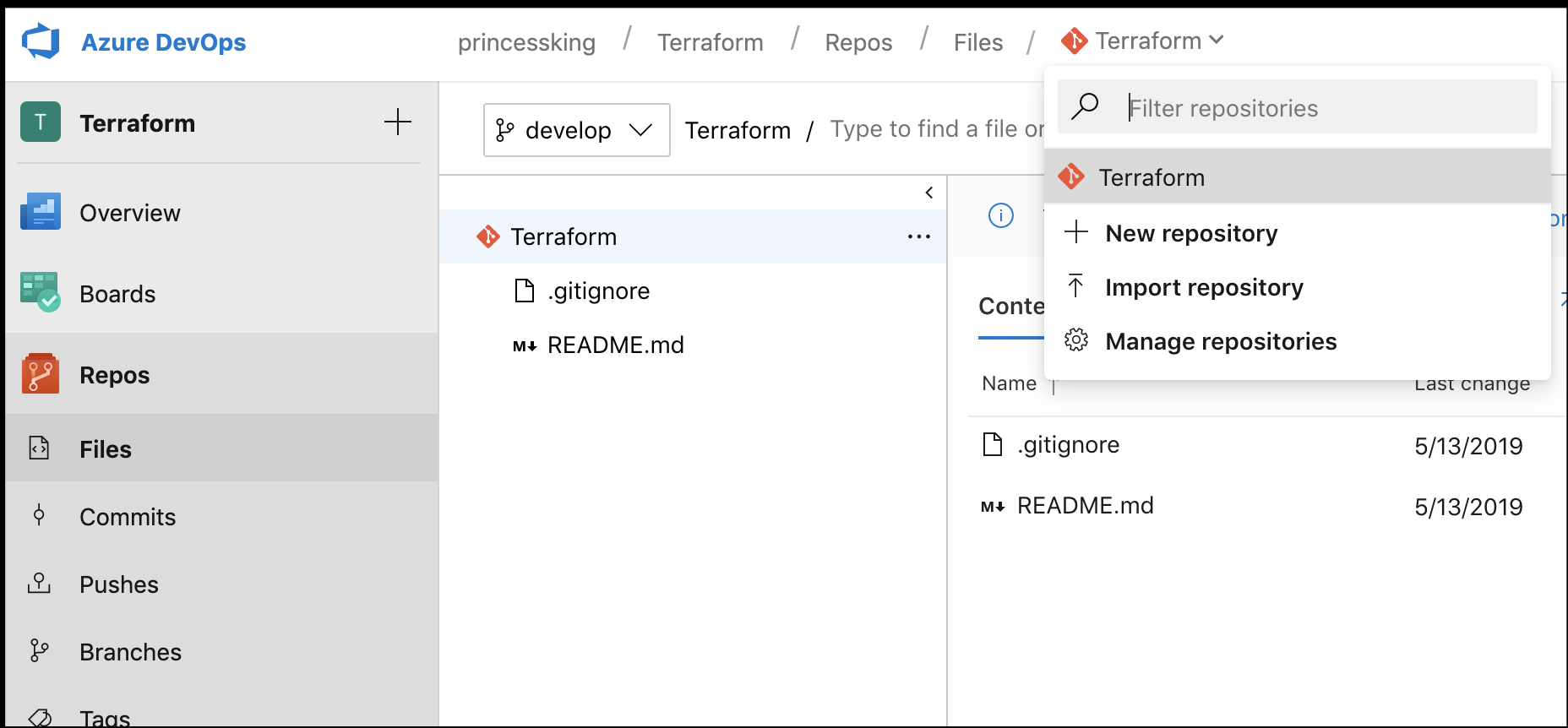

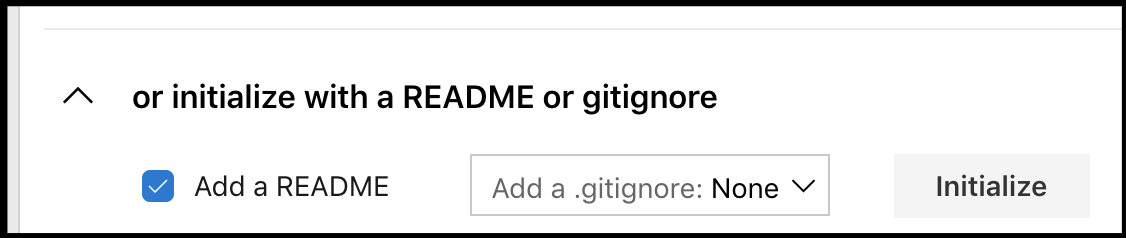
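If you'd rather script this than click through the portal, the azure-devops extension for the az CLI (`az extension add --name azure-devops`) can probably do the same. This is a minimal sketch that assumes you've already run `az login`. The helper name is hypothetical, and the organization URL is the one from our clone URL. As far as I know, a new Git project also gets an empty default repo with the same name, which lines up with the `Terraform/_git/Terraform` path.

```bash
# Hypothetical helper: create an Azure DevOps project that uses Git.
# Assumes `az login` has been run and the azure-devops extension is installed.
create_azdo_project() {
  local org="$1" project="$2"
  az devops project create \
    --organization "$org" \
    --name "$project" \
    --source-control git \
    --visibility private
  # Remember org/project so later `az repos` / `az boards` calls can omit them.
  az devops configure --defaults organization="$org" project="$project"
}
```

Usage would look like `create_azdo_project https://princessking.visualstudio.com Terraform`.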

Next we'll create a new repo (Terraform) and initialize it:

Then let's clone it and run terraform init inside:

```
$ git clone https://princessking.visualstudio.com/Terraform/_git/Terraform
Cloning into 'Terraform'...
remote: Azure Repos
remote: Found 17 objects to send. (194 ms)
Unpacking objects: 100% (17/17), done.
$ cd Terraform
$ terraform init
Terraform initialized in an empty directory!

The directory has no Terraform configuration files. You may begin working
with Terraform immediately by creating Terraform configuration files.
```

Let's create a develop branch and push our work:

```
$ git checkout -b develop
Switched to a new branch 'develop'
$ vi README.md
$ git add README.md
$ git commit -m "basic readme in develop branch"
[develop 913ff0c] basic readme in develop branch
1 file changed, 15 insertions(+), 20 deletions(-)
rewrite README.md (77%)
$ git push
fatal: The current branch develop has no upstream branch.
To push the current branch and set the remote as upstream, use

    git push --set-upstream origin develop

$ darf
git push --set-upstream origin develop [enter/↑/↓/ctrl+c]
Enumerating objects: 5, done.
Counting objects: 100% (5/5), done.
Delta compression using up to 4 threads
Compressing objects: 100% (3/3), done.
Writing objects: 100% (3/3), 582 bytes | 582.00 KiB/s, done.
Total 3 (delta 0), reused 0 (delta 0)
remote: Analyzing objects... (3/3) (18 ms)
remote: Storing packfile... done (169 ms)
remote: Storing index... done (78 ms)
To https://princessking.visualstudio.com/Terraform/_git/Terraform
* [new branch] develop -> develop
Branch 'develop' set up to track remote branch 'develop' from 'origin'.
```

Note: I remap thefuck to darf since I'm not all that profane in real life. Great utility. If you want to do the same remapping, just add the following to your ~/.bash_profile:

```bash
eval $(thefuck --alias darf)
```

Work Items

Next I'll want to create a Work Item to track my work.

Saving it creates WI 46.

Next I’ll want a user story in this iteration (sprint) for building a pipeline that at least matches what we made in our last blog post.
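Work items can be scripted too. This sketch assumes the `az devops configure --defaults` organization and project are already set. The helper name, title, and iteration path are illustrative, so substitute your own sprint's path.

```bash
# Hypothetical helper: create a work item (Task, User Story, ...) in an iteration.
# Assumes org/project defaults were set with `az devops configure --defaults`.
create_work_item() {
  local type="$1" title="$2" iteration="$3"
  # --query/--output print only the new work item's ID (handy for branch names).
  az boards work-item create \
    --type "$type" \
    --title "$title" \
    --iteration "$iteration" \
    --query id --output tsv
}
```

For example, `create_work_item "User Story" "Pipeline for DO k8s Terraform" 'Terraform\Sprint 1'`, where the iteration path is a placeholder. The printed ID is what ends up in branch names like `feature/47-...`.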

Next, I need to modify the Repo a bit.

Branch Defaults and Policies

First, set develop as the default branch. I used to eschew the gitflow model, but in recent years I've become a convert.
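The default branch can likewise be set from the CLI. A small sketch; the helper name is hypothetical and it assumes the org/project defaults are configured:

```bash
# Hypothetical helper: set a repo's default branch (develop unless told otherwise).
set_default_branch() {
  local repo="$1" branch="${2:-develop}"
  az repos update --repository "$repo" --default-branch "$branch"
}
```

Usage: `set_default_branch Terraform develop`.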

Next, we’ll want to set branch policies on develop and master.

Normally I would not allow self-approval, but I’m doing this on my own for now.
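A scripted version of the reviewer policy might look like the following. It's a sketch under a few assumptions: the helper name is hypothetical, the org/project defaults are configured, and `--creator-vote-counts true` is what permits self-approval. Flip it to `false` once you have teammates.

```bash
# Hypothetical helper: require one approving reviewer on a branch, but let the
# PR author's own vote count (self-approval) for a one-person setup.
require_reviewer() {
  local repo="$1" branch="$2" repo_id
  # Branch policies are keyed by repository ID, not by name.
  repo_id="$(az repos show --repository "$repo" --query id --output tsv)"
  az repos policy approver-count create \
    --repository-id "$repo_id" \
    --branch "$branch" \
    --minimum-approver-count 1 \
    --creator-vote-counts true \
    --allow-downvotes false \
    --reset-on-source-push false \
    --blocking true \
    --enabled true
}
```

To cover both branches: `for b in develop master; do require_reviewer Terraform "$b"; done`.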

Now we can create a branch and add the main.tf from our last blog post:

```
$ git checkout -b feature/47-initial-DO-TF-creation
Switched to a new branch 'feature/47-initial-DO-TF-creation'
$ ls
README.md
$ mkdir terraform
$ cd terraform/
$ ls
$ mkdir do_k8s
$ cd do_k8s/
$ ls
$ vi main.tf
$ git status
On branch feature/47-initial-DO-TF-creation
Untracked files:
  (use "git add <file>..." to include in what will be committed)

    ../

nothing added to commit but untracked files present (use "git add" to track)
$ cd ../..
$ ls
README.md terraform
$ git add -A
$ git status
On branch feature/47-initial-DO-TF-creation
Changes to be committed:
  (use "git reset HEAD <file>..." to unstage)

    new file: terraform/do_k8s/main.tf

$ git commit -m "tf from our last blog post"
[feature/47-initial-DO-TF-creation 52ad65b] tf from our last blog post
1 file changed, 34 insertions(+)
create mode 100644 terraform/do_k8s/main.tf
$ git push
fatal: The current branch feature/47-initial-DO-TF-creation has no upstream branch.
To push the current branch and set the remote as upstream, use

    git push --set-upstream origin feature/47-initial-DO-TF-creation

$ darf
git push --set-upstream origin feature/47-initial-DO-TF-creation [enter/↑/↓/ctrl+c]
Enumerating objects: 6, done.
Counting objects: 100% (6/6), done.
Delta compression using up to 4 threads
Compressing objects: 100% (3/3), done.
Writing objects: 100% (5/5), 813 bytes | 813.00 KiB/s, done.
Total 5 (delta 0), reused 0 (delta 0)
remote: Analyzing objects... (5/5) (14 ms)
remote: Storing packfile... done (192 ms)
remote: Storing index... done (77 ms)
To https://princessking.visualstudio.com/Terraform/_git/Terraform
* [new branch] feature/47-initial-DO-TF-creation -> feature/47-initial-DO-TF-creation
Branch 'feature/47-initial-DO-TF-creation' set up to track remote branch 'feature/47-initial-DO-TF-creation' from 'origin'.
```

Now that our code is in the repo, we can set up the build.

Quick pause: first we need a storage account, namely one for our Terraform state files. Hop into the Azure portal and create one (or do it with the az CLI if you prefer).
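For the az CLI route, something like this should work. The resource group name, storage account name, and `eastus` default are placeholders I made up. Storage account names must be globally unique, 3 to 24 characters, lowercase letters and digits only.

```bash
# Hypothetical helper: resource group + storage account for Terraform state.
create_tf_state_account() {
  local rg="$1" account="$2" location="${3:-eastus}"
  az group create --name "$rg" --location "$location"
  # Standard_LRS is the cheapest redundancy tier; fine for a state file.
  az storage account create \
    --name "$account" \
    --resource-group "$rg" \
    --location "$location" \
    --sku Standard_LRS \
    --kind StorageV2
}
```

Usage: `create_tf_state_account tfstate-rg tfstateprincessking`.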

Once your storage account is created, create a blob container in it.
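The container can be created the same way. This sketch fetches an account key rather than relying on `--auth-mode login`, which would need a blob data RBAC role on your user. The helper name and the `tfstate` container name are assumed placeholders.

```bash
# Hypothetical helper: create the blob container that will hold *.tfstate files.
create_tf_state_container() {
  local rg="$1" account="$2" container="${3:-tfstate}" key
  # Use the account's first access key so no extra role assignment is needed.
  key="$(az storage account keys list \
    --resource-group "$rg" --account-name "$account" \
    --query '[0].value' --output tsv)"
  az storage container create \
    --name "$container" \
    --account-name "$account" \
    --account-key "$key"
}
```

Usage: `create_tf_state_container tfstate-rg tfstateprincessking`.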

Let's also create an Azure Key Vault (AKV).
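The CLI version of the Key Vault step is short. Vault names are globally unique too (3 to 24 characters: letters, digits, and hyphens). `princessking-kv` below is just a placeholder, as is the helper name.

```bash
# Hypothetical helper: create a Key Vault in the same resource group.
create_key_vault() {
  local rg="$1" vault="$2" location="${3:-eastus}"
  az keyvault create --name "$vault" --resource-group "$rg" --location "$location"
}
```

Usage: `create_key_vault tfstate-rg princessking-kv`.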

And create a secret to store our do-token (we’ll use this for a managed variable group later).
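Storing the token from the CLI might look like this. The sketch reads it from a `DO_TOKEN` environment variable (an assumed name) so you don't type it into your shell history, and defaults the secret name to `do-token`. Note that the value is still passed to `az` as a process argument, so run it on a machine you trust.

```bash
# Hypothetical helper: store the DigitalOcean API token as a Key Vault secret.
store_do_token() {
  local vault="$1" name="${2:-do-token}"
  if [ -z "${DO_TOKEN:-}" ]; then
    echo "DO_TOKEN is not set" >&2
    return 1
  fi
  # --output none keeps the secret out of the command's JSON output.
  az keyvault secret set --vault-name "$vault" --name "$name" --value "$DO_TOKEN" --output none
}
```

Usage (bash): `read -rs DO_TOKEN; store_do_token princessking-kv`.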

Back in AzDO, we next need to create a service connection to our Azure subscription.
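The portal wizard can create the service principal for you. If you already have one (for example from `az ad sp create-for-rbac`), the CLI equivalent is roughly the following. Assumptions: the helper name and argument order are mine, and the extension reads the principal's secret from the `AZURE_DEVOPS_EXT_AZURE_RM_SERVICE_PRINCIPAL_KEY` environment variable rather than from a flag, so export that first.

```bash
# Hypothetical helper: Azure Resource Manager service connection from an existing
# service principal. Expects the SP secret in an exported environment variable.
create_arm_service_connection() {
  local name="$1" sp_id="$2" tenant_id="$3" sub_id="$4" sub_name="$5"
  if [ -z "${AZURE_DEVOPS_EXT_AZURE_RM_SERVICE_PRINCIPAL_KEY:-}" ]; then
    echo "export AZURE_DEVOPS_EXT_AZURE_RM_SERVICE_PRINCIPAL_KEY first" >&2
    return 1
  fi
  az devops service-endpoint azurerm create \
    --name "$name" \
    --azure-rm-service-principal-id "$sp_id" \
    --azure-rm-tenant-id "$tenant_id" \
    --azure-rm-subscription-id "$sub_id" \
    --azure-rm-subscription-name "$sub_name"
}
```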

In Pipelines/Library, create a new variable group for tf-tokens. There you can link it to the Key Vault and then pick our token.
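One gotcha: secret variables from a variable group are not exposed to script steps as environment variables automatically. In a classic Bash task you map them yourself in the task's environment variables, for example `TF_VAR_do_token` set to `$(do-token)`. Terraform then uses any `TF_VAR_<name>` as the value of input variable `<name>`. A minimal script-step sketch, assuming main.tf declares a `do_token` variable (that name is an assumption, as is the helper):

```bash
# Hypothetical pipeline script step. Assumes the task maps the Key Vault secret
# into TF_VAR_do_token and that main.tf declares `variable "do_token" {}`.
tf_plan() {
  if [ -z "${TF_VAR_do_token:-}" ]; then
    echo "TF_VAR_do_token is empty; check the variable group mapping" >&2
    return 1
  fi
  # -input=false makes Terraform fail fast instead of prompting in CI.
  terraform plan -input=false -out=tfplan
}
```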

Now we can go back and create pipeline variables that match our storage account from earlier.
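The post doesn't show how those variables get consumed. One common approach (an assumption here) is partial backend configuration. main.tf carries an empty `backend "azurerm" {}` block, and the pipeline passes the storage details to `terraform init`. The azurerm backend can read the storage account key from `ARM_ACCESS_KEY`, which keeps it off the command line. The helper name and the default state key are hypothetical.

```bash
# Hypothetical helper: point Terraform's azurerm backend at our storage account.
# Assumes main.tf contains: terraform { backend "azurerm" {} }
tf_init_remote_state() {
  local account="$1" container="$2" state_key="${3:-do_k8s.tfstate}"
  if [ -z "${ARM_ACCESS_KEY:-}" ]; then
    echo "ARM_ACCESS_KEY (storage account key) is not set" >&2
    return 1
  fi
  terraform init -input=false \
    -backend-config="storage_account_name=$account" \
    -backend-config="container_name=$container" \
    -backend-config="key=$state_key"
}
```

Usage: `tf_init_remote_state tfstateprincessking tfstate do_k8s.tfstate`, with `ARM_ACCESS_KEY` mapped from a secret pipeline variable.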

Creating the pipeline:

Summary

At this point we have most of what we need:

- We've stored our token in Key Vault and tied it to AzDO via a Key Vault-linked variable group (under Pipelines/Library).

- We've created some Work Items to track our work.

- We've created a Git repo to store our Terraform, and set up the basic branch structure and policies.

- We've created the proper Azure service connection, should we need any other objects from our subscription.

In Part 2...

In our next post we'll dive into the pipeline definition itself and test it. Microsoft would like you to use YAML definitions, but I still find them woefully inadequate to author, so I tend to create pipelines with the graphical wizard instead.

Once we've created the pipeline, we'll dig into reusable blocks of steps called "Task Groups" that we can use to build multiple variations of a pipeline.