A challenge that has come up for me a few times recently is monitoring Kubernetes events. In several situations I've needed to trigger things based on changes in a Kubernetes namespace. Luckily for us, Dapr.io has a component just for this purpose: bindings.kubernetes.

We will explore using that as an input event trigger, then compare it with Kubewatch, a Helm-installable system that does much the same thing. Lastly, we'll tie one of them into Azure DevOps webhook triggers, a relatively recent feature for triggering Azure DevOps Pipelines from external events.

Dapr.io Event Binding

First, let's apply the k8s binding

$ cat k8s_event_binding.yaml

apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: kubeevents
  namespace: default
spec:
  type: bindings.kubernetes
  version: v1
  metadata:
  - name: namespace
    value: default
  - name: resyncPeriodInSec
    value: "5"

$ kubectl apply -f k8s_event_binding.yaml

and the role and rolebinding

$ cat role.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: event-reader
  namespace: default
rules:
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - get
  - watch
  - list
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: event-reader-rb
  namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: event-reader
subjects:
- kind: ServiceAccount
  name: default
  namespace: default

$ kubectl apply -f role.yaml

NodeJS Consumer

Next we need to create the Node.js app. We will base our app.js on the code in the Dapr docs (https://docs.dapr.io/developing-applications/building-blocks/bindings/howto-triggers/)

$ cat app.js

const express = require('express')
const bodyParser = require('body-parser')
const app = express()
app.use(bodyParser.json())

const port = 8080

// The route name matches the name of the Dapr binding component (kubeevents)
app.post('/kubeevents', (req, res) => {
    console.log(req.body)
    res.status(200).send()
})

app.listen(port, () => console.log(`k8s event consumer app listening on port ${port}!`))

The only difference is the port (8080 instead of 3000).

Verify we can test

$ npm install --save express

$ npm install --save body-parser

$ node app.js

Now build the dockerfile

$ cat Dockerfile

FROM node:14

# Create app directory

WORKDIR /usr/src/app

# Install app dependencies

# A wildcard is used to ensure both package.json AND package-lock.json are copied

# where available (npm@5+)

COPY package*.json ./

RUN npm install

# If you are building your code for production

# RUN npm ci --only=production

# Bundle app source

COPY . .

EXPOSE 8080

CMD [ "node", "app.js" ]

$ docker build -f Dockerfile -t nodeeventwatcher .

Now that it's built, we need to tag the image and push it to our private registry

$ docker tag nodeeventwatcher:latest harbor.freshbrewed.science/freshbrewedprivate/nodeeventwatcher:latest

$ docker push harbor.freshbrewed.science/freshbrewedprivate/nodeeventwatcher:latest

Testing

Next, install by applying the deployment.yaml

$ cat kubeevents.dep.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nodeeventwatcher
  name: nodeeventwatcher-deployment
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nodeeventwatcher
  template:
    metadata:
      annotations:
        dapr.io/app-id: nodeeventwatcher
        dapr.io/app-port: "8080"
        dapr.io/config: appconfig
        dapr.io/enabled: "true"
      labels:
        app: nodeeventwatcher
    spec:
      containers:
      - env:
        - name: PORT
          value: "8080"
        image: harbor.freshbrewed.science/freshbrewedprivate/nodeeventwatcher:latest
        imagePullPolicy: Always
        name: nodeeventwatcher
        ports:
        - containerPort: 8080
          protocol: TCP
      imagePullSecrets:
      - name: myharborreg

$ kubectl apply -f kubeevents.dep.yaml
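The deployment sets a PORT environment variable, but app.js hardcodes 8080, so the variable is currently unused. A minimal sketch of how the app could read it instead is below. The helper name resolvePort is hypothetical, and whichever port is chosen still has to match the dapr.io/app-port annotation.

```javascript
// Sketch: resolve the listen port from the environment, defaulting to 8080.
// resolvePort is a hypothetical helper, not part of the Dapr sample code.
function resolvePort(env, fallback = 8080) {
  const parsed = Number.parseInt(env.PORT, 10);
  // Accept only valid TCP port numbers; otherwise use the fallback.
  const valid = Number.isInteger(parsed) && parsed > 0 && parsed < 65536;
  return valid ? parsed : fallback;
}

const port = resolvePort(process.env);
console.log(`would listen on port ${port}`);
```

In app.js this would replace the `const port = 8080` line.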

We can now see we are getting k8s events sent to the pod

$ kubectl logs nodeeventwatcher-deployment-6dddc4858c-m66n5 nodeeventwatcher | tail -n 63

{

event: 'update',

oldVal: {

metadata: {

name: 'nodeeventwatcher-deployment-6dddc4858c-m66n5.1685ddb34e199dff',

namespace: 'default',

selfLink: '/api/v1/namespaces/default/events/nodeeventwatcher-deployment-6dddc4858c-m66n5.1685ddb34e199dff',

uid: 'ef7d197a-764c-46cb-a962-98d986ee154d',

resourceVersion: '42943418',

creationTimestamp: '2021-06-06T02:36:42Z',

managedFields: [Array]

},

involvedObject: {

kind: 'Pod',

namespace: 'default',

name: 'nodeeventwatcher-deployment-6dddc4858c-m66n5',

uid: '255c0dc6-80de-4253-9304-b20e28199693',

apiVersion: 'v1',

resourceVersion: '42943220',

fieldPath: 'spec.containers{nodeeventwatcher}'

},

reason: 'Created',

message: 'Created container nodeeventwatcher',

source: { component: 'kubelet', host: 'isaac-macbookair' },

firstTimestamp: '2021-06-06T02:36:42Z',

lastTimestamp: '2021-06-06T02:36:42Z',

count: 1,

type: 'Normal',

eventTime: null,

reportingComponent: '',

reportingInstance: ''

},

newVal: {

metadata: {

name: 'nodeeventwatcher-deployment-6dddc4858c-m66n5.1685ddb34e199dff',

namespace: 'default',

selfLink: '/api/v1/namespaces/default/events/nodeeventwatcher-deployment-6dddc4858c-m66n5.1685ddb34e199dff',

uid: 'ef7d197a-764c-46cb-a962-98d986ee154d',

resourceVersion: '42943418',

creationTimestamp: '2021-06-06T02:36:42Z',

managedFields: [Array]

},

involvedObject: {

kind: 'Pod',

namespace: 'default',

name: 'nodeeventwatcher-deployment-6dddc4858c-m66n5',

uid: '255c0dc6-80de-4253-9304-b20e28199693',

apiVersion: 'v1',

resourceVersion: '42943220',

fieldPath: 'spec.containers{nodeeventwatcher}'

},

reason: 'Created',

message: 'Created container nodeeventwatcher',

source: { component: 'kubelet', host: 'isaac-macbookair' },

firstTimestamp: '2021-06-06T02:36:42Z',

lastTimestamp: '2021-06-06T02:36:42Z',

count: 1,

type: 'Normal',

eventTime: null,

reportingComponent: '',

reportingInstance: ''

}

}

We can also trim to just event messages…

$ kubectl logs nodeeventwatcher-deployment-6dddc4858c-m66n5 nodeeventwatcher | grep message | tail -n5

message: 'Started container nodeeventwatcher',

message: 'Created container daprd',

message: 'Created container daprd',

message: 'Container image "docker.io/daprio/daprd:1.1.1" already present on machine',

message: 'Container image "docker.io/daprio/daprd:1.1.1" already present on machine',
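Rather than grepping the logs afterwards, the handler itself could log a one-line summary. The following is a rough sketch only: summarizeEvent is a hypothetical helper, the field names are taken from the payload printed above, and falling back to oldVal when newVal is missing is an assumption about how deletes might arrive.

```javascript
// Sketch: reduce a bindings.kubernetes payload to a one-line summary.
// Field names (event, newVal, oldVal, involvedObject, reason, message, type)
// come from the log output above; summarizeEvent itself is hypothetical.
function summarizeEvent(body) {
  const event = (body && body.event) || 'unknown';
  // Assumption: prefer the new state and fall back to the old one if absent.
  const val = (body && (body.newVal || body.oldVal)) || {};
  const obj = val.involvedObject || {};
  const target = [obj.kind, obj.namespace, obj.name].filter(Boolean).join('/') || '?';
  return `[${event}] ${val.type || '?'} ${target}: ${val.reason || '?'} - ${val.message || ''}`;
}

// Example payload trimmed from the log output above.
const sample = {
  event: 'update',
  newVal: {
    involvedObject: {
      kind: 'Pod',
      namespace: 'default',
      name: 'nodeeventwatcher-deployment-6dddc4858c-m66n5'
    },
    reason: 'Created',
    message: 'Created container nodeeventwatcher',
    type: 'Normal'
  }
};

console.log(summarizeEvent(sample));
```

In app.js, `console.log(req.body)` could then become `console.log(summarizeEvent(req.body))`.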

However, one disadvantage is that this does not seem to trigger on ConfigMap and Secret updates. That is rather key for my needs and, as best I can see from the docs, the binding cannot be customized to cover them.

Kubewatch

To get other events, we could use something like kubewatch.

Install

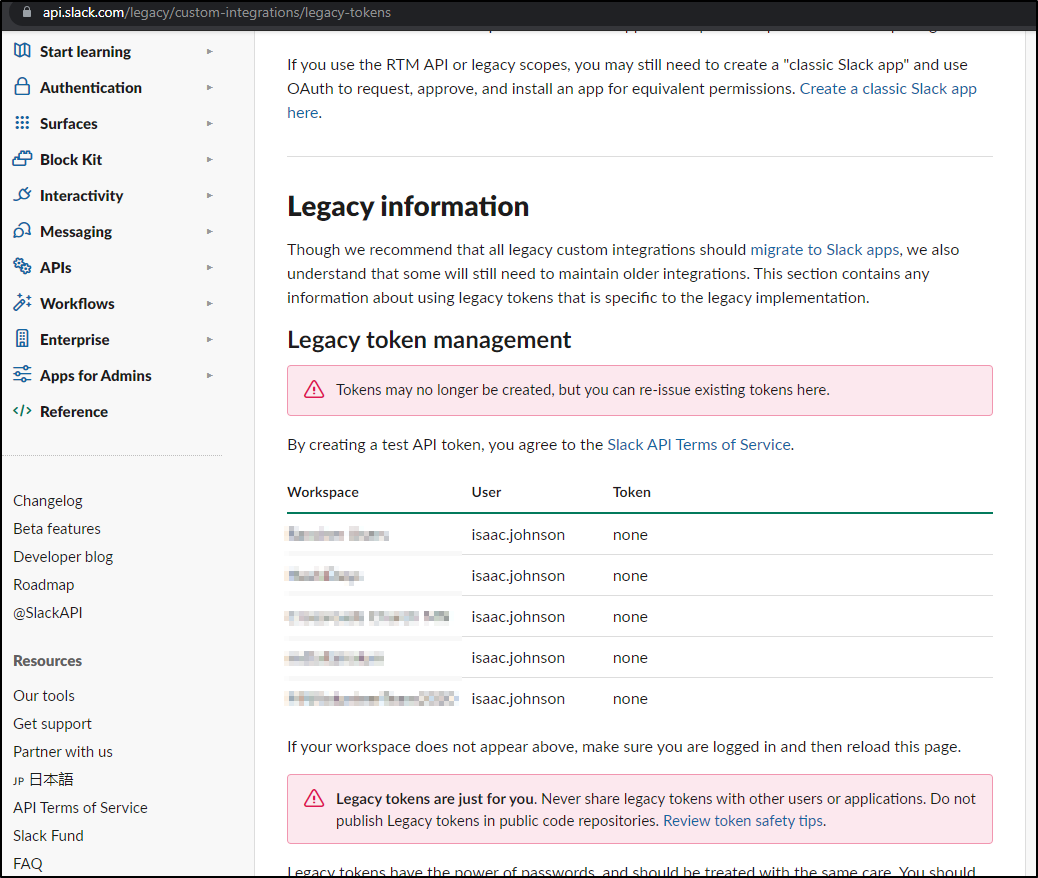

For the first install, we can just specify a couple of things to monitor, along with our Slack legacy API token

$ helm install kubewatch bitnami/kubewatch --set='rbac.create=true,slack.channel=#random,slack.token=xoxp-555555555555-555555555555-555555555555-555555555555555555555555555555555555,resourcesToWatch.pod=true,resourcesToWatch.daemonset=true'

We can also update the values via a file.

$ cat kubewatch_values.yaml

rbac:
  create: true
resourcesToWatch:
  deployment: true
  replicationcontroller: false
  replicaset: false
  daemonset: false
  services: true
  pod: true
  job: true
  node: false
  clusterrole: true
  serviceaccount: true
  persistentvolume: false
  namespace: false
  secret: true
  configmap: true
  ingress: true
slack:
  channel: '#random'
  token: 'xoxp-555555555555-555555555555-555555555555-555555555555555555555555555555555555'

$ helm upgrade kubewatch bitnami/kubewatch --values=kubewatch_values.yaml

Release "kubewatch" has been upgraded. Happy Helming!

NAME: kubewatch

LAST DEPLOYED: Sun May 30 16:51:47 2021

NAMESPACE: default

STATUS: deployed

REVISION: 2

TEST SUITE: None

NOTES:

** Please be patient while the chart is being deployed **

To verify that kubewatch has started, run:

kubectl get deploy -w --namespace default kubewatch

We also need to ensure we have the ability to watch these events. A simple CRB that grants Kubewatch cluster admin would suffice

$ cat kubewatch_clusteradmin.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubewatch-clusteradmin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubewatch
  namespace: default

$ kubectl apply -f kubewatch_clusteradmin.yaml

clusterrolebinding.rbac.authorization.k8s.io/kubewatch-clusteradmin created

We can check the pod logs to see whether it is posting to Slack:

time="2021-06-06T22:04:10Z" level=info msg="Processing update to configmap: default/ingress-controller-leader-nginx" pkg=kubewatch-configmap

2021/06/06 22:04:10 token_revoked

time="2021-06-06T22:04:10Z" level=info msg="Processing update to configmap: default/datadog-leader-election" pkg=kubewatch-configmap

2021/06/06 22:04:10 token_revoked

time="2021-06-06T22:04:11Z" level=info msg="Processing update to configmap: default/operator.dapr.io" pkg=kubewatch-configmap

time="2021-06-06T22:04:11Z" level=info msg="Processing update to configmap: kube-system/cert-manager-cainjector-leader-election-core" pkg=kubewatch-configmap

time="2021-06-06T22:04:11Z" level=info msg="Processing update to configmap: kube-system/cert-manager-cainjector-leader-election" pkg=kubewatch-configmap

2021/06/06 22:04:11 token_revoked

2021/06/06 22:04:11 token_revoked

2021/06/06 22:04:12 token_revoked

It seems our token was revoked (due to disuse) and Slack doesn't let us make more… (boo!)

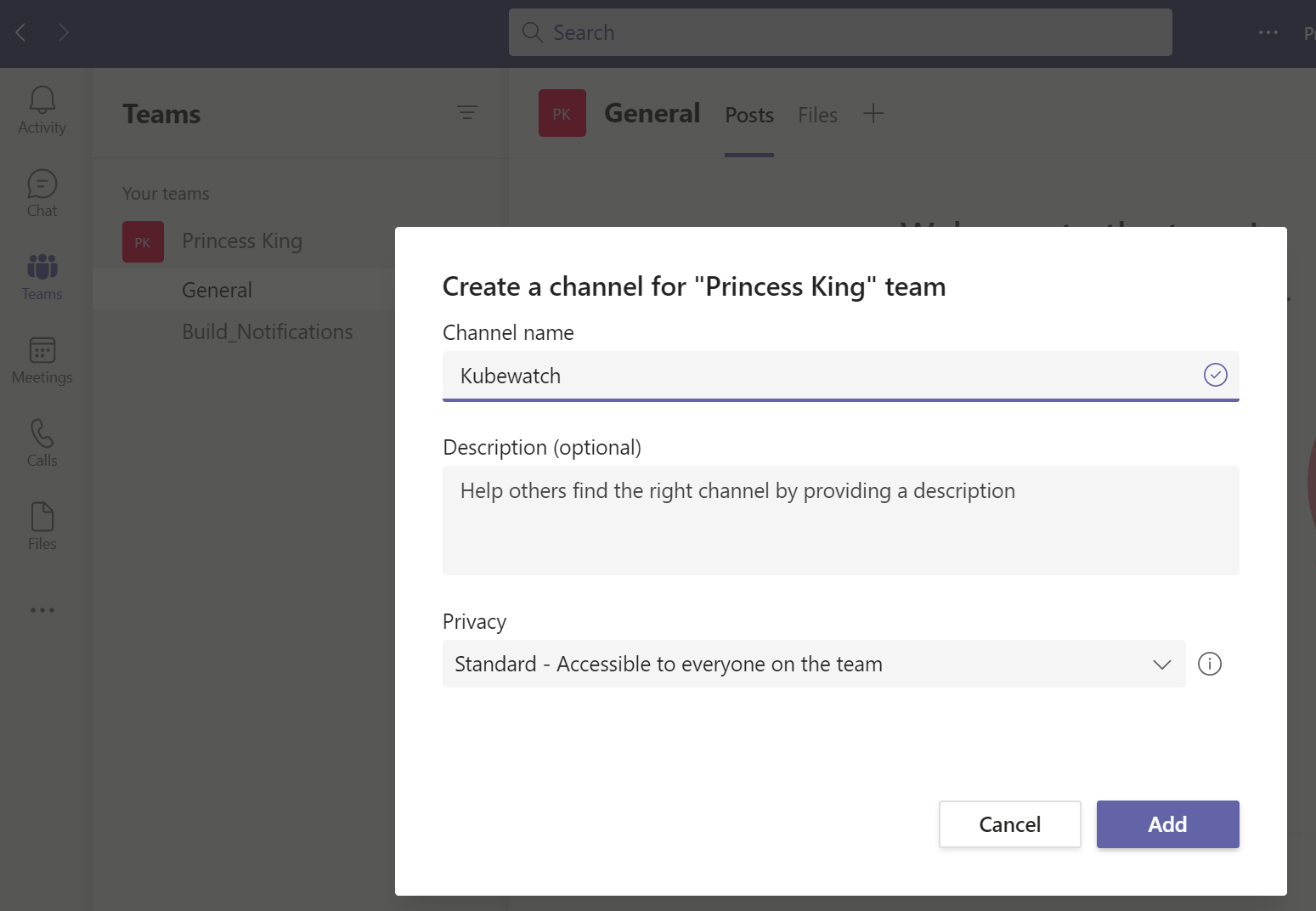

Using Microsoft Teams

Since Slack isn't going to work for us, let's use a Teams channel instead.

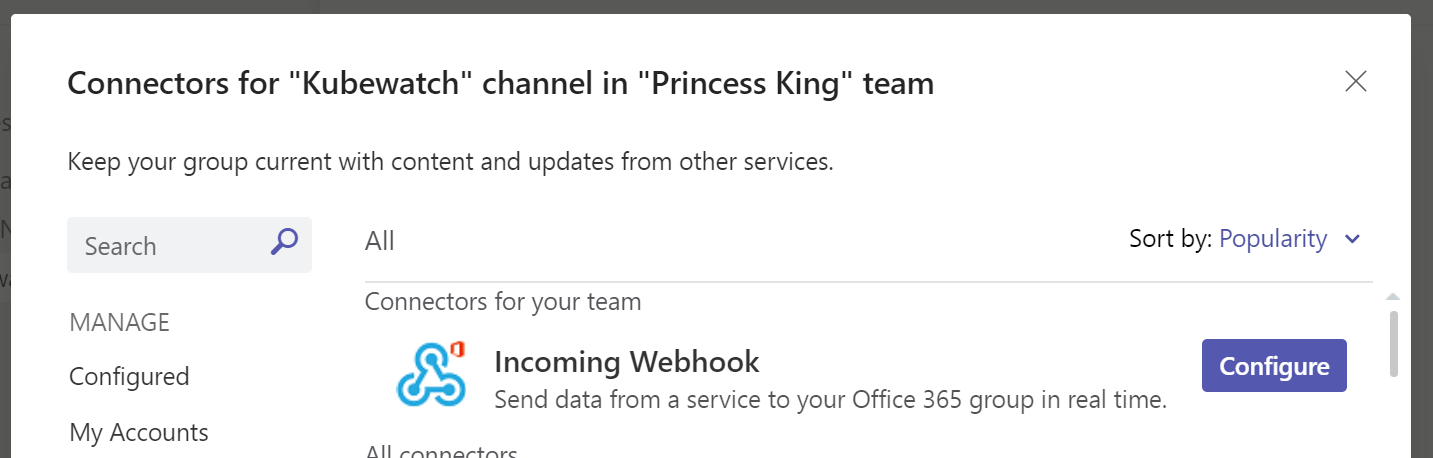

Create a new channel for events

Once created, right click on the channel and select connectors. Then choose webhook

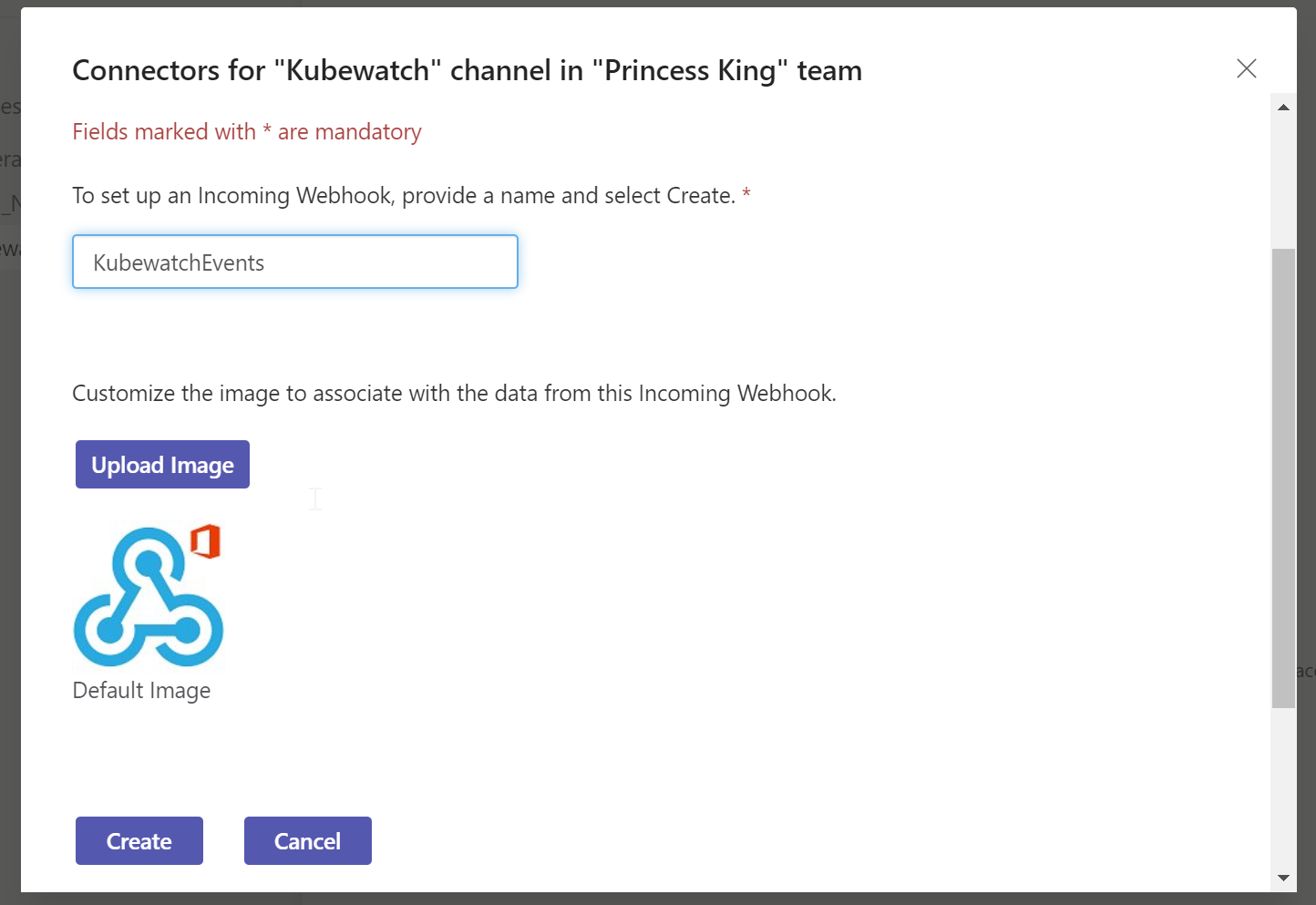

We can just do basic events, so we'll call it KubewatchEvents

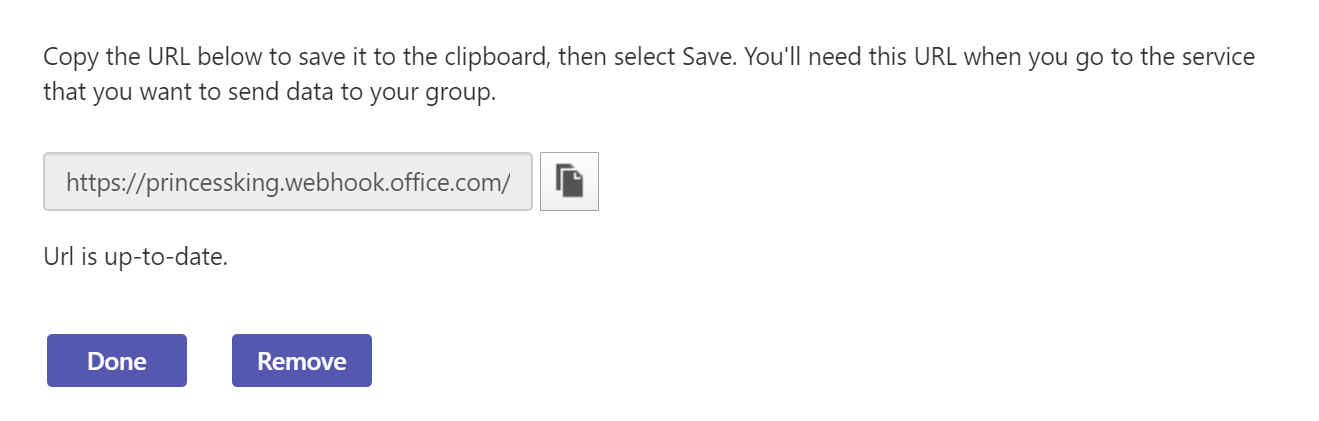

Then we can retrieve the webhook URL after clicking create

e.g.

https://princessking.webhook.office.com/webhookb2/0119e157-eb03-4d10-b097-fea3bfa2115c@6f503690-f043-4e2a-bba1-106faaa1c397/IncomingWebhook/23585651-24cd-4b09-95f4-ea7a721eec5c

We can now insert this into our configmap directly (later we'll update the helm chart, but this is a quick test)

builder@DESKTOP-72D2D9T:~/Workspaces/daprk8sevents$ kubectl get cm kubewatch-config -o yaml > kwconfig.yaml

builder@DESKTOP-72D2D9T:~/Workspaces/daprk8sevents$ kubectl get cm kubewatch-config -o yaml > kwconfig.yaml.bak

builder@DESKTOP-72D2D9T:~/Workspaces/daprk8sevents$ vi kwconfig.yaml

builder@DESKTOP-72D2D9T:~/Workspaces/daprk8sevents$ diff kwconfig.yaml kwconfig.yaml.bak

5,6c5,8

< msteams:

< webhookurl: https://princessking.webhook.office.com/webhookb2/0119e157-eb03-4d10-b097-fea3bfa2115c@6f503690-f043-4e2a-bba1-106faaa1c397/IncomingWebhook/23585651-24cd-4b09-95f4-ea7a721eec5c

---

> slack:

> channel: '#random'

> enabled: true

> token: xoxp-555555555555-555555555555-555555555555-555555555555555555555555555555555555

$ kubectl apply -f kwconfig.yaml

Warning: resource configmaps/kubewatch-config is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

configmap/kubewatch-config configured

To make it take effect, rotate the pod

$ kubectl get pods | grep kubewatch

kubewatch-6f895cd797-glngx 1/1 Running 0 29m

$ kubectl delete pod kubewatch-6f895cd797-glngx

pod "kubewatch-6f895cd797-glngx" deleted

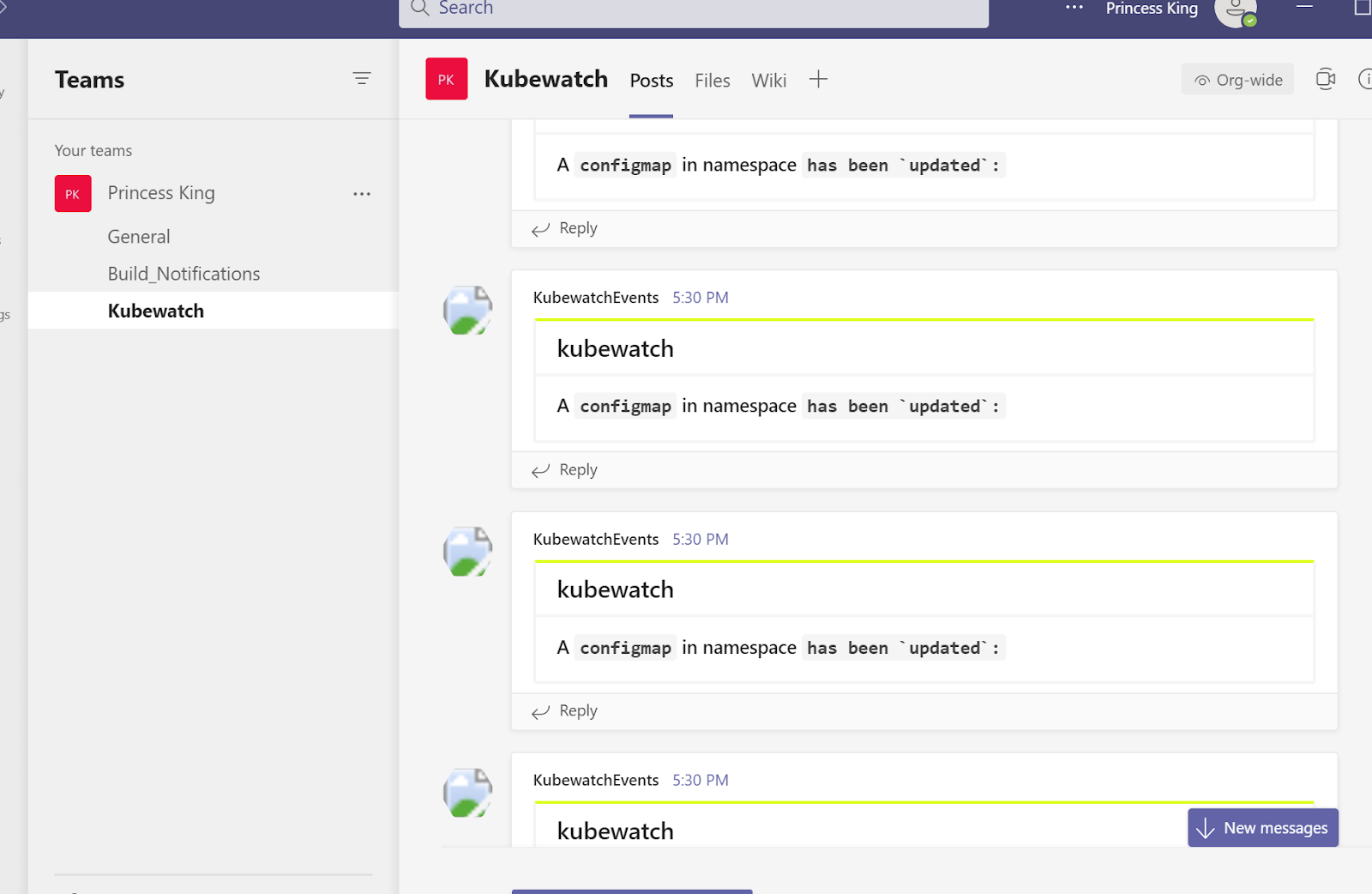

Immediately our channel started to get spammed

Looking at the logs, we can see it's our configmaps that are the issue.

time="2021-06-06T22:32:23Z" level=info msg="Processing update to configmap: kube-system/cert-manager-cainjector-leader-election" pkg=kubewatch-configmap

2021/06/06 22:32:23 Message successfully sent to MS Teams

2021/06/06 22:32:23 Message successfully sent to MS Teams

time="2021-06-06T22:32:23Z" level=info msg="Processing update to configmap: default/operator.dapr.io" pkg=kubewatch-configmap

2021/06/06 22:32:24 Message successfully sent to MS Teams

time="2021-06-06T22:32:24Z" level=info msg="Processing update to configmap: kube-system/cert-manager-cainjector-leader-election-core" pkg=kubewatch-configmap

2021/06/06 22:32:24 Message successfully sent to MS Teams

time="2021-06-06T22:32:24Z" level=info msg="Processing update to configmap: kube-system/cert-manager-controller" pkg=kubewatch-configmap

time="2021-06-06T22:32:25Z" level=info msg="Processing update to configmap: default/datadog-leader-election" pkg=kubewatch-configmap

2021/06/06 22:32:25 Message successfully sent to MS Teams

time="2021-06-06T22:32:25Z" level=info msg="Processing update to configmap: kube-system/cert-manager-cainjector-leader-election" pkg=kubewatch-configmap

2021/06/06 22:32:25 Message successfully sent to MS Teams

2021/06/06 22:32:25 Message successfully sent to MS Teams

time="2021-06-06T22:32:25Z" level=info msg="Processing update to configmap: default/operator.dapr.io" pkg=kubewatch-configmap

2021/06/06 22:32:26 Message successfully sent to MS Teams

time="2021-06-06T22:32:26Z" level=info msg="Processing update to configmap: kube-system/cert-manager-cainjector-leader-election-core" pkg=kubewatch-configmap

2021/06/06 22:32:26 Message successfully sent to MS Teams

time="2021-06-06T22:32:27Z" level=info msg="Processing update to configmap: kube-system/cert-manager-cainjector-leader-election" pkg=kubewatch-configmap

2021/06/06 22:32:27 Message successfully sent to MS Teams

time="2021-06-06T22:32:27Z" level=info msg="Processing update to configmap: default/operator.dapr.io" pkg=kubewatch-configmap

2021/06/06 22:32:28 Message successfully sent to MS Teams

time="2021-06-06T22:32:28Z" level=info msg="Processing update to configmap: kube-system/cert-manager-cainjector-leader-election-core" pkg=kubewatch-configmap

time="2021-06-06T22:32:28Z" level=info msg="Processing update to configmap: default/ingress-controller-leader-nginx" pkg=kubewatch-configmap

2021/06/06 22:32:28 Message successfully sent to MS Teams

time="2021-06-06T22:32:28Z" level=info msg="Processing update to configmap: default/datadog-leader-election" pkg=kubewatch-configmap
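To see which objects are responsible for the noise, the log lines can be tallied per resource. This is a rough sketch, assuming the log format shown above; tallyUpdates and the regular expression are my own and not part of Kubewatch.

```javascript
// Sketch: count Kubewatch "Processing <action> to <kind>: <ns>/<name>" log
// lines per resource to see which objects generate the most notifications.
// The pattern is derived from the log lines above; tallyUpdates is hypothetical.
const LINE = /msg="Processing (\w+) to (\w+): ([^"]+)"/;

function tallyUpdates(logText) {
  const counts = new Map();
  for (const line of logText.split('\n')) {
    const m = LINE.exec(line);
    if (!m) continue; // skip "Message successfully sent" and other lines
    const key = `${m[2]} ${m[3]}`;
    counts.set(key, (counts.get(key) || 0) + 1);
  }
  // Most frequent first.
  return [...counts.entries()].sort((a, b) => b[1] - a[1]);
}

// A few lines copied from the log output above.
const sampleLog = [
  'time="2021-06-06T22:32:23Z" level=info msg="Processing update to configmap: kube-system/cert-manager-cainjector-leader-election" pkg=kubewatch-configmap',
  '2021/06/06 22:32:23 Message successfully sent to MS Teams',
  'time="2021-06-06T22:32:23Z" level=info msg="Processing update to configmap: default/operator.dapr.io" pkg=kubewatch-configmap',
  'time="2021-06-06T22:32:25Z" level=info msg="Processing update to configmap: kube-system/cert-manager-cainjector-leader-election" pkg=kubewatch-configmap'
].join('\n');

console.log(tallyUpdates(sampleLog));
```

Fed the full output of kubectl logs, a tally like this makes it easier to decide which resource types to switch off in resourcesToWatch.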

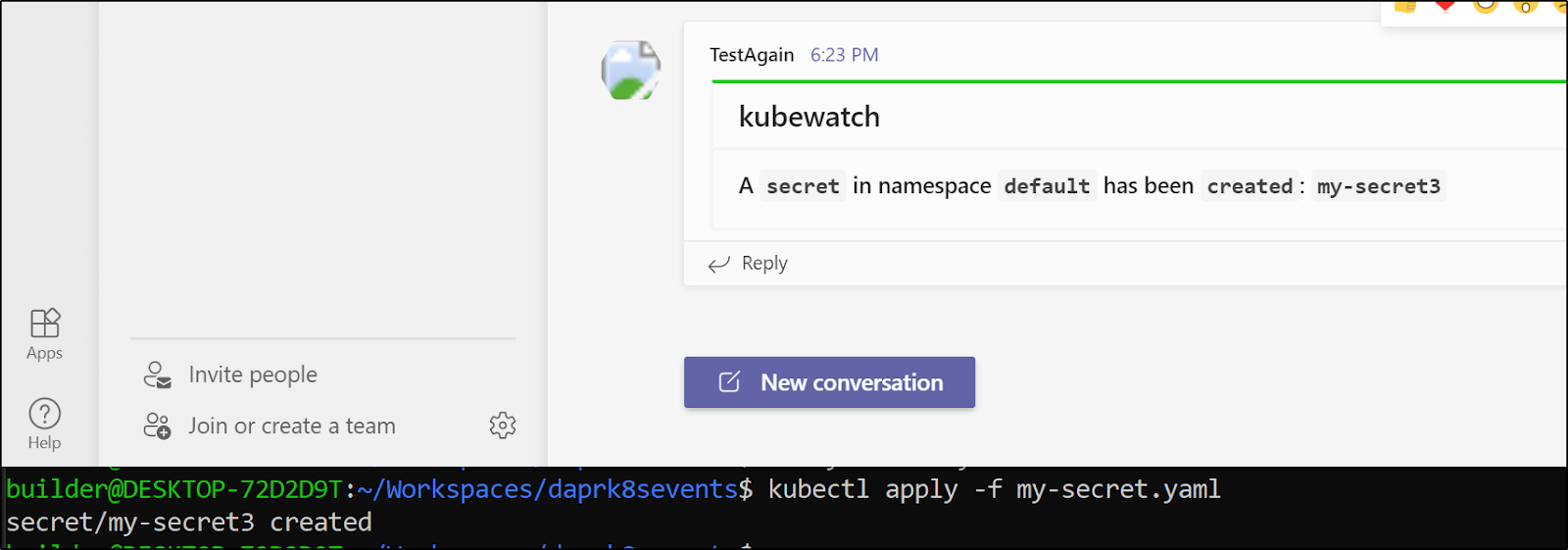

Once configured properly, we can see changes reflected in Teams when we update secrets. As you can see, I had to recreate my webhook (as "TestAgain") since Teams (properly) stopped accepting posts after a bit of nonstop spam.

Triggering AzDO Pipelines with webhooks

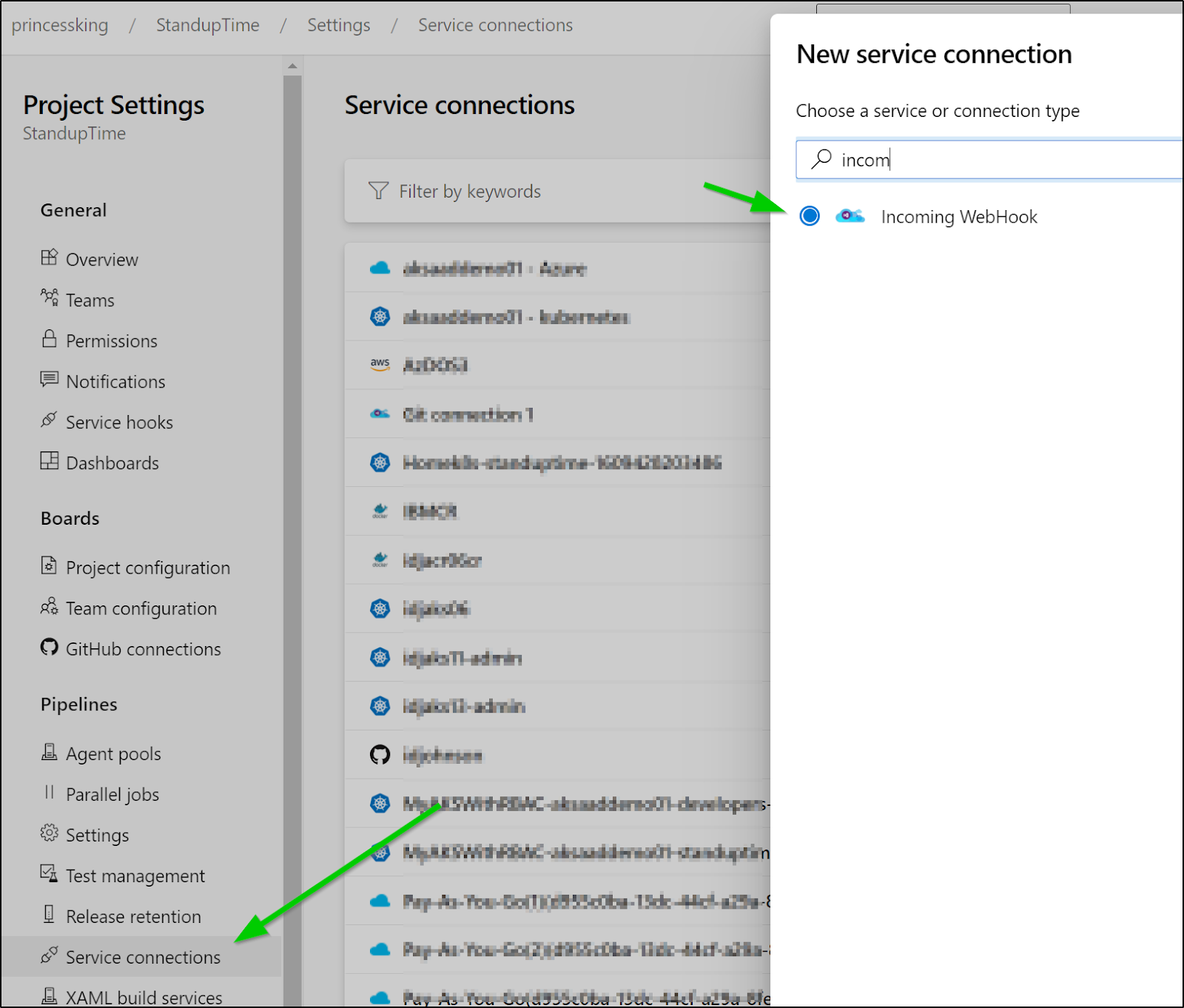

Since Sprint 172, we have had the ability to trigger a pipeline on a webhook.

Creating a Webhook

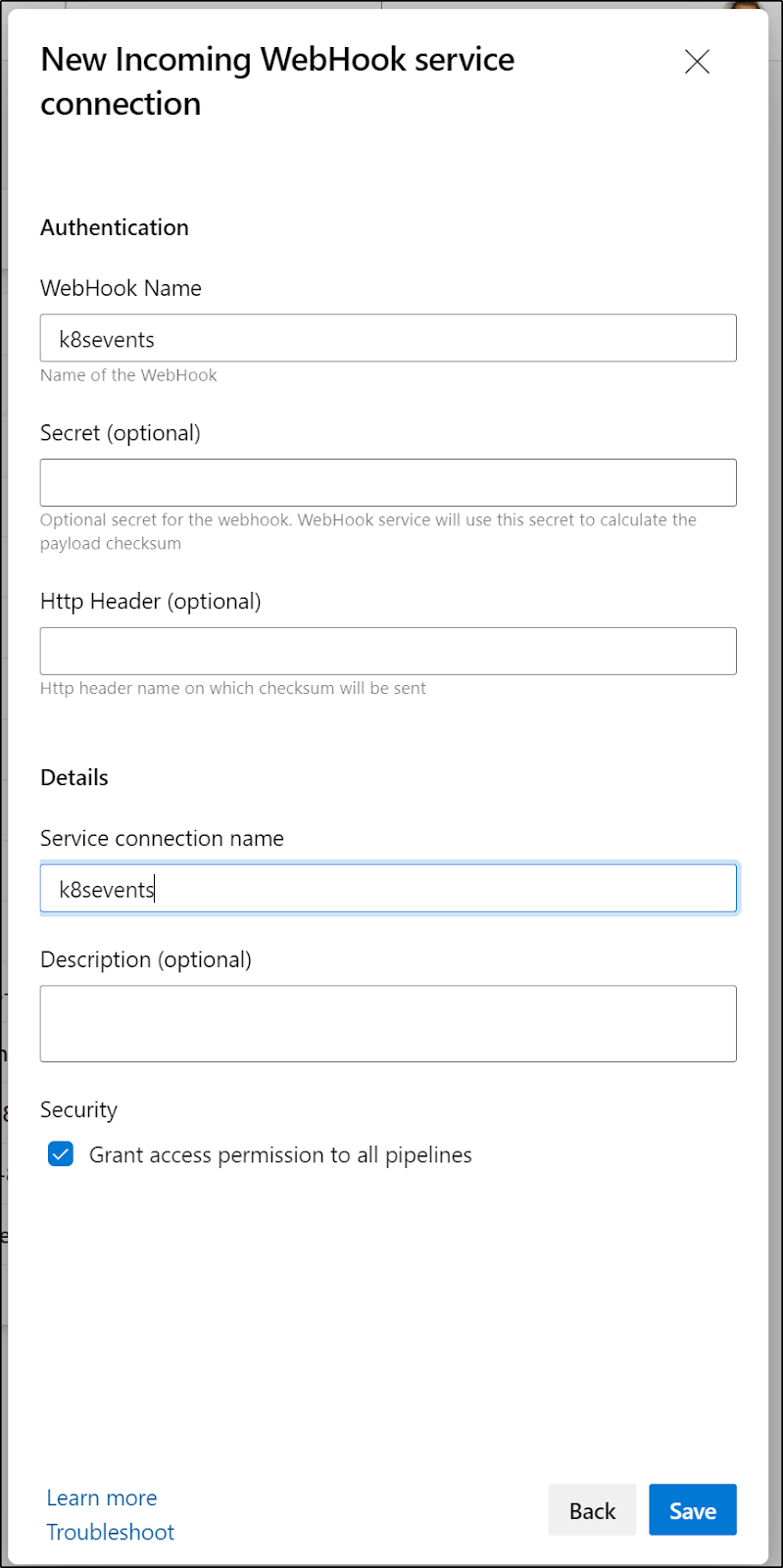

First, let's create an incoming webhook trigger under Service Connections

Then fill in the details. Optionally you can secure it with a secret. For what we are doing with Kubewatch, the secret won't work.

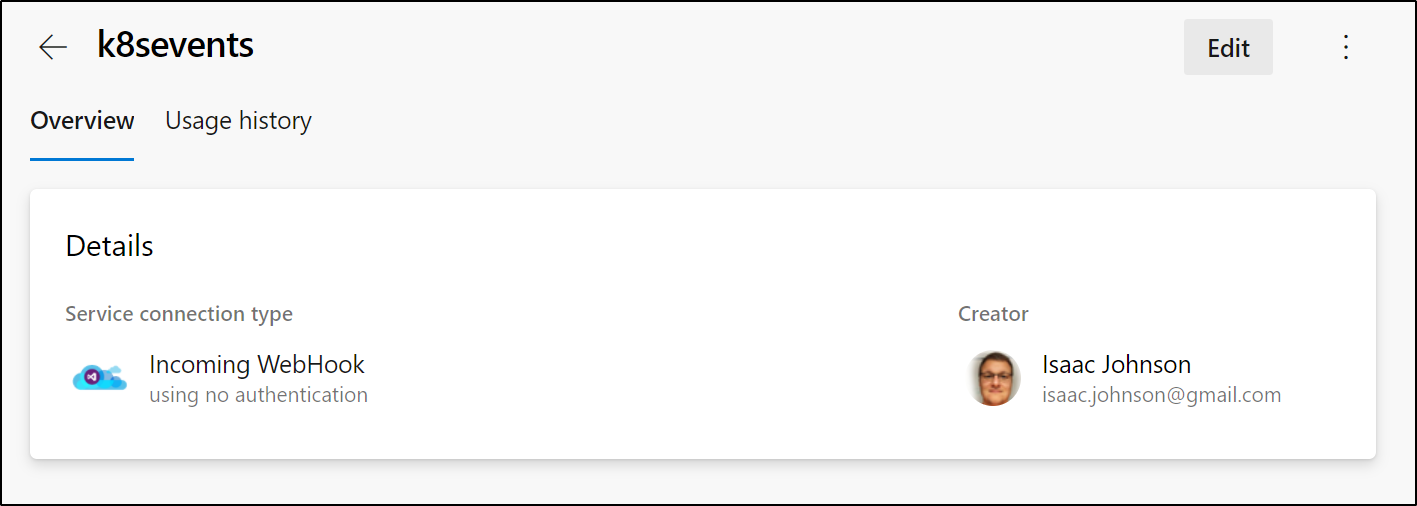

Save and we should have a webhook trigger created

Next we can make a basic job that can trigger on changes:

$ cat azure-pipelines.yaml

# Azure DevOps pipeline.yaml
trigger:
- main

resources:
  webhooks:
  - webhook: k8sevents
    connection: k8sevents

pool:
  vmImage: ubuntu-latest

steps:
- script: echo Hello, world!
  displayName: 'Run a one-line script'

- script: |
    echo Add other tasks to build, test, and deploy your project.
    echo See https://aka.ms/yaml
  displayName: 'Run a multi-line script'

Update the Kubewatch chart with values that will notify both MS Teams and our new webhook. Note: it seems Kubewatch assumes Slack is enabled, so when NOT using Slack, you have to explicitly set enabled to false in the configmap.

$ cat kubewatch_values.yaml

rbac:
  create: true
resourcesToWatch:
  deployment: false
  replicationcontroller: false
  replicaset: false
  daemonset: false
  services: false
  pod: false
  job: false
  node: false
  clusterrole: false
  serviceaccount: false
  persistentvolume: false
  namespace: false
  secret: true
  configmap: false
  ingress: false
msteams:
  enabled: true
  webhookurl: 'https://princessking.webhook.office.com/webhookb2/0119e157-eb03-4d10-b097-fea3bfa2115c@6f503690-f043-4e2a-bba1-106faaa1c397/IncomingWebhook/23585651-24cd-4b09-95f4-ea7a721eec5c'
slack:
  enabled: false
webhook:
  enabled: true
  url: 'https://dev.azure.com/princessking/_apis/public/distributedtask/webhooks/k8sevents?api-version=6.0-preview'

$ helm upgrade kubewatch bitnami/kubewatch --values=kubewatch_values.yaml

Release "kubewatch" has been upgraded. Happy Helming!

NAME: kubewatch

LAST DEPLOYED: Sun May 30 18:54:02 2021

NAMESPACE: default

STATUS: deployed

REVISION: 7

TEST SUITE: None

NOTES:

** Please be patient while the chart is being deployed **

To verify that kubewatch has started, run:

kubectl get deploy -w --namespace default kubewatch

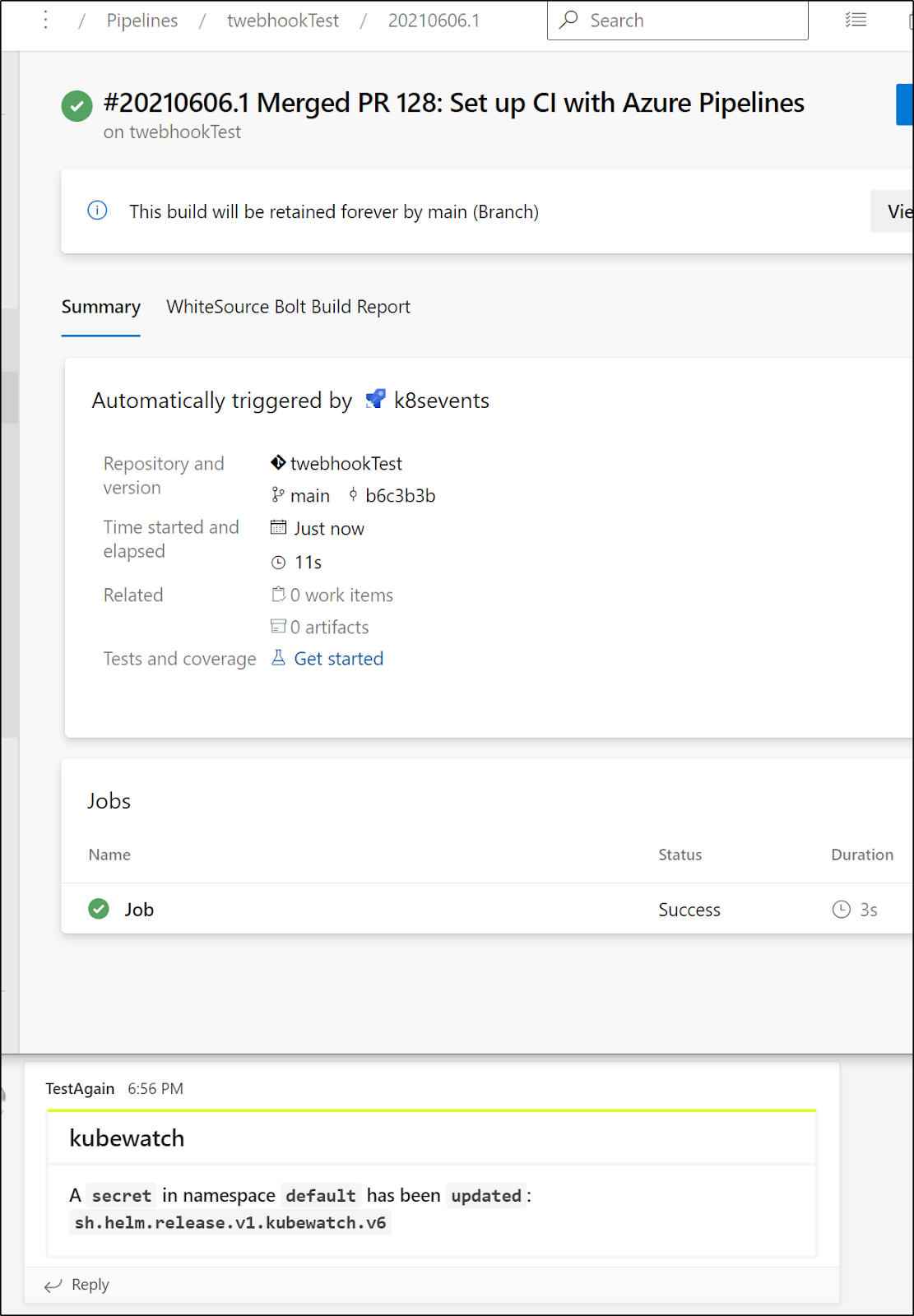

Now when we update the secret, we see both that Teams was notified and that the AzDO job kicked off

$ kubectl apply -f my-secret.yaml

secret/my-secret4 created

Checking the logs of the pod, we see it indeed triggered Teams notifications and the AzDO webhook

$ kubectl logs kubewatch-5d466cffc8-fncpl | tail -n 5

time="2021-06-06T23:56:13Z" level=info msg="Processing add to secret: default/sh.helm.release.v1.kubewatch.v2" pkg=kubewatch-secret

time="2021-06-06T23:56:13Z" level=info msg="Processing add to secret: default/sh.helm.release.v1.kubewatch.v4" pkg=kubewatch-secret

time="2021-06-06T23:56:13Z" level=info msg="Kubewatch controller synced and ready" pkg=kubewatch-secret

time="2021-06-06T23:57:09Z" level=info msg="Processing add to secret: default/my-secret4" pkg=kubewatch-secret

2021/06/06 23:57:09 Message successfully sent to https://dev.azure.com/princessking/_apis/public/distributedtask/webhooks/k8sevents?api-version=6.0-preview at 2021-06-06 23:57:09.74699227 +0000 UTC m=+56.432415102

And we can see both the job was invoked and Teams was notified

What to do next

This could be useful if we wished to monitor a namespace for secret updates and then trigger, for instance, an Azure DevOps pipeline that would consume them and push them into a permanent store like AKV or Hashi Vault.

One does need to be careful, however, as some things, like configmaps, change quite often (with leader election updates, for instance).
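If we ever put a small relay of our own between Kubewatch and the pipeline (which the stock chart does not provide), a name filter could drop the noisy objects before anything is triggered. A minimal sketch follows; shouldForward is hypothetical, and the ignore patterns are assumptions based only on the names seen in the logs earlier.

```javascript
// Sketch: decide whether a changed resource is worth forwarding.
// The ignore patterns are assumptions based on names seen in the Kubewatch
// logs (leader-election configmaps, Helm release secrets).
const IGNORE = [
  /leader-election/,            // e.g. datadog-leader-election
  /^ingress-controller-leader/, // e.g. ingress-controller-leader-nginx
  /^sh\.helm\.release\.v1\./    // Helm release bookkeeping secrets
];

function shouldForward(resource) {
  // resource is "<namespace>/<name>", as printed by Kubewatch.
  const name = resource.includes('/')
    ? resource.split('/').slice(1).join('/')
    : resource;
  return !IGNORE.some((pattern) => pattern.test(name));
}

console.log(shouldForward('default/my-secret4'));
console.log(shouldForward('default/sh.helm.release.v1.kubewatch.v2'));
```

The list would need tuning per cluster; the point is only that filtering by name is cheap compared with letting every leader-election heartbeat start a pipeline run.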

If we want to secure the endpoint, we have to set a secret and a header field that would carry a checksum computed with that secret. You can see some details in this GH post: https://github.com/MicrosoftDocs/azure-devops-docs/issues/8913

For instance, say this was your POST data to the webhook (fields we can parse from the resource in our AzDO job)

$ jq -n --arg waitMinutes 10 --argjson targets "${targets_json:-[]}" '{ waitMinutes: $waitMinutes, targets: $targets }'

{

"waitMinutes": "10",

"targets": []

}

Then we could sign it (an HMAC-SHA1 checksum rather than encryption) with the secret (e.g. mysupersecret1234)

$ export post_data=`jq -n --arg waitMinutes 10 --argjson targets "${targets_json:-[]}" '{ waitMinutes: $waitMinutes, targets: $targets }'`

$ export hash_value=$( echo -n "$post_data" | openssl sha1 -hmac "mysupersecret1234")

$ echo $hash_value

(stdin)= c2901c9b22bd1c391afa744738bcf0b62e679d4e

This would make the request

out=$( curl -v -X POST -d"$post_data" -H "Content-type: application/json" -H "$azdo_header_field: $hash_value" "$azdo_url" )

So if our header was, just for instance, MYCRAZYHEADER, then we would have a header as

-H "Content-type: application/json" -H "MYCRAZYHEADER: (stdin)= c2901c9b22bd1c391afa744738bcf0b62e679d4e"

# or

-H "Content-type: application/json" -H "MYCRAZYHEADER: c2901c9b22bd1c391afa744738bcf0b62e679d4e"

which would line up with the signed POST data.

This is a bit much for the basic Helm chart of Kubewatch, but it is good to know if writing something more customized.
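For a custom sender written in Node.js, the same checksum can be computed with the built-in crypto module. This is a sketch under assumptions: signPayload is a hypothetical helper, the secret and header name are the illustrative ones used above, and it produces the bare hex form of the header (without the "(stdin)= " prefix).

```javascript
const { createHmac } = require('crypto');

// Sketch: compute the HMAC-SHA1 hex digest of a request body, i.e. the hex
// string that `openssl sha1 -hmac` prints after "(stdin)= " for the same bytes.
function signPayload(body, secret) {
  return createHmac('sha1', secret).update(body, 'utf8').digest('hex');
}

// Illustrative values only: the header name and secret must match whatever
// was entered on the incoming webhook service connection.
const secret = 'mysupersecret1234';
const headerName = 'MYCRAZYHEADER';
const body = JSON.stringify({ waitMinutes: '10', targets: [] });

const headers = {
  'Content-Type': 'application/json',
  [headerName]: signPayload(body, secret)
};

console.log(headers);
```

Note that the checksum covers the exact bytes sent, so the compact JSON from JSON.stringify yields a different value than the pretty-printed jq output; whatever string is signed must be the string that is posted.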

Summary

We explored using the Dapr Kubernetes events binding and how to trigger a Dapr Node.js app with event messages. We then explored using Kubewatch to do something similar and trigger Slack, Teams and, lastly, Azure DevOps webhook triggers.

These systems could be used to watch Kubernetes for changes, say dynamically created secrets or secrets created in a namespace by another team, then sync those secrets into a more permanent system of record like Hashi Vault or AKV.