In our prior guides we covered creating and monitoring clusters, but how do we handle a service mesh? That is, a layer that handles discovery of, and routing between, our services. There are many options for a “service mesh”, including Istio, Consul, and the Netflix OSS stack (Eureka, Zuul and Hystrix).

There are many places on the web already that can talk big picture with fancy slides, but you come here for the ‘doing’ not the ‘talking’. So let’s dig in and see how these actually stack up.

Create a DevOps Project

Before we do anything else, we need a repo to store our code. Since we will deploy through CI, let’s start in Azure DevOps (AzDO).

$ az extension add --name azure-devops

The installed extension 'azure-devops' is in preview.

While we can do many things with the az CLI, creating the initial organization and personal access token (PAT) is still done through the Azure portal.

And lastly, we can have it create an Azure Kubernetes Service (AKS) cluster and a CD pipeline automatically.

Click "Done" and it will start to create all the resources - this includes a new DevOps instance, Repo, Pipeline, Cluster and Container Registry! This may take a few minutes.

When done we should have:

If we click the Code button above, we are taken to the repo where we can clone the code:

$ git clone https://ijohnson-thinkahead.visualstudio.com/idjSpringBootApp/_git/idjSpringBootApp

Cloning into 'idjSpringBootApp'...

remote: Azure Repos

remote: Found 46 objects to send. (25 ms)

Unpacking objects: 100% (46/46), done.

$ cd idjSpringBootApp/

Getting JDK8

Let's install JDK 8 so we can test the app. We're using a Mac, so we'll install it with Homebrew. The catch is that Oracle has been releasing new JDK versions non-stop (we're up to 12 now), so installing 8 takes a few extra steps:

$ brew tap caskroom/versions

Updating Homebrew...

==> Auto-updated Homebrew!

Updated 2 taps (homebrew/core and homebrew/cask).

==> Updated Formulae

minetest

==> Tapping caskroom/versions

Cloning into '/usr/local/Homebrew/Library/Taps/caskroom/homebrew-versions'...

remote: Enumerating objects: 231, done.

…

Then we can install it:

$ brew cask install java8

==> Caveats

Installing java8 means you have AGREED to the license at

https://www.oracle.com/technetwork/java/javase/terms/license/javase-license.html

==> Satisfying dependencies

…
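Since newer JDKs can end up on the PATH alongside 8, it's worth confirming which one `java` actually resolves to. Here's a small shell sketch (our own helper, not part of Homebrew) that reads `java -version` output and prints the major version, handling both the old `1.8.0_xxx` scheme and the newer `12.0.1` style:

```shell
# Print the Java major version from `java -version` output read on stdin.
java_major_version() {
  local version major
  # First line looks like: java version "1.8.0_202"  or  openjdk version "12.0.1" 2019-04-16
  version=$(head -n 1 | sed -E 's/.*version "([^"]+)".*/\1/')
  case "$version" in
    1.*) major=${version#1.}; major=${major%%.*} ;;   # legacy scheme: 1.8.0_202 -> 8
    *)   major=${version%%[.+-]*} ;;                  # modern scheme: 12.0.1 -> 12
  esac
  echo "$major"
}
```

Run it as `java -version 2>&1 | java_major_version` (the version banner goes to stderr). On macOS, `/usr/libexec/java_home -v 1.8` prints the JDK 8 home directory, so `export JAVA_HOME=$(/usr/libexec/java_home -v 1.8)` points Maven at it.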

Next, install maven:

$ brew install maven

==> Downloading https://www.apache.org/dyn/closer.cgi?path=maven/maven-3/3.6.0/binaries/apache-maven-3.6.0-

==> Downloading from http://mirrors.koehn.com/apache/maven/maven-3/3.6.0/binaries/apache-maven-3.6.0-bin.ta

###################################

…

With Java 8 and Maven installed, let's verify we can build:

$ mvn clean package

[INFO] Scanning for projects...

[INFO]

[INFO] -------------------------< com.microsoft:ROOT >-------------------------

[INFO] Building Sample Spring App 1.0

[INFO] --------------------------------[ war ]---------------------------------

[INFO]

[INFO] --- maven-clean-plugin:2.6.1:clean (default-clean) @ ROOT ---

[INFO] Deleting /Users/isaac.johnson/Workspaces/idjSpringBootApp/Application/target

[INFO]

…

Downloaded from central: https://repo.maven.apache.org/maven2/asm/asm-analysis/3.2/asm-analysis-3.2.jar (18 kB at 30 kB/s)

Downloaded from central: https://repo.maven.apache.org/maven2/commons-io/commons-io/1.3.2/commons-io-1.3.2.jar (88 kB at 146 kB/s)

Downloaded from central: https://repo.maven.apache.org/maven2/asm/asm-util/3.2/asm-util-3.2.jar (37 kB at 58 kB/s)

Downloaded from central: https://repo.maven.apache.org/maven2/com/google/guava/guava/18.0/guava-18.0.jar (2.3 MB at 2.9 MB/s)

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 10.689 s

[INFO] Finished at: 2019-04-13T12:23:00-05:00

[INFO] ------------------------------------------------------------------------

Let’s check on our deployment… the “Helm Upgrade” step in the release pipeline will give us some details:

We can see the External IP listed right there, so if we check our browser:

We can see it's clearly working:

But what if we want to go directly to the pod to check it out? We can get our AKS cluster name and resource group from the portal, then fetch the kubectl credentials and look up the running pods:

$ az aks get-credentials --name idjSpringBootApp --resource-group idjSpringBootApp-rg

Merged "idjSpringBootApp" as current context in /Users/isaac.johnson/.kube/config

$ kubectl get pods --all-namespaces | grep sampleapp

dev6f9f sampleapp-659d9cdfc8-s8ltd 1/1 Running 0 12m

dev6f9f sampleapp-659d9cdfc8-v7zc2 1/1 Running 0 12m
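Rather than eyeballing grep output and copying names by hand, we can pull the namespace and pod name straight out of the listing. A minimal sketch (our own helper, not a kubectl feature) that matches on the pod-name column only, so a namespace that merely contains the string won't produce a false hit:

```shell
# Print "<namespace> <pod>" for each pod whose name starts with "<prefix>-",
# reading `kubectl get pods --all-namespaces` output on stdin. Only the NAME
# column is matched, so the header row and unrelated pods are skipped.
find_pods() {
  awk -v p="$1" 'index($2, p "-") == 1 { print $1, $2 }'
}
```

For example, `kubectl get pods --all-namespaces | find_pods sampleapp | head -n 1` prints the first sampleapp pod with its namespace, which can feed `read -r ns pod` before a port-forward. A label selector (`kubectl get pods --all-namespaces -l app=sampleapp`, using the app label we rely on later) avoids text matching altogether.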

Then port-forward to view the pod directly via localhost:

$ kubectl port-forward sampleapp-659d9cdfc8-s8ltd --namespace dev6f9f 8080:8080

Forwarding from 127.0.0.1:8080 -> 8080

Forwarding from [::1]:8080 -> 8080

And if we want to log in directly and see what is going on:

$ kubectl exec -it sampleapp-659d9cdfc8-s8ltd --namespace dev6f9f -- /bin/sh

# cd logs

# tail -f localhost_access_log.2019-04-13.txt

10.244.1.1 - - [13/Apr/2019:17:46:19 +0000] "GET / HTTP/1.1" 200 2884

10.244.1.1 - - [13/Apr/2019:17:46:22 +0000] "GET / HTTP/1.1" 200 2884

10.244.1.1 - - [13/Apr/2019:17:46:29 +0000] "GET / HTTP/1.1" 200 2884

10.244.1.1 - - [13/Apr/2019:17:46:32 +0000] "GET / HTTP/1.1" 200 2884

We can verify our requests are landing on this pod by requesting a nonexistent page:

Because the service load-balances across a two-pod ReplicaSet, I wouldn't expect every refresh to show up in this pod's log, but roughly half should.
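To check the "roughly half" claim with numbers instead of watching tail, we can tally status codes from each pod's access log. This sketch assumes the Tomcat access log uses the Common Log Format shown above, where the status code is the ninth whitespace-separated field:

```shell
# Tally HTTP status codes from Common Log Format lines (files as arguments, or stdin):
#   10.244.1.1 - - [13/Apr/2019:17:46:19 +0000] "GET / HTTP/1.1" 200 2884
# Field 9 is the status code; output is "<status> <count>", sorted by status.
status_counts() {
  awk 'NF >= 10 { count[$9]++ } END { for (s in count) print s, count[s] }' "$@" | sort
}
```

Running `kubectl exec sampleapp-659d9cdfc8-s8ltd --namespace dev6f9f -- cat logs/localhost_access_log.2019-04-13.txt | status_counts` against each pod in turn should show the requests split between them (the relative `logs/` path assumes the same starting directory we used with `cd logs` above).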

Verifying CI/CD works

Let’s make a minor change to the page and confirm that it is deployed automatically.

First, let’s see what the last build was:

$ az pipelines build list -o table

ID Number Status Result Definition ID Definition Name Source Branch Queued Time Reason

---- ---------- --------- --------- --------------- --------------------- --------------- -------------------------- -----------

6 20190413.1 completed succeeded 5 idjSpringBootApp - CI master 2019-04-13 08:51:34.747383 userCreated

Next, let's add a Java 8 note to the page:

$ sed -i.bak 's/Your Java Spring app/Your Java8 Spring app/g' src/main/webapp/index.html

$ git diff

Before we add anything, a quick git status shows some files that should be ignored (the .bak file is the backup that sed -i.bak left behind):

$ git status

On branch master

Your branch is up to date with 'origin/master'.

Changes not staged for commit:

(use "git add <file>..." to update what will be committed)

(use "git checkout -- <file>..." to discard changes in working directory)

modified: src/main/webapp/index.html

Untracked files:

(use "git add <file>..." to include in what will be committed)

bin/

src/main/webapp/index.html.bak

target/

Pro-tip: You likely will want to create a gitignore file at the root of your repo (.gitignore).

You can find a decent one here: https://gitignore.io/api/vim,java,macos,maven,windows,visualstudio,visualstudiocode
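If you'd rather not pull in a generated file, a minimal alternative is to append just the entries from the git status output above. This helper is our own sketch; it only adds lines that aren't already present, so it is safe to rerun:

```shell
# Append each argument to ./.gitignore unless an identical line already exists.
ensure_gitignored() {
  local file=".gitignore" entry
  touch "$file"
  # Make sure the file ends with a newline before appending to it.
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    echo >> "$file"
  fi
  for entry in "$@"; do
    # -x: whole-line match, -F: treat the entry literally (e.g. "*.bak").
    grep -qxF -- "$entry" "$file" || printf '%s\n' "$entry" >> "$file"
  done
}
```

From the repo root, `ensure_gitignored bin/ target/ '*.bak'` covers the three untracked paths above.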

Now add the modified HTML file, commit it and push:

$ git add src/main/webapp/index.html && git commit -m "change java 8 line and trigger build" && git push

Now we should see the CI (and CD) has been triggered:

$ az pipelines build list -o table

ID Number Status Result Definition ID Definition Name Source Branch Queued Time Reason

---- ---------- ---------- --------- --------------- --------------------- --------------- -------------------------- -----------

7 20190413.2 inProgress 5 idjSpringBootApp - CI master 2019-04-13 15:54:49.170701 batchedCI

6 20190413.1 completed succeeded 5 idjSpringBootApp - CI master 2019-04-13 08:51:34.747383 userCreated
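Instead of rerunning the list until the new build flips to completed, we can poll. This generic helper (our own sketch) reruns a command until its output matches an expected value or the attempts run out:

```shell
# Rerun a command until its output equals <expected>, or give up.
# Usage: wait_for_output <expected> <max_attempts> <sleep_seconds> <command...>
wait_for_output() {
  local expected="$1" attempts="$2" delay="$3" i=1 out=""
  shift 3
  while [ "$i" -le "$attempts" ]; do
    out=$("$@") || true          # a failing command just counts as a miss
    if [ "$out" = "$expected" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "gave up after $attempts attempts (last output: '$out')" >&2
  return 1
}
```

For example, `wait_for_output completed 60 10 az pipelines build list --top 1 --query "[0].status" -o tsv` waits up to about ten minutes, assuming the newest build is listed first as in the table above.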

We can port forward the latest container pretty easily if we know the namespace:

$ kubectl port-forward $(kubectl get pod -l app=sampleapp -n dev6f9f -o=jsonpath='{.items[0].metadata.name}') --namespace dev6f9f 8080:8080
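One caveat: items[0] is just the first pod kubectl returns (the list is sorted by name), which isn't necessarily the one from the latest rollout. A small sketch that picks the newest pod by creation time instead; it relies on RFC 3339 UTC timestamps sorting correctly as plain strings:

```shell
# Print the most recently created pod from "<name> <creationTimestamp>" lines
# on stdin, e.g. sampleapp-654c974b8d-c7tjm 2019-04-13T20:54:49Z
newest_pod() {
  LC_ALL=C sort -k2,2 | tail -n 1 | awk '{ print $1 }'
}
```

Feeding it from kubectl's custom columns:

kubectl port-forward "$(kubectl get pods -n dev6f9f -l app=sampleapp --no-headers -o custom-columns=NAME:.metadata.name,CREATED:.metadata.creationTimestamp | newest_pod)" --namespace dev6f9f 8080:8080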

Adding Istio

Download the latest release (1.1.2 at the time of writing):

$ cd ~ && mkdir istio_install

$ cd istio_install/

$ export ISTIO_VERSION=1.1.2

$ curl -sL "https://github.com/istio/istio/releases/download/$ISTIO_VERSION/istio-$ISTIO_VERSION-osx.tar.gz" | tar xz
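The curl above hardcodes the osx tarball. If you're following along from Linux, a small sketch like this picks the asset name from uname; it assumes 1.1.x releases are published as istio-<version>-osx.tar.gz and istio-<version>-linux.tar.gz (check the release page if in doubt):

```shell
# Print the Istio release tarball URL; the OS is detected unless given as $2.
istio_release_url() {
  local version="$1" os="${2:-}"
  if [ -z "$os" ]; then
    case "$(uname -s)" in
      Darwin) os=osx ;;
      Linux)  os=linux ;;
      *) echo "unsupported OS: $(uname -s)" >&2; return 1 ;;
    esac
  fi
  echo "https://github.com/istio/istio/releases/download/${version}/istio-${version}-${os}.tar.gz"
}
```

Usage: `curl -sL "$(istio_release_url "$ISTIO_VERSION")" | tar xz`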

Then install the istioctl client locally:

$ cd istio-1.1.2/

$ ls

LICENSE README.md bin install istio.VERSION samples tools

$ chmod +x ./bin/istioctl

$ sudo mv ./bin/istioctl /usr/local/bin/istioctl

Password:

Pro-tip: the install guide also walks through adding bash completions for istioctl. I tried this, but found it generally made using istioctl more problematic (when I wanted to tab-complete a path to a local YAML file, it tried to complete it as a command instead).

# Generate the bash completion file and source it in your current shell

mkdir -p ~/completions && istioctl collateral --bash -o ~/completions

source ~/completions/istioctl.bash

# Source the bash completion file in your .bashrc so that the command-line completions

# are permanently available in your shell

echo "source ~/completions/istioctl.bash" >> ~/.bashrc

We’ll assume you’ve already installed helm but if not, you can follow this guide here: https://helm.sh/docs/using_helm/

Set up Tiller if it's missing (it likely is):

$ helm init

First, we need to install "istio-init":

$ helm install install/kubernetes/helm/istio-init --name istio-init --namespace istio-system

NAME: istio-init

LAST DEPLOYED: Sat Apr 13 16:20:07 2019

NAMESPACE: istio-system

STATUS: DEPLOYED

...
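As we understand the 1.1 chart, istio-init registers Istio's CRDs via Kubernetes Jobs, so they may still be arriving when the helm command returns. Before installing the main chart, it's worth checking that the count has settled. A minimal sketch that counts them from kubectl output:

```shell
# Count CRDs whose names contain "istio.io" in `kubectl get crds` output on stdin.
# grep -c prints 0 but exits non-zero when nothing matches, hence the || true.
count_istio_crds() {
  grep -c 'istio\.io' || true
}
```

Run `kubectl get crds | count_istio_crds` a few times; once the number stops changing (and matches the count the Istio install docs give for your version), move on.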

Then install Istio itself (this can take a bit):

$ helm install install/kubernetes/helm/istio --name istio --namespace istio-system

NAME: istio

LAST DEPLOYED: Sat Apr 13 16:21:22 2019

NAMESPACE: istio-system

STATUS: DEPLOYED

RESOURCES:

==> v1/ClusterRole

NAME AGE

istio-citadel-istio-system 31s

istio-galley-istio-system 31s

istio-ingressgateway-istio-system 31s

istio-mixer-istio-system 31s

istio-pilot-istio-system 31s

istio-reader 31s

istio-sidecar-injector-istio-system 31s

prometheus-istio-system 31s

==> v1/ClusterRoleBinding

NAME AGE

istio-citadel-istio-system 31s

istio-galley-admin-role-binding-istio-system 31s

istio-ingressgateway-istio-system 31s

istio-mixer-admin-role-binding-istio-system 31s

istio-multi 31s

istio-pilot-istio-system 31s

istio-sidecar-injector-admin-role-binding-istio-system 31s

prometheus-istio-system 31s

==> v1/ConfigMap

NAME DATA AGE

istio 2 31s

istio-galley-configuration 1 31s

istio-security-custom-resources 2 31s

istio-sidecar-injector 1 31s

prometheus 1 31s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

istio-citadel-5bbc997554-bzgc8 1/1 Running 0 30s

istio-galley-64f64687c8-7j6s4 0/1 ContainerCreating 0 31s

istio-ingressgateway-5f577bbbcd-x954m 0/1 Running 0 31s

istio-pilot-78f7d6645f-5rx78 1/2 Running 0 30s

istio-policy-5fd9989f74-wnfvq 2/2 Running 0 30s

istio-sidecar-injector-549585c8d9-jx64h 1/1 Running 0 30s

istio-telemetry-5b47cf5b9b-7j2qd 2/2 Running 0 30s

prometheus-8647cf4bc7-rgq8w 0/1 Init:0/1 0 30s

==> v1/Role

NAME AGE

istio-ingressgateway-sds 31s

==> v1/RoleBinding

NAME AGE

istio-ingressgateway-sds 31s

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

istio-citadel ClusterIP 10.0.180.48 <none> 8060/TCP,15014/TCP 31s

istio-galley ClusterIP 10.0.185.215 <none> 443/TCP,15014/TCP,9901/TCP 31s

istio-ingressgateway LoadBalancer 10.0.200.183 <pending> 80:31380/TCP,443:31390/TCP,31400:31400/TCP,15029:31541/TCP,15030:30284/TCP,15031:30932/TCP,15032:32705/TCP,15443:31289/TCP,15020:31342/TCP 31s

istio-pilot ClusterIP 10.0.203.190 <none> 15010/TCP,15011/TCP,8080/TCP,15014/TCP 31s

istio-policy ClusterIP 10.0.137.162 <none> 9091/TCP,15004/TCP,15014/TCP 31s

istio-sidecar-injector ClusterIP 10.0.1.204 <none> 443/TCP 31s

istio-telemetry ClusterIP 10.0.92.214 <none> 9091/TCP,15004/TCP,15014/TCP,42422/TCP 31s

prometheus ClusterIP 10.0.46.21 <none> 9090/TCP 31s

==> v1/ServiceAccount

NAME SECRETS AGE

istio-citadel-service-account 1 31s

istio-galley-service-account 1 31s

istio-ingressgateway-service-account 1 31s

istio-mixer-service-account 1 31s

istio-multi 1 31s

istio-pilot-service-account 1 31s

istio-security-post-install-account 1 31s

istio-sidecar-injector-service-account 1 31s

prometheus 1 31s

==> v1alpha2/attributemanifest

NAME AGE

istioproxy 30s

kubernetes 30s

==> v1alpha2/handler

NAME AGE

kubernetesenv 30s

prometheus 30s

==> v1alpha2/kubernetes

NAME AGE

attributes 30s

==> v1alpha2/metric

NAME AGE

requestcount 30s

requestduration 30s

requestsize 30s

responsesize 30s

tcpbytereceived 30s

tcpbytesent 30s

tcpconnectionsclosed 30s

tcpconnectionsopened 30s

==> v1alpha2/rule

NAME AGE

kubeattrgenrulerule 30s

promhttp 30s

promtcp 30s

promtcpconnectionclosed 30s

promtcpconnectionopen 30s

tcpkubeattrgenrulerule 30s

==> v1alpha3/DestinationRule

NAME AGE

istio-policy 30s

istio-telemetry 30s

==> v1beta1/ClusterRole

NAME AGE

istio-security-post-install-istio-system 31s

==> v1beta1/ClusterRoleBinding

NAME AGE

istio-security-post-install-role-binding-istio-system 31s

==> v1beta1/Deployment

NAME READY UP-TO-DATE AVAILABLE AGE

istio-citadel 1/1 1 1 30s

istio-galley 0/1 1 0 31s

istio-ingressgateway 0/1 1 0 31s

istio-pilot 0/1 1 0 31s

istio-policy 1/1 1 1 31s

istio-sidecar-injector 1/1 1 1 30s

istio-telemetry 1/1 1 1 31s

prometheus 0/1 1 0 31s

==> v1beta1/MutatingWebhookConfiguration

NAME AGE

istio-sidecar-injector 30s

==> v1beta1/PodDisruptionBudget

NAME MIN AVAILABLE MAX UNAVAILABLE ALLOWED DISRUPTIONS AGE

istio-galley 1 N/A 0 31s

istio-ingressgateway 1 N/A 0 31s

istio-pilot 1 N/A 0 31s

istio-policy 1 N/A 0 31s

istio-telemetry 1 N/A 0 31s

==> v2beta1/HorizontalPodAutoscaler

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

istio-ingressgateway Deployment/istio-ingressgateway <unknown>/80% 1 5 1 30s

istio-pilot Deployment/istio-pilot <unknown>/80% 1 5 1 30s

istio-policy Deployment/istio-policy <unknown>/80% 1 5 1 30s

istio-telemetry Deployment/istio-telemetry <unknown>/80% 1 5 1 30s

NOTES:

Thank you for installing istio.

Your release is named istio.

To get started running application with Istio, execute the following steps:

1. Label namespace that application object will be deployed to by the following command (take default namespace as an example)

$ kubectl label namespace default istio-injection=enabled

$ kubectl get namespace -L istio-injection

2. Deploy your applications

$ kubectl apply -f <your-application>.yaml

For more information on running Istio, visit:

https://istio.io/
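As the pod list in that output shows, several components (galley, pilot, prometheus) weren't ready yet when helm returned. Here's a sketch that lists any pod whose READY column isn't n/n or whose status isn't Running, skipping Completed job pods; it expects `kubectl get pods` output:

```shell
# List pods that are not fully ready, reading `kubectl get pods` output on stdin
# (columns: NAME READY STATUS RESTARTS AGE). Prints "<name> <ready> <status>".
unready_pods() {
  awk '$1 == "NAME" || $3 == "Completed" { next }
       { split($2, r, "/"); if (r[1] != r[2] || $3 != "Running") print $1, $2, $3 }'
}
```

To block until the control plane is up: `until [ -z "$(kubectl get pods -n istio-system --no-headers | unready_pods)" ]; do sleep 10; done`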

So let’s apply the istio label to the dev namespace and verify:

$ kubectl label namespace dev6f9f istio-injection=enabled

namespace/dev6f9f labeled

$ kubectl get namespace -L istio-injection

NAME STATUS AGE ISTIO-INJECTION

default Active 7h37m

dev6f9f Active 7h33m enabled

istio-system Active 14m

kube-public Active 7h37m

kube-system Active 7h37m
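Keep in mind the label only affects pods created after it's set: the sidecar is added at admission time by the istio-sidecar-injector webhook (the MutatingWebhookConfiguration in the helm output), so pods that are already running keep a single container until they're recreated. A sketch to find pods still missing the istio-proxy container:

```shell
# Print pods without an istio-proxy container. Reads lines of
# "<pod> <container> [<container>...]" on stdin.
pods_without_sidecar() {
  awk '{
    found = 0
    for (i = 2; i <= NF; i++) if ($i == "istio-proxy") found = 1
    if (!found) print $1
  }'
}
```

Feed it from a jsonpath listing of each pod's container names:

kubectl get pods -n dev6f9f -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.spec.containers[*].name}{"\n"}{end}' | pods_without_sidecar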

If we want, we can check out what prometheus is tracking already:

$ kubectl -n istio-system port-forward $(kubectl -n istio-system get pod -l app=prometheus -o jsonpath='{.items[0].metadata.name}') 9090:9090

Forwarding from 127.0.0.1:9090 -> 9090

Forwarding from [::1]:9090 -> 9090

Handling connection for 9090

And we’ll see entries for our Springboot app already:

But for better application-level metrics, we can instrument the app with Micrometer by adding a dependency on micrometer-registry-prometheus:

<!-- Micrometer Prometheus registry -->

<dependency>

<groupId>io.micrometer</groupId>

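<artifactId>micrometer-registry-prometheus</artifactId>

<!-- add a <version> element here unless a parent POM (such as Spring Boot's) already manages it -->

</dependency>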

Just add it to the dependencies section of the top-level pom.xml.

Pro-tip: if you need to look up the latest version of an artifact, you can search the Maven Central repository:

Let's push the change and watch for our pod to recycle:

$ git add pom.xml

$ git commit -m "add io.micrometer for prometheus"

[master 25bdeb8] add io.micrometer for prometheus

1 file changed, 7 insertions(+), 1 deletion(-)

$ git push

Enumerating objects: 7, done.

Counting objects: 100% (7/7), done.

Delta compression using up to 4 threads

Compressing objects: 100% (4/4), done.

Writing objects: 100% (4/4), 543 bytes | 543.00 KiB/s, done.

Total 4 (delta 2), reused 0 (delta 0)

remote: Analyzing objects... (4/4) (7 ms)

remote: Storing packfile... done (254 ms)

remote: Storing index... done (79 ms)

To https://ijohnson-thinkahead.visualstudio.com/idjSpringBootApp/_git/idjSpringBootApp

02131d0..25bdeb8 master -> master

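Now we watch for the deployment (though you could also watch the release pipeline). Note that the new pods come up with two containers (READY 2/2) rather than one: because the namespace is labeled, the Istio sidecar is now injected alongside the app.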

$ kubectl get pods --all-namespaces | grep sampleapp

dev6f9f sampleapp-654c4f4689-9ls65 1/1 Running 0 64m

dev6f9f sampleapp-654c4f4689-f7lgg 1/1 Running 0 65m

dev6f9f sampleapp-654c974b8d-c7tjm 1/2 Running 0 19s

$ kubectl get pods --all-namespaces | grep sampleapp

dev6f9f sampleapp-654c4f4689-9ls65 1/1 Terminating 0 64m

dev6f9f sampleapp-654c4f4689-f7lgg 1/1 Running 0 65m

dev6f9f sampleapp-654c974b8d-622dw 0/2 Pending 0 0s

dev6f9f sampleapp-654c974b8d-c7tjm 2/2 Running 0 21s

$ kubectl get pods --all-namespaces | grep sampleapp

dev6f9f sampleapp-654c4f4689-9ls65 1/1 Terminating 0 64m

dev6f9f sampleapp-654c4f4689-f7lgg 1/1 Running 0 65m

dev6f9f sampleapp-654c974b8d-622dw 0/2 Init:0/1 0 2s

dev6f9f sampleapp-654c974b8d-c7tjm 2/2 Running 0 23s
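Once the new pods are up, we can check that the app itself exposes metrics. Note that the micrometer-registry-prometheus dependency on its own doesn't add an HTTP endpoint; in a Spring Boot 2 app that is typically done by Actuator at /actuator/prometheus. That path, and Actuator being enabled in this sample, are assumptions. This sketch pulls a single value out of Prometheus text-format output, assuming sample lines carry no trailing timestamp:

```shell
# Print the value of the first sample of metric $1 from Prometheus text-format
# output on stdin. Comment lines (# HELP / # TYPE) are skipped; the value is
# taken as the last field, so lines with a trailing timestamp are not handled.
metric_value() {
  awk -v n="$1" '
    /^#/ { next }
    index($0, n "{") == 1 || index($0, n " ") == 1 { print $NF; exit }'
}
```

With a port-forward to one of the sampleapp pods running: `curl -s http://localhost:8080/actuator/prometheus | metric_value jvm_threads_live_threads` (the metric name is also an assumption; check the endpoint's output for what your Micrometer version emits).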

Now let’s fire up Prometheus:

$ kubectl -n istio-system port-forward $(kubectl -n istio-system get pod -l app=prometheus -o jsonpath='{.items[0].metadata.name}') 9090:9090

Forwarding from 127.0.0.1:9090 -> 9090

Forwarding from [::1]:9090 -> 9090
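Beyond the UI, Prometheus has an HTTP API we can query through the same port-forward. A sketch with two helpers: one builds a PromQL request-rate query over Istio's istio_requests_total metric, the other sends it to /api/v1/query. Filtering on the destination_service_name label is our assumption about which label fits best here:

```shell
# Build a PromQL query for the per-second request rate Istio's sidecars report
# for one destination service, over an optional window (default 1m).
istio_rps_query() {
  local service="$1" window="${2:-1m}"
  printf 'sum(rate(istio_requests_total{destination_service_name="%s"}[%s]))\n' "$service" "$window"
}

# Run an instant query against Prometheus' HTTP API (needs the port-forward above).
# -G sends the URL-encoded query as a GET parameter.
prom_query() {
  curl -sG "http://localhost:9090/api/v1/query" --data-urlencode "query=$1"
}
```

Usage: `prom_query "$(istio_rps_query sampleapp)"` returns Prometheus' JSON response.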

But Istio’s real power is routing.

Istio Routing

Let’s test with a voting app. Before I dig in: most of the following steps come from a great Microsoft article here. I don't want to replicate it all, but they have a great walkthrough with diagrams as well.

Let's clone the repo, create the namespace and enable automatic Istio sidecar injection on it.

$ git clone https://github.com/Azure-Samples/aks-voting-app.git

Cloning into 'aks-voting-app'...

remote: Enumerating objects: 163, done.

remote: Total 163 (delta 0), reused 0 (delta 0), pack-reused 163

Receiving objects: 100% (163/163), 30.66 KiB | 784.00 KiB/s, done.

Resolving deltas: 100% (82/82), done.

$ cd aks-voting-app/

$ cd scenarios/intelligent-routing-with-istio/

$ kubectl create namespace voting

namespace/voting created

$ kubectl label namespace voting istio-injection=enabled

namespace/voting labeled

Next we can launch the voting app:

$ kubectl apply -f kubernetes/step-1-create-voting-app.yaml --namespace voting

deployment.apps/voting-storage-1-0 created

service/voting-storage created

deployment.apps/voting-analytics-1-0 created

service/voting-analytics created

deployment.apps/voting-app-1-0 created

service/voting-app created

And create the Istio gateway. Frankly, this is where the magic happens:

$ istioctl create -f istio/step-1-create-voting-app-gateway.yaml --namespace voting

Command "create" is deprecated, Use `kubectl create` instead (see https://kubernetes.io/docs/tasks/tools/install-kubectl)

Created config virtual-service/voting/voting-app at revision 66082

Created config gateway/voting/voting-app-gateway at revision 66083

Once launched, we can get the IP of the Istio ingress gateway:

$ kubectl get service istio-ingressgateway --namespace istio-system -o jsonpath='{.status.loadBalancer.ingress[0].ip}'

40.121.141.103
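On a fresh cluster the ingress gateway's EXTERNAL-IP sits at <pending> for a while (as it did in the helm output), and the jsonpath query then prints nothing. A sketch that polls until a real IPv4 address shows up:

```shell
# Succeed if $1 is a dotted-quad IPv4 address (each octet 0-255).
is_ipv4() {
  printf '%s\n' "$1" | awk -F. '
    NF != 4 { exit 1 }
    {
      for (i = 1; i <= 4; i++)
        if ($i !~ /^[0-9]+$/ || length($i) > 3 || $i + 0 > 255) exit 1
    }'
}

# Poll (up to 60 x 10s) until istio-ingressgateway has an external IP, then print it.
ingress_ip() {
  local ip="" tries=0
  while [ "$tries" -lt 60 ]; do
    ip=$(kubectl get service istio-ingressgateway --namespace istio-system \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
    if is_ipv4 "$ip"; then
      echo "$ip"
      return 0
    fi
    tries=$((tries + 1))
    sleep 10
  done
  echo "no external IP after $tries attempts" >&2
  return 1
}
```

Usage: `GATEWAY_IP=$(ingress_ip)`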

Next let's update the analytics engine so we can see multiple versions of the app.

$ kubectl apply -f kubernetes/step-2-update-voting-analytics-to-1.1.yaml --namespace voting

deployment.apps/voting-analytics-1-1 created

This shows we have different engines available:

$ kubectl get pods -n voting

NAME READY STATUS RESTARTS AGE

voting-analytics-1-0-57c7fccb44-lmjr8 2/2 Running 0 17m

voting-analytics-1-1-75f7559f78-lbqc4 2/2 Running 0 5m28s

voting-app-1-0-956756fd-m25q7 2/2 Running 0 17m

voting-app-1-0-956756fd-v22t2 2/2 Running 0 17m

voting-app-1-0-956756fd-vrxpt 2/2 Running 0 17m

voting-storage-1-0-5d8fcc89c4-txrdj 2/2 Running 0 17m

Refreshing the page gives different results depending on which voting-analytics version serves the request:

As you can see above, it's the same app container, but it's hitting different voting-analytics pods.

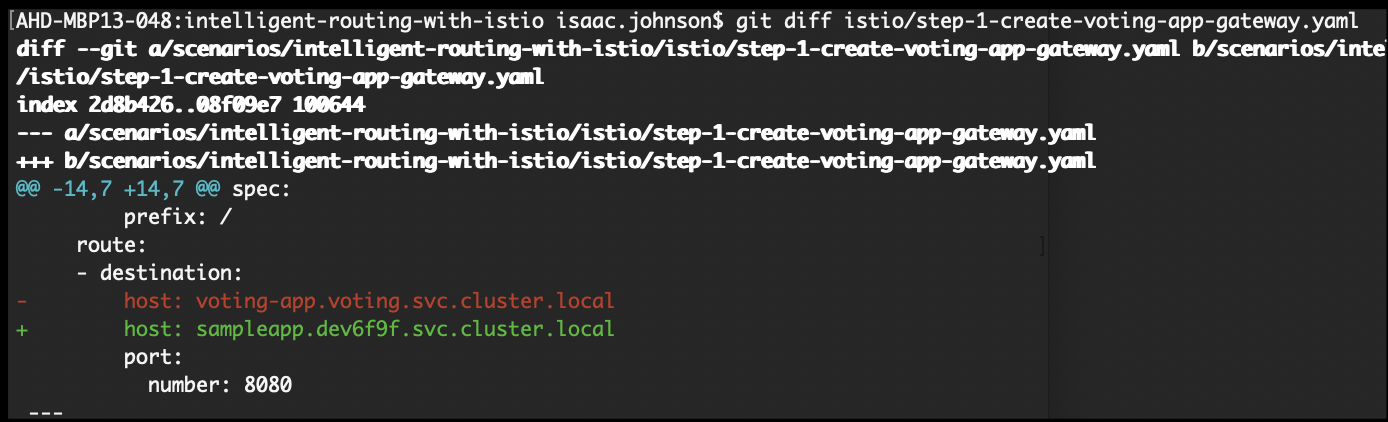
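Rather than refreshing by hand, we can sample the gateway a number of times and tally which analytics version answered. The grep pattern is a placeholder: pick whatever text on the page identifies the analytics version, since we haven't assumed anything about the voting app's markup:

```shell
# Count how often each distinct line appears on stdin, most frequent first.
tally() {
  sort | uniq -c | sort -rn
}

# Request <url> <count> times and tally the first match of <pattern> per response.
sample_responses() {
  local url="$1" count="$2" pattern="$3" i=0
  while [ "$i" -lt "$count" ]; do
    # Keep only the first match per response so each request counts once.
    curl -s "$url" | grep -oE "$pattern" | head -n 1
    i=$((i + 1))
  done | tally
}
```

For example: `sample_responses "http://40.121.141.103/" 20 'PLACEHOLDER-PATTERN'`, replacing the hypothetical pattern with one that matches the version text on the page.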

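So what happens if we point the gateway at our sample app instead of the voting app? Did we indeed get automatic Istio routing to our sample app? To find out, we can change the voting-app VirtualService to route to the sampleapp service in the dev6f9f namespace: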

```yaml
# voting-app-virtualservice.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: voting-app
spec:
  hosts:
  - "*"
  gateways:
  - voting-app-gateway
  http:
  - match:
    - uri:
        prefix: /
    route:
    - destination:
        host: sampleapp.dev6f9f.svc.cluster.local
        port:
          number: 8080
```

$ istioctl replace -f istio/step-1-create-voting-app-gateway.yaml --namespace voting

Command "replace" is deprecated, Use `kubectl apply` instead (see https://kubernetes.io/docs/tasks/tools/install-kubectl)

Updated config virtual-service/voting/voting-app to revision 167095

Updated config gateway/voting/voting-app-gateway to revision 66083

Refreshing the page now routes us to our HelloWorld app, showing that Istio routing is indeed working:

Nothing has changed with the original ARM-deployed chart, however: our HelloWorld app is still load-balanced and available via the original external IP.

$ kubectl get service --namespace dev6f9f

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

sampleapp LoadBalancer 10.0.50.200 13.82.213.133 8080:32144/TCP 28h

tiller-deploy ClusterIP 10.0.187.192 <none> 44134/TCP 32h

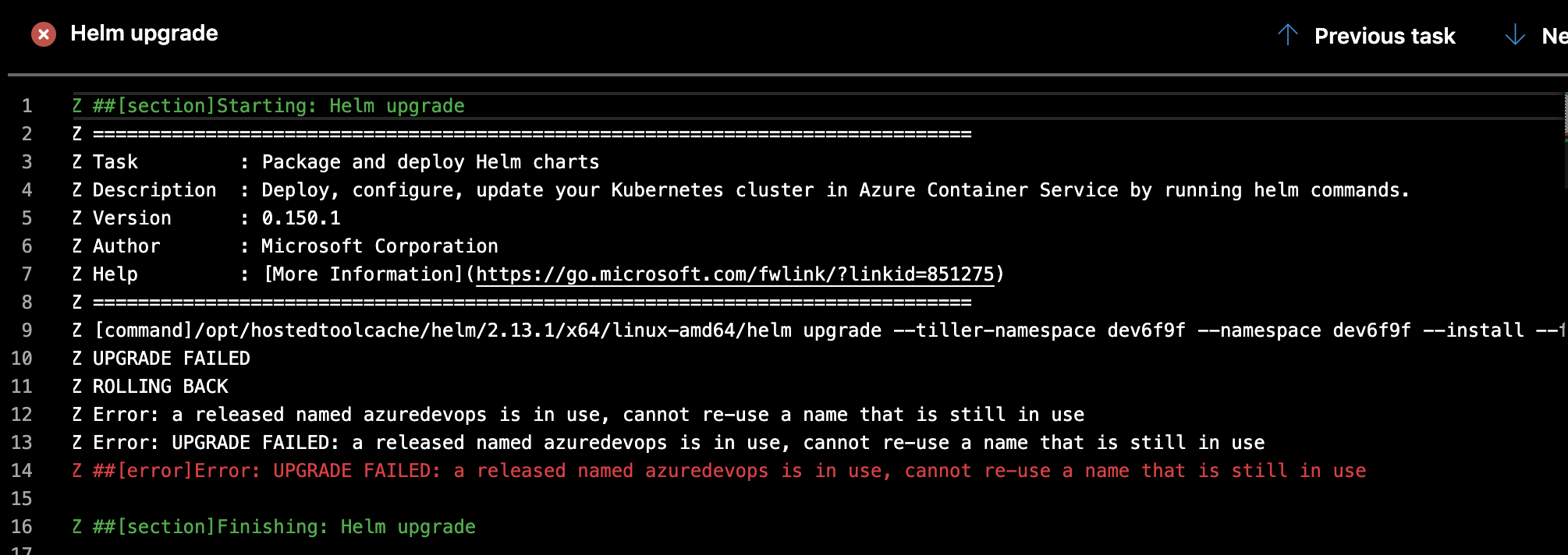

The only outstanding issue is that our experimenting in the dev namespace has convinced Kubernetes that the namespace is in use, which blocks further CD deployments:

While we won't solve it here, the proper fix would be to disable Istio injection on the namespace, delete the pods there and purge the Helm release (helm delete --purge) before our next Helm deployment.
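A sketch of that cleanup, assuming Helm 2 (where purging a release is helm delete --purge) and that Tiller runs in dev6f9f, which the tiller-deploy service above suggests. The release name is a placeholder (helm ls --tiller-namespace dev6f9f should show it), and the commands are only printed unless DRY_RUN=0:

```shell
# Print a command, or run it when DRY_RUN=0 (dry run is the default).
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  else
    echo "$*"
  fi
}

# Usage: reset_dev_namespace <namespace> <helm-release>
reset_dev_namespace() {
  local ns="$1" release="$2"
  # A trailing "-" on a label key removes that label, turning injection off.
  run kubectl label namespace "$ns" istio-injection-
  # Delete only the app pods (the app=sampleapp label used earlier), not Tiller.
  run kubectl delete pods --namespace "$ns" -l app=sampleapp
  # Helm 2: purge the release so the next deployment starts clean.
  run helm delete --purge "$release" --tiller-namespace "$ns"
}
```

Review the output of `reset_dev_namespace dev6f9f <release>` first, then rerun it with `DRY_RUN=0` to apply it.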

Summary

In this post we covered creating an AKS cluster and launching a SpringBoot app via Azure DevOps, which created a repo, CI and CD pipelines and related charts. We installed Istio and touched on Prometheus, which can monitor our applications and nodes. We enabled Istio sidecar injection and verified it worked, both with a voting app and by retrofitting our existing HelloWorld Java SpringBoot app.