Published: Feb 12, 2026 by Isaac Johnson

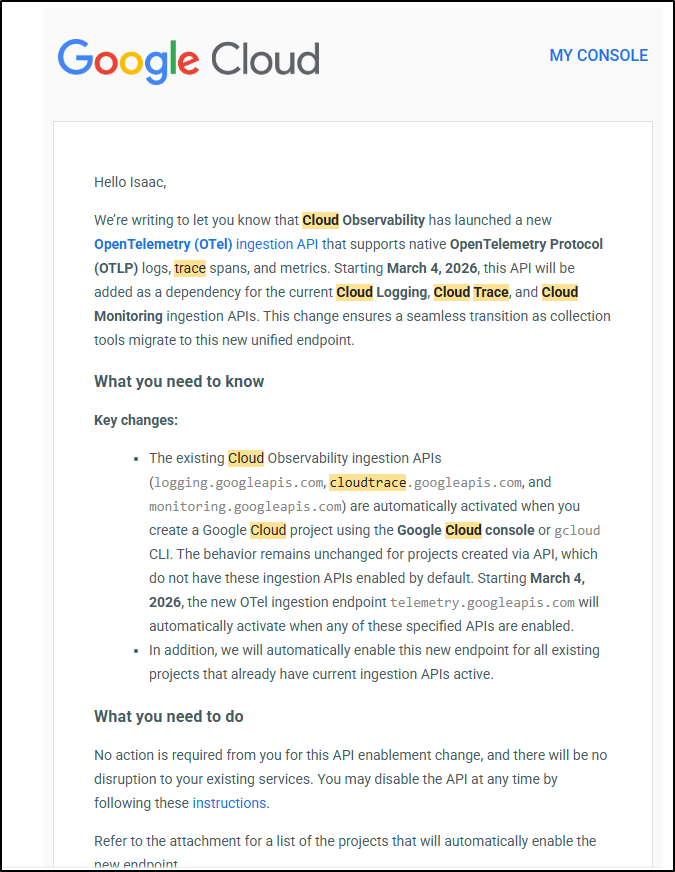

Google is now starting to roll out native OpenTelemetry Protocol (OTLP) endpoints for logs, metrics, and traces. According to a recent email, it will be the preferred unified endpoint starting in early March.

Those that know me know I love OTel. Because of that, I often lean towards New Relic for my SaaS: they have a simple-to-use, globally available OTel endpoint that just requires an API key header.

So how does Google do it? The email was light on details - auth is always the unique factor and most of the ‘setup guides’ assumed stacks living in GCP.

So let’s attack this from a few angles. I’m going to take GotifyMe, a simple RESTful Python app I recently developed just for fun, and update it for monitoring. I’ll instrument it in the following ways:

- GCP OpenTelemetry - set up using the GCP libraries in Python
  - configure a proper SA, review usage
  - add metrics and logs to the traces as well (and note the updates)
- Native OpenTelemetry to a containerized OTel forwarder
  - look at Zipkin (service) and New Relic
  - set up the Zipkin GCP forwarder to send traces to GCP via standard OTel

Lastly, I’ll break it down with some comparisons and discuss the pros/cons of each approach.

GCP Native Setup

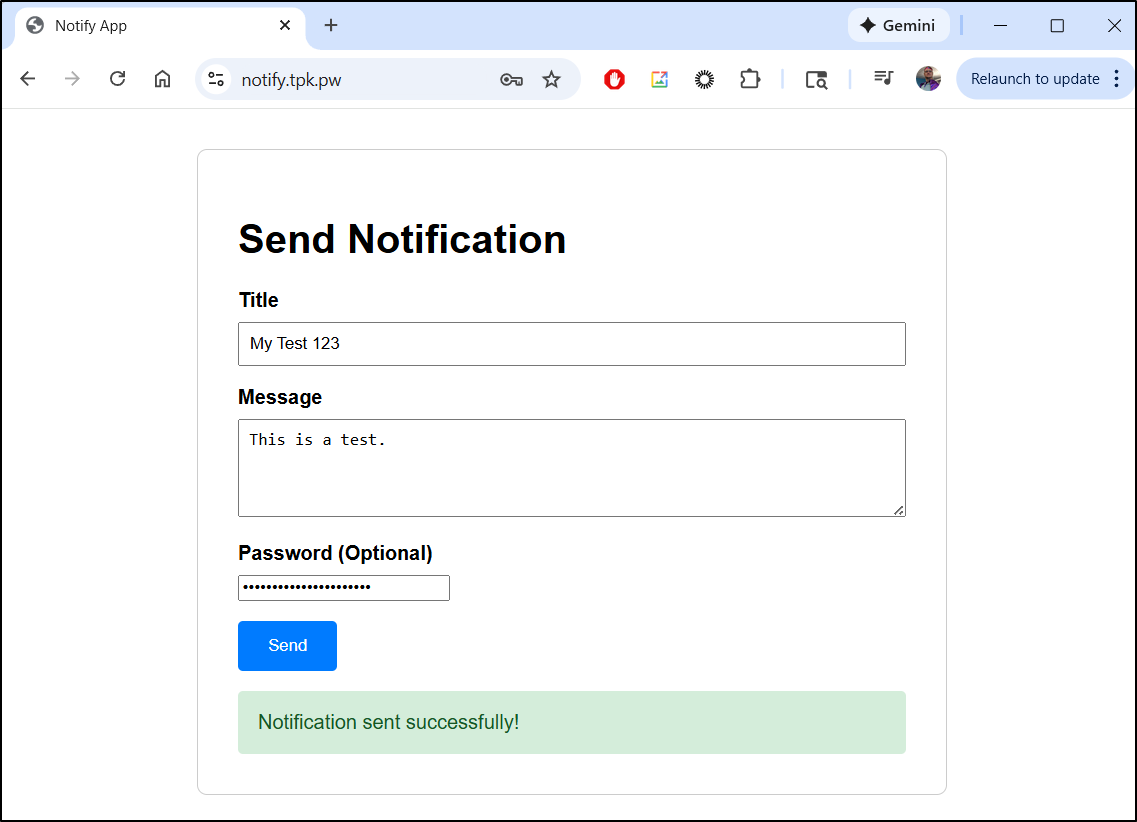

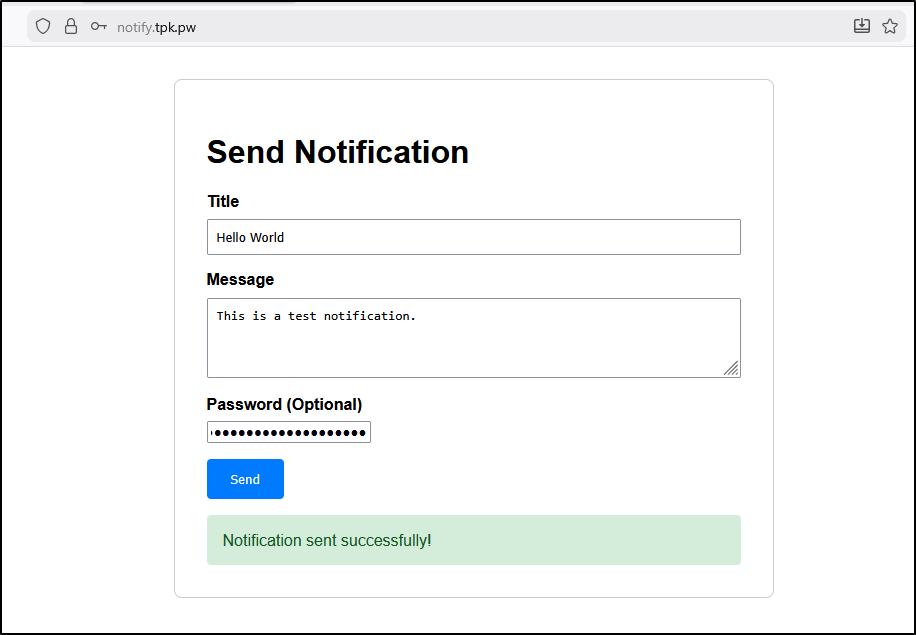

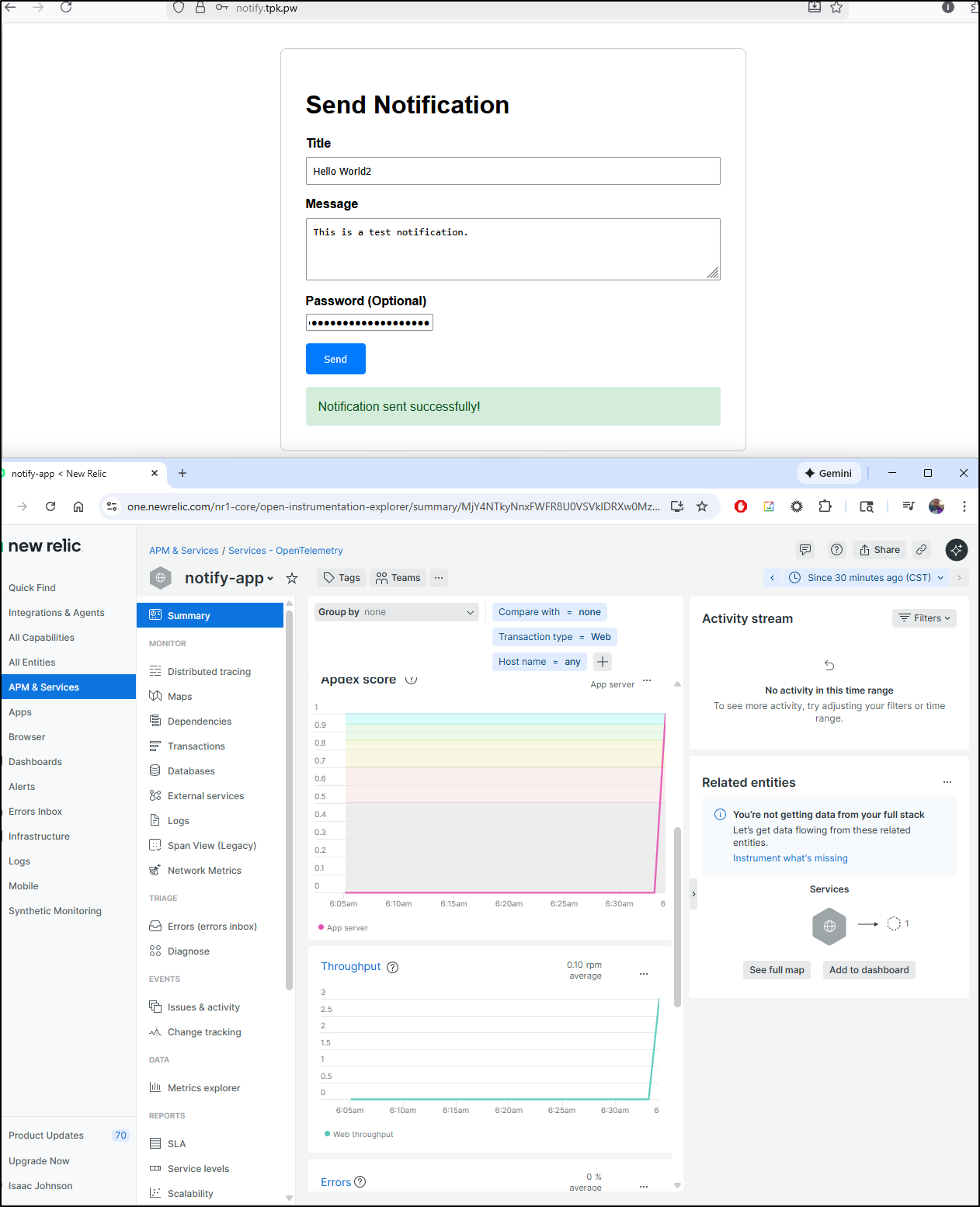

So we might have something with which to experiment, I created a basic Python app GotifyMe.

It is a simple app that notifies my Gotify server, with both a RESTful backend and a web interface.

It has a helm chart for installing into Kubernetes.

Here is a simple deployed instance at notify.tpk.pw:

GCP Cloud Trace

I saw this email come from GCP about the new OTel library

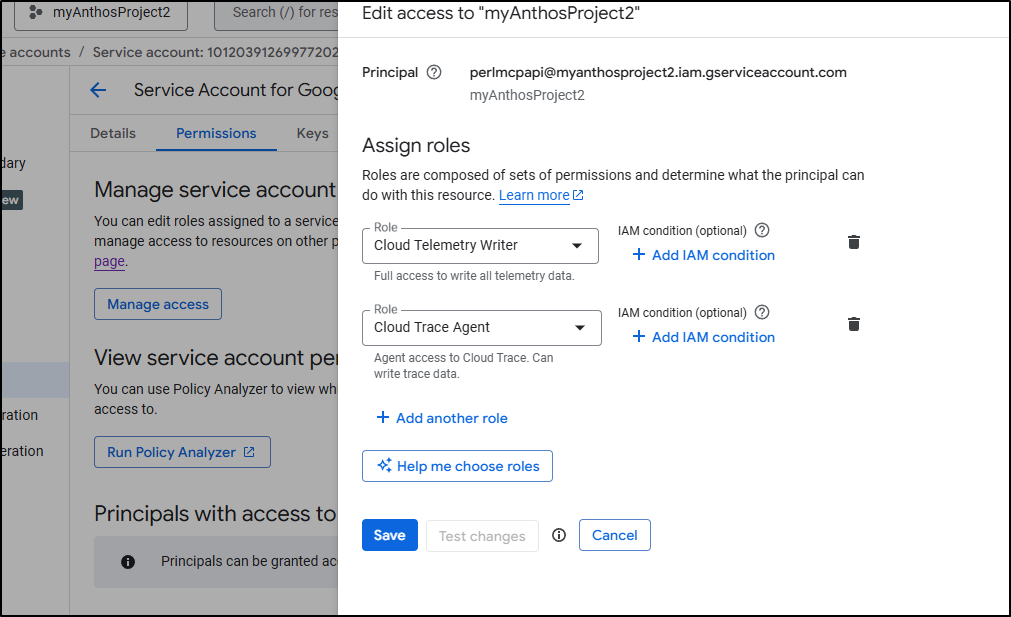

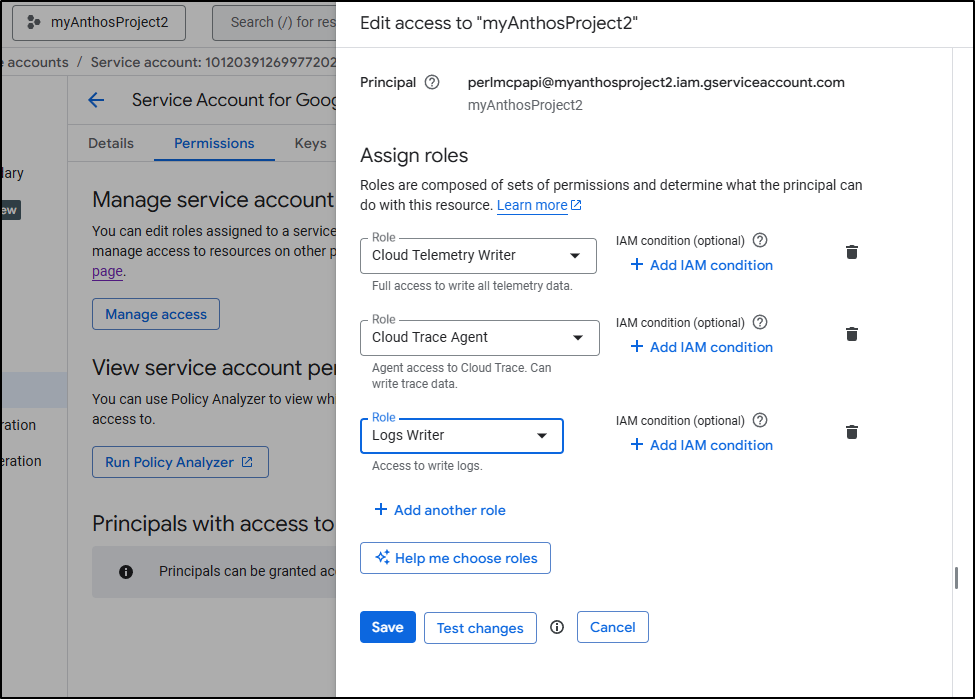

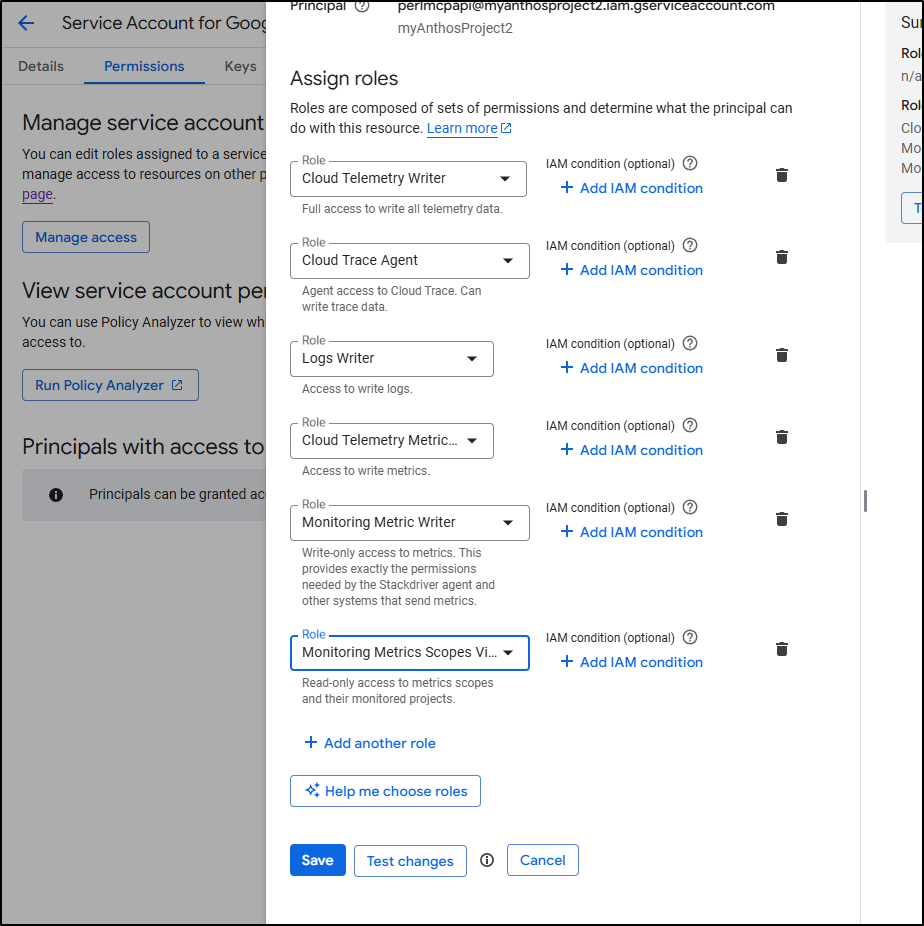

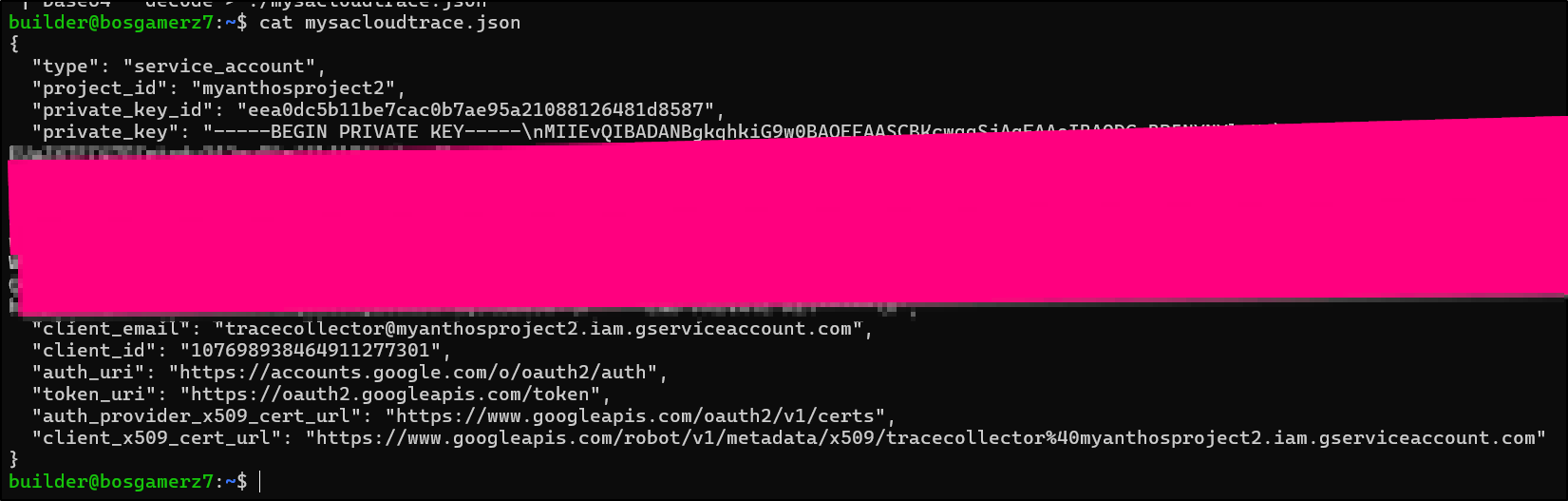

Now, to use it from outside GCP, I need a Service Account that has at least the “Cloud Trace Agent” role (I also added “Cloud Telemetry Writer”).
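Before pointing `GOOGLE_APPLICATION_CREDENTIALS` at a downloaded key, it can help to confirm the file really is a service account key and belongs to the project you expect. Here is a minimal sketch using only the standard library. The function name is hypothetical (it is not part of GotifyMe), and it assumes the usual key fields `type`, `project_id`, `client_email` and `private_key`.

```python
import json
from pathlib import Path


def describe_service_account_key(path):
    """Return the identifying, non-secret fields of a service account key file."""
    data = json.loads(Path(path).read_text(encoding="utf-8"))
    if data.get("type") != "service_account":
        raise ValueError(f"{path} is not a service account key (type={data.get('type')!r})")
    missing = [k for k in ("project_id", "client_email", "private_key") if not data.get(k)]
    if missing:
        raise ValueError(f"{path} is missing fields: {', '.join(missing)}")
    # The private key is deliberately left out so the result is safe to log.
    return {"project_id": data["project_id"], "client_email": data["client_email"]}
```

Calling `describe_service_account_key("mysa.json")` should hand back the same project and email that show up later in the app's `GCP Auth:` debug lines, without ever printing the key material.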

I worked with Gemini and a bit of Copilot to work through the issues and could eventually get it to work (see PR1)

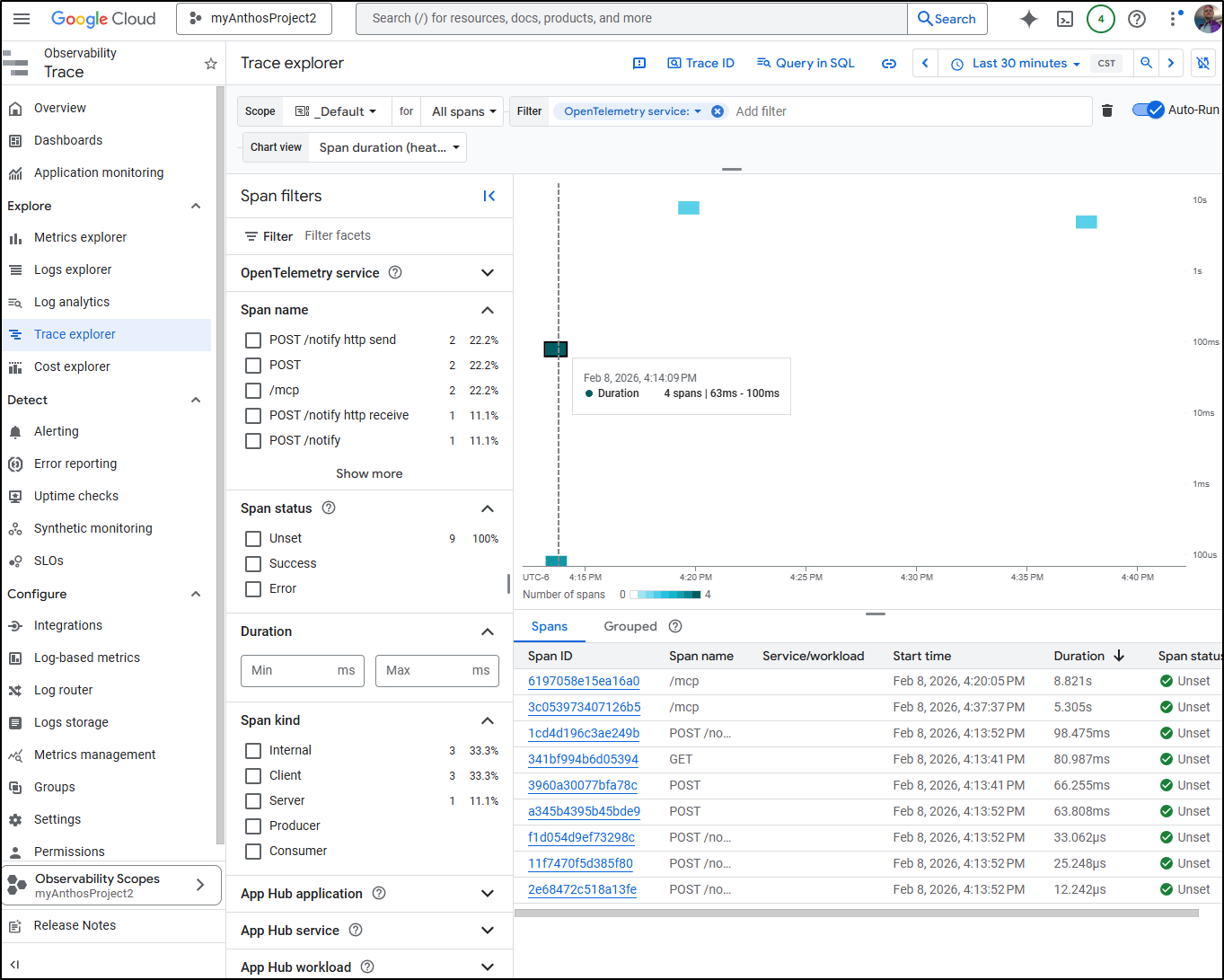

I can now get trace data in GCP.

I fire it up

(venv) builder@DESKTOP-QADGF36:~/Workspaces/GotifyMe$ uvicorn app.main:app --host 0.0.0.0 --port 8002

DEBUG:app.main:Env: GOOGLE_APPLICATION_CREDENTIALS=mysa.json

DEBUG:app.main:Env: GOOGLE_CLOUD_PROJECT=myanthosproject2

DEBUG:app.main:Env: ENABLE_OTEL=true

DEBUG:app.main:Env: GOTIFY_ENDPOINT=https://gotify.tpk.pw

DEBUG:google.auth._default:Checking 'mysa.json' for explicit credentials as part of auth process...

DEBUG:app.main:GCP Auth: GOOGLE_APPLICATION_CREDENTIALS is set to: mysa.json

DEBUG:app.main:GCP Auth: Project ID being used: myanthosproject2 (Detected: myanthosproject2, Env: myanthosproject2)

DEBUG:app.main:GCP Auth: Service Account Email: perlmcpapi@myanthosproject2.iam.gserviceaccount.com

DEBUG:urllib3.connectionpool:Starting new HTTPS connection (1): oauth2.googleapis.com:443

DEBUG:urllib3.connectionpool:https://oauth2.googleapis.com:443 "POST /token HTTP/1.1" 200 None

DEBUG:app.main:GCP Auth: Credentials valid: True

DEBUG:app.main:GCP Auth: Credentials expired: False

DEBUG:app.main:GCP Auth: Scopes: ['https://www.googleapis.com/auth/cloud-platform']

DEBUG:app.main:OTEL Resource Attributes: {'telemetry.sdk.language': 'python', 'telemetry.sdk.name': 'opentelemetry', 'telemetry.sdk.version': '1.39.1', 'service.name': 'gotifyme', 'service.namespace': 'gotifyme-ns', 'gcp.project_id': 'myanthosproject2'}

INFO:app.main:OpenTelemetry configured successfully for Google Cloud Trace (Project: myanthosproject2)

INFO: Started server process [49008]

INFO: Waiting for application startup.

INFO:app.main:Starting up... Setting up Gotify Client

DEBUG:urllib3.connectionpool:Starting new HTTPS connection (1): gotify.tpk.pw:443

DEBUG:urllib3.connectionpool:https://gotify.tpk.pw:443 "GET /application HTTP/1.1" 200 428

INFO:app.gotify_client:Authenticated using Client Token.

INFO:app.gotify_client:Found existing app 'FastAPI_Notify_App'. using token: AL.Hh...

DEBUG:urllib3.connectionpool:Starting new HTTPS connection (1): gotify.tpk.pw:443

DEBUG:urllib3.connectionpool:https://gotify.tpk.pw:443 "POST /message HTTP/1.1" 200 133

INFO:app.main:Startup notification sent.

INFO: Application startup complete.

INFO: Uvicorn running on http://0.0.0.0:8002 (Press CTRL+C to quit)

DEBUG:urllib3.connectionpool:Starting new HTTPS connection (1): gotify.tpk.pw:443

DEBUG:urllib3.connectionpool:https://gotify.tpk.pw:443 "POST /message HTTP/1.1" 200 135

INFO: 127.0.0.1:34750 - "POST /notify HTTP/1.1" 200 OK

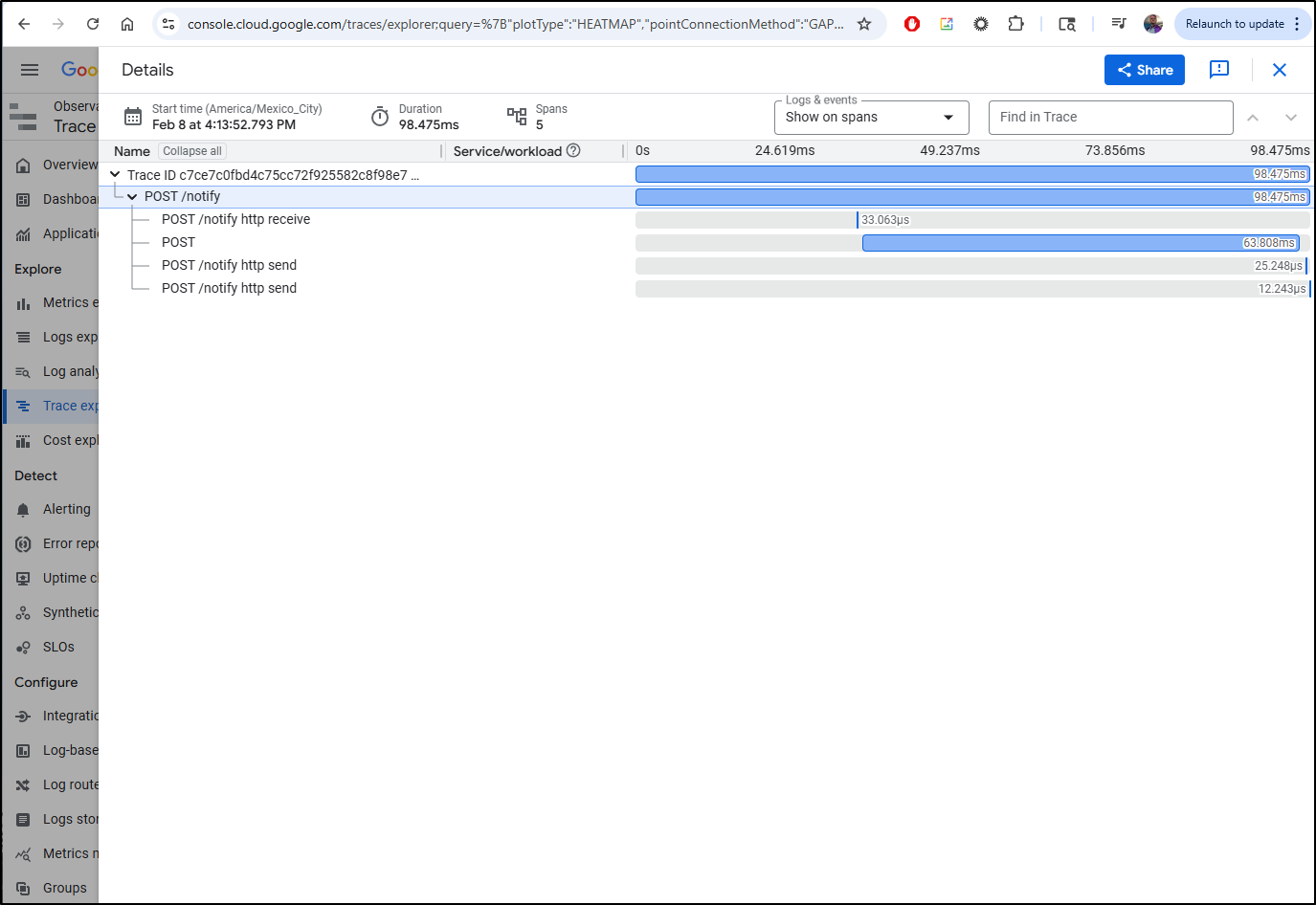

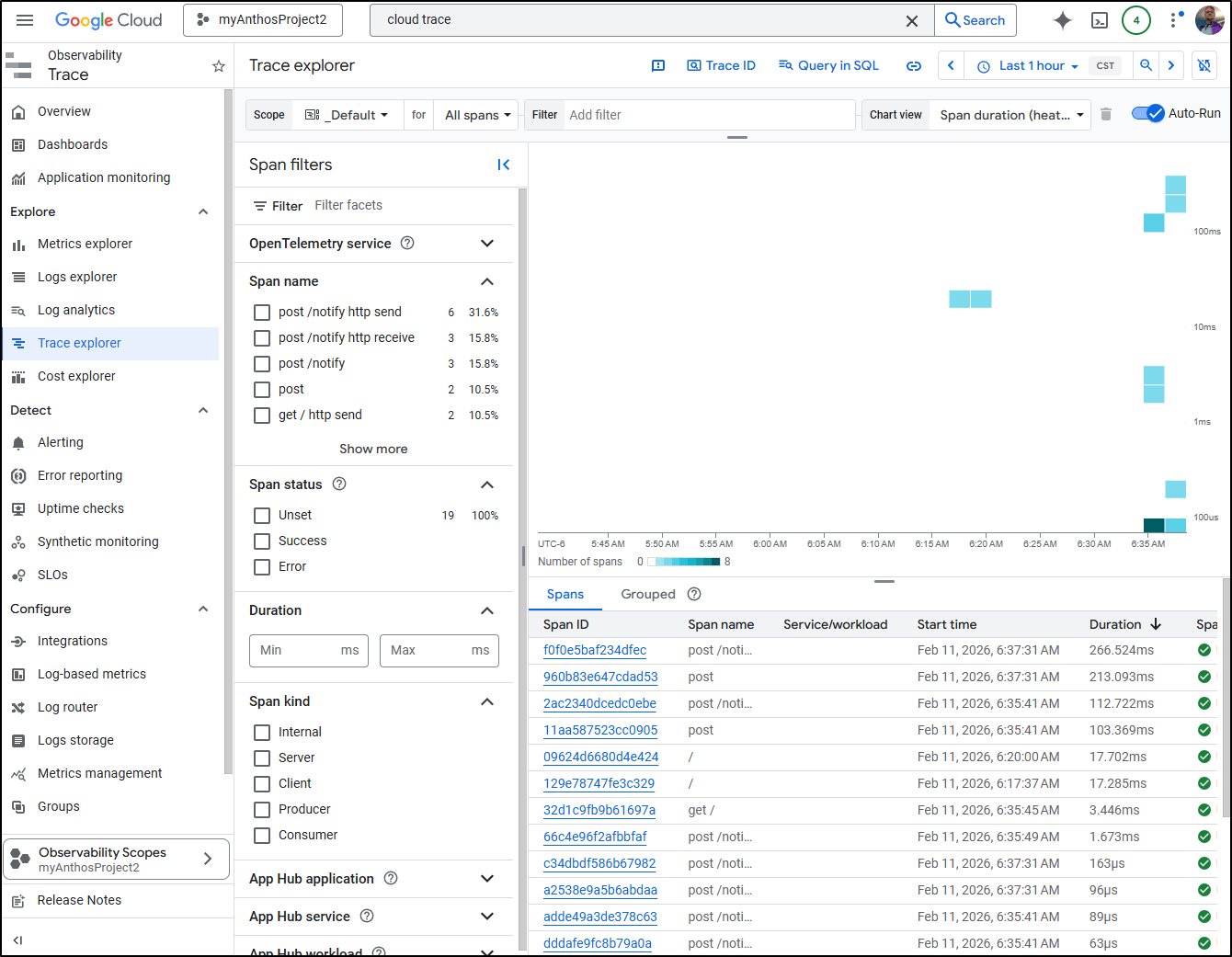

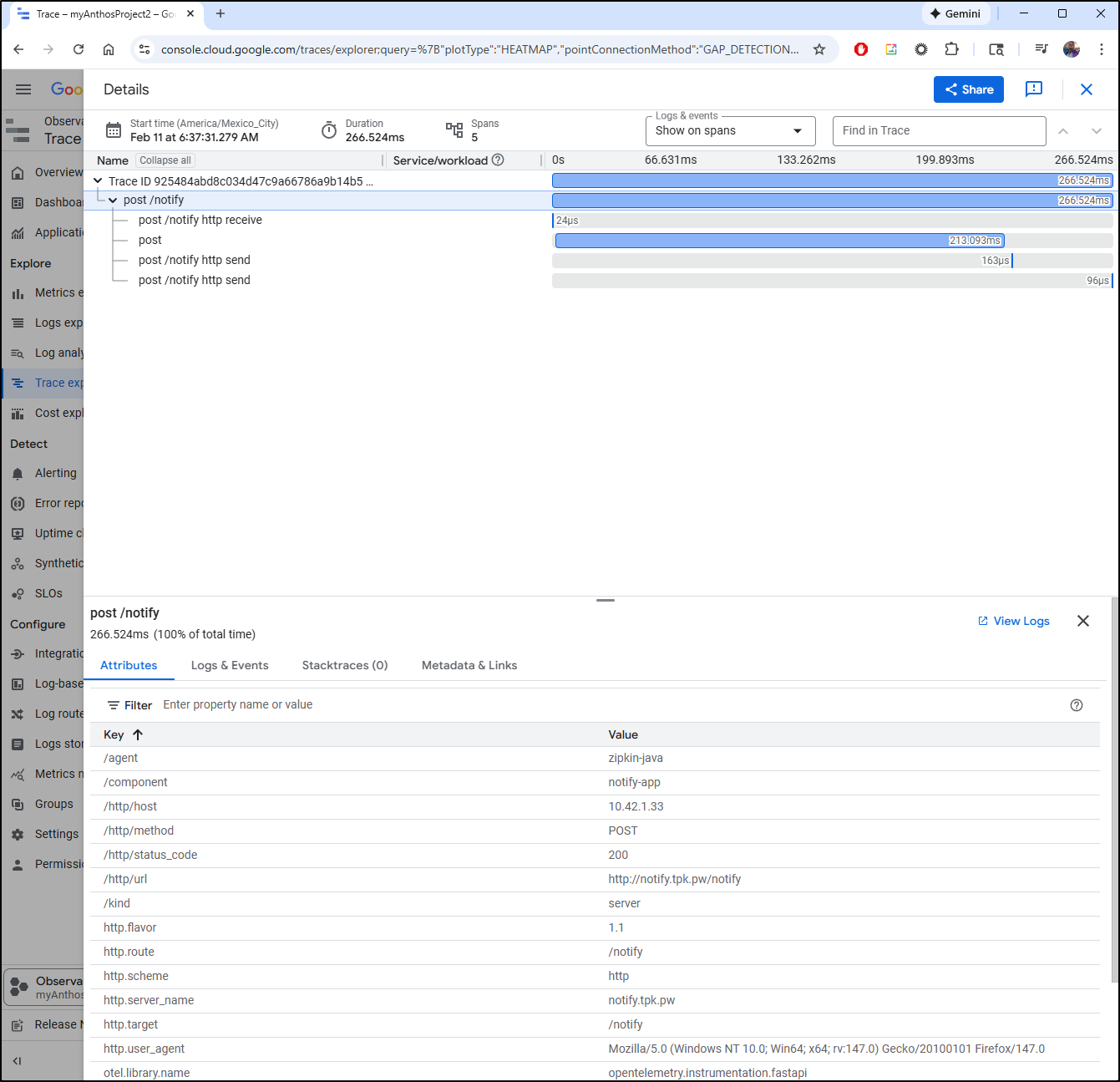

In testing, I can see spans now in GCP

And look at traces:

Adding Metrics and logs

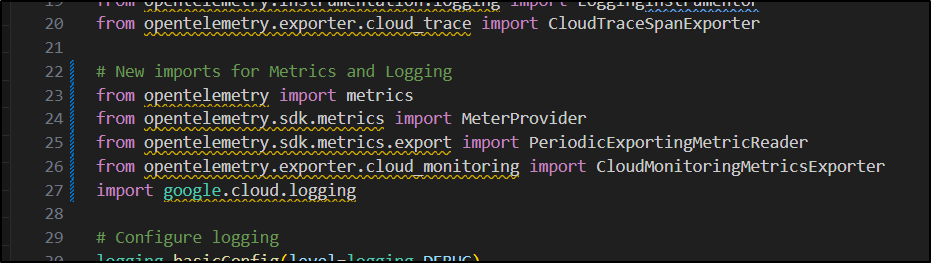

I then had Gemini CLI add Metrics and Logging

✦ I have successfully extended the GotifyMe application to send metrics and logs to Google Cloud Platform.

Summary of changes:

1. Dependencies: Updated requirements.txt to include opentelemetry-exporter-gcp-monitoring and google-cloud-logging.

2. Instrumentation:

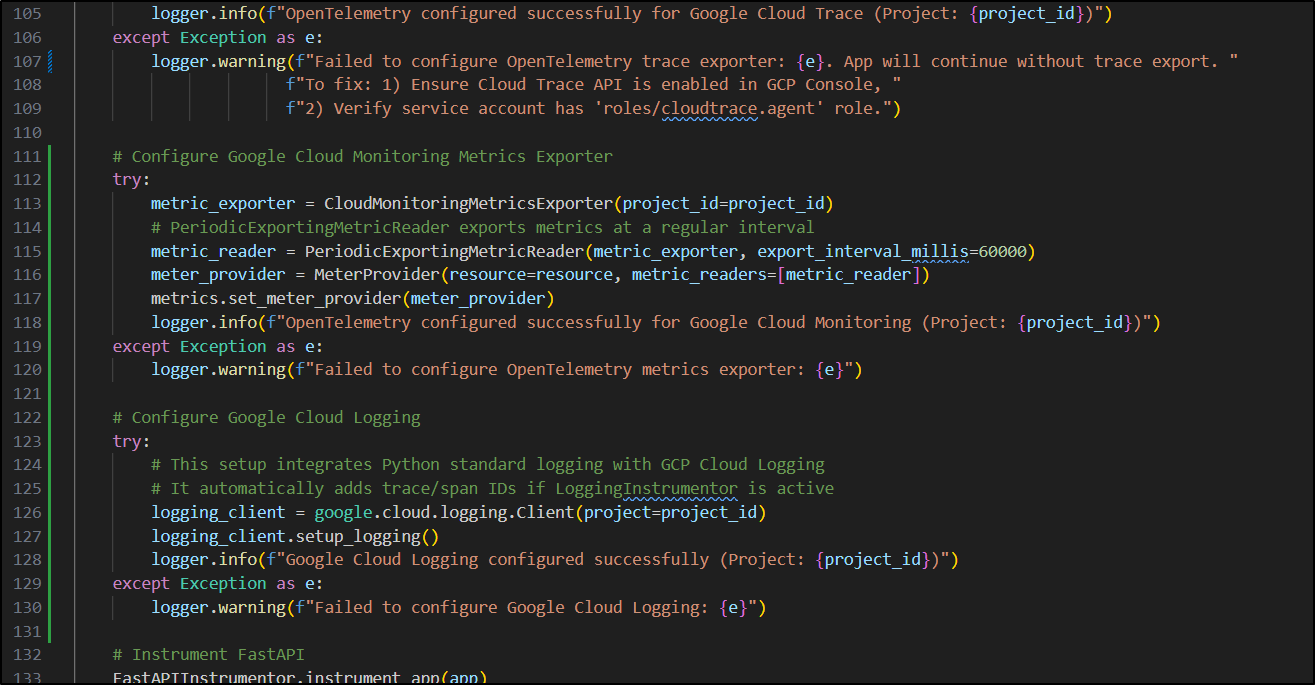

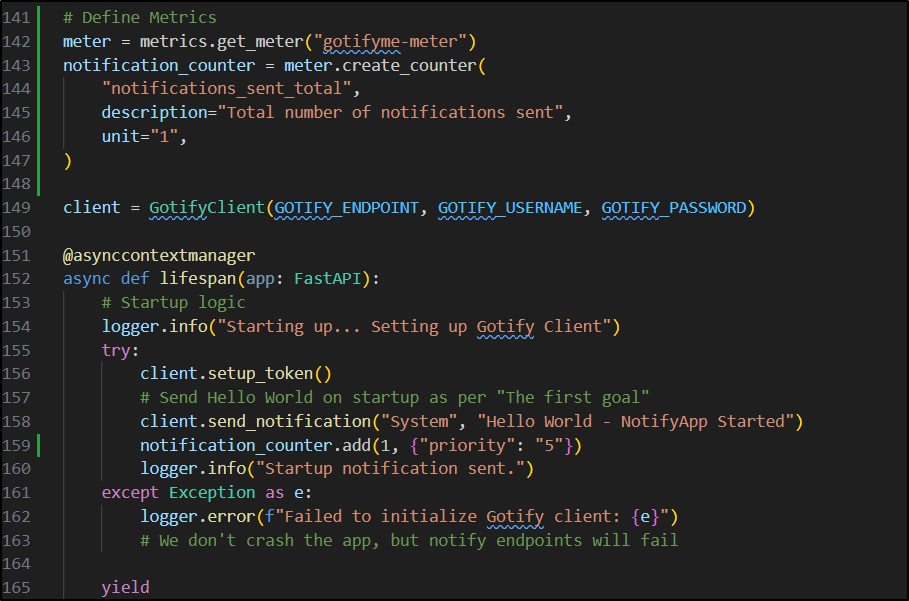

* Metrics: Integrated CloudMonitoringMetricsExporter in app/main.py. Added a custom counter notifications_sent_total that tracks the number of notifications sent, labeled by priority.

* Logging: Integrated google-cloud-logging to automatically export Python logs to Cloud Logging. Application logs now include OpenTelemetry trace and span IDs for seamless correlation in the GCP Console.

3. Application Logic: Updated the /notify endpoint and the startup lifespan to increment the notification counter and provide descriptive log messages.

4. Documentation: Updated INSTRUMENTATION.md to reflect the new metrics and logs features.

You can now monitor notification counts in Google Cloud Monitoring and view correlated logs and traces in the Google Cloud Observability suite.
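To make the "trace and span IDs for correlation" part concrete: Cloud Logging links a structured log entry to a trace through a few special JSON fields. Below is a minimal sketch of building those fields by hand from OTel-style integer IDs. The function name is hypothetical, and the google-cloud-logging handler may well populate these differently internally; this only illustrates the shape of the data.

```python
import json


def cloud_logging_trace_fields(project_id, trace_id, span_id, sampled=True):
    """Build the structured-log fields Cloud Logging uses for trace correlation.

    trace_id is a 128-bit integer and span_id a 64-bit integer, as in OTel.
    """
    return {
        # Trace IDs are rendered as 32 lowercase hex characters.
        "logging.googleapis.com/trace": f"projects/{project_id}/traces/{trace_id:032x}",
        # Span IDs are rendered as 16 lowercase hex characters.
        "logging.googleapis.com/spanId": f"{span_id:016x}",
        "logging.googleapis.com/trace_sampled": sampled,
    }


# Hypothetical IDs, just to show the formatting.
entry = {"message": "notification sent", "severity": "INFO"}
entry.update(cloud_logging_trace_fields("myanthosproject2", 0xABC, 0x1F))
print(json.dumps(entry))
```

The zero padding matters: a short hex string will not match the trace ID that Cloud Trace stores, so the log and the span would not line up in the console.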

Key here is to add in the Metrics exporter and the Google Cloud logging import - today, I believe, Metrics and Traces (Spans) use the OTel endpoint, but logs might still be using native GCP packages.

Gemini CLI detected I like logging (good catch) and added proper try/except blocks, which later helped me sort out the Service Account permissions.

Before we can use a metric (custom), we need to create it. In this case we are creating a “notifications_sent_total” metric

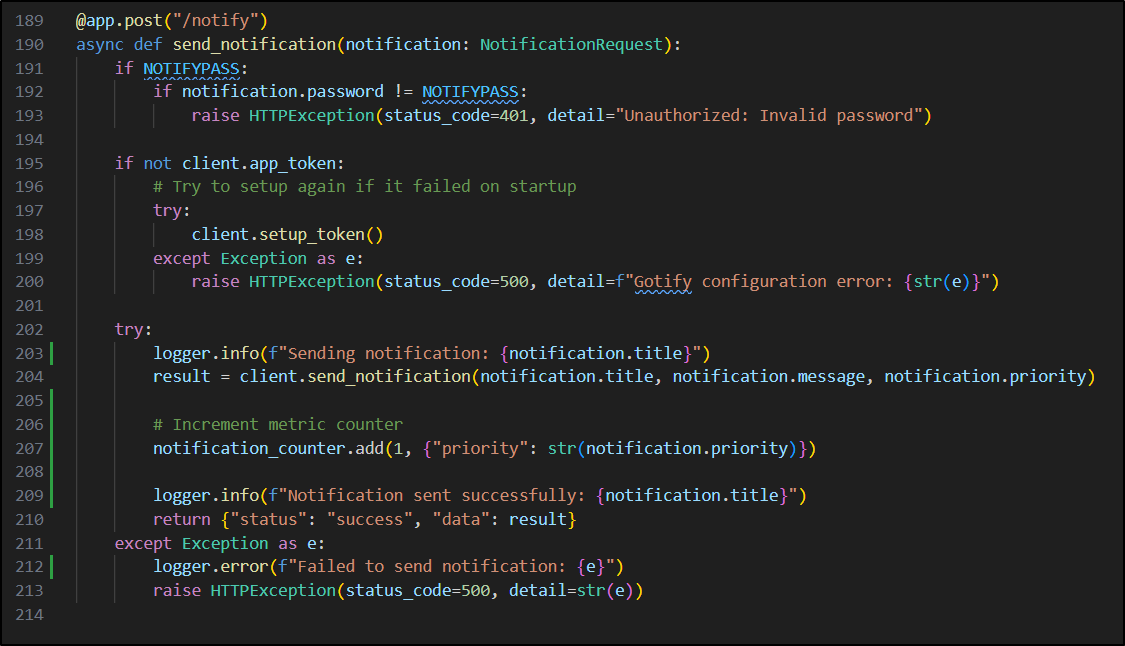

Lastly, when a message is posted, we want to send not only a metric (notification_counter) but also a log (send_notification)

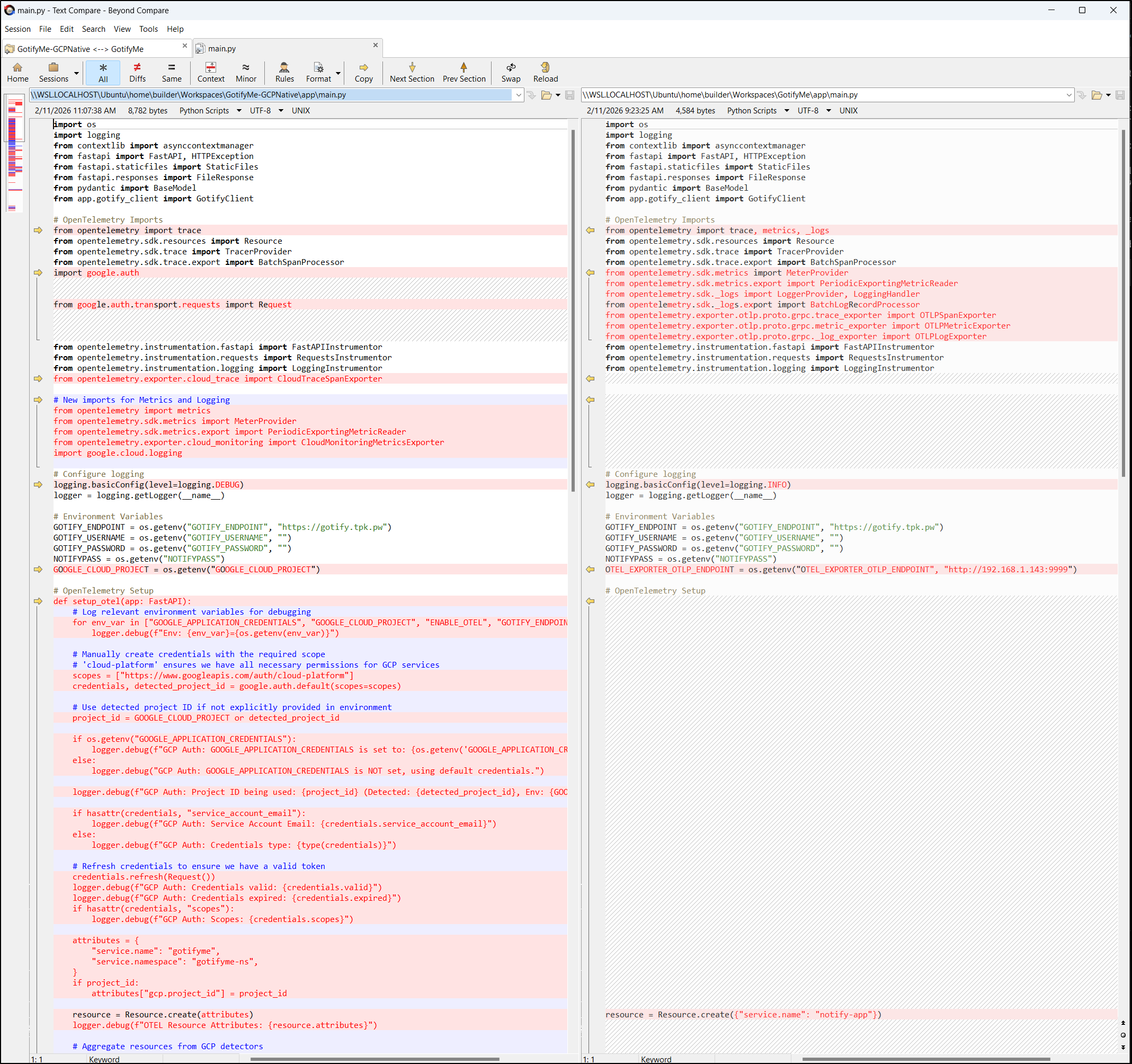

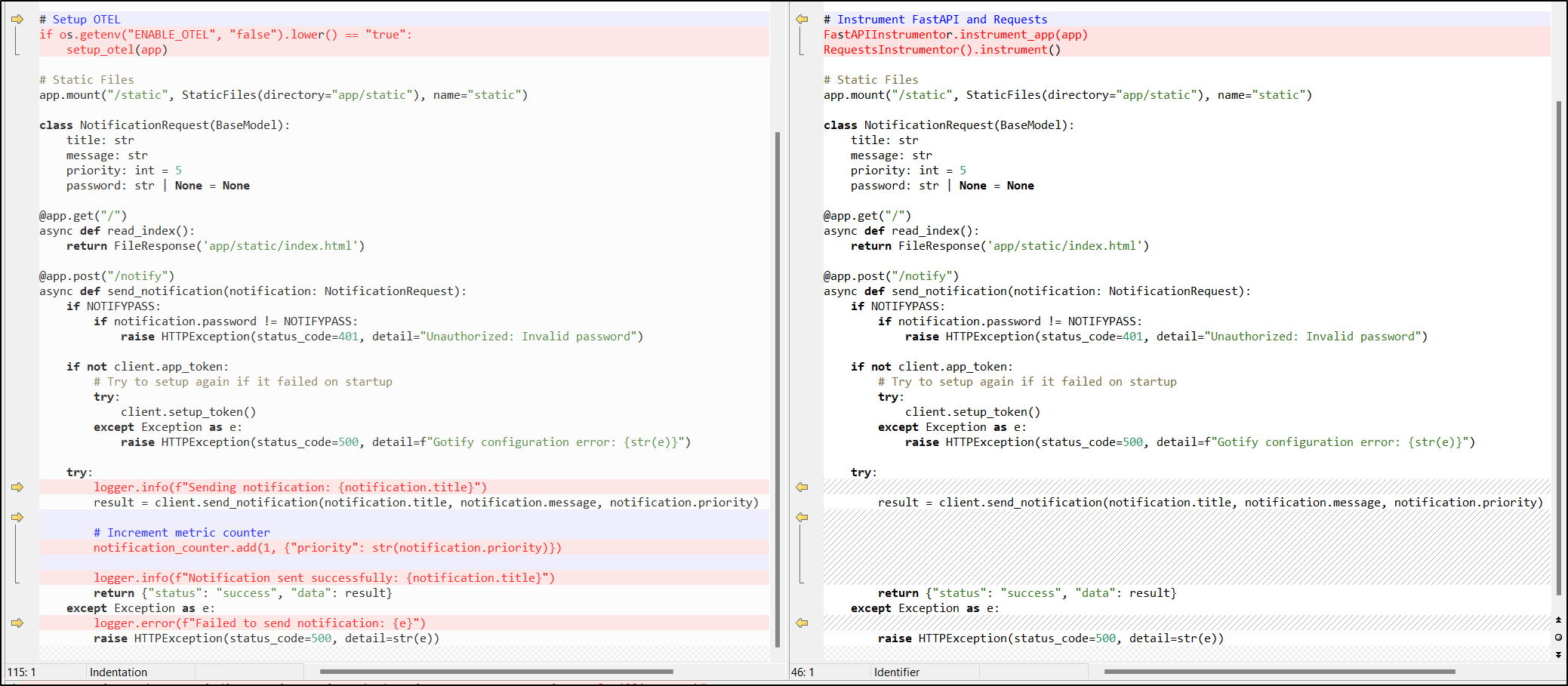

We can see the differences that were added in PR2

That said, on first launch, I realized I missed something with permissions:

INFO:app.gotify_client:Found existing app 'FastAPI_Notify_App'. using token: AL.Hh...

Failed to submit 1 logs.

Traceback (most recent call last):

File "/home/builder/Workspaces/poznote/mcp-server/venv/lib/python3.11/site-packages/google/cloud/logging_v2/handlers/transports/background_thread.py", line 122, in _safely_commit_batch

batch.commit()

File "/home/builder/Workspaces/poznote/mcp-server/venv/lib/python3.11/site-packages/google/cloud/logging_v2/logger.py", line 507, in commit

client.logging_api.write_entries(

File "/home/builder/Workspaces/poznote/mcp-server/venv/lib/python3.11/site-packages/google/cloud/logging_v2/_gapic.py", line 167, in write_entries

self._gapic_api.write_log_entries(request=request)

File "/home/builder/Workspaces/poznote/mcp-server/venv/lib/python3.11/site-packages/google/cloud/logging_v2/services/logging_service_v2/client.py", line 1010, in write_log_entries

response = rpc(

^^^^

File "/home/builder/Workspaces/poznote/mcp-server/venv/lib/python3.11/site-packages/google/api_core/gapic_v1/method.py", line 131, in __call__

return wrapped_func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/builder/Workspaces/poznote/mcp-server/venv/lib/python3.11/site-packages/google/api_core/retry/retry_unary.py", line 294, in retry_wrapped_func

return retry_target(

^^^^^^^^^^^^^

File "/home/builder/Workspaces/poznote/mcp-server/venv/lib/python3.11/site-packages/google/api_core/retry/retry_unary.py", line 156, in retry_target

next_sleep = _retry_error_helper(

^^^^^^^^^^^^^^^^^^^^

File "/home/builder/Workspaces/poznote/mcp-server/venv/lib/python3.11/site-packages/google/api_core/retry/retry_base.py", line 214, in _retry_error_helper

raise final_exc from source_exc

File "/home/builder/Workspaces/poznote/mcp-server/venv/lib/python3.11/site-packages/google/api_core/retry/retry_unary.py", line 147, in retry_target

result = target()

^^^^^^^^

File "/home/builder/Workspaces/poznote/mcp-server/venv/lib/python3.11/site-packages/google/api_core/timeout.py", line 130, in func_with_timeout

return func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^

File "/home/builder/Workspaces/poznote/mcp-server/venv/lib/python3.11/site-packages/google/api_core/grpc_helpers.py", line 77, in error_remapped_callable

raise exceptions.from_grpc_error(exc) from exc

google.api_core.exceptions.PermissionDenied: 403 Permission 'logging.logEntries.create' denied on resource (or it may not exist). [reason: "IAM_PERMISSION_DENIED"

domain: "iam.googleapis.com"

metadata {

key: "permission"

value: "logging.logEntries.create"

}

, type_url: "type.googleapis.com/google.logging.v2.WriteLogEntriesPartialErrors"

I would need to add the “Logs Writer” role to the SA.

The other error we may see relates to metrics:

google.api_core.exceptions.PermissionDenied: 403 Permission monitoring.metricDescriptors.create denied (or the resource may not exist).

ERROR:opentelemetry.exporter.cloud_monitoring:Failed to create metric descriptor labels {

This is due to a “Permission monitoring.metricDescriptors.create denied (or the resource may not exist)” error with our SA.

To be honest, I wasn’t sure whether “Cloud Telemetry Metrics Writer” or just “Monitoring Metric Writer” was required, so I added both (some searching suggests the latter is correct). I also added a Metrics viewer (beta) role.
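Since both failures above name the exact permission that was denied, a small lookup can turn the error text into a role to try. This is a rough sketch, not an official mapping: the table only covers the permissions relevant to this post, the function name is hypothetical, and other predefined roles may grant the same permissions.

```python
import re

# Permission -> a predefined role that includes it (not exhaustive).
ROLE_FOR_PERMISSION = {
    "cloudtrace.traces.patch": "roles/cloudtrace.agent",           # Cloud Trace Agent
    "logging.logEntries.create": "roles/logging.logWriter",        # Logs Writer
    "monitoring.metricDescriptors.create": "roles/monitoring.metricWriter",  # Monitoring Metric Writer
    "monitoring.timeSeries.create": "roles/monitoring.metricWriter",
}

# Matches both the quoted and unquoted forms seen in the errors above.
_DENIED = re.compile(r"Permission '?([A-Za-z][\w.]*\w)'? denied")


def suggest_role(error_text):
    """Return (permission, suggested_role_or_None), or None if no permission is named."""
    match = _DENIED.search(error_text)
    if match is None:
        return None
    permission = match.group(1)
    return permission, ROLE_FOR_PERMISSION.get(permission)


print(suggest_role("403 Permission 'logging.logEntries.create' denied on resource (or it may not exist)."))
print(suggest_role("403 Permission monitoring.metricDescriptors.create denied (or the resource may not exist)."))
```

For the metrics error this points at `roles/monitoring.metricWriter`, which lines up with the "Monitoring Metric Writer" guess.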

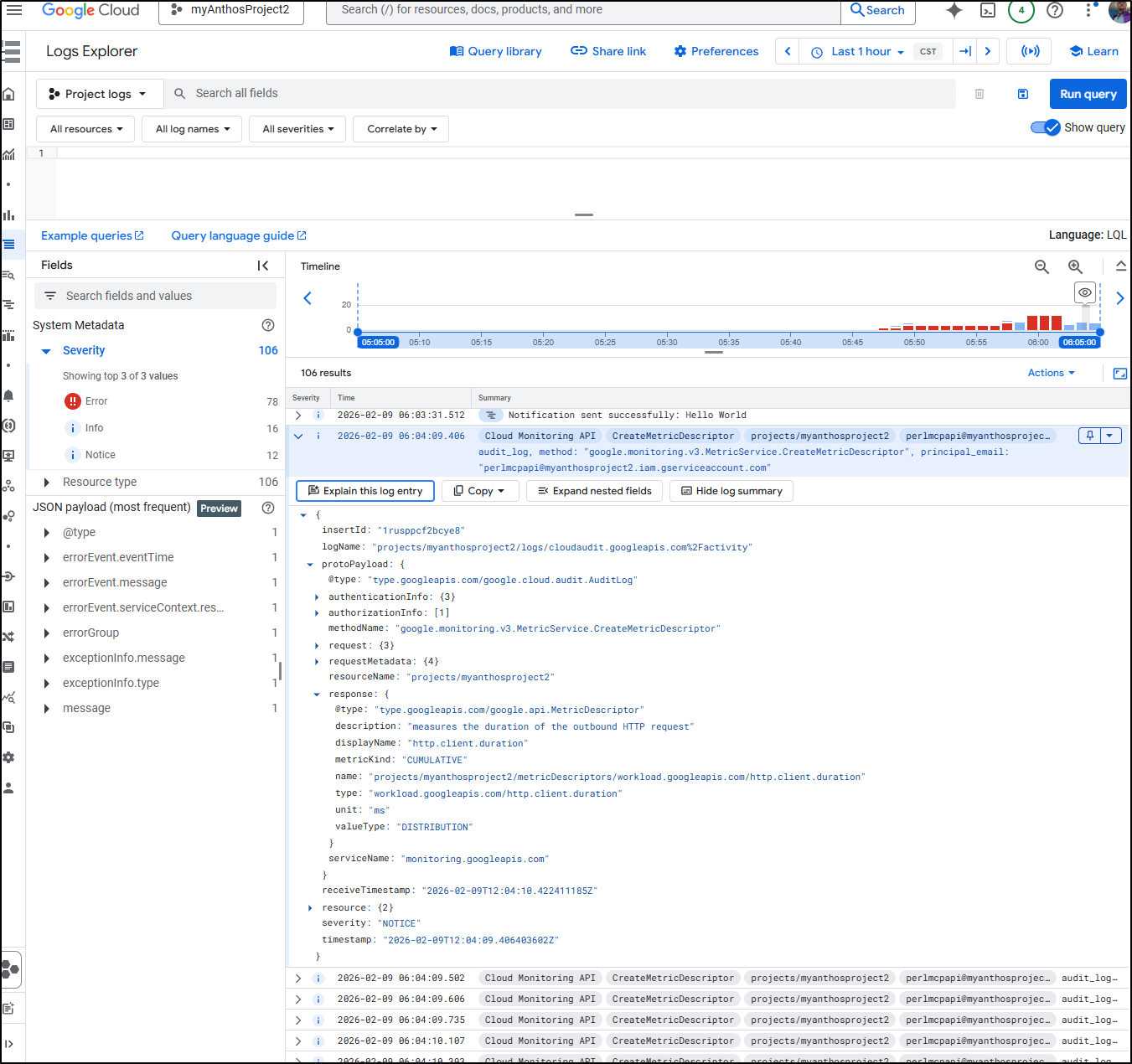

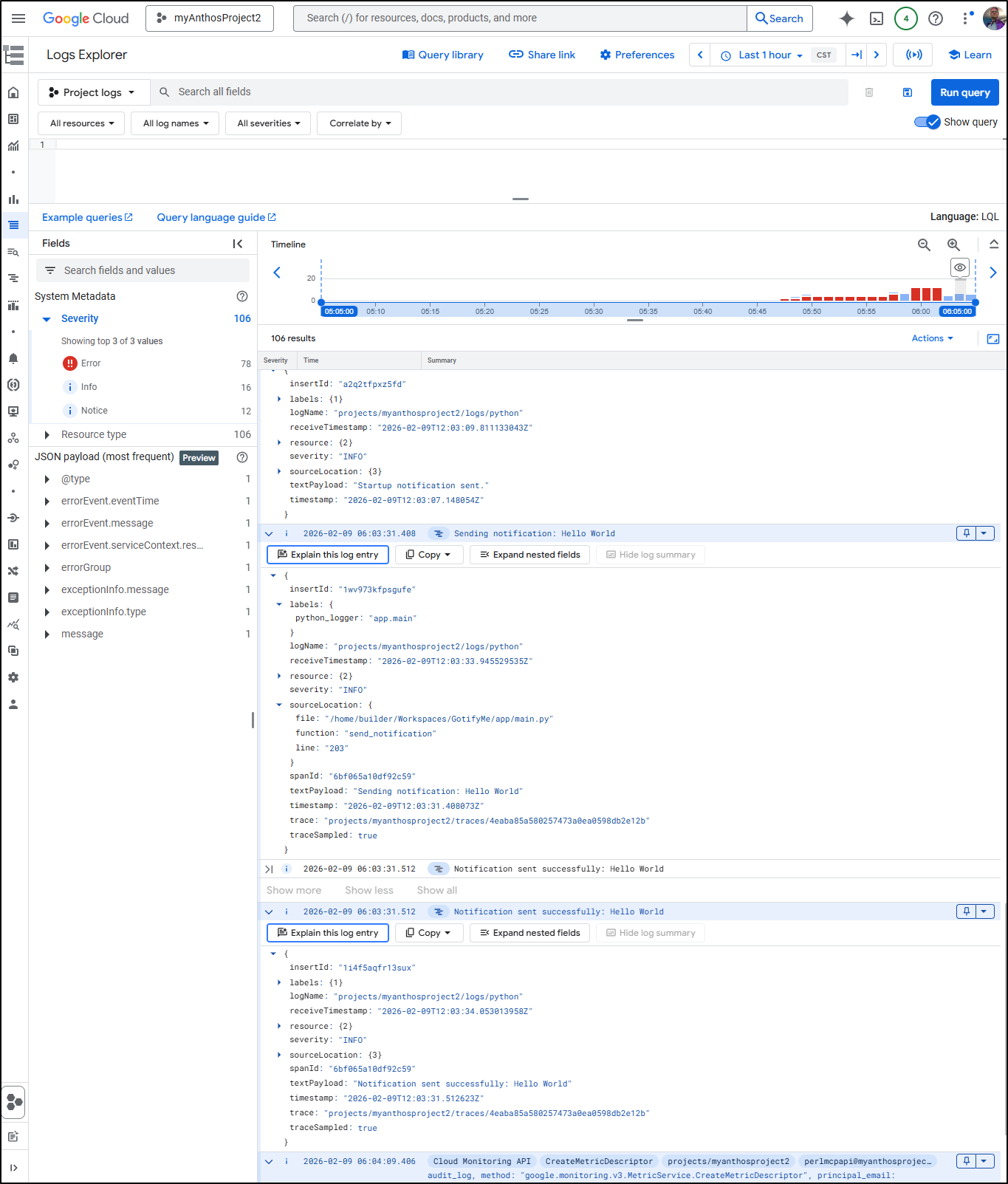

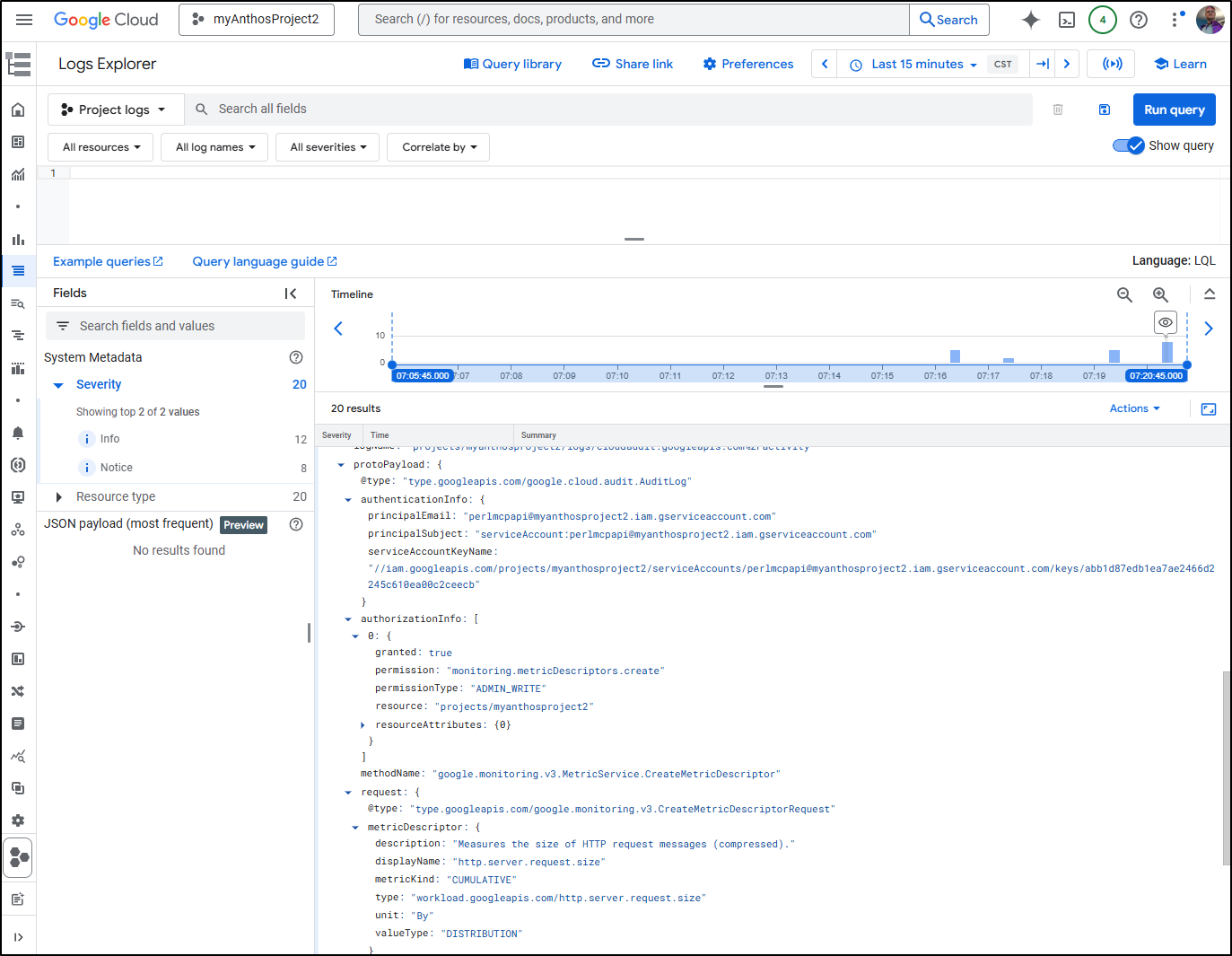

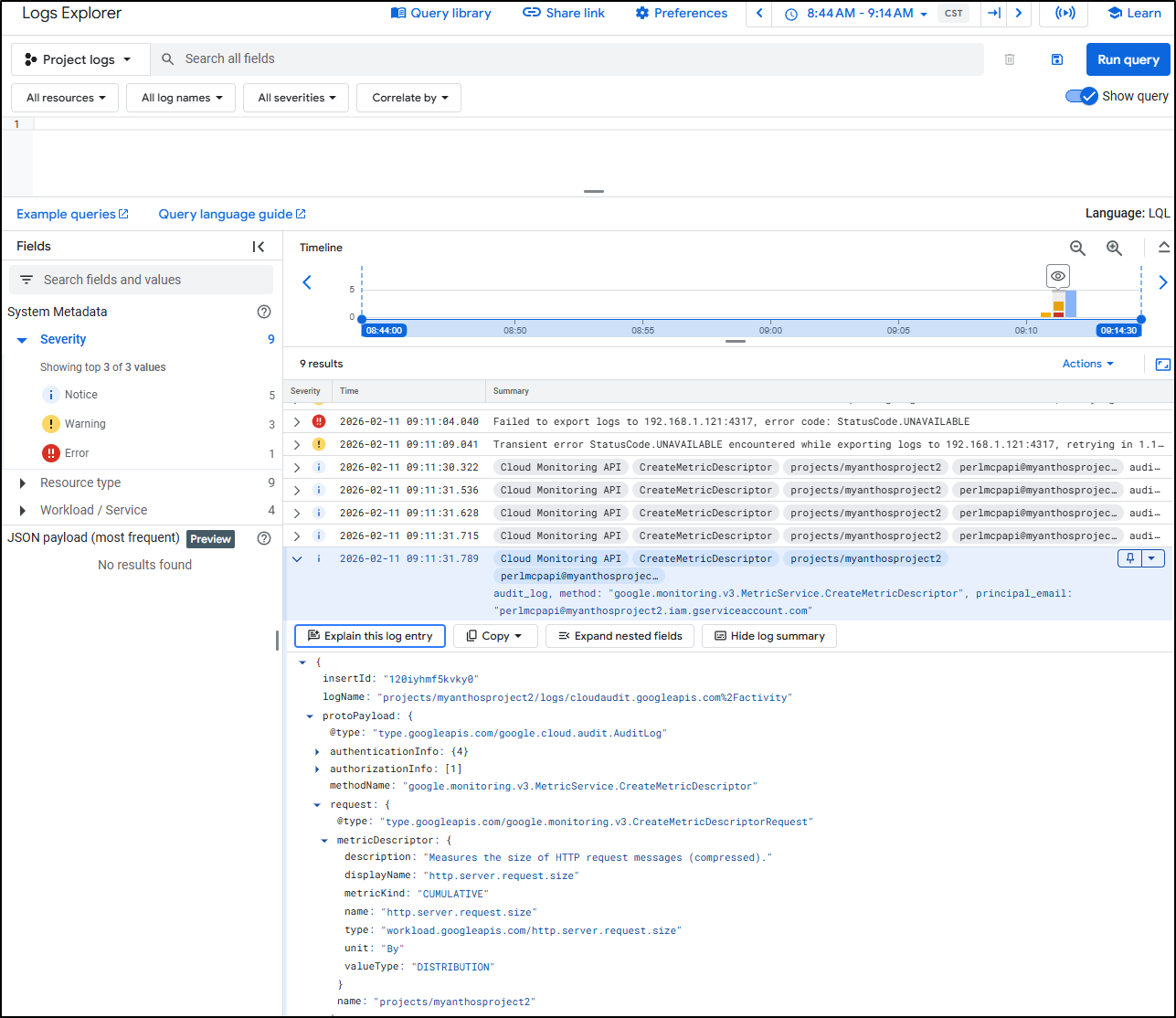

I can now see logs being written (about adding metrics)

But more importantly, I can see payloads showing what was sent

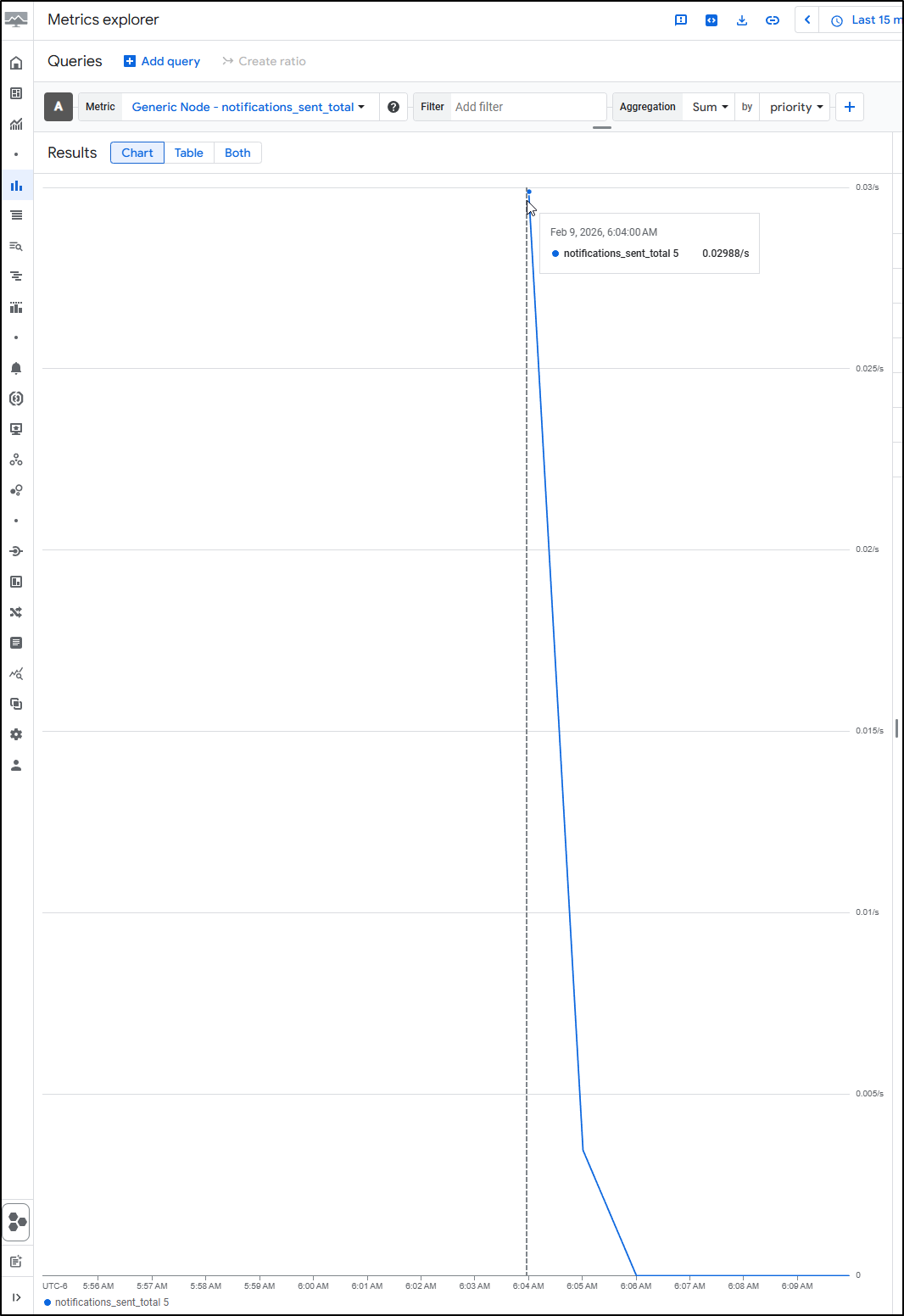

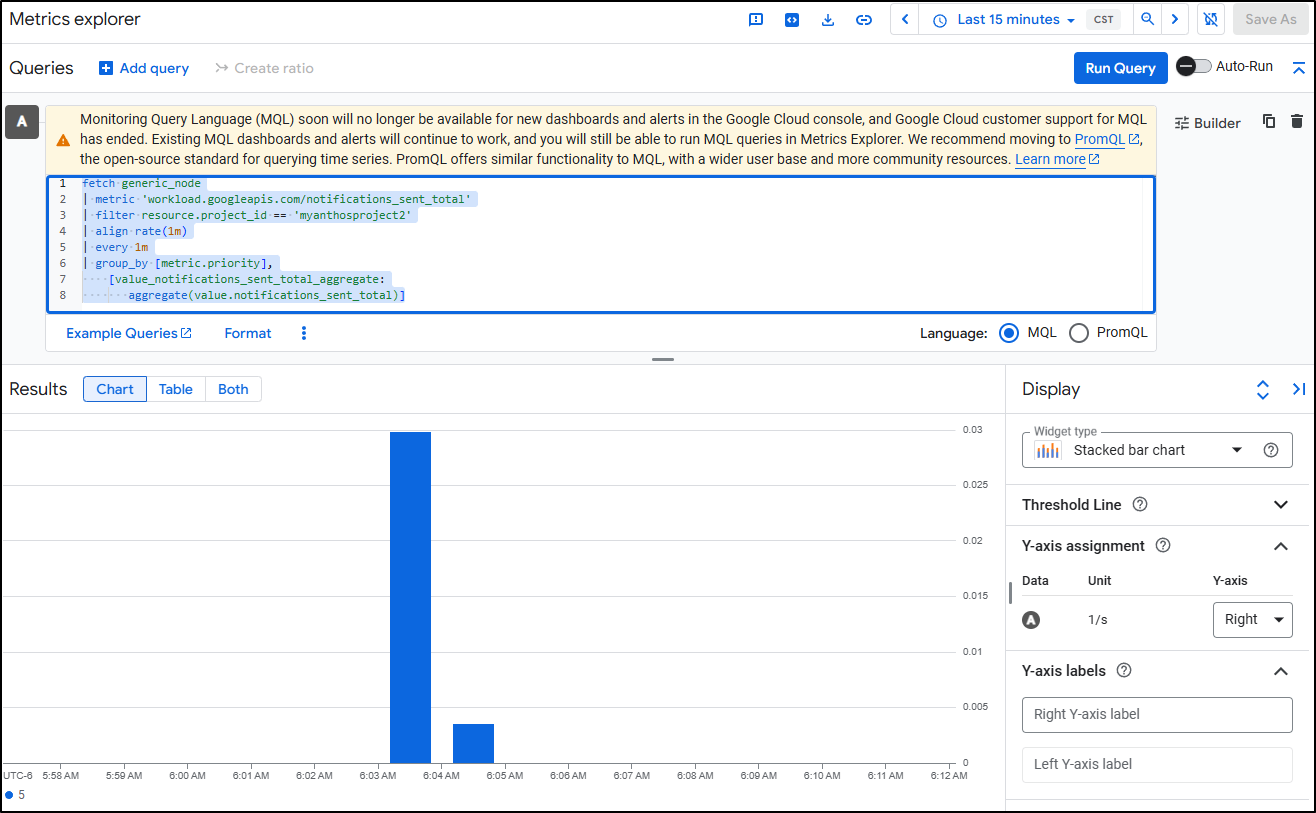

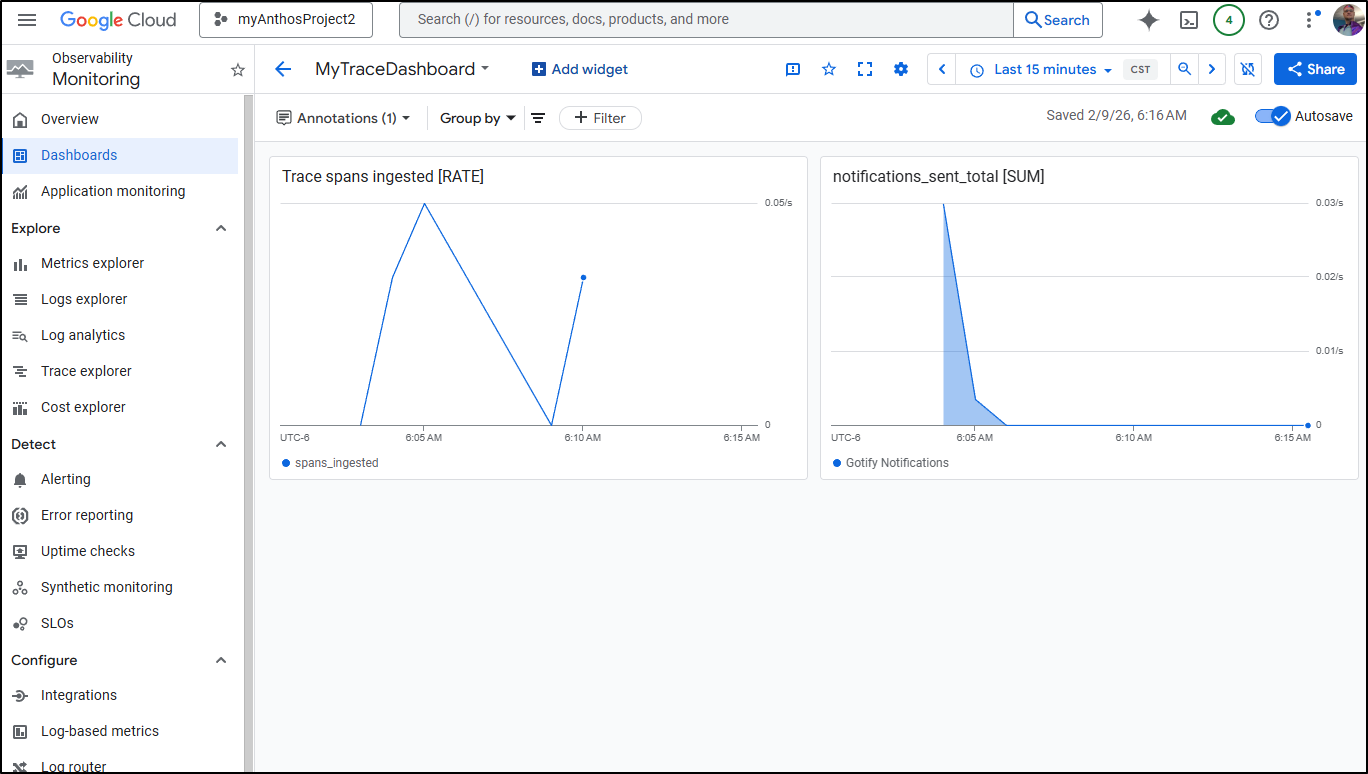

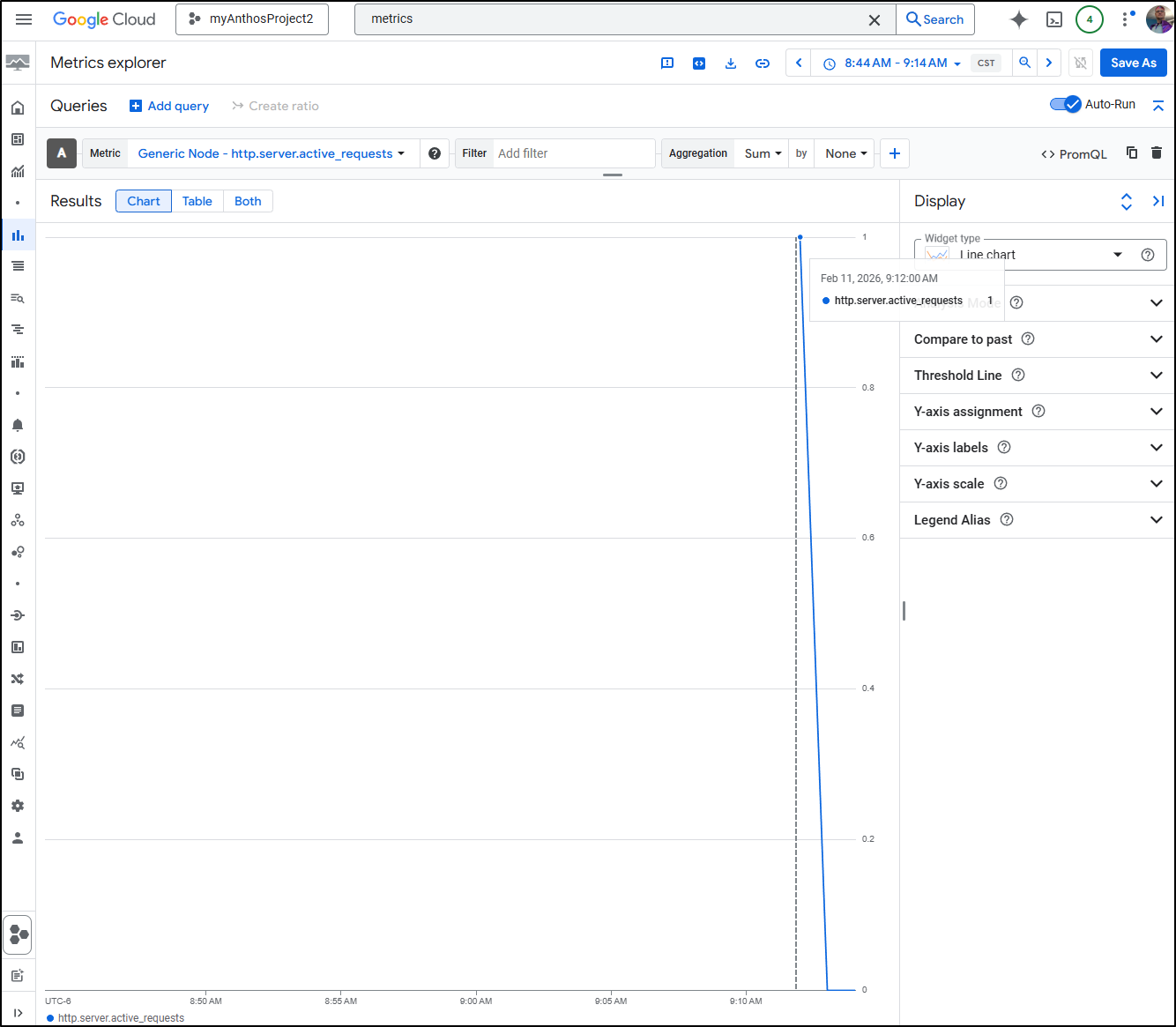

We can also see the “Notifications Sent Total” metric being gathered in GCP Metrics viewer

For reference, the MQL for that is

fetch generic_node

| metric 'workload.googleapis.com/notifications_sent_total'

| filter resource.project_id == 'myanthosproject2'

| align rate(1m)

| every 1m

| group_by [metric.priority],

[value_notifications_sent_total_aggregate:

aggregate(value.notifications_sent_total)]

And PromQL is

sum by ("priority")(rate({"__name__"="workload.googleapis.com/notifications_sent_total","monitored_resource"="generic_node","project_id"="myanthosproject2"}[${__interval}]))
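If you end up with more than one custom metric, the query is easy to generate rather than retype. Here is a small sketch that assembles the same PromQL string, assuming the selector uses the standard `__name__` label for the metric name. The function name and its defaults are my own, and `${__interval}` is passed through verbatim as the dashboard placeholder.

```python
def promql_rate_by_label(metric, project_id, group_label,
                         monitored_resource="generic_node",
                         window="${__interval}"):
    """Build a 'sum by (label)(rate(selector[window]))' PromQL query string."""
    labels = (
        ("__name__", metric),
        ("monitored_resource", monitored_resource),
        ("project_id", project_id),
    )
    selector = ",".join(f'"{key}"="{value}"' for key, value in labels)
    # Triple braces: a literal '{', the selector, then a literal '}'.
    return f'sum by ("{group_label}")(rate({{{selector}}}[{window}]))'


print(promql_rate_by_label(
    "workload.googleapis.com/notifications_sent_total",
    "myanthosproject2",
    "priority",
))
```

Note that PromQL needs plain ASCII double quotes; curly quotes pasted from a web page will not parse.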

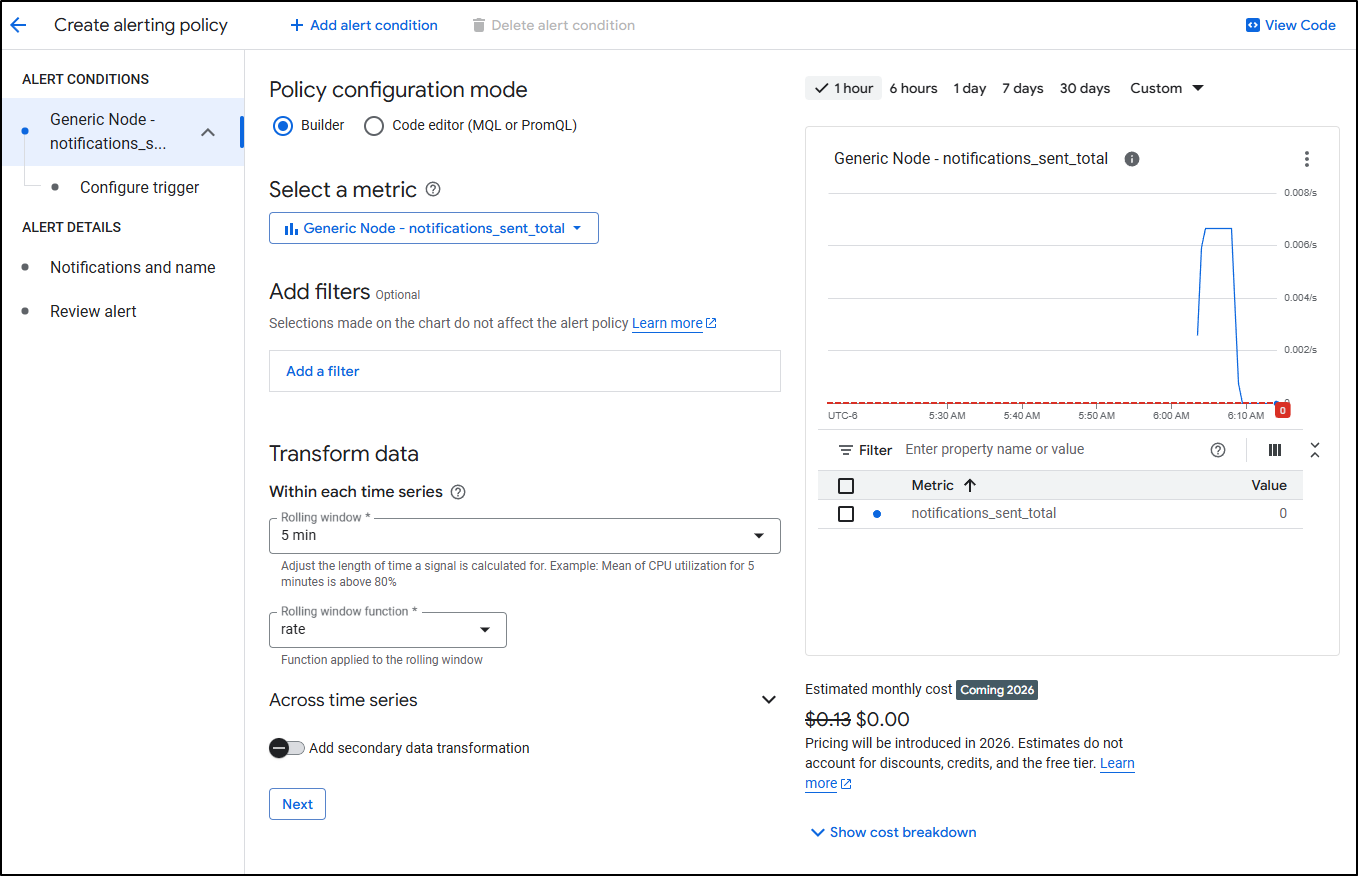

Of course, one of the key reasons to do this is to create an alert based on the metric, perhaps one that fires if it gets excessively high or goes too long with no messages.

Or we can add it to a dashboard as a Metrics Widget.

Helm Updates

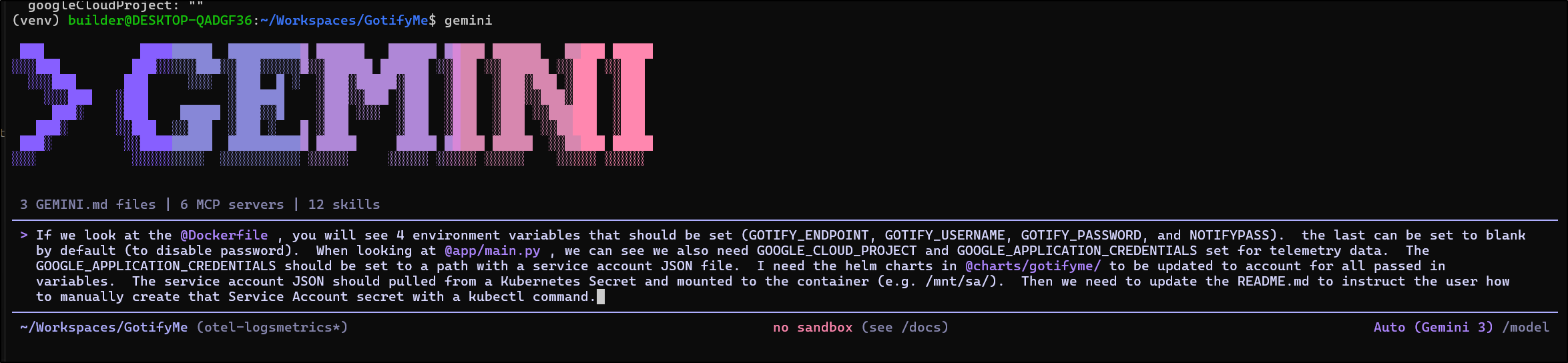

I debated updating it all myself, but I always get the exact syntax for a manually created secret a bit off, so I asked Gemini CLI to do it. However, I was very exacting in my ask:

First, I’ll create a secret from the SA JSON

$ kubectl create secret generic gotifyme-sa --from-file=mysa.json=/home/builder/Workspaces/GotifyMe/mysa.json

secret/gotifyme-sa created
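For anyone who prefers manifests over imperative commands: a generic secret created with `--from-file` is essentially an Opaque Secret whose `data` value is the base64 of the file contents. Here is a minimal sketch that builds an equivalent manifest as JSON (which kubectl also accepts). The function name is hypothetical and the `default` namespace is an assumption based on the Helm output in this post.

```python
import base64
import json


def build_secret_manifest(name, filename, content, namespace="default"):
    """Build a Secret manifest holding one file, keyed by its filename."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        # Secret data values must be base64-encoded strings.
        "data": {filename: base64.b64encode(content).decode("ascii")},
    }


# Placeholder bytes; in practice this would be the contents of mysa.json.
manifest = build_secret_manifest("gotifyme-sa", "mysa.json", b'{"type": "service_account"}')
print(json.dumps(manifest, indent=2))
```

The key under `data` (`mysa.json`) is what becomes the filename when the secret is mounted, which is why it has to match `googleApplicationCredentialsFile` in the values.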

I then updated the values for the image and settings

$ cat myvalues.yaml

autoscaling:

enabled: true

maxReplicas: 2

minReplicas: 1

targetCPUUtilizationPercentage: 80

gotify:

endpoint: https://gotify.tpk.pw

password: xxxxxxxxxxx

username: sampleapp

notifyPass: xxxxxxxxxxxxxx

otel:

enabled: true

googleCloudProject: "myanthosproject2"

googleApplicationCredentialsSecret: "gotifyme-sa"

googleApplicationCredentialsFile: "mysa.json"

image:

pullPolicy: IfNotPresent

repository: harbor.freshbrewed.science/library/notifyapp

tag: gcpotel1

ingress:

annotations:

cert-manager.io/cluster-issuer: azuredns-tpkpw

ingress.kubernetes.io/proxy-body-size: "0"

ingress.kubernetes.io/ssl-redirect: "true"

kubernetes.io/tls-acme: "true"

nginx.ingress.kubernetes.io/proxy-body-size: "0"

nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"

nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"

className: nginx

enabled: true

hosts:

- host: notify.tpk.pw

paths:

- path: /

pathType: ImplementationSpecific

tls:

- hosts:

- notify.tpk.pw

secretName: notify-tls

replicaCount: 1

resources:

limits:

cpu: 250m

memory: 256Mi

requests:

cpu: 100m

memory: 128Mi

I can now upgrade it

$ helm upgrade notifyapp -f ./myvalues.yaml ./charts/gotifyme

Release "notifyapp" has been upgraded. Happy Helming!

NAME: notifyapp

LAST DEPLOYED: Mon Feb 9 07:16:14 2026

NAMESPACE: default

STATUS: deployed

REVISION: 5

TEST SUITE: None

NOTES:

1. Get the application URL by running these commands:

https://notify.tpk.pw/

I can now get logs from the container

$ kubectl logs notifyapp-gotifyme-657ddf7c4f-lq6bt

/usr/local/lib/python3.10/site-packages/google/api_core/_python_version_support.py:275: FutureWarning: You are using a Python version (3.10.19) which Google will stop supporting in new releases of google.api_core once it reaches its end of life (2026-10-04). Please upgrade to the latest Python version, or at least Python 3.11, to continue receiving updates for google.api_core past that date.

warnings.warn(message, FutureWarning)

/usr/local/lib/python3.10/site-packages/google/api_core/_python_version_support.py:275: FutureWarning: You are using a Python version (3.10.19) which Google will stop supporting in new releases of google.cloud.trace_v2 once it reaches its end of life (2026-10-04). Please upgrade to the latest Python version, or at least Python 3.11, to continue receiving updates for google.cloud.trace_v2 past that date.

warnings.warn(message, FutureWarning)

/usr/local/lib/python3.10/site-packages/google/api_core/_python_version_support.py:275: FutureWarning: You are using a Python version (3.10.19) which Google will stop supporting in new releases of google.cloud.monitoring_v3 once it reaches its end of life (2026-10-04). Please upgrade to the latest Python version, or at least Python 3.11, to continue receiving updates for google.cloud.monitoring_v3 past that date.

warnings.warn(message, FutureWarning)

/usr/local/lib/python3.10/site-packages/google/api_core/_python_version_support.py:275: FutureWarning: You are using a Python version (3.10.19) which Google will stop supporting in new releases of google.cloud.appengine_logging_v1 once it reaches its end of life (2026-10-04). Please upgrade to the latest Python version, or at least Python 3.11, to continue receiving updates for google.cloud.appengine_logging_v1 past that date.

warnings.warn(message, FutureWarning)

DEBUG:app.main:Env: GOOGLE_APPLICATION_CREDENTIALS=/mnt/sa/mysa.json

DEBUG:app.main:Env: GOOGLE_CLOUD_PROJECT=myanthosproject2

DEBUG:app.main:Env: ENABLE_OTEL=true

DEBUG:app.main:Env: GOTIFY_ENDPOINT=https://gotify.tpk.pw

DEBUG:google.auth._default:Checking '/mnt/sa/mysa.json' for explicit credentials as part of auth process...

DEBUG:app.main:GCP Auth: GOOGLE_APPLICATION_CREDENTIALS is set to: /mnt/sa/mysa.json

DEBUG:app.main:GCP Auth: Project ID being used: myanthosproject2 (Detected: myanthosproject2, Env: myanthosproject2)

DEBUG:app.main:GCP Auth: Service Account Email: perlmcpapi@myanthosproject2.iam.gserviceaccount.com

DEBUG:urllib3.connectionpool:Starting new HTTPS connection (1): oauth2.googleapis.com:443

DEBUG:urllib3.connectionpool:https://oauth2.googleapis.com:443 "POST /token HTTP/1.1" 200 None

DEBUG:app.main:GCP Auth: Credentials valid: True

DEBUG:app.main:GCP Auth: Credentials expired: False

DEBUG:app.main:GCP Auth: Scopes: ['https://www.googleapis.com/auth/cloud-platform']

DEBUG:app.main:OTEL Resource Attributes: {'telemetry.sdk.language': 'python', 'telemetry.sdk.name': 'opentelemetry', 'telemetry.sdk.version': '1.39.1', 'service.name': 'gotifyme', 'service.namespace': 'gotifyme-ns', 'gcp.project_id': 'myanthosproject2'}

INFO:app.main:OpenTelemetry configured successfully for Google Cloud Trace (Project: myanthosproject2)

INFO:app.main:OpenTelemetry configured successfully for Google Cloud Monitoring (Project: myanthosproject2)

DEBUG:urllib3.connectionpool:Starting new HTTP connection (1): metadata.google.internal.:80

DEBUG:urllib3.connectionpool:Starting new HTTP connection (1): metadata.google.internal.:80

DEBUG:urllib3.connectionpool:Starting new HTTP connection (1): metadata.google.internal.:80

DEBUG:urllib3.connectionpool:Starting new HTTP connection (1): metadata.google.internal.:80

INFO:app.main:Google Cloud Logging configured successfully (Project: myanthosproject2)

INFO: Started server process [1]

INFO: Waiting for application startup.

INFO:app.main:Starting up... Setting up Gotify Client

INFO:app.gotify_client:Authenticated using Client Token.

INFO:app.gotify_client:Found existing app 'FastAPI_Notify_App'. using token: AL.Hh...

INFO:app.main:Startup notification sent.

INFO: Application startup complete.

INFO: Uvicorn running on http://0.0.0.0:80 (Press CTRL+C to quit)

I can now test

and see evidence in the logs

Otel Standard Setup

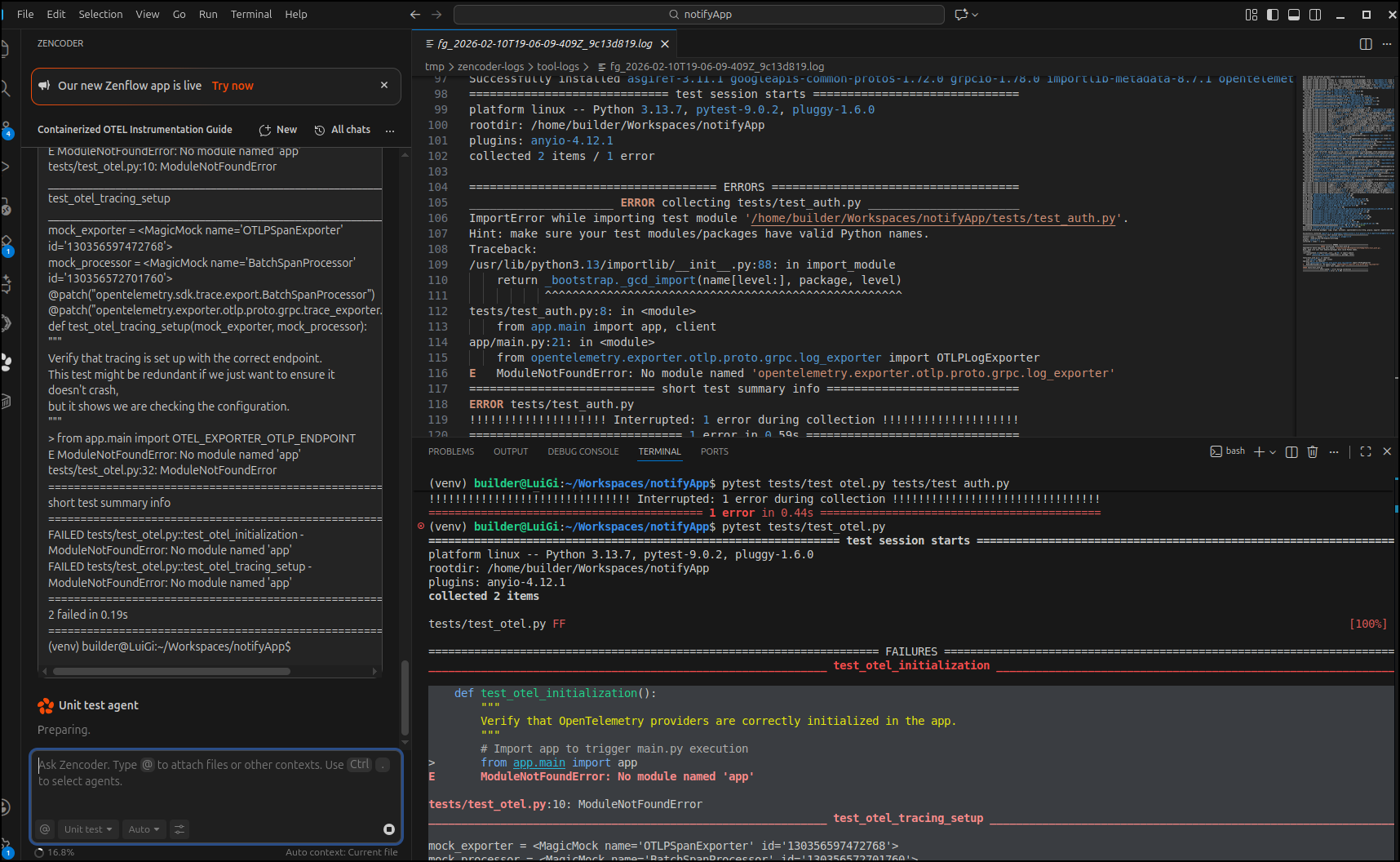

I have not used Verdant AI in a while, let’s give it a crack at building out a pure Otel solution in our Python app with a Test-first approach (using Unit Test Agent)

It got close, but then got into a loop as you saw at the end.

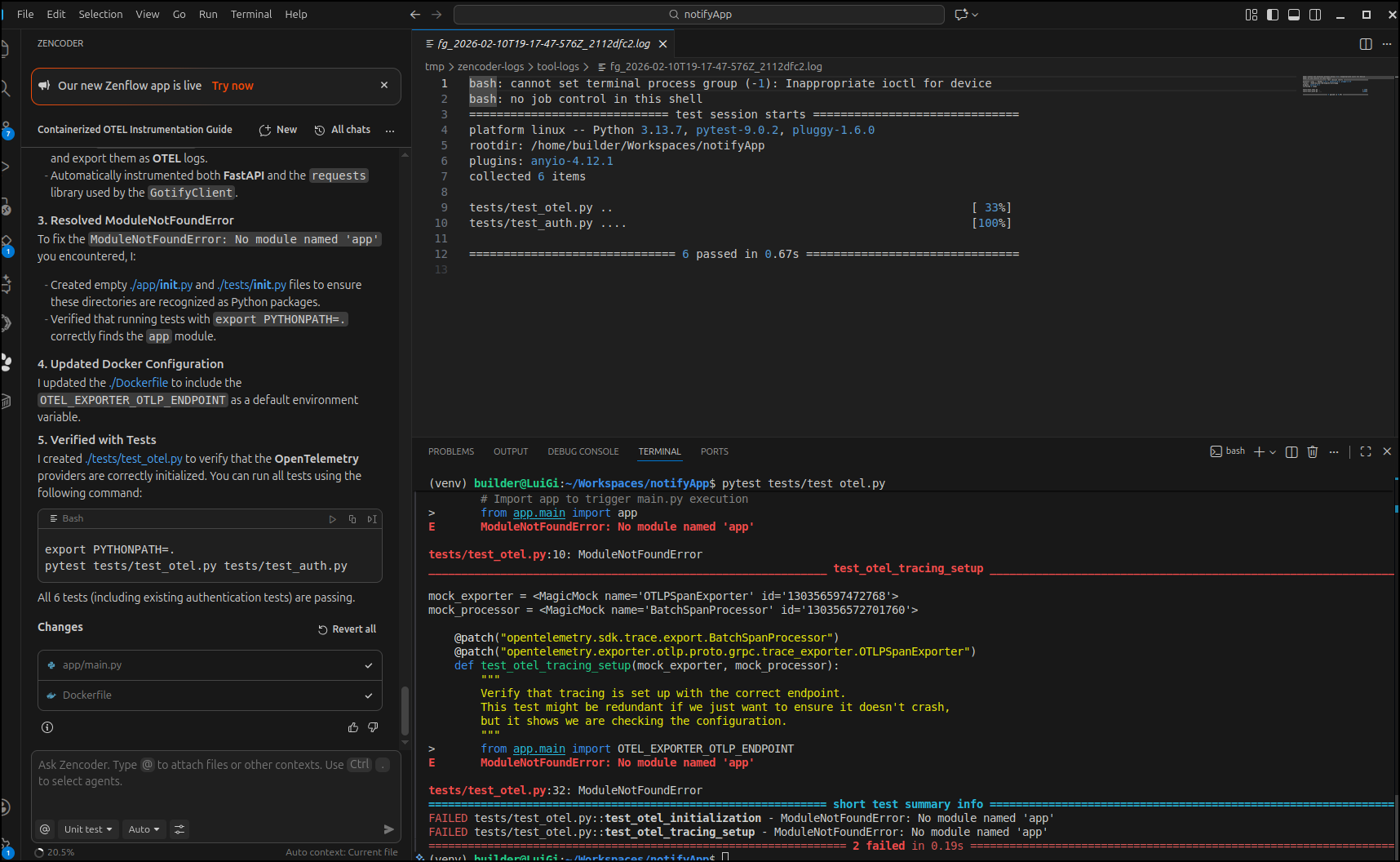

I then asked it to fix that specific error after manually running tests in a virtual env

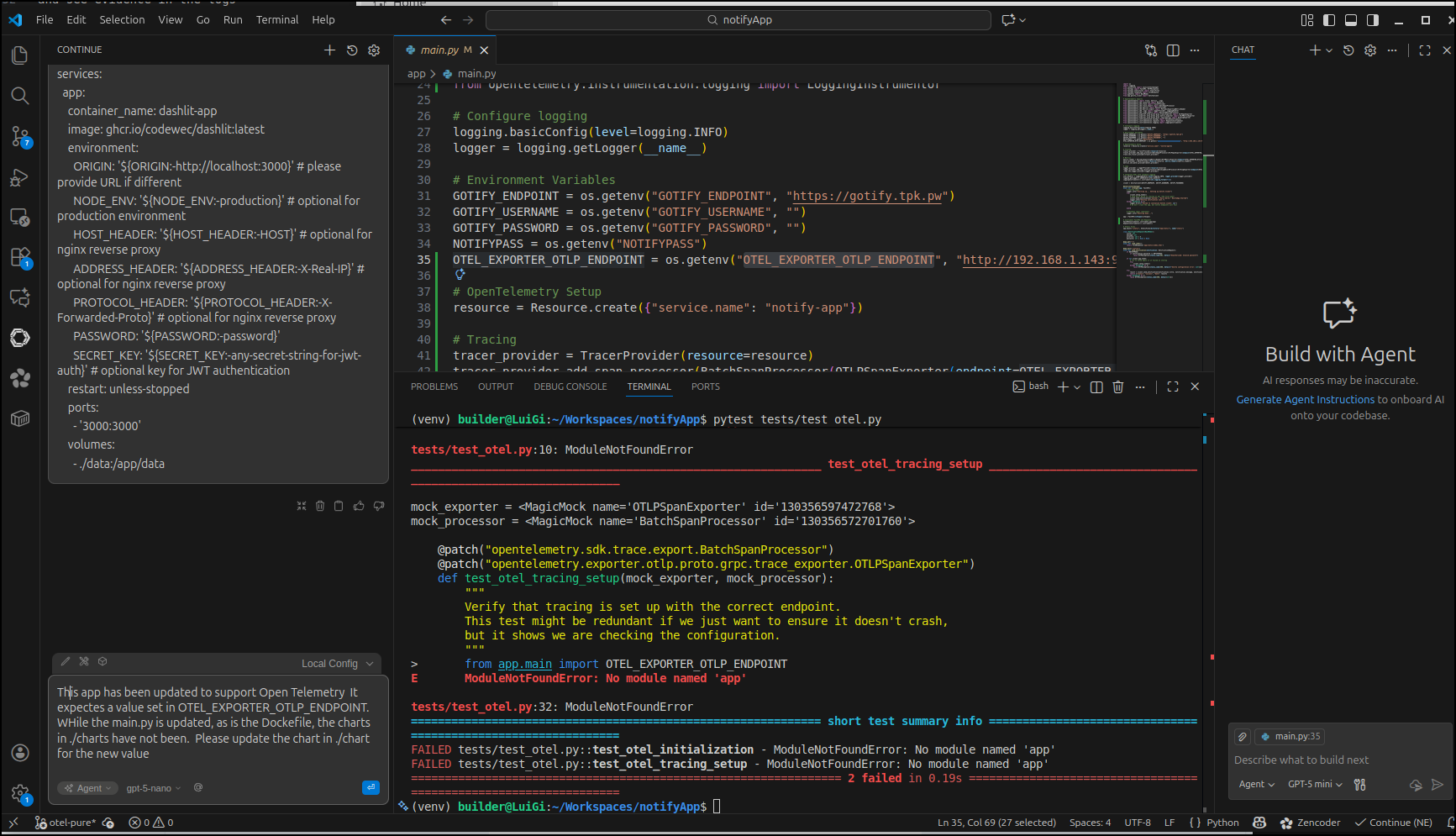

That did fix it (not in the way I expected), then wrapped by touching up the Dockerfile

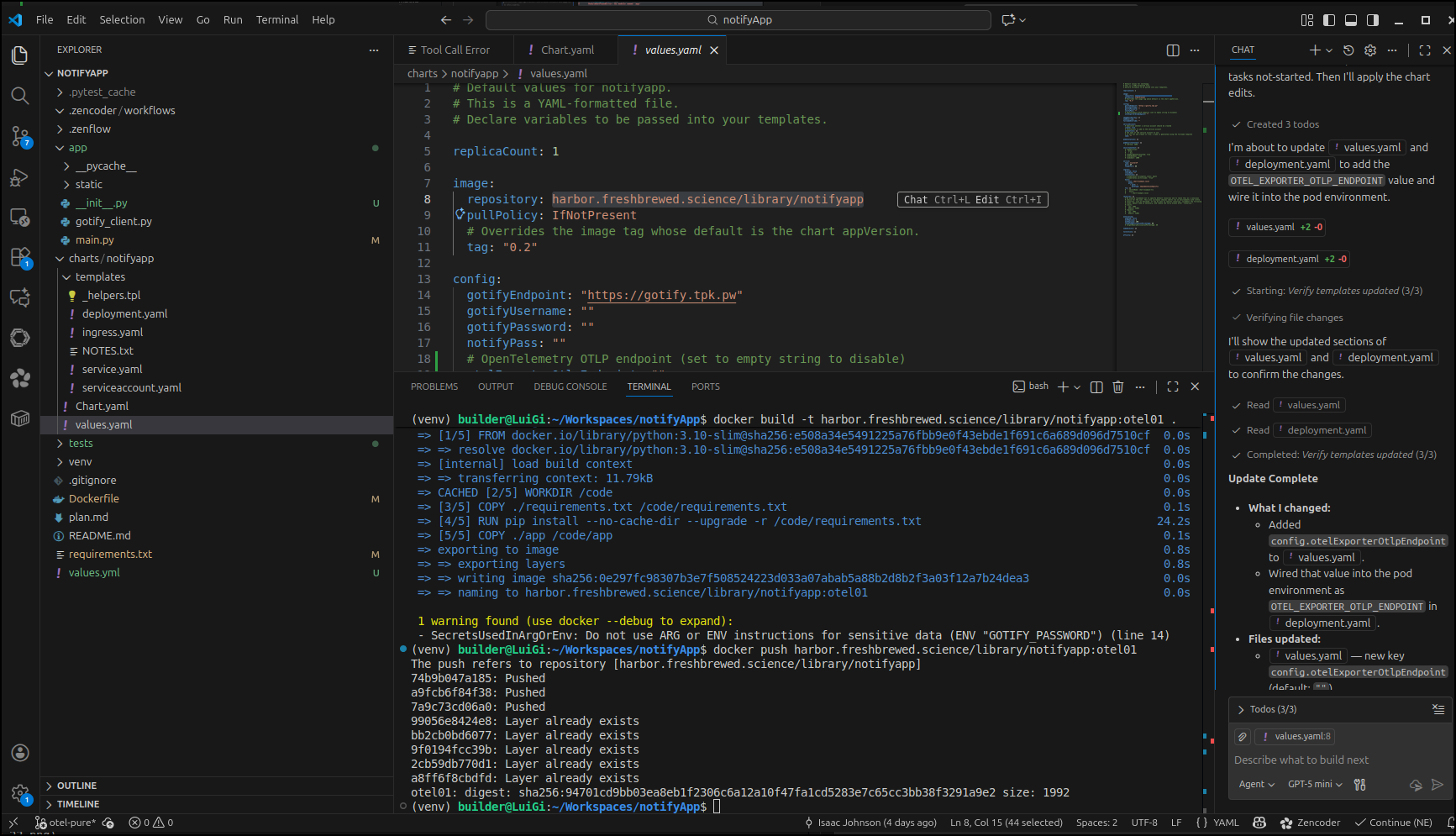

To update the Charts, let’s pivot to GPT 5 as hosted via Azure AI Foundry

I also used GPT 5 with Copilot free tier

I did a docker build and push so we can have an OTEL instrumented image to try

(venv) builder@LuiGi:~/Workspaces/notifyApp$ docker build -t harbor.freshbrewed.science/library/notifyapp:otel01 .

[+] Building 26.3s (11/11) FINISHED docker:default

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 489B 0.0s

=> [internal] load metadata for docker.io/library/python:3.10-slim 0.7s

=> [auth] library/python:pull token for registry-1.docker.io 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [1/5] FROM docker.io/library/python:3.10-slim@sha256:e508a34e5491225a76fbb9e0f43ebde1f691c6a689d096d7510cf 0.0s

=> => resolve docker.io/library/python:3.10-slim@sha256:e508a34e5491225a76fbb9e0f43ebde1f691c6a689d096d7510cf 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 11.79kB 0.0s

=> CACHED [2/5] WORKDIR /code 0.0s

=> [3/5] COPY ./requirements.txt /code/requirements.txt 0.1s

=> [4/5] RUN pip install --no-cache-dir --upgrade -r /code/requirements.txt 24.2s

=> [5/5] COPY ./app /code/app 0.1s

=> exporting to image 0.8s

=> => exporting layers 0.8s

=> => writing image sha256:0e297fc98307b3e7f508524223d033a07abab5a88b2d8b2f3a03f12a7b24dea3 0.0s

=> => naming to harbor.freshbrewed.science/library/notifyapp:otel01 0.0s

1 warning found (use docker --debug to expand):

- SecretsUsedInArgOrEnv: Do not use ARG or ENV instructions for sensitive data (ENV "GOTIFY_PASSWORD") (line 14)

(venv) builder@LuiGi:~/Workspaces/notifyApp$ docker push harbor.freshbrewed.science/library/notifyapp:otel01

The push refers to repository [harbor.freshbrewed.science/library/notifyapp]

74b9b047a185: Pushed

a9fcb6f84f38: Pushed

7a9c73cd06a0: Pushed

99056e8424e8: Layer already exists

bb2cb0bd6077: Layer already exists

9f0194fcc39b: Layer already exists

2cb59db770d1: Layer already exists

a8ff6f8cbdfd: Layer already exists

otel01: digest: sha256:94701cd9bb03ea8eb1f2306c6a12a10f47fa1cd5283e7c65cc3bb38f3291a9e2 size: 1992

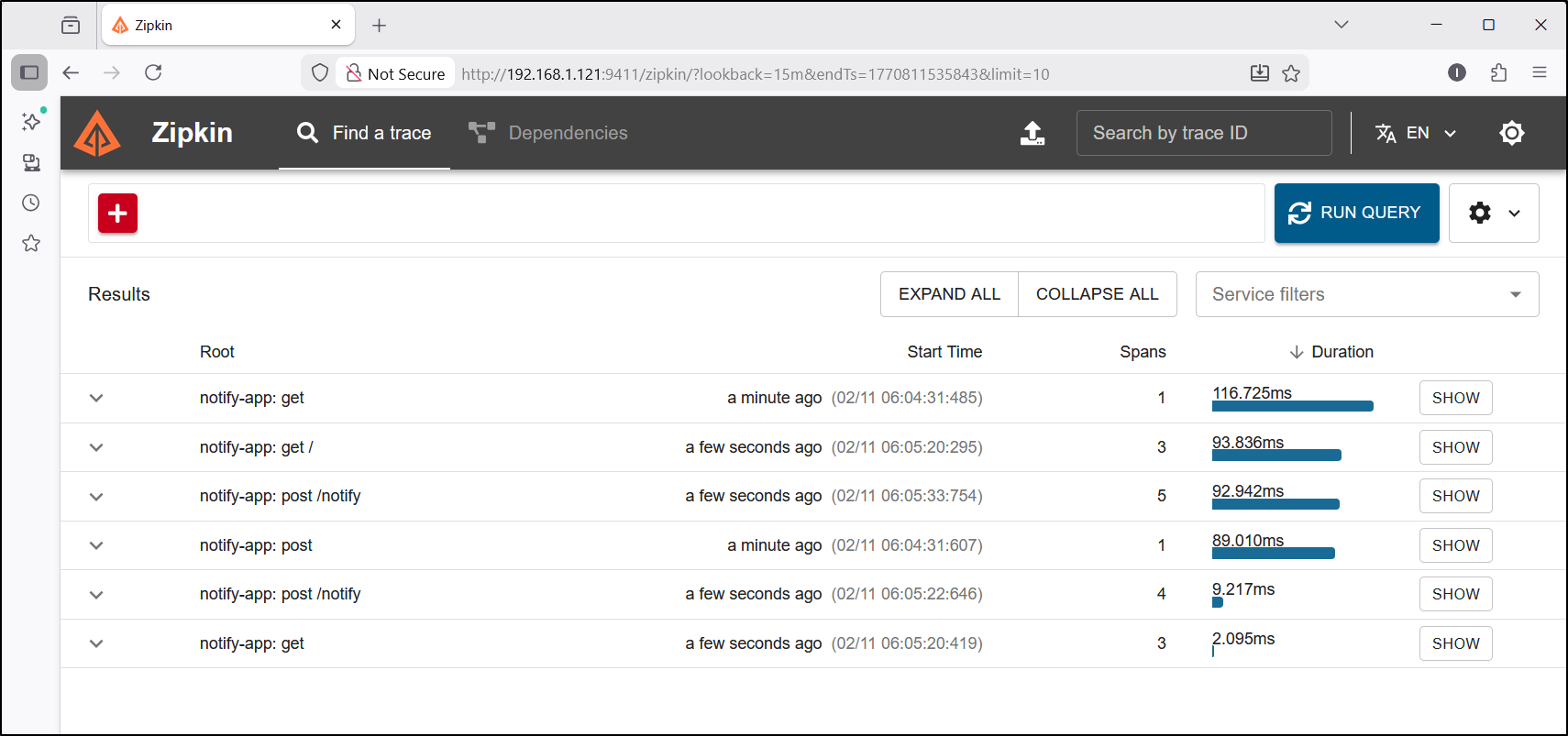

I have a Linux box running with an Open Telemetry collector already routing to both a local Zipkin and New Relic.

Let’s first test with that host:

builder@DESKTOP-QADGF36:~/Workspaces/notifyapp$ vi myvalues.yaml

builder@DESKTOP-QADGF36:~/Workspaces/notifyapp$ cat myvalues.yaml | grep otel

otelExporterOtlpEndpoint: "192.168.1.121:4317"

tag: otel01

otel:
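Before rolling this out, it is worth checking that the collector endpoint actually accepts TCP connections from wherever the app runs. Here is a quick standard-library probe. Both function names are hypothetical, and a successful connect only proves something is listening on the port, not that it speaks OTLP.

```python
import socket


def split_endpoint(endpoint):
    """Split 'host:port' (as used for otelExporterOtlpEndpoint) into (host, port)."""
    host, sep, port = endpoint.rpartition(":")
    if not sep or not host or not port.isdigit():
        raise ValueError(f"expected host:port, got {endpoint!r}")
    return host, int(port)


def can_connect(endpoint, timeout=2.0):
    """Return True if a TCP connection to the endpoint succeeds within the timeout."""
    host, port = split_endpoint(endpoint)
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

Running `can_connect("192.168.1.121:4317")` from a pod or a host on the same network gives a fast yes/no before digging through exporter retry warnings.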

Then use the updated values in a helm upgrade to make them live

builder@DESKTOP-QADGF36:~/Workspaces/notifyapp$ helm upgrade notifyapp -f ./myvalues.yaml ./charts/notifyapp/

Release "notifyapp" has been upgraded. Happy Helming!

NAME: notifyapp

LAST DEPLOYED: Wed Feb 11 05:57:34 2026

NAMESPACE: default

STATUS: deployed

REVISION: 7

TEST SUITE: None

NOTES:

1. Get the application URL by running these commands:

https://notify.tpk.pw/

I can now test those updates

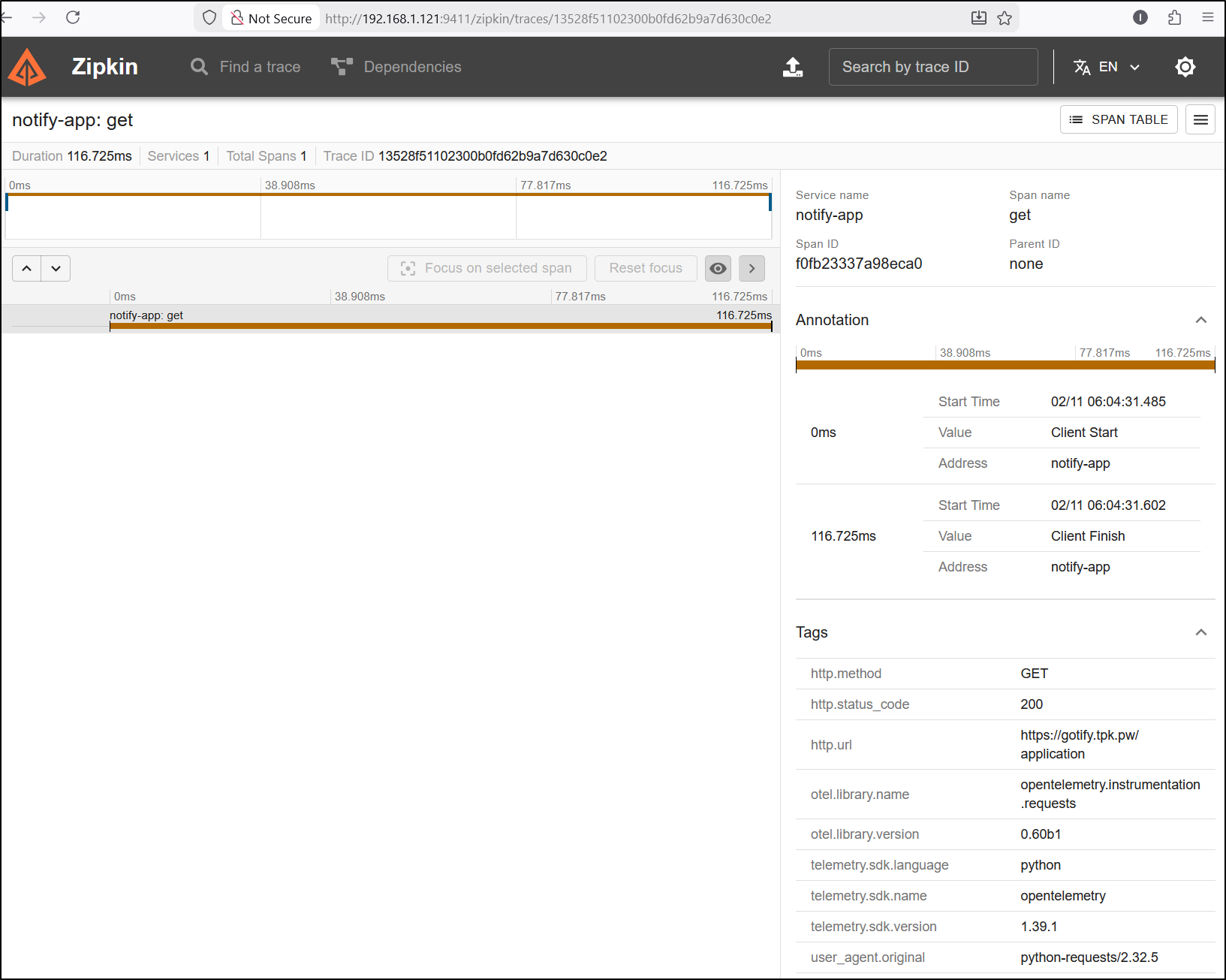

We can see local traces in Zipkin

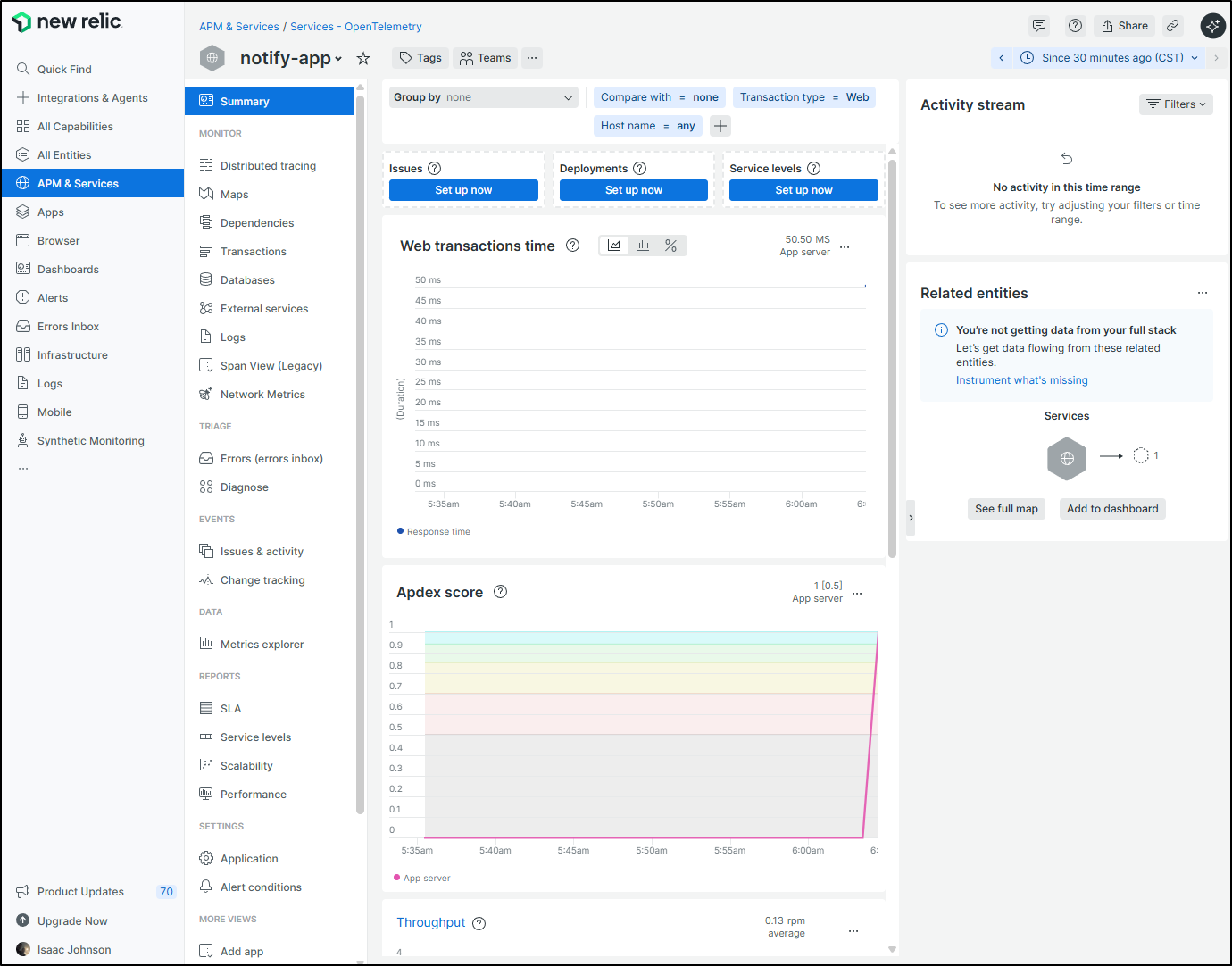

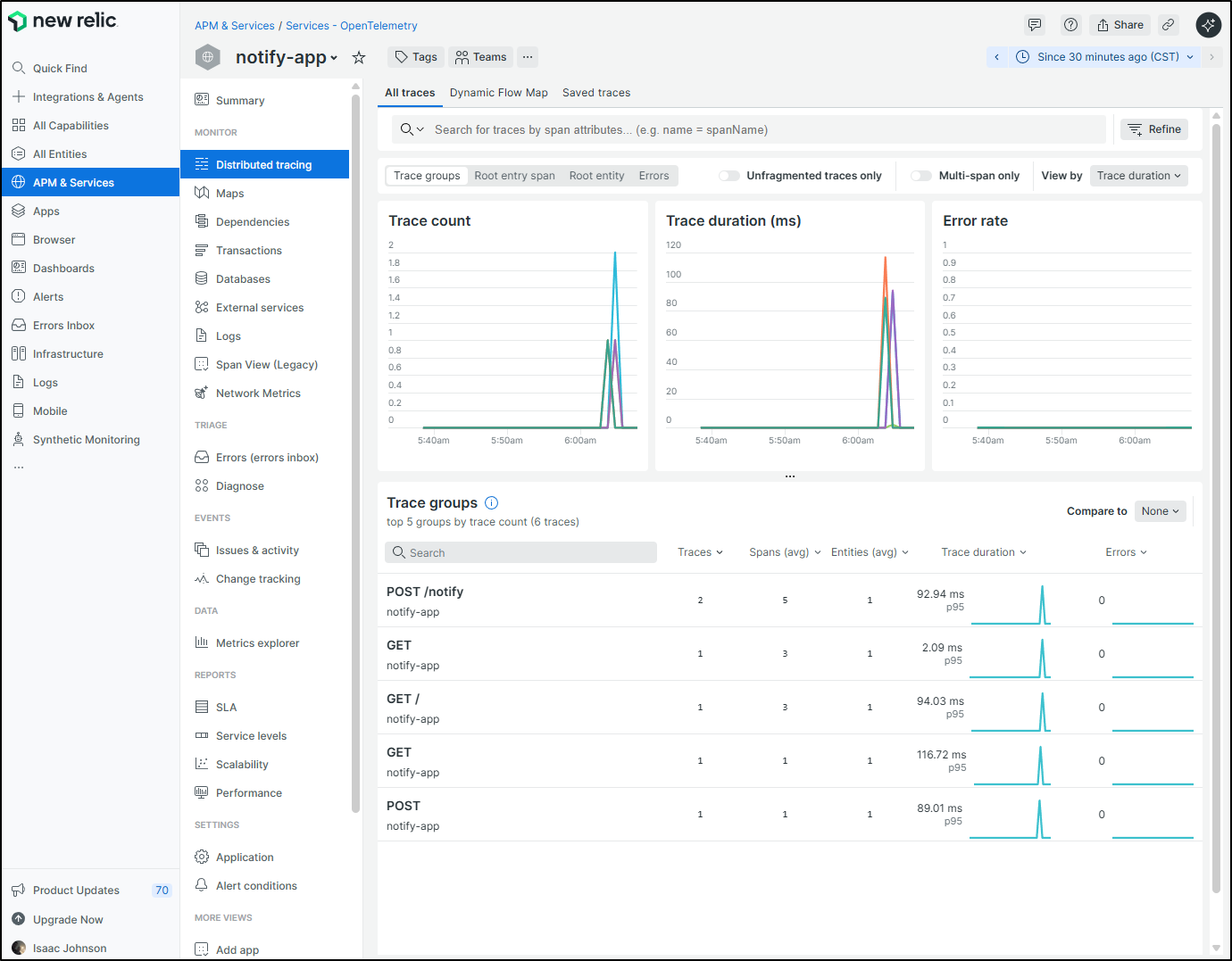

But also New Relic

which has much the same data (but a bit nicer looking)

Using Zipkin forwarder

One of the easiest ways to just get our traces over is to use a Zipkin forwarder

I need a GCP SA that has “Cloud Trace Agent” role enabled

I can set that as a CM in my Kubernetes cluster

$ kubectl create configmap gcptracecred --from-file=/mnt/c/Users/isaac/Downloads/myanthosproject2-eea0dc5b11be.json

configmap/gcptracecred created

In my former writeup, we used this as the main Zipkin, which meant a ClusterIP service listening on 9411:

$ cat zipkin-gcp.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: zipkin

labels:

app: zipkin

spec:

replicas: 1

selector:

matchLabels:

app: zipkin

template:

metadata:

labels:

app: zipkin

spec:

containers:

- name: zipkin

image: openzipkin/zipkin-gcp

env:

- name: STORAGE_TYPE

value: stackdriver

- name: GOOGLE_APPLICATION_CREDENTIALS

value: /zipkin/.gcp/myanthosproject2-eea0dc5b11be.json

- name: STACKDRIVER_PROJECT_ID

value: myanthosproject2

volumeMounts:

- name: gcp-volume

mountPath: /zipkin/.gcp

ports:

- containerPort: 9411

volumes:

- name: gcp-volume

configMap:

name: gcptracecred

---

kind: Service

apiVersion: v1

metadata:

name: zipkin

labels:

app: zipkin

spec:

selector:

app: zipkin

ports:

- protocol: TCP

port: 9411

targetPort: 9411

type: ClusterIP

But there would be two issues with this:

- I already have an in-cluster Zipkin service I use for various things that has claimed port 9411

- I would still need to sort out external ingress as in this test, my Open Telemetry collector runs outside of Kubernetes on a different Linux host

This means using NodePort makes far more sense:

$ cat zipkin-gcp.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: zipkin

labels:

app: zipkin

spec:

replicas: 1

selector:

matchLabels:

app: zipkin

template:

metadata:

labels:

app: zipkin

spec:

containers:

- name: zipkin

image: openzipkin/zipkin-gcp

env:

- name: STORAGE_TYPE

value: stackdriver

- name: GOOGLE_APPLICATION_CREDENTIALS

value: /zipkin/.gcp/myanthosproject2-eea0dc5b11be.json

- name: STACKDRIVER_PROJECT_ID

value: myanthosproject2

volumeMounts:

- name: gcp-volume

mountPath: /zipkin/.gcp

ports:

- containerPort: 9411

volumes:

- name: gcp-volume

configMap:

name: gcptracecred

---

apiVersion: v1

kind: Service

metadata:

name: zipkinnp

spec:

ports:

- nodePort: 31633

port: 9411

protocol: TCP

targetPort: 9411

selector:

app: zipkin

type: NodePort

There is nothing special about 31633, it’s just an open high numbered port in the cluster.

I can now deploy and check that it is running

$ kubectl apply -f ./zipkin-gcp.yaml

deployment.apps/zipkin created

service/zipkinnp created

$ kubectl get po -l app=zipkin

NAME READY STATUS RESTARTS AGE

zipkin-5bcc757f7-gqhnl 1/1 Running 0 10s

$ kubectl get svc zipkinnp

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

zipkinnp NodePort 10.43.113.125 <none> 9411:31633/TCP 34s

I can use any node in the cluster, but I prefer to use the Node that the pod is using to minimize traffic

$ kubectl describe po zipkin-5bcc757f7-gqhnl | grep -i Node:

Node: builder-hp-elitebook-850-g1/192.168.1.34

Back on the Linux VM running OTel, I can just swap the Zipkin endpoint for the Node and NodePort

exporters:

debug:

verbosity: ${LOG_EXPORTER_LOG_VERBOSITY}

otlphttp:

endpoint: https://otlp.nr-data.net

headers:

api-key: ${NEW_RELIC_API_KEY}

zipkin:

#endpoint: http://192.168.1.121:9411/api/v2/spans

endpoint: http://192.168.1.34:31633/api/v2/spans
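The endpoint is just the node IP, the NodePort, and Zipkin's v2 spans path. Here is a tiny sketch that assembles it and catches the easy mistake of using the service port (9411) instead of the NodePort. The function name is hypothetical, and the range check assumes the Kubernetes default NodePort range of 30000-32767, which a cluster can override.

```python
import ipaddress

# Kubernetes default service-node-port-range (clusters may configure another).
DEFAULT_NODEPORT_RANGE = range(30000, 32768)


def zipkin_spans_endpoint(node_ip, node_port):
    """Build the Zipkin v2 spans URL for a node IP and NodePort."""
    address = ipaddress.ip_address(node_ip)  # raises ValueError on a bad IP
    if node_port not in DEFAULT_NODEPORT_RANGE:
        raise ValueError(f"{node_port} is outside the default NodePort range")
    # IPv6 literals need brackets inside a URL.
    host = f"[{address}]" if address.version == 6 else str(address)
    return f"http://{host}:{node_port}/api/v2/spans"


print(zipkin_spans_endpoint("192.168.1.34", 31633))
```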

Then just restart the Otel collector with Docker compose to get the new config to take effect

builder@bosgamerz7:~/otel$ vi otel-config.yaml

builder@bosgamerz7:~/otel$ docker compose down

WARN[0000] The "OTELCOL_ARGS" variable is not set. Defaulting to a blank string.

WARN[0000] /home/builder/otel/docker-compose.yml: the attribute `version` is obsolete, it will be ignored, please remove it to avoid potential confusion

[+] Running 2/2

✔ Container otel-otel-collector-1 Removed 2.1s

✔ Network otel_default Removed 0.2s

builder@bosgamerz7:~/otel$ docker compose up -d

WARN[0000] The "OTELCOL_ARGS" variable is not set. Defaulting to a blank string.

WARN[0000] /home/builder/otel/docker-compose.yml: the attribute `version` is obsolete, it will be ignored, please remove it to avoid potential confusion

[+] Running 2/2

✔ Network otel_default Created 0.0s

✔ Container otel-otel-collector-1 Started 0.3s

builder@bosgamerz7:~/otel$

builder@bosgamerz7:~/otel$ docker ps | grep otel

6b1dcd51649c otel/opentelemetry-collector-contrib:0.121.0 "/otelcol-contrib --…" 10 seconds ago Up 9 seconds 0.0.0.0:4317-4318->4317-4318/tcp, [::]:4317-4318->4317-4318/tcp, 0.0.0.0:8006->8006/tcp, [::]:8006->8006/tcp, 0.0.0.0:13133->13133/tcp, [::]:13133->13133/tcp, 0.0.0.0:8006->8006/udp, [::]:8006->8006/udp, 55678-55679/tcp otel-otel-collector-1

A quick test shows New Relic is listening

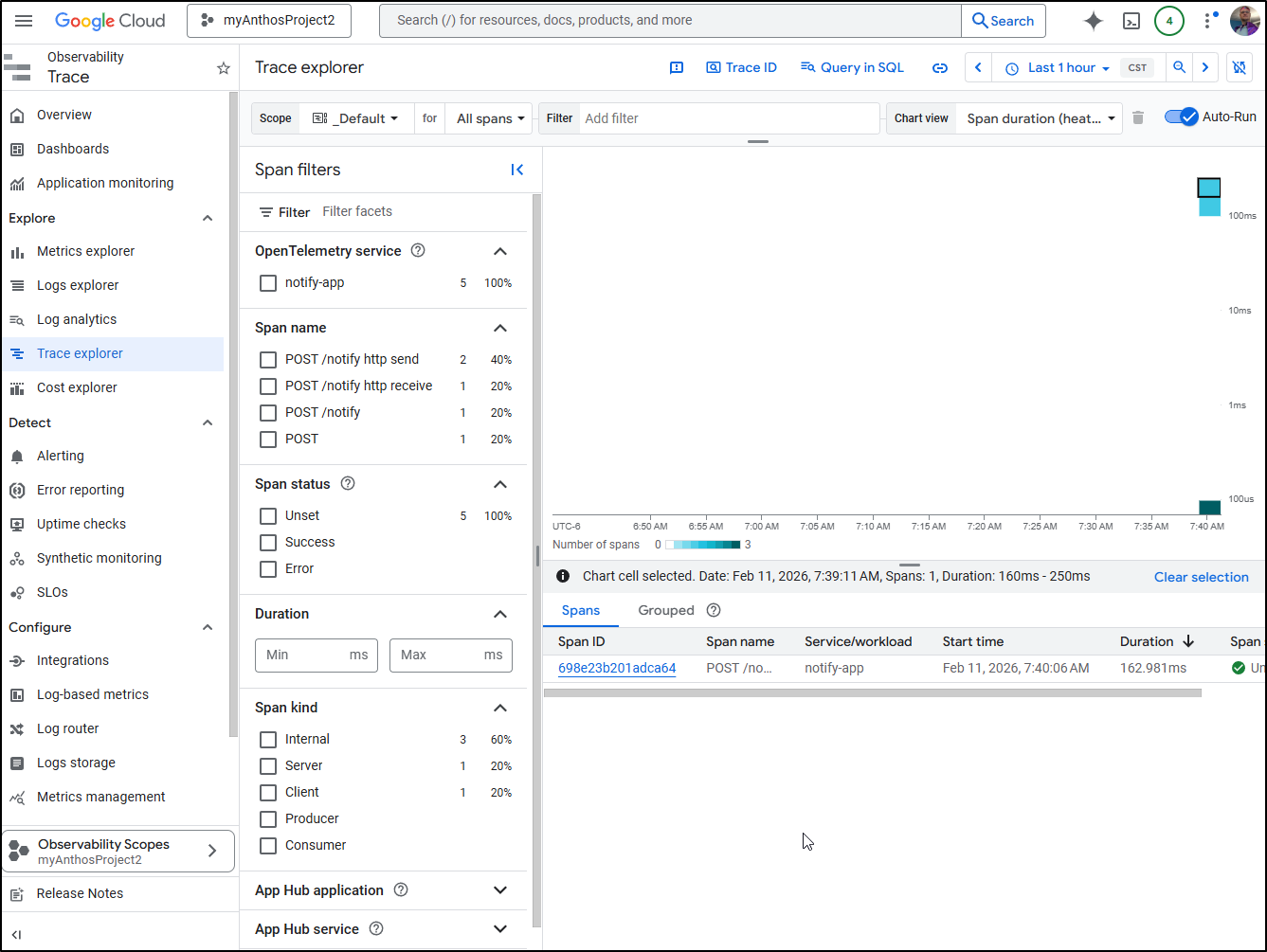

I can see the traces in GCP as well

with much the same detail

Trying the Otel Contrib

There are some docs on GCP about their OTel collector, but it’s really focused on running in GCP and misses some of the SA steps.

Thus we can look to the standard “opentelemetry-collector-contrib” which supports GCP Exporting

The OOTB config should cover us:

receivers:

otlp:

protocols:

grpc:

http:

exporters:

googlecloud:

log:

default_log_name: opentelemetry.io/collector-exported-log

processors:

memory_limiter:

check_interval: 1s

limit_percentage: 65

spike_limit_percentage: 20

resourcedetection:

detectors: [gcp]

timeout: 10s

service:

pipelines:

traces:

receivers: [otlp]

processors: [memory_limiter]

exporters: [googlecloud]

metrics:

receivers: [otlp]

processors: [memory_limiter]

exporters: [googlecloud]

logs:

receivers: [otlp]

processors: [memory_limiter]

exporters: [googlecloud]
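One thing worth noticing in that config: a component only runs if a pipeline references it. Here is a small audit sketch over the same config expressed as a Python dict (the standard library has no YAML parser, so I transcribed it by hand). The function name is hypothetical.

```python
CONFIG = {
    "receivers": {"otlp": {"protocols": {"grpc": None, "http": None}}},
    "exporters": {"googlecloud": {"log": {
        "default_log_name": "opentelemetry.io/collector-exported-log"}}},
    "processors": {
        "memory_limiter": {"check_interval": "1s", "limit_percentage": 65,
                           "spike_limit_percentage": 20},
        "resourcedetection": {"detectors": ["gcp"], "timeout": "10s"},
    },
    "service": {"pipelines": {
        name: {"receivers": ["otlp"], "processors": ["memory_limiter"],
               "exporters": ["googlecloud"]}
        for name in ("traces", "metrics", "logs")
    }},
}


def audit_pipelines(config):
    """Report components that are defined but unused, or used but undefined."""
    used = {kind: set() for kind in ("receivers", "processors", "exporters")}
    for pipeline in config["service"]["pipelines"].values():
        for kind in used:
            used[kind].update(pipeline.get(kind, []))
    report = {"unused": [], "undefined": []}
    for kind, names in used.items():
        defined = set(config.get(kind, {}))
        report["unused"] += sorted(f"{kind}/{n}" for n in defined - names)
        report["undefined"] += sorted(f"{kind}/{n}" for n in names - defined)
    return report


print(audit_pipelines(CONFIG))
# {'unused': ['processors/resourcedetection'], 'undefined': []}
```

So `resourcedetection` is configured but never wired into a pipeline. That is harmless outside GCP, where the `gcp` detector has little to find anyway, but it is the kind of thing that is easy to miss by eye.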

A standard deploy (assuming that SA we had is in ~/service-account-key.json) would look like

docker run \

--volume ~/service-account-key.json:/etc/otelcol-contrib/key.json \

--volume $(pwd)/config.yaml:/etc/otelcol-contrib/config.yaml \

--env GOOGLE_APPLICATION_CREDENTIALS=/etc/otelcol-contrib/key.json \

--expose 4317 \

--expose 55681 \

--rm \

otel/opentelemetry-collector-contrib

However, I’m using 4317 for my existing collector, so I’ll want to stop that one first, then start

builder@bosgamerz7:~/otel-gcp$ docker ps | grep 4317

6b1dcd51649c otel/opentelemetry-collector-contrib:0.121.0 "/otelcol-contrib --…" 20 minutes ago Up 20 minutes 0.0.0.0:4317-4318->4317-4318/tcp, [::]:4317-4318->4317-4318/tcp, 0.0.0.0:8006->8006/tcp, [::]:8006->8006/tcp, 0.0.0.0:13133->13133/tcp, [::]:13133->13133/tcp, 0.0.0.0:8006->8006/udp, [::]:8006->8006/udp, 55678-55679/tcp otel-otel-collector-1

builder@bosgamerz7:~/otel-gcp$ docker stop otel-otel-collector-1

otel-otel-collector-1

$ docker run --volume $HOME/service-account-key.json:/etc/otelcol-contrib/key.json --volume $(pwd)/config.yaml:/etc/otelcol-contrib/config.yaml --env GOOGLE_APPLICATION_CREDENTIALS=/etc/otelcol-contrib/key.json --expose 4317 --expose 55681 --rm otel/opentelemetry-collector-contrib

2026-02-11T12:59:11.957Z info service@v0.132.0/service.go:187 Setting up own telemetry... {"resource": {"service.instance.id": "c164c51c-9626-4af1-9010-519828d329f3", "service.name": "otelcol-contrib", "service.version": "0.132.0"}}

2026-02-11T12:59:11.957Z info memorylimiter@v0.132.0/memorylimiter.go:146 Using percentage memory limiter {"resource": {"service.instance.id": "c164c51c-9626-4af1-9010-519828d329f3", "service.name": "otelcol-contrib", "service.version": "0.132.0"}, "otelcol.component.kind": "processor", "total_memory_mib": 28901, "limit_percentage": 65, "spike_limit_percentage": 20}

2026-02-11T12:59:11.957Z info memorylimiter@v0.132.0/memorylimiter.go:71 Memory limiter configured {"resource": {"service.instance.id": "c164c51c-9626-4af1-9010-519828d329f3", "service.name": "otelcol-contrib", "service.version": "0.132.0"}, "otelcol.component.kind": "processor", "limit_mib": 18786, "spike_limit_mib": 5780, "check_interval": 1}

2026-02-11T12:59:11.959Z info service@v0.132.0/service.go:249 Starting otelcol-contrib... {"resource": {"service.instance.id": "c164c51c-9626-4af1-9010-519828d329f3", "service.name": "otelcol-contrib", "service.version": "0.132.0"}, "Version": "0.132.0", "NumCPU": 16}

2026-02-11T12:59:11.959Z info extensions/extensions.go:41 Starting extensions... {"resource": {"service.instance.id": "c164c51c-9626-4af1-9010-519828d329f3", "service.name": "otelcol-contrib", "service.version": "0.132.0"}}

2026-02-11T12:59:11.960Z info otlpreceiver@v0.132.0/otlp.go:117 Starting GRPC server {"resource": {"service.instance.id": "c164c51c-9626-4af1-9010-519828d329f3", "service.name": "otelcol-contrib", "service.version": "0.132.0"}, "otelcol.component.id": "otlp", "otelcol.component.kind": "receiver", "endpoint": "localhost:4317"}

2026-02-11T12:59:11.961Z info otlpreceiver@v0.132.0/otlp.go:175 Starting HTTP server {"resource": {"service.instance.id": "c164c51c-9626-4af1-9010-519828d329f3", "service.name": "otelcol-contrib", "service.version": "0.132.0"}, "otelcol.component.id": "otlp", "otelcol.component.kind": "receiver", "endpoint": "localhost:4318"}

2026-02-11T12:59:11.962Z info service@v0.132.0/service.go:272 Everything is ready. Begin running and processing data. {"resource": {"service.instance.id": "c164c51c-9626-4af1-9010-519828d329f3", "service.name": "otelcol-contrib", "service.version": "0.132.0"}}

I fought this for a while:

- I tried just using port forwarding syntax (-p 4317:4317)

- I tried using the tag mentioned in the current Prometheus docs, which is “0.140.0”

But no matter what I did:

$ docker run --volume $HOME/service-account-key.json:/etc/otelcol-contrib/key.json --volume $(pwd)/config.yaml:/etc/otelcol-contrib/config.yaml --env GOOGLE_APPLICATION_CREDENTIALS=/etc/otelcol-contrib/key.json --expose 4317 --expose 55681 --rm otel/opentelemetry-collector-contrib

# Use GCP image

$ docker run --volume $HOME/service-account-key.json:/etc/otelcol-contrib/key.json --volume $(pwd)/config.yaml:/etc/otelcol-contrib/config.yaml --env GOOGLE_APPLICATION_CREDENTIALS=/etc/otelcol-contrib/key.json -p 4317:4317 -p 55681:55681 -d otel/opentelemetry-collector-contrib:0.140.0

# Include http

$ docker run --volume $HOME/service-account-key.json:/etc/otelcol-contrib/key.json --volume $(pwd)/config.yaml:/etc/otelcol-contrib/config.yaml --env GOOGLE_APPLICATION_CREDENTIALS=/etc/otelcol-contrib/key.json -p 4317:4317 -p 4318:4318 -p 55681:55681 -d otel/opentelemetry-collector-contrib:0.140.0

The result was the same; I saw logs showing running but not listening

$ docker logs upbeat_blackburn

2026-02-11T13:14:27.143Z info memorylimiter@v0.140.0/memorylimiter.go:146 Using percentage memory limiter {"resource": {"service.instance.id": "70b24811-3826-42ba-a22f-203fefc6e80e", "service.name": "otelcol-contrib", "service.version": "0.140.1"}, "otelcol.component.kind": "processor", "total_memory_mib": 28901, "limit_percentage": 65, "spike_limit_percentage": 20}

2026-02-11T13:14:27.143Z info memorylimiter@v0.140.0/memorylimiter.go:71 Memory limiter configured {"resource": {"service.instance.id": "70b24811-3826-42ba-a22f-203fefc6e80e", "service.name": "otelcol-contrib", "service.version": "0.140.1"}, "otelcol.component.kind": "processor", "limit_mib": 18786, "spike_limit_mib": 5780, "check_interval": 1}

2026-02-11T13:14:27.144Z info service@v0.140.0/service.go:224 Starting otelcol-contrib... {"resource": {"service.instance.id": "70b24811-3826-42ba-a22f-203fefc6e80e", "service.name": "otelcol-contrib", "service.version": "0.140.1"}, "Version": "0.140.1", "NumCPU": 16}

2026-02-11T13:14:27.144Z info extensions/extensions.go:40 Starting extensions... {"resource": {"service.instance.id": "70b24811-3826-42ba-a22f-203fefc6e80e", "service.name": "otelcol-contrib", "service.version": "0.140.1"}}

2026-02-11T13:14:27.145Z info otlpreceiver@v0.140.0/otlp.go:120 Starting GRPC server {"resource": {"service.instance.id": "70b24811-3826-42ba-a22f-203fefc6e80e", "service.name": "otelcol-contrib", "service.version": "0.140.1"}, "otelcol.component.id": "otlp", "otelcol.component.kind": "receiver", "endpoint": "127.0.0.1:4317"}

2026-02-11T13:14:27.145Z info otlpreceiver@v0.140.0/otlp.go:178 Starting HTTP server {"resource": {"service.instance.id": "70b24811-3826-42ba-a22f-203fefc6e80e", "service.name": "otelcol-contrib", "service.version": "0.140.1"}, "otelcol.component.id": "otlp", "otelcol.component.kind": "receiver", "endpoint": "127.0.0.1:4318"}

2026-02-11T13:14:27.145Z info service@v0.140.0/service.go:247 Everything is ready. Begin running and processing data. {"resource": {"service.instance.id": "70b24811-3826-42ba-a22f-203fefc6e80e", "service.name": "otelcol-contrib", "service.version": "0.140.1"}}

Meanwhile, my Notify app was showing rejected connections:

$ kubectl logs notifyapp-7667cfb94c-69z4n | tail -n5

WARNING:opentelemetry.exporter.otlp.proto.grpc.exporter:Transient error StatusCode.UNAVAILABLE encountered while exporting metrics to 192.168.1.121:4317, retrying in 0.98s.

ERROR:opentelemetry.exporter.otlp.proto.grpc.exporter:Failed to export metrics to 192.168.1.121:4317, error code: StatusCode.UNAVAILABLE

WARNING:opentelemetry.exporter.otlp.proto.grpc.exporter:Transient error StatusCode.UNAVAILABLE encountered while exporting logs to 192.168.1.121:4317, retrying in 1.08s.

ERROR:opentelemetry.exporter.otlp.proto.grpc.exporter:Failed to export logs to 192.168.1.121:4317, error code: StatusCode.UNAVAILABLE

WARNING:opentelemetry.exporter.otlp.proto.grpc.exporter:Transient error StatusCode.UNAVAILABLE encountered while exporting logs to 192.168.1.121:4317, retrying in 0.83s.
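Before blaming the exporter, it can help to confirm whether the collector port is reachable at all from the client's side. Here is a minimal sketch using only the Python standard library; the helper name `can_connect` is my own, and it only checks that a TCP connection opens, not that OTLP is actually being served.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a plain TCP connection to host:port can be opened."""
    try:
        # create_connection raises OSError (refused, unreachable, timeout) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Example usage (host and ports taken from the logs above):
#   for port in (4317, 4318):
#       print(port, can_connect("192.168.1.121", port))
```

A `False` here points at networking or the listener's bind address rather than at the OTel SDK in the app.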

I tested locally with some sample spans and they too were rejected, so I think something is amiss with the otel contrib container as it just won’t “hear” me.

builder@bosgamerz7:~/otel$ grpcurl -plaintext -d @ 192.168.1.121:4317 opentelemetry.collector.trace.v1.TraceService/Export < ../otel/test_span_grpc.json

Failed to dial target host "192.168.1.121:4317": connection error: desc = "transport: error while dialing: dial tcp 192.168.1.121:4317: connect: connection refused"

builder@bosgamerz7:~/otel$ grpcurl -plaintext -d @ 192.168.1.121:4317 opentelemetry.collector.trace.v1.TraceService/Export < ../otel/test_span_grpc.json

Failed to dial target host "192.168.1.121:4317": connection error: desc = "transport: error while dialing: dial tcp 192.168.1.121:4317: connect: connection refused"

builder@bosgamerz7:~/otel$ grpcurl -plaintext -d @ 192.168.1.121:4318 opentelemetry.collector.trace.v1.TraceService/Export < ../otel/test_span_grpc.json

Failed to dial target host "192.168.1.121:4318": connection error: desc = "transport: error while dialing: dial tcp 192.168.1.121:4318: connect: connection refused"

builder@bosgamerz7:~/otel$ grpcurl -plaintext -d @ 192.168.1.121:4317 opentelemetry.collector.trace.v1.TraceService/Export < ../otel/test_span_grpc.json

Failed to dial target host "192.168.1.121:4317": connection error: desc = "transport: error while dialing: dial tcp 192.168.1.121:4317: connect: connection refused"

builder@bosgamerz7:~/otel$ grpcurl -plaintext -d @ localhost:4317 traces/Export < test_span_grpc.json

Failed to dial target host "localhost:4317": context deadline exceeded

builder@bosgamerz7:~/otel$ curl -i http://127.0.0.1:4318/v1/traces -X POST -H "Content-Type: application/json" -d @test_span.json

curl: (56) Recv failure: Connection reset by peer

builder@bosgamerz7:~/otel$ curl -i http://127.0.0.1:4318/v1/traces -X POST -H "Content-Type: application/json" -d @test_span.json

curl: (56) Recv failure: Connection reset by peer
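The contents of test_span.json are not shown here, so as an illustration only, this is a sketch of how a minimal OTLP/JSON trace payload for the `/v1/traces` endpoint could be generated. The service name, scope name and helper names are hypothetical. It sets explicit non-zero start and end times, since Cloud Trace rejects spans without a valid start time (that exact error shows up later in this post).

```python
import json
import secrets
import time


def build_test_span(service_name: str = "test-service", span_name: str = "test-span") -> dict:
    """Build a minimal OTLP/JSON export request containing a single span."""
    end_ns = time.time_ns()
    start_ns = end_ns - 50_000_000  # pretend the span lasted 50 ms
    return {
        "resourceSpans": [
            {
                "resource": {
                    "attributes": [
                        {"key": "service.name", "value": {"stringValue": service_name}}
                    ]
                },
                "scopeSpans": [
                    {
                        "scope": {"name": "manual-test"},  # hypothetical scope name
                        "spans": [
                            {
                                "traceId": secrets.token_hex(16),  # 32 hex characters
                                "spanId": secrets.token_hex(8),  # 16 hex characters
                                "name": span_name,
                                "kind": 1,  # SPAN_KIND_INTERNAL
                                # 64-bit integers are written as strings in OTLP/JSON
                                "startTimeUnixNano": str(start_ns),
                                "endTimeUnixNano": str(end_ns),
                            }
                        ],
                    }
                ],
            }
        ]
    }


def write_test_span(path: str = "test_span.json") -> None:
    """Write the payload to a file that curl can send with -d @path."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(build_test_span(), handle, indent=2)
```

Calling `write_test_span()` produces a file that can be posted with the same curl command as above.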

I even tried swapping to a batch processor, similar to the way my current Otel container is set up:

$ cat config.yaml

receivers:
  otlp:
    protocols:
      grpc:
      http:
exporters:
  googlecloud:
    log:
      default_log_name: opentelemetry.io/collector-exported-log
processors:
  batch:
  # Will convert all monotonic, cumulative sums to monotonic, delta sums
  cumulativetodelta:
  transform:
    trace_statements:
      - truncate_all(span.attributes, 4095)
      - truncate_all(resource.attributes, 4095)
    log_statements:
      - truncate_all(log.attributes, 4095)
      - truncate_all(resource.attributes, 4095)
    metric_statements:
      - truncate_all(datapoint.attributes, 4095)
      - truncate_all(resource.attributes, 4095)
service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [transform, batch]
      exporters: [googlecloud]
    metrics:
      receivers: [otlp]
      processors: [transform, batch]
      exporters: [googlecloud]
    logs:
      receivers: [otlp]
      processors: [transform, batch]
      exporters: [googlecloud]

Nothing seemed to get it to “listen”.

I tried running the problem past Claude via the free tier of Copilot, but it had no ideas.

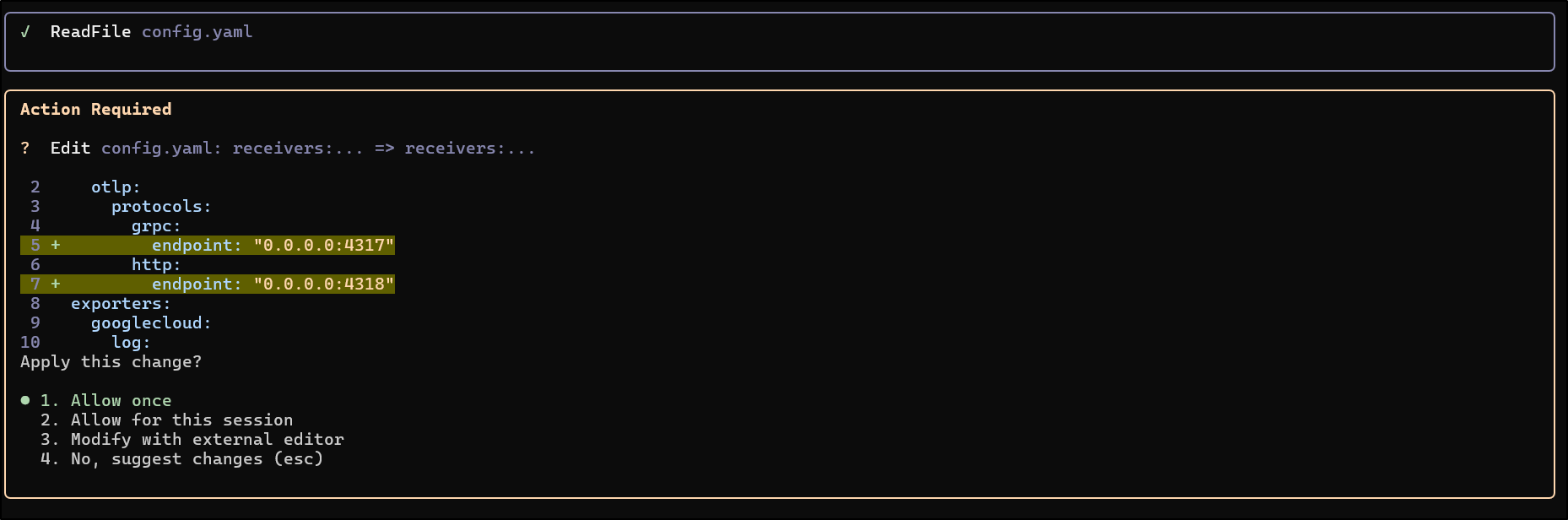

The old adage, “If at first you don’t succeed, try, try, then stop. No sense in making a damn fool of yourself”, might better be put “If at first you don’t succeed, try, try, try, then dammit, call up Gemini CLI!”

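It suggested the “0.0.0.0” syntax in the endpoint configuration (which is triggering some déjà vu). That makes sense in hindsight: with no endpoint set, the receivers bound to 127.0.0.1 inside the container (see the "endpoint" values in the log lines above), so the ports Docker published had nothing listening behind them.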

The new config

$ cat config.yaml

receivers:
  otlp:
    protocols:
      grpc:
        endpoint: "0.0.0.0:4317"
      http:
        endpoint: "0.0.0.0:4318"
exporters:
  googlecloud:
    log:
      default_log_name: opentelemetry.io/collector-exported-log
processors:
  batch:
  # Will convert all monotonic, cumulative sums to monotonic, delta sums
  cumulativetodelta:
  transform:
    trace_statements:
      - truncate_all(span.attributes, 4095)
      - truncate_all(resource.attributes, 4095)
    log_statements:
      - truncate_all(log.attributes, 4095)
      - truncate_all(resource.attributes, 4095)
    metric_statements:
      - truncate_all(datapoint.attributes, 4095)
      - truncate_all(resource.attributes, 4095)
service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [transform, batch]
      exporters: [googlecloud]
    metrics:
      receivers: [otlp]
      processors: [transform, batch]
      exporters: [googlecloud]
    logs:
      receivers: [otlp]
      processors: [transform, batch]
      exporters: [googlecloud]

That seemed to work: traces were now being sent.
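The giveaway was in the collector's own startup logs all along: the failing runs reported "endpoint": "127.0.0.1:4317", while a working run reports "[::]:4317". As a rough sketch (the helper names are mine, and it assumes the log format shown in this post, where each line ends in a JSON object), something like this could flag loopback-only receivers from `docker logs` output:

```python
import ipaddress
import json
from typing import List, Optional


def bound_endpoint(log_line: str) -> Optional[str]:
    """Return the "endpoint" field from a collector log line, or None if absent."""
    start = log_line.find("{")
    if start == -1:
        return None
    try:
        fields = json.loads(log_line[start:])
    except json.JSONDecodeError:
        return None
    return fields.get("endpoint")


def is_loopback_only(endpoint: str) -> bool:
    """True if host:port binds only to localhost, for example 127.0.0.1 or [::1]."""
    host = endpoint.rsplit(":", 1)[0].strip("[]")
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        return False  # not an IP literal; treat as unknown rather than loopback


def loopback_receivers(log_text: str) -> List[str]:
    """List every endpoint in the log text that is bound to loopback only."""
    found = []
    for line in log_text.splitlines():
        endpoint = bound_endpoint(line)
        if endpoint and is_loopback_only(endpoint):
            found.append(endpoint)
    return found
```

Feeding it the earlier logs would return the 127.0.0.1 endpoints, while the logs from the 0.0.0.0 config should return an empty list.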

If I check the logs, however, we see there are some issues:

$ docker logs --follow jovial_neumann

2026-02-11T13:38:00.821Z info service@v0.140.0/service.go:224 Starting otelcol-contrib... {"resource": {"service.instance.id": "7dc47037-8767-4169-8942-73c35fc1eee0", "service.name": "otelcol-contrib", "service.version": "0.140.1"}, "Version": "0.140.1", "NumCPU": 16}

2026-02-11T13:38:00.821Z info extensions/extensions.go:40 Starting extensions... {"resource": {"service.instance.id": "7dc47037-8767-4169-8942-73c35fc1eee0", "service.name": "otelcol-contrib", "service.version": "0.140.1"}}

2026-02-11T13:38:00.822Z info otlpreceiver@v0.140.0/otlp.go:120 Starting GRPC server {"resource": {"service.instance.id": "7dc47037-8767-4169-8942-73c35fc1eee0", "service.name": "otelcol-contrib", "service.version": "0.140.1"}, "otelcol.component.id": "otlp", "otelcol.component.kind": "receiver", "endpoint": "[::]:4317"}

2026-02-11T13:38:00.822Z info otlpreceiver@v0.140.0/otlp.go:178 Starting HTTP server {"resource": {"service.instance.id": "7dc47037-8767-4169-8942-73c35fc1eee0", "service.name": "otelcol-contrib", "service.version": "0.140.1"}, "otelcol.component.id": "otlp", "otelcol.component.kind": "receiver", "endpoint": "[::]:4318"}

2026-02-11T13:38:00.823Z info service@v0.140.0/service.go:247 Everything is ready. Begin running and processing data. {"resource": {"service.instance.id": "7dc47037-8767-4169-8942-73c35fc1eee0", "service.name": "otelcol-contrib", "service.version": "0.140.1"}}

2026/02/11 13:39:20 failed to export to Google Cloud Trace: rpc error: code = InvalidArgument desc = A valid, non-zero start time is required.

2026-02-11T13:39:20.986Z error internal/queue_sender.go:49 Exporting failed. Dropping data. {"resource": {"service.instance.id": "7dc47037-8767-4169-8942-73c35fc1eee0", "service.name": "otelcol-contrib", "service.version": "0.140.1"}, "otelcol.component.id": "googlecloud", "otelcol.component.kind": "exporter", "otelcol.signal": "traces", "error": "rpc error: code = InvalidArgument desc = A valid, non-zero start time is required.", "dropped_items": 1}

go.opentelemetry.io/collector/exporter/exporterhelper/internal.NewQueueSender.func1

go.opentelemetry.io/collector/exporter/exporterhelper@v0.140.0/internal/queue_sender.go:49

go.opentelemetry.io/collector/exporter/exporterhelper/internal/queuebatch.(*disabledBatcher[...]).Consume

go.opentelemetry.io/collector/exporter/exporterhelper@v0.140.0/internal/queuebatch/disabled_batcher.go:23

go.opentelemetry.io/collector/exporter/exporterhelper/internal/queue.(*asyncQueue[...]).Start.func1

go.opentelemetry.io/collector/exporter/exporterhelper@v0.140.0/internal/queue/async_queue.go:49

2026-02-11T13:39:28.822Z error internal/queue_sender.go:49 Exporting failed. Dropping data. {"resource": {"service.instance.id": "7dc47037-8767-4169-8942-73c35fc1eee0", "service.name": "otelcol-contrib", "service.version": "0.140.1"}, "otelcol.component.id": "googlecloud", "otelcol.component.kind": "exporter", "otelcol.signal": "logs", "error": "rpc error: code = PermissionDenied desc = Permission 'logging.logEntries.create' denied on resource (or it may not exist).\nerror details: name = ErrorInfo reason = IAM_PERMISSION_DENIED domain = iam.googleapis.com metadata = map[permission:logging.logEntries.create]\nerror details: name = Unknown desc = log_entry_errors:{key:0 value:{code:7 message:\"Permission 'logging.logEntries.create' denied on resource (or it may not exist).\"}} log_entry_errors:{key:1 value:{code:7 message:\"Permission 'logging.logEntries.create' denied on resource (or it may not exist).\"}}", "dropped_items": 2}

go.opentelemetry.io/collector/exporter/exporterhelper/internal.NewQueueSender.func1

go.opentelemetry.io/collector/exporter/exporterhelper@v0.140.0/internal/queue_sender.go:49

go.opentelemetry.io/collector/exporter/exporterhelper/internal/queuebatch.(*disabledBatcher[...]).Consume

go.opentelemetry.io/collector/exporter/exporterhelper@v0.140.0/internal/queuebatch/disabled_batcher.go:23

go.opentelemetry.io/collector/exporter/exporterhelper/internal/queue.(*asyncQueue[...]).Start.func1

go.opentelemetry.io/collector/exporter/exporterhelper@v0.140.0/internal/queue/async_queue.go:49

2026-02-11T13:39:33.559Z error internal/queue_sender.go:49 Exporting failed. Dropping data. {"resource": {"service.instance.id": "7dc47037-8767-4169-8942-73c35fc1eee0", "service.name": "otelcol-contrib", "service.version": "0.140.1"}, "otelcol.component.id": "googlecloud", "otelcol.component.kind": "exporter", "otelcol.signal": "logs", "error": "rpc error: code = PermissionDenied desc = Permission 'logging.logEntries.create' denied on resource (or it may not exist).\nerror details: name = ErrorInfo reason = IAM_PERMISSION_DENIED domain = iam.googleapis.com metadata = map[permission:logging.logEntries.create]\nerror details: name = Unknown desc = log_entry_errors:{key:0 value:{code:7 message:\"Permission 'logging.logEntries.create' denied on resource (or it may not exist).\"}}", "dropped_items": 1}

go.opentelemetry.io/collector/exporter/exporterhelper/internal.NewQueueSender.func1

go.opentelemetry.io/collector/exporter/exporterhelper@v0.140.0/internal/queue_sender.go:49

go.opentelemetry.io/collector/exporter/exporterhelper/internal/queuebatch.(*disabledBatcher[...]).Consume

go.opentelemetry.io/collector/exporter/exporterhelper@v0.140.0/internal/queuebatch/disabled_batcher.go:23

go.opentelemetry.io/collector/exporter/exporterhelper/internal/queue.(*asyncQueue[...]).Start.func1

go.opentelemetry.io/collector/exporter/exporterhelper@v0.140.0/internal/queue/async_queue.go:49

2026-02-11T13:40:09.443Z error internal/queue_sender.go:49 Exporting failed. Dropping data. {"resource": {"service.instance.id": "7dc47037-8767-4169-8942-73c35fc1eee0", "service.name": "otelcol-contrib", "service.version": "0.140.1"}, "otelcol.component.id": "googlecloud", "otelcol.component.kind": "exporter", "otelcol.signal": "metrics", "error": "failed to export metrics to projects/myanthosproject2: rpc error: code = PermissionDenied desc = Permission monitoring.timeSeries.create denied (or the resource may not exist).", "dropped_items": 6}

go.opentelemetry.io/collector/exporter/exporterhelper/internal.NewQueueSender.func1

go.opentelemetry.io/collector/exporter/exporterhelper@v0.140.0/internal/queue_sender.go:49

go.opentelemetry.io/collector/exporter/exporterhelper/internal/queuebatch.(*disabledBatcher[...]).Consume

go.opentelemetry.io/collector/exporter/exporterhelper@v0.140.0/internal/queuebatch/disabled_batcher.go:23

go.opentelemetry.io/collector/exporter/exporterhelper/internal/queue.(*asyncQueue[...]).Start.func1

go.opentelemetry.io/collector/exporter/exporterhelper@v0.140.0/internal/queue/async_queue.go:49

2026-02-11T13:40:09.450Z error collector@v0.54.0/metrics.go:772 Unable to send metric descriptor. {"resource": {"service.instance.id": "7dc47037-8767-4169-8942-73c35fc1eee0", "service.name": "otelcol-contrib", "service.version": "0.140.1"}, "otelcol.component.id": "googlecloud", "otelcol.component.kind": "exporter", "otelcol.signal": "metrics", "error": "rpc error: code = PermissionDenied desc = Permission monitoring.metricDescriptors.create denied (or the resource may not exist).", "metric_descriptor": "name:\"http.client.duration\" type:\"workload.googleapis.com/http.client.duration\" labels:{key:\"instrumentation_source\"} labels:{key:\"instrumentation_version\"} labels:{key:\"service_name\"} labels:{key:\"http_method\"} labels:{key:\"http_scheme\"} labels:{key:\"http_host\"} labels:{key:\"net_peer_name\"} labels:{key:\"http_status_code\"} labels:{key:\"http_flavor\"} metric_kind:CUMULATIVE value_type:DISTRIBUTION unit:\"ms\" description:\"measures the duration of the outbound HTTP request\" display_name:\"http.client.duration\""}

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector.(*MetricsExporter).exportMetricDescriptor

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector@v0.54.0/metrics.go:772

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector.(*MetricsExporter).exportMetricDescriptorRunner

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector@v0.54.0/metrics.go:738

2026-02-11T13:40:09.533Z error collector@v0.54.0/metrics.go:772 Unable to send metric descriptor. {"resource": {"service.instance.id": "7dc47037-8767-4169-8942-73c35fc1eee0", "service.name": "otelcol-contrib", "service.version": "0.140.1"}, "otelcol.component.id": "googlecloud", "otelcol.component.kind": "exporter", "otelcol.signal": "metrics", "error": "rpc error: code = Unavailable desc = The service is currently unavailable.", "metric_descriptor": "name:\"http.server.active_requests\" type:\"workload.googleapis.com/http.server.active_requests\" labels:{key:\"instrumentation_source\"} labels:{key:\"instrumentation_version\"} labels:{key:\"service_name\"} labels:{key:\"http_scheme\"} labels:{key:\"http_host\"} labels:{key:\"http_flavor\"} labels:{key:\"http_method\"} labels:{key:\"http_server_name\"} metric_kind:GAUGE value_type:INT64 unit:\"{request}\" description:\"Number of active HTTP server requests.\" display_name:\"http.server.active_requests\""}

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector.(*MetricsExporter).exportMetricDescriptor

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector@v0.54.0/metrics.go:772

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector.(*MetricsExporter).exportMetricDescriptorRunner

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector@v0.54.0/metrics.go:738

2026-02-11T13:40:09.762Z error collector@v0.54.0/metrics.go:772 Unable to send metric descriptor. {"resource": {"service.instance.id": "7dc47037-8767-4169-8942-73c35fc1eee0", "service.name": "otelcol-contrib", "service.version": "0.140.1"}, "otelcol.component.id": "googlecloud", "otelcol.component.kind": "exporter", "otelcol.signal": "metrics", "error": "rpc error: code = PermissionDenied desc = Permission monitoring.metricDescriptors.create denied (or the resource may not exist).", "metric_descriptor": "name:\"http.server.duration\" type:\"workload.googleapis.com/http.server.duration\" labels:{key:\"service_name\"} labels:{key:\"instrumentation_source\"} labels:{key:\"instrumentation_version\"} labels:{key:\"http_scheme\"} labels:{key:\"http_host\"} labels:{key:\"net_host_port\"} labels:{key:\"http_flavor\"} labels:{key:\"http_method\"} labels:{key:\"http_server_name\"} labels:{key:\"http_status_code\"} labels:{key:\"http_target\"} metric_kind:CUMULATIVE value_type:DISTRIBUTION unit:\"ms\" description:\"Measures the duration of inbound HTTP requests.\" display_name:\"http.server.duration\""}

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector.(*MetricsExporter).exportMetricDescriptor

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector@v0.54.0/metrics.go:772

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector.(*MetricsExporter).exportMetricDescriptorRunner

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector@v0.54.0/metrics.go:738

2026-02-11T13:40:09.962Z error collector@v0.54.0/metrics.go:772 Unable to send metric descriptor. {"resource": {"service.instance.id": "7dc47037-8767-4169-8942-73c35fc1eee0", "service.name": "otelcol-contrib", "service.version": "0.140.1"}, "otelcol.component.id": "googlecloud", "otelcol.component.kind": "exporter", "otelcol.signal": "metrics", "error": "rpc error: code = PermissionDenied desc = Permission monitoring.metricDescriptors.create denied (or the resource may not exist).", "metric_descriptor": "name:\"http.server.response.size\" type:\"workload.googleapis.com/http.server.response.size\" labels:{key:\"instrumentation_source\"} labels:{key:\"instrumentation_version\"} labels:{key:\"service_name\"} labels:{key:\"http_scheme\"} labels:{key:\"http_host\"} labels:{key:\"net_host_port\"} labels:{key:\"http_flavor\"} labels:{key:\"http_method\"} labels:{key:\"http_server_name\"} labels:{key:\"http_status_code\"} labels:{key:\"http_target\"} metric_kind:CUMULATIVE value_type:DISTRIBUTION unit:\"By\" description:\"measures the size of HTTP response messages (compressed).\" display_name:\"http.server.response.size\""}

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector.(*MetricsExporter).exportMetricDescriptor

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector@v0.54.0/metrics.go:772

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector.(*MetricsExporter).exportMetricDescriptorRunner

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector@v0.54.0/metrics.go:738

2026-02-11T13:40:10.162Z error collector@v0.54.0/metrics.go:772 Unable to send metric descriptor. {"resource": {"service.instance.id": "7dc47037-8767-4169-8942-73c35fc1eee0", "service.name": "otelcol-contrib", "service.version": "0.140.1"}, "otelcol.component.id": "googlecloud", "otelcol.component.kind": "exporter", "otelcol.signal": "metrics", "error": "rpc error: code = PermissionDenied desc = Permission monitoring.metricDescriptors.create denied (or the resource may not exist).", "metric_descriptor": "name:\"http.server.request.size\" type:\"workload.googleapis.com/http.server.request.size\" labels:{key:\"instrumentation_source\"} labels:{key:\"instrumentation_version\"} labels:{key:\"service_name\"} labels:{key:\"http_scheme\"} labels:{key:\"http_host\"} labels:{key:\"net_host_port\"} labels:{key:\"http_flavor\"} labels:{key:\"http_method\"} labels:{key:\"http_server_name\"} labels:{key:\"http_status_code\"} labels:{key:\"http_target\"} metric_kind:CUMULATIVE value_type:DISTRIBUTION unit:\"By\" description:\"Measures the size of HTTP request messages (compressed).\" display_name:\"http.server.request.size\""}

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector.(*MetricsExporter).exportMetricDescriptor

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector@v0.54.0/metrics.go:772

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector.(*MetricsExporter).exportMetricDescriptorRunner

github.com/GoogleCloudPlatform/opentelemetry-operations-go/exporter/collector@v0.54.0/metrics.go:738

Namely, if I want to route all the OTel signals (metrics, traces and logs), then I need to grant that SA more permissions (or use an SA that already has them).
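Those stack traces are noisy, so here is a small sketch that pulls just the denied IAM permissions out of the collector logs. The function names and the `ROLE_HINTS` table are my own additions; the role mapping reflects my understanding of the predefined roles and should be verified against the IAM documentation before granting anything.

```python
import re
from typing import List

# Assumption: predefined roles that include each permission; verify in the IAM docs.
ROLE_HINTS = {
    "logging.logEntries.create": "roles/logging.logWriter",
    "monitoring.timeSeries.create": "roles/monitoring.metricWriter",
    "monitoring.metricDescriptors.create": "roles/monitoring.metricWriter",
}

# Matches both "Permission 'a.b.c' denied" and "Permission a.b.c denied"
_DENIED = re.compile(r"Permission '?([A-Za-z]+(?:\.[A-Za-z]+)+)'? denied")


def denied_permissions(log_text: str) -> List[str]:
    """Return the unique permissions reported as denied, sorted alphabetically."""
    return sorted(set(_DENIED.findall(log_text)))


def suggested_roles(log_text: str) -> List[str]:
    """Map denied permissions to candidate roles using the ROLE_HINTS table."""
    perms = denied_permissions(log_text)
    return sorted({ROLE_HINTS[p] for p in perms if p in ROLE_HINTS})
```

Run against the log output above, `denied_permissions` should list `logging.logEntries.create`, `monitoring.metricDescriptors.create` and `monitoring.timeSeries.create`, which lines up with the missing log and metric write access.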

Now, if you recall, we created a secret for our Gotify app when we added native GCP Otel instrumentation libraries:

$ kubectl create secret generic gotifyme-sa --from-file=mysa.json=/home/builder/Workspaces/GotifyMe/mysa.json

secret/gotifyme-sa created

I should be able to just swap that SA in the OTEL launch

builder@bosgamerz7:~/otel-gcp$ cat ~/mysa.json | grep client_email

"client_email": "perlmcpapi@myanthosproject2.iam.gserviceaccount.com",

builder@bosgamerz7:~/otel-gcp$ docker run --volume $HOME/mysa.json:/etc/otelcol-contrib/key.json --volume $(pwd)/config.yaml:/etc/otelcol-contrib/config.yaml --env GOOGLE_APPLICATION_CREDENTIALS=/etc/otelcol-contrib/key.json -p 4317:4317 -p 4318:4318 -p 55681:55681 -d otel/opentelemetry-collector-contrib

f9be7f07017d191dab31a7393afe14915a0ba1ed2699ddb28233a8c3719e5475

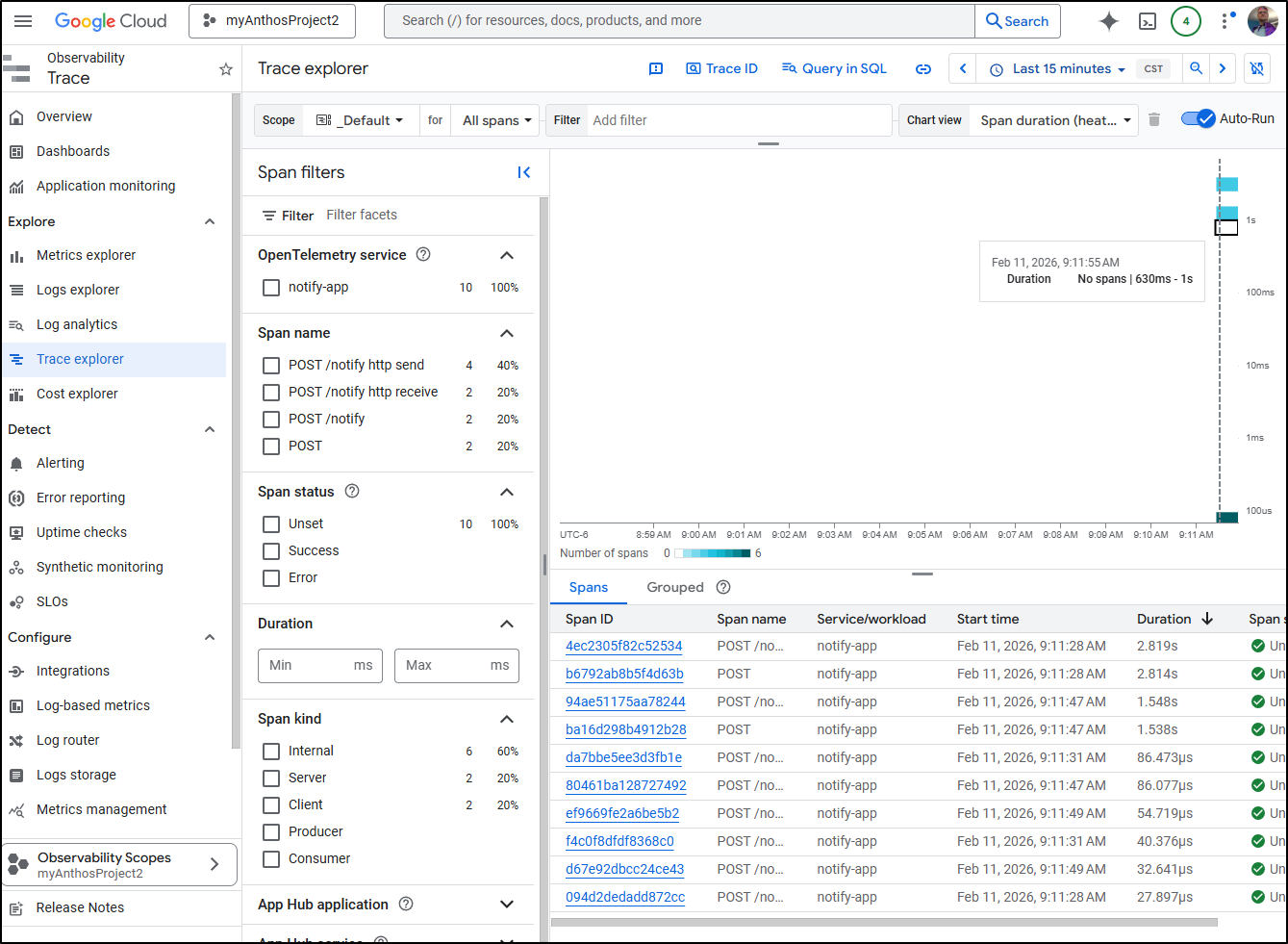

Now when testing the notify app, I can see fresh spans

The first time they came by way of App -> Otel -> ZipkinGCP -> CloudTrace.

This time, they are using App -> OtelGCP -> CloudTrace.

Additionally, I can see logs entered (this one about making a metric)

as well as Metrics

I pushed this to the Public GotifyMe repo and made a PR so you can review the code changes: PR3

GCP Native vs OTEL Standard

We looked at Google’s New(er) OpenTelemetry endpoints and tested by instrumenting some Python code.

Let’s compare the code for “GCP Native OTel” against the standard “OTel” approach (which we redirected through a forwarder).

The first thing you will notice is that for “GCP Native” we need to pull in libraries for Google Auth and Cloud Trace, while for “OTel Native” we pull in a lot of OTel SDK libraries.

We cannot fault GCP Native for the verbosity of the auth block: if we look at my code, much of it is verbose debug output (which I used to sort out my permissions).

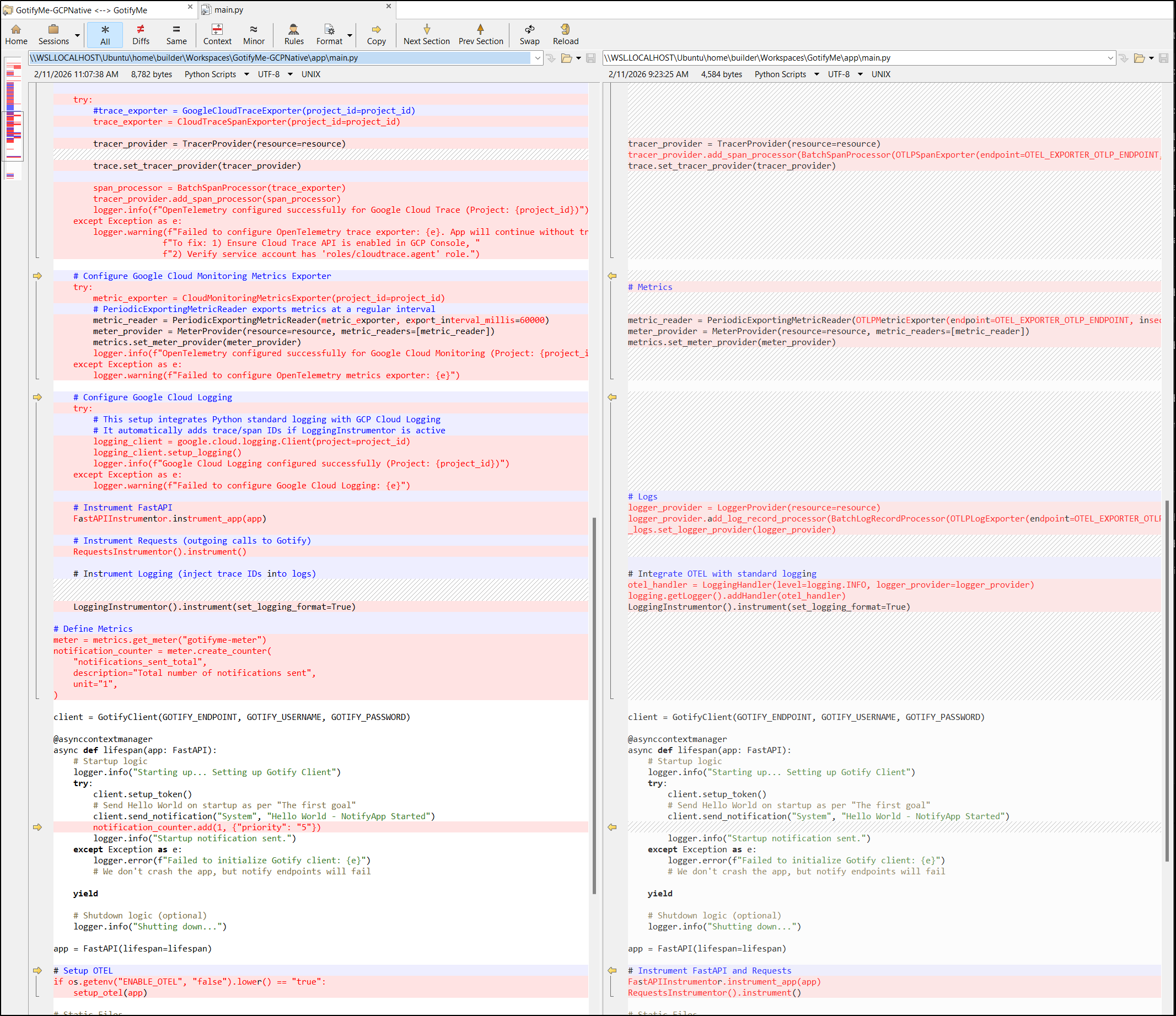

Let’s now look at the differences in the core of the app:

We can see how with the GCP endpoint, we send, for instance, metrics to the metric_exporter tied to the project

metric_exporter = CloudMonitoringMetricsExporter(project_id=project_id)

metric_reader = PeriodicExportingMetricReader(metric_exporter, export_interval_millis=60000)

versus the native OTEL which just goes to an endpoint

metric_reader = PeriodicExportingMetricReader(OTLPMetricExporter(endpoint=OTEL_EXPORTER_OTLP_ENDPOINT, insecure=True))

Lastly, we can compare the setup steps. Note that in the “GCP Native” version I added more logging and metrics (e.g. a custom counter metric).

Summary

We looked at setting up instrumentation in Python using the GCP Libraries with Google auth. This did involve sorting out some SA permissions but then was easy to direct our traces and metrics up to GCP.

We then looked at setting up native Open Telemetry monitors using a forwarder (namely the Otel Contrib container which can run in Docker and Kubernetes). We also looked at the Zipkin GCP trace forwarder which can tie into the standard Otel collector (and this would work fine with Grafana Alloy as well, though we didn’t demo it). This allows us to have a bit more control locally (like sending just Zipkin formatted traces), or a more full deployment with an Otel Contrib.

At the end of the day, I think one needs to compare trade-offs:

- If we use Native GCP, we are tied to a specific vendor, but I can abstract the auth to a set SA

- and I can use managed identities and avoid the SA JSON by using infrastructure in GCP like GKE and Cloud Run

- If we use Native Open Telemetry, we have more control over our destinations (including routing to effectively /dev/null by not including an exporter, which is useful for noisy services).

- We can also leverage the Zipkin GCP forwarder to just do traces which is a nice option

I don’t think, at this time, I would switch to using the GCP Native endpoints just because the auth layer is cumbersome. From an SRE / Ops perspective, I’m also far more inclined to have a managed OTel forwarder separate from the development stack so I do not need to expose any Service Account credentials. And while I didn’t dive into it here, there are quite a lot of processors that one can leverage to redact data or transform logs and metrics.

That said, I’ll keep an eye out for any updates Google may roll out on this service and likely review it again in the future.