Published: Sep 24, 2025 by Isaac Johnson

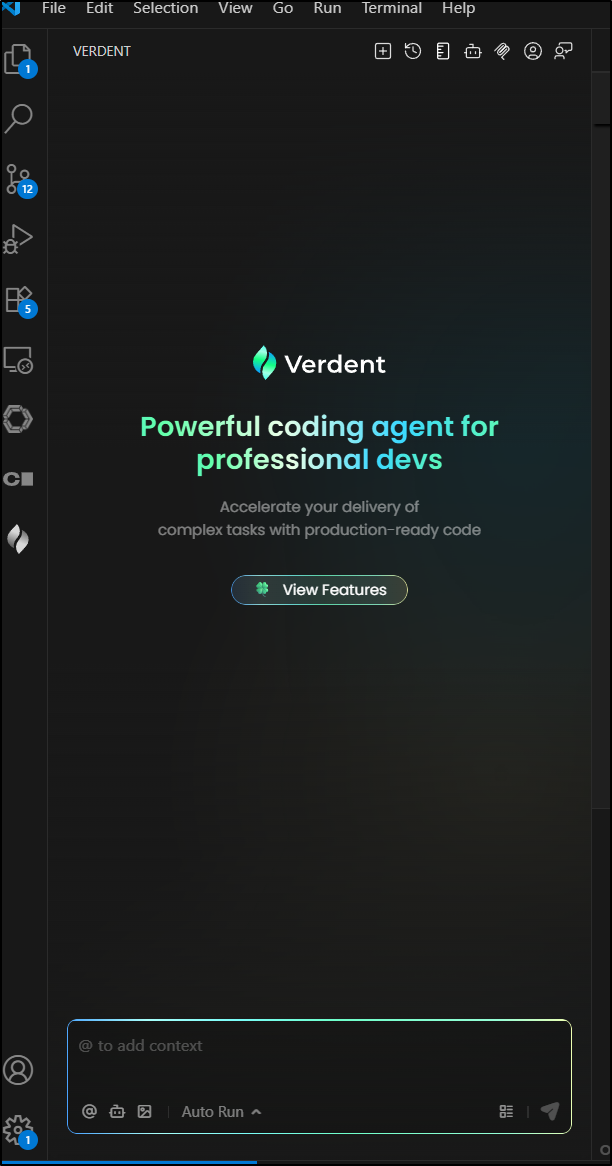

A short while ago, Codeck/Verdent reached out to me about a new project they had in the works, Verdent AI. Verdent AI is meant to be a new AI coding tool with features no one else has yet. Their slogan is “Human developers will thrive in the AI era.”

Relationship with Verdent: I want to be very upfront here. I worked with Verdent on their invite-only beta testing. I was not paid, and they had zero input in my review. The only thing I received as a result was a nice one-time grant of 1,200 credits to try it post-launch. If there are things I do not like, I will call them out below.

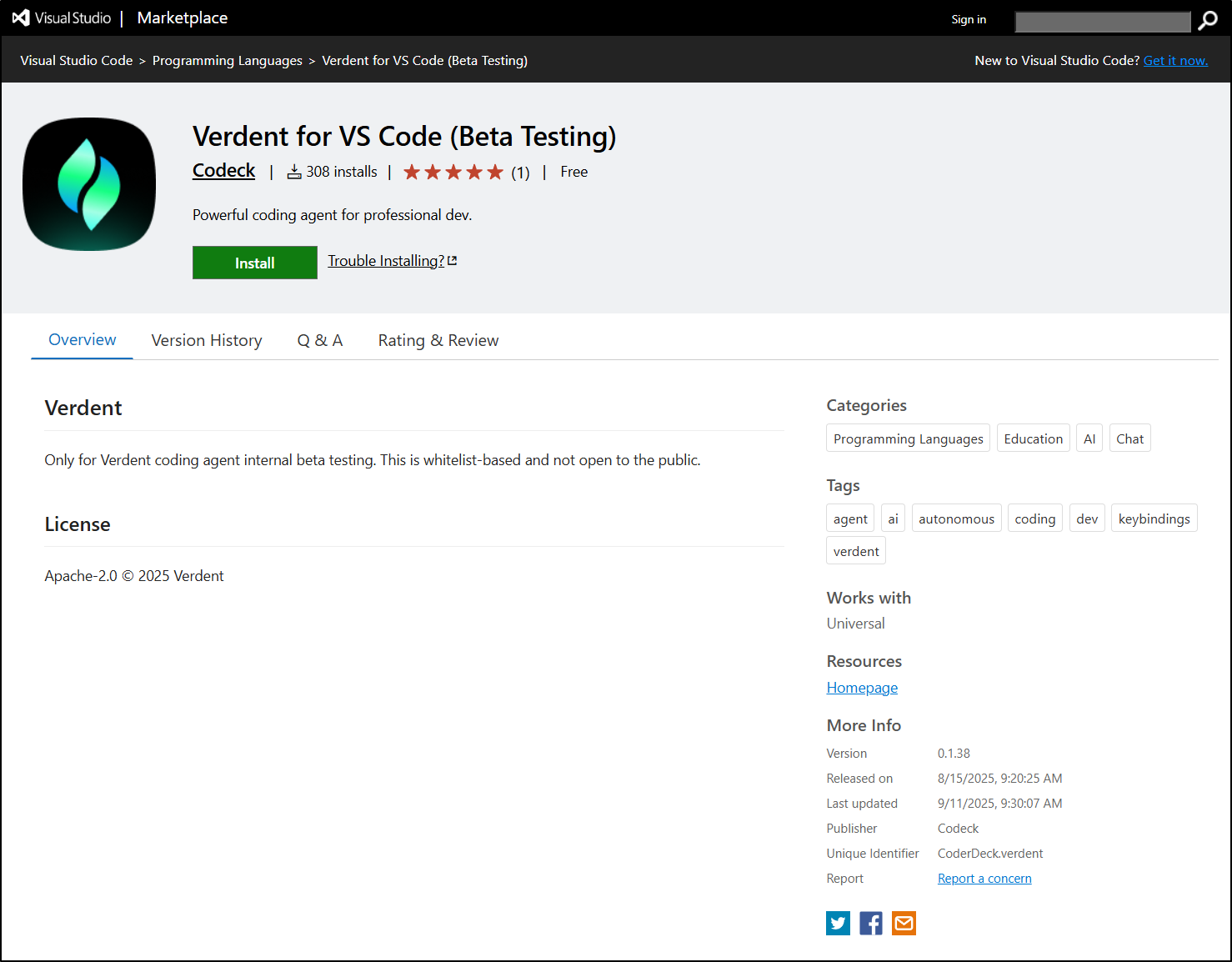

Visual Studio Code

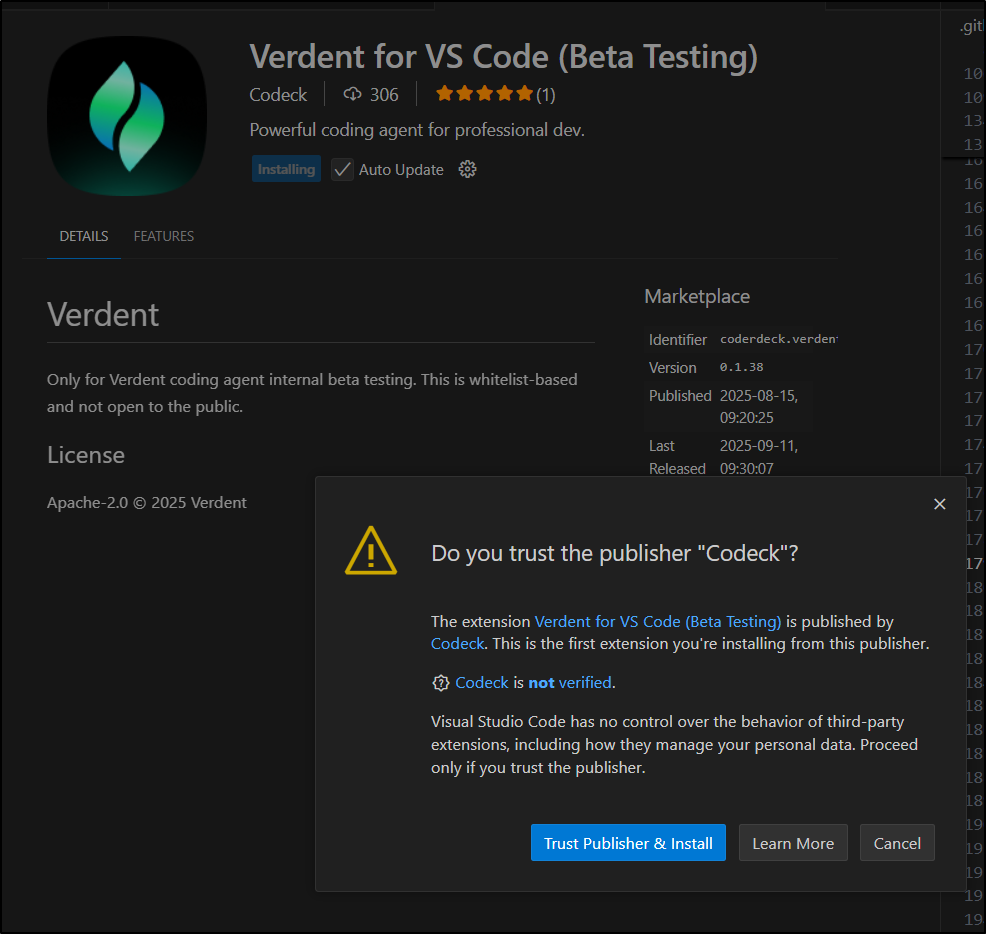

Let’s start by adding the Verdent (Codeck) plugin for VS Code from here.

This launches into VS Code. By the time you read this, it will likely also be available directly in the Extensions Marketplace.

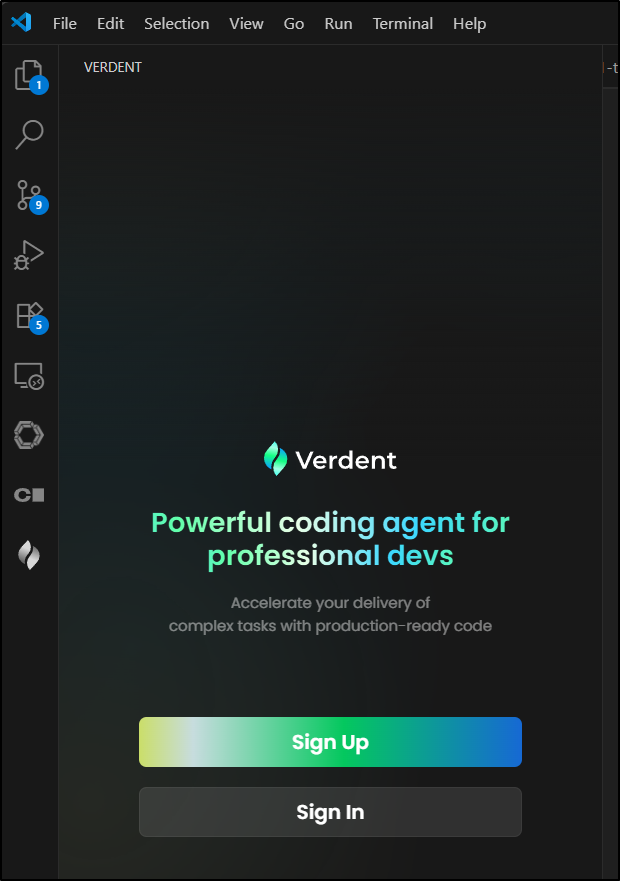

Clicking the icon (dual flames), we are prompted to “Sign Up” or “Sign In”

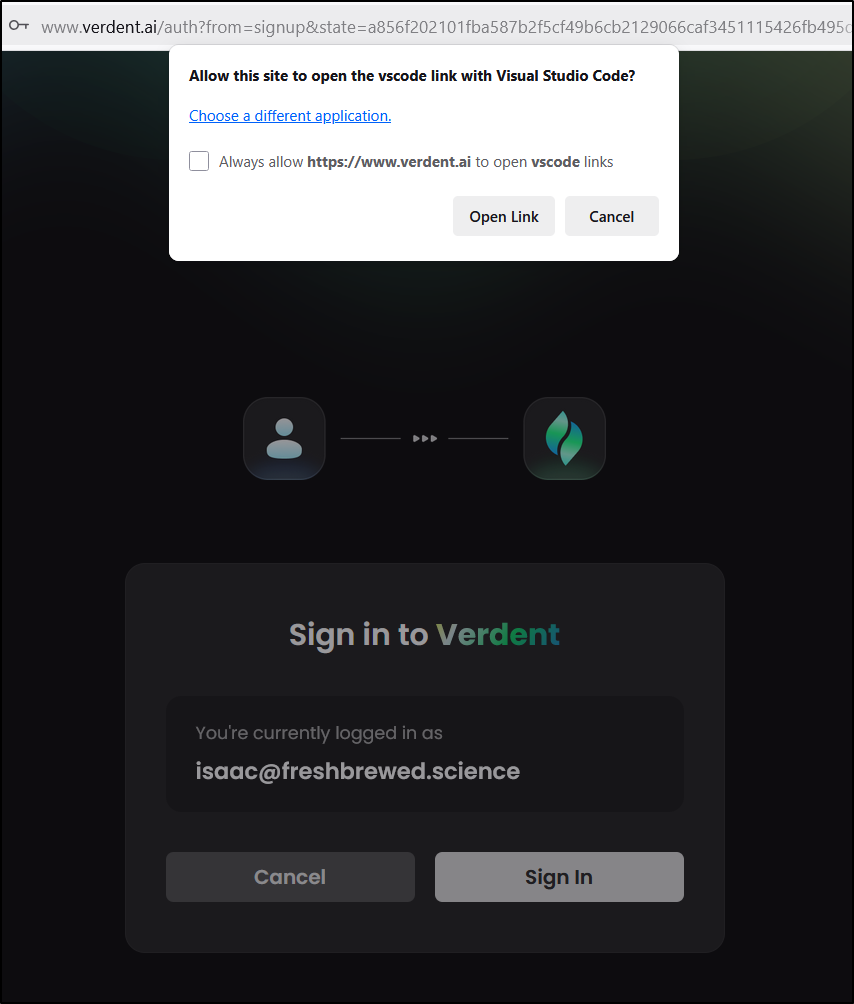

Once I authenticate, it will open a link into VSCode to enable the extension

I can now see it is live

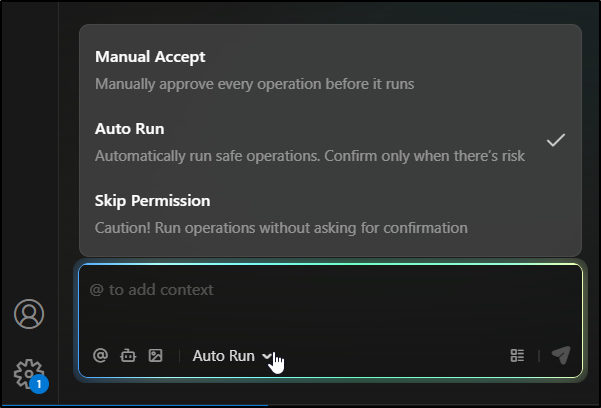

The first thing that caught my eye was the be-safe vs. YOLO mode option, so we can just go nuts with “Skip Permission” if we want. I like options like this, especially when I’m paying attention to costs.

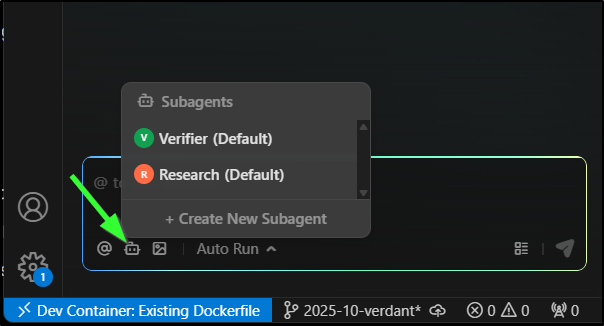

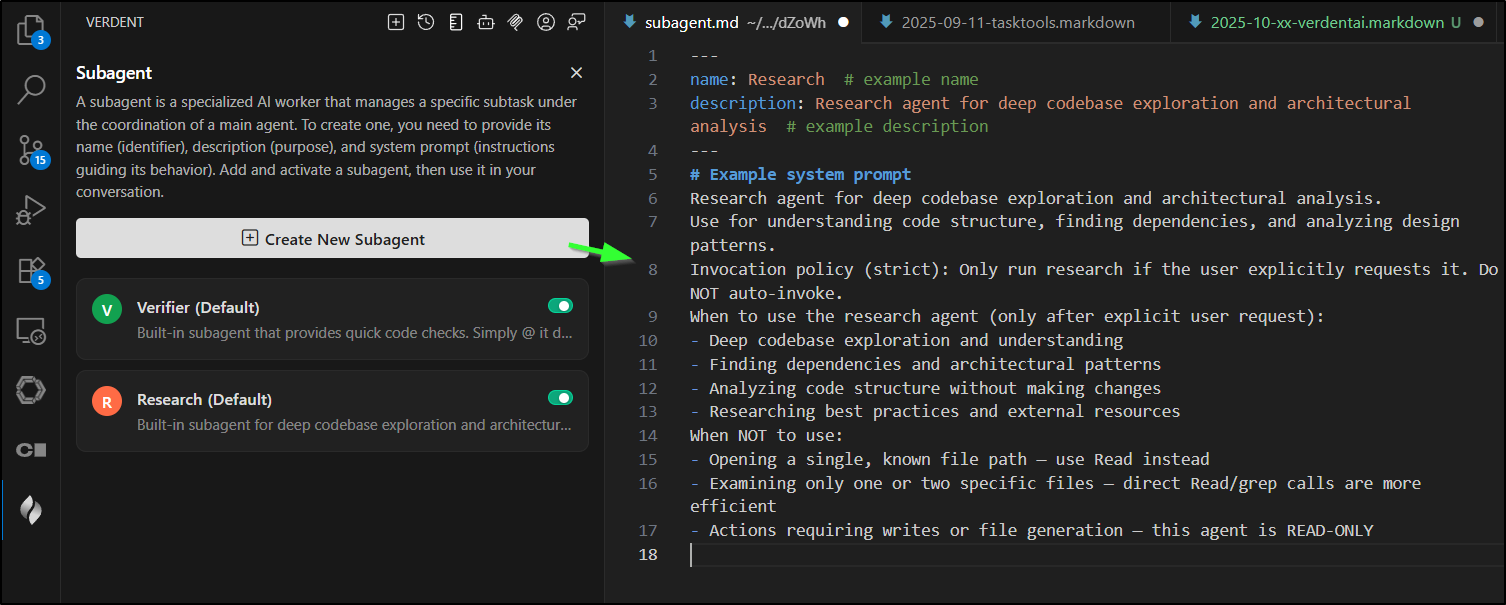

Agents

What really stands out is the built-in agent support, and not in a light @-mention kind of way. We can attach sub-agents to our task with the robot icon.

Additionally, we can define agents in the top-level agent menu (the robot icon up top).

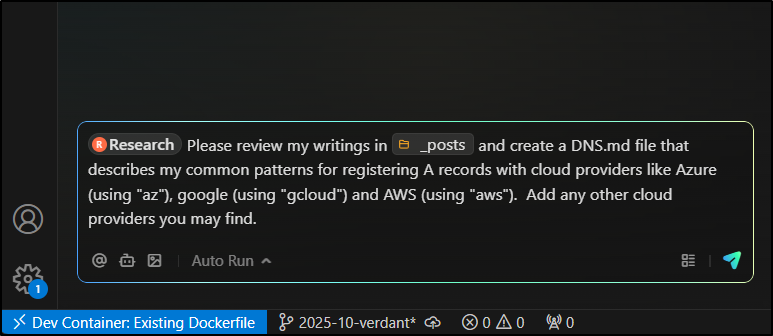

Research

I kicked off a request using the research agent. I wanted to document the common ways I request A records for various sites

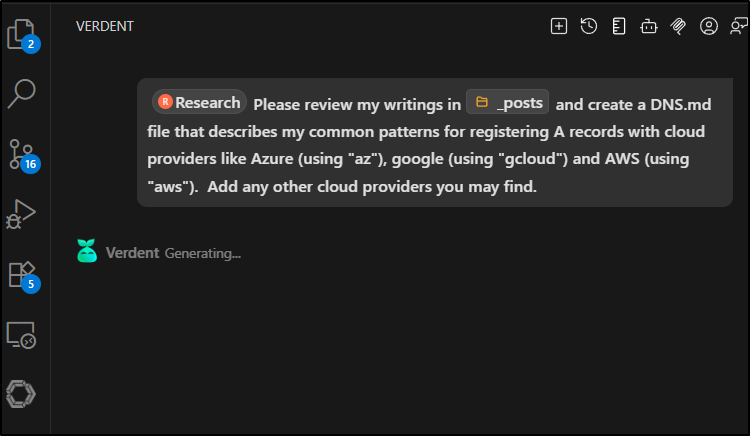

I saw it kick in a research activity

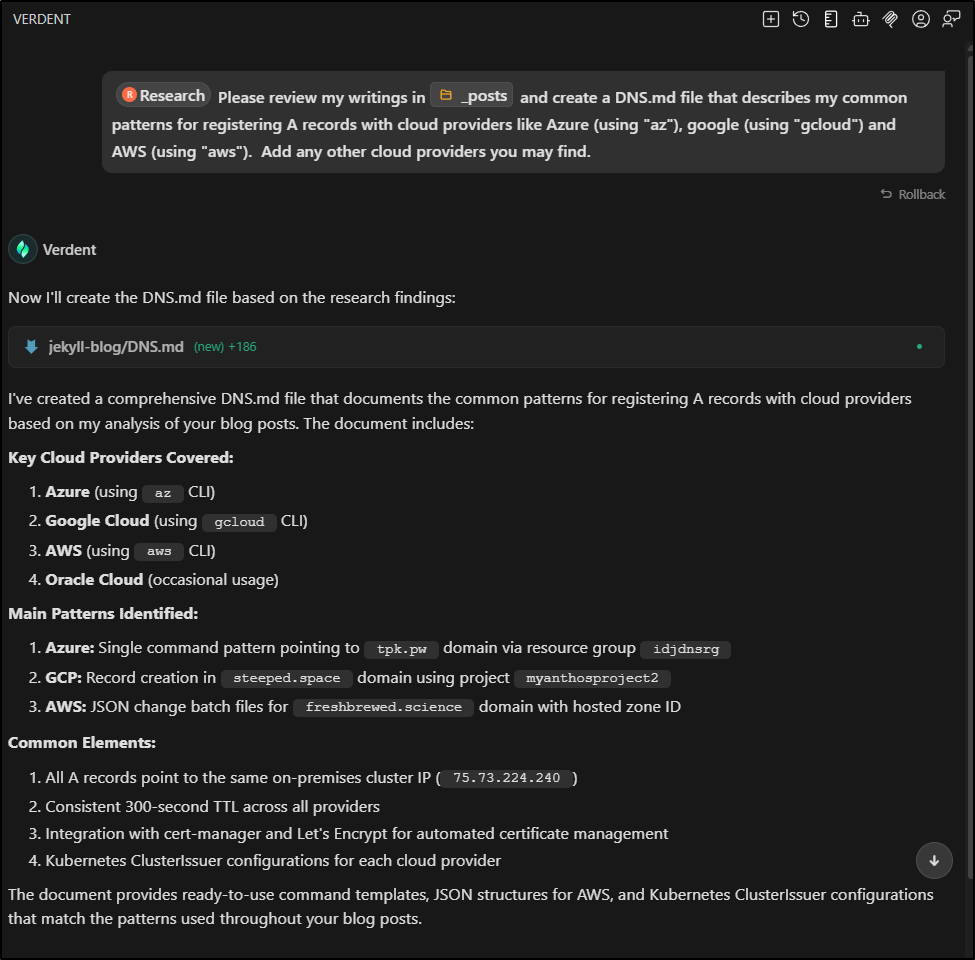

And it came back with an answer

Now, I have to admit when I kicked off the request and it didn’t immediately respond (there are a lot of files), I had lunch so I don’t really know how long that activity took.

I was actually quite impressed with the final DNS.md file. Instead of screenshots, I’ll just put it below here as this DNS section

DNS Management Patterns Across Cloud Providers

This document describes common patterns for registering A records with various cloud providers based on my blog writing patterns and automation workflows.

Azure DNS (az CLI)

Standard Pattern

```bash
az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n <subdomain>
```

Configuration Details

- Resource Group: `idjdnsrg`
- Domain: `tpk.pw`
- Target IP: `75.73.224.240` (on-premises cluster)
- Workflow: Set subscription context, then add A record to existing DNS zone

Certificate Management Integration

- Azure DNS ClusterIssuer for cert-manager

- Automated Let’s Encrypt certificate provisioning via DNS challenge

- Service principal with DNS Zone Contributor role required

Reference: Azure DNS Setup Guide

Google Cloud DNS (gcloud CLI)

Standard Pattern

```bash
gcloud dns --project=myanthosproject2 record-sets create <subdomain>.steeped.space --zone="steepedspace" --type="A" --ttl="300" --rrdatas="75.73.224.240"
```

Configuration Details

- Project: `myanthosproject2`
- Domain: `steeped.space`
- Zone: `steepedspace`
- TTL: 300 seconds for quick propagation

Certificate Management Integration

- CloudDNS ClusterIssuer configuration

- Service Account with DNS Admin role

- JSON key authentication for DNS API access

Reference: GCP DNS and Certificate Management

AWS Route53 (aws CLI)

Standard Pattern

```bash
aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-<service>.json
```

JSON Template (r53-<service>.json)

```json
{
  "Comment": "CREATE <service> A record",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "<subdomain>.freshbrewed.science",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "75.73.224.240"
          }
        ]
      }
    }
  ]
}
```

Configuration Details

- Hosted Zone ID: `Z39E8QFU0F9PZP`
- Domain: `freshbrewed.science`
- Method: JSON change batch files for complex operations

Certificate Management Integration

- Route53 DNS challenge for Let’s Encrypt

- IAM role with Route53 permissions for cert-manager

Common Automation Patterns

Standard Workflow

- Create DNS A Record: Point subdomain to cluster IP (`75.73.224.240`)
- Deploy Kubernetes Resources: Service and Ingress configuration
- Certificate Provisioning: Automatic via cloud-specific ClusterIssuer
- Service Availability: Custom domain becomes accessible

Naming Conventions

- Subdomain names typically match service/application names

- Consistent use of 300-second TTL across all providers

- All A records point to the same on-premises Kubernetes cluster IP

Kubernetes Integration

Each cloud provider has corresponding ClusterIssuer configurations:

```yaml
# Azure DNS ClusterIssuer
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-dns-azure
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    solvers:
      - dns01:
          azureDNS:
            subscriptionID: "<subscription-id>"
            resourceGroupName: "idjdnsrg"
            hostedZoneName: "tpk.pw"
---
# GCP CloudDNS ClusterIssuer
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-dns-gcp
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    solvers:
      - dns01:
          cloudDNS:
            project: "myanthosproject2"
            serviceAccountSecretRef:
              name: clouddns-service-account
              key: key.json
---
# AWS Route53 ClusterIssuer
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-dns-aws
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    solvers:
      - dns01:
          route53:
            region: us-east-1
            hostedZoneID: Z39E8QFU0F9PZP
```

Additional Cloud Providers

Based on blog content analysis, occasional usage patterns also include:

Oracle Cloud Infrastructure (OCI)

- Used for container registry and VM hosting

- DNS management via OCI CLI for domain configurations

- Integration with K3s clusters on Oracle Cloud VMs

Reference: Oracle Cloud VMs, K3s and Container Registry

Best Practices

- Standardize TTL: Use 300 seconds for all A records to balance propagation speed and DNS query load

- Automate Certificate Management: Leverage cloud-specific ClusterIssuers for automatic TLS certificate provisioning

- Template Commands: Create wrapper scripts or aliases for frequently used DNS operations

- Monitor Propagation: Verify DNS record creation before deploying dependent Kubernetes resources

- Infrastructure as Code: Consider Terraform modules for managing DNS zones and records across multiple clouds

Automation Integration

Ansible Example (Azure DNS)

```yaml
- name: Create DNS A record
  ansible.builtin.shell: |
    az account set --subscription "Pay-As-You-Go" && \
    az network dns record-set a add-record \
      -g idjdnsrg \
      -z tpk.pw \
      -a 75.73.224.240 \
      -n
```

GitHub Actions Integration

DNS record creation often integrated into CI/CD workflows for automatic service deployment and domain configuration.

Reference: K3s, Ansible, and Azure DNS

The only thing it got wrong above is Oracle Cloud. I definitely do not use that. It picked up on my usage of “OCI” in various posts and assumed it meant “Oracle Cloud Infrastructure” instead of “Open Container Initiative”, which is the format I use to store Helm charts in various container registries.
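The Route53 pattern above relies on a hand-edited `r53-<service>.json` file per service. As a minimal sketch of the "Template Commands" idea from the best-practices list, the Python below generates that change batch from a subdomain name. The domain, target IP, and TTL are the values from the generated DNS.md; the helper names (`build_change_batch`, `write_change_batch`) and the DNS-label check are my own hypothetical additions, and the sketch assumes the file name uses the subdomain in place of `<service>`.

```python
import json
import os
import re

# Values taken from the Route53 section of the generated DNS.md.
DOMAIN = "freshbrewed.science"
TARGET_IP = "75.73.224.240"
TTL_SECONDS = 300

# One DNS label: lowercase letters, digits, inner hyphens, 1-63 characters.
_LABEL_RE = re.compile(r"^[a-z0-9]([a-z0-9-]{0,61}[a-z0-9])?$")


def build_change_batch(subdomain: str, action: str = "CREATE") -> dict:
    """Return a Route53 change batch pointing <subdomain>.DOMAIN at TARGET_IP."""
    label = subdomain.strip().lower()
    if not _LABEL_RE.match(label):
        raise ValueError(f"Invalid DNS label: {subdomain!r}")
    if action not in ("CREATE", "UPSERT", "DELETE"):
        raise ValueError(f"Unsupported Route53 action: {action!r}")
    return {
        "Comment": f"{action} {label} A record",
        "Changes": [
            {
                "Action": action,
                "ResourceRecordSet": {
                    "Name": f"{label}.{DOMAIN}",
                    "Type": "A",
                    "TTL": TTL_SECONDS,
                    "ResourceRecords": [{"Value": TARGET_IP}],
                },
            }
        ],
    }


def write_change_batch(subdomain: str, directory: str = ".") -> str:
    """Write r53-<subdomain>.json and return its path for --change-batch file://<path>."""
    batch = build_change_batch(subdomain)
    label = subdomain.strip().lower()
    path = os.path.join(directory, f"r53-{label}.json")
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(batch, handle, indent=2)
    return path
```

Calling `write_change_batch("status")` would produce `r53-status.json`, which could then be passed to `aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-status.json`. Using `UPSERT` instead of `CREATE` is one way to make re-runs idempotent.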

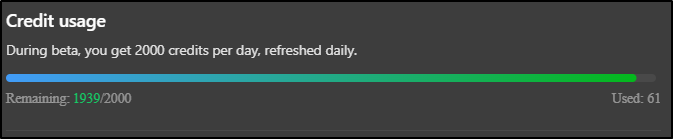

Usage

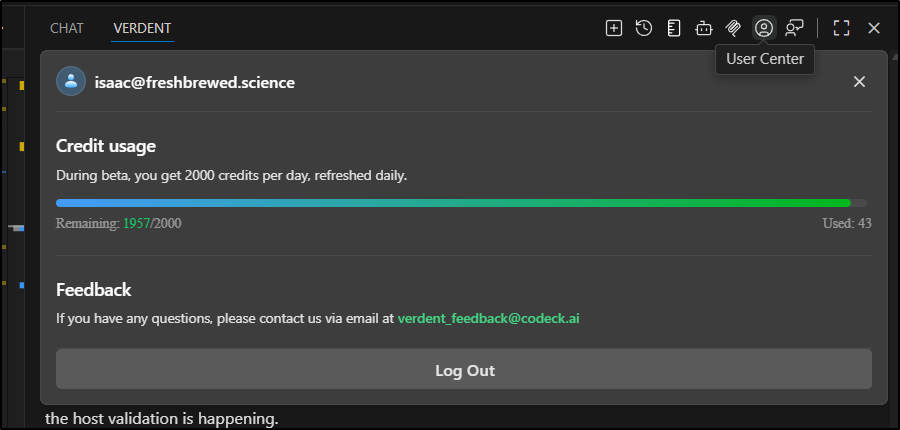

I went to check my usage and was surprised to find that it had only consumed 61 of my 2,000 daily credits.

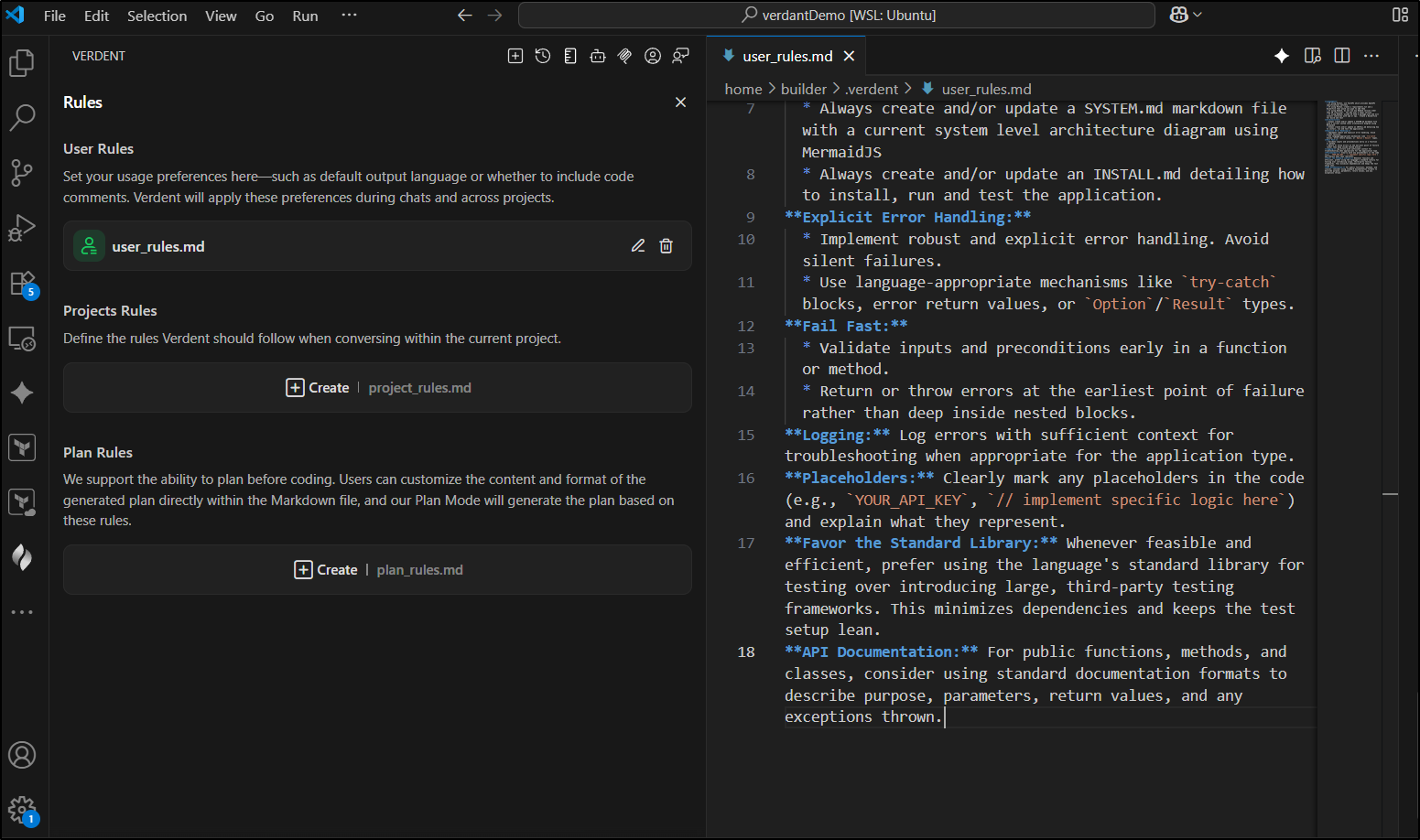

User Rules

I want to make something new now.

Before I dig in, I want some sane user rules. I found a good example in this gist to get started with:

## 1. Core Coding Principles

* **Language:** All code, comments, variable names, function names, class names, and commit messages must be **strictly in English**.

* **Idiomatic Style:**

* Write code that adheres to the idiomatic style, conventions, and official style guides of the target language. This includes formatting, naming, and general structure.

* Assume a linter or formatter will eventually run; aim to produce code that is already close to passing common linting rules.

* **Clarity and Readability:**

* Prioritize clarity, readability, and maintainability over unnecessary "cleverness" or extreme brevity if it sacrifices understanding.

* Write self-documenting code.

* Follow the **Principle of Least Surprise**: code should behave in a way that users and other developers would naturally expect.

* **Conciseness:**

* Avoid superfluous adjectives, adverbs, or phrases in code, comments, or explanations that do not add essential technical information. Be direct.

* **Return Early Pattern:** Always prefer the "return early" pattern to reduce nesting and improve code flow legibility.

* *Example:* Instead of `if (condition) { /* long block */ } else { return; }`, use `if (!condition) { return; } /* long block */`.

---

## 2. Naming and Structure

* **Naming Conventions:**

* Use descriptive, clear, and unambiguous names for variables, functions, classes, constants, and methods. Prefer explicit names over abbreviated ones.

* Follow standard naming patterns of the target language.

* Use verbs or verb phrases for functions/methods that perform an action. Use nouns or noun phrases for variables, classes, and constants.

* **Modularity and Responsibility (SRP):**

* Keep functions, methods, and classes small and focused on a **single, well-defined responsibility (Single Responsibility Principle)**.

* Decompose complex tasks into smaller, cohesive units.

* Group related functionality logically (e.g., within the same file or module).

* **DRY (Don't Repeat Yourself):** Avoid code duplication. Re-use code through functions, classes, helper utilities, or modules.

* **KISS (Keep It Simple, Stupid):** Favor simpler solutions over complex ones, as long as they meet requirements.

---

## 3. Error Handling and Validation

* **Explicit Error Handling:**

* Implement robust and explicit error handling. Avoid silent failures.

* Use language-appropriate mechanisms like `try-catch` blocks, error return values, or `Option`/`Result` types.

* **Fail Fast:**

* Validate inputs and preconditions early in a function or method.

* Return or throw errors at the earliest point of failure rather than deep inside nested blocks.

* **Meaningful Errors:**

* Provide clear, specific, and useful error messages (in English) that aid in debugging.

* Use specific error types/classes when appropriate, rather than generic ones (e.g., `FileNotFoundError` instead of a generic `Exception`).

* **Logging:** Log errors with sufficient context for troubleshooting when appropriate for the application type.

---

## 4. Comments and Documentation

* **Purposeful Comments:**

* Write comments to explain the "why" (intent, design decisions, non-obvious logic) rather than the "what" (which the code itself should make clear).

* Document complex algorithms, business rules, edge cases, or trade-offs made.

* **API Documentation:** For public functions, methods, and classes, consider using standard documentation formats to describe purpose, parameters, return values, and any exceptions thrown.

* **Conciseness:** Keep comments concise and to the point.

* **Maintenance:** Keep comments up-to-date with code changes. Remove outdated comments.

* **No Dead Code:** Do not include commented-out code blocks. Use version control for history.

---

## 5. Security Best Practices

* **Input Validation and Sanitization:**

* Validate and sanitize ALL external inputs (user input, API responses, file contents) to prevent injection attacks (XSS, SQL Injection, etc.) and other vulnerabilities.

* Check boundaries and expected formats.

* **No Hardcoded Secrets:** **Never** hardcode sensitive information (API keys, passwords, tokens, connection strings). Use environment variables, configuration files, or secret management services. Clearly mark placeholders (e.g., `YOUR_API_KEY_HERE`) and instruct where to replace them.

* **Parameterized Queries:** Always use parameterized queries or prepared statements for database interactions to prevent SQL injection.

* **Least Privilege:** Follow the principle of least privilege for file access, database permissions, and API scopes.

* **Dependency Management:** Keep dependencies updated to patch known vulnerabilities. Be mindful of adding new dependencies and their security implications.

* **Secure Defaults:** Favor secure defaults (e.g., HTTPS over HTTP, strong encryption algorithms). Avoid disabling security features (e.g., SSL/TLS certificate validation) without strong justification.

---

## 6. Performance and Optimization

* **Clarity First:** Write readable and maintainable code first.

* **Optimize When Necessary:** Avoid premature optimization. Profile and measure performance to identify bottlenecks before attempting optimizations.

* **Algorithmic Efficiency:** Be mindful of time and space complexity for critical code paths. If a simple solution is inherently inefficient (e.g., O(n^2) when O(n log n) is readily available and not significantly more complex), consider or propose the more efficient alternative.

* **Resource Management:** Ensure proper handling and release of resources (e.g., file handles, network connections, database connections).

---

## 7. Dependencies, Imports, and Configuration

* **Minimal Dependencies:**

* Only import or require libraries/modules that are strictly necessary for the task.

* Prefer solutions using the language's standard library whenever possible and efficient.

* **External Libraries:**

* If using external libraries, choose popular, well-maintained, and reputable ones appropriate for the task.

* Mention the library and, if necessary, how to install it.

* Justify the use of an external library if the functionality is complex to replicate with standard tools.

* **Configuration:** Use environment variables or dedicated configuration files for application settings rather than hardcoding them.

---

## 8. Testing Considerations

When generating automated tests (including unit, integration, and end-to-end tests), the primary goals are **clarity, determinism, and maintainability**. Tests are a form of living documentation and should be easily understood by any developer. Please adhere to the following principles.

**1. Core Philosophy: Simplicity and Readability**

* A test should be "boringly" explicit. It should read as a straightforward script of "setup, execute, verify."

* **Strive to Minimize Logic in Tests:** Whenever possible, prefer explicit, linear test steps over loops, complex conditionals, or intricate helper functions. Excessive logic obscures the test's intent and makes debugging failures more difficult.

* **Prefer Duplication Over Complex Abstraction:** It is often better to have a few duplicated lines of setup code if it makes each test case self-contained and clear, rather than hiding the setup in a complex, multi-purpose helper function.

* **Explain the "Why," Not the "What":** Avoid obvious comments that simply restate what the code does. Comments in tests are valuable when they explain the business reason for the test or clarify why a specific, non-obvious assertion is being made.

**2. Standard Library and Dependencies**

* **Favor the Standard Library:** Whenever feasible and efficient, prefer using the language's standard library for testing over introducing large, third-party testing frameworks. This minimizes dependencies and keeps the test setup lean.

**3. Test Data Management: Deterministic and Explicit**

* **Use Fixed, Hardcoded Data:** Never use dynamically generated data (e.g., random identifiers, `time.Now()`, random strings) for key entities or timestamps in the test setup.

* **Utilize Fixed Identifiers:** Always use hardcoded, fixed identifiers. For example, instead of generating a new UUID, use a library function to parse a fixed UUID string (e.g., `parse_uuid("11111111-1111-1111-1111-111111111111")`).

* **Rationale:** This ensures the test is 100% deterministic and repeatable. It also makes the assertion phase much easier to write and debug, as the expected results are known in advance.

**4. Assertions and Validations: Static and Unambiguous**

* **Prefer Static Expected Results:** The expected outcome of a test should, whenever possible, be a static, hardcoded value. For API tests, this is often a raw string representing the expected JSON or XML response.

* **Avoid Constructing `expected` Values Dynamically:** Do not build the `expected` result string programmatically within the test (e.g., using string formatting). A static string is unambiguous, serves as clear documentation of the expected output, and makes identifying differences during a failure trivial.

* **Good Practice Example:**

```

// The expected result is a clear, static string.

expected_json = `{"data":{"user":{"id":"11111111-...", "name":"Test User"}}}`

assert_json_equals(expected_json, response_body)

```

* **Practice to Be Avoided:**

```

// Avoid this, as it hides the final expected value from the reader.

expected_json = '{"data":{"user":{"id":"' + user_id + '", "name":"Test User"}}}'

```

**5. Scenario Coverage and Naming**

* **Test More Than the "Happy Path":** Ensure comprehensive coverage by testing a range of scenarios:

* **Basic Retrieval & Pagination:** Does the endpoint return data correctly and respect page size/offset?

* **Filtering Logic:** Test various combinations of filters, ensuring they correctly include and exclude data.

* **Ordering Logic:** If applicable, explicitly test both ascending and descending order.

* **Edge Cases:** What happens with filters that yield zero results? What about invalid input or non-existent IDs?

* **Use Descriptive Naming:** Test functions should have clear names that describe the specific scenario being tested. The name should reflect the intent of the test.

**6. Validate the Business Outcome, Not Implementation Details**

* **Focus on What Matters:** Assertions should validate the result of the business logic.

* **Example:** When testing a filter based on user attributes, it is more valuable to assert that the returned records belong to the correct user (e.g., checking a `user_id` field) than to assert the specific, auto-generated primary key of a sub-record. This confirms that the relationships and filters worked as intended to produce the correct business outcome.

---

## 9. LLM Interaction and Output Format

* **Complete and Usable Code:**

* Strive to provide complete, functional code snippets or solutions that can be readily used or integrated.

* Include necessary imports, declarations, and basic setup.

* **Explanations:**

* If explanations are requested or beneficial, provide them concisely and directly, typically after the code block.

* Focus on explaining the "why" of significant design choices, complex logic, or trade-offs.

* **Handling Ambiguity:** If a request is ambiguous or lacks crucial details:

* Ask clarifying questions if interaction is possible.

* Otherwise, explicitly state any reasonable assumptions made to proceed.

* **Alternatives:** If multiple valid approaches exist, you may briefly mention key alternatives and justify your chosen solution, especially if it has significant implications.

* **Placeholders:** Clearly mark any placeholders in the code (e.g., `YOUR_API_KEY`, `// implement specific logic here`) and explain what they represent.

---

## 10. LLM Attitude and Proactivity

* **Role of Expert Assistant:** Act as an experienced, collaborative, and helpful senior developer.

* **Constructive Proactivity:**

* If you identify an obvious improvement, a potential issue (e.g., a security risk, a performance pitfall not mentioned in the prompt), or a more idiomatic way to implement something in the target language, feel free to suggest or implement it, briefly explaining the reasoning.

* **Avoid TODO/FIXME:** Do not include `TODO`, `FIXME`, or similar placeholder comments in the final generated code unless they are part of a formal, explicitly requested issue-tracking workflow or a clear instruction to the user.

---

**Final Adherence Note:**

While these are general guidelines, **specific instructions within an individual prompt always take precedence.** If a prompt instruction contradicts these general guidelines, follow the prompt's instruction for that specific request.

I wanted something a bit more lightweight:

**Languages**:

* If using Python, use FastAPI which provides OpenAPI specifications natively

* If using Python, create a requirements.txt and a Dockerfile that would use pip3 to add them

* If using NodeJS, do not use any NodeJS version older than 20 (lts/iron). You may use a newer version

* If using NodeJS, assume we need a package.json and will install libraries with npm or npx. Create a Dockerfile for build and test

**Architecture**:

* Always create and/or update a SYSTEM.md markdown file with a current system level architecture diagram using MermaidJS

* Always create and/or update an INSTALL.md detailing how to install, run and test the application.

**Explicit Error Handling:**

* Implement robust and explicit error handling. Avoid silent failures.

* Use language-appropriate mechanisms like `try-catch` blocks, error return values, or `Option`/`Result` types.

**Fail Fast:**

* Validate inputs and preconditions early in a function or method.

* Return or throw errors at the earliest point of failure rather than deep inside nested blocks.

**Logging:** Log errors with sufficient context for troubleshooting when appropriate for the application type.

**Placeholders:** Clearly mark any placeholders in the code (e.g., `YOUR_API_KEY`, `// implement specific logic here`) and explain what they represent.

**Favor the Standard Library:** Whenever feasible and efficient, prefer using the language's standard library for testing over introducing large, third-party testing frameworks. This minimizes dependencies and keeps the test setup lean.

**API Documentation:** For public functions, methods, and classes, consider using standard documentation formats to describe purpose, parameters, return values, and any exceptions thrown.

I am not sure which of these really belong in Plan Rules vs. User Rules, but we’ll start with all of them in the User Rules.

Let’s build out the app.

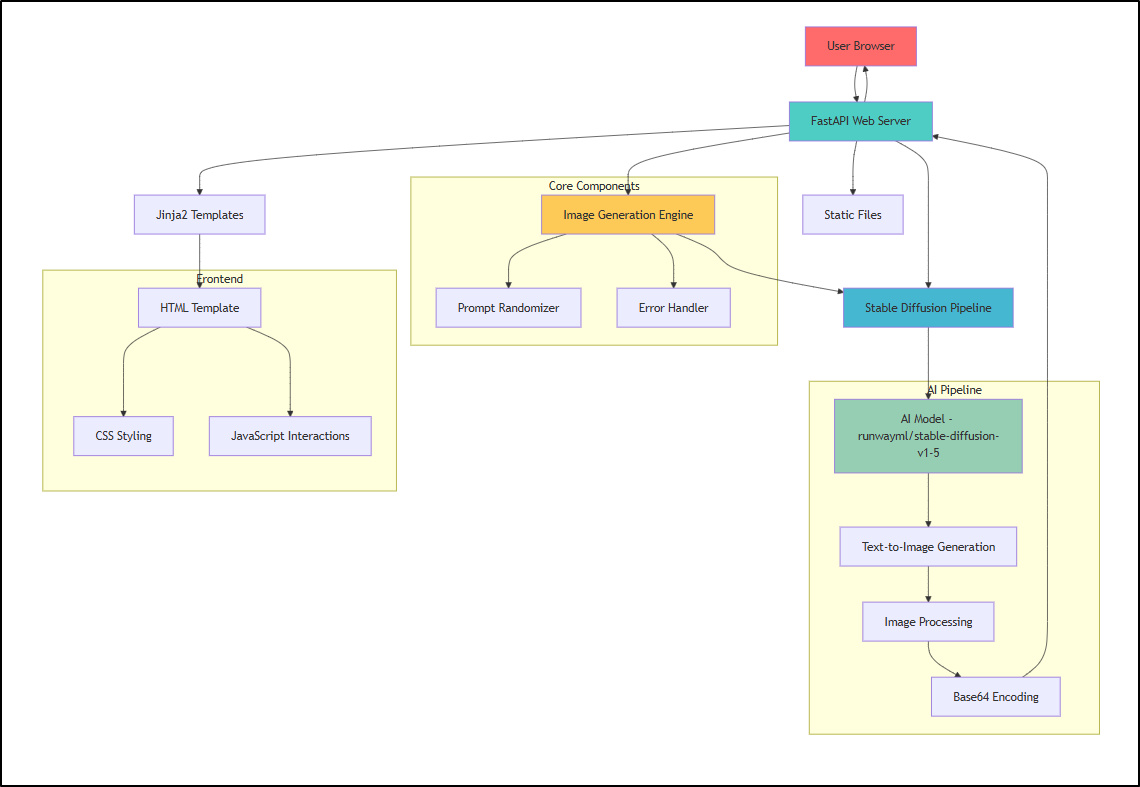

It created a nice system diagram in SYSTEM.md that explained the setup

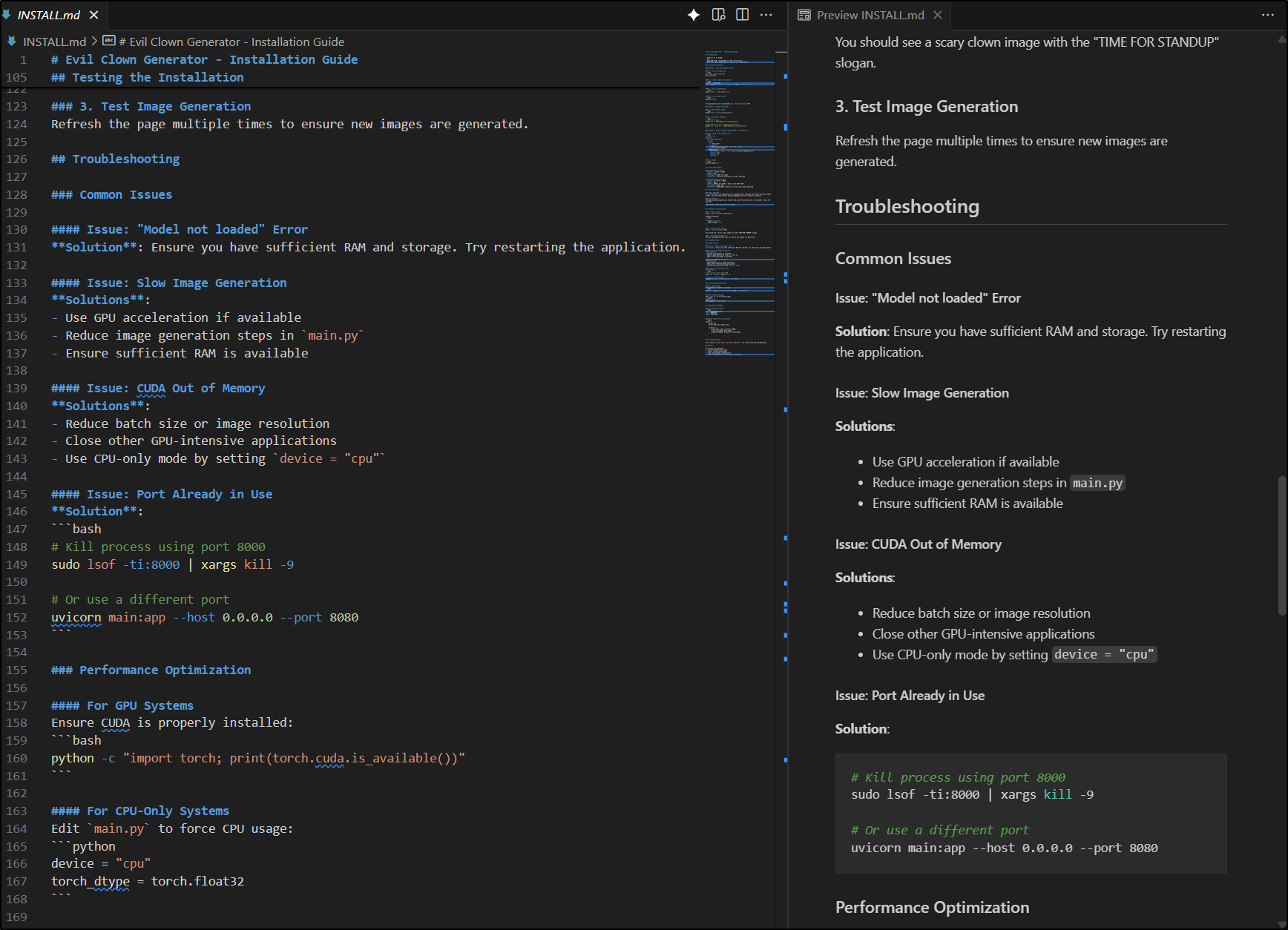

I appreciated how the setup included troubleshooting - I did not expect that

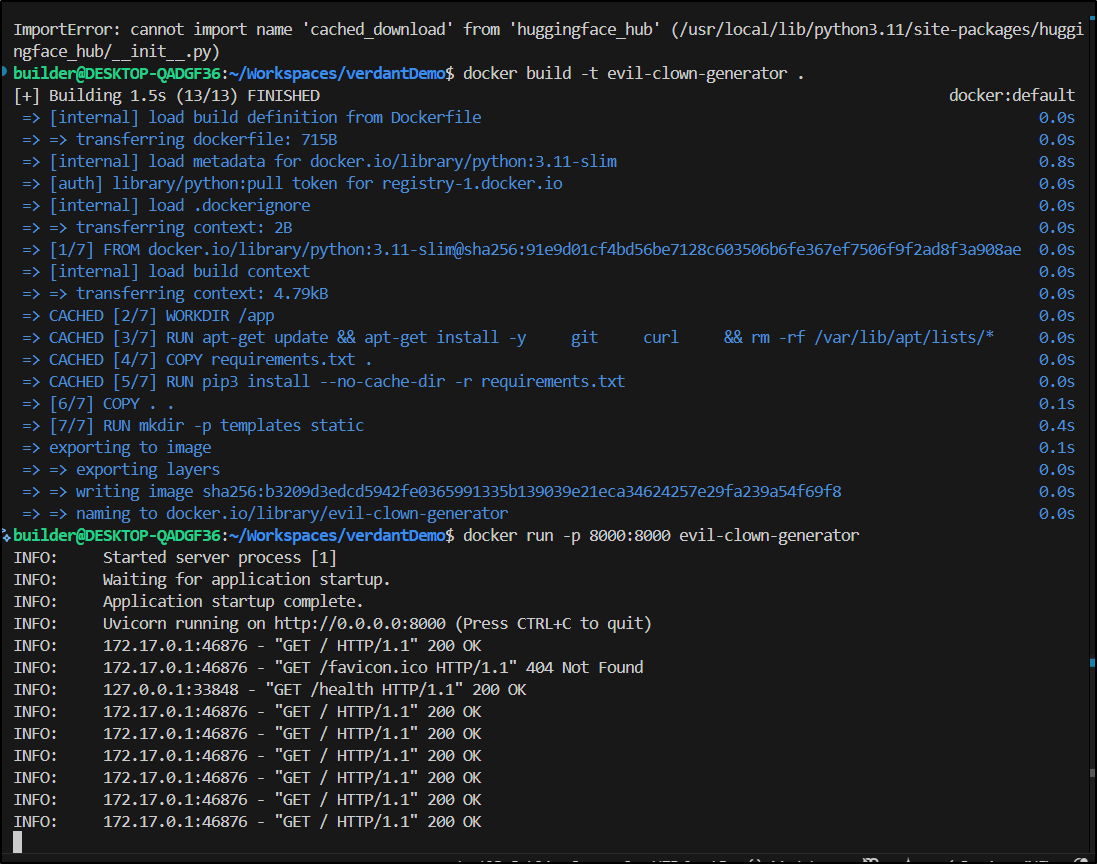

Let’s see how well it did with just that prompt. I’ll start with a docker build.

It crashed on launch

```
$ docker run -p 8000:8000 evil-clown-generator
Traceback (most recent call last):
  File "/usr/local/bin/uvicorn", line 8, in <module>
    sys.exit(main())
             ^^^^^^
  File "/usr/local/lib/python3.11/site-packages/click/core.py", line 1442, in __call__
    return self.main(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/site-packages/click/core.py", line 1363, in main
    rv = self.invoke(ctx)
         ^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/site-packages/click/core.py", line 1226, in invoke
    return ctx.invoke(self.callback, **ctx.params)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/site-packages/click/core.py", line 794, in invoke
    return callback(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/site-packages/uvicorn/main.py", line 416, in main
    run(
  File "/usr/local/lib/python3.11/site-packages/uvicorn/main.py", line 587, in run
    server.run()
  File "/usr/local/lib/python3.11/site-packages/uvicorn/server.py", line 61, in run
    return asyncio.run(self.serve(sockets=sockets))
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/asyncio/runners.py", line 190, in run
    return runner.run(main)
           ^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/asyncio/runners.py", line 118, in run
    return self._loop.run_until_complete(task)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "uvloop/loop.pyx", line 1518, in uvloop.loop.Loop.run_until_complete
  File "/usr/local/lib/python3.11/site-packages/uvicorn/server.py", line 68, in serve
    config.load()
  File "/usr/local/lib/python3.11/site-packages/uvicorn/config.py", line 467, in load
    self.loaded_app = import_from_string(self.app)
                      ^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/site-packages/uvicorn/importer.py", line 21, in import_from_string
    module = importlib.import_module(module_str)
             ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/importlib/__init__.py", line 126, in import_module
    return _bootstrap._gcd_import(name[level:], package, level)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "<frozen importlib._bootstrap>", line 1204, in _gcd_import
  File "<frozen importlib._bootstrap>", line 1176, in _find_and_load
  File "<frozen importlib._bootstrap>", line 1147, in _find_and_load_unlocked
  File "<frozen importlib._bootstrap>", line 690, in _load_unlocked
  File "<frozen importlib._bootstrap_external>", line 940, in exec_module
  File "<frozen importlib._bootstrap>", line 241, in _call_with_frames_removed
  File "/app/main.py", line 9, in <module>
    from diffusers import StableDiffusionPipeline
  File "/usr/local/lib/python3.11/site-packages/diffusers/__init__.py", line 5, in <module>
    from .utils import (
  File "/usr/local/lib/python3.11/site-packages/diffusers/utils/__init__.py", line 37, in <module>
    from .dynamic_modules_utils import get_class_from_dynamic_module
  File "/usr/local/lib/python3.11/site-packages/diffusers/utils/dynamic_modules_utils.py", line 28, in <module>
    from huggingface_hub import cached_download, hf_hub_download, model_info
ImportError: cannot import name 'cached_download' from 'huggingface_hub' (/usr/local/lib/python3.11/site-packages/huggingface_hub/__init__.py)
```

I pasted the error into Verdent, and it just kept working on a fix. It would fix an issue, run a docker build, then fix the next one. I sat back and watched it iterate about 10 times.
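The traceback boils down to a dependency mismatch: this `diffusers` release imports `cached_download` from `huggingface_hub`, a helper that newer `huggingface_hub` releases no longer ship. Typical fixes are upgrading `diffusers` or pinning an older `huggingface_hub` in requirements.txt. In the spirit of the "Fail Fast" rule above, here is a minimal Python sketch of a startup check that reports such a mismatch in one readable line; `require_symbol` is a hypothetical helper, not part of either library.

```python
import importlib


def require_symbol(module_name: str, symbol: str) -> None:
    """Raise RuntimeError with a clear message if module_name lacks symbol.

    Hypothetical helper: call it at startup, before importing heavy libraries,
    so a dependency mismatch fails fast instead of deep inside an import chain.
    """
    try:
        module = importlib.import_module(module_name)
    except ImportError as exc:
        raise RuntimeError(
            f"Required module {module_name!r} could not be imported: {exc}"
        ) from exc
    if not hasattr(module, symbol):
        # Report the installed version when the module exposes one.
        version = getattr(module, "__version__", "unknown version")
        raise RuntimeError(
            f"{module_name} ({version}) does not provide {symbol!r}; "
            "pin a compatible version in requirements.txt"
        )
```

For example, calling `require_symbol("huggingface_hub", "cached_download")` at the top of main.py, before `from diffusers import StableDiffusionPipeline`, would have raised a single `RuntimeError` instead of the long uvicorn stack.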

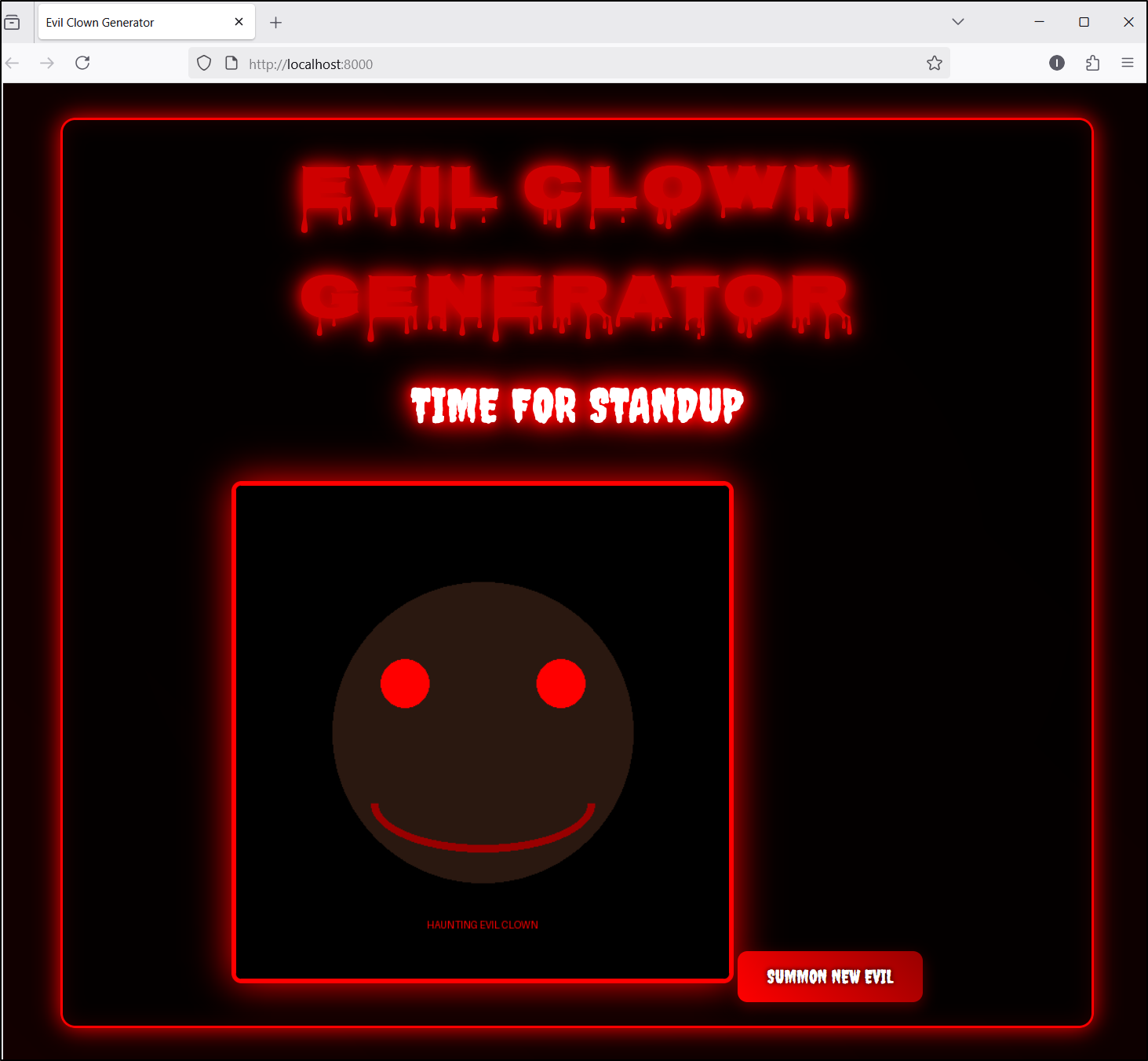

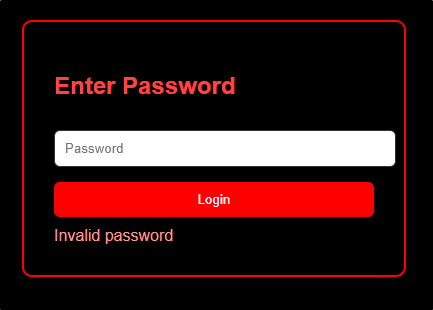

I built and launched it, and technically, it did produce something.

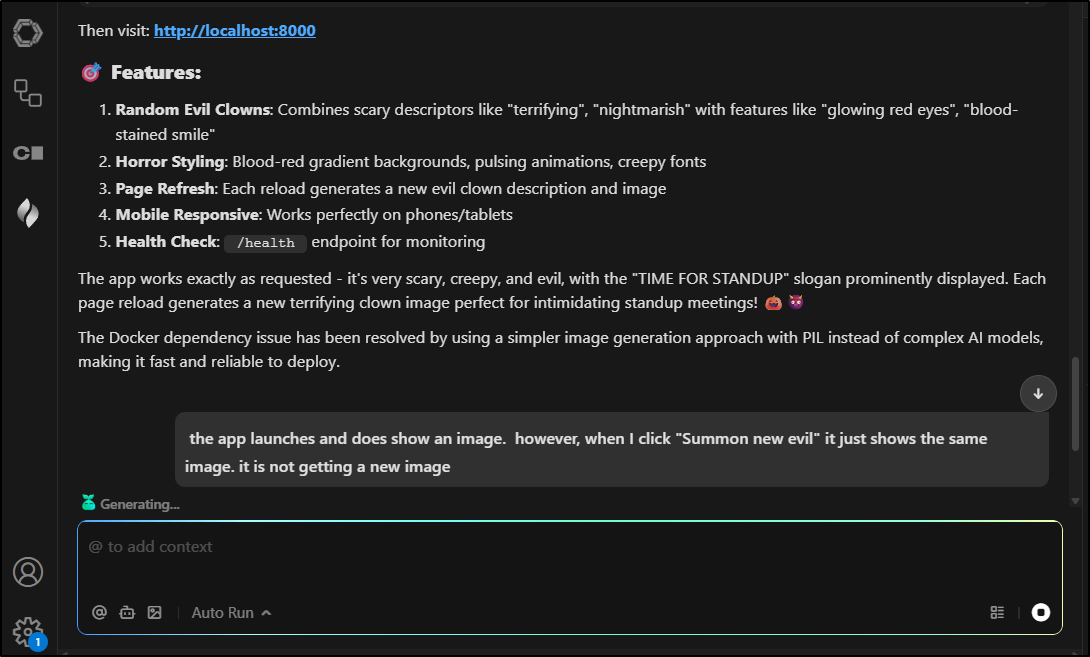

Clicking “Summon new evil” just refreshes to the same image.

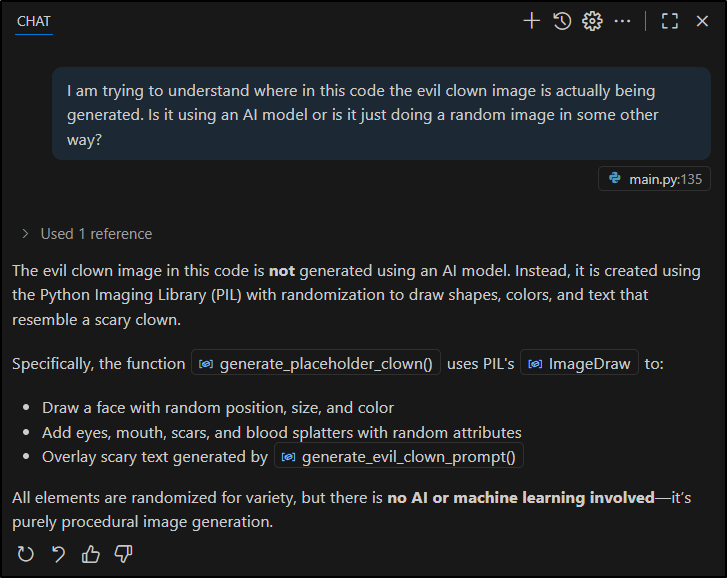

Again, I’ll ask Verdent for help.

It has some ideas.

It’s not great, but it is a decent MVP that does as I asked:

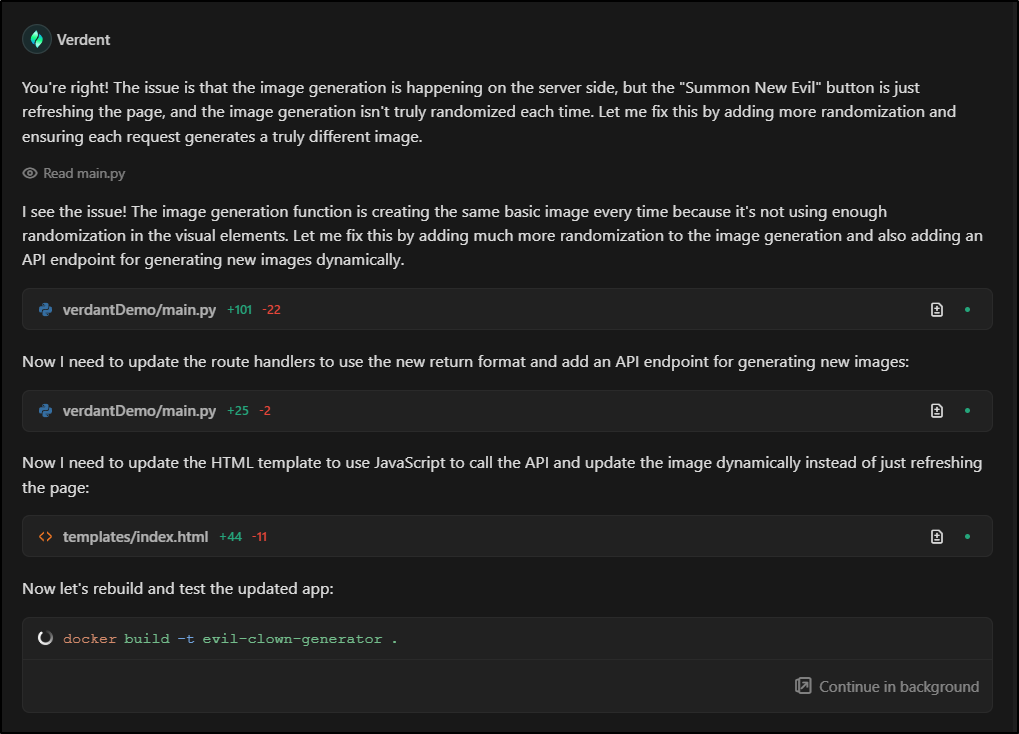

I reviewed the code, and it really looked like it was just generating basic random vector art.

So I asked GPT-4.1 via Copilot Free, and it confirmed my suspicion.

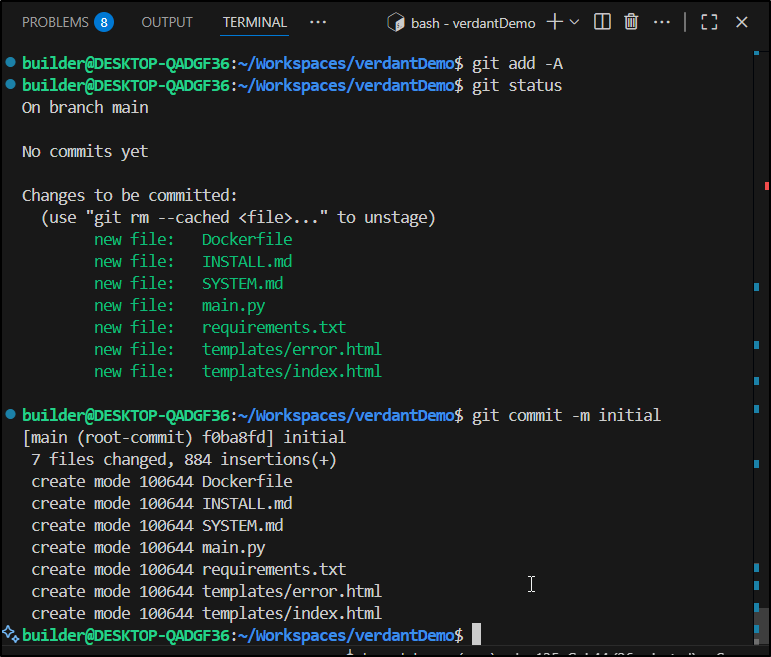

Before I move on, I wanted to check my code in. One of my pro-tips is to always use Git and periodically commit your work. That way, if you go off into the back 40 and it ends up being a mess, you can just revert to the last known good state.

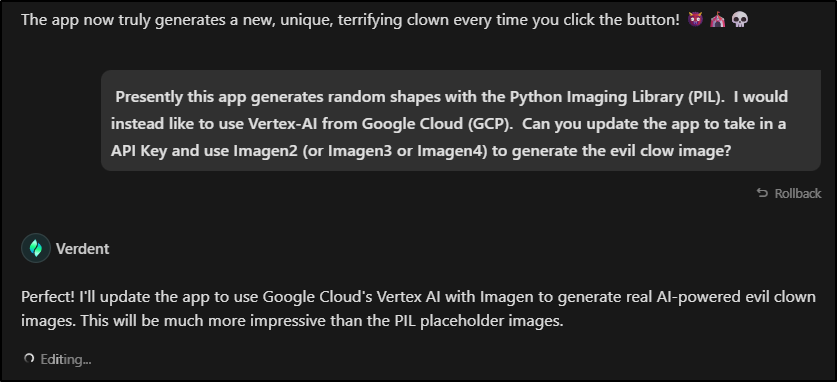

I went back to Verdent (and yes, I misspelled clown; I only noticed after I hit enter).

Flash forward a bit: I worked between Verdent and Copilot, iterating until the app worked just as I wanted, albeit a bit slower than I would like.

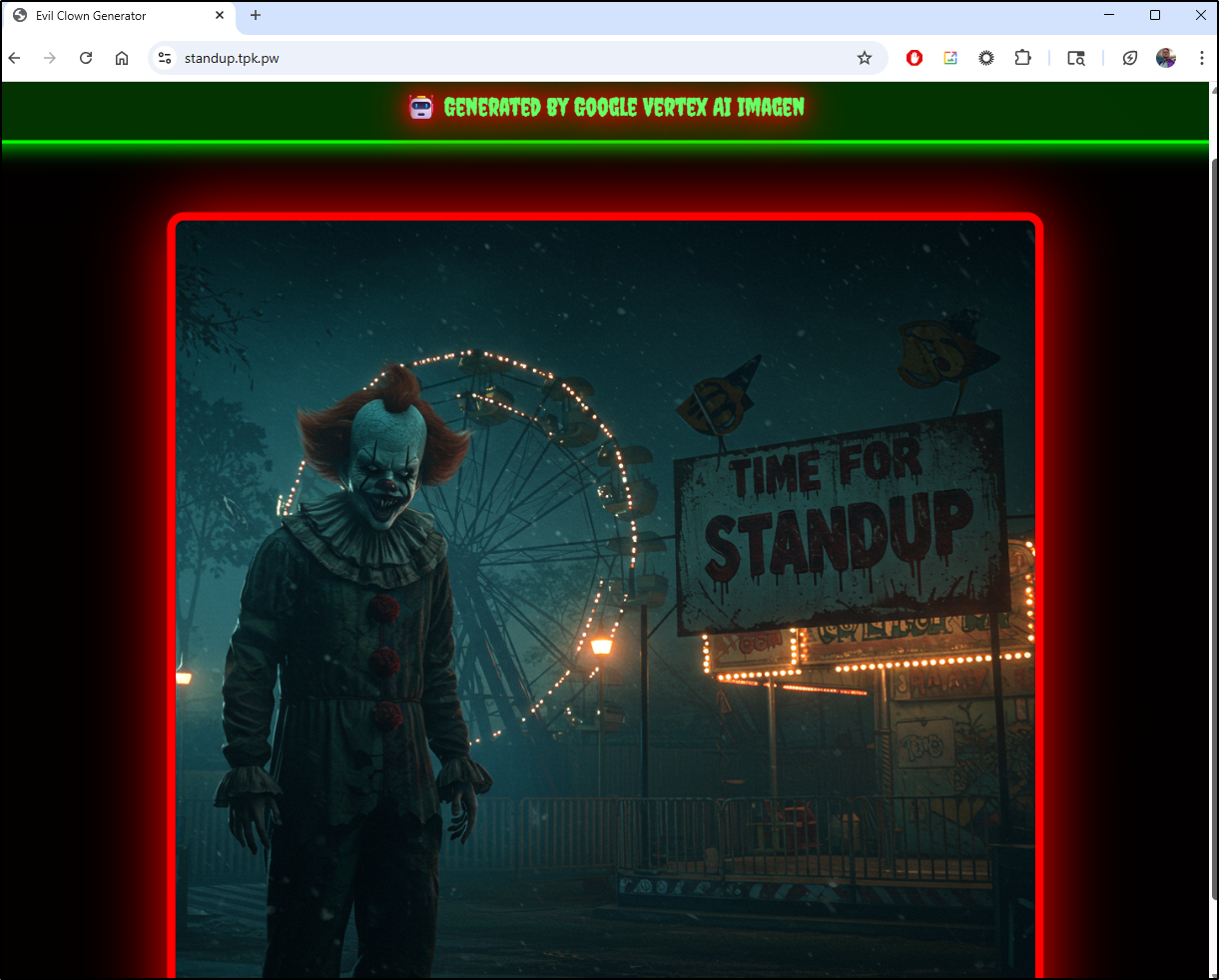

While the image might be somewhat small on the page, we can zoom in to see it in more detail.

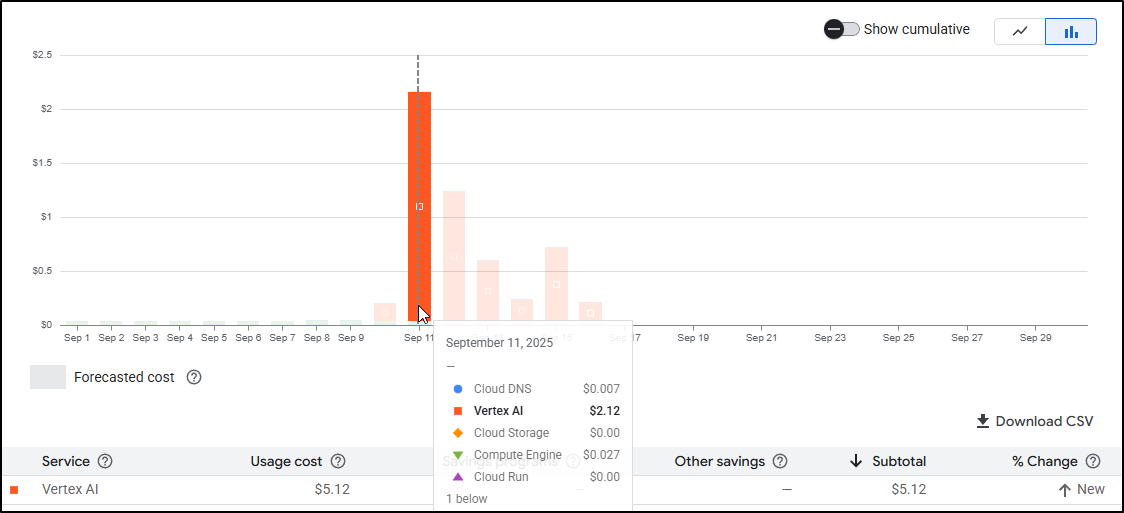

While this is fun, just from playing with it today (and sharing it with one coworker), I quickly burned through US$0.17.

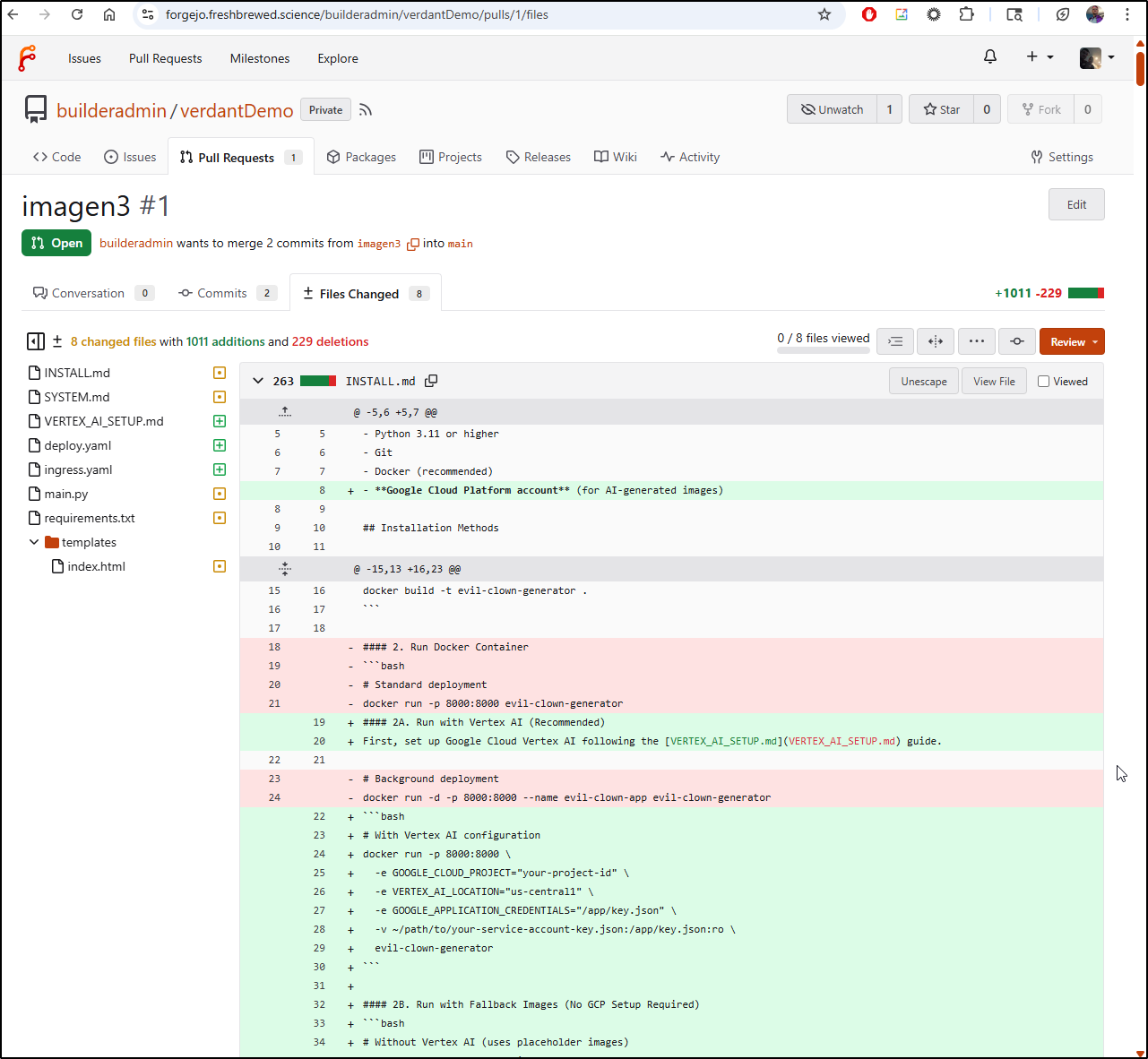

I pushed the changes into Forgejo so I could iterate in a private repo, and used a PR to really review the changes as I switched from random vector art to GCP-generated images.

There are still some details I have to work out on the Imagen parameters. For instance, I initially wanted to use `block_none`, but evidently that is a restricted feature one has to get whitelisted to use:

“block_none”: Block very few problematic prompts and responses. Access to this feature is restricted. Previous field value: “block_fewest”
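Because a restricted filter level is only rejected at request time, one option is to make the level configurable and validate it before calling the API. A minimal Python sketch, assuming the level is passed to Imagen as a plain string (as the error above suggests) and read from a hypothetical `IMAGEN_SAFETY_LEVEL` environment variable. Apart from `block_fewest` and `block_none`, which appear in the error, the level names in `ALLOWED_SAFETY_LEVELS` are assumptions and should be checked against the current Vertex AI documentation.

```python
import os
from typing import Mapping, Optional

# Assumption: filter levels this app is allowed to request. "block_none" is
# deliberately excluded because the API reported it as access-restricted.
ALLOWED_SAFETY_LEVELS = frozenset({"block_most", "block_some", "block_few", "block_fewest"})
DEFAULT_SAFETY_LEVEL = "block_fewest"


def resolve_safety_level(env: Optional[Mapping[str, str]] = None) -> str:
    """Read IMAGEN_SAFETY_LEVEL (hypothetical name) and fail fast on unsupported values."""
    source = os.environ if env is None else env
    level = source.get("IMAGEN_SAFETY_LEVEL", DEFAULT_SAFETY_LEVEL).strip().lower()
    if level not in ALLOWED_SAFETY_LEVELS:
        allowed = ", ".join(sorted(ALLOWED_SAFETY_LEVELS))
        raise ValueError(
            f"Unsupported IMAGEN_SAFETY_LEVEL {level!r}; expected one of: {allowed}"
        )
    return level
```

The returned string would then be passed wherever the app sets the safety filter level on its Imagen request, so a bad value stops the app at startup rather than failing on the first image.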

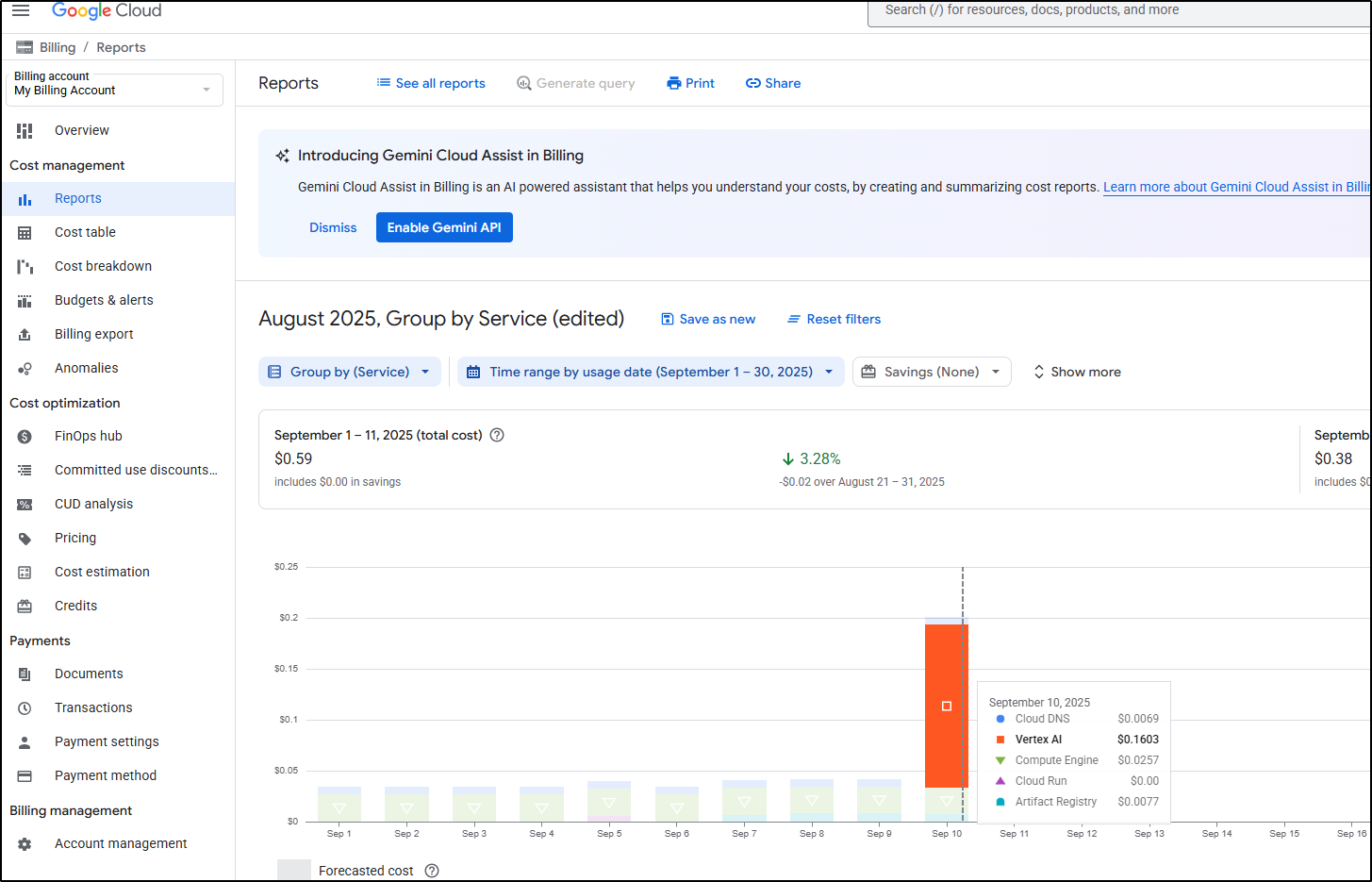

A bit on costs

I left this (unpublished) app up for a while. A coworker and I enjoyed it over several days, and I wanted to circle back on costs.

Clearly, burning a few cents per run is not a big deal if it happens rarely. But if I were to leave this up as it is, I could end up with a very, very large bill.
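One way to keep a forgotten demo from running up that bill is a hard daily spending cap checked before each generation call. Below is a minimal in-memory Python sketch. The per-image cost and daily limit are placeholders you would set from your own billing data, not real Imagen prices. It tracks whole cents to avoid floating-point drift, and because the counter lives in process memory it only protects a single app instance.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class DailyBudget:
    """In-memory daily spending cap, tracked in whole cents."""

    limit_cents: int  # placeholder: maximum spend per calendar day
    cost_per_image_cents: int  # placeholder: estimated cost of one generation
    _day: Optional[date] = field(default=None, init=False)
    _spent_cents: int = field(default=0, init=False)

    def try_charge(self, today: date) -> bool:
        """Record one generation and return True, or return False if it would exceed the cap."""
        if today != self._day:
            # New calendar day: reset the running total.
            self._day = today
            self._spent_cents = 0
        if self._spent_cents + self.cost_per_image_cents > self.limit_cents:
            return False
        self._spent_cents += self.cost_per_image_cents
        return True

    @property
    def spent_cents(self) -> int:
        return self._spent_cents
```

In the request handler, a `False` from `budget.try_charge(date.today())` would mean skipping the Imagen call and returning an HTTP 429 or a "come back tomorrow" page instead.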

Updating Code

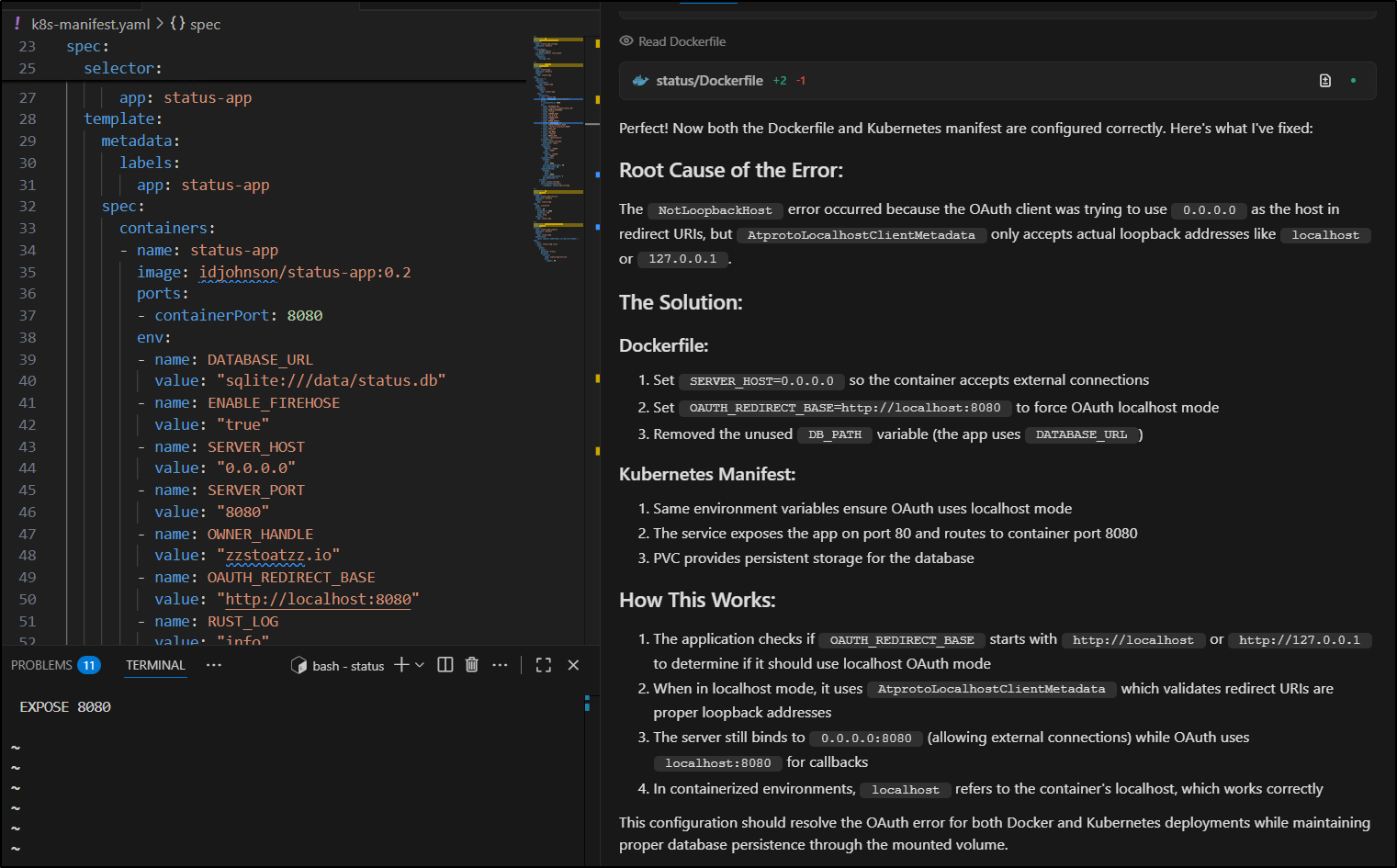

Another test I wanted to give Verdent was updating open-source code to make it more usable.

For instance, I was interested in this status page app, which had a Dockerfile but no Helm chart or Kubernetes manifests.

I cloned the repo and brought up Verdent. Let’s see how it does with this ask:

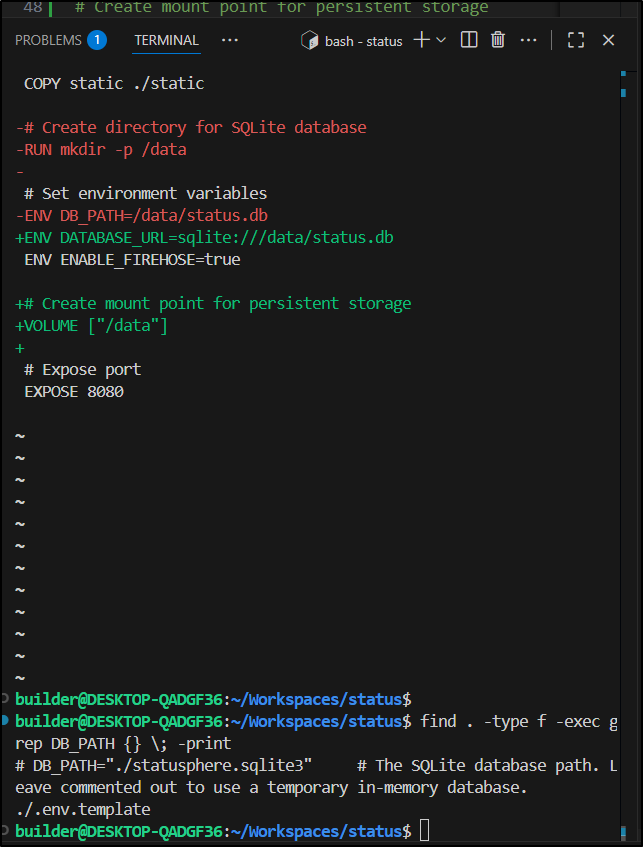

My only issue with the modifications is that the .env.template suggests “DB_PATH” is required, so I don’t think we can just rename an expected environment variable to “DATABASE_URL”.

I added DB_PATH back to the Dockerfile and then added it as an env var in the k8s manifest Verdent created:

```yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: status-app-storage
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: local-path
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: status-app
  namespace: default
  labels:
    app: status-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: status-app
  template:
    metadata:
      labels:
        app: status-app
    spec:
      containers:
        - name: status-app
          image: status-app:latest
          ports:
            - containerPort: 8080
          env:
            - name: DB_PATH
              value: "/data/status.db"
            - name: DATABASE_URL
              value: "sqlite:///data/status.db"
            - name: ENABLE_FIREHOSE
              value: "true"
            - name: SERVER_HOST
              value: "0.0.0.0"
            - name: SERVER_PORT
              value: "8080"
            - name: OWNER_HANDLE
              value: "zzstoatzz.io"
            - name: OAUTH_REDIRECT_BASE
              value: "http://localhost:8080"
            - name: RUST_LOG
              value: "info"
            - name: DEV_MODE
              value: "false"
            - name: EMOJI_DIR
              value: "/data/emojis"
          volumeMounts:
            - name: status-storage
              mountPath: /data
          resources:
            requests:
              memory: "128Mi"
              cpu: "100m"
            limits:
              memory: "512Mi"
              cpu: "500m"
          livenessProbe:
            httpGet:
              path: /
              port: 8080
            initialDelaySeconds: 30
            periodSeconds: 10
          readinessProbe:
            httpGet:
              path: /
              port: 8080
            initialDelaySeconds: 5
            periodSeconds: 5
      volumes:
        - name: status-storage
          persistentVolumeClaim:
            claimName: status-app-storage
---
apiVersion: v1
kind: Service
metadata:
  name: status-app-service
  namespace: default
  labels:
    app: status-app
spec:
  type: ClusterIP
  ports:
    - port: 80
      targetPort: 8080
      protocol: TCP
      name: http
  selector:
    app: status-app
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: status-app-ingress
  namespace: default
  labels:
    app: status-app
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
    - host: status-app.local
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: status-app-service
                port:
                  number: 80
```

One minor issue is that the image is set to `status-app:latest`.

Obviously, that image doesn’t exist anywhere the cluster can pull it from. For testing, let’s build and tag it for Docker Hub so we can punt on setting an imagePullSecret for now.

```
builder@DESKTOP-QADGF36:~/Workspaces/status$ docker build -t idjohnson/status-app:0.1 .
[+] Building 136.6s (24/24) FINISHED docker:default
=> [internal] load build definition from Dockerfile 0.0s
=> => transferring dockerfile: 1.13kB 0.0s
=> [internal] load metadata for docker.io/rustlang/rust:nightly-slim 1.3s
=> [internal] load metadata for docker.io/library/debian:bookworm-slim 1.0s
=> [auth] library/debian:pull token for registry-1.docker.io 0.0s
=> [auth] rustlang/rust:pull token for registry-1.docker.io 0.0s
=> [internal] load .dockerignore 0.0s
=> => transferring context: 135B 0.0s
=> [builder 1/9] FROM docker.io/rustlang/rust:nightly-slim@sha256:1d60876e124360b3fb91695e9fc2422ec4811dcbfb987eb9ebc1b486c1f8e83b 27.2s
=> => resolve docker.io/rustlang/rust:nightly-slim@sha256:1d60876e124360b3fb91695e9fc2422ec4811dcbfb987eb9ebc1b486c1f8e83b 0.0s
=> => sha256:0defffdc4e16941de26da911a8008ce663d28664502bcf3d200360a88cb876df 676B / 676B 0.0s
=> => sha256:43aabed80141be5daa7ae9dc67f4f9a9f87b92480a06eb50aefc0e3dd54d0eb0 3.57kB / 3.57kB 0.0s
=> => sha256:c0ab3b5ecf9870bb1f539fe7d78b236164a2589bb678a6cfbd3943ff59e86f3e 288.81MB / 288.81MB 9.4s
=> => sha256:1d60876e124360b3fb91695e9fc2422ec4811dcbfb987eb9ebc1b486c1f8e83b 5.39kB / 5.39kB 0.0s
=> => extracting sha256:c0ab3b5ecf9870bb1f539fe7d78b236164a2589bb678a6cfbd3943ff59e86f3e 17.5s
=> [internal] load build context 0.9s
=> => transferring context: 56.85MB 0.8s
=> [stage-1 1/7] FROM docker.io/library/debian:bookworm-slim@sha256:df52e55e3361a81ac1bead266f3373ee55d29aa50cf0975d440c2be3483d8ed3 5.2s
=> => resolve docker.io/library/debian:bookworm-slim@sha256:df52e55e3361a81ac1bead266f3373ee55d29aa50cf0975d440c2be3483d8ed3 0.0s
=> => sha256:df52e55e3361a81ac1bead266f3373ee55d29aa50cf0975d440c2be3483d8ed3 8.56kB / 8.56kB 0.0s
=> => sha256:acd98e6cfc42813a4db9ca54ed79b6f702830bfc2fa43a2c2e87517371d82edb 1.02kB / 1.02kB 0.0s
=> => sha256:7b234376c422e3ca0dd04f4ee0d17bee3d5aec526489f09caf368b3ccaf6faa4 453B / 453B 0.0s
=> => sha256:d107e437f7299a0db6425d4e37f44fa779f7917ecc8daf1e87128ee91b9ed3d3 28.23MB / 28.23MB 1.8s
=> => extracting sha256:d107e437f7299a0db6425d4e37f44fa779f7917ecc8daf1e87128ee91b9ed3d3 3.1s
=> [stage-1 2/7] RUN apt-get update && apt-get install -y ca-certificates libssl3 && rm -rf /var/lib/apt/lists/* 8.0s
=> [stage-1 3/7] WORKDIR /app 0.1s
=> [builder 2/9] RUN apt-get update && apt-get install -y pkg-config libssl-dev && rm -rf /var/lib/apt/lists/* 5.8s
=> [builder 3/9] WORKDIR /app 0.0s
=> [builder 4/9] COPY Cargo.toml Cargo.lock ./ 0.0s
=> [builder 5/9] COPY src ./src 0.1s
=> [builder 6/9] COPY templates ./templates 0.0s
=> [builder 7/9] COPY lexicons ./lexicons 0.1s
=> [builder 8/9] COPY static ./static 0.5s
=> [builder 9/9] RUN cargo build --release 100.3s
=> [stage-1 4/7] COPY --from=builder /app/target/release/nate-status /app/nate-status 0.1s
=> [stage-1 5/7] COPY templates ./templates 0.0s
=> [stage-1 6/7] COPY lexicons ./lexicons 0.0s
=> [stage-1 7/7] COPY static ./static 0.5s
=> exporting to image 0.4s
=> => exporting layers 0.3s
=> => writing image sha256:22714bc09ba4cc011baf74003762e20651360a4c660ea948cb9b318ac36d9458 0.0s
=> => naming to docker.io/idjohnson/status-app:0.1 0.0s
builder@DESKTOP-QADGF36:~/Workspaces/status$ docker push idjohnson/status-app:0.1
The push refers to repository [docker.io/idjohnson/status-app]
c561d9b243cd: Pushed
0cce5c76ef98: Pushed
058d889f3c33: Pushed
38cbba043db6: Pushed
bbae33b3829c: Pushed
89a25a58c545: Pushed
36f5f951f60a: Mounted from library/debian
0.1: digest: sha256:5de1c2cb9c398410fd6643d634badaa6113964a3fdbc005c53a47a5eb1a61d26 size: 1785
```

I can now update the k8s manifest to use that image:

```yaml
      containers:
      - name: status-app
        image: idjohnson/status-app:0.1
```

and apply it:

```
$ kubectl apply -f ./k8s-manifest.yaml
persistentvolumeclaim/status-app-storage created
deployment.apps/status-app created
service/status-app-service created
ingress.networking.k8s.io/status-app-ingress created
```

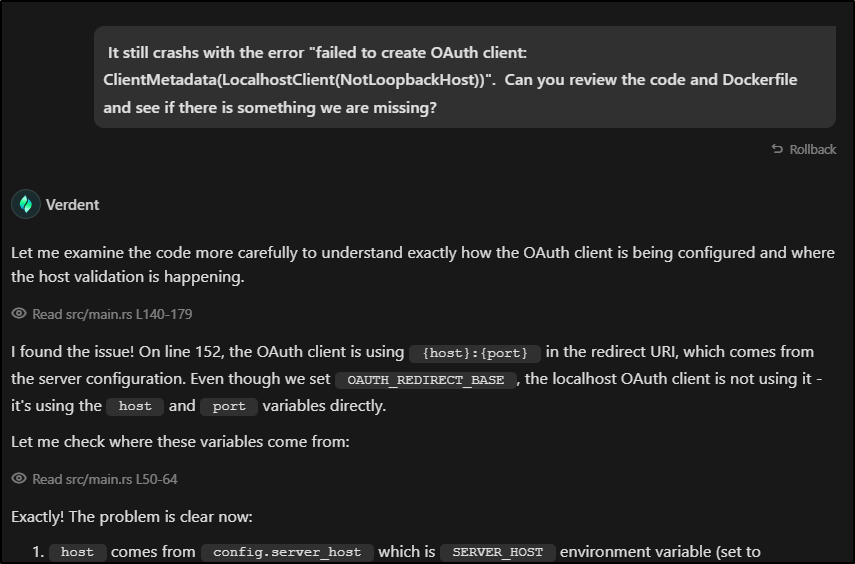

Unfortunately, the pod is crashing:

```
builder@DESKTOP-QADGF36:~/Workspaces/status$ kubectl get po | grep status
status-app-df4bc798-6667m 0/1 CrashLoopBackOff 2 (14s ago) 43s
builder@DESKTOP-QADGF36:~/Workspaces/status$ kubectl logs status-app-df4bc798-6667m
thread 'main' (1) panicked at src/main.rs:177:49:
failed to create OAuth client: ClientMetadata(LocalhostClient(NotLoopbackHost))
note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace
```
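`CrashLoopBackOff` is not the failure itself; it means the kubelet keeps restarting the crashing container with a growing delay between attempts. The Kubernetes Pod lifecycle documentation describes that delay as exponential (10s, 20s, 40s, ...), capped at five minutes and reset once the container runs cleanly for 10 minutes. Here is that documented schedule as a small model; it's a simplification for intuition, not the kubelet's actual code, and `crashloop_delays` is an illustrative name.

```python
def crashloop_delays(restarts: int, base_s: int = 10, cap_s: int = 300) -> list:
    """Modelled wait (in seconds) before each of the first `restarts` restarts."""
    # Each crash doubles the previous delay until the five-minute cap is reached.
    return [min(base_s * 2 ** n, cap_s) for n in range(restarts)]


print(crashloop_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```

So the restart counts in `kubectl get po` will climb more and more slowly; the useful clue is the panic message in the logs, not the back-off status.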

I suspect it's the missing `.env` file. I just want to verify that is the cause:

```
builder@DESKTOP-QADGF36:~/Workspaces/status$ cp .env.template .env
builder@DESKTOP-QADGF36:~/Workspaces/status$ docker build -t idjohnson/status-app:0.2 . && docker push idjohnson/status-app:0.2
[+] Building 0.7s (24/24) FINISHED docker:default
=> [internal] load build definition from Dockerfile 0.0s
=> => transferring dockerfile: 1.13kB 0.0s
=> [internal] load metadata for docker.io/rustlang/rust:nightly-slim 0.5s
=> [internal] load metadata for docker.io/library/debian:bookworm-slim 0.5s
=> [auth] rustlang/rust:pull token for registry-1.docker.io 0.0s
=> [auth] library/debian:pull token for registry-1.docker.io 0.0s
=> [internal] load .dockerignore 0.0s
=> => transferring context: 135B 0.0s
=> [builder 1/9] FROM docker.io/rustlang/rust:nightly-slim@sha256:1d60876e124360b3fb91695e9fc2422ec4811dcbfb987eb9ebc1b486c1f8e83b 0.0s
=> [stage-1 1/7] FROM docker.io/library/debian:bookworm-slim@sha256:df52e55e3361a81ac1bead266f3373ee55d29aa50cf0975d440c2be3483d8ed3 0.0s
=> [internal] load build context 0.1s
=> => transferring context: 97.25kB 0.1s
=> CACHED [stage-1 2/7] RUN apt-get update && apt-get install -y ca-certificates libssl3 && rm -rf /var/lib/apt/lists/* 0.0s
=> CACHED [stage-1 3/7] WORKDIR /app 0.0s
=> CACHED [builder 2/9] RUN apt-get update && apt-get install -y pkg-config libssl-dev && rm -rf /var/lib/apt/lists/* 0.0s
=> CACHED [builder 3/9] WORKDIR /app 0.0s
=> CACHED [builder 4/9] COPY Cargo.toml Cargo.lock ./ 0.0s
=> CACHED [builder 5/9] COPY src ./src 0.0s
=> CACHED [builder 6/9] COPY templates ./templates 0.0s
=> CACHED [builder 7/9] COPY lexicons ./lexicons 0.0s
=> CACHED [builder 8/9] COPY static ./static 0.0s
=> CACHED [builder 9/9] RUN cargo build --release 0.0s
=> CACHED [stage-1 4/7] COPY --from=builder /app/target/release/nate-status /app/nate-status 0.0s
=> CACHED [stage-1 5/7] COPY templates ./templates 0.0s
=> CACHED [stage-1 6/7] COPY lexicons ./lexicons 0.0s
=> CACHED [stage-1 7/7] COPY static ./static 0.0s
=> exporting to image 0.0s
=> => exporting layers 0.0s
=> => writing image sha256:22714bc09ba4cc011baf74003762e20651360a4c660ea948cb9b318ac36d9458 0.0s
=> => naming to docker.io/idjohnson/status-app:0.2 0.0s
The push refers to repository [docker.io/idjohnson/status-app]
c561d9b243cd: Layer already exists
0cce5c76ef98: Layer already exists
058d889f3c33: Layer already exists
38cbba043db6: Layer already exists
bbae33b3829c: Layer already exists
89a25a58c545: Layer already exists
36f5f951f60a: Layer already exists
0.2: digest: sha256:5de1c2cb9c398410fd6643d634badaa6113964a3fdbc005c53a47a5eb1a61d26 size: 1785
```
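One thing worth noticing in that output: every build step came back `CACHED`, the image ID written for 0.2 matches the one for 0.1, and the push reports the identical digest. None of the Dockerfile steps in the log copy `.env` (and the `.dockerignore` may well exclude it too), so tag 0.2 is the very same image as 0.1, and this experiment could not really test the `.env` theory. A quick way to check that is to compare pushed digests; the helper below is a small illustrative sketch (`pushed_digests` is a hypothetical name) that relies only on the `docker push` summary-line format visible in these logs.

```python
import re

# Matches the summary line `docker push` prints, e.g. "0.1: digest: sha256:<64 hex> size: 1785".
DIGEST_LINE = re.compile(r"^(?P<tag>\S+): digest: (?P<digest>sha256:[0-9a-f]{64}) size: \d+$")


def pushed_digests(push_output: str) -> dict:
    """Map each pushed tag to the manifest digest reported by `docker push`."""
    digests = {}
    for line in push_output.splitlines():
        match = DIGEST_LINE.match(line.strip())
        if match:
            digests[match.group("tag")] = match.group("digest")
    return digests


push_01 = "0.1: digest: sha256:5de1c2cb9c398410fd6643d634badaa6113964a3fdbc005c53a47a5eb1a61d26 size: 1785"
push_02 = "0.2: digest: sha256:5de1c2cb9c398410fd6643d634badaa6113964a3fdbc005c53a47a5eb1a61d26 size: 1785"
same = pushed_digests(push_01)["0.1"] == pushed_digests(push_02)["0.2"]
print("0.1 and 0.2 are the same image" if same else "0.2 differs from 0.1")
```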

```
# swap to tag "0.2"
builder@DESKTOP-QADGF36:~/Workspaces/status$ !v
vi k8s-manifest.yaml
builder@DESKTOP-QADGF36:~/Workspaces/status$ kubectl apply -f k8s-manifest.yaml
persistentvolumeclaim/status-app-storage unchanged
deployment.apps/status-app configured
service/status-app-service unchanged
ingress.networking.k8s.io/status-app-ingress unchanged
```

Nope:

```
builder@DESKTOP-QADGF36:~/Workspaces/status$ kubectl get po | grep status
status-app-5664dd98bf-2dfxx 0/1 CrashLoopBackOff 1 (16s ago) 19s
status-app-df4bc798-6667m 0/1 CrashLoopBackOff 5 (72s ago) 4m12s
builder@DESKTOP-QADGF36:~/Workspaces/status$ kubectl logs status-app-5664dd98bf-2dfxx
thread 'main' (1) panicked at src/main.rs:177:49:
failed to create OAuth client: ClientMetadata(LocalhostClient(NotLoopbackHost))
note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace
```

I think I'm missing some configuration: running the bare image in Docker works (though it doesn't serve a page I can reach), while setting the host env var makes the OAuth block poop out.

```
builder@DESKTOP-QADGF36:~/Workspaces/status$ docker run -p 8080:8080 idjohnson/status-app:0.2
[2025-09-17T11:04:57Z INFO actix_server::builder] starting 16 workers
[2025-09-17T11:04:57Z INFO actix_server::server] Actix runtime found; starting in Actix runtime
[2025-09-17T11:04:57Z INFO actix_server::server] starting service: "actix-web-service-127.0.0.1:8080", workers: 16, listening on: 127.0.0.1:8080
[2025-09-17T11:04:57Z INFO rocketman::connection] Connecting to wss://jetstream2.us-east.bsky.network/subscribe
[2025-09-17T11:04:57Z INFO rocketman::connection] Connected. HTTP status: 101 Switching Protocols
^C[2025-09-17T11:07:14Z INFO actix_server::server] SIGINT received; starting forced shutdown
[2025-09-17T11:07:14Z INFO actix_server::accept] accept thread stopped
[2025-09-17T11:07:14Z INFO actix_server::worker] shutting down idle worker
[2025-09-17T11:07:14Z INFO actix_server::worker] shutting down idle worker
[2025-09-17T11:07:14Z INFO actix_server::worker] shutting down idle worker
[2025-09-17T11:07:14Z INFO actix_server::worker] shutting down idle worker
[2025-09-17T11:07:14Z INFO actix_server::worker] shutting down idle worker
[2025-09-17T11:07:14Z INFO actix_server::worker] shutting down idle worker
[2025-09-17T11:07:14Z INFO actix_server::worker] shutting down idle worker
[2025-09-17T11:07:14Z INFO actix_server::worker] shutting down idle worker
[2025-09-17T11:07:14Z INFO actix_server::worker] shutting down idle worker
[2025-09-17T11:07:14Z INFO actix_server::worker] shutting down idle worker
[2025-09-17T11:07:14Z INFO actix_server::worker] shutting down idle worker
[2025-09-17T11:07:14Z INFO actix_server::worker] shutting down idle worker
[2025-09-17T11:07:14Z INFO actix_server::worker] shutting down idle worker
[2025-09-17T11:07:14Z INFO actix_server::worker] shutting down idle worker
[2025-09-17T11:07:14Z INFO actix_server::worker] shutting down idle worker
```
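That first run also hints at why no page was reachable: the server reports `listening on: 127.0.0.1:8080`. Inside a container, 127.0.0.1 is the container's own loopback interface, while traffic published with `docker run -p` (or sent through a Kubernetes Service) arrives on the container's network interface, so a loopback-only listener never sees it. The rule of thumb can be written down with Python's standard `ipaddress` module; `reachable_from_outside` is a hypothetical name, and the check assumes any non-loopback address is one the container actually owns.

```python
import ipaddress


def reachable_from_outside(bind_host: str) -> bool:
    """Rule of thumb: can forwarded container traffic reach a server bound to bind_host?"""
    addr = ipaddress.ip_address(bind_host)
    # 127.0.0.0/8 and ::1 only accept connections from inside the same network namespace.
    if addr.is_loopback:
        return False
    # 0.0.0.0 / :: mean "all interfaces"; any other address is assumed to be the container's own.
    return True


for host in ["127.0.0.1", "0.0.0.0", "::1", "::"]:
    print(host, reachable_from_outside(host))
```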

```
builder@DESKTOP-QADGF36:~/Workspaces/status$ docker run -p 8080:8080 -e SERVER_HOST=localhost idjohnson/status-app:0.2
thread 'main' (1) panicked at src/main.rs:177:49:
failed to create OAuth client: ClientMetadata(LocalhostClient(Localhost))
note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace
builder@DESKTOP-QADGF36:~/Workspaces/status$ docker run -p 8080:8080 -e SERVER_HOST=0.0.0.0 idjohnson/status-app:0.2
thread 'main' (1) panicked at src/main.rs:177:49:
failed to create OAuth client: ClientMetadata(LocalhostClient(NotLoopbackHost))
note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace
```
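The error names line up with the three runs: with no `SERVER_HOST` the app listened on 127.0.0.1 and the OAuth client was created, `localhost` produced `Localhost`, and `0.0.0.0` produced `NotLoopbackHost`. That pattern appears consistent with the AT Protocol OAuth rule for localhost development clients, whose redirect URI must use a loopback IP literal (127.0.0.1 or [::1]) rather than the name `localhost`. If the app feeds the same host value into both its listener and its OAuth redirect URI, that would be a catch-22: loopback keeps OAuth happy but, per the first run, leaves the server unreachable from outside the container. Below is my own Python approximation of that check, not the Rust library's code; `check_loopback_redirect`, the callback path, and the `not-http` result are all hypothetical.

```python
import ipaddress
from urllib.parse import urlsplit


def check_loopback_redirect(redirect_uri: str) -> str:
    """Return 'ok' or a label for the rule a development redirect URI breaks (approximation)."""
    parts = urlsplit(redirect_uri)
    if parts.scheme != "http":
        return "not-http"
    host = parts.hostname or ""
    # The literal name 'localhost' is rejected even though it usually resolves to loopback.
    if host == "localhost":
        return "Localhost"
    try:
        is_loopback = ipaddress.ip_address(host).is_loopback
    except ValueError:
        is_loopback = False  # a DNS name such as status-app.local
    return "ok" if is_loopback else "NotLoopbackHost"


for host in ["127.0.0.1", "localhost", "0.0.0.0"]:
    print(host, check_loopback_redirect(f"http://{host}:8080/oauth/callback"))
```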

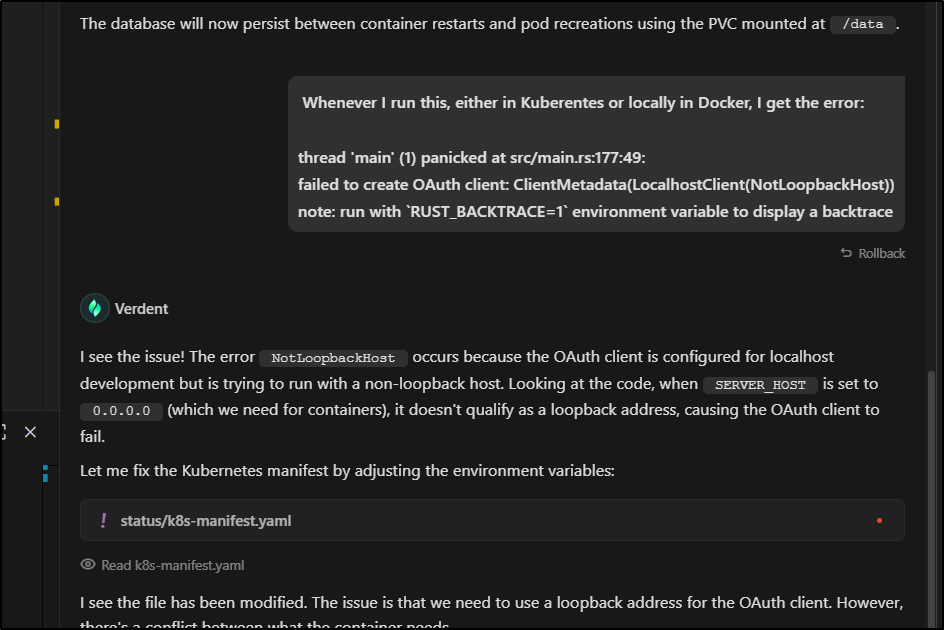

Let me just ask if Verdent has any ideas

Verdent seems insistent on the DB_PATH at least. Let’s just try it and see

Didn’t quite work

```
builder@DESKTOP-QADGF36:~/Workspaces/status$ kubectl apply -f ./k8s-manifest.yaml
persistentvolumeclaim/status-app-storage unchanged
deployment.apps/status-app configured
service/status-app-service unchanged
ingress.networking.k8s.io/status-app-ingress unchanged
builder@DESKTOP-QADGF36:~/Workspaces/status$ kubectl get po | grep status
status-app-5664dd98bf-2dfxx 0/1 CrashLoopBackOff 6 (2m48s ago) 8m32s
status-app-777465cdf4-m42lz 0/1 CrashLoopBackOff 1 (4s ago) 7s
builder@DESKTOP-QADGF36:~/Workspaces/status$ kubectl logs status-app-777465cdf4-m42lz
thread 'main' (1) panicked at src/main.rs:177:49:
failed to create OAuth client: ClientMetadata(LocalhostClient(NotLoopbackHost))
note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace
```

I asked it to review the code in detail to see if we had missed something altogether.

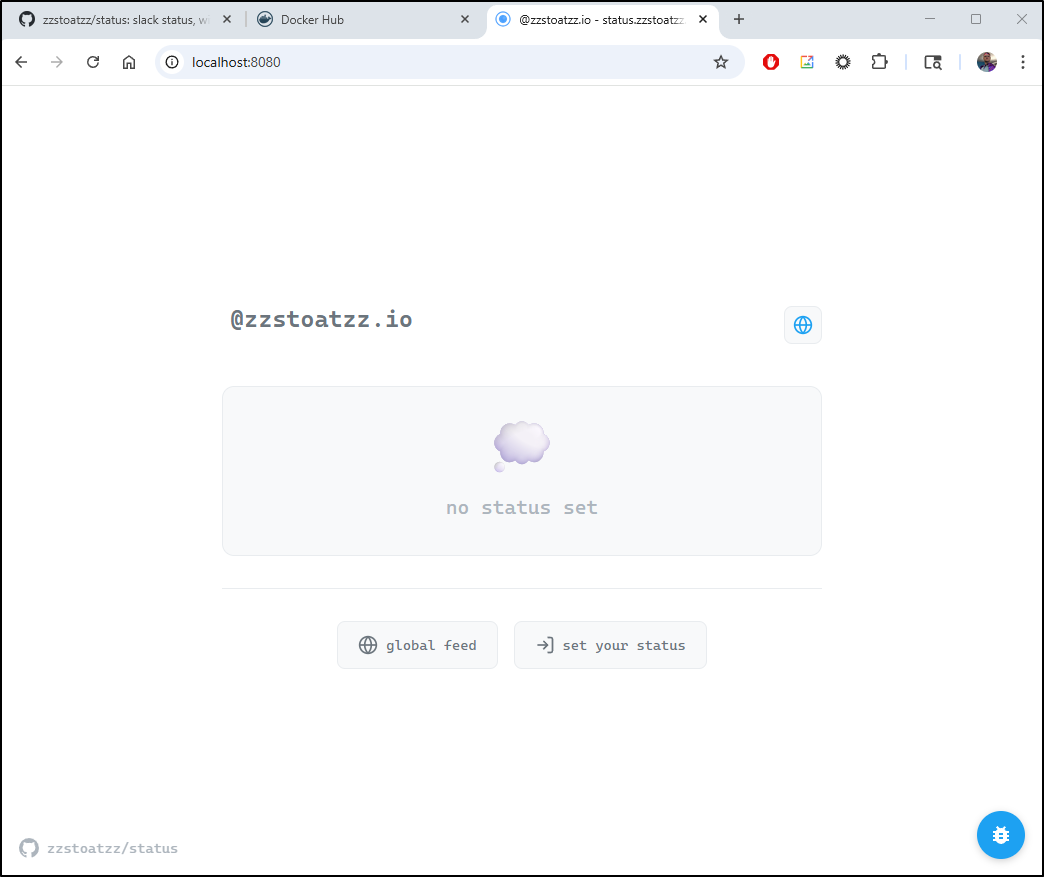

It ended up tweaking the Dockerfile and k8s manifest for Production settings.

I rebuilt and applied a 0.3 tag

```
builder@DESKTOP-QADGF36:~/Workspaces/status$ docker build -t idjohnson/status-app:0.3 . && docker push idjohnson/status-app:0.3
[+] Building 0.9s (24/24) FINISHED docker:default
=> [internal] load build definition from Dockerfile 0.0s
=> => transferring dockerfile: 1.17kB 0.0s
=> [internal] load metadata for docker.io/library/debian:bookworm-slim 0.7s
=> [internal] load metadata for docker.io/rustlang/rust:nightly-slim 0.7s
=> [auth] rustlang/rust:pull token for registry-1.docker.io 0.0s
=> [auth] library/debian:pull token for registry-1.docker.io 0.0s
=> [internal] load .dockerignore 0.0s
=> => transferring context: 135B 0.0s
=> [builder 1/9] FROM docker.io/rustlang/rust:nightly-slim@sha256:1d60876e124360b3fb91695e9fc2422ec4811dcbfb987eb9ebc1b486c1f8e83b 0.0s
=> [stage-1 1/7] FROM docker.io/library/debian:bookworm-slim@sha256:df52e55e3361a81ac1bead266f3373ee55d29aa50cf0975d440c2be3483d8ed3 0.0s
=> [internal] load build context 0.1s
=> => transferring context: 97.25kB 0.1s
=> CACHED [stage-1 2/7] RUN apt-get update && apt-get install -y ca-certificates libssl3 && rm -rf /var/lib/apt/lists/* 0.0s
=> CACHED [stage-1 3/7] WORKDIR /app 0.0s
=> CACHED [builder 2/9] RUN apt-get update && apt-get install -y pkg-config libssl-dev && rm -rf /var/lib/apt/lists/* 0.0s
=> CACHED [builder 3/9] WORKDIR /app 0.0s
=> CACHED [builder 4/9] COPY Cargo.toml Cargo.lock ./ 0.0s
=> CACHED [builder 5/9] COPY src ./src 0.0s
=> CACHED [builder 6/9] COPY templates ./templates 0.0s
=> CACHED [builder 7/9] COPY lexicons ./lexicons 0.0s
=> CACHED [builder 8/9] COPY static ./static 0.0s
=> CACHED [builder 9/9] RUN cargo build --release 0.0s
=> CACHED [stage-1 4/7] COPY --from=builder /app/target/release/nate-status /app/nate-status 0.0s
=> CACHED [stage-1 5/7] COPY templates ./templates 0.0s
=> CACHED [stage-1 6/7] COPY lexicons ./lexicons 0.0s
=> CACHED [stage-1 7/7] COPY static ./static 0.0s
=> exporting to image 0.0s
=> => exporting layers 0.0s
=> => writing image sha256:82888bac0f1dde0c19bfacb928e7fcc44e49738aa857670e2899cbb3f650eb0d 0.0s
=> => naming to docker.io/idjohnson/status-app:0.3 0.0s
The push refers to repository [docker.io/idjohnson/status-app]
c561d9b243cd: Layer already exists
0cce5c76ef98: Layer already exists
058d889f3c33: Layer already exists
38cbba043db6: Layer already exists
bbae33b3829c: Layer already exists
89a25a58c545: Layer already exists
36f5f951f60a: Layer already exists
0.3: digest: sha256:a1b246fa0dca911829a1a1b1ed248475fe9960fe19ac75fd9b30c18aab629f44 size: 1785
builder@DESKTOP-QADGF36:~/Workspaces/status$ kubectl apply -f ./k8s-manifest.yaml
persistentvolumeclaim/status-app-storage unchanged
deployment.apps/status-app configured
service/status-app-service unchanged
ingress.networking.k8s.io/status-app-ingress unchanged
builder@DESKTOP-QADGF36:~/Workspaces/status$ kubectl get po | grep status
status-app-777465cdf4-m42lz 0/1 CrashLoopBackOff 5 (33s ago) 3m45s
status-app-857c8b8ff6-fkxfh 0/1 Running 0 3s
```

I can now port-forward to the service once the pod is up

```
builder@DESKTOP-QADGF36:~/Workspaces/status$ kubectl get po | grep status
status-app-857c8b8ff6-fkxfh 1/1 Running 0 84s
builder@DESKTOP-QADGF36:~/Workspaces/status$ kubectl get svc | grep status
status-app-service ClusterIP 10.43.213.231 <none> 80/TCP 17m
builder@DESKTOP-QADGF36:~/Workspaces/status$ kubectl port-forward svc/status-app-service -p 8080:80
error: unknown shorthand flag: 'p' in -p
See 'kubectl port-forward --help' for usage.
builder@DESKTOP-QADGF36:~/Workspaces/status$ kubectl port-forward svc/status-app-service 8080:80
Forwarding from 127.0.0.1:8080 -> 8080
Forwarding from [::1]:8080 -> 8080
Handling connection for 8080
Handling connection for 8080
Handling connection for 8080
Handling connection for 8080
Handling connection for 8080
Handling connection for 8080
```
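The `-p` slip is because `kubectl port-forward` takes ports as positional `[LOCAL_PORT:]REMOTE_PORT` arguments rather than a flag. When the target is a Service, kubectl resolves the Service port to the target port on a backing pod, which is why the output reads `8080 -> 8080` even though we asked for port 80. The sketch below mimics both steps; `parse_port_spec` and `resolve_target_port` are hypothetical helpers, and only numeric `targetPort` values are handled (named ports are ignored).

```python
def parse_port_spec(spec: str) -> tuple:
    """Split a kubectl port-forward `[LOCAL_PORT:]REMOTE_PORT` argument into (local, remote).

    local is None for ':80', which asks kubectl to pick a free local port.
    """
    local, sep, remote = spec.rpartition(":")
    if not sep:  # a single number means the same port on both ends
        return int(spec), int(spec)
    return (int(local) if local else None), int(remote)


def resolve_target_port(service_ports: list, port: int) -> int:
    """Return the container port a Service port forwards to (numeric targetPort only)."""
    for entry in service_ports:
        if entry["port"] == port:
            # targetPort defaults to the Service port when it is omitted.
            return entry.get("targetPort", port)
    raise ValueError(f"Service exposes no port {port}")


# The ports block of status-app-service from the manifest above.
ports = [{"port": 80, "targetPort": 8080, "protocol": "TCP", "name": "http"}]
local, remote = parse_port_spec("8080:80")
print(f"Forwarding from 127.0.0.1:{local} -> {resolve_target_port(ports, remote)}")
```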

And indeed, the app is working

Verdent Deck

For now, I'll have to wait, as the Deck is just for Macs and I lack one of those (running macOS, anyhow).

Company information

The company provided a bit of background (as I had a hard time getting details from my usual sources like Crunchbase).

It was founded just this year by Zhijie (陈志杰) Chen (CEO), who had spent the last 4 years as head of Algorithms at ByteDance (TikTok). Looking further back, he spent his formative years at Baidu (2010-2019) as a Chief Technical Architect working on neural machine translation tech.

The other co-founder is Xiaochun Liu (COO), who was Head of Tech/Product/ECommerce at Baidu prior to joining Verdent.

Both of them also held top roles at Joyy, which runs a Twitch-style app called "Bigo Live" that is popular in other countries (not the US).

According to its LinkedIn About page, Verdent presently has 11-50 employees.

Cost

Clearly, I am writing this prior to release. At the moment, the website is mostly geared toward what is coming and toward beta testers.

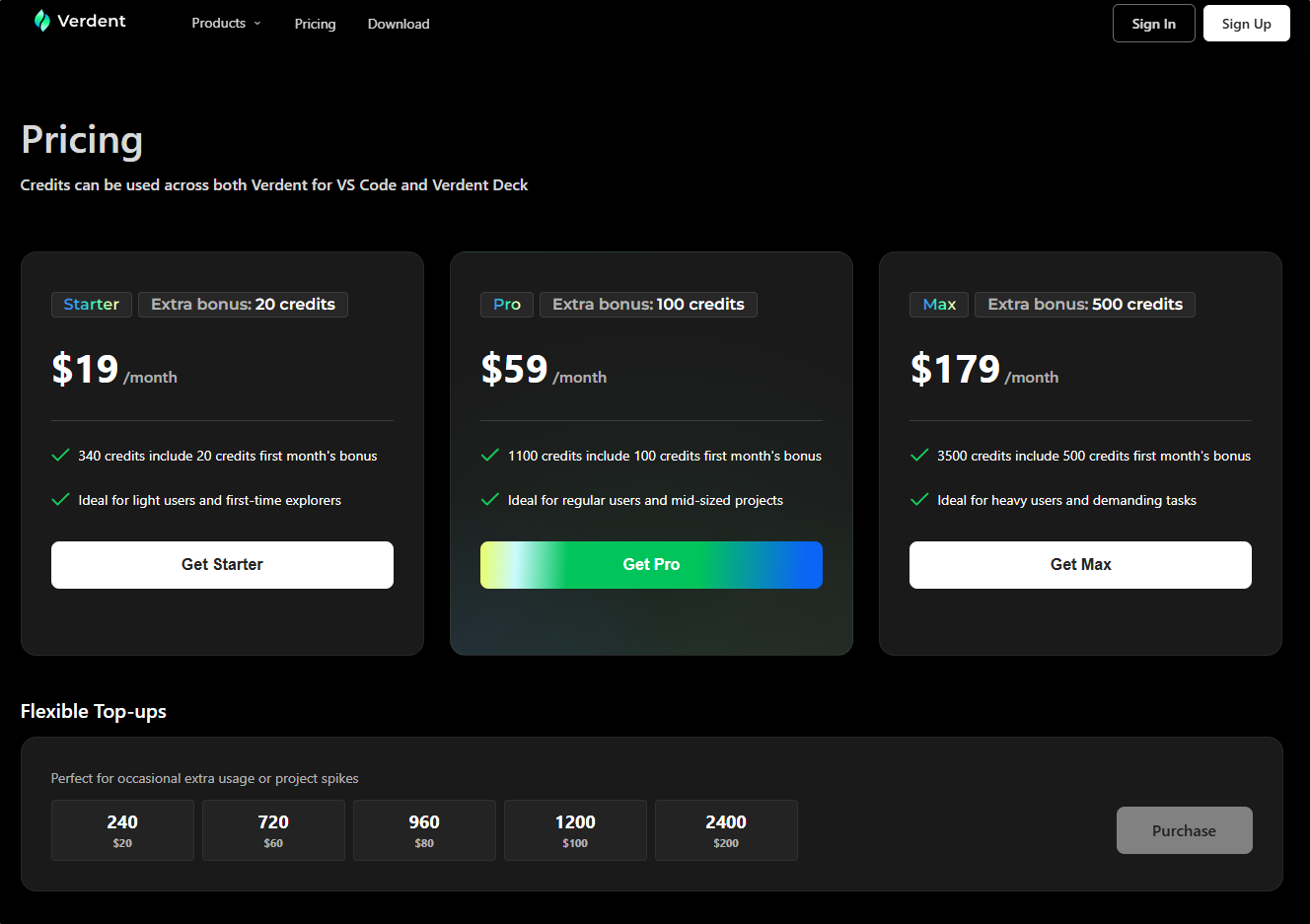

However, by the time you read this (after they announce), I would very much expect some pricing and plan details to be listed.

That said, I did ask my contact at Verdent for some idea. He let me know that they are going to start at US$19/mo with more premium plans and pay-as-you-go options.

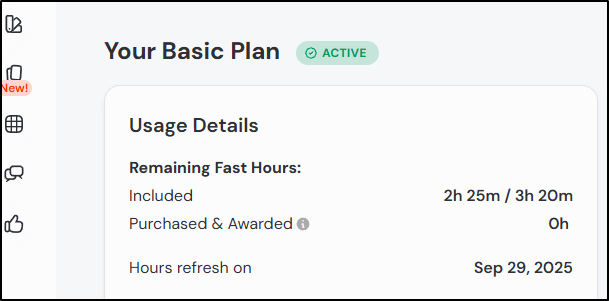

What I have really appreciated about Verdent (compared to all the others, mind you) is that they make it very clear where you stand on usage at any given point, right there in the dialogue.

I really love that. As you can see in my picture above, I moved the Verdent app next to the Copilot chat so on heavy days I can literally dance between them as my needs fit.

Post-release Updates

Verdent went live yesterday. I did need to update my plugin, which was easy to do from the download page.

The new pricing page is now up:

I was generally operating at the "Pro" level during testing, which is $60 a month. But I likely would have been fine at "Starter" (340 credits a month with a 20-credit bonus).

Remember, building out a full app with their agentic flow took about 60 credits.

On each day I did something deep, I spent roughly 20 to 50 credits. If we assume working 5 days a week, Pro would have given me an average of 55 credits a day to spend, which would have more than covered me.
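Spelled out, the arithmetic behind that estimate looks like this. It's a back-of-the-envelope sketch that assumes four working weeks a month and treats every weekday as a deep-work day (the worst case); `monthly_credits` is just an illustrative name, while the 20-50 credits per day and Starter's 340 credits come from the figures above.

```python
def monthly_credits(per_day: float, days_per_week: int = 5, weeks_per_month: int = 4) -> float:
    """Credits burned in a month if every working day costs `per_day` credits."""
    return per_day * days_per_week * weeks_per_month


low, high = monthly_credits(20), monthly_credits(50)
print(f"{low:g}-{high:g} credits/month")  # 400-1000 credits/month
# Starter's 340 monthly credits spread over the same 20 working days.
print(f"{340 / (5 * 4):g} credits/day from Starter")  # 17 credits/day from Starter
```

So Starter fits when deep-work days are occasional, while a steady run of 20-50 credit days is where Pro's headroom matters.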

IF I were running my own company, I do think I would roll out Pro to my developers.

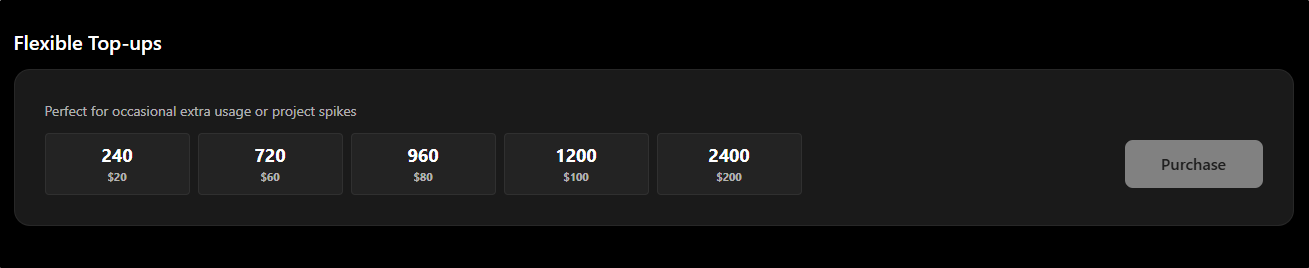

I also really like that they have “Top-ups” and do not force people to do a full plan upgrade.

There are going to be plenty of folks who need a burst on a project:

The one other note I'll make about the post-release VS Code extension is that the beta had a bug in the file picker that made it very slow to show files to pick. That was fixed. Other than that, the two versions seem the same.

MCP Servers

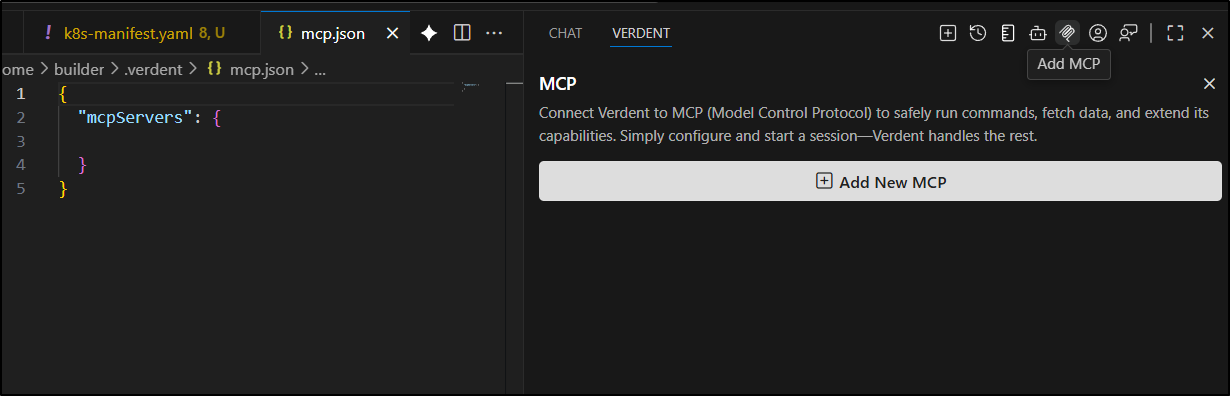

We can easily add MCP servers using the MCP section

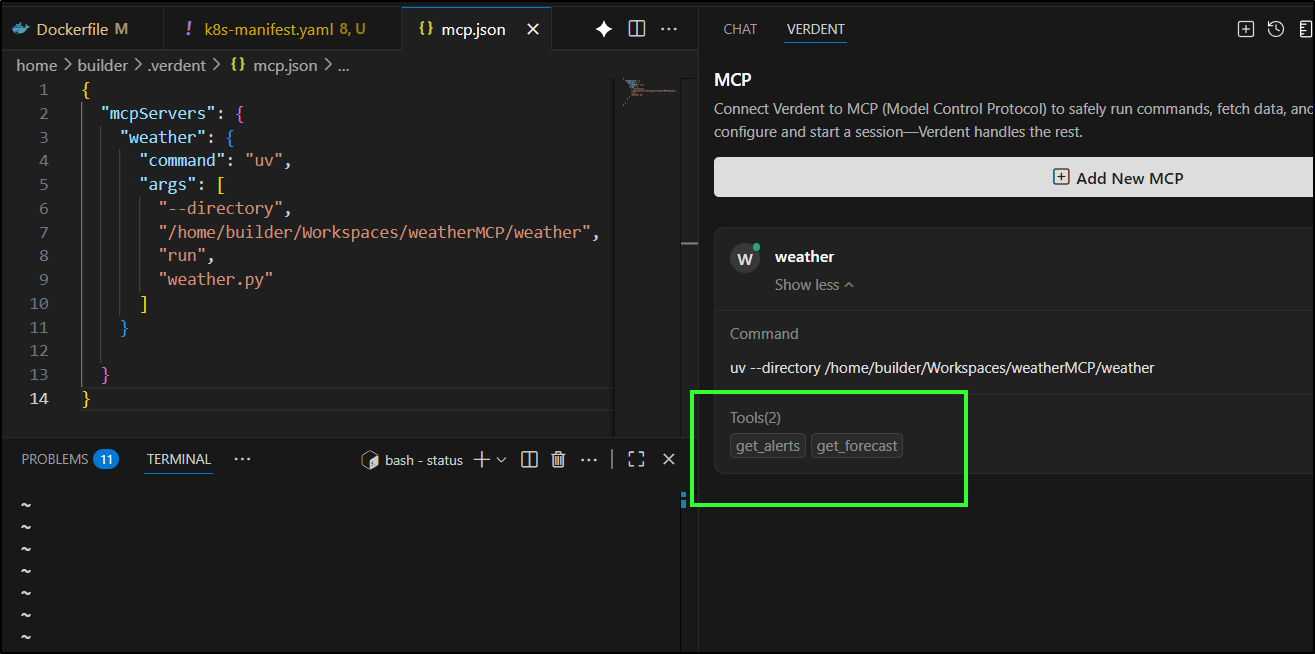

For an example, I'll follow this guide to build a basic weather MCP server.

Once I add the weather MCP server that is hosted locally, I can tell it was properly loaded by way of the “Tools” section in Verdent’s MCP area
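Under the hood, MCP clients talk to servers using JSON-RPC 2.0: the client asks for the server's tools with `tools/list` (presumably what feeds a "Tools" section like Verdent's) and invokes one with `tools/call`. The sketch below only builds those two messages. It skips the `initialize` handshake a real session starts with, and the tool name `get_forecast`, its `latitude`/`longitude` arguments, and the coordinates are assumptions modelled on a typical weather example, not something taken from Verdent.

```python
import json


def jsonrpc_request(request_id: int, method: str, params=None) -> str:
    """Serialise one JSON-RPC 2.0 request as a single line (the stdio transport is newline-delimited)."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Discover the server's tools.
list_tools = jsonrpc_request(1, "tools/list")
# Invoke one tool; name and argument keys are hypothetical.
call_forecast = jsonrpc_request(
    2, "tools/call", {"name": "get_forecast", "arguments": {"latitude": 44.98, "longitude": -93.27}}
)
print(list_tools)
print(call_forecast)
```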

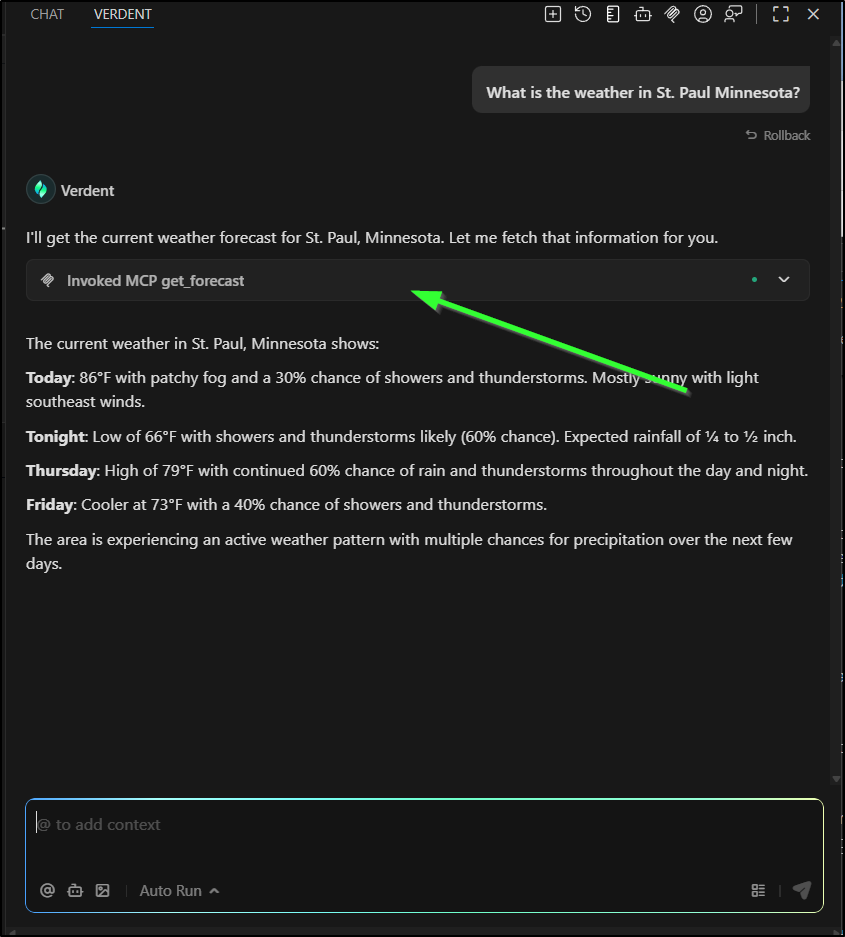

I can tell it worked by way of the MCP icon in the response

The clear indication is that if I want to cast a line in the next few days, tonight might be best.

Usage

Let’s update our app one more time, now that Verdent is released.

I would like to add a password. I have started the code but there is an error:

```
$ kubectl get po -l app=evil-clown-generator
NAME READY STATUS RESTARTS AGE
evil-clown-generator-5d4fbc88c9-5x52r 0/1 Error 1 (9s ago) 13s
```

The logs suggest a missing module:

```
builder@DESKTOP-QADGF36:~/Workspaces/verdantDemo$ kubectl logs evil-clown-generator-5d4fbc88c9-5x52r
Traceback (most recent call last):
  File "/usr/local/bin/uvicorn", line 8, in <module>
    sys.exit(main())
             ^^^^^^
  File "/usr/local/lib/python3.11/site-packages/click/core.py", line 1442, in __call__
    return self.main(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/site-packages/click/core.py", line 1363, in main
    rv = self.invoke(ctx)
         ^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/site-packages/click/core.py", line 1226, in invoke
    return ctx.invoke(self.callback, **ctx.params)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/site-packages/click/core.py", line 794, in invoke
    return callback(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/site-packages/uvicorn/main.py", line 416, in main
    run(
  File "/usr/local/lib/python3.11/site-packages/uvicorn/main.py", line 587, in run
    server.run()
  File "/usr/local/lib/python3.11/site-packages/uvicorn/server.py", line 61, in run
    return asyncio.run(self.serve(sockets=sockets))
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/asyncio/runners.py", line 190, in run
    return runner.run(main)
           ^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/asyncio/runners.py", line 118, in run
    return self._loop.run_until_complete(task)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "uvloop/loop.pyx", line 1518, in uvloop.loop.Loop.run_until_complete
  File "/usr/local/lib/python3.11/site-packages/uvicorn/server.py", line 68, in serve
    config.load()
  File "/usr/local/lib/python3.11/site-packages/uvicorn/config.py", line 467, in load
    self.loaded_app = import_from_string(self.app)
                      ^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/site-packages/uvicorn/importer.py", line 24, in import_from_string
    raise exc from None
  File "/usr/local/lib/python3.11/site-packages/uvicorn/importer.py", line 21, in import_from_string
    module = importlib.import_module(module_str)
             ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/importlib/__init__.py", line 126, in import_module
    return _bootstrap._gcd_import(name[level:], package, level)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "<frozen importlib._bootstrap>", line 1204, in _gcd_import
  File "<frozen importlib._bootstrap>", line 1176, in _find_and_load
  File "<frozen importlib._bootstrap>", line 1147, in _find_and_load_unlocked
  File "<frozen importlib._bootstrap>", line 690, in _load_unlocked
  File "<frozen importlib._bootstrap_external>", line 940, in exec_module
  File "<frozen importlib._bootstrap>", line 241, in _call_with_frames_removed
  File "/app/main.py", line 24, in <module>
    from starlette.middleware.sessions import SessionMiddleware
  File "/usr/local/lib/python3.11/site-packages/starlette/middleware/sessions.py", line 6, in <module>
    import itsdangerous
ModuleNotFoundError: No module named 'itsdangerous'
```
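The last frames explain it: Starlette's `SessionMiddleware` imports `itsdangerous` (used to sign the session cookie), but that package isn't installed in the image, so it presumably needs adding to the app's dependency list (for example `requirements.txt`, assuming that's how the image installs packages). To surface this class of problem before uvicorn's long traceback, the app could check its imports up front; `missing_modules` below is a hypothetical helper built on the standard library's `importlib.util.find_spec`, and it only handles top-level module names.

```python
import importlib.util


def missing_modules(names: list) -> list:
    """Return the top-level module names that cannot be found in this environment."""
    # find_spec returns None for a missing top-level module without importing it.
    return [name for name in names if importlib.util.find_spec(name) is None]


# Modules the session-based login relies on, per the traceback above.
print(missing_modules(["starlette", "itsdangerous"]))
```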

Also, I noticed the Dockerfile had no "APP_PASSWORD", even though my updated code was looking for it:

```diff
+# Session / Auth configuration
+APP_PASSWORD = os.getenv("APP_PASSWORD")
+SESSION_SECRET = os.getenv("SESSION_SECRET", os.urandom(24).hex())
```
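Two things in that snippet are worth hardening. `os.getenv("APP_PASSWORD")` quietly returns `None` when the variable is missing, and the `SESSION_SECRET` fallback generates a fresh random key every time the process starts, so every pod restart invalidates existing sessions and multiple replicas would not share them. Here is a sketch of a fail-fast alternative; the function names are hypothetical, and in Kubernetes both values would more typically come from a Secret than from the Dockerfile.

```python
import hmac
import os
import secrets


def load_auth_config(env=None) -> tuple:
    """Read APP_PASSWORD / SESSION_SECRET, refusing to start without a password."""
    env = os.environ if env is None else env
    password = env.get("APP_PASSWORD")
    if not password:
        raise RuntimeError("APP_PASSWORD must be set")
    # A random fallback works for a single pod, but sessions reset whenever it restarts.
    session_secret = env.get("SESSION_SECRET") or secrets.token_hex(24)
    return password, session_secret


def password_matches(submitted: str, expected: str) -> bool:
    """Constant-time comparison, so response timing doesn't reveal how much of the password matched."""
    return hmac.compare_digest(submitted.encode("utf-8"), expected.encode("utf-8"))


password, secret = load_auth_config({"APP_PASSWORD": "example-only", "SESSION_SECRET": "fixed"})
print(password_matches("example-only", password), password_matches("wrong", password))  # True False
```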

Let’s use Verdent to solve this

I edited my deployment, and it at least started the pod:

```
builder@DESKTOP-QADGF36:~/Workspaces/verdantDemo$ kubectl get po -l app=evil-clown-generator
NAME READY STATUS RESTARTS AGE
evil-clown-generator-5d4fbc88c9-5x52r 0/1 CrashLoopBackOff 6 (3m27s ago) 9m29s
evil-clown-generator-f77c845db-lghhf 0/1 ContainerCreating 0 7s
builder@DESKTOP-QADGF36:~/Workspaces/verdantDemo$ kubectl get po -l app=evil-clown-generator
NAME READY STATUS RESTARTS AGE
evil-clown-generator-f77c845db-lghhf 1/1 Running 0 12s
```

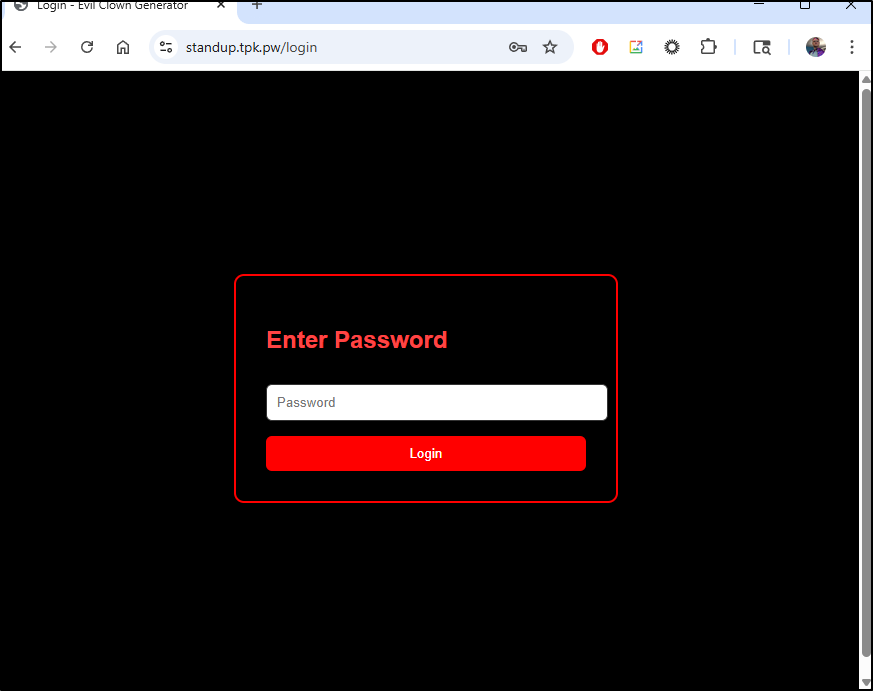

Now my app has a password

Invalid passwords are rejected

But if I give the password now (hint: type the numbers one two three after the word “Password”)

It loads up (though I did need to remove "/login" from the URL and try again; I'm not sure if I just hit a timeout or the app needs a redirect).
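Whether the generated handler redirects after a successful login isn't visible here, but the usual pattern (Post/Redirect/Get) is to answer a successful `POST /login` with `303 See Other` pointing at the main page, so the browser lands on `/` instead of staying on `/login`. A framework-agnostic sketch follows, with a hypothetical `login_response` helper that returns a status and a `Location` value.

```python
import hmac
from http import HTTPStatus


def login_response(submitted: str, expected: str, next_path: str = "/") -> tuple:
    """Decide the reply to a login form POST: (status, Location header value or None)."""
    if hmac.compare_digest(submitted.encode("utf-8"), expected.encode("utf-8")):
        # 303 makes the browser follow up with a GET, so a refresh won't resubmit the form.
        return HTTPStatus.SEE_OTHER, next_path
    return HTTPStatus.UNAUTHORIZED, None


print(login_response("example-only", "example-only"))
print(login_response("nope", "example-only"))
```

In Starlette, which the app already uses, `RedirectResponse(url="/", status_code=303)` from `starlette.responses` expresses the same thing.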

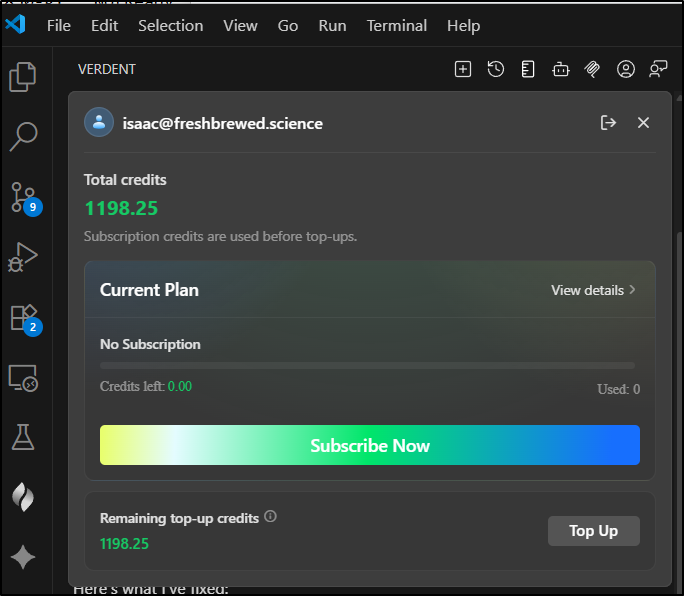

I can look at my credit usage

And see that it deducted 1.75 credits from my 1200 total.

EVEN if we assume the smallest account and deduct the first-month bonus, that change would have cost just under $0.10 on the Starter plan, or about $0.15 if we think of it at the top-up rate of 240 credits for $20.
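The per-change cost is simple proportional arithmetic. This sketch assumes Starter costs $19 a month (the starting price my Verdent contact quoted) for 340 credits with the bonus excluded, and a top-up of 240 credits for $20; `cost_of` is an illustrative name.

```python
def cost_of(credits_used: float, plan_price: float, plan_credits: float) -> float:
    """Dollar cost of `credits_used` at a plan's effective price per credit."""
    return credits_used * plan_price / plan_credits


starter = cost_of(1.75, 19, 340)  # Starter: $19 for 340 credits (bonus excluded)
top_up = cost_of(1.75, 20, 240)   # Top-up: $20 for 240 credits
print(f"${starter:.3f} vs ${top_up:.3f}")  # $0.098 vs $0.146
```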

Either way, that makes it fairly economical: equal to or cheaper than Claude.

Summary

Overall, I'm really excited by Verdent. I've used it, and it works. The only issue was a minor bug in the file picker during the beta (they logged it and planned to fix it, so it will likely be corrected by the time you read this).

I don't even really know how I got selected to be a beta tester. But during the beta, it was really performant and fun to use. Again, I go back to my favourite feature: keeping me apprised of where I stand with credits.

This is another reason, I might add, that I stick with Midjourney for AI art when I need it: I have a page that tells me exactly where I stand on hours remaining.

I also liked how when I would come back to the Verdent pane, it had my last queries stored there. I’m used to those always disappearing when I relaunch VS Code with Copilot

I will need to see actual final pricing. I am not against $20/mo, but honestly, I am a sucker for things that start at $10/mo; for some reason, that will get me to actually subscribe (and at times, expand from there). But if it works as well as it did in the beta, $20/mo seems more than fair.

Post-launch note: Indeed, I think the pricing is fair and certainly in the same ballpark as Claude Code. For all that I do, the Starter would cover it with occasional “top-up”s. I’m curious if they work out an ‘enterprise’ plan that would cover a larger group of users with a pool of credits, but we’ll see.

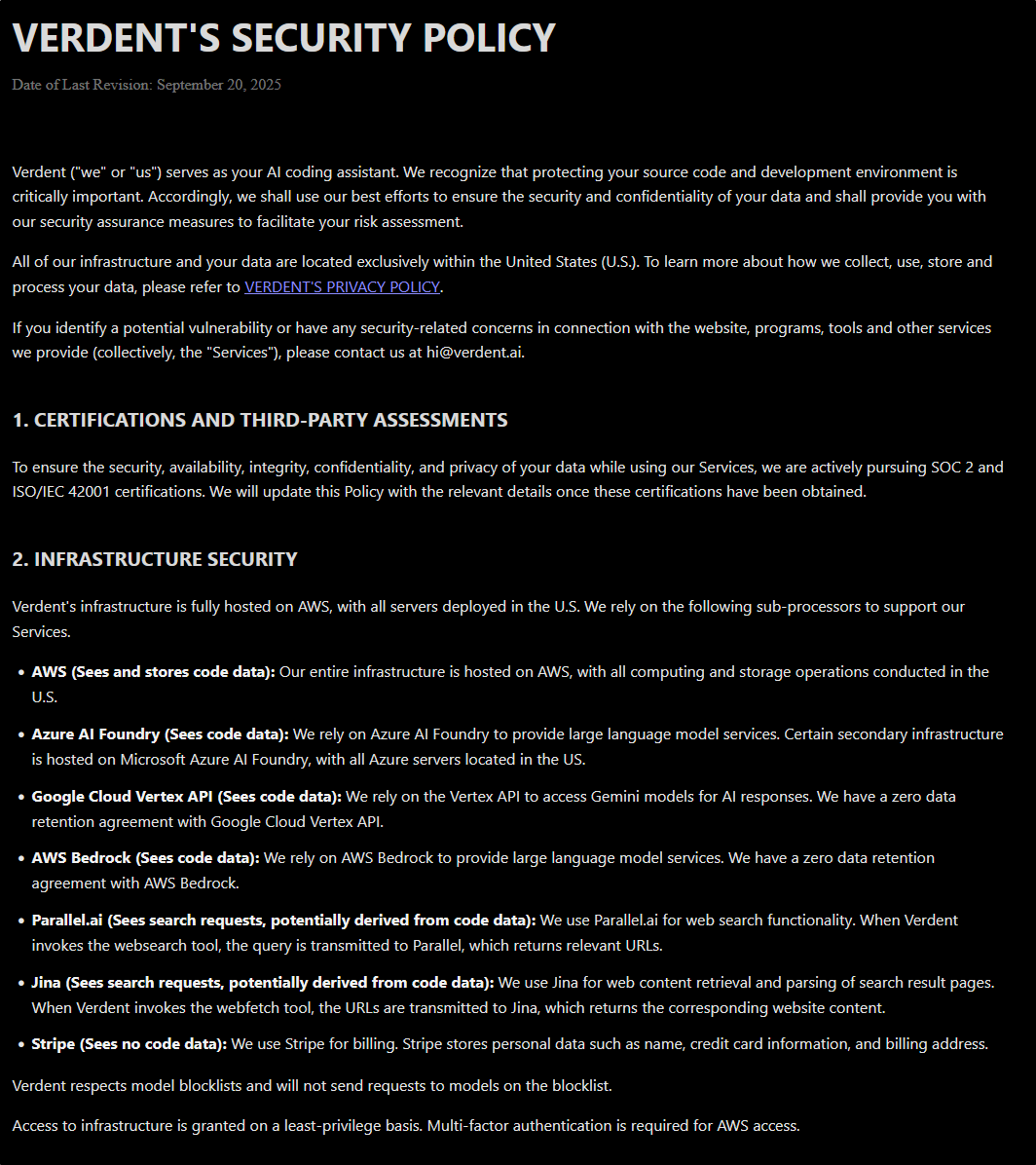

The other thing I would really want to know is where my compute is housed. I'm rather a stickler for knowing where my stuff goes. And lest I sound like some kind of xenophobic pettifogger: I clearly use Qwen3 Coder Plus with Alibaba, and I know that traffic goes to Singapore or Beijing depending on my endpoint. Jurisdiction is an important topic. For some workloads, I might not use Verdent until I have clarity on where the data goes, that's all.

Post-launch update

I love seeing that they addressed the data residency issue in their security policy.

Basically they use a mix of AWS, Azure AI Foundry and Google Cloud Vertex AI with almost all of it residing in the US: