Published: Sep 23, 2025 by Isaac Johnson

Here is the setup: Let’s say you have some data in a PostgreSQL database that would sure be nice to be able to query/use/leverage with AI tools.

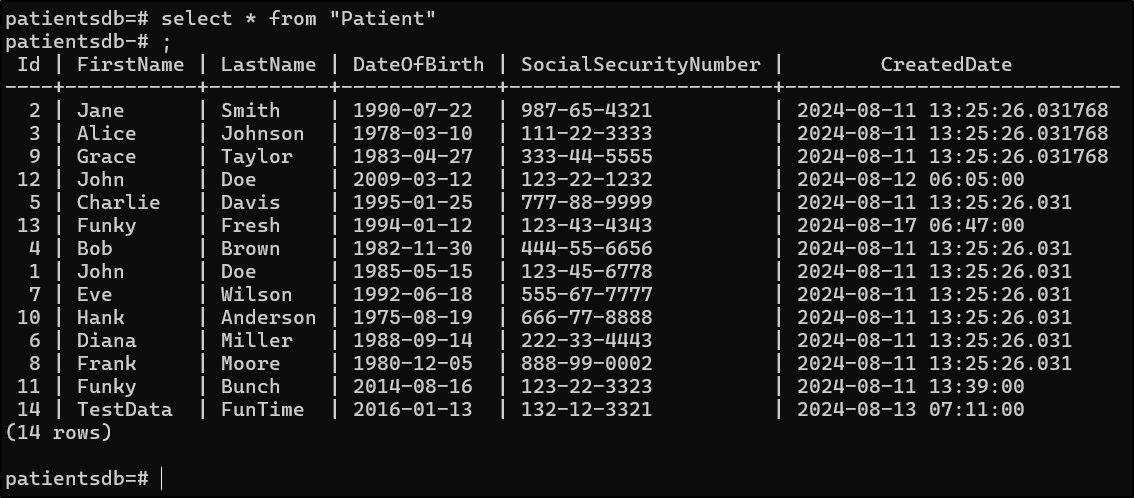

The above example is from a simple Patients .NET MVC app I setup in August last year.

Today I want to explore using MCP (Model Context Protocol) servers with our Agentic AI tooling like Copilot and Gemini CLI.

There are two similar tools, pgmcp, written in Go and pg-mcp-server written in python. The both work a little different so let’s dig in and see if we can figure out how to use them with a local on-prem database (the one shown above).

Note: A quick spoiler. In the start of this article I have some challenges getting Gemini CLI to work. I cover this in a section near the end “Gemini CLI revisit (and fixes)” that solves the proper invokations.

pgmcp

The first PostgreSQL MCP server we will try is this go-based one “pgmcp”.

Let’s first build the server code. It needs at least go 1.23+. I had 1.19 in my chain

$ go build -o pgmcp-server ./server

go: errors parsing go.mod:

/home/builder/Workspaces/pgmcp/go.mod:3: invalid go version '1.23.0': must match format 1.23

/home/builder/Workspaces/pgmcp/go.mod:5: unknown directive: toolchain

$ go version

go version go1.19.13 linux/amd64

I used homebrew to upgrade

$ brew install go@1.23

==> Auto-updating Homebrew...

At least for me, I needed to update my path to make it take effect

If you need to have go@1.23 first in your PATH, run:

echo 'export PATH="/home/linuxbrew/.linuxbrew/opt/go@1.23/bin:$PATH"' >> ~/.profile

I can now build the server

$ go build -o pgmcp-server ./server

go: downloading github.com/openai/openai-go/v2 v2.4.3

go: downloading github.com/jackc/pgx/v5 v5.6.0

go: downloading github.com/modelcontextprotocol/go-sdk v0.5.0

go: downloading github.com/rs/zerolog v1.33.0

go: downloading github.com/mattn/go-isatty v0.0.19

go: downloading golang.org/x/sys v0.29.0

go: downloading github.com/jackc/puddle/v2 v2.2.1

go: downloading golang.org/x/text v0.21.0

go: downloading golang.org/x/crypto v0.32.0

go: downloading github.com/jackc/pgpassfile v1.0.0

go: downloading github.com/jackc/pgservicefile v0.0.0-20221227161230-091c0ba34f0a

go: downloading github.com/yosida95/uritemplate/v3 v3.0.2

go: downloading github.com/google/jsonschema-go v0.2.3

go: downloading golang.org/x/sync v0.10.0

go: downloading github.com/tidwall/gjson v1.14.4

go: downloading github.com/tidwall/sjson v1.2.5

go: downloading github.com/tidwall/pretty v1.2.1

go: downloading github.com/tidwall/match v1.1.1

and client

$ go build -o pgmcp-client ./client

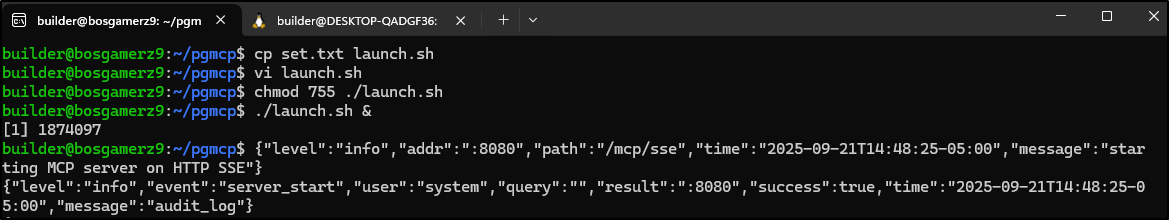

Now we can run the server

builder@DESKTOP-QADGF36:~/Workspaces/pgmcp$ export OPENAI_API_KEY="sk-proj-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"

# set DB connection and start server

builder@DESKTOP-QADGF36:~/Workspaces/pgmcp$ export DATABASE_URL="postgres://patientsuser:asdfasdfasdf@192.168.1.77:5432/patientsdb"

builder@DESKTOP-QADGF36:~/Workspaces/pgmcp$ ./pgmcp-server

{"level":"info","addr":":8080","path":"/mcp/sse","time":"2025-09-20T19:11:55-05:00","message":"starting MCP server on HTTP SSE"}

{"level":"info","event":"server_start","user":"system","query":"","result":":8080","success":true,"time":"2025-09-20T19:11:55-05:00","message":"audit_log"}

Let’s test:

builder@DESKTOP-QADGF36:~/Workspaces/pgmcp$ ./pgmcp-client -ask "How many patients are over 40 years old?"

{

"has_more": false,

"note": "model=gpt-4o-mini (streamed 1 pages)",

"rows": [

{

"count": 6

}

],

"sql": "SELECT COUNT(*) FROM public.\"Patient\" WHERE age(current_date, \"DateOfBirth\") \u003e interval '40 years'"

}

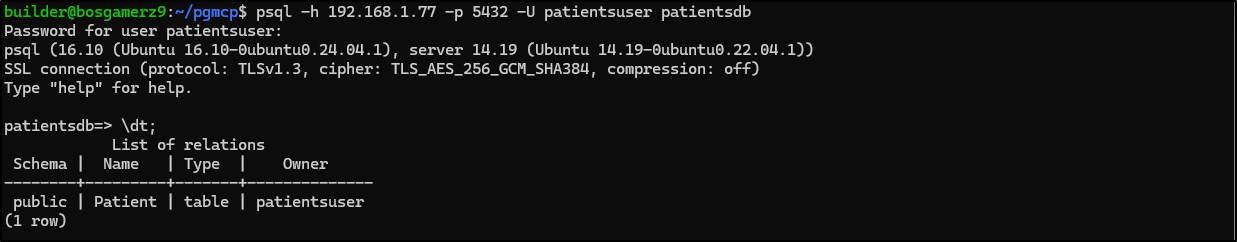

Checking our data

patientsdb=# select * from "Patient"

patientsdb-# ;

Id | FirstName | LastName | DateOfBirth | SocialSecurityNumber | CreatedDate

----+-----------+----------+-------------+----------------------+----------------------------

2 | Jane | Smith | 1990-07-22 | 987-65-4321 | 2024-08-11 13:25:26.031768

3 | Alice | Johnson | 1978-03-10 | 111-22-3333 | 2024-08-11 13:25:26.031768

9 | Grace | Taylor | 1983-04-27 | 333-44-5555 | 2024-08-11 13:25:26.031768

12 | John | Doe | 2009-03-12 | 123-22-1232 | 2024-08-12 06:05:00

5 | Charlie | Davis | 1995-01-25 | 777-88-9999 | 2024-08-11 13:25:26.031

13 | Funky | Fresh | 1994-01-12 | 123-43-4343 | 2024-08-17 06:47:00

4 | Bob | Brown | 1982-11-30 | 444-55-6656 | 2024-08-11 13:25:26.031

1 | John | Doe | 1985-05-15 | 123-45-6778 | 2024-08-11 13:25:26.031

7 | Eve | Wilson | 1992-06-18 | 555-67-7777 | 2024-08-11 13:25:26.031

10 | Hank | Anderson | 1975-08-19 | 666-77-8888 | 2024-08-11 13:25:26.031

6 | Diana | Miller | 1988-09-14 | 222-33-4443 | 2024-08-11 13:25:26.031

8 | Frank | Moore | 1980-12-05 | 888-99-0002 | 2024-08-11 13:25:26.031

11 | Funky | Bunch | 2014-08-16 | 123-22-3323 | 2024-08-11 13:39:00

14 | TestData | FunTime | 2016-01-13 | 132-12-3321 | 2024-08-13 07:11:00

(14 rows)

Indeed, that would be 6 patients over 40

Interestingly, when I asked for those with names start A-F, unless it was treating them exclusively, it seems off

$ ./pgmcp-client -ask "How many patients last names start with A-F?"

{

"has_more": false,

"note": "model=gpt-4o-mini (streamed 1 pages)",

"rows": [

{

"count": 6

}

],

"sql": "SELECT COUNT(*) FROM public.\"Patient\" WHERE \"LastName\" BETWEEN 'A' AND 'F'"

}

coming back to ask for the names gave me 8 (though in fairness, I do have Jone Doe in the database twice)

$ ./pgmcp-client -ask "How many patients last names start with A-F and what are their names?"

{

"has_more": false,

"note": "model=gpt-4o-mini (streamed 1 pages)",

"rows": [

{

"PatientCount": 8,

"PatientNames": [

"John Doe",

"Charlie Davis",

"Funky Fresh",

"Bob Brown",

"John Doe",

"Hank Anderson",

"Funky Bunch",

"TestData FunTime"

]

}

],

"sql": "SELECT COUNT(*) AS \"PatientCount\", ARRAY_AGG(\"FirstName\" || ' ' || \"LastName\") AS \"PatientNames\"\nFROM public.\"Patient\"\nWHERE \"LastName\" \u003e= 'A' AND \"LastName\" \u003c 'G'"

}

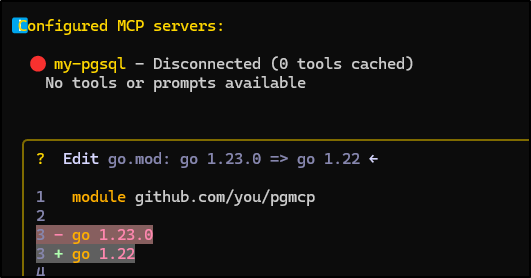

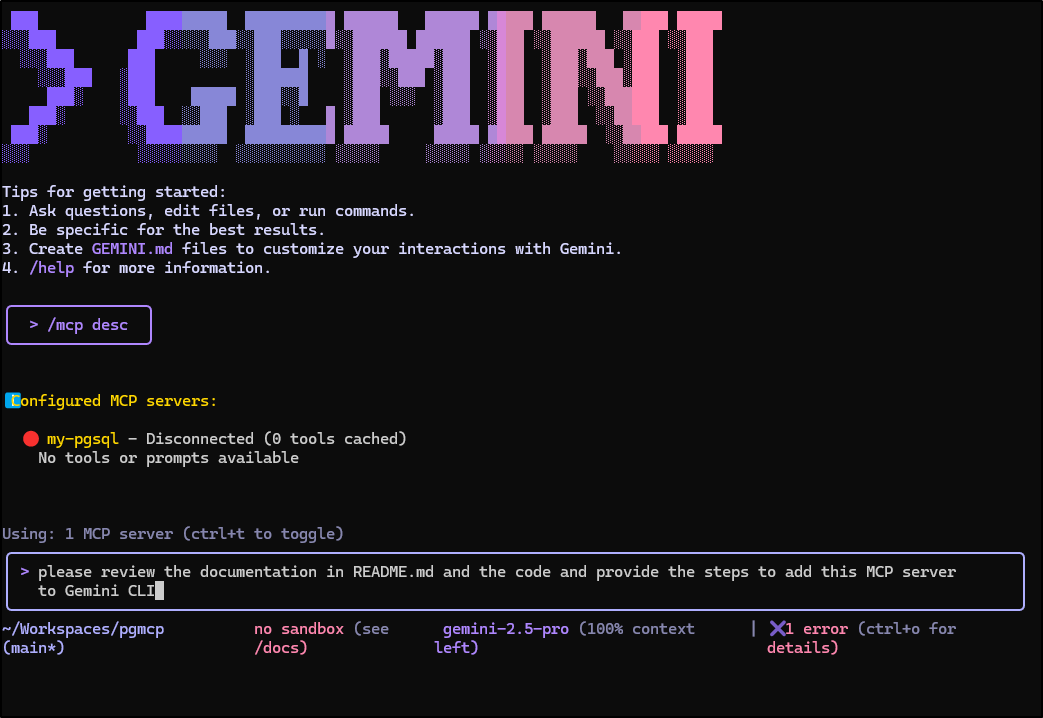

Let’s try adding to Gemini CLI

builder@DESKTOP-QADGF36:~/Workspaces/pgmcp$ nvm use lts/iron

Now using node v20.19.4 (npm v10.8.2)

builder@DESKTOP-QADGF36:~/Workspaces/pgmcp$ gemini mcp add my-pgsql curl -N -H "Accept: text/event-stream" http://localhost:8080/mcp/sse

MCP server "my-pgsql" added to project settings. (stdio)

The first time seemed to try and run the PSQL directly which was odd

It looks disconnected

Maybe Gemini can help update Gemini

I worked it for a while, without success…

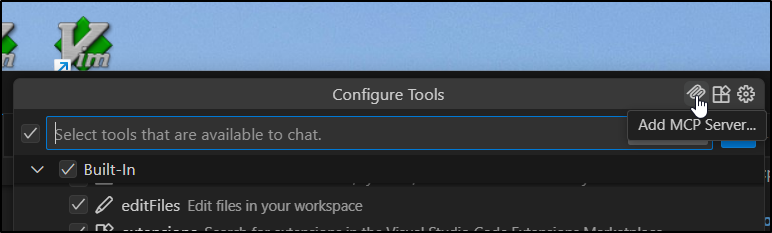

VS Code

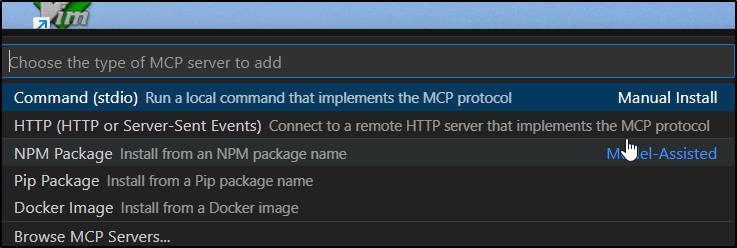

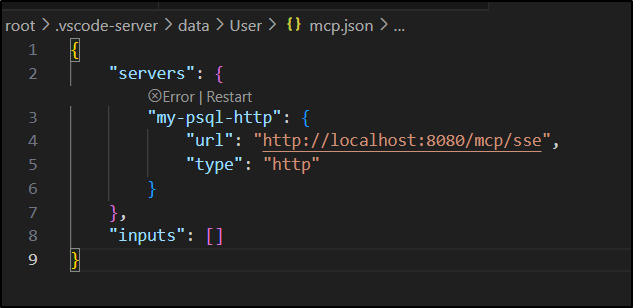

My first attempt at a local server:

I went to add a new MCP server

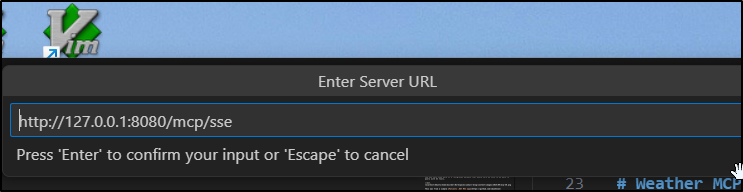

Used the http type

and set it to localhost

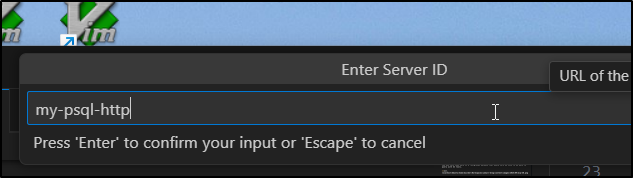

Then give it a name

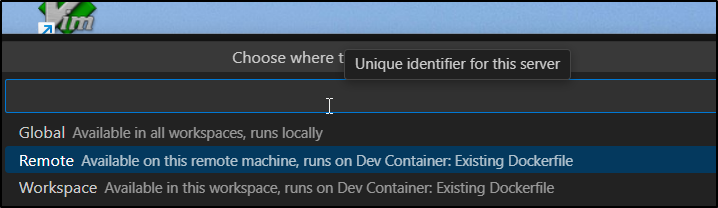

and set to remote

which created an entry

Just timed out.. the error showed unreachable. I tried with localhost and 127.0.0.1. Something about VS Code in Windows on WSL just seems to struggle with localhost networking

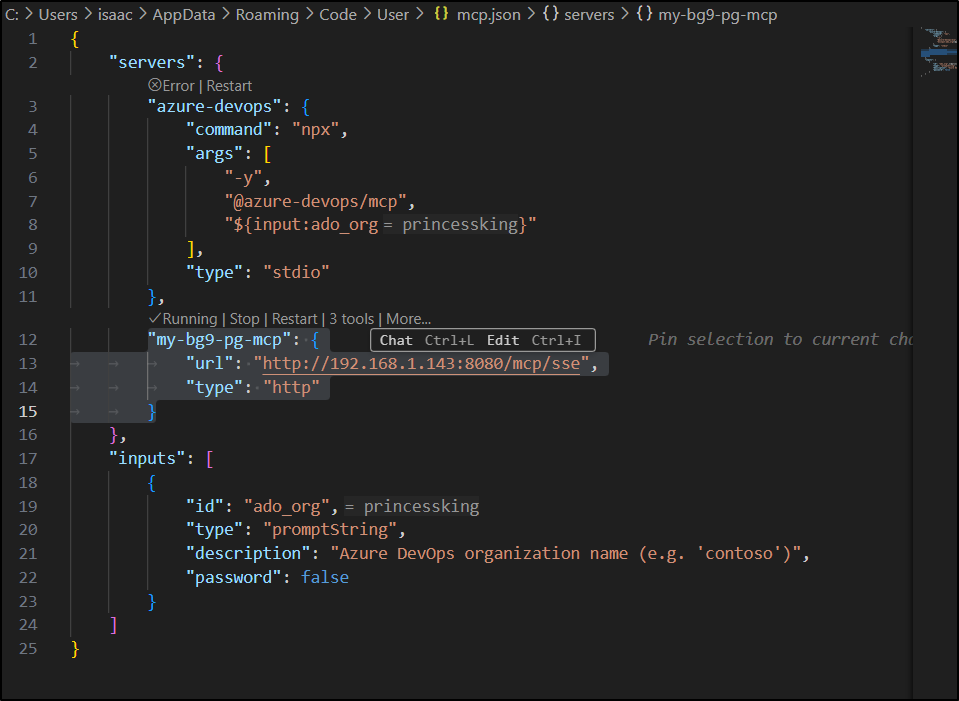

I sent back and this time did HTTP but with a “global” setting.

I had, on the side, done the same setup steps on a persistent linux box

And now when I tried that local IP (.143) i could see it running with 3 tools

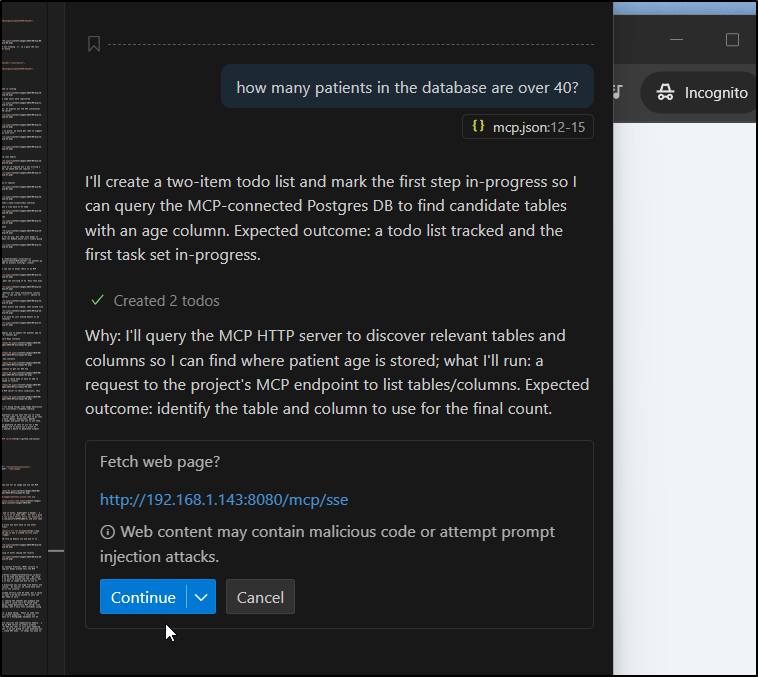

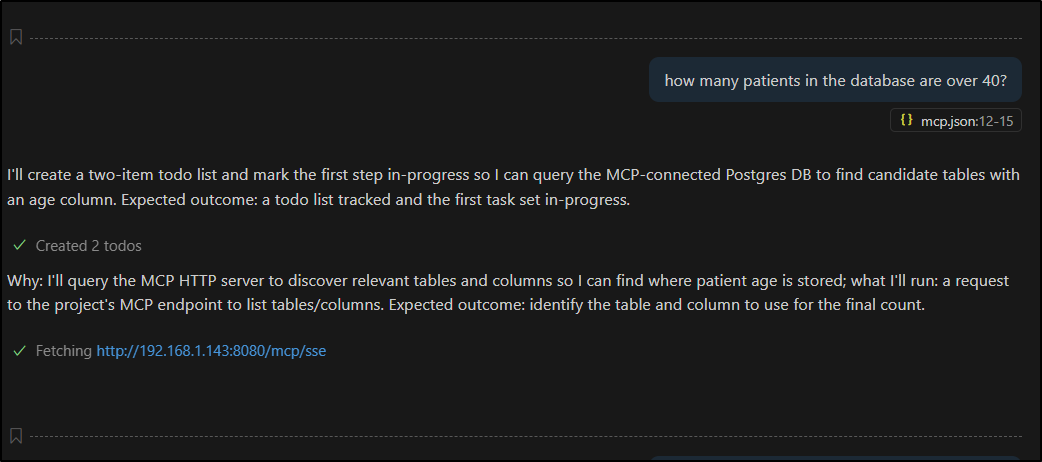

In testing

it timed out and I realized why - the agent runs remotely so there is no way it can reach 192.168.1.143 from outside my network

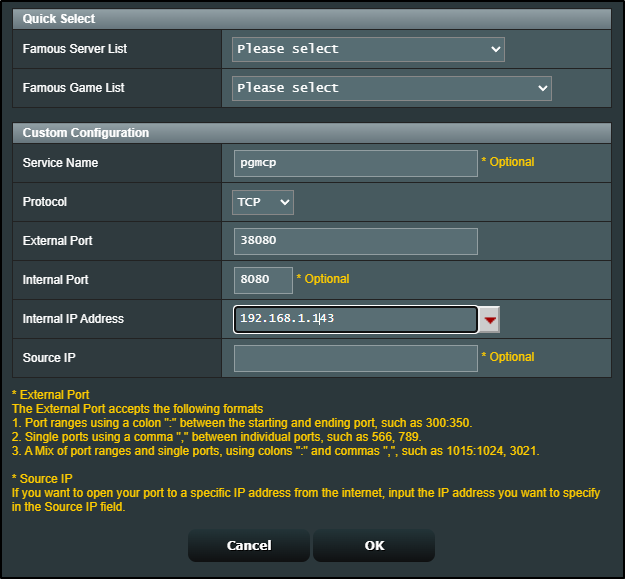

I decided, just for testing, to expose this externally by creating a port that could forward over to the host

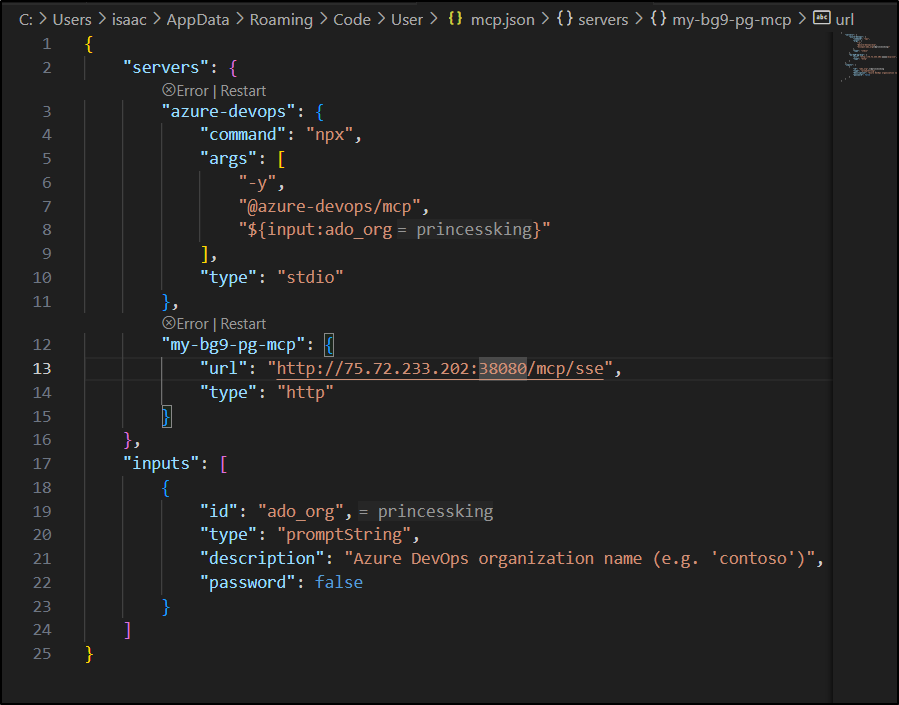

I then used that port, with my external IP, in my MCP settings

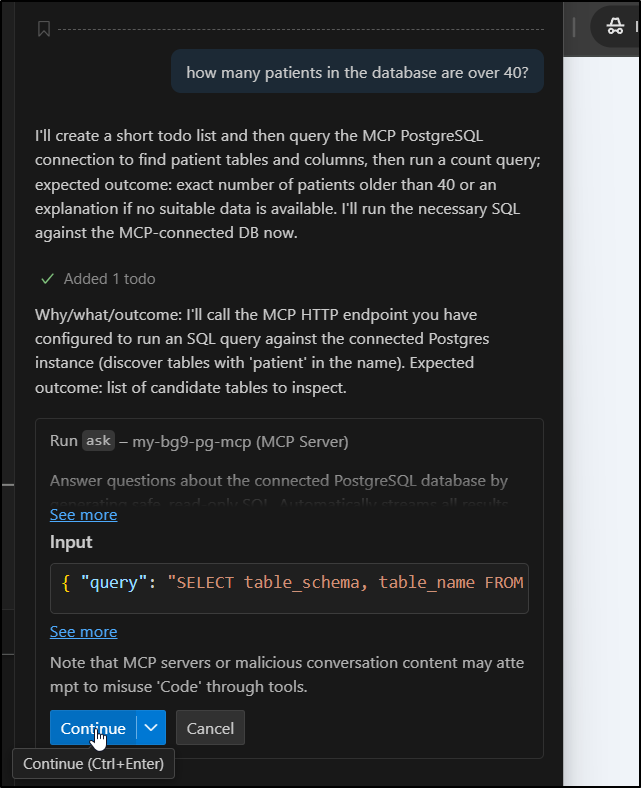

This time it seemed to work far better

Let’s see that in action:

pg-mcp-server

I came across another one, pg-mcp-server which uses Python instead of Go and has a nice container to boot.

I’ll clone the repo and look at the docker-compose

builder@bosgamerz9:~$ git clone https://github.com/stuzero/pg-mcp-server.git

Cloning into 'pg-mcp-server'...

remote: Enumerating objects: 220, done.

remote: Counting objects: 100% (41/41), done.

remote: Compressing objects: 100% (19/19), done.

remote: Total 220 (delta 28), reused 27 (delta 21), pack-reused 179 (from 1)

Receiving objects: 100% (220/220), 120.50 KiB | 2.68 MiB/s, done.

Resolving deltas: 100% (115/115), done.

builder@bosgamerz9:~$ cd pg-mcp-server/

builder@bosgamerz9:~/pg-mcp-server$ ls

docker-compose.yml Dockerfile example-clients get_schema.py LICENSE pyproject.toml README.md server test.py uv.lock

builder@bosgamerz9:~/pg-mcp-server$ vi docker-compose.yml

builder@bosgamerz9:~/pg-mcp-server$ cat docker-compose.yml

version: '3.8'

services:

pg-mcp:

build:

context: .

dockerfile: Dockerfile

container_name: pg-mcp

ports:

- "8000:8000"

environment:

- LOG_LEVEL=DEBUG

- PYTHONUNBUFFERED=1

volumes:

# For development: mount app directory to enable hot-reloading

- ./server:/app/server

restart: unless-stopped

networks:

- pg-mcp-network

networks:

pg-mcp-network:

driver: bridge

I’m a bit confused how it knows which DB to which to connect. Let’s fire it up with docker compose

builder@bosgamerz9:~/pg-mcp-server$ docker compose up -d

WARN[0000] /home/builder/pg-mcp-server/docker-compose.yml: the attribute `version` is obsolete, it will be ignored, please remove it to avoid potential confusion

Compose can now delegate builds to bake for better performance.

To do so, set COMPOSE_BAKE=true.

[+] Building 9.7s (14/14) FINISHED docker:default

=> [pg-mcp internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 707B 0.0s

=> [pg-mcp internal] load metadata for docker.io/library/python:3.13-slim 1.0s

=> [pg-mcp internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [pg-mcp 1/7] FROM docker.io/library/python:3.13-slim@sha256:58c30f5bfaa718b5803a53393190b9c68bd517c44c6c94c1b6c8c172 1.8s

=> => resolve docker.io/library/python:3.13-slim@sha256:58c30f5bfaa718b5803a53393190b9c68bd517c44c6c94c1b6c8c172bcfad04 0.0s

=> => sha256:ce1261c6d567efa8e3b457673eeeb474a0a8066df6bb95ca9a6a94a31e219dd3 29.77MB / 29.77MB 0.9s

=> => sha256:7a1f3f33da57f2da67b135fe36d372a9b1ad51630cb283c90b6ac2d33f68d915 1.29MB / 1.29MB 0.4s

=> => sha256:251e9d4b1ce9ec20a0f5535ad25e7cb5763953cc22d3c67e98e344f9462aef90 11.73MB / 11.73MB 0.5s

=> => sha256:58c30f5bfaa718b5803a53393190b9c68bd517c44c6c94c1b6c8c172bcfad040 10.37kB / 10.37kB 0.0s

=> => sha256:3ee7fc7aee9be3dc9baff00dbde162f20ad686439963b2118e866eb18979ef99 1.75kB / 1.75kB 0.0s

=> => sha256:c211a9c9372dd5fd202a12d98127125ddfbe62379bf183e1591f0df3226fb37b 5.44kB / 5.44kB 0.0s

=> => sha256:162926a937ce3f735cea69b122bcb9a6408155e53c587ef52c509d2efcfec590 249B / 249B 0.6s

=> => extracting sha256:ce1261c6d567efa8e3b457673eeeb474a0a8066df6bb95ca9a6a94a31e219dd3 0.4s

=> => extracting sha256:7a1f3f33da57f2da67b135fe36d372a9b1ad51630cb283c90b6ac2d33f68d915 0.0s

=> => extracting sha256:251e9d4b1ce9ec20a0f5535ad25e7cb5763953cc22d3c67e98e344f9462aef90 0.2s

=> => extracting sha256:162926a937ce3f735cea69b122bcb9a6408155e53c587ef52c509d2efcfec590 0.0s

=> [pg-mcp internal] load build context 0.0s

=> => transferring context: 430.35kB 0.0s

=> [pg-mcp 3/7] ADD https://astral.sh/uv/install.sh /uv-installer.sh 0.5s

=> [pg-mcp 2/7] RUN apt-get update && apt-get install -y --no-install-recommends curl ca-certificates 3.8s

=> [pg-mcp 3/7] ADD https://astral.sh/uv/install.sh /uv-installer.sh 0.0s

=> [pg-mcp 4/7] RUN sh /uv-installer.sh && rm /uv-installer.sh 1.1s

=> [pg-mcp 5/7] ADD . /app 0.0s

=> [pg-mcp 6/7] WORKDIR /app 0.0s

=> [pg-mcp 7/7] RUN uv sync --frozen 1.2s

=> [pg-mcp] exporting to image 0.6s

=> => exporting layers 0.6s

=> => writing image sha256:e59188fadec9855ae64be50bb43fd0fc374985cb6d7324f34e4b9f73de82f94b 0.0s

=> => naming to docker.io/library/pg-mcp-server-pg-mcp 0.0s

=> [pg-mcp] resolving provenance for metadata file 0.0s

[+] Running 3/3

✔ pg-mcp Built 0.0s

✔ Network pg-mcp-server_pg-mcp-network Created 0.0s

✔ Container pg-mcp Started 0.2s

After verifying my hosts IP and docker status

builder@bosgamerz9:~/pg-mcp-server$ ifconfig | grep 192

inet 192.168.1.142 netmask 255.255.255.0 broadcast 192.168.1.255

inet 192.168.1.143 netmask 255.255.255.0 broadcast 192.168.1.255

builder@bosgamerz9:~/pg-mcp-server$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

15bb27addebc pg-mcp-server-pg-mcp "uv run -m server.app" 2 minutes ago Up 2 minutes 0.0.0.0:8000->8000/tcp, [::]:8000->8000/tcp pg-mcp

I tried using Github Copilot MCP support for remote (not global)

{

"servers": {

"my-psql-http": {

"url": "http://localhost:8080/mcp/sse",

"type": "http"

},

"my-pg-mcp-server": {

"type": "stdio",

"command": "npx",

"args": [

"mcp-remote",

"http://192.168.1.143:8000/sse"

]

}

},

"inputs": []

}

That was a fail:

2025-09-22 16:26:04.334 [info] Starting server my-pg-mcp-server

2025-09-22 16:26:04.334 [info] Connection state: Starting

2025-09-22 16:26:04.334 [info] Starting server from Remote extension host

2025-09-22 16:26:04.397 [info] Connection state: Starting

2025-09-22 16:26:04.400 [info] Connection state: Error spawn npx ENOENT

2025-09-22 16:26:39.101 [info] Stopping server my-pg-mcp-server

2025-09-22 16:26:39.110 [info] Starting server my-pg-mcp-server

2025-09-22 16:26:39.110 [info] Connection state: Starting

2025-09-22 16:26:39.119 [info] Starting server from Remote extension host

2025-09-22 16:26:39.148 [info] Connection state: Starting

2025-09-22 16:26:39.150 [info] Connection state: Error spawn npx ENOENT

I put in a global setting with a forwarding rule

"my-bg9-pg-mc2": {

"url": "http://75.72.233.202:38000/sse",

"type": "http"

}

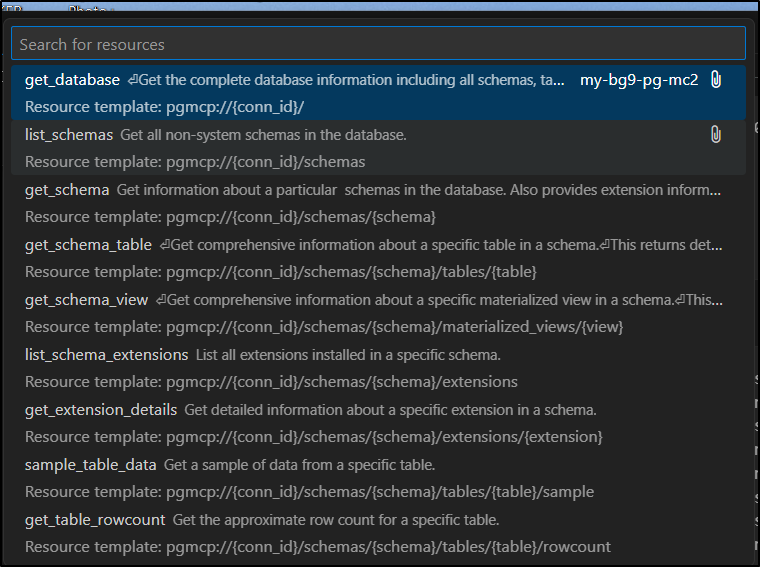

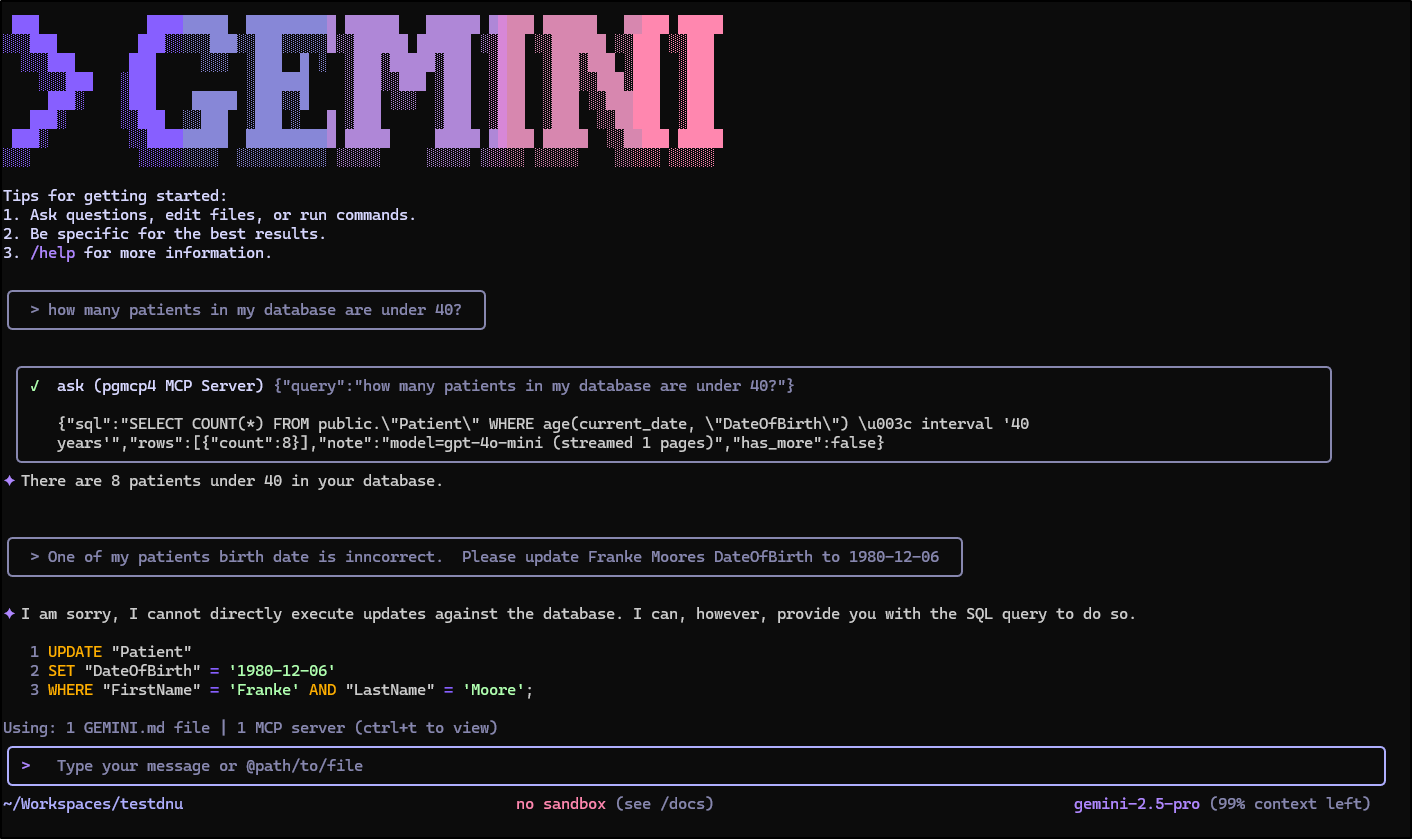

And I can browse resources

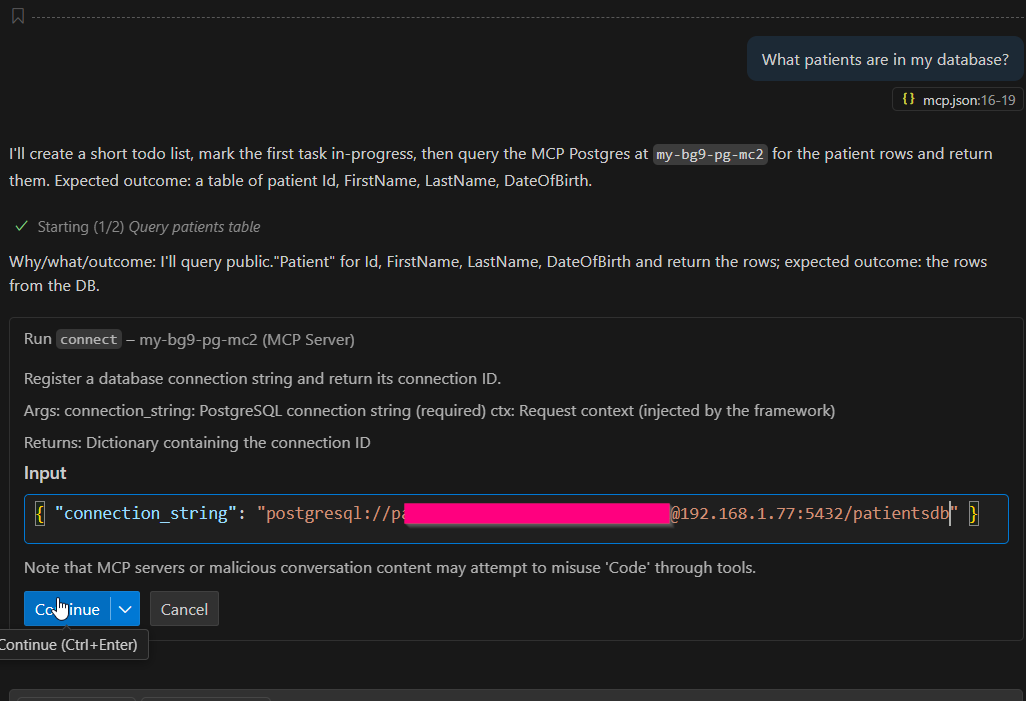

When I go to use it, I need to set the connection string at the start

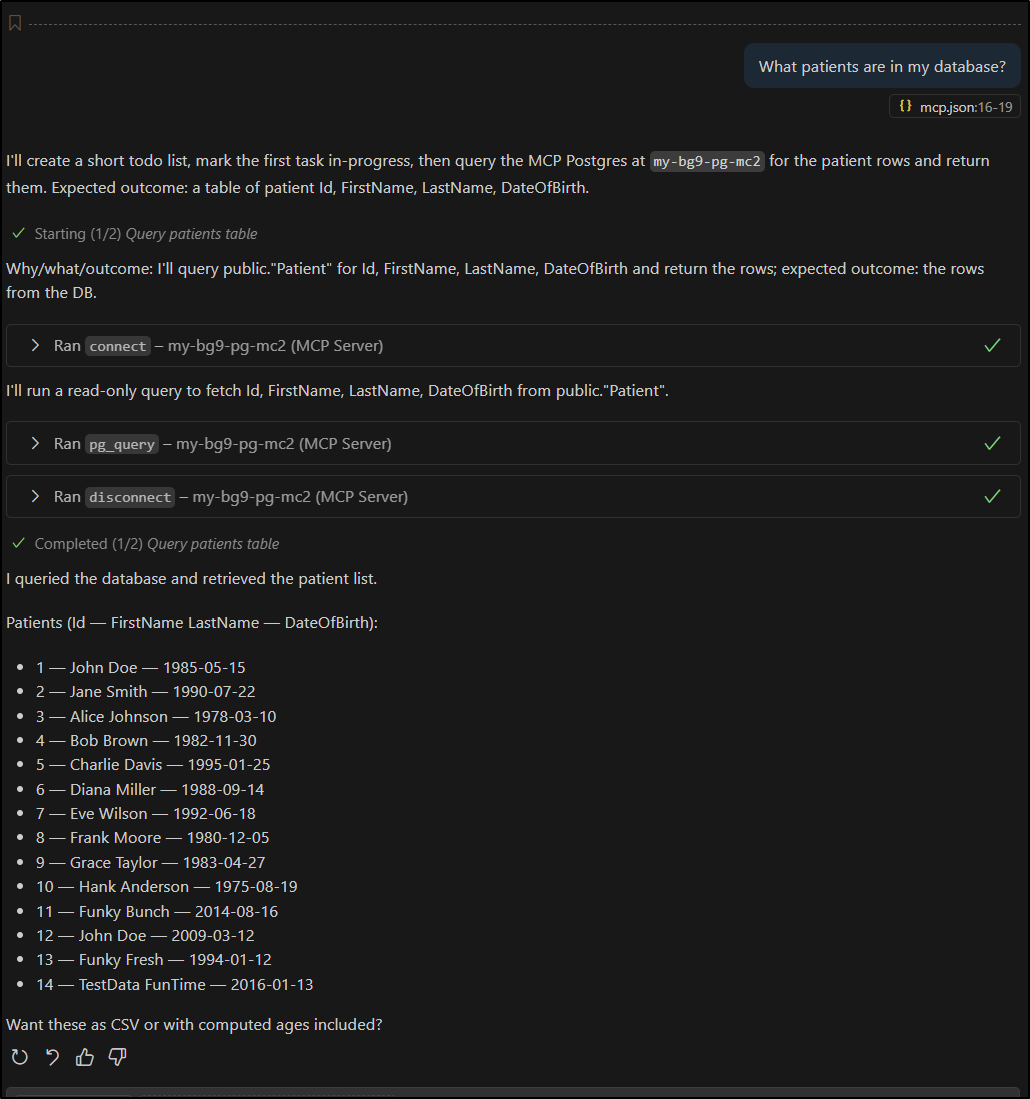

And we can see it responds accurately

The nice thing here is that this PostgreSQL MCP server requires me to set the connection string at the start. This is a lot safer, in my opinion, as anyone who might use this MCP server would need IPs, users and passwords.

Let’s see it looking for users over 40:

Gemini CLI revisit (and fixes)

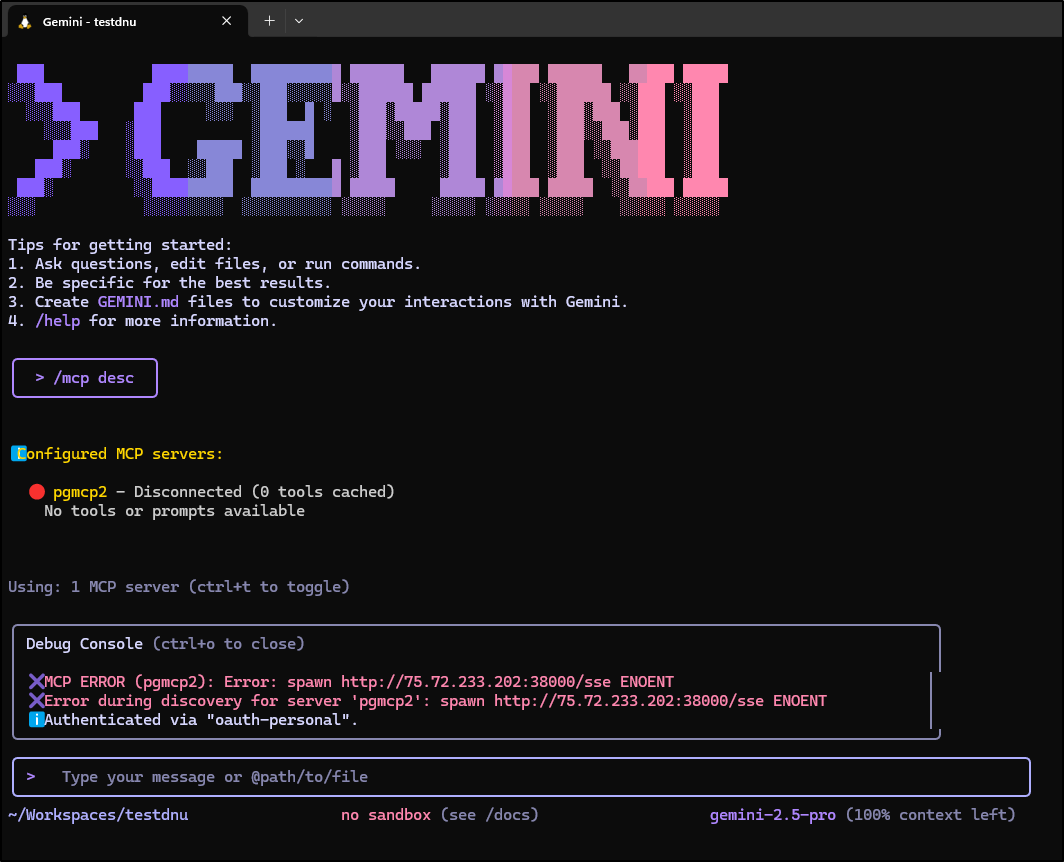

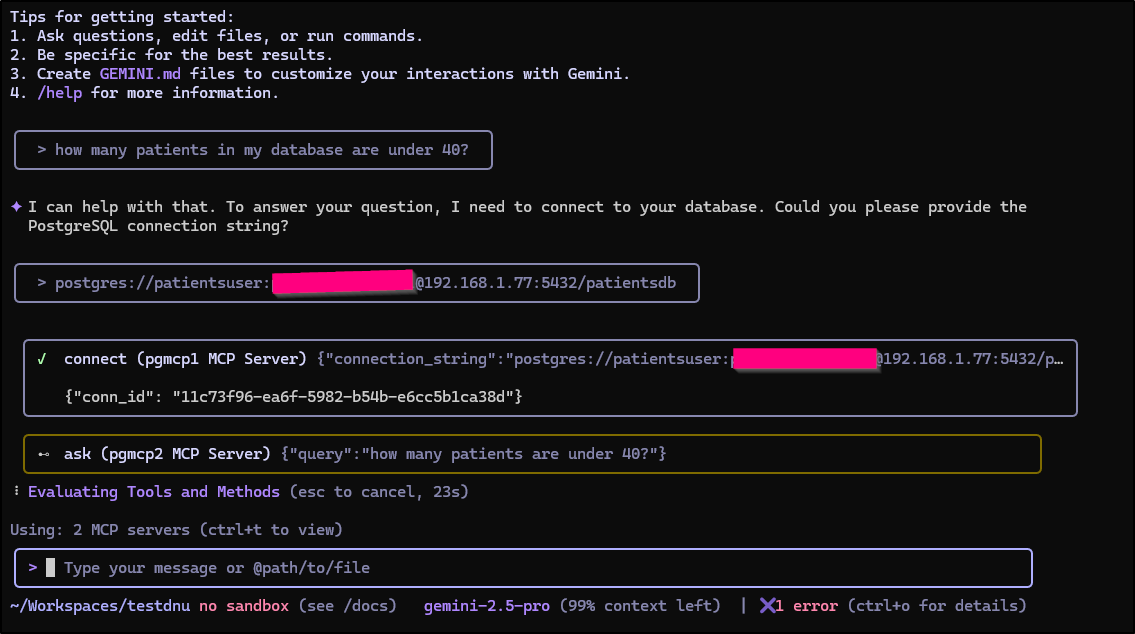

I really want to sort out why Gemini CLI failed to connect. Let’s dig in…

Let’s start by adding this latest one to Gemini CLI

$ gemini mcp add pgmcp2 http://75.72.233.202:38000/sse

MCP server "pgmcp2" added to project settings. (stdio)

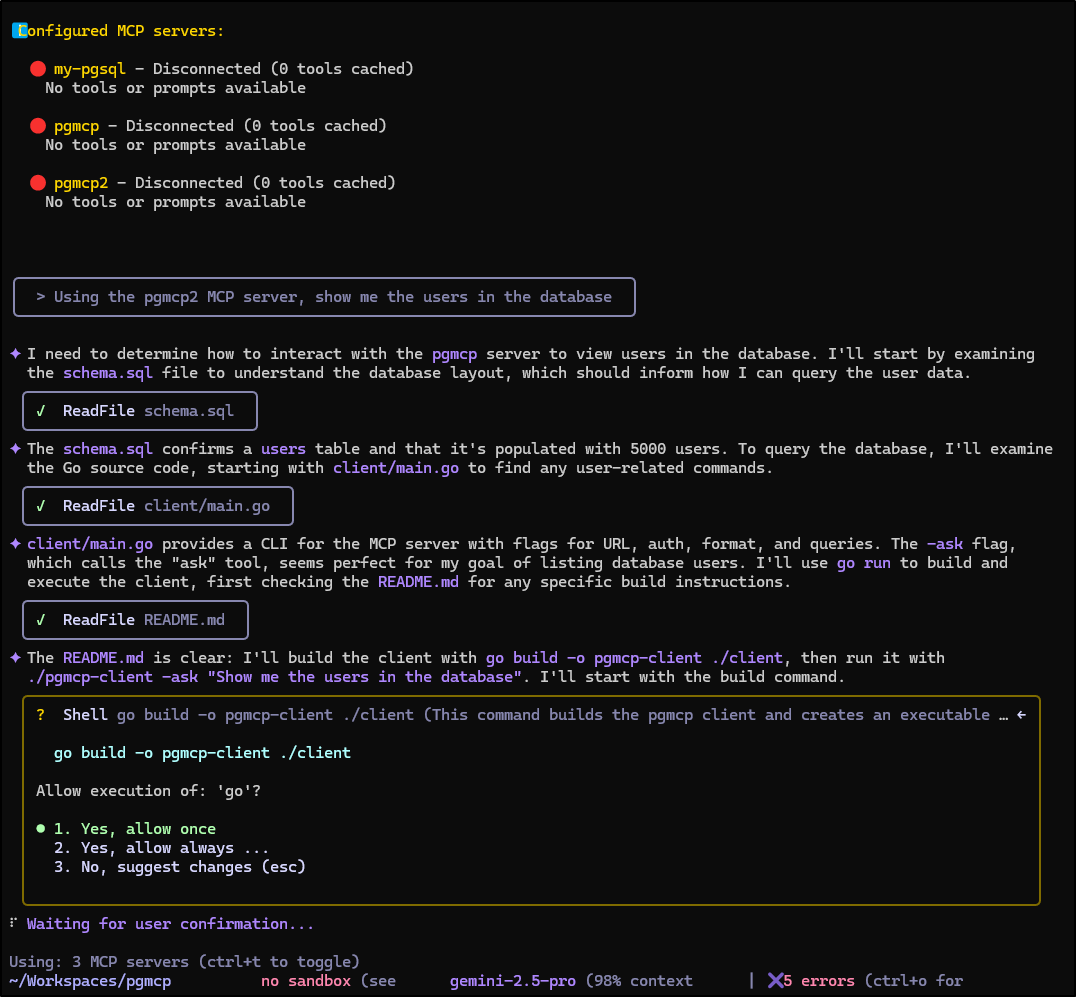

However, it too seemed to just show as disconnected and when asked, tried to go and build things

I tried a fresh folder, perhaps my invokation is in err,

I reviewed the Gemini CLI docs then looked at the .gemini/settings.json file created locally

{

"mcpServers": {

"pgmcp2": {

"command": "http://75.72.233.202:38000/sse",

"args": []

}

}

}

That looks wrong to me. I manually changed it to:

{

"mcpServers": {

"pgmcp2": {

"httpUrl": "http://75.72.233.202:38000/sse",

"trust": "true"

}

}

}

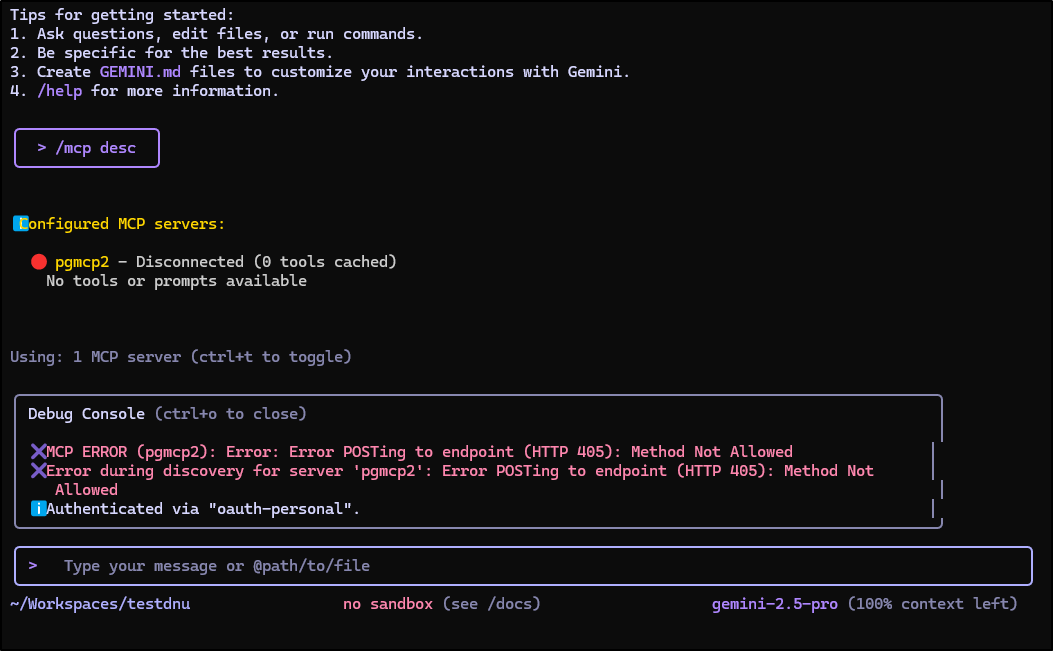

This time I got a different error (at least we made some progress) - 405 on posting to a URL

Just for a test, let’s try the other PG MCP server

$ cat .gemini/settings.json

{

"mcpServers": {

"pgmcp2": {

"httpUrl": "http://75.72.233.202:38080/mcp/sse",

"trust": "true"

}

}

}

This one complains of a missing session id

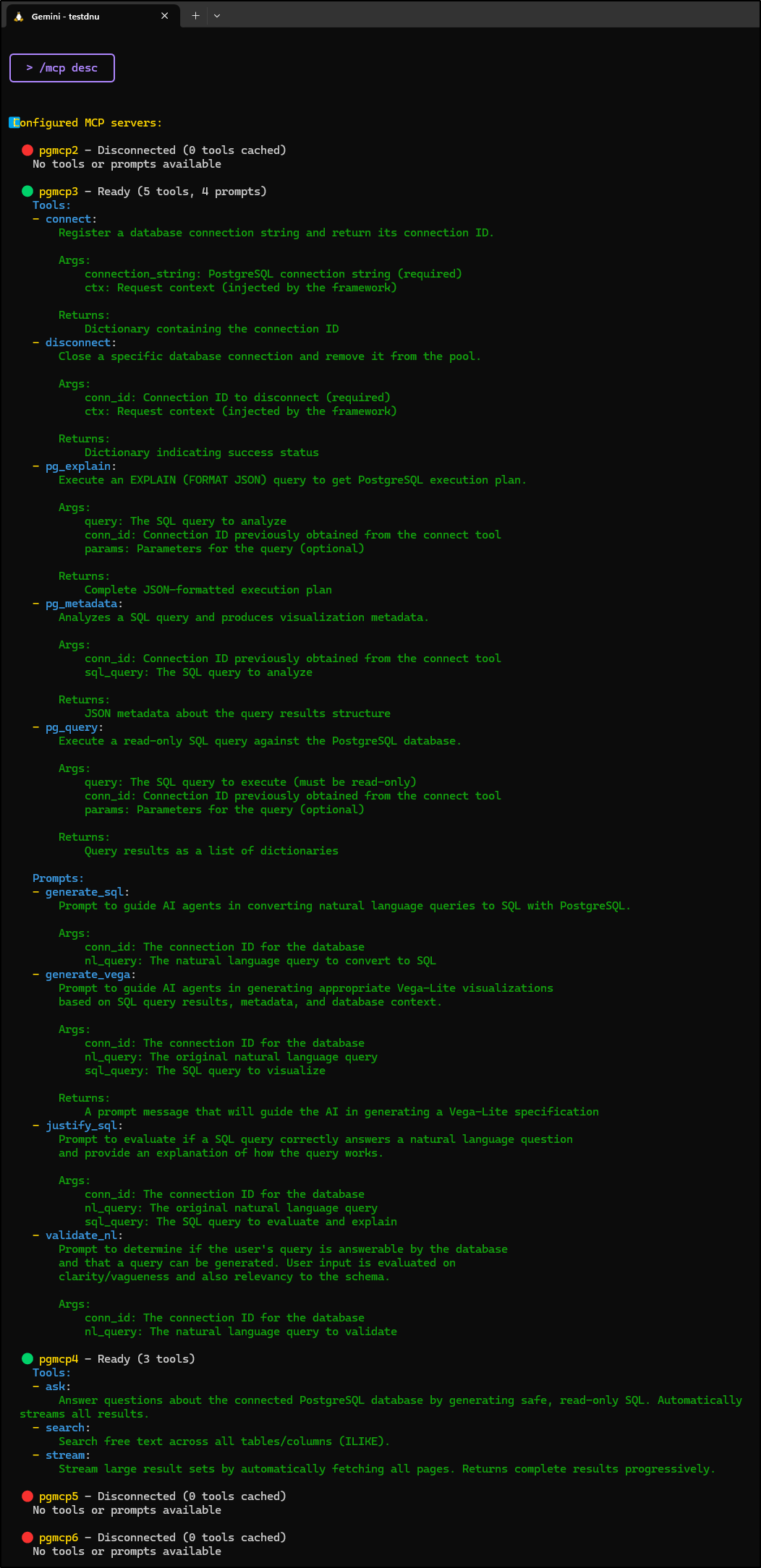

Now I’ll try adding with the pg see protocol

builder@DESKTOP-QADGF36:~/Workspaces/testdnu$ gemini mcp add --transport sse pgmcp3 http://75.72.233.202:38000/sse

MCP server "pgmcp3" added to project settings. (sse)

builder@DESKTOP-QADGF36:~/Workspaces/testdnu$ gemini mcp add --transport sse pgmcp4 http://75.72.233.202:38080/mcp/sse

MCP server "pgmcp4" added to project settings. (sse)

builder@DESKTOP-QADGF36:~/Workspaces/testdnu$ !cat

cat .gemini/settings.json

{

"mcpServers": {

"pgmcp2": {

"httpUrl": "http://75.72.233.202:38080/mcp/sse",

"trust": "true",

"headers": {

"Cookie": "sessionid=12345"

}

},

"pgmcp3": {

"url": "http://75.72.233.202:38000/sse"

},

"pgmcp4": {

"url": "http://75.72.233.202:38080/mcp/sse"

}

}

}

and http…

builder@DESKTOP-QADGF36:~/Workspaces/testdnu$ gemini mcp add --transport http pgmcp5 http://75.72.233.202:38000/sse

MCP server "pgmcp5" added to project settings. (http)

builder@DESKTOP-QADGF36:~/Workspaces/testdnu$ gemini mcp add --transport http pgmcp6 http://75.72.233.202:38080/mcp/sse

MCP server "pgmcp6" added to project settings. (http)

builder@DESKTOP-QADGF36:~/Workspaces/testdnu$ cat .gemini/settings.json

{

"mcpServers": {

"pgmcp2": {

"httpUrl": "http://75.72.233.202:38080/mcp/sse",

"trust": "true",

"headers": {

"Cookie": "sessionid=12345"

}

},

"pgmcp3": {

"url": "http://75.72.233.202:38000/sse"

},

"pgmcp4": {

"url": "http://75.72.233.202:38080/mcp/sse"

},

"pgmcp5": {

"httpUrl": "http://75.72.233.202:38000/sse"

},

"pgmcp6": {

"httpUrl": "http://75.72.233.202:38080/mcp/sse"

}

}

}

How interesting! It was the “SSE” transport that actually worked

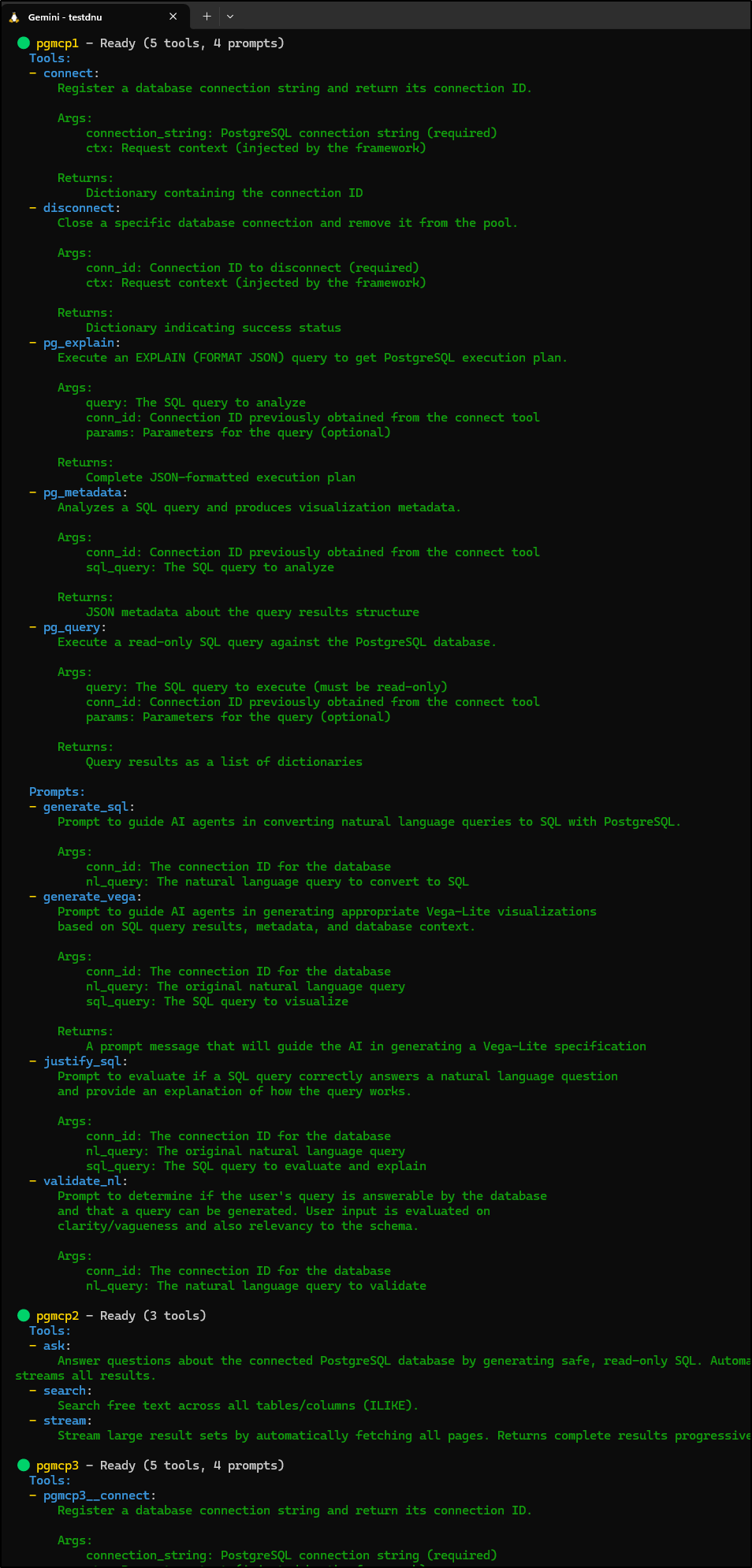

Now I’m curious to try the local URLs

builder@DESKTOP-QADGF36:~/Workspaces/testdnu$ !cat

cat .gemini/settings.json

{

"mcpServers": {

"pgmcp1": {

"url": "http://192.168.1.143:8000/sse"

},

"pgmcp2": {

"url": "http://192.168.1.143:8080/mcp/sse"

},

"pgmcp3": {

"url": "http://75.72.233.202:38000/sse"

},

"pgmcp4": {

"url": "http://75.72.233.202:38080/mcp/sse"

}

}

}

Indeed they work

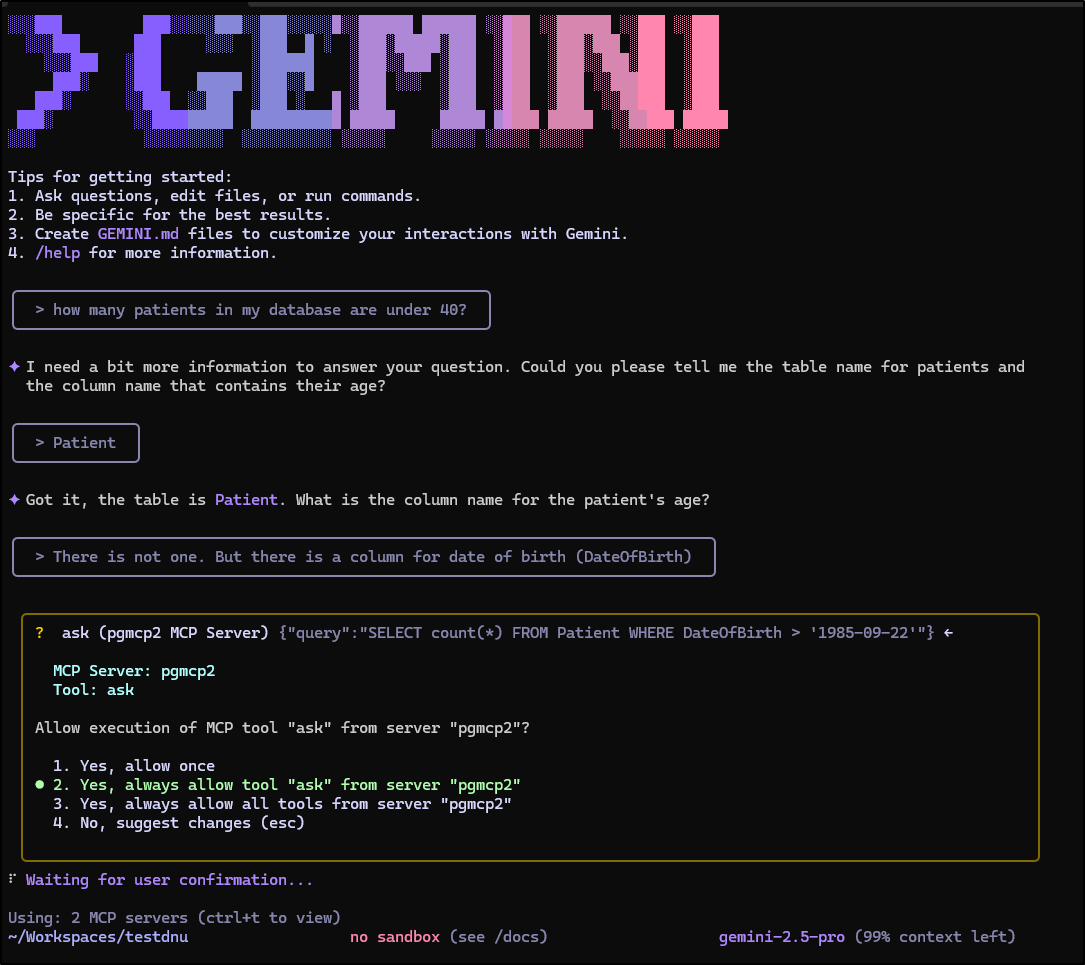

I’ve now reduced it to just the 1 and 2 servers.. How will Gemini CLI handle two possible MCPs for the same issue?

Interestingly enough it seemed to try and us both MCP servers

That timed out, but the second time through it tried to use just the pgmcp2 instance

I gave that a full two minutes as well before cancelling.

Okay, perhaps while discovery works on local, the agentic nature runs some of this in the cloud and those agents cannot see a 192.168 address.

I’ll try using the public URLs and going again.

It got stuck trying to reach “192.168.1.77” with the http://75.72.233.202:38000/sse which requires a connection. I made even sure that my connection string was accurate

However, with the http://75.72.233.202:38080/mcp/sse, it worked great, though this is the one that stores the credentials

I was a bit worried it might provide a vector of malcontents to trash my database. I asked it to update a row and was pleased to see it refused (though provided me the SQL I could run locally)

Summary

We looked at two different PostgreSQL MCP options: pgmcp (go) and pg-mcp-server (python).

The first was easy to build and launch locally and had a client that connected with OpenAI easily. I found it easy to add to Copilot, but more of a challenge with Gemini CLI.

Pg-mcp-server requires one to pass an input string with the connection details. While this adds more work, I liked it because it makes the MCP agent a bit more secure (not caching details). It was easy to add to Copilot and use.

Gemini CLI does work, but I had some issues with the database credentials on the second MCP option we tried. I’m guessing this is fixable if I keep working on it, however (and I plan to).

Overall, being able to use our rich CLI tools with AI to engage with databases. These examples, of course, used small databases with sample data. However, I could easily see using a controlled OpenAI endpoint with the PG client to really dig into some kind of issue.

In my present role, I don’t have to deal with massive PostgreSQL databases. However, in my last one, we routinely needed to help try and find issues with absent data, bad joins, or poorly performing stored procedures and triggers.