Published: Sep 4, 2025 by Isaac Johnson

There have been a couple of posts from Marius in my backlog to check into. One was on Containery, a nice containerized Docker monitoring and administration tool. I plan to show how to test it in WSL (and then clean up WSL), run it on a proper Linux container host and, as I often do, wrap up by exposing it with TLS via Kubernetes.

I also want to dig into Dockpeek, which is a much smaller, lightweight container monitoring tool. You will see why I like this, even though it does far less than Containery. As with Containery, I plan to fire it up locally, then on Linux, then expose it externally with an Azure DNS A record and TLS ingress in Kubernetes.

Let’s start with Containery…

Containery

The first one I want to check out is something Marius posted about in July: Containery.

We can start with the docker-compose file provided in the GitHub README

services:
  app:
    image: ghcr.io/danylo829/containery:latest
    container_name: containery
    restart: "unless-stopped"
    ports:
      - "5000:5000"
    volumes:
      - containery_data:/containery_data
      - containery_static:/containery/app/static/dist
      - /var/run/docker.sock:/var/run/docker.sock:ro
volumes:
  containery_data:
    name: containery_data
  containery_static:
    name: containery_static

We can fire that up interactively

$ cat ./docker-compose.yml

services:
  app:
    image: ghcr.io/danylo829/containery:latest
    container_name: containery
    restart: "unless-stopped"
    ports:
      - "5000:5000"
    volumes:
      - containery_data:/containery_data
      - containery_static:/containery/app/static/dist
      - /var/run/docker.sock:/var/run/docker.sock:ro
volumes:
  containery_data:
    name: containery_data
  containery_static:
    name: containery_static

$ docker compose up

[+] Running 9/9

✔ app Pulled 7.8s

✔ 59e22667830b Already exists 0.0s

✔ 4c665aba06d1 Pull complete 1.3s

✔ e3586b415667 Pull complete 3.1s

✔ f5cc5422ebcb Pull complete 3.1s

✔ fbc740372be1 Pull complete 3.2s

✔ 734dee942992 Pull complete 3.2s

✔ 1fbaefecde4a Pull complete 6.3s

✔ 6ddaa8352378 Pull complete 6.5s

[+] Running 4/4

✔ Network containery_default Created 0.1s

✔ Volume "containery_data" Created 0.0s

✔ Volume "containery_static" Created 0.0s

✔ Container containery Created 0.3s

Attaching to containery

containery | Initializing Flask-Migrate at /containery_data/migrations...

containery | Creating directory /containery_data/migrations ... done

containery | Creating directory /containery_data/migrations/versions ... done

containery | Generating /containery_data/migrations/script.py.mako ... done

containery | Generating /containery_data/migrations/env.py ... done

containery | Generating /containery_data/migrations/alembic.ini ... done

containery | Generating /containery_data/migrations/README ... done

containery | Please edit configuration/connection/logging settings in /containery_data/migrations/alembic.ini before proceeding.

containery | Waiting for the database to be ready...

containery | INFO [alembic.runtime.migration] Context impl SQLiteImpl.

containery | INFO [alembic.runtime.migration] Will assume non-transactional DDL.

containery | Applying database migrations...

containery | INFO [alembic.runtime.migration] Context impl SQLiteImpl.

containery | INFO [alembic.runtime.migration] Will assume non-transactional DDL.

containery | INFO [alembic.autogenerate.compare] Detected added table 'stg_global_settings'

containery | INFO [alembic.autogenerate.compare] Detected added table 'usr_role'

containery | INFO [alembic.autogenerate.compare] Detected added table 'usr_user'

containery | INFO [alembic.autogenerate.compare] Detected added table 'usr_personal_settings'

containery | INFO [alembic.autogenerate.compare] Detected added table 'usr_role_permission'

containery | INFO [alembic.autogenerate.compare] Detected added table 'usr_user_role'

containery | Generating /containery_data/migrations/versions/8a47cfa7ab20_.py ... done

containery | INFO [alembic.runtime.migration] Context impl SQLiteImpl.

containery | INFO [alembic.runtime.migration] Will assume non-transactional DDL.

containery | INFO [alembic.runtime.migration] Running upgrade -> 8a47cfa7ab20, empty message

containery | Starting Gunicorn...

containery | [2025-08-29 10:59:12 +0000] [11] [INFO] Starting gunicorn 22.0.0

containery | [2025-08-29 10:59:12 +0000] [11] [INFO] Listening at: http://0.0.0.0:5000 (11)

containery | [2025-08-29 10:59:12 +0000] [11] [INFO] Using worker: eventlet

containery | [2025-08-29 10:59:12 +0000] [12] [INFO] Booting worker with pid: 12

containery | Copying lib static files...

containery | ✔ Registered module: index

containery | ✔ Registered module: auth

containery | ✔ Registered module: settings

containery | ✔ Registered module: user

containery | ✔ Registered module: main

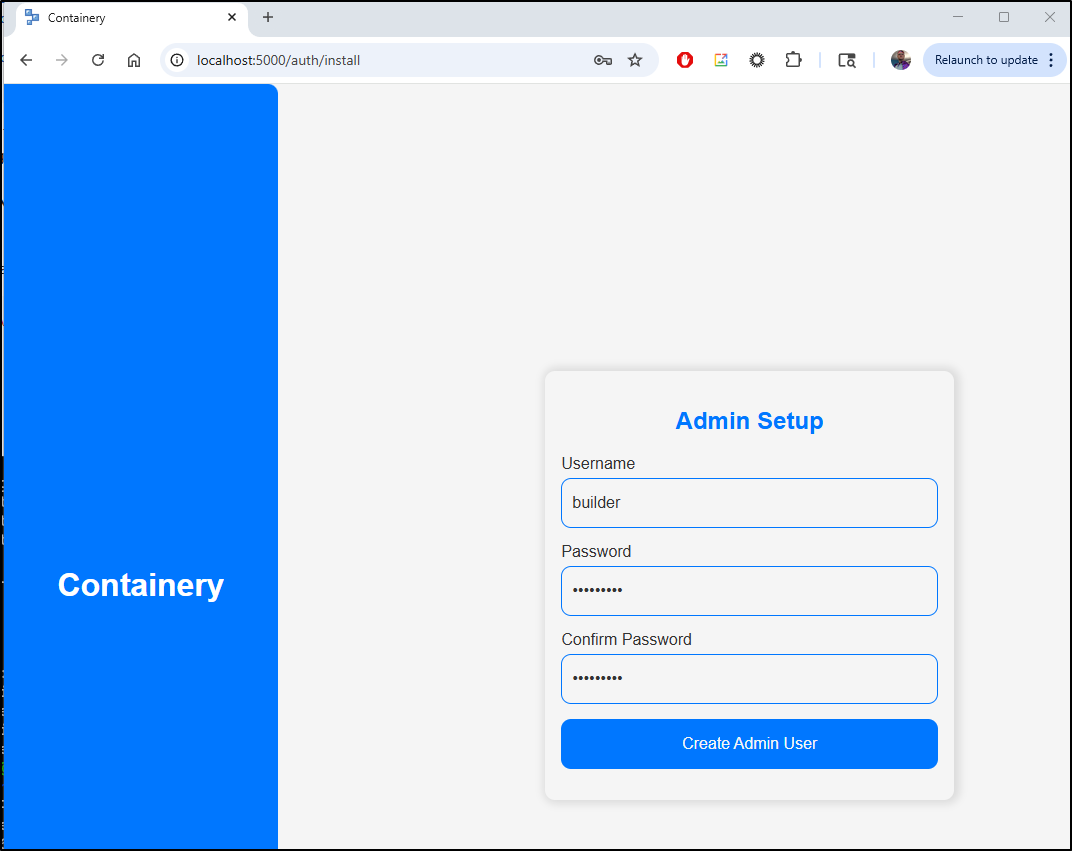

The first step on access is to create an admin user

Then log in with that user

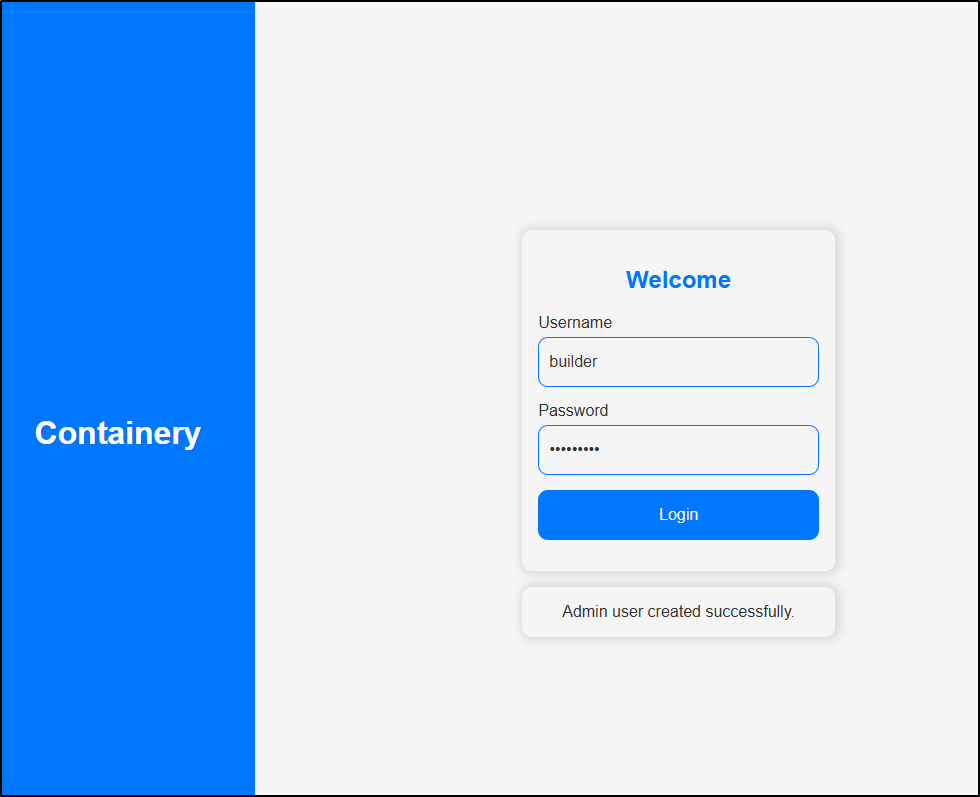

This provides a pretty clean interface showing CPU and RAM usage as well as some basic system info

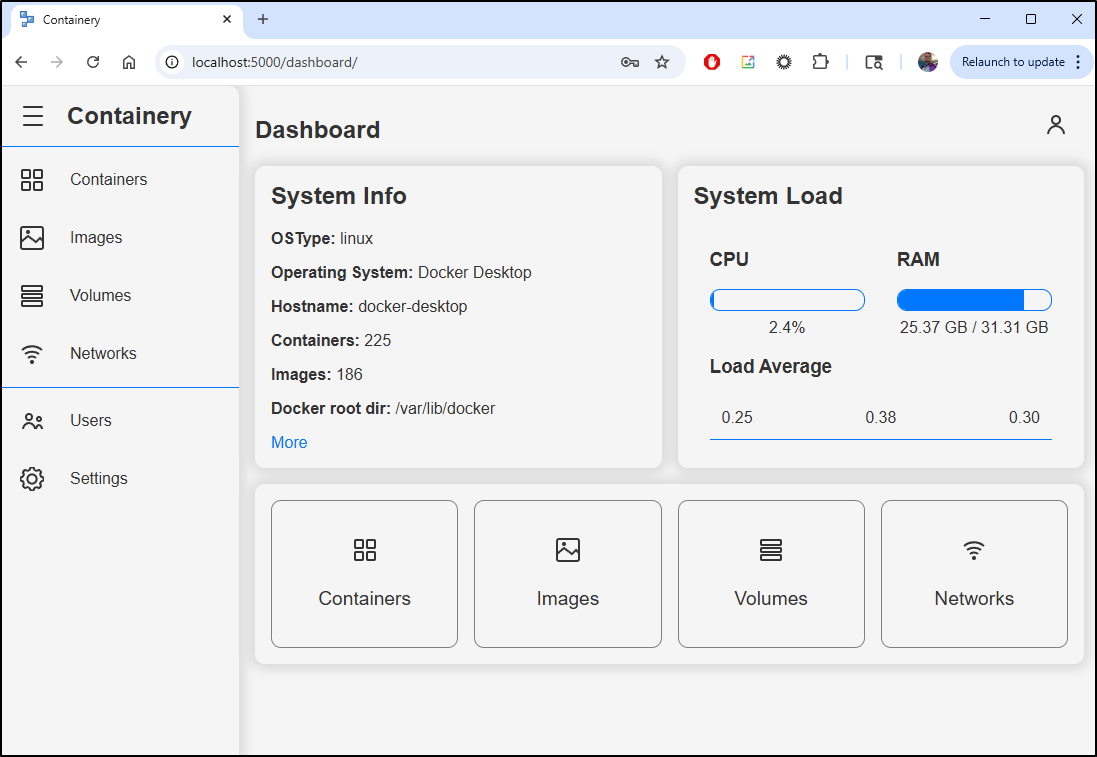

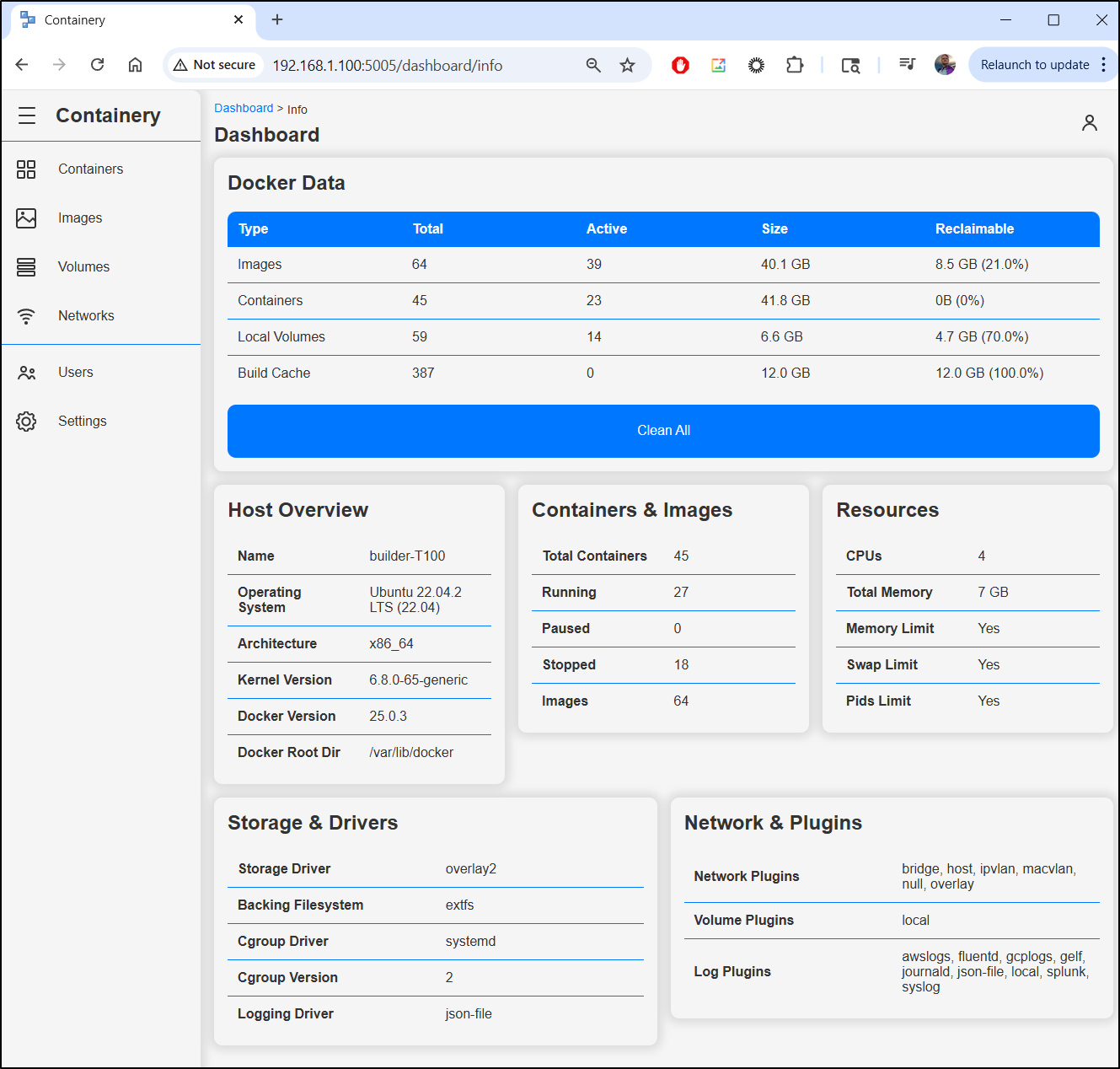

If I click “more” on the system info, we can see everything from network plugins to storage drivers, CPU details and kernel info.

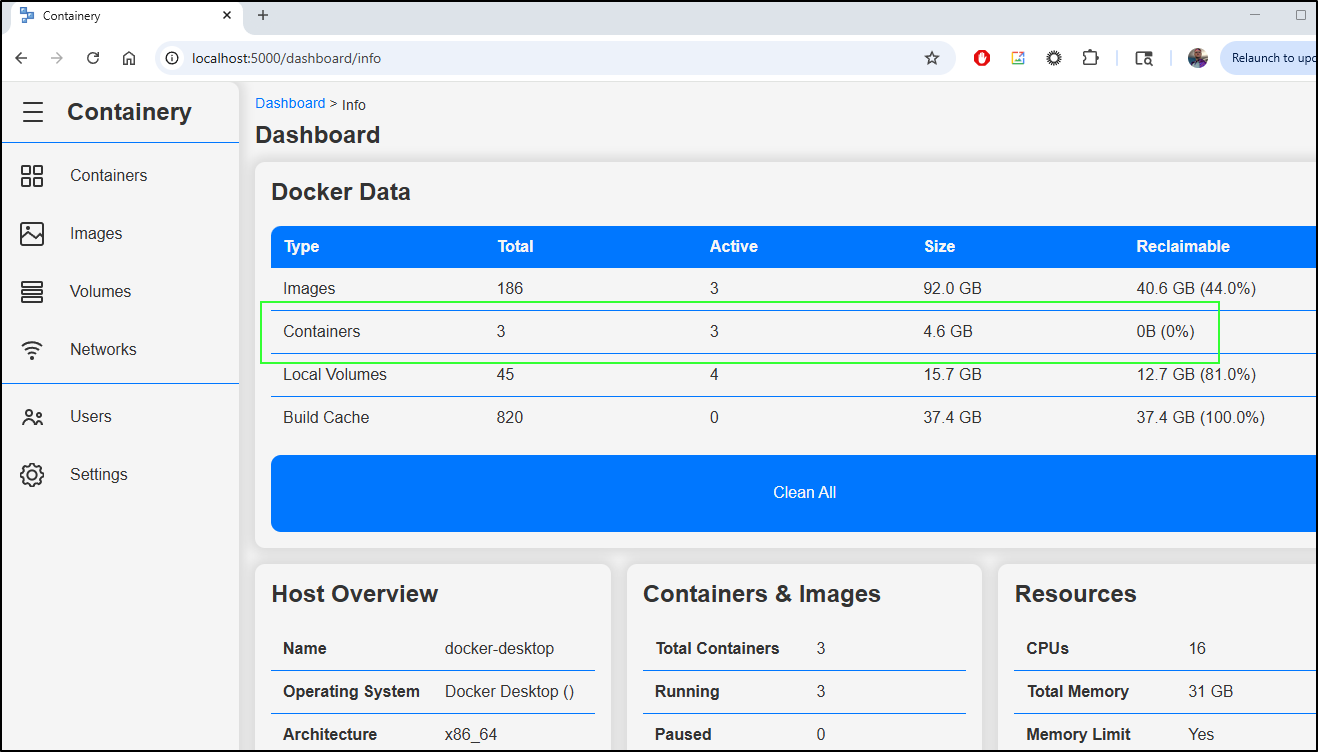

I like seeing the calculated sizes of images and containers since those tend to creep up on me.

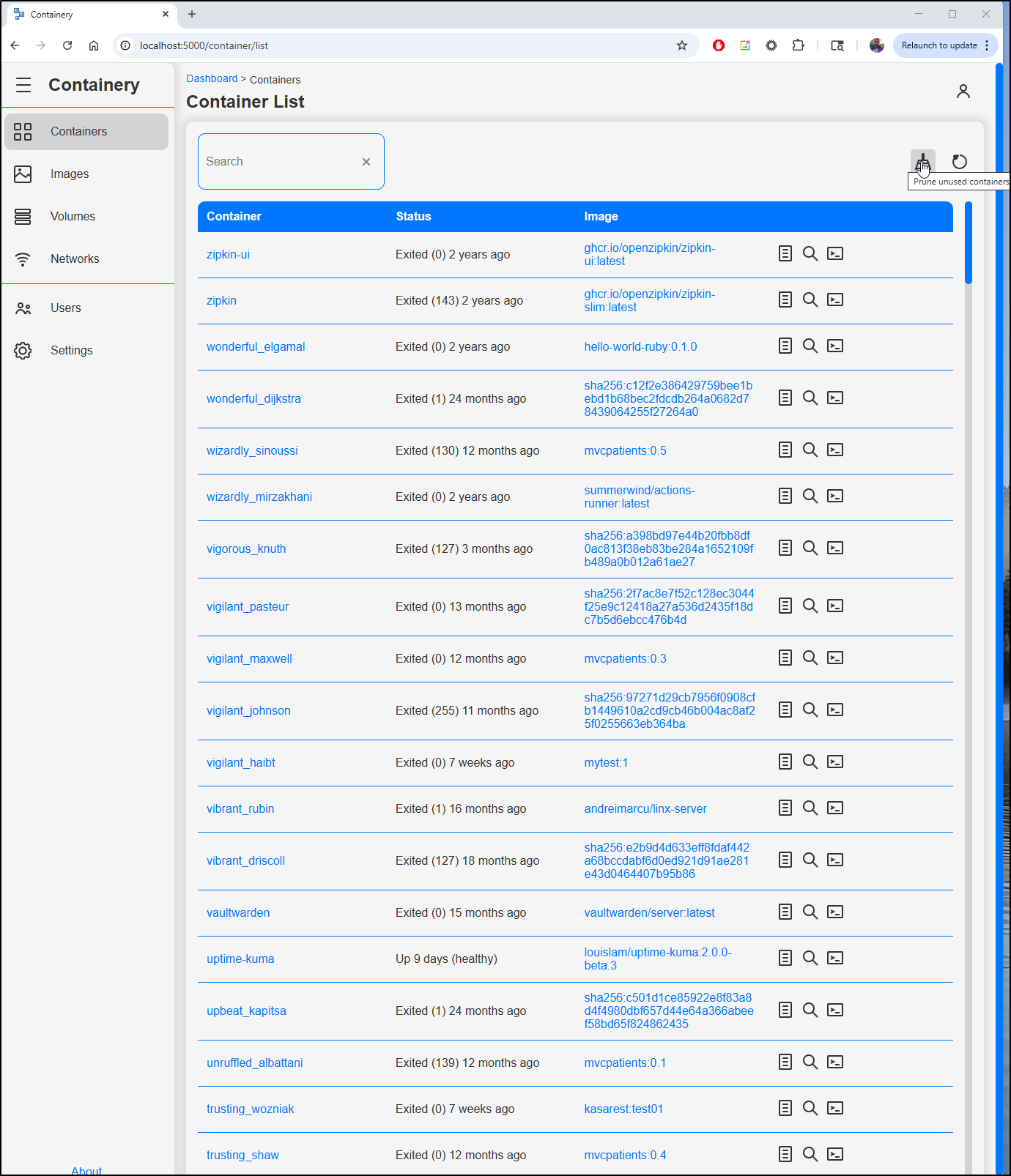

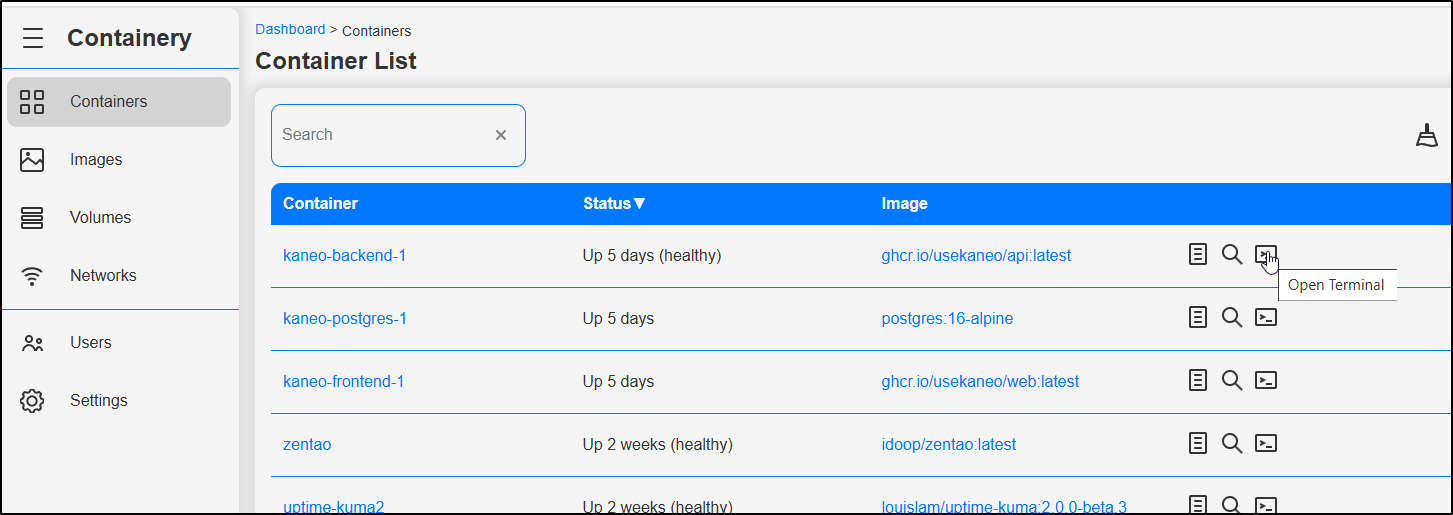

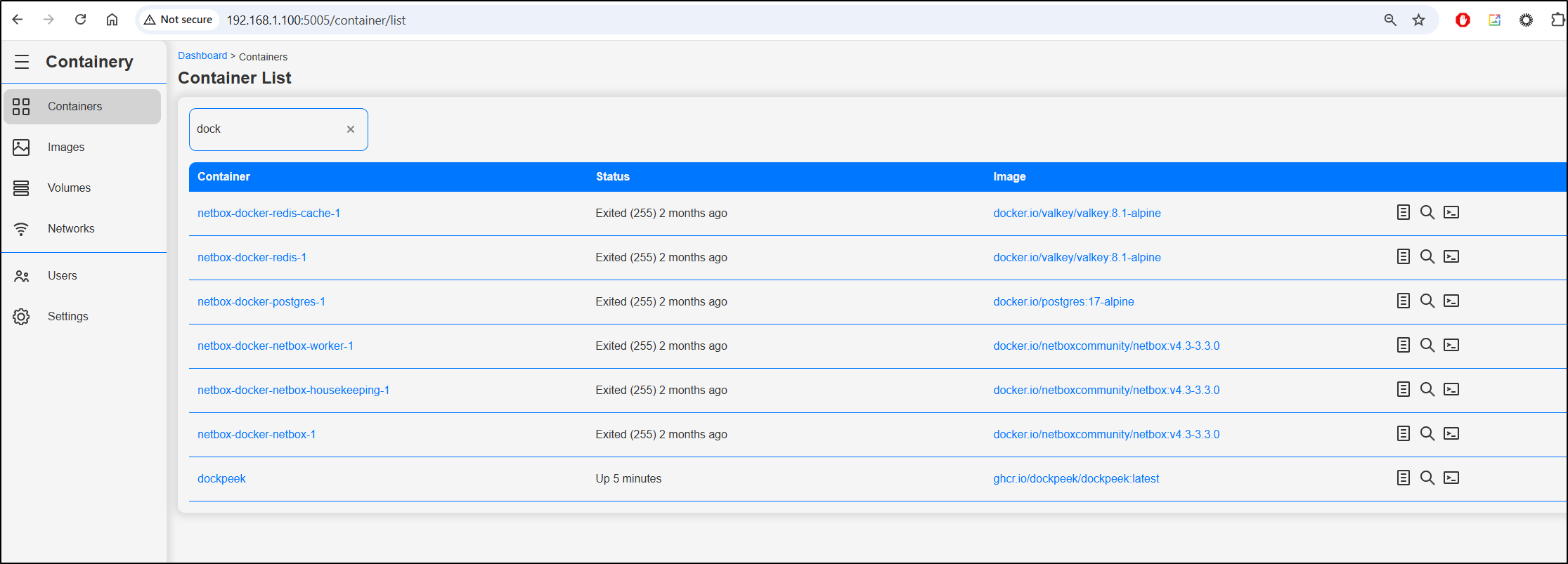

Heading to containers, I can see quite a few dead and unused ones. There is a scrub icon for cleaning up the unused ones

I’m prompted to confirm I want to delete the stopped containers

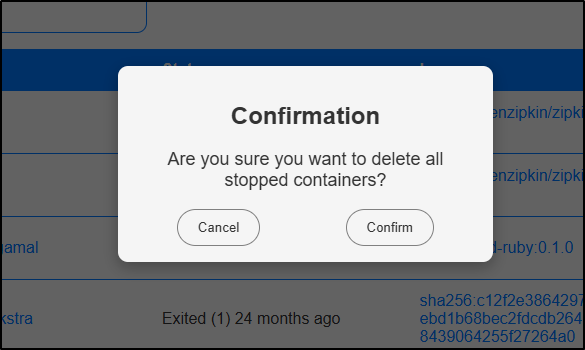

A spinning icon is telling me it’s cranking away

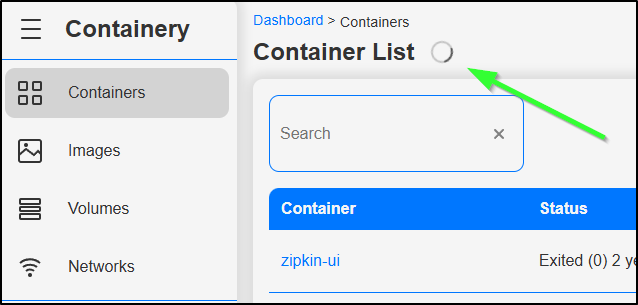

After a minute or so, the page refreshes to show a much trimmer list

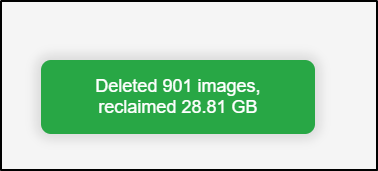

Wow.. I’m amazed how much space that cleared

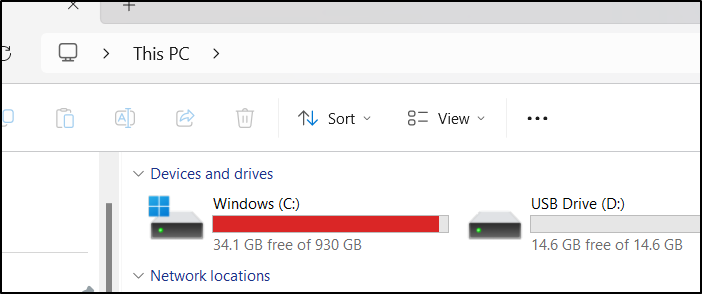

However, I question whether that was actual disk space or just what Docker said was available. I say this because my particular volume is always almost out of space and I hoped perhaps we had cleared some.

But checking my C:\ didn’t show anything really improved that much

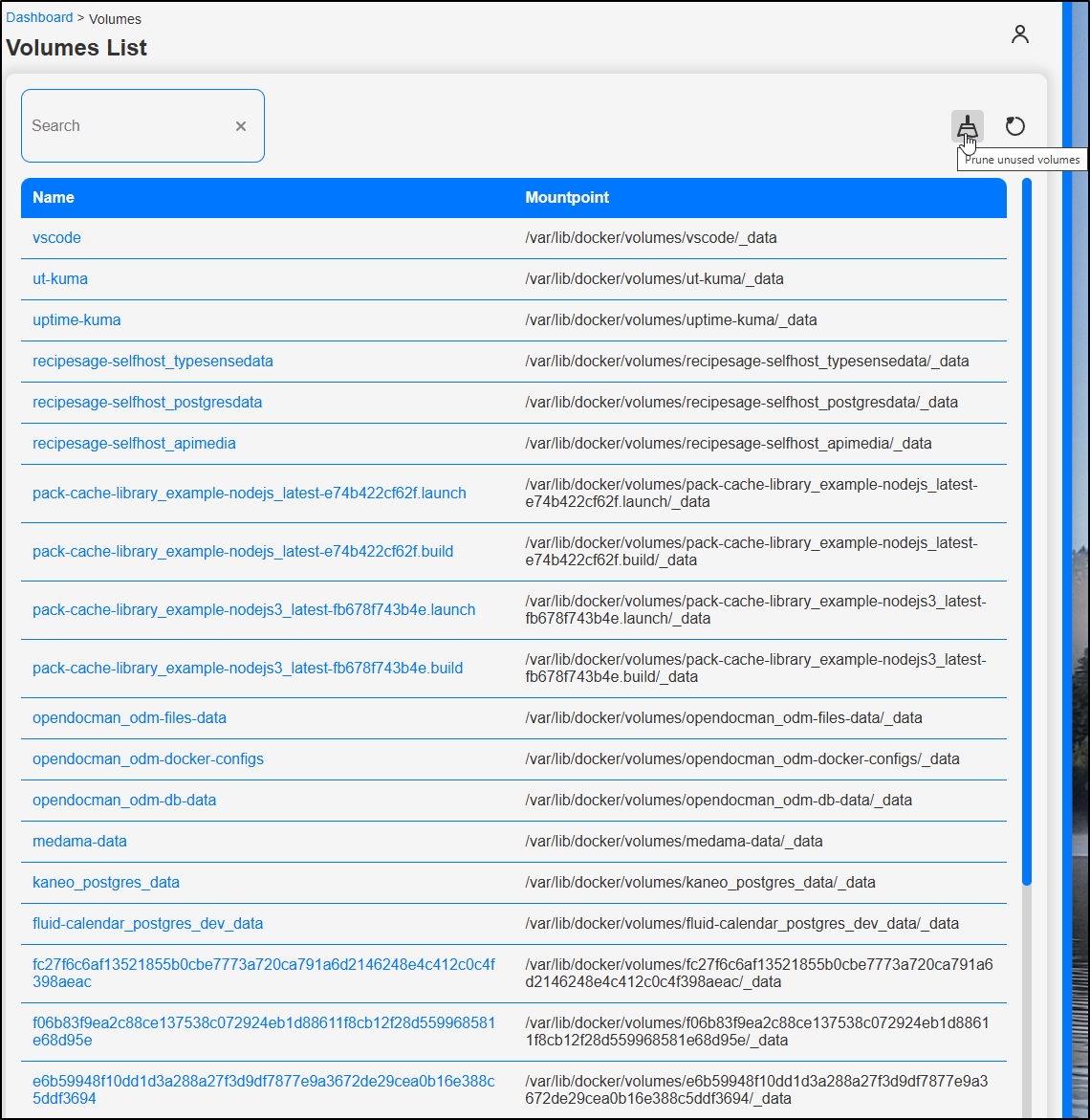

Let’s do similar with Volumes

This time, however, it said there was nothing to prune.

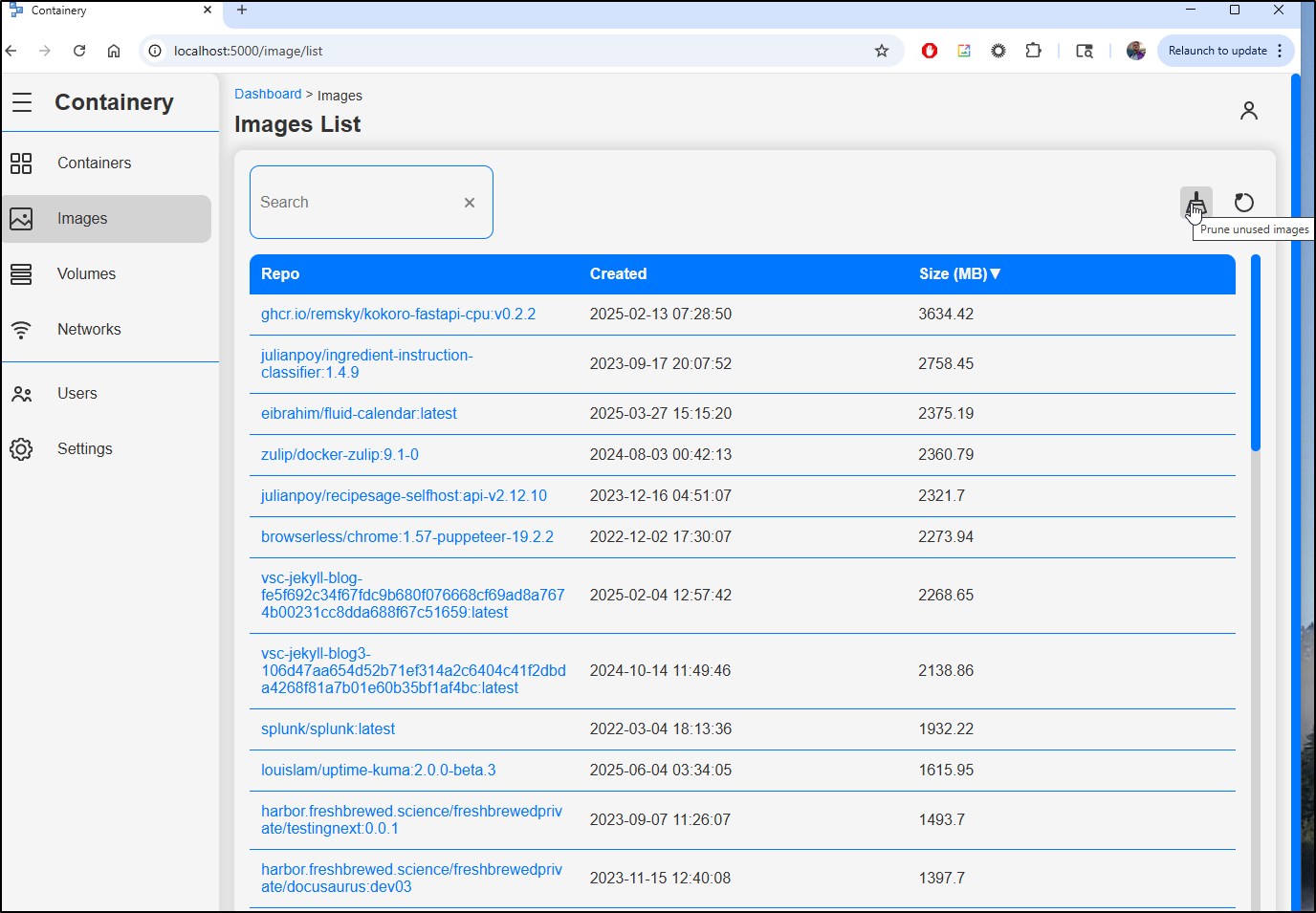

Lastly, I’ll clear old images

While it said it reclaimed space, I only saw my primary drive’s free space go from 34.1GB to 34.0GB. After some research, this turned out to be a WSL issue that has nothing to do with Containery
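For reference, the prune buttons used above have rough CLI counterparts, and `docker system df` reports Docker's own view of reclaimable space, which (as seen here) need not match what Windows reports for the WSL disk. The following is a minimal sketch and not what Containery runs internally; the `run` wrapper and the `DRY_RUN` switch are hypothetical helpers added so nothing is deleted by accident.

```shell
#!/usr/bin/env bash
# Sketch only: CLI counterparts to the UI prune actions, plus Docker's disk accounting.
# DRY_RUN is a hypothetical safety switch for this example; set DRY_RUN=0 to really prune.
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "would run: $*"
  else
    "$@"
  fi
}

prune_all() {
  run docker container prune -f   # remove stopped containers
  run docker volume prune -f      # remove unused volumes (newer Docker releases only drop anonymous ones unless -a is given)
  run docker image prune -a -f    # remove images not used by any container
  run docker system df            # show what Docker still counts as in use and reclaimable
}
```

Calling `prune_all` as-is only prints the four commands; `DRY_RUN=0 prune_all` would actually execute them.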

Cleanup

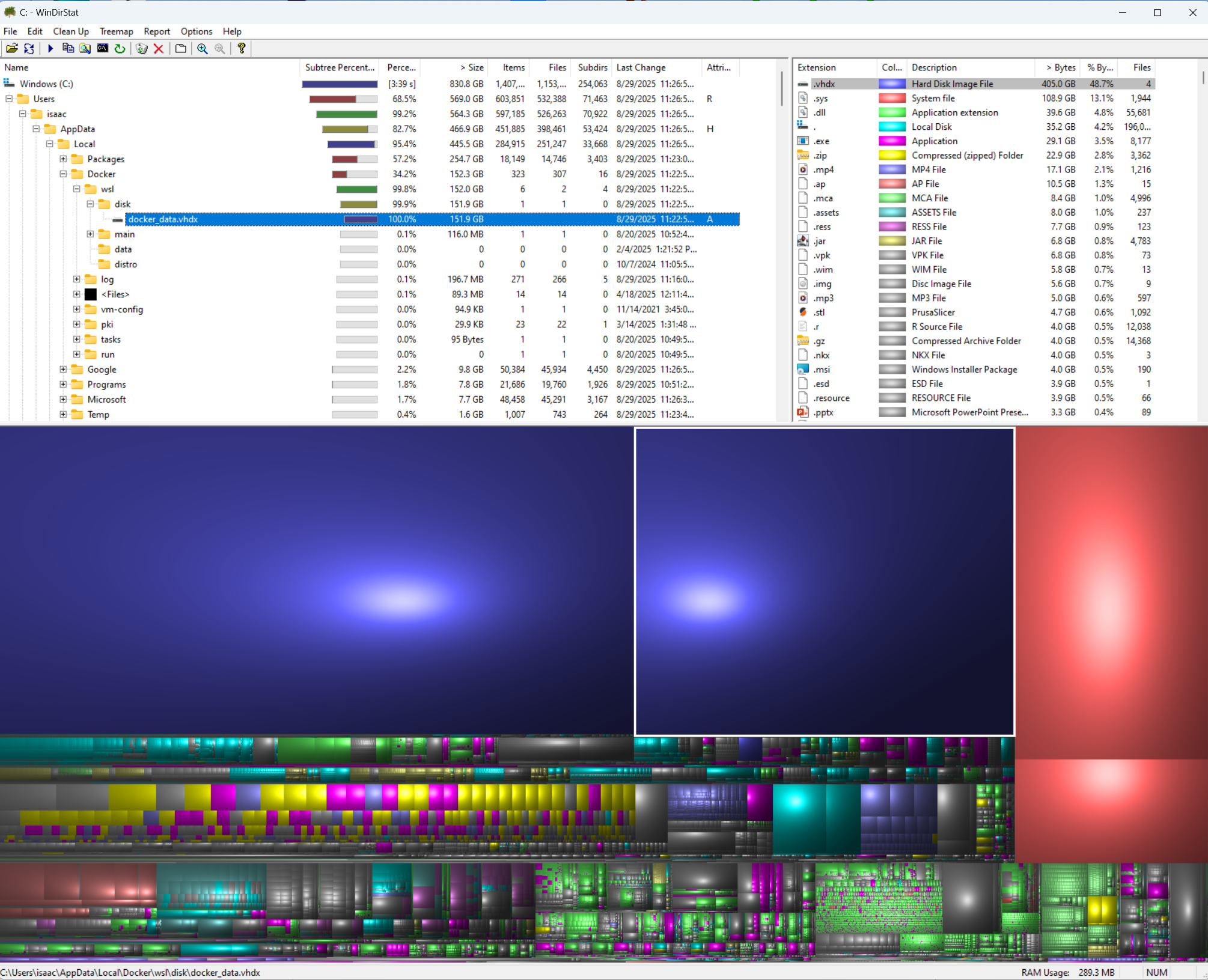

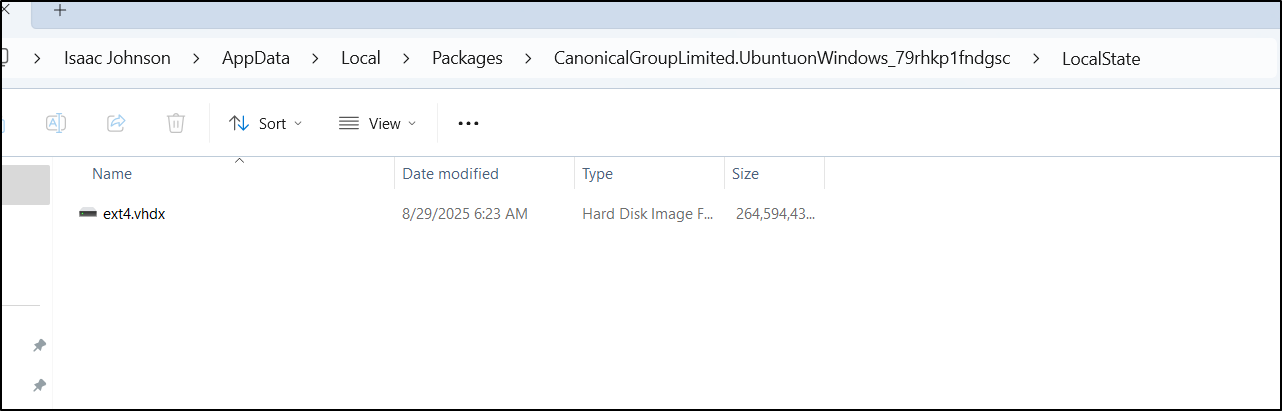

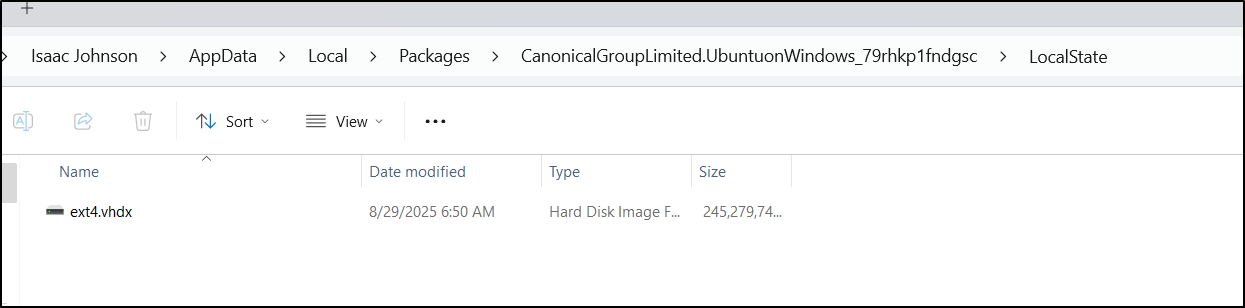

Let’s look up where my big VHD files live.

Obviously, because I blog in WSL, I did this in Windows (and am now back to write up what I did, so clearly I didn’t blow up my WSL).

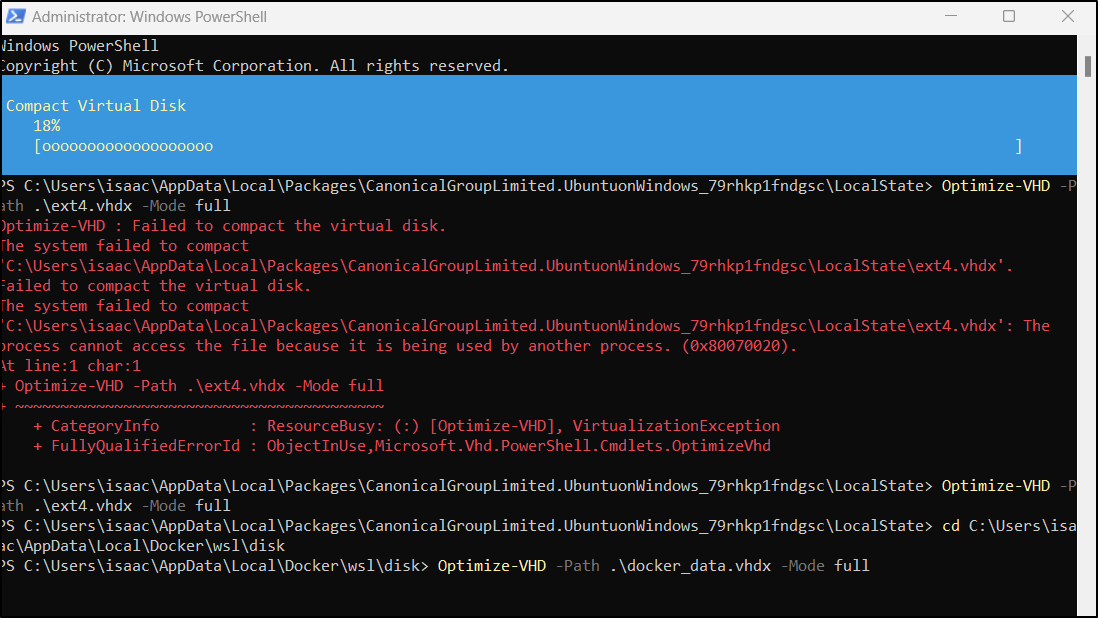

We can see the larger Linux drive is about 264.5GB

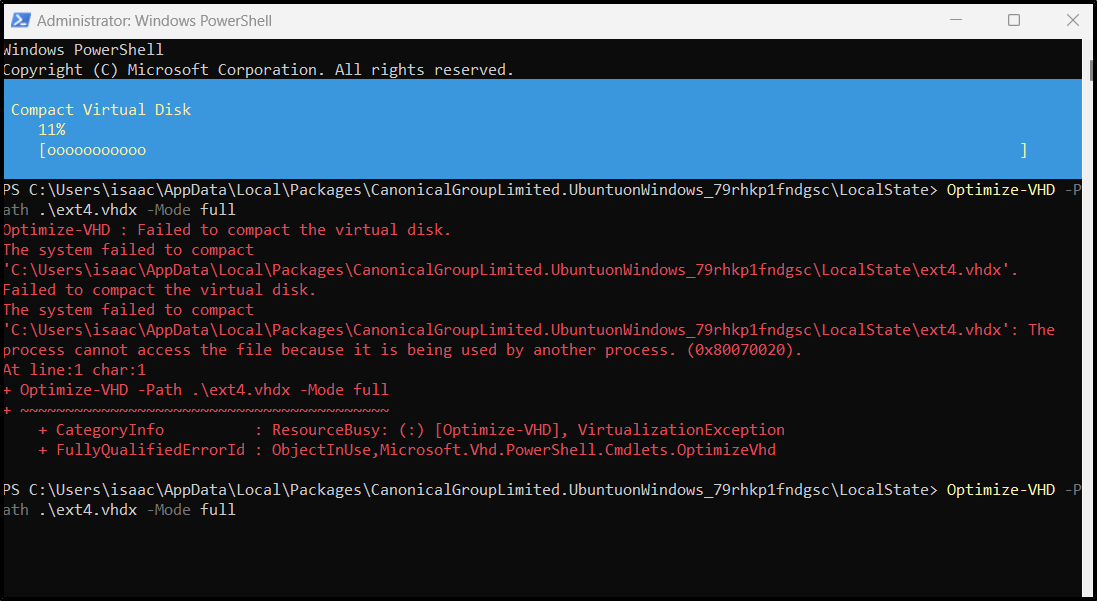

I had to stop WSL with wsl --shutdown as well as kill Docker Desktop to allow the command to work (also, use an Administrator PowerShell prompt)

That dropped me down to 245GB used - at least some savings

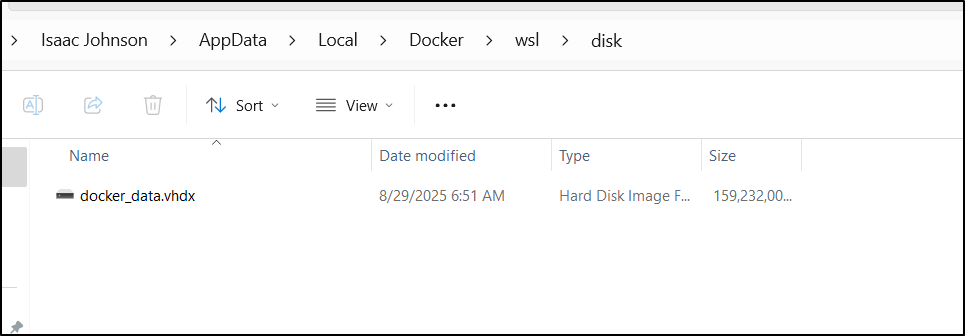

The Docker Desktop VHD was 160GB

I ran the compress on that too

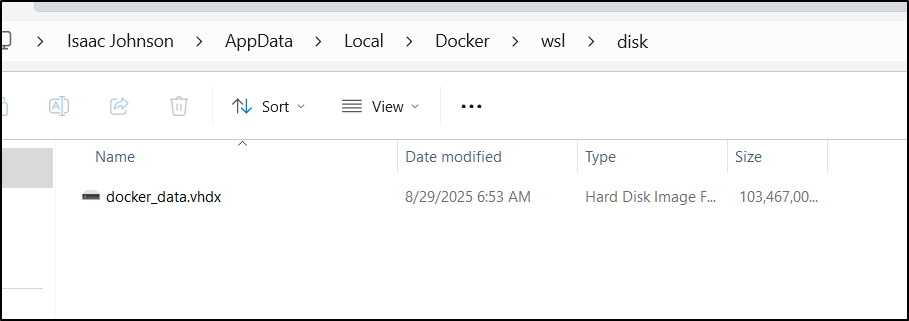

This was a much bigger savings, bringing the drive down to 103GB

In total this was a massive savings I wish I had known about sooner.

Docker Host

Let’s fire this up on my primary Dockerhost, T-100

builder@builder-T100:~/containery$ cat ./docker-compose.yml

services:
  app:
    image: ghcr.io/danylo829/containery:latest
    container_name: containery
    restart: "unless-stopped"
    ports:
      - "5005:5000"
    volumes:
      - containery_data:/containery_data
      - containery_static:/containery/app/static/dist
      - /var/run/docker.sock:/var/run/docker.sock:ro
volumes:
  containery_data:
    name: containery_data
  containery_static:
    name: containery_static

builder@builder-T100:~/containery$ docker compose up

[+] Running 9/9

✔ app 8 layers [⣿⣿⣿⣿⣿⣿⣿⣿] 0B/0B Pulled 3.4s

✔ 59e22667830b Already exists 0.0s

✔ 4c665aba06d1 Pull complete 0.6s

✔ e3586b415667 Pull complete 1.2s

✔ f5cc5422ebcb Pull complete 1.2s

✔ fbc740372be1 Pull complete 1.2s

✔ 734dee942992 Pull complete 1.2s

✔ 1fbaefecde4a Pull complete 2.2s

✔ 6ddaa8352378 Pull complete 2.2s

[+] Building 0.0s (0/0)

[+] Running 4/4

✔ Network containery_default Created 0.2s

✔ Volume "containery_static" Created 0.0s

✔ Volume "containery_data" Created 0.0s

✔ Container containery Created 0.6s

Attaching to containery

containery | Initializing Flask-Migrate at /containery_data/migrations...

containery | Creating directory /containery_data/migrations ... done

containery | Creating directory /containery_data/migrations/versions ... done

containery | Generating /containery_data/migrations/README ... done

containery | Generating /containery_data/migrations/script.py.mako ... done

containery | Generating /containery_data/migrations/env.py ... done

containery | Generating /containery_data/migrations/alembic.ini ... done

containery | Please edit configuration/connection/logging settings in /containery_data/migrations/alembic.ini before proceeding.

containery | Waiting for the database to be ready...

containery | INFO [alembic.runtime.migration] Context impl SQLiteImpl.

containery | INFO [alembic.runtime.migration] Will assume non-transactional DDL.

containery | Applying database migrations...

containery | INFO [alembic.runtime.migration] Context impl SQLiteImpl.

containery | INFO [alembic.runtime.migration] Will assume non-transactional DDL.

containery | INFO [alembic.autogenerate.compare] Detected added table 'stg_global_settings'

containery | INFO [alembic.autogenerate.compare] Detected added table 'usr_role'

containery | INFO [alembic.autogenerate.compare] Detected added table 'usr_user'

containery | INFO [alembic.autogenerate.compare] Detected added table 'usr_personal_settings'

containery | INFO [alembic.autogenerate.compare] Detected added table 'usr_role_permission'

containery | INFO [alembic.autogenerate.compare] Detected added table 'usr_user_role'

containery | Generating /containery_data/migrations/versions/ed7ec7fac7db_.py ... done

containery | INFO [alembic.runtime.migration] Context impl SQLiteImpl.

containery | INFO [alembic.runtime.migration] Will assume non-transactional DDL.

containery | INFO [alembic.runtime.migration] Running upgrade -> ed7ec7fac7db, empty message

containery | Starting Gunicorn...

containery | [2025-08-29 11:50:24 +0000] [10] [INFO] Starting gunicorn 22.0.0

containery | [2025-08-29 11:50:24 +0000] [10] [INFO] Listening at: http://0.0.0.0:5000 (10)

containery | [2025-08-29 11:50:24 +0000] [10] [INFO] Using worker: eventlet

containery | [2025-08-29 11:50:24 +0000] [11] [INFO] Booting worker with pid: 11

containery | Copying lib static files...

containery | ✔ Registered module: main

containery | ✔ Registered module: settings

containery | ✔ Registered module: user

containery | ✔ Registered module: index

containery | ✔ Registered module: auth

I noticed there was not as much to reclaim on this host

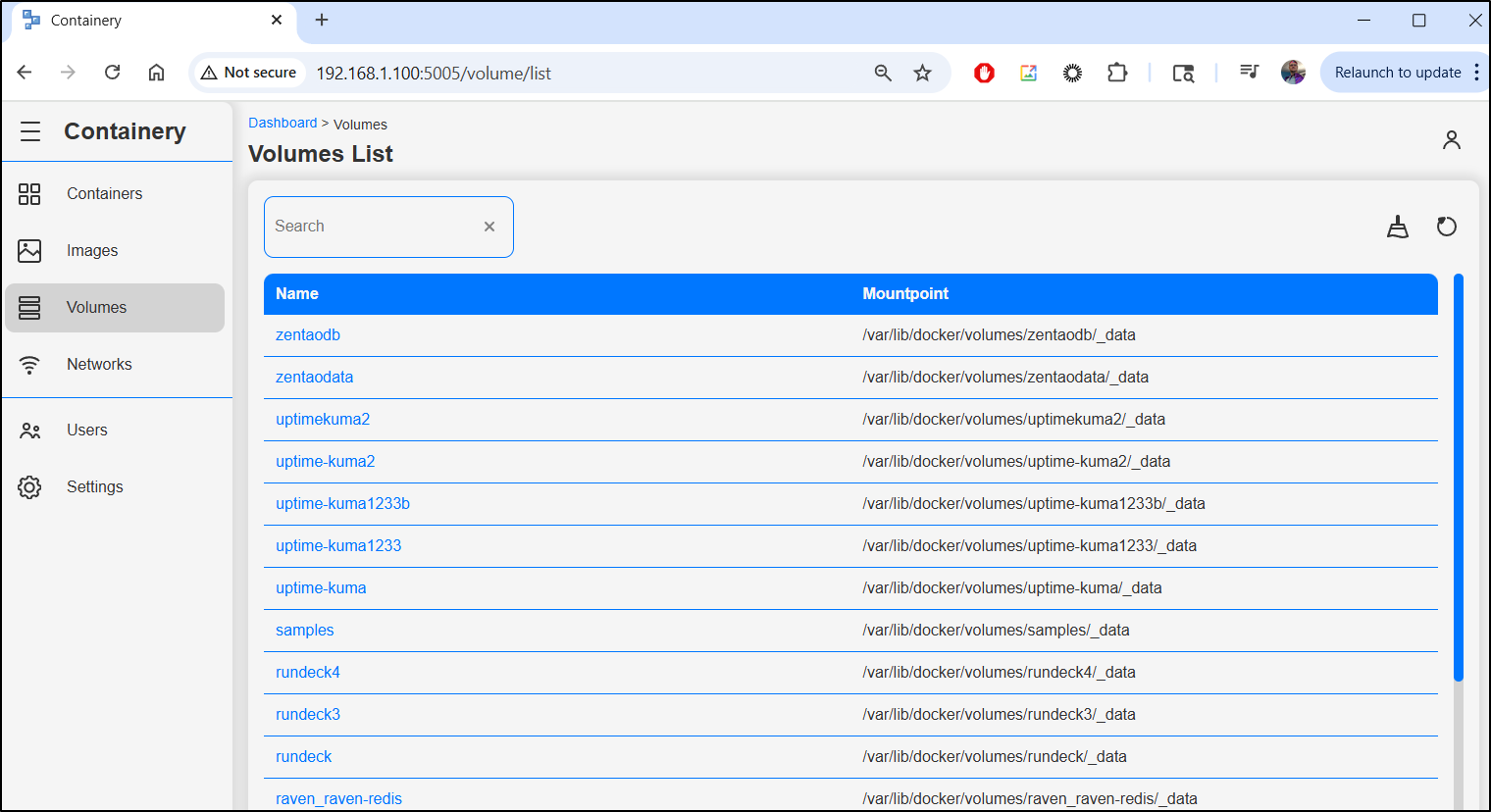

Volumes shows mount points, but not sizes

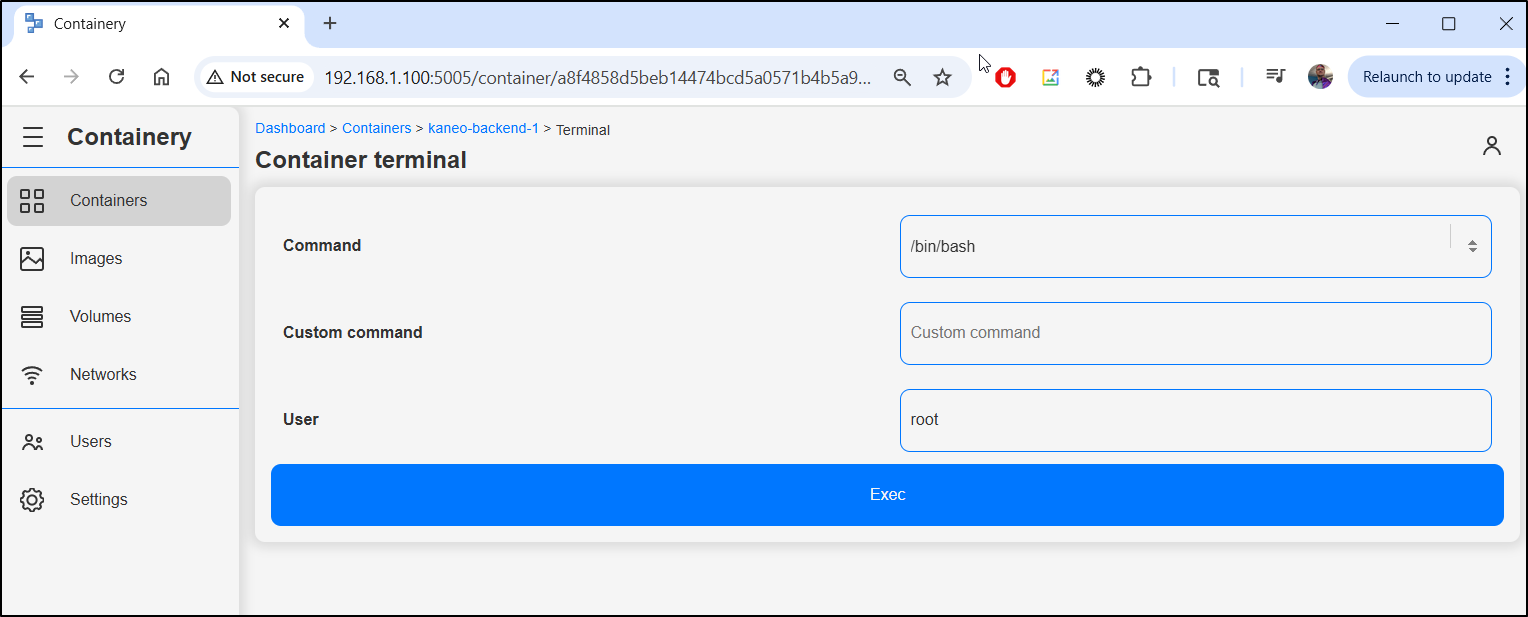

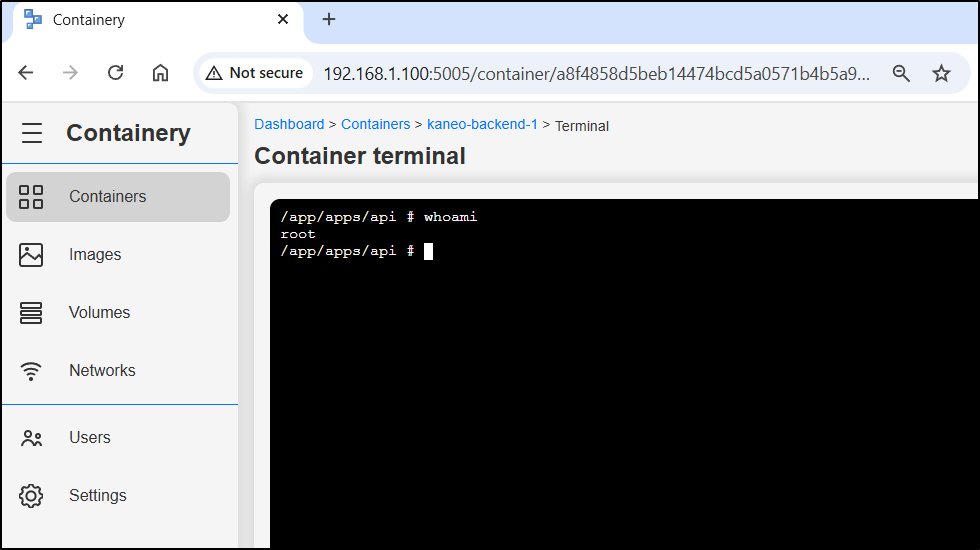

I can go to a running container and open a shell

I can fire off a /bin/bash or /bin/sh shell

Here I have a nice interactive prompt

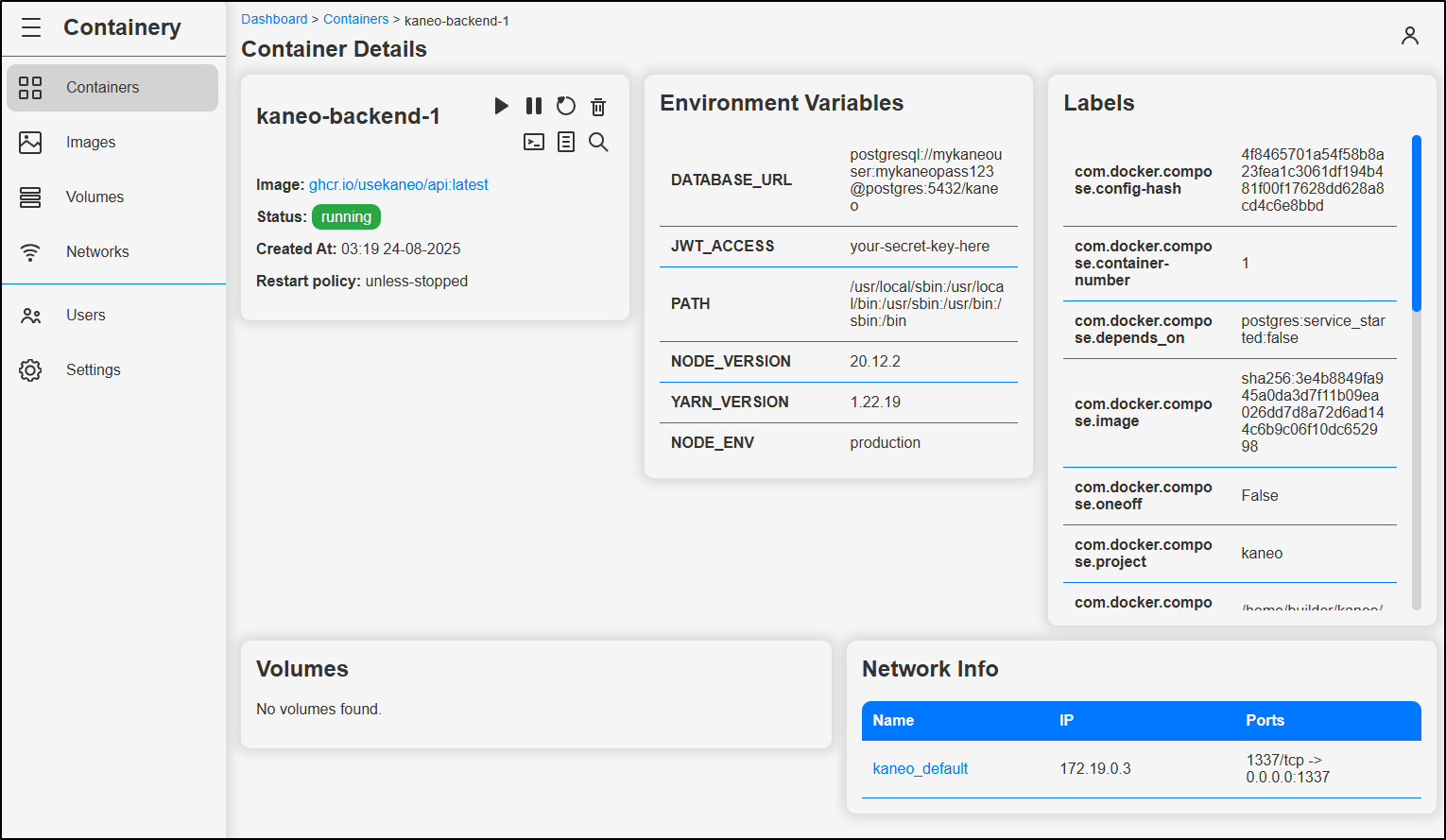

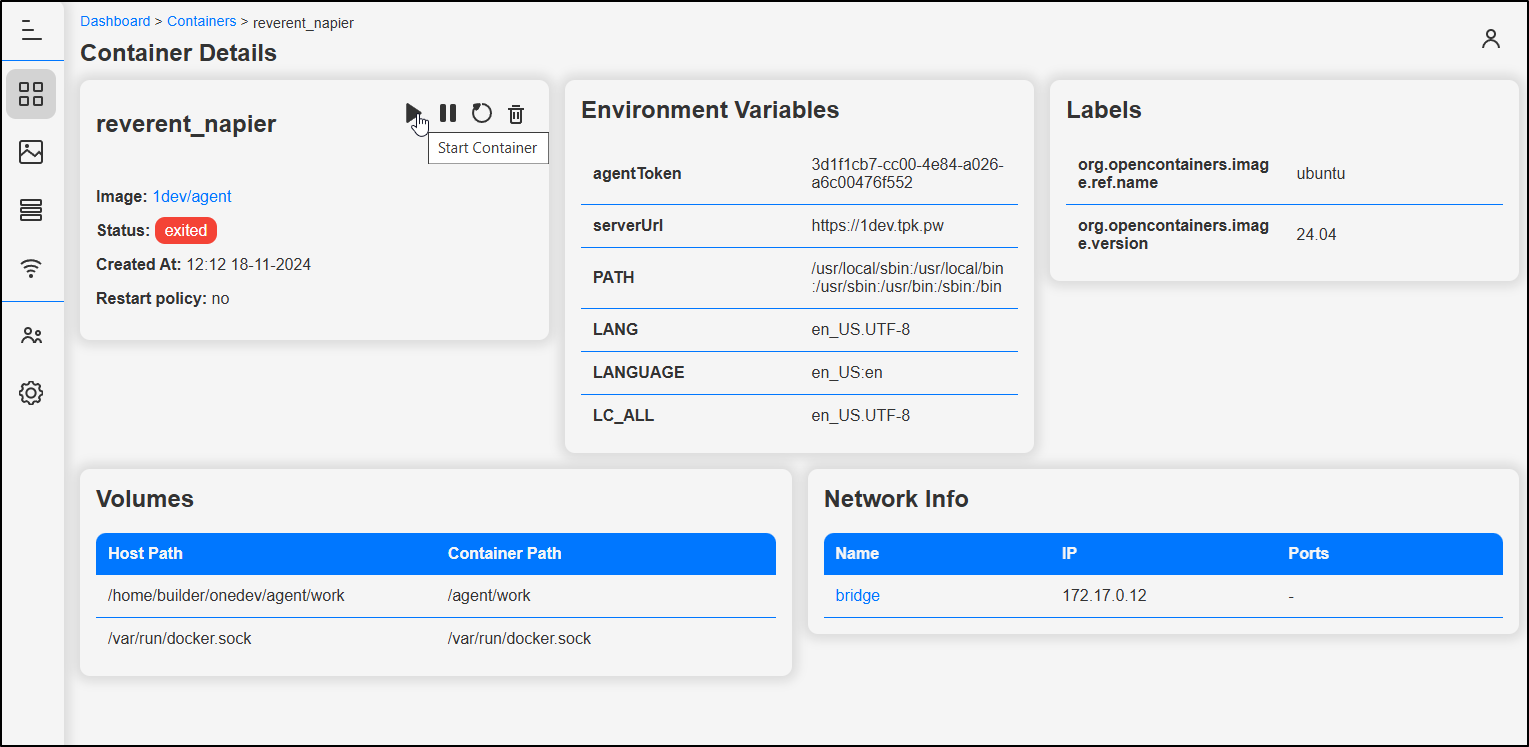

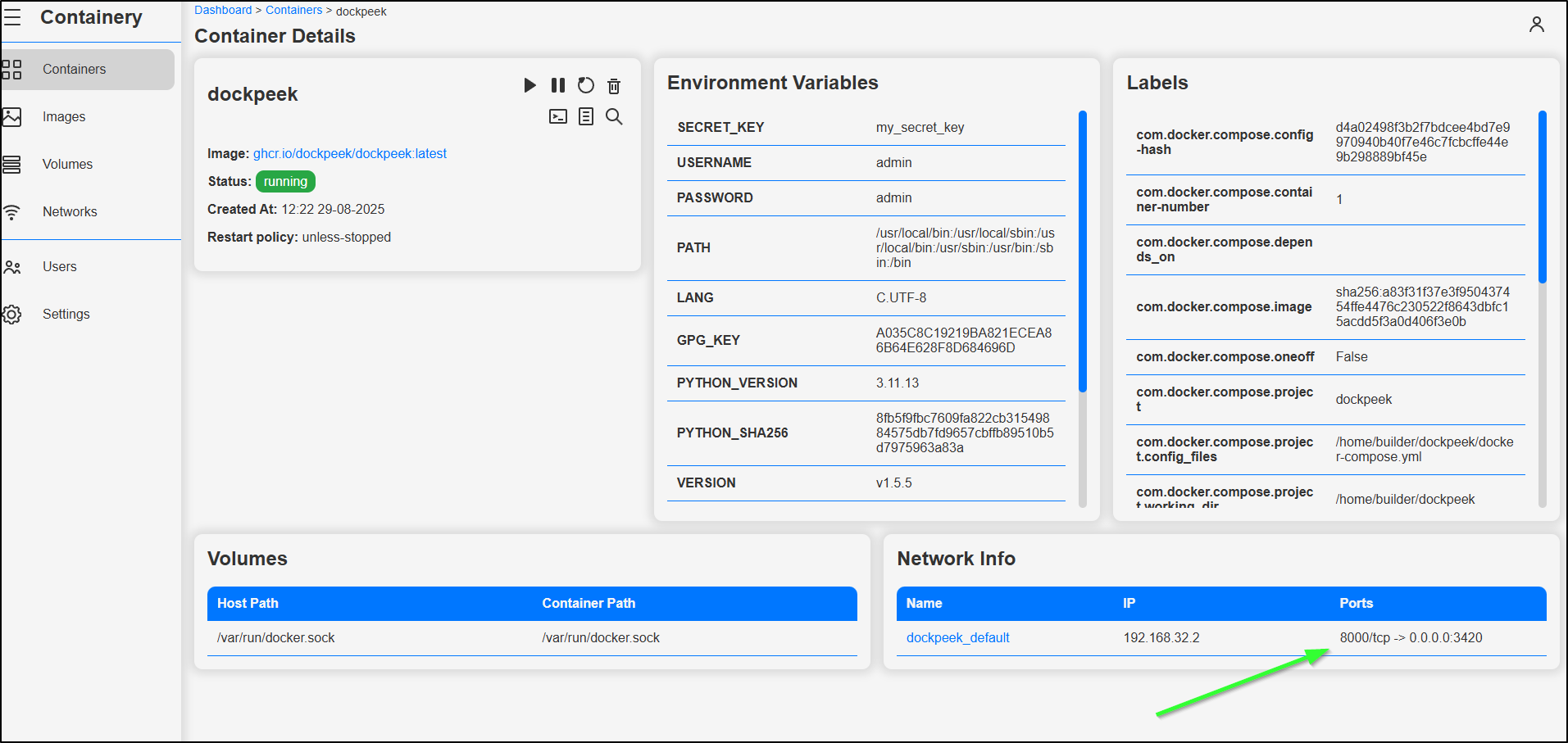

On running containers we can see details

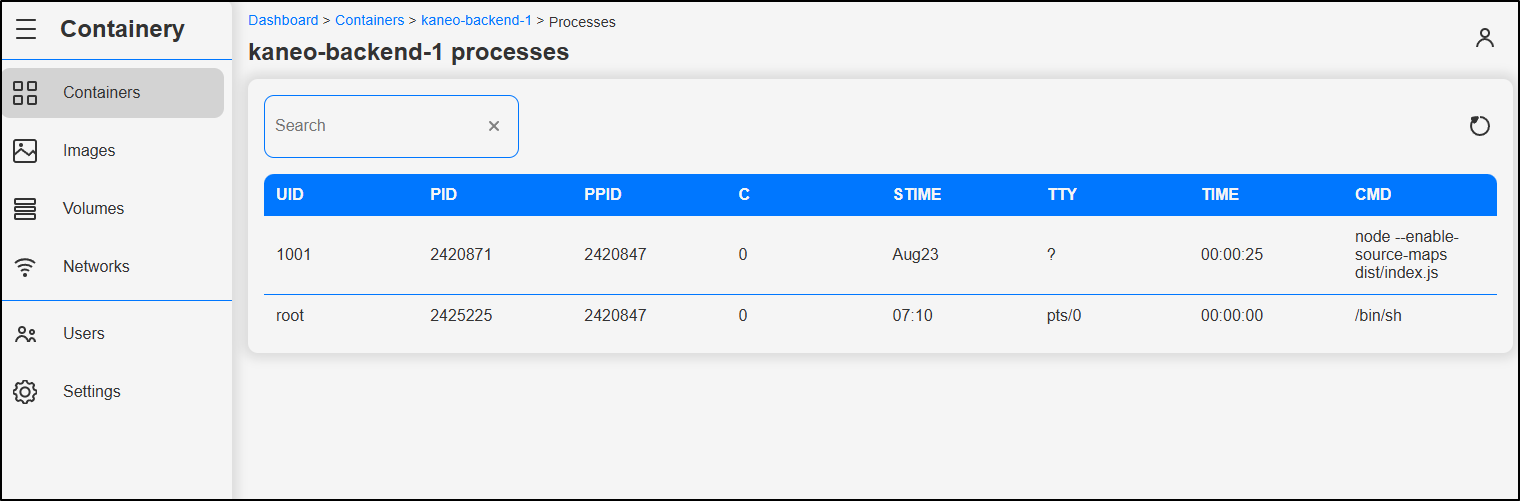

I can see processes

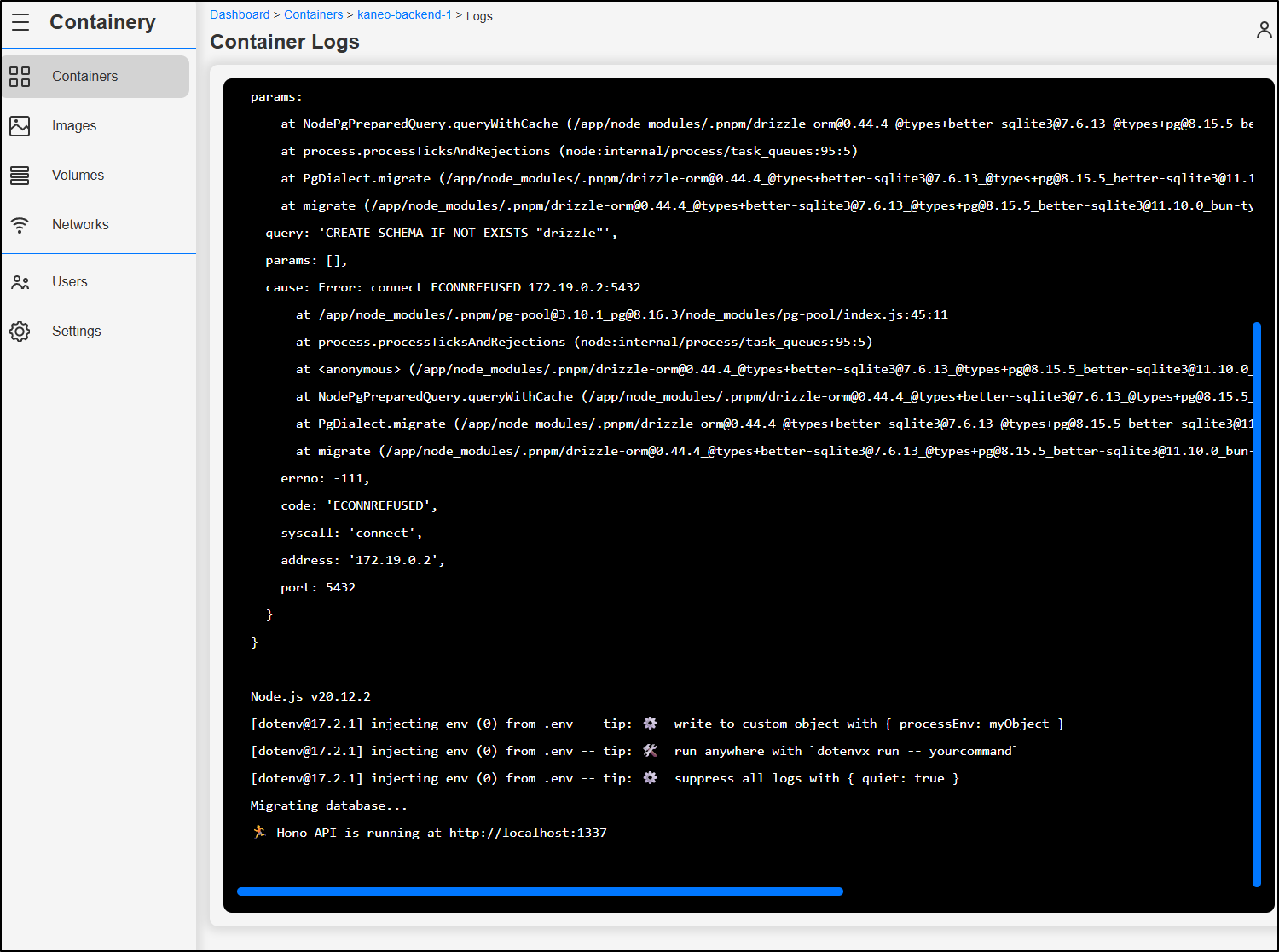

and view logs, which could be helpful in troubleshooting problems with containerized workloads

It’s worth noting we can go to a stopped container and start it if desired

Settings

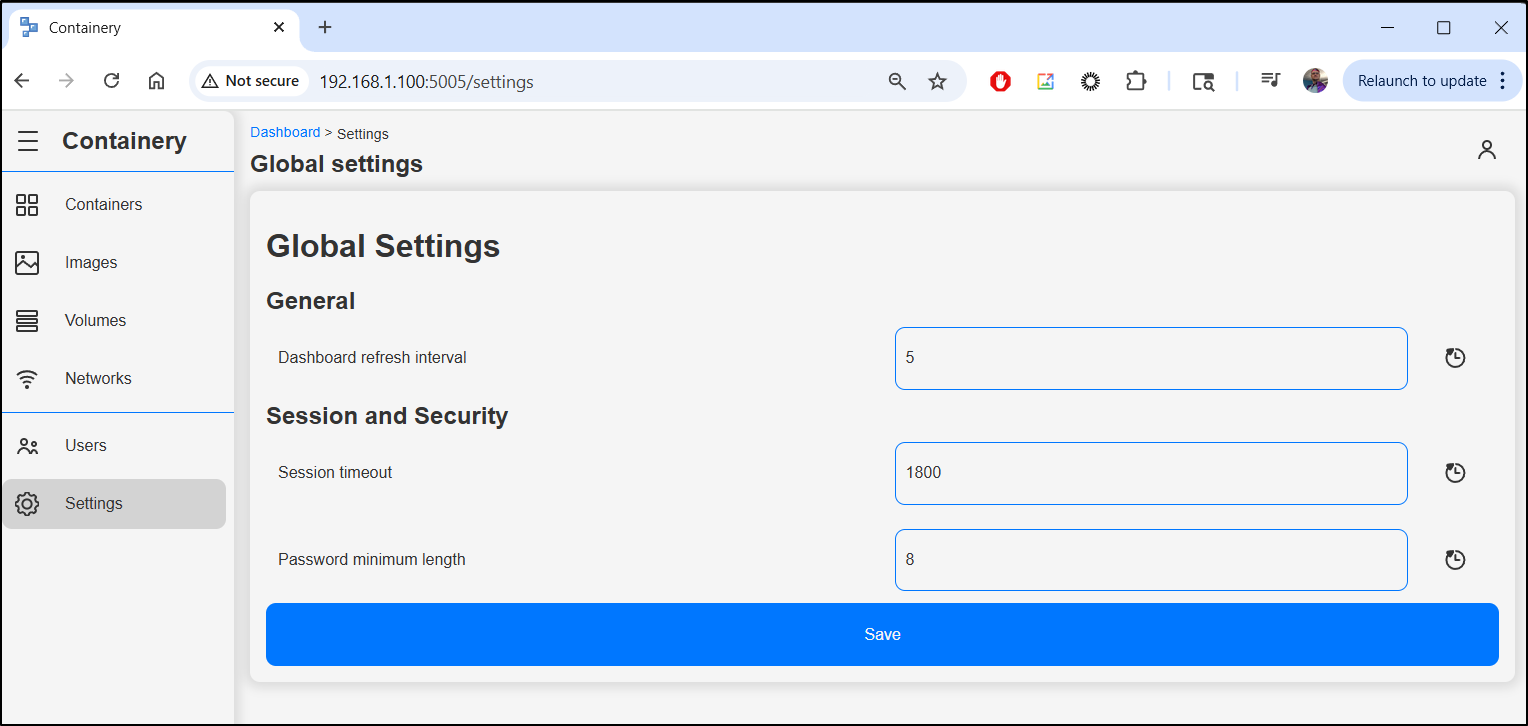

The settings page just has password length and refresh settings

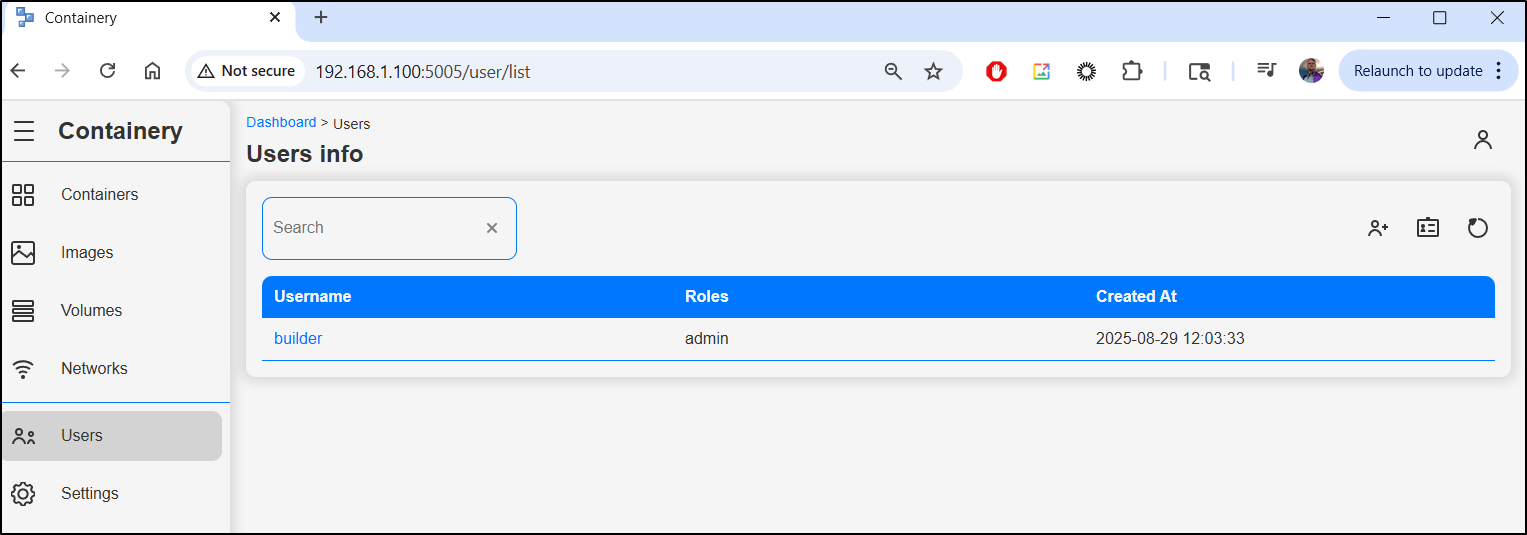

I can also add and remove users in the users section

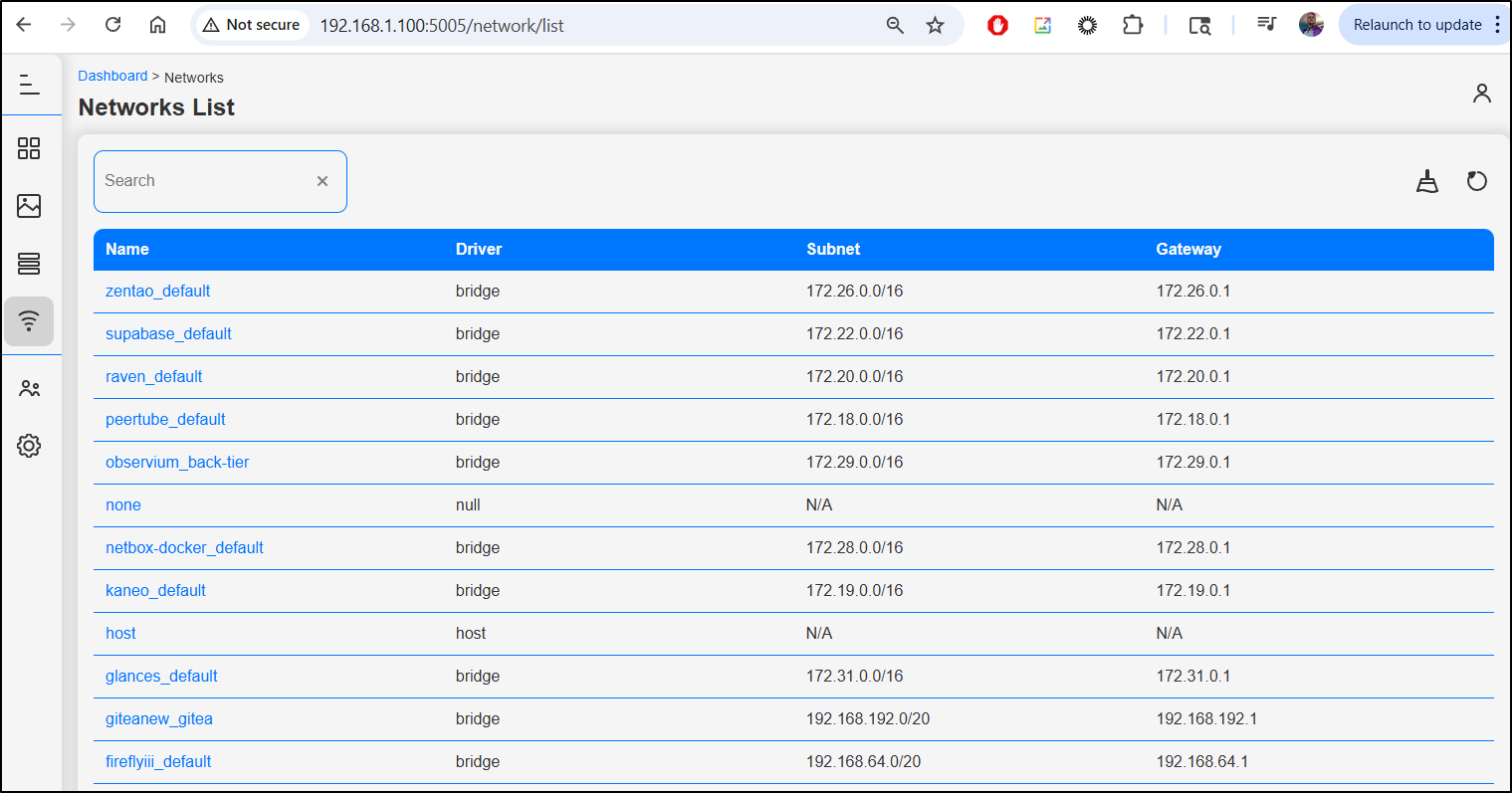

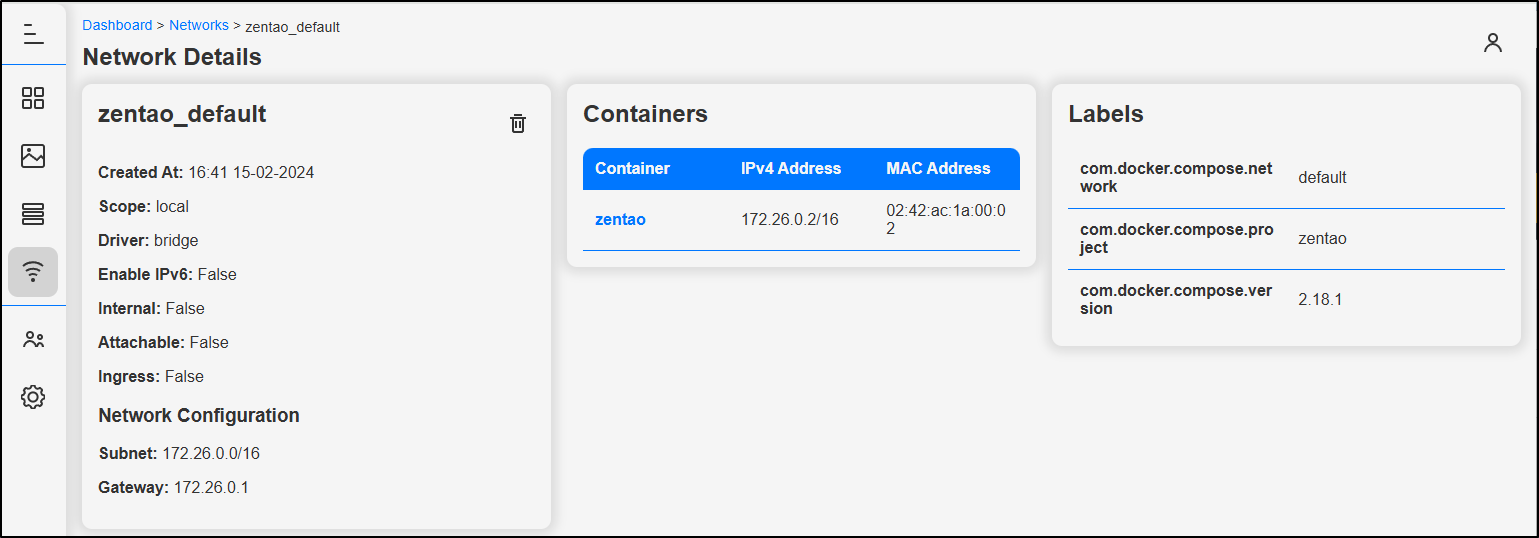

Networks show some details like driver, subnet and gateway

The details are interesting, but I’m not certain what I would use them for

I stopped the interactive run and fired it up in detached (daemon) mode so we could view it later

builder@builder-T100:~/containery$ docker compose up -d &

[1] 2451975

[+] Building 0.0s (0/0)

[+] Running 1/1

✔ Container containery Started 0.5s

[1]+ Done docker compose up -d

Ingress

Say we wish to expose this externally. I could punch a hole through my firewall and access with HTTP but that is a bit insecure.

Let’s instead set up a TLS endpoint fronted by Kubernetes.

I’ll start with an A Record for containery.tpk.pw in Azure DNS

$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n containery

{

"ARecords": [

{

"ipv4Address": "75.73.224.240"

}

],

"TTL": 3600,

"etag": "b91b54c3-90a6-419a-a395-2c7f509f35f7",

"fqdn": "containery.tpk.pw.",

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/containery",

"name": "containery",

"provisioningState": "Succeeded",

"resourceGroup": "idjdnsrg",

"targetResource": {},

"trafficManagementProfile": {},

"type": "Microsoft.Network/dnszones/A"

}

Then create a YAML manifest that will use it

apiVersion: v1
kind: Endpoints
metadata:
  name: containery-external-ip
subsets:
- addresses:
  - ip: 192.168.1.100
  ports:
  - name: containery
    port: 5005
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: containery-external-ip
spec:
  clusterIP: None
  clusterIPs:
  - None
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  - IPv6
  ipFamilyPolicy: RequireDualStack
  ports:
  - name: containery
    port: 80
    protocol: TCP
    targetPort: 5005
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/client-max-body-size: "0"
    nginx.org/proxy-connect-timeout: "3600"
    nginx.org/proxy-read-timeout: "3600"
    nginx.org/websocket-services: containery-external-ip
  name: containery-ingress
spec:
  rules:
  - host: containery.tpk.pw
    http:
      paths:
      - backend:
          service:
            name: containery-external-ip
            port:
              number: 80
        path: /
        pathType: Prefix
  tls:
  - hosts:
    - containery.tpk.pw
    secretName: containery-tls

Then apply

$ kubectl apply -f ./ingress.yaml

endpoints/containery-external-ip created

service/containery-external-ip created

Warning: annotation "kubernetes.io/ingress.class" is deprecated, please use 'spec.ingressClassName' instead

ingress.networking.k8s.io/containery-ingress created

Then I wait until I see the cert is satisfied

$ kubectl get cert containery-tls

NAME READY SECRET AGE

containery-tls False containery-tls 33s

$ kubectl get cert containery-tls

NAME READY SECRET AGE

containery-tls True containery-tls 102s

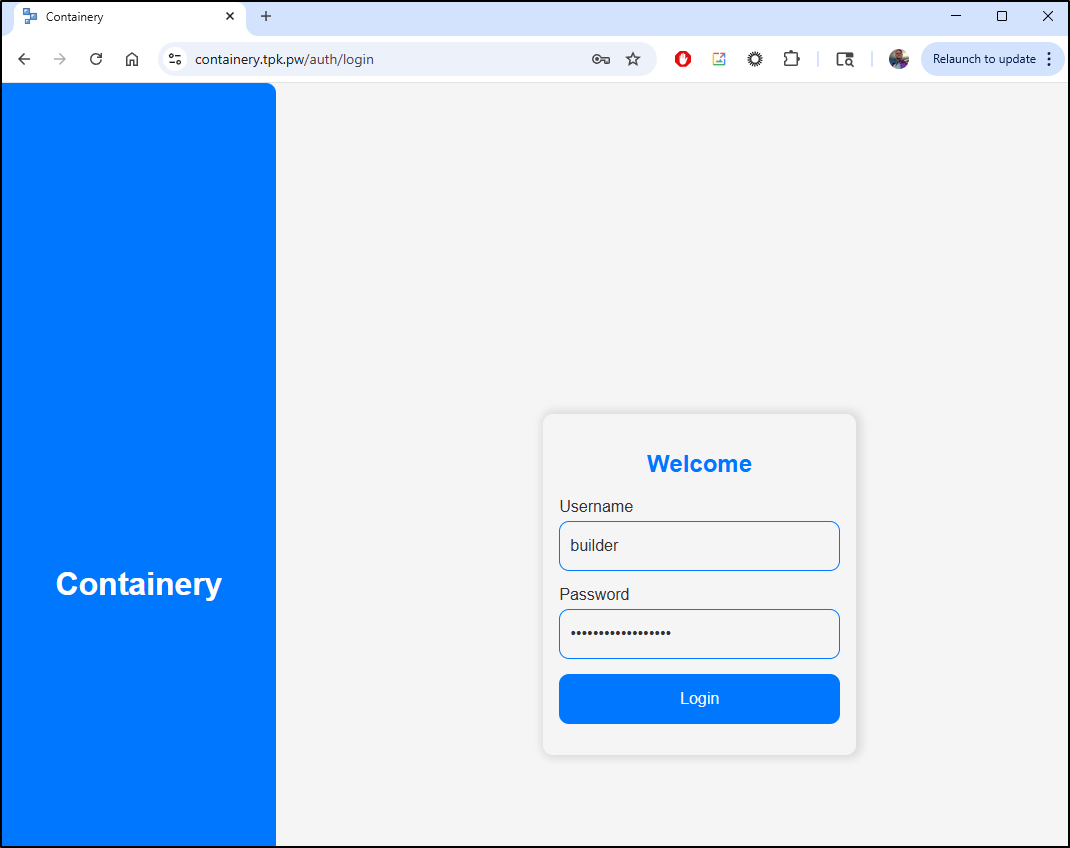

I can now log in externally at https://containery.tpk.pw/
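Rather than re-running `kubectl get cert` until READY flips to True, `kubectl wait` can block on the Certificate's Ready condition. This is a small sketch of that idea; the `wait_for_cert` function name and the `KUBECTL` override variable are hypothetical, added only so the command can be dry-run.

```shell
#!/usr/bin/env bash
# KUBECTL is a hypothetical override (e.g. KUBECTL=echo for a dry run); defaults to the real CLI.
KUBECTL="${KUBECTL:-kubectl}"

# Block until the named cert-manager Certificate reports Ready, or the timeout expires.
# $1 = certificate name, $2 = timeout in seconds (defaults to 300)
wait_for_cert() {
  cert_name="$1"
  timeout_secs="${2:-300}"
  "$KUBECTL" wait --for=condition=Ready "certificate/${cert_name}" --timeout="${timeout_secs}s"
}
```

For the example above this would be `wait_for_cert containery-tls`, which returns once the cert is issued and exits non-zero if it is still pending after five minutes.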

Dockpeek

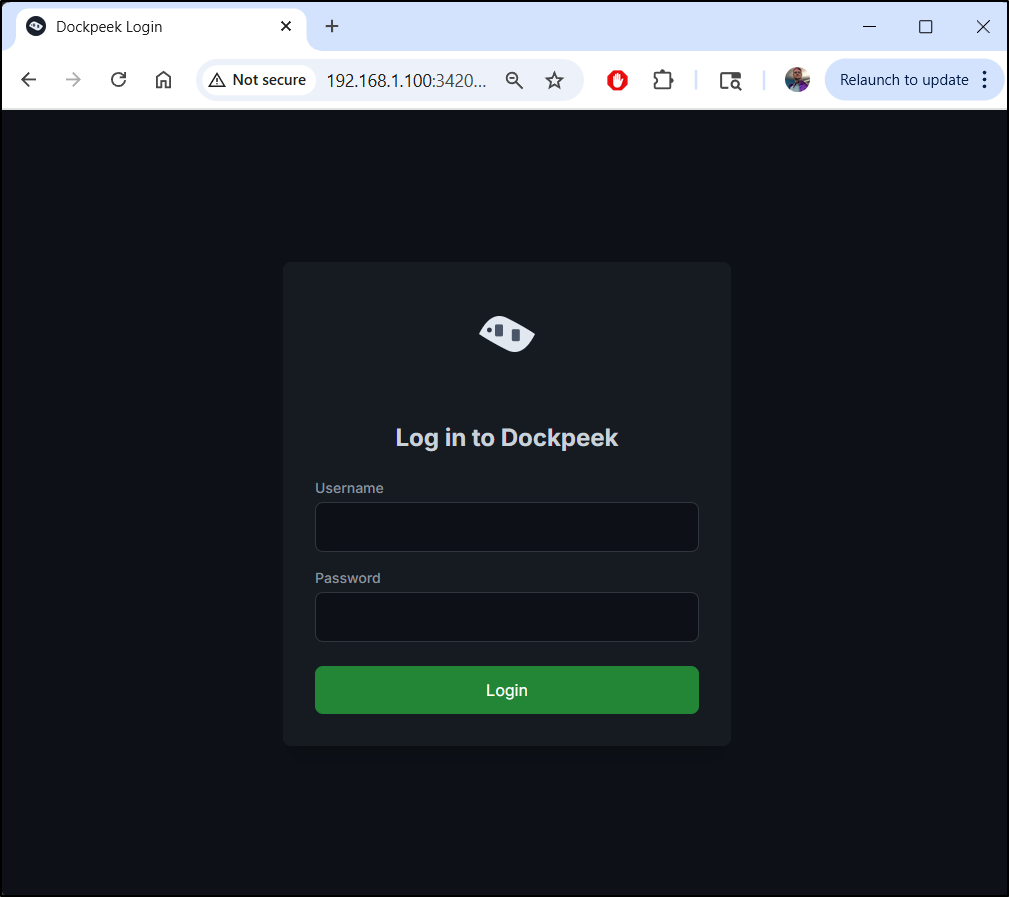

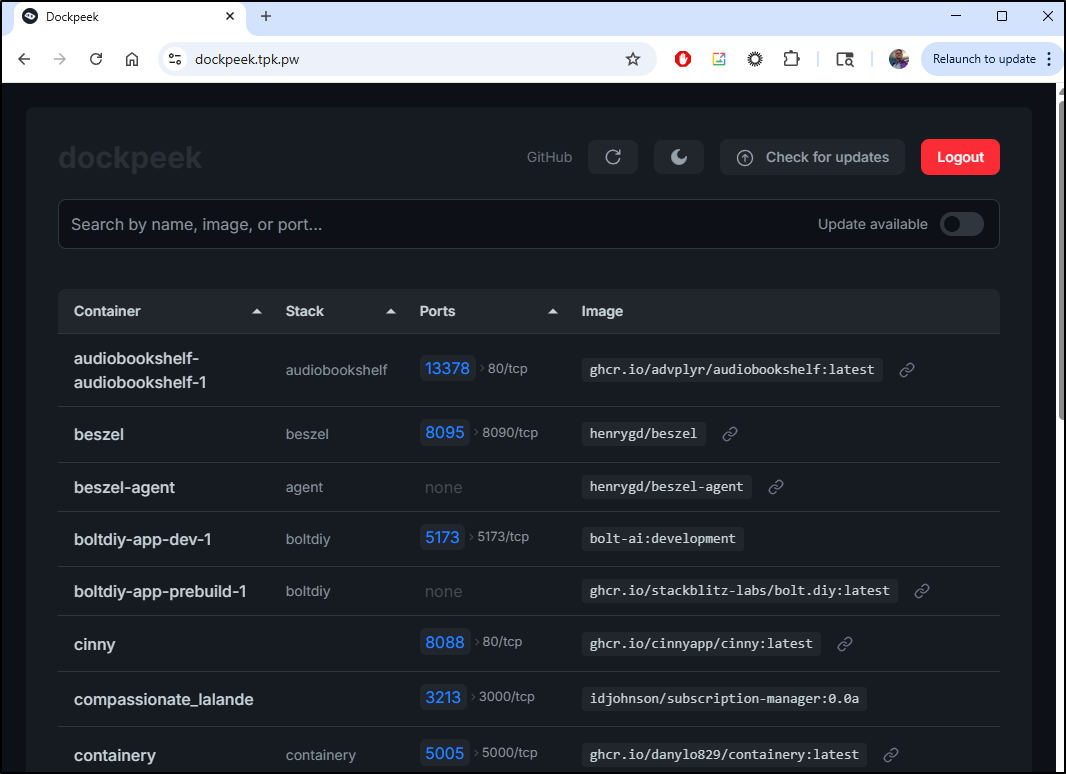

Another, more recent post by Marius was about Dockpeek

Let’s fire that up on the Dockerhost using docker compose

builder@builder-T100:~/dockpeek$ cat docker-compose.yml

services:
  dockpeek:
    image: ghcr.io/dockpeek/dockpeek:latest
    container_name: dockpeek
    environment:
      - SECRET_KEY=my_secret_key # Set secret key
      - USERNAME=admin # Change default username
      - PASSWORD=admin # Change default password
    ports:
      - "3420:8000"
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
    restart: unless-stopped

Then an interactive launch

builder@builder-T100:~/dockpeek$ docker compose up

[+] Running 9/9

✔ dockpeek 8 layers [⣿⣿⣿⣿⣿⣿⣿⣿] 0B/0B Pulled 3.9s

✔ 396b1da7636e Pull complete 1.9s

✔ fcec5a125fd8 Pull complete 2.0s

✔ a27cb4be7017 Pull complete 2.5s

✔ 1961ca026b04 Pull complete 2.5s

✔ a45ad9b924e1 Pull complete 2.5s

✔ 47596506f01f Pull complete 2.5s

✔ 2c1db1f4510e Pull complete 2.8s

✔ 03bf88adcbaf Pull complete 2.8s

[+] Building 0.0s (0/0)

[+] Running 2/2

✔ Network dockpeek_default Created 0.1s

✔ Container dockpeek Created 0.6s

Attaching to dockpeek

dockpeek | * Serving Flask app 'app'

dockpeek | * Debug mode: off

We are presented with a login page

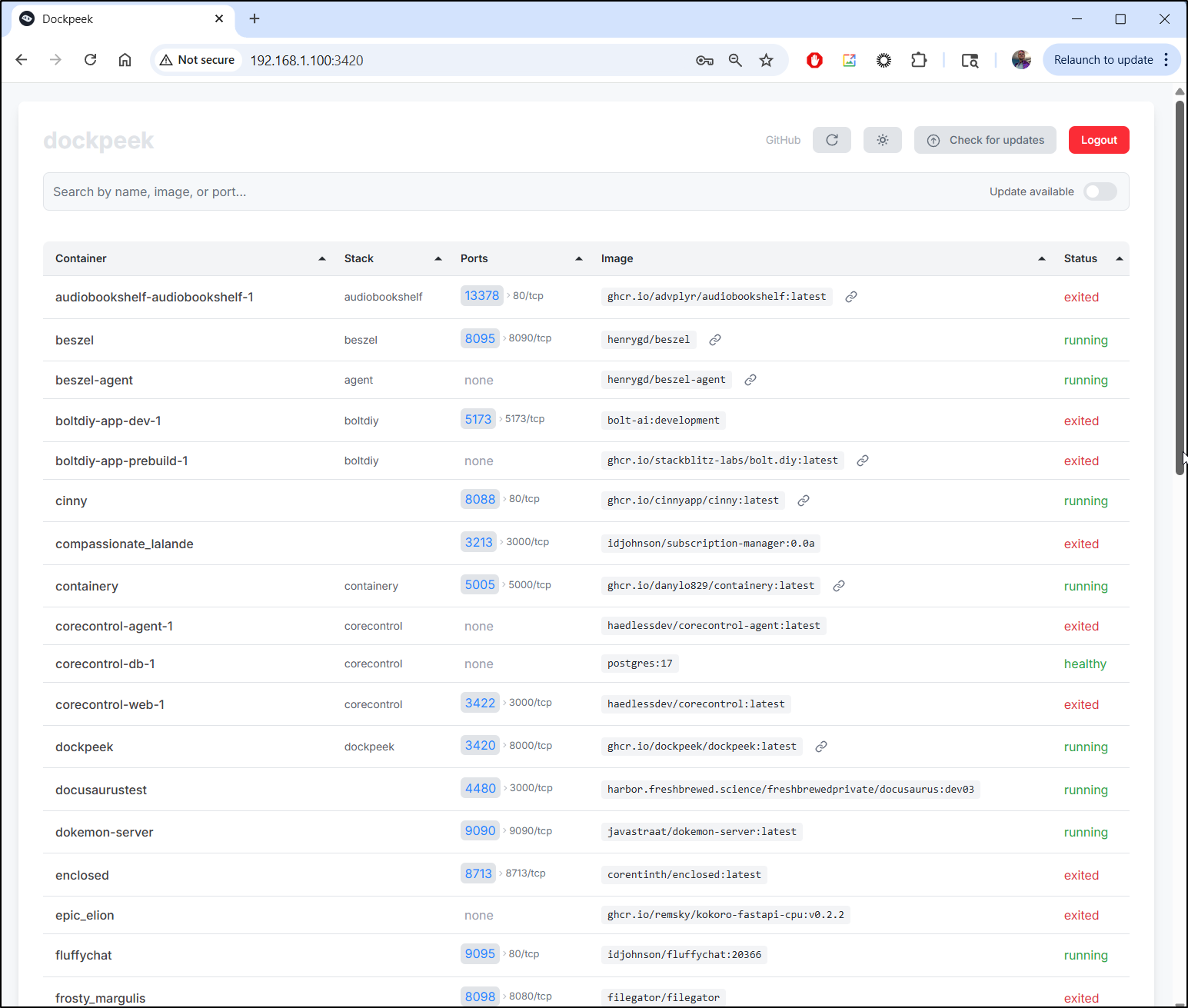

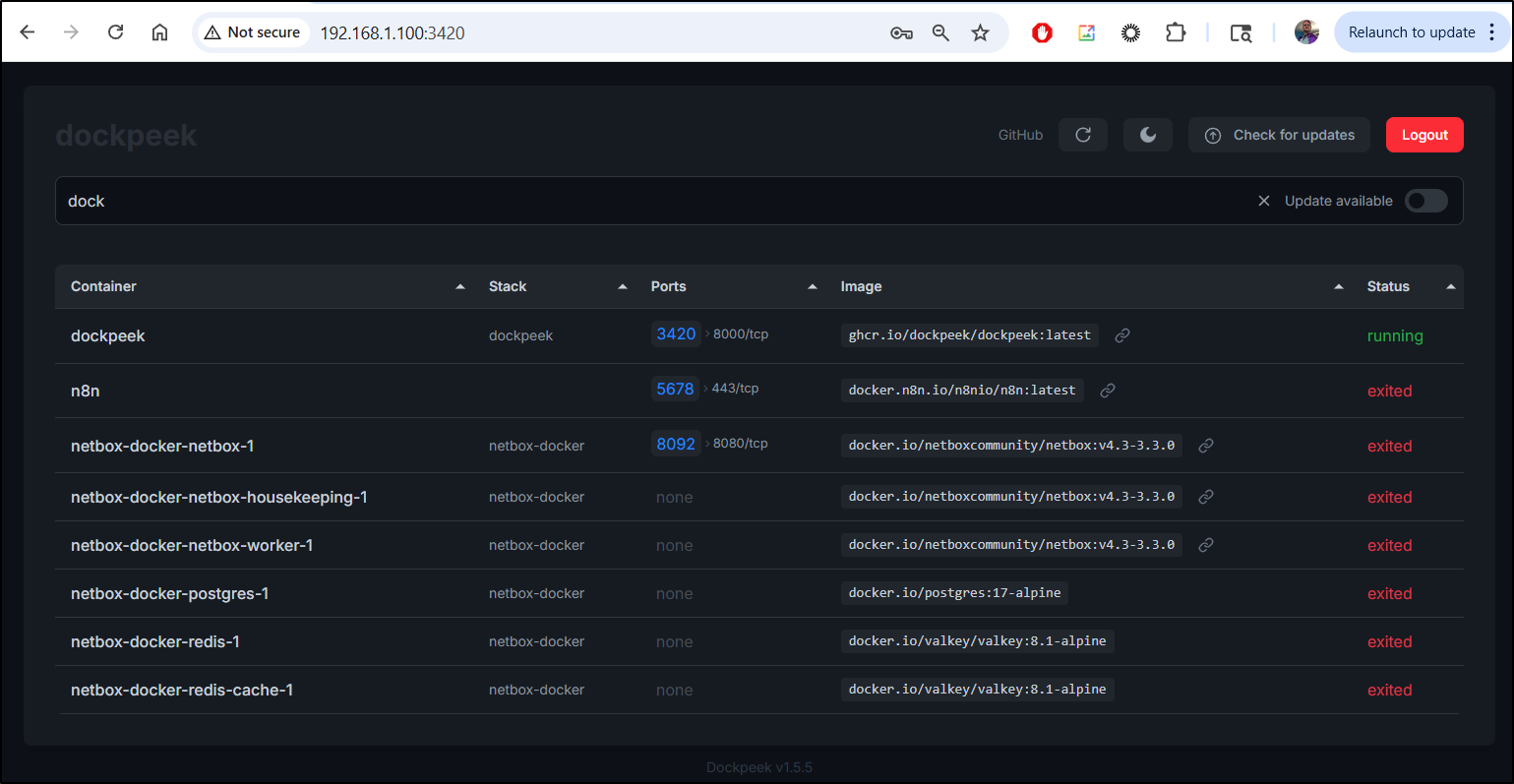

I’m presented with an excellent report page that just has the key details - the important one for me being port number and status

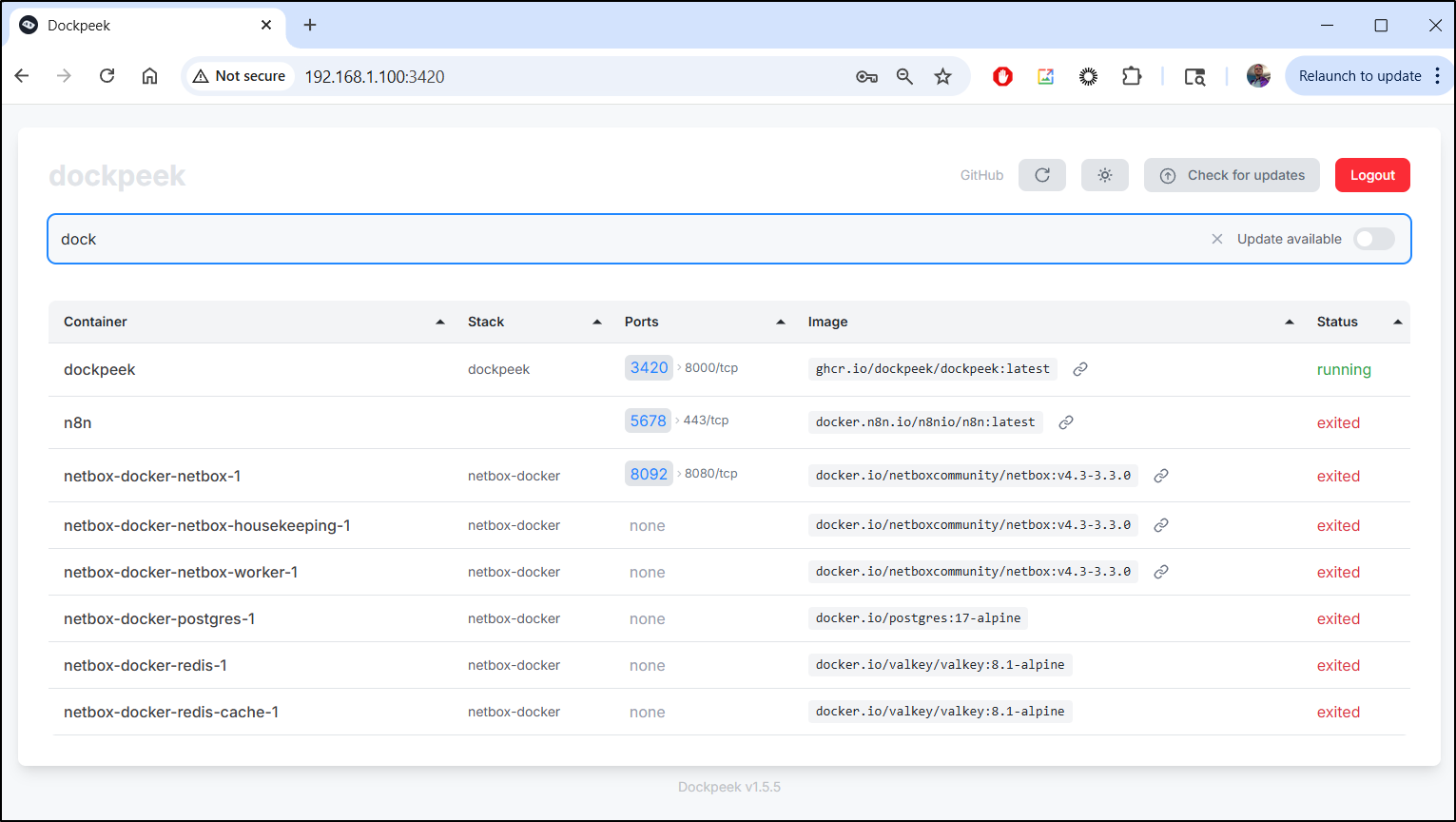

I can start to search and the list refines as I type in a very satisfying dynamic way

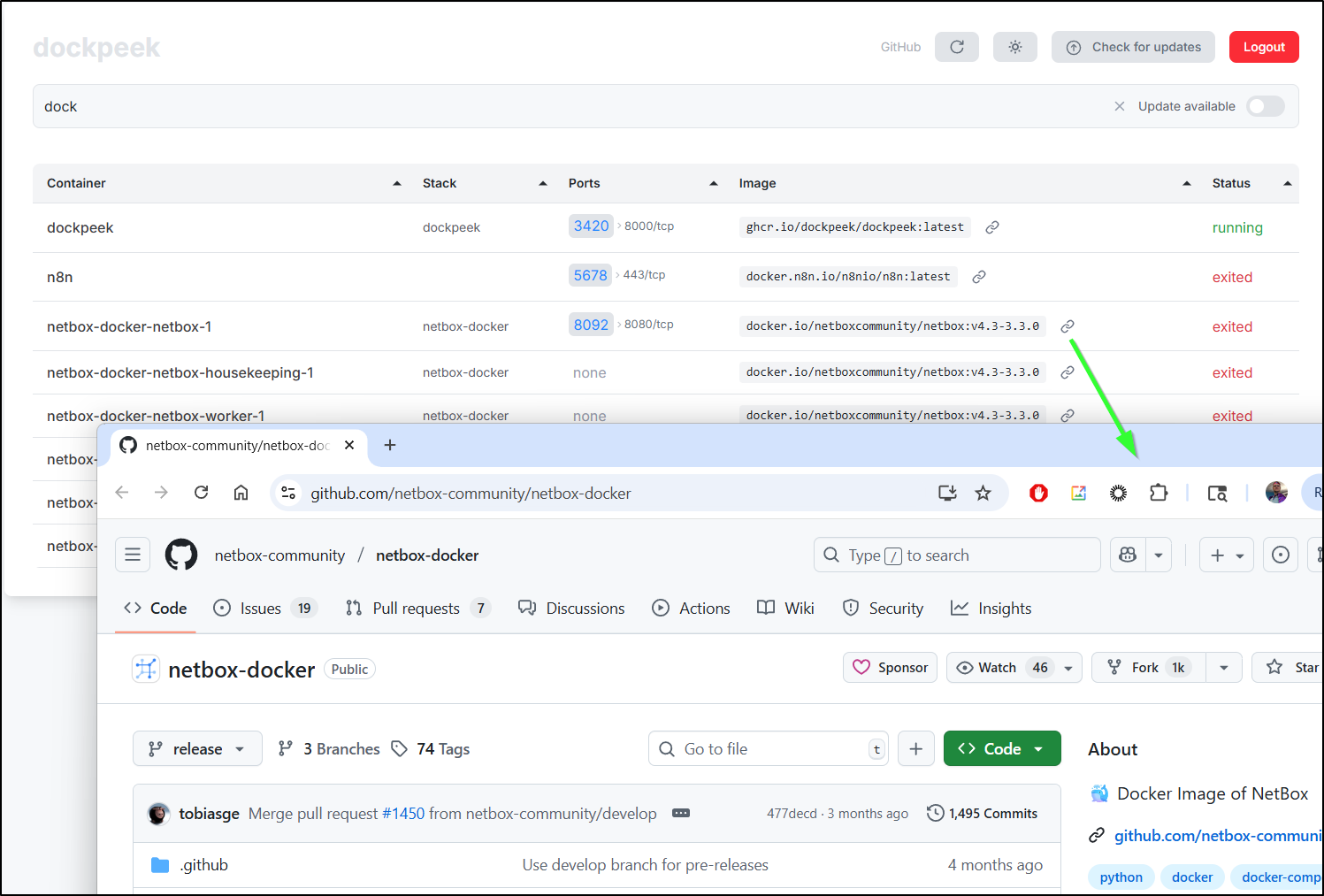

I thought the link on the image might just go to Docker Hub, but it more cleverly went to the GitHub project that built it

The list is not actionable. There is nothing more to do here but refresh or switch from light to dark mode

However, at least for me, this is really handy.

For instance, going back to Containery, we can search for the same keyword but I don’t see ports listed in the results

The information is there if you go to details and know where to look

But a lot of the time, I’m testing out new tools and need to see what ports are in use or I’m debugging a K8s forwarding rule with Endpoints and need to figure out what port I used this time (since I never am consistent).
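When only a terminal is handy, a similar "ports and status" view can be approximated with `docker ps` and its `--format` option. This is a rough sketch and not how Dockpeek itself gathers the data; `list_ports`, `host_ports` and the `DOCKER` override are hypothetical names used for illustration, and `host_ports` only handles simple single-port mappings.

```shell
#!/usr/bin/env bash
# DOCKER is a hypothetical override so the sketch can be dry-run; defaults to the real CLI.
DOCKER="${DOCKER:-docker}"

# Print name, status and published ports for running containers.
list_ports() {
  "$DOCKER" ps --format 'table {{.Names}}\t{{.Status}}\t{{.Ports}}'
}

# Pull the unique host-side ports out of one Ports string,
# e.g. "0.0.0.0:3420->8000/tcp, :::3420->8000/tcp".
host_ports() {
  printf '%s\n' "$1" \
    | grep -oE ':[0-9]+->' \
    | tr -cd '0-9\n' \
    | sort -un \
    | paste -sd, -
}
```

`list_ports` gives the overview, while `host_ports` is handy when all that is needed is the number to put into a Kubernetes Endpoints manifest.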

Ingress

In much the same way, we can create an A Record

$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n dockpeek

{

"ARecords": [

{

"ipv4Address": "75.73.224.240"

}

],

"TTL": 3600,

"etag": "be29bea0-f6f0-453d-9c09-224126ad1931",

"fqdn": "dockpeek.tpk.pw.",

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/dockpeek",

"name": "dockpeek",

"provisioningState": "Succeeded",

"resourceGroup": "idjdnsrg",

"targetResource": {},

"trafficManagementProfile": {},

"type": "Microsoft.Network/dnszones/A"

}

Then an ingress that can use it

$ cat ./dockpeek.yaml

apiVersion: v1
kind: Endpoints
metadata:
  name: dockpeek-external-ip
subsets:
- addresses:
  - ip: 192.168.1.100
  ports:
  - name: dockpeek
    port: 3420
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: dockpeek-external-ip
spec:
  clusterIP: None
  clusterIPs:
  - None
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  - IPv6
  ipFamilyPolicy: RequireDualStack
  ports:
  - name: dockpeek
    port: 80
    protocol: TCP
    targetPort: 3420
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/client-max-body-size: "0"
    nginx.org/proxy-connect-timeout: "3600"
    nginx.org/proxy-read-timeout: "3600"
    nginx.org/websocket-services: dockpeek-external-ip
  name: dockpeek-ingress
spec:
  rules:
  - host: dockpeek.tpk.pw
    http:
      paths:
      - backend:
          service:
            name: dockpeek-external-ip
            port:
              number: 80
        path: /
        pathType: Prefix
  tls:
  - hosts:
    - dockpeek.tpk.pw
    secretName: dockpeek-tls

Then apply

$ kubectl apply -f ./dockpeek.yaml

endpoints/dockpeek-external-ip created

service/dockpeek-external-ip created

Warning: annotation "kubernetes.io/ingress.class" is deprecated, please use 'spec.ingressClassName' instead

ingress.networking.k8s.io/dockpeek-ingress created

I’ll also want to stop the existing container and change the user/password to something more secure

dockpeek | * Serving Flask app 'app'

dockpeek | * Debug mode: off

^CGracefully stopping... (press Ctrl+C again to force)

Aborting on container exit...

[+] Stopping 1/1

✔ Container dockpeek Stopped 10.2s

canceled

builder@builder-T100:~/dockpeek$ vi docker-compose.yml

builder@builder-T100:~/dockpeek$ docker compose up -d &

[1] 2703901

[+] Building 0.0s (0/0)

[+] Running 1/1

✔ Container dockpeek Started 0.4s

[1]+ Done docker compose up -d
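For the credential change, one option is to generate the values rather than invent them. The sketch below is an assumption about how that might be done, not what was used here: `random_hex` and `dockpeek_env` are hypothetical helper names, and only `SECRET_KEY` and `PASSWORD` come from the compose file shown earlier.

```shell
#!/usr/bin/env bash
# Print N random bytes as lowercase hex (2*N characters), using only coreutils.
random_hex() {
  head -c "$1" /dev/urandom | od -An -tx1 | tr -d ' \t\n'
  echo
}

# Emit .env-style lines; the variable names mirror the Dockpeek compose file.
dockpeek_env() {
  echo "SECRET_KEY=$(random_hex 32)"
  echo "PASSWORD=$(random_hex 12)"
}
```

Running `dockpeek_env > .env` next to docker-compose.yml would let Compose substitute the values, provided the environment entries are changed to something like `SECRET_KEY=${SECRET_KEY}` and `PASSWORD=${PASSWORD}`.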

I can now see the cert is satisfied so we can test with our new user account

$ kubectl get cert dockpeek-tls

NAME READY SECRET AGE

dockpeek-tls False dockpeek-tls 26s

$ kubectl get cert dockpeek-tls

NAME READY SECRET AGE

dockpeek-tls True dockpeek-tls 93s

Summary

Today we looked at Containery and Dockpeek, both excellent Docker container monitoring tools.

I found Containery to be fantastic at cleaning up wasted space and getting details for containers. Being able to see logs, inspect specific images, restart containers and even get a shell was great.

While Dockpeek is far simpler, it serves a real purpose for me - allowing me to see the ports in use and container status. Most of the time, these are really all the details I need. I can get away with using a dumbterm to get into the Dockerhost and look at logs, start containers, etc. When I consider what I need when remotely debugging, this really is it.

A small downside with these tools, at least with my TLS forwarding implementation, is that I can only see one host at a time. Ideally I would want these on my various Dockerhosts so I could check out servers at 121, 143 and 100.
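Until then, a quick terminal-side workaround is to loop over the hosts with the Docker CLI's SSH transport. This is only a sketch under several assumptions: that 121 and 143 sit on the same 192.168.1.x network as the host at 192.168.1.100, that SSH access with a `builder` user works on each, and that the `HOST_SUFFIXES`, `SSH_USER` and `DOCKER` variables (all hypothetical names) are set to match.

```shell
#!/usr/bin/env bash
# All three variables are hypothetical knobs for this sketch.
DOCKER="${DOCKER:-docker}"                     # override for dry runs
HOST_SUFFIXES="${HOST_SUFFIXES:-100 121 143}"  # assumed last octets on 192.168.1.x
SSH_USER="${SSH_USER:-builder}"                # assumed SSH login on each host

# Show name, status and published ports for the containers on every host in turn.
ports_on_all_hosts() {
  for n in $HOST_SUFFIXES; do
    echo "== 192.168.1.${n} =="
    "$DOCKER" -H "ssh://${SSH_USER}@192.168.1.${n}" ps \
      --format 'table {{.Names}}\t{{.Status}}\t{{.Ports}}'
  done
}
```

This gives the Dockpeek-style port and status view for each host in one pass, without exposing anything beyond SSH.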