Published: Aug 26, 2025 by Isaac Johnson

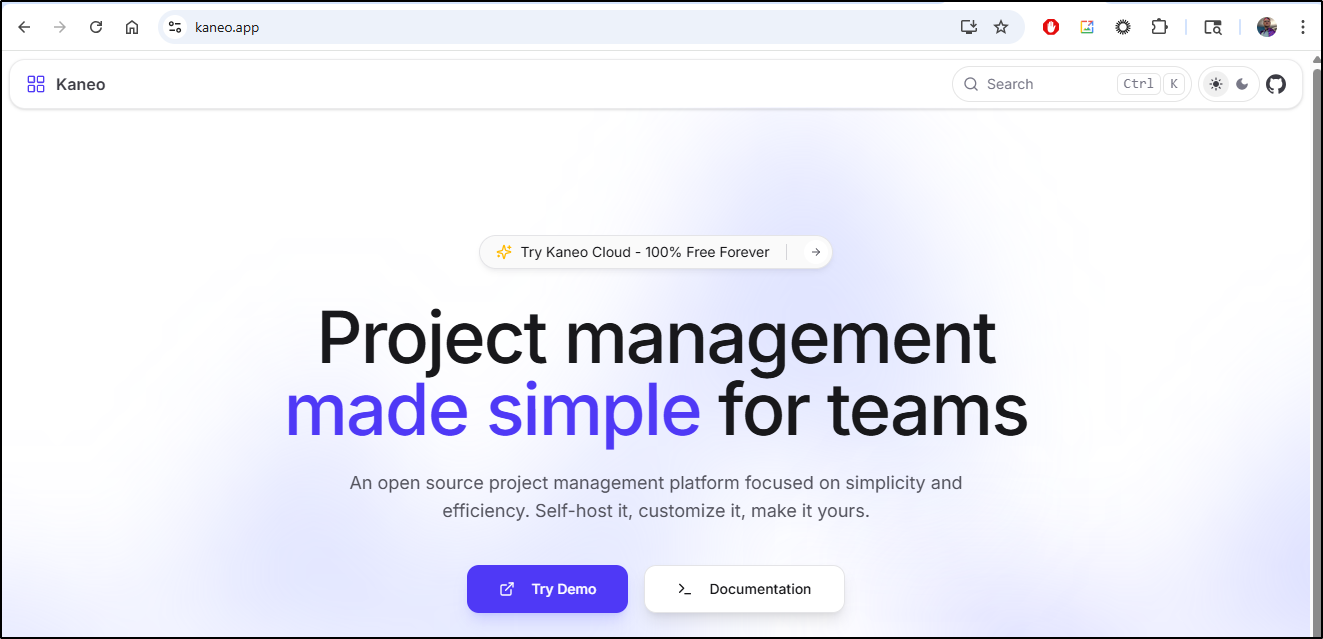

Today we’ll look at Kaneo, a pretty nice open-source PjM tool. We’ll run it in Docker, try the SaaS, and then look to host it externally.

We’ll also look at FossFlow, a really slick open-source isometric diagram tool. As with Kaneo, we’ll look at Docker and Kubernetes, hosting it externally, and some usage details.

Lastly, I’ll touch on a commercial product (with a free guest instance) called IsoFlow, the project FossFlow was forked from. We’ll look at both the SaaS and the Community Edition running locally.

Kaneo

I’ve used Vikunja for months now, but I had a note to take a look at another good open-source PjM suite, Kaneo.

I saw it first mentioned back in April in this Marius post.

I’m going to start locally with Docker Compose:

```
$ cat docker-compose.yml
services:
  postgres:
    image: postgres:16-alpine
    environment:
      POSTGRES_DB: kaneo
      POSTGRES_USER: mykaneouser
      POSTGRES_PASSWORD: mykaneopass123
    volumes:
      - postgres_data:/var/lib/postgresql/data
    restart: unless-stopped

  backend:
    image: ghcr.io/usekaneo/api:latest
    environment:
      JWT_ACCESS: "your-secret-key-here"
      DATABASE_URL: "postgresql://mykaneouser:mykaneopass123@postgres:5432/kaneo"
    ports:
      - 1337:1337
    depends_on:
      - postgres
    restart: unless-stopped

  frontend:
    image: ghcr.io/usekaneo/web:latest
    environment:
      KANEO_API_URL: "http://localhost:1337"
    ports:
      - 5173:5173
    depends_on:
      - backend
    restart: unless-stopped

volumes:
  postgres_data:
```
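Note that the backend’s `DATABASE_URL` repeats the user, password, and database name from the `postgres` service, and its host is simply the Compose service name (`postgres`). If you change the password, both places have to change, and a password containing characters like `@`, `:` or `/` has to be percent-encoded inside the URL. Here is a minimal Python sketch that builds the URL from the same values; `build_database_url` is a hypothetical helper of mine, not something Kaneo provides.

```python
from urllib.parse import quote


def build_database_url(user: str, password: str, host: str, port: int, db: str) -> str:
    """Build a postgresql:// connection URL (hypothetical helper, not part of Kaneo).

    User, password and database name are percent-encoded with safe='' so that
    characters such as '@', ':' or '/' cannot break the URL structure.
    """
    return (
        f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{quote(db, safe='')}"
    )
```

With the compose values, `build_database_url("mykaneouser", "mykaneopass123", "postgres", 5432, "kaneo")` produces exactly the string used above.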

I’ll fire it up interactively (attached, not detached) to start:

```
builder@DESKTOP-QADGF36:~/Workspaces/kaneo$ docker compose up
[+] Running 27/27
✔ backend Pulled 18.6s
✔ 3bce96456554 Pull complete 6.5s
✔ 2bde47b9f7c3 Pull complete 6.6s
✔ db3e2f2b6054 Pull complete 6.7s
✔ f654cfc9cb19 Pull complete 6.7s
✔ 4f4fb700ef54 Pull complete 6.7s
✔ ceb3a1c625c0 Pull complete 6.8s
✔ 863993be634a Pull complete 6.8s
✔ 90b66fb610c9 Pull complete 6.8s
✔ a70b1328ca1e Pull complete 6.8s
✔ 178e58b90e0a Pull complete 16.8s
✔ c3e3b567e60b Pull complete 16.9s
✔ 765b33a5772f Pull complete 16.9s
✔ frontend Pulled 4.7s
✔ 4abcf2066143 Pull complete 0.9s
✔ fc21a1d387f5 Pull complete 1.8s
✔ e6ef242c1570 Pull complete 1.8s
✔ 13fcfbc94648 Pull complete 1.8s
✔ d4bca490e609 Pull complete 1.8s
✔ 5406ed7b06d9 Pull complete 1.9s
✔ 8a3742a9529d Pull complete 1.9s
✔ 0d0c16747d2c Pull complete 2.8s
✔ a35acf90e25d Pull complete 2.8s
✔ 567123fc343b Pull complete 2.9s
✔ f3721f3008a0 Pull complete 2.9s
✔ 608cacd076c7 Pull complete 3.0s
✔ 9fc72ec3b0bc Pull complete 3.0s
[+] Running 5/5
✔ Network kaneo_default Created 0.1s
✔ Volume "kaneo_postgres_data" Created 0.0s
✔ Container kaneo-postgres-1 Created 0.4s
✔ Container kaneo-backend-1 Created 0.1s
✔ Container kaneo-frontend-1 Created 0.1s
Attaching to backend-1, frontend-1, postgres-1
postgres-1 | The files belonging to this database system will be owned by user "postgres".
postgres-1 | This user must also own the server process.
postgres-1 |
postgres-1 | The database cluster will be initialized with locale "en_US.utf8".
postgres-1 | The default database encoding has accordingly been set to "UTF8".
postgres-1 | The default text search configuration will be set to "english".
postgres-1 |
postgres-1 | Data page checksums are disabled.
postgres-1 |
postgres-1 | fixing permissions on existing directory /var/lib/postgresql/data ... ok
postgres-1 | creating subdirectories ... ok
postgres-1 | selecting dynamic shared memory implementation ... posix
postgres-1 | selecting default max_connections ... 100
postgres-1 | selecting default shared_buffers ... 128MB
postgres-1 | selecting default time zone ... UTC
postgres-1 | creating configuration files ... ok
postgres-1 | running bootstrap script ... ok
postgres-1 | sh: locale: not found
postgres-1 | 2025-08-22 13:36:09.986 UTC [35] WARNING: no usable system locales were found
backend-1 | [dotenv@17.2.1] injecting env (0) from .env -- tip: 🔐 prevent building .env in docker: https://dotenvx.com/prebuild
frontend-1 | /docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration
frontend-1 | /docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/
frontend-1 | /docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh
frontend-1 | 10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf
backend-1 | [dotenv@17.2.1] injecting env (0) from .env -- tip: 🔐 prevent building .env in docker: https://dotenvx.com/prebuild
backend-1 | [dotenv@17.2.1] injecting env (0) from .env -- tip: 🛠️ run anywhere with `dotenvx run -- yourcommand`
backend-1 | Migrating database...
frontend-1 | 10-listen-on-ipv6-by-default.sh: info: /etc/nginx/conf.d/default.conf differs from the packaged version
frontend-1 | /docker-entrypoint.sh: Sourcing /docker-entrypoint.d/15-local-resolvers.envsh
frontend-1 | /docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh
frontend-1 | /docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh
frontend-1 | /docker-entrypoint.sh: Launching /docker-entrypoint.d/env.sh
frontend-1 | Starting environment variable replacement...
frontend-1 | Found KANEO_API_URL: http://localhost:1337
backend-1 | 🏃 Hono API is running at http://localhost:1337
backend-1 | /app/node_modules/.pnpm/drizzle-orm@0.44.4_@types+better-sqlite3@7.6.13_@types+pg@8.15.5_better-sqlite3@11.10.0_bun-types@1.2.8_pg@8.16.3/node_modules/src/pg-core/session.ts:73
backend-1 | throw new DrizzleQueryError(queryString, params, e as Error);
backend-1 | ^
backend-1 |
backend-1 |
backend-1 | DrizzleQueryError: Failed query: CREATE SCHEMA IF NOT EXISTS "drizzle"
backend-1 | params:
backend-1 | at NodePgPreparedQuery.queryWithCache (/app/node_modules/.pnpm/drizzle-orm@0.44.4_@types+better-sqlite3@7.6.13_@types+pg@8.15.5_better-sqlite3@11.10.0_bun-types@1.2.8_pg@8.16.3/node_modules/src/pg-core/session.ts:73:11)
backend-1 | at process.processTicksAndRejections (node:internal/process/task_queues:95:5)
backend-1 | at PgDialect.migrate (/app/node_modules/.pnpm/drizzle-orm@0.44.4_@types+better-sqlite3@7.6.13_@types+pg@8.15.5_better-sqlite3@11.10.0_bun-types@1.2.8_pg@8.16.3/node_modules/src/pg-core/dialect.ts:85:3)
backend-1 | at migrate (/app/node_modules/.pnpm/drizzle-orm@0.44.4_@types+better-sqlite3@7.6.13_@types+pg@8.15.5_better-sqlite3@11.10.0_bun-types@1.2.8_pg@8.16.3/node_modules/src/postgres-js/migrator.ts:10:2) {
backend-1 | query: 'CREATE SCHEMA IF NOT EXISTS "drizzle"',
backend-1 | params: [],
backend-1 | cause: Error: connect ECONNREFUSED 172.25.0.2:5432
backend-1 | at /app/node_modules/.pnpm/pg-pool@3.10.1_pg@8.16.3/node_modules/pg-pool/index.js:45:11
backend-1 | at process.processTicksAndRejections (node:internal/process/task_queues:95:5)
backend-1 | at <anonymous> (/app/node_modules/.pnpm/drizzle-orm@0.44.4_@types+better-sqlite3@7.6.13_@types+pg@8.15.5_better-sqlite3@11.10.0_bun-types@1.2.8_pg@8.16.3/node_modules/src/node-postgres/session.ts:149:14)
backend-1 | at NodePgPreparedQuery.queryWithCache (/app/node_modules/.pnpm/drizzle-orm@0.44.4_@types+better-sqlite3@7.6.13_@types+pg@8.15.5_better-sqlite3@11.10.0_bun-types@1.2.8_pg@8.16.3/node_modules/src/pg-core/session.ts:71:12)
backend-1 | at PgDialect.migrate (/app/node_modules/.pnpm/drizzle-orm@0.44.4_@types+better-sqlite3@7.6.13_@types+pg@8.15.5_better-sqlite3@11.10.0_bun-types@1.2.8_pg@8.16.3/node_modules/src/pg-core/dialect.ts:85:3)
backend-1 | at migrate (/app/node_modules/.pnpm/drizzle-orm@0.44.4_@types+better-sqlite3@7.6.13_@types+pg@8.15.5_better-sqlite3@11.10.0_bun-types@1.2.8_pg@8.16.3/node_modules/src/postgres-js/migrator.ts:10:2) {
backend-1 | errno: -111,
backend-1 | code: 'ECONNREFUSED',
backend-1 | syscall: 'connect',
backend-1 | address: '172.25.0.2',
backend-1 | port: 5432
backend-1 | }
backend-1 | }
backend-1 |
backend-1 | Node.js v20.12.2
frontend-1 | Replaced KANEO_API_URL with http://localhost:1337
frontend-1 | Environment variable replacement complete
frontend-1 | /docker-entrypoint.sh: Configuration complete; ready for start up
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: using the "epoll" event method
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: nginx/1.25.5
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: built by gcc 13.2.1 20231014 (Alpine 13.2.1_git20231014)
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: OS: Linux 6.6.87.2-microsoft-standard-WSL2
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:1048576
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: start worker processes
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: start worker process 41
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: start worker process 42
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: start worker process 43
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: start worker process 44
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: start worker process 45
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: start worker process 46
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: start worker process 47
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: start worker process 48
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: start worker process 49
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: start worker process 50
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: start worker process 51
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: start worker process 52
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: start worker process 53
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: start worker process 54
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: start worker process 55
frontend-1 | 2025/08/22 13:36:10 [notice] 1#1: start worker process 56
postgres-1 | performing post-bootstrap initialization ... ok
postgres-1 | initdb: warning: enabling "trust" authentication for local connections
postgres-1 | initdb: hint: You can change this by editing pg_hba.conf or using the option -A, or --auth-local and --auth-host, the next time you run initdb.
postgres-1 | syncing data to disk ... ok
postgres-1 |
postgres-1 |
postgres-1 | Success. You can now start the database server using:
postgres-1 |
postgres-1 | pg_ctl -D /var/lib/postgresql/data -l logfile start
postgres-1 |
postgres-1 | waiting for server to start....2025-08-22 13:36:11.095 UTC [41] LOG: starting PostgreSQL 16.8 on x86_64-pc-linux-musl, compiled by gcc (Alpine 14.2.0) 14.2.0, 64-bit
postgres-1 | 2025-08-22 13:36:11.097 UTC [41] LOG: listening on Unix socket "/var/run/postgresql/.s.PGSQL.5432"
postgres-1 | 2025-08-22 13:36:11.104 UTC [44] LOG: database system was shut down at 2025-08-22 13:36:10 UTC
postgres-1 | 2025-08-22 13:36:11.112 UTC [41] LOG: database system is ready to accept connections
postgres-1 | done
postgres-1 | server started
postgres-1 | CREATE DATABASE
postgres-1 |
postgres-1 |
postgres-1 | /usr/local/bin/docker-entrypoint.sh: ignoring /docker-entrypoint-initdb.d/*
postgres-1 |
postgres-1 | waiting for server to shut down...2025-08-22 13:36:11.297 UTC [41] LOG: received fast shutdown request
postgres-1 | .2025-08-22 13:36:11.300 UTC [41] LOG: aborting any active transactions
postgres-1 | 2025-08-22 13:36:11.304 UTC [41] LOG: background worker "logical replication launcher" (PID 47) exited with exit code 1
postgres-1 | 2025-08-22 13:36:11.304 UTC [42] LOG: shutting down
postgres-1 | 2025-08-22 13:36:11.306 UTC [42] LOG: checkpoint starting: shutdown immediate
backend-1 exited with code 0
postgres-1 | 2025-08-22 13:36:11.437 UTC [42] LOG: checkpoint complete: wrote 924 buffers (5.6%); 0 WAL file(s) added, 0 removed, 0 recycled; write=0.040 s, sync=0.085 s, total=0.133 s; sync files=301, longest=0.003 s, average=0.001 s; distance=4267 kB, estimate=4267 kB; lsn=0/191AB60, redo lsn=0/191AB60
postgres-1 | 2025-08-22 13:36:11.456 UTC [41] LOG: database system is shut down
postgres-1 | done
postgres-1 | server stopped
postgres-1 |
postgres-1 | PostgreSQL init process complete; ready for start up.
postgres-1 |
postgres-1 | 2025-08-22 13:36:11.530 UTC [1] LOG: starting PostgreSQL 16.8 on x86_64-pc-linux-musl, compiled by gcc (Alpine 14.2.0) 14.2.0, 64-bit
postgres-1 | 2025-08-22 13:36:11.530 UTC [1] LOG: listening on IPv4 address "0.0.0.0", port 5432
postgres-1 | 2025-08-22 13:36:11.530 UTC [1] LOG: listening on IPv6 address "::", port 5432
postgres-1 | 2025-08-22 13:36:11.534 UTC [1] LOG: listening on Unix socket "/var/run/postgresql/.s.PGSQL.5432"
postgres-1 | 2025-08-22 13:36:11.542 UTC [57] LOG: database system was shut down at 2025-08-22 13:36:11 UTC
postgres-1 | 2025-08-22 13:36:11.549 UTC [1] LOG: database system is ready to accept connections
backend-1 | [dotenv@17.2.1] injecting env (0) from .env -- tip: 🔐 encrypt with Dotenvx: https://dotenvx.com
backend-1 | [dotenv@17.2.1] injecting env (0) from .env -- tip: ⚙️ enable debug logging with { debug: true }
backend-1 | [dotenv@17.2.1] injecting env (0) from .env -- tip: 🔐 encrypt with Dotenvx: https://dotenvx.com
backend-1 | Migrating database...
backend-1 | 🏃 Hono API is running at http://localhost:1337
```
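That stack trace is the backend trying to run its migrations before Postgres was listening: the connection to port 5432 was refused while the postgres container was still initializing. A plain `depends_on` list only waits for the postgres container to start, not for the database to accept connections. Here the backend exited, `restart: unless-stopped` brought it back, and the second attempt migrated cleanly. Compose can instead gate startup on a healthcheck with `condition: service_healthy`. The same wait-until-ready idea, as a minimal Python sketch (the function name, timeout, and polling interval are my own assumptions, not anything Kaneo ships):

```python
import socket
import time


def wait_for_tcp(host: str, port: int, timeout: float = 30.0, interval: float = 1.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or the timeout elapses.

    Returns True as soon as something accepts the connection, False once the
    deadline passes. A refused connection (ECONNREFUSED), a connect timeout, or
    an unresolvable name are all OSError subclasses, so each simply triggers a retry.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, from inside the Compose network, `wait_for_tcp("postgres", 5432, timeout=60)` would block until Postgres is listening or a minute passes. An open port only means something is listening; a `pg_isready`-based healthcheck is the more precise gate for "the database is ready".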

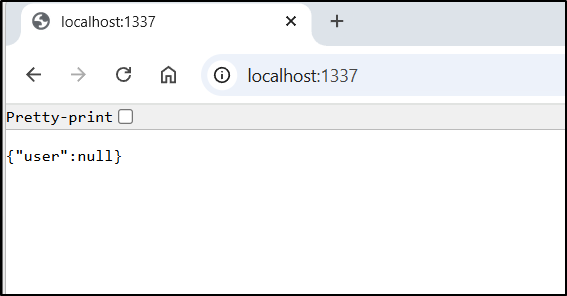

I can see the API backend is up

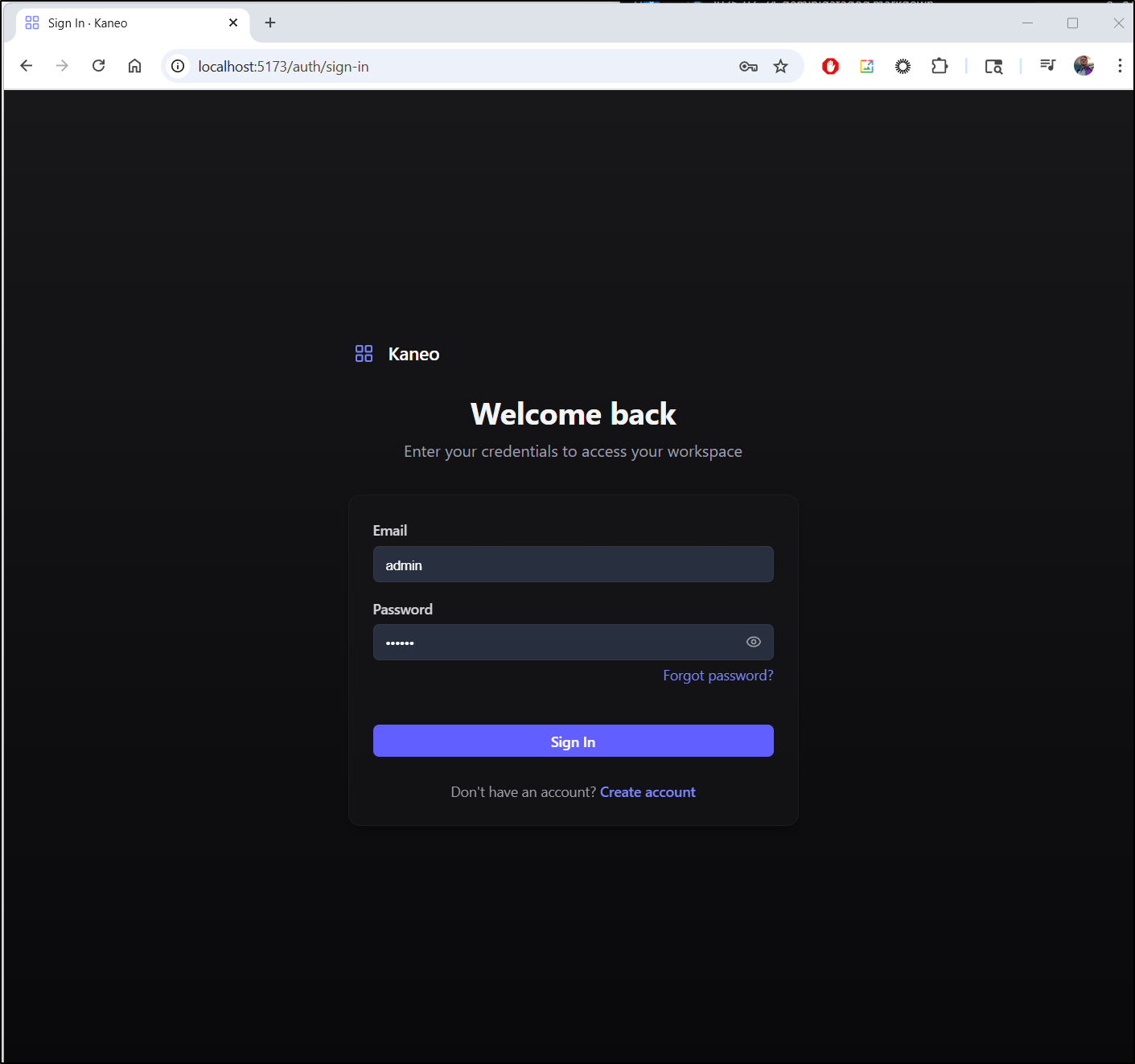

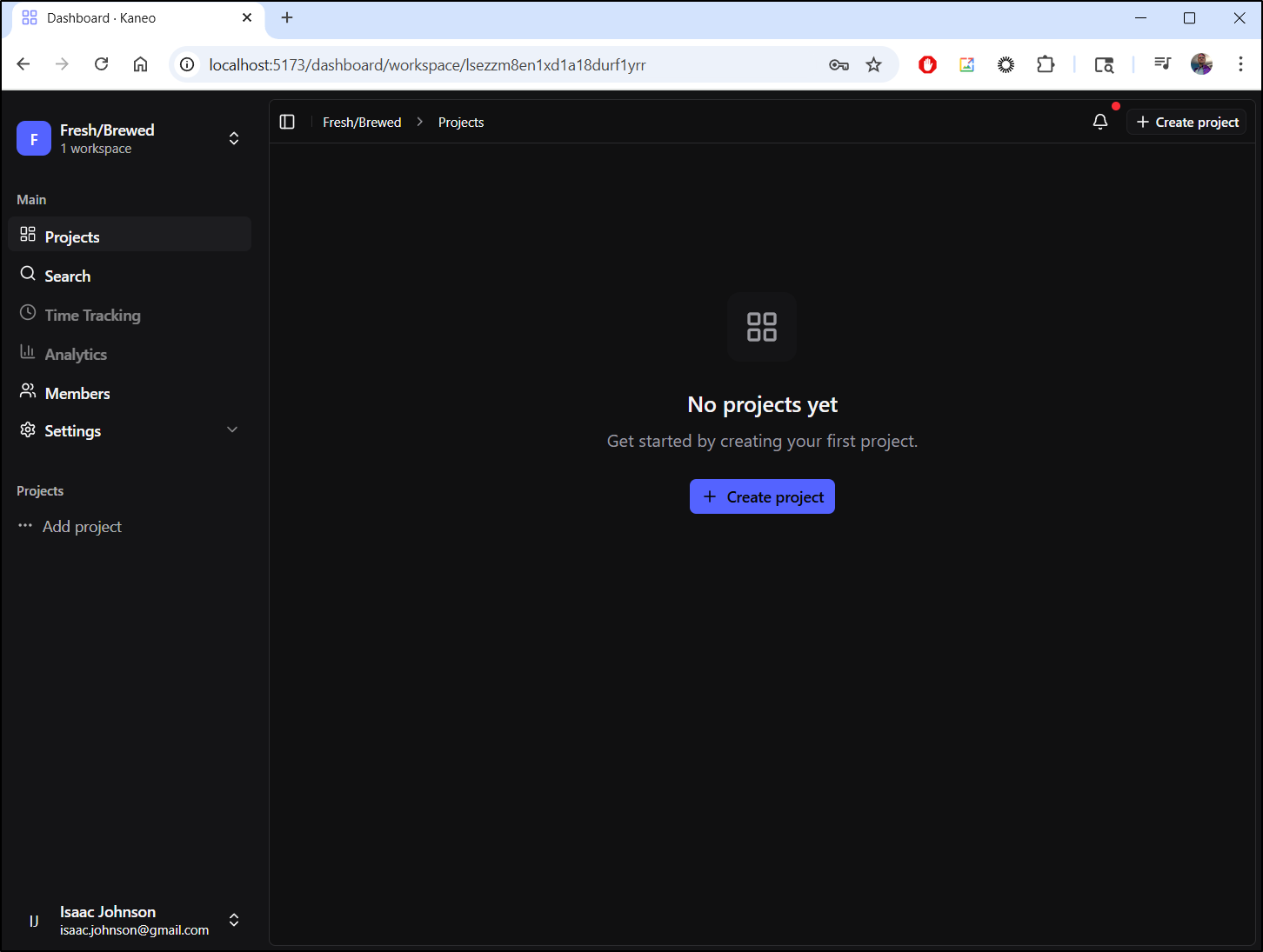

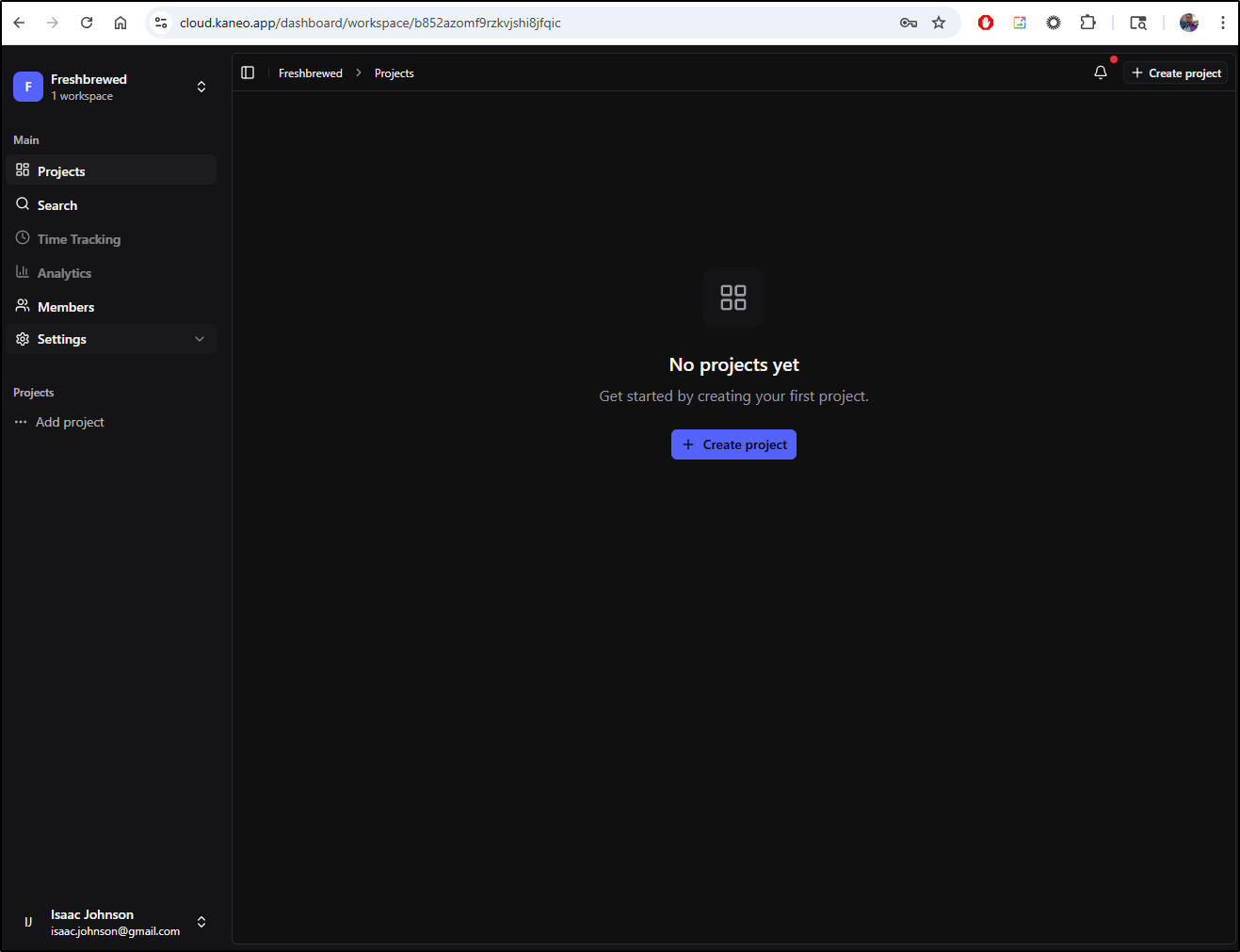

I can access the Web interface at port 5173

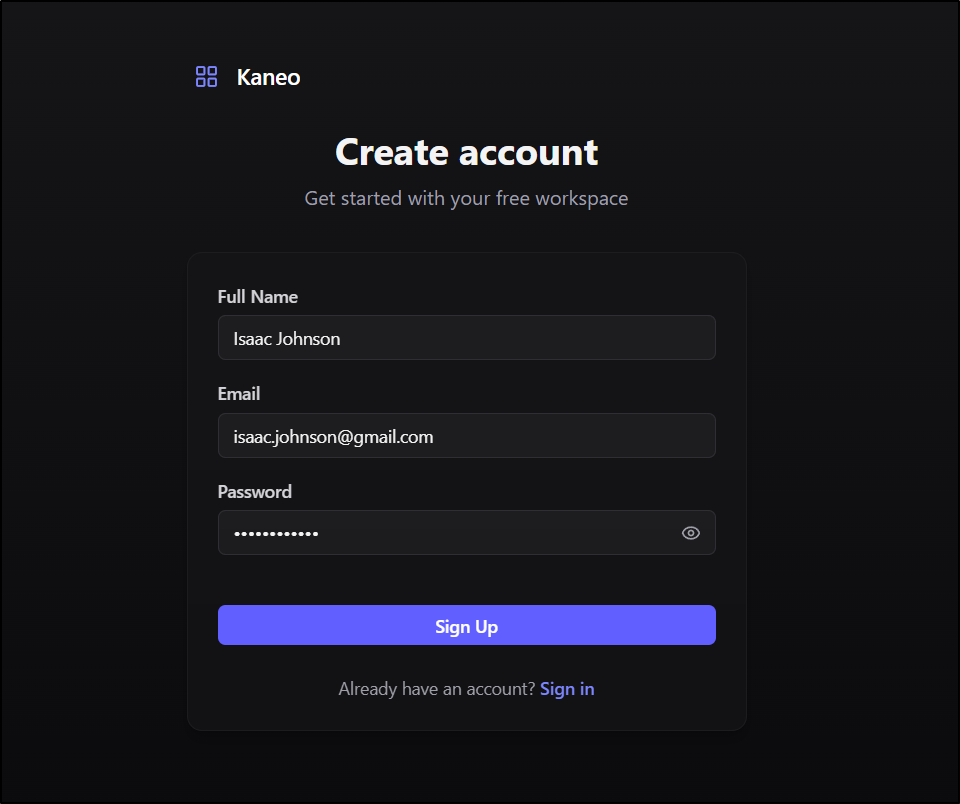

I’ll create an account

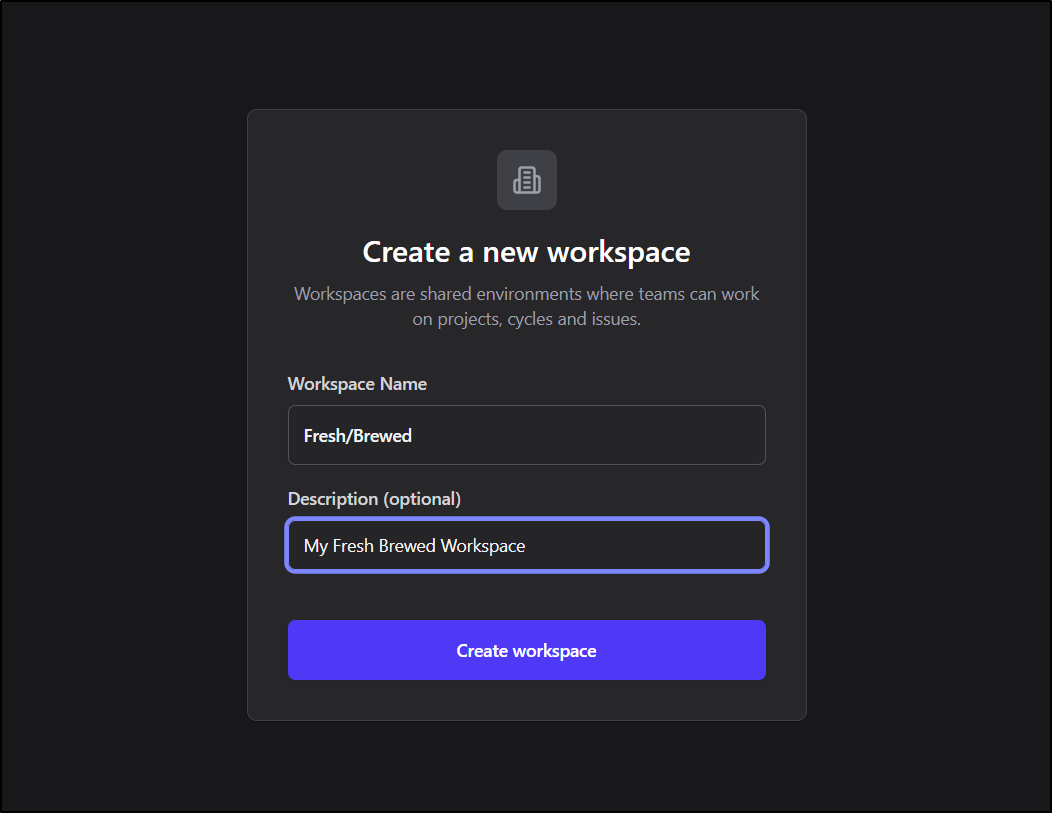

Then create a workspace

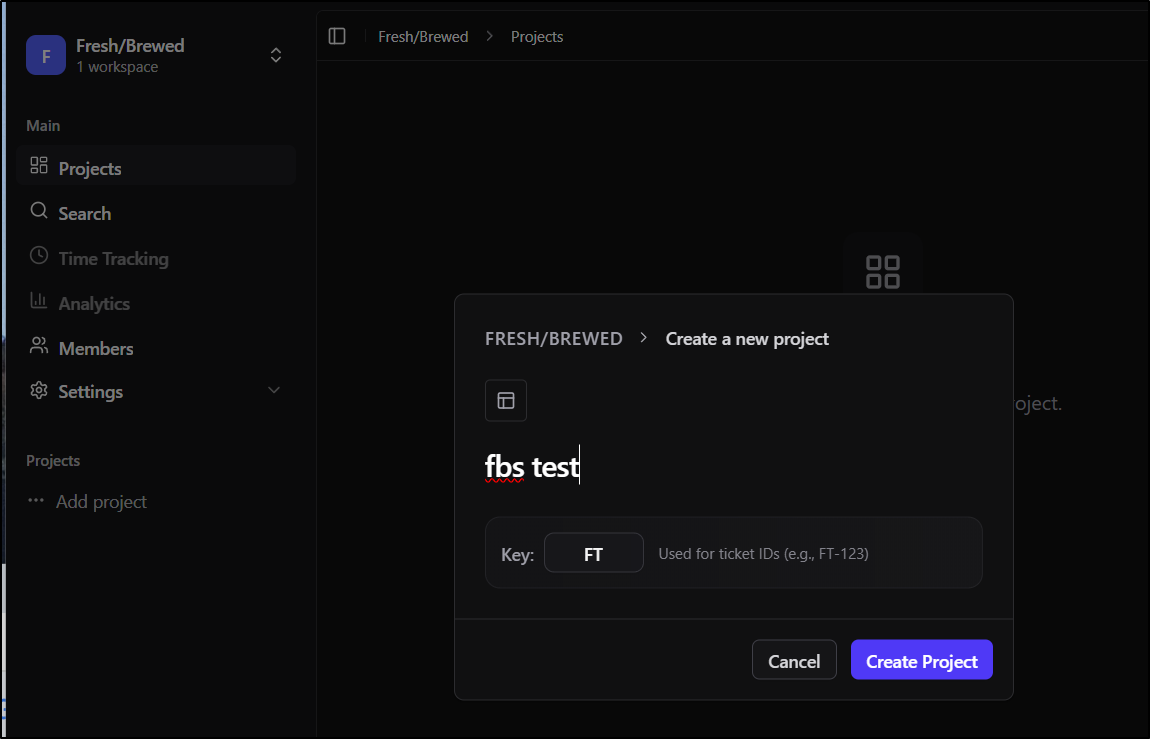

Now that I’m in my new workspace, I can create a project.

It suggests a key for tickets as we create the new project.

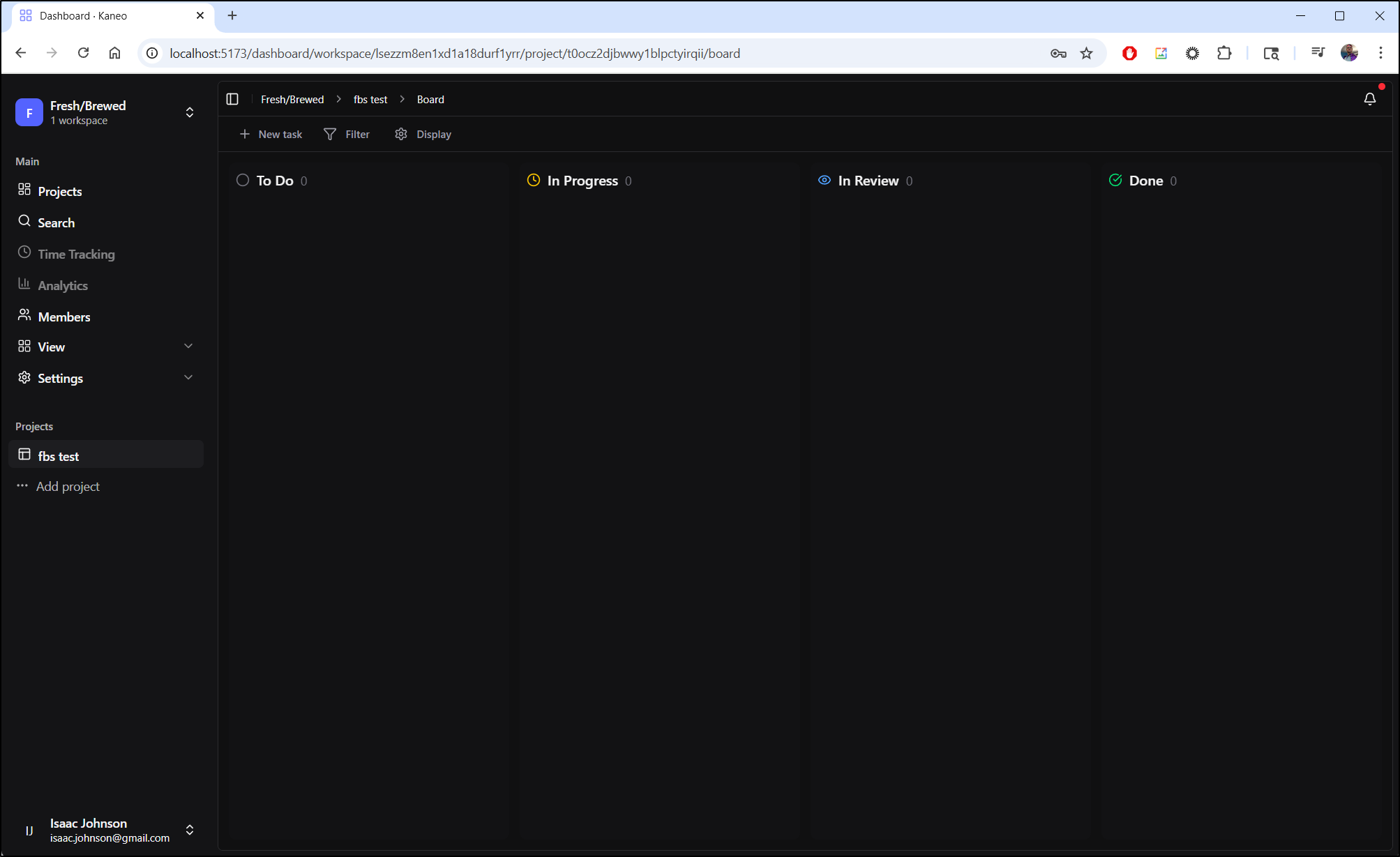

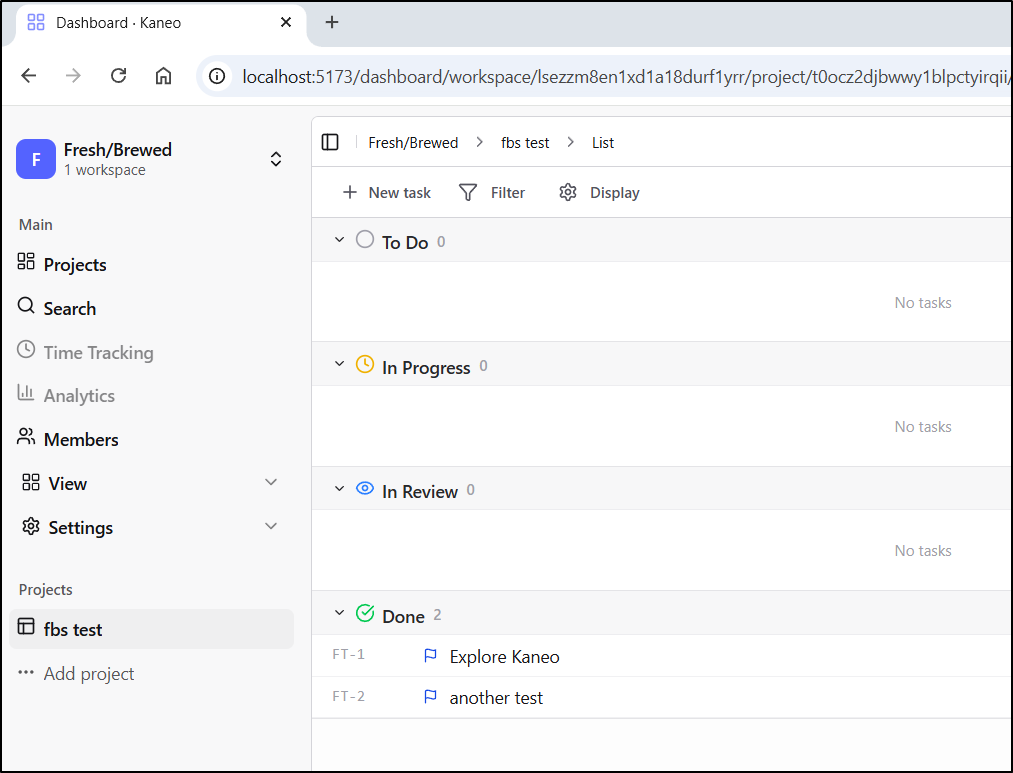

I’m now dropped into a nice Kanban board with To Do, In Progress, In Review and Done.

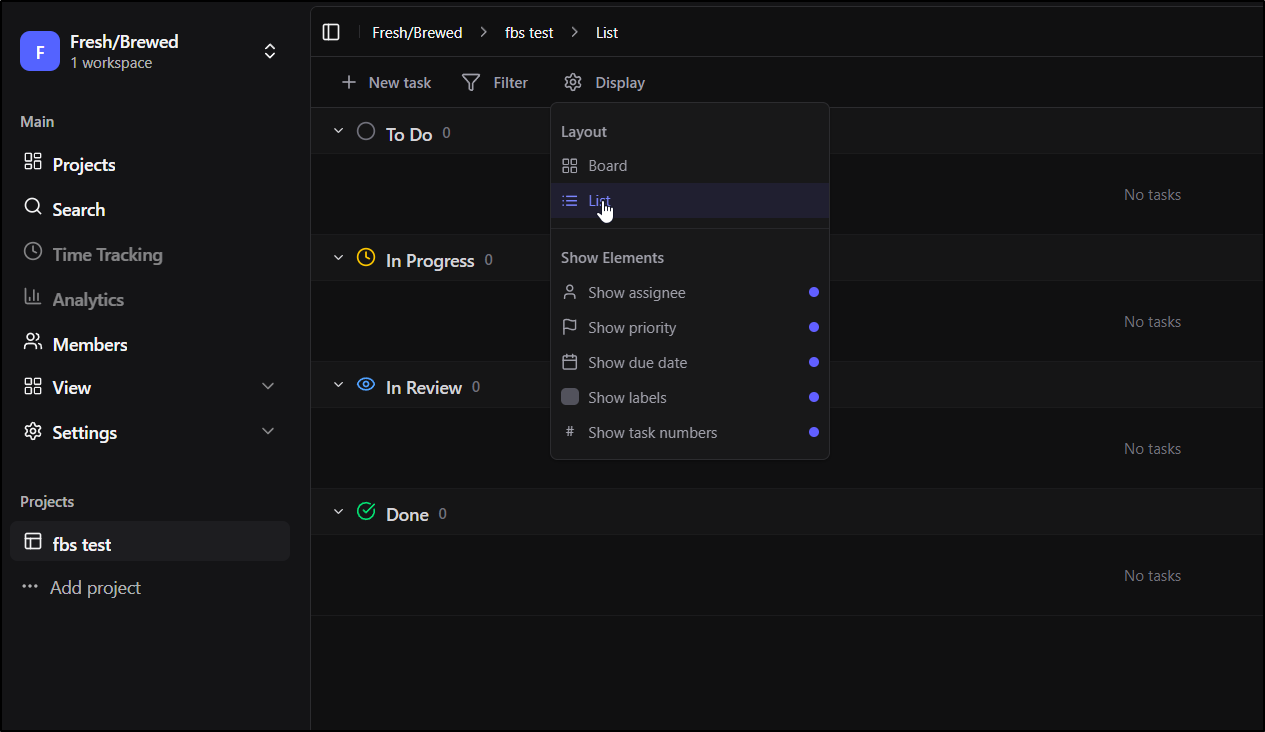

That said, it’s easy enough to switch that to a list view.

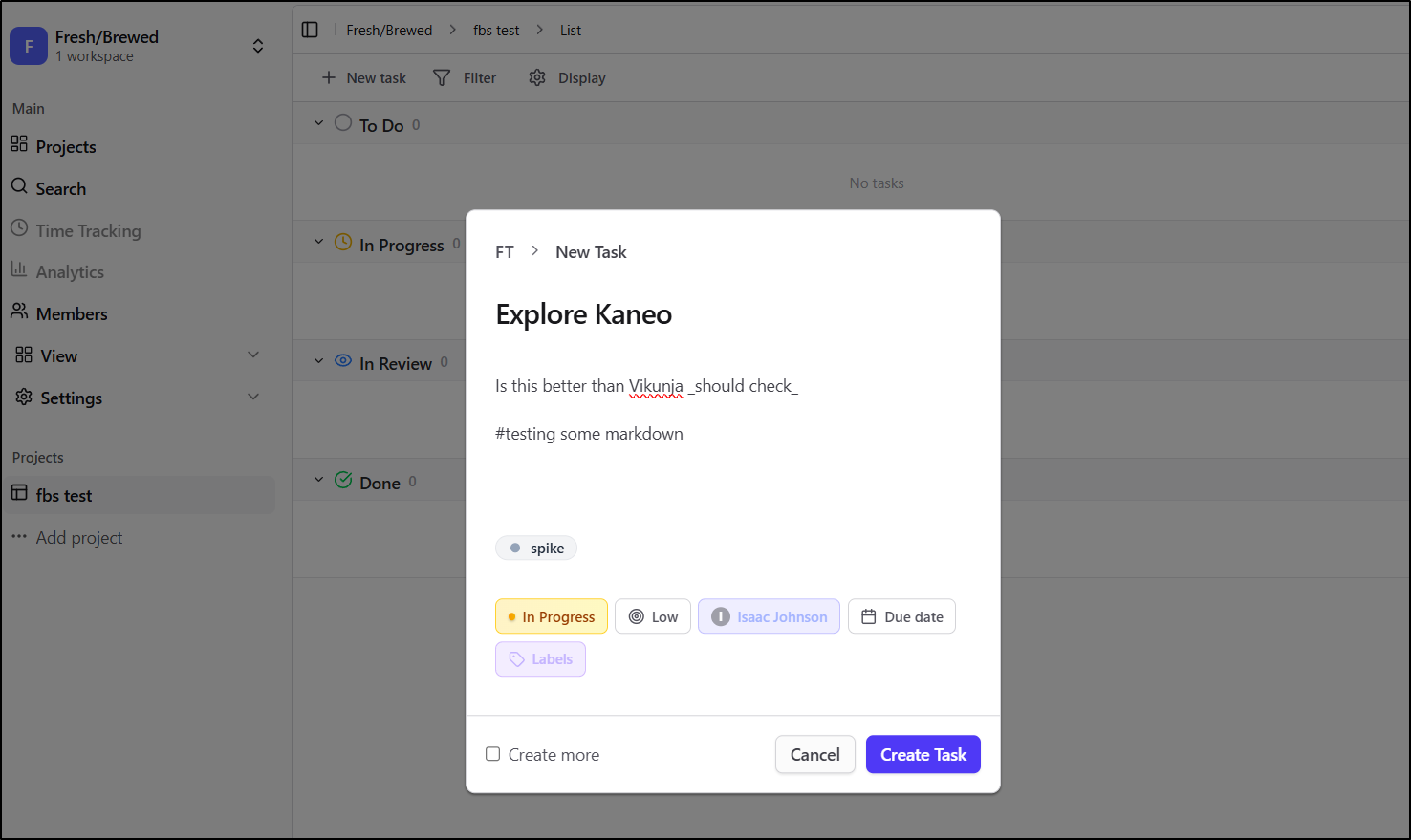

I’ll flip to light mode and the compact interface, then create a new ticket.

It lets me add arbitrary tags and set assignees, but, at least in this create dialog, it doesn’t seem to take Markdown.

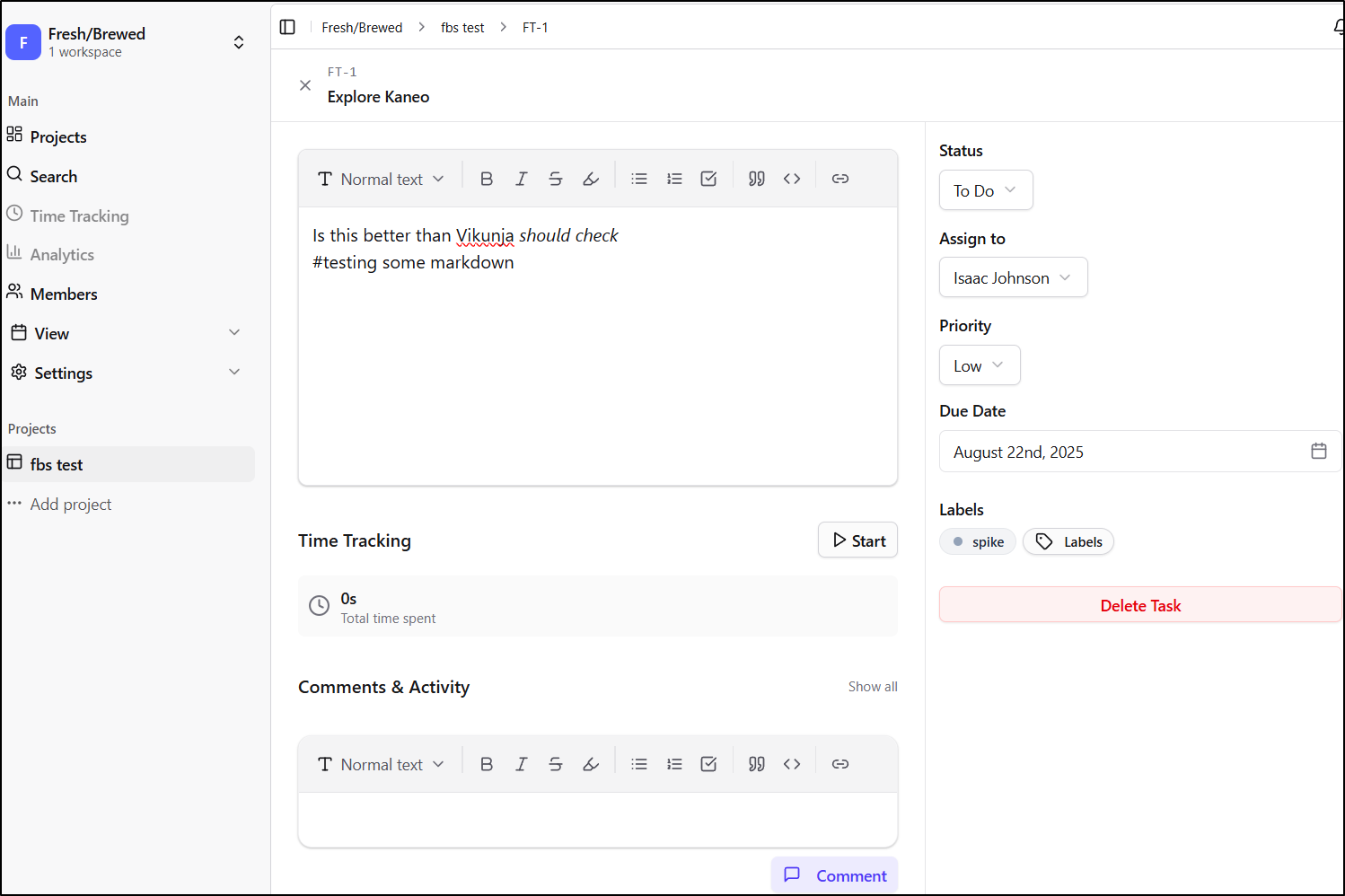

The created ticket does have some nice features like Time Tracking, but I’m not as big a fan of WYSIWYG editors.

Actually, I tweaked it and it does look like it took the Markdown (it just needed a space after the pound sign).

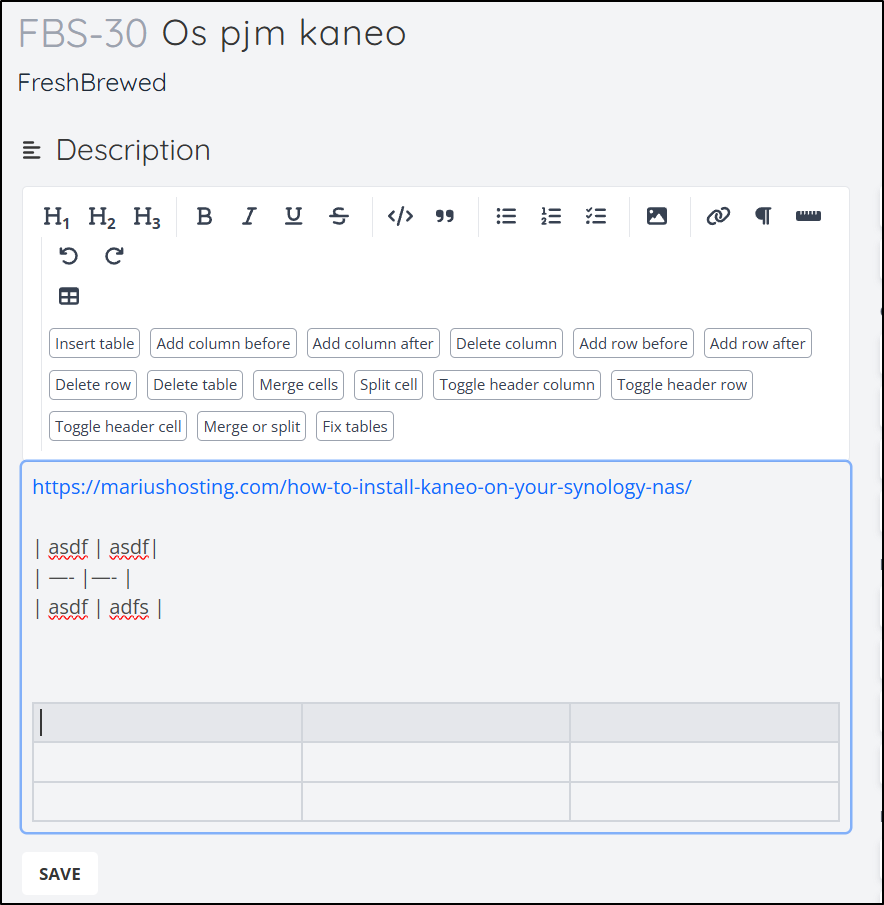

As we can see above, it seems to support some Markdown, but not all. I really do use tables, so it would stink to have no table support.

I was curious whether Vikunja does tables, as I shouldn’t judge so quickly.

As you can see with the actual Vikunja ticket for this post, I can add tables with the WYSIWYG editor, but not with Markdown.

Let’s test some time tracking

I’m going back and forth in my head on whether I like this. On one hand, I like that start/stop is independent: when a ticket moves to Done, whatever was logged so far is captured, and it lets me add time if I, say, missed an item and want to capture it.

On the other hand, I like systems that just capture this for me so I can track it. If I were billing clients, however, a very manual start/pause/stop would be better, as I tend to multi-task (i.e., having an active work item but not actioning it at the moment).

Another confusing thing is that I have logged time now, but the Time Tracking and Analytics sections are still grayed out.

Setting up prod

Since I want to experiment with the API, I’ll set this up in the production cluster.

First, I’ll sort out an A record:

```
$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n kaneo
{
  "ARecords": [
    {
      "ipv4Address": "75.73.224.240"
    }
  ],
  "TTL": 3600,
  "etag": "03c992bd-4665-4e5f-a5f6-af846c084466",
  "fqdn": "kaneo.tpk.pw.",
  "id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/kaneo",
  "name": "kaneo",
  "provisioningState": "Succeeded",
  "resourceGroup": "idjdnsrg",
  "targetResource": {},
  "trafficManagementProfile": {},
  "type": "Microsoft.Network/dnszones/A"
}
```
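Before moving on, it can help to confirm the new record actually resolves to the expected address, since a freshly added record may take a little while to show up through your resolver. A minimal sketch using Python’s standard resolver (it goes through the system’s DNS configuration); `resolves_to` is a hypothetical helper name:

```python
import socket


def resolves_to(hostname: str, expected_ip: str) -> bool:
    """Return True if hostname's IPv4 addresses include expected_ip.

    Uses the system resolver via getaddrinfo, restricted to AF_INET (A records).
    """
    try:
        infos = socket.getaddrinfo(hostname, None, family=socket.AF_INET)
    except socket.gaierror:
        return False  # the name does not resolve (yet)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    addresses = {info[4][0] for info in infos}
    return expected_ip in addresses
```

For this record, the check would be `resolves_to("kaneo.tpk.pw", "75.73.224.240")`.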

I’ll want to use the chart, but it’s in the Git repo (not in a Helm repo):

```
builder@LuiGi:~/Workspaces$ git clone https://github.com/usekaneo/kaneo.git
Cloning into 'kaneo'...
remote: Enumerating objects: 8181, done.
remote: Counting objects: 100% (216/216), done.
remote: Compressing objects: 100% (144/144), done.
remote: Total 8181 (delta 112), reused 105 (delta 68), pack-reused 7965 (from 2)
Receiving objects: 100% (8181/8181), 7.56 MiB | 6.64 MiB/s, done.
Resolving deltas: 100% (4399/4399), done.
builder@LuiGi:~/Workspaces$ cd kaneo/
builder@LuiGi:~/Workspaces/kaneo$
```

I’ll make a values file with my DNS name and cluster issuer

```
$ cat myvalues.yaml
# PostgreSQL configuration
postgresql:
  auth:
    password: "your-secure-db-password"
  persistence:
    enabled: true
    size: 20Gi
    storageClass: "local-path"
  resources:
    limits:
      cpu: 500m
      memory: 512Mi
    requests:
      cpu: 100m
      memory: 128Mi

# API configuration
api:
  env:
    jwtAccess: "your-secure-jwt-token"
  persistence:
    enabled: true
    size: 5Gi
    storageClass: "local-path"
  # For production, consider setting resource limits
  resources:
    limits:
      cpu: 500m
      memory: 512Mi
    requests:
      cpu: 100m
      memory: 256Mi

# Web configuration
web:
  env:
    apiUrl: "https://kaneo.tpk.pw"
  resources:
    limits:
      cpu: 300m
      memory: 256Mi
    requests:
      cpu: 100m
      memory: 128Mi

ingress:
  enabled: true
  className: "nginx"
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/rewrite-target: /$1
  hosts:
    - host: kaneo.tpk.pw
      paths:
        - path: /?(.*)
          pathType: Prefix
          service: web
          port: 80
        - path: /api/?(.*)
          pathType: Prefix
          service: api
          port: 1337
  tls:
    - secretName: kaneo-tls
      hosts:
        - kaneo.tpk.pw
```
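Both the compose file and these values use placeholder secrets, and the chart’s install NOTES echo the JWT value back in plain text. For anything exposed externally, it’s worth generating real random values first. A minimal sketch with Python’s `secrets` module; the helper name and the 32-byte default are my own choices. URL-safe output also avoids the percent-encoding concern if the value ends up inside a `DATABASE_URL`.

```python
import secrets


def generate_secret(num_bytes: int = 32) -> str:
    """Return a random, URL-safe string (base64url of num_bytes random bytes, no padding).

    Suitable as a value for something like jwtAccess or a database password.
    """
    return secrets.token_urlsafe(num_bytes)
```

Calling `generate_secret()` twice gives two different 43-character strings made only of letters, digits, `-` and `_`.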

Then install

```
$ helm install -f ./myvalues.yaml kaneo ./charts/kaneo --namespace kaneo --create-namespace
NAME: kaneo
LAST DEPLOYED: Sat Aug 23 16:52:42 2025
NAMESPACE: kaneo
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
Thank you for installing kaneo.
Your release is named kaneo.
To learn more about the release, try:
  $ helm status kaneo
  $ helm get all kaneo
You can access the application using these URLs:
  https://kaneo.tpk.pw
Note: This chart uses path patterns with regex capture groups for nginx:
  - /?(.*) -> for web frontend
  - /api/?(.*) -> for API requests
Make sure your ingress controller supports the configured annotations:
  nginx.ingress.kubernetes.io/rewrite-target: /$1
NOTES:
1. Kaneo is configured with SQLite database for persistence.
   A PersistentVolumeClaim is used to store the SQLite database file.
2. Important environment variables:
   - API:
     - JWT_ACCESS: Secret key for generating JWT tokens (currently set to: your-secure-jwt-token)
     - DB_PATH: Path to the SQLite database file (set to: "/")
   - Web:
     - KANEO_API_URL: URL of the API service (set to: "https://kaneo.tpk.pw/api")
   You can customize these values in your values.yaml file.
3. IMPORTANT: This chart uses a combined pod approach where both the API and Web containers
   run in the same pod. This allows the web frontend to connect directly to the API via
   localhost, simplifying the deployment and eliminating cross-origin issues.
```
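The NOTES call out the regex capture groups. With `rewrite-target: /$1`, ingress-nginx replaces the request path with whatever the `(.*)` group captured, so the `/api` prefix is stripped before the request reaches the API container. The Python sketch below mimics only that capture-and-rewrite step for the two patterns. Assumptions: matching starts at the beginning of the path and is case-insensitive (as I understand ingress-nginx treats regex paths), and the `/api` rule is tried first; it is not a model of ingress-nginx’s real location ordering, and I haven’t verified whether the Kaneo API expects the prefix removed.

```python
import re
from typing import Optional, Tuple

# The two path patterns from the chart's ingress values, most specific first.
RULES = [
    (r"/api/?(.*)", "api:1337"),
    (r"/?(.*)", "web:80"),
]


def rewrite(path: str, pattern: str) -> Optional[str]:
    """Apply rewrite-target '/$1': return '/' + group 1 if the pattern matches from the start."""
    m = re.match(pattern, path, flags=re.IGNORECASE)
    if m is None:
        return None
    return "/" + m.group(1)


def route(path: str) -> Optional[Tuple[str, str]]:
    """Return (backend, rewritten_path) for the first rule that matches, else None."""
    for pattern, backend in RULES:
        new_path = rewrite(path, pattern)
        if new_path is not None:
            return backend, new_path
    return None
```

For instance, `route("/api/tasks/1")` gives `("api:1337", "/tasks/1")`, while `route("/")` falls through to `("web:80", "/")`. Because the slash after `/api` is optional, a path such as `/apiary` would also hit the API rule.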

I’ll wait for the cert to be satisfied:

```
builder@LuiGi:~/Workspaces/kaneo$ kubectl get cert -n kaneo
NAME        READY   SECRET      AGE
kaneo-tls   False   kaneo-tls   70s
builder@LuiGi:~/Workspaces/kaneo$ kubectl get cert -n kaneo
NAME        READY   SECRET      AGE
kaneo-tls   True    kaneo-tls   107s
```

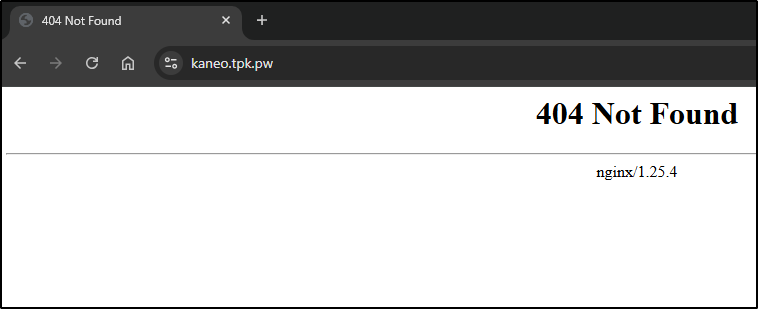

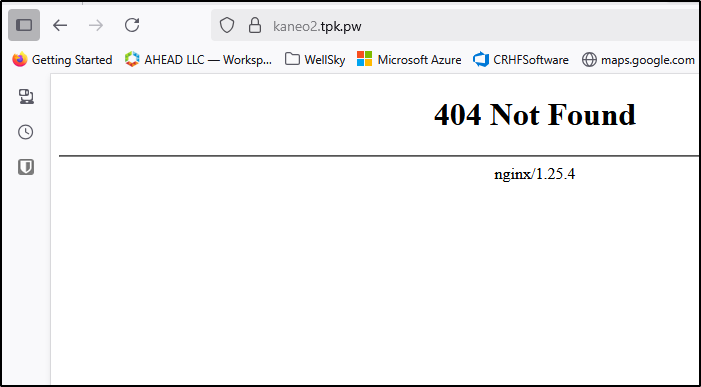

But I don’t see the app, even though the pods are up:

```
$ kubectl get po -n kaneo -l app.kubernetes.io/instance=kaneo,app.kubernetes.io/name=kaneo
NAME                                READY   STATUS    RESTARTS        AGE
kaneo-64bc789697-8kgz9              2/2     Running   2 (3m55s ago)   4m15s
kaneo-postgresql-767c55694f-6jccs   1/1     Running   0               4m15s
```

However, nothing seems to work. The pod now crashes on the DB migrations:

```
$ kubectl get po -n kaneo -l app.kubernetes.io/instance=kaneo,app.kubernetes.io/name=kaneo,app.kubernetes.io/component=web
NAME                   READY   STATUS    RESTARTS      AGE
kaneo-fd546974-zkrvw   1/2     Running   2 (12m ago)   12m
```

I can see the DB is up

```
$ kubectl get po -n kaneo
NAME                                READY   STATUS    RESTARTS      AGE
kaneo-fd546974-zkrvw                1/2     Running   2 (13m ago)   13m
kaneo-postgresql-767c55694f-lvtfv   1/1     Running   0             13m
```

Yet the failures point to the migrations, specifically a refused connection to the Postgres service on port 5432:

```
$ kubectl logs -n kaneo --previous kaneo-fd546974-zkrvw
Defaulted container "api" out of: api, web
[dotenv@17.2.1] injecting env (0) from .env -- tip: ⚙️ load multiple .env files with { path: ['.env.local', '.env'] }
[dotenv@17.2.1] injecting env (0) from .env -- tip: ⚙️ load multiple .env files with { path: ['.env.local', '.env'] }
[dotenv@17.2.1] injecting env (0) from .env -- tip: ⚙️ suppress all logs with { quiet: true }
Migrating database...
🏃 Hono API is running at http://localhost:1337
/app/node_modules/.pnpm/drizzle-orm@0.44.4_@types+better-sqlite3@7.6.13_@types+pg@8.15.5_better-sqlite3@11.10.0_bun-types@1.2.8_pg@8.16.3/node_modules/src/pg-core/session.ts:73
    throw new DrizzleQueryError(queryString, params, e as Error);
          ^

DrizzleQueryError: Failed query: CREATE SCHEMA IF NOT EXISTS "drizzle"
params:
    at NodePgPreparedQuery.queryWithCache (/app/node_modules/.pnpm/drizzle-orm@0.44.4_@types+better-sqlite3@7.6.13_@types+pg@8.15.5_better-sqlite3@11.10.0_bun-types@1.2.8_pg@8.16.3/node_modules/src/pg-core/session.ts:73:11)
    at process.processTicksAndRejections (node:internal/process/task_queues:95:5)
    at PgDialect.migrate (/app/node_modules/.pnpm/drizzle-orm@0.44.4_@types+better-sqlite3@7.6.13_@types+pg@8.15.5_better-sqlite3@11.10.0_bun-types@1.2.8_pg@8.16.3/node_modules/src/pg-core/dialect.ts:85:3)
    at migrate (/app/node_modules/.pnpm/drizzle-orm@0.44.4_@types+better-sqlite3@7.6.13_@types+pg@8.15.5_better-sqlite3@11.10.0_bun-types@1.2.8_pg@8.16.3/node_modules/src/postgres-js/migrator.ts:10:2) {
  query: 'CREATE SCHEMA IF NOT EXISTS "drizzle"',
  params: [],
  cause: Error: connect ECONNREFUSED 10.43.32.114:5432
      at /app/node_modules/.pnpm/pg-pool@3.10.1_pg@8.16.3/node_modules/pg-pool/index.js:45:11
      at process.processTicksAndRejections (node:internal/process/task_queues:95:5)
      at <anonymous> (/app/node_modules/.pnpm/drizzle-orm@0.44.4_@types+better-sqlite3@7.6.13_@types+pg@8.15.5_better-sqlite3@11.10.0_bun-types@1.2.8_pg@8.16.3/node_modules/src/node-postgres/session.ts:149:14)
      at NodePgPreparedQuery.queryWithCache (/app/node_modules/.pnpm/drizzle-orm@0.44.4_@types+better-sqlite3@7.6.13_@types+pg@8.15.5_better-sqlite3@11.10.0_bun-types@1.2.8_pg@8.16.3/node_modules/src/pg-core/session.ts:71:12)
      at PgDialect.migrate (/app/node_modules/.pnpm/drizzle-orm@0.44.4_@types+better-sqlite3@7.6.13_@types+pg@8.15.5_better-sqlite3@11.10.0_bun-types@1.2.8_pg@8.16.3/node_modules/src/pg-core/dialect.ts:85:3)
      at migrate (/app/node_modules/.pnpm/drizzle-orm@0.44.4_@types+better-sqlite3@7.6.13_@types+pg@8.15.5_better-sqlite3@11.10.0_bun-types@1.2.8_pg@8.16.3/node_modules/src/postgres-js/migrator.ts:10:2) {
    errno: -111,
    code: 'ECONNREFUSED',
    syscall: 'connect',
    address: '10.43.32.114',
    port: 5432
  }
}

Node.js v20.12.2
```

Let’s try another way.

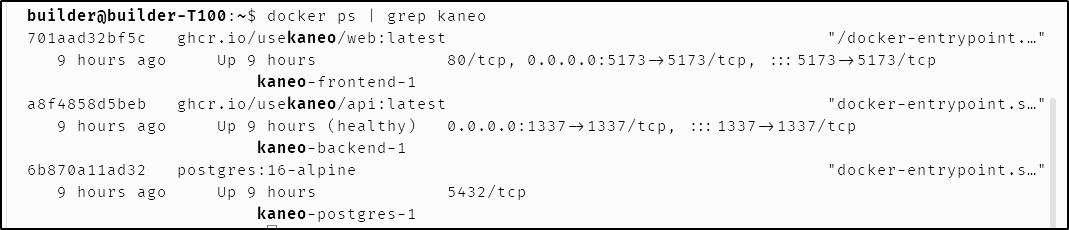

I’ll fire this up on my dockerhost

```
builder@builder-T100:~/kaneo$ docker compose up -d
[+] Running 39/39
✔ postgres 11 layers [⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿] 0B/0B Pulled 9.7s
✔ 9824c27679d3 Pull complete 3.9s
✔ 01ef787617d5 Pull complete 3.9s
✔ d444581c5dc1 Pull complete 4.1s
✔ 127625cab66d Pull complete 4.1s
✔ 7f8bf47818a2 Pull complete 4.3s
✔ 0951477387e1 Pull complete 8.4s
✔ 878e28e3ecd5 Pull complete 8.4s
✔ d079e32a74cc Pull complete 8.5s
✔ cb87d3c01966 Pull complete 8.5s
✔ 40af0ccd9733 Pull complete 8.5s
✔ 0b003ba20c51 Pull complete 8.5s
✔ frontend 12 layers [⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿] 0B/0B Pulled 4.4s
✔ fc21a1d387f5 Pull complete 2.2s
✔ e6ef242c1570 Pull complete 2.2s
✔ 13fcfbc94648 Pull complete 2.2s
✔ d4bca490e609 Pull complete 2.2s
✔ 5406ed7b06d9 Pull complete 2.2s
✔ 8a3742a9529d Pull complete 2.3s
✔ 0d0c16747d2c Pull complete 3.0s
✔ a35acf90e25d Pull complete 3.1s
✔ 567123fc343b Pull complete 3.1s
✔ f3721f3008a0 Pull complete 3.2s
✔ 608cacd076c7 Pull complete 3.2s
✔ 9fc72ec3b0bc Pull complete 3.2s
✔ backend 13 layers [⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿] 0B/0B Pulled 9.4s
✔ 4abcf2066143 Pull complete 0.7s
✔ 3bce96456554 Pull complete 2.9s
✔ 2bde47b9f7c3 Pull complete 3.0s
✔ db3e2f2b6054 Pull complete 3.0s
✔ f654cfc9cb19 Pull complete 3.1s
✔ 4f4fb700ef54 Pull complete 3.1s
✔ ceb3a1c625c0 Pull complete 3.1s
✔ 863993be634a Pull complete 3.2s
✔ 90b66fb610c9 Pull complete 3.2s
✔ a70b1328ca1e Pull complete 3.2s
✔ 178e58b90e0a Pull complete 8.1s
✔ c3e3b567e60b Pull complete 8.2s
✔ 765b33a5772f Pull complete 8.2s
[+] Building 0.0s (0/0)
[+] Running 5/5
✔ Network kaneo_default Created 0.1s
✔ Volume "kaneo_postgres_data" Created 0.0s
✔ Container kaneo-postgres-1 Started 1.4s
✔ Container kaneo-backend-1 Started 0.8s
✔ Container kaneo-frontend-1 Started 1.1s
```

I’m going to try two things: using the same port numbers on each Service as on the containers, and using a new DNS name (kaneo2.tpk.pw):

```
---
apiVersion: v1
kind: Endpoints
metadata:
  name: kaneoweb-external-ip
subsets:
  - addresses:
      - ip: 192.168.1.100
    ports:
      - name: kaneoweb
        port: 5173
        protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: kaneoweb-external-ip
spec:
  clusterIP: None
  clusterIPs:
    - None
  internalTrafficPolicy: Cluster
  ipFamilies:
    - IPv4
    - IPv6
  ipFamilyPolicy: RequireDualStack
  ports:
    - name: kaneoweb
      port: 5173
      protocol: TCP
      targetPort: 5173
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: v1
kind: Endpoints
metadata:
  name: kaneoapi-external-ip
subsets:
  - addresses:
      - ip: 192.168.1.100
    ports:
      - name: kaneoapi
        port: 1337
        protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: kaneoapi-external-ip
spec:
  clusterIP: None
  clusterIPs:
    - None
  internalTrafficPolicy: Cluster
  ipFamilies:
    - IPv4
    - IPv6
  ipFamilyPolicy: RequireDualStack
  ports:
    - name: kaneoapi
      port: 1337
      protocol: TCP
      targetPort: 1337
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/client-max-body-size: "0"
    nginx.org/proxy-connect-timeout: "3600"
    nginx.org/proxy-read-timeout: "3600"
  name: kaneo-ingress
spec:
  rules:
    - host: kaneo2.tpk.pw
      http:
        paths:
          - path: /?(.*)
            pathType: Prefix
            backend:
              service:
                name: kaneoweb-external-ip
                port:
                  number: 5173
          - path: /api/?(.*)
            pathType: Prefix
            backend:
              service:
                name: kaneoapi-external-ip
                port:
                  number: 1337
  tls:
    - hosts:
        - kaneo2.tpk.pw
      secretName: kaneo2-tls
```

Then I’ll swap them in by deleting and re-applying the manifest:

```
builder@LuiGi:~/Workspaces/kaneo$ kubectl delete -f ./k8s-fwd.yaml
endpoints "kaneoweb-external-ip" deleted
service "kaneoweb-external-ip" deleted
endpoints "kaneoapi-external-ip" deleted
service "kaneoapi-external-ip" deleted
ingress.networking.k8s.io "kaneo-ingress" deleted
builder@LuiGi:~/Workspaces/kaneo$ kubectl apply -f ./k8s-fwd.yaml
endpoints/kaneoweb-external-ip created
service/kaneoweb-external-ip created
endpoints/kaneoapi-external-ip created
service/kaneoapi-external-ip created
Warning: annotation "kubernetes.io/ingress.class" is deprecated, please use 'spec.ingressClassName' instead
ingress.networking.k8s.io/kaneo-ingress created
```

I get a valid cert, but no luck getting through to the dockerhost.

I double-checked that the containers were up.
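One way to split the problem in half is to hit the dockerhost directly from a machine on the same network as the cluster nodes, bypassing the Ingress entirely. If those calls succeed but the Ingress still fails, the issue is on the Kubernetes side rather than with the containers. One candidate worth checking: unlike the Helm chart’s values, this Ingress uses regex-style paths (`/?(.*)`) without a `rewrite-target` or `use-regex` annotation, and as far as I know ingress-nginx only treats paths as regexes when one of those is set. A minimal standard-library sketch; the IP and ports come from the Endpoints above, while `http_status` and its timeout are my own assumptions:

```python
import urllib.error
import urllib.request
from typing import Optional


def http_status(url: str, timeout: float = 5.0) -> Optional[int]:
    """Return the HTTP status code for a GET to url, or None if no HTTP response came back."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # The server answered (e.g. 404); that still proves it is reachable.
        return err.code
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, and so on.
        return None
```

For example, `http_status("http://192.168.1.100:5173/")` and `http_status("http://192.168.1.100:1337/")` should both return a status code rather than `None` if the containers are reachable from that network.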

SaaS

One thing we can try is Kaneo Cloud

The account creation flow is about the same.

This works, and it’s reasonably fast

However, I do have concerns when there’s a SaaS option with no premium add-ons. I would expect at least a “pay for support” feature; otherwise I can’t see how it stays funded and up.

Based on a traceroute, it runs on a Roadrunner (rr.com) network, which suggests a home lab like my own.

Again, I’m not pooh-poohing it; those things are just harder to depend upon.

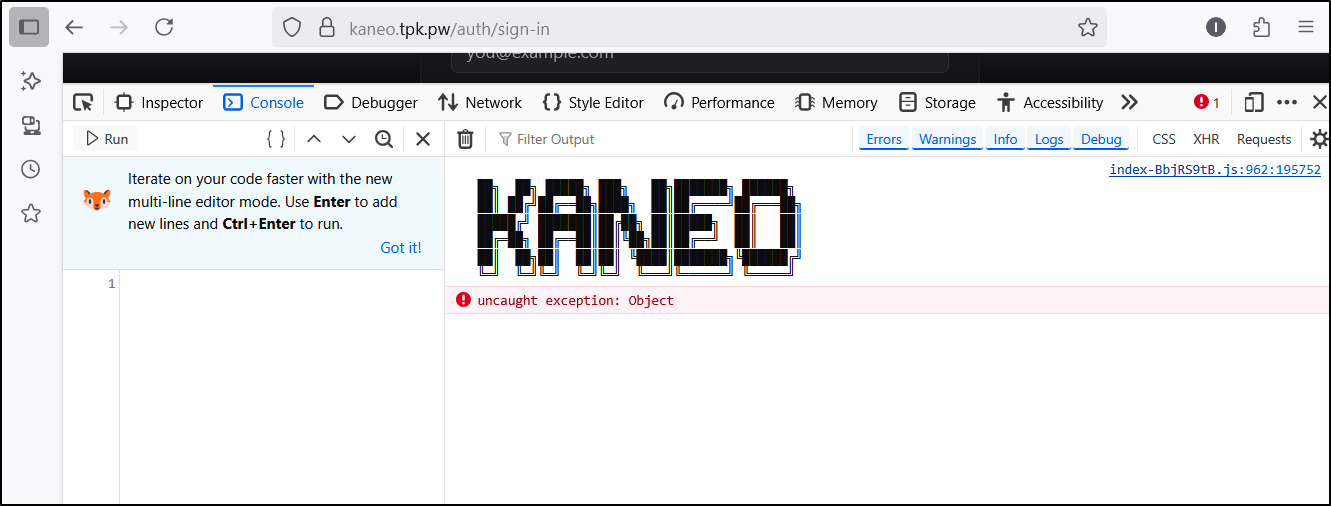

Try again

I tried again with a new, plain YAML manifest:

```
$ cat k8s.yaml
apiVersion: v1
kind: Secret
metadata:
  name: postgres-secret
type: Opaque
data:
  POSTGRES_PASSWORD: bXlrYW5lb3Bhc3MxMjM=
---
apiVersion: v1
kind: Secret
metadata:
  name: jwt-secret
type: Opaque
data:
  JWT_ACCESS: eW91ci1zZWNyZXQta2V5LWhlcmU=
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: postgres-pvc
spec:
  storageClassName: local-path
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgres-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: postgres
  template:
    metadata:
      labels:
        app: postgres
    spec:
      containers:
        - name: postgres
          image: postgres:16-alpine
          env:
            - name: POSTGRES_DB
              value: "kaneo"
            - name: POSTGRES_USER
              value: "mykaneouser"
            - name: POSTGRES_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: postgres-secret
                  key: POSTGRES_PASSWORD
          ports:
            - containerPort: 5432
          volumeMounts:
            - name: postgres-data
              mountPath: /var/lib/postgresql/data
      volumes:
        - name: postgres-data
          persistentVolumeClaim:
            claimName: postgres-pvc
---
apiVersion: v1
kind: Service
metadata:
  name: postgres-service
spec:
  selector:
    app: postgres
  ports:
    - protocol: TCP
      port: 5432
      targetPort: 5432
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: backend-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: backend
  template:
    metadata:
      labels:
        app: backend
    spec:
      containers:
        - name: backend
          image: ghcr.io/usekaneo/api:latest
          env:
            - name: JWT_ACCESS
              valueFrom:
                secretKeyRef:
                  name: jwt-secret
                  key: JWT_ACCESS
            - name: DATABASE_URL
              value: "postgresql://mykaneouser:mykaneopass123@postgres-service:5432/kaneo"
          ports:
            - containerPort: 1337
---
apiVersion: v1
kind: Service
metadata:
  name: backend-service
spec:
  selector:
    app: backend
  ports:
    - protocol: TCP
      port: 1337
      targetPort: 1337
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: frontend
  template:
    metadata:
      labels:
        app: frontend
    spec:
      containers:
        - name: frontend
          image: ghcr.io/usekaneo/web:latest
          env:
            - name: KANEO_API_URL
              value: "https://kaneo.tpk.pw/api"
          ports:
            - containerPort: 5173
---
apiVersion: v1
kind: Service
metadata:
  name: frontend-service
spec:
  selector:
    app: frontend
  ports:
    - protocol: TCP
      port: 80
      targetPort: 5173
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kaneo-ingress
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/tls-acme: "true"
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
    - host: kaneo.tpk.pw
      http:
        paths:
          - path: /api/
            pathType: Prefix
            backend:
              service:
                name: backend-service
                port:
                  number: 1337
          - path: /
            pathType: Prefix
            backend:
              service:
                name: frontend-service
                port:
                  number: 80
  tls:
    - hosts:
        - kaneo.tpk.pw
      secretName: kaneo-tls
```
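This Ingress uses plain `Prefix` paths instead of regexes, and there is no rewrite, so requests reach the backend with `/api/` still on the path. Per the Kubernetes Ingress documentation, `Prefix` matching is done element by element on the `/`-separated path (a trailing slash in the rule is ignored), and when several paths match, the longest matching path wins. The sketch below models those documented rules for these two paths; it’s a simplified illustration, not any controller’s actual code, and `select_backend` is a hypothetical name.

```python
from typing import List, Optional, Tuple

# (rule path, backend) pairs from the Ingress above.
PATHS: List[Tuple[str, str]] = [
    ("/api/", "backend-service:1337"),
    ("/", "frontend-service:80"),
]


def _elements(path: str) -> List[str]:
    """Split a path into its non-empty '/'-separated elements."""
    return [part for part in path.split("/") if part]


def prefix_matches(rule_path: str, request_path: str) -> bool:
    """Element-wise Prefix match: '/api/' matches '/api' and '/api/x' but not '/apix'."""
    rule, req = _elements(rule_path), _elements(request_path)
    return req[: len(rule)] == rule


def select_backend(request_path: str) -> Optional[str]:
    """Return the backend whose matching rule path is longest (most path elements)."""
    candidates = [
        (len(_elements(p)), backend) for p, backend in PATHS if prefix_matches(p, request_path)
    ]
    if not candidates:
        return None
    return max(candidates)[1]
```

So `select_backend("/api/tasks")` picks `backend-service:1337`, while `/board` or `/` fall through to `frontend-service:80`.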

I can create an account, but logging in gives an object error
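A note on the two Secrets in that manifest: Kubernetes Secret `data` values are only base64-encoded, not encrypted. `POSTGRES_PASSWORD` decodes to the same `mykaneopass123` that is hardcoded in the backend’s `DATABASE_URL`, so the two have to be kept in sync by hand. A small Python sketch for producing and checking such values (the helper names are mine); `kubectl create secret generic <name> --from-literal=KEY=value` can also generate the Secret for you.

```python
import base64


def to_secret_value(plain: str) -> str:
    """Encode a string the way a Secret's `data` field expects (base64 of the UTF-8 bytes)."""
    return base64.b64encode(plain.encode("utf-8")).decode("ascii")


def from_secret_value(encoded: str) -> str:
    """Decode a Secret `data` value back to text; base64 is an encoding, not encryption."""
    return base64.b64decode(encoded).decode("utf-8")
```

For example, `from_secret_value("eW91ci1zZWNyZXQta2V5LWhlcmU=")` returns the same `your-secret-key-here` placeholder used for `JWT_ACCESS` in the compose file.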

Fossflow

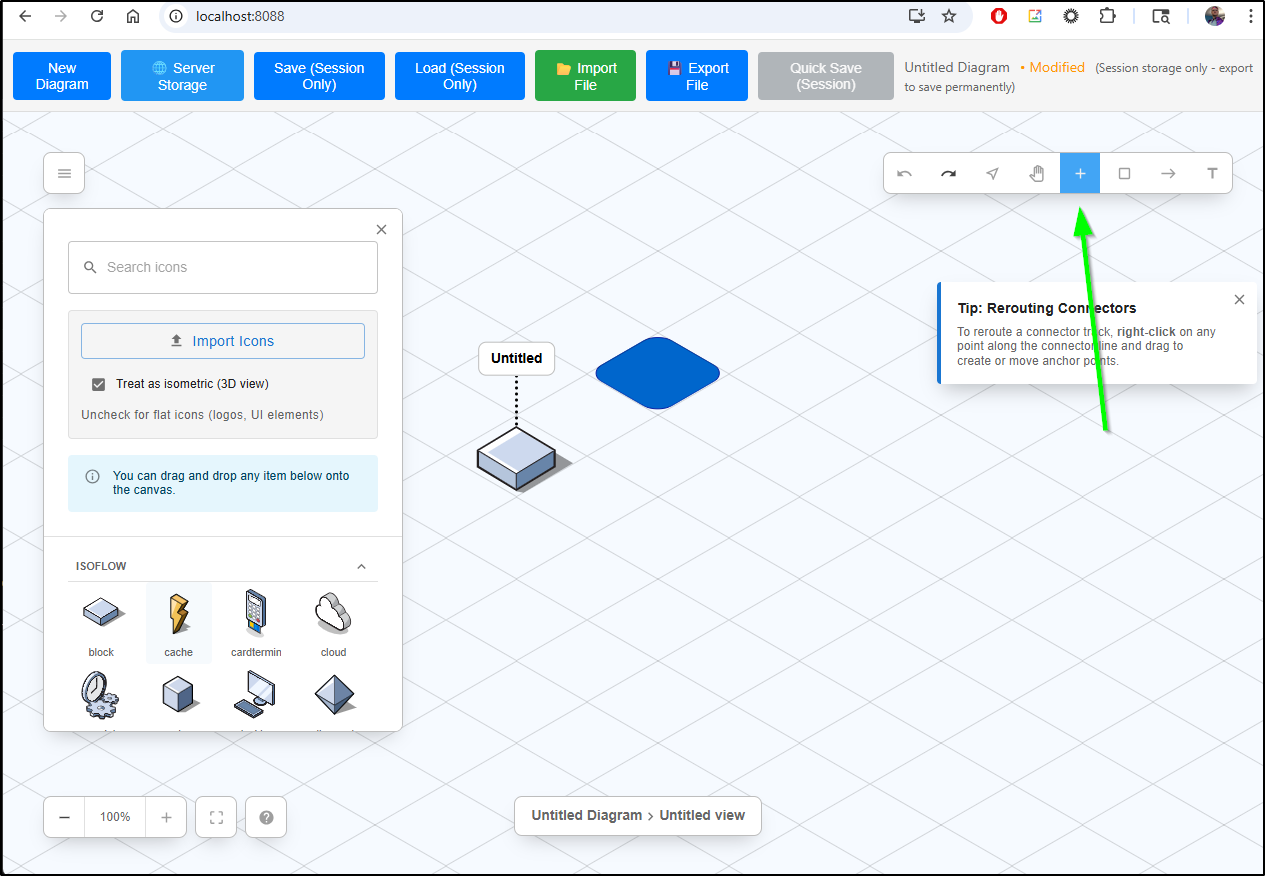

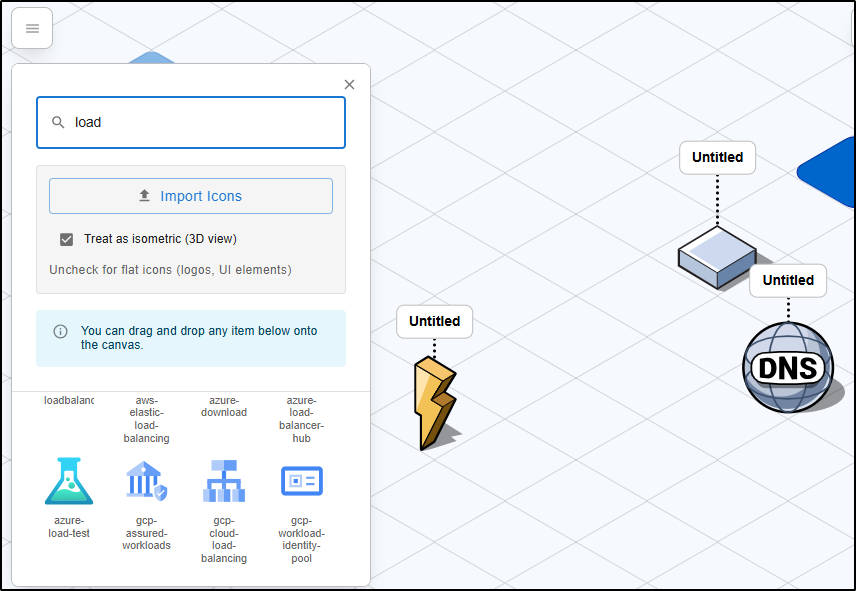

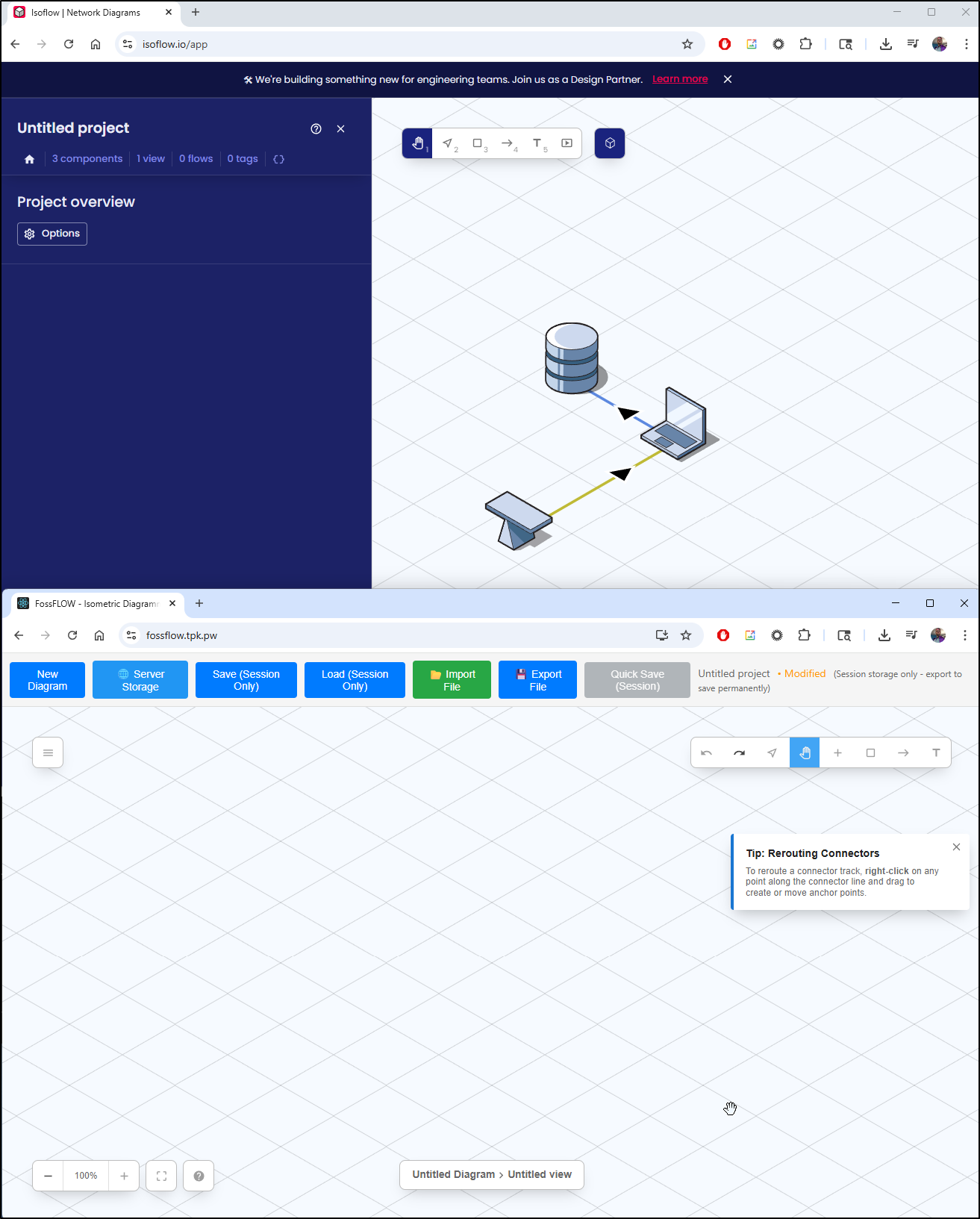

Marius recently posted about FossFlow, which can create isometric diagrams (something I’ve used cloudcraft.co for before, but it’s paid).

Let’s try locally with docker to start

```
builder@LuiGi:~/Workspaces$ git clone https://github.com/stan-smith/FossFLOW.git
Cloning into 'FossFLOW'...
remote: Enumerating objects: 5934, done.
remote: Counting objects: 100% (125/125), done.
remote: Compressing objects: 100% (59/59), done.
remote: Total 5934 (delta 84), reused 71 (delta 65), pack-reused 5809 (from 2)
Receiving objects: 100% (5934/5934), 2.47 MiB | 5.07 MiB/s, done.
Resolving deltas: 100% (3596/3596), done.
builder@LuiGi:~/Workspaces$ cd FossFLOW/
```

The only change I needed to make to the Docker Compose file was to map a local port other than 80:

```
builder@LuiGi:~/Workspaces/FossFLOW$ git diff compose.yml
diff --git a/compose.yml b/compose.yml
index 0f7d4a0..fc4c9ed 100644
--- a/compose.yml
+++ b/compose.yml
@@ -3,11 +3,11 @@ services:
     image: stnsmith/fossflow:latest
     pull_policy: always
     ports:
-      - 80:80
+      - 8088:80
     environment:
       - NODE_ENV=production
       - ENABLE_SERVER_STORAGE=${ENABLE_SERVER_STORAGE:-true}
       - STORAGE_PATH=/data/diagrams
       - ENABLE_GIT_BACKUP=${ENABLE_GIT_BACKUP:-false}
     volumes:
-      - ./diagrams:/data/diagrams
\ No newline at end of file
+      - ./diagrams:/data/diagrams
builder@LuiGi:~/Workspaces/FossFLOW$ docker compose up
[+] Running 1/1
 ✔ fossflow Pulled                            0.7s
[+] Running 1/1
 ✔ Container fossflow-fossflow-1  Recreated   0.2s
Attaching to fossflow-1
fossflow-1  | Starting FossFLOW backend server...
fossflow-1  | npm warn config production Use `--omit=dev` instead.
```
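Rather than hard-coding a new host port, the compose file's own `${VAR:-default}` interpolation (already used for `ENABLE_SERVER_STORAGE`) could make the port configurable. This is a minimal sketch; `FOSSFLOW_PORT` is a hypothetical variable name I’m introducing, not something the project defines:

```yaml
services:
  fossflow:
    image: stnsmith/fossflow:latest
    pull_policy: always
    ports:
      # Hypothetical FOSSFLOW_PORT variable; falls back to the original port 80 when unset
      - "${FOSSFLOW_PORT:-80}:80"
    environment:
      - NODE_ENV=production
      - ENABLE_SERVER_STORAGE=${ENABLE_SERVER_STORAGE:-true}
      - STORAGE_PATH=/data/diagrams
      - ENABLE_GIT_BACKUP=${ENABLE_GIT_BACKUP:-false}
    volumes:
      - ./diagrams:/data/diagrams
```

With that in place, `FOSSFLOW_PORT=8088 docker compose up` would give the same 8088 mapping without editing the port line each time.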

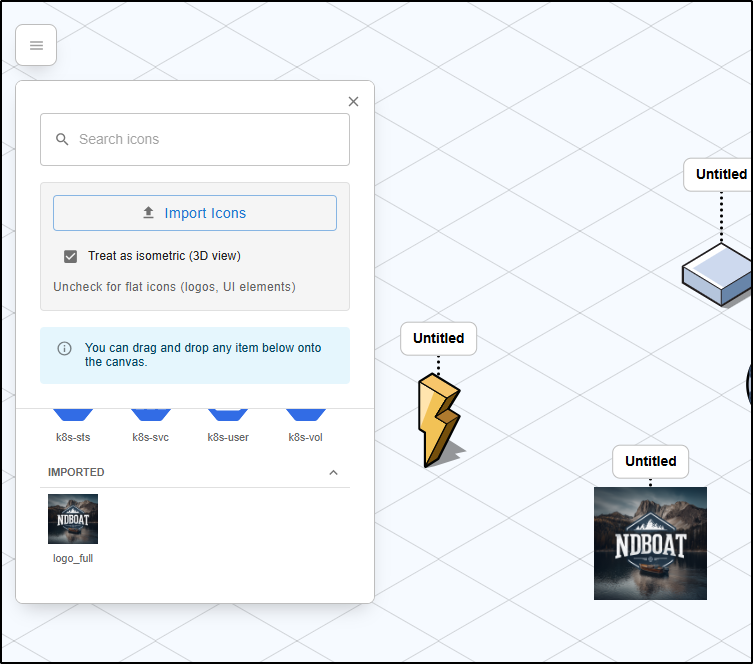

At the start you just see nodes and areas, but under the “+” you will find many common icons.

The bundled icons include the major clouds, such as GCP.

Imported icons, however, show up only as the flat 2D images you import.

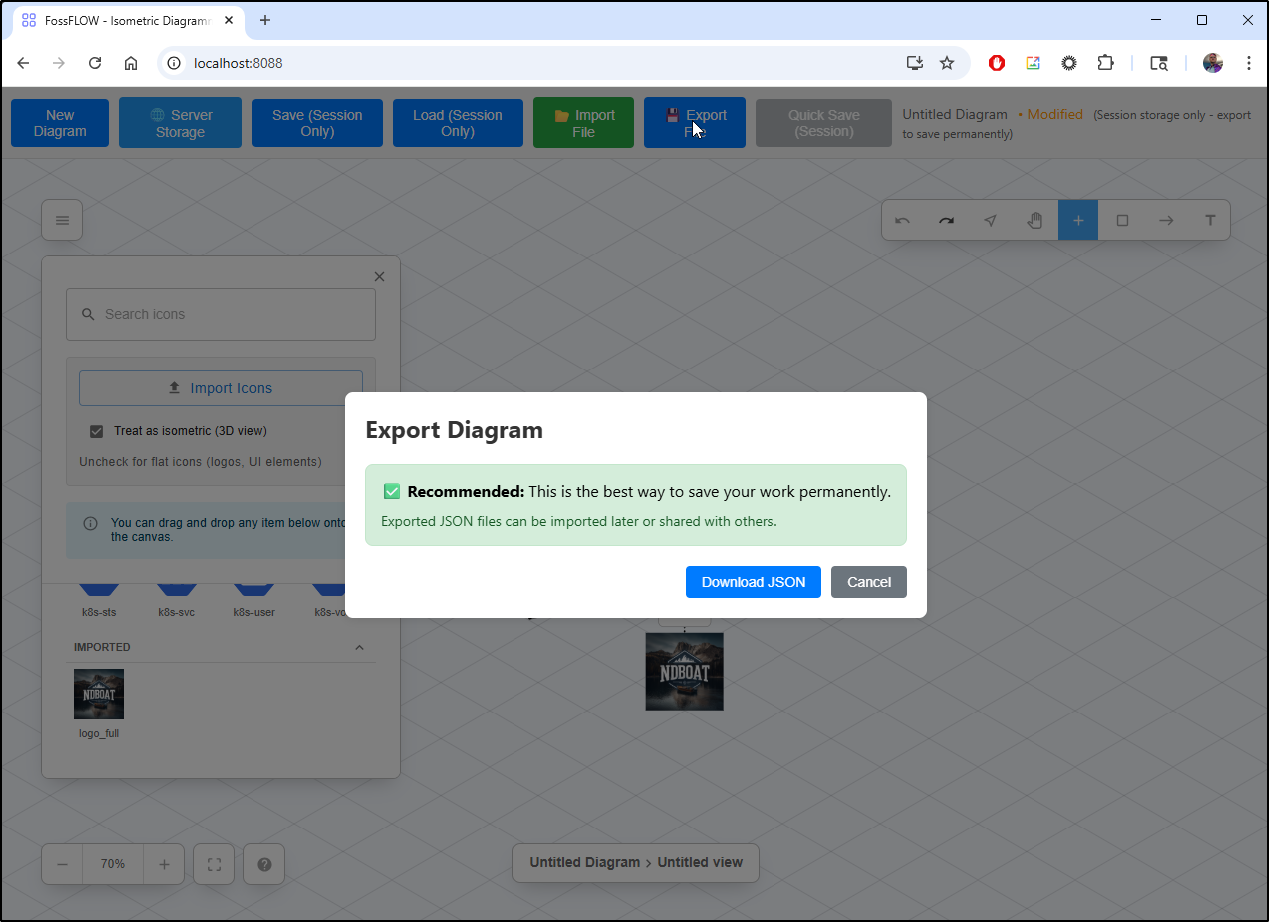

Export simply lets you download the diagram in JSON format so you can import it later.

Thus, at present, the only ways to capture a diagram are to use the app itself or to take a screen capture.

Hosting it

Let’s try hosting a version.

Like before, I’ll create an A record:

```
$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n fossflow
{
  "ARecords": [
    {
      "ipv4Address": "75.73.224.240"
    }
  ],
  "TTL": 3600,
  "etag": "a0cb140b-64f5-4c28-835a-5f2b0128ab75",
  "fqdn": "fossflow.tpk.pw.",
  "id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/fossflow",
  "name": "fossflow",
  "provisioningState": "Succeeded",
  "resourceGroup": "idjdnsrg",
  "targetResource": {},
  "trafficManagementProfile": {},
  "type": "Microsoft.Network/dnszones/A"
}
```

I’ll fire up a quick deployment using a Kubernetes YAML manifest:

```yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: fossflow-diagrams-pvc
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: local-path
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: fossflow
spec:
  replicas: 1
  selector:
    matchLabels:
      app: fossflow
  template:
    metadata:
      labels:
        app: fossflow
    spec:
      containers:
        - name: fossflow
          image: stnsmith/fossflow:latest
          ports:
            - containerPort: 80
          volumeMounts:
            - name: diagrams
              mountPath: /data/diagrams
      volumes:
        - name: diagrams
          persistentVolumeClaim:
            claimName: fossflow-diagrams-pvc
---
apiVersion: v1
kind: Service
metadata:
  name: fossflow
spec:
  selector:
    app: fossflow
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  type: ClusterIP
```

And see it come up

```
$ kubectl apply -f ./manifest.yaml
persistentvolumeclaim/fossflow-diagrams-pvc created
deployment.apps/fossflow created
service/fossflow created
```
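One difference from the compose file: this manifest doesn’t set the storage-related environment variables that compose passes (`ENABLE_SERVER_STORAGE` defaulting to `true`, `STORAGE_PATH=/data/diagrams`). Server storage did work in my testing, so the image evidently has workable defaults, but being explicit keeps the two deployments in sync. A sketch of the container section only (it slots under the Deployment’s `spec.template.spec`), assuming the image honours the same variables the compose file sets:

```yaml
containers:
  - name: fossflow
    image: stnsmith/fossflow:latest
    env:
      # Same values the compose file supplies
      - name: NODE_ENV
        value: "production"
      - name: ENABLE_SERVER_STORAGE
        value: "true"
      - name: STORAGE_PATH
        value: "/data/diagrams"   # must match the PVC mountPath below
      - name: ENABLE_GIT_BACKUP
        value: "false"
    ports:
      - containerPort: 80
    volumeMounts:
      - name: diagrams
        mountPath: /data/diagrams
```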

Then I just need an ingress to route traffic to it:

```
$ cat ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/client-max-body-size: "0"
    nginx.org/proxy-connect-timeout: "3600"
    nginx.org/proxy-read-timeout: "3600"
    nginx.org/websocket-services: fossflow
  name: fossflow-ingress
spec:
  rules:
    - host: fossflow.tpk.pw
      http:
        paths:
          - backend:
              service:
                name: fossflow
                port:
                  number: 80
            path: /
            pathType: Prefix
  tls:
    - hosts:
        - fossflow.tpk.pw
      secretName: fossflow-tls

$ kubectl apply -f ./ingress.yaml
Warning: annotation "kubernetes.io/ingress.class" is deprecated, please use 'spec.ingressClassName' instead
ingress.networking.k8s.io/fossflow-ingress created
```
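The warning itself points to the fix: move the class out of the deprecated annotation and into `spec.ingressClassName`. This is a sketch of the same Ingress with only that change, assuming the controller’s IngressClass is named `nginx` (`kubectl get ingressclass` will confirm the actual name):

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: fossflow-ingress
  annotations:
    # kubernetes.io/ingress.class removed; the class now lives in spec.ingressClassName
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/client-max-body-size: "0"
    nginx.org/proxy-connect-timeout: "3600"
    nginx.org/proxy-read-timeout: "3600"
    nginx.org/websocket-services: fossflow
spec:
  ingressClassName: nginx   # assumed IngressClass name
  rules:
    - host: fossflow.tpk.pw
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: fossflow
                port:
                  number: 80
  tls:
    - hosts:
        - fossflow.tpk.pw
      secretName: fossflow-tls
```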

Once the certificate shows as ready:

```
$ kubectl get cert fossflow-tls
NAME           READY   SECRET         AGE
fossflow-tls   False   fossflow-tls   51s
$ kubectl get cert fossflow-tls
NAME           READY   SECRET         AGE
fossflow-tls   True    fossflow-tls   2m23s
```

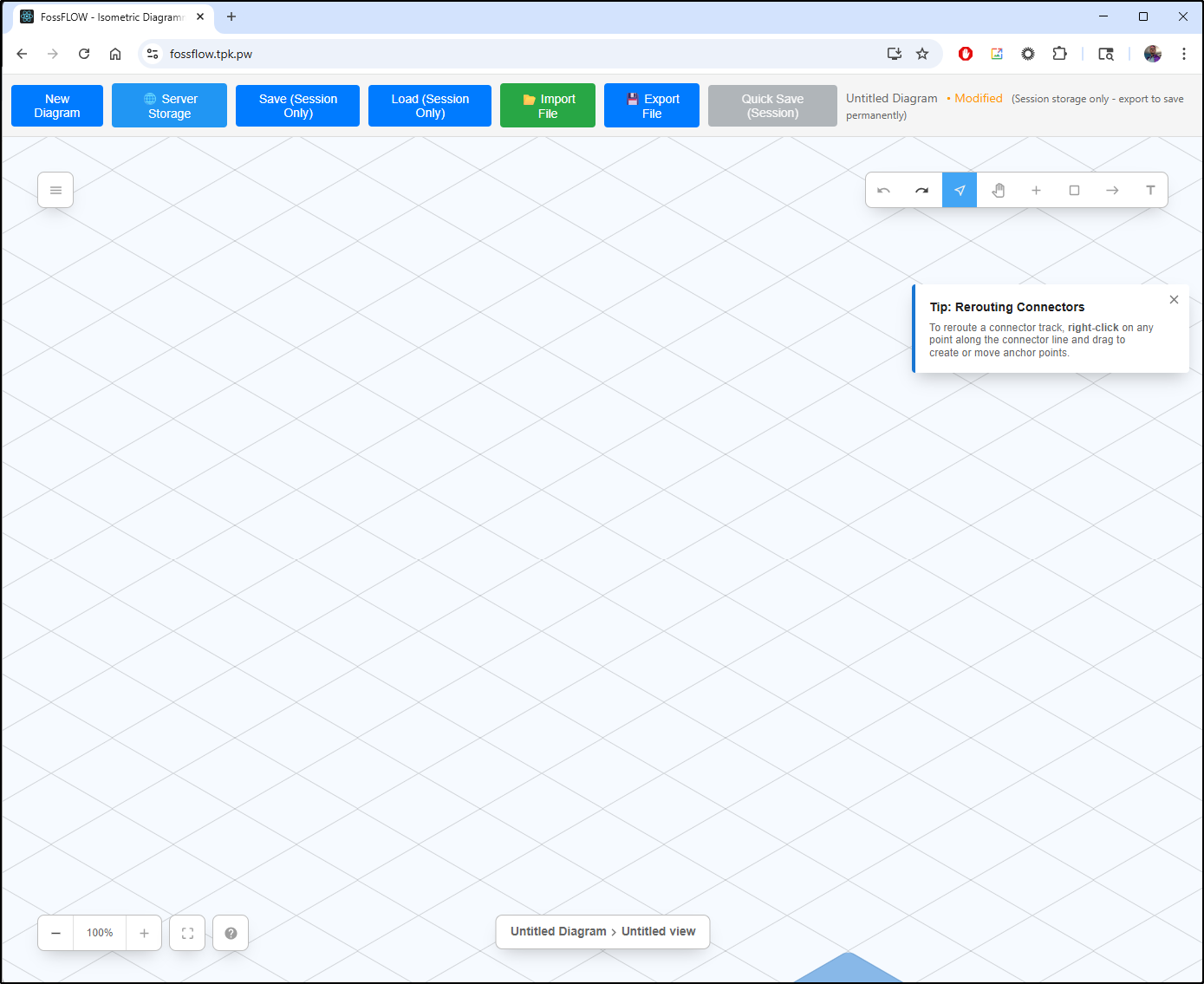

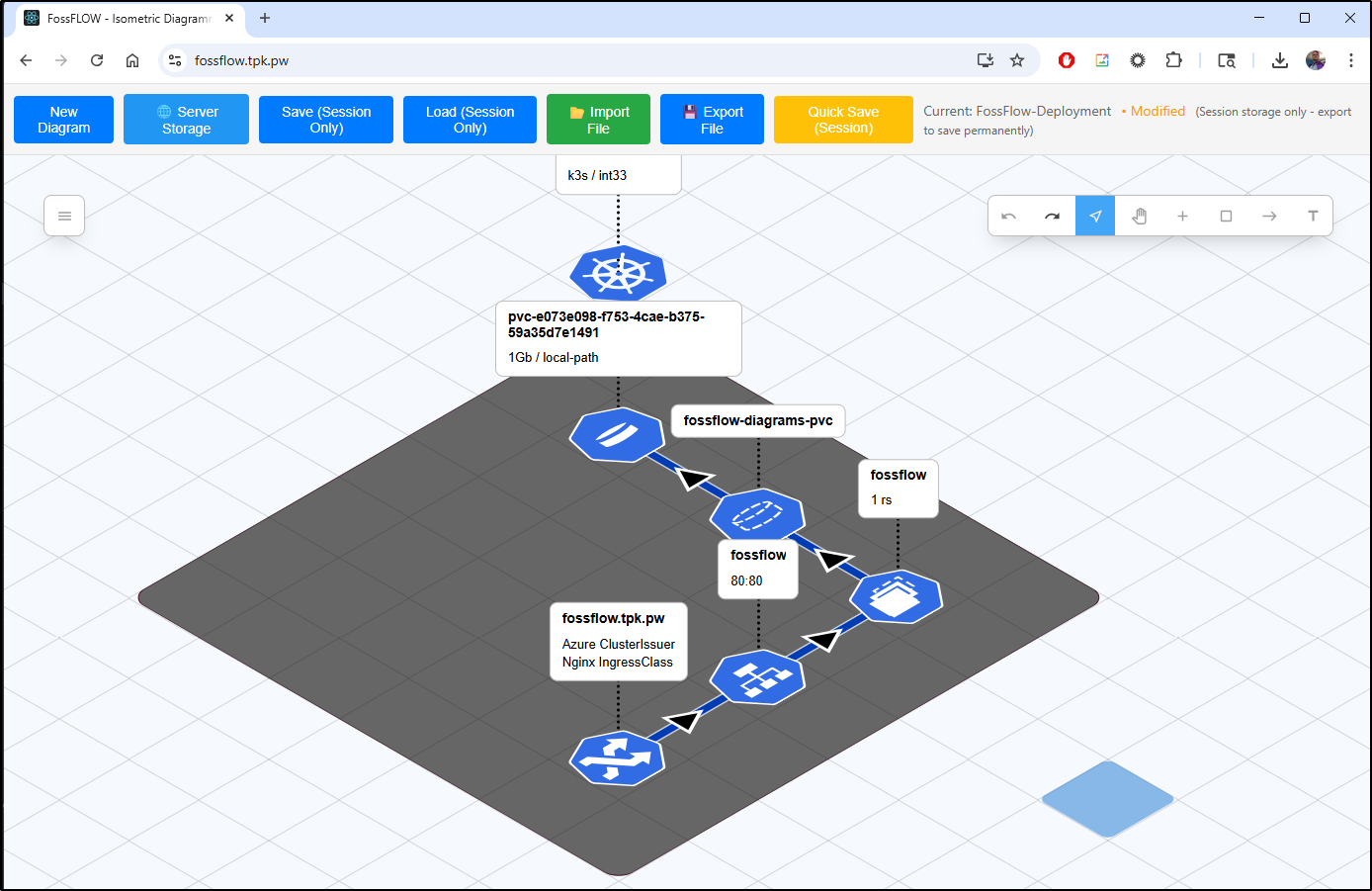

I can hop right in

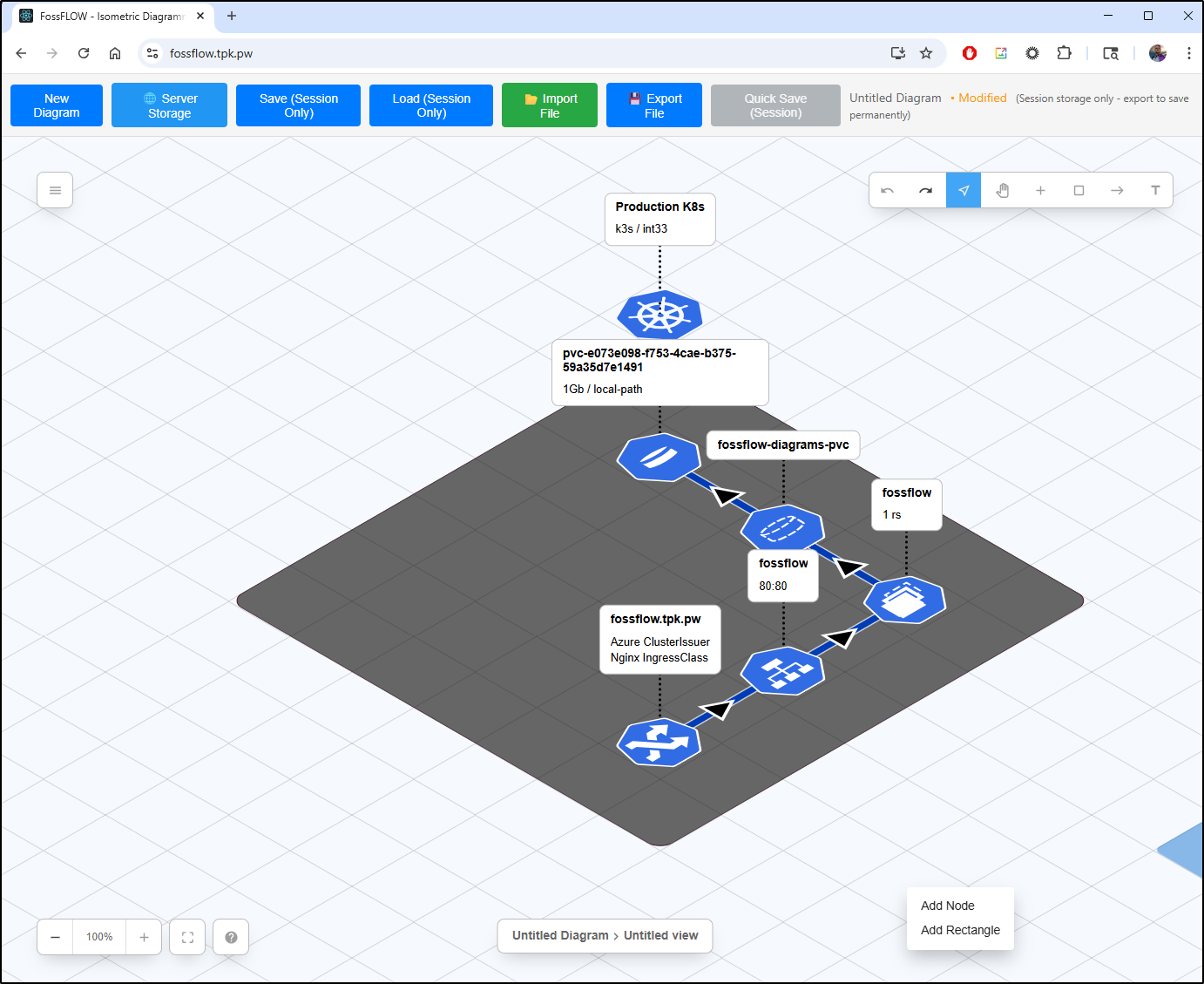

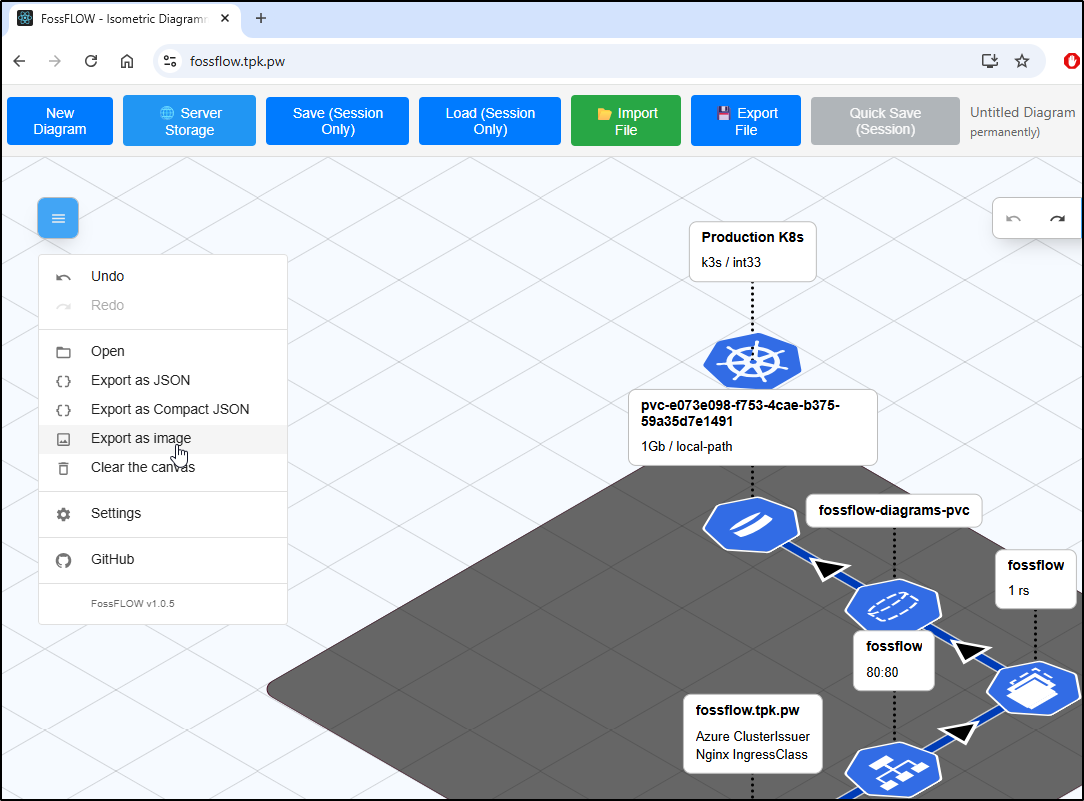

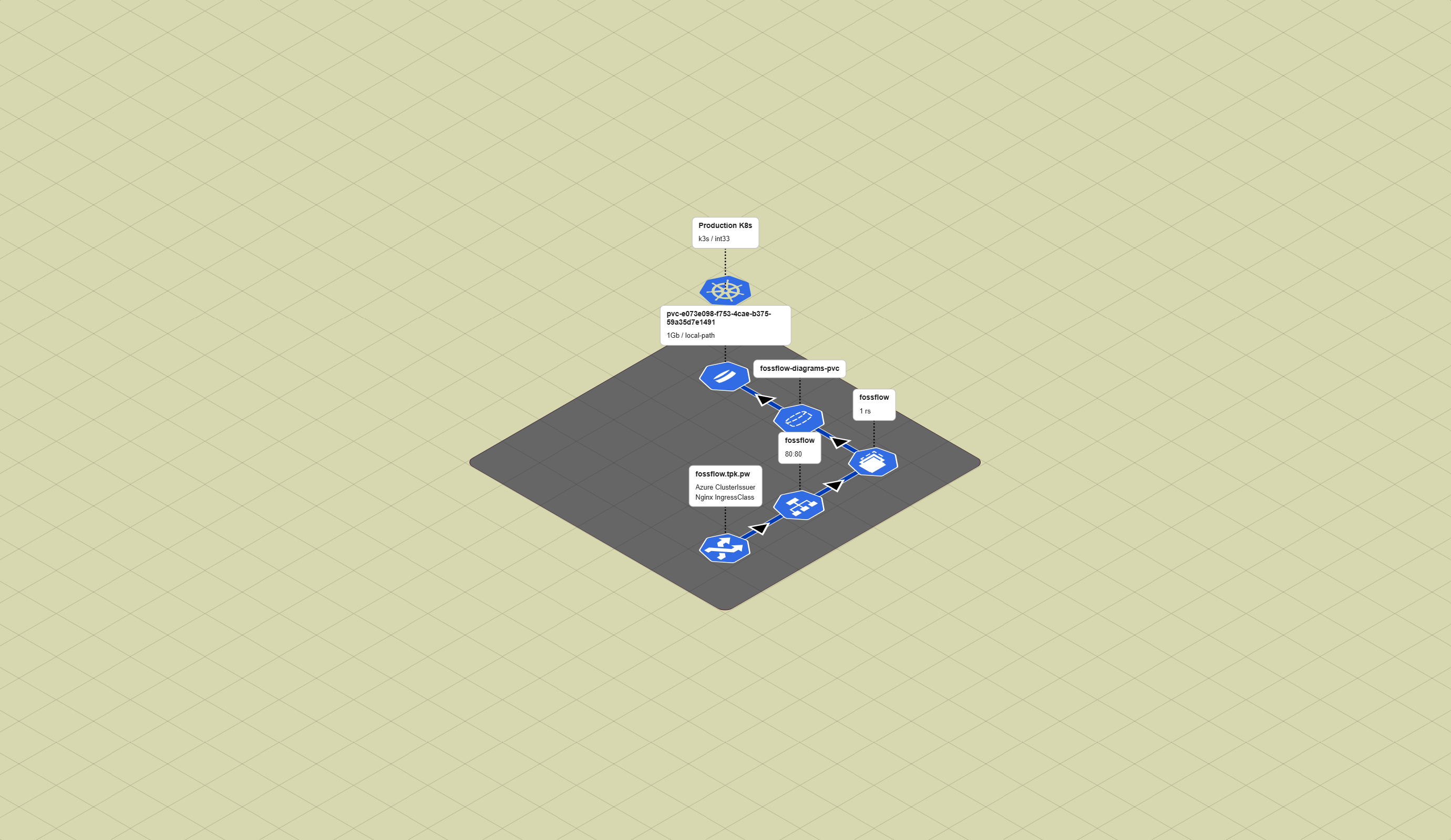

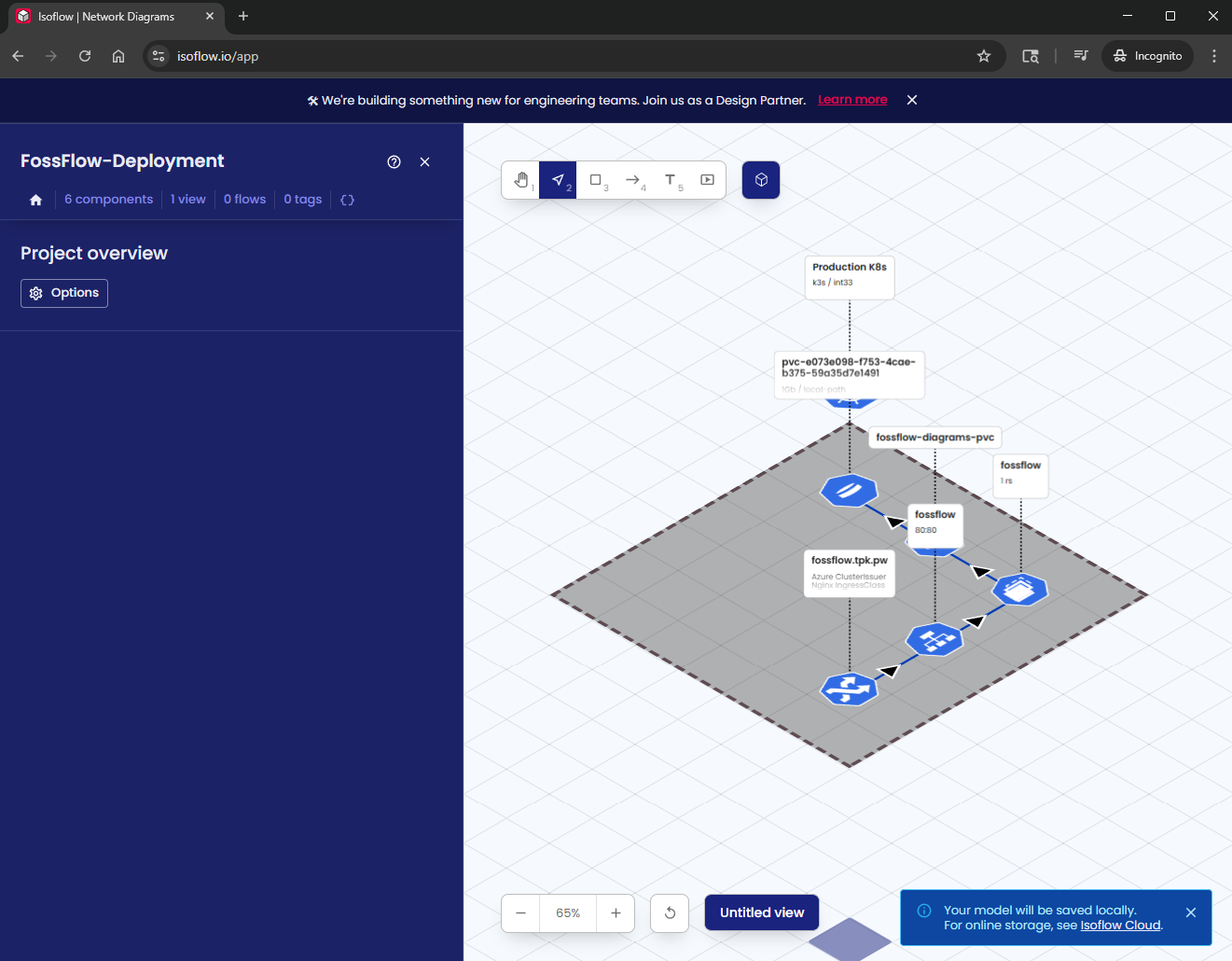

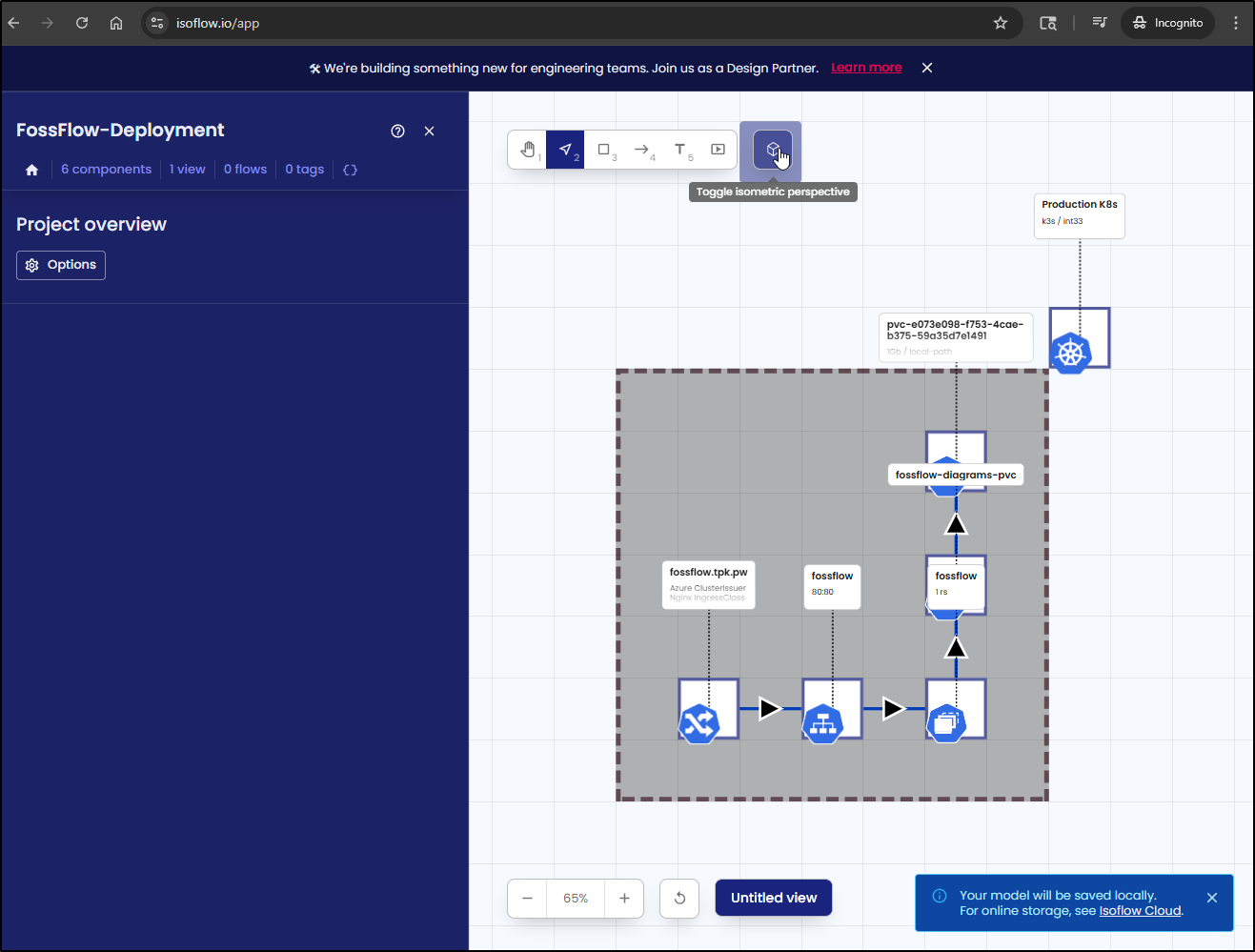

I can diagram up this deployment

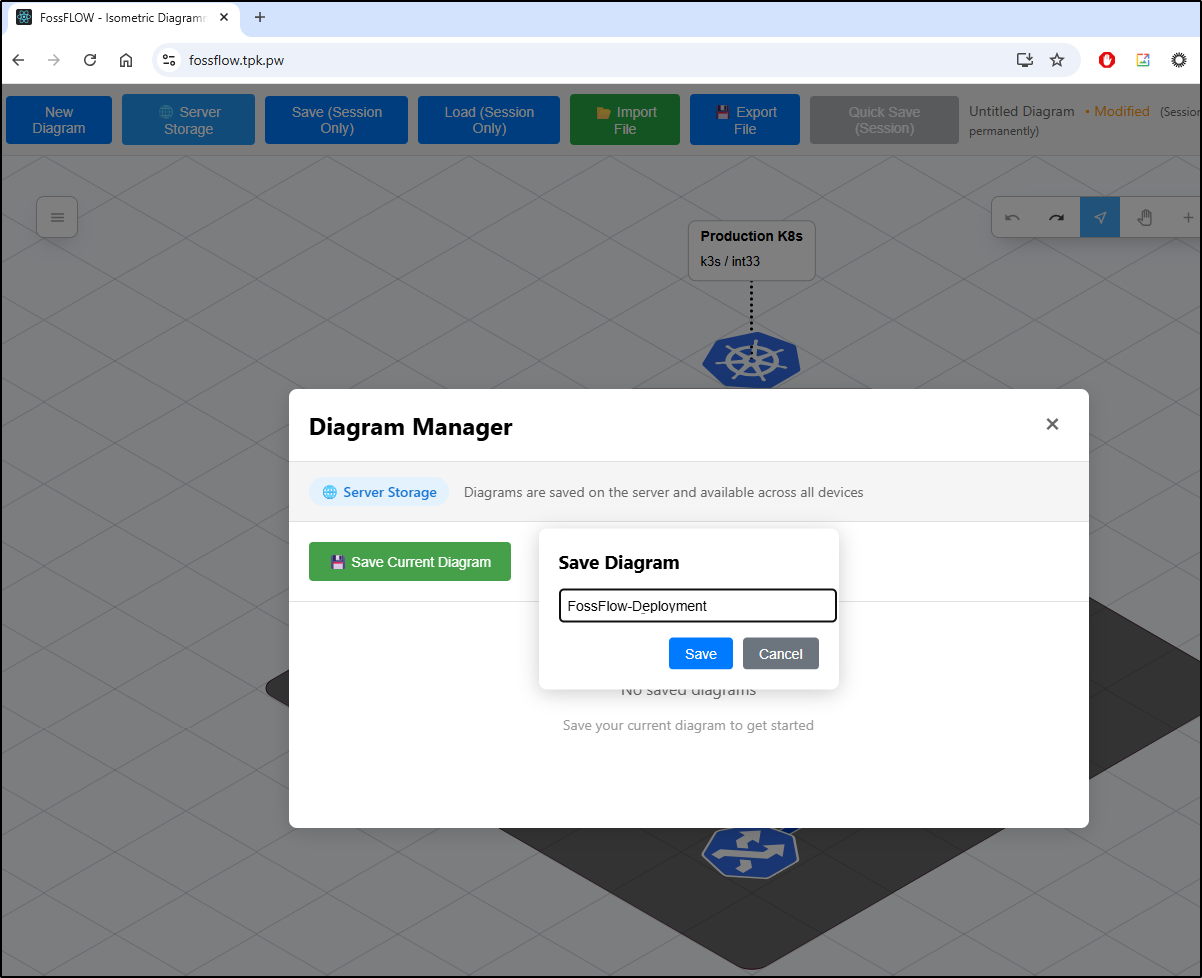

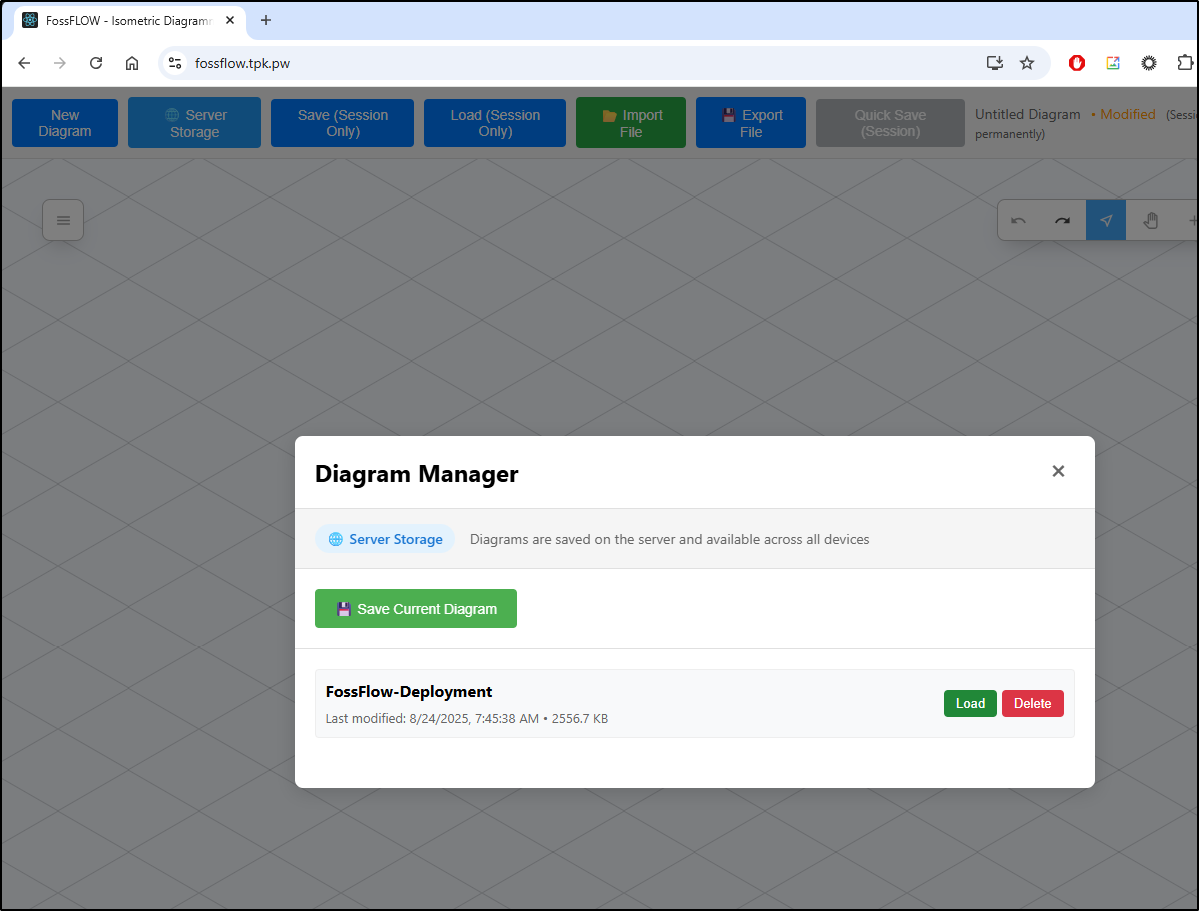

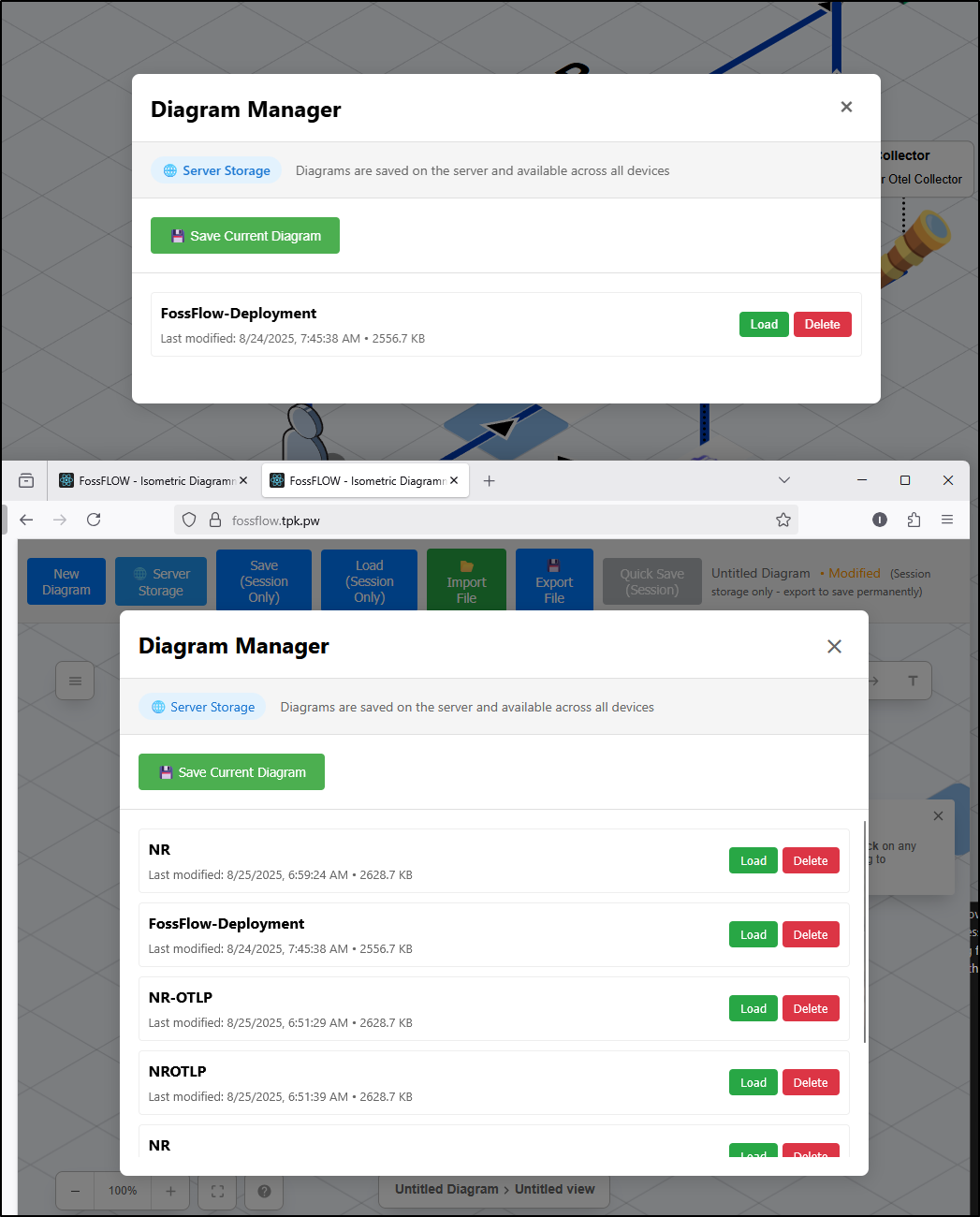

I can save it to server storage to come back to later

I can also download the JSON

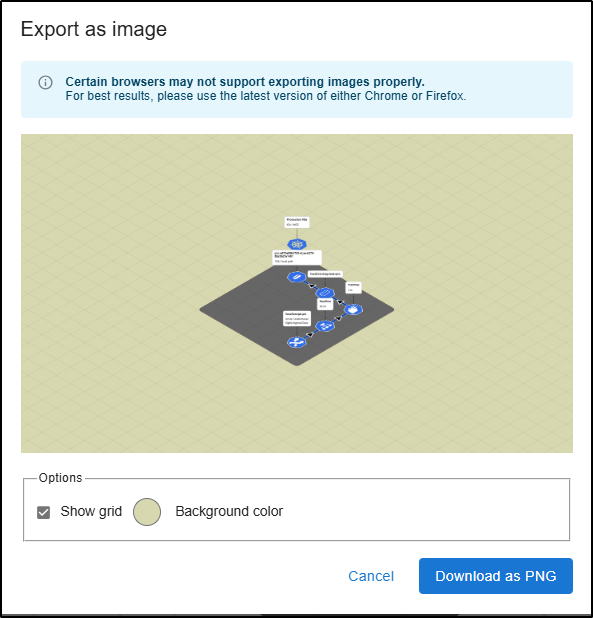

Let’s also try exporting an image.

I can optionally set a background colour and/or show the grid, which you can see here:

I want to test that PVC, so let’s kill the existing pod:

```
builder@LuiGi:~/Workspaces/FossFLOW$ kubectl get deployments | grep foss
fossflow   1/1     1            1           9m25s
builder@LuiGi:~/Workspaces/FossFLOW$ kubectl get po | grep foss
fossflow-78666fdbbb-4rfll   1/1     Running   0          17m
builder@LuiGi:~/Workspaces/FossFLOW$ kubectl delete po fossflow-78666fdbbb-4rfll
pod "fossflow-78666fdbbb-4rfll" deleted
builder@LuiGi:~/Workspaces/FossFLOW$ kubectl get po -l app=fossflow
NAME                        READY   STATUS    RESTARTS   AGE
fossflow-78666fdbbb-vxvlf   1/1     Running   0          43s
```

After refreshing, the page is empty, but I do see my saved diagram in server storage, and loading it worked fine.

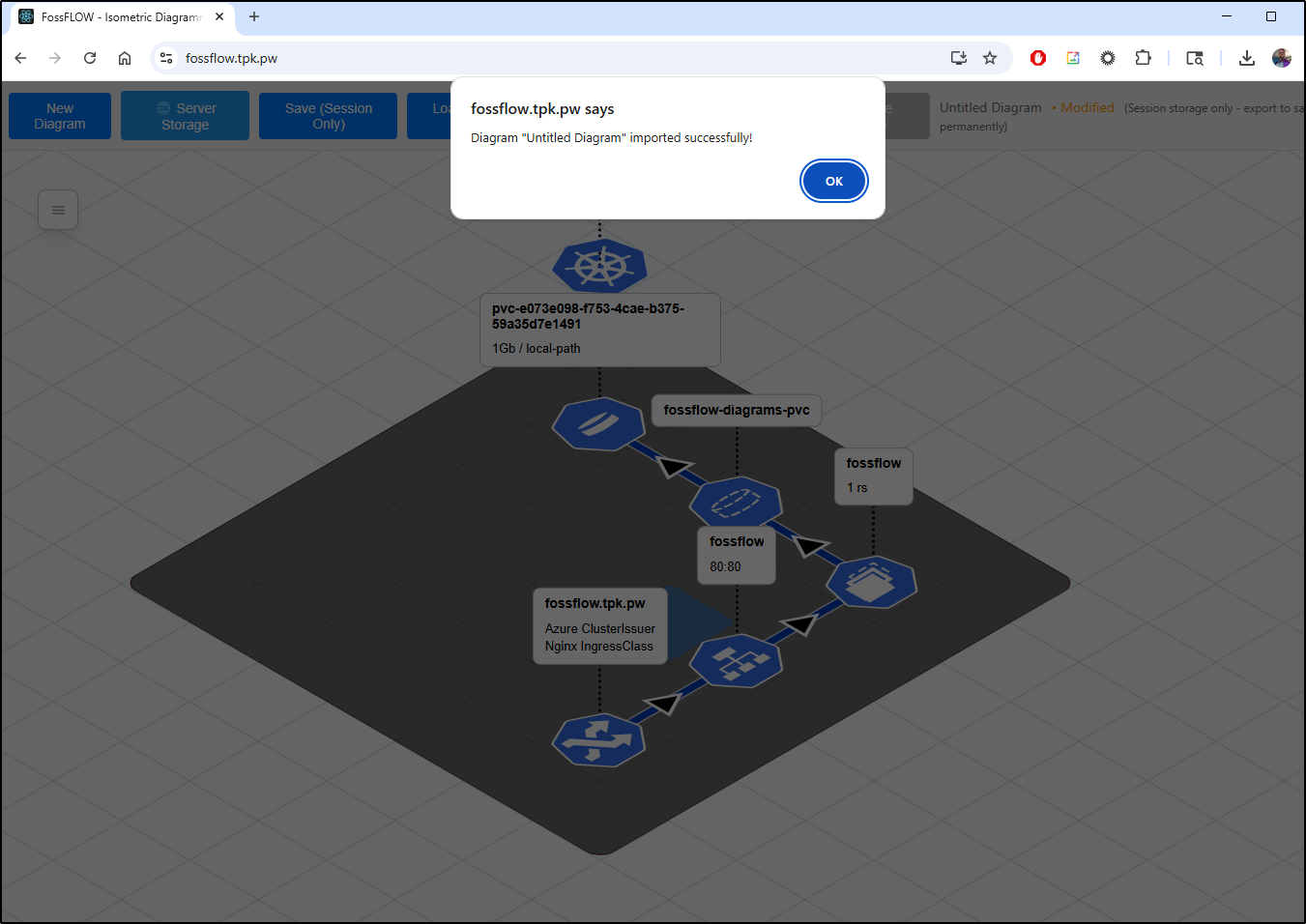

Importing also worked just fine
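Deleting the pod only exercised the ReplicaSet replacing it. A rollout, such as a `kubectl rollout restart` to pick up a newer `latest` image, is the other case worth considering: with the default `RollingUpdate` strategy and one replica, Kubernetes starts the new pod before stopping the old one, so two FossFlow processes can briefly share the `ReadWriteOnce` volume (permitted, since `local-path` keeps both on the same node). If that overlap is a concern, a `Recreate` strategy stops the old pod first, at the cost of a short outage. A sketch of just the field to add to the Deployment; whether FossFlow actually minds two concurrent writers is an assumption I haven’t tested:

```yaml
# Fragment: merges into the fossflow Deployment's top-level spec
spec:
  replicas: 1
  strategy:
    type: Recreate   # terminate the old pod before starting its replacement
```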

Imports from IsoFlow

The dialog suggested it could pull in IsoFlow diagrams.

However, when I tested it, nothing showed up.

Another nuance I found with FossFlow: when I did a “save to server”, I saw no evidence of the save in my session. I kept saving in different ways and began to panic.

However, when I opened it in another browser and went to load, I saw all my saves, so it’s just a minor UI bug.

IsoFlow

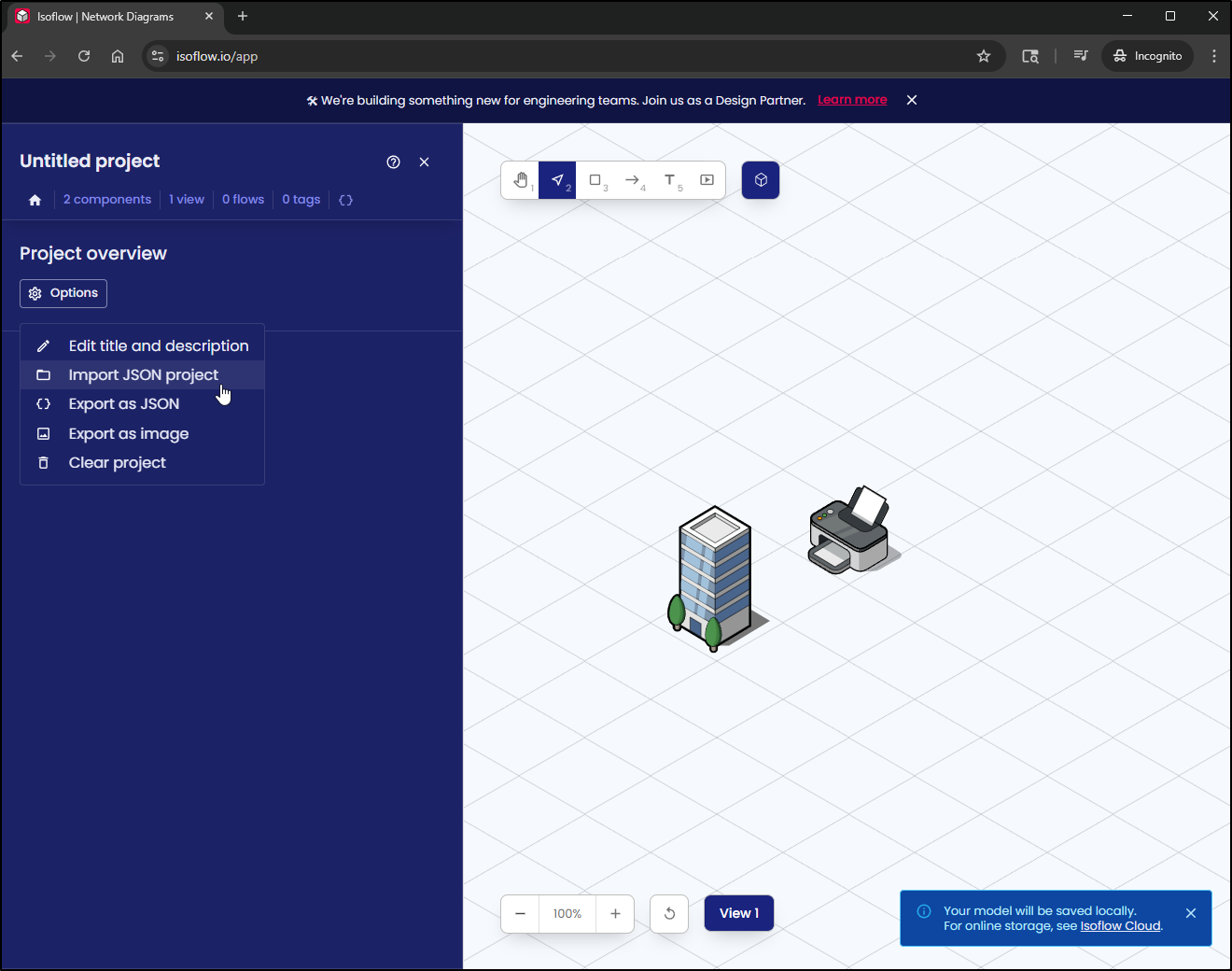

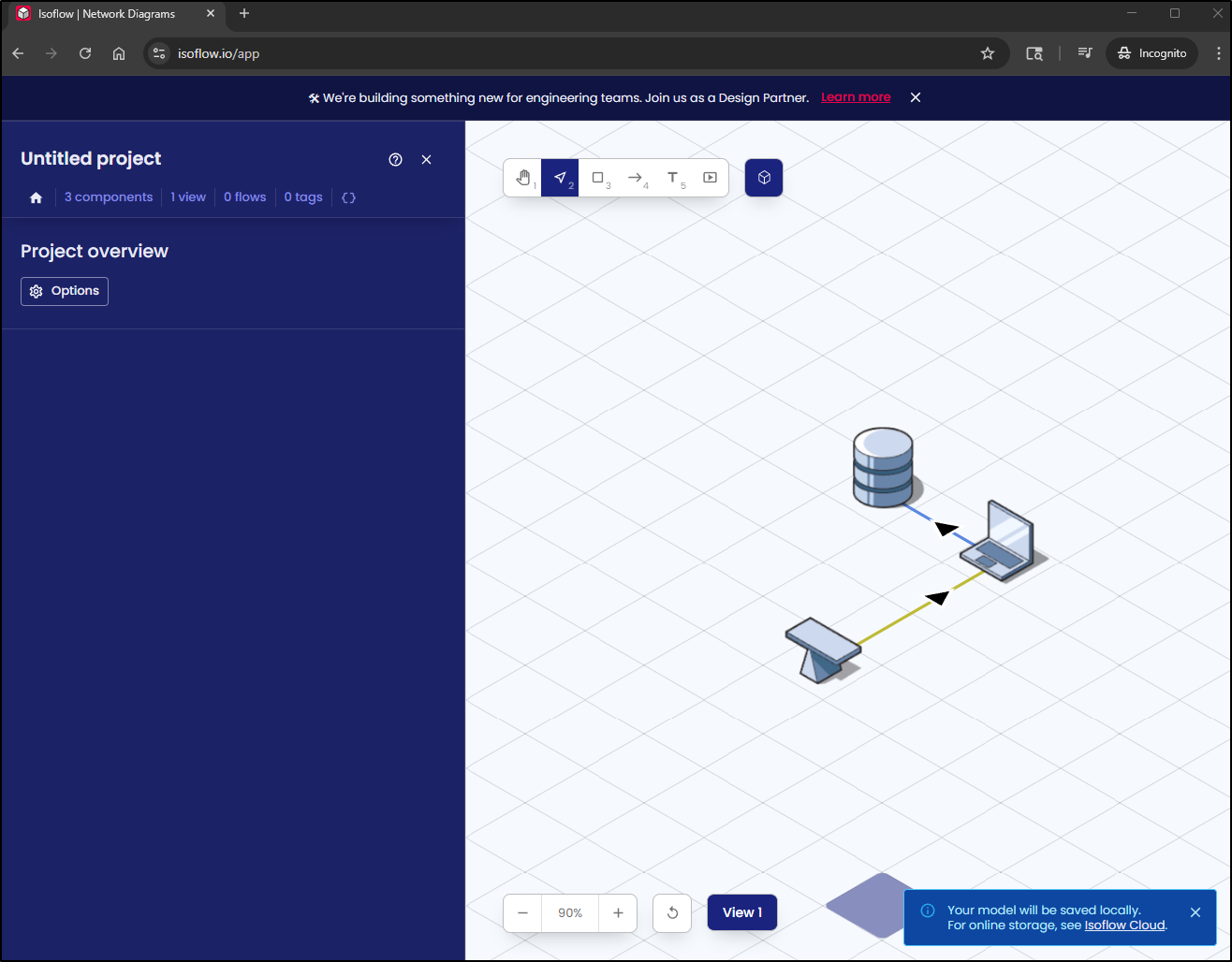

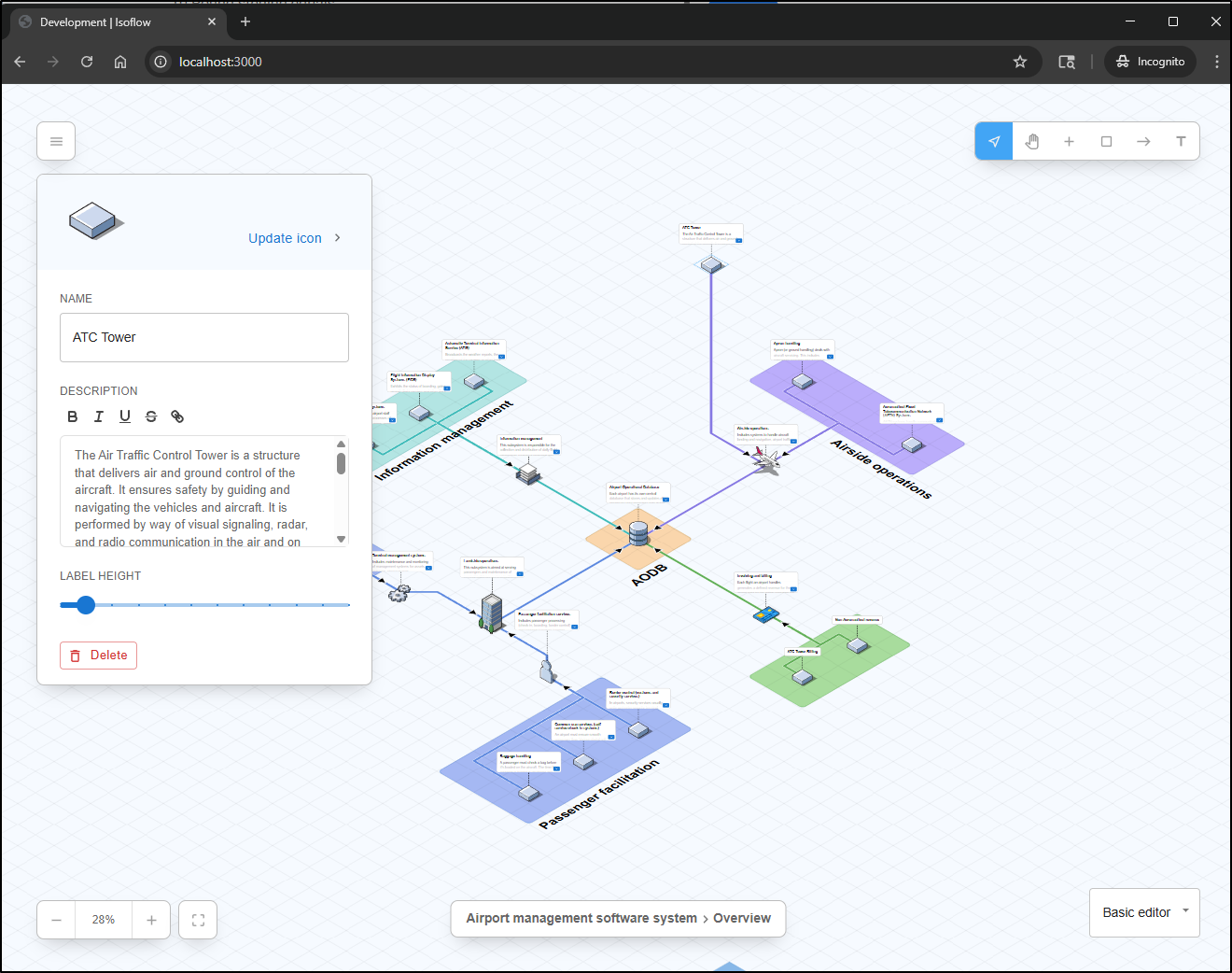

IsoFlow is interesting. You can use the free editor to make some diagrams

And, as we saw, I can export the JSON. Testing showed the exports could be imported back in again.

While we couldn’t pull an IsoFlow diagram into FossFlow, surprisingly it did work in reverse: I could pull a FossFlow diagram into IsoFlow.

That lets me do things like switch back to a flat 2D diagram.

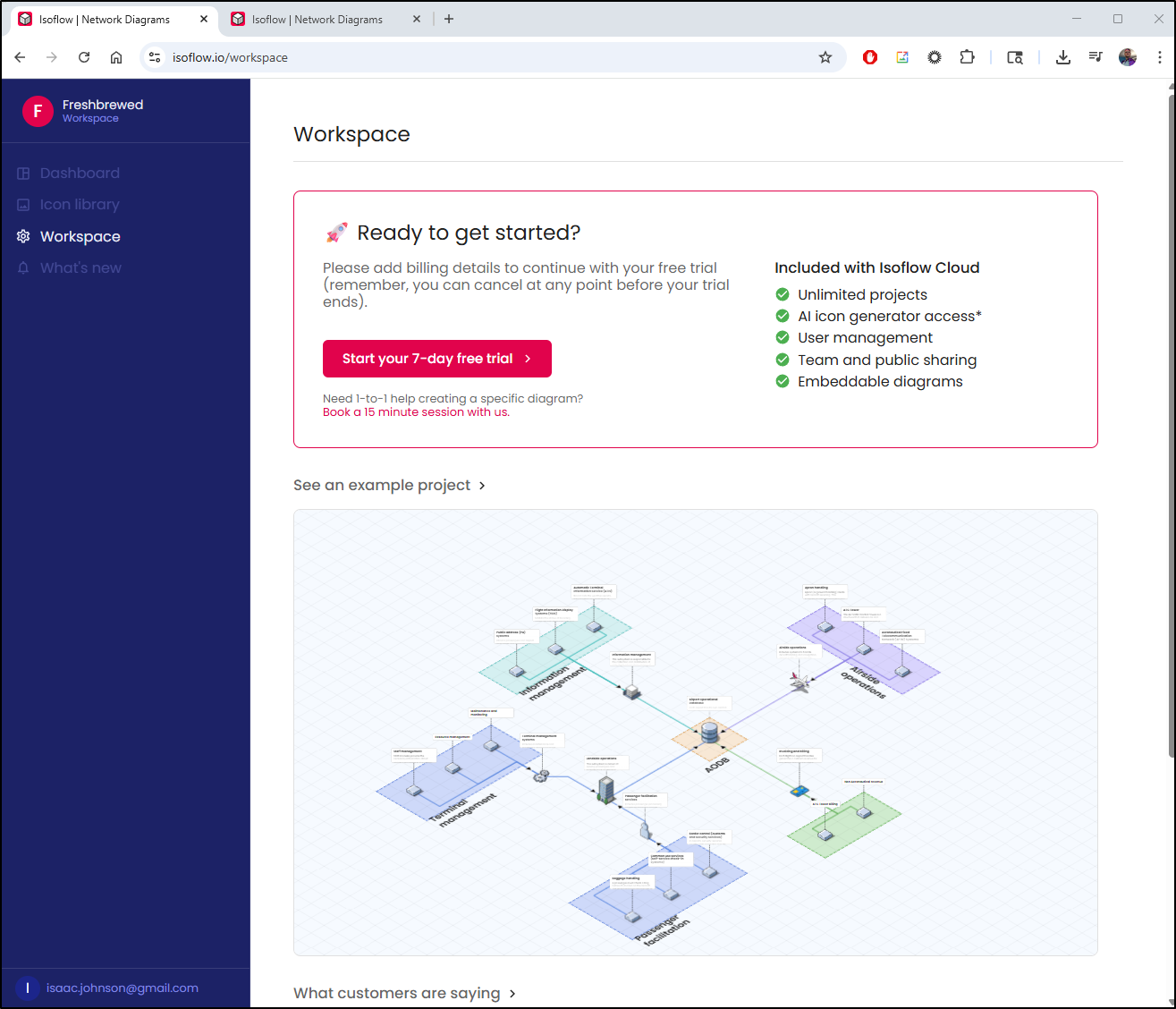

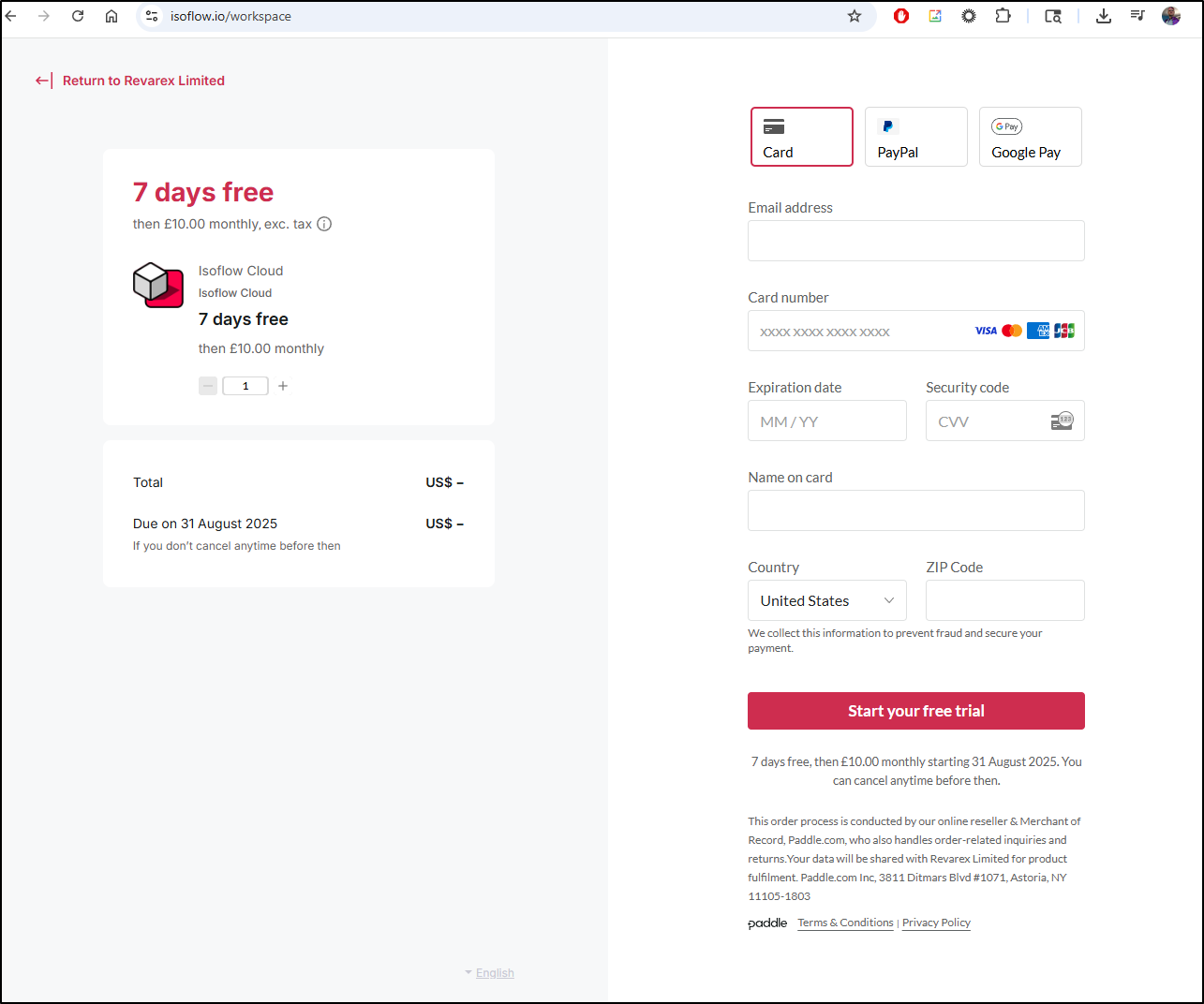

However, as soon as you make an account and want to try the free cloud product, you are locked into a “Start your 7-day free trial” button with no way out (other than logging out).

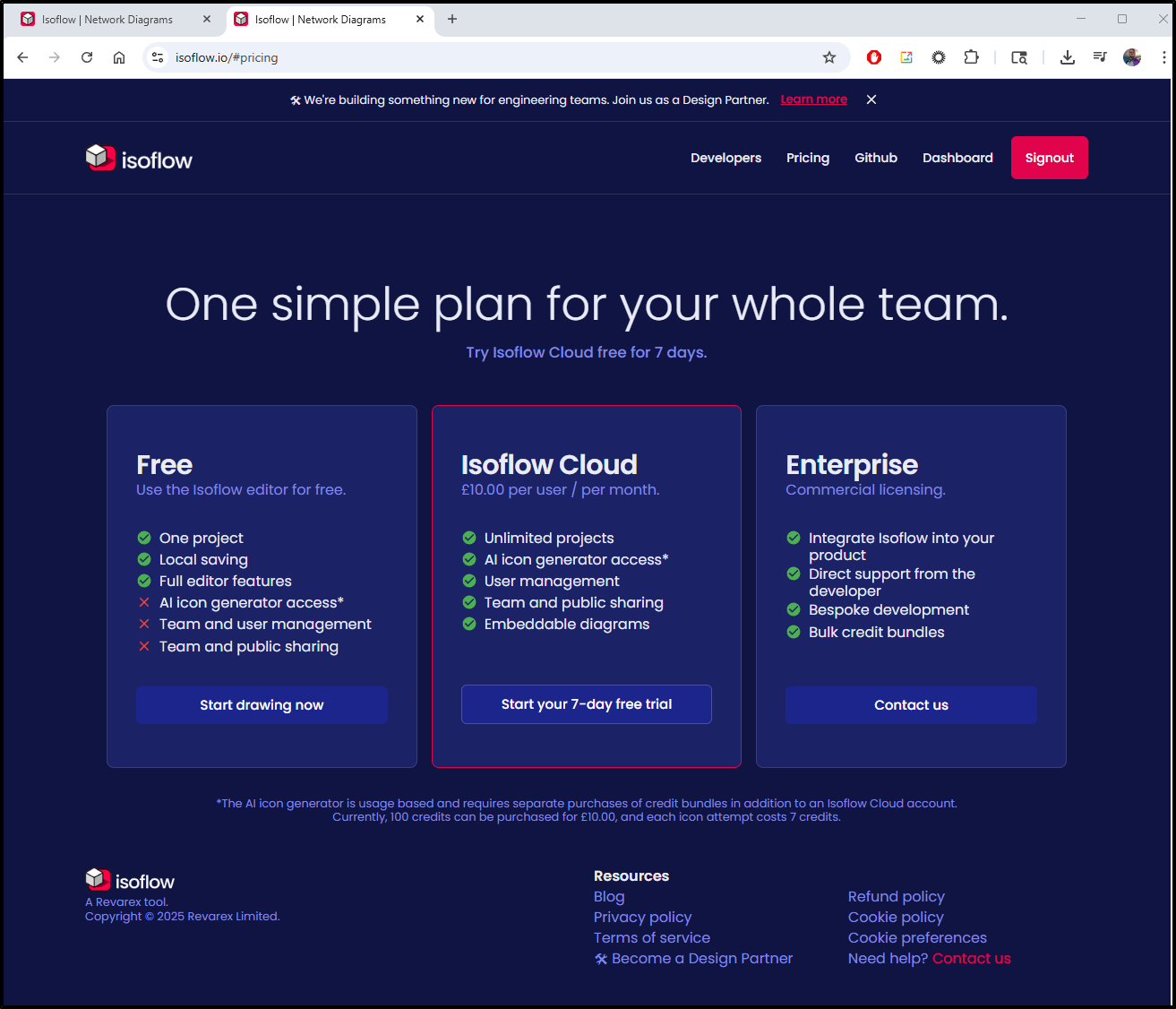

And while it is only USD $13.53/month, with a free tier available, there is no way through without entering payment details with a separate billing provider (Paddle?).

Community edition

They have a Community Edition, which is the project FossFlow was forked from.

We can clone the repo and fire it up with npm

```
builder@DESKTOP-QADGF36:~/Workspaces$ git clone https://github.com/markmanx/isoflow.git
Cloning into 'isoflow'...
remote: Enumerating objects: 6632, done.
remote: Counting objects: 100% (978/978), done.
remote: Compressing objects: 100% (140/140), done.
remote: Total 6632 (delta 876), reused 838 (delta 838), pack-reused 5654 (from 2)
Receiving objects: 100% (6632/6632), 2.43 MiB | 8.38 MiB/s, done.
Resolving deltas: 100% (3506/3506), done.
builder@DESKTOP-QADGF36:~/Workspaces$ cd isoflow/
builder@DESKTOP-QADGF36:~/Workspaces/isoflow$ nvm use lts/iron
Now using node v20.19.4 (npm v10.8.2)
builder@DESKTOP-QADGF36:~/Workspaces/isoflow$ npm i

added 1209 packages, and audited 1210 packages in 42s

192 packages are looking for funding
  run `npm fund` for details

29 vulnerabilities (6 low, 13 moderate, 8 high, 2 critical)

To address issues that do not require attention, run:
  npm audit fix

To address all issues (including breaking changes), run:
  npm audit fix --force

Run `npm audit` for details.
builder@DESKTOP-QADGF36:~/Workspaces/isoflow$ npm run start

> isoflow@1.1.1 start
> webpack serve --config ./webpack/dev.config.js

<i> [webpack-dev-server] Project is running at:
<i> [webpack-dev-server] Loopback: http://localhost:3000/
<i> [webpack-dev-server] On Your Network (IPv4): http://172.22.72.68:3000/
<i> [webpack-dev-server] Content not from webpack is served from '/home/builder/Workspaces/isoflow/webpack/build' directory
```

As suggested, it is a basic editor.

It looks like the FossFlow folks took the basics and just extended it.

Summary

Today we looked at Kaneo and how to run it locally in Docker. Hosting it in Kubernetes, however, was a clear failure, whether with Helm or as a forwarding service. It seems pretty good, but I don’t think I’m ready to leave Vikunja for it.

We looked at two related isometric diagram tools: FossFlow and IsoFlow. They both have some nice features. I would like to give IsoFlow a real chance, but I don’t like having to enter my billing details just to start a forced 7-day trial.

I do think moving items around is a lot smoother in IsoFlow.