Published: Aug 14, 2025 by Isaac Johnson

I have a couple of older tools on my production cluster, namely Trilium and LibreNMS. I hadn’t used them in a while and wanted to see whether they had new features or would spark any new interest. One of them actually surprised me.

The other tool on my list was a small document management tool, Papra. We’ll give it a quick look to see whether it might fill some needs.

Let’s start with Trilium.

Trilium

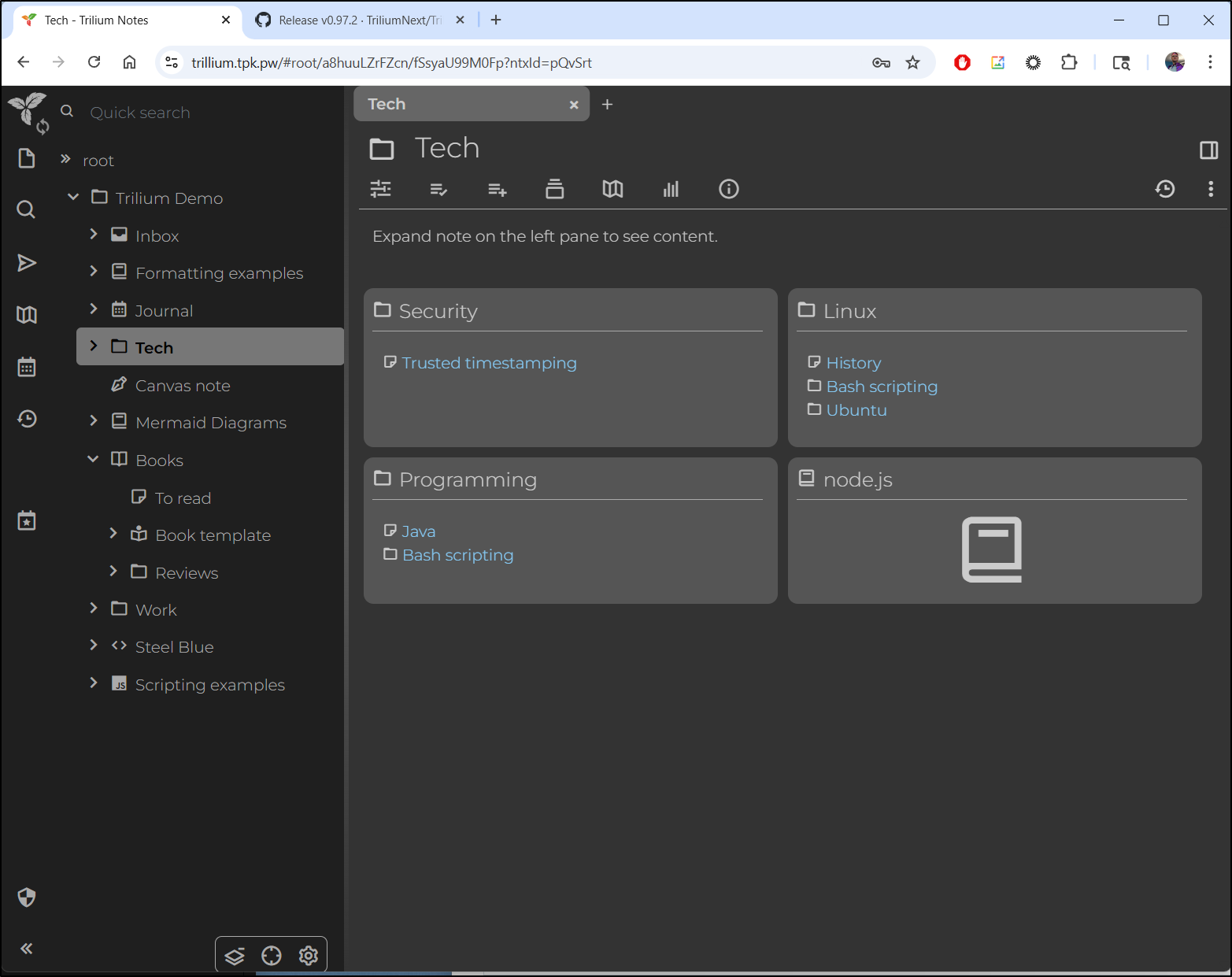

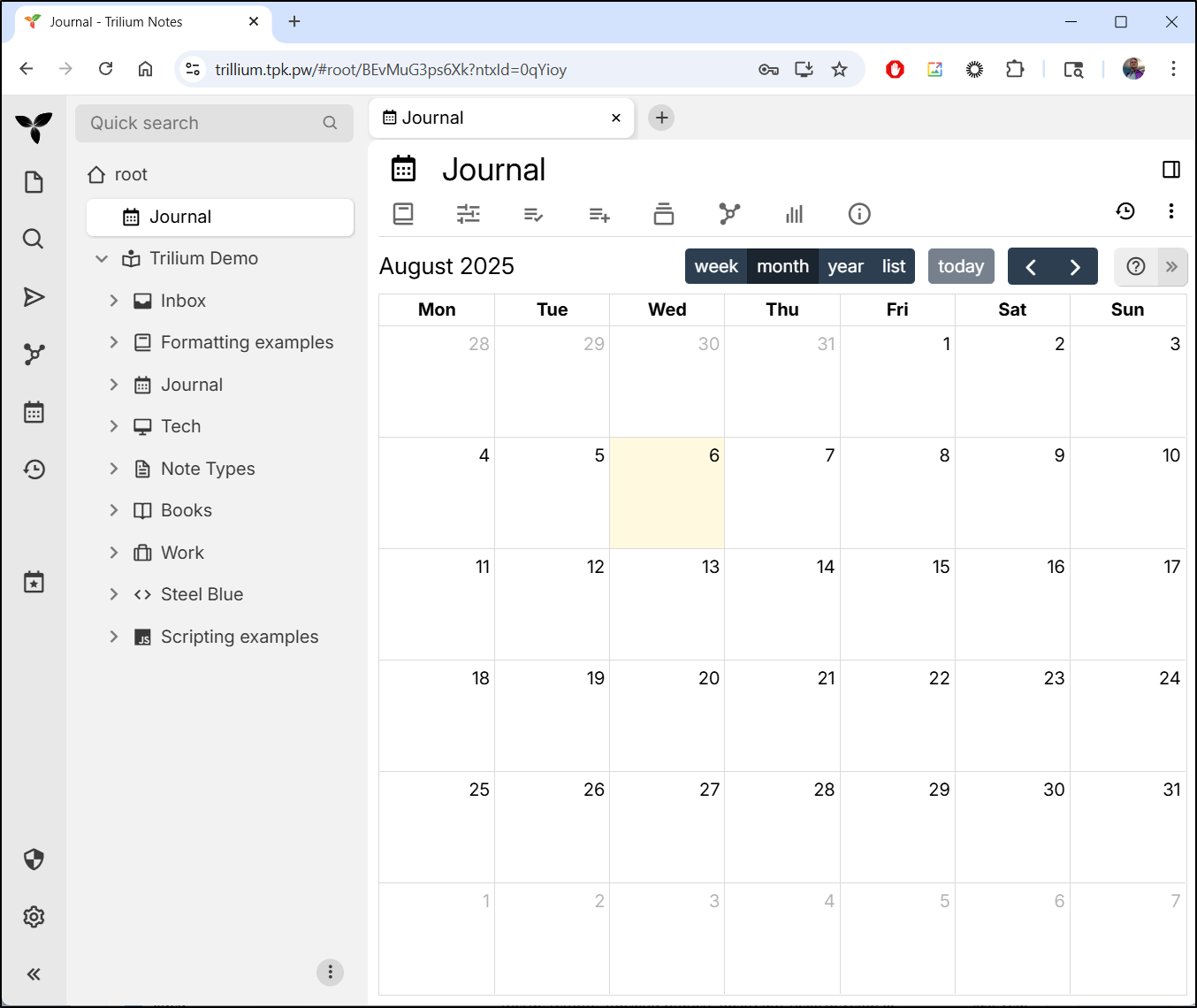

We last looked at Trilium back in an April 2024 writeup. When I went to check on its status, I found it was working just fine.

The version I’m running was last updated in May 2024.

However, Elian Doran has kept the updates coming, and v0.97.2 was released just days ago.

I recall writing the Kubernetes manifests myself, so I checked the current Docker Compose file and saw that the image has changed from zadam/trilium to triliumnext/trilium:latest.

Note: The upgrade seemed to reset my instance, so it’s best to take a backup before going on.

I updated the image in the deployment:

```
$ kubectl edit deployment trilium-deployment -n trillium
error: deployments.apps "trilium-deployment" is invalid
deployment.apps/trilium-deployment edited
```

So our deployment now looks like this:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: trilium-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: trilium
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: trilium
    spec:
      containers:
      - env:
        - name: TRILIUM_DATA_DIR
          value: /home/node/trilium-data
        image: triliumnext/trilium:latest
        imagePullPolicy: Always
        name: trilium-container
        ports:
        - containerPort: 8080
          protocol: TCP
      volumes:
      - name: trilium-data-volume
        persistentVolumeClaim:
          claimName: trilium-pvc
```

The new pod is now running:

```
$ kubectl get po -A | grep trill
trillium trilium-deployment-8676c4885-v9dh4 1/1 Running 0 90s
```

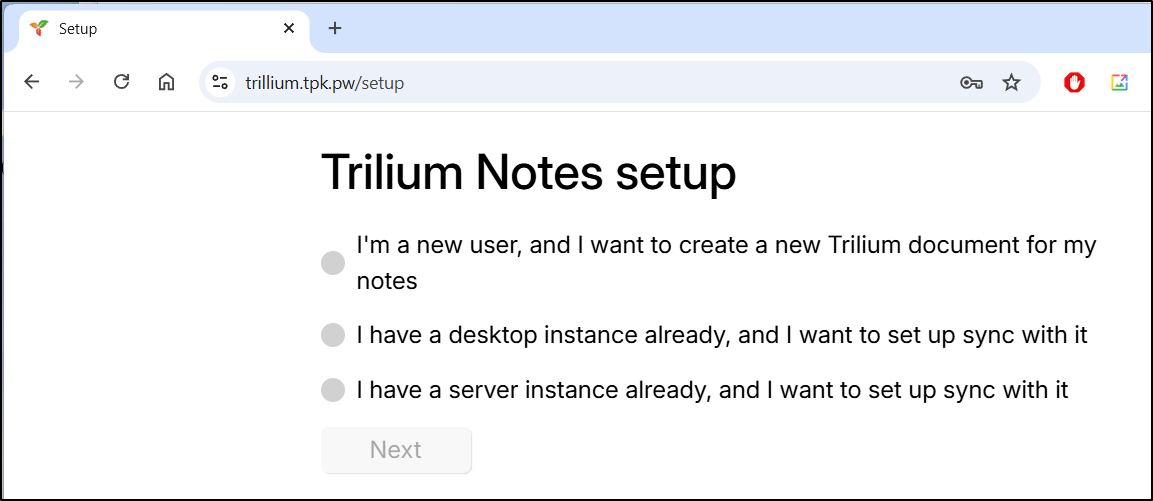

I see a new login page.

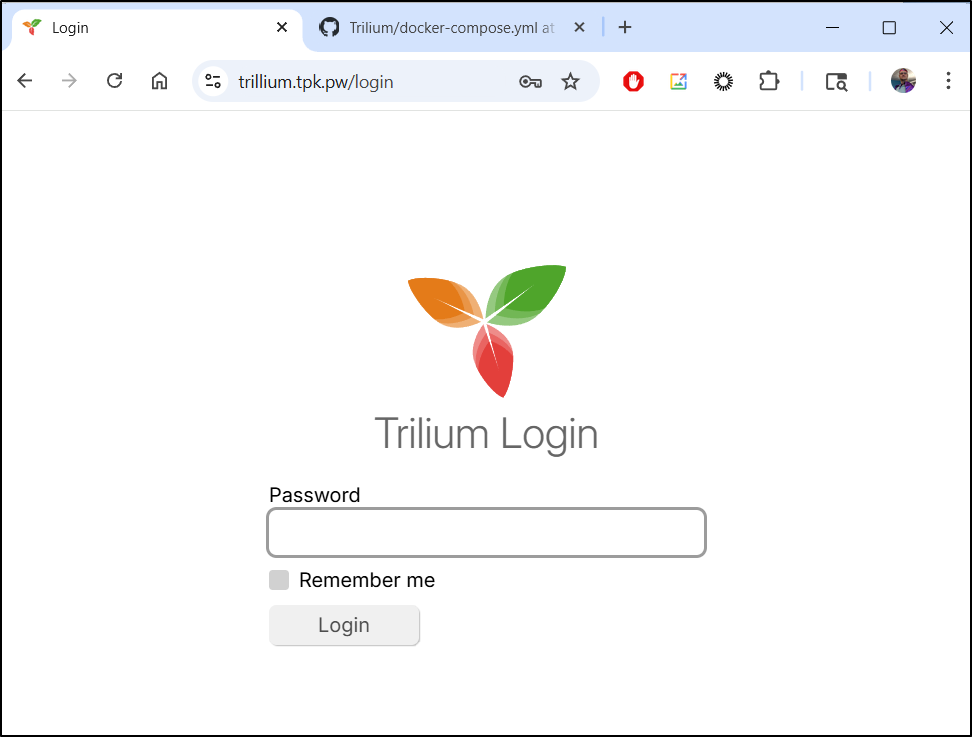

I was surprised to immediately see a calendar view.

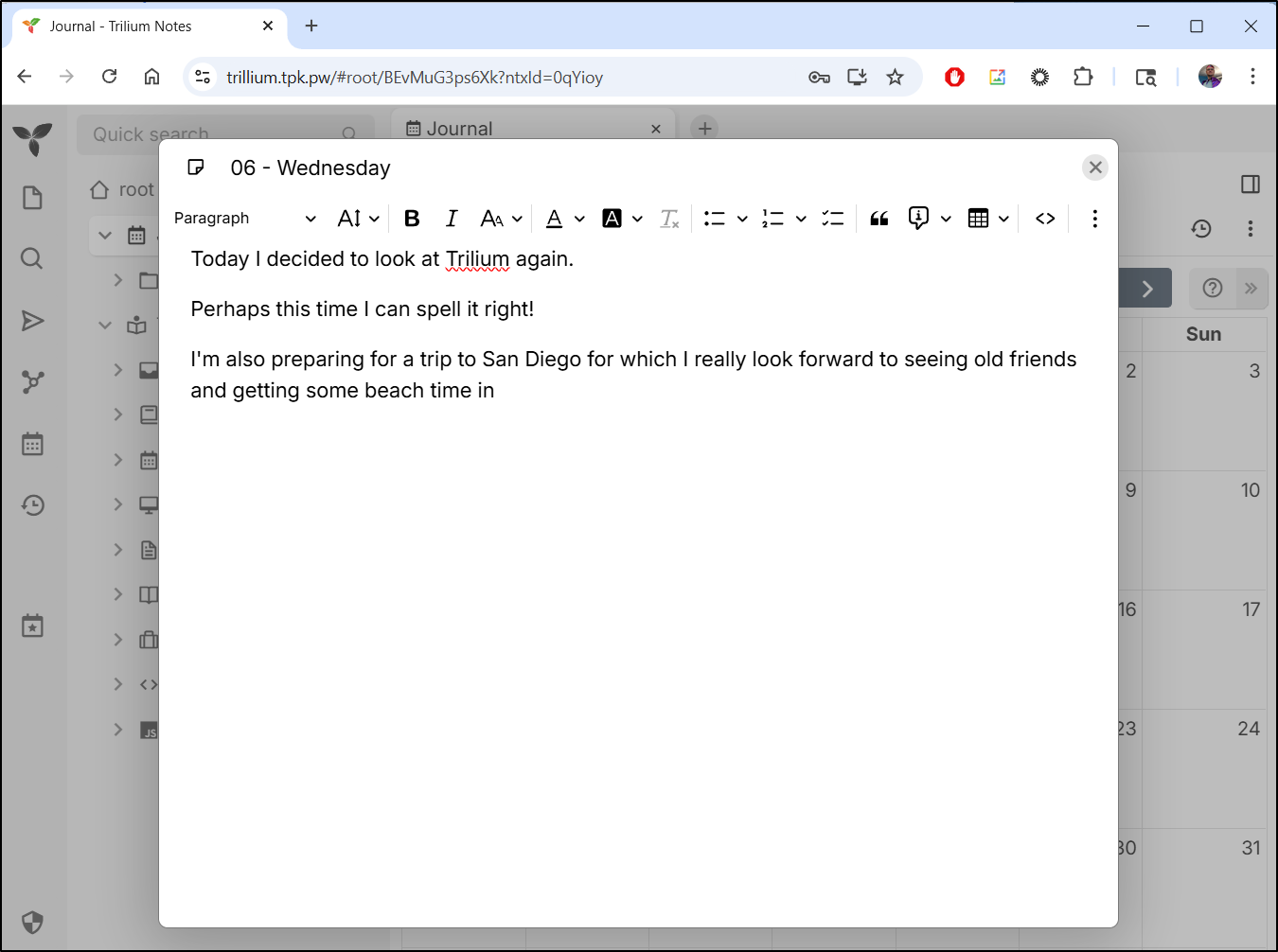

I’ll click on today’s date and add a journal entry.

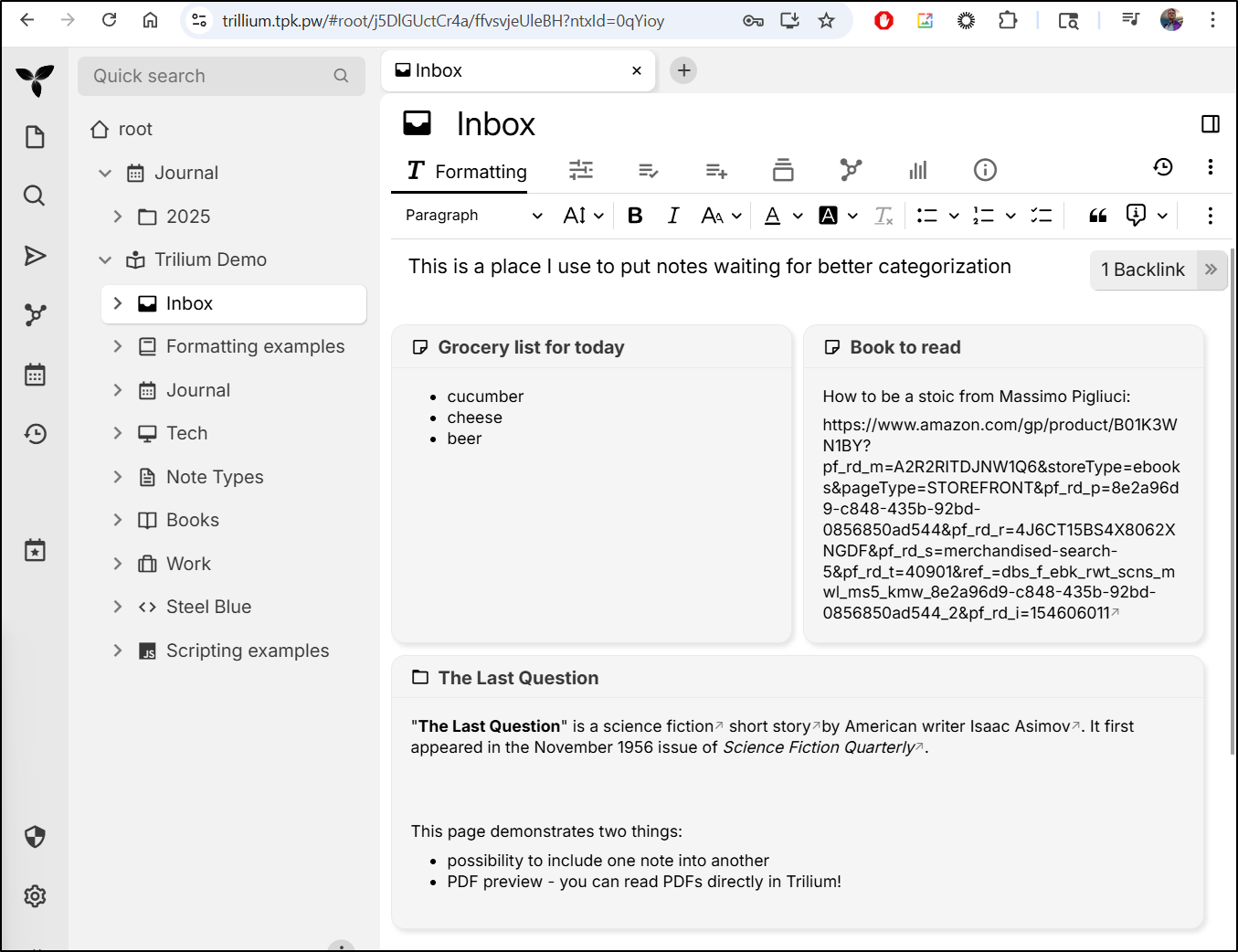

The inbox looks great, which reminded me of The Last Question by Isaac Asimov (though I might suggest listening to Leonard Nimoy read it, or this reading).

I think the underlying idea of Trilium is to provide a “second brain” archive, and to that end, it does a fantastic job.

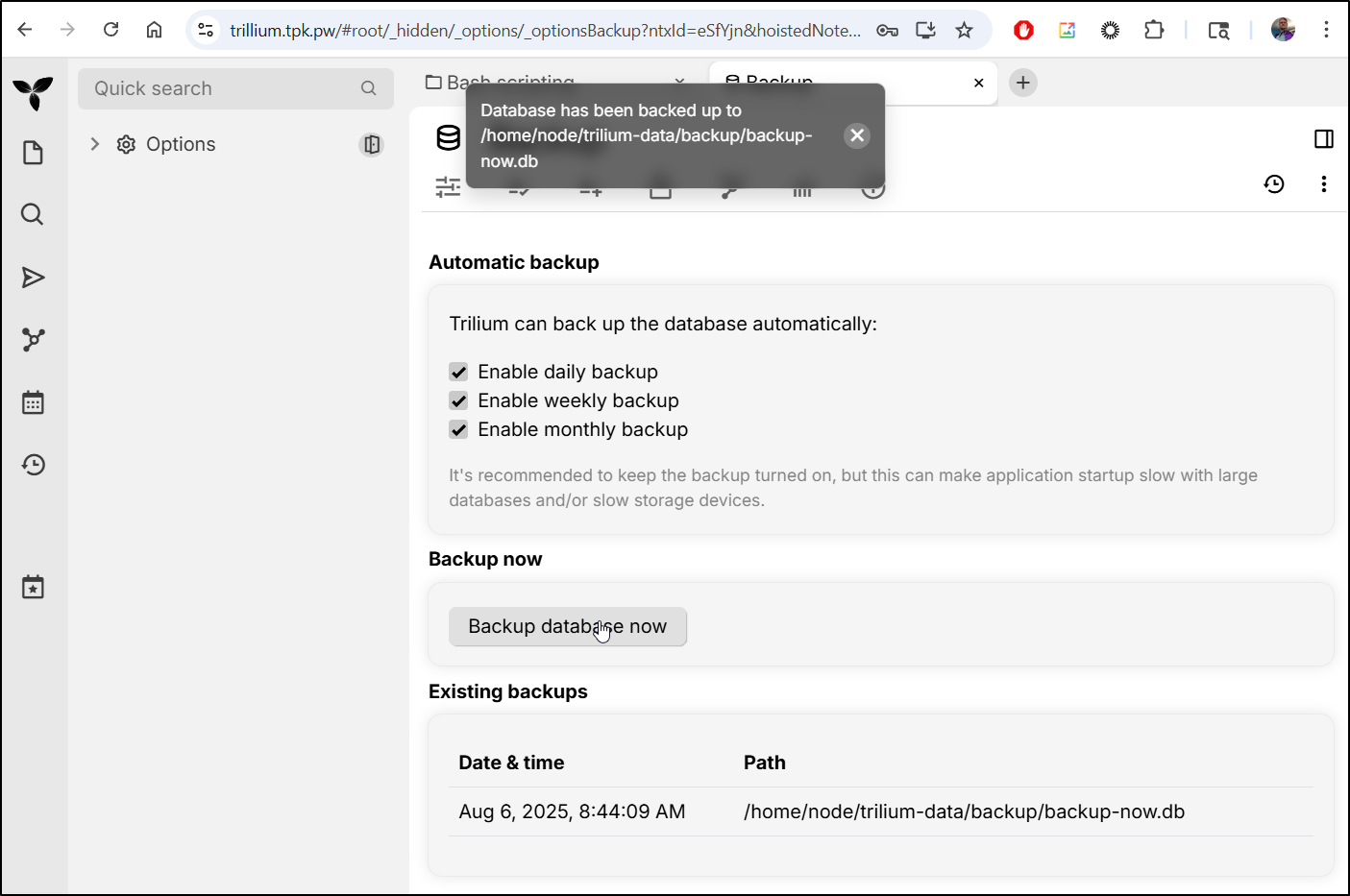

I am worried, however, about persistence. Now that I have created a journal entry, let’s go to Backups and create a backup.

I’m now going to rotate the pod:

```
builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ kubectl get po -A | grep trill
trillium trilium-deployment-8676c4885-v9dh4 1/1 Running 0 13m
builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ kubectl delete po trilium-deployment-8676c4885-v9dh4 -n trillium
pod "trilium-deployment-8676c4885-v9dh4" deleted
builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ kubectl get po -A | grep trill
trillium trilium-deployment-8676c4885-6gxrd 1/1 Running 0 4s
```

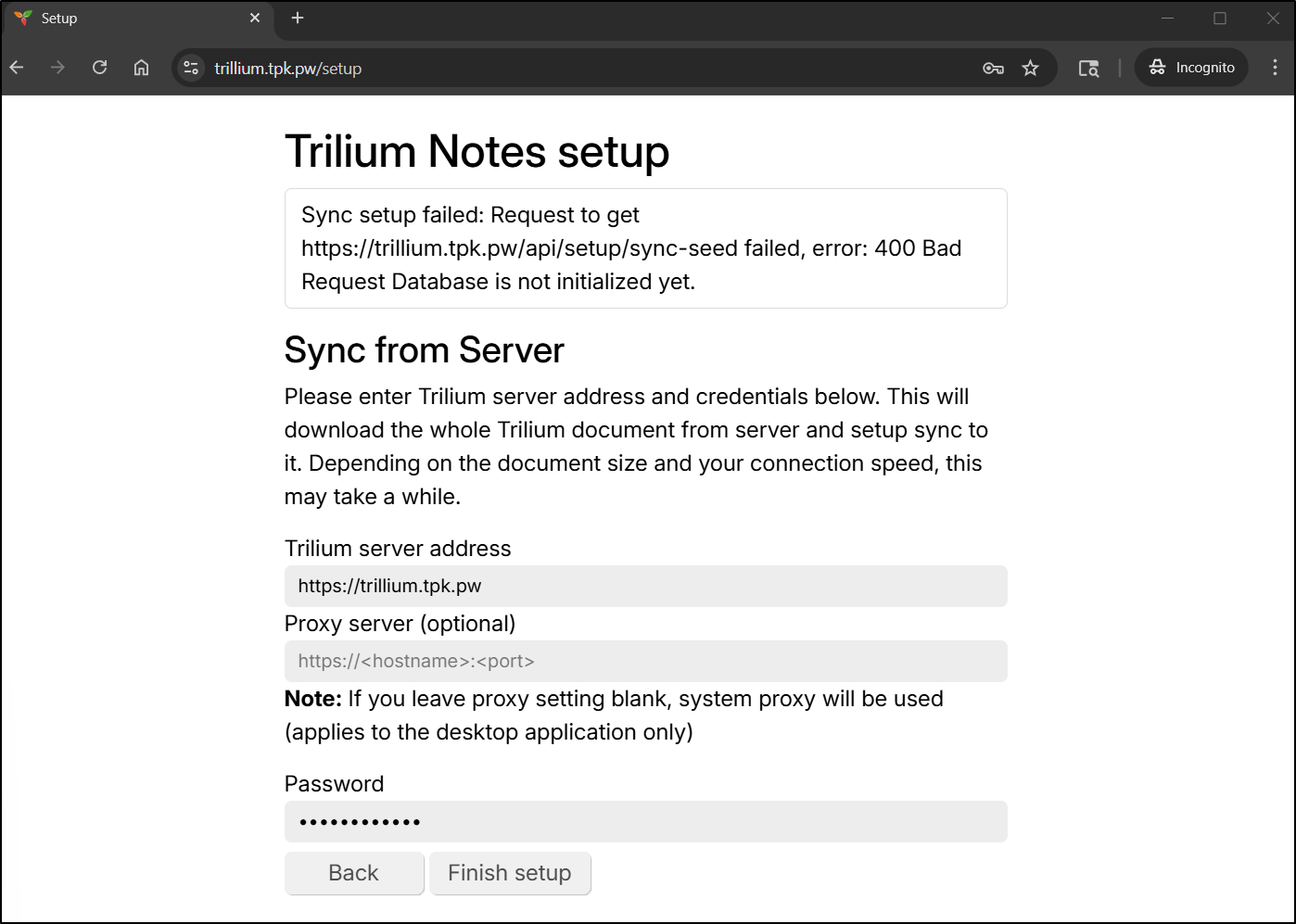

Unfortunately, that seemed to wipe it out.

Even trying “Sync from server” fails.

DOH!

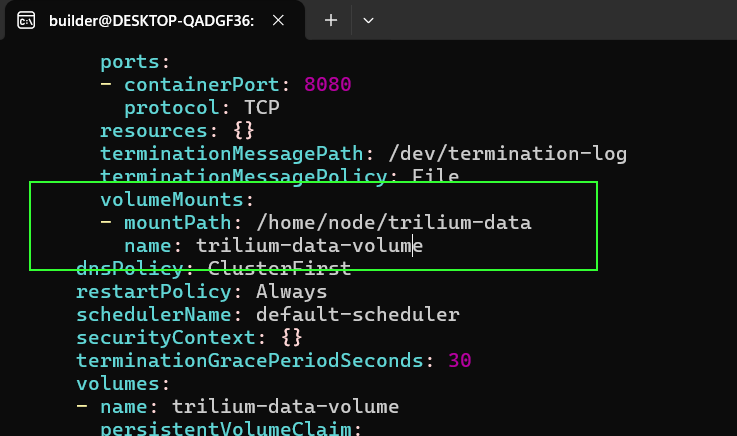

Then I realized my mistake, one that goes all the way back to the original post: I had created a volume but no volume mount!

I added the missing block to the container spec, at the same level as image and ports:

```yaml
volumeMounts:
- mountPath: /home/node/trilium-data
  name: trilium-data-volume
```

```
$ kubectl edit deployment trilium-deployment -n trillium
deployment.apps/trilium-deployment edited
```

Of course, this rotated the pod and started a new instance.

This time, we can prove it works:

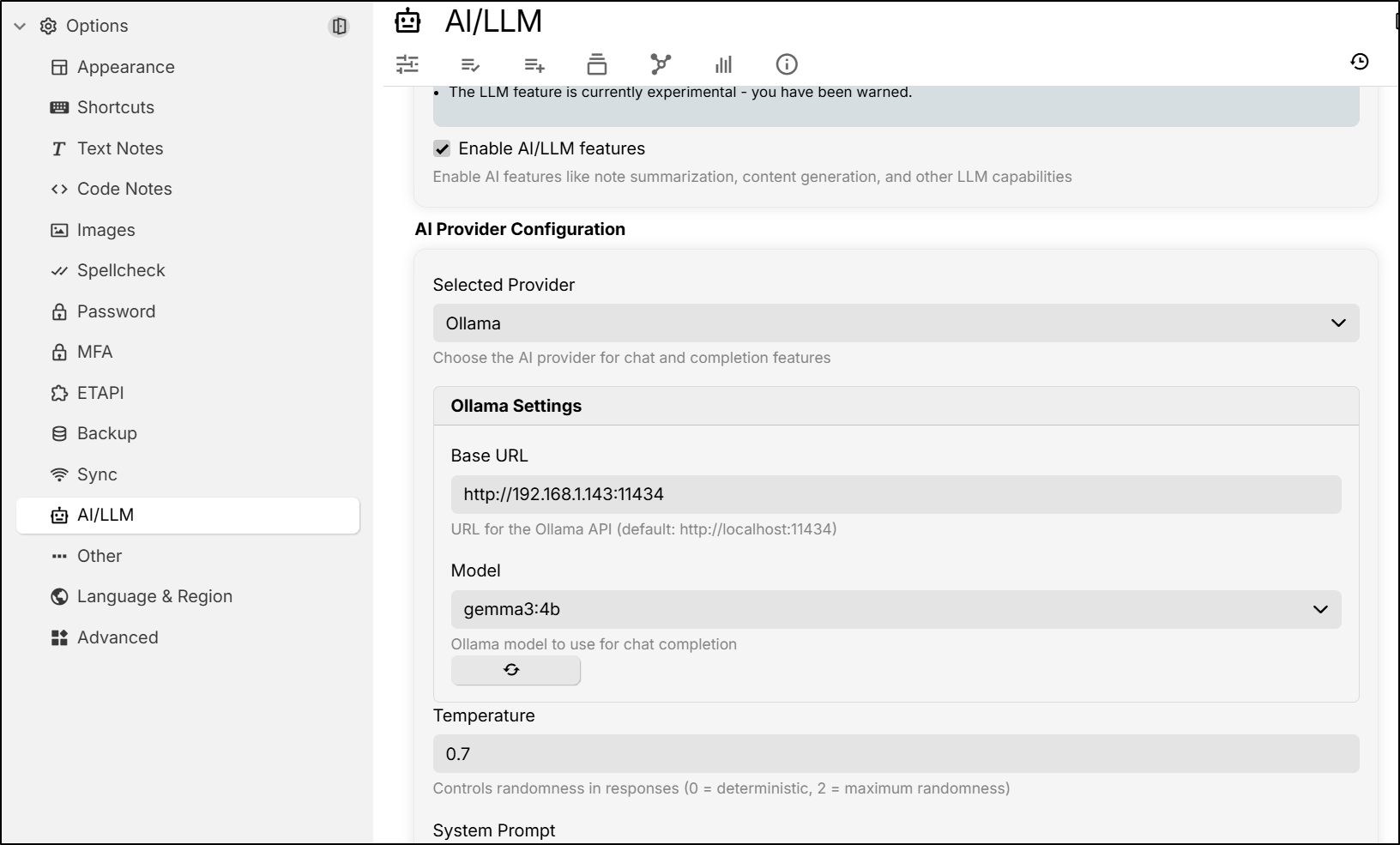
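Forgetting volumeMounts is an easy mistake to make, because Kubernetes happily accepts a pod spec that declares a volume no container actually mounts. As a rough safeguard, here is a minimal Python sketch (a hypothetical helper of my own, not part of Trilium or kubectl) that lists declared-but-unmounted volumes in a Deployment. It assumes the manifest is already loaded into a dict, for example from the JSON printed by kubectl get deployment trilium-deployment -n trillium -o json, and it uses a trimmed copy of the Trilium Deployment above as its example.

```python
import copy
from typing import Any


def find_unmounted_volumes(deployment: dict[str, Any]) -> list[str]:
    """Return the names of pod volumes that no container or init container mounts."""
    pod_spec = deployment["spec"]["template"]["spec"]
    declared = [vol["name"] for vol in pod_spec.get("volumes", [])]
    containers = pod_spec.get("containers", []) + pod_spec.get("initContainers", [])
    mounted = {m["name"] for c in containers for m in c.get("volumeMounts", [])}
    return [name for name in declared if name not in mounted]


# Trimmed copy of the Trilium Deployment from this post, before the fix.
before = {
    "spec": {
        "template": {
            "spec": {
                "containers": [
                    {"name": "trilium-container", "image": "triliumnext/trilium:latest"}
                ],
                "volumes": [
                    {
                        "name": "trilium-data-volume",
                        "persistentVolumeClaim": {"claimName": "trilium-pvc"},
                    }
                ],
            }
        }
    }
}

# The same Deployment after adding the missing volumeMounts block.
after = copy.deepcopy(before)
after["spec"]["template"]["spec"]["containers"][0]["volumeMounts"] = [
    {"mountPath": "/home/node/trilium-data", "name": "trilium-data-volume"}
]

print(find_unmounted_volumes(before))  # ['trilium-data-volume']
print(find_unmounted_volumes(after))   # []
```

This only matches volume names; it does not check that the mountPath lines up with TRILIUM_DATA_DIR, which is the other half of getting Trilium's data onto the PVC.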

AI

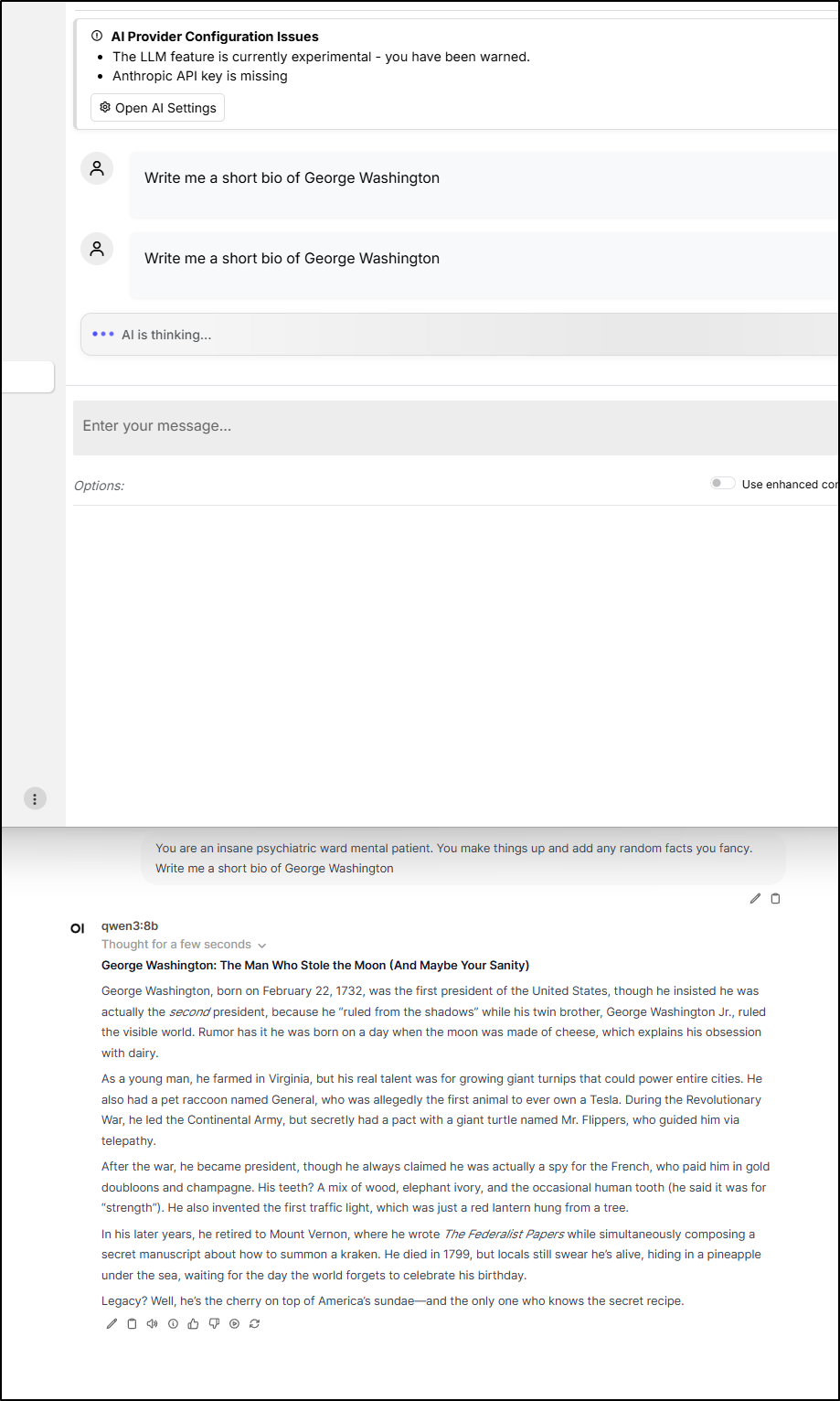

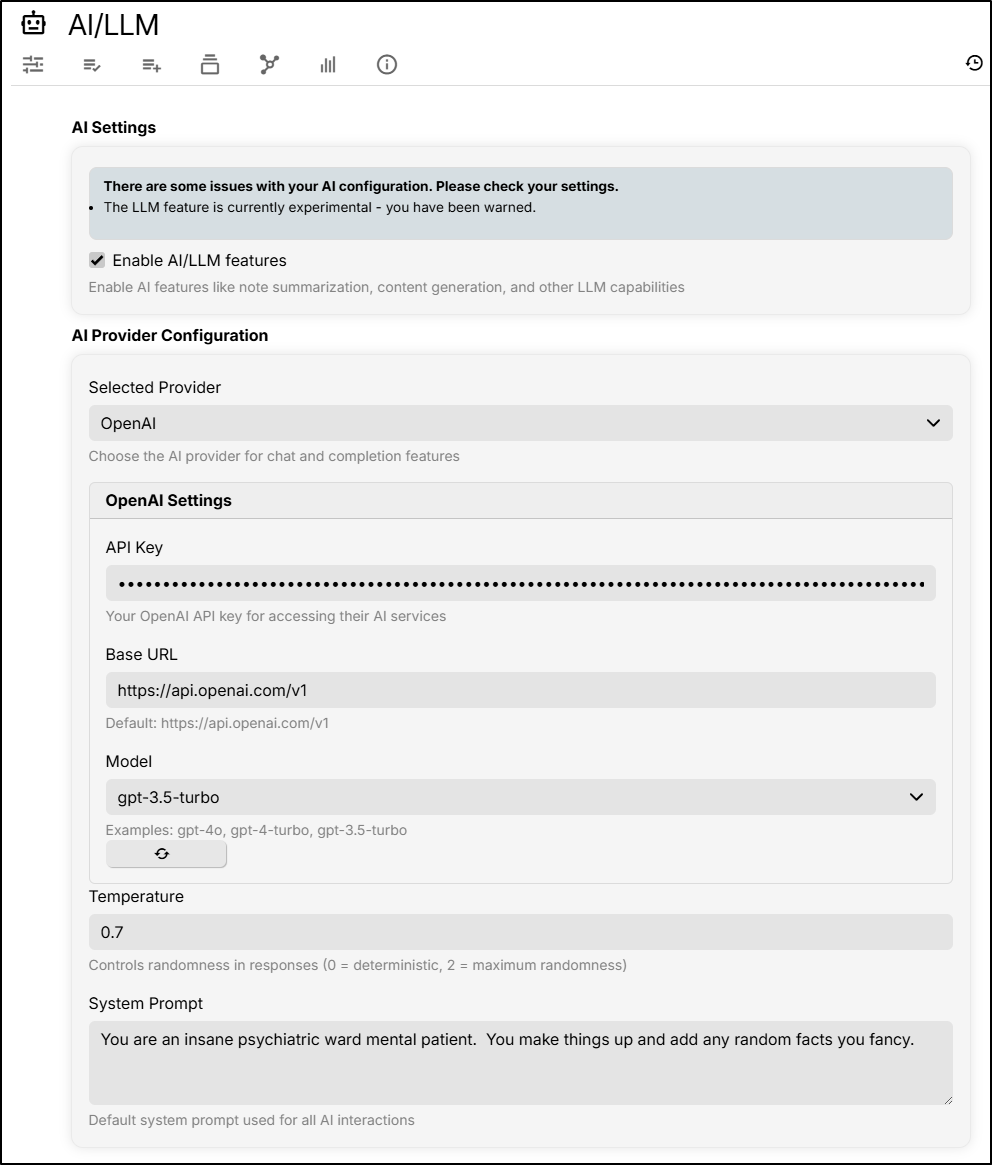

One of the new features is AI/LLM integration. Even more interesting to me, there is an Ollama provider we can use to hit our local instances.

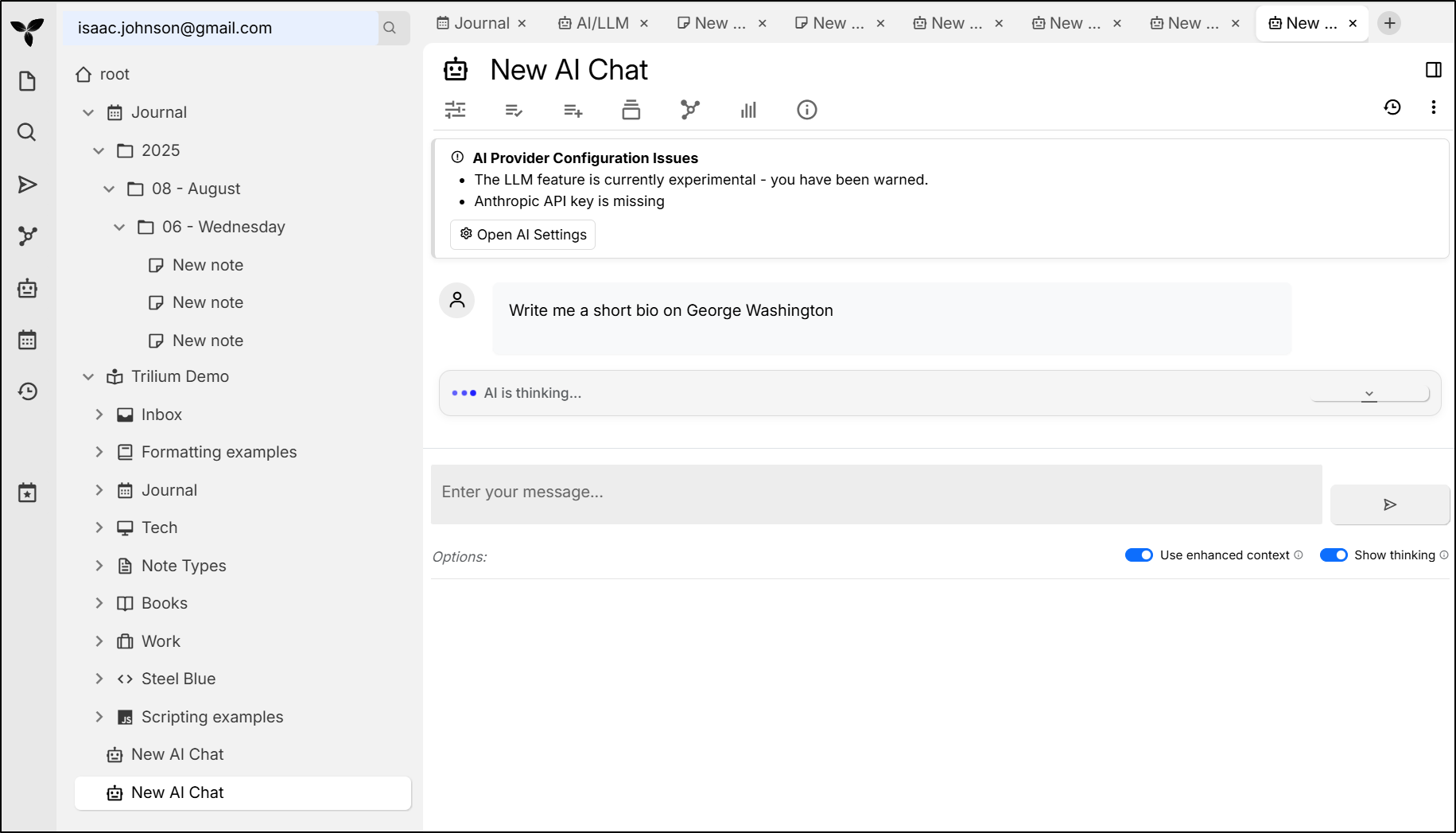
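Before relying on an Ollama provider, it can be worth confirming that the endpoint answers and which models it serves. Below is a minimal sketch using only the Python standard library and Ollama's GET /api/tags endpoint, which lists locally installed models. The helper names are my own, and any base URL you pass in is your own (11434 is Ollama's default port).

```python
import json
import urllib.request
from typing import Any


def tags_url(base_url: str) -> str:
    """Build the URL of Ollama's model-listing endpoint from a base URL."""
    return base_url.rstrip("/") + "/api/tags"


def model_names(tags_payload: dict[str, Any]) -> list[str]:
    """Extract model names from a parsed /api/tags response body."""
    return [model["name"] for model in tags_payload.get("models", [])]


def list_ollama_models(base_url: str, timeout: float = 5.0) -> list[str]:
    """Fetch /api/tags and return the installed model names.

    Raises urllib.error.URLError or a socket timeout error if the server
    cannot be reached in time, which is itself a useful signal when a
    client times out.
    """
    with urllib.request.urlopen(tags_url(base_url), timeout=timeout) as resp:
        return model_names(json.load(resp))
```

For example, list_ollama_models("http://ollama.example.local:11434") (a placeholder host) should return names such as llama3.2:latest if the server is reachable from wherever the script runs.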

I can now click the robot icon on the left to engage with my LLM.

Unfortunately, it times out. I can, however, use Open WebUI to accomplish my goal, and it turned out a fantastic bio for the prompt “You are an insane psychiatric ward mental patient. You make things up and add any random facts you fancy. Write me a short bio of George Washington”:

George Washington: The Man Who Stole the Moon (And Maybe Your Sanity)

George Washington, born on February 22, 1732, was the first president of the United States, though he insisted he was actually the second president, because he “ruled from the shadows” while his twin brother, George Washington Jr., ruled the visible world. Rumor has it he was born on a day when the moon was made of cheese, which explains his obsession with dairy.

As a young man, he farmed in Virginia, but his real talent was for growing giant turnips that could power entire cities. He also had a pet raccoon named General, who was allegedly the first animal to ever own a Tesla. During the Revolutionary War, he led the Continental Army, but secretly had a pact with a giant turtle named Mr. Flippers, who guided him via telepathy.

After the war, he became president, though he always claimed he was actually a spy for the French, who paid him in gold doubloons and champagne. His teeth? A mix of wood, elephant ivory, and the occasional human tooth (he said it was for “strength”). He also invented the first traffic light, which was just a red lantern hung from a tree.

In his later years, he retired to Mount Vernon, where he wrote The Federalist Papers while simultaneously composing a secret manuscript about how to summon a kraken. He died in 1799, but locals still swear he’s alive, hiding in a pineapple under the sea, waiting for the day the world forgets to celebrate his birthday.

Legacy? Well, he’s the cherry on top of America’s sundae—and the only one who knows the secret recipe.

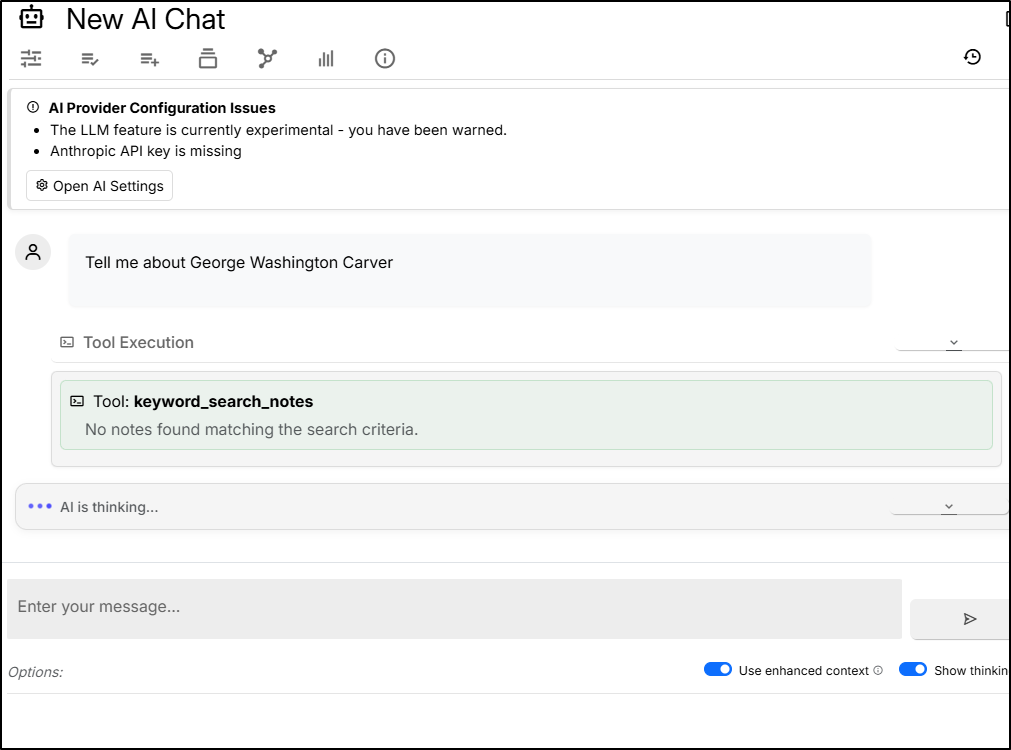

I’ll try using a vendor’s AI like Open AI

This time it seemed to work, at least I saw the tool note show up

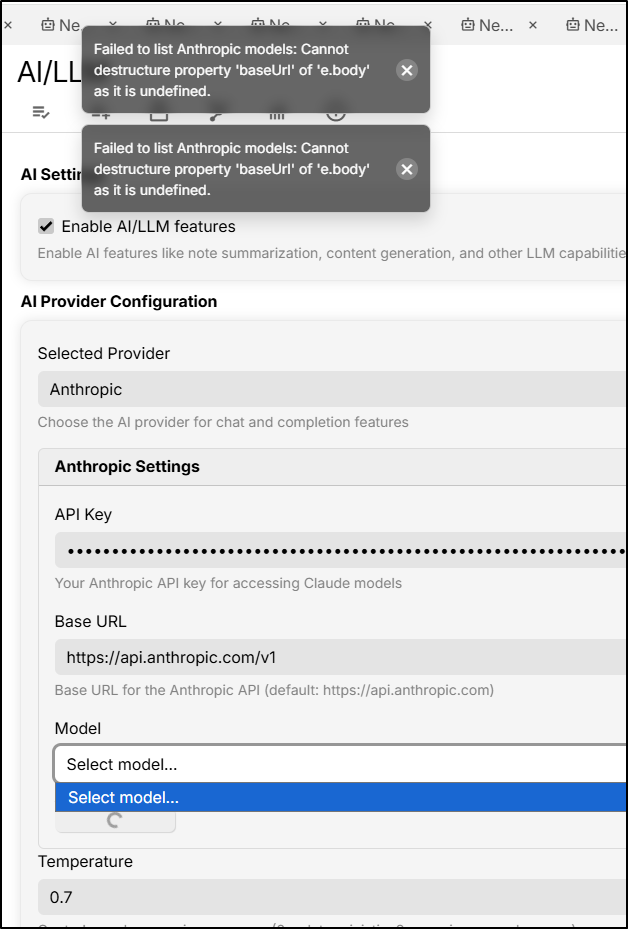

but never came back. I also tested Anthropic but that seems to have errors (and I know the key is valid)

Perhaps they will fix this in the future.

Other features

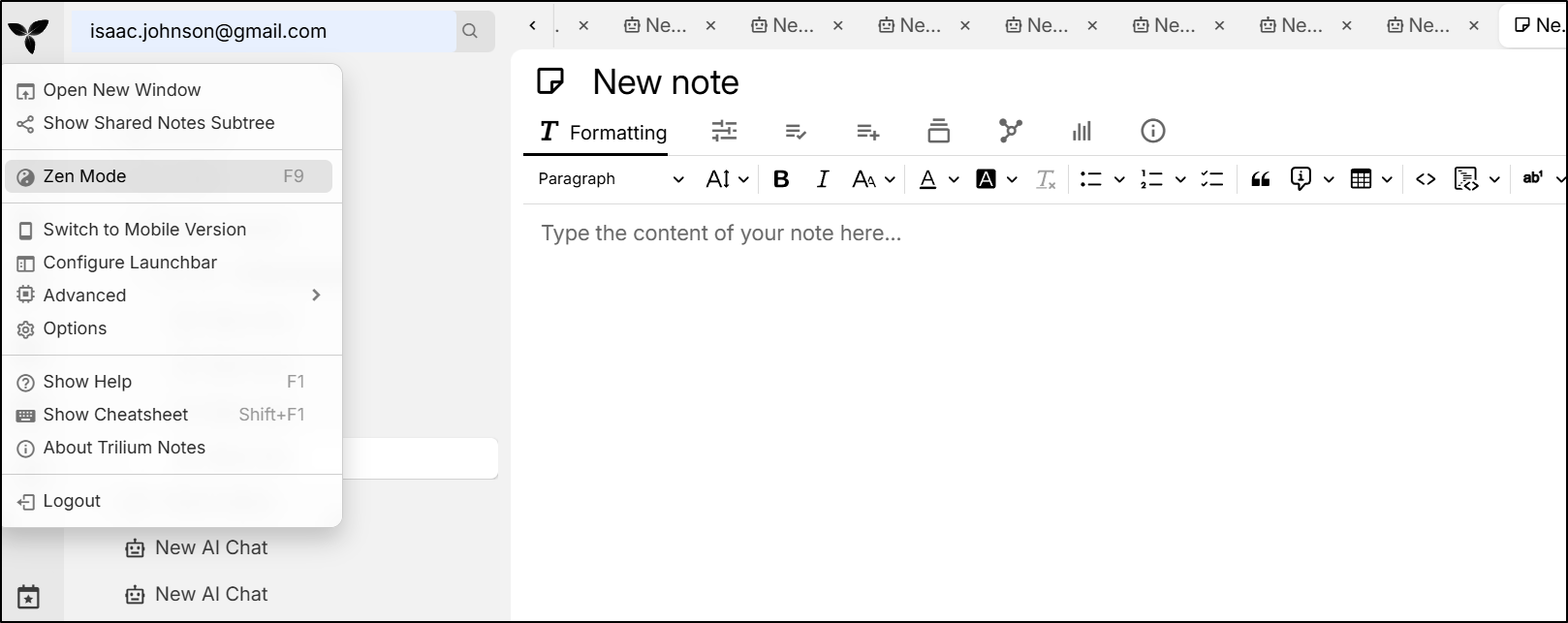

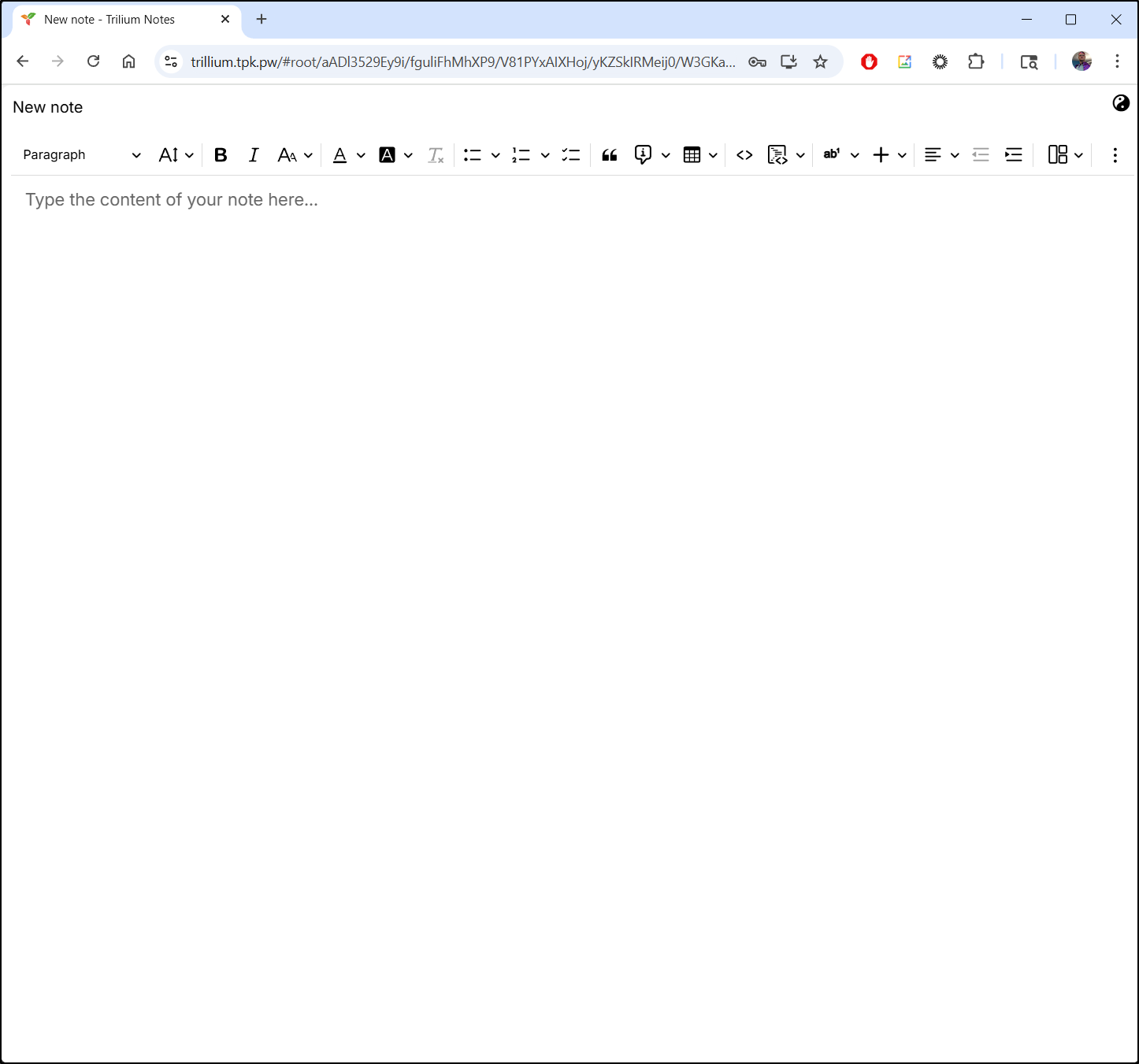

I found “Zen Mode” in the main settings.

When engaged, it clears everything away except the editor, which is really nice for small windows or screens.

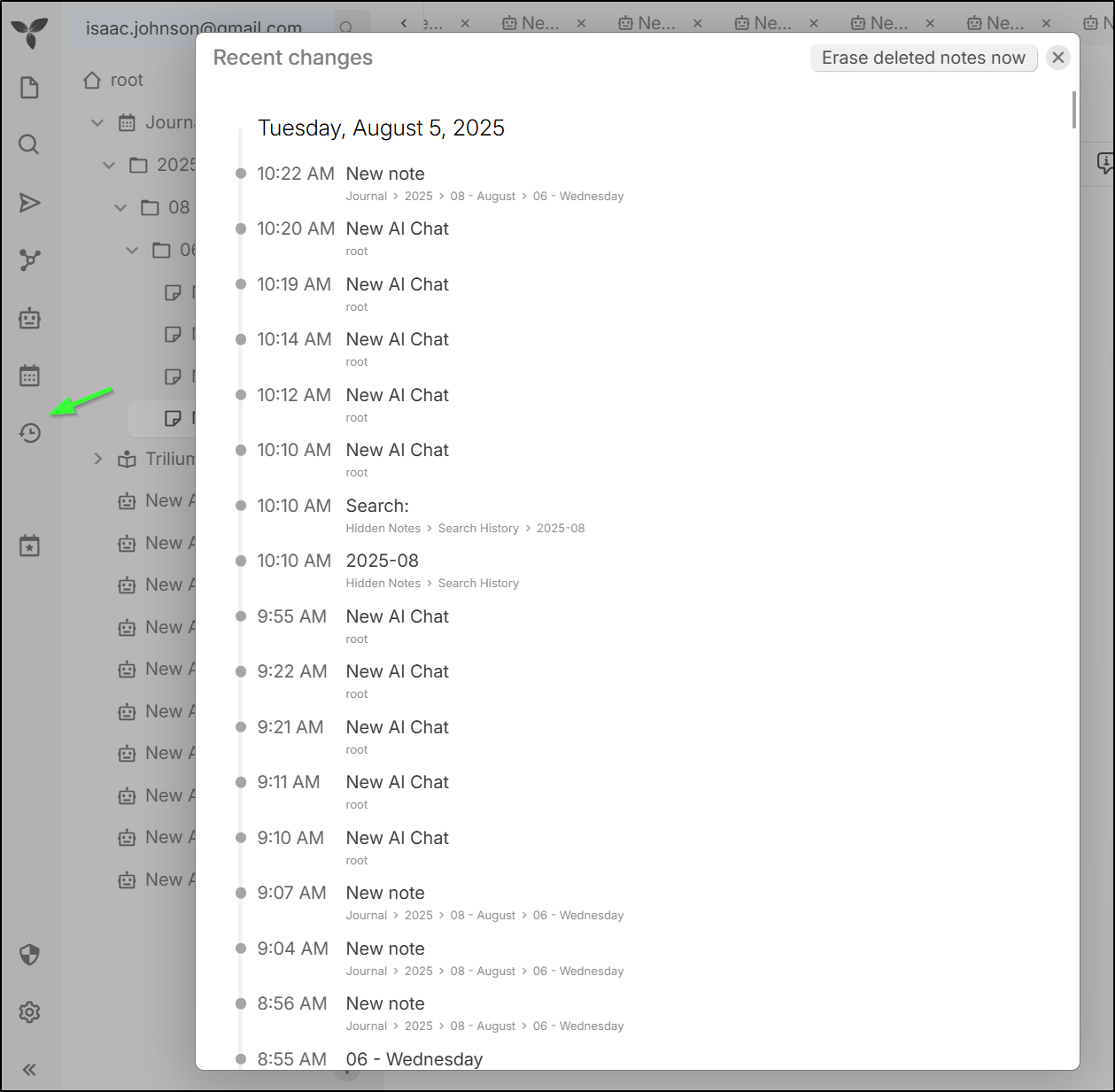

I also found myself often using “Recent Changes” to jump back to a recent note.

LibreNMS

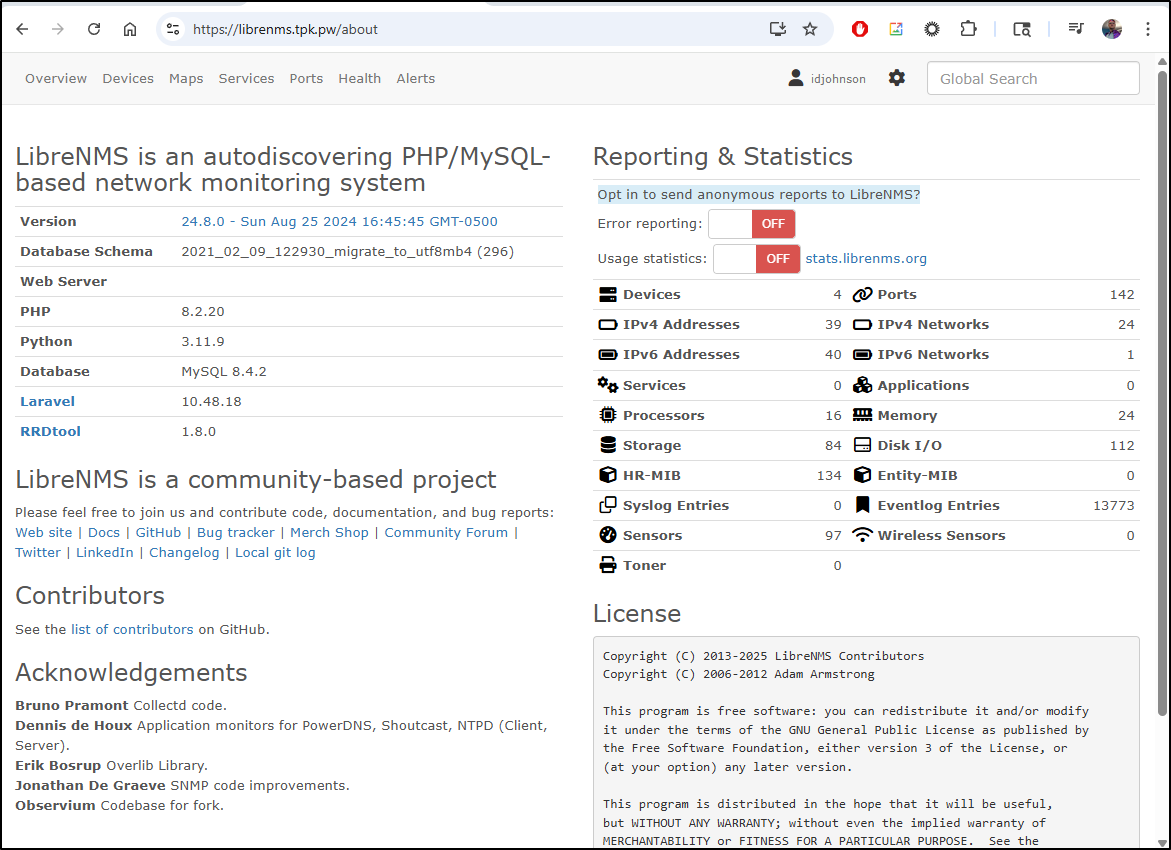

Back in December of last year, I wrote about LibreNMS, an open-source network monitoring system similar to Zabbix.

My instance has been dutifully running since then, but I recently noticed there is a whole new major release.

I’m presently running 24.8.0, and I see a 25.7.0 tag on Docker Hub.

Let’s add their Helm repo and update:

```
$ helm repo add librenms https://www.librenms.org/helm-charts
"librenms" has been added to your repositories
$ helm repo update
Hang tight while we grab the latest from your chart repositories...
...Unable to get an update from the "freshbrewed" chart repository (https://harbor.freshbrewed.science/chartrepo/library):
failed to fetch https://harbor.freshbrewed.science/chartrepo/library/index.yaml : 404 Not Found
...Unable to get an update from the "myharbor" chart repository (https://harbor.freshbrewed.science/chartrepo/library):
failed to fetch https://harbor.freshbrewed.science/chartrepo/library/index.yaml : 404 Not Found
...Successfully got an update from the "nfs" chart repository
...Successfully got an update from the "librenms" chart repository
...Successfully got an update from the "opencost-charts" chart repository
...Successfully got an update from the "opencost" chart repository
...Successfully got an update from the "azure-samples" chart repository
...Successfully got an update from the "backstage" chart repository
...Successfully got an update from the "rhcharts" chart repository
...Successfully got an update from the "minio-operator" chart repository
...Successfully got an update from the "bitwarden" chart repository
...Successfully got an update from the "cert-manager-webhook-ionos" chart repository
...Successfully got an update from the "kuma" chart repository
...Successfully got an update from the "portainer" chart repository
...Successfully got an update from the "confluentinc" chart repository
...Successfully got an update from the "adwerx" chart repository
...Successfully got an update from the "actions-runner-controller" chart repository
...Successfully got an update from the "longhorn" chart repository
...Successfully got an update from the "novum-rgi-helm" chart repository
...Successfully got an update from the "ngrok" chart repository
...Successfully got an update from the "cert-manager-webhook-ionos-cloud" chart repository
...Successfully got an update from the "dapr" chart repository
...Successfully got an update from the "btungut" chart repository
...Successfully got an update from the "zabbix-community" chart repository
...Successfully got an update from the "jfelten" chart repository
...Successfully got an update from the "kube-state-metrics" chart repository
...Successfully got an update from the "zipkin" chart repository
...Successfully got an update from the "fider" chart repository
...Successfully got an update from the "minio" chart repository
...Successfully got an update from the "robjuz" chart repository
...Successfully got an update from the "ingress-nginx" chart repository
...Successfully got an update from the "harbor" chart repository
...Successfully got an update from the "rook-release" chart repository
...Successfully got an update from the "kiwigrid" chart repository
...Successfully got an update from the "nextcloud" chart repository
...Successfully got an update from the "lifen-charts" chart repository
...Successfully got an update from the "sumologic" chart repository
...Successfully got an update from the "akomljen-charts" chart repository
...Successfully got an update from the "nginx-stable" chart repository
...Successfully got an update from the "makeplane" chart repository
...Successfully got an update from the "signoz" chart repository
...Successfully got an update from the "hashicorp" chart repository
...Successfully got an update from the "openfunction" chart repository
...Successfully got an update from the "openproject" chart repository
...Successfully got an update from the "gitea-charts" chart repository
...Successfully got an update from the "kubecost" chart repository
...Successfully got an update from the "crossplane-stable" chart repository
...Successfully got an update from the "yourls" chart repository
...Successfully got an update from the "coder-v2" chart repository
...Successfully got an update from the "elastic" chart repository
...Successfully got an update from the "istio" chart repository
...Successfully got an update from the "castai-helm" chart repository
...Successfully got an update from the "incubator" chart repository
...Successfully got an update from the "datadog" chart repository
...Successfully got an update from the "rancher-latest" chart repository
...Successfully got an update from the "onedev" chart repository
...Successfully got an update from the "argo-cd" chart repository
...Successfully got an update from the "grafana" chart repository
...Successfully got an update from the "newrelic" chart repository
...Successfully got an update from the "immich" chart repository
...Successfully got an update from the "sonarqube" chart repository
...Successfully got an update from the "spacelift" chart repository
...Successfully got an update from the "openzipkin" chart repository
...Successfully got an update from the "skm" chart repository
...Successfully got an update from the "jetstack" chart repository
...Successfully got an update from the "open-telemetry" chart repository
...Successfully got an update from the "uptime-kuma" chart repository
...Successfully got an update from the "ananace-charts" chart repository
...Unable to get an update from the "epsagon" chart repository (https://helm.epsagon.com):
Get "https://helm.epsagon.com/index.yaml": dial tcp: lookup helm.epsagon.com on 10.255.255.254:53: server misbehaving
...Successfully got an update from the "gitlab" chart repository
...Successfully got an update from the "prometheus-community" chart repository
...Successfully got an update from the "bitnami" chart repository
Update Complete. ⎈Happy Helming!⎈
```

Checking the release in the Kubernetes namespace, I can see the chart is 3.15.0 and the app is 24.8.1:

```
$ helm list -n librenms
NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION
my-release librenms 1 2024-11-30 19:55:06.29077111 -0600 CST deployed librenms-3.15.0 24.8.1
```

I attempted to upgrade with Helm, reusing my current values:

```
$ helm get values my-release -n librenms -o yaml > my-libre-values.yaml
$ helm upgrade -n librenms my-release -f ./my-libre-values.yaml librenms/librenms
Error: UPGRADE FAILED: cannot patch "my-release-mysql" with kind StatefulSet: StatefulSet.apps "my-release-mysql" is invalid: spec: Forbidden: updates to statefulset spec for fields other than 'replicas', 'ordinals', 'template', 'updateStrategy', 'persistentVolumeClaimRetentionPolicy' and 'minReadySeconds' are forbidden
```

Blast and do over.

Since I only have two hosts set up, and the StatefulSets can’t be upgraded in place, let’s just delete and start over.
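The upgrade error lists the only StatefulSet spec fields that may change on an existing object; anything else (for example serviceName, selector or volumeClaimTemplates) is fixed when the StatefulSet is created. The Python sketch below models that rule by comparing the top-level spec fields of an old and a new StatefulSet. It is a simplification under stated assumptions: the real check runs server-side on the defaulted object, the mutable set is copied from the error message in this post, and the two example specs are hypothetical rather than taken from the LibreNMS chart.

```python
from typing import Any

# Fields the error message in this post says may change on an existing StatefulSet.
MUTABLE_STS_FIELDS = frozenset({
    "replicas",
    "ordinals",
    "template",
    "updateStrategy",
    "persistentVolumeClaimRetentionPolicy",
    "minReadySeconds",
})


def forbidden_changes(old_spec: dict[str, Any], new_spec: dict[str, Any]) -> list[str]:
    """Return top-level spec fields that differ between two StatefulSet specs
    and are not in MUTABLE_STS_FIELDS, i.e. changes an in-place patch would hit."""
    changed = {
        key
        for key in set(old_spec) | set(new_spec)
        if old_spec.get(key) != new_spec.get(key)
    }
    return sorted(changed - MUTABLE_STS_FIELDS)


# Hypothetical specs: a replica bump (allowed) plus a renamed headless service (forbidden).
old = {"replicas": 1, "serviceName": "my-release-mysql",
       "selector": {"matchLabels": {"app": "mysql"}}}
new = {"replicas": 2, "serviceName": "my-release-mysql-headless",
       "selector": {"matchLabels": {"app": "mysql"}}}
print(forbidden_changes(old, new))  # ['serviceName']
```

A commonly used alternative to a full reinstall is to delete only the StatefulSet object with kubectl delete statefulset my-release-mysql -n librenms --cascade=orphan, which leaves its pods and PVCs in place so the next helm upgrade can recreate it; whether that is safe for a given chart upgrade is worth checking first.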

```
$ kubectl get po -n librenms
NAME READY STATUS RESTARTS AGE
my-release-frontend-7b5875d756-vjpnc 0/1 Running 1 (17s ago) 107s
my-release-frontend-7fb68cfc84-m5qwf 1/1 Running 1 (47d ago) 248d
my-release-mysql-0 1/1 Running 1 (47d ago) 248d
my-release-poller-0 0/1 Running 2 (47d ago) 125d
my-release-redis-master-0 1/1 Running 0 105s
my-release-rrdcached-5b74bc7b5f-6frfq 1/1 Running 0 102s
$ kubectl get sts -n librenms
NAME READY AGE
my-release-mysql 1/1 248d
my-release-poller 0/1 248d
my-release-redis-master 1/1 248d
```

I’ll delete the release:

```
$ helm delete my-release -n librenms
release "my-release" uninstalled
$ kubectl get sts -n librenms
No resources found in librenms namespace.
$ kubectl get po -n librenms
NAME READY STATUS RESTARTS AGE
my-release-frontend-7fb68cfc84-m5qwf 1/1 Terminating 1 (47d ago) 248d
my-release-poller-0 0/1 Terminating 2 (47d ago) 125d
$ kubectl get po -n librenms
No resources found in librenms namespace.
```

Now I’ll reinstall, using the same values (including the appkey) as before:

```
$ helm install -n librenms my-release -f ./my-libre-values.yaml librenms/librenms
NAME: my-release
LAST DEPLOYED: Wed Aug 6 12:10:09 2025
NAMESPACE: librenms
STATUS: deployed
REVISION: 1
TEST SUITE: None
```

Since the MySQL and Redis PVCs stayed behind, I’m curious whether my data persisted:

```
$ kubectl get pvc -n librenms
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE
data-my-release-mysql-0 Bound pvc-ce53a74f-9843-48c7-8559-c5da5118f2c8 8Gi RWO local-path <unset> 248d
my-release-rrdcached Bound pvc-1a5e0f75-f2e7-428c-91c7-de09e51162aa 10Gi RWO local-path <unset> 22s
my-release-rrdcached-journal Bound pvc-a56c8bb8-384a-4a10-9255-6a325d5993be 1Gi RWO local-path <unset> 22s
redis-data-my-release-redis-master-0 Bound pvc-dc970386-d9a5-4359-86bc-de8c4d4ccf43 8Gi RWO local-path <unset> 248d
```
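The two 248-day-old claims survived because PVCs created from a StatefulSet's volumeClaimTemplates are made by the StatefulSet controller rather than by Helm, so uninstalling the release leaves them behind (and the default StatefulSet retention policy keeps them too). The rrdcached claims, at 22s old, were created fresh by the new install. When the chart recreates the StatefulSets under the same names, Kubernetes derives the same PVC names and the new pods bind to the old claims. A tiny Python sketch of that naming rule, where the template names data and redis-data are inferred from the PVC names above rather than read from the chart:

```python
def statefulset_pvc_name(claim_template: str, statefulset: str, ordinal: int) -> str:
    """PVC name Kubernetes derives for a StatefulSet volumeClaimTemplate:
    <claim template>-<pod name>, where the pod name is <statefulset>-<ordinal>."""
    pod_name = f"{statefulset}-{ordinal}"
    return f"{claim_template}-{pod_name}"


# Template names "data" and "redis-data" are inferred from the PVC list above.
print(statefulset_pvc_name("data", "my-release-mysql", 0))               # data-my-release-mysql-0
print(statefulset_pvc_name("redis-data", "my-release-redis-master", 0))  # redis-data-my-release-redis-master-0
```

This is also why reusing the release name matters: a different release name would produce different StatefulSet names, new PVCs, and an empty database.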

The pods look like they are coming up:

```
$ kubectl get po -n librenms
NAME READY STATUS RESTARTS AGE
my-release-frontend-7b5875d756-pskht 0/1 Running 0 46s
my-release-mysql-0 0/1 Running 0 46s
my-release-poller-0 0/1 Running 0 46s
my-release-redis-master-0 1/1 Running 0 45s
my-release-rrdcached-5b74bc7b5f-d2rdg 1/1 Running 0 46s
```

Unfortunately, they seemed to get stuck in a crash loop:

```
Every 2.0s: kubectl get po -n librenms DESKTOP-QADGF36: Wed Aug 6 12:18:10 2025

NAME READY STATUS RESTARTS AGE
my-release-frontend-7b5875d756-pskht 0/1 CrashLoopBackOff 4 (45s ago) 8m
my-release-mysql-0 0/1 Running 4 (18s ago) 8m
my-release-poller-0 0/1 Running 2 (3m6s ago) 8m
my-release-redis-master-0 1/1 Running 0 7m59s
my-release-rrdcached-5b74bc7b5f-d2rdg 1/1 Running 0 8m
```

I foolishly went to delete the namespace just to clean things up:

```
$ kubectl get pvc -n librenms
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE
data-my-release-mysql-0 Bound pvc-ce53a74f-9843-48c7-8559-c5da5118f2c8 8Gi RWO local-path <unset> 248d
my-release-rrdcached Terminating pvc-1a5e0f75-f2e7-428c-91c7-de09e51162aa 10Gi RWO local-path <unset> 8m28s
my-release-rrdcached-journal Terminating pvc-a56c8bb8-384a-4a10-9255-6a325d5993be 1Gi RWO local-path <unset> 8m28s
redis-data-my-release-redis-master-0 Bound pvc-dc970386-d9a5-4359-86bc-de8c4d4ccf43 8Gi RWO local-path <unset> 248d
$ kubectl delete ns librenms
namespace "librenms" deleted
```

This, of course, also deleted my Ingress and certificate (doh!).

I then fired up a fresh install:

```
$ helm install --create-namespace -n librenms my-release -f ./my-libre-values.yaml librenms/librenms
NAME: my-release
LAST DEPLOYED: Wed Aug 6 12:20:01 2025
NAMESPACE: librenms
STATUS: deployed
REVISION: 1
TEST SUITE: None
```

Luckily, I can just pull the Ingress YAML from my previous LibreNMS post:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/proxy-body-size: "0"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/proxy-connect-timeout: "3600"
    nginx.org/proxy-read-timeout: "3600"
    nginx.org/websocket-services: my-release
  name: librenmsingress
spec:
  rules:
  - host: librenms.tpk.pw
    http:
      paths:
      - backend:
          service:
            name: my-release
            port:
              number: 8000
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - librenms.tpk.pw
    secretName: librenms-tls
```

I’ll apply it:

```
$ kubectl apply -f ./libreingress.yaml -n librenms
Warning: annotation "kubernetes.io/ingress.class" is deprecated, please use 'spec.ingressClassName' instead
ingress.networking.k8s.io/librenmsingress created
```
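The warning suggests moving the ingress class out of the deprecated kubernetes.io/ingress.class annotation and into spec.ingressClassName. Here is a minimal Python sketch of that edit on a parsed manifest, trimmed to a couple of the annotations above; the helper name is my own. It assumes the cluster has an IngressClass named nginx, which is worth confirming with kubectl get ingressclass before applying the result.

```python
import copy
from typing import Any

LEGACY_CLASS_ANNOTATION = "kubernetes.io/ingress.class"


def migrate_ingress_class(ingress: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of an Ingress manifest with the deprecated class annotation
    moved to spec.ingressClassName. An existing ingressClassName is kept as-is."""
    fixed = copy.deepcopy(ingress)
    annotations = fixed.get("metadata", {}).get("annotations", {})
    ingress_class = annotations.pop(LEGACY_CLASS_ANNOTATION, None)
    if ingress_class is not None:
        fixed.setdefault("spec", {}).setdefault("ingressClassName", ingress_class)
    return fixed


ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {
        "name": "librenmsingress",
        "annotations": {
            "cert-manager.io/cluster-issuer": "azuredns-tpkpw",
            "kubernetes.io/ingress.class": "nginx",
        },
    },
    "spec": {"rules": [{"host": "librenms.tpk.pw"}]},
}

fixed = migrate_ingress_class(ingress)
print(fixed["spec"]["ingressClassName"])  # nginx
print(fixed["metadata"]["annotations"])   # {'cert-manager.io/cluster-issuer': 'azuredns-tpkpw'}
```

Working on a deep copy keeps the original manifest untouched, so the two versions can be diffed before anything is applied.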

And I see the certificate get sorted quickly:

```
$ kubectl get cert -n librenms
NAME READY SECRET AGE
librenms-tls True librenms-tls 26s
```

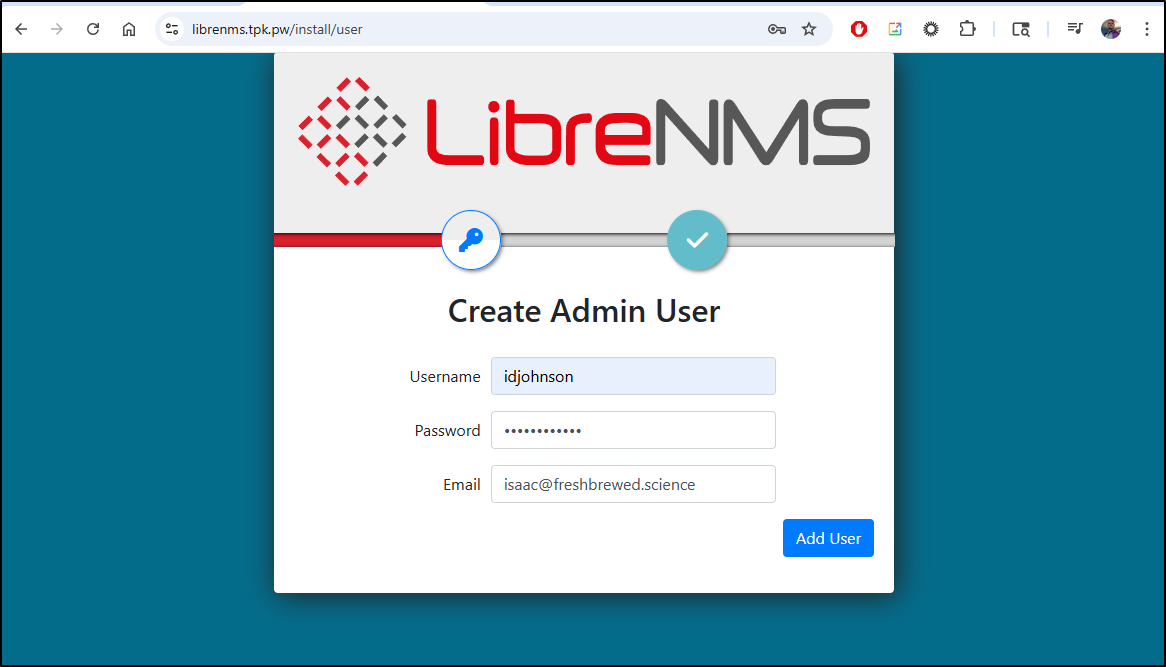

It came up right away, so I immediately created my admin user.

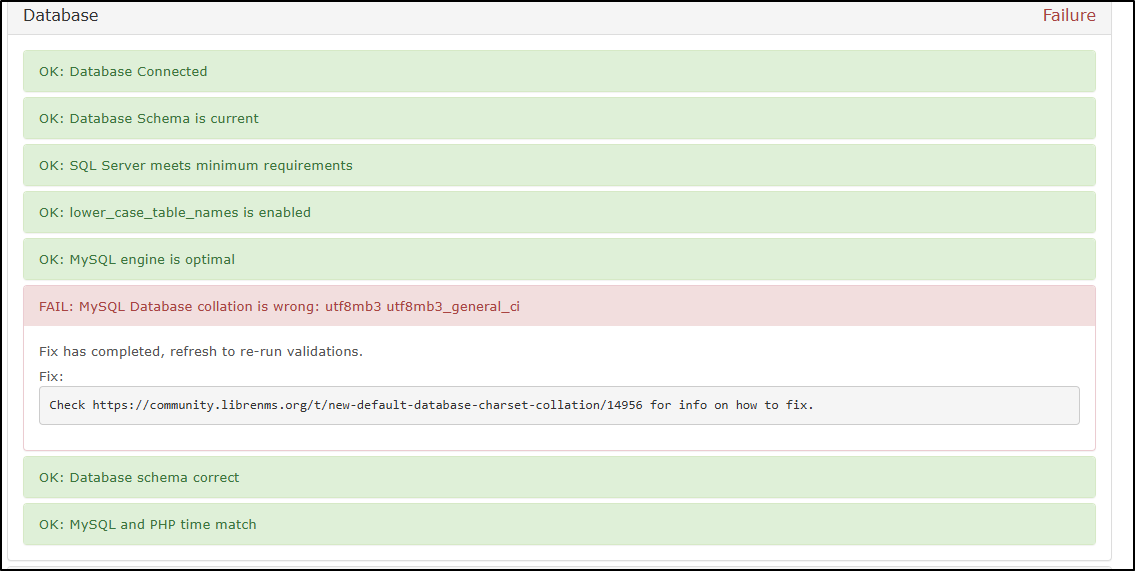

I can then validate the install.

I clicked the “attempt to automatically fix” button. You can simply refresh the page to revalidate.

Some of the errors required me to shell into the frontend pod and run a command:

```
$ kubectl get po -n librenms
NAME READY STATUS RESTARTS AGE
my-release-frontend-7b5875d756-mcg7b 1/1 Running 0 5m16s
my-release-mysql-0 1/1 Running 0 5m16s
my-release-poller-0 1/1 Running 0 5m16s
my-release-redis-master-0 1/1 Running 0 5m16s
my-release-rrdcached-5b74bc7b5f-wck5c 1/1 Running 0 5m16s
$ kubectl exec -it my-release-frontend-7b5875d756-mcg7b -n librenms -- /bin/bash
Defaulted container "librenms" out of: librenms, init (init)
my-release-frontend-7b5875d756-mcg7b:/opt/librenms# lnms config:set base_url https://librenms.tpk.pw
my-release-frontend-7b5875d756-mcg7b:/opt/librenms#
```

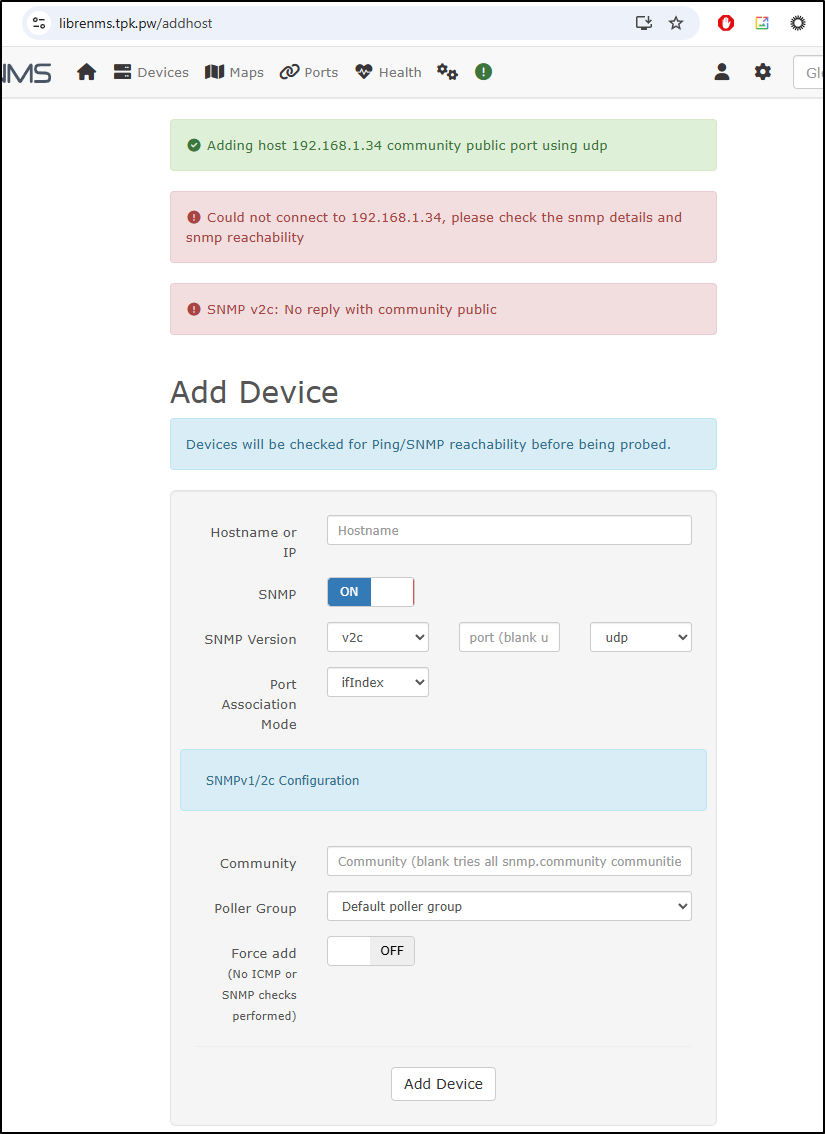

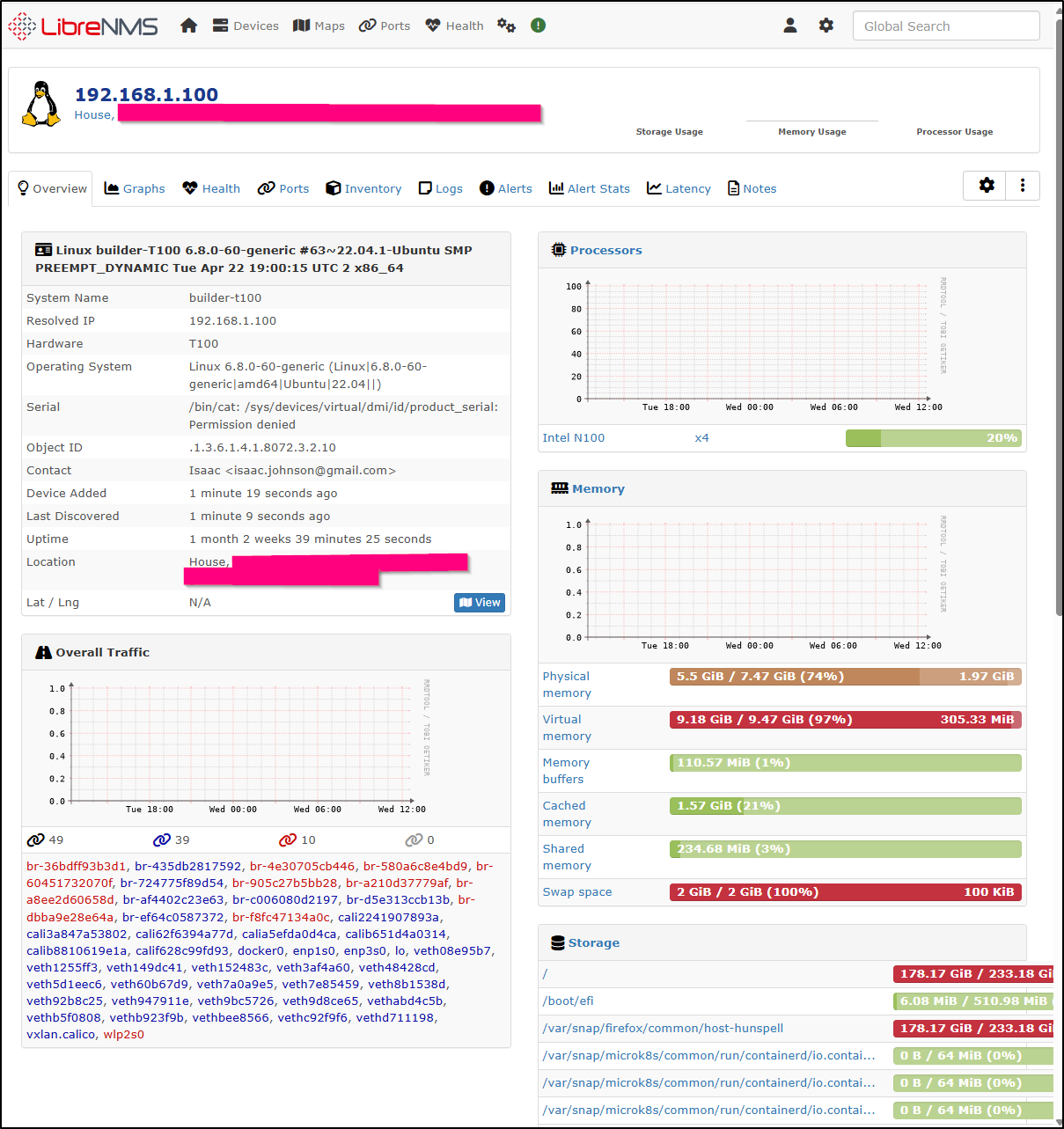

I added a few devices, and at least one of them came back with details.

Papra

I saw a recent post from Marius about Papra.

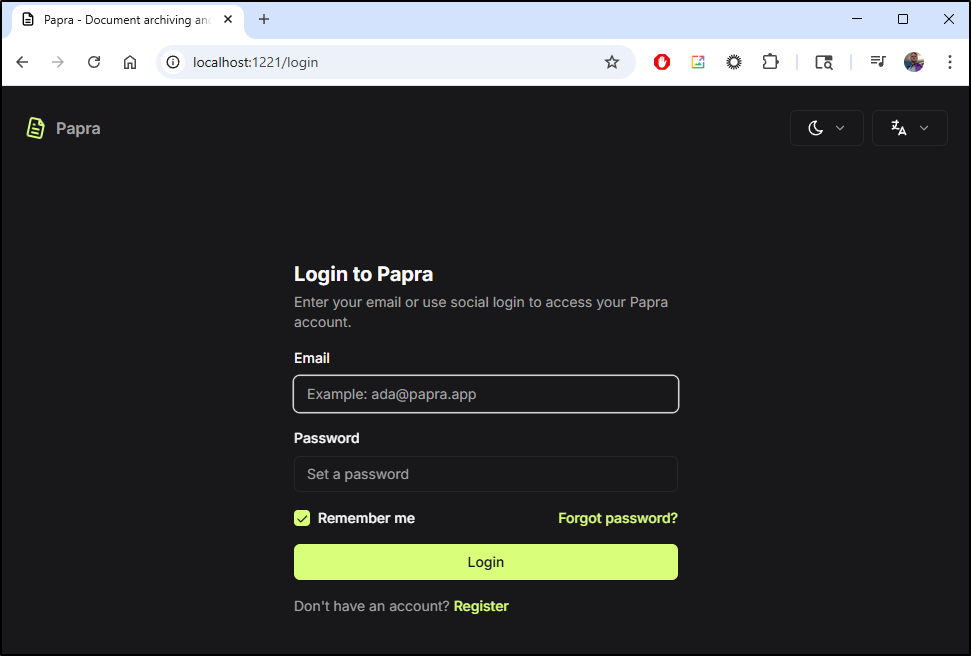

We can fire it up with Docker to test it:

```
$ docker run -d --name papra -p 1221:1221 ghcr.io/papra-hq/papra:latest
Unable to find image 'ghcr.io/papra-hq/papra:latest' locally
latest: Pulling from papra-hq/papra
59e22667830b: Pull complete
49a91da077e1: Pull complete
d929bb762a7b: Pull complete
42bf1b567479: Pull complete
0980aecccf12: Pull complete
79944bd76028: Pull complete
9622a32cdfd0: Pull complete
984ecefe6e45: Pull complete
c26ad59129de: Pull complete
197de1e330c2: Pull complete
9bf5f3894ad3: Pull complete
b24101075ea3: Pull complete
84169d74ce56: Pull complete
Digest: sha256:6fdc2208f403882f4a3b8103a8aa7c2832e285f01205d54c24a9458c66607ba0
Status: Downloaded newer image for ghcr.io/papra-hq/papra:latest
ecdc042dc63a62a3f9b5a8bf854724121f0b6b237d64d89b89e08a508e6efc66
```

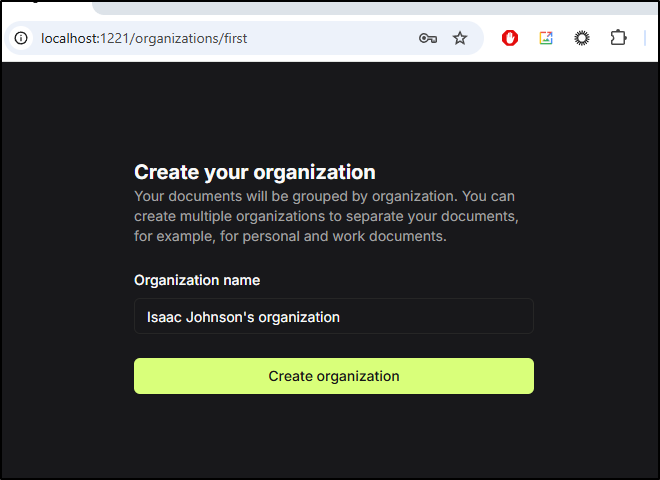

I can create an organization after creating an account.

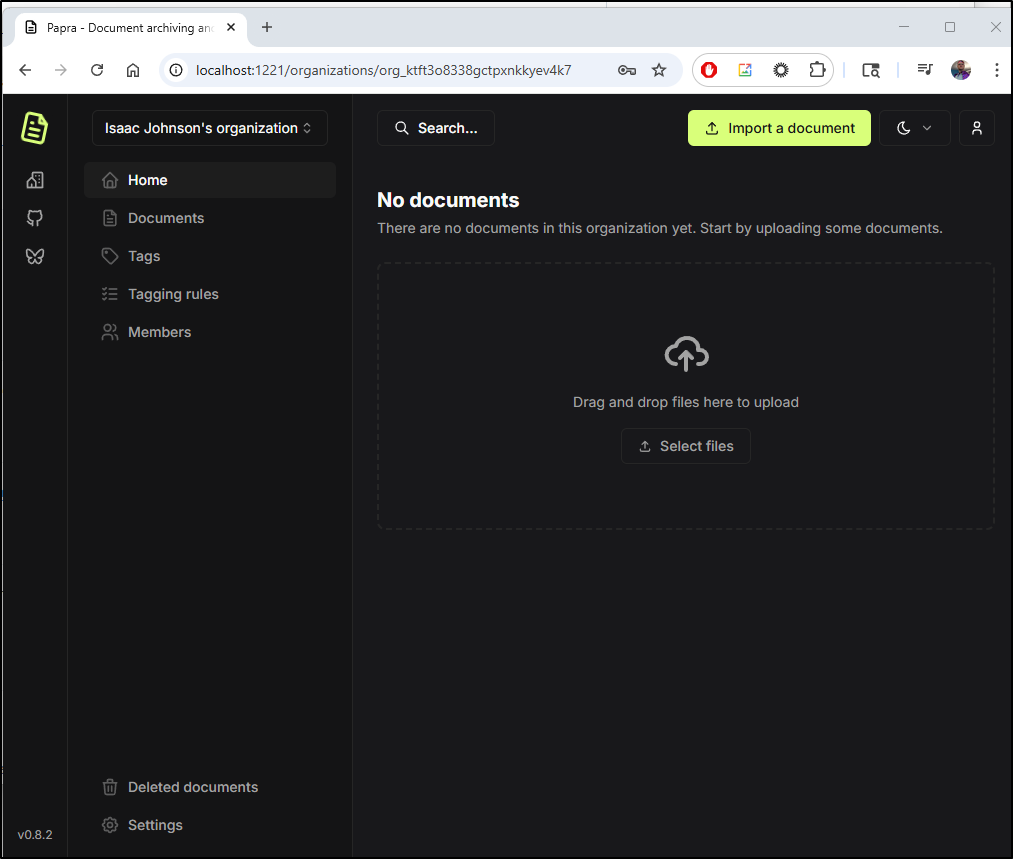

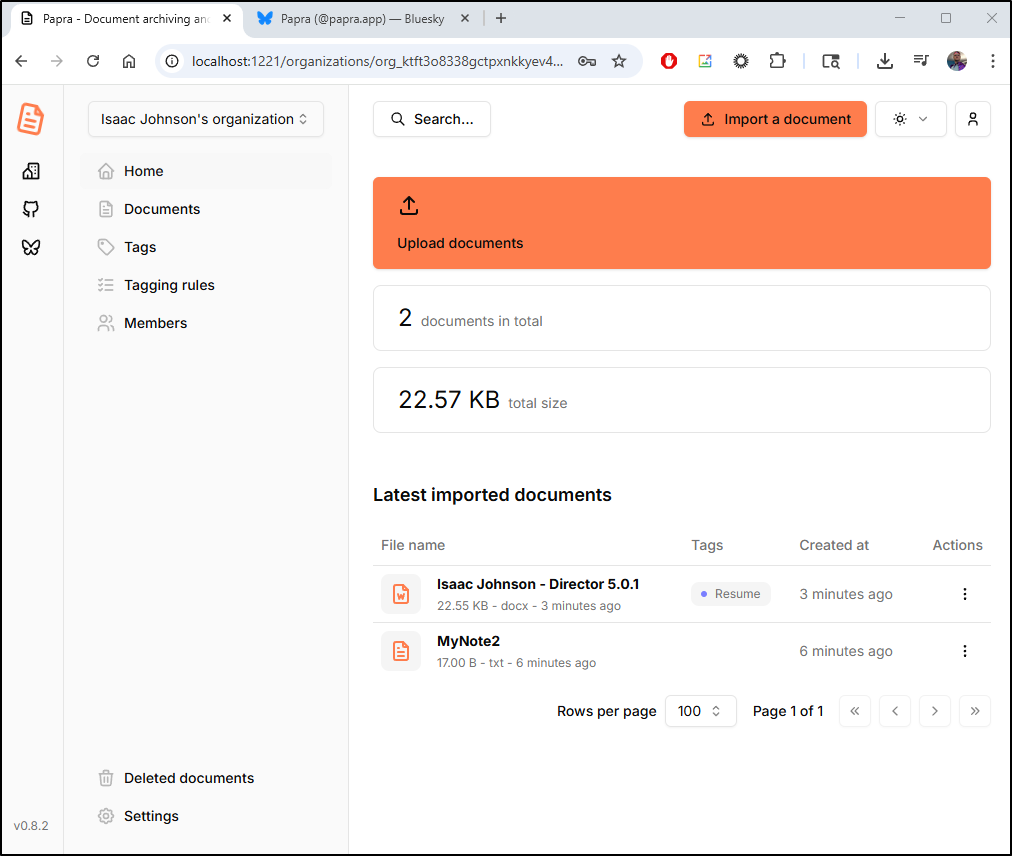

I can now see my landing page.

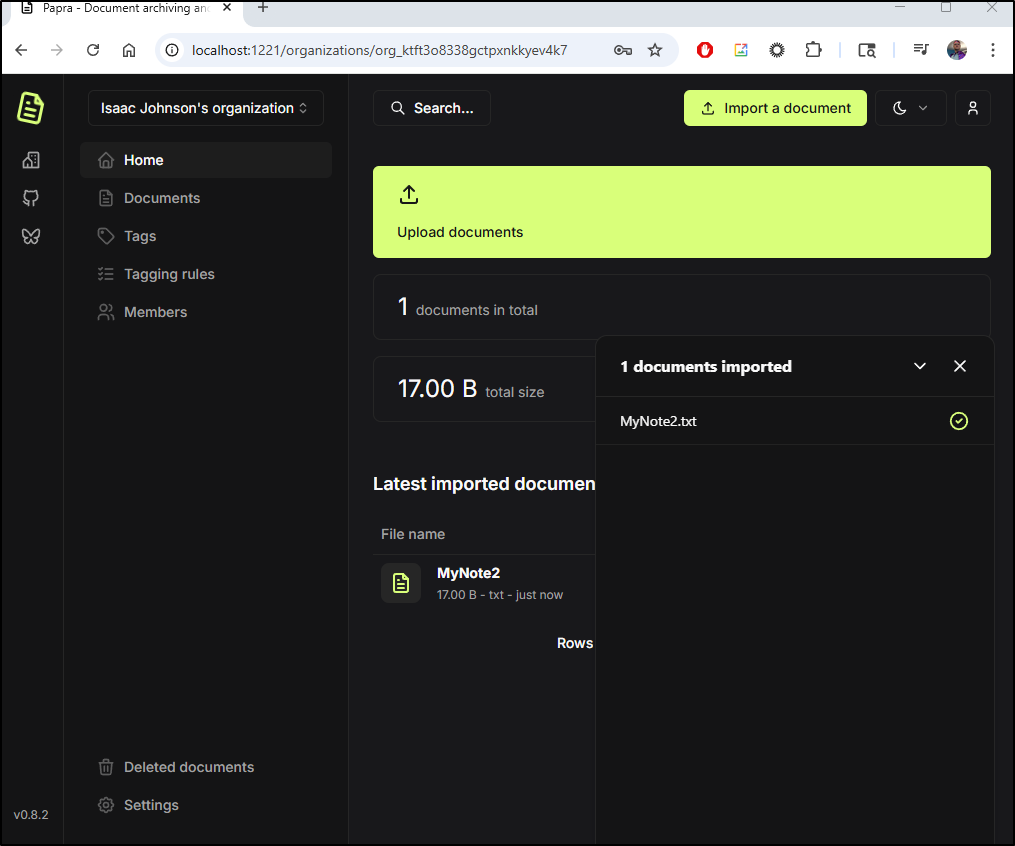

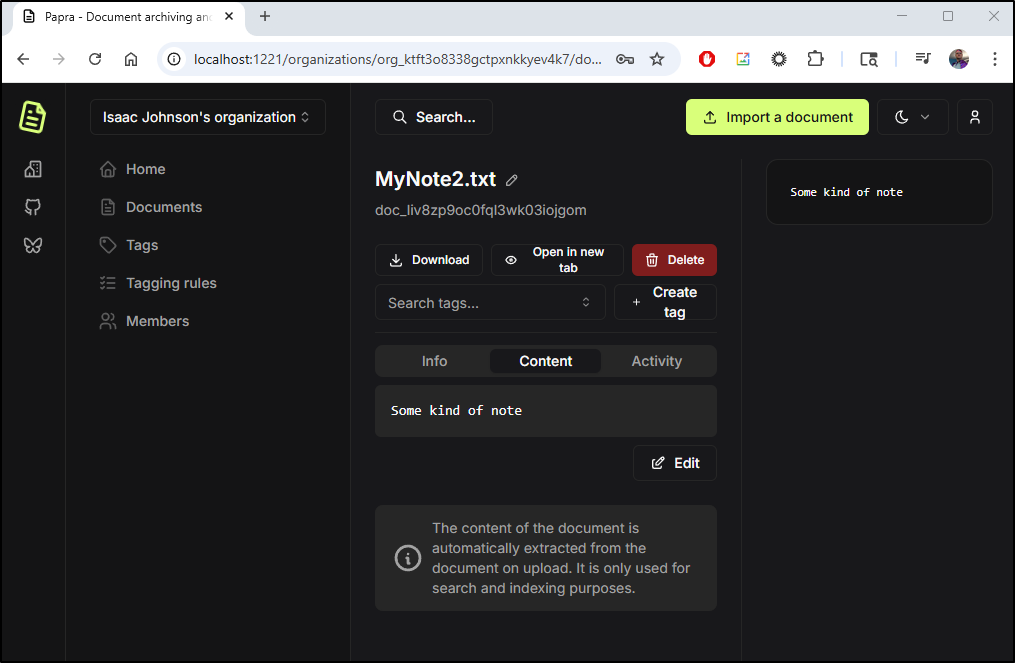

I can import a note and then see it.

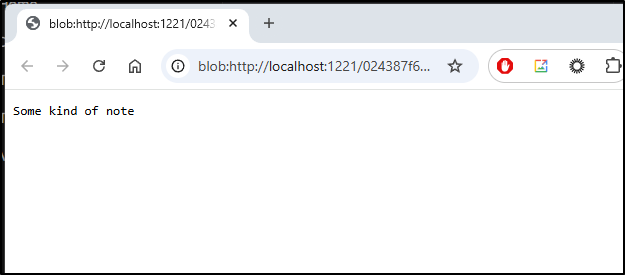

I can see the output when I click Download.

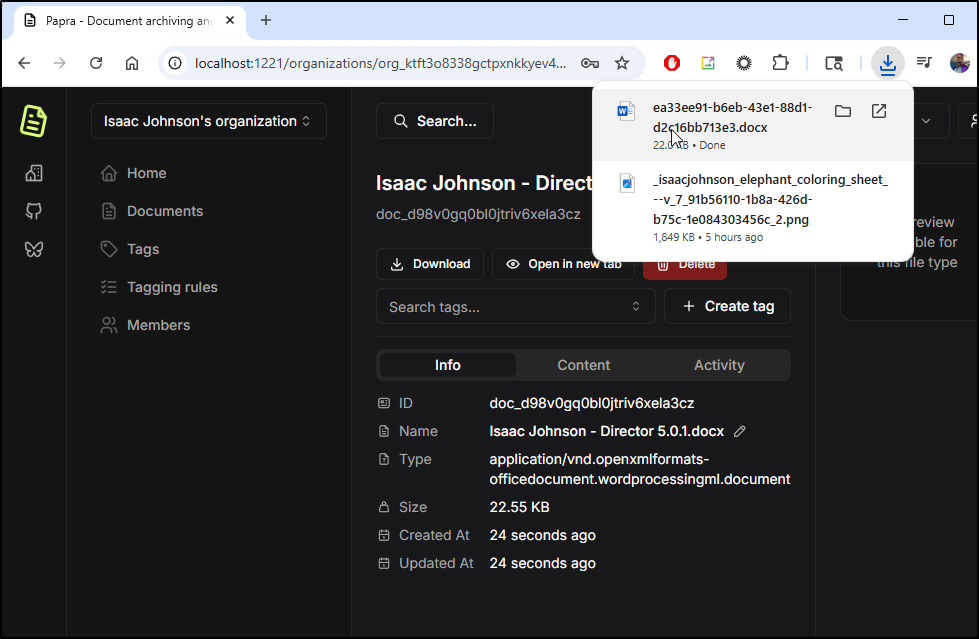

However, for a Word document, clicking Download produces a uniquely named file instead.

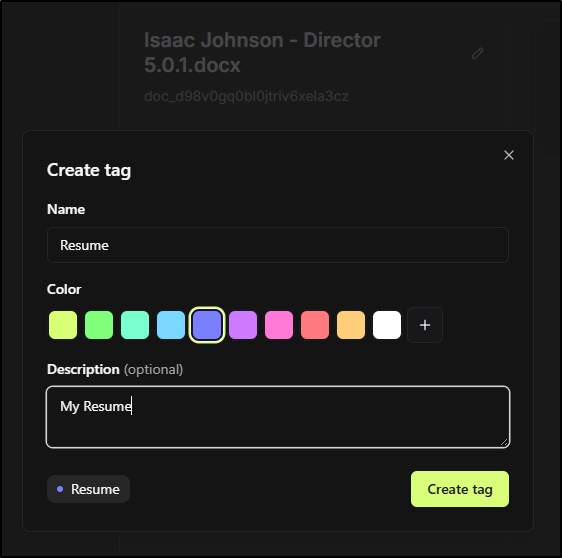

I can create tags to organize documents.

It also has a light mode, and we can see the tags associated with each document.

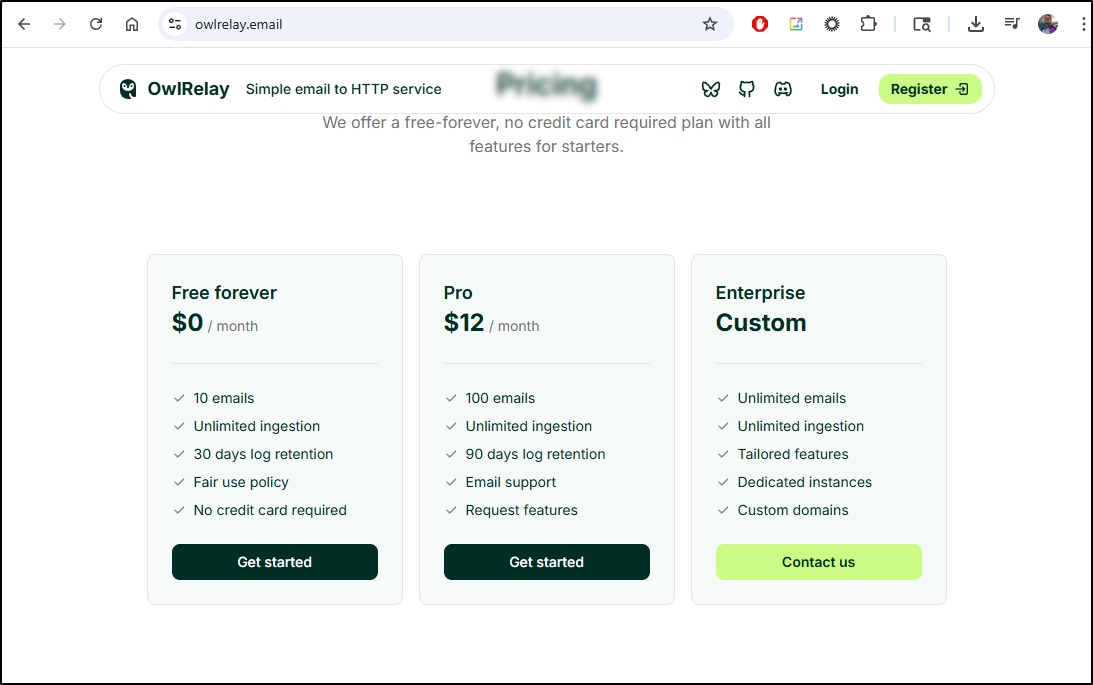

I could create an email relay with OwlRelay.

However, its free tier is very limited, so I did not set that up.

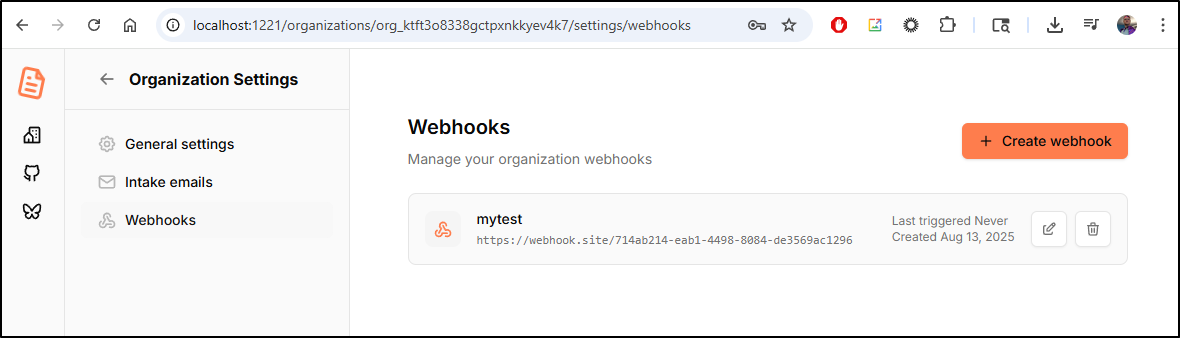

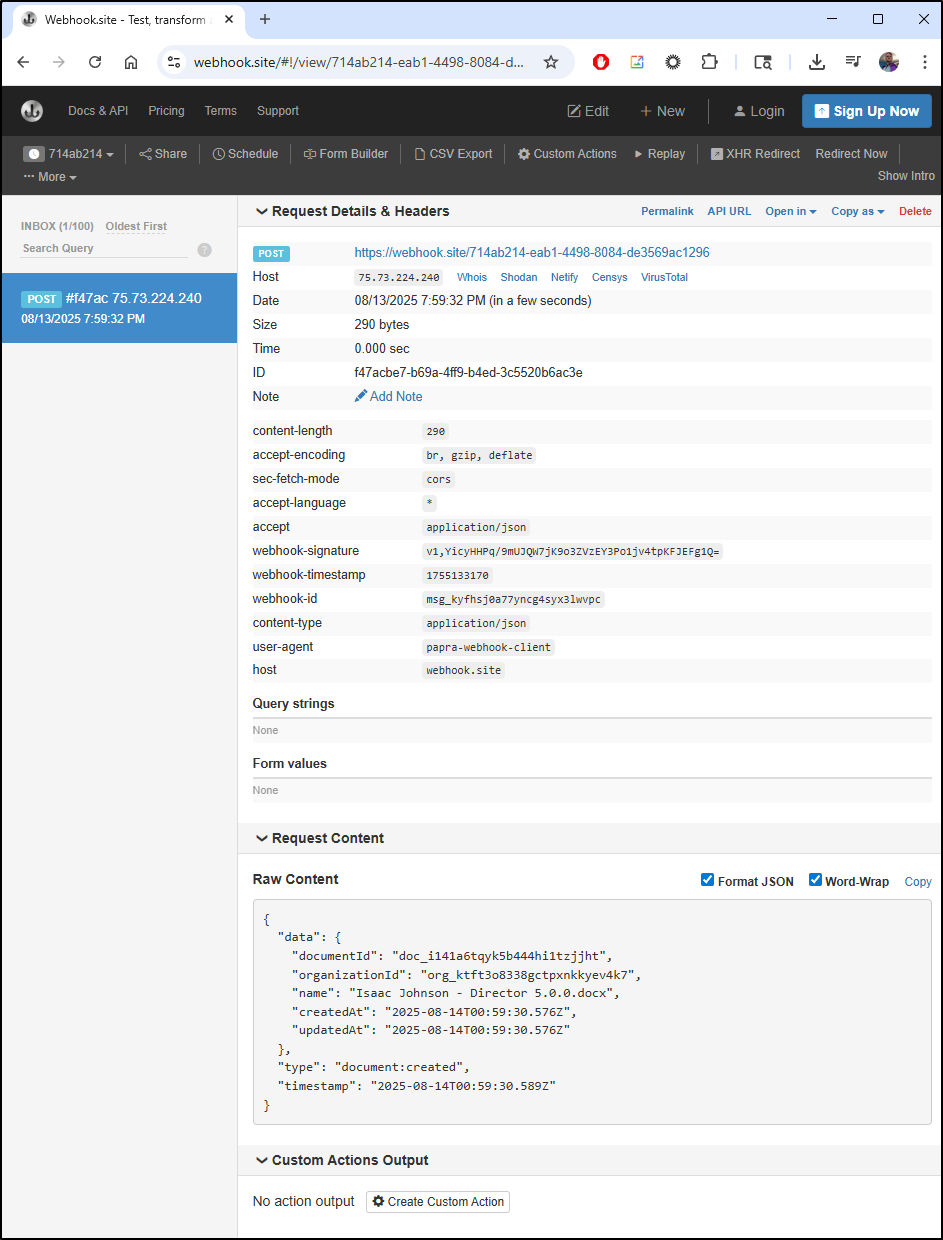

I also tried webhooks triggered on document creation.

They work when sending a test (by creating a document).
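To see what a webhook actually delivers, a throwaway receiver is handy. The following is a minimal sketch using only Python's standard library that prints whatever gets POSTed and pretty-prints JSON bodies. It does not assume any particular Papra payload shape or signature header; the port 8088 is an arbitrary placeholder, and because Papra runs in a container here, the webhook URL has to use an address that container can reach.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def describe_event(body: bytes) -> str:
    """Summarize a webhook body: sorted, indented JSON if it parses, else raw text."""
    try:
        return json.dumps(json.loads(body), indent=2, sort_keys=True)
    except ValueError:  # covers JSONDecodeError and UnicodeDecodeError
        return body.decode("utf-8", errors="replace")


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        print(f"POST {self.path}\n{describe_event(body)}")
        self.send_response(204)  # acknowledge with no response body
        self.end_headers()


def serve(port: int = 8088) -> None:
    """Listen on all interfaces until interrupted with Ctrl+C."""
    HTTPServer(("0.0.0.0", port), WebhookHandler).serve_forever()
```

Calling serve() and then creating a document in Papra should print each delivery to the terminal, which makes it easy to see which fields are available before wiring the webhook to anything real.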

Overall, this is a fine app, but for me it lacks features found in some of my other file-sharing tools.

Summary

Today we looked at Trilium again, since it’s been over a year since I last checked it out. It has some new features, plus some beta features like AI. However, despite many attempts, I just couldn’t get the AI features to function properly. Data also disappeared on a pod reset, but that was a failing on my part: once I fixed the missing volume mount, the data persisted, and I will likely give Trilium a proper go again.

Another tool I circled back on was LibreNMS. It does a lot for tracking servers, but I’m not sure I would use it over Zabbix.

I also gave Papra a quick test, and it worked for document management, but without the ability to do public shares or revisions, I’m likely not going to keep it in my stack.

In summary, I found LibreNMS and Papra just okay, but Trilium does quite a lot, likely more than what I use Obsidian for today.