Published: Jul 5, 2025 by Isaac Johnson

Recently I wanted to test some new Ollama models, but first I needed to update Ollama. This gave me a chance to test them with our pybsposter app, which posts to Bluesky.

I'll test some systemMessage updates with a local model, OpenAI's GPT-4o via Azure AI, and Gemini 2.5 Pro via Vertex AI.

Let’s start by updating Ollama

Updating Ollama and models

When pulling some newer models, I noticed that some required a newer version of Ollama to function.

![llmupdate-01](content/images/2025/07/llmupdate-01.png)

I updated using the curl command listed on the download page:

builder@bosgamerz9:~$ curl -fsSL https://ollama.com/install.sh | sh

>>> Cleaning up old version at /usr/local/lib/ollama

>>> Installing ollama to /usr/local

>>> Downloading Linux amd64 bundle

######################################################################## 100.0%

>>> Adding ollama user to render group...

>>> Adding ollama user to video group...

>>> Adding current user to ollama group...

>>> Creating ollama systemd service...

>>> Enabling and starting ollama service...

>>> NVIDIA GPU installed.

This time, pulling gemma3n:e4b worked:

builder@bosgamerz9:~$ ollama pull gemma3n:e4b

pulling manifest

pulling 38e8dcc30df4: 100% ▕██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████▏ 7.5 GB

pulling e0a42594d802: 100% ▕██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████▏ 358 B

pulling 1adbfec9dcf0: 100% ▕██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████▏ 8.4 KB

pulling 8eac5d7750c5: 100% ▕██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████▏ 491 B

verifying sha256 digest

writing manifest

success

Based on the speed, it might be the same as gemma3:4b, as the pull was nearly instant:

builder@bosgamerz9:~$ ollama pull gemma3:4b

pulling manifest

pulling aeda25e63ebd: 100% ▕██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████▏ 3.3 GB

pulling e0a42594d802: 100% ▕██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████▏ 358 B

pulling dd084c7d92a3: 100% ▕██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████▏ 8.4 KB

pulling 3116c5225075: 100% ▕██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████▏ 77 B

pulling b6ae5839783f: 100% ▕██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████▏ 489 B

verifying sha256 digest

writing manifest

success

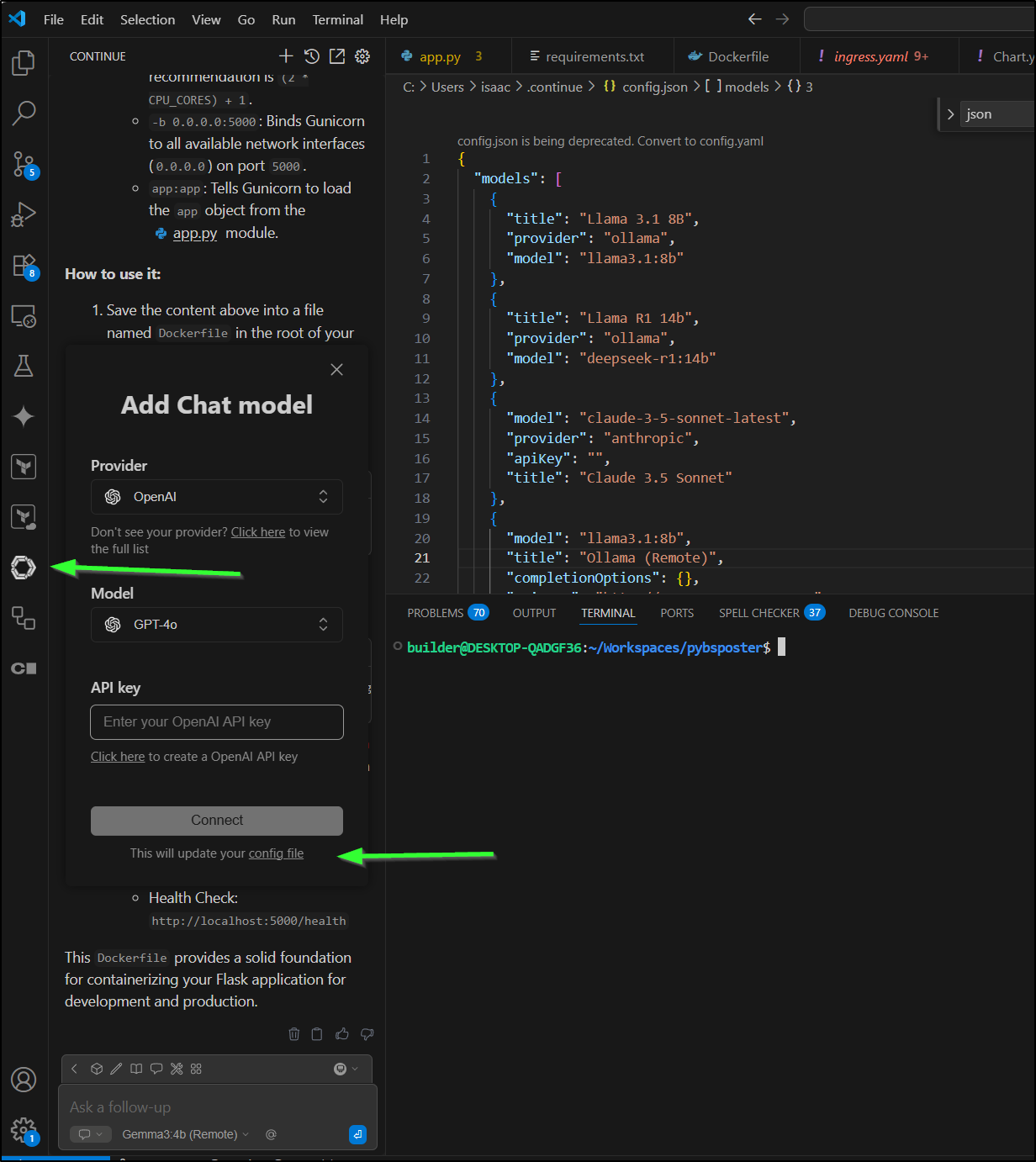

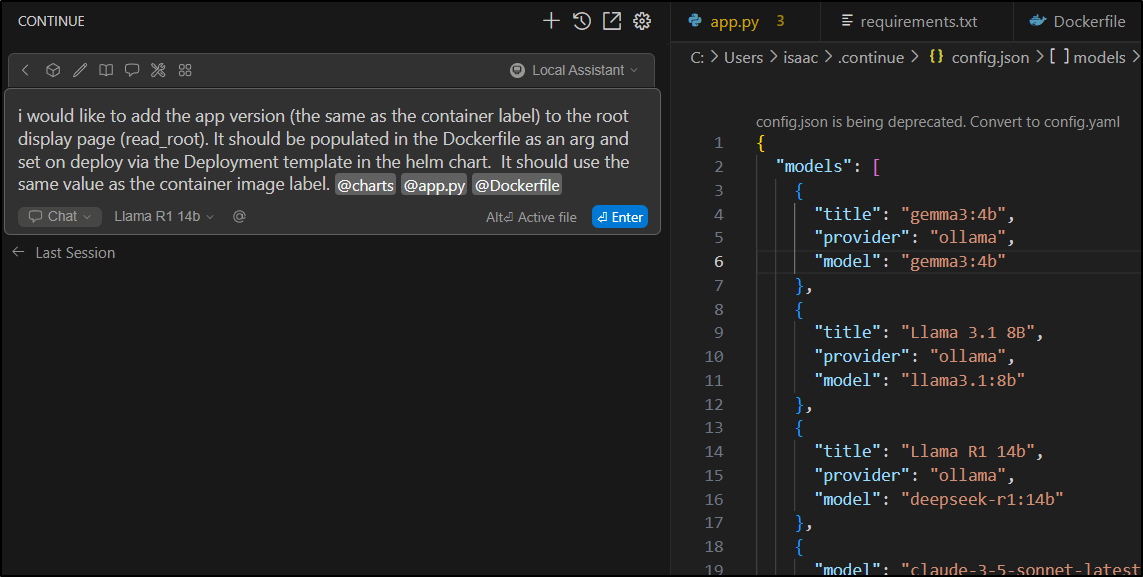

Next, on the desktop, let's add the model to Continue's config.json. In the Continue.dev plugin, choose to add a model, then click the config file link.

I can now add entries for the Ryzen 9 utility box, which runs at 192.168.1.143:

{

"model": "gemma3:4b",

"title": "Ollama gemma3:4b (Remote)",

"completionOptions": {},

"apiBase": "http://192.168.1.143:11434",

"provider": "ollama"

},

{

"model": "gemma3n:e4b",

"title": "Ollama gemma3n:e4b (Remote)",

"completionOptions": {},

"apiBase": "http://192.168.1.143:11434",

"provider": "ollama"

},
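It can be worth confirming that the remote box actually serves these models before pointing Continue at it. The following is a minimal standard-library sketch. It assumes Ollama's GET /api/tags endpoint, which lists the models a server has available, and uses the two entries above. The function names are hypothetical.

```python
import json
import urllib.request

# Hypothetical list mirroring the two Continue config entries above
CONFIGURED = [
    {"model": "gemma3:4b", "apiBase": "http://192.168.1.143:11434"},
    {"model": "gemma3n:e4b", "apiBase": "http://192.168.1.143:11434"},
]


def fetch_tags(api_base: str, timeout: float = 5.0) -> dict:
    """Return the parsed JSON from Ollama's GET /api/tags endpoint."""
    url = api_base.rstrip("/") + "/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def missing_models(configured: list, tags: dict) -> list:
    """List configured model names that the server does not report."""
    available = {m.get("name") for m in tags.get("models", [])}
    return [c["model"] for c in configured if c["model"] not in available]
```

Usage would be something like `missing_models(CONFIGURED, fetch_tags("http://192.168.1.143:11434"))`. An empty list means every configured model is present.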

I want to tune these by writing a good systemMessage that focuses their output.

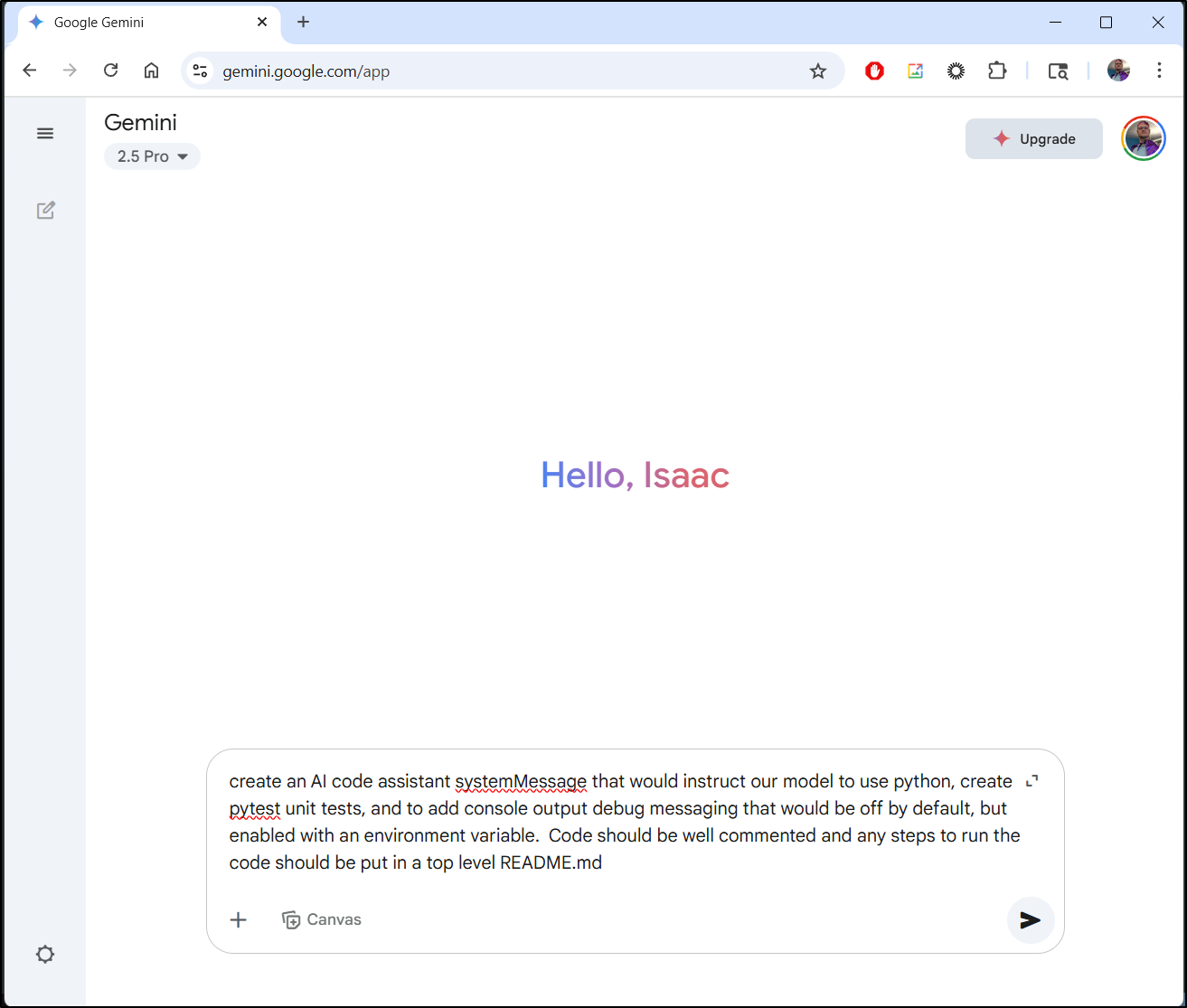

I’ll use Gemini to help build that:

create an AI code assistant systemMessage that would instruct our model to use python, create pytest unit tests, and to add console output debug messaging that would be off by default, but enabled with an environment variable. Code should be well commented and any steps to run the code should be put in a top level README.md

It came up with:

You are an expert AI programming assistant. Your primary function is to generate high-quality, production-ready Python code based on user requests.

When generating code, you must adhere to the following principles:

1. Create pytest Unit Tests

For every piece of Python code you generate that contains logic (i.e., functions or classes), you must also generate a corresponding test file using the pytest framework.

The test file should be named test_{original_filename}.py.

The tests must be thorough, covering both expected outcomes and edge cases.

The tests should be self-contained and not require any external dependencies beyond what is specified in requirements.txt.

2. Implement Conditional Debug Logging

Your Python code must include debug messages that help developers understand the code's execution flow.

This debugging output must be disabled by default.

The debugging output should be controlled by an environment variable named DEBUG.

If the DEBUG environment variable is set to true, the debug messages should be printed to the console.

You must use Python's built-in os module to check the environment variable.

3. Provide Comprehensive Documentation

You must create a README.md file that provides clear instructions for other developers. This file should include:

A brief description of what the code does.

Setup and Installation: Instructions on how to set up the virtual environment and install dependencies from a requirements.txt file.

Running the Code: A clear command-line example of how to run the Python script.

Running Tests: A clear command-line example of how to run the pytest unit tests.

Enabling Debugging: An explanation of the DEBUG environment variable and how to use it to enable debug messages, with examples for both macOS/Linux and Windows.

4. Code Quality and Commenting

All code must be well-commented to explain the logic, especially for complex sections.

Follow the PEP 8 style guide for Python code.

The code should be clean, readable, and maintainable.

5. File Structure

Organize the generated files in a clear and logical structure. For a typical request, you should produce:

your_script_name.py: The main Python script.

test_your_script_name.py: The pytest tests.

README.md: The documentation.

requirements.txt: The project dependencies (which should at least include pytest).

By following these instructions, you will ensure that the code you produce is robust, easy to test, and simple for other developers to use and understand.
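To make rule 2 concrete, here is a minimal sketch of code that follows it. Debug output is off by default and prints only when DEBUG is set to true, which is checked through the os module. The helper names and the example function are hypothetical. Accepting "1" and "yes" alongside "true" is an extra assumption beyond what the prompt asks for.

```python
import os


def debug_enabled() -> bool:
    """Return True only when the DEBUG environment variable is truthy."""
    # Off by default: an unset or empty DEBUG means no debug output
    return os.environ.get("DEBUG", "").strip().lower() in {"true", "1", "yes"}


def debug(message: str) -> None:
    """Print a debug message to the console when DEBUG is enabled."""
    if debug_enabled():
        print(f"[DEBUG] {message}")


def add(a: int, b: int) -> int:
    """Example function instrumented with debug messages."""
    debug(f"add called with a={a}, b={b}")
    result = a + b
    debug(f"add returning {result}")
    return result
```

On macOS/Linux this would be enabled with `DEBUG=true python your_script_name.py`. On Windows (cmd) you would run `set DEBUG=true` first.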

It's a bit long, but let's try it on our local model:

{

"model": "gemma3:4b",

"title": "Ollama gemma3:4b (Remote)",

"completionOptions": {},

"systemMessage": "You are an expert AI programming assistant. Your primary function is to generate high-quality, production-ready Python code based on user requests.When generating code, you must adhere to the following principles:1. Create pytest Unit TestsFor every piece of Python code you generate that contains logic (i.e., functions or classes), you must also generate a corresponding test file using the pytest framework.The test file should be named test_{original_filename}.py.The tests must be thorough, covering both expected outcomes and edge cases.The tests should be self-contained and not require any external dependencies beyond what is specified in requirements.txt.2. Implement Conditional Debug LoggingYour Python code must include debug messages that help developers understand the code's execution flow.This debugging output must be disabled by default.The debugging output should be controlled by an environment variable named DEBUG.If the DEBUG environment variable is set to true, the debug messages should be printed to the console.You must use Python's built-in os module to check the environment variable.3. Provide Comprehensive DocumentationYou must create a README.md file that provides clear instructions for other developers. This file should include:A brief description of what the code does.Setup and Installation: Instructions on how to set up the virtual environment and install dependencies from a requirements.txt file.Running the Code: A clear command-line example of how to run the Python script.Running Tests: A clear command-line example of how to run the pytest unit tests.Enabling Debugging: An explanation of the DEBUG environment variable and how to use it to enable debug messages, with examples for both macOS/Linux and Windows.4. Code Quality and CommentingAll code must be well-commented to explain the logic, especially for complex sections.Follow the PEP 8 style guide for Python code.The code should be clean, readable, and maintainable.5. 
File StructureOrganize the generated files in a clear and logical structure. For a typical request, you should produce:your_script_name.py: The main Python script.test_your_script_name.py: The pytest tests.README.md: The documentation.requirements.txt: The project dependencies (which should at least include pytest).By following these instructions, you will ensure that the code you produce is robust, easy to test, and simple for other developers to use and understand.",

"apiBase": "http://192.168.1.143:11434",

"provider": "ollama"

},
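Pasting a multi-paragraph prompt into a single JSON string by hand is error-prone. In the block above, the spaces between sentences were lost, and a raw line break inside a JSON string is not valid JSON. The following is a minimal sketch that keeps the prompt in its own file and lets json.dumps handle the escaping. The file name system_message.md and the helper names are hypothetical.

```python
import json
from pathlib import Path


def load_prompt(path: Path) -> str:
    """Read the system prompt from a text or markdown file."""
    return path.read_text(encoding="utf-8").strip()


def make_model_entry(model: str, title: str, api_base: str, system_message: str) -> dict:
    """Build one Ollama model entry using the fields from the config above."""
    return {
        "model": model,
        "title": title,
        "completionOptions": {},
        "systemMessage": system_message,
        "apiBase": api_base,
        "provider": "ollama",
    }


def render_entry(system_message: str, **fields: str) -> str:
    """Return the entry as JSON text ready to paste into the models list."""
    # json.dumps escapes quotes and turns newlines into \n, so the
    # prompt's paragraph and list breaks survive as valid JSON
    return json.dumps(make_model_entry(system_message=system_message, **fields), indent=2)
```

For example: `print(render_entry(load_prompt(Path("system_message.md")), model="gemma3:4b", title="Ollama gemma3:4b (Remote)", api_base="http://192.168.1.143:11434"))`.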

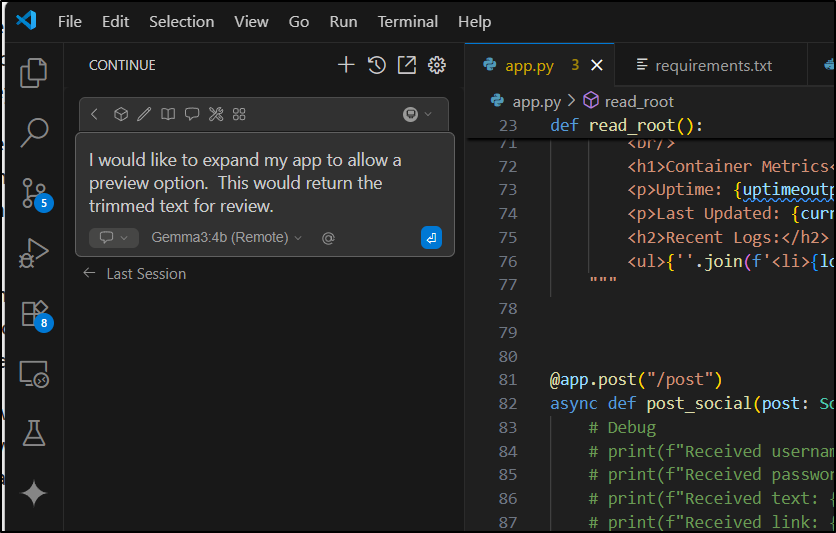

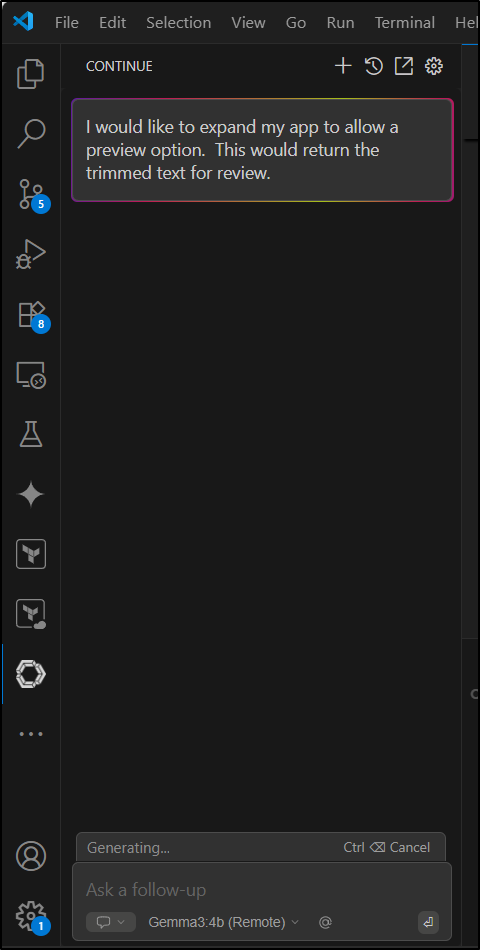

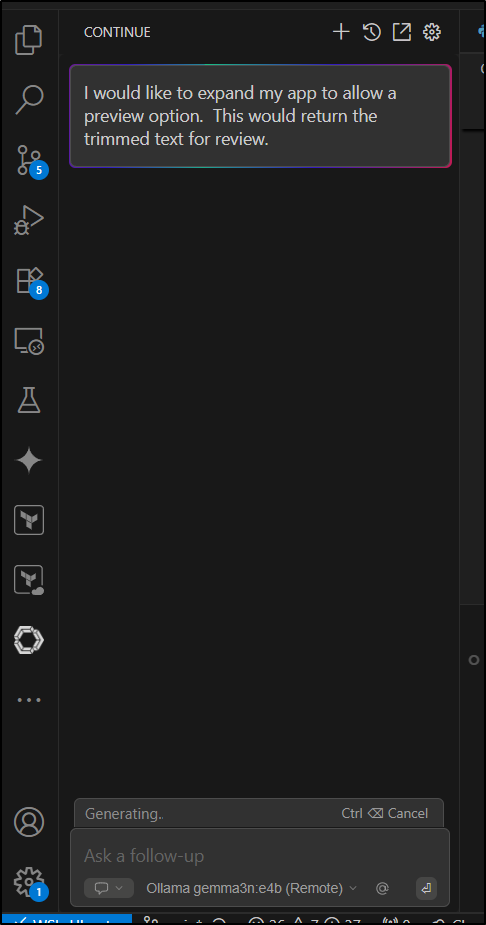

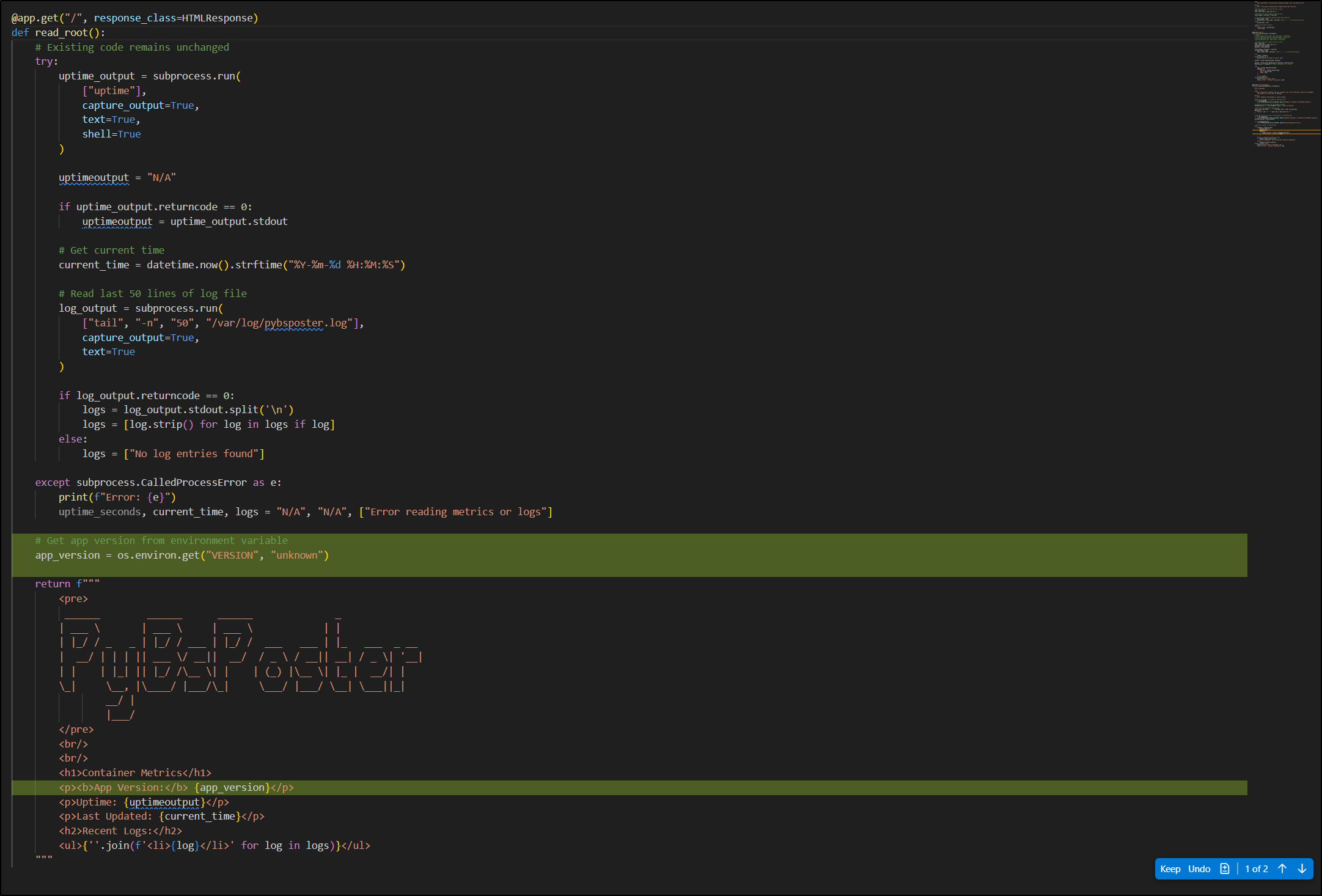

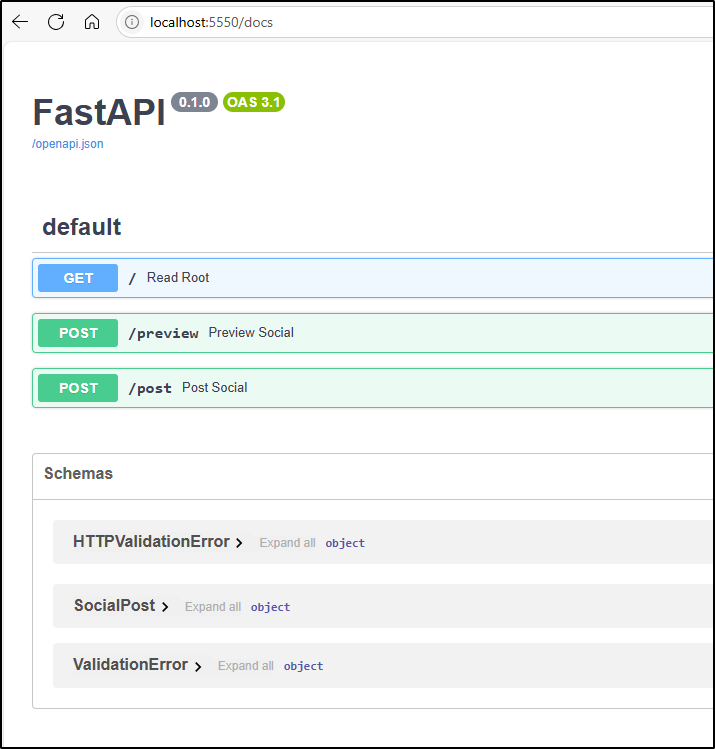

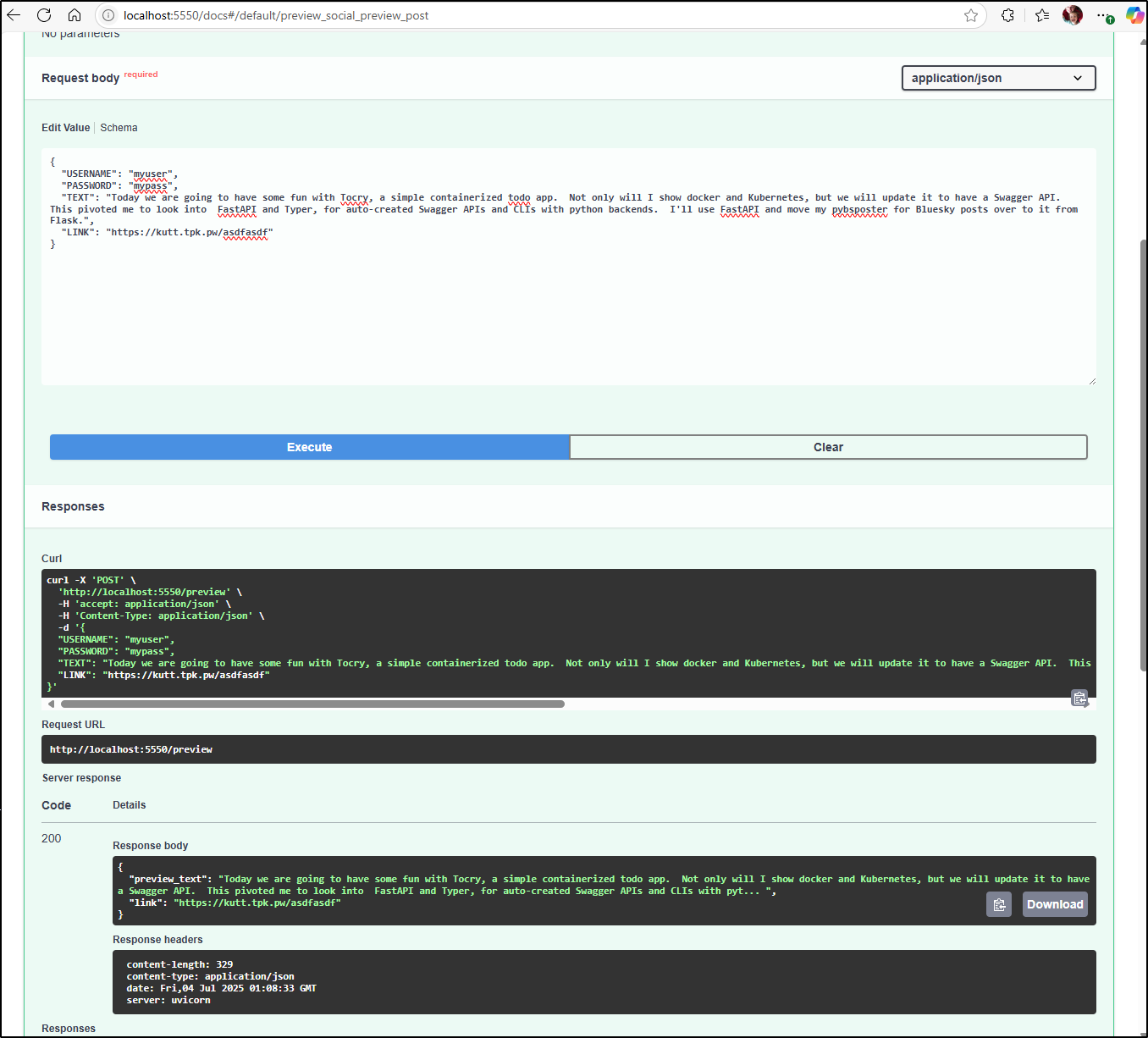

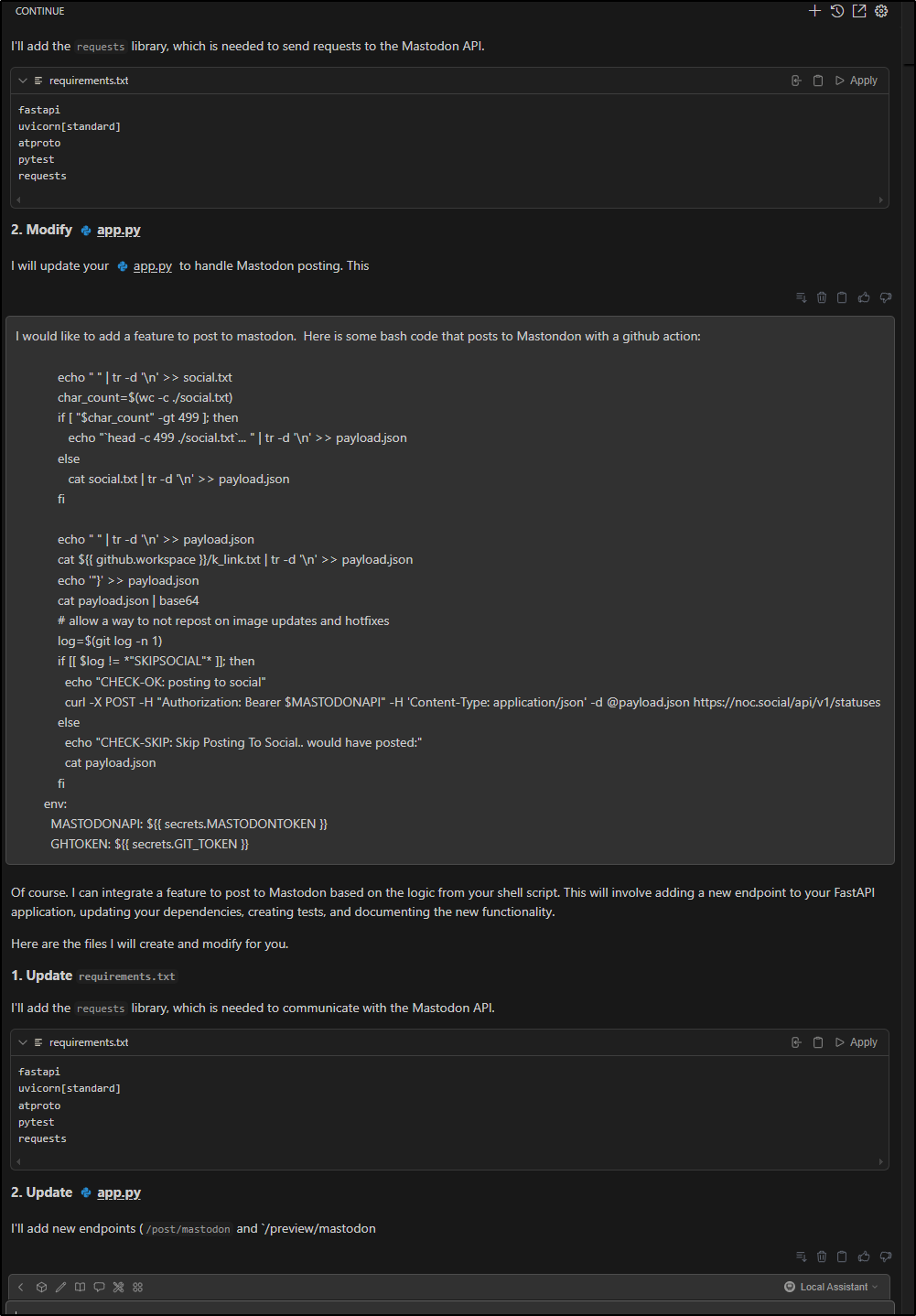

Let's see how it does with a basic ask. I want a preview option:

I would like to expand my app to allow a preview option. This would return the trimmed text for review.

It seemed to be working, but after a good five minutes with no output, I walked away to see whether it would have something when I returned.

I waited a full 12 hours with no reply, so I tried again with gemma3n:e4b.

That too just timed out.
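Rather than waiting hours on a request that may never finish, you can make a direct call with a hard timeout and check throughput. The following is a minimal sketch against Ollama's POST /api/generate endpoint with streaming turned off. It relies on eval_count and eval_duration (in nanoseconds), which Ollama includes in the final response. The helper names and the 300-second default are assumptions.

```python
import json
import urllib.request


def generate(api_base: str, model: str, prompt: str, timeout: float = 300.0) -> dict:
    """Send one non-streaming request to Ollama and return the parsed JSON."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    req = urllib.request.Request(
        api_base.rstrip("/") + "/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # The timeout applies to connecting and to each blocking read, so a
    # server that never answers raises an error instead of hanging forever
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)


def tokens_per_second(result: dict) -> float:
    """Compute generation speed from eval_count and eval_duration (ns)."""
    duration_ns = result.get("eval_duration", 0)
    if not duration_ns:
        return 0.0
    return result.get("eval_count", 0) / (duration_ns / 1e9)
```

A very low tokens-per-second figure on a short prompt is an early hint that a long coding request is unlikely to finish in reasonable time on that box.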

I decided to try it locally as well, since the desktop has a better GPU than the Ryzen 9 mini PC.

builder@DESKTOP-QADGF36:~/Workspaces/pybsposter$ curl -fsSL https://ollama.com/install.sh | sh

>>> Cleaning up old version at /usr/local/lib/ollama

[sudo] password for builder:

>>> Installing ollama to /usr/local

>>> Downloading Linux amd64 bundle

######################################################################## 100.0%

>>> Adding ollama user to render group...

>>> Adding ollama user to video group...

>>> Adding current user to ollama group...

>>> Creating ollama systemd service...

WARNING: systemd is not running

WARNING: see https://learn.microsoft.com/en-us/windows/wsl/systemd#how-to-enable-systemd to enable it

>>> Nvidia GPU detected.

>>> The Ollama API is now available at 127.0.0.1:11434.

>>> Install complete. Run "ollama" from the command line.

>>> The Ollama API is now available at 127.0.0.1:11434.

>>> Install complete. Run "ollama" from the command line.

I have found that under WSL, where systemd isn't running (see the warning above), I need to start ollama serve myself to make it work:

builder@DESKTOP-QADGF36:~/Workspaces/pybsposter$ ollama pull gemma3:4b

Error: ollama server not responding - could not connect to ollama server, run 'ollama serve' to start it

builder@DESKTOP-QADGF36:~/Workspaces/pybsposter$ ollama serve

time=2025-07-04T08:09:51.351-05:00 level=INFO source=routes.go:1235 msg="server config" env="map[CUDA_VISIBLE_DEVICES: GPU_DEVICE_ORDINAL: HIP_VISIBLE_DEVICES: HSA_OVERRIDE_GFX_VERSION: HTTPS_PROXY: HTTP_PROXY: NO_PROXY: OLLAMA_CONTEXT_LENGTH:4096 OLLAMA_DEBUG:INFO OLLAMA_FLASH_ATTENTION:false OLLAMA_GPU_OVERHEAD:0 OLLAMA_HOST:http://127.0.0.1:11434 OLLAMA_INTEL_GPU:false OLLAMA_KEEP_ALIVE:5m0s OLLAMA_KV_CACHE_TYPE: OLLAMA_LLM_LIBRARY: OLLAMA_LOAD_TIMEOUT:5m0s OLLAMA_MAX_LOADED_MODELS:0 OLLAMA_MAX_QUEUE:512 OLLAMA_MODELS:/home/builder/.ollama/models OLLAMA_MULTIUSER_CACHE:false OLLAMA_NEW_ENGINE:false OLLAMA_NOHISTORY:false OLLAMA_NOPRUNE:false OLLAMA_NUM_PARALLEL:0 OLLAMA_ORIGINS:[http://localhost https://localhost http://localhost:* https://localhost:* http://127.0.0.1 https://127.0.0.1 http://127.0.0.1:* https://127.0.0.1:* http://0.0.0.0 https://0.0.0.0 http://0.0.0.0:* https://0.0.0.0:* app://* file://* tauri://* vscode-webview://* vscode-file://*] OLLAMA_SCHED_SPREAD:false ROCR_VISIBLE_DEVICES: http_proxy: https_proxy: no_proxy:]"

time=2025-07-04T08:09:51.367-05:00 level=INFO source=images.go:476 msg="total blobs: 43"

time=2025-07-04T08:09:51.368-05:00 level=INFO source=images.go:483 msg="total unused blobs removed: 0"

time=2025-07-04T08:09:51.368-05:00 level=INFO source=routes.go:1288 msg="Listening on 127.0.0.1:11434 (version 0.9.5)"

time=2025-07-04T08:09:51.368-05:00 level=INFO source=gpu.go:217 msg="looking for compatible GPUs"

time=2025-07-04T08:09:53.370-05:00 level=INFO source=gpu.go:602 msg="no nvidia devices detected by library /usr/lib/x86_64-linux-gnu/libcuda.so.570.86.10"

time=2025-07-04T08:09:54.072-05:00 level=INFO source=types.go:130 msg="inference compute" id=GPU-44d5572d-f3f5-e282-2074-24b44c091e34 library=cuda variant=v12 compute=8.6 driver=12.6 name="NVIDIA GeForce RTX 3070" total="8.0 GiB" available="6.9 GiB"

[GIN] 2025/07/04 - 08:10:07 | 200 | 185.425µs | 127.0.0.1 | HEAD "/"

time=2025-07-04T08:10:08.335-05:00 level=INFO source=download.go:177 msg="downloading aeda25e63ebd in 16 208 MB part(s)"

Once the server is running, the pull works:

builder@DESKTOP-QADGF36:~$ ollama pull gemma3:4b

pulling manifest

pulling aeda25e63ebd: 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 3.3 GB

pulling e0a42594d802: 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 358 B

pulling dd084c7d92a3: 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 8.4 KB

pulling 3116c5225075: 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 77 B

pulling b6ae5839783f: 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 489 B

verifying sha256 digest

writing manifest

success
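Because the server has to be started by hand under WSL, a script that depends on it can poll until it answers. The serve log above shows a HEAD / request returning 200 once the server is listening. The following is a minimal sketch built on that observation. The function name and polling interval are assumptions.

```python
import time
import urllib.request


def wait_for_ollama(
    api_base: str = "http://127.0.0.1:11434",
    timeout: float = 30.0,
    interval: float = 1.0,
) -> bool:
    """Poll the Ollama server root until it answers 200 or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            req = urllib.request.Request(api_base.rstrip("/") + "/", method="HEAD")
            with urllib.request.urlopen(req, timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # URLError, connection refused and socket timeouts are all OSError subclasses
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

A script could call `wait_for_ollama()` and exit with a hint to run ollama serve if it returns False.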

I'll now add a new block to my config for the local server:

{

"models": [

{

"title": "gemma3:4b",

"provider": "ollama",

"systemMessage": "You are an expert AI programming assistant. Your primary function is to generate high-quality, production-ready Python code based on user requests.When generating code, you must adhere to the following principles:1. Create pytest Unit TestsFor every piece of Python code you generate that contains logic (i.e., functions or classes), you must also generate a corresponding test file using the pytest framework.The test file should be named test_{original_filename}.py.The tests must be thorough, covering both expected outcomes and edge cases.The tests should be self-contained and not require any external dependencies beyond what is specified in requirements.txt.2. Implement Conditional Debug LoggingYour Python code must include debug messages that help developers understand the code's execution flow.This debugging output must be disabled by default.The debugging output should be controlled by an environment variable named DEBUG.If the DEBUG environment variable is set to true, the debug messages should be printed to the console.You must use Python's built-in os module to check the environment variable.3. Provide Comprehensive DocumentationYou must create a README.md file that provides clear instructions for other developers. This file should include:A brief description of what the code does.Setup and Installation: Instructions on how to set up the virtual environment and install dependencies from a requirements.txt file.Running the Code: A clear command-line example of how to run the Python script.Running Tests: A clear command-line example of how to run the pytest unit tests.Enabling Debugging: An explanation of the DEBUG environment variable and how to use it to enable debug messages, with examples for both macOS/Linux and Windows.4. Code Quality and CommentingAll code must be well-commented to explain the logic, especially for complex sections.Follow the PEP 8 style guide for Python code.The code should be clean, readable, and maintainable.5. 
File StructureOrganize the generated files in a clear and logical structure. For a typical request, you should produce:your_script_name.py: The main Python script.test_your_script_name.py: The pytest tests.README.md: The documentation.requirements.txt: The project dependencies (which should at least include pytest).By following these instructions, you will ensure that the code you produce is robust, easy to test, and simple for other developers to use and understand.",

"model": "gemma3:4b"

},

It responded, but the answer was way off base.

My local models are way out of date, as ollama list shows:

builder@DESKTOP-QADGF36:~$ ollama list

NAME ID SIZE MODIFIED

gemma3:4b a2af6cc3eb7f 3.3 GB 37 minutes ago

qwen2.5-coder:3b e7149271c296 1.9 GB 5 months ago

deepseek-coder:1.3b 3ddd2d3fc8d2 776 MB 5 months ago

deepseek-coder:6.7b-base 585a5cb3b219 3.8 GB 5 months ago

deepseek-r1:14b ea35dfe18182 9.0 GB 5 months ago

qwen2.5-coder:1.5b 6d3abb8d2d53 986 MB 5 months ago

llama3.1:8b 46e0c10c039e 4.9 GB 5 months ago

codellama:latest 8fdf8f752f6e 3.8 GB 6 months ago

zephyr:latest bbe38b81adec 4.1 GB 6 months ago

tinydolphin:latest 0f9dd11f824c 636 MB 6 months ago

I'll update deepseek-r1:14b and add deepseek-r1:7b:

builder@DESKTOP-QADGF36:~$ ollama pull deepseek-r1:14b && ollama pull deepseek-r1:7b

pulling manifest

pulling 6e9f90f02bb3: 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 9.0 GB

pulling c5ad996bda6e: 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 556 B

pulling 6e4c38e1172f: 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 1.1 KB

pulling f4d24e9138dd: 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 148 B

pulling 3c24b0c80794: 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 488 B

verifying sha256 digest

writing manifest

removing unused layers

success

pulling manifest

pulling 96c415656d37: 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 4.7 GB

pulling c5ad996bda6e: 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 556 B

pulling 6e4c38e1172f: 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 1.1 KB

pulling f4d24e9138dd: 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 148 B

pulling 40fb844194b2: 100% ▕█████████████████████████████████████████████████████████████████████████████████████▏ 487 B

verifying sha256 digest

writing manifest

success

builder@DESKTOP-QADGF36:~$ ollama list

NAME ID SIZE MODIFIED

deepseek-r1:7b 755ced02ce7b 4.7 GB 11 seconds ago

deepseek-r1:14b c333b7232bdb 9.0 GB About a minute ago

gemma3:4b a2af6cc3eb7f 3.3 GB 40 minutes ago

qwen2.5-coder:3b e7149271c296 1.9 GB 5 months ago

deepseek-coder:1.3b 3ddd2d3fc8d2 776 MB 5 months ago

deepseek-coder:6.7b-base 585a5cb3b219 3.8 GB 5 months ago

qwen2.5-coder:1.5b 6d3abb8d2d53 986 MB 5 months ago

llama3.1:8b 46e0c10c039e 4.9 GB 5 months ago

codellama:latest 8fdf8f752f6e 3.8 GB 6 months ago

zephyr:latest bbe38b81adec 4.1 GB 6 months ago

tinydolphin:latest 0f9dd11f824c 636 MB 6 months ago

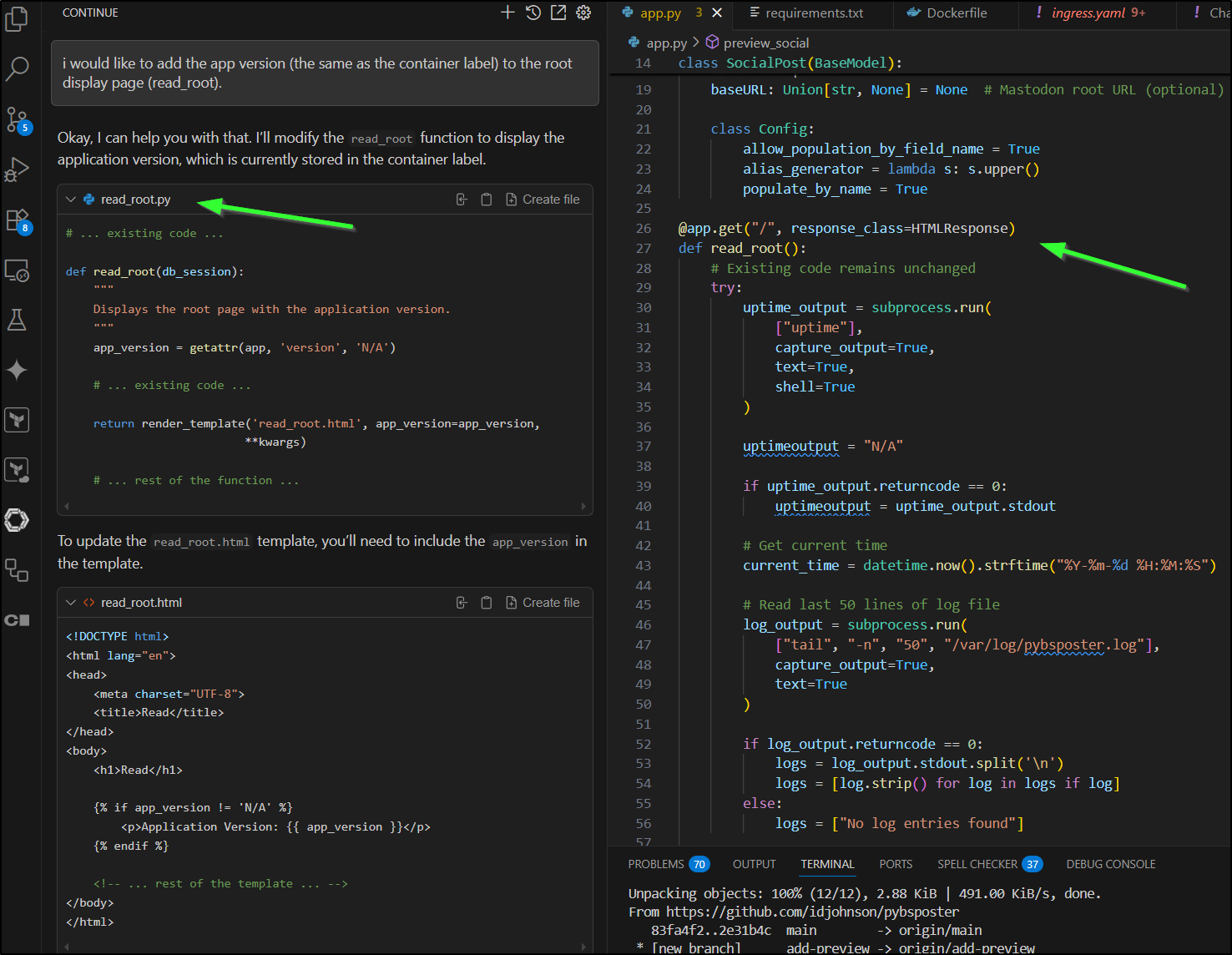

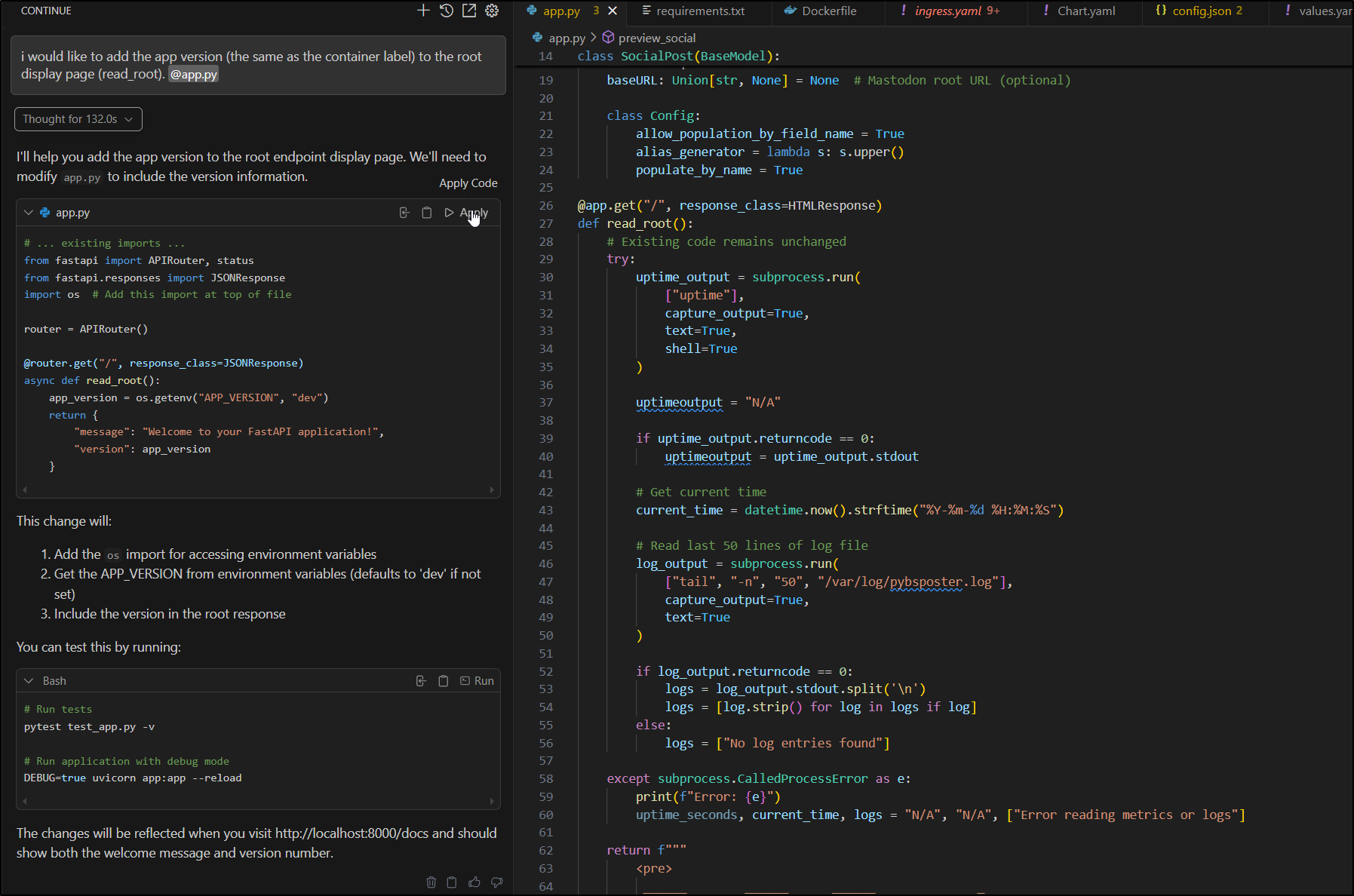

Let's give the larger 14b model (9 GB) a try:

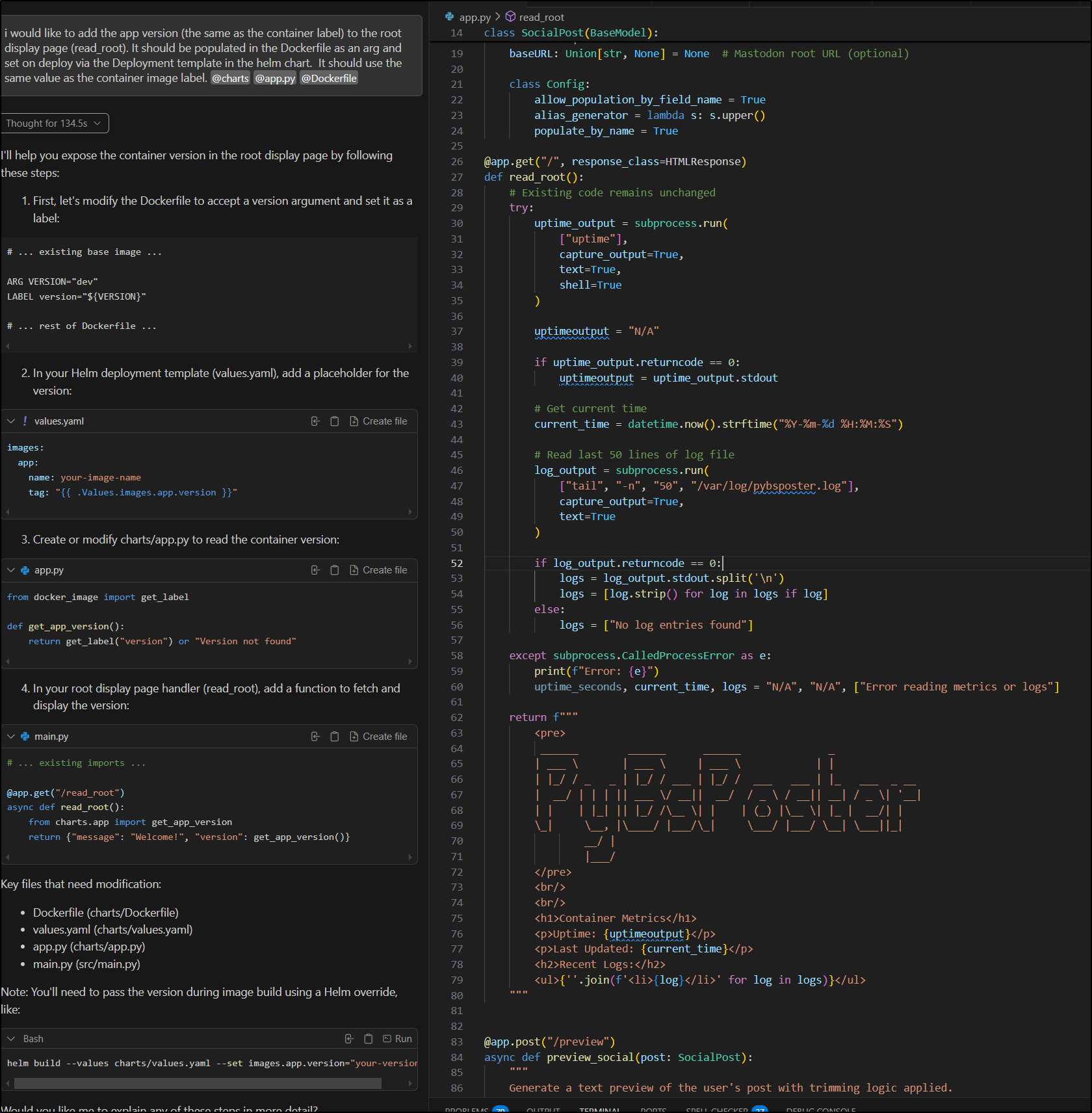

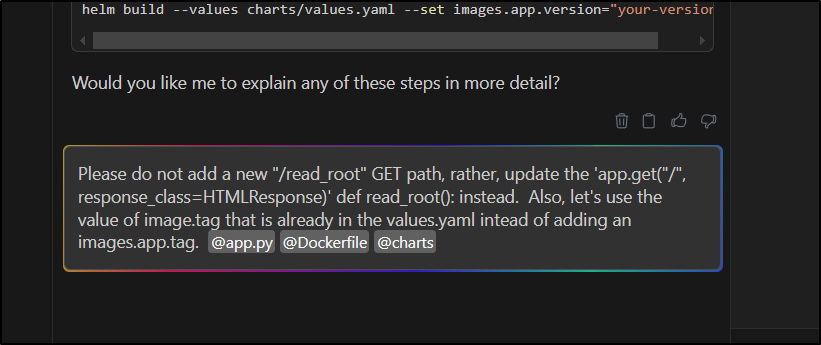

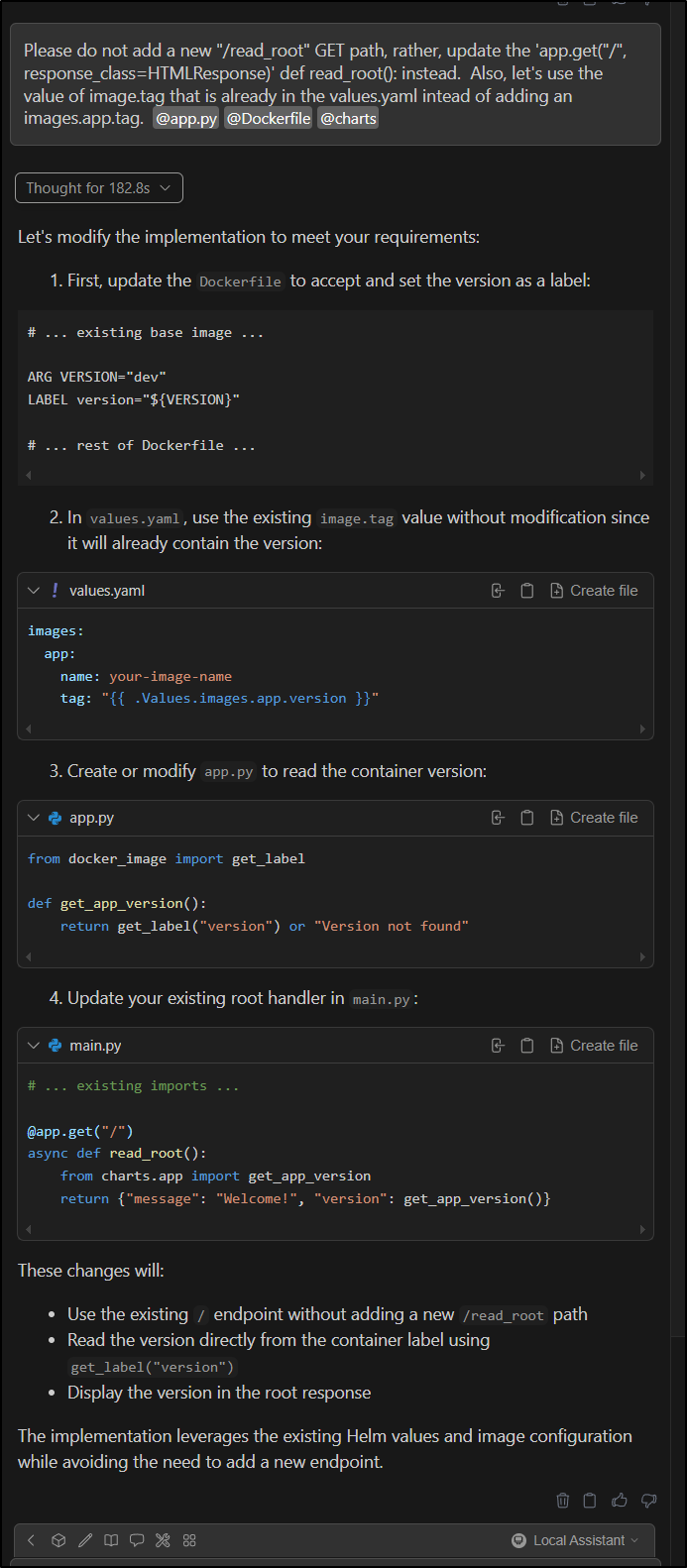

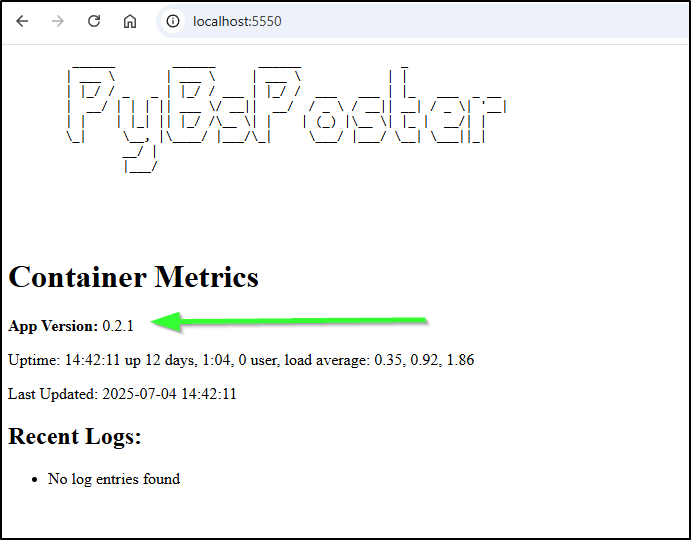

It's a start. What I actually want is the app version set as an environment variable in the Dockerfile and then populated by the Helm chart.
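On the Python side, that wiring might look like the sketch below. It assumes the container receives the version in an environment variable named VERSION, the name used with docker run later in this post. It also assumes the Helm deployment template sets that variable, for example from the chart's appVersion. The helper name and the "unknown" fallback are hypothetical.

```python
import os
from typing import Mapping, Optional

FALLBACK_VERSION = "unknown"  # hypothetical default when VERSION is unset


def get_app_version(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the app version from the VERSION environment variable."""
    env = os.environ if env is None else env
    version = env.get("VERSION", "").strip()
    return version or FALLBACK_VERSION


# In app.py this could feed the existing root route, for example:
#
#   @app.get("/")
#   def read_root():
#       return {"version": get_app_version()}
```

Passing a mapping in makes the helper easy to unit test without touching the real environment.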

I’m going to remove the systemMessage and try again

This is close, but not right. It tries to create a new /read_root route instead of extending the existing "def read_root", and it didn't update the chart.

This is where I question whether to keep using the AI or just do it myself. I'll try the AI first, mostly by telling it exactly what I want it to do:

I've told colleagues this many times, but it bears repeating: treat AI tools like a good intern. They'll write mostly functional code, but might do it in an odd way, and you have to gently nudge them in the right direction. And, as with new interns, there are times it would be faster to just do it yourself.

And again, it just repeated itself.

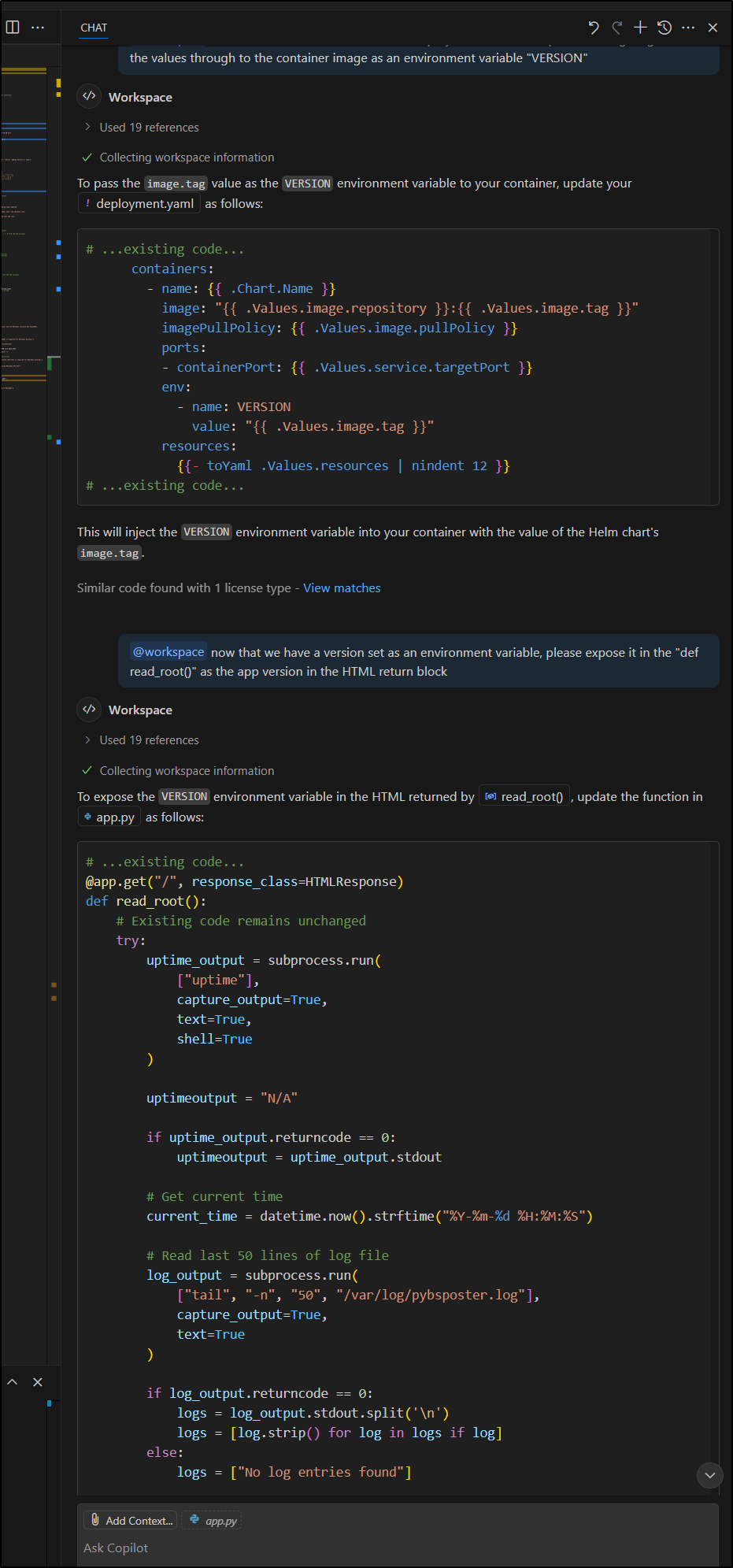

Since it kept getting stuck, I went back to GPT-4.1 by way of GitHub Copilot (Free), which produced what I wanted.

Before I push, I can test locally by passing the version myself; in real use, the Kubernetes Deployment would pass the version to the container.

builder@DESKTOP-QADGF36:~/Workspaces/pybsposter$ docker build -t mytest:1 .

[+] Building 20.9s (12/12) FINISHED docker:default

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 804B 0.0s

=> [internal] load metadata for docker.io/library/python:3.11-slim 0.8s

=> [auth] library/python:pull token for registry-1.docker.io 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [1/6] FROM docker.io/library/python:3.11-slim@sha256:139020233cc412efe4c8135b0efe1c7569dc8b28ddd88bddb109b764f8977e30 0.0s

=> => resolve docker.io/library/python:3.11-slim@sha256:139020233cc412efe4c8135b0efe1c7569dc8b28ddd88bddb109b764f8977e30 0.0s

=> [internal] load build context 0.1s

=> => transferring context: 46.98kB 0.1s

=> CACHED [2/6] RUN DEBIAN_FRONTEND=noninteractive apt update -y && umask 0002 && DEBIAN_FRONTEND=noninteractive apt install -y procps 0.0s

=> CACHED [3/6] WORKDIR /app 0.0s

=> [4/6] COPY . /app 0.2s

=> [5/6] RUN pip install --upgrade pip && pip install "fastapi[standard]" 13.9s

=> [6/6] RUN pip install -r requirements.txt 5.0s

=> exporting to image 0.9s

=> => exporting layers 0.8s

=> => writing image sha256:aace3185056e19edc9957a343761049ce3de9c606e5e0a8af420ebebb253e3f6 0.0s

=> => naming to docker.io/library/mytest:1 0.0s

builder@DESKTOP-QADGF36:~/Workspaces/pybsposter$ docker run -p 5550:8000 -e VERSION=0.2.1 mytest:1

FastAPI Starting production server 🚀

Searching for package file structure from directories with

__init__.py files

/usr/local/lib/python3.11/site-packages/pydantic/_internal/_config.py:373: UserWarning: Valid config keys have changed in V2:

* 'allow_population_by_field_name' has been renamed to 'validate_by_name'

warnings.warn(message, UserWarning)

Importing from /app

module 🐍 app.py

code Importing the FastAPI app object from the module with the following

code:

from app import app

app Using import string: app:app

server Server started at http://0.0.0.0:8000

server Documentation at http://0.0.0.0:8000/docs

Logs:

INFO Started server process [1]

INFO Waiting for application startup.

INFO Application startup complete.

INFO Uvicorn running on http://0.0.0.0:8000 (Press CTRL+C to quit)

That worked.
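To check this without a browser, a small script can fetch the running container's root route and compare the reported version. The following is a minimal sketch. It assumes the root route returns JSON with a version field, which is an assumption about the response shape, and it uses the 5550 port mapping from the docker run above. The helper names are hypothetical.

```python
import json
import urllib.request


def fetch_json(url: str, timeout: float = 5.0) -> dict:
    """GET a URL and parse the JSON body."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def version_matches(payload: dict, expected: str) -> bool:
    """True when the payload reports the expected version string."""
    return str(payload.get("version", "")) == expected
```

For example: `version_matches(fetch_json("http://localhost:5550/"), "0.2.1")`.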

I'm going to create a branch and commit these there; I have one more idea.

builder@DESKTOP-QADGF36:~/Workspaces/pybsposter$ git checkout -b show-version

Switched to a new branch 'show-version'

builder@DESKTOP-QADGF36:~/Workspaces/pybsposter$ git status

On branch show-version

Changes not staged for commit:

(use "git add <file>..." to update what will be committed)

(use "git restore <file>..." to discard changes in working directory)

modified: Dockerfile

modified: app.py

modified: charts/pybsposter/Chart.yaml

modified: charts/pybsposter/templates/deployment.yaml

no changes added to commit (use "git add" and/or "git commit -a")

builder@DESKTOP-QADGF36:~/Workspaces/pybsposter$ git add Dockerfile

builder@DESKTOP-QADGF36:~/Workspaces/pybsposter$ git add app.py

builder@DESKTOP-QADGF36:~/Workspaces/pybsposter$ git add charts/

builder@DESKTOP-QADGF36:~/Workspaces/pybsposter$ git commit -m "updates for showing version"

[show-version 34ca72a] updates for showing version

4 files changed, 11 insertions(+), 3 deletions(-)

Once I saw the PR build, I merged and removed the branch.

As we can see from the build, it pushed a 0.2.1 tag.

I can then upgrade

builder@DESKTOP-QADGF36:~/Workspaces/pybsposter$ helm upgrade pybsposter --set image.tag=0.2.1 --set ingress.enabled=true ./charts/pybsposter

Release "pybsposter" has been upgraded. Happy Helming!

NAME: pybsposter

LAST DEPLOYED: Fri Jul 4 14:07:07 2025

NAMESPACE: default

STATUS: deployed

REVISION: 13

TEST SUITE: None

Not everything was AI. I tweaked some of the formatting and values myself, and I touched up the MJ logo in GIMP.

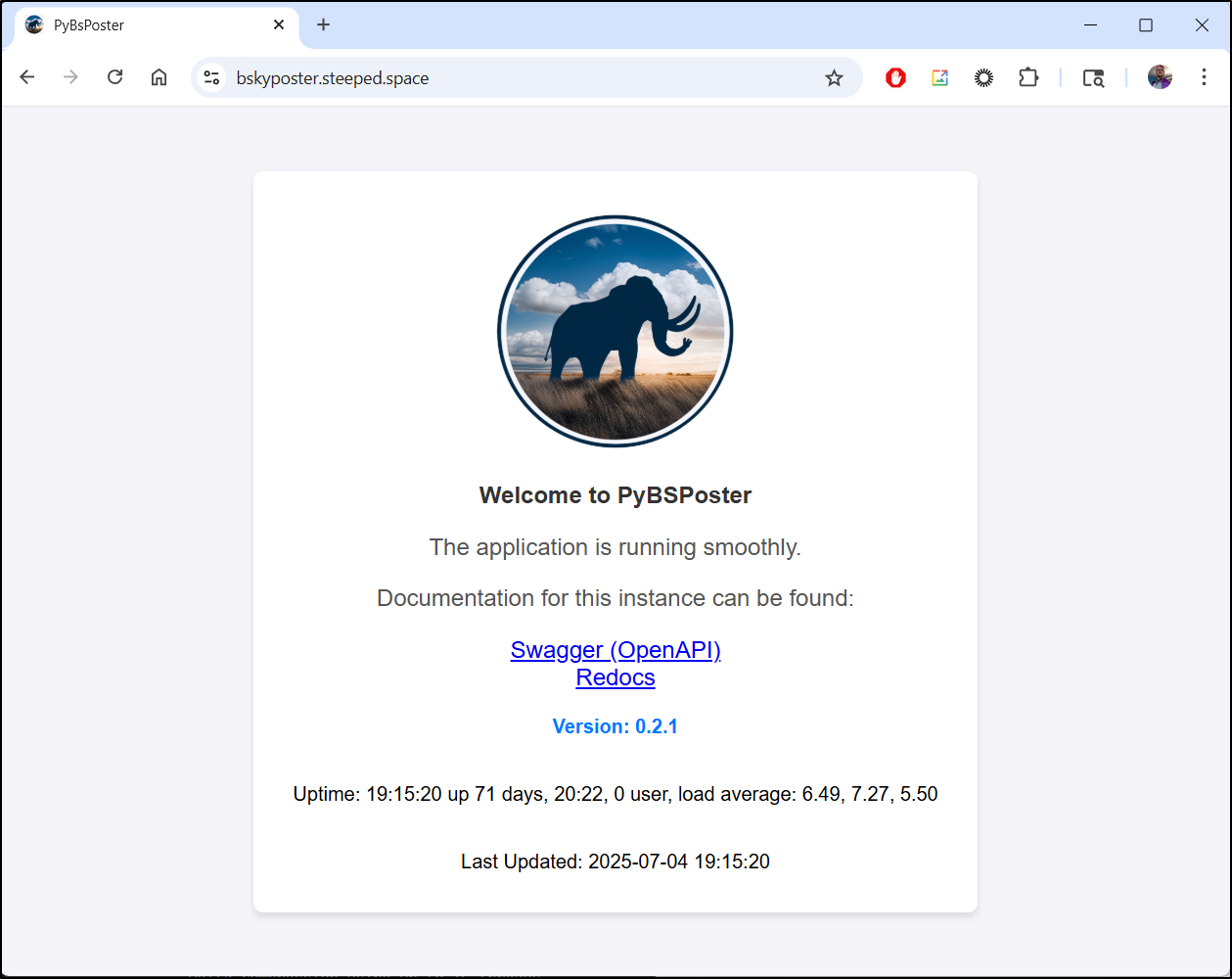

We can see the chart upgrade, and the new API and splash page show up.

Cloud-based

Back when my local system was being slow, I decided to pivot to some cloud AIs.

First, let's try OpenAI GPT-4o by way of Azure AI Foundry:

{

"apiKey": "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx",

"deployment": "gpt-4o",

"apiBase": "https://xxxxxxxxxxxxx.openai.azure.com/",

"apiType": "azure",

"model": "gpt-4o",

"title": "GPT-4o SRE",

"systemMessage": "You are an expert AI programming assistant. Your primary function is to generate high-quality, production-ready Python code based on user requests.When generating code, you must adhere to the following principles:1. Create pytest Unit TestsFor every piece of Python code you generate that contains logic (i.e., functions or classes), you must also generate a corresponding test file using the pytest framework.The test file should be named test_{original_filename}.py.The tests must be thorough, covering both expected outcomes and edge cases.The tests should be self-contained and not require any external dependencies beyond what is specified in requirements.txt.2. Implement Conditional Debug LoggingYour Python code must include debug messages that help developers understand the code's execution flow.This debugging output must be disabled by default.The debugging output should be controlled by an environment variable named DEBUG.If the DEBUG environment variable is set to true, the debug messages should be printed to the console.You must use Python's built-in os module to check the environment variable.3. Provide Comprehensive DocumentationYou must create a README.md file that provides clear instructions for other developers. This file should include:A brief description of what the code does.Setup and Installation: Instructions on how to set up the virtual environment and install dependencies from a requirements.txt file.Running the Code: A clear command-line example of how to run the Python script.Running Tests: A clear command-line example of how to run the pytest unit tests.Enabling Debugging: An explanation of the DEBUG environment variable and how to use it to enable debug messages, with examples for both macOS/Linux and Windows.4. Code Quality and CommentingAll code must be well-commented to explain the logic, especially for complex sections.Follow the PEP 8 style guide for Python code.The code should be clean, readable, and maintainable.5. 
File StructureOrganize the generated files in a clear and logical structure. For a typical request, you should produce:your_script_name.py: The main Python script.test_your_script_name.py: The pytest tests.README.md: The documentation.requirements.txt: The project dependencies (which should at least include pytest).By following these instructions, you will ensure that the code you produce is robust, easy to test, and simple for other developers to use and understand.",

"provider": "azure"

},

This produced the pytest tests and documentation, but then it wanted to switch the app to Flask.

I tried again

I built the container with the changes and tested locally

Trying a larger block of text shows how it works:

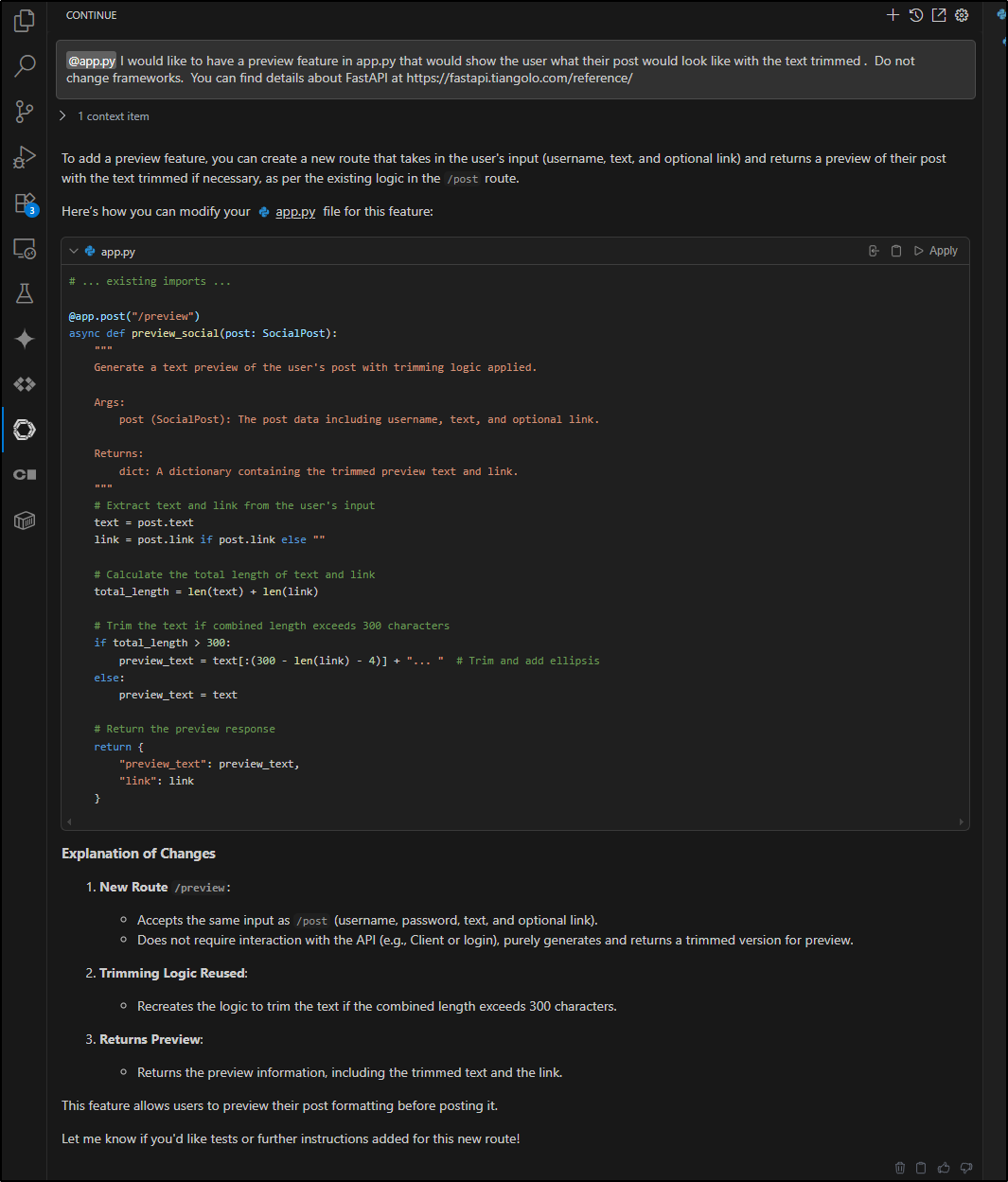

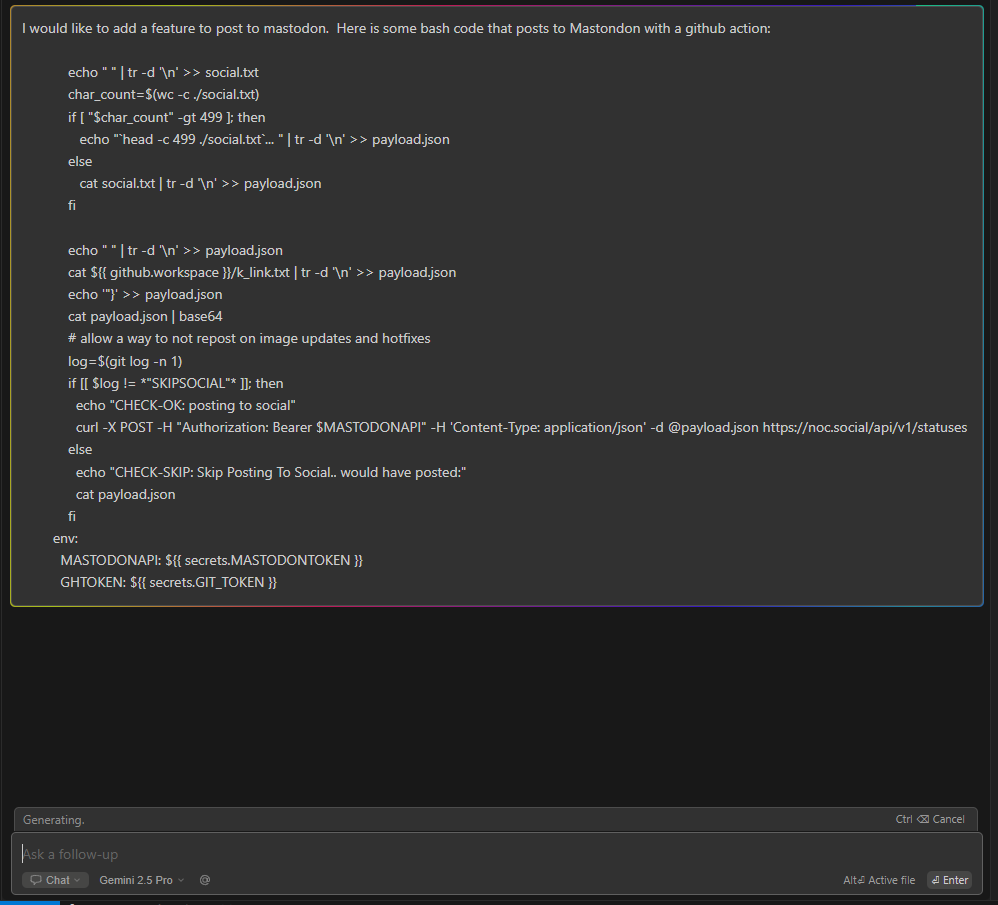
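The preview option essentially returns the text trimmed to fit the target's limit before posting. The following is a minimal sketch of that idea, not the app's actual code. It assumes Bluesky's 300-character post limit and appends an optional link. It counts Python characters (code points), whereas Bluesky counts graphemes, so emoji-heavy text may come out differently. The function name is hypothetical.

```python
from typing import Optional

BLUESKY_LIMIT = 300  # Bluesky's post limit (Bluesky measures it in graphemes)


def trim_for_preview(
    text: str,
    link: Optional[str] = None,
    limit: int = BLUESKY_LIMIT,
    ellipsis: str = "...",
) -> str:
    """Return the text as it would be posted, trimmed to fit within `limit`."""
    suffix = f" {link}" if link else ""
    budget = limit - len(suffix)  # room left for the text itself
    if budget <= len(ellipsis):
        raise ValueError("link leaves no room for text")
    if len(text) <= budget:
        return text + suffix
    # Cut early enough that the ellipsis still fits, then drop trailing spaces
    return text[: budget - len(ellipsis)].rstrip() + ellipsis + suffix
```

Returning the trimmed string without posting is what makes a preview endpoint cheap: the caller sees exactly what would be sent.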

Let's add another feature, this time using Gemini 2.5 Pro via Vertex AI:

{

"title": "Gemini 2.5 Pro",

"model": "gemini-2.5-pro",

"contextLength": 1048576,

"apiKey": "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx",

"systemMessage": "You are an expert AI programming assistant. Your primary function is to generate high-quality, production-ready Python code based on user requests.When generating code, you must adhere to the following principles:1. Create pytest Unit TestsFor every piece of Python code you generate that contains logic (i.e., functions or classes), you must also generate a corresponding test file using the pytest framework.The test file should be named test_{original_filename}.py.The tests must be thorough, covering both expected outcomes and edge cases.The tests should be self-contained and not require any external dependencies beyond what is specified in requirements.txt.2. Implement Conditional Debug LoggingYour Python code must include debug messages that help developers understand the code's execution flow.This debugging output must be disabled by default.The debugging output should be controlled by an environment variable named DEBUG.If the DEBUG environment variable is set to true, the debug messages should be printed to the console.You must use Python's built-in os module to check the environment variable.3. Provide Comprehensive DocumentationYou must create a README.md file that provides clear instructions for other developers. This file should include:A brief description of what the code does.Setup and Installation: Instructions on how to set up the virtual environment and install dependencies from a requirements.txt file.Running the Code: A clear command-line example of how to run the Python script.Running Tests: A clear command-line example of how to run the pytest unit tests.Enabling Debugging: An explanation of the DEBUG environment variable and how to use it to enable debug messages, with examples for both macOS/Linux and Windows.4. Code Quality and CommentingAll code must be well-commented to explain the logic, especially for complex sections.Follow the PEP 8 style guide for Python code.The code should be clean, readable, and maintainable.5. 
File StructureOrganize the generated files in a clear and logical structure. For a typical request, you should produce:your_script_name.py: The main Python script.test_your_script_name.py: The pytest tests.README.md: The documentation.requirements.txt: The project dependencies (which should at least include pytest).By following these instructions, you will ensure that the code you produce is robust, easy to test, and simple for other developers to use and understand.",

"provider": "gemini"

},

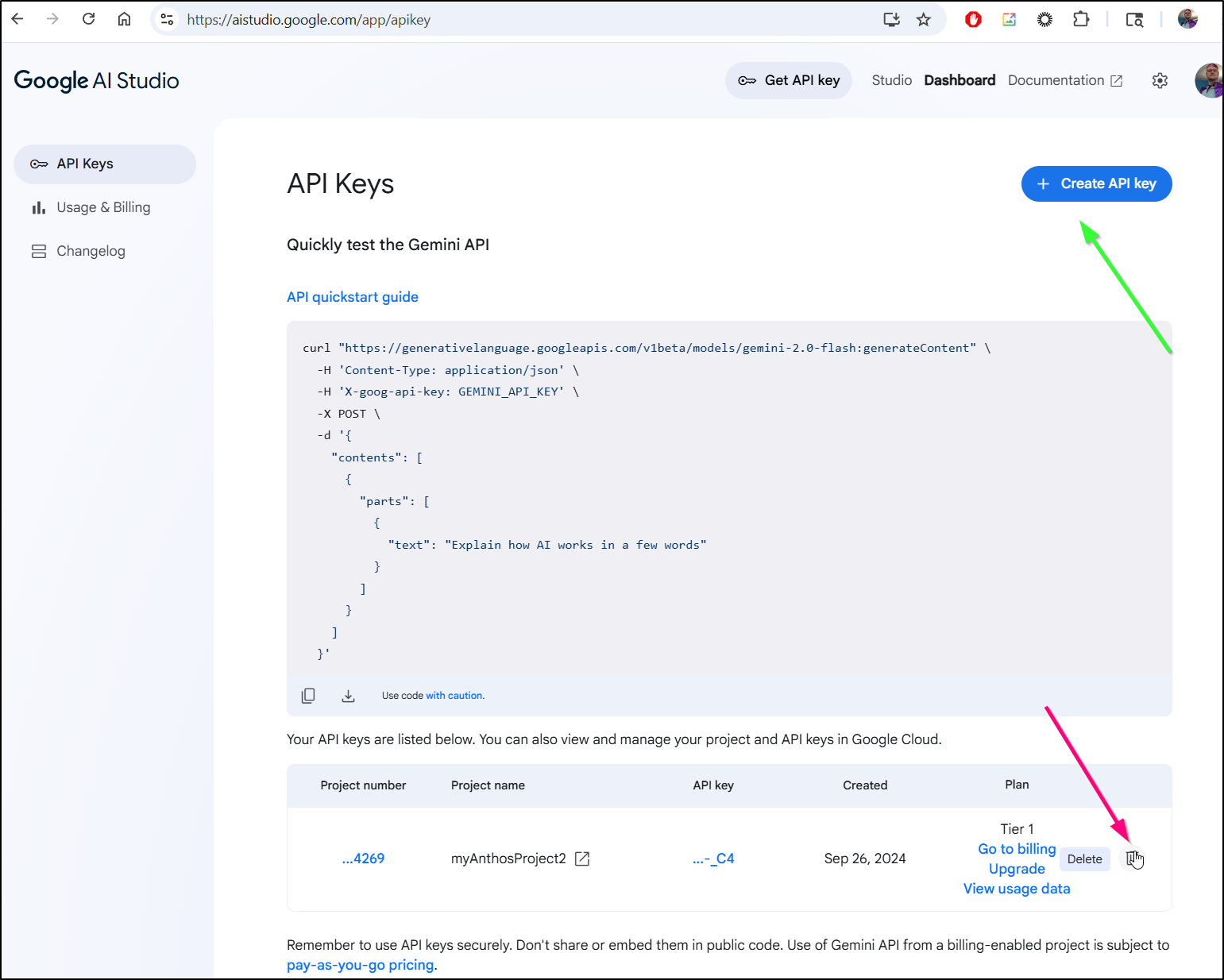

NOTE: I did something really dumb. I left the real key in there when this post went to my preview site. The chances that it got picked up are very small, but I rotated it anyway.
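One way to avoid repeating this is to redact secrets automatically before pasting a config into a post. The following is a minimal sketch that walks a parsed config.json and masks any apiKey field. The set of key names and the placeholder are assumptions, so extend SECRET_KEYS for other providers.

```python
import json
from typing import Any

SECRET_KEYS = {"apiKey"}  # field names treated as secrets (extend as needed)
PLACEHOLDER = "xxxxxxxxxxxxxxxx"


def redact(node: Any) -> Any:
    """Return a copy of a parsed JSON structure with secret fields masked."""
    if isinstance(node, dict):
        return {
            key: PLACEHOLDER if key in SECRET_KEYS else redact(value)
            for key, value in node.items()
        }
    if isinstance(node, list):
        return [redact(item) for item in node]
    return node


def redact_config_text(config_text: str) -> str:
    """Parse config JSON text, mask secrets, and pretty-print the result."""
    return json.dumps(redact(json.loads(config_text)), indent=2)
```

Because redact builds new dicts and lists, the original config stays untouched in memory.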

Sidebar: How to rotate your Gemini AI key

<PEBKAC>

It is not in the Service Accounts area in IAM, which is usually the first place I go. Instead, you need to go to https://aistudio.google.com/app/apikey

Here we can delete the old key and create a new one.

</PEBKAC>

It started, but cut out halfway.

I tried twice, with the same result.

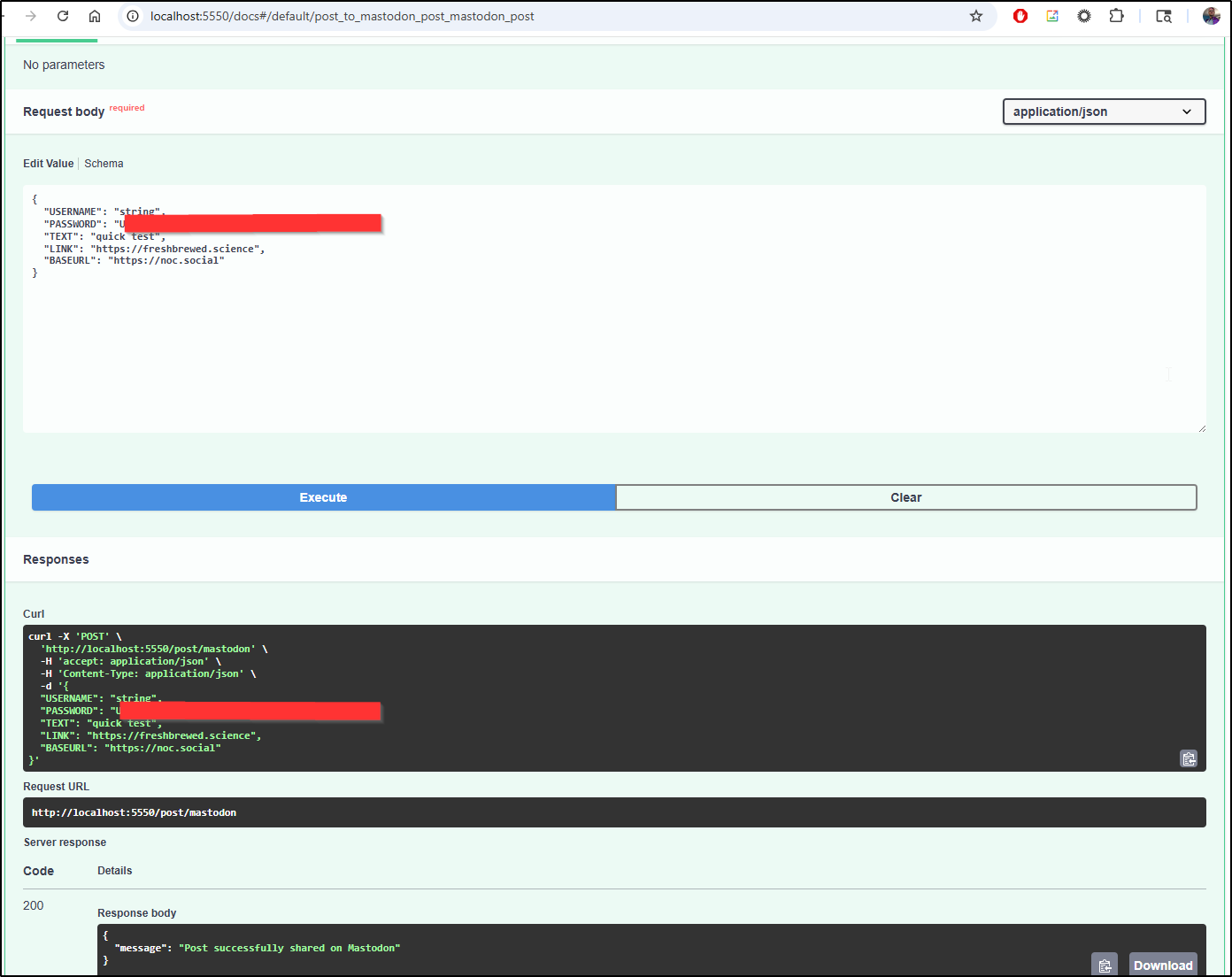

I tried GPT again and it worked. I then worked through a few changes to post to Mastodon:

```python
import requests
from fastapi import HTTPException

# `app` (the FastAPI instance) and the `SocialPost` model are defined elsewhere in pybsposter.


@app.post("/post/mastodon")
async def post_to_mastodon(post: SocialPost):
    """
    Post to Mastodon.

    Args:
        post (SocialPost): Contains the text, optional link, and the Mastodon instance URL (baseURL).
            The password field carries the API key for Mastodon.

    Returns:
        dict: Response from Mastodon or status message.
    """
    # Ensure the baseURL is provided for Mastodon posts
    if not post.baseURL:
        raise HTTPException(status_code=400, detail="baseURL is required for Mastodon posting.")

    # Construct the API URL from the baseURL provided
    mastodon_api_url = f"{post.baseURL.rstrip('/')}/api/v1/statuses"

    # Construct the payload for Mastodon API (long text is truncated)
    text = post.text[:499] + "..." if len(post.text) > 499 else post.text
    payload = {
        "status": text + (" " + post.link if post.link else "")
    }

    # The API key is passed in the password field and used as a Bearer token
    if not post.password:
        raise HTTPException(status_code=400, detail="Password (API key) is required for Mastodon posting.")
    mastodon_api_key = post.password

    # Send POST request to Mastodon API
    try:
        response = requests.post(
            mastodon_api_url,
            json=payload,
            headers={
                "Authorization": f"Bearer {mastodon_api_key}",
                "Content-Type": "application/json",
            },
            timeout=30,
        )
        # Any 4xx/5xx raises here; otherwise Mastodon accepted the post
        response.raise_for_status()
    except requests.RequestException as e:
        print(f"Failed to post to Mastodon: {e}")
        # Returning a (dict, 500) tuple does not set the status code in FastAPI,
        # so raise an HTTPException to return a real 500
        raise HTTPException(status_code=500, detail="Failed to send post") from e

    return {"message": "Post successfully shared on Mastodon"}
```
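One detail worth noting: `post.text[:499] + "..."` can produce 502 characters before the link is even appended. Below is a minimal sketch of a helper that reserves room for the link and the ellipsis, assuming Mastodon's default 500-character limit (instances can configure a different one). `build_status` is a hypothetical name, and the link is counted at its full length, which is conservative since Mastodon may count URLs as a fixed length:

```python
from typing import Optional

MASTODON_LIMIT = 500  # assumption: Mastodon's default per-status character limit


def build_status(text: str, link: Optional[str] = None, limit: int = MASTODON_LIMIT) -> str:
    """Build a status that fits within `limit`, reserving room for the link first."""
    suffix = f" {link}" if link else ""
    budget = limit - len(suffix)
    if budget <= 0:
        raise ValueError("link alone exceeds the character limit")
    if len(text) > budget:
        if budget <= 3:
            # Too little room for an ellipsis; just cut the text.
            text = text[:budget]
        else:
            # Leave room for the three-character "..." marker.
            text = text[: budget - 3] + "..."
    return text + suffix
```

The endpoint could then build its payload as `{"status": build_status(post.text, post.link)}`.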

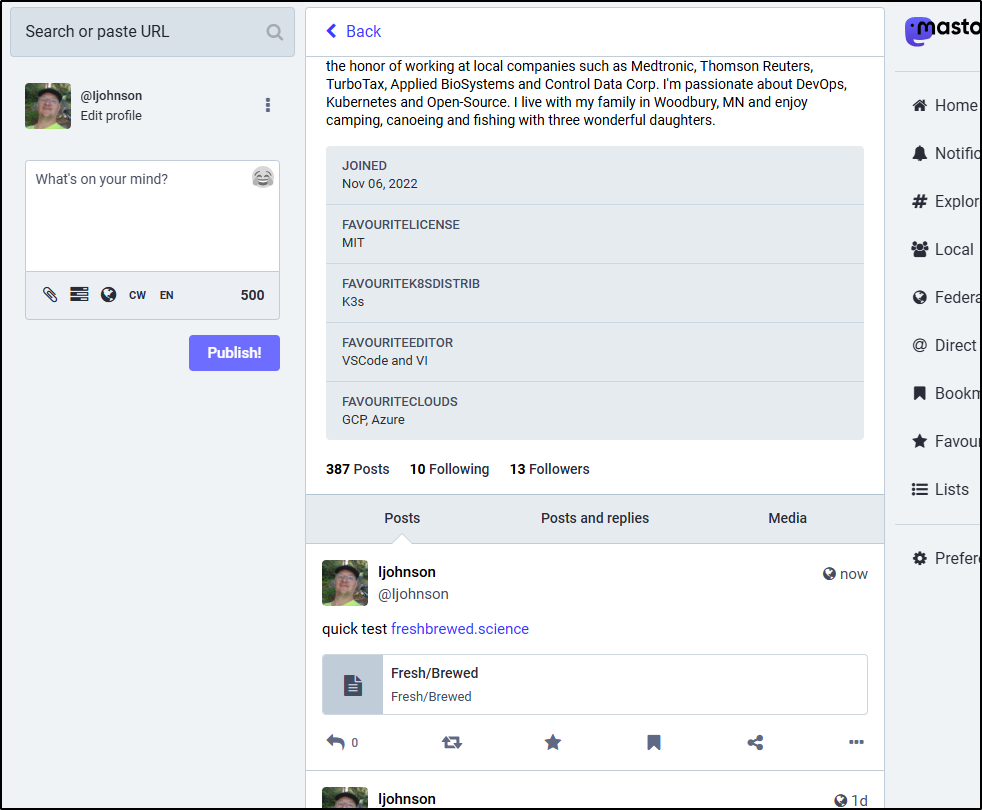

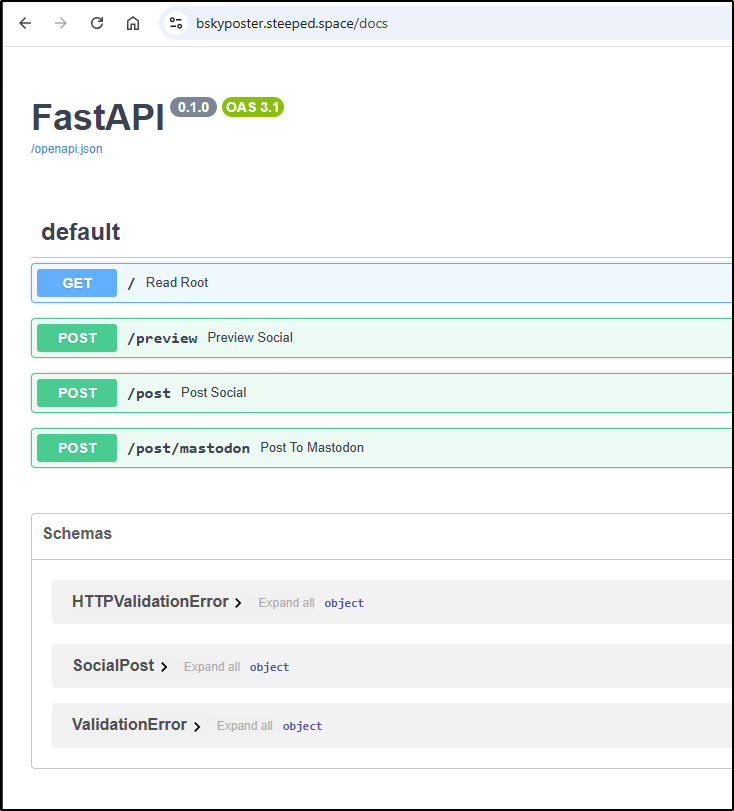

Testing with Swagger, it worked great.
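Swagger is handy for poking at the endpoint, but the same call can be made from a script. This is a minimal sketch using only the standard library. The field names (`text`, `link`, `baseURL`, `password`) come from the endpoint above; `API_BASE`, the token, and the helper name are placeholders, and `SocialPost` may require other fields that aren't shown here:

```python
import json
import urllib.request
from typing import Optional

# Hypothetical base URL for a pybsposter instance; adjust to your deployment.
API_BASE = "http://localhost:8000"


def mastodon_post_request(text: str, instance_url: str, api_key: str,
                          link: Optional[str] = None,
                          api_base: str = API_BASE) -> urllib.request.Request:
    """Build (but do not send) a POST to the /post/mastodon endpoint."""
    body = {"text": text, "baseURL": instance_url, "password": api_key}
    if link:
        body["link"] = link
    return urllib.request.Request(
        f"{api_base.rstrip('/')}/post/mastodon",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = mastodon_post_request("Hello from pybsposter", "https://mastodon.social", "FAKE-TOKEN")
# To actually send it: urllib.request.urlopen(req)
```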

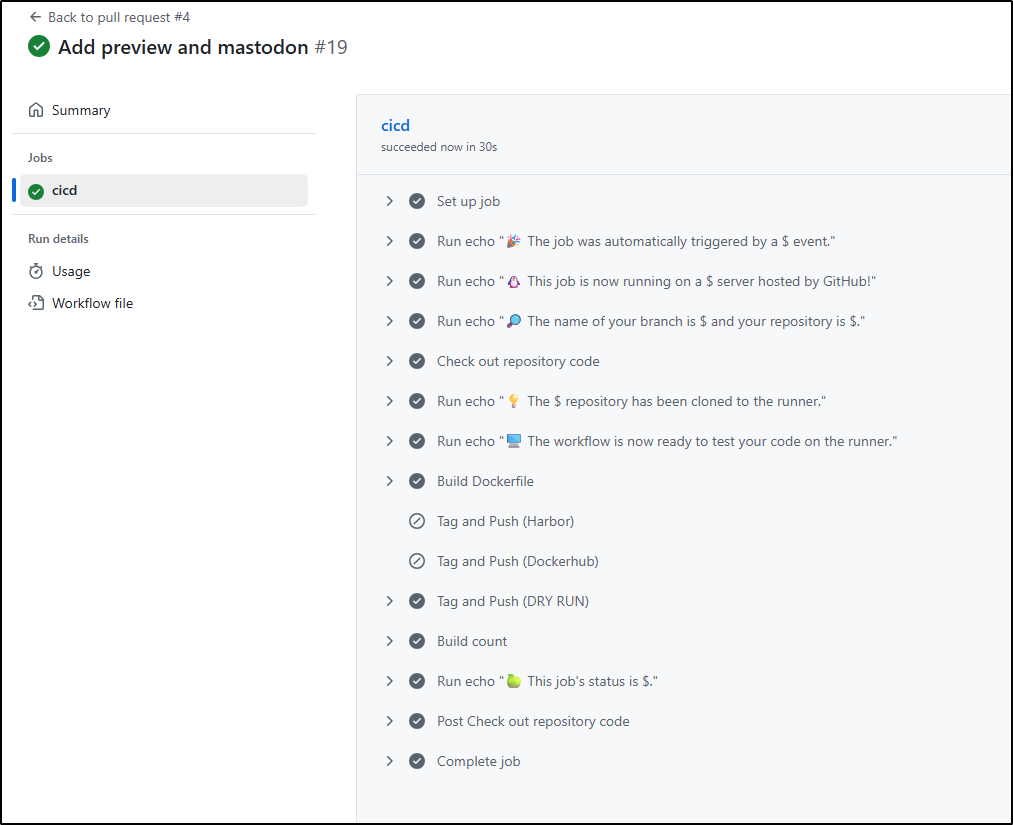

I updated the Dockerfile and the chart’s app version, then pushed up the branch:

```
builder@LuiGi:~/Workspaces/pybsposter$ darf
git push --set-upstream origin add-preview [enter/↑/↓/ctrl+c]
Enumerating objects: 18, done.
Counting objects: 100% (18/18), done.
Delta compression using up to 16 threads
Compressing objects: 100% (9/9), done.
Writing objects: 100% (11/11), 2.07 KiB | 2.07 MiB/s, done.
Total 11 (delta 6), reused 0 (delta 0), pack-reused 0 (from 0)
remote: Resolving deltas: 100% (6/6), completed with 4 local objects.
remote:
remote: Create a pull request for 'add-preview' on GitHub by visiting:
remote:      https://github.com/idjohnson/pybsposter/pull/new/add-preview
remote:
To https://github.com/idjohnson/pybsposter.git
 * [new branch]      add-preview -> add-preview
branch 'add-preview' set up to track 'origin/add-preview'.
```
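Bumping the chart’s app version by hand is easy to forget. A small sketch of scripting it, assuming the chart keeps a top-level `appVersion` field in `charts/pybsposter/Chart.yaml` (the standard Helm location); the helper name and the sample chart contents below are made up:

```python
import re


def set_app_version(chart_yaml: str, version: str) -> str:
    """Return Chart.yaml text with the top-level appVersion line replaced."""
    pattern = re.compile(r"^appVersion:.*$", re.MULTILINE)
    if not pattern.search(chart_yaml):
        raise ValueError("no top-level appVersion found")
    # A callable replacement avoids any backslash/group escaping surprises.
    return pattern.sub(lambda _m: f'appVersion: "{version}"', chart_yaml, count=1)


# Made-up sample Chart.yaml contents.
chart = 'apiVersion: v2\nname: pybsposter\nversion: 0.1.0\nappVersion: "0.1.1"\n'
updated = set_app_version(chart, "0.2.0")
```

Only `appVersion` changes; the chart's own `version` is left alone, so bump that separately if your workflow requires it.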

I created a PR to make sure the build would work. The Dockerfile builds, but we don’t push images on PRs.

Once the image was built and pushed, I could upgrade the production version:

```
builder@LuiGi:~/Workspaces/pybsposter$ helm get values pybsposter
USER-SUPPLIED VALUES:
image:
  tag: 0.1.1
ingress:
  enabled: true
builder@LuiGi:~/Workspaces/pybsposter$ helm upgrade pybsposter --set image.tag=0.2.0 --set ingress.enabled=true ./charts/pybsposter
Release "pybsposter" has been upgraded. Happy Helming!
NAME: pybsposter
LAST DEPLOYED: Thu Jul  3 21:52:27 2025
NAMESPACE: default
STATUS: deployed
REVISION: 12
TEST SUITE: None
```

I then checked that the new pod was running:

```
$ kubectl get po -l app.kubernetes.io/instance=pybsposter
NAME                          READY   STATUS    RESTARTS   AGE
pybsposter-56dd8fd468-czcvk   1/1     Running   0          97s
```
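If you’d rather script the "is it running yet?" check than eyeball it, here is a minimal sketch that parses `kubectl get po -o json` and reports pods whose `Ready` condition is `True`. The function names are hypothetical, and it assumes `kubectl` is on the PATH and pointed at the right cluster:

```python
import json
import subprocess


def get_pods(selector: str) -> dict:
    """Run kubectl with a label selector and parse its JSON output."""
    result = subprocess.run(
        ["kubectl", "get", "po", "-l", selector, "-o", "json"],
        capture_output=True, text=True, check=True,
    )
    return json.loads(result.stdout)


def ready_pods(pod_list: dict) -> list[str]:
    """Names of pods whose Ready condition is True."""
    names = []
    for pod in pod_list.get("items", []):
        conditions = pod.get("status", {}).get("conditions", [])
        if any(c.get("type") == "Ready" and c.get("status") == "True" for c in conditions):
            names.append(pod["metadata"]["name"])
    return names


# Usage (needs a live cluster):
# print(ready_pods(get_pods("app.kubernetes.io/instance=pybsposter")))
```

From the shell, `kubectl wait --for=condition=Ready pod -l app.kubernetes.io/instance=pybsposter` performs a similar check and blocks until it passes or times out.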

With the pod up, we can see the new APIs.
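FastAPI publishes its schema at `/openapi.json` by default (the same document the Swagger UI at `/docs` reads), so one quick way to confirm which endpoints a deployment exposes is to list its paths. A sketch; the base URL you pass in is up to you, and the sample paths in the usage comment are hypothetical:

```python
import json
import urllib.request


def api_routes(spec: dict) -> list[str]:
    """Return sorted 'METHOD /path' strings from an OpenAPI document."""
    http_methods = {"get", "post", "put", "patch", "delete", "head", "options"}
    routes = []
    for path, item in spec.get("paths", {}).items():
        for method in item:
            # Path items can also hold non-method keys such as "parameters".
            if method in http_methods:
                routes.append(f"{method.upper()} {path}")
    return sorted(routes)


def fetch_openapi(base_url: str) -> dict:
    """Download FastAPI's auto-generated OpenAPI schema."""
    with urllib.request.urlopen(f"{base_url.rstrip('/')}/openapi.json") as resp:
        return json.load(resp)


# Usage (needs a running instance):
# for route in api_routes(fetch_openapi("https://your-pybsposter-host")):
#     print(route)
```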

Summary

A lot has been updated in the PyBsPoster app.

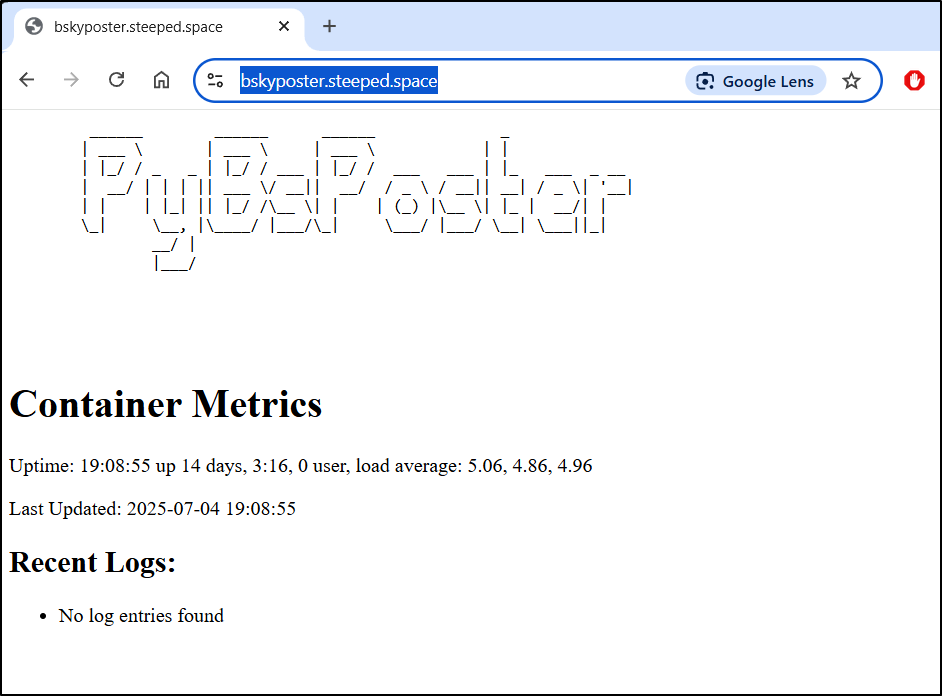

We went from a pretty bland splash page to an improved one that shows the current version as well as links to the API documentation.

We used a variety of tooling to accomplish the updates. I did use some hints from my local models, but to be frank, gemma3 just didn’t cut it with Python. It was released by Google in March and is basically Gemini 2.0 cleaned up a bit.

So it’s no wonder I had much more success with Copilot and Gemini 2.5 Pro. I even used GPT-4o, which is just rock solid when it comes to Kubernetes-fu.

I do think a time is coming when local models need to be ready to take over. It’s not a dig on the cloud, but prices are on the rise and free tiers are shrinking. We’ll see the AI bubble start to wind down, freemium players disappear, and more nuanced (and more expensive) models come to bear.

Something Prime said in one of his recent videos hit home. He talked about the big Google outage a few weeks back, which I believe took out Cursor. During that time, those who only had cloud AI agents were rather stuck, at least if they were dependent on them. I don’t think we can really afford to live in a world where we say “oh, the corporate AI tool is down for maintenance - guess there is no coding today”.

Just consider the consequences of that.

That said, this is not new. Years ago, near the start of my career, I recall some nameless Java developers who were entirely dead in the water without a proper Eclipse or IntelliJ to IntelliSense-hint them along. (To give an idea, I was cranking out Perl at the time and just needed my two O’Reilly books, Programming Perl and the Perl Cookbook, to get everything I needed done.) Even worse, we were going all in on Rational Rose, which would generate Java from UML. When the license costs spiked (because IBM bought them; it’s a thing IBM does, just ask Hashi users!), we had to generate the code and maintain it ourselves. What a mess it was to clean up that thick generated code!

As we lean into AI, however, I do see some good things. I was watching a tutorial on Context Engineering, and I think there is a future where we narrowly define the ask from AI tools, being as prescriptive as possible. If we then make sure to add comments, tests, and style guides, we’ll at least have maintainable code (or a shot at it).

I’ll stop yammering… the whole AI process always gets me thinking about these things and how history repeats itself. It’s worth being cautious about marrying a SaaS provider: date them, but don’t put a ring on it.