Published: Jun 30, 2025 by Isaac Johnson

Recently my AWX instance, now many years old and the last of the Adwerx line, was falling down. It was time to pivot to the Operator model, which itself is now undergoing a refactor.

I’ll cover AWX setup and some testing before showing how we can automate all the steps using Kubernetes Jobs. I let Gemini Code Assist help build those out (though I’ve done it nearly a dozen times over the last few years, so I was mostly interested in seeing how the AI might do it differently than I would).

AWX Operator install

We can follow the Helm steps to install the operator in the default namespace:

$ helm repo add awx-operator https://ansible-community.github.io/awx-operator-helm/

"awx-operator" has been added to your repositories

$ helm install my-awx-operator awx-operator/awx-operator

NAME: my-awx-operator

LAST DEPLOYED: Tue Jun 24 06:52:45 2025

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

AWX Operator installed with Helm Chart version 3.1.0

When we see the pods up, we can move on to the next steps

$ kubectl get po -l helm.sh/chart=awx-operator

NAME READY STATUS RESTARTS AGE

awx-operator-controller-manager-7fcf495945-lpn6t 2/2 Running 0 3m44s

Instance install

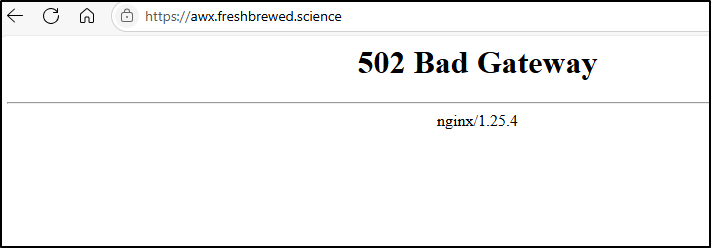

My former Adwerx instance is using awx.freshbrewed.science and perhaps I can resurrect it (port-forwarding works to a service, but the ingress falls down).

To avoid conflict, I’ll create a DNS entry in tpk.pw for it

$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n awx

{

"ARecords": [

{

"ipv4Address": "75.73.224.240"

}

],

"TTL": 3600,

"etag": "9fae4e6b-1930-4d6e-9906-532c18b94f1e",

"fqdn": "awx.tpk.pw.",

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/awx",

"name": "awx",

"provisioningState": "Succeeded",

"resourceGroup": "idjdnsrg",

"targetResource": {},

"trafficManagementProfile": {},

"type": "Microsoft.Network/dnszones/A"

}

Kubernetes upgrades

My cluster was really not doing well: 502s in Harbor, services not resolving. Sometimes the best way to “freshen up” the cluster is a full upgrade.

At present, I’m running v1.26, which is pretty old (nearly three years old now).

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

builder-hp-elitebook-850-g2 Ready <none> 337d v1.26.14+k3s1

builder-hp-elitebook-745-g5 Ready control-plane,master 479d v1.26.14+k3s1

hp-hp-elitebook-850-g2 Ready <none> 476d v1.26.14+k3s1

builder-hp-elitebook-850-g1 Ready <none> 479d v1.26.14+k3s1

I’ll set up the Rancher automated upgrade system (the System Upgrade Controller)

$ kubectl apply -f https://github.com/rancher/system-upgrade-controller/releases/latest/download/system-upgrade-controller.yaml

namespace/system-upgrade created

serviceaccount/system-upgrade created

role.rbac.authorization.k8s.io/system-upgrade-controller created

clusterrole.rbac.authorization.k8s.io/system-upgrade-controller created

clusterrole.rbac.authorization.k8s.io/system-upgrade-controller-drainer created

rolebinding.rbac.authorization.k8s.io/system-upgrade created

clusterrolebinding.rbac.authorization.k8s.io/system-upgrade created

clusterrolebinding.rbac.authorization.k8s.io/system-upgrade-drainer created

configmap/default-controller-env created

deployment.apps/system-upgrade-controller created

$ kubectl apply -f https://github.com/rancher/system-upgrade-controller/releases/latest/download/crd.yaml

Warning: resource customresourcedefinitions/plans.upgrade.cattle.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/plans.upgrade.cattle.io configured

I’m not quite ready for the 30s, so let’s use the latest v1.29 that K3s has, v1.29.15+k3s1

Then perform the upgrade

$ cat k8s_upgrade_plan.yaml

# Server plan
apiVersion: upgrade.cattle.io/v1
kind: Plan
metadata:
  name: server-plan
  namespace: system-upgrade
spec:
  concurrency: 1
  cordon: true
  nodeSelector:
    matchExpressions:
    - key: node-role.kubernetes.io/control-plane
      operator: In
      values:
      - "true"
  serviceAccountName: system-upgrade
  upgrade:
    image: rancher/k3s-upgrade
  version: v1.29.15+k3s1
---
# Agent plan
apiVersion: upgrade.cattle.io/v1
kind: Plan
metadata:
  name: agent-plan
  namespace: system-upgrade
spec:
  concurrency: 1
  cordon: true
  nodeSelector:
    matchExpressions:
    - key: node-role.kubernetes.io/control-plane
      operator: DoesNotExist
  prepare:
    args:
    - prepare
    - server-plan
    image: rancher/k3s-upgrade
  serviceAccountName: system-upgrade
  upgrade:
    image: rancher/k3s-upgrade
  version: v1.29.15+k3s1

$ kubectl apply -f ./k8s_upgrade_plan.yaml

plan.upgrade.cattle.io/server-plan created

plan.upgrade.cattle.io/agent-plan created

Almost immediately I can see it start the process

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

builder-hp-elitebook-745-g5 NotReady,SchedulingDisabled control-plane,master 479d v1.29.15+k3s1

builder-hp-elitebook-850-g1 Ready <none> 479d v1.26.14+k3s1

builder-hp-elitebook-850-g2 Ready <none> 337d v1.26.14+k3s1

hp-hp-elitebook-850-g2 Ready <none> 476d v1.26.14+k3s1

Within 10 minutes the upgrade was complete (I was expecting much longer)

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

builder-hp-elitebook-745-g5 Ready control-plane,master 479d v1.29.15+k3s1

builder-hp-elitebook-850-g1 Ready <none> 479d v1.29.15+k3s1

builder-hp-elitebook-850-g2 Ready <none> 337d v1.29.15+k3s1

hp-hp-elitebook-850-g2 Ready <none> 476d v1.29.15+k3s1

Let’s talk about problems. Especially those we create for ourselves.

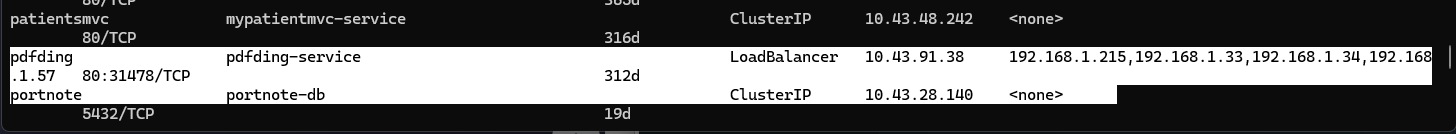

It’s summer, and we’ve had some power blips at home. I recently added another UPS to my stack, but at some point during the reboots, one of the “demo” services beat Nginx to the LoadBalancer

What this means is when we have services, like PDFDing or YAL capturing port 80

$ kubectl get svc -A | grep Load

default zipkinlb LoadBalancer 10.43.102.173 192.168.1.215,192.168.1.33,192.168.1.34,192.168.1.57 9411:31557/TCP 315d

nginx-ingress nginx-ingress LoadBalancer 10.43.168.217 192.168.1.34 80:32747/TCP,443:32259/TCP 479d

yal yal-service LoadBalancer 10.43.176.22 192.168.1.33 80:32265/TCP 442d

Nginx cannot capture port 80 across the board. In fact, prior to the listing above, my Nginx LB process was stuck in Pending:

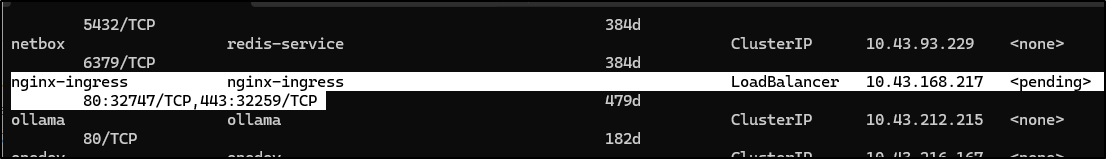

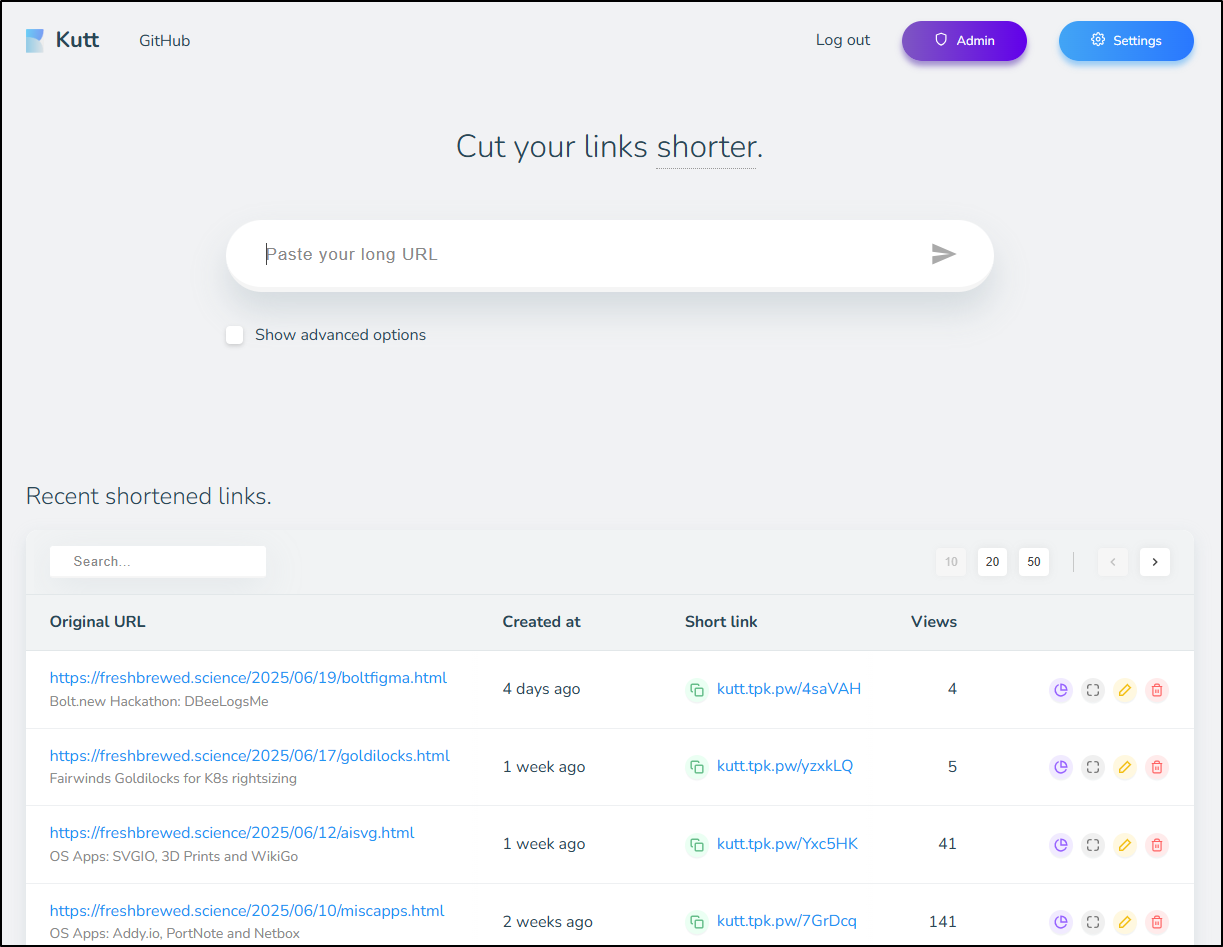

That can really mess me up as I use Kutt to shorten and track links: if kutt.tpk.pw is down, then my social links just time out.

Moreover, if my cluster is just dumping and not working, I cannot even blog, as my GitHub runners live here too.
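One way to spot this kind of port contention quickly is to filter the service listing for LoadBalancers that expose port 80. The sketch below is only an illustration: the function name `lb_port80` is made up, and it assumes the default column order of `kubectl get svc -A` (namespace, name, type, cluster IP, external IP, ports, age). The sample rows are copied from the listing above so it runs without a cluster.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get svc -A` rows on stdin and print
# every LoadBalancer service that exposes port 80, with its external IP.
lb_port80() {
  awk '$3 == "LoadBalancer" {
    n = split($6, ports, ",")
    for (i = 1; i <= n; i++) {
      if (ports[i] ~ /^80:/) {
        print $1 "/" $2 " -> " $5
        break
      }
    }
  }'
}

# Sample rows copied from the listing above; on a live cluster this would be
#   kubectl get svc -A | lb_port80
lb_port80 <<'EOF'
default zipkinlb LoadBalancer 10.43.102.173 192.168.1.215,192.168.1.33,192.168.1.34,192.168.1.57 9411:31557/TCP 315d
nginx-ingress nginx-ingress LoadBalancer 10.43.168.217 192.168.1.34 80:32747/TCP,443:32259/TCP 479d
yal yal-service LoadBalancer 10.43.176.22 192.168.1.33 80:32265/TCP 442d
EOF
```

With those rows it lists nginx-ingress/nginx-ingress and yal/yal-service, which are the two services competing for port 80. A service stuck waiting would show `<pending>` in place of the IP.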

With Nginx restored, not only does Harbor work again (so all my crashing pods that couldn’t pull private images come back online), but so too does my AWX, which had been unreachable behind a 502. It is now alive again.

Back to install

I can now install with a simple manifest that will use the Operator

$ cat newawx.yaml

apiVersion: awx.ansible.com/v1beta1
kind: AWX
metadata:
  name: awx
spec:
  admin_user: admin
  admin_password_secret: awx-admin-password
---
apiVersion: v1
kind: Secret
metadata:
  name: awx-admin-password
stringData:
  password: "notmyrealpassword"
type: Opaque

$ kubectl apply -f ./newawx.yaml

Now that my cluster is alive again, we can see our AWX service is ready for testing

$ kubectl get svc | grep -i awx

awx-operator-controller-manager-metrics-service ClusterIP 10.43.11.142 <none> 8443/TCP 58m

awx-postgres-15 ClusterIP None <none> 5432/TCP 47m

awx-service ClusterIP 10.43.230.47 <none> 80/TCP 46m

Let’s port-forward to the service

$ kubectl port-forward svc/awx-service 8088:80

Forwarding from 127.0.0.1:8088 -> 8052

Forwarding from [::1]:8088 -> 8052

Handling connection for 8088

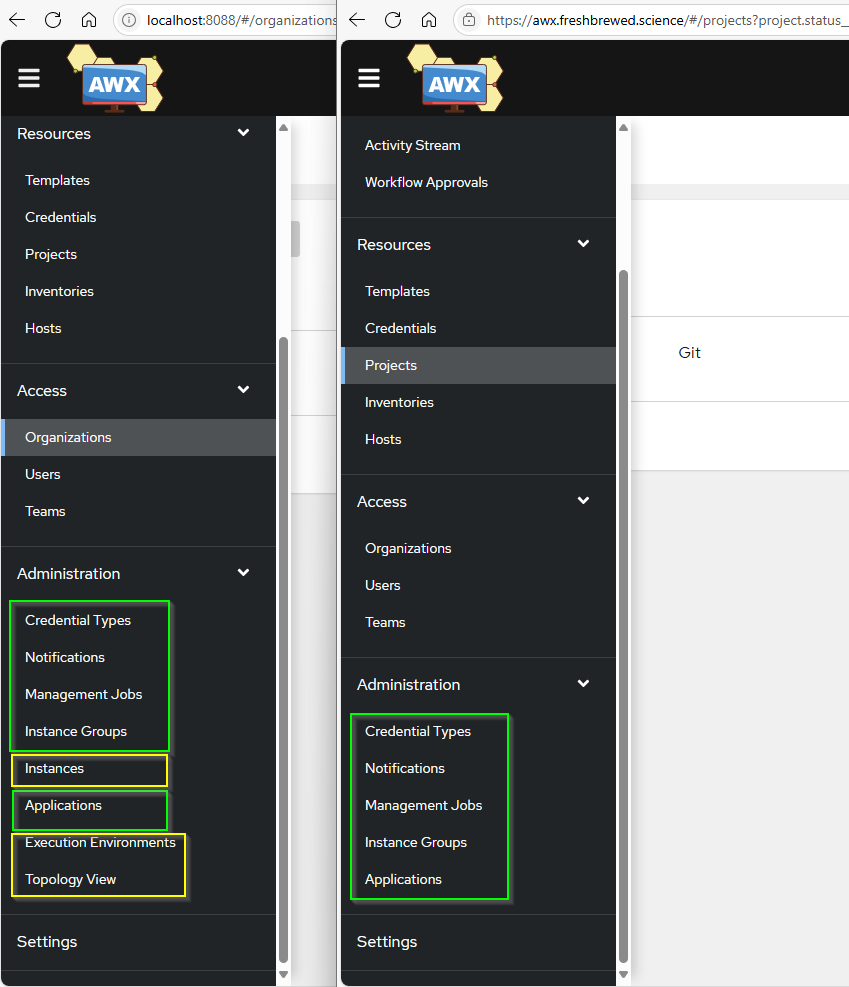

You’ll notice, if we compare the new (left) and the former Adwerx (right), that our new Operator-based system has Execution Environments as well as Instances and Topology view

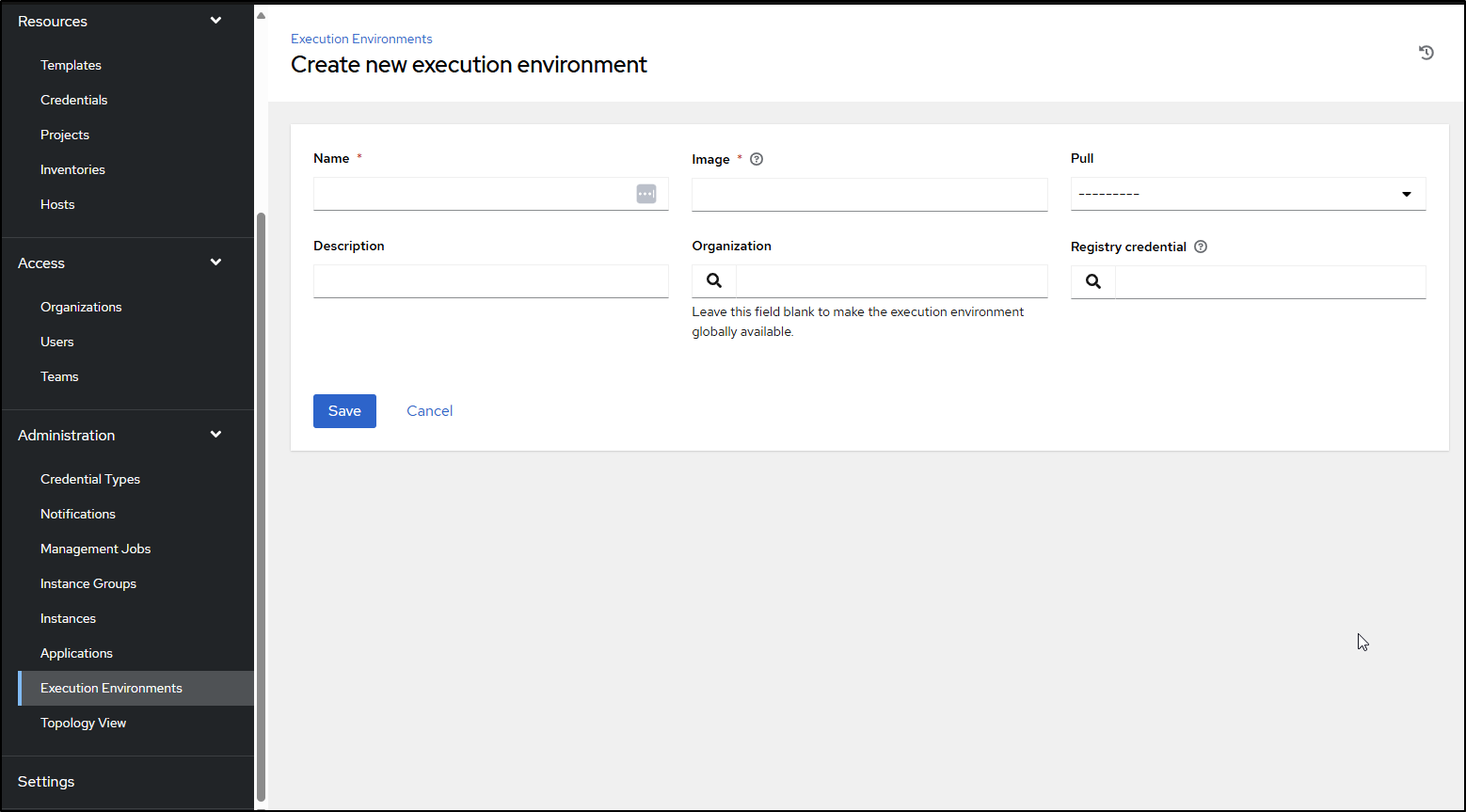

The execution environments are particularly handy as they allow one to pull a custom image as a base, which would be needed to shoehorn in Ansible Galaxy templates

I don’t really want to have to port-forward to the newer AWX, so let’s first double-check the service

$ kubectl get svc awx-service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

awx-service ClusterIP 10.43.230.47 <none> 80/TCP 37h

Let’s make an A-Record for this new instance

$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n awx

{

"ARecords": [

{

"ipv4Address": "75.73.224.240"

}

],

"TTL": 3600,

"etag": "cd2ee354-2bd0-4f4d-aae4-526775124a67",

"fqdn": "awx.tpk.pw.",

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/awx",

"name": "awx",

"provisioningState": "Succeeded",

"resourceGroup": "idjdnsrg",

"targetResource": {},

"trafficManagementProfile": {},

"type": "Microsoft.Network/dnszones/A"

}

Let’s apply

$ cat new-awx.ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
  name: awx-ingress
spec:
  rules:
  - host: awx.tpk.pw
    http:
      paths:
      - backend:
          service:
            name: awx-service
            port:
              number: 80
        path: /
        pathType: Prefix
  tls:
  - hosts:
    - awx.tpk.pw
    secretName: awxtpk-tls

$ kubectl apply -f ./new-awx.ingress.yaml

Warning: annotation "kubernetes.io/ingress.class" is deprecated, please use 'spec.ingressClassName' instead

ingress.networking.k8s.io/awx-ingress created

Once the cert has been satisfied

$ kubectl get cert | grep awx

awxtpk-tls True awxtpk-tls 98s

which worked

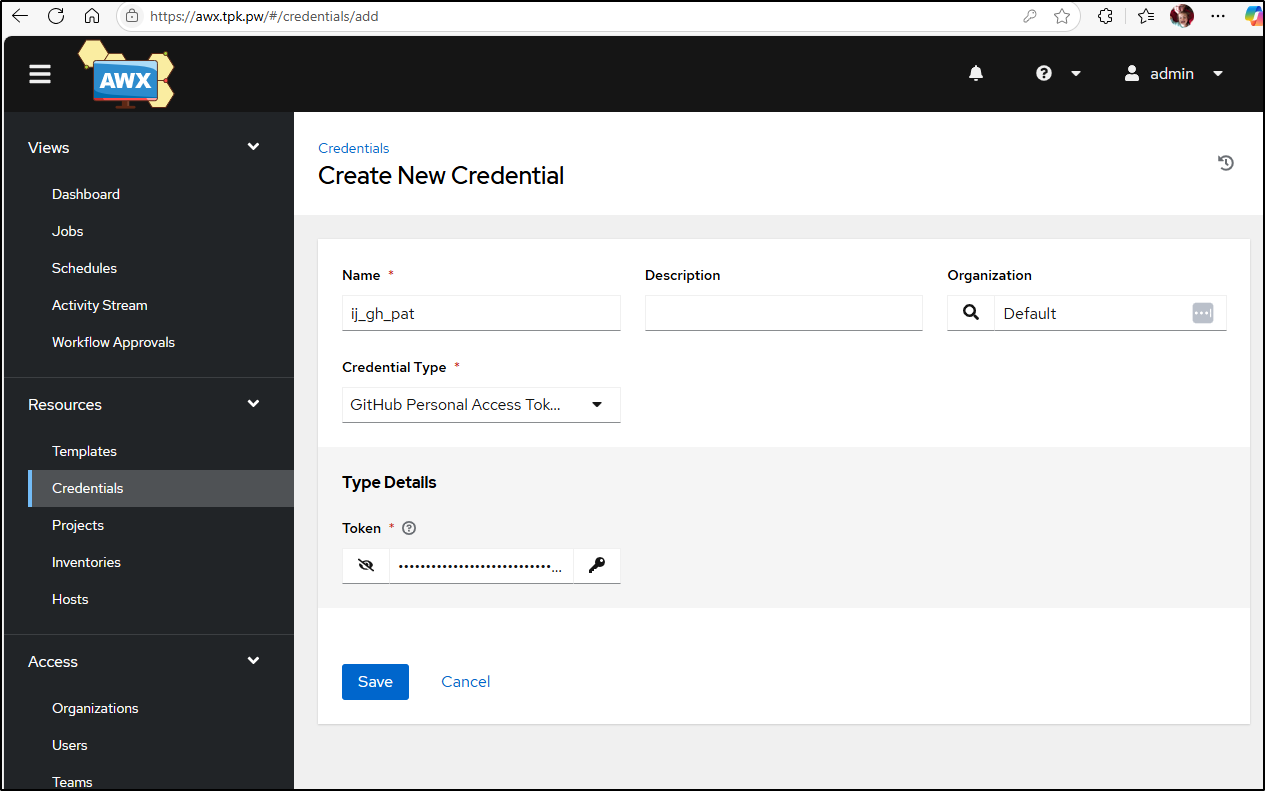

I’ll forgo setting up a new organization. Thus, for our first step, let’s get a GitHub PAT set

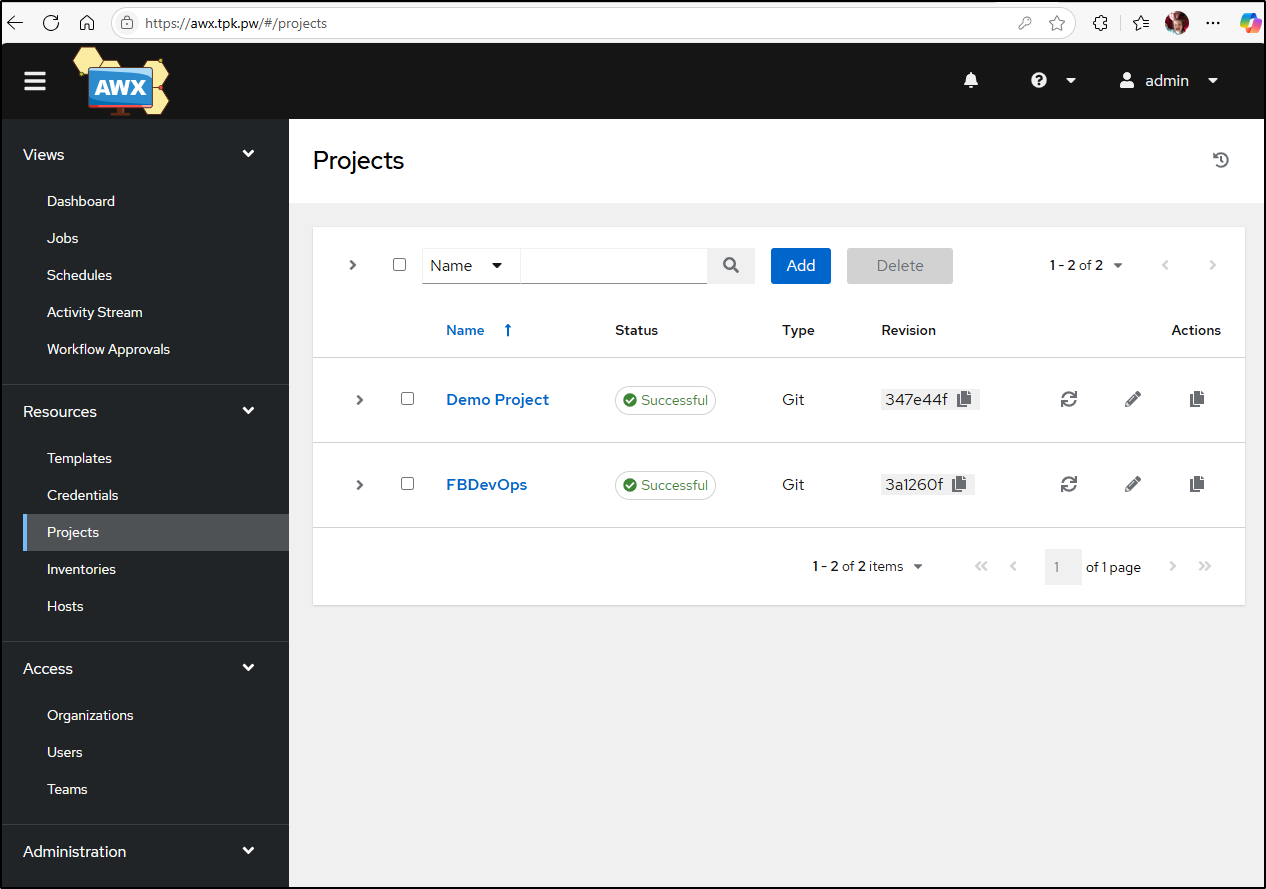

However, as my GitHub repo is public, I don’t really need it to set up the project

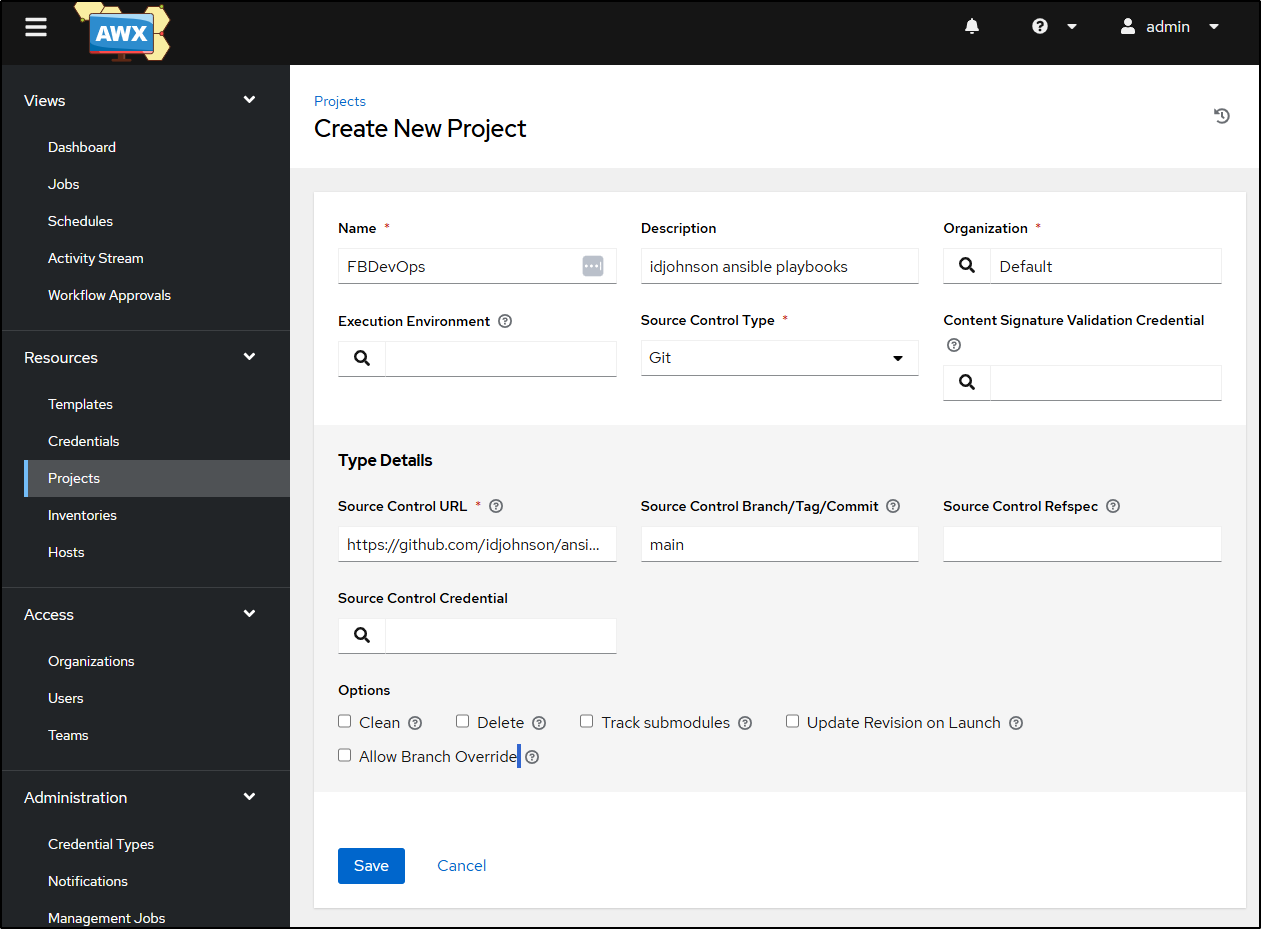

I’ll save it and see it start to sync

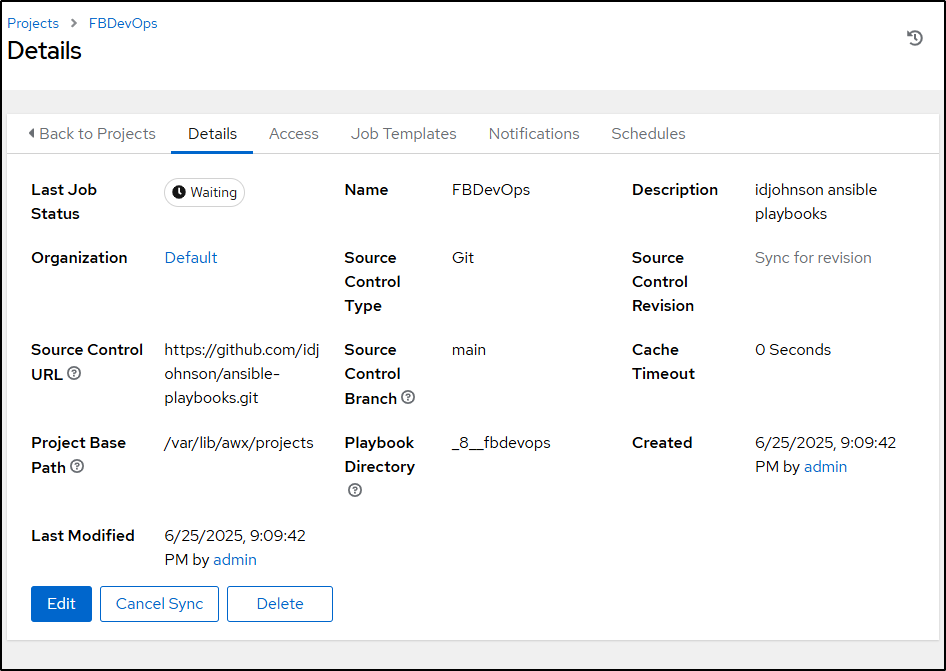

If I go back to my Projects page, we’ll see it’s already synced

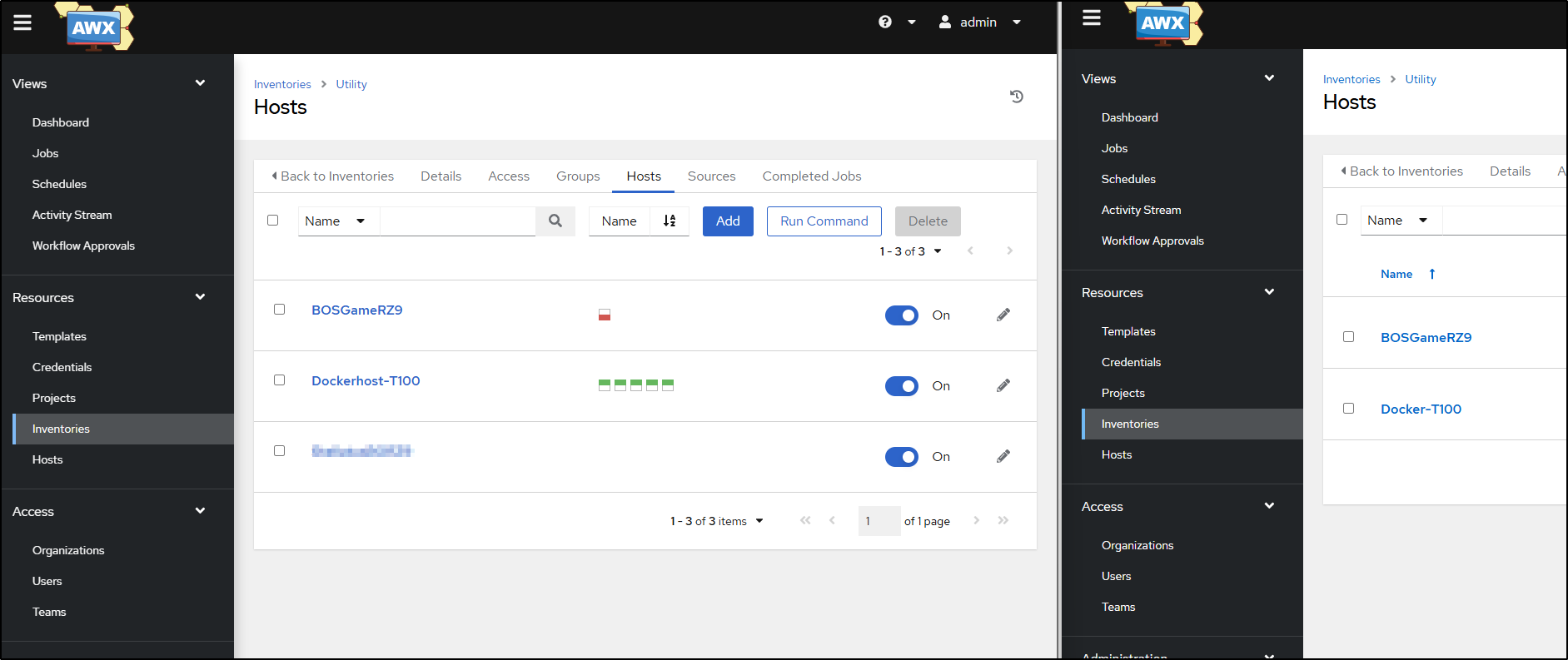

I’ll create some hosts we can use

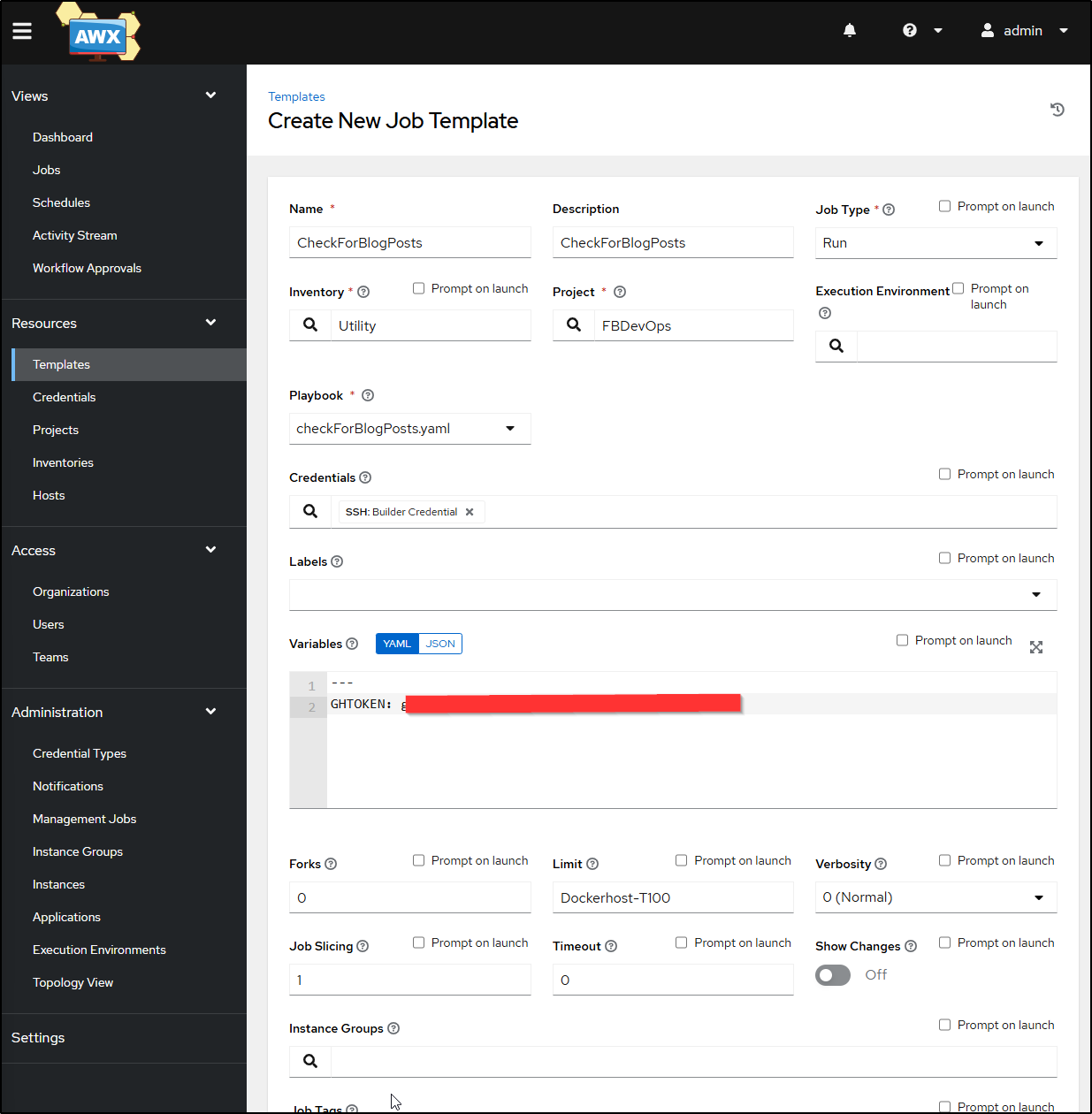

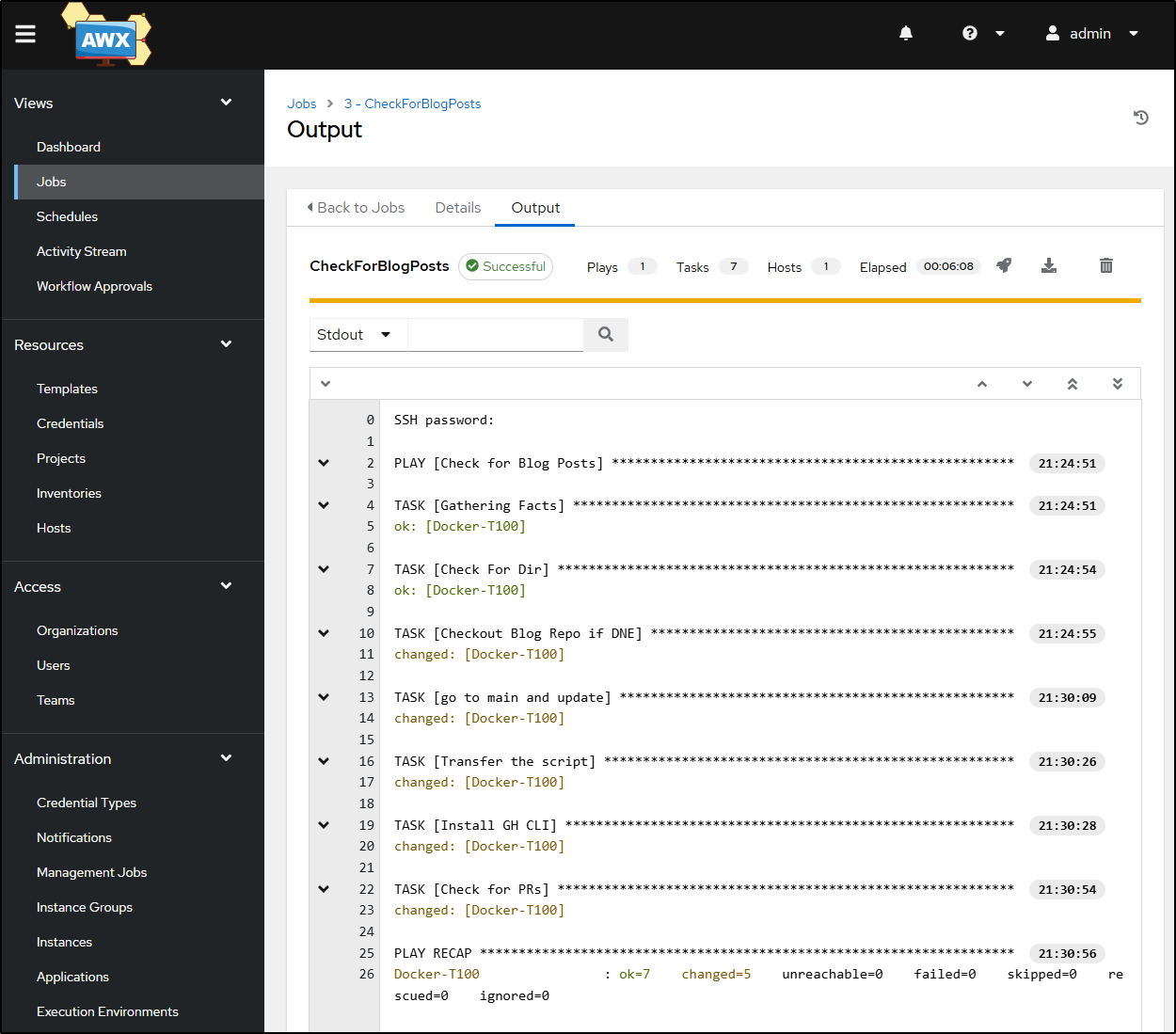

Let’s create the Blog Post checker template

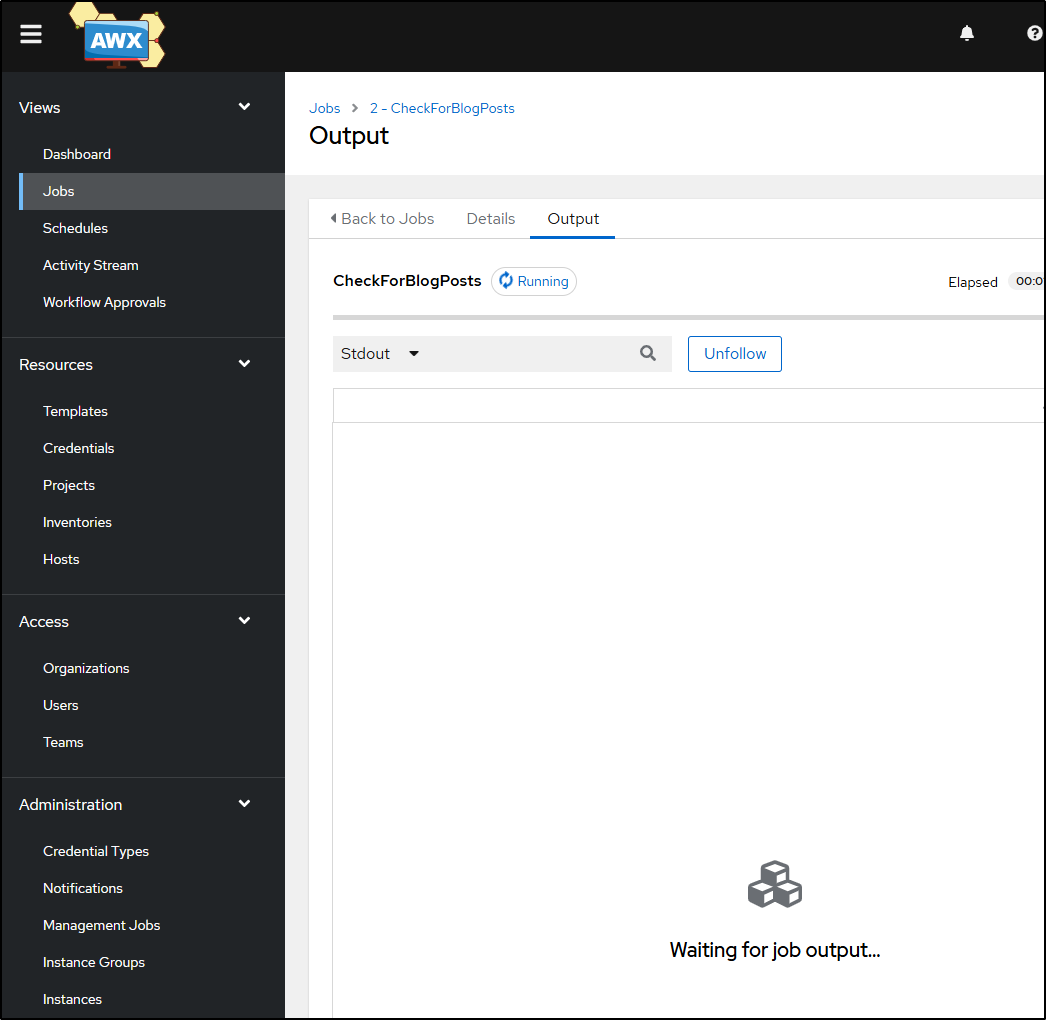

Now it’s running

This first time, it takes a bit longer to sync the repo down, but as we can see, the template completed successfully

Automation with K8s Jobs

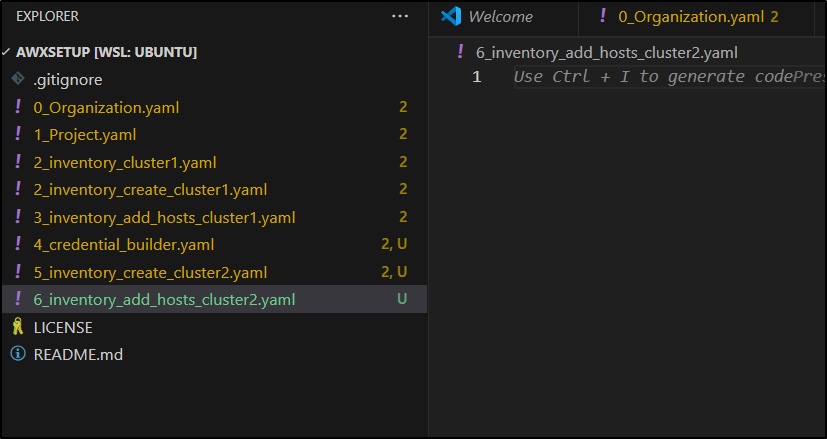

Something I’ve done a handful of times for employers (but never for myself) has been to set up a series of turnkey Kubernetes Jobs that, when chained together, set up AWX entirely.

I had used these with some metadata startup scripts along with Terraform to build a one-node AWX on the fly, both on-prem and in the clouds.

Note: I’ll put all of these in a new public GitHub repo, awxSetup

First, we start with the organization.

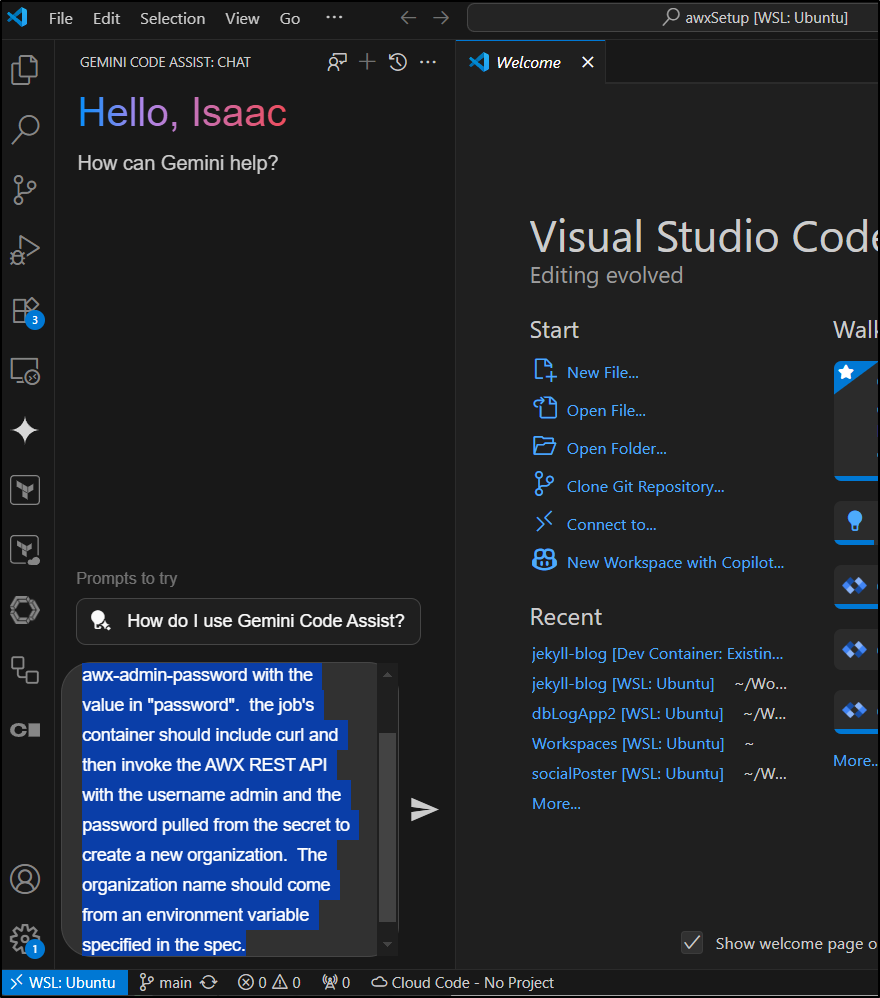

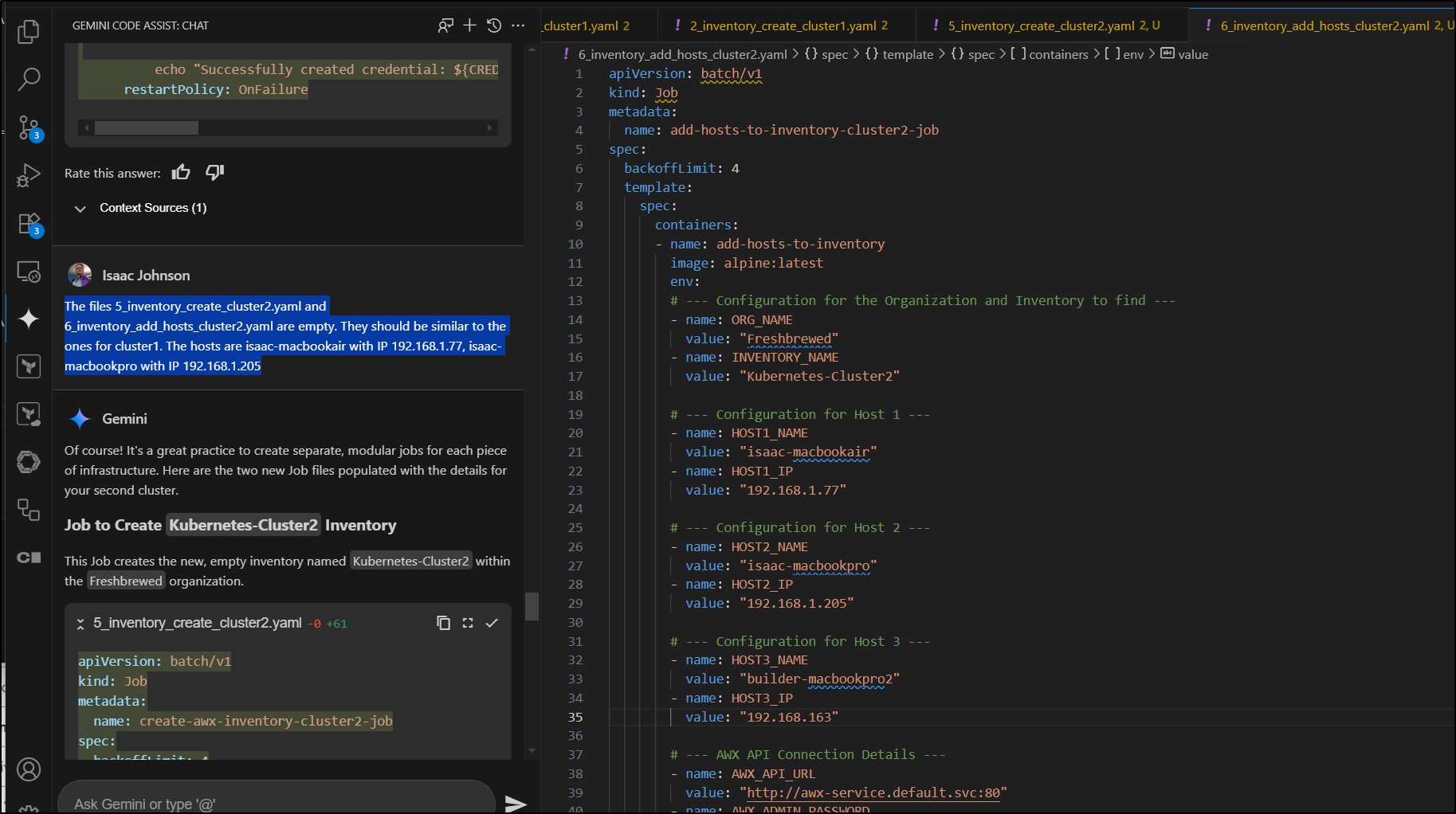

I’ll give Gemini Code Assist a shot to build this

create a Kubernetes Job YAML that pulls a secret from a secret awx-admin-password with the value in “password”. the job’s container should include curl and then invoke the AWX REST API with the username admin and the password pulled from the secret to create a new organization. The organization name should come from an environment variable specified in the spec.

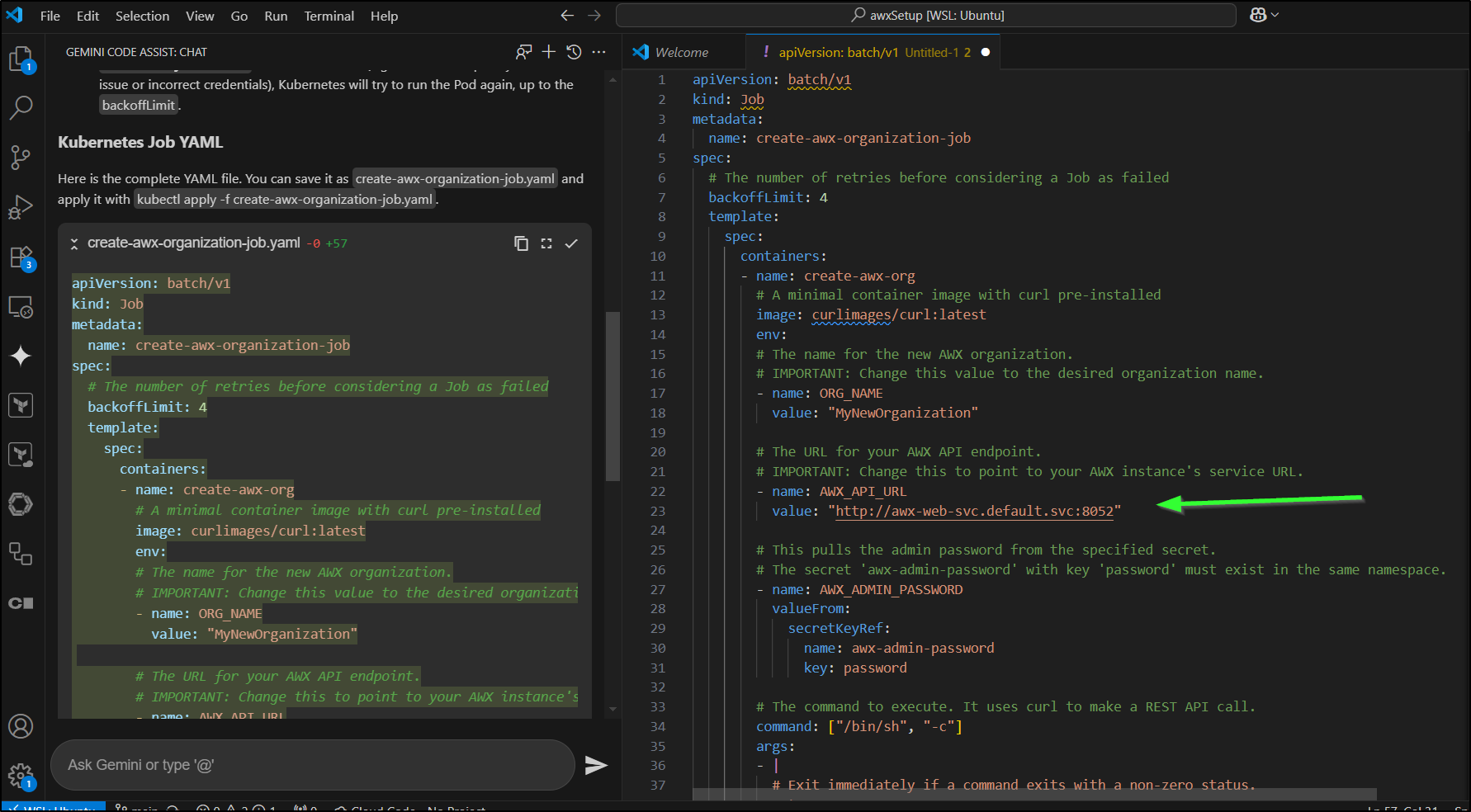

I was happy it caught a gap, in that I need to know my ingress, so it suggested a reasonable value for an internal K8s URL to AWX

I tweaked the service to match the current AWX service name and port as well as the Organization name

apiVersion: batch/v1
kind: Job
metadata:
  name: create-awx-organization-job
spec:
  # The number of retries before considering a Job as failed
  backoffLimit: 4
  template:
    spec:
      containers:
      - name: create-awx-org
        # A minimal container image with curl pre-installed
        image: curlimages/curl:latest
        env:
        # The name for the new AWX organization.
        # IMPORTANT: Change this value to the desired organization name.
        - name: ORG_NAME
          value: "Freshbrewed"
        # The URL for your AWX API endpoint.
        # IMPORTANT: Change this to point to your AWX instance's service URL.
        - name: AWX_API_URL
          value: "http://awx-service.default.svc:80"
        # This pulls the admin password from the specified secret.
        # The secret 'awx-admin-password' with key 'password' must exist in the same namespace.
        - name: AWX_ADMIN_PASSWORD
          valueFrom:
            secretKeyRef:
              name: awx-admin-password
              key: password
        # The command to execute. It uses curl to make a REST API call.
        command: ["/bin/sh", "-c"]
        args:
        - |
          # Exit immediately if a command exits with a non-zero status.
          set -e
          # Print each command to stdout before executing it.
          set -x

          echo "Attempting to create organization: ${ORG_NAME} in AWX at ${AWX_API_URL}"

          # Use curl to send a POST request to the AWX API to create a new organization.
          # -k: Allows insecure server connections (e.g., self-signed certs). Remove if not needed.
          # -f: Fail with an error code on HTTP server errors (like 4xx or 5xx).
          # -u: Basic authentication with the admin user and password from the secret.
          # -H: Sets the Content-Type header to application/json.
          # -d: The JSON data payload for creating the organization.
          curl -k -f -X POST \
            -u "admin:${AWX_ADMIN_PASSWORD}" \
            -H "Content-Type: application/json" \
            -d "{\"name\": \"${ORG_NAME}\", \"description\": \"Organization created by Kubernetes Job\"}" \
            "${AWX_API_URL}/api/v2/organizations/"

          echo "Successfully created organization: ${ORG_NAME}"
      restartPolicy: OnFailure

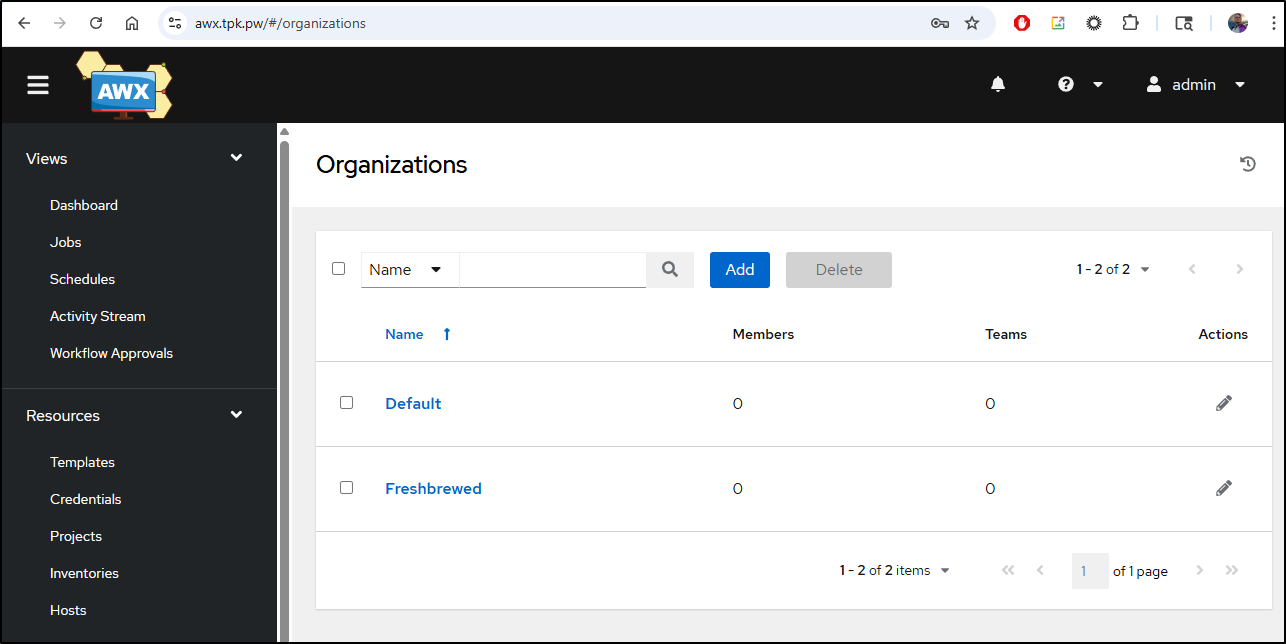

Next, I ran it to see if it would create the Org

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl apply -f ./0_Organization.yaml

job.batch/create-awx-organization-job created

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl get jobs create-awx-organization-job

NAME COMPLETIONS DURATION AGE

create-awx-organization-job 1/1 8s 18s

And indeed it did
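One caveat with a Job like this is that it is not idempotent: applying it a second time would POST the same name again, and AWX would likely reject the duplicate. A rough sketch of a pre-check follows, using only `sed` on the assumption that `jq` is not available in the curl image. The helper names `awx_count` and `needs_create` are hypothetical, and the sketch assumes the list response begins with a `count` key, as AWX's paginated list endpoints normally do. Canned JSON stands in for the real `curl` call so it runs anywhere.

```shell
#!/bin/sh
# Hypothetical helper: print the leading "count" field of an AWX list
# response read from stdin. Assumes "count" is the first key in the body.
awx_count() {
  tr -d '\n' | sed -n 's/^[^"]*"count"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

# Succeeds (exit 0) only when the list response reports zero matches.
# An unparseable response counts as "do not create", which errs on the safe side.
needs_create() {
  [ "$(awx_count)" = "0" ]
}

# Canned responses stand in for:
#   curl -s -f -u "admin:${AWX_ADMIN_PASSWORD}" \
#     "${AWX_API_URL}/api/v2/organizations/?name=${ORG_NAME}"
if printf '%s' '{"count": 0, "next": null, "previous": null, "results": []}' | needs_create; then
  echo "no match: safe to POST the new organization"
fi
if ! printf '%s' '{"count": 1, "next": null, "previous": null, "results": [{"id": 2}]}' | needs_create; then
  echo "already exists: skip the POST"
fi
```

In the Job script, the existing `curl -X POST` would sit inside the first `if`, so a re-run simply exits cleanly when the organization is already there.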

I’ll give it a harder one for the project

I next need a Job that will fetch the Orgnization ID that matches our value we used in the first step. That is, find the Orgnization ID that matches the name value of “FreshBrewed”. I want to use that to create a Project named “ansible-playbooks” using that Organization ID, the Source Control URL of https://github.com/idjohnson/ansible-playbooks.git, the type of GIT, the branch of “main” and it should update revision on launch. This does not need credentials (as it is public)

I found it interesting that it did a search on name and took the first (0th) result. I usually just ask for all organizations and then match on name in a loop. I believe Gemini’s might be a better way to do it

apiVersion: batch/v1
kind: Job
metadata:
  name: create-awx-project-job
spec:
  backoffLimit: 4
  template:
    spec:
      containers:
      - name: create-awx-project
        # Using a general-purpose image to install the tools we need (curl and jq)
        image: alpine:latest
        env:
        # --- Configuration for the Organization to find ---
        - name: ORG_NAME
          value: "Freshbrewed"
        # --- Configuration for the Project to create ---
        - name: PROJECT_NAME
          value: "ansible-playbooks"
        - name: PROJECT_SCM_URL
          value: "https://github.com/idjohnson/ansible-playbooks.git"
        - name: PROJECT_SCM_BRANCH
          value: "main"
        # --- AWX API Connection Details ---
        - name: AWX_API_URL
          value: "http://awx-service.default.svc:80"
        - name: AWX_ADMIN_PASSWORD
          valueFrom:
            secretKeyRef:
              name: awx-admin-password
              key: password
        command: ["/bin/sh", "-c"]
        args:
        - |
          # Exit on error, print commands
          set -ex

          echo "Installing dependencies..."
          apk add --no-cache curl jq

          # 1. FETCH ORGANIZATION ID
          # Query the API for the organization by name and use jq to parse the JSON response,
          # extracting the 'id' from the first result.
          echo "Fetching ID for organization: ${ORG_NAME}"
          ORG_ID=$(curl -k -f -s -u "admin:${AWX_ADMIN_PASSWORD}" "${AWX_API_URL}/api/v2/organizations/?name=${ORG_NAME}" | jq '.results[0].id')

          # Verify that we got a valid ID.
          if [ -z "$ORG_ID" ] || [ "$ORG_ID" == "null" ]; then
            echo "Error: Could not find organization with name '${ORG_NAME}'"
            exit 1
          fi
          echo "Found Organization ID: ${ORG_ID}"

          # 2. CREATE PROJECT
          # Prepare the JSON payload for the new project. Using a variable makes the curl command cleaner.
          echo "Preparing to create project '${PROJECT_NAME}'..."
          JSON_PAYLOAD=$(cat <<EOF
          {
            "name": "${PROJECT_NAME}",
            "organization": ${ORG_ID},
            "scm_type": "git",
            "scm_url": "${PROJECT_SCM_URL}",
            "scm_branch": "${PROJECT_SCM_BRANCH}",
            "scm_update_on_launch": true
          }
          EOF
          )

          # Send the POST request to create the project.
          curl -k -f -X POST \
            -u "admin:${AWX_ADMIN_PASSWORD}" \
            -H "Content-Type: application/json" \
            -d "${JSON_PAYLOAD}" \
            "${AWX_API_URL}/api/v2/projects/"

          echo "Successfully created project: ${PROJECT_NAME}"
      restartPolicy: OnFailure
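The name lookup above drops `${ORG_NAME}` straight into the query string, which is fine for "Freshbrewed" but would break for a name containing spaces or `&`. A minimal sketch of percent-encoding the value in plain POSIX sh is below. The `urlencode` function is hypothetical (not part of the generated Job) and only handles ASCII input; curl's own `-G --data-urlencode "name=${ORG_NAME}"` is likely the simpler route when curl is already doing the request.

```shell
#!/bin/sh
# Hypothetical helper: percent-encode an ASCII string for use in a URL query.
# Unreserved characters (letters, digits, . ~ _ -) pass through unchanged.
urlencode() {
  s=$1
  out=
  while [ -n "$s" ]; do
    rest=${s#?}          # everything after the first character
    c=${s%"$rest"}       # the first character itself
    case $c in
      [a-zA-Z0-9.~_-]) out="$out$c" ;;
      *) out="$out$(printf '%%%02X' "'$c")" ;;   # 'c yields the character code
    esac
    s=$rest
  done
  printf '%s\n' "$out"
}

# Example with a made-up organization name that needs encoding.
urlencode "Fresh brewed & Co"
```

That prints `Fresh%20brewed%20%26%20Co`, which could then be used as `"${AWX_API_URL}/api/v2/organizations/?name=$(urlencode "$ORG_NAME")"`.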

As before, let’s launch it

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl apply -f ./1_Project.yaml

job.batch/create-awx-project-job created

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl get jobs create-awx-project-job

NAME COMPLETIONS DURATION AGE

create-awx-project-job 1/1 8s 10s

And I can already see from the revision it looks right.

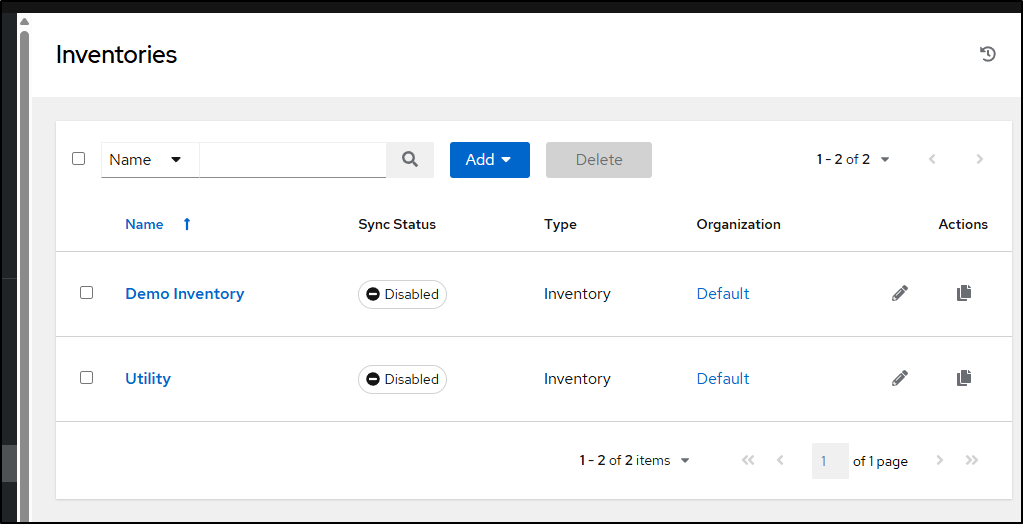

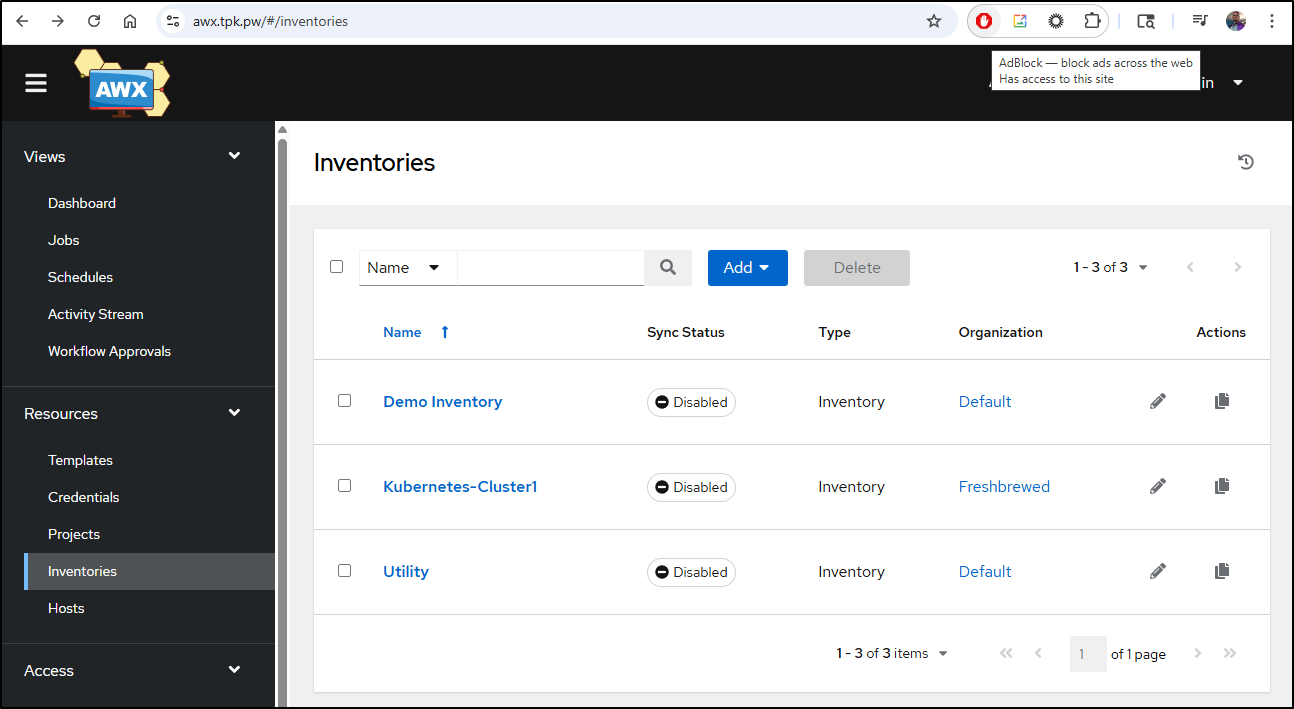

Earlier, I added a “Utility” Inventory to the default Organization

I now want to mimic my former AWX setup by having two clusters and a combined inventory

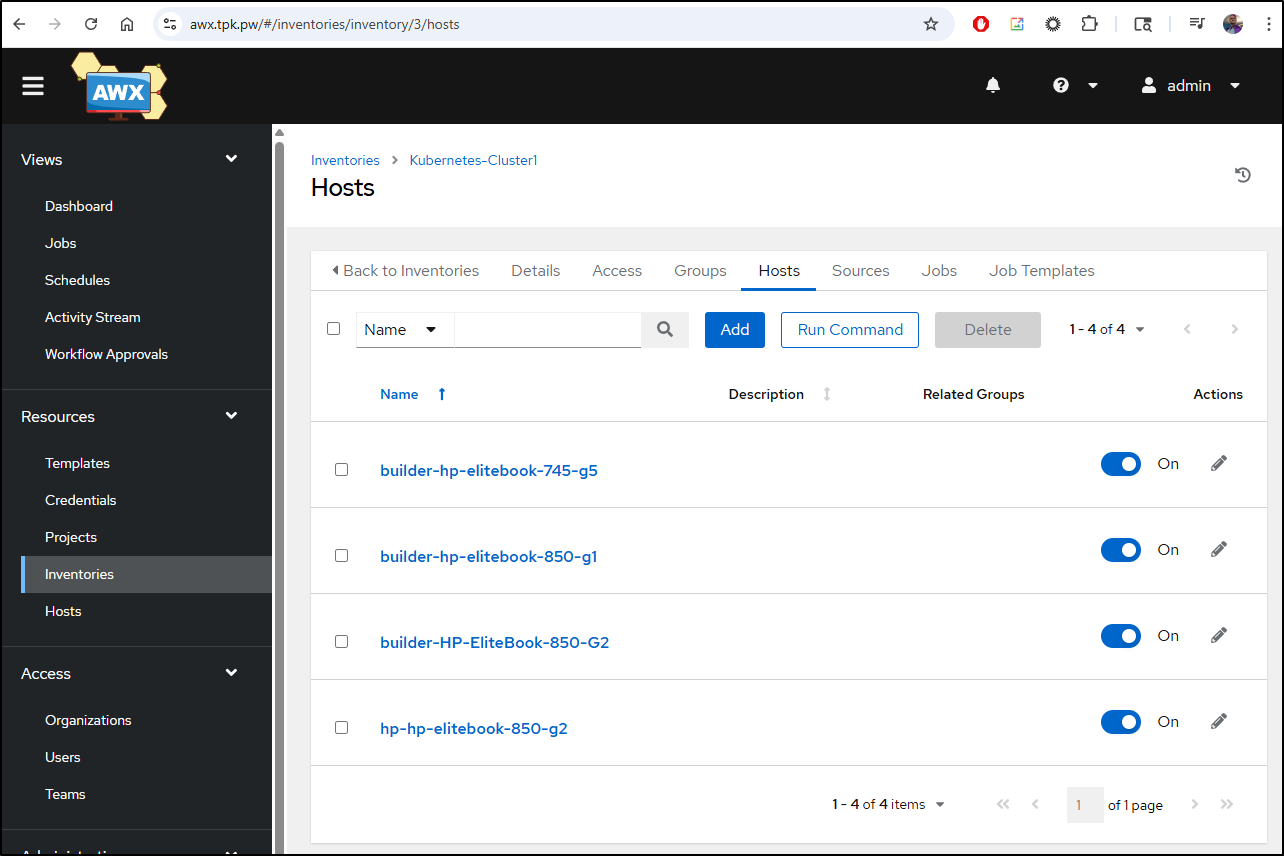

I now want to add a Kubernetes Job to add an AWX Inventory called “Kubernetes-Cluster1” which would include two hosts: “builder-hp-elitebook-745-g5” that uses variables to set the ansible_host to 192.168.1.33 and ansible_connection of ssh. “builder-hp-elitebook-850-g1” that uses variables to set the ansible_host to 192.168.1.36 and ansible_connection of ssh

While this worked to create a single Job

apiVersion: batch/v1

kind: Job

metadata:

name: create-awx-inventory-job

spec:

backoffLimit: 4

template:

spec:

containers:

- name: create-awx-inventory

image: alpine:latest

env:

# --- Configuration for the Organization to find ---

- name: ORG_NAME

value: "Freshbrewed"

# --- Configuration for the Inventory to create ---

- name: INVENTORY_NAME

value: "Kubernetes-Cluster1"

# --- Configuration for Host 1 ---

- name: HOST1_NAME

value: "builder-hp-elitebook-745-g5"

- name: HOST1_IP

value: "192.168.1.33"

# --- Configuration for Host 2 ---

- name: HOST2_NAME

value: "builder-hp-elitebook-850-g1"

- name: HOST2_IP

value: "192.168.1.36"

# --- AWX API Connection Details ---

- name: AWX_API_URL

value: "http://awx-service.default.svc:80"

- name: AWX_ADMIN_PASSWORD

valueFrom:

secretKeyRef:

name: awx-admin-password

key: password

command: ["/bin/sh", "-c"]

args:

- |

# Exit on error, print commands

set -ex

echo "Installing dependencies..."

apk add --no-cache curl jq

# 1. FETCH ORGANIZATION ID

echo "Fetching ID for organization: ${ORG_NAME}"

ORG_ID=$(curl -k -f -s -u "admin:${AWX_ADMIN_PASSWORD}" "${AWX_API_URL}/api/v2/organizations/?name=${ORG_NAME}" | jq '.results[0].id')

if [ -z "$ORG_ID" ] || [ "$ORG_ID" == "null" ]; then

echo "Error: Could not find organization with name '${ORG_NAME}'"

exit 1

fi

echo "Found Organization ID: ${ORG_ID}"

# 2. CREATE INVENTORY AND CAPTURE ITS ID

echo "Creating inventory: ${INVENTORY_NAME}"

INVENTORY_PAYLOAD="{\"name\": \"${INVENTORY_NAME}\", \"organization\": ${ORG_ID}}"

INVENTORY_ID=$(curl -k -f -s -X POST -u "admin:${AWX_ADMIN_PASSWORD}" -H "Content-Type: application/json" -d "${INVENTORY_PAYLOAD}" "${AWX_API_URL}/api/v2/inventories/" | jq '.id')

if [ -z "$INVENTORY_ID" ] || [ "$INVENTORY_ID" == "null" ]; then

echo "Error: Failed to create inventory '${INVENTORY_NAME}' or could not parse its ID."

exit 1

fi

echo "Successfully created inventory with ID: ${INVENTORY_ID}"

# 3. ADD HOST 1 TO THE NEW INVENTORY

echo "Adding host '${HOST1_NAME}' to inventory ID ${INVENTORY_ID}"

HOST1_PAYLOAD=$(cat <<EOF

{

"name": "${HOST1_NAME}",

"variables": "ansible_host: ${HOST1_IP}\nansible_connection: ssh"

}

EOF

)

curl -k -f -X POST -u "admin:${AWX_ADMIN_PASSWORD}" -H "Content-Type: application/json" -d "${HOST1_PAYLOAD}" "${AWX_API_URL}/api/v2/inventories/${INVENTORY_ID}/hosts/"

# 4. ADD HOST 2 TO THE NEW INVENTORY

echo "Adding host '${HOST2_NAME}' to inventory ID ${INVENTORY_ID}"

HOST2_PAYLOAD=$(cat <<EOF

{

"name": "${HOST2_NAME}",

"variables": "ansible_host: ${HOST2_IP}\nansible_connection: ssh"

}

EOF

)

curl -k -f -X POST -u "admin:${AWX_ADMIN_PASSWORD}" -H "Content-Type: application/json" -d "${HOST2_PAYLOAD}" "${AWX_API_URL}/api/v2/inventories/${INVENTORY_ID}/hosts/"

echo "Successfully created inventory and added hosts."

restartPolicy: OnFailure

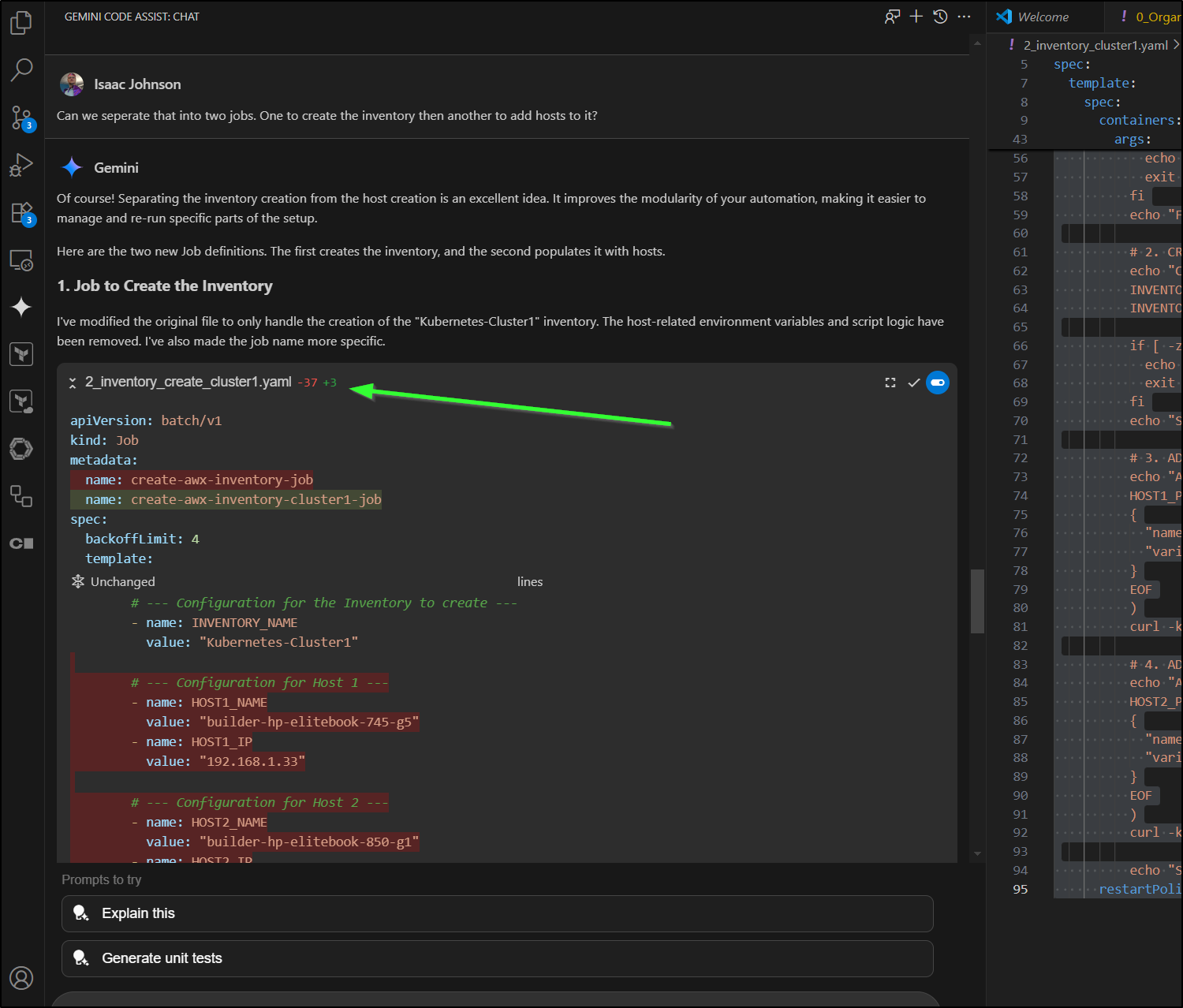

I realized it might be best to separate into two jobs

Can we separate that into two jobs. One to create the inventory then another to add hosts to it?

I noticed that it has been detecting my files, which is how it figured out the proper service name.

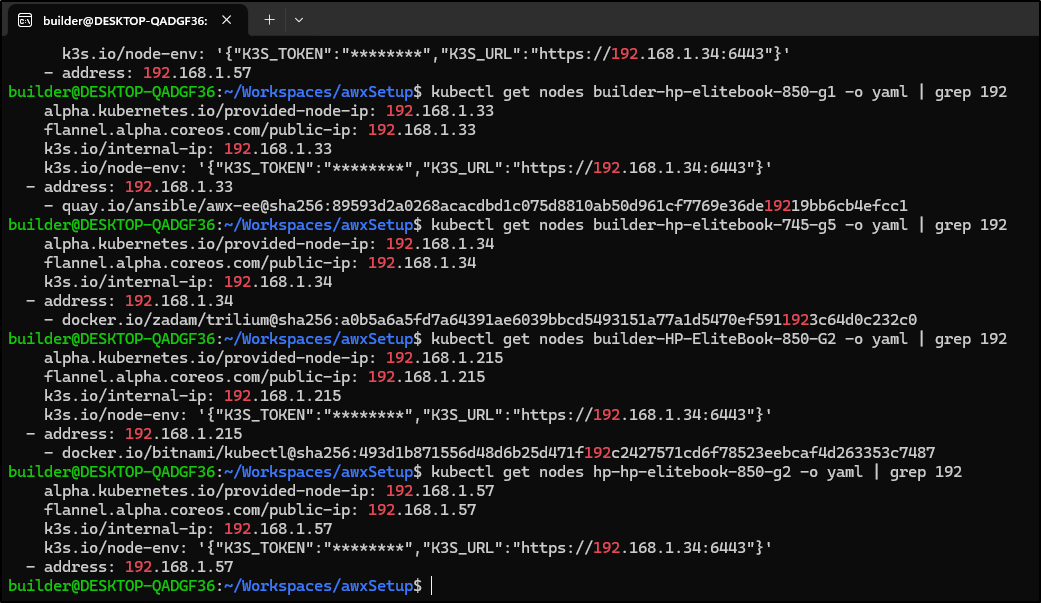

I did need to confirm (and correct) the IPs

This resulted in a Job for the Inventory

apiVersion: batch/v1

kind: Job

metadata:

name: create-awx-inventory-cluster1-job

spec:

backoffLimit: 4

template:

spec:

containers:

- name: create-awx-inventory

image: alpine:latest

env:

# --- Configuration for the Organization to find ---

- name: ORG_NAME

value: "Freshbrewed"

# --- Configuration for the Inventory to create ---

- name: INVENTORY_NAME

value: "Kubernetes-Cluster1"

# --- AWX API Connection Details ---

- name: AWX_API_URL

value: "http://awx-service.default.svc:80"

- name: AWX_ADMIN_PASSWORD

valueFrom:

secretKeyRef:

name: awx-admin-password

key: password

command: ["/bin/sh", "-c"]

args:

- |

# Exit on error, print commands

set -ex

echo "Installing dependencies..."

apk add --no-cache curl jq

# 1. FETCH ORGANIZATION ID

echo "Fetching ID for organization: ${ORG_NAME}"

ORG_ID=$(curl -k -f -s -u "admin:${AWX_ADMIN_PASSWORD}" "${AWX_API_URL}/api/v2/organizations/?name=${ORG_NAME}" | jq '.results[0].id')

if [ -z "$ORG_ID" ] || [ "$ORG_ID" == "null" ]; then

echo "Error: Could not find organization with name '${ORG_NAME}'"

exit 1

fi

echo "Found Organization ID: ${ORG_ID}"

# 2. CREATE INVENTORY AND CAPTURE ITS ID

echo "Creating inventory: ${INVENTORY_NAME}"

INVENTORY_PAYLOAD="{\"name\": \"${INVENTORY_NAME}\", \"organization\": ${ORG_ID}}"

INVENTORY_ID=$(curl -k -f -s -X POST -u "admin:${AWX_ADMIN_PASSWORD}" -H "Content-Type: application/json" -d "${INVENTORY_PAYLOAD}" "${AWX_API_URL}/api/v2/inventories/" | jq '.id')

if [ -z "$INVENTORY_ID" ] || [ "$INVENTORY_ID" == "null" ]; then

echo "Error: Failed to create inventory '${INVENTORY_NAME}' or could not parse its ID."

exit 1

fi

echo "Successfully created inventory with ID: ${INVENTORY_ID}"

echo "Successfully created inventory: ${INVENTORY_NAME}"

restartPolicy: OnFailure

Then hosts (I added two more on my own)

apiVersion: batch/v1

kind: Job

metadata:

name: add-hosts-to-inventory-cluster1-job

spec:

backoffLimit: 4

template:

spec:

containers:

- name: add-hosts-to-inventory

image: alpine:latest

env:

# --- Configuration for the Organization and Inventory to find ---

- name: ORG_NAME

value: "Freshbrewed"

- name: INVENTORY_NAME

value: "Kubernetes-Cluster1"

# --- Configuration for Host 1 ---

- name: HOST1_NAME

value: "builder-hp-elitebook-745-g5"

- name: HOST1_IP

value: "192.168.1.34"

# --- Configuration for Host 2 ---

- name: HOST2_NAME

value: "builder-hp-elitebook-850-g1"

- name: HOST2_IP

value: "192.168.1.33"

# --- Configuration for Host 3 ---

- name: HOST3_NAME

value: "builder-HP-EliteBook-850-G2"

- name: HOST3_IP

value: "192.168.1.215"

# --- Configuration for Host 4 ---

- name: HOST4_NAME

value: "hp-hp-elitebook-850-g2"

- name: HOST4_IP

value: "192.168.1.57"

# --- AWX API Connection Details ---

- name: AWX_API_URL

value: "http://awx-service.default.svc:80"

- name: AWX_ADMIN_PASSWORD

valueFrom:

secretKeyRef:

name: awx-admin-password

key: password

command: ["/bin/sh", "-c"]

args:

- |

# Exit on error, print commands

set -ex

echo "Installing dependencies..."

apk add --no-cache curl jq

# 1. FETCH INVENTORY ID

# We query by both inventory name and organization name to ensure we get the correct one.

echo "Fetching ID for inventory '${INVENTORY_NAME}' in organization '${ORG_NAME}'"

INVENTORY_ID=$(curl -k -f -s -u "admin:${AWX_ADMIN_PASSWORD}" "${AWX_API_URL}/api/v2/inventories/?name=${INVENTORY_NAME}&organization__name=${ORG_NAME}" | jq '.results[0].id')

if [ -z "$INVENTORY_ID" ] || [ "$INVENTORY_ID" == "null" ]; then

echo "Error: Could not find inventory named '${INVENTORY_NAME}' in organization '${ORG_NAME}'"

exit 1

fi

echo "Found Inventory ID: ${INVENTORY_ID}"

# 2. ADD HOST 1 TO THE INVENTORY

echo "Adding host '${HOST1_NAME}' to inventory ID ${INVENTORY_ID}"

HOST1_PAYLOAD=$(cat <<EOF

{

"name": "${HOST1_NAME}",

"variables": "ansible_host: ${HOST1_IP}\nansible_connection: ssh"

}

EOF

)

curl -k -f -X POST -u "admin:${AWX_ADMIN_PASSWORD}" -H "Content-Type: application/json" -d "${HOST1_PAYLOAD}" "${AWX_API_URL}/api/v2/inventories/${INVENTORY_ID}/hosts/"

# 3. ADD HOST 2 TO THE INVENTORY

echo "Adding host '${HOST2_NAME}' to inventory ID ${INVENTORY_ID}"

HOST2_PAYLOAD=$(cat <<EOF

{

"name": "${HOST2_NAME}",

"variables": "ansible_host: ${HOST2_IP}\nansible_connection: ssh"

}

EOF

)

curl -k -f -X POST -u "admin:${AWX_ADMIN_PASSWORD}" -H "Content-Type: application/json" -d "${HOST2_PAYLOAD}" "${AWX_API_URL}/api/v2/inventories/${INVENTORY_ID}/hosts/"

# 4. ADD HOST 3 TO THE INVENTORY

echo "Adding host '${HOST3_NAME}' to inventory ID ${INVENTORY_ID}"

HOST3_PAYLOAD=$(cat <<EOF

{

"name": "${HOST3_NAME}",

"variables": "ansible_host: ${HOST3_IP}\nansible_connection: ssh"

}

EOF

)

curl -k -f -X POST -u "admin:${AWX_ADMIN_PASSWORD}" -H "Content-Type: application/json" -d "${HOST3_PAYLOAD}" "${AWX_API_URL}/api/v2/inventories/${INVENTORY_ID}/hosts/"

# 5. ADD HOST 4 TO THE INVENTORY

echo "Adding host '${HOST4_NAME}' to inventory ID ${INVENTORY_ID}"

HOST4_PAYLOAD=$(cat <<EOF

{

"name": "${HOST4_NAME}",

"variables": "ansible_host: ${HOST4_IP}\nansible_connection: ssh"

}

EOF

)

curl -k -f -X POST -u "admin:${AWX_ADMIN_PASSWORD}" -H "Content-Type: application/json" -d "${HOST4_PAYLOAD}" "${AWX_API_URL}/api/v2/inventories/${INVENTORY_ID}/hosts/"

echo "Successfully added hosts to inventory '${INVENTORY_NAME}'."

restartPolicy: OnFailure
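The four host blocks in that Job differ only in name and IP, so the script could be collapsed into a loop. The following is a sketch under a few assumptions: `host_payload` and the `HOSTS` variable are names invented here, the name=ip pairs are packed into one space-separated string, and the values contain no quotes or backslashes (otherwise a JSON-aware tool such as jq would be the safer choice).

```shell
#!/bin/sh
# Hypothetical helper: build the JSON body for one AWX host.
# $1 = host name, $2 = IP address. The "\\n" in the format string emits a
# literal backslash-n, which JSON decodes to a newline inside "variables".
host_payload() {
  printf '{"name": "%s", "variables": "ansible_host: %s\\nansible_connection: ssh"}\n' "$1" "$2"
}

# name=ip pairs taken from the Job above (two shown for brevity).
HOSTS="builder-hp-elitebook-745-g5=192.168.1.34 builder-hp-elitebook-850-g1=192.168.1.33"

for pair in $HOSTS; do
  name=${pair%%=*}   # text before the first "="
  ip=${pair#*=}      # text after the first "="
  host_payload "$name" "$ip"
  # In the Job, this payload would be POSTed with curl to
  # "${AWX_API_URL}/api/v2/inventories/${INVENTORY_ID}/hosts/".
done
```

Adding a host then becomes a one-word change to `HOSTS` rather than another copied block of env vars, heredoc, and curl call.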

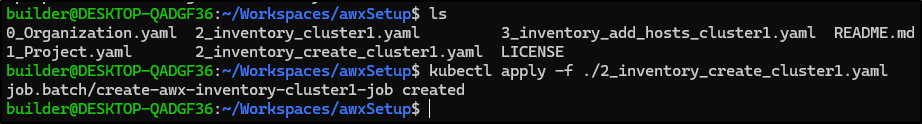

I first fired off the cluster inventory create

and verified it was created

Then I fired off the Job to add the hosts

$ kubectl apply -f ./3_inventory_add_hosts_cluster1.yaml

job.batch/add-hosts-to-inventory-cluster1-job created

I’ll add these files to Git before we move on

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ git status

On branch main

Your branch is up to date with 'origin/main'.

Untracked files:

(use "git add <file>..." to include in what will be committed)

0_Organization.yaml

1_Project.yaml

2_inventory_cluster1.yaml

2_inventory_create_cluster1.yaml

3_inventory_add_hosts_cluster1.yaml

nothing added to commit but untracked files present (use "git add" to track)

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ git add -A

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ git commit -m updates

[main d8c49a1] updates

5 files changed, 412 insertions(+)

create mode 100644 0_Organization.yaml

create mode 100644 1_Project.yaml

create mode 100644 2_inventory_cluster1.yaml

create mode 100644 2_inventory_create_cluster1.yaml

create mode 100644 3_inventory_add_hosts_cluster1.yaml

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ git push

Enumerating objects: 8, done.

Counting objects: 100% (8/8), done.

Delta compression using up to 16 threads

Compressing objects: 100% (7/7), done.

Writing objects: 100% (7/7), 4.26 KiB | 4.26 MiB/s, done.

Total 7 (delta 2), reused 0 (delta 0)

remote: Resolving deltas: 100% (2/2), done.

To https://github.com/idjohnson/awxSetup.git

dd62b1e..d8c49a1 main -> main

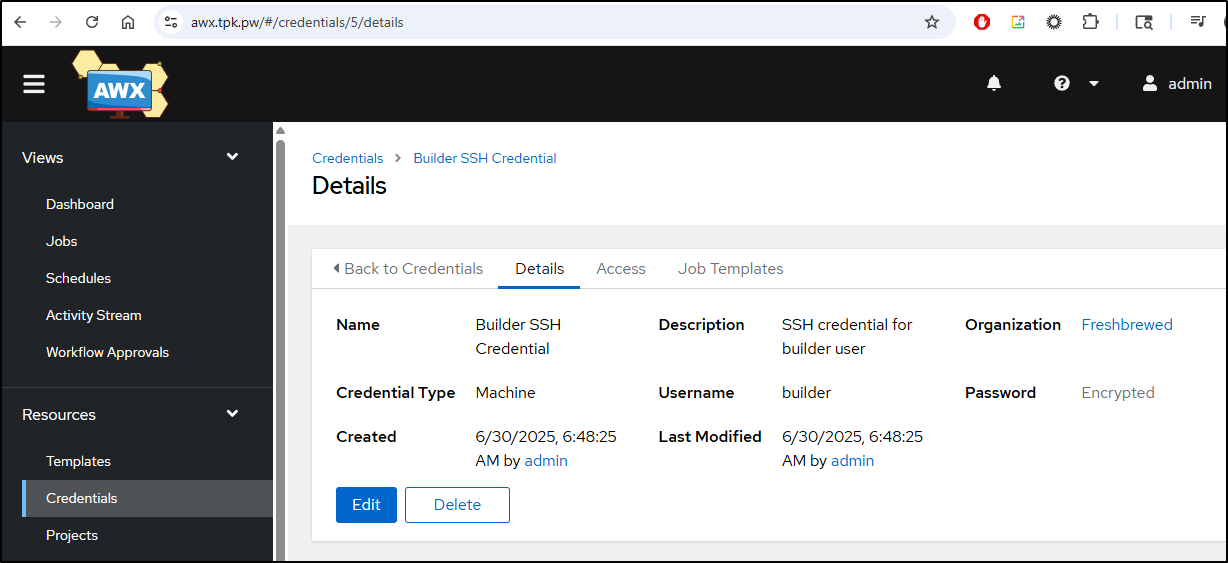

To use these hosts, I’ll want to pull in a builder credential.

I’ll ask Gemini Code Assist:

I want another Kubernetes Job to create a machine credential for the Freshbrewed Organization that has the username of builder and password that is pulled from a kubernetes secret. We should create the secret manually with kubectl.

It came back with:

kubectl create secret generic awx-builder-password \

--from-literal=password='YOUR_SUPER_SECRET_PASSWORD'

Followed by

apiVersion: batch/v1

kind: Job

metadata:

name: create-awx-builder-credential-job

spec:

backoffLimit: 4

template:

spec:

containers:

- name: create-awx-credential

image: alpine:latest

env:

# --- Configuration for the Organization to find ---

- name: ORG_NAME

value: "Freshbrewed"

# --- Configuration for the Credential to create ---

- name: CREDENTIAL_NAME

value: "Builder SSH Credential"

- name: CREDENTIAL_USERNAME

value: "builder"

# --- AWX API Connection Details ---

- name: AWX_API_URL

value: "http://awx-service.default.svc:80"

- name: AWX_ADMIN_PASSWORD

valueFrom:

secretKeyRef:

name: awx-admin-password

key: password

# --- Password for the new 'builder' credential ---

- name: BUILDER_PASSWORD

valueFrom:

secretKeyRef:

# This secret must be created manually beforehand

name: awx-builder-password

key: password

command: ["/bin/sh", "-c"]

args:

- |

# Exit on error, print commands

set -ex

echo "Installing dependencies..."

apk add --no-cache curl jq

# 1. FETCH ORGANIZATION ID

echo "Fetching ID for organization: ${ORG_NAME}"

ORG_ID=$(curl -k -f -s -u "admin:${AWX_ADMIN_PASSWORD}" "${AWX_API_URL}/api/v2/organizations/?name=${ORG_NAME}" | jq '.results[0].id')

if [ -z "$ORG_ID" ] || [ "$ORG_ID" == "null" ]; then

echo "Error: Could not find organization with name '${ORG_NAME}'"

exit 1

fi

echo "Found Organization ID: ${ORG_ID}"

# 2. FETCH MACHINE CREDENTIAL TYPE ID

echo "Fetching ID for 'Machine' credential type (kind=ssh)"

CREDENTIAL_TYPE_ID=$(curl -k -f -s -u "admin:${AWX_ADMIN_PASSWORD}" "${AWX_API_URL}/api/v2/credential_types/?kind=ssh" | jq '.results[0].id')

if [ -z "$CREDENTIAL_TYPE_ID" ] || [ "$CREDENTIAL_TYPE_ID" == "null" ]; then

echo "Error: Could not find credential type with kind=ssh"

exit 1

fi

echo "Found Machine Credential Type ID: ${CREDENTIAL_TYPE_ID}"

# 3. CREATE THE CREDENTIAL

echo "Creating credential '${CREDENTIAL_NAME}'..."

CREDENTIAL_PAYLOAD=$(jq -n \

--arg name "$CREDENTIAL_NAME" \

--arg org_id "$ORG_ID" \

--arg cred_type_id "$CREDENTIAL_TYPE_ID" \

--arg username "$CREDENTIAL_USERNAME" \

--arg password "$BUILDER_PASSWORD" \

'{name: $name, description: "SSH credential for builder user", organization: ($org_id|tonumber), credential_type: ($cred_type_id|tonumber), inputs: {username: $username, password: $password}}')

curl -k -f -X POST \

-u "admin:${AWX_ADMIN_PASSWORD}" \

-H "Content-Type: application/json" \

-d "${CREDENTIAL_PAYLOAD}" \

"${AWX_API_URL}/api/v2/credentials/"

echo "Successfully created credential: ${CREDENTIAL_NAME}"

restartPolicy: OnFailure

Let’s run those next

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl create secret generic awx-builder-password --from-literal=password='xxxxxxxx'

secret/awx-builder-password created

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl apply -f ./4_credential_builder.yaml

job.batch/create-awx-builder-credential-job created

And there it is

We can wrap by actually testing it.

I had one more test I wanted to throw at Gemini Code Assist. That was to populate empty files.

I created placeholders for cluster2

Again, Gemini delivered and I added the one extra host

However, this time things did not go as smoothly

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl apply -f ./6_inventory_add_hosts_cluster2.yaml

job.batch/add-hosts-to-inventory-cluster2-job created

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl get jobs

NAME STATUS COMPLETIONS DURATION AGE

add-hosts-to-inventory-cluster2-job Running 0/1 59s 59s

create-awx-inventory-cluster2-job Running 0/1 69s 69s

It seems to be having trouble with the admin password

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl get po | grep create

create-awx-inventory-cluster2-job-dbqtx 0/1 CreateContainerConfigError 0 96s

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl describe po create-awx-inventory-cluster2-job-dbqtx | tail -n15

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 109s default-scheduler Successfully assigned default/create-awx-inventory-cluster2-job-dbqtx to isaac-macbookair

Normal Pulled 108s kubelet Successfully pulled image "alpine:latest" in 1.447s (1.447s including waiting). Image size: 3807667 bytes.

Normal Pulled 107s kubelet Successfully pulled image "alpine:latest" in 457ms (457ms including waiting). Image size: 3807667 bytes.

Normal Pulled 91s kubelet Successfully pulled image "alpine:latest" in 503ms (503ms including waiting). Image size: 3807667 bytes.

Normal Pulled 79s kubelet Successfully pulled image "alpine:latest" in 558ms (558ms including waiting). Image size: 3807667 bytes.

Normal Pulled 67s kubelet Successfully pulled image "alpine:latest" in 542ms (542ms including waiting). Image size: 3807667 bytes.

Normal Pulled 53s kubelet Successfully pulled image "alpine:latest" in 483ms (483ms including waiting). Image size: 3807667 bytes.

Normal Pulled 41s kubelet Successfully pulled image "alpine:latest" in 484ms (484ms including waiting). Image size: 3807667 bytes.

Warning Failed 27s (x8 over 108s) kubelet Error: secret "awx-admin-password" not found

Normal Pulled 27s kubelet Successfully pulled image "alpine:latest" in 593ms (593ms including waiting). Image size: 3807667 bytes.

Normal Pulling 12s (x9 over 109s) kubelet Pulling image "alpine:latest"

Then I realized my mistake! I had switched cluster contexts to check node names.
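A small guard can make this kind of slip harder to repeat. The sketch below is only an illustration: `check_context` is a made-up helper name, and it simply compares the output of `kubectl config current-context` with the context I expect (here `int33`, the one used in this post) before anything gets applied.

```shell
# Hypothetical helper: fail unless the active kubectl context is the expected one.
# The optional second argument overrides the lookup (handy for testing).
check_context() {
  expected="$1"
  current="${2:-$(kubectl config current-context)}"
  if [ "$current" != "$expected" ]; then
    echo "Refusing to continue: context is '${current}', expected '${expected}'" >&2
    return 1
  fi
  echo "Context OK: ${current}"
}

# Example use before applying a Job:
#   check_context int33 && kubectl apply -f ./5_inventory_create_cluster2.yaml
```

Chaining it with `&&` means the `kubectl apply` never runs when the context is wrong.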

Let’s clean up, then go back to the production cluster to try again

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectx mac77

Switched to context "mac77".

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl get jobs

NAME STATUS COMPLETIONS DURATION AGE

add-hosts-to-inventory-cluster2-job Running 0/1 3m16s 3m16s

create-awx-inventory-cluster2-job Running 0/1 3m26s 3m26s

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl delete -f ./5_inventory_create_cluster2.yaml

job.batch "create-awx-inventory-cluster2-job" deleted

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl delete -f ./6_inventory_add_hosts_cluster2.yaml

job.batch "add-hosts-to-inventory-cluster2-job" deleted

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl get jobs

No resources found in default namespace.

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectx int33

Switched to context "int33".

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl apply -f ./5_inventory_create_cluster2.yaml

job.batch/create-awx-inventory-cluster2-job created

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl get jobs

NAME COMPLETIONS DURATION AGE

add-hosts-to-inventory-cluster1-job 1/1 12s 36m

awx-migration-24.6.1 1/1 5m 5d23h

create-awx-builder-credential-job 1/1 9s 29m

create-awx-inventory-cluster1-job 1/1 9s 37m

create-awx-inventory-cluster2-job 0/1 5s 5s

create-awx-organization-job 1/1 8s 78m

create-awx-project-job 1/1 8s 68m

kubectl-job 1/1 5s 367d

meet-db-migrate 0/1 120d 120d

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl apply -f ./6_inventory_add_hosts_cluster2.yaml

job.batch/add-hosts-to-inventory-cluster2-job created

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl get jobs | head -n3

NAME COMPLETIONS DURATION AGE

add-hosts-to-inventory-cluster1-job 1/1 12s 36m

add-hosts-to-inventory-cluster2-job 0/1 6s 6s

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl get jobs | head -n3

NAME COMPLETIONS DURATION AGE

add-hosts-to-inventory-cluster1-job 1/1 12s 36m

add-hosts-to-inventory-cluster2-job 1/1 8s 12s
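Rather than re-running `kubectl get jobs` until the count flips to 1/1, `kubectl wait` can block until the Job reports completion. A minimal sketch, assuming a made-up helper name `wait_for_job` and an overridable `KUBECTL` variable (neither is part of the files above):

```shell
# Hypothetical helper: block until a Job completes, then print its logs.
# KUBECTL can be overridden; it defaults to the kubectl found on PATH.
wait_for_job() {
  job="$1"
  timeout="${2:-120s}"
  "${KUBECTL:-kubectl}" wait --for=condition=complete "job/${job}" --timeout="${timeout}" &&
    "${KUBECTL:-kubectl}" logs "job/${job}"
}

# Example:
#   wait_for_job add-hosts-to-inventory-cluster2-job 60s
```

A Job whose pod never starts (like the missing-secret case earlier) would simply hit the timeout, which is still a clearer signal than a Job that sits at 0/1.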

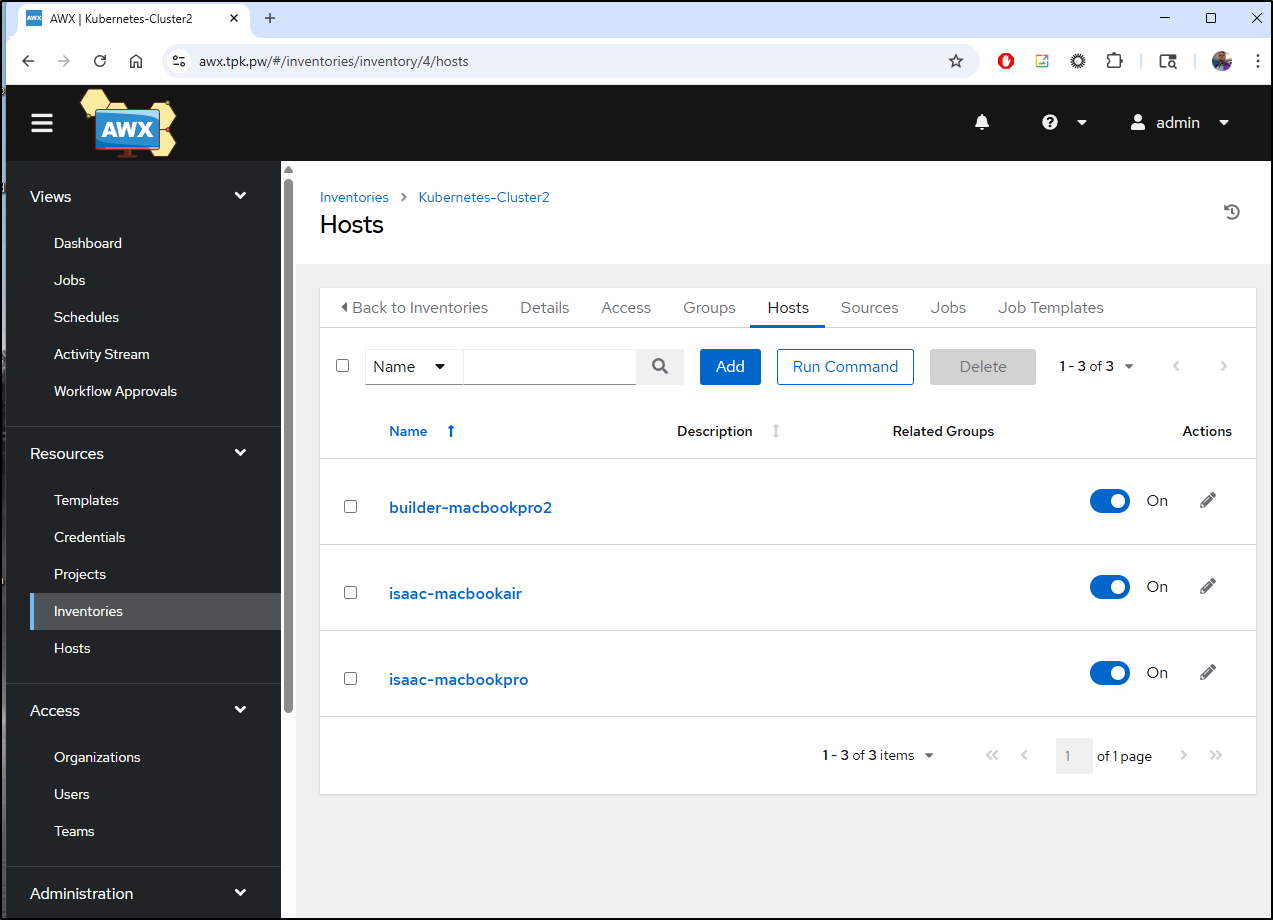

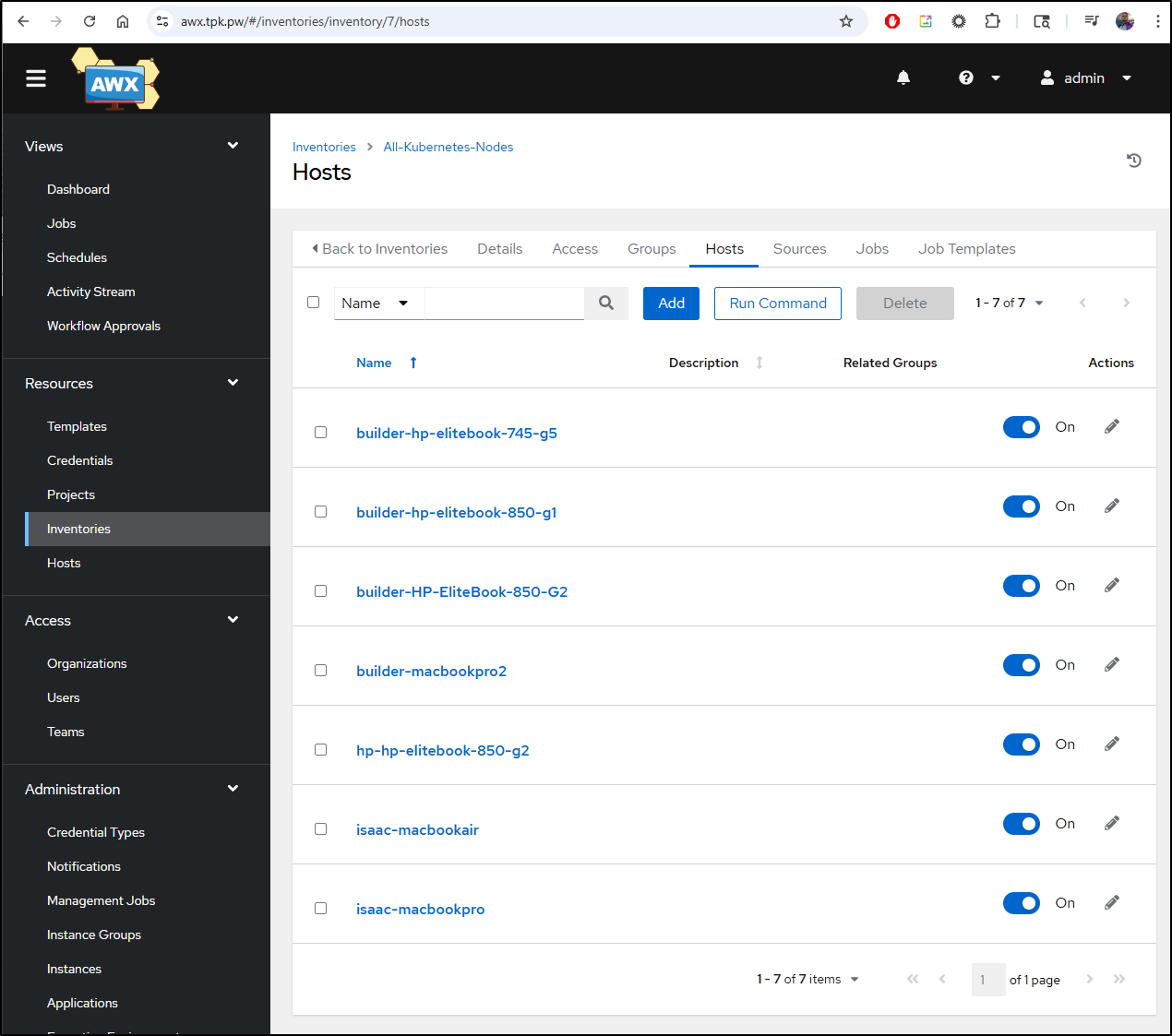

And here we can see they are entirely populated

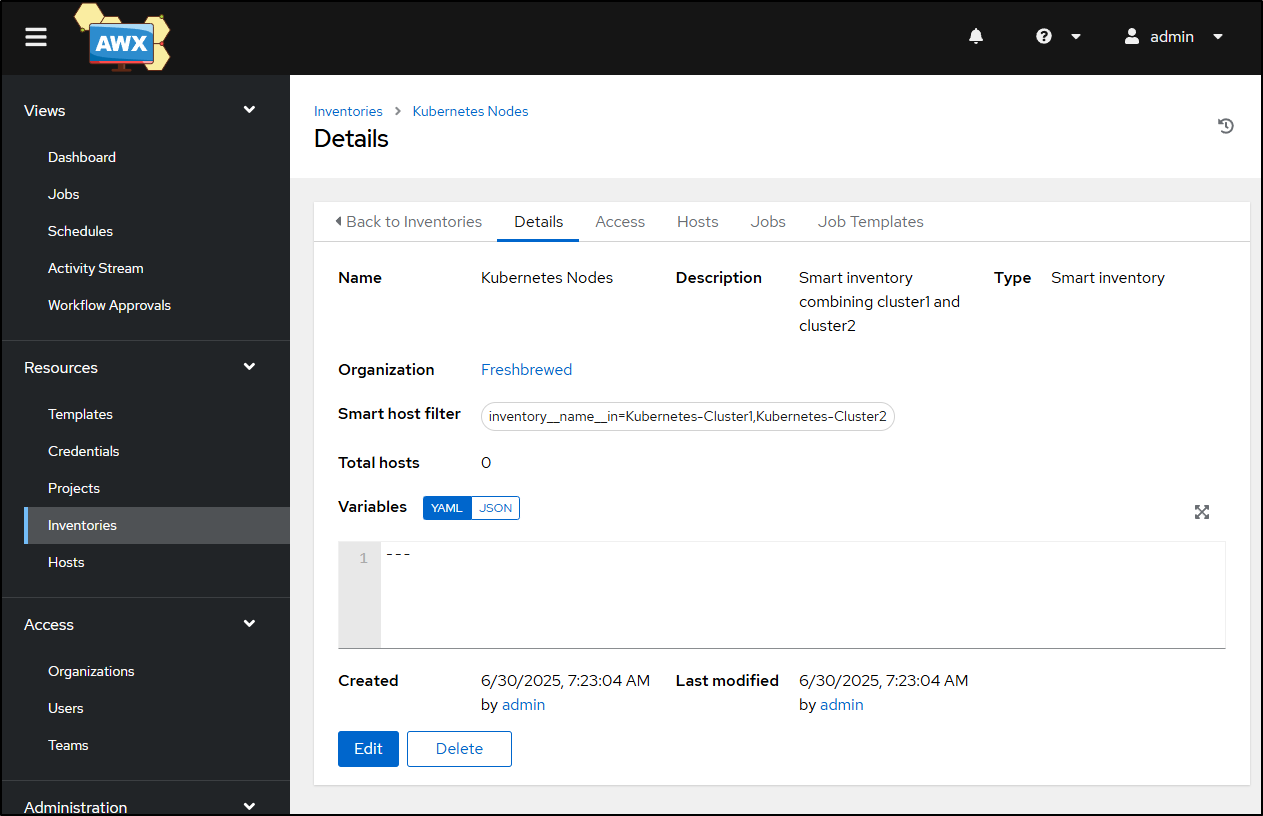

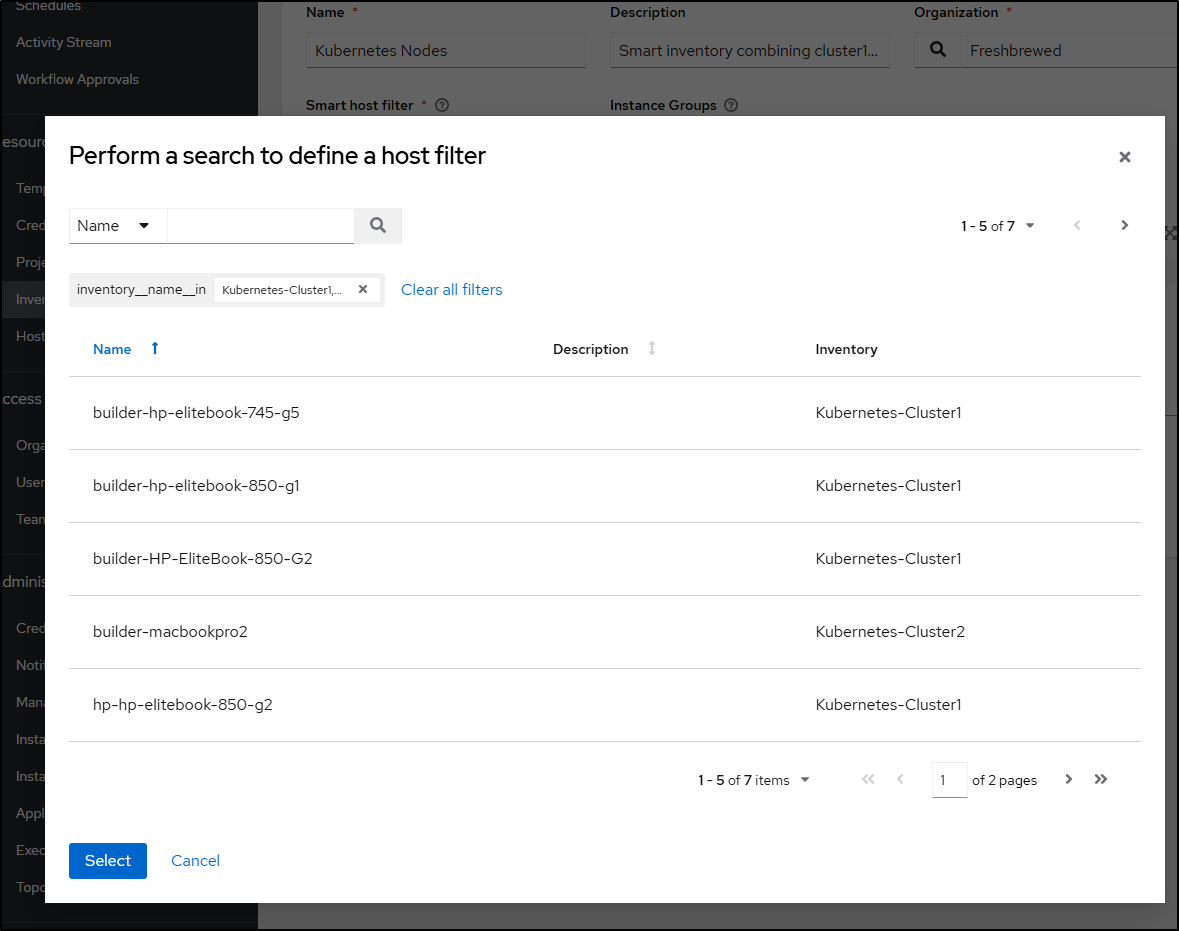

I’ll ask GCA

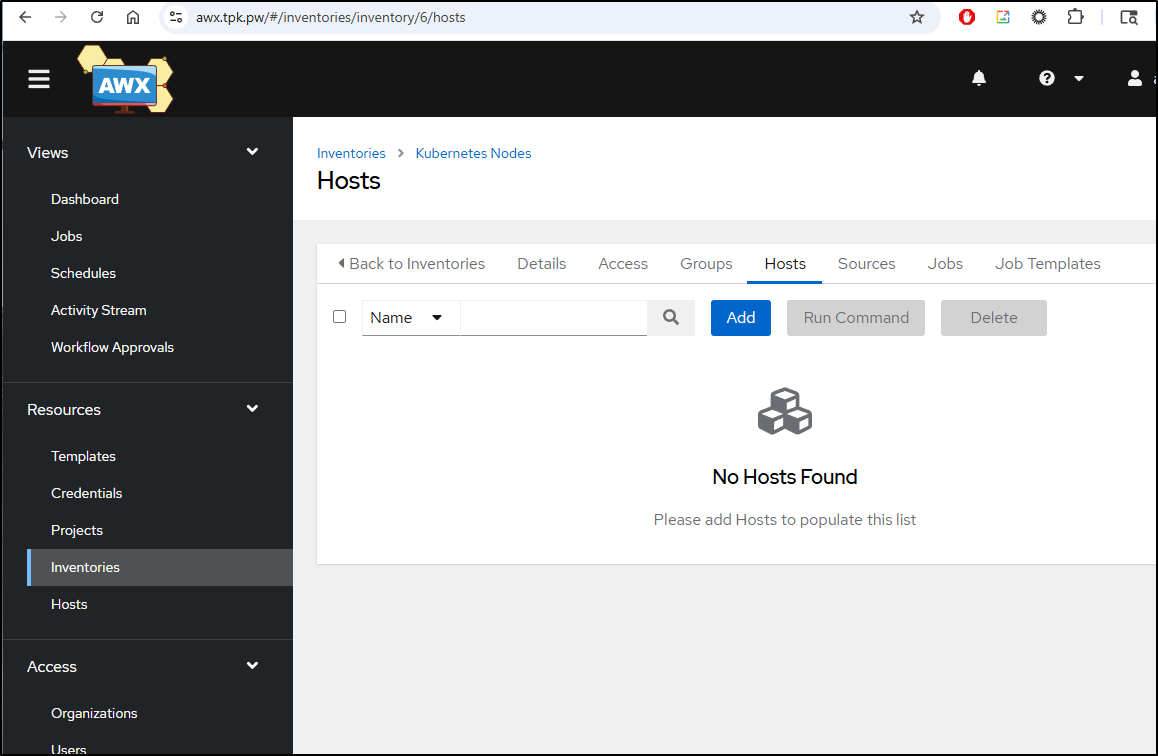

I now have two inventories, Kubernetes-Cluster1 and Kubernetes-Cluster2 as defined in files 2_inventory_create_cluster1.yaml 3_inventory_add_hosts_cluster1.yaml 5_inventory_create_cluster2.yaml and 6_inventory_add_hosts_cluster2.yaml. I want to create one more inventory called “Kubernetes Nodes” which includes all the hosts from cluster1 and cluster2

I had expected it to do what I would have done, which is simply create a third inventory that combines the lot of them, but instead it suggested a “Smart Inventory”

apiVersion: batch/v1

kind: Job

metadata:

name: create-awx-smart-inventory-nodes-job

spec:

backoffLimit: 4

template:

spec:

containers:

- name: create-awx-smart-inventory

image: alpine:latest

env:

# --- Configuration for the Organization to find ---

- name: ORG_NAME

value: "Freshbrewed"

# --- Configuration for the Smart Inventory to create ---

- name: SMART_INVENTORY_NAME

value: "Kubernetes Nodes"

- name: SOURCE_INVENTORY_1

value: "Kubernetes-Cluster1"

- name: SOURCE_INVENTORY_2

value: "Kubernetes-Cluster2"

# --- AWX API Connection Details ---

- name: AWX_API_URL

value: "http://awx-service.default.svc:80"

- name: AWX_ADMIN_PASSWORD

valueFrom:

secretKeyRef:

name: awx-admin-password

key: password

command: ["/bin/sh", "-c"]

args:

- |

# Exit on error, print commands

set -ex

echo "Installing dependencies..."

apk add --no-cache curl jq

# 1. FETCH ORGANIZATION ID

echo "Fetching ID for organization: ${ORG_NAME}"

ORG_ID=$(curl -k -f -s -u "admin:${AWX_ADMIN_PASSWORD}" "${AWX_API_URL}/api/v2/organizations/?name=${ORG_NAME}" | jq '.results[0].id')

if [ -z "$ORG_ID" ] || [ "$ORG_ID" == "null" ]; then

echo "Error: Could not find organization with name '${ORG_NAME}'"

exit 1

fi

echo "Found Organization ID: ${ORG_ID}"

# 2. CONSTRUCT THE HOST FILTER AND JSON PAYLOAD

# The 'inventory__name__in' filter finds all hosts that are in any of the listed inventories.

HOST_FILTER="inventory__name__in=${SOURCE_INVENTORY_1},${SOURCE_INVENTORY_2}"

echo "Using host filter: ${HOST_FILTER}"

SMART_INVENTORY_PAYLOAD=$(jq -n \

--arg name "$SMART_INVENTORY_NAME" \

--arg org_id "$ORG_ID" \

--arg host_filter "$HOST_FILTER" \

'{name: $name, description: "Smart inventory combining cluster1 and cluster2", organization: ($org_id|tonumber), kind: "smart", host_filter: $host_filter}')

# 3. CREATE THE SMART INVENTORY

echo "Creating smart inventory: ${SMART_INVENTORY_NAME}"

curl -k -f -X POST \

-u "admin:${AWX_ADMIN_PASSWORD}" \

-H "Content-Type: application/json" \

-d "${SMART_INVENTORY_PAYLOAD}" \

"${AWX_API_URL}/api/v2/inventories/"

echo "Successfully created smart inventory: ${SMART_INVENTORY_NAME}"

restartPolicy: OnFailure
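A side note on the `set -ex` used in these scripts: the `-x` trace prints each command after expansion, so the `-u "admin:${AWX_ADMIN_PASSWORD}"` argument ends up in the pod log. The simplest fix is to drop the `x`. If the trace is still wanted, one option is to switch it off just around the curl call. This is only a sketch, and `awx_curl` is a made-up wrapper name, not something from the generated Jobs:

```shell
# Hypothetical wrapper: run curl with the admin credentials, with xtrace disabled
# inside a subshell so the expanded password is not written to the log.
awx_curl() {
  (
    set +x
    curl -k -f -s -u "admin:${AWX_ADMIN_PASSWORD}" "$@"
  )
}

# Example, as a drop-in for the organization lookup:
#   ORG_ID=$(awx_curl "${AWX_API_URL}/api/v2/organizations/?name=${ORG_NAME}" | jq '.results[0].id')
```

Because `set +x` runs inside the subshell, tracing resumes for the rest of the script as soon as the wrapper returns.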

I see it made an inventory

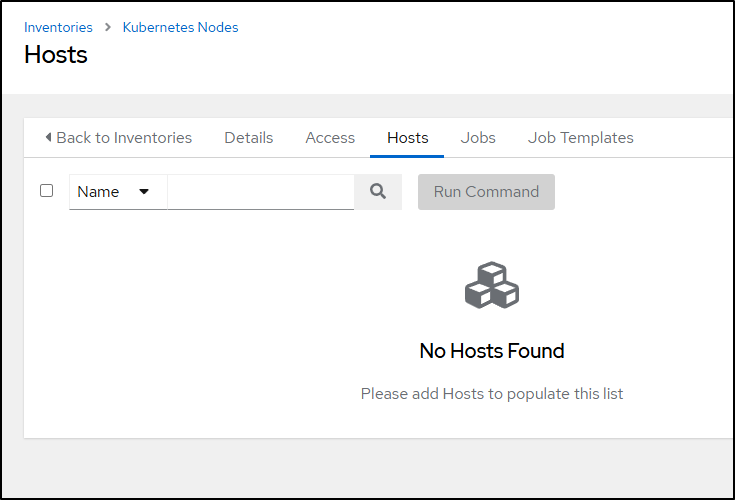

but it was empty

Though when I edited it and checked the filter, it looked right

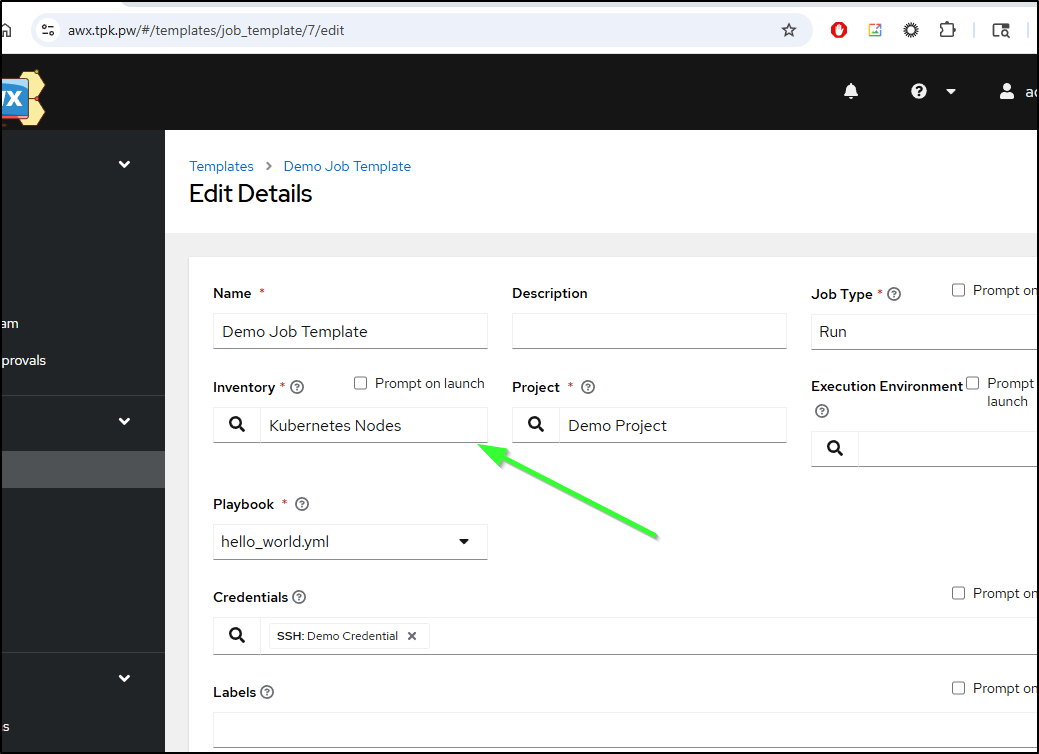

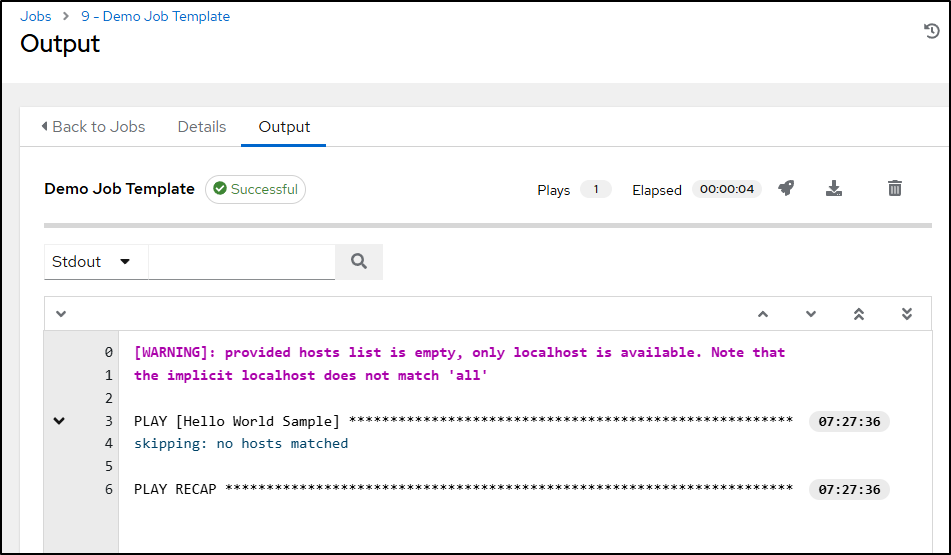

Let’s test with the demo job by editing the job template to pick our new “smart inventory”

That didn’t seem to work

I’ll pivot with GCA

actually I would like the job to fetch the currently defined hosts from Kubernetes-Cluster1 and Kubernetes-Cluster2 and then create a new Kubernetes Nodes inventory and then populate with each host it had found from Cluster1 and Cluster2.

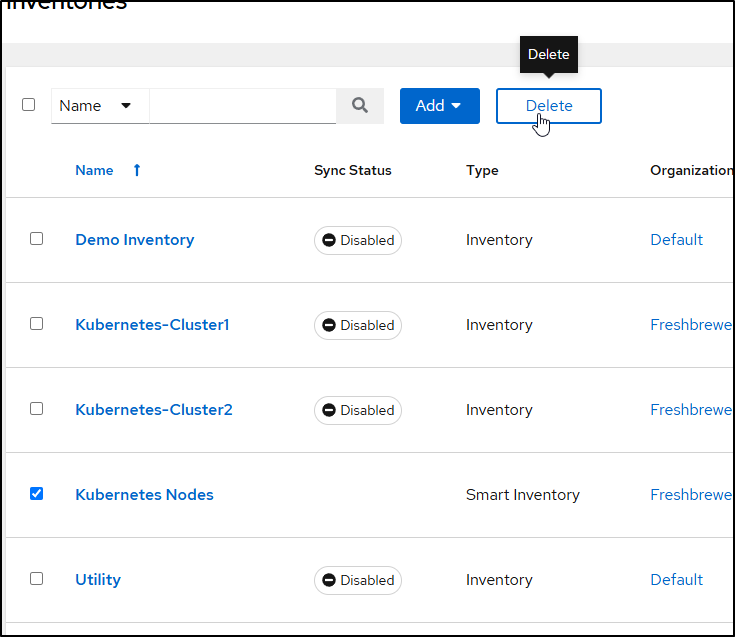

I’ll first clean up by deleting the current job

$ kubectl delete job create-awx-smart-inventory-nodes-job

job.batch "create-awx-smart-inventory-nodes-job" deleted

and the smart inventory

Then using the suggested “static” option

apiVersion: batch/v1

kind: Job

metadata:

name: create-awx-static-inventory-nodes-job

spec:

backoffLimit: 4

template:

spec:

containers:

- name: create-awx-smart-inventory

image: alpine:latest

env:

# --- Configuration for the Organization to find ---

- name: ORG_NAME

value: "Freshbrewed"

# --- Configuration for the new static Inventory to create ---

- name: TARGET_INVENTORY_NAME

value: "Kubernetes Nodes"

- name: SOURCE_INVENTORY_1

value: "Kubernetes-Cluster1"

- name: SOURCE_INVENTORY_2

value: "Kubernetes-Cluster2"

# --- AWX API Connection Details ---

- name: AWX_API_URL

value: "http://awx-service.default.svc:80"

- name: AWX_ADMIN_PASSWORD

valueFrom:

secretKeyRef:

name: awx-admin-password

key: password

command: ["/bin/sh", "-c"]

args:

- |

# Exit on error, print commands

set -ex

echo "Installing dependencies..."

apk add --no-cache curl jq

# 1. FETCH ORGANIZATION ID

echo "Fetching ID for organization: ${ORG_NAME}"

ORG_ID=$(curl -k -f -s -u "admin:${AWX_ADMIN_PASSWORD}" "${AWX_API_URL}/api/v2/organizations/?name=${ORG_NAME}" | jq '.results[0].id')

if [ -z "$ORG_ID" ] || [ "$ORG_ID" == "null" ]; then

echo "Error: Could not find organization with name '${ORG_NAME}'"

exit 1

fi

echo "Found Organization ID: ${ORG_ID}"

# 2. CREATE THE NEW STANDARD INVENTORY

echo "Creating target inventory: ${TARGET_INVENTORY_NAME}"

TARGET_INVENTORY_PAYLOAD="{\"name\": \"${TARGET_INVENTORY_NAME}\", \"organization\": ${ORG_ID}, \"description\": \"Static inventory populated from other clusters\"}"

TARGET_INVENTORY_ID=$(curl -k -f -s -X POST -u "admin:${AWX_ADMIN_PASSWORD}" -H "Content-Type: application/json" -d "${TARGET_INVENTORY_PAYLOAD}" "${AWX_API_URL}/api/v2/inventories/" | jq '.id')

if [ -z "$TARGET_INVENTORY_ID" ] || [ "$TARGET_INVENTORY_ID" == "null" ]; then

echo "Error: Failed to create inventory '${TARGET_INVENTORY_NAME}' or could not parse its ID."

exit 1

fi

echo "Successfully created new inventory with ID: ${TARGET_INVENTORY_ID}"

# 3. FETCH ALL HOSTS FROM SOURCE INVENTORIES

echo "Fetching all hosts from ${SOURCE_INVENTORY_1} and ${SOURCE_INVENTORY_2}"

# Use a high page_size to avoid dealing with pagination in shell.

# The jq '.results' will give us a JSON array of host objects.

ALL_HOSTS_JSON=$(curl -k -f -s -u "admin:${AWX_ADMIN_PASSWORD}" "${AWX_API_URL}/api/v2/hosts/?inventory__name__in=${SOURCE_INVENTORY_1},${SOURCE_INVENTORY_2}&page_size=200" | jq '.results')

# 4. LOOP THROUGH HOSTS AND ADD THEM TO THE NEW INVENTORY

echo "Populating new inventory with found hosts..."

# Use jq to iterate over the array. The -c flag produces compact output, one JSON object per line.

echo "${ALL_HOSTS_JSON}" | jq -c '.[]' | while read host_object; do

# For each host object, construct the payload for the new host.

# We just need the name and variables.

ADD_HOST_PAYLOAD=$(echo "${host_object}" | jq '{name, variables}')

HOST_NAME=$(echo "${host_object}" | jq -r '.name')

echo "Adding host: ${HOST_NAME} to inventory ID ${TARGET_INVENTORY_ID}"

curl -k -f -X POST \

-u "admin:${AWX_ADMIN_PASSWORD}" \

-H "Content-Type: application/json" \

-d "${ADD_HOST_PAYLOAD}" \

"${AWX_API_URL}/api/v2/inventories/${TARGET_INVENTORY_ID}/hosts/"

done

HOST_COUNT=$(echo "${ALL_HOSTS_JSON}" | jq 'length')

echo "Successfully populated inventory '${TARGET_INVENTORY_NAME}' with ${HOST_COUNT} hosts."

restartPolicy: OnFailure

I’ll apply

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl apply -f ./7_inventory_create_static_nodes.yaml

job.batch/create-awx-static-inventory-nodes-job created

builder@DESKTOP-QADGF36:~/Workspaces/awxSetup$ kubectl get jobs | head -n3

NAME COMPLETIONS DURATION AGE

add-hosts-to-inventory-cluster1-job 1/1 12s 51m

add-hosts-to-inventory-cluster2-job 1/1 8s 14m

This created the inventory but no hosts

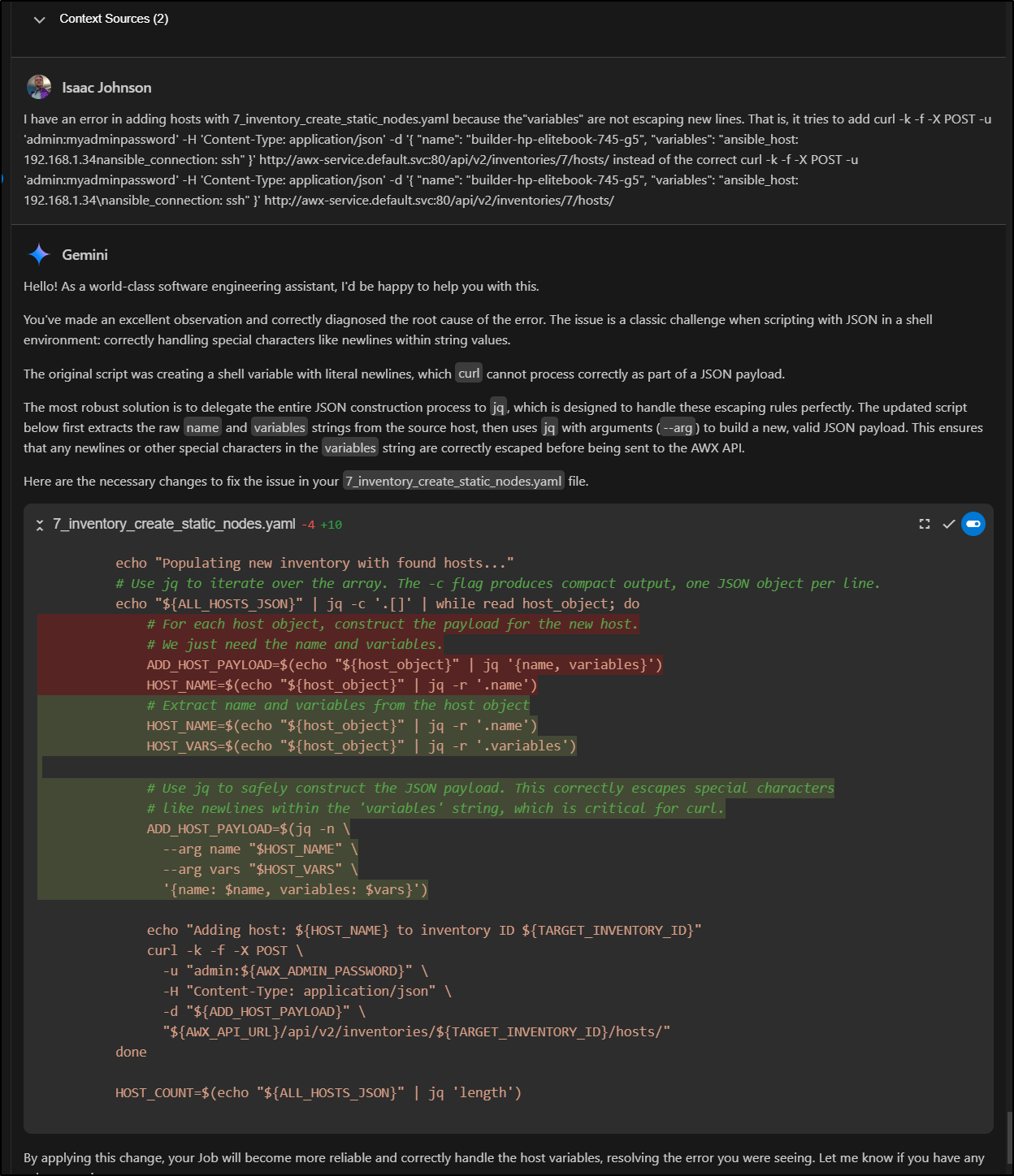

I found a couple of minor issues. First, I needed to make it idempotent (don’t error if the inventory already exists). Second, the variables needed their newlines escaped (it was trying to add “variables”: “ansible_host: 192.168.1.34nansible_connection: ssh”, for instance)
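The idempotency part boils down to looking the inventory up by name first and only POSTing when nothing comes back. A rough sketch of that idea, using the same variables as the Job; the helper names `first_id` and `get_or_create_inventory` are made up for illustration and this is not the exact code I ended up with:

```shell
# Hypothetical helper: print the id of the first result in an AWX list
# response read from stdin, or nothing at all when the list is empty.
first_id() {
  jq -r '.results[0].id // empty'
}

# Hypothetical helper: reuse the inventory if it exists, otherwise create it.
# Expects AWX_API_URL, AWX_ADMIN_PASSWORD, ORG_ID and TARGET_INVENTORY_NAME.
get_or_create_inventory() {
  # -G with --data-urlencode sends a GET and encodes the space in "Kubernetes Nodes".
  existing=$(curl -k -f -s -G -u "admin:${AWX_ADMIN_PASSWORD}" \
    --data-urlencode "name=${TARGET_INVENTORY_NAME}" \
    "${AWX_API_URL}/api/v2/inventories/" | first_id)
  if [ -n "$existing" ]; then
    echo "$existing"
    return 0
  fi
  payload=$(jq -n --arg name "$TARGET_INVENTORY_NAME" --arg org "$ORG_ID" \
    '{name: $name, organization: ($org|tonumber)}')
  curl -k -f -s -X POST -u "admin:${AWX_ADMIN_PASSWORD}" \
    -H "Content-Type: application/json" -d "$payload" \
    "${AWX_API_URL}/api/v2/inventories/" | jq -r '.id'
}

# Example:
#   TARGET_INVENTORY_ID=$(get_or_create_inventory)
```

With this shape, a retry after a partial failure picks up the existing inventory ID instead of failing on a duplicate name.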

I liked GCA’s creative idea of using jq to solve the escaping

I actually fought this for a bit (and even tried GPT-4.1). But sometimes this is where we have to step in and figure it out ourselves. What I was seeing was the variables coming through the loop without their escaped newlines.

I solved it by recognizing that anything reading “nansible_” should really be “\nansible_”, and changed the code to find and fix it with sed

# 5. LOOP THROUGH SOURCE HOSTS AND ADD THEM TO THE NEW INVENTORY

echo "Populating new inventory with found hosts..."

# Use jq to iterate over the array. The -c flag produces compact output, one JSON object per line.

echo "${ALL_HOSTS_JSON}" | jq -c '.[]' | while read host_object; do

# Extract name and variables from the host object

HOST_NAME=$(echo "${host_object}" | jq -r '.name')

HOST_VARS=$(echo "${host_object}" | jq -r '.variables')

echo "Processing host: ${HOST_NAME} with variables: ${HOST_VARS}"

vars_json=$(echo "$HOST_VARS" | sed 's/nansible_/\\nansible_/g')

echo "Processing host: ${HOST_NAME} with variables: ${vars_json}"

echo "Adding host: ${HOST_NAME} to inventory ID ${TARGET_INVENTORY_ID}"

curl -k -f -X POST \

-u "admin:${AWX_ADMIN_PASSWORD}" \

-H "Content-Type: application/json" \

-d "{\"name\": \"$HOST_NAME\", \"variables\": \"$vars_json\"}" \

"${AWX_API_URL}/api/v2/inventories/${TARGET_INVENTORY_ID}/hosts/"

done

which worked
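For what it’s worth, a bare `n` where `\n` should be is exactly what a plain `read` produces: without `-r` the shell treats the backslash as an escape character and drops it. So a likely alternative to the `sed` patch is `read -r`, with jq building each payload. I haven’t re-run the Job this way, so treat it as a sketch; `add_hosts` is a made-up function name and the variables are the ones from the Job.

```shell
# A host object as `jq -c` prints it: the newline inside "variables" is the
# two characters backslash + n.
line='{"name":"host1","variables":"ansible_host: 192.168.1.34\nansible_connection: ssh"}'

# Plain read consumes the backslash; read -r leaves the line untouched.
printf '%s\n' "$line" | { read obj; printf 'read:    %s\n' "$obj"; }
printf '%s\n' "$line" | { IFS= read -r obj; printf 'read -r: %s\n' "$obj"; }

# Sketch of the loop with read -r, letting jq emit each payload so no manual
# escaping is needed.
add_hosts() {
  printf '%s\n' "${ALL_HOSTS_JSON}" | jq -c '.[] | {name, variables}' |
    while IFS= read -r payload; do
      curl -k -f -s -X POST -u "admin:${AWX_ADMIN_PASSWORD}" \
        -H "Content-Type: application/json" -d "${payload}" \
        "${AWX_API_URL}/api/v2/inventories/${TARGET_INVENTORY_ID}/hosts/"
    done
}
```

The first `printf` line shows the same `34nansible_connection` damage as above, while the second keeps the `\n` intact. That also removes the dependency on every variable name starting with `ansible_`.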

Summary

Today we worked through setting up a new AWX instance, this time using the newer Operator model, because my former AWX 17.1.0 instance, based on a long-abandoned Adwerx chart, was dying.

I managed to fix my cluster and access the old one again, but then we used it as a basis to build out and test the new AWX instance (which is based on AWX 24.6.1)

Once the initial tests were sorted, we walked through creating a handful of Kubernetes Jobs to create the organization, project, inventories, Machine credential and hosts.

Lastly, we worked through making the inventory creation more robust and idempotent and fixing some issues with newlines and jq.

Hopefully you found something useful in that. I know it was fun for me to revisit this, as I had done it so many times before, but never for myself.