Published: Jun 17, 2025 by Isaac Johnson

Fairwinds was an OSN sponsor this year and had a few interesting open-source projects I wanted to circle back to. They are a managed service provider for GKE, but what I think is really cool is that they open-source a lot of their key tooling.

I have a sticker pack in my OSN bag with their more recent open-source offerings, so let’s check them out. The first we’ll explore is Goldilocks.

Goldilocks

Goldilocks is a tool to answer the question “Well, what should be the right size for my pods?” The CTO of Fairwinds was saying on a call that customers kept asking for help determining their pods’ correct size, and that’s how they came up with Goldilocks: not too small, not too big, but just right.

This sounds really interesting to me because, in my own experience, right-sizing pods is as much art as science, and it invariably becomes a heated discussion whenever we start to hit scaling issues.

Part of the reason it becomes contentious, in my opinion, is that all sorts of monitoring and “costing” tools (such as Ternary and Kubecost) will suggest we “can save money” by reducing pod sizes, but there are often consequences: smaller pods can bring QoS and performance issues.

Installation Pre-reqs

Before I can get started, I need to add a few key pieces.

First, we need to install the metrics-server if it isn’t there already:

$ kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml
Warning: resource serviceaccounts/metrics-server is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
serviceaccount/metrics-server configured
Warning: resource clusterroles/system:aggregated-metrics-reader is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader configured
Warning: resource clusterroles/system:metrics-server is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
clusterrole.rbac.authorization.k8s.io/system:metrics-server configured
Warning: resource rolebindings/metrics-server-auth-reader is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader configured
Warning: resource clusterrolebindings/metrics-server:system:auth-delegator is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator configured
Warning: resource clusterrolebindings/system:metrics-server is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server configured
Warning: resource services/metrics-server is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
service/metrics-server configured
Warning: resource deployments/metrics-server is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
deployment.apps/metrics-server configured
Warning: resource apiservices/v1beta1.metrics.k8s.io is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io configured

Alternatively, we can use Helm to install it:

builder@LuiGi:~$ helm repo add metrics-server https://kubernetes-sigs.github.io/metrics-server/
"metrics-server" has been added to your repositories
builder@LuiGi:~$ helm upgrade --install metrics-server metrics-server/metrics-server
Release "metrics-server" does not exist. Installing it now.
NAME: metrics-server
LAST DEPLOYED: Sun Jun 8 13:12:42 2025
NAMESPACE: default
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
***********************************************************************
* Metrics Server *
***********************************************************************
Chart version: 3.12.2
App version: 0.7.2
Image tag: registry.k8s.io/metrics-server/metrics-server:v0.7.2
***********************************************************************

Next, I’ll need a VPA (Vertical Pod Autoscaler) installed. Fairwinds has a Helm chart for that which we can use:

builder@LuiGi:~$ helm repo add fairwinds-stable https://charts.fairwinds.com/stable
"fairwinds-stable" has been added to your repositories
builder@LuiGi:~$ helm install vpa fairwinds-stable/vpa --namespace vpa --create-namespace
NAME: vpa
LAST DEPLOYED: Sun Jun 8 13:15:03 2025
NAMESPACE: vpa
STATUS: deployed
REVISION: 1
NOTES:
Congratulations on installing the Vertical Pod Autoscaler!
Components Installed:
- recommender
- updater
- admission-controller
To verify functionality, you can try running 'helm -n vpa test vpa'

Note: I’m not sure if it was the weak Wi-Fi at a sporting event, but the Helm install took a very long time with no output until the end.

Goldilocks install

Let’s now move on to installing Goldilocks with Helm:

$ helm repo add fairwinds-stable https://charts.fairwinds.com/stable
$ helm install goldilocks --create-namespace --namespace goldilocks fairwinds-stable/goldilocks
NAME: goldilocks
LAST DEPLOYED: Sun Jun 8 13:23:13 2025
NAMESPACE: goldilocks
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
1. Get the application URL by running these commands:
kubectl -n goldilocks port-forward svc/goldilocks-dashboard 8080:80
echo "Visit http://127.0.0.1:8080 to use your application"

Checking that the pods are healthy:

builder@LuiGi:~$ kubectl get po -n goldilocks
NAME                                    READY   STATUS    RESTARTS   AGE
goldilocks-controller-c96cbbd94-x8wd2   1/1     Running   0          8m59s
goldilocks-dashboard-5d486cdd4d-rgjjt   1/1     Running   0          8m59s
goldilocks-dashboard-5d486cdd4d-zxkgl   1/1     Running   0          8m54s
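The controller pod is the piece doing the work. As I understand it, Goldilocks does not compute sizes itself: for each workload in an enabled namespace it creates a VerticalPodAutoscaler object with its update mode set to "Off", so the VPA recommender installed earlier publishes recommendations without ever evicting or resizing pods. Below is a minimal Python sketch of roughly what such an object looks like. The `vpa_manifest` helper, the `goldilocks-` name prefix and the `my-app` Deployment are illustrative assumptions, not Goldilocks code.

```python
import json


def vpa_manifest(workload: str, namespace: str, kind: str = "Deployment") -> dict:
    """Build a recommendation-only VerticalPodAutoscaler manifest (illustrative helper)."""
    return {
        "apiVersion": "autoscaling.k8s.io/v1",
        "kind": "VerticalPodAutoscaler",
        # Assumption: a "goldilocks-" name prefix, purely for illustration.
        "metadata": {"name": f"goldilocks-{workload}", "namespace": namespace},
        "spec": {
            # Which workload the VPA should watch.
            "targetRef": {"apiVersion": "apps/v1", "kind": kind, "name": workload},
            # "Off": compute recommendations only; never evict or resize pods.
            "updatePolicy": {"updateMode": "Off"},
        },
    }


if __name__ == "__main__":
    # Hypothetical Deployment name; prints the manifest as JSON.
    print(json.dumps(vpa_manifest("my-app", "default"), indent=2))
```

Because kubectl accepts JSON manifests as well as YAML, the printed output could be piped to `kubectl apply -f -`. With Goldilocks running, though, there should be no need to create these by hand.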

I can then port-forward to the service

builder@LuiGi:~$ kubectl get svc -n goldilocks
NAME                   TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
goldilocks-dashboard   ClusterIP   10.43.253.69   <none>        80/TCP    9m44s
builder@LuiGi:~$ kubectl port-forward svc/goldilocks-dashboard -n goldilocks 8088:80
Forwarding from 127.0.0.1:8088 -> 8080
Forwarding from [::1]:8088 -> 8080

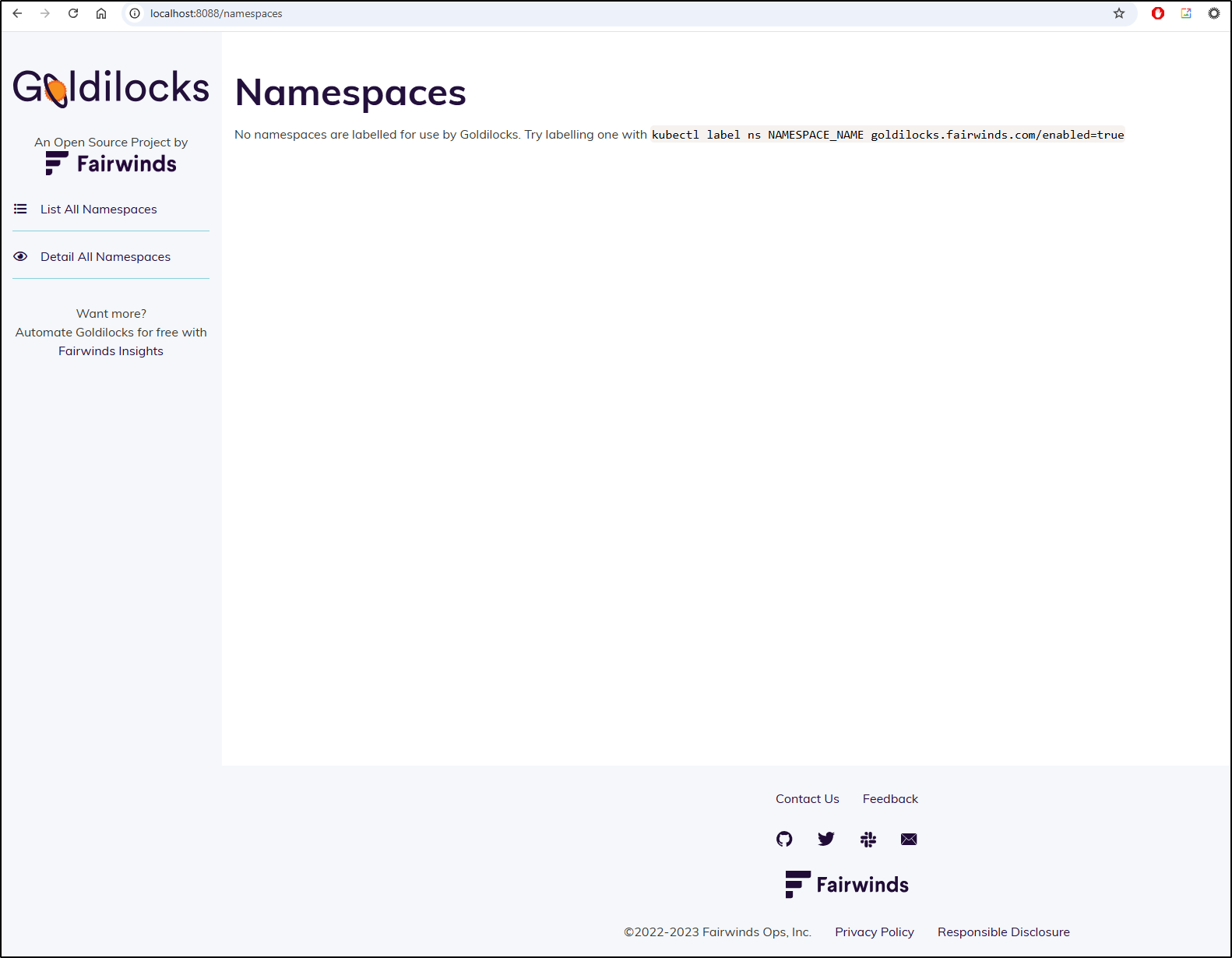

This brings up the dashboard

Clearly, we need to label a namespace to see any results, using kubectl label ns NAMESPACE_NAME goldilocks.fairwinds.com/enabled=true

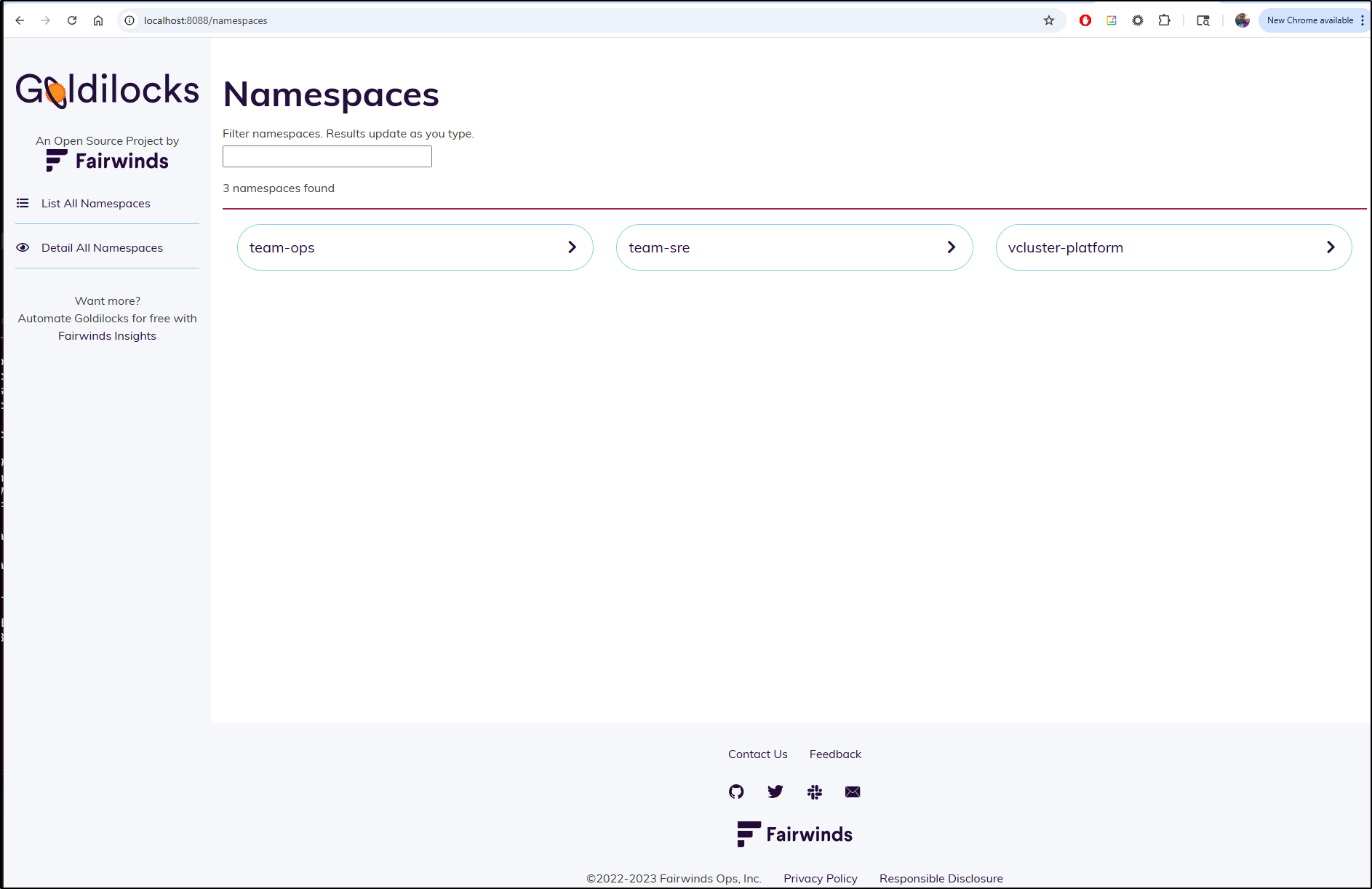

We can see that my test cluster has two vClusters as well as the vCluster platform itself (from Loft):

builder@LuiGi:~$ kubectl get ns
NAME                STATUS   AGE
default             Active   65d
goldilocks          Active   12m
kube-node-lease     Active   65d
kube-public         Active   65d
kube-system         Active   65d
p-default           Active   36d
team-ops            Active   36d
team-sre            Active   37d
vcluster-platform   Active   36d
vpa                 Active   20m

Let’s label those:

builder@LuiGi:~$ kubectl label ns team-ops goldilocks.fairwinds.com/enabled=true
namespace/team-ops labeled
builder@LuiGi:~$ kubectl label ns team-sre goldilocks.fairwinds.com/enabled=true
namespace/team-sre labeled
builder@LuiGi:~$ kubectl label ns vcluster-platform goldilocks.fairwinds.com/enabled=true
namespace/vcluster-platform labeled

I can now see those in the Goldilocks dashboard

Note: If you want the default namespace included, label it as well:

$ kubectl label ns default goldilocks.fairwinds.com/enabled=true
namespace/default labeled
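Only namespaces carrying that label get watched. The opt-in check amounts to something like the sketch below. The namespace data is a hand-written stand-in for what `kubectl get ns --show-labels` would report, and `enabled_namespaces` is a hypothetical helper, not part of Goldilocks.

```python
ENABLED_LABEL = "goldilocks.fairwinds.com/enabled"


def enabled_namespaces(namespaces: dict[str, dict[str, str]]) -> list[str]:
    """Return the names of namespaces that opted in with the label value "true"."""
    return sorted(
        name
        for name, labels in namespaces.items()
        if labels.get(ENABLED_LABEL) == "true"
    )


if __name__ == "__main__":
    # Hand-written stand-in for a few namespaces in the test cluster.
    cluster = {
        "default": {ENABLED_LABEL: "true"},
        "kube-system": {},
        "team-ops": {ENABLED_LABEL: "true"},
        "team-sre": {ENABLED_LABEL: "true"},
        "vcluster-platform": {ENABLED_LABEL: "true"},
        "vpa": {},
    }
    print(enabled_namespaces(cluster))
```

Anything without the label (kube-system and vpa in this example) is simply skipped. That opt-in behavior is what makes trying this on a real cluster less nerve-wracking.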

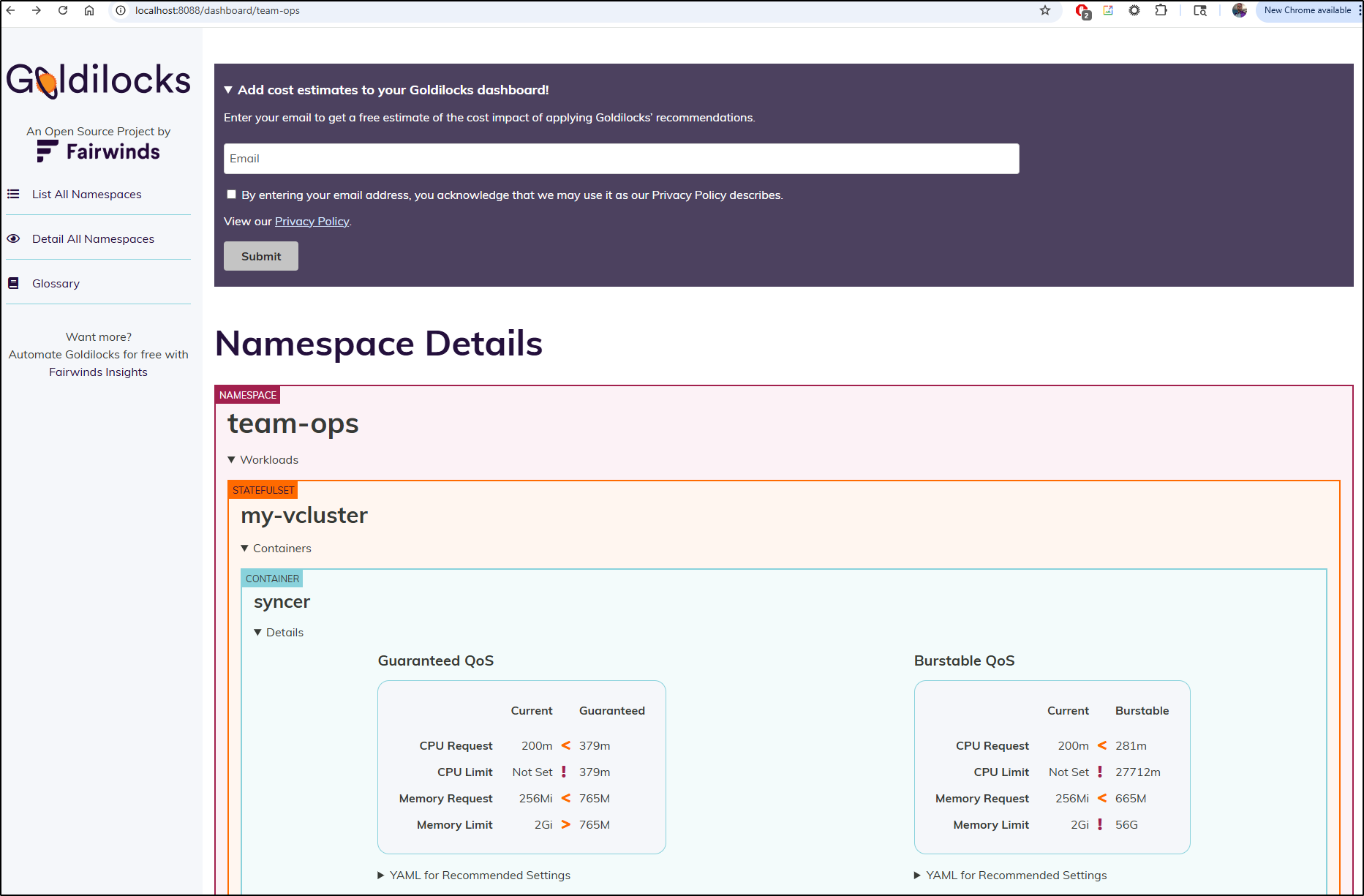

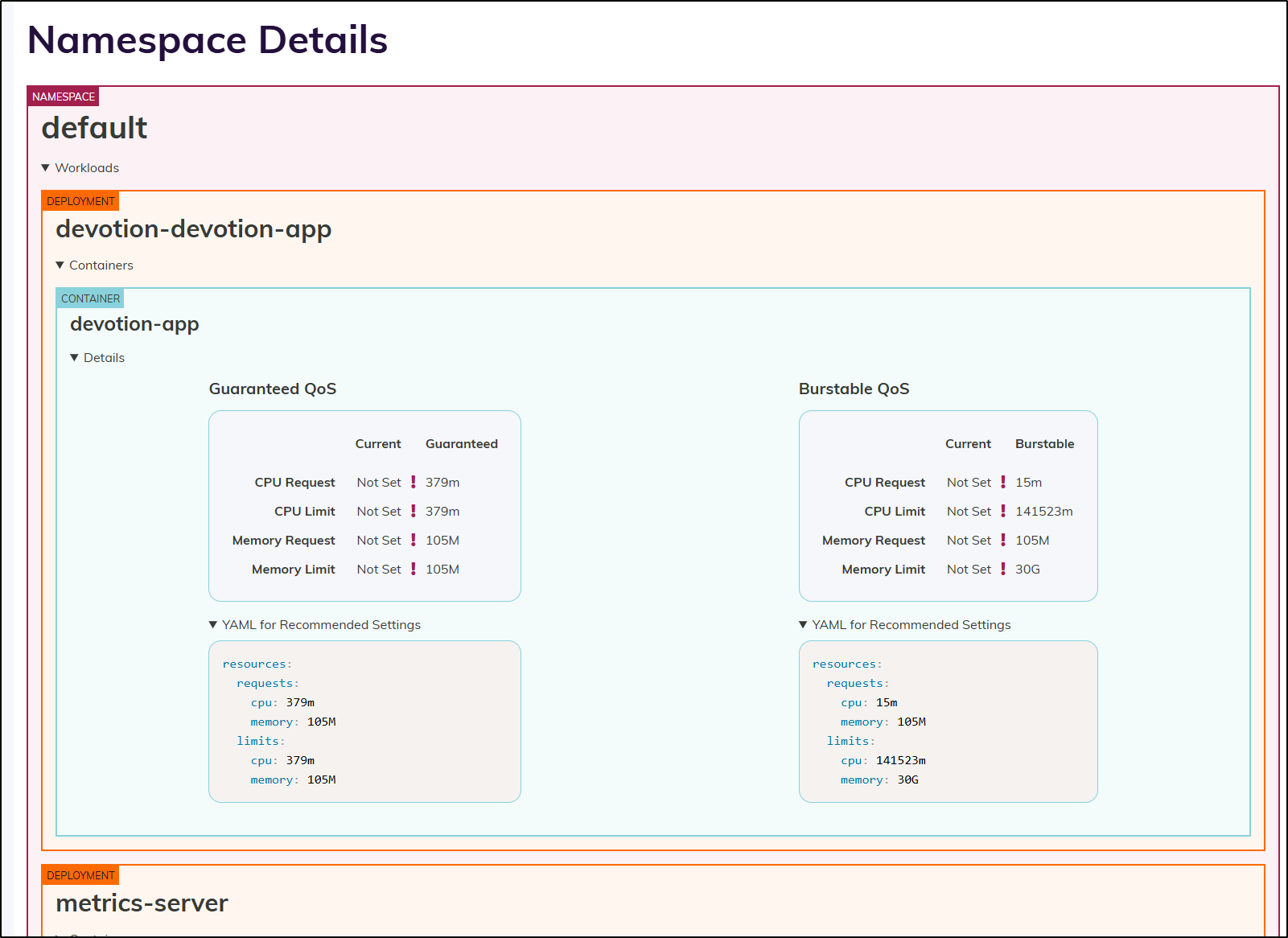

Almost immediately we can see some recommendations

I am not that thrilled with a massive purple banner trying to get me to sign up with Fairwinds sales. It seems a bit “needy” to me.

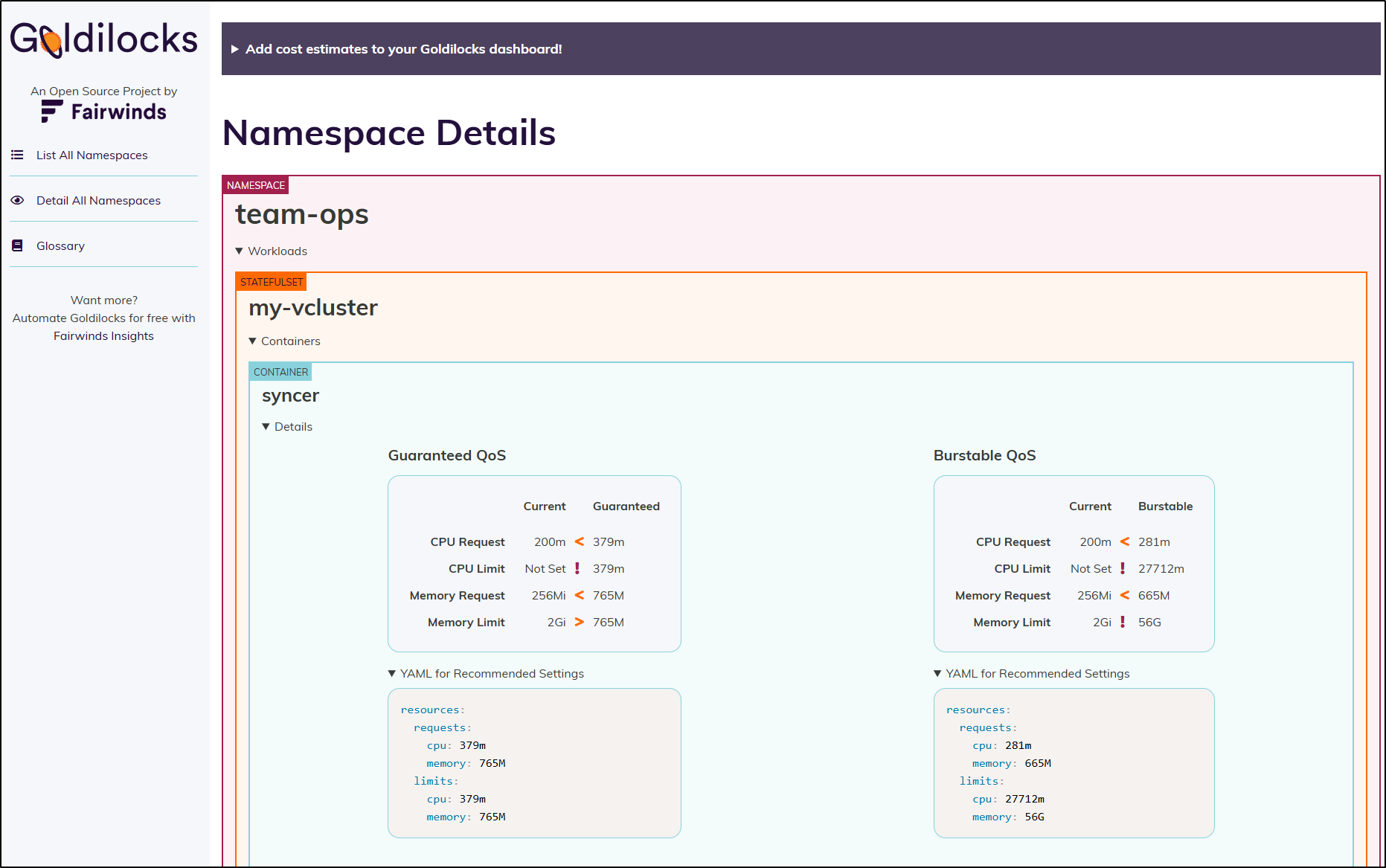

That said, I can minimize it as well as see the YAML block I would use in my charts or manifests

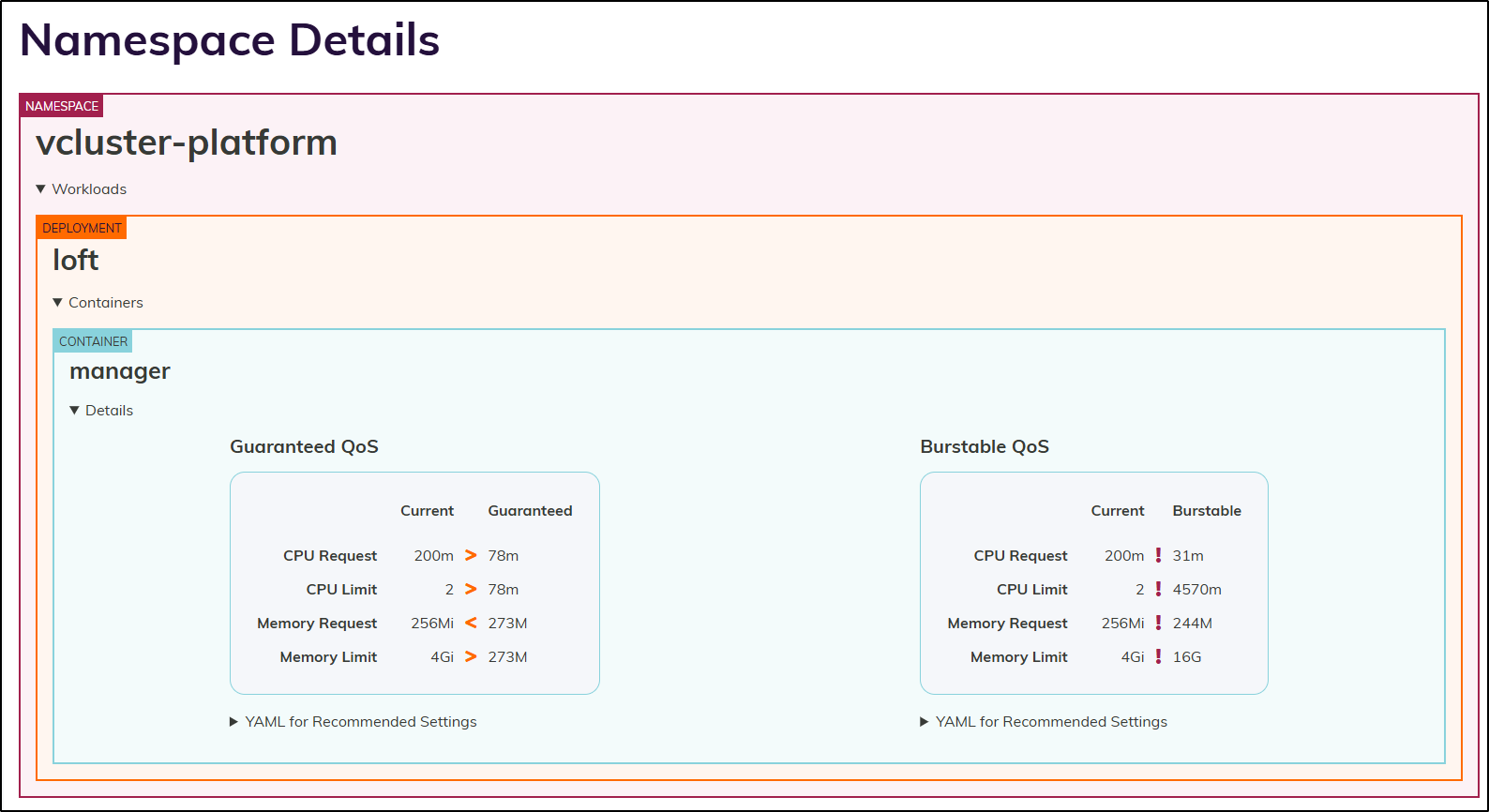
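Those YAML blocks are derived from the VPA’s status. My reading of the Goldilocks docs is that the Guaranteed QoS suggestion uses the VPA `target` for both requests and limits, while the Burstable suggestion uses `lowerBound` for requests and `upperBound` for limits. Below is a small sketch of that mapping. `goldilocks_suggestions` is a hypothetical helper, and the recommendation is made up, shaped like an entry in the VPA’s `status.recommendation.containerRecommendations`.

```python
def goldilocks_suggestions(container_rec: dict) -> dict:
    """Turn one VPA container recommendation into Guaranteed and Burstable resource blocks."""
    return {
        # Guaranteed QoS: requests == limits, both set to the VPA target.
        "guaranteed": {
            "requests": dict(container_rec["target"]),
            "limits": dict(container_rec["target"]),
        },
        # Burstable QoS: request at the lower bound, limit at the upper bound.
        "burstable": {
            "requests": dict(container_rec["lowerBound"]),
            "limits": dict(container_rec["upperBound"]),
        },
    }


if __name__ == "__main__":
    # Made-up recommendation, shaped like a VPA status entry.
    rec = {
        "containerName": "app",
        "lowerBound": {"cpu": "10m", "memory": "50Mi"},
        "target": {"cpu": "25m", "memory": "64Mi"},
        "upperBound": {"cpu": "100m", "memory": "128Mi"},
    }
    print(goldilocks_suggestions(rec))
```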

I found it particularly interesting that, for the Guaranteed Quality of Service (QoS) class, it detected that I had set the pod values too low, that the memory limit was out of whack, and that the CPU limit did not exist.

While it doesn’t happen often, a runaway process can start to hog CPU, and without a limit nothing throttles it, so it can starve the other pods on that node (Kubernetes won’t automatically replace the node for you). That’s a good catch, imho.
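For reference, Kubernetes assigns a pod’s QoS class from its containers’ CPU and memory settings:

- **Guaranteed:** every container has CPU and memory limits, with requests equal to those limits. An unset request defaults to its limit.
- **BestEffort:** no container sets any CPU or memory request or limit.
- **Burstable:** everything else.

The `qos_class` helper below is a hypothetical, simplified classifier. It ignores init containers and compares quantities as plain strings, so "1" and "1000m" would be treated as different.

```python
RESOURCES = ("cpu", "memory")


def qos_class(containers: list[dict]) -> str:
    """Simplified Kubernetes QoS classification.

    Each container is {"requests": {...}, "limits": {...}} with string quantities.
    """
    any_set = False
    guaranteed = True
    for c in containers:
        requests = c.get("requests", {})
        limits = c.get("limits", {})
        if any(r in requests or r in limits for r in RESOURCES):
            any_set = True
        for r in RESOURCES:
            # If only a limit is given, Kubernetes defaults the request to the limit.
            request = requests.get(r, limits.get(r))
            if r not in limits or request != limits[r]:
                guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


if __name__ == "__main__":
    same = {"cpu": "379m", "memory": "105M"}
    print(qos_class([{"requests": same, "limits": same}]))  # requests == limits
    print(qos_class([{"requests": {"cpu": "15m"}}]))  # request only, no limits
```

Under these rules, a container with a CPU request but no CPU limit lands in Burstable. That trades Guaranteed’s predictability for the ability to use spare CPU on the node.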

Loft’s vCluster platform also had recommendations

I then installed my “meet” app locally

$ kubectl get po
NAME                                    READY   STATUS    RESTARTS   AGE
devotion-db-migrate-9r22l               0/1     Error     0          6m33s
devotion-db-migrate-ksmsn               0/1     Error     0          3m39s
devotion-db-migrate-mk52b               0/1     Error     0          10m
devotion-db-migrate-qrltk               0/1     Error     0          8m12s
devotion-db-migrate-wlvkq               0/1     Error     0          9m12s
devotion-devotion-app-6c4d7b5bc-2m4dh   1/1     Running   0          4m24s
metrics-server-85f985c745-nzh4p         1/1     Running   0          75m

Here we can see some guidance on the missing requests and limits

Since I exposed those as a block in my Helm values, I can change resources: {} to:

... snip ...
size: 1Gi
replicaCount: 1
resources:
  requests:
    cpu: 379m
    memory: 105M
  limits:
    cpu: 379m
    memory: 105M
secrets:
  sendgrid:
... snip ...
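One detail worth double-checking in that block is that Kubernetes quantities distinguish decimal suffixes from binary ones:

- `105M` means 105,000,000 bytes.
- `105Mi` would be 105 × 2^20 bytes.
- `379m` means 379 millicores, or 0.379 of a CPU.

The `parse_quantity` helper below is a hypothetical, minimal parser covering just the suffixes used in this post. It does not handle exponents or the full suffix set, but it makes the difference concrete.

```python
from decimal import Decimal

# A subset of Kubernetes quantity suffixes: decimal (SI) and binary (IEC).
SUFFIXES = {
    "m": Decimal("0.001"),
    "k": Decimal(10) ** 3,
    "M": Decimal(10) ** 6,
    "G": Decimal(10) ** 9,
    "Ki": Decimal(2) ** 10,
    "Mi": Decimal(2) ** 20,
    "Gi": Decimal(2) ** 30,
}


def parse_quantity(q: str) -> Decimal:
    """Parse a simple quantity such as '379m', '105M' or '1Gi' into base units."""
    # Try two-character suffixes first so "Mi" is not mistaken for "M".
    for suffix in sorted(SUFFIXES, key=len, reverse=True):
        if q.endswith(suffix):
            return Decimal(q[: -len(suffix)]) * SUFFIXES[suffix]
    return Decimal(q)


if __name__ == "__main__":
    print(parse_quantity("379m"))  # CPU cores
    print(parse_quantity("105M") / parse_quantity("1Mi"))  # 105M expressed in MiB
```

The second line comes out at roughly 100.1, so `105M` is about 5% smaller than `105Mi`. The difference is harmless here, but it is easy to trip over when copying values between tools.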

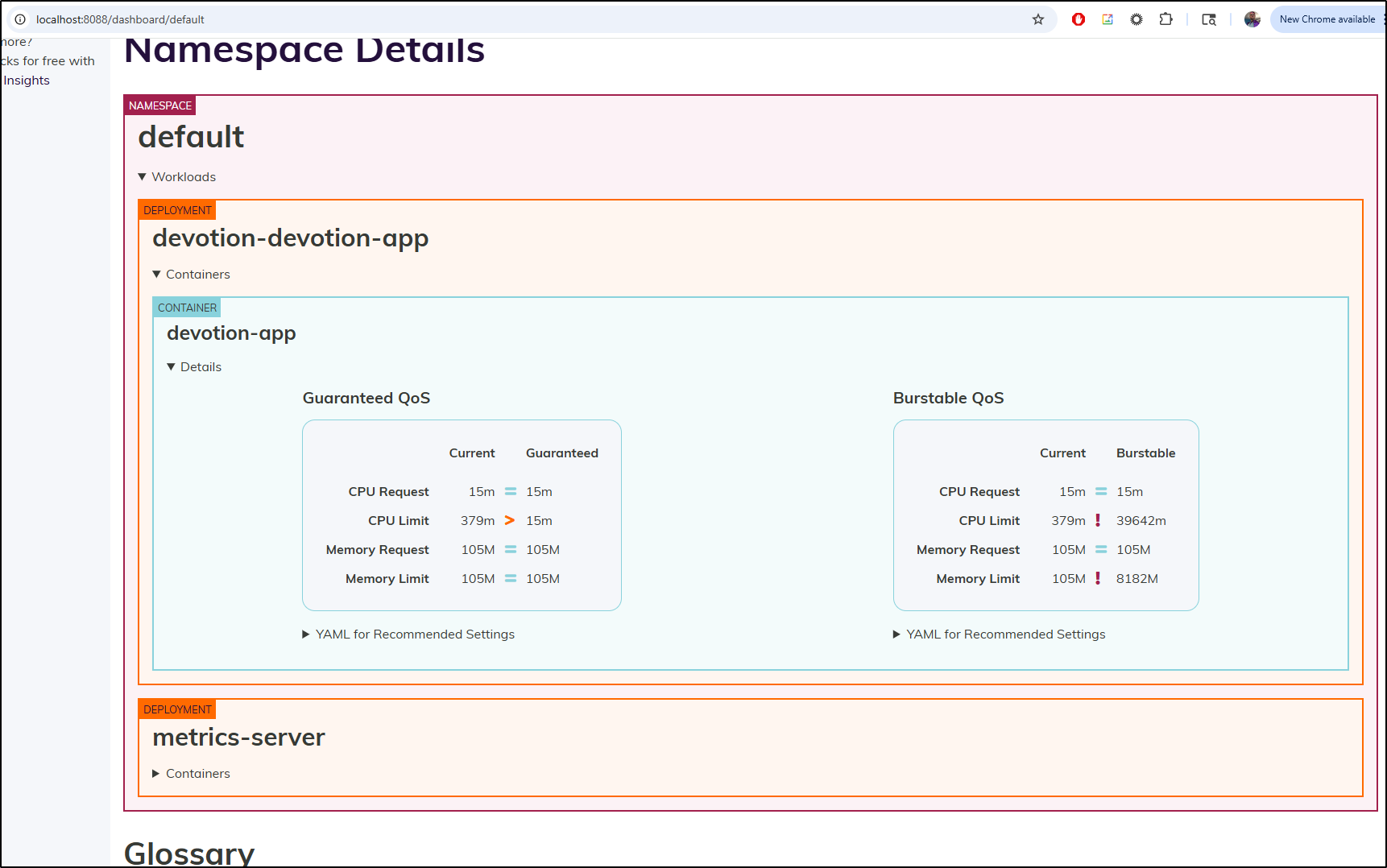

I upgraded via Helm to set them

builder@LuiGi:~/Workspaces/flaskAppBase$ vi values.yaml
builder@LuiGi:~/Workspaces/flaskAppBase$ helm upgrade --install devotion -f ./values.yaml ./helm/devotion
Release "devotion" has been upgraded. Happy Helming!
NAME: devotion
LAST DEPLOYED: Sun Jun 8 14:33:38 2025
NAMESPACE: default
STATUS: deployed
REVISION: 4
TEST SUITE: None
builder@LuiGi:~/Workspaces/flaskAppBase$ kubectl get po
NAME                                    READY   STATUS    RESTARTS   AGE
devotion-db-migrate-9r22l               0/1     Error     0          13m
devotion-db-migrate-ksmsn               0/1     Error     0          10m
devotion-db-migrate-mk52b               0/1     Error     0          16m
devotion-db-migrate-qrltk               0/1     Error     0          14m
devotion-db-migrate-s7ckq               0/1     Error     0          4m36s
devotion-db-migrate-wlvkq               0/1     Error     0          15m
devotion-devotion-app-6c4d7b5bc-2m4dh   0/1     Error     0          10m
devotion-devotion-app-9779dc69-bxzdm    1/1     Running   0          96s
metrics-server-85f985c745-nzh4p         1/1     Running   0          82m

While Goldilocks is happy with my memory settings, it now seems annoyed with my CPU settings. I don’t know why it would want to limit it to 15 millicores, but that would be a bit much. 379m, in CPU request terms, is a bit more than a third of a CPU.

I might try setting the request to 15m but not the limit.

Now the question becomes: is this based on the metrics gathered from Kubernetes or on some kind of pod inspection? I tend to think it’s the former, which doesn’t help on a demo cluster with no load.
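As far as I can tell it is the former: the VPA recommender builds its suggestions from usage samples reported through the metrics API, not from inspecting the pod. Below is a heavily simplified sketch of the idea, which takes a high percentile of observed CPU usage and adds a safety margin. The real recommender uses decaying histograms and more tuning knobs. The 90th percentile, the 15% margin, the helper names and the sample numbers are all illustrative assumptions.

```python
import math


def percentile(samples: list[int], p: float) -> int:
    """Nearest-rank p-th percentile (0 < p <= 100) of a non-empty list."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_cpu_millicores(samples: list[int], p: float = 90, margin_pct: int = 15) -> int:
    """Suggest a CPU request: the p-th percentile of usage plus a percentage safety margin."""
    return math.ceil(percentile(samples, p) * (100 + margin_pct) / 100)


if __name__ == "__main__":
    # Made-up CPU usage samples, in millicores.
    idle_demo = [2, 3, 3, 4, 5, 2, 3, 4, 3, 12]
    busy_prod = [250, 300, 280, 400, 350, 320, 310, 290, 900, 330]
    print(recommend_cpu_millicores(idle_demo), recommend_cpu_millicores(busy_prod))
```

On an idle demo cluster every sample is tiny, so any usage-based recommender will suggest a tiny request. That would be consistent with the low CPU numbers I saw. Using a percentile rather than the maximum also means a single spike (the 900 above) does not drive the recommendation on its own.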

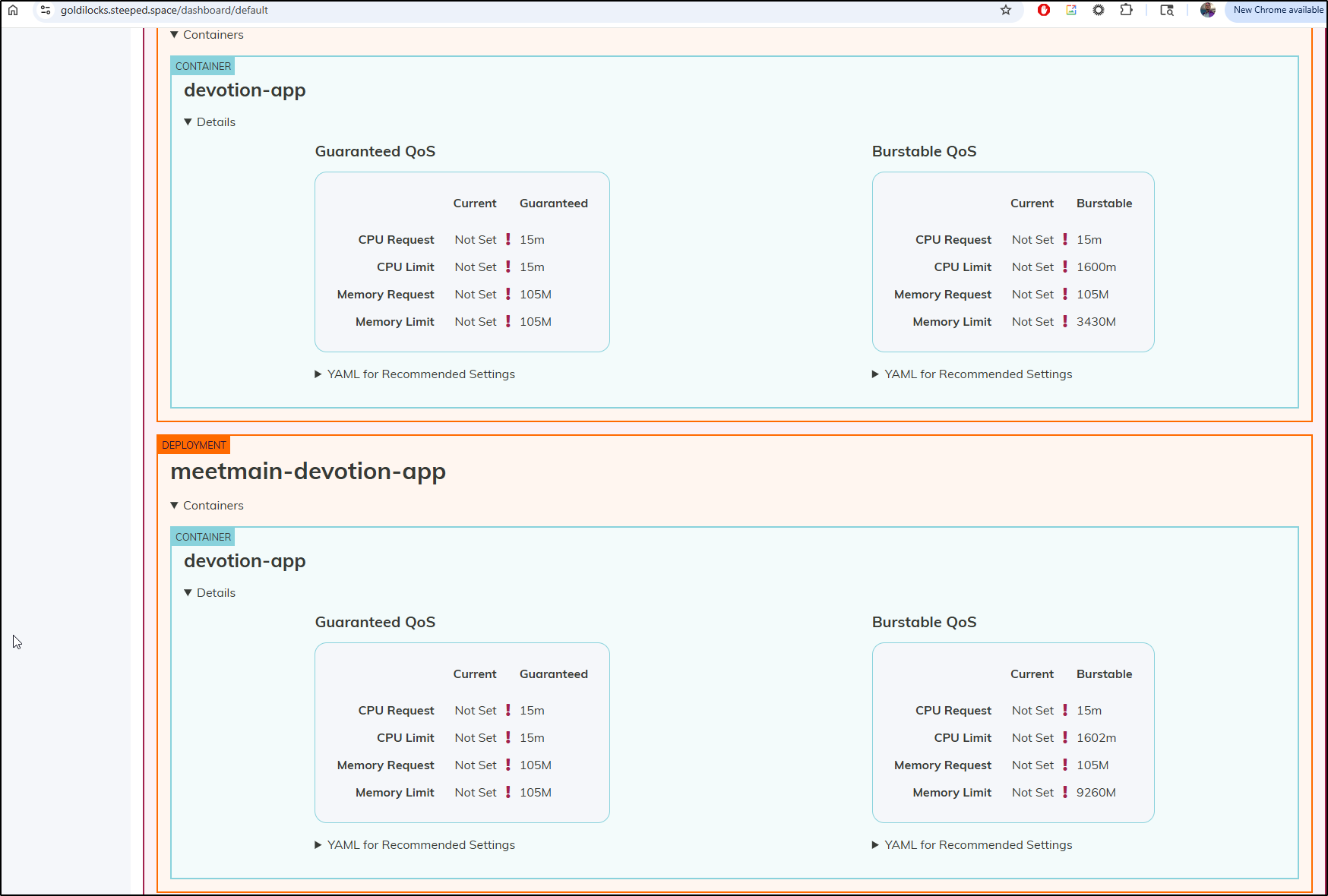

As nervous as this makes me, I think putting this into the production cluster is the only way to really see if it shines.

As it does not monitor all namespaces by default, just those we have labeled, I feel a bit safer.

Production

I can confirm my production cluster already has a metrics server:

$ kubectl get po -A | grep metrics
kube-system   metrics-server-68cf49699b-274v6   1/1   Running   4 (66d ago)   463d

I’ll now install the Fairwinds VPA chart

$ helm install vpa fairwinds-stable/vpa --namespace vpa --create-namespace
NAME: vpa
LAST DEPLOYED: Sun Jun 8 14:45:19 2025
NAMESPACE: vpa
STATUS: deployed
REVISION: 1
NOTES:
Congratulations on installing the Vertical Pod Autoscaler!
Components Installed:
- recommender
- updater
- admission-controller
To verify functionality, you can try running 'helm -n vpa test vpa'

I’ll actually invoke that test this time to check the VPA was properly installed

$ helm -n vpa test vpa
NAME: vpa
LAST DEPLOYED: Sun Jun 8 14:45:19 2025
NAMESPACE: vpa
STATUS: deployed
REVISION: 1
TEST SUITE: vpa-test
Last Started: Sun Jun 8 14:46:47 2025
Last Completed: Sun Jun 8 14:46:47 2025
Phase: Succeeded
TEST SUITE: vpa-test
Last Started: Sun Jun 8 14:46:47 2025
Last Completed: Sun Jun 8 14:46:48 2025
Phase: Succeeded
TEST SUITE: vpa-test
Last Started: Sun Jun 8 14:46:48 2025
Last Completed: Sun Jun 8 14:46:48 2025
Phase: Succeeded
TEST SUITE: vpa-test
Last Started: Sun Jun 8 14:46:48 2025
Last Completed: Sun Jun 8 14:46:49 2025
Phase: Succeeded
TEST SUITE: vpa-test
Last Started: Sun Jun 8 14:46:49 2025
Last Completed: Sun Jun 8 14:46:49 2025
Phase: Succeeded
TEST SUITE: vpa-test-crds-available
Last Started: Sun Jun 8 14:46:49 2025
Last Completed: Sun Jun 8 14:47:08 2025
Phase: Succeeded
TEST SUITE: vpa-test-create-vpa
Last Started: Sun Jun 8 14:47:08 2025
Last Completed: Sun Jun 8 14:47:15 2025
Phase: Succeeded
TEST SUITE: vpa-test-metrics-api-available
Last Started: Sun Jun 8 14:47:22 2025
Last Completed: Sun Jun 8 14:47:26 2025
Phase: Succeeded
TEST SUITE: vpa-test-webhook-configuration
Last Started: Sun Jun 8 14:47:16 2025
Last Completed: Sun Jun 8 14:47:21 2025
Phase: Succeeded
NOTES:
Congratulations on installing the Vertical Pod Autoscaler!
Components Installed:
- recommender
- updater
- admission-controller
To verify functionality, you can try running 'helm -n vpa test vpa'

I can now add Goldilocks via Helm as I did on the test cluster

$ helm install goldilocks --create-namespace --namespace goldilocks fairwinds-stable/goldilocks
NAME: goldilocks
LAST DEPLOYED: Sun Jun 8 14:49:17 2025
NAMESPACE: goldilocks
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
1. Get the application URL by running these commands:
kubectl -n goldilocks port-forward svc/goldilocks-dashboard 8080:80
echo "Visit http://127.0.0.1:8080 to use your application"

I’m also interested in tracking the Meet metrics, so let’s label our default namespace:

$ kubectl label ns default goldilocks.fairwinds.com/enabled=true
namespace/default labeled

I am going to expose this with ingress just to test, but do NOT plan to leave it live

$ gcloud dns --project=myanthosproject2 record-sets create goldilocks.steeped.space --zone="steepedspace" --type="A" --ttl="300" --rrdatas="75.73.224.240"
NAME                       TYPE   TTL   DATA
goldilocks.steeped.space.  A      300   75.73.224.240

Then I create and apply an Ingress that can use it:

builder@LuiGi:~/Workspaces$ cat ./goldilocks.ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: gcpleprod2
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/websocket-services: goldilocks-dashboard
  labels:
    app.kubernetes.io/instance: goldilocksingress
  name: goldilocksingress
spec:
  rules:
  - host: goldilocks.steeped.space
    http:
      paths:
      - backend:
          service:
            name: goldilocks-dashboard
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - goldilocks.steeped.space
    secretName: goldilocks-tls
builder@LuiGi:~/Workspaces$ kubectl apply -f ./goldilocks.ingress.yaml -n goldilocks
ingress.networking.k8s.io/goldilocksingress created

Then I wait until the certificate is ready:

builder@LuiGi:~/Workspaces$ kubectl get cert -n goldilocks
NAME             READY   SECRET           AGE
goldilocks-tls   False   goldilocks-tls   23s
builder@LuiGi:~/Workspaces$ kubectl get cert -n goldilocks
NAME             READY   SECRET           AGE
goldilocks-tls   False   goldilocks-tls   44s
builder@LuiGi:~/Workspaces$ kubectl get cert -n goldilocks
NAME             READY   SECRET           AGE
goldilocks-tls   False   goldilocks-tls   58s
builder@LuiGi:~/Workspaces$ kubectl get cert -n goldilocks
NAME             READY   SECRET           AGE
goldilocks-tls   True    goldilocks-tls   72s

I can now access it at https://goldilocks.steeped.space/

I’ll now leave this live for a few days to see if the settings change
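Rather than re-running kubectl get cert by hand, kubectl wait --for=condition=Ready certificate/goldilocks-tls -n goldilocks --timeout=120s should block until cert-manager marks the certificate Ready. Below is the same poll-until-ready pattern as a generic Python sketch. `wait_until` is a hypothetical helper, and `check` stands in for whatever readiness probe you have. The injectable `sleep` and `clock` parameters exist only to make it easy to test.

```python
import time
from typing import Callable


def wait_until(
    check: Callable[[], bool],
    timeout: float = 120.0,
    interval: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Call check() every `interval` seconds until it returns True or `timeout` elapses."""
    deadline = clock() + timeout
    while True:
        if check():
            return True
        if clock() >= deadline:
            return False
        sleep(interval)
```

A monotonic clock is used for the deadline so that wall-clock adjustments during the wait cannot shorten or stretch the timeout.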

Datadog

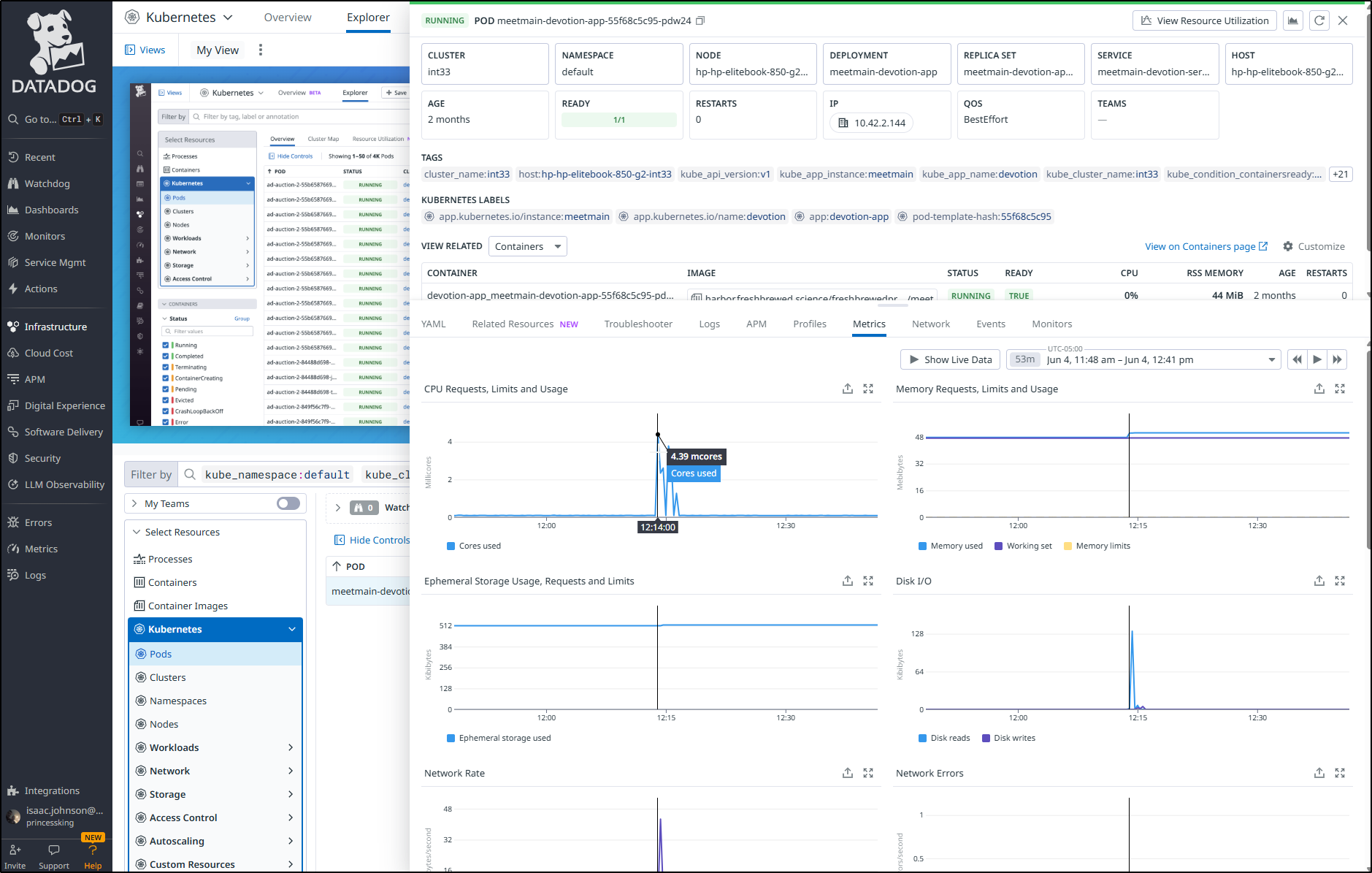

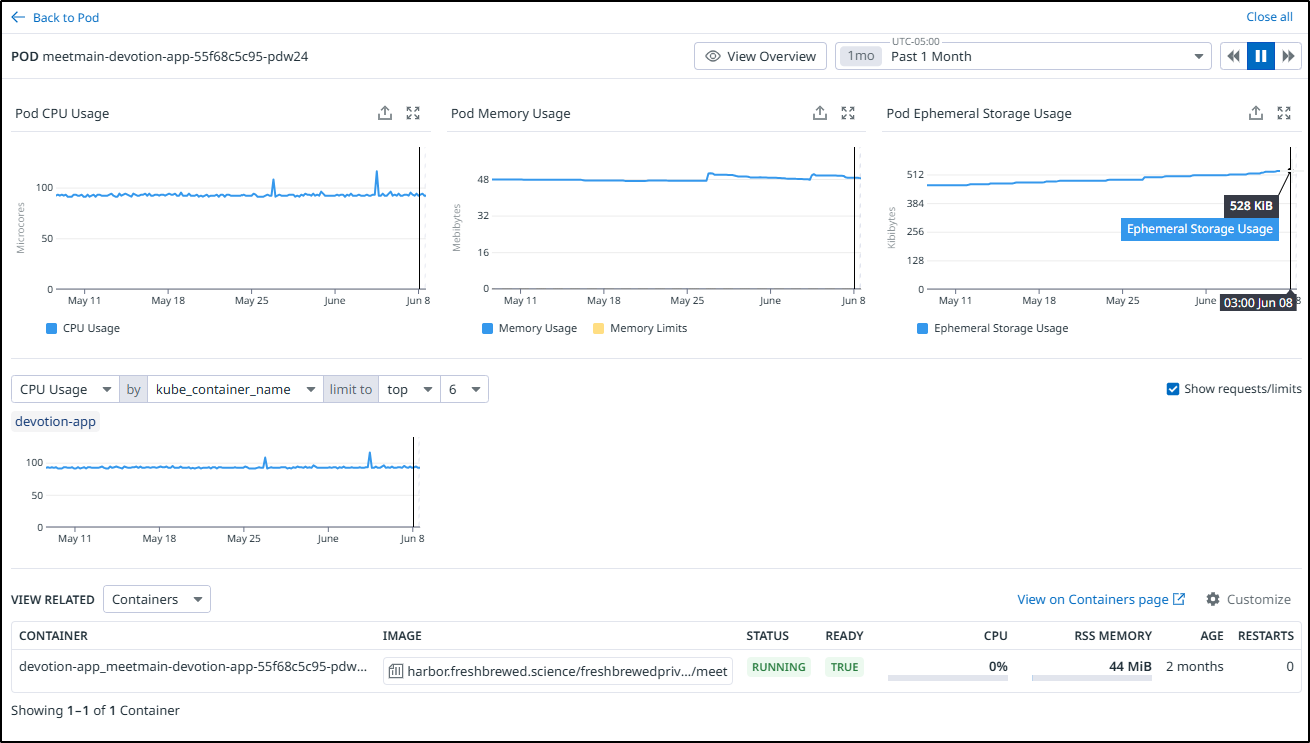

This is where having a monitoring suite like Datadog comes in real handy.

I can view the pods for my deployment over the last month and zoom in on a spike.

I can view “Resource Utilization” to see it’s basically just shy of 100 microcores and 44 MB of memory most of the time, though storage (however small) does tend to creep up.

Summary

I don’t do this often, but I think Goldilocks has earned a place on the lid

Appropriately near the OSN sticker

Disclosure

I had to look this up because I thought I might have been the winner of their booth giveaway, and indeed I was!

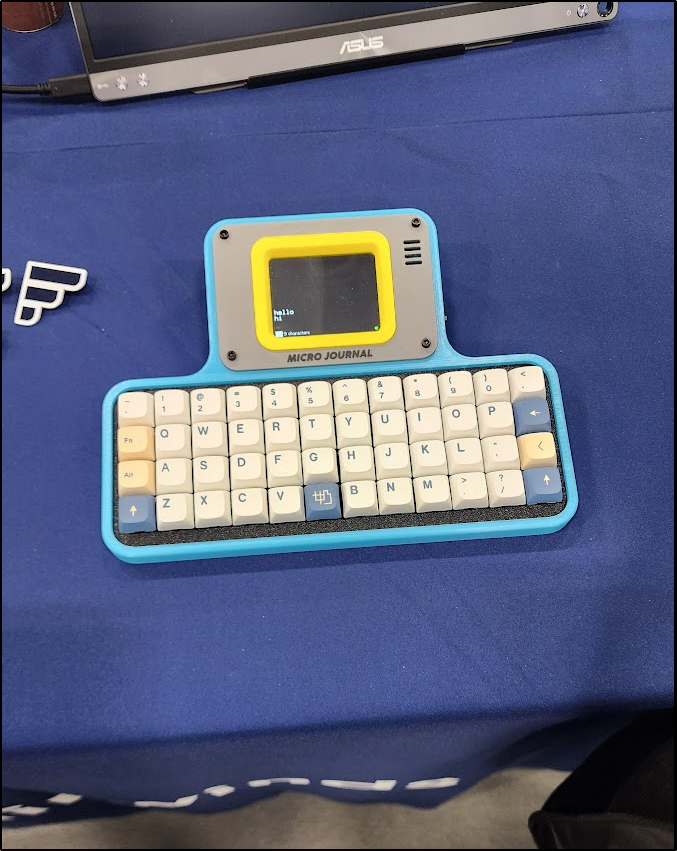

They had this glorious Micro Journal, which I gave to my middle daughter, who uses it often for writing.

While this had no bearing on my decision to dig into their open-source solutions, I felt it worth bringing up.

Editor’s Note

The domain “steeped.space” has since been retired. All work that would have been there is now on “steeped.icu”. Thus any links you see above mentioning “goldilocks.steeped.space”, for instance, really would be valid now on “goldilocks.steeped.icu”.