Published: Jun 10, 2025 by Isaac Johnson

addy.io is basically an anonymous email forwarding service based on an open-source platform we can install locally. I’ll focus on the free tier and the lowest-end paid tier of their SaaS offering, showing how we can configure our own domain for email forwarding.

The second half of this article focuses on ways to track ports and hosts in a homelab. Based on this Mariushosting post on PortNote, I’ll dig into hosting it in Docker and Kubernetes, then compare it to another NOC / Operations platform, NetBox.

Lastly, I’ll wrap up with some automation tips for keeping PortNote always up to date.

Addy

I’m not sure how I even came across addy.io. It was scribbled in my notes with just the URL.

Addy is simple: anonymous email forwarding. It is Open Source and one can self-host. However, for this test, I’ll just work with their free SaaS offering.

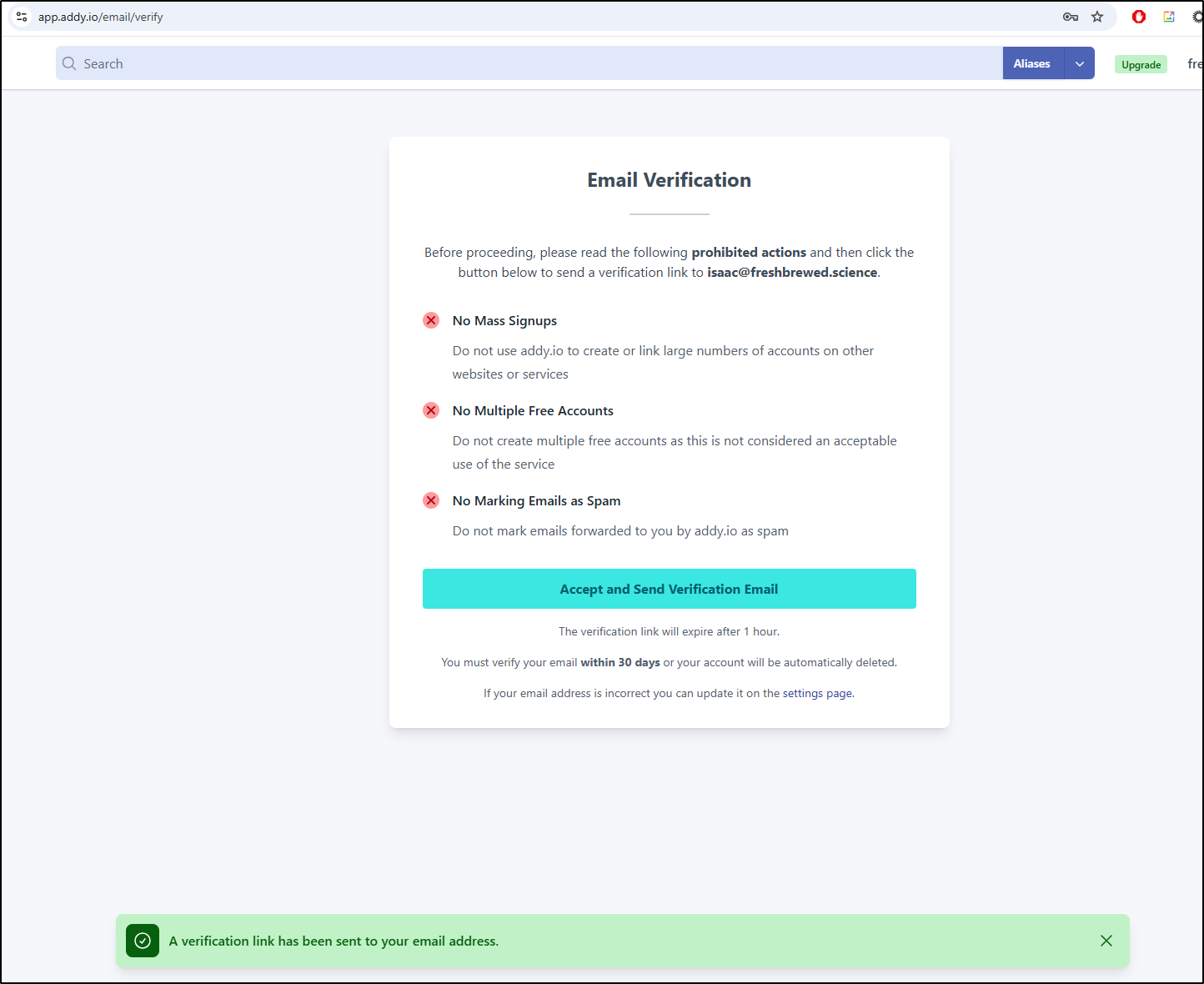

I’ll have to verify my destination email

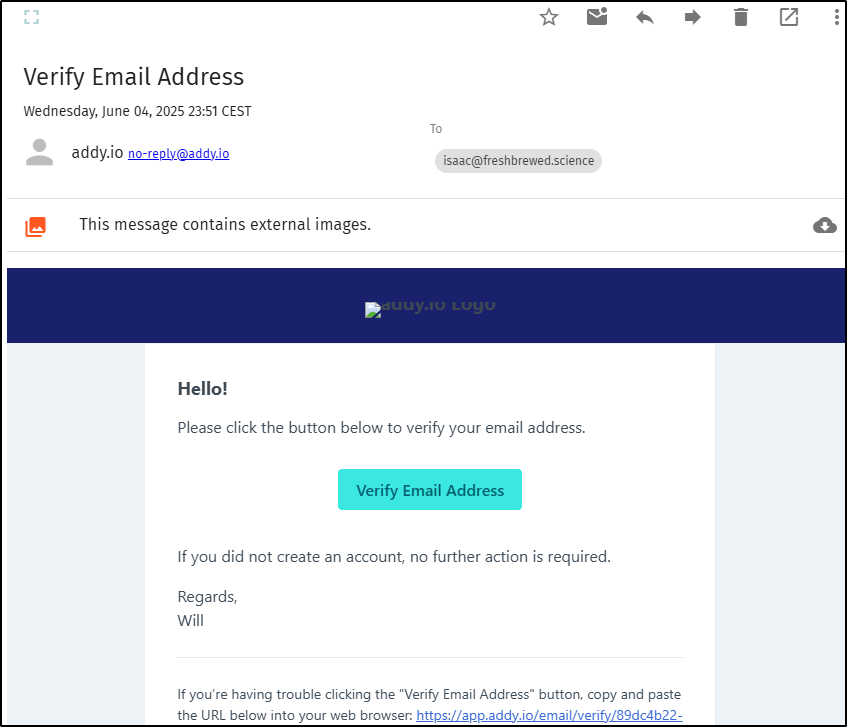

with the link they sent

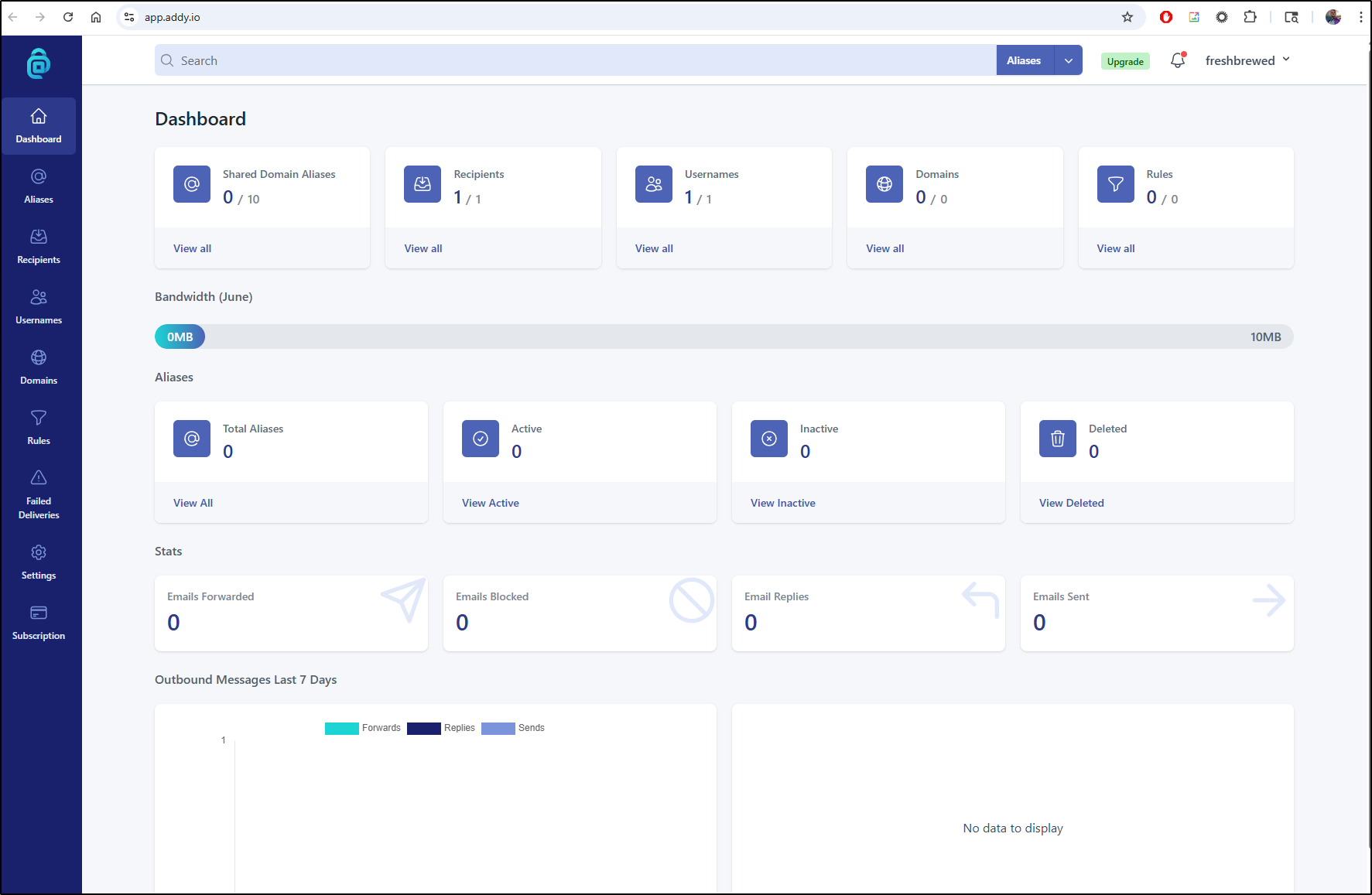

I now see the addy dashboard

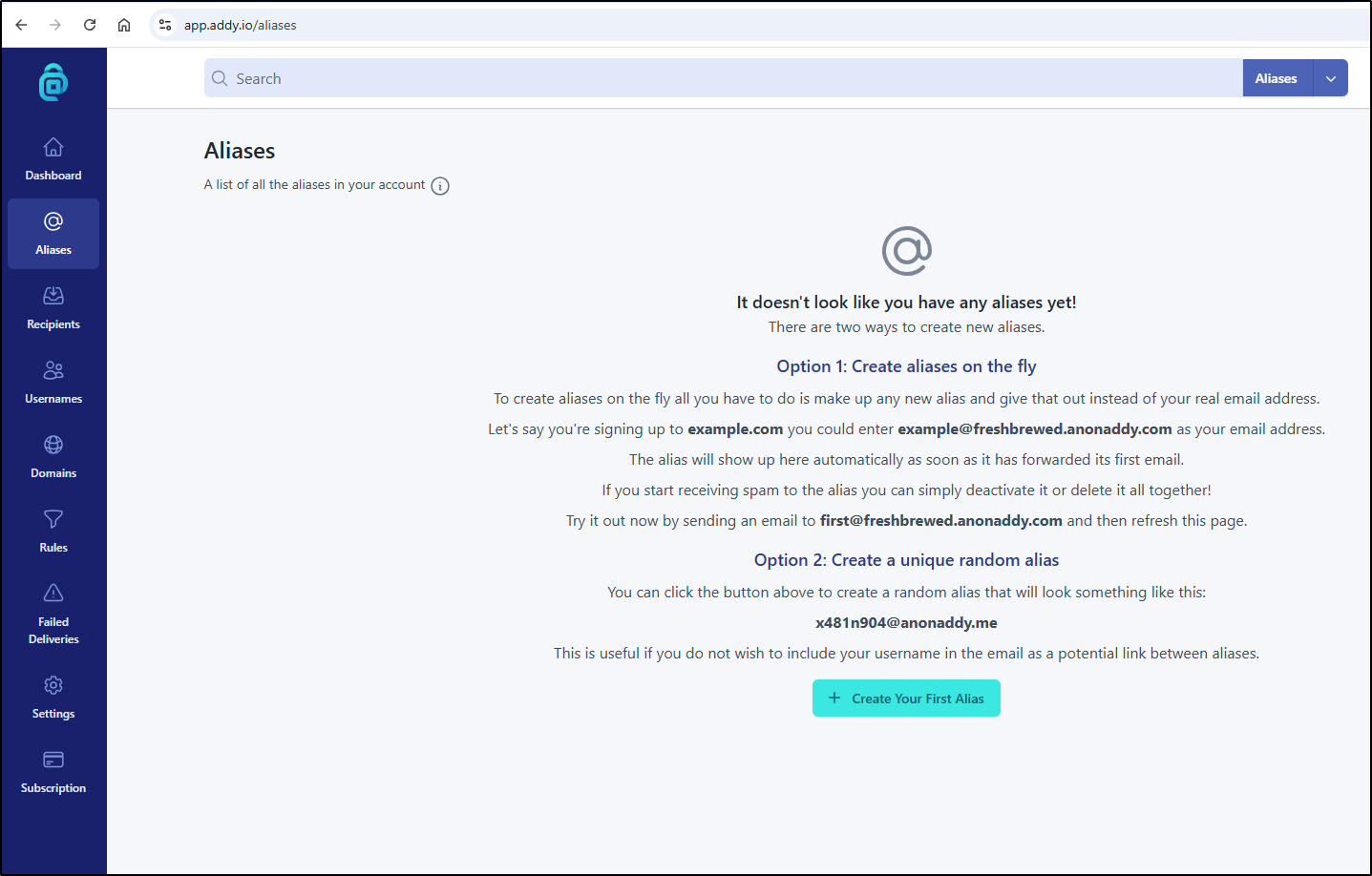

Aliases

We can create a top-level one with a random string, or use any name under our own subdomain

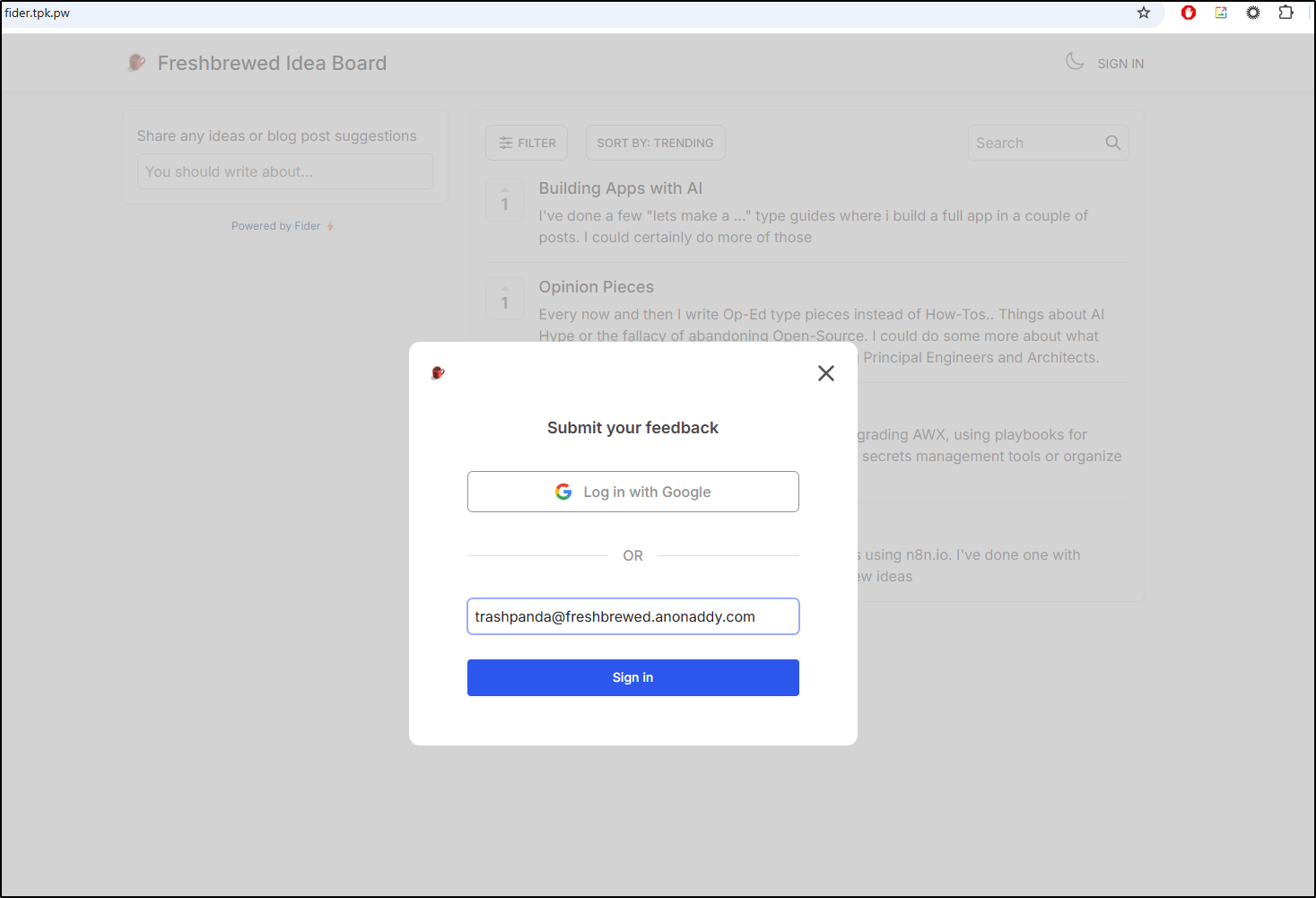

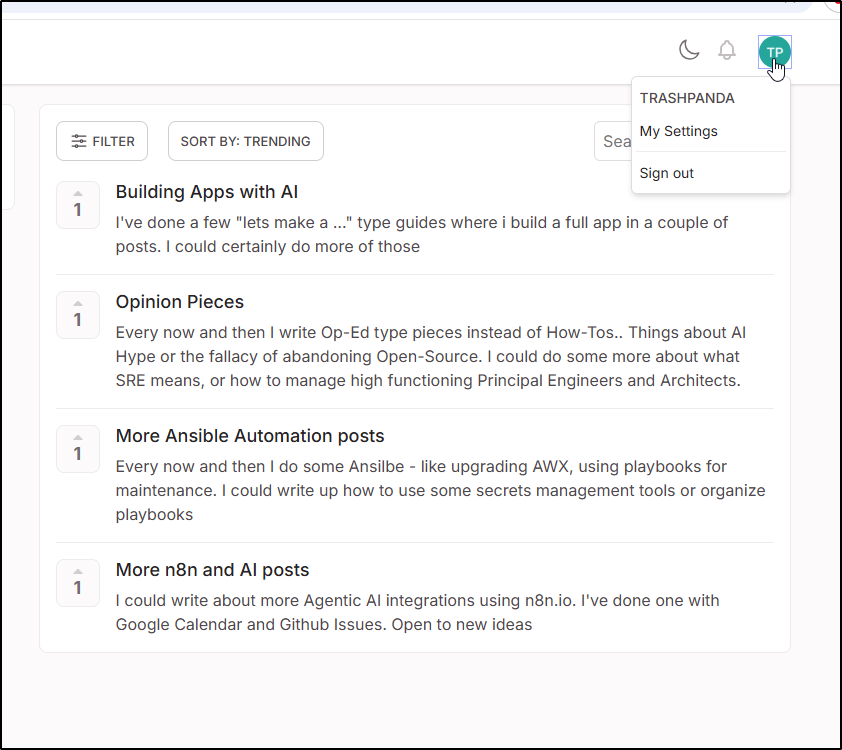

I’ll try using trashpanda@freshbrewed.anonaddy.com to start. Perhaps we wish to game the voting page

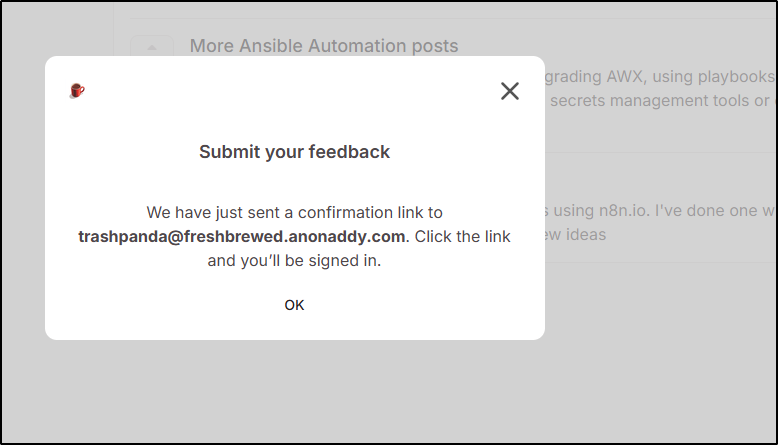

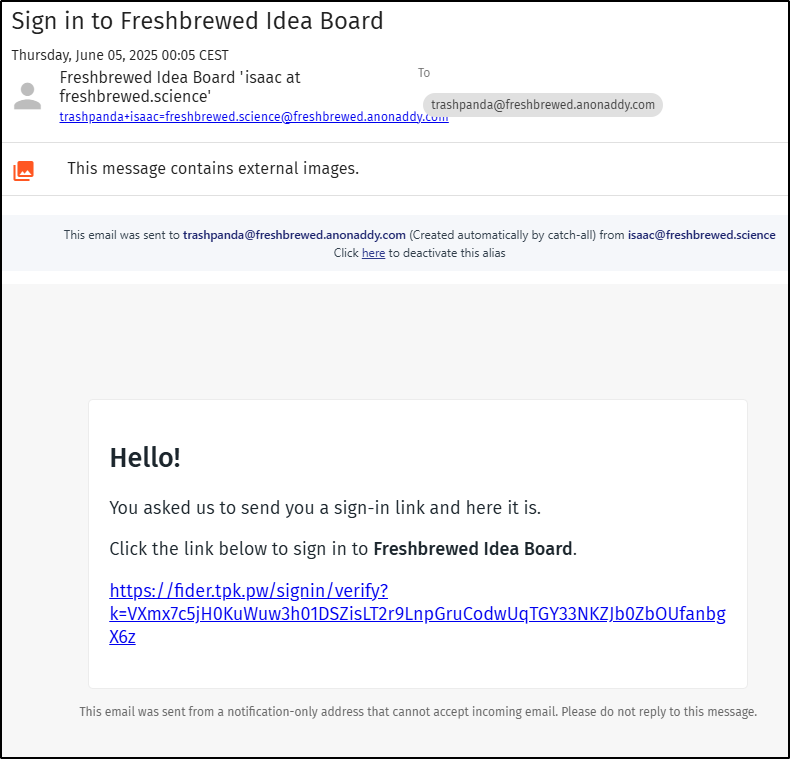

which sends a confirmation email

I can see it forwarded

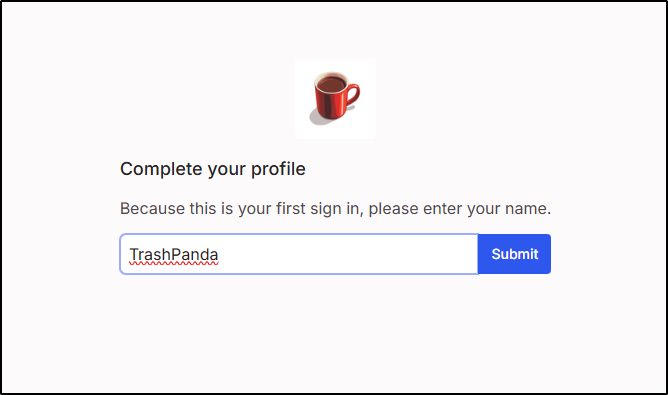

Which then let me create a user in Fider

And now I could vote if I wanted

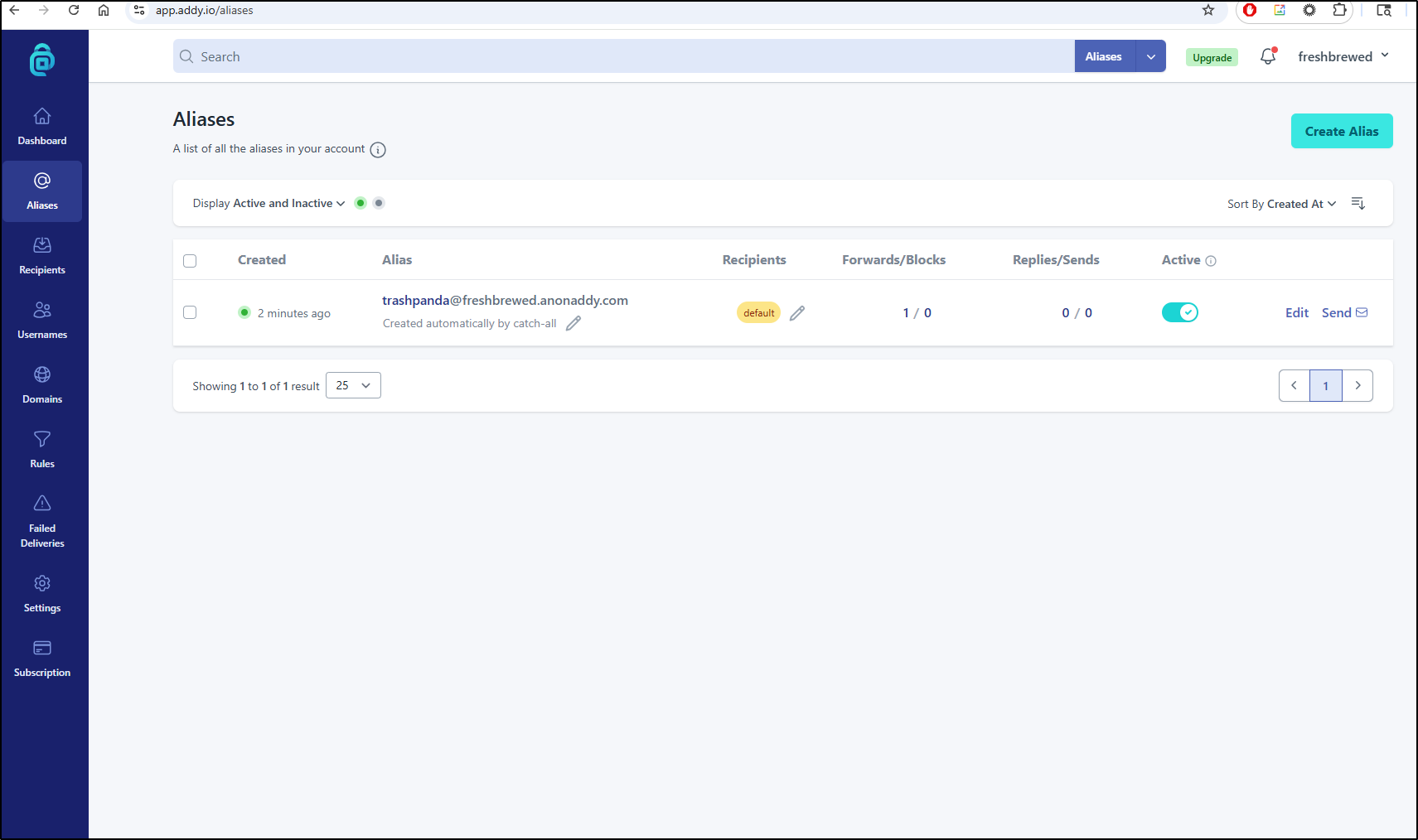

Back in Aliases we can see the new alias that was used

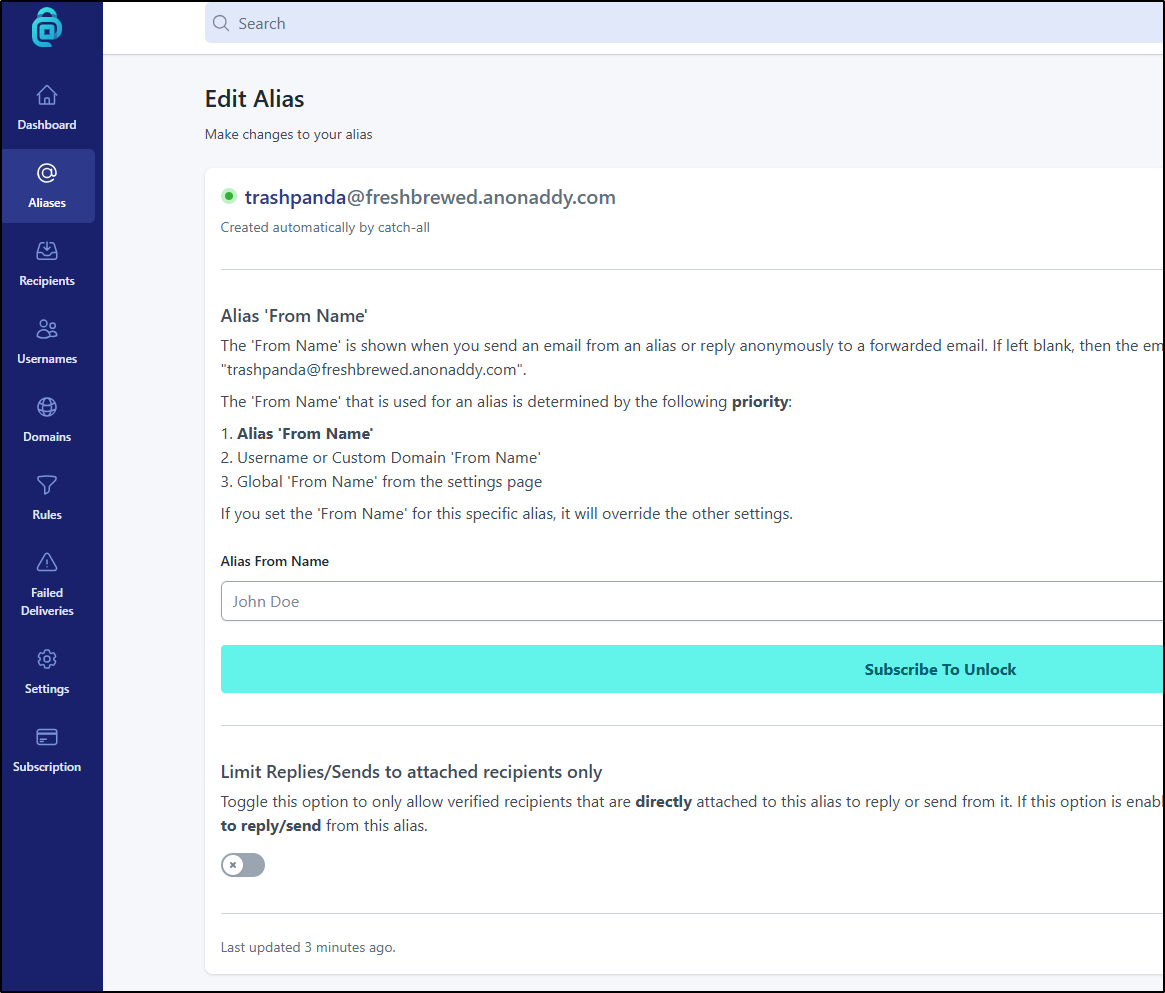

If we click “edit” on the alias we can see our first paid feature: a custom Alias From Name

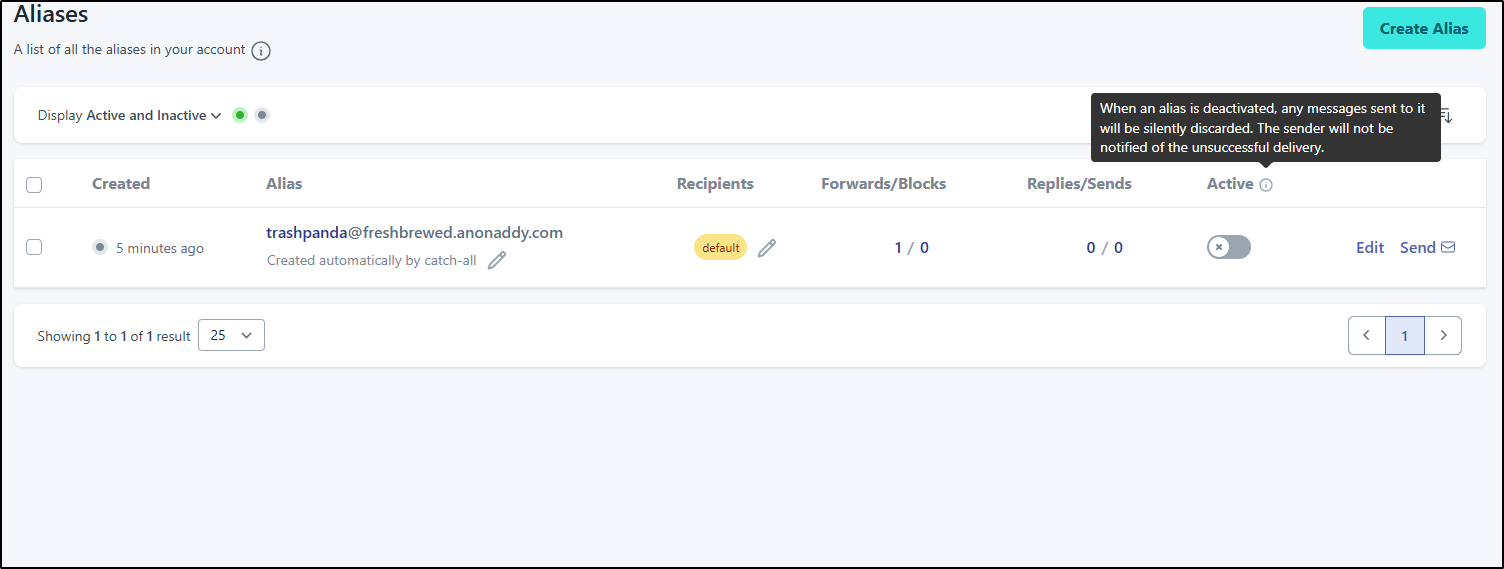

Now, if we want to stop using it, we need to deactivate it. Once it is set to not active, future spam messages sent to it will be thrown out

Custom Domains

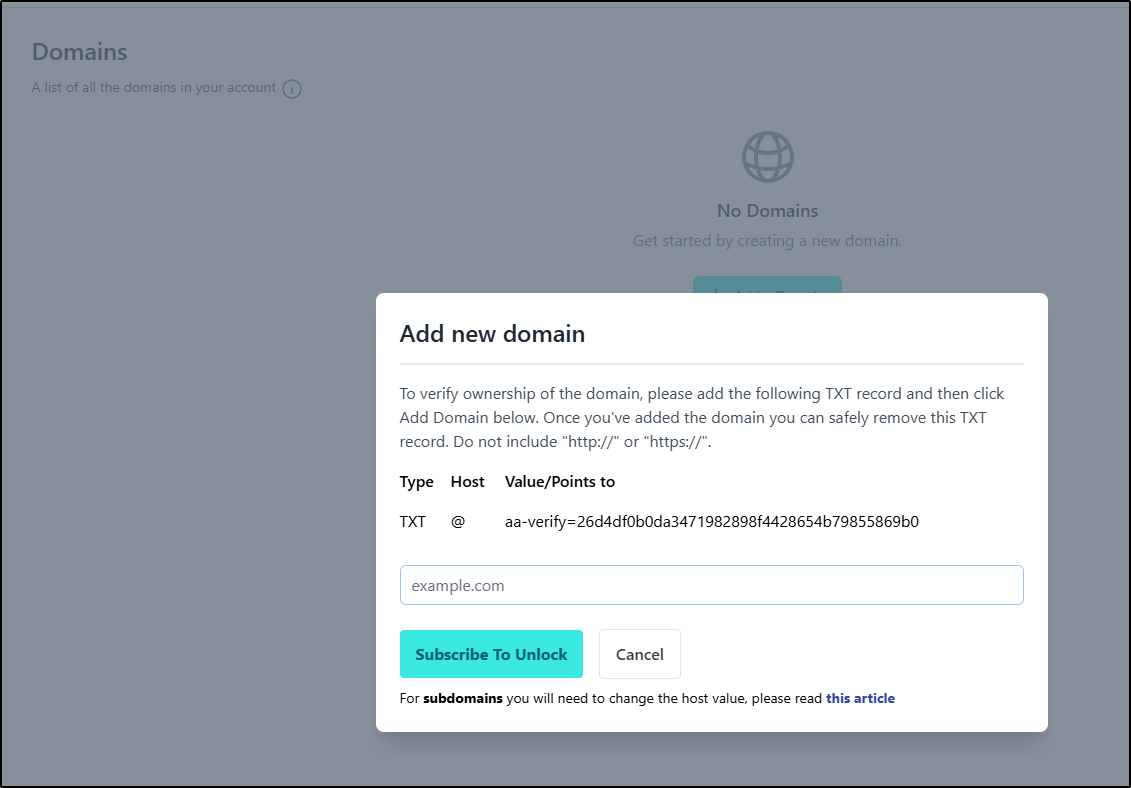

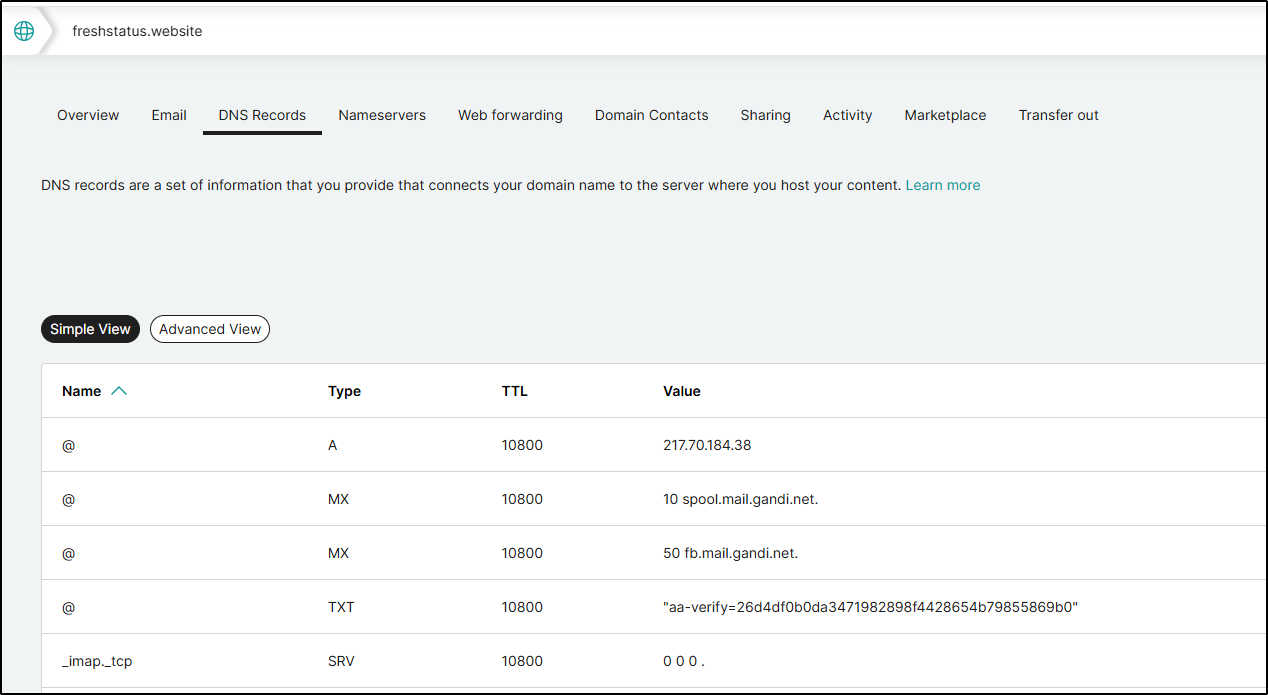

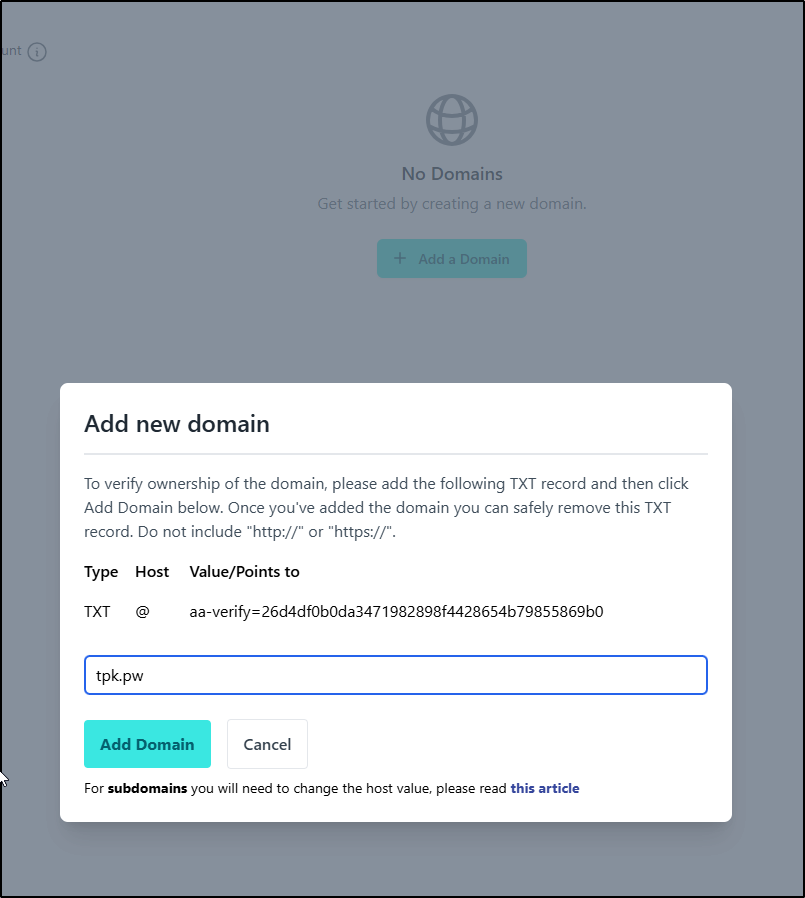

We could do custom domains by adding the aa-verify TXT record to our DNS records, but as that is another paid feature, I won’t be able to test it

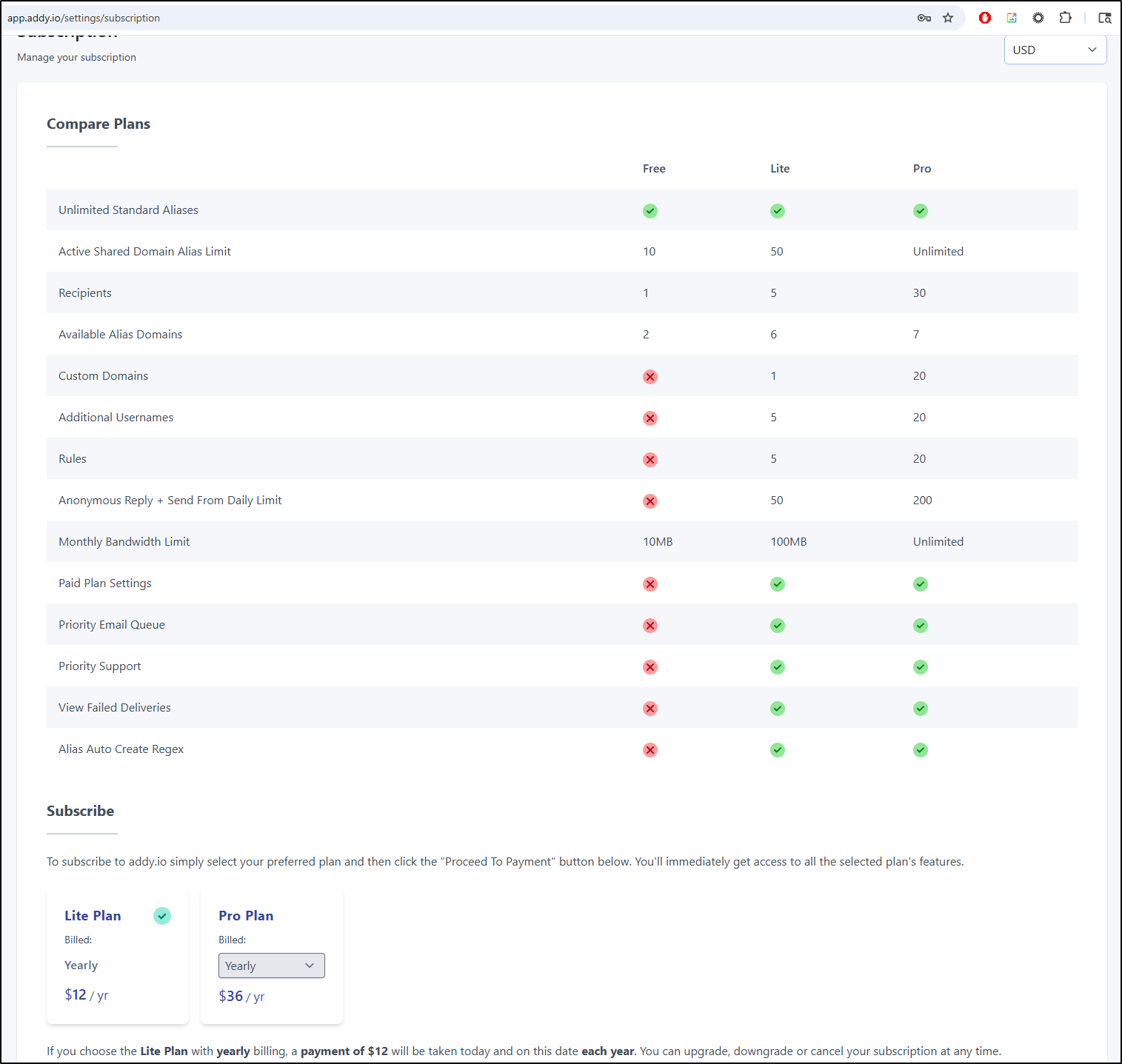

I’m seriously considering buying this because the Lite plan, which would cover my needs, is a mere US $12/year

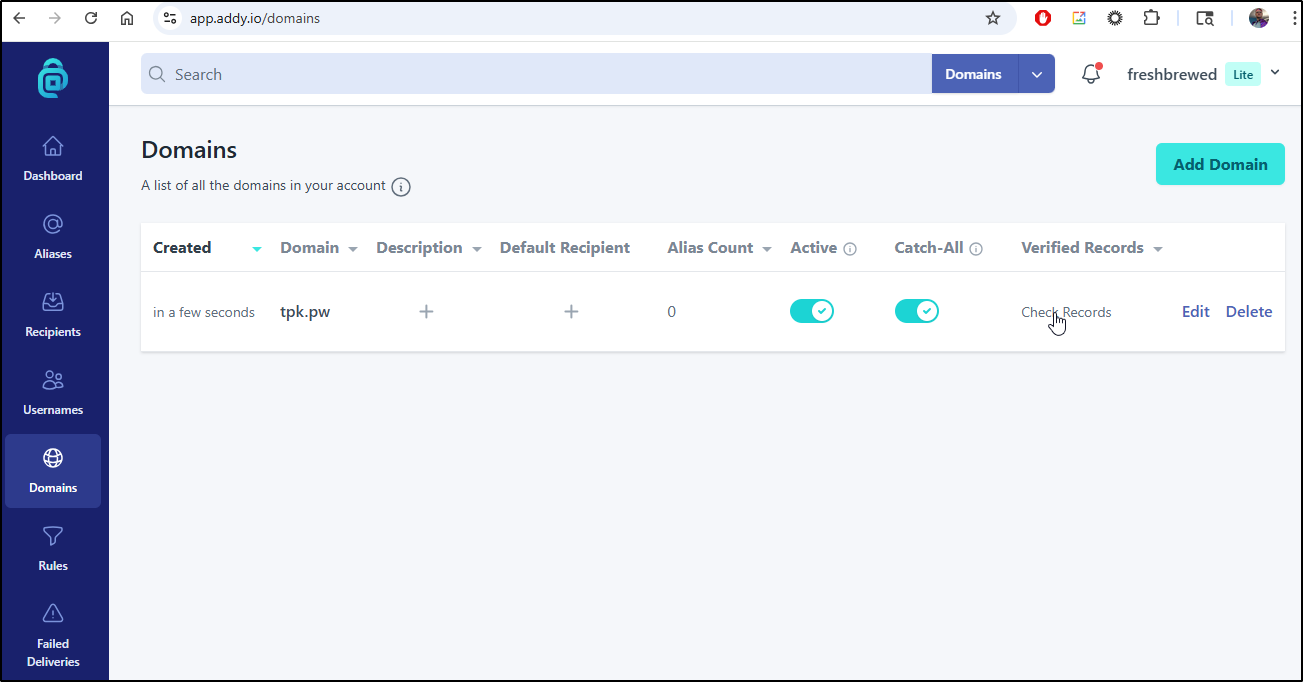

Dammit… I couldn’t resist. I got the Lite version

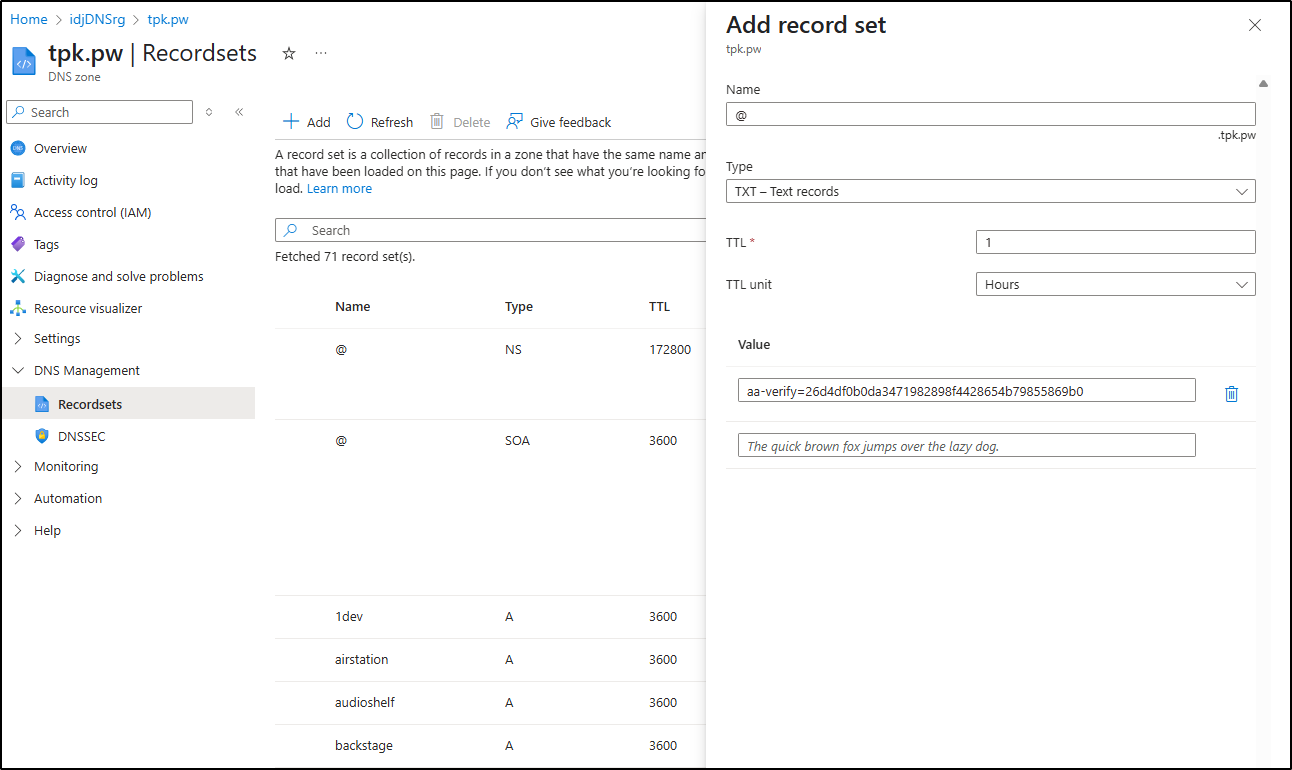

I’ll add the verify record to Azure DNS, in this case, for tpk.pw

Then we can set it back in addy.io

Since I already added the TXT record, I can ask Addy to verify now

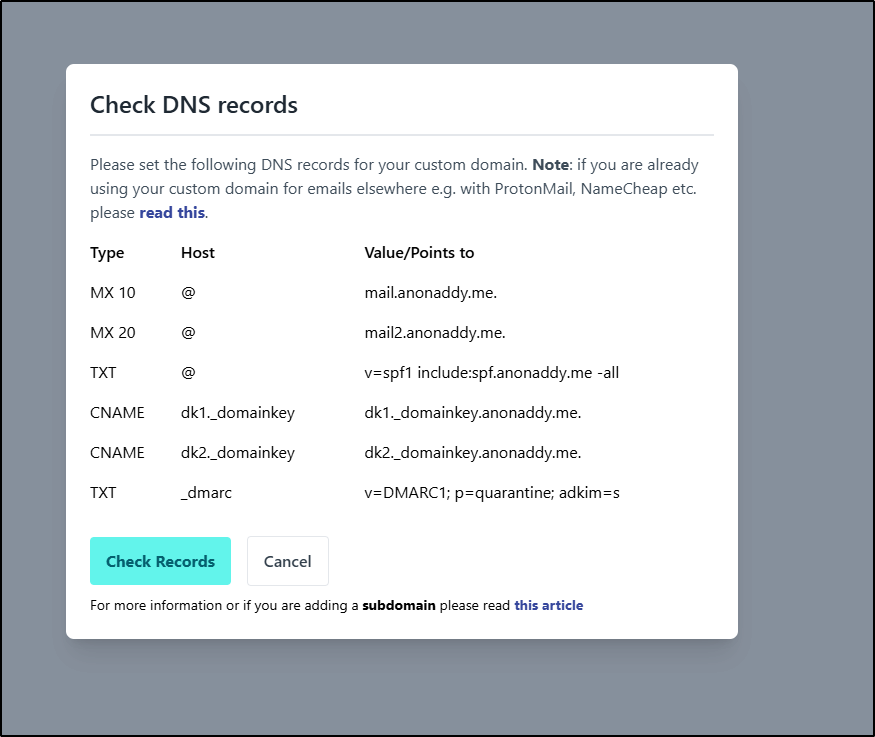

Actually, looks like I have a few more to set

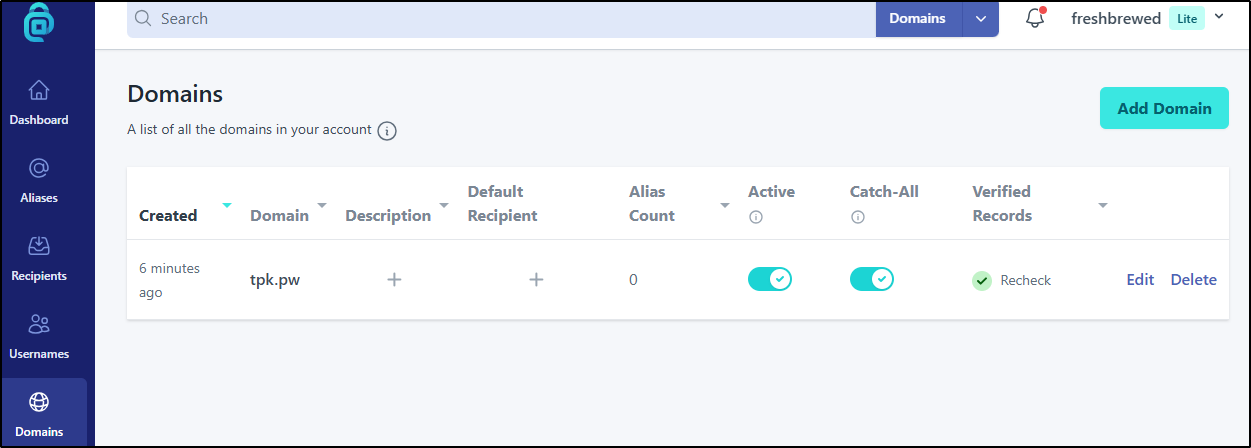

Once updated, we can see it show verified

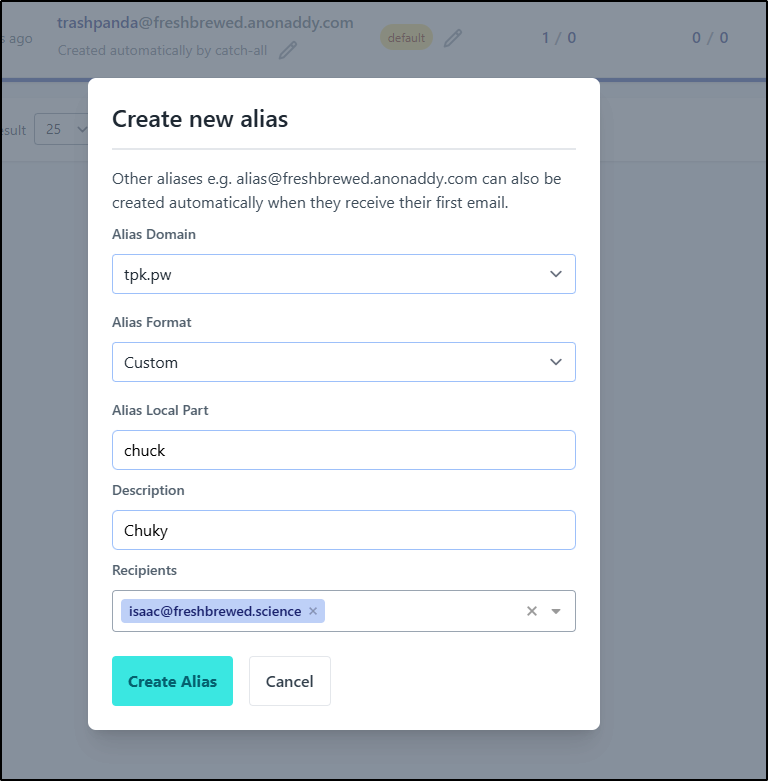

Now I can use my domain in an alias

And indeed, that worked!

Now, this will be handy for the types of services that have crappy regexps on their email forms and block “.science” as it’s too long (in their eyes).
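To make that concrete, here is a hypothetical pair of validators. The function names and patterns are my own, not taken from any real site. The strict one only allows TLDs of two to four letters, which is exactly the kind of check that rejects .science. The lenient one accepts any TLD of two or more letters. Neither is a full RFC 5322 address check.

```bash
#!/usr/bin/env bash
# Hypothetical validators for illustration only; not from any real sign-up form.

# Too strict: the TLD may only be 2-4 letters, so ".science" (7 letters) fails.
strict_email_ok() {
  printf '%s\n' "$1" | grep -Eq '^[^@[:space:]]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,4}$'
}

# More lenient: any TLD of two or more letters is accepted.
lenient_email_ok() {
  printf '%s\n' "$1" | grep -Eq '^[^@[:space:]]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}$'
}
```

For example, strict_email_ok 'me@tpk.pw' succeeds while strict_email_ok 'me@freshbrewed.science' fails, which is why a short domain like tpk.pw gets past those forms.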

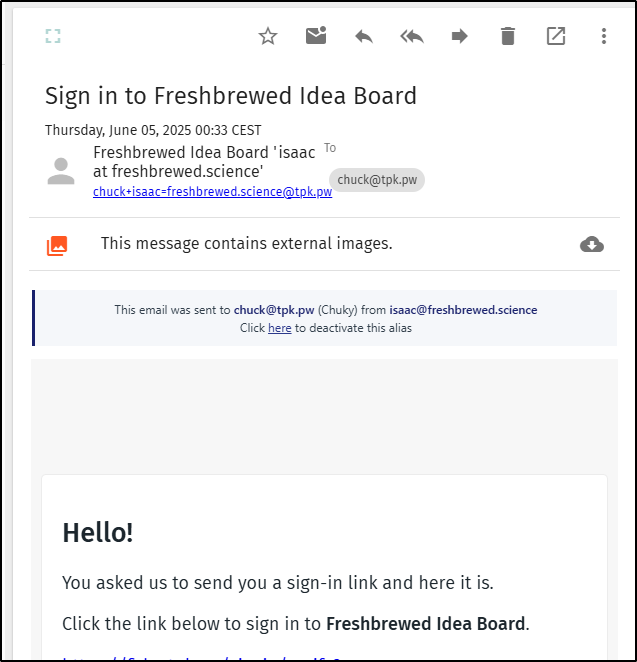

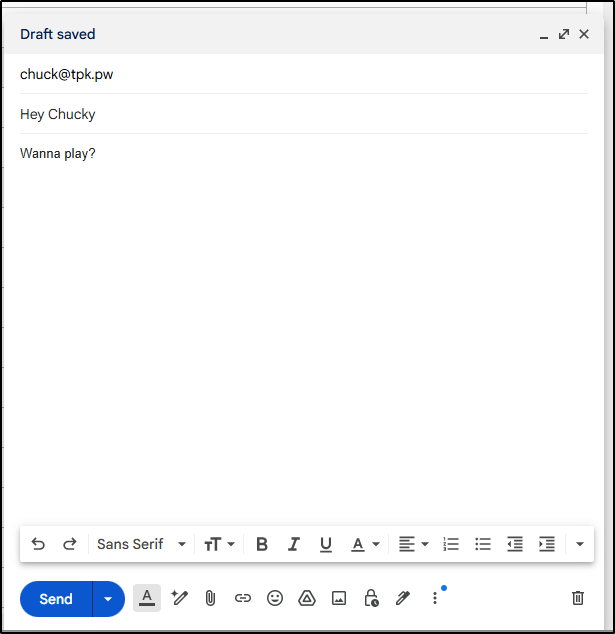

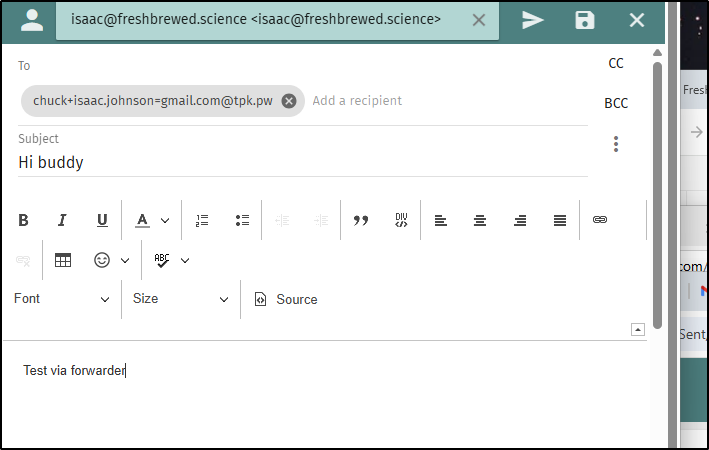

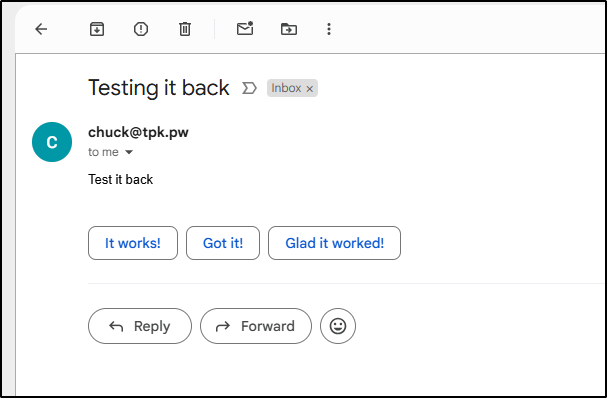

I want to test the email flow, so I’ll try sending an email from my Gmail account

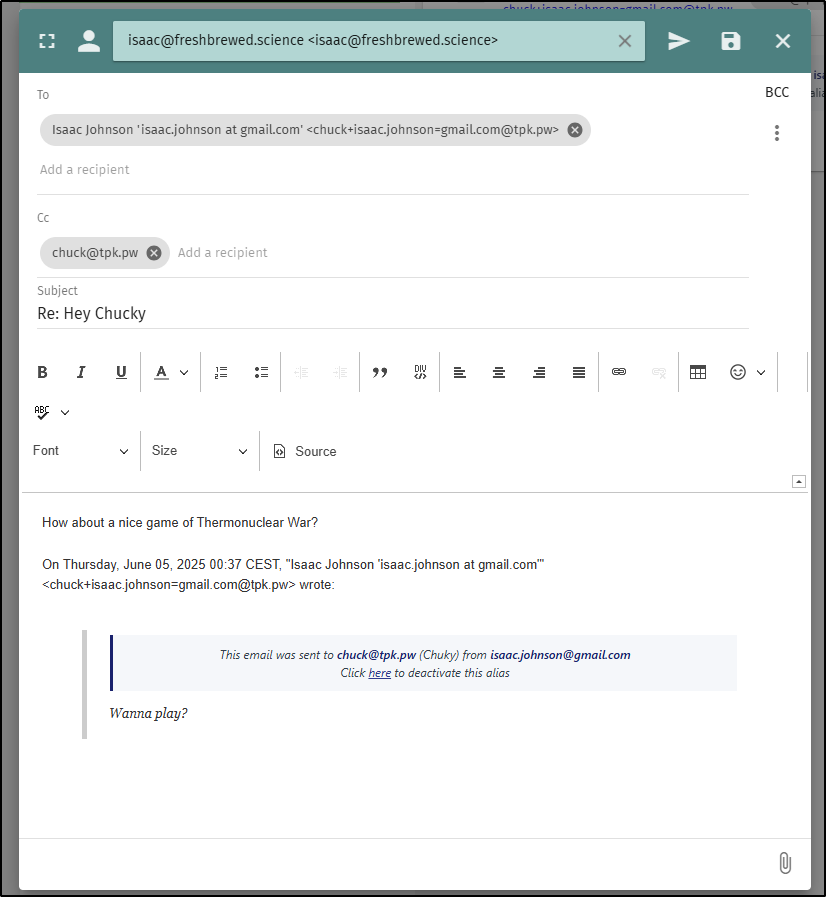

I then replied

However, I found the replies did not get back to my gmail.

I then tried using a forwarder email

I still have some kind of DMARC issue with freshbrewed.science blocking things, but I ran the whole flow from Gmail and managed to get it working

So I’ll have to come back and sort out whatever DMARC issue is hanging up freshbrewed.science emails
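A reasonable first step is to check which DMARC policy the domain actually publishes, for example with dig +short TXT _dmarc.freshbrewed.science. The sketch below uses a hypothetical dmarc_tag helper to pull a single tag out of that record. Useful tags include p (the policy) and rua (where aggregate reports go), so the value can be checked or scripted.

```bash
#!/usr/bin/env bash
# dmarc_tag TAG RECORD
# Hypothetical helper: prints the value of TAG from a DMARC TXT record string,
# e.g. dmarc_tag p 'v=DMARC1; p=quarantine; rua=mailto:dmarc@example.com'
# Returns non-zero if the tag is not present.
dmarc_tag() {
  local tag="$1" record="$2"
  # Drop the quotes dig prints, put each tag on its own line,
  # trim surrounding spaces, and normalise "tag = value" to "tag=value".
  printf '%s\n' "$record" | tr -d '"' | tr ';' '\n' \
    | sed 's/^[[:space:]]*//; s/[[:space:]]*$//; s/[[:space:]]*=[[:space:]]*/=/' \
    | awk -F= -v t="$tag" '$1 == t { sub(/^[^=]*=/, ""); print; found = 1 } END { exit !found }'
}
```

A typical use would be dmarc_tag p "$(dig +short TXT _dmarc.freshbrewed.science)" to see whether the policy is none, quarantine or reject.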

PortNote

A while back Marius posted about PortNote.

PortNote is a straightforward app to manage all of your servers and used ports in a simple interface.

I’ll start with the docker compose locally to give it a shot

services:
  web:
    image: haedlessdev/portnote:latest
    ports:
      - "3000:3000"
    environment:
      JWT_SECRET: RANDOM_SECRET # Replace with a secure random string
      USER_SECRET: RANDOM_SECRET # Replace with a secure random string
      LOGIN_USERNAME: username # Replace with a username
      LOGIN_PASSWORD: mypassword # Replace with a custom password
      DATABASE_URL: "postgresql://postgres:postgres@db:5432/postgres"
    depends_on:
      db:
        condition: service_started

  agent:
    image: haedlessdev/portnote-agent:latest
    environment:
      DATABASE_URL: "postgresql://postgres:postgres@db:5432/postgres"
    depends_on:
      db:
        condition: service_started

  db:
    image: postgres:17
    restart: always
    environment:
      POSTGRES_USER: postgres
      POSTGRES_PASSWORD: postgres
      POSTGRES_DB: postgres
    volumes:
      - postgres_data:/var/lib/postgresql/data

volumes:
  postgres_data:

which I can just launch from the GitHub repo

builder@LuiGi:~/Workspaces$ git clone https://github.com/crocofied/PortNote.git

Cloning into 'PortNote'...

remote: Enumerating objects: 569, done.

remote: Counting objects: 100% (39/39), done.

remote: Compressing objects: 100% (39/39), done.

remote: Total 569 (delta 0), reused 0 (delta 0), pack-reused 530 (from 1)

Receiving objects: 100% (569/569), 25.87 MiB | 8.53 MiB/s, done.

Resolving deltas: 100% (288/288), done.

Updating files: 100% (80/80), done.

builder@LuiGi:~/Workspaces$ cd PortNote

builder@LuiGi:~/Workspaces/PortNote$ vi compose.yml

builder@LuiGi:~/Workspaces/PortNote$ docker compose up

[+] Running 16/16

✔ agent Pulled 5.6s

✔ ceda16c5871d Pull complete 2.3s

✔ 4f4fb700ef54 Pull complete 49.3s

✔ e2faaa07380c Pull complete 3.1s

✔ web Pulled 52.0s

✔ f18232174bc9 Already exists 0.0s

✔ a54ab62fca2d Pull complete 10.0s

✔ 6bf3eed75316 Pull complete 10.2s

✔ efb51bda5e87 Pull complete 10.3s

✔ d98dd163e50f Pull complete 10.4s

✔ 119ebbf4413a Pull complete 46.5s

✔ d4420ff72fb1 Pull complete 48.1s

✔ 835c2aa23956 Pull complete 49.0s

✔ 72f8d4da615d Pull complete 49.1s

✔ 9141ee28cc14 Pull complete 49.2s

✔ c10de3cfc9cc Pull complete 49.6s

[+] Running 5/5

✔ Network portnote_default Created 0.3s

✔ Volume "portnote_postgres_data" Created 0.0s

✔ Container portnote-db-1 Created 0.4s

✔ Container portnote-agent-1 Created 0.2s

✔ Container portnote-web-1 Created 0.2s

Attaching to agent-1, db-1, web-1

db-1 | The files belonging to this database system will be owned by user "postgres".

db-1 | This user must also own the server process.

db-1 |

db-1 | The database cluster will be initialized with locale "en_US.utf8".

db-1 | The default database encoding has accordingly been set to "UTF8".

db-1 | The default text search configuration will be set to "english".

db-1 |

db-1 | Data page checksums are disabled.

db-1 |

db-1 | fixing permissions on existing directory /var/lib/postgresql/data ... ok

db-1 | creating subdirectories ... ok

db-1 | selecting dynamic shared memory implementation ... posix

db-1 | selecting default "max_connections" ... 100

db-1 | selecting default "shared_buffers" ... 128MB

agent-1 | Unable to connect to database: failed to connect to `host=db user=postgres database=postgres`: dial error (dial tcp 172.28.0.2:5432: connect: connection refused)

db-1 | selecting default time zone ... Etc/UTC

db-1 | creating configuration files ... ok

db-1 | running bootstrap script ... ok

agent-1 exited with code 1

db-1 | performing post-bootstrap initialization ... ok

db-1 | syncing data to disk ... ok

db-1 |

db-1 |

db-1 | Success. You can now start the database server using:

db-1 |

db-1 | pg_ctl -D /var/lib/postgresql/data -l logfile start

db-1 |

db-1 | initdb: warning: enabling "trust" authentication for local connections

db-1 | initdb: hint: You can change this by editing pg_hba.conf or using the option -A, or --auth-local and --auth-host, the next time you run initdb.

db-1 | waiting for server to start....2025-06-04 22:51:26.038 UTC [48] LOG: starting PostgreSQL 17.5 (Debian 17.5-1.pgdg120+1) on x86_64-pc-linux-gnu, compiled by gcc (Debian 12.2.0-14) 12.2.0, 64-bit

db-1 | 2025-06-04 22:51:26.045 UTC [48] LOG: listening on Unix socket "/var/run/postgresql/.s.PGSQL.5432"

db-1 | 2025-06-04 22:51:26.067 UTC [51] LOG: database system was shut down at 2025-06-04 22:51:25 UTC

db-1 | 2025-06-04 22:51:26.081 UTC [48] LOG: database system is ready to accept connections

db-1 | done

db-1 | server started

db-1 |

db-1 | /usr/local/bin/docker-entrypoint.sh: ignoring /docker-entrypoint-initdb.d/*

db-1 |

db-1 | waiting for server to shut down....2025-06-04 22:51:26.302 UTC [48] LOG: received fast shutdown request

db-1 | 2025-06-04 22:51:26.311 UTC [48] LOG: aborting any active transactions

db-1 | 2025-06-04 22:51:26.316 UTC [48] LOG: background worker "logical replication launcher" (PID 54) exited with exit code 1

db-1 | 2025-06-04 22:51:26.320 UTC [49] LOG: shutting down

db-1 | 2025-06-04 22:51:26.326 UTC [49] LOG: checkpoint starting: shutdown immediate

db-1 | 2025-06-04 22:51:26.361 UTC [49] LOG: checkpoint complete: wrote 3 buffers (0.0%); 0 WAL file(s) added, 0 removed, 0 recycled; write=0.011 s, sync=0.006 s, total=0.041 s; sync files=2, longest=0.003 s, average=0.003 s; distance=0 kB, estimate=0 kB; lsn=0/14E4FA0, redo lsn=0/14E4FA0

db-1 | 2025-06-04 22:51:26.366 UTC [48] LOG: database system is shut down

db-1 | done

db-1 | server stopped

db-1 |

db-1 | PostgreSQL init process complete; ready for start up.

db-1 |

db-1 | 2025-06-04 22:51:26.458 UTC [1] LOG: starting PostgreSQL 17.5 (Debian 17.5-1.pgdg120+1) on x86_64-pc-linux-gnu, compiled by gcc (Debian 12.2.0-14) 12.2.0, 64-bit

db-1 | 2025-06-04 22:51:26.459 UTC [1] LOG: listening on IPv4 address "0.0.0.0", port 5432

db-1 | 2025-06-04 22:51:26.459 UTC [1] LOG: listening on IPv6 address "::", port 5432

db-1 | 2025-06-04 22:51:26.469 UTC [1] LOG: listening on Unix socket "/var/run/postgresql/.s.PGSQL.5432"

db-1 | 2025-06-04 22:51:26.482 UTC [62] LOG: database system was shut down at 2025-06-04 22:51:26 UTC

db-1 | 2025-06-04 22:51:26.495 UTC [1] LOG: database system is ready to accept connections

web-1 | Prisma schema loaded from prisma/schema.prisma

web-1 | Datasource "db": PostgreSQL database "postgres", schema "public" at "db:5432"

db-1 | 2025-06-04 22:51:26.905 UTC [66] LOG: could not receive data from client: Connection reset by peer

web-1 |

web-1 | 3 migrations found in prisma/migrations

web-1 |

web-1 | Applying migration `20250510105645_server_and_port_model`

web-1 | Applying migration `20250510130844_add_server_id_to_port_model`

web-1 | Applying migration `20250511104442_scan_model`

web-1 |

web-1 | The following migration(s) have been applied:

web-1 |

web-1 | migrations/

web-1 | └─ 20250510105645_server_and_port_model/

web-1 | └─ migration.sql

web-1 | └─ 20250510130844_add_server_id_to_port_model/

web-1 | └─ migration.sql

web-1 | └─ 20250511104442_scan_model/

web-1 | └─ migration.sql

web-1 |

web-1 | All migrations have been successfully applied.

web-1 | npm notice

web-1 | npm notice New major version of npm available! 10.8.2 -> 11.4.1

web-1 | npm notice Changelog: https://github.com/npm/cli/releases/tag/v11.4.1

web-1 | npm notice To update run: npm install -g npm@11.4.1

web-1 | npm notice

web-1 |

web-1 | > portnote@1.2.0 start

web-1 | > next start

web-1 |

web-1 | ▲ Next.js 15.3.2

web-1 | - Local: http://localhost:3000

web-1 | - Network: http://172.28.0.4:3000

web-1 |

web-1 | ✓ Starting...

web-1 | ✓ Ready in 698ms
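One thing worth noticing in that log: agent-1 tried to reach Postgres before the database was accepting connections, then exited with code 1, and nothing restarted it. That happens because condition: service_started only waits for the db container to start, not for the database inside it to be ready. A minimal workaround, assuming a hypothetical retry helper, is to poll pg_isready inside the db container and only then (re)start the agent:

```bash
#!/usr/bin/env bash
# retry TRIES DELAY CMD [ARGS...]
# Hypothetical helper: runs CMD until it exits 0, at most TRIES times,
# sleeping DELAY seconds between attempts. Returns 1 if every attempt failed.
retry() {
  local tries="$1" delay="$2" i=1
  shift 2
  while :; do
    "$@" && return 0
    [ "$i" -ge "$tries" ] && return 1
    i=$((i + 1))
    sleep "$delay"
  done
}

# Example (run from the PortNote checkout):
#   retry 30 2 docker compose exec -T db pg_isready -U postgres &&
#     docker compose up -d agent
```

There are two alternatives. Adding restart: on-failure to the agent service should let Docker keep restarting it until the database is up, much like Kubernetes does with the CrashLoopBackOff later on. Or give db a healthcheck and switch the agent's depends_on condition to service_healthy.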

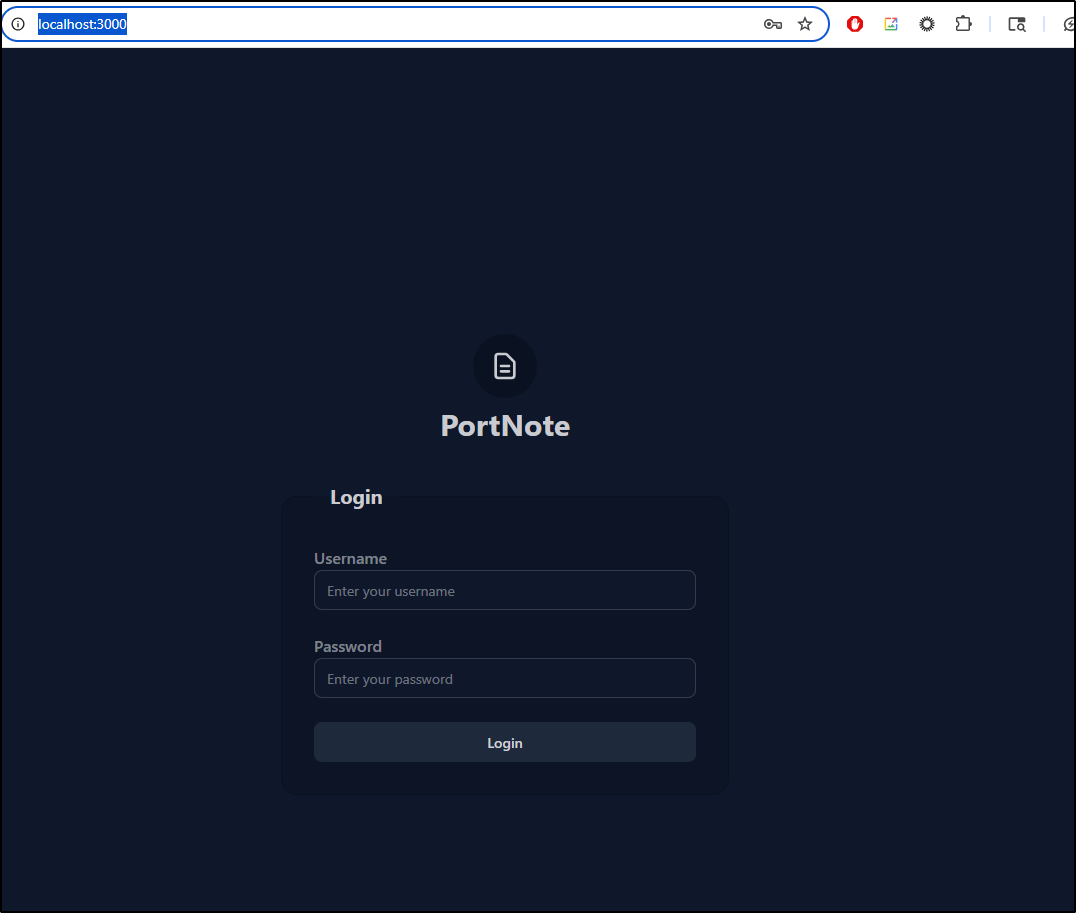

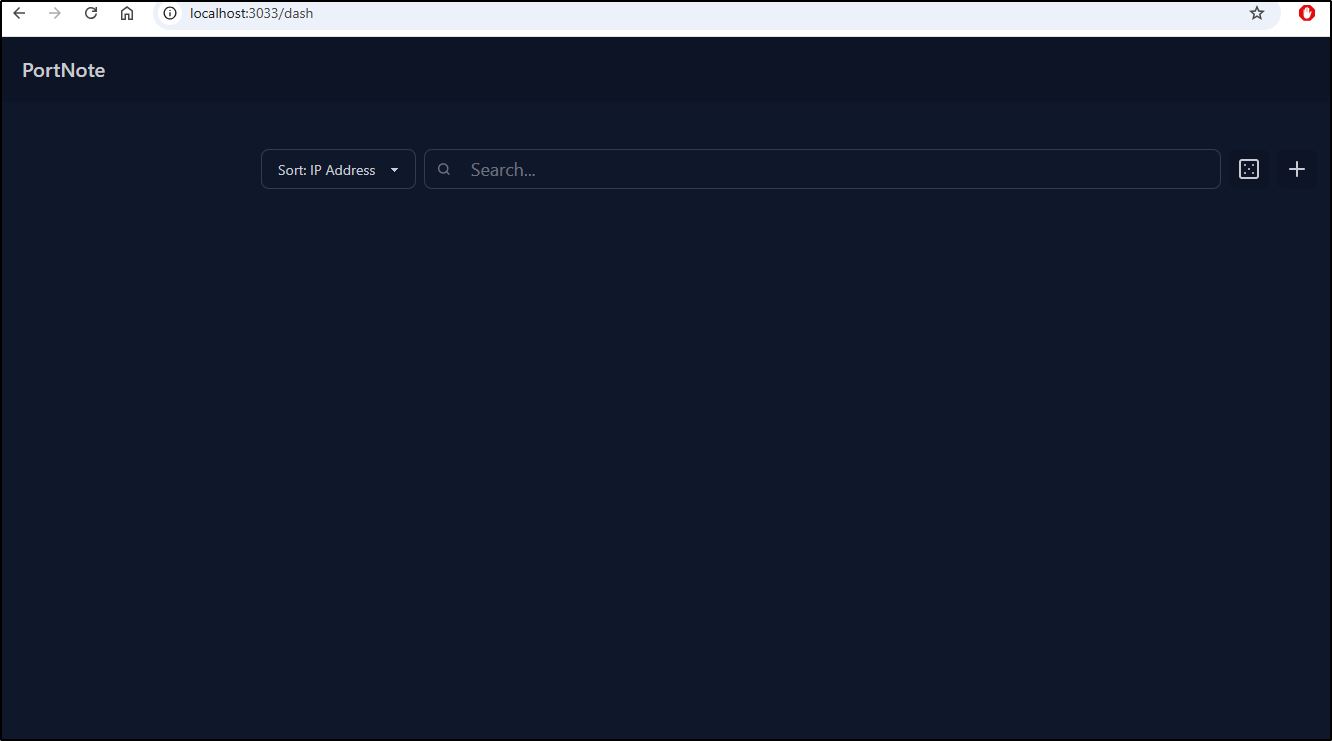

I can hit port 3000 and log in with the default username / mypassword credentials
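Those defaults, along with the RANDOM_SECRET placeholders for JWT_SECRET and USER_SECRET, are fine for a quick local test. They should be replaced before this lives anywhere else. One way to produce secure random strings is to hex-encode bytes from /dev/urandom, sketched here with a hypothetical gen_secret helper:

```bash
#!/usr/bin/env bash
# gen_secret [BYTES]
# Hypothetical helper: prints BYTES (default 32) random bytes from /dev/urandom
# as a lowercase hex string, i.e. 2*BYTES characters with no trailing newline.
gen_secret() {
  local bytes="${1:-32}"
  # od -v disables duplicate-line suppression so every byte is printed
  head -c "$bytes" /dev/urandom | od -An -v -tx1 | tr -d ' \n'
}

# Example: write the secrets to a .env file next to compose.yml
#   printf 'JWT_SECRET=%s\nUSER_SECRET=%s\n' "$(gen_secret)" "$(gen_secret)" > .env
```

Docker Compose reads a .env file in the project directory for variable interpolation. compose.yml could therefore use JWT_SECRET: ${JWT_SECRET} and USER_SECRET: ${USER_SECRET} instead of literal values.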

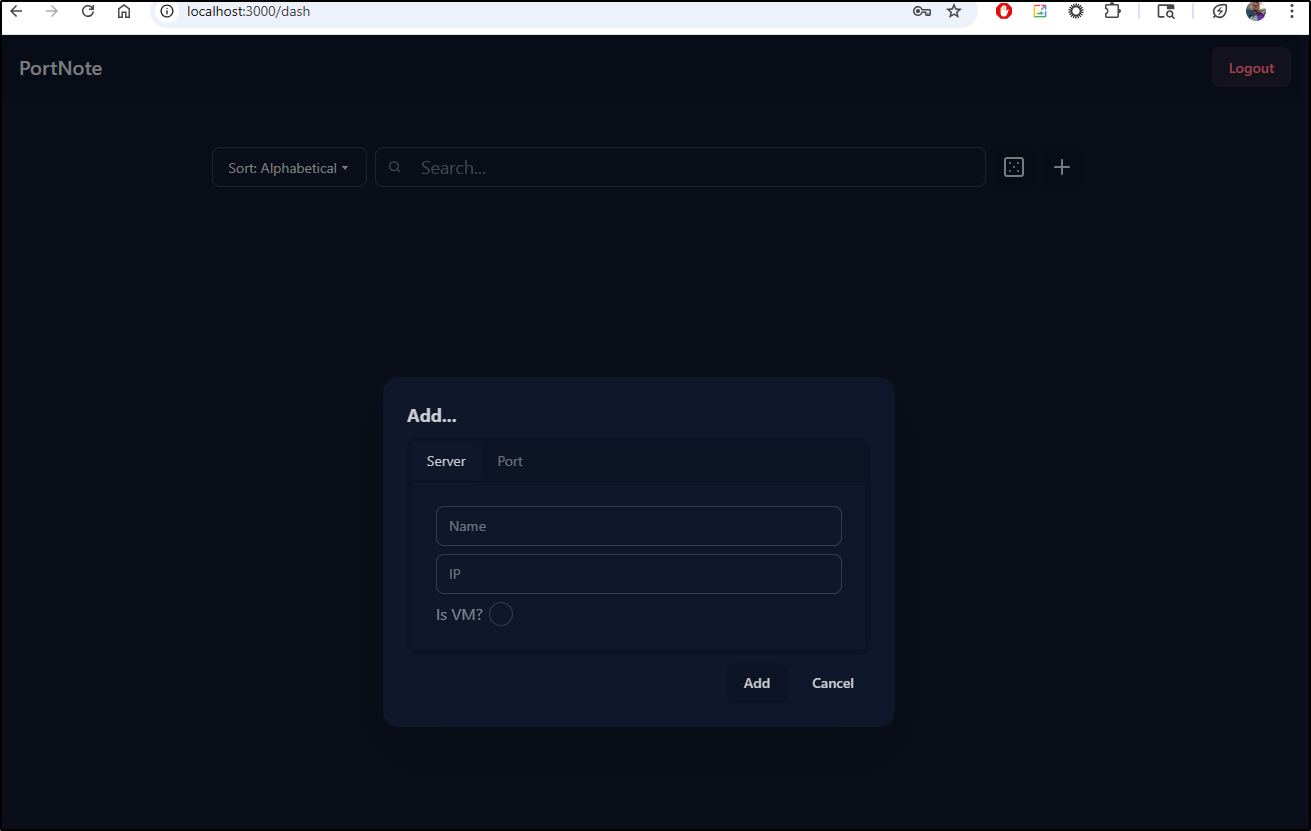

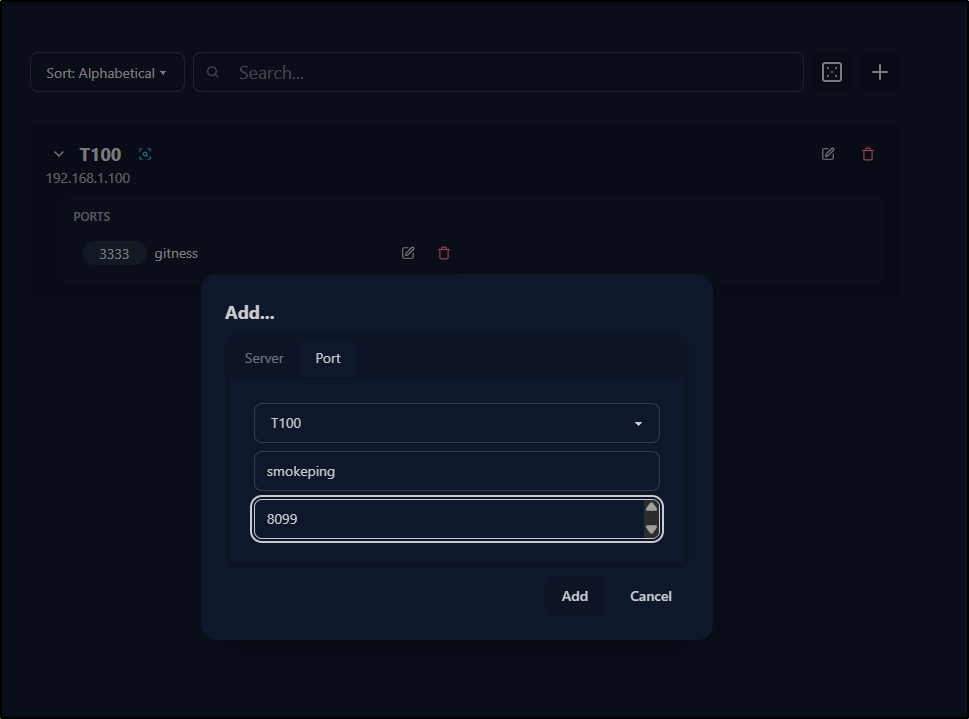

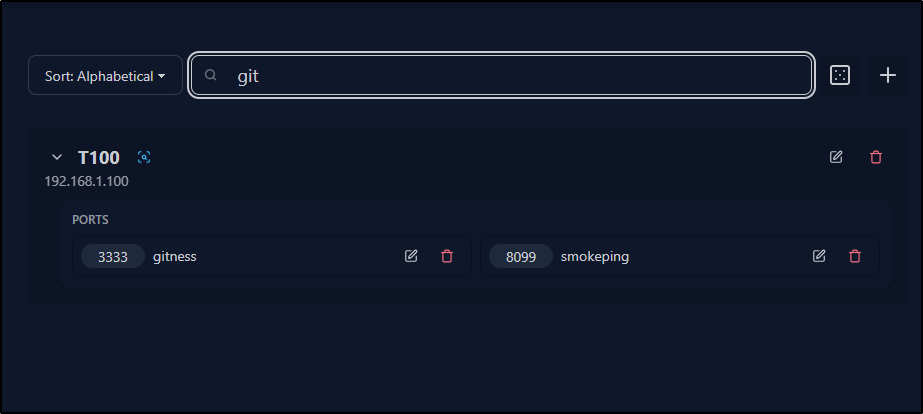

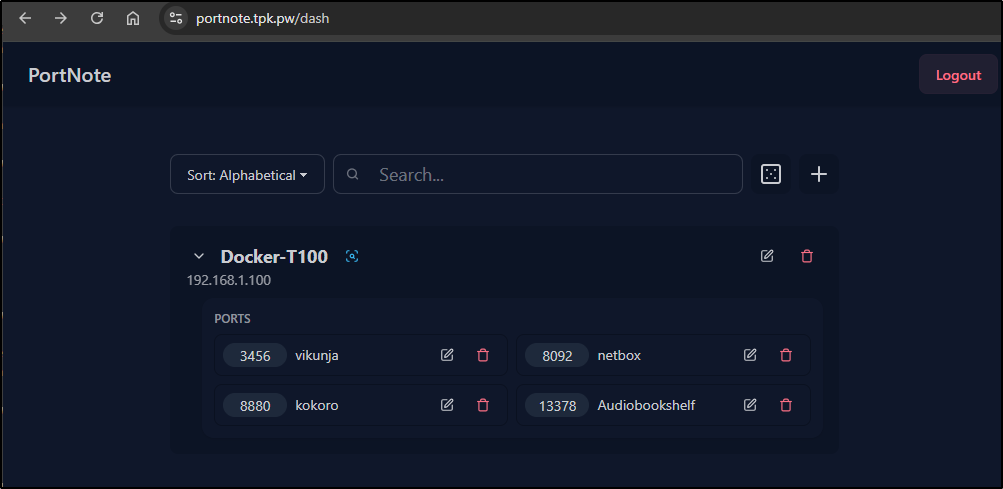

Nothing is there, so we can add a new server and port

Once I add a host, I can then start adding ports to existing servers

If I search for any part of the name of a port, it will show just hosts that match

Kubernetes

Next, I’ll try just turning it all into a Kubernetes YAML manifest with a service

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: postgres-data
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: portnote-db
spec:
  replicas: 1
  selector:
    matchLabels:
      app: portnote-db
  template:
    metadata:
      labels:
        app: portnote-db
    spec:
      containers:
        - name: postgres
          image: postgres:17
          env:
            - name: POSTGRES_USER
              value: "postgres"
            - name: POSTGRES_PASSWORD
              value: "postgres"
            - name: POSTGRES_DB
              value: "postgres"
          ports:
            - containerPort: 5432
          volumeMounts:
            - name: postgres-data
              mountPath: /var/lib/postgresql/data
      volumes:
        - name: postgres-data
          persistentVolumeClaim:
            claimName: postgres-data
---
apiVersion: v1
kind: Service
metadata:
  name: portnote-db
spec:
  ports:
    - port: 5432
      targetPort: 5432
  selector:
    app: portnote-db
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: portnote-web
spec:
  replicas: 1
  selector:
    matchLabels:
      app: portnote-web
  template:
    metadata:
      labels:
        app: portnote-web
    spec:
      containers:
        - name: web
          image: haedlessdev/portnote:latest
          env:
            - name: JWT_SECRET
              value: "RANDOM_SECRET" # Replace with a secure random string
            - name: USER_SECRET
              value: "RANDOM_SECRET" # Replace with a secure random string
            - name: LOGIN_USERNAME
              value: "username" # Replace with a username
            - name: LOGIN_PASSWORD
              value: "mypassword" # Replace with a custom password
            - name: DATABASE_URL
              value: "postgresql://postgres:postgres@portnote-db:5432/postgres"
          ports:
            - containerPort: 3000
---
apiVersion: v1
kind: Service
metadata:
  name: portnote-web
spec:
  type: ClusterIP
  ports:
    - port: 3000
      targetPort: 3000
  selector:
    app: portnote-web
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: portnote-agent
spec:
  replicas: 1
  selector:
    matchLabels:
      app: portnote-agent
  template:
    metadata:
      labels:
        app: portnote-agent
    spec:
      containers:
        - name: agent
          image: haedlessdev/portnote-agent:latest
          env:
            - name: DATABASE_URL
              value: "postgresql://postgres:postgres@portnote-db:5432/postgres"

Then applying it

builder@LuiGi:~/Workspaces/PortNote$ kubectl create ns portnote

namespace/portnote created

builder@LuiGi:~/Workspaces/PortNote$ kubectl apply -f ./deployment.yaml -n portnote

persistentvolumeclaim/postgres-data created

deployment.apps/portnote-db created

service/portnote-db created

deployment.apps/portnote-web created

service/portnote-web created

deployment.apps/portnote-agent created

Seems to have some issues at the start

$ kubectl get po -n portnote

NAME READY STATUS RESTARTS AGE

portnote-web-7c6d7fb679-x74v4 0/1 ContainerCreating 0 48s

portnote-db-56b464f8f4-zrmn4 1/1 Running 0 48s

portnote-agent-59f88f79bf-n4kml 0/1 CrashLoopBackOff 2 (21s ago) 48s

but soon seemed happy without my intervention

$ kubectl get po -n portnote

NAME READY STATUS RESTARTS AGE

portnote-db-56b464f8f4-zrmn4 1/1 Running 0 72s

portnote-web-7c6d7fb679-x74v4 1/1 Running 0 72s

portnote-agent-59f88f79bf-n4kml 1/1 Running 3 (45s ago) 72s

Which worked great

$ kubectl port-forward svc/portnote-web -n portnote 3033:3000

Forwarding from 127.0.0.1:3033 -> 3000

Forwarding from [::1]:3033 -> 3000

Handling connection for 3033

Handling connection for 3033

Handling connection for 3033

Now, I won’t expose an app with the default username, even if it’s just for port mapping.

I changed it and reapplied the manifest

builder@LuiGi:~/Workspaces/PortNote$ vi deployment.yaml

builder@LuiGi:~/Workspaces/PortNote$ kubectl apply -f ./deployment.yaml -n portnote

persistentvolumeclaim/postgres-data unchanged

deployment.apps/portnote-db unchanged

service/portnote-db unchanged

deployment.apps/portnote-web configured

service/portnote-web unchanged

deployment.apps/portnote-agent unchanged

which worked.
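Even with a changed username, the credentials still sit in plain text in deployment.yaml. Below is a sketch of moving them into a Kubernetes Secret instead. The Secret name portnote-login and both helper names are my own. The values are written base64-encoded under data, as the Secret API expects, which also avoids YAML quoting trouble with special characters in passwords.

```bash
#!/usr/bin/env bash
# Base64-encode a string with no trailing newline and no line wrapping.
b64() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# portnote_secret_manifest USER PASSWORD
# Hypothetical helper: prints a Secret manifest named "portnote-login"
# holding the PortNote LOGIN_USERNAME and LOGIN_PASSWORD values.
portnote_secret_manifest() {
  local user="$1" pass="$2"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: portnote-login
type: Opaque
data:
  LOGIN_USERNAME: $(b64 "$user")
  LOGIN_PASSWORD: $(b64 "$pass")
EOF
}

# Example:
#   portnote_secret_manifest myuser "$(head -c 18 /dev/urandom | base64)" \
#     | kubectl apply -n portnote -f -
#   kubectl set env deployment/portnote-web -n portnote --from=secret/portnote-login
```

kubectl set env --from imports every key of the Secret as an environment variable. The literal LOGIN_USERNAME and LOGIN_PASSWORD entries would then need to come out of deployment.yaml, or be switched to valueFrom.secretKeyRef, so the two sources don't conflict on the next kubectl apply.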

I’ll now set an A Record

$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n portnote

{

"ARecords": [

{

"ipv4Address": "75.73.224.240"

}

],

"TTL": 3600,

"etag": "d55ddfea-71f1-4d33-b45c-ee08a6ba6de2",

"fqdn": "portnote.tpk.pw.",

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/portnote",

"name": "portnote",

"provisioningState": "Succeeded",

"resourceGroup": "idjdnsrg",

"targetResource": {},

"trafficManagementProfile": {},

"type": "Microsoft.Network/dnszones/A"

}

Now to make an ingress and use it

builder@LuiGi:~/Workspaces/PortNote$ cat ./ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/websocket-services: portnote-web
  name: portnoteingress
spec:
  rules:
    - host: portnote.tpk.pw
      http:
        paths:
          - backend:
              service:
                name: portnote-web
                port:
                  number: 3000
            path: /
            pathType: ImplementationSpecific
  tls:
    - hosts:
        - portnote.tpk.pw
      secretName: portnote-tls

builder@LuiGi:~/Workspaces/PortNote$ kubectl apply -f ./ingress.yaml -n portnote

ingress.networking.k8s.io/portnoteingress created

Once the cert is satisfied

$ kubectl get cert -n portnote

NAME READY SECRET AGE

portnote-tls True portnote-tls 2m9s

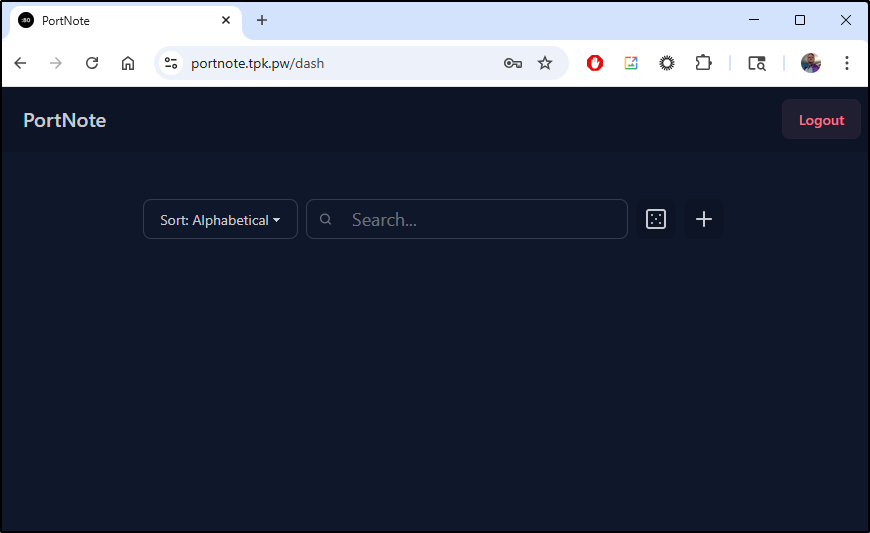

I could now add hosts if I wanted

That said, I’m not sure it covers quite enough for me. I would like a bit more detail. I can think of tools like Zabbix being a good fit for that.

Netbox

When searching for other options, I found NetBox Labs. The install docs seemed geared toward direct Linux host installation.

However, I did find [this community Docker container](https://github.com/netbox-community/netbox-docker)

Let’s pull down the repo and start with docker compose

builder@LuiGi:~/Workspaces$ git clone https://github.com/netbox-community/netbox-docker.git

Cloning into 'netbox-docker'...

remote: Enumerating objects: 5089, done.

remote: Counting objects: 100% (19/19), done.

remote: Compressing objects: 100% (13/13), done.

remote: Total 5089 (delta 11), reused 6 (delta 6), pack-reused 5070 (from 3)

Receiving objects: 100% (5089/5089), 1.28 MiB | 3.80 MiB/s, done.

Resolving deltas: 100% (2918/2918), done.

builder@LuiGi:~/Workspaces$ cd netbox-docker/

builder@LuiGi:~/Workspaces/netbox-docker$ tee docker-compose.override.yml <<EOF
services:
  netbox:
    ports:
      - 8000:8080
EOF
services:
  netbox:
    ports:
      - 8000:8080

builder@LuiGi:~/Workspaces/netbox-docker$ docker compose pull

[+] Pulling 40/40

✔ netbox-worker Skipped - Image is already being pulled by netbox 0.0s

✔ netbox-housekeeping Skipped - Image is already being pulled by netbox 0.0s

✔ netbox Pulled 50.4s

✔ redis-cache Skipped - Image is already being pulled by redis 0.0s

✔ redis Pulled 7.9s

✔ postgres Pulled 27.0s

builder@LuiGi:~/Workspaces/netbox-docker$ docker compose up

[+] Running 13/13

✔ Network netbox-docker_default Created 0.1s

✔ Volume "netbox-docker_netbox-media-files" Created 0.0s

✔ Volume "netbox-docker_netbox-reports-files" Created 0.0s

✔ Volume "netbox-docker_netbox-scripts-files" Created 0.0s

✔ Volume "netbox-docker_netbox-postgres-data" Created 0.0s

✔ Volume "netbox-docker_netbox-redis-data" Created 0.0s

✔ Volume "netbox-docker_netbox-redis-cache-data" Created 0.0s

✔ Container netbox-docker-redis-1 Created 0.3s

✔ Container netbox-docker-redis-cache-1 Created 0.3s

✔ Container netbox-docker-postgres-1 Created 0.3s

✔ Container netbox-docker-netbox-1 Created 0.2s

✔ Container netbox-docker-netbox-housekeeping-1 Created 0.1s

✔ Container netbox-docker-netbox-worker-1 Created 0.1s

Attaching to netbox-1, netbox-housekeeping-1, netbox-worker-1, postgres-1, redis-1, redis-cache-1

... snip ...

seems to crash

builder@LuiGi:~/Workspaces/netbox-docker$ docker compose up

[+] Running 4/4

✔ Container netbox-docker-redis-1 Running 0.0s

✔ Container netbox-docker-redis-cache-1 Running 0.0s

✔ Container netbox-docker-postgres-1 Running 0.0s

✔ Container netbox-docker-netbox-1 Running 0.0s

Attaching to netbox-1, netbox-housekeeping-1, netbox-worker-1, postgres-1, redis-1, redis-cache-1

Gracefully stopping... (press Ctrl+C again to force)

dependency failed to start: container netbox-docker-netbox-1 is unhealthy

It took a few tries, but then it came up

I then used manage.py to create a superuser

builder@LuiGi:~/Workspaces/netbox-docker$ docker exec -it netbox-docker-netbox-1 /bin/bash

unit@46d90e38bd03:/opt/netbox/netbox$ ./manage.py createsuperuser

🧬 loaded config '/etc/netbox/config/configuration.py'

🧬 loaded config '/etc/netbox/config/extra.py'

🧬 loaded config '/etc/netbox/config/logging.py'

🧬 loaded config '/etc/netbox/config/plugins.py'

Username: admin

Email address: admin@tpk.pw

Password:

Password (again):

This password is too short. It must contain at least 12 characters.

Bypass password validation and create user anyway? [y/N]: N

Password:

Password (again):

Superuser created successfully.

unit@46d90e38bd03:/opt/netbox/netbox$

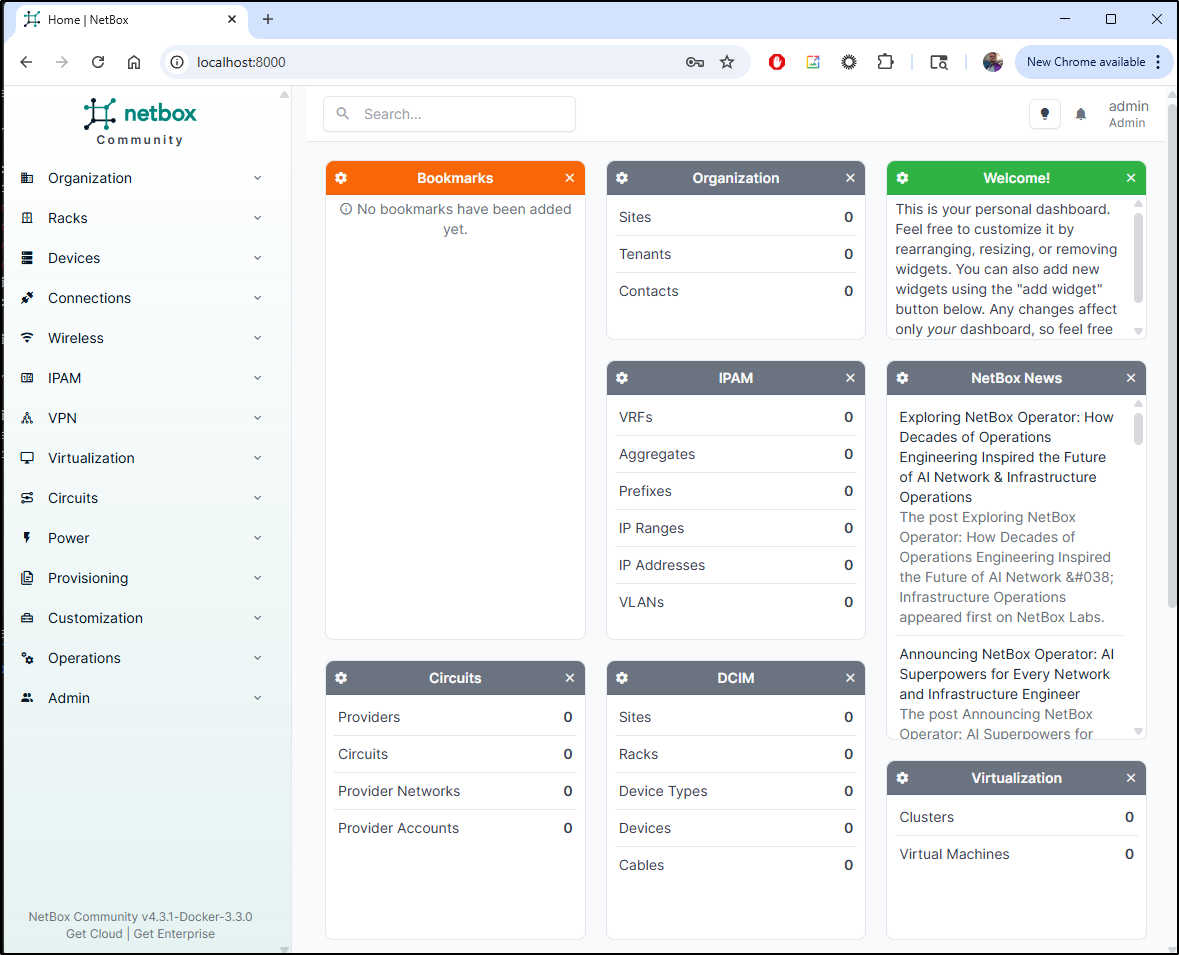

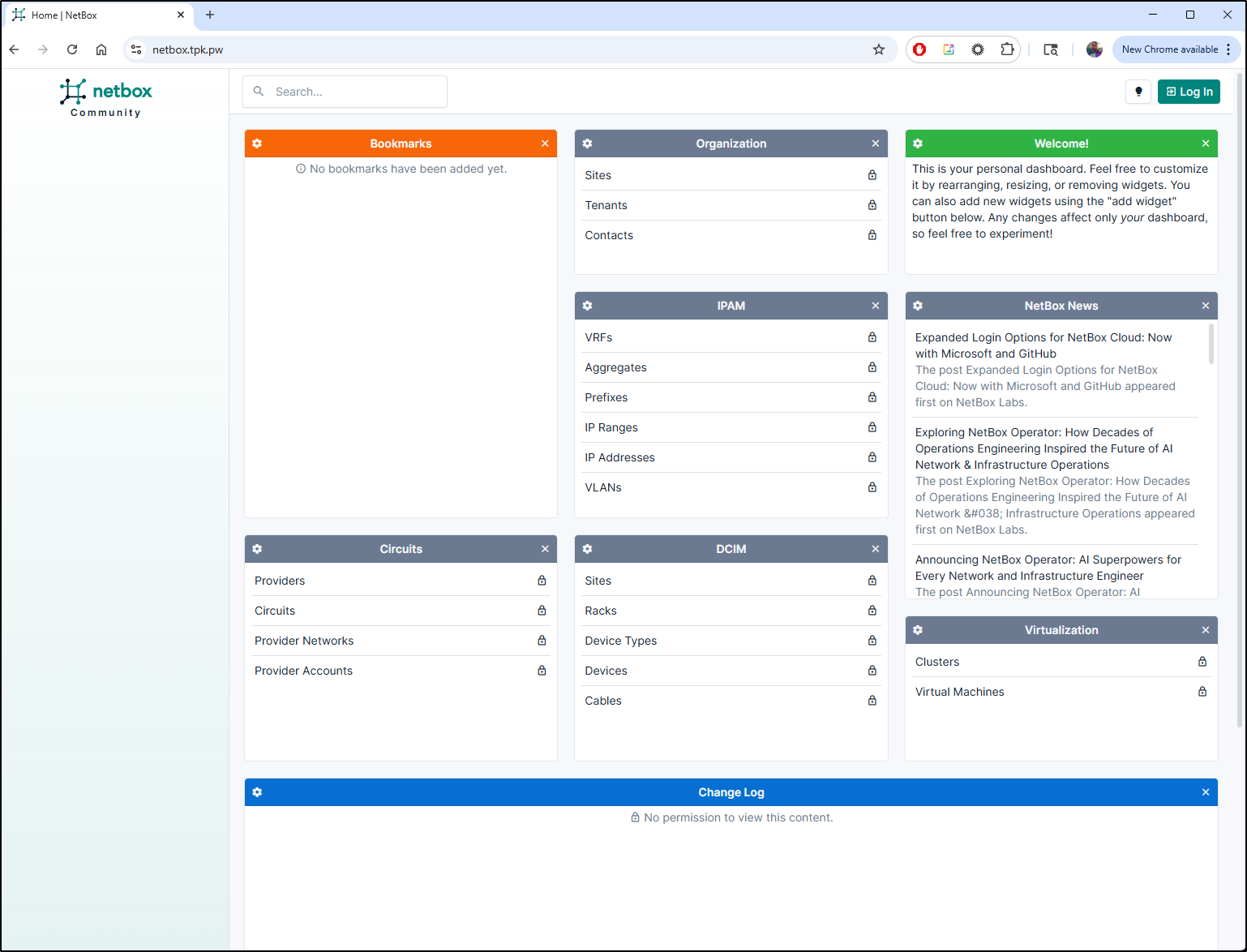

Now I can login

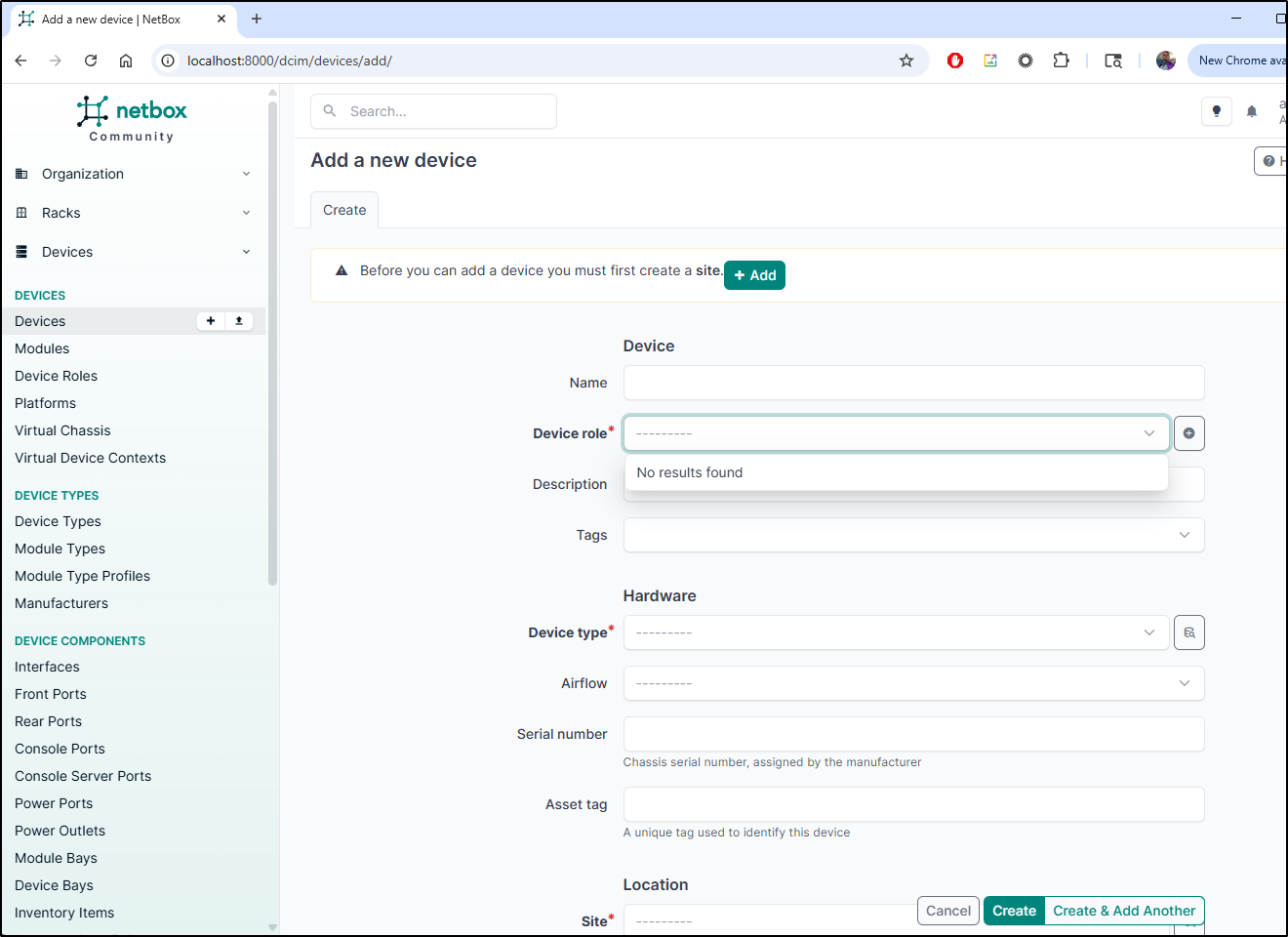

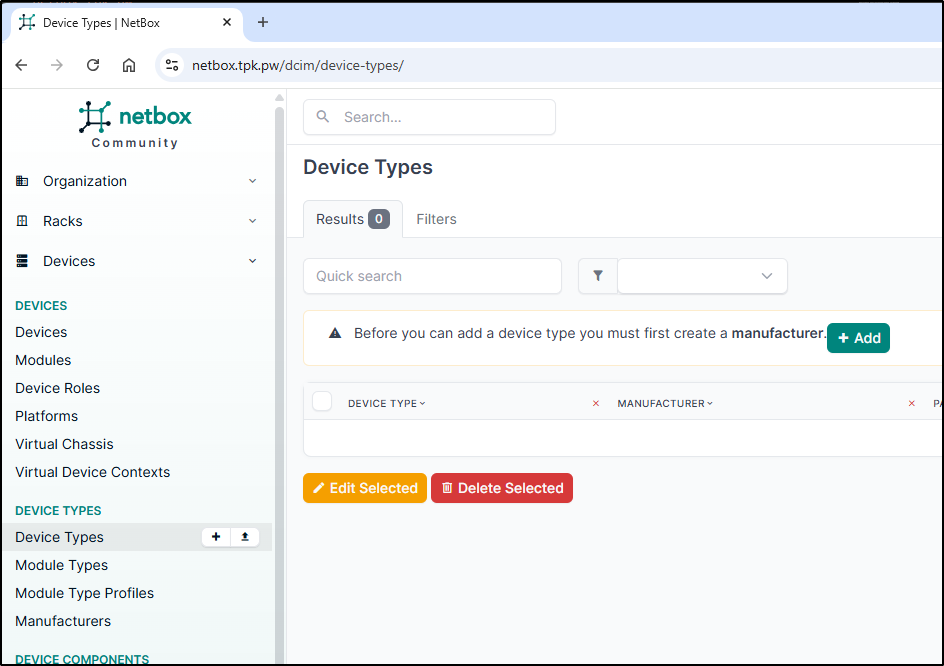

But before I create devices I need to add types and roles

Exposing Externally

I opted to use port 8092

builder@builder-T100:~/netbox-docker$ cat docker-compose.override.yml

services:
  netbox:
    ports:
      - 8092:8080

Let’s fire this up on a shared docker host.

builder@builder-T100:~/netbox-docker$ docker compose up -d

[+] Building 0.0s (0/0)

[+] Running 6/6

✔ Container netbox-docker-redis-cache-1 Running 0.0s

✔ Container netbox-docker-redis-1 Running 0.0s

✔ Container netbox-docker-postgres-1 Running 0.0s

✔ Container netbox-docker-netbox-1 Healthy 0.5s

✔ Container netbox-docker-netbox-housekeeping-1 Started 0.9s

✔ Container netbox-docker-netbox-worker-1 Started

Note: I had to run that twice, but on the second attempt all the containers started without issue.

Then I just need to create an admin user

builder@builder-T100:~$ docker exec -it netbox-docker-netbox-1 /bin/bash

unit@0ba6f24ff908:/opt/netbox/netbox$ ./manage.py createsuperuser

🧬 loaded config '/etc/netbox/config/configuration.py'

🧬 loaded config '/etc/netbox/config/extra.py'

🧬 loaded config '/etc/netbox/config/logging.py'

🧬 loaded config '/etc/netbox/config/plugins.py'

Username: builder

Email address: isaac@freshbrewed.science

Password:

Password (again):

Superuser created successfully.

We can then create an A record which we’ll use for an ingress

$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n netbox

{

"ARecords": [

{

"ipv4Address": "75.73.224.240"

}

],

"TTL": 3600,

"etag": "384b493e-2b7f-43b3-8d8e-c22d722d3c2f",

"fqdn": "netbox.tpk.pw.",

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/netbox",

"name": "netbox",

"provisioningState": "Succeeded",

"resourceGroup": "idjdnsrg",

"targetResource": {},

"trafficManagementProfile": {},

"type": "Microsoft.Network/dnszones/A"

}

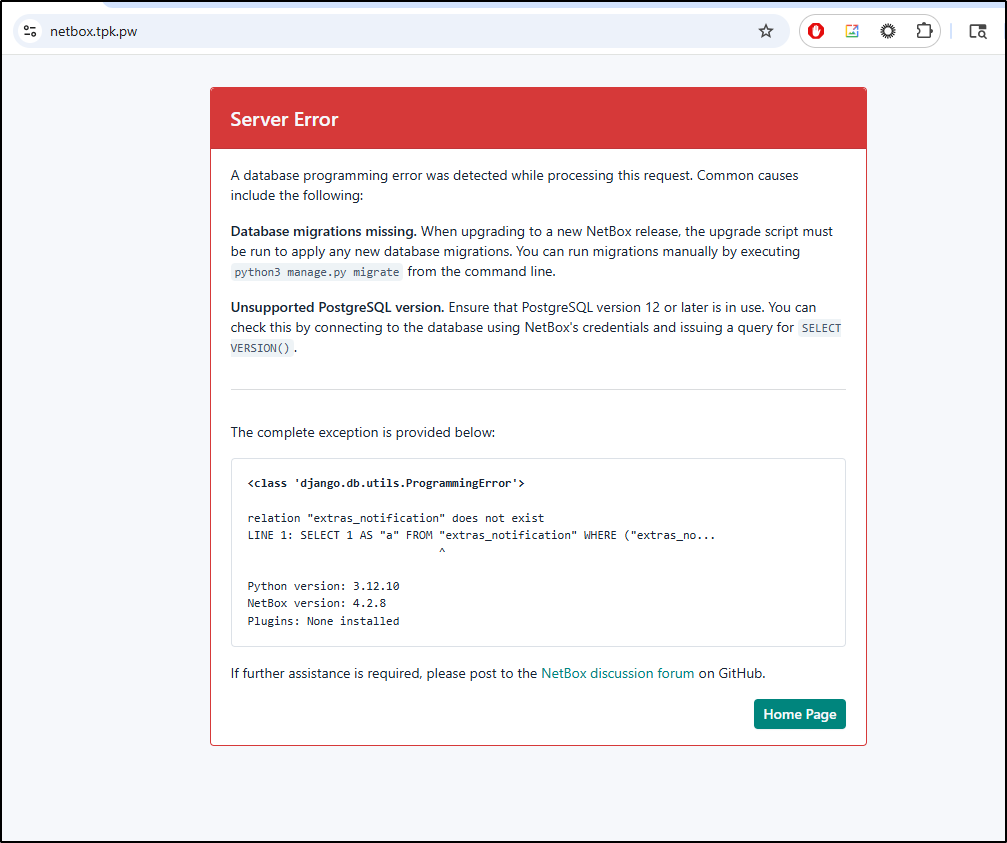

I should note, I initially attempted to convert it all to Kubernetes YAML manifests

builder@LuiGi:~/Workspaces/netbox-docker$ kubectl get deployment -n netbox

NAME READY UP-TO-DATE AVAILABLE AGE

redis-deployment 1/1 1 1 367d

postgres-deployment 1/1 1 1 367d

netbox-deployment 1/1 1 1 367d

builder@LuiGi:~/Workspaces/netbox-docker$ kubectl get svc -n netbox

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

postgres-service ClusterIP 10.43.222.7 <none> 5432/TCP 367d

redis-service ClusterIP 10.43.93.229 <none> 6379/TCP 367d

netbox-service ClusterIP 10.43.202.87 <none> 8000/TCP 367d

builder@LuiGi:~/Workspaces/netbox-docker$ kubectl get pvc -n netbox

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

postgres-pvc Bound pvc-7dfd3be7-5087-4329-94d9-f25e3b2b4366 1Gi RWO local-path 367d

netbox-pvc Bound pvc-271e6e59-ad7a-4be2-b60b-06a6b704ecca 1Gi RWO local-path 367d

builder@LuiGi:~/Workspaces/netbox-docker$ kubectl get ingress -n netbox

NAME CLASS HOSTS ADDRESS PORTS AGE

netbox-ingress <none> netbox.tpk.pw 80, 443 367d

But I saw database errors.

Next, we apply Endpoints, Service and Ingress objects in Kubernetes that will use that A record

$ cat ingress.yaml

apiVersion: v1
kind: Endpoints
metadata:
  name: netbox-external-ip
subsets:
  - addresses:
      - ip: 192.168.1.100
    ports:
      - name: netboxint
        port: 8092
        protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: netbox-external-ip
spec:
  clusterIP: None
  clusterIPs:
    - None
  internalTrafficPolicy: Cluster
  ipFamilies:
    - IPv4
    - IPv6
  ipFamilyPolicy: RequireDualStack
  ports:
    - name: netbox
      port: 80
      protocol: TCP
      targetPort: 8092
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/websocket-services: netbox-external-ip
  generation: 1
  name: netboxingress
spec:
  rules:
    - host: netbox.tpk.pw
      http:
        paths:
          - backend:
              service:
                name: netbox-external-ip
                port:
                  number: 80
            path: /
            pathType: ImplementationSpecific
  tls:
    - hosts:
        - netbox.tpk.pw
      secretName: netbox-tls

$ kubectl apply -f ./ingress.yaml

endpoints/netbox-external-ip created

service/netbox-external-ip created

ingress.networking.k8s.io/netboxingress created

When the cert comes up

kubectl get cert netbox-tls

NAME READY SECRET AGE

netbox-tls True netbox-tls 18s

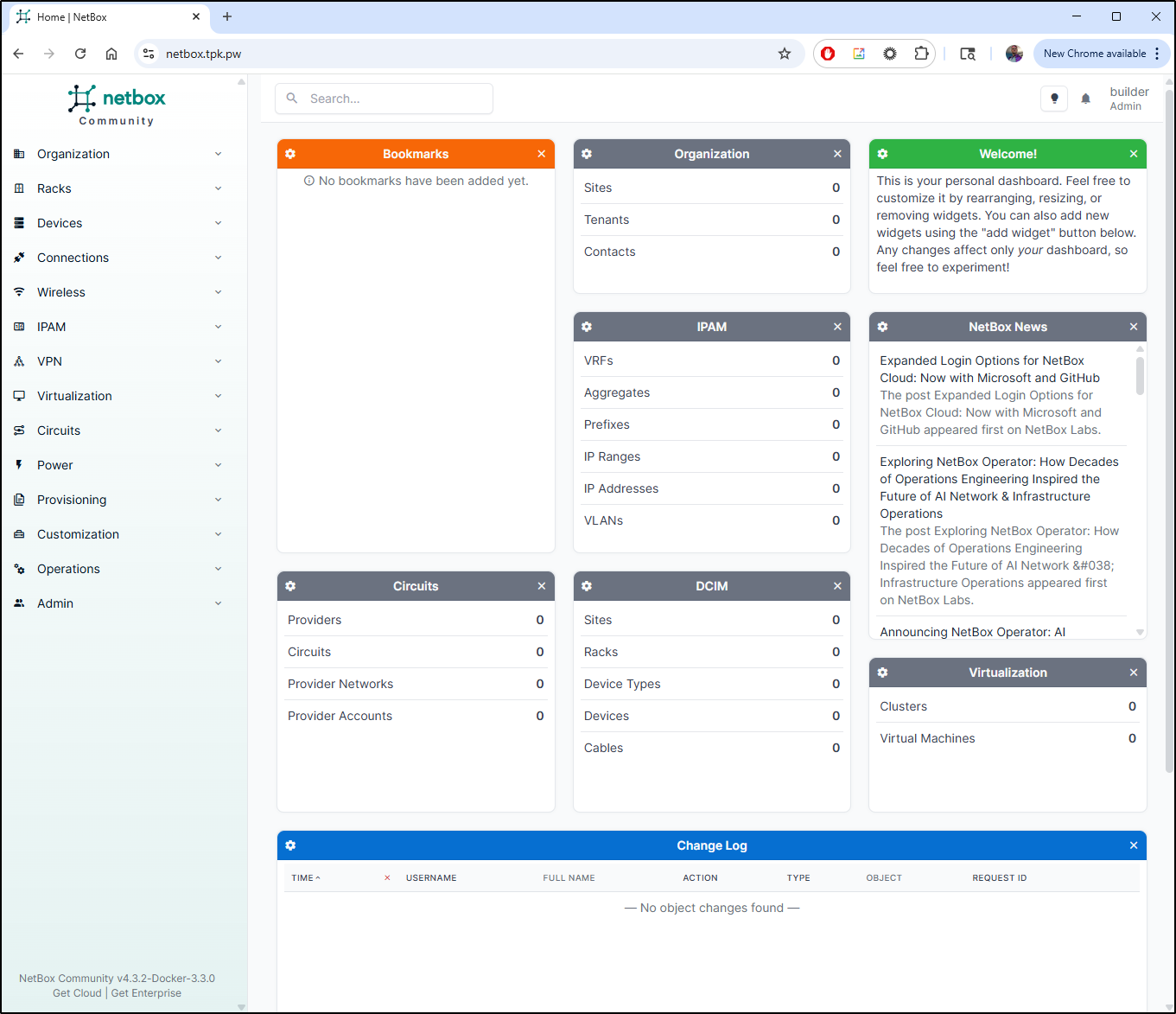

I can now see the dashboard with login

And can login without issue

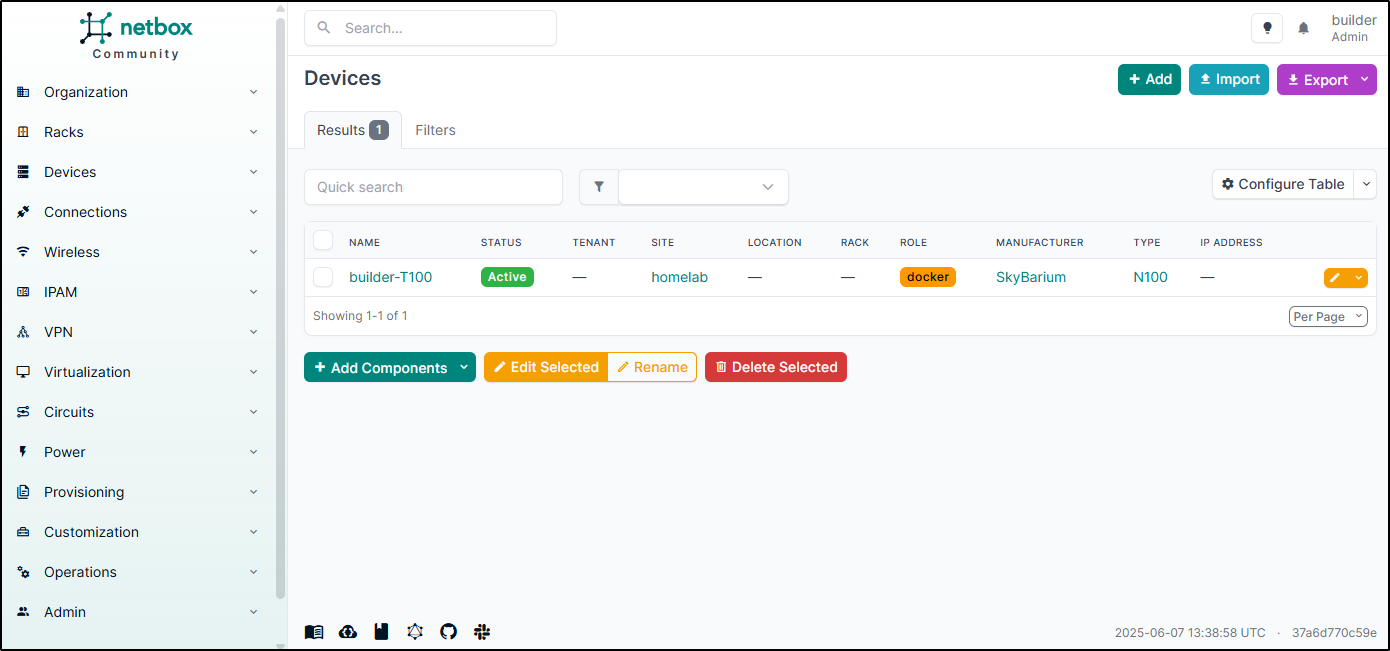

There is a lot to get through to add a machine. We need, at the very least, a site (which wants a location), then a chassis and a manufacturer in order to add a device type.
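If clicking through all of those prerequisites gets tedious, NetBox also exposes them over its REST API, for example /api/dcim/sites/, /api/dcim/device-types/ and /api/dcim/devices/. The sketch below builds the JSON body for creating a device. It rests on my own assumptions: NetBox 4.x field names (role rather than the older device_role), numeric IDs for the related objects, and an API token in a NETBOX_TOKEN variable.

```bash
#!/usr/bin/env bash
# netbox_device_payload NAME DEVICE_TYPE_ID ROLE_ID SITE_ID
# Hypothetical helper: prints a JSON body for POST /api/dcim/devices/
# (NetBox 4.x field names assumed). NAME is inserted verbatim, so it must
# not contain double quotes or backslashes.
netbox_device_payload() {
  printf '{"name": "%s", "device_type": %d, "role": %d, "site": %d}\n' \
    "$1" "$2" "$3" "$4"
}

# Example (the IDs are placeholders for objects created beforehand):
#   curl -sS -X POST https://netbox.tpk.pw/api/dcim/devices/ \
#     -H "Authorization: Token $NETBOX_TOKEN" \
#     -H "Content-Type: application/json" \
#     --data "$(netbox_device_payload dockerhost 1 1 1)"
```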

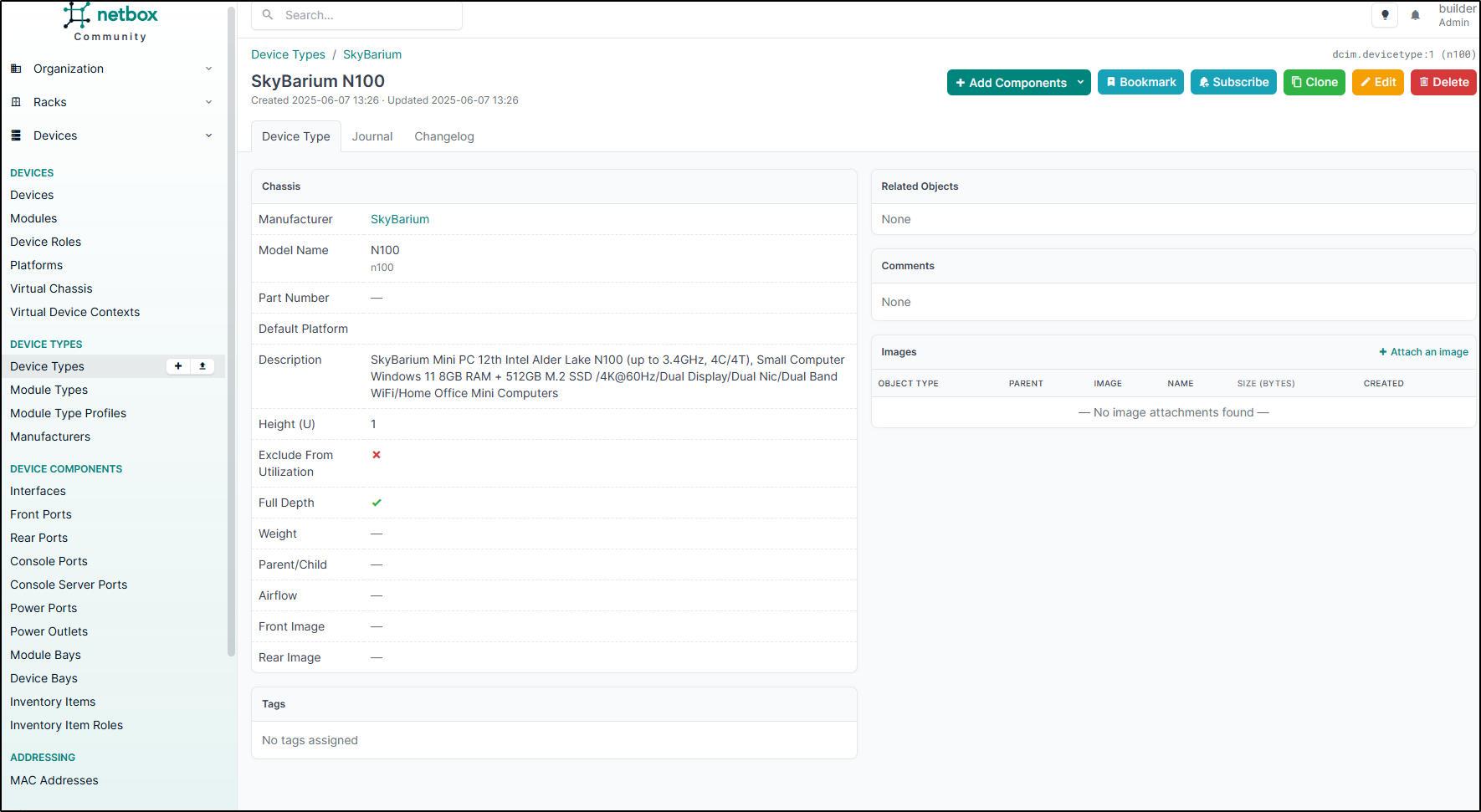

I added a Device Type for my Docker host

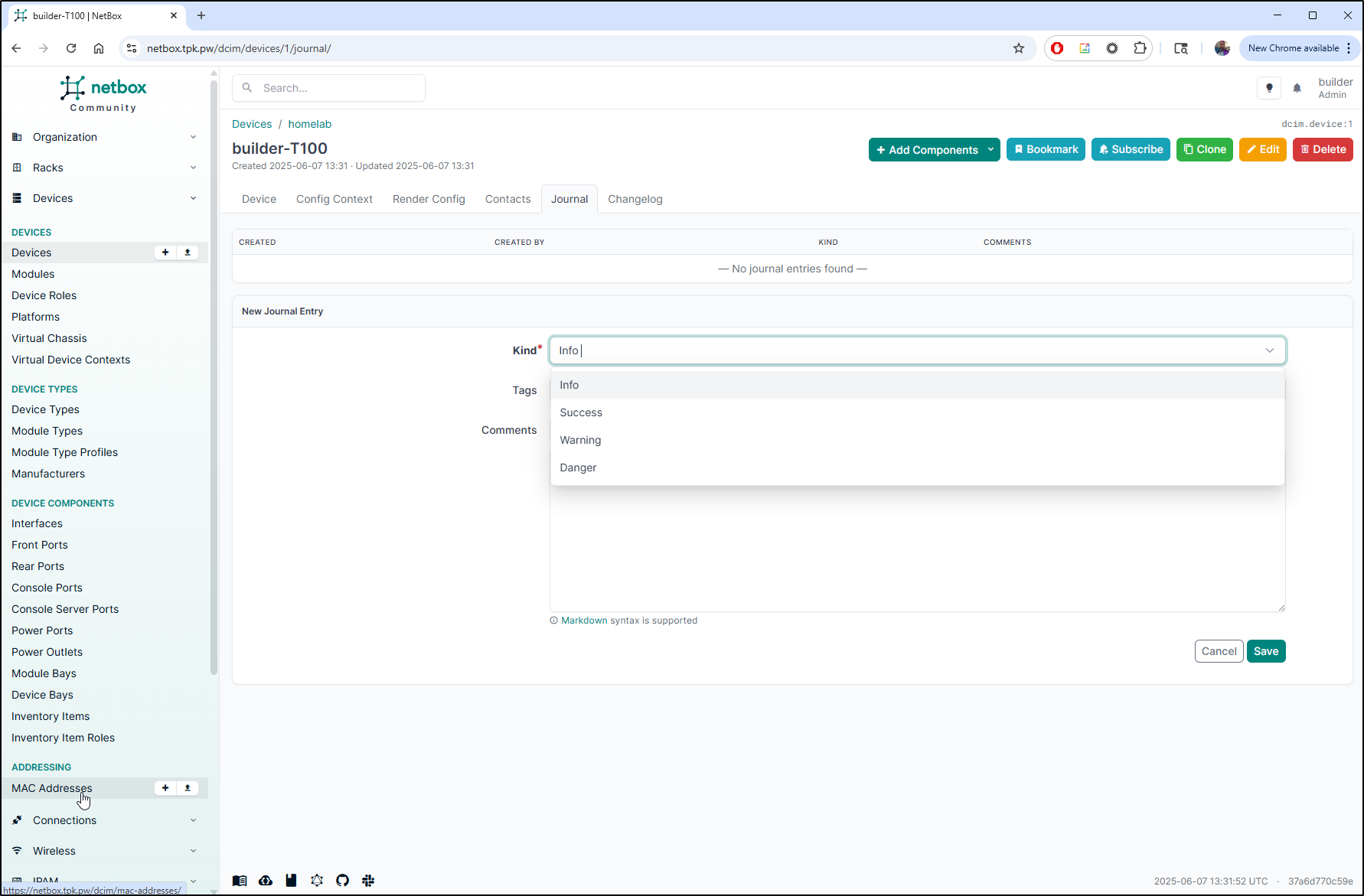

Once we finally add a host, we can do things like add Journal entries for maintenance

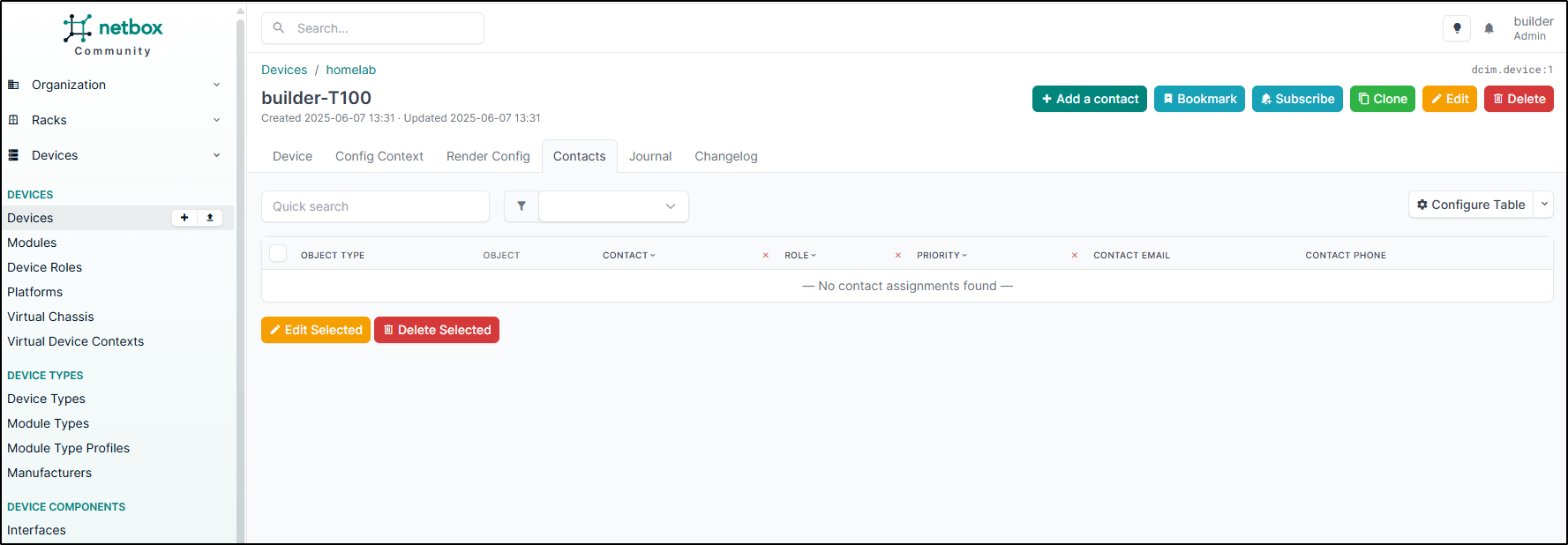

or add contacts, which could be useful for notifying affected teams or projects when we have to update the host

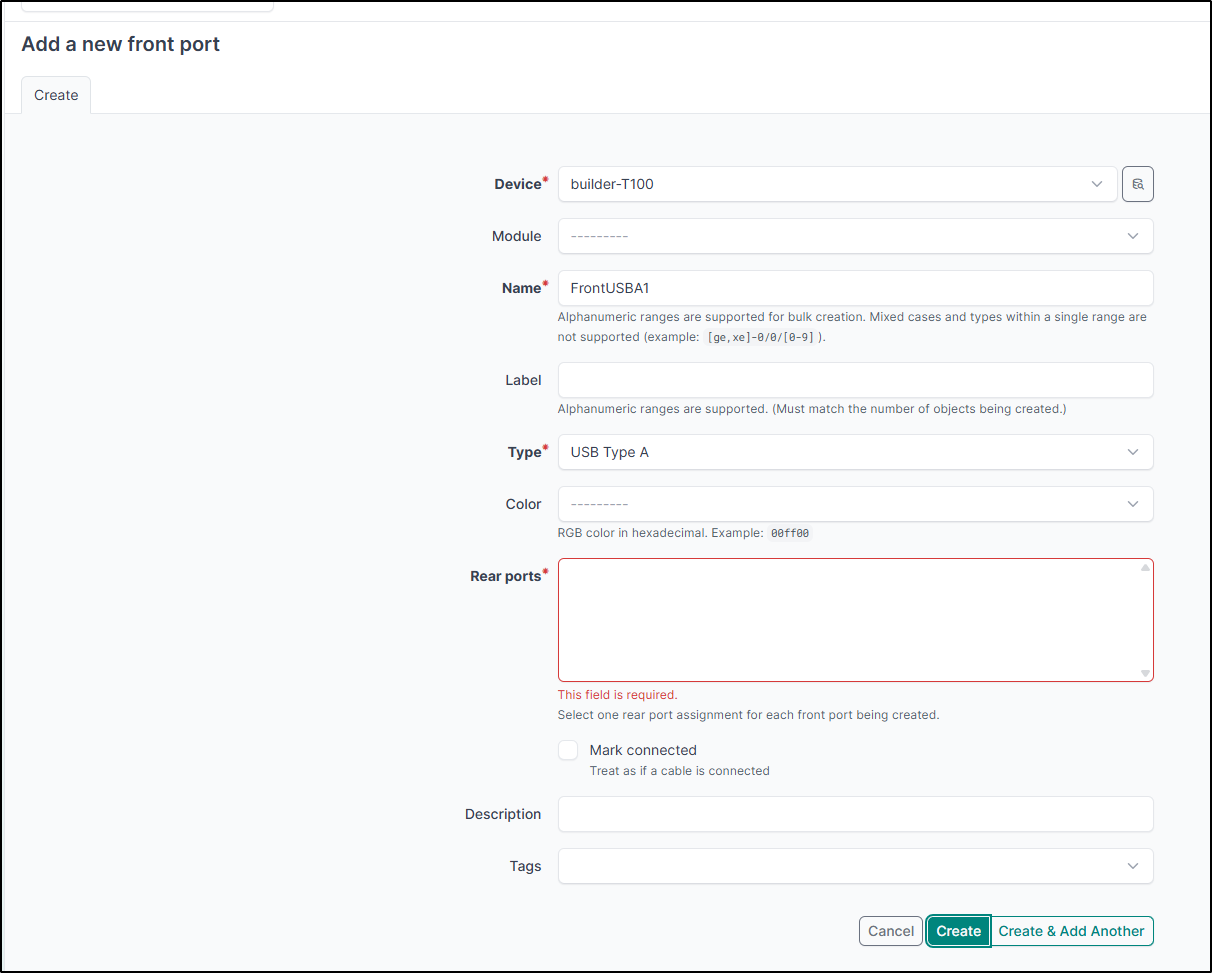

The ports seem geared more toward network adapters. Even though I could add a USB-A type port, it requires a connected rear port

At most I might use it to track my hosts:

Summary

Today we looked at addy.io, which makes it easy to create anonymous emails for signups and other purposes. I don’t often sign up for SaaS services on the first shot, but I found their lowest tier to offer quite a lot of features and I wanted custom domain support. It worked pretty well and highlighted some outstanding issues with my own mail server settings.

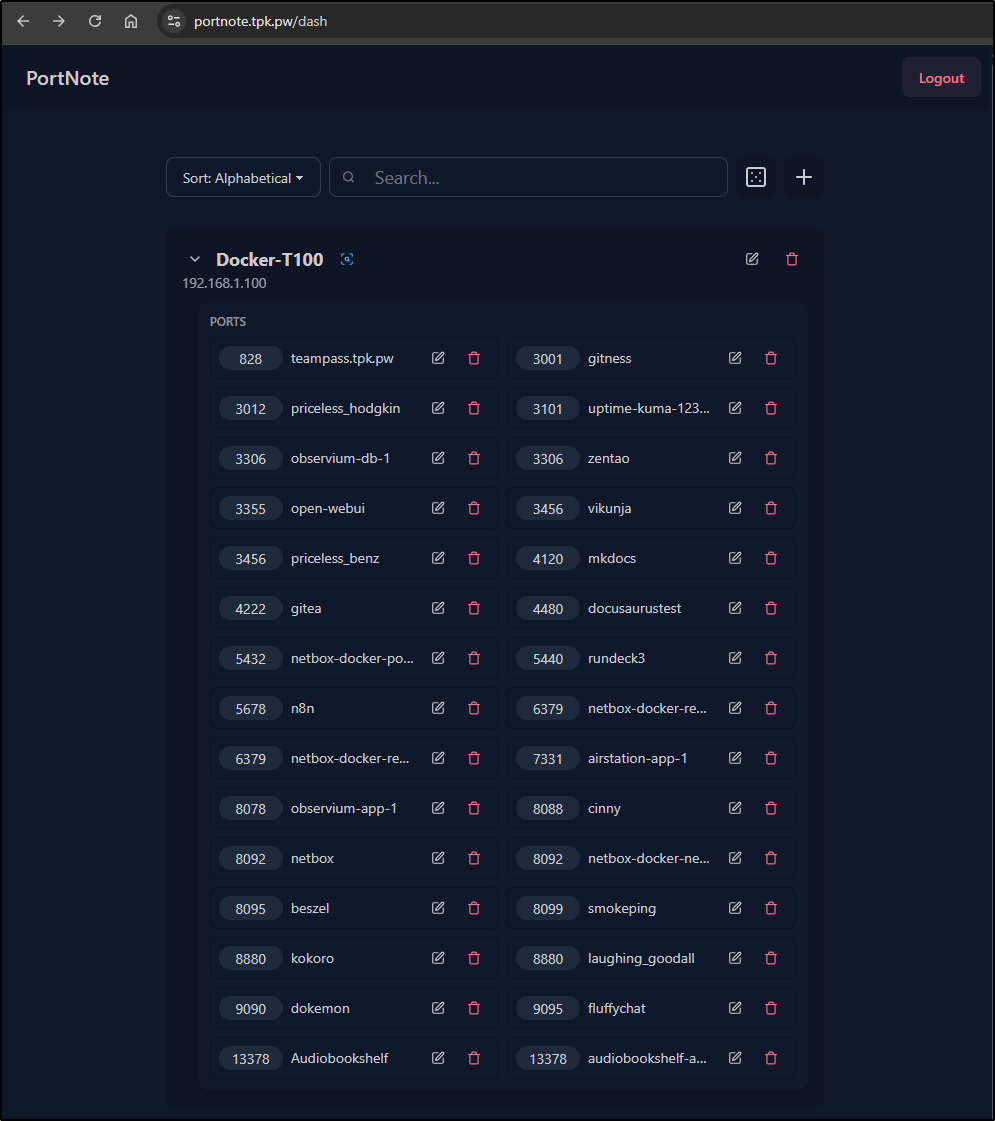

I then tested out PortNote which does a great job of just organizing hosts and ports. It’s pretty basic, but I did find (by watching the console) we could populate it with a bunch of curl statements, so I could automate if I wanted.

e.g.

$ curl -X POST -H "Authorization:Basic bm90bXl1c2VyOjg2NDdmZGp0" --header "Content-Type: application/json" https://portnote.tpk.pw/api/add --data '{ "portNote": "vikunja", "portPort": 3456, "portServer": 1, "type": 1}'

{"id":4,"serverId":1,"note":"vikunja","port":3456}
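That Authorization: Basic value is ordinary HTTP Basic authentication (RFC 7617): the base64 encoding of username:password. Presumably these are the LOGIN_USERNAME and LOGIN_PASSWORD set on the deployment, though that mapping is an assumption, not something confirmed from the PortNote source. Rather than pasting an opaque string, a small hypothetical helper can build the header:

```bash
#!/usr/bin/env bash
# basic_auth_header USER PASSWORD
# Hypothetical helper: prints an HTTP Basic Authorization header,
# i.e. "Authorization: Basic " followed by base64("USER:PASSWORD").
basic_auth_header() {
  printf 'Authorization: Basic %s\n' "$(printf '%s:%s' "$1" "$2" | base64 | tr -d '\n')"
}

# Example (PN_USER and PN_PASS are hypothetical variables):
#   curl -X POST -H "$(basic_auth_header "$PN_USER" "$PN_PASS")" \
#     -H "Content-Type: application/json" \
#     https://portnote.tpk.pw/api/add --data "$payload"
```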

I could even automate this with a quick bash script:

#!/bin/bash
docker ps --format '{{.Names}} {{.Ports}}' | awk '
{
  # $2 is the first port entry, e.g. 0.0.0.0:3000->3000/tcp,
  # Take the part before "->" (the host side of the mapping)
  split($2, ports, "->");
  # The host side may be IP:PORT; split() returns the number of pieces
  n = split(ports[1], hostport, ":");
  # The last piece is the host port (or PORT/proto if nothing is published)
  portproto = hostport[n];
  # Remove any protocol suffix (e.g. /tcp)
  split(portproto, portonly, "/");
  port = portonly[1];
  # Skip containers without a numeric port so we never emit invalid JSON
  if (port ~ /^[0-9]+$/)
    printf("{\"portServer\": 1, \"type\": 1, \"portNote\":\"%s\",\"portPort\":%s}\n", $1, port);
}' > portfile

while read -r line; do
  curl -X POST -H "Authorization:Basic bm90bXl1c2VyOjg2NDdmZGp0" --header "Content-Type: application/json" -d "$line" https://portnote.tpk.pw/api/add || true
done < portfile

Which worked to populate the containers on this host:

I also popped that into my crontab so it would auto-update in the future

builder@builder-T100:~$ crontab -l | tail -n 1

0 4 * * * /home/builder/portnote-load.sh
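One caveat with the awk in that script is that it only looks at the first entry of {{.Ports}}. Also, for a container that exposes but does not publish a port (shown as just 5432/tcp), it records the container port rather than a host port. Below is a sketch of a stricter parser; both function names are hypothetical. It emits one PortNote body per published host port, using the same portServer/type/portNote/portPort fields as the curl example, and skips port ranges.

```bash
#!/usr/bin/env bash
# published_ports PORTS
# PORTS is a docker ps {{.Ports}} value, e.g.
#   "0.0.0.0:8092->8080/tcp, [::]:8092->8080/tcp, 5432/tcp"
# Prints each published host port once, sorted; unpublished ports and
# ranges such as 8000-8002 are skipped.
published_ports() {
  printf '%s\n' "$1" | tr ',' '\n' \
    | awk -F'->' 'NF == 2 { n = split($1, a, ":"); if (a[n] ~ /^[0-9]+$/) print a[n] }' \
    | sort -un
}

# portnote_payloads SERVER_ID
# Reads "NAME PORTS..." lines on stdin and prints one JSON body per
# published port for the PortNote /api/add endpoint.
portnote_payloads() {
  local server="$1" name ports port
  while read -r name ports; do
    for port in $(published_ports "$ports"); do
      printf '{"portServer": %d, "type": 1, "portNote": "%s", "portPort": %d}\n' \
        "$server" "$name" "$port"
    done
  done
}

# Example:
#   docker ps --format '{{.Names}} {{.Ports}}' | portnote_payloads 1 > portfile
```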

Lastly, I tried out NetBox, which has an open-source community edition. However, it’s a bit too detailed for my needs and seems more geared toward managing a NOC.