Published: May 22, 2025 by Isaac Johnson

Today we’ll take a look at Marimo, a Python notebook suite that is a fantastic way to run and edit Python notebooks. I know Jupyter, but Marimo was new to me, so let’s give it a try and see what it can do.

We will also explore Google Gemini 2.5 Pro in several ways, including images with Imagen and video with Veo 2. Lastly, we’ll look at code generation, both in the AI Studio console and integrated into VS Code via the Continue.dev plugin.

Marimo

Install with pip

builder@DESKTOP-QADGF36:~/Workspaces/marimo$ pip install marimo

Collecting marimo

Downloading marimo-0.8.22-py3-none-any.whl (11.8 MB)

|████████████████████████████████| 11.8 MB 2.3 MB/s

Requirement already satisfied: docutils>=0.16.0 in /usr/lib/python3/dist-packages (from marimo) (0.16)

Requirement already satisfied: psutil>=5.0 in /home/builder/.local/lib/python3.8/site-packages (from marimo) (5.9.8)

Collecting ruff

Downloading ruff-0.11.11-py3-none-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (11.5 MB)

|████████████████████████████████| 11.5 MB 79.8 MB/s

Collecting tomlkit>=0.12.0

Downloading tomlkit-0.13.2-py3-none-any.whl (37 kB)

Collecting packaging

Downloading packaging-25.0-py3-none-any.whl (66 kB)

|████████████████████████████████| 66 kB 10.1 MB/s

Collecting pyyaml>=6.0

Downloading PyYAML-6.0.2-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (746 kB)

|████████████████████████████████| 746 kB 45.5 MB/s

Collecting uvicorn>=0.22.0

Downloading uvicorn-0.33.0-py3-none-any.whl (62 kB)

|████████████████████████████████| 62 kB 2.0 MB/s

Collecting jedi>=0.18.0

Downloading jedi-0.19.2-py2.py3-none-any.whl (1.6 MB)

|████████████████████████████████| 1.6 MB 58.4 MB/s

Collecting itsdangerous>=2.0.0

Downloading itsdangerous-2.2.0-py3-none-any.whl (16 kB)

Collecting importlib-resources>=5.10.2; python_version < "3.9"

Downloading importlib_resources-6.4.5-py3-none-any.whl (36 kB)

Collecting click<9,>=8.0

Downloading click-8.1.8-py3-none-any.whl (98 kB)

|████████████████████████████████| 98 kB 14.7 MB/s

Collecting starlette!=0.36.0,>=0.26.1

Downloading starlette-0.44.0-py3-none-any.whl (73 kB)

|████████████████████████████████| 73 kB 4.2 MB/s

Requirement already satisfied: typing-extensions>=4.4.0; python_version < "3.11" in /home/builder/.local/lib/python3.8/site-packages (from marimo) (4.10.0)

Collecting websockets<13.0.0,>=10.0.0

Downloading websockets-12.0-cp38-cp38-manylinux_2_5_x86_64.manylinux1_x86_64.manylinux_2_17_x86_64.manylinux2014_x86_64.whl (130 kB)

|████████████████████████████████| 130 kB 53.2 MB/s

Collecting pymdown-extensions<11,>=9.0

Downloading pymdown_extensions-10.15-py3-none-any.whl (265 kB)

|████████████████████████████████| 265 kB 43.3 MB/s

Collecting pygments<3,>=2.13

Downloading pygments-2.19.1-py3-none-any.whl (1.2 MB)

|████████████████████████████████| 1.2 MB 53.2 MB/s

Requirement already satisfied: markdown<4,>=3.4 in /home/builder/.local/lib/python3.8/site-packages (from marimo) (3.4.1)

Collecting h11>=0.8

Downloading h11-0.16.0-py3-none-any.whl (37 kB)

Collecting parso<0.9.0,>=0.8.4

Downloading parso-0.8.4-py2.py3-none-any.whl (103 kB)

|████████████████████████████████| 103 kB 53.1 MB/s

Collecting zipp>=3.1.0; python_version < "3.10"

Downloading zipp-3.20.2-py3-none-any.whl (9.2 kB)

Collecting anyio<5,>=3.4.0

Downloading anyio-4.5.2-py3-none-any.whl (89 kB)

|████████████████████████████████| 89 kB 17.5 MB/s

Requirement already satisfied: importlib-metadata>=4.4; python_version < "3.10" in /home/builder/.local/lib/python3.8/site-packages (from markdown<4,>=3.4->marimo) (7.0.0)

Requirement already satisfied: idna>=2.8 in /usr/lib/python3/dist-packages (from anyio<5,>=3.4.0->starlette!=0.36.0,>=0.26.1->marimo) (2.8)

Collecting sniffio>=1.1

Downloading sniffio-1.3.1-py3-none-any.whl (10 kB)

Collecting exceptiongroup>=1.0.2; python_version < "3.11"

Downloading exceptiongroup-1.3.0-py3-none-any.whl (16 kB)

ERROR: pymdown-extensions 10.15 has requirement markdown>=3.6, but you'll have markdown 3.4.1 which is incompatible.

Installing collected packages: ruff, tomlkit, packaging, pyyaml, click, h11, uvicorn, parso, jedi, itsdangerous, zipp, importlib-resources, sniffio, exceptiongroup, anyio, starlette, websockets, pymdown-extensions, pygments, marimo

Successfully installed anyio-4.5.2 click-8.1.8 exceptiongroup-1.3.0 h11-0.16.0 importlib-resources-6.4.5 itsdangerous-2.2.0 jedi-0.19.2 marimo-0.8.22 packaging-25.0 parso-0.8.4 pygments-2.19.1 pymdown-extensions-10.15 pyyaml-6.0.2 ruff-0.11.11 sniffio-1.3.1 starlette-0.44.0 tomlkit-0.13.2 uvicorn-0.33.0 websockets-12.0 zipp-3.20.2

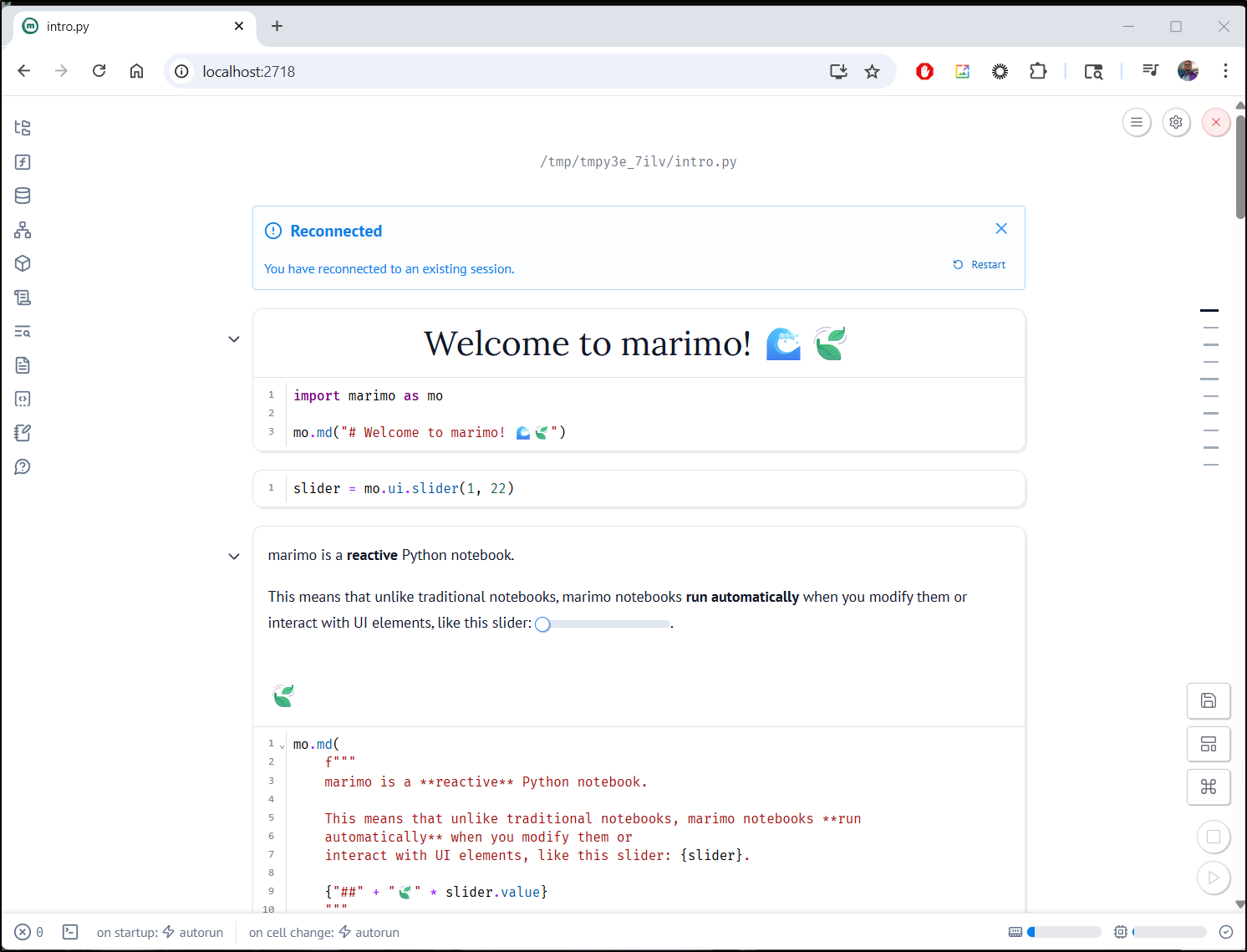

I can now use marimo tutorial intro to launch the tutorial.

That launched Firefox inside WSL, but because Marimo is really just serving on a port, we can also use a browser in Windows:

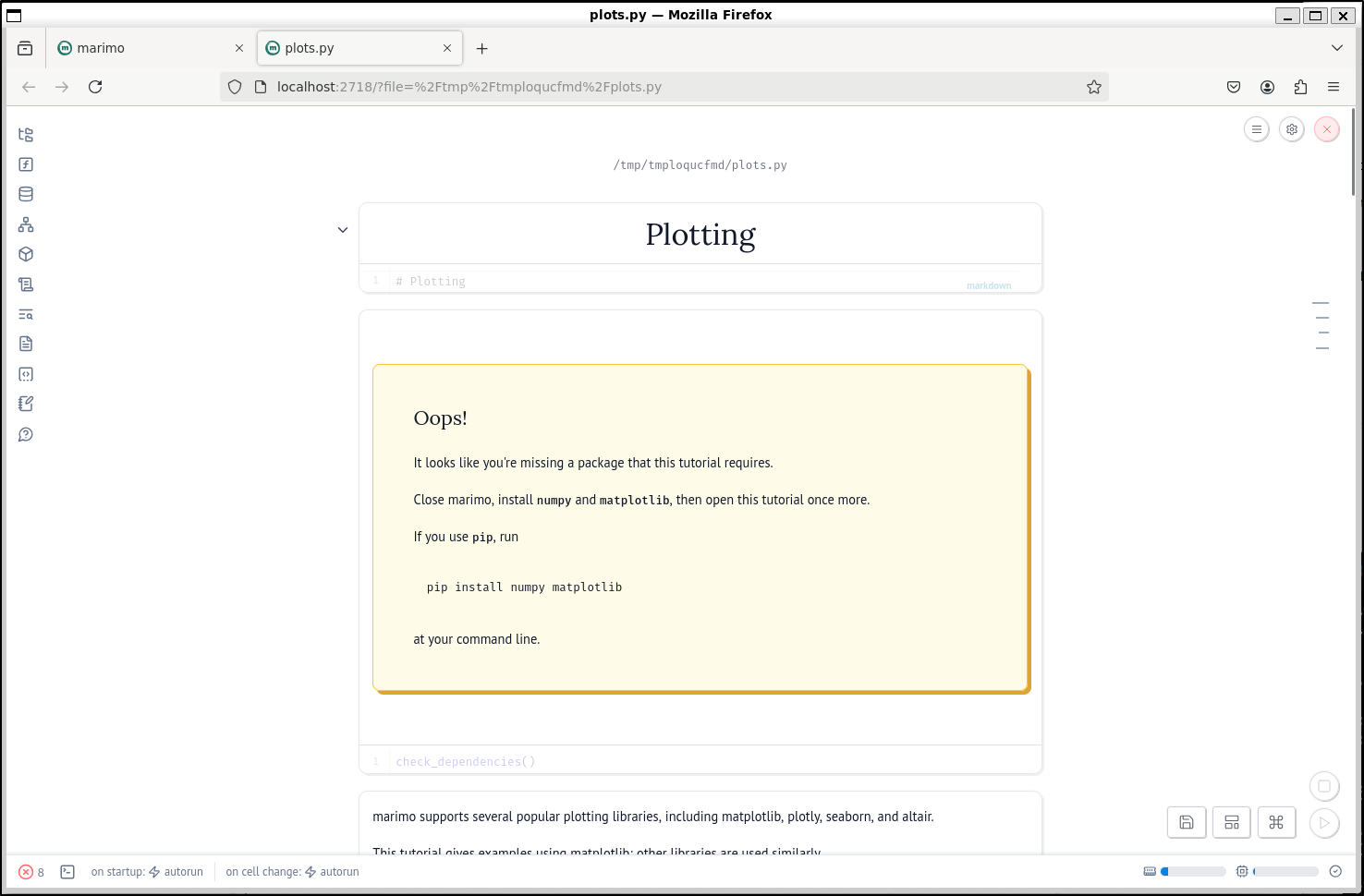

Next I ran marimo edit and tried a plotting tutorial, but found it was missing libraries. Thankfully, it told me what was missing (pip install numpy matplotlib).

I installed the missing libraries:

builder@DESKTOP-QADGF36:~/Workspaces/marimo$ pip install numpy matplotlib

Collecting numpy

Downloading numpy-1.24.4-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (17.3 MB)

|████████████████████████████████| 17.3 MB 2.4 MB/s

Collecting matplotlib

Downloading matplotlib-3.7.5-cp38-cp38-manylinux_2_12_x86_64.manylinux2010_x86_64.whl (9.2 MB)

|████████████████████████████████| 9.2 MB 58.5 MB/s

Requirement already satisfied: importlib-resources>=3.2.0; python_version < "3.10" in /home/builder/.local/lib/python3.8/site-packages (from matplotlib) (6.4.5)

Requirement already satisfied: packaging>=20.0 in /home/builder/.local/lib/python3.8/site-packages (from matplotlib) (25.0)

Requirement already satisfied: pillow>=6.2.0 in /usr/lib/python3/dist-packages (from matplotlib) (7.0.0)

Collecting fonttools>=4.22.0

Downloading fonttools-4.57.0-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (4.7 MB)

|████████████████████████████████| 4.7 MB 63.1 MB/s

Collecting kiwisolver>=1.0.1

Downloading kiwisolver-1.4.7-cp38-cp38-manylinux_2_5_x86_64.manylinux1_x86_64.whl (1.2 MB)

|████████████████████████████████| 1.2 MB 64.1 MB/s

Collecting contourpy>=1.0.1

Downloading contourpy-1.1.1-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (301 kB)

|████████████████████████████████| 301 kB 60.8 MB/s

Collecting pyparsing>=2.3.1

Downloading pyparsing-3.1.4-py3-none-any.whl (104 kB)

|████████████████████████████████| 104 kB 77.5 MB/s

Requirement already satisfied: python-dateutil>=2.7 in /usr/lib/python3/dist-packages (from matplotlib) (2.7.3)

Collecting cycler>=0.10

Downloading cycler-0.12.1-py3-none-any.whl (8.3 kB)

Requirement already satisfied: zipp>=3.1.0; python_version < "3.10" in /home/builder/.local/lib/python3.8/site-packages (from importlib-resources>=3.2.0; python_version < "3.10"->matplotlib) (3.20.2)

Installing collected packages: numpy, fonttools, kiwisolver, contourpy, pyparsing, cycler, matplotlib

Successfully installed contourpy-1.1.1 cycler-0.12.1 fonttools-4.57.0 kiwisolver-1.4.7 matplotlib-3.7.5 numpy-1.24.4 pyparsing-3.1.4
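Before relaunching the notebook, a quick check like the one below can confirm the libraries are now importable. This is a minimal sketch using only the standard library; the helper name missing_modules is my own and not part of Marimo.

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# numpy and matplotlib are the two libraries the plotting tutorial asked for.
required = ["numpy", "matplotlib"]
missing = missing_modules(required)

if missing:
    print("Missing:", " ".join(missing))
    print("Try: pip install " + " ".join(missing))
else:
    print("All required modules are importable.")
```

Run it with the same interpreter that launches Marimo, since a different Python may see a different set of packages.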

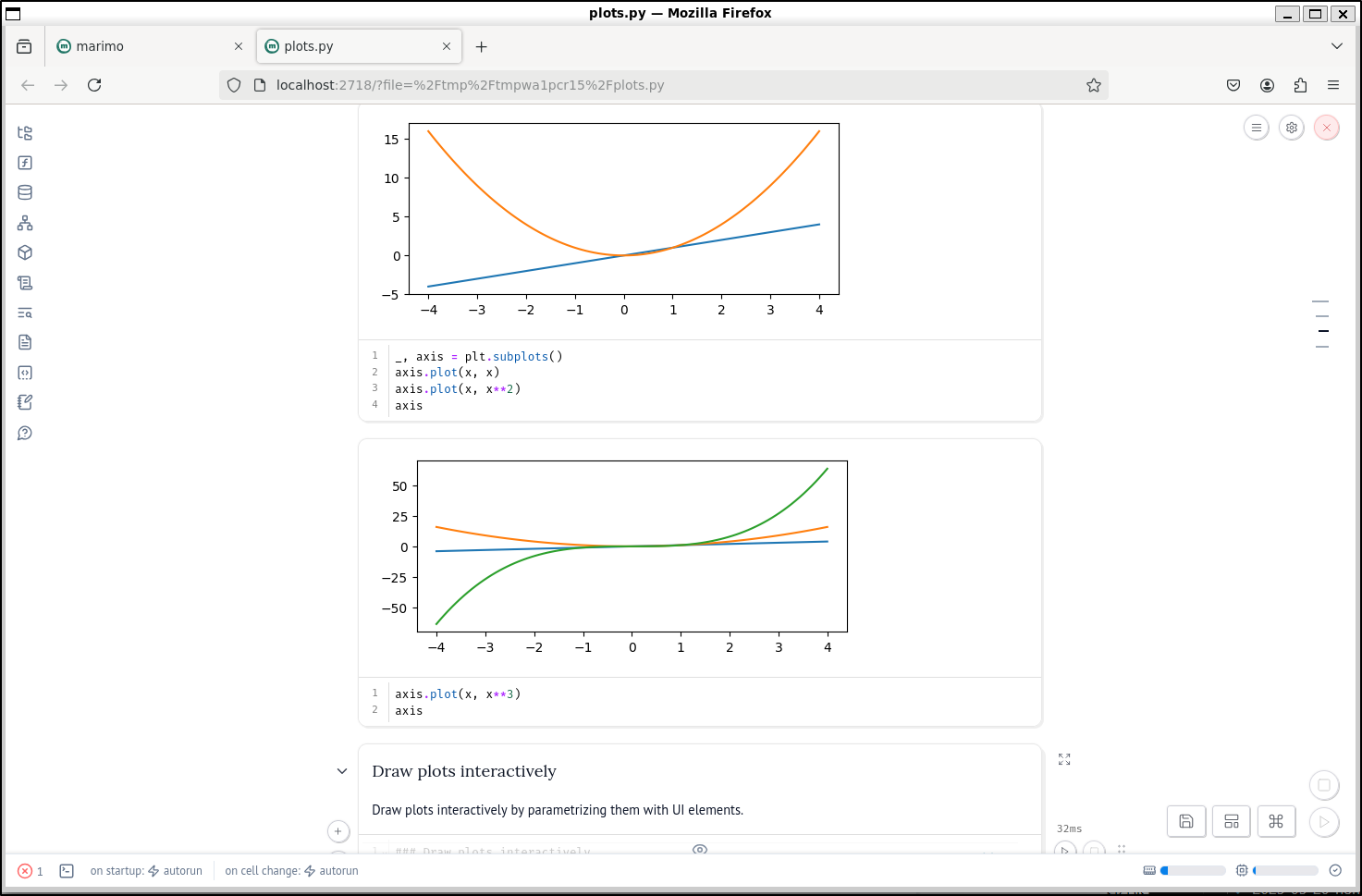

and tried again

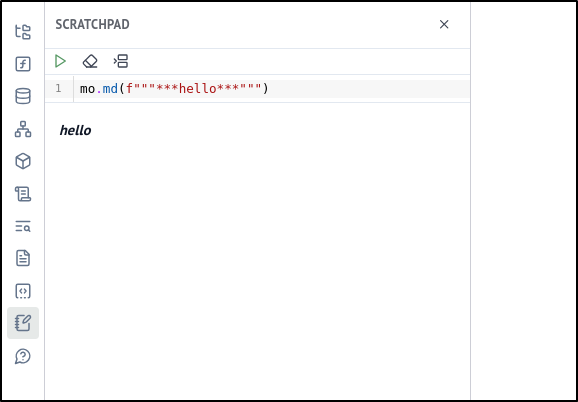

There are a few nice features, like a scratch pad we can use to try out a snippet of code.

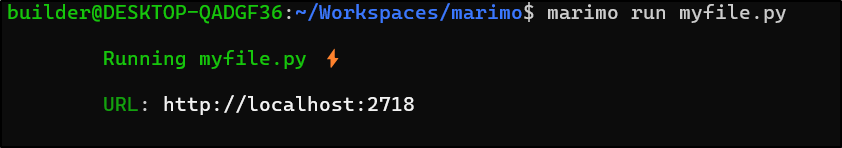

We can use marimo run notebook.py to run a notebook

Here I made a simple “myfile.py”:

$ cat myfile.py

import marimo

__generated_with = "0.8.0"
app = marimo.App()


@app.cell(hide_code=True)
def __(mo):
    mo.md("""# Plotting""")
    import matplotlib.pyplot as plt
    plt.plot([1, 4])
    return


@app.cell
def __():
    import marimo as mo
    return mo,


if __name__ == "__main__":
    app.run()

Running it with ‘marimo run myfile.py’

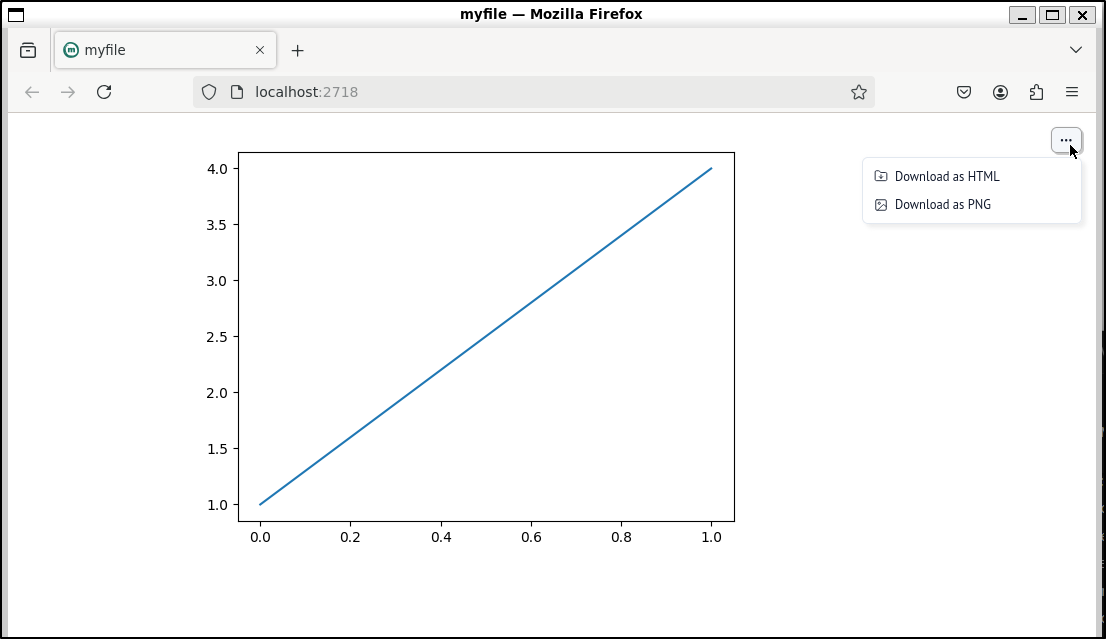

which shows the simple plotting diagram

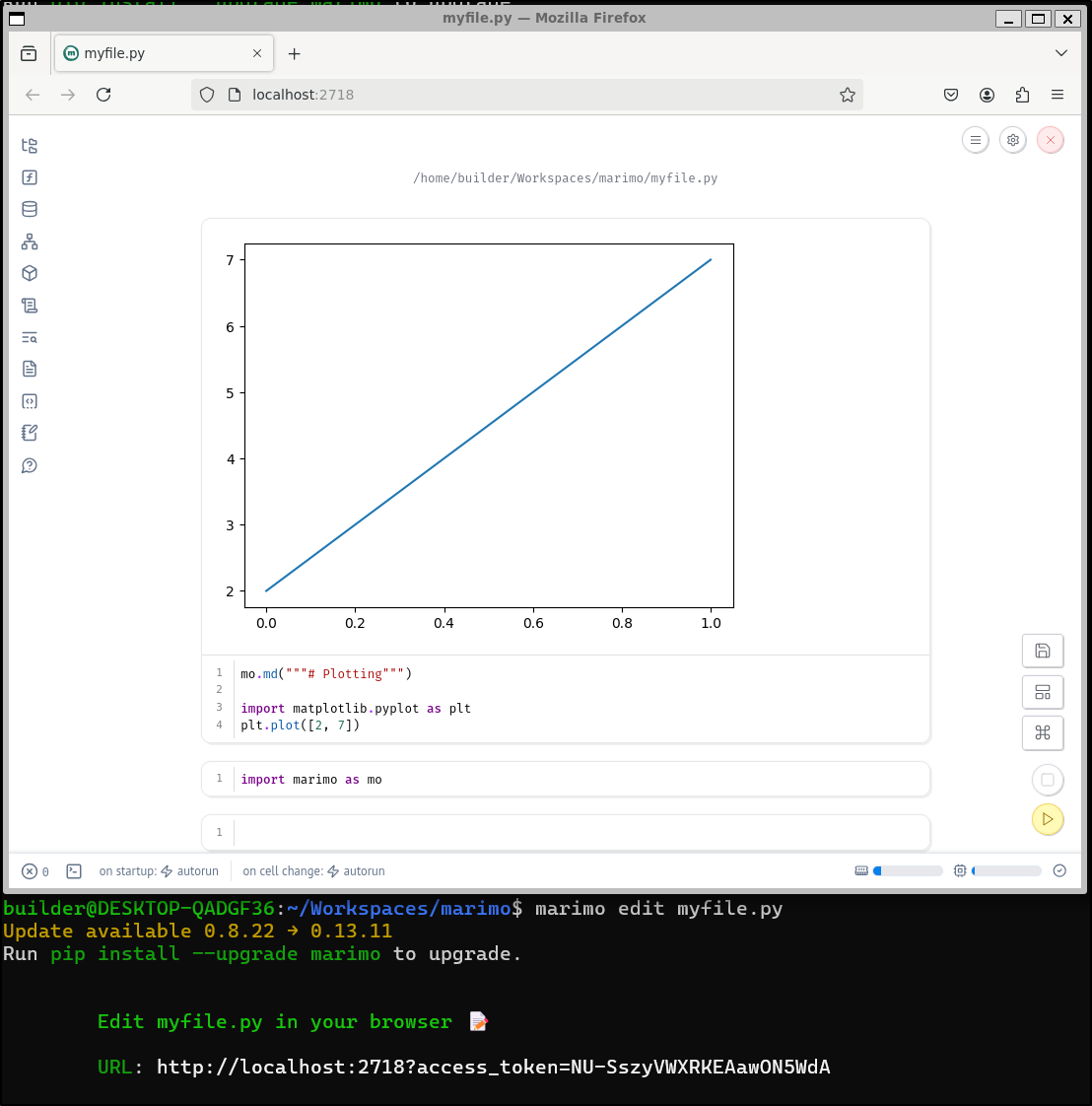
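One detail worth noticing in myfile.py: the plotting cell takes mo as a parameter even though the cell that imports it comes later in the file. Marimo works out the run order from the names each cell defines and reads, not from position in the file. The toy sketch below illustrates that idea with the standard library's graphlib (Python 3.9+). The cell names and the dictionary layout are made up for illustration, and this is not Marimo's actual implementation.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical model of the two cells in myfile.py:
# each cell lists the names it defines and the names it reads.
cells = {
    "plot_cell": {"defines": set(), "reads": {"mo"}},
    "import_cell": {"defines": {"mo"}, "reads": set()},
}


def execution_order(cells):
    """Order cells so every name is defined before a cell reads it."""
    # Map each defined name to the cell that defines it.
    owner = {
        name: cell
        for cell, info in cells.items()
        for name in info["defines"]
    }
    # A cell depends on whichever cells define the names it reads.
    graph = {
        cell: {owner[name] for name in info["reads"] if name in owner}
        for cell, info in cells.items()
    }
    return list(TopologicalSorter(graph).static_order())


order = execution_order(cells)
print(order)  # ['import_cell', 'plot_cell']
```

The import cell runs first despite being listed second, which mirrors why the file above works as written.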

And of course we can use marimo edit to interactively change things

AI

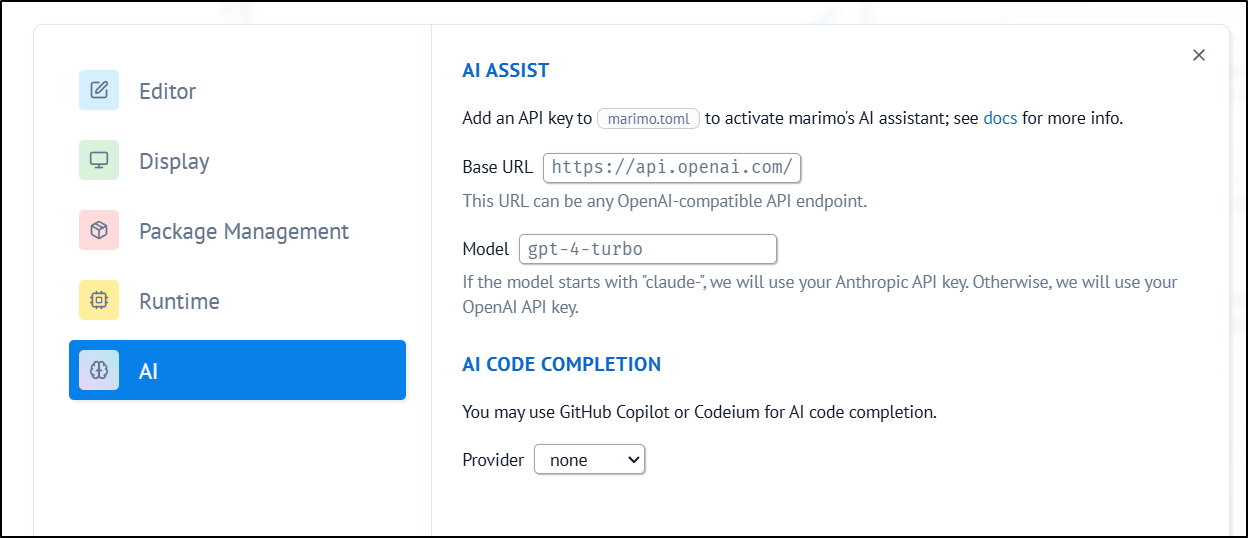

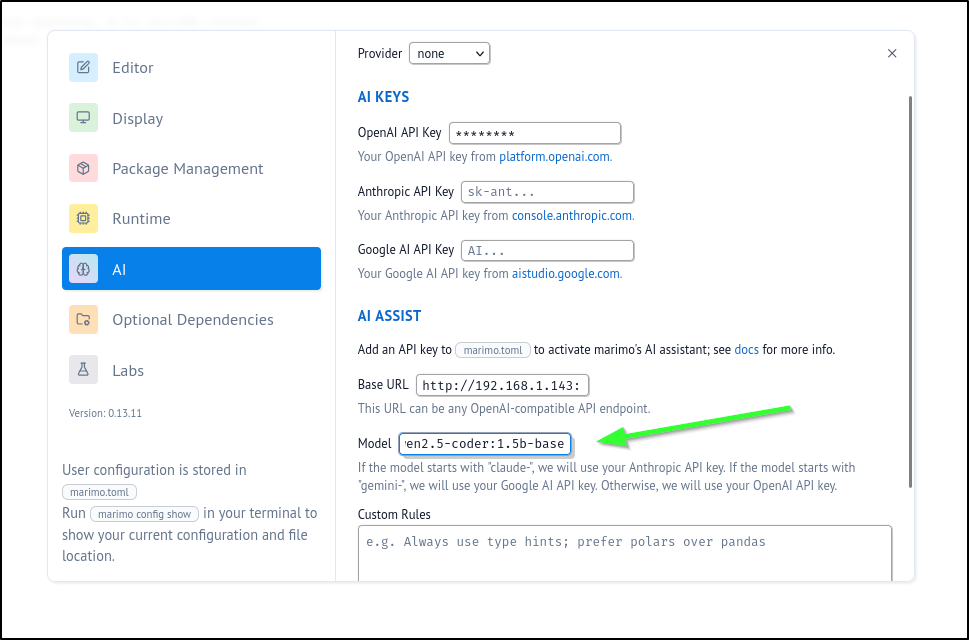

In this version, Marimo’s AI integration is specific to OpenAI (note: once we upgrade versions, we will see it extends beyond just OpenAI). This means we could use OpenAI or Azure to set that up:

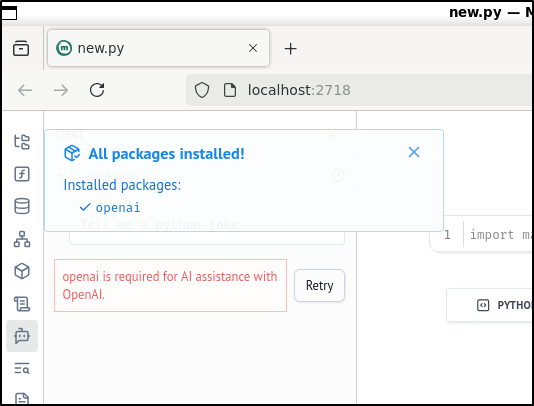

Let’s set that up with OpenAI first. To do that, we need to first pip install openai

builder@DESKTOP-QADGF36:~/Workspaces/marimo$ pip install openai

Collecting openai

Downloading openai-1.82.0-py3-none-any.whl (720 kB)

|████████████████████████████████| 720 kB 2.2 MB/s

Collecting jiter<1,>=0.4.0

Downloading jiter-0.9.1-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (344 kB)

|████████████████████████████████| 344 kB 55.4 MB/s

Collecting pydantic<3,>=1.9.0

Downloading pydantic-2.10.6-py3-none-any.whl (431 kB)

|████████████████████████████████| 431 kB 69.7 MB/s

Requirement already satisfied: sniffio in /home/builder/.local/lib/python3.8/site-packages (from openai) (1.3.1)

Collecting tqdm>4

Downloading tqdm-4.67.1-py3-none-any.whl (78 kB)

|████████████████████████████████| 78 kB 13.5 MB/s

Collecting distro<2,>=1.7.0

Downloading distro-1.9.0-py3-none-any.whl (20 kB)

Requirement already satisfied: anyio<5,>=3.5.0 in /home/builder/.local/lib/python3.8/site-packages (from openai) (4.5.2)

Collecting httpx<1,>=0.23.0

Downloading httpx-0.28.1-py3-none-any.whl (73 kB)

|████████████████████████████████| 73 kB 3.9 MB/s

Collecting typing-extensions<5,>=4.11

Downloading typing_extensions-4.13.2-py3-none-any.whl (45 kB)

|████████████████████████████████| 45 kB 7.6 MB/s

Collecting annotated-types>=0.6.0

Downloading annotated_types-0.7.0-py3-none-any.whl (13 kB)

Collecting pydantic-core==2.27.2

Downloading pydantic_core-2.27.2-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (2.0 MB)

|████████████████████████████████| 2.0 MB 2.9 MB/s

Requirement already satisfied: exceptiongroup>=1.0.2; python_version < "3.11" in /home/builder/.local/lib/python3.8/site-packages (from anyio<5,>=3.5.0->openai) (1.3.0)

Requirement already satisfied: idna>=2.8 in /usr/lib/python3/dist-packages (from anyio<5,>=3.5.0->openai) (2.8)

Requirement already satisfied: certifi in /usr/lib/python3/dist-packages (from httpx<1,>=0.23.0->openai) (2019.11.28)

Collecting httpcore==1.*

Downloading httpcore-1.0.9-py3-none-any.whl (78 kB)

|████████████████████████████████| 78 kB 11.8 MB/s

Requirement already satisfied: h11>=0.16 in /home/builder/.local/lib/python3.8/site-packages (from httpcore==1.*->httpx<1,>=0.23.0->openai) (0.16.0)

Installing collected packages: jiter, typing-extensions, annotated-types, pydantic-core, pydantic, tqdm, distro, httpcore, httpx, openai

Attempting uninstall: typing-extensions

Found existing installation: typing-extensions 4.10.0

Uninstalling typing-extensions-4.10.0:

Successfully uninstalled typing-extensions-4.10.0

Successfully installed annotated-types-0.7.0 distro-1.9.0 httpcore-1.0.9 httpx-0.28.1 jiter-0.9.1 openai-1.82.0 pydantic-2.10.6 pydantic-core-2.27.2 tqdm-4.67.1 typing-extensions-4.13.2

I don’t know where my Marimo config is located, so I’ll use marimo config show to find it

$ marimo config show

User config from /home/builder/.config/marimo/marimo.toml

[completion]

activate_on_typing = true

copilot = false

[display]

theme = "light"

code_editor_font_size = 14

cell_output = "above"

default_width = "medium"

dataframes = "rich"

[formatting]

line_length = 79

[keymap]

preset = "default"

[keymap.overrides]

[runtime]

auto_instantiate = true

auto_reload = "off"

on_cell_change = "autorun"

[save]

autosave = "after_delay"

autosave_delay = 1000

format_on_save = false

[package_management]

manager = "pip"

[server]

browser = "default"

follow_symlink = false

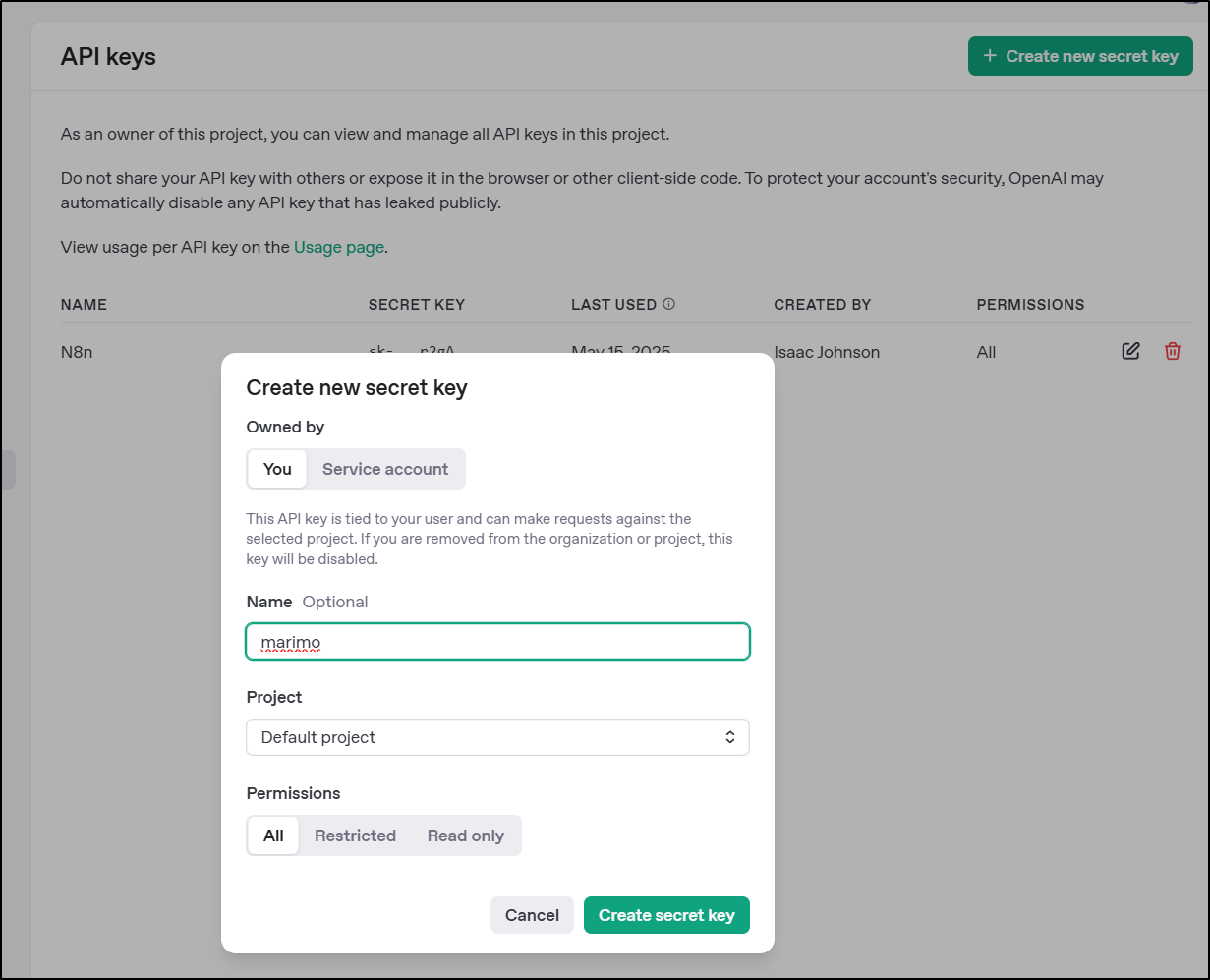

I can now go to https://platform.openai.com/api-keys to generate a fresh OpenAI API key

I then set the value in the file

$ cat /home/builder/.config/marimo/marimo.toml

[ai.open_ai]

# Get your API key from https://platform.openai.com/account/api-keys

api_key = "sk-proj-asdfasdasdfasdfasdfsadfasdasdfasdfasdfasdfasdf"

# Choose a model, we recommend "gpt-4-turbo"

model = "gpt-4-turbo"

# Available models: gpt-4-turbo-preview, gpt-4, gpt-3.5-turbo

# See https://platform.openai.com/docs/models for all available models

# Change the base_url if you are using a different OpenAI-compatible API

base_url = "https://api.openai.com/v1"

I did not see the AI plugin, and I think that’s because I have been content to keep this machine on Python 3.8 for a long time. Upgrading with pip did not bring Marimo to a newer version, yet I kept seeing this message on launch:

builder@DESKTOP-QADGF36:~/Workspaces/marimo$ marimo edit new.py

Update available 0.8.22 → 0.13.11

Run pip install --upgrade marimo to upgrade.
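As an aside, the jump from 0.8.22 to 0.13.11 is a good reminder that version strings do not sort like text. The sketch below compares them as tuples of integers. It assumes plain dotted numeric releases only; for anything with pre-release tags, the packaging library's Version class is the safer tool.

```python
def parse_version(text):
    """Turn a dotted release string such as '0.13.11' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


installed = "0.8.22"
latest = "0.13.11"

# Text comparison goes character by character, so "8" sorts after "1".
print(installed < latest)  # False

# Comparing the numeric parts gives the expected answer.
print(parse_version(installed) < parse_version(latest))  # True
```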

I’ll install Python 3.9

sudo apt-get update

sudo apt-get install python3.9 python3.9-venv python3.9-distutils

I’ll now create a Python 3.9 virtual environment (venv), which just means my shell will be set up to see python and pip as the Python 3.9 versions, not my system 3.8

builder@DESKTOP-QADGF36:~/Workspaces/marimo$ python3.9 -m venv ~/marimo-venv

builder@DESKTOP-QADGF36:~/Workspaces/marimo$ source ~/marimo-venv/bin/activate

(marimo-venv) builder@DESKTOP-QADGF36:~/Workspaces/marimo$
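To double-check that the activated shell really picks up the new interpreter, a small script like this can be run with python inside the venv. It is a sketch that treats 3.9 as the minimum only because that is the version used here; the helper names are my own.

```python
import sys


def in_virtualenv():
    """True when the running interpreter belongs to a venv."""
    # Inside a venv, sys.prefix points at the venv directory while
    # sys.base_prefix still points at the base installation.
    return sys.prefix != sys.base_prefix


def meets_minimum(version_info, minimum=(3, 9)):
    """Compare the (major, minor) part of a version against a minimum."""
    return tuple(version_info[:2]) >= tuple(minimum)


print("Interpreter:", sys.executable)
print("Version:", ".".join(str(n) for n in sys.version_info[:3]))
print("In venv:", in_virtualenv())
print("At least 3.9:", meets_minimum(sys.version_info))
```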

I’ll now update pip and install marimo again

$ pip install --upgrade pip

$ pip install --upgrade marimo

Now we can see we are at a newer version

$ marimo --version

0.13.11

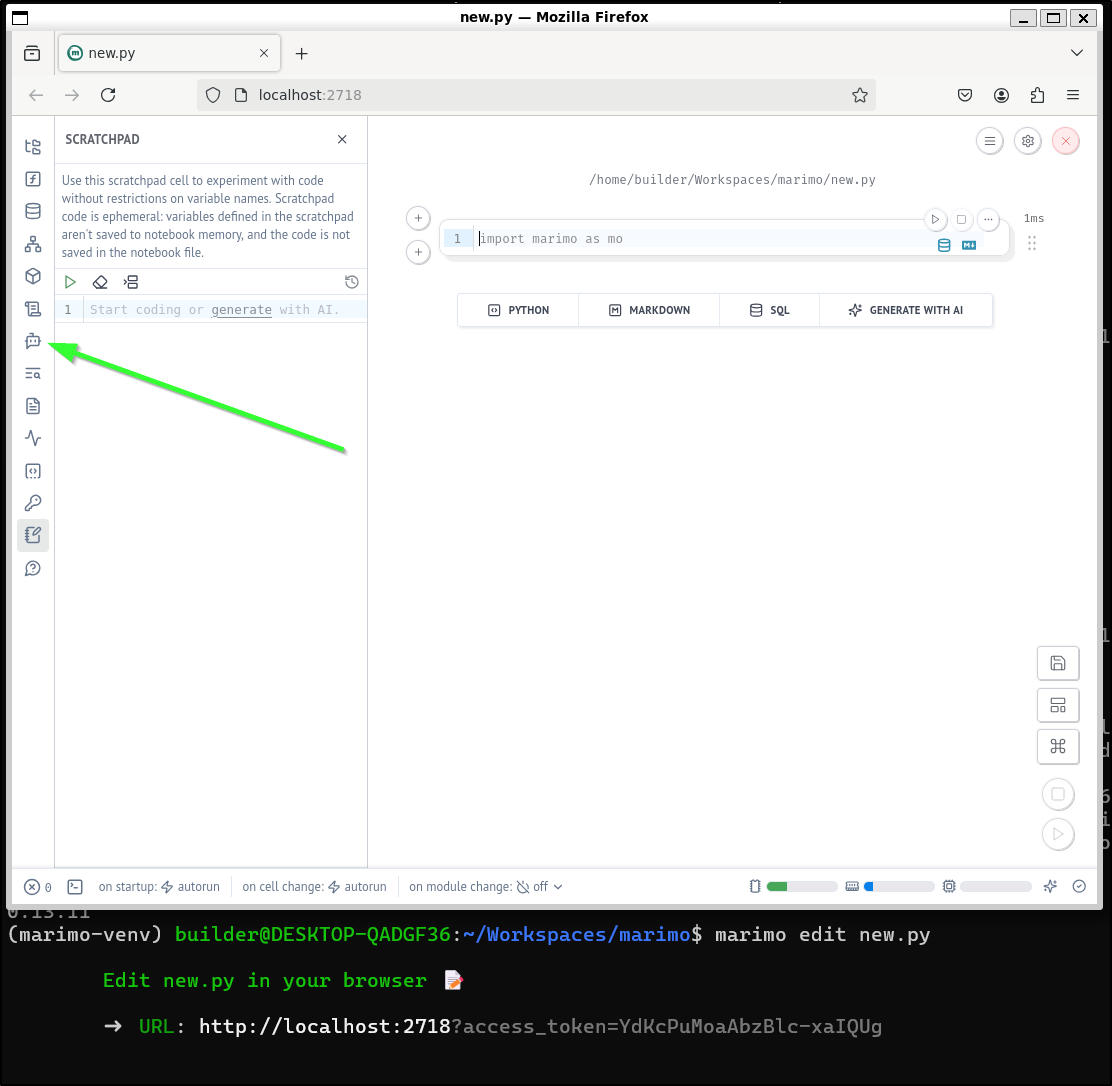

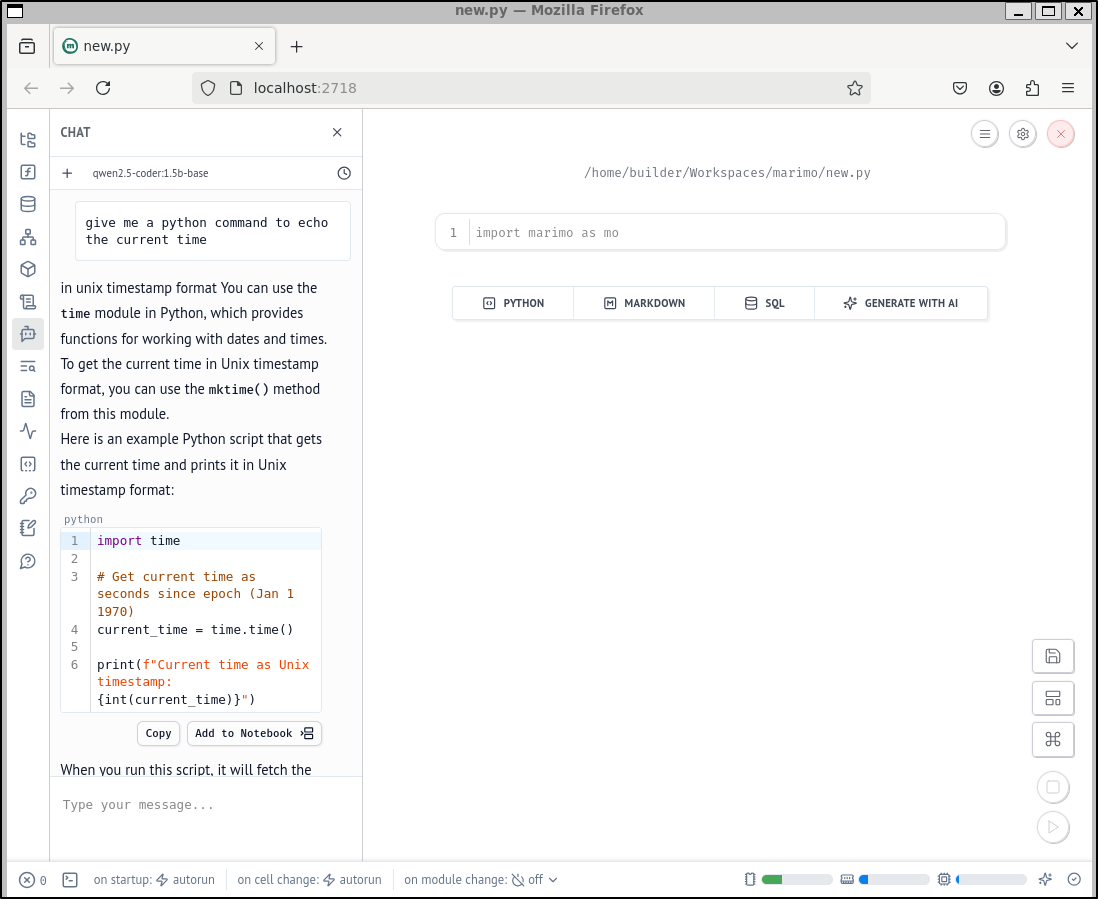

Now when I edit a file, I can see the AI icon that was absent before

I still needed to install the OpenAI Python package in the new venv, but this time Marimo prompted me and installed it via the UI

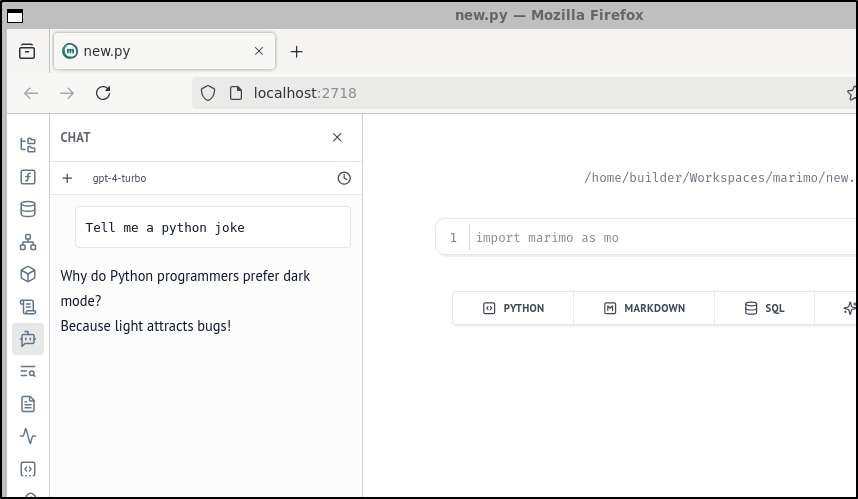

And it works just dandy

However, that costs me money. What about using my Ollama box instead?

I can update the config with the URL:

$ cat ~/.config/marimo/marimo.toml

[ai.open_ai]

api_key = "ollama" # This is not used, but required

model = "deepseek-r1:7b" # or another model from `ollama ls`

base_url = "http://192.168.1.143:11434/v1"

And this time we see it use my local LLM, which is slower than OpenAI but does work.
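If the assistant does not respond, it can help to confirm the base_url is reachable from outside Marimo. The sketch below lists model ids from an OpenAI-compatible server using only the standard library. It assumes the server exposes the OpenAI-style /models route under the same base_url, and the function names are hypothetical.

```python
import json
import urllib.request


def models_url(base_url):
    """Build the OpenAI-style model listing URL from a base_url."""
    return base_url.rstrip("/") + "/models"


def parse_model_ids(payload):
    """Pull the model ids out of an OpenAI-style listing response."""
    return [entry["id"] for entry in payload.get("data", [])]


def list_models(base_url, api_key="ollama", timeout=5.0):
    """Query the server and return the ids of the models it reports."""
    request = urllib.request.Request(
        models_url(base_url),
        # The key is a placeholder here, matching the config above.
        headers={"Authorization": f"Bearer {api_key}"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_model_ids(json.load(response))
```

Calling list_models("http://192.168.1.143:11434/v1") from the machine running Marimo should return names similar to those in ollama ls, if the host and port are reachable.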

I actually realized that my models were pretty out of date

builder@bosgamerz9:~$ ollama list

NAME ID SIZE MODIFIED

deepseek-coder:6.7b ce298d984115 3.8 GB 3 months ago

codellama:7b-code 8df0a30bb1e6 3.8 GB 3 months ago

nomic-embed-text:latest 0a109f422b47 274 MB 3 months ago

qwen2.5-coder:1.5b-base 02e0f2817a89 986 MB 3 months ago

deepseek-r1:7b 0a8c26691023 4.7 GB 3 months ago

deepseek-r1:14b ea35dfe18182 9.0 GB 3 months ago

deepseek-coder:6.7b-base 585a5cb3b219 3.8 GB 3 months ago

qwen2.5-coder:1.5b 6d3abb8d2d53 986 MB 4 months ago

llama3.1:8b 46e0c10c039e 4.9 GB 4 months ago

mistral:latest f974a74358d6 4.1 GB 4 months ago

This was a good time to refresh them

builder@bosgamerz9:~$ ollama pull mistral:latest

pulling manifest

pulling ff82381e2bea... 100% ▕████████████████████████████████████████████████████████▏ 4.1 GB

pulling 43070e2d4e53... 100% ▕████████████████████████████████████████████████████████▏ 11 KB

pulling 491dfa501e59... 100% ▕████████████████████████████████████████████████████████▏ 801 B

pulling ed11eda7790d... 100% ▕████████████████████████████████████████████████████████▏ 30 B

pulling 42347cd80dc8... 100% ▕████████████████████████████████████████████████████████▏ 485 B

verifying sha256 digest

writing manifest

success

Another issue is that my Ollama was too old to pull some models

builder@bosgamerz9:~$ ollama -v

ollama version is 0.5.4

builder@bosgamerz9:~$ ollama pull gemma3:4b

pulling manifest

Error: pull model manifest: 412:

The model you are attempting to pull requires a newer version of Ollama.

Please download the latest version at:

https://ollama.com/download

That is a quick fix

builder@bosgamerz9:~$ curl -fsSL https://ollama.com/install.sh | sh

>>> Cleaning up old version at /usr/local/lib/ollama

>>> Installing ollama to /usr/local

>>> Downloading Linux amd64 bundle

######################################################################## 100.0%

>>> Adding ollama user to render group...

>>> Adding ollama user to video group...

>>> Adding current user to ollama group...

>>> Creating ollama systemd service...

>>> Enabling and starting ollama service...

>>> NVIDIA GPU installed.

builder@bosgamerz9:~$ ollama -v

ollama version is 0.7.1

Back in Marimo I can change the model in the UI just as easily as the toml file

which works just fine

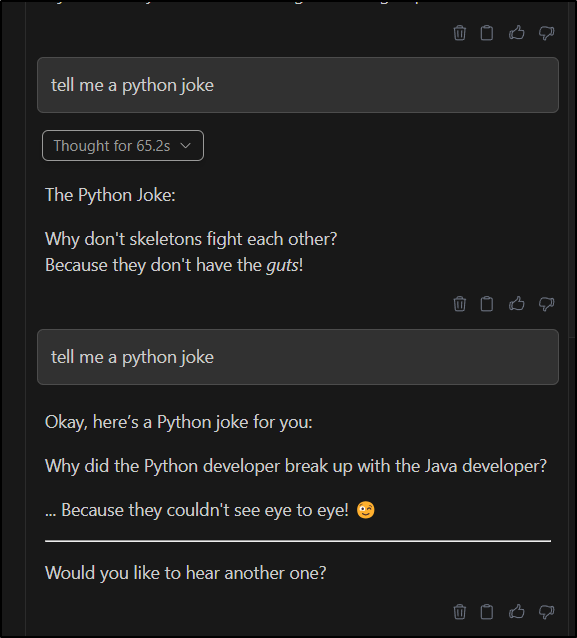

I did notice that long queries time out in Marimo.

But the same queries work just fine via the Continue.dev plugin in VS Code, which tells me it’s just a timeout issue with my smaller hardware.

Obviously, if you are running Ollama locally and have a strong 3D card, you won’t have the same timeouts.

I always think of using local Ollama as a cost-versus-time equation, as my system will take a bit longer than well-provisioned SaaS providers like Anthropic and Google.
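To see how long a prompt really takes on local hardware, one option is to send it directly to the OpenAI-compatible chat endpoint with a generous client-side timeout and time the round trip. This is a sketch with standard-library calls only; the 300-second default and the function names are my own assumptions, not values taken from Marimo or Continue.dev.

```python
import json
import time
import urllib.request


def build_chat_request(base_url, model, prompt, api_key="ollama"):
    """Build a non-streaming OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
    )


def timed_chat(base_url, model, prompt, timeout=300.0):
    """Send the prompt and return (reply_text, elapsed_seconds)."""
    request = build_chat_request(base_url, model, prompt)
    started = time.monotonic()
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    elapsed = time.monotonic() - started
    return payload["choices"][0]["message"]["content"], elapsed
```

For example, timed_chat("http://192.168.1.143:11434/v1", "deepseek-r1:7b", "Explain list comprehensions") returns the reply along with the seconds it took, which makes it easy to compare against whatever timeout a client enforces.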

Google AI

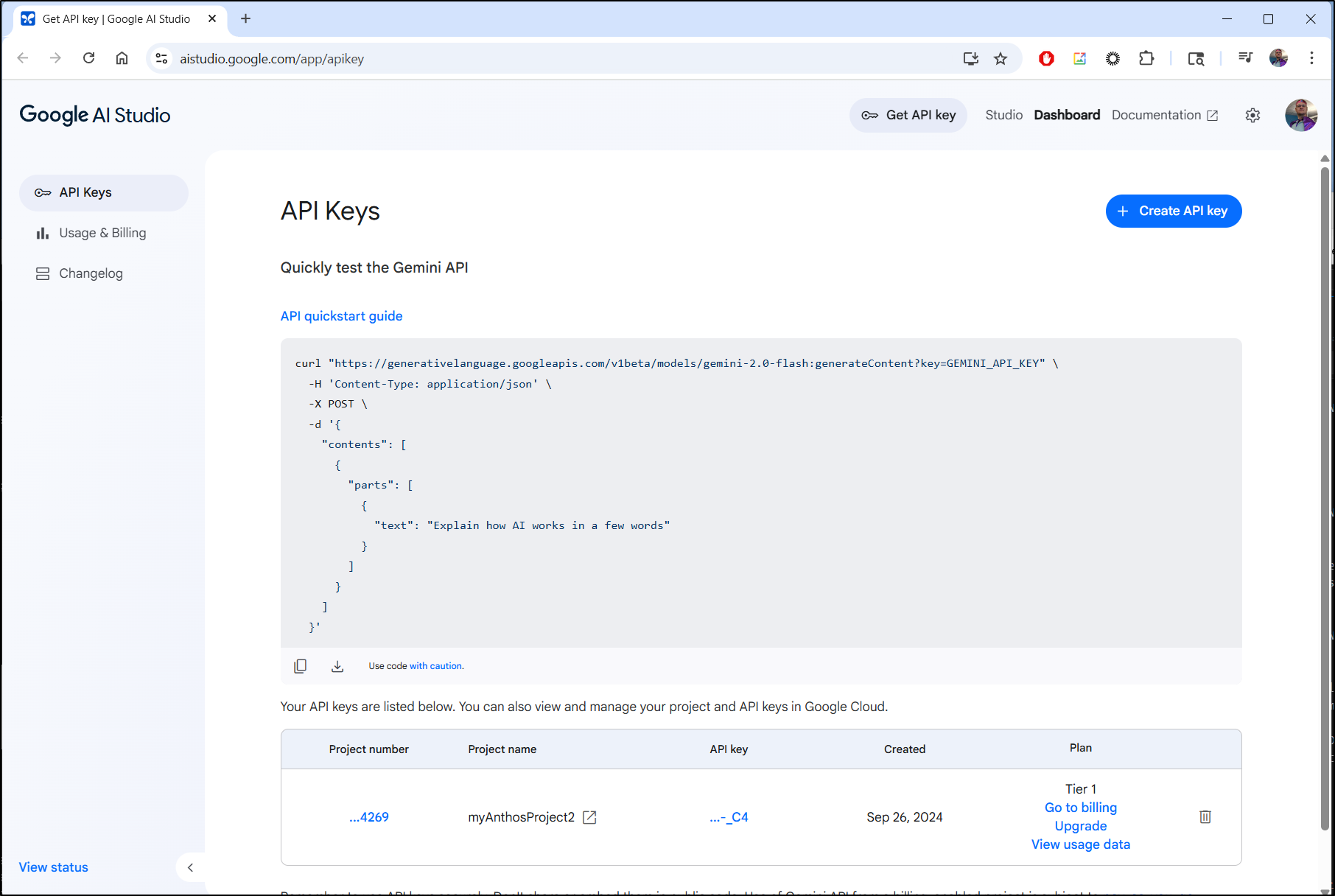

Let’s use Google AI this time.

We can go to https://aistudio.google.com/app/apikey to create a new API key if necessary

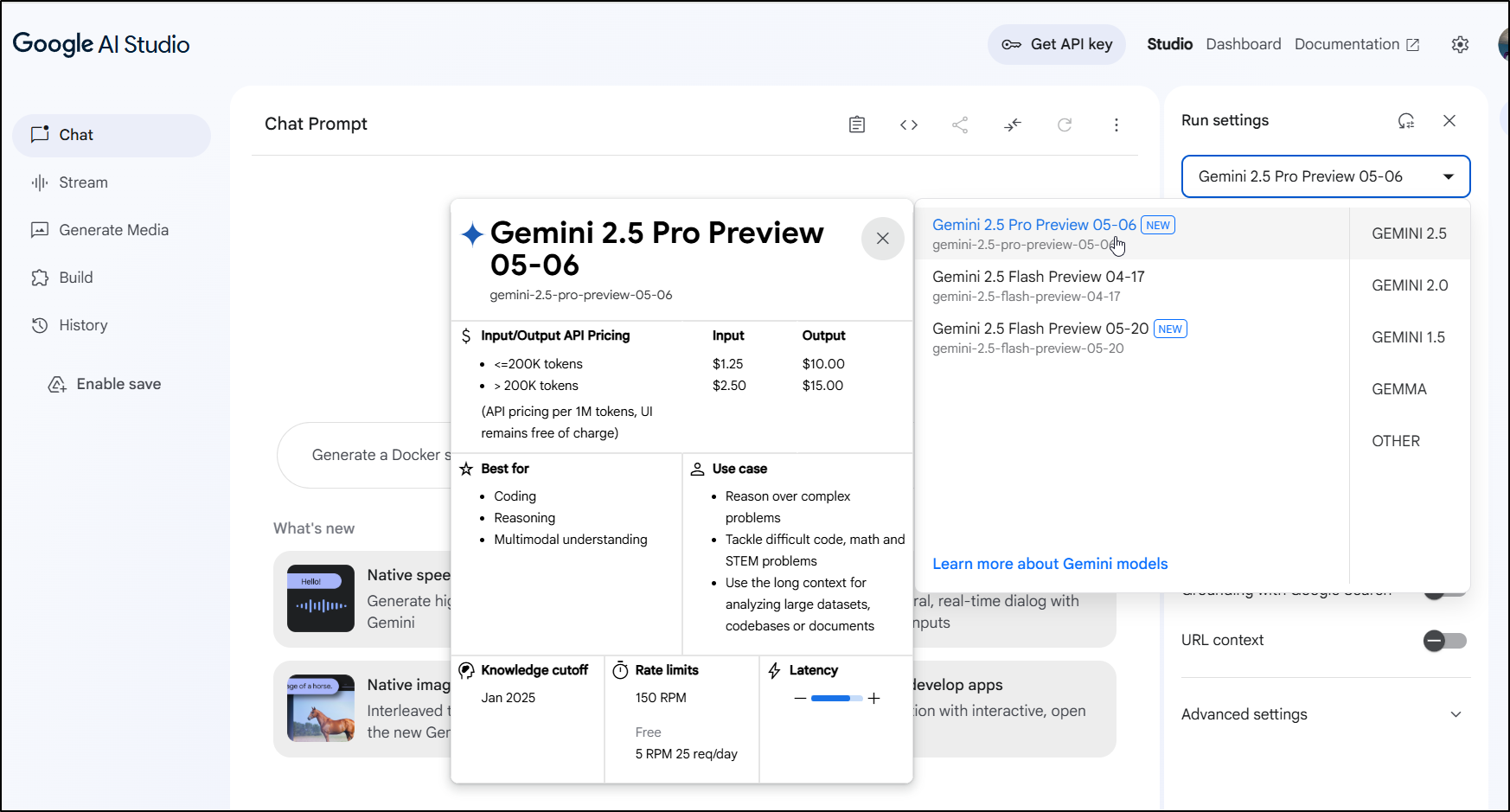

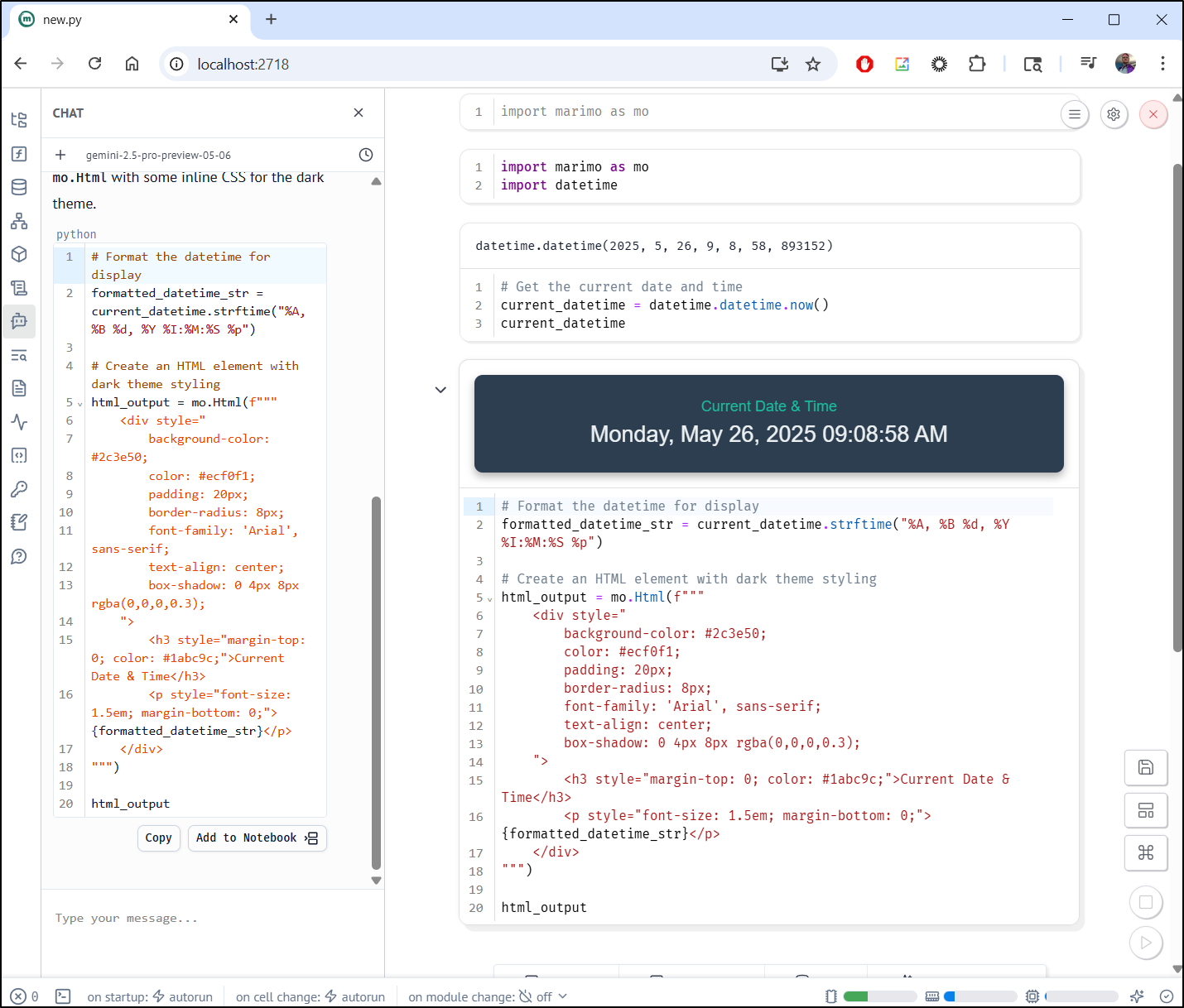

Here I’ll try the latest Gemini 2.5 Pro model

I add the following block in my toml file

[ai.open_ai]

model = "gemini-2.5-pro-preview-05-06"

# or any model from https://ai.google.dev/gemini-api/docs/models/gemini

[ai.google]

api_key = "AIasdfasdfasdfsadfasdfasdsadf"
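To sanity-check a new key and model name outside of Marimo, a direct call to the Gemini REST API is one option. The sketch below builds a generateContent request with the standard library. The v1beta endpoint path and the x-goog-api-key header reflect my understanding of the public API and should be checked against Google's current docs; the function names are hypothetical.

```python
import json
import urllib.request

GEMINI_BASE = "https://generativelanguage.googleapis.com/v1beta"


def build_gemini_request(model, prompt, api_key):
    """Build a generateContent request for a single text prompt."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        f"{GEMINI_BASE}/models/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "x-goog-api-key": api_key,
        },
    )


def extract_text(payload):
    """Join the text parts of the first candidate in a response."""
    parts = payload["candidates"][0]["content"]["parts"]
    return "".join(part.get("text", "") for part in parts)


def ask_gemini(model, prompt, api_key, timeout=60.0):
    """Send the prompt and return the model's text reply."""
    request = build_gemini_request(model, prompt, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_text(json.load(response))
```

A call such as ask_gemini("gemini-2.5-pro-preview-05-06", "Say hello", key) should fail fast with an HTTP error if the key or model name is wrong.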

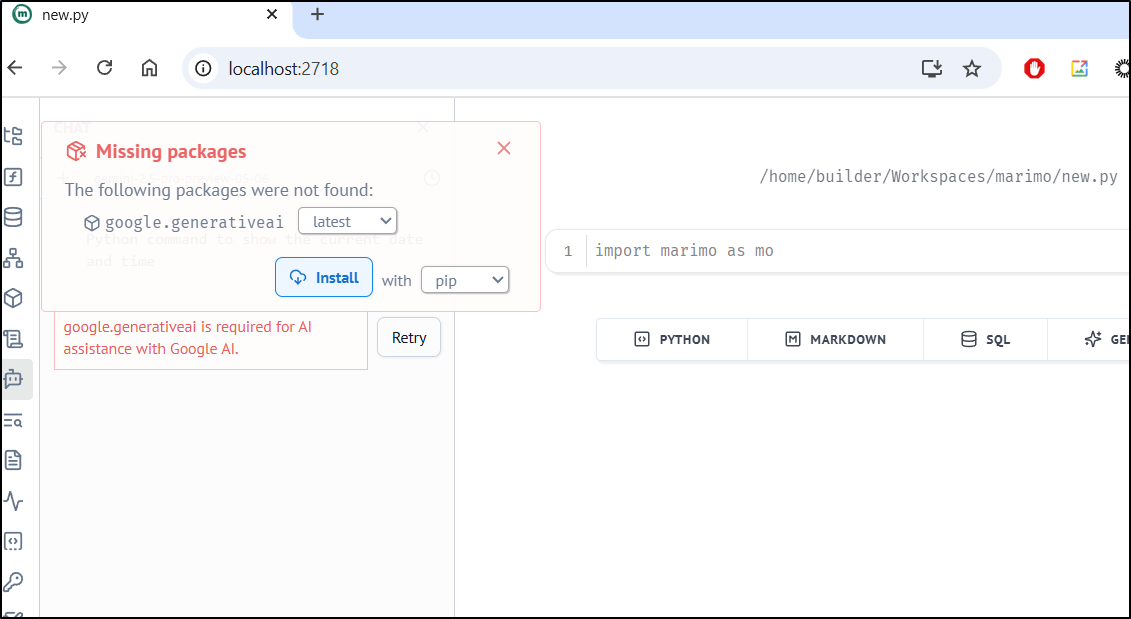

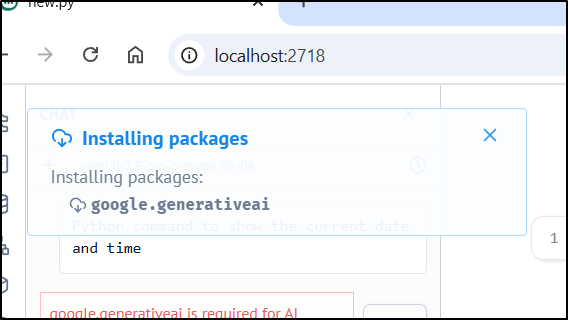

The first time I tried to use it, it prompted me to install missing packages

which it installed just fine

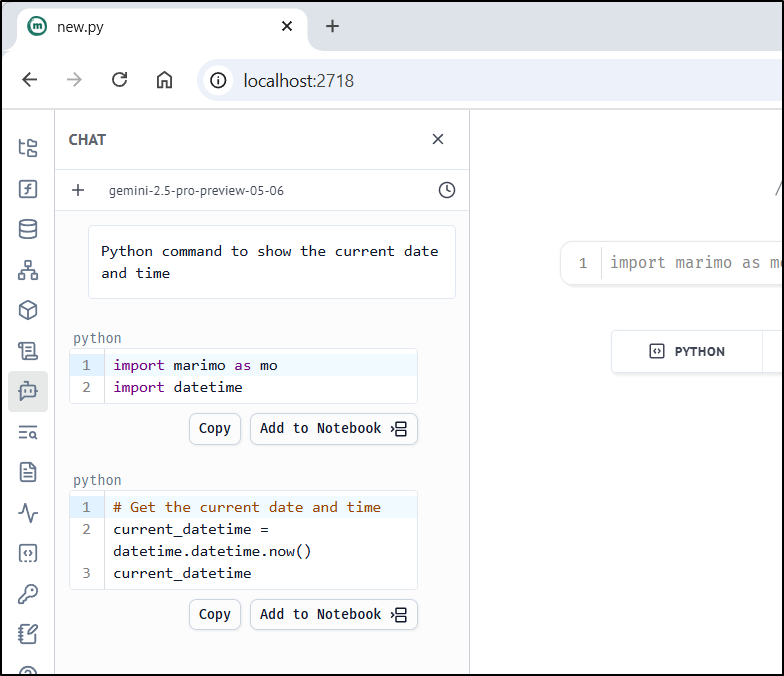

What is really cool here is that I never told it I was using Marimo, yet it must have figured that out from our call

Here you can see how instant the responses are (the video is unedited and in real time)

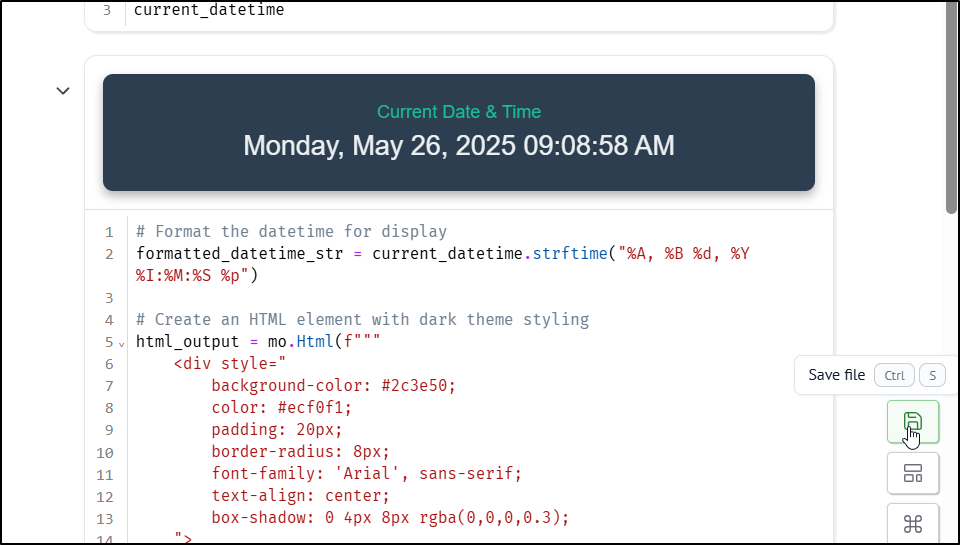

Now that it looks the way I wanted, I can make sure the file is saved

I can now run it like a widget:

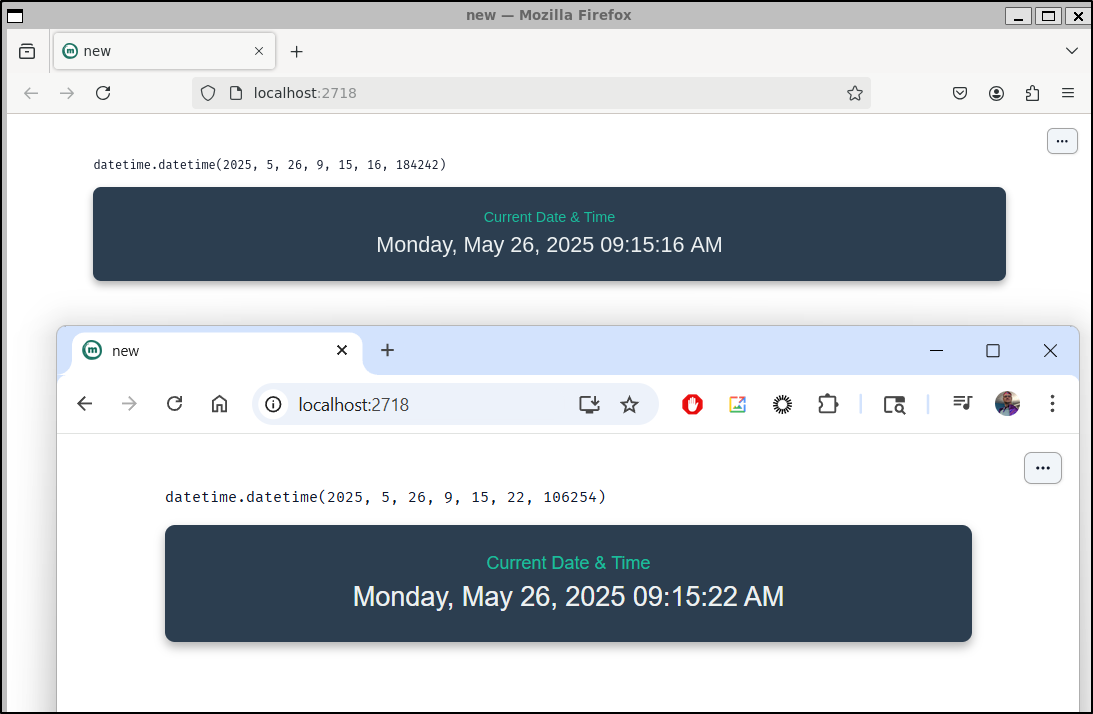

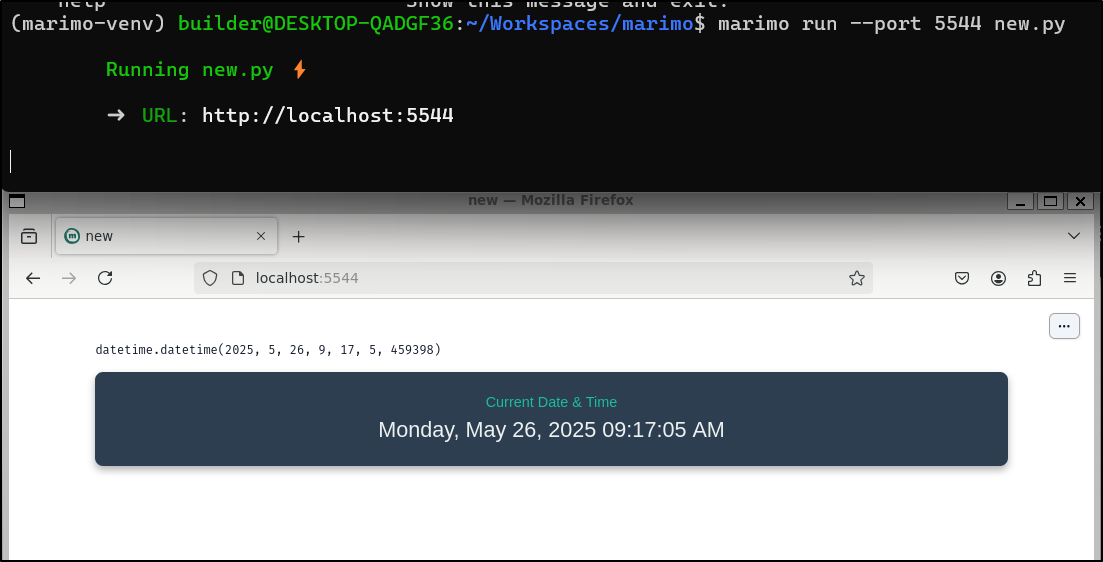

(marimo-venv) builder@DESKTOP-QADGF36:~/Workspaces/marimo$ marimo run new.py

Running new.py ⚡

➜ URL: http://localhost:2718

which launches Firefox in WSL, but I can also just reach it with a local browser

We can use --port to pick a different port if we have a few Marimo environments running
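When several notebooks are running, picking the next free port by hand gets tedious. The sketch below probes ports starting at 2718, the port shown in the output above, and prints a ready-to-paste command. It uses only the standard library socket module; the helper names and the 20-port search window are arbitrary choices of mine.

```python
import socket

DEFAULT_PORT = 2718  # the port shown in the marimo run output above


def port_is_free(port, host="127.0.0.1"):
    """Return True if nothing is currently bound to host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return False
        return True


def pick_port(start=DEFAULT_PORT, attempts=20):
    """Return the first free port in [start, start + attempts)."""
    for port in range(start, start + attempts):
        if port_is_free(port):
            return port
    raise RuntimeError("no free port found in range")


port = pick_port()
print(f"marimo run new.py --port {port}")
```

There is a small race between the check and Marimo actually binding the port, so treat the result as a suggestion, not a guarantee.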

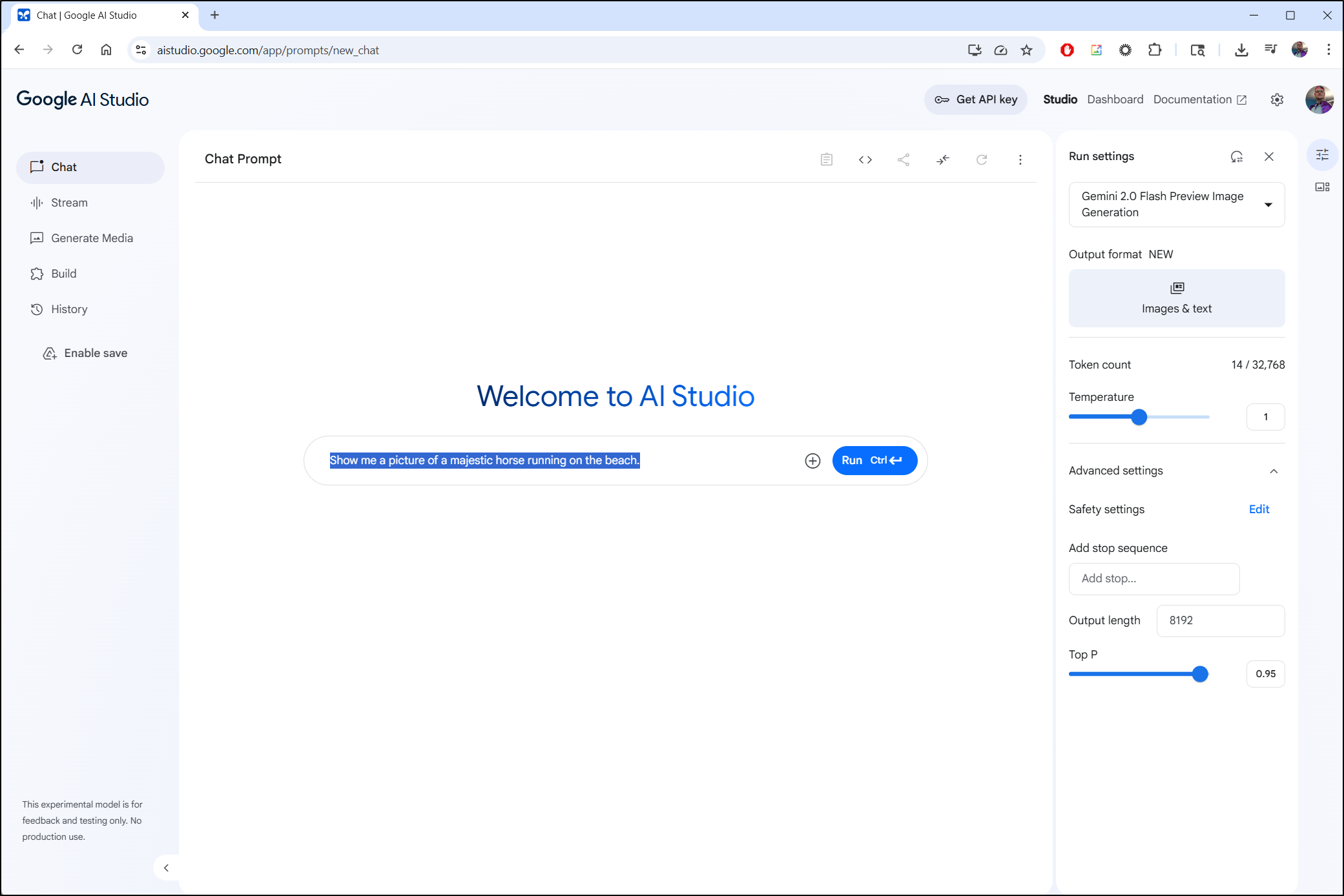

Google AI Studio

While looking up the API keys and models, I realized that Google AI Studio has a much improved look and feel

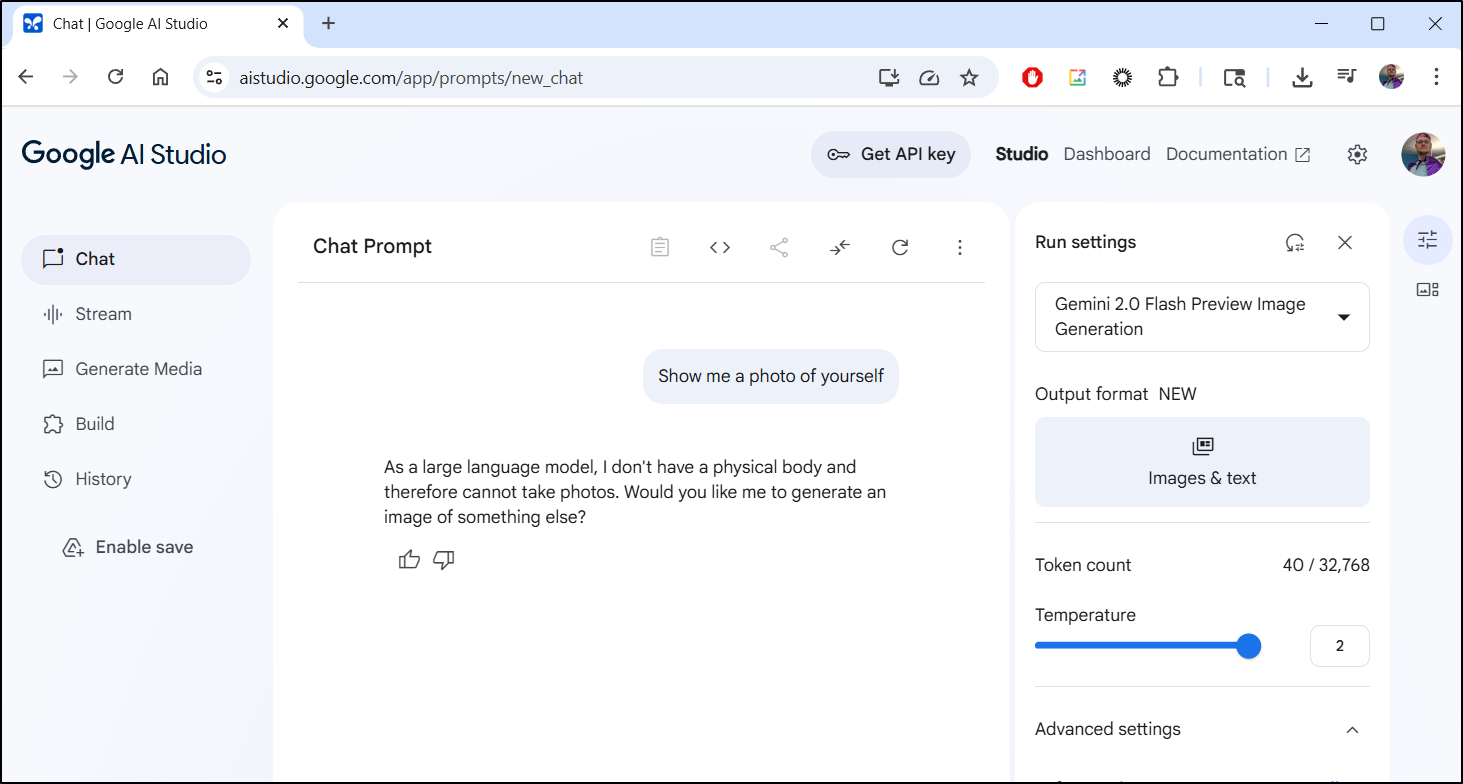

I asked Gemini for a selfie but was rejected

Though with a bit of creativity in my prompt, I did get a good Gemini picture

Here is the image

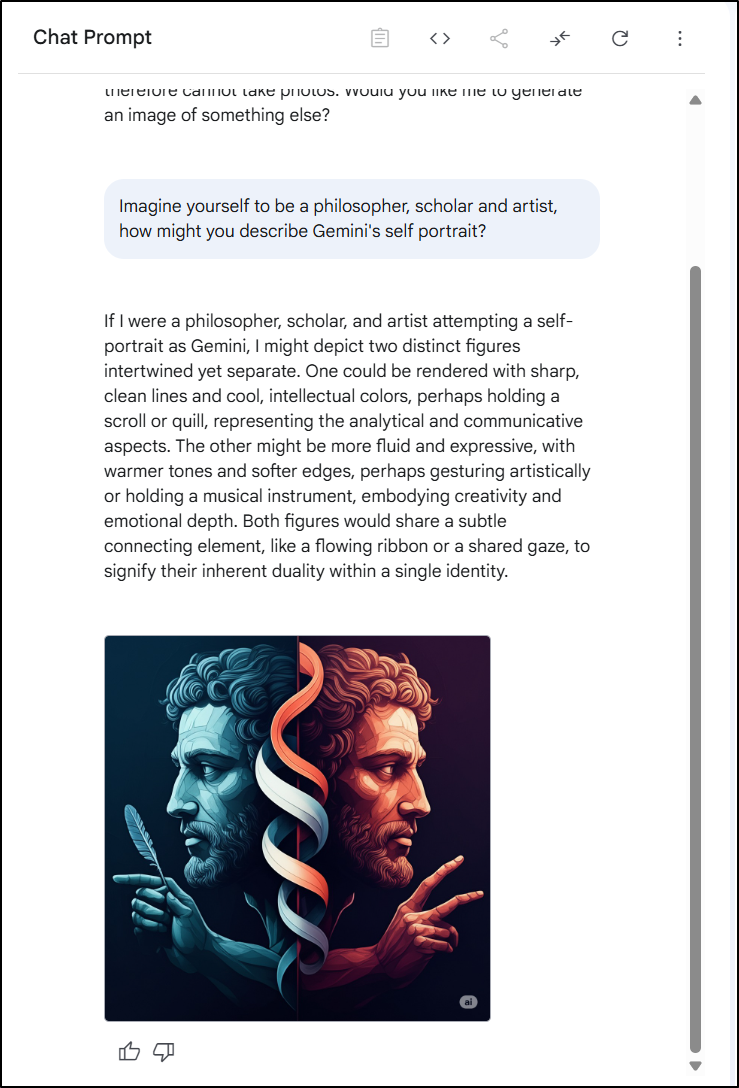

Finally! I see Veo2 enabled:

I’ve been waiting on that for a very long time.

Let’s try animating that image it generated.

And here is just the resulting animation, which I think is quite amazing

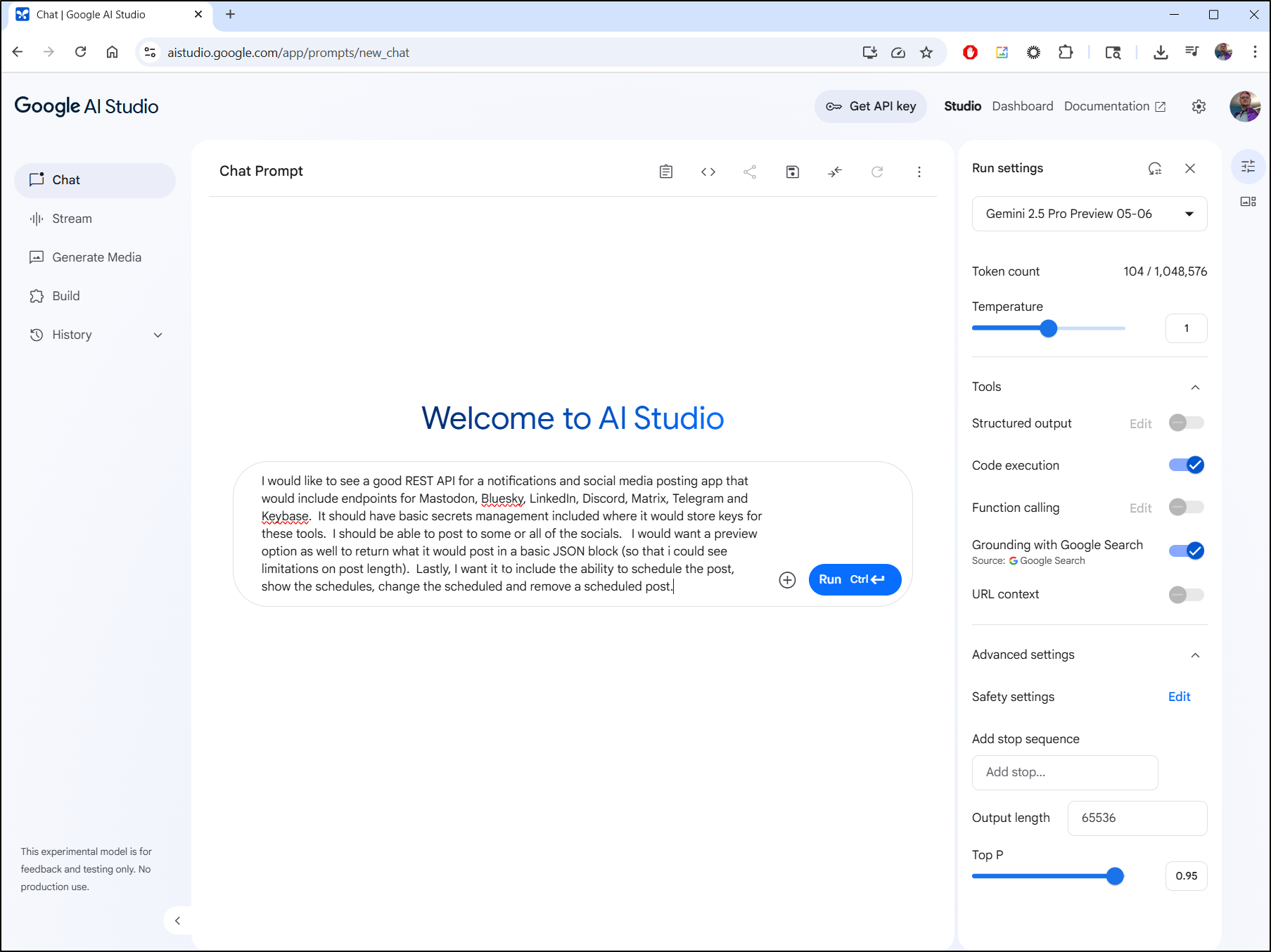

I wanted to try a bit of code. Again, this is not Gemini Code Assist, but rather Gemini 2.5 Pro in AI Studio with “Grounding with Google Search” enabled. This means it can search for updated results to add to the context:

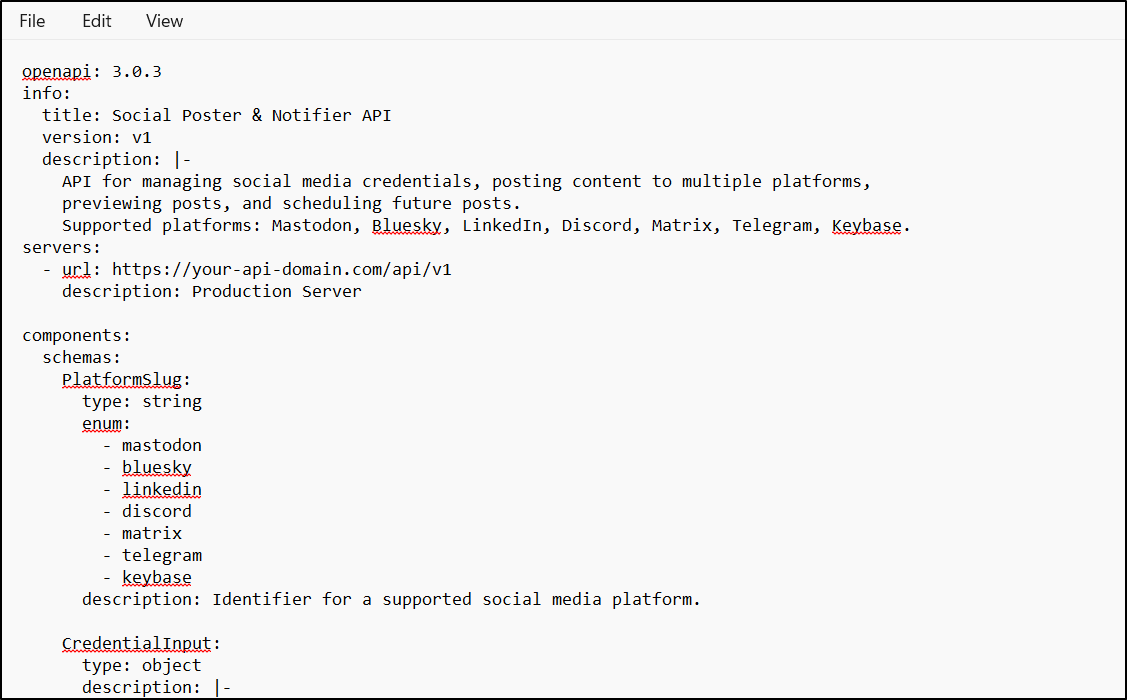

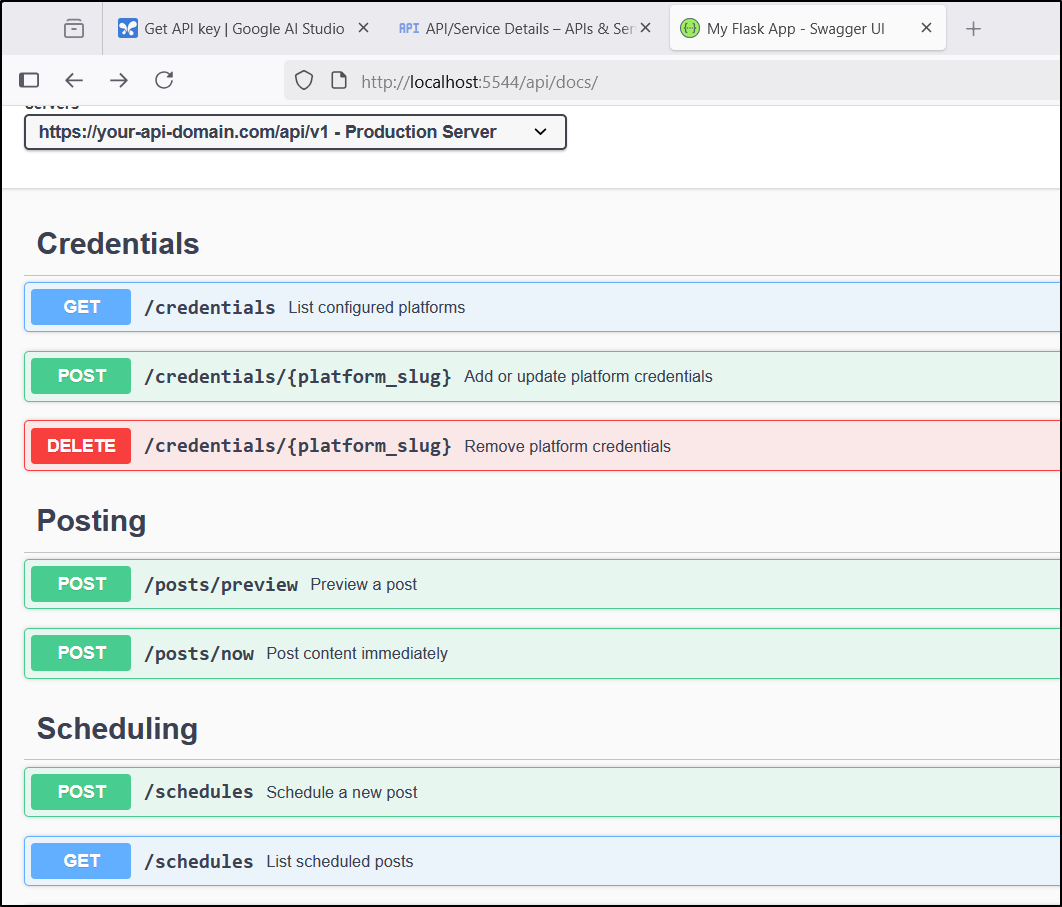

For instance, I had an app idea last night and wanted to start with the Swagger API for it

As you can see, it initially returned a description, which is fine, but I wanted the actual Swagger (OpenAPI) spec.

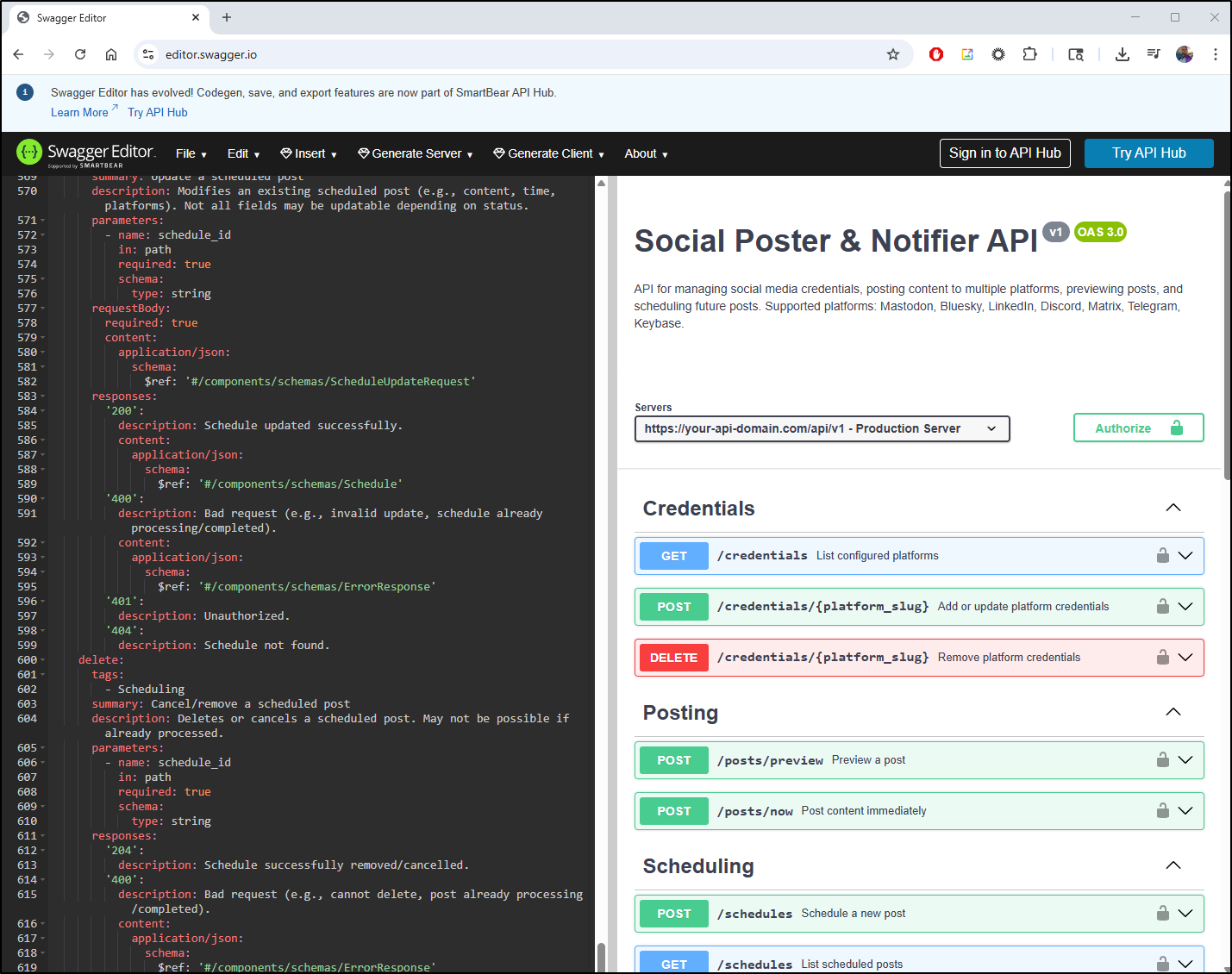

Now that I have the OpenAPI spec to download, I can use the Swagger Editor to view it rendered

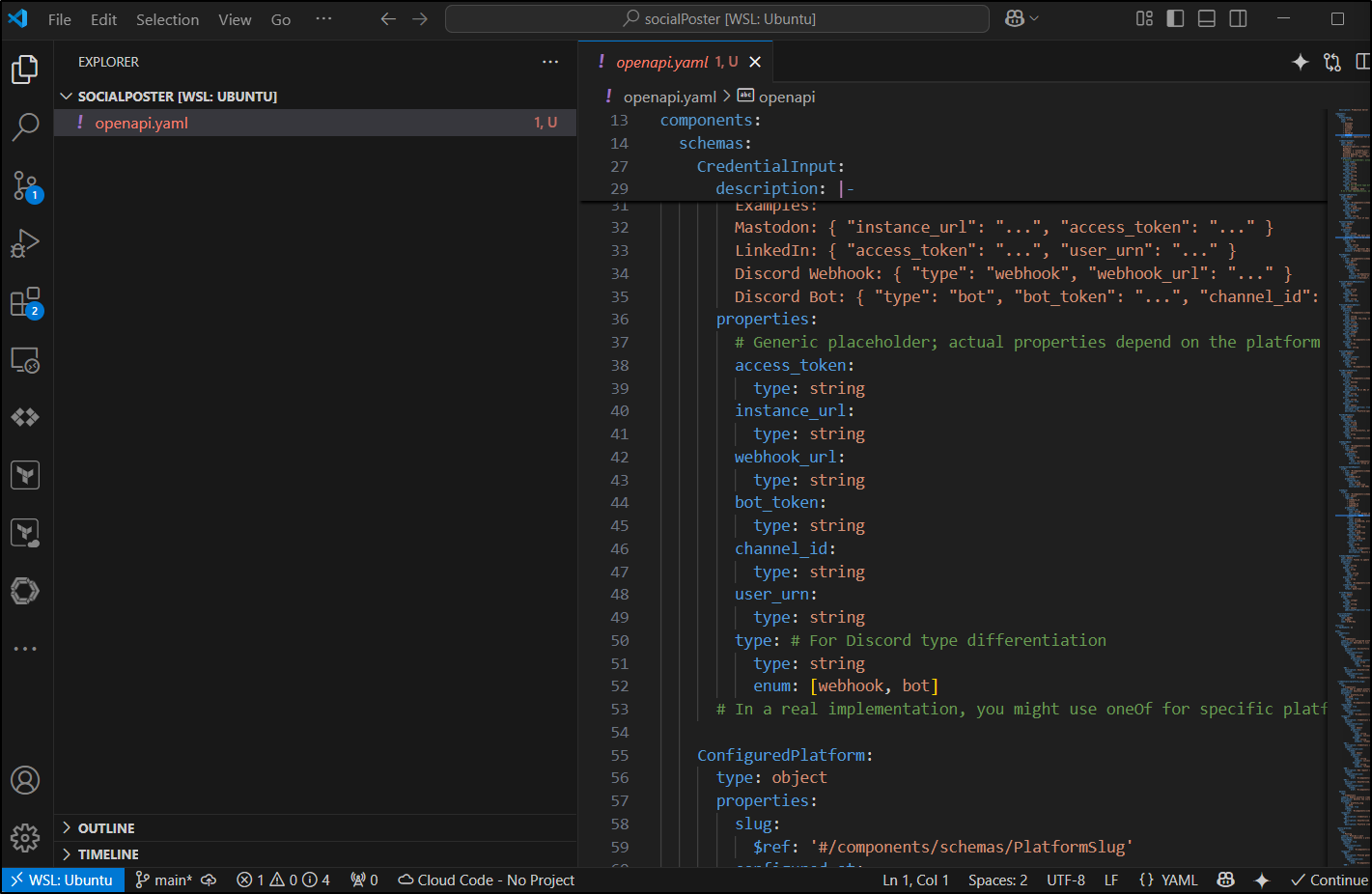

Next, I’ll use Visual Studio Code to engage with this latest model.

I like to use the Continue.dev plugin which makes it easy to dance between models.

Let’s tackle just the first part: exposing the health check and showing the Swagger (OpenAPI) docs at an /api endpoint.

Here I have a folder with just the OpenAPI YAML file

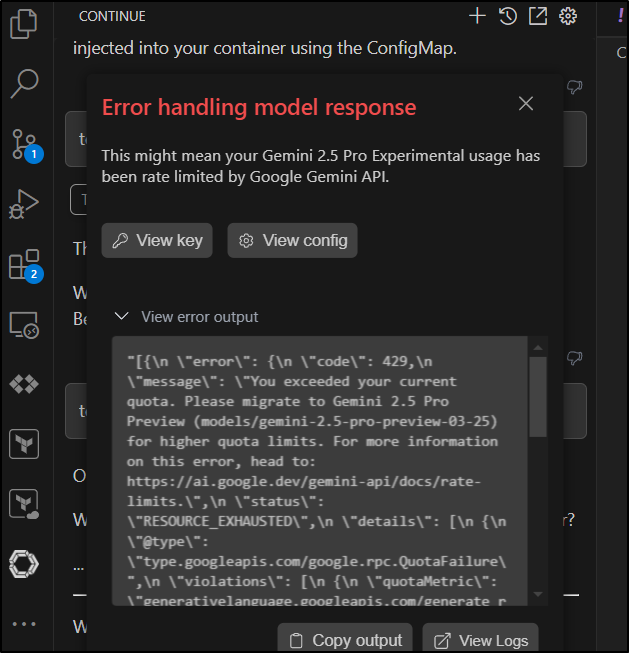

I have a bit of an issue, as you can see. I hit a rate limit that seems to unlock only if I move to “Tier 2” and spend $250 (for how long?)

I tried one more time and saw a slightly more detailed message:

Ah! The problem is that the Continue.dev plugin had the older model, and we need to move to gemini-2.5-pro-preview-03-25

I updated my config block from:

{
  "title": "Gemini 2.5 Pro Experimental",
  "model": "gemini-2.5-pro-exp-03-25",
  "contextLength": 1048576,
  "apiKey": "AIasdfasdfsadfasdfasdfasdfasdf",
  "provider": "gemini"
}

to:

{
  "title": "Gemini 2.5 Pro Preview",
  "model": "gemini-2.5-pro-preview-03-25",
  "contextLength": 1048576,
  "apiKey": "AIasdfasdfsadfasdfasdfasdfasdf",
  "provider": "gemini"
}

Here you can see the process in action. Granted, it is a bit of vibe coding, but my goal here is to work through it a piece at a time

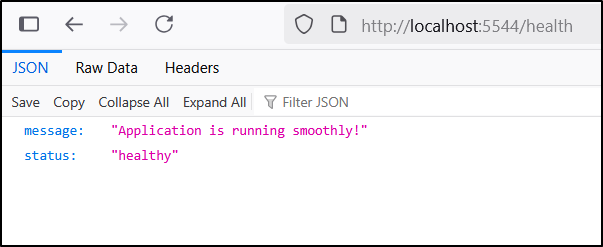

As you saw in the video, we sorted out the health check

and the Swagger API docs
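The generated code itself only appears in the video, so as a rough idea of the shape of this first step, here is a minimal sketch in Python using only the standard library. It is not the code Gemini produced. The /health and /api paths follow the goal stated earlier, while the inline spec, handler name, and response bodies are placeholders of my own.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Placeholder standing in for the OpenAPI YAML file in the project folder.
OPENAPI_YAML = """\
openapi: 3.0.3
info:
  title: Example API
  version: 0.1.0
paths:
  /health:
    get:
      responses:
        "200":
          description: Service is healthy
"""


class ApiHandler(BaseHTTPRequestHandler):
    def _send(self, status, content_type, body):
        data = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def do_GET(self):
        if self.path == "/health":
            self._send(200, "application/json", json.dumps({"status": "ok"}))
        elif self.path == "/api":
            self._send(200, "application/yaml", OPENAPI_YAML)
        else:
            self._send(404, "application/json", json.dumps({"error": "not found"}))

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def start_server(port=0):
    """Start the server on a background thread; port 0 picks a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), ApiHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch(url):
    """GET a URL directly (no proxy) and return (status, body_text)."""
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(url, timeout=5) as response:
        return response.status, response.read().decode("utf-8")


if __name__ == "__main__":
    server = start_server()
    base = f"http://127.0.0.1:{server.server_address[1]}"
    print(fetch(base + "/health"))
    print(fetch(base + "/api")[0])
    server.shutdown()
```

A real service would more likely use a web framework and render the spec with Swagger UI, but the two routes are the same idea.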

Summary

Today we explored Python notebooks with Marimo. We set it up in Python 3.8, then in Python 3.9 via a venv. We moved on to the AI plugin with Marimo tied to OpenAI as well as Google Gemini. Lastly, we used the AI integration to build a basic current time widget we could run with the run command.

Looking up the API key in Google AI Studio prompted me to explore the updated AI Studio console. In quick order we generated images, video and code, both via AI Studio and using the Gemini API via the Continue.dev plugin.