Published: May 20, 2025 by Isaac Johnson

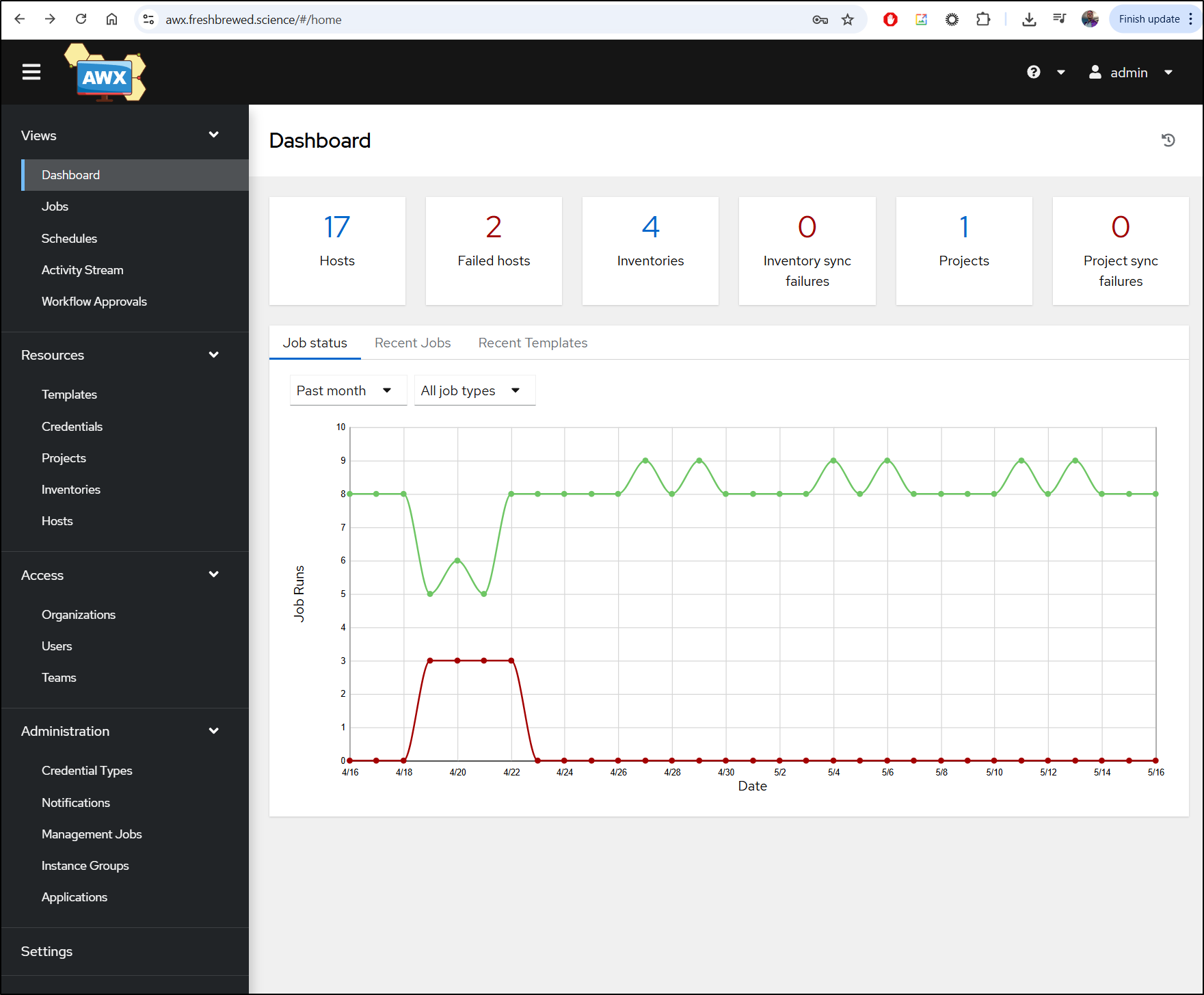

In this last article (for a bit) exclusively on n8n, let’s look at a few more features like Scheduled Tasks and how we might use them for work presently done in tools like Ansible Tower (AWX). Along the same lines, we’ll look at how we can tie observability suites like Datadog and New Relic to n8n via Webhooks for server maintenance.

Next, I’ll cover some Day 2 operational issues such as container upgrades (easy), API usage and some of my challenges around credentials. I’ll wrap with notes on costs and what I would really want changed to buy in altogether on n8n.

Let’s dig in!

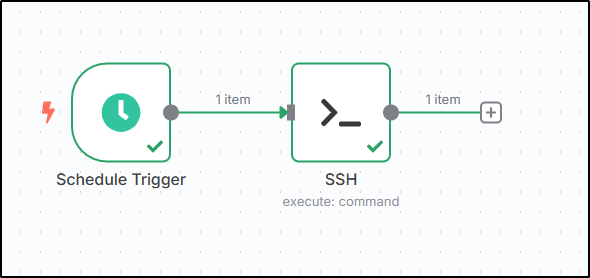

Scheduled work

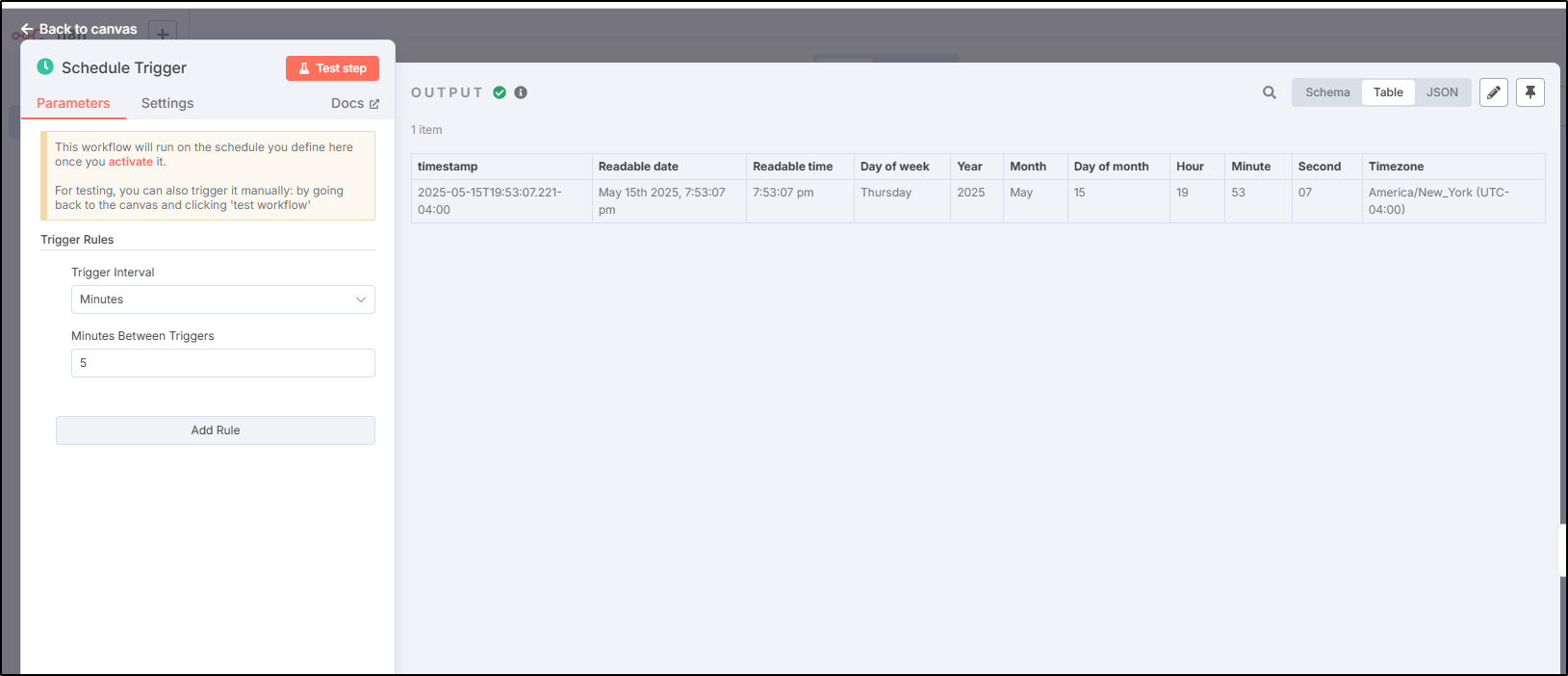

Let’s create a scheduled trigger

When run I can see the time details

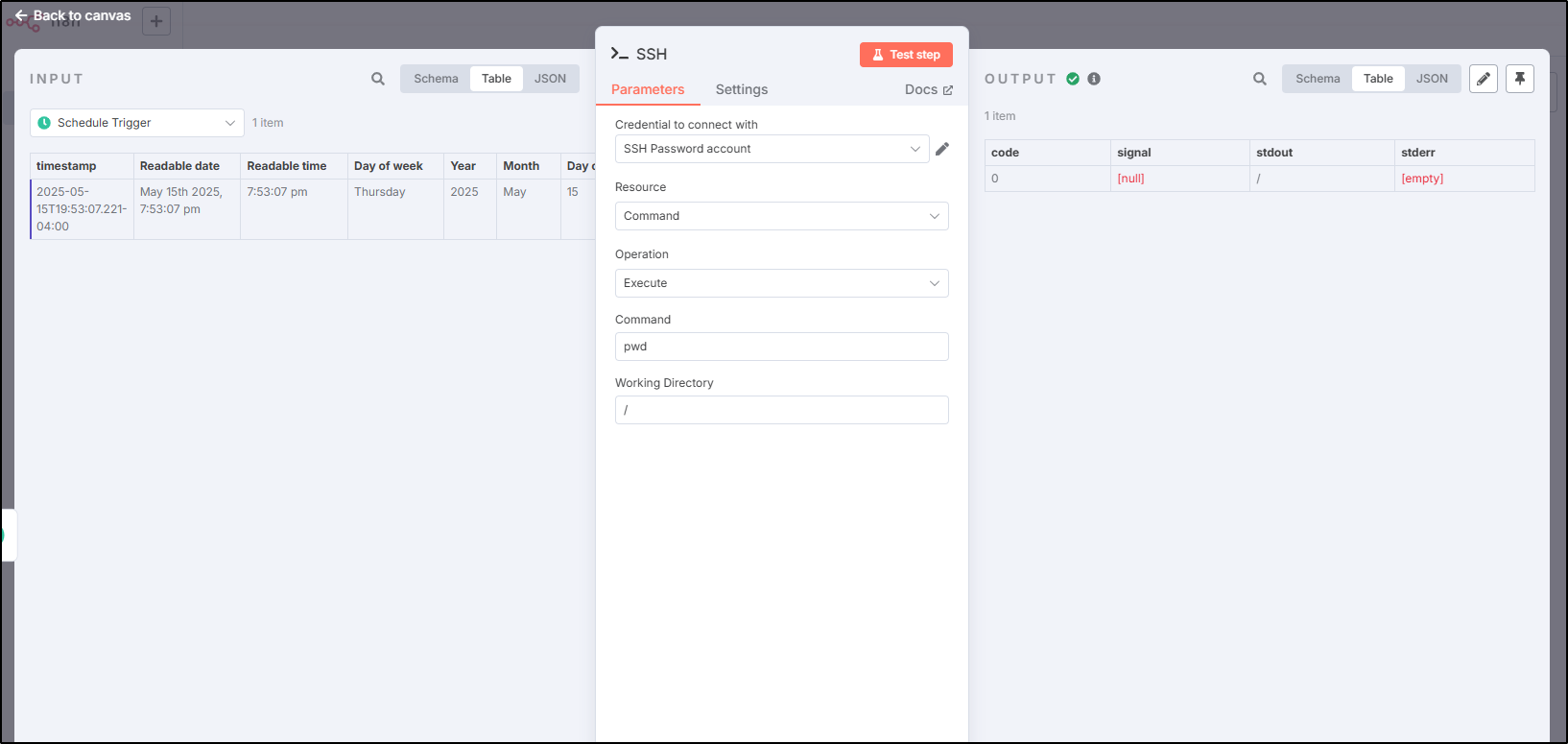

I can add a credential to a host and then use it in my workflow

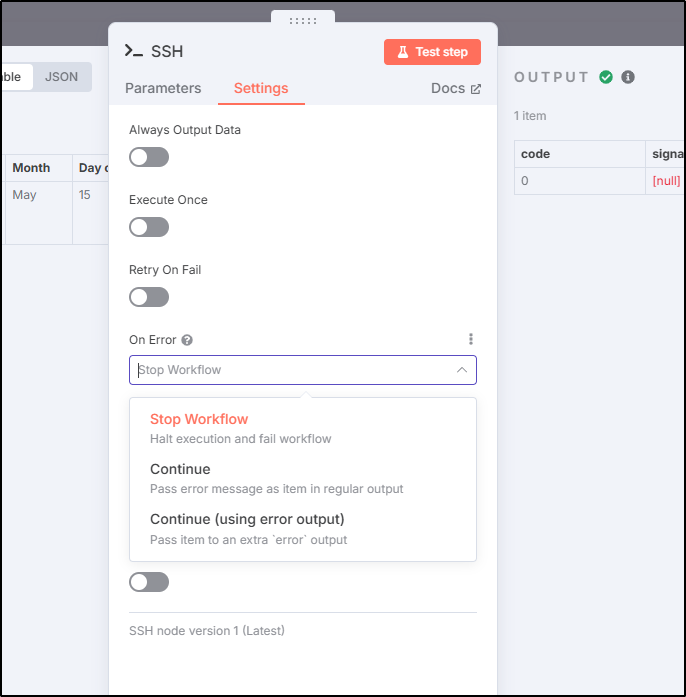

We have some options like whether to continue on error

The temptation I’m having is whether to move some of my scheduled work presently done in AWX over

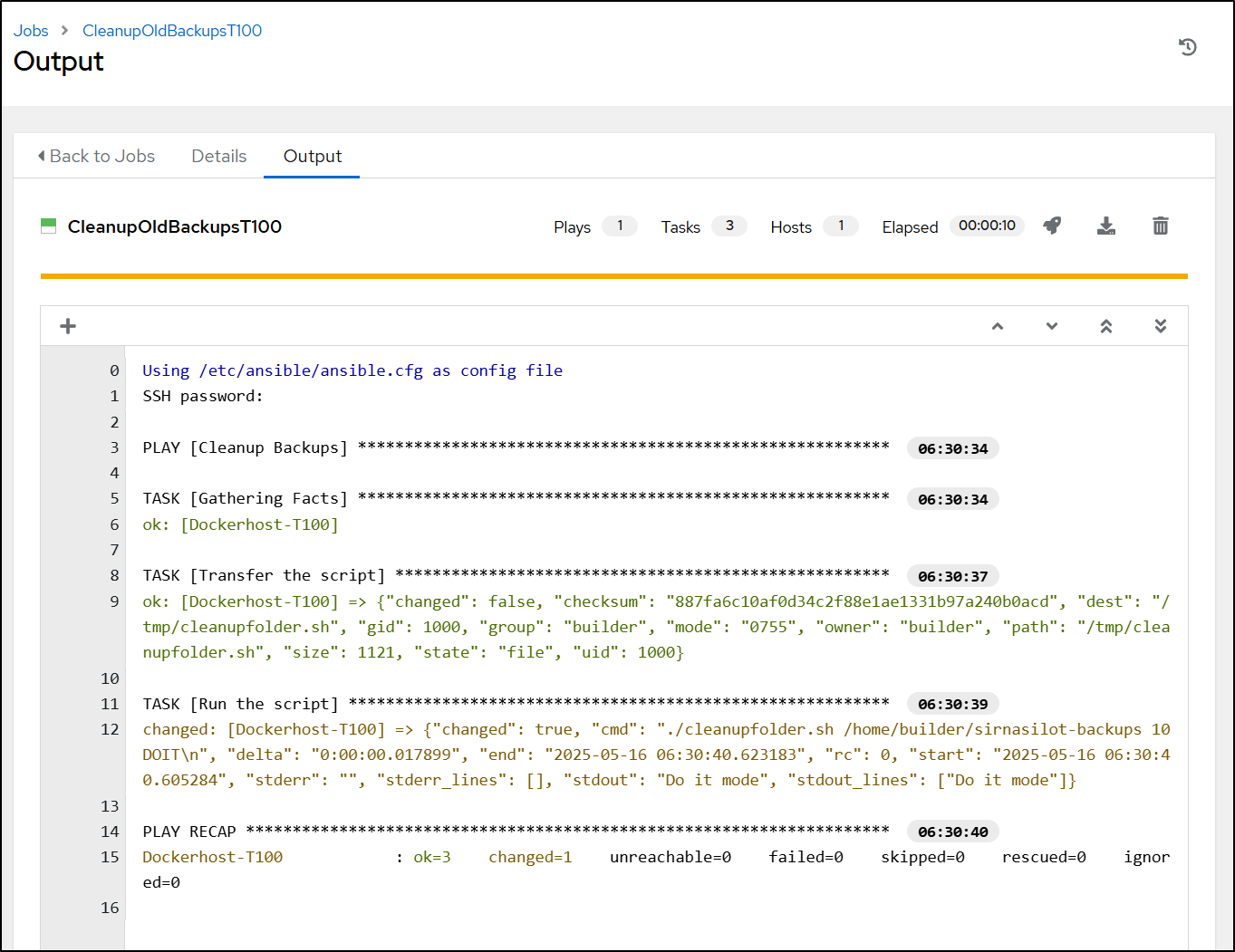

For instance, I have this simple cleanup playbook:

- name: Cleanup Backups
  hosts: all
  tasks:
    - name: Transfer the script
      copy: src=cleanupfolder.sh dest=/tmp mode=0755
    # use ACTION=dryrun or ACTION=DOIT
    - name: Run the script
      ansible.builtin.shell: |
        ./cleanupfolder.sh
      args:
        chdir: /tmp

That just runs a basic bash script

#!/bin/bash

# Check if required arguments are provided

if [ $# -ne 3 ]; then

echo "Usage: $0 <backup_directory> <number_of_files_to_keep> <action: DRYRUN or DOIT>"

echo "Example: $0 /path/to/backups 10 DRYRUN"

exit 1

fi

BACKUP_DIR="$1"

FILES_TO_KEEP="$2"

ACTION="$3"

# Check if backup directory exists

if [ ! -d "$BACKUP_DIR" ]; then

echo "Error: Directory $BACKUP_DIR does not exist"

exit 1

fi

# Check if FILES_TO_KEEP is a positive number

if ! [[ "$FILES_TO_KEEP" =~ ^[0-9]+$ ]] || [ "$FILES_TO_KEEP" -lt 1 ]; then

echo "Error: Number of files to keep must be a positive integer"

exit 1

fi

if [ "$ACTION" != "DRYRUN" ] && [ "$ACTION" != "DOIT" ]; then

echo "Error: Action must be either DRYRUN or DOIT"

exit 1

fi

if [ "$ACTION" == "DRYRUN" ]; then

echo "Dry run mode"

find "$BACKUP_DIR" -type f -printf '%T@ %p\n' | sort -n | head -n -"$FILES_TO_KEEP" | cut -d' ' -f2- | xargs echo rm -f

fi

if [ "$ACTION" == "DOIT" ]; then

echo "Do it mode"

find "$BACKUP_DIR" -type f -printf '%T@ %p\n' | sort -n | head -n -"$FILES_TO_KEEP" | cut -d' ' -f2- | xargs rm -f

fi

and runs every night
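The `sort -n | head -n -N` pipeline is the heart of that script, and it is easy to misread. Here is a minimal JavaScript sketch of the same selection logic, just to show which files end up deleted. The `filesToDelete` name and the sample file list are made up for illustration; the real script works on the mtimes printed by `find`.

```javascript
// Hypothetical helper mirroring: find ... | sort -n | head -n -N
// files: array of { path, mtime } where mtime is a number (epoch seconds).
function filesToDelete(files, keep) {
  // sort -n on the mtime column puts the oldest files first
  const oldestFirst = [...files].sort((a, b) => a.mtime - b.mtime);
  // head -n -N drops the last N lines, i.e. the N newest files survive
  const cutoff = Math.max(0, oldestFirst.length - keep);
  return oldestFirst.slice(0, cutoff).map((file) => file.path);
}

// Made-up sample data: three backups, keep the newest one.
const sampleBackups = [
  { path: '/backups/c.tgz', mtime: 300 },
  { path: '/backups/a.tgz', mtime: 100 },
  { path: '/backups/b.tgz', mtime: 200 },
];
console.log(filesToDelete(sampleBackups, 1)); // the two oldest: a.tgz, then b.tgz
```

If the keep count is at least the number of files, nothing is selected, which matches how `head -n -N` behaves on short input.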

In n8n, this is super easy to create:

Which in JSON form is this:

{

"name": "My workflow 3",

"nodes": [

{

"parameters": {

"rule": {

"interval": [

{}

]

}

},

"type": "n8n-nodes-base.scheduleTrigger",

"typeVersion": 1.2,

"position": [

0,

0

],

"id": "4e3be65a-949d-4533-9016-2fbf31bfd038",

"name": "Schedule Trigger"

},

{

"parameters": {

"command": "find \"/home/builder/sirnasilot-backups\" -type f -printf '%T@ %p\\n' | sort -n | head -n -\"10\" | cut -d' ' -f2- | xargs rm -f",

"cwd": "/tmp"

},

"type": "n8n-nodes-base.ssh",

"typeVersion": 1,

"position": [

220,

0

],

"id": "1264e7d4-7310-46a1-9aaf-fc2c502e45e2",

"name": "SSH",

"alwaysOutputData": true,

"credentials": {

"sshPassword": {

"id": "cfpXXMrPJqSfkMDQ",

"name": "sample ssh"

}

}

}

],

"pinData": {},

"connections": {

"Schedule Trigger": {

"main": [

[

{

"node": "SSH",

"type": "main",

"index": 0

}

]

]

}

},

"active": false,

"settings": {

"executionOrder": "v1"

},

"versionId": "5f275501-809e-4002-a5ff-599de084cf35",

"meta": {

"templateCredsSetupCompleted": true,

"instanceId": "30decc2c2e2f0d1ef5cae3101d975562c56caa5eaccf613e399c5ae0a4395615"

},

"id": "BAXRiNxqJf2idsFi",

"tags": []

}

Webhook Flows

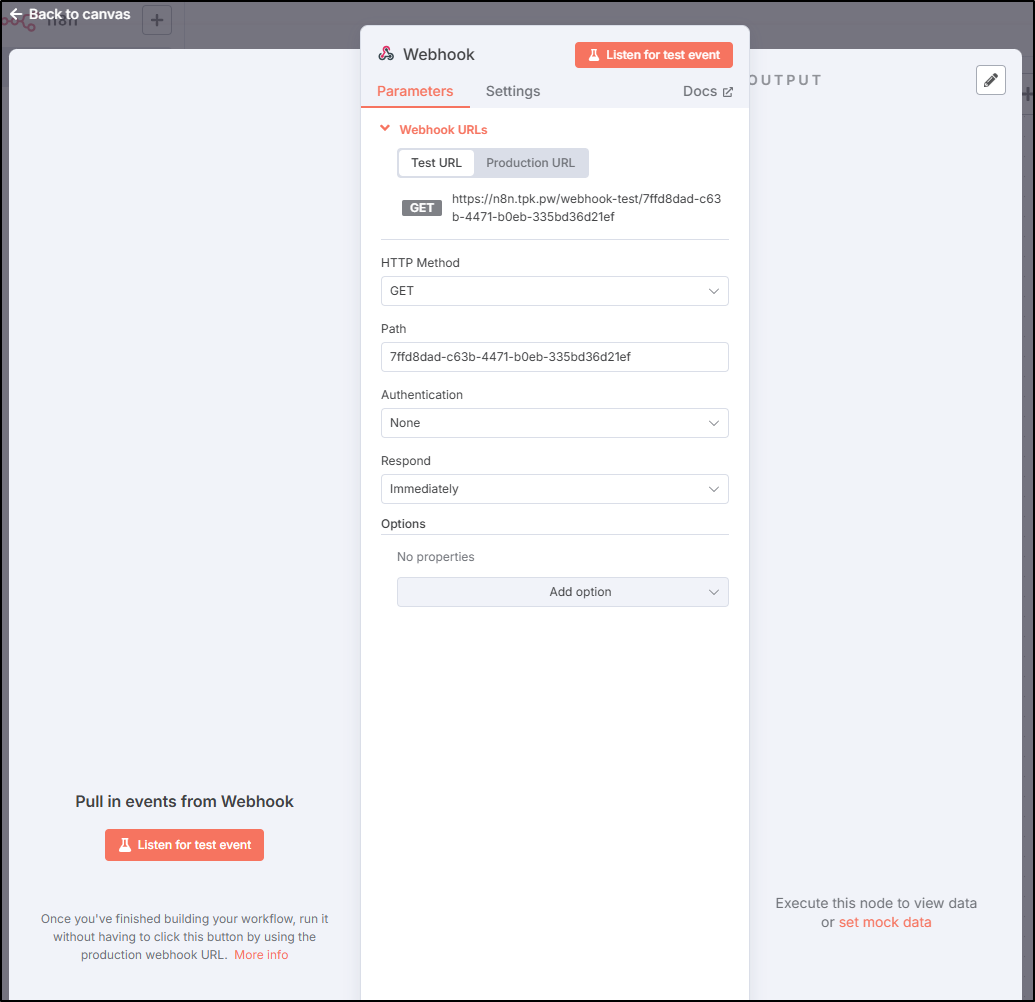

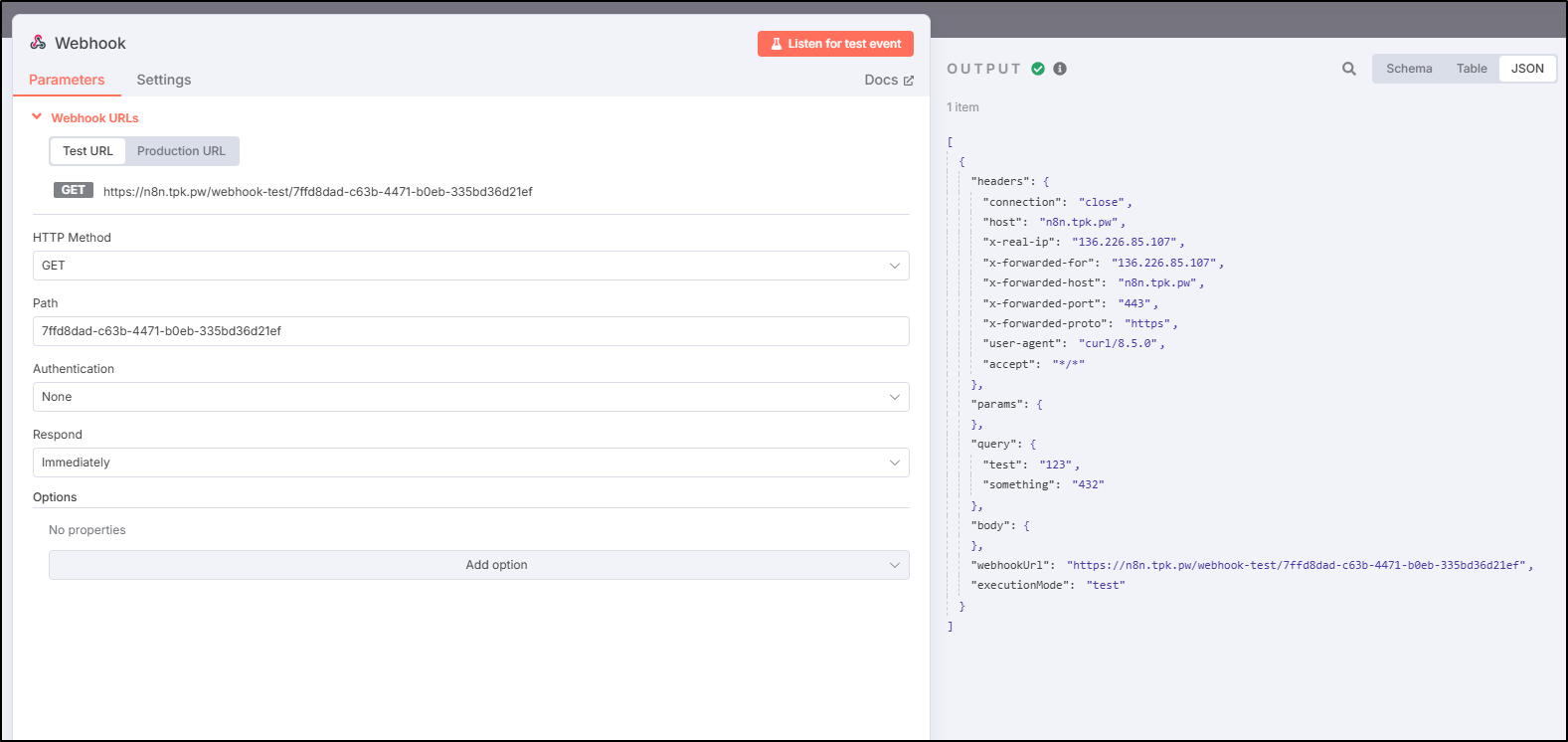

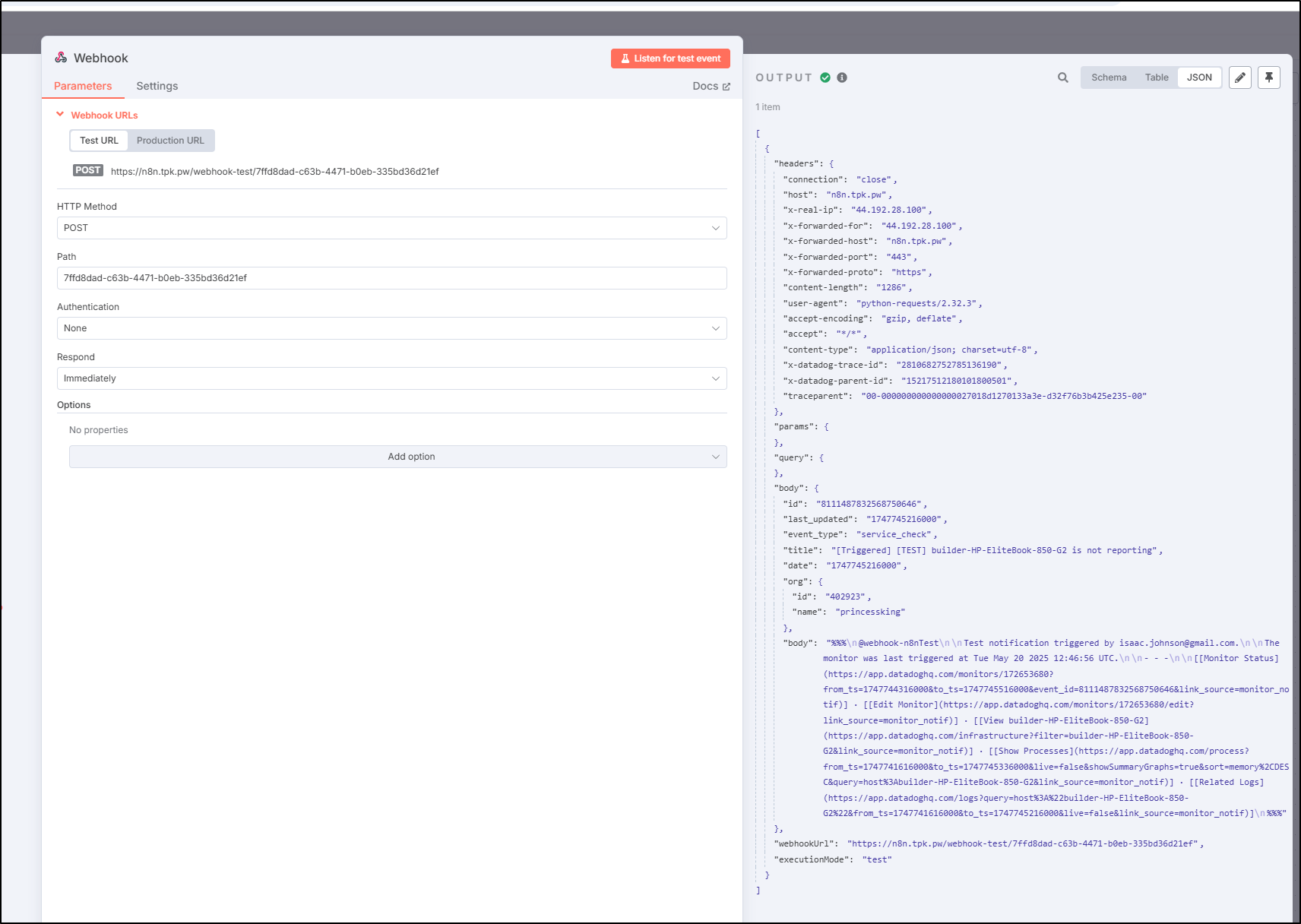

Let’s start by creating a webhook node

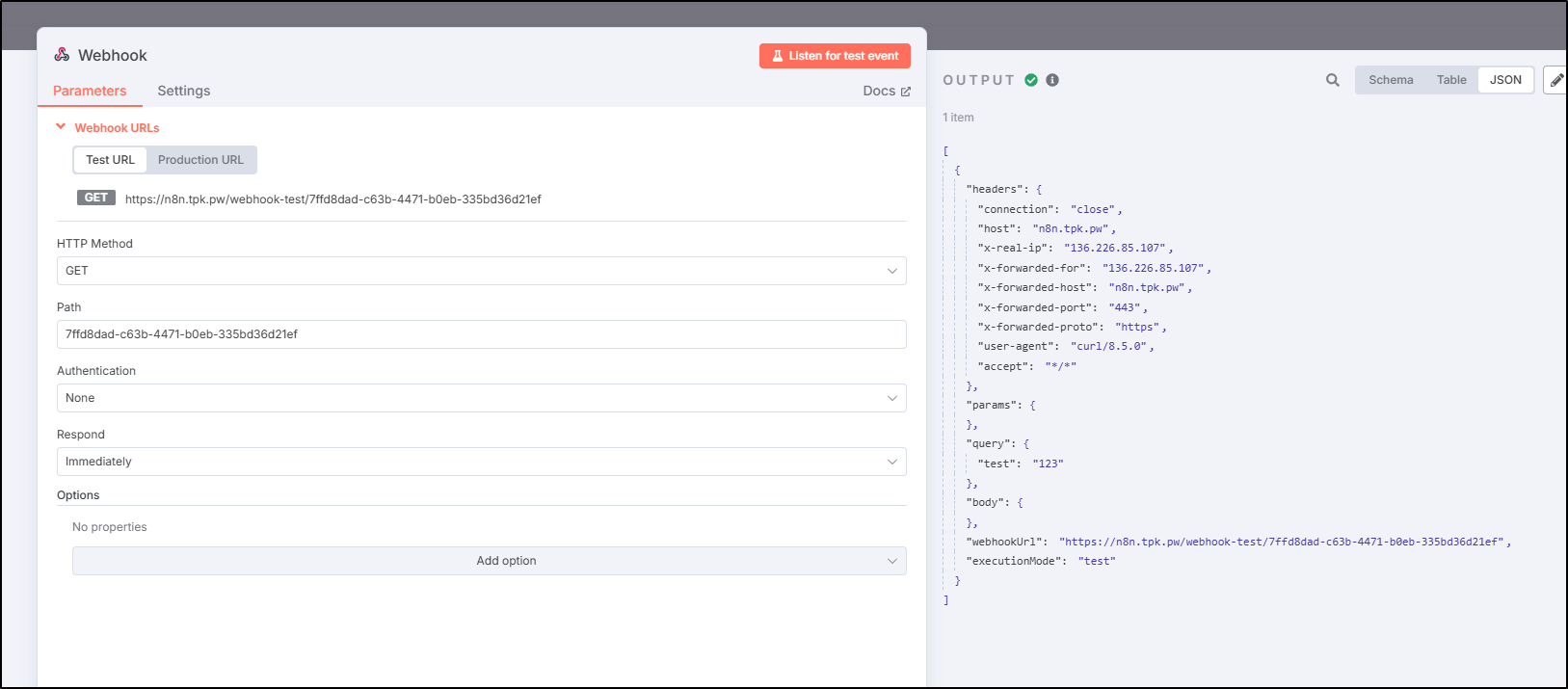

I can click test to listen for an invocation, then use curl to send some content

builder@LuiGi:~/Workspaces/jekyll-blog$ curl -X GET https://n8n.tpk.pw/webhook-test/7ffd8dad-c63b-4471-b0eb-335bd36d21ef?test=123&something=432

[1] 14668

builder@LuiGi:~/Workspaces/jekyll-blog$ {"message":"Workflow was started"}

I see it picked up the first query param but not the second

I then realized I forgot to wrap my URL in single quotes and tried again

$ curl -X GET 'https://n8n.tpk.pw/webhook-test/7ffd8dad-c63b-4471-b0eb-335bd36d21ef?test=123&something=432'

{"message":"Workflow was started"}

and this time I see both
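The unquoted `&` told the shell to background the command, which is why only `test=123` arrived the first time. If I were calling the hook from Node instead of a shell, a sketch like this would sidestep the quoting problem entirely. `buildWebhookUrl` is a hypothetical helper name; `URL` and `searchParams` are standard in Node and browsers.

```javascript
// Hypothetical helper: append query parameters to a webhook URL safely.
function buildWebhookUrl(base, params) {
  const url = new URL(base);
  for (const [key, value] of Object.entries(params)) {
    // searchParams handles the "&" separators and any escaping for us
    url.searchParams.set(key, String(value));
  }
  return url.toString();
}

const testUrl = buildWebhookUrl(
  'https://n8n.tpk.pw/webhook-test/7ffd8dad-c63b-4471-b0eb-335bd36d21ef',
  { test: 123, something: 432 }
);
console.log(testUrl);
// To actually fire it (Node 18+ has a global fetch):
// fetch(testUrl).then((res) => res.text()).then(console.log);
```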

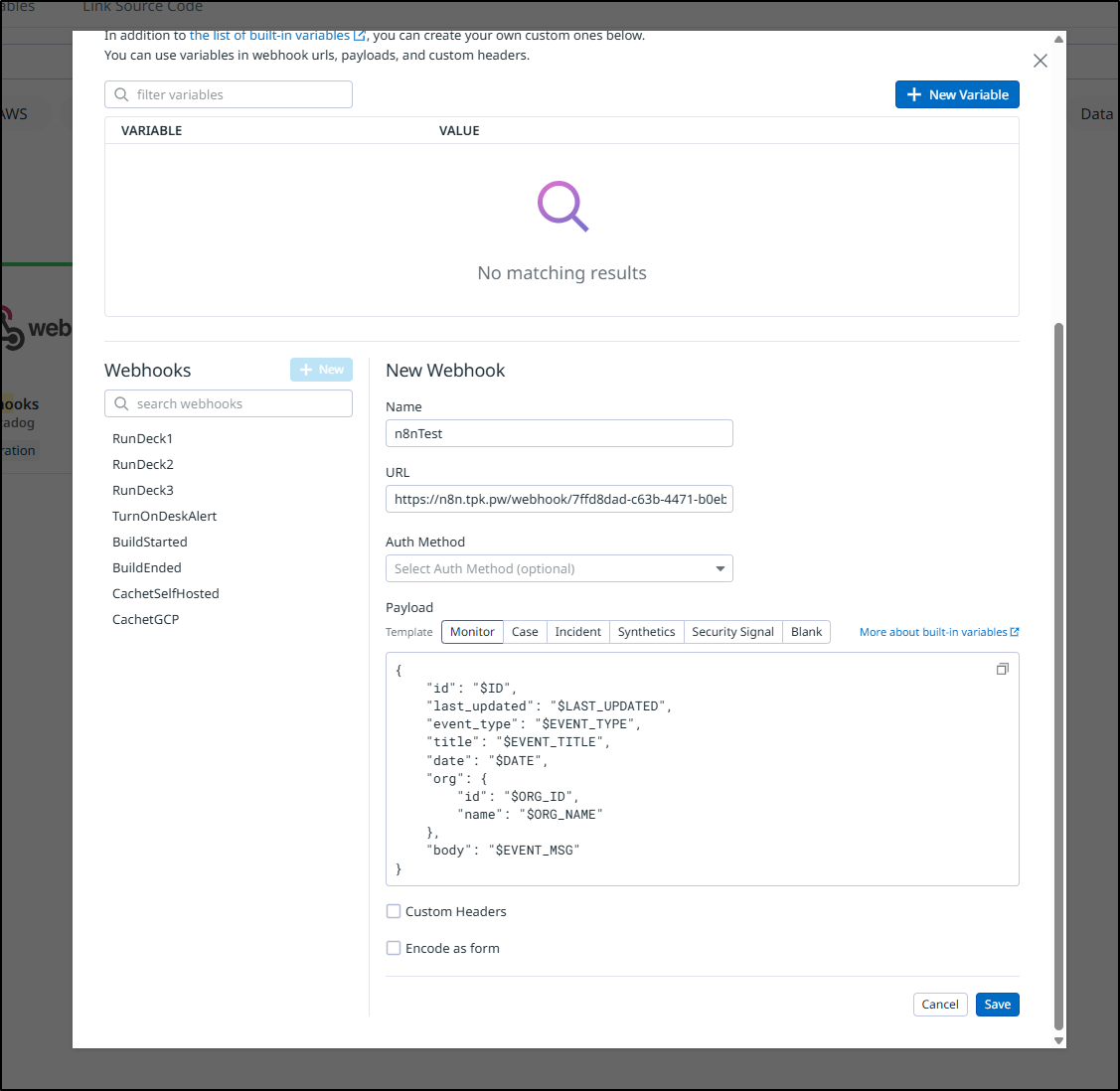

In Datadog, I can go to Integrations and Webhooks to add a new Webhook, this time with the Production URL

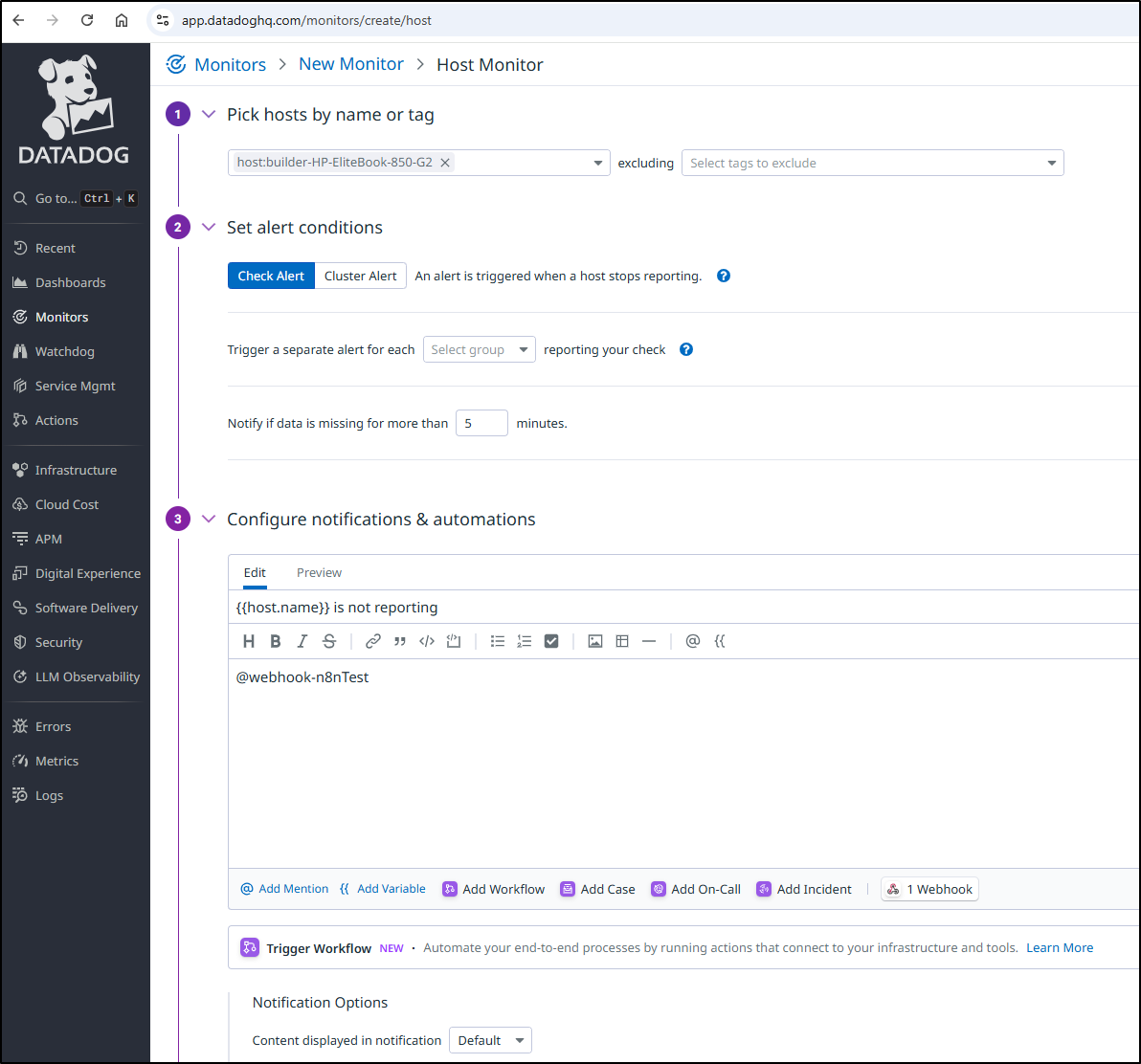

For the purpose of demonstration, let’s just check on one host. Say the HP 850-G2 stops reporting for 5 minutes

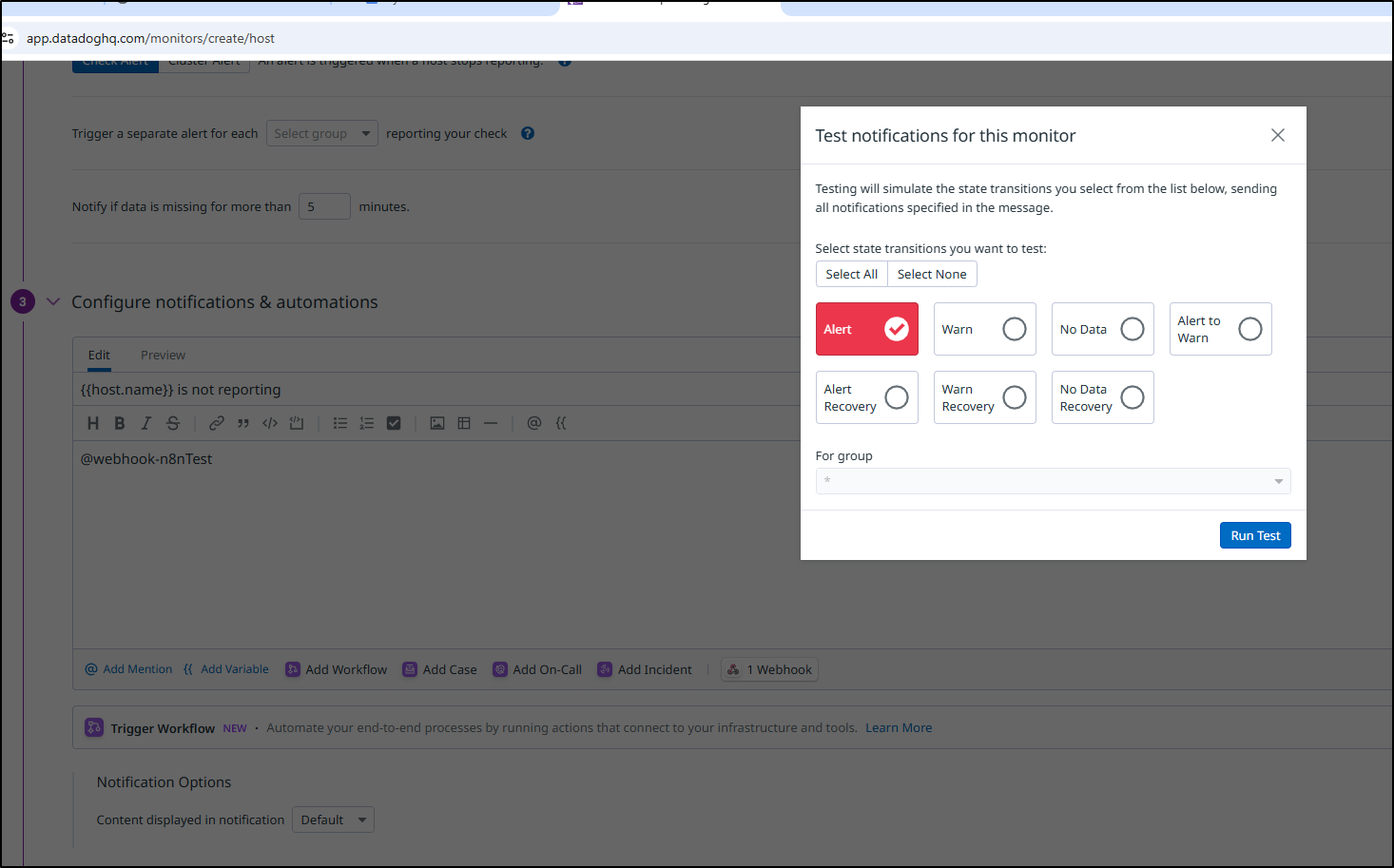

I can then run a test

For the purpose of testing the hook, I did have to use the “-test” URL, but we can see the payload as delivered by Datadog

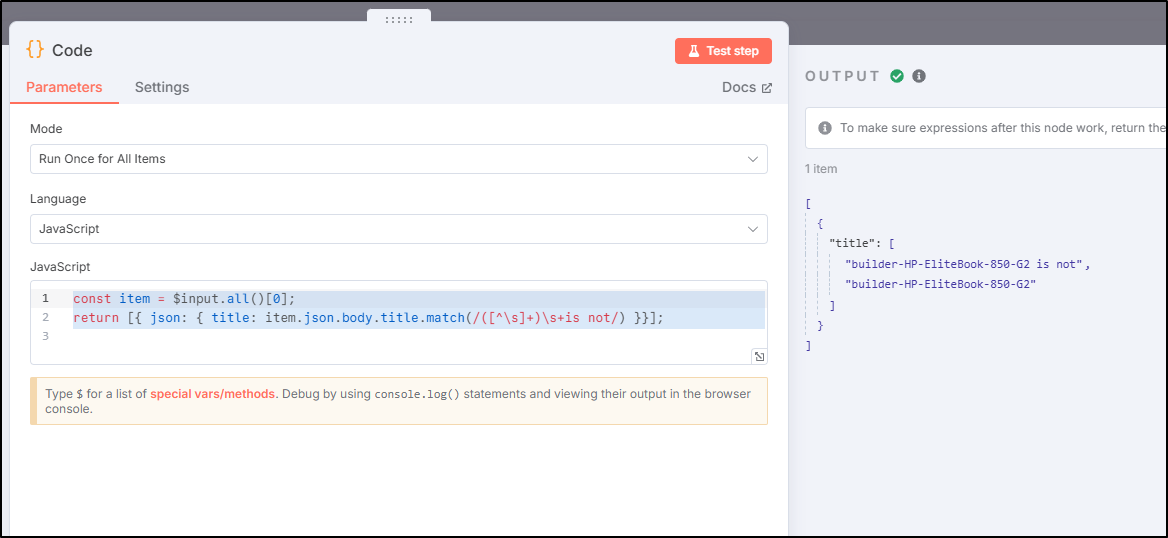

I don’t have the JavaScript perfect, but something like

const item = $input.all()[0];

return [{ json: { title: item.json.body.title.match(/([^\s]+)\s+is not/) }}];

finds the title and server name
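Since `match()` returns the whole match array (or `null` when the title does not fit), a slightly more defensive version might look like the sketch below. The `extractHost` name and the sample title are my own placeholders; the real Datadog title may be worded differently.

```javascript
// Hypothetical helper for an n8n Code node: pull the host name out of an alert title.
function extractHost(title) {
  if (typeof title !== 'string') {
    return null;
  }
  // Capture the token right before " is not"; match() returns null when nothing matches.
  const match = title.match(/(\S+)\s+is not/);
  return match ? match[1] : null;
}

// Sample title is made up for illustration.
console.log(extractHost('[Triggered] my-hp-850-g2 is not reporting')); // my-hp-850-g2

// In the Code node this might be used as:
// return [{ json: { host: extractHost($input.all()[0].json.body.title) } }];
```

Returning `null` instead of throwing leaves it to a later IF node to decide what to do with an alert that does not name a host.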

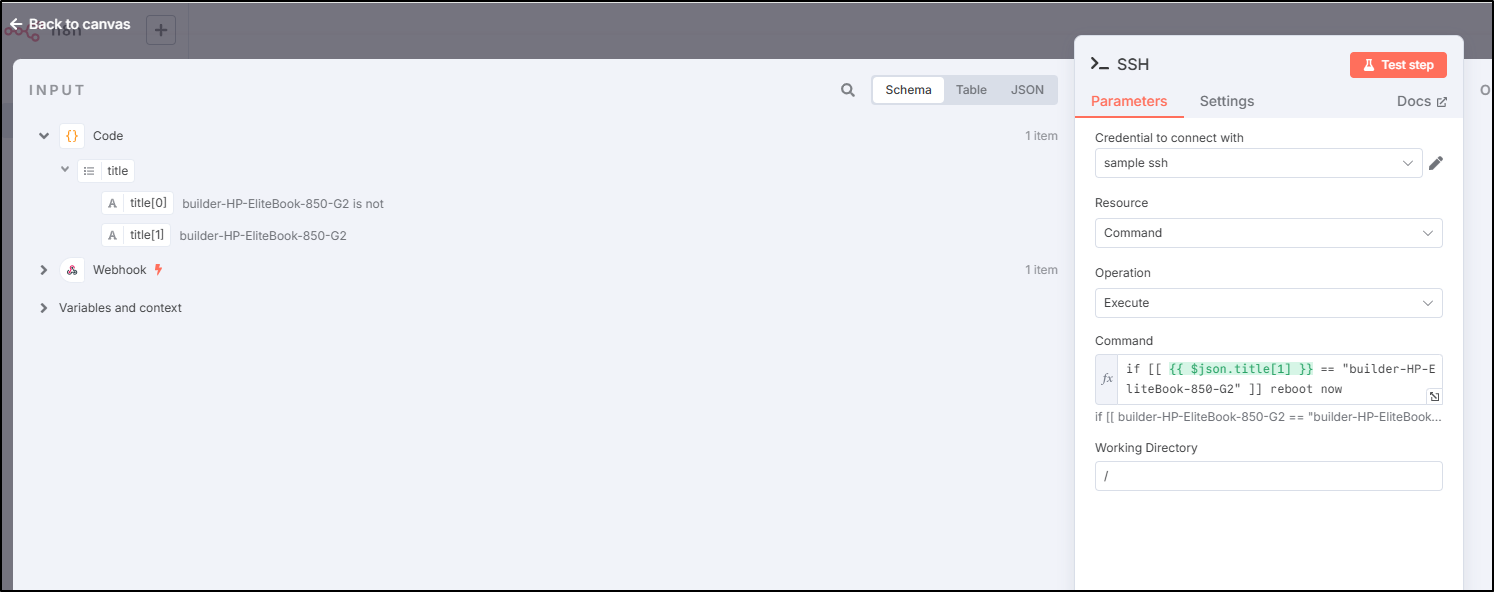

I could then check that the name matches in the SSH step and if it does, reboot the server (obviously, if it can connect)

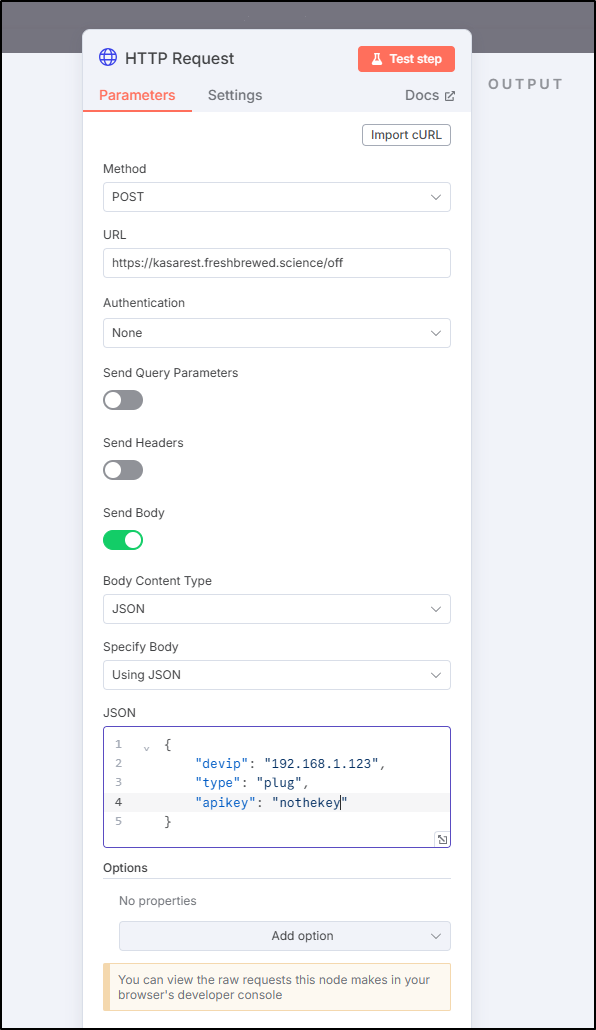

If the server is hooked to a smart plug, I could also use an HTTP request to turn it off

Then I could do the same thing with “/on” afterwards for a quick power cycle.

While I’m not quite ready to automate the power cycling of hosts, it does show how we could pair up an Observability suite with n8n to automate lab maintenance for auto-healing servers.

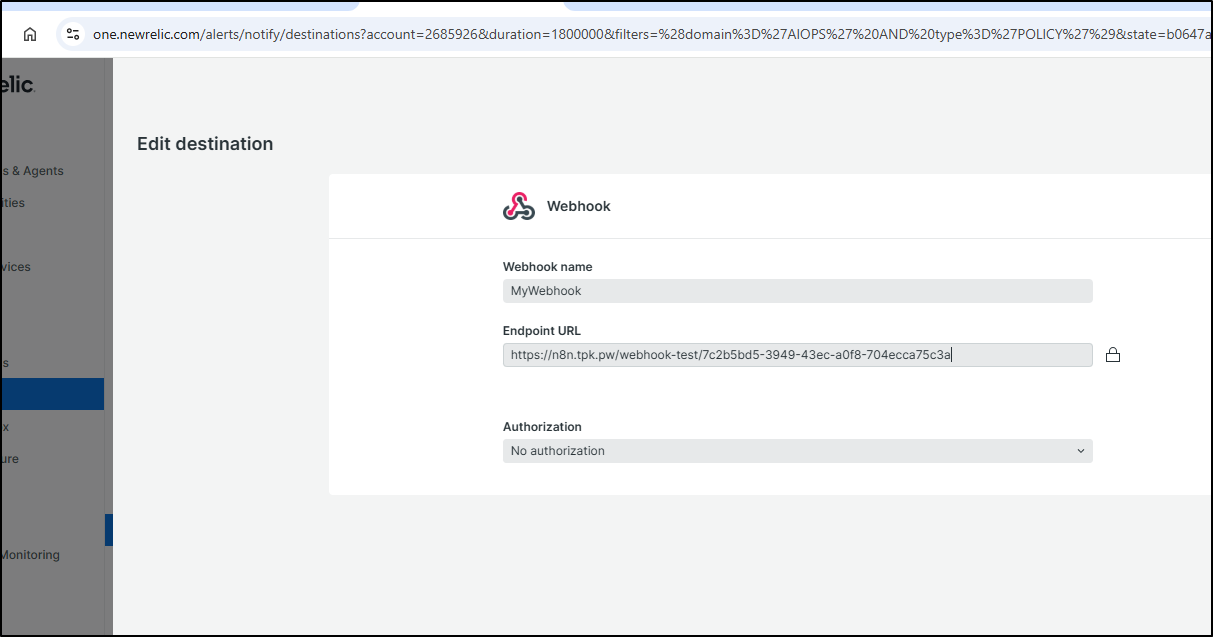

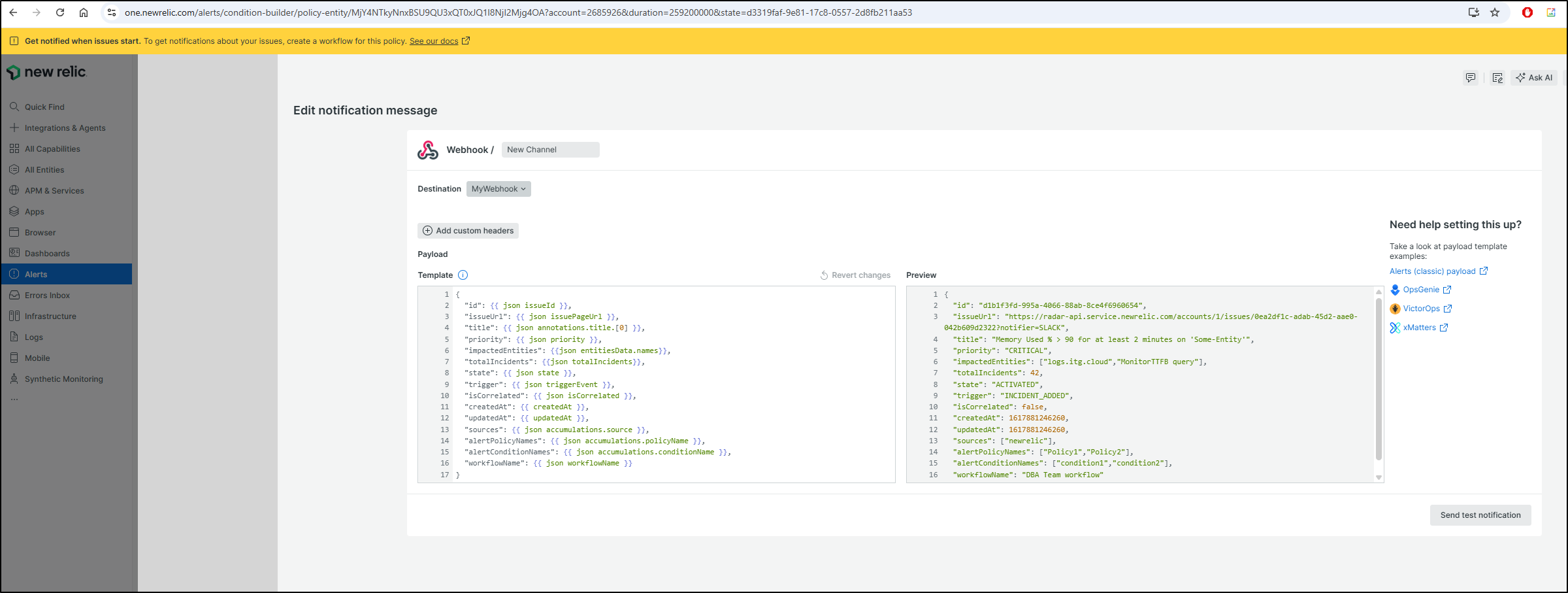

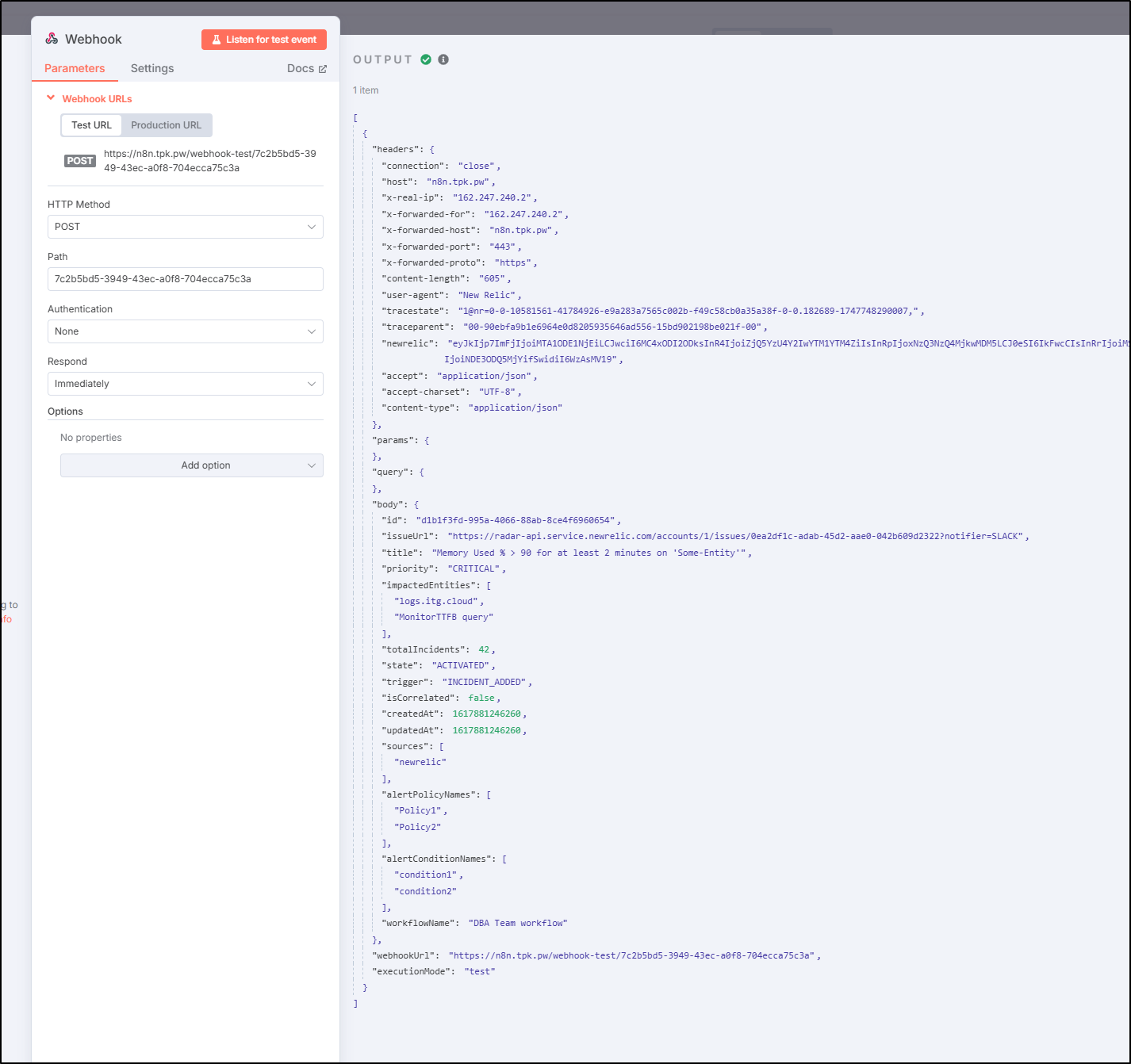

And I showed Datadog, but we can do the same with New Relic as well:

I can tie this to an alert policy based on host metrics

And we can see that “Some-Entity” would be passed in the body.title similar to Datadog

I appreciate that there is an “executionMode” of “test” we could match on to skip actually rebooting, which makes this an easier flow to test.
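A guard for that could be sketched like this. I am assuming `executionMode` sits at the top level of the webhook item, next to `body`; adjust the path if your payload differs. `shouldReboot` and the sample payloads are hypothetical.

```javascript
// Hypothetical guard: only reboot on a real (non-test) alert that names the expected host.
// Assumes a payload shaped like { executionMode: 'test' | 'production', body: { title: '...' } }.
function shouldReboot(payload, expectedHost) {
  if (!payload || payload.executionMode === 'test') {
    return false; // test deliveries never trigger a reboot
  }
  const title = payload.body && typeof payload.body.title === 'string' ? payload.body.title : '';
  return title.includes(expectedHost);
}

// Made-up payloads for illustration.
const testDelivery = { executionMode: 'test', body: { title: 'Some-Entity is not reporting' } };
const liveDelivery = { executionMode: 'production', body: { title: 'Some-Entity is not reporting' } };
console.log(shouldReboot(testDelivery, 'Some-Entity')); // false
console.log(shouldReboot(liveDelivery, 'Some-Entity')); // true
```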

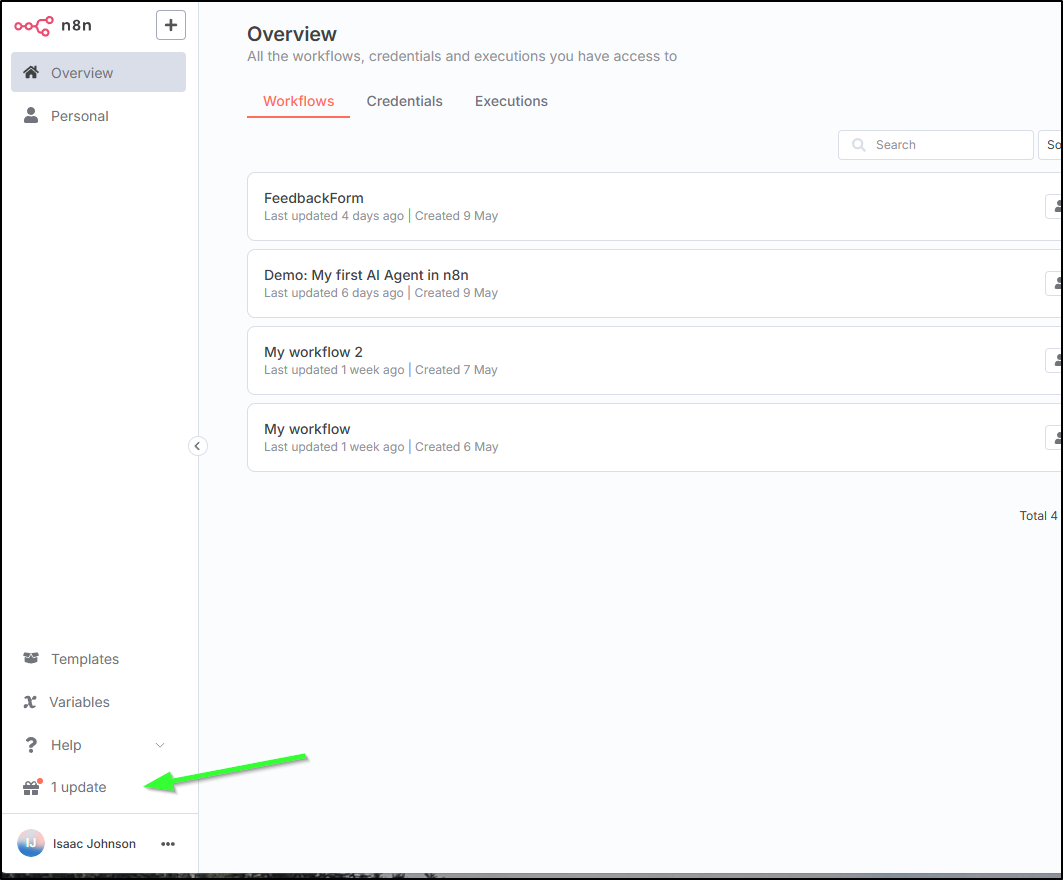

Upgrades

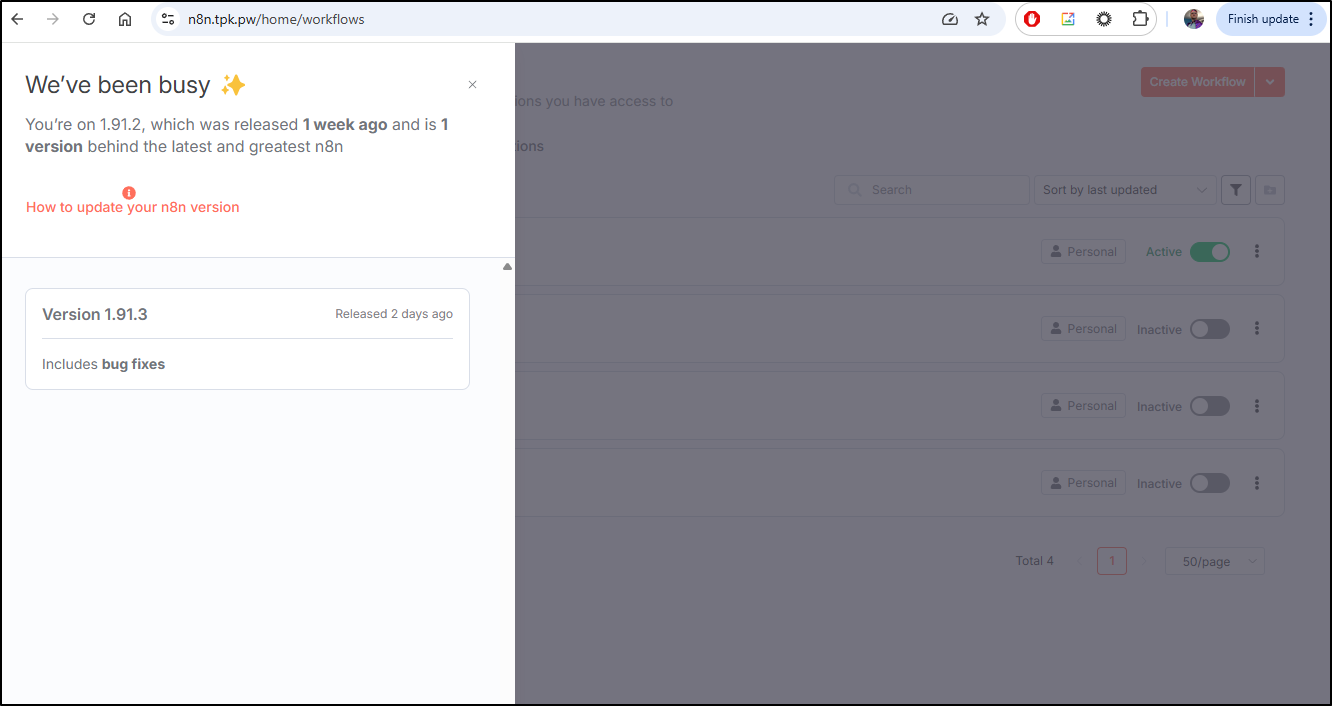

We launched n8n via Docker before and as one would expect, there are updates.

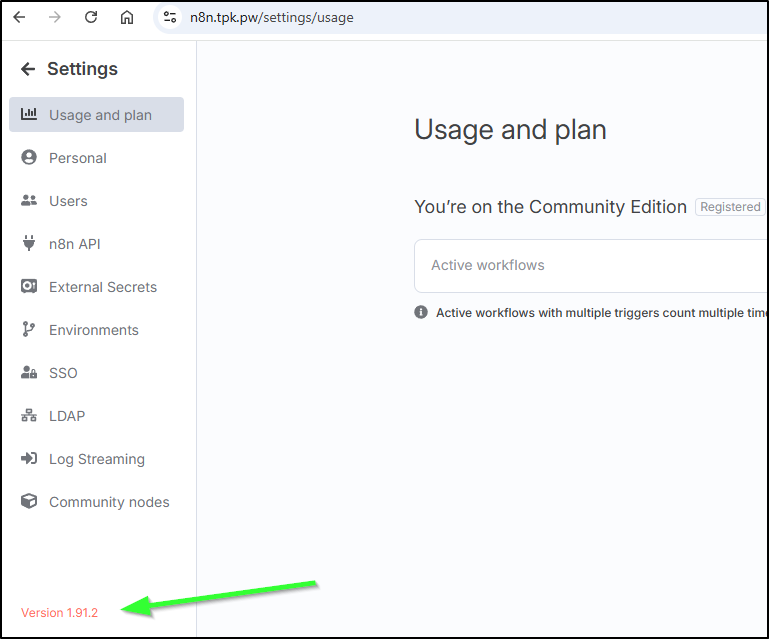

And I can see 1.91.3 was released two days back

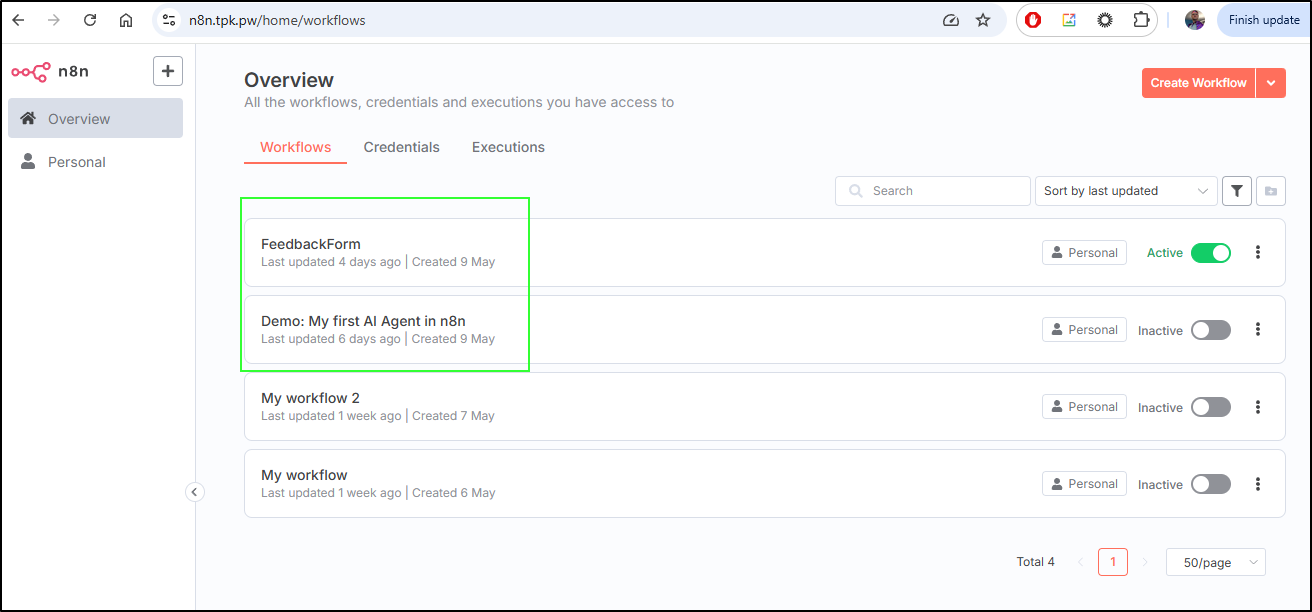

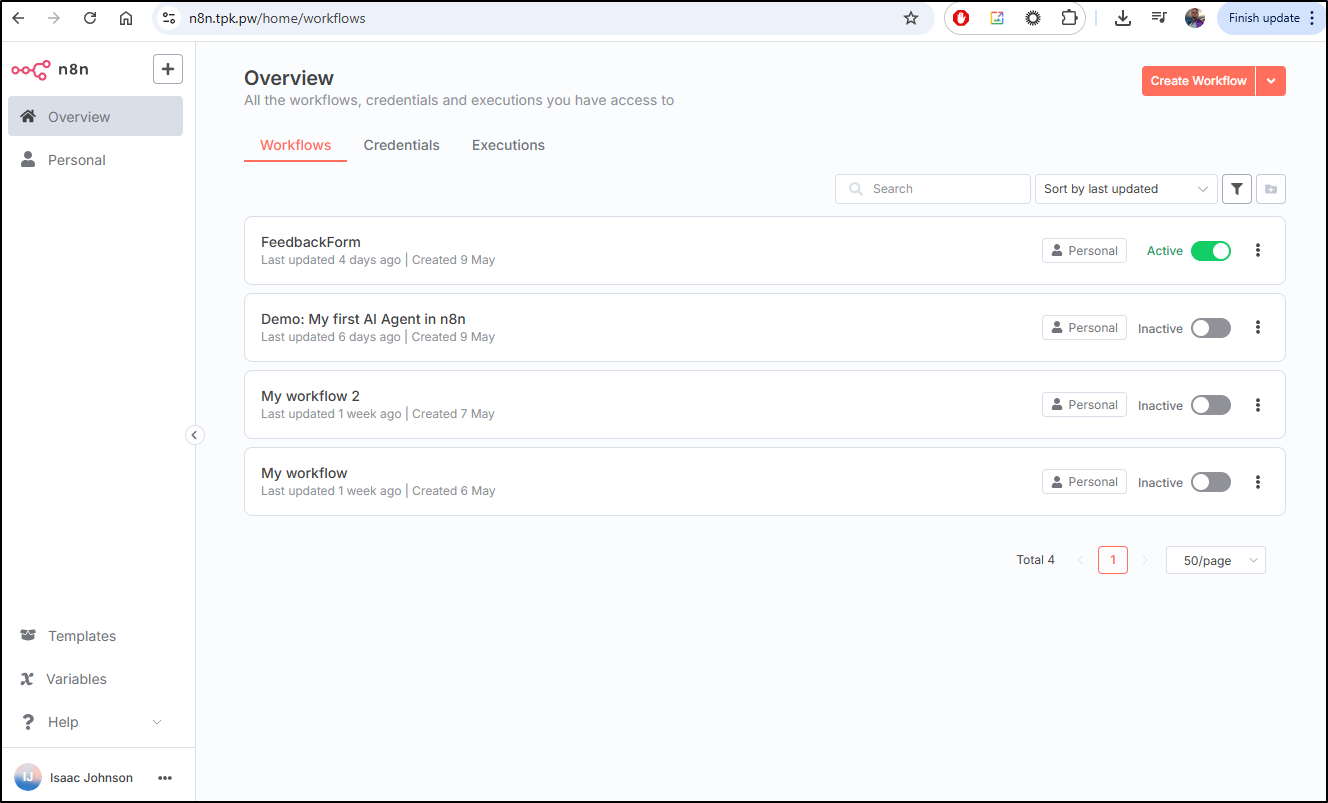

Because we are not using an HA database, rather a local one, it would be smart to back up any existing workflows before we roll out.

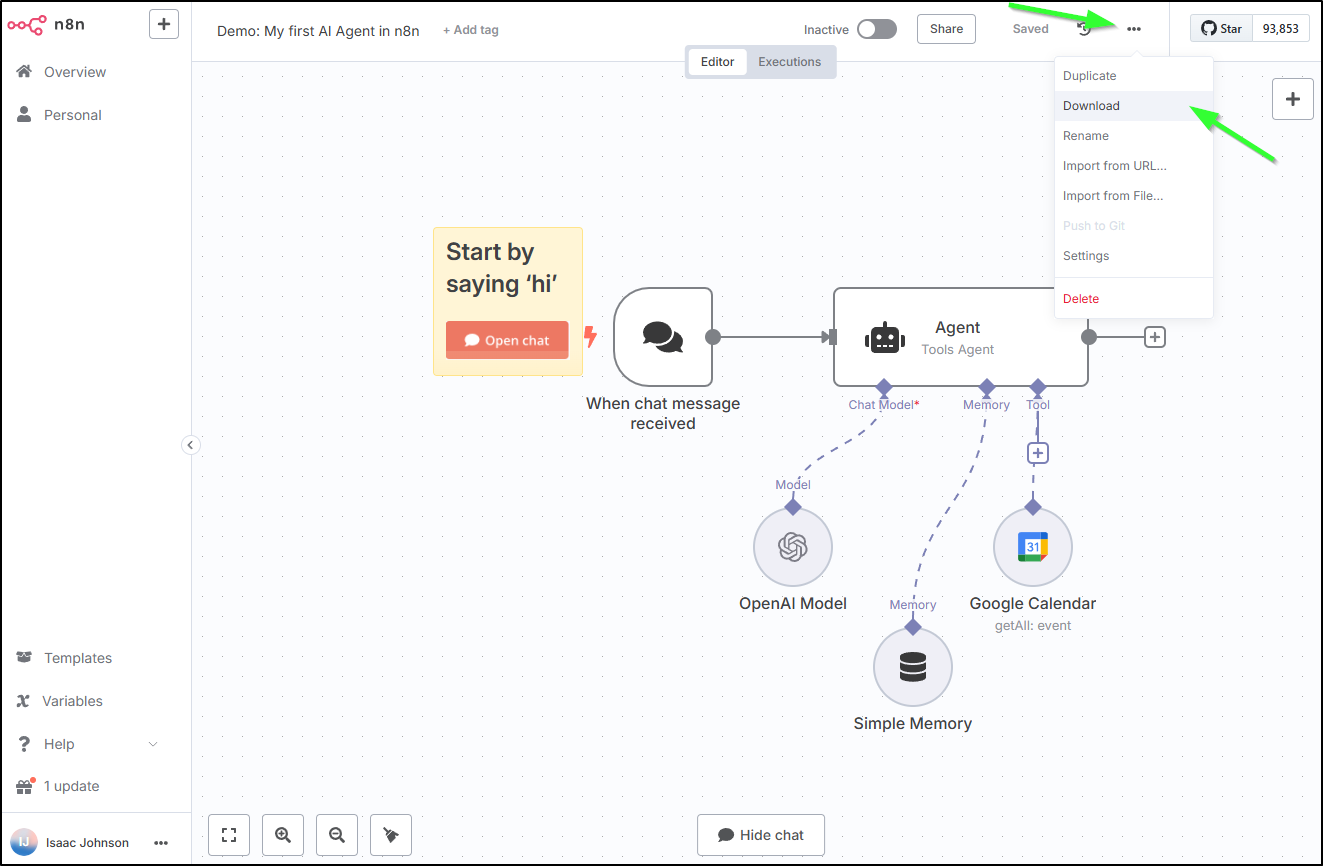

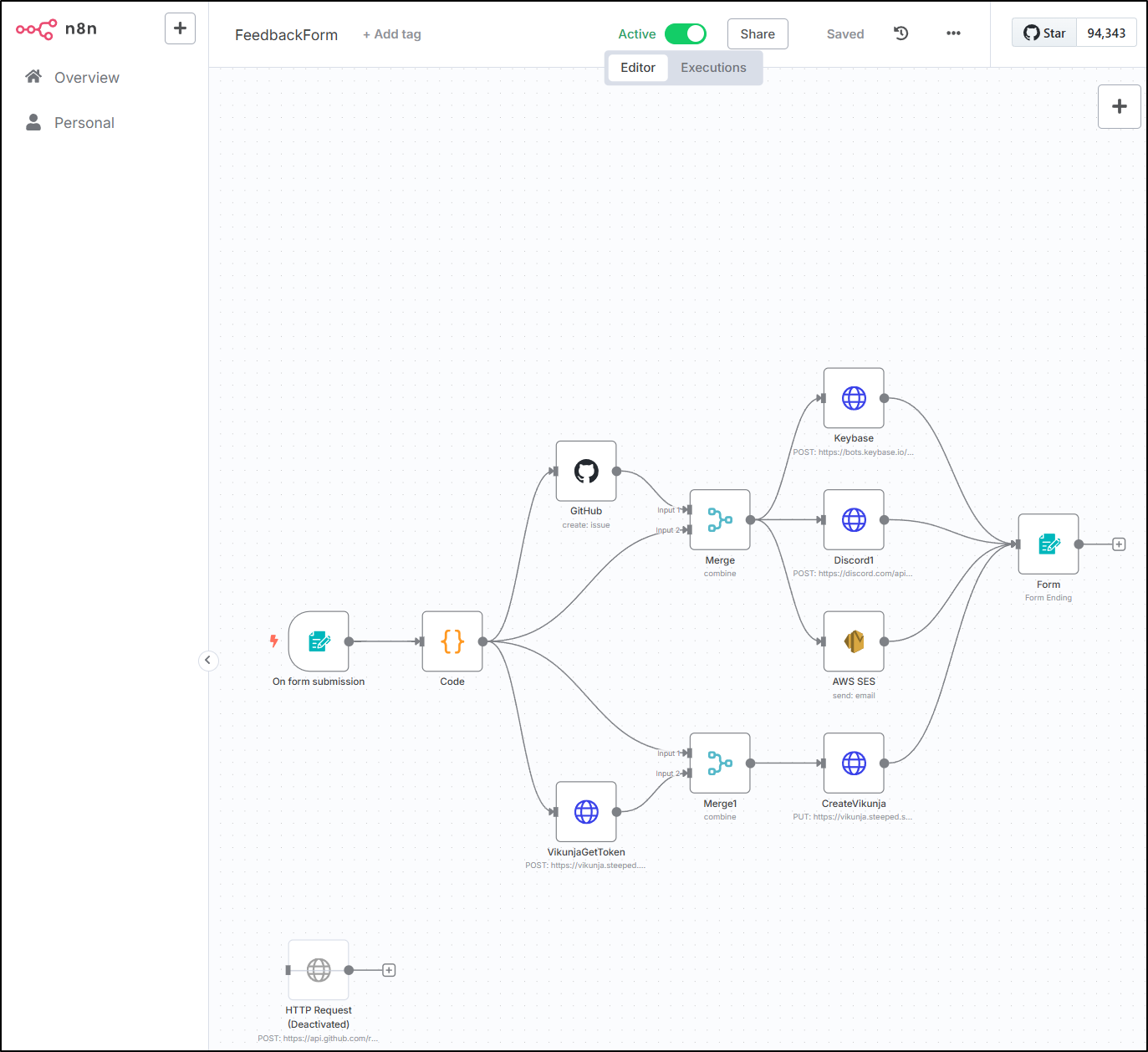

For me, I want to save the top two - my Agentic AI demo and the active Feedback form

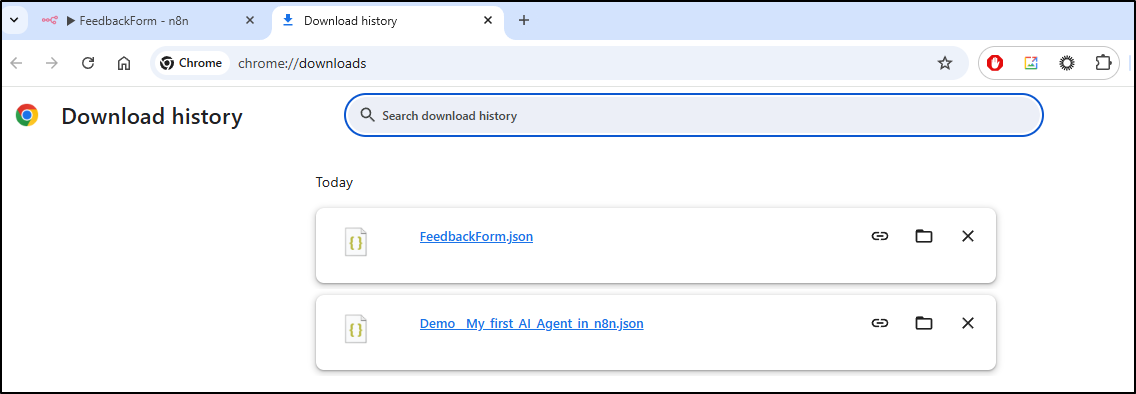

I’ll open both and download the workflow

Now when I upgrade, I at least have confidence that I have a point-in-time backup of the flows

Looking at my history, I can see that I did NOT pin to a particular version

docker run -d --name n8n -p 5678:443 -e N8N_SECURE_COOKIE=false \
-e N8N_HOST=n8n.tpk.pw -e N8N_PROTOCOL=https -e N8N_PORT=443 \
-v /home/builder/n8n:/home/node/.n8n docker.n8n.io/n8nio/n8n

I can see I’m just one point release behind by just going to settings/usage

I can see from GitHub Releases there are no breaking changes.

While I can’t pull an index from their Docker host, I can use Docker Hub to check tags. It’s also mentioned in their Docker docs that we can use latest, next and specific tags:

# Pull latest (stable) version

docker pull docker.n8n.io/n8nio/n8n

# Pull specific version

docker pull docker.n8n.io/n8nio/n8n:1.81.0

# Pull next (unstable) version

docker pull docker.n8n.io/n8nio/n8n:next

Let’s use an explicit tag to avoid ambiguity (in the run command below I spell out :latest).

builder@builder-T100:~$ docker ps | head -n2

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d01742c69b86 docker.n8n.io/n8nio/n8n "tini -- /docker-ent…" 6 days ago Up 6 days 5678/tcp, 0.0.0.0:5678->443/tcp, :::5678->443/tcp n8n

builder@builder-T100:~$ docker stop n8n

n8n

builder@builder-T100:~$ docker pull docker.n8n.io/n8nio/n8n

Using default tag: latest

latest: Pulling from n8nio/n8n

f18232174bc9: Already exists

8cc209e5911c: Already exists

d7a069a788e0: Already exists

42ec265e2954: Already exists

c8212b925ea8: Already exists

4f4fb700ef54: Already exists

8407fa236366: Pull complete

a85740143146: Pull complete

3d840b6f91fa: Pull complete

90ea31d66485: Pull complete

a9ad41dbf2d3: Pull complete

Digest: sha256:f8fd85104e5a0cccb0d745536eeed64739c28239e6bfc2d0479380f31c394506

Status: Downloaded newer image for docker.n8n.io/n8nio/n8n:latest

docker.n8n.io/n8nio/n8n:latest

builder@builder-T100:~$ docker rm n8n

n8n

builder@builder-T100:~$ docker run -d --name n8n -p 5678:443 -e N8N_SECURE_COOKIE=false -e N8N_HOST=n8n.tpk.pw -e N8N_PROTOCOL=https -e N8N_PORT=443 -v /home/builder/n8n:/home/node/.n8n docker.n8n.io/n8nio/n8n:latest

140fec5b53b8f9038b36a22a3181bca7d9cfdc147ced53aff2d5c1ff4f86962b

Thankfully it came back without issue

Challenges

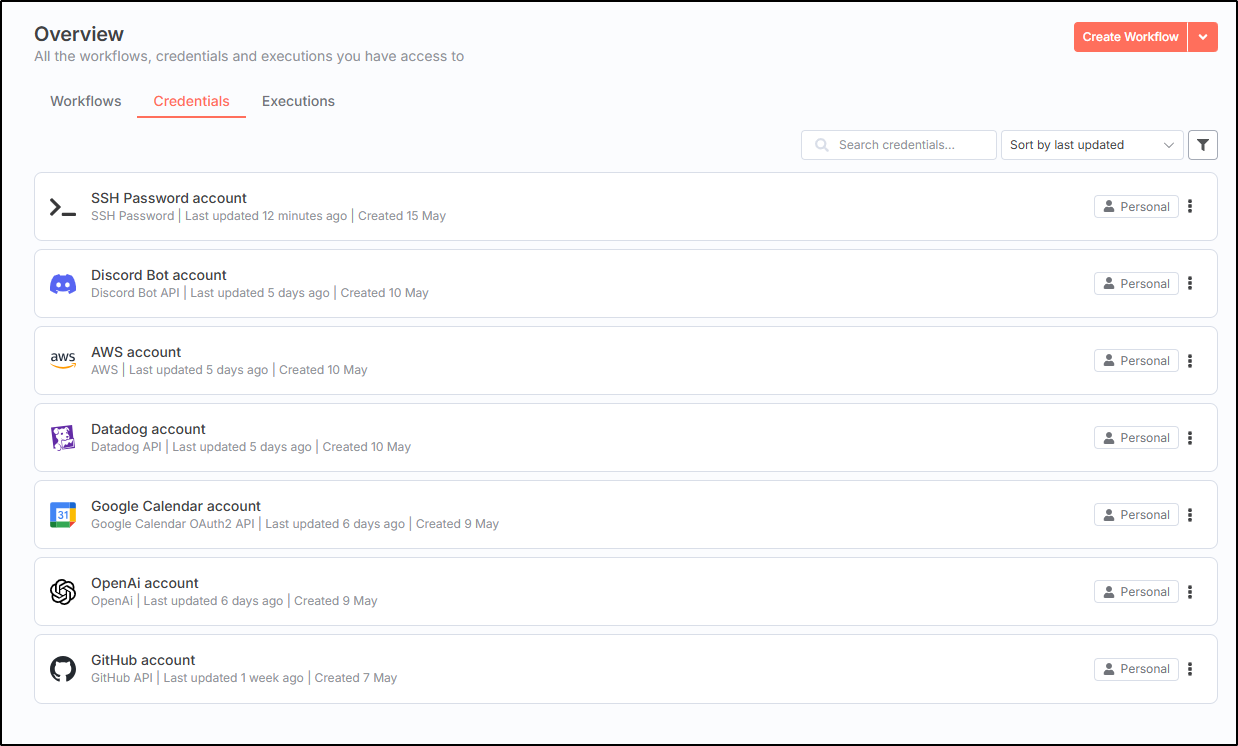

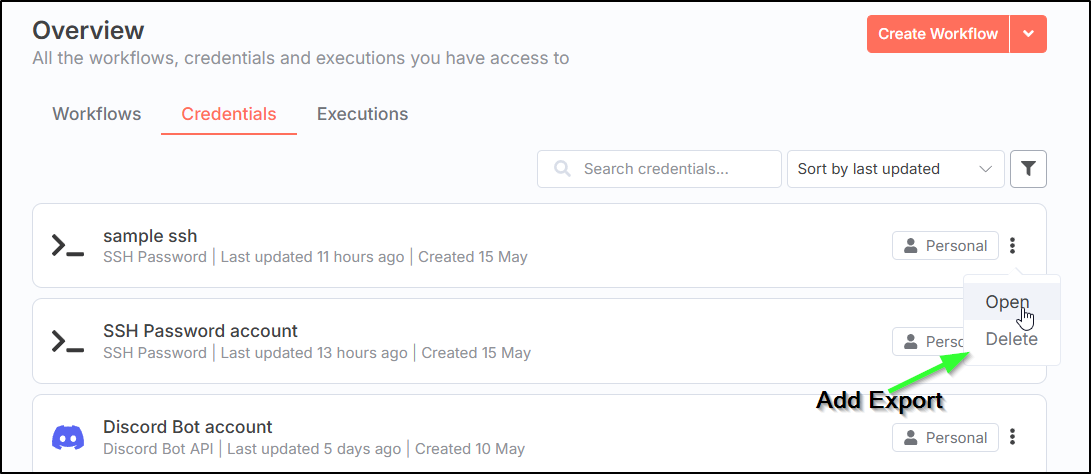

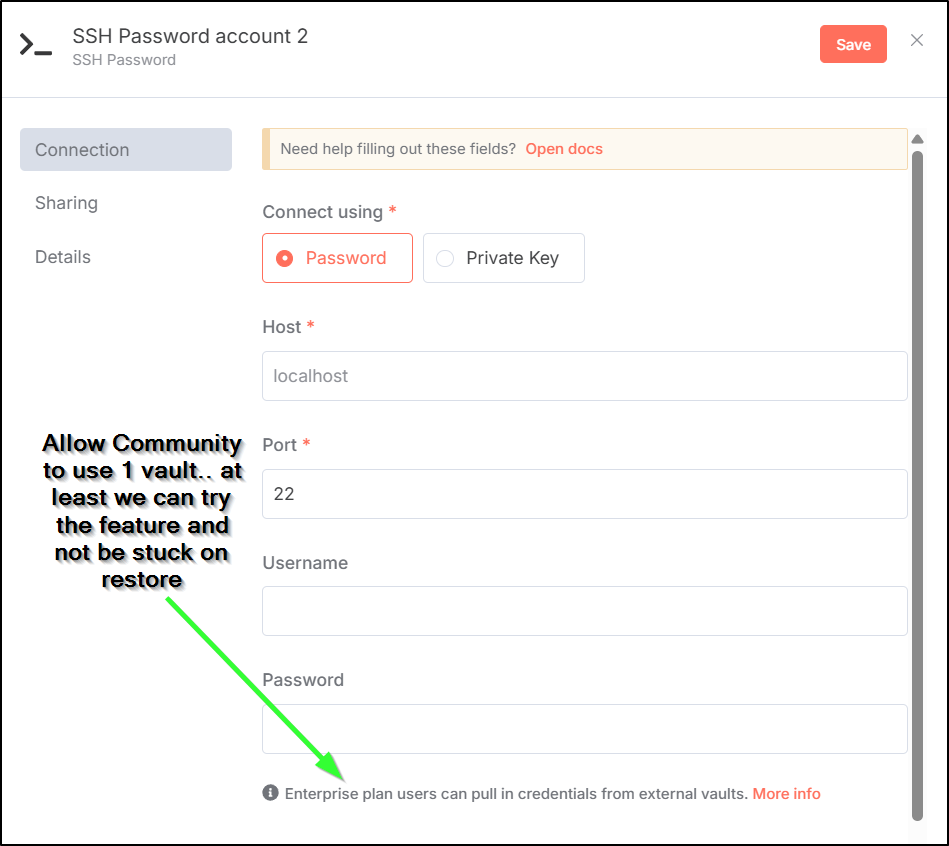

When we add credentials, they are shown in the Credentials page

I can see some details but not the passwords

However, I could tgz up the mounted volume and store it on my NAS mount

builder@builder-T100:~/n8n$ ls

binaryData config crash.journal database.sqlite git n8nEventLog-1.log n8nEventLog-2.log n8nEventLog-3.log n8nEventLog.log ssh

I can then make a point in time backup

builder@builder-T100:~$ sudo tar -czvf "/mnt/linuxbackups/n8n_$(date +%Y-%m-%d_%H-%M-%S).tar.gz" /home/builder/n8n/

tar: Removing leading `/' from member names

/home/builder/n8n/

/home/builder/n8n/binaryData/

/home/builder/n8n/crash.journal

/home/builder/n8n/n8nEventLog-2.log

/home/builder/n8n/n8nEventLog-3.log

/home/builder/n8n/n8nEventLog.log

/home/builder/n8n/git/

/home/builder/n8n/config

/home/builder/n8n/database.sqlite

/home/builder/n8n/ssh/

/home/builder/n8n/n8nEventLog-1.log

It is pretty small in space

$ sudo ls -lh /mnt/linuxbackups/

total 1.9G

-rw-r--r-- 1 1024 users 1.9G Nov 30 10:32 myghrunner-1.1.16.tar.gz

-rwxrwxrwx 1 1024 users 121K May 15 20:11 n8n_2025-05-15_20-11-55.tar.gz

-rwxrwxrwx 1 1024 users 15 Sep 6 2024 testfile.txt

API

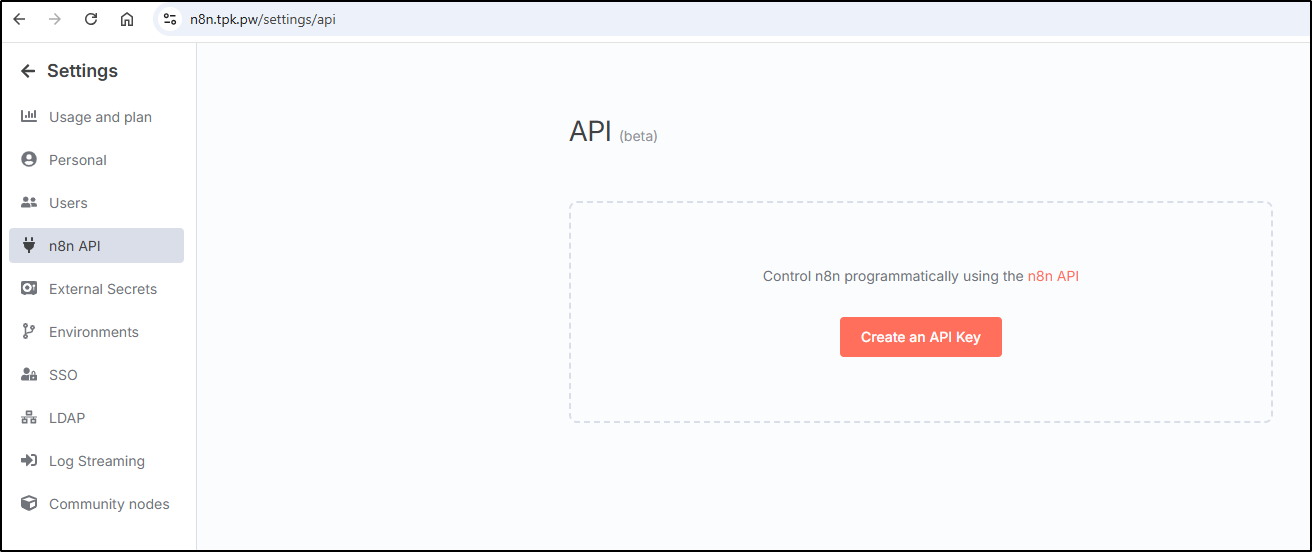

To use the API key, you can start by going to “n8n API” under settings and create a key

The API key, by default, has all scopes. But they locked changing that behind a paywall (boo!)

We can use the API key in a curl to get users

$ curl -X GET -H "X-N8N-API-KEY: eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJzdWIiOiI1MjQ5YzEzYi00NDY5LTRiN2EtOTc0OS03MDM2MmUwYzQ0ODQiLCJpc3MiOiJuOG4iLCJhdWQiOiJwdWJsaWMtYXBpIiwiaWF0IjoxNzQ3MzU4NDc0LCJleHAiOjE3NDk4NzM2MDB9.h09fj3eefx0M89TaZtW5jDNwVRLMAYZ5DF0yP2UTaJs" https://n8n.tpk.pw/api/v1/users/

{"data":[{"id":"5249c13b-4469-4b7a-9749-70362e0c4484","email":"isaac.johnson@gmail.com","firstName":"Isaac","lastName":"Johnson","createdAt":"2025-05-07T00:02:54.673Z","updatedAt":"2025-05-10T12:36:55.505Z","isPending":false}],"nextCursor":null}

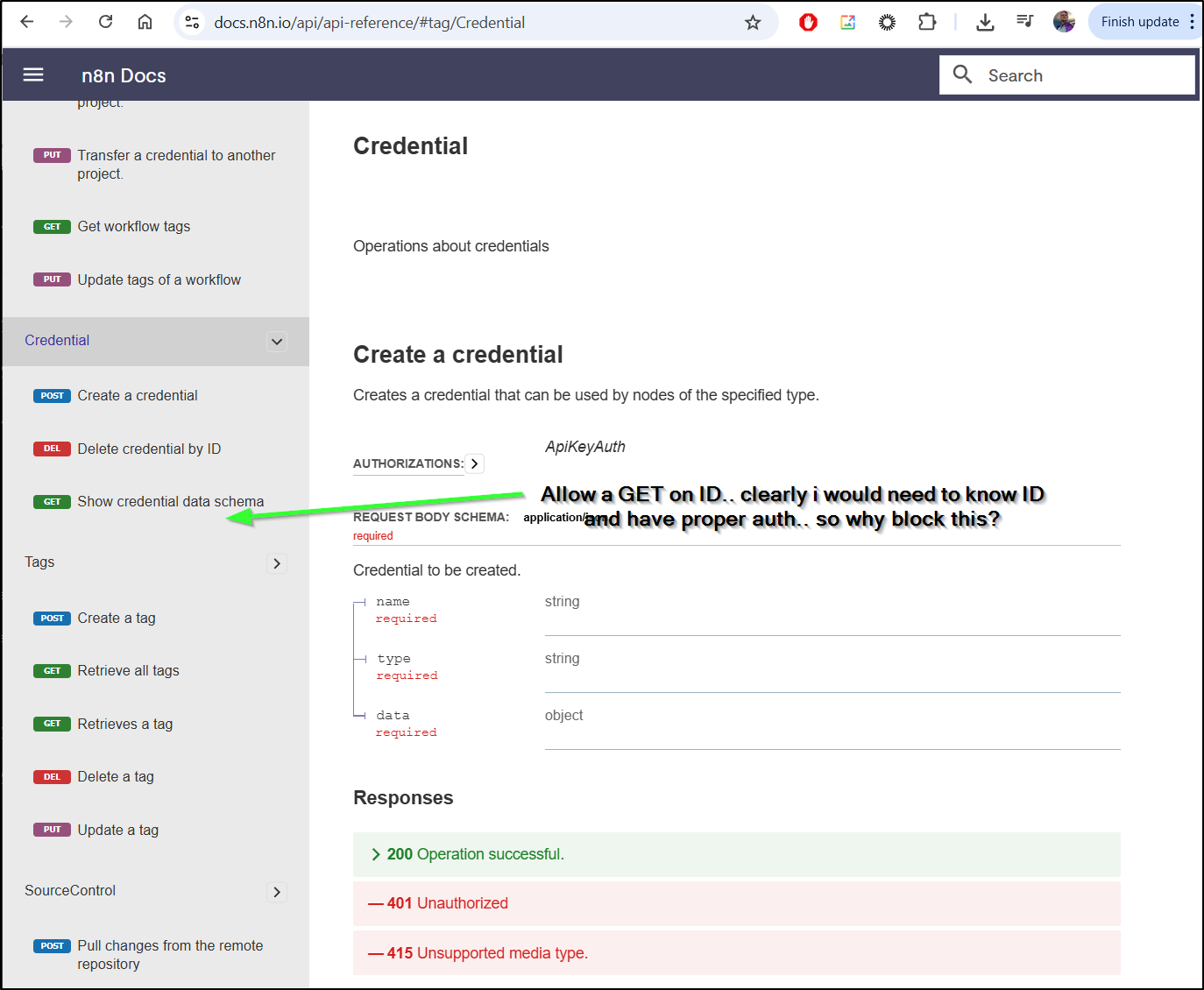

Going back to the API reference, I was hoping I might get my credentials out by the REST API, but alas they don’t allow that

$ curl -X GET -H "X-N8N-API-KEY: eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJzdWIiOiI1MjQ5YzEzYi00NDY5LTRiN2EtOTc0OS03MDM2MmUwYzQ0ODQiLCJpc3MiOiJuOG4iLCJhdWQiOiJwdWJsaWMtYXBpIiwiaWF0IjoxNzQ3MzU4NDc0LCJleHAiOjE3NDk4NzM2MDB9.h09fj3eefx0M89TaZtW5jDNwVRLMAYZ5DF0yP2UTaJs" https://n8n.tpk.pw/api/v1/credentials/

{"message":"GET method not allowed"}

$ curl -X GET -H "X-N8N-API-KEY: eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJzdWIiOiI1MjQ5YzEzYi00NDY5LTRiN2EtOTc0OS03MDM2MmUwYzQ0ODQiLCJpc3MiOiJuOG4iLCJhdWQiOiJwdWJsaWMtYXBpIiwiaWF0IjoxNzQ3MzU4NDc0LCJleHAiOjE3NDk4NzM2MDB9.h09fj3eefx0M89TaZtW5jDNwVRLMAYZ5DF0yP2UTaJs" https://n8n.tpk.pw/api/v1/credentials/bwI56CCWPWYvBWmA

{"message":"GET method not allowed"}

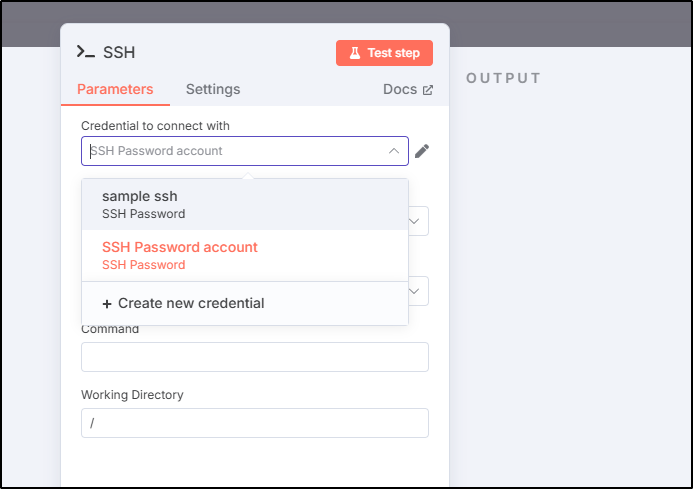

We can get the schema, but you have to guess what the credential type name is, as I see nowhere that it is defined

$ curl -X GET -H "X-N8N-API-KEY: eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJzdWIiOiI1MjQ5YzEzYi00NDY5LTRiN2EtOTc0OS03MDM2MmUwYzQ0ODQiLCJpc3MiOiJuOG4iLCJhdWQiOiJwdWJsaWMtYXBpIiwiaWF0IjoxNzQ3MzU4NDc0LCJleHAiOjE3NDk4NzM2MDB9.h09fj3eefx0M89TaZtW5jDNwVRLMAYZ5DF0yP2UTaJs" https://n8n.tpk.pw/api/v1/credentials/schema/sshPassword

{"additionalProperties":false,"type":"object","properties":{"host":{"type":"string"},"port":{"type":"number"},"username":{"type":"string"},"password":{"type":"string"}},"required":["host","port"]}

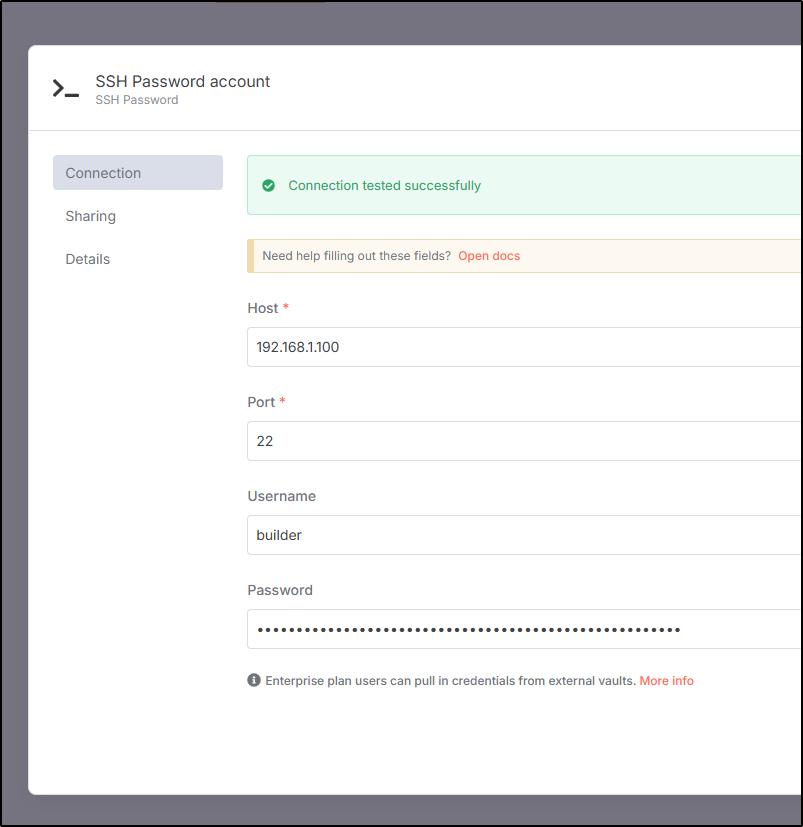

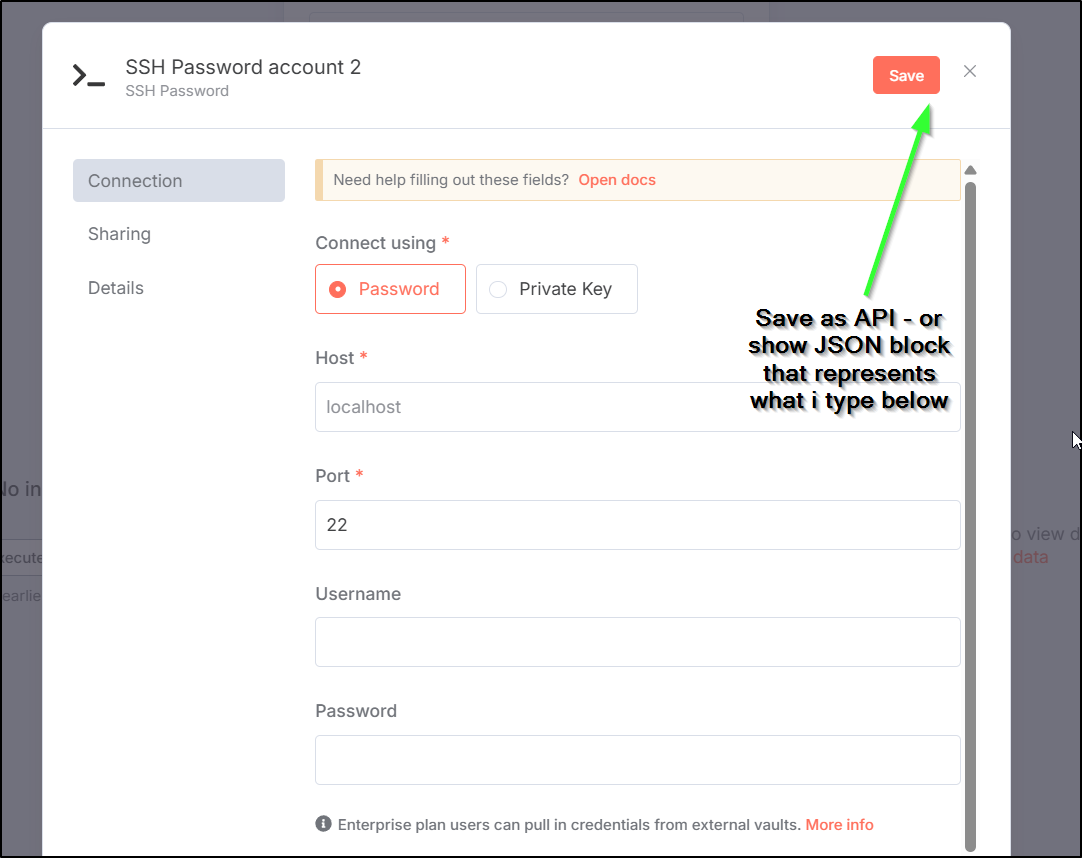

Let’s use the REST API to create an SSH credential.

I’ll first get the JSON set aside

$ curl -X GET -H "X-N8N-API-KEY: eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJzdWIiOiI1MjQ5YzEzYi00NDY5LTRiN2EtOTc0OS03MDM2MmUwYzQ0ODQiLCJpc3MiOiJuOG4iLCJhdWQiOiJwdWJsaWMtYXBpIiwiaWF0IjoxNzQ3MzU4NDc0LCJleHAiOjE3NDk4NzM2MDB9.h09fj3eefx0M89TaZtW5jDNwVRLMAYZ5DF0yP2UTaJs" https://n8n.tpk.pw/api/v1/credentials/schema/sshPassword | jq > myssh.json

I’ll use the hints to build the payload

$ cat ./myssh.json

{

"name": "sample ssh",

"type": "sshPassword",

"data": {

"host": "192.168.1.100",

"port": 22,

"username": "builder",

"password": "myuserpassword1234"

}

}
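Since the schema sets `additionalProperties` to false and lists required fields, a quick local check before posting can catch a typo in the payload. This is only a sketch: `checkCredentialData` is a hypothetical helper, and it only understands the simple string, number and boolean types seen in the schema above, not full JSON Schema.

```javascript
// Hypothetical pre-flight check of a credential "data" object against the schema from the API.
const PRIMITIVE_TYPES = ['string', 'number', 'boolean'];

function checkCredentialData(schema, data) {
  const properties = schema.properties || {};
  const allowed = Object.keys(properties);
  // required fields that the payload does not supply
  const missing = (schema.required || []).filter((key) => !(key in data));
  // keys the schema would reject because additionalProperties is false
  const unknown = schema.additionalProperties === false
    ? Object.keys(data).filter((key) => !allowed.includes(key))
    : [];
  // supplied values whose JavaScript type disagrees with a primitive schema type
  const wrongType = allowed.filter((key) =>
    key in data &&
    PRIMITIVE_TYPES.includes(properties[key].type) &&
    typeof data[key] !== properties[key].type
  );
  return { missing, unknown, wrongType };
}

// Schema as returned by /credentials/schema/sshPassword earlier in this post.
const sshPasswordSchema = {
  additionalProperties: false,
  type: 'object',
  properties: {
    host: { type: 'string' },
    port: { type: 'number' },
    username: { type: 'string' },
    password: { type: 'string' },
  },
  required: ['host', 'port'],
};

// A deliberately sloppy payload: port as a string, and "user" instead of "username".
console.log(checkCredentialData(sshPasswordSchema, { host: '192.168.1.100', port: '22', user: 'builder' }));
// reports port as the wrong type and user as an unknown key
```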

I can now POST it to the credentials endpoint

$ curl -X POST -H "Content-Type: application/json" -H "X-N8N-API-KEY: eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJzdWIiOiI1MjQ5YzEzYi00NDY5LTRiN2EtOTc0OS03MDM2MmUwYzQ0ODQiLCJpc3MiOiJuOG4iLCJhdWQiOiJwdWJsaWMtYXBpIiwiaWF0IjoxNzQ3MzU4NDc0LCJleHAiOjE3NDk4NzM2MDB9.h09fj3eefx0M89TaZtW5jDNwVRLMAYZ5DF0yP2UTaJs" https://n8n.tpk.pw/api/v1/credentials/ -d @myssh.json

{"name":"sample ssh","type":"sshPassword","id":"cfpXXMrPJqSfkMDQ","createdAt":"2025-05-16T01:38:35.462Z","updatedAt":"2025-05-16T01:38:35.462Z","isManaged":false}

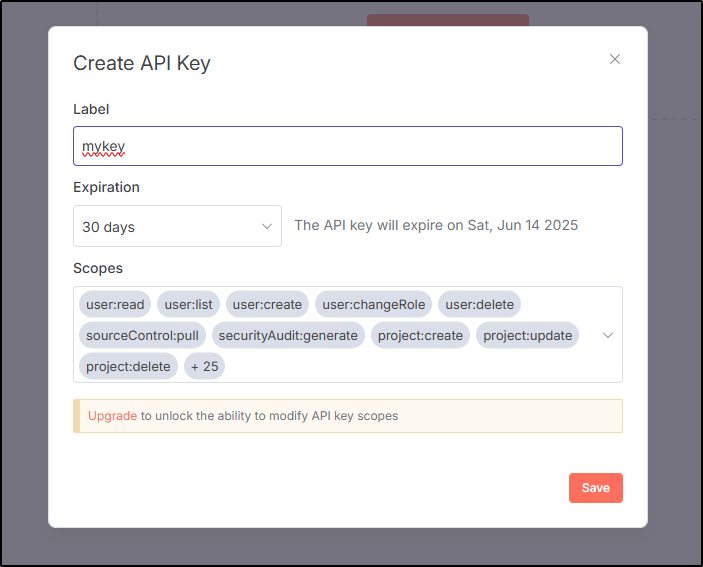

I can now use the new credential in an SSH step

I found the endpoints for all the Schemas I used:

https://n8n.tpk.pw/api/v1/credentials/schema/discordBotApi

https://n8n.tpk.pw/api/v1/credentials/schema/aws

https://n8n.tpk.pw/api/v1/credentials/schema/datadogApi

https://n8n.tpk.pw/api/v1/credentials/schema/googleCalendarOAuth2Api

https://n8n.tpk.pw/api/v1/credentials/schema/githubApi

https://n8n.tpk.pw/api/v1/credentials/schema/openAiApi

I had some trouble figuring out the OpenAI schema name, but later I found this GitHub page that listed them all.

The idea here is that we may want a Bash script that recreates all our credentials, so that a proper restore of our host would mean recreating the keys via REST and then importing the workflows from their JSON backups.

The way that would work is that we would create a credential, e.g.

$ curl -X POST -H "Content-Type: application/json" -H "X-N8N-API-KEY: eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJzdWIiOiI1MjQ5YzEzYi00NDY5LTRiN2EtOTc0OS03MDM2MmUwYzQ0ODQiLCJpc3MiOiJuOG4iLCJhdWQiOiJwdWJsaWMtYXBpIiwiaWF0IjoxNzQ3MzU4NDc0LCJleHAiOjE3NDk4NzM2MDB9.h09fj3eefx0M89TaZtW5jDNwVRLMAYZ5DF0yP2UTaJs" https://n8n.tpk.pw/api/v1/credentials/ -d @mgh.json

{"name":"mygh","type":"githubApi","id":"cfpXXMrPJqSfkMDQ","createdAt":"2025-05-16T01:38:35.462Z","updatedAt":"2025-05-16T01:38:35.462Z","isManaged":false}

I could then use that new ID in the backup JSON when restoring
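That last step is mechanical enough to script. Here is a rough sketch that walks an exported workflow and swaps in new credential IDs, matched by credential name. It assumes the export has the `nodes[].credentials.<type>.{id,name}` shape shown in the workflow JSON earlier; `remapCredentialIds` and the `newIdsByName` map are hypothetical, and the replacement ID below is a made-up placeholder.

```javascript
// Hypothetical helper: return a copy of the workflow with credential IDs replaced.
// newIdsByName maps a credential name (e.g. "sample ssh") to the ID returned by the POST.
function remapCredentialIds(workflow, newIdsByName) {
  const copy = JSON.parse(JSON.stringify(workflow)); // deep copy, leave the backup untouched
  for (const node of copy.nodes || []) {
    for (const credential of Object.values(node.credentials || {})) {
      if (Object.prototype.hasOwnProperty.call(newIdsByName, credential.name)) {
        credential.id = newIdsByName[credential.name];
      }
    }
  }
  return copy;
}

// Trimmed-down version of the SSH workflow export from earlier.
const backup = {
  name: 'My workflow 3',
  nodes: [
    { name: 'Schedule Trigger', type: 'n8n-nodes-base.scheduleTrigger' },
    {
      name: 'SSH',
      type: 'n8n-nodes-base.ssh',
      credentials: { sshPassword: { id: 'cfpXXMrPJqSfkMDQ', name: 'sample ssh' } },
    },
  ],
};

const restored = remapCredentialIds(backup, { 'sample ssh': 'NEW-ID-PLACEHOLDER' });
console.log(restored.nodes[1].credentials.sshPassword.id); // NEW-ID-PLACEHOLDER
```

Matching on name rather than the old ID is a design choice on my part: names are the one thing I control on both the old and the rebuilt instance.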

The Continued Struggle

I am really torn. I like n8n - clearly - I wrote three back-to-back blog posts. It’s really an amazing suite and it can do so many things.

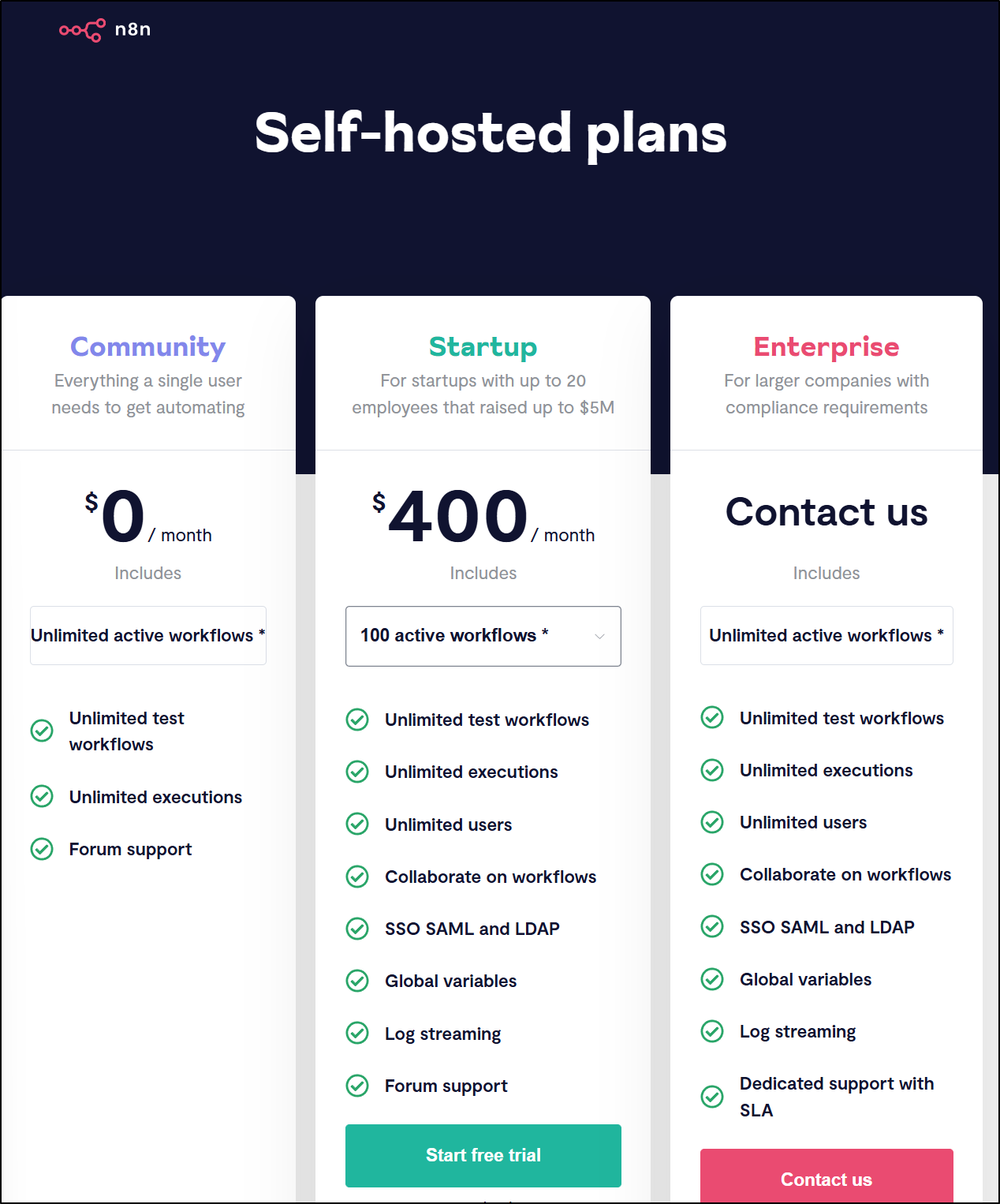

However, I feel there is a problem with their pricing:

There is $0 then $400/mo ($4800/year) for the next step up….

There is this big gap for what I might call the “enthusiast” or “solo” person. I want global variables, I want to share out to a community so I can blog and help ultimately sell their product.

I had this exact same issue with Portainer: I did a setup post and a usage follow-up, then threw it out when the demo license wrapped, simply because too much was behind an expensive paywall.

Then the question is “what would I pay”? I think I might fork over $10/mo or $100 for an annual license. I do think there needs to be a period set on the license (not a one time purchase) just because maintaining that many plugins has to be expensive.

Or, if they consider what people like me do a valuable addition to marketing, then offer a one time purchase for $20 or just expand what is in the Community edition to handle day 2 and I will keep using it (and talking about it).

My Ask

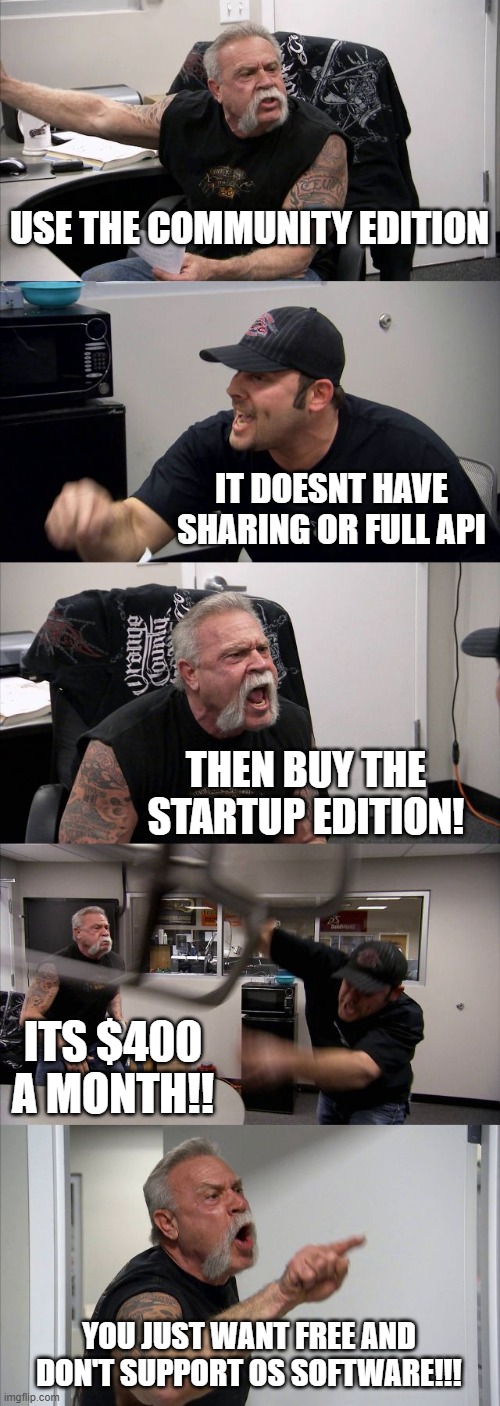

As it stands, it’s just barely over the line for me to stick with, as I can back things up with API + JSON. I do find it incredibly annoying that I have to work backwards through the Credential API by hand. So here would be my top feature request:

Either:

- Add an export

- When creating, allow an export or show the CLI commands

- Allow at least 1 vault usage for community

- Allow GET on credential via API

Thankfully, when I created some tokens for my work I stored them in AKV so I could find and fetch them in a DR situation. But what if I hadn’t? I would need to document how to find, rotate and save as new when restoring a JSON flow. That just seems really cumbersome for something as complicated as my Form flow.

Counting the deactivated node, that would be 6 credentials to find and fix.

Summary

Today we looked at n8n Schedule Trigger flows as well as some ideas on automating server maintenance by sending New Relic and Datadog alerts to an n8n webhook. I had a bit of trouble working out the JavaScript in the Code node, but I think there is a good enough start for someone to work out the rest.

We then tackled upgrades (of the Docker-based setup) as well as some challenges this might create regarding backups. That led us to investigate what we can and cannot do with the API and, basically, there are some real gaps on credentials. I formulated an “Ask” that would really get me to buy in altogether on n8n.

Until there is a way to back up credentials, I would advise using your own secrets management solution for tracking what you used for Credentials in the case of DR. Obviously there is a perfectly suitable SaaS solution from n8n but I don’t think they have a great pricing model (goes from free to too spendy for a sole proprietor). I’m hopeful they address these concerns in the future.

There are also some pretty good n8n tutorials on YouTube. If you want to get more into the Agentic AI, check out this AI Foundations tutorial.