Published: Apr 4, 2025 by Isaac Johnson

Something I am only now realizing, having gone back to the office, is that when things go down at home I cannot just get up and bounce a box.

Most things I can manage remotely, but I rely heavily on AWX in Kubernetes to manage things. I also use codeserver and a linuxserver container, both in Kubernetes, as my remote management plan for shelling into systems.

I also usually have two clusters, so if one goes bad I still have a fallback. Finally, most of my work is spread between a production Kubernetes cluster, a Dockerhost and a couple of Synology NASes.

Primary Cluster Outage

When my primary cluster is out and my secondary is out of commission, I’m left in a rather stuck position.

I tried to access it remotely, but found my external endpoint was DOA

$ kubectl get nodes

E0402 07:03:26.662052 225181 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://75.73.224.240:25568/api?timeout=32s\": dial tcp 75.73.224.240:25568: i/o timeout"

E0402 07:03:56.663832 225181 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://75.73.224.240:25568/api?timeout=32s\": dial tcp 75.73.224.240:25568: i/o timeout"

E0402 07:04:26.671262 225181 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://75.73.224.240:25568/api?timeout=32s\": dial tcp 75.73.224.240:25568: i/o timeout"

E0402 07:04:56.673565 225181 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://75.73.224.240:25568/api?timeout=32s\": dial tcp 75.73.224.240:25568: i/o timeout"

E0402 07:05:26.677447 225181 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://75.73.224.240:25568/api?timeout=32s\": dial tcp 75.73.224.240:25568: i/o timeout"

Unable to connect to the server: dial tcp 75.73.224.240:25568: i/o timeout

My first thought was “did my external IP change”? My ISP bumps me from time to time, usually every couple years (which is manageable).

I usually use the AWX instance to do a dig (or I’m home and can just use whatismyip.com). Obviously, I cannot do that now when I’m at work far away.

But it dawned on me I had an AzDO agent running on-prem still.

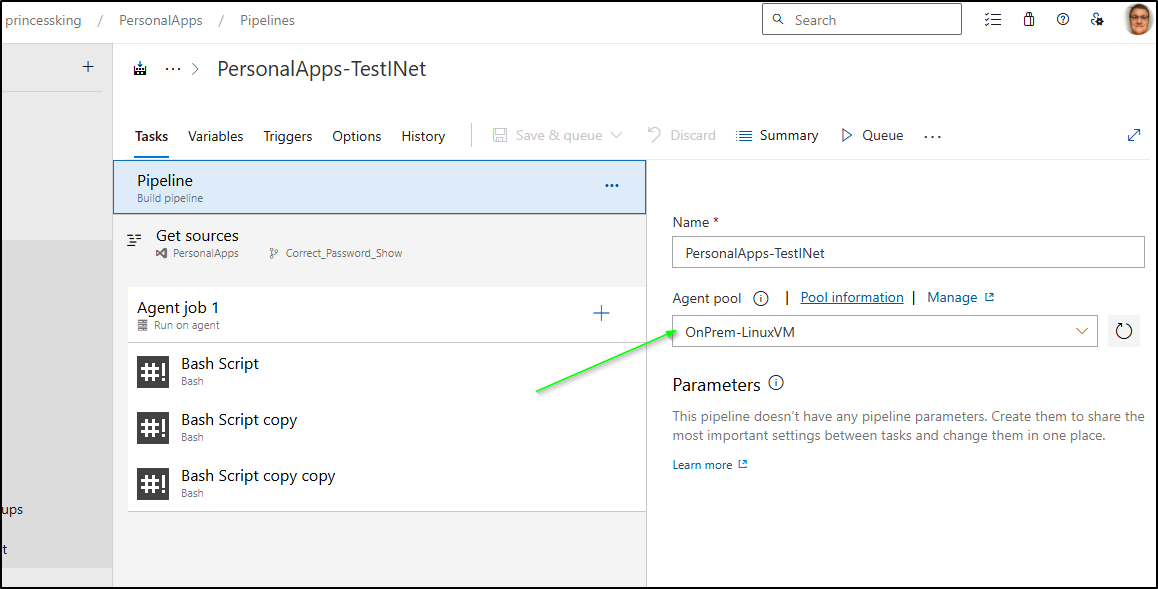

I whipped up a quick classic UI job to use the agent

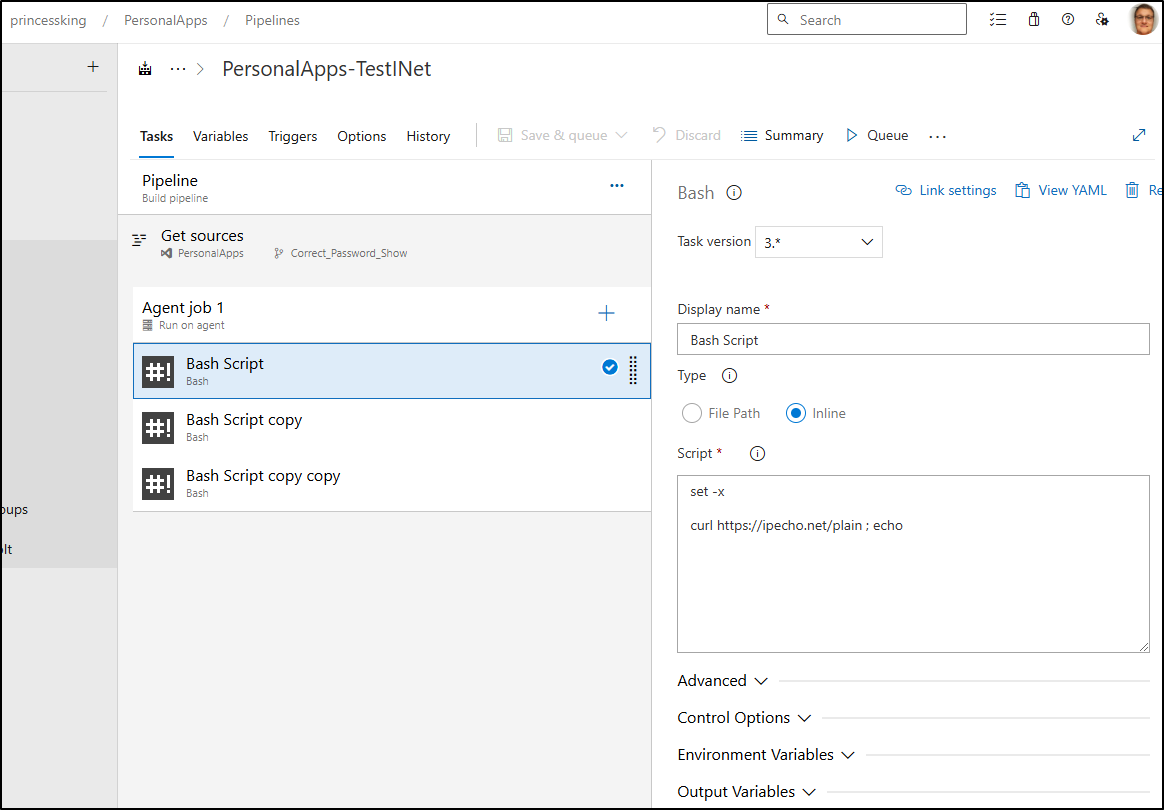

and look up IPs

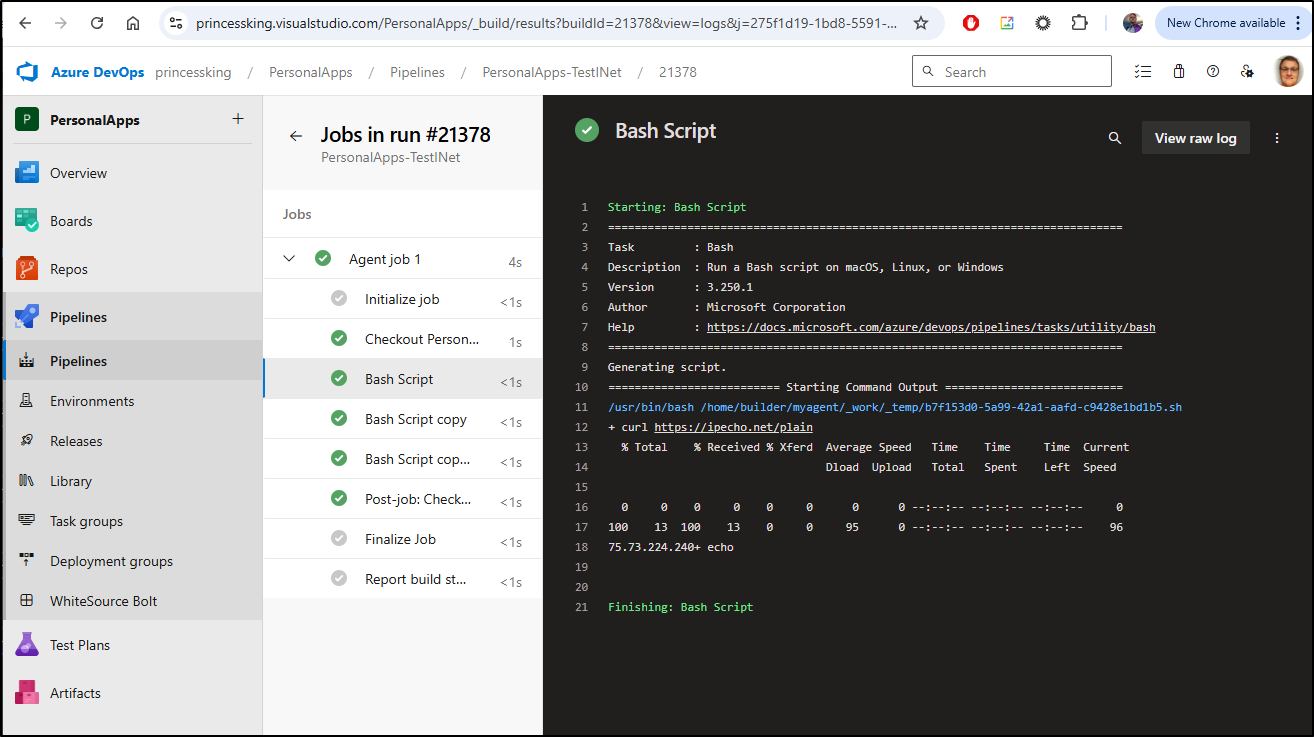

I then ran it and verified that, indeed, my IP was still correct
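For reference, here is a rough sketch of the kind of check that agent job performs. It assumes `dig` and `kubectl` are available on the on-prem machine and that the OpenDNS resolver is reachable; the function names are my own for illustration, and the URL parsing only handles IPv4 addresses or hostnames.

```shell
#!/usr/bin/env bash
# Sketch: compare the address my kubeconfig points at with the
# public IP the home network currently has. Function names are illustrative.

# Strip scheme, path and port from a URL like https://1.2.3.4:25568/api
host_from_url() {
  local url="$1"
  url="${url#*://}"            # drop the scheme
  url="${url%%/*}"             # drop any path or query
  printf '%s\n' "${url%%:*}"   # drop the port (IPv4/hostname only)
}

# Ask OpenDNS which address our traffic appears to come from.
# This must run on a machine inside the home network.
current_public_ip() {
  dig +short myip.opendns.com @resolver1.opendns.com
}

check_external_ip() {
  local server expected actual
  server="$(kubectl config view --minify -o jsonpath='{.clusters[0].cluster.server}')"
  expected="$(host_from_url "$server")"
  actual="$(current_public_ip)"
  if [ "$expected" = "$actual" ]; then
    echo "External IP unchanged: $actual"
  else
    echo "External IP changed: kubeconfig has $expected, network reports $actual"
    return 1
  fi
}
```

Calling `check_external_ip` from a script step on the on-prem agent would then pass or fail the job depending on whether the address moved.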

Now that I’m home

It had a hard crash, a kernel panic. There was nothing I can imagine I could have done remotely here.

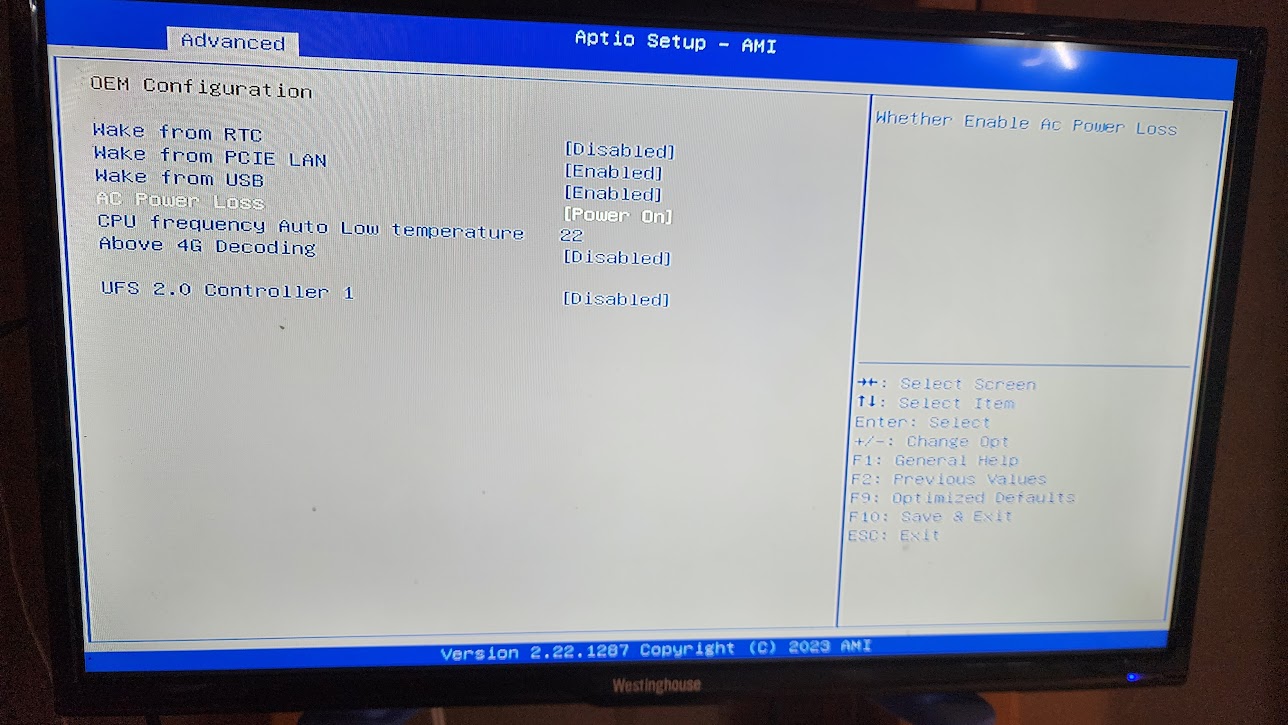

When I bounced it, I set the BIOS to wake on AC power. I’ll want to test that later.

Fully powered up, the IP changed on me, which is never fun.

So even if I update my kubeconfig, it’s clear the replica nodes cannot connect to the control-plane/master

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

hp-hp-elitebook-850-g2 NotReady <none> 393d v1.26.14+k3s1

builder-hp-elitebook-850-g1 NotReady <none> 397d v1.26.14+k3s1

builder-hp-elitebook-850-g2 NotReady <none> 254d v1.26.14+k3s1

builder-hp-elitebook-745-g5 Ready control-plane,master 397d v1.26.14+k3s1

I’m pretty sure I just need to get on each of the nodes and update the K3S_URL

builder@builder-HP-EliteBook-850-G2:~$ sudo cat /etc/systemd/system/k3s-agent.service.env

K3S_TOKEN='K10ef48ebf2c2adb1da135c5fd0ad3fa4966ac35da5d6d941de7ecaa52d48993605::server:ed854070980c4560bbba49f8217363d4'

K3S_URL='https://192.168.1.33:6443'

builder@builder-HP-EliteBook-850-G2:~$ sudo vi /etc/systemd/system/k3s-agent.service.env

builder@builder-HP-EliteBook-850-G2:~$ sudo cat /etc/systemd/system/k3s-agent.service.env

K3S_TOKEN='K10ef48ebf2c2adb1da135c5fd0ad3fa4966ac35da5d6d941de7ecaa52d48993605::server:ed854070980c4560bbba49f8217363d4'

K3S_URL='https://192.168.1.34:6443'

Then restart the service

builder@builder-HP-EliteBook-850-G2:~$ sudo systemctl stop k3s-agent.service

builder@builder-HP-EliteBook-850-G2:~$ sudo systemctl start k3s-agent.service
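Since the same edit has to be repeated on every agent node, the manual `vi` step could be scripted. This is a minimal sketch, assuming the env file path shown above and the default 6443 port; the function name `update_k3s_url` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: point a k3s agent at a new server address.
# The default path matches the env file shown above; the function name is illustrative.
update_k3s_url() {
  local new_ip="$1"
  local env_file="${2:-/etc/systemd/system/k3s-agent.service.env}"
  # Replace the whole K3S_URL line and keep a .bak copy of the original file
  sed -i.bak "s|^K3S_URL=.*|K3S_URL='https://${new_ip}:6443'|" "$env_file"
}

# On each agent node, as root:
#   update_k3s_url 192.168.1.34
#   systemctl restart k3s-agent.service
```

The K3S_TOKEN line is left untouched, so only the server address changes.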

I can now see that it is syncing

builder@DESKTOP-QADGF36:~$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

builder-hp-elitebook-745-g5 Ready control-plane,master 397d v1.26.14+k3s1

builder-hp-elitebook-850-g2 Ready <none> 254d v1.26.14+k3s1

builder-hp-elitebook-850-g1 NotReady <none> 397d v1.26.14+k3s1

hp-hp-elitebook-850-g2 NotReady <none> 393d v1.26.14+k3s1

Once I updated the other two, the cluster was alive again

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

builder-hp-elitebook-850-g1 Ready <none> 397d v1.26.14+k3s1

builder-hp-elitebook-850-g2 Ready <none> 254d v1.26.14+k3s1

hp-hp-elitebook-850-g2 Ready <none> 393d v1.26.14+k3s1

builder-hp-elitebook-745-g5 Ready control-plane,master 397d v1.26.14+k3s1

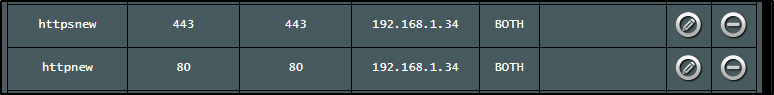

I also needed to fix the routing

Lastly, a quick check shows my ingress is working

Via NAS

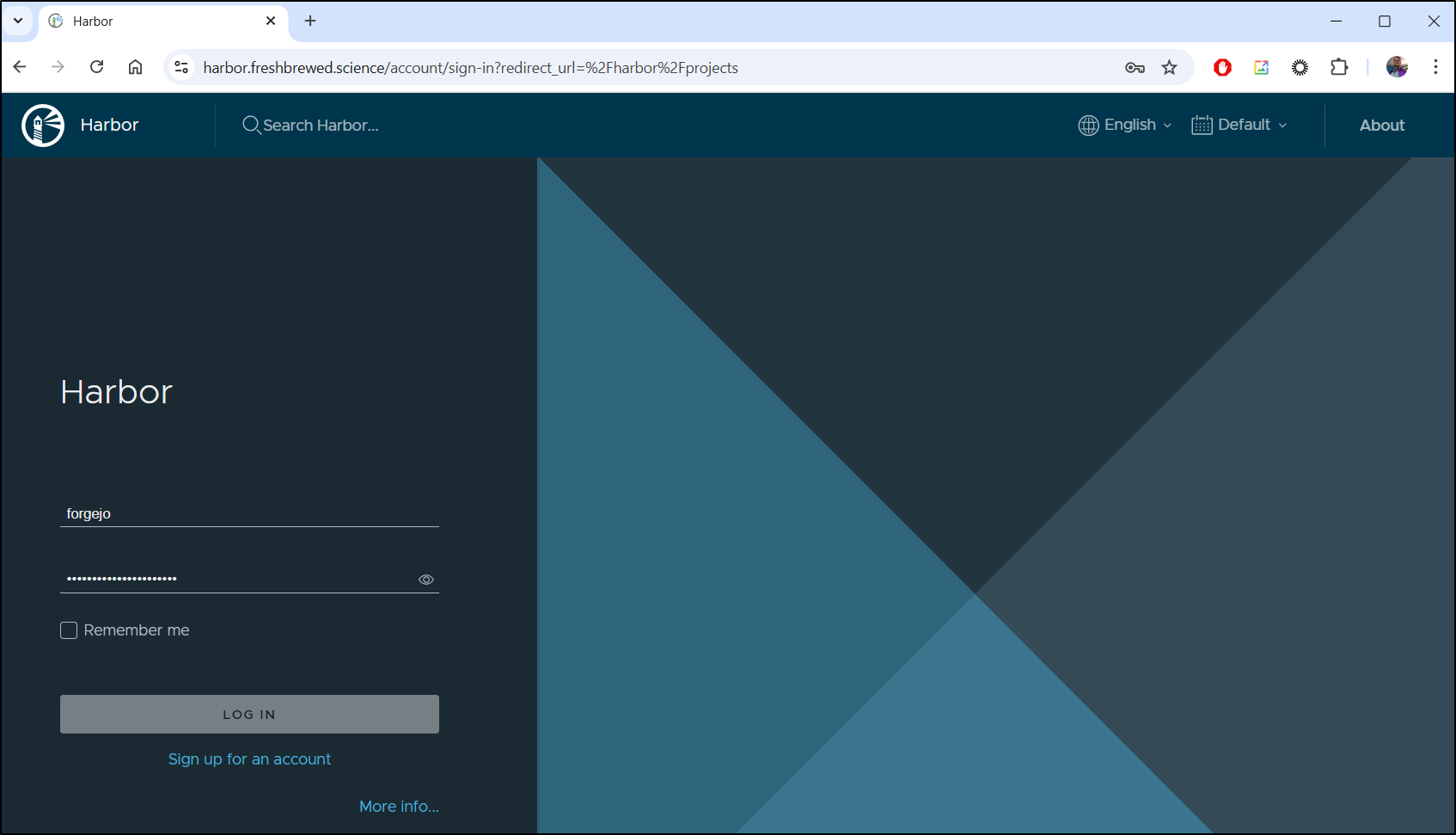

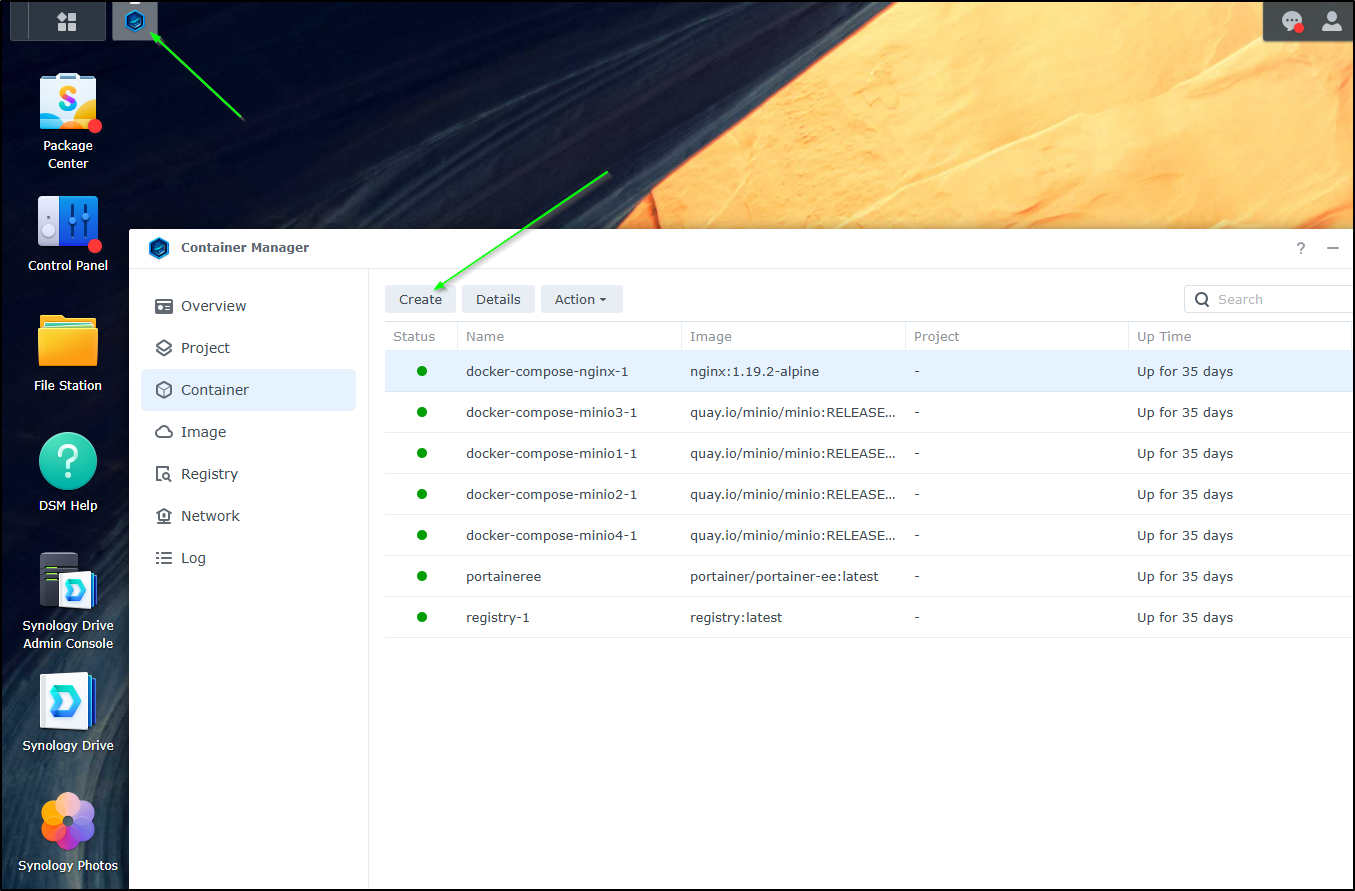

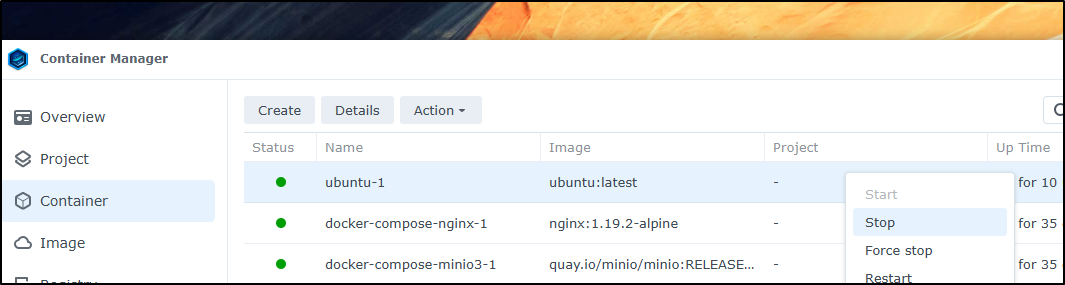

I logged into both of my NASes.

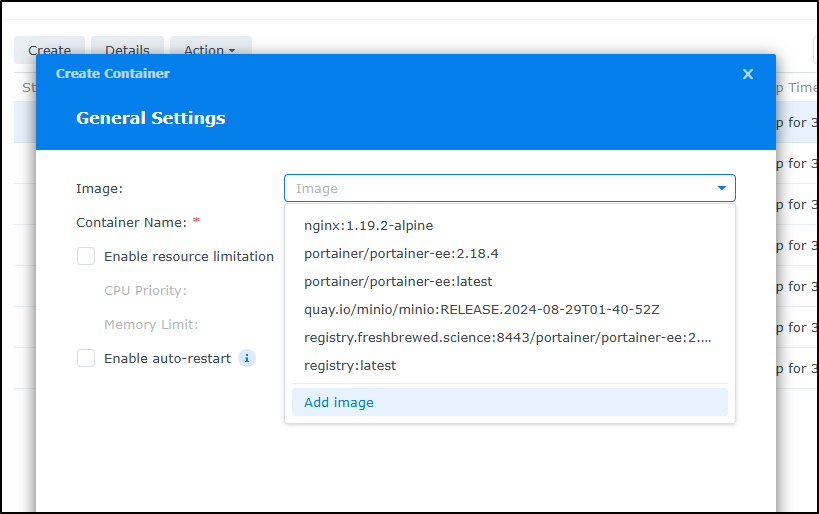

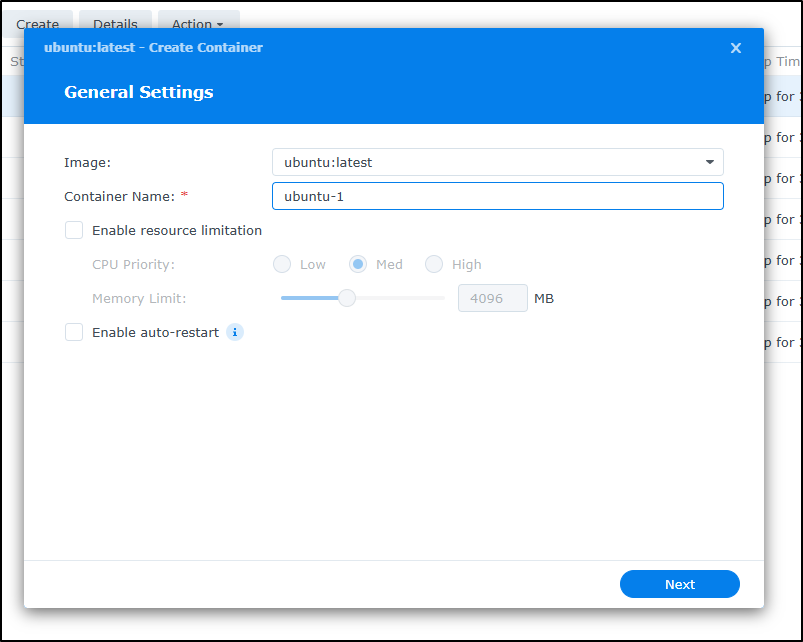

I realized the newer one runs a Container Manager. I fired it up and chose to “Create” a new container

I’ll need to add an image

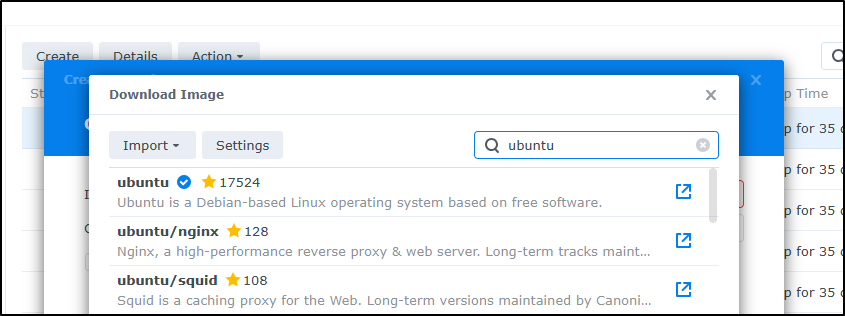

I could go with busybox or ubuntu. I went with the latter

I chose the “latest” tag and continued. After it downloaded, I clicked Next

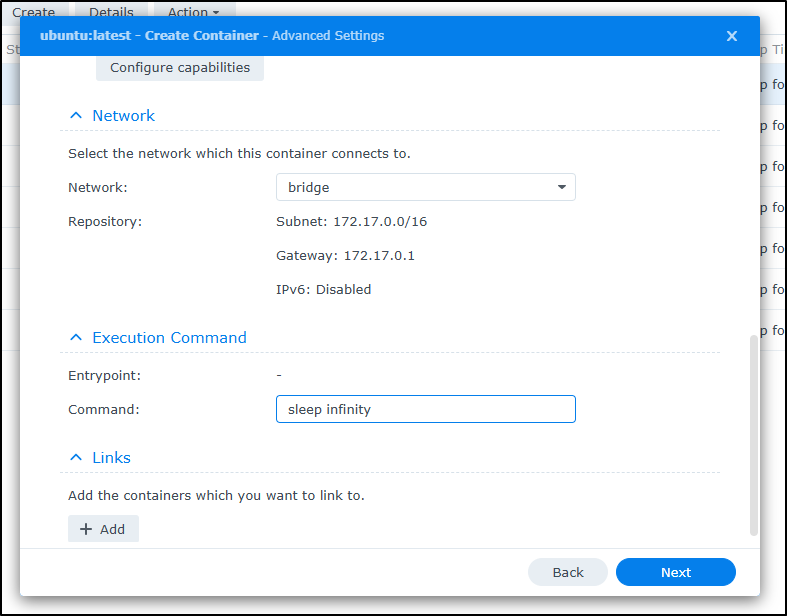

I’ll tell it to sleep infinitely (otherwise the container would just launch and close)

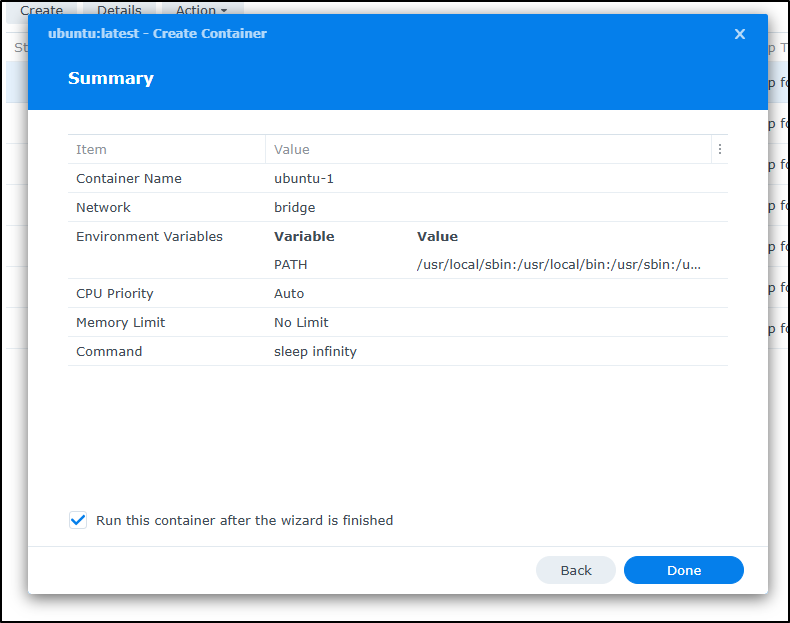

Press Done to create and launch

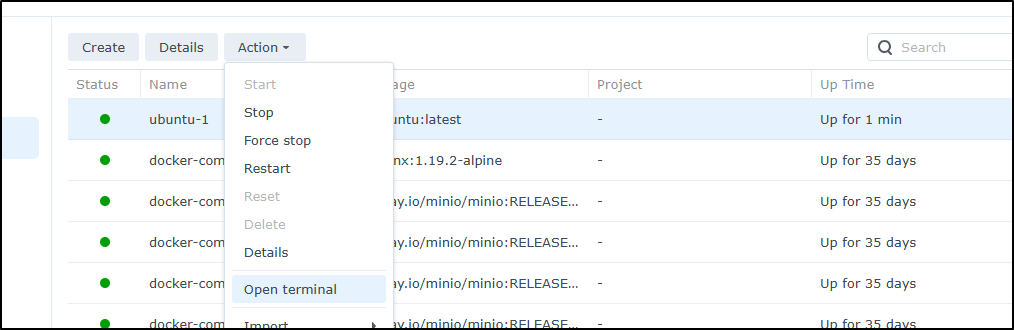

I can now select the container, click Actions and choose to open a terminal

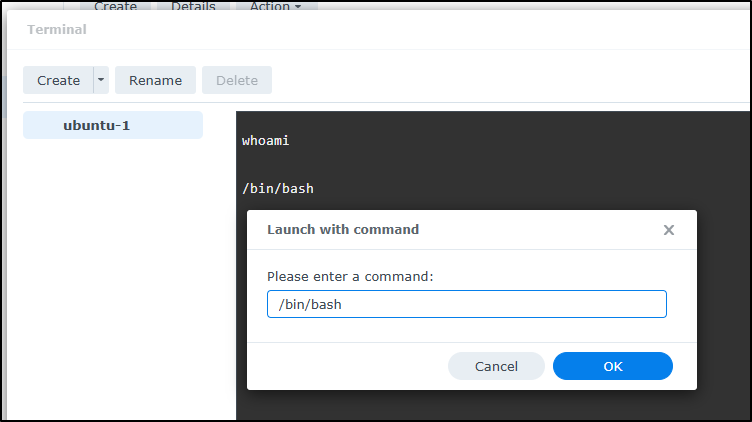

I realized that I needed to use the “Create” drop-down to launch with command and enter bash

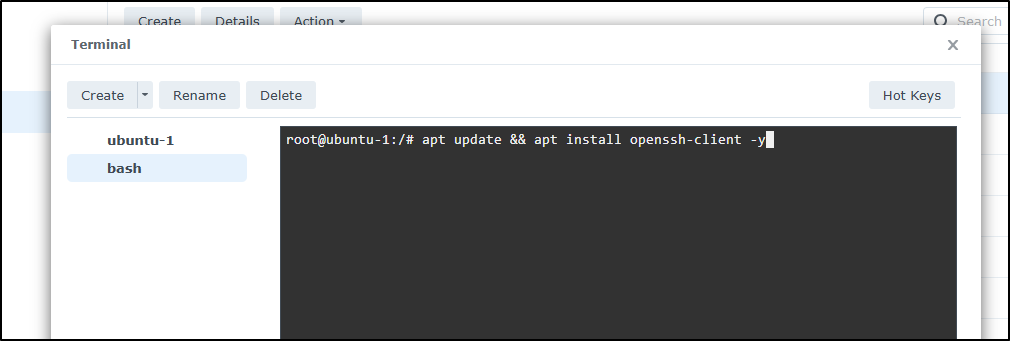

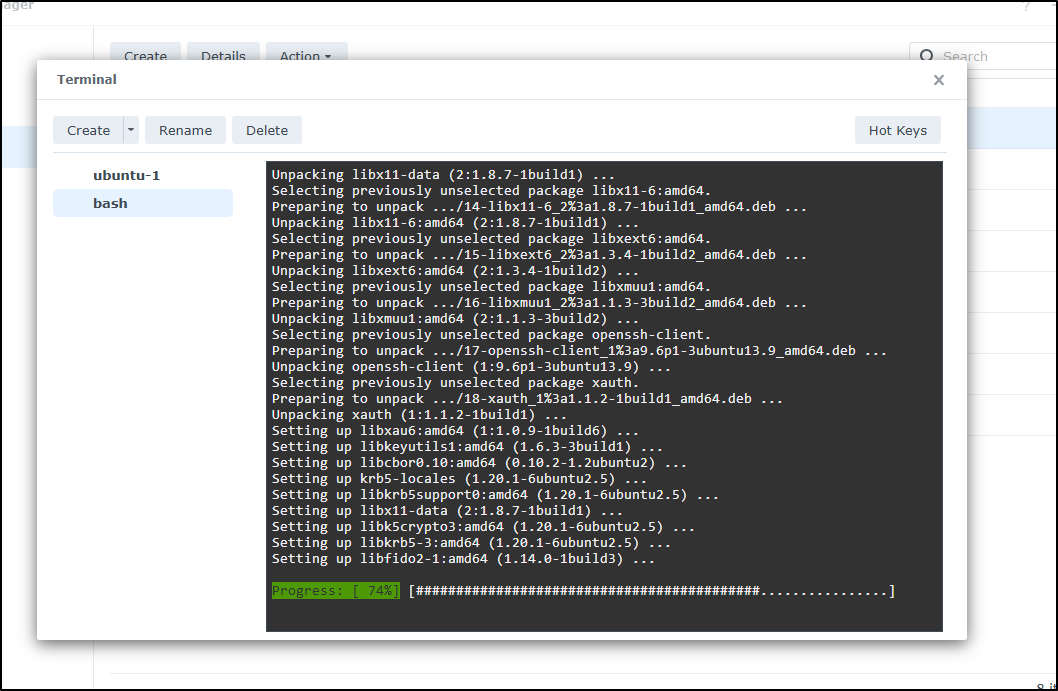

The Ubuntu container will be pretty basic. I’ll now want to run apt update && apt install openssh-client -y to get an SSH client on here so I can jump to other boxes

This took a fat minute, but did load

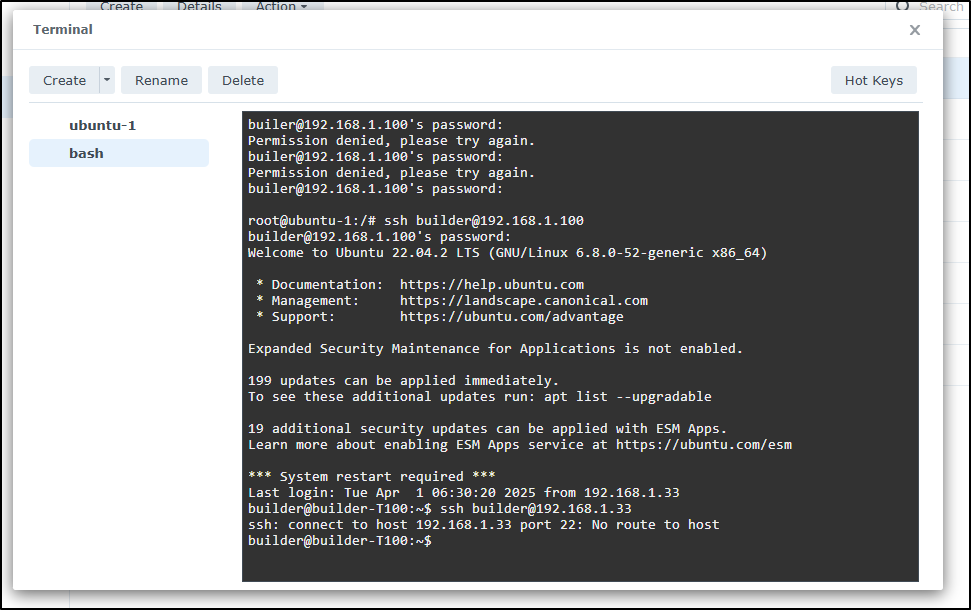

My goal here is to see if the host that serves as my K8s master is just acting up and will allow me to SSH in and reboot.

If it requires a hard-reset, I’ll be a bit more stuck.

Indeed, I found the host 192.168.1.33 was either off the network, had changed IPs or was hung. I made sure it wasn’t just the NAS connectivity by going to the 192.168.1.100 Dockerhost first
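A quick way to tell "host is gone" from "SSH is misbehaving" before even installing a client is to probe the port directly. This is a sketch only, assuming bash and coreutils `timeout` are present in the container (they are in the stock Ubuntu image); the function names are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: is anything answering on a TCP port?
# Uses bash's built-in /dev/tcp, so no extra packages are needed.
port_open() {
  local host="$1" port="${2:-22}"
  # Give up after 3 seconds so a hung or missing host does not block us
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Check a known-good host first, then the suspect one
check_hosts() {
  local host
  for host in "$@"; do
    if port_open "$host" 22; then
      echo "$host: SSH port is answering"
    else
      echo "$host: no answer on port 22"
    fi
  done
}

# Example: check_hosts 192.168.1.100 192.168.1.33
```

If the known-good Dockerhost answers and the master does not, the problem is the master and not the path out of the NAS.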

I can now close the terminal window and stop the container so it’s not wasting CPU or Memory resources

A Bad Second Cluster

I’m still mourning the loss of my ‘Anna-Macbook Air’ which died mid-March. Just totally dead, nothing. I have been running that sucker hot for years, which really pushed the boundaries of what a Macbook Air can do.

I lack the funds presently to replace it, but I do have its brother “Isaac Macbook Air” which had some issues and was pulled from my stack for a while. I meant to replace the cluster but didn’t get to it. Now is the time.

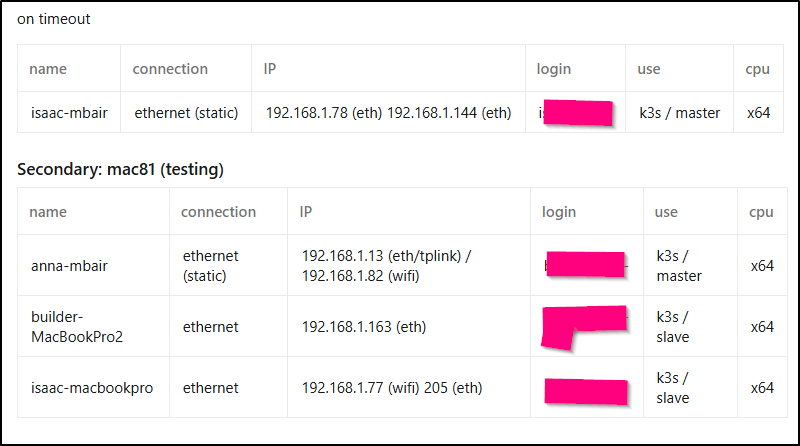

I think, due to lack of resources, I’m going to move the other macbook air (isaac-mbair) into being the new master for the secondary cluster

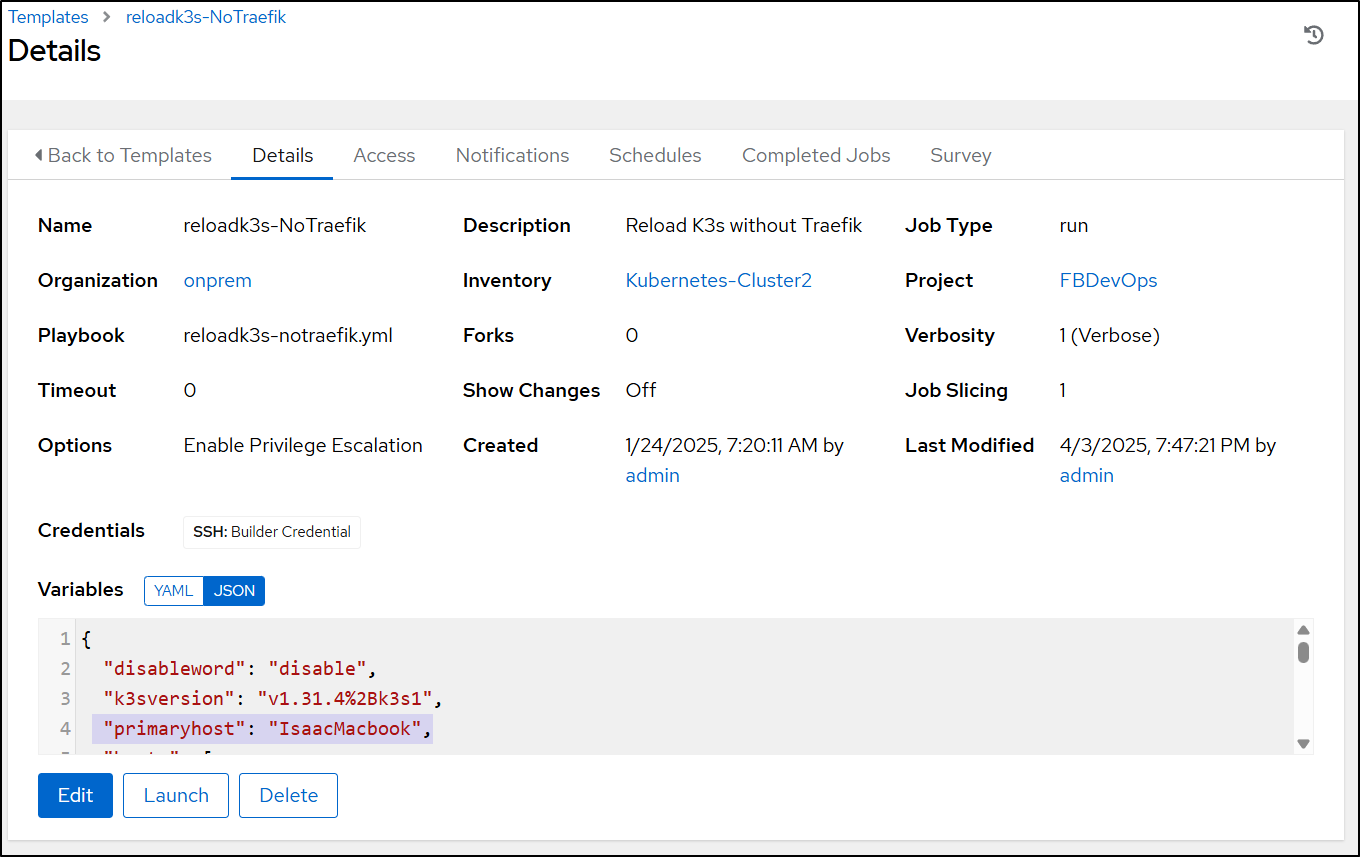

One minor issue I’ll have is I hardcoded the now defunct “AnnaMacbook” into the Playbook.

- name: Update Primary

hosts: AnnaMacbook

tasks:

I’ll need to parameterize that to using “primaryhost”
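As a rough sketch of that change, the hardcoded `hosts:` line can be swapped for a `primaryhost` variable and the value passed in at run time. The function name, the playbook filename `update.yml` and the inventory name `isaac-macbookair` below are assumptions for illustration, not my actual files.

```shell
#!/usr/bin/env bash
# Sketch: replace a hardcoded host in a playbook's "hosts:" line with a variable.
# Function name and defaults are illustrative.
parameterize_hosts() {
  local playbook="$1" old_host="$2" var_name="${3:-primaryhost}"
  # Keep the original indentation (\1) and write hosts: "{{ primaryhost }}"
  sed -i.bak \
    "s|^\([[:space:]]*hosts:[[:space:]]*\)${old_host}[[:space:]]*\$|\1\"{{ ${var_name} }}\"|" \
    "$playbook"
}

# Hypothetical usage:
#   parameterize_hosts update.yml AnnaMacbook
#   ansible-playbook update.yml -e primaryhost=isaac-macbookair
```

In AWX the same value would go into the job template's extra variables instead of `-e` on the command line.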

It took some updating, including adding az back to the old Macbook Air

But after a few loads (and swapping batteries between the old Macbook Pros), I got the system online

builder@DESKTOP-QADGF36:~/.kube$ kubectx mac77

Switched to context "mac77".

builder@DESKTOP-QADGF36:~/.kube$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

builder-macbookpro2 Ready <none> 19m v1.31.4+k3s1

isaac-macbookair Ready control-plane,master 20m v1.31.4+k3s1

isaac-macbookpro Ready <none> 19m v1.31.4+k3s1

I will also say the old Macbook Air was fried. I dismantled it and saved the battery and hard drive.

My teenage daughter, who saw me dismantle the laptop, thought the aftermarket brand name of the battery was pretty hilarious:

Remote Reboots

I have an idea for using some Kasa plugs I have yet to use (provided I can find them).

If I set the BIOS to boot on power, then I should be able to power-cycle remotely.

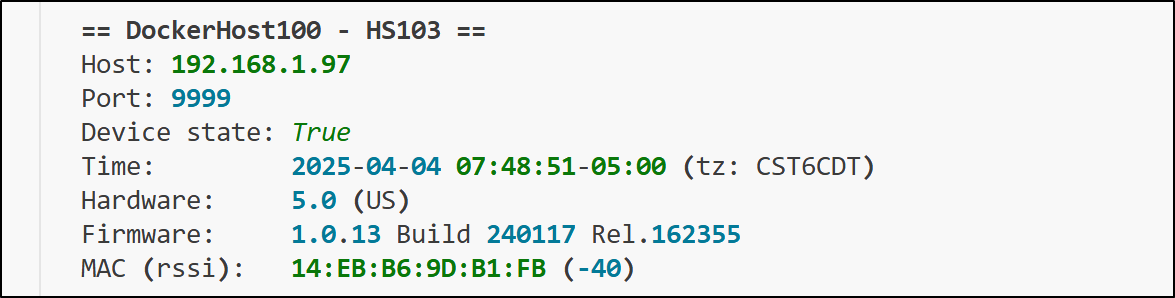

I added some Kasa plugs I had in a box from a while back (some HS103s)

I set them up on the Primary Kubernetes host and my “Dockerhost” aka the T100

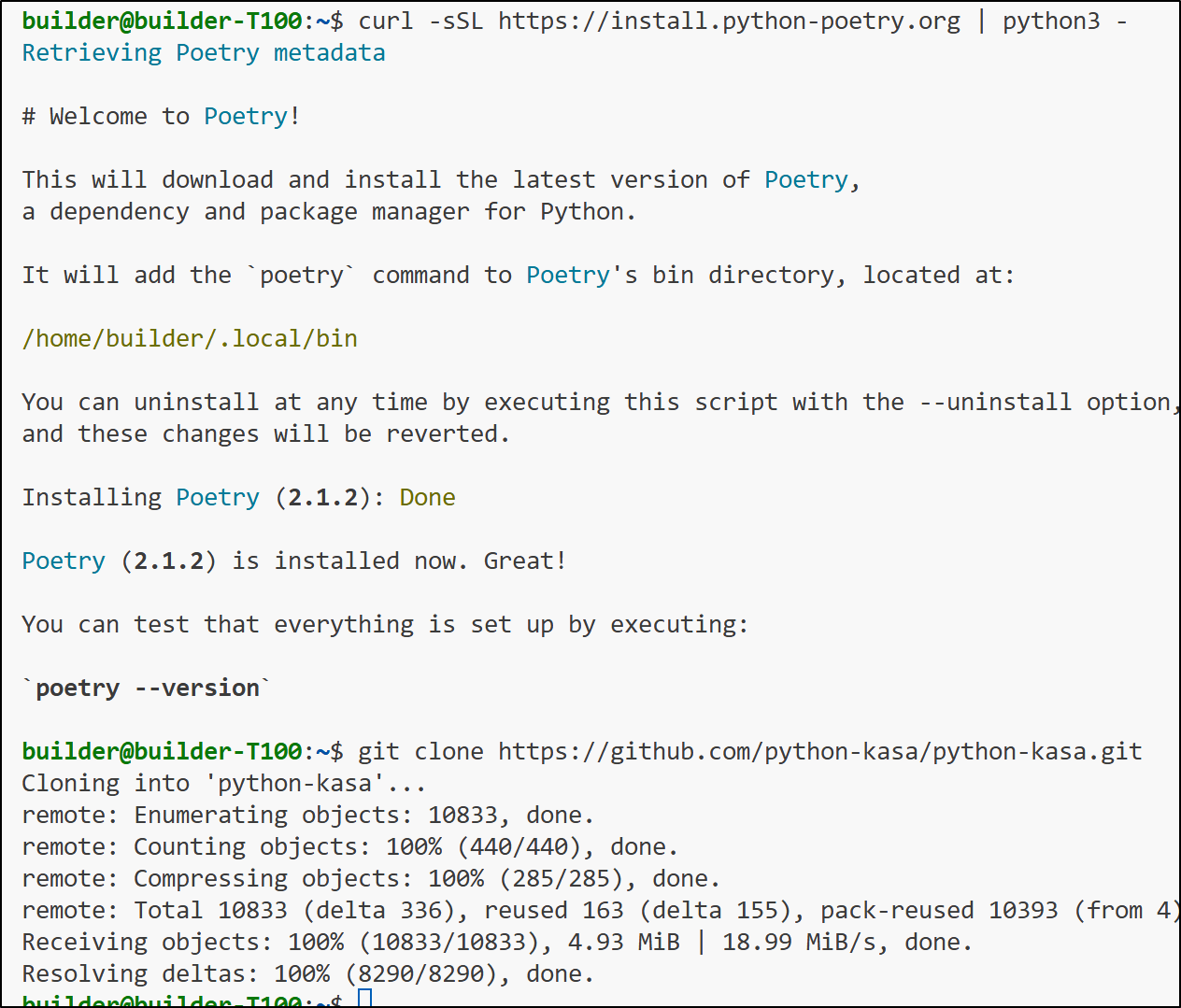

While remote, I then used a remote shell to get back to the T100 to add the Kasa local library so I could determine which IPs matched these plugs

$ curl -sSL https://install.python-poetry.org | python3 -

$ git clone https://github.com/python-kasa/python-kasa.git

My first issue was the local Python was too old

builder@builder-T100:~$ cd python-kasa/

builder@builder-T100:~/python-kasa$ poetry install

The currently activated Python version 3.10.12 is not supported by the project (>=3.11,<4.0).

Trying to find and use a compatible version.

Poetry was unable to find a compatible version. If you have one, you can explicitly use it via the "env use" command.

builder@builder-T100:~/python-kasa$

I fought a bit, but ultimately used these steps to upgrade Python on one of my hosts.

I fought poetry for a while before pivoting to just using uv as the README says (install from here)

git clone https://github.com/python-kasa/python-kasa.git

cd python-kasa/

uv sync --all-extras

uv run kasa

And this worked great

Now that I ran the local client, I have the IPv4’s for these:

- the Elitebook is 192.168.1.48

- the DockerT100 is 192.168.1.97

This means (borrowing from my Github workflow used to trigger the ‘build light’), I could power cycle them with

curl -s -o /dev/null "https://kasarest.freshbrewed.science/on?devip=192.168.1.48&apikey=$"

curl -s -o /dev/null "https://kasarest.freshbrewed.science/on?devip=192.168.1.97&apikey=$"
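A full power cycle needs an off, a pause, and then an on. As a sketch of doing that locally with the python-kasa CLI directly (not my REST wrapper), assuming `kasa` is on the PATH and the plug IPs above are still current:

```shell
#!/usr/bin/env bash
# Sketch: power-cycle a Kasa plug with the python-kasa CLI (off, wait, on).
# The function name and the 10 second default pause are illustrative choices.
power_cycle() {
  local plug_ip="$1" wait_secs="${2:-10}"
  kasa --host "$plug_ip" off
  sleep "$wait_secs"     # give the host time to lose power fully
  kasa --host "$plug_ip" on
}

# Example: power_cycle 192.168.1.48
# From the python-kasa checkout, "uv run kasa --host <ip> off" is the equivalent call.
```

This has to run from a machine that is not itself plugged into the outlet being cycled, otherwise the "on" half never happens.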

Summary

I covered fixing the primary cluster (it was hung in a way that, short of a power-cycle, left me with not much I could do). I then showed how to handle a Rancher K3s IP change on the master (without a full respin). I showed how to use a Synology NAS as a backdoor when nothing else works (using containers).

We looked at rebuilding the secondary cluster now that one of my Macbook Airs is dead (RIP). I covered updating the Ansible Playbooks to remove some hardcoded host names, and lastly we revisited using Kasa plugs (HS103s) and the Python Kasa library to create a poor man’s LightsOut setup.