Published: Mar 21, 2025 by Isaac Johnson

Today we’ll check out Kokoro, a very nice web UI wrapped around a text-to-speech engine. We’ll see it in Docker and then try to get it running in Kubernetes with an exposed TLS ingress. I’ll also check out its performance on different classes of machine.

I will then take a moment to look at NZBGet, a Usenet newsgroup downloader. After that we’ll move on to exploring Kutt, an excellent modern URL shortener. We will look at Docker, Kubernetes and the REST API before showing how to integrate it into a GitHub Actions workflow. I actually delayed this article for a while because I wanted to get some real-world usage out of it so we could compare it with YOURLS.

Kokoro

I saw this Marius post a few weeks ago about “Kokoro”, a nice text-to-speech app you can run in a container.

If we go to its GitHub home, we can see how to invoke it with Docker:

# CPU

docker run -p 8880:8880 ghcr.io/remsky/kokoro-fastapi-cpu:v0.2.2

# GPU

docker run --gpus all -p 8880:8880 ghcr.io/remsky/kokoro-fastapi-gpu:v0.2.2

I’ll try the GPU image first, as I’m on my bigger machine with an NVIDIA card:

$ docker run --gpus all -p 8880:8880 ghcr.io/remsky/kokoro-fastapi-gpu:v0.2.2

Unable to find image 'ghcr.io/remsky/kokoro-fastapi-gpu:v0.2.2' locally

v0.2.2: Pulling from remsky/kokoro-fastapi-gpu

de44b265507a: Pull complete

7c19210cd82d: Pull complete

5f5407c3a203: Pull complete

0f8429c62360: Pull complete

4b650590013c: Pull complete

14fc1a5fdf5b: Downloading [=====================> ] 881.7MB/2.07GB

66a4307f086b: Download complete

ba1786052c2e: Download complete

b98cda71b10f: Download complete

5e64b8041de6: Downloading [============================================> ] 658.6MB/732.9MB

d1da8f0791a1: Download complete

bf83beda4da9: Download complete

d27544a87475: Download complete

4f4fb700ef54: Download complete

36b7d6a9ac01: Download complete

6921f6108f74: Downloading [===============> ] 1.104GB/3.591GB

333fa843603f: Waiting

6eabf45d2dc4: Waiting

2b73a021bed5: Waiting

74d90daacc5b: Waiting

40a1c66fd673: Waiting

As you can see, this container is huge. When it did finally land, it didn’t like my CUDA version:

$ docker run --gpus all -p 8880:8880 ghcr.io/remsky/kokoro-fastapi-gpu:v0.2.2

Unable to find image 'ghcr.io/remsky/kokoro-fastapi-gpu:v0.2.2' locally

v0.2.2: Pulling from remsky/kokoro-fastapi-gpu

de44b265507a: Pull complete

7c19210cd82d: Pull complete

5f5407c3a203: Pull complete

0f8429c62360: Pull complete

4b650590013c: Pull complete

14fc1a5fdf5b: Pull complete

66a4307f086b: Pull complete

ba1786052c2e: Pull complete

b98cda71b10f: Pull complete

5e64b8041de6: Pull complete

d1da8f0791a1: Pull complete

bf83beda4da9: Pull complete

d27544a87475: Pull complete

4f4fb700ef54: Pull complete

36b7d6a9ac01: Pull complete

6921f6108f74: Pull complete

333fa843603f: Pull complete

6eabf45d2dc4: Pull complete

2b73a021bed5: Pull complete

74d90daacc5b: Pull complete

40a1c66fd673: Pull complete

Digest: sha256:4dc5455046339ecbea75f2ddb90721ad8dbeb0ab14c8c828e4b962dac2b80135

Status: Downloaded newer image for ghcr.io/remsky/kokoro-fastapi-gpu:v0.2.2

docker: Error response from daemon: failed to create task for container: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: error during container init: error running hook #0: error running hook: exit status 1, stdout: , stderr: Auto-detected mode as 'legacy'

nvidia-container-cli: requirement error: unsatisfied condition: cuda>=12.8, please update your driver to a newer version, or use an earlier cuda container: unknown.

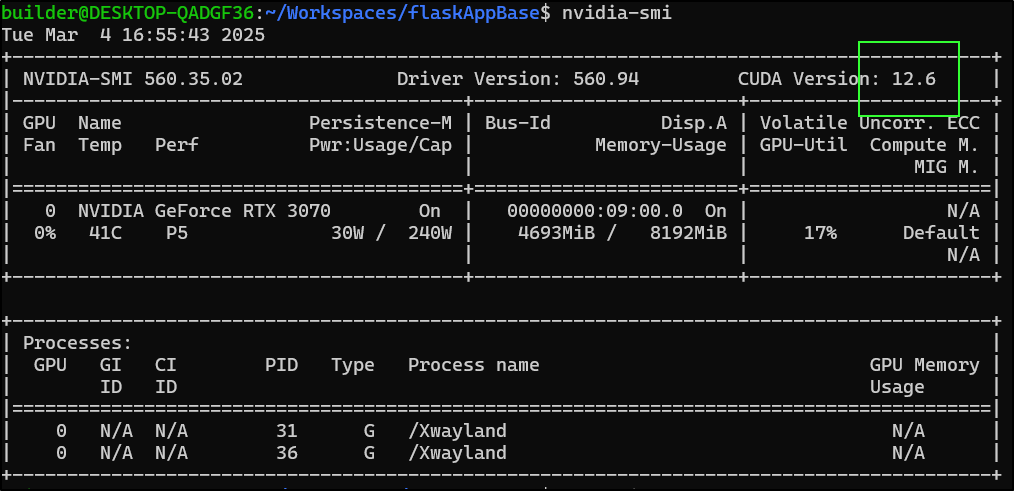

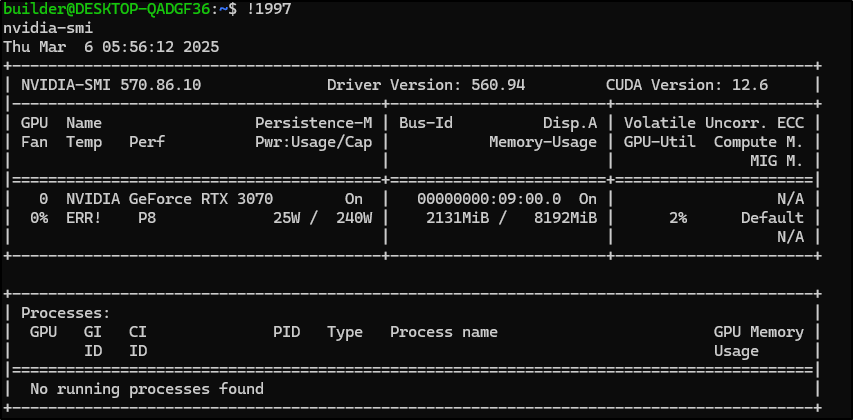

It seems it wants CUDA 12.8 or later, but I have 12.6 installed.

I’ll follow the steps here

$ wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64/cuda-ubuntu2004.pin

$ sudo mv cuda-ubuntu2004.pin /etc/apt/preferences.d/cuda-repository-pin-600

$ wget https://developer.download.nvidia.com/compute/cuda/12.8.0/local_installers/cuda-repo-ubuntu2004-12-8-local_12.8.0-570.86.10-1_amd64.deb

$ sudo dpkg -i cuda-repo-ubuntu2004-12-8-local_12.8.0-570.86.10-1_amd64.deb

$ sudo cp /var/cuda-repo-ubuntu2004-12-8-local/cuda-*-keyring.gpg /usr/share/keyrings/

$ sudo apt-get update

$ sudo apt-get -y install cuda-toolkit-12-8

That alone did not satisfy the CUDA requirement, so I then installed nvidia-open:

$ sudo apt-get install -y nvidia-open

Still no go. I may have to reboot.

builder@DESKTOP-QADGF36:~/Workspaces/flaskAppBase$ docker run --gpus all -p 8880:8880 ghcr.io/remsky/kokoro-fastapi-gpu:v0.2.2

docker: Error response from daemon: failed to create task for container: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: error during container init: error running hook #0: error running hook: exit status 1, stdout: , stderr: Auto-detected mode as 'legacy'

nvidia-container-cli: requirement error: unsatisfied condition: cuda>=12.8, please update your driver to a newer version, or use an earlier cuda container: unknown.

builder@DESKTOP-QADGF36:~/Workspaces/flaskAppBase$ nvidia-smi

Tue Mar 4 17:08:29 2025

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 560.35.02 Driver Version: 560.94 CUDA Version: 12.6 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA GeForce RTX 3070 On | 00000000:09:00.0 On | N/A |

| 0% 41C P5 30W / 240W | 4614MiB / 8192MiB | 56% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| 0 N/A N/A 31 G /Xwayland N/A |

| 0 N/A N/A 36 G /Xwayland N/A |

+-----------------------------------------------------------------------------------------+

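Even a reboot didn’t help. nvidia-smi still reports CUDA 12.6, which is the highest version the installed driver (560.94) supports, while the container insists on a driver that supports 12.8.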
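The `CUDA Version` field in the `nvidia-smi` header reflects what the installed driver supports, not which toolkit is installed, which would explain why adding the 12.8 toolkit alone did not clear the `cuda>=12.8` check. Here is a minimal sketch of that comparison, run against the header line from the output above. The function names are my own and not part of any NVIDIA tooling.

```python
import re


def driver_cuda_version(smi_header: str) -> tuple[int, int]:
    """Pull the 'CUDA Version: X.Y' field out of an nvidia-smi header line."""
    match = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", smi_header)
    if match is None:
        raise ValueError("no CUDA version found in nvidia-smi output")
    return int(match.group(1)), int(match.group(2))


def satisfies(smi_header: str, required: tuple[int, int]) -> bool:
    """True when the driver-reported CUDA version meets the requirement."""
    return driver_cuda_version(smi_header) >= required


# Header line copied from the nvidia-smi output in this post.
header = "| NVIDIA-SMI 560.35.02 Driver Version: 560.94 CUDA Version: 12.6 |"

print(driver_cuda_version(header))  # the version the driver supports
print(satisfies(header, (12, 8)))   # the container's cuda>=12.8 requirement
```

Running this check before pulling a multi-gigabyte GPU image would have saved me a long download.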

I’ll just use the CPU version instead

$ docker run -p 8880:8880 ghcr.io/remsky/kokoro-fastapi-cpu:v0.2.2

Unable to find image 'ghcr.io/remsky/kokoro-fastapi-cpu:v0.2.2' locally

v0.2.2: Pulling from remsky/kokoro-fastapi-cpu

c29f5b76f736: Pull complete

74e68b11a1c1: Pull complete

a477a912afa7: Pull complete

8c67a072a8ad: Pull complete

406e12cf3cd9: Pull complete

31312498c845: Pull complete

87ccd60e8dd6: Pull complete

4f4fb700ef54: Pull complete

15cd46d12611: Pull complete

d94653bc0bd7: Pull complete

43d164395a1c: Pull complete

98755b5b49e7: Pull complete

614c4f55d6a2: Pull complete

041a0f34698b: Pull complete

fac405487e7b: Pull complete

Digest: sha256:76549cce3c5cc5ed4089619a9cffc3d39a041476ff99c5138cd18b6da832c4d7

Status: Downloaded newer image for ghcr.io/remsky/kokoro-fastapi-cpu:v0.2.2

2025-03-06 12:03:43.929 | INFO | __main__:download_model:60 - Model files already exist and are valid

Building kokoro-fastapi @ file:///app

Built kokoro-fastapi @ file:///app

Uninstalled 1 package in 1ms

Installed 1 package in 0.98ms

INFO: Started server process [31]

INFO: Waiting for application startup.

12:03:57 PM | INFO | main:57 | Loading TTS model and voice packs...

12:03:57 PM | INFO | model_manager:38 | Initializing Kokoro V1 on cpu

12:03:57 PM | DEBUG | paths:101 | Searching for model in path: /app/api/src/models

12:03:57 PM | INFO | kokoro_v1:45 | Loading Kokoro model on cpu

12:03:57 PM | INFO | kokoro_v1:46 | Config path: /app/api/src/models/v1_0/config.json

12:03:57 PM | INFO | kokoro_v1:47 | Model path: /app/api/src/models/v1_0/kokoro-v1_0.pth

/app/.venv/lib/python3.10/site-packages/torch/nn/modules/rnn.py:123: UserWarning: dropout option adds dropout after all but last recurrent layer, so non-zero dropout expects num_layers greater than 1, but got dropout=0.2 and num_layers=1

warnings.warn(

/app/.venv/lib/python3.10/site-packages/torch/nn/utils/weight_norm.py:143: FutureWarning: `torch.nn.utils.weight_norm` is deprecated in favor of `torch.nn.utils.parametrizations.weight_norm`.

WeightNorm.apply(module, name, dim)

12:03:58 PM | DEBUG | paths:153 | Scanning for voices in path: /app/api/src/voices/v1_0

12:03:58 PM | DEBUG | paths:131 | Searching for voice in path: /app/api/src/voices/v1_0

12:03:58 PM | DEBUG | model_manager:77 | Using default voice 'af_heart' for warmup

12:03:58 PM | INFO | kokoro_v1:73 | Creating new pipeline for language code: a

12:03:59 PM | DEBUG | kokoro_v1:244 | Generating audio for text with lang_code 'a': 'Warmup text for initialization.'

12:04:00 PM | DEBUG | kokoro_v1:251 | Got audio chunk with shape: torch.Size([57600])

12:04:00 PM | INFO | model_manager:84 | Warmup completed in 2740ms

12:04:00 PM | INFO | main:101 |

░░░░░░░░░░░░░░░░░░░░░░░░

╔═╗┌─┐┌─┐┌┬┐

╠╣ ├─┤└─┐ │

╚ ┴ ┴└─┘ ┴

╦╔═┌─┐┬┌─┌─┐

╠╩╗│ │├┴┐│ │

╩ ╩└─┘┴ ┴└─┘

░░░░░░░░░░░░░░░░░░░░░░░░

Model warmed up on cpu: kokoro_v1CUDA: False

67 voice packs loaded

Beta Web Player: http://0.0.0.0:8880/web/

or http://localhost:8880/web/

░░░░░░░░░░░░░░░░░░░░░░░░

INFO: Application startup complete.

INFO: Uvicorn running on http://0.0.0.0:8880 (Press CTRL+C to quit)

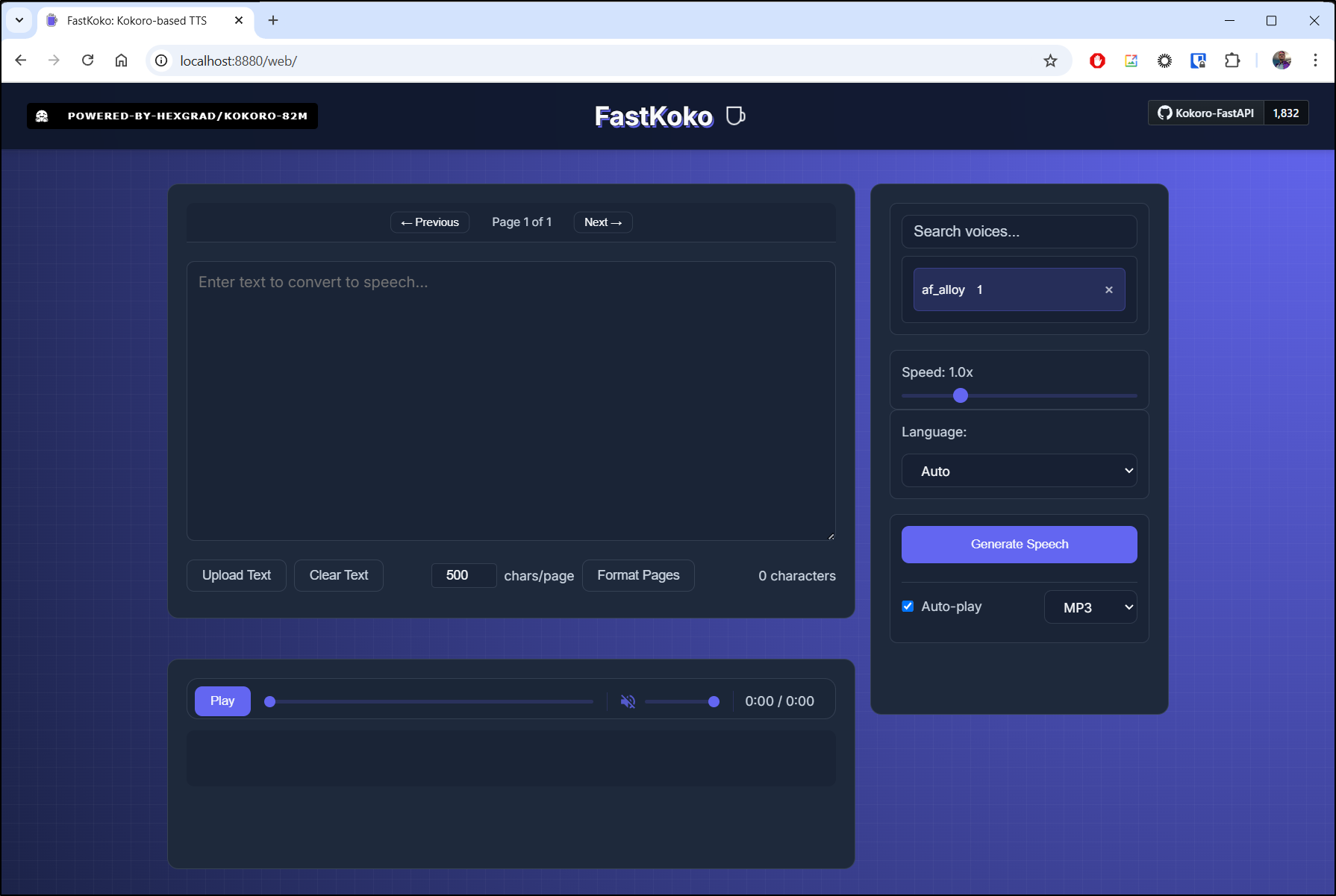

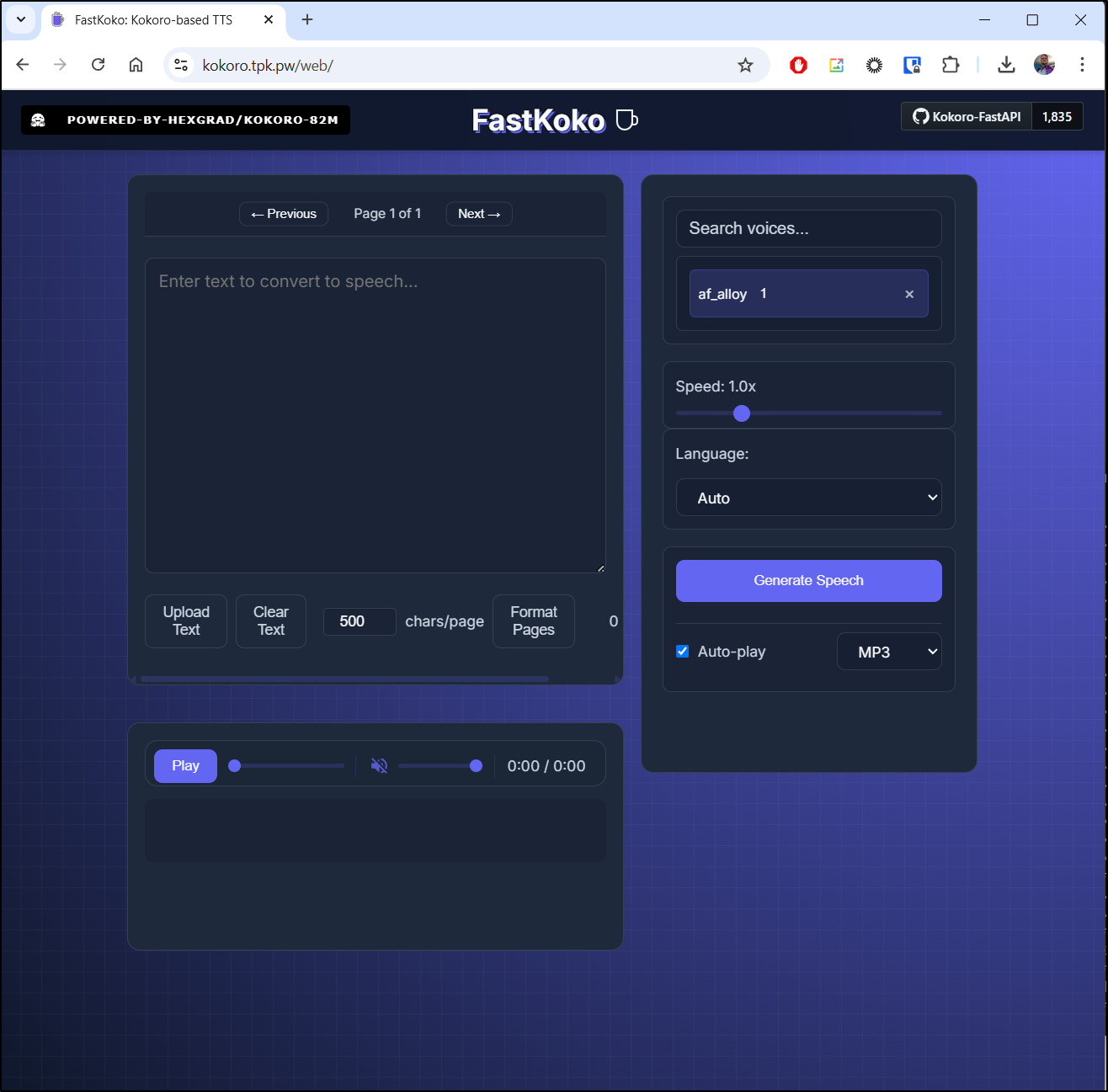

And up it came

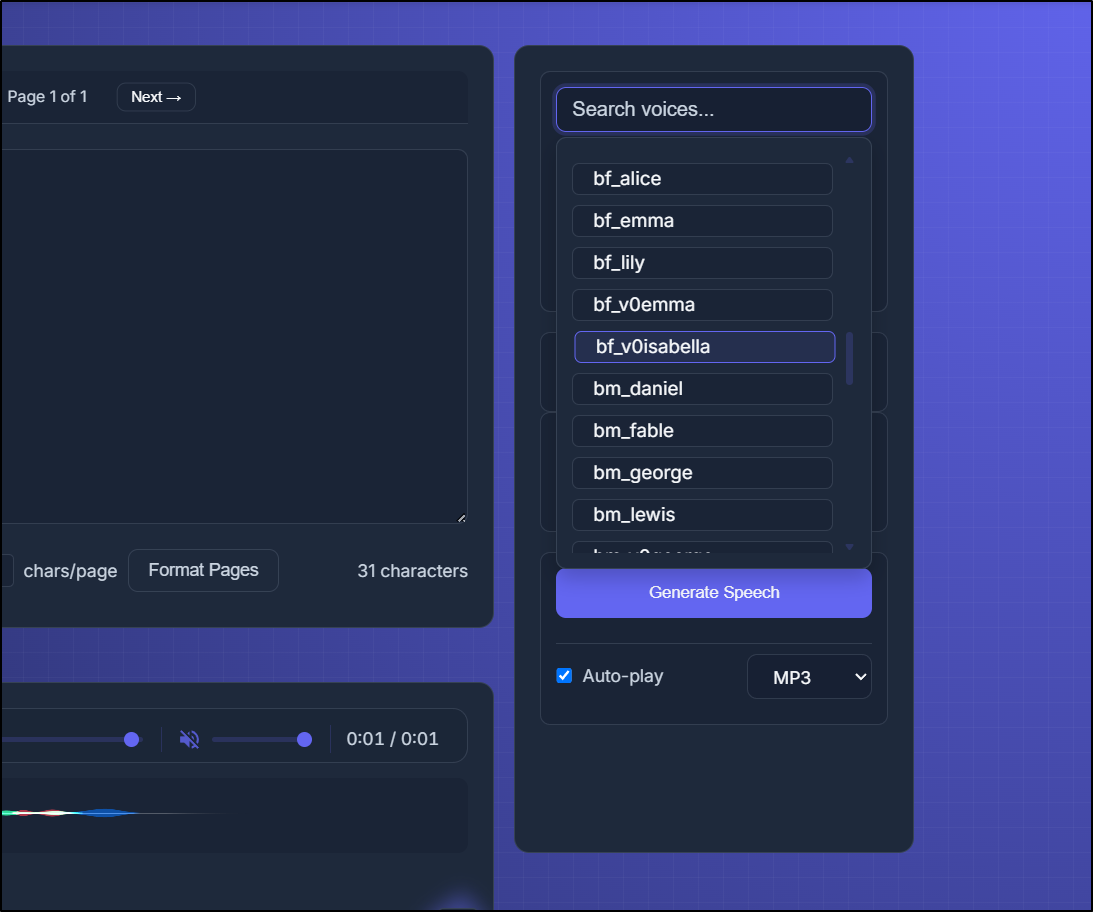

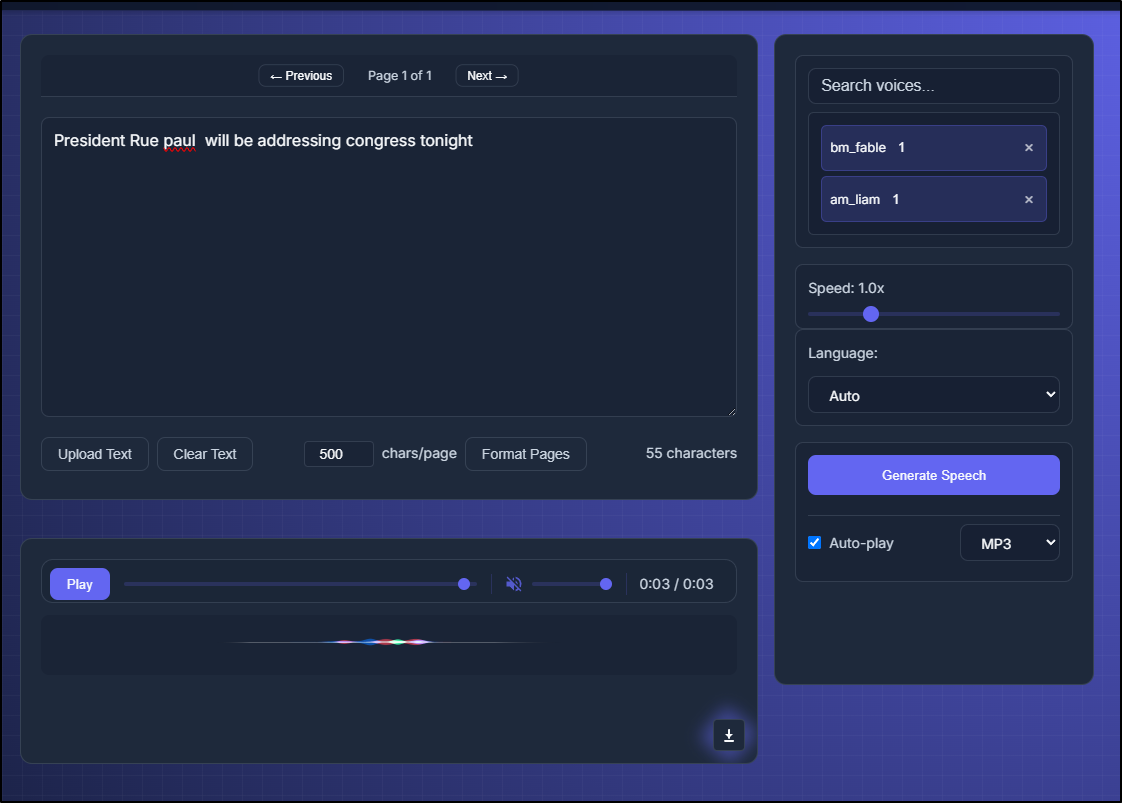

There are a lot of voices from which to pick

Here you can see some examples (a longer one at the end). This has audio, of course:

On the Mini PC with Ryzen 9s

builder@bosgamerz9:~$ wget https://developer.download.nvidia.com/compute/cuda/12.8.1/local_installers/cuda_12.8.1_570.124.06_linux.run

--2025-03-06 18:00:21-- https://developer.download.nvidia.com/compute/cuda/12.8.1/local_installers/cuda_12.8.1_570.124.06_linux.run

Resolving developer.download.nvidia.com (developer.download.nvidia.com)... 23.193.200.156, 23.193.200.134

Connecting to developer.download.nvidia.com (developer.download.nvidia.com)|23.193.200.156|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 5382238770 (5.0G) [application/octet-stream]

Saving to: ‘cuda_12.8.1_570.124.06_linux.run’

cuda_12.8.1_570.124.06_linux.run 100%[=================================================================>] 5.01G 16.5MB/s in 6m 17s

2025-03-06 18:06:39 (13.6 MB/s) - ‘cuda_12.8.1_570.124.06_linux.run’ saved [5382238770/5382238770]

builder@bosgamerz9:~$ sudo sh cuda_12.8.1_570.124.06_linux.run

===========

= Summary =

===========

Driver: Installed

Toolkit: Installed in /usr/local/cuda-12.8/

Please make sure that

- PATH includes /usr/local/cuda-12.8/bin

- LD_LIBRARY_PATH includes /usr/local/cuda-12.8/lib64, or, add /usr/local/cuda-12.8/lib64 to /etc/ld.so.conf and run ldconfig as root

To uninstall the CUDA Toolkit, run cuda-uninstaller in /usr/local/cuda-12.8/bin

To uninstall the NVIDIA Driver, run nvidia-uninstall

Logfile is /var/log/cuda-installer.log

I tried the GPU image there, but it didn’t work either:

builder@bosgamerz9:~$ sudo docker run --gpus all -p 8880:8880 ghcr.io/remsky/kokoro-fastapi-gpu:v0.2.2

Unable to find image 'ghcr.io/remsky/kokoro-fastapi-gpu:v0.2.2' locally

v0.2.2: Pulling from remsky/kokoro-fastapi-gpu

de44b265507a: Pull complete

7c19210cd82d: Pull complete

5f5407c3a203: Pull complete

0f8429c62360: Pull complete

4b650590013c: Pull complete

14fc1a5fdf5b: Pull complete

66a4307f086b: Pull complete

ba1786052c2e: Pull complete

b98cda71b10f: Pull complete

5e64b8041de6: Pull complete

d1da8f0791a1: Pull complete

bf83beda4da9: Pull complete

d27544a87475: Pull complete

4f4fb700ef54: Pull complete

36b7d6a9ac01: Pull complete

6921f6108f74: Pull complete

333fa843603f: Pull complete

6eabf45d2dc4: Pull complete

2b73a021bed5: Pull complete

74d90daacc5b: Pull complete

40a1c66fd673: Pull complete

Digest: sha256:4dc5455046339ecbea75f2ddb90721ad8dbeb0ab14c8c828e4b962dac2b80135

Status: Downloaded newer image for ghcr.io/remsky/kokoro-fastapi-gpu:v0.2.2

docker: Error response from daemon: could not select device driver "" with capabilities: [[gpu]].

builder@bosgamerz9:~$ sudo docker run --gpus all -p 8880:8880 ghcr.io/remsky/kokoro-fastapi-gpu:v0.2.2

docker: Error response from daemon: could not select device driver "" with capabilities: [[gpu]].

Here are similar examples using the CPU variant on that machine:

On an i3

Here is a similar result on my smaller “Dockerhost” which just runs weaker N100 CPUs

As you can see, it works, but takes a lot longer to produce output.

For instance, consider the byline for Mickey 17:

Written and directed by Academy Award-winning writer/director Bong Joon Ho (PARASITE), Robert Pattinson (THE BATMAN, TENET) stars as an unlikely hero in the extraordinary circumstance of working for an employer who demands the ultimate commitment to the job… to die, for a living

It took 18s to create the speech on the smaller CPUs versus 7s on the Ryzen 9s. The generated audio itself runs about 17s.
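One way to read those numbers is as a real-time factor: generation time divided by audio length, where anything under 1.0 means speech is produced faster than it plays. A small sketch using the rounded timings above (the host labels are just my shorthand):

```python
def real_time_factor(generation_seconds: float, audio_seconds: float) -> float:
    """Generation time relative to audio length; below 1.0 is faster than playback."""
    return generation_seconds / audio_seconds


AUDIO_SECONDS = 17.0  # approximate length of the generated clip

# Approximate generation times quoted in the post.
timings = {"N100 Dockerhost": 18.0, "Ryzen 9 mini PC": 7.0}

for host, generation_seconds in timings.items():
    rtf = real_time_factor(generation_seconds, AUDIO_SECONDS)
    print(f"{host}: real-time factor {rtf:.2f}")

speedup = timings["N100 Dockerhost"] / timings["Ryzen 9 mini PC"]
print(f"Ryzen 9 speedup over N100: {speedup:.2f}x")
```

By that measure the smaller host is roughly keeping pace with playback, while the Ryzen 9 box generates audio well ahead of it.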

Exposing with TLS

Let’s say I want to expose this, just for fun.

I first make an A Record in something like Azure DNS

$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n kokoro

{

"ARecords": [

{

"ipv4Address": "75.73.224.240"

}

],

"TTL": 3600,

"etag": "8a5b800b-419d-4991-bc56-2a07aeec0bb1",

"fqdn": "kokoro.tpk.pw.",

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/kokoro",

"name": "kokoro",

"provisioningState": "Succeeded",

"resourceGroup": "idjdnsrg",

"targetResource": {},

"trafficManagementProfile": {},

"type": "Microsoft.Network/dnszones/A"

}

I can then create the Ingress, Service and Endpoint to forward traffic to the dockerhost:

$ cat ../kokoro.yaml

apiVersion: v1
kind: Endpoints
metadata:
  name: kokoro-external-ip
subsets:
- addresses:
  - ip: 192.168.1.100
  ports:
  - name: kokoroint
    port: 8880
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: kokoro-external-ip
spec:
  clusterIP: None
  clusterIPs:
  - None
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  - IPv6
  ipFamilyPolicy: RequireDualStack
  ports:
  - name: kokoro
    port: 80
    protocol: TCP
    targetPort: 8880
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/websocket-services: kokoro-external-ip
  generation: 1
  labels:
    app.kubernetes.io/instance: kokoroingress
  name: kokoroingress
spec:
  rules:
  - host: kokoro.tpk.pw
    http:
      paths:
      - backend:
          service:
            name: kokoro-external-ip
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - kokoro.tpk.pw
    secretName: kokoro-tls

$ kubectl apply -f ../kokoro.yaml

endpoints/kokoro-external-ip created

service/kokoro-external-ip created

ingress.networking.k8s.io/kokoroingress created

Lastly, I just need to ensure Kokoro is running in detached (daemon) mode in Docker:

builder@builder-T100:~$ docker run -d -p 8880:8880 ghcr.io/remsky/kokoro-fastapi-cpu:v0.2.2

af3172e5ed7186474ef12019e6ea9e450d6e8df4c9e53893db07f022b02f36be

We now have a functional (albeit not the fastest) public Kokoro service:

Fever Dreams (literally)

I had an idea inspired by a YouTube video.

It follows a wild dream I had when sick recently. In it we had Randy Rainbow as press secretary, for which I’ll use Midjourney to get it close.

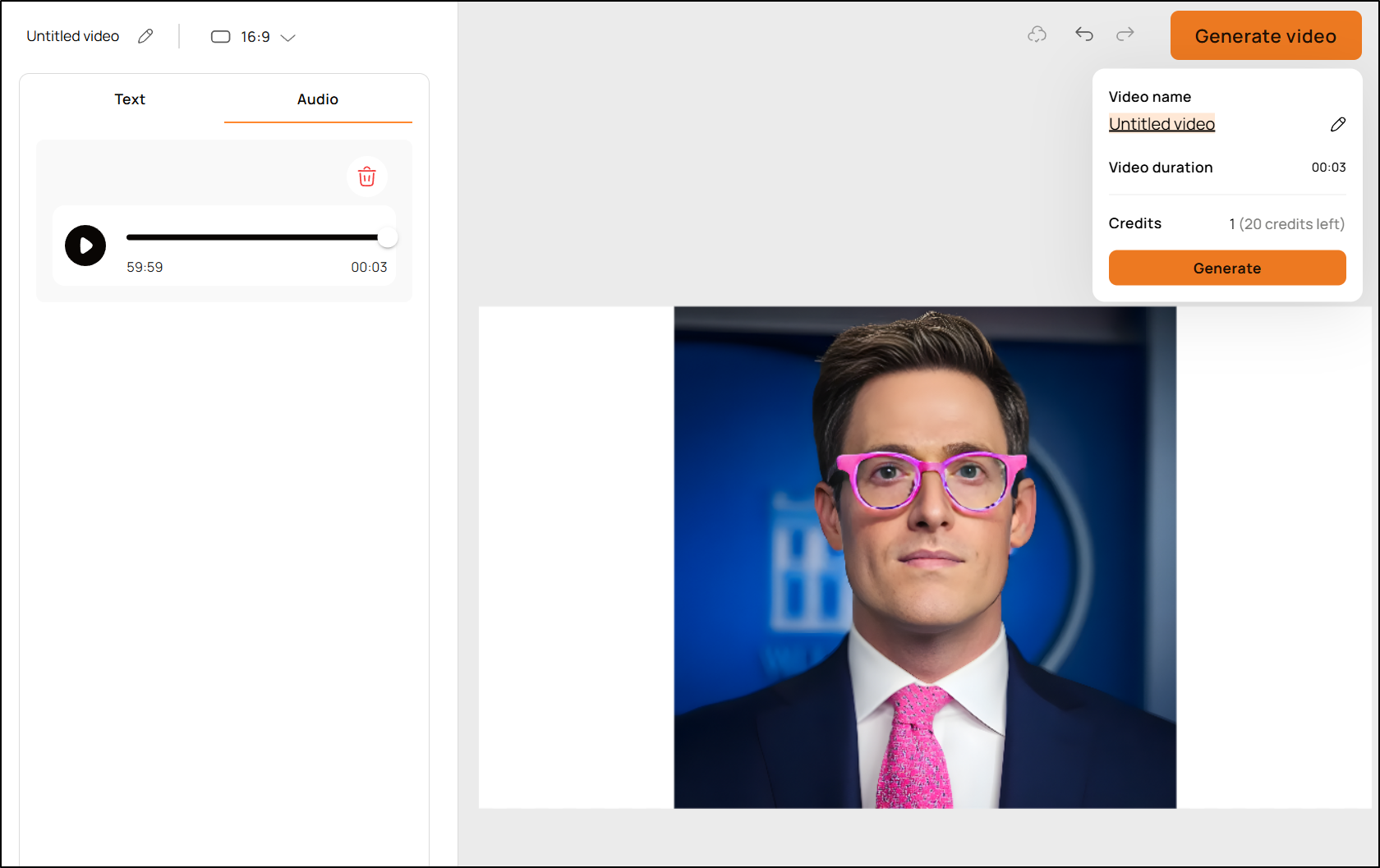

I use this simple AI Face Swap tool to refine things.

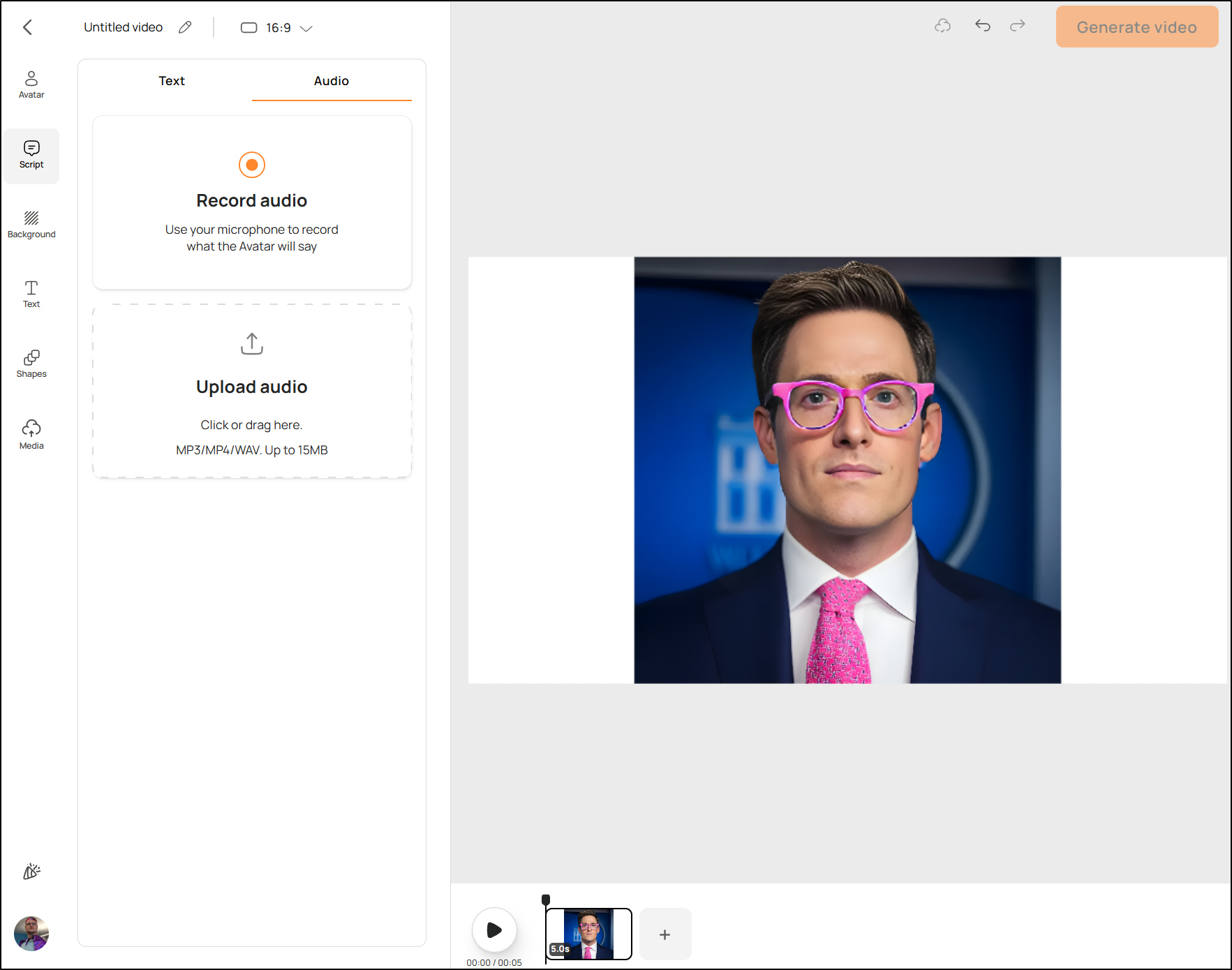

Now I’ll pop over to D-ID to create an avatar

From here I can upload audio

That brings us back to Kokoro. I found that bm_fable and am_liam combined were closest to Randy Rainbow’s voice, but I’m sure further refinements could be made.
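The same voice blend can be requested without the web UI. The sketch below assumes the OpenAI-style `/v1/audio/speech` endpoint and the `+`-joined voice syntax described in the Kokoro-FastAPI README, so verify both against the image version you run. The helper names and the output filename are hypothetical.

```python
import json
import urllib.request


def build_speech_request(base_url: str, text: str, voices: list[str]) -> urllib.request.Request:
    """Build a POST for Kokoro's speech endpoint (path and fields assumed from the README)."""
    payload = {
        "model": "kokoro",
        "input": text,
        "voice": "+".join(voices),  # e.g. "bm_fable+am_liam" blends two voice packs
        "response_format": "mp3",
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/audio/speech",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def synthesize(base_url: str, text: str, voices: list[str], out_path: str) -> None:
    """Send the request and write the returned audio bytes to out_path."""
    request = build_speech_request(base_url, text, voices)
    with urllib.request.urlopen(request) as response, open(out_path, "wb") as out:
        out.write(response.read())


# Building the request does not touch the network; synthesize() does.
request = build_speech_request(
    "http://localhost:8880", "Hello from Kokoro.", ["bm_fable", "am_liam"]
)
print(request.full_url)
print(request.data.decode("utf-8"))
```

With the container from earlier running, calling `synthesize("http://localhost:8880", "Hello from Kokoro.", ["bm_fable", "am_liam"], "press.mp3")` should produce a file ready to upload to D-ID.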

Now I can upload and generate

And as an aside, if you don’t wish to pay for Midjourney, there are other models such as “stable-cascade” on Hugging Face you can use.

I also had a version of the dream where Tim Walz was president and RuPaul was VP, but Tim had Ru do the presentations to Congress just because she’s a badass.

Midjourney, which I pay for, has so many nanny filters it is sometimes hard to get the image you want

But again, tell me President Ru wouldn’t smack a … who gets out of line. I’d vote for her.

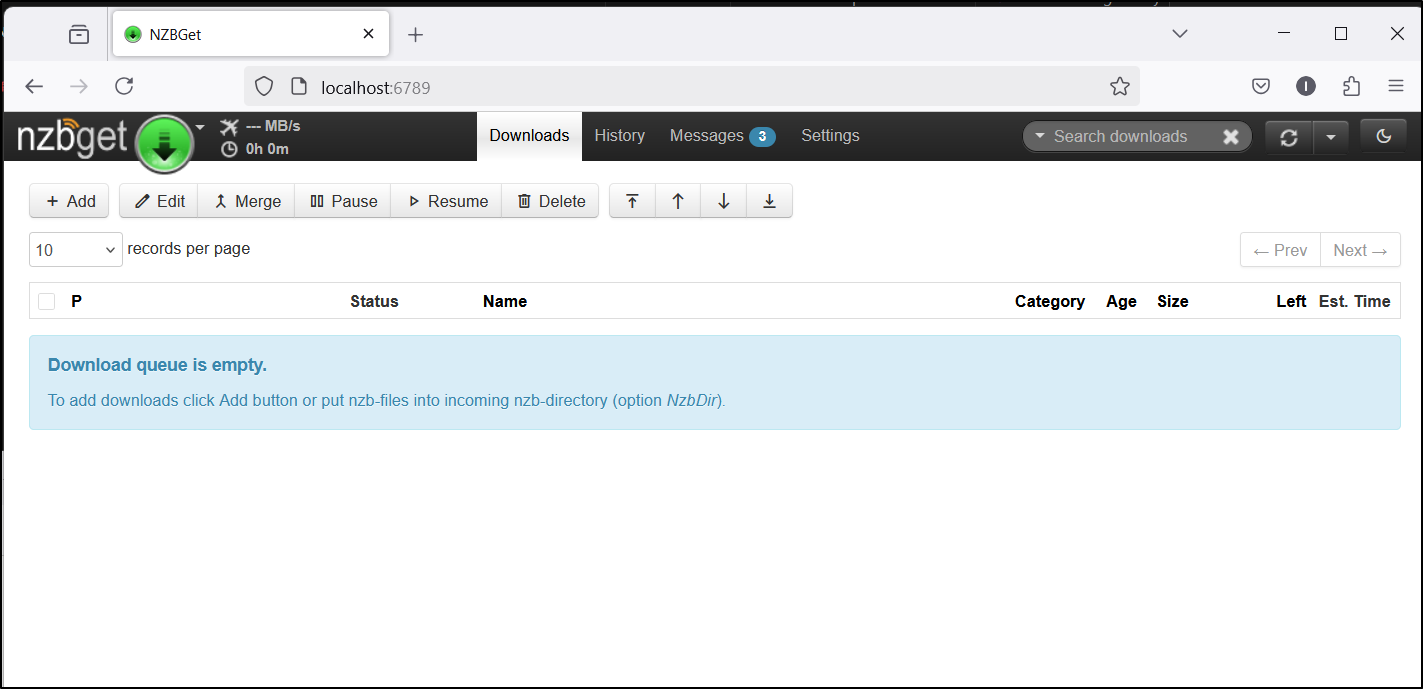

NZBGet

I had a note to try out the LinuxServer NZBGet image. NZBGet is a Usenet newsgroup downloader.

Docker Hub shows how to run it in Docker:

docker run -d \
  --name=nzbget \
  -e PUID=1000 \
  -e PGID=1000 \
  -e TZ=Etc/UTC \
  -e NZBGET_USER=nzbget `#optional` \
  -e NZBGET_PASS=tegbzn6789 `#optional` \
  -p 6789:6789 \
  -v /path/to/nzbget/data:/config \
  -v /path/to/downloads:/downloads `#optional` \
  --restart unless-stopped \
  lscr.io/linuxserver/nzbget:latest

I’ll pivot that to a Kubernetes Manifest YAML

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nzbget-config
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nzbget-downloads
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 40Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nzbget
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nzbget
  template:
    metadata:
      labels:
        app: nzbget
    spec:
      containers:
        - name: nzbget
          image: lscr.io/linuxserver/nzbget:latest
          env:
            - name: PUID
              value: "1000"
            - name: PGID
              value: "1000"
            - name: TZ
              value: "Etc/UTC"
            - name: NZBGET_USER
              value: "nzbget"
            - name: NZBGET_PASS
              value: "tegbzn6789"
          ports:
            - containerPort: 6789
          volumeMounts:
            - name: nzbget-config
              mountPath: /config
            - name: nzbget-downloads
              mountPath: /downloads
      volumes:
        - name: nzbget-config
          persistentVolumeClaim:
            claimName: nzbget-config
        - name: nzbget-downloads
          persistentVolumeClaim:
            claimName: nzbget-downloads
---
apiVersion: v1
kind: Service
metadata:
  name: nzbget
spec:
  selector:
    app: nzbget
  ports:
    - port: 6789
      targetPort: 6789
  type: ClusterIP

And apply it

$ kubectl apply -f ./nzbget.yaml

persistentvolumeclaim/nzbget-config created

persistentvolumeclaim/nzbget-downloads created

deployment.apps/nzbget created

service/nzbget created

I’ll then port-forward to the service port

$ kubectl port-forward svc/nzbget 6789:6789

Forwarding from 127.0.0.1:6789 -> 6789

Forwarding from [::1]:6789 -> 6789

I’m now in, but there was no login required, so I’m not sure what the username and password were for.

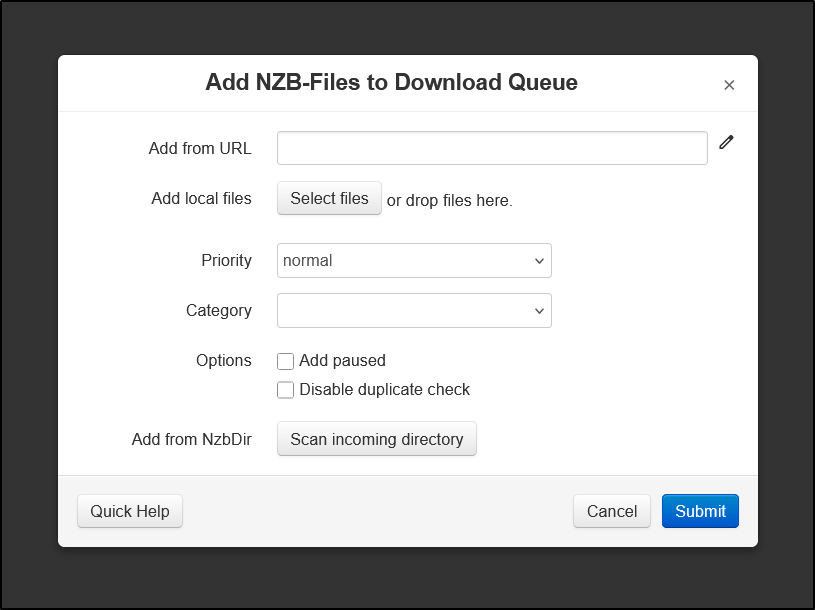

I can add from URL by using “+ Add”

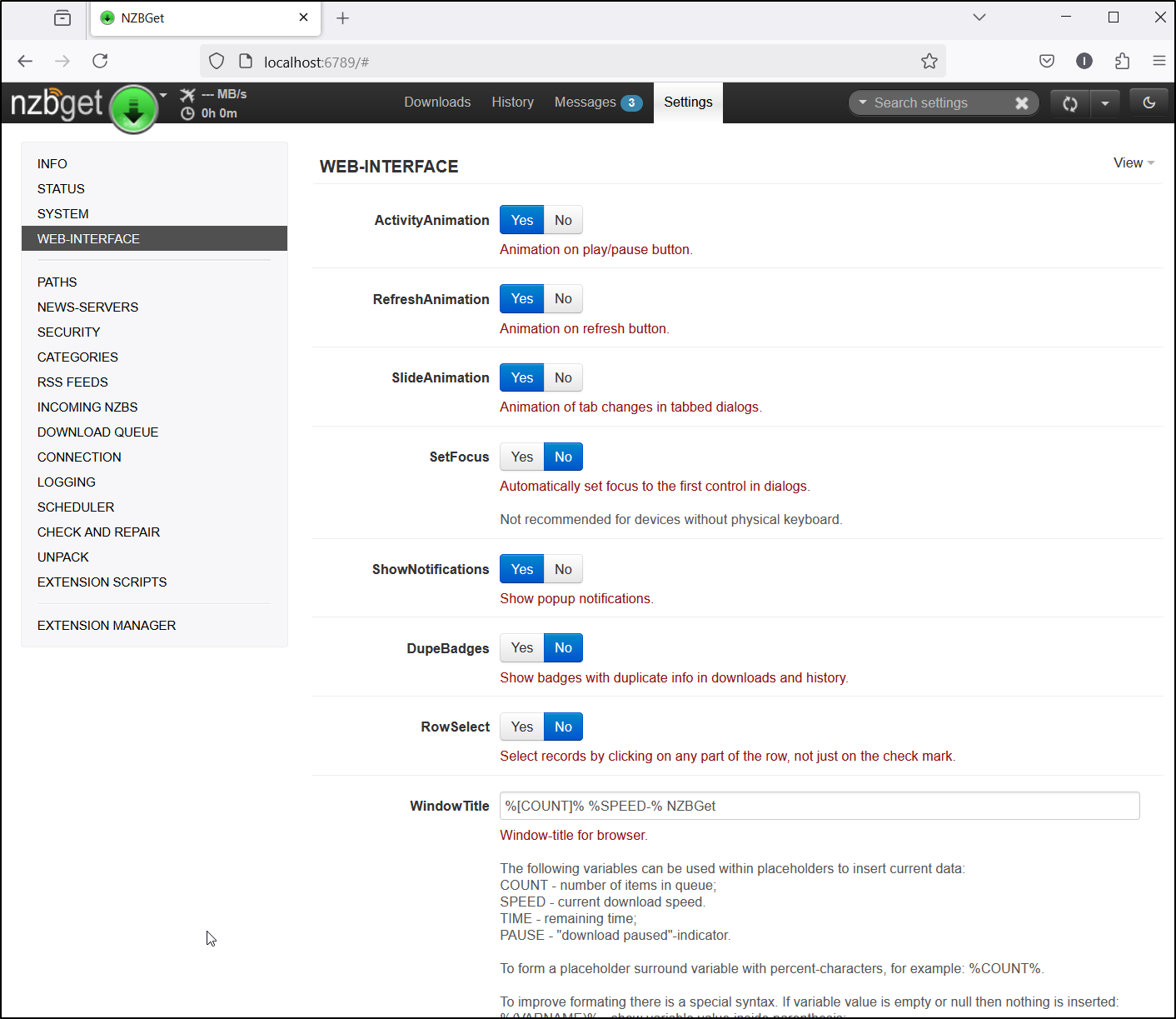

And change settings

The problem is that the more I searched, the more I realized Usenet access is no longer free. Even articles about free access just link to paid trials.

Unfortunately, I don’t trust any of these providers enough to sign up, so I’ll just have to stop here.

Also, without proper auth login, there is no way I would expose this service publicly.

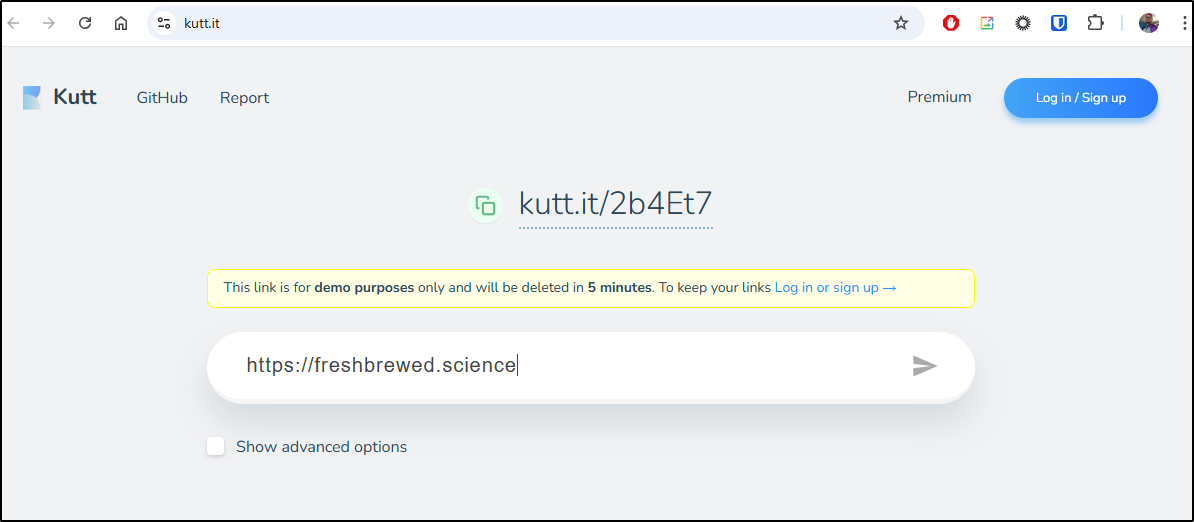

Kutt

We looked at some URL shorteners before and I still use YOURLS today.

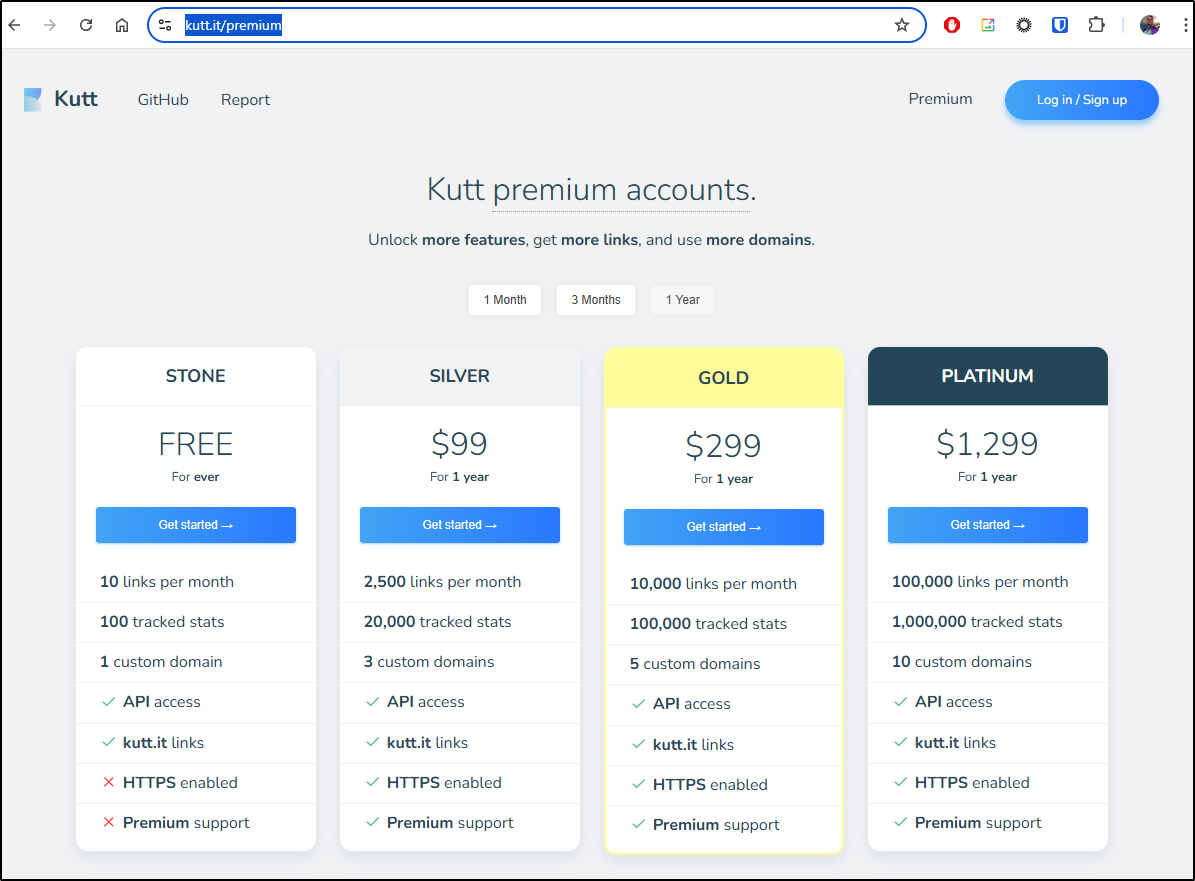

Kutt’s hosted option will let you shorten URLs for free. Beyond that free tier, even the “silver” plan at US$99 a year seems like pretty good value for what they offer.

We can go to Kutt’s GitHub page to find some Docker Compose files.

They have options for MariaDB, PostgreSQL, SQLite and Redis. Let’s try SQLite+Redis:

builder@LuiGi:~/Workspaces$ git clone https://github.com/thedevs-network/kutt.git

Cloning into 'kutt'...

remote: Enumerating objects: 5976, done.

remote: Counting objects: 100% (250/250), done.

remote: Compressing objects: 100% (84/84), done.

remote: Total 5976 (delta 209), reused 166 (delta 166), pack-reused 5726 (from 2)

Receiving objects: 100% (5976/5976), 5.09 MiB | 1.44 MiB/s, done.

Resolving deltas: 100% (3833/3833), done.

builder@LuiGi:~/Workspaces$ cd kutt/

builder@LuiGi:~/Workspaces/kutt$ cat docker-compose.sqlite-redis.yml

services:
  server:
    build:
      context: .
    volumes:
      - db_data_sqlite:/var/lib/kutt
      - custom:/kutt/custom
    environment:
      DB_FILENAME: "/var/lib/kutt/data.sqlite"
      REDIS_ENABLED: true
      REDIS_HOST: redis
      REDIS_PORT: 6379
    ports:
      - 3000:3000
    depends_on:
      redis:
        condition: service_started
  redis:
    image: redis:alpine
    restart: always
    expose:
      - 6379
volumes:
  db_data_sqlite:
  custom:

builder@LuiGi:~/Workspaces/kutt$ docker compose -f docker-compose.sqlite-redis.yml up

[+] Running 9/9

✔ redis Pulled 6.8s

✔ f18232174bc9 Already exists 0.0s

✔ 16cd0457255f Pull complete 0.6s

✔ fa05f5234346 Pull complete 0.8s

✔ 4d03bd351ab9 Pull complete 0.9s

✔ 4738865c5f32 Pull complete 3.8s

✔ 927ba88b2847 Pull complete 3.8s

✔ 4f4fb700ef54 Pull complete 3.9s

✔ 4c7f0522bc7d Pull complete 4.0s

[+] Building 31.7s (12/12) FINISHED docker:default

=> [server internal] load build definition from Dockerfile 0.1s

=> => transferring dockerfile: 660B 0.0s

=> [server internal] load metadata for docker.io/library/node:22-alpine 1.7s

=> [server auth] library/node:pull token for registry-1.docker.io 0.0s

=> [server internal] load .dockerignore 0.0s

=> => transferring context: 58B 0.0s

=> [server stage-0 1/5] FROM docker.io/library/node:22-alpine@sha256:9bef0ef1e268f60627da9ba7d7605e8831d5b56ad07487d24d1aa386336d1944 10.3s

=> => resolve docker.io/library/node:22-alpine@sha256:9bef0ef1e268f60627da9ba7d7605e8831d5b56ad07487d24d1aa386336d1944 0.1s

=> => sha256:cb2bde55f71f84688f9eb7197e0f69aa7c4457499bdf39f34989ab16455c3369 50.34MB / 50.34MB 7.8s

=> => sha256:9d0e0719fbe047cc0770ba9ed1cb150a4ee9bc8a55480eeb8a84a736c8037dbc 1.26MB / 1.26MB 0.4s

=> => sha256:6f063dbd7a5db7835273c913fc420b1082dcda3b5972d75d7478b619da284053 446B / 446B 0.5s

=> => sha256:9bef0ef1e268f60627da9ba7d7605e8831d5b56ad07487d24d1aa386336d1944 6.41kB / 6.41kB 0.0s

=> => sha256:01393fe5a51489b63da0ab51aa8e0a7ff9990132917cf20cfc3d46f5e36c0e48 1.72kB / 1.72kB 0.0s

=> => sha256:33544e83793ca080b49f5a30fb7dbe8a678765973de6ea301572a0ef53e76333 6.18kB / 6.18kB 0.0s

=> => extracting sha256:cb2bde55f71f84688f9eb7197e0f69aa7c4457499bdf39f34989ab16455c3369 1.9s

=> => extracting sha256:9d0e0719fbe047cc0770ba9ed1cb150a4ee9bc8a55480eeb8a84a736c8037dbc 0.1s

=> => extracting sha256:6f063dbd7a5db7835273c913fc420b1082dcda3b5972d75d7478b619da284053 0.0s

=> [server internal] load build context 0.2s

=> => transferring context: 1.17MB 0.1s

=> [server stage-0 2/5] WORKDIR /kutt 0.1s

=> [server stage-0 3/5] RUN --mount=type=bind,source=package.json,target=package.json --mount=type=bind,source=package-lock.json,target=package-lock.json --mount=type=cache,target=/root/.npm npm ci --omit=dev 15.9s

=> [server stage-0 4/5] RUN mkdir -p /var/lib/kutt 0.7s

=> [server stage-0 5/5] COPY . . 0.2s

=> [server] exporting to image 2.4s

=> => exporting layers 2.3s

=> => writing image sha256:ddfbb61a51be693c7b6c2cc85f7b0050e6b792c6d9a1d44a26c93418f6c62f0a 0.0s

=> => naming to docker.io/library/kutt-server 0.0s

=> [server] resolving provenance for metadata file 0.0s

[+] Running 6/6

✔ server Built 0.0s

✔ Network kutt_default Created 0.3s

✔ Volume "kutt_db_data_sqlite" Created 0.0s

✔ Volume "kutt_custom" Created 0.0s

✔ Container kutt-redis-1 Created 0.2s

✔ Container kutt-server-1 Created 0.1s

Attaching to redis-1, server-1

redis-1 | 1:C 08 Mar 2025 13:37:55.188 * oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

redis-1 | 1:C 08 Mar 2025 13:37:55.188 * Redis version=7.4.2, bits=64, commit=00000000, modified=0, pid=1, just started

redis-1 | 1:C 08 Mar 2025 13:37:55.188 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf

redis-1 | 1:M 08 Mar 2025 13:37:55.189 * monotonic clock: POSIX clock_gettime

redis-1 | 1:M 08 Mar 2025 13:37:55.192 * Running mode=standalone, port=6379.

redis-1 | 1:M 08 Mar 2025 13:37:55.193 * Server initialized

redis-1 | 1:M 08 Mar 2025 13:37:55.194 * Ready to accept connections tcp

server-1 |

server-1 | > kutt@3.2.2 migrate

server-1 | > knex migrate:latest

server-1 |

server-1 | Batch 1 run: 10 migrations

server-1 | npm notice

server-1 | npm notice New major version of npm available! 10.9.2 -> 11.2.0

server-1 | npm notice Changelog: https://github.com/npm/cli/releases/tag/v11.2.0

server-1 | npm notice To update run: npm install -g npm@11.2.0

server-1 | npm notice

server-1 |

server-1 | > kutt@3.2.2 start

server-1 | > node server/server.js --production

server-1 |

server-1 | ================================

server-1 | Missing environment variables:

server-1 | JWT_SECRET: undefined

server-1 | ================================

server-1 |

server-1 | Exiting with error code 1

server-1 exited with code 1

Looks like we need to specify a JWT secret

builder@LuiGi:~/Workspaces/kutt$ !v

vi docker-compose.sqlite-redis.yml

builder@LuiGi:~/Workspaces/kutt$ cat ./docker-compose.sqlite-redis.yml

services:
  server:
    build:
      context: .
    volumes:
      - db_data_sqlite:/var/lib/kutt
      - custom:/kutt/custom
    environment:
      JWT_SECRET: "ThisIsMyJWTSecretAndIThinkItsPrettyLong"
      DB_FILENAME: "/var/lib/kutt/data.sqlite"
      REDIS_ENABLED: true
      REDIS_HOST: redis
      REDIS_PORT: 6379
    ports:
      - 3000:3000
    depends_on:
      redis:
        condition: service_started
  redis:
    image: redis:alpine
    restart: always
    expose:
      - 6379
volumes:
  db_data_sqlite:
  custom:

builder@LuiGi:~/Workspaces/kutt$ docker compose -f docker-compose.sqlite-redis.yml up

[+] Running 2/2

✔ Container kutt-redis-1 Created 0.0s

✔ Container kutt-server-1 Recreated 0.1s

Attaching to redis-1, server-1

redis-1 | 1:C 08 Mar 2025 13:40:51.756 * oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

redis-1 | 1:C 08 Mar 2025 13:40:51.756 * Redis version=7.4.2, bits=64, commit=00000000, modified=0, pid=1, just started

redis-1 | 1:C 08 Mar 2025 13:40:51.756 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf

redis-1 | 1:M 08 Mar 2025 13:40:51.757 * monotonic clock: POSIX clock_gettime

redis-1 | 1:M 08 Mar 2025 13:40:51.758 * Running mode=standalone, port=6379.

redis-1 | 1:M 08 Mar 2025 13:40:51.760 * Server initialized

redis-1 | 1:M 08 Mar 2025 13:40:51.761 * Loading RDB produced by version 7.4.2

redis-1 | 1:M 08 Mar 2025 13:40:51.761 * RDB age 148 seconds

redis-1 | 1:M 08 Mar 2025 13:40:51.761 * RDB memory usage when created 0.90 Mb

redis-1 | 1:M 08 Mar 2025 13:40:51.761 * Done loading RDB, keys loaded: 0, keys expired: 0.

redis-1 | 1:M 08 Mar 2025 13:40:51.761 * DB loaded from disk: 0.000 seconds

redis-1 | 1:M 08 Mar 2025 13:40:51.761 * Ready to accept connections tcp

server-1 |

server-1 | > kutt@3.2.2 migrate

server-1 | > knex migrate:latest

server-1 |

server-1 | Already up to date

server-1 |

server-1 | > kutt@3.2.2 start

server-1 | > node server/server.js --production

server-1 |

server-1 | > Ready on http://localhost:3000
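The JWT secret above is a readable phrase, which is fine for a local test. For anything exposed publicly, a random value is the safer choice. Here is a minimal sketch using Python's standard `secrets` module. The function name and the 48-byte length are my own choices, not a Kutt requirement.

```python
import secrets


def generate_jwt_secret(num_bytes: int = 48) -> str:
    """Return a URL-safe random string built from num_bytes of OS-level randomness."""
    return secrets.token_urlsafe(num_bytes)


# Print a line that can be pasted into the compose file's environment block.
secret = generate_jwt_secret()
print(f'JWT_SECRET: "{secret}"')
```

Bear in mind that changing the secret later would likely invalidate any tokens signed with the old one.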

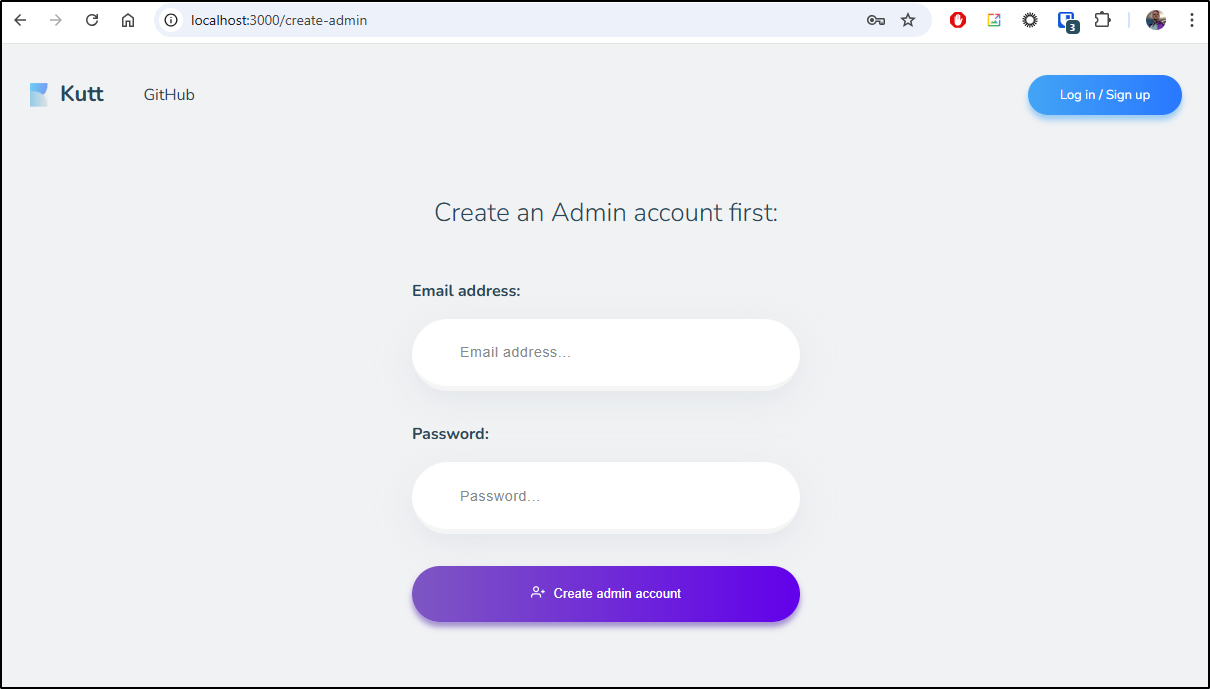

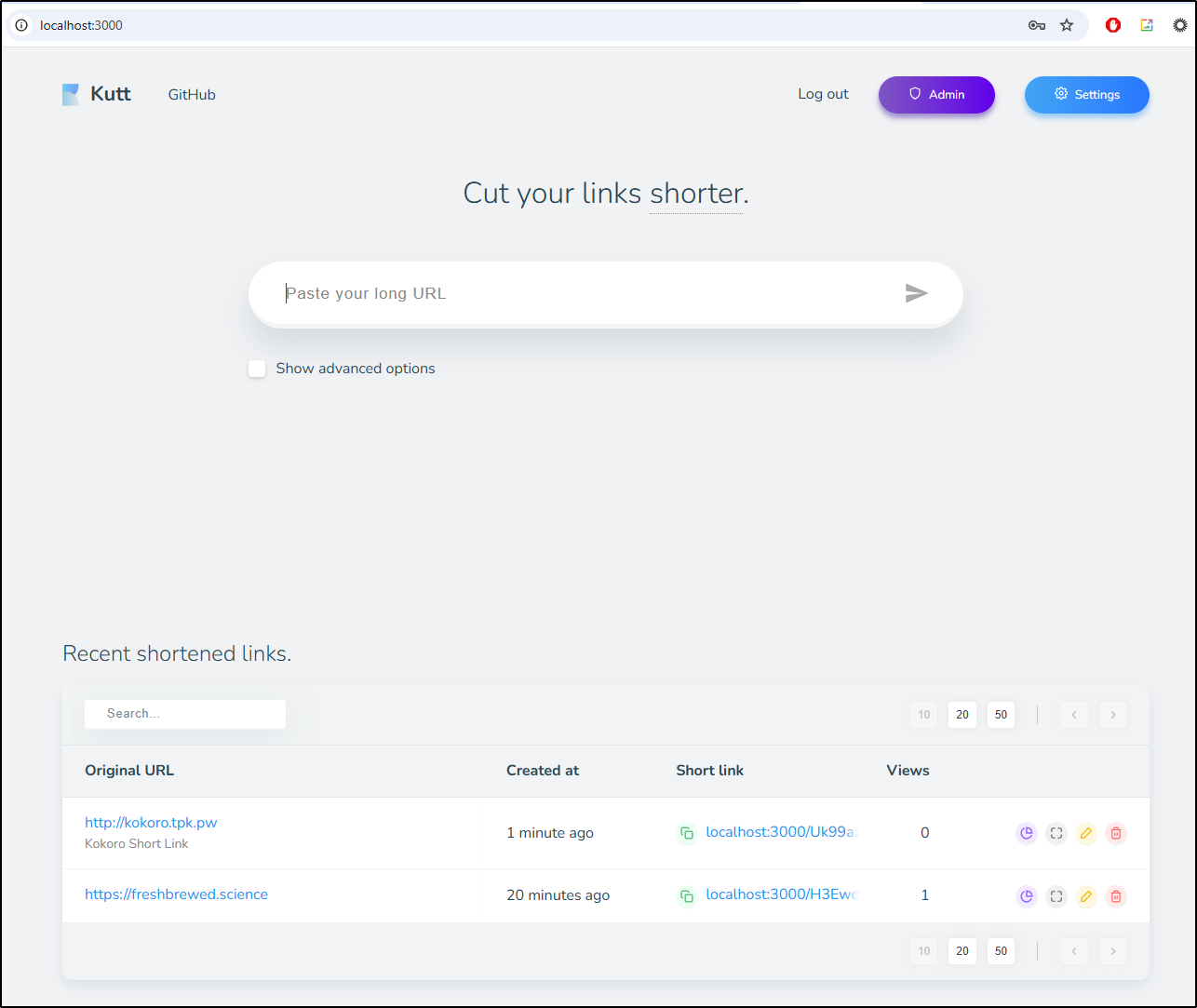

First, I need to create an Admin account

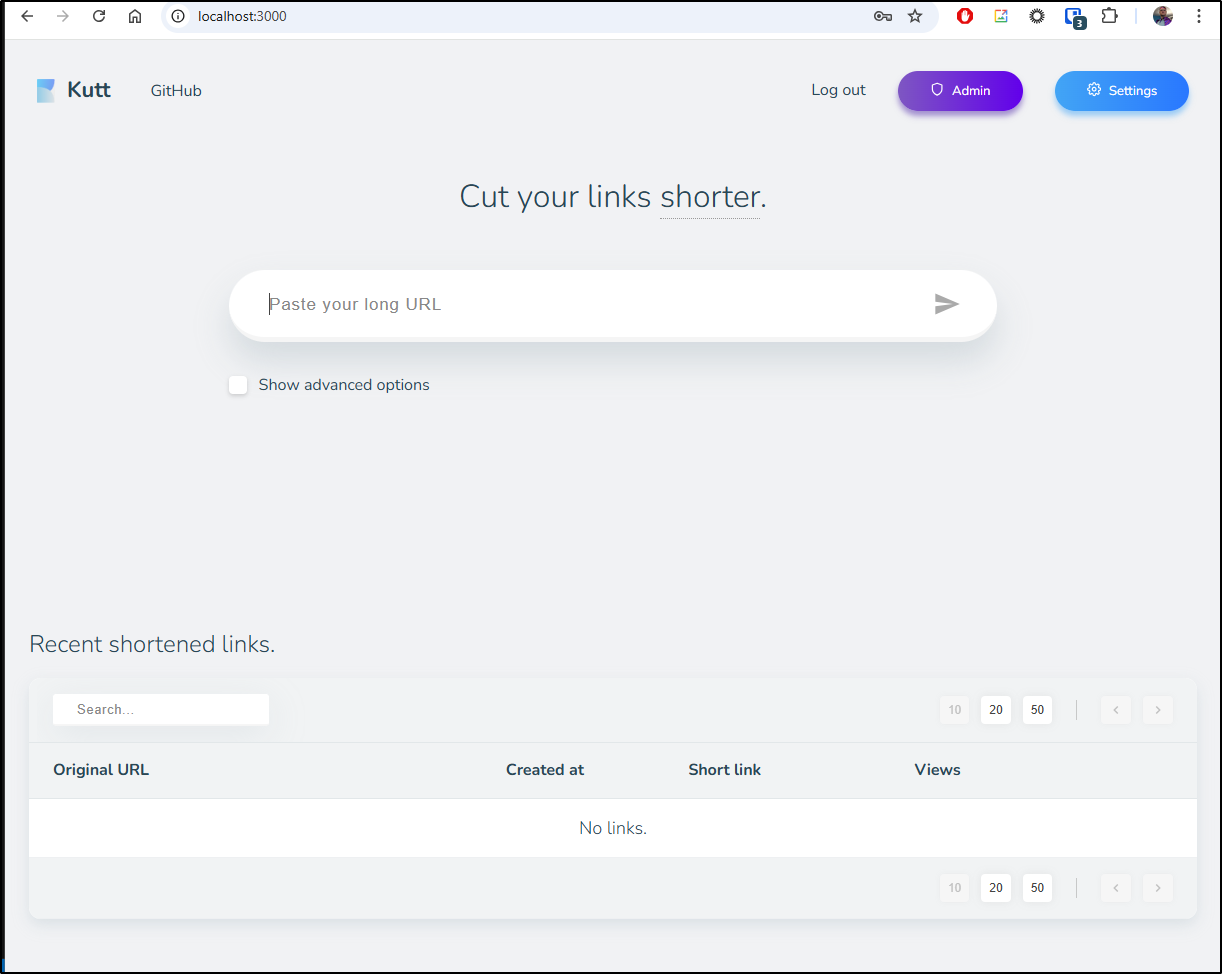

I can then login to try it

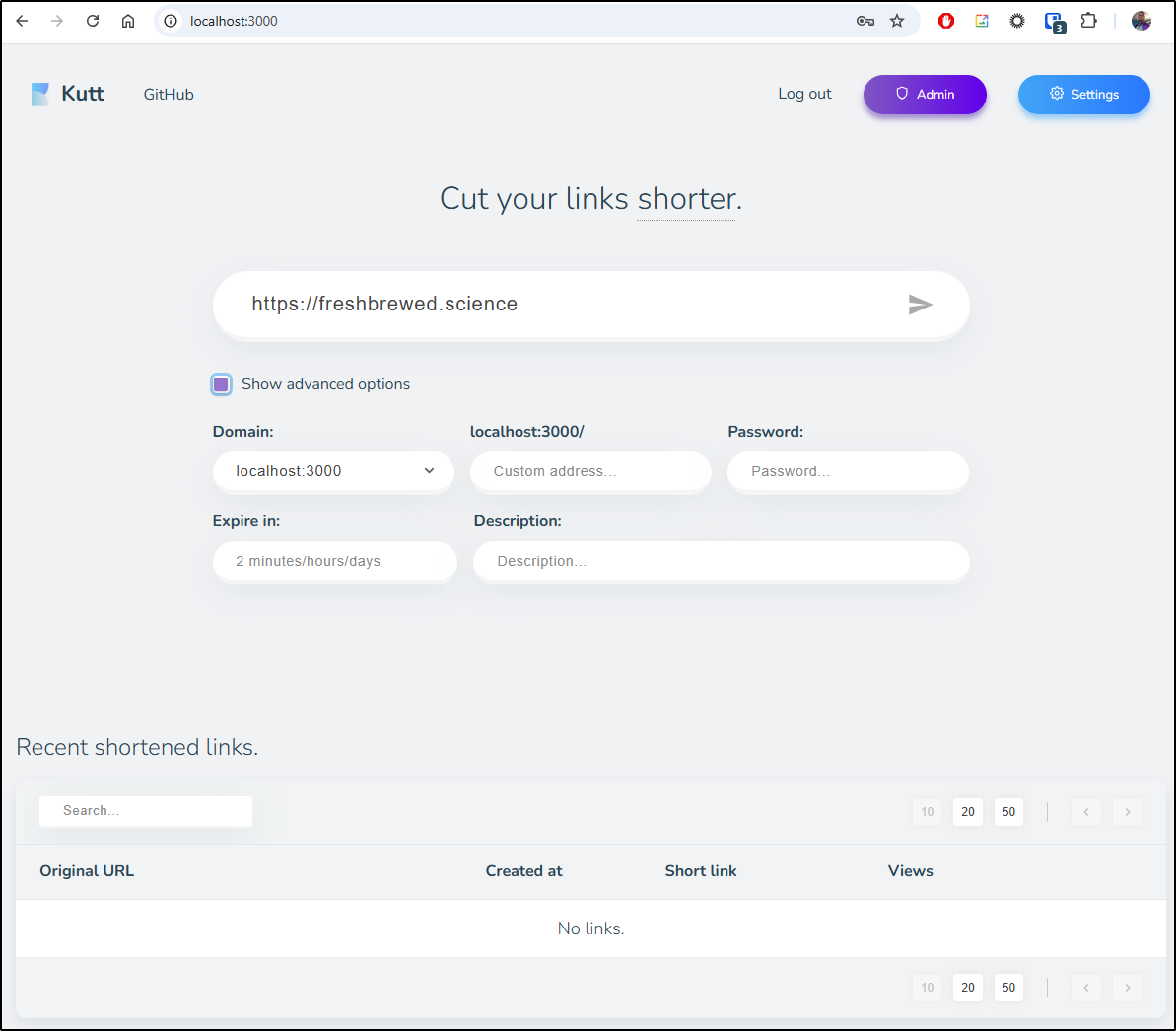

In creating a link, I can specify some advanced options

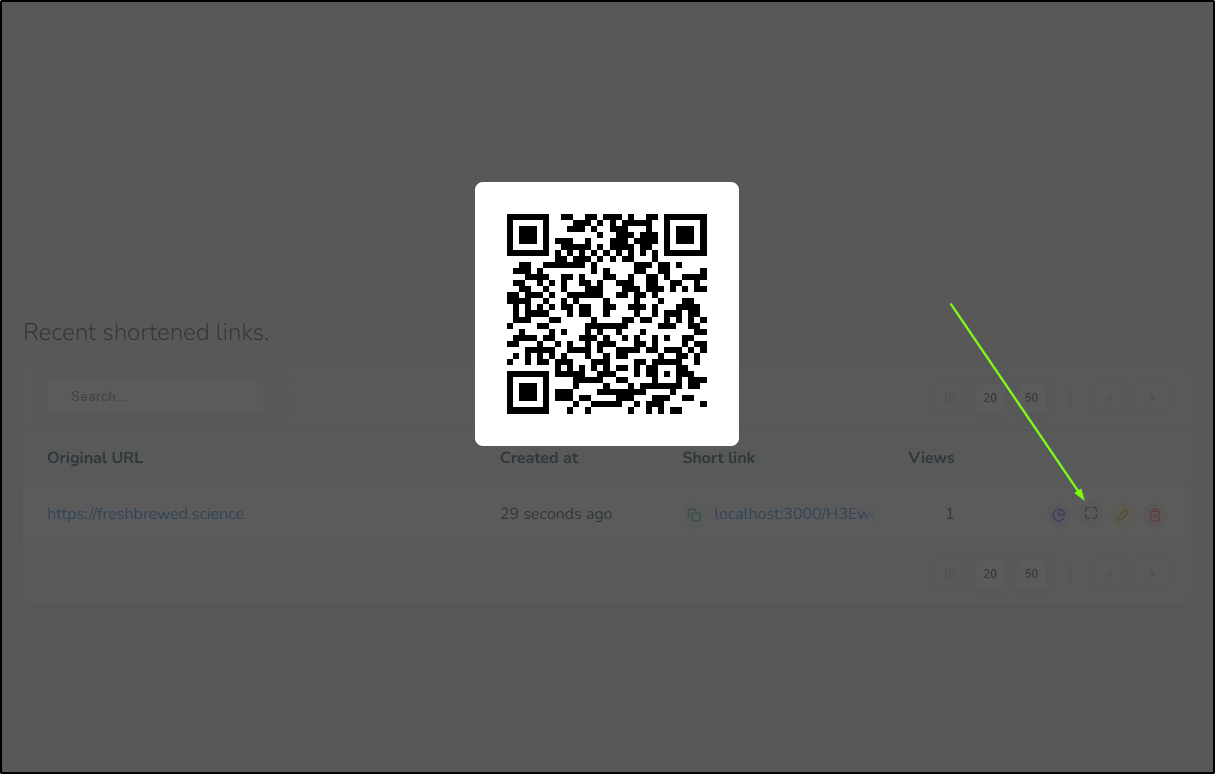

There are some nice features in this tool like creating a QR code on the fly

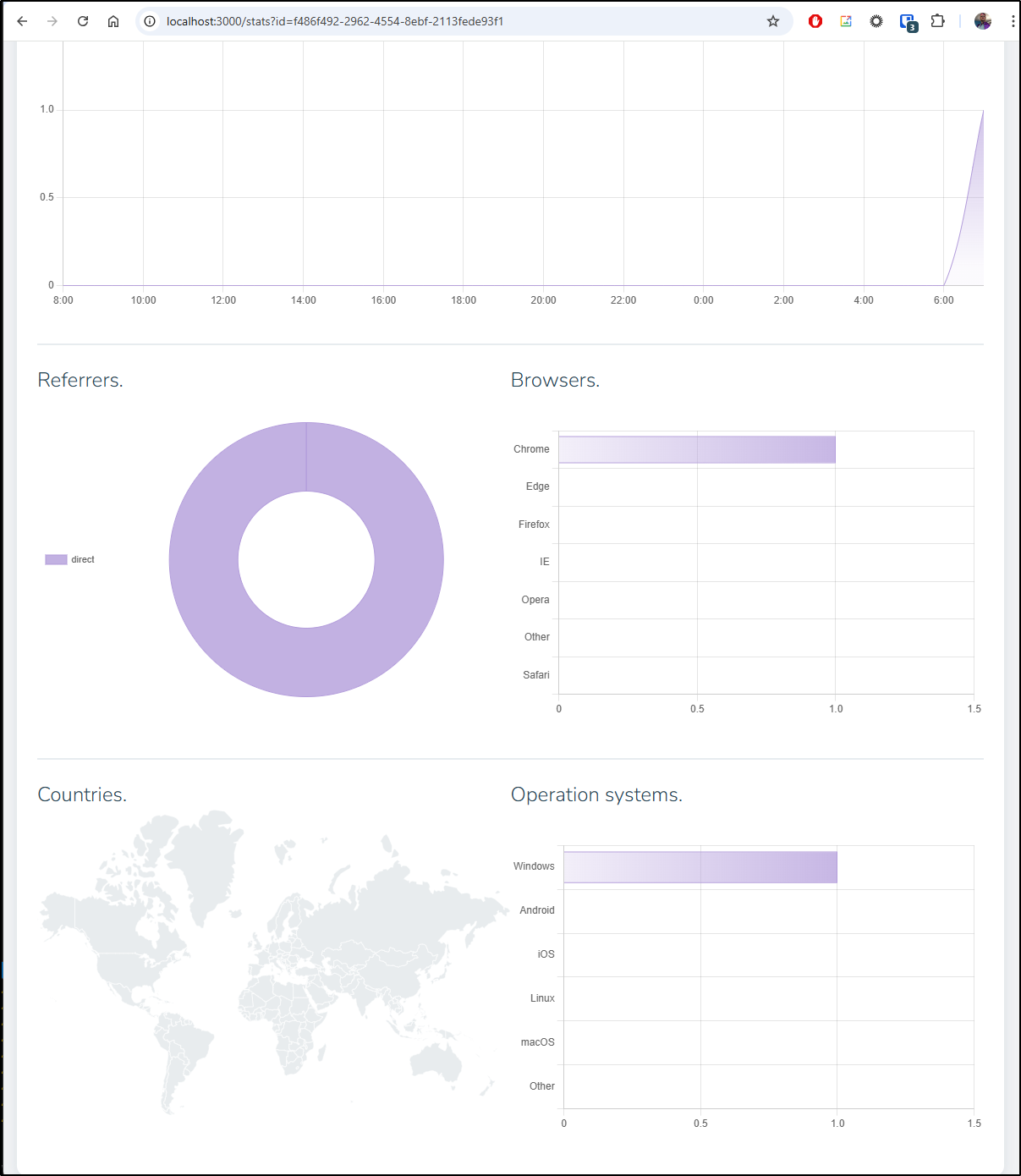

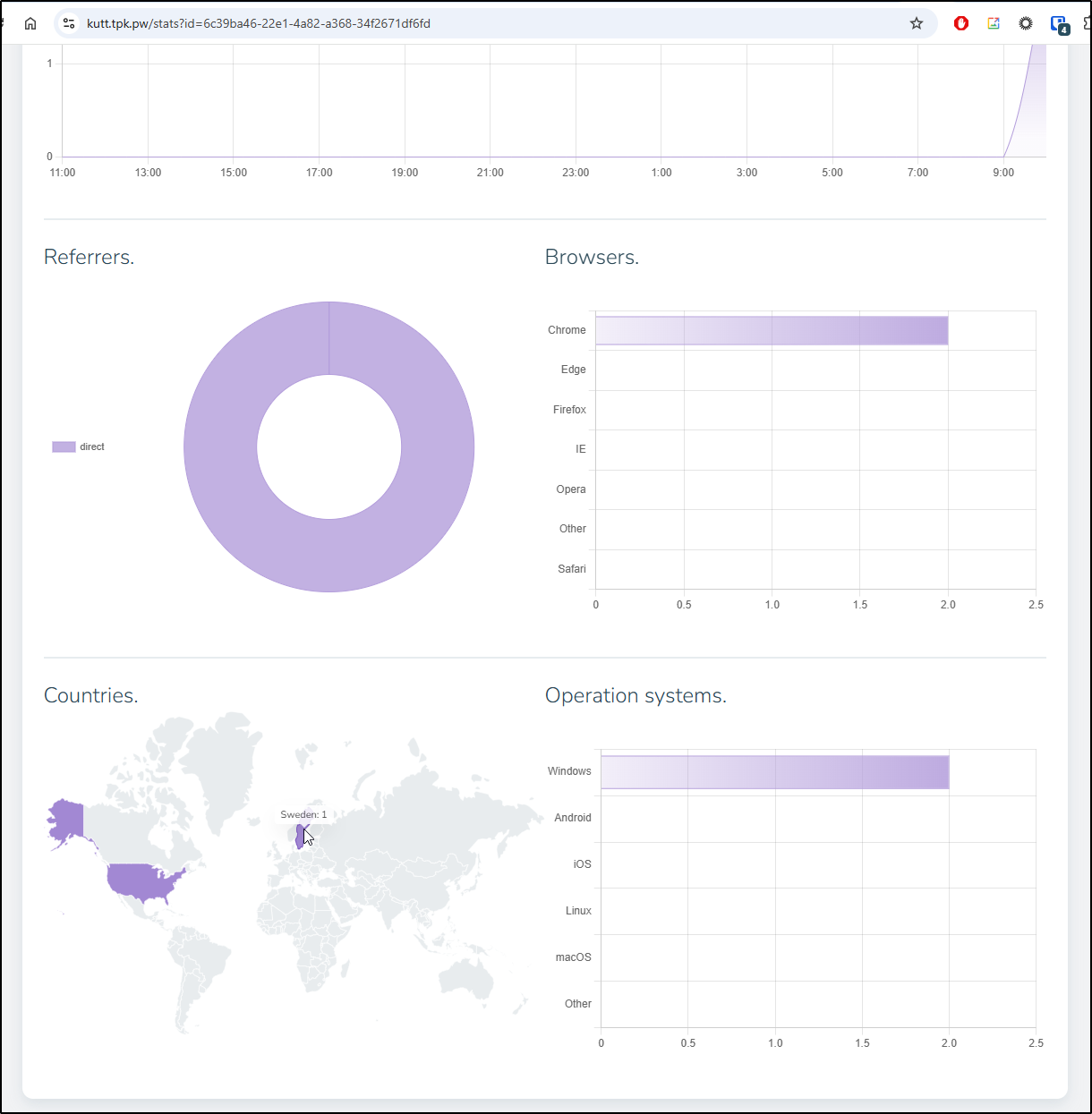

and some nice stats (they draw in a really smooth dynamic way as the page loads)

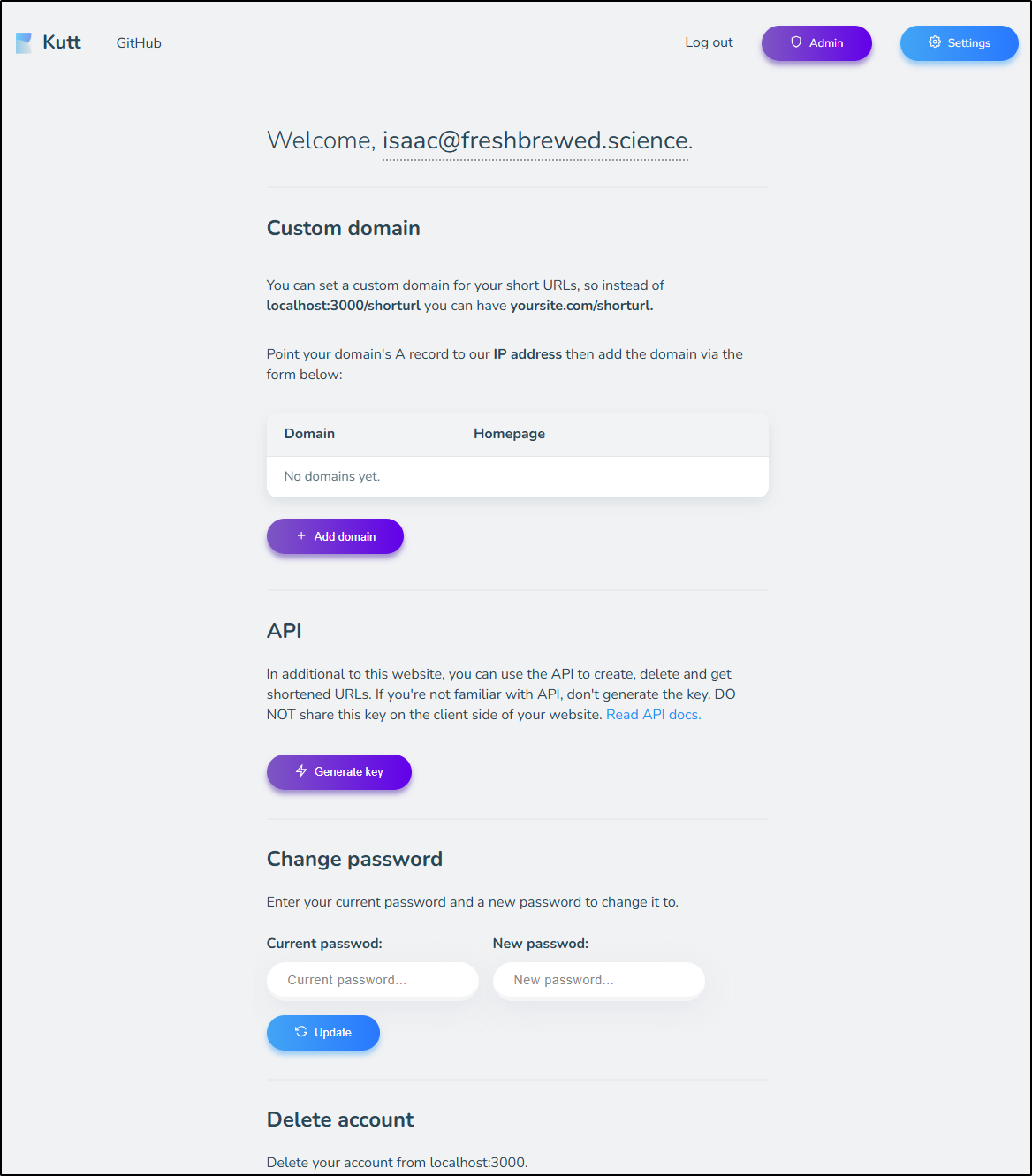

From the Admin side, we can get an API key to use with their API, change passwords, or add an A Record (assuming this was hosted on a box or VM).

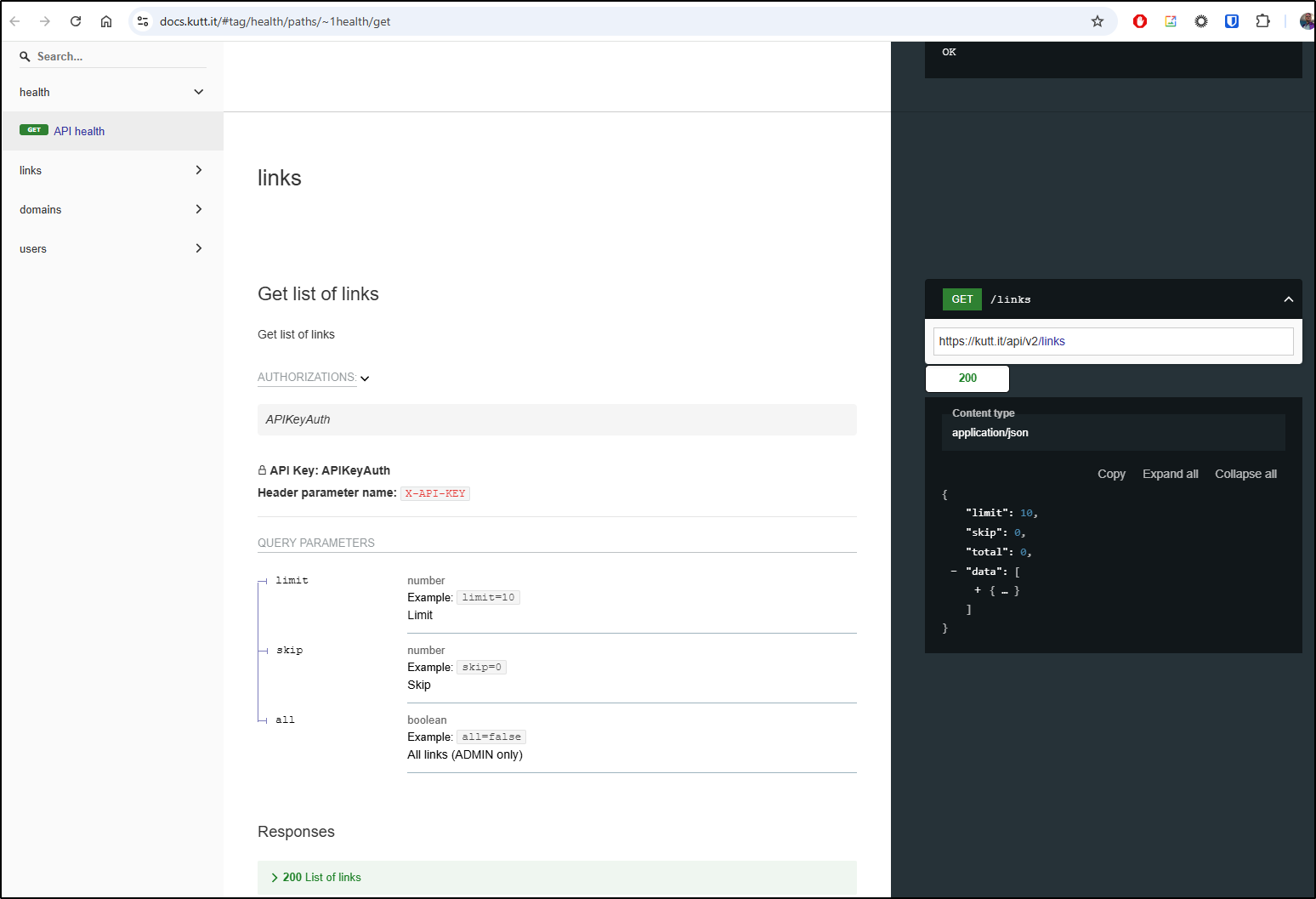

API

Let’s check the API docs to see how hard the API might be to use.

Based on what I see here, I should be able to use:

curl -X GET -H "X-API-KEY: vYvhc2utYBKvvlWMv-TNpoBVb1aadiyBSiLs14G_" http://localhost:3000/api/v2/links

I tested both forms and they work great:

$ curl -X GET -H "X-API-KEY: vYvhc2utYBKvvlWMv-TNpoBVb1aadiyBSiLs14G_" http://localhost:3000/api/v2/links

{"total":1,"limit":10,"skip":0,"data":[{"id":"f486f492-2962-4554-8ebf-2113fede93f1","address":"H3EwqN","banned":false,"created_at":"2025-03-08T13:48:15.000Z","updated_at":"2025-03-08T13:48:15.000Z","password":false,"description":null,"expire_in":null,"target":"https://freshbrewed.science","visit_count":1,"domain":null,"link":"https://localhost:3000/H3EwqN"}]}

$ curl --request GET --header "Content-Type: application/json" --header "X-API-KEY: vYvhc2utYBKvvlWMv-TNpoBVb1aadiyBSiLs14G_" http://localhost:3000/api/v2/links

{"total":1,"limit":10,"skip":0,"data":[{"id":"f486f492-2962-4554-8ebf-2113fede93f1","address":"H3EwqN","banned":false,"created_at":"2025-03-08T13:48:15.000Z","updated_at":"2025-03-08T13:48:15.000Z","password":false,"description":null,"expire_in":null,"target":"https://freshbrewed.science","visit_count":1,"domain":null,"link":"https://localhost:3000/H3EwqN"}]}

I can try and create a link as well

$ curl -X POST -H "Content-Type: application/json" -H "X-API-KEY: vYvhc2utYBKvvlWMv-TNpoBVb1aadiyBSiLs14G_" --data '{"target": "http://kokoro.tpk.pw","description": "Kokoro Short Link","reuse": false}' http://localhost:3000/api/v2/links | tee o.f

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 398 100 314 100 84 1984 531 --:--:-- --:--:-- --:--:-- 2518

{"id":"d0dcec9f-56ae-475d-a1a8-6cf00e7a5d7f","address":"Uk99az","description":"Kokoro Short Link","banned":false,"password":false,"expire_in":null,"target":"http://kokoro.tpk.pw","visit_count":0,"created_at":"2025-03-08T14:07:05.000Z","updated_at":"2025-03-08T14:07:05.000Z","link":"https://localhost:3000/Uk99az"}

And I can then fetch the created link programmatically

$ cat o.f | jq .link

"https://localhost:3000/Uk99az"

as well as see it on the admin page

Helm this sucka

Okay, I’m diggin this enough to take it to the next step

Let’s create a simple Kubernetes Manifest for the SQLite deployment. However, because I’m a bit of an open-source fan, I’ll swap out Redis for Valkey:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: db-data-sqlite
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: custom
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kutt-redis
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kutt-redis
  template:
    metadata:
      labels:
        app: kutt-redis
    spec:
      containers:
        - name: kutt-redis
          image: valkey:latest
          ports:
            - containerPort: 6379
          env:
            - name: REDIS_PASSWORD
              value: "your_redis_password" # Replace with your actual password
---
apiVersion: v1
kind: Service
metadata:
  name: kutt-redis
spec:
  selector:
    app: kutt-redis
  ports:
    - port: 6379
      targetPort: 6379
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kutt-server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kutt-server
  template:
    metadata:
      labels:
        app: kutt-server
    spec:
      containers:
        - name: kutt-server
          image: kutt-server:latest
          env:
            - name: JWT_SECRET
              value: "ThisIsMyJWTSecretAndIThinkItsPrettyLong"
            - name: DB_FILENAME
              value: "/var/lib/kutt/data.sqlite"
            - name: REDIS_ENABLED
              value: "true"
            - name: REDIS_HOST
              value: "valkey"
            - name: REDIS_PORT
              value: "6379"
            - name: DEFAULT_DOMAIN
              value: "kutt.tpk.pw"
            - name: TRUST_PROXY
              value: "true"
          ports:
            - containerPort: 3000
          volumeMounts:
            - name: db-data-sqlite
              mountPath: /var/lib/kutt
            - name: custom
              mountPath: /kutt/custom
      volumes:
        - name: db-data-sqlite
          persistentVolumeClaim:
            claimName: db_data_sqlite
        - name: custom
          persistentVolumeClaim:
            claimName: custom
---
apiVersion: v1
kind: Service
metadata:
  name: kutt-service
spec:
  type: ClusterIP
  ports:
    - port: 3000
      targetPort: 3000
  selector:
    app: kutt-server

Note: I added two settings so we can front this with Kubernetes and a proper DNS entry:

- name: DEFAULT_DOMAIN
  value: "kutt.tpk.pw"
- name: TRUST_PROXY
  value: "true"
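Why the proxy setting matters: behind an ingress, every request reaches the app from the ingress controller’s address, so the real visitor address only survives in a forwarding header. The sketch below shows the general idea only. It is not Kutt’s code; the `client_ip` function and the sample addresses are made up for illustration.

```python
def client_ip(remote_addr: str, headers: dict[str, str], trust_proxy: bool) -> str:
    """Pick the address to treat as the visitor (illustrative, not Kutt's logic).

    remote_addr is the peer of the TCP connection, which behind an ingress
    is the ingress controller rather than the visitor.
    """
    forwarded = headers.get("X-Forwarded-For", "")
    if trust_proxy and forwarded:
        # The left-most entry is the original client as reported by the proxy chain.
        return forwarded.split(",")[0].strip()
    return remote_addr


if __name__ == "__main__":
    hdrs = {"X-Forwarded-For": "203.0.113.7, 10.42.0.1"}
    print(client_ip("10.42.0.5", hdrs, trust_proxy=True))
    print(client_ip("10.42.0.5", hdrs, trust_proxy=False))
```

Only trust the header when something you control (here, the NGINX ingress) is the one setting it, since clients can send any value they like.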

To keep things clean, I’ll launch this into a namespace

builder@LuiGi:~/Workspaces/kutt$ kubectl create ns kutt

namespace/kutt created

builder@LuiGi:~/Workspaces/kutt$ kubectl apply -f ./k8s.manifest.yaml -n kutt

persistentvolumeclaim/db-data-sqlite created

persistentvolumeclaim/custom created

deployment.apps/kutt-redis created

service/kutt-redis created

deployment.apps/kutt-server created

service/kutt-service created

Though that didn’t seem to launch the pods

$ kubectl get po -n kutt

NAME READY STATUS RESTARTS AGE

kutt-server-7567bb4668-rvbn5 0/1 Pending 0 83s

kutt-redis-6d54744b4b-plt8w 0/1 ImagePullBackOff 0 84s

Interesting. It seems there is no plain “valkey:latest” image to pull

$ kubectl describe po kutt-redis-6d54744b4b-plt8w -n kutt | tail -n 10

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 2m27s default-scheduler Successfully assigned kutt/kutt-redis-6d54744b4b-plt8w to hp-hp-elitebook-850-g2

Normal Pulling 55s (x4 over 2m27s) kubelet Pulling image "valkey:latest"

Warning Failed 55s (x4 over 2m26s) kubelet Failed to pull image "valkey:latest": rpc error: code = Unknown desc = failed to pull and unpack image "docker.io/library/valkey:latest": failed to resolve reference "docker.io/library/valkey:latest": pull access denied, repository does not exist or may require authorization: server message: insufficient_scope: authorization failed

Warning Failed 55s (x4 over 2m26s) kubelet Error: ErrImagePull

Warning Failed 41s (x6 over 2m25s) kubelet Error: ImagePullBackOff

Normal BackOff 26s (x7 over 2m25s) kubelet Back-off pulling image "valkey:latest"

Checking Docker Hub, it looks like I need the namespaced repository along with a tag:

image: valkey/valkey:alpine

That solved Redis/Valkey at least

$ kubectl get po -n kutt

NAME READY STATUS RESTARTS AGE

kutt-server-7567bb4668-rvbn5 0/1 Pending 0 9m20s

kutt-redis-cf5cfbd87-llnsm 1/1 Running 0 68s
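The pull error above shows the short name “valkey:latest” being expanded to “docker.io/library/valkey:latest”, which is the official-images namespace where Valkey doesn’t live. Here is a rough sketch of that expansion. It is a simplification I wrote for illustration (it ignores digests, for one) and not the resolver containerd actually runs.

```python
def normalize_image(ref: str) -> str:
    """Expand a short image reference using Docker Hub defaults (simplified)."""
    name, tag = ref, "latest"
    last_slash = ref.rfind("/")
    colon = ref.rfind(":")
    # A colon after the last slash separates the tag; an earlier one is a registry port.
    if colon > last_slash:
        name, tag = ref[:colon], ref[colon + 1:]

    parts = name.split("/")
    first = parts[0]
    has_registry = len(parts) > 1 and ("." in first or ":" in first or first == "localhost")
    if has_registry:
        return f"{name}:{tag}"
    if len(parts) == 1:
        # No namespace given: Docker Hub assumes the official "library" namespace.
        name = f"library/{name}"
    return f"docker.io/{name}:{tag}"


if __name__ == "__main__":
    for ref in ("valkey:latest", "valkey/valkey:alpine"):
        print(ref, "->", normalize_image(ref))
```

Seen this way, any single-word image name only works if it is an official Docker Hub image.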

The issue with Kutt Server is that it’s missing its PersistentVolumeClaim

builder@LuiGi:~/Workspaces/kutt$ kubectl describe po kutt-server-7567bb4668-rvbn5 -n kutt | tail -n2

Warning FailedScheduling 10m default-scheduler 0/4 nodes are available: persistentvolumeclaim "db_data_sqlite" not found. preemption: 0/4 nodes are available: 4 Preemption is not helpful for scheduling..

Warning FailedScheduling 22s (x2 over 5m22s) default-scheduler 0/4 nodes are available: persistentvolumeclaim "db_data_sqlite" not found. preemption: 0/4 nodes are available: 4 Preemption is not helpful for scheduling..

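The Deployment asks for a claim named “db_data_sqlite”, but the PVC I created is “db-data-sqlite”, and I don’t think underscores are allowed in Kubernetes names anyway. I’ll rename the PVC and the claim reference to “dbdatasqlite” so they match.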
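For reference, most Kubernetes object names have to be valid DNS subdomain names (RFC 1123): lowercase letters, digits, “-” and “.”, starting and ending with an alphanumeric. A quick sketch of that check, with a helper name of my own choosing:

```python
import re

# DNS-1123 subdomain: dot-separated labels of lowercase alphanumerics and dashes.
DNS_1123_SUBDOMAIN = re.compile(
    r"[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*"
)


def is_valid_k8s_name(name: str) -> bool:
    """Return True if name is usable as a typical Kubernetes object name."""
    return len(name) <= 253 and DNS_1123_SUBDOMAIN.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ("db_data_sqlite", "db-data-sqlite", "dbdatasqlite"):
        print(candidate, is_valid_k8s_name(candidate))
```

So dashes would have been fine for the PVC; it is only the underscore form that can never match a real object.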

After a few debugging rounds, I found a funny hidden character had gotten into my YAML. Once I fixed it, I could apply the YAML again

$ cat ./k8s.manifest.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: dbdatasqlite
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: custom
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kutt-redis
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kutt-redis
  template:
    metadata:
      labels:
        app: kutt-redis
    spec:
      containers:
        - name: kutt-redis
          image: valkey/valkey:alpine
          ports:
            - containerPort: 6379
          env:
            - name: REDIS_PASSWORD
              value: "your_redis_password" # Replace with your actual password
---
apiVersion: v1
kind: Service
metadata:
  name: kutt-redis
spec:
  selector:
    app: kutt-redis
  ports:
    - port: 6379
      targetPort: 6379
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kutt-server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kutt-server
  template:
    metadata:
      labels:
        app: kutt-server
    spec:
      containers:
        - name: kutt-server
          image: kutt-server:latest
          env:
            - name: JWT_SECRET
              value: "ThisIsMyJWTSecretAndIThinkItsPrettyLong"
            - name: DB_FILENAME
              value: "/var/lib/kutt/data.sqlite"
            - name: REDIS_ENABLED
              value: "true"
            - name: REDIS_HOST
              value: "valkey"
            - name: REDIS_PORT
              value: "6379"
            - name: DEFAULT_DOMAIN
              value: "kutt.tpk.pw"
            - name: TRUST_PROXY
              value: "true"
          ports:
            - containerPort: 3000
          volumeMounts:
            - name: db-data-sqlite
              mountPath: /var/lib/kutt
            - name: custom
              mountPath: /kutt/custom
      volumes:
        - name: db-data-sqlite
          persistentVolumeClaim:
            claimName: dbdatasqlite
        - name: custom
          persistentVolumeClaim:
            claimName: custom
---
apiVersion: v1
kind: Service
metadata:
  name: kutt-service
spec:
  type: ClusterIP
  ports:
    - port: 3000
      targetPort: 3000
  selector:
    app: kutt-server

applying

$ kubectl apply -f ./k8s.manifest.yaml -n kutt

persistentvolumeclaim/dbdatasqlite unchanged

persistentvolumeclaim/custom unchanged

deployment.apps/kutt-redis unchanged

service/kutt-redis unchanged

deployment.apps/kutt-server configured

service/kutt-service unchanged
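As for the hidden character I mentioned earlier, those are hard to spot by eye, so a small scan can save a few debugging rounds. This is just a sketch: the function name is mine, and it flags tabs plus anything outside printable ASCII, which would be too strict for a YAML file that legitimately contains non-English text.

```python
import unicodedata


def find_suspicious_chars(text: str) -> list[tuple[int, int, str]]:
    """Return (line, column, description) for tabs and non-printable-ASCII characters."""
    hits = []
    for line_no, line in enumerate(text.split("\n"), start=1):
        for col, ch in enumerate(line, start=1):
            if not (" " <= ch <= "~"):
                # Fall back to the code point when the character has no Unicode name.
                label = unicodedata.name(ch, f"U+{ord(ch):04X}")
                hits.append((line_no, col, label))
    return hits


if __name__ == "__main__":
    # A non-breaking space hiding where a normal space should be.
    sample = "metadata:\n  name:\u00a0dbdatasqlite\n"
    for line_no, col, label in find_suspicious_chars(sample):
        print(f"line {line_no}, col {col}: {label}")
```

To run it against a real file, read it with `open("k8s.manifest.yaml", encoding="utf-8").read()` and pass the text in.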

Now there seems to be an issue with the Kutt server image

$ kubectl describe po kutt-server-6bdcfcd9d-jmxhh -n kutt | tail -n 10

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 91s default-scheduler Successfully assigned kutt/kutt-server-6bdcfcd9d-jmxhh to hp-hp-elitebook-850-g2

Normal BackOff 17s (x4 over 89s) kubelet Back-off pulling image "kutt-server:latest"

Warning Failed 17s (x4 over 89s) kubelet Error: ImagePullBackOff

Normal Pulling 3s (x4 over 90s) kubelet Pulling image "kutt-server:latest"

Warning Failed 3s (x4 over 90s) kubelet Failed to pull image "kutt-server:latest": rpc error: code = Unknown desc = failed to pull and unpack image "docker.io/library/kutt-server:latest": failed to resolve reference "docker.io/library/kutt-server:latest": pull access denied, repository does not exist or may require authorization: server message: insufficient_scope: authorization failed

Warning Failed 3s (x4 over 90s) kubelet Error: ErrImagePull

Again, a quick check of Docker Hub shows that I should be using “kutt/kutt:latest”

containers:
  - name: kutt-server
    image: kutt/kutt:latest

Let’s fix and try again

builder@LuiGi:~/Workspaces/kutt$ !v

vi k8s.manifest.yaml

builder@LuiGi:~/Workspaces/kutt$ cat ./k8s.manifest.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: dbdatasqlite
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: custom
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kutt-redis
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kutt-redis
  template:
    metadata:
      labels:
        app: kutt-redis
    spec:
      containers:
        - name: kutt-redis
          image: valkey/valkey:alpine
          ports:
            - containerPort: 6379
          env:
            - name: REDIS_PASSWORD
              value: "your_redis_password" # Replace with your actual password
---
apiVersion: v1
kind: Service
metadata:
  name: kutt-redis
spec:
  selector:
    app: kutt-redis
  ports:
    - port: 6379
      targetPort: 6379
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kutt-server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kutt-server
  template:
    metadata:
      labels:
        app: kutt-server
    spec:
      containers:
        - name: kutt-server
          image: kutt/kutt:latest
          env:
            - name: JWT_SECRET
              value: "ThisIsMyJWTSecretAndIThinkItsPrettyLong"
            - name: DB_FILENAME
              value: "/var/lib/kutt/data.sqlite"
            - name: REDIS_ENABLED
              value: "true"
            - name: REDIS_HOST
              value: "valkey"
            - name: REDIS_PORT
              value: "6379"
            - name: DEFAULT_DOMAIN
              value: "kutt.tpk.pw"
            - name: TRUST_PROXY
              value: "true"
          ports:
            - containerPort: 3000
          volumeMounts:
            - name: db-data-sqlite
              mountPath: /var/lib/kutt
            - name: custom
              mountPath: /kutt/custom
      volumes:
        - name: db-data-sqlite
          persistentVolumeClaim:
            claimName: dbdatasqlite
        - name: custom
          persistentVolumeClaim:
            claimName: custom
---
apiVersion: v1
kind: Service
metadata:
  name: kutt-service
spec:
  type: ClusterIP
  ports:
    - port: 3000
      targetPort: 3000
  selector:
    app: kutt-server

builder@LuiGi:~/Workspaces/kutt$ kubectl apply -f ./k8s.manifest.yaml -n kutt

persistentvolumeclaim/dbdatasqlite unchanged

persistentvolumeclaim/custom unchanged

deployment.apps/kutt-redis unchanged

service/kutt-redis unchanged

deployment.apps/kutt-server configured

service/kutt-service unchanged

Finally! We are (likely) running

builder@LuiGi:~/Workspaces/kutt$ kubectl get po -n kutt

NAME READY STATUS RESTARTS AGE

kutt-redis-cf5cfbd87-llnsm 1/1 Running 0 13m

kutt-server-6bdcfcd9d-jmxhh 0/1 ImagePullBackOff 0 4m30s

kutt-server-84dcf54f45-wcbnc 0/1 ContainerCreating 0 20s

builder@LuiGi:~/Workspaces/kutt$ kubectl get po -n kutt

NAME READY STATUS RESTARTS AGE

kutt-redis-cf5cfbd87-llnsm 1/1 Running 0 13m

kutt-server-84dcf54f45-wcbnc 1/1 Running 0 34s
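Rather than eyeballing the pod list each time, the same check can be scripted. The sketch below parses the plain-text table as it appears above; the `not_ready` helper is hypothetical, and for anything serious I’d reach for `kubectl get po -o json` or `kubectl rollout status` instead of scraping columns.

```python
def not_ready(table: str) -> list[str]:
    """Return pod names that are not Running or whose READY count is incomplete."""
    pods = []
    # Skip the header row, then read NAME, READY and STATUS from each line.
    for line in table.strip().splitlines()[1:]:
        fields = line.split()
        if len(fields) < 3:
            continue
        name, ready, status = fields[0], fields[1], fields[2]
        have, want = ready.split("/")
        if status != "Running" or have != want:
            pods.append(name)
    return pods


if __name__ == "__main__":
    first_check = """NAME READY STATUS RESTARTS AGE
kutt-redis-cf5cfbd87-llnsm 1/1 Running 0 13m
kutt-server-6bdcfcd9d-jmxhh 0/1 ImagePullBackOff 0 4m30s
kutt-server-84dcf54f45-wcbnc 0/1 ContainerCreating 0 20s"""
    print(not_ready(first_check))
```

An empty list means every pod in the table is up.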

Let’s create an A Record so we can create the Ingress

$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n kutt

{

"ARecords": [

{

"ipv4Address": "75.73.224.240"

}

],

"TTL": 3600,

"etag": "f986dd86-7bbb-46af-802e-5fbcb322a369",

"fqdn": "kutt.tpk.pw.",

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/kutt",

"name": "kutt",

"provisioningState": "Succeeded",

"resourceGroup": "idjdnsrg",

"targetResource": {},

"trafficManagementProfile": {},

"type": "Microsoft.Network/dnszones/A"

}

I’ll now create the ingress

$ cat ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/websocket-services: kutt-service
  name: kuttingress
spec:
  rules:
    - host: kutt.tpk.pw
      http:
        paths:
          - backend:
              service:
                name: kutt-service
                port:
                  number: 3000
            path: /
            pathType: ImplementationSpecific
  tls:
    - hosts:
        - kutt.tpk.pw
      secretName: kutt-tls

$ kubectl apply -f ./ingress.yaml -n kutt

ingress.networking.k8s.io/kuttingress created

When the cert is satisfied

$ kubectl get cert -n kutt

NAME READY SECRET AGE

kutt-tls True kutt-tls 86s

I can now log in and test.

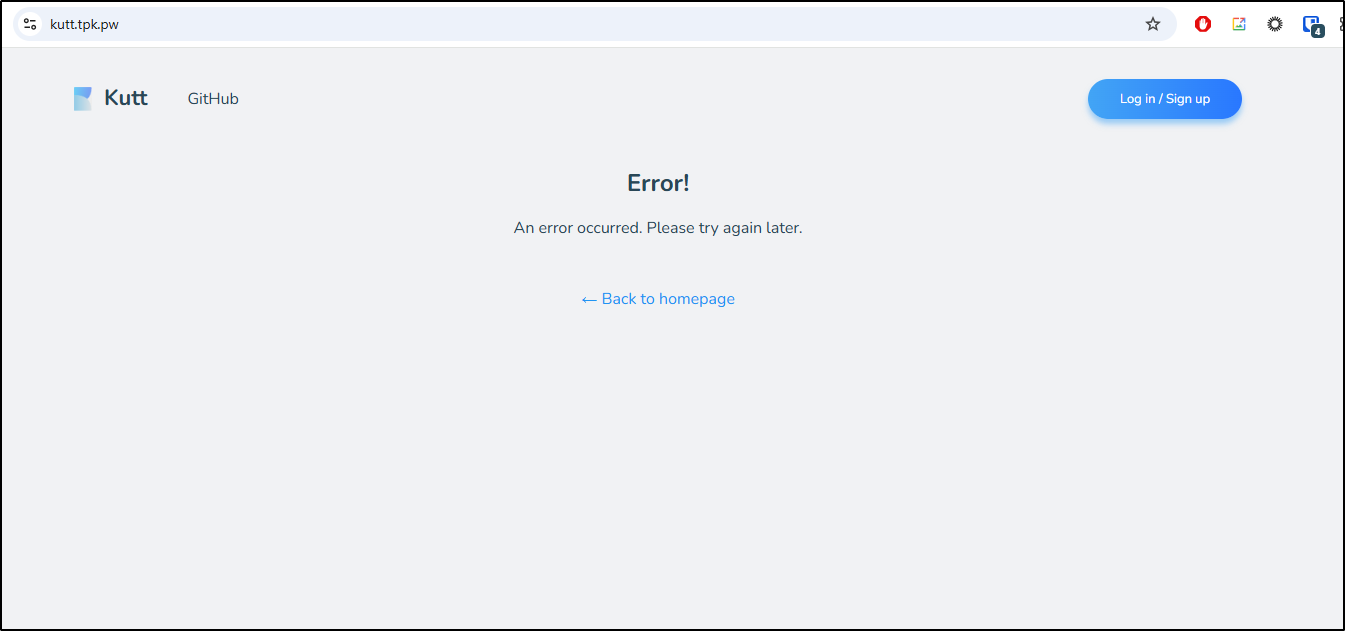

Uh oh, getting an error:

Looking at the logs, it would appear we cannot find “valkey”

$ kubectl logs kutt-server-84dcf54f45-wcbnc -n kutt

> kutt@3.2.2 migrate

> knex migrate:latest

Already up to date

npm notice

npm notice New major version of npm available! 10.9.2 -> 11.2.0

npm notice Changelog: https://github.com/npm/cli/releases/tag/v11.2.0

npm notice To update run: npm install -g npm@11.2.0

npm notice

> kutt@3.2.2 start

> node server/server.js --production

> Ready on http://localhost:3000

[ioredis] Unhandled error event: Error: getaddrinfo ENOTFOUND valkey

at GetAddrInfoReqWrap.onlookup [as oncomplete] (node:dns:109:26)

[ioredis] Unhandled error event: Error: getaddrinfo ENOTFOUND valkey

at GetAddrInfoReqWrap.onlookup [as oncomplete] (node:dns:109:26)

[ioredis] Unhandled error event: Error: getaddrinfo ENOTFOUND valkey

at GetAddrInfoReqWrap.onlookup [as oncomplete] (node:dns:109:26)

[ioredis] Unhandled error event: Error: getaddrinfo ENOTFOUND valkey

at GetAddrInfoReqWrap.onlookup [as oncomplete] (node:dns:109:26)

Checking the services, it would seem we left the Redis one named “kutt-redis”, while REDIS_HOST points at “valkey”

$ kubectl get svc -n kutt

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kutt-redis ClusterIP 10.43.217.83 <none> 6379/TCP 31m

kutt-service ClusterIP 10.43.164.129 <none> 3000/TCP 31m
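The lookup fails because a Service is only reachable in cluster DNS under its own name (plus the namespace-qualified variants). Here is a small sketch of that naming scheme; the helper names are mine, and “cluster.local” is the usual default cluster domain, which I’m assuming rather than having checked on this cluster.

```python
def service_dns_names(service: str, namespace: str, cluster_domain: str = "cluster.local") -> list[str]:
    """Names a pod in the same namespace can use to reach a Service."""
    return [
        service,  # short name, same namespace only
        f"{service}.{namespace}",
        f"{service}.{namespace}.svc",
        f"{service}.{namespace}.svc.{cluster_domain}",
    ]


def host_resolves(host: str, services: list[str], namespace: str) -> bool:
    """True if host matches a DNS name of one of the listed Services."""
    return any(host in service_dns_names(svc, namespace) for svc in services)


if __name__ == "__main__":
    current = ["kutt-redis", "kutt-service"]
    print(host_resolves("valkey", current, "kutt"))      # the REDIS_HOST we configured
    print(host_resolves("kutt-redis", current, "kutt"))  # what actually exists
```

Either side can move: rename the Service to “valkey”, or point REDIS_HOST at “kutt-redis”.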

Easy enough to just rename the service and leave the rest

$ cat k8s.manifest.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: dbdatasqlite
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: custom
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kutt-redis
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kutt-redis
  template:
    metadata:
      labels:
        app: kutt-redis
    spec:
      containers:
        - name: kutt-redis
          image: valkey/valkey:alpine
          ports:
            - containerPort: 6379
          env:
            - name: REDIS_PASSWORD
              value: "your_redis_password" # Replace with your actual password
---
apiVersion: v1
kind: Service
metadata:
  name: valkey
spec:
  selector:
    app: kutt-redis
  ports:
    - port: 6379
      targetPort: 6379
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kutt-server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kutt-server
  template:
    metadata:
      labels:
        app: kutt-server
    spec:
      containers:
        - name: kutt-server
          image: kutt/kutt:latest
          env:
            - name: JWT_SECRET
              value: "ThisIsMyJWTSecretAndIThinkItsPrettyLong"
            - name: DB_FILENAME
              value: "/var/lib/kutt/data.sqlite"
            - name: REDIS_ENABLED
              value: "true"
            - name: REDIS_HOST
              value: "valkey"
            - name: REDIS_PORT
              value: "6379"
            - name: DEFAULT_DOMAIN
              value: "kutt.tpk.pw"
            - name: TRUST_PROXY
              value: "true"
          ports:
            - containerPort: 3000
          volumeMounts:
            - name: db-data-sqlite
              mountPath: /var/lib/kutt
            - name: custom
              mountPath: /kutt/custom
      volumes:
        - name: db-data-sqlite
          persistentVolumeClaim:
            claimName: dbdatasqlite
        - name: custom
          persistentVolumeClaim:
            claimName: custom
---
apiVersion: v1
kind: Service
metadata:
  name: kutt-service
spec:
  type: ClusterIP
  ports:
    - port: 3000
      targetPort: 3000
  selector:
    app: kutt-server

applying

$ kubectl apply -f ./k8s.manifest.yaml -n kutt

persistentvolumeclaim/dbdatasqlite unchanged

persistentvolumeclaim/custom unchanged

deployment.apps/kutt-redis unchanged

service/valkey created

deployment.apps/kutt-server unchanged

service/kutt-service unchanged

Seems it’s timing out connecting via ioredis now

$ kubectl logs kutt-server-84dcf54f45-qlsn4 -n kutt

> kutt@3.2.2 migrate

> knex migrate:latest

Already up to date

npm notice

npm notice New major version of npm available! 10.9.2 -> 11.2.0

npm notice Changelog: https://github.com/npm/cli/releases/tag/v11.2.0

npm notice To update run: npm install -g npm@11.2.0

npm notice

> kutt@3.2.2 start

> node server/server.js --production

> Ready on http://localhost:3000

[ioredis] Unhandled error event: Error: connect ETIMEDOUT

at Socket.<anonymous> (/kutt/node_modules/ioredis/built/Redis.js:170:41)

at Object.onceWrapper (node:events:638:28)

at Socket.emit (node:events:524:28)

at Socket._onTimeout (node:net:609:8)

at listOnTimeout (node:internal/timers:594:17)

at process.processTimers (node:internal/timers:529:7)

[ioredis] Unhandled error event: Error: connect ETIMEDOUT

at Socket.<anonymous> (/kutt/node_modules/ioredis/built/Redis.js:170:41)

at Object.onceWrapper (node:events:638:28)

at Socket.emit (node:events:524:28)

at Socket._onTimeout (node:net:609:8)

at listOnTimeout (node:internal/timers:594:17)

at process.processTimers (node:internal/timers:529:7)

After a lot of debugging here on the side, there is something not quite right between the latest Valkey and the ioredis library.

I could make it work by connecting to the PodIP, but not the service. I tried a few different images as well as exposing the deployment directly with kubectl expose

$ kubectl expose deployment kutt-vakey -n kutt --port=6379 --target-port=6379 --protocol=TCP

I could test with an Ubuntu pod and had no issues.

I popped over to some older Redis instances lying about my cluster to see if it was a networking (ClusterIP), DNS (KubeDNS) or version issue.

It would seem to be version related as that redis pod is 276d old.
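When comparing “works by PodIP” against “fails by service name”, a plain TCP probe helps separate network and DNS problems from client-library ones. A minimal sketch using only the standard library; the function name is my own, and the hosts in the usage note are whatever your cluster actually has.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections and timeouts alike.
        return False


if __name__ == "__main__":
    # Run from a pod in the kutt namespace to compare the Service name with localhost.
    for target in ("valkey", "127.0.0.1"):
        print(target, can_connect(target, 6379, timeout=1.0))
```

If the service name connects here but ioredis still times out, the problem is above the TCP layer, which would fit the version theory.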

Testing

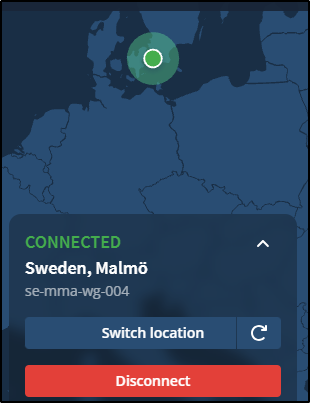

My first test was just a quick link. I verified that it redirected and that I could see the origin as US.

I then turned on my VPN and sent my traffic to Sweden

Tested and it was showing properly

I only use the shorteners outside of LinkedIn as LinkedIn replaces URLs anyways.

So you can see them in use on Mastodon posts and Bluesky.

I get a lot of traffic by way of Google and LinkedIn, which isn’t covered in Kutt, but here you can see that which came by way of Mastodon and Bluesky:

That Claud post actually was split between Kutt and YOURLS.

The same link but by way of YOURLS:

Summary

We looked at Kokoro, a very nice web UI wrapped around a text-to-speech engine. Even on lower-end hardware, it did a fine job generating rather high-quality speech. I’ve left the instance up at kokoro.tpk.pw.

I briefly touched on NZBGet, but realized I had no active way to get USENET groups and didn’t really want to test it further.

Lastly, we checked out Kutt. I actually delayed this article for a while just because I wanted to get some real-world usage out of it. We fired it up in Docker, then Kubernetes. We updated our Github Actions to use the REST API to create URLs and then cut over from YOURLS. YOURLS works well and there is nothing wrong with it. However, Kutt is a bit more modern and dynamic, and I like the built-in QR code feature.