Published: Feb 18, 2025 by Isaac Johnson

I enjoy using LLMs to help augment my work and more often than not I use them in VS Code. However, there are times I would like to run a long query off on the side and keep my editor out of the picture.

I wondered how hard it would be to put a web interface in front of my Ollama instance. We’ll look at two very similar open-source web front ends for Ollama: Open WebUI and Ollama WebUI.

These containerized apps make it easy to wrap our self-hosted Ollama with a Web interface.

Open WebUI Setup

Let’s go to the repo on GitHub. There we can see two ways to run this.

First, with Python:

pip install open-webui

open-webui serve

Or Docker:

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

There are other Docker invocations, such as with NVIDIA CUDA support and API only.

But let’s use the one for connecting to an Ollama on a different server:

docker run -d -p 3000:8080 \

-e OLLAMA_BASE_URL=https://example.com \

-v open-webui:/app/backend/data \

--name open-webui \

--restart always \

ghcr.io/open-webui/open-webui:main

For me, that would be

docker run -d -p 3355:8080 \

-e OLLAMA_BASE_URL=http://192.168.1.143:11434 \

-v /home/builder/openwebui:/app/backend/data \

--name open-webui \

--restart always \

ghcr.io/open-webui/open-webui:main

I’ll make the directory on the Dockerhost

builder@builder-T100:~$ mkdir openwebui

builder@builder-T100:~$ cd openwebui/

builder@builder-T100:~/openwebui$ pwd

/home/builder/openwebui

Then run it

builder@builder-T100:~/openwebui$ docker run -d -p 3355:8080 \

-e OLLAMA_BASE_URL=http://192.168.1.143:11434 \

-v /home/builder/openwebui:/app/backend/data \

--name open-webui \

--restart always \

ghcr.io/open-webui/open-webui:main

Unable to find image 'ghcr.io/open-webui/open-webui:main' locally

main: Pulling from open-webui/open-webui

c29f5b76f736: Pull complete

73c4bbda278d: Pull complete

acc53c3e87ac: Pull complete

ad3b14759e4f: Pull complete

b874b4974f13: Pull complete

4f4fb700ef54: Pull complete

dfcf69fcbc2b: Pull complete

e8bfaf4ee0e0: Pull complete

17b8c991f4f9: Pull complete

cac7b012c1cf: Pull complete

19163ec7da38: Pull complete

d4995fbfa404: Pull complete

3c90b4d01587: Pull complete

78a0f8f94f20: Pull complete

6cd13850c639: Pull complete

Digest: sha256:f3d3d5c1358f1cd511e59ac951be032e4ef8f853f37cb7af33a800b976be6dc2

Status: Downloaded newer image for ghcr.io/open-webui/open-webui:main

22d5ce789f74d95f020ec750c15dbbc3fc600ba870e5638b1286cf4e4171af4e

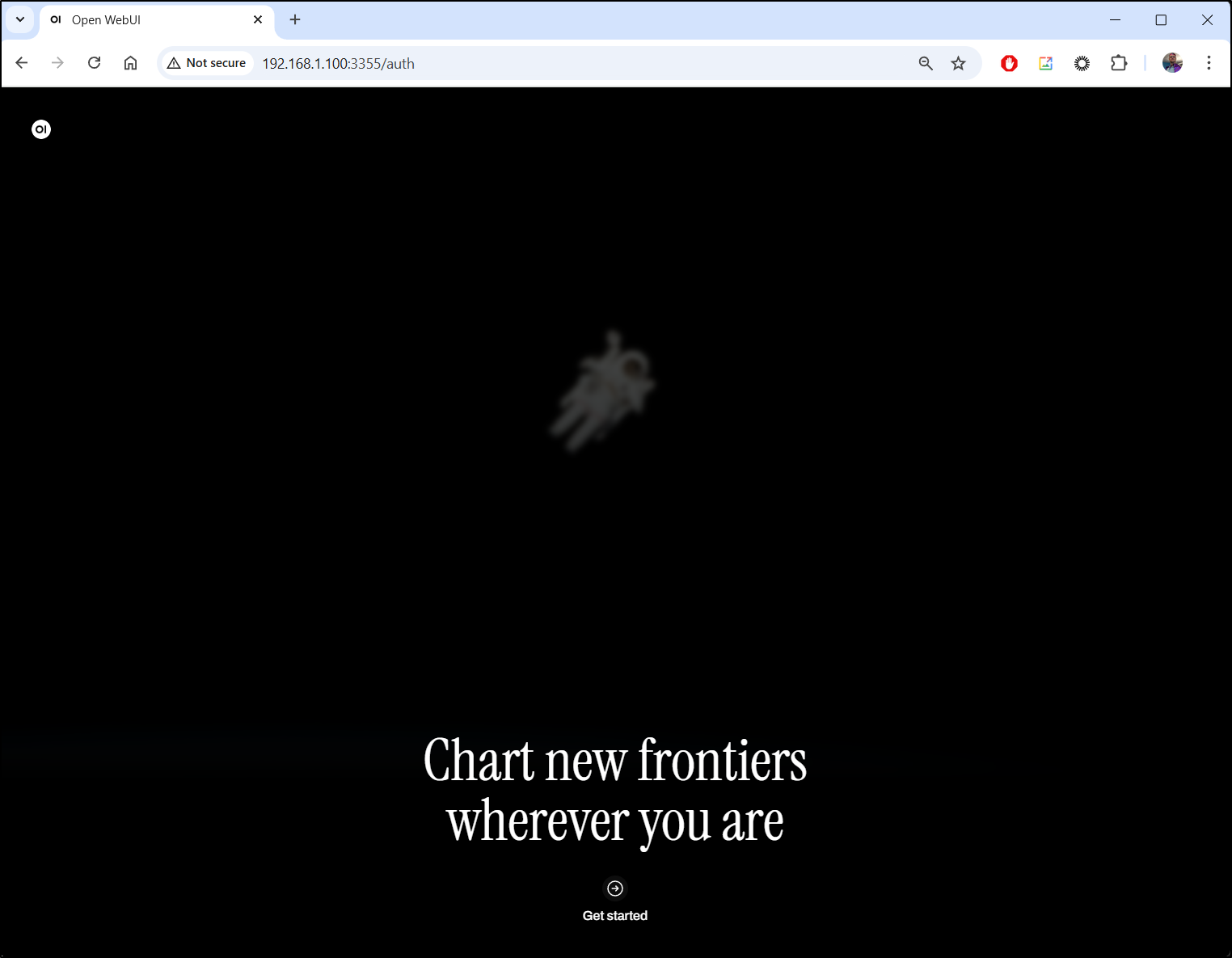

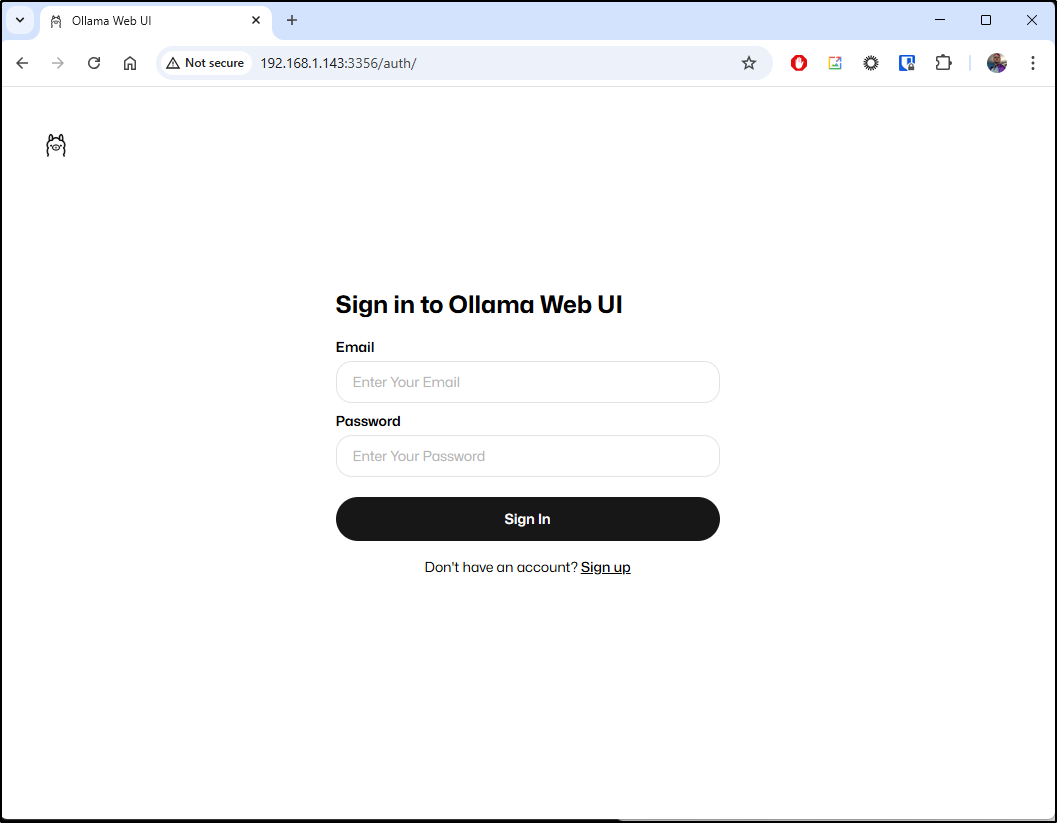

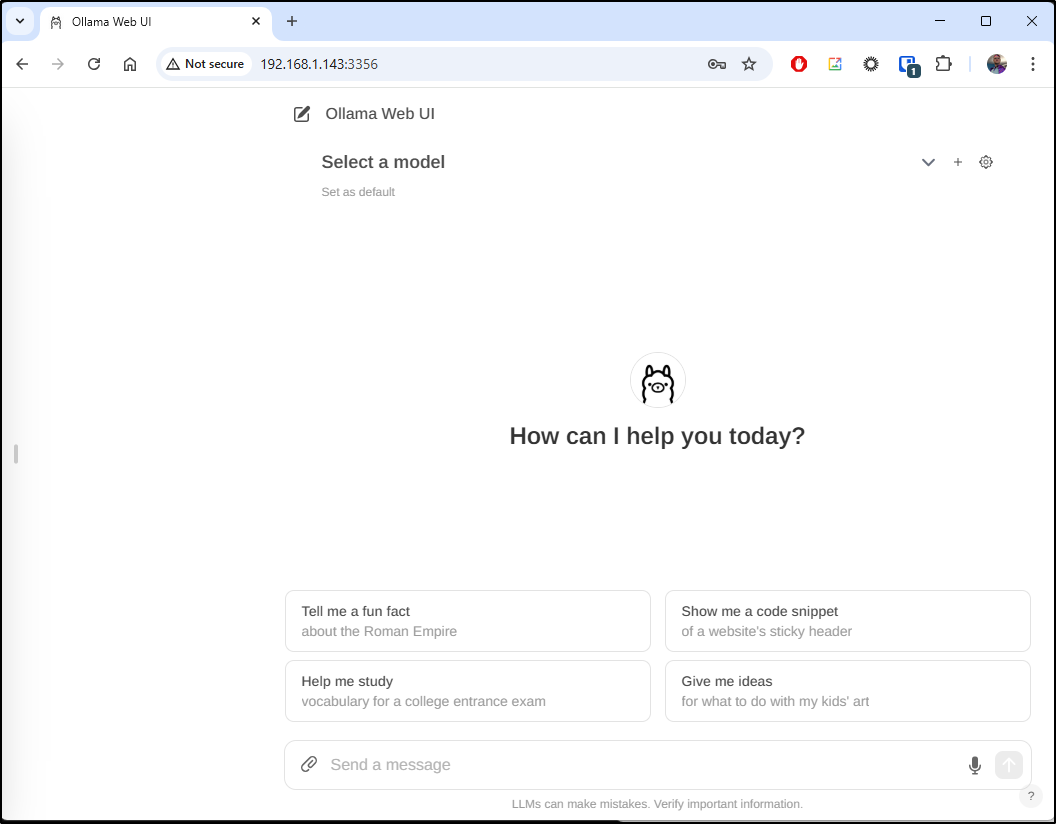

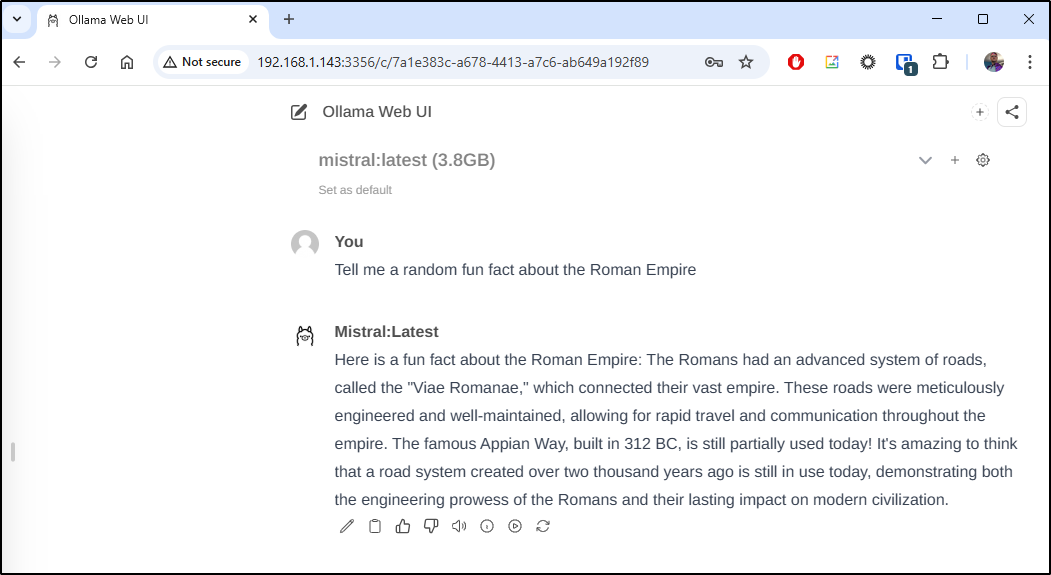

I can now hit the landing page

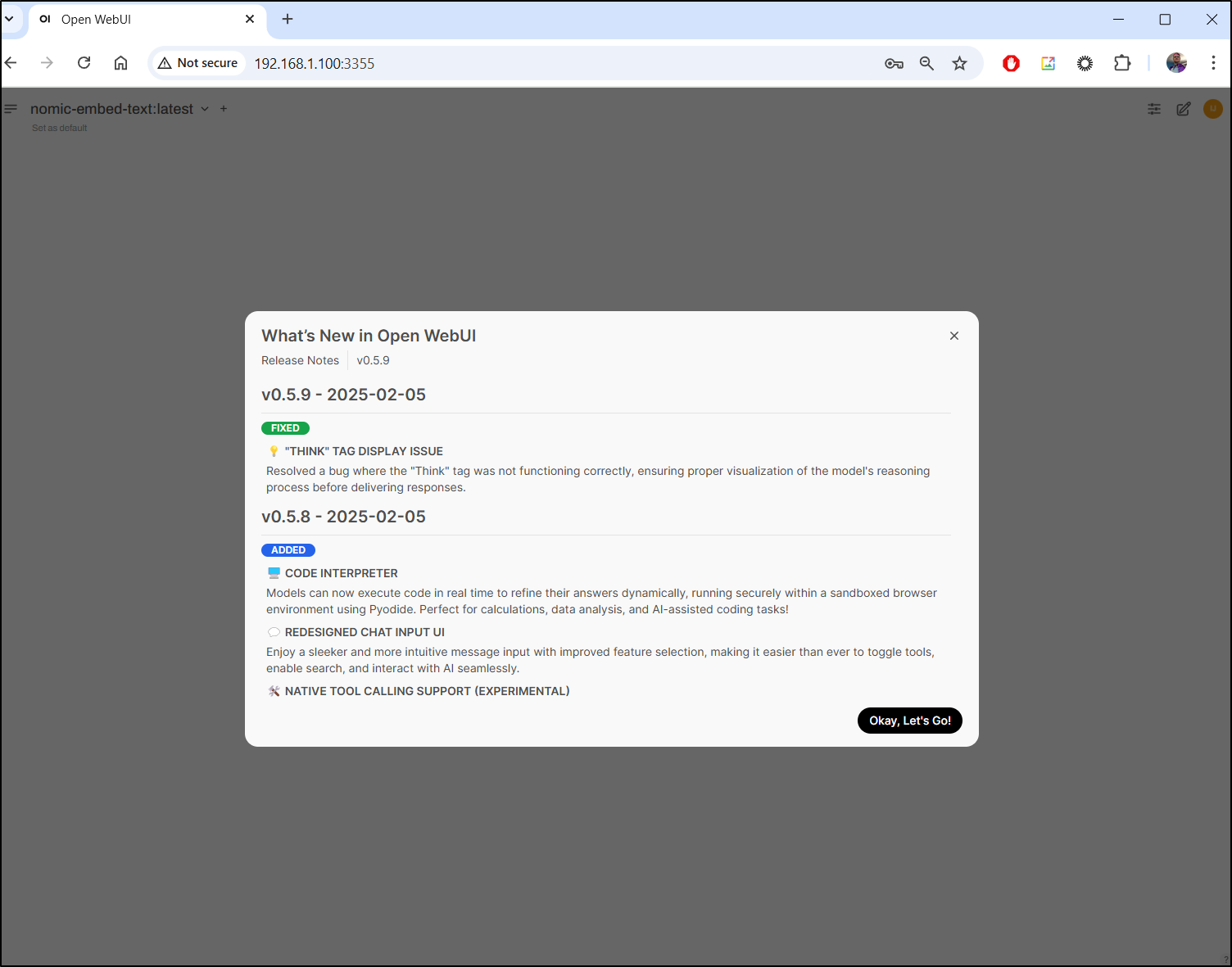

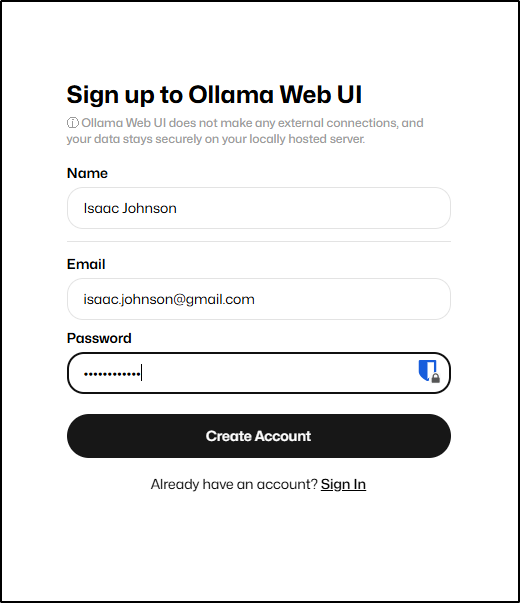

I can then add an account and login

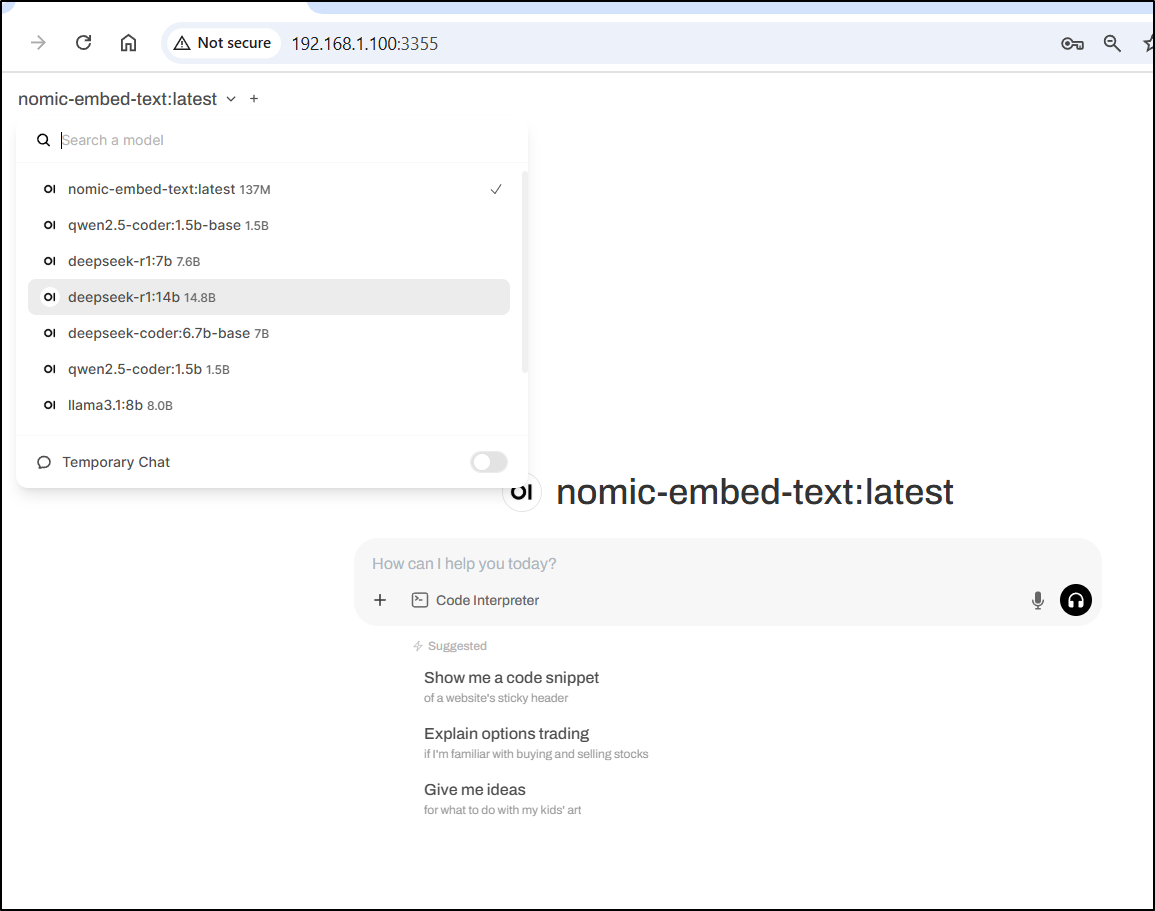

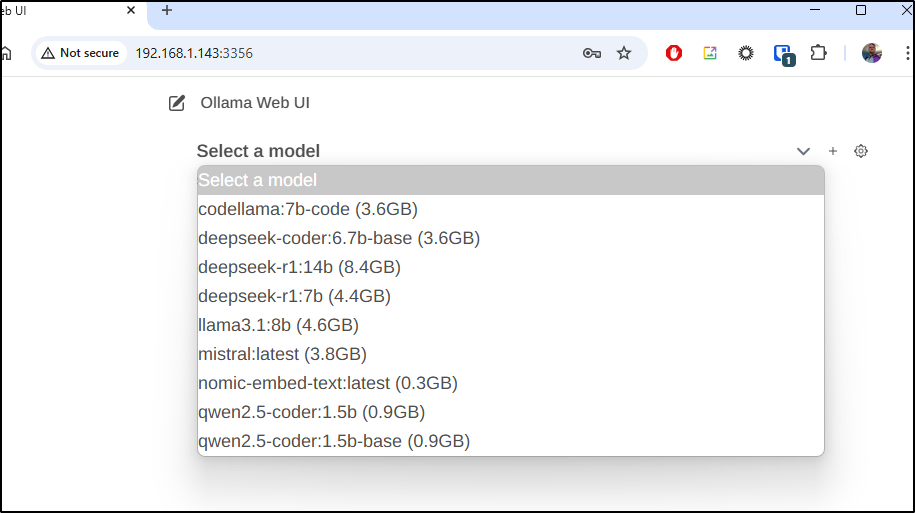

I noticed the list did include all the models I’ve pulled lately
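The model list in the UI should mirror what Ollama itself reports. As a rough cross-check, a short script can ask Ollama's `/api/tags` endpoint which models have been pulled. This is only a sketch: the helper names are hypothetical, and the base URL would be whatever was passed as `OLLAMA_BASE_URL`.

```python
import json
from urllib.request import urlopen


def model_names(tags_payload):
    """Extract model names from an Ollama /api/tags response body."""
    return [model["name"] for model in tags_payload.get("models", [])]


def fetch_model_names(base_url, timeout=10):
    """Ask Ollama which models are pulled (makes a network call)."""
    url = f"{base_url.rstrip('/')}/api/tags"
    with urlopen(url, timeout=timeout) as resp:
        return model_names(json.load(resp))
```

Calling `fetch_model_names("http://192.168.1.143:11434")` from a machine on the same network should return the same names the dropdown shows.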

Using

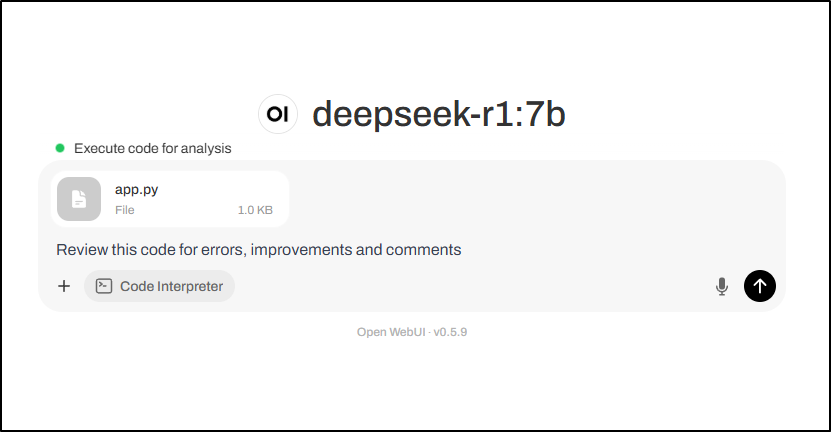

One of my ideas recently has been to see if I could review my existing apps for improvements.

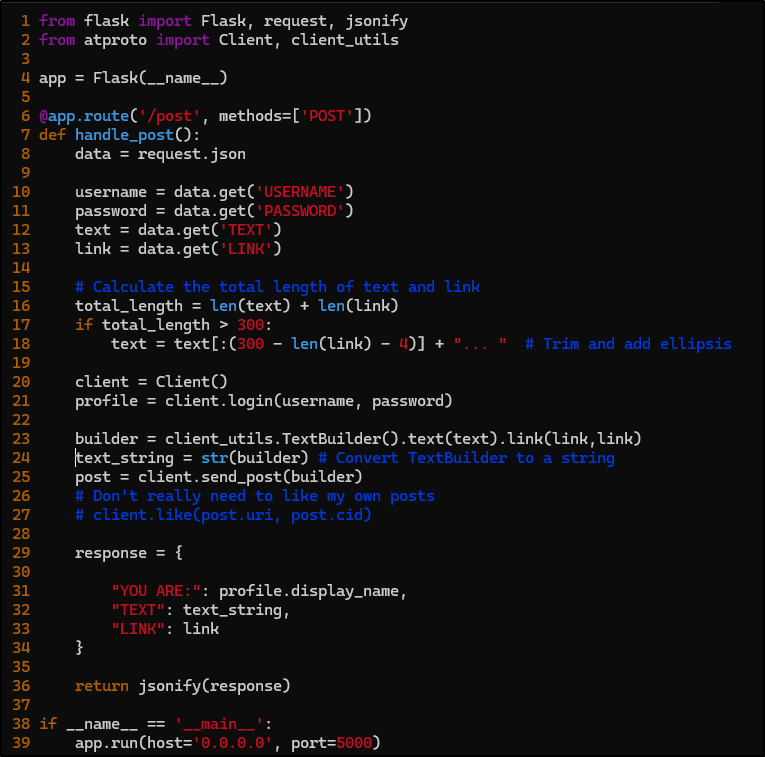

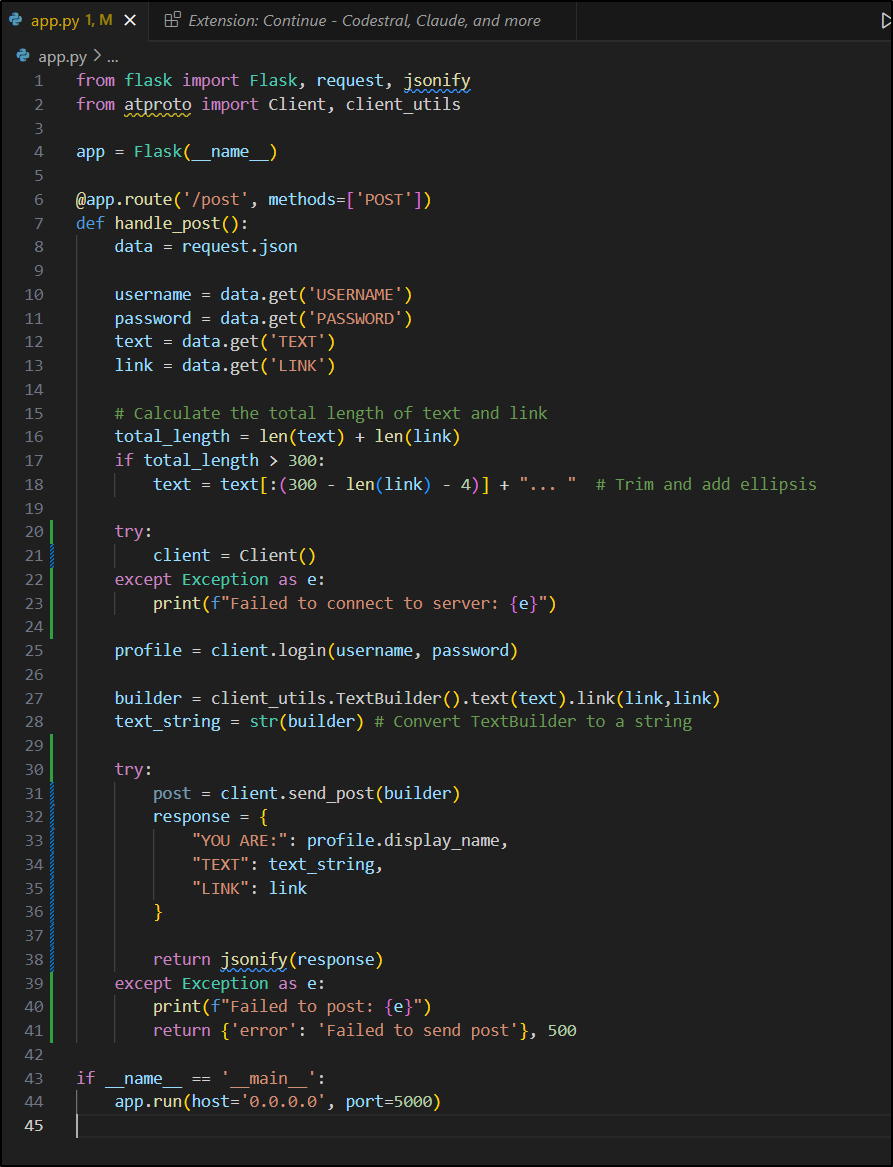

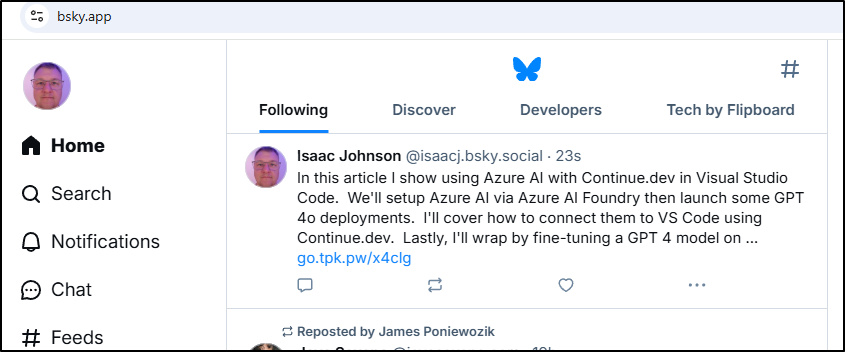

For instance, I have this open-source app, pybsposter, which I use for posting to BlueSky

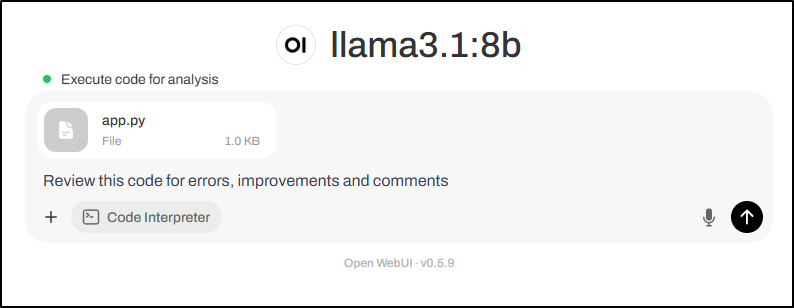

I uploaded the main “app.py” and set Open WebUI for “Code Analysis” mode then asked it to “Review this code for errors, improvements and comments”

I then see what I believe to be a wait screen as it does the work

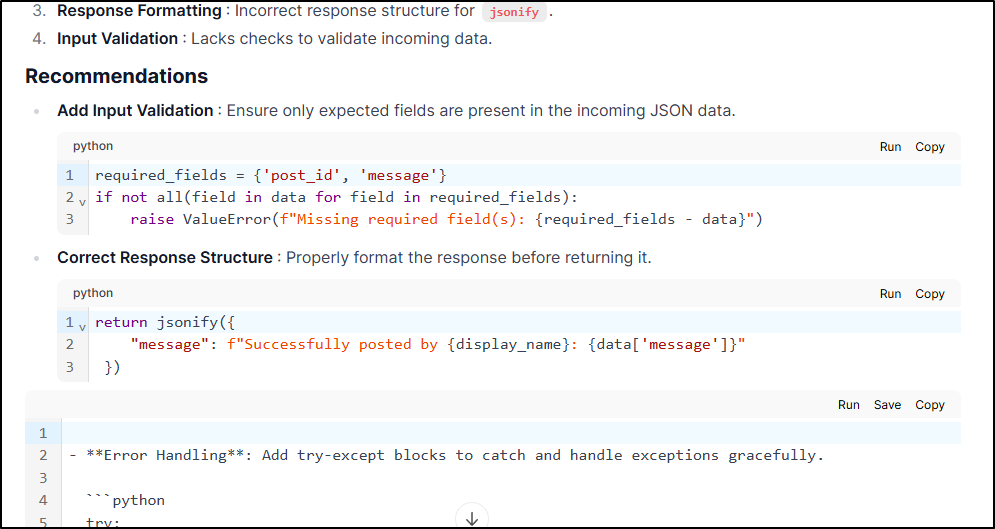

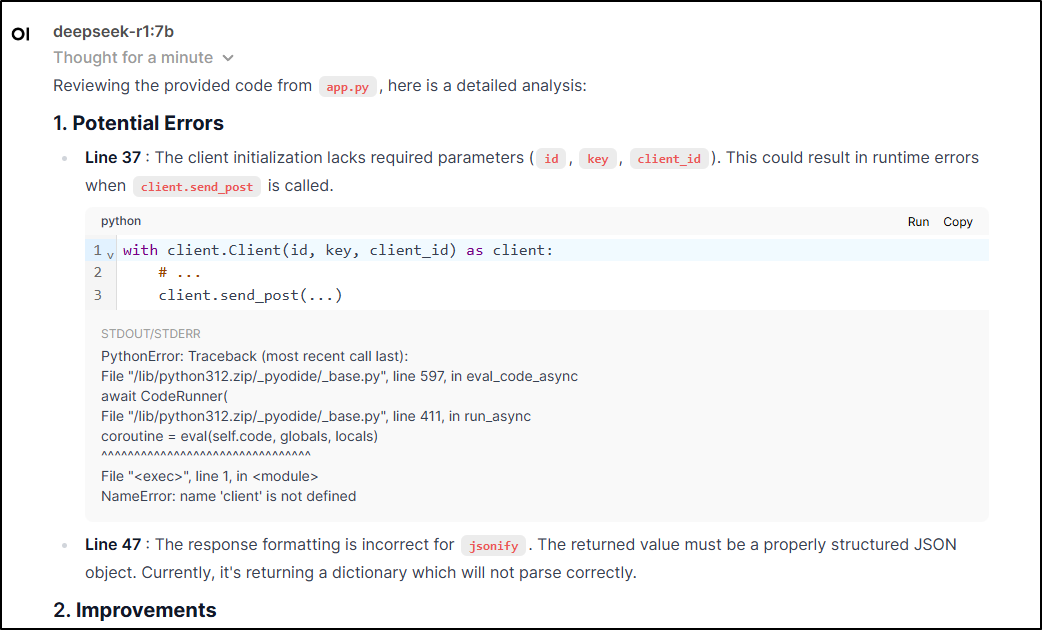

Here we can see it does a great job formatting results

The problem here, and maybe it’s the training model, is a lot of this is inaccurate.

For instance, the recommendation on ‘post_id’ and ‘message’ is just wrong - my code doesn’t read either of those supposedly required fields

As you can see this is the full app

Which also makes the first suggestion confusing as there is no line 47 and line 37 is the jsonify response (not 47)

For comparison, I’ll try the exact same prompt and file on llama3.1:8b

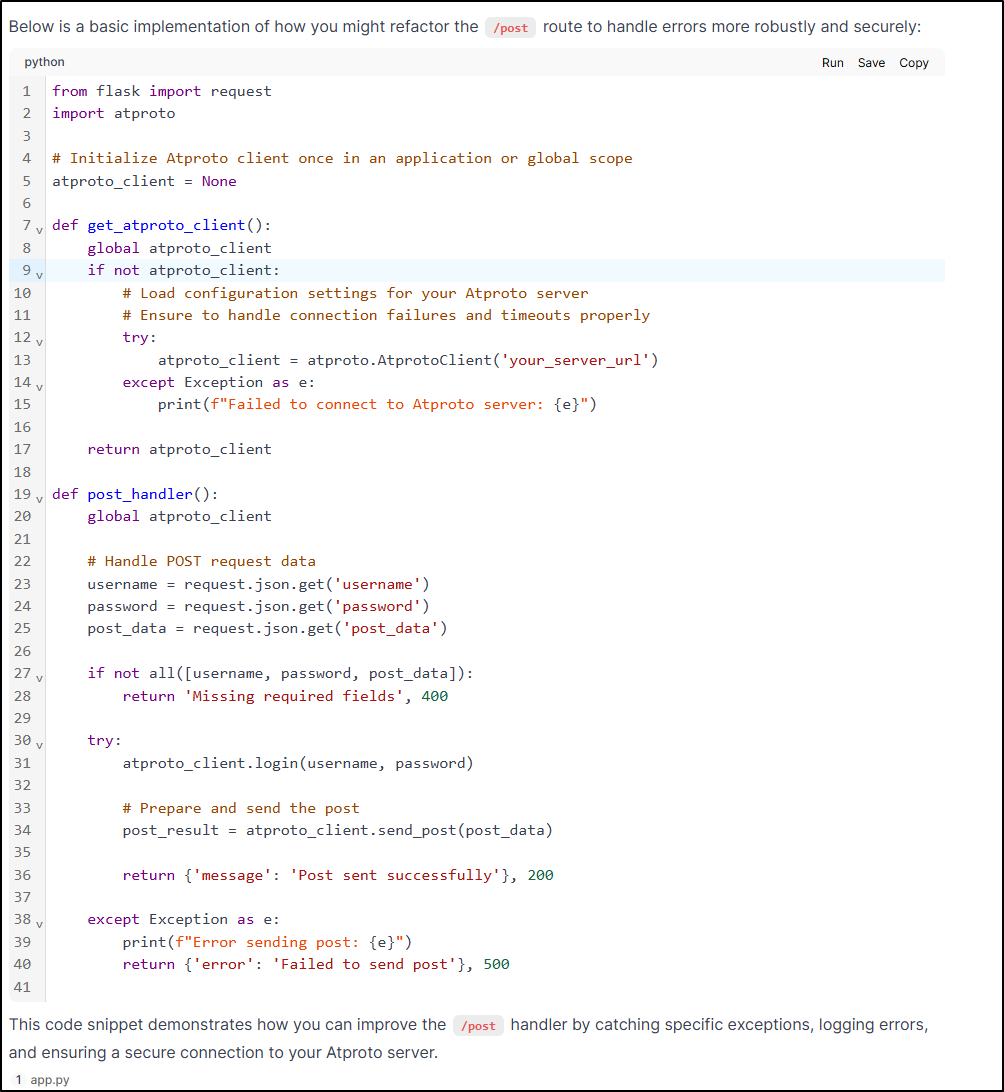

Here we can see the results

Again, this isn’t exactly right either - better - but still incomplete

It suggests using “atproto.AtProtoClient(url)” (line 13) but we can scour the online docs and clearly see there is no such class.

There is, however, a class atproto_client.AsyncClient(base_url: str | None = None, *args: Any, **kwargs: Any)

and a non-async class atproto_client.Client(base_url: str | None = None, *args: Any, **kwargs: Any).

However, this does prompt me to make a change and catch connection errors. I could change

client = Client()

to

try:
    client = Client()
except Exception as e:
    print(f"Failed to connect to server: {e}")

and in much the same way, when I’m about to send

post = client.send_post(builder)

response = {

"YOU ARE:": profile.display_name,

"TEXT": text_string,

"LINK": link

}

return jsonify(response)

to

try:
    post = client.send_post(builder)
    response = {
        "YOU ARE:": profile.display_name,
        "TEXT": text_string,
        "LINK": link
    }
    return jsonify(response)
except Exception as e:
    print(f"Failed to post: {e}")
    return {'error': 'Failed to send post'}, 500

That is:

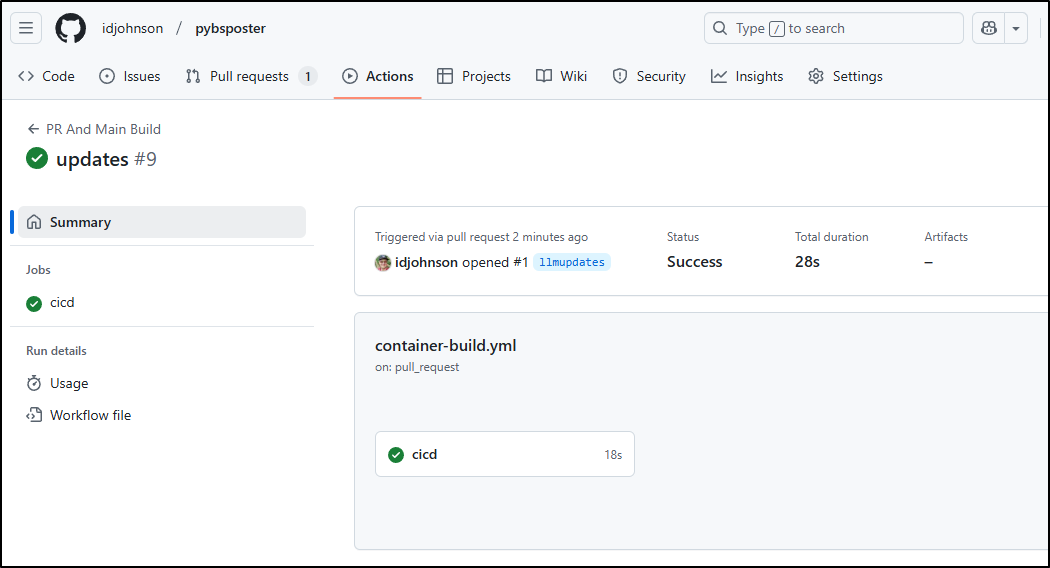

which we can see in this PR

I can see it passed the CI build

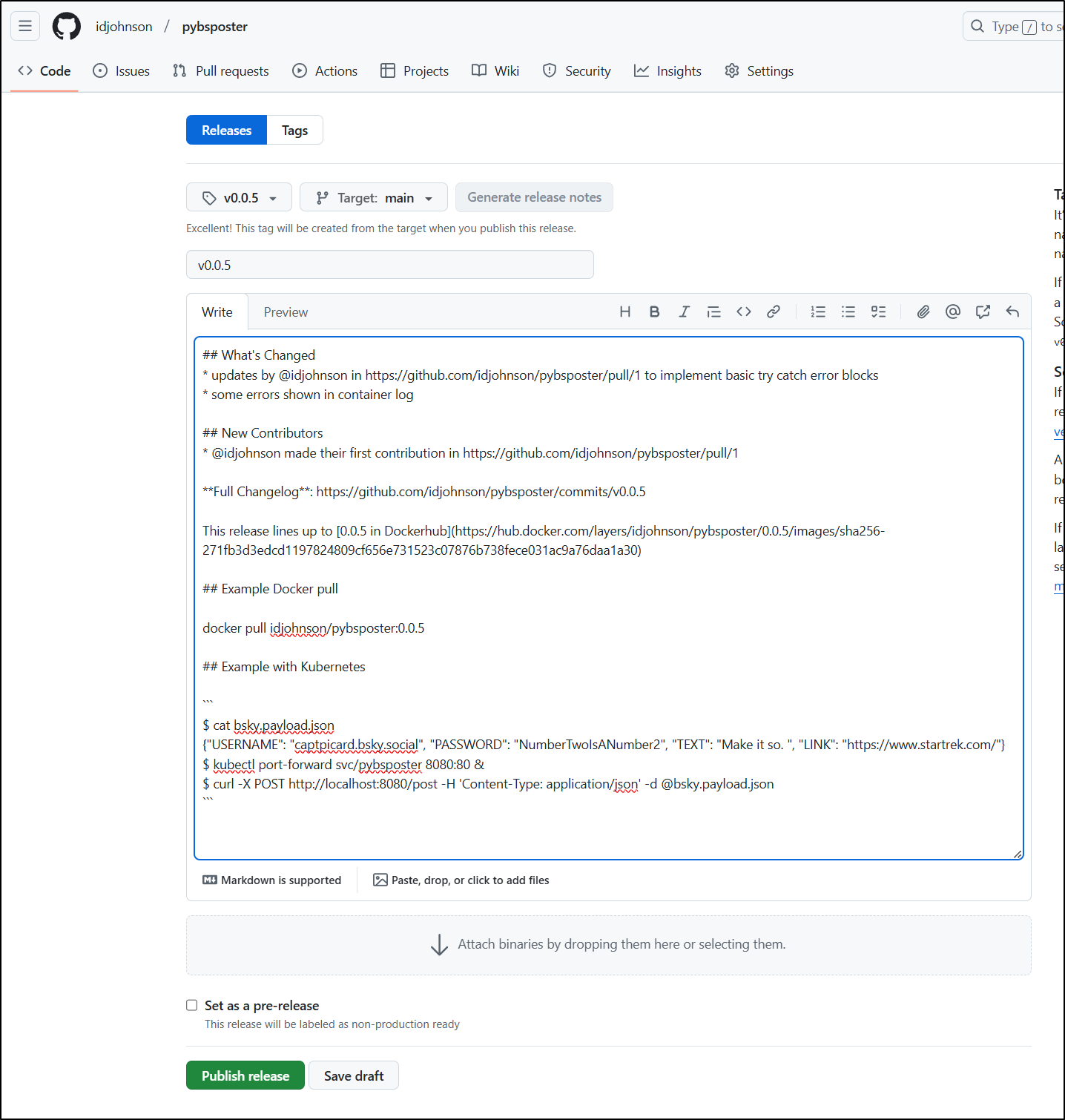

My last step is to touch the Dockerfile tag and push.

However, I realize this really would be best if I shared with everyone, at the very least in Dockerhub

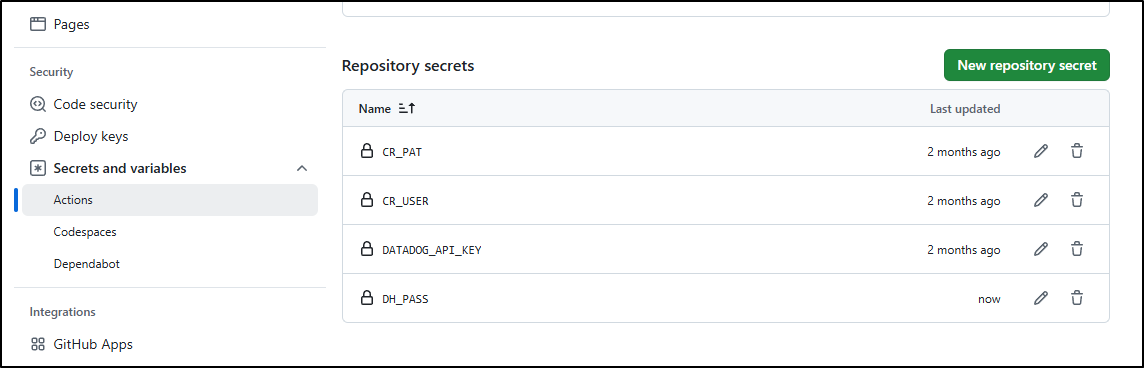

I created a new Docker Hub PAT and user for pushing and added them as repo secrets

then added a new block to the GH action

- id: tagandpushdh
  name: Tag and Push (Dockerhub)
  run: |
    export BUILDIMGTAG="`cat Dockerfile | tail -n1 | sed 's/^.*\///g'`"
    # Note the replacement to Dockerhub
    export FINALBUILDTAG="`cat Dockerfile | tail -n1 | sed 's/^#.*\//idjohnson\//g'`"
    export FINALBUILDLATEST="`cat Dockerfile | tail -n1 | sed 's/^#//g' | sed 's/:.*/:latest/'`"
    docker tag $BUILDIMGTAG $FINALBUILDTAG
    docker tag $BUILDIMGTAG $FINALBUILDLATEST
    docker images
    echo $CR_PAT | docker login -u $CR_USER --password-stdin
    docker push "$FINALBUILDTAG"
    # add a "latest" for others to use
    docker push $FINALBUILDLATEST
    # Push Charts
    export CVER="`cat ./charts/pybsposter/Chart.yaml | grep 'version:' | sed 's/version: //' | tr -d '\n'`"
    #helm push ./charts/pybsposter-$CVER.tgz oci://harbor.freshbrewed.science/library/
  env: # Or as an environment variable
    CR_PAT: $
    CR_USER: $
  if: github.ref == 'refs/heads/main'
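The three `sed` expressions in that step are dense, so here is a rough Python equivalent that makes the tag derivation easier to follow. It assumes the last line of the Dockerfile is a comment holding the full image reference (something like `#registry.example.com/library/pybsposter:0.0.5`, which is a made-up example); `derive_tags` is a hypothetical helper, not part of the workflow.

```python
import re


def derive_tags(last_line, hub_user="idjohnson"):
    """Mirror the three sed expressions from the workflow step."""
    line = last_line.strip()
    # sed 's/^.*\///g': drop everything up to and including the last slash
    build_tag = re.sub(r"^.*/", "", line)
    # sed 's/^#.*\//idjohnson\//g': swap the commented registry path for the Docker Hub user
    final_tag = re.sub(r"^#.*/", f"{hub_user}/", line)
    # sed 's/^#//g' | sed 's/:.*/:latest/': strip the leading '#', then replace the tag
    latest_tag = re.sub(r":.*", ":latest", re.sub(r"^#", "", line), count=1)
    return build_tag, final_tag, latest_tag
```

Read this way, the "latest" tag keeps whatever registry prefix the comment line carries, so where it ends up depends on the actual Dockerfile line, which is not shown here.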

Note: I later realized I used “PAT” in the workflow and “PASS” in the GitHub secrets, so I corrected the secrets to match

We can see it built

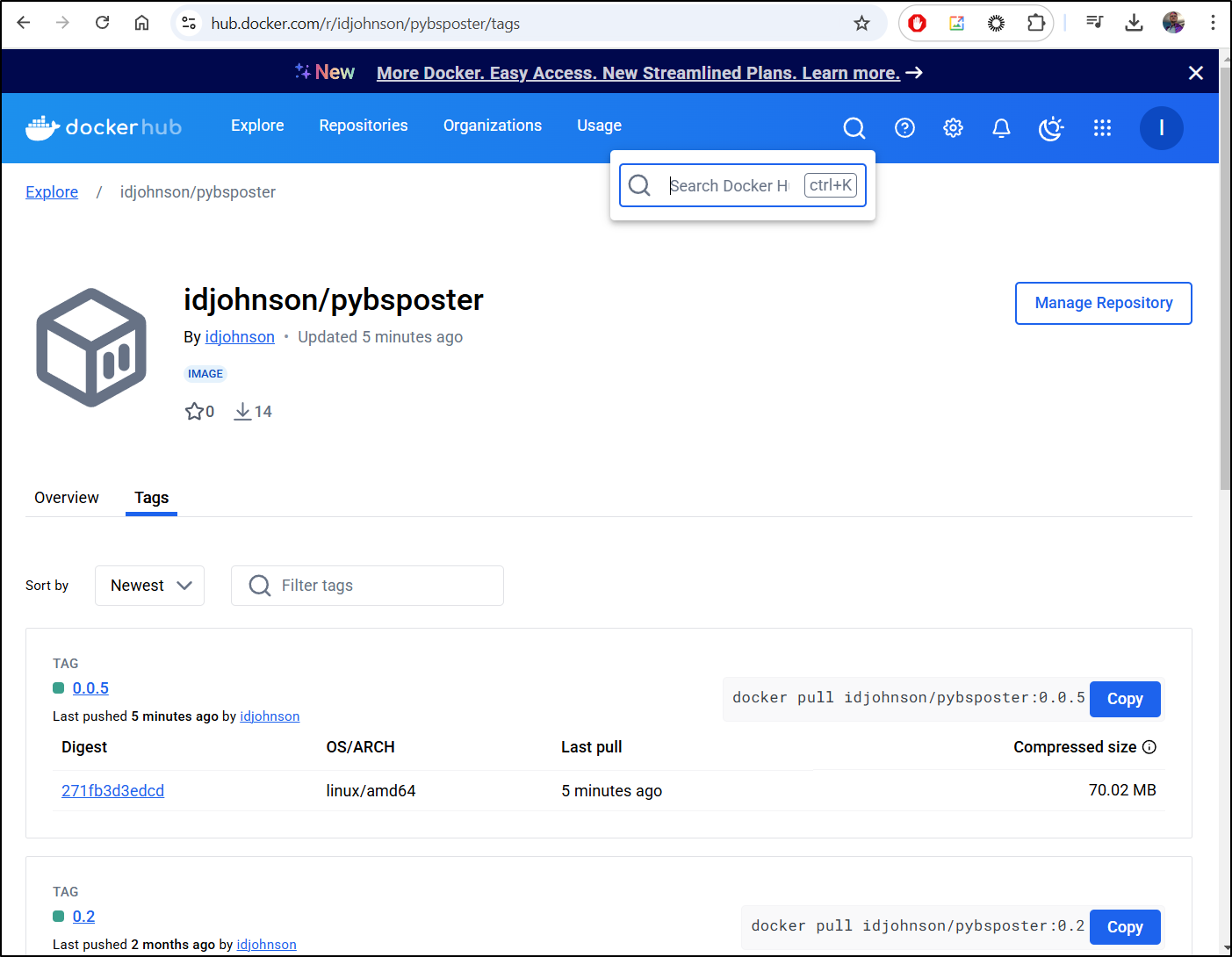

And I can see it now live at https://hub.docker.com/r/idjohnson/pybsposter

I can then upgrade my local copy with the new image “0.0.5”


builder@DESKTOP-QADGF36:~/Workspaces/pybsposter$ helm get values pybsposter

USER-SUPPLIED VALUES:

image:

tag: 0.0.4

ingress:

enabled: true

builder@DESKTOP-QADGF36:~/Workspaces/pybsposter$ helm upgrade pybsposter --set image.tag=0.0.5 ./charts/pybsposter

Release "pybsposter" has been upgraded. Happy Helming!

NAME: pybsposter

LAST DEPLOYED: Wed Feb 5 19:17:04 2025

NAMESPACE: default

STATUS: deployed

REVISION: 5

TEST SUITE: None

And it’s running now

$ kubectl get po | grep pybs

pybsposter-6dc7d87cbf-2bj8v 1/1 Running 0 49s

pybsposter-8b5578f98-4bzr6 1/1 Terminating 0 61d

The next day I realized my mistake: I had neglected to either set ingress.enabled=true or use the values file

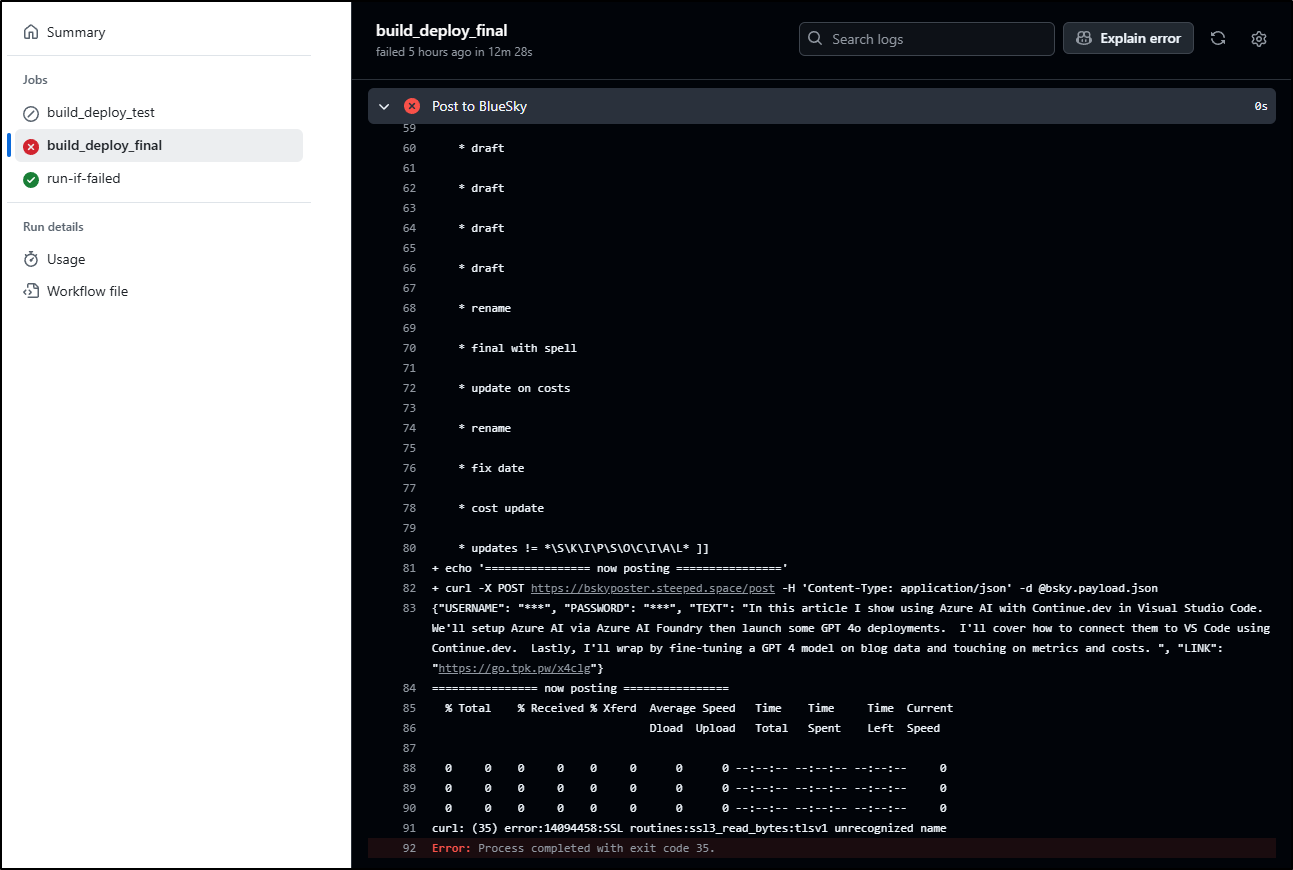

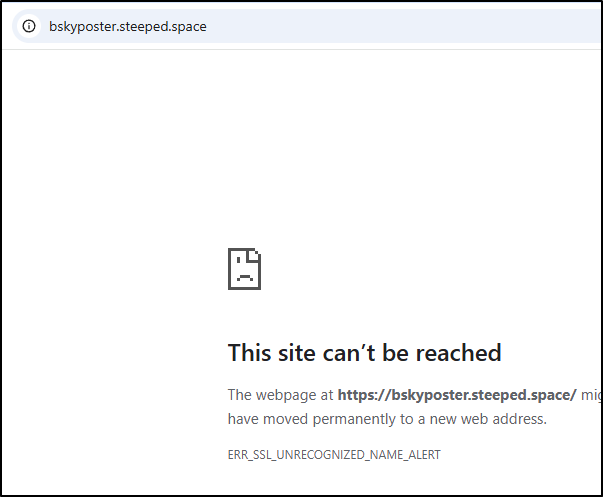

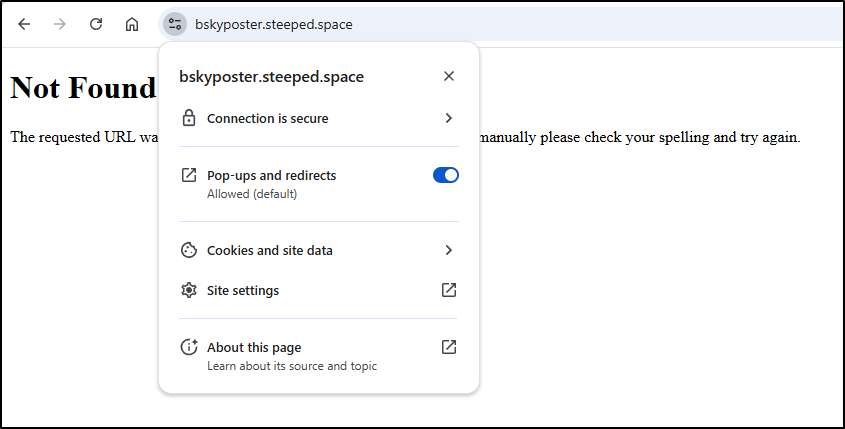

The error was, of course, caused by the lack of ingress (and thus an invalid SSL cert)

I’ll run the upgrade again, this time enabling ingress

$ helm upgrade pybsposter --set image.tag=0.0.5 --set ingress.enabled=true ./charts/pybsposter

Release "pybsposter" has been upgraded. Happy Helming!

NAME: pybsposter

LAST DEPLOYED: Thu Feb 6 08:14:25 2025

NAMESPACE: default

STATUS: deployed

REVISION: 6

TEST SUITE: None

That seemed to fix the ingress

I can now build the payload and test locally

$ curl -X POST https://bskyposter.steeped.space/post -H 'Content-Type: application/json' -d @bsky.payload.json

{"LINK":"https://go.tpk.pw/x4clg","TEXT":"<atproto_client.utils.text_builder.TextBuilder object at 0x7fb6fd2c36a0>","YOU ARE:":"Isaac Johnson"}

And see it posted
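The same request can be made from Python instead of curl. This sketch uses only the standard library and the four keys the payload file carries (USERNAME, PASSWORD, TEXT, LINK); the function names are hypothetical.

```python
import json
from urllib.request import Request, urlopen


def build_payload(username, password, text, link):
    """Assemble the JSON body using the key names from bsky.payload.json."""
    return {"USERNAME": username, "PASSWORD": password, "TEXT": text, "LINK": link}


def post_payload(url, payload, timeout=30):
    """POST the payload as JSON and return (status, decoded JSON body)."""
    req = Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urlopen(req, timeout=timeout) as resp:
        return resp.status, json.load(resp)
```

Keep in mind that `urlopen` raises `urllib.error.HTTPError` on a 4xx or 5xx reply, so a caller would want to catch that to see error responses.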

As for error handling, we can now see a bit more verbose logs.

Here I’ll send the same payload but with a wrong password:

$ cat bsky.payload.json

{"USERNAME": "isaacj.bsky.social", "PASSWORD": "NOTPASSWORD", "TEXT": "In this article I show using Azure AI with Continue.dev in Visual Studio Code. We'll setup Azure AI via Azure AI Foundry then launch some GPT 4o deployments. I'll cover how to connect them to VS Code using Continue.dev. Lastly, I'll wrap by fine-tuning a GPT 4 model on blog data and touching on metrics and costs. ", "LINK": "https://go.tpk.pw/x4clg"}

$ curl -X POST https://bskyposter.steeped.space/post -H 'Content-Type: application/json' -d @bsky.payload.json

<!doctype html>

<html lang=en>

<title>500 Internal Server Error</title>

<h1>Internal Server Error</h1>

<p>The server encountered an internal error and was unable to complete your request. Either the server is overloaded or there is an error in the application.</p>

Now in the logs I can see a stack dump

$ kubectl logs pybsposter-6dc7d87cbf-2bj8v

* Serving Flask app 'app'

* Debug mode: off

WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.

* Running on all addresses (0.0.0.0)

* Running on http://127.0.0.1:5000

* Running on http://10.42.2.63:5000

Press CTRL+C to quit

10.42.0.20 - - [06/Feb/2025 14:14:46] "GET / HTTP/1.1" 404 -

10.42.0.20 - - [06/Feb/2025 14:14:46] "GET /favicon.ico HTTP/1.1" 404 -

10.42.0.20 - - [06/Feb/2025 14:14:48] "GET / HTTP/1.1" 404 -

10.42.0.20 - - [06/Feb/2025 14:15:25] "GET / HTTP/1.1" 404 -

10.42.0.20 - - [06/Feb/2025 14:15:26] "GET / HTTP/1.1" 404 -

10.42.0.20 - - [06/Feb/2025 14:18:05] "POST /post HTTP/1.1" 200 -

10.42.0.20 - - [06/Feb/2025 22:19:50] "GET /.env HTTP/1.1" 404 -

[2025-02-07 11:45:26,609] ERROR in app: Exception on /post [POST]

Traceback (most recent call last):

File "/usr/local/lib/python3.9/site-packages/flask/app.py", line 1511, in wsgi_app

response = self.full_dispatch_request()

File "/usr/local/lib/python3.9/site-packages/flask/app.py", line 919, in full_dispatch_request

rv = self.handle_user_exception(e)

File "/usr/local/lib/python3.9/site-packages/flask/app.py", line 917, in full_dispatch_request

rv = self.dispatch_request()

File "/usr/local/lib/python3.9/site-packages/flask/app.py", line 902, in dispatch_request

return self.ensure_sync(self.view_functions[rule.endpoint])(**view_args) # type: ignore[no-any-return]

File "/app/app.py", line 25, in handle_post

profile = client.login(username, password)

File "/usr/local/lib/python3.9/site-packages/atproto_client/client/client.py", line 92, in login

session = self._get_and_set_session(login, password)

File "/usr/local/lib/python3.9/site-packages/atproto_client/client/client.py", line 47, in _get_and_set_session

session = self.com.atproto.server.create_session(

File "/usr/local/lib/python3.9/site-packages/atproto_client/namespaces/sync_ns.py", line 5691, in create_session

response = self._client.invoke_procedure(

File "/usr/local/lib/python3.9/site-packages/atproto_client/client/base.py", line 115, in invoke_procedure

return self._invoke(InvokeType.PROCEDURE, url=self._build_url(nsid), params=params, data=data, **kwargs)

File "/usr/local/lib/python3.9/site-packages/atproto_client/client/client.py", line 40, in _invoke

return super()._invoke(invoke_type, **kwargs)

File "/usr/local/lib/python3.9/site-packages/atproto_client/client/base.py", line 122, in _invoke

return self.request.post(**kwargs)

File "/usr/local/lib/python3.9/site-packages/atproto_client/request.py", line 181, in post

return _parse_response(self._send_request('POST', *args, **kwargs))

File "/usr/local/lib/python3.9/site-packages/atproto_client/request.py", line 171, in _send_request

_handle_request_errors(e)

File "/usr/local/lib/python3.9/site-packages/atproto_client/request.py", line 54, in _handle_request_errors

raise exception

File "/usr/local/lib/python3.9/site-packages/atproto_client/request.py", line 169, in _send_request

return _handle_response(response)

File "/usr/local/lib/python3.9/site-packages/atproto_client/request.py", line 77, in _handle_response

raise exceptions.UnauthorizedError(error_response)

atproto_client.exceptions.UnauthorizedError: Response(success=False, status_code=401, content=XrpcError(error='AuthenticationRequired', message='Invalid identifier or password'), headers={'date': 'Fri, 07 Feb 2025 11:45:26 GMT', 'content-type': 'application/json; charset=utf-8', 'content-length': '77', 'connection': 'keep-alive', 'x-powered-by': 'Express', 'access-control-allow-origin': '*', 'ratelimit-limit': '10', 'ratelimit-remaining': '9', 'ratelimit-reset': '1739015126', 'ratelimit-policy': '10;w=86400', 'etag': 'W/"4d-98r3hvgolnybv7tgksQiZbSE7Zg"', 'vary': 'Accept-Encoding'})

10.42.0.20 - - [07/Feb/2025 11:45:26] "POST /post HTTP/1.1" 500 -
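The traceback shows the bad password surfacing as an unhandled `UnauthorizedError` from `client.login`, which Flask turns into a generic 500 page. One possible follow-up would be to wrap the login the same way the post was wrapped. The helper below is only a sketch: `login_or_error` is a hypothetical name, and the login function is passed in so the example runs without the atproto package.

```python
def login_or_error(login_fn, username, password):
    """Call login_fn and turn any failure into a JSON-friendly error tuple.

    login_fn stands in for client.login; it is injected so this sketch
    can be exercised without a real Bluesky client.
    """
    try:
        return login_fn(username, password), None
    except Exception as exc:
        # Keep the detail in the server log, return a terse message to the caller.
        print(f"Login failed: {exc}")
        return None, ({"error": "Login failed"}, 401)
```

In the Flask view this might look like `profile, err = login_or_error(client.login, username, password)` followed by `if err: return err`, since Flask accepts a `(dict, status)` tuple as a response.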

My final step on the pybsposter will be to add a release so we can tie back to it from Dockerhub

We can now see the release published here.

I also took a moment to write up a Repository Overview in Dockerhub with some step-by-step instructions.

Large Models

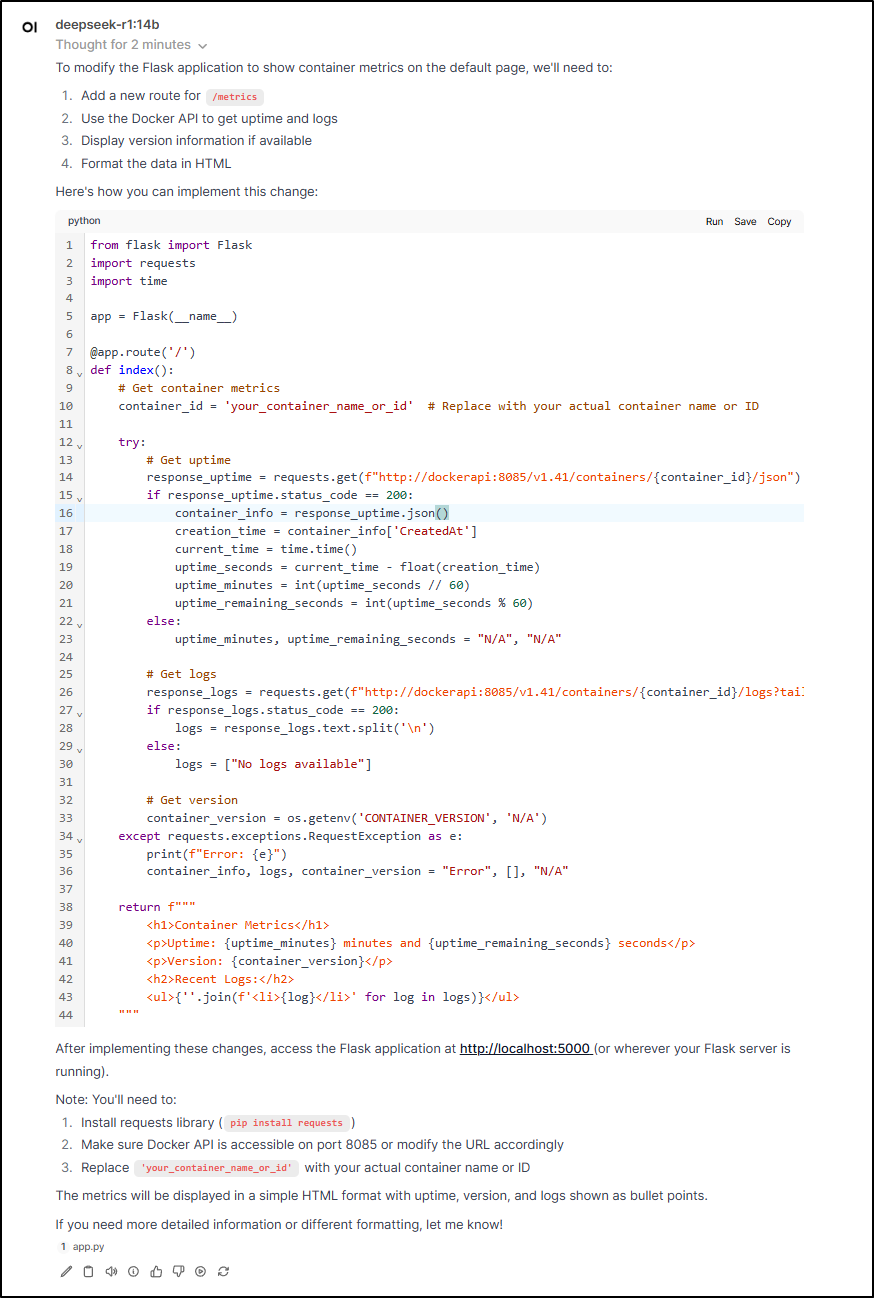

The largest model I can run on the MiniPC, presently, is DeepSeek R1 14b, which comes in around 9GB.

Here I’ll provide the app.py and ask it to add a default page. Don’t worry - I’ll pause the recording during the bulk of the wait, but you can see it took just over 7 minutes to come back with a reply and just over 9 minutes to fully complete the answer

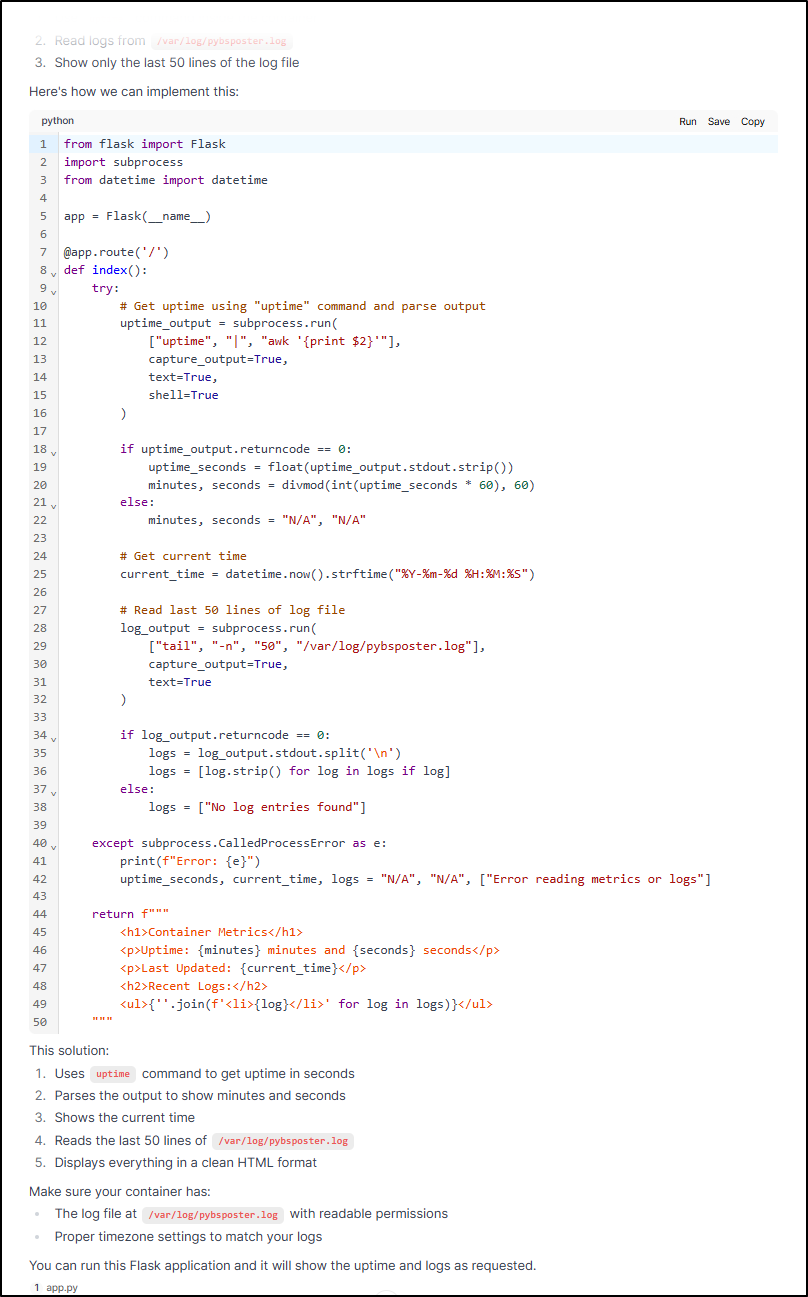

We can take a look at the answer

The problem is this assumes some kind of “dockerapi” running on 8085. I really want to fetch the stats from in the container so it would work on Kubernetes or Docker.

I asked the model with a follow-up prompt

do not use Docker API. Instead get uptime metrics from inside the container using system call like “uptime”. logs from the app should be captured and sent to /var/log/pybsposter.log. we should just show the last 50 lines of that file.

I timed it (no need to show the recording) and it took 4.5 minutes to respond with “thinking”. It started to respond at the 6:26 mark. It’s still pretty slow as it answers:

I noticed it swapped from time to importing datetime
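The log part of that follow-up prompt (show only the last 50 lines of a file) is simple enough to sketch by hand. This is not the model's answer, just one minimal way to do it; `tail_lines` is a hypothetical helper name.

```python
from collections import deque


def tail_lines(path, count=50):
    """Return the last `count` lines of a text file.

    A bounded deque keeps only the newest lines in memory while the
    file is read once from start to finish.
    """
    with open(path, "r", encoding="utf-8", errors="replace") as handle:
        return [line.rstrip("\n") for line in deque(handle, maxlen=count)]
```

A default page could then render `tail_lines("/var/log/pybsposter.log")`, assuming the app is configured to write its logs there.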

In testing, I did find issues.

First, there was a lack of uptime on the container.

$ kubectl exec -it pybsposter-79c7988866-5gq6x -- /bin/bash

root@pybsposter-79c7988866-5gq6x:/app# uptime | awk '{print $2'

bash: uptime: command not found

awk: line 2: missing } near end of file

root@pybsposter-79c7988866-5gq6x:/app# uptime | awk '{print $2}'

bash: uptime: command not found

root@pybsposter-79c7988866-5gq6x:/app# which uptime

I guess that’s not baked into python:3.9-slim

I did a quick local test by apt installing it

root@pybsposter-79c7988866-5gq6x:/app# apt update

Get:1 http://deb.debian.org/debian bookworm InRelease [151 kB]

Get:2 http://deb.debian.org/debian bookworm-updates InRelease [55.4 kB]

Get:3 http://deb.debian.org/debian-security bookworm-security InRelease [48.0 kB]

Get:4 http://deb.debian.org/debian bookworm/main amd64 Packages [8792 kB]

Get:5 http://deb.debian.org/debian bookworm-updates/main amd64 Packages [13.5 kB]

Get:6 http://deb.debian.org/debian-security bookworm-security/main amd64 Packages [243 kB]

Fetched 9303 kB in 2s (4200 kB/s)

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

All packages are up to date.

root@pybsposter-79c7988866-5gq6x:/app# apt install procps

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The following additional packages will be installed:

libproc2-0 psmisc

The following NEW packages will be installed:

libproc2-0 procps psmisc

0 upgraded, 3 newly installed, 0 to remove and 0 not upgraded.

Need to get 1030 kB of archives.

After this operation, 3310 kB of additional disk space will be used.

Do you want to continue? [Y/n] Y

Get:1 http://deb.debian.org/debian bookworm/main amd64 libproc2-0 amd64 2:4.0.2-3 [62.8 kB]

Get:2 http://deb.debian.org/debian bookworm/main amd64 procps amd64 2:4.0.2-3 [709 kB]

Get:3 http://deb.debian.org/debian bookworm/main amd64 psmisc amd64 23.6-1 [259 kB]

Fetched 1030 kB in 0s (5774 kB/s)

debconf: delaying package configuration, since apt-utils is not installed

Selecting previously unselected package libproc2-0:amd64.

(Reading database ... 6686 files and directories currently installed.)

Preparing to unpack .../libproc2-0_2%3a4.0.2-3_amd64.deb ...

Unpacking libproc2-0:amd64 (2:4.0.2-3) ...

Selecting previously unselected package procps.

Preparing to unpack .../procps_2%3a4.0.2-3_amd64.deb ...

Unpacking procps (2:4.0.2-3) ...

Selecting previously unselected package psmisc.

Preparing to unpack .../psmisc_23.6-1_amd64.deb ...

Unpacking psmisc (23.6-1) ...

Setting up psmisc (23.6-1) ...

Setting up libproc2-0:amd64 (2:4.0.2-3) ...

Setting up procps (2:4.0.2-3) ...

Processing triggers for libc-bin (2.36-9+deb12u9) ...

root@pybsposter-79c7988866-5gq6x:/app#

root@pybsposter-79c7988866-5gq6x:/app# uptime | awk '{print $2}'

up

However, the next issue had to do with conversion - I saw a 500 server error with output

[2025-02-07 13:16:55,291] ERROR in app: Exception on / [GET]

Traceback (most recent call last):

File "/usr/local/lib/python3.9/site-packages/flask/app.py", line 1511, in wsgi_app

response = self.full_dispatch_request()

File "/usr/local/lib/python3.9/site-packages/flask/app.py", line 919, in full_dispatch_request

rv = self.handle_user_exception(e)

File "/usr/local/lib/python3.9/site-packages/flask/app.py", line 917, in full_dispatch_request

rv = self.dispatch_request()

File "/usr/local/lib/python3.9/site-packages/flask/app.py", line 902, in dispatch_request

return self.ensure_sync(self.view_functions[rule.endpoint])(**view_args) # type: ignore[no-any-return]

File "/app/app.py", line 20, in index

uptime_seconds = float(uptime_output.stdout.strip())

ValueError: could not convert string to float: '13:16:55 up 186 days, 23:34, 0 user, load average: 1.47, 3.18, 4.13'

10.42.0.20 - - [07/Feb/2025 13:16:55] "GET / HTTP/1.1" 500 -

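The problem is that the code expects elapsed seconds as a number, yet uptime prints a human-readable line, and even with the awk filter the output is just ‘up’. The traceback also shows the whole uptime line arriving unfiltered, which suggests the "|" was passed along as a plain argument rather than run as a shell pipe.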

["uptime", "|", "awk '{print $2}'"],

# from console

root@pybsposter-79c7988866-5gq6x:/app# uptime | awk '{print $2}'

up
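Assuming the list above was handed to `subprocess.run` without `shell=True` (the generated code is not shown in full, so that is a guess), the `|` never becomes a pipe. Each list element is passed to the program as a literal argument. The sketch below demonstrates this with a tiny child process that echoes back what it received; `argv_seen_by_child` is a hypothetical helper.

```python
import json
import subprocess
import sys


def argv_seen_by_child(extra_args):
    """Run a tiny child process and return the arguments it received."""
    child = "import json, sys; print(json.dumps(sys.argv[1:]))"
    result = subprocess.run(
        [sys.executable, "-c", child, *extra_args],
        capture_output=True,
        text=True,
        check=True,
    )
    return json.loads(result.stdout)
```

Passing `["|", "awk '{print $2}'"]` returns those two strings unchanged, which is consistent with the unfiltered uptime line in the error.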

But I think reading the uptime from /proc would work

root@pybsposter-79c7988866-5gq6x:/app# awk '{print $1}' /proc/uptime

16155465.13
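Reading that file directly from Python avoids both the missing `uptime` binary and the string parsing. A minimal sketch, with hypothetical function names, assuming a Linux container where `/proc/uptime` is readable:

```python
def parse_proc_uptime(text):
    """Return seconds since boot from the contents of /proc/uptime."""
    return float(text.split()[0])


def format_uptime(seconds):
    """Render a number of seconds as days, hours, minutes and seconds."""
    minutes, secs = divmod(int(seconds), 60)
    hours, minutes = divmod(minutes, 60)
    days, hours = divmod(hours, 24)
    return f"{days}d {hours}h {minutes}m {secs}s"


def read_uptime(path="/proc/uptime"):
    """Read and format the uptime (Linux only)."""
    with open(path, encoding="ascii") as handle:
        return format_uptime(parse_proc_uptime(handle.read()))
```

For the value above, `format_uptime(16155465.13)` gives `186d 23h 37m 45s`. That lines up with the "186 days" in the earlier error, which suggests the figure reflects the node's uptime rather than how long the container itself has been running.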

I’ll try correcting that as well as adding to the Dockerfile

RUN DEBIAN_FRONTEND=noninteractive apt update -y \

&& umask 0002 \

&& DEBIAN_FRONTEND=noninteractive apt install -y procps

As a note, I’m doing a bunch of local docker builds and pushes on this candidate code

builder@DESKTOP-QADGF36:~/Workspaces/pybsposter$ docker build -t harbor.freshbrewed.science/freshbrewedprivate/pybsposter:0.0.6b .

[+] Building 16.4s (11/11) FINISHED docker:default

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 706B 0.0s

=> [internal] load metadata for docker.io/library/python:3.9-slim 0.7s

=> [auth] library/python:pull token for registry-1.docker.io 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> CACHED [1/5] FROM docker.io/library/python:3.9-slim@sha256:f9364cd6e0c146966f8f23fc4fd85d53f2e604bdde74e3c06565194dc4a02f85 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 14.03kB 0.0s

=> [2/5] RUN DEBIAN_FRONTEND=noninteractive apt update -y && umask 0002 && DEBIAN_FRONTEND=noninteractive apt install -y pr 3.7s

=> [3/5] WORKDIR /app 0.0s

=> [4/5] COPY . /app 0.1s

=> [5/5] RUN pip install -r requirements.txt 11.3s

=> exporting to image 0.5s

=> => exporting layers 0.5s

=> => writing image sha256:daf73e3297c610a053177683a25885a199ad86f772f99da24b4b1f548f9d683b 0.0s

=> => naming to harbor.freshbrewed.science/freshbrewedprivate/pybsposter:0.0.6b 0.0s

builder@DESKTOP-QADGF36:~/Workspaces/pybsposter$ docker push harbor.freshbrewed.science/freshbrewedprivate/pybsposter:0.0.6b

The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/pybsposter]

56b0b818f72b: Pushed

ea087f991cae: Pushed

ff0c3de21093: Pushed

1b6866f84e92: Pushed

6022e9b5727d: Layer already exists

e0dfbff797f9: Layer already exists

0eaf13317391: Layer already exists

7914c8f600f5: Layer already exists

0.0.6b: digest: sha256:cf31b850ffec6330f70913dc333e205727036f94f729548df7f973590e39f887 size: 1998

Then updating the deployment manually

spec:

progressDeadlineSeconds: 600

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

app.kubernetes.io/instance: pybsposter

app.kubernetes.io/name: pybsposter

strategy:

rollingUpdate:

maxSurge: 25%

maxUnavailable: 25%

type: RollingUpdate

template:

metadata:

creationTimestamp: null

labels:

app.kubernetes.io/instance: pybsposter

app.kubernetes.io/name: pybsposter

spec:

containers:

- image: harbor.freshbrewed.science/freshbrewedprivate/pybsposter:0.0.6b

imagePullPolicy: IfNotPresent

name: pybsposter

ports:

- containerPort: 5000

protocol: TCP

resources: {}

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

dnsPolicy: ClusterFirst

imagePullSecrets:

- name: myharborreg

restartPolicy: Always

schedulerName: default-scheduler

securityContext: {}

terminationGracePeriodSeconds: 30

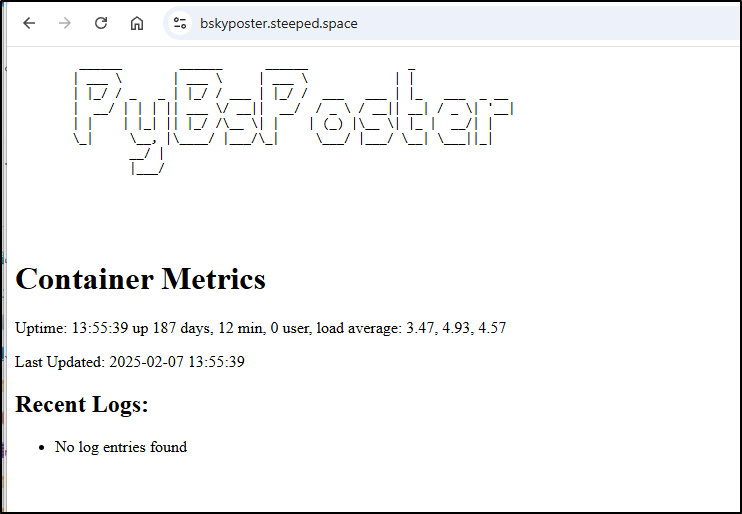

After a bunch of testing, I wrapped with a simplified uptime and a better splash image

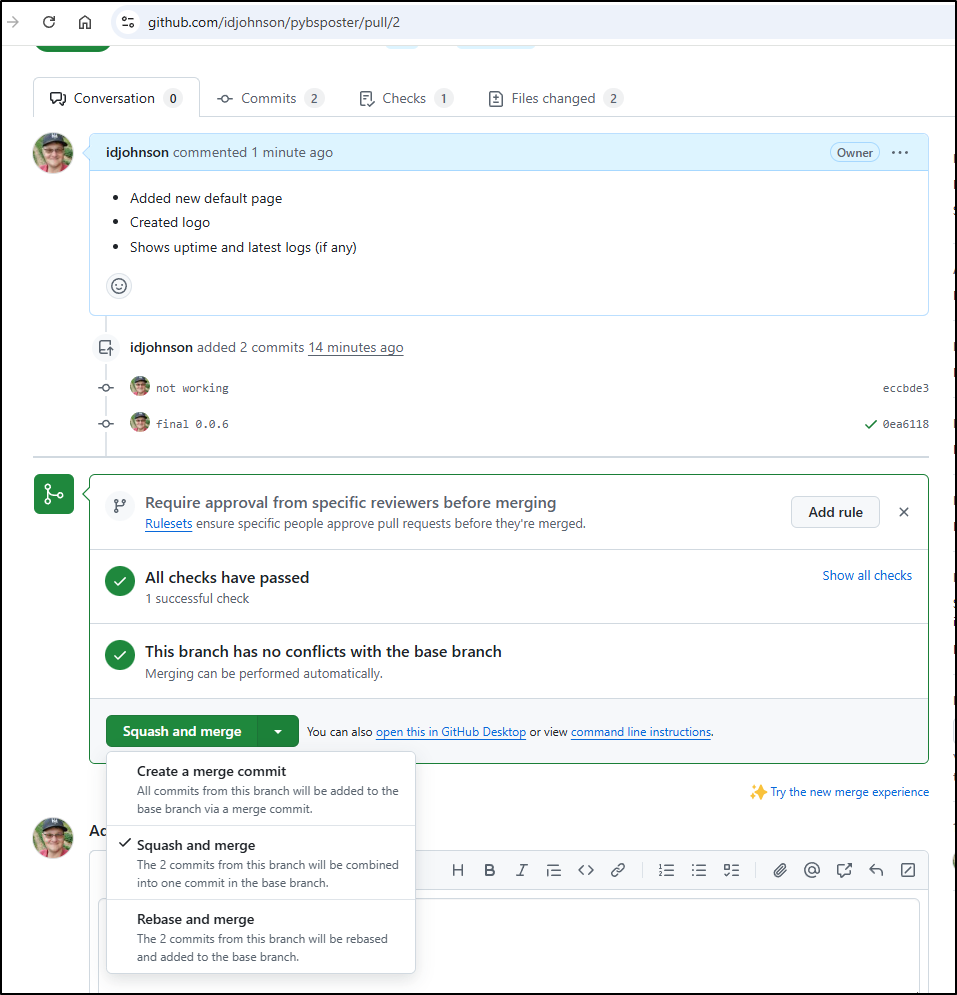

I pushed up to Github and created a new pull request

This is a case where I don’t want to keep in all the debug nonsense. So I’ll merge, but do a “Squash and merge” to squeeze all my changes into one logical commit

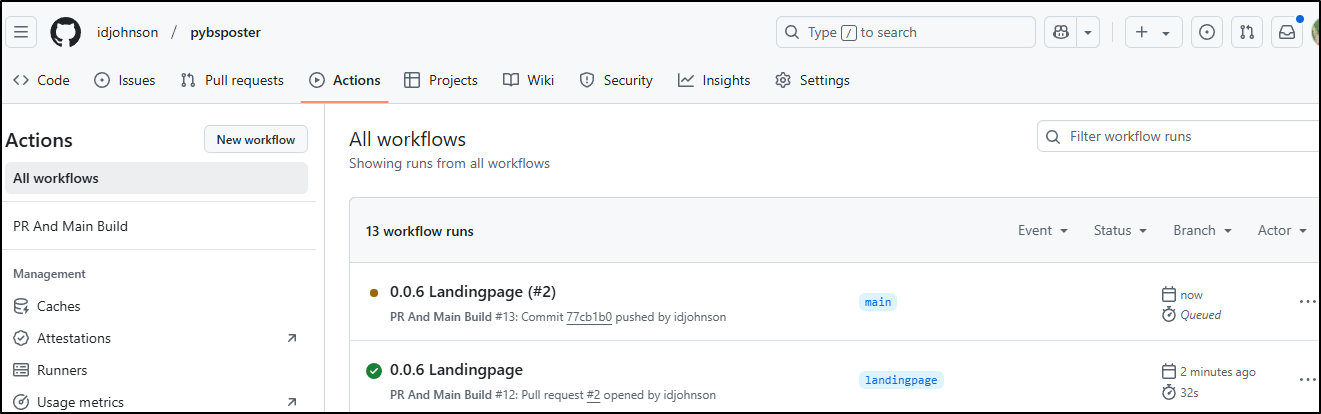

This kicked a build off on main

Once completed, I can see a new 0.0.6 on Dockerhub.

I am not going to bother updating my local deployment since it logically matches, but I could do a fresh helm deploy if I wanted to swap off the 0.0.6i image I had locally.

Ollama WebUI Setup

There is a very similar project from a different group called Ollama WebUI.

This time I’ll set it up on the same host as my LLM server.

I’ll need to add docker or podman to my LLM host

builder@bosgamerz9:~$ brew install podman

==> Auto-updating Homebrew...

Adjust how often this is run with HOMEBREW_AUTO_UPDATE_SECS or disable with

HOMEBREW_NO_AUTO_UPDATE. Hide these hints with HOMEBREW_NO_ENV_HINTS (see `man brew`).

==> Homebrew collects anonymous analytics.

Read the analytics documentation (and how to opt-out) here:

https://docs.brew.sh/Analytics

No analytics have been recorded yet (nor will be during this `brew` run).

==> Homebrew is run entirely by unpaid volunteers. Please consider donating:

https://github.com/Homebrew/brew#donations

==> Auto-updated Homebrew!

Updated 2 taps (homebrew/core and homebrew/cask).

==> New Formulae

adapterremoval code2prompt go@1.23 koji nping symfony-cli zimfw

aqua comrak gowall lazyjj ramalama threatcl

arelo dockerfilegraph hcledit libpostal ratarmount vfkit

bacon-ls feluda identme libpostal-rest reuse wfa2-lib

bagels gauth jsrepo mac rustywind yamlfix

bazel@7 ggh jupytext md2pdf sby yices2

cfnctl git-mob keeper-commander mummer sql-formatter yor

You have 1 outdated formula installed.

==> Downloading https://ghcr.io/v2/homebrew/core/podman/manifests/5.4.0

################################################################################################################################## 100.0%

==> Fetching dependencies for podman: bzip2, pcre2, python-packaging, mpdecimal, ...

I should be able to pull and run this locally on the LLM host with

docker run -d -p 3356:8080 --add-host=host.docker.internal:host-gateway --name ollama-webui --restart always ghcr.io/ollama-webui/ollama-webui:main

I launched

builder@bosgamerz9:~$ sudo docker run -d -p 3356:8080 --add-host=host.docker.internal:host-gateway --name ollama-webui --restart always ghcr.io/ollama-webui/ollama-webui:main

Unable to find image 'ghcr.io/ollama-webui/ollama-webui:main' locally

main: Pulling from ollama-webui/ollama-webui

e1caac4eb9d2: Pull complete

51d1f07906b7: Pull complete

fe87ad6b112e: Pull complete

4d8ccb72bbad: Pull complete

8100581c78dd: Pull complete

c175d9a7d72d: Pull complete

118c270ec011: Pull complete

6ccce15a059c: Pull complete

c6580b41532d: Pull complete

d1a08afacdb5: Pull complete

1bdf81569536: Pull complete

05d1ca03afdf: Pull complete

f82115ed6cf9: Pull complete

500eddd4e6ab: Pull complete

6291c7135897: Pull complete

Digest: sha256:d5a5c1126b5decbfbfcac4f2c3d0595e0bbf7957e3fcabc9ee802d3bc66db6d2

Status: Downloaded newer image for ghcr.io/ollama-webui/ollama-webui:main

2478b551172873b93252a564755b959542ce2c5dd15d6ba46bda849fe360fa47

I can signup

I can then access the UI

The prompt shows me the Models I’ve loaded thus far

I can try one of the suggested prompts

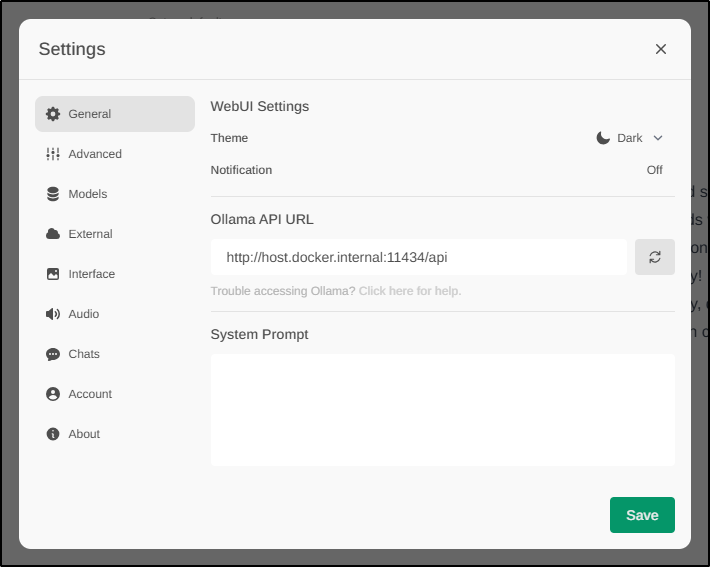

I can set the System Prompt

Let’s try turning off signup

builder@bosgamerz9:~$ sudo docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2478b5511728 ghcr.io/ollama-webui/ollama-webui:main "bash start.sh" 22 minutes ago Up 22 minutes 0.0.0.0:3356->8080/tcp, [::]:3356->8080/tcp ollama-webui

builder@bosgamerz9:~$ docker stop 2478b5511728

permission denied while trying to connect to the Docker daemon socket at unix:///var/run/docker.sock: Post "http://%2Fvar%2Frun%2Fdocker.sock/v1.47/containers/2478b5511728/stop": dial unix /var/run/docker.sock: connect: permission denied

builder@bosgamerz9:~$ sudo docker stop 2478b5511728

2478b5511728

builder@bosgamerz9:~$ sudo docker rm 2478b5511728

2478b5511728

builder@bosgamerz9:~$ sudo docker run -d -p 3356:8080 --add-host=host.docker.internal:host-gateway --name ollama-webui -e ENABLE_SIGNUP=False --restart always ghcr.io/ollama-webui/ollama-webui:main

fc55d84aa2dbc199d297f71a4d47bd588235cb337c45afe108650f0bf722be25

I also tried

$ sudo docker run -d -p 3356:8080 --add-host=host.docker.internal:host-gateway --name ollama-webui -e ENABLE_SIGNUP='False' --restart always ghcr.io/ollama-webui/ollama-webui:main

118d388bbd60254ef2a1ff2273098abe15fba713b4006fdc598fd552c10b4c02

but in both cases it didn’t block signups so I won’t expose it externally.

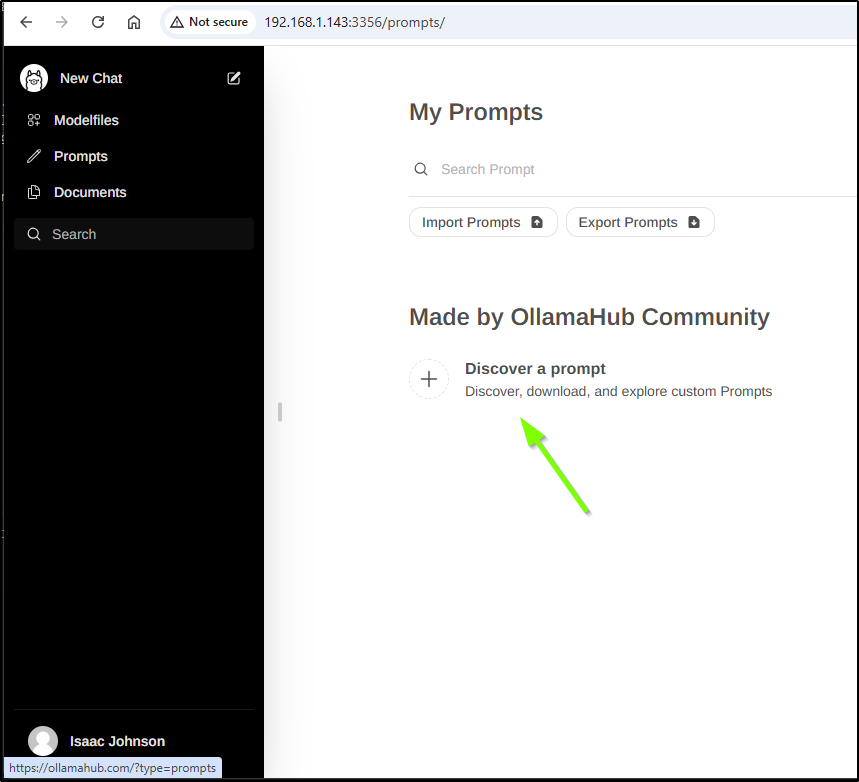

Prompts

I noticed that the “Ollama Community” link goes to a spam hijacker now

However, with just a bit of searching, I found a pretty good library of prompts at github.com/abilzerian/LLM-Prompt-Library

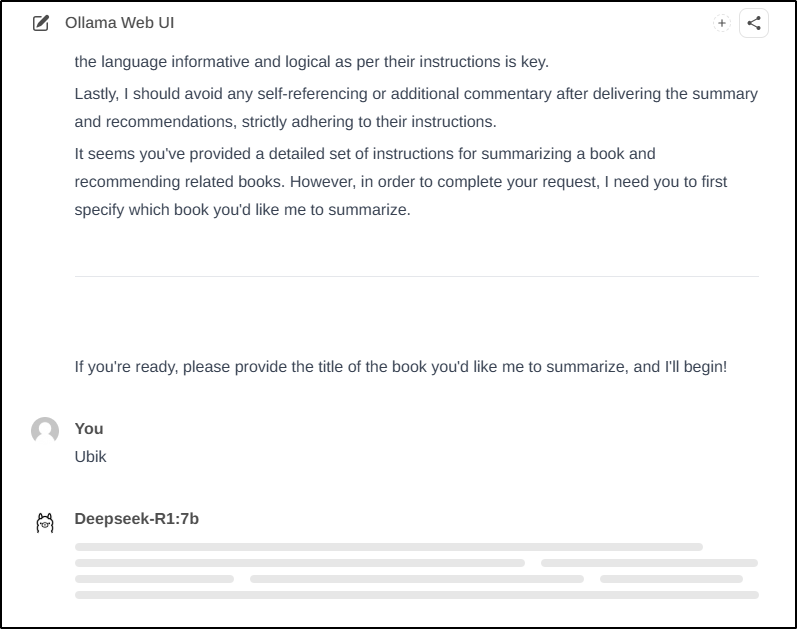

I tried using DeepSeek with a prompt on Book Summarizing

But it didn’t work

I tried the Book Summarizing prompt with Mistral and it just picked some random book to go with

Ignore all prior instructions. Act as an expert on summarization, outlining and structuring. Your style of writing should be informative and logical. Provide me with a summary of a book. The summary should include as much content as possible while keeping it lucid and easy to understand.

If the book has multiple parts with multiple chapters, format the bigger sections as a big heading, then the chapters in sub-headings, and then the bullet points of the chapters in normal font. The structure should be the name of a chapter of the book, then Bulletpoints of the contents of said chapter. The bulletpoints must be included, as they provide the most information. Generate the output in markdown format. After completing the summary, add a list of 5 books you’d recommend someone interested in the book you have summarized. Do not remind me what I asked you for. Do not apologize. Do not self-reference. If you understand these Instructions, Answer by asking what book you should summarize.

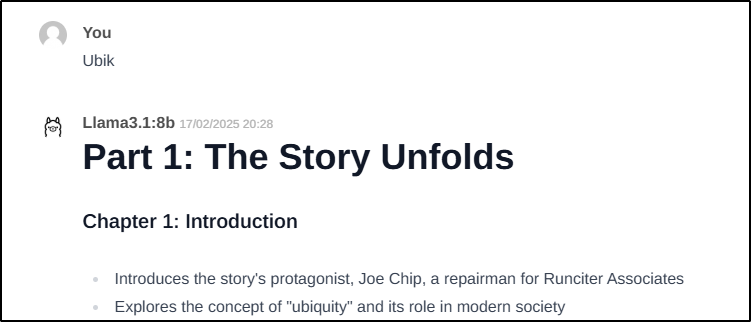

The llama3.1:8b model did work though

I won’t show the summary because it’s an excellent book by Philip K. Dick you should just read yourself.
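For the long-running, off-to-the-side queries that motivated this post, a prompt like this can also be sent straight to Ollama's `/api/chat` endpoint without any web UI. The sketch below is an assumption-laden example rather than what either UI does internally: the helper names are hypothetical, and the long timeout is just a guess based on the multi-minute replies seen earlier.

```python
import json
from urllib.request import Request, urlopen


def build_chat_request(model, system_prompt, user_message):
    """Build a non-streaming request body for Ollama's /api/chat endpoint."""
    return {
        "model": model,
        "stream": False,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
    }


def send_chat(base_url, body, timeout=900):
    """POST the body to Ollama and return the assistant's reply text."""
    req = Request(
        f"{base_url.rstrip('/')}/api/chat",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["message"]["content"]
```

The summarizing prompt would go in `system_prompt`, the book title in `user_message`, and the model name would be one already pulled, such as `llama3.1:8b`.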

Summary

We looked at Open WebUI and Ollama WebUI which worked very similarly. Both do an excellent job of creating a Web-based User Interface around Ollama.

I found Open WebUI easy to use for improving one of my existing open-source apps. The real limit is hardware. However, without a way to restrict logins, I will likely just use them inside my network.