Published: Feb 4, 2025 by Isaac Johnson

Today we have some clean ups to do. It started when I was trying to debug an issue with my Ingress controller and noticed that it was getting slammed with Gitea REST calls. Let’s dig into why that was happening and how we can fix it.

Also, I want to respin a K3s cluster that has pretty much gone to pot. I’ll work through resetting it and, this time, using Istio instead of Traefik or Nginx.

Lastly, as a result of cleaning up the test cluster, I noticed my Uptime Kuma was out of date and needed some updates.

Gitea

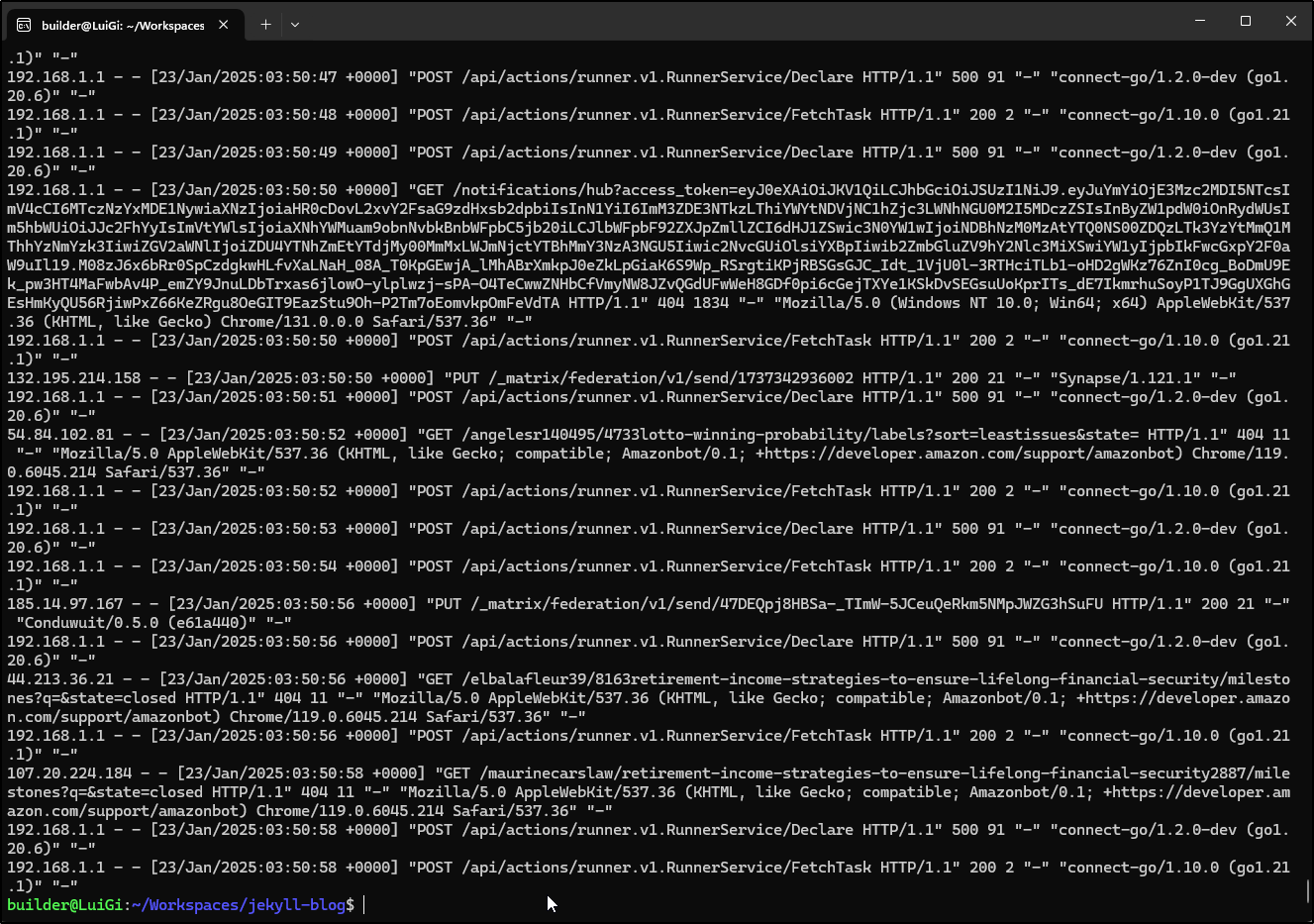

I was recently debugging my ingress controller and noticed the sheer volume of traffic coming through to my Gitea instance.

Here is just a snippet from now:

```
57.141.5.14 - - [23/Jan/2025:01:31:39 +0000] "GET /lonnabolden647?tab=stars&sort=recentupdate&q=&language= HTTP/1.1" 200 18664 "-" "meta-externalagent/1.1 (+https://developers.facebook.com/docs/sharing/webmasters/crawler)" "-"
149.56.70.72 - - [23/Jan/2025:01:31:39 +0000] "PUT /_matrix/federation/v1/send/1737595899200-5908 HTTP/1.1" 200 21 "-" "Dendrite/0.14.1+40bef6a" "-"
147.53.120.155 - - [23/Jan/2025:01:31:39 +0000] "GET /user/settings HTTP/1.1" 200 27890 "https://gitea.freshbrewed.science:443/billiesinnett2/6732powerball/wiki/How-to-Decode-Winning-Powerball-Numbers%3A-Strategies%2C-Stats%2C-and-Insights" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Vivaldi/5.3.2679.68" "-"
192.168.1.1 - - [23/Jan/2025:01:31:39 +0000] "POST /api/actions/runner.v1.RunnerService/Declare HTTP/1.1" 500 91 "-" "connect-go/1.2.0-dev (go1.20.6)" "-"
85.208.96.207 - - [23/Jan/2025:01:31:40 +0000] "GET /dorthysturdiva/situs-slot-rajatoto885017/wiki/?action=_pages HTTP/1.1" 200 18593 "-" "Mozilla/5.0 (compatible; SemrushBot/7~bl; +http://www.semrush.com/bot.html)" "-"
192.168.1.1 - - [23/Jan/2025:01:31:40 +0000] "POST /api/actions/runner.v1.RunnerService/FetchTask HTTP/1.1" 200 2 "-" "connect-go/1.10.0 (go1.21.1)" "-"
147.53.120.155 - - [23/Jan/2025:01:31:40 +0000] "POST /user/settings HTTP/1.1" 303 0 "https://gitea.freshbrewed.science:443/user/settings" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Vivaldi/5.3.2679.68" "-"
98.84.60.17 - - [23/Jan/2025:01:31:41 +0000] "GET /layneenriquez8/cindi1988/issues?assignee=-1&labels=0&milestone=-1&poster=0&project=-1&q=&sort=nearduedate&state=open&type=all HTTP/1.1" 200 33096 "-" "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; Amazonbot/0.1; +https://developer.amazon.com/support/amazonbot) Chrome/119.0.6045.214 Safari/537.36" "-"
147.53.120.155 - - [23/Jan/2025:01:31:41 +0000] "GET /user/settings HTTP/1.1" 200 28037 "https://gitea.freshbrewed.science:443/user/settings" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Vivaldi/5.3.2679.68" "-"
```
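To put numbers on a snippet like this, a small awk pipeline can tally requests per user agent. This is a minimal sketch. It assumes the NGINX-style "combined" log format shown above, where the user agent is the sixth double-quote-delimited field. `top_agents` is a hypothetical helper name.

```shell
# Tally requests per user agent from access-log lines on stdin, busiest first.
# Assumption: lines look like the ones above, so splitting on '"' puts the
# request in field 2, the status/size in field 3 and the user agent in field 6.
top_agents() {
  local limit="${1:-10}"   # how many agents to show (default 10)
  awk -F'"' '{ print $6 }' | sort | uniq -c | sort -rn | head -n "$limit"
}
```

For example, piping the ingress controller's logs into `top_agents 5` should show which crawlers (Meta, Semrush, Amazonbot and so on) dominate the traffic.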

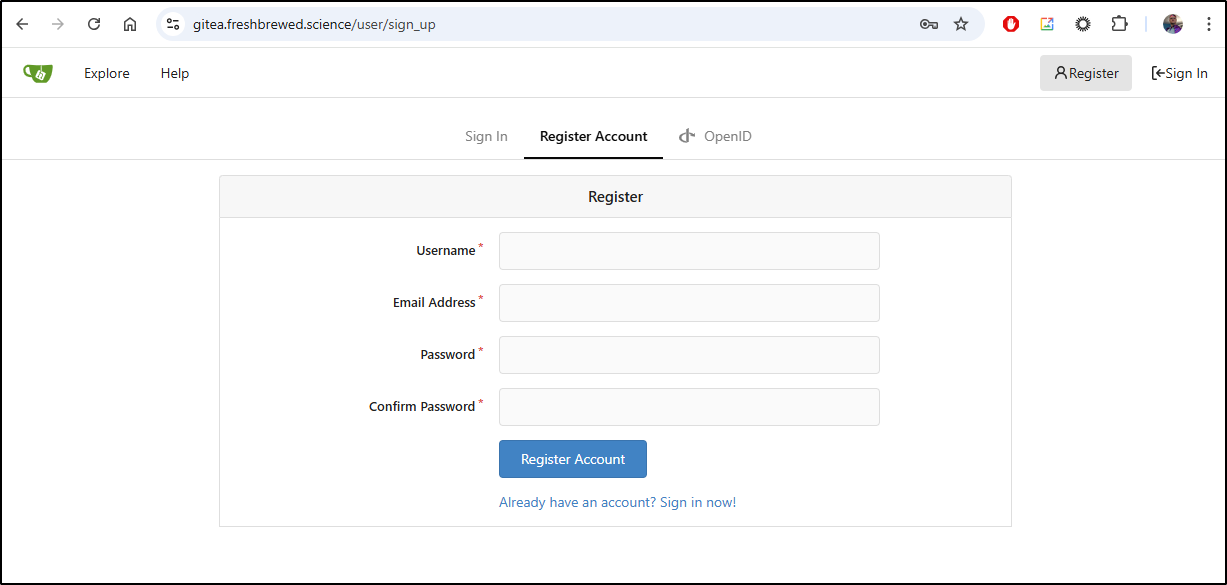

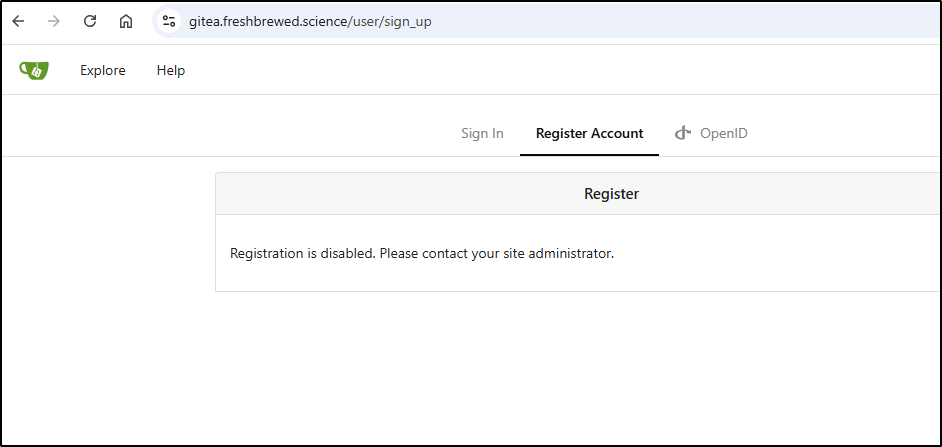

The first thing I noticed when I went to my Gitea was that the front door was indeed wide open: anyone could register an account.

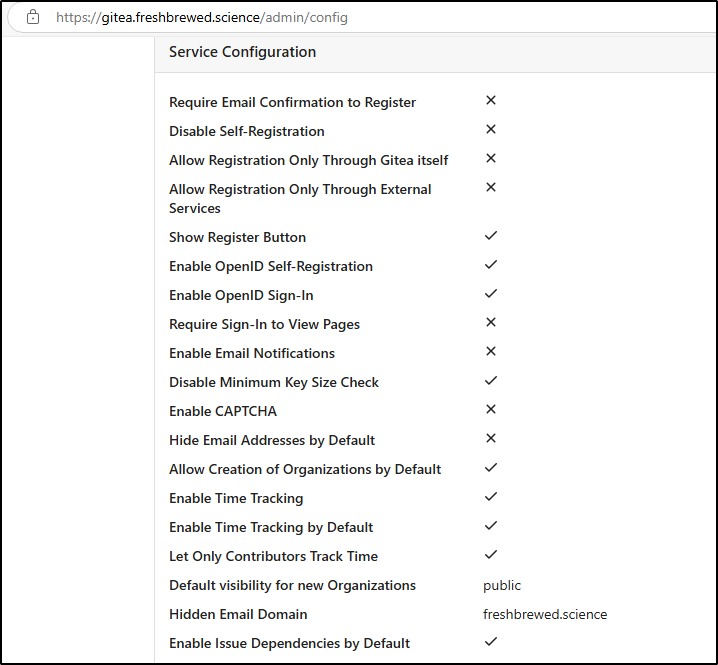

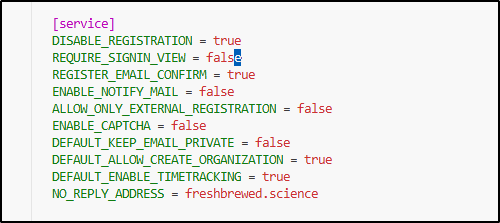

When I looked at the config, I saw I must have left the defaults in place, which allow sign-up without any kind of checks.

The service was created 10 months ago, and this container has been running for 2 months:

```
builder@builder-T100:~$ sudo docker ps -a | grep 4220
b0121334df7f   gitea/gitea:1.21.7   "/usr/bin/entrypoint…"   10 months ago   Up 2 months   0.0.0.0:4222->22/tcp, :::4222->22/tcp, 0.0.0.0:4220->3000/tcp, :::4220->3000/tcp   gitea
```

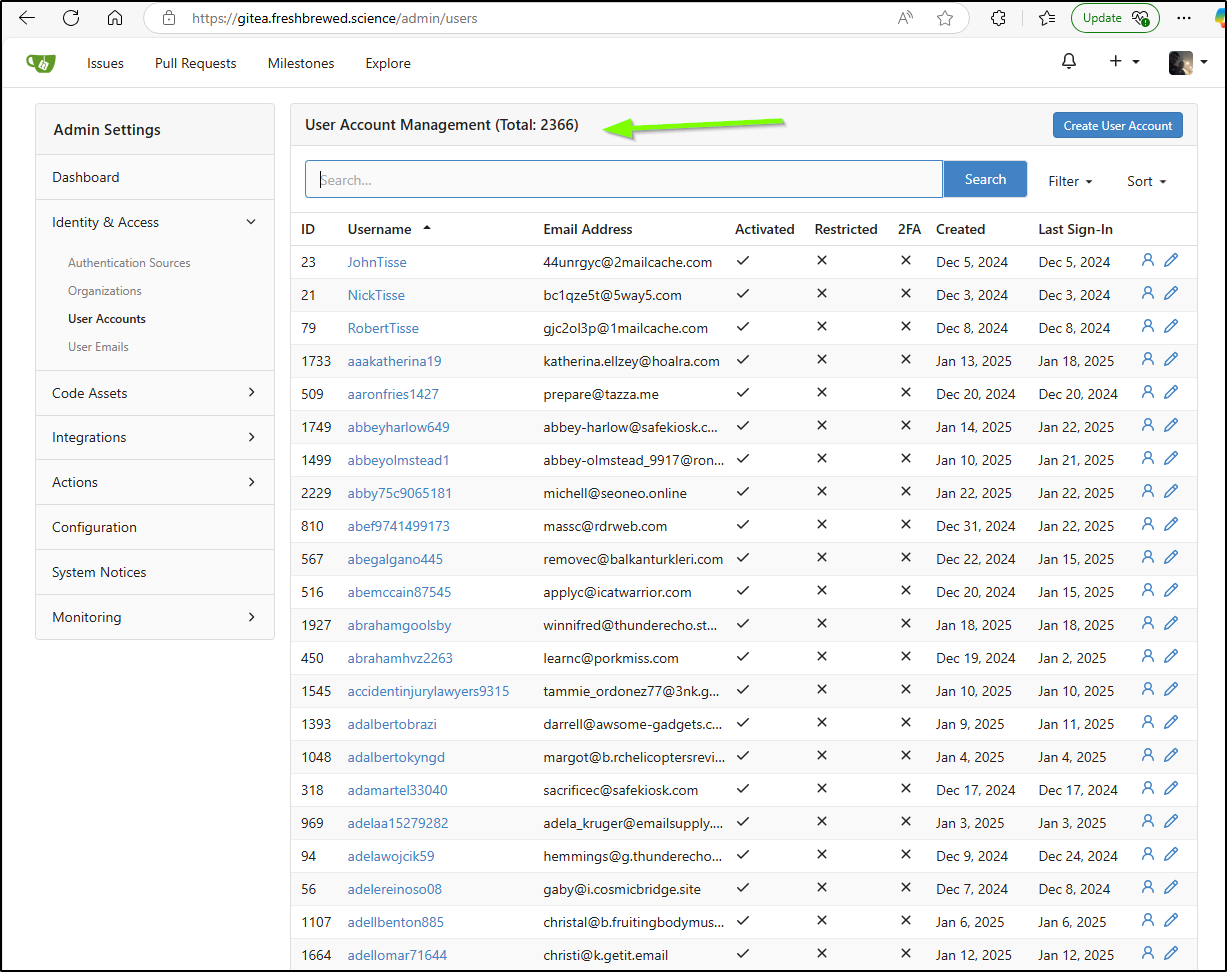

I mean, how many users could it be handling?

Sweet crap on a stick: 2366!?!?

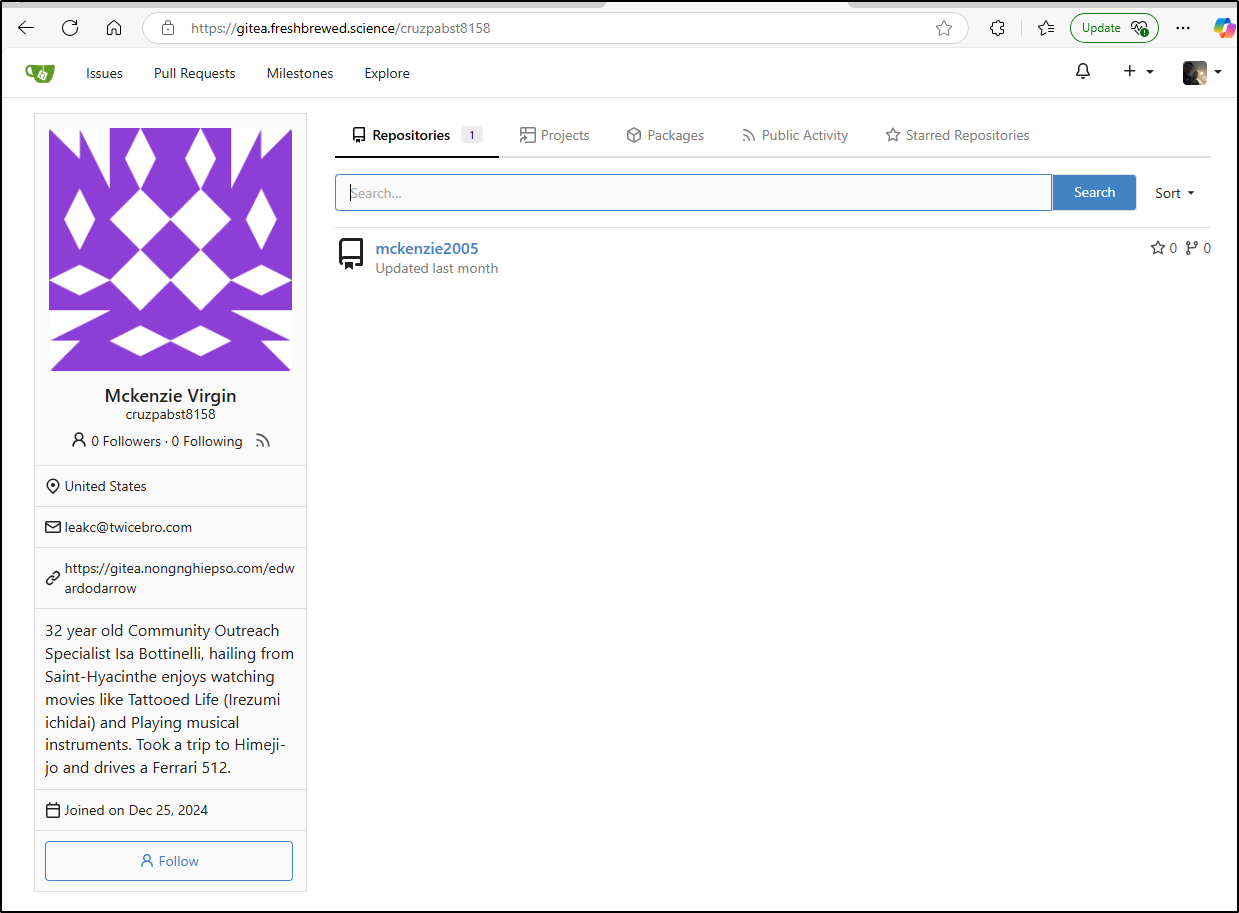

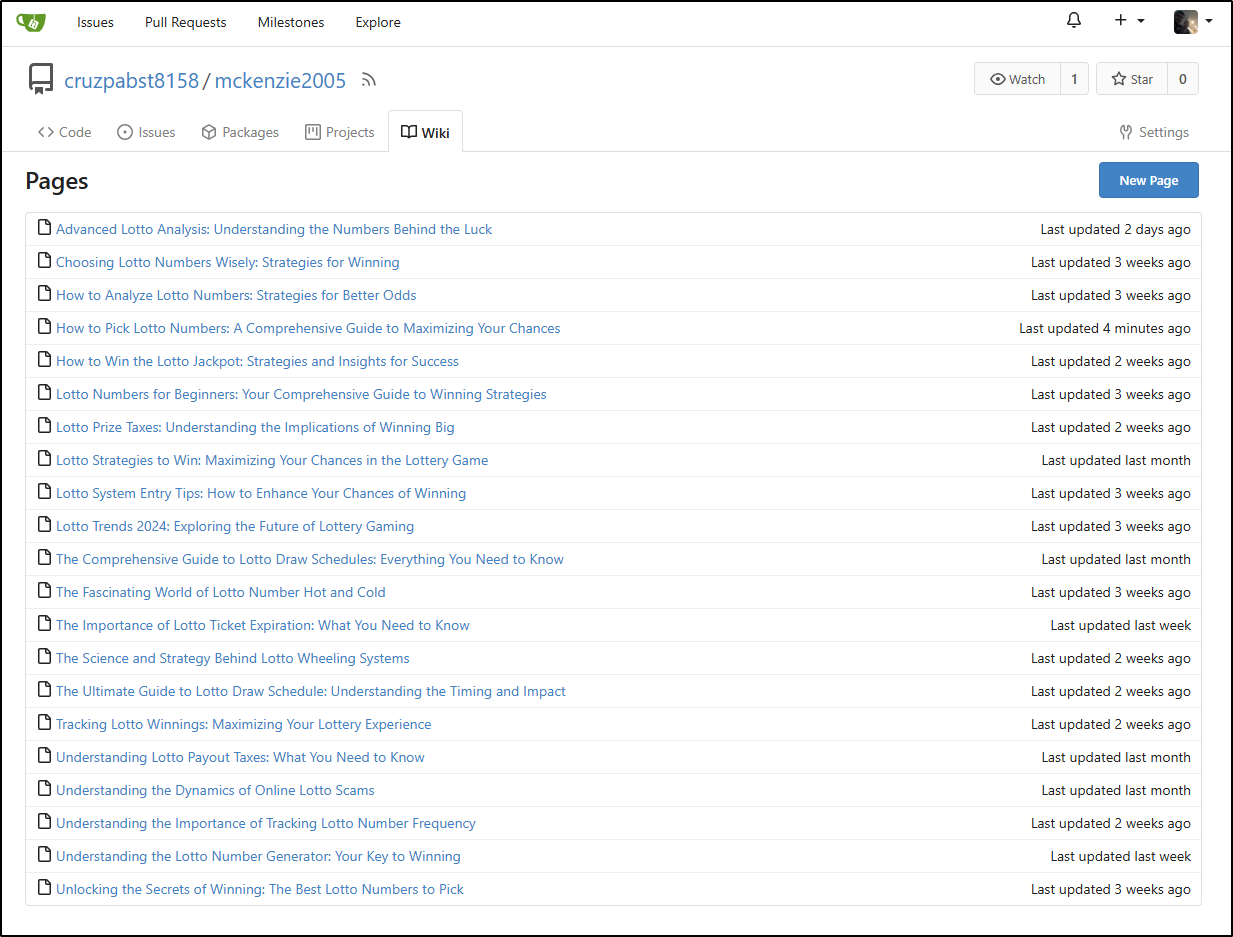

One can see the fake profiles.

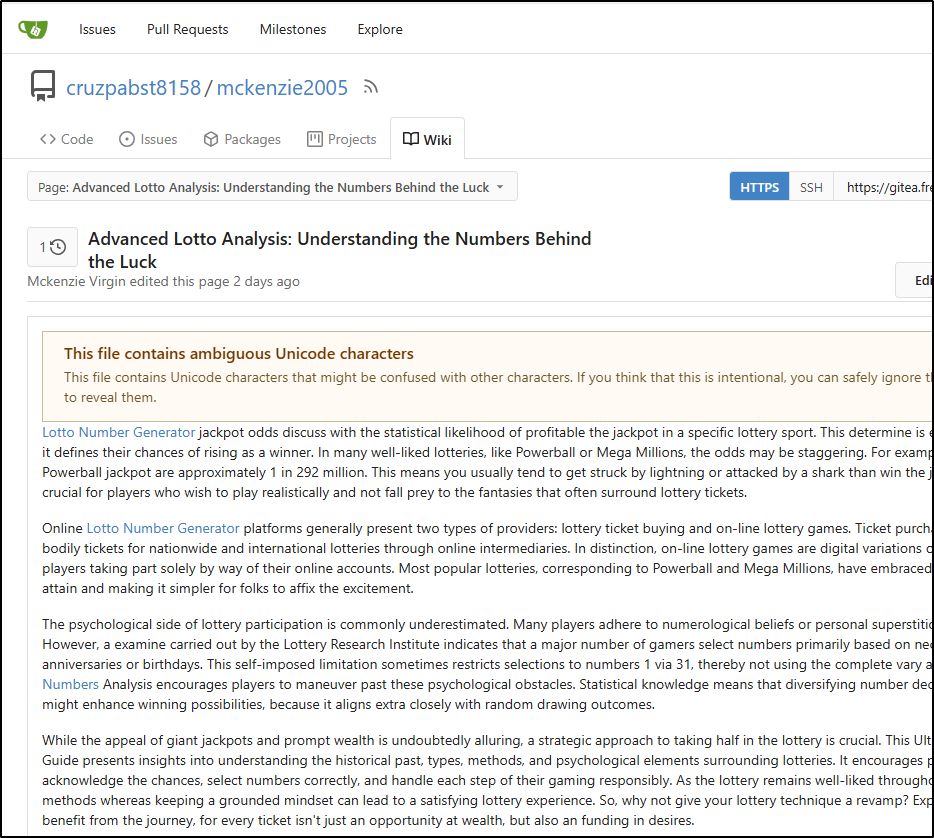

In almost all cases, these accounts are just using the wiki to host spam articles.

These appear to be AI-generated fake articles, clearly posted by spammers.

I’m going to open the `app.ini`:

```
/GiteaNew/gitea/gitea/conf$ vi app.ini
```

and disable sign-ups.

I ran `sudo docker-compose down` and `sudo docker-compose up &` to cycle it, then confirmed that sign-ups were now disabled.
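To double-check the setting without opening the UI, a short awk check can confirm it directly in `app.ini`. This is a sketch. It assumes the change is Gitea's `DISABLE_REGISTRATION` key in the `[service]` section, and `registration_disabled` is a hypothetical helper.

```shell
# Succeed (exit 0) only if DISABLE_REGISTRATION = true appears in the [service]
# section of the given Gitea app.ini. Assumption: plain INI layout, one key per line.
registration_disabled() {
  awk '
    /^[ \t]*\[/ { in_service = ($0 ~ /^[ \t]*\[service\][ \t]*$/) }  # track current section
    in_service && /^[ \t]*DISABLE_REGISTRATION[ \t]*=/ {
      val = $0
      sub(/^[^=]*=[ \t]*/, "", val)    # keep only the value
      sub(/[ \t\r]*$/, "", val)        # trim trailing whitespace / CR
      found = (tolower(val) == "true")
    }
    END { exit !found }
  ' "$1"
}
```

From the `conf` directory, `registration_disabled app.ini && echo "sign-ups are off"` gives a quick yes/no. Sub-sections such as `[service.explore]` are deliberately not treated as `[service]`.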

Removing users

The next bit was interesting - there is no easy way to bulk delete users. One article suggested using database commands.

Since I need to do some surgery on the SQLite3 DB, I’ll stop Gitea for now with `docker compose down`.
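Before deleting rows by hand, copying the database file is cheap insurance. Because Gitea is stopped, a plain file copy should give a consistent snapshot. If `-wal` or `-shm` files sit beside the DB, they are worth copying too. This is a minimal sketch: `backup_db` is a hypothetical name, and the post doesn't show where `gitea.db` lives on the host.

```shell
# Copy a (stopped) SQLite database to a timestamped backup next to it and print
# the backup path. Assumption: nothing is writing to the file during the copy.
backup_db() {
  local db="$1"
  [ -f "$db" ] || { echo "no such file: $db" >&2; return 1; }
  local backup="${db}.$(date +%Y%m%d-%H%M%S).bak"
  cp -p "$db" "$backup" && printf '%s\n' "$backup"
}
```

Run it from the directory holding the database, for example `backup_db gitea.db`. Restoring is then just copying the `.bak` file back while Gitea is down.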

Then I’ll clear out every email address except my own two:

```
sqlite> DELETE from email_address where email not in ('isaac.johnson@gmail.com','isaac@freshbrewed.science');
sqlite> select COUNT(*) from email_address where email not in ('isaac.johnson@gmail.com','isaac@freshbrewed.science');
0
```

Next, I’ll remove the accounts from the `user` table too:

```
sqlite> select id, name, email from user LIMIT 10;
1|builderadmin|isaac.johnson@gmail.com
2|ForgejoBackups|
3|kathimcintosh4|kathi.mcintosh@emailinbox.store
4|milorudolph60|milo.rudolph_3513@regularemail.shop
5|debramoorhouse|debra.moorhouse2606@regularemail.shop
6|tiffanyrountre|info@emailsgateway.store
7|renaldobooker0|renaldo_booker2580@emailinbox.store
8|lawrencecolquh|lawrencecolquhoun.6854@emailclient.online
9|marissathaxton|marissa-thaxton@quirkyemails.fun
10|wilma985214546|wilma-laver9101@quirkyemails.fun
sqlite> DELETE FROM user WHERE id not in (1,2);
```

Now the repos get cleaned up:

```
sqlite> SELECT owner_id, owner_name, name FROM repository LIMIT 10;
2|ForgejoBackups|BlogManagement
3|kathimcintosh4|bet9ja-promo-code-yohaig
4|milorudolph60|bet9ja-promo-code-yohaig
6|tiffanyrountre|bet9ja-promotion-code-yohaig
5|debramoorhouse|bet9ja-promo-code-yohaig
7|renaldobooker0|mc-oluomo-elected-as-nurtw-president---blueprint-newspapers-limited
12|stephanyx90660|stephany2007
14|philipusing232|philip2002
16|blythekeys8365|7468525
18|fayeyuc2075636|budal1983
sqlite> DELETE FROM repository WHERE owner_id not in (1, 2);
sqlite> SELECT owner_id, owner_name, name FROM repository LIMIT 10;
2|ForgejoBackups|BlogManagement
```
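One caveat: deleting rows from `repository` (and `user`) doesn't touch the bare Git repositories on disk, and wikis are stored as separate `<repo>.wiki.git` repositories there. The sketch below assumes Gitea's usual layout of `<repository root>/<owner>/<repo>.git` with lowercased owner names. It lists owner directories that don't belong to a kept account. The function name is hypothetical and the repository root path isn't shown in the post, so review the output before removing anything.

```shell
# Print owner directories under a Gitea repository root that are NOT in the keep list.
# Usage: orphan_owner_dirs <repo-root> <keep-owner>...   (owner names in lowercase)
orphan_owner_dirs() {
  local root="$1"; shift
  local dir name keep skip
  for dir in "$root"/*/; do
    [ -d "$dir" ] || continue          # glob matched nothing
    name="$(basename "$dir")"
    skip=0
    for keep in "$@"; do
      [ "$name" = "$keep" ] && skip=1
    done
    [ "$skip" -eq 0 ] && printf '%s\n' "$name"
  done
  return 0
}
```

Here the keep list would be `builderadmin forgejobackups`. The output is only a candidate list, nothing is deleted.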

So now I’ll fire it back up

```
builder@builder-T100:~/GiteaNew$ sudo docker-compose up -d
2025-01-22 21:48:32,698 DEBUG [ddtrace.appsec._remoteconfiguration] [_remoteconfiguration.py:60] [dd.service=bin dd.env= dd.version= dd.trace_id=0 dd.span_id=0] - [3879176][P: 3879175] Register ASM Remote Config Callback
2025-01-22 21:48:32,699 DEBUG [ddtrace._trace.tracer] [tracer.py:868] [dd.service=bin dd.env= dd.version= dd.trace_id=0 dd.span_id=0] - finishing span name='requests.request' id=8124676839364187293 trace_id=137667180970989971669389181615678715143 parent_id=None service='requests' resource='GET /version' type='http' start=1737604112.5592725 end=1737604112.697234 duration=0.137961441 error=0 tags={'_dd.base_service': 'bin', '_dd.injection.mode': 'host', '_dd.p.dm': '-0', '_dd.p.tid': '6791bc1000000000', 'component': 'requests', 'http.method': 'GET', 'http.status_code': '200', 'http.url': 'http+docker://localhost/version', 'http.useragent': 'docker-compose/1.29.2 docker-py/5.0.3 Linux/6.8.0-48-generic', 'language': 'python', 'out.host': 'localhost', 'runtime-id': '147187b2e15d4207a48e6e74509fae4e', 'span.kind': 'client'} metrics={'_dd.measured': 1, '_dd.top_level': 1, '_dd.tracer_kr': 1.0, '_sampling_priority_v1': 1, 'process_id': 3879176} links='' events='' (enabled:True)
2025-01-22 21:48:32,713 DEBUG [ddtrace._trace.tracer] [tracer.py:868] [dd.service=bin dd.env= dd.version= dd.trace_id=0 dd.span_id=0] - finishing span name='requests.request' id=14111092360656294140 trace_id=137667180970989971664043727157691609037 parent_id=None service='requests' resource='GET /v1.44/networks/giteanew_gitea' type='http' start=1737604112.7011092 end=1737604112.7127628 duration=0.011653751 error=0 tags={'_dd.base_service': 'bin', '_dd.injection.mode': 'host', '_dd.p.dm': '-0', '_dd.p.tid': '6791bc1000000000', 'component': 'requests', 'http.method': 'GET', 'http.status_code': '404', 'http.url': 'http+docker://localhost/v1.44/networks/giteanew_gitea', 'http.useragent': 'docker-compose/1.29.2 docker-py/5.0.3 Linux/6.8.0-48-generic', 'language': 'python', 'out.host': 'localhost', 'runtime-id': '147187b2e15d4207a48e6e74509fae4e', 'span.kind': 'client'} metrics={'_dd.measured': 1, '_dd.top_level': 1, '_dd.tracer_kr': 1.0, '_sampling_priority_v1': 1, 'process_id': 3879176} links='' events='' (enabled:True)
.. snip ...
```

Now it looks clean

In my logs, I can still see some Gitea runners repeatedly trying to connect, along with notifications.
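To gauge how noisy those runners are, the same access-log lines can be grouped by status code for the `RunnerService` endpoints. For example, the `Declare` call in the earlier snippet returned a 500. This sketch uses the same log-format assumption as before: the request is in the second double-quote-delimited field and the status is in the third. `runner_calls_by_status` is a hypothetical name.

```shell
# Count Gitea Actions runner calls (RunnerService endpoints) per HTTP status code.
runner_calls_by_status() {
  awk -F'"' '$2 ~ /RunnerService/ {
    split($3, parts, " ")   # field 3 looks like " <status> <bytes> "
    print parts[1]
  }' | sort | uniq -c | sort -rn
}
```

A steady stream of 500s from a single internal IP would point at a runner that needs re-registering or shutting down, rather than at outside traffic.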

Test Cluster

While my test cluster is up, it has slowed to a crawl and isn’t scheduling workloads well anymore.

```
$ kubectl get nodes
NAME                  STATUS   ROLES                  AGE    VERSION
anna-macbookair       Ready    control-plane,master   278d   v1.27.6+k3s1
isaac-macbookpro      Ready    <none>                 278d   v1.27.6+k3s1
builder-macbookpro2   Ready    <none>                 278d   v1.27.6+k3s1
```

Since it’s been a fat minute since I last did this, let’s check our nodes and their current IPs:

```
$ kubectl get nodes -o json | jq -r '
  [
    "Name", "Internal IP"
  ],
  (.items[] | [
    .metadata.name,
    (.status.addresses[] | select(.type == "InternalIP") | .address)
  ]) | @tsv
' | column -t
Name                 Internal IP
builder-macbookpro2  192.168.1.163
anna-macbookair      192.168.1.13
isaac-macbookpro     192.168.1.205
```

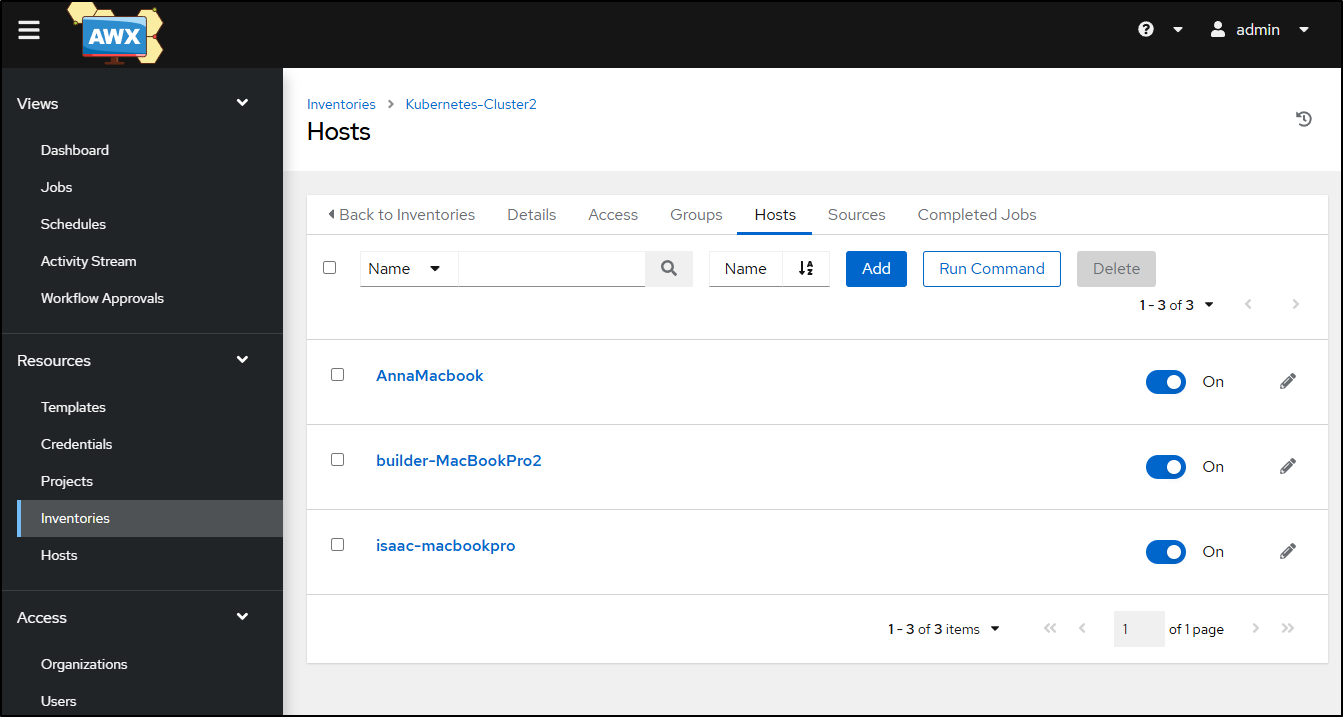

This matches my “Kubernetes-Cluster2”

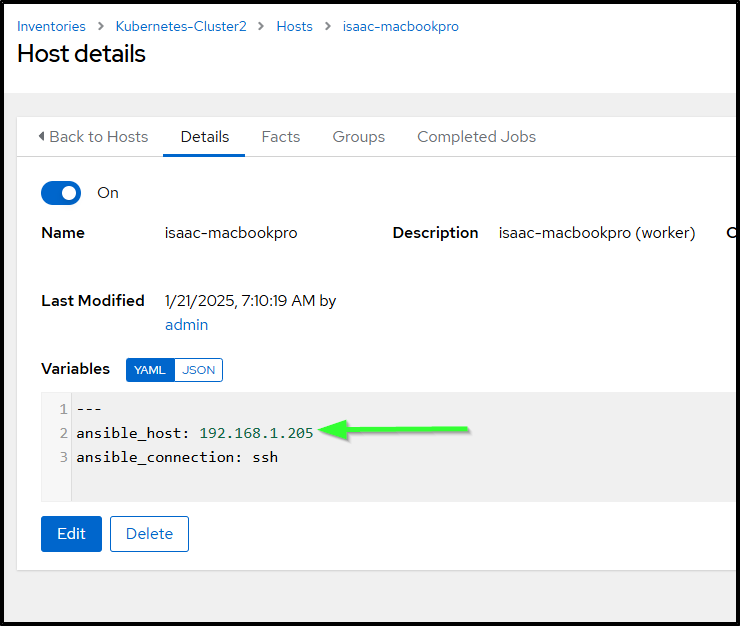

I then checked each host to confirm its IP still lines up.

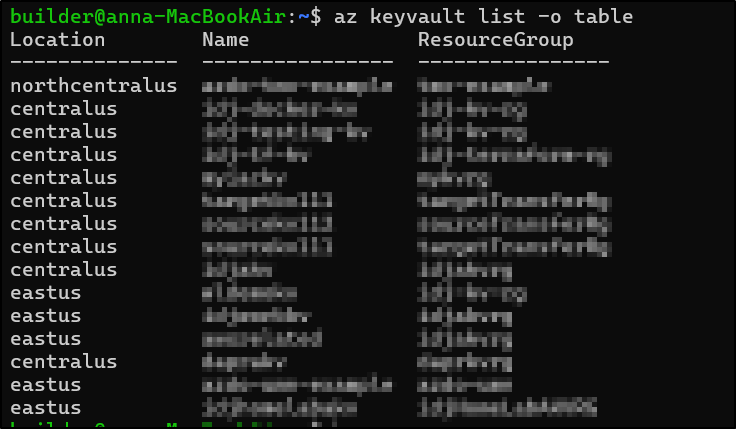

Before I even begin: I know that during the process I will stash a key in Azure Key Vault (AKV) for later use, and by this point the Azure CLI is almost always out of date, has an expired session, or both.

The `az upgrade` step seemed to fail, or maybe 2.53.0 is the latest available for this OS:

```
builder@anna-MacBookAir:~$ az upgrade
This command is in preview and under development. Reference and support levels: https://aka.ms/CLI_refstatus
Your current Azure CLI version is 2.53.0. Latest version available is 2.68.0.
Please check the release notes first: https://docs.microsoft.com/cli/azure/release-notes-azure-cli
Do you want to continue? (Y/n): Y
Hit:1 https://download.docker.com/linux/ubuntu jammy InRelease
Hit:2 http://security.ubuntu.com/ubuntu jammy-security InRelease
Hit:3 http://us.archive.ubuntu.com/ubuntu jammy InRelease
Hit:4 http://us.archive.ubuntu.com/ubuntu jammy-updates InRelease
Hit:5 http://repo.mongodb.org/apt/debian buster/mongodb-org/6.0 InRelease
Hit:6 http://us.archive.ubuntu.com/ubuntu jammy-backports InRelease
Hit:7 https://packages.cloud.google.com/apt gcsfuse-jammy InRelease
Hit:8 https://baltocdn.com/helm/stable/debian all InRelease
Hit:9 https://packages.cloud.google.com/apt cloud-sdk InRelease
Hit:10 https://ppa.launchpadcontent.net/ansible/ansible/ubuntu jammy InRelease
Reading package lists... Done
W: http://repo.mongodb.org/apt/debian/dists/buster/mongodb-org/6.0/InRelease: Key is stored in legacy trusted.gpg keyring (/etc/apt/trusted.gpg), see the DEPRECATION section in apt-key(8) for details.
N: Skipping acquire of configured file 'main/binary-i386/Packages' as repository 'http://repo.mongodb.org/apt/debian buster/mongodb-org/6.0 InRelease' doesn't support architecture 'i386'
W: https://packages.cloud.google.com/apt/dists/gcsfuse-jammy/InRelease: Key is stored in legacy trusted.gpg keyring (/etc/apt/trusted.gpg), see the DEPRECATION section in apt-key(8) for details.
N: Skipping acquire of configured file 'main/binary-i386/Packages' as repository 'https://packages.cloud.google.com/apt gcsfuse-jammy InRelease' doesn't support architecture 'i386'
Reading package lists... Done
Building dependency tree... Done
Reading state information... Done
azure-cli is already the newest version (2.53.0-1~focal).
The following packages were automatically installed and are no longer required:
  app-install-data-partner bsdmainutils crda g++-9 gir1.2-clutter-1.0 gir1.2-clutter-gst-3.0 gir1.2-cogl-1.0
  gir1.2-coglpango-1.0 gir1.2-gnomebluetooth-1.0 gir1.2-gtkclutter-1.0 gir1.2-handy-0.0 gnome-getting-started-docs
  gnome-screenshot hddtemp ibverbs-providers ippusbxd libamtk-5-0 libamtk-5-common libapr1 libaprutil1
  libaprutil1-dbd-sqlite3 libaprutil1-ldap libasn1-8-heimdal libboost-date-time1.71.0 libboost-filesystem1.71.0
  libboost-iostreams1.71.0 libboost-locale1.71.0 libboost-thread1.71.0 libbrlapi0.7 libcamel-1.2-62 libcbor0.6
  libcdio18 libcmis-0.5-5v5 libcolord-gtk1 libdns-export1109 libedataserver-1.2-24 libedataserverui-1.2-2
  libextutils-pkgconfig-perl libfprint-2-tod1 libfreerdp-server2-2 libfwupdplugin1 libgnome-bg-4-1 libgsound0
  libgssapi3-heimdal libgssdp-1.2-0 libgtksourceview-3.0-1 libgtksourceview-3.0-common libgupnp-1.2-0 libgupnp-1.2-1
  libgupnp-av-1.0-3 libgupnp-dlna-2.0-4 libhandy-0.0-0 libhcrypto4-heimdal libheimbase1-heimdal libheimntlm0-heimdal
  libhogweed5 libhx509-5-heimdal libibverbs1 libicu66 libidn11 libilmbase24 libisl22 libjson-c4 libjuh-java
  libjurt-java libkrb5-26-heimdal libldap-2.4-2 liblibreoffice-java libllvm10 libllvm11 libllvm12 libmodbus5
  libmozjs-68-0 libmpdec2 libneon27-gnutls libnettle7 libntfs-3g883 libntfs-3g89 libopenexr24 liborcus-0.15-0
  libperl5.30 libphonenumber7 libpoppler97 libprotobuf17 libpython3.8 libpython3.8-dev libpython3.8-minimal
  libpython3.8-stdlib libqpdf26 libraw19 librdmacm1 libreadline5 libreoffice-style-tango libridl-java
  libroken18-heimdal librygel-core-2.6-2 librygel-db-2.6-2 librygel-renderer-2.6-2 librygel-server-2.6-2 libsane
  libsnmp35 libstdc++-9-dev libtepl-4-0 libtracker-control-2.0-0 libtracker-miner-2.0-0 libtracker-sparql-2.0-0
  libunoloader-java liburcu6 liburl-dispatcher1 libvncserver1 libvpx6 libwebp6 libwind0-heimdal libwmf0.2-7 libxmlb1
  ltrace lz4 mongodb-database-tools mongodb-mongosh ncal perl-modules-5.30 popularity-contest python3-entrypoints
  python3-requests-unixsocket python3-simplejson python3.8 python3.8-dev python3.8-minimal rygel shim syslinux
  syslinux-common syslinux-legacy ure-java vino xul-ext-ubufox
Use 'sudo apt autoremove' to remove them.
0 upgraded, 0 newly installed, 0 to remove and 46 not upgraded.
CLI upgrade failed or aborted.
```
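Looking at that output, none of the `Hit:` lines come from `packages.microsoft.com`. Also, the installed package is the `~focal` build on a Jammy host. A plausible explanation is that apt simply has no Azure CLI repository to pull a newer `azure-cli` from. Here is a quick check for Microsoft's Azure CLI apt source, as a sketch. The helper name is hypothetical, and `/etc/apt` is the standard apt configuration directory.

```shell
# Succeed if any apt source under the given directory (default /etc/apt) points at
# Microsoft's Azure CLI repository; searches recursively, so .list and deb822
# .sources files are both covered.
has_azure_cli_repo() {
  local dir="${1:-/etc/apt}"
  grep -rqsF 'packages.microsoft.com/repos/azure-cli' "$dir"
}
```

If `has_azure_cli_repo || echo "no Azure CLI apt repo configured"` prints the message, Microsoft's Linux install instructions for the Azure CLI cover adding the repository for the current release.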

I’ll try what I actually need, namely the Azure Command Line Interface (CLI) with Azure Key Vault.

First, I’ll show the version, then log in with a device code:

```
builder@anna-MacBookAir:~$ az version
{
  "azure-cli": "2.53.0",
  "azure-cli-core": "2.53.0",
  "azure-cli-telemetry": "1.1.0",
  "extensions": {}
}
builder@anna-MacBookAir:~$ az login --use-device-code
To sign in, use a web browser to open the page https://microsoft.com/devicelogin and enter the code A4SC2NKX6 to authenticate.
The following tenants don't contain accessible subscriptions. Use 'az login --allow-no-subscriptions' to have tenant level access.
15d19784-ad58-4a57-a66f-ad1c0f826a45 'WellSky'
2f5b31ae-cc9f-445d-bec6-fe0546b2a741 'mytestdomain'
5b42c447-cf12-4b56-8de9-fc7688e36ec6 'IDJCompleteNonsense'
92a2e5a9-a8ab-4d8c-91eb-8af9712c16d5 'Princess King'
[
  {
    "cloudName": "AzureCloud",
    "homeTenantId": "28c575f6-asdf-asdf-asdf-7e6d1ba0eb4a",
    "id": "d955c0ba-asdf-asdf-asdf-8fed74cbb22d",
    "isDefault": true,
    "managedByTenants": [],
    "name": "Pay-As-You-Go",
    "state": "Enabled",
    "tenantId": "28c575f6-asdf-asdf-asdf-7e6d1ba0eb4a",
    "user": {
      "name": "isaac.johnson@gmail.com",
      "type": "user"
    }
  }
]
```

After that, a list command seems to work:

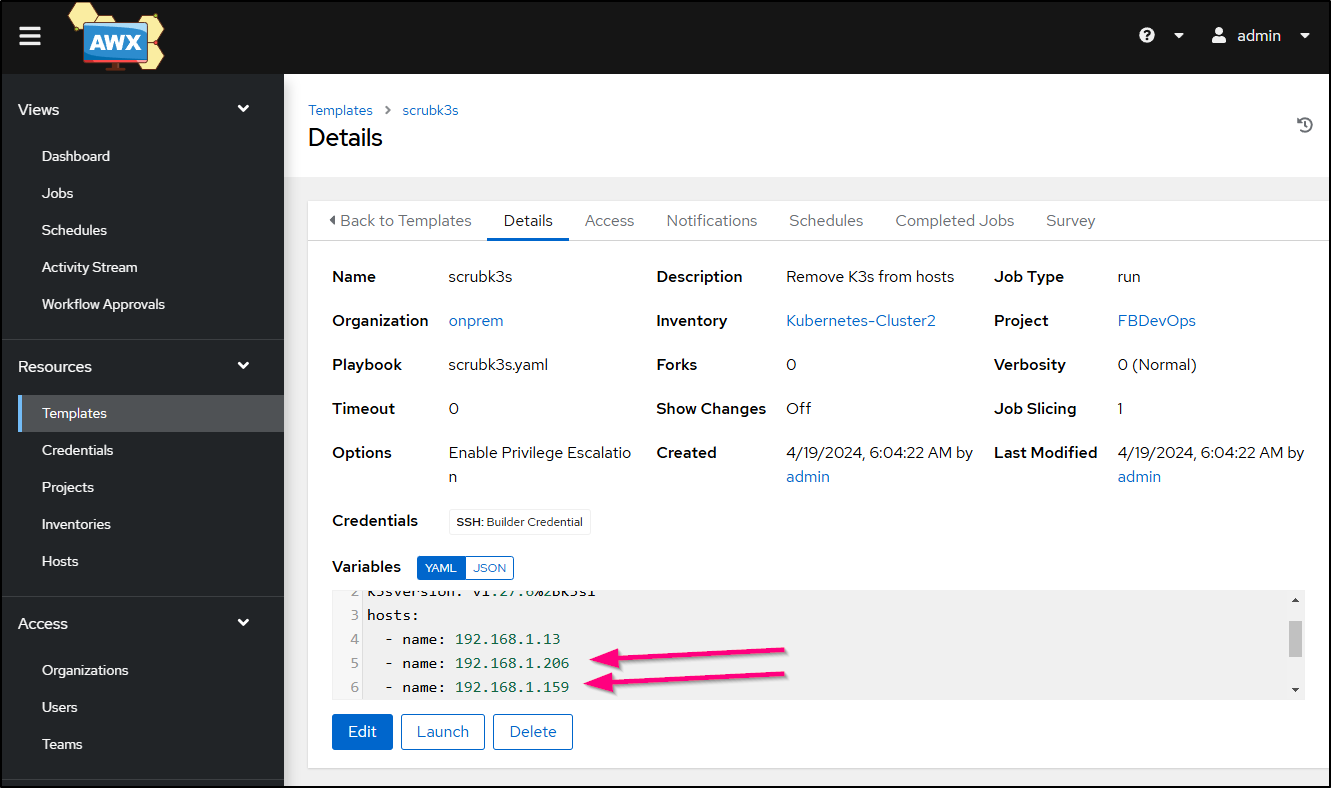

I should now be able to run my “scrub” playbook; however, it’s worth noting I had to fix some IPs there too.

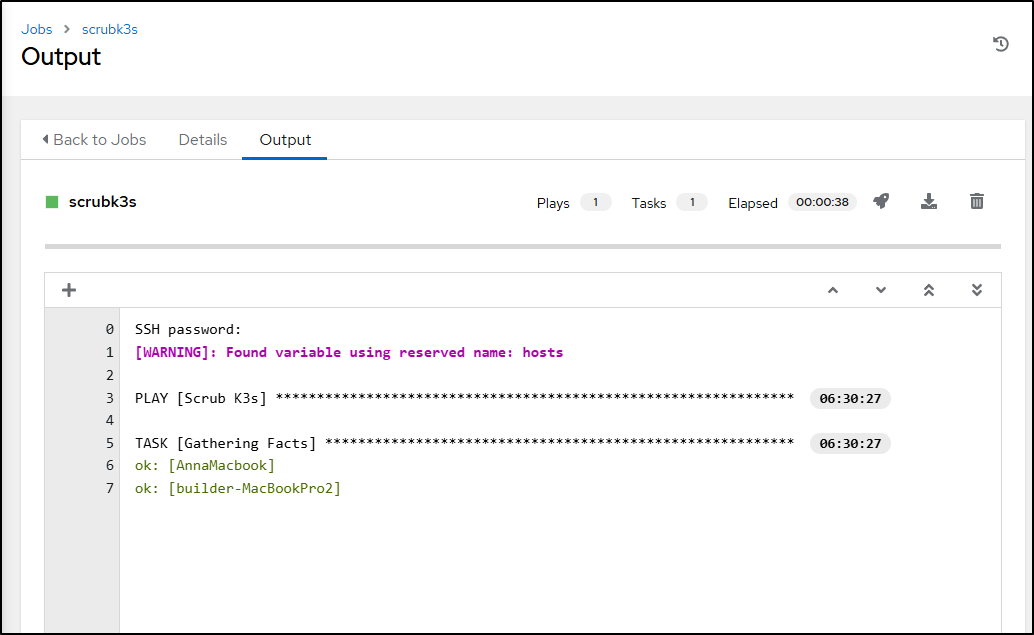

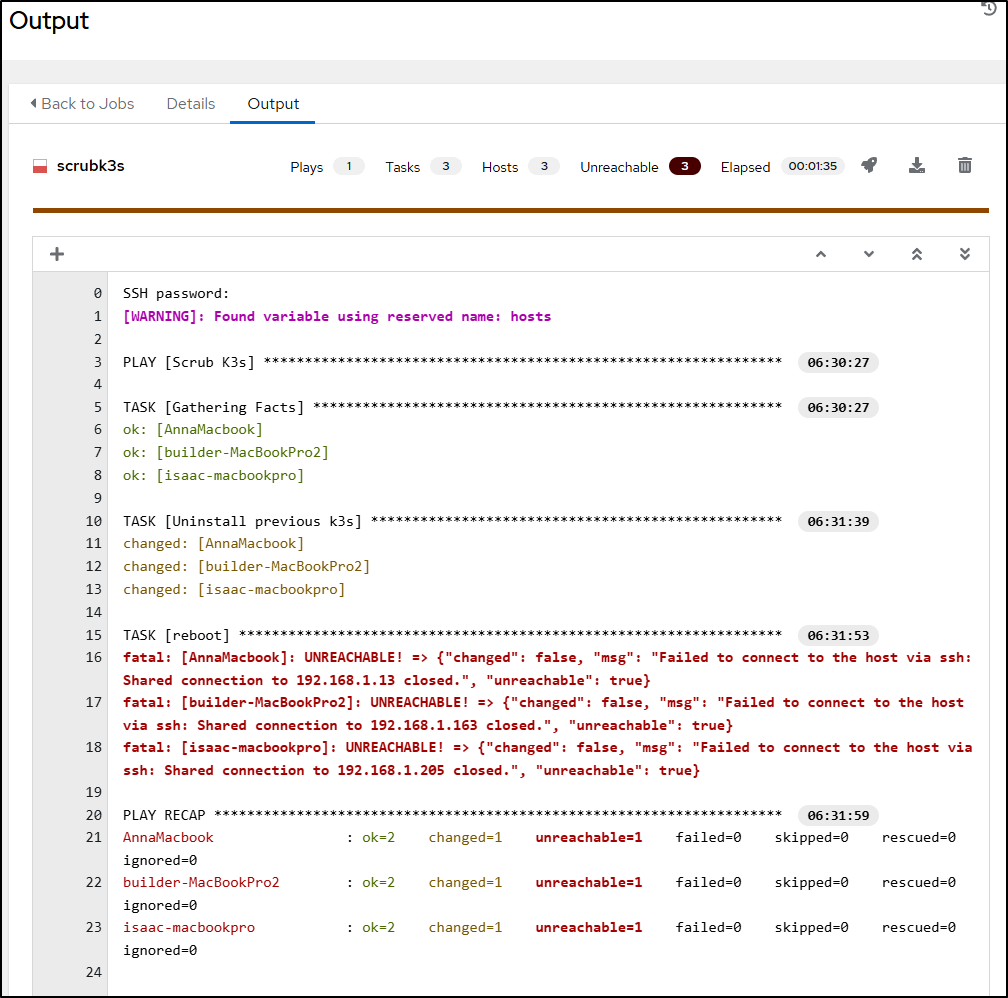

This playbook takes a while, as it removes K3s and restarts the hosts along the way (which are all old, physical machines).

The run will look like a failure, but that’s just because of my reboot step.

I can check a host and see it’s been bounced and K3s is gone:

```
builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ ssh builder@192.168.1.13
Welcome to Ubuntu 22.04.5 LTS (GNU/Linux 6.8.0-51-generic x86_64)

 * Documentation: https://help.ubuntu.com
 * Management: https://landscape.canonical.com
 * Support: https://ubuntu.com/pro

Expanded Security Maintenance for Applications is not enabled.

33 updates can be applied immediately.
To see these additional updates run: apt list --upgradable

14 additional security updates can be applied with ESM Apps.
Learn more about enabling ESM Apps service at https://ubuntu.com/esm

New release '24.04.1 LTS' available.
Run 'do-release-upgrade' to upgrade to it.

Last login: Fri Jan 24 06:31:54 2025 from 192.168.1.33
builder@anna-MacBookAir:~$ uptime
 06:35:52 up 3 min, 1 user, load average: 0.18, 0.20, 0.09
builder@anna-MacBookAir:~$ kubectl get nodes
Command 'kubectl' not found, but can be installed with:
sudo snap install kubectl
builder@anna-MacBookAir:~$ sudo ps -ef | grep k3s
builder 2184 2133 0 06:36 pts/0 00:00:00 grep --color=auto k3s
```
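A side note on that check: `ps -ef | grep k3s` always matches its own `grep` process, so output like the above is easy to misread. A slightly more direct check uses `pgrep`, which never matches itself, plus a look for files the K3s installer leaves behind. The paths come from the install and uninstall output in this post, plus `k3s-uninstall.sh`, the server-side counterpart of `k3s-agent-uninstall.sh`. The helper name is hypothetical, and the optional prefix argument exists only so it can be pointed at a test directory.

```shell
# Report K3s processes and leftover install artifacts on this host.
# Optional $1: a path prefix (e.g. a test directory); when empty, also runs pgrep.
k3s_leftovers() {
  local prefix="${1:-}"
  local path
  if [ -z "$prefix" ] && pgrep -a k3s; then   # pgrep does not match itself
    echo "k3s processes still running" >&2
  fi
  for path in /usr/local/bin/k3s /usr/local/bin/k3s-killall.sh \
              /usr/local/bin/k3s-uninstall.sh /usr/local/bin/k3s-agent-uninstall.sh \
              /etc/rancher/k3s /var/lib/rancher/k3s; do
    [ -e "${prefix}${path}" ] && printf '%s\n' "${prefix}${path}"
  done
  return 0
}
```

On a cleanly scrubbed host, `k3s_leftovers` should print nothing at all.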

For whatever reason, one host failed at the cleanup job:

```
builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ ssh builder@192.168.1.205
builder@192.168.1.205's password:
Welcome to Ubuntu 20.04.6 LTS (GNU/Linux 5.15.0-130-generic x86_64)

 * Documentation: https://help.ubuntu.com
 * Management: https://landscape.canonical.com
 * Support: https://ubuntu.com/pro

Expanded Security Maintenance for Applications is not enabled.

14 updates can be applied immediately.
To see these additional updates run: apt list --upgradable

19 additional security updates can be applied with ESM Apps.
Learn more about enabling ESM Apps service at https://ubuntu.com/esm

New release '22.04.5 LTS' available.
Run 'do-release-upgrade' to upgrade to it.

Your Hardware Enablement Stack (HWE) is supported until April 2025.
Last login: Fri Jan 24 06:31:54 2025 from 192.168.1.33
builder@isaac-MacBookPro:~$ sudo ps -ef | grep k3s
root 1048 1 2 06:37 ? 00:00:01 /usr/local/bin/k3s agent
builder 1728 1549 0 06:38 pts/0 00:00:00 grep k3s
```

So I ran the uninstall script by hand:

```
builder@isaac-MacBookPro:~$ sudo /usr/local/bin/k3s-agent-uninstall.sh
+ id -u
+ [ 0 -eq 0 ]
+ /usr/local/bin/k3s-killall.sh
+ [ -s /etc/systemd/system/k3s-agent.service ]
+ basename /etc/systemd/system/k3s-agent.service
+ systemctl stop k3s-agent.service
+ [ -x /etc/init.d/k3s* ]
+ killtree
+ kill -9
... snip ...
+ rm -rf /etc/rancher/k3s
+ rm -rf /run/k3s
+ rm -rf /run/flannel
+ type rpm-ostree
+ type zypper
+ remove_uninstall
+ rm -f /usr/local/bin/k3s-agent-uninstall.sh
```

The cleanup took a long time, but when it finished, I verified the script was gone and rebooted the host myself:

```
builder@isaac-MacBookPro:~$ sudo /usr/local/bin/k3s-agent-uninstall.sh
sudo: /usr/local/bin/k3s-agent-uninstall.sh: command not found
builder@isaac-MacBookPro:~$ sudo reboot now
Connection to 192.168.1.205 closed by remote host.
Connection to 192.168.1.205 closed.
```

After it came back up, I could move on to reinstalling everything.

My last run was k3s 1.27.6

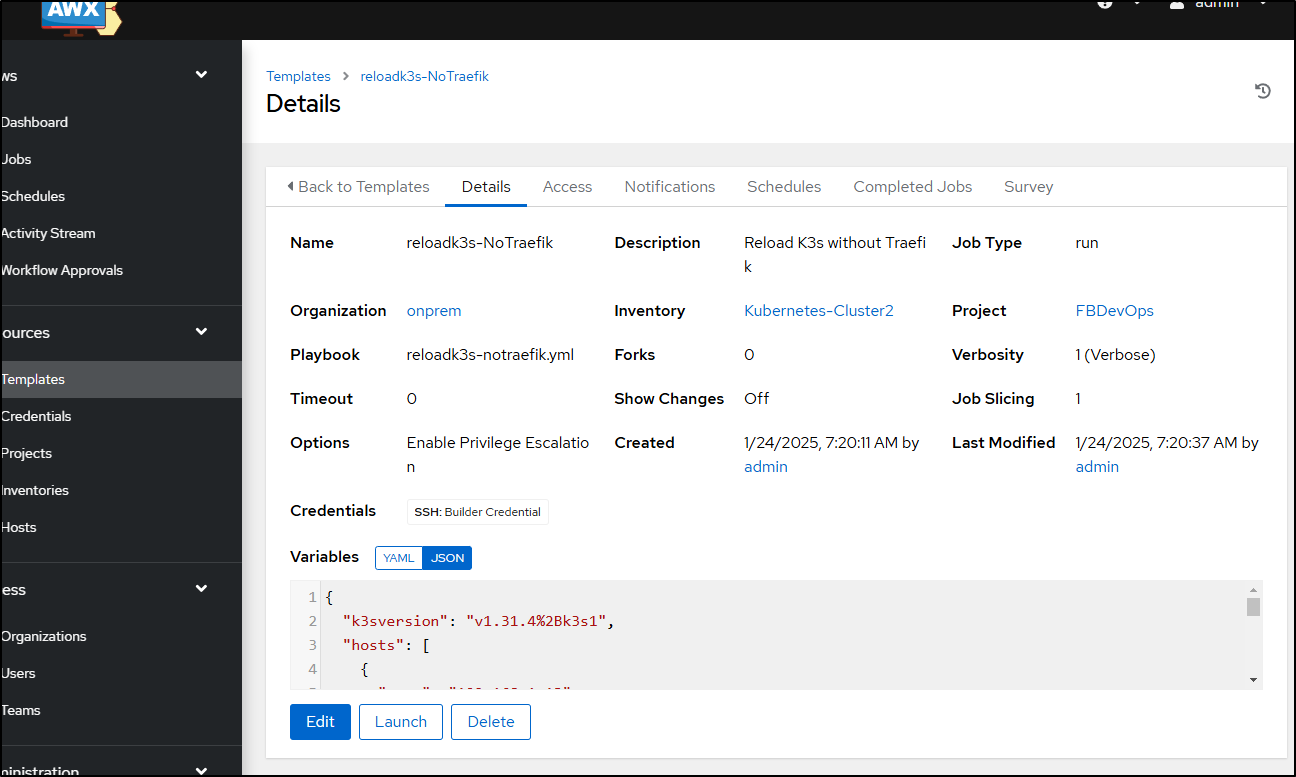

Since 1.32 is brand new, I’ll go with the latest of the 1.31 lineage

I’m guessing the release name still carries the `%2B` (the URL-encoded `+`), so it would be `v1.31.4%2Bk3s1`.
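The `+` in a K3s release tag is a reserved character in URLs, which is why the GitHub download links use `%2B`. Here is a tiny bash helper that produces the encoded form, as a sketch. The name is hypothetical, and it only handles `+`, the one character in these tags that needs encoding.

```shell
# Turn a K3s version such as v1.31.4+k3s1 into its URL-encoded form (v1.31.4%2Bk3s1).
k3s_version_encoded() {
  printf '%s\n' "${1//+/%2B}"   # bash pattern substitution: replace every "+"
}
```

For example, `INSTALL_K3S_VERSION="$(k3s_version_encoded v1.31.4+k3s1)"` yields the same value used for the install later in this post. Note that `kubectl get nodes` reports the decoded `v1.31.4+k3s1` form.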

I’ve been using Istio by way of Anthos Service Mesh a lot more lately, so I’m going to start without Traefik and see if I can get Istio on here.

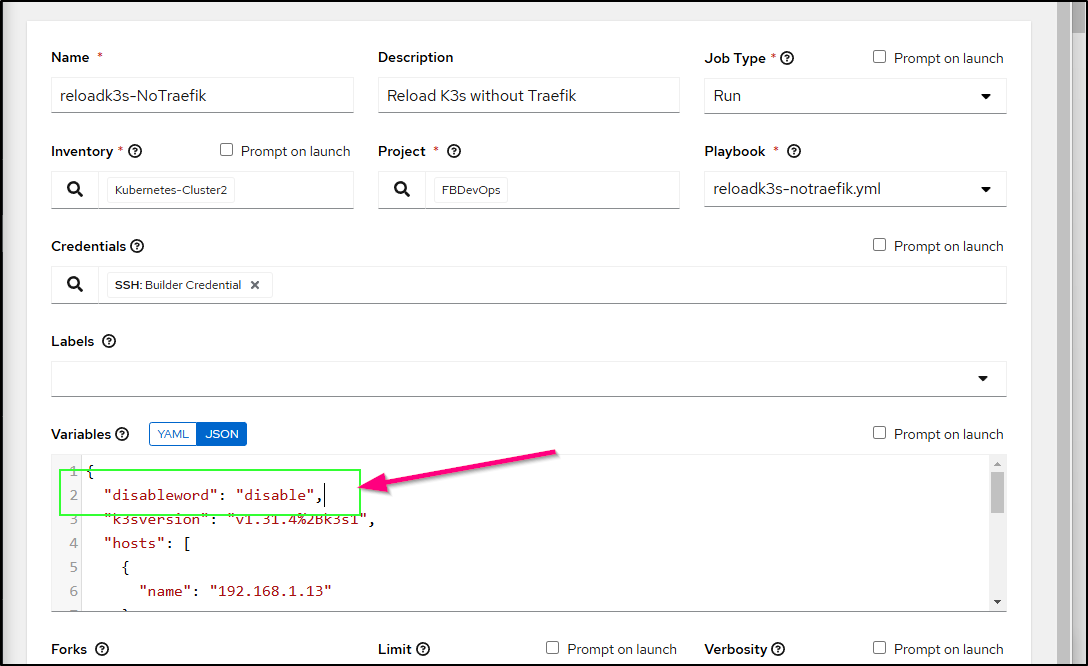

I updated the hosts and version and clicked Launch

I saw an error, and checking the service status showed it related to the `--no-deploy` flag:

```
● k3s.service - Lightweight Kubernetes
     Loaded: loaded (/etc/systemd/system/k3s.service; enabled; vendor preset: enabled)
     Active: activating (auto-restart) (Result: exit-code) since Fri 2025-01-24 07:27:38 CST; 2s ago
       Docs: https://k3s.io
    Process: 5790 ExecStartPre=/bin/sh -xc ! /usr/bin/systemctl is-enabled --quiet nm-cloud-setup.service 2>/dev/null (code=exited, status=0/SUCCESS)
    Process: 5792 ExecStartPre=/sbin/modprobe br_netfilter (code=exited, status=0/SUCCESS)
    Process: 5793 ExecStartPre=/sbin/modprobe overlay (code=exited, status=0/SUCCESS)
    Process: 5794 ExecStart=/usr/local/bin/k3s server --no-deploy traefik --tls-san 75.73.224.240 (code=exited, status=1/FAILURE)
   Main PID: 5794 (code=exited, status=1/FAILURE)
        CPU: 20ms

Jan 24 07:27:38 anna-MacBookAir systemd[1]: Failed to start Lightweight Kubernetes.
Jan 24 07:27:38 anna-MacBookAir k3s[5794]: --protect-kernel-defaults (agent/node) Kernel tuning behavior. If set, error if kernel tunables are different than kub>
Jan 24 07:27:38 anna-MacBookAir k3s[5794]: --secrets-encryption Enable secret encryption at rest
Jan 24 07:27:38 anna-MacBookAir k3s[5794]: --enable-pprof (experimental) Enable pprof endpoint on supervisor port
Jan 24 07:27:38 anna-MacBookAir k3s[5794]: --rootless (experimental) Run rootless
Jan 24 07:27:38 anna-MacBookAir k3s[5794]: --prefer-bundled-bin (experimental) Prefer bundled userspace binaries over host binaries
Jan 24 07:27:38 anna-MacBookAir k3s[5794]: --selinux (agent/node) Enable SELinux in containerd [$K3S_SELINUX]
Jan 24 07:27:38 anna-MacBookAir k3s[5794]: --lb-server-port value (agent/node) Local port for supervisor client load-balancer. If the supervisor and apiserver>
Jan 24 07:27:38 anna-MacBookAir k3s[5794]:
Jan 24 07:27:38 anna-MacBookAir k3s[5794]: time="2025-01-24T07:27:38-06:00" level=fatal msg="flag provided but not defined: -no-deploy"
```

Reading through some comments on a GitHub Issues page, it seems the `--no-deploy` flag was deprecated in favor of `--disable`, and per those comments it was dropped in K3s 1.25. That matches the “flag provided but not defined” error above.

Switching to `--disable` worked:

```
builder@anna-MacBookAir:~$ systemctl status k3s.service
● k3s.service - Lightweight Kubernetes
     Loaded: loaded (/etc/systemd/system/k3s.service; enabled; vendor preset: enabled)
     Active: active (running) since Fri 2025-01-24 07:33:03 CST; 37s ago
       Docs: https://k3s.io
    Process: 6890 ExecStartPre=/bin/sh -xc ! /usr/bin/systemctl is-enabled --quiet nm-cloud-setup.service 2>/dev/null (code=exited, status=0/SUCCESS)
    Process: 6892 ExecStartPre=/sbin/modprobe br_netfilter (code=exited, status=0/SUCCESS)
    Process: 6893 ExecStartPre=/sbin/modprobe overlay (code=exited, status=0/SUCCESS)
   Main PID: 6894 (k3s-server)
      Tasks: 62
     Memory: 888.5M
        CPU: 36.191s
     CGroup: /system.slice/k3s.service
             ├─6894 "/usr/local/bin/k3s server" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" ""
             ├─6916 "containerd " "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" >
             ├─7481 /var/lib/rancher/k3s/data/c3b3bbf39f70985bb27180c065eb5928b00f3fad75e5c614e8736017d239b9e0/bin/containerd-shim-runc-v2 -namespace k8s.io -id 77e7b404e9ebb05d51c3>
             ├─7491 /var/lib/rancher/k3s/data/c3b3bbf39f70985bb27180c065eb5928b00f3fad75e5c614e8736017d239b9e0/bin/containerd-shim-runc-v2 -namespace k8s.io -id aefd5c1e4f70cebec07c>
             └─7518 /var/lib/rancher/k3s/data/c3b3bbf39f70985bb27180c065eb5928b00f3fad75e5c614e8736017d239b9e0/bin/containerd-shim-runc-v2 -namespace k8s.io -id 1470e4f0a5ee5a6aef41>

Jan 24 07:33:19 anna-MacBookAir k3s[6894]: I0124 07:33:19.313053 6894 replica_set.go:679] "Finished syncing" kind="ReplicaSet" key="kube-system/metrics-server-5985cbc9d7" duratio>
Jan 24 07:33:19 anna-MacBookAir k3s[6894]: I0124 07:33:19.315433 6894 pod_startup_latency_tracker.go:104] "Observed pod startup duration" pod="kube-system/metrics-server-5985cbc9>
Jan 24 07:33:20 anna-MacBookAir k3s[6894]: I0124 07:33:20.337845 6894 pod_startup_latency_tracker.go:104] "Observed pod startup duration" pod="kube-system/coredns-ccb96694c-c8zhk>
Jan 24 07:33:20 anna-MacBookAir k3s[6894]: I0124 07:33:20.339312 6894 replica_set.go:679] "Finished syncing" kind="ReplicaSet" key="kube-system/coredns-ccb96694c" duration="368.6>
Jan 24 07:33:20 anna-MacBookAir k3s[6894]: I0124 07:33:20.370442 6894 replica_set.go:679] "Finished syncing" kind="ReplicaSet" key="kube-system/coredns-ccb96694c" duration="12.14>
Jan 24 07:33:20 anna-MacBookAir k3s[6894]: I0124 07:33:20.371272 6894 replica_set.go:679] "Finished syncing" kind="ReplicaSet" key="kube-system/coredns-ccb96694c" duration="50.20>
Jan 24 07:33:35 anna-MacBookAir k3s[6894]: I0124 07:33:35.115954 6894 range_allocator.go:247] "Successfully synced" key="anna-macbookair"
Jan 24 07:33:36 anna-MacBookAir k3s[6894]: I0124 07:33:36.860659 6894 replica_set.go:679] "Finished syncing" kind="ReplicaSet" key="kube-system/metrics-server-5985cbc9d7" duratio>
Jan 24 07:33:36 anna-MacBookAir k3s[6894]: I0124 07:33:36.862608 6894 replica_set.go:679] "Finished syncing" kind="ReplicaSet" key="kube-system/metrics-server-5985cbc9d7" duratio>
Jan 24 07:33:36 anna-MacBookAir k3s[6894]: I0124 07:33:36.897609 6894 handler.go:286] Adding GroupVersion metrics.k8s.io v1beta1 to ResourceManager
```

Looking at my playbook, you can see I had accounted for this; I just forgot to add it to my variables for this newer release.

I’ll just add the “disableword” back in:

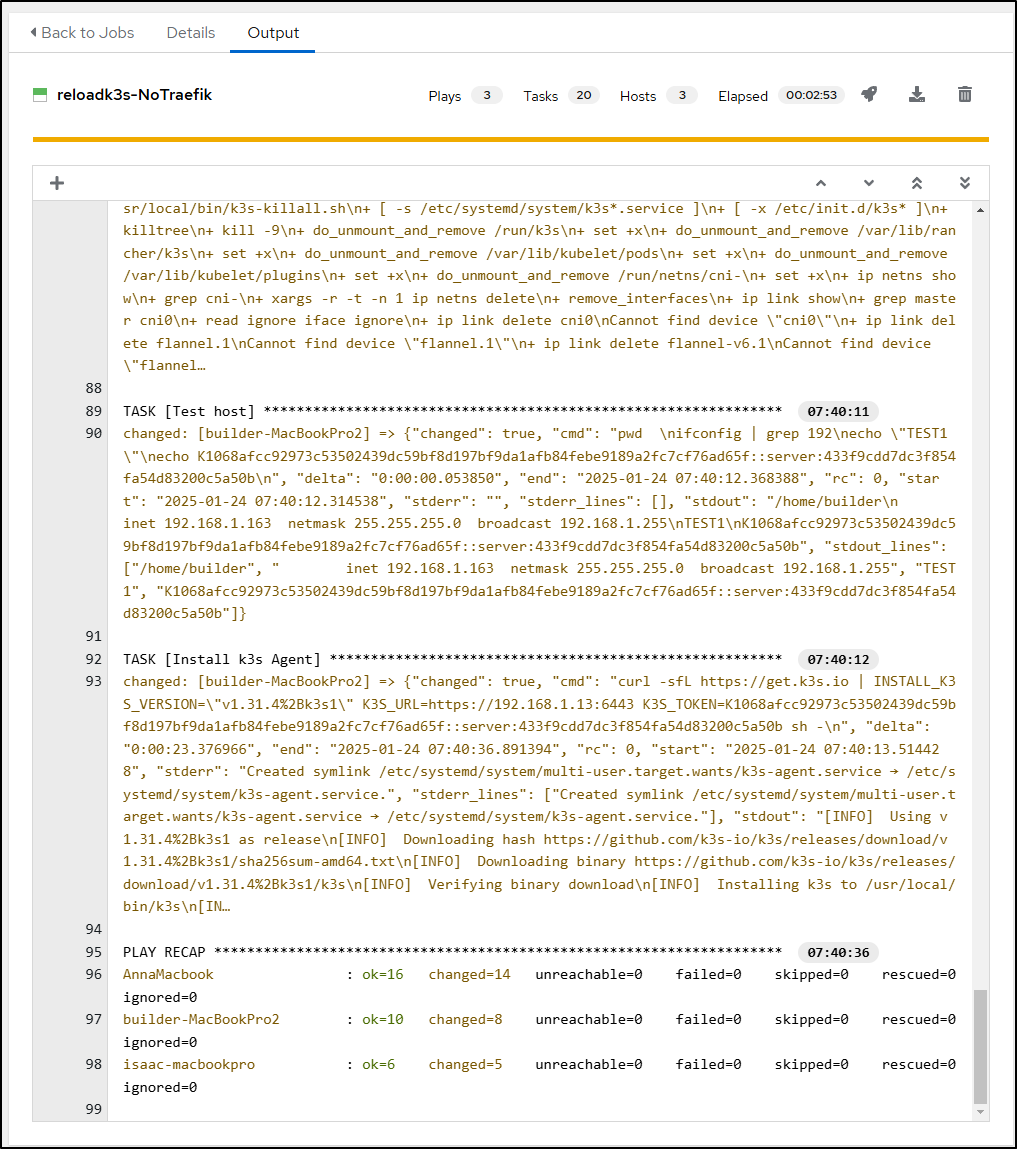
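The playbook’s “disableword” idea can be sketched as a version check. This hypothetical bash helper relies on the GitHub issue comments above, which say `--no-deploy` is gone as of K3s 1.25. It also assumes a `1.x` version string. If every release you still install already accepts `--disable`, always passing `--disable` would avoid the branch entirely.

```shell
# Pick the K3s server flag that skips a packaged component (e.g. traefik) for a
# version string like v1.31.4+k3s1. Assumptions: a 1.x version, and --no-deploy
# removed as of 1.25 (per the issue comments referenced above).
k3s_disable_flag() {
  local version="${1#v}"                          # e.g. 1.31.4+k3s1
  local minor
  minor="$(printf '%s' "$version" | cut -d. -f2)"  # e.g. 31
  if [ "$minor" -ge 25 ]; then
    printf '%s\n' "--disable"
  else
    printf '%s\n' "--no-deploy"
  fi
}
```

In use, the server command would become something like `k3s server $(k3s_disable_flag v1.31.4+k3s1) traefik --tls-san <public IP>`.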

This time the playbook completed without issue

I can now see two nodes are up

```
builder@DESKTOP-QADGF36:~$ kubectl get nodes
NAME                  STATUS   ROLES                  AGE   VERSION
anna-macbookair       Ready    control-plane,master   12m   v1.31.4+k3s1
builder-macbookpro2   Ready    <none>                 10m   v1.31.4+k3s1
```

I’m not sure why the older host didn’t join. I’ll try running the install command directly on the box:

```
builder@DESKTOP-QADGF36:~$ ssh builder@192.168.1.205
builder@192.168.1.205's password:
Welcome to Ubuntu 20.04.6 LTS (GNU/Linux 5.15.0-130-generic x86_64)

 * Documentation: https://help.ubuntu.com
 * Management: https://landscape.canonical.com
 * Support: https://ubuntu.com/pro

Expanded Security Maintenance for Applications is not enabled.

13 updates can be applied immediately.
To see these additional updates run: apt list --upgradable

19 additional security updates can be applied with ESM Apps.
Learn more about enabling ESM Apps service at https://ubuntu.com/esm

New release '22.04.5 LTS' available.
Run 'do-release-upgrade' to upgrade to it.

Your Hardware Enablement Stack (HWE) is supported until April 2025.
Last login: Fri Jan 24 07:38:13 2025 from 192.168.1.33
builder@isaac-MacBookPro:~$ sudo ps -ef | grep k3s
builder 4563 4557 0 07:52 pts/0 00:00:00 grep k3s
builder@isaac-MacBookPro:~$ curl -sfL https://get.k3s.io | INSTALL_K3S_VERSION="v1.31.4%2Bk3s1" K3S_URL=https://192.168.1.13:6443 K3S_TOKEN=asdfsadfasdfasdfasdfasdfasdfasdfsadfasdf::server:asdfasdfasdfsadfasdfasdf sh -
[INFO]  Using v1.31.4%2Bk3s1 as release
[INFO]  Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.31.4%2Bk3s1/sha256sum-amd64.txt
[INFO]  Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.31.4%2Bk3s1/k3s
[INFO]  Verifying binary download
[INFO]  Installing k3s to /usr/local/bin/k3s
[INFO]  Skipping installation of SELinux RPM
[INFO]  Creating /usr/local/bin/kubectl symlink to k3s
[INFO]  Creating /usr/local/bin/crictl symlink to k3s
[INFO]  Skipping /usr/local/bin/ctr symlink to k3s, command exists in PATH at /usr/bin/ctr
[INFO]  Creating killall script /usr/local/bin/k3s-killall.sh
[INFO]  Creating uninstall script /usr/local/bin/k3s-agent-uninstall.sh
[INFO]  env: Creating environment file /etc/systemd/system/k3s-agent.service.env
[INFO]  systemd: Creating service file /etc/systemd/system/k3s-agent.service
[INFO]  systemd: Enabling k3s-agent unit
Created symlink /etc/systemd/system/multi-user.target.wants/k3s-agent.service → /etc/systemd/system/k3s-agent.service.
[INFO]  systemd: Starting k3s-agent
```

That seemed to work dandy

```
... snip ...
Created symlink /etc/systemd/system/multi-user.target.wants/k3s-agent.service → /etc/systemd/system/k3s-agent.service.
[INFO]  systemd: Starting k3s-agent
builder@isaac-MacBookPro:~$ exit
logout
Connection to 192.168.1.205 closed.
builder@DESKTOP-QADGF36:~$ kubectl get nodes
NAME                  STATUS   ROLES                  AGE   VERSION
anna-macbookair       Ready    control-plane,master   15m   v1.31.4+k3s1
builder-macbookpro2   Ready    <none>                 12m   v1.31.4+k3s1
isaac-macbookpro      Ready    <none>                 20s   v1.31.4+k3s1
```

Adding Istio

My guess is that my playbook is a bit dated.

It currently pins cert-manager to 1.11:

```
helm install cert-manager jetstack/cert-manager --namespace cert-manager --create-namespace --version v1.11.0 --set installCRDs=true
```

I can search the Jetstack repo and see the latest is now 1.16.3

```
$ helm search repo jetstack
NAME                                      CHART VERSION  APP VERSION  DESCRIPTION
jetstack/cert-manager                     v1.16.3        v1.16.3      A Helm chart for cert-manager
jetstack/cert-manager-approver-policy     v0.18.0        v0.18.0      approver-policy is a CertificateRequest approve...
jetstack/cert-manager-csi-driver          v0.10.2        v0.10.2      cert-manager csi-driver enables issuing secretl...
jetstack/cert-manager-csi-driver-spiffe   v0.8.2         v0.8.2       csi-driver-spiffe is a Kubernetes CSI plugin wh...
jetstack/cert-manager-google-cas-issuer   v0.9.0         v0.9.0       A Helm chart for jetstack/google-cas-issuer
jetstack/cert-manager-istio-csr           v0.14.0        v0.14.0      istio-csr enables the use of cert-manager for i...
jetstack/cert-manager-trust               v0.2.1         v0.2.0       DEPRECATED: The old name for trust-manager. Use...
jetstack/finops-dashboards                v0.0.5         0.0.5        A Helm chart for Kubernetes
jetstack/finops-policies                  v0.0.6         v0.0.6       A Helm chart for Kubernetes
jetstack/finops-stack                     v0.0.5         0.0.3        A FinOps Stack for Kubernetes
jetstack/trust-manager                    v0.15.0        v0.15.0      trust-manager is the easiest way to manage TLS ...
jetstack/version-checker                  v0.8.5         v0.8.5       A Helm chart for version-checker
```

I think for ease, I’ll just do this by hand.

I’ll add the Istio base chart:

```
builder@DESKTOP-QADGF36:~$ helm repo add istio https://istio-release.storage.googleapis.com/charts
"istio" has been added to your repositories
builder@DESKTOP-QADGF36:~$ helm install istio-base istio/base -n istio-system --create-namespace
NAME: istio-base
LAST DEPLOYED: Fri Jan 24 08:07:51 2025
NAMESPACE: istio-system
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
Istio base successfully installed!

To learn more about the release, try:
  $ helm status istio-base -n istio-system
  $ helm get all istio-base -n istio-system
```

Then Istio discovery (`istiod`):

```
builder@DESKTOP-QADGF36:~$ helm install istiod istio/istiod -n istio-system --wait
NAME: istiod
LAST DEPLOYED: Fri Jan 24 08:08:17 2025
NAMESPACE: istio-system
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
"istiod" successfully installed!

To learn more about the release, try:
  $ helm status istiod -n istio-system
  $ helm get all istiod -n istio-system

Next steps:
  * Deploy a Gateway: https://istio.io/latest/docs/setup/additional-setup/gateway/
  * Try out our tasks to get started on common configurations:
    * https://istio.io/latest/docs/tasks/traffic-management
    * https://istio.io/latest/docs/tasks/security/
    * https://istio.io/latest/docs/tasks/policy-enforcement/
  * Review the list of actively supported releases, CVE publications and our hardening guide:
    * https://istio.io/latest/docs/releases/supported-releases/
    * https://istio.io/latest/news/security/
    * https://istio.io/latest/docs/ops/best-practices/security/

For further documentation see https://istio.io website
```

Lastly, we can add the Istio ingress gateway:

```
builder@DESKTOP-QADGF36:~$ helm install istio-ingress istio/gateway -n istio-ingress --wait
NAME: istio-ingress
LAST DEPLOYED: Fri Jan 24 08:09:35 2025
NAMESPACE: istio-ingress
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
"istio-ingress" successfully installed!

To learn more about the release, try:
  $ helm status istio-ingress -n istio-ingress
  $ helm get all istio-ingress -n istio-ingress

Next steps:
  * Deploy an HTTP Gateway: https://istio.io/latest/docs/tasks/traffic-management/ingress/ingress-control/
  * Deploy an HTTPS Gateway: https://istio.io/latest/docs/tasks/traffic-management/ingress/secure-ingress/
```

Now that Istio is there, I’ll add in the Cert Manager. I’m going to try not specifying a version to see if that just grabs the latest. I’m pretty sure it will.

```
builder@DESKTOP-QADGF36:~$ helm install cert-manager jetstack/cert-manager --namespace cert-manager --create-namespace --set installCRDs=true
NAME: cert-manager
LAST DEPLOYED: Fri Jan 24 08:10:49 2025
NAMESPACE: cert-manager
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
⚠️  WARNING: `installCRDs` is deprecated, use `crds.enabled` instead.
cert-manager v1.16.3 has been deployed successfully!

In order to begin issuing certificates, you will need to set up a ClusterIssuer
or Issuer resource (for example, by creating a 'letsencrypt-staging' issuer).

More information on the different types of issuers and how to configure them
can be found in our documentation:

https://cert-manager.io/docs/configuration/

For information on how to configure cert-manager to automatically provision
Certificates for Ingress resources, take a look at the `ingress-shim`
documentation:

https://cert-manager.io/docs/usage/ingress/
```

Based on that warning, I should update the playbook to use `--set crds.enabled=true` instead of `--set installCRDs=true` going forward.
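A minimal sketch of that playbook change, assuming the flag appears verbatim on the `helm install` line of the cert-manager task. The function name `fix_cert_manager_crds` and the file `k3s-playbook.yaml` are hypothetical; the example builds a throwaway stand-in file rather than touching a real playbook.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Swap the deprecated cert-manager chart value for its replacement.
# Usage: fix_cert_manager_crds <file>   (hypothetical helper)
fix_cert_manager_crds() {
  local file="$1"
  # -i.bak edits in place and keeps a backup (works with GNU and BSD sed)
  sed -i.bak 's/--set installCRDs=true/--set crds.enabled=true/g' "$file"
}

# Throwaway stand-in for the real playbook task
cat > k3s-playbook.yaml <<'EOF'
- name: Install cert-manager
  ansible.builtin.shell: >
    helm install cert-manager jetstack/cert-manager
    --namespace cert-manager --create-namespace --set installCRDs=true
EOF

fix_cert_manager_crds k3s-playbook.yaml
# Show the rewritten flag line
grep -- '--set' k3s-playbook.yaml
```

The `.bak` copy makes it easy to diff the change before committing it to the playbook repo.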

I still need to test the cluster, but it does appear to be generally healthy:

```
$ kubectl get po -A
NAMESPACE       NAME                                      READY   STATUS    RESTARTS   AGE
cert-manager    cert-manager-56d4c7dfb7-ldr8g             1/1     Running   0          2m9s
cert-manager    cert-manager-cainjector-6dc54dcd78-t28vb  1/1     Running   0          2m9s
cert-manager    cert-manager-webhook-5d74598b49-9mv8b     1/1     Running   0          2m9s
istio-ingress   istio-ingress-6994c5d49d-tqlkd            1/1     Running   0          3m24s
istio-system    istiod-5f6b94dc6d-9xxz5                   1/1     Running   0          4m41s
kube-system     coredns-ccb96694c-pb2h6                   1/1     Running   0          34m
kube-system     local-path-provisioner-5cf85fd84d-rflfp   1/1     Running   0          34m
kube-system     metrics-server-5985cbc9d7-x4mq9           1/1     Running   0          34m
kube-system     svclb-istio-ingress-c42643a3-7trqh        3/3     Running   0          3m24s
kube-system     svclb-istio-ingress-c42643a3-g6zvz        3/3     Running   0          3m24s
kube-system     svclb-istio-ingress-c42643a3-jhpqg        3/3     Running   0          3m24s
```
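That health check was done by eye. As a rough sketch, the awk filter below flags any pod whose STATUS isn't `Running` or whose READY count is short (for example `0/1` or `2/3`). It assumes the default column order of `kubectl get po -A --no-headers` (NAMESPACE, NAME, READY, STATUS, ...); the function name `unhealthy_pods` is my own.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Reads `kubectl get po -A --no-headers` output on stdin and prints pods that
# are not fully ready. Columns: $1 NAMESPACE, $2 NAME, $3 READY, $4 STATUS.
unhealthy_pods() {
  awk '{
    split($3, ready, "/")
    # Finished Job pods legitimately report 0/N ready, so skip them
    if ($4 == "Completed") next
    if ($4 != "Running" || ready[1] != ready[2]) print $1 "/" $2 " " $3 " " $4
  }'
}

# Live usage against the cluster:
#   kubectl get po -A --no-headers | unhealthy_pods
```

Empty output means every pod is Running with all containers ready. Skipping `Completed` keeps one-off Job pods from showing up as false alarms.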

Uptime Cleanup

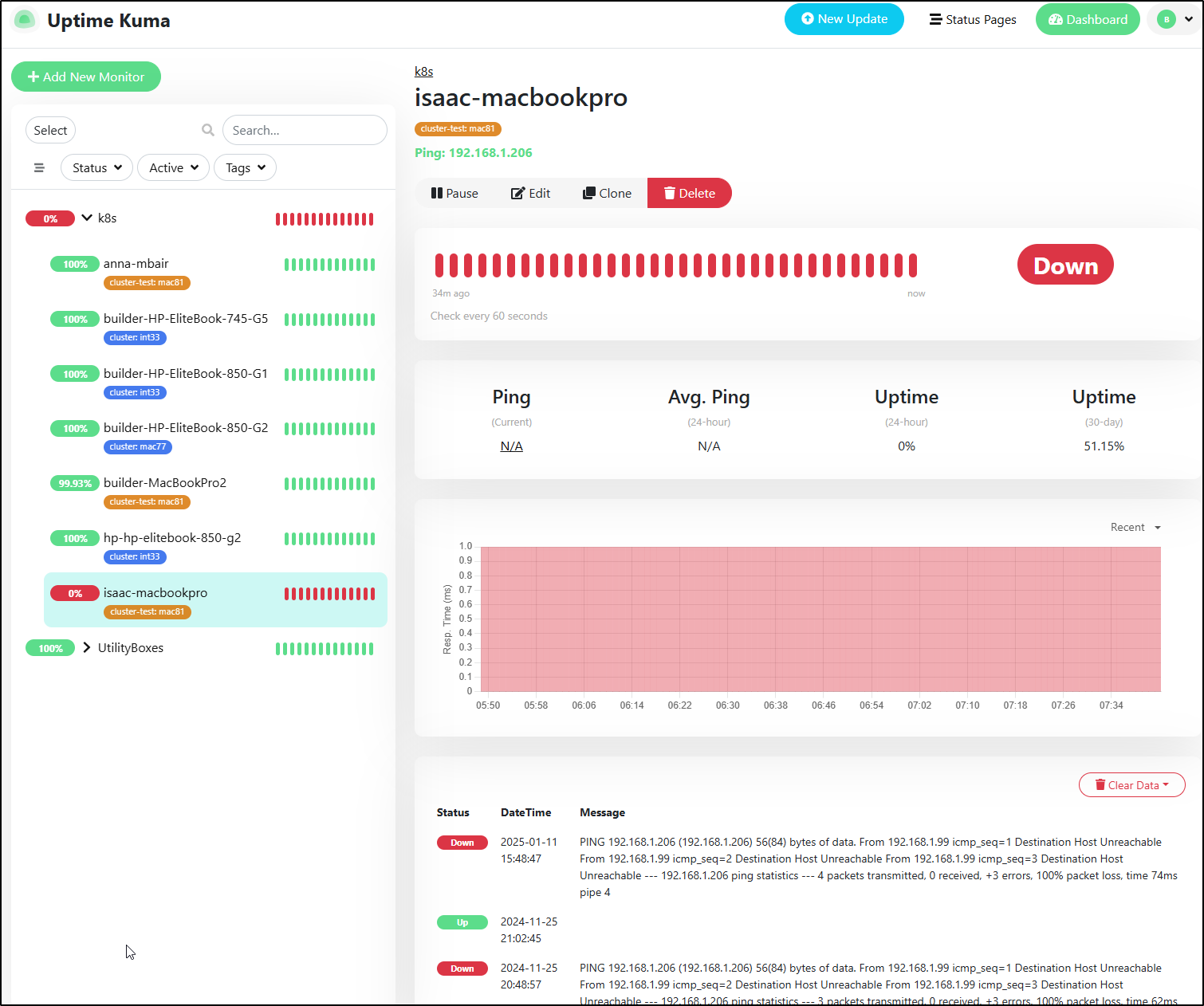

We noticed above that a couple of our hosts changed IPs.

This prompted me to check my Uptime Kuma instance to see if things were off.

I need to pause just a moment.

<old man rant> Folks, we need to talk about how broken search has become. I wanted to find the URL for Uptime Kuma just to link above. However, searching Bing, it’s nowhere on the first page. Look at this junk:

Google results were a bit better (I’m a Google fan, but I switched to Bing just because I couldn’t take all the AI nonsense at the top anymore). But seriously, Bing had the GitHub home page (and GitHub is part of Microsoft!) on the second page.

We can do better. </old man rant>

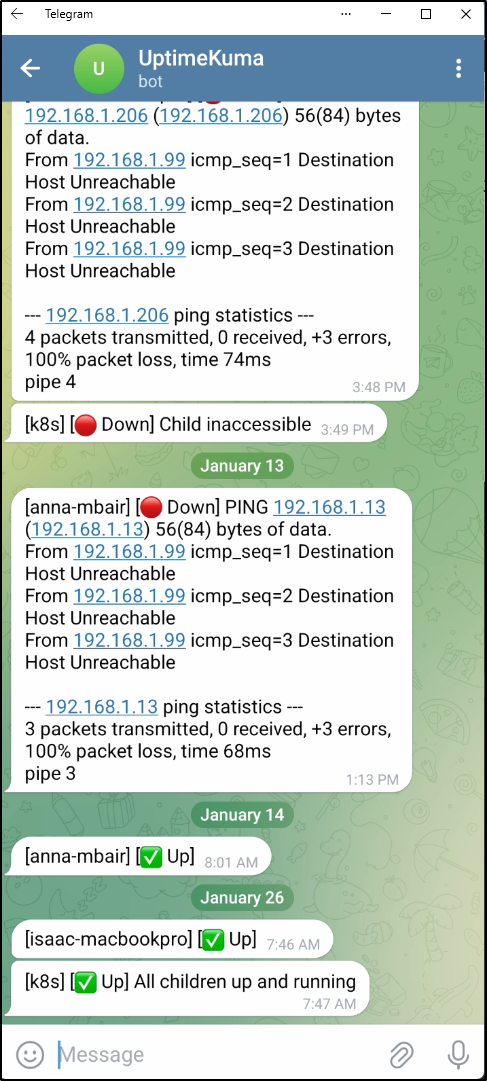

Okay, back to Uptime Kuma. I can see one host is whacked out and has been for a couple of weeks.

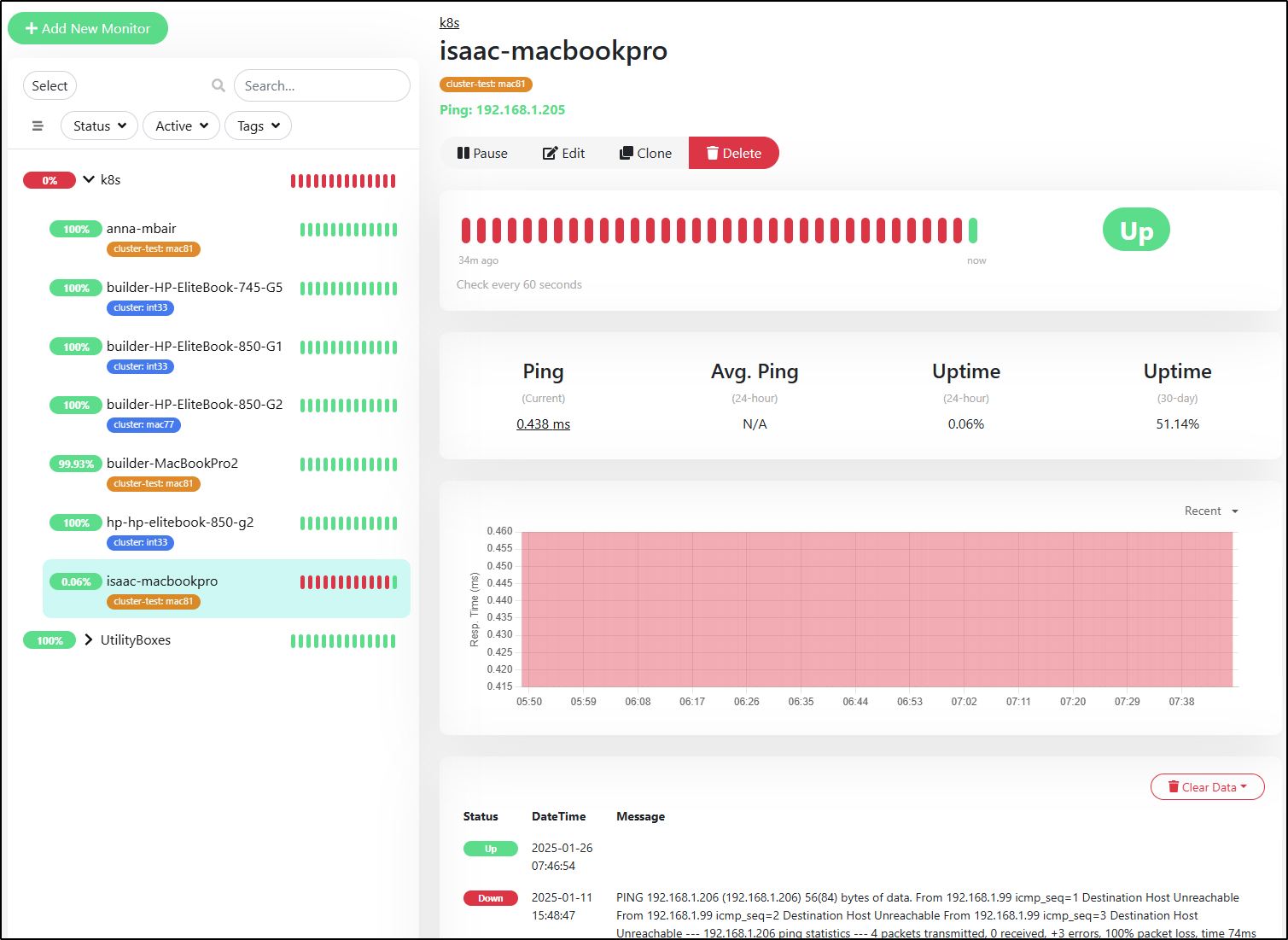

There are two things to call out. First, the hostname was off (206) and just needs to change to 205. Second, and this is less an issue than a consequence, this is my “test” cluster and I didn’t want pages from it, so you can see I sent alerts to Telegram but not to PagerDuty or Matrix.

I saved the change (and added Matrix), and we can see it’s already showing healthy.

I also realized I wasn’t keeping up with Telegram, as there were unread messages from December.

But you can see that, as of this writing (Jan 26), it has popped back online.

Side note: if you are curious how that screenshot came about, I use Phone Link, a free Windows 11 utility that connects to an Android phone (it says it works with iOS too, but I don’t have any of those). It makes it really easy to deal with text messages and apps remotely. It’s a lot like Samsung DeX.

Conclusion

We started by determining that my ingress controller was getting slammed with Gitea requests, and, in my case, Gitea is really just used as a backup/sync system. I discovered it had been taken over by AI spammers, and I worked to surgically clean it out (I could have just nuked it and started over).

I then cleaned up my aging K3s test cluster and upgraded it to a newer Kubernetes version. I hit a bit of a hiccup with my Ansible playbook for respinning K3s, but I was able to get everything back up and running.

I also noticed some issues with my Uptime Kuma instance that I was able to fix by correcting IPs.

I’m still a bit annoyed about search, but that’s a rant for another day.

Thanks for reading! I hope you found this useful.