Published: Nov 12, 2024 by Isaac Johnson

First off, credit to MariusHosting for all three suggestions. I recently saw his post on YOURLS, looked further, and found he has covered at least three different open-source URL shorteners over the last year.

Today we’ll look at YOURLS, with a focus on running it in Kubernetes using its Helm chart, and then at Shlink and Reduced.to.

I hope to compare and contrast them, then wrap up by selecting one to carry forward on my tpk.pw domain.

YOURLS

$ helm repo add yourls https://charts.yourls.org/

"yourls" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "jfelten" chart repository

...Successfully got an update from the "kube-state-metrics" chart repository

...Successfully got an update from the "adwerx" chart repository

...Successfully got an update from the "opencost" chart repository

...Successfully got an update from the "backstage" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "minio-operator" chart repository

...Unable to get an update from the "myharbor" chart repository (https://harbor.freshbrewed.science/chartrepo/library):

failed to fetch https://harbor.freshbrewed.science/chartrepo/library/index.yaml : 404 Not Found

...Unable to get an update from the "freshbrewed" chart repository (https://harbor.freshbrewed.science/chartrepo/library):

failed to fetch https://harbor.freshbrewed.science/chartrepo/library/index.yaml : 404 Not Found

...Successfully got an update from the "makeplane" chart repository

...Successfully got an update from the "zipkin" chart repository

...Successfully got an update from the "akomljen-charts" chart repository

...Successfully got an update from the "minio" chart repository

...Successfully got an update from the "opencost-charts" chart repository

...Successfully got an update from the "kuma" chart repository

...Successfully got an update from the "skm" chart repository

...Successfully got an update from the "btungut" chart repository

...Successfully got an update from the "zabbix-community" chart repository

...Successfully got an update from the "ngrok" chart repository

...Successfully got an update from the "immich" chart repository

...Successfully got an update from the "spacelift" chart repository

...Successfully got an update from the "rhcharts" chart repository

...Successfully got an update from the "openfunction" chart repository

...Successfully got an update from the "dapr" chart repository

...Successfully got an update from the "lifen-charts" chart repository

...Successfully got an update from the "novum-rgi-helm" chart repository

...Successfully got an update from the "longhorn" chart repository

...Successfully got an update from the "rook-release" chart repository

...Successfully got an update from the "openproject" chart repository

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "nfs" chart repository

...Successfully got an update from the "uptime-kuma" chart repository

...Successfully got an update from the "hashicorp" chart repository

...Successfully got an update from the "bitwarden" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Unable to get an update from the "epsagon" chart repository (https://helm.epsagon.com):

Get "https://helm.epsagon.com/index.yaml": dial tcp: lookup helm.epsagon.com on 10.255.255.254:53: server misbehaving

...Successfully got an update from the "sonarqube" chart repository

...Successfully got an update from the "ingress-nginx" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "elastic" chart repository

...Successfully got an update from the "jetstack" chart repository

...Successfully got an update from the "signoz" chart repository

...Successfully got an update from the "crossplane-stable" chart repository

...Successfully got an update from the "gitea-charts" chart repository

...Successfully got an update from the "castai-helm" chart repository

...Successfully got an update from the "kubecost" chart repository

...Successfully got an update from the "yourls" chart repository

...Successfully got an update from the "rancher-latest" chart repository

...Successfully got an update from the "open-telemetry" chart repository

...Successfully got an update from the "sumologic" chart repository

...Successfully got an update from the "argo-cd" chart repository

...Successfully got an update from the "newrelic" chart repository

...Successfully got an update from the "kiwigrid" chart repository

...Successfully got an update from the "ananace-charts" chart repository

...Successfully got an update from the "openzipkin" chart repository

...Successfully got an update from the "grafana" chart repository

...Successfully got an update from the "portainer" chart repository

...Successfully got an update from the "confluentinc" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "gitlab" chart repository

...Successfully got an update from the "incubator" chart repository

...Successfully got an update from the "bitnami" chart repository

...Successfully got an update from the "prometheus-community" chart repository

Update Complete. ⎈Happy Helming!⎈

Now we can install it

$ helm install my-yourls yourls/yourls

NAME: my-yourls

LAST DEPLOYED: Sun Nov 10 20:56:19 2024

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: yourls

CHART VERSION: 6.1.14

APP VERSION: 1.9.2

** Please be patient while the chart is being deployed **

Your YOURLS site can be accessed through the following DNS name from within your cluster:

my-yourls.default.svc.cluster.local (port 80)

To access your YOURLS site from outside the cluster follow the steps below:

1. Get the YOURLS URL by running these commands:

NOTE: It may take a few minutes for the LoadBalancer IP to be available.

Watch the status with: 'kubectl get svc --namespace default -w my-yourls'

export SERVICE_IP=$(kubectl get svc --namespace default my-yourls --template "{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}")

echo "YOURLS URL: http://$SERVICE_IP/"

echo "YOURLS Admin URL: http://$SERVICE_IP/admin"

2. Open a browser and access YOURLS using the obtained URL.

3. Login with the following credentials below to see your app:

echo Username: $(kubectl get secret --namespace default my-yourls -o jsonpath="{.data.username}" | base64 --decode)

echo Password: $(kubectl get secret --namespace default my-yourls -o jsonpath="{.data.password}" | base64 --decode)

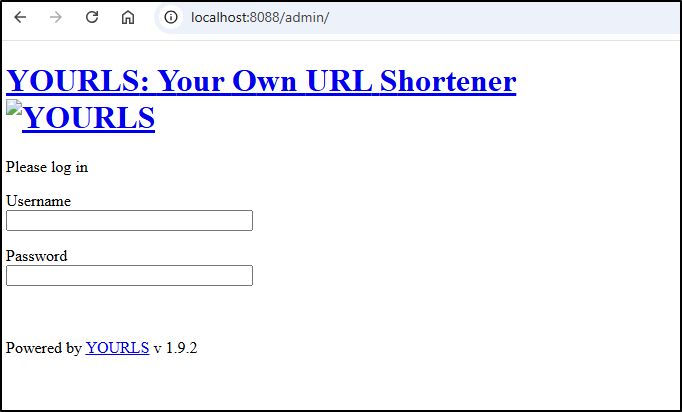

I can port-forward to the service

$ kubectl port-forward svc/my-yourls 8088:80

Forwarding from 127.0.0.1:8088 -> 80

Forwarding from [::1]:8088 -> 80

Handling connection for 8088

Handling connection for 8088

Handling connection for 8088

Handling connection for 8088

Going through a port-forward to localhost causes a lot of redirects, so let’s create a DNS record for it

$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n yourls

{

"ARecords": [

{

"ipv4Address": "75.73.224.240"

}

],

"TTL": 3600,

"etag": "60e800f1-b38b-46ab-89ff-0d2d82e034fa",

"fqdn": "yourls.tpk.pw.",

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/yourls",

"name": "yourls",

"provisioningState": "Succeeded",

"resourceGroup": "idjdnsrg",

"targetResource": {},

"trafficManagementProfile": {},

"type": "Microsoft.Network/dnszones/A"

}

I then reinstalled

$ helm delete my-yourls

release "my-yourls" uninstalled

$ helm install my-yourls --set yourls.domain=yourls.tpk.pw yourls/yourls

NAME: my-yourls

LAST DEPLOYED: Sun Nov 10 21:21:47 2024

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: yourls

CHART VERSION: 6.1.14

APP VERSION: 1.9.2

** Please be patient while the chart is being deployed **

Your YOURLS site can be accessed through the following DNS name from within your cluster:

my-yourls.default.svc.cluster.local (port 80)

To access your YOURLS site from outside the cluster follow the steps below:

1. Get the YOURLS URL by running these commands:

NOTE: It may take a few minutes for the LoadBalancer IP to be available.

Watch the status with: 'kubectl get svc --namespace default -w my-yourls'

export SERVICE_IP=$(kubectl get svc --namespace default my-yourls --template "{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}")

echo "YOURLS URL: http://$SERVICE_IP/"

echo "YOURLS Admin URL: http://$SERVICE_IP/admin"

2. Open a browser and access YOURLS using the obtained URL.

3. Login with the following credentials below to see your app:

echo Username: $(kubectl get secret --namespace default my-yourls -o jsonpath="{.data.username}" | base64 --decode)

echo Password: $(kubectl get secret --namespace default my-yourls -o jsonpath="{.data.password}" | base64 --decode)

Next, we add an ingress

$ cat yourls.ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/websocket-services: my-yourls
  name: yourlsingress
  namespace: default
spec:
  rules:
  - host: yourls.tpk.pw
    http:
      paths:
      - backend:
          service:
            name: my-yourls
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - yourls.tpk.pw
    secretName: myyourls-tls

$ kubectl apply -f ./yourls.ingress.yaml

ingress.networking.k8s.io/enclosedingress configured

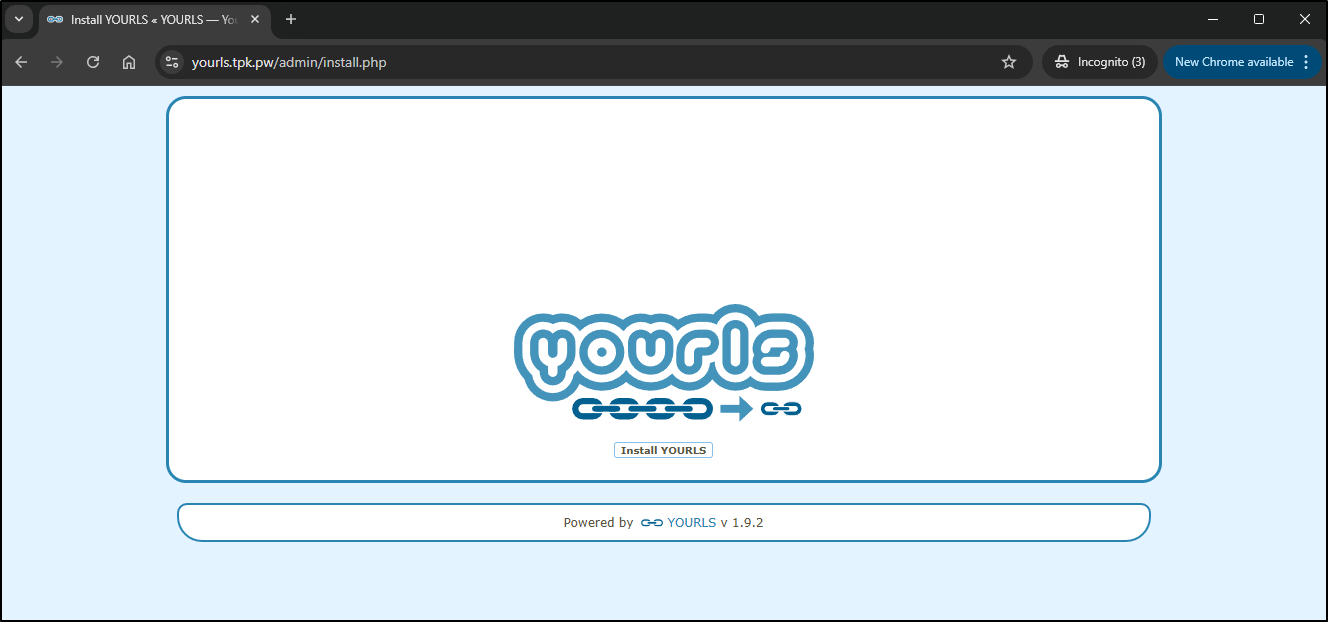

I then waited until I saw the certificate was ready

$ kubectl get cert myyourls-tls

NAME READY SECRET AGE

myyourls-tls True myyourls-tls 86s

This time it worked

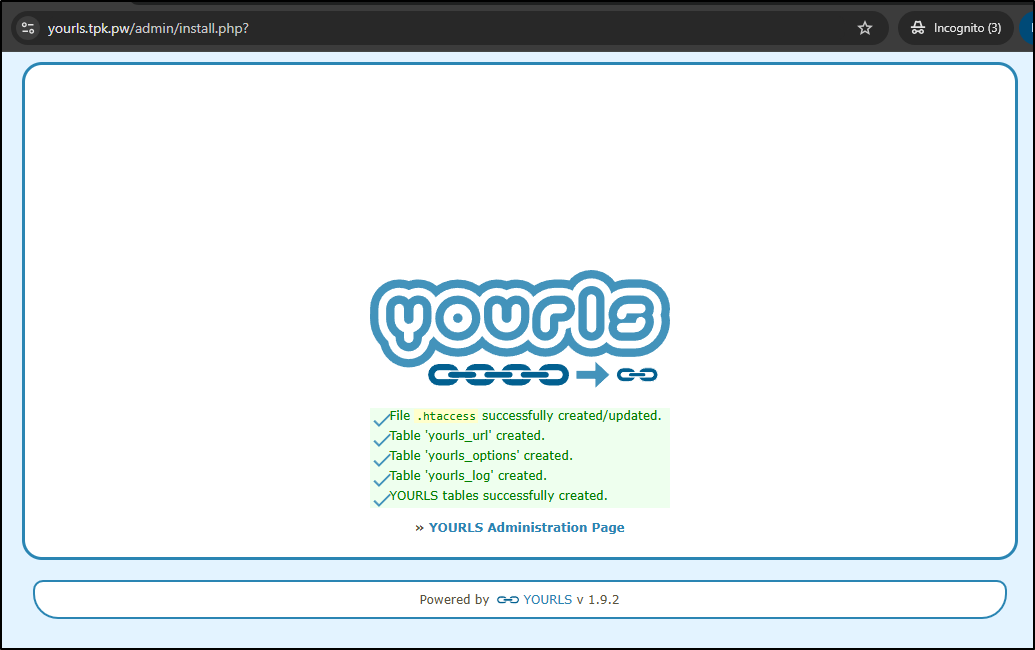

We can now see it is installed

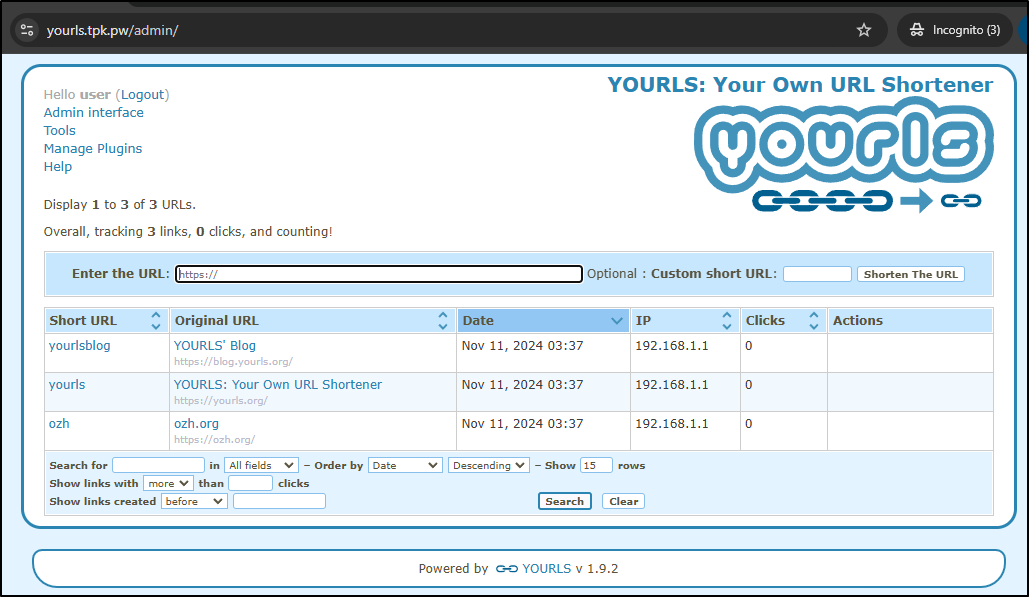

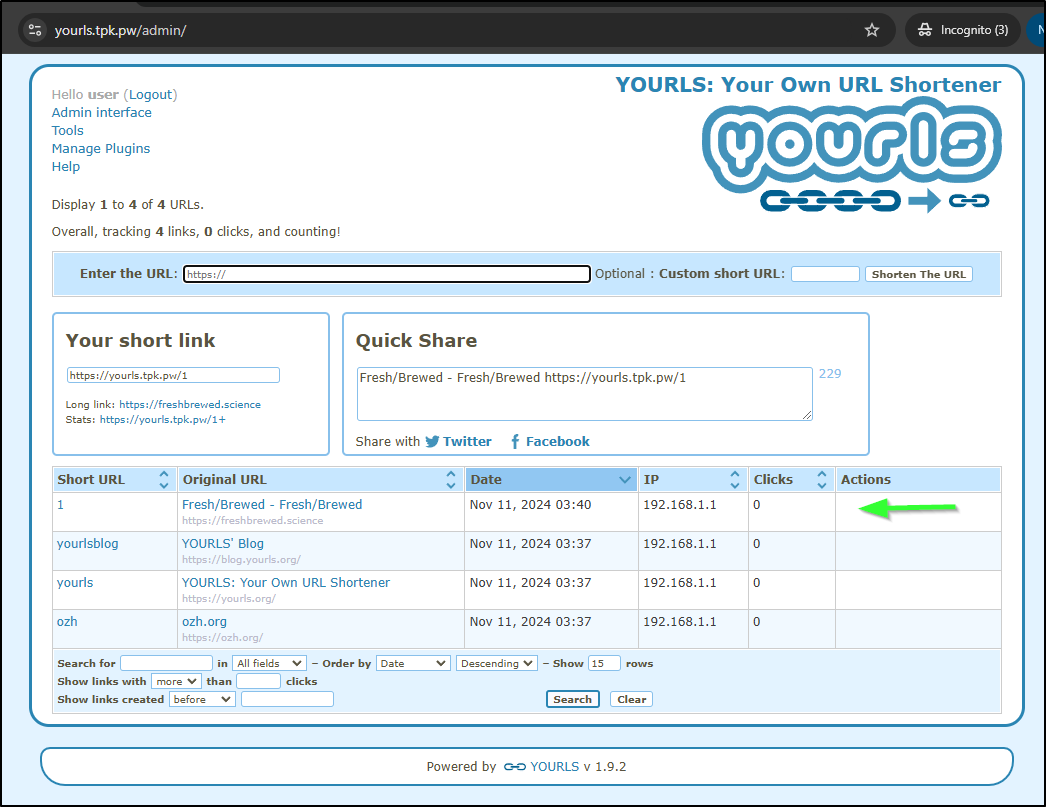

Once logged in I can see some links

I can then create a new link

I can now use https://yourls.tpk.pw/1.

I can also create customized short urls:

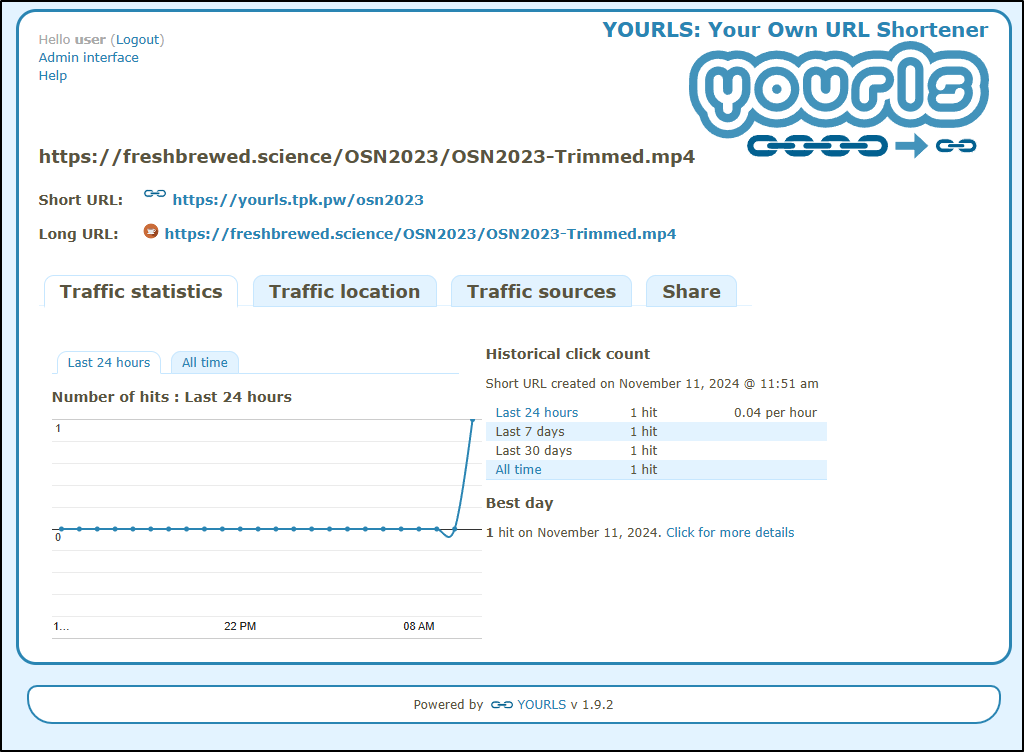

We can see stats on our links

Theoretically we can get some data like country and sources, but both were blank for me

Perhaps that works only with older browsers.

Shlink

Next up is Shlink.

According to the documentation, we can run it with a single docker invocation:

docker run \
    --name my_shlink \
    -p 8080:8080 \
    -e DEFAULT_DOMAIN=s.test \
    -e IS_HTTPS_ENABLED=true \
    -e GEOLITE_LICENSE_KEY=kjh23ljkbndskj345 \
    shlinkio/shlink:stable

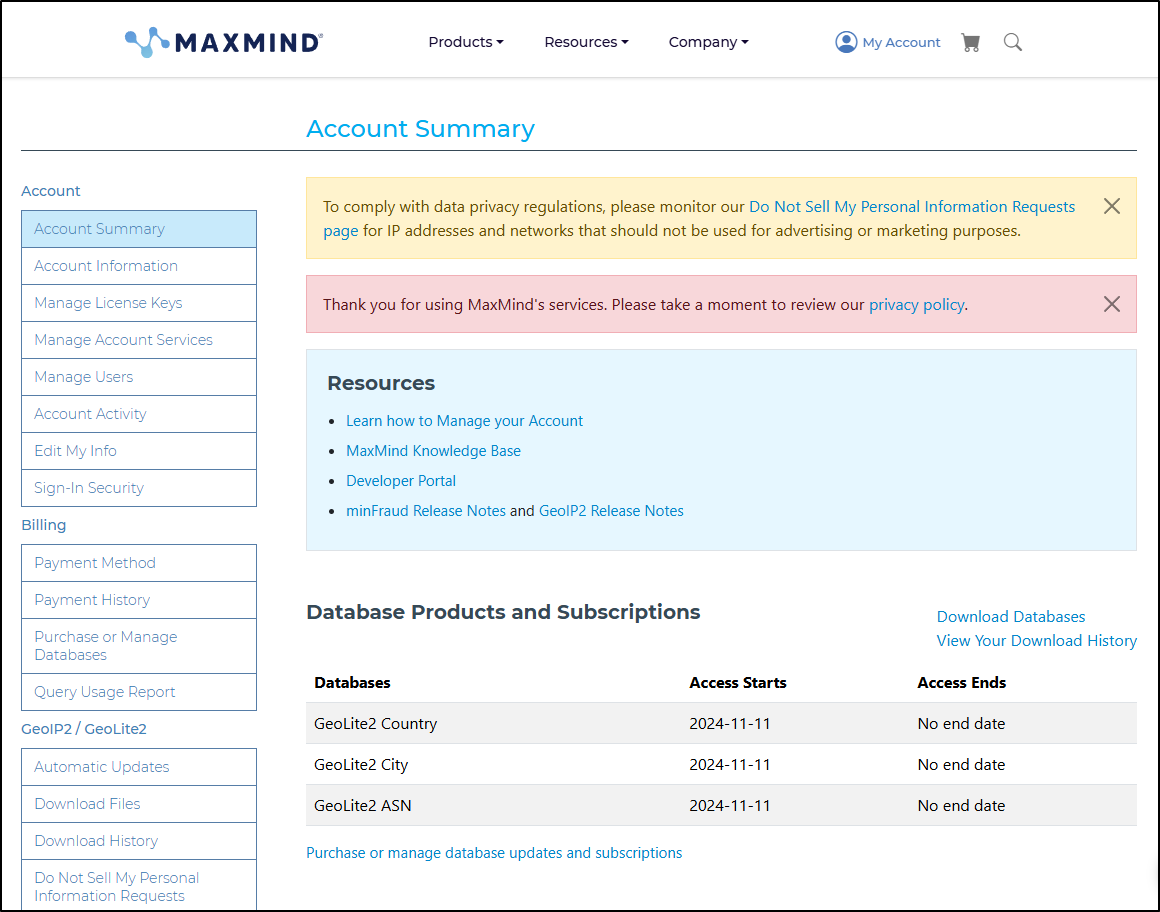

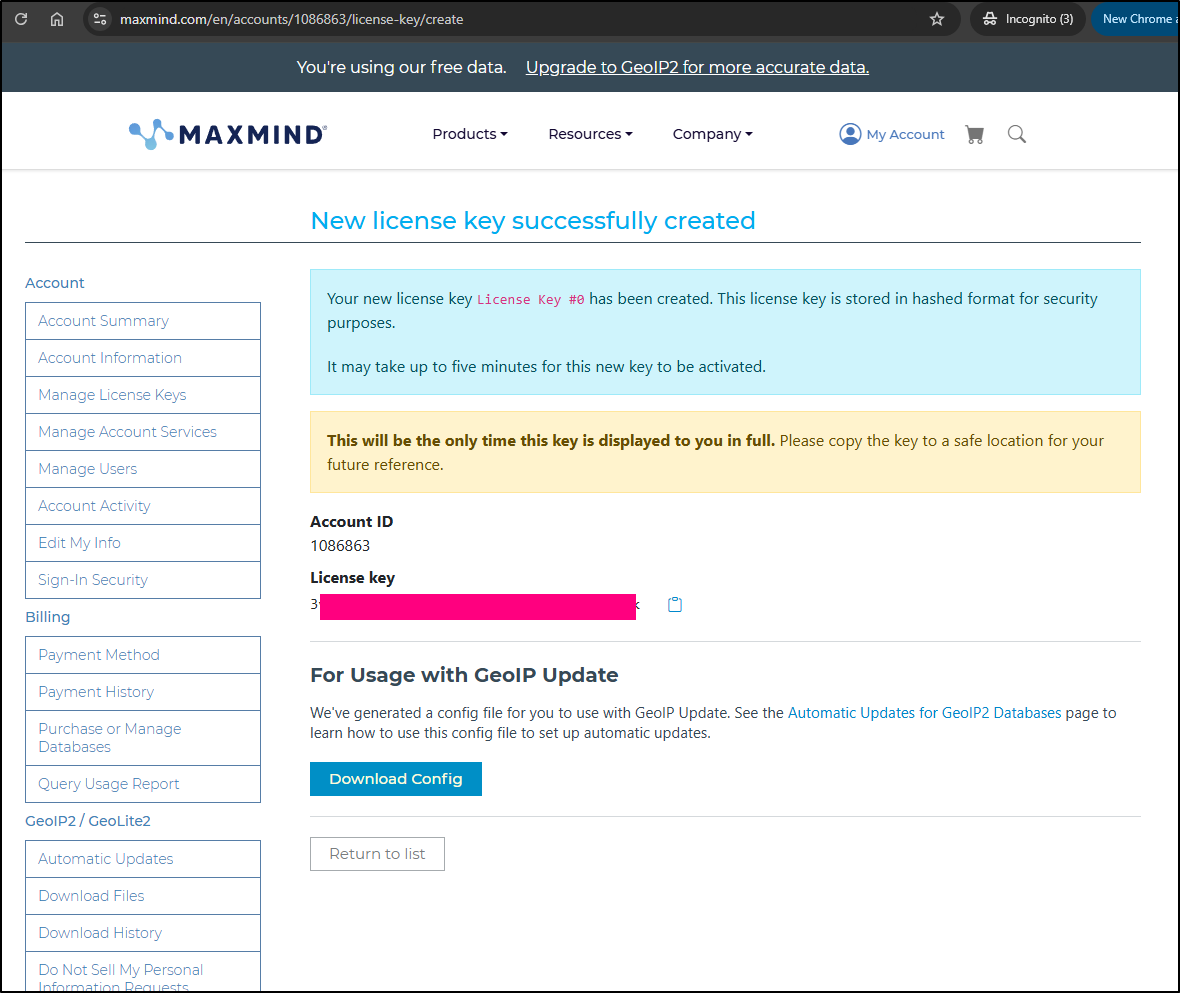

To test this, I’ll need a GeoLite2 account. We can sign up here

I’ll now generate a key

Instead of docker, let’s switch this to a Kubernetes Manifest

apiVersion: v1
kind: Secret
metadata:
  name: geolite-license-key-secret
type: Opaque
data:
  GEOLITE_LICENSE_KEY: a2poMjNsamtibmRza2ozNDU= # Base64 encoded value of key
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-shlink-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-shlink
  template:
    metadata:
      labels:
        app: my-shlink
    spec:
      containers:
      - name: my-shlink
        image: shlinkio/shlink:stable
        ports:
        - containerPort: 8080
        env:
        - name: DEFAULT_DOMAIN
          value: s.test
        - name: IS_HTTPS_ENABLED
          value: "true"
        - name: GEOLITE_LICENSE_KEY
          valueFrom:
            secretKeyRef:
              name: geolite-license-key-secret
              key: GEOLITE_LICENSE_KEY
---
apiVersion: v1
kind: Service
metadata:
  name: my-shlink-service
spec:
  selector:
    app: my-shlink
  ports:
  - protocol: TCP
    port: 80
    targetPort: 8080
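
As a quick aside, the `data` field of a Secret expects Base64, so the license key has to be encoded before it goes into the manifest. Here is a minimal sketch of how that value can be produced, using the placeholder key from the docker example (the variable names are my own, and your real key will of course differ):

```shell
# Placeholder key copied from the docker example; substitute your own.
license_key='kjh23ljkbndskj345'

# printf avoids the trailing newline that echo would add to the encoded value.
encoded=$(printf '%s' "$license_key" | base64)
echo "$encoded"

# Round-trip the value to confirm what the container will actually receive.
decoded=$(printf '%s' "$encoded" | base64 --decode)
echo "$decoded"
```

The encoded string is what goes after `GEOLITE_LICENSE_KEY:` in the Secret. If you would rather not encode by hand, a Secret also accepts plain values under `stringData` instead of `data`.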

I’m going to set up an ingress up front for this, starting with the DNS record.

$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n shlink

{

"ARecords": [

{

"ipv4Address": "75.73.224.240"

}

],

"TTL": 3600,

"etag": "d05827f1-a045-4ac4-bb26-e3fa74891942",

"fqdn": "shlink.tpk.pw.",

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/shlink",

"name": "shlink",

"provisioningState": "Succeeded",

"resourceGroup": "idjdnsrg",

"targetResource": {},

"trafficManagementProfile": {},

"type": "Microsoft.Network/dnszones/A"

}

Then apply an ingress.yaml

$ cat ./shlink.ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/websocket-services: my-shlink-service
  name: shlinkingress
  namespace: default
spec:
  rules:
  - host: shlink.tpk.pw
    http:
      paths:
      - backend:
          service:
            name: my-shlink-service
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - shlink.tpk.pw
    secretName: myshlink-tls

$ kubectl apply -f ./shlink.ingress.yaml

ingress.networking.k8s.io/shlinkingress created

I can now apply my YAML (with my values of course)

$ kubectl apply -f ./shlink.yaml

secret/geolite-license-key-secret created

deployment.apps/my-shlink-deployment created

service/my-shlink-service created

Shlink doesn’t use a web interface to manage links.

Most of our interaction with Shlink will come from running commands in the container. We can see the full list by exec’ing ‘shlink’ on it

$ kubectl exec -it `kubectl get pods -l app=my-shlink -o jsonpath='{.items[*].metadata.name}'` -- shlink

Shlink 4.2.5

Usage:

command [options] [arguments]

Options:

-h, --help Display help for the given command. When no command is given display help for the list command

-q, --quiet Do not output any message

-V, --version Display this application version

--ansi|--no-ansi Force (or disable --no-ansi) ANSI output

-n, --no-interaction Do not ask any interactive question

-v|vv|vvv, --verbose Increase the verbosity of messages: 1 for normal output, 2 for more verbose output and 3 for debug

Available commands:

completion Dump the shell completion script

help Display help for a command

list List commands

api-key

api-key:disable Disables an API key.

api-key:generate Generate a new valid API key.

api-key:list Lists all the available API keys.

domain

domain:list List all domains that have been ever used for some short URL

domain:redirects Set specific "not found" redirects for individual domains.

domain:visits Returns the list of visits for provided domain.

integration

integration:matomo:send-visits [MATOMO INTEGRATION DISABLED] Send existing visits to the configured matomo instance

short-url

short-url:create Generates a short URL for provided long URL and returns it

short-url:delete Deletes a short URL

short-url:delete-expired Deletes all short URLs that are considered expired, because they have a validUntil date in the past

short-url:edit Edit an existing short URL

short-url:import Allows to import short URLs from third party sources

short-url:list List all short URLs

short-url:manage-rules Set redirect rules for a short URL

short-url:parse Returns the long URL behind a short code

short-url:visits Returns the detailed visits information for provided short code

short-url:visits-delete Deletes visits from a short URL

tag

tag:delete Deletes one or more tags.

tag:list Lists existing tags.

tag:rename Renames one existing tag.

tag:visits Returns the list of visits for provided tag.

visit

visit:download-db Checks if the GeoLite2 db file is too old or it does not exist, and tries to download an up-to-date copy if so.

visit:locate Resolves visits origin locations. It implicitly downloads/updates the GeoLite2 db file if needed.

visit:non-orphan Returns the list of non-orphan visits.

visit:orphan Returns the list of orphan visits.

visit:orphan-delete Deletes all orphan visits
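
Since that exec one-liner gets repeated for every Shlink command, it can be handy to wrap it. This is just a sketch: the function name `shlink_cli` is my own invention, and it assumes the `app=my-shlink` label from the manifest above and a single running pod.

```shell
# Hypothetical helper: run a Shlink CLI command in the first pod matching the label.
shlink_cli() {
  # Look up the pod name once; items[0] assumes a single replica.
  pod=$(kubectl get pods -l app=my-shlink -o jsonpath='{.items[0].metadata.name}')
  # Pass every argument straight through to the shlink binary in the container.
  kubectl exec -it "$pod" -- shlink "$@"
}

# Usage (requires access to the cluster):
#   shlink_cli short-url:list
#   shlink_cli api-key:generate
```

Using `items[0]` rather than `items[*]` keeps the lookup from returning several names at once if the deployment is ever scaled up.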

So to replicate what we did with YOURLS, we’ll fire up the interactive short-url:create

$ kubectl exec -it `kubectl get pods -l app=my-shlink -o jsonpath='{.items[*].metadata.name}'` -- shlink short-url:create

Which URL do you want to shorten?:

> https://freshbrewed.science/OSN2023/OSN2023-Trimmed.mp4

Processed long URL: https://freshbrewed.science/OSN2023/OSN2023-Trimmed.mp4

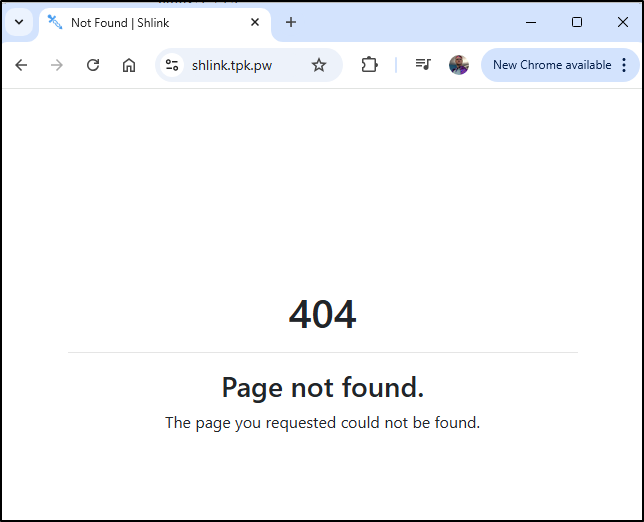

Generated short URL: https://shlink.tpk.pw/QVLf6

This works just as YOURLS did, redirecting to the recording.

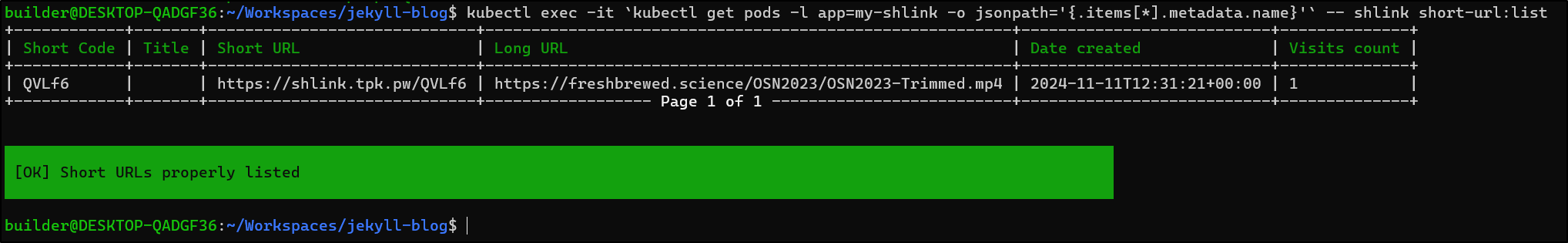

I can use “list” to get a list of my existing links

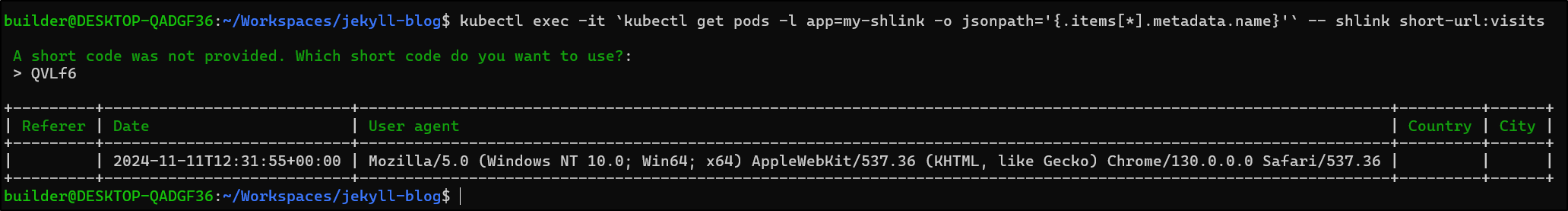

I can use that short-code to get visits

But as before, I guess my location is hidden as I don’t see it in the visits log

$ kubectl exec -it `kubectl get pods -l app=my-shlink -o jsonpath='{.items[*].metadata.name}'` -- shlink short-url:visits

A short code was not provided. Which short code do you want to use?:

> QVLf6

+---------+---------------------------+-----------------------------------------------------------------------------------------------------------------+---------+------+

| Referer | Date | User agent | Country | City |

+---------+---------------------------+-----------------------------------------------------------------------------------------------------------------+---------+------+

| | 2024-11-11T12:31:55+00:00 | Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/130.0.0.0 Safari/537.36 | | |

+---------+---------------------------+-----------------------------------------------------------------------------------------------------------------+---------+------+

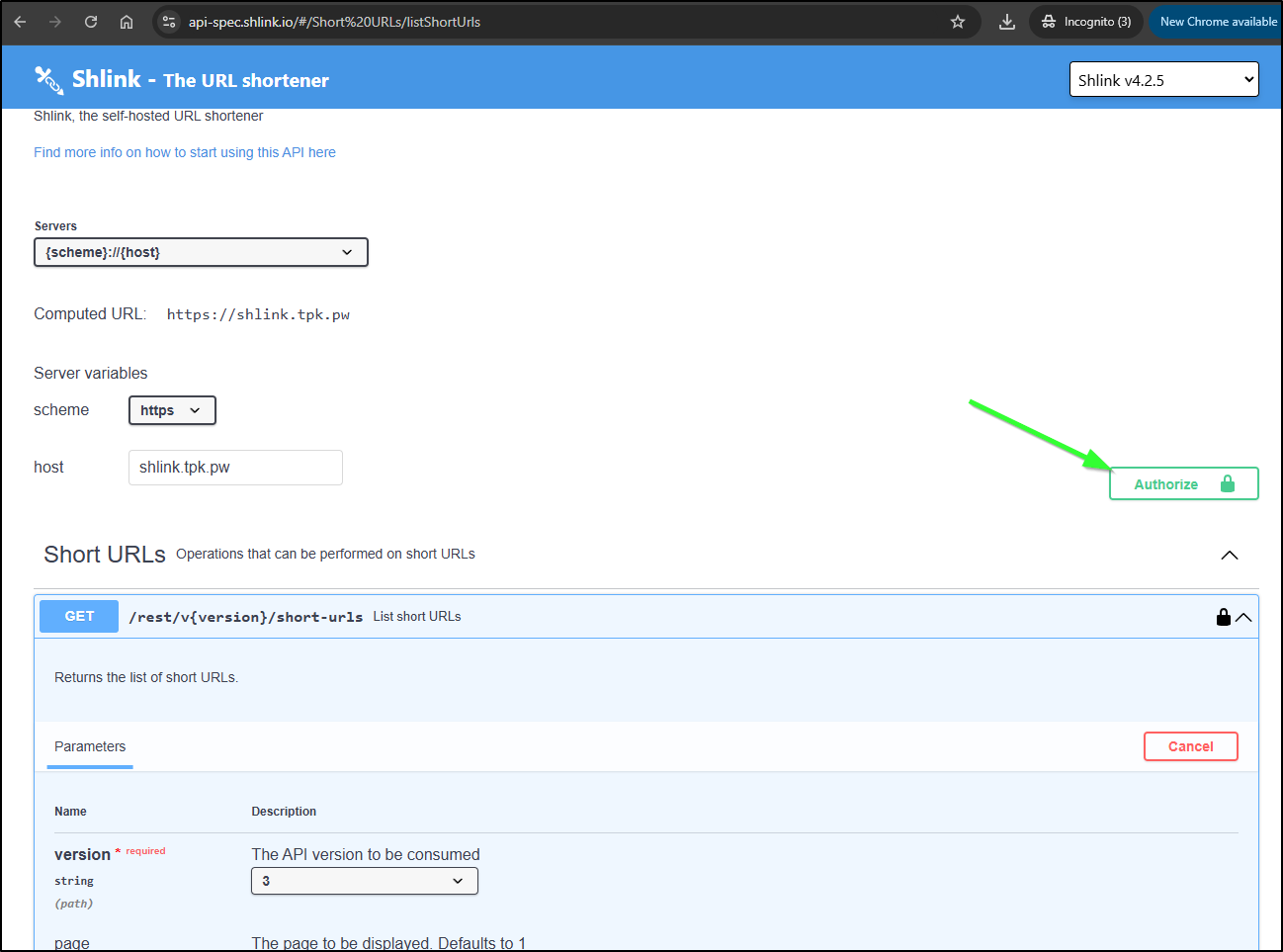

There is a rich REST API with Swagger docs here

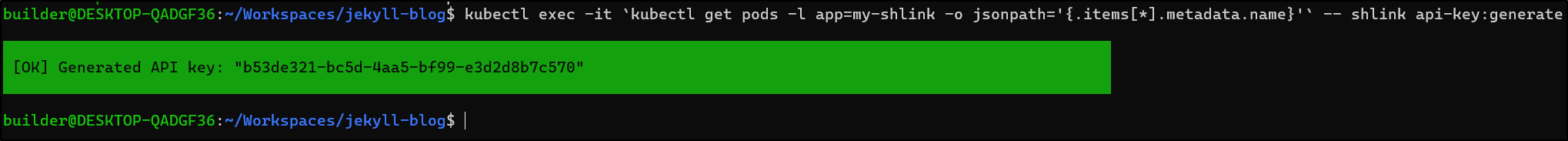

To use those, we need an API key (which we generate in the container with api-key:generate)

I used that in the Authorize box of the REST Docs

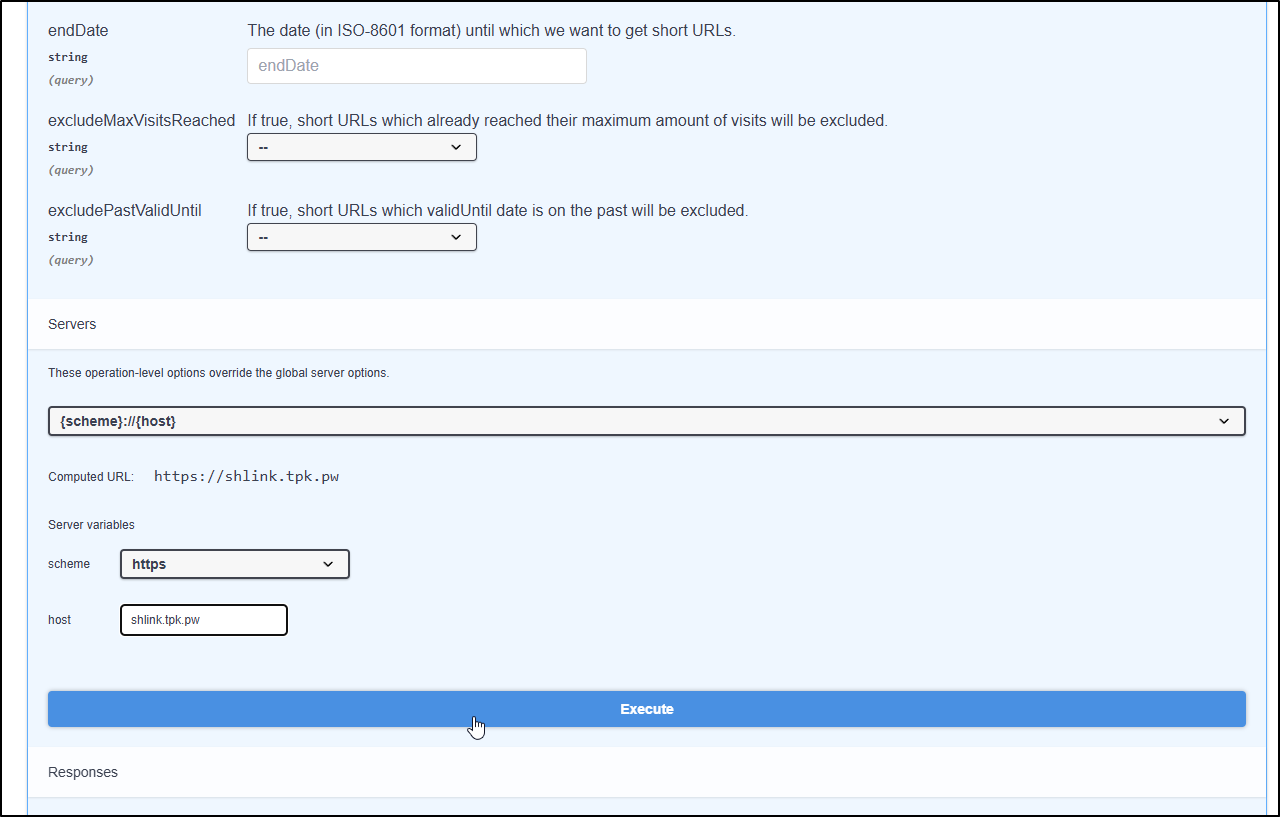

I can then click “execute” to invoke it

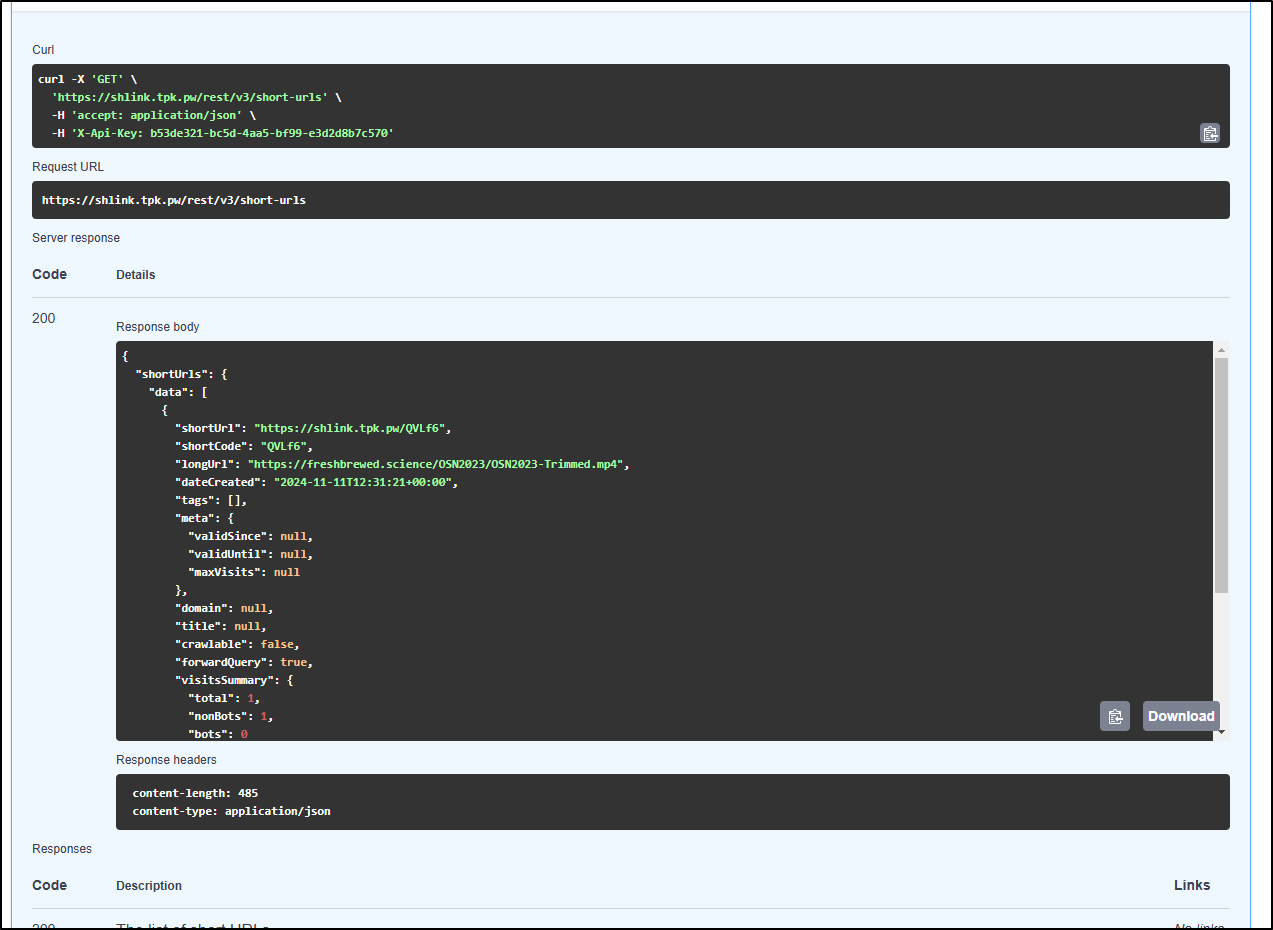

Which then shows results and the query behind it

This I can, of course, test locally

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ curl -X 'GET' 'https://shlink.tpk.pw/rest/v3/short-urls' -H 'accept: application/json' -H 'X-Api-Key: b53de321-bc5d-4aa5-bf99-e3d2d8b7c570' | jq

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 485 100 485 0 0 3849 0 --:--:-- --:--:-- --:--:-- 3849

{

"shortUrls": {

"data": [

{

"shortUrl": "https://shlink.tpk.pw/QVLf6",

"shortCode": "QVLf6",

"longUrl": "https://freshbrewed.science/OSN2023/OSN2023-Trimmed.mp4",

"dateCreated": "2024-11-11T12:31:21+00:00",

"tags": [],

"meta": {

"validSince": null,

"validUntil": null,

"maxVisits": null

},

"domain": null,

"title": null,

"crawlable": false,

"forwardQuery": true,

"visitsSummary": {

"total": 1,

"nonBots": 1,

"bots": 0

}

}

],

"pagination": {

"currentPage": 1,

"pagesCount": 1,

"itemsPerPage": 10,

"itemsInCurrentPage": 1,

"totalItems": 1

}

}

}

I can use the same REST API to POST a link as well

curl -X 'POST' \
  'https://shlink.tpk.pw/rest/v3/short-urls' \
  -H 'accept: application/json' \
  -H 'X-Api-Key: b53de321-bc5d-4aa5-bf99-e3d2d8b7c570' \
  -H 'Content-Type: application/json' \
  -d '{
  "longUrl": "https://freshbrewed.science/OSN2023/OSN2023-Trimmed.pptx",
  "tags": [
    "OSN"
  ],
  "title": "OSN2024PPTX",
  "crawlable": true,
  "forwardQuery": true,
  "findIfExists": true,
  "shortCodeLength": 4
}'

Which now directs https://shlink.tpk.pw/SHqU to the PPTX
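
If I were scripting this, I would probably build the JSON body in a small function and keep the API key out of the command line. The following is only a sketch: `build_payload` and the `SHLINK_API_KEY` variable are names I made up, and it assumes the URL and tag contain no quotes or backslashes (otherwise something like jq would be a safer way to build the JSON).

```shell
# Hypothetical helper: build a minimal request body for POST /rest/v3/short-urls.
# $1 = long URL, $2 = a single tag. Values are assumed to need no JSON escaping.
build_payload() {
  printf '{"longUrl":"%s","tags":["%s"],"findIfExists":true,"shortCodeLength":4}' "$1" "$2"
}

payload=$(build_payload 'https://freshbrewed.science/OSN2023/OSN2023-Trimmed.pptx' 'OSN')
echo "$payload"

# Sending it (requires network access and a valid key in the environment):
#   curl -X POST 'https://shlink.tpk.pw/rest/v3/short-urls' \
#     -H 'accept: application/json' \
#     -H "X-Api-Key: $SHLINK_API_KEY" \
#     -H 'Content-Type: application/json' \
#     -d "$payload"
```

Reading the key from an environment variable keeps it out of the script itself and out of any output pasted into a writeup.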

Since I’ve exposed the API key in this writeup, we’ll want to disable it now as well

$ kubectl exec -it `kubectl get pods -l app=my-shlink -o jsonpath='{.items[*].metadata.name}'` -- shlink api-key:list

+--------------------------------------+------+------------+-----------------+-------+

| Key | Name | Is enabled | Expiration date | Roles |

+--------------------------------------+------+------------+-----------------+-------+

| b53de321-bc5d-4aa5-bf99-e3d2d8b7c570 | - | +++ | - | Admin |

+--------------------------------------+------+------------+-----------------+-------+

$ kubectl exec -it `kubectl get pods -l app=my-shlink -o jsonpath='{.items[*].metadata.name}'` -- shlink api-key:disable b53de321-bc5d-4aa5-bf99-e3d2d8b7c570

[OK] API key "b53de321-bc5d-4aa5-bf99-e3d2d8b7c570" properly disabled

Let’s do one together:

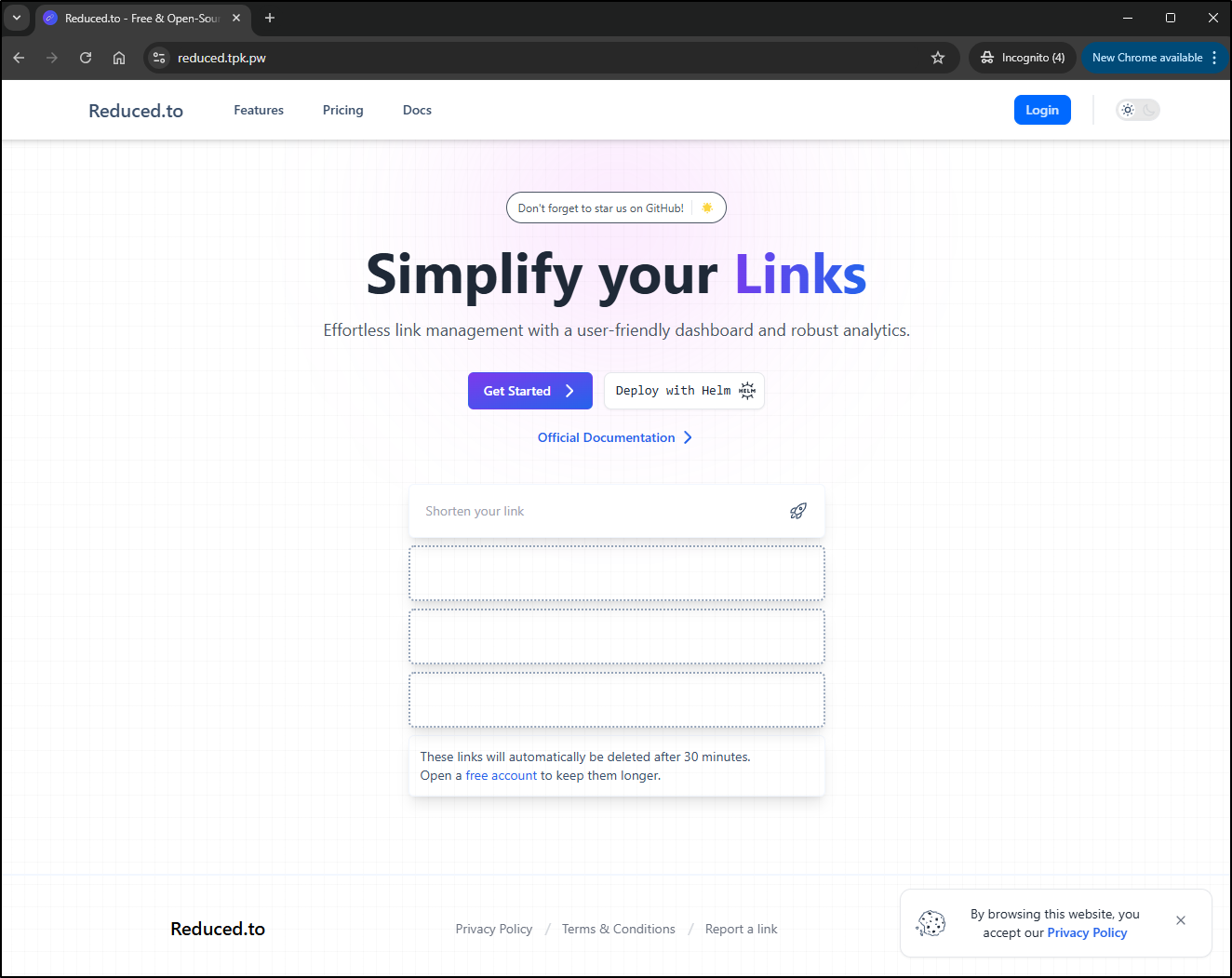

Reduced.to

While they have guides to run it directly with npx as well as docker, I want to dig right into their Helm chart.

I created the namespace

$ kubectl create ns reduced-to

namespace/reduced-to created

However, the install does not want to take the chart’s Ingress as it stands

$ helm install my-reduced --set tls.issuer=azuredns-tpkpw --set tls.secret_name=reduced-tls --set shared_configmap.DOMAIN=reduced.tpk.pw ./chart/

Error: INSTALLATION FAILED: 1 error occurred:

* Ingress.networking.k8s.io "reduced-ingress" is invalid: annotations.kubernetes.io/ingress.class: Invalid value: "nginx": can not be set when the class field is also set

Let me try removing the redundant ingressClassName

builder@DESKTOP-QADGF36:~/Workspaces/reduced.to/docker/k8s$ git diff

diff --git a/docker/k8s/chart/templates/common/reduced-ingress.yaml b/docker/k8s/chart/templates/common/reduced-ingress.yaml

index 47ed14d..a6ceb9b 100644

--- a/docker/k8s/chart/templates/common/reduced-ingress.yaml

+++ b/docker/k8s/chart/templates/common/reduced-ingress.yaml

@@ -9,7 +9,6 @@ metadata:

labels:

app: reduced

spec:

- ingressClassName: nginx

tls:

- hosts:

- {{ .Values.shared_configmap.DOMAIN }}

I then tried again

$ helm delete my-reduced

release "my-reduced" uninstalled

$ helm install my-reduced --set tls.issuer=azuredns-tpkpw --set tls.secret_name=reduced-tls --set shared_configmap.DOMAIN=reduced.tpk.pw ./chart/

NAME: my-reduced

LAST DEPLOYED: Mon Nov 11 20:29:42 2024

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

This looks okay

$ kubectl get pods -n reduced-to

NAME READY STATUS RESTARTS AGE

postgres-statefulset-0 1/1 Running 0 38s

frontend-deployment-79b95ffd65-snnzz 1/1 Running 0 38s

tracker-deployment-589c9b5d9b-6m5hv 1/1 Running 0 37s

redis-deployment-5c59c4ddd-f429h 1/1 Running 0 37s

backend-deployment-6d8cd4fc47-qzttq 1/1 Running 0 37s

I can see the Ingress was created

$ kubectl get ingress -n reduced-to

NAME CLASS HOSTS ADDRESS PORTS AGE

reduced-ingress <none> reduced.tpk.pw 80, 443 57s

Then the cert was issued soon after

$ kubectl get cert -n reduced-to

NAME READY SECRET AGE

reduced-tls True reduced-tls 80s

I then tried to log in

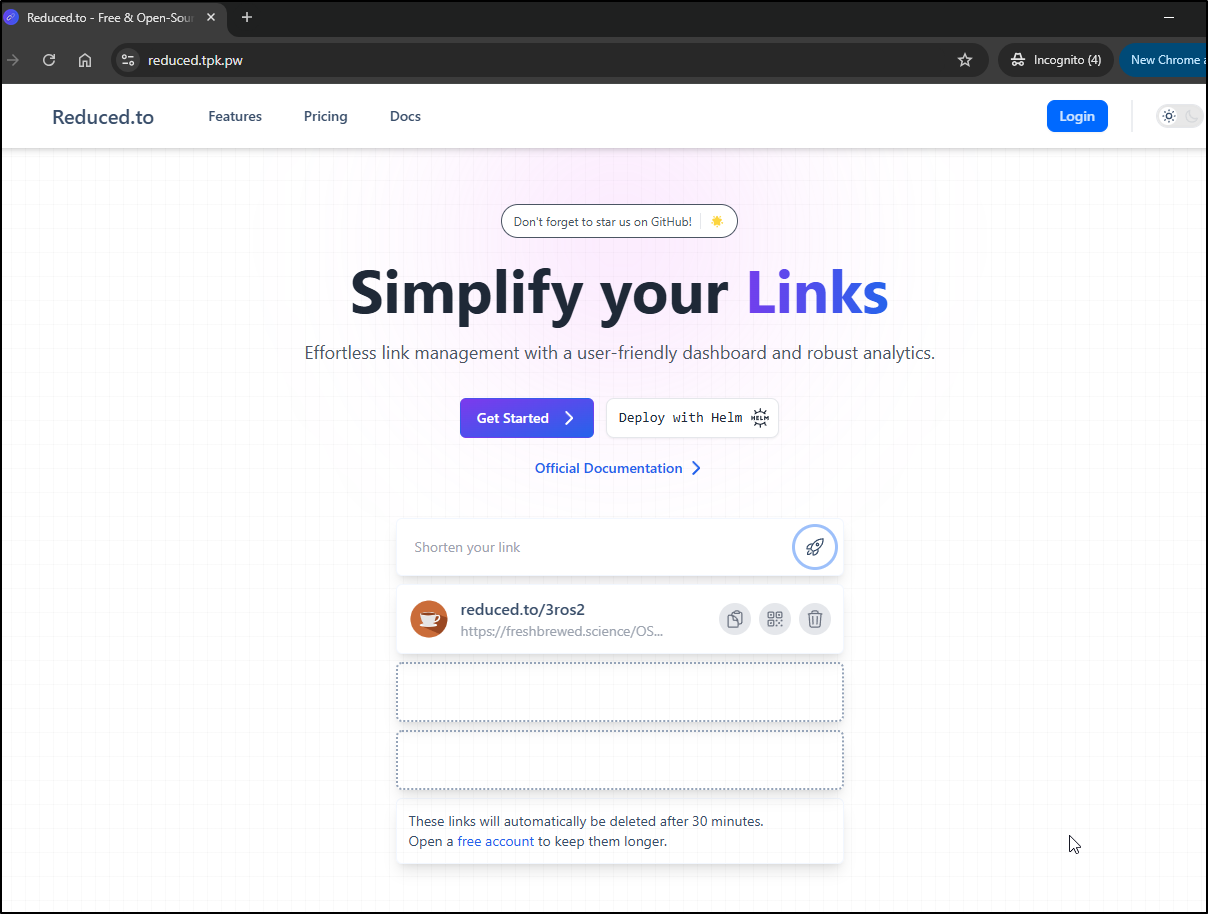

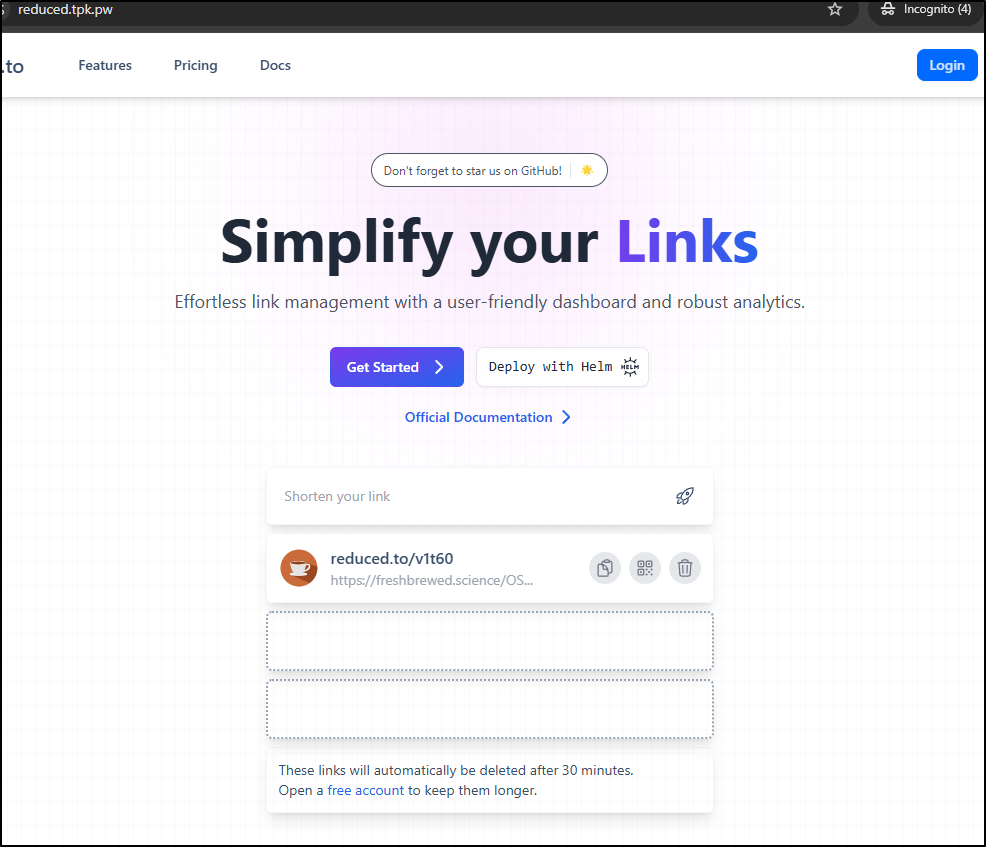

I first tried the “Shorten your link” field which does not require login

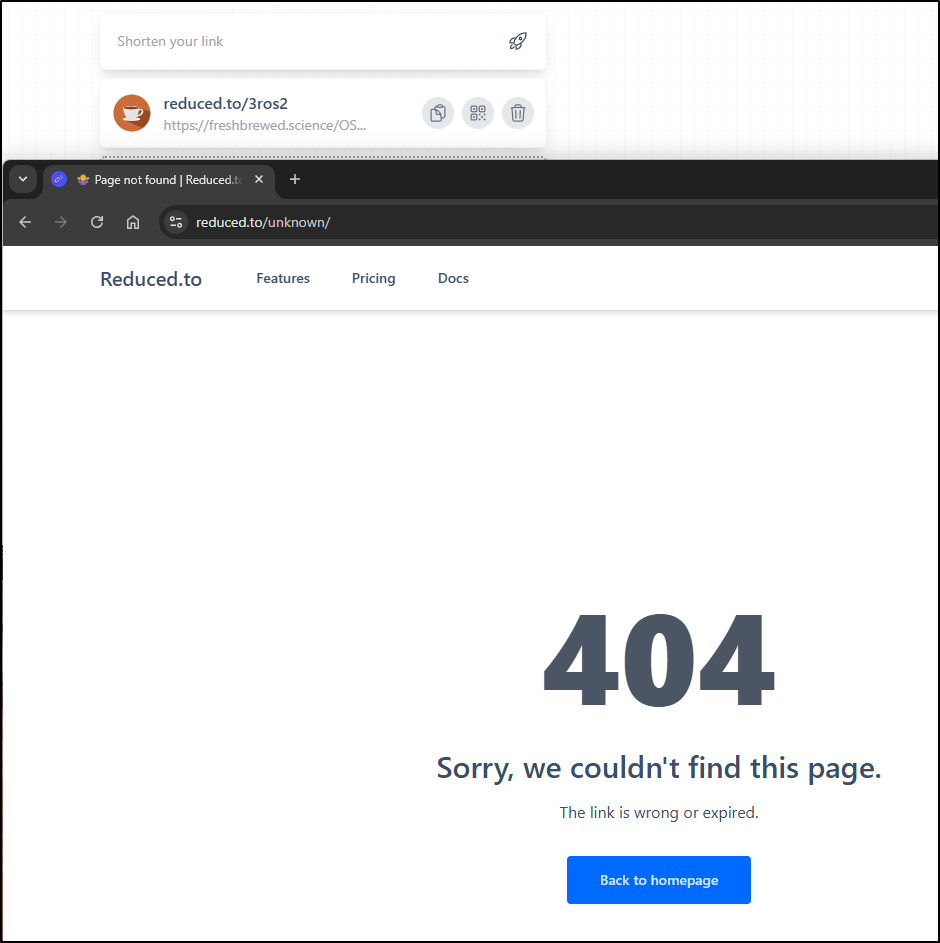

Clearly it did not create the link on the real reduced.to

However, using my domain https://reduced.tpk.pw/3ros2, it worked.

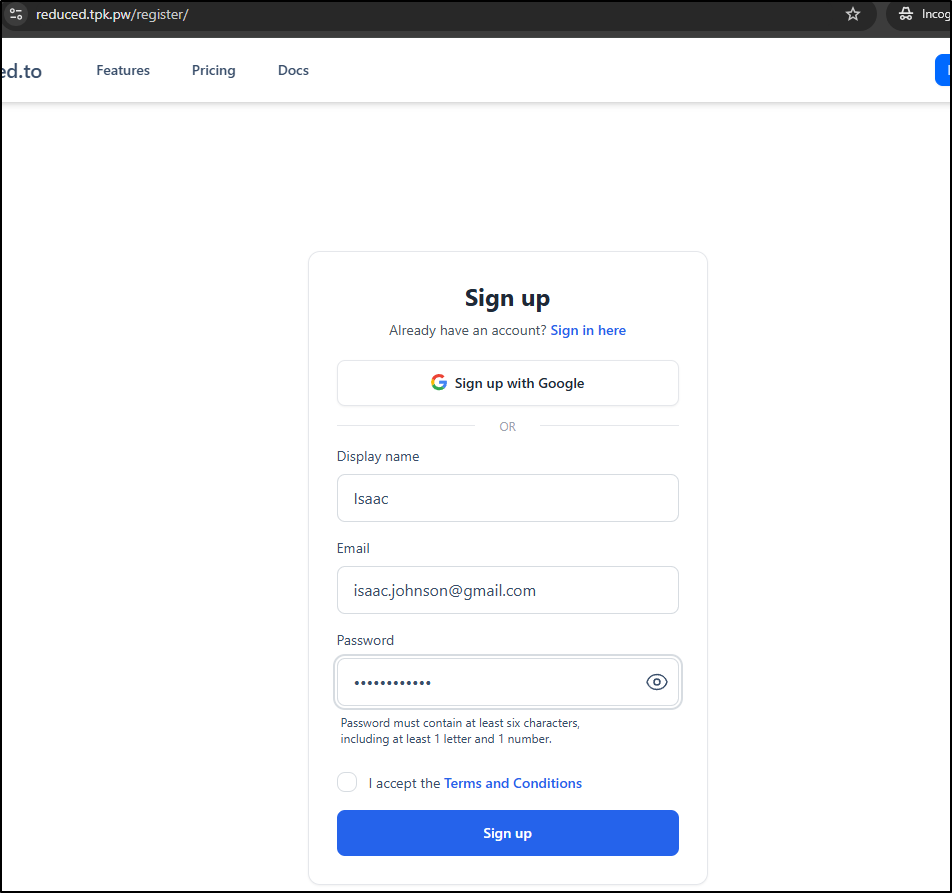

I can also create a new account

I signed in, but it doesn’t seem to do anything.

I think I need to try updating CLIENTSIDE_API_DOMAIN

I’ll now reinstall with that set

$ helm install my-reduced --set tls.issuer=azuredns-tpkpw --set tls.secret_name=reduced-tls --set shared_configmap.DOMAIN=reduced.tpk.pw --set shared_configmap.CLIENTSIDE_API_DOMAIN='https://reduced.tpk.pw' ./chart/

NAME: my-reduced

LAST DEPLOYED: Mon Nov 11 20:40:45 2024

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

However, it is still showing the wrong URL on create

And logins fail.

Let’s try more values

$ helm install my-reduced --set tls.issuer=azuredns-tpkpw --set tls.secret_name=reduced-tls --set shared_configmap.DOMAIN=reduced.tpk.pw --set shared_configmap.CLIENTSIDE_API_DOMAIN='https://reduced.tpk.pw' --set shared_configmap.API_DOMAIN=reduced.tpk.pw --set shared_configmap.BACKEND_APP_PORT=8080 --set shared_configmap.FRONTEND_APP_PORT=8088 --set shared_configmap.TRACKER_APP_PORT=8099 ./chart/

NAME: my-reduced

LAST DEPLOYED: Mon Nov 11 20:48:59 2024

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

This was even worse, failing to create links at all.

Using a transform

The MariusHosting article showed the manifest he used with Portainer.

I fed that into Copilot and, with some minor tweaks (AI is never perfect), I got a decent Kubernetes YAML

$ cat manifest_marius.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: redis-pvc
spec:
  storageClassName: local-path
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: db-pvc
spec:
  storageClassName: local-path
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis
spec:
  replicas: 1
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
    spec:
      containers:
      - name: redis
        image: redis
        command: ["/bin/sh", "-c", "redis-server --requirepass redispass"]
        ports:
        - containerPort: 6379
        resources:
          limits:
            memory: "256Mi"
            cpu: "768m"
          requests:
            memory: "50Mi"
        volumeMounts:
        - mountPath: /data
          name: redis-data
      volumes:
      - name: redis-data
        persistentVolumeClaim:
          claimName: redis-pvc
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgres
spec:
  replicas: 1
  selector:
    matchLabels:
      app: postgres
  template:
    metadata:
      labels:
        app: postgres
    spec:
      containers:
      - name: postgres
        image: postgres
        env:
        - name: POSTGRES_DB
          value: reduced
        - name: POSTGRES_USER
          value: reduceduser
        - name: POSTGRES_PASSWORD
          value: reducedpass
        ports:
        - containerPort: 5432
        resources:
          limits:
            memory: "512Mi"
            cpu: "768m"
        volumeMounts:
        - mountPath: /var/lib/postgresql/data
          name: db-data
      volumes:
      - name: db-data
        persistentVolumeClaim:
          claimName: db-pvc
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: backend
spec:
  replicas: 1
  selector:
    matchLabels:
      app: backend
  template:
    metadata:
      labels:
        app: backend
    spec:
      containers:
      - name: backend
        image: ghcr.io/origranot/reduced.to/backend:master
        env:
        - name: APP_PORT
          value: "3000"
        - name: RATE_LIMIT_TTL
          value: "60"
        - name: RATE_LIMIT_COUNT
          value: "10"
        - name: FRONT_DOMAIN
          value: "http://reduced-front:5000"
        - name: DATABASE_URL
          value: "postgresql://reduceduser:reducedpass@postgres-service:5432/reduced?schema=public"
        - name: REDIS_ENABLE
          value: "true"
        - name: REDIS_HOST
          value: reduced-redis
        - name: REDIS_PORT
          value: "6379"
        - name: REDIS_PASSWORD
          value: redispass
        - name: REDIS_TTL
          value: "604800" # 1 week
        - name: JWT_ACCESS_SECRET
          value: FreshbrewedScienceIsGreat2024123
        - name: JWT_REFRESH_SECRET
          value: FreshbrewedScienceIsFantastic024
        ports:
        - containerPort: 3000
        resources:
          limits:
            memory: "1Gi"
            cpu: "768m"
        readinessProbe:
          exec:
            command:
            - stat
            - /etc/passwd
        securityContext:
          capabilities:
            drop: ["all"]
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
spec:
  replicas: 1
  selector:
    matchLabels:
      app: frontend
  template:
    metadata:
      labels:
        app: frontend
    spec:
      containers:
      - name: frontend
        image: ghcr.io/origranot/reduced.to/frontend:master
        env:
        - name: API_DOMAIN
          value: "http://reduced-back:3000"
        - name: DOMAIN
          value: "localhost"
        ports:
        - containerPort: 5000
        securityContext:
          runAsUser: 1026
          runAsGroup: 100
          capabilities:
            drop: ["all"]
---
apiVersion: v1
kind: Service
metadata:
  name: redis-service
spec:
  selector:
    app: redis
  ports:
  - protocol: TCP
    port: 6379
    targetPort: 6379
---
apiVersion: v1
kind: Service
metadata:
  name: postgres-service
spec:
  selector:
    app: postgres
  ports:
  - protocol: TCP
    port: 5432
    targetPort: 5432
---
apiVersion: v1
kind: Service
metadata:
  name: backend-service
spec:
  selector:
    app: backend
  ports:
  - protocol: TCP
    port: 3000
    targetPort: 3000
---
apiVersion: v1
kind: Service
metadata:
  name: frontend-service
spec:
  selector:
    app: frontend
  ports:
  - protocol: TCP
    port: 8302
    targetPort: 5000

I then applied it

$ kubectl apply -f ./manifest_marius.yaml -n reduced-to

persistentvolumeclaim/redis-pvc created

persistentvolumeclaim/db-pvc created

deployment.apps/redis created

deployment.apps/postgres created

deployment.apps/backend created

deployment.apps/frontend created

service/redis-service created

service/postgres-service created

service/backend-service created

service/frontend-service created

I saw some initial errors

$ kubectl get pods -n reduced-to

NAME READY STATUS RESTARTS AGE

frontend-75c87bb7b9-2n72w 1/1 Running 0 6m43s

redis-77656478cb-9td54 1/1 Running 0 6m43s

backend-69d47fd8d6-sppp8 0/1 CrashLoopBackOff 6 (22s ago) 6m43s

postgres-dd4644bfb-5dsf6 1/1 Running 0 12s

I think this is just a race condition, with the backend starting before Postgres was reachable (I also had a mistake in the first YAML for the postgres instance, which I corrected)

$ kubectl logs backend-69d47fd8d6-sppp8 -n reduced-to

> nx run prisma:migrate-deploy

(node:25) [DEP0060] DeprecationWarning: The `util._extend` API is deprecated. Please use Object.assign() instead.

(Use `node --trace-deprecation ...` to show where the warning was created)

Prisma schema loaded from libs/prisma/src/schema.prisma

Datasource "db": PostgreSQL database "reduced", schema "public" at "postgres-service:5432"

Error: P1001: Can't reach database server at `postgres-service`:`5432`

Please make sure your database server is running at `postgres-service`:`5432`.

Warning: run-commands command "npx prisma migrate deploy --schema=libs/prisma/src/schema.prisma" exited with non-zero status code

> NX Running target migrate-deploy for project prisma failed

Failed tasks:

- prisma:migrate-deploy

Hint: run the command with --verbose for more details.
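
One common way to soften this kind of startup race is to have the dependent workload wait for its database before starting, for instance from an init container. I did not do that here, so treat the following purely as a sketch: `wait_for` is a made-up helper that retries any command a fixed number of times, and the `pg_isready` call in the comment assumes the init container uses an image that ships the Postgres client tools.

```shell
# Hypothetical helper: retry a command until it succeeds or attempts run out.
# $1 = max attempts, $2 = seconds between attempts, remaining args = command to run.
wait_for() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    # Stop as soon as the probe command succeeds.
    if "$@"; then
      return 0
    fi
    echo "attempt $i/$attempts failed; retrying in ${delay}s" >&2
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}

# In an init container this might look like:
#   wait_for 30 2 pg_isready -h postgres-service -p 5432
# Here a trivial command stands in for the real probe.
wait_for 3 0 true && echo "dependency is up"
```

The helper returns non-zero when every attempt fails, so the container would still exit with an error if the database never comes up.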

After rotating the pods I still saw errors

builder@DESKTOP-QADGF36:~/Workspaces/reduced.to$ kubectl get pods -n reduced-to

NAME READY STATUS RESTARTS AGE

frontend-75c87bb7b9-2n72w 1/1 Running 0 7m58s

redis-77656478cb-9td54 1/1 Running 0 7m58s

backend-69d47fd8d6-sppp8 0/1 CrashLoopBackOff 6 (97s ago) 7m58s

postgres-dd4644bfb-5dsf6 1/1 Running 0 87s

builder@DESKTOP-QADGF36:~/Workspaces/reduced.to$ kubectl delete pod backend-69d47fd8d6-sppp8 -n reduced-to

pod "backend-69d47fd8d6-sppp8" deleted

builder@DESKTOP-QADGF36:~/Workspaces/reduced.to$ kubectl get pods -n reduced-to

NAME READY STATUS RESTARTS AGE

frontend-75c87bb7b9-2n72w 1/1 Running 0 9m53s

redis-77656478cb-9td54 1/1 Running 0 9m53s

postgres-dd4644bfb-5dsf6 1/1 Running 0 3m22s

backend-69d47fd8d6-l8sh8 0/1 CrashLoopBackOff 3 (10s ago) 105s

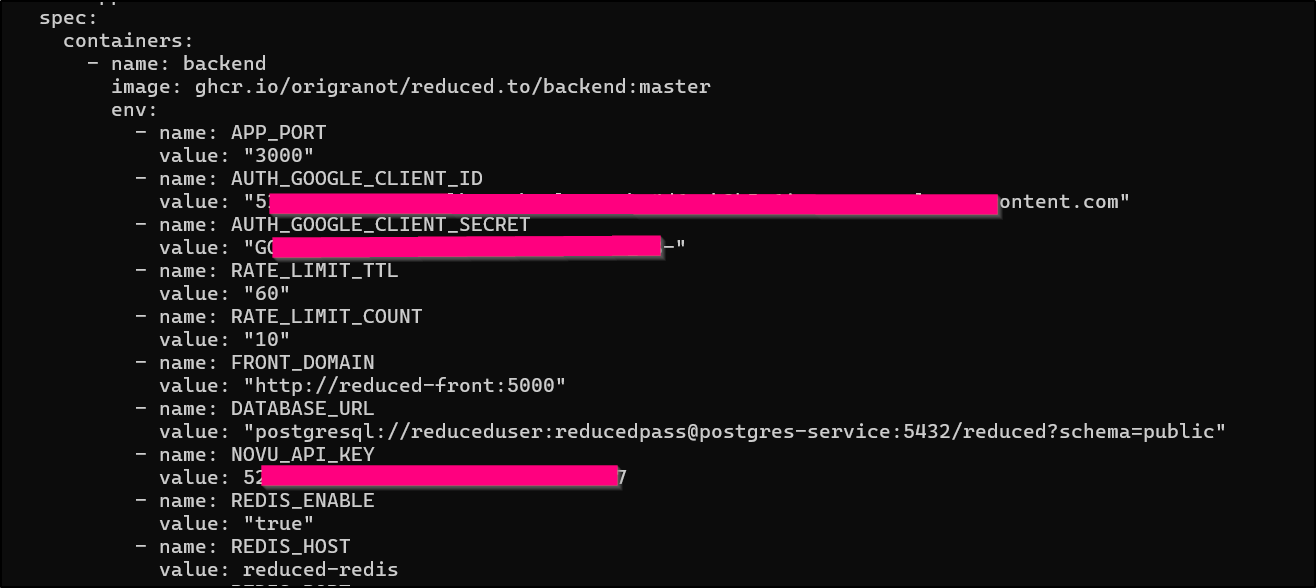

This time it had to do with OAuth2 and, presumably, a missing clientID

$ kubectl logs backend-69d47fd8d6-l8sh8 -n reduced-to

> nx run prisma:migrate-deploy

(node:25) [DEP0060] DeprecationWarning: The `util._extend` API is deprecated. Please use Object.assign() instead.

(Use `node --trace-deprecation ...` to show where the warning was created)

Prisma schema loaded from libs/prisma/src/schema.prisma

Datasource "db": PostgreSQL database "reduced", schema "public" at "postgres-service:5432"

15 migrations found in prisma/migrations

No pending migrations to apply.

> NX Successfully ran target migrate-deploy for project prisma

(node:1) [DEP0040] DeprecationWarning: The `punycode` module is deprecated. Please use a userland alternative instead.

(Use `node --trace-deprecation ...` to show where the warning was created)

[Nest] 1 - 11/12/2024, 12:36:48 PM LOG [NestFactory] Starting Nest application...

[Nest] 1 - 11/12/2024, 12:36:49 PM LOG [InstanceLoader] PrismaModule dependencies initialized +84ms

[Nest] 1 - 11/12/2024, 12:36:49 PM LOG [InstanceLoader] PassportModule dependencies initialized +0ms

[Nest] 1 - 11/12/2024, 12:36:49 PM LOG [InstanceLoader] ConfigHostModule dependencies initialized +2ms

[Nest] 1 - 11/12/2024, 12:36:49 PM LOG [InstanceLoader] DiscoveryModule dependencies initialized +1ms

[Nest] 1 - 11/12/2024, 12:36:49 PM LOG [InstanceLoader] UsageModule dependencies initialized +0ms

[Nest] 1 - 11/12/2024, 12:36:49 PM LOG [InstanceLoader] ConfigModule dependencies initialized +1ms

[Nest] 1 - 11/12/2024, 12:36:49 PM LOG [InstanceLoader] AppConfigModule dependencies initialized +0ms

[Nest] 1 - 11/12/2024, 12:36:49 PM LOG [InstanceLoader] ScheduleModule dependencies initialized +2ms

[Nest] 1 - 11/12/2024, 12:36:49 PM LOG [InstanceLoader] TasksModule dependencies initialized +73ms

[Nest] 1 - 11/12/2024, 12:36:49 PM ERROR [ExceptionHandler] OAuth2Strategy requires a clientID option

TypeError: OAuth2Strategy requires a clientID option

at GoogleStrategy.OAuth2Strategy (/app/node_modules/passport-oauth2/lib/strategy.js:87:34)

at new Strategy (/app/node_modules/passport-google-oauth20/lib/strategy.js:52:18)

at new MixinStrategy (/app/node_modules/@nestjs/passport/dist/passport/passport.strategy.js:32:13)

at new GoogleStrategy (/app/backend/main.js:2310:9)

at Injector.instantiateClass (/app/node_modules/@nestjs/core/injector/injector.js:365:19)

at callback (/app/node_modules/@nestjs/core/injector/injector.js:65:45)

at process.processTicksAndRejections (node:internal/process/task_queues:105:5)

at async Injector.resolveConstructorParams (/app/node_modules/@nestjs/core/injector/injector.js:144:24)

at async Injector.loadInstance (/app/node_modules/@nestjs/core/injector/injector.js:70:13)

at async Injector.loadProvider (/app/node_modules/@nestjs/core/injector/injector.js:97:9)

I think it really wants the Google API keys set up.

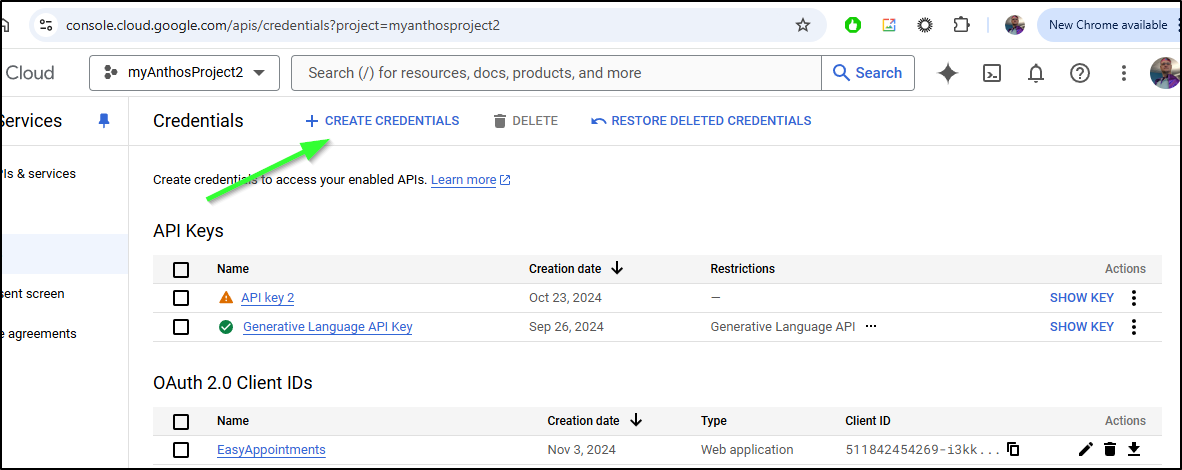

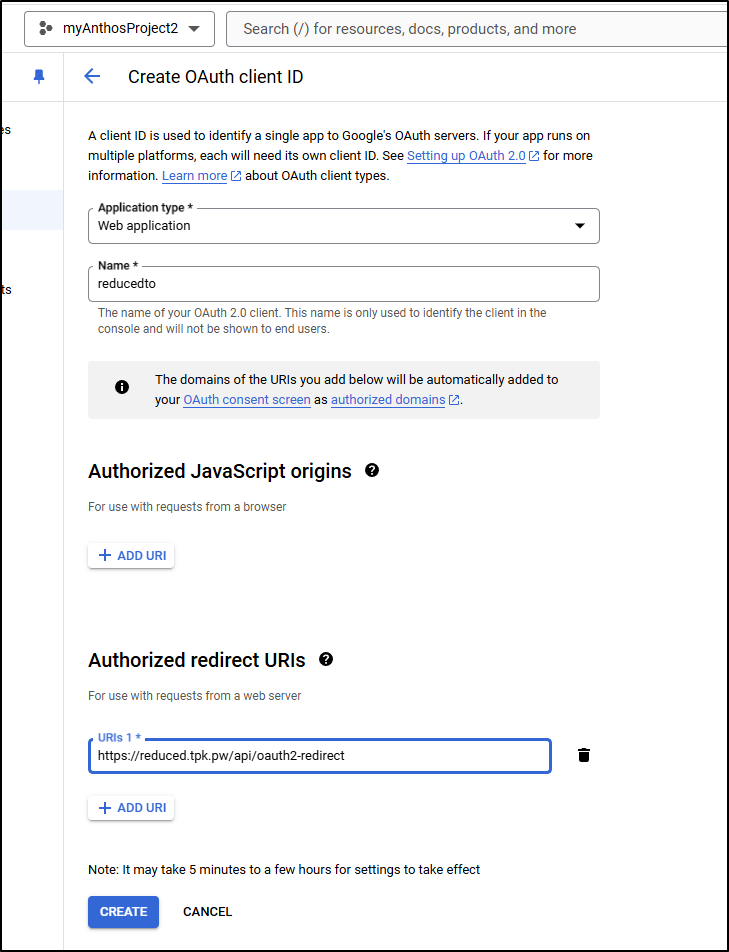

We can go create some new API credentials in GCP

There is no documentation on OAuth credentials setup, but since the stack trace shows they use passport-google-oauth20, I’ll assume a standard OAuth2 callback URL.

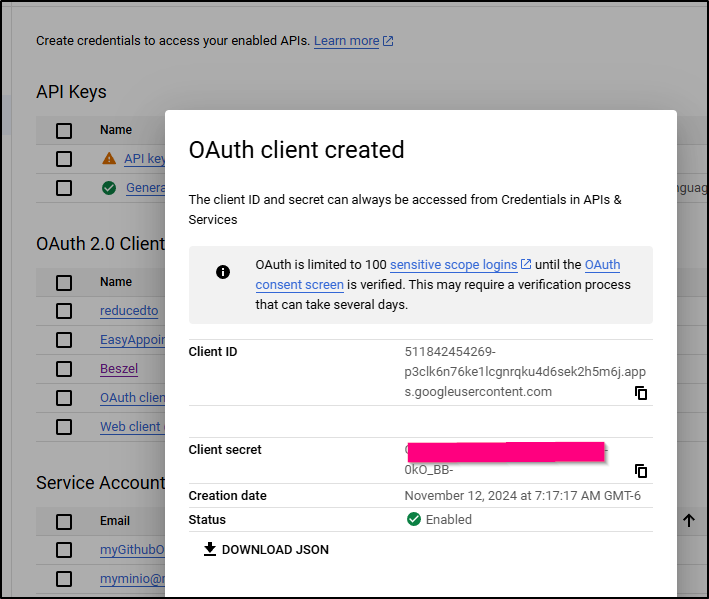

I now have a Client to try

I’ll now try adding the client ID and secret to the backend manifest and applying it:

$ kubectl apply -f ./manifest_marius.yaml -n reduced-to

persistentvolumeclaim/redis-pvc unchanged

persistentvolumeclaim/db-pvc unchanged

deployment.apps/redis unchanged

deployment.apps/postgres unchanged

deployment.apps/backend configured

deployment.apps/frontend unchanged

service/redis-service unchanged

service/postgres-service unchanged

service/backend-service unchanged

service/frontend-service unchanged

This seems to be running

$ kubectl get pods -n reduced-to

NAME READY STATUS RESTARTS AGE

frontend-75c87bb7b9-2n72w 1/1 Running 0 53m

redis-77656478cb-9td54 1/1 Running 0 53m

postgres-dd4644bfb-5dsf6 1/1 Running 0 47m

backend-7d6785cbbc-vbwl4 1/1 Running 1 (8s ago) 17s
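The manifest edit itself is not shown above. As a rough sketch of another way to get the same values into the backend (not what was done here), the client ID and secret could live in a Secret and be injected with `kubectl set env`. The function name and the Secret name `google-oauth` are made up for illustration, and the variable names `AUTH_GOOGLE_CLIENT_ID` and `AUTH_GOOGLE_CLIENT_SECRET` are an assumption; check them against the project's `.example.env` before relying on them.

```shell
# Store the Google OAuth client in a Secret and expose its keys as
# environment variables on the backend Deployment.
# ASSUMPTION: the env var names below are guesses, verify against .example.env.
# ASSUMPTION: "google-oauth" is a hypothetical Secret name.
set_google_oauth() {
  client_id="$1"
  client_secret="$2"
  ns="${3:-reduced-to}"

  kubectl create secret generic google-oauth -n "$ns" \
    --from-literal=AUTH_GOOGLE_CLIENT_ID="$client_id" \
    --from-literal=AUTH_GOOGLE_CLIENT_SECRET="$client_secret" &&
  # Each key in the Secret becomes an env var of the same name
  kubectl set env deployment/backend -n "$ns" --from=secret/google-oauth
}
```

It would be called as `set_google_oauth "<client id>" "<client secret>"`; changing the Deployment's environment triggers a new rollout of the backend pod.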

Since I set my service to 8302, I’ll need to whip up a quick new ingress file

$ kubectl get svc -n reduced-to

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

redis-service ClusterIP 10.43.156.13 <none> 6379/TCP 54m

postgres-service ClusterIP 10.43.255.240 <none> 5432/TCP 54m

backend-service ClusterIP 10.43.116.25 <none> 3000/TCP 54m

frontend-service ClusterIP 10.43.196.220 <none> 8302/TCP 54m

$ cat ./ingress.reduced.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/websocket-services: frontend-service
  name: reducedingress
spec:
  rules:
  - host: reduced.tpk.pw
    http:
      paths:
      - backend:
          service:
            name: frontend-service
            port:
              number: 8302
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - reduced.tpk.pw
    secretName: myreduced-tls

I can then apply

$ kubectl apply -f ./ingress.reduced.yaml -n reduced-to

ingress.networking.k8s.io/reducedingress created

That fetched a cert rather quickly:

$ kubectl get cert -n reduced-to

NAME READY SECRET AGE

myreduced-tls True myreduced-tls 15s

But as you can see from the testing, it does not work either:

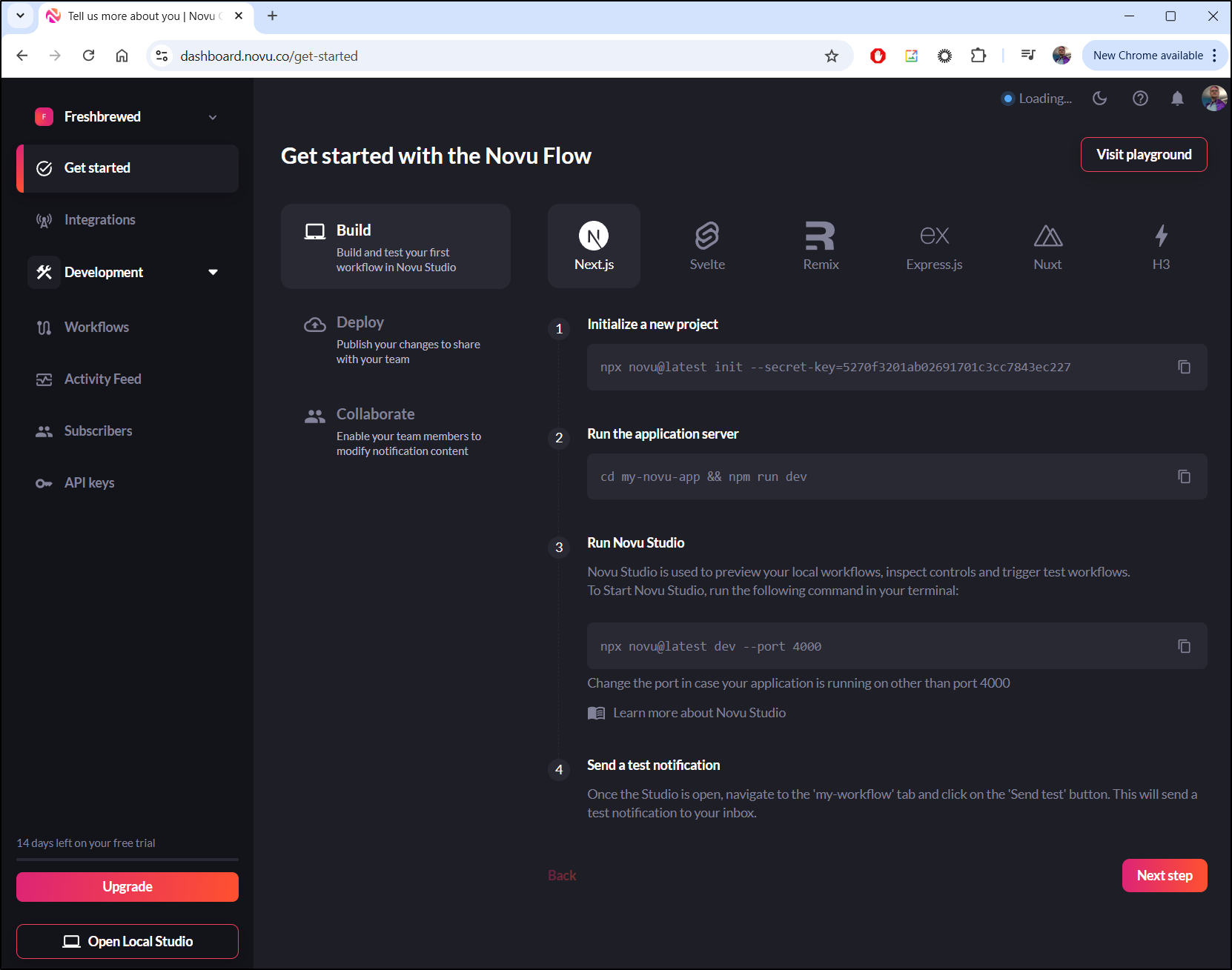

Docker

I’ll pivot to Docker. Since I don’t care to fart around with fixing accounts in the database, I’ll sign up for a novu.co account to get an API key, which I can find under API Keys on the left of that nav.

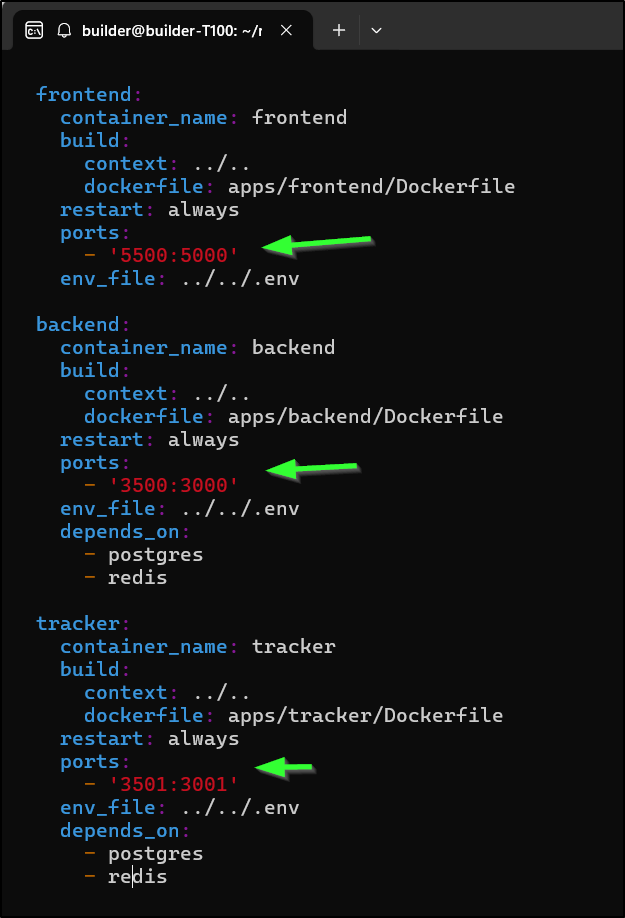

I also needed to change the docker-compose file to use ports that aren’t in use (adding a “5”), and I used the .example.env to create a .env file:

$ cat ../../.env

# General

BACKEND_APP_PORT=3500

FRONTEND_APP_PORT=5500

TRACKER_APP_PORT=3501

NODE_ENV=development

...

I can now test with a docker compose up

$ docker compose up -d

[+] Running 23/23

✔ redis 7 layers [⣿⣿⣿⣿⣿⣿⣿] 0B/0B Pulled 5.2s

✔ 89511e3ccef2 Pull complete 2.4s

✔ 4ca428e0bb5e Pull complete 2.7s

✔ 41cc262fb5bb Pull complete 3.0s

✔ 228fc9e0b0ff Pull complete 3.6s

✔ 23d1d45ab415 Pull complete 3.7s

✔ 4f4fb700ef54 Pull complete 3.9s

✔ 6adf9ee29d6f Pull complete 4.0s

✔ postgres 14 layers [⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿] 0B/0B Pulled 8.8s

✔ a480a496ba95 Pull complete 2.0s

✔ f5ece9c40e2b Pull complete 2.1s

✔ 241e5725184f Pull complete 2.7s

✔ 6832ae83547e Pull complete 3.0s

✔ 4db87ef10d0d Pull complete 3.5s

✔ 979fa3114f7b Pull complete 3.8s

✔ f2bc6009bf64 Pull complete 3.9s

✔ c9097748b1df Pull complete 4.1s

✔ 9d5c934890a8 Pull complete 7.1s

✔ d14a7815879e Pull complete 7.3s

✔ 442a42d0b75a Pull complete 7.4s

✔ 82020414c082 Pull complete 7.5s

✔ b6ce4c941ce7 Pull complete 7.6s

✔ 42e63a35cca7 Pull complete 7.7s

[+] Building 82.5s (22/40)

[+] Building 93.8s (22/40)

=> => sha256:6e96017b105b9b791db5b1e1a924abdd3d066861fa8c5c29e4b83dbbea2c8b2d 1.39MB / 1.39MB 0.8s

=> => sha256:5d9c86674f9fdc9f31743b0ceb6769531a0a5d4d9d0b8fb1406f03f92d72c4b4 446B / 446B 1.1s

=> => extracting sha256:f037f8beafdb35ef5c9a7254780c75e81cf3b2505ab9f8be06edc4a6eca4dbec 1.5s

=> => extracting sha256:6e96017b105b9b791db5b1e1a924abdd3d066861fa8c5c29e4b83dbbea2c8b2d 0.1s

=> => extracting sha256:5d9c86674f9fdc9f31743b0ceb6769531a0a5d4d9d0b8fb1406f03f92d72c4b4 0.0s

=> [frontend dependencies 1/5] FROM docker.io/library/node:20.17.0-alpine3.20@sha256:2d07db07a2df6830718ae2a47db6fedce6745f5bcd174c398f2acdda90a11c03 2.9s

=> => resolve docker.io/library/node:20.17.0-alpine3.20@sha256:2d07db07a2df6830718ae2a47db6fedce6745f5bcd174c398f2acdda90a11c03 0.0s

=> => sha256:2d07db07a2df6830718ae2a47db6fedce6745f5bcd174c398f2acdda90a11c03 7.67kB / 7.67kB 0.0s

=> => sha256:558a6416c5e0da2cb0f19bbc766f0a76825a7e76484bc211af0bb1cedbb7ca1e 1.72kB / 1.72kB 0.0s

=> => sha256:45a59611ca84e7fa7e39413f7a657afd43e2b71b8d6cb3cb722d97ffc842decb 6.36kB / 6.36kB 0.0s

=> => sha256:c36270121b0cfc4d7ddaf49f4711e7bcad4cf5e364694d5ff4bd5b65b01ed571 42.31MB / 42.31MB 1.0s

=> => sha256:842cd80f9366843dd6175d28abb6233501045711892a1a5a700676a0ed2d6ec4 1.39MB / 1.39MB 0.3s

=> => sha256:737da86e5e86be8d490b910c63f7a45bc0a97913a42ff71d396c801c8bf06a58 446B / 446B 0.3s

=> => extracting sha256:c36270121b0cfc4d7ddaf49f4711e7bcad4cf5e364694d5ff4bd5b65b01ed571 1.2s

=> => extracting sha256:842cd80f9366843dd6175d28abb6233501045711892a1a5a700676a0ed2d6ec4 0.2s

=> => extracting sha256:737da86e5e86be8d490b910c63f7a45bc0a97913a42ff71d396c801c8bf06a58 0.0s

=> [frontend internal] load build context 1.0s

=> => transferring context: 26.92MB 0.7s

=> [backend internal] load build context 1.0s

=> => transferring context: 26.92MB 0.8s

=> [frontend dependencies 2/5] WORKDIR /app 0.4s

=> [frontend dependencies 3/5] RUN apk add --no-cache python3 make g++ 6.9s

=> [frontend build 3/5] COPY . . 0.7s

=> [backend dependencies 2/5] WORKDIR /app 0.2s

=> [tracker build 3/5] COPY . . 1.3s

=> [tracker dependencies 3/5] RUN apk add --no-cache python3 make g++ 5.2s

=> [tracker dependencies 4/5] COPY package*.json ./ 0.8s

=> [tracker dependencies 5/5] RUN npm ci 82.8s

=> [frontend dependencies 4/5] COPY package*.json ./ 0.2s

=> [frontend dependencies 5/5] RUN npm ci 82.4s

=> => # npm warn deprecated trim@0.0.1: Use String.prototype.trim() instead

=> => # npm warn deprecated stable@0.1.8: Modern JS already guarantees Array#sort() is a stable sort, so this library is deprecated. See the compatibility table on MDN: https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/Arr

=> => # ay/sort#browser_compatibility

=> => # npm warn deprecated @babel/plugin-proposal-object-rest-spread@7.12.1: This proposal has been merged to the ECMAScript standard and thus this plugin is no longer maintained. Please use @babel/plugin-transform-object-rest-spread instead.

=> => # npm warn deprecated @babel/plugin-proposal-class-properties@7.18.6: This proposal has been merged to the ECMAScript standard and thus this plugin is no longer maintained. Please use @babel/plugin-transform-class-properties instead.

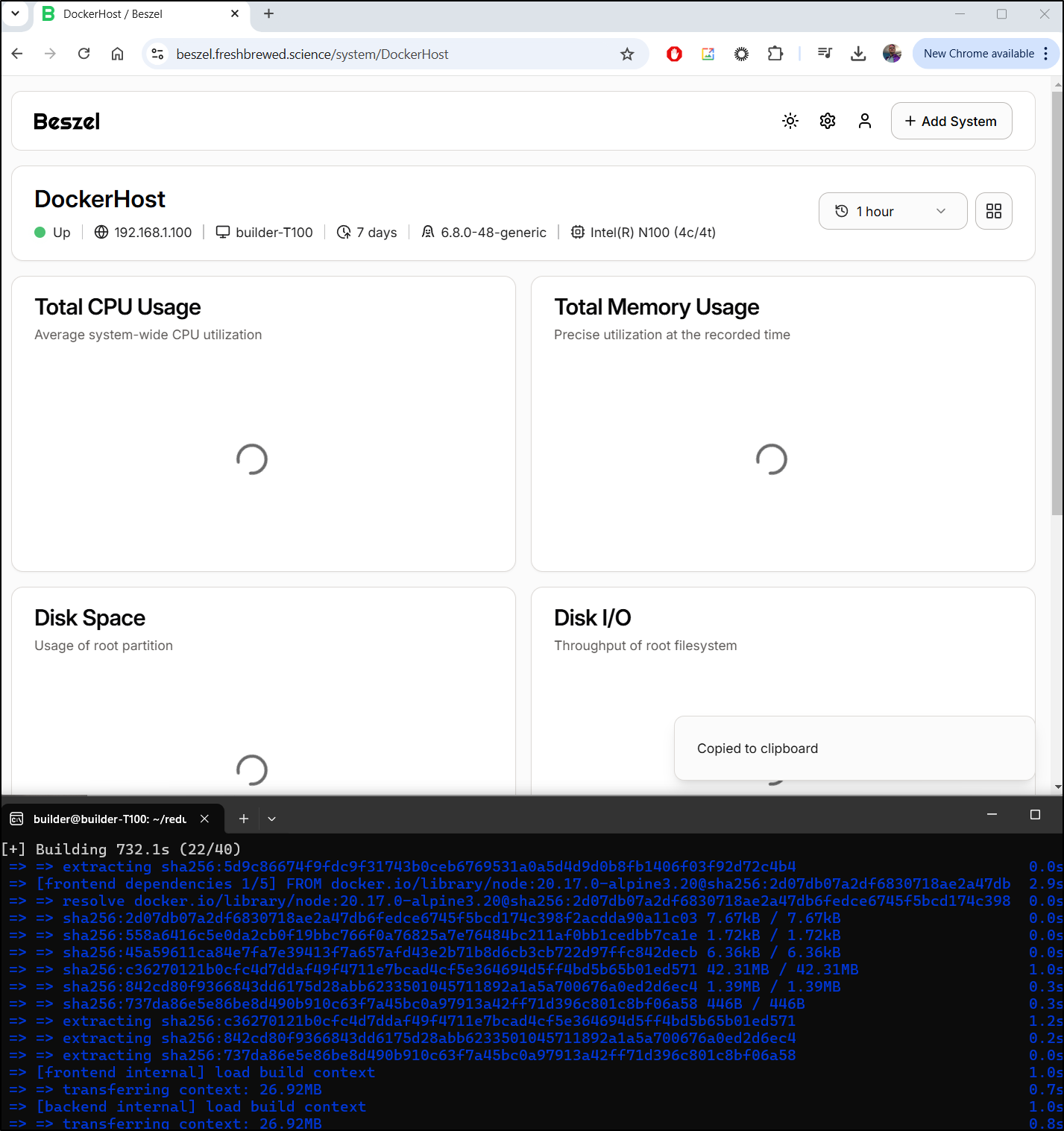

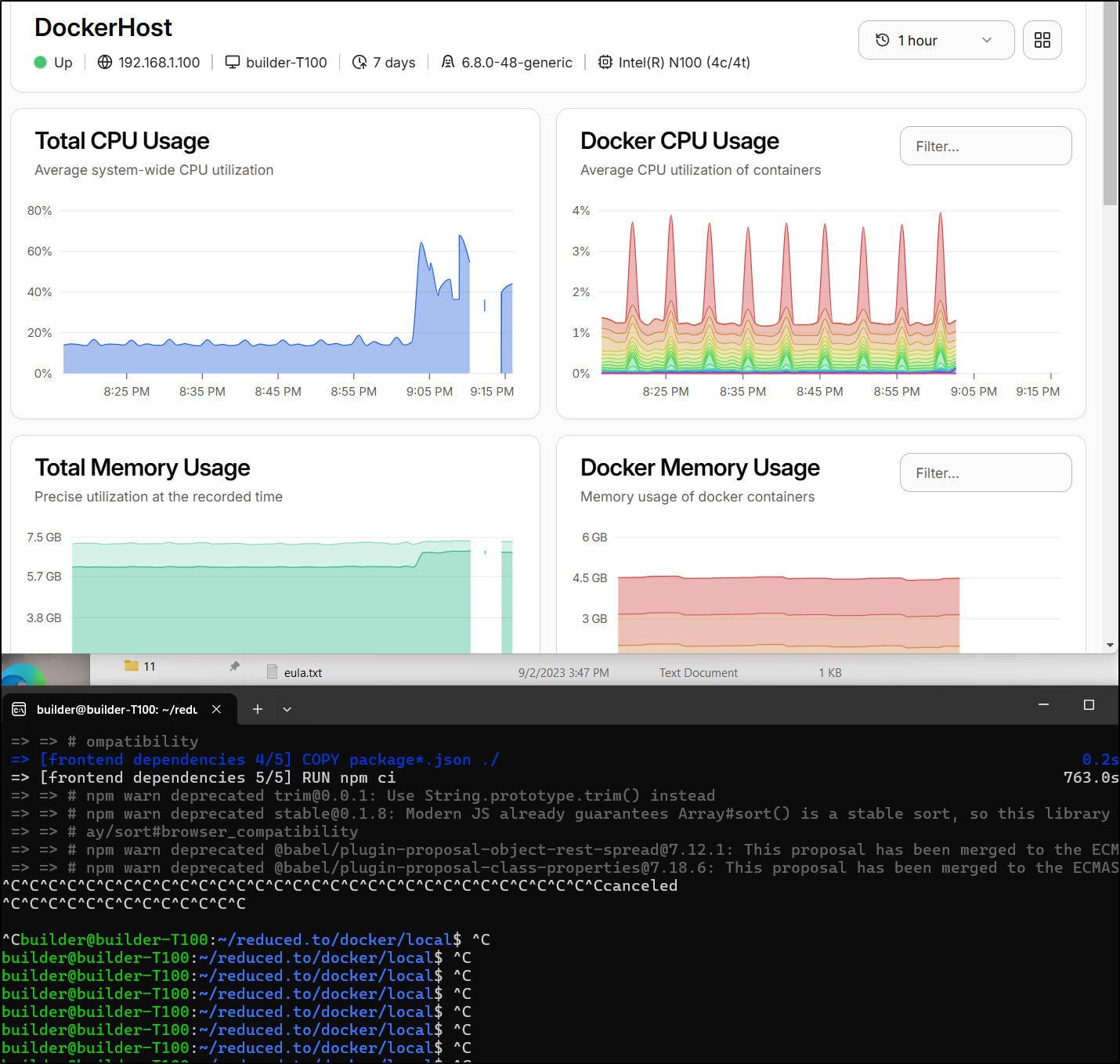

I waited a while and saw the host spike to max memory usage.

I had to kill it as it was knocking everything else out.

It even required a restart - not good.
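The build output above shows several services building at once, each running its own `npm ci`. One thing that might lower the peak, sketched here and not tested on this host, is to build the services one at a time before bringing the stack up. The function name is made up; the service names come from the compose output.

```shell
# Build the compose services one at a time, then start the stack.
# Stops at the first failed build so a broken image is never started.
build_one_at_a_time() {
  for svc in "$@"; do
    echo "building $svc"
    docker compose build "$svc" || return 1
  done
  docker compose up -d
}
```

It would be run from the directory holding the compose file as `build_one_at_a_time backend tracker frontend`. Whether that is enough headroom for the `nx build` steps on a small host is an open question.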

I tried the same docker compose up on a different, bigger host (relatively speaking; it’s an old retired MacBook Air):

isaac@isaac-MacBookAir:~/Documents/reduced.to/docker/local$ sudo docker compose up -d

WARN[0000] /home/isaac/Documents/reduced.to/docker/local/docker-compose.yml: the attribute `version` is obsolete, it will be ignored, please remove it to avoid potential confusion

[+] Running 23/23

✔ postgres Pulled 10.7s

✔ 2d429b9e73a6 Pull complete 3.3s

✔ 8cd18d1b1f3c Pull complete 3.3s

✔ efe013fcce98 Pull complete 3.6s

✔ e5f0c600cf82 Pull complete 3.8s

✔ f1c903b2aeee Pull complete 4.5s

✔ 49faa60cc715 Pull complete 4.6s

✔ 744e496a897f Pull complete 4.7s

✔ 83c689f809e5 Pull complete 4.7s

✔ 5dc6568b6a72 Pull complete 9.3s

✔ 5e679d2c32d2 Pull complete 9.3s

✔ 4905c4cdeae1 Pull complete 9.4s

✔ 15266ecdce80 Pull complete 9.4s

✔ 37c10b51b83c Pull complete 9.5s

✔ 3c636afee197 Pull complete 9.5s

✔ redis Pulled 5.5s

✔ 92ef1eccbb9f Pull complete 3.3s

✔ 5e00ad97561c Pull complete 3.4s

✔ 8f865c3d417c Pull complete 3.5s

✔ 74c736b00471 Pull complete 4.1s

✔ 928f5dbb5007 Pull complete 4.2s

✔ 4f4fb700ef54 Pull complete 4.2s

✔ 6fd0c1bf3b91 Pull complete 4.3s

[+] Building 604.2s (45/45) FINISHED docker:default

=> [tracker internal] load build definition from Dockerfile 0.3s

=> => transferring dockerfile: 618B 0.1s

=> [backend internal] load build definition from Dockerfile 0.3s

=> => transferring dockerfile: 728B 0.0s

=> [frontend internal] load build definition from Dockerfile 0.3s

=> => transferring dockerfile: 863B 0.0s

=> WARN: FromAsCasing: 'as' and 'FROM' keywords' casing do not match (line 1) 0.3s

=> WARN: FromAsCasing: 'as' and 'FROM' keywords' casing do not match (line 11) 0.3s

=> WARN: FromAsCasing: 'as' and 'FROM' keywords' casing do not match (line 31) 0.3s

=> [backend internal] load metadata for docker.io/library/node:lts-alpine 1.0s

=> [frontend internal] load metadata for docker.io/library/node:20.17.0-alpine3.20 1.0s

=> [frontend internal] load .dockerignore 0.1s

=> => transferring context: 2B 0.0s

=> [tracker internal] load .dockerignore 0.2s

=> => transferring context: 2B 0.0s

=> [backend internal] load .dockerignore 0.2s

=> => transferring context: 2B 0.0s

=> [frontend internal] load build context 1.5s

=> => transferring context: 26.92MB 1.2s

=> [frontend dependencies 1/5] FROM docker.io/library/node:20.17.0-alpine3.20@sha256:2d07db07a2df6830718ae2a47db6fedce6745f5bcd174c398f2acdda90a11c03 5.3s

=> => resolve docker.io/library/node:20.17.0-alpine3.20@sha256:2d07db07a2df6830718ae2a47db6fedce6745f5bcd174c398f2acdda90a11c03 0.1s

=> => sha256:c36270121b0cfc4d7ddaf49f4711e7bcad4cf5e364694d5ff4bd5b65b01ed571 42.31MB / 42.31MB 1.4s

=> => sha256:2d07db07a2df6830718ae2a47db6fedce6745f5bcd174c398f2acdda90a11c03 7.67kB / 7.67kB 0.0s

=> => sha256:558a6416c5e0da2cb0f19bbc766f0a76825a7e76484bc211af0bb1cedbb7ca1e 1.72kB / 1.72kB 0.0s

=> => sha256:45a59611ca84e7fa7e39413f7a657afd43e2b71b8d6cb3cb722d97ffc842decb 6.36kB / 6.36kB 0.0s

=> => sha256:842cd80f9366843dd6175d28abb6233501045711892a1a5a700676a0ed2d6ec4 1.39MB / 1.39MB 0.4s

=> => sha256:737da86e5e86be8d490b910c63f7a45bc0a97913a42ff71d396c801c8bf06a58 446B / 446B 0.2s

=> => extracting sha256:c36270121b0cfc4d7ddaf49f4711e7bcad4cf5e364694d5ff4bd5b65b01ed571 3.1s

=> => extracting sha256:842cd80f9366843dd6175d28abb6233501045711892a1a5a700676a0ed2d6ec4 0.1s

=> => extracting sha256:737da86e5e86be8d490b910c63f7a45bc0a97913a42ff71d396c801c8bf06a58 0.0s

=> [backend dependencies 1/5] FROM docker.io/library/node:lts-alpine@sha256:dc8ba2f61dd86c44e43eb25a7812ad03c5b1b224a19fc6f77e1eb9e5669f0b82 7.3s

=> => resolve docker.io/library/node:lts-alpine@sha256:dc8ba2f61dd86c44e43eb25a7812ad03c5b1b224a19fc6f77e1eb9e5669f0b82 0.2s

=> => sha256:dc8ba2f61dd86c44e43eb25a7812ad03c5b1b224a19fc6f77e1eb9e5669f0b82 6.41kB / 6.41kB 0.0s

=> => sha256:acfe48528d337d95603fbeaf249da67a07c4082e22f2c6ec85cf0fdffcfd63ff 1.72kB / 1.72kB 0.0s

=> => sha256:ee9ae20d6258e37bb705df1b02049a173c872534a0790fd9d13ab3b04523aa8f 6.20kB / 6.20kB 0.0s

=> => sha256:da9db072f522755cbeb85be2b3f84059b70571b229512f1571d9217b77e1087f 3.62MB / 3.62MB 0.5s

=> => sha256:2c683692384c7436fa88d4cd7481be6b45a69a4cf25896cc0e3eaa79a795c0f4 49.24MB / 49.24MB 1.8s

=> => extracting sha256:da9db072f522755cbeb85be2b3f84059b70571b229512f1571d9217b77e1087f 0.6s

=> => sha256:e7428cb4ffb31db5a9006b61751d36556f1a429fd33a9d3c0063c77ee50ac0fc 1.39MB / 1.39MB 0.8s

=> => sha256:d9f81725f57f61d3b3491b2eb12ea1949ae8c74d9e28bbd45ffe250990890b2e 444B / 444B 1.0s

=> => extracting sha256:2c683692384c7436fa88d4cd7481be6b45a69a4cf25896cc0e3eaa79a795c0f4 3.1s

=> => extracting sha256:e7428cb4ffb31db5a9006b61751d36556f1a429fd33a9d3c0063c77ee50ac0fc 0.3s

=> => extracting sha256:d9f81725f57f61d3b3491b2eb12ea1949ae8c74d9e28bbd45ffe250990890b2e 0.0s

=> [tracker internal] load build context 1.6s

=> => transferring context: 26.92MB 1.4s

=> [backend internal] load build context 1.8s

=> => transferring context: 26.92MB 1.7s

=> [frontend dependencies 2/5] WORKDIR /app 1.2s

=> [frontend build 3/5] COPY . . 0.9s

=> [frontend dependencies 3/5] RUN apk add --no-cache python3 make g++ 7.6s

=> [backend dependencies 2/5] WORKDIR /app 0.1s

=> [tracker build 3/5] COPY . . 0.6s

=> [backend dependencies 3/5] RUN apk add --no-cache python3 make g++ 5.6s

=> [backend dependencies 4/5] COPY package*.json ./ 0.2s

=> [tracker dependencies 5/5] RUN npm ci 211.6s

=> [frontend dependencies 4/5] COPY package*.json ./ 0.2s

=> [frontend dependencies 5/5] RUN npm ci 106.8s

=> [frontend build 4/5] COPY --from=dependencies /app/node_modules ./node_modules 22.6s

=> [frontend build 5/5] RUN npx nx build frontend --prod --skip-nx-cache && npm prune --production 380.7s

=> [tracker build 4/5] COPY --from=dependencies /app/node_modules ./node_modules 30.4s

=> CACHED [backend build 3/5] COPY . . 0.0s

=> CACHED [backend dependencies 4/5] COPY package*.json ./ 0.0s

=> CACHED [backend dependencies 5/5] RUN npm ci 0.0s

=> CACHED [backend build 4/5] COPY --from=dependencies /app/node_modules ./node_modules 0.0s

=> [backend build 5/5] RUN npx nx build backend --prod --skip-nx-cache 106.6s

=> [tracker build 5/5] RUN npx nx build tracker --prod --skip-nx-cache && npm prune --production 232.2s

=> [backend production 3/5] COPY --from=build /app/dist/apps/backend ./backend 0.2s

=> [backend production 4/5] COPY --from=build /app/node_modules ./node_modules 26.6s

=> [backend production 5/5] COPY --from=build /app/libs/prisma ./libs/prisma 0.3s

=> [backend] exporting to image 43.4s

=> => exporting layers 43.3s

=> => writing image sha256:9d0e12b2376662a0252c9deada8000c8c95b710e8fef77b347d4c70d3b8cba7c 0.0s

=> => naming to docker.io/library/local-backend 0.0s

=> [backend] resolving provenance for metadata file 0.1s

=> [tracker production 3/4] COPY --from=build /app/dist/apps/tracker ./tracker 0.2s

=> [tracker production 4/4] COPY --from=build /app/node_modules ./node_modules 12.0s

=> [tracker] exporting to image 15.7s

=> => exporting layers 15.6s

=> => writing image sha256:8aa611481206ca4f68322d3e7ec6ddbe44eacfb29656a57ddda19ac1fd958fa7 0.0s

=> => naming to docker.io/library/local-tracker 0.0s

=> [tracker] resolving provenance for metadata file 0.0s

=> [frontend production 3/4] COPY --from=build /app/dist/apps/frontend ./frontend 0.1s

=> [frontend production 4/4] COPY --from=build /app/node_modules ./node_modules 7.8s

=> [frontend] exporting to image 15.7s

=> => exporting layers 15.7s

=> => writing image sha256:31900c850102478e2614ef085459ce43217a1ecc1f058f0e2a68f21be2d87eea 0.0s

=> => naming to docker.io/library/local-frontend 0.0s

=> [frontend] resolving provenance for metadata file 0.0s

[+] Running 5/6

✔ Network local_default Created 0.2s

✔ Container frontend Started 1.2s

✔ Container redis Started 1.2s

⠋ Container postgres Starting 1.2s

✔ Container tracker Created 0.1s

✔ Container backend Created 0.1s

Error response from daemon: driver failed programming external connectivity on endpoint postgres (cda9c941c06e9418a16a24661f366ff501ab8a45cef17f324f60a96516155ed3): failed to bind port 0.0.0.0:5432/tcp: Error starting userland proxy: listen tcp4 0.0.0.0:5432: bind: address already in use

isaac@isaac-MacBookAir:~/Documents/reduced.to/docker/local$

That errored, as you see above, after a good 15+ minutes because I already use 5432 for the Immich DB on that host.
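Because the port collision only surfaces once the containers start, a quick pre-flight check before the long build can save the wait. This is a minimal sketch assuming a Linux host with `ss` (from iproute2) available; the helper name `has_listener` is made up, and the port list is taken from the .env and the error above.

```shell
# Succeeds if any line of `ss -ltn` output on stdin has a local
# address ending in :PORT (matches both 0.0.0.0:5432 and [::]:5432).
has_listener() {
  awk -v p="$1" '{ n = split($4, a, ":"); if (a[n] == p) found = 1 } END { exit !found }'
}

# Ports from the .env plus the Postgres default; adjust to your compose file.
for port in 3500 3501 5500 5432; do
  if ss -ltn 2>/dev/null | has_listener "$port"; then
    echo "port $port is already in use"
  fi
done
```

Any port it reports needs to be changed in both the .env and the compose port mapping before running `docker compose up`.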

I changed the DB port to 5532 in both the .env and the docker-compose port forwarding and tried again.

$ sudo docker compose up -d

WARN[0000] /home/isaac/Documents/reduced.to/docker/local/docker-compose.yml: the attribute `version` is obsolete, it will be ignored, please remove it to avoid potential confusion

[+] Running 5/5

✔ Container backend Started 12.2s

✔ Container frontend Started 11.4s

✔ Container tracker Started 12.2s

✔ Container postgres Started 11.5s

✔ Container redis Started

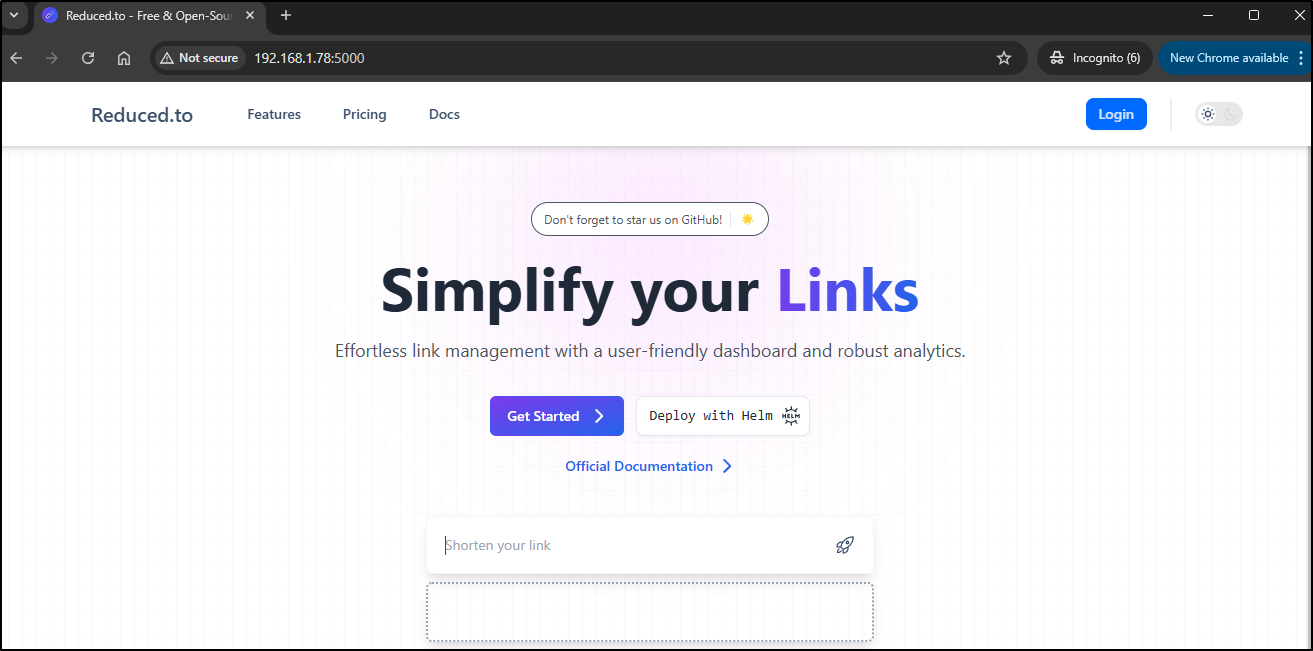

The app loads on the host IP at the frontend port, but adding links does nothing.

Moreover, the login doesn’t work, nor does account creation:

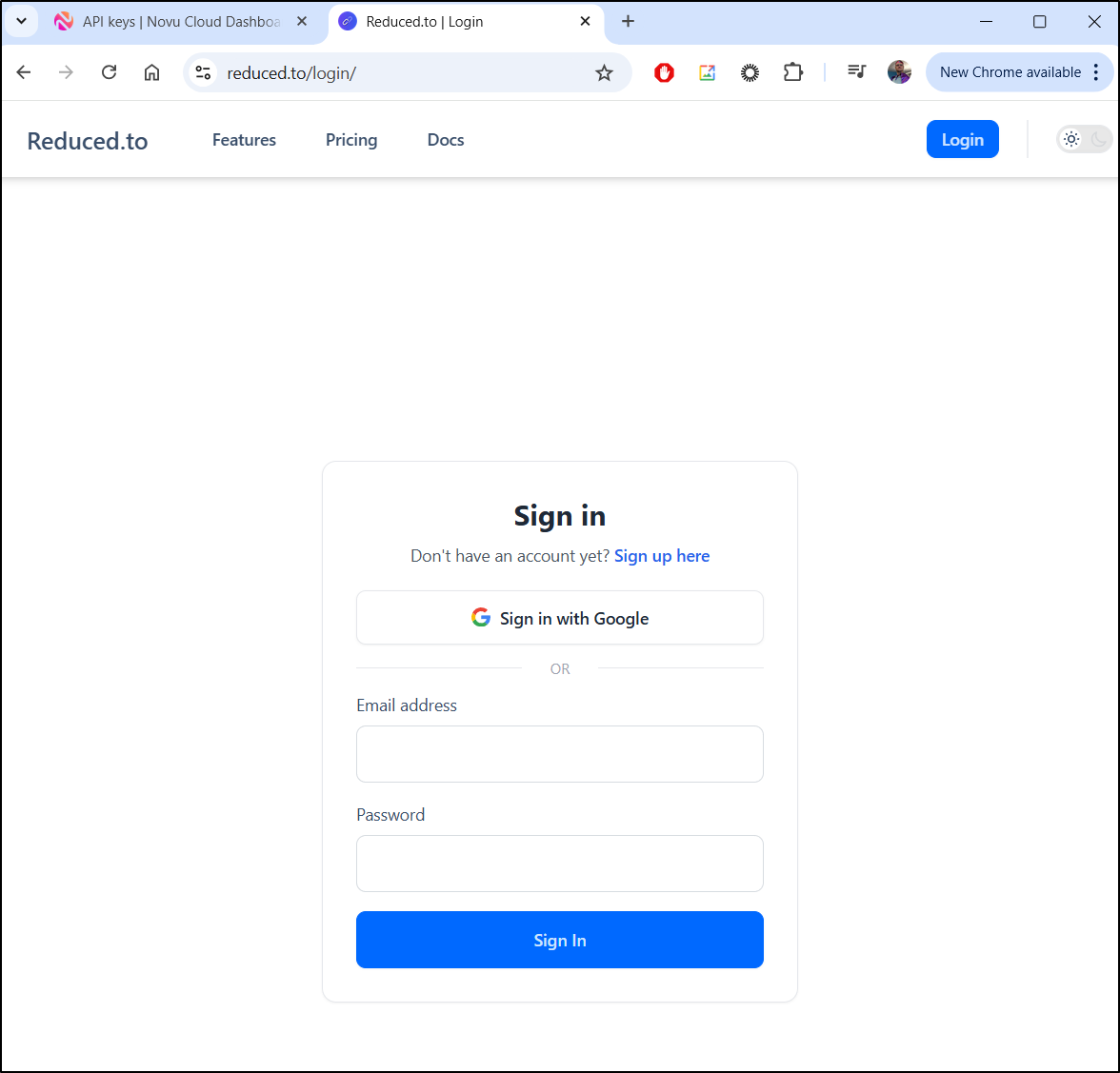

SaaS

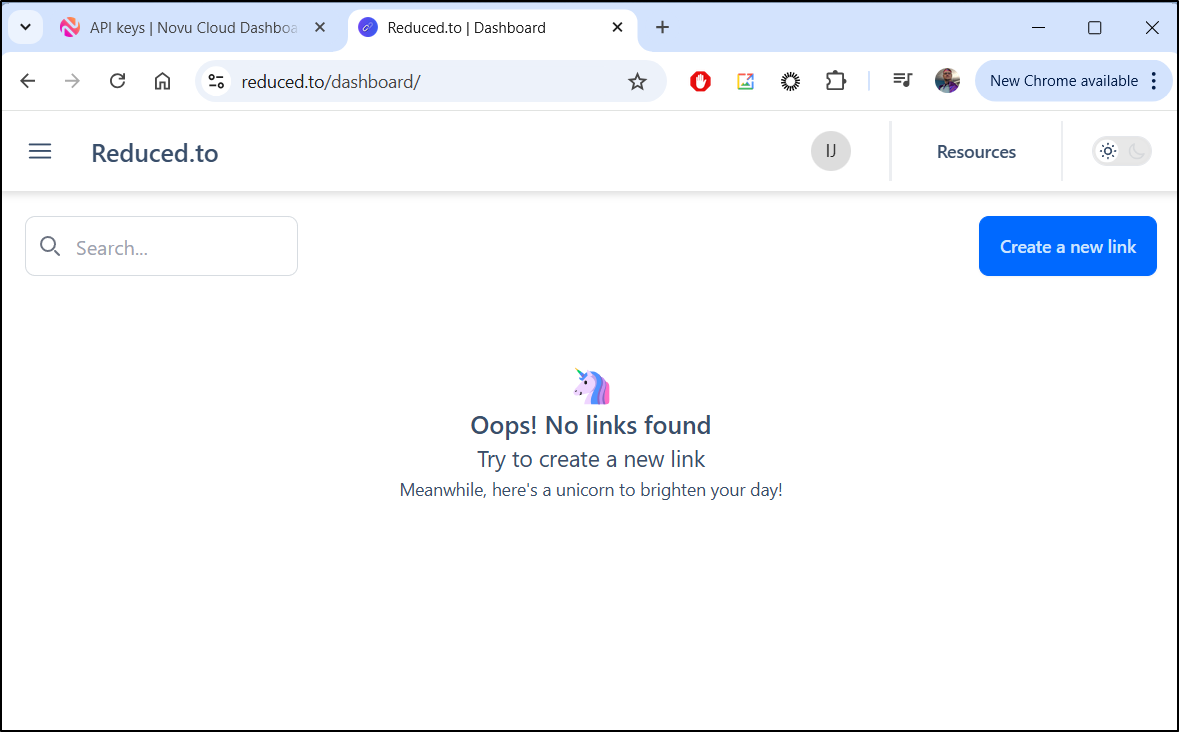

Now, in fairness, reduced.to also has a hosted SaaS offering at, as you guessed, reduced.to.

We can sign up there; I’ll sign up with Google.

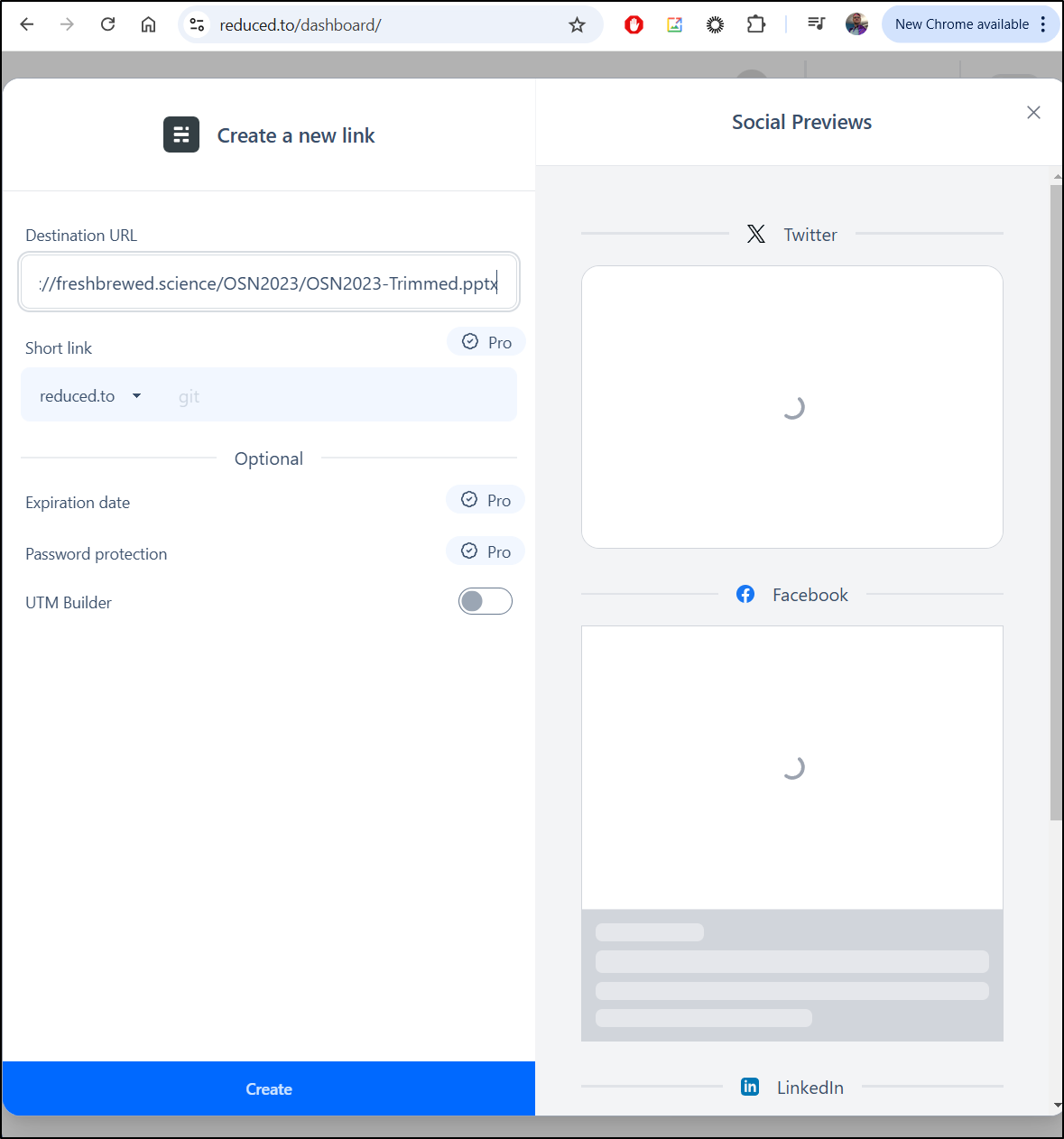

I can then create a new link

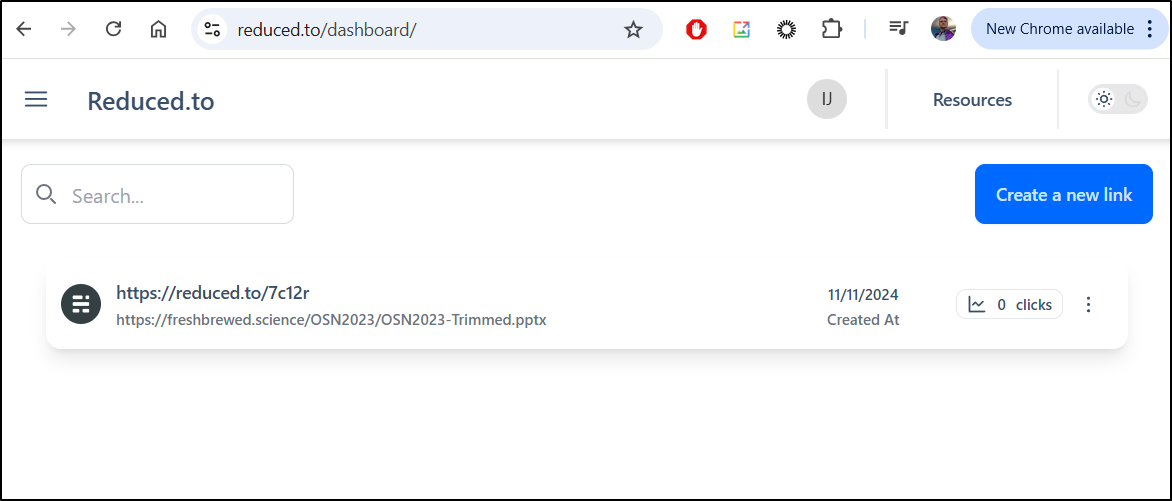

And see the URL, which does work at https://reduced.to/7c12r.

Summary

We looked at three tools with the hope of creating a nice URL shortener that, possibly, would have some kind of basic tracking. First was YOURLS, which worked quite well. It has a web interface that makes it easy to add and remove links. It’s clearly older, based on the setup with backend .htaccess files and PHP, but there is nothing wrong with old. Its backend is MariaDB, which means I can easily migrate it to the MariaDB on my NAS or take regular MySQL backups.

The second we looked at was shlink. I used the built-in SQLite database so it’s a self-contained container, but if we check the docs, it would be easy enough to use MySQL/MariaDB, PostgreSQL or MSSQL. This means I could use either of my NAS databases (one runs MariaDB and the other PostgreSQL). Like YOURLS, shlink is also PHP driven but, unlike YOURLS, does not have a web interface. All our interactions are done either with the CLI on the container or using the well-documented REST interface. Because it’s command-line driven, we don’t get nice usage graphs of URLs, but it’s also handy to not have an exposed admin web interface that could be attacked.

Lastly, I tried - I really did - to get Reduced.to to work. I tried their charts, my manifests, and Docker Compose on multiple hosts. Really, nothing worked. I would guess, and it’s just a guess, that back when MariusHosting reviewed it in 2023, the open-source container wasn’t as deeply tied to the commercial offering. I could just be a big dummy and missing things, but I think they made it open source just enough for bug fixes but not enough to make it easy to launch on one’s own. I did show the SaaS offering, but that has rather strict limitations on the free tier - namely 5 links a month and only tracking the first 250 visitors to a link. Also, let’s be fair: a reduced.to shortened link is not much better than me just dropping a CNAME using tpk.pw. Honestly, I highly doubt I’ll use reduced.to just because of that alone.

Addendum

I plan on picking either shlink or YOURLS going forward, with a properly backed-up database. I’ll likely document the whole process as a small blog entry later. I’m slightly leaning towards YOURLS since I’m a sucker for a web interface with graphs. Really, either could do the job.

Also, I mentioned I was inspired by MariusHosting, which somehow is a featured player on my phone’s daily feed. Here are some links with a focus on running things on NASes: