Published: Aug 20, 2024 by Isaac Johnson

Today we will check out a couple of interesting Open-Source projects. The first is PdfDing, a lightweight Python-based app for viewing a library of self-hosted PDFs. I discovered it by way of MariusHosting; it’s not on GitHub but rather on Codeberg.

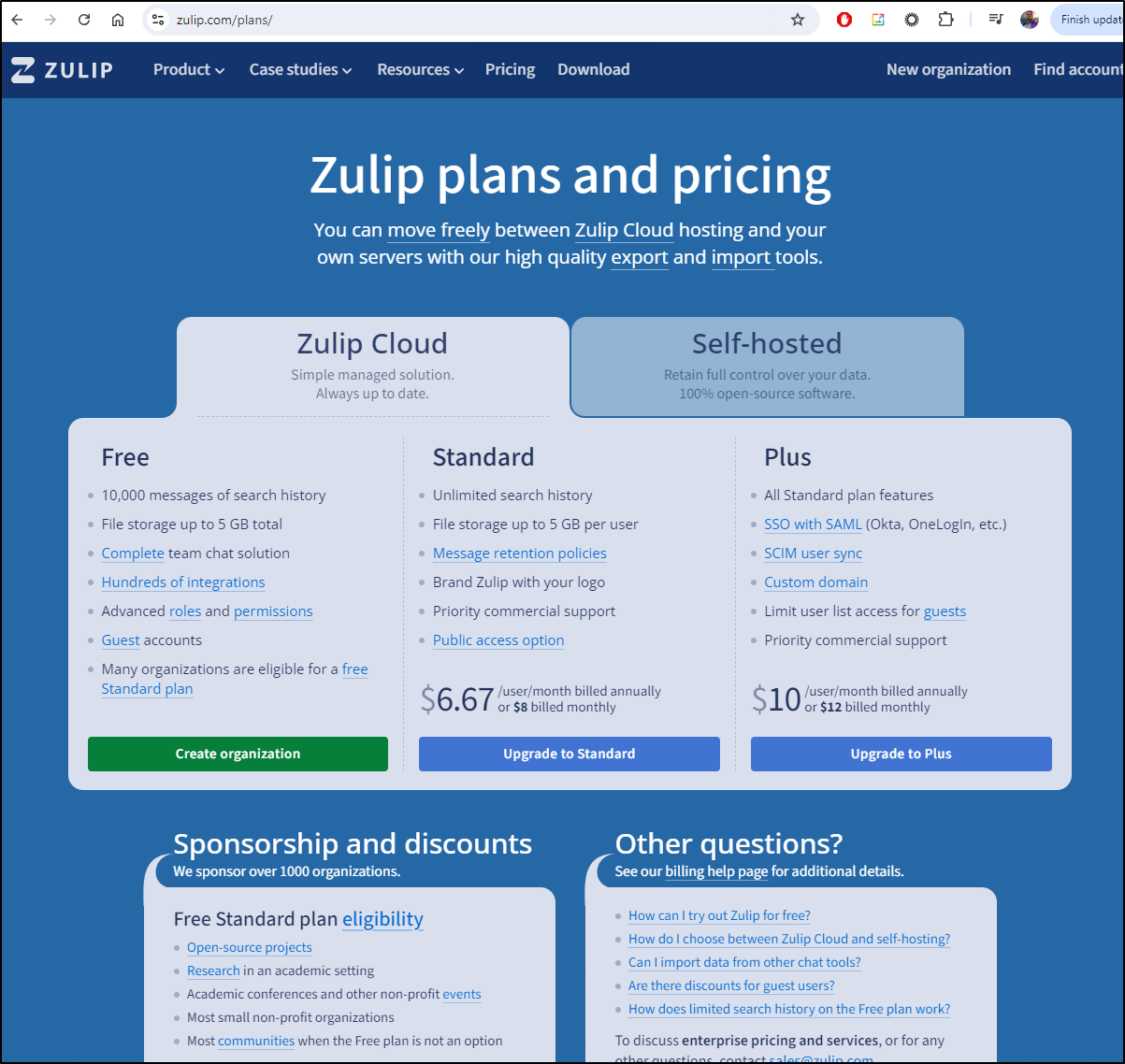

The second is Zulip, another Slack-like Open-Source chat app that people are starting to migrate to following Element’s decision to change some of the license terms of its Matrix server.

PdfDing

I had bookmarked this MariusHosting post about setting up PdfDing.

That post is largely focused on using Docker on a NAS.

At its simplest, we can use a single container image with an embedded SQLite instance:

```bash
$ docker run --name pdfding \
    -p 8000:8000 \
    -v sqlite_data:/home/nonroot/pdfding/db -v media:/home/nonroot/pdfding/media \
    -e HOST_NAME=127.0.0.1 -e SECRET_KEY=some_secret -e CSRF_COOKIE_SECURE=FALSE -e SESSION_COOKIE_SECURE=FALSE \
    -d \
    mrmn/pdfding:latest
```
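The `SECRET_KEY=some_secret` value above is just a placeholder. Assuming PdfDing, like most Django-style apps, only needs a long random string here, a minimal sketch using Python’s standard `secrets` module can generate one (the script name `gen_secret.py` in the usage line is hypothetical):

```python
import secrets
import string

# Letters, digits, '-' and '_' only: safe inside shell quotes, YAML strings
# and environment variables (no quotes, '$', '%' or backslashes).
ALPHABET = string.ascii_letters + string.digits + "-_"


def generate_secret_key(length: int = 50) -> str:
    """Return a random key drawn from a CSPRNG (the `secrets` module)."""
    if length < 32:
        raise ValueError("use at least 32 characters for a secret key")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


if __name__ == "__main__":
    print(generate_secret_key())
```

It could then be passed in with something like `-e SECRET_KEY="$(python3 gen_secret.py)"`. Restricting the alphabet costs a little entropy per character but avoids quoting surprises in shells and YAML.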

I can easily turn that `docker run` command into a Kubernetes manifest:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: sqlite-data-pvc
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: local-path
  resources:
    requests:
      storage: 2Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: media-pvc
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: local-path
  resources:
    requests:
      storage: 2Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pdfding
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pdfding
  template:
    metadata:
      labels:
        app: pdfding
    spec:
      containers:
        - name: pdfding
          image: mrmn/pdfding:latest
          ports:
            - containerPort: 8000
          env:
            - name: HOST_NAME
              value: "127.0.0.1"
            - name: SECRET_KEY
              value: "some_secret"
            - name: CSRF_COOKIE_SECURE
              value: "FALSE"
            - name: SESSION_COOKIE_SECURE
              value: "FALSE"
          volumeMounts:
            - mountPath: /home/nonroot/pdfding/db
              name: sqlite-data
            - mountPath: /home/nonroot/pdfding/media
              name: media
      volumes:
        - name: sqlite-data
          persistentVolumeClaim:
            claimName: sqlite-data-pvc
        - name: media
          persistentVolumeClaim:
            claimName: media-pvc
---
apiVersion: v1
kind: Service
metadata:
  name: pdfding-service
spec:
  selector:
    app: pdfding
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8000
  type: LoadBalancer
```

I can then apply it in a new namespace:

```bash
builder@DESKTOP-QADGF36:~/Workspaces/pdfding$ kubectl create ns pdfding
namespace/pdfding created
builder@DESKTOP-QADGF36:~/Workspaces/pdfding$ kubectl apply -f ./manifest.yaml -n pdfding
persistentvolumeclaim/sqlite-data-pvc created
persistentvolumeclaim/media-pvc created
deployment.apps/pdfding created
service/pdfding-service created
```
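The PdfDing manifest keeps `SECRET_KEY` in plain text inside the Deployment. One alternative is to store it in a standard Kubernetes `Secret`. This is a sketch, not what I deployed; the names `pdfding-secret` and `secret-key` are hypothetical. It generates the Secret as JSON, which `kubectl apply -f` accepts as readily as YAML:

```python
import base64
import json
import secrets


def build_secret_manifest(name: str, namespace: str, values: dict[str, str]) -> dict:
    """Build a v1 Opaque Secret; entries under `data` must be base64-encoded."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


if __name__ == "__main__":
    manifest = build_secret_manifest(
        "pdfding-secret",  # hypothetical Secret name
        "pdfding",
        {"secret-key": secrets.token_urlsafe(48)},  # hypothetical key name
    )
    print(json.dumps(manifest, indent=2))
```

After `kubectl apply -f` on the printed JSON, the container’s `SECRET_KEY` env entry would use `valueFrom.secretKeyRef` (with `name: pdfding-secret` and `key: secret-key`) instead of a literal `value`.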

I can port-forward to the service:

```bash
builder@DESKTOP-QADGF36:~/Workspaces/pdfding$ kubectl port-forward svc/pdfding-service -n pdfding 8888:80
Forwarding from 127.0.0.1:8888 -> 8000
Forwarding from [::1]:8888 -> 8000
```

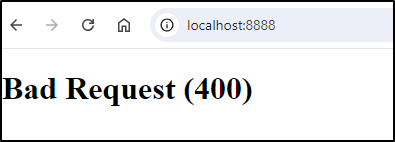

However, I got a 400 error.

It seems the app is strict about the hostname: browsing to `localhost` didn’t work, but `127.0.0.1` (the value of `HOST_NAME`) did.
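A 400 that depends only on the hostname is the classic symptom of a Django-style `ALLOWED_HOSTS` check, which rejects any request whose `Host` header isn’t on an allow-list. I’m assuming (not confirmed from the PdfDing source) that `HOST_NAME` feeds such a list. The simplified re-implementation below is my own illustration, not Django’s or PdfDing’s code:

```python
def split_domain_port(host: str) -> tuple[str, str]:
    """Split a Host header such as 'localhost:8888' or '[::1]:8888'."""
    host = host.lower()
    if host.startswith("["):  # bracketed IPv6 literal
        end = host.find("]")
        if end == -1:
            return "", ""
        return host[: end + 1], host[end + 2 :]
    domain, sep, port = host.partition(":")
    return (domain, port) if sep else (host, "")


def is_allowed_host(host_header: str, allowed_hosts: list[str]) -> bool:
    """Return True if the Host header matches an allow-list entry.

    Entries may be exact names, '*' (anything) or '.example.com'
    (that domain plus all of its subdomains). The port is ignored.
    """
    domain, _port = split_domain_port(host_header)
    domain = domain.rstrip(".")
    if not domain:
        return False
    for pattern in (p.lower() for p in allowed_hosts):
        if pattern == "*":
            return True
        if pattern.startswith("."):
            if domain == pattern[1:] or domain.endswith(pattern):
                return True
        elif domain == pattern:
            return True
    return False


if __name__ == "__main__":
    allowed = ["127.0.0.1"]  # what HOST_NAME was set to
    for host in ("127.0.0.1:8888", "localhost:8888", "pdfding.steeped.space"):
        print(host, "->", "allowed" if is_allowed_host(host, allowed) else "400")
```

Run as a script, only `127.0.0.1:8888` passes. Under this model, `0.0.0.0` is not a wildcard either, which would be consistent with that setting failing too.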

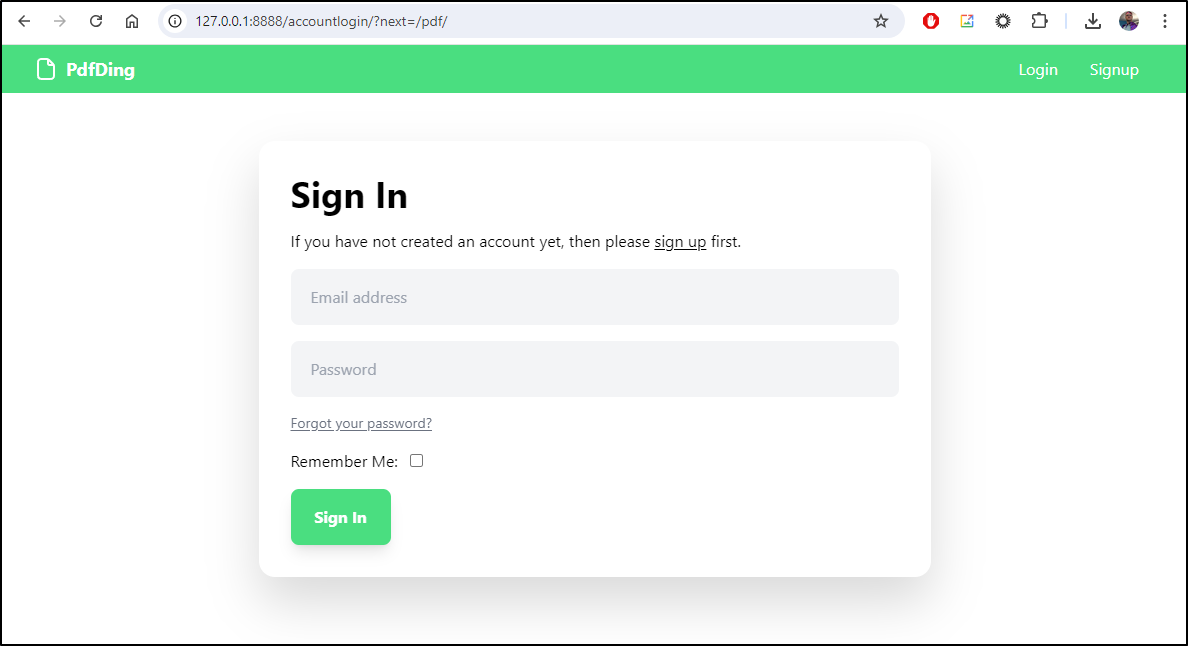

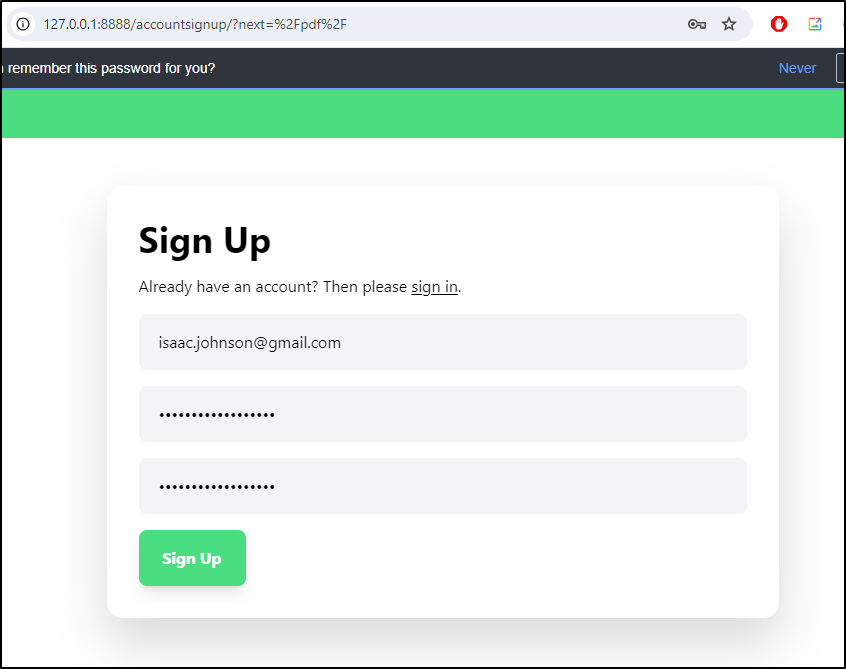

Since I have no accounts, I first need to sign up for one

I’m then greeted with a landing page

Ingress

I’ll first make an A record in Cloud DNS:

```bash
$ gcloud dns --project=myanthosproject2 record-sets create pdfding.steeped.space --zone="steepedspace" --type="A" --ttl="300" --rrdatas="75.73.224.240"
NAME                    TYPE  TTL  DATA
pdfding.steeped.space.  A     300  75.73.224.240
```

Then make the Ingress YAML (`pdfding.ingress.yaml`):

```yaml
apiVersion: v1
kind: List
metadata:
  resourceVersion: ""
items:
  - apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      annotations:
        cert-manager.io/cluster-issuer: gcpleprod2
        ingress.kubernetes.io/proxy-body-size: "0"
        ingress.kubernetes.io/ssl-redirect: "true"
        kubernetes.io/ingress.class: nginx
        kubernetes.io/tls-acme: "true"
        nginx.ingress.kubernetes.io/proxy-body-size: "0"
        nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
        nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
        nginx.ingress.kubernetes.io/ssl-redirect: "true"
        nginx.org/client-max-body-size: "0"
        nginx.org/proxy-connect-timeout: "3600"
        nginx.org/proxy-read-timeout: "3600"
        nginx.org/websocket-services: pdfding-service
      name: pdfdingingress
      namespace: pdfding
    spec:
      rules:
        - host: pdfding.steeped.space
          http:
            paths:
              - backend:
                  service:
                    name: pdfding-service
                    port:
                      number: 80
                path: /
                pathType: ImplementationSpecific
      tls:
        - hosts:
            - pdfding.steeped.space
          secretName: pdfding-tls
```

```bash
builder@DESKTOP-QADGF36:~/Workspaces/pdfding$ kubectl apply -f ./pdfding.ingress.yaml
ingress.networking.k8s.io/pdfdingingress created
```

I get the same 400 error.

I tried omitting `HOST_NAME` and setting it to `0.0.0.0`. Both failed, but setting `HOST_NAME` to the DNS name worked:

```yaml
env:
  - name: HOST_NAME
    value: "pdfding.steeped.space"
  - name: SECRET_KEY
    value: "mygreatsecret54321"
  - name: CSRF_COOKIE_SECURE
    value: "FALSE"
  - name: SESSION_COOKIE_SECURE
    value: "FALSE"
```

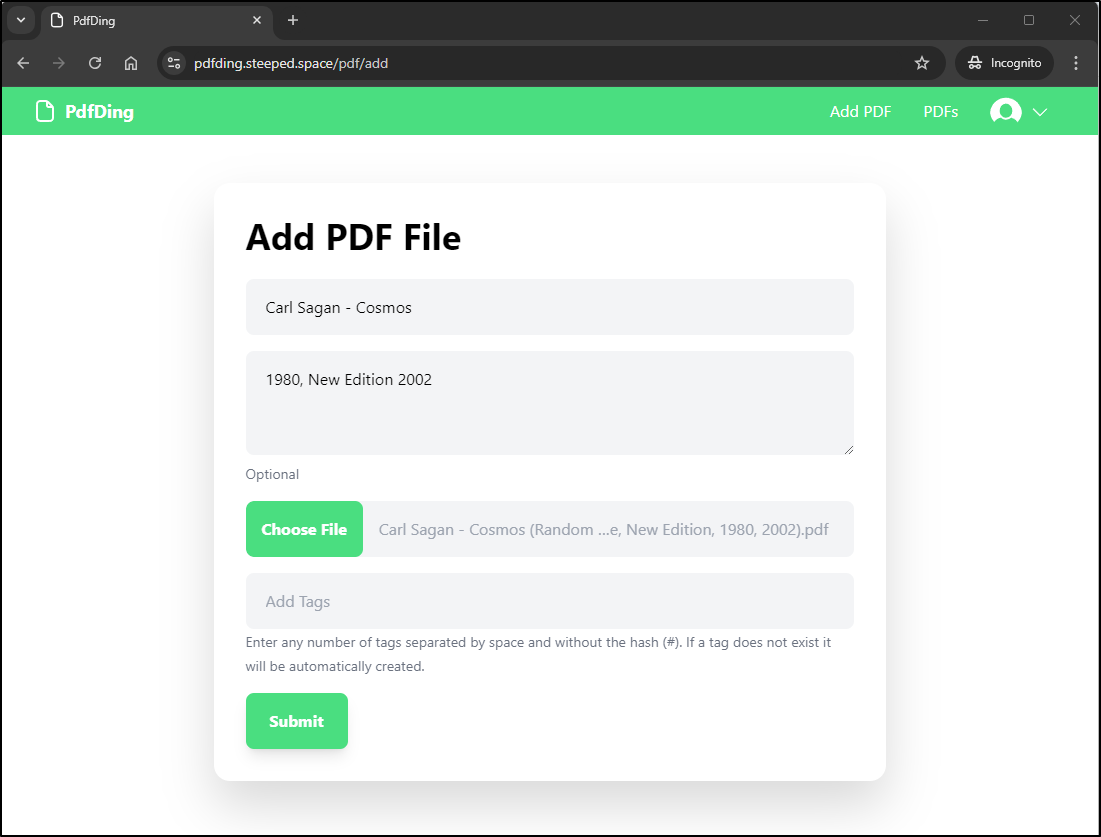

Next, I want to add a PDF

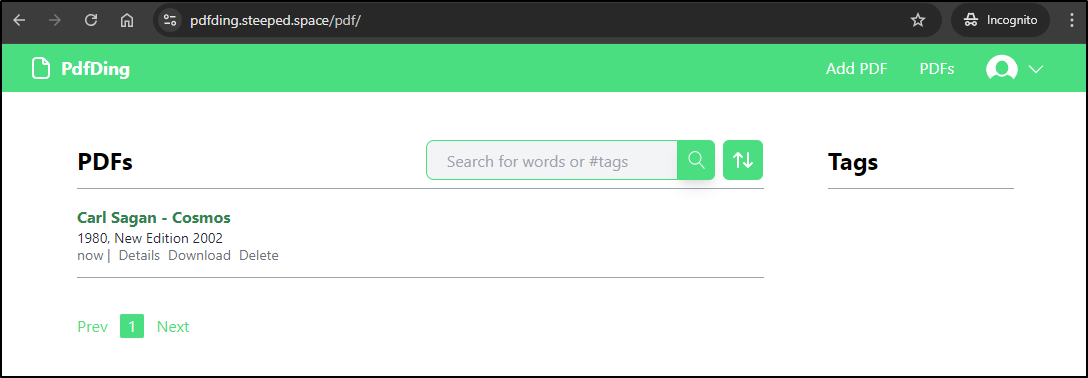

I can now see it on the list

I can then read it in the browser

I logged in with my phone and found it remembered my place in the book

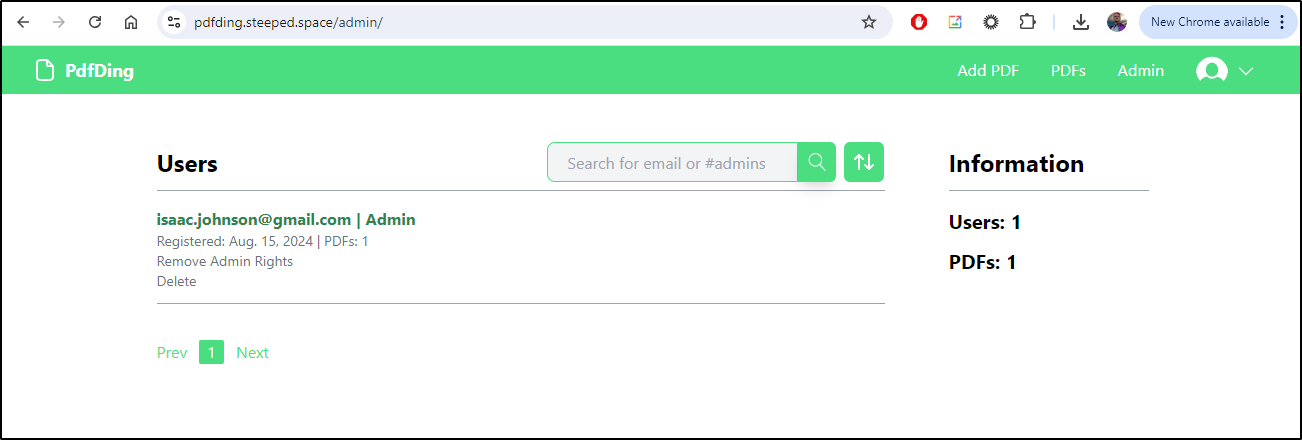

Make admin user

We have to hop into the pod to make ourselves the admin

```bash
builder@LuiGi:~/Workspaces/jekyll-blog$ kubectl get pods -n pdfding
NAME                      READY   STATUS    RESTARTS   AGE
pdfding-b99897cbb-tpkpx   1/1     Running   0          10h
builder@LuiGi:~/Workspaces/jekyll-blog$ kubectl exec -it pdfding-b99897cbb-tpkpx -n pdfding -- /bin/bash
nonroot@pdfding-b99897cbb-tpkpx:~$ pdfding/manage.py make_admin -e isaac.johnson@gmail.com
nonroot@pdfding-b99897cbb-tpkpx:~$
```

I can now see an admin menu where I can edit users

Users

The problem is that I don’t really want to allow the world to sign up, but, at least with the settings as they stand, there is no option other than implementing OIDC.

I’m going to try basic auth instead:

```bash
builder@DESKTOP-QADGF36:~/Workspaces/pdfding$ htpasswd -c auth builder
New password:
Re-type new password:
Adding password for user builder
builder@DESKTOP-QADGF36:~/Workspaces/pdfding$ kubectl create secret generic basic-auth -n pdfding --from-file=auth
secret/basic-auth created
```
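If Apache’s `htpasswd` tool isn’t installed, the `auth` file can be produced another way. This sketch is not what I ran. By default `htpasswd` writes an Apache MD5 (`$apr1$`) hash, whereas this writes a `user:{SSHA}...` line, the salted SHA-1 scheme from RFC 2307 that nginx’s `auth_basic_user_file` documentation lists as supported. I’m assuming the ingress controller’s nginx build honors it. Both schemes are weak by modern standards, so use a long, unique password:

```python
import base64
import hashlib
import hmac
import os
from typing import Optional


def ssha_entry(user: str, password: str, salt: Optional[bytes] = None) -> str:
    """Return one htpasswd line hashed with the RFC 2307 {SSHA} scheme."""
    if ":" in user:
        raise ValueError("htpasswd user names cannot contain ':'")
    if salt is None:
        salt = os.urandom(8)  # fresh random salt per entry
    digest = hashlib.sha1(password.encode("utf-8") + salt).digest()
    encoded = base64.b64encode(digest + salt).decode("ascii")
    return f"{user}:{{SSHA}}{encoded}"


def check_ssha(entry: str, user: str, password: str) -> bool:
    """Check a password against a 'user:{SSHA}...' line."""
    name, _, hashed = entry.strip().partition(":")
    if name != user or not hashed.startswith("{SSHA}"):
        return False
    raw = base64.b64decode(hashed[len("{SSHA}"):])
    digest, salt = raw[:20], raw[20:]  # a SHA-1 digest is 20 bytes
    candidate = hashlib.sha1(password.encode("utf-8") + salt).digest()
    return hmac.compare_digest(digest, candidate)
```

For example, `open("auth", "w").write(ssha_entry("builder", pw) + "\n")` writes a file that `kubectl create secret generic basic-auth --from-file=auth` can load in the same way.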

I’ll then reference that secret from our Ingress:

```bash
builder@DESKTOP-QADGF36:~/Workspaces/pdfding$ kubectl get ingress -n pdfding pdfdingingress -o yaml > pdfding.ingress.yaml.set
builder@DESKTOP-QADGF36:~/Workspaces/pdfding$ kubectl get ingress -n pdfding pdfdingingress -o yaml > pdfding.ingress.yaml.new
builder@DESKTOP-QADGF36:~/Workspaces/pdfding$ vi pdfding.ingress.yaml.new
builder@DESKTOP-QADGF36:~/Workspaces/pdfding$ diff pdfding.ingress.yaml.new pdfding.ingress.yaml.set
8,13d7
<     # type of authentication
<     nginx.ingress.kubernetes.io/auth-type: basic
<     # name of the secret that contains the user/password definitions
<     nginx.ingress.kubernetes.io/auth-secret: basic-auth
<     # message to display with an appropriate context why the authentication is required
<     nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required'
builder@DESKTOP-QADGF36:~/Workspaces/pdfding$ kubectl apply -f ./pdfding.ingress.yaml.new -n pdfding
ingress.networking.k8s.io/pdfdingingress configured
```

The older annotation format did prompt for a password:

```yaml
nginx.org/basic-auth-secret: basic-auth
```

but then dumped me to a Forbidden page after authenticating.

I guess I’ll just have to wait on a release. I didn’t detail it here, but I tried updating the image with a disable-signup option, and that didn’t work either. I’m still a bit stumped: when I look at the code in https://codeberg.org/mrmn/PdfDing/src/branch/master, I can’t find any of the UI strings, so I have no idea where it actually loads those pages from.

In the meantime, we can keep an eye on the changelog for updates.

Zulip

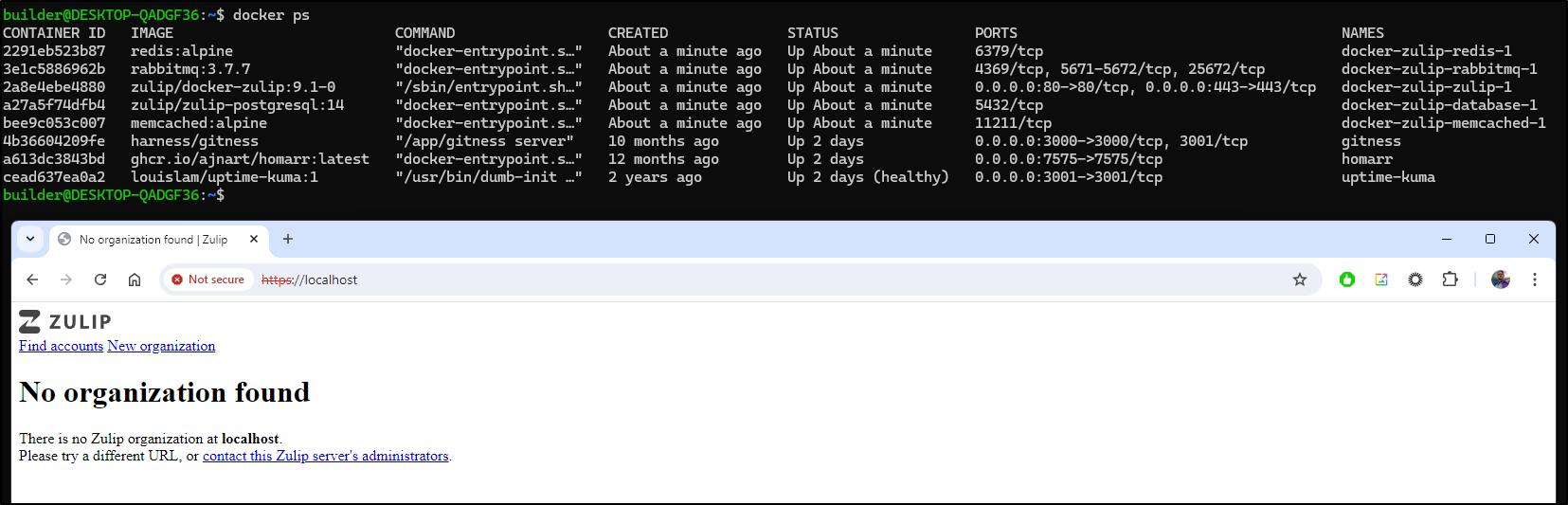

I recently saw an article on TheNewStack about Zulip, an Open-Source chat app that, according to TheNewStack, Rust developers prefer over Slack. That led me to zulip.org / zulip.com, which pointed me to the Docker install instructions in their GitHub repo.

I’m going to try the quickest route first to see if I like it. That means just cloning the repo and running `docker compose up`:

```bash
builder@DESKTOP-QADGF36:~/Workspaces$ git clone https://github.com/zulip/docker-zulip.git
Cloning into 'docker-zulip'...
remote: Enumerating objects: 4055, done.
remote: Counting objects: 100% (499/499), done.
remote: Compressing objects: 100% (190/190), done.
remote: Total 4055 (delta 317), reused 417 (delta 281), pack-reused 3556 (from 1)
Receiving objects: 100% (4055/4055), 1.08 MiB | 5.80 MiB/s, done.
Resolving deltas: 100% (2355/2355), done.
builder@DESKTOP-QADGF36:~/Workspaces$ cd docker-zulip/
builder@DESKTOP-QADGF36:~/Workspaces/docker-zulip$ ls
CODE_OF_CONDUCT.md  LICENSE    UPGRADING.md         custom_zulip_files  entrypoint.sh  upgrade-postgresql
Dockerfile          README.md  certbot-deploy-hook  docker-compose.yml  kubernetes
builder@DESKTOP-QADGF36:~/Workspaces/docker-zulip$ docker compose up
[+] Running 22/37
 ⠏ database Pulling                17.0s
   ⠋ 661ff4d9561e Waiting           0.1s
   ⠋ 6d47f5cef872 Waiting           0.1s
   ⠋ 0dba180d34e2 Waiting           0.1s
   ⠋ 730b24dd5556 Waiting           0.1s
   ⠋ 52c5bf0a9aef Waiting           0.1s
   ⠋ 3318a3e8afae Pulling fs layer  0.1s
   ⠋ 420c19ad94e3 Waiting
... snip. ...
```

The first time it crashed and dumped output, but the second time I ran `docker compose up` it worked.

I guess it’s working, albeit empty

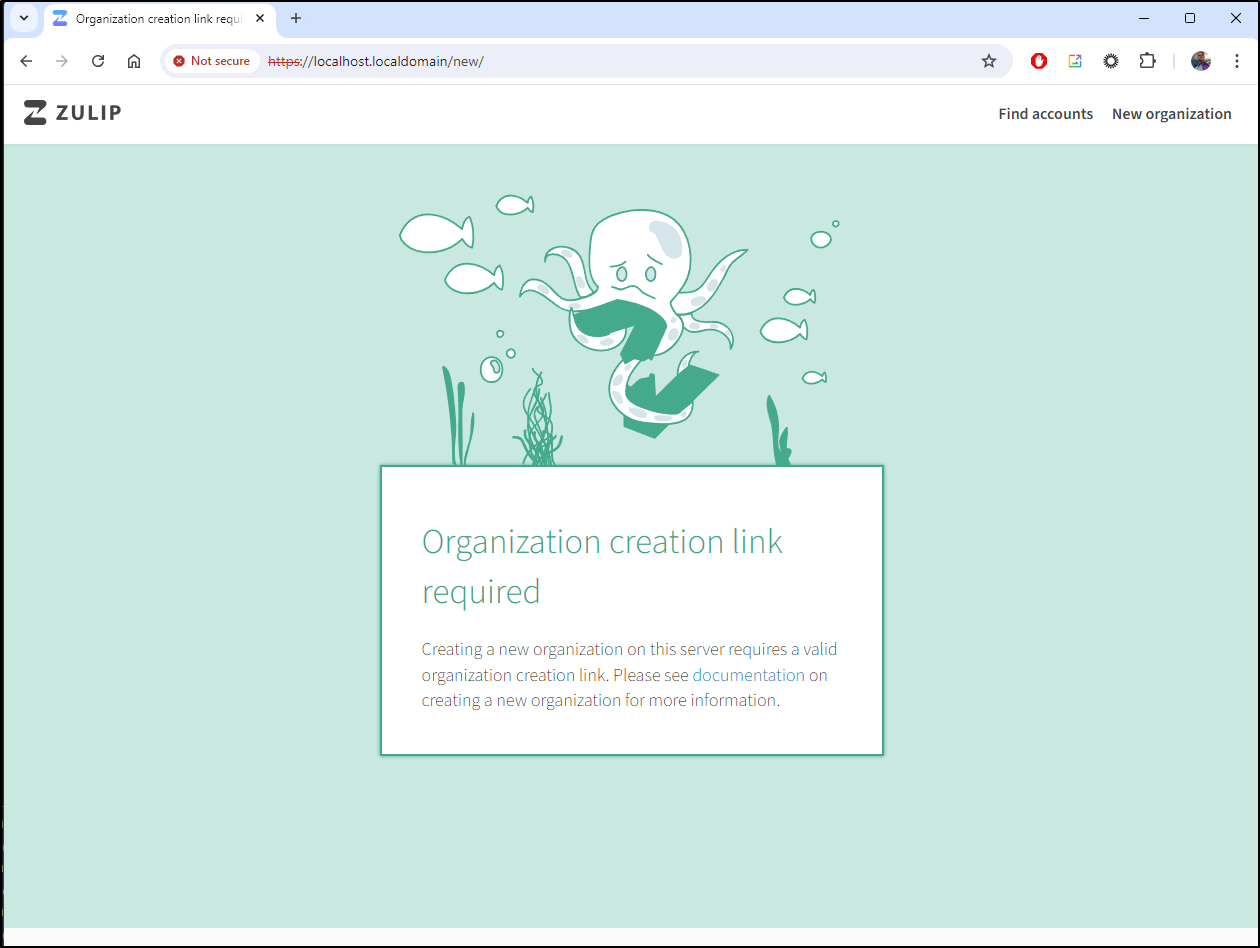

I tried to create an organization but got an error page (a bit prettier this time though)

The docs say I need to run `manage.py generate_realm_creation_link` on the server, so I’ll try that with a `docker exec` into the ‘server’ container.

It wasn’t found from the root directory:

```bash
builder@DESKTOP-QADGF36:~$ docker exec -it docker-zulip-zulip-1 /bin/bash
root@2a8e4ebe4880:/# manage.py generate_realm_creation_link
bash: manage.py: command not found
```

However, I found one in a deployment directory:

```bash
root@2a8e4ebe4880:/# find . -type f -name manage.py -print
./home/zulip/deployments/2024-08-03-00-42-06/manage.py
```

It refuses to run as root:

```bash
root@2a8e4ebe4880:/home/zulip/deployments/2024-08-03-00-42-06# manage.py generate_realm_creation_link
bash: manage.py: command not found
root@2a8e4ebe4880:/home/zulip/deployments/2024-08-03-00-42-06# ./manage.py generate_realm_creation_link
manage.py should not be run as root. Use `su zulip` to switch to the 'zulip'
user before rerunning this, or use
  su zulip -c '/home/zulip/deployments/2024-08-03-00-42-06/manage.py ...'
to switch users and run this as a single command.
```

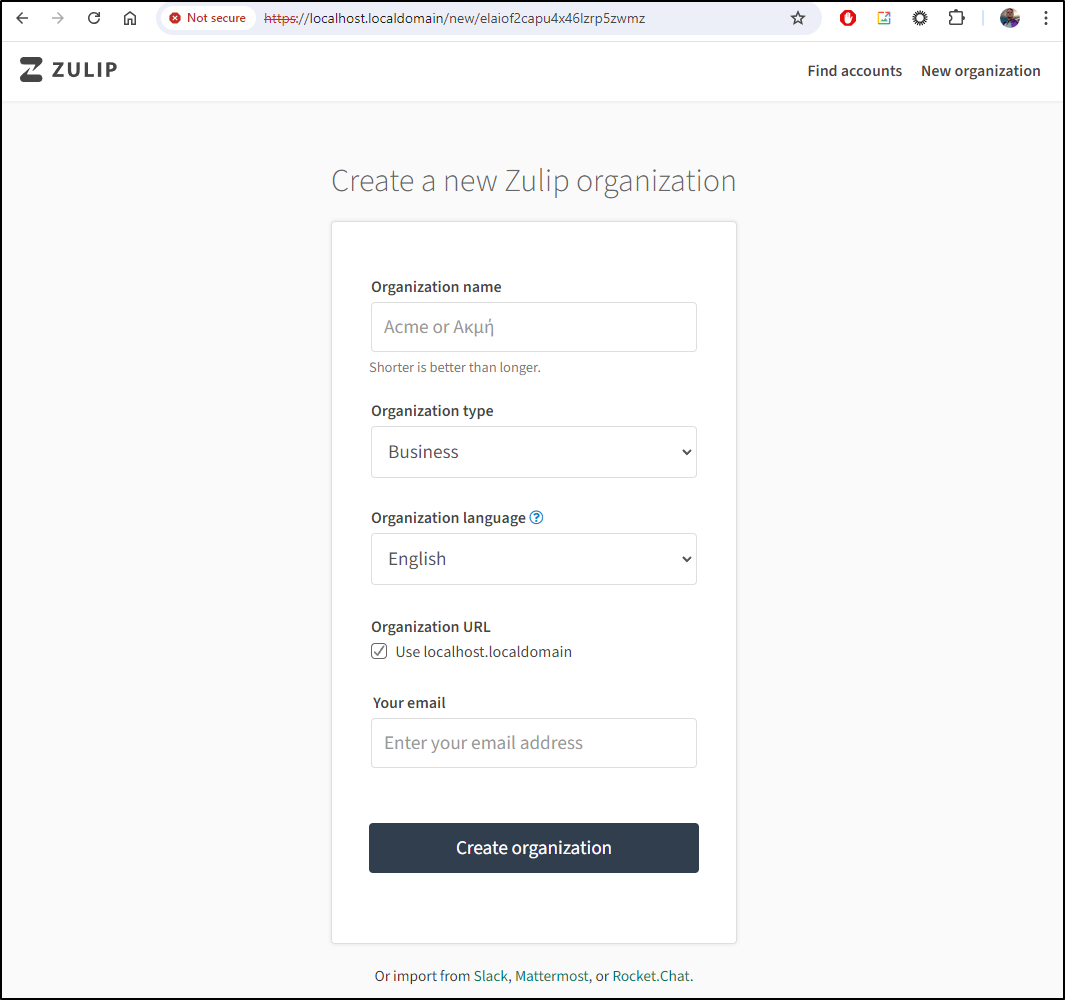

Running it as the `zulip` user works:

```bash
root@2a8e4ebe4880:/home/zulip/deployments/2024-08-03-00-42-06# su zulip -c '/home/zulip/deployments/2024-08-03-00-42-06/manage.py generate_realm_creation_link'
Please visit the following secure single-use link to register your
new Zulip organization:

    https://localhost.localdomain/new/elaiof2capu4x46lzrp5zwmz
```

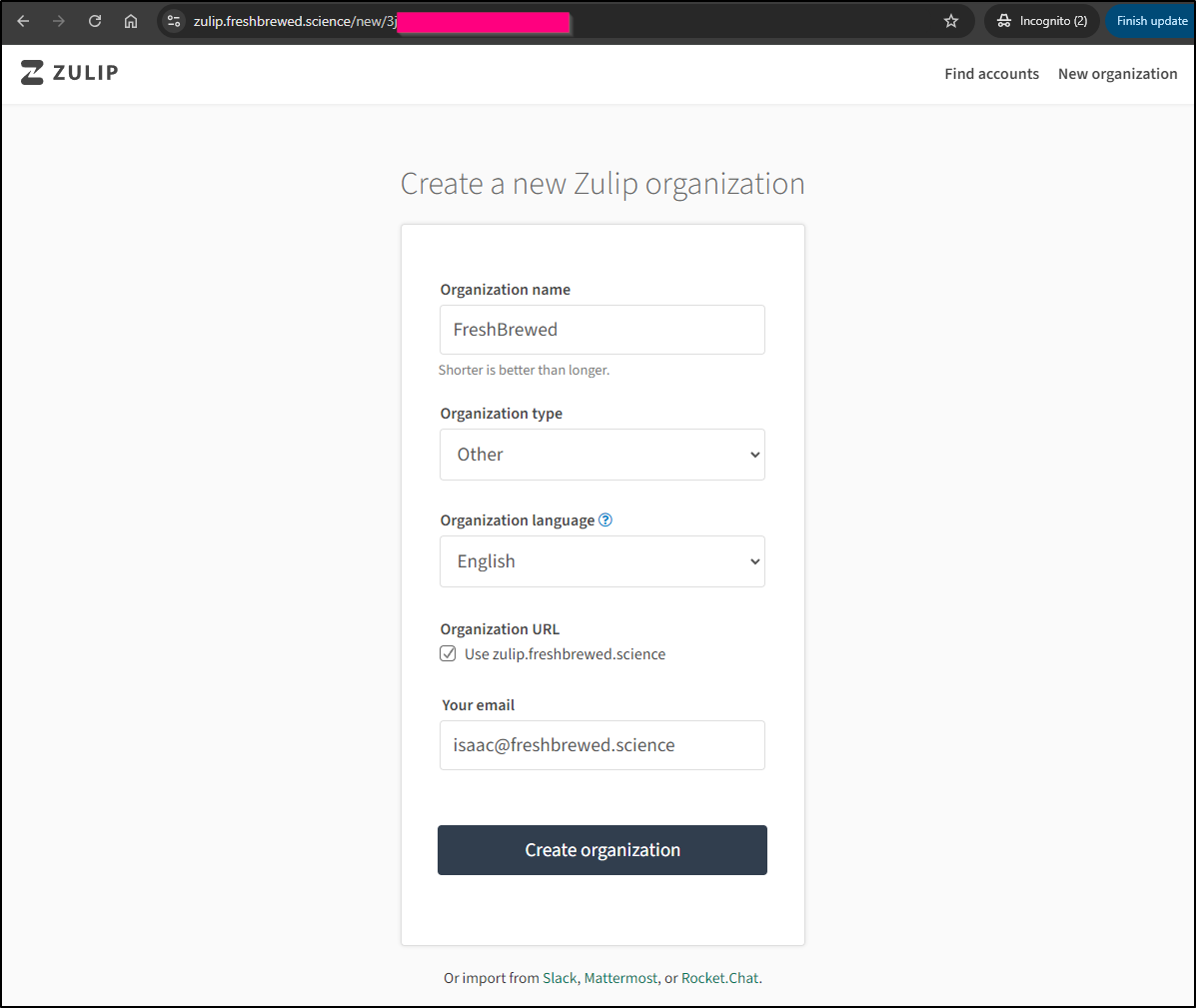

I can then use that link to create the org. This is a nice bit of safety for something that could be publicly exposed.

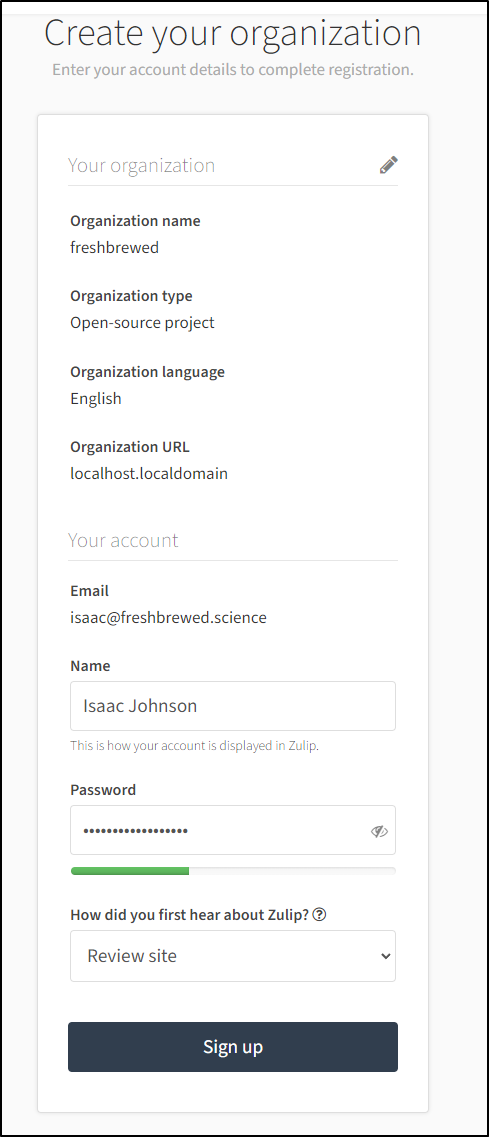

The next page lets me create the admin user

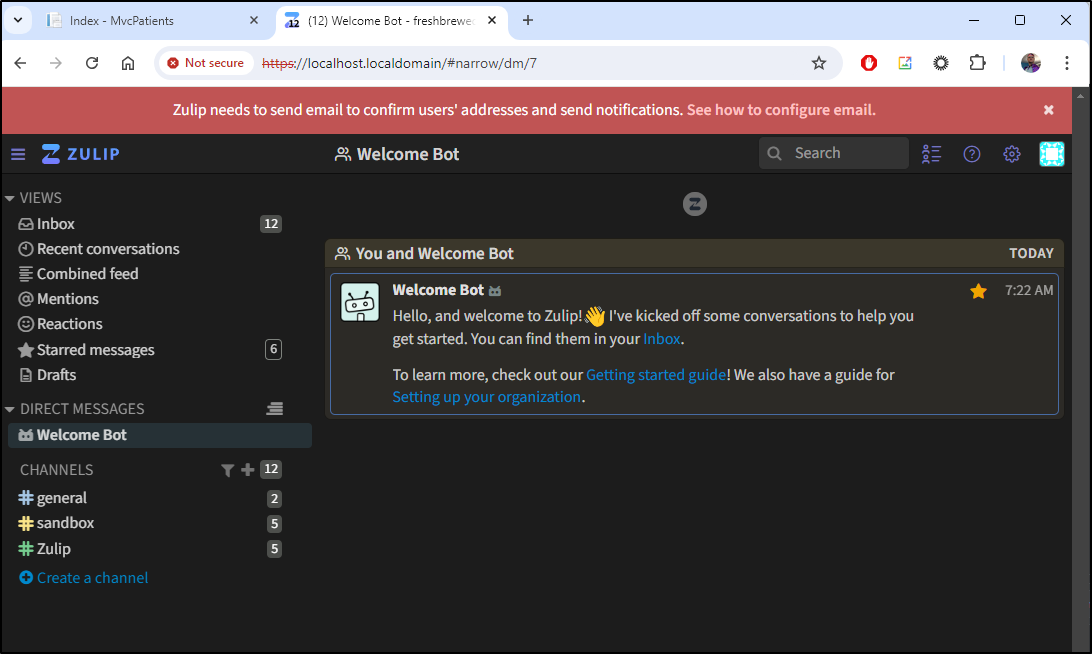

I see a warning about email server settings, but the rest is coming through just fine

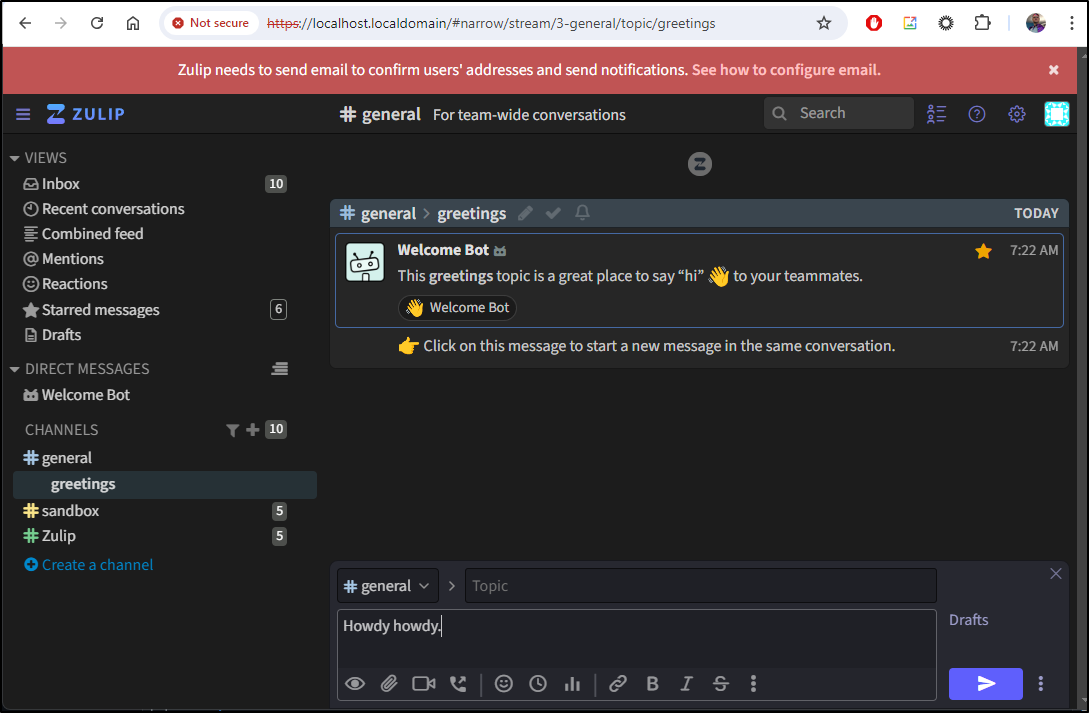

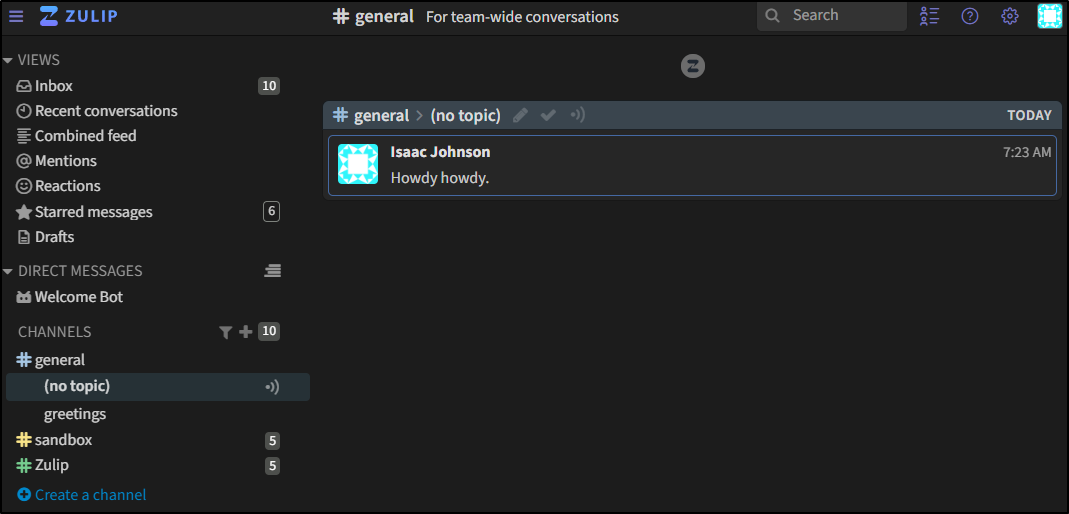

I can create a new message

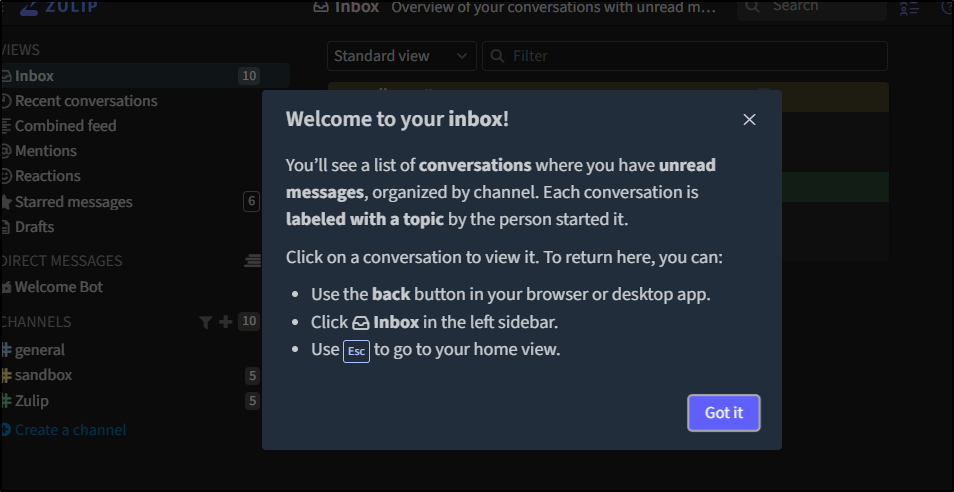

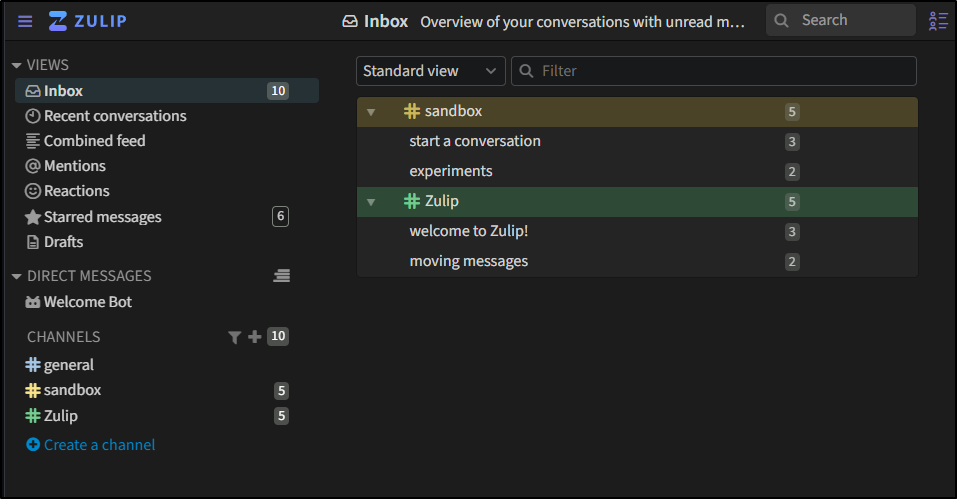

Clicking on Inbox lets me know what it’s about

I can then see messages waiting for me

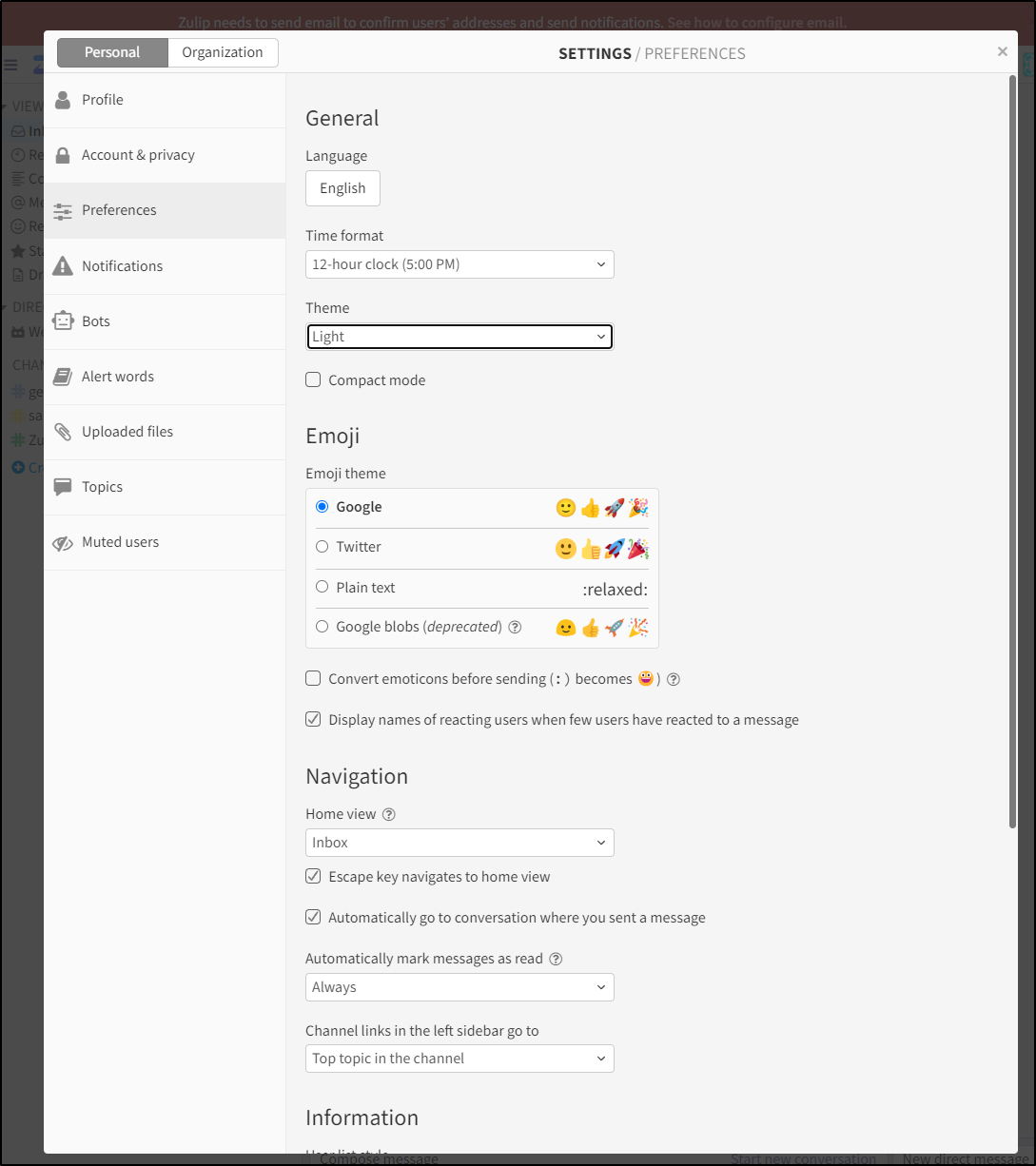

There are so many settings I feel I could take a long time touring each one. The first thing I wanted to do, however, was switch to a light theme.

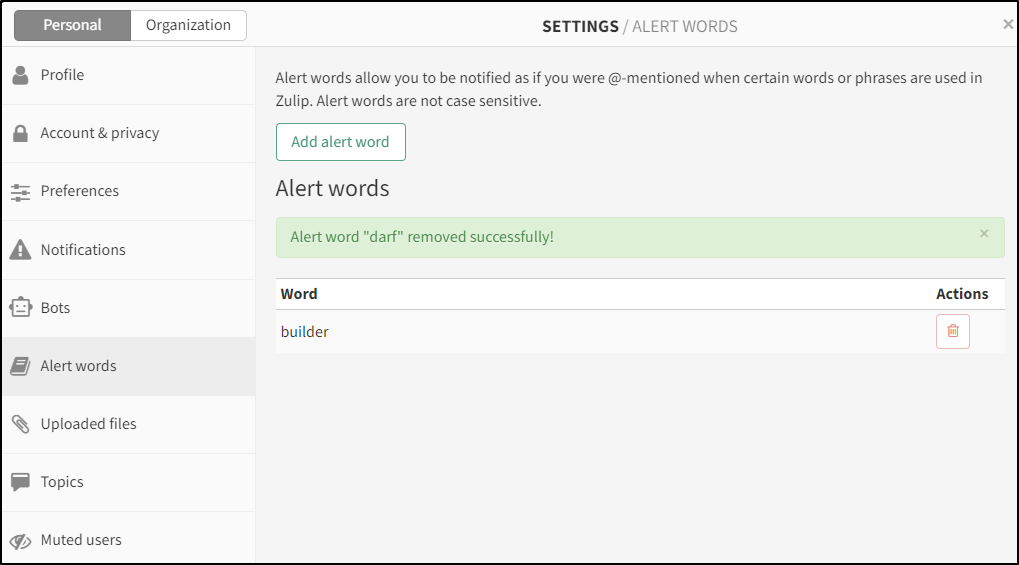

One feature that looks promising is “alert words”

The idea here is if someone said a word, say “builder”, it would act like they targeted the message to me (akin to an @user mention).
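To make the idea concrete, here is a rough sketch of alert-word matching: case-insensitive, whole-word hits against a user’s configured words. It is my own simplification for illustration, not Zulip’s implementation, whose exact rules (punctuation, multi-word phrases) may differ:

```python
import re


def find_alert_words(message: str, alert_words: list[str]) -> list[str]:
    """Return the configured alert words that appear as whole words in `message`."""
    hits = []
    for word in alert_words:
        # (?<!\w) and (?!\w) stop "builder" from matching inside "rebuilders",
        # and unlike \b they still work for words ending in symbols ("c++").
        pattern = r"(?<!\w)" + re.escape(word) + r"(?!\w)"
        if re.search(pattern, message, flags=re.IGNORECASE):
            hits.append(word)
    return hits


if __name__ == "__main__":
    words = ["builder", "deploy"]
    print(find_alert_words("Hey Builder, can you check the rebuilders job?", words))
```

Here only `builder` is reported: "Builder," matches despite the capital and the trailing comma, while "rebuilders" does not.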

So far this is looking pretty slick, at least enough for me to move beyond a self-contained WSL instance.

Helm

First, I need to create a Route53 entry for it so we can use the AWS resolver:

```bash
builder@DESKTOP-QADGF36:~/Workspaces/docker-zulip$ cat r53-zulip.json
{
  "Comment": "CREATE zulip fb.s A record ",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "zulip.freshbrewed.science",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "75.73.224.240"
          }
        ]
      }
    }
  ]
}
builder@DESKTOP-QADGF36:~/Workspaces/docker-zulip$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-zulip.json
{
    "ChangeInfo": {
        "Id": "/change/C04336661FHWSBVXCRVH7",
        "Status": "PENDING",
        "SubmittedAt": "2024-08-18T21:31:16.868Z",
        "Comment": "CREATE zulip fb.s A record "
    }
}
```
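Hand-writing that JSON gets tedious when adding records often. The sketch below builds the same change-batch shape as `r53-zulip.json`. The helper and the script name `r53_batch.py` are my own. `UPSERT` is also a valid Route 53 action if you want re-runs to update an existing record instead of failing:

```python
import ipaddress
import json


def a_record_change_batch(name: str, ip: str, ttl: int = 300,
                          action: str = "CREATE") -> dict:
    """Build a Route 53 change batch for a single A record."""
    if action not in {"CREATE", "UPSERT", "DELETE"}:
        raise ValueError(f"unsupported action: {action}")
    ipaddress.IPv4Address(ip)  # raises ValueError if `ip` is not IPv4
    return {
        "Comment": f"{action} {name} A record",
        "Changes": [
            {
                "Action": action,
                "ResourceRecordSet": {
                    "Name": name,
                    "Type": "A",
                    "TTL": ttl,
                    "ResourceRecords": [{"Value": ip}],
                },
            }
        ],
    }


if __name__ == "__main__":
    batch = a_record_change_batch("zulip.freshbrewed.science", "75.73.224.240")
    print(json.dumps(batch, indent=2))
```

Usage would be `python3 r53_batch.py > r53-zulip.json`, followed by the same `aws route53 change-resource-record-sets` command.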

Next, I’ll want to set up the values for Helm. These are mostly for Ingress and email:

```yaml
ingress:
  # -- Enable this to use an Ingress to reach the Zulip service.
  enabled: true
  # -- Can be used to add custom Ingress annotations.
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    # kubernetes.io/ingress.class: nginx
    # kubernetes.io/tls-acme: "true"
  hosts:
    # -- Host for the Ingress. Should be the same as
    # `zulip.environment.SETTING_EXTERNAL_HOST`.
    - host: zulip.freshbrewed.science
      # -- Serves Zulip root of the chosen host domain.
      paths:
        - path: /
  # -- Set a specific secret to read the TLS certificate from. If you use
  # cert-manager, it will save the TLS secret here. If you do not, you need to
  # manually create a secret with your TLS certificate.
  tls:
    - secretName: zulip-tls
      hosts:
        - zulip.freshbrewed.science

zulip:
  # Environment variables based on https://github.com/zulip/docker-zulip/blob/master/docker-compose.yml#L63
  environment:
    # -- Disables HTTPS if set to "true".
    # HTTPS and certificates are managed by the Kubernetes cluster, so
    # by default it's disabled inside the container
    DISABLE_HTTPS: true
    # -- Set SSL certificate generation to self-signed because Kubernetes
    # manages the client-facing SSL certs.
    SSL_CERTIFICATE_GENERATION: self-signed
    # -- Domain Zulip is hosted on.
    SETTING_EXTERNAL_HOST: zulip.freshbrewed.science
    # -- SMTP email password.
    SECRETS_email_password: "SG.xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"
    SETTING_ZULIP_ADMINISTRATOR: "isaac@freshbrewed.science"
    SETTING_EMAIL_HOST: "smtp.sendgrid.net" # e.g. smtp.example.com
    SETTING_EMAIL_HOST_USER: "apikey" # isaac@freshbrewed.science"
    SETTING_EMAIL_PORT: "587"
    SETTING_EMAIL_USE_SSL: "False"
    SETTING_EMAIL_USE_TLS: "True"
    ZULIP_AUTH_BACKENDS: "EmailAuthBackend"
  # -- If `persistence.existingClaim` is not set, a PVC is generated with these
  # specifications.
  persistence:
    enabled: true
    accessMode: ReadWriteOnce
    size: 10Gi
    # existingClaim: "" # Use an already existing PVC
```

Next, I’ll head to the chart folder and update the dependencies. I had to move to the oldest supported versions that could still be found. For instance, I checked the available Redis chart versions, then updated the Chart.yaml:

```yaml
apiVersion: v2
description: Zulip is an open source threaded team chat that helps teams stay productive and focused.
name: zulip
type: application
icon: https://raw.githubusercontent.com/zulip/zulip/main/static/images/logo/zulip-icon-square.svg
# This is the chart version. This version number should be incremented each time you make changes
# to the chart and its templates, including the app version.
# Versions are expected to follow Semantic Versioning (https://semver.org/)
version: 0.9.1
# This is the version number of the application being deployed. This version number should be
# incremented each time you make changes to the application. Versions are not expected to
# follow Semantic Versioning. They should reflect the version the application is using.
# It is recommended to use it with quotes.
appVersion: "9.1-0"
dependencies:
  - name: memcached
    repository: https://charts.bitnami.com/bitnami
    tags:
      - memcached
    version: 6.1.11
  - name: rabbitmq
    repository: https://charts.bitnami.com/bitnami
    tags:
      - rabbitmq
    version: 10.1.19
  - name: redis
    repository: https://charts.bitnami.com/bitnami
    tags:
      - redis
    version: 16.11.3
  - name: postgresql
    repository: https://charts.bitnami.com/bitnami
    tags:
      - postgresql
    # Note: values.yaml overwrites postgresql image to zulip/zulip-postgresql:14
    version: 11.9.13
sources:
  - https://github.com/zulip/zulip
  - https://github.com/zulip/docker-zulip
  - https://hub.docker.com/r/zulip/docker-zulip
```

I also updated the Chart.lock file before running `helm dependency update` and `helm dependency build`:

```bash
builder@DESKTOP-QADGF36:~/Workspaces/docker-zulip/kubernetes/chart$ helm dependency update ./zulip
Hang tight while we grab the latest from your chart repositories...
...Successfully got an update from the "confluentinc" chart repository
... snip ...
...Unable to get an update from the "freshbrewed" chart repository (https://harbor.freshbrewed.science/chartrepo/library):
        failed to fetch https://harbor.freshbrewed.science/chartrepo/library/index.yaml : 404 Not Found
...Unable to get an update from the "myharbor" chart repository (https://harbor.freshbrewed.science/chartrepo/library):
        failed to fetch https://harbor.freshbrewed.science/chartrepo/library/index.yaml : 404 Not Found
... snip ...
...Unable to get an update from the "epsagon" chart repository (https://helm.epsagon.com):
        Get "https://helm.epsagon.com/index.yaml": dial tcp: lookup helm.epsagon.com on 10.255.255.254:53: server misbehaving
... snip ...
...Successfully got an update from the "bitnami" chart repository
...Successfully got an update from the "prometheus-community" chart repository
Update Complete. ⎈Happy Helming!⎈
Saving 4 charts
Downloading memcached from repo https://charts.bitnami.com/bitnami
Downloading rabbitmq from repo https://charts.bitnami.com/bitnami
Downloading redis from repo https://charts.bitnami.com/bitnami
Downloading postgresql from repo https://charts.bitnami.com/bitnami
Deleting outdated charts
builder@DESKTOP-QADGF36:~/Workspaces/docker-zulip/kubernetes/chart$ helm dependency build ./zulip
Hang tight while we grab the latest from your chart repositories...
... snip: same repository updates, including the same harbor 404 and epsagon lookup failures ...
Update Complete. ⎈Happy Helming!⎈
Saving 4 charts
Downloading memcached from repo https://charts.bitnami.com/bitnami
Downloading rabbitmq from repo https://charts.bitnami.com/bitnami
Downloading redis from repo https://charts.bitnami.com/bitnami
Downloading postgresql from repo https://charts.bitnami.com/bitnami
Deleting outdated charts
```

I also noticed a typo in the chart helpers that I needed to fix:

```diff
builder@DESKTOP-QADGF36:~/Workspaces/docker-zulip$ git diff kubernetes/chart/zulip/templates/_helpers.tpl
diff --git a/kubernetes/chart/zulip/templates/_helpers.tpl b/kubernetes/chart/zulip/templates/_helpers.tpl
index 7a5aa20..88b69be 100644
--- a/kubernetes/chart/zulip/templates/_helpers.tpl
+++ b/kubernetes/chart/zulip/templates/_helpers.tpl
@@ -74,7 +74,7 @@ include all env variables for Zulip pods
 - name: SETTING_MEMCACHED_LOCATION
   value: "{{ template "common.names.fullname" .Subcharts.memcached }}:11211"
 - name: SETTING_RABBITMQ_HOST
-  value: "{{ template "rabbitmq.fullname" .Subcharts.rabbitmq }}"
+  value: "{{ template "common.names.fullname" .Subcharts.rabbitmq }}"
 - name: SETTING_REDIS_HOST
   value: "{{ template "common.names.fullname" .Subcharts.redis }}-headless"
 - name: SECRETS_rabbitmq_password
```

I can then install it:

```bash
builder@DESKTOP-QADGF36:~/Workspaces/docker-zulip$ helm install zulip -n zulip --create-namespace -f ./values.yaml ./kubernetes/chart/zulip/
NAME: zulip
LAST DEPLOYED: Sun Aug 18 16:57:43 2024
NAMESPACE: zulip
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
1. To create a realm so you can sign in:

   export POD_NAME=$(kubectl get pods --namespace zulip -l "app.kubernetes.io/name=zulip" -o jsonpath="{.items[0].metadata.name}")
   kubectl -n zulip exec -it "$POD_NAME" -c zulip -- sudo -u zulip /home/zulip/deployments/current/manage.py generate_realm_creation_link

2. Zulip will be available on:

   https://
```

So far it looks OK:

```bash
builder@DESKTOP-QADGF36:~/Workspaces/docker-zulip$ kubectl get pods -n zulip
NAME                               READY   STATUS    RESTARTS   AGE
zulip-memcached-74f9bfc676-4v6rv   1/1     Running   0          72s
zulip-redis-master-0               1/1     Running   0          72s
zulip-postgresql-0                 1/1     Running   0          72s
zulip-rabbitmq-0                   1/1     Running   0          72s
zulip-0                            0/1     Running   0          72s
```

It soon crashed, though, so I looked at the logs:

```
builder@DESKTOP-QADGF36:~/Workspaces/docker-zulip$ kubectl logs zulip-0 -n zulip
=== Begin Initial Configuration Phase ===
Preparing and linking the uploads folder ...
Prepared and linked the uploads directory.
Executing puppet configuration ...
Disabling https in nginx.
Notice: Compiled catalog for zulip-0.zulip.zulip.svc.cluster.local in environment production in 4.04 seconds
Notice: /Stage[main]/Zulip::Profile::Base/File[/etc/zulip/settings.py]/ensure: created
Notice: /Stage[main]/Zulip::Profile::Base/File[/etc/zulip/zulip-secrets.conf]/ensure: created
Notice: /Stage[main]/Zulip::App_frontend_base/File[/etc/nginx/zulip-include/s3-cache]/content: content changed '{md5}de61e21371d050ad1d89cd720f820b29' to '{md5}172d762a10c17101902d1d98c24e11ae'
Notice: /Stage[main]/Zulip::Profile::App_frontend/File[/etc/nginx/sites-available/zulip-enterprise]/content: content changed '{md5}1defc38fb8209121cd3a565185de3c80' to '{md5}636302ffdcd12fe6e57a590c932bbdaa'
Notice: /Stage[main]/Zulip::Nginx/Service[nginx]: Triggered 'refresh' from 2 events
Notice: Applied catalog in 0.48 seconds
Executing nginx configuration ...
Nginx configuration succeeded.
Certificates configuration succeeded.
Setting database configuration ...
Setting key "REMOTE_POSTGRES_HOST", type "string" in file "/etc/zulip/settings.py".
Setting key "REMOTE_POSTGRES_PORT", type "string" in file "/etc/zulip/settings.py".
Setting key "REMOTE_POSTGRES_SSLMODE", type "string" in file "/etc/zulip/settings.py".
Database configuration succeeded.
Setting Zulip secrets ...
Generating Zulip secrets ...
generate_secrets: No new secrets to generate.
Secrets generation succeeded.
Empty secret for key "secret_key".
Zulip secrets configuration succeeded.
Activating authentication backends ...
Setting key "AUTHENTICATION_BACKENDS", type "array" in file "/etc/zulip/settings.py".
Adding authentication backend "EmailAuthBackend".
Authentication backend activation succeeded.
Executing Zulip configuration ...
Setting key "EMAIL_HOST", type "string" in file "/etc/zulip/settings.py".
Setting key "EMAIL_HOST_USER", type "string" in file "/etc/zulip/settings.py".
Setting key "EMAIL_PORT", type "integer" in file "/etc/zulip/settings.py".
Setting key "EMAIL_USE_SSL", type "bool" in file "/etc/zulip/settings.py".
Setting key "EMAIL_USE_TLS", type "bool" in file "/etc/zulip/settings.py".
Setting key "EXTERNAL_HOST", type "string" in file "/etc/zulip/settings.py".
Setting key "MEMCACHED_LOCATION", type "string" in file "/etc/zulip/settings.py".
Setting key "RABBITMQ_HOST", type "string" in file "/etc/zulip/settings.py".
Setting key "RABBITMQ_USER", type "string" in file "/etc/zulip/settings.py".
Setting key "RATE_LIMITING", type "bool" in file "/etc/zulip/settings.py".
Setting key "REDIS_HOST", type "string" in file "/etc/zulip/settings.py".
Setting key "REDIS_PORT", type "integer" in file "/etc/zulip/settings.py".
Setting key "ZULIP_ADMINISTRATOR", type "string" in file "/etc/zulip/settings.py".
Traceback (most recent call last):
  File "/home/zulip/deployments/current/manage.py", line 150, in <module>
    execute_from_command_line(sys.argv)
  File "/home/zulip/deployments/current/manage.py", line 115, in execute_from_command_line
    utility.execute()
  File "/srv/zulip-venv-cache/58c4cec1a70fbcdbd716f3aa98fe57c891b4ee84/zulip-py3-venv/lib/python3.10/site-packages/django/core/management/__init__.py", line 436, in execute
    self.fetch_command(subcommand).run_from_argv(self.argv)
  File "/srv/zulip-venv-cache/58c4cec1a70fbcdbd716f3aa98fe57c891b4ee84/zulip-py3-venv/lib/python3.10/site-packages/django/core/management/base.py", line 413, in run_from_argv
    self.execute(*args, **cmd_options)
  File "/home/zulip/deployments/2024-08-03-00-42-06/zerver/lib/management.py", line 97, in execute
    super().execute(*args, **options)
  File "/srv/zulip-venv-cache/58c4cec1a70fbcdbd716f3aa98fe57c891b4ee84/zulip-py3-venv/lib/python3.10/site-packages/django/core/management/base.py", line 459, in execute
    output = self.handle(*args, **options)
  File "/home/zulip/deployments/2024-08-03-00-42-06/zerver/management/commands/checkconfig.py", line 13, in handle
    check_config()
  File "/home/zulip/deployments/2024-08-03-00-42-06/zerver/lib/management.py", line 36, in check_config
    if getattr(settings, setting_name) != default:
  File "/srv/zulip-venv-cache/58c4cec1a70fbcdbd716f3aa98fe57c891b4ee84/zulip-py3-venv/lib/python3.10/site-packages/django/conf/__init__.py", line 98, in __getattr__
    raise ImproperlyConfigured("The SECRET_KEY setting must not be empty.")
django.core.exceptions.ImproperlyConfigured: The SECRET_KEY setting must not be empty.
Error in the Zulip configuration. Exiting.
```

It seems this is a gap in the sample values and docs. I initially tried just adding “SECRET_KEY”, but that didn’t work. My next try was setting SECRETS_secret_key, e.g.:

SECRETS_secret_key: 'it5bs))q6toz-1gwf(+j+f9@rd8%_-0nx)p-2!egr*y1o51=45XXCV'
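You don't have to mash the keyboard to get a key. You can generate a random one instead. Below is a minimal Python sketch using the standard library's `secrets` module, with the same 50-character alphabet that Django's `get_random_secret_key()` draws from. It assumes Zulip accepts any long random string for `secret_key`. `make_secret_key` is just an illustrative helper name.

```python
import secrets
import string

# Same alphabet and length Django's get_random_secret_key() uses.
# Assumption: Zulip accepts any long random string for secret_key.
ALPHABET = string.ascii_lowercase + string.digits + "!@#$%^&*(-_=+)"
KEY_LENGTH = 50


def make_secret_key(length: int = KEY_LENGTH) -> str:
    """Return a cryptographically random key for SECRETS_secret_key."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


if __name__ == "__main__":
    # Single-quoted YAML is safe here: ALPHABET contains no quote characters.
    print(f"SECRETS_secret_key: '{make_secret_key()}'")
```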

That looked much better


builder@DESKTOP-QADGF36:~/Workspaces/docker-zulip$ kubectl logs zulip-0 -n zulip | tail -n10

2024-08-18 22:10:44,383 INFO success: zulip_events_embed_links entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2024-08-18 22:10:44,383 INFO success: zulip_events_embedded_bots entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2024-08-18 22:10:44,383 INFO success: zulip_events_email_senders entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2024-08-18 22:10:44,383 INFO success: zulip_events_missedmessage_emails entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2024-08-18 22:10:44,404 INFO success: zulip_events_missedmessage_mobile_notifications entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2024-08-18 22:10:44,494 INFO success: zulip_events_outgoing_webhooks entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2024-08-18 22:10:44,603 INFO success: zulip_events_thumbnail entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2024-08-18 22:10:44,603 INFO success: zulip_events_user_activity entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2024-08-18 22:10:44,604 INFO success: zulip_events_user_activity_interval entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2024-08-18 22:10:44,983 INFO success: zulip_events_user_presence entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

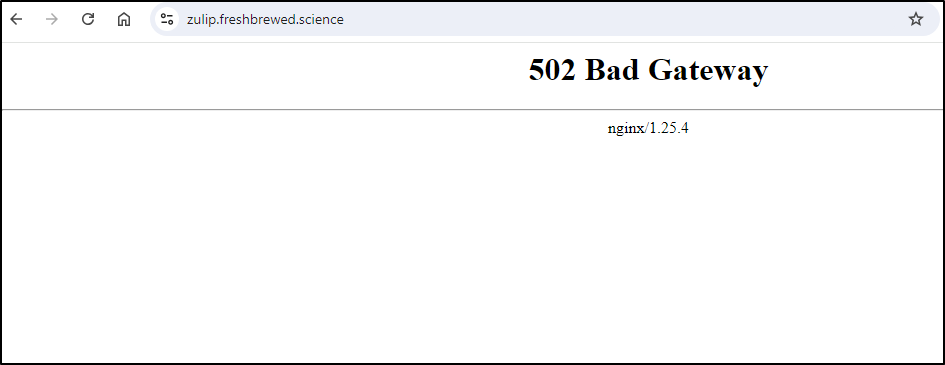

I have an Ingress defined, but I get a 502.

I can see that the Ingress points to a valid Service, which in turn finds the main pod. However, we are still stuck waiting for the pod to pass its health checks and become Ready:

$ kubectl get pods -l app.kubernetes.io/instance=zulip,app.kubernetes.io/name=zulip -n zulip

NAME READY STATUS RESTARTS AGE

zulip-0 0/1 Running 0 2m25s

This is actually caused by a bit of a chicken-and-egg problem. We can't return a healthy web response without a live container, but the container’s liveness and startup probes are checking the actual URL:

livenessProbe:

failureThreshold: 3

httpGet:

httpHeaders:

- name: Host

value: zulip.freshbrewed.science

path: /

port: http

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 5

name: zulip

ports:

- containerPort: 80

name: http

protocol: TCP

resources: {}

securityContext: {}

startupProbe:

failureThreshold: 30

httpGet:

httpHeaders:

- name: Host

value: zulip.freshbrewed.science

path: /

port: http

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 5

I let it run for a while just to see:

$ kubectl get pods -l app.kubernetes.io/instance=zulip,app.kubernetes.io/name=zulip -n zulip

NAME READY STATUS RESTARTS AGE

zulip-0 0/1 Running 21 (116s ago) 110m
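That restart count is roughly what the startupProbe settings predict. Here is a back-of-the-envelope sketch, which is my own approximation. It ignores `timeoutSeconds`, container start time and any restart back-off. The kubelet gives the container about `initialDelaySeconds + failureThreshold × periodSeconds` before killing it and starting over:

```python
def startup_window_seconds(initial_delay: int, period: int, failure_threshold: int) -> int:
    """Approximate time a startup probe allows before the container is restarted."""
    # Rough estimate only: ignores probe timeouts, container start time and back-off.
    return initial_delay + failure_threshold * period


def expected_restarts(elapsed_seconds: int, window_seconds: int) -> int:
    """Rough restart count if every startup window ends in a probe-triggered restart."""
    return elapsed_seconds // window_seconds


if __name__ == "__main__":
    # startupProbe above: initialDelaySeconds=10, periodSeconds=10, failureThreshold=30
    window = startup_window_seconds(10, 10, 30)
    print(window)                               # 310
    print(expected_restarts(110 * 60, window))  # 21
```

With those values, each attempt lasts about 310 seconds. That works out to roughly 21 restarts in 110 minutes, which lines up with the output above.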

I tried editing the StatefulSet (kubectl edit statefulset) to drop the Host header, so that the httpGet probe simply hits port 80:

livenessProbe:

failureThreshold: 3

httpGet:

path: /

port: http

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 5

name: zulip

ports:

- containerPort: 80

name: http

protocol: TCP

resources: {}

securityContext: {}

startupProbe:

failureThreshold: 30

httpGet:

path: /

port: http

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 5

But no go:

$ kubectl get pods -n zulip

NAME READY STATUS RESTARTS AGE

zulip-memcached-74f9bfc676-4v6rv 1/1 Running 0 12h

zulip-redis-master-0 1/1 Running 0 12h

zulip-postgresql-0 1/1 Running 0 12h

zulip-rabbitmq-0 1/1 Running 0 12h

zulip-0 0/1 Running 121 (3m19s ago) 10h

One more try, this time setting the port to 80 explicitly and using the /health endpoint:

livenessProbe:

failureThreshold: 3

httpGet:

path: /health

port: 80

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 5

name: zulip

ports:

- containerPort: 80

name: http

protocol: TCP

resources: {}

securityContext: {}

startupProbe:

failureThreshold: 30

httpGet:

path: /health

port: 80

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 5

Still seemingly stuck:

$ kubectl get pods -n zulip

NAME READY STATUS RESTARTS AGE

zulip-memcached-74f9bfc676-4v6rv 1/1 Running 0 12h

zulip-redis-master-0 1/1 Running 0 12h

zulip-postgresql-0 1/1 Running 0 12h

zulip-rabbitmq-0 1/1 Running 0 12h

zulip-0 0/1 Running 0 2m25s

Warning Unhealthy 2m35s kubelet Startup probe failed: Get "http://10.42.3.88:80/health": dial tcp 10.42.3.88:80: connect: connection refused

Warning Unhealthy 5s (x15 over 2m25s) kubelet Startup probe failed: HTTP probe failed with statuscode: 403
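Some context on that 403: an httpGet probe only counts status codes from 200 through 399 as success, so a 403 fails it just as a refused connection does. Below is a small Python sketch of that check using `http.client`. It takes an optional Host header override, so you can compare the bare pod address against the public hostname. `http_probe` is a hypothetical helper, not part of any Kubernetes tooling.

```python
import http.client
from typing import Optional, Tuple


def http_probe(host: str, port: int, path: str = "/",
               host_header: Optional[str] = None,
               timeout: float = 5.0) -> Tuple[bool, Optional[int]]:
    """Mimic a kubelet httpGet probe, returning (success, status_code)."""
    # Override Host like the probe's httpHeaders block; otherwise http.client sends host:port.
    headers = {"Host": host_header} if host_header else {}
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("GET", path, headers=headers)
        status = conn.getresponse().status
    except (OSError, http.client.HTTPException):
        # Connection refused or timed out, like the first event above.
        return False, None
    finally:
        conn.close()
    # Kubernetes treats 200 <= status < 400 as success.
    return 200 <= status < 400, status
```

As a usage example, run `kubectl port-forward pod/zulip-0 8080:80 -n zulip` first. Then compare `http_probe("127.0.0.1", 8080, "/health")` with `http_probe("127.0.0.1", 8080, "/health", host_header="zulip.freshbrewed.science")`. The comparison should show whether the Host header changes the answer.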

It seems to be that pesky behavior of refusing requests unless they come in for the expected hostname. I’ll pivot to a basic TCP check:

livenessProbe:

failureThreshold: 3

tcpSocket:

port: 80

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 5

name: zulip

ports:

- containerPort: 80

name: http

protocol: TCP

resources: {}

securityContext: {}

startupProbe:

failureThreshold: 30

tcpSocket:

port: 80

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 5
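The trade-off with a TCP check is that it only proves something is listening on port 80. No HTTP request is made, so the hostname and status code no longer matter. Here is a minimal sketch of the equivalent check in Python (`tcp_probe` is an illustrative name):

```python
import socket


def tcp_probe(host: str, port: int, timeout: float = 5.0) -> bool:
    """Mimic a kubelet tcpSocket probe: success if a TCP connection can be opened."""
    try:
        # Only the TCP handshake matters; nothing is sent over the connection.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, unreachable or timed out.
        return False
```

This is a weaker health signal than an HTTP check: a hung application behind a listening web server would still pass. However, it sidesteps the hostname problem entirely.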

Then I saved the edit and rotated the pod:

builder@DESKTOP-QADGF36:~/Workspaces/docker-zulip$ kubectl edit statefulset zulip -n zulip

statefulset.apps/zulip edited

builder@DESKTOP-QADGF36:~/Workspaces/docker-zulip$ kubectl delete pod zulip-0 -n zulip

pod "zulip-0" deleted

Much better

builder@DESKTOP-QADGF36:~/Workspaces/docker-zulip$ kubectl get pods -n zulip

NAME READY STATUS RESTARTS AGE

zulip-memcached-74f9bfc676-4v6rv 1/1 Running 0 12h

zulip-redis-master-0 1/1 Running 0 12h

zulip-postgresql-0 1/1 Running 0 12h

zulip-rabbitmq-0 1/1 Running 0 12h

zulip-0 1/1 Running 0 56s

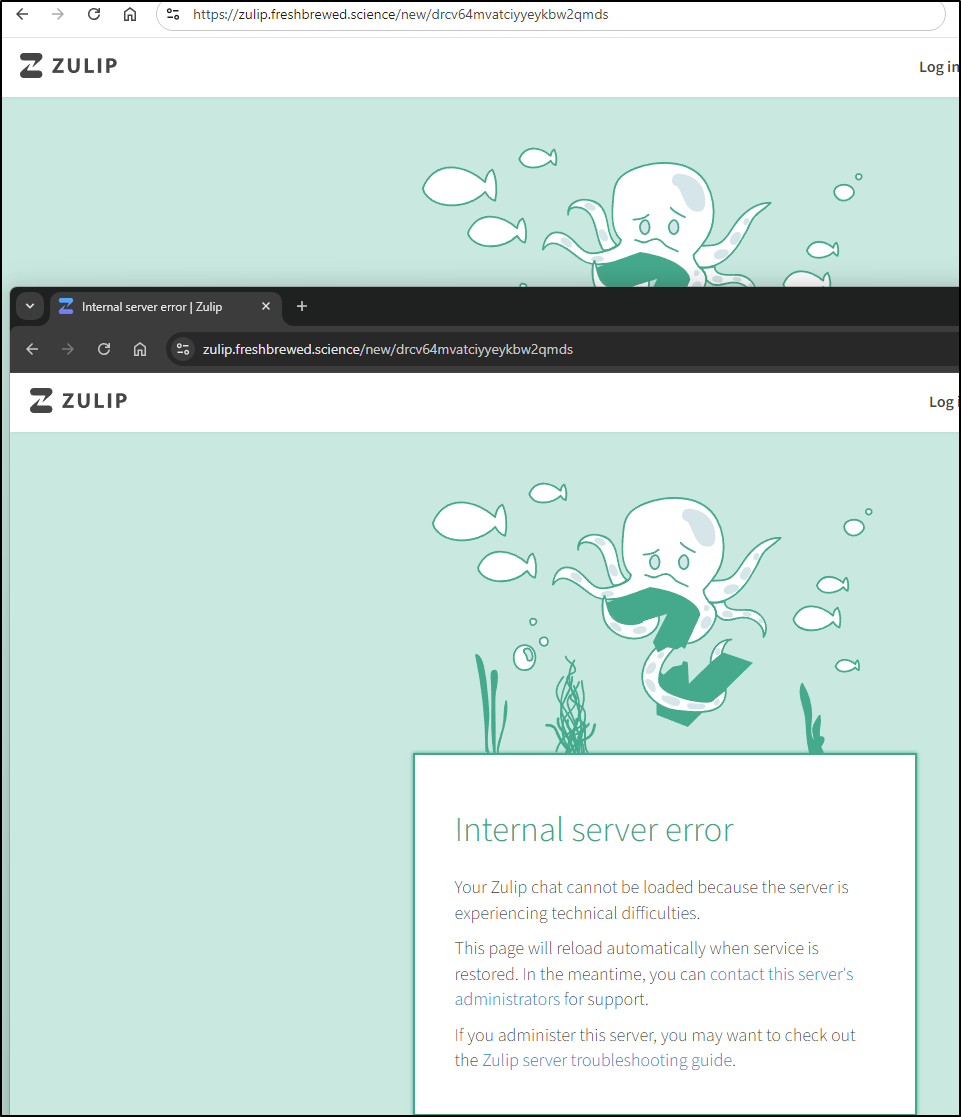

It’s an error page, but I’ll take it

As with the container, I can hop into the pod to get that realm creation link:

builder@DESKTOP-QADGF36:~/Workspaces/docker-zulip$ kubectl exec -it zulip-0 -n zulip -- /bin/bash

root@zulip-0:/# pwd

/

root@zulip-0:/# su zulip -c '/home/zulip/deployments/current/manage.py generate_realm_creation_link'

Please visit the following secure single-use link to register your

new Zulip organization:

https://zulip.freshbrewed.science/new/drcv64mvatciyyeykbw2qmds

root@zulip-0:/# exit

exit

builder@DESKTOP-QADGF36:~/Workspaces/docker-zulip$

However, that did not work

The errors point to a detected reverse proxy:

root@zulip-0:/var/log/zulip# tail -n 30 errors.log

2024-08-19 10:45:46.608 ERR [django.request] Internal Server Error: /new/drcv64mvatciyyeykbw2qmds

Traceback (most recent call last):

File "/srv/zulip-venv-cache/58c4cec1a70fbcdbd716f3aa98fe57c891b4ee84/zulip-py3-venv/lib/python3.10/site-packages/django/core/handlers/exception.py", line 55, in inner

response = get_response(request)

File "/srv/zulip-venv-cache/58c4cec1a70fbcdbd716f3aa98fe57c891b4ee84/zulip-py3-venv/lib/python3.10/site-packages/django/core/handlers/base.py", line 185, in _get_response

response = middleware_method(

File "/home/zulip/deployments/2024-08-03-00-42-06/zerver/middleware.py", line 670, in process_view

raise ProxyMisconfigurationError(proxy_state_header)

zerver.middleware.ProxyMisconfigurationError: Reverse proxy misconfiguration: No proxies configured in Zulip, but proxy headers detected from proxy at 10.42.0.20; see https://zulip.readthedocs.io/en/latest/production/reverse-proxies.html

2024-08-19 10:45:47.380 WARN [zerver.lib.push_notifications] Mobile push notifications are not configured.

See https://zulip.readthedocs.io/en/latest/production/mobile-push-notifications.html

2024-08-19 10:45:48.899 ERR [django.request] Internal Server Error: /new/drcv64mvatciyyeykbw2qmds

Traceback (most recent call last):

File "/srv/zulip-venv-cache/58c4cec1a70fbcdbd716f3aa98fe57c891b4ee84/zulip-py3-venv/lib/python3.10/site-packages/django/core/handlers/exception.py", line 55, in inner

response = get_response(request)

File "/srv/zulip-venv-cache/58c4cec1a70fbcdbd716f3aa98fe57c891b4ee84/zulip-py3-venv/lib/python3.10/site-packages/django/core/handlers/base.py", line 185, in _get_response

response = middleware_method(

File "/home/zulip/deployments/2024-08-03-00-42-06/zerver/middleware.py", line 670, in process_view

raise ProxyMisconfigurationError(proxy_state_header)

zerver.middleware.ProxyMisconfigurationError: Reverse proxy misconfiguration: No proxies configured in Zulip, but proxy headers detected from proxy at 10.42.0.20; see https://zulip.readthedocs.io/en/latest/production/reverse-proxies.html

2024-08-19 10:45:54.580 ERR [django.request] Internal Server Error: /new/drcv64mvatciyyeykbw2qmds

Traceback (most recent call last):

File "/srv/zulip-venv-cache/58c4cec1a70fbcdbd716f3aa98fe57c891b4ee84/zulip-py3-venv/lib/python3.10/site-packages/django/core/handlers/exception.py", line 55, in inner

response = get_response(request)

File "/srv/zulip-venv-cache/58c4cec1a70fbcdbd716f3aa98fe57c891b4ee84/zulip-py3-venv/lib/python3.10/site-packages/django/core/handlers/base.py", line 185, in _get_response

response = middleware_method(

File "/home/zulip/deployments/2024-08-03-00-42-06/zerver/middleware.py", line 670, in process_view

raise ProxyMisconfigurationError(proxy_state_header)

zerver.middleware.ProxyMisconfigurationError: Reverse proxy misconfiguration: No proxies configured in Zulip, but proxy headers detected from proxy at 10.42.0.20; see https://zulip.readthedocs.io/en/latest/production/reverse-proxies.html

Since 10.42.0.20 is my detected load balancer IP, I’ll add it to the StatefulSet as a new LOADBALANCER_IPS environment variable. I used a range (10.42.0.0/20) that covers it:

builder@DESKTOP-QADGF36:~/Workspaces/docker-zulip$ kubectl get statefulset zulip -n zulip -o yaml | grep -C 2 LOAD

- name: ZULIP_AUTH_BACKENDS

value: EmailAuthBackend

- name: LOADBALANCER_IPS

value: 10.42.0.0/20

image: zulip/docker-zulip:9.1-0
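To double-check that the range actually covers the proxy address from errors.log, Python's `ipaddress` module makes it a one-liner. This sketch assumes LOADBALANCER_IPS takes a comma-separated list of addresses or CIDRs. It only does a membership check and does not reproduce how Zulip's middleware evaluates proxies.

```python
import ipaddress
from typing import List, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


def trusted_networks(value: str) -> List[Network]:
    """Parse a comma-separated list of IPs/CIDRs (assumed LOADBALANCER_IPS format)."""
    return [
        ipaddress.ip_network(part.strip(), strict=False)
        for part in value.split(",")
        if part.strip()
    ]


def is_trusted(ip: str, networks: List[Network]) -> bool:
    """True if the address falls inside any of the trusted networks."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in networks)


if __name__ == "__main__":
    nets = trusted_networks("10.42.0.0/20")  # value set in the StatefulSet
    print(nets[0][0], nets[0][-1])           # 10.42.0.0 10.42.15.255
    print(is_trusted("10.42.0.20", nets))    # True: proxy IP from errors.log
```

A /20 spans 4,096 addresses, so it trusts more than the one proxy. The upside is that it keeps working if the ingress pod comes back with a different IP inside that range.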

That seemed to work, and I was able to get an organization URL the same way as before:

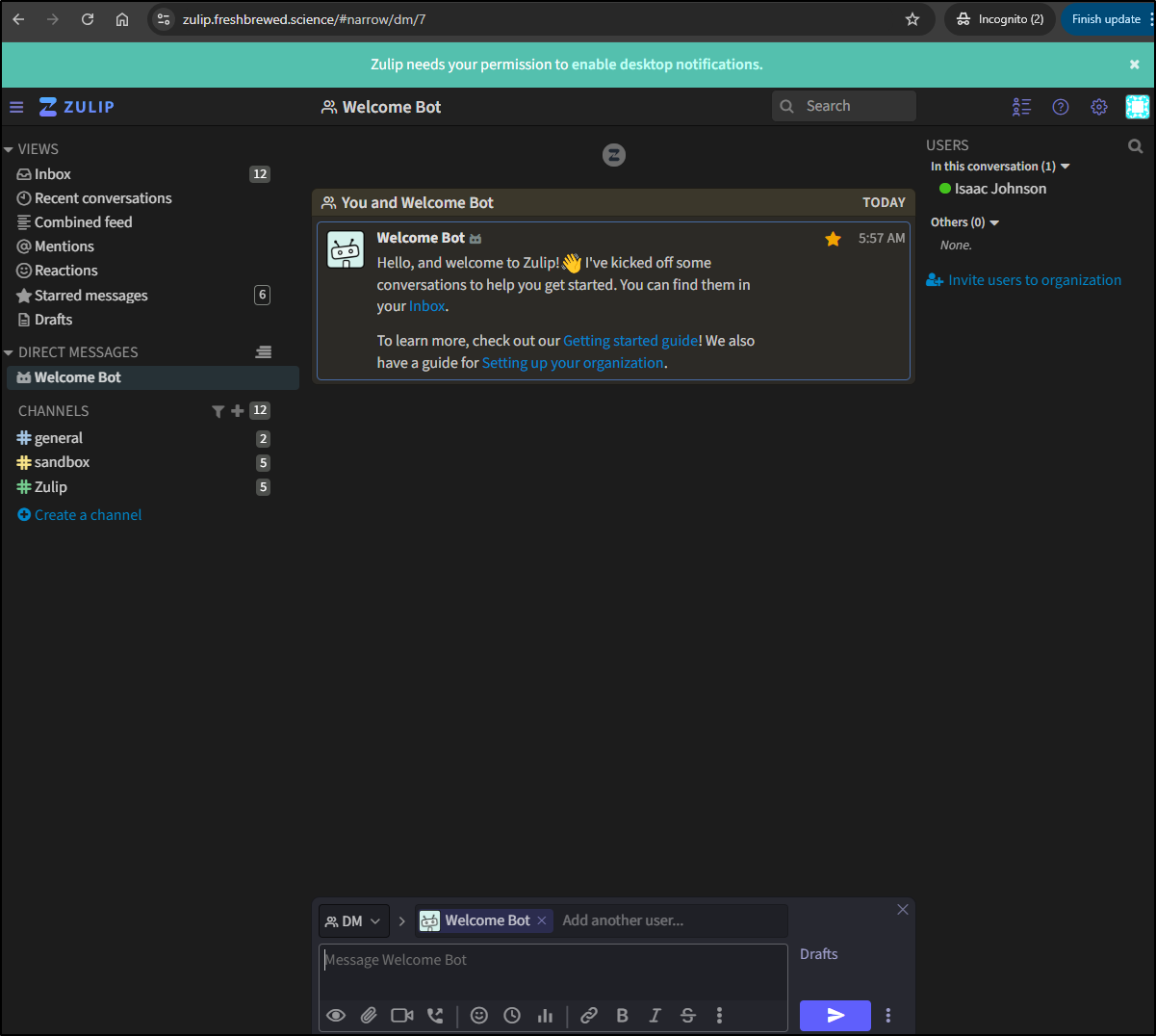

I now have an instance!

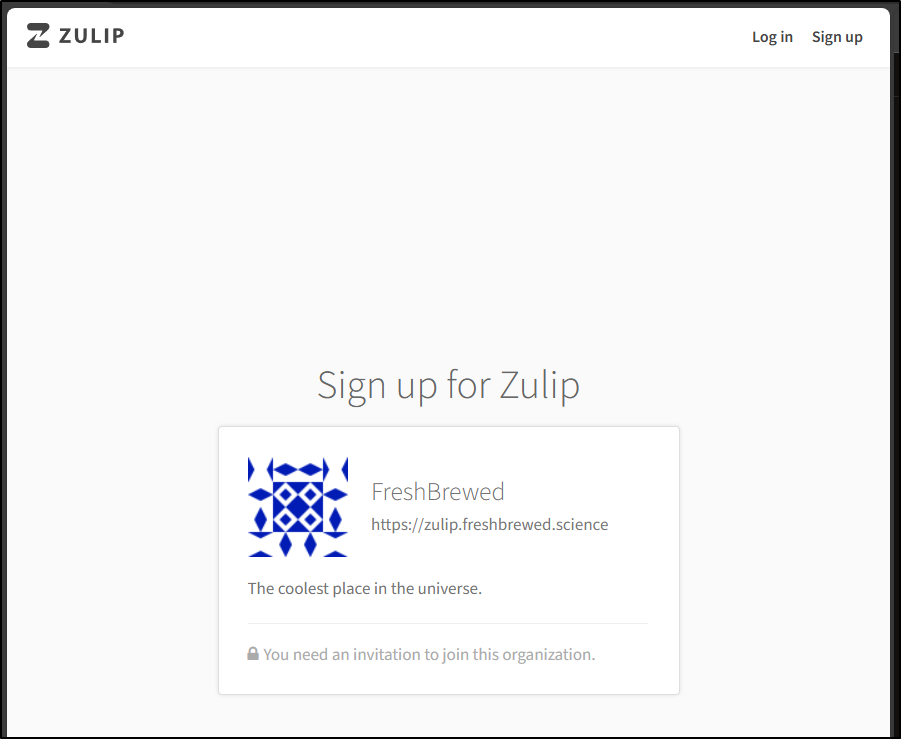

Using Edge, I found that the organization is set to require invitations.

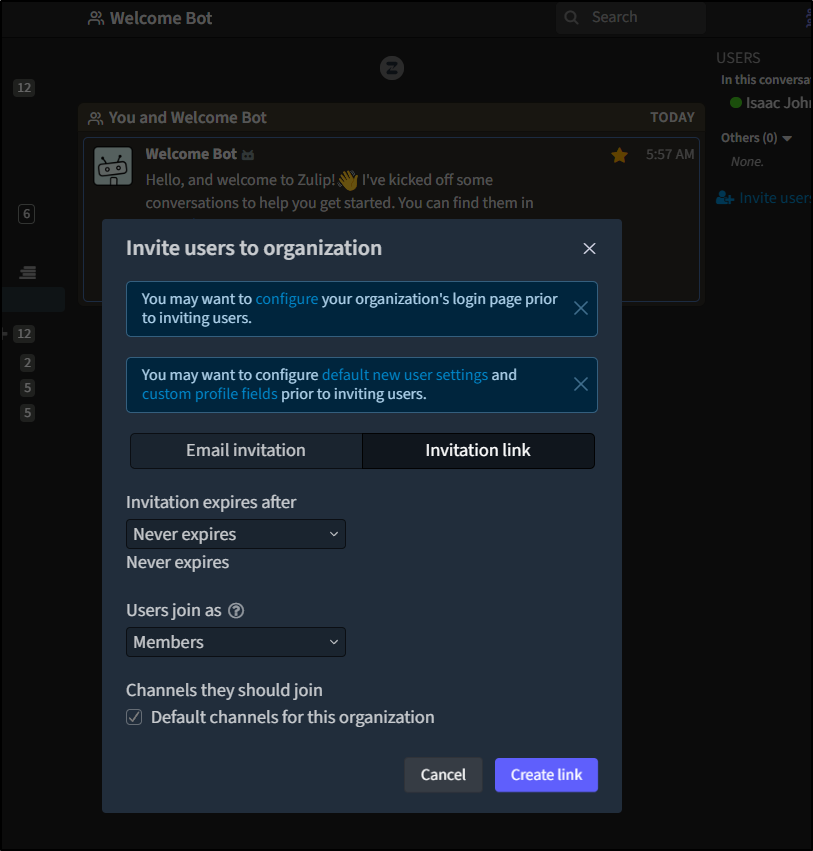

That said, I can create a non-expiring link.

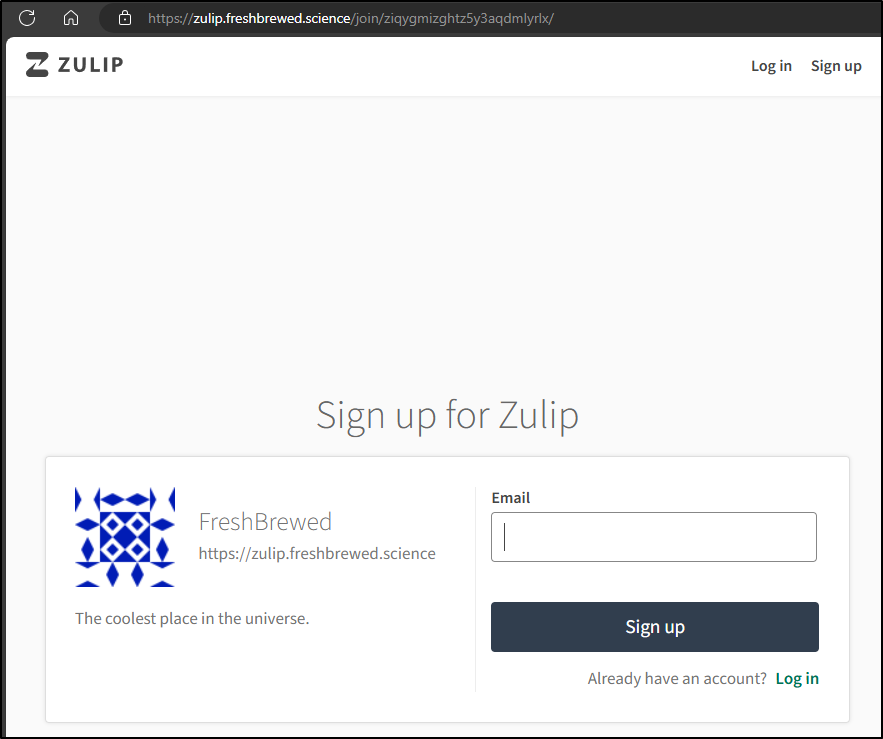

Which allows signups

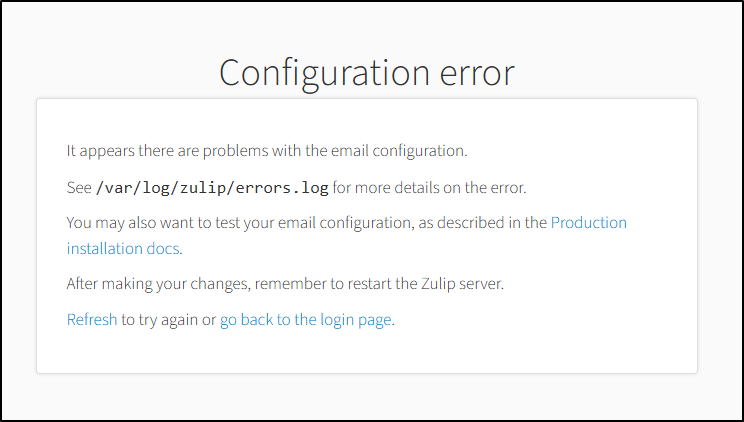

However, I was met with an error when I tried it.

I checked the error log:

2024-08-19 11:01:42.249 WARN [zerver.management.commands.deliver_scheduled_emails] <ScheduledEmail: 1 [<UserProfile: isaac@freshbrewed.science <Realm: 2>>] 2024-08-19 10:57:13.802492+00:00> not delivered

2024-08-19 11:01:42.651 ERR [zulip.send_email] Error sending account_registered email to ['Isaac Johnson <isaac@freshbrewed.science>']: Connection unexpectedly closed

Traceback (most recent call last):

File "/home/zulip/deployments/2024-08-03-00-42-06/zerver/lib/send_email.py", line 295, in send_email

if connection.send_messages([mail]) == 0:

File "/srv/zulip-venv-cache/58c4cec1a70fbcdbd716f3aa98fe57c891b4ee84/zulip-py3-venv/lib/python3.10/site-packages/django/core/mail/backends/smtp.py", line 128, in send_messages

new_conn_created = self.open()

File "/srv/zulip-venv-cache/58c4cec1a70fbcdbd716f3aa98fe57c891b4ee84/zulip-py3-venv/lib/python3.10/site-packages/django/core/mail/backends/smtp.py", line 95, in open

self.connection.login(self.username, self.password)

File "/usr/lib/python3.10/smtplib.py", line 739, in login

(code, resp) = self.auth(

File "/usr/lib/python3.10/smtplib.py", line 642, in auth

(code, resp) = self.docmd("AUTH", mechanism + " " + response)

File "/usr/lib/python3.10/smtplib.py", line 432, in docmd

return self.getreply()

File "/usr/lib/python3.10/smtplib.py", line 405, in getreply

raise SMTPServerDisconnected("Connection unexpectedly closed")

smtplib.SMTPServerDisconnected: Connection unexpectedly closed

Stack (most recent call last):

File "/home/zulip/deployments/current/manage.py", line 150, in <module>

execute_from_command_line(sys.argv)

File "/home/zulip/deployments/current/manage.py", line 115, in execute_from_command_line

utility.execute()

File "/srv/zulip-venv-cache/58c4cec1a70fbcdbd716f3aa98fe57c891b4ee84/zulip-py3-venv/lib/python3.10/site-packages/django/core/management/__init__.py", line 436, in execute

self.fetch_command(subcommand).run_from_argv(self.argv)

File "/srv/zulip-venv-cache/58c4cec1a70fbcdbd716f3aa98fe57c891b4ee84/zulip-py3-venv/lib/python3.10/site-packages/django/core/management/base.py", line 413, in run_from_argv

self.execute(*args, **cmd_options)

File "/home/zulip/deployments/2024-08-03-00-42-06/zerver/lib/management.py", line 97, in execute

super().execute(*args, **options)

File "/srv/zulip-venv-cache/58c4cec1a70fbcdbd716f3aa98fe57c891b4ee84/zulip-py3-venv/lib/python3.10/site-packages/django/core/management/base.py", line 459, in execute

output = self.handle(*args, **options)

File "/home/zulip/deployments/2024-08-03-00-42-06/zerver/management/commands/deliver_scheduled_emails.py", line 50, in handle

deliver_scheduled_emails(job)

File "/home/zulip/deployments/2024-08-03-00-42-06/zerver/lib/send_email.py", line 506, in deliver_scheduled_emails

send_email(**data)

File "/home/zulip/deployments/2024-08-03-00-42-06/zerver/lib/send_email.py", line 309, in send_email

logger.exception("Error sending %s email to %s: %s", template, mail.to, e, stack_info=True)

2024-08-19 11:01:42.652 WARN [zerver.management.commands.deliver_scheduled_emails] <ScheduledEmail: 1 [<UserProfile: isaac@freshbrewed.science <Realm: 2>>] 2024-08-19 10:57:13.802492+00:00> not delivered
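The traceback shows the connection dropping inside `login()` during the SMTP AUTH exchange, so it is worth testing the credentials outside Zulip. Here is a minimal Python sketch with `smtplib`. It mirrors the EMAIL_USE_SSL / EMAIL_USE_TLS pair, which Django treats as mutually exclusive. The function names are my own.

```python
import smtplib
import ssl


def smtp_mode(use_ssl: bool, use_tls: bool) -> str:
    """Pick a connection style from the EMAIL_USE_SSL / EMAIL_USE_TLS pair."""
    if use_ssl and use_tls:
        # Django's SMTP backend rejects this combination as well.
        raise ValueError("EMAIL_USE_SSL and EMAIL_USE_TLS are mutually exclusive")
    if use_ssl:
        return "ssl"       # implicit TLS from the first byte (commonly port 465)
    if use_tls:
        return "starttls"  # plain connect, then STARTTLS upgrade (commonly port 587)
    return "plain"


def check_smtp_login(host: str, port: int, user: str, password: str,
                     use_ssl: bool = False, use_tls: bool = True,
                     timeout: float = 10.0) -> None:
    """Connect, authenticate and QUIT; raises smtplib.SMTPException or OSError on failure."""
    context = ssl.create_default_context()
    mode = smtp_mode(use_ssl, use_tls)
    if mode == "ssl":
        server = smtplib.SMTP_SSL(host, port, timeout=timeout, context=context)
    else:
        server = smtplib.SMTP(host, port, timeout=timeout)
    with server:  # sends QUIT on exit
        if mode == "starttls":
            server.starttls(context=context)
        server.login(user, password)
```

For example, `check_smtp_login("smtp.gmail.com", 587, "you@gmail.com", "<app password>")` either returns quietly or raises an `smtplib.SMTPException`, such as `SMTPServerDisconnected` or `SMTPAuthenticationError`.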

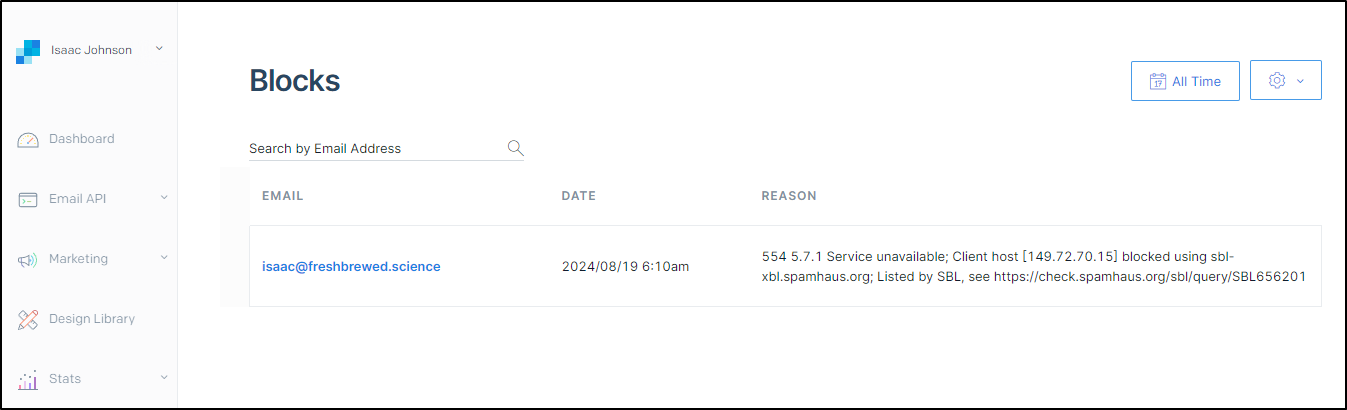

I’m actually a little stumped here. I set a new SG password, and looking at the blocks, it seems it blocked... itself?

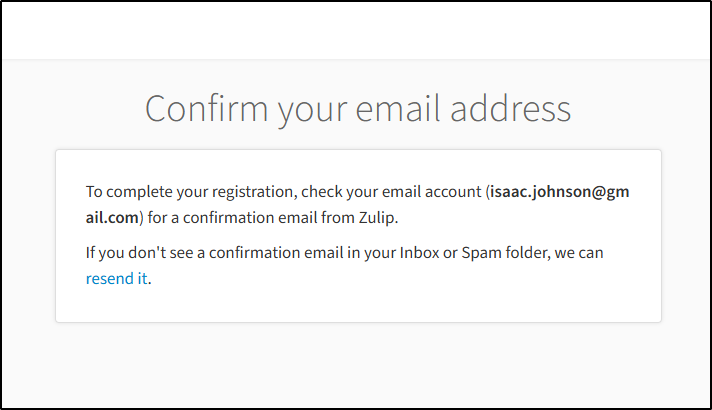

I switched to Gmail and used a Gmail App Password, since 2FA on my main account blocks a plain password login. This finally worked:

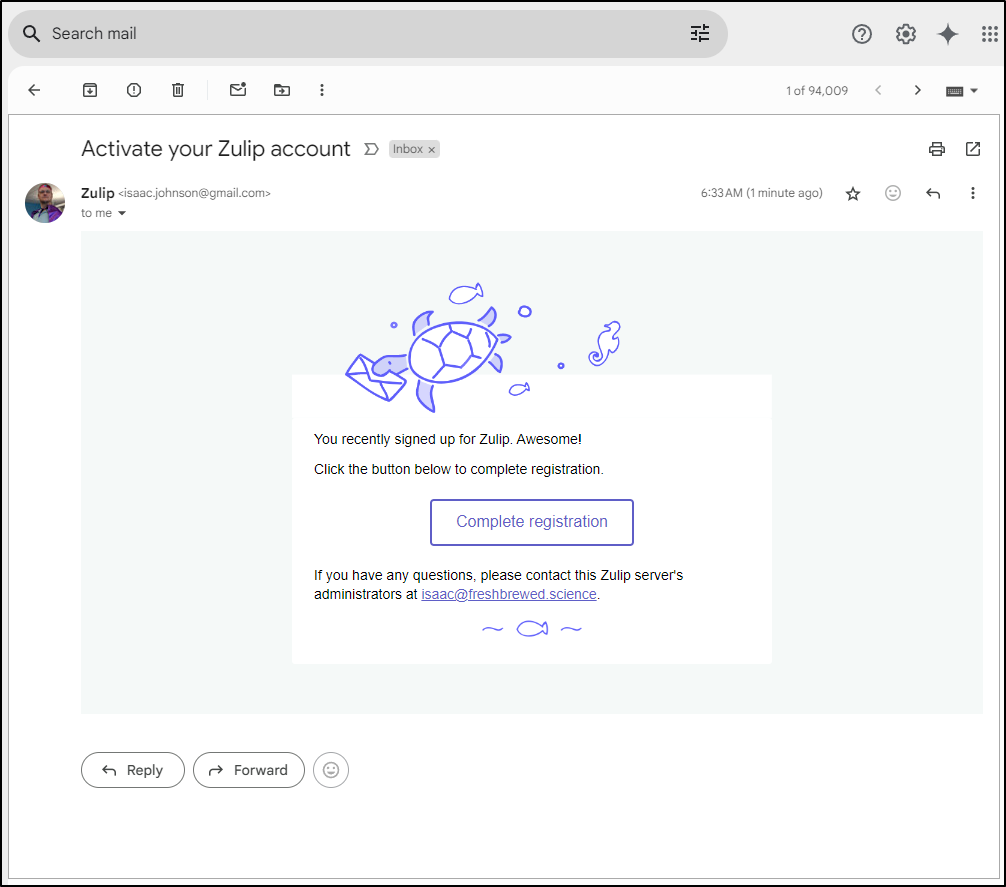

Which generated the welcome email: <!–

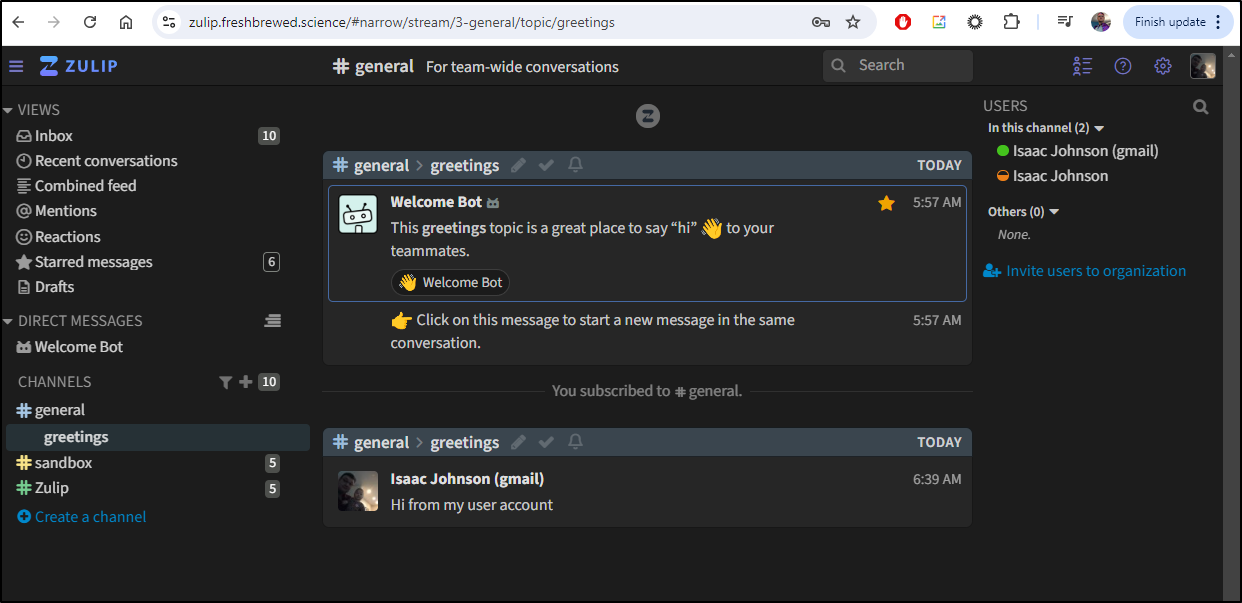

–>

A quick test shows it works

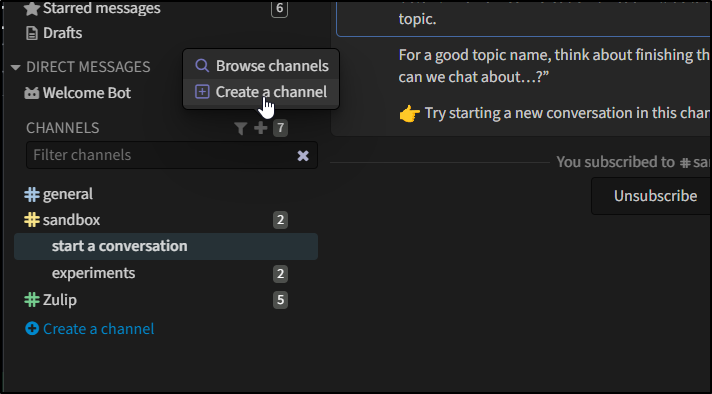

I’ll next create a channel

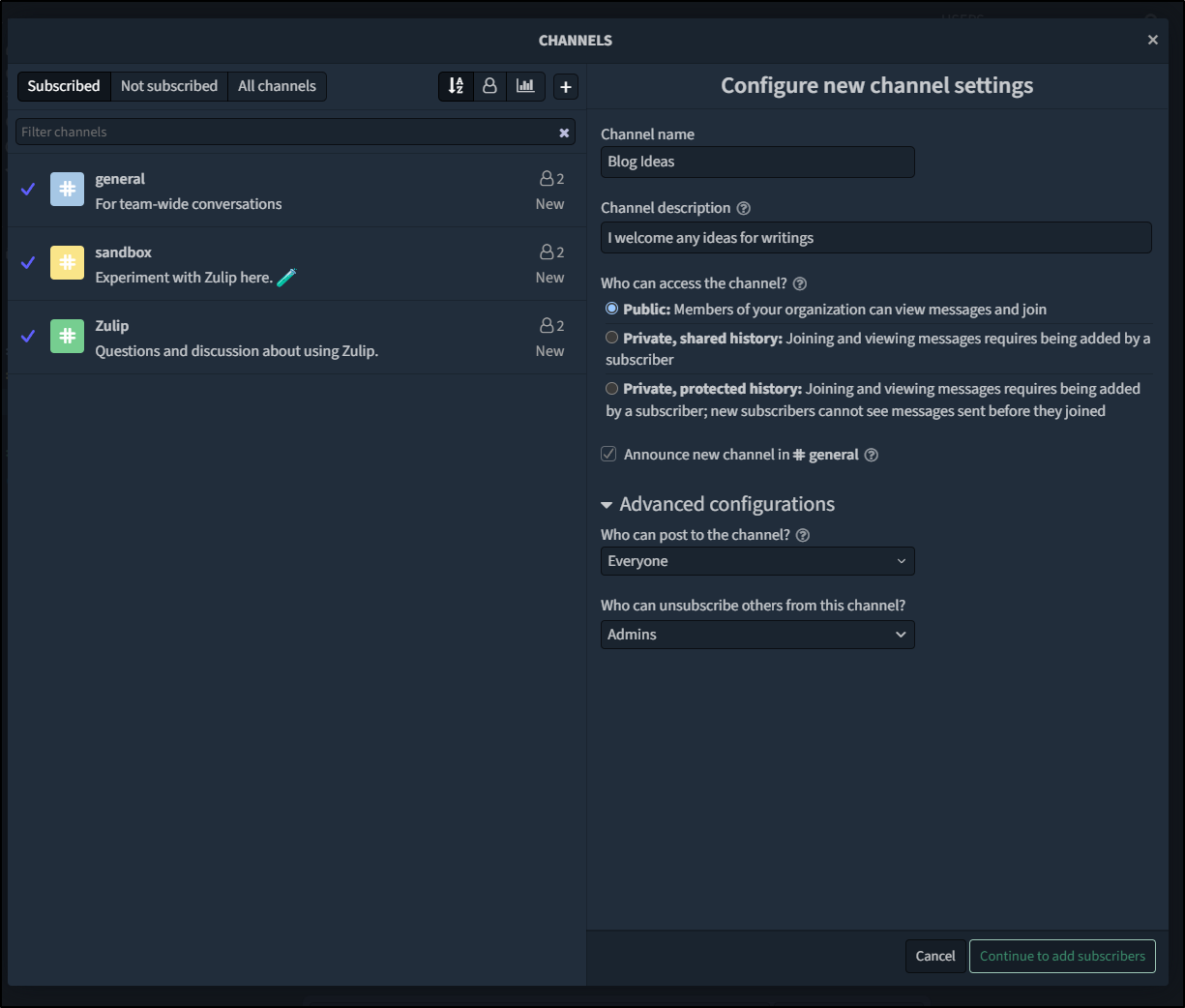

I can add a “Blog Ideas” channel

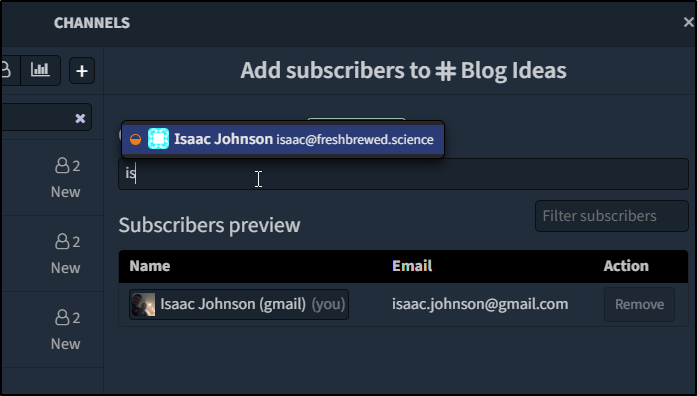

I can also pull in other users (like my admin user)

Before I go all in on this, I’ll give a week or so to see if I still like it. If I do, I’ll come back and write more.

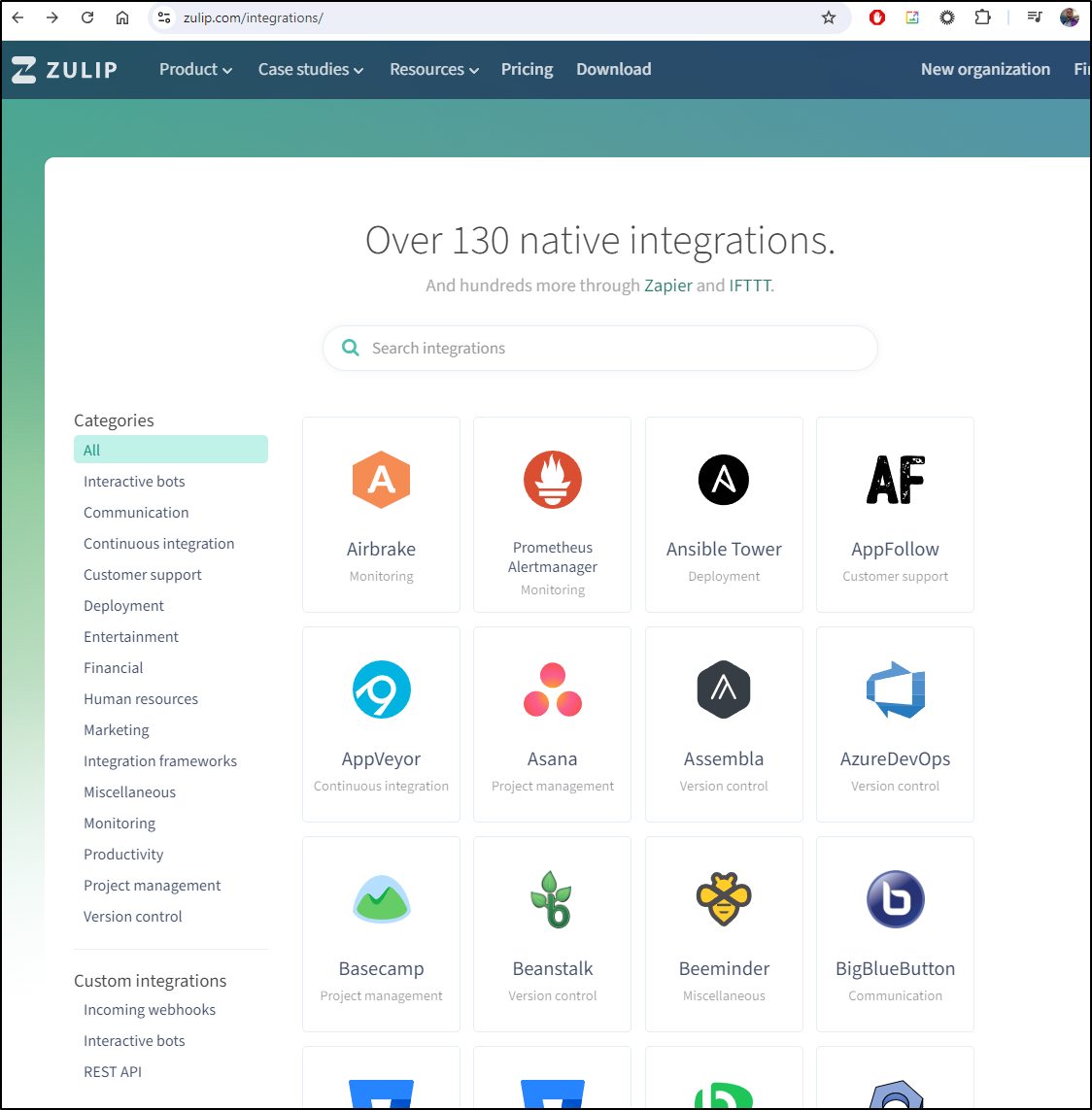

I should point out that they have many more integrations I have yet to explore:

They also have a very functional free tier you can use on their cloud here

To wrap up, here is what I had to change in the chart. I updated the dependencies to more current versions and fixed _helpers.tpl, which had an error in the RabbitMQ name:

builder@DESKTOP-QADGF36:~/Workspaces/docker-zulip$ git diff kubernetes/chart/

diff --git a/kubernetes/chart/zulip/Chart.lock b/kubernetes/chart/zulip/Chart.lock

index a1b22d2..00070f7 100644

--- a/kubernetes/chart/zulip/Chart.lock

+++ b/kubernetes/chart/zulip/Chart.lock

@@ -1,15 +1,15 @@

dependencies:

- name: memcached

repository: https://charts.bitnami.com/bitnami

- version: 6.0.16

+ version: 6.1.11

- name: rabbitmq

repository: https://charts.bitnami.com/bitnami

- version: 8.32.0

+ version: 10.1.19

- name: redis

repository: https://charts.bitnami.com/bitnami

- version: 16.8.7

+ version: 16.11.3

- name: postgresql

repository: https://charts.bitnami.com/bitnami

- version: 11.1.22

-digest: sha256:376a93b6d6df79610d9ba283727a95560378644bb006f4ffc2c19571453a5cad

-generated: "2022-04-21T11:44:41.427111348+02:00"

+ version: 11.9.13

+digest: sha256:7ebaf1f9f0e11f190cbd87d7ac1403ef56829ebf1279e5a91cbcca62e3ac6ed7

+generated: "2024-08-18T16:44:35.278201191-05:00"

diff --git a/kubernetes/chart/zulip/Chart.yaml b/kubernetes/chart/zulip/Chart.yaml

index 7d94e25..9d0e4b8 100644

--- a/kubernetes/chart/zulip/Chart.yaml

+++ b/kubernetes/chart/zulip/Chart.yaml

@@ -18,23 +18,23 @@ dependencies:

repository: https://charts.bitnami.com/bitnami

tags:

- memcached

- version: 6.0.16

+ version: 6.1.11

- name: rabbitmq

repository: https://charts.bitnami.com/bitnami

tags:

- rabbitmq

- version: 8.32.0

+ version: 10.1.19

- name: redis

repository: https://charts.bitnami.com/bitnami

tags:

- redis

- version: 16.8.7

+ version: 16.11.3

- name: postgresql

repository: https://charts.bitnami.com/bitnami

tags:

- postgresql

# Note: values.yaml overwrites posgresql image to zulip/zulip-postgresql:14

- version: 11.1.22

+ version: 11.9.13

sources:

- https://github.com/zulip/zulip

diff --git a/kubernetes/chart/zulip/templates/_helpers.tpl b/kubernetes/chart/zulip/templates/_helpers.tpl

index 7a5aa20..88b69be 100644

--- a/kubernetes/chart/zulip/templates/_helpers.tpl

+++ b/kubernetes/chart/zulip/templates/_helpers.tpl

@@ -74,7 +74,7 @@ include all env variables for Zulip pods

- name: SETTING_MEMCACHED_LOCATION

value: "{{ template "common.names.fullname" .Subcharts.memcached }}:11211"

- name: SETTING_RABBITMQ_HOST

- value: "{{ template "rabbitmq.fullname" .Subcharts.rabbitmq }}"

+ value: "{{ template "common.names.fullname" .Subcharts.rabbitmq }}"

- name: SETTING_REDIS_HOST

value: "{{ template "common.names.fullname" .Subcharts.redis }}-headless"

- name: SECRETS_rabbitmq_password

diff --git a/kubernetes/chart/zulip/values.yaml b/kubernetes/chart/zulip/values.yaml

index 95c082f..0bc43d5 100644

--- a/kubernetes/chart/zulip/values.yaml

+++ b/kubernetes/chart/zulip/values.yaml

@@ -192,6 +192,7 @@ postgresql:

# -- Rabbitmq settings, see [Requirements](#Requirements).

rabbitmq:

+ fullname: rabbitmq

auth:

username: zulip

# Set this to true if you need the rabbitmq to be persistent
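Here is why the _helpers.tpl change works. The memcached and redis hosts already come from Bitnami's shared `common.names.fullname` template, and the fix points RabbitMQ at the same helper. Below is a Python rendition of that helper's naming rule as I understand it. Treat it as an approximation of the Helm template, not a drop-in replacement.

```python
def _trunc63(value: str) -> str:
    """Helm's `trunc 63 | trimSuffix "-"`: cut to 63 chars, drop one trailing dash."""
    value = value[:63]
    return value[:-1] if value.endswith("-") else value


def common_names_fullname(release_name: str, chart_name: str,
                          name_override: str = "",
                          fullname_override: str = "") -> str:
    """Approximate Python port of Bitnami's common.names.fullname template."""
    # As I understand the helper, only fullnameOverride and nameOverride are consulted.
    if fullname_override:
        return _trunc63(fullname_override)
    name = name_override or chart_name
    # Sprig's `contains $name .Release.Name` is a substring check.
    if name in release_name:
        return _trunc63(release_name)
    return _trunc63(f"{release_name}-{name}")


if __name__ == "__main__":
    for subchart in ("memcached", "rabbitmq", "redis", "postgresql"):
        print(common_names_fullname("zulip", subchart))
```

With a release named zulip, this yields zulip-memcached, zulip-rabbitmq, zulip-redis and zulip-postgresql. Those match the hosts in the rendered StatefulSet below, where Redis adds -headless.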

I then needed to tweak the email settings and the liveness/startup probes on the StatefulSet (STS):

builder@DESKTOP-QADGF36:~/Workspaces/docker-zulip$ kubectl get sts -n zulip zulip -o yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:

annotations:

meta.helm.sh/release-name: zulip

meta.helm.sh/release-namespace: zulip

creationTimestamp: "2024-08-18T21:57:46Z"

generation: 10

labels:

app.kubernetes.io/instance: zulip

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/name: zulip

app.kubernetes.io/version: 9.1-0

helm.sh/chart: zulip-0.9.1

name: zulip

namespace: zulip

resourceVersion: "26714911"

uid: c38af2de-5de3-499a-8cc6-61b4e68042b0

spec:

podManagementPolicy: OrderedReady

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

app.kubernetes.io/instance: zulip

app.kubernetes.io/name: zulip

serviceName: zulip

template:

metadata:

creationTimestamp: null

labels:

app.kubernetes.io/instance: zulip

app.kubernetes.io/name: zulip

spec:

containers:

- env:

- name: DB_HOST

value: zulip-postgresql

- name: DB_HOST_PORT

value: "5432"

- name: DB_USER

value: postgres

- name: SETTING_MEMCACHED_LOCATION

value: zulip-memcached:11211

- name: SETTING_RABBITMQ_HOST

value: zulip-rabbitmq

- name: SETTING_REDIS_HOST

value: zulip-redis-headless

- name: SECRETS_rabbitmq_password

value: asdfasdfasdfasfdasdfas

- name: SECRETS_postgres_password

value: asdfasdfasdfasfdasdfas

- name: SECRETS_memcached_password

value: asdfasdfasdfasfdasdfas

- name: SECRETS_redis_password

value: asdfasdfasdfasfdasdfas

- name: SECRETS_secret_key

value: ixxxxxxxxxxxxxxxxxxxxxxV

- name: DISABLE_HTTPS

value: "true"

- name: SECRETS_email_password

value: xxxxxxxxxxxxxxxxxxxxxx

- name: SETTING_EMAIL_HOST

value: smtp.gmail.com

- name: SETTING_EMAIL_HOST_USER

value: isaac.johnson@gmail.com

- name: SETTING_EMAIL_PORT

value: "587"

- name: SETTING_EMAIL_USE_SSL

value: "False"

- name: SETTING_EMAIL_USE_TLS

value: "True"

- name: SETTING_EXTERNAL_HOST

value: zulip.freshbrewed.science

- name: SETTING_NOREPLY_EMAIL_ADDRESS

value: isaac.johnson@gmail.com

- name: SETTING_ZULIP_ADMINISTRATOR

value: isaac@freshbrewed.science

- name: SSL_CERTIFICATE_GENERATION

value: self-signed

- name: ZULIP_AUTH_BACKENDS

value: EmailAuthBackend

- name: LOADBALANCER_IPS

value: 10.42.0.0/20

image: zulip/docker-zulip:9.1-0

imagePullPolicy: IfNotPresent

livenessProbe:

failureThreshold: 3

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

tcpSocket:

port: 80

timeoutSeconds: 5

name: zulip

ports:

- containerPort: 80

name: http

protocol: TCP

resources: {}

securityContext: {}

startupProbe:

failureThreshold: 30

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

tcpSocket:

port: 80

timeoutSeconds: 5

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

volumeMounts:

- mountPath: /data

name: zulip-persistent-storage

- mountPath: /data/post-setup.d

name: zulip-post-setup-scripts

dnsPolicy: ClusterFirst

restartPolicy: Always

schedulerName: default-scheduler

securityContext: {}

serviceAccount: zulip

serviceAccountName: zulip

terminationGracePeriodSeconds: 30

volumes:

- name: zulip-persistent-storage

persistentVolumeClaim:

claimName: zulip-data

- configMap:

defaultMode: 488

name: zulip-post-setup-scripts

name: zulip-post-setup-scripts

updateStrategy:

rollingUpdate:

partition: 0

type: RollingUpdate

status:

availableReplicas: 1

collisionCount: 0

currentReplicas: 1

currentRevision: zulip-67f6659d97

observedGeneration: 10

readyReplicas: 1

replicas: 1

updateRevision: zulip-67f6659d97

updatedReplicas: 1
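The env block above follows the docker-zulip convention visible in the earlier startup log. Each `SETTING_FOO` variable ends up as key `FOO` in /etc/zulip/settings.py, typed as a string, integer or bool. The sketch below is my own guess at that coercion, written only to mirror the types the log printed (EMAIL_PORT as integer, EMAIL_USE_TLS as bool, EMAIL_HOST as string). It is not the image's actual entrypoint code, and the function names are hypothetical.

```python
from typing import Dict, Union

Value = Union[bool, int, str]


def guess_setting_type(raw: str) -> Value:
    """Hypothetical type guess for one env value: bool, then integer, else string."""
    if raw in ("True", "False"):
        return raw == "True"
    if raw.isdigit():
        return int(raw)
    return raw


def settings_from_env(env: Dict[str, str], prefix: str = "SETTING_") -> Dict[str, Value]:
    """Collect SETTING_* variables as settings.py keys with guessed types."""
    return {
        key[len(prefix):]: guess_setting_type(value)
        for key, value in env.items()
        if key.startswith(prefix)
    }


if __name__ == "__main__":
    import os

    for name, value in sorted(settings_from_env(dict(os.environ)).items()):
        print(f"{name} = {value!r}")
```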

Summary

We checked out two Open-Source apps: one for viewing PDFs and the other for chat and social communication. While I like PDFDing, its lack of auth (other than OIDC) is a bit limiting. I worry about letting unlimited people dump PDFs in the cluster; I see it as a possible spam haven.

The Zulip server is interesting. Its Helm chart was so old that I question how well it’s really being maintained. I’m going to give it a shot for a while and see. I actually like the fact that it doesn’t have VOIP. I’m rather tired of endless Teams and Zoom calls. I have a phone, and I’m quite fine using it if I really want to talk with mouthwords. That said, they have integrations, including Jitsi and Zoom, so you can add VOIP if you desire.